Yogi Berra: 'If the world were perfect, it wouldn't be.'

"If the world were perfect, it wouldn't be." - Yogi Berra

Yogi Berra, a legendary American baseball player and philosopher, famously stated this intriguing quote that invites us to consider the imperfection of our world. At first glance, it may seem paradoxical or even pessimistic, but delving deeper into its meaning reveals profound philosophical insights. Berra's words encapsulate the idea that perfection is not attainable or desirable, and that the imperfections of our world are what make it truly remarkable.

In a straightforward sense, this quote suggests that a perfect world is an impossibility. If everything were flawless and without blemish, there would be no room for growth, development, or change. Perfection implies a static state where nothing can be improved or enhanced. And in such a world, life would lack the variety and challenges that propel us forward.

To fully understand the significance of Berra's quote, let us introduce a concept known as "The Beauty of Imperfection." This concept challenges the conventional belief that perfection is the ultimate goal. It emphasizes that perfection can lead to stagnation and monotony, robbing life of its richness and vibrancy. Instead, embracing imperfections allows us to appreciate the unique and diverse aspects of our world.

Consider the natural world, for instance. The intricacies of the flora and fauna, the delicate balance of ecosystems, the changing seasons, and the unpredictability of weather patterns all contribute to the charm and splendor of our planet. If everything were perfect and predictable, nature would lose its awe-inspiring and mysterious appeal. The imperfections in nature make it captivating and engender a sense of wonder that propels us to explore and learn.

The concept of embracing imperfection also applies to human relationships. It is through our flaws and imperfections that we connect with others on a deeper level. When we share vulnerability, empathy grows, fostering meaningful connections. In a perfect world, relationships would lack authenticity and depth, as there would be no need for understanding, forgiveness, or growth.

Furthermore, it is in the pursuit of overcoming imperfections that we demonstrate resilience, creativity, and innovation. Unattainable perfection drives us to constantly seek improvement, to find innovative solutions to problems, and to push boundaries. The imperfections in technology, for example, provide the impetus for advancements and breakthroughs, fueling progress in various fields.

However, it is important to note that embracing imperfection does not mean accepting mediocrity or ignoring the pursuit of excellence. Instead, it encourages us to recognize that mistakes, failures, and shortcomings are essential components of progress and personal growth. It is through our imperfections that we learn and evolve, both individually and collectively.

In conclusion, Yogi Berra's thought-provoking quote, "If the world were perfect, it wouldn't be," sheds light on an intricate aspect of our existence. It reminds us of the beauty found in imperfections and challenges the notion that perfection should be our ultimate goal. Embracing imperfection encourages growth, fosters meaningful relationships, fuels creativity, and propels progress. By celebrating the imperfect world we inhabit, we can find inspiration, fulfillment, and a greater appreciation for the complexities of life.

9 Newtonian Worldview

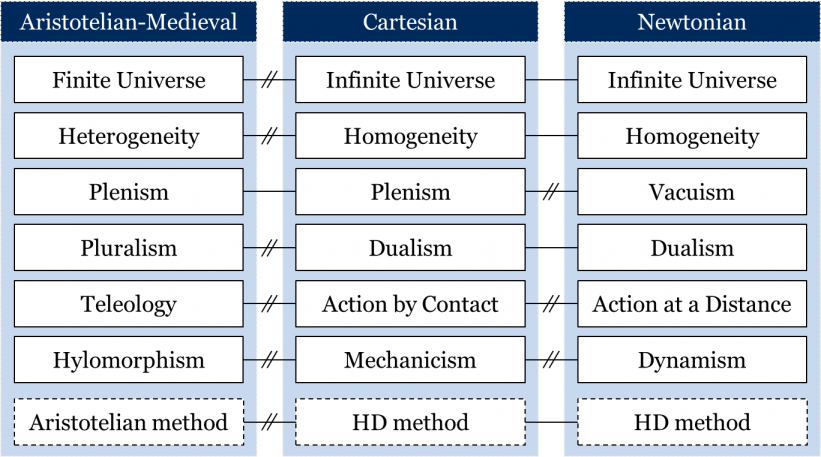

Back in chapter 4, we deduced a couple of theorems from the laws of scientific change. One such theorem is called the mosaic split theorem, where the acceptance of two incompatible theories leads to a split in a mosaic. A very noteworthy split happened to the Aristotelian-Medieval mosaic around 1700, when theories of both the Cartesian and the Newtonian worldviews equally satisfied the expectations of Aristotelians. For a period of around 40 years between 1700 and 1740, two incompatible sets of theories were accepted by two very different communities. We covered the Cartesian worldview, which was accepted on the Continent, in chapter 8. In this chapter, we will cover the Newtonian worldview.

The Newtonian mosaic was first accepted in Britain ca. 1700. Continental Europe accepted the Newtonian mosaic around 1740, following the confirmation of a novel prediction concerning the shape of the Earth that favoured the Newtonian theory of gravity. The once-split Cartesian and Newtonian mosaics merged, leaving the Newtonian worldview accepted across Europe until about 1920.

One thing we must bear in mind is that the Newtonian mosaic of 1700 looked quite different from the Newtonian mosaic of, say, 1900; a lot can happen to a mosaic over two centuries. Recall that theories and methods of a mosaic do not change all at once, but rather in a piecemeal fashion. We nevertheless suggest the mosaic of 1700 exemplifies the same worldview as that of 1900 because, generally speaking, both mosaics bore similar underlying metaphysical assumptions – principles to be elaborated on throughout this chapter.

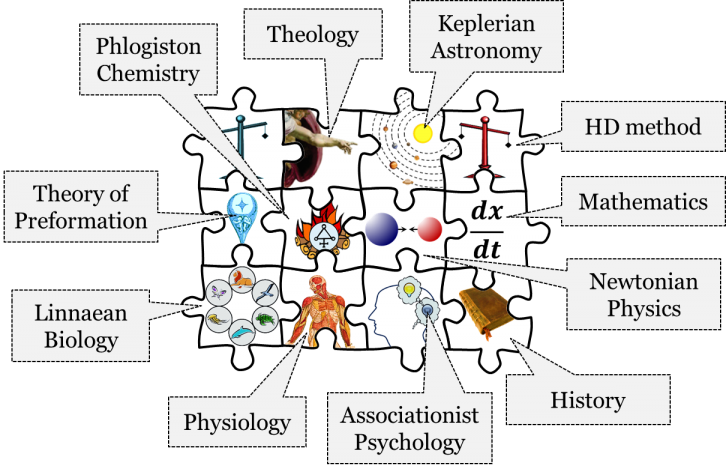

That said, we can still understand and appreciate the key elements of the Newtonian mosaic at some particular time. In our case, we’re going to provide a snapshot of the mosaic ca. 1765. Its key elements at that time included revealed and natural theology, natural astrology, Newtonian physics and Keplerian astronomy, vitalist physiology, phlogiston chemistry, the theory of preformation, Linnaean biology, associationist psychology, history, mathematics (including calculus) as well as the hypothetico-deductive method.

Let’s start with the most obvious elements of the Newtonian mosaic – Newtonian physics and cosmology.

Newtonian Physics and Cosmology

In 1687, Isaac Newton first published one of the most studied texts in the history and philosophy of science, Philosophiæ Naturalis Principia Mathematica, or the Principia for short. It is in this text that Newton first described the physical laws that are part and parcel of every first-year physics course, including his three laws of motion, his law of universal gravitation, and the laws of planetary motion. Of course, it would take several decades of debate and discussion for the community of the time to fully accept Newtonian physics. Nevertheless, by the 1760s, Newtonian cosmology and physics were accepted across Europe.

As we did in chapter 8, here we’re going to cover not only the individual theories of the Newtonian mosaic, but also the metaphysical elements underlying these theories. Since any metaphysical element is best understood when paired with its opposite elements (e.g. hylomorphism vs. mechanicism, pluralism vs. dualism, etc.), we will also be introducing those elements of the Aristotelian-Medieval and Cartesian worldviews which the Newtonian worldview opposed.

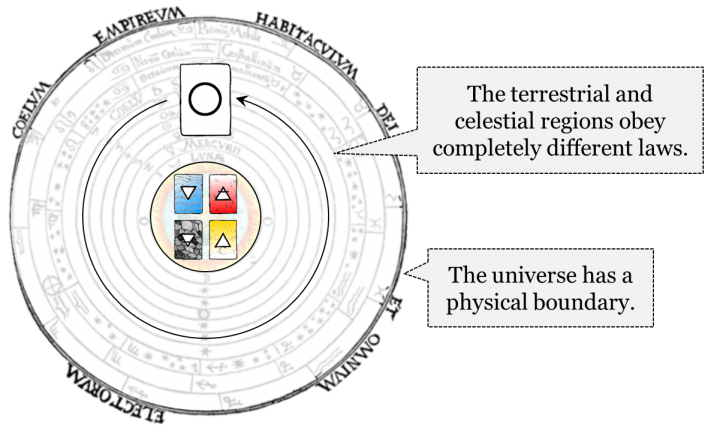

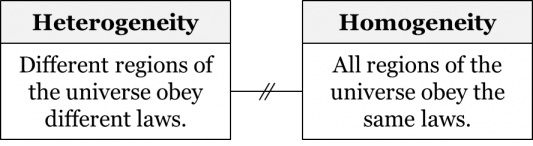

Recall from chapter 7 that, in their accepted cosmology, Aristotelians separated the universe into two regions – terrestrial and celestial. They believed that, in the terrestrial region, there are four elements – earth, water, air, and fire – that move linearly either towards or away from the centre of the universe. The celestial region, on the other hand, is composed of a single element – aether – which moves circularly around the centre of the universe. Since Aristotelians believed that terrestrial and celestial objects behave differently, we say that Aristotelians accepted the metaphysical principle of heterogeneity, that the terrestrial and celestial regions were fundamentally different.

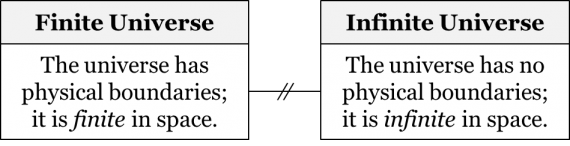

Additionally, Aristotelians posited that the celestial region was organized in a series of concentric spheres – something like a Matryoshka, or Russian nesting doll – with each planet nested in a spherical shell. The outermost sphere was considered the sphere of the stars, which was believed to be the physical boundary of the universe. According to Aristotelians, there is nothing beyond that sphere, not even empty space. Thus, they also accepted that the universe is finite.

Cartesians rejected the Aristotelian idea of heterogeneity of the two regions as well as the idea of a finite universe. First, let’s recall one of the central tenets of the Cartesian worldview: the principal attribute of all matter is extension. For Cartesians, it makes no difference whether that is the tangible matter of the Earth or the invisible matter of a stellar vortex – it must always be extended, i.e. occupy space. Since all matter, both terrestrial and celestial, is just an extended substance, the same set of physical laws applied anywhere in the universe. That is, Cartesians accepted the homogeneity of the laws of nature, that all regions of the universe obey the same laws.

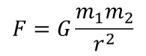

Additionally, if extension is merely an attribute of matter, i.e. if space cannot exist independently of matter, then a question emerges: what would a Cartesian imagine existing beyond any boundary? Surely, they would never imagine an edge to the universe followed by empty space and nothingness – that would violate their belief in plenism. Instead, beyond every seeming boundary is simply more extended matter, be it spatial matter or the matter of other planetary systems. Descartes would say that the universe extends ‘indefinitely’, meaning potentially infinitely, because he could imagine (but be uncertain about) a universe without boundaries and because he reserved the true idea of infiniteness (rather than indefiniteness) for God. So, we would say that Cartesians accepted an infinite universe, that the universe has no physical boundaries and is infinite in space.

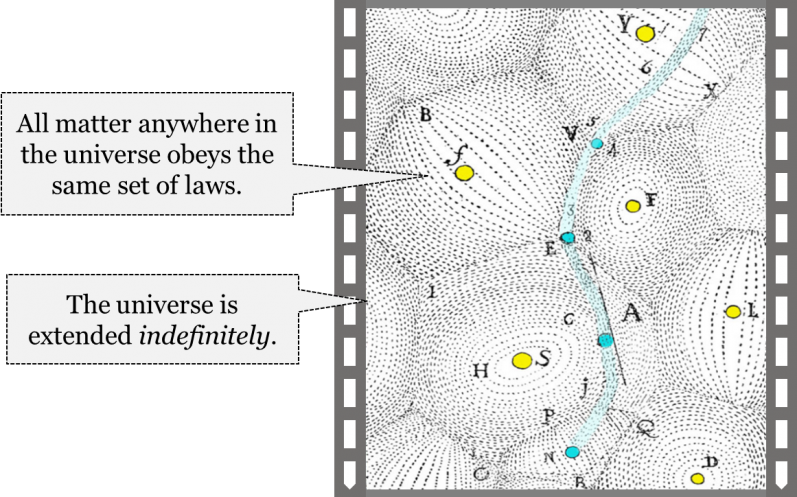

This is how Descartes himself imagined a fragment of our infinite universe:

The drawing shows a number of stars (in yellow) with their respective stellar vortices, as well as a comet (in light blue) wandering from one vortex to another.

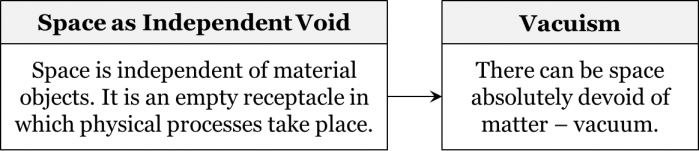

What about the Newtonian attitude towards the scope of the laws of nature and the boundaries of the universe? Let’s start with the Newtonian view on the boundaries of the universe. While Cartesians accepted that space was an attribute of matter, i.e. that it is inseparable from matter, Newtonians accepted quite the opposite: that space can and does exist independently from matter. For Newtonians, space is like a stage on which the entire material universe is built. But space can also exist without that material universe. This idea is known as the conception of absolute space: space is independent of material objects; it is an empty receptacle in which physical processes take place.

Given that Newtonians accepted the existence of absolute space, it remained possible, from the Newtonian point of view, for this absolute space to exist beyond any perceived boundary of the universe. Effectively, such boundaries would not even exist in the Newtonian worldview; space is essentially a giant void filled with solar system after solar system. If space is a void with no boundary, then the universe must be infinite. Therefore, we say that Newtonians accepted the metaphysical idea of an infinite universe.

What about the laws of nature in the Newtonian worldview? Newton introduced three laws of motion as well as the law of universal gravitation to describe physical processes. Let’s go over these laws and see what they suggest about homogeneity or heterogeneity. First, consider Newton’s second law, which states:

The acceleration (a) of a body is directly proportional to the net force (F) acting on the body and is inversely proportional to the mass (m) of the body:

$$a = \frac{F}{m}$$

The law is also often stated as F = ma. To understand what this law states, imagine we used the law to describe an arrow being launched from a bow. A Newtonian would say the acceleration of the arrow while being launched from the bow would depend on the mass of the arrow, as well as the force of the bowstring pushing the arrow.

Now, what would happen to this arrow if there were no additional forces applied to it after being launched? For Newtonians, the answer is given by Newton’s first law, or the law of inertia, which states that:

If an object experiences no net force, then the velocity of the object is constant: it is either at rest or moves in a straight line with constant speed.

So, after being launched but while remaining subject to no additional forces, the arrow would just keep moving in a straight line because of inertia. In other words, an object will remain at rest or in constant motion until some external force acts upon it. In reality, projectiles do not fly in a vacuum, for other than inertia, they are also subject to gravity. Newton accounted for the falling of objects by a force of mutual gravitational attraction between every pair of objects. His law of universal gravitation states that any two bodies attract each other with a force proportional to the product of their masses and inversely proportional to the square of the distance between them.

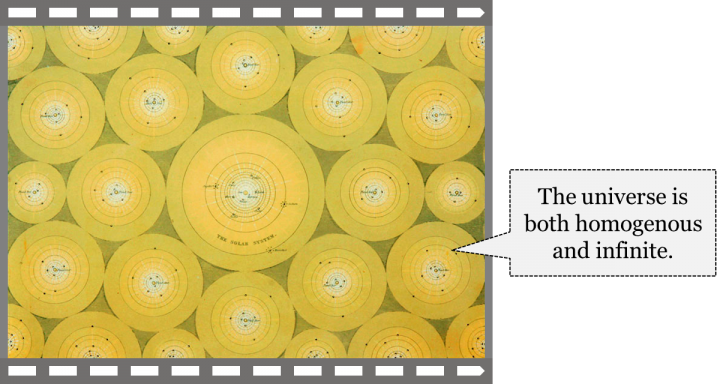

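Any two bodies attract each other with a force (F) proportional to the product of their masses (m₁ and m₂) and inversely proportional to the square of the distance (r) between them:

$$F = G \frac{m_1 m_2}{r^2}$$

Here G is the constant of proportionality, known as the gravitational constant.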

To apply Newton’s theory to the flying arrow, a Newtonian would need to know the distance between the arrow and the centre of the Earth, as well as the masses of the arrow and the Earth. Knowing these values, they could calculate what the force of gravity between the arrow and the Earth is. Gravity is a force of mutual attraction, so the Earth is pulled towards the arrow with the same force as the arrow is pulled towards the Earth. However, the resulting acceleration of the Earth towards the arrow is immeasurably tiny compared to the acceleration of the arrow towards the Earth. This is because the mass of the Earth is vastly greater than that of the arrow.
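The calculation described above can be sketched in a few lines of Python. This is only an illustration: the text gives no numerical values, so the gravitational constant, the mass and radius of the Earth, and especially the mass of the arrow are assumed modern figures, and the function names are hypothetical. The sketch computes the magnitude of the mutual attraction and then, using the second law, the acceleration that this force produces in each body.

```python
# All numerical values are assumptions for illustration; none appear in the text.
G = 6.674e-11            # gravitational constant, N m^2 / kg^2
EARTH_MASS = 5.972e24    # kg
EARTH_RADIUS = 6.371e6   # m, distance from the Earth's centre to an arrow near the surface
ARROW_MASS = 0.02        # kg, a hypothetical arrow


def gravitational_force(m1, m2, r):
    """Magnitude of the mutual attraction: F = G * m1 * m2 / r**2."""
    return G * m1 * m2 / r**2


def acceleration(force, mass):
    """Newton's second law rearranged: a = F / m."""
    return force / mass


# The same force magnitude acts on both bodies, in opposite directions.
force = gravitational_force(ARROW_MASS, EARTH_MASS, EARTH_RADIUS)
arrow_acceleration = acceleration(force, ARROW_MASS)
earth_acceleration = acceleration(force, EARTH_MASS)

print(f"force on each body: {force:.3f} N")
print(f"arrow acceleration: {arrow_acceleration:.2f} m/s^2")
print(f"Earth acceleration: {earth_acceleration:.2e} m/s^2")
```

With these assumed values, the arrow's acceleration comes out close to the familiar figure for free fall near the Earth's surface, while the Earth's acceleration is many orders of magnitude smaller.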

Finally, we have Newton’s third law, which states that:

If one body exerts a force on a second body, then the latter simultaneously exerts a force on the former, and the two forces are equal and opposite.

In other words, the third law is the law of equal and opposite reactions. So, every time an object interacts with another object – either by colliding with it, or by exerting some attractive force on it – the other object will experience the same force, but in the opposite direction. Thus, as the bowstring exerts its propulsive force on the arrow, the arrow exerts an equal and opposite force on the bowstring.

The two main factors a Newtonian would have to consider in determining a flying arrow’s trajectory are inertia and gravity. Were the arrow launched at a forty-five degree angle, the Newtonian would explain the forward and upward motion of the arrow, after leaving the bow, as due to its inertia. They would explain the fact that the arrow does not move in a straight line at a forty-five degree angle to the ground as due to the action of the force of gravity, which bends the arrow’s trajectory by pulling it towards the surface of the Earth. The resulting motion would be due to both inertia and gravitational force. The angle of ascent would decrease and turn into an angle of descent, and the arrow’s trajectory would be a parabola.
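The combination of inertia and gravity described above can be illustrated with a short Python sketch. It rests on simplifying assumptions that go beyond the text: no air resistance, flat ground, and a constant downward acceleration of 9.81 m/s² near the surface. The launch speed of 50 m/s and the function names are hypothetical. The sketch adds the fall due to gravity to straight-line inertial motion and compares the result with the equation of a parabola.

```python
import math

G_SURFACE = 9.81  # m/s^2, assumed constant acceleration due to gravity near the ground


def arrow_position(speed, angle_deg, t):
    """Position (x, y) at time t: straight-line inertial motion plus the fall due to gravity."""
    angle = math.radians(angle_deg)
    x = speed * math.cos(angle) * t                            # horizontal motion: inertia only
    y = speed * math.sin(angle) * t - 0.5 * G_SURFACE * t**2   # vertical motion: inertia minus the fall
    return x, y


def parabola_height(speed, angle_deg, x):
    """Height given by the parabola y = x*tan(a) - g*x^2 / (2*v^2*cos(a)^2)."""
    angle = math.radians(angle_deg)
    return x * math.tan(angle) - G_SURFACE * x**2 / (2 * speed**2 * math.cos(angle) ** 2)


def flight_range(speed, angle_deg):
    """Horizontal distance covered before the arrow returns to its launch height."""
    return speed**2 * math.sin(2 * math.radians(angle_deg)) / G_SURFACE


# A hypothetical arrow launched at 50 m/s at a forty-five degree angle.
for t in (0.0, 1.0, 2.0, 3.0):
    x, y = arrow_position(50.0, 45.0, t)
    print(f"t={t:.0f} s  x={x:7.2f} m  y={y:6.2f} m  parabola={parabola_height(50.0, 45.0, x):6.2f} m")
```

At every sampled moment the height obtained by combining the two motions agrees with the height given by the parabola, which is the sense in which the trajectory is parabolic.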

In a thought experiment in his Treatise of the System of the World, Newton imagined he had a fantastically powerful cannon on top of an imaginary mountain so high that the force of air resistance on a cannonball would be negligible. If he fired a cannonball from this super cannon, it would hurtle forward due to inertia, but also fall toward Earth due to the force of gravity, eventually crashing into Earth’s surface. The faster the cannonball left the cannon barrel, the further it would travel before crashing to Earth. Newton realized that if a cannonball were fired fast enough, its fall due to the force of gravity would be at the same rate as the Earth curved away beneath it. Rather than crashing to Earth, it would continue to circle the globe forever, just as the moon circles the Earth in its orbit. The orbital path it would follow would be circular or, more generally, elliptical: an oval shape with an off-centre Earth. If the cannonball were fired faster than a certain critical velocity, called the escape velocity, a Newtonian could calculate that it would escape from the Earth’s gravitational pull entirely, hurtling away into outer space, never to return.
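The thresholds in this thought experiment can be estimated with a short Python sketch. The formulas are the standard Newtonian results for the speed of a circular orbit, v = √(GM/r), and for the escape velocity, v = √(2GM/r); they are not stated in the text. The numerical values are assumed modern figures, the height of the mountain is taken to be negligible compared with the Earth's radius, and the function names are hypothetical.

```python
import math

# Assumed modern values; none are given in the text.
GM_EARTH = 3.986e14      # m^3/s^2, gravitational constant times the Earth's mass
EARTH_RADIUS = 6.371e6   # m; the mountain's height is assumed negligible by comparison


def circular_orbit_speed(r):
    """Speed at which the fall of the cannonball matches the curvature of the Earth."""
    return math.sqrt(GM_EARTH / r)


def escape_speed(r):
    """Critical speed above which the cannonball never returns."""
    return math.sqrt(2 * GM_EARTH / r)


def cannonball_fate(speed, r=EARTH_RADIUS):
    """Classify a horizontally fired cannonball, ignoring air resistance."""
    if speed < circular_orbit_speed(r):
        return "falls back to Earth"
    if speed < escape_speed(r):
        return "orbits the Earth"
    return "escapes into space"


for speed in (100.0, 9000.0, 12000.0):  # hypothetical muzzle speeds in m/s
    print(f"{speed:8.0f} m/s: {cannonball_fate(speed)}")
```

Under these assumptions the circular-orbit speed is a little under 8 km/s and the escape velocity is larger by a factor of √2, so the three sample speeds fall into the three cases described in the paragraph above.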

The conclusion we can draw from these examples is that the same laws that govern a projectile here on Earth must also govern a projectile in the heavens, as well as the motion of the planets and the stars. In other words, Newtonians accepted that the same laws of nature applied in the terrestrial regions of the universe as in the celestial. For this reason, they abandoned the distinction between the two regions that characterized the Aristotelian-Medieval worldview, and instead accepted the principle of homogeneity of the laws of nature. That is, in addition to the idea of infinite universe, they also accepted that all regions of the universe obey the same laws.

By this point in the chapter, it is hopefully evident that the underlying assumptions of the Newtonian worldview are vastly different from those of the Aristotelian-Medieval worldview. It might also seem as though Newtonians shared many assumptions with Cartesians – which they did. But the rival Cartesian and Newtonian communities also saw stark contrasts in the basic characteristics of their worldviews. In some ways, Cartesians shared more with Aristotelians than Newtonians.

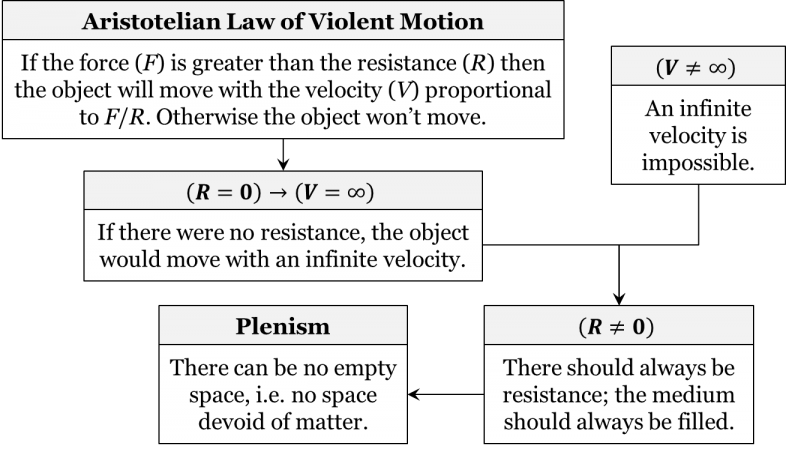

Let us consider the idea of absolute space. Neither Aristotelians nor Cartesians would ever accept such an idea, for it conflicted with some basic assumptions of their worldviews. First, recall the Aristotelian law of violent motion: if force (F) is greater than resistance (R), then the object will move with a velocity (V) proportional to F/R; if resistance (R) is greater than force (F), then the object will stay put. According to this law, should a moving object experience no resistance, the formula calls for us to divide by 0, leaving the object with an infinite velocity. It’s tough to imagine what infinite velocity would look like in the real world, but we might imagine it as something like instant teleportation or being in two or more places at once. Aristotelians recognized the absurdity of an infinite velocity, and accordingly denied it was even possible in the first place. It followed from the impossibility of an infinite velocity that some resistance is always necessary in any motion. For our purposes, what this means is that, for Aristotelians, the universe is always filled with something that creates resistance. Thus, implicit in the Aristotelian-Medieval worldview was the idea of plenism, that there can be no empty space, i.e. no space devoid of matter.
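The reasoning behind the Aristotelian rejection of empty space can be made concrete with a small Python sketch of the law of violent motion. It is only an illustration under stated assumptions: the constant of proportionality `k` is set to 1, the case where force exactly equals resistance (which the law as stated leaves open) is treated as no motion, and the function name is hypothetical.

```python
import math


def violent_motion_velocity(force, resistance, k=1.0):
    """Velocity under the Aristotelian law of violent motion, V = k * F / R.

    Assumptions: k is an arbitrary constant of proportionality, and the case
    F == R, which the law does not address, is treated as no motion.
    """
    if force < 0 or resistance < 0:
        raise ValueError("force and resistance must be non-negative")
    if force <= resistance:
        return 0.0       # resistance wins: the object stays put
    if resistance == 0:
        return math.inf  # dividing by zero resistance: the 'absurd' infinite velocity
    return k * force / resistance


print(violent_motion_velocity(10.0, 2.0))  # force exceeds resistance
print(violent_motion_velocity(2.0, 10.0))  # resistance exceeds force
print(violent_motion_velocity(10.0, 0.0))  # motion through a void
```

The third call is the case Aristotelians ruled out: with no resistance at all the law yields an unbounded velocity, which is why they concluded that every motion must take place in a medium.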

Cartesians, as we know from chapter 8, also accepted plenism, though they justified it in a very different way. Since extension, according to Cartesians, is the principal attribute of matter, and since no attribute can exist without a substance, extension too cannot exist on its own. Extension, according to Cartesians, is always attached to something extended, i.e. to material things. Thus, there is no space without matter. The idea of plenism was one of the essential metaphysical assumptions of the Cartesian worldview.

In contrast, Newtonians rejected plenism. Recall that, in the Principia, Newton introduced and defended the idea of absolute space – the idea of space as independent from material objects. This implies vacuism, which is, quite simply, the exact opposite of plenism. It says that there can be space absolutely devoid of matter, or that there can be a vacuum.

That said, why might Newton have introduced the idea of absolute space to begin with? Our historical hypothesis is that, at the time Newton was writing the Principia, scientists across Europe were conducting experiments that seemed to suggest the existence of a vacuum. These included barometric experiments conducted by Evangelista Torricelli and Blaise Pascal. Because the idea of vacuism contradicted the then-accepted Aristotelian-Medieval idea of plenism, it could only, at best, be seen as a pursued theory at the time. Newton, however, seemed to have taken the results of these experiments seriously and developed a physical theory that could account for the possibility, or even actuality, of empty space.

Let’s focus on one such experiment in more detail: the Magdeburg hemispheres. After the barometric experiments of Torricelli and Pascal, Otto von Guericke, mayor of the German town of Magdeburg, invented a device that could pump the air out of a sealed space, effectively, he claimed, creating a vacuum. Von Guericke’s device consisted of two semi-spherical shells, or hemispheres, that, when placed together and emptied of air, would remain sealed by both the vacuum within and the air pressure without. So powerful was the vacuum within the sealed hemispheres that, reportedly, two sets of horses could not pry the device apart. Were the universe a plenum, it should have been impossible to create a vacuum inside the device, and the horses would have been easily able to pull the halves apart. Since this was not the case, it seemed that pumping air out of the device left a vacuum inside of it. The pressure exerted by the air outside the two halves of the device was not balanced by the pressure of any matter within the device, making the hemispheres extremely difficult to pull apart.

It is because of experiments like those of von Guericke that Newton seems to have been inspired to base his physics on the idea of absolute space. Effectively, he built his theory upon a new assumption, that space is not an attribute of matter, but rather an independent void or a receptacle that can, but need not, hold any kind of matter. It is furthermore the reason that vacuism was an important element of the Newtonian worldview.

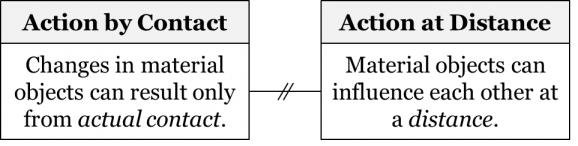

There are other important metaphysical elements that separate the Cartesian worldview from the Newtonian. Recall that Cartesians accepted the principle of action by contact – under which material particles can only interact by colliding with one another. Also recall that Descartes’ first law of motion – that every material body maintains its state of motion or rest unless a collision with another body changes that state – follows from action by contact. Newton, as well, had a first law of motion. Phrased more along the lines of Descartes’ first law, Newton’s first law of motion says that every material body maintains its state of motion or rest unless it is caused to change its state by some force. The key difference between the two first laws is that the Newtonian worldview allows changes to result from the influence of forces, while in the Cartesian worldview changes can only result from the actual contact of material bodies. The clearest example of a force is probably the force of gravity. It is entirely possible in the Newtonian worldview for two objects, like the Moon and the Earth, to be gravitationally attracted to one another without any intermediary objects, like the bits of matter of a vortex. Essentially, this means that in place of action by contact, Newtonians accepted the principle of action at a distance – that material objects can influence each other at a distance through empty space.

There is an important clarification to make about action at a distance. Accepting the possibility of action at a distance does not necessitate that all objects interact at a distance. For instance, were a Newtonian to observe a football (or soccer, if that’s what you think the sport is called) player dribbling a ball down a field with their feet, they would not assume that there is some kind of contactless force keeping the ball with the moving player. Rather, they would explain that the ball is being moved by the player’s feet contacting the ball and pushing it down the field. Newtonians continued to accept that many objects interact by contact. But they also accepted the idea that objects can influence one another across empty space through forces.

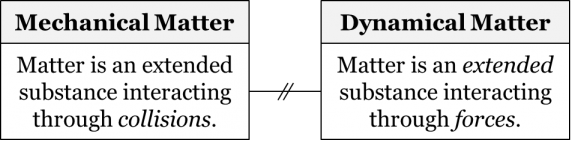

In addition to action by contact, we also know that Cartesians accepted the principle of mechanicism – that all material objects are extended substances composed of bits of interacting matter. So, for Cartesians all seeming instances of action at a distance, like the revolution of the Moon around the Earth or the Earth around the Sun, must actually be the result of colliding particles – the matter of the terrestrial and solar vortices, in these cases. Effectively, the absence of forces in the Cartesian worldview, and the fact that the source of all motion is external to any particular piece of matter, i.e. caused by its collision with another piece of matter, mean that all matter is inert. In other words, material things do not have any capacity to influence other things without actually touching them.

Newtonians conceived of matter in a new way. While Cartesian mechanical matter was inert, Newtonian matter was active and dynamic. It not only occupied space and maintained its state of rest or motion unless compelled to change it by an external force, it also had an active capacity to exert force on other bodies from a distance. Newton’s law of gravitation tells us that any two objects are gravitationally attracted to one another. Effectively, Newtonians replaced the Cartesian conception of mechanicism with dynamism, the idea of matter as an extended substance interacting through forces. Thus, they saw all matter as not only occupying some space, i.e. as being extended, but also as having some active capacity.

These new ideas were actually troubling to Newton himself. Newton disliked that his theory of universal gravitation suggested that objects have a mysterious, almost magical, ability to interact from a distance. Action at a distance and dynamic matter were, for Newton, occult ideas. Mathematically, the law of gravity worked, and it allowed one to predict and explain a wide range of terrestrial and celestial phenomena. But because Newton was educated in a mechanistic tradition, he initially believed that proper explanations should be mechanistic, i.e. should involve physical contact. In fact, he searched for, but ultimately failed to provide, a mechanical explanation for gravity. In other words, it seems as though Newton himself likely accepted mechanicism. However, because of his failure to provide such a mechanical explanation, and the many successes of his theory, the Newtonian community eventually accepted the notion of the force of gravity as acting at a distance. Furthermore, the strong implication that action at a distance remained the most reasonable explanation for the motion of the planets and the stars led the Newtonian community to accept that matter must be dynamic.

We bring up the conflicting perspectives of Newton and the Newtonians to emphasize the importance of distinguishing between individual and communal beliefs when studying the history of science. On the one hand, we can write fascinating intellectual biographies of great scientists such as Newton. But when we write such histories – histories of individuals – we risk misrepresenting the accepted worldviews of the communities in which those great individuals worked. In such a case, we trade a proper reconstruction of the belief system of the time for a focus on the discoveries and inventions of individuals – what we would call newly pursued theories. If we write our histories from the perspective of the community, we can understand these individuals in their proper context. We can better realize not only how novel their ideas were but also what the response of the community was and at what point, if ever, their proposed theories became accepted. In sum, it is only by distinguishing the history of the individual from the history of the community that we can realize that Newton’s personal views on matter and motion did not necessarily align with those of the Newtonians; he most likely accepted mechanicism, while Newtonians clearly accepted dynamism.

The dynamic conception of matter underlies more than just the cosmology and physics of the Newtonian mosaic. We see dynamism implicit in the theories of other fields as well. For instance, Newtonians rejected the Cartesian idea of corkscrew particles to explain magnetism, accepting the idea of a magnetic force in its place. In chemistry, Newtonians accepted that the chemicals they believed to exist – like mercury, lead, silver, and gold – combine and react more or less effectively because of something they called a chemical affinity. Chemical affinity was interpreted as an active capacity inherent in different chemical substances which caused some to combine with others in a way that had clear parallels to the Newtonian conception of gravity. For example, following numerous experiments and observations, they concluded that mercury would combine better with gold than with silver, and they explained this in terms of mercury’s strong chemical affinity to gold. Even in physiology, Newtonians posited and accepted the existence of a vital force that brought organisms to life.

In the Cartesian worldview, the accepted physiological theories were mechanistic. That is, Cartesians saw human bodies – indeed, all living organisms – as complex machines of interconnected and moving parts. Though they were uncertain how the mind commands the body to operate, they were confident that all biological processes acted mechanistically through actual contact, similar to a clock with its various gears and cogs.

The Newtonian response to mechanistic physiology was known as vitalism. In the first few decades of the eighteenth century, physicians found themselves asking what properties are essential to life. Mechanicists would probably answer that living organisms were carefully organized combinations of bits of extended matter, much like the carefully organized gears, wheels, and pendulum of a clock. But by the mid-to-late eighteenth century, the medical community began observing phenomena that were anomalous for mechanistic physiology. One observation concerned an animal’s ability to preserve its body heat, even when the circulation of its blood was stopped. Mechanicists posited that heat is generated by circulating blood, and they could not provide a satisfactory explanation for why heat continued to be generated in the absence of this mechanical cause. Another observation concerned the temperature of a dog’s nose. It was noted that a dog’s nose is filled with blood and should thus be warm like the rest of its body, and yet most often a dog’s nose is as cold as the air around the dog. Why did the rest of the dog’s body not cool to the temperature of the air around it? It seemed that mechanicists could not produce a satisfactory answer to such questions. Vitalists, on the other hand, posited that there was some additional force inherent in living things which, in this case, regulates an animal’s body heat. By the late 1700s, vitalist physiology and the idea of a vital force had replaced mechanistic physiology as the accepted physiological theory of the time. In essence, vitalism suggested that living matter is organized by an inherent vital force.

Newtonians saw vital forces as the living principles responsible for maintaining health and curing illness. Physicians generally characterized an organism’s vital force by two properties, sensibility and contractility. Sensibility involved what the different parts of your body could feel. It included both voluntary properties that allowed you to use your senses to interact with your environment, and involuntary properties, like feeling hunger or maintaining a sense of balance. Contractility involved how the different parts of your body moved. It was sometimes an involuntary property that ensured the beating of your heart or the digestion of food, and sometimes a voluntary property involved with things like locomotion. In essence, vitalists accepted that organisms would lack both sensibility and contractility in the absence of a vital force.

Accordingly, vitalists suggested that illness and disease derived from damage to one of these vital properties. For instance, vitalists believed proper digestion was a contractile property directed by a vital force. Were a person to catch the flu, vitalists suggested that the vital force directing proper digestion was somehow interrupted. In effect, that person’s digestive system would not function properly, as demonstrated by flu-like symptoms such as vomiting. Alternatively, consider a sensible property guided by a vital force, like hunger or thirst. Falling ill manifests itself not only in changes in existing sensible properties, like a loss of appetite, but sometimes also in the addition of new, unwanted sensations, like itching or tingling.

The treatment of illness, for vitalists, was about administering medicine that activates the vital forces of the body in such a way as to accelerate healing by the proper functioning of these vital properties. Treatment did not always involve straightforward activation of one of these properties. For instance, a physician would avoid giving medicine that heightens contractility to a person suffering from convulsions – a symptom, the involuntary contraction of the muscles, associated with an unwanted increase in the contractile property.

The vitalist conceptions of illness and treatment were in sharp contrast with those of both Aristotelians and Cartesians. As explained in earlier chapters, for Aristotelians disease was a result of an imbalance of bodily fluids or humors. Consequently, treatment involved the rebalancing of these humors. For Cartesians, the ideas of disease and curing remained largely the same as those of Aristotelians, despite the fact that in Cartesian physiology, humors received a purely mechanistic interpretation. Conversely, vitalists at the time of the Newtonian worldview didn’t believe curing was about the mechanical balancing of humors; it was about restoring the vital forces that help maintain a properly functioning body.

Similarly to the force of gravity, vital force was seen as a property of a material body, as something that doesn’t exist independently of the body. Importantly, Newtonians did not see vital force as a separate immaterial substance. This is true about the dynamic conception of matter in general: the behaviour of material objects is guided by forces that are inherent in matter itself.

Although Cartesians and Newtonians had different conceptions of matter – mechanical and dynamic respectively – they agreed that matter and mind can exist independently of each other. Both Cartesians and Newtonians accepted dualism, the idea that there are two independent substances: matter and mind.

In addition to purely material and purely spiritual entities, both parties would agree that there are also entities that are both material and spiritual. Specifically, Cartesians and Newtonians would agree that human beings are the only citizens of two worlds. However, some alternatives to this view were pursued at the time. For instance, some philosophers believed that animals and plants were composed of not only matter, but also mind. They believed this because they saw all living organisms as having inherent organizing principles – minds, souls, or spirits – which are essentially non-material. Others denied that humans are composed of any mind, or spiritual substance, at all; they saw humans, along with animals, plants, and rocks, as entirely material, while only angels and God were composed of a mental, spiritual substance. Although pursued, these alternative views remained unaccepted. The position implicit in the Newtonian worldview was that only humans are composed of both mind and matter.

This dualistic position was very much in accord with another important puzzle piece of the Newtonian mosaic – theology. Different Newtonian communities accepted different theologies. In Europe alone, there would be a number of different mosaics: Catholic Newtonian, Orthodox Newtonian, Lutheran Newtonian, Anglican Newtonian, etc. Yet, the theologies accepted in all of these mosaics assumed that the spiritual world can exist independently of the material world and that matter and mind are separate substances. So, it is not surprising that dualism was accepted in all of these mosaics.

Theology, or the study of God and relations between God, humankind, and the universe, held an important place in the Newtonian worldview. Theologians and natural philosophers alike were concerned with revealing the attributes of God as well as finding proofs of his existence. Nowadays, these theological questions strike us as non-scientific. But in the eighteenth and nineteenth centuries, they remained legitimate topics of scientific study.

Just like Aristotelians and Cartesians before them, Newtonians accepted that there were two distinct branches of theology: revealed theology and natural theology. Revealed theology was concerned with inferring God’s existence and attributes – what he can and cannot do – exclusively from his acts of self-revelation. Most commonly for Newtonians, revealed theology meant that God revealed knowledge about himself and the natural world through a holy text like the Bible. But revelation also occurred in the form of a supernatural entity like a saint, an angel, or God himself speaking to a mortal person, or through a genuine miracle like the curing of some untreatable illness.

It wasn’t uncommon for a natural philosopher of the time to practice revealed theology. Newton himself interpreted many passages from the Bible as evidence of various prophecies. For instance, he believed that a passage from the Book of Revelation indicated that the reign of the Catholic Church would only last for 1260 years. But he was never certain in which year the reign of the Catholic Church had actually begun, and so he came up with multiple dates to mark the fulfilment of the prophecy of 1260 years. Nevertheless, Newton’s belief in this prophecy stems from his reading of the Bible, i.e. it stems from his practice of and belief in revealed theology.

In contrast, natural theology was the branch of theology concerned with inferring God’s existence and attributes by means of reason unaided by God’s acts of self-revelation. Philosophers were practicing natural theology when they made arguments about God with reason and logic. Descartes’ ontological argument for the existence of God from chapter 8 is an example of a theory in natural theology. Others would practice natural theology by studying God through the natural world around them. In any case, what characterizes natural theology is that conclusions regarding God, his attributes, and works were drawn without any reference to a holy text.

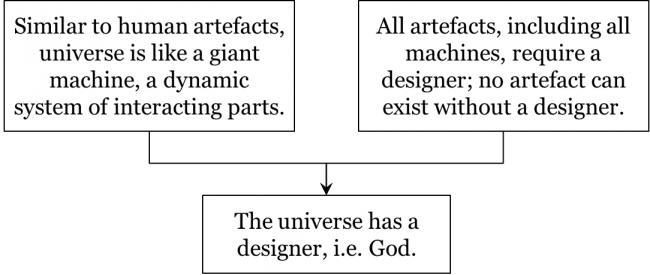

Let’s consider one formulation of the famous argument from design for God’s existence. The argument goes like this. On the one hand, the universe seems like a great big machine, a dynamic system of interacting parts. It is, in a sense, analogous to human artefacts; it is akin to a very complex clock, where all the bits and pieces work in a perfect harmony. On the other hand, we know that artefacts, including machines, have a designer. Therefore, so the argument goes, the universe also has a designer, i.e. God.

In essence, the argument from design assumes an analogy between the universe as a whole and a human artefact, such as a steam engine, a mercury thermometer, or a marine chronometer. Since such artefacts are the product of design by a higher entity (i.e. humans), then perhaps the universe itself, with the planets and stars moving about in the heavens, is the product of design by some even higher entity – God. The argument fits under the category of natural theology because it is based on a certain understanding of nature, i.e. the idea that the universe is a dynamic system of interacting parts operating through collisions and forces.

The argument from design was far from perfect. The eighteenth-century philosopher David Hume remained unconvinced by it and pointed out some of its major problems. First, Hume rejected the premise that there is an analogy between the universe as a machine and artefacts, making the entire argument unsound. He noted that the reason we claim artefacts have a designer is that we have experienced humanity designing artefacts from initial concept to final product. But when it comes to the universe as a whole, Hume reasoned, we have never experienced such a conceptual stage. That is, no one has ever seen an all-powerful being create a universe; we’re merely living in an already operational “machine”. So, while artefacts clearly have a designer, this doesn’t imply that the universe has a designer.

Second, Hume pointed out that this argument for the existence of God says nothing about what God is like. Even if we were to accept the argument, the only conclusion that would logically follow from it is that the universe has some designer. Importantly, it wouldn’t imply that this designer is necessarily the omnipotent, omniscient, and omnibenevolent God of the Christian religion. There is nothing in the argument to preclude an imperfect God from designing the universe, or even multiple gods from designing it. To accept an imperfect God, or the existence of multiple gods, would be incompatible with the then-accepted Christian beliefs concerning an all-perfect God.

Regardless of Hume’s criticism, Newtonians accepted some form of the argument from design for the existence of God. More generally, Newtonians accepted both revealed theology, or the study of God through his acts of self-revelation, and natural theology, or the study of God by examining the universe he created.

While theology was an essential part of the Newtonian mosaic, astrology, the study of celestial influences upon terrestrial events, suffered a different fate. Newtonians understood that the stars and the planets exerted some kind of influence upon the Earth. However, in the Newtonian worldview, the astrological topics that were accepted in the Aristotelian worldview were either gradually encompassed by other fields of natural science or rejected altogether.

Traditionally, astrology was divided into two branches – judicial astrology and natural astrology. Judicial astrology was the branch of astrology concerned with celestial influences upon human affairs. For instance, consulting the heavens to advise a monarch on when to go to war, or when to conceive an heir, fell within the domain of judicial astrology. Judicial astrology could also be involved in something as innocent as reading a horoscope to decide when to ask for a crush’s hand in marriage. In all of these examples, it was suggested that the influence of the heavens extended beyond the material world to the human mind.

The other branch of astrology was natural astrology, and it was concerned with celestial influences upon natural things. For instance, positing a link between the rising and setting of the Moon and the ebb and flow of the tide fell under natural astrology. Similarly, any study of light and heat coming from the Sun and affecting the Earth was considered part of natural astrology. The measuring of time and the forecasting of weather by studying planetary positions would also pertain to natural astrology. Medical prognostications using natal horoscopes would equally belong to this field.

A question arises: why weren’t medical prognostications using natal horoscopes a part of judicial astrology; didn’t they concern heavenly influences upon humans? To answer this question, we need to recall the distinction between the mind and the body. You would be right to recall that physicians would require knowledge of the heavens – specifically of a patient’s natal horoscope – in order to properly rebalance a patient’s humors. So, it might seem as though physicians were studying celestial influences over human affairs and therefore practicing judicial astrology. But, more precisely, physicians would simply monitor and make changes to a patient’s body; they would not be concerned with any celestial influences over a patient’s mind. Medical prognostication did not fall under judicial astrology because physicians accepted that the celestial realm can and does influence the material world by bringing humors in and out of balance. They did not accept, as claimed by judicial astrologers, that the heavens could determine what would normally be determined by a person’s mind or by an even more powerful agent, God.

Furthermore, the idea of judicial astrology was in conflict with the Christian belief in free will. According to one of the fundamental Christian dogmas, humans have an ability to act spontaneously and make decisions that are not predetermined by prior events. This goes against the key idea of judicial astrology: if celestial events do, in fact, determine the actions of humans, then in what sense can humans be said to possess free will? If a state of the human mind is determined by the position of stars and planets, then the very notion of human free will becomes questionable. It is not surprising, therefore, that the practice of judicial astrology was considered heresy and therefore banned. As such, judicial astrology was never an accepted part of the Aristotelian-Medieval, Cartesian, or Newtonian mosaics.

Natural astrology, on the other hand, was an accepted element of the Aristotelian-Medieval worldview and many of its topics even persisted through the Cartesian and Newtonian worldviews. While in the Aristotelian-Medieval mosaic natural astrology was a separate element, it ceased to be so in the Cartesian and Newtonian mosaics; only some of its topics survived and were subsumed under other fields, such as astronomy, geology, meteorology, or physics. There were other topics of natural astrology that were simply rejected. Consider the following three questions:

How are tides influenced by celestial objects such as the Sun and the Moon?

How is the weather on Earth influenced by celestial phenomena?

How is human health influenced by the arrangement of celestial objects?

In the Aristotelian-Medieval worldview, all three of these questions were accepted as legitimate topics of study; they all pertained to the domain of natural astrology. In particular, Aristotelians accepted that there was a certain link between the Moon and the tides. They believed that the positions of planets influenced weather on Earth. For instance, a major flood might be explained by a conjunction of planets in the constellation of Aquarius. Finally, Aristotelian physicians believed that the positions of the planets affect the balance of humors in the body, and thus human health.

Of these three topics, only two survived in the Cartesian and Newtonian mosaics. Thus, both Cartesians and Newtonians accepted that the position of the Moon plays an important role in the ebb and flow of the tides. While they would not agree as to what actual mechanism causes this ebb and flow, they would all accept that a “celestial” influence upon the tides exists. Similarly, they would both agree that the weather on Earth might be affected by the Sun. However, the question of the celestial influence upon the human body was rejected in the Cartesian and Newtonian worldviews.

In short, while some traditional topics of natural astrology survived in the Cartesian and Newtonian worldviews, they didn’t do so under the label of natural astrology. Instead, they were absorbed by other fields of science.

Newtonian Method

How did Newtonians evaluate the theories that would become a part of their worldview? If we were to ask this question to Newtonians themselves, especially in the eighteenth century, their explicit answer would be in accord with the empiricist-inductivist methodology of Locke and Newton. As mentioned in chapter 3, the empiricist-inductivist methodology prescribed that a theory is acceptable if it merely inductively generalizes from empirical results without postulating any hypothetical entities. However, the actual expectations of the Newtonian community – their method, not their methodology – were different. When we study the actual eighteenth-century transitions in accepted theories, it becomes apparent that the scientists of the time were willing to accept theories that postulated unobservable entities. Recall, for instance, the fluid theory of electricity that postulated the existence of an electric fluid, the theory of preformation that postulated invisibly small homunculi in men’s semen, or Newton’s theory that postulated the existence of absolute space, absolute time, and the force of gravity. In other words, the actual expectations of the community of the time, i.e. their methods, were different from their explicitly proclaimed methodological rules.

So how did Newtonians actually evaluate their theories? In fact, the same way as Cartesians: all theories in the Newtonian mosaic had to satisfy the requirements of the hypothetico-deductive (HD) method in order to be accepted. Indeed, the employment of this new method also had to follow the third law of scientific change: just as in the case of the Cartesian mosaic, the HD method was a logical consequence of some of the key metaphysical principles underlying the Newtonian worldview. These metaphysical principles would be the same in both mosaics, but they would be arrived at differently in Cartesian and Newtonian mosaics.

In previous chapters, we explained how the HD method became employed in the Cartesian mosaic because it followed from their belief that the principal attribute of matter is extension. Newtonians, on the other hand, had a slightly different understanding of matter. They believed in a dynamical conception of matter, that matter is an extended substance interacting through forces. We can draw two conclusions from the Newtonian belief in dynamic matter. First, the secondary qualities of matter, like taste, smell, and colour, result from the combination and dynamic interaction of material parts. Since these secondary qualities were taken as the products of a more fundamental inner mechanism, albeit one that allows for the influence of forces, Newtonians accepted the principle of complexity. Second, any phenomenon can be produced by an infinite number of different combinations of particles interacting through collisions and forces. Accordingly, it is possible for many different, equally precise explanations to be given for any phenomenon after the fact. Thus, Newtonians also accepted the principle that post-hoc explanations should be distrusted, and novel, otherwise unexpected predictions should be valued. If these conclusions seem similar to the conclusions Cartesians drew from their belief that matter is extension, it’s because they are. The fact that Newtonians also accepted forces doesn’t seem to have influenced their employment of the HD method. As per the third law of scientific change, the HD method becomes employed because it is a deductive consequence of Newtonians’ belief in complexity and their mistrust of post-hoc explanations.

Let’s summarize the many metaphysical conceptions we’ve uncovered in this chapter. While Aristotelians believed in the heterogeneity of the laws of nature and that the universe was finite, Cartesians and Newtonians alike believed the laws of nature to be homogeneous and the universe to, in fact, be infinite. Newtonians also shared the Cartesian belief in dualism, that there are two substances in the world: mind and matter. At the same time, the metaphysical assumptions of the Newtonian worldview contrasted in many ways with both those of the Aristotelian-Medieval and the Cartesian worldviews. Newtonians replaced the idea of plenism with that of vacuism – that there can be empty space; they expanded their understanding of change and motion from action by contact to allowing for the possibility of action at a distance; and they modified the conception of matter from being mechanical and inert to being active and dynamic.

One point that we want to emphasize about all of these metaphysical elements insofar as the Newtonian worldview is concerned is that they weren’t always explicitly discussed or taught in the eighteenth and nineteenth centuries. Rather, some of these assumptions are implicit elements of the worldview; they are ideas that, had we had a conversation with a Newtonian, we would expect them to agree with, for they all follow from their accepted theories. For instance, the reason Newtonians would accept the conception of dynamism is that their explicit acceptance of the law of gravity implies that matter can interact through forces.

Before concluding this chapter, there’s another important point to re-emphasize about these historical chapters in general: all mosaics change in a piecemeal fashion. What this means is that the Newtonian worldview of, say, 1760, looked vastly different from the Newtonian worldview of 1900. While we’ve tried to describe the theories accepted by Newtonians and the metaphysical principles that characterized their worldview around the second half of the eighteenth century, this may create a false impression that the Newtonian mosaic did not change much after that. It actually did.

One notable change within the Newtonian worldview was a shift in the belief over how to conceive of matter, a shift from dynamism to the belief in particles and waves. Up until around the 1820s, Newtonians conceived of matter merely in terms of particles. These particles could interact via collisions and forces – hence the dynamic conception of matter – but they were always understood in a literally corpuscular sense. After the 1820s, however, Newtonians had experimentally observed matter behaving in a non-corpuscular way. Some matter had been observed acting more like a wave in a fluid medium than like a particle. The idea of dynamic matter consisting exclusively of particles interacting through collisions and forces came to be replaced by the idea of dynamic matter consisting of both particles and waves interacting through collisions and forces. It is possible that some Newtonians sought to replace the idea of a force with that of a wave, so that all forces pushing and pulling dynamic matter about could be interpreted in terms of waves in a subtle fluid medium sometimes called the luminiferous ether. But not all forces at the time of the Newtonian worldview were explained in terms of waves, so the most that we can say about them is that they accepted that both particles and waves interacted through collisions and forces. Recall the discussion of Fresnel’s wave theory of light from chapter 3.

Did the Newtonian conception of matter in terms of particles and waves persist in the twentieth century? That will be a topic of our next chapter, on the Contemporary worldview.

Introduction to History and Philosophy of Science Copyright © by Barseghyan, Hakob; Overgaard, Nicholas; and Rupik, Gregory is licensed under a Creative Commons Attribution 4.0 International License, except where otherwise noted.

Understanding Science

How science REALLY works...

- Testing ideas with evidence is at the heart of the process of science.

- Scientific testing involves figuring out what we would expect to observe if an idea were correct and comparing that expectation to what we actually observe.

Misconception: Science proves ideas.

Misconception: Science can only disprove ideas.

Correction: Science neither proves nor disproves. It accepts or rejects ideas based on supporting and refuting evidence, but may revise those conclusions if warranted by new evidence or perspectives.

Testing scientific ideas

Testing ideas about childbed fever.

As a simple example of how scientific testing works, consider the case of Ignaz Semmelweis, who worked as a doctor on a maternity ward in the 1800s. In his ward, an unusually high percentage of new mothers died of what was then called childbed fever. Semmelweis considered many possible explanations for this high death rate. Two of the many ideas that he considered were (1) that the fever was caused by mothers giving birth lying on their backs (as opposed to on their sides) and (2) that the fever was caused by doctors’ unclean hands (the doctors often performed autopsies immediately before examining women in labor). He tested these ideas by considering what expectations each idea generated. If it were true that childbed fever were caused by giving birth on one’s back, then changing procedures so that women labored on their sides should lead to lower rates of childbed fever. Semmelweis tried changing the position of labor, but the incidence of fever did not decrease; the actual observations did not match the expected results. If, however, childbed fever were caused by doctors’ unclean hands, having doctors wash their hands thoroughly with a strong disinfecting agent before attending to women in labor should lead to lower rates of childbed fever. When Semmelweis tried this, rates of fever plummeted; the actual observations matched the expected results, supporting the second explanation.

Testing in the tropics

Let’s take a look at another, very different, example of scientific testing: investigating the origins of coral atolls in the tropics. Consider the atoll Eniwetok (Anewetak) in the Marshall Islands — an oceanic ring of exposed coral surrounding a central lagoon. From the 1800s up until today, scientists have been trying to learn what supports atoll structures beneath the water’s surface and exactly how atolls form. Coral only grows near the surface of the ocean where light penetrates, so Eniwetok could have formed in several ways:

Hypothesis 1: The coral that makes up Eniwetok might have grown in a ring around a volcanic island that then slowly subsided. The key to this hypothesis is the idea that, as the island sank, the coral kept growing upward toward the light, forming an underwater coral tower atop the sunken volcano.

Hypothesis 2: The coral that makes up Eniwetok might have grown in a ring atop an underwater mountain already near the surface. The key to this hypothesis is the idea that underwater mountains don’t sink; instead the remains of dead sea animals (shells, etc.) accumulate on underwater mountains, potentially assisted by tectonic uplifting. Eventually, the top of the mountain/debris pile would reach the depth at which coral grow, and the atoll would form.

Which is a better explanation for Eniwetok? Did the atoll grow atop a sinking volcano, forming an underwater coral tower, or was the mountain instead built up until it neared the surface where coral were eventually able to grow? Which of these explanations is best supported by the evidence? We can’t perform an experiment to find out. Instead, we must figure out what expectations each hypothesis generates, and then collect data from the world to see whether our observations are a better match with one of the two ideas.

If Eniwetok grew atop an underwater mountain, then we would expect the atoll to be made up of a relatively thin layer of coral on top of limestone or basalt. But if it grew upwards around a subsiding island, then we would expect the atoll to be made up of many hundreds of feet of coral on top of volcanic rock. When geologists drilled into Eniwetok in 1951 as part of a survey preparing for nuclear weapons tests, the drill bored through more than 4000 feet (1219 meters) of coral before hitting volcanic basalt! The actual observation contradicted the underwater mountain explanation and matched the subsiding island explanation, supporting that idea. Of course, many other lines of evidence also shed light on the origins of coral atolls, but the surprising depth of coral on Eniwetok was particularly convincing to many geologists.

Scientists test hypotheses and theories. They are both scientific explanations for what we observe in the natural world, but theories deal with a much wider range of phenomena than do hypotheses. To learn more about the differences between hypotheses and theories, jump ahead to Science at multiple levels.

June 18, 2020

The Truth about Scientific Models

They don’t necessarily try to predict what will happen—but they can help us understand possible futures

By Sabine Hossenfelder

As COVID-19 claimed victims at the start of the pandemic, scientific models made headlines. We needed such models to make informed decisions. But how can we tell whether they can be trusted? The philosophy of science, it seems, has become a matter of life or death. Whether we are talking about traffic noise from a new highway, climate change or a pandemic, scientists rely on models, which are simplified, mathematical representations of the real world. Models are approximations and omit details, but a good model will robustly output the quantities it was developed for.

Models do not always predict the future. This does not make them unscientific, but it makes them a target for science skeptics. I cannot even blame the skeptics, because scientists frequently praise correct predictions to prove a model’s worth. It isn’t originally their idea. Many eminent philosophers of science, including Karl Popper and Imre Lakatos, opined that correct predictions are a way of telling science from pseudoscience.

But correct predictions alone don’t make for a good scientific model. And the opposite is also true: a model can be good science without ever making predictions. Indeed, the models that matter most for political discourse are those that do not make predictions. Instead they produce “projections” or “scenarios” that, in contrast to predictions, are forecasts that depend on the course of action we will take. That is, after all, the reason we consult models: so we can decide what to do. But because we cannot predict political decisions themselves, the actual future trend is necessarily unpredictable.

This has become one of the major difficulties in explaining pandemic models. Dire predictions in March 2020 for COVID’s global death toll did not come true. But they were projections for the case in which we took no measures; they were not predictions.

Political decisions are not the only reason a model may make merely contingent projections rather than definite predictions. Trends of global warming, for example, depend on the frequency and severity of volcanic eruptions, which themselves cannot currently be predicted. They also depend on technological progress, which itself depends on economic prosperity, which in turn depends on, among many other things, whether society is in the grasp of a pandemic. Sometimes asking for predictions is really asking for too much.

Predictions are also not enough to make for good science. Recall how each time a natural catastrophe happens, it turns out to have been “predicted” in a movie or a book. Given that most natural catastrophes are predictable to the extent that “eventually something like this will happen,” this is hardly surprising. But these are not predictions; they are scientifically meaningless prophecies because they are not based on a model whose methodology can be reproduced, and no one has tested whether the prophecies were better than random guesses.

Thus, predictions are neither necessary for a good scientific model nor sufficient to judge one. But why, then, were the philosophers so adamant that good science needs to make predictions? It’s not that they were wrong. It’s just that they were trying to address a different problem than what we are facing now.

Scientists tell good models from bad ones by using statistical methods that are hard to communicate without equations. These methods depend on the type of model, the amount of data and the field of research. In short, it’s difficult. The rough answer is that a good scientific model accurately explains a lot of data with few assumptions. The fewer the assumptions and the better the fit to data, the better the model.
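As a rough illustration of weighing fit against the number of assumptions, the sketch below compares two toy models using the Akaike information criterion (AIC). AIC is only one common choice among many and is not a method named in this article. The data points are invented for the example, and the count of fitted parameters `k` stands in for "assumptions."

```python
import math

# Invented data for illustration: roughly y = 2x + 1 with small wiggles.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1.1, 2.9, 5.2, 6.8, 9.1, 10.9, 13.2, 14.8, 17.1, 18.9]


def rss_constant(ys):
    """Residual sum of squares for the model y = c (one fitted parameter)."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def rss_linear(xs, ys):
    """Residual sum of squares for the least-squares line y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


def aic(rss, n, k):
    """AIC for Gaussian errors, up to an additive constant: n*ln(rss/n) + 2k."""
    return n * math.log(rss / n) + 2 * k


n = len(xs)
aic_constant = aic(rss_constant(ys), n, k=1)  # one parameter: the mean
aic_linear = aic(rss_linear(xs, ys), n, k=2)  # two parameters: slope, intercept
print("constant model AIC:", round(aic_constant, 2))
print("linear model AIC:  ", round(aic_linear, 2))
```

A lower AIC is preferred. Here the extra parameter of the straight line earns its keep because it improves the fit far more than the penalty term costs.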

But the philosophers were not concerned with quantifying explanatory power. They were looking for a way to tell good science from bad science without having to dissect scientific details. And although correct predictions may not tell you whether a model is good science, they increase trust in the scientists’ conclusions because predictions prevent scientists from adding assumptions after they have seen the data. Thus, asking for predictions is a good rule of thumb, but it is a crude and error-prone criterion. And fundamentally it makes no sense. A model either accurately describes nature or doesn’t. At which moment in time a scientist made a calculation is irrelevant for the model’s relation to nature.

A confusion closely related to the idea that good science must make predictions is the belief that scientists should not update a model when new data come in. This can also be traced back to Popper & Co., who thought updating was bad scientific practice. But of course, a good scientist updates their model when they get new data! This is the essence of the scientific method: When you learn something new, revise. In practice, this usually means recalibrating model parameters with new data. This is why we saw regular updates of COVID case projections. What a scientist is not supposed to do is add so many assumptions that their model can fit any data. This would be a model with no explanatory power.
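To make the last point concrete, here is a minimal sketch of a model with enough free parameters to fit any data. It passes a degree-9 polynomial exactly through ten invented points (Lagrange interpolation) and compares it with a two-parameter straight line. The data, the assumed underlying rule y = 2x + 1, and all function names are illustrative assumptions, not anything taken from a real study.

```python
# Invented data for illustration: roughly y = 2x + 1 with small wiggles.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1.1, 2.9, 5.2, 6.8, 9.1, 10.9, 13.2, 14.8, 17.1, 18.9]


def fit_line(xs, ys):
    """Least-squares straight line; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def lagrange_predict(xs, ys, x):
    """Evaluate the polynomial that passes exactly through every data point."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        weight = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                weight *= (x - xj) / (xi - xj)
        total += yi * weight
    return total


# The many-parameter model reproduces the data it was given essentially exactly.
max_train_residual = max(
    abs(lagrange_predict(xs, ys, x) - y) for x, y in zip(xs, ys)
)

# Compare both models on a new point, using the assumed rule y = 2x + 1.
new_x = 10
assumed_true = 2 * new_x + 1
intercept, slope = fit_line(xs, ys)
line_error = abs(intercept + slope * new_x - assumed_true)
interp_error = abs(lagrange_predict(xs, ys, new_x) - assumed_true)

print("largest miss on the fitted points (polynomial):", max_train_residual)
print("error at x = 10, straight line:", round(line_error, 3))
print("error at x = 10, polynomial:   ", round(interp_error, 3))
```

The ten-parameter polynomial matches every point it was fitted to, yet it misses the new point by a wide margin, while the two-parameter line stays close. A perfect fit bought with enough parameters says little about nature.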

Understanding the role of predictions in science also matters for climate models. These models have correctly predicted many observed trends, from the increase of surface temperature, to stratospheric cooling, to sea ice melting. This fact is often used by scientists against climate change deniers. But the deniers then come back with some papers that made wrong predictions. In response, the scientists point out the wrong predictions were few and far between. The deniers counter there may have been all kinds of reasons for the skewed number of papers that have nothing to do with scientific merit. Now we are counting heads and quibbling about the ethics of scientific publishing rather than talking science. What went wrong? Predictions are the wrong argument.

A better answer to deniers is that climate models explain loads of data with few assumptions. The computationally simplest explanation for our observations is that the trends are caused by human carbon dioxide emission. It’s the hypothesis that has the most explanatory power.

In summary, to judge a scientific model, do not ask for predictions. Ask instead to what degree the data are explained by the model and how many assumptions were necessary for this. And most of all, do not judge a model by whether you like what it tells you.

Sabine Hossenfelder is a physicist and research fellow at the Frankfurt Institute for Advanced Studies in Germany. She currently works on dark matter and the foundations of quantum mechanics.

Understanding Hypotheses and Predictions

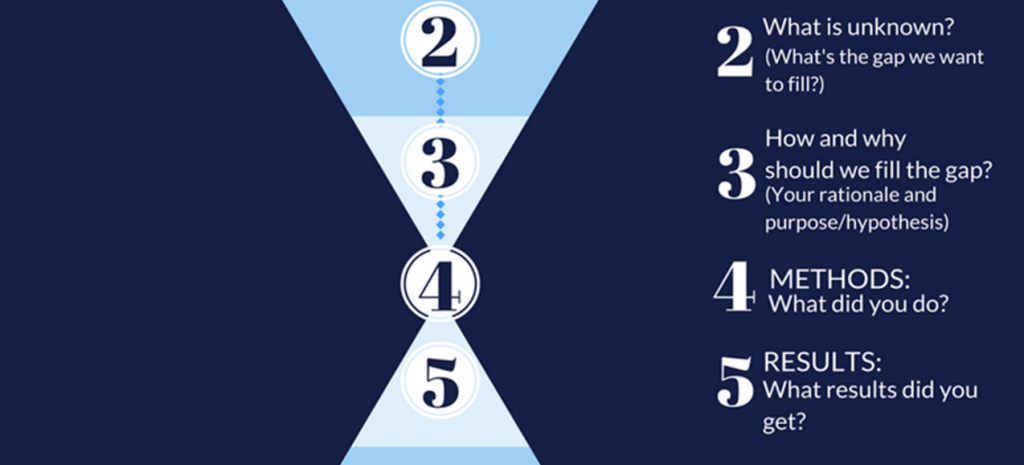

Hypotheses and predictions are different components of the scientific method. The scientific method is a systematic process that helps minimize bias in research and begins by developing good research questions.

Research Questions

Descriptive research questions are based on observations made in previous research or in passing. This type of research question often quantifies these observations. For example, while out bird watching, you notice that a certain species of sparrow makes all its nests with the same material: grasses. A descriptive research question would be “On average, how much grass is used to build sparrow nests?”

Descriptive research questions lead to causal questions. This type of research question seeks to understand why we observe certain trends or patterns. If we return to our observation about sparrow nests, a causal question would be “Why are the nests of sparrows made with grasses rather than twigs?”

In simple terms, a hypothesis is the answer to your causal question. A hypothesis should be based on a strong rationale that is usually supported by background research. From the question about sparrow nests, you might hypothesize, “Sparrows use grasses in their nests rather than twigs because grasses are the more abundant material in their habitat.” This abundance hypothesis might be supported by your prior knowledge about the availability of nest building materials (i.e. grasses are more abundant than twigs).

On the other hand, a prediction is the outcome you would observe if your hypothesis were correct. Predictions are often written in the form of “if, and, then” statements, as in, “if my hypothesis is true, and I were to do this test, then this is what I will observe.” Following our sparrow example, you could predict that, “If sparrows use grass because it is more abundant, and I compare areas that have more twigs than grasses available, then, in those areas, nests should be made out of twigs.” A more refined prediction might alter the wording so as not to repeat the hypothesis verbatim: “If sparrows choose nesting materials based on their abundance, then when twigs are more abundant, sparrows will use those in their nests.”

As you can see, the terms hypothesis and prediction are different and distinct even though, sometimes, they are incorrectly used interchangeably.

Let us take a look at another example:

Causal Question: Why are there fewer asparagus beetles when asparagus is grown next to marigolds?

Hypothesis: Marigolds deter asparagus beetles.

Prediction: If marigolds deter asparagus beetles, and we grow asparagus next to marigolds, then we should find fewer asparagus beetles when asparagus plants are planted with marigolds.
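One way to check a prediction like this against data is an exact permutation test, sketched below. The beetle counts are invented purely for illustration, and the function name `one_sided_permutation_p` is hypothetical. The test asks how often a difference at least as large as the observed one would arise if marigolds made no difference and the plot labels were assigned at random.

```python
from itertools import combinations

# Invented beetle counts per plot, for illustration only.
with_marigolds = [3, 5, 4, 6, 2]
without_marigolds = [9, 7, 11, 8, 10]


def one_sided_permutation_p(treated, control):
    """Share of all relabelings whose mean difference (control - treated)
    is at least as large as the observed one."""
    pooled = treated + control
    n_treated = len(treated)
    n_control = len(control)
    pooled_sum = sum(pooled)
    observed = sum(control) / n_control - sum(treated) / n_treated

    at_least_as_extreme = 0
    relabelings = 0
    # Enumerate every way of choosing which plots count as "treated".
    for chosen in combinations(range(len(pooled)), n_treated):
        treated_sum = sum(pooled[i] for i in chosen)
        control_sum = pooled_sum - treated_sum
        diff = control_sum / n_control - treated_sum / n_treated
        relabelings += 1
        if diff >= observed - 1e-12:  # small tolerance for rounding
            at_least_as_extreme += 1
    return at_least_as_extreme / relabelings


p_value = one_sided_permutation_p(with_marigolds, without_marigolds)
print("one-sided p-value:", round(p_value, 4))
```

A small p-value means the observed drop in beetles would be unlikely if marigolds had no effect, which supports the prediction. It does not by itself prove the hypothesis, since other explanations for the difference would still need to be ruled out.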

A final note

It is exciting when the outcome of your study or experiment supports your hypothesis. However, it can be equally exciting if this does not happen. There are many reasons why you can have an unexpected result, and you need to think about why this occurred. Maybe you had a potential problem with your methods, but on the flip side, maybe you have just discovered a new line of evidence that can be used to develop another experiment or study.

How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.

Verywell / Alex Dos Diaz

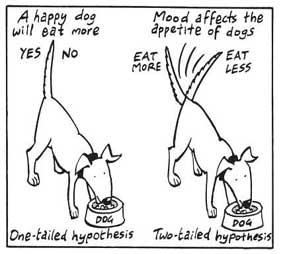

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes confuse falsifiability with the idea that a claim is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.