Approaches in Determining Software Development Methods for Organizations: A Systematic Literature Review


Lau F, Kuziemsky C, editors. Handbook of eHealth Evaluation: An Evidence-based Approach [Internet]. Victoria (BC): University of Victoria; 2017 Feb 27.


Chapter 9. Methods for Literature Reviews

Guy Paré and Spyros Kitsiou.

9.1. Introduction

Literature reviews play a critical role in scholarship because science remains, first and foremost, a cumulative endeavour (vom Brocke et al., 2009). As in any academic discipline, rigorous knowledge syntheses are becoming indispensable in keeping up with an exponentially growing eHealth literature, assisting practitioners, academics, and graduate students in finding, evaluating, and synthesizing the contents of many empirical and conceptual papers. Among other methods, literature reviews are essential for: (a) identifying what has been written on a subject or topic; (b) determining the extent to which a specific research area reveals any interpretable trends or patterns; (c) aggregating empirical findings related to a narrow research question to support evidence-based practice; (d) generating new frameworks and theories; and (e) identifying topics or questions requiring more investigation (Paré, Trudel, Jaana, & Kitsiou, 2015).

Literature reviews can take two major forms. The most prevalent one is the “literature review” or “background” section within a journal paper or a chapter in a graduate thesis. This section synthesizes the extant literature and usually identifies the gaps in knowledge that the empirical study addresses (Sylvester, Tate, & Johnstone, 2013). It may also provide a theoretical foundation for the proposed study, substantiate the presence of the research problem, justify the research as one that contributes something new to the cumulated knowledge, or validate the methods and approaches for the proposed study (Hart, 1998; Levy & Ellis, 2006).

The second form of literature review, which is the focus of this chapter, constitutes an original and valuable work of research in and of itself (Paré et al., 2015). Rather than providing a base for a researcher’s own work, it creates a solid starting point for all members of the community interested in a particular area or topic (Mulrow, 1987). The so-called “review article” is a journal-length paper whose overarching purpose is to synthesize the literature in a field without collecting or analyzing any primary data (Green, Johnson, & Adams, 2006).

When appropriately conducted, review articles represent powerful information sources for practitioners looking for state-of-the-art evidence to guide their decision-making and work practices (Paré et al., 2015). Further, high-quality reviews become frequently cited pieces of work which researchers seek out as a first clear outline of the literature when undertaking empirical studies (Cooper, 1988; Rowe, 2014). Scholars who track and gauge the impact of articles have found that review papers are cited and downloaded more often than any other type of published article (Cronin, Ryan, & Coughlan, 2008; Montori, Wilczynski, Morgan, Haynes, & Hedges, 2003; Patsopoulos, Analatos, & Ioannidis, 2005). Their popularity may stem from the fact that reading a review gives one an overview, if not a detailed knowledge, of the area in question, as well as references to the most useful primary sources (Cronin et al., 2008). Although review articles are not easy to conduct, the commitment to complete one provides a tremendous service to one’s academic community (Paré et al., 2015; Petticrew & Roberts, 2006). Most, if not all, peer-reviewed journals in the field of medical informatics publish review articles of some type.

The main objectives of this chapter are fourfold: (a) to provide an overview of the major steps and activities involved in conducting a stand-alone literature review; (b) to describe and contrast the different types of review articles that can contribute to the eHealth knowledge base; (c) to illustrate each review type with one or two examples from the eHealth literature; and (d) to provide a series of recommendations for prospective authors of review articles in this domain.

9.2. Overview of the Literature Review Process and Steps

As explained in Templier and Paré (2015), there are six generic steps involved in conducting a review article:

- formulating the research question(s) and objective(s),

- searching the extant literature,

- screening for inclusion,

- assessing the quality of primary studies,

- extracting data, and

- analyzing data.

Although these steps are presented here in sequential order, one must keep in mind that the review process can be iterative and that many activities can be initiated during the planning stage and later refined during subsequent phases (Finfgeld-Connett & Johnson, 2013; Kitchenham & Charters, 2007).

Formulating the research question(s) and objective(s): As a first step, members of the review team must appropriately justify the need for the review itself (Petticrew & Roberts, 2006), identify the review’s main objective(s) (Okoli & Schabram, 2010), and define the concepts or variables at the heart of their synthesis (Cooper & Hedges, 2009; Webster & Watson, 2002). Importantly, they also need to articulate the research question(s) they propose to investigate (Kitchenham & Charters, 2007). In this regard, we concur with Jesson, Matheson, and Lacey (2011) that clearly articulated research questions are key ingredients that guide the entire review methodology; they underscore the type of information that is needed, inform the search for and selection of relevant literature, and guide or orient the subsequent analysis.

Searching the extant literature: The next step consists of searching the literature and making decisions about the suitability of material to be considered in the review (Cooper, 1988). There are three main coverage strategies. First, exhaustive coverage means an effort is made to be as comprehensive as possible in order to ensure that all relevant studies, published and unpublished, are included in the review and, thus, that conclusions are based on this all-inclusive knowledge base. Second, representative coverage consists of presenting materials that are representative of most other works in a given field or area; authors who adopt this strategy often search for relevant articles in a small number of top-tier journals in a field (Paré et al., 2015). In the third strategy, the review team concentrates on prior works that have been central or pivotal to a particular topic. This may include empirical studies or conceptual papers that initiated a line of investigation, changed how problems or questions were framed, introduced new methods or concepts, or engendered important debate (Cooper, 1988).

Screening for inclusion: The following step consists of evaluating the applicability of the material identified in the preceding step (Levy & Ellis, 2006; vom Brocke et al., 2009). Once a group of potential studies has been identified, members of the review team must screen them to determine their relevance (Petticrew & Roberts, 2006). A set of predetermined rules provides a basis for including or excluding certain studies. This exercise requires a significant investment on the part of researchers, who must ensure enhanced objectivity and avoid biases or mistakes. As discussed later in this chapter, for certain types of reviews there must be at least two independent reviewers involved in the screening process, and a procedure to resolve disagreements must also be in place (Liberati et al., 2009; Shea et al., 2009).

Assessing the quality of primary studies: In addition to screening material for inclusion, members of the review team may need to assess the scientific quality of the selected studies, that is, appraise the rigour of the research design and methods. Such formal assessment, which is usually conducted independently by at least two coders, helps members of the review team refine which studies to include in the final sample, determine whether differences in quality may affect their conclusions, or guide how they analyze the data and interpret the findings (Petticrew & Roberts, 2006). Ascribing quality scores to each primary study, or using domain-based evaluations to consider which study components have or have not been designed and executed appropriately, makes it possible to reflect on the extent to which the selected study addresses possible biases and maximizes validity (Shea et al., 2009).

Extracting data: The following step involves gathering or extracting applicable information from each primary study included in the sample and deciding what is relevant to the problem of interest (Cooper & Hedges, 2009).
The type of data that should be recorded depends mainly on the initial research questions (Okoli & Schabram, 2010). However, important information may also be gathered about how, when, where, and by whom the primary study was conducted, the research design and methods, or qualitative/quantitative results (Cooper & Hedges, 2009).

Analyzing and synthesizing data: As a final step, members of the review team must collate, summarize, aggregate, organize, and compare the evidence extracted from the included studies. The extracted data must be presented in a meaningful way that suggests a new contribution to the extant literature (Jesson et al., 2011). Webster and Watson (2002) warn researchers that literature reviews should be much more than lists of papers and should provide a coherent lens to make sense of extant knowledge on a given topic. There exist several methods and techniques for synthesizing quantitative (e.g., frequency analysis, meta-analysis) and qualitative (e.g., grounded theory, narrative analysis, meta-ethnography) evidence (Dixon-Woods, Agarwal, Jones, Young, & Sutton, 2005; Thomas & Harden, 2008).
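
As an illustration of the extraction step, the sketch below shows one way a review team might structure a data extraction form in code. It assumes Python 3.9+ and uses hypothetical names (`ExtractionRecord`, `collate`) and invented example studies; the fields simply mirror the kinds of information listed above (how, when, where, and by whom a study was conducted, its design and methods, and its results). A real form should be derived from the review's own research questions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ExtractionRecord:
    """One row of a hypothetical data extraction form for a primary study."""
    study_id: str
    authors: str            # by whom the study was conducted
    year: int               # when
    setting: str            # where
    design: str             # research design, e.g. "RCT" or "case study"
    methods: str            # data collection and analysis methods
    findings: str           # qualitative or quantitative results
    notes: Optional[str] = None
    extra: dict = field(default_factory=dict)  # question-specific items


def collate(records: list[ExtractionRecord], attribute: str) -> dict[str, list[str]]:
    """Group study IDs by the value of one extracted attribute (a first collation step)."""
    groups: dict[str, list[str]] = {}
    for record in records:
        key = str(getattr(record, attribute))
        groups.setdefault(key, []).append(record.study_id)
    return groups


# Invented example studies, for illustration only.
studies = [
    ExtractionRecord("S1", "Author A", 2010, "Canada", "RCT", "survey", "improved attendance"),
    ExtractionRecord("S2", "Author B", 2012, "France", "case study", "interviews", "mixed adoption"),
    ExtractionRecord("S3", "Author C", 2014, "Canada", "RCT", "system logs", "no difference"),
]
by_design = collate(studies, "design")
# by_design == {"RCT": ["S1", "S3"], "case study": ["S2"]}
```

Keeping one typed record per study makes it straightforward to collate, tabulate, and compare the extracted evidence in the analysis step.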

9.3. Types of Review Articles and Brief Illustrations

EHealth researchers have at their disposal a number of approaches and methods for making sense of existing literature, all with the purpose of casting current research findings into historical contexts or explaining contradictions that might exist among a set of primary research studies conducted on a particular topic. Our classification scheme is largely inspired by Paré and colleagues’ (2015) typology. Below we present and illustrate those review types that we feel are central to the growth and development of the eHealth domain.

9.3.1. Narrative Reviews

The narrative review is the “traditional” way of reviewing the extant literature and is skewed towards a qualitative interpretation of prior knowledge (Sylvester et al., 2013). Put simply, a narrative review attempts to summarize or synthesize what has been written on a particular topic but does not seek generalization or cumulative knowledge from what is reviewed (Davies, 2000; Green et al., 2006). Instead, the review team often undertakes the task of accumulating and synthesizing the literature to demonstrate the value of a particular point of view (Baumeister & Leary, 1997). As such, reviewers may selectively ignore or limit the attention paid to certain studies in order to make a point. In this rather unsystematic approach, the selection of information from primary articles is subjective, lacks explicit criteria for inclusion, and can lead to biased interpretations or inferences (Green et al., 2006). As in all fields, the eHealth domain contains several narrative reviews that follow such an unstructured approach (Silva et al., 2015; Paul et al., 2015).

Despite these criticisms, this type of review can be very useful in gathering together a volume of literature in a specific subject area and synthesizing it. As mentioned above, its primary purpose is to provide the reader with a comprehensive background for understanding current knowledge and highlighting the significance of new research (Cronin et al., 2008). Faculty like to use narrative reviews in the classroom because they are often more up to date than textbooks, provide a single source for students to reference, and expose students to peer-reviewed literature (Green et al., 2006). For researchers, narrative reviews can inspire research ideas by identifying gaps or inconsistencies in a body of knowledge, thus helping researchers to determine research questions or formulate hypotheses. Importantly, narrative reviews can also be used as educational articles to bring practitioners up to date with certain topics or issues (Green et al., 2006).

Recently, there have been several efforts to introduce more rigour into narrative reviews, elucidate common pitfalls, and bring changes to their publication standards. Information systems researchers, among others, have contributed to advancing knowledge on how to structure a “traditional” review. For instance, Levy and Ellis (2006) proposed a generic framework for conducting such reviews. Their model follows the systematic data processing approach, which consists of three steps: (a) literature search and screening; (b) data extraction and analysis; and (c) writing the literature review. They provide detailed and very helpful instructions on how to conduct each step of the review process. As another methodological contribution, vom Brocke et al. (2009) offered a series of guidelines for conducting literature reviews, with a particular focus on how to search and extract the relevant body of knowledge. Last, Bandara, Miskon, and Fielt (2011) proposed a structured, predefined, and tool-supported method to identify primary studies within a feasible scope, extract relevant content from identified articles, synthesize and analyze the findings, and effectively write and present the results of the literature review. We highly recommend that prospective authors of narrative reviews consult these useful sources before embarking on their work.

Darlow and Wen (2015) provide a good example of a highly structured narrative review in the eHealth field. These authors synthesized published articles that describe the development process of mobile health (m-health) interventions for patients’ cancer care self-management. As in most narrative reviews, the scope of the research questions being investigated is broad: (a) how the development of these systems is carried out; (b) which methods are used to investigate these systems; and (c) what conclusions can be drawn as a result of the development of these systems. To provide clear answers to these questions, a literature search was conducted on six electronic databases and Google Scholar. The search was performed using several terms and free text words, combined in an appropriate manner. Four inclusion and three exclusion criteria were used during the screening process. Both authors independently reviewed each of the identified articles to determine eligibility and extract study information. A flow diagram shows the number of studies identified, screened, and included or excluded at each stage of study selection. In terms of contributions, this review provides a series of practical recommendations for m-health intervention development.
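
Combining several search terms and free text words typically means grouping synonyms for each concept with OR and linking the concepts with AND. The sketch below builds such a Boolean search string. The function name `build_query` and the example terms are hypothetical and are not Darlow and Wen's actual strategy; real databases also differ in syntax (field tags, truncation, controlled vocabulary), so the output would need adapting to each one.

```python
def build_query(concept_groups: list[list[str]]) -> str:
    """Build a Boolean search string from groups of synonymous terms.

    Terms within a group are combined with OR; groups are combined with AND.
    Multi-word terms are wrapped in double quotes so they are searched as phrases.
    """
    clauses = []
    for terms in concept_groups:
        if not terms:
            continue  # skip empty concept groups
        quoted = [f'"{term}"' if " " in term else term for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    if not clauses:
        raise ValueError("at least one non-empty concept group is required")
    return " AND ".join(clauses)


# Hypothetical concepts: technology, condition, and outcome of interest.
query = build_query([
    ["mHealth", "mobile health", "smartphone"],
    ["cancer", "oncology"],
    ["self-management", "self care"],
])
print(query)
# (mHealth OR "mobile health" OR smartphone) AND (cancer OR oncology) AND (self-management OR "self care")
```

Keeping the concept groups as data, rather than as a hand-typed string, makes it easier to report the search strategy and to rerun it consistently across databases.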

9.3.2. Descriptive or Mapping Reviews

The primary goal of a descriptive review is to determine the extent to which a body of knowledge in a particular research topic reveals any interpretable pattern or trend with respect to pre-existing propositions, theories, methodologies, or findings (King & He, 2005; Paré et al., 2015). In contrast with narrative reviews, descriptive reviews follow a systematic and transparent procedure, including searching, screening, and classifying studies (Petersen, Vakkalanka, & Kuzniarz, 2015). Indeed, structured search methods are used to form a representative sample of a larger group of published works (Paré et al., 2015). Further, authors of descriptive reviews extract from each study certain characteristics of interest, such as publication year, research methods, data collection techniques, and direction or strength of research outcomes (e.g., positive, negative, or non-significant) in the form of frequency analysis to produce quantitative results (Sylvester et al., 2013). In essence, each study included in a descriptive review is treated as the unit of analysis, and the published literature as a whole provides a database from which the authors attempt to identify any interpretable trends or draw overall conclusions about the merits of existing conceptualizations, propositions, methods, or findings (Paré et al., 2015). In doing so, a descriptive review may claim that its findings represent the state of the art in a particular domain (King & He, 2005).
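
The frequency analysis described above can be sketched in a few lines. The example below assumes each included study has already been coded into a small dictionary of characteristics; the studies, field names, and the helper `frequency_table` are hypothetical.

```python
from collections import Counter

# Hypothetical coding sheet: one dict per included study (the unit of analysis).
studies = [
    {"year": 2011, "method": "survey", "outcome": "positive"},
    {"year": 2012, "method": "experiment", "outcome": "non-significant"},
    {"year": 2012, "method": "survey", "outcome": "positive"},
    {"year": 2013, "method": "case study", "outcome": "negative"},
    {"year": 2013, "method": "survey", "outcome": "positive"},
]


def frequency_table(records, attribute):
    """Return (value, count, percent) rows for one coded attribute, most frequent first."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return [(value, n, round(100 * n / total, 1)) for value, n in counts.most_common()]


for row in frequency_table(studies, "method"):
    print(row)
# ('survey', 3, 60.0)
# ('experiment', 1, 20.0)
# ('case study', 1, 20.0)
```

Running the same function on `"year"` or `"outcome"` gives the publication-trend and outcome-direction tables a descriptive review typically reports.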

In the fields of health sciences and medical informatics, reviews that focus on examining the range, nature, and evolution of a topic area are described by Anderson, Allen, Peckham, and Goodwin (2008) as mapping reviews. As with descriptive reviews, the research questions are generic and usually relate to publication patterns and trends. There is no preconceived plan to systematically review all of the literature, although this can be done. Instead, researchers often present studies that are representative of most works published in a particular area, and they consider a specific time frame to be mapped.

An example of this approach in the eHealth domain is offered by DeShazo, Lavallie, and Wolf (2009). The purpose of this descriptive or mapping review was to characterize publication trends in the medical informatics literature over a 20-year period (1987 to 2006). To achieve this ambitious objective, the authors performed a bibliometric analysis of medical informatics citations indexed in MEDLINE using publication trends, journal frequencies, impact factors, Medical Subject Headings (MeSH) term frequencies, and characteristics of citations. Findings revealed that over 77,000 medical informatics articles were published during the covered period in numerous journals and that the average annual growth rate was 12%. The MeSH term analysis also suggested a strong interdisciplinary trend. Finally, average impact scores increased over time, with two notable growth periods. Overall, the patterns in research output that seem to characterize the historic trends and current components of the field of medical informatics suggest it may be a maturing discipline (DeShazo et al., 2009).
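
An "average annual growth rate" can be computed in more than one way, and the chapter does not say which definition DeShazo et al. used. Two common choices are the arithmetic mean of year-over-year growth rates and the compound annual growth rate (CAGR); they agree for steady growth but diverge when output fluctuates. The sketch below computes both from a list of yearly article counts; the function names and counts are invented for illustration and are not the review's data.

```python
def year_over_year_rates(counts: list[float]) -> list[float]:
    """Growth rate between each pair of consecutive yearly counts."""
    if len(counts) < 2 or any(c <= 0 for c in counts):
        raise ValueError("need at least two positive yearly counts")
    return [(later - earlier) / earlier for earlier, later in zip(counts, counts[1:])]


def mean_annual_growth(counts: list[float]) -> float:
    """Arithmetic mean of the year-over-year growth rates."""
    rates = year_over_year_rates(counts)
    return sum(rates) / len(rates)


def compound_annual_growth(counts: list[float]) -> float:
    """CAGR: the constant yearly rate that takes the first count to the last."""
    if len(counts) < 2 or counts[0] <= 0 or counts[-1] <= 0:
        raise ValueError("need at least two positive yearly counts")
    periods = len(counts) - 1
    return (counts[-1] / counts[0]) ** (1 / periods) - 1


# Invented counts: steady 10% growth gives about 0.10 under both definitions...
steady = [100, 110, 121]
# ...but a boom-and-bust series does not (mean = 0.25, CAGR = 0.0).
volatile = [100, 200, 100]
```

When reporting or comparing growth figures across bibliometric studies, it is worth checking which of these definitions was applied.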

9.3.3. Scoping Reviews

Scoping reviews attempt to provide an initial indication of the potential size and nature of the extant literature on an emergent topic (Arksey & O’Malley, 2005; Daudt, van Mossel, & Scott, 2013; Levac, Colquhoun, & O’Brien, 2010). A scoping review may be conducted to examine the extent, range, and nature of research activities in a particular area, determine the value of undertaking a full systematic review (discussed next), or identify research gaps in the extant literature (Paré et al., 2015). In line with their main objective, scoping reviews usually conclude with the presentation of a detailed research agenda for future works along with potential implications for both practice and research.

Unlike narrative and descriptive reviews, the whole point of scoping the field is to be as comprehensive as possible, including grey literature (Arksey & O’Malley, 2005). Inclusion and exclusion criteria must be established to help researchers eliminate studies that are not aligned with the research questions. It is also recommended that at least two independent coders review abstracts yielded from the search strategy and then the full articles for study selection (Daudt et al., 2013). The synthesized evidence from content or thematic analysis is relatively easy to present in tabular form (Arksey & O’Malley, 2005; Thomas & Harden, 2008).
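
Screening by two independent coders, followed by a procedure to resolve disagreements, can be tracked with a small amount of bookkeeping. The sketch below assumes each coder records a boolean include/exclude decision per record ID; the function names, the simple percent-agreement statistic, and the use of consensus decisions to settle conflicts are illustrative assumptions rather than a procedure prescribed by the sources cited here.

```python
def reconcile(coder_a: dict[str, bool], coder_b: dict[str, bool]):
    """Compare two coders' independent include/exclude decisions.

    Returns (included, excluded, conflicts) as sorted lists of record IDs.
    """
    if coder_a.keys() != coder_b.keys():
        raise ValueError("both coders must screen the same set of records")
    included, excluded, conflicts = [], [], []
    for record_id in sorted(coder_a):
        a, b = coder_a[record_id], coder_b[record_id]
        if a and b:
            included.append(record_id)
        elif not a and not b:
            excluded.append(record_id)
        else:
            conflicts.append(record_id)
    return included, excluded, conflicts


def percent_agreement(coder_a: dict[str, bool], coder_b: dict[str, bool]) -> float:
    """Share of records on which the two coders made the same decision."""
    if not coder_a:
        raise ValueError("no records to compare")
    same = sum(coder_a[r] == coder_b[r] for r in coder_a)
    return same / len(coder_a)


def resolve(conflicts: list[str], consensus: dict[str, bool]) -> list[str]:
    """Keep the conflicting records that the agreed resolution procedure includes."""
    return [r for r in conflicts if consensus[r]]


# Stage 1: title/abstract screening of four hypothetical records.
a = {"R1": True, "R2": False, "R3": True, "R4": False}
b = {"R1": True, "R2": False, "R3": False, "R4": True}
included, excluded, conflicts = reconcile(a, b)  # ['R1'], ['R2'], ['R3', 'R4']
full_text_pool = included + resolve(conflicts, {"R3": True, "R4": False})  # ['R1', 'R3']
# Stage 2 would repeat reconcile() on the coders' full-text decisions for full_text_pool.
```

Percent agreement does not correct for agreement expected by chance, so reviews often report a chance-corrected statistic such as Cohen's kappa alongside or instead of it.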

One of the most highly cited scoping reviews in the eHealth domain was published by Archer, Fevrier-Thomas, Lokker, McKibbon, and Straus (2011). These authors reviewed the existing literature on personal health record (PHR) systems, including design, functionality, implementation, applications, outcomes, and benefits. Seven databases were searched from 1985 to March 2010. Several search terms relating to PHRs were used during this process. Two authors independently screened titles and abstracts to determine inclusion status. A second screen of full-text articles, again by two independent members of the research team, ensured that the studies described PHRs. All in all, 130 articles met the criteria, and their data were extracted manually into a database. The authors concluded that although there is a large amount of survey, observational, cohort/panel, and anecdotal evidence of PHR benefits and satisfaction for patients, more research is needed to evaluate the results of PHR implementations. Their in-depth analysis of the literature signalled that there is little solid evidence from randomized controlled trials or other studies on the use of PHRs. Hence, they suggested that more research is needed to address the current lack of understanding of optimal functionality and usability of these systems, and how they can play a beneficial role in supporting patient self-management (Archer et al., 2011).

9.3.4. Forms of Aggregative Reviews

Healthcare providers, practitioners, and policy-makers are nowadays overwhelmed with large volumes of information, including research-based evidence from numerous clinical trials and evaluation studies assessing the effectiveness of health information technologies and interventions (Ammenwerth & de Keizer, 2004; DeShazo et al., 2009). It is unrealistic to expect that all these disparate actors will have the time, skills, and necessary resources to identify the available evidence in the area of their expertise and consider it when making decisions. Systematic reviews that involve the rigorous application of scientific strategies aimed at limiting subjectivity and bias (i.e., systematic and random errors) can respond to this challenge.

Systematic reviews attempt to aggregate, appraise, and synthesize in a single source all empirical evidence that meets a set of previously specified eligibility criteria in order to answer a clearly formulated and often narrow research question on a particular topic of interest to support evidence-based practice (Liberati et al., 2009). They adhere closely to explicit scientific principles (Liberati et al., 2009) and rigorous methodological guidelines (Higgins & Green, 2008) aimed at reducing random and systematic errors that can lead to deviations from the truth in results or inferences. The use of explicit methods allows systematic reviews to aggregate a large body of research evidence, assess whether effects or relationships are in the same direction and of the same general magnitude, explain possible inconsistencies between study results, and determine the strength of the overall evidence for every outcome of interest based on the quality of included studies and the general consistency among them (Cook, Mulrow, & Haynes, 1997). The main procedures of a systematic review involve:

- Formulating a review question and developing a search strategy based on explicit inclusion criteria for the identification of eligible studies (usually described in the context of a detailed review protocol).

- Searching for eligible studies using multiple databases and information sources, including grey literature sources, without any language restrictions.

- Selecting studies, extracting data, and assessing risk of bias in a duplicate manner using two independent reviewers to avoid random or systematic errors in the process.

- Analyzing data using quantitative or qualitative methods.

- Presenting results in summary of findings tables.

- Interpreting results and drawing conclusions.

Many systematic reviews, but not all, use statistical methods to combine the results of independent studies into a single quantitative estimate or summary effect size. Known as meta-analyses, these reviews use specific data extraction and statistical techniques (e.g., network, frequentist, or Bayesian meta-analyses) to calculate, for each study and outcome of interest, an effect size along with a confidence interval that reflects the degree of uncertainty behind the point estimate of effect (Borenstein, Hedges, Higgins, & Rothstein, 2009; Deeks, Higgins, & Altman, 2008). Subsequently, they use fixed- or random-effects analysis models to combine the results of the included studies, assess statistical heterogeneity, and calculate a weighted average of the effect estimates from the different studies, taking into account their sample sizes. The summary effect size is a value that reflects the average magnitude of the intervention effect for a particular outcome of interest or, more generally, the strength of a relationship between two variables across all studies included in the systematic review. By statistically combining data from multiple studies, meta-analyses can create more precise and reliable estimates of intervention effects than those derived from individual studies examined independently as discrete sources of information.
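
To make the pooling step concrete, the sketch below implements one widely used combination: inverse-variance weighting for the fixed-effect model and the DerSimonian-Laird estimate of between-study variance (tau squared) for the random-effects model, with Cochran's Q and I² as heterogeneity measures. The chapter does not prescribe these particular estimators, and the function name and inputs are assumptions: each study contributes an effect estimate on an additive scale (e.g., a mean difference or a log risk ratio) and its sampling variance. Because a study's variance generally shrinks as its sample size grows, inverse-variance weights give larger studies more influence, which is how sample size enters the weighted average.

```python
import math


def meta_analysis(effects: list[float], variances: list[float], z: float = 1.96) -> dict:
    """Pool study effect sizes with fixed-effect and DerSimonian-Laird random-effects models.

    effects   -- per-study effect estimates on an additive scale (e.g. log risk ratios)
    variances -- per-study sampling variances (squared standard errors), all > 0
    z         -- normal quantile for the confidence interval (1.96 gives ~95%)
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need matching effects and variances for at least two studies")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    # Fixed-effect model: inverse-variance weighted mean.
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    se_fixed = math.sqrt(1 / sum_w)

    # Heterogeneity: Cochran's Q, its degrees of freedom, and I^2.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    # DerSimonian-Laird between-study variance tau^2 (truncated at zero).
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # Random-effects model: each weight also includes tau^2.
    w_re = [1 / (v + tau2) for v in variances]
    sum_w_re = sum(w_re)
    pooled_re = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum_w_re
    se_re = math.sqrt(1 / sum_w_re)

    return {
        "fixed": (fixed, fixed - z * se_fixed, fixed + z * se_fixed),
        "random": (pooled_re, pooled_re - z * se_re, pooled_re + z * se_re),
        "Q": q,
        "I2": i2,
        "tau2": tau2,
    }


# Two invented studies with clearly different effects.
result = meta_analysis([0.0, 2.0], [0.5, 0.5])
# Here tau2 == 1.5 and I2 == 0.75, so the random-effects CI is wider than the fixed-effect CI.
```

For ratio measures such as risk ratios, the pooled estimate and its confidence limits would be computed on the log scale and exponentiated afterwards.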

The review by Gurol-Urganci, de Jongh, Vodopivec-Jamsek, Atun, and Car (2013) on the effects of mobile phone messaging reminders for attendance at healthcare appointments is an illustrative example of a high-quality systematic review with meta-analysis. Missed appointments are a major cause of inefficiency in healthcare delivery, with substantial monetary costs to health systems. These authors sought to assess whether mobile phone-based appointment reminders delivered through Short Message Service (SMS) or Multimedia Messaging Service (MMS) are effective in improving rates of patient attendance and reducing overall costs. To this end, they conducted a comprehensive search on multiple databases using highly sensitive search strategies without language or publication-type restrictions to identify all RCTs eligible for inclusion. In order to minimize the risk of omitting eligible studies not captured by the original search, they supplemented all electronic searches with manual screening of trial registers and references contained in the included studies. Study selection, data extraction, and risk of bias assessments were performed independently by two coders using standardized methods to ensure consistency and to eliminate potential errors. Findings from eight RCTs involving 6,615 participants were pooled into meta-analyses to calculate the magnitude of effects that mobile text message reminders have on the rate of attendance at healthcare appointments compared to no reminders and phone call reminders.

Meta-analyses are regarded as powerful tools for deriving meaningful conclusions. However, there are situations in which it is neither reasonable nor appropriate to pool studies together using meta-analytic methods, for instance when there is extensive clinical heterogeneity between the included studies or variation in measurement tools, comparisons, or outcomes of interest. In these cases, systematic reviews can use qualitative synthesis methods such as vote counting, content analysis, classification schemes, and tabulations as an alternative approach to narratively synthesize the results of the independent studies included in the review. This form of review is known as a qualitative systematic review.
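
Vote counting, one of the qualitative synthesis methods listed above, tallies how many studies report each direction of effect for each outcome. The sketch below assumes results have been coded as (study, outcome, direction) triples using three hypothetical direction labels; the function and data are illustrative only. Because vote counting ignores effect magnitude and precision, such tallies are best read as a description of the evidence rather than as a pooled estimate.

```python
DIRECTIONS = ("positive", "negative", "non-significant")


def vote_count(results: list[tuple[str, str, str]]) -> dict[str, dict[str, int]]:
    """Tally studies by direction of effect, separately for each outcome.

    results -- (study_id, outcome, direction) triples, with direction in DIRECTIONS
    """
    table: dict[str, dict[str, int]] = {}
    for study_id, outcome, direction in results:
        if direction not in DIRECTIONS:
            raise ValueError(f"{study_id}: unknown direction {direction!r}")
        row = table.setdefault(outcome, {d: 0 for d in DIRECTIONS})
        row[direction] += 1
    return table


# Invented coded results for three studies and two outcomes.
coded = [
    ("S1", "outcome A", "positive"),
    ("S2", "outcome A", "positive"),
    ("S2", "outcome B", "non-significant"),
    ("S3", "outcome B", "negative"),
]
for outcome, tally in vote_count(coded).items():
    print(outcome, tally)
# outcome A {'positive': 2, 'negative': 0, 'non-significant': 0}
# outcome B {'positive': 0, 'negative': 1, 'non-significant': 1}
```

The resulting per-outcome table maps directly onto the tabulations that qualitative systematic reviews often present.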

A rigorous example of one such review in the eHealth domain is presented by Mickan, Atherton, Roberts, Heneghan, and Tilson (2014) on the use of handheld computers by healthcare professionals and their impact on access to information and clinical decision-making. In line with the methodological guidelines for systematic reviews, these authors: (a) developed an a priori review protocol and registered it with PROSPERO (www.crd.york.ac.uk/prospero/); (b) conducted comprehensive searches for eligible studies using multiple databases and other supplementary strategies (e.g., forward searches); and (c) subsequently carried out study selection, data extraction, and risk of bias assessments in a duplicate manner to eliminate potential errors in the review process. Heterogeneity between the included studies in terms of reported outcomes and measures precluded the use of meta-analytic methods. Instead, the authors used narrative analysis and synthesis to describe the effectiveness of handheld computers on accessing information for clinical knowledge, adherence to safety and clinical quality guidelines, and diagnostic decision-making.

In recent years, the number of systematic reviews in the field of health informatics has increased considerably. Systematic reviews with discordant findings can cause great confusion and make it difficult for decision-makers to interpret the review-level evidence (Moher, 2013). Therefore, there is a growing need for appraisal and synthesis of prior systematic reviews to ensure that decision-making is constantly informed by the best available accumulated evidence. Umbrella reviews, also known as overviews of systematic reviews, are tertiary types of evidence synthesis that aim to accomplish this; that is, they aim to compare and contrast findings from multiple systematic reviews and meta-analyses (Becker & Oxman, 2008). Umbrella reviews generally adhere to the same principles and rigorous methodological guidelines used in systematic reviews. However, the unit of analysis in umbrella reviews is the systematic review rather than the primary study (Becker & Oxman, 2008). Unlike systematic reviews that have a narrow focus of inquiry, umbrella reviews focus on broader research topics for which there are several potential interventions (Smith, Devane, Begley, & Clarke, 2011). A recent umbrella review on the effects of home telemonitoring interventions for patients with heart failure critically appraised, compared, and synthesized evidence from 15 systematic reviews to investigate which types of home telemonitoring technologies and forms of interventions are more effective in reducing mortality and hospital admissions (Kitsiou, Paré, & Jaana, 2015).

9.3.5. Realist Reviews

Realist reviews are theory-driven interpretative reviews developed to inform, enhance, or supplement conventional systematic reviews by making sense of heterogeneous evidence about complex interventions applied in diverse contexts in a way that informs policy decision-making (Greenhalgh, Wong, Westhorp, & Pawson, 2011). They originated from criticisms of positivist systematic reviews, which centre on their “simplistic” underlying assumptions (Oates, 2011). As explained above, systematic reviews seek to identify causation. Such logic is appropriate for fields like medicine and education, where findings of randomized controlled trials can be aggregated to see whether a new treatment or intervention does improve outcomes. However, many argue that it is not possible to establish such direct causal links between interventions and outcomes in fields such as social policy, management, and information systems, where for any intervention there is unlikely to be a regular or consistent outcome (Oates, 2011; Pawson, 2006; Rousseau, Manning, & Denyer, 2008).

To circumvent these limitations, Pawson, Greenhalgh, Harvey, and Walshe (2005) have proposed a new approach for synthesizing knowledge that seeks to unpack the mechanism of how “complex interventions” work in particular contexts. The basic research question usually associated with systematic reviews, “what works?”, changes to: what is it about this intervention that works, for whom, in what circumstances, in what respects and why? Realist reviews have no particular preference for either quantitative or qualitative evidence. As a theory-building approach, a realist review usually starts by articulating likely underlying mechanisms and then scrutinizes available evidence to find out whether and where these mechanisms are applicable ( Shepperd et al., 2009 ). Primary studies found in the extant literature are viewed as case studies which can test and modify the initial theories ( Rousseau et al., 2008 ).

The main objective pursued in the realist review conducted by Otte-Trojel, de Bont, Rundall, and van de Klundert (2014) was to examine how patient portals contribute to health service delivery and patient outcomes. The specific goals were to investigate how outcomes are produced and, most importantly, how variations in outcomes can be explained. The research team started with an exploratory review of background documents and research studies to identify ways in which patient portals may contribute to health service delivery and patient outcomes. The authors identified six main ways, which represent “educated guesses” to be tested against the data in the evaluation studies. These studies were identified through a formal and systematic search of four databases covering the period from 2003 to 2013. Two members of the research team selected the articles using a pre-established list of inclusion and exclusion criteria and following a two-step procedure. The authors then extracted data from the selected articles and created several tables, one for each outcome category. They organized this information to bring forward the mechanisms through which patient portals contribute to outcomes and the variation in outcomes across different contexts.
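The selection and extraction steps described above can be sketched as a small data pipeline. This is a hedged illustration, not a reconstruction of Otte-Trojel et al.'s (2014) procedure. Several details are assumptions: the two-step procedure is modelled as a title/abstract screen followed by a full-text screen, the inclusion and exclusion criteria are reduced to two boolean flags, "2003 to 2013" is read as a publication-year filter, and each extracted row is laid out as study, context, mechanism, and outcome.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Study:
    """A candidate evaluation study; the screening flags are hypothetical."""
    title: str
    year: int
    on_topic: bool         # step 1: judged from title and abstract
    meets_full_text: bool  # step 2: judged against the full text

@dataclass
class Finding:
    """One extracted record linking a context, a mechanism, and an outcome."""
    study: str
    outcome_category: str
    context: str
    mechanism: str
    outcome: str

def select(studies, first_year=2003, last_year=2013):
    """Apply the two screening steps in order; a study must pass both."""
    step1 = [s for s in studies
             if first_year <= s.year <= last_year and s.on_topic]
    return [s for s in step1 if s.meets_full_text]

def tables_by_outcome(findings):
    """Build one table (a list of rows) per outcome category."""
    tables = defaultdict(list)
    for f in findings:
        tables[f.outcome_category].append(
            (f.study, f.context, f.mechanism, f.outcome))
    return dict(tables)

# Hypothetical usage: S2 falls outside the period, S3 fails the full-text step.
studies = [Study("S1", 2009, True, True), Study("S2", 2001, True, True),
           Study("S3", 2011, True, False)]
print([s.title for s in select(studies)])  # ['S1']
```

Keeping context and mechanism as separate columns mirrors the realist question of what works, for whom, and in what circumstances.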

9.3.6. Critical Reviews

Lastly, critical reviews aim to provide a critical evaluation and interpretive analysis of existing literature on a particular topic of interest to reveal strengths, weaknesses, contradictions, controversies, inconsistencies, and/or other important issues with respect to theories, hypotheses, research methods or results ( Baumeister & Leary, 1997 ; Kirkevold, 1997 ). Unlike other review types, critical reviews attempt to take a reflective account of the research that has been done in a particular area of interest, and assess its credibility by using appraisal instruments or critical interpretive methods. In this way, critical reviews attempt to constructively inform other scholars about the weaknesses of prior research and strengthen knowledge development by giving focus and direction to studies for further improvement ( Kirkevold, 1997 ).

Kitsiou, Paré, and Jaana (2013) provide an example of a critical review that assessed the methodological quality of prior systematic reviews of home telemonitoring studies for chronic patients. The authors conducted a comprehensive search on multiple databases to identify eligible reviews and subsequently used a validated instrument to conduct an in-depth quality appraisal. Results indicate that the majority of systematic reviews in this particular area suffer from important methodological flaws and biases that impair their internal validity and limit their usefulness for clinical and decision-making purposes. Accordingly, the authors provide a number of recommendations for improving the design and execution of future reviews on home telemonitoring, with the aim of strengthening knowledge development in this area.
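The paragraph does not name the validated instrument. The chapter's reference list does include AMSTAR (Shea et al., 2009), so the sketch below assumes an AMSTAR-style checklist. In the original AMSTAR, each of 11 items is answered "yes", "no", "can't answer", or "not applicable", and a review's score is the number of "yes" answers. The `threshold` used to summarize a set of reviews is a hypothetical parameter chosen by the reviewer. The code does not reproduce the authors' analysis.

```python
from enum import Enum

class Answer(Enum):
    YES = "yes"
    NO = "no"
    CANT_ANSWER = "can't answer"
    NOT_APPLICABLE = "not applicable"

AMSTAR_ITEMS = 11  # the original AMSTAR checklist has 11 items

def amstar_score(answers):
    """Score one review as the number of items answered 'yes' (0 to 11)."""
    if len(answers) != AMSTAR_ITEMS:
        raise ValueError(f"expected {AMSTAR_ITEMS} answers, got {len(answers)}")
    return sum(1 for a in answers if a is Answer.YES)

def share_below(scores, threshold):
    """Fraction of appraised reviews scoring below a chosen (hypothetical) threshold."""
    if not scores:
        raise ValueError("no scores given")
    return sum(1 for s in scores if s < threshold) / len(scores)

# Hypothetical usage: one appraised review.
review = [Answer.YES] * 6 + [Answer.NO] * 3 + [Answer.CANT_ANSWER, Answer.NOT_APPLICABLE]
print(amstar_score(review))  # 6
```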

9.4. Summary

Table 9.1 outlines the main types of literature reviews that were described in the previous sub-sections and summarizes the main characteristics that distinguish one review type from another. It also includes key references to methodological guidelines and useful sources that can be used by eHealth scholars and researchers for planning and developing reviews.

Table 9.1. Typology of Literature Reviews (adapted from Paré et al., 2015).

As shown in Table 9.1, each review type addresses different kinds of research questions or objectives, which subsequently define and dictate the methods and approaches that need to be used to achieve the overarching goal(s) of the review. For example, in the case of narrative reviews, there is greater flexibility in searching and synthesizing articles ( Green et al., 2006 ). Researchers are often relatively free to use a diversity of approaches to search, identify, and select relevant scientific articles, describe their operational characteristics, present how the individual studies fit together, and formulate conclusions. On the other hand, systematic reviews are characterized by their high level of systematicity, rigour, and use of explicit methods, based on an “a priori” review plan that aims to minimize bias in the analysis and synthesis process ( Higgins & Green, 2008 ). Some reviews are exploratory in nature (e.g., scoping/mapping reviews), whereas others may be conducted to discover patterns (e.g., descriptive reviews) or involve a synthesis approach that may include the critical analysis of prior research ( Paré et al., 2015 ). Hence, to select the most appropriate type of review, it is critical to know, before embarking on a review project, why the research synthesis is being conducted and which methods are best aligned with the pursued goals.

9.5. Concluding Remarks

In light of the increased use of evidence-based practice and research generating stronger evidence ( Grady et al., 2011 ; Lyden et al., 2013 ), review articles have become essential tools for summarizing, synthesizing, integrating or critically appraising prior knowledge in the eHealth field. As mentioned earlier, when rigorously conducted, review articles represent powerful information sources for eHealth scholars and practitioners looking for state-of-the-art evidence. The typology of literature reviews used herein will allow eHealth researchers, graduate students and practitioners to gain a better understanding of the similarities and differences between review types.

We must stress that this classification scheme does not privilege any specific type of review as being of higher quality than another ( Paré et al., 2015 ). As explained above, each type of review has its own strengths and limitations. Having said that, we realize that the methodological rigour of any review — be it qualitative, quantitative or mixed — is a critical aspect that should be considered seriously by prospective authors. In the present context, the notion of rigour refers to the reliability and validity of the review process described in section 9.2. For one thing, reliability is related to the reproducibility of the review process and steps, which is facilitated by a comprehensive documentation of the literature search process, extraction, coding and analysis performed in the review. Whether the search is comprehensive or not, whether it involves a methodical approach for data extraction and synthesis or not, it is important that the review documents in an explicit and transparent manner the steps and approach that were used in the process of its development. Next, validity characterizes the degree to which the review process was conducted appropriately. It goes beyond documentation and reflects decisions related to the selection of the sources, the search terms used, the period of time covered, the articles selected in the search, and the application of backward and forward searches ( vom Brocke et al., 2009 ). In short, the rigour of any review article is reflected by the explicitness of its methods (i.e., transparency) and the soundness of the approach used. We refer those interested in the concepts of rigour and quality to the work of Templier and Paré (2015) which offers a detailed set of methodological guidelines for conducting and evaluating various types of review articles.
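The documentation elements listed above can be captured in a structured search log that another team could re-run: sources, search terms, period of time covered, articles selected, and backward and forward searches. The Python sketch below shows one way to do that. The `SearchRecord` layout, the pipe-separated output format, and the example database and counts are all hypothetical. None of them comes from vom Brocke et al. (2009) or Templier and Paré (2015).

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class SearchRecord:
    """One documented search (hypothetical layout)."""
    source: str                    # database or other source searched
    search_terms: str              # query string exactly as submitted
    period: tuple                  # (first, last) publication dates covered
    run_on: date                   # when the search was executed
    hits: int                      # records returned by the source
    selected: int                  # records retained after screening
    backward_search: bool = False  # reference lists of included articles checked
    forward_search: bool = False   # articles citing the included ones checked

def search_log(records):
    """Render each search as one plain-text line for the review's methods section."""
    lines = []
    for r in records:
        start, end = r.period
        lines.append(
            f"{r.source} | {r.search_terms} | "
            f"{start.isoformat()} to {end.isoformat()} | "
            f"run {r.run_on.isoformat()} | {r.hits} hits, {r.selected} selected | "
            f"backward={'yes' if r.backward_search else 'no'}, "
            f"forward={'yes' if r.forward_search else 'no'}")
    return "\n".join(lines)

# Hypothetical usage with a made-up database name and counts.
print(search_log([SearchRecord("ExampleDB", '"patient portal" AND outcome*',
                               (date(2003, 1, 1), date(2013, 12, 31)),
                               run_on=date(2014, 2, 1), hits=120, selected=9,
                               backward_search=True)]))
```

Recording the exact query string and run date supports the reliability (reproducibility) side of rigour. Fields such as the period covered and the backward/forward flags make the validity-related decisions visible to readers.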

To conclude, our main objective in this chapter was to demystify the various types of literature reviews that are central to the continuous development of the eHealth field. It is our hope that our descriptive account will serve as a valuable source for those conducting, evaluating or using reviews in this important and growing domain.

- Ammenwerth E., de Keizer N. An inventory of evaluation studies of information technology in health care. Trends in evaluation research, 1982-2002. International Journal of Medical Informatics. 2004;44(1):44–56. PMID: 15778794.

- Anderson S., Allen P., Peckham S., Goodwin N. Asking the right questions: scoping studies in the commissioning of research on the organisation and delivery of health services. Health Research Policy and Systems. 2008;6(7):1–12. PMCID: PMC2500008. PMID: 18613961.

- Archer N., Fevrier-Thomas U., Lokker C., McKibbon K. A., Straus S. E. Personal health records: a scoping review. Journal of American Medical Informatics Association. 2011;18(4):515–522. PMCID: PMC3128401. PMID: 21672914.

- Arksey H., O’Malley L. Scoping studies: towards a methodological framework. International Journal of Social Research Methodology. 2005;8(1):19–32.

- A systematic, tool-supported method for conducting literature reviews in information systems. Paper presented at the Proceedings of the 19th European Conference on Information Systems (ECIS 2011); June 9 to 11; Helsinki, Finland. 2011.

- Baumeister R. F., Leary M. R. Writing narrative literature reviews. Review of General Psychology. 1997;1(3):311–320.

- Becker L. A., Oxman A. D. In: Cochrane handbook for systematic reviews of interventions. Higgins J. P. T., Green S., editors. Hoboken, NJ: John Wiley & Sons, Ltd; 2008. Overviews of reviews; pp. 607–631.

- Borenstein M., Hedges L., Higgins J., Rothstein H. Introduction to meta-analysis. Hoboken, NJ: John Wiley & Sons Inc; 2009.

- Cook D. J., Mulrow C. D., Haynes B. Systematic reviews: Synthesis of best evidence for clinical decisions. Annals of Internal Medicine. 1997;126(5):376–380. PMID: 9054282.

- Cooper H., Hedges L. V. In: The handbook of research synthesis and meta-analysis. 2nd ed. Cooper H., Hedges L. V., Valentine J. C., editors. New York: Russell Sage Foundation; 2009. Research synthesis as a scientific process; pp. 3–17.

- Cooper H. M. Organizing knowledge syntheses: A taxonomy of literature reviews. Knowledge in Society. 1988;1(1):104–126.

- Cronin P., Ryan F., Coughlan M. Undertaking a literature review: a step-by-step approach. British Journal of Nursing. 2008;17(1):38–43. PMID: 18399395.

- Darlow S., Wen K. Y. Development testing of mobile health interventions for cancer patient self-management: A review. Health Informatics Journal. 2015 (online before print). PMID: 25916831.

- Daudt H. M., van Mossel C., Scott S. J. Enhancing the scoping study methodology: a large, inter-professional team’s experience with Arksey and O’Malley’s framework. BMC Medical Research Methodology. 2013;13:48. PMCID: PMC3614526. PMID: 23522333.

- Davies P. The relevance of systematic reviews to educational policy and practice. Oxford Review of Education. 2000;26(3-4):365–378.

- Deeks J. J., Higgins J. P. T., Altman D. G. In: Cochrane handbook for systematic reviews of interventions. Higgins J. P. T., Green S., editors. Hoboken, NJ: John Wiley & Sons, Ltd; 2008. Analysing data and undertaking meta-analyses; pp. 243–296.

- Deshazo J. P., Lavallie D. L., Wolf F. M. Publication trends in the medical informatics literature: 20 years of “Medical Informatics” in MeSH. BMC Medical Informatics and Decision Making. 2009;9:7. PMCID: PMC2652453. PMID: 19159472.

- Dixon-Woods M., Agarwal S., Jones D., Young B., Sutton A. Synthesising qualitative and quantitative evidence: a review of possible methods. Journal of Health Services Research and Policy. 2005;10(1):45–53. PMID: 15667704.

- Finfgeld-Connett D., Johnson E. D. Literature search strategies for conducting knowledge-building and theory-generating qualitative systematic reviews. Journal of Advanced Nursing. 2013;69(1):194–204. PMCID: PMC3424349. PMID: 22591030.

- Grady B., Myers K. M., Nelson E. L., Belz N., Bennett L., Carnahan L. … Guidelines Working Group. Evidence-based practice for telemental health. Telemedicine Journal and E Health. 2011;17(2):131–148. PMID: 21385026.

- Green B. N., Johnson C. D., Adams A. Writing narrative literature reviews for peer-reviewed journals: secrets of the trade. Journal of Chiropractic Medicine. 2006;5(3):101–117. PMCID: PMC2647067. PMID: 19674681.

- Greenhalgh T., Wong G., Westhorp G., Pawson R. Protocol–realist and meta-narrative evidence synthesis: evolving standards (RAMESES). BMC Medical Research Methodology. 2011;11:115. PMCID: PMC3173389. PMID: 21843376.

- Gurol-Urganci I., de Jongh T., Vodopivec-Jamsek V., Atun R., Car J. Mobile phone messaging reminders for attendance at healthcare appointments. Cochrane Database System Review. 2013;12:CD007458. PMCID: PMC6485985. PMID: 24310741.

- Hart C. Doing a literature review: Releasing the social science research imagination. London: SAGE Publications; 1998.

- Higgins J. P. T., Green S., editors. Cochrane handbook for systematic reviews of interventions: Cochrane book series. Hoboken, NJ: Wiley-Blackwell; 2008.

- Jesson J., Matheson L., Lacey F. M. Doing your literature review: traditional and systematic techniques. Los Angeles & London: SAGE Publications; 2011.

- King W. R., He J. Understanding the role and methods of meta-analysis in IS research. Communications of the Association for Information Systems. 2005;16:1.

- Kirkevold M. Integrative nursing research — an important strategy to further the development of nursing science and nursing practice. Journal of Advanced Nursing. 1997;25(5):977–984. PMID: 9147203.

- Kitchenham B., Charters S. EBSE Technical Report Version 2.3. Keele & Durham, UK: Keele University & University of Durham; 2007. Guidelines for performing systematic literature reviews in software engineering.

- Kitsiou S., Paré G., Jaana M. Systematic reviews and meta-analyses of home telemonitoring interventions for patients with chronic diseases: a critical assessment of their methodological quality. Journal of Medical Internet Research. 2013;15(7):e150. PMCID: PMC3785977. PMID: 23880072.

- Kitsiou S., Paré G., Jaana M. Effects of home telemonitoring interventions on patients with chronic heart failure: an overview of systematic reviews. Journal of Medical Internet Research. 2015;17(3):e63. PMCID: PMC4376138. PMID: 25768664.

- Levac D., Colquhoun H., O’Brien K. K. Scoping studies: advancing the methodology. Implementation Science. 2010;5(1):69. PMCID: PMC2954944. PMID: 20854677.

- Levy Y., Ellis T. J. A systems approach to conduct an effective literature review in support of information systems research. Informing Science. 2006;9:181–211.

- Liberati A., Altman D. G., Tetzlaff J., Mulrow C., Gøtzsche P. C., Ioannidis J. P. A. et al. Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: Explanation and elaboration. Annals of Internal Medicine. 2009;151(4):W-65. PMID: 19622512.

- Lyden J. R., Zickmund S. L., Bhargava T. D., Bryce C. L., Conroy M. B., Fischer G. S. et al. McTigue K. M. Implementing health information technology in a patient-centered manner: Patient experiences with an online evidence-based lifestyle intervention. Journal for Healthcare Quality. 2013;35(5):47–57. PMID: 24004039.

- Mickan S., Atherton H., Roberts N. W., Heneghan C., Tilson J. K. Use of handheld computers in clinical practice: a systematic review. BMC Medical Informatics and Decision Making. 2014;14:56. PMCID: PMC4099138. PMID: 24998515.

- Moher D. The problem of duplicate systematic reviews. British Medical Journal. 2013;347(5040). PMID: 23945367.

- Montori V. M., Wilczynski N. L., Morgan D., Haynes R. B., Hedges T. Systematic reviews: a cross-sectional study of location and citation counts. BMC Medicine. 2003;1:2. PMCID: PMC281591. PMID: 14633274.

- Mulrow C. D. The medical review article: state of the science. Annals of Internal Medicine. 1987;106(3):485–488. PMID: 3813259.

- Evidence-based information systems: A decade later. Proceedings of the European Conference on Information Systems; 2011. Retrieved from http://aisel.aisnet.org/cgi/viewcontent.cgi?article=1221&context=ecis2011.

- Okoli C., Schabram K. A guide to conducting a systematic literature review of information systems research. SSRN Electronic Journal. 2010.

- Otte-Trojel T., de Bont A., Rundall T. G., van de Klundert J. How outcomes are achieved through patient portals: a realist review. Journal of American Medical Informatics Association. 2014;21(4):751–757. PMCID: PMC4078283. PMID: 24503882.

- Paré G., Trudel M.-C., Jaana M., Kitsiou S. Synthesizing information systems knowledge: A typology of literature reviews. Information & Management. 2015;52(2):183–199.

- Patsopoulos N. A., Analatos A. A., Ioannidis J. P. A. Relative citation impact of various study designs in the health sciences. Journal of the American Medical Association. 2005;293(19):2362–2366. PMID: 15900006.

- Paul M. M., Greene C. M., Newton-Dame R., Thorpe L. E., Perlman S. E., McVeigh K. H., Gourevitch M. N. The state of population health surveillance using electronic health records: A narrative review. Population Health Management. 2015;18(3):209–216. PMID: 25608033.

- Pawson R. Evidence-based policy: a realist perspective. London: SAGE Publications; 2006.

- Pawson R., Greenhalgh T., Harvey G., Walshe K. Realist review—a new method of systematic review designed for complex policy interventions. Journal of Health Services Research & Policy. 2005;10(Suppl 1):21–34. PMID: 16053581.

- Petersen K., Vakkalanka S., Kuzniarz L. Guidelines for conducting systematic mapping studies in software engineering: An update. Information and Software Technology. 2015;64:1–18.

- Petticrew M., Roberts H. Systematic reviews in the social sciences: A practical guide. Malden, MA: Blackwell Publishing Co; 2006.

- Rousseau D. M., Manning J., Denyer D. Evidence in management and organizational science: Assembling the field’s full weight of scientific knowledge through syntheses. The Academy of Management Annals. 2008;2(1):475–515.

- Rowe F. What literature review is not: diversity, boundaries and recommendations. European Journal of Information Systems. 2014;23(3):241–255.

- Shea B. J., Hamel C., Wells G. A., Bouter L. M., Kristjansson E., Grimshaw J. et al. Boers M. AMSTAR is a reliable and valid measurement tool to assess the methodological quality of systematic reviews. Journal of Clinical Epidemiology. 2009;62(10):1013–1020. PMID: 19230606.

- Shepperd S., Lewin S., Straus S., Clarke M., Eccles M. P., Fitzpatrick R. et al. Sheikh A. Can we systematically review studies that evaluate complex interventions? PLoS Medicine. 2009;6(8):e1000086. PMCID: PMC2717209. PMID: 19668360.

- Silva B. M., Rodrigues J. J., de la Torre Díez I., López-Coronado M., Saleem K. Mobile-health: A review of current state in 2015. Journal of Biomedical Informatics. 2015;56:265–272. PMID: 26071682.

- Smith V., Devane D., Begley C., Clarke M. Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Medical Research Methodology. 2011;11(1):15. PMCID: PMC3039637. PMID: 21291558.

- Sylvester A., Tate M., Johnstone D. Beyond synthesis: re-presenting heterogeneous research literature. Behaviour & Information Technology. 2013;32(12):1199–1215.

- Templier M., Paré G. A framework for guiding and evaluating literature reviews. Communications of the Association for Information Systems. 2015;37(6):112–137.

- Thomas J., Harden A. Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Medical Research Methodology. 2008;8(1):45. PMCID: PMC2478656. PMID: 18616818.

- Reconstructing the giant: on the importance of rigour in documenting the literature search process. Paper presented at the Proceedings of the 17th European Conference on Information Systems (ECIS 2009); Verona, Italy. 2009.

- Webster J., Watson R. T. Analyzing the past to prepare for the future: Writing a literature review. Management Information Systems Quarterly. 2002;26(2):11.

- Whitlock E. P., Lin J. S., Chou R., Shekelle P., Robinson K. A. Using existing systematic reviews in complex systematic reviews. Annals of Internal Medicine. 2008;148(10):776–782. PMID: 18490690.

This publication is licensed under a Creative Commons License, Attribution-Noncommercial 4.0 International License (CC BY-NC 4.0): see https://creativecommons.org/licenses/by-nc/4.0/

- Cite this page: Paré G, Kitsiou S. Chapter 9 Methods for Literature Reviews. In: Lau F, Kuziemsky C, editors. Handbook of eHealth Evaluation: An Evidence-based Approach [Internet]. Victoria (BC): University of Victoria; 2017 Feb 27.


A Maturity Level Framework for Practicing Machine Learning Operations in CI/CD For Software Deployment

- Muhammad Adeel Mannan Department of Computing, Faculty of Engineering Sciences and Technology (FEST), Hamdard University, Karachi, Pakistan

- Sumaira Mustafa Department of Computing, Faculty of Engineering Sciences and Technology (FEST), Hamdard University, Karachi, Pakistan

- Afzal Hussain Department of Computing, Faculty of Engineering Sciences and Technology (FEST), Hamdard University, Karachi, Pakistan

The adoption of continuous software engineering techniques such as DevOps (Development and Operations) in business operations has made significantly shorter software development and deployment cycles possible. Data scientists and operations teams have recently become increasingly interested in a practice known as MLO (Machine Learning Operations). However, MLO adoption in practice is still in its early stages, and there are few established best practices for integrating it into current software development methods. In this paper, we conduct a systematic literature review as well as a grey literature review. From these, we derive a framework that outlines the steps necessary for adopting MLO and the stages through which businesses progress as their practices mature and become more sophisticated. We test this approach in three case companies and demonstrate how they were able to adopt and incorporate MLO into their large-scale software development. This study offers three contributions. First, to give an overview of the state of the art in MLO, we examine recent publications. Based on this analysis, we create an MLO framework that outlines the steps taken in the continuous development of machine learning models. Second, we define, in a maturity model, the stages that businesses go through as they develop their MLO practices. Third, we map the firms to the maturity model stages and test our methodology using three embedded systems case companies. The main objective is to create an MLO framework.
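A maturity model of the kind described above places each company at the most advanced stage whose practices it has fully adopted, together with those of every earlier stage. The Python sketch below shows only that mapping rule. The stage names and practices are hypothetical placeholders, not the stages defined by the authors, whose model is not detailed in the abstract. In a real study, whether a company has a given practice in place would come from case-study evidence such as interviews.

```python
# Hypothetical stages and practices, for illustration only.
STAGES = [
    ("Stage 1", {"ad hoc model training"}),
    ("Stage 2", {"versioned data and models", "automated training pipeline"}),
    ("Stage 3", {"CI/CD for models", "automated deployment"}),
    ("Stage 4", {"production monitoring", "automated retraining"}),
]

def maturity_stage(practices):
    """Return the highest stage whose practices, and those of all earlier
    stages, are in place; None if even the first stage is not reached."""
    practices = set(practices)
    reached = None
    for name, required in STAGES:
        if not required <= practices:  # a missing practice stops progression
            break
        reached = name
    return reached

# Hypothetical case company: has stage 1 and 2 practices, nothing from stage 3.
company = {"ad hoc model training", "versioned data and models",
           "automated training pipeline"}
print(maturity_stage(company))  # Stage 2
```

Requiring every earlier stage to be complete, rather than counting practices, reflects the idea that companies progress through the stages in order.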



What is Software Development?


Software development is the lifeblood of the digital age. It’s how we design, build, and maintain the software that powers everything from our smartphones to the complex systems running global businesses. The impact of software development is undeniable:

Explosive Growth: According to Gartner, the global software market was poised to exceed $600 billion by 2023. This highlights the vast reach and importance of software in our lives.

High Demand, High Reward: Software developers are in high demand. The U.S. Bureau of Labor Statistics projects 22% employment growth for the occupation through 2030, much faster than the average for all occupations.

In this guide, we’ll delve into the world of software development, exploring its significance, the essential skills involved, and how you can launch your career in this dynamic field.

Software development is the process of designing, writing (coding), testing, debugging, and maintaining the source code of computer programs. Picture it like this:

Software developers are the architects, builders, quality control, and renovation crew all in one! They turn ideas into the functional software that shapes our digital experiences.

This covers a lot of ground, from the websites and tools you use online, to the apps on your mobile devices, regular computer programs, big systems for running companies, and even the fun games you play.

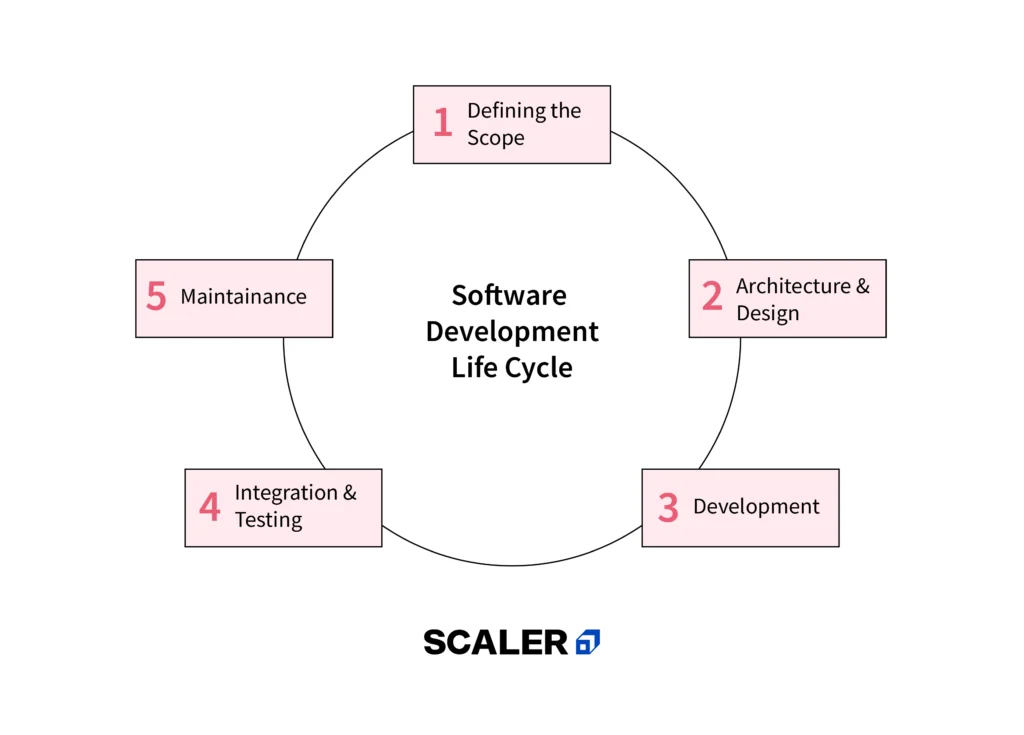

Steps in the Software Development Process

Building software isn’t a haphazard process. It generally follows a structured lifecycle known as the Software Development Life Cycle (SDLC). Here’s an overview of its key phases:

1. Needs Identification

At this early stage, it’s all about figuring out the “why” of the software. What’s the issue you’re trying to solve for users? Will it simplify a business process? Will it create an exciting new entertainment experience? Or will it fill an existing market gap? For instance, an online retailer might recognize that their website’s recommendation engine needs to be improved in order to increase sales.

2. Requirement Analysis

Once the purpose is clear, the team delves into the detailed blueprint. They meticulously gather all the specific requirements the software must fulfill. This includes defining features, how users will interact with the software, security considerations, and necessary performance levels. Continuing our example, the e-commerce company might determine requirements for personalized product suggestions, integration with their inventory system, and the ability to handle a high volume of users.

3. Design

In this phase, software architects and designers create a structural plan. They’ll choose the right technologies (programming languages, frameworks), decide how the software is broken into modules, and define how different components will interact. For the recommendation engine, designers might opt for a cloud-based solution for scalability and use a machine learning library to power the suggestion algorithm.
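One way to make "how different components will interact" concrete is to define the boundary between two modules as an interface. The Python sketch below assumes a hypothetical `Inventory` interface with a single `in_stock` method; the text does not describe the retailer's real inventory API. Cloud deployment is out of scope here. The point is that the recommendation module depends only on the interface, so an in-memory stand-in can replace the real inventory system during development.

```python
from typing import Protocol

class Inventory(Protocol):
    """Boundary to the retailer's inventory system (hypothetical interface)."""
    def in_stock(self, product_id: str) -> bool: ...

class Recommender:
    """Recommendation module that depends only on the Inventory interface."""
    def __init__(self, inventory: Inventory, related: dict):
        self.inventory = inventory
        self.related = related  # product id -> list of related product ids

    def suggest(self, product_id: str, k: int = 3) -> list:
        """Return up to k related products that are currently in stock."""
        candidates = self.related.get(product_id, [])
        return [p for p in candidates if self.inventory.in_stock(p)][:k]

class FakeInventory:
    """In-memory stand-in that satisfies the Inventory interface."""
    def __init__(self, stock):
        self.stock = set(stock)

    def in_stock(self, product_id: str) -> bool:
        return product_id in self.stock

# Hypothetical usage: "c" is out of stock, so it is filtered out.
rec = Recommender(FakeInventory({"b", "d"}), {"a": ["b", "c", "d"]})
print(rec.suggest("a"))  # ['b', 'd']
```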

4. Development and Implementation

This is where the building happens! Developers use their programming expertise to bring the design to life, writing the code that embodies the application’s logic and functionality. Depending on the project’s complexity, multiple programming languages and tools might be involved. In our recommendation engine example, the team could utilize Python for the machine learning algorithm and a web framework like Flask to integrate it into their website.
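Here, item-to-item co-occurrence counting ("customers who bought X also bought Y") stands in for the machine learning library mentioned above. It keeps the Python sketch dependency-free. The example does not say which algorithm the hypothetical retailer would actually use, and the product names are made up. Exposing the function through Flask is omitted.

```python
from collections import Counter, defaultdict
from itertools import combinations

def build_cooccurrence(baskets):
    """Count how often each pair of products appears in the same order."""
    counts = defaultdict(Counter)
    for basket in baskets:
        for a, b in combinations(sorted(set(basket)), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts

def recommend(counts, product_id, k=3):
    """Return up to k products most often bought with product_id.
    Ties are broken alphabetically so results are deterministic."""
    ranked = sorted(counts.get(product_id, {}).items(),
                    key=lambda kv: (-kv[1], kv[0]))
    return [p for p, _ in ranked[:k]]

# Hypothetical order history.
orders = [["laptop", "mouse"], ["laptop", "mouse", "bag"],
          ["laptop", "bag"], ["mouse", "pad"]]
counts = build_cooccurrence(orders)
print(recommend(counts, "laptop"))  # ['bag', 'mouse']
```

Counting co-occurrences is cheap and easy to explain. A production system would more likely use a learned model, which is where the machine learning library from the design phase comes in.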

5. Testing

A rigorous testing phase is crucial for catching bugs and confirming that the software functions as intended. Testers employ techniques like unit testing (checking individual code components), integration testing (checking how components work together), and user acceptance testing (confirming with real users that the software meets their needs). In our scenario, testers will verify that the recommendation system suggests relevant products and gracefully handles edge cases, such as users with limited browsing history.
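The following sketch shows what unit tests for this scenario could look like, written with Python’s built-in `unittest` module. The scenario is users with limited browsing history, often called the “cold start” case. The `recommend` function, its fallback to a best-seller list, and the fixture data are hypothetical, chosen only to show how each test checks one expected behavior.

```python
import unittest


def recommend(history, co_counts, popular, limit=3):
    """Hypothetical function under test.

    Ranks products that co-occur with the user's history and falls back to
    best-sellers when there is no usable history.
    """
    seen = set(history)
    scores = {}
    for sku in history:
        for other, count in co_counts.get(sku, {}).items():
            if other not in seen:
                scores[other] = scores.get(other, 0) + count
    ranked = sorted(scores, key=scores.get, reverse=True)
    if ranked:
        return ranked[:limit]
    # Fallback: best-sellers the user has not already viewed.
    return [p for p in popular if p not in seen][:limit]


# Hypothetical fixture data: co-purchase counts and a best-seller list.
CO_COUNTS = {"lamp": {"bulb": 2, "shade": 1}, "desk": {"chair": 3}}
POPULAR = ["chair", "lamp", "bulb"]


class RecommendTests(unittest.TestCase):
    def test_suggests_related_product(self):
        self.assertEqual(recommend(["lamp"], CO_COUNTS, POPULAR, limit=1), ["bulb"])

    def test_excludes_items_already_viewed(self):
        self.assertNotIn("lamp", recommend(["lamp", "bulb"], CO_COUNTS, POPULAR))

    def test_new_user_gets_popular_items(self):
        self.assertEqual(recommend([], CO_COUNTS, POPULAR, limit=2), ["chair", "lamp"])

    def test_unknown_history_falls_back_without_repeats(self):
        self.assertEqual(recommend(["bulb"], CO_COUNTS, POPULAR), ["chair", "lamp"])
```

To run these tests, save the file with a name starting with `test` and run `python -m unittest` in that directory. The runner will discover and execute the `RecommendTests` cases.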

6. Deployment and Maintenance

It’s launch time! The software is deployed to its final environment, whether that’s an app store release, a live web application, or an installation on company systems. However, the journey doesn’t end here. Ongoing maintenance is vital for applying security patches, fixing bugs, and adding new features down the line.

The SDLC can follow different models, such as Waterfall and Agile. In real-world development, teams often iterate, revisiting earlier stages as needed.

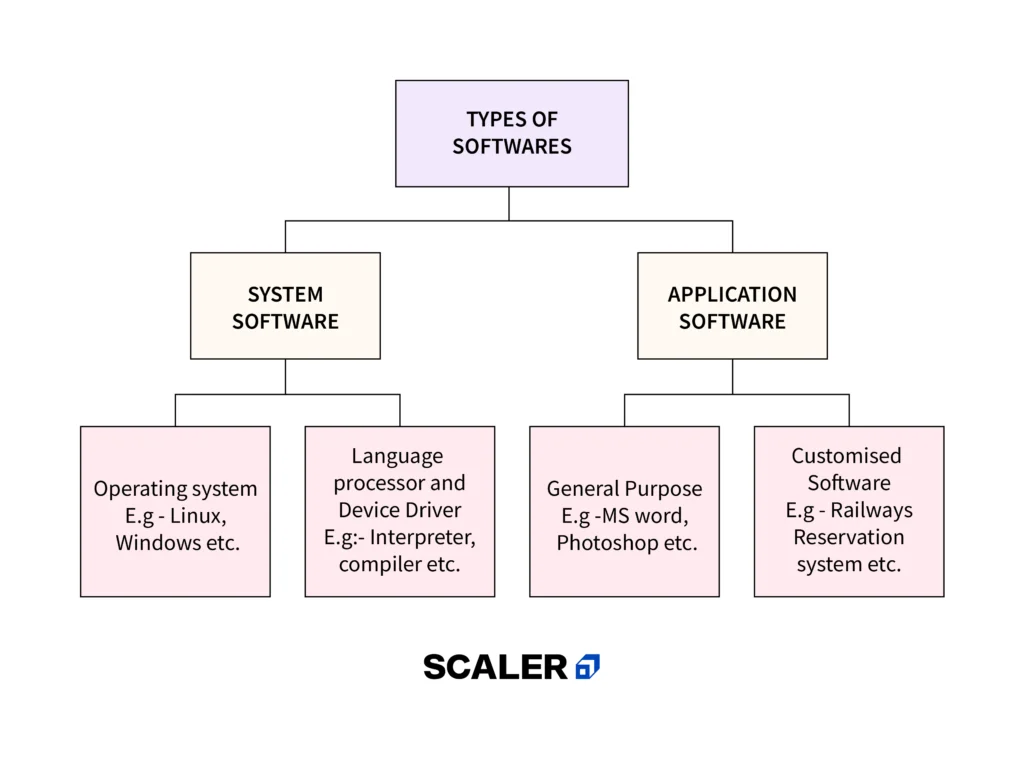

Types of Software

The world of software is divided into broad categories, each serving a distinct purpose. Let’s explore these main types:

1. System Software

Think of system software as the foundation upon which your computer operates. It includes the operating system (like Windows, macOS, or Linux), which manages your computer’s hardware, provides a user interface, and enables you to run applications. Additionally, system software encompasses device drivers, the programs that allow your computer to communicate with devices like printers and webcams, and essential utilities like antivirus software and disk management tools.

2. Application Software

Application software is what you interact with directly to get things done, whether for work or play. This encompasses the programs you’re familiar with, such as web browsers (Chrome, Firefox), productivity suites (Microsoft Office, Google Workspace), video and photo editing software (Adobe Premiere Pro, Photoshop), games, and music streaming services like Spotify.

3. Programming Languages

Software developers use programming languages to write the instructions a computer executes; both application software and system software are built with them. Python, C++, Java, C#, and JavaScript are among the best-known programming languages. Python is flexible and easy for beginners to learn, Java is used in Android apps and enterprise programs, JavaScript makes websites interactive, and C++ is used for high-performance games and systems-level software.

Important Note

While these categories are helpful, the lines between them can sometimes blur. For example, a web browser, though considered application software, heavily relies on components of your system software to function properly.

Why is Software Development Important?

Software development isn’t just about coding; it’s the engine driving progress in our modern world. Here’s why it matters:

The Fuel of Innovation: Breakthrough technologies like artificial intelligence, self-driving cars, and virtual reality would be impossible without sophisticated software. Software development pushes the boundaries of what’s possible, enabling entirely new industries and ways of interacting with the world.

Streamlining and Automating: From online banking to automated customer service chatbots, software makes processes faster, more convenient, and less prone to human error. This increased efficiency revolutionizes businesses and saves countless hours across various domains.

Adapting to Change: In our rapidly evolving world, software’s ability to be updated and modified is crucial. Whether it’s adapting to new regulations, customer needs, or technology trends, software empowers organizations to stay agile and remain competitive.

Global Reach: The internet and software transcend borders. Software-powered platforms connect people worldwide, facilitating remote work, global collaboration, and the rise of e-commerce, opening up markets once limited by geography.

Software Development’s Impact Across Industries

Let’s consider a few examples of how software changes the game in various sectors:

- Healthcare: Software powers medical imaging tools, analyzes vast datasets to help discover new treatments, and even assists with robot-guided surgery, ultimately improving patient care.

- Finance: Secure banking platforms, algorithmic trading, and fraud-detection software enable the flow of money while reducing risk.

- Education: Online learning platforms, interactive simulations, and adaptive learning tools make education more accessible and personalized for students of all ages.

- Transportation: Ride-hailing apps, traffic management systems, and developments in self-driving technology all rely on complex software systems.

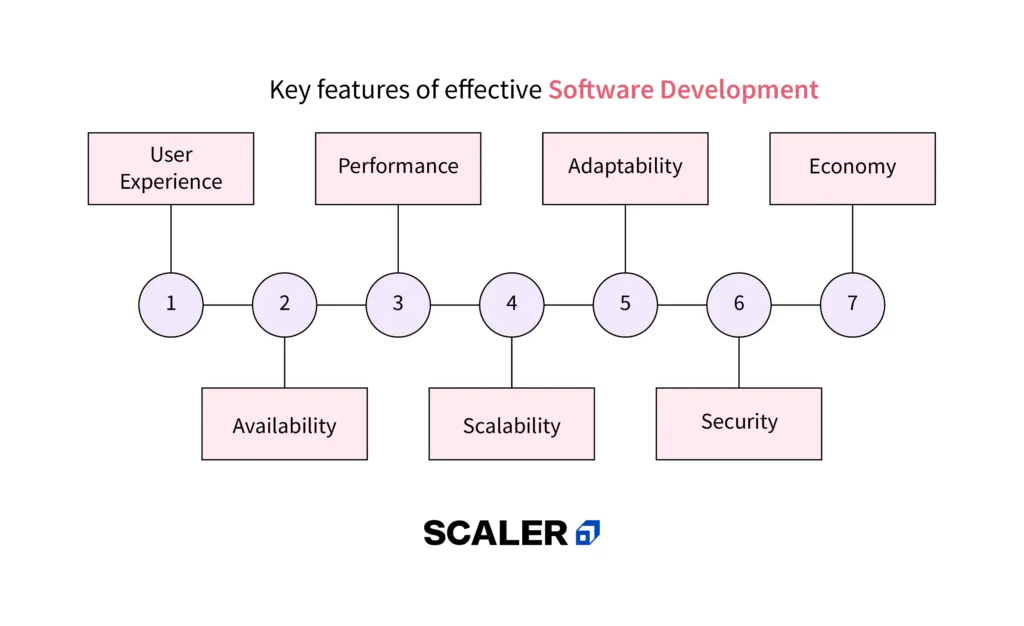

Key Features of Software Development

Building high-quality software that meets user needs and endures over time requires a focus on these essential features:

Scalability: The ability of software to handle an increasing workload. Imagine a social media app: well-built software keeps performing smoothly whether it’s serving a few thousand users or millions. Scalability involves planning for growth and ensuring the system can expand gracefully.
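One common technique for keeping response times steady as traffic grows is caching results that many users request. The sketch below uses Python’s `functools.lru_cache`; the `top_products` function and the catalog data are hypothetical. This cache lives inside a single process, so a service running on many servers would typically need a shared cache instead.

```python
from functools import lru_cache

# Hypothetical catalog data standing in for a slow database query.
CATALOG = {
    "lighting": ["lamp", "bulb", "shade"],
    "furniture": ["desk", "chair"],
}
expensive_calls = 0


@lru_cache(maxsize=1024)
def top_products(category: str) -> tuple[str, ...]:
    """Pretend this runs an expensive query; lru_cache reuses results for repeat requests."""
    global expensive_calls
    expensive_calls += 1
    # Return an immutable tuple so callers cannot alter the cached result.
    return tuple(CATALOG.get(category, []))


for _ in range(1000):  # 1,000 requests for the same category
    top_products("lighting")

print(expensive_calls)                 # 1: only the first request did the work
print(top_products.cache_info().hits)  # 999
```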

Reliability: Users expect software to work consistently and as intended. Reliability encompasses thorough testing to minimize bugs and crashes, as well as implementing error-handling procedures to provide the best possible experience even when unexpected glitches occur.
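Error handling often includes retrying operations that fail for temporary reasons, such as a brief network outage. Here is a minimal sketch of retries with exponential backoff in Python. The `call_with_retries` helper, its default values, and the simulated `flaky_lookup` dependency are assumptions for illustration, not a standard library API.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Call `operation`, retrying transient failures with exponential backoff.

    Only exceptions listed in `retry_on` are retried; any other error
    propagates immediately, and the last transient error is re-raised
    once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == attempts:
                raise
            # Delays grow as base_delay, 2*base_delay, 4*base_delay, ...
            # plus random jitter so many clients do not retry in lockstep.
            delay = base_delay * 2 ** (attempt - 1)
            time.sleep(delay + random.uniform(0, delay))
    raise AssertionError("unreachable")  # the loop always returns or raises


# Hypothetical flaky dependency: fails twice, then succeeds.
calls = {"count": 0}


def flaky_lookup() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("inventory service unavailable")
    return "in stock"


print(call_with_retries(flaky_lookup, base_delay=0.01))  # in stock
```

Retrying only specific exception types is a deliberate choice. A programming error such as a `ValueError` should surface immediately rather than be retried.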

Security: With cyberattacks on the rise, protecting user data and preventing unauthorized access is paramount. Secure software development includes careful encryption practices, safeguarding against common vulnerabilities, and constantly staying updated to address potential threats.
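SQL injection is one of the most common vulnerabilities. The sketch below uses Python’s built-in `sqlite3` module with a throwaway in-memory database; the `users` table and the `find_user` function are hypothetical. Passing user input as a query parameter, instead of pasting it into the SQL string, keeps that input from being interpreted as SQL.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, email: str) -> list[tuple[int, str]]:
    # The "?" placeholder makes sqlite3 send `email` as data, never as SQL,
    # so input such as "' OR '1'='1" cannot change the query's logic.
    cur = conn.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cur.fetchall()

# Unsafe alternative, shown only for contrast (do not use):
#   conn.execute(f"SELECT id, email FROM users WHERE email = '{email}'")
# With the input "' OR '1'='1" that query would return every row.


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO users (email) VALUES (?)",
    [("ana@example.com",), ("raj@example.com",)],
)

print(find_user(conn, "ana@example.com"))  # [(1, 'ana@example.com')]
print(find_user(conn, "' OR '1'='1"))      # []
```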

Flexibility: User needs change, technologies evolve, and new features are often desired. Flexible software is designed to be adaptable and maintainable. This makes it easier to introduce updates, integrate with other systems, and pivot in response to market changes.

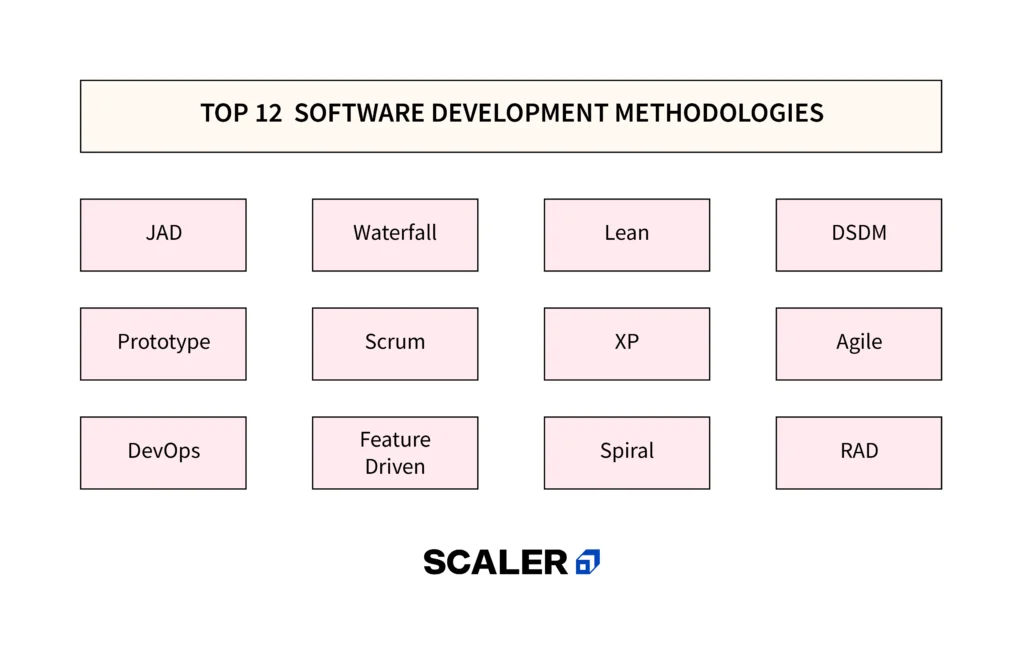

Software Development Methodologies

Think of software development methodologies as different roadmaps to guide a software project from start to finish. Let’s explore some popular approaches:

Waterfall: The Traditional Approach

The Waterfall model is linear and sequential. It involves phases like requirements analysis, design, coding, testing, and then deployment. Each phase must be fully complete before moving on to the next. This approach is best suited for projects with very clear, unchanging requirements and a long development timeline.

Agile: Embracing Iteration

Agile methods like Scrum and Kanban focus on delivering work in small increments, either in short, fixed-length cycles (sprints, in Scrum) or as a continuous flow of tasks (Kanban), with frequent feedback and adaptability to change. They emphasize collaboration between developers, stakeholders, and end-users. Agile methodologies are well-suited for projects where requirements might shift or evolve, and where delivering working software quickly is a priority.

DevOps: Bridging Development and Operations

DevOps focuses on collaboration between development teams and IT operations. It emphasizes automation, frequent updates, and monitoring to enable continuous delivery and rapid issue resolution. The aim is faster releases without sacrificing stability, which lets businesses innovate quickly while keeping their systems reliable.

Choosing the Right Methodology

The best methodology depends on factors like the project’s size and complexity, the clarity of requirements upfront, company culture, and the desired speed of delivery.

Modern Trends: Hybrid Approaches and Adaptability

Many organizations adopt variations or hybrid approaches, combining elements of different methodologies to suit their specific needs. The ability to adapt the process itself is becoming a key hallmark of successful software development.

Software Development Tools and Solutions

Building software involves a whole toolkit beyond just coding skills. Let’s take a look at some commonly used categories of tools and technologies:

Integrated Development Environments (IDEs)

Think of IDEs as the developer’s powerhouse workspace. They combine a code editor, debugger, and compiler/interpreter, providing a streamlined experience. Popular IDEs include Visual Studio Code, IntelliJ IDEA, PyCharm, and Eclipse. IDEs boost productivity with features like code completion, syntax highlighting, and error checking, simplifying the overall development process.

Version Control Systems (e.g., Git)

Version control systems act like time travel for your code. Git, the most widely used system, allows developers to track every change made, revert to older versions if needed, collaborate effectively, and maintain different branches of code for experimentation. This provides a safety net, facilitates easy collaboration, and streamlines code management.

Project Management Tools (e.g., Jira, Trello)

These tools help organize tasks, set deadlines, and visualize the project’s progress. They often support methodologies like Agile (Jira is particularly popular for this) and provide features like Kanban boards or Gantt charts to keep projects organized and efficient, especially within teams.

Collaboration Platforms (e.g., Slack, Microsoft Teams)

These platforms serve as a communication hub for development teams. They enable real-time messaging, file sharing, and video conferencing for seamless collaboration, reducing email overload and promoting quick problem-solving and knowledge sharing among team members.

Other Important Tools

- Cloud Computing Platforms (AWS, Azure, Google Cloud): Provide on-demand access to computing resources, databases, and a vast array of development tools.

- Testing Frameworks: Tools that help design and execute automated tests to ensure software quality.

- Web Frameworks (Django, Flask, Ruby on Rails): Offer structure and reusable components for building web applications.

Jobs That Use Software Development

- Quality Assurance (QA) Engineer: These professionals meticulously test software to identify bugs and ensure quality before release. They employ both manual and automated testing methods, working closely with developers to enhance the final product.

- Computer Programmer: Computer Programmers focus on the core act of writing code. They use specific programming languages to translate software designs into functional programs.

- Database Administrator (DBA): DBAs are responsible for the design, implementation, security, and performance optimization of database systems. They manage the storage, organization, and retrieval of an organization’s crucial data.

- Senior Systems Analyst: Systems Analysts act as a bridge between business needs and technical solutions. They analyze an organization’s requirements, propose software solutions, and often oversee the development process to ensure the system meets its intended goals.

- Software Engineer: This is a broad term encompassing roles involved in designing, building, testing, and maintaining software applications. Software Engineers might specialize in areas like web development, mobile development, game development, or embedded systems.

Software Development Resources

Online Courses and Platforms

- Beginner-friendly: Look for websites that have interactive lessons and guided projects to start with.

- Comprehensive Programs: Look for websites that cover a vast array of languages, frameworks, and specializations.

- Scaler’s Full-Stack Developer Course: A structured program with industry-relevant curriculum, experienced mentors, and strong community support.

Tutorials and Documentation

- Official Sources: Programming language websites (e.g., Python.org) and framework documentation provide the most reliable information.

- Community Hubs: Websites like Stack Overflow are phenomenal for troubleshooting and finding answers to common questions.

Coding Communities

- Online Forums: Engage with fellow learners, seek help, and discuss projects on platforms like Reddit (programming-related subreddits) or Discord servers dedicated to software development.

- Meetups: Network with developers in your area through local meetups and events.

How Can Scaler Help?

Scaler’s comprehensive Full-Stack Developer Course can be an accelerator on your software development journey. Consider the advantages:

- Structured Learning: A well-designed curriculum takes you systematically from core concepts to advanced applications, offering a clear path forward.

- Mentorship: Guidance from industry experts provides valuable insights and helps you overcome roadblocks.

- Projects and Practical Experience: Hands-on building solidifies learning and creates a portfolio to showcase your skills.

- Community and Career Support: Interaction with peers and access to career services can be beneficial as you navigate the journey into software development.

Learning software development is an ongoing process. Even experienced developers continue to explore new technologies and techniques.

- From everyday applications to world-changing inventions that fuel digitalization in every sector, software development is the process that creates them all.

- Designing, developing, testing and deploying are among the critical stages of the SDLC (Software Development Life Cycle).