Effective Big Data Analytics Use Cases in 20+ Industries

Among the modern technologies and industry disruptions that can benefit every industry and every business organization, Big Data Analytics fits the bill perfectly.

The big data analytics market is slated to hit 103 bn USD by 2023, and 70% of large enterprises are already using big data.

Organizations continue to generate heaps of data every year, and the global amount of data created, stored, and consumed by 2025 is slated to surpass 180 zettabytes.

However, many of them are unable to put this huge amount of data to the right use because they do not know how to put their big data to work.

Here, we are discussing the top big data analytics use cases for a wide range of industries. So, take a thorough read and get started with your big data journey.

Let us begin with understanding the term Big Data Analytics.

What is Big Data Analytics?

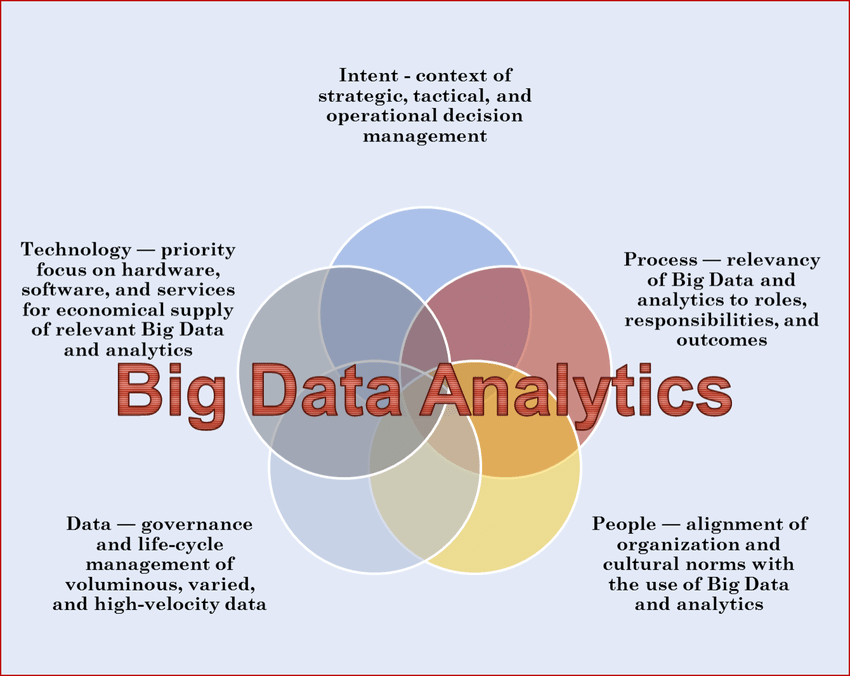

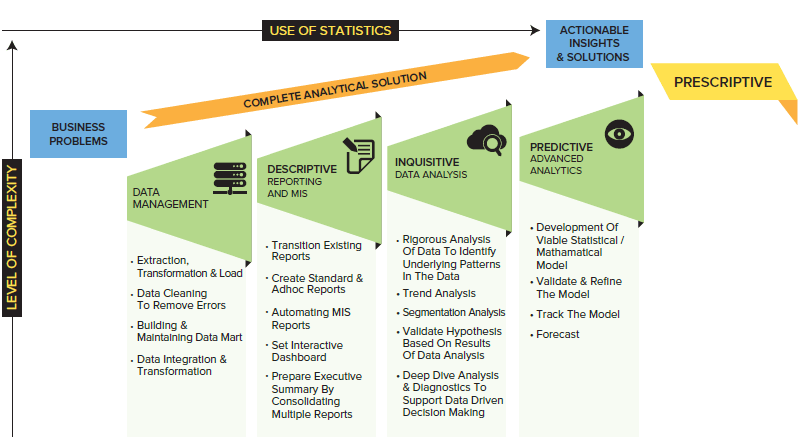

Big data analytics is the process of applying advanced analytical techniques to extremely large and diverse data sets that contain structured, semi-structured, and unstructured data. It is a complex process in which the data is processed and parsed to discover hidden patterns, market trends, and correlations, and to draw actionable insights from them.

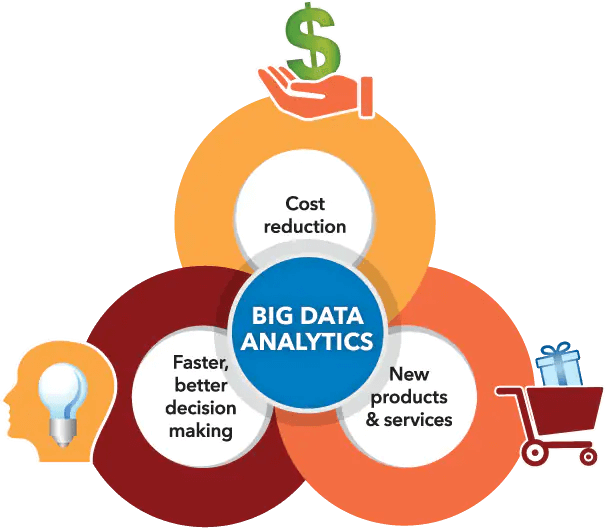

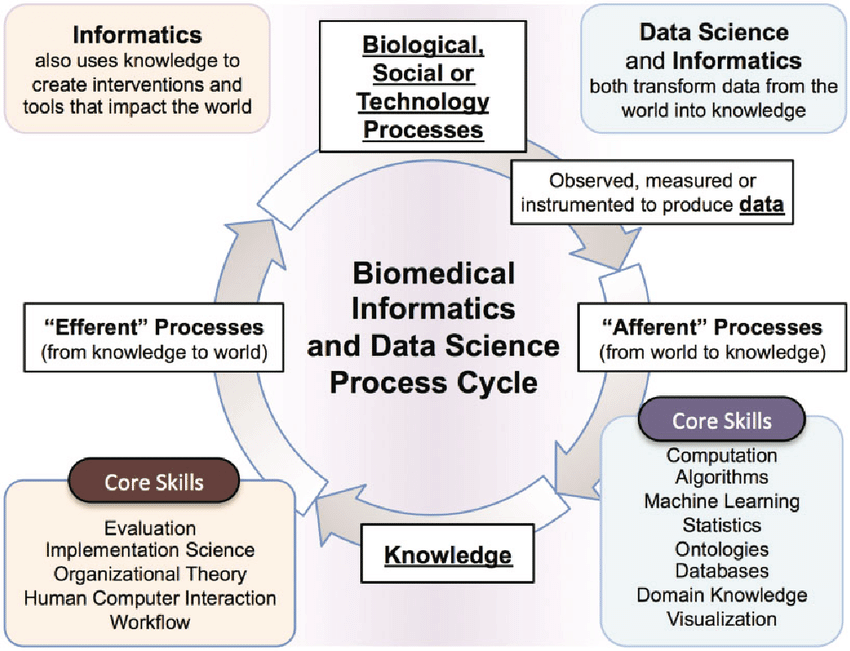

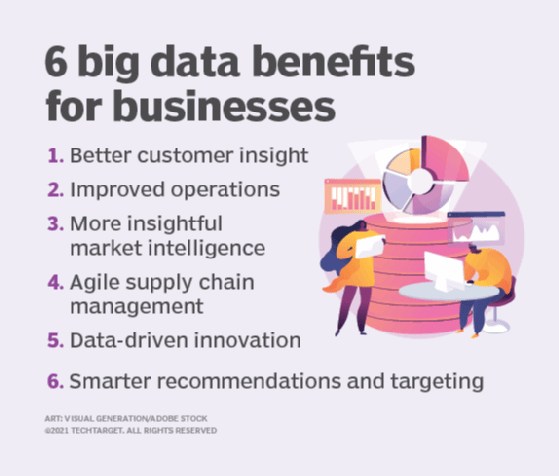

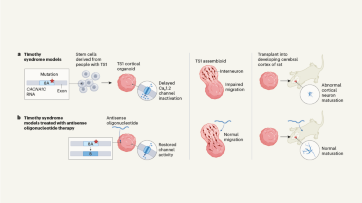

The following image shows some benefits of big data analytics:

Big data analytics enables business organizations to make sense of the data they are accumulating and leverage the insights drawn from it for various business activities.

The following visual shows some of the direct benefits of using big data analytics:

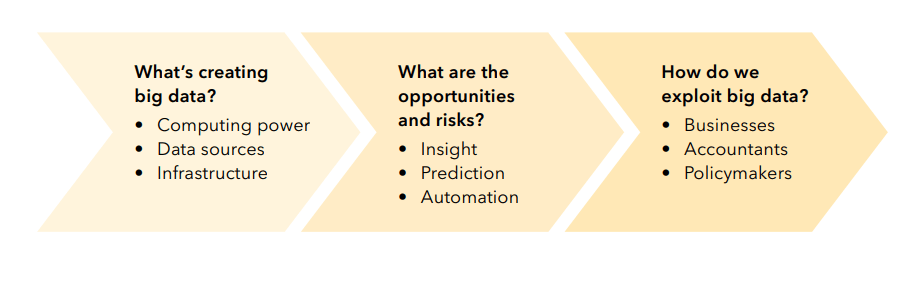

Before we move on to discuss the use cases of big data analytics, it is important to address one more thing – What makes big data analytics so versatile?

Core Strengths of Big Data Analytics

Big data analytics combines multiple advanced technologies that work together to help business organizations get the best value out of their data.

Some of these technologies are machine learning, data mining, data management, Hadoop, etc.

Below, we discuss the core strengths of big data.

1. Cost Reduction

Big data analytics offers data-driven insights to business stakeholders, who can then make better strategic decisions, streamline and optimize operational processes, and understand their customers better. All this helps in cost-cutting and adds efficiency to the business model.

Big data analytics also streamlines supply chains to reduce time, effort, and resource consumption.

Studies also reveal that big data analytics solutions can help companies reduce the cost of failure by 35% via:

- Real-time monitoring

- Real-time visualization

- In-memory Analytics

- Product Monitoring

- Effective Fleet Management

2. Reliable and Continuous Data

As big data analytics allows business enterprises to make use of organizational data, they don’t have to rely upon third-party market research or tools for the same. Further, as the organizational data expands continually, having a reliable and robust big data analytics platform ensures reliable and continuous data streams.

3. New Products and Services

Because big data analytics brings together a diverse set of advanced technologies, you can make better decisions about developing new products and services.

Also, you always have the best market and customer or end-user insights to steer the development processes in the right direction.

Hence, big data analytics also facilitates faster decision-making stemming from data-driven actionable insights.

4. Improved Efficiency

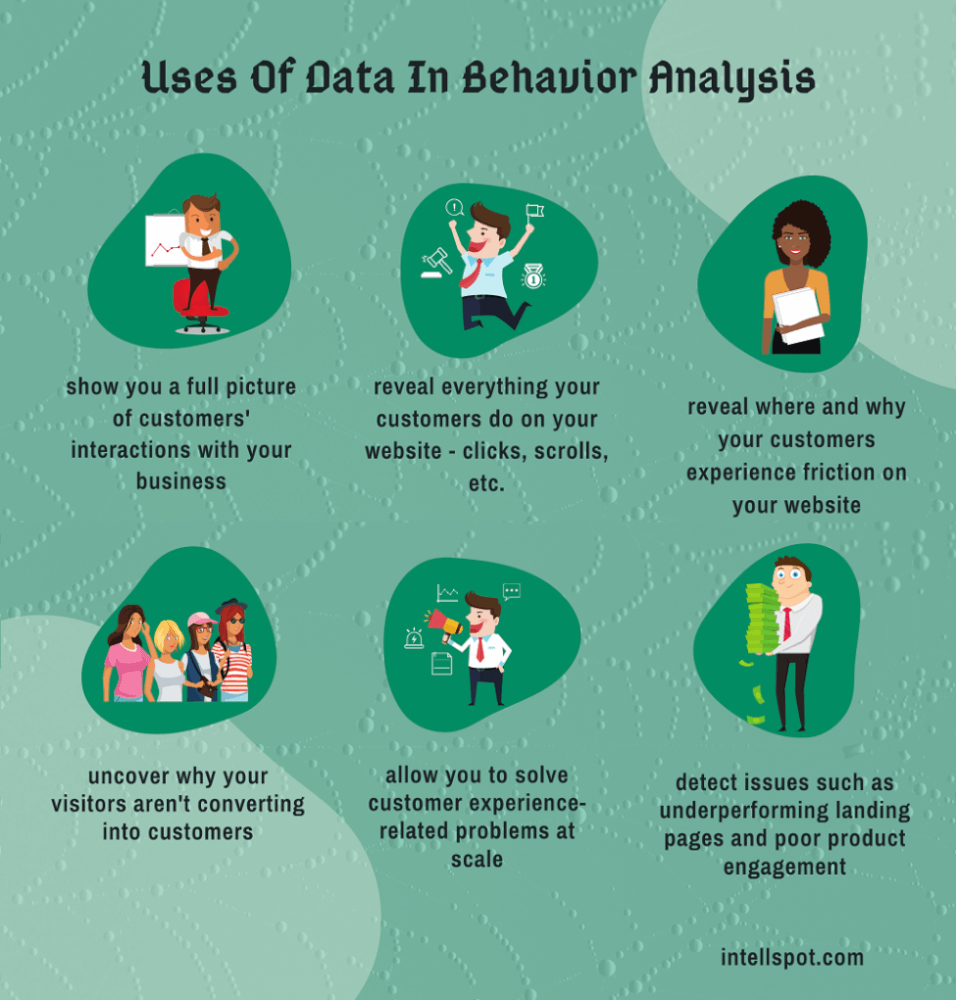

Big data analytics improves accuracy, efficiency, and overall decision-making in business organizations. You can analyze customer behavior via shopping data and use predictive analytics to estimate figures such as checkout wait times. Stats reveal that 38% of companies use big data for organizational efficiency.

5. Better Monitoring and Tracking

Big data analytics also empowers organizations with real-time monitoring and tracking functionalities, and amplifies the results by suggesting appropriate actions or nudges derived from predictive data analytics.

These tracking and monitoring capabilities are of extreme importance in:

- Security posture management

- Mitigating cybersecurity attacks and minimizing the damage

- Database backup

- IT infrastructure management

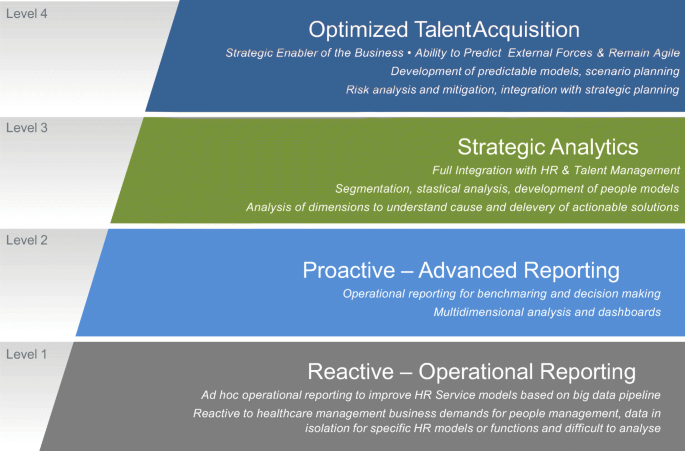

6. Better Remote Resource Management

Be it hiring or remote team management and monitoring, big data analytics offers a wide range of capabilities to enterprises. Big data analytics can empower business owners with core insights to make better decisions regarding employee tracking, employee hiring, performance management, etc.

This remote resource management capability works well for IT infrastructure management as well.

7. Taking Right Organizational Decisions

Take a look at the following visual that shows how big data analytics can help companies make better, data-driven organizational decisions.

Now, we discuss the top big data analytics use cases in various industries.

Big Data Analytics Use Cases in Various Industries

1. Banking and Finance (Fraud Detection, Risk & Insurance, and Asset Management)

Futuristic banks and financial institutions are capitalizing on big data in various ways, ranging from capturing new markets and market opportunities to fraud reduction and investment risk management. These organizations are able to leverage big data analytics as a powerful solution to gain a competitive advantage as well.

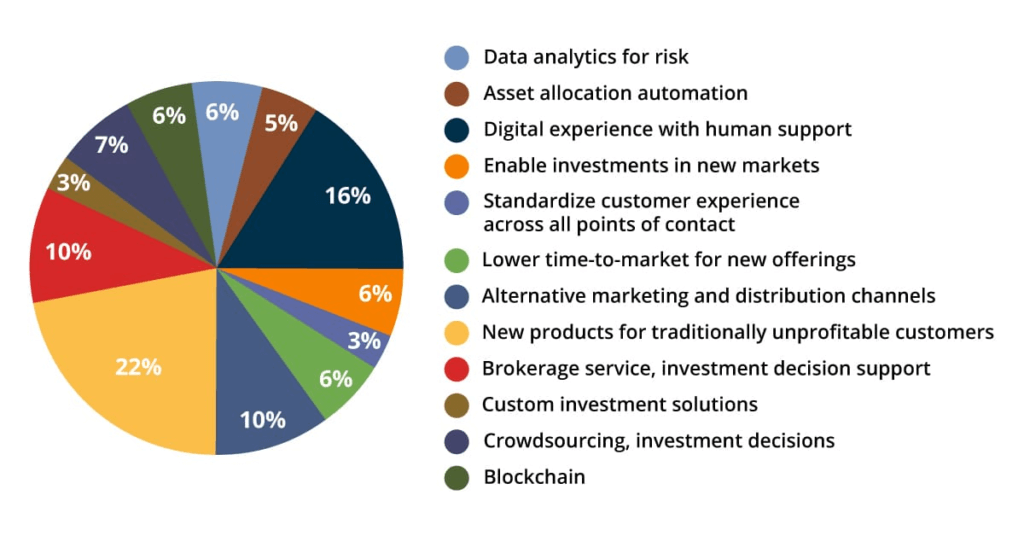

Take a look at the following image that shows various use cases of big data analytics in the finance and banking sector:

Recent studies suggest that big data analytics in the sector is going to register a CAGR of 22.97% over the period of 2021 to 2026. Growing data volumes and increasing government regulation are fueling the demand for big data analytics in the sector.
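
To put a CAGR figure like the one above into perspective, here is a minimal Python sketch of the compounding arithmetic. It assumes five full compounding years between 2021 and 2026; the function name `growth_multiple` is illustrative and not taken from any cited study.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return the total growth factor implied by a compound annual growth rate."""
    return (1.0 + cagr) ** years


# Assumption: a 22.97% CAGR compounded over the five years from 2021 to 2026
multiple = growth_multiple(0.2297, 5)
print(f"Implied growth multiple: {multiple:.2f}x")  # prints "Implied growth multiple: 2.81x"
```

In other words, under that assumption a market growing at this rate would be roughly 2.8 times its 2021 size by 2026.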

2. Accounting

Data is at the heart of accounting, and using big data analytics will certainly deliver more value to accounting businesses. The accounting sector covers various activities, such as different types of audits, checking and maintaining ledgers, transaction management, taxation, and financial planning.

The auditors have to deal with numerous sorts of data that might be structured or unstructured, and big data analytics can help them in:

- Identifying outliers

- Excluding exceptions

- Focusing on the data blocks in the areas of greatest risk

- Visualizing data

- Connecting financial and non-financial data

- Comparing predicted outcomes to improve forecasting

Using big data analytics will also improve regulatory efficiency, and minimize the redundancy in accounting.
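
As a rough illustration of the outlier-identification step listed above, the following Python sketch flags unusual ledger amounts with the interquartile range (IQR) rule. The sample amounts and the `iqr_outliers` helper are hypothetical, and the IQR rule is only one of many possible techniques an auditor might choose.

```python
import statistics


def iqr_outliers(amounts, k=1.5):
    """Return values lying more than k * IQR outside the middle 50% of the data."""
    q1, _, q3 = statistics.quantiles(amounts, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [a for a in amounts if a < low or a > high]


# Hypothetical ledger entries; one amount is far outside the usual range
ledger = [120.0, 135.5, 128.0, 131.2, 9800.0, 126.4, 133.9, 129.7]
flagged = iqr_outliers(ledger)
print(flagged)  # prints [9800.0]
```

Flagged entries are candidates for manual review, not proof of an error or fraud.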

3. Aviation

Studies reveal that the aviation analytics market will hit 3 bn USD by 2025 and will register a CAGR of 11.5% over the forecast period.

The major growth drivers of the aviation market are:

- Increasing demand for optimized business operations

- COVID-19 outbreak affecting the normal aviation operations

- Mergers, acquisitions, and joint ventures

- Recent trends and changes in the Original Equipment Manufacturer (OEM) and user segments of the aviation industry

One of the most bankable big data analytics opportunities in the aviation industry is cloud-based real-time data collection and analytics, which requires diverse data models.

Likewise, big data analytics has huge potential in the airline industry, where it can improve everything from basic operations, such as maintenance, distribution of resources, flight safety, and flight services, to business goals, such as loyalty programs and route optimization.

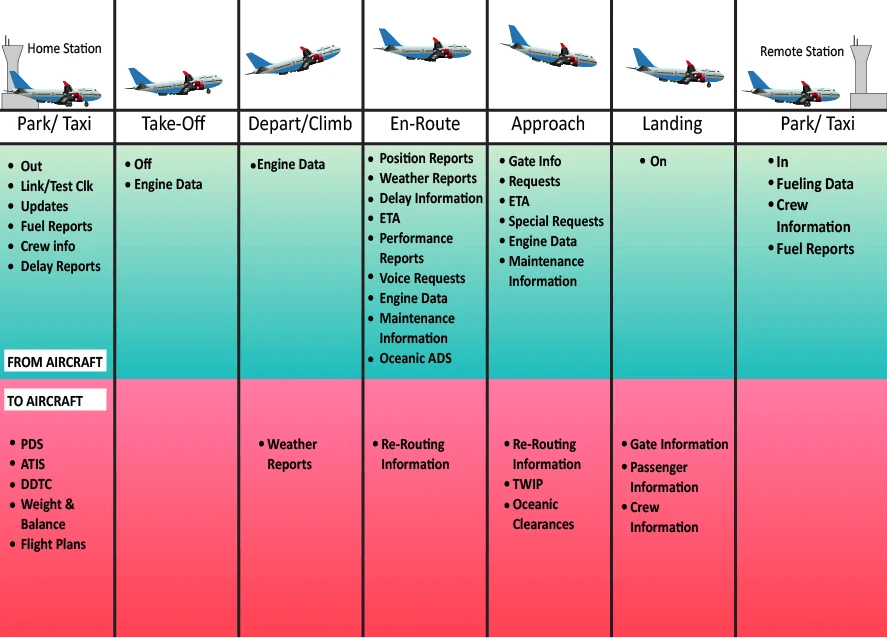

The following image shows the various points of data generation in the aviation industry (flights only), that can be a valid use case for big data analytics:

4. Agriculture

UN estimates reveal that the world population will hit the 9.8 billion mark by 2050, and agriculture needs to change to meet the food demands of such a large population. However, climate change has not only rendered the majority of farmlands unfit for farming, but has also altered rainfall patterns and dried up a number of water sources.

This means that apart from increasing crop production, farmers have to improve the other farming-related activities.

Big data analytics can help agriculture and agribusiness stakeholders in the following ways:

- Precision farming techniques stemming from advanced technologies, such as big data, IoT, analytics, etc.

- Offer advance warnings and climate change predictions

- Ethical and wise use of pesticides

- Farm equipment optimization

- Supply chain optimization and streamlining

A notable case study in this regard is:

- IBM Food Trust

5. Automotive

Be it research and development or marketing planning, big data analytics has huge scope in the automotive industry, which is itself a combination of a number of individual industries. As a core infrastructure segment that powers a number of crucial public and private ecosystems, the automobile sector generates huge loads of data every single day!

Hence, it is one of the most critical use cases for big data analytics.

Some common applications are:

- Improve the design and manufacturing process via a definitive cost analysis of various designs and concepts.

- Vehicle use and maintenance constraints

- Tracking and monitoring the manufacturing processes to ensure zero faults in production

- Predicting market trends for sales, manufacturing, and technologies used by the automotive companies

- Supply chain and logistics analysis

- Streamlining the manufacturing to stay ahead of market competition

- Excellent quality analytics to create extremely user-friendly and high-performing vehicles

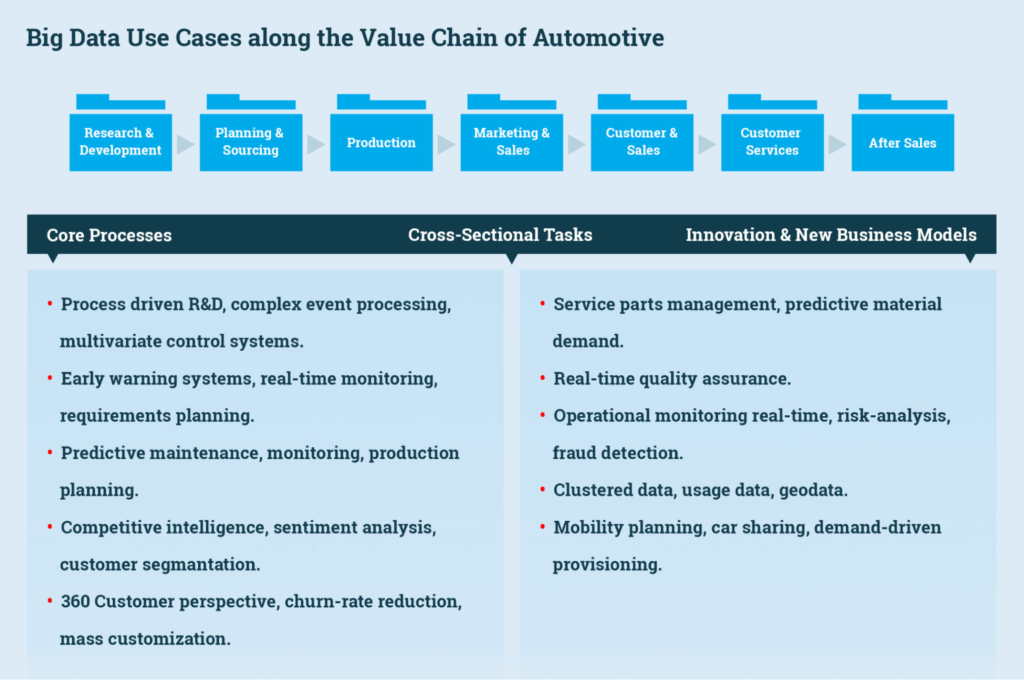

Take a look at the following visual to get an overall idea of the big data analytics use cases in the value chain of the automotive industry:

6. Biomedical Research and Healthcare (Cancer, Genomic medicine, COVID-19 Management)

Recent stats reveal that the big data analytics market in healthcare will be around 67.82 bn USD by 2025. Healthcare is a huge industry generating mountains of data that is extremely crucial for the patients, medical institutions, insurance companies, government, and research as well.

With proper analysis of huge data blocks, big data analytics can help medical researchers not only to devise more targeted and successful treatment plans but also to procure medical supplies from all over the world.

Organ donation, betterment of treatment facilities, development of better medicines, and prediction of pandemic or epidemic outbreaks to contain their ferocity – there are multiple ways big data analytics can benefit the healthcare industry.

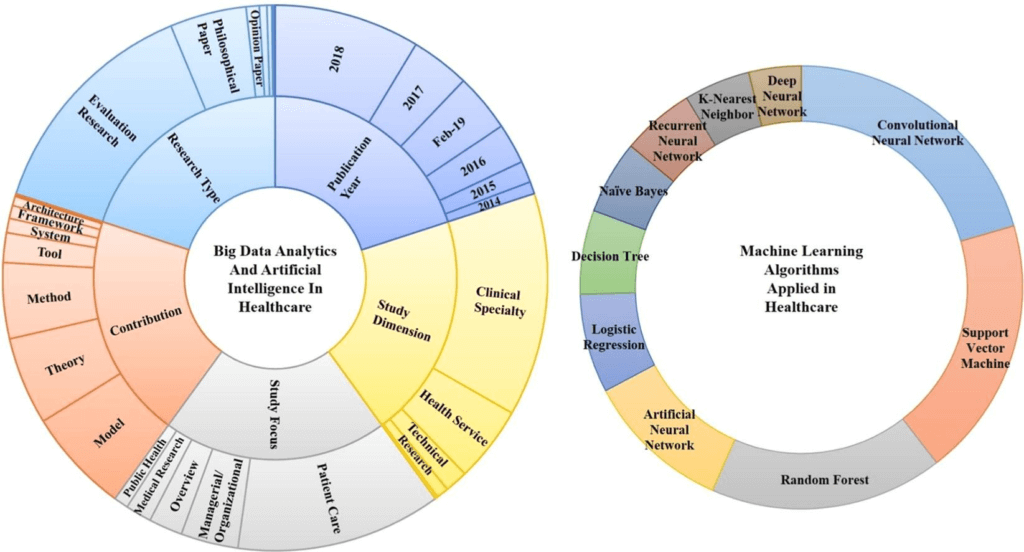

Take a look at the following image for a better understanding:

Also, big data analytics is playing a huge role in COVID-19 management by predicting the outbreaks, red zones, and facilitating crucial data for the frontline workers.

Finally, when we talk about Biomedical research, big data analytics emerges as a powerful tool for:

- Data sourcing, processing, and reporting

- Predicting trends, and offering hidden patterns from historic data blocks

- Genome research and individual genetic data processing for personalized medicine development

The biomedical research and healthcare industry is a huge use case for big data analytics and the applications can themselves form a topic of lengthy discussion.

Various applications of big data analytics in biomedical informatics:

7. Business and Management

95% of businesses cite unstructured data management as a major problem and 97.2% of business organizations are investing in AI and big data to streamline operations, implement digitization and introduce automation, among other business objectives.

However, the business organizations suffer from multiple data pain points, such as:

- Unstructured data

- Fragmented data

- Database incompatibility

- Unstructured data storage and management

- Data loss due to cyber crimes

Big data analytics can thus be a knight in shining armor for business process streamlining and management with its massive capability set.

Business owners can make more targeted, data-driven, and smart decisions based on the data insights provided by big data analytics, and do much more, as ideated in the following visual:

8. Cloud Computing

45% of businesses across the globe are running at least one big data workload on the cloud, and public cloud services will drive 90% of innovation in analytics and data.

Cloud computing has many challenges, and security is one of them. In fact, security is becoming a major concern for business organizations across the world as well.

Also, big data analytics has rigorous network, data, and server requirements that persuade business organizations across the globe to outsource the hassle and operational overloads to third parties. It is spurring a number of new opportunities that support big data analytics and help organizations overcome architectural hurdles.

9. Cybersecurity

In cybersecurity, big data security analytics is an emerging trend that helps business organizations improve security by:

- Identifying outliers and anomalies in security data to detect malicious or suspicious activities

- Automating workflows for responding to threats, such as disrupting obvious malware attacks

53% of the companies that are already using big data security analytics say that they experienced high benefits from big data analytics.
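
The anomaly-identification idea mentioned above can be sketched in a few lines of Python. This example assumes you already have a count of failed logins per host; the host names, the counts, and the `flag_anomalous_hosts` helper are hypothetical, and the modified z-score (based on the median absolute deviation) is just one simple technique, not a description of any particular security product.

```python
import statistics


def flag_anomalous_hosts(failed_logins, threshold=3.5):
    """Flag hosts whose failed-login count has a modified z-score above threshold."""
    counts = list(failed_logins.values())
    median = statistics.median(counts)
    mad = statistics.median(abs(c - median) for c in counts)
    if mad == 0:
        return []  # no spread in the data, so nothing stands out
    flagged = []
    for host, count in failed_logins.items():
        score = 0.6745 * (count - median) / mad  # modified z-score
        if score > threshold:
            flagged.append(host)
    return flagged


# Hypothetical failed-login counts per host for one hour
observed = {"web-01": 4, "web-02": 6, "db-01": 5, "vpn-01": 240, "mail-01": 3}
print(flag_anomalous_hosts(observed))  # prints ['vpn-01']
```

A median-based score is used here because a single extreme host would distort a plain mean and standard deviation in such a small sample.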

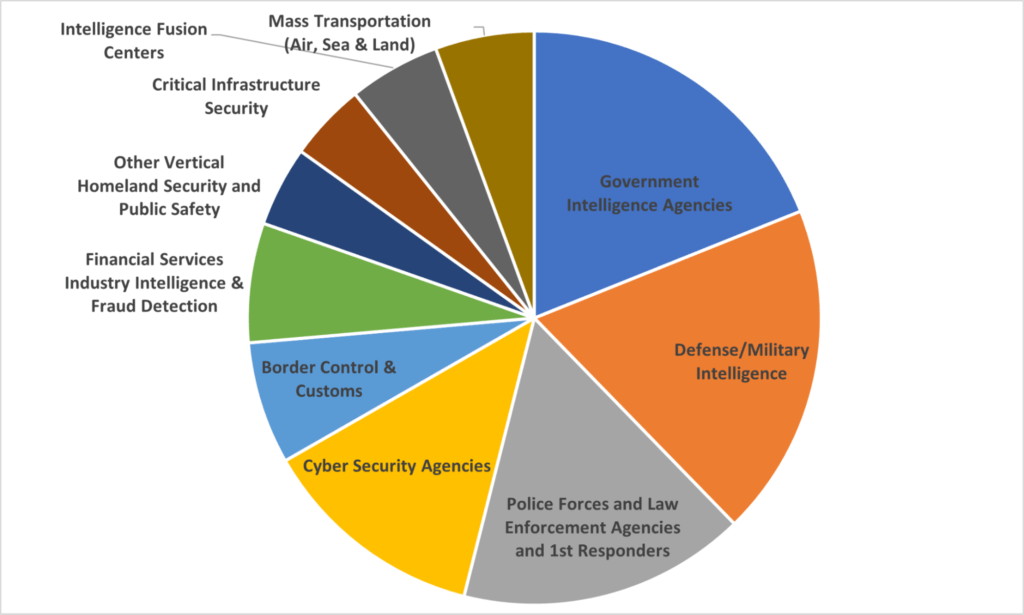

10. Government and Law Enforcement

Government and public infrastructure produce a large amount of data from various sources, such as body cameras, CCTV footage, satellites, public schemes, registrations, certifications, social media, etc.

Big data analytics can empower the government and public services sector in many ways, some of which are mentioned below:

- Open data initiatives to manage, monitor, and track the private company data

- Encouraging public participation and transparency in open data initiatives by the government

- Predicting consumer frauds, political shifts, and tracking the border security

- Defense and consumer protection

- Public safety via a rapid and efficient address of public grievances

- Transportation and city infrastructure management

- Public health management

- Efficient and data-driven management of energy, environment, and public utilities

Also, big data analytics is of extreme importance in the law enforcement segment. Tracking crimes, round-the-clock real-time policing of sensitive areas, real-time monitoring and tracking of criminals and smugglers, and tracing money launderers are some of the ways big data analytics can help law enforcement stakeholders.

The following visual shows how big data analytics can help the law enforcement and national security sectors:

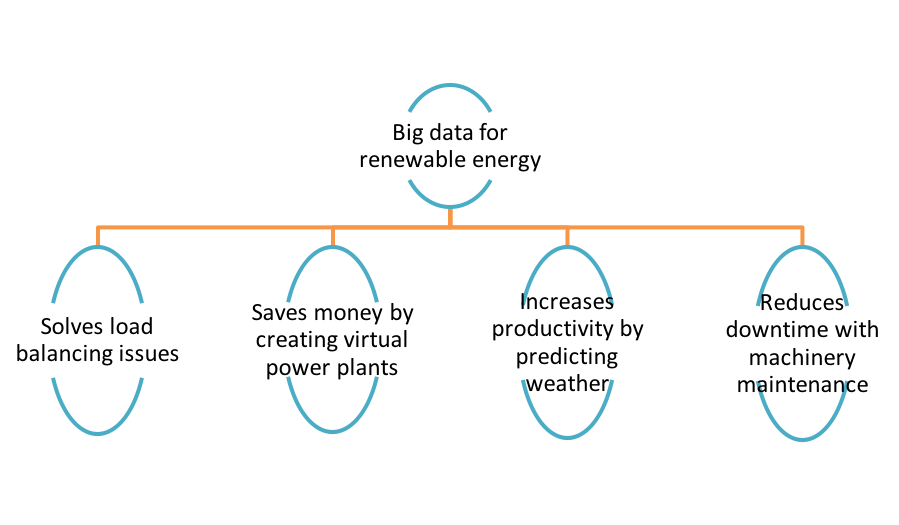

11. Oil, Gas & Renewable Energy

From offering new ways to innovate for various sectors to using data sensors for tracking and monitoring new reserves, big data analytics offers many use cases in the energy industry.

Some common application areas include:

- Tracking and monitoring of oil well and equipment performance

- Monitoring well activity

- Predictive equipment maintenance in remote and deep-water locations

- Oil exploration and optimizing drilling sites

- Optimization of oil production via unstructured sensor and historical data

Some other potential areas where data analytics is of extreme importance are the safety of oil sites, supply pipes, and saving time via automation.

Improvement of fuel transportation, supply chain, and logistics are some other areas where big data analytics can be of help.

Further, in the renewable energy sector, the technology can offer actionable insights such as geographical data insights for installing renewable energy plants, deforestation maps, efficiency, and cost-benefit analysis of various methods of energy production, as shown below:

12. Manufacturing & Supply Chain Management

With the world on the verge of the fourth industrial revolution, the manufacturing sector and supply chains are being transformed in many ways. Manufacturers are looking for ways to harness the massive data they generate in order to streamline business processes, uncover hidden patterns and market trends in huge data blocks, drive profits, and boost their business equity.

There are three core segments in the manufacturing industry that form crucial application areas of big data analytics:

- Predictive Maintenance – Predict equipment failure, discover potential issues in the manufacturing units as well as products, etc.

- Operational Efficiency – Analysis and assessment of production processes, proactive customer feedback, future demand forecasts, etc.

- Production Optimization – Optimizing the production lines to decrease the costs and increase business revenue, and identify the processes or activities causing delays in production.
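
To make the predictive maintenance idea above more concrete, here is a minimal Python sketch that raises an alert when the rolling average of a sensor reading drifts above a limit. The vibration values, the window size, and the 7.0 limit are hypothetical; real systems typically use far richer models than a rolling mean.

```python
from collections import deque


def first_alert_index(readings, window=3, limit=7.0):
    """Return the index at which the rolling mean first exceeds limit, or None."""
    recent = deque(maxlen=window)  # keeps only the latest `window` readings
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > limit:
            return i
    return None


# Hypothetical vibration readings (mm/s) from one machine, trending upward
vibration_mm_s = [4.1, 4.3, 4.2, 4.8, 5.9, 6.8, 7.9, 8.6]
print(first_alert_index(vibration_mm_s))  # prints 7
```

Averaging over a short window keeps a single noisy reading from triggering a maintenance ticket.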

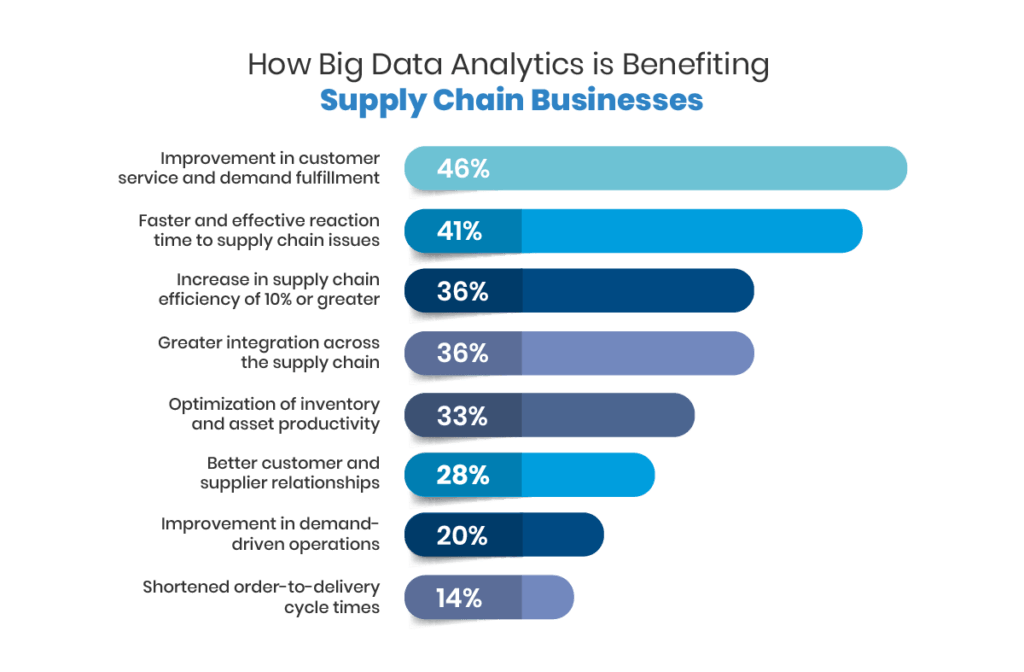

Big data analytics can help businesses revolutionize the supply chains in various ways, such as:

13. Retail

The modern retail landscape is alight with fierce competition and is becoming increasingly volatile with industry disruptions and the break-neck pace of technological advancements. Businesses are focusing on many granular aspects of customers and business offerings, irrespective of them being product-based vendors or service-based vendors.

Some of the big data analysis use cases in retail are:

- Product Development – Predictive business models, market research for developing products that are high in demand, and deep insights from huge consumer and market data across multiple platforms.

- Customer Experience and Service – Providing personalized and hyper-personalized services and customer experiences throughout the customer journeys and addressing crucial events, such as customer complaints, customer churn, etc.

- Customer Lifetime Value – Rich actionable insights on customer behavior, purchase patterns, and motivation to offer a highly personalized lifetime plan to all the customers.
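
For readers new to the customer lifetime value metric named above, a commonly used simplified estimate multiplies average order value, purchase frequency, and expected retention time. The Python sketch below assumes that simple formula; the `simple_clv` helper and all input figures are hypothetical, and real retail models are usually more elaborate.

```python
def simple_clv(avg_order_value, orders_per_year, years_retained, margin=1.0):
    """Estimate customer lifetime value as order value x frequency x lifespan x margin."""
    return avg_order_value * orders_per_year * years_retained * margin


# Hypothetical customer: 40.0 per order, 6 orders a year, retained 3 years, 25% margin
print(simple_clv(40.0, 6, 3, margin=0.25))  # prints 180.0
```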

14. Stock Market

Another crucial industry that runs in parallel with retail and drives the economy is the stock market, and big data analytics can be a game-changer here as well.

Experts say that big data analytics has changed finance and stock market trading by:

- Offering smart automated investment and trading modules

- Smart modules for funds planning and management of stocks based on real-time market insights

- Using predictive insights for gaining more by trading well ahead of time

- Estimation of outcomes and returns for investments of all sizes and all types.

15. Telecom

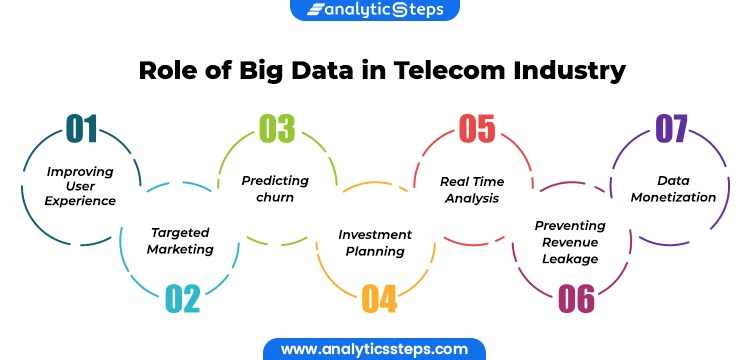

The telecom industry is in for a huge wave of digital transformation driven by advanced technologies and data analytics. As the number of smartphone users increases and technologies like 5G are all set to penetrate the developing countries as well, big data analytics emerges as a credible tool to tackle multiple issues.

Some applications are shown in the following image:

Some use cases for big data in the telecom industry are:

- Optimizing Network Capacity – Analysis of network usage for deciding rerouting bandwidth, managing the network limitation, and decoding infrastructure investments with data-driven insights from multiple areas.

- Telecom Customer Churn – With multiple options available in the market, the business operators are always at risk of losing customers to their competitors. With insights collected from data about customer satisfaction, market research, and service quality, the brands can address the issue with much clarity.

- New Product Offerings – With predictive analytics and thorough market research, the telecom companies can come up with new product offerings that are unique, address the customer pain points, and cater to usability concerns, instead of generic brand offerings.

16. Media and Entertainment

In the Media and Entertainment industry, big data analytics can offer insights about the various content preferences, reception, and cost/subscription ideas to the brands.

Further, analysis of customer behavior and content consumption can be used to offer more personalized content recommendations and get insights for creating new shows. Market potential, market segmentation, and insights about customer sentiments can also help drive core business decisions to increase revenue and decrease the odds of creating flop or lopsided content.

17. Education

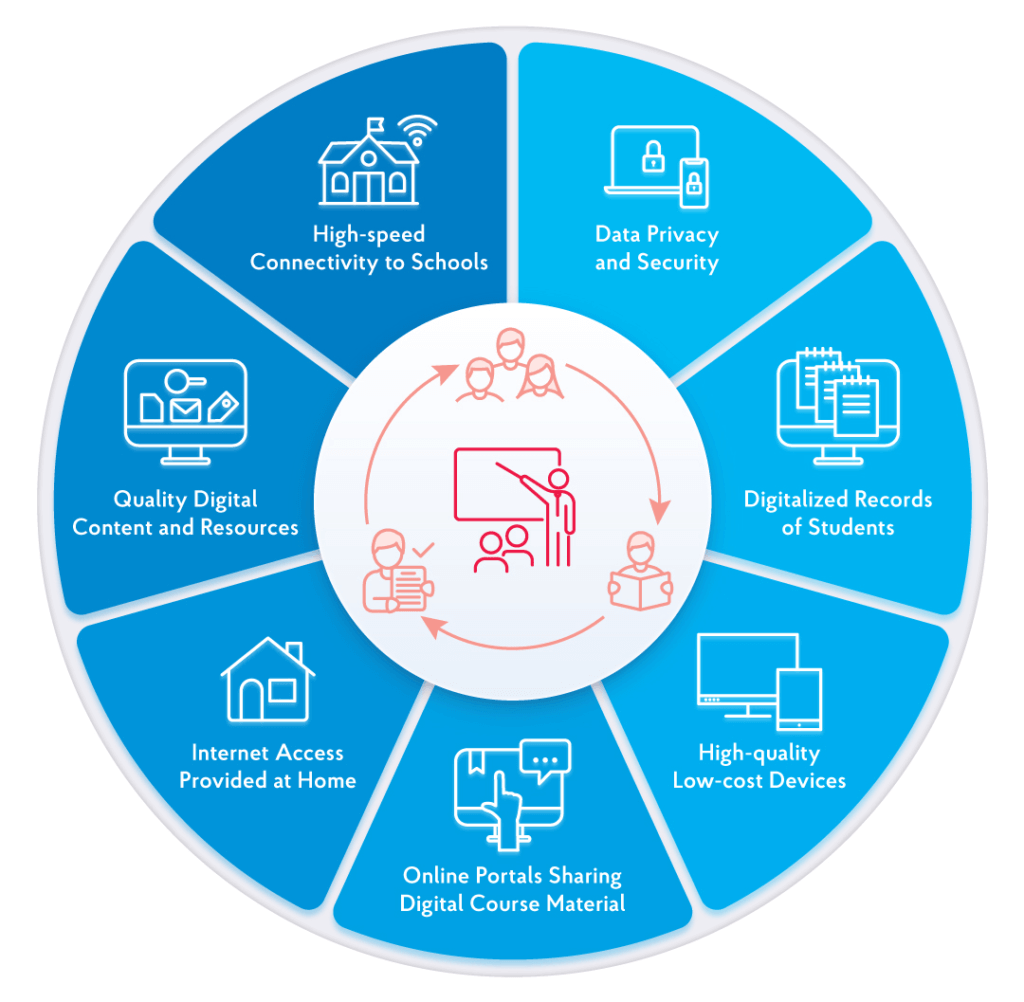

Market forecasts suggest that the big data analytics market in education will stand at 57.14 bn USD by 2030. Although the technology is extremely useful in various segments of the education sector, its market valuation differs greatly from that of the industries mentioned above.

There are many reasons for this, such as regional education policies and the lack of digitization and technological advancement in the sector.

Some core areas of application are shown in the following visual:

18. Pharmacy

In the Pharmacy sector, big data analytics is of extreme importance in the following areas:

- Standardization of image and numerical data processing methods

- Gaining insights from troves of analytical and medical data that are still siloed in research files

- Clinical monitoring

- Personalized drug development and digitized data analysis

- Operations management in institutes and manufacturing units

- Addressing the failure of traditional data processing methods

- Taking model-based decisions

19. Psychology

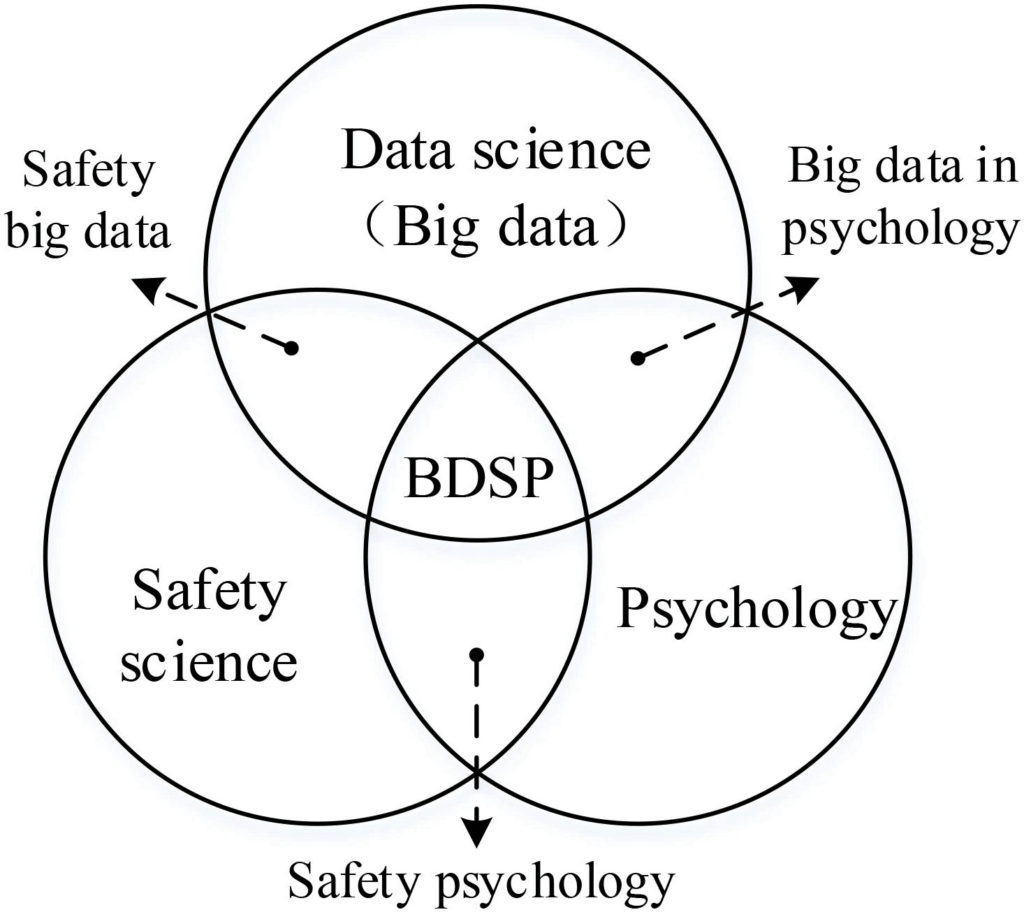

If you are unable to grasp the relationship between psychology and data analytics, take a look at the graphical relationship diagram below:

Big data analytics has a big role in psychology and its multiple branches, such as organizational psychology, where it helps to understand employee motivation and satisfaction better, and safety psychology, where it helps to improve counseling and medical consultation.

Further, when it comes to therapeutic counseling, big data analytics can help practitioners by offering behavioral models of a patient and their tendencies, by supporting the development of personalized therapy programs, and by aiding the diagnosis of severe psychological disorders in criminal cases.

20. Project Management

The global business dissatisfaction with project management techniques is increasing despite innovation in workplace tech. Also, only 78% of the projects meet original goals and only 64% of them are completed on time.

Project management is a huge use case for big data analytics, and some application areas are:

- Deriving project feasibility stats from initial work plans and SRS documents

- Predicting the success and failure of the development process

- Checking the market relevance, budgeting, etc

Some other applications of big data analytics in project management are:

21. Marketing and Sales (Advertising)

Market research is a complex industry with various independent surveys and studies going on simultaneously. Apart from generating a huge amount of data, these studies also generate a huge number of redundancies because of the unstructured nature of data.

Big data analytics can not only make study results better but also help organizations to leverage them better by allowing them to define specific test cases and custom parameters.

Also, when it comes to sales and sales processes, big data analytics is of paramount importance as it surpasses the “dry” nature of data.

It can go beyond the statistics to discover the underlying trends, such as behavioral analytics, sentiment analysis, predictive analysis of customer comments in informal or regional language to decode customer satisfaction levels, etc.

The following visual shows how these stats help businesses make important decisions:

Thus, the brands can market more, better, and with proper customer targets in mind.

22. Social Media Management

Another crucial segment of marketing and sales is social media management and monitoring as more and more people are now using social media platforms for shopping, reviewing, and interacting with brands.

However, when it comes to drawing sensible business-relevant insights from the huge amounts of social media data, the majority of brands succumb to feeble data analytics software.

Big data analytics can uncover excellent data insights from the social media channels and platforms to make marketing, customer service, and advertising better and more aligned to business goals.

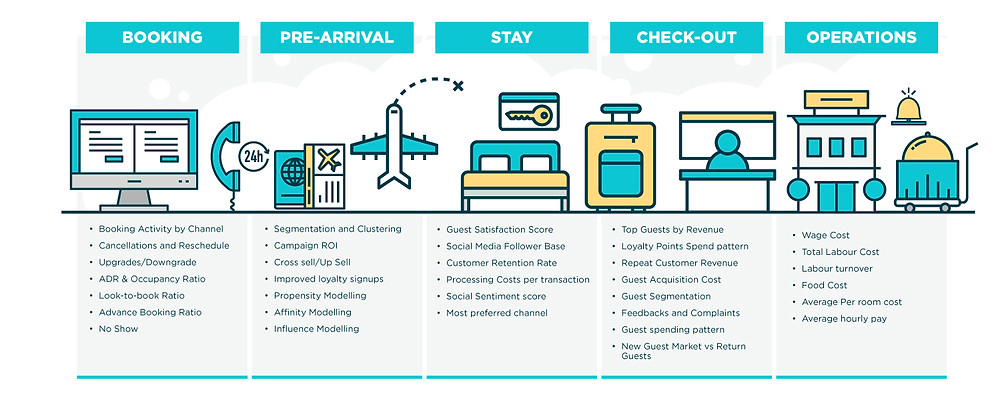

23. Hospitality, Restaurants, and Tourism

From an increase in online revenue and a reduction in guest complaints to higher customer satisfaction via highly personalized services during the stay, there are multiple use cases for big data analytics in the hospitality and restaurant industries.

Apart from the customer-relevant insights, big data analytics can also offer business insights to the business owners such as:

- Location suggestions

- Itinerary suggestions

- Deals, discounts, and promotional campaigns

- Smart advertising

- Pricing and family/corporate-specific services

- Travelers’ needs

The tourism industry is also an interesting use case, as people now travel for many purposes beyond business and leisure, such as medical tourism.

Some of the application areas of big data analytics in the tourism industry are shown in the following visual:

24. Miscellaneous Use Cases

Construction

- Resolving structural issues

- Improved collaboration

- Reduced construction time, wastage, and carbon emissions

- Wearables’ data processing to improve worker safety

Image Processing

- Better image data visualization

- Satellite image processing

- Improved security for confidential images

- Interactive digital media

- Military imagery protection and image data processing

- Image-based modeling and algorithms

- Knowledge-based recognition

- Virtual and augmented reality

Railways

- Track maintenance and planning

- Service, customer, and travel data

- Real-time predictive analysis for minimizing delays owing to weather and sudden incidents

- Infrastructure management

- Coach maintenance, facility maintenance, and safety of travelers

Big Data Analytics: Laying the Road for Future-Ready Businesses

The future of the business landscape is full of uncertainties and intense competition, and nothing is more reliable and credible than data!

Big data analytics offers powerful data mining, management, and processing capabilities that can help businesses make the most of historical data and continuously generated organizational data.

With abilities to drive business decisions for the present and future, big data analytics is one of the most bankable technologies for businesses of all types and all scales.

While it is easy to say, adopting and implementing big data analytics is a challenging task with serious requirements in terms of resources and capital. Hence, the best way to take the first step towards embracing the revolution is to work with a reputed big data consulting company, such as DataToBiz, that can help you identify, understand, and cater to your big data analytics needs.

For more information, book an appointment today!

Arya Bharti

Driven by passion and an unrelenting urge to learn, Arya Bharti has a keen interest in evolving and innovative business technology and solutions that empower businesses and people alike. You can connect with Arya on LinkedIn and Facebook.

Big data case study: How UPS is using analytics to improve performance

A new initiative at UPS will use real-time data, advanced analytics and artificial intelligence to help employees make better decisions.

As chief information and engineering officer for logistics giant UPS, Juan Perez is placing analytics and insight at the heart of business operations.

"Big data at UPS takes many forms because of all the types of information we collect," he says. "We're excited about the opportunity of using big data to solve practical business problems. We've already had some good experience of using data and analytics and we're very keen to do more."

Perez says UPS is using technology to improve its flexibility, capability, and efficiency, and that the right insight at the right time helps line-of-business managers to improve performance.

The aim for UPS, says Perez, is to use the data it collects to optimise processes, to enable automation and autonomy, and to continue to learn how to improve its global delivery network.

Leading data-fed projects that change the business for the better

Perez says one of his firm's key initiatives, known as Network Planning Tools, will help UPS to optimise its logistics network through the effective use of data. The system will use real-time data, advanced analytics and artificial intelligence to help employees make better decisions. The company expects to begin rolling out the initiative from the first quarter of 2018.

"That will help all our business units to make smart use of our assets and it's just one key project that's being supported in the organisation as part of the smart logistics network," says Perez, who also points to related and continuing developments in Orion (On-road Integrated Optimization and Navigation), which is the firm's fleet management system.

Orion uses telematics and advanced algorithms to create optimal routes for delivery drivers. The IT team is currently working on the third version of the technology, and Perez says this latest update to Orion will provide two key benefits to UPS.

First, the technology will include higher levels of route optimisation which will be sent as navigation advice to delivery drivers. "That will help to boost efficiency," says Perez.

Second, Orion will use big data to optimise delivery routes dynamically.

"Today, Orion creates delivery routes before drivers leave the facility and they stay with that static route throughout the day," he says. "In the future, our system will continually look at the work that's been completed, and that still needs to be completed, and will then dynamically optimise the route as drivers complete their deliveries. That approach will ensure we meet our service commitments and reduce overall delivery miles."
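
The difference between a static route and a dynamically re-planned one can be illustrated with a small Python sketch. This is not how Orion works internally (the article does not describe its algorithms); it swaps in a simple greedy nearest-neighbour heuristic, and the stop names, coordinates, and `replan_route` helper are all hypothetical.

```python
import math


def replan_route(current, remaining):
    """Order the remaining stops greedily by nearest-neighbour distance from current."""
    route, position, todo = [], current, dict(remaining)
    while todo:
        # Pick the closest unvisited stop from wherever the driver is now
        name = min(todo, key=lambda n: math.dist(position, todo[n]))
        position = todo.pop(name)
        route.append(name)
    return route


# Static plan computed at the depot before the driver leaves
stops = {"A": (0.0, 5.0), "B": (1.0, 1.0), "C": (4.0, 1.0)}
first_plan = replan_route((0.0, 0.0), stops)
print(first_plan)  # prints ['B', 'C', 'A']

# Dynamic re-plan: stop B is done, and a new pickup D has appeared nearby
remaining = {"A": (0.0, 5.0), "C": (4.0, 1.0), "D": (1.0, 3.0)}
second_plan = replan_route(stops["B"], remaining)
print(second_plan)  # prints ['D', 'A', 'C']
```

Re-running the planner from the driver's current position after each completed stop is what lets new work change the order of the rest of the day, which a route fixed at the depot cannot do.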

Once Orion is fully operational for more than 55,000 drivers this year, it will lead to a reduction of about 100 million delivery miles -- and 100,000 metric tons of carbon emissions. Perez says these reductions represent a key measure of business efficiency and effectiveness, particularly in terms of sustainability.
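The difference between a static route and one that is re-optimised during the day can be illustrated with a small sketch. This is not Orion's algorithm, which the article does not describe; it is a greedy nearest-neighbour heuristic over hypothetical 2D coordinates, and the names `nearest_neighbor_route`, `route_length`, `driver_position` and `remaining_stops` are assumptions made for illustration.

```python
import math


def nearest_neighbor_route(start, stops):
    """Greedy re-ordering: always drive to the closest stop not yet served."""
    route, current, remaining = [], start, list(stops)
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    return route


def route_length(start, route):
    """Total straight-line distance driven from start through every stop in order."""
    total, current = 0.0, start
    for stop in route:
        total += math.dist(current, stop)
        current = stop
    return total


# Hypothetical mid-day state: where the driver is and which stops are left.
driver_position = (0.0, 0.0)
remaining_stops = [(5.0, 0.0), (1.0, 0.0), (3.0, 0.0)]

static_plan = list(remaining_stops)  # order fixed before leaving the facility
dynamic_plan = nearest_neighbor_route(driver_position, remaining_stops)

print(route_length(driver_position, static_plan))   # distance for the fixed order
print(route_length(driver_position, dynamic_plan))  # distance after re-ordering
```

A production system would also have to respect delivery time windows, traffic and service commitments, which this sketch ignores.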

Projects such as Orion and Network Planning Tools form part of a collective of initiatives that UPS is using to improve decision making across the package delivery network. The firm, for example, recently launched the third iteration of its chatbot that uses artificial intelligence to help customers find rates and tracking information across a series of platforms, including Facebook and Amazon Echo.

"That project will continue to evolve, as will all our innovations across the smart logistics network," says Perez. "Everything runs well today but we also recognise there are opportunities for continuous improvement."

Overcoming business challenges to make the most of big data

"Big data is all about the business case -- how effective are we as an IT team in defining a good business case, which includes how to improve our service to our customers, what is the return on investment and how will the use of data improve other aspects of the business," says Perez.

These alternative use cases are not always at the forefront of executive thinking. Consultant McKinsey says too many organisations drill down on a single data set in isolation and fail to consider what different data sets mean for other parts of the business.

However, Perez says the re-use of information can have a significant impact at UPS. Perez talks, for example, about using delivery data to help understand what types of distribution solutions work better in different geographical locations.

"Should we have more access points? Should we introduce lockers? Should we allow drivers to release shipments without signatures? Data, technology, and analytics will improve our ability to answer those questions in individual locations -- and those benefits can come from using the information we collect from our customers in a different way," says Perez.

Perez says this fresh, open approach creates new opportunities for other data-savvy CIOs. "The conversation in the past used to be about buying technology, creating a data repository and discovering information," he says. "Now the conversation is changing and it's exciting. Every time we talk about a new project, the start of the conversation includes data."

By way of an example, Perez says senior individuals across the organisation now talk as a matter of course about the potential use of data in their line-of-business and how that application of insight might be related to other models across the organisation.

These senior executives, he says, also ask about the availability of information and whether the existence of data in other parts of the business will allow the firm to avoid a duplication of effort.

"The conversation about data is now much more active," says Perez. "That higher level of collaboration provides benefits for everyone because the awareness across the organisation means we'll have better repositories, less duplication and much more effective data models for new business cases in the future."

10 Real World Data Science Case Studies Projects with Example

Top 10 Data Science Case Studies Projects with Examples and Solutions in Python to inspire your data science learning in 2023.

Data science has been a trending buzzword in recent times. With wide applications in sectors like healthcare, education, retail, transportation, media, and banking, data science is at the core of pretty much every industry out there. The possibilities are endless, from fraud analysis in the finance sector to personalized recommendations in eCommerce. We have developed ten exciting data science case studies to explain how data science is leveraged across various industries to make smarter decisions and develop innovative personalized products tailored to specific customers.

Table of Contents

- Data science case studies in retail

- Data science case study examples in entertainment industry

- Data analytics case study examples in travel industry

- Case studies for data analytics in social media

- Real world data science projects in healthcare

- Data analytics case studies in oil and gas

- What is a case study in data science

- How do you prepare a data science case study

- 10 most interesting data science case studies with examples

So, without much ado, let's get started with data science business case studies!

1) Walmart

With humble beginnings as a simple discount retailer, Walmart today operates 10,500 stores and clubs in 24 countries, along with eCommerce websites, and employs around 2.2 million people around the globe. For the fiscal year ended January 31, 2021, Walmart's total revenue was $559 billion, a growth of $35 billion driven by the expansion of the eCommerce sector. Walmart is a data-driven company that works on the principle of 'Everyday low cost' for its consumers. To achieve this goal, it depends heavily on the advances of its data science and analytics department for research and development, also known as Walmart Labs. Walmart is home to the world's largest private cloud, which can manage 2.5 petabytes of data every hour! To analyze this humongous amount of data, Walmart has created 'Data Café,' a state-of-the-art analytics hub located within its Bentonville, Arkansas headquarters. The Walmart Labs team invests heavily in building and managing technologies like cloud, data, DevOps, infrastructure, and security.

As the world's largest retailer, Walmart is experiencing massive digital growth. Walmart has been leveraging big data and advances in data science to build solutions that enhance, optimize, and customize the shopping experience and serve its customers better. At Walmart Labs, data scientists are focused on creating data-driven solutions that power the efficiency and effectiveness of complex supply chain management processes. Here are some of the applications of data science at Walmart:

i) Personalized Customer Shopping Experience

Walmart analyses customer preferences and shopping patterns to optimize the stocking and displaying of merchandise in its stores. Analysis of big data also helps it understand new item sales, decide which products to discontinue, and track the performance of brands.

ii) Order Sourcing and On-Time Delivery Promise

Millions of customers view items on Walmart.com, and Walmart provides each customer a real-time estimated delivery date for the items purchased. Walmart runs a backend algorithm that estimates this based on the distance between the customer and the fulfillment center, inventory levels, and shipping methods available. The supply chain management system determines the optimum fulfillment center based on distance and inventory levels for every order. It also has to decide on the shipping method to minimize transportation costs while meeting the promised delivery date.
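The sourcing decision described above can be sketched in a few lines. This is a simplified illustration, not Walmart's backend algorithm: the `Center` and `ShippingMethod` classes, their fields, and all numbers are hypothetical. The sketch picks the nearest fulfillment center with enough stock, then the cheapest shipping method that still meets the promised delivery date.

```python
from dataclasses import dataclass


@dataclass
class Center:
    name: str
    distance_km: float  # distance from the customer (hypothetical)
    stock: int          # units of the ordered item on hand


@dataclass
class ShippingMethod:
    name: str
    km_per_day: float   # assumed average transit speed
    cost_per_km: float  # assumed transport cost


def source_order(centers, methods, quantity, promised_days):
    """Return (center name, method name), or None if the promise cannot be met."""
    eligible = [c for c in centers if c.stock >= quantity]
    if not eligible:
        return None
    center = min(eligible, key=lambda c: c.distance_km)
    feasible = [m for m in methods
                if center.distance_km / m.km_per_day <= promised_days]
    if not feasible:
        return None
    method = min(feasible, key=lambda m: m.cost_per_km * center.distance_km)
    return center.name, method.name


centers = [Center("A", 50, 0), Center("B", 300, 10), Center("C", 800, 5)]
methods = [ShippingMethod("ground", 200, 0.1), ShippingMethod("air", 1000, 0.5)]

print(source_order(centers, methods, quantity=2, promised_days=2))
print(source_order(centers, methods, quantity=2, promised_days=1))
```

Choosing the center first and the method second keeps the sketch short; a real system would more likely optimise both jointly across all open orders.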

iii) Packing Optimization

Packing optimization, also known as box recommendation, is a daily occurrence in the shipping of items in the retail and eCommerce business. Whenever the items of an order, or of multiple orders placed by the same customer, are picked from the shelf and are ready for packing, Walmart's recommender system determines, within a fixed amount of time, the best-sized box to hold all the ordered items with a minimum of in-box space wasted. This is the Bin Packing Problem, a classic NP-Hard problem familiar to data scientists.
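Because bin packing is NP-Hard, practical systems usually rely on heuristics. The sketch below shows first-fit decreasing, a standard textbook heuristic, on a one-dimensional simplification in which each item is reduced to a volume and every box has the same capacity. It is not Walmart's recommender; the function name and the sample volumes are assumptions for illustration.

```python
def first_fit_decreasing(item_volumes, box_capacity):
    """Pack items into as few equal-capacity boxes as the heuristic can manage.

    Items are placed largest first, each into the first box that still has room.
    """
    boxes = []  # each box is a list of the item volumes placed in it
    for volume in sorted(item_volumes, reverse=True):
        if volume > box_capacity:
            raise ValueError("item does not fit in any box")
        for box in boxes:
            if sum(box) + volume <= box_capacity:
                box.append(volume)
                break
        else:
            # No existing box had room, so open a new one.
            boxes.append([volume])
    return boxes


# Hypothetical order: item volumes in litres, boxes hold 10 litres each.
packed = first_fit_decreasing([4, 8, 1, 4, 2, 1], box_capacity=10)
print(packed)
```

Real box recommendation also has to account for item dimensions, orientation and fragility, which a volume-only model ignores.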

Here is a sales prediction data science case study to help you understand the applications of data science in the real world. The Walmart Sales Forecasting Project uses historical sales data for 45 Walmart stores located in different regions. Each store contains many departments, and you must build a predictive model to project the sales for each department in each store. You can also try your hand at the Inventory Demand Forecasting Data Science Project to develop a machine learning model that forecasts inventory demand accurately based on historical sales data.

2) Amazon

Amazon is an American multinational technology company based in Seattle, USA. It started as an online bookseller, but today it focuses on eCommerce, cloud computing, digital streaming, and artificial intelligence. It hosts an estimated 1,000,000,000 gigabytes of data across more than 1,400,000 servers. Through its constant innovation in data science and big data, Amazon is always ahead in understanding its customers. Here are a few data analytics case study examples at Amazon:

i) Recommendation Systems

Data science models help Amazon understand customers' needs and recommend products to them before they even search for them; these models use collaborative filtering. Amazon uses data from 152 million customer purchases to help users decide which products to buy. The company generates 35% of its annual sales through recommendation-based systems (RBS).

Here is a Recommender System Project to help you build a recommendation system using collaborative filtering.
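As a rough illustration of collaborative filtering, the sketch below implements a small item-based variant: two products are considered similar when many of the same customers bought both (cosine similarity over binary purchase data), and a customer is recommended the products most similar to what they already own. The purchase data and function names are hypothetical, and this is a teaching sketch rather than Amazon's production system.

```python
import math
from collections import defaultdict


def item_similarity(purchases):
    """Cosine similarity between every pair of items from binary purchase data."""
    buyers = defaultdict(set)
    for user, items in purchases.items():
        for item in items:
            buyers[item].add(user)
    sim = {}
    for a in buyers:
        for b in buyers:
            if a != b:
                overlap = len(buyers[a] & buyers[b])
                sim[(a, b)] = overlap / math.sqrt(len(buyers[a]) * len(buyers[b]))
    return sim


def recommend(user, purchases, top_n=2):
    """Rank items the user does not own by summed similarity to items they own."""
    sim = item_similarity(purchases)
    owned = purchases[user]
    scores = defaultdict(float)
    for (a, b), s in sim.items():
        if a in owned and b not in owned:
            scores[b] += s
    # Sort by score (highest first), breaking ties alphabetically.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, score in ranked[:top_n] if score > 0]


# Hypothetical purchase histories.
purchases = {
    "ann": {"kettle", "teapot"},
    "bob": {"kettle", "teapot", "mug"},
    "cat": {"kettle", "mug"},
    "fay": {"kettle", "mug"},
    "dan": {"novel"},
    "eve": {"kettle"},
}

print(recommend("eve", purchases))
```

Computing all pairwise similarities is quadratic in the number of items, so large catalogues need sparse or approximate methods.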

ii) Retail Price Optimization

Amazon product prices are optimized by a predictive model that determines the best price so that users do not refuse to buy a product because of its price. The model determines the optimal price by considering the customer's likelihood of purchasing the product and how the price will affect the customer's future buying patterns. The price of a product is determined according to your activity on the website, competitors' pricing, product availability, item preferences, order history, expected profit margin, and other factors.

Check out this Retail Price Optimization Project to build a Dynamic Pricing Model.
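The trade-off behind price optimization, a higher margin against a lower chance of purchase, can be sketched with a toy model. The logistic demand curve, its parameters, and the candidate prices below are all assumptions for illustration; Amazon's actual model and inputs are not described in this article.

```python
import math


def purchase_probability(price, reference_price=20.0, sensitivity=0.3):
    """Hypothetical logistic demand curve: the chance of purchase falls as price rises."""
    return 1.0 / (1.0 + math.exp(sensitivity * (price - reference_price)))


def expected_profit(price, unit_cost):
    """Margin per sale weighted by the probability that the sale happens."""
    return (price - unit_cost) * purchase_probability(price)


def best_price(candidate_prices, unit_cost):
    """Grid search: pick the candidate with the highest expected profit."""
    return max(candidate_prices, key=lambda p: expected_profit(p, unit_cost))


candidates = [10.0, 15.0, 20.0, 25.0, 30.0]
chosen = best_price(candidates, unit_cost=8.0)
print(chosen, round(expected_profit(chosen, 8.0), 2))
```

In practice the purchase probability would itself be a learned model conditioned on signals such as competitor prices and availability, rather than a fixed curve.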

iii) Fraud Detection

Being a significant eCommerce business, Amazon remains at high risk of retail fraud. As a preemptive measure, the company collects historical and real-time data for every order and uses machine learning algorithms to find transactions with a higher probability of being fraudulent. This proactive measure has helped the company restrict customers with an excessive number of product returns.

You can look at this Credit Card Fraud Detection Project to implement a fraud detection model to classify fraudulent credit card transactions.

Let us explore data analytics case study examples in the entertainment industry.

3) Netflix

Netflix started as a DVD rental service in 1997 and has since expanded into the streaming business. Headquartered in Los Gatos, California, Netflix is the largest content streaming company in the world. Netflix currently has over 208 million paid subscribers worldwide, and with streaming supported on thousands of smart devices, around 3 billion hours are watched every month. The secret to this massive growth and popularity is Netflix's advanced use of data analytics and recommendation systems to provide personalized and relevant content recommendations to its users. Netflix collects data on over 100 billion events every day. Here are a few examples of data analysis case studies applied at Netflix:

i) Personalized Recommendation System

Netflix uses over 1,300 recommendation clusters based on consumer viewing preferences to provide a personalized experience. The data that Netflix collects from its users includes viewing time, platform searches for keywords, and metadata related to content abandonment, such as pause time, rewinds, and rewatches. Using this data, Netflix can predict what a viewer is likely to watch and give the user a personalized watchlist. Some of the algorithms used by the Netflix recommendation system are the Personalized Video Ranker, the Trending Now ranker, and the Continue Watching ranker.

ii) Content Development using Data Analytics

Netflix uses data science to analyze the behavior and patterns of its users to recognize themes and categories that the masses prefer to watch. This data is used to produce shows like The Umbrella Academy, Orange Is the New Black, and The Queen's Gambit. These shows may seem like huge risks, but the decisions behind them were based significantly on data analytics, which assured Netflix that they would succeed with its audience. Data analytics is helping Netflix come up with content that its viewers want to watch even before they know they want to watch it.

iii) Marketing Analytics for Campaigns

Netflix uses data analytics to find the right time to launch shows and ad campaigns to have maximum impact on the target audience. Marketing analytics also helps it come up with different trailers and thumbnails for different groups of viewers. For example, the House of Cards Season 5 trailer with a giant American flag was launched during the American presidential elections, as it would resonate well with the audience.

Here is a Customer Segmentation Project using association rule mining to understand the primary grouping of customers based on various parameters.
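Association rule mining rests on two simple measures: support (how often a set of items appears together) and confidence (how often the consequent appears when the antecedent does). The sketch below computes both for a rule over hypothetical viewing histories; the data and function names are made up for illustration.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in the itemset."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Estimated P(consequent | antecedent) from co-occurrence counts."""
    base = support(transactions, antecedent)
    if base == 0:
        return 0.0
    return support(transactions, set(antecedent) | set(consequent)) / base


# Hypothetical viewing histories, one set of genres per customer.
histories = [
    {"drama", "thriller"},
    {"drama", "thriller", "comedy"},
    {"drama", "comedy"},
    {"comedy"},
]

print(support(histories, {"drama", "thriller"}))
print(confidence(histories, {"drama"}, {"thriller"}))
```

Algorithms such as Apriori use these measures to prune the search, keeping only itemsets whose support clears a chosen threshold.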

4) Spotify

In a world where purchasing music is a thing of the past and streaming music is the current trend, Spotify has emerged as one of the most popular streaming platforms. With 320 million monthly users, around 4 billion playlists, and approximately 2 million podcasts, Spotify leads the pack among well-known streaming platforms like Apple Music, Wynk, Songza, and Amazon Music. The success of Spotify has mainly depended on data analytics. By analyzing massive volumes of listener data, Spotify provides real-time and personalized services to its listeners. Most of Spotify's revenue comes from paid premium subscriptions. Here are some examples of how Spotify uses data analytics to provide enhanced services to its listeners:

i) Personalization of Content using Recommendation Systems

Spotify uses BaRT (Bandits for Recommendations as Treatments) to generate music recommendations for its listeners in real time. BaRT ignores any song a user listens to for less than 30 seconds. The model is retrained every day to provide updated recommendations. Spotify has also been granted a patent for an AI application that identifies a user's musical tastes based on audio signals, gender, age, and accent to make better music recommendations.

Spotify creates daily playlists for its listeners called 'Daily Mixes,' based on their taste profiles. These contain songs the user has added to their playlists, or songs by artists the user has included in their playlists. They also include new artists and songs that the user might be unfamiliar with but that might improve the playlist. Similar to these are the weekly 'Release Radar' playlists, which contain newly released songs by artists that the listener follows or has liked before.

ii) Targeted marketing through Customer Segmentation

Beyond enhancing personalized song recommendations, Spotify uses its massive user dataset for targeted ad campaigns and personalized service recommendations. Spotify uses ML models to analyze listeners' behavior and group them based on music preferences, age, gender, ethnicity, etc. These insights help it create ad campaigns for a specific target audience. One of its well-known campaigns was the meme-inspired ads for potential target customers, which was a huge success globally.

iii) CNNs for Classification of Songs and Audio Tracks

Spotify builds audio models to evaluate songs and tracks, which helps develop better playlists and recommendations for its users. These models allow Spotify to filter new tracks based on their lyrics and rhythms and recommend them to users who like similar tracks (collaborative filtering). Spotify also uses NLP (natural language processing) to scan articles and blogs and analyze the words used to describe songs and artists. These analytical insights help identify and group similar artists and songs, which can then be used to build playlists.

Here is a Music Recommender System Project for you to start learning. We have listed another music recommendations dataset for you to use for your projects: Dataset1. You can use this dataset of Spotify metadata to classify songs based on artist, mood, and liveliness. Plot histograms and heatmaps to get a better understanding of the dataset. Use classification algorithms like logistic regression and SVM, together with dimensionality reduction techniques like principal component analysis, to generate valuable insights from the dataset.

Below you will find case studies for data analytics in the travel and tourism industry.

5) Airbnb

Airbnb was born in 2007 in San Francisco and has since grown to 4 million hosts and 5.6 million listings worldwide, which have welcomed more than 1 billion guest arrivals. Airbnb is active in every country on the planet except Iran, Sudan, Syria, and North Korea, which is around 97.95% of the world's countries. Treating data as the voice of its customers, Airbnb uses the large volume of customer reviews and host inputs to understand trends across communities, rate user experiences, and make informed decisions to build a better business model. The data scientists at Airbnb are developing new solutions to boost the business and find the best match between guests and hosts for a supreme customer experience. Airbnb's data servers serve approximately 10 million requests a day and process around one million search queries.

i) Recommendation Systems and Search Ranking Algorithms

Airbnb helps people find 'local experiences' in a place with the help of search algorithms that make searches and listings precise. Airbnb uses a 'listing quality score' to find homes based on the proximity to the searched location and uses previous guest reviews. Airbnb uses deep neural networks to build models that take the guest's earlier stays into account and area information to find a perfect match. The search algorithms are optimized based on guest and host preferences, rankings, pricing, and availability to understand users’ needs and provide the best match possible.

ii) Natural Language Processing for Review Analysis

Airbnb characterizes data as the voice of its customers. Customer and host reviews give a direct insight into the experience, but star ratings alone are not enough to understand that experience. Hence Airbnb uses natural language processing to understand reviews and the sentiments behind them. The NLP models are developed using convolutional neural networks.

Practice this Sentiment Analysis Project for analyzing product reviews to understand the basic concepts of natural language processing.

iii) Smart Pricing using Predictive Analytics

The Airbnb host community uses the service as a source of supplementary income. The vacation homes and guest houses rented to customers raise local community earnings, as Airbnb guests stay 2.4 times longer and spend approximately 2.3 times as much money as hotel guests. This has a significant positive impact on the local neighborhood community. Airbnb uses predictive analytics to predict the prices of listings and help hosts set a competitive and optimal price. The overall profitability of an Airbnb host depends on factors like the time invested by the host and responsiveness to changing demand across seasons. The factors that impact real-time smart pricing are the location of the listing, proximity to transport options, season, and amenities available in the neighborhood of the listing.

Here is a Price Prediction Project to help you understand the concept of predictive analysis, which is common in case studies for data analytics.

6) Uber

Uber is the biggest global taxi service provider. As of December 2018, Uber had 91 million monthly active consumers and 3.8 million drivers, and completed 14 million trips each day. Uber uses data analytics and big data-driven technologies to optimize its business processes and provide enhanced customer service. The data science team at Uber constantly explores futuristic technologies to provide better service. Machine learning and data analytics help Uber make data-driven decisions that enable benefits like ride-sharing, dynamic price surges, better customer support, and demand forecasting. Here are some of the real world data science projects used by Uber:

i) Dynamic Pricing for Price Surges and Demand Forecasting

Uber prices change at peak hours based on demand. Uber uses surge pricing to encourage more cab drivers to sign up with the company and meet the demand from passengers. When prices increase, both the driver and the passenger are informed about the surge. Uber uses a patented predictive model for price surging called 'Geosurge,' which is based on the demand for rides and the location.
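The idea of a demand-driven surge can be shown with a toy calculation. The sketch below is not Geosurge, whose details are not given here; it is a hypothetical rule that scales the fare by the ratio of ride requests to available drivers in one area, bounded below by 1.0 and above by a cap.

```python
def surge_multiplier(ride_requests, available_drivers, cap=3.0):
    """Toy surge rule for one area: demand/supply ratio clamped to [1.0, cap]."""
    if available_drivers == 0:
        # No supply at all: charge the maximum allowed multiplier.
        return cap
    ratio = ride_requests / available_drivers
    return round(min(max(ratio, 1.0), cap), 2)


def surged_fare(base_fare, ride_requests, available_drivers):
    """Apply the multiplier to a base fare (all values hypothetical)."""
    return round(base_fare * surge_multiplier(ride_requests, available_drivers), 2)


print(surge_multiplier(50, 100))    # more drivers than requests
print(surge_multiplier(150, 100))   # demand exceeds supply
print(surged_fare(12.0, 150, 100))
```

A forecasting model would feed predicted rather than observed demand into such a rule, so that prices can adjust before the shortage occurs.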

ii) One-Click Chat

Uber has developed a machine learning and natural language processing solution called one-click chat, or OCC, for coordination between drivers and users. This feature anticipates responses to commonly asked questions, making it easy for drivers to respond to customer messages. Drivers can reply with the click of just one button. One-click chat is built on Uber's machine learning platform Michelangelo to perform NLP on rider chat messages and generate appropriate responses to them.

iii) Customer Retention

Failure to meet the customer demand for cabs could lead to users opting for other services. Uber uses machine learning models to bridge this demand-supply gap. By using prediction models to predict the demand in any location, Uber retains its customers. Uber also uses a tier-based reward system, which segments customers into different levels based on usage. The higher the level the user achieves, the better the perks. Uber also provides personalized destination suggestions based on the user's history and frequently traveled destinations.

You can take a look at this Python Chatbot Project and build a simple chatbot application to better understand the techniques used for natural language processing. You can also practice building a demand forecasting model with this project using time series analysis, or look at this project, which uses time series forecasting and clustering on a dataset containing geospatial data to forecast customer demand for Ola rides.

7) LinkedIn

LinkedIn is the largest professional social networking site with nearly 800 million members in more than 200 countries worldwide. Almost 40% of the users access LinkedIn daily, clocking around 1 billion interactions per month. The data science team at LinkedIn works with this massive pool of data to generate insights to build strategies, apply algorithms and statistical inferences to optimize engineering solutions, and help the company achieve its goals. Here are some of the real world data science projects at LinkedIn:

i) LinkedIn Recruiter: Search Algorithms and Recommendation Systems

LinkedIn Recruiter helps recruiters build and manage a talent pool to optimize the chances of hiring candidates successfully. This sophisticated product works on search and recommendation engines. LinkedIn Recruiter handles complex queries and filters on a large, constantly growing dataset, and the results delivered have to be relevant and specific. The initial search model was based on linear regression but was eventually upgraded to gradient boosted decision trees to capture non-linear correlations in the dataset. In addition to these models, LinkedIn Recruiter also uses a generalized linear mixed model to improve the results of prediction problems and give personalized results.

ii) Recommendation Systems Personalized for News Feed

The LinkedIn news feed is the heart and soul of the professional community. A member's newsfeed is a place to discover conversations among connections, career news, posts, suggestions, photos, and videos. Every time a member visits LinkedIn, machine learning algorithms identify the best exchanges to be displayed on the feed by sorting through posts and ranking the most relevant results on top. The algorithms help LinkedIn understand member preferences and help provide personalized news feeds. The algorithms used include logistic regression, gradient boosted decision trees and neural networks for recommendation systems.

iii) CNNs to Detect Inappropriate Content

Providing a safe, trusted space where people can express themselves professionally has been a critical goal at LinkedIn. LinkedIn has invested heavily in building solutions to detect fake accounts and abusive behavior on its platform. Any form of spam, harassment, or inappropriate content is immediately flagged and taken down. This content can range from profanity to advertisements for illegal services. LinkedIn uses a machine learning model based on convolutional neural networks. This classifier is trained on a dataset containing accounts labeled as either "inappropriate" or "appropriate." The inappropriate list consists of accounts having content from "blocklisted" phrases or words and a small portion of manually reviewed accounts reported by the user community.

Here is a Text Classification Project to help you understand NLP basics for text classification. You can find a news recommendation system dataset to help you build a personalized news recommender system. You can also use this dataset to build a classifier using logistic regression, Naive Bayes, or Neural networks to classify toxic comments.
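To make the Naive Bayes option concrete, here is a minimal multinomial Naive Bayes text classifier with add-one (Laplace) smoothing, written with the standard library only. The four training sentences and their labels are invented for illustration, and the sketch is far simpler than the CNN-based classifier LinkedIn is described as using.

```python
import math
from collections import Counter


class NaiveBayes:
    """Multinomial Naive Bayes over whitespace tokens with add-one smoothing."""

    def fit(self, texts, labels):
        self.word_counts = {label: Counter() for label in set(labels)}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def log_score(self, text, label):
        """Log prior plus summed log likelihood of each token under the label."""
        counts = self.word_counts[label]
        total = sum(counts.values()) + len(self.vocab)
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in text.lower().split():
            score += math.log((counts[word] + 1) / total)
        return score

    def predict(self, text):
        return max(self.word_counts, key=lambda label: self.log_score(text, label))


# Tiny hypothetical training set.
texts = [
    "buy cheap followers now",
    "cheap pills buy now",
    "hiring a data engineer",
    "great talk on data science",
]
labels = ["inappropriate", "inappropriate", "appropriate", "appropriate"]

model = NaiveBayes().fit(texts, labels)
print(model.predict("buy cheap pills"))
print(model.predict("data engineer talk"))
```

With real data you would add proper tokenization, a held-out test set, and a check for class imbalance, since toxic comments are usually a small minority.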

8) Pfizer

Pfizer is a multinational pharmaceutical company headquartered in New York, USA. It is one of the largest pharmaceutical companies globally, known for developing a wide range of medicines and vaccines in disciplines like immunology, oncology, cardiology, and neurology. Pfizer became a household name in 2020 when its COVID-19 vaccine was the first to be authorized by the FDA. In early November 2021, the CDC approved the Pfizer vaccine for children aged 5 to 11. Pfizer has been using machine learning and artificial intelligence to develop drugs and streamline trials, which played a massive role in developing and deploying the COVID-19 vaccine. Here are a few data analytics case studies by Pfizer:

i) Identifying Patients for Clinical Trials

Artificial intelligence and machine learning are used to streamline and optimize clinical trials to increase their efficiency. Natural language processing and exploratory data analysis of patient records can help identify suitable patients for clinical trials, including patients with distinct symptoms. These techniques can also help examine interactions of potential trial members' specific biomarkers and predict drug interactions and side effects, which helps avoid complications. Pfizer's AI implementation helped rapidly identify signals within the noise of millions of data points across its 44,000-candidate COVID-19 clinical trial.

ii) Supply Chain and Manufacturing

Data science and machine learning techniques help pharmaceutical companies better forecast demand for vaccines and drugs and distribute them efficiently. Machine learning models can help identify efficient supply systems by automating and optimizing the production steps. These will help supply drugs customized to small pools of patients in specific gene pools. Pfizer uses Machine learning to predict the maintenance cost of equipment used. Predictive maintenance using AI is the next big step for Pharmaceutical companies to reduce costs.

iii) Drug Development

Computer simulations of proteins, tests of their interactions, and yield analysis help researchers develop and test drugs more efficiently. In 2016, Watson Health and Pfizer announced a collaboration to use IBM Watson for Drug Discovery to help accelerate Pfizer's research in immuno-oncology, an approach to cancer treatment that uses the body's immune system to help fight cancer. Deep learning models have recently been used for bioactivity and synthesis prediction for drugs and vaccines, in addition to molecular design. Deep learning has been a revolutionary technique for drug discovery, as it factors in everything from new applications of medications to possible toxic reactions, which can save millions in drug trials.

You can create a machine learning model to predict molecular activity to help design medicine using this dataset. You may build a CNN or a deep neural network for this data analyst case study project.

9) Shell Data Analyst Case Study Project

Shell is a global group of energy and petrochemical companies with over 80,000 employees in around 70 countries. Shell uses advanced technologies and innovations to help build a sustainable energy future. As the world needs more and cleaner energy solutions, Shell is going through a significant transition with the aim of becoming a clean energy company by 2050. This requires substantial changes in the way energy is used. Digital technologies, including AI and machine learning, play an essential role in this transformation. Their applications include efficient exploration and energy production, more reliable manufacturing, more nimble trading, and a personalized customer experience. Using AI in various phases of the organization will help Shell achieve this goal and stay competitive in the market. Here are a few data analytics case studies in the petrochemical industry:

i) Precision Drilling

Shell is involved in the entire oil and gas supply chain, ranging from mining hydrocarbons to refining the fuel to retailing it to customers. Recently, Shell has adopted reinforcement learning to control the drilling equipment used in mining. Reinforcement learning works on a reward-based system driven by the outcome of the AI model. The algorithm is designed to guide the drills as they move through the subsurface, based on historical data from drilling records. This data includes information such as the size of drill bits, temperatures, pressures, and knowledge of seismic activity. The model helps the human operator understand the environment better, leading to better and faster results with minimal damage to the machinery used.

ii) Efficient Charging Terminals

Due to climate change, governments have encouraged people to switch to electric vehicles to reduce carbon dioxide emissions. However, the lack of public charging terminals has deterred people from switching to electric cars. Shell uses AI to monitor and predict the demand for terminals so that it can provide an efficient supply. Multiple vehicles charging from a single terminal may create a considerable grid load, and demand predictions can help make this process more efficient.

iii) Monitoring Service and Charging Stations

Another Shell initiative, trialed in Thailand and Singapore, uses computer vision cameras to watch for potentially hazardous activities, such as lighting cigarettes in the vicinity of the pumps while refueling. The model is built to process the content of the captured images and to label and classify it. The algorithm can then alert the staff and hence reduce the risk of fires. The model can be trained further to detect rash driving or theft in the future.

Here is a project to help you understand multiclass image classification. You can use the Hourly Energy Consumption Dataset to build an energy consumption prediction model. You can use time series with XGBoost to develop your model.
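Tree-based regressors such as XGBoost expect a table of features rather than a raw time series, so a common first step is to derive calendar and lag features from the hourly readings. The sketch below shows only that feature-building step, using the standard library. The function name `make_lag_features`, the chosen lags (1 hour and 24 hours), and the synthetic readings are assumptions for illustration and are not taken from the dataset.

```python
from datetime import datetime, timedelta


def make_lag_features(timestamps, values, lags=(1, 24)):
    """Turn an hourly series into (feature rows, targets) for a tabular regressor."""
    max_lag = max(lags)
    rows, targets = [], []
    # Start at max_lag so every requested lag has a value to look back to.
    for i in range(max_lag, len(values)):
        ts = timestamps[i]
        row = {"hour": ts.hour, "dayofweek": ts.weekday()}
        for lag in lags:
            row[f"lag_{lag}"] = values[i - lag]
        rows.append(row)
        targets.append(values[i])
    return rows, targets


# Synthetic three-day series with a repeating daily pattern (not real data).
start = datetime(2024, 1, 1)
timestamps = [start + timedelta(hours=h) for h in range(72)]
values = [100.0 + 10.0 * ts.hour for ts in timestamps]

rows, targets = make_lag_features(timestamps, values)

if __name__ == "__main__":
    print(len(rows), rows[0], targets[0])
```

The resulting rows and targets can then be passed to a regressor of your choice. For time series, split the data chronologically rather than at random, so that the model is always evaluated on readings that come after its training data.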

10) Zomato Case Study on Data Analytics

Zomato was founded in 2010 and is currently one of the most well-known food tech companies. Zomato offers services like restaurant discovery, home delivery, online table reservation, and online payments for dining. Zomato partners with restaurants to provide tools to acquire more customers while also providing delivery services and easy procurement of ingredients and kitchen supplies. Currently, Zomato has over 2 lakh (200,000) restaurant partners and around 1 lakh (100,000) delivery partners, and it has completed over ten crore (100 million) delivery orders to date. Zomato uses ML and AI to boost its business growth, drawing on the massive amount of data collected over the years from food orders and user consumption patterns. Here are a few examples of data analyst case study projects developed by the data scientists at Zomato:

i) Personalized Recommendation System for Homepage

Zomato uses data analytics to create personalized homepages for its users. Zomato uses data science to provide order personalization, like giving recommendations to the customers for specific cuisines, locations, prices, brands, etc. Restaurant recommendations are made based on a customer's past purchases, browsing history, and what other similar customers in the vicinity are ordering. This personalized recommendation system has led to a 15% improvement in order conversions and click-through rates for Zomato.

You can use the Restaurant Recommendation Dataset to build a restaurant recommendation system to predict what restaurants customers are most likely to order from, given the customer location, restaurant information, and customer order history.
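One simple way to use "what other similar customers are ordering" is user-based collaborative filtering: measure how similar two customers' order histories are, then score restaurants the target customer has not tried by the similarity-weighted orders of the others. The sketch below is a toy illustration, not Zomato's system. The customer names, restaurant names, and order counts are made up, and location and restaurant attributes are ignored.

```python
import math


def cosine(u, v):
    """Cosine similarity between two sparse {restaurant: order_count} vectors."""
    shared = set(u) & set(v)
    dot = sum(u[k] * v[k] for k in shared)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(orders, customer, top_n=2):
    """Rank restaurants the customer has not ordered from, weighted by peer similarity."""
    mine = orders[customer]
    scores = {}
    for other, theirs in orders.items():
        if other == customer:
            continue
        sim = cosine(mine, theirs)
        for restaurant, count in theirs.items():
            if restaurant not in mine:
                scores[restaurant] = scores.get(restaurant, 0.0) + sim * count
    # Highest score first; ties are broken alphabetically for a stable order.
    ranked = sorted(scores, key=lambda r: (-scores[r], r))
    return ranked[:top_n]


# Hypothetical order histories: customer -> {restaurant: number of past orders}.
orders = {
    "asha": {"Biryani House": 3, "Dosa Corner": 1},
    "ravi": {"Biryani House": 2, "Dosa Corner": 1, "Kebab Lane": 4},
    "meera": {"Pizza Stop": 5, "Dosa Corner": 1},
}

if __name__ == "__main__":
    print(recommend(orders, "asha"))
```

Here "ravi" shares most of "asha"'s history, so his other favourite ranks above the restaurant favoured by the less similar "meera". A production recommender would also use location, price, and browsing signals, as described above.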

ii) Analyzing Customer Sentiment

Zomato uses natural language processing and machine learning to understand customer sentiment from social media posts and customer reviews. This helps the company gauge the inclination of its customer base towards the brand. Deep learning models analyze the sentiment of brand mentions on social networking sites like Twitter, Instagram, LinkedIn, and Facebook. These analytics give the company insights that help it build the brand and understand its target audience.

iii) Predicting Food Preparation Time (FPT)

Food preparation time is an essential variable in the estimated delivery time of an order placed by a customer on Zomato. It depends on numerous factors, such as the number of dishes ordered, the time of day, footfall in the restaurant, and the day of the week. Accurate prediction of the food preparation time leads to a better estimated delivery time, which makes delivery partners less likely to breach it. Zomato uses a bidirectional LSTM-based deep learning model that considers all these features and provides the food preparation time for each order in real time.

Data scientists are companies' secret weapons when it comes to analyzing customer sentiment and behavior and leveraging it to drive conversion, loyalty, and profits. These 10 data science case study projects, with examples and solutions, show how various organizations use data science technologies to succeed and stay at the top of their field. To summarize, data science has not only accelerated the performance of companies but has also made it easier for them to manage and sustain that performance.

FAQs on Data Analysis Case Studies

A case study in data science is an in-depth analysis of a real-world problem using data-driven approaches. It involves collecting, cleaning, and analyzing data to extract insights and solve challenges, offering practical insights into how data science techniques can address complex issues across various industries.

To create a data science case study, identify a relevant problem, define objectives, and gather suitable data. Clean and preprocess data, perform exploratory data analysis, and apply appropriate algorithms for analysis. Summarize findings, visualize results, and provide actionable recommendations, showcasing the problem-solving potential of data science techniques.

About the Author

ProjectPro is an online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. It offers over 270 reusable project templates in data science and big data with step-by-step walkthroughs.

© 2024 Iconiq Inc.

Datamation content and product recommendations are editorially independent. We may make money when you click on links to our partners.

A growing number of enterprises are pooling terabytes and petabytes of data, but many of them are grappling with ways to apply their big data as it grows.

How can companies determine what big data solutions will work best for their industry, business model, and specific data science goals?

Check out these big data enterprise case studies from some of the top big data companies and their clients to learn about the types of solutions that exist for big data management.

Enterprise case studies

- Netflix on AWS

- AccuWeather on Microsoft Azure

- China Eastern Airlines on Oracle Cloud

- Etsy on Google Cloud

- mLogica on SAP HANA Cloud

Netflix on AWS

Netflix is one of the largest media and technology enterprises in the world, with thousands of shows that it hosts for streaming as well as a growing media production division. Netflix stores billions of data sets in its systems related to audiovisual data, consumer metrics, and recommendation engines. The company required a solution that would allow it to store, manage, and optimize viewers’ data. As its studio has grown, Netflix also needed a platform that would enable quicker and more efficient collaboration on projects.

“Amazon Kinesis Streams processes multiple terabytes of log data each day. Yet, events show up in our analytics in seconds,” says John Bennett, senior software engineer at Netflix.

“We can discover and respond to issues in real-time, ensuring high availability and a great customer experience.”

Industries: Entertainment, media streaming

Use cases: Computing power, storage scaling, database and analytics management, recommendation engines powered through AI/ML, video transcoding, cloud collaboration space for production, traffic flow processing, scaled email and communication capabilities

- Now using over 100,000 server instances on AWS for different operational functions

- Used AWS to build a studio in the cloud for content production that improves collaborative capabilities

- Produced entire seasons of shows via the cloud during COVID-19 lockdowns

- Scaled and optimized mass email capabilities with Amazon Simple Email Service (Amazon SES)

- Netflix’s Amazon Kinesis Streams-based solution now processes billions of traffic flows daily

AccuWeather on Microsoft Azure

AccuWeather is one of the oldest and most trusted providers of weather forecast data. The weather company provides an API that other companies can use to embed its weather content into their own systems. AccuWeather wanted to move its data processes to the cloud. However, the traditional GRIB 2 data format for weather data is not supported by most data management platforms. With Microsoft Azure, Azure Data Lake Storage, and Azure Databricks (AI), AccuWeather was able to find a solution that would convert the GRIB 2 data, analyze it in more depth than before, and store this data in a scalable way.

“With some types of severe weather forecasts, it can be a life-or-death scenario,” says Christopher Patti, CTO at AccuWeather.

“With Azure, we’re agile enough to process and deliver severe weather warnings rapidly and offer customers more time to respond, which is important when seconds count and lives are on the line.”

Industries: Media, weather forecasting, professional services

Use cases: Making legacy and traditional data formats usable for AI-powered analysis, API migration to Azure, data lakes for storage, more precise reporting and scaling

- GRIB 2 weather data made operational for AI-powered next-generation forecasting engine, via Azure Databricks

- Delta lake storage layer helps to create data pipelines and more accessibility

- Improved speed, accuracy, and localization of forecasts via machine learning

- Real-time measurement of API key usage and performance

- Ability to extract weather-related data from smart-city systems and self-driving vehicles

China Eastern Airlines on Oracle Cloud

China Eastern Airlines is one of the largest airlines in the world and is working to improve safety, efficiency, and overall customer experience through big data analytics. With Oracle’s cloud setup and a large portfolio of analytics tools, it now has access to more in-flight, aircraft, and customer metrics.

“By processing and analyzing over 100 TB of complex daily flight data with Oracle Big Data Appliance, we gained the ability to easily identify and predict potential faults and enhanced flight safety,” says Wang Xuewu, head of China Eastern Airlines’ data lab.

“The solution also helped to cut fuel consumption and increase customer experience.”

Industries: Airline, travel, transportation

Use cases: Increased flight safety and fuel efficiency, reduced operational costs, big data analytics

- Optimized big data analysis to analyze flight angle, take-off speed, and landing speed, maximizing predictive analytics for engine and flight safety

- Multi-dimensional analysis on over 60 attributes provides advanced metrics and recommendations to improve aircraft fuel use

- Advanced spatial analytics on the travelers’ experience, with metrics covering in-flight cabin service, baggage, ground service, marketing, flight operation, website, and call center

- Using Oracle Big Data Appliance to integrate Hadoop data from aircraft sensors, unifying and simplifying the process for evaluating device health across an aircraft

- Central interface for daily management of real-time flight data

Etsy on Google Cloud

Etsy is an e-commerce site for independent artisan sellers. With its goal to create a buying and selling space that puts the individual first, Etsy wanted to advance its platform to the cloud to keep up with needed innovations. But it didn’t want to lose the personal touches or values that drew customers in the first place. Etsy chose Google for cloud migration and big data management for several primary reasons: Google’s advanced features that back scalability, its commitment to sustainability, and the collaborative spirit of the Google team.

Mike Fisher, CTO at Etsy, explains how Google’s problem-solving approach won them over.

“We found that Google would come into meetings, pull their chairs up, meet us halfway, and say, ‘We don’t do that, but let’s figure out a way that we can do that for you.'”

Industries: Retail, E-commerce

Use cases: Data center migration to the cloud, accessing collaboration tools, leveraging machine learning (ML) and artificial intelligence (AI), sustainability efforts

- 5.5 petabytes of data migrated from existing data center to Google Cloud

- >50% savings in compute energy, minimizing total carbon footprint and energy usage

- 42% reduced compute costs and improved cost predictability through virtual machine (VM), solid state drive (SSD), and storage optimizations

- Democratization of cost data for Etsy engineers

- 15% of Etsy engineers moved from system infrastructure management to customer experience, search, and recommendation optimization

mLogica on SAP HANA Cloud

mLogica is a technology and product consulting firm that wanted to move to the cloud in order to better support its customers’ big data storage and analytics needs. Although it held on to its existing data analytics platform, CAP*M, mLogica relied on SAP HANA Cloud to move from on-premises infrastructure to a more scalable cloud structure.

“More and more of our clients are moving to the cloud, and our solutions need to keep pace with this trend,” says Michael Kane, VP of strategic alliances and marketing at mLogica.

“With CAP*M on SAP HANA Cloud, we can future-proof clients’ data setups.”