1.4: Basic Concepts of Hypothesis Testing

- John H. McDonald

- University of Delaware

Learning Objectives

- One of the main goals of statistical hypothesis testing is to estimate the \(P\) value, which is the probability of obtaining the observed results, or something more extreme, if the null hypothesis were true. If the observed results are unlikely under the null hypothesis, reject the null hypothesis.

- Alternatives to this "frequentist" approach to statistics include Bayesian statistics and estimation of effect sizes and confidence intervals.

Introduction

There are different ways of doing statistics. The technique used by the vast majority of biologists, and the technique that most of this handbook describes, is sometimes called "frequentist" or "classical" statistics. It involves testing a null hypothesis by comparing the data you observe in your experiment with the predictions of that null hypothesis. You estimate what the probability would be of obtaining the observed results, or something more extreme, if the null hypothesis were true. If this estimated probability (the \(P\) value) is small enough (below the significance level), then you conclude that it is unlikely that the null hypothesis is true; you reject the null hypothesis and accept an alternative hypothesis.

Many statisticians harshly criticize frequentist statistics, but their criticisms haven't had much effect on the way most biologists do statistics. Here I will outline some of the key concepts used in frequentist statistics, then briefly describe some of the alternatives.

Null Hypothesis

The null hypothesis is a statement that you want to test. In general, the null hypothesis is that things are the same as each other, or the same as a theoretical expectation. For example, if you measure the size of the feet of male and female chickens, the null hypothesis could be that the average foot size in male chickens is the same as the average foot size in female chickens. If you count the number of male and female chickens born to a set of hens, the null hypothesis could be that the ratio of males to females is equal to a theoretical expectation of a \(1:1\) ratio.

The alternative hypothesis is that things are different from each other, or different from a theoretical expectation.

For example, one alternative hypothesis would be that male chickens have a different average foot size than female chickens; another would be that the sex ratio is different from \(1:1\).

Usually, the null hypothesis is boring and the alternative hypothesis is interesting. For example, let's say you feed chocolate to a bunch of chickens, then look at the sex ratio in their offspring. If you get more females than males, it would be a tremendously exciting discovery: it would be a fundamental discovery about the mechanism of sex determination, female chickens are more valuable than male chickens in egg-laying breeds, and you'd be able to publish your result in Science or Nature. Lots of people have spent a lot of time and money trying to change the sex ratio in chickens, and if you're successful, you'll be rich and famous. But if the chocolate doesn't change the sex ratio, it would be an extremely boring result, and you'd have a hard time getting it published in the Eastern Delaware Journal of Chickenology. It's therefore tempting to look for patterns in your data that support the exciting alternative hypothesis. For example, you might look at \(48\) offspring of chocolate-fed chickens and see \(31\) females and only \(17\) males. This looks promising, but before you get all happy and start buying formal wear for the Nobel Prize ceremony, you need to ask "What's the probability of getting a deviation from the null expectation that large, just by chance, if the boring null hypothesis is really true?" Only when that probability is low can you reject the null hypothesis. The goal of statistical hypothesis testing is to estimate the probability of getting your observed results under the null hypothesis.

Biological vs. Statistical Null Hypotheses

It is important to distinguish between biological null and alternative hypotheses and statistical null and alternative hypotheses. "Sexual selection by females has caused male chickens to evolve bigger feet than females" is a biological alternative hypothesis; it says something about biological processes, in this case sexual selection. "Male chickens have a different average foot size than females" is a statistical alternative hypothesis; it says something about the numbers, but nothing about what caused those numbers to be different. The biological null and alternative hypotheses are the first that you should think of, as they describe something interesting about biology; they are two possible answers to the biological question you are interested in ("What affects foot size in chickens?"). The statistical null and alternative hypotheses are statements about the data that should follow from the biological hypotheses: if sexual selection favors bigger feet in male chickens (a biological hypothesis), then the average foot size in male chickens should be larger than the average in females (a statistical hypothesis). If you reject the statistical null hypothesis, you then have to decide whether that's enough evidence that you can reject your biological null hypothesis. For example, if you don't find a significant difference in foot size between male and female chickens, you could conclude "There is no significant evidence that sexual selection has caused male chickens to have bigger feet." If you do find a statistically significant difference in foot size, that might not be enough for you to conclude that sexual selection caused the bigger feet; it might be that males eat more, or that the bigger feet are a developmental byproduct of the roosters' combs, or that males run around more and the exercise makes their feet bigger. 
When there are multiple biological interpretations of a statistical result, you need to think of additional experiments to test the different possibilities.

Testing the Null Hypothesis

The primary goal of a statistical test is to determine whether an observed data set is so different from what you would expect under the null hypothesis that you should reject the null hypothesis. For example, let's say you are studying sex determination in chickens. For breeds of chickens that are bred to lay lots of eggs, female chicks are more valuable than male chicks, so if you could figure out a way to manipulate the sex ratio, you could make a lot of chicken farmers very happy. You've fed chocolate to a bunch of female chickens (in birds, unlike mammals, the female parent determines the sex of the offspring), and you get \(25\) female chicks and \(23\) male chicks. Anyone would look at those numbers and see that they could easily result from chance; there would be no reason to reject the null hypothesis of a \(1:1\) ratio of females to males. If you got \(47\) females and \(1\) male, most people would look at those numbers and see that they would be extremely unlikely to happen due to luck, if the null hypothesis were true; you would reject the null hypothesis and conclude that chocolate really changed the sex ratio. However, what if you had \(31\) females and \(17\) males? That's definitely more females than males, but is it really so unlikely to occur due to chance that you can reject the null hypothesis? To answer that, you need more than common sense, you need to calculate the probability of getting a deviation that large due to chance.

In the figure above, I used the BINOMDIST function of Excel to calculate the probability of getting each possible number of males, from \(0\) to \(48\), under the null hypothesis that \(0.5\) are male. As you can see, the probability of getting \(17\) males out of \(48\) total chickens is about \(0.015\). That seems like a pretty small probability, doesn't it? However, that's the probability of getting exactly \(17\) males. What you want to know is the probability of getting \(17\) or fewer males. If you were going to accept \(17\) males as evidence that the sex ratio was biased, you would also have accepted \(16\), or \(15\), or \(14\),… males as evidence for a biased sex ratio. You therefore need to add together the probabilities of all these outcomes. The probability of getting \(17\) or fewer males out of \(48\), under the null hypothesis, is \(0.030\). That means that if you had an infinite number of chickens, half males and half females, and you took a bunch of random samples of \(48\) chickens, \(3.0\%\) of the samples would have \(17\) or fewer males.

This number, \(0.030\), is the \(P\) value. It is defined as the probability of getting the observed result, or a more extreme result, if the null hypothesis is true. So "\(P=0.030\)" is a shorthand way of saying "The probability of getting \(17\) or fewer male chickens out of \(48\) total chickens, IF the null hypothesis is true that \(50\%\) of chickens are male, is \(0.030\)."
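The two probabilities quoted above can be reproduced in a few lines of Python rather than with Excel's BINOMDIST. This is only a minimal sketch, not the calculation used to make the figure; the helper name `binom_pmf` is made up for illustration, and the code assumes Python 3.8 or later for `math.comb`.

```python
from math import comb

def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials (hypothetical helper)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n_chicks = 48   # total offspring in the chocolate example
n_males = 17    # observed number of males
p_null = 0.5    # null hypothesis: half of the chicks are male

# Probability of getting exactly 17 males if the null hypothesis is true
p_exactly_17 = binom_pmf(n_males, n_chicks, p_null)

# P value: probability of 17 or fewer males if the null hypothesis is true
p_17_or_fewer = sum(binom_pmf(k, n_chicks, p_null) for k in range(n_males + 1))

print(f"P(exactly 17 males)  = {p_exactly_17:.3f}")
print(f"P(17 or fewer males) = {p_17_or_fewer:.3f}")
```

Rounded to three decimal places, the two results agree with the \(0.015\) and \(0.030\) given in the text.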

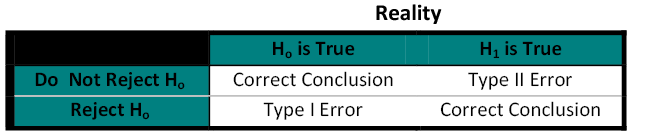

False Positives vs. False Negatives

After you do a statistical test, you are either going to reject or fail to reject the null hypothesis. Rejecting the null hypothesis means that you conclude that the null hypothesis is not true; in our chicken sex example, you would conclude that the true proportion of male chicks, if you gave chocolate to an infinite number of chicken mothers, would be less than \(50\%\).

When you reject a null hypothesis, there's a chance that you're making a mistake. The null hypothesis might really be true, and it may be that your experimental results deviate from the null hypothesis purely as a result of chance. In a sample of \(48\) chickens, it's possible to get \(17\) male chickens purely by chance; it's even possible (although extremely unlikely) to get \(0\) male and \(48\) female chickens purely by chance, even though the true proportion is \(50\%\) males. This is why we never say we "prove" something in science; there's always a chance, however minuscule, that our data are fooling us and deviate from the null hypothesis purely due to chance. When your data fool you into rejecting the null hypothesis even though it's true, it's called a "false positive," or a "Type I error." So another way of defining the \(P\) value is the probability of getting a false positive like the one you've observed, if the null hypothesis is true.

Another way your data can fool you is when you don't reject the null hypothesis, even though it's not true. If the true proportion of female chicks is \(51\%\), the null hypothesis of a \(50\%\) proportion is not true, but you're unlikely to get a significant difference from the null hypothesis unless you have a huge sample size. Failing to reject the null hypothesis, even though it's not true, is a "false negative" or "Type II error." This is why we never say that our data show the null hypothesis to be true; all we can say is that we haven't rejected the null hypothesis.

Significance Levels

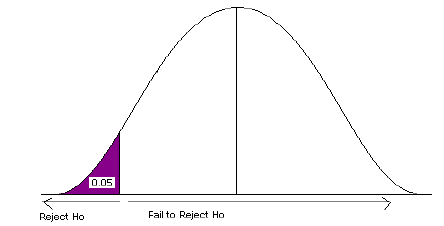

Does a probability of \(0.030\) mean that you should reject the null hypothesis, and conclude that chocolate really caused a change in the sex ratio? The convention in most biological research is to use a significance level of \(0.05\). This means that if the \(P\) value is less than \(0.05\), you reject the null hypothesis; if \(P\) is greater than or equal to \(0.05\), you don't reject the null hypothesis. There is nothing mathematically magic about \(0.05\); it was chosen rather arbitrarily during the early days of statistics, and people could have agreed upon \(0.04\), or \(0.025\), or \(0.071\) as the conventional significance level.

The significance level (also known as the "critical value" or "alpha") you should use depends on the costs of different kinds of errors. With a significance level of \(0.05\), you have a \(5\%\) chance of rejecting the null hypothesis, even if it is true. If you try \(100\) different treatments on your chickens, and none of them really change the sex ratio, \(5\%\) of your experiments will give you data that are significantly different from a \(1:1\) sex ratio, just by chance. In other words, \(5\%\) of your experiments will give you a false positive. If you use a higher significance level than the conventional \(0.05\), such as \(0.10\), you will increase your chance of a false positive to \(0.10\) (therefore increasing your chance of an embarrassingly wrong conclusion), but you will also decrease your chance of a false negative (increasing your chance of detecting a subtle effect). If you use a lower significance level than the conventional \(0.05\), such as \(0.01\), you decrease your chance of an embarrassing false positive, but you also make it less likely that you'll detect a real deviation from the null hypothesis if there is one.
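The trade-off described above can be made concrete for the \(48\)-chick experiment. The sketch below is illustrative only: the function names are made up, the "true" proportion of \(0.35\) males is a hypothetical effect chosen for the example, and the two-tailed \(P\) value is computed by doubling one tail of the exact binomial distribution, which is valid here because the \(1:1\) null distribution is symmetric.

```python
from math import comb

def binom_pmf(k, n, p):
    """Probability of exactly k males out of n chicks (hypothetical helper)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def two_tailed_p(k, n):
    """Two-tailed P value for k males out of n under a 1:1 null hypothesis."""
    tail = min(k, n - k)
    one_tail = sum(binom_pmf(i, n, 0.5) for i in range(tail + 1))
    return min(1.0, 2 * one_tail)

def rejection_rate(alpha, n, true_p):
    """Chance that an experiment with n chicks rejects the null at level alpha."""
    return sum(
        binom_pmf(k, n, true_p)
        for k in range(n + 1)
        if two_tailed_p(k, n) < alpha
    )

N_CHICKS = 48
HYPOTHETICAL_TRUE_P = 0.35  # assumed real effect, for illustration only

results = {}
for alpha in (0.01, 0.05, 0.10):
    false_positive_rate = rejection_rate(alpha, N_CHICKS, 0.5)
    power = rejection_rate(alpha, N_CHICKS, HYPOTHETICAL_TRUE_P)
    results[alpha] = (false_positive_rate, power)
    print(f"alpha={alpha:.2f}  false positive rate={false_positive_rate:.4f}  "
          f"false negative rate={1 - power:.4f}")
```

Raising the significance level raises the false positive rate and lowers the false negative rate, as the text says. Because the number of chicks is a whole number, the actual false positive rate of an exact test like this one sits somewhat below the nominal significance level.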

The relative costs of false positives and false negatives, and thus the best significance level to use, will be different for different experiments. If you are screening a bunch of potential sex-ratio-changing treatments and get a false positive, it wouldn't be a big deal; you'd just run a few more tests on that treatment until you were convinced the initial result was a false positive. The cost of a false negative, however, would be that you would miss out on a tremendously valuable discovery. You might therefore set your significance level to \(0.10\) or more for your initial tests. On the other hand, once your sex-ratio-changing treatment is undergoing final trials before being sold to farmers, a false positive could be very expensive; you'd want to be very confident that it really worked. Otherwise, if you sell the chicken farmers a sex-ratio treatment that turns out to not really work (it was a false positive), they'll sue the pants off of you. Therefore, you might want to set your significance level to \(0.01\), or even lower, for your final tests.

The significance level you choose should also depend on how likely you think it is that your alternative hypothesis will be true, a prediction that you make before you do the experiment. This is the foundation of Bayesian statistics, as explained below.

You must choose your significance level before you collect the data, of course. If you choose to use a different significance level than the conventional \(0.05\), people will be skeptical; you must be able to justify your choice. Throughout this handbook, I will always use \(P< 0.05\) as the significance level. If you are doing an experiment where the cost of a false positive is a lot greater or smaller than the cost of a false negative, or an experiment where you think it is unlikely that the alternative hypothesis will be true, you should consider using a different significance level.

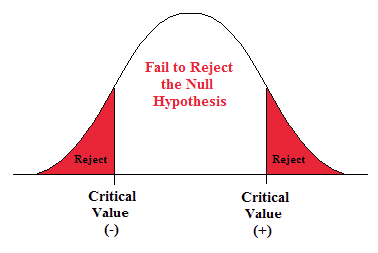

One-tailed vs. Two-tailed Probabilities

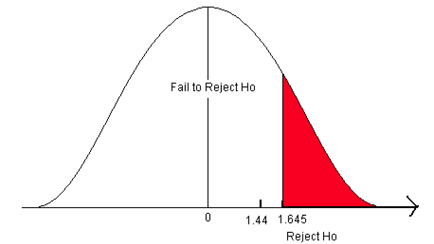

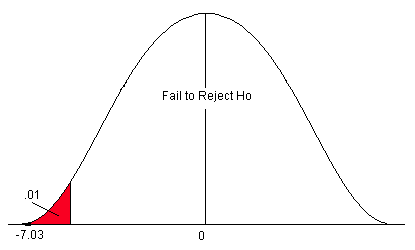

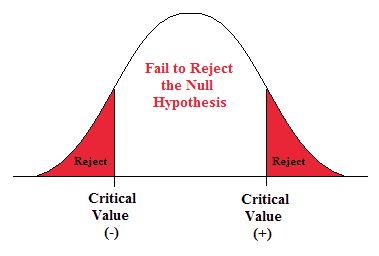

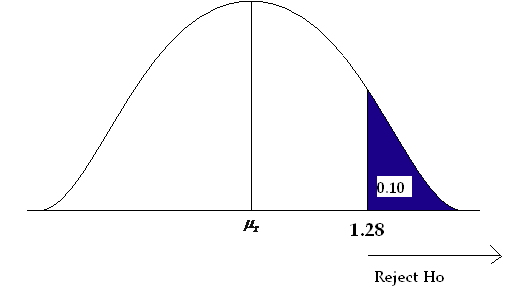

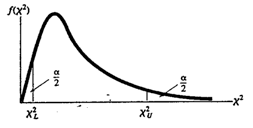

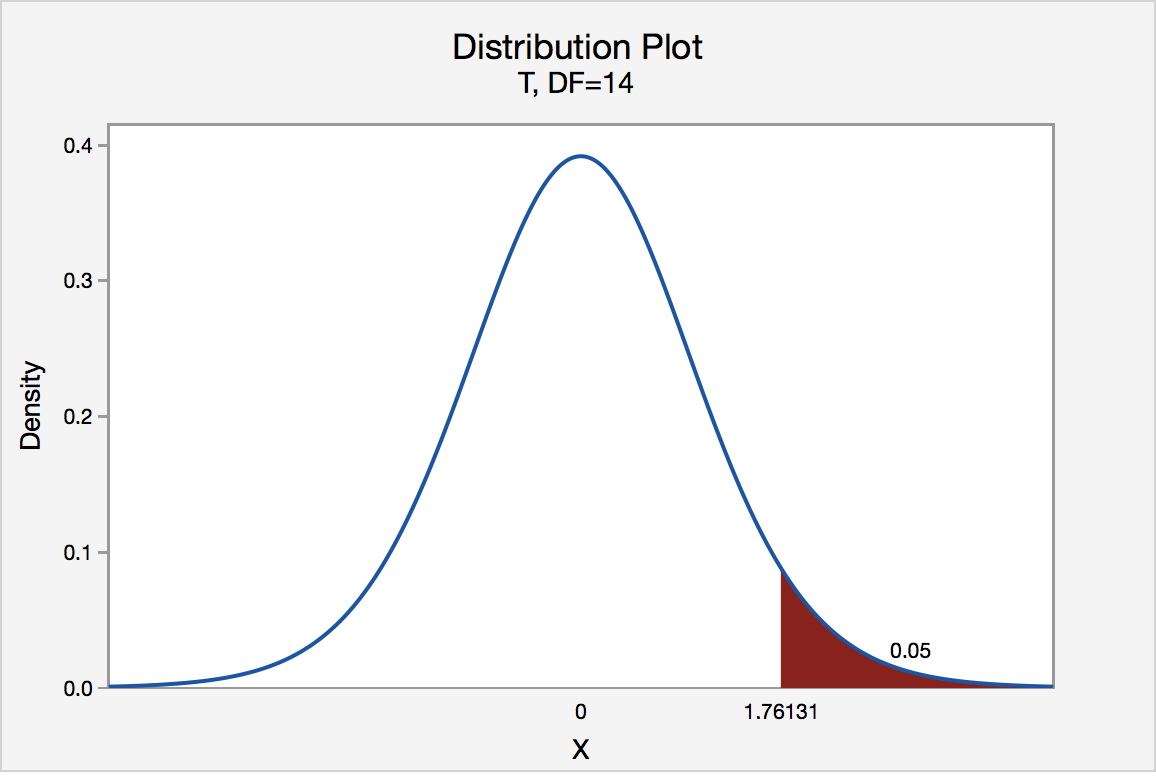

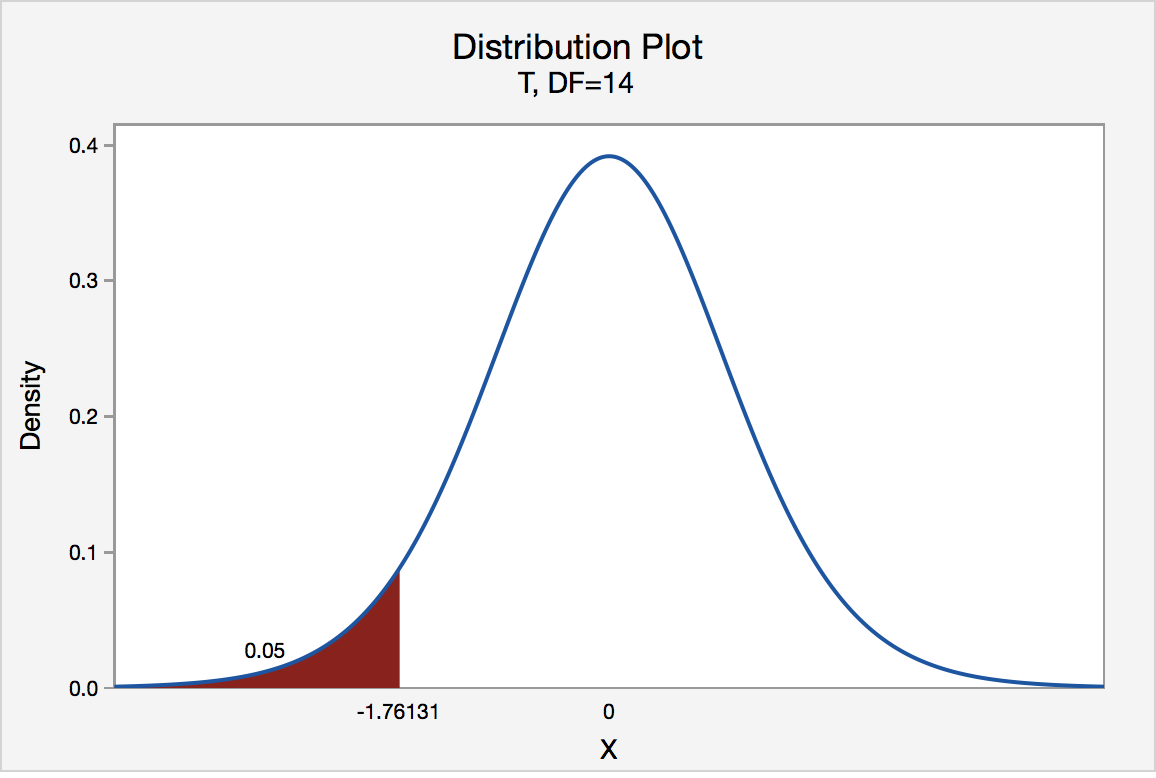

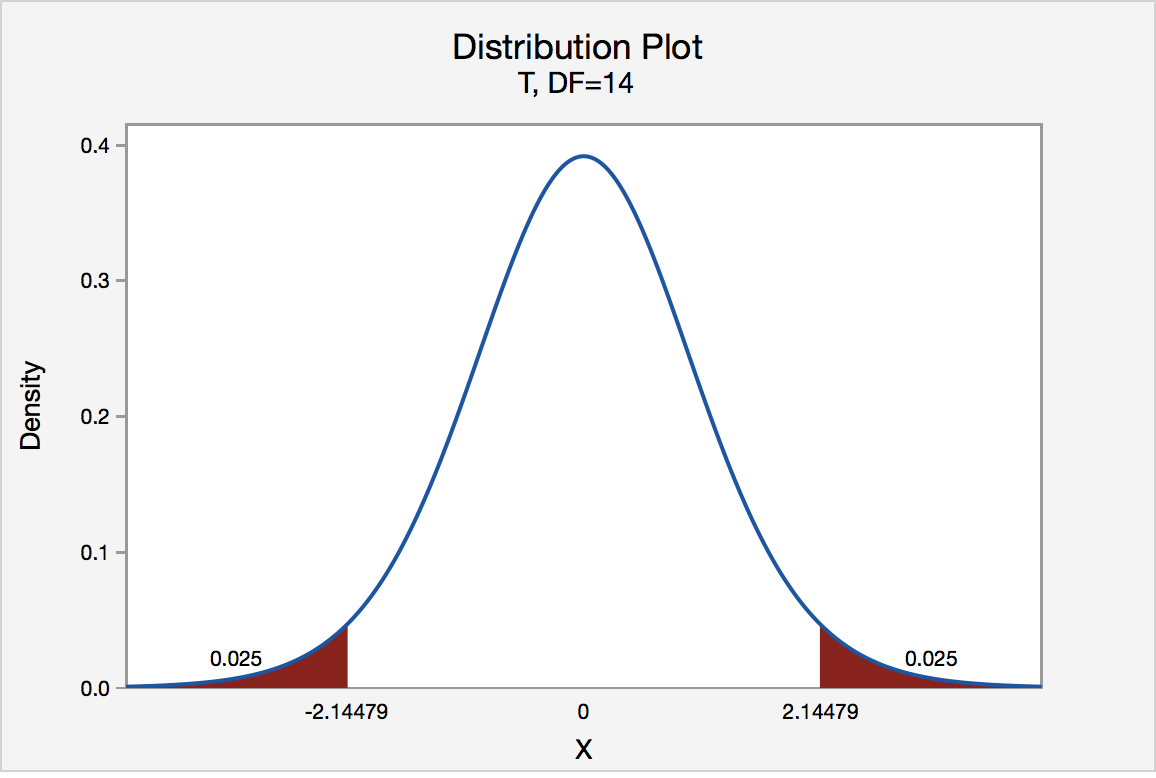

The probability that was calculated above, \(0.030\), is the probability of getting \(17\) or fewer males out of \(48\). It would be significant, using the conventional \(P< 0.05\) criterion. However, what about the probability of getting \(17\) or fewer females? If your null hypothesis is "The proportion of males is \(0.5\) or more" and your alternative hypothesis is "The proportion of males is less than \(0.5\)," then you would use the \(P=0.03\) value found by adding the probabilities of getting \(17\) or fewer males. This is called a one-tailed probability, because you are adding the probabilities in only one tail of the distribution shown in the figure. However, if your null hypothesis is "The proportion of males is \(0.5\)", then your alternative hypothesis is "The proportion of males is different from \(0.5\)." In that case, you should add the probability of getting \(17\) or fewer females to the probability of getting \(17\) or fewer males. This is called a two-tailed probability. If you do that with the chicken result, you get \(P=0.06\), which is not quite significant.
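As a check on the arithmetic, the one-tailed and two-tailed probabilities for the chicken result can be computed directly. This is a minimal sketch with made-up variable names, assuming Python 3.8 or later for `math.comb`; it adds up exact binomial probabilities under a \(1:1\) null hypothesis.

```python
from math import comb

n_chicks = 48
n_males = 17
n_females = n_chicks - n_males  # 31

def prob_males(k: int) -> float:
    """Probability of exactly k males out of 48 under a 1:1 null hypothesis."""
    return comb(n_chicks, k) * 0.5**n_chicks

# One tail: 17 or fewer males
one_tailed = sum(prob_males(k) for k in range(0, n_males + 1))

# Other tail: 17 or fewer females, which is the same as 31 or more males
other_tail = sum(prob_males(k) for k in range(n_females, n_chicks + 1))

two_tailed = one_tailed + other_tail

print(f"one-tailed P = {one_tailed:.3f}")
print(f"two-tailed P = {two_tailed:.3f}")
```

Rounded to two decimal places these are the \(0.03\) and \(0.06\) given in the text: the one-tailed value falls below \(0.05\) and the two-tailed value does not.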

You should decide whether to use the one-tailed or two-tailed probability before you collect your data, of course. A one-tailed probability is more powerful, in the sense of having a lower chance of false negatives, but you should only use a one-tailed probability if you really, truly have a firm prediction about which direction of deviation you would consider interesting. In the chicken example, you might be tempted to use a one-tailed probability, because you're only looking for treatments that decrease the proportion of worthless male chickens. But if you accidentally found a treatment that produced \(87\%\) male chickens, would you really publish the result as "The treatment did not cause a significant decrease in the proportion of male chickens"? I hope not. You'd realize that this unexpected result, even though it wasn't what you and your farmer friends wanted, would be very interesting to other people; by leading to discoveries about the fundamental biology of sex-determination in chickens, it might even help you produce more female chickens someday. Any time a deviation in either direction would be interesting, you should use the two-tailed probability. In addition, people are skeptical of one-tailed probabilities, especially if a one-tailed probability is significant and a two-tailed probability would not be significant (as in our chocolate-eating chicken example). Unless you provide a very convincing explanation, people may think you decided to use the one-tailed probability after you saw that the two-tailed probability wasn't quite significant, which would be cheating. It may be easier to always use two-tailed probabilities. For this handbook, I will always use two-tailed probabilities, unless I make it very clear that only one direction of deviation from the null hypothesis would be interesting.

Reporting your results

In the olden days, when people looked up \(P\) values in printed tables, they would report the results of a statistical test as "\(P< 0.05\)", "\(P< 0.01\)", "\(P>0.10\)", etc. Nowadays, almost all computer statistics programs give the exact \(P\) value resulting from a statistical test, such as \(P=0.029\), and that's what you should report in your publications. You will conclude that the results are either significant or they're not significant; they either reject the null hypothesis (if \(P\) is below your pre-determined significance level) or don't reject the null hypothesis (if \(P\) is above your significance level). But other people will want to know if your results are "strongly" significant (\(P\) much less than \(0.05\)), which will give them more confidence in your results than if they were "barely" significant (\(P=0.043\), for example). In addition, other researchers will need the exact \(P\) value if they want to combine your results with others into a meta-analysis.

Computer statistics programs can give somewhat inaccurate \(P\) values when they are very small. Once your \(P\) values get very small, you can just say "\(P< 0.00001\)" or some other impressively small number. You should also give either your raw data, or the test statistic and degrees of freedom, in case anyone wants to calculate your exact \(P\) value.

Effect Sizes and Confidence Intervals

A fairly common criticism of the hypothesis-testing approach to statistics is that the null hypothesis will always be false, if you have a big enough sample size. In the chicken-feet example, critics would argue that if you had an infinite sample size, it is impossible that male chickens would have exactly the same average foot size as female chickens. Therefore, since you know before doing the experiment that the null hypothesis is false, there's no point in testing it.

This criticism only applies to two-tailed tests, where the null hypothesis is "Things are exactly the same" and the alternative is "Things are different." Presumably these critics think it would be okay to do a one-tailed test with a null hypothesis like "Foot length of male chickens is the same as, or less than, that of females," because the null hypothesis that male chickens have smaller feet than females could be true. So if you're worried about this issue, you could think of a two-tailed test, where the null hypothesis is that things are the same, as shorthand for doing two one-tailed tests. A significant rejection of the null hypothesis in a two-tailed test would then be the equivalent of rejecting one of the two one-tailed null hypotheses.

A related criticism is that a significant rejection of a null hypothesis might not be biologically meaningful, if the difference is too small to matter. For example, in the chicken-sex experiment, having a treatment that produced \(49.9\%\) male chicks might be significantly different from \(50\%\), but it wouldn't be enough to make farmers want to buy your treatment. These critics say you should estimate the effect size and put a confidence interval on it, not estimate a \(P\) value. So the goal of your chicken-sex experiment should not be to say "Chocolate gives a proportion of males that is significantly less than \(50\%\) (\(P=0.015\))" but to say "Chocolate produced \(36.1\%\) males with a \(95\%\) confidence interval of \(25.9\%\) to \(47.4\%\)." For the chicken-feet experiment, you would say something like "The difference between males and females in mean foot size is \(2.45\) mm, with a confidence interval on the difference of \(\pm 1.98\) mm."
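To show what "estimate the effect size and put a confidence interval on it" looks like in practice, here is a sketch for a proportion. The text does not say which method produced the interval it quotes, so the Wilson score interval used here is an assumption (it is one common choice among several), the function name is made up, and the input is the \(17\) males out of \(48\) chicks from the earlier example. The output is therefore not expected to match the quoted percentages.

```python
from math import sqrt

def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Approximate 95% Wilson score interval for a proportion (z=1.96 assumed)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width

n_males, n_chicks = 17, 48
proportion_male = n_males / n_chicks           # the effect-size estimate
low, high = wilson_interval(n_males, n_chicks)

print(f"estimated proportion of males: {proportion_male:.1%}")
print(f"approximate 95% interval: {low:.1%} to {high:.1%}")
```

Other interval methods (exact Clopper-Pearson, for instance) give somewhat different limits for the same data, which is one reason to state the method alongside the interval.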

Estimating effect sizes and confidence intervals is a useful way to summarize your results, and it should usually be part of your data analysis; you'll often want to include confidence intervals in a graph. However, there are a lot of experiments where the goal is to decide a yes/no question, not estimate a number. In the initial tests of chocolate on chicken sex ratio, the goal would be to decide between "It changed the sex ratio" and "It didn't seem to change the sex ratio." Any change in sex ratio that is large enough that you could detect it would be interesting and worth follow-up experiments. While it's true that the difference between \(49.9\%\) and \(50\%\) might not be worth pursuing, you wouldn't do an experiment on enough chickens to detect a difference that small.

Often, the people who claim to avoid hypothesis testing will say something like "the \(95\%\) confidence interval of \(25.9\%\) to \(47.4\%\) does not include \(50\%\), so we conclude that the plant extract significantly changed the sex ratio." This is a clumsy and roundabout form of hypothesis testing, and they might as well admit it and report the \(P\) value.

Bayesian statistics

Another alternative to frequentist statistics is Bayesian statistics. A key difference is that Bayesian statistics requires specifying your best guess of the probability of each possible value of the parameter to be estimated, before the experiment is done. This is known as the "prior probability." So for your chicken-sex experiment, you're trying to estimate the "true" proportion of male chickens that would be born, if you had an infinite number of chickens. You would have to specify how likely you thought it was that the true proportion of male chickens was \(50\%\), or \(51\%\), or \(52\%\), or \(47.3\%\), etc. You would then look at the results of your experiment and use the information to calculate new probabilities that the true proportion of male chickens was \(50\%\), or \(51\%\), or \(52\%\), or \(47.3\%\), etc. (the posterior distribution).
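The prior-to-posterior update described above can be sketched on a grid of candidate proportions. Everything specific here is an assumption made for illustration: the grid spacing of \(0.01\), the flat prior (every candidate proportion equally likely beforehand), and the data of \(17\) males and \(31\) females taken from the chocolate example. A real Bayesian analysis would have to justify its prior.

```python
# Candidate values for the true proportion of males: 0.01, 0.02, ..., 0.99
grid = [i / 100 for i in range(1, 100)]

# Prior probability of each candidate value (flat prior, assumed for illustration)
prior = [1 / len(grid)] * len(grid)

n_males, n_females = 17, 31

# Likelihood of the observed data for each candidate proportion.
# The binomial coefficient is omitted because it is the same for every
# candidate and cancels when the posterior is normalized.
likelihood = [p**n_males * (1 - p) ** n_females for p in grid]

# Posterior is proportional to prior times likelihood
unnormalized = [pr * lk for pr, lk in zip(prior, likelihood)]
total = sum(unnormalized)
posterior = [u / total for u in unnormalized]

most_probable = grid[posterior.index(max(posterior))]
prob_below_half = sum(post for p, post in zip(grid, posterior) if p < 0.5)

print(f"most probable proportion of males: {most_probable:.2f}")
print(f"posterior probability that the proportion is below 0.5: {prob_below_half:.3f}")
```

With a different prior, for example one that puts most of its weight near \(50\%\), the same data would give a posterior that stays closer to \(50\%\).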

I'll confess that I don't really understand Bayesian statistics, and I apologize for not explaining it well. In particular, I don't understand how people are supposed to come up with a prior distribution for the kinds of experiments that most biologists do. With the exception of systematics, where Bayesian estimation of phylogenies is quite popular and seems to make sense, I haven't seen many research biologists using Bayesian statistics for routine data analysis of simple laboratory experiments. This means that even if the cult-like adherents of Bayesian statistics convinced you that they were right, you would have a difficult time explaining your results to your biologist peers. Statistics is a method of conveying information, and if you're speaking a different language than the people you're talking to, you won't convey much information. So I'll stick with traditional frequentist statistics for this handbook.

Having said that, there's one key concept from Bayesian statistics that is important for all users of statistics to understand. To illustrate it, imagine that you are testing extracts from \(1000\) different tropical plants, trying to find something that will kill beetle larvae. The reality (which you don't know) is that \(500\) of the extracts kill beetle larvae, and \(500\) don't. You do the \(1000\) experiments and do the \(1000\) frequentist statistical tests, and you use the traditional significance level of \(P< 0.05\). The \(500\) plant extracts that really work all give you \(P< 0.05\); these are the true positives. Of the \(500\) extracts that don't work, \(5\%\) of them give you \(P< 0.05\) by chance (this is the meaning of the \(P\) value, after all), so you have \(25\) false positives. So you end up with \(525\) plant extracts that gave you a \(P\) value less than \(0.05\). You'll have to do further experiments to figure out which are the \(25\) false positives and which are the \(500\) true positives, but that's not so bad, since you know that most of them will turn out to be true positives.

Now imagine that you are testing those extracts from \(1000\) different tropical plants to try to find one that will make hair grow. The reality (which you don't know) is that one of the extracts makes hair grow, and the other \(999\) don't. You do the \(1000\) experiments and do the \(1000\) frequentist statistical tests, and you use the traditional significance level of \(P< 0.05\). The one plant extract that really works gives you \(P< 0.05\); this is the true positive. But of the \(999\) extracts that don't work, \(5\%\) of them give you \(P< 0.05\) by chance, so you have about \(50\) false positives. You end up with \(51\) \(P\) values less than \(0.05\), but almost all of them are false positives.
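The arithmetic behind the beetle and hair examples fits in one small function. This is a sketch with a made-up function name; like the text, it assumes that every extract that really works gives a significant result (a power of \(1\)), which is an idealization.

```python
def fraction_true_positives(n_real, n_null, alpha=0.05, power=1.0):
    """Expected fraction of significant results that are true positives.

    n_real: number of treatments that really work
    n_null: number of treatments that do nothing
    alpha:  significance level
    power:  chance that a real effect gives a significant result (assumed 1.0)
    """
    true_positives = n_real * power
    false_positives = n_null * alpha
    return true_positives / (true_positives + false_positives)

beetle = fraction_true_positives(n_real=500, n_null=500)
hair = fraction_true_positives(n_real=1, n_null=999)

print(f"beetle larvae: {beetle:.1%} of significant results are real")
print(f"hair growth:   {hair:.1%} of significant results are real")
```

The significance level is the same in both cases; only the proportion of treatments that really work differs, and that alone moves the answer from about \(95\%\) to about \(2\%\).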

Now instead of testing \(1000\) plant extracts, imagine that you are testing just one. If you are testing it to see if it kills beetle larvae, you know (based on everything you know about plant and beetle biology) there's a pretty good chance it will work, so you can be pretty sure that a \(P\) value less than \(0.05\) is a true positive. But if you are testing that one plant extract to see if it grows hair, which you know is very unlikely (based on everything you know about plants and hair), a \(P\) value less than \(0.05\) is almost certainly a false positive. In other words, if you expect that the null hypothesis is probably true, a statistically significant result is probably a false positive. This is sad; the most exciting, amazing, unexpected results in your experiments are probably just your data trying to make you jump to ridiculous conclusions. You should require a much lower \(P\) value to reject a null hypothesis that you think is probably true.

A Bayesian would insist that you put in numbers just how likely you think the null hypothesis and various values of the alternative hypothesis are, before you do the experiment, and I'm not sure how that is supposed to work in practice for most experimental biology. But the general concept is a valuable one: as Carl Sagan summarized it, "Extraordinary claims require extraordinary evidence."

Recommendations

Here are three experiments to illustrate when the different approaches to statistics are appropriate. In the first experiment, you are testing a plant extract on rabbits to see if it will lower their blood pressure. You already know that the plant extract is a diuretic (makes the rabbits pee more) and you already know that diuretics tend to lower blood pressure, so you think there's a good chance it will work. If it does work, you'll do more low-cost animal tests on it before you do expensive, potentially risky human trials. Your prior expectation is that the null hypothesis (that the plant extract has no effect) has a good chance of being false, and the cost of a false positive is fairly low. So you should do frequentist hypothesis testing, with a significance level of \(0.05\).

In the second experiment, you are going to put human volunteers with high blood pressure on a strict low-salt diet and see how much their blood pressure goes down. Everyone will be confined to a hospital for a month and fed either a normal diet, or the same foods with half as much salt. For this experiment, you wouldn't be very interested in the \(P\) value, as based on prior research in animals and humans, you are already quite certain that reducing salt intake will lower blood pressure; you're pretty sure that the null hypothesis that "Salt intake has no effect on blood pressure" is false. Instead, you are very interested to know how much the blood pressure goes down. Cutting salt intake in half is a big deal, and if it only reduces blood pressure by \(1\) mm Hg, the tiny gain in life expectancy wouldn't be worth a lifetime of bland food and obsessive label-reading. If it reduces blood pressure by \(20\) mm Hg with a confidence interval of \(\pm 5\) mm Hg, it might be worth it. So you should estimate the effect size (the difference in blood pressure between the diets) and the confidence interval on the difference.

In the third experiment, you are going to put magnetic hats on guinea pigs and see if their blood pressure goes down (relative to guinea pigs wearing the kind of non-magnetic hats that guinea pigs usually wear). This is a really goofy experiment, and you know that it is very unlikely that the magnets will have any effect (it's not impossible—magnets affect the sense of direction of homing pigeons, and maybe guinea pigs have something similar in their brains and maybe it will somehow affect their blood pressure—it just seems really unlikely). You might analyze your results using Bayesian statistics, which will require specifying in numerical terms just how unlikely you think it is that the magnetic hats will work. Or you might use frequentist statistics, but require a \(P\) value much, much lower than \(0.05\) to convince yourself that the effect is real.

- Picture of giant concrete chicken from Sue and Tony's Photo Site.

- Picture of guinea pigs wearing hats from all over the internet; if you know the original photographer, please let me know.

Introduction to Hypothesis Testing

A statistical hypothesis is an assumption about a population parameter.

For example, we may assume that the mean height of a male in the U.S. is 70 inches.

The assumption about the height is the statistical hypothesis and the true mean height of a male in the U.S. is the population parameter.

A hypothesis test is a formal statistical test we use to reject or fail to reject a statistical hypothesis.

The Two Types of Statistical Hypotheses

To test whether a statistical hypothesis about a population parameter is true, we obtain a random sample from the population and perform a hypothesis test on the sample data.

There are two types of statistical hypotheses:

The null hypothesis, denoted as \(H_0\), is the hypothesis that the sample data occurs purely from chance.

The alternative hypothesis, denoted as \(H_1\) or \(H_a\), is the hypothesis that the sample data is influenced by some non-random cause.

Hypothesis Tests

A hypothesis test consists of five steps:

1. State the hypotheses.

State the null and alternative hypotheses. These two hypotheses need to be mutually exclusive, so if one is true then the other must be false.

2. Determine a significance level to use for the hypothesis.

Decide on a significance level. Common choices are .01, .05, and .1.

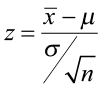

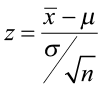

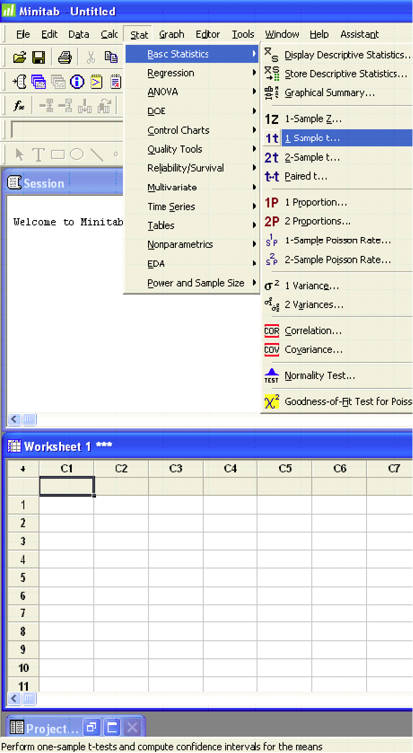

3. Find the test statistic.

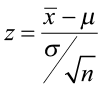

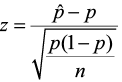

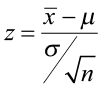

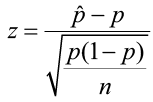

Find the test statistic and the corresponding p-value. Often we are analyzing a population mean or proportion and the general formula to find the test statistic is: (sample statistic – population parameter) / (standard deviation of statistic)
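The general formula above can be sketched in Python for the case of a population mean with a known standard deviation (a one-sample z-test). The sample values below are hypothetical, chosen only to continue the height example, and the function names are illustrative.

```python
import math
from statistics import NormalDist

def z_test_statistic(sample_mean, hypothesized_mean, population_sd, n):
    """(sample statistic - population parameter) / (standard deviation of statistic)."""
    standard_error = population_sd / math.sqrt(n)
    return (sample_mean - hypothesized_mean) / standard_error

def two_sided_p_value(z):
    """Probability of a standard normal value at least as extreme as z."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical sample: 100 men with a mean height of 70.8 inches,
# assuming a known population standard deviation of 3 inches.
z = z_test_statistic(sample_mean=70.8, hypothesized_mean=70, population_sd=3, n=100)
p = two_sided_p_value(z)
print(f"z = {z:.2f}, p = {p:.4f}")
```

When the population standard deviation is unknown and estimated from the sample, a t-test would normally be used instead.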

4. Reject or fail to reject the null hypothesis.

Using the test statistic or the p-value, determine if you can reject or fail to reject the null hypothesis based on the significance level.

The p-value tells us the strength of evidence against the null hypothesis: the smaller the p-value, the stronger the evidence. If the p-value is less than the significance level, we reject the null hypothesis.

5. Interpret the results.

Interpret the results of the hypothesis test in the context of the question being asked.

The Two Types of Decision Errors

There are two types of decision errors that one can make when doing a hypothesis test:

Type I error: You reject the null hypothesis when it is actually true. The probability of committing a Type I error is equal to the significance level, often called alpha, and denoted as α.

Type II error: You fail to reject the null hypothesis when it is actually false. The probability of committing a Type II error is called beta, denoted as β. The power of the test is 1 − β, the probability of correctly rejecting a false null hypothesis.
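To make the relationship between α, β, and power concrete, the following sketch computes β for a right-tailed z-test with a known standard deviation. The true mean `mu1`, the standard deviation, and the sample size are assumptions chosen for illustration, and the function name is hypothetical.

```python
import math
from statistics import NormalDist

def type_ii_error_and_power(mu0, mu1, sd, n, alpha=0.05):
    """Return (beta, power) for testing H0: mu = mu0 against Ha: mu > mu0.

    mu1 is the assumed true mean under the alternative hypothesis.
    """
    se = sd / math.sqrt(n)
    # Smallest sample mean that leads to rejecting H0 at level alpha.
    critical_mean = mu0 + NormalDist().inv_cdf(1 - alpha) * se
    # Type II error: the sample mean falls below the cutoff although mu = mu1.
    beta = NormalDist(mu=mu1, sigma=se).cdf(critical_mean)
    return beta, 1 - beta

# Hypothetical scenario: the true mean height is 71 inches rather than 70.
beta, power = type_ii_error_and_power(mu0=70, mu1=71, sd=3, n=50)
print(f"beta = {beta:.3f}, power = {power:.3f}")
```

Increasing the sample size or the significance level reduces β and therefore raises the power.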

One-Tailed and Two-Tailed Tests

A statistical hypothesis can be one-tailed or two-tailed.

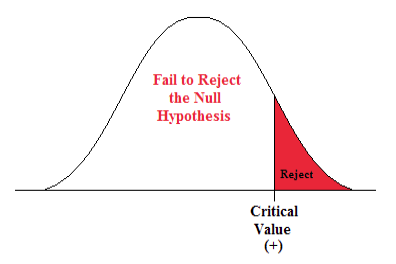

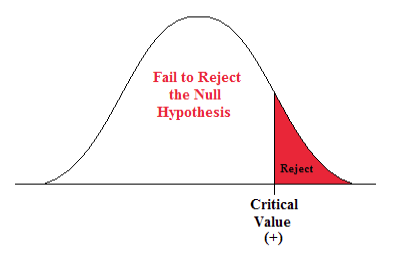

A one-tailed hypothesis involves making a “greater than” or “less than” statement.

For example, suppose we assume the mean height of a male in the U.S. is greater than or equal to 70 inches. The null hypothesis would be H0: µ ≥ 70 inches and the alternative hypothesis would be Ha: µ < 70 inches.

A two-tailed hypothesis involves making an “equal to” or “not equal to” statement.

For example, suppose we assume the mean height of a male in the U.S. is equal to 70 inches. The null hypothesis would be H0: µ = 70 inches and the alternative hypothesis would be Ha: µ ≠ 70 inches.

Note: The “equal” sign is always included in the null hypothesis, whether it is =, ≥, or ≤.
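The practical difference between one-tailed and two-tailed tests shows up in how the p-value is computed from the same test statistic. The sketch below assumes a z statistic has already been calculated; the value of -1.8 is hypothetical.

```python
from statistics import NormalDist

def p_values(z):
    """Return left-tailed, right-tailed, and two-tailed p-values for a z statistic."""
    cdf = NormalDist().cdf(z)
    return {
        "left": cdf,                          # Ha: mu < hypothesized value
        "right": 1 - cdf,                     # Ha: mu > hypothesized value
        "two_sided": 2 * min(cdf, 1 - cdf),   # Ha: mu != hypothesized value
    }

result = p_values(-1.8)  # hypothetical test statistic
for tail, p in result.items():
    print(f"{tail}: {p:.4f}")
```

With this statistic, a left-tailed test at the .05 level rejects the null hypothesis while a two-tailed test does not, which is why the choice of tails should be made before looking at the data.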


Types of Hypothesis Tests

There are many different types of hypothesis tests you can perform depending on the type of data you’re working with and the goal of your analysis.

The following tutorials provide an explanation of the most common types of hypothesis tests:

- Introduction to the One Sample t-test

- Introduction to the Two Sample t-test

- Introduction to the Paired Samples t-test

- Introduction to the One Proportion Z-Test

- Introduction to the Two Proportion Z-Test

Hey there. My name is Zach Bobbitt. I have a Master of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.


Summary of the 3 Approaches to Hypothesis Testing

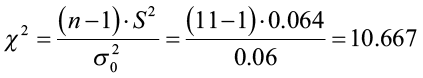

Steps to Conduct a Hypothesis Test Using $p$-values:

Identify the null hypothesis and the alternative hypothesis (and decide which is the claim).

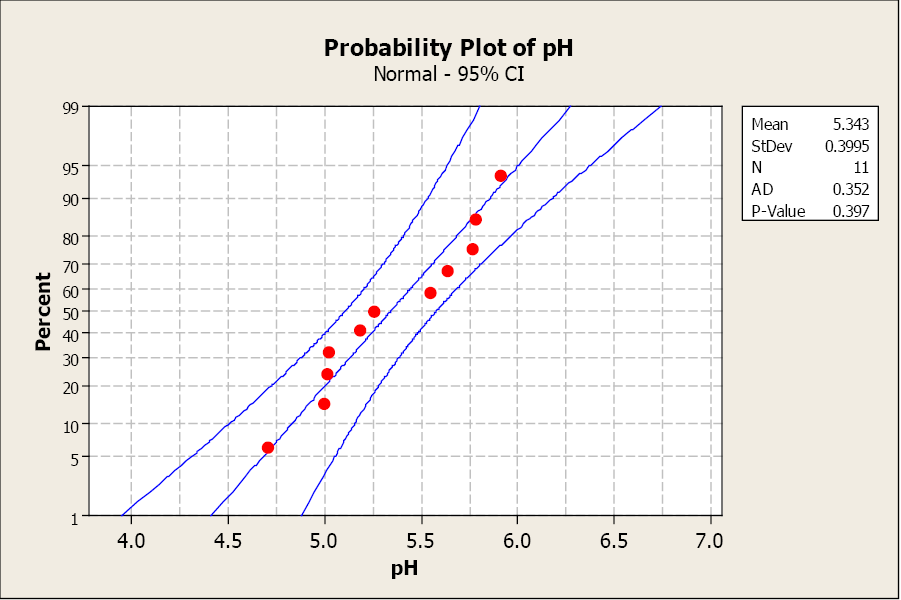

Ensure any necessary assumptions are met for the test to be conducted.

Find the test statistic.

Find the p-value associated with the test statistic as it relates to the alternative hypothesis.

Compare the p-value with the significance level, $\alpha$. If $p \lt \alpha$, conclude that the null hypothesis should be rejected based on what we saw. If not, conclude that we fail to reject the null hypothesis as a result of what we saw.

Make an inference.

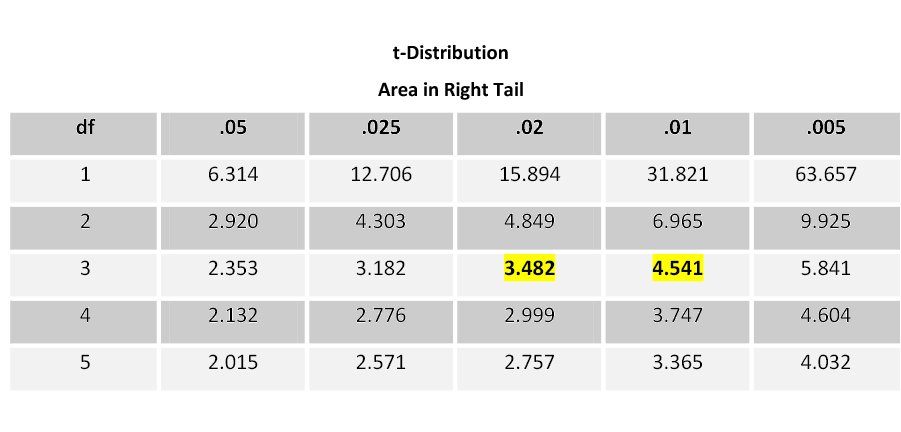

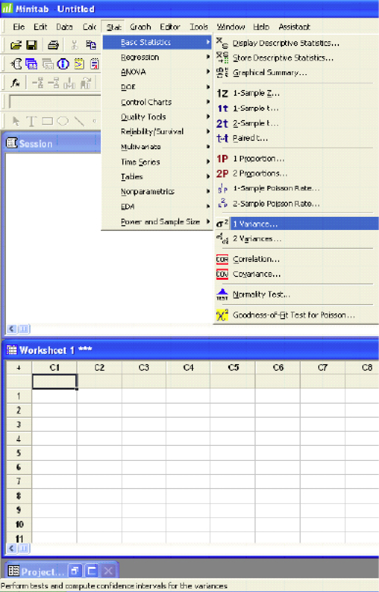

Steps to Conduct a Hypothesis Test Using Critical Values:

Find the critical values associated with the significance level, $\alpha$, and the alternative hypothesis to establish the rejection region in the distribution.

If the test statistic falls in the rejection region, conclude that the null hypothesis should be rejected based on what we saw. If not, conclude that we fail to reject the null hypothesis as a result of what we saw.

Steps to Conduct a Hypothesis Test Using a Confidence Interval:

Construct a confidence interval with a confidence level of $(1-\alpha)$

If the hypothesized population parameter falls outside of the confidence interval, conclude that the null hypothesis should be rejected based on what we saw. If it falls within the confidence interval, conclude that we fail to reject the null hypothesis as a result of what we saw.
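The three approaches summarized above can be compared side by side. This is a minimal sketch for a two-sided z-test of a mean with a known standard deviation; the inputs are hypothetical and the function name `three_approaches` is illustrative. In this setting the three decisions agree (apart from exact ties at the boundary).

```python
import math
from statistics import NormalDist

def three_approaches(sample_mean, mu0, sd, n, alpha=0.05):
    """Return the reject/fail-to-reject decision (True = reject) from each approach."""
    std_normal = NormalDist()
    se = sd / math.sqrt(n)
    z = (sample_mean - mu0) / se

    # 1. p-value approach: compare p with alpha.
    p_value = 2 * (1 - std_normal.cdf(abs(z)))

    # 2. Critical value approach: is z in the rejection region?
    z_crit = std_normal.inv_cdf(1 - alpha / 2)

    # 3. Confidence interval approach: is mu0 outside the (1 - alpha) interval?
    ci = (sample_mean - z_crit * se, sample_mean + z_crit * se)

    return {
        "p_value": p_value < alpha,
        "critical_value": abs(z) > z_crit,
        "confidence_interval": not (ci[0] <= mu0 <= ci[1]),
    }

print(three_approaches(sample_mean=70.8, mu0=70, sd=3, n=100))
print(three_approaches(sample_mean=70.3, mu0=70, sd=3, n=100))
```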


What is Hypothesis Testing? Types and Methods

- Soumyaa Rawat

- Jul 23, 2021

Hypothesis Testing

Hypothesis testing is the act of testing a hypothesis or a supposition in relation to a statistical parameter. Analysts implement hypothesis testing in order to test if a hypothesis is plausible or not.

In data science and statistics, hypothesis testing is an important step as it involves the verification of an assumption that could help develop a statistical parameter. For instance, a researcher establishes a hypothesis assuming that the average of all odd numbers is an even number.

In order to find the plausibility of this hypothesis, the researcher will have to test the hypothesis using hypothesis testing methods. Unlike a hypothesis that is ‘supposed’ to stand true on the basis of little or no evidence, hypothesis testing is required to have plausible evidence in order to establish that a statistical hypothesis is true.

Perhaps this is where statistics play an important role. A number of components are involved in this process. But before understanding the process involved in hypothesis testing in research methodology, we shall first understand the types of hypotheses that are involved in the process. Let us get started!

Types of Hypotheses

In data sampling, different types of hypothesis are involved in finding whether the tested samples test positive for a hypothesis or not. In this segment, we shall discover the different types of hypotheses and understand the role they play in hypothesis testing.

Alternative Hypothesis

Alternative Hypothesis (H1) or the research hypothesis states that there is a relationship between two variables (where one variable affects the other). The alternative hypothesis is the main driving force for hypothesis testing.

It implies that the two variables are related to each other and the relationship that exists between them is not due to chance or coincidence.

When the process of hypothesis testing is carried out, the alternative hypothesis is the main subject of the testing process. The analyst intends to test the alternative hypothesis and verifies its plausibility.

Null Hypothesis

The Null Hypothesis (H0) aims to nullify the alternative hypothesis by implying that there exists no relation between two variables in statistics. It states that the effect of one variable on the other is solely due to chance and no empirical cause lies behind it.

The null hypothesis is established alongside the alternative hypothesis and is recognized as important as the latter. In hypothesis testing, the null hypothesis has a major role to play as it influences the testing against the alternative hypothesis.


Non-Directional Hypothesis

The Non-directional hypothesis states that the relation between two variables has no specified direction.

Simply put, it asserts that there exists a relation between two variables, but does not specify the direction of the effect, that is, whether an increase in variable A is associated with an increase or a decrease in variable B.

Directional Hypothesis

The Directional hypothesis, on the other hand, asserts the direction of effect of the relationship that exists between two variables.

Herein, the hypothesis clearly states whether variable A increases or decreases variable B.

Statistical Hypothesis

A statistical hypothesis is a hypothesis that can be verified to be plausible on the basis of statistics.

By using data sampling and statistical knowledge, one can determine the plausibility of a statistical hypothesis and find out if it stands true or not.


Performing Hypothesis Testing

Now that we have understood the types of hypotheses and the role they play in hypothesis testing, let us now move on to understand the process in a better manner.

In hypothesis testing, a researcher is first required to establish two hypotheses - alternative hypothesis and null hypothesis in order to begin with the procedure.

To establish these two hypotheses, one is required to study data samples, find a plausible pattern among the samples, and pen down a statistical hypothesis that they wish to test.

To begin hypothesis testing, a random sample can be drawn from the population. Of the two hypotheses, alternative and null, only one can be true. Both hypotheses must be stated for the process to work.

At the end of the hypothesis testing procedure, either of the hypotheses will be rejected and the other one will be supported. Even though one of the two hypotheses turns out to be true, no hypothesis can ever be verified 100%.


Therefore, a hypothesis can only be supported based on the statistical samples and verified data. Here is a step-by-step guide for hypothesis testing.

Establish the hypotheses

First things first, one is required to establish two hypotheses - alternative and null, that will set the foundation for hypothesis testing.

These hypotheses initiate the testing process that involves the researcher working on data samples in order to either support the alternative hypothesis or the null hypothesis.

Generate a testing plan

Once the hypotheses have been formulated, it is now time to generate a testing plan. A testing plan or an analysis plan involves the accumulation of data samples, determining which statistic is to be considered and laying out the sample size.

All these factors are very important while one is working on hypothesis testing.

Analyze data samples

As soon as a testing plan is ready, it is time to move on to the analysis part. Analysis of data samples involves configuring statistical values of samples, drawing them together, and deriving a pattern out of these samples.

While analyzing the data samples, a researcher needs to determine a set of things -

Significance Level - The significance level (α) is the probability threshold chosen before the analysis; it is the probability of rejecting the null hypothesis when the null hypothesis is actually true.

Testing Method - The testing method specifies a sampling distribution and a test statistic used to carry out the test. There are a number of testing methods that can assist in the analysis of data samples.

Test statistic - The test statistic is a numerical summary of a data set that can be used to perform hypothesis testing.

P-value - The P-value is the probability of obtaining a sample statistic at least as extreme as the observed test statistic, assuming the null hypothesis is true. A small P-value indicates that the data are unlikely under the null hypothesis.

Infer the results

The analysis of data samples leads to the inference of results that establishes whether the alternative hypothesis stands true or not. When the P-value is less than the significance level, the null hypothesis is rejected and the alternative hypothesis turns out to be plausible.

Methods of Hypothesis Testing

As we have already looked into different aspects of hypothesis testing, we shall now look into the different methods of hypothesis testing. All in all, there are 2 most common types of hypothesis testing methods. They are as follows -

Frequentist Hypothesis Testing

Frequentist hypothesis testing, the traditional approach, is a method that draws conclusions by considering only the current data.

The supposed truths and assumptions are based on the current data and a set of 2 hypotheses are formulated. A very popular subtype of the frequentist approach is the Null Hypothesis Significance Testing (NHST).

The NHST approach (involving the null and alternative hypothesis) has been one of the most sought-after methods of hypothesis testing in the field of statistics ever since its inception in the mid-1950s.

Bayesian Hypothesis Testing

A less conventional and more modern method, Bayesian hypothesis testing evaluates a hypothesis by combining prior knowledge (for example, from past data samples), expressed as a prior probability, with the current data to assess the plausibility of the hypothesis.

The result obtained indicates the posterior probability of the hypothesis. In this method, the researcher relies on ‘prior probability and posterior probability’ to conduct hypothesis testing on hand.

On the basis of this prior probability, the Bayesian approach tests a hypothesis to be true or false. The Bayes factor, a major component of this method, indicates the likelihood ratio among the null hypothesis and the alternative hypothesis.

The Bayes factor is the indicator of the plausibility of either of the two hypotheses that are established for hypothesis testing.
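The way a prior probability and a Bayes factor combine into a posterior probability can be sketched with the odds form of Bayes' theorem: posterior odds equal the Bayes factor times the prior odds. The notation `bayes_factor_10` (evidence for the alternative relative to the null) and the numbers below are assumptions made for illustration.

```python
def posterior_probability(prior_prob_h1, bayes_factor_10):
    """Posterior probability of the alternative hypothesis H1.

    bayes_factor_10 is the ratio of the probability of the data under H1
    to the probability of the data under H0.
    """
    prior_odds = prior_prob_h1 / (1 - prior_prob_h1)
    posterior_odds = bayes_factor_10 * prior_odds
    return posterior_odds / (1 + posterior_odds)

# Hypothetical cases: the same evidence applied to a neutral and a skeptical prior.
neutral = posterior_probability(prior_prob_h1=0.5, bayes_factor_10=20)
skeptical = posterior_probability(prior_prob_h1=0.01, bayes_factor_10=20)
print(f"neutral prior: {neutral:.3f}, skeptical prior: {skeptical:.3f}")
```

The same data leave a skeptical analyst far less convinced than a neutral one, which is the sense in which the Bayesian result depends on the prior.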


To conclude, hypothesis testing, a way to verify the plausibility of a supposed assumption can be done through different methods - the Bayesian approach or the Frequentist approach.

Whereas the Bayesian approach relies on a prior probability for the hypothesis, the frequentist approach does not assign a prior probability. The elements involved in hypothesis testing include the significance level, p-value, test statistic, and method of hypothesis testing.


A significant way to determine whether a hypothesis stands true or not is to verify the data samples and identify the plausible hypothesis among the null hypothesis and alternative hypothesis.


NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.


Hypothesis testing, p values, confidence intervals, and significance.

Jacob Shreffler ; Martin R. Huecker .


Last Update: March 13, 2023 .

- Definition/Introduction

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

- Issues of Concern

Without a foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance, healthcare providers may be unable to make clinical decisions without relying purely on the level of significance deemed appropriate by the research investigators. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

Hypothesis Testing

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis.

Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent.

Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p-value can be very low.

Null Hypothesis: There are no significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22.

The null hypothesis is deemed true until a study presents significant data to support rejecting the null hypothesis. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (they could not provide proof that there were significant differences or associations).
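The remark above that p values shrink as the sample size grows can be illustrated with a short sketch. It assumes two equal-sized groups, a known common standard deviation, and a fixed small difference in means; all numbers and the function name are hypothetical.

```python
import math
from statistics import NormalDist

def p_value_for_fixed_effect(effect, sd, n_per_group):
    """Two-sided p-value of a two-sample z-test for a fixed observed mean difference."""
    se = sd * math.sqrt(2 / n_per_group)  # standard error of the difference
    z = effect / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

# The same small difference (0.1 standard deviations) at increasing sample sizes.
sizes = (10, 100, 1000, 10000)
results = [p_value_for_fixed_effect(effect=0.1, sd=1.0, n_per_group=n) for n in sizes]
for n, p in zip(sizes, results):
    print(f"n per group = {n:>5}: p = {p:.4g}")
```

The effect is identical in every row; only the sample size changes, which is one reason a very small p-value should not be read as a large or clinically important effect.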

To test a hypothesis, researchers obtain data on a representative sample to determine whether to reject or fail to reject a null hypothesis. In most research studies, it is not feasible to obtain data for an entire population. Using a sampling procedure allows for statistical inference, though this involves a certain possibility of error. [1] When determining whether to reject or fail to reject the null hypothesis, mistakes can be made: Type I and Type II errors. Though it is impossible to ensure that these errors have not occurred, researchers should limit the possibilities of these faults. [2]

Significance

Significance is a term to describe the substantive importance of medical research. Statistical significance concerns the likelihood that results are due to chance. [3] Healthcare providers should always distinguish statistical significance from clinical significance; conflating the two is a common error when reviewing biomedical research. [4] When conceptualizing findings reported as either significant or not significant, healthcare providers should not simply accept researchers' results or conclusions without considering the clinical significance. Healthcare professionals should consider the clinical importance of findings and understand both p values and confidence intervals so they do not have to rely on the researchers to determine the level of significance. [5] One criterion often used to determine statistical significance is the utilization of p values.

P values are used in research to determine whether the sample estimate is significantly different from a hypothesized value. The p-value is the probability that the observed effect within the study, or a more extreme one, would have occurred by chance if, in reality, there was no true effect. Conventionally, data yielding a p<0.05 or p<0.01 is considered statistically significant. While some have argued that the 0.05 level should be lowered, it is still widely used. [6] Hypothesis testing allows us to determine whether an effect is statistically detectable; on its own, it does not convey the size of the effect.

Examples of findings reported with p values are below:

Statement: Drug 23 reduced patients' symptoms compared to Drug 22. Patients who received Drug 23 (n=100) were 2.1 times less likely than patients who received Drug 22 (n = 100) to experience symptoms of Disease A, p<0.05.

Statement: Individuals who were prescribed Drug 23 experienced fewer symptoms (M = 1.3, SD = 0.7) compared to individuals who were prescribed Drug 22 (M = 5.3, SD = 1.9). This finding was statistically significant, p = 0.02.

For either statement, if the threshold had been set at 0.05, the null hypothesis (that there was no relationship) should be rejected, and we should conclude significant differences. Noticeably, as can be seen in the two statements above, some researchers will report findings with < or > and others will provide an exact p-value (0.000001) but never zero. [6] When examining research, readers should understand how p values are reported. The best practice is to report all p values for all variables within a study design, rather than only providing p values for variables with significant findings. [7] The inclusion of all p values provides evidence for study validity and limits suspicion for selective reporting/data mining.

While researchers have historically used p values, experts who find p values problematic encourage the use of confidence intervals. [8] P-values alone do not allow us to understand the size or the extent of the differences or associations. [3] In March 2016, the American Statistical Association (ASA) released a statement on p values, noting that scientific decision-making and conclusions should not be based on a fixed p-value threshold (e.g., 0.05). They recommend focusing on the significance of results in the context of study design, quality of measurements, and validity of data. Ultimately, the ASA statement noted that in isolation, a p-value does not provide strong evidence. [9]

When conceptualizing clinical work, healthcare professionals should consider p values with a concurrent appraisal of study design validity. For example, a p-value from a double-blinded randomized clinical trial (designed to minimize bias) should be weighted higher than one from a retrospective observational study. [7] The p-value debate has smoldered since the 1950s, [10] and replacement with confidence intervals has been suggested since the 1980s. [11]

Confidence Intervals

A confidence interval provides a range of values that, at a given confidence level (e.g., 95%), is expected to include the true value of the statistical parameter within a targeted population. [12] Most research uses a 95% CI, but investigators can set any level (e.g., 90% CI, 99% CI). [13] A CI provides a range with the lower bound and upper bound limits of a difference or association that would be plausible for a population. [14] Therefore, a CI of 95% indicates that if a study were to be carried out 100 times, the range would contain the true value in about 95 of them. [15] Confidence intervals provide more evidence regarding the precision of an estimate compared to p-values. [6]

In consideration of the similar research example provided above, one could make the following statement with 95% CI:

Statement: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22; the mean difference in days to recovery between the two groups was 4.2 days (95% CI: 1.9 – 7.8).

It is important to note that the width of the CI is affected by the standard error and the sample size; reducing a study sample number will result in less precision of the CI (increase the width). [14] A larger width indicates a smaller sample size or a larger variability. [16] A researcher would want to increase the precision of the CI. For example, a 95% CI of 1.43 – 1.47 is much more precise than the one provided in the example above. In research and clinical practice, CIs provide valuable information on whether the interval includes or excludes any clinically significant values. [14]
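The dependence of CI width on sample size and variability can be sketched as follows. The example assumes a CI for a single mean with a known standard deviation and a normal critical value; the numbers and the function name `ci_width` are hypothetical.

```python
import math
from statistics import NormalDist

def ci_width(sd, n, confidence=0.95):
    """Full width of a normal-approximation confidence interval for a mean."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return 2 * z * sd / math.sqrt(n)

# Same variability, increasing sample size: the interval narrows.
for n in (25, 100, 400):
    print(f"n = {n:>3}: width = {ci_width(sd=10, n=n):.2f}")

# Same sample size, larger variability: the interval widens.
print(f"sd = 20, n = 100: width = {ci_width(sd=20, n=100):.2f}")
```

Because the width scales with one over the square root of the sample size, quadrupling the sample halves the width of the interval.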

Null values are sometimes used for differences with CI (zero for differential comparisons and 1 for ratios). However, CIs provide more information than that. [15] Consider this example: A hospital implements a new protocol that reduced wait time for patients in the emergency department by an average of 25 minutes (95% CI: -2.5 – 41 minutes). Because the range crosses zero, implementing this protocol in different populations could result in longer wait times; however, the range is much higher on the positive side. Thus, while the p-value used to detect statistical significance for this may result in "not significant" findings, individuals should examine this range, consider the study design, and weigh whether or not it is still worth piloting in their workplace.

Similarly to p-values, 95% CIs cannot control for researchers' errors (e.g., study bias or improper data analysis). [14] In consideration of whether to report p-values or CIs, researchers should examine journal preferences. When in doubt, reporting both may be beneficial. [13] An example is below:

Reporting both: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22, p = 0.009. The mean difference in days to recovery between the two groups was 4.2 days (95% CI: 1.9 – 7.8).

- Clinical Significance

Recall that clinical significance and statistical significance are two different concepts. Healthcare providers should remember that a study with statistically significant differences and large sample size may be of no interest to clinicians, whereas a study with smaller sample size and statistically non-significant results could impact clinical practice. [14] Additionally, as previously mentioned, a non-significant finding may reflect the study design itself rather than relationships between variables.

Healthcare providers using evidence-based medicine to inform practice should use clinical judgment to determine the practical importance of studies through careful evaluation of the design, sample size, power, likelihood of type I and type II errors, data analysis, and reporting of statistical findings (p values, 95% CI or both). [4] Interestingly, some experts have called for "statistically significant" or "not significant" to be excluded from work as statistical significance never has and will never be equivalent to clinical significance. [17]

The decision on what is clinically significant can be challenging, depending on the providers' experience and especially the severity of the disease. Providers should use their knowledge and experiences to determine the meaningfulness of study results and make inferences based not only on significant or insignificant results by researchers but through their understanding of study limitations and practical implications.

- Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts in this chapter. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care.


Disclosure: Jacob Shreffler declares no relevant financial relationships with ineligible companies.

Disclosure: Martin Huecker declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Shreffler J, Huecker MR. Hypothesis Testing, P Values, Confidence Intervals, and Significance. [Updated 2023 Mar 13]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.


Chapter 3: Hypothesis Testing

The previous two chapters introduced methods for organizing and summarizing sample data, and using sample statistics to estimate population parameters. This chapter introduces the next major topic of inferential statistics: hypothesis testing.

A hypothesis is a statement or claim about a property of a population.

The Fundamentals of Hypothesis Testing

When conducting scientific research, typically there is some known information, perhaps from some past work or from a long accepted idea. We want to test whether this claim is believable. This is the basic idea behind a hypothesis test:

- State what we think is true.

- Quantify how confident we are about our claim.

- Use sample statistics to make inferences about population parameters.

For example, past research tells us that the average life span for a hummingbird is about four years. You have been studying the hummingbirds in the southeastern United States and find a sample mean lifespan of 4.8 years. Should you reject the known or accepted information in favor of your results? How confident are you in your estimate? At what point would you say that there is enough evidence to reject the known information and support your alternative claim? How far from the known mean of four years can the sample mean be before we reject the idea that the average lifespan of a hummingbird is four years?

Hypothesis testing is a procedure, based on sample evidence and probability, used to test claims regarding a characteristic of a population.

A hypothesis test is a way for us to use our sample statistics to test a specific claim about a characteristic of a population of interest to us. For example:

The population mean weight is known to be 157 lb. We want to test the claim that the mean weight has increased.

Two years ago, the proportion of infected plants was 37%. We believe that a treatment has helped, and we want to test the claim that there has been a reduction in the proportion of infected plants.

Components of a Formal Hypothesis Test

The null hypothesis is a statement about the value of a population parameter, such as the population mean (µ) or the population proportion ( p ). It contains the condition of equality and is denoted as H 0 (H-naught).

H 0 : µ = 157 or H 0 : p = 0.37

The alternative hypothesis is the claim to be tested, the opposite of the null hypothesis. It contains the value of the parameter that we consider plausible and is denoted as H 1 .

H 1 : µ > 157 or H 1 : p < 0.37

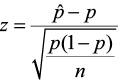

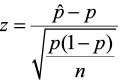

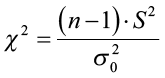

The test statistic is a value computed from the sample data that is used in making a decision about the rejection of the null hypothesis. The test statistic converts the sample mean ( x̄ ) or sample proportion ( p̂ ) to a Z- or t-score under the assumption that the null hypothesis is true . It is used to decide whether the difference between the sample statistic and the hypothesized claim is significant.

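The p-value is the probability, computed under the assumption that the null hypothesis is true, of obtaining a test statistic at least as extreme as the one observed. It is the area under the curve to the left or right of the test statistic (the area in both tails for a two-sided test). It is compared to the level of significance ( α ).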

The critical value is the value that defines the rejection zone (the test statistic values that would lead to rejection of the null hypothesis). It is defined by the level of significance.

The level of significance ( α ) is the probability that the test statistic will fall into the critical region when the null hypothesis is true. This level is set by the researcher.

The conclusion is the final decision of the hypothesis test. The conclusion must always be clearly stated, communicating the decision based on the components of the test. It is important to realize that we never prove or accept the null hypothesis. We are merely saying that the sample evidence is not strong enough to warrant the rejection of the null hypothesis. The conclusion is made up of two parts:

1) Reject or fail to reject the null hypothesis, and 2) there is or is not enough evidence to support the alternative claim.

Option 1) Reject the null hypothesis (H 0 ). This means that you have enough statistical evidence to support the alternative claim (H 1 ).

Option 2) Fail to reject the null hypothesis (H 0 ). This means that you do NOT have enough evidence to support the alternative claim (H 1 ).

Another way to think about hypothesis testing is to compare it to the US justice system. A defendant is innocent until proven guilty (Null hypothesis—innocent). The prosecuting attorney tries to prove that the defendant is guilty (Alternative hypothesis—guilty). There are two possible conclusions that the jury can reach. First, the defendant is guilty (Reject the null hypothesis). Second, the defendant is not guilty (Fail to reject the null hypothesis). This is NOT the same thing as saying the defendant is innocent! In the first case, the prosecutor had enough evidence to reject the null hypothesis (innocent) and support the alternative claim (guilty). In the second case, the prosecutor did NOT have enough evidence to reject the null hypothesis (innocent) and support the alternative claim of guilty.

The Null and Alternative Hypotheses

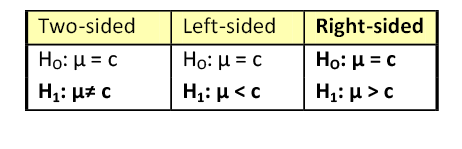

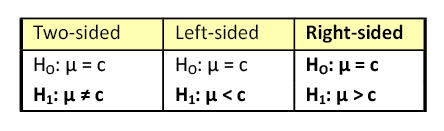

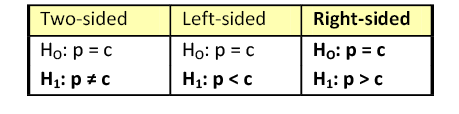

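There are three different pairs of null and alternative hypotheses, where c is some known value:

- H 0 : μ = c vs. H 1 : μ ≠ c (two-sided)

- H 0 : μ = c vs. H 1 : μ > c (right-sided)

- H 0 : μ = c vs. H 1 : μ < c (left-sided)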

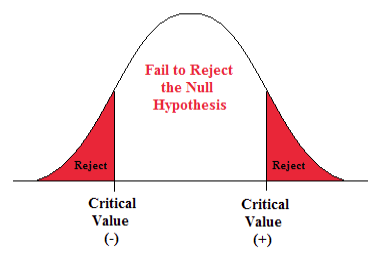

A Two-sided Test

This tests whether the population parameter is equal to, versus not equal to, some specific value.

H 0 : μ = 12 vs. H 1 : μ ≠ 12

The critical region is divided equally into the two tails and the critical values are ± values that define the rejection zones.

A forester studying diameter growth of red pine believes that the mean diameter growth will be different if a fertilization treatment is applied to the stand.

- H 0 : μ = 1.2 in./year

- H 1 : μ ≠ 1.2 in./year

This is a two-sided question, as the forester doesn’t state whether population mean diameter growth will increase or decrease.

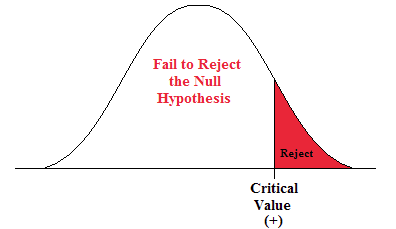

A Right-sided Test

This tests whether the population parameter is equal to, versus greater than, some specific value.

H 0 : μ = 12 vs. H 1 : μ > 12

The critical region is in the right tail and the critical value is a positive value that defines the rejection zone.

A biologist believes that there has been an increase in the mean number of lakes infected with milfoil, an invasive species, since the last study five years ago.

- H 0 : μ = 15 lakes

- H 1 : μ > 15 lakes

This is a right-sided question, as the biologist believes that there has been an increase in population mean number of infected lakes.

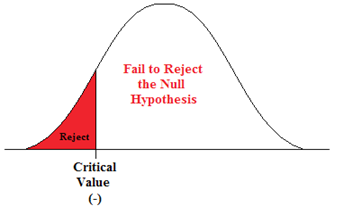

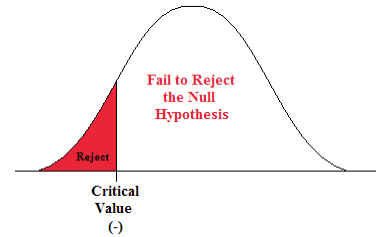

A Left-sided Test

This tests whether the population parameter is equal to, versus less than, some specific value.

H 0 : μ = 12 vs. H 1 : μ < 12

The critical region is in the left tail and the critical value is a negative value that defines the rejection zone.

A scientist’s research indicates that there has been a change in the proportion of people who support certain environmental policies. He wants to test the claim that there has been a reduction in the proportion of people who support these policies.

- H 0 : p = 0.57

- H 1 : p < 0.57

This is a left-sided question, as the scientist believes that there has been a reduction in the true population proportion.
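The way the alternative hypothesis determines the rejection zone can be sketched in a few lines of Python. This is a minimal illustration, assuming a Z-based test and using the standard library's `statistics.NormalDist`; the function name `critical_values` and the labels `"two-sided"`, `"right"`, and `"left"` are choices made for this sketch, not part of the chapter.

```python
from statistics import NormalDist


def critical_values(alpha, alternative):
    """Return the Z critical value(s) for a given level of significance.

    alternative: "two-sided", "right", or "left" (labels chosen for this sketch).
    """
    std_normal = NormalDist()  # standard normal: mean 0, standard deviation 1
    if alternative == "two-sided":
        upper = std_normal.inv_cdf(1 - alpha / 2)  # alpha is split between the two tails
        return (-upper, upper)
    if alternative == "right":
        return (std_normal.inv_cdf(1 - alpha),)    # all of alpha sits in the right tail
    if alternative == "left":
        return (std_normal.inv_cdf(alpha),)        # all of alpha sits in the left tail
    raise ValueError("alternative must be 'two-sided', 'right', or 'left'")


for alt in ("two-sided", "right", "left"):
    print(alt, [round(c, 3) for c in critical_values(0.05, alt)])
```

At α = 0.05 the two-sided critical values are about ±1.96, while the one-sided values are about 1.645 (right) and -1.645 (left). Putting all of α in a single tail moves the critical value closer to zero.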

Statistically Significant

When the observed results (the sample statistics) are unlikely (a low probability) under the assumption that the null hypothesis is true, we say that the result is statistically significant, and we reject the null hypothesis. This result depends on the level of significance, the sample statistic, sample size, and whether it is a one- or two-sided alternative hypothesis.

Types of Errors

When testing, we arrive at a conclusion of rejecting the null hypothesis or failing to reject the null hypothesis. Such conclusions are sometimes correct and sometimes incorrect (even when we have followed all the correct procedures). We use incomplete sample data to reach a conclusion and there is always the possibility of reaching the wrong conclusion. There are four possible conclusions to reach from hypothesis testing. Of the four possible outcomes, two are correct and two are NOT correct.

A Type I error occurs when we reject the null hypothesis when it is true. The symbol α (alpha) represents the probability of a Type I error. This is the same alpha we use as the level of significance. By setting alpha as low as reasonably possible, we try to control the Type I error through the level of significance.

A Type II error occurs when we fail to reject the null hypothesis when it is false. The symbol β (beta) represents the probability of a Type II error.
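The link between α and the Type I error rate can be checked with a small simulation. The sketch below is illustrative only: it assumes a two-sided Z-test with known σ, and the population values (mean 157, standard deviation 12), the sample size, and the function name `type_i_error_rate` are hypothetical choices made for the example.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def type_i_error_rate(mu0, sigma, n, alpha, trials, seed=1):
    """Estimate how often a two-sided Z-test rejects a TRUE null hypothesis."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        # Draw a sample from a population in which H0 really is true.
        sample = [rng.gauss(mu0, sigma) for _ in range(n)]
        z = (fmean(sample) - mu0) / (sigma / sqrt(n))
        if abs(z) > z_crit:
            rejections += 1  # rejecting a true H0 is a Type I error
    return rejections / trials


# Hypothetical population values, for illustration only.
rate = type_i_error_rate(mu0=157, sigma=12, n=30, alpha=0.05, trials=5000)
print(round(rate, 3))
```

Because every sample is drawn from a population where the null hypothesis holds, each rejection is a Type I error, and the observed rejection rate should land close to the chosen α.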

In general, Type I errors are considered more serious. One step in the hypothesis test procedure involves selecting the significance level ( α ), which is the probability of rejecting the null hypothesis when it is correct. So the researcher can select the level of significance that minimizes Type I errors. However, there is a mathematical relationship between α, β , and n (sample size).

- As α increases, β decreases

- As α decreases, β increases

- As sample size (n) increases, β decreases for a fixed α, so both error probabilities can be kept small

The natural inclination is to select the smallest possible value for α, thinking to minimize the possibility of causing a Type I error. Unfortunately, this forces an increase in Type II errors. By making the rejection zone too small, you may fail to reject the null hypothesis, when, in fact, it is false. Typically, we select the best sample size and level of significance, automatically setting β .

Power of the Test

The symbol β is the probability of a Type II error, that is, the probability of failing to reject a false null hypothesis. It follows that 1 - β is the probability of rejecting a false null hypothesis. This probability is identified as the power of the test, and is often used to gauge the test’s effectiveness in recognizing that a null hypothesis is false.

The probability that a fixed level α significance test will reject H 0 when a particular alternative value of the parameter is true is called the power of the test.

Power is also directly linked to sample size. For example, suppose the null hypothesis is that the mean fish weight is 8.7 lb. Given sample data, a level of significance of 5%, and an alternative weight of 9.2 lb., we can compute the power of the test to reject μ = 8.7 lb. If we have a small sample size, the power will be low. However, increasing the sample size will increase the power of the test. Increasing the level of significance will also increase power. A 5% test of significance will have a greater chance of rejecting the null hypothesis than a 1% test because the strength of evidence required for the rejection is less. Decreasing the standard deviation has the same effect as increasing the sample size: there is more information about μ .
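The effects described above can be sketched numerically for the fish-weight example. The text does not give a sample size or standard deviation, so the values below (σ = 1.5 lb. and the sample sizes 10, 30, and 100) are assumptions made purely for illustration, as is the choice of a right-sided Z-test and the function name `power_right_sided`.

```python
from math import sqrt
from statistics import NormalDist


def power_right_sided(mu0, mu_alt, sigma, n, alpha):
    """Power of a right-sided Z-test of H0: mu = mu0 when the true mean is mu_alt."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)
    se = sigma / sqrt(n)  # standard error of the sample mean
    # H0 is rejected when the Z-score exceeds z_crit; under mu_alt the Z-score
    # is shifted by (mu_alt - mu0) / se, which gives the probability below.
    return 1 - std_normal.cdf(z_crit - (mu_alt - mu0) / se)


SIGMA = 1.5  # assumed population standard deviation (not given in the text)

# Larger samples give more power.
power_by_n = {n: power_right_sided(8.7, 9.2, SIGMA, n, 0.05) for n in (10, 30, 100)}

# A 5% test has more power than a 1% test at the same sample size.
power_alpha_01 = power_right_sided(8.7, 9.2, SIGMA, 30, 0.01)
power_alpha_05 = power_right_sided(8.7, 9.2, SIGMA, 30, 0.05)

# A smaller standard deviation also raises power.
power_small_sigma = power_right_sided(8.7, 9.2, 1.0, 30, 0.05)

for n, power in power_by_n.items():
    print(f"n = {n:3d}, alpha = 0.05: power = {power:.3f}")
print(f"n =  30, alpha = 0.01: power = {power_alpha_01:.3f}")
print(f"n =  30, alpha = 0.05, sigma = 1.0: power = {power_small_sigma:.3f}")
```

Under these assumed values, power rises with the sample size, rises when α is raised from 1% to 5%, and rises when the standard deviation shrinks, matching the three relationships stated in the paragraph.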

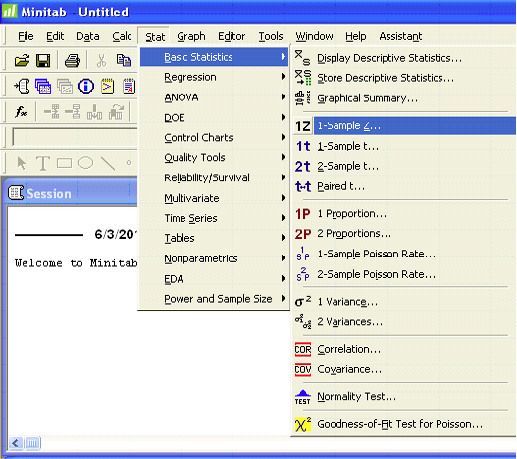

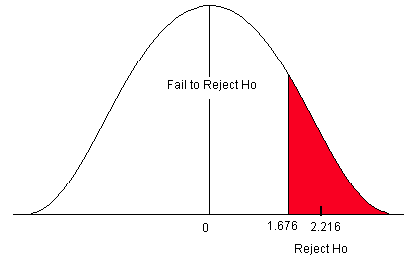

Hypothesis Test about the Population Mean ( μ ) when the Population Standard Deviation ( σ ) is Known

We are going to examine two equivalent ways to perform a hypothesis test: the classical approach and the p-value approach. The classical approach is based on standard deviations. This method compares the test statistic (Z-score) to a critical value (Z-score) from the standard normal table. If the test statistic falls in the rejection zone, you reject the null hypothesis. The p-value approach is based on area under the normal curve. This method compares the area associated with the test statistic to alpha ( α ), the level of significance (which is also area under the normal curve). If the p-value is less than alpha, you would reject the null hypothesis.

As a past student poetically said: If the p-value is a wee value, Reject Ho

Both methods must have:

- Data from a random sample.

- Verification of the assumption of normality.

- A null and alternative hypothesis.

- A criterion that determines if we reject or fail to reject the null hypothesis.

- A conclusion that answers the question.

There are four steps required for a hypothesis test:

- State the null and alternative hypotheses.

- State the level of significance and the critical value.

- Compute the test statistic.

- State a conclusion.
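The four steps, and the equivalence of the classical and p-value approaches, can be sketched as a single function. This is a minimal sketch assuming a Z-test with known σ; the function name `z_test`, its argument names, and the sample figures in the usage lines (a sample mean of 160.2 lb. from 40 observations with σ = 12 lb.) are hypothetical and not taken from the chapter.

```python
from math import sqrt
from statistics import NormalDist


def z_test(xbar, mu0, sigma, n, alpha=0.05, alternative="two-sided"):
    """Z-test for a population mean with known sigma, decided both ways."""
    std_normal = NormalDist()
    z = (xbar - mu0) / (sigma / sqrt(n))  # test statistic

    if alternative == "two-sided":
        p_value = 2 * (1 - std_normal.cdf(abs(z)))  # area in both tails
        reject_classical = abs(z) > std_normal.inv_cdf(1 - alpha / 2)
    elif alternative == "right":
        p_value = 1 - std_normal.cdf(z)             # area to the right of z
        reject_classical = z > std_normal.inv_cdf(1 - alpha)
    elif alternative == "left":
        p_value = std_normal.cdf(z)                 # area to the left of z
        reject_classical = z < std_normal.inv_cdf(alpha)
    else:
        raise ValueError("alternative must be 'two-sided', 'right', or 'left'")

    return {
        "z": z,
        "p_value": p_value,
        "reject_classical": reject_classical,  # test statistic vs. critical value
        "reject_p_value": p_value < alpha,     # p-value vs. alpha
    }


# Hypothetical data: H0: mu = 157 vs. H1: mu > 157.
result = z_test(xbar=160.2, mu0=157, sigma=12, n=40, alpha=0.05, alternative="right")
print(result)
```

Both decision rules are computed from the same test statistic, so they agree: the test statistic falls in the rejection zone exactly when the p-value is below α.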

The Classical Method for Testing a Claim about the Population Mean ( μ ) when the Population Standard Deviation ( σ ) is Known

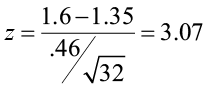

A forester studying diameter growth of red pine believes that the mean diameter growth will be different from the known mean growth of 1.35 inches/year if a fertilization treatment is applied to the stand. He conducts his experiment, collects data from a sample of 32 plots, and gets a sample mean diameter growth of 1.6 in./year. The population standard deviation for this stand is known to be 0.46 in./year. Does he have enough evidence to support his claim?

Step 1) State the null and alternative hypotheses.

- H 0 : μ = 1.35 in./year

- H 1 : μ ≠ 1.35 in./year

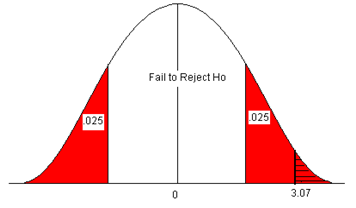

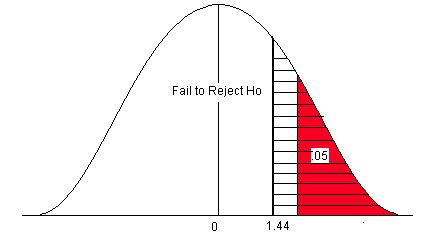

Step 2) State the level of significance and the critical value.

- We will choose a level of significance of 5% ( α = 0.05).

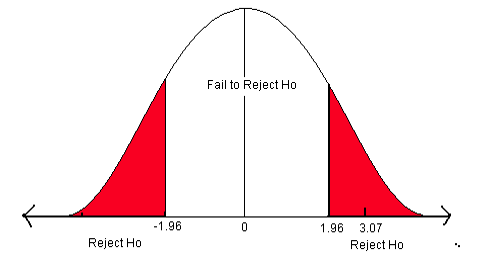

- For a two-sided question, we need a two-sided critical value – Z α /2 and + Z α /2 .

- The level of significance is divided by 2 (since we are only testing “not equal”). We must have two rejection zones that can deal with either a greater than or less than outcome (to the right (+) or to the left (-)).

- We need to find the Z-score associated with the area of 0.025. The red areas are equal to α /2 = 0.05/2 = 0.025 or 2.5% of the area under the normal curve.

- Go into the body of values and find the negative Z-score associated with the area 0.025.

- The negative critical value is -1.96. Since the curve is symmetric, we know that the positive critical value is 1.96.

- ±1.96 are the critical values. These values set up the rejection zone. If the test statistic falls within these red rejection zones, we reject the null hypothesis.

Step 3) Compute the test statistic.

- The test statistic is the number of standard deviations the sample mean is from the known mean. It is also a Z-score, just like the critical value.

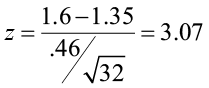

- For this problem, the test statistic is z = ( x̄ - μ ) / ( σ / √n ) = (1.6 - 1.35) / (0.46 / √32) ≈ 3.07.

Step 4) State a conclusion.

- Compare the test statistic to the critical value. If the test statistic falls into the rejection zones, reject the null hypothesis. In other words, if the test statistic is greater than +1.96 or less than -1.96, reject the null hypothesis.

In this problem, the test statistic falls in the red rejection zone. The test statistic of 3.07 is greater than the critical value of 1.96. We will reject the null hypothesis. We have enough evidence to support the claim that the mean diameter growth is different from (not equal to) 1.35 in./year.
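The arithmetic in this example can be reproduced with a short Python sketch. It uses only the figures stated in the problem; the variable names are choices made for illustration, and `statistics.NormalDist` stands in for the printed standard normal table.

```python
from math import sqrt
from statistics import NormalDist

# Values stated in the red pine example.
mu0 = 1.35    # hypothesized mean diameter growth (in./year)
xbar = 1.6    # sample mean (in./year)
sigma = 0.46  # known population standard deviation (in./year)
n = 32        # number of plots sampled
alpha = 0.05  # level of significance

# Step 2: two-sided critical value, with alpha/2 in each tail.
z_crit = NormalDist().inv_cdf(1 - alpha / 2)

# Step 3: test statistic, the number of standard errors x-bar lies from mu0.
z_stat = (xbar - mu0) / (sigma / sqrt(n))

# Step 4: reject H0 if the test statistic falls in either rejection zone.
reject = abs(z_stat) > z_crit

print(f"critical values: +/-{z_crit:.2f}")
print(f"test statistic:  {z_stat:.2f}")
print("reject H0" if reject else "fail to reject H0")
```

The script reports critical values of about ±1.96 and a test statistic of about 3.07, so it reaches the same decision as the worked example: reject the null hypothesis.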

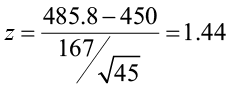

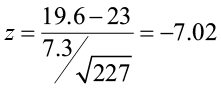

A researcher believes that there has been an increase in the average farm size in his state since the last study five years ago. The previous study reported a mean size of 450 acres with a population standard deviation ( σ ) of 167 acres. He samples 45 farms and gets a sample mean of 485.8 acres. Is there enough information to support his claim?

- H 0 : μ = 450 acres