What Is Computer Architecture? Components, Types, and Examples

Computer architecture determines how a computer’s components exchange electronic signals to enable input, processing, and output.

- Computer architecture is defined as the end-to-end structure of a computer system that determines how its components interact with each other in helping execute the machine’s purpose (i.e., processing data).

- This article explains the components of computer architecture and its key types and gives a few notable examples.

Table of Contents

What Is Computer Architecture?

Components of Computer Architecture

Types of Computer Architecture

Examples of Computer Architecture

What Is Computer Architecture?

Computer architecture refers to the end-to-end structure of a computer system that determines how its components interact with each other in helping to execute the machine’s purpose (i.e., processing data), often avoiding any reference to the actual technical implementation.

Examples of Computer Architecture: Von Neumann Architecture (a) and Harvard Architecture (b)

Source: ResearchGate

Computers are an integral element of any organization’s infrastructure, from the equipment employees use at the office to the cell phones and wearables they use to work from home. All computers, regardless of their size, are founded on a set of principles describing how hardware and software connect to make them function. This is what constitutes computer architecture.

Computer architecture is the arrangement of the components that comprise a computer system and the engine at the core of the processes that drive its functioning. It specifies the machine interface for which programming languages and associated processors are designed.

Complex instruction set computer (CISC) and reduced instruction set computer (RISC) are the two predominant approaches to instruction set design, and they influence how computer processors function.

CISC processors typically combine a small register set with a large instruction set containing hundreds of distinct instructions. A single CISC instruction can carry out a multistep task, such as loading operands from memory, operating on them, and storing the result, which simplifies the programmer’s work because fewer lines of code are needed to complete an operation. This approach produces compact programs that use less memory, but an individual instruction may take several clock cycles to execute.

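A reassessment of this approach led to high-performance computers based on the RISC architecture. RISC hardware is designed to be as simple and fast as possible: each instruction performs one basic operation and can typically complete in a single clock cycle, and complex operations are built from sequences of these simpler instructions.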
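As a rough illustration of the difference, the Python sketch below performs the same addition of two memory values in both styles. The opcodes (`ADDM`, `LOAD`, `ADD`, `STORE`) and the dictionary-based memory are hypothetical, chosen only to contrast a single memory-to-memory CISC-style instruction with a RISC-style load/store sequence; real instruction sets differ in detail.

```python
# Toy model: memory is a dict of address -> value, registers a dict of name -> value.
# The opcodes ADDM, LOAD, ADD, and STORE are hypothetical, used only for contrast.

def run(program, memory):
    """Execute a list of (opcode, *operands) tuples; return (memory, instructions executed)."""
    regs = {}
    executed = 0
    for op, *args in program:
        executed += 1
        if op == "ADDM":      # CISC style: read two memory operands, add, write memory
            dst, a, b = args
            memory[dst] = memory[a] + memory[b]
        elif op == "LOAD":    # RISC style: only loads and stores touch memory
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "ADD":     # RISC style: arithmetic works on registers only
            rd, rs, rt = args
            regs[rd] = regs[rs] + regs[rt]
        elif op == "STORE":
            reg, addr = args
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown opcode {op}")
    return memory, executed

cisc_program = [("ADDM", 0x30, 0x10, 0x20)]
risc_program = [
    ("LOAD", "r1", 0x10),
    ("LOAD", "r2", 0x20),
    ("ADD", "r3", "r1", "r2"),
    ("STORE", "r3", 0x30),
]

if __name__ == "__main__":
    for name, prog in (("CISC", cisc_program), ("RISC", risc_program)):
        mem, count = run(prog, {0x10: 7, 0x20: 5})
        print(name, mem[0x30], count)   # CISC 12 1, then RISC 12 4
```

Both versions leave 12 at address 0x30, but the RISC program needs four instructions where the CISC program needs one; in exchange, the RISC hardware only has to handle simple, uniform operations.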

How does computer architecture work?

Computer architecture allows a computer to compute, retain, and retrieve information. This data can be digits in a spreadsheet, lines of text in a file, dots of color in an image, sound patterns, or the state of a device such as a flash drive.

- Purpose of computer architecture: Everything a system does, from web browsing to printing, involves transmitting and processing numbers. A computer’s architecture is essentially a mathematical system designed to collect, transmit, and interpret numbers.

- Data in numbers: The computer stores all data as numbers. When a developer is engrossed in machine learning code and analyzing sophisticated algorithms and data structures, it is easy to forget this.

- Manipulating data: The computer manipulates information using numerical operations. For example, it can display an image on a screen by transferring a matrix of numbers to the video memory, with each number representing the color of one pixel.

- Multifaceted functions: The components of a computer architecture include both software and hardware. The processor — hardware that executes computer programs — is the primary part of any computer.

- Booting up: At the most elementary level of a computer design, the processor executes programs as soon as the computer is switched on. These programs configure the computer for proper operation and initialize the different hardware sub-components to a known state. This software is known as firmware because it is stored persistently in the computer’s non-volatile memory.

- Support for temporary storage: Memory is also a vital component of computer architecture, with several types often present in a single system. The memory is used to hold programs (applications) while they are being executed by the processor and the data being processed by the programs.

- Support for permanent storage and I/O: A computer system can also include devices for storing data or exchanging information with the outside world. These enable text input through the keyboard, the display of information on a monitor, and the transfer of programs and data to or from a disc drive.

- User-facing functionality: Software governs the operation and functioning of a computer. Several software ‘layers’ exist in computer architecture. Typically, a layer would only interface with layers below or above it.
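To make the “Manipulating data” point concrete, here is a minimal Python sketch of a framebuffer, the region of video memory that the screen image is drawn from. It assumes a hypothetical 4x3-pixel display with a simple linear layout of three bytes (red, green, blue) per pixel, stored row by row; real video hardware uses a variety of pixel formats and memory layouts.

```python
# A hypothetical 4x3 framebuffer: 3 bytes (R, G, B) per pixel, stored row by row.
WIDTH, HEIGHT, BYTES_PER_PIXEL = 4, 3, 3
framebuffer = bytearray(WIDTH * HEIGHT * BYTES_PER_PIXEL)  # all zeros = black

def set_pixel(fb, x, y, rgb):
    """Write one pixel; rgb is a 24-bit number such as 0xFF0000 (red)."""
    offset = (y * WIDTH + x) * BYTES_PER_PIXEL   # row-major address arithmetic
    fb[offset] = (rgb >> 16) & 0xFF              # red component
    fb[offset + 1] = (rgb >> 8) & 0xFF           # green component
    fb[offset + 2] = rgb & 0xFF                  # blue component

def get_pixel(fb, x, y):
    """Read one pixel back as a 24-bit number."""
    offset = (y * WIDTH + x) * BYTES_PER_PIXEL
    r, g, b = fb[offset:offset + 3]
    return (r << 16) | (g << 8) | b

set_pixel(framebuffer, 1, 2, 0xFF8000)   # an orange pixel at column 1, row 2
print(list(framebuffer[27:30]))          # [255, 128, 0]
```

Drawing the pixel is nothing more than computing an address and storing three numbers there, which is exactly the kind of numeric manipulation described above.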

The working of a computer architecture begins with the bootup process. Once the firmware is loaded, it can initialize the rest of the computer architecture and ensure that it works seamlessly, i.e., helping the user retrieve, consume, and work on different types of data.


Components of Computer Architecture

Depending on the method of categorization, the parts of a computer architecture can be subdivided in several ways. The main components of a computer architecture are the CPU, memory, and peripherals. All these elements are linked by the system bus, which comprises an address bus, a data bus, and a control bus. Within this framework, the computer architecture has eight key components, as described below.
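The roles of the three buses can be sketched in a few lines of Python. This is a toy model under simplifying assumptions: the bus is a single object whose `address`, `data`, and `control` fields stand in for the three groups of signal lines, and `SystemBus`, `cpu_read`, and `cpu_write` are hypothetical names, not a real hardware interface.

```python
class Memory:
    def __init__(self, size):
        self.cells = [0] * size

class SystemBus:
    """Toy bus: the CPU drives the address and control lines; values travel on the data lines."""
    def __init__(self, memory):
        self.memory = memory
        self.address = 0      # address bus: which location is being accessed
        self.data = 0         # data bus: the value being transferred
        self.control = None   # control bus: "READ" or "WRITE"

    def transaction(self):
        # The memory decodes the control signal and address, then drives or latches the data lines.
        if self.control == "READ":
            self.data = self.memory.cells[self.address]
        elif self.control == "WRITE":
            self.memory.cells[self.address] = self.data
        else:
            raise ValueError("control bus must carry READ or WRITE")

def cpu_write(bus, address, value):
    bus.address, bus.data, bus.control = address, value, "WRITE"
    bus.transaction()

def cpu_read(bus, address):
    bus.address, bus.control = address, "READ"
    bus.transaction()
    return bus.data

bus = SystemBus(Memory(256))
cpu_write(bus, 0x42, 99)
print(cpu_read(bus, 0x42))  # 99
```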

1. Input unit and associated peripherals

The input unit brings data from external sources into the computer system, connecting the external environment to the computer. It receives information from input devices, converts it into a binary form the computer can process, and then passes it into the computer system. The keyboard and mouse are the most commonly used input devices, and each has a corresponding device driver that allows it to work in sync with the rest of the computer architecture.

2. Output unit and associated peripherals

The output unit delivers the results of the computer’s processing to the user. Output data commonly takes the form of text, graphics, audio, or video. A computer architecture’s output devices include the display, printer, speakers, headphones, etc.

To play an MP3 file, for instance, the system reads an array of numbers from the disc into memory. The computer architecture manipulates these numbers to convert the compressed audio data into uncompressed audio data and then outputs the resulting set of numbers (the uncompressed audio) to the audio chip. The chip converts these digital samples into an analog signal, which the output unit and its associated peripherals, such as speakers or headphones, make audible to the user.

3. Storage unit/memory

The storage unit contains numerous computer parts that are employed to store data. It is typically separated into primary storage and secondary storage.

Primary storage unit

This component of the computer architecture is also referred to as the main memory, as the CPU has direct access to it. Primary memory stores data and instructions during program execution. Random access memory (RAM) and read-only memory (ROM) are its two kinds:

- RAM supplies the necessary data directly to the CPU. It is volatile memory that holds data and instructions temporarily, only while the computer is powered on.

- ROM is a memory type that contains pre-installed instructions, including firmware. Its content is persistent and is not modified during normal operation. ROM is used to boot the machine upon startup, when the computer knows nothing beyond what the ROM contains. The chip’s instructions tell the computer how to set up the computer architecture, conduct a power-on self-test (POST), and finally locate the hard drive so that the operating system can be launched.

Secondary storage unit

Secondary or external storage is not directly accessible to the CPU; before the CPU can use data held in secondary storage, that data must be transferred to main storage. Secondary storage retains large amounts of data permanently. Examples include hard disk drives (HDDs), solid-state drives (SSDs), compact discs (CDs), etc.


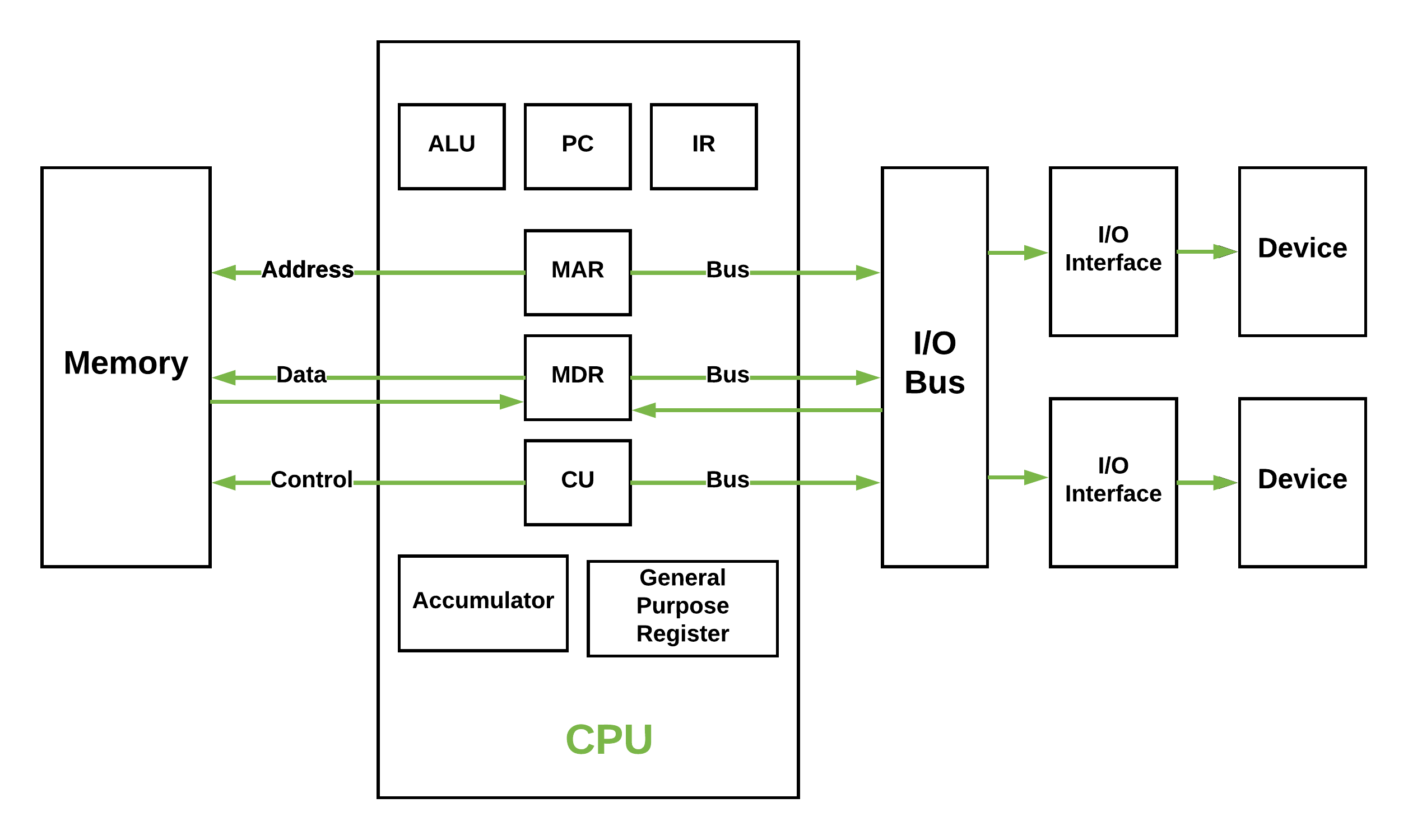

4. Central processing unit (CPU)

The central processing unit includes registers, an arithmetic logic unit (ALU), and control circuits, which interpret and execute machine instructions. The CPU interacts with all the other parts of the computer architecture to make sense of the data and deliver the necessary output.

Here is a brief overview of the CPU’s sub-components:

1. Registers

These are high-speed, purpose-built temporary storage locations inside the CPU. Rather than being accessed through memory addresses, they are named directly in instructions and are read and modified by the CPU throughout execution. Essentially, they hold what the CPU is currently processing: data values, instructions, addresses, and intermediate results.

2. Arithmetic logic unit (ALU)

The arithmetic logic unit contains the electrical circuitry that performs arithmetic and logical operations on the supplied data. It executes all arithmetic (addition, subtraction, multiplication, division) and logical (<, >, AND, OR, etc.) computations. The ALU uses registers to hold the data being processed.
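A minimal sketch of an ALU, assuming a hypothetical 8-bit design that supports a subset of the operations listed above and reports two status flags (zero and carry). Results are truncated to 8 bits, as fixed-width hardware would do; the operation names are illustrative.

```python
WIDTH = 8
MASK = (1 << WIDTH) - 1   # 0xFF for an 8-bit ALU

def alu(op, a, b):
    """Return (result, flags) for a hypothetical 8-bit ALU with zero and carry flags."""
    if op == "ADD":
        full = a + b
    elif op == "SUB":
        full = a - b              # negative results wrap around (two's complement)
    elif op == "AND":
        full = a & b
    elif op == "OR":
        full = a | b
    elif op == "LT":              # comparison: 1 if a < b (unsigned), else 0
        full = int(a < b)
    else:
        raise ValueError(f"unsupported operation {op}")
    result = full & MASK          # keep only the bits that fit in the register
    flags = {
        "zero": result == 0,
        "carry": full > MASK or full < 0,   # carry out (ADD) or borrow (SUB)
    }
    return result, flags

print(alu("ADD", 200, 100))   # (44, {'zero': False, 'carry': True})
```

200 + 100 = 300 does not fit in 8 bits, so the register keeps 300 - 256 = 44 and the carry flag records the overflow.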

3. Control unit

The control unit coordinates the computer’s input and output devices. It directs the execution of stored program instructions by communicating with the ALU and registers. In short, the control unit organizes the processing of data and instructions.
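The three sub-components work together in what is usually called the fetch-decode-execute cycle. The Python sketch below is a toy model of that cycle: the opcodes (`LOADI`, `ADD`, `ADDI`, `BNZ`, `HALT`) and the four-register file are hypothetical, invented only to show the control unit fetching the instruction at the program counter, decoding it, and driving the registers and ALU.

```python
def run_cpu(program, num_registers=4):
    """Toy control unit: fetch, decode, execute until HALT. Returns the register file."""
    regs = [0] * num_registers   # general-purpose registers
    pc = 0                       # program counter: address of the next instruction
    while True:
        ir = program[pc]         # FETCH the instruction into the instruction register
        pc += 1
        opcode, *operands = ir   # DECODE: split the opcode from its operands
        # EXECUTE: route operands through the ALU or change the program counter
        if opcode == "LOADI":
            rd, imm = operands
            regs[rd] = imm
        elif opcode == "ADD":
            rd, rs, rt = operands
            regs[rd] = regs[rs] + regs[rt]
        elif opcode == "ADDI":
            rd, rs, imm = operands
            regs[rd] = regs[rs] + imm
        elif opcode == "BNZ":
            rs, target = operands
            if regs[rs] != 0:
                pc = target
        elif opcode == "HALT":
            return regs
        else:
            raise ValueError(f"illegal instruction {ir!r}")

# Sum 5 + 4 + 3 + 2 + 1 with a countdown loop.
program = [
    ("LOADI", 0, 0),     # r0 = 0 (running sum)
    ("LOADI", 1, 5),     # r1 = 5 (counter)
    ("ADD", 0, 0, 1),    # r0 = r0 + r1
    ("ADDI", 1, 1, -1),  # r1 = r1 - 1
    ("BNZ", 1, 2),       # loop back to address 2 while r1 != 0
    ("HALT",),
]
print(run_cpu(program)[0])  # 15
```

A real control unit does this work with hardware signals rather than `if` statements, but the sequence is the same: the program counter selects the next instruction, and decoding determines which registers and ALU operation are used.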

A microprocessor is a CPU implemented on a single integrated circuit, and it is the primary component of computer hardware. Microprocessors are mounted on printed circuit boards (PCBs) in electronic systems of all kinds, including desktops, calculators, and internet of things (IoT) devices. The Intel 4004 was the first microprocessor with all CPU components on a single chip.

In addition to these four core components, a computer architecture also has supporting elements that make it easier to function, such as:

5. Bootloader

The firmware contains the bootloader, a specific program executed by the processor that retrieves the operating system from the disc (or non-volatile memory or network interface, as deemed applicable) and loads it into the memory so that the processor can execute it. The bootloader is found on desktop and workstation computers and embedded devices. It is essential for all computer architectures.
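The boot sequence described above (firmware, power-on self-test, bootloader, operating system) can be outlined as a short Python sketch. Everything here is hypothetical: the device names, the `BOOT_ORDER` priority list, and the string standing in for an OS image are placeholders for what real firmware reads from hardware.

```python
# Hypothetical boot-device priority list, as it might be configured in firmware settings.
BOOT_ORDER = ["disk", "usb", "network"]

def power_on_self_test(hardware):
    """Firmware step: every required component must report that it is working."""
    return all(hardware.values())

def find_boot_device(devices):
    """Return the first device in BOOT_ORDER that holds an OS image."""
    for name in BOOT_ORDER:
        image = devices.get(name)
        if image is not None:
            return name, image
    raise RuntimeError("no bootable device found")

def boot(hardware, devices):
    log = ["firmware: started from ROM"]
    if not power_on_self_test(hardware):
        raise RuntimeError("POST failed")
    log.append("firmware: POST passed")
    name, image = find_boot_device(devices)
    log.append(f"bootloader: loading OS image from {name}")
    main_memory = {"kernel": image}   # the bootloader copies the OS into main memory...
    log.append(f"bootloader: jumping to {main_memory['kernel']}")  # ...then hands over control
    return log

for line in boot({"cpu": True, "ram": True}, {"disk": None, "usb": "os-image-v1"}):
    print(line)
```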

6. Operating system (OS)

The operating system governs the computer’s functionality just above firmware. It manages memory usage and regulates devices such as the keyboard, mouse, display, and disc drives. The OS also provides the user with an interface, allowing them to launch apps and access data on the drive.

Typically, the operating system offers programs a set of services, commonly exposed as system calls, that allow them to access the screen, disc drives, and other elements of the computer’s architecture.
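Python’s `os` module offers a convenient view of these OS services from a program’s side. On Unix-like systems, `os.pipe()`, `os.write()`, `os.read()`, and `os.close()` are thin wrappers over the system calls of the same names, so the sketch below asks the kernel to create a pipe, copy bytes into it, and hand them back. The pipe is just a convenient example; file and device access follow the same request-the-OS pattern.

```python
import os

read_fd, write_fd = os.pipe()            # ask the OS for a kernel-managed channel
written = os.write(write_fd, b"hello")   # system call: copy bytes into the kernel
os.close(write_fd)
data = os.read(read_fd, 1024)            # system call: copy the bytes back out
os.close(read_fd)
print(written, data)                     # 5 b'hello'
```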

7. Bus

A bus is a physical collection of signal lines that share a related purpose; a familiar example is the universal serial bus (USB). Buses carry electrical signals between the various components of a computer’s design, transferring information from one component to another. The width of a bus is the number of signal lines that carry information. An 8-bit bus, for instance, transports 8 data bits in parallel.
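Bus width determines how many transfers a value needs. The sketch below splits a value into bus-width pieces, each of which would travel as one parallel transfer; it assumes the least significant piece is sent first, which is an illustrative choice rather than a rule. An 8-bit bus needs four transfers for a 32-bit value, while a 32-bit bus moves it in one.

```python
def bus_transfers(value, value_bits, bus_width_bits):
    """Split a value into bus-width chunks, least significant chunk first (assumed order).

    Each chunk is one parallel transfer: all of its bits travel at once on separate lines.
    """
    mask = (1 << bus_width_bits) - 1
    transfers = []
    for shift in range(0, value_bits, bus_width_bits):
        transfers.append((value >> shift) & mask)
    return transfers

print([hex(t) for t in bus_transfers(0x12345678, 32, 8)])   # ['0x78', '0x56', '0x34', '0x12']
print(len(bus_transfers(0x12345678, 32, 32)))               # 1
```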

8. Interrupts

Interrupts, also known as traps or exceptions in certain processors, are a mechanism for diverting the processor from the current program so that it can handle an event. Such an event might be an error reported by a peripheral, or simply the fact that an I/O device has completed its previous task and is ready for another one. Every time you press a key or click a mouse button, your system generates an interrupt.
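The redirect-and-resume behavior of interrupts can be modeled in a small Python sketch. It assumes a hypothetical interrupt vector table that maps IRQ numbers to handler functions and checks for pending interrupts between instructions; real processors also save and restore registers and run handlers at a privileged level, which this model omits.

```python
from collections import deque

def keyboard_handler(log):
    log.append("handled keyboard interrupt")

def disk_handler(log):
    log.append("handled disk-ready interrupt")

# Hypothetical interrupt vector table: IRQ number -> handler routine.
VECTOR_TABLE = {1: keyboard_handler, 14: disk_handler}

def run(program_steps, raise_at):
    """Run the main program; raise_at maps a step index to the IRQ a device raises during it."""
    log, pending = [], deque()
    for step in range(program_steps):
        log.append(f"main program step {step}")   # execute one instruction
        if step in raise_at:
            pending.append(raise_at[step])         # a device asserts its interrupt line
        while pending:                             # check for interrupts between instructions
            irq = pending.popleft()
            VECTOR_TABLE[irq](log)                 # dispatch via the vector table, then resume
    return log

print(run(3, {1: 1}))
```

The handler runs between steps 1 and 2, after which the main program resumes exactly where it left off.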


Types of Computer Architecture

It is possible to set up and configure the above architectural components in numerous ways. This gives rise to the different types of computer architecture. The most notable ones include:

1. Instruction set architecture (ISA)

Instruction set architecture (ISA) is a bridge between the software and hardware of a computer. It functions as a programmer’s view of the machine. Computers can only execute binary machine code (0s and 1s), while humans write programs in high-level languages using constructs such as if-else statements and while loops. The ISA defines the machine instructions into which compilers and assemblers translate those programs, which is why it plays a crucial role in communication between users and the computer.

In addition, ISA outlines the architecture of a computer in terms of the fundamental operations it must support. It is not concerned with implementation-specific features. Instruction set architecture dictates that the computer must support:

- Arithmetic/logic instructions: These instructions perform arithmetic or logical operations on one or more operands (data inputs).

- Data transfer instructions: These instructions move data from memory into the processor registers, or vice versa.

- Branch and jump instructions: These instructions alter the sequential flow of execution by transferring control to another instruction address.
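To see the three instruction classes at the binary level, the sketch below encodes one instruction of each kind using the MIPS32 formats, MIPS being a classic RISC ISA. The R-type layout is opcode (6 bits), rs, rt, rd, shamt (5 bits each), and funct (6 bits); the I-type layout is opcode, rs, rt, and a 16-bit immediate. Only the three registers needed here are included in the lookup table.

```python
# MIPS32 register numbers for the registers used below.
REG = {"$t0": 8, "$t1": 9, "$t2": 10}

def r_type(funct, rd, rs, rt, shamt=0):
    """Arithmetic/logic: opcode(6)=0 | rs(5) | rt(5) | rd(5) | shamt(5) | funct(6)."""
    return (0 << 26) | (REG[rs] << 21) | (REG[rt] << 16) | (REG[rd] << 11) | (shamt << 6) | funct

def i_type(opcode, rs, rt, imm):
    """Data transfer and branches: opcode(6) | rs(5) | rt(5) | immediate(16)."""
    return (opcode << 26) | (REG[rs] << 21) | (REG[rt] << 16) | (imm & 0xFFFF)

add_word = r_type(0x20, "$t0", "$t1", "$t2")  # add $t0, $t1, $t2   (arithmetic)
lw_word = i_type(0x23, "$t1", "$t0", 4)       # lw  $t0, 4($t1)     (data transfer: base $t1)
beq_word = i_type(0x04, "$t0", "$t1", 3)      # beq $t0, $t1, +3    (branch; offset in instructions)

for word in (add_word, lw_word, beq_word):
    print(f"{word:032b}  0x{word:08X}")
```

The three words come out as 0x012A4020, 0x8D280004, and 0x11090003: these bit patterns, not the assembly text, are what the processor actually fetches and decodes.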

2. Microarchitecture

Microarchitecture, unlike ISA, focuses on the implementation of how instructions will be executed at a lower level. This is influenced by the microprocessor’s structural design.

Microarchitecture is the way a given instruction set architecture is implemented in a processor. Engineers and hardware designers implement an ISA with various microarchitectures, which change as new technologies develop. Therefore, processors can be physically redesigned to execute a given instruction set more efficiently without modifying the ISA.

Simply put, microarchitecture is the purpose-built logical arrangement of the microprocessor’s electrical components and data pathways. It facilitates the optimum execution of instructions.
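The ISA/microarchitecture split can be illustrated with a multiply instruction. In the Python sketch below, two hypothetical implementations of the same `MUL` operation return identical results, so software sees no difference, but one models an iterative shift-and-add unit (one step per multiplier bit) and the other a parallel array multiplier. The cycle counts are simplified assumptions, not measurements of any real processor.

```python
def mul_shift_add(a, b, bits=8):
    """Iterative design: one shift-and-add step per multiplier bit (assumed one cycle each)."""
    product, cycles = 0, 0
    for i in range(bits):
        if (b >> i) & 1:          # if bit i of the multiplier is set...
            product += a << i     # ...add the multiplicand shifted left by i
        cycles += 1
    return product, cycles

def mul_array(a, b, bits=8):
    """Parallel design: a hardware array multiplier produces the product at once (assumed 1 cycle)."""
    return a * b, 1

# Same instruction (MUL), same architectural result, different implementation cost.
for impl in (mul_shift_add, mul_array):
    print(impl.__name__, impl(13, 11))   # (143, 8), then (143, 1)
```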

3. Client-server architecture

Multiple clients (remote processors) may request and receive services from a single, centralized server (host computer) in a client-server system. Client computers allow users to request services from the server and receive the server’s reply. Servers receive and respond to client requests.

A server should provide clients with a standardized, transparent interface so that they are unaware of the system’s features (software and hardware components) that are used to provide the service.

Clients usually run on desktops or laptops, while servers typically run on more powerful hardware elsewhere on the network. This computer architecture is most efficient when clients and servers each perform well-defined tasks that recur frequently.
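A minimal client-server exchange can be sketched with Python’s standard `socket` and `threading` modules. To keep the example self-contained, the server and client run in the same program on the loopback address: the server thread accepts one connection and replies, while the client sends a request and waits for the answer without knowing how the reply is produced. The `ECHO:` reply format and the function names are hypothetical.

```python
import socket
import threading

def recv_all(sock):
    """Read until the peer closes its sending side."""
    chunks = []
    while True:
        data = sock.recv(4096)
        if not data:
            return b"".join(chunks)
        chunks.append(data)

def serve_one(server_sock):
    """Server: accept a single client, read its request, send a reply."""
    conn, _addr = server_sock.accept()
    with conn:
        request = recv_all(conn)
        conn.sendall(b"ECHO: " + request)   # the client never sees how the reply is produced

def request_from_server(message):
    """Start a one-shot server on a free loopback port, then act as its client."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server_sock:
        server_sock.bind(("127.0.0.1", 0))      # port 0: let the OS pick a free port
        server_sock.listen(1)
        port = server_sock.getsockname()[1]
        server = threading.Thread(target=serve_one, args=(server_sock,))
        server.start()
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)     # tell the server the request is complete
            reply = recv_all(client)
        server.join()
    return reply

print(request_from_server(b"hello"))  # b'ECHO: hello'
```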

4. Single instruction, multiple data (SIMD) architecture

Single instruction, multiple data (SIMD) computer systems can process multiple data points concurrently. This paved the way for supercomputers and other devices with very high performance. In this design, all processors receive the same instruction from the control unit but operate on different data elements. The shared memory is divided into multiple modules so that it can communicate with all processors simultaneously.
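The lockstep idea behind SIMD can be modeled in Python, with the caveat that the loop below runs sequentially; it only illustrates the control structure, in which one control unit broadcasts a single instruction and every processing element applies it to its own data. The `ProcessingElement` class and the `ADD`/`MUL` operations are hypothetical.

```python
class ProcessingElement:
    """One processor in the array: it has its own local data but no control unit."""
    def __init__(self, a, b):
        self.a, self.b, self.result = a, b, None

    def execute(self, op):
        if op == "ADD":
            self.result = self.a + self.b
        elif op == "MUL":
            self.result = self.a * self.b
        else:
            raise ValueError(f"unsupported operation {op}")

def control_unit_broadcast(pes, op):
    """The single control unit issues one instruction; every PE applies it to its own data."""
    for pe in pes:          # in hardware these steps happen simultaneously, in lockstep
        pe.execute(op)
    return [pe.result for pe in pes]

pes = [ProcessingElement(a, b) for a, b in zip([1, 2, 3, 4], [10, 20, 30, 40])]
print(control_unit_broadcast(pes, "ADD"))   # [11, 22, 33, 44]
```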

5. Multicore architecture

Multicore is a design in which a single physical processor contains the logic of multiple processors. A multicore architecture integrates several processing cores onto a single integrated circuit. The goal is a system that can perform more tasks concurrently, improving overall system performance.
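Software takes advantage of multiple cores by splitting work into independent pieces. The sketch below divides a list into one slice per core and processes the slices in parallel with `concurrent.futures.ProcessPoolExecutor`. It uses processes rather than threads because, in the standard CPython build, the global interpreter lock prevents pure-Python threads from executing bytecode in parallel. The function names are illustrative, and any actual speedup depends on the machine and the workload.

```python
import os
from concurrent.futures import ProcessPoolExecutor

def chunk(data, parts):
    """Split data into `parts` contiguous slices whose sizes differ by at most one."""
    size, extra = divmod(len(data), parts)
    slices, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        slices.append(data[start:end])
        start = end
    return slices

def sum_of_squares(values):
    """The per-core work item."""
    return sum(v * v for v in values)

def parallel_sum_of_squares(data, cores=None):
    """Give each core one slice, run the slices in separate processes, combine the partial sums."""
    cores = cores or os.cpu_count() or 1
    with ProcessPoolExecutor(max_workers=cores) as pool:
        return sum(pool.map(sum_of_squares, chunk(data, cores)))

if __name__ == "__main__":
    data = list(range(200_000))
    print(parallel_sum_of_squares(data) == sum_of_squares(data))  # True
```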


Examples of Computer Architecture

Two notable examples of computer architecture have paved the way for recent advancements in computing. These are ‘Von Neumann architecture’ and ‘Harvard architecture.’ Most other architectural designs are proprietary and are therefore not revealed in the public domain beyond a basic abstraction.

Here’s a description of what these two examples of computer architecture are all about.

1. Von Neumann architecture

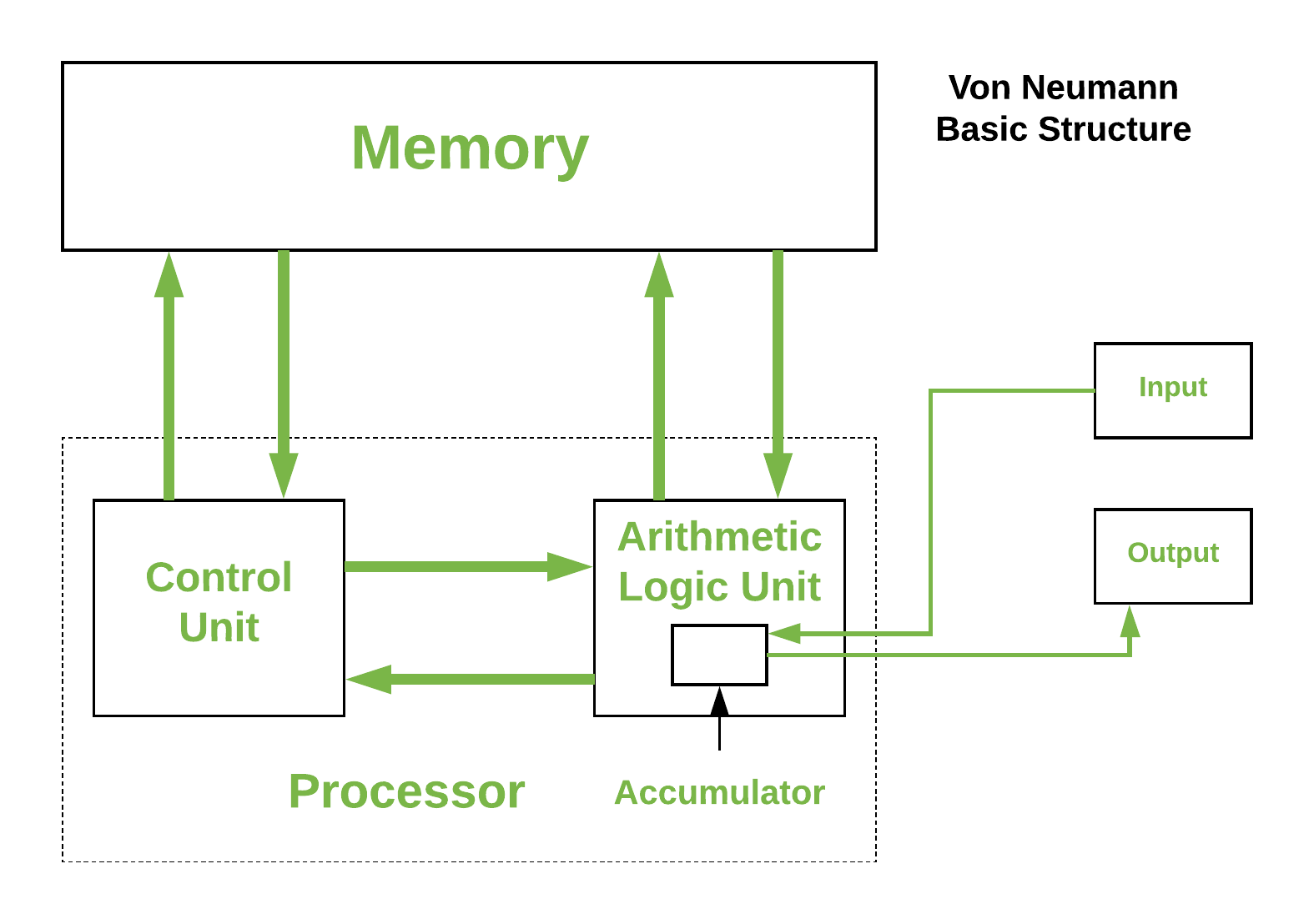

The von Neumann architecture, often referred to as the Princeton architecture, is a computer architecture described in 1945 by John von Neumann and his collaborators in the First Draft of a Report on the EDVAC (Electronic Discrete Variable Automatic Computer). This example of computer architecture proposes five components:

- A processing unit with an arithmetic logic unit and processor registers

- A control unit that includes an instruction register and a program counter

- Memory capable of storing information as well as instructions and communicating via buses

- Additional or external storage

- Input and output mechanisms

2. Harvard architecture

The Harvard architecture refers to a computer architecture with separate storage and signal pathways for data and instructions. In contrast to the von Neumann architecture, in which program instructions and data share the same memory and pathways, this design separates the two. In practice, many processors use a modified Harvard architecture with separate instruction and data caches backed by a shared main memory; x86 and Advanced RISC Machine (ARM) systems commonly employ this approach.
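One practical consequence of the two designs is how instruction fetches and data accesses compete for memory. The Python sketch below counts memory cycles under a deliberately simplified assumption: every memory access takes one cycle and nothing else costs time. With a single shared path (von Neumann), a load or store must wait for its own instruction fetch; with separate paths (Harvard), the two can overlap.

```python
def memory_cycles(program, separate_paths):
    """Count memory cycles, assuming every memory access takes exactly one cycle.

    program: list of opcodes; "LOAD" and "STORE" also access data memory.
    separate_paths=False models von Neumann (one shared path);
    separate_paths=True models Harvard (instruction and data paths used in parallel).
    """
    cycles = 0
    for op in program:
        needs_data = op in ("LOAD", "STORE")
        if separate_paths:
            cycles += 1                          # fetch and data access overlap
        else:
            cycles += 2 if needs_data else 1     # fetch, then data access, on the same path
    return cycles

program = ["LOAD", "LOAD", "ADD", "STORE"]
print(memory_cycles(program, separate_paths=False))  # 7 (von Neumann)
print(memory_cycles(program, separate_paths=True))   # 4 (Harvard)
```

The exact seven-versus-four count is an artifact of the toy model, but it reflects the real pressure on a shared memory path that split instruction and data caches are partly meant to relieve.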


Computer architecture is one of the key concepts that define modern computing. Depending on the architecture, you can build small single-board computers such as the Raspberry Pi or extremely powerful systems such as supercomputers. It determines how electrical signals move across the different pathways in a computing system to achieve the best possible outcome.


Image source: Shutterstock


Technical Writer


EE282: Computer Systems Architecture

Instructor : Caroline Trippel Teaching Assistant : Sneha Goenka

EE282 focuses on key topics in advanced computer systems architecture such as multilevel memory hierarchies, advanced pipelining and superscalar techniques, vectors, GPUs and accelerators, non-volatile storage and advanced IO systems, virtualization, and datacenter hardware and software architecture. The programming assignments introduce performance analysis and optimization techniques for computer systems. At the completion of the course, you will understand how computer systems are organized, why they are organized that way, and what determines their performance. You will also understand the rich interactions between the hardware and software layers in modern systems. EE282 is appropriate for undergraduate and graduate students specializing in the broad field of computer systems. It is also appropriate for other EE and CS students who want to understand, program, and make efficient use of modern computer systems of any scale in their day-to-day work. Post-EE282, students can take CS316, a research seminar on advanced computer architecture based on recent papers, or CS349d, a seminar that covers the software infrastructure of cloud computing and large-scale datacenters.

News (top is most recent)

- (5/24) Problem Set 3 due date has been extended to May 31, 1pm PST.

- (5/20) Programming Assignment 2 has been released. It is due June 4, 1pm PST.

- (5/10) Problem Set 3 has been released. It is due May 26, 1pm PST.

- (5/6) Programming Assignment 1 has been released. It is due May 20, 1pm PST.

- (4/28) We will not have the office hour on May 4.

- (4/28) We will have the virtual office hour/midterm review session (SCPD students) on May 2 from 5:30AM-6:30PM. It will appear as a lecture under Zoom on Canvas.

- (4/28) We will have the virtual office hour/midterm review session on May 2 from 11:00AM-1:00PM. It will appear as a lecture under Zoom on Canvas.

- (4/28) We will have a review session on April 29 from 2:30PM-5:00PM. It will appear as a lecture under Zoom on Canvas.

- (4/21) Problem Set 2 has been released. It is due May 10, 1pm PST.

- (4/14) We will have a review session on April 15 from 3:00PM-4:30PM. It will appear as a lecture under Zoom on Canvas.

- (4/7) We will have a review session on April 8 from 3:00PM-4:30PM. It will appear as a lecture under Zoom on Canvas.

- (4/5) Problem Set 1 has been released. It is due April 21, 1pm PST.

- Sneha's March 30th office hours will be held over zoom [Password: 101121] from 3:00pm.

- Programming Assignment 3 released here. It is due June 6, 1pm PST on Gradescope. Setup instructions are available here.

- Programming Assignment 2 released here. It is due May 18, 1pm PST on Gradescope. Setup instructions are available here.

- Programming Assignment 1 released here. It is due May 9, 1pm PST on Gradescope.

- GCP setup instructions released here.

- Problem Set for the quarter released here.

- Please make sure you have access to the Canvas, Ed and GradeScope (links posted above).

- Welcome to EE282!

Required Textbook: H&P: J. Hennessy & D. Patterson, Computer Architecture: A Quantitative Approach, 6th edition. M/C: Morgan Claypool Synthesis Lectures (available through the library using your SUID). Other materials: Problem Set (for the Reference Problems in the table below), Programming Assignment 1, Programming Assignment 2.

Homework and Projects

Problem sets.

- Problem Set 3, due Thursday 5/31, at 1pm PST. Solutions

- Problem Set 2, due Tuesday 5/10, at 1pm PST. Solutions

- Problem Set 1, due Thursday 4/21, at 1pm PST. Solutions

Programming Assignments

- GCE Setup Instructions

- Programming Assignment 1, due Friday 5/20, at 1pm PST.

- Programming Assignment 2, due Saturday 6/4, at 1pm PST.

Announcements: Visit this web page regularly to access all the handouts, solutions, and announcements. Please check your email regularly as well for announcements from Ed!

- Caroline Trippel: Tuesdays 3:00pm – 4:00pm, Gates 470 (starting 04/04/2023) or by appointment.

- Sneha Goenka: Wednesdays 12:00noon – 1:30pm, Packard 106 (starting 04/05/2023) or by appointment.

- Mondays 2:00PM – 3:00pm, over zoom and it is recorded.

- Midterm quiz on Wednesday, May 11th (1:30PM - 3:00PM PST) in Skilling Auditorium, covers lectures 1-10.

- Final quiz on Saturday, June 10th (3:30PM - 5:00PM PST) in Skilling Auditorium, covers lectures 1-18.

Grading:

- Read the material for each lecture, ask questions, participate (5%)

- Problem set (15%)

- 3 programming assignments (30%)

- Midterm quiz (25%)

- Final quiz (25%)

- No more than 2 people can collaborate on a homework or project assignment.

- Students working together should submit a single assignment for the pair.

- Any assistance received for homework or programming assignment solutions should be acknowledged in writing with specific details.

- No sharing of code, or partial or complete solutions among groups is permitted.

SCPD Video Recording Disclaimer: Video cameras located in the back of the room will capture the instructor presentations in this course. For your convenience, you can access these recordings by logging into the course Canvas site. These recordings might be reused in other Stanford courses, viewed by other Stanford students, faculty, or staff, or used for other education and research purposes. Note that while the cameras are positioned with the intention of recording only the instructor, occasionally a part of your image or voice might be incidentally captured. If you have questions, please contact a member of the teaching team.


Assignments should be attempted individually. If you cannot come up with an answer after trying for a while then you may discuss the material or specific issue with a friend/TA/Prof. The answers should be your own. In other words, don't discuss or compare your answers to an assignment with anyone or any source.

Project teams should work independently. Collaboration among team members is unrestricted (obviously).


Course info, instructors.

- Dr. Joel Emer

- Prof. Krste Asanovic

- Prof. Arvind

Departments

- Electrical Engineering and Computer Science

As Taught In

- Computer Design and Engineering

- Theory of Computation


Computer system architecture, course description.


Computer architecture and organization

Note: This exam date is subject to change based on seat availability. You can check the final exam date on your hall ticket.


Course layout, books and references, instructor bio.

Prof. Indranil Sengupta

Prof. Kamalika Datta

Course certificate.


Computer Organization and Architecture (2-1-1)

SPRING 2019-20

Lecture: Monday (8:45 am - 10:45 am); Lab: Monday (10:50 am - 1:50 pm); Tuesday (8:45 am - 10:45 am).

Venue: Room-5006, CC3 Building

Course Objective:

Course Outline:

References:

2. Carl Hamacher, Zvonko Vranesic and Safwat Zaky, Computer Organization, McGraw Hill

3. William Stallings, Computer Organization and Architecture: Designing for Performance, Pearson Education

4. John P. Hayes, Computer Architecture and Organization, McGraw Hill

5. Morris Mano, Computer System Architecture, Pearson Education

6. Michael D. Ciletti, Advanced Digital Design with the Verilog HDL, 2nd Edition, Pearson

Important Instructions:

1. Classes will be conducted using slide presentation as well as chalk-board.

2. Official slide sets and miscellaneous study materials from some of the main textbooks and elsewhere will be uploaded to the website on a regular basis.

3. Every student is expected to have access to at least one of the references mentioned above.

4. Attendance in the classes is mandatory. If a student's attendance falls below 75% at the end of the C2 component, he/she will be dropped from the course.

5. Grading Policy:

- 30%: Component 1 - Closed book exam (10%); Take home assignment (10%) and Lab assignment (10%)

- 30%: Component 2 - Closed book exam (10%); Take home assignment (10%) and Lab assignment (10%)

- 40%: Component 3 - Closed book written exam

6. Take-home assignments: They will be assigned at the beginning of each module (announcements will be made on the course website every week). These assignments will not only help you develop an in-depth understanding of each topic of the course but will also help you prepare for your written examinations. They will be evaluated during tutorial classes. You will have to explain your solution to the TAs during tutorial classes (deadlines will be mentioned on the course website), and you may be assigned group projects that have to be demonstrated during tutorial sessions.

Lab Course Outline:

The lab classes will mainly consist of (a) simulation of Verilog models for digital systems (including data path and control path design of a simple hypothetical CPU) using proprietary/open-source simulation tools and (b) simulation of MIPS32 programs using the MARS/SPIM simulator. Students are expected to take the lab assignments seriously to gain a more refined knowledge of the topics.

Tools and Language

Regarding Modelsim

Regarding MARS

Open Source Simulators

Machines, OS and Editors

Lab Related Instructions

Announcements:

1. C1 review test solutions

2. Semester Project

3. C2 review test solutions

4. C3 review test solutions

1. Quiz1 solutions

2. Mid-sem solutions

3. Quiz2 solutions

4. End-sem solutions


Important Links: WWW Computer Architecture

Tutorial/Resources

Lab Assignments

Lab Resources

Introduction

An Introductory Lecture - IITM

Installing Modelsim

Modelsim Setup - Linux ( .run file)

modelsim.sh

Basics of Logic Design - Part 1

Logic Design

Data Representation

Instructing the Computer-1

Instructing the Computer-2

Assignment 1

Tut 1 : Getting Started with Modelsim

Tut 2 : Verilog Modelling using Modelsim

Detailed Tutorial on Modelsim

Verilog modelling

Combinational Circuit Design Using Verilog

Basics of Logic Design - Part 2

Sequential Circuits

Design of Sequential Circuits

Design of Finite State Machines

Home-Work Assignment 1

MIPS Instruction Set Architecture

MIPS Instruction Set

Assignment 2

Sequential Circuit Design Using Verilog

FSM Design Using Verilog

Discussion on Homework / Evaluation 1

Assembly Language Programming Using MIPS Instruction Set

Home-Work Assignment 2

Getting Started with MIPS using MARS

JRE Download

MARS Download

Discussion on Homework / Evaluation 2

MIPS Assignment 1

Tut 1: MIPS Programming Using MARS

Expected leave on account of ASMITA

Arithmetic for Computer Systems

MIPS Assignment 2

Tut 2: MIPS Programming Using MARS

C1 Component Period

Memory System

Exploiting Memory Hierarchy

MIPS Assignment 3

Cache Memory

Tut 3 : Cache Simulator

Additional Resources

Assignments

Combinational Circuits

Logic Gates

Problem Set 1

Registers and Counters

Problem Set 2

Finite State Machine Design

1. Integer representation

2. Floating Point representation

Problem Set 3

Problem Set 4

Computer Arithmetic

Arithmetic Circuits

Instruction Set Architecture

MIPS Programming

Data Path Design

Control Unit Design

Input/Output

Input Output Organization

Programming the Basic Computer

Simple RISC Instruction Set

ARM Instruction Set

A GUI based SimpleRisc emulator

Problem Set : ARM

Designing the CPU

Input/Output System

Ch 12 slides from book Computer Organization and Design by S.Sarangi


- Computer Organization and Architecture Tutorial

Basic Computer Instructions

- What is Computer

- Issues in Computer Design

- Difference between assembly language and high level language

- Addressing Modes

- Difference between Memory based and Register based Addressing Modes

Computer Organization | Von Neumann architecture

- Harvard Architecture

- Interaction of a Program with Hardware

- Simplified Instructional Computer (SIC)

- Instruction Set used in simplified instructional Computer (SIC)

- Instruction Set used in SIC/XE

- RISC and CISC in Computer Organization

- Vector processor classification

- Essential Registers for Instruction Execution

- Introduction of Single Accumulator based CPU organization

- Introduction of Stack based CPU Organization

- Machine Control Instructions in Microprocessor

- Very Long Instruction Word (VLIW) Architecture

Input and Output Systems

- Computer Organization | Different Instruction Cycles

- Machine Instructions

- Computer Organization | Instruction Formats (Zero, One, Two and Three Address Instruction)

- Difference between 2-address instruction and 1-address instructions

- Difference between 3-address instruction and 0-address instruction

- Register content and Flag status after Instructions

- Debugging a machine level program

- Vector Instruction Format in Vector Processors

- Vector instruction types

Instruction Design and Format

- Introduction of ALU and Data Path

- Computer Arithmetic | Set - 1

- Computer Arithmetic | Set - 2

- Difference between 1's Complement representation and 2's Complement representation Technique

- Restoring Division Algorithm For Unsigned Integer

- Non-Restoring Division For Unsigned Integer

- Computer Organization | Booth's Algorithm

- How the negative numbers are stored in memory?

Microprogrammed Control

- Computer Organization | Micro-Operation

- Microarchitecture and Instruction Set Architecture

- Types of Program Control Instructions

- Difference between CALL and JUMP instructions

- Computer Organization | Hardwired v/s Micro-programmed Control Unit

- Implementation of Micro Instructions Sequencer

- Performance of Computer in Computer Organization

- Introduction of Control Unit and its Design

- Computer Organization | Amdahl's law and its proof

- Subroutine, Subroutine nesting and Stack memory

- Different Types of RAM (Random Access Memory )

- Random Access Memory (RAM) and Read Only Memory (ROM)

- 2D and 2.5D Memory organization

Input and Output Organization

- Priority Interrupts | (S/W Polling and Daisy Chaining)

- I/O Interface (Interrupt and DMA Mode)

- Direct memory access with DMA controller 8257/8237

- Computer Organization | Asynchronous input output synchronization

- Programmable peripheral interface 8255

- Synchronous Data Transfer in Computer Organization

- Introduction of Input-Output Processor

- MPU Communication in Computer Organization

- Memory mapped I/O and Isolated I/O

Memory Organization

- Introduction to memory and memory units

- Memory Hierarchy Design and its Characteristics

- Register Allocations in Code Generation

- Cache Memory

- Cache Organization | Set 1 (Introduction)

- Multilevel Cache Organisation

- Difference between RAM and ROM

- What's difference between CPU Cache and TLB?

- Introduction to Solid-State Drive (SSD)

- Read and Write operations in Memory

- Instruction Level Parallelism

- Computer Organization and Architecture | Pipelining | Set 1 (Execution, Stages and Throughput)

- Computer Organization and Architecture | Pipelining | Set 3 (Types and Stalling)

- Computer Organization and Architecture | Pipelining | Set 2 (Dependencies and Data Hazard)

- Last Minute Notes Computer Organization

COA GATE PYQ's AND COA Quiz

- Computer Organization and Architecture

- Digital Logic & Number representation

- Number Representation

- Microprocessor

- GATE CS Preparation

Von Neumann computer architecture:

The design now known as the Von Neumann architecture was proposed in 1945.

Historically, there have been two types of computers:

- Fixed-program computers – Their function is very specific and they cannot be reprogrammed, e.g., calculators.

- Stored-program computers – These can be programmed to carry out many different tasks; their programs (applications) are stored in memory, hence the name.

Modern computers are based on the stored-program concept introduced by John Von Neumann. In this concept, programs and data are stored in the same memory unit and are treated alike. This novel idea meant that a computer built with this architecture would be much easier to reprogram: changing its task only requires loading a different program into memory.
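As a minimal sketch of the stored-program idea, the hypothetical Python snippet below models memory as one flat list that holds both instructions and data. Encoding instructions as `(opcode, operand)` tuples is an assumption made for readability; a real machine stores them as binary words. Reprogramming is then nothing more than writing new values into ordinary memory cells.

```python
# Hypothetical toy memory: one flat list holds both instructions and data,
# as in the stored-program concept. Instructions are (opcode, operand)
# tuples purely for readability; real hardware stores binary words.
memory = [
    ("LOAD", 4),   # address 0: instruction
    ("ADD", 5),    # address 1: instruction
    ("STORE", 6),  # address 2: instruction
    ("HALT", 0),   # address 3: instruction
    10,            # address 4: data
    32,            # address 5: data
    0,             # address 6: data (a result would be stored here)
]


def reprogram(mem, new_program):
    """Overwrite the start of memory with new instructions.

    Because code lives in the same memory as data, changing the machine's
    task needs no rewiring: we simply write different values into cells.
    """
    if len(new_program) > len(mem):
        raise ValueError("program does not fit in memory")
    mem[:len(new_program)] = new_program
    return mem


def is_instruction(cell):
    """Toy helper: the hardware cannot tell code from data; this check
    works only because of our tuple encoding."""
    return isinstance(cell, tuple)


# Swap ADD for SUB: the program is edited exactly as data would be.
reprogram(memory, [("LOAD", 4), ("SUB", 5)])
```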

Its basic structure is described below.

The design is also associated with the IAS computer (the machine built at the Institute for Advanced Study following this architecture), and it has three basic units:

- The Central Processing Unit (CPU)

- The Main Memory Unit

- The Input/Output Devices

Let’s consider them in detail.

1. Central Processing Unit

The central processing unit is the electronic circuitry that executes the instructions of a computer program.

It has the following major components:

1. Control Unit (CU)

2. Arithmetic and Logic Unit (ALU)

3. A variety of registers

- Control Unit – A control unit (CU) handles all processor control signals. It directs all input and output flow, fetches code for instructions, and controls how data moves around the system.

- Arithmetic and Logic Unit (ALU) – The arithmetic logic unit is the part of the CPU that handles all the calculations the CPU may need, e.g., addition, subtraction, and comparisons. It performs arithmetic, logical, and bit-shifting operations.

Figure – Basic CPU structure, illustrating ALU
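The ALU's role can be illustrated with a small, hypothetical 8-bit ALU written in Python. The operation names, the 8-bit word width and the two flags (zero and carry/borrow) are assumptions for this sketch; real ALUs are combinational circuits and usually expose further status flags such as sign and overflow.

```python
from typing import Dict, Optional, Tuple

WORD_BITS = 8
MASK = (1 << WORD_BITS) - 1          # 0xFF for an 8-bit word
TOP_BIT = 1 << (WORD_BITS - 1)       # 0x80, the bit lost by a left shift


def alu(op: str, a: int, b: int = 0) -> Tuple[Optional[int], Dict[str, bool]]:
    """Perform one hypothetical 8-bit ALU operation and return (result, flags).

    'carry' means carry-out for ADD/SHL, borrow for SUB/CMP, and the bit
    shifted out for SHR. CMP subtracts b from a only to set the flags and
    returns no result.
    """
    a &= MASK
    b &= MASK
    if op == "ADD":
        raw, carry = a + b, a + b > MASK
    elif op in ("SUB", "CMP"):
        raw, carry = a - b, a < b            # borrow when a < b
    elif op == "AND":
        raw, carry = a & b, False
    elif op == "OR":
        raw, carry = a | b, False
    elif op == "XOR":
        raw, carry = a ^ b, False
    elif op == "NOT":
        raw, carry = ~a, False
    elif op == "SHL":
        raw, carry = a << 1, bool(a & TOP_BIT)
    elif op == "SHR":
        raw, carry = a >> 1, bool(a & 1)
    else:
        raise ValueError(f"unsupported operation: {op}")

    result = raw & MASK                      # keep only the low 8 bits
    flags = {"zero": result == 0, "carry": carry}
    return (None if op == "CMP" else result), flags


print(alu("ADD", 200, 100))  # (44, {'zero': False, 'carry': True})
print(alu("CMP", 7, 7))      # (None, {'zero': True, 'carry': False})
```

Comparison is modelled here as a subtraction whose result is discarded: the zero flag records equality and the borrow records whether `a` was smaller than `b`.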

- Accumulator: Stores the results of calculations made by the ALU. It holds the intermediate results of arithmetic and logical operations and acts as a temporary storage location.

- Program Counter (PC): Keeps track of the memory location of the next instruction to be executed. The PC passes this address to the Memory Address Register (MAR).

- Memory Address Register (MAR): It stores the memory address of the instruction or data to be fetched from memory, or of the location where data is to be stored.

- Memory Data Register (MDR): It stores instructions fetched from memory or any data that is to be transferred to, and stored in, memory.

- Current Instruction Register (CIR): It stores the most recently fetched instruction while it waits to be decoded and executed.

- Instruction Buffer Register (IBR): An instruction that is not to be executed immediately is held in the instruction buffer register (IBR).

- Data Bus: It carries data among the memory unit, the I/O devices, and the processor.

- Address Bus: It carries the address of data (not the actual data) between memory and processor.

- Control Bus: It carries control commands from the CPU (and status signals from other devices) in order to control and coordinate all the activities within the computer.

- Input/Output Devices – Programs or data are read into main memory from an input device or secondary storage under the control of CPU input instructions. Output devices are used to output information from a computer: once the computer has computed results and stored them, output devices present them to the user.
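To show how these registers cooperate, here is a minimal sketch of the fetch-decode-execute cycle for a hypothetical accumulator machine. The instruction set (`LOAD`, `ADD`, `SUB`, `STORE`, `HALT`), the tuple encoding and the `ToyCPU` class are illustrative assumptions, not a real ISA; the IBR is not modelled, and every memory access goes through MAR and MDR to mirror the description above.

```python
from dataclasses import dataclass
from typing import Any, List, Tuple


@dataclass
class ToyCPU:
    """Hypothetical accumulator machine with one shared memory (Von Neumann)."""

    memory: List[Any]                     # instructions and data in one memory
    pc: int = 0                           # Program Counter: next instruction address
    mar: int = 0                          # Memory Address Register
    mdr: Any = 0                          # Memory Data Register
    cir: Tuple[str, int] = ("HALT", 0)    # Current Instruction Register
    acc: int = 0                          # Accumulator
    bus_transfers: int = 0                # reads/writes over the single memory bus

    def read(self, address: int) -> Any:
        self.mar = address                # address bus carries MAR
        self.mdr = self.memory[self.mar]  # data bus fills MDR
        self.bus_transfers += 1
        return self.mdr

    def write(self, address: int, value: Any) -> None:
        self.mar = address
        self.mdr = value
        self.memory[self.mar] = self.mdr
        self.bus_transfers += 1

    def step(self) -> bool:
        """Run one fetch-decode-execute cycle; return False once HALT executes."""
        # Fetch: PC -> MAR, memory -> MDR -> CIR, then advance the PC.
        self.cir = self.read(self.pc)
        self.pc += 1
        # Decode: split the instruction into opcode and operand address.
        opcode, operand = self.cir
        # Execute: the arithmetic here stands in for the ALU.
        if opcode == "LOAD":
            self.acc = self.read(operand)
        elif opcode == "ADD":
            self.acc += self.read(operand)
        elif opcode == "SUB":
            self.acc -= self.read(operand)
        elif opcode == "STORE":
            self.write(operand, self.acc)
        elif opcode == "HALT":
            return False
        else:
            raise ValueError(f"unknown opcode {opcode!r}")
        return True

    def run(self, max_steps: int = 1000) -> int:
        for _ in range(max_steps):
            if not self.step():
                return self.acc
        raise RuntimeError("program did not halt")


if __name__ == "__main__":
    program = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 10, 32, 0]
    cpu = ToyCPU(program)
    print(cpu.run())            # 42
    print(program[6])           # 42 (result written back to shared memory)
    print(cpu.bus_transfers)    # 7 (4 instruction fetches + 3 data accesses)
```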

Von Neumann bottleneck – Because instructions and data share the same memory and the same bus, the CPU can transfer only one item to or from memory at a time, so instructions are fetched and executed one after another. Whatever we do to enhance performance, we cannot get away from this one-at-a-time, sequential operation, and it holds back the throughput of the CPU. This is commonly referred to as the ‘Von Neumann bottleneck’. We can provide a Von Neumann processor with more cache, more RAM, or faster components, but if real gains are to be made in CPU performance, the CPU’s configuration needs to be fundamentally rethought.
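A rough way to see the bottleneck is to count memory-bus cycles. The sketch below assumes, hypothetically, that every memory access takes one cycle and that each instruction is described only by how many data accesses it makes. With one shared bus, the instruction fetch and the data accesses must happen one after another; for comparison, a Harvard-style machine with separate instruction and data paths is modelled, very simply, as overlapping the fetch with one data access.

```python
from typing import Sequence


def bus_cycles(data_accesses: Sequence[int], separate_paths: bool = False) -> int:
    """Estimate memory cycles for a program under a one-cycle-per-access model.

    data_accesses[i] is the number of data reads/writes instruction i makes.
    Shared bus (Von Neumann): fetch and data accesses are serialized,
    so an instruction costs 1 + d cycles.
    Separate paths (Harvard-style, simplified): the fetch can overlap one
    data access, so an instruction costs max(1, d) cycles.
    """
    if any(d < 0 for d in data_accesses):
        raise ValueError("access counts must be non-negative")
    if separate_paths:
        return sum(max(1, d) for d in data_accesses)
    return sum(1 + d for d in data_accesses)


# LOAD, ADD, STORE each touch data once; HALT touches none.
program = [1, 1, 1, 0]
print(bus_cycles(program))                       # 7 on a shared bus
print(bus_cycles(program, separate_paths=True))  # 4 with separate paths
```

Adding cache or faster memory shrinks the cost of each cycle but does not remove the serialization counted here.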

This architecture is very important and underlies our PCs and even supercomputers.


41 Summary and Concluding Remarks

Dr A. P. Shanthi

The objectives of this module are to summarize the various concepts that we have discussed so far in this course on Computer Architecture and to discuss future trends.

The basic concepts of Computer Architecture can be beautifully described with the help of the eight ideas listed below. According to David A. Patterson, “Computers come and go, but these ideas have powered through six decades of computer design”. Dr. David A. Patterson is a pioneer in Computer Science who has been teaching Computer Architecture at the University of California, Berkeley since 1977. He is the co-author of the classic texts Computer Organization and Design and Computer Architecture: A Quantitative Approach, which we have used throughout this course. His co-author is former Stanford University President Dr. John L. Hennessy, who has been a member of the Stanford faculty since 1977 in the Departments of Electrical Engineering and Computer Science. These are eight great ideas that computer architects have invented in the last 60 years of computer design. They are so powerful that they have lasted long after the first computer that used them, with newer architects demonstrating their admiration by imitating their predecessors. Let us discuss each of them in detail.

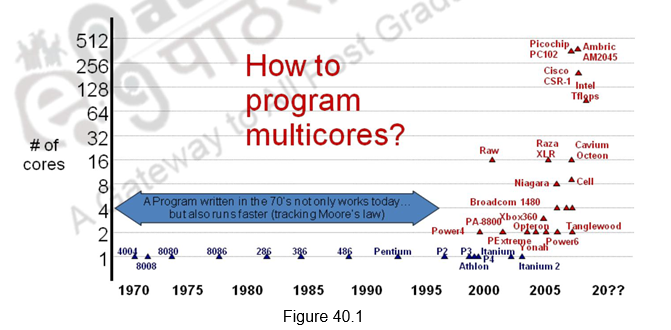

1. Design for Moore’s Law: The one constant for computer designers is rapid change, which is driven largely by Moore’s Law. Moore’s Law, as we have seen earlier, is the observation that the number of transistors in a dense integrated circuit doubles approximately every two years. The observation is named after Gordon Moore, the co-founder of Fairchild Semiconductor and Intel, whose 1965 paper described a doubling every year in the number of components per integrated circuit and projected that this rate of growth would continue for at least another decade. In 1975, looking forward to the next decade, he revised the forecast to doubling every two years. The period is often quoted as 18 months because of Intel executive David House, who predicted that chip performance would double every 18 months as the combined effect of more transistors and faster transistors.

Moore’s prediction proved accurate for several decades and has been used in the semiconductor industry to guide long-term planning and to set targets for research and development. Because computer designs can take years, the resources available per chip can easily double or quadruple between the start and finish of a project.

Therefore, computer architects must anticipate where the technology will be when the design finishes rather than design for where it starts.
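The arithmetic behind anticipating where the technology will be is simple exponential growth. The sketch below assumes a clean, fixed doubling period (two years by default, with 18 months as an alternative); real scaling has never been this regular, as the following paragraphs note.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplicative growth in transistors per chip after `years`,
    assuming a fixed doubling period (an idealisation of Moore's Law)."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return 2.0 ** (years / doubling_period_years)


# Resources available at the end of design projects of different lengths.
for project_years in (2, 3, 4):
    print(project_years, round(growth_factor(project_years), 2))
# 2 2.0
# 3 2.83
# 4 4.0
```

With an 18-month period instead, `growth_factor(4, 1.5)` gives roughly 6.3, which is why the assumed doubling period matters so much when planning a multi-year design.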

Moore’s Law is an observation or projection, not a physical or natural law. Although the rate held steady from 1975 until around 2012, it was faster during the first decade. In general, it is not logically sound to extrapolate from the historical growth rate into the indefinite future. For example, the 2010 update to the International Technology Roadmap for Semiconductors predicted that growth would slow around 2013, and in 2015 Gordon Moore foresaw that the rate of progress would reach saturation.

Intel stated in 2015 that the pace of advancement has slowed, starting at the 22 nm feature width around 2012, and continuing at 14 nm. Brian Krzanich, CEO of Intel, announced that “our cadence today is closer to two and a half years than two.” This is scheduled to hold through the 10 nm width in late 2017. He cited Moore’s 1975 revision as a precedent for the current deceleration, which results from technical challenges and is “a natural part of the history of Moore’s law.”

2. Use Abstraction to Simplify Design: Both computer architects and programmers had to invent techniques to be more productive, for otherwise design time would lengthen as dramatically as resources grew by Moore’s Law. A major productivity technique for hardware and software is to use abstractions to represent the design at different levels of representation; lower-level details are hidden to offer a simpler model at higher levels. Abstraction refers to ignoring irrelevant details and focusing on higher-level design/implementation issues. Abstraction can be used at multiple levels, with each level hiding the details of the levels below it. For example, the instruction set of a processor hides the details of the activities involved in executing an instruction. High-level languages hide the details of the sequence of instructions needed to accomplish a task. Operating systems hide the details involved in handling input and output devices.

3. Make the common case fast: The most significant improvements in computer performance come from improving the common case, i.e., computations that are executed frequently, rather than optimizing the rare case. Rare cases are encountered infrequently, so it matters little if the processor is not optimized for them and spends more time on them. Ironically, the common case is often simpler than the rare case and hence is often easier to enhance. The common case can be identified through careful experimentation and measurement. We have discussed Amdahl’s Law, which puts forth this idea. Recall that Amdahl’s Law is a quantitative means of estimating the overall speedup obtained by improving part of a system, and is closely related to the law of diminishing returns.
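Amdahl’s Law is commonly written as Speedup = 1 / ((1 − f) + f / s), where f is the fraction of execution time that benefits from an enhancement and s is the speedup of that fraction. A minimal Python version, with parameter names of our own choosing, shows why the common case matters:

```python
def amdahl_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Overall speedup when a fraction f of execution time is sped up by s."""
    if not 0.0 <= fraction_enhanced <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    if speedup_enhanced <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced)


# Common case (80% of time) made 4x faster vs. rare case (10% of time) made 10x faster.
print(round(amdahl_speedup(0.8, 4), 2))    # 2.5
print(round(amdahl_speedup(0.1, 10), 2))   # 1.1
# Upper bound as s grows without limit: 1 / (1 - f) = 5 for f = 0.8
print(round(amdahl_speedup(0.8, 1e9), 2))  # 5.0
```

A modest speedup of the frequent case beats a large speedup of the rare case, and the untouched fraction (1 − f) caps the achievable gain.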

4. Performance via parallelism: Since the dawn of computing, computer architects have offered designs that get more performance by exploiting the parallelism exhibited by applications. There are basically two types of parallelism present in applications: Data Level Parallelism (DLP), which arises because there are many data items that can be operated on in parallel, and Task Level Parallelism (TLP), which arises because tasks of work are created that can operate independently and in parallel. Computer systems exploit the DLP and TLP exhibited by applications in four major ways. Instruction Level Parallelism (ILP) exploits DLP at modest levels with the help of compilers, using ideas like pipelining, dynamic scheduling and speculative execution. Vector architectures and GPUs exploit DLP. Thread Level Parallelism exploits either DLP or TLP in a tightly coupled hardware model that allows for interaction among threads. Request Level Parallelism (RLP) exploits parallelism among largely decoupled tasks specified by the programmer or the OS. Warehouse-scale computers exploit request level parallelism and data level parallelism. We have discussed all these styles of architectures in detail in our earlier modules. We have also discussed the need for multi-core architectures, which basically fall under the category of MIMD style of architectures, and how such architectures exploit ILP, DLP and TLP. The various case studies of multi-core architectures that we have looked at show which type of parallelism is exploited the most and how.
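The difference between DLP and TLP can be sketched with Python's standard `concurrent.futures` module. This only illustrates how work is decomposed: in CPython, threads running pure-Python code do not execute bytecode simultaneously because of the global interpreter lock, so no real speedup should be expected here. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple


def scale_chunk(chunk: Sequence[float], factor: float) -> List[float]:
    """The single operation applied identically to every data item."""
    return [x * factor for x in chunk]


def data_parallel_scale(data: Sequence[float], factor: float, workers: int = 4) -> List[float]:
    """DLP: split the data into slices and apply the same operation to each slice."""
    size = max(1, -(-len(data) // workers))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in the same order as the input chunks
        results = list(pool.map(scale_chunk, chunks, [factor] * len(chunks)))
    return [x for chunk in results for x in chunk]


def task_parallel_summary(data: Sequence[float]) -> Tuple[float, float, float]:
    """TLP: run different, independent tasks on the same (non-empty) input."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        total = pool.submit(sum, data)
        largest = pool.submit(max, data)
        smallest = pool.submit(min, data)
        return total.result(), largest.result(), smallest.result()


print(data_parallel_scale(list(range(8)), 10))  # [0, 10, 20, 30, 40, 50, 60, 70]
print(task_parallel_summary([4, 1, 9]))         # (14, 9, 1)
```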

5. Performance via pipelining: Pipelining is a way of exploiting parallelism in uni-processor machines. It forms the primary method of exploiting ILP. It essentially handles the activities involved in instruction execution as in an assembly line. The instruction cycle can be split into several independent steps and as soon as the first activity of an instruction is done you move it to the second activity and start the first activity of a new instruction. This results in overlapped execution of instructions which leads to executing more instructions per unit time compared to waiting for all activities of the first instruction to complete before starting the second instruction. We have discussed in detail about the basics of pipelining, how it is implemented and also focused on the various hazards that might occur in a pipeline and the possible solutions.
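The gain from overlapping can be quantified for an idealised pipeline. Assuming k stages of one cycle each and no hazards or stalls (assumptions real pipelines rarely meet), n instructions take n × k cycles without pipelining but only k + (n − 1) cycles with it, because once the first instruction has filled the pipeline, one instruction completes every cycle. The stage names below follow the classic five-stage design and are an assumption for illustration.

```python
from typing import List, Sequence


def unpipelined_cycles(n: int, k: int) -> int:
    """Each instruction passes through all k stages before the next starts."""
    return n * k


def pipelined_cycles(n: int, k: int) -> int:
    """Ideal pipeline: k cycles to fill, then one instruction completes per cycle."""
    return 0 if n == 0 else k + n - 1


def pipeline_speedup(n: int, k: int) -> float:
    return unpipelined_cycles(n, k) / pipelined_cycles(n, k)


def stage_chart(n: int, stages: Sequence[str] = ("IF", "ID", "EX", "MEM", "WB")) -> List[str]:
    """Return one text row per instruction showing its stage in each cycle."""
    k = len(stages)
    rows = []
    for i in range(n):
        cells = ["  .  "] * pipelined_cycles(n, k)
        for s, name in enumerate(stages):
            cells[i + s] = f"{name:^5}"  # instruction i enters stage s in cycle i + s
        rows.append(f"I{i + 1}: " + "".join(cells))
    return rows


print(pipelined_cycles(100, 5))             # 104
print(round(pipeline_speedup(100, 5), 2))   # 4.81
for row in stage_chart(3):
    print(row)
```

As n grows, the speedup approaches k, the number of stages, in this ideal model.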

6. Performance via prediction: In some cases it can be faster on average to guess and start working rather than wait until you know for sure, assuming that the mechanism to recover from a misprediction is not too expensive and your prediction is relatively accurate. We generally make use of prediction, both at the compiler level and at the architecture level, to improve performance. For example, a conditional branch is a type of instruction that determines the next instruction to be executed based on a condition test. Conditional branches are essential for implementing high-level language if statements and loops. Unfortunately, conditional branches interfere with the smooth operation of a pipeline: the processor does not know where to fetch the next instruction until after the condition has been tested. Many modern processors reduce the impact of branches with speculative execution: they make an informed guess about the outcome of the condition test and start executing the indicated instruction. Performance is improved if the guesses are reasonably accurate and the penalty of wrong guesses is not too severe. We have discussed the various branch predictors and speculative execution in detail in our earlier modules.
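A common hardware scheme, shown here as an illustrative example rather than the predictor of any particular processor, is the 2-bit saturating counter: its prediction flips only after two consecutive mispredictions, so a loop branch that is taken many times and not taken once at loop exit is mispredicted only once per exit. The class and function names are hypothetical.

```python
from typing import Iterable


class TwoBitPredictor:
    """2-bit saturating counter for a single branch.

    States 0 and 1 predict "not taken"; states 2 and 3 predict "taken".
    Each outcome moves the counter one step toward it, saturating at 0 and 3.
    """

    def __init__(self, initial_state: int = 2) -> None:
        if initial_state not in (0, 1, 2, 3):
            raise ValueError("state must be 0..3")
        self.state = initial_state

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def accuracy(outcomes: Iterable[bool], predictor: TwoBitPredictor) -> float:
    """Fraction of branch outcomes predicted correctly (predict, then update)."""
    correct = total = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
        total += 1
    return correct / total if total else 0.0


# A loop branch: taken 9 times, then not taken at exit; the loop runs 3 times.
loop_branch = ([True] * 9 + [False]) * 3
print(accuracy(loop_branch, TwoBitPredictor()))  # 0.9
```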

7. Hierarchy of memories: Programmers want memory to be fast, large, and cheap, as memory speed often shapes performance, capacity limits the size of problems that can be solved, and the cost of memory today is often the majority of computer cost. Architects have found that they can address these conflicting demands with a hierarchy of memories, with the fastest, smallest, and most expensive memory per bit at the top of the hierarchy and the slowest, largest, and cheapest per bit at the bottom. Caches give the programmer the illusion that main memory is nearly as fast as the top of the hierarchy and nearly as big and cheap as the bottom of the hierarchy. We have discussed the various cache mapping policies in detail, and also how the performance of the hierarchical memory system can be improved through various optimization techniques.
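The effect of a cache can be explored with a toy direct-mapped cache together with the standard average memory access time formula, AMAT = hit time + miss rate × miss penalty. The cache geometry and the timings in the example are arbitrary assumptions chosen for illustration, and the simulator tracks only tags, not data.

```python
from typing import Iterable, List, Optional, Tuple


def simulate_direct_mapped(addresses: Iterable[int], num_lines: int, block_size: int) -> Tuple[int, int]:
    """Count (hits, misses) for a direct-mapped cache that stores only tags.

    Each address maps to exactly one line: index = block number mod num_lines.
    """
    tags: List[Optional[int]] = [None] * num_lines
    hits = misses = 0
    for addr in addresses:
        block = addr // block_size      # which memory block holds this byte
        index = block % num_lines       # the only line this block may occupy
        tag = block // num_lines        # distinguishes blocks sharing a line
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag           # fetch the block, evicting the old one
    return hits, misses


def amat(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average memory access time = hit time + miss rate x miss penalty."""
    return hit_time + miss_rate * miss_penalty


# Two sequential passes over 64 bytes with 4 lines of 16 bytes each.
hits, misses = simulate_direct_mapped(list(range(64)) * 2, num_lines=4, block_size=16)
print(hits, misses)                              # 124 4
print(amat(1, misses / (hits + misses), 100))    # 4.125
```

Sequential accesses enjoy spatial and temporal locality, so a miss penalty of 100 cycles adds only about 3 cycles on average. By contrast, alternating between addresses 0 and 64 maps both to line 0 and misses every time (a conflict miss).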

8. Dependability via redundancy: Last of all, computers not only need to be fast, they need to be dependable. Since any physical device can fail, we make systems dependable by including redundant components that can take over when a failure occurs and that help detect failures. We discussed this in our module on warehouse-scale computers, where dependability is very important.
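One classic form of redundancy is triple modular redundancy (TMR): three copies of a unit compute the same result and a voter takes the majority, which both masks a single failure and identifies the unit that disagreed. The sketch below illustrates the voting logic in software only; the function names are hypothetical.

```python
from collections import Counter
from typing import Hashable, List, Sequence, Tuple


def majority_vote(outputs: Sequence[Hashable]) -> Hashable:
    """Return the value produced by a strict majority of redundant units."""
    if not outputs:
        raise ValueError("no outputs to vote on")
    value, count = Counter(outputs).most_common(1)[0]
    if count * 2 <= len(outputs):
        raise RuntimeError("no majority: the failure cannot be masked")
    return value


def vote_and_detect(outputs: Sequence[Hashable]) -> Tuple[Hashable, List[int]]:
    """Mask failures by majority vote and report which units disagreed."""
    value = majority_vote(outputs)
    suspects = [i for i, out in enumerate(outputs) if out != value]
    return value, suspects


print(vote_and_detect([42, 42, 7]))  # (42, [2]): unit 2 is flagged as faulty
```

With three units, one failure is masked and detected; two simultaneous, different failures leave no majority and are reported rather than silently passed on.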

The challenges associated with building ever better architectures are always there. Ever-increasing performance and decreasing costs make computers more and more affordable and, in turn, accelerate additional software and hardware developments that fuel this process even more. As new architectures come up, more sophisticated algorithms are run on them, these algorithms keep demanding more, and the process continues. We must also remember that it is not only hardware that has to keep changing with the times; software also has to improve in order to harness the power of the hardware. According to E. Dijkstra, in his 1972 Turing Award Lecture, “To put it quite bluntly: as long as there were no machines, programming was no problem at all; when we had a few weak computers, programming became a mild problem, and now we have gigantic computers, programming has become an equally gigantic problem.” We shall elaborate on these issues a little more.

The First Software Crisis: The first challenge arose in the ’60s and ’70s, when Assembly Language Programming was predominantly used. Computers had to handle larger and more complex programs, and we needed to get abstraction and portability without losing performance. That gave rise to the introduction of high-level programming languages.

The Second Software Crisis: The second crisis arose in the ’80s and ’90s, with the inability to build and maintain complex and robust applications requiring multi-million lines of code developed by hundreds of programmers. There was a need for composability, malleability and maintainability. These problems were handled with the introduction of Object Oriented Programming, better tools and better software engineering methodologies.

Today, with so much abstraction introduced, there is a solid boundary between hardware and software, and programmers don’t have to know anything about the processor. Programs are oblivious to the processor, as they work on all processors. A program written in C in the ’70s still works, and runs much faster today. This abstraction provides a lot of freedom for programmers. However, for the past decade or so we have also been facing a third crisis with respect to programming multi-core processors. This is illustrated in Figure 40.1.

The Origins of a Third Crisis

The Origins of a Third Crisis: Since the advent of multi-core architectures, we need to develop software that exploits the architectural advances and also sustains portability, malleability and maintainability without unduly increasing the complexity faced by the programmer. It is critical to keep up with the current rate of evolution in software. However, parallel programming is hard because of the following factors:

• A huge increase in complexity and work for the programmer

– Programmer has to think about performance

– Parallelism has to be designed in at every level

• Humans are sequential beings

– Deconstructing problems into parallel tasks is hard for many of us

• Parallelism is not easy to implement

– Parallelism cannot be abstracted or layered away

– Code and data have to be restructured in very different (non-intuitive) ways

• Parallel programs are very hard to debug

– Combinatorial explosion of possible execution orderings

– Race-condition and deadlock bugs are non-deterministic and elusive

– Non-deterministic bugs go away in the lab environment and under instrumentation

Ideas on Solving the Third Software Crisis: Experts in Computer Architecture have also pointed out the following directions to solve the present software crisis.

Advances in Computer Architecture: As advancements are happening in the field of Computer Architecture, we need to be aware of the following facts. As pointed out by David Patterson, there is a change from the conventional wisdom in Computer Architecture.

• Moore’s Law and Power Wall

– Contrary to the past, power is now expensive, but transistors can be considered free

– Dynamic power and static power need to be reduced

• Monolithic uniprocessors are reliable internally, with errors occurring only at pins

– As the feature sizes drop, the soft and hard error rates increase, causing concerns

– Wire delay, noise, cross coupling, reliability, clock jitter, design validation, … stretch development time and cost of large designs at ≤65 nm

• Researchers normally demonstrate new architectures by building chips

– The cost of masks at 65 nm or below, the cost of ECAD tools, and the extended design time for GHz clocks mean that researchers no longer build believable chips

• Multiplies are normally slow, but loads and stores are fast

– Contrary to the earlier state, loads and stores are now slow but multiplies are fast, because of advanced hardware: we have a Memory Wall

• We can reveal more ILP via compilers and architecture innovation

– Diminishing returns on finding more ILP – ILP Wall

• Moore’s law may point to 2X CPU performance every 18 months

– Power Wall + Memory Wall + ILP Wall = Brick Wall

All the above factors have led to a forced shift in the programming model.

Novel Programming Models and Languages: Novel programming models and languages are critical in solving the third software crisis. Novel languages were the central solution in the last two crises also. With a paradigm shift in architecture, new application domains like data science and Internet of Things, new hardware features and new customers coming in, we should be able to achieve parallelism with new programming models and languages, without burdening the programmer.

Tools for Parallel Programming: With new programming models and languages coming in, we also need to build appropriate tools to improve programmer productivity. We need to build aggressive compilers and tools that will identify parallelism, debug parallel code, update and maintain parallel code and stitch multiple domains together.

Thus, while all consumer CPUs are now multi-core, software is still designed as mainly sequential. The parallelisation of legacy code is very expensive and requires developers with skills in both computer architecture and the application domain. We need a new generation of tools for writing software, backed by innovative programming models. New tools should be natively parallel and allow for optimisation of code at run-time across the multiple dimensions of performance, reliability, throughput, latency and energy consumption while presenting the appropriate level of abstraction to developers. Innovative business models may be needed in order to make the development of new generation tools economically viable.

Future Trends: Based on the discussions above, in future we can expect that hardware will become a commodity and the value will be in the software that drives it and the data it generates. The data deluge will require an infrastructure that can transfer and store the data, and computing systems that can analyze and extract value from data in real time. According to the roadmap provided for computer systems, there are arguments suggesting that the computing sector will become increasingly polarized between small application-specific computing units that connect to provide system services, and larger, more powerful units that will be required to analyze large volumes of data in real time.

Applications in automation, aerospace, automotive and manufacturing require computing power which was typical of supercomputers a few years ago, but with constraints on size, power consumption and guaranteed response time which are typical of the embedded applications. Therefore, we need to develop a family of innovative and scalable technologies, powering computing devices ranging from the embedded micro-server to the large data centre.

Computing applications merging automation, real-time processing of big data, autonomous behaviour and very low power consumption are changing the physical world we live in, and creating new areas of application such as smart cities and smart homes. Data locality is becoming an issue, driving the development of multi-level applications in which processing and data are shared between local/mobile devices and cloud-based servers. The concept of the Internet of Everything is developing fast.

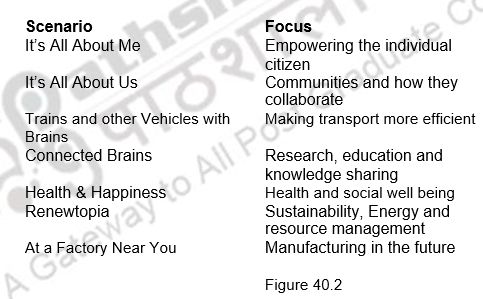

The roadmap points out several scenarios that address critical aspects of society and economy. Figure 40.2 gives an overview of these scenarios. Describing how computing will evolve in each of the scenarios will allow us to describe a series of technology needs that could translate into research and innovation challenges for the computer industry. A common theme across all scenarios is the need for small, low-cost and low-power computing systems that are fully interconnected, self-aware, context-aware and self-optimising within application boundaries.

To summarize, we have reviewed the concepts discussed in this course on Computer Architecture, pointed out the basic ideas that need to be remembered, and outlined the major challenges that we are facing today.

- Study Guides

- Homework Questions

Computer Arcitecture Assignment 3

- Computer Science

IMAGES

VIDEO

COMMENTS

ASSIGNMENTS TOPICS HANDOUTS Problem Set 0 Prerequisite Self-Assessment Test () Problem Set 1 ISAs, Microprogramming, Simple Pipelining and Hazards (PDF ‑ 3.6 MB) Handout 1: EDSACjr ()Handout 2: 6.823 Stack ISA ()Handout 3: CISC ISA-x86jr ()Handout 4: RISC ISA-6.823 MIPS ()Handout 5: Bus-based MIPS Implementation ()Problem Set 2

Computer Organization and Architecture is used to design computer systems. Computer Architecture is considered to be those attributes of a system that are visible to the user like addressing techniques, instruction sets, and bits used for data, and have a direct impact on the logic execution of a program, It defines the system in an abstract manner, It deals with What does the system do.

Course Prerequisites. Basic Computer Organization (e.g., CS/ECE 552) Logic: gates, Boolean functions, latches, memories. Datapath: ALU, register file, muxes. Control: single-cycle control, micro-code. Caches & pipelining (will go into these in more detail here) Some familiarity with assembly language.

CS-480/585 Assignments: COMPUTER ARCHITECTURE Assignment 2a: READINGS (both CS-480 and CS-585): Principles of Computer Architecture (Murdocca & Heuring): 1. Chapter 2: Data Representation READINGS (CS-585 only): Readings in Computer Architecture (Hill, Jouppi, & Souhi): 2. Section on Data Format (pages 21-24, and the figure on page 25) from the article by Amdahl et

The main components of a computer architecture are the CPU, memory, and peripherals. All these elements are linked by the system bus, which comprises an address bus, a data bus, and a control bus. Within this framework, the computer architecture has eight key components, as described below. 1.

EE282 focuses on key topics in advanced computer systems architecture such as multilevel in memory hierarchies, advanced pipelining and super scalar techniques, vectors, GPUs and accelerators, non-volatile storage and advanced IO systems, virtualization, and datacenter hardware and software architecture. The programming assignments introduce ...

An introduction to computer architecture and organization. Instruction set design; basic processor implementation techniques; performance measurement; caches and virtual memory; pipelined processor design; RISC architectures; design trade-offs among cost, performance, and complexity. ... Assignments should be attempted individually. If you ...

CIS 501 Introduction to Computer Architecture is a course offered by the University of Pennsylvania that covers the basic concepts and principles of designing and analyzing computer systems. The course explores topics such as instruction sets, pipelining, caches, memory, and parallelism. The pdf file provides an overview of the course objectives, contents, and expectations.

Course Description. 6.823 is a course in the department's "Computer Systems and Architecture" concentration. 6.823 is a study of the evolution of computer architecture and the factors influencing the design of hardware and software elements of computer systems. Topics may include: instruction set design; processor micro-architecture and ….

In this course, you will learn to design the computer architecture of complex modern microprocessors. All the features of this course are available for free. It does not offer a certificate upon completion. ... Access to lectures and assignments depends on your type of enrollment. If you take a course in audit mode, you will be able to see most ...

This course will discuss the basic concepts of computer architecture and organization that can help the participants to have a clear view as to how a computer system works. Examples and illustrations will be mostly based on a popular Reduced Instruction Set Computer (RISC) platform. ... • Average assignment score = 25% of average of best 8 ...

Module 2: Instructing a Computer. CPU Architecture, Register Organization , Instruction formats, basic instruction cycle, Instruction interpretation and Sequencing, RTL interpretation of instructions, addressing modes, instruction set. Case study - instruction sets of MIPS processor; Assembly language programming using MIPS instruction set.

• Homework assignments (performed in groups of 2) • Pencil and paper problems • Small programming problems • Project (performed in groups of 2) • Building the Duke152-S11 computer in real hardware! • Programming the Duke152-S11 computer you built • You will choose project partners, and I will ensure that group

Hennessy and Patterson, "Computer Architecture: A Quantitative Approach", Sixth Edition. Other (frequently referenced) online resources: ... An assignment that is turned in before solutions are posted will be assessed a flat 10% (of the maximum score) late penalty. If you turn in the assignment after the solutions have been posted, a flat 20% ...

Von-Neumann computer architecture design was proposed in 1945.It was later known as Von-Neumann architecture. Historically there have been 2 types of Computers: Fixed Program Computers - Their function is very specific and they couldn't be reprogrammed, e.g. Calculators. Stored Program Computers - These can be programmed to carry out many ...

This assignment covers material presented in the chapters Digital Circuit Theory: Combinational Logic Circuits and Instruction Set Architecture. The following lessons, in particular, should help ...

The basic concepts of computer architecture can be described with the help of eight great ideas. According to David A. Patterson, "Computers come and go, but these ideas have powered through six decades of computer design." Dr. David A. Patterson is a pioneer in computer science who has been ...

This is an introductory computer architecture course for beginners. We will start out with a discussion of binary representations and a discussion of number...
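The snippet does not say which binary representations the course covers. Two's complement is used here as one common example, because it is how modern processors typically encode signed integers. This is a minimal sketch; the function names are hypothetical. It shows that a negative number is stored as its value modulo 2^bits. A leading 1 bit therefore means "subtract 2^bits" when the pattern is read back.

```python
def to_twos_complement(value: int, bits: int = 8) -> str:
    """Return the `bits`-wide two's-complement bit pattern of a signed integer."""
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    if not lo <= value <= hi:
        raise ValueError(f"{value} is out of range [{lo}, {hi}] for {bits} bits")
    # Masking with 2**bits - 1 maps a negative value to its two's-complement encoding.
    return format(value & ((1 << bits) - 1), f"0{bits}b")


def from_twos_complement(pattern: str) -> int:
    """Interpret a bit string as a two's-complement signed integer."""
    if not pattern:
        raise ValueError("pattern must contain at least one bit")
    raw = int(pattern, 2)
    # If the sign (leftmost) bit is set, subtract 2**bits to recover the negative value.
    return raw - (1 << len(pattern)) if pattern[0] == "1" else raw


if __name__ == "__main__":
    print(to_twos_complement(5))               # 00000101
    print(to_twos_complement(-5))              # 11111011
    print(from_twos_complement("11111011"))    # -5
```

With this encoding, the same adder circuit handles both signed and unsigned addition. That is one reason two's complement won out over sign-magnitude and ones' complement.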

UNIT-3, COMPUTER ORGANIZATION AND ARCHITECTURE (RCS302), Assignment-3

Q.1 Define instruction pipeline. Explain a four-segment pipeline with the help of a flowchart.

Q.2 Differentiate between horizontal and vertical microprogramming. Suppose there are 136 control signals in a processor data path. Calculate the control word size for horizontal and vertical encoding.
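The arithmetic behind Q.1 and Q.2 can be sketched in a few lines. For Q.2, horizontal microprogramming uses one bit per control signal. Fully encoded vertical microprogramming instead stores a binary code for the single signal to assert, which needs ceil(log2 n) bits. That gives 136 bits and 8 bits for 136 signals (2^7 = 128 < 136 <= 256 = 2^8). Some textbooks reserve an extra "no signal" code, which still needs 8 bits here. For Q.1, the sketch assumes an ideal k-segment pipeline with equal stage delays and no stalls. It completes n tasks in k + n - 1 cycles, compared with n * k cycles without pipelining. All function names are hypothetical.

```python
def horizontal_control_word_bits(num_signals: int) -> int:
    """Horizontal microprogramming: one bit per control signal."""
    return num_signals


def vertical_control_word_bits(num_signals: int) -> int:
    """Fully encoded vertical microprogramming: a binary code names one signal.

    Assumes exactly one signal is asserted per microinstruction, so
    ceil(log2(num_signals)) bits suffice. (n - 1).bit_length() computes that
    ceiling exactly for n >= 1, without floating-point rounding.
    """
    if num_signals < 1:
        raise ValueError("need at least one control signal")
    return (num_signals - 1).bit_length()


def pipeline_cycles(stages: int, tasks: int) -> int:
    """Cycles for `tasks` items on an ideal `stages`-segment pipeline."""
    # The first result appears after `stages` cycles; each later one follows a cycle behind.
    return stages + tasks - 1


def pipeline_speedup(stages: int, tasks: int) -> float:
    """Speedup over a non-pipelined unit that needs `stages` cycles per task."""
    return (stages * tasks) / pipeline_cycles(stages, tasks)


if __name__ == "__main__":
    print(horizontal_control_word_bits(136))  # 136
    print(vertical_control_word_bits(136))    # 8
    print(pipeline_cycles(4, 100))            # 103 cycles instead of 400
    print(round(pipeline_speedup(4, 100), 2)) # 3.88
```

As the number of tasks grows, the speedup approaches the number of segments (4 here). Real pipelines fall short of this because of hazards and unequal stage delays.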

Assignment: Computer Architecture

Instructions:
- Answer all questions thoroughly.
- Submit your solutions by the deadline specified by your instructor.
- Provide explanations and reasoning where necessary.
- Ensure your solutions are clear and easy to understand.

Question 1: Explain the concept of the Von Neumann architecture. Discuss its main components and how they interact with each other.

Abstract: Computer architecture is the organization of the components making up a computer system and the semantics or meaning of the operations that guide its function. As such, the computer architecture governs the design of a family of computers and defines the logical interface that is targeted by programming languages and their compilers. The organization determines the mix of functional ...