Guide to Computer Vision: Why It Matters and How It Helps Solve Problems

This post was written to support the beginner developer community, especially those new to computer vision and computer science. NVIDIA recognizes that solving the world's visual computing challenges through computer vision and artificial intelligence, and benefiting from those solutions, requires all of us. NVIDIA is excited to partner with and dedicate this post to Black Women in Artificial Intelligence.

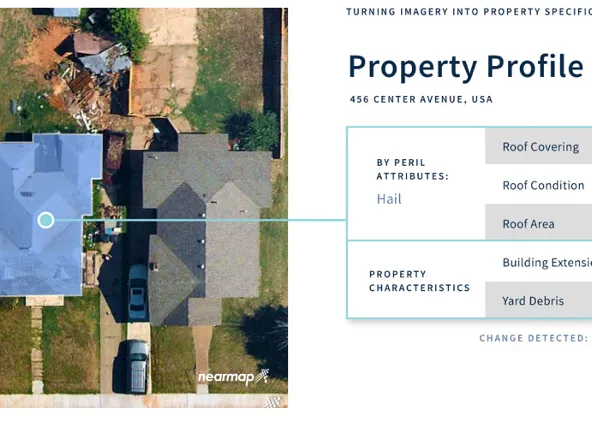

Computer vision's real-world use and reach are growing, and its applications are in turn challenging and changing its meaning. Computer vision, which has existed in some form for decades, is becoming an increasingly common phrase in conversations across the world and across industries: computer vision systems, computer vision software, computer vision hardware, computer vision development, computer vision pipelines, computer vision technology.

What is computer vision?

There is more to the term and field of computer vision than meets the eye, both literally and figuratively. Computer vision is also referred to as vision AI; as traditional image processing in specific non-AI instances; and as machine vision in manufacturing and industrial use cases.

Simply put, computer vision enables devices, including laptops, smartphones, self-driving cars, robots, drones, satellites, and x-ray machines to perceive, process, analyze, and interpret data in digital images and video.

In other words, computer vision fundamentally takes image data or image datasets as inputs, including both still images and moving frames of a video, either recorded or from a live camera feed. Computer vision gives devices human-like vision capabilities modeled on our own visual system. In human vision, your eyes perceive the physical world around you as different reflections of light in real time.

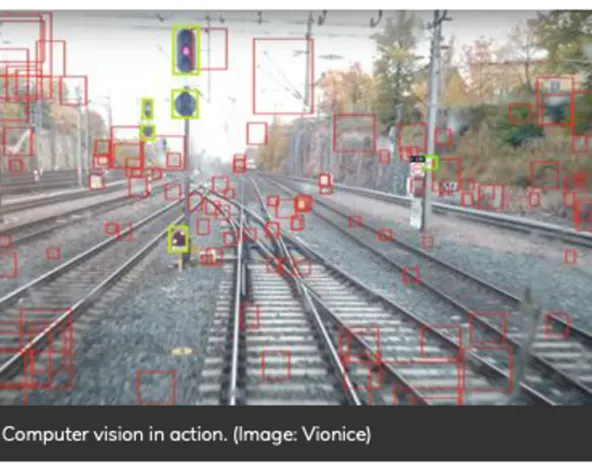

Similarly, computer vision devices perceive the pixels of images and videos, detecting patterns and interpreting image inputs that can be used for further analysis or decision making. In this sense, computer vision “sees” much as human vision does, using intelligence and compute power to process input visual data and output meaningful insights, like a robot detecting and avoiding an obstacle in its path.

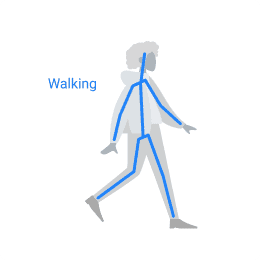

Different computer vision tasks mimic the human vision system, performing, automating, and enhancing functions similar to those of human sight.

How does computer vision relate to other forms of AI?

Computer vision is helping machines learn and master seeing, just as conversational AI is helping them learn and master the sense of sound through speech, in applications that recognize, translate, and verbalize text: the words we use to define and describe the physical world around us.

Computer vision does the same for the sense of sight through digital images and video. More broadly, the term computer vision can also describe how device sensors, typically cameras, perceive and work as vision systems in applications such as detecting, tracking, and recognizing objects or patterns in images.

Multimodal conversational AI combines the capabilities of conversational AI with computer vision in multimedia conferencing applications, such as NVIDIA Maxine.

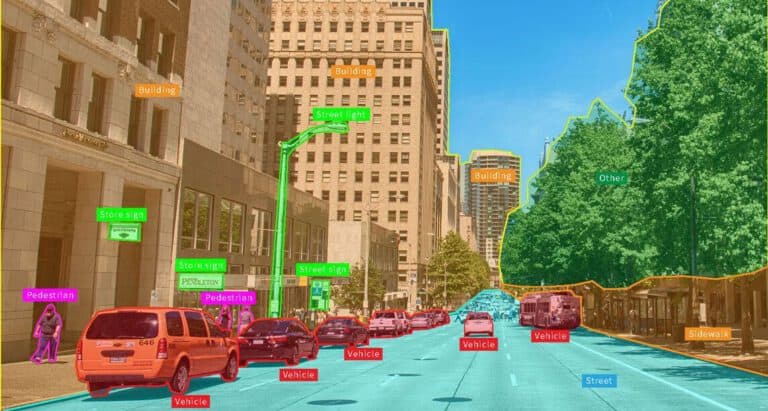

Computer vision can also be used broadly to describe how other types of sensors, like light detection and ranging (LiDAR) and radio detection and ranging (RADAR), perceive the physical world. In self-driving cars, computer vision describes how LiDAR and RADAR sensors work, often in tandem with cameras, to recognize and classify people, objects, and debris.

What are some common tasks?

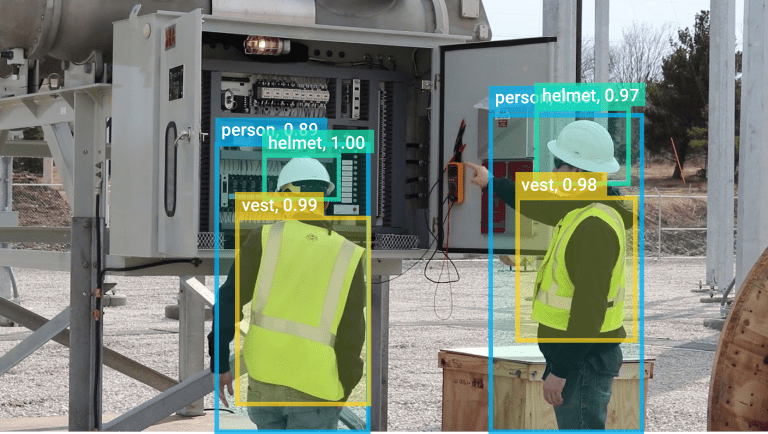

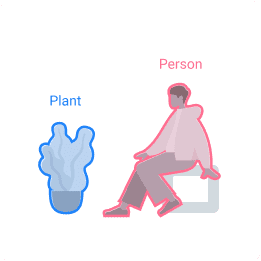

While computer vision tasks cover a wide breadth of perception capabilities and the list continues to grow, the latest techniques support and help solve use cases involving detection, classification, segmentation, and image synthesis.

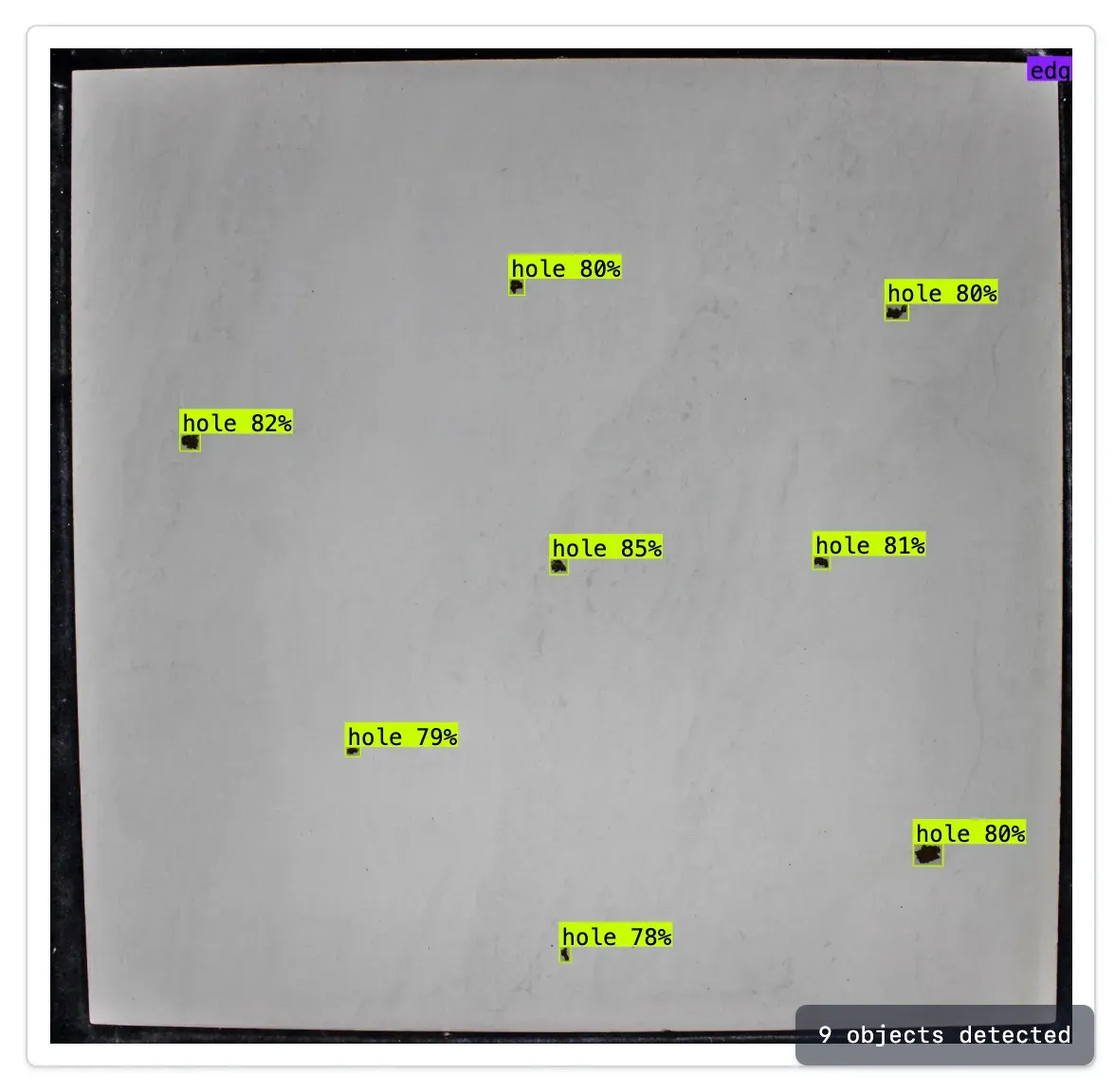

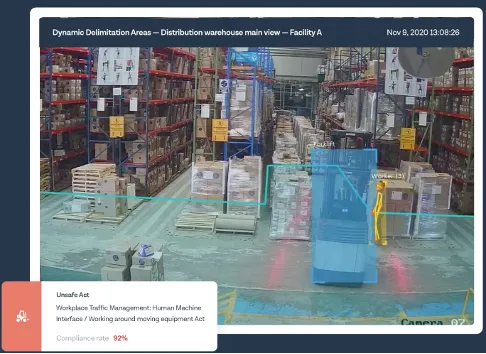

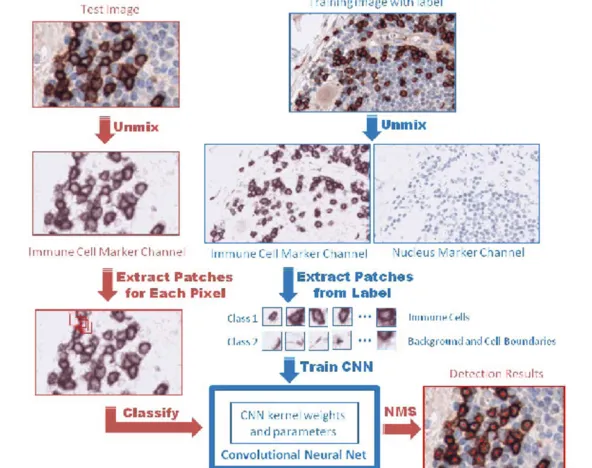

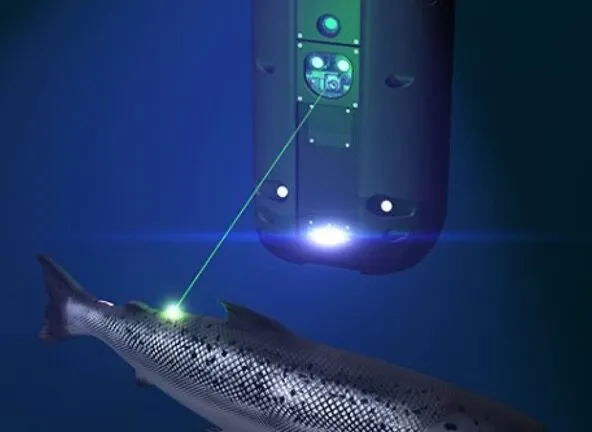

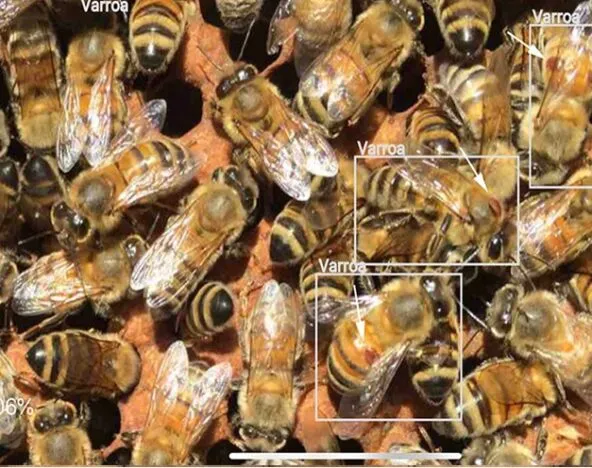

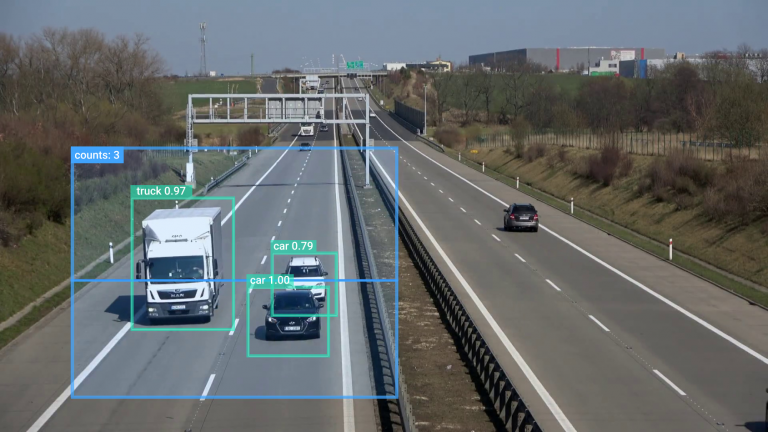

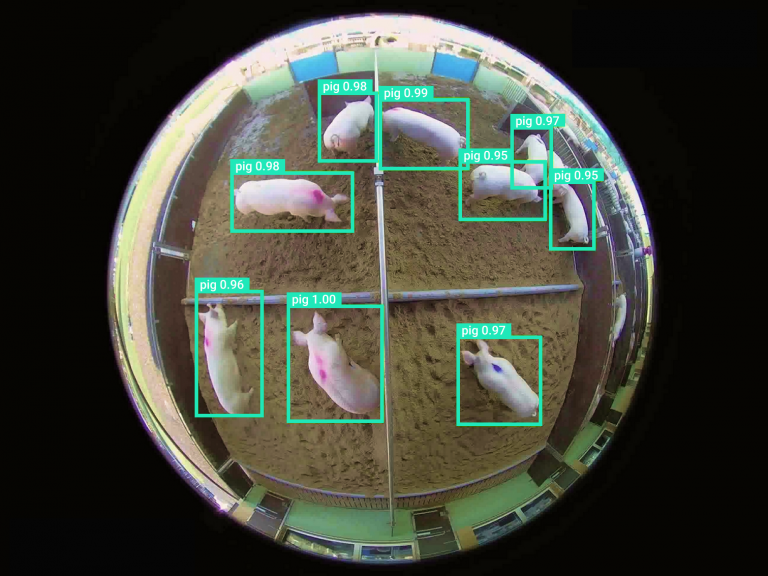

Detection tasks locate, and sometimes track, where an object exists in an image. For example, in healthcare for digital pathology, detection could involve identifying cancer cells through medical imaging. In robotics, software developers are using object detection to avoid obstacles on the factory floor.

Classification techniques determine what object exists within the visual data. For example, in manufacturing, an object recognition system classifies different types of bottles to package. In agriculture, farmers are using classification to identify weeds among their crops.

Segmentation tasks classify pixels belonging to a certain category, either individually by pixel (semantic image segmentation) or by additionally separating multiple objects of the same class into individual instances (instance image segmentation). For example, a self-driving car segments parts of a road scene as drivable and non-drivable space.

Image synthesis techniques create synthetic data by morphing existing digital images to contain desired content. Generative adversarial networks (GANs), such as EditGAN, enable generating synthetic visual information from text descriptions and existing images of landscapes and people. Using synthetic data to complement and simulate real data is an emerging computer vision use case in logistics, where vision AI supports applications like smart inventory control.

What are the different types of computer vision?

To understand the different domains within computer vision, it is important to understand the techniques on which computer vision tasks are based. Most computer vision techniques begin with a model, or mathematical algorithm, that performs a specific elementary operation, a task, or a combination of these. While we classify traditional image processing and AI-based computer vision algorithms separately, most computer vision systems rely on a combination depending on the use case, complexity, and performance required.

Traditional computer vision

Traditional, non-deep learning-based computer vision can refer to both computer vision and image processing techniques.

In traditional computer vision, a specific set of instructions performs a specific task, like detecting corners or edges to identify windows in an image of a building.
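To illustrate what a hand-written instruction set looks like, here is a minimal sketch of a Sobel edge detector in plain Python. The image format (a list of rows of grayscale values from 0 to 255), the function names, and the threshold of 100 are illustrative assumptions; a production system would call an optimized library routine instead.

```python
# Hand-written Sobel kernels: fixed weights that respond to horizontal
# and vertical intensity changes. Nothing here is learned from data.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def apply_kernel(image, kernel, row, col):
    """Weighted sum of the 3x3 neighborhood centered at (row, col)."""
    total = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            total += kernel[dr + 1][dc + 1] * image[row + dr][col + dc]
    return total


def sobel_edges(image, threshold):
    """Binary edge map (1 = edge). Border pixels are left as 0 for simplicity."""
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = apply_kernel(image, SOBEL_X, r, c)
            gy = apply_kernel(image, SOBEL_Y, r, c)
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges[r][c] = 1
    return edges


# Toy image: dark left half, bright right half, so one vertical edge.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
for row in sobel_edges(image, threshold=100):
    print(row)
```

Every step is spelled out by the programmer; changing what counts as an "edge" means editing the kernels or the threshold by hand.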

On the other hand, image processing performs a specific manipulation of an image that can be then used for further processing with a vision algorithm. For instance, you may want to smooth or compress an image’s pixels for display or reduce its overall size. This can be likened to bending the light that enters the eye to adjust focus or viewing field. Other examples of image processing include adjusting, converting, rescaling, and warping an input image.
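As a rough sketch of two such manipulations, the code below smooths a grayscale image with a 3x3 box blur and halves its size by averaging 2x2 blocks. The list-of-rows image format, the border handling, and the function names are assumptions chosen for illustration.

```python
def box_blur(image):
    """Smooth an image by replacing each pixel with the mean of its 3x3
    neighborhood. At the borders, only neighbors inside the image are used."""
    height, width = len(image), len(image[0])
    smoothed = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            neighbors = [image[rr][cc]
                         for rr in range(max(0, r - 1), min(height, r + 2))
                         for cc in range(max(0, c - 1), min(width, c + 2))]
            smoothed[r][c] = sum(neighbors) / len(neighbors)
    return smoothed


def downscale_2x(image):
    """Halve width and height by averaging each 2x2 block of pixels.
    A trailing odd row or column is dropped."""
    return [[(image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4
             for c in range(0, len(image[0]) - 1, 2)]
            for r in range(0, len(image) - 1, 2)]


noisy = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]  # one bright "noise" pixel
print(box_blur(noisy)[1][1])  # 1.0: the spike is spread over its neighborhood
print(downscale_2x([[1, 3], [5, 7]]))  # [[4.0]]
```

The output of either function can be passed on to a vision algorithm, which is the role image processing plays in a pipeline.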

AI-based computer vision

AI-based computer vision or vision AI relies on algorithms that have been trained on visual data to accomplish a specific task, as opposed to programmed, hard-coded instructions like that of image processing.

The detection, classification, segmentation, and synthesis tasks mentioned earlier typically are AI-based computer vision algorithms because of the accuracy and robustness that can be achieved. In many instances, AI-based computer vision algorithms can outperform traditional algorithms in terms of these two performance metrics.

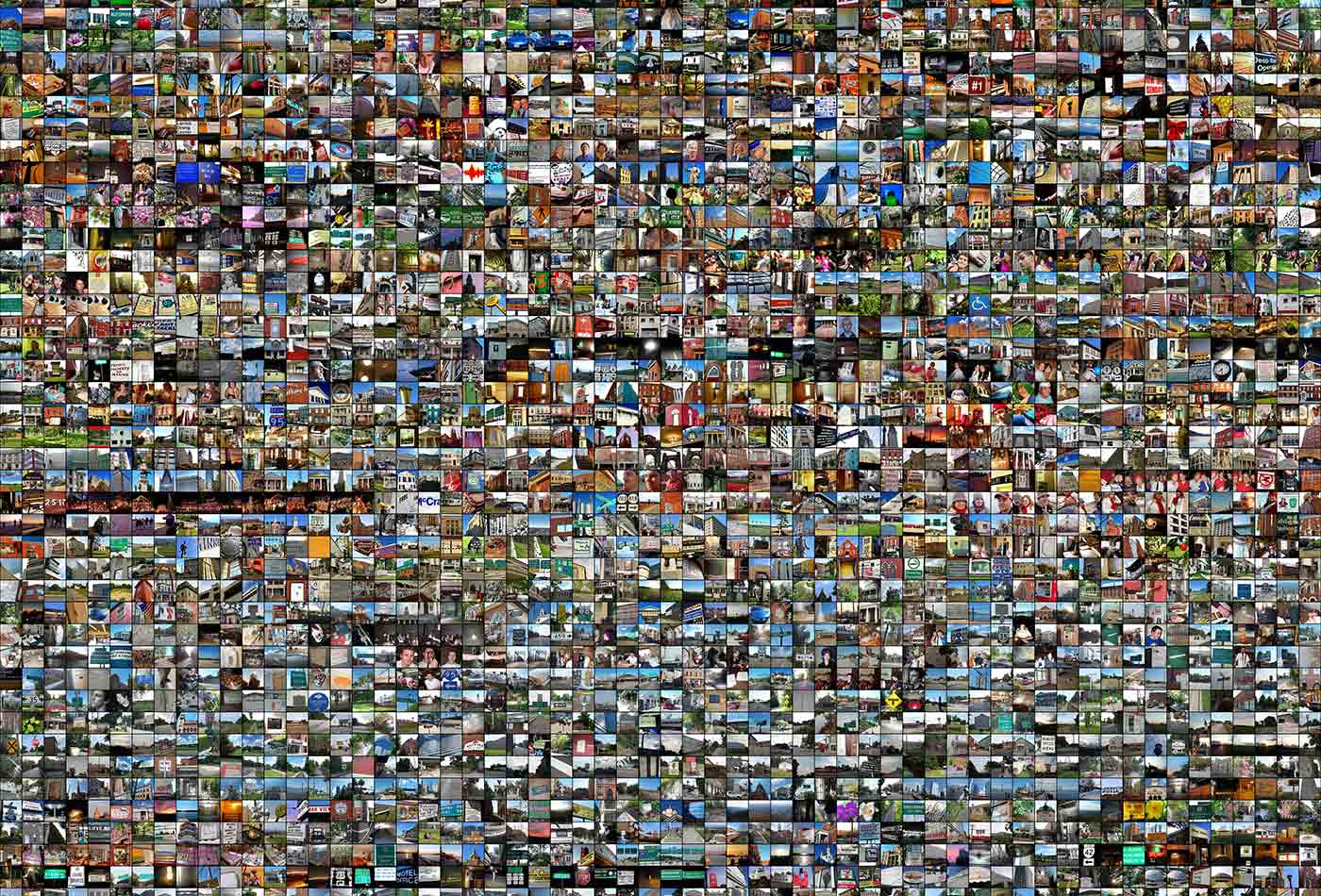

AI-based computer vision algorithms mimic the human vision system more closely by learning from and adapting to visual data inputs, making them the computer vision models of choice in most cases. That said, AI-based computer vision algorithms require large amounts of data, and the quality of that data directly drives the quality of the model's output. In most cases, however, the performance gains outweigh this cost.

AI-based neural networks teach themselves, and what they learn depends on the data they were trained on. AI-based computer vision is like learning from experience and making predictions based on context rather than explicit instructions. The learning process is akin to when your eye sees an unfamiliar object and your brain tries to learn what it is, storing that knowledge for future predictions.
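To make the contrast with hard-coded rules concrete, the sketch below trains a single artificial neuron (a perceptron, chosen here only for brevity; real vision models are far larger neural networks) on a handful of labeled examples. The two features per image and the labels are hypothetical toy data.

```python
def predict(weights, bias, features):
    """Output 1 if the weighted sum of the features plus bias is positive."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, epochs=20, lr=0.1):
    """Learn weights from labeled examples instead of writing rules by hand."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = label - predict(weights, bias, features)  # -1, 0, or +1
            # Nudge the weights toward the correct answer when the prediction is wrong.
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


# Hypothetical features per image: [mean brightness, fraction of edge pixels].
samples = [[0.9, 0.8], [0.8, 0.9], [0.1, 0.2], [0.2, 0.1]]
labels = [1, 1, 0, 0]
weights, bias = train_perceptron(samples, labels)
print(predict(weights, bias, [0.85, 0.85]))  # 1 for this unseen example
```

No threshold was written by hand: the weights and bias come entirely from the training examples, so different data would produce a different rule.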

Machine learning compared to deep learning in AI-based computer vision

Machine learning computer vision is a type of AI-based computer vision. AI-based computer vision based on machine learning commonly uses artificial neural networks, made of layers loosely inspired by the neurons in the human brain, to connect and transmit signals about the ingested visual data. In these networks, the layers are separate and distinct, the connections between layers are explicitly defined, and visual data is transmitted in predefined directions.

Deep learning-based computer vision models are a subset of machine learning-based computer vision. The “deep” in deep learning derives its name from the depth or number of the layers in the neural network. Typically, a neural network with three or more layers is considered deep.

AI-based computer vision based on deep learning is trained on large volumes of data. It is not uncommon to see hundreds of thousands or millions of digital images used to train and develop deep neural network models. For more information, see What’s the Difference Between Artificial Intelligence, Machine Learning, and Deep Learning?

Get started developing computer vision

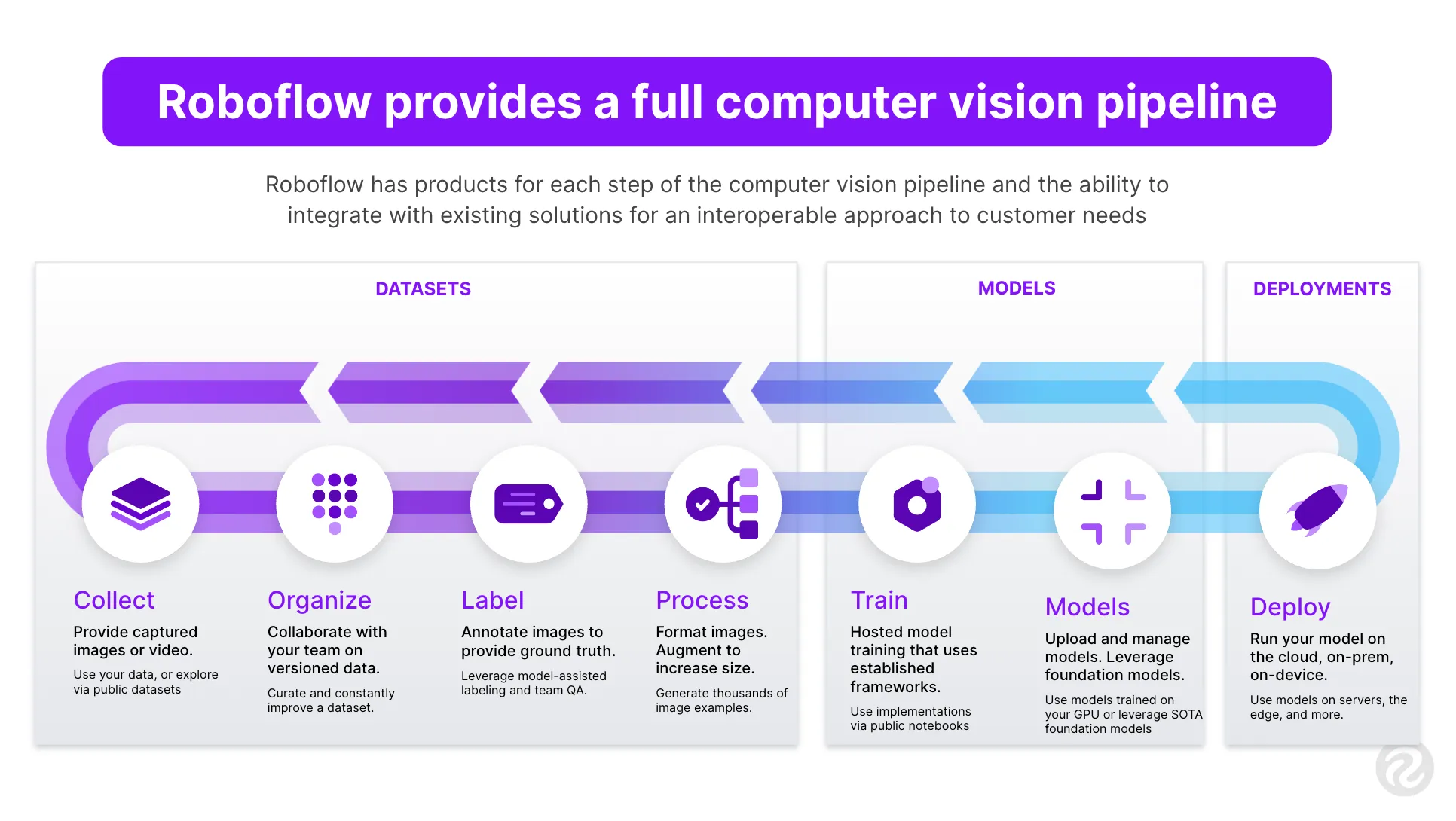

Now that we have covered the fundamentals of computer vision, we encourage you to start developing computer vision applications. We recommend that beginners start with the Vision Programming Interface (VPI) Computer Vision and Image Processing Library for non-AI algorithms, or with one of the TAO Toolkit's fully operational, ready-to-use pretrained AI models.

To see how NVIDIA enables the end-to-end computer vision workflow, see the Computer Vision Solutions page. NVIDIA provides models plus computer vision and image-processing tools. We also provide AI-based software application frameworks for training on visual data, testing and evaluating image datasets, deployment and execution, and scaling.

To help enable emerging computer vision developers everywhere, NVIDIA is curating a series of paths to mastery to chart and nurture next-generation leaders. Stay tuned for the upcoming release of the computer vision path to mastery to self-pace your learning journey and showcase your #NVCV progress on social media.

Related resources

- DLI course: Deep Learning for Industrial Inspection

- GTC session: The Visionaries: A Cross-Industry Exploration of Computer Vision

- GTC session: Vision AI Demystified

- GTC session: Boost your Vision AI Application with Vision Transformer

- NGC Containers: MATLAB

- Webinar: Transforming Warehouse Operation Management Using Computer Vision and Digital Twins


Open Computer Vision Library

A Comprehensive Guide to Computer Vision Research in 2024

By bharat, January 17, 2024, in AI Careers. Tags: ai, computer vision, computer vision research, computer vision research groups, deep learning, OpenCV

Introduction

In our earlier blogs, we discussed the best institutes across the world for computer vision research. In this fun read, we'll look at the different stages of computer vision research and how you can go about publishing your research work. Let us delve into them now. Looking to become a Computer Vision Engineer? Check out our Comprehensive Guide!

Table of Contents

- Introduction

- Different Stages of Computer Vision Research

- Research Publications

Different Stages of Computer Vision Research

Computer vision research can be divided into several stages, each building on the one before. Let us look at them in detail.

Identification of Problem Statement

Computer Vision research starts with identifying the problem statement. It is a crucial step in defining the scope and goals of a research project. It involves clearly understanding the specific challenge or task the researchers aim to address using computer vision techniques. Here are the steps involved in identifying the problem statement in computer vision research:

- Problem Statement Analysis: The first step is to pinpoint the specific application domain within computer vision. This could be related to object recognition in autonomous vehicles or medical image analysis for disease detection.

- Defining the problem: Next, we define the precise problem we want to solve within that domain, like classifying images of animals or diagnosing diseases from X-rays.

- Understanding the objectives: We need to understand the research objectives and outline what we intend to achieve through this project. For instance, improving classification accuracy or reducing false positives in a medical imaging system.

- Data availability: Next, we need to analyze the availability of data for our project. Check if existing datasets are suitable for our task or if we need to gather our own data, like collecting images of specific objects or medical cases.

- Review: Conduct a thorough review of existing research and the latest methodologies in the field. This will help you gain insights into the current state-of-the-art techniques and the challenges others have faced in similar projects.

- Question formulation: Once we review the work, we can formulate research questions to guide our experiments. These questions could address specific aspects of our computer vision problem and help better structure our research.

- Metrics: Next, we define the evaluation metrics that we’ll use to measure the performance of our vision system. Some common metrics include accuracy, precision, recall, and F1-score.

- Highlighting: Highlight how solving the problem will have an effect in the real world. For instance, improving road safety through better object recognition or enhanced medical diagnoses for early treatment.

- Research Outline: Finally, outline the research plan, and detail the methodology employed for data collection, model development, and evaluation. A structured outline will ensure we are on the right track throughout our research project.

Let us move to the next step, data collection and creation.

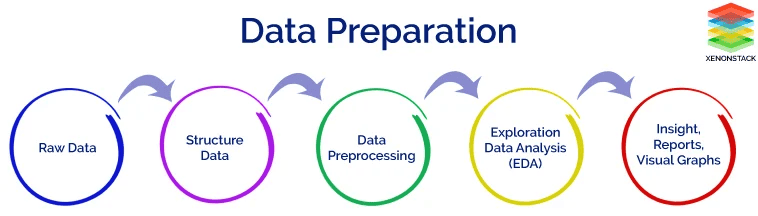

Dataset Collection and Creation

Creating and gathering datasets is one of the key building blocks of computer vision research. The algorithms and models used in vision systems learn from and are evaluated on these datasets. Let us see how this is done.

- Firstly we need to know what we are trying to solve. For instance, are we training models to recognize dogs in photos or identify anomalies in medical images?

- Now, we’ll need images or videos. Depending on the research needs, we can find them on public datasets or collect our own.

- Next, we mark up (annotate) the data. For instance, if you're teaching a computer to spot dogs in pictures, you'll draw boxes around the dogs and label them, “These are dogs!”

- Raw data can be a mess. We may need to resize images, adjust colors, or add more examples to ensure our dataset is neat and complete.

- Then, split the data into three parts:

  - one part for training your model

  - one part for fine-tuning

  - one part for testing how well your model works

- Next, ensure the dataset fairly represents the real world and doesn’t favor one group or category too much.

One can also share their dataset and research with others for inputs and improvements. Dataset collection and creation are vital in computer vision research.

Exploratory Data Analysis

Exploratory Data Analysis (EDA) is a preliminary analysis of a dataset that answers initial questions and guides the modeling process, for instance by looking for patterns across different classes. It is used not only by Computer Vision Engineers but also by Data Scientists to ensure that the data they provide aligns with business goals or outcomes. For image datasets, EDA is used to spot anomalies, understand the data distribution, and gain insights that inform model training. Let us look at the role of EDA in model development.

- With EDA, one can develop data preprocessing pipelines and choose data augmentation strategies.

- EDA findings can also inform the choice of model architecture, for instance how many convolutional layers are needed or what input image size to use.

- EDA is also valuable for advanced computer vision tasks like object detection, segmentation, and image generation.

Now let us dive into the specifics of EDA methods and preparing image datasets for model development.

Visualization

- Sample Image Visualization involves displaying a random set of images from the dataset. This is a fundamental step where we get an idea of the data like lighting conditions or variations in image quality. From this, one can infer the visual diversity and any challenges in the dataset.

- Analyzing pixel intensity distributions reveals brightness and contrast variations across the dataset and shows whether image enhancement techniques are needed.

- Next, creating histograms for different color channels gives us a better understanding of the color distribution of the dataset. This is a crucial step for tasks such as image classification.
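The intensity and color-histogram checks above can be sketched as follows. The pixel list is hypothetical, the 4-bin histogram is kept coarse for readability, and the brightness measure is a simple unweighted average of the three channels (an assumption; luminance formulas usually weight the channels differently).

```python
def channel_histograms(pixels, bins=4):
    """Count each channel's values into `bins` equal-width bins over 0-255."""
    bin_width = 256 / bins
    histograms = {"R": [0] * bins, "G": [0] * bins, "B": [0] * bins}
    for r, g, b in pixels:
        for channel, value in (("R", r), ("G", g), ("B", b)):
            histograms[channel][int(value // bin_width)] += 1
    return histograms


def mean_intensity(pixels):
    """Mean brightness, using an unweighted average of the three channels."""
    return sum((r + g + b) / 3 for r, g, b in pixels) / len(pixels)


# Hypothetical (R, G, B) pixels pooled from a few sample images.
pixels = [(255, 0, 0), (250, 10, 5), (0, 0, 255), (128, 128, 128)]
print(channel_histograms(pixels))
print(round(mean_intensity(pixels), 2))
```

Run over a whole dataset, a histogram skewed toward the low bins would suggest dark images that may benefit from enhancement.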

Image Property Analysis

- Another crucial part is understanding the resolution and the aspect ratio of images in the dataset. It helps make decisions like resizing the image or normalizing the aspect ratio, which is crucial in maintaining consistency in input data for neural networks.

- In datasets with annotations, analyzing the size and distribution of annotated objects can be insightful. It helps in understanding the scale of objects and influences the design of layers in the neural network.
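A minimal sketch of these property checks, assuming image sizes are available as (width, height) tuples and boxes as (x_min, y_min, x_max, y_max) pixel coordinates; both formats and all values are hypothetical.

```python
from collections import Counter


def summarize_sizes(sizes):
    """Most common resolution and aspect-ratio range for (width, height) tuples."""
    aspect_ratios = [w / h for w, h in sizes]
    return {
        "most_common_resolution": Counter(sizes).most_common(1)[0][0],
        "min_aspect_ratio": min(aspect_ratios),
        "max_aspect_ratio": max(aspect_ratios),
    }


def relative_box_areas(boxes, image_size):
    """Fraction of the image covered by each (x_min, y_min, x_max, y_max) box."""
    width, height = image_size
    return [(x2 - x1) * (y2 - y1) / (width * height) for x1, y1, x2, y2 in boxes]


sizes = [(640, 480), (640, 480), (1920, 1080), (480, 640)]
print(summarize_sizes(sizes))
print(relative_box_areas([(0, 0, 64, 48)], (640, 480)))  # a small object
```

A wide aspect-ratio range points to a resizing or padding strategy, and many very small boxes suggest the network must preserve fine spatial detail.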

Correlation Analysis

- For high-dimensional image data, advanced EDA analyzes the relationships between different features. This aids dimensionality reduction or feature selection.

- Next, it is crucial to understand the spatial correlations within images, like the relationship between different regions in an image. It helps in the development of spatial hierarchies in neural networks.
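As one way to analyze relationships between features, the sketch below computes Pearson correlation between per-image features and flags highly correlated (likely redundant) pairs. The feature names, their values, and the 0.95 threshold are hypothetical.

```python
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def redundant_pairs(features, threshold=0.95):
    """Feature pairs whose absolute correlation exceeds the threshold."""
    return [(a, b) for a, b in combinations(features, 2)
            if abs(pearson(features[a], features[b])) > threshold]


# Hypothetical per-image features for four images.
features = {
    "brightness": [0.2, 0.4, 0.6, 0.8],
    "contrast": [0.1, 0.2, 0.3, 0.4],
    "edge_density": [0.5, 0.1, 0.4, 0.2],
}
print(redundant_pairs(features))
```

Dropping one feature from each flagged pair is a simple form of the feature selection mentioned above.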

Class Distribution Analysis

- EDA is important for understanding imbalances in the class distribution. This is key in classification tasks, where imbalanced data can lead to biased models.

- Once the imbalances are identified, we can adopt techniques like undersampling majority classes or oversampling minority classes during model training.
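A minimal sketch of counting classes and then applying random oversampling, one of the techniques named above: minority-class samples are duplicated at random until every class matches the largest one. The sample IDs and labels are hypothetical.

```python
import random
from collections import Counter


def oversample(samples, labels, seed=0):
    """Randomly duplicate minority-class samples until all classes are equal in size."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    new_samples, new_labels = list(samples), list(labels)
    for label, items in by_class.items():
        for _ in range(target - counts[label]):
            new_samples.append(rng.choice(items))
            new_labels.append(label)
    return new_samples, new_labels


samples = list(range(8))  # hypothetical image IDs
labels = ["cat"] * 6 + ["dog"] * 2
print(Counter(labels))  # the imbalance revealed by EDA
balanced_samples, balanced_labels = oversample(samples, labels)
print(Counter(balanced_labels))
```

Oversampling should be applied only to the training split; duplicating images into the validation or test set would inflate the measured performance.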

Geometric Analysis

- Understanding geometric properties like edges, shapes, and textures in images offers insights into the features important for the problem at hand. We can make informed decisions on selecting specific filters or layers in the network architecture.

- It’s important to understand how different morphological transformations affect images for segmentation and object detection tasks.
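To see how morphological transformations change a mask, here is a sketch of binary dilation and erosion with a 3x3 square structuring element. Treating out-of-bounds neighbors as ignored is an assumption; libraries differ in how they handle borders.

```python
def _neighborhood(mask, r, c):
    """Values in the 3x3 neighborhood of (r, c); out-of-bounds neighbors are skipped."""
    h, w = len(mask), len(mask[0])
    return [mask[rr][cc]
            for rr in range(max(0, r - 1), min(h, r + 2))
            for cc in range(max(0, c - 1), min(w, c + 2))]


def dilate(mask):
    """A pixel becomes 1 if any neighbor is 1, so shapes grow."""
    return [[1 if any(_neighborhood(mask, r, c)) else 0 for c in range(len(mask[0]))]
            for r in range(len(mask))]


def erode(mask):
    """A pixel stays 1 only if every neighbor is 1, so shapes shrink."""
    return [[1 if all(_neighborhood(mask, r, c)) else 0 for c in range(len(mask[0]))]
            for r in range(len(mask))]


# A 5x5 segmentation mask with a one-pixel hole in the middle.
holey = [[1] * 5 for _ in range(5)]
holey[2][2] = 0
closed = erode(dilate(holey))  # "closing": dilation followed by erosion
print(closed[2][2])  # 1: the hole has been filled
```

Closing like this is often used to clean up noisy segmentation masks, but it can also merge nearby objects, which matters for detection tasks.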

Sequential Analysis

Sequential analysis applies to video data.

- For instance, analyzing changes between frames can offer information like motion, temporal consistency, or the need for temporal modeling in video datasets or video sequences.

- Identifying temporal variations and scene changes gives us insights into the dynamics within the video data that are crucial for tasks like event detection or action recognition.
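A simple way to analyze changes between frames is frame differencing. The sketch below flags frames whose mean absolute pixel difference from the previous frame exceeds a threshold; the tiny frames and the threshold of 50 are hypothetical, and real scene-change detectors typically use more robust measures.

```python
def frame_difference(prev, curr):
    """Mean absolute per-pixel difference between two same-sized grayscale frames."""
    total = sum(abs(a - b)
                for row_a, row_b in zip(prev, curr)
                for a, b in zip(row_a, row_b))
    return total / (len(prev) * len(prev[0]))


def scene_changes(frames, threshold):
    """Indices of frames that differ sharply from the frame before them."""
    return [i for i in range(1, len(frames))
            if frame_difference(frames[i - 1], frames[i]) > threshold]


dark = [[10, 12], [11, 10]]
bright = [[200, 205], [198, 202]]
frames = [dark, dark, bright, bright]
print(scene_changes(frames, threshold=50))  # [2]
```

Small but nonzero differences between consecutive frames indicate motion, while large jumps like this one suggest a scene cut.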

Now that we've discussed Exploratory Data Analysis and some of its techniques, let us move to the next stage in computer vision research: defining the model architecture.

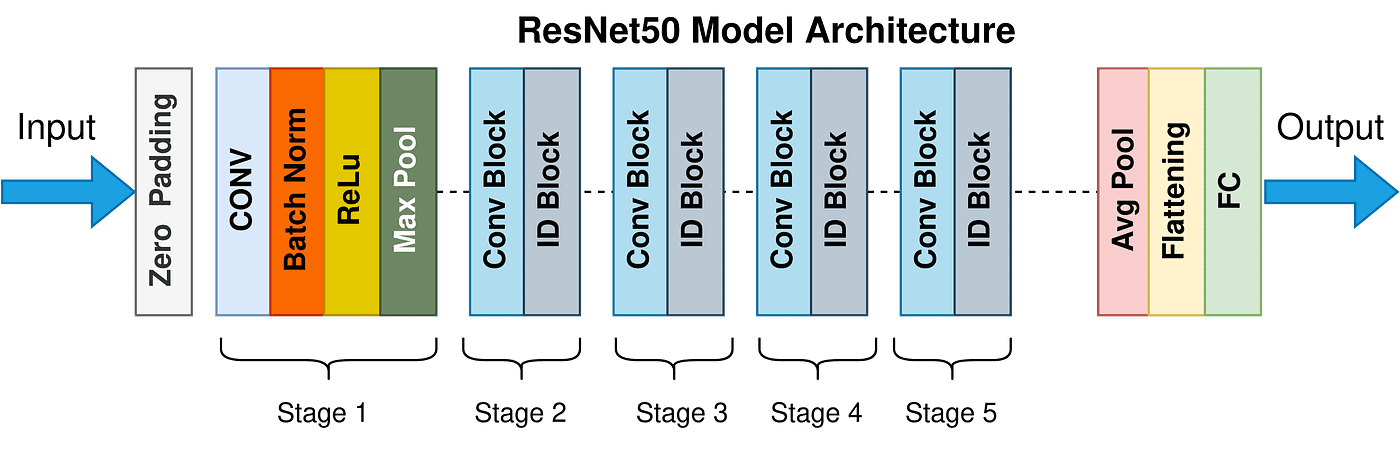

Defining Model Architecture

Defining a model architecture is a critical component of computer vision research, as it lays the foundation for how a machine learning model will perceive, process, and interpret visual data. The architecture directly affects the model's ability to learn from visual data and perform tasks like object detection or semantic segmentation.

Model architecture in computer vision refers to the structural design of an artificial neural network. The architecture defines how the model processes input images, extracts features, and makes predictions and classifications.

What are the components of a model architecture? Let’s explore them.

Input Layer

This is where the model receives the image data, usually as a multi-dimensional array. For color images, this is typically a 3D array of height, width, and channels, where the three channels hold the RGB values. Preprocessing steps like normalization are applied to the data before or at this stage.

Convolutional Layers

These layers apply a set of filters to the input. Each filter slides (convolves) across the width and height of the input volume, computing the dot product between the filter entries and the input at each position, and produces a 2D activation map. Because convolution preserves the spatial relationships between pixels, stacked convolutional layers capture spatial hierarchies in the image.
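A minimal single-channel sketch of the sliding dot product described above, with stride 1 and no padding ("valid" convolution). Like most deep learning frameworks, it does not flip the kernel (strictly, cross-correlation). The 4x4 input and 2x2 kernel are arbitrary illustrative values; in a CNN the kernel values would be learned.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1 on a single-channel input.
    The output shrinks by (kernel size - 1) in each dimension."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 0],
          [0, -1]]  # compares each pixel with its lower-right neighbor
activation_map = conv2d(image, kernel)
print(activation_map)
```

A real convolutional layer applies many such filters across all input channels at once and adds a bias per filter, producing one activation map per filter.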

Activation Functions

Activation functions let networks learn more complex representations by introducing non-linearity. For instance, the ReLU (Rectified Linear Unit) function applies the non-linear transformation f(x) = max(0, x), which keeps positive values and sets negative values to zero. Other functions include sigmoid and tanh.
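The three activation functions named above can be written directly from their definitions; this is an illustrative scalar version, whereas frameworks apply them element-wise to whole tensors.

```python
import math


def relu(x):
    """f(x) = max(0, x): keeps positive values, zeroes out negative ones."""
    return max(0.0, x)


def sigmoid(x):
    """Squashes any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squashes any input into the range (-1, 1)."""
    return math.tanh(x)


inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
print([relu(x) for x in inputs])  # [0.0, 0.0, 0.0, 0.5, 2.0]
print([round(sigmoid(x), 3) for x in inputs])
```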

Pooling Layers

These layers perform a down-sampling operation along the spatial dimensions (width and height), reducing the number of parameters and computations in the rest of the network. Max pooling, a common approach, takes the maximum value within each filter window. This operation provides some spatial invariance, making the detected features less sensitive to small shifts in their position within the input.
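A sketch of 2x2 max pooling with stride 2 on a single feature map; the window size, stride, and input values are illustrative choices.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Keep the maximum value in each size x size window, moving by `stride`."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [[max(fmap[r * stride + i][c * stride + j]
                 for i in range(size) for j in range(size))
             for c in range(out_w)]
            for r in range(out_h)]


feature_map = [[1, 3, 2, 4],
               [5, 6, 1, 0],
               [7, 2, 9, 8],
               [3, 4, 6, 5]]
print(max_pool2d(feature_map))  # [[6, 4], [7, 9]]
```

The 4x4 map shrinks to 2x2, and each output stays the same if its maximum moves anywhere within its window, which is the source of the small-shift invariance described above.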

Fully Connected Layers

Here, every neuron in one layer is connected to every neuron in the next layer. In a CNN, the high-level reasoning is performed by these dense layers. They are typically positioned near the end of the network: the output of the convolutional and pooling layers is flattened into a single feature vector, which the dense layers use for the final classification or regression.
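The flatten-then-dense step can be sketched as follows. The weights and biases are arbitrary illustrative numbers; in a trained network they would be learned.

```python
def flatten(feature_maps):
    """Turn a list of 2D feature maps into one flat feature vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input.
    weights[j][i] is the connection from input i to output neuron j."""
    return [sum(w * x for w, x in zip(neuron_weights, inputs)) + b
            for neuron_weights, b in zip(weights, biases)]


features = flatten([[[1.0, 2.0], [3.0, 4.0]]])  # one 2x2 feature map -> 4 values
scores = dense(features, weights=[[1, 1, 1, 1], [0, 0, 0, 1]], biases=[0.0, 0.5])
print(scores)  # [10.0, 4.5]
```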

Dropout Layers

Dropout is a regularization technique in which randomly selected neurons are ignored during training. Their contribution to the activation of downstream neurons is temporarily removed on the forward pass, and no weight updates are applied to them on the backward pass. This helps prevent overfitting.
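A sketch of dropout on a list of activations. It uses the common "inverted dropout" variant, which scales the surviving activations by 1 / (1 - p) during training so that nothing needs to change at inference time; this scaling is a widely used implementation choice, not something stated in the description above.

```python
import random


def dropout(activations, p, rng, training=True):
    """Zero each activation with probability p during training and scale
    survivors by 1 / (1 - p) so the expected value is unchanged."""
    if not training or p == 0:
        return list(activations)  # dropout is disabled at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
out = dropout([1.0] * 1000, p=0.5, rng=rng)
print(out.count(0.0), "of 1000 activations dropped")
```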

Batch Normalization

In batch normalization, the output of a previous activation layer is normalized by subtracting the batch mean and dividing by the batch standard deviation; the result is then scaled and shifted by two learnable parameters. This technique helps stabilize the learning process and can significantly reduce the number of training epochs required to train deep networks.
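For a single feature across one mini-batch, the normalization step looks like the sketch below. `gamma` and `beta` stand in for the learnable scale and shift (fixed here), and `eps` is the small constant commonly added to avoid division by zero. At inference time, real implementations use running averages of the mean and variance instead of batch statistics.

```python
def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale (gamma) and shift (beta)."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    return [gamma * (v - mean) / (variance + eps) ** 0.5 + beta for v in values]


normalized = batch_norm([2.0, 4.0, 6.0, 8.0])
print([round(v, 3) for v in normalized])  # roughly zero mean, unit variance
```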

Loss Function

The difference between the expected outcomes and the predictions made by the model is quantified by the loss function. Cross-entropy for classification tasks and mean squared error for regression tasks are some of the common loss functions in computer vision.
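The two loss functions named above can be sketched for a single sample (cross-entropy) and a small batch of predictions (mean squared error). The probabilities and targets are hypothetical values.

```python
import math


def cross_entropy(probs, true_class):
    """Classification loss for one sample: -log(probability of the true class)."""
    return -math.log(probs[true_class])


def mean_squared_error(predictions, targets):
    """Regression loss: average squared difference between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)


probs = [0.7, 0.2, 0.1]  # hypothetical model output for 3 classes
print(cross_entropy(probs, 0))  # small loss: confident and correct
print(cross_entropy(probs, 2))  # large loss: the true class got low probability
print(mean_squared_error([2.5, 0.0], [3.0, -0.5]))  # 0.25
```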

Optimizer

The optimizer is an algorithm used to minimize the loss function. It updates the network's weights based on the gradient of the loss. Some common optimizers include Stochastic Gradient Descent (SGD), Adam, and RMSprop. They rely on gradients computed by backpropagation to determine the direction in which each weight should be adjusted to reduce the loss.

Output Layer

This is the final layer, where the model’s output is produced. The output layer typically includes a softmax function for classification tasks that converts the outputs to probability values for each class. For regression tasks, the output layer may have a single neuron.
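A sketch of the softmax function that turns raw output scores (logits) into class probabilities. Subtracting the maximum logit first is a standard numerical-stability trick and does not change the result.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that are positive and sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])  # hypothetical logits for 3 classes
predicted_class = probs.index(max(probs))
print([round(p, 3) for p in probs], predicted_class)
```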

Frameworks like TensorFlow, PyTorch, and Keras are widely used for designing and implementing model architectures. They offer pre-built layers, training routines, and easy integration with hardware accelerators.

Defining a model architecture requires a good grasp of both the theoretical aspects of neural networks and the practical aspects of the specific task.

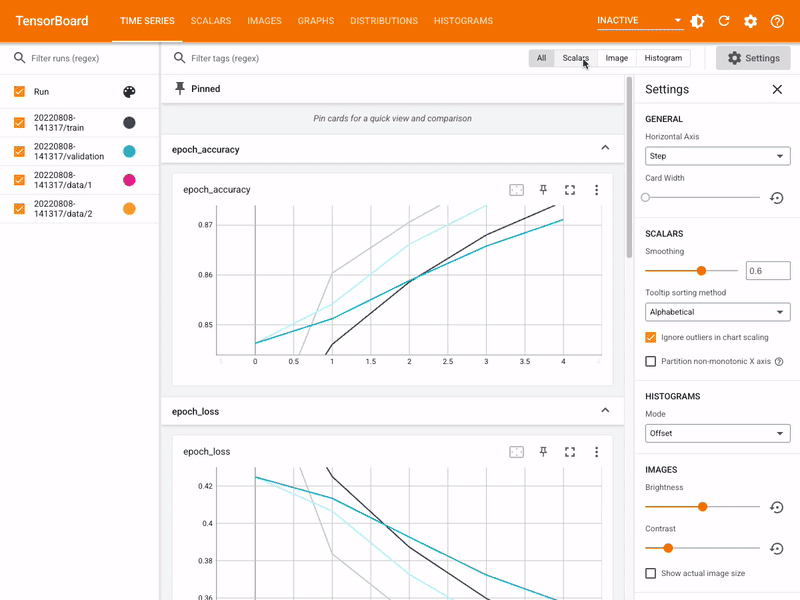

Training and Validation

Training and validation are crucial in developing a model. They help evaluate a model’s performance, especially when dealing with object detection or image classification tasks.

In this phase, the model is represented as a neural network that learns to recognize image patterns and features by altering its internal parameters iteratively. These parameters are weights and biases related to the network’s layers. Training is key for extracting meaningful features from raw visual data. Let us see how one can go about training a model.

- Acquiring a dataset is the first step. It could be in the form of images or videos for the model to learn from. For robustness, the data should cover various environmental conditions, variations, and object classes.

- Resizing ensures that all input data has the same dimensions for batch processing.

- In Normalization, pixels are standardized to zero mean and unit variance, aiding convergence.

- Augmentation applies random transformations to increase the size of the dataset artificially, thereby improving the model’s ability to generalize.

- Once data preprocessing is done, we must choose the appropriate neural network architecture catering to the specific vision task. For instance, CNNs are widely used for image-related tasks.

- Next, we initialize the model parameters, usually weights and biases, using random values or pre-trained weights from a model trained on a related, often larger, dataset. This transfer learning can significantly improve performance, especially when data is limited.

- Then we choose an optimization algorithm, such as stochastic gradient descent (SGD) or RMSprop, to adjust the parameters iteratively. The gradients of the loss with respect to the model's parameters are computed through backpropagation and used to update the parameters.

- The training data is then passed through the network in mini-batches, computing the loss for each mini-batch and performing gradient updates. This continues until the loss falls below a predefined threshold or a set number of epochs is reached.

- Next, we improve training performance and convergence speed by fine-tuning the hyperparameters. This could be done by optimizing learning rates, batch sizes, weight regularization terms, or network architectures.

- We need to assess the model’s performance using validation or test datasets and eventually deploy the model in real-world applications through software integrations or embedded devices.
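The core of the training loop described above can be sketched in a few lines. To stay self-contained, this toy example fits a straight line y ≈ w·x + b with mini-batch SGD on mean squared error instead of training a real network. The data, learning rate, batch size, and epoch count are illustrative assumptions, and it runs for a fixed number of epochs rather than until a loss threshold is reached.

```python
import random


def train_linear(xs, ys, lr=0.1, epochs=200, batch_size=2, seed=0):
    """Fit y ~ w * x + b with mini-batch stochastic gradient descent on MSE."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # parameter initialization (zeros, for simplicity)
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # new mini-batch composition every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradients of mean((w*x + b - y)**2) over the mini-batch.
            residuals = [w * xs[i] + b - ys[i] for i in batch]
            grad_w = sum(2 * res * xs[i] for res, i in zip(residuals, batch)) / len(batch)
            grad_b = sum(2 * res for res in residuals) / len(batch)
            # Gradient descent step.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [2 * x + 1 for x in xs]  # noise-free toy targets generated from w=2, b=1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```

In a real network, the two hand-derived gradients are replaced by backpropagation through every layer, but the shuffle, mini-batch, and update structure is the same.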

Now let us move to the next step: validation.

Validation is fundamental for quantitatively assessing the performance and generalization capabilities of algorithms. It ensures the reliability and effectiveness of the models when applied to real-world data. Validation evaluates a model's ability to make accurate predictions on previously unseen data, and thereby gauges its ability to generalize.

Now let us explore some of the key techniques involved in validation.

Cross-Validation Techniques

- K-Fold Cross-Validation is the method where the dataset is partitioned into K non-overlapping subsets. The model is trained and evaluated K times, with each fold taking turns as the validation set while the rest serve as the training set. The results are averaged to obtain a robust performance estimate.

- Leave-One-Out Cross-Validation (LOOCV) is an extreme form of cross-validation in which each data point in turn is used as the validation set while the remaining data points constitute the training set. LOOCV offers an exhaustive evaluation of model performance, but it requires training the model once per data point, which is expensive for large image datasets.
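The fold bookkeeping for both techniques can be sketched as index generation; LOOCV is simply K-fold with K equal to the number of samples. This version uses contiguous folds; in practice the data is usually shuffled first. The function name is illustrative.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) for K contiguous, non-overlapping folds.
    Fold sizes differ by at most one when n is not divisible by k."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, val_idx
        start += size


for train_idx, val_idx in k_fold_indices(10, 3):
    print("validate on", val_idx, "train on", len(train_idx), "samples")

loocv_folds = list(k_fold_indices(5, 5))  # LOOCV: one sample per validation set
```

With K folds, the model is trained K times and the K validation scores are averaged.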

Stratified Sampling

In imbalanced datasets, where a few classes have significantly fewer instances than others, stratified sampling ensures that the training and validation sets preserve the class distribution of the full dataset.
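A minimal sketch of a stratified train/validation split: each class is shuffled and split separately, so both sets keep roughly the original class proportions. The 90/10 label mix and the 20% validation fraction are hypothetical.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, val_fraction, seed=0):
    """Return (train_idx, val_idx) with each class split in the same proportion."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    train_idx, val_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_val = round(len(indices) * val_fraction)
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return sorted(train_idx), sorted(val_idx)


labels = ["normal"] * 90 + ["defect"] * 10  # hypothetical imbalanced dataset
train_idx, val_idx = stratified_split(labels, val_fraction=0.2)
print(Counter(labels[i] for i in val_idx))  # both classes appear in validation
```

With a purely random split, a rare class could easily end up with very few or no validation examples.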

Performance Metrics

To assess the model's performance, a range of performance metrics suited to computer vision tasks is used, including but not limited to the following:

- Accuracy is the ratio of the correctly predicted instances to the total number of instances.

- Precision is the proportion of true positive predictions among all positive predictions.

- Recall is the proportion of true positive predictions among all positive instances.

- F1-Score is the harmonic mean of precision and recall.

- Mean Average Precision (mAP) is commonly used in object detection and image retrieval tasks to evaluate the quality of ranked lists of results.
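The first four metrics can be computed from binary labels as sketched below (1 = positive class). Returning 0.0 when a ratio is undefined is an assumption made for simplicity; mAP requires ranked detections and is not covered here. The labels are hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 0, 1]  # e.g. 1 = "defect present"
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
print(binary_metrics(y_true, y_pred))
```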

Hyperparameter Tuning

Validation is closely integrated with hyperparameter tuning, where the model’s hyperparameters are systematically adjusted and evaluated using the validation set. Techniques such as grid search, random search, or Bayesian optimization help identify the optimal hyperparameter configuration for the model.
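Grid search, the simplest of these techniques, can be sketched as follows. The `evaluate` callback is a hypothetical stand-in for "train with these hyperparameters, then score on the validation set"; a toy scoring function replaces it here so the sketch runs instantly.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Evaluate every combination in param_grid; return the best (params, score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)  # e.g. validation accuracy after training
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params):
    """Hypothetical stand-in that peaks at learning_rate=0.01, batch_size=32."""
    return -abs(params["learning_rate"] - 0.01) - abs(params["batch_size"] - 32) / 1000


grid = {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [16, 32, 64]}
best, score = grid_search(grid, toy_validation_score)
print(best)
```

Grid search cost grows multiplicatively with each added hyperparameter; random search instead samples a fixed number of combinations, and Bayesian optimization chooses the next combination based on previous scores.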

Data Augmentation

During validation, data augmentation techniques can simulate variations in the input data to test the model's robustness and its ability to handle different conditions or transformations.
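Two simple augmentations, a horizontal flip and a brightness shift, can be sketched on a grayscale image stored as a list of rows. The 50% flip chance and the ±30 brightness range are illustrative assumptions.

```python
import random


def horizontal_flip(image):
    """Mirror a grayscale image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def adjust_brightness(image, delta):
    """Add delta to every pixel, clipping to the valid 0-255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]


def augment(image, rng):
    """Randomly flip (50% chance) and shift brightness by up to +/-30 levels."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    return adjust_brightness(image, rng.randint(-30, 30))


rng = random.Random(42)
original = [[10, 200], [30, 240]]
variants = [augment(original, rng) for _ in range(3)]
print(variants)
```

The same functions can augment training data or, as described above, create altered validation inputs to probe how robust the model is.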

Training is where the model learns from labeled data, and Validation is where the model’s learning and generalization capabilities are assessed. They ensure that the final model is robust, accurate, and capable of performing well on unseen data, which is critical for computer vision research.

Hyperparameter tuning refers to systematically optimizing the hyperparameters of deep learning models for tasks like image processing and segmentation. Hyperparameters control the learning algorithm's behavior but are not learned from the training data. Fine-tuning them is crucial for achieving accurate results.

Batch Size

Batch size is the number of training examples used in each forward and backward pass. Larger batch sizes give smoother convergence but need more memory. Conversely, smaller batch sizes need less memory, and their noisier updates can help escape local minima.

Number of Epochs

The number of epochs defines how many times the entire training dataset is processed during training. Too few epochs can lead to underfitting, and too many can lead to overfitting.

Learning Rate

This determines the step size during gradient-based optimization. If the learning rate is too high, updates can overshoot the minimum and cause the loss to diverge; if it is too low, convergence can be slow.
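The effect of the learning rate is easy to see on the one-dimensional function f(x) = x², whose gradient is 2x. This toy function and the three learning rates are illustrative choices, not values for a real model.

```python
def gradient_descent(lr, steps=50, x0=5.0):
    """Minimize f(x) = x**2 (gradient 2x) with a fixed learning rate."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x
    return x


for lr in (0.01, 0.1, 1.1):
    print(lr, gradient_descent(lr))
```

Each step multiplies x by (1 - 2·lr). With lr = 0.01 that factor is 0.98, so after 50 steps x is still far from the minimum at 0 (slow convergence); with lr = 0.1 it is 0.8 and x gets very close to 0; with lr = 1.1 it is -1.2, so x overshoots back and forth with growing magnitude and diverges.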

Weight Initialization

The initialization of weights affects training stability. Techniques such as Glorot (Xavier) initialization are designed to address the vanishing and exploding gradient problems.
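A sketch of the uniform variant of Glorot initialization, which draws each weight from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)) so that signal variance stays roughly constant across layers. The layer sizes are hypothetical.

```python
import math
import random


def glorot_uniform(fan_in, fan_out, rng):
    """Glorot (Xavier) uniform init: U(-limit, limit), limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)] for _ in range(fan_in)]


weights = glorot_uniform(fan_in=256, fan_out=128, rng=random.Random(0))
print(len(weights), len(weights[0]), math.sqrt(6.0 / (256 + 128)))  # 256 128 0.125
```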

Regularization Techniques

Techniques like dropout and weight decay help prevent overfitting. Data augmentation, for example applying random rotations, further enhances model generalization.

Choice of Optimizer

The optimizer determines how model weights are updated during training. Optimizers have their own hyperparameters, such as momentum, decay rates, and epsilon.

Hyperparameter tuning is usually approached as an optimization problem. Techniques like Bayesian optimization explore the hyperparameter space efficiently, balancing computational cost against performance. Well-designed hyperparameter tuning not only adjusts individual hyperparameters but also considers their interactions.
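To show how an optimizer hyperparameter changes the update rule, here is a sketch of SGD with (heavy-ball) momentum minimizing the toy function f(x) = x². Only momentum is illustrated; the decay rates and epsilon used by optimizers such as Adam and RMSprop are not modeled. The learning rate, momentum value, and step count are illustrative.

```python
def sgd_momentum(grad_fn, x0, lr=0.1, momentum=0.9, steps=200):
    """SGD with momentum: the velocity accumulates a decaying sum of past gradients."""
    x, velocity = x0, 0.0
    for _ in range(steps):
        velocity = momentum * velocity - lr * grad_fn(x)
        x += velocity
    return x


# Minimize f(x) = x**2, whose gradient is 2x, starting from x = 5.
print(sgd_momentum(lambda x: 2 * x, x0=5.0))
```

Setting `momentum=0.0` recovers plain SGD; higher values smooth the updates but can cause oscillation around the minimum.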

Performance Evaluation on Unseen Data

In the earlier section, we discussed how to train and validate a model. Now we’ll discuss how to evaluate a model’s performance on unseen data.

Splitting the dataset into training and validation sets is paramount when developing and evaluating models. This split is not to be confused with the training and validation stages of a model we discussed earlier. Holding out part of the data shows how the model performs on data it has not seen, which indicates whether it generalizes well to new data. Let us look at the subsets.

- A training dataset is a collection of labeled data points for training the model, adjusting parameters, and inferring patterns and features.

- The validation dataset is a separate dataset used to evaluate the model during development, for hyperparameter tuning and model selection.

- Then there is the test dataset, an independent dataset used for assessing the final performance and generalization ability on unseen data.

Splitting the dataset prevents the model from being evaluated on the same data it was trained on, which would overestimate its performance and hide overfitting. Commonly used split ratios are 70:30, 80:20, or 90:10, with the larger portion used for training and the smaller portion for validation.
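A minimal sketch of a shuffled three-way split with NumPy. The function name is hypothetical, and the 70/15/15 proportions are just one illustrative choice; the same idea works for the two-way ratios above by setting `test_frac=0`.

```python
import numpy as np

def train_val_test_split(n_samples, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle sample indices and split them into disjoint train/validation/test sets."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)  # shuffle so each split is representative
    n_test = int(round(n_samples * test_frac))
    n_val = int(round(n_samples * val_frac))
    test_idx = indices[:n_test]
    val_idx = indices[n_test:n_test + n_val]
    train_idx = indices[n_test + n_val:]
    return train_idx, val_idx, test_idx

train_idx, val_idx, test_idx = train_val_test_split(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 700 150 150
```

Because the splits are taken from one shuffled permutation, no sample can appear in more than one subset.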

You have put so much effort into your research paper. But how do you publish it? Where should you publish it? How do you find the right computer vision research groups? That is what this section covers, so let’s get to it.

Conferences

There are some top-tier computer vision conferences happening across the globe. They are among the best places to showcase research work, look for future collaborations, and build networks.

Conference on Computer Vision and Pattern Recognition (CVPR)

Commonly known as CVPR, it is one of the most prestigious conferences in the world of computer vision. It is an annual event organized by the IEEE Computer Society, with a long history of showcasing cutting-edge research papers in image analysis, object detection, deep learning techniques, and much more. CVPR sets a high bar and places a strong emphasis on the technical quality of submissions, which must meet the following criteria.

Papers must make an innovative contribution to the field, such as new algorithms, techniques, or methodologies that advance computer vision.

If applicable, the submissions must have mathematical formulations of their methods, like equations and theorem proofs. This offers a solid theoretical foundation for the paper’s approach.

Next, the paper should include comprehensive experimental results involving many datasets and benchmarking against existing models. These are key to demonstrating the effectiveness of your proposed approach.

Clarity – this is a no-brainer; the writing and presentation must be clear and concise. The writers are expected to explain the algorithms, models, and results in a technically sound manner.

CVPR is an amazing platform for networking and engaging with the community. It’s a great place to meet academics, researchers, and industry experts to collaborate and exchange ideas. The acceptance rate for papers is only about 25.8%, so an accepted paper carries considerable recognition within the vision community. It often leads to citations, greater visibility, and potential collaborations with renowned researchers and professionals.

International Conference on Computer Vision (ICCV)

ICCV is another premier conference, offering an amazing platform for cutting-edge computer vision research. Unlike CVPR, it is held every two years. Like CVPR, ICCV is organized by the IEEE Computer Society and attracts visionaries, researchers, and professionals from around the world. Topics range from object detection and recognition all the way to computational photography. ICCV invites original papers that offer a significant contribution to the field, and the submission criteria are very similar to CVPR’s: papers must present mathematical formulations, algorithms, experimental methodology, and results. ICCV uses peer review to add a layer of technical rigor and quality to accepted papers, and submissions usually undergo multiple stages of review with detailed feedback on the technical aspects of the research. The acceptance rate at ICCV is typically low, around 26.2%.

Besides the main conference, the ICCV hosts workshops and tutorials that offer in-depth discussions and presentations in emerging research areas. It also offers challenges and competitions associated with computer vision tasks like image segmentation and object detection.

Like CVPR, ICCV offers excellent opportunities for future collaborations, networking with peers, and exchanging ideas. Papers accepted at ICCV are typically published through the IEEE Computer Society and made available to the vision community, giving researchers significant visibility and recognition.

European Conference on Computer Vision (ECCV)

The European Conference on Computer Vision (ECCV) is another comprehensive conference to consider if you are looking for the top computer vision conferences globally. ECCV places strong emphasis on the scientific and technical quality of papers. Like the two conferences discussed above, it looks at how researchers combine mathematical foundations, algorithms, and detailed derivations and proofs with extensive experimental evaluations.

According to the ECCV formatting guidelines, a paper should ideally be 10 to 14 pages long. ECCV uses double-blind peer review, so authors must anonymize their submissions to minimize reviewer bias.

ECCV also offers huge opportunities for collaborations and establishing connections. With an acceptance rate of 31.8%, a researcher can benefit from academic recognition, high visibility, and citations.

Winter Conference on Applications of Computer Vision (WACV)

WACV is a top international computer vision event consisting of the main conference plus a few workshops and tutorials. Like CVPR, it is held annually, usually in the first week of January. With an acceptance rate below 30%, it attracts leading researchers and industry professionals.

As a computer vision researcher, you should also publish your work in journals to share your findings and give more insight into the field. Let us look at a few computer vision journals.

Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

Commonly known as TPAMI, this journal focuses on various aspects of machine intelligence, pattern recognition, and computer vision. It is a hybrid journal, accepting both traditional manuscripts and author-paid open-access manuscripts.

Open-access papers are freely available to everyone through IEEE Xplore and the Computer Society Digital Library.

For traditional manuscript submissions, the IEEE Computer Society has various award-winning journals for publication, and you can browse the different topics to find the one that fits your research. They often publish special sections on emerging topics. Some factors to consider are the time from submission to publication, bibliometric scores like the impact factor, and publishing fees.

International Journal of Computer Vision (IJCV)

IJCV offers a platform for new research results. With 15 issues a year, the International Journal of Computer Vision publishes high-quality, original contributions to the field of computer vision. Articles range from 10-page regular articles to survey papers of up to 30 pages that offer state-of-the-art presentations and results. Papers cover the mathematical, physical, and computational aspects of computer vision, such as image formation, processing, interpretation, machine learning techniques, and statistical approaches. Researchers are not charged to publish in IJCV. It is not only a journal that opens doors for researchers to showcase their papers but also a goldmine of information on deep learning, artificial intelligence, and robotics.

Journal of Machine Learning Research (JMLR)

Established in 2000, JMLR is a forum for the electronic and print publication of comprehensive research papers. It covers topics like machine learning algorithms and techniques, deep learning, neural networks, robotics, and computer vision. JMLR is freely available to the public and run by volunteers. Its papers undergo rigorous review, making it a valuable resource for the latest developments in the field.

You’ve invested weeks or months into this paper, so why not get the recognition and credibility your work deserves? The journals and conferences above are the ultimate gateway for researchers to showcase their work and open up a wealth of opportunities for academic and industry collaborations.

In conclusion, our journey through the intricate world of computer vision research has been a fun one. From understanding the problem statement to publishing your work at conferences and in journals, we’ve covered each stage in depth.

No research is too big or too small; each project contributes to the ever-evolving field of computer vision.

We have more detailed posts coming your way, so stay tuned! See you in the next one!


MIT News | Massachusetts Institute of Technology


When computer vision works more like a brain, it sees more like people do


From cameras to self-driving cars, many of today’s technologies depend on artificial intelligence to extract meaning from visual information. Today’s AI technology has artificial neural networks at its core, and most of the time we can trust these AI computer vision systems to see things the way we do — but sometimes they falter. According to MIT and IBM research scientists, one way to improve computer vision is to instruct the artificial neural networks that they rely on to deliberately mimic the way the brain’s biological neural network processes visual images.

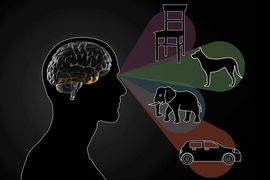

Researchers led by MIT Professor James DiCarlo , the director of MIT’s Quest for Intelligence and member of the MIT-IBM Watson AI Lab, have made a computer vision model more robust by training it to work like a part of the brain that humans and other primates rely on for object recognition. This May, at the International Conference on Learning Representations, the team reported that when they trained an artificial neural network using neural activity patterns in the brain’s inferior temporal (IT) cortex, the artificial neural network was more robustly able to identify objects in images than a model that lacked that neural training. And the model’s interpretations of images more closely matched what humans saw, even when images included minor distortions that made the task more difficult.

Comparing neural circuits

Many of the artificial neural networks used for computer vision already resemble the multilayered brain circuits that process visual information in humans and other primates. Like the brain, they use neuron-like units that work together to process information. As they are trained for a particular task, these layered components collectively and progressively process the visual information to complete the task — determining, for example, that an image depicts a bear or a car or a tree.

DiCarlo and others previously found that when such deep-learning computer vision systems establish efficient ways to solve visual problems, they end up with artificial circuits that work similarly to the neural circuits that process visual information in our own brains. That is, they turn out to be surprisingly good scientific models of the neural mechanisms underlying primate and human vision.

That resemblance is helping neuroscientists deepen their understanding of the brain. By demonstrating ways visual information can be processed to make sense of images, computational models suggest hypotheses about how the brain might accomplish the same task. As developers continue to refine computer vision models, neuroscientists have found new ideas to explore in their own work.

“As vision systems get better at performing in the real world, some of them turn out to be more human-like in their internal processing. That’s useful from an understanding-biology point of view,” says DiCarlo, who is also a professor of brain and cognitive sciences and an investigator at the McGovern Institute for Brain Research.

Engineering a more brain-like AI

While their potential is promising, computer vision systems are not yet perfect models of human vision. DiCarlo suspected one way to improve computer vision may be to incorporate specific brain-like features into these models.

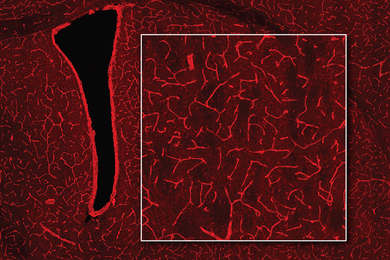

To test this idea, he and his collaborators built a computer vision model using neural data previously collected from vision-processing neurons in the monkey IT cortex — a key part of the primate ventral visual pathway involved in the recognition of objects — while the animals viewed various images. More specifically, Joel Dapello, a Harvard University graduate student and former MIT-IBM Watson AI Lab intern; and Kohitij Kar, assistant professor and Canada Research Chair (Visual Neuroscience) at York University and visiting scientist at MIT; in collaboration with David Cox, IBM Research’s vice president for AI models and IBM director of the MIT-IBM Watson AI Lab; and other researchers at IBM Research and MIT asked an artificial neural network to emulate the behavior of these primate vision-processing neurons while the network learned to identify objects in a standard computer vision task.

“In effect, we said to the network, ‘please solve this standard computer vision task, but please also make the function of one of your inside simulated “neural” layers be as similar as possible to the function of the corresponding biological neural layer,’” DiCarlo explains. “We asked it to do both of those things as best it could.” This forced the artificial neural circuits to find a different way to process visual information than the standard, computer vision approach, he says.

After training the artificial model with biological data, DiCarlo’s team compared its activity to a similarly-sized neural network model trained without neural data, using the standard approach for computer vision. They found that the new, biologically informed model IT layer was — as instructed — a better match for IT neural data. That is, for every image tested, the population of artificial IT neurons in the model responded more similarly to the corresponding population of biological IT neurons.

The researchers also found that the model IT was a better match to IT neural data collected from another monkey, even though the model had never seen data from that animal, and even when that comparison was evaluated on that monkey’s IT responses to new images. This indicated that the team’s new, “neurally aligned” computer model may be an improved model of the neurobiological function of the primate IT cortex. That is an interesting finding, given that it was previously unknown whether the amount of neural data that can currently be collected from the primate visual system is capable of directly guiding model development.

With their new computer model in hand, the team asked whether the “IT neural alignment” procedure also leads to any changes in the overall behavioral performance of the model. Indeed, they found that the neurally-aligned model was more human-like in its behavior — it tended to succeed in correctly categorizing objects in images for which humans also succeed, and it tended to fail when humans also fail.

Adversarial attacks

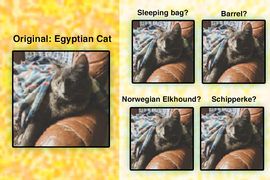

The team also found that the neurally aligned model was more resistant to “adversarial attacks” that developers use to test computer vision and AI systems. In computer vision, adversarial attacks introduce small distortions into images that are meant to mislead an artificial neural network.

“Say that you have an image that the model identifies as a cat. Because you have the knowledge of the internal workings of the model, you can then design very small changes in the image so that the model suddenly thinks it’s no longer a cat,” DiCarlo explains.

These minor distortions don’t typically fool humans, but computer vision models struggle with these alterations. A person who looks at the subtly distorted cat still reliably and robustly reports that it’s a cat. But standard computer vision models are more likely to mistake the cat for a dog, or even a tree.

“There must be some internal differences in the way our brains process images that lead to our vision being more resistant to those kinds of attacks,” DiCarlo says. And indeed, the team found that when they made their model more neurally aligned, it became more robust, correctly identifying more images in the face of adversarial attacks. The model could still be fooled by stronger “attacks,” but so can people, DiCarlo says. His team is now exploring the limits of adversarial robustness in humans.

A few years ago, DiCarlo’s team found they could also improve a model’s resistance to adversarial attacks by designing the first layer of the artificial network to emulate the early visual processing layer in the brain. One key next step is to combine such approaches — making new models that are simultaneously neurally aligned at multiple visual processing layers.

The new work is further evidence that an exchange of ideas between neuroscience and computer science can drive progress in both fields. “Everybody gets something out of the exciting virtuous cycle between natural/biological intelligence and artificial intelligence,” DiCarlo says. “In this case, computer vision and AI researchers get new ways to achieve robustness, and neuroscientists and cognitive scientists get more accurate mechanistic models of human vision.”

This work was supported by the MIT-IBM Watson AI Lab, Semiconductor Research Corporation, the U.S. Defense Advanced Research Projects Agency, the MIT Shoemaker Fellowship, U.S. Office of Naval Research, the Simons Foundation, and Canada Research Chair Program.


What Is Computer Vision?

Computer vision is a field of artificial intelligence (AI) that applies machine learning to images and videos to understand media and make decisions about them. With computer vision, we can, in a sense, give vision to software and technology.

How Does Computer Vision Work?

Computer vision programs use a combination of techniques to process raw images and turn them into usable data and insights.

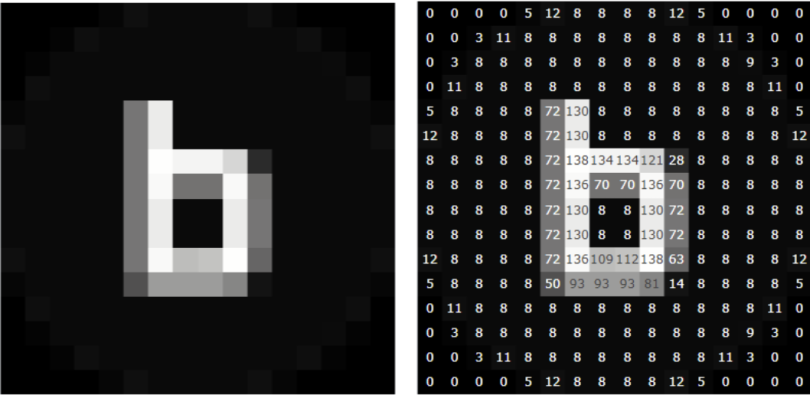

The basis for much computer vision work is 2D images. While images may seem like a complex input, we can decompose them into raw numbers. Images are really just a collection of individual pixels, and each pixel can be represented by a single number (grayscale) or a combination of numbers, such as (255, 0, 0) for a pure red pixel in RGB.

Once we’ve translated an image into a set of numbers, a computer vision algorithm processes them. One classic technique is the convolutional neural network (CNN), which uses layers to group pixels together to create successively more meaningful representations of the data. A CNN may first translate pixels into lines, which are then combined to form features such as eyes and finally combined to create more complex items such as face shapes.
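To see an image as numbers, here is a tiny hand-built RGB image as a NumPy array, plus a grayscale conversion. The pixel values are made up for illustration, and the grayscale weights are the ITU-R BT.601 luma coefficients, one common convention among several.

```python
import numpy as np

# A tiny 2 x 2 RGB image: each pixel is a (red, green, blue) triple in the range 0-255.
rgb = np.array([[[255, 0, 0], [0, 255, 0]],
                [[0, 0, 255], [255, 255, 255]]], dtype=np.uint8)
print(rgb.shape)   # (2, 2, 3): height, width, color channels
print(rgb[0, 0])   # the top-left pixel, pure red

# A grayscale version stores a single intensity per pixel.
gray = (0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]).round().astype(np.uint8)
print(gray)
```

Libraries such as OpenCV or Pillow load real image files into arrays of this same height × width × channels shape.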
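The "pixels into lines" step can be illustrated with a single convolution. In the minimal sketch below, the kernel is a hand-written, Sobel-like vertical-edge detector chosen for illustration; in a trained CNN the kernel values are learned rather than hand-picked. Like most deep learning libraries, the function computes cross-correlation (no kernel flip).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide a kernel over a 2D image (no padding, stride 1) and return the feature map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Image with a dark left half (0) and a bright right half (1): one vertical edge.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Vertical-edge kernel: responds where brightness increases from left to right.
kernel = np.array([[-1, 0, 1],
                   [-2, 0, 2],
                   [-1, 0, 1]], dtype=float)

print(conv2d_valid(image, kernel))
```

The output is zero over the flat regions and large only in the columns that straddle the boundary, which is exactly the "line" a first CNN layer might detect.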

Why Is Computer Vision Important?

Computer vision has been around since as early as the 1950s and continues to be a popular field of research with many applications. According to the deep learning research group BitRefine, the computer vision industry was expected to grow to nearly 50 billion USD in 2022, with 75 percent of the revenue coming from hardware.

The importance of computer vision comes from the increasing need for computers to be able to understand the human environment. To understand the environment, it helps if computers can see what we do, which means mimicking the sense of human vision. This is especially important as we develop more complex AI systems that are more human-like in their abilities.


Computer Vision Examples

Computer vision is often used in everyday life and its applications range from simple to very complex.

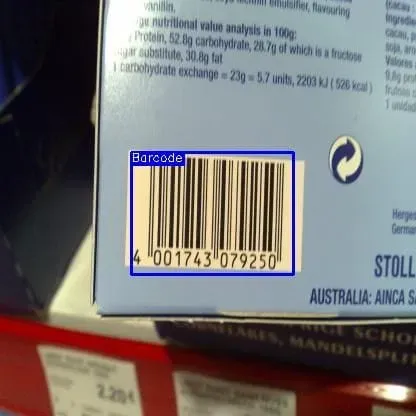

Optical character recognition (OCR) is one of the most widespread applications of computer vision. The best-known example today is Google Translate, which can take an image of almost anything, from menus to signboards, and convert it into text that the program then translates into the user’s native language. OCR also has other uses, such as automated tolling of cars on highways and converting handwritten documents into digital text.

A more recent application, which is still under development and will play a big role in the future of transportation, is object recognition. In object recognition, an algorithm takes an input image, searches it for a set of objects, draws a boundary around each object, and labels it. This application is critical for self-driving cars, which need to identify their surroundings quickly in order to decide on the best course of action.
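The "boundary plus label" output of object recognition is often represented as a list of detections, each with a class label, a confidence score, and a bounding box. The sketch below is hypothetical: the `Detection` class, the example values, and the 0.5 threshold are all assumptions, and it only shows how such output might be filtered before a car acts on it.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    """One detected object: a class label, a confidence score, and a bounding box."""
    label: str
    score: float
    box: tuple  # (x_min, y_min, x_max, y_max) in pixel coordinates

def keep_confident(detections, threshold=0.5):
    """Keep only detections whose confidence meets the threshold."""
    return [d for d in detections if d.score >= threshold]

# Hypothetical raw output of a detector for one street-scene frame.
raw = [
    Detection("car", 0.92, (34, 120, 210, 260)),
    Detection("pedestrian", 0.81, (300, 90, 350, 240)),
    Detection("traffic light", 0.30, (400, 10, 420, 60)),
]
for d in keep_confident(raw):
    print(f"{d.label}: {d.score:.2f} at {d.box}")
```

Choosing the threshold is a trade-off: a lower value misses fewer objects but lets through more false alarms.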

Computer Vision Applications

- Facial recognition

- Self-driving cars

- Robotic automation

- Medical anomaly detection

- Sports performance analysis

- Manufacturing fault detection

- Agricultural monitoring

- Plant species classification

- Text parsing

What Are the Risks of Computer Vision?

As with all technology, computer vision is a tool, which means that it can have benefits, but also risks. Computer vision has many applications in everyday life that make it a useful part of modern society but recent concerns have been raised around privacy. The issue that we see most often in the media is around facial recognition. Facial recognition technology uses computer vision to identify specific people in photos and videos. In its lightest form it’s used by companies such as Meta or Google to suggest people to tag in photos, but it can also be used by law enforcement agencies to track suspicious individuals. Some people feel facial recognition violates privacy, especially when private companies may use it to track customers to learn their movements and buying patterns.

Built In’s expert contributor network publishes thoughtful, solutions-oriented stories written by innovative tech professionals. It is the tech industry’s definitive destination for sharing compelling, first-person accounts of problem-solving on the road to innovation.


What Is Computer Vision and How It Works

We perceive and interpret visual information from the world around us automatically. So implementing computer vision might seem like a trivial task. But is it really that easy to artificially model a process that took millions of years to evolve?

Read this post if you want to learn more about what is behind computer vision technology and how ML engineers teach machines to see things.

What is computer vision?

Computer vision is a field of artificial intelligence and machine learning that studies the technologies and tools that allow for training computers to perceive and interpret visual information from the real world.

‘Seeing’ the world is the easy part: for that, you just need a camera. However, simply connecting a camera to a computer is not enough. The challenging part is to classify and interpret the objects in images and videos, the relationship between them, and the context of what is going on. What we want computers to do is to be able to explain what is in an image, video footage, or real-time video stream.

That means that the computer must effectively solve these three tasks:

- Automatically understand what the objects in the image are and where they are located.

- Categorize these objects and understand the relationships between them.

- Understand the context of the scene.

In other words, a general goal of this field is to ensure that a machine understands an image as well as or better than a human. As you will see later on, this is quite challenging.

How does computer vision work?

To recognize visual objects, a machine must be trained on hundreds of thousands of examples. Suppose you want someone to be able to distinguish between cars and bicycles. How would you describe this task to a human?

Normally, you would say that a bicycle has two wheels and a car has four, or that a bicycle has pedals and a car doesn’t. In machine learning, describing objects through hand-picked characteristics like these is called feature engineering.

However, as you might already notice, this method is far from perfect. Some bicycles have three or four wheels, and some cars have only two. Also, motorcycles and mopeds exist that can be mistaken for bicycles. How will the algorithm classify those?
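A hand-written, rule-based classifier built from exactly the two features above (wheel count and pedals) shows how quickly such rules break. The function and example vehicles are illustrative only.

```python
def classify_vehicle(num_wheels, has_pedals):
    """Hand-engineered rules: bicycles have two wheels and pedals,
    cars have four wheels and no pedals."""
    if num_wheels == 2 and has_pedals:
        return "bicycle"
    if num_wheels == 4 and not has_pedals:
        return "car"
    return "unknown"

examples = {
    "road bike": (2, True),
    "sedan": (4, False),
    "tricycle": (3, True),     # pedal-powered, but the rules do not recognize it
    "motorcycle": (2, False),  # two wheels, no pedals: falls through the rules
    "moped": (2, True),        # many mopeds have pedals, so it is called a bicycle
}
for name, features in examples.items():
    print(f"{name}: {classify_vehicle(*features)}")
```

Each new edge case needs another hand-written rule, which is the maintenance burden that learned features avoid.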

As you build more complicated systems (for example, facial recognition software), misclassification becomes more frequent. Simply stating the eye or hair color of every person won’t do: the ML engineer would have to take hundreds of measurements, such as the distance between the eyes or between the eyes and the corners of the mouth, to describe a person’s face.

Moreover, the accuracy of such a model would leave much to be desired: change the lighting, face expression, or angle and you have to start the measurements all over again.

Here are several common obstacles to solving computer vision problems.

- Different lighting

For computer vision, it is very important to collect knowledge about the real world that represents objects in different kinds of lighting. A filter might make a ball look blue or yellow while in fact it is still white. A red object under a red lamp becomes almost invisible.

- Noise

If the image has a lot of noise, it is hard for computer vision to recognize objects. In computer vision, noise means that individual pixels in the image appear brighter or darker than they should be. For example, traffic cameras that detect road violations are much less effective when it is raining or snowing outside.
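A minimal NumPy sketch of this kind of noise: "salt-and-pepper" noise forces random pixels to pure black or white. The sketch also applies a 3×3 median filter, which is one common remedy chosen here for illustration, not something the original text prescribes. Function names are hypothetical.

```python
import numpy as np

def add_salt_and_pepper(image, amount, rng):
    """Set a random fraction `amount` of pixels to pure black (0) or pure white (255)."""
    noisy = image.copy()
    mask = rng.random(image.shape) < amount
    noisy[mask] = rng.choice([0, 255], size=int(mask.sum()))
    return noisy

def median_filter3x3(image):
    """Replace each interior pixel with the median of its 3x3 neighborhood; borders are kept."""
    out = image.copy()
    for i in range(1, image.shape[0] - 1):
        for j in range(1, image.shape[1] - 1):
            out[i, j] = np.median(image[i - 1:i + 2, j - 1:j + 2])
    return out

rng = np.random.default_rng(0)
clean = np.full((32, 32), 128, dtype=np.uint8)  # a flat gray image
noisy = add_salt_and_pepper(clean, amount=0.05, rng=rng)
denoised = median_filter3x3(noisy)
print("noisy pixels:", int((noisy != clean).sum()),
      "after median filter:", int((denoised != clean).sum()))
```

The median ignores a few extreme values in each neighborhood, so isolated bright or dark pixels are replaced by their surroundings.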

- Unfamiliar angles

It’s important to have pictures of the object from several angles. Otherwise, a computer won’t be able to recognize it if the angle changes.

- Overlapping

When there is more than one object in the image, they can overlap. As a result, some characteristics of the objects may be hidden, which makes it even more difficult for the machine to recognize them.

- Different types of objects

Things that belong to the same category may look totally different. For example, there are many types of lamps, but the algorithm must successfully recognize both a nightstand lamp and a ceiling lamp.

- Fake similarity

Items from different categories can sometimes look similar. For example, you have probably met people who remind you of a celebrity in photos taken from a certain angle but not so much in real life. Cases of misrecognition are common in CV. For example, Samoyed puppies can easily be mistaken for little polar bears in some pictures.

It’s almost impossible to think about all of these cases and prevent them via feature engineering. That is why today, computer vision is almost exclusively dominated by deep artificial neural networks.

Convolutional neural networks are very efficient at extracting features and save engineers a great deal of manual work. VGG-16 and VGG-19 are among the most prominent CNN architectures. Deep learning does demand a lot of examples, but images are plentiful: approximately 657 billion photos are uploaded to the internet each year!

Uses of computer vision
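VGG networks are built by stacking small 3×3 convolutions with ReLU activations and periodically downsampling with 2×2 max pooling. The single-channel NumPy sketch below shows one such block. It is a simplification for readability: real VGG layers have many channels, and the random kernels here merely stand in for learned weights.

```python
import numpy as np

def conv2d_same(x, kernel):
    """3x3 convolution (cross-correlation) with zero padding, so output size equals input size."""
    padded = np.pad(x, 1)
    out = np.zeros_like(x, dtype=float)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(padded[i:i + 3, j:j + 3] * kernel)
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """2x2 max pooling with stride 2 (assumes even height and width)."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def vgg_style_block(x, kernels):
    """Stacked 3x3 conv + ReLU layers followed by max pooling, in the spirit of VGG."""
    for k in kernels:
        x = relu(conv2d_same(x, k))
    return max_pool2x2(x)

rng = np.random.default_rng(0)
image = rng.random((8, 8))
kernels = [rng.standard_normal((3, 3)) for _ in range(2)]  # random stand-ins for learned weights
features = vgg_style_block(image, kernels)
print(features.shape)  # (4, 4)
```

Each block halves the spatial resolution, so deeper blocks summarize larger regions of the input image.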

Interpreting digital images and videos comes in handy in many fields. Let us look at some of the use cases:

Medical diagnosis. Image classification and pattern detection are widely used to develop software that assists doctors in diagnosing dangerous diseases such as lung cancer. One group of researchers trained an AI system to analyze CT scans of oncology patients; the algorithm achieved 95% accuracy, compared with only 65% for humans.

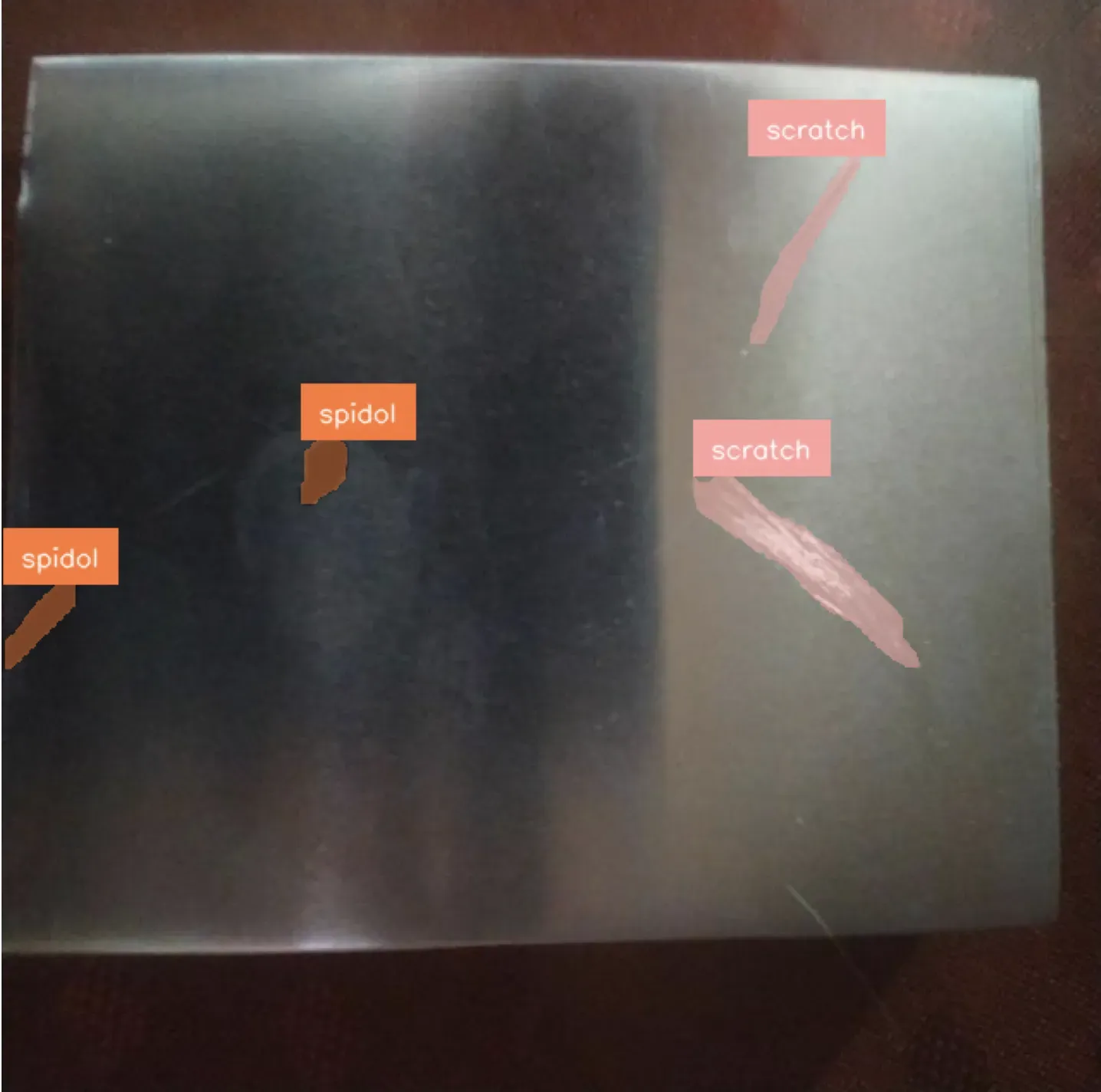

Factory management. It is important to detect defects in the manufacture with maximum accuracy, but this is challenging because it often requires monitoring on a micro-scale. For example, when you need to check the threading of hundreds of thousands of screws. A computer vision system uses real-time data from cameras and applies ML algorithms to analyze the data streams. This way it is easy to find low-quality items.

Retail. Amazon was the first company to open a store that runs without any cashiers or checkout machines. Amazon Go is fitted with hundreds of computer vision cameras that track the items customers put in their shopping carts. The cameras can also tell when a customer returns a product to the shelf, and they remove it from the virtual shopping cart. Customers are charged through the Amazon Go app, so there is no need to wait in line. The cameras also help prevent shoplifting and flag products that are running out of stock.

Security systems. Facial recognition is used in enterprises, schools, factories, and essentially anywhere security is important. Some schools in the United States use facial recognition technology to identify sex offenders and other criminals and to reduce potential threats. Such software can also recognize weapons to help prevent acts of violence in schools. Meanwhile, some airlines use face recognition for passenger identification and check-in, saving time and reducing the cost of checking tickets.

Animal conservation. Ecologists use computer vision to gather data about wildlife without disturbing the animals, including the movements and behavior patterns of rare species. CV also makes image review for scientific research more efficient and accurate.

Self-driving vehicles. Using sensors and cameras, cars have learned to recognize bumpers, trees, poles, and parked vehicles around them. Computer vision lets them move through their environment with little or no human supervision.

Main problems in computer vision

Computer vision aids humans across many different fields, but there is still plenty of room for development. Here are some areas that have yet to be improved.

- Scene understanding

CV is good at finding and identifying objects. However, it struggles to understand the context of a scene, especially a non-trivial one. Look at this image, for example. What do you think these people are doing?

You can immediately tell that these are children wearing cardboard boxes on their heads. It is not some sort of postmodern art that tries to expose the meaninglessness of school education. These children are watching a solar eclipse. Without that context, though, you might never understand what is going on. In the vast majority of cases, AI is still in exactly that position. To improve the situation, we would need to invent artificial general intelligence, meaning AI whose problem-solving capabilities are roughly equal to a human's and can be applied universally. We are very far from doing that.

- Privacy issues

Computer vision raises serious privacy concerns, because governments in various countries are adopting face recognition systems to promote national security. AI-powered cameras installed in the Moscow metro help catch criminals. Meanwhile, Chinese authorities profile Uyghur individuals (a Muslim ethnic minority) and single them out for tracking and incarceration. When facial recognition is everywhere, everything you do can be subject to policing and shaming. AI ethicists have yet to figure out what omnipresent CV means for public wellbeing.

Computer vision is an innovative field that uses the latest machine learning technologies to build software systems that assist humans across different fields. From retail to wildlife conservation, smart algorithms solve the problems of image classification and pattern recognition, sometimes even better than humans.



PyImageSearch


Announcing “Case Studies: Solving real world problems with computer vision”

by Adrian Rosebrock on June 26, 2014

I have some big news to announce today…

Besides writing a ton of blog posts about computer vision, image processing, and image search engines, I’ve been working behind the scenes on a second book.

And you may be thinking, hey, didn’t you just finish up Practical Python and OpenCV?

Yep. I did.

Now, don’t get me wrong. The feedback for Practical Python and OpenCV has been amazing. And it’s done exactly what I thought it would: teach developers, programmers, and students just like you the basics of computer vision in a single weekend.

But now that you know the fundamentals of computer vision and have a solid starting point, it’s time to move on to something more interesting…

Let’s take your knowledge of computer vision and solve some actual, real-world problems.

What type of problems?

I’m happy you asked. Read on and I’ll show you.

What does this book cover?

This book covers five main topics related to computer vision in the real world. Check out each one below, along with a screenshot of each.

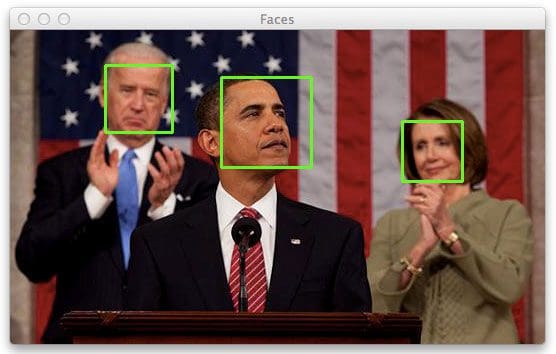

#1. Face detection in photos and video

By far, the most requested tutorial of all time on this blog has been “How do I find faces in images?” If you’re interested in face detection and finding faces in images and video, then this book is for you.

#2. Object tracking in video

Another common question I get asked is “How can I track objects in video?” In this chapter, I discuss how you can use the color of an object to track its trajectory as it moves in the video.
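Color-based tracking can be sketched in a few lines. First, build a mask of the pixels whose color falls inside a chosen range. Then take the centroid of that mask in every frame. The sequence of centroids is the object's trajectory. This is a hedged, dependency-free illustration, not the book's code. Frames are assumed to be nested lists of (R, G, B) tuples, the color bounds are arbitrary, and `make_frame` is a hypothetical helper that only generates test input. In OpenCV, the masking step is commonly done with `cv2.inRange` on a BGR or HSV image, and the centroid can be derived from `cv2.moments`.

```python
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B): assumed channel order
Frame = List[List[Pixel]]
Point = Tuple[float, float]    # (x, y)


def color_mask(frame: Frame, lower: Pixel, upper: Pixel) -> List[List[bool]]:
    """True where every channel of the pixel lies within [lower, upper]."""
    return [
        [all(lo <= c <= hi for c, lo, hi in zip(px, lower, upper)) for px in row]
        for row in frame
    ]


def centroid(mask: List[List[bool]]) -> Optional[Point]:
    """Mean (x, y) position of the masked pixels, or None if nothing matched."""
    xs: List[int] = []
    ys: List[int] = []
    for y, row in enumerate(mask):
        for x, hit in enumerate(row):
            if hit:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def track(frames: Sequence[Frame], lower: Pixel, upper: Pixel) -> List[Optional[Point]]:
    """Trajectory of the colored object: one centroid (or None) per frame."""
    return [centroid(color_mask(f, lower, upper)) for f in frames]


def make_frame(width: int, height: int, x0: int, y0: int,
               color: Pixel = (0, 0, 255)) -> Frame:
    """Hypothetical test helper: black frame with a 2x2 colored blob at (x0, y0)."""
    frame = [[(0, 0, 0)] * width for _ in range(height)]
    for y in range(y0, y0 + 2):
        for x in range(x0, x0 + 2):
            frame[y][x] = color
    return frame


if __name__ == "__main__":
    # A blue blob moving two pixels to the right per frame.
    frames = [make_frame(8, 4, x, 1) for x in (0, 2, 4)]
    blue_lower, blue_upper = (0, 0, 200), (50, 50, 255)
    print(track(frames, blue_lower, blue_upper))
    # prints [(0.5, 1.5), (2.5, 1.5), (4.5, 1.5)]
```

Returning `None` for frames where the color is absent lets the caller decide how to handle an object that leaves the view. It can, for example, skip the frame or stop drawing the trail.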

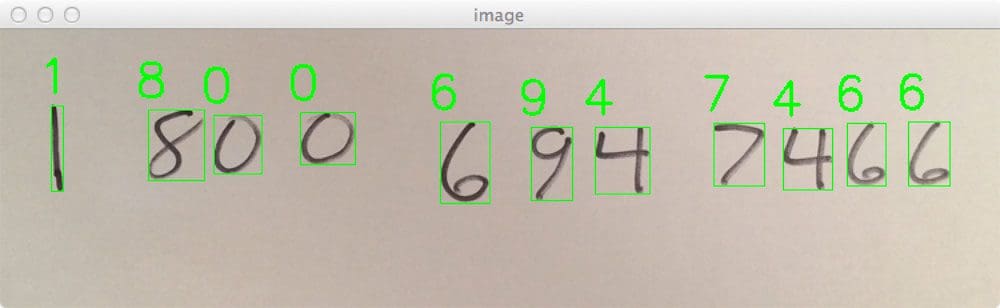

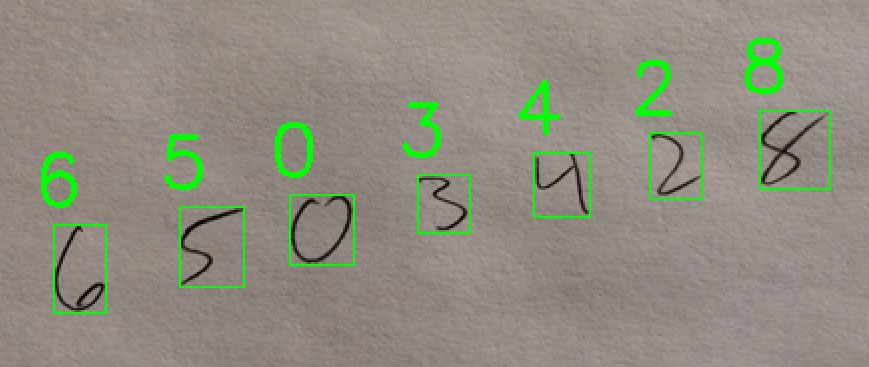

#3. Handwriting recognition with Histogram of Oriented Gradients (HOG)

This is probably my favorite chapter in the entire Case Studies book, simply because it is so practical and useful.

Imagine you’re at a bar or pub with a group of friends, when all of a sudden a beautiful stranger comes up to you and hands you their phone number written on a napkin.

Do you stuff the napkin in your pocket, hoping you don’t lose it? Do you take out your phone and manually create a new contact?

Well you could. Or. You could take a picture of the phone number and have it automatically recognized and stored safely.

In this chapter of my Case Studies book, you’ll learn how to use the Histogram of Oriented Gradients (HOG) descriptor and Linear Support Vector Machines to classify digits in an image.
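The heart of the HOG descriptor is a histogram of gradient orientations computed over a small cell of pixels. The sketch below shows only that step, in simplified form:

- central-difference gradients;
- unsigned orientations (0 to 180 degrees) split into 9 bins;
- hard voting weighted by gradient magnitude;
- a plain L2 normalization of the single cell.

Full HOG implementations, such as scikit-image's `skimage.feature.hog`, add interpolated voting between bins and normalize over overlapping blocks of cells. Treat this as an illustration, not a replacement. Concatenating such histograms over all cells of a digit image gives the kind of feature vector a Linear SVM would then be trained on.

```python
import math
from typing import List


def cell_histogram(cell: List[List[float]], bins: int = 9) -> List[float]:
    """Magnitude-weighted histogram of unsigned gradient orientations for one cell.

    Simplified: hard binning, interior pixels only (borders lack neighbors).
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    h, w = len(cell), len(cell[0])
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]   # horizontal gradient
            gy = cell[y + 1][x] - cell[y - 1][x]   # vertical gradient
            mag = math.hypot(gx, gy)
            if mag == 0:
                continue
            # Fold the angle into [0, 180): opposite directions share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += mag
    return hist


def l2_normalize(vec: List[float], eps: float = 1e-6) -> List[float]:
    """Scale a vector to (almost) unit length; eps avoids division by zero."""
    norm = math.sqrt(sum(v * v for v in vec)) + eps
    return [v / norm for v in vec]


if __name__ == "__main__":
    vertical_edge = [[0, 0, 10, 10] for _ in range(4)]        # dark left, bright right
    horizontal_edge = [[0] * 4, [0] * 4, [10] * 4, [10] * 4]  # dark top, bright bottom
    print(cell_histogram(vertical_edge))    # all weight (40.0) in bin 0: 0-20 degrees
    print(cell_histogram(horizontal_edge))  # all weight (40.0) in bin 4: 80-100 degrees
    print(l2_normalize(cell_histogram(vertical_edge)))
```

Because strokes in handwritten digits have characteristic orientations, these histograms separate digits like "1" and "7" far better than raw pixel values, and they tolerate small shifts in position.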

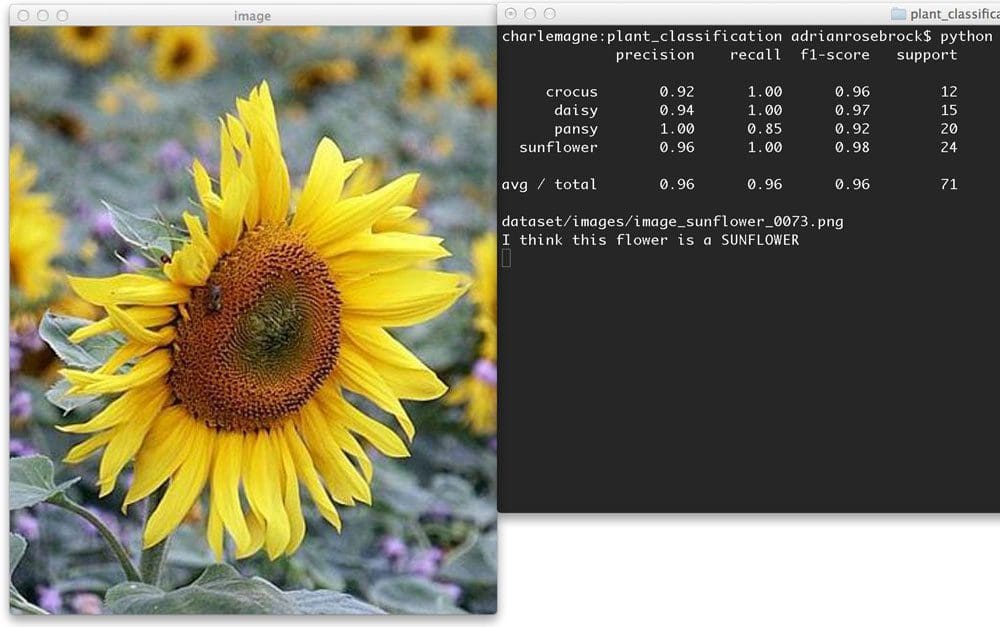

#4. Plant classification using color histograms and machine learning

A common use of computer vision is to classify the contents of an image. In order to do this, you need to utilize machine learning. This chapter explores how to extract color histograms using OpenCV and then train a Random Forest Classifier using scikit-learn to classify the species of a flower.
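A color histogram turns an image of any size into a fixed-length feature vector by counting how many pixels fall into each color bin. The dependency-free sketch below builds a flattened 3D RGB histogram with a configurable number of bins per channel. It then normalizes the counts so that images of different sizes are comparable. The default of 8 bins and the RGB channel order are assumptions for illustration. In OpenCV this step is usually done with `cv2.calcHist`, and the book's exact settings may differ.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0-255


def color_histogram(pixels: Sequence[Pixel], bins: int = 8) -> List[float]:
    """Flattened 3D RGB histogram with `bins` bins per channel, normalized to sum to 1."""
    hist = [0.0] * (bins ** 3)
    for r, g, b in pixels:
        # c * bins // 256 maps 0..255 onto 0..bins-1 for any positive bin count.
        rb, gb, bb = (c * bins // 256 for c in (r, g, b))
        hist[(rb * bins + gb) * bins + bb] += 1
    total = len(pixels)
    return [v / total for v in hist] if total else hist


if __name__ == "__main__":
    # Toy "image": three pure-red pixels and one pure-blue pixel, 2 bins per channel.
    pixels = [(255, 0, 0)] * 3 + [(0, 0, 255)]
    print(color_histogram(pixels, bins=2))
    # prints [0.0, 0.25, 0.0, 0.0, 0.75, 0.0, 0.0, 0.0]
    print(len(color_histogram(pixels)))  # 512 features with the default 8 bins
```

Each image yields one vector of 512 values with 8 bins per channel. Stack those vectors into a matrix `X` alongside an array of species labels `y`. That is the input shape scikit-learn's `RandomForestClassifier` expects in its `fit(X, y)` method.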

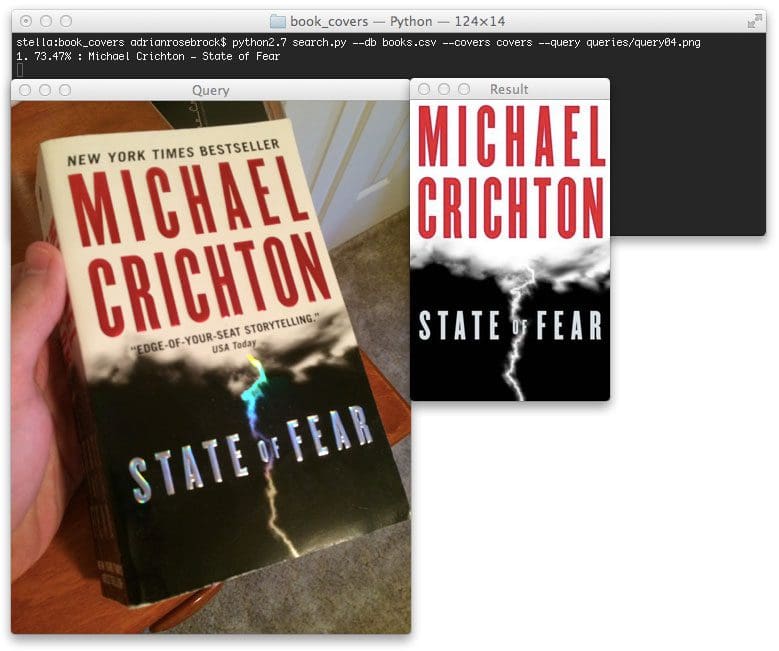

#5. Building an Amazon.com book cover search

Three weeks ago, I went out to have a few beers with my friend Gregory, a hotshot entrepreneur in San Francisco. He has been developing a piece of software that instantly recognizes and identifies book covers using only an image. With this software, users could snap a photo of books they were interested in, have them automatically added to their cart, and get them shipped to their doorstep at a substantially cheaper price than your standard Barnes & Noble!

Anyway, I guess Gregory had one too many beers, because guess what?

He clued me in on his secrets.

Gregory begged me not to tell…but I couldn’t resist.

In this chapter you’ll learn how to utilize keypoint extraction and SIFT descriptors to perform keypoint matching.
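Keypoint matching boils down to comparing descriptor vectors. A common recipe for SIFT descriptors combines brute-force nearest-neighbor search with Lowe's ratio test. A match is kept only if the closest candidate is clearly closer than the second-closest, which filters out ambiguous matches. The sketch below uses toy 2-D descriptors; real SIFT descriptors have 128 dimensions. The 0.75 ratio and the `min_matches` threshold in the hypothetical `is_same_cover` helper are illustrative assumptions, not values from the book. In recent OpenCV versions, descriptors typically come from `cv2.SIFT_create()`, and matching is done with `cv2.BFMatcher` and its `knnMatch` method.

```python
import math
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]


def ratio_test_matches(query: List[Descriptor], train: List[Descriptor],
                       ratio: float = 0.75) -> List[Tuple[int, int]]:
    """Brute-force nearest-neighbor matching filtered by Lowe's ratio test.

    Returns (query_index, train_index) pairs whose best match is clearly
    better than the second-best one.
    """
    if len(train) < 2:
        return []  # the ratio test needs two neighbors to compare
    matches = []
    for qi, q in enumerate(query):
        # Euclidean distance to every training descriptor, closest first.
        dists = sorted((math.dist(q, t), ti) for ti, t in enumerate(train))
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


def is_same_cover(query: List[Descriptor], train: List[Descriptor],
                  min_matches: int = 10, ratio: float = 0.75) -> bool:
    """Hypothetical decision rule: enough good matches means the covers match."""
    return len(ratio_test_matches(query, train, ratio)) >= min_matches


if __name__ == "__main__":
    cover = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]  # stored cover descriptors
    photo = [[1.0, 0.0], [5.0, 5.0]]                # descriptors from a snapshot
    print(ratio_test_matches(photo, cover))  # prints [(0, 0)]
    # [5, 5] is equidistant from all three stored descriptors, so it is rejected.
```

Brute-force search is quadratic in the number of descriptors. That is fine for one query cover, but a search over a large catalog of covers would typically switch to an approximate nearest-neighbor index.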

The end result is a system that can recognize and identify the cover of a book in a snap…of your smartphone!

All of these examples are covered in detail, from front to back, with lots of code.

By the time you finish reading my Case Studies book, you’ll be a pro at solving real-world computer vision problems.

So who is this book for?

This book is for people like yourself who have a solid foundation in computer vision and image processing. Ideally, you have already read through Practical Python and OpenCV and have a strong grasp of the basics (if you haven’t had a chance to read Practical Python and OpenCV, definitely pick up a copy).

I consider my new Case Studies book to be the next logical step in your journey to learn computer vision.

You see, this book focuses on taking the fundamentals of computer vision and then applying them to solve actual real-world problems.

So if you’re interested in applying computer vision to solve real-world problems, you’ll definitely want to pick up a copy.

Reserve your spot in line to receive early access

If you sign up for my newsletter, I’ll be sending out previews of each chapter so you can see firsthand how to use computer vision techniques to solve real-world problems.

But if you simply can’t wait and want to lock in your spot in line to receive early access to my new Case Studies eBook, just click here.

Sound good?

Sign-up now to receive an exclusive pre-release deal when the book launches.


About the Author

Hi there, I’m Adrian Rosebrock, PhD. All too often I see developers, students, and researchers wasting their time, studying the wrong things, and generally struggling to get started with Computer Vision, Deep Learning, and OpenCV. I created this website to show you what I believe is the best possible way to get your start.


Solving real-world business problems with computer vision

Applications of CNNs for real-time image classification in the enterprise.

The process of data integration has traditionally been done using structured and semistructured data in batch-oriented use cases. In the last few years, real-time data has become the new frontier for many enterprises, and real-time streaming of unstructured or binary data has been a particularly tough nut to crack. In fact, many enterprises have large volumes of binary data that are not used to their full potential because of the inherent complexity of ingesting and processing such data.

Here are a few examples of how one might work with binary data:
