Real-world evidence research based on big data

Motivation—challenges—success factors

Real-World-Evidence-Forschung auf Basis von Big Data

Motivation – Herausforderungen – Erfolgsfaktoren

- Open access

- Published: 07 June 2018

- Volume 24, pages 91–98 (2018)

- Benedikt E. Maissenhaelter

- Ashley L. Woolmore

- Peter M. Schlag

Abstract

Background

In recent years there has been a growing, and in part critical, interest in understanding the potential benefits of generating real-world evidence (RWE) in medicine.

Objectives

The benefits and limitations of RWE in the context of randomized controlled trials (RCTs) are described, along with a view on how the two may complement each other as partners in generating evidence for clinical oncology. Moreover, challenges and success factors in building an effective RWE network of cooperating cancer centers are analyzed and discussed.

Material and methods

This article is based on a selective literature search (predominantly 2015–2017) combined with our practical experience to date in establishing European oncology RWE networks.

Results

RWE studies can be highly valuable and complementary to RCTs due to their high external validity. If cancer centers successfully address the various challenges in establishing an effective RWE study network and in the subsequent execution of studies, they may efficiently generate high-quality research findings on treatment effectiveness and safety. Concerns pertaining to data privacy are of utmost importance and are discussed accordingly. Securing data completeness, accuracy, and a common data structure for routinely collected disease and treatment-related data of patients with cancer is a challenging task that requires high engagement of all participants in the process.

Conclusion

Based on the discussed prerequisites, the analysis of comprehensive and complex real-world data in the context of an RWE study network represents an important and promising complementary partner to RCTs. This enables research into the general quality of cancer care and can permit comparative effectiveness studies across partner centers. Moreover, it will provide insights into a broader optimization of cancer care, refined therapeutic strategies for patient subgroups, and avenues for further research in oncology.

Summary

Background

In recent years there has been a growing, and in part decidedly critical, interest in real-world evidence (RWE) in medicine.

Objectives

The advantages and disadvantages of RWE are analyzed, particularly in the context of randomized controlled trials (RCTs), together with a discussion of how RWE and RCTs can complement each other as partners in generating evidence in clinical oncology. In addition, the challenges and success factors in building a high-performing RWE network of cooperating cancer centers are set out.

Material and methods

The current literature (predominantly 2015–2017) was searched selectively and combined with the authors' own practical experience from establishing European oncology real-world evidence networks to date.

Results

Owing to their high external validity, RWE studies can be an extremely valuable complement to RCTs. If cancer centers carefully address the numerous challenges both in building a high-performing RWE network and in the later conduct of studies, high-quality research findings on treatment effectiveness and treatment safety can be obtained. The data protection requirements that arise from using linked, large, and multilayered data sets (big data) must be observed from the outset. Securing quality-checked, adequately complete, and uniformly structured oncological treatment and follow-up data is a demanding task that requires the active and responsible participation of everyone involved.

Conclusions

Under the prerequisites set out above, the analysis of large and complex real-world data within an RWE network is an important and promising complement to RCTs. It allows the general quality of oncological care to be analyzed and enables a (quality) comparison between different institutions. It can also yield pointers toward a broad optimization of oncological treatment, improved therapeutic strategies for patient subgroups, and suggestions for new fields of oncological research.

“We have entered the era of big data in healthcare” [ 12 ] and this era will transform medicine and especially oncology [ 13 , 24 ]. In this article, we focus on a specific aspect: how and under which conditions can real-world evidence (RWE) enrich and improve outcome research in oncology?

The U.S. Food and Drug Administration (FDA) defines RWE as “the clinical evidence regarding the usage, and potential benefits or risks, of a medical product derived from analysis of real-world data” [ 34 ]. The British Academy of Medical Sciences employs a similar definition: “the evidence generated from clinically relevant data collected outside of the context of conventional randomised controlled trials” [ 33 ]. Common to these definitions, and others, is the focus on evidence that is clinically relevant and that stems from routine clinical practice [ 32 ]. We understand RWE as the technology-facilitated collation of all routinely collected patient information from clinical systems into a comprehensive, homogeneously analyzable dataset (big data) that reflects the reality of treatment as faithfully and comparably as possible.

In recent years there has been a growing interest in the potential benefits and the relevance of RWE studies [ 29 , 30 ]. For example, Tannock et al. recently pointed out in The Lancet Oncology that RWE studies enable “crucial insights into quality of care and effectiveness” [ 32 ]. In particular, key healthcare institutions have joined the scientific debate about when and how RWE studies can enrich our understanding of medical evidence [ 33 , 34 ]. At the same time, the high value of traditional randomized controlled trials (RCTs) should not be challenged and the necessity to conduct RWE studies at a high level of methodological and scientific rigor needs to be emphasized [ 18 ].

In oncology, the American Society of Clinical Oncology (ASCO) has recently published a research statement that discusses the potential of RWE and provides recommendations on how RWE may be utilized in conjunction with RCTs [ 35 ]. We follow this line of reasoning and assume that RWE and RCTs are, in principle, complementary approaches in clinical research. It follows that RWE studies can also be a valuable tool for clinical research in oncology. In the following, we first discuss the benefits of RWE studies. Subsequently, we analyze a series of specific challenges and success factors in the establishment of an RWE study network that enables relevant research studies.

Strengths and weaknesses of RWE studies versus RCTs

Only a small proportion of cancer patients are recruited into RCTs, and those who participate are typically younger and have fewer comorbidities than those who are not included in RCTs. The inclusion and exclusion criteria of RCTs usually create idealized conditions, whereas RWE studies by definition provide insights into the routine clinical setting [ 3 ]. As a result, RWE studies may benefit from greater generalizability and external validity compared to RCTs [ 26 , 27 , 32 ].

RWE studies are complementary to RCTs in the generation of scientific evidence

The lack of generalizability of RCTs may contribute to a limited uptake of novel treatments despite positive evidence within RCTs [ 3 , 26 ]. This may be due to uncertainty about how this evidence may transfer to broader patient groups and how to integrate these treatments into routine practice [ 10 , 30 ].

In addition to higher external validity, RWE studies have the potential to address a number of further limitations of RCTs. For example, RCTs often underestimate (long-term) toxicity, and they rarely, or only with a delay, explore certain research topics such as head-to-head comparisons of novel medications or interventions. Analyses of various clinical outcomes, in particular long-term and quality of life parameters, are addressed relatively infrequently [ 10 , 26 , 32 ]. Moreover, a substantial number of RCTs focus on surrogate parameters instead of endpoints that are more clinically relevant [ 10 , 32 ]. Therefore, RWE studies can be used in a supplemental manner to provide surveillance for new therapies and to enable analyses of differential benefits of therapies in routine clinical care or by patient subgroups [ 35 ]. Finally, RCTs are relatively time- and resource-intensive [ 10 , 29 ]. RWE studies, by contrast, hold the promise of being conducted significantly faster and more resource-efficiently, but only once the necessary structures have been established in the centers and institutions. The financing sources for RWE studies are principally the same as for RCTs.

These critical remarks are not intended to challenge the high value of RCTs, especially in the assessment of the efficacy of novel therapies. We believe, however, that RWE studies based on the data already captured in clinical systems can yield important additional insights into research and clinical care if they are conducted at a high level of quality. To achieve this, we need sophisticated planning and careful execution to dispel a number of concerns about RWE studies. The large amount of apparently available electronic data may tempt researchers to conduct studies without close attention to a stringent study design. This may include RWE studies that do not properly attend to data quality and thereby run the risk of biased data [ 19 , 35 ]. It further includes RWE studies that conduct ‘data dredging’ in disregard of scientific principles [ 2 ] and RWE studies that are initiated with a view towards commercial objectives instead of clinical or scientific insights [ 14 ].

In principle, the limited internal validity of RWE studies, primarily due to the general lack of randomization, is an important criticism and calls for caution [ 2 , 17 ]. Certainly, the lower internal validity needs to be addressed to disentangle the effect of the treatment under investigation from other factors [ 3 ]. We will discuss later how advanced statistical techniques may support researchers in responding to this challenge.

Internal and external validity are both vital cornerstones of good science. While RCTs have higher internal validity, RWE studies have higher external validity. Thus, there may be a complementarity in the generation of scientific evidence [ 3 , 29 , 33 ].

For example, RWE studies can help in setting the research direction and in generating hypotheses of future RCTs or serve as the foundation for future confirmatory RCTs [ 29 ]. On the other hand, RWE studies can extend our knowledge of treatment effectiveness and safety by generalizing the findings of prior RCTs [ 29 ]. They may further describe underutilization of therapies or reveal overtreatment [ 3 ], and also foster research of rare tumors because they may allow the use of data sets with sufficiently large patient cohorts [ 13 ].

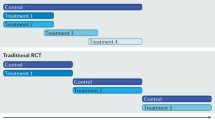

By demonstrating that positive results of RCTs are also applicable in routine clinical practice, RWE will also increase the confidence of oncologists with respect to their use of anti-cancer therapies in routine clinical care. Along the way, RWE studies may uncover boundary conditions and derive adapted approaches and safety insights for subpopulations (Fig. 1).

Fig. 1 Strengths and weaknesses of RCTs vs. RWE studies, and their complementarity

Concept and examples of RWE studies

A good example of the complementarity of RCTs and RWE studies, and of the potential therein, is a study recently published in the Journal of Clinical Oncology (JCO) [ 22 ]. The research question of this study arose from disputed results of several international RCTs regarding the role of neoadjuvant chemotherapy (NACT) and primary cytoreductive surgery (PCS) in ovarian cancer of stages IIIC and IV. The study, which analyzed a comprehensive database of 1538 patients from 6 renowned American centers of the National Comprehensive Cancer Network (NCCN), found a survival benefit for patients in stage IIIC in the PCS group. This agreed with a subgroup analysis of a prior European Organisation for Research and Treatment of Cancer (EORTC) study and is in line with current treatment guidelines, e.g., in Germany, the S3 guideline of the Association of the Scientific Medical Societies in Germany (AWMF). The comparability of both groups was ensured by means of refined propensity score matching (n = 594). The general increase in NACT indications for ovarian cancer during the analysis period of this study should be viewed critically, since the study also showed that interval cytoreductive surgery (ICS) did not improve outcomes. However, the study confirmed that NACT is noninferior to PCS in stage IV. Thus, several new research questions (hypotheses) with regard to optimizing the treatment algorithm for ovarian cancer in stages IIIC and IV may be derived from this RWE study.

Other examples of the complementarity of RCTs and RWE studies are long-term studies on treatment safety [ 6 ] or on topics for which RCTs were not feasible (especially for patients with a rare tumor) [ 23 ]. These examples demonstrate the direction that RWE studies could and should be taking. Greater data depth and data quality, combined with stringent methods and processes, should further reduce the limitations while increasing the quality and quantity of RWE studies [ 24 ].

Benefits of establishing an oncology RWE network

There is a range of additional benefits from the establishment of a network of cancer centers that collaborate with each other. In a partner network, cancer centers can learn from each other and exchange experiences on topics such as building the required infrastructure, creating high-quality data sets, or designing and executing RWE studies. In addition, the cancer centers can collectively analyze their data to form sufficiently large patient cohorts. Moreover, a network of cancer centers would ideally include centers that employ partially different processes and regimens in the treatment of patients. It is this variation in practices that may help uncover novel insights into as yet insufficiently covered factors and their impact on treatment effectiveness. Furthermore, treatment alternatives (whose pros and cons have not been fully defined in treatment guidelines) can be tested [ 19 ].

Such topics cannot currently, or only partially, be derived from epidemiological [ 4 ] or clinical cancer registries [ 15 ] because these registries have a different main objective. RWE studies, as described in this article, are of course not completely new but rather based on established observational study methods such as cohort studies [ 16 ], registry studies [ 25 ] and population studies [ 20 ]. These registries and types of studies provide different and crucial insights into the quality of cancer care but often capture data with a time delay and necessarily in limited depth. RWE studies are therefore also complementary to these efforts.

Challenges and success factors

Establishing an RWE network

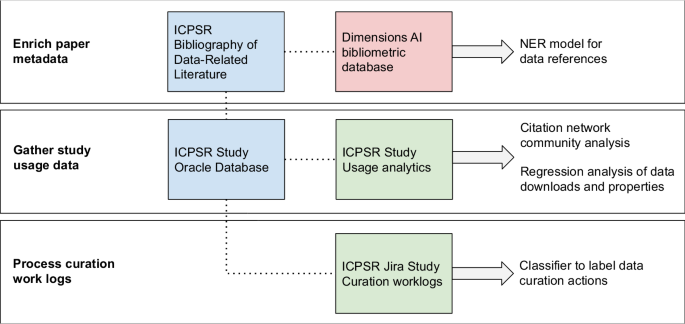

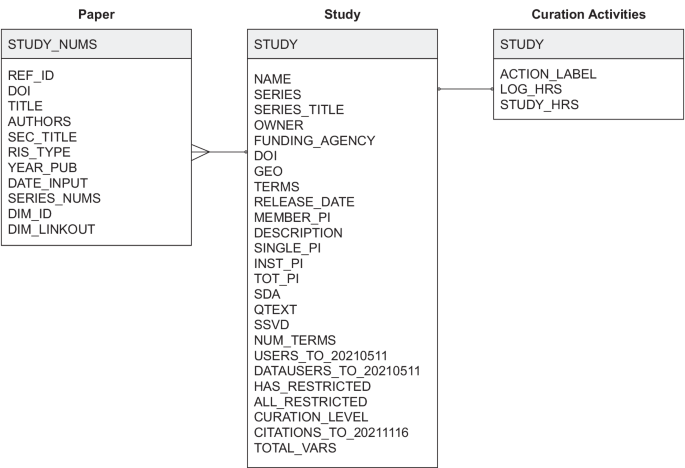

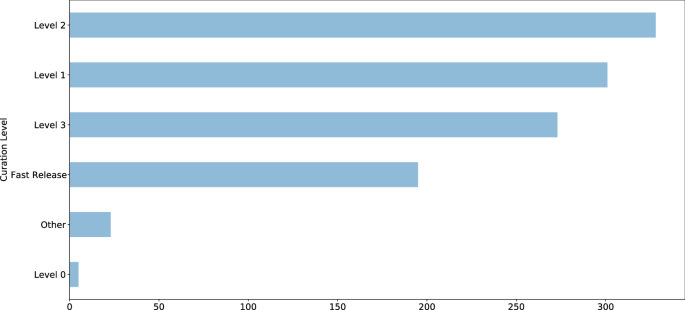

In order to achieve the possibilities and objectives described above, several tasks need to be addressed. These relate predominantly to the current reality of fragmented clinical IT systems, the quality of the data therein, and information governance and operations. Resolving these challenges prior to the initiation of studies will establish the technical and operational infrastructure to conduct RWE studies more efficiently, more reliably, and at a consistently high level of quality. In this context, continuous attention and efforts to utilize appropriate technologies and up-to-date management of the “big data sets” are of particular importance [ 13 ].

Identification of partner cancer centers

Establishing a network raises the question of how to identify suitable partner cancer centers. The high importance of an appropriate technical infrastructure, and especially of high data quality, necessitates the inclusion of partner cancer centers that are committed to investing time, resources and determination in optimizing their data infrastructure. Furthermore, key personnel in the cancer centers should be convinced of the benefits of such an RWE network. Lastly, one should strive to connect cancer centers that complement each other with regard to their patient profiles, research areas, and geographical variation. On this basis, the participating centers are better equipped to cover a wide range of influencing parameters that may otherwise be neglected, and thereby also to counteract potential confounders [ 18 ].

IT systems and databases

Experience tells us that clinical data sets currently often reside in ‘silos’ [ 27 , 35 ]. In essence, the data are located in different domains because they are captured by organizationally different clinical units, by means of different systems, and are ultimately stored in different infrastructure units [ 9 ]. Hospitals and cancer centers typically do not have unified and integrated data warehouses. On the contrary, laboratory results are stored in a laboratory information management system (LIMS), (radiological) imaging data in a picture archiving and communication system (PACS), prescription data in the pharmacy system, etc. This fragmented landscape poses a challenge for any given study, even within a specific type of tumor at a single cancer center. In addition, a highly effective RWE network should strive to achieve data comparability across the partner cancer centers to enable multicenter studies. Data, however, are often not comparable even within a center, and achieving comparability across cancer centers is thus even more challenging.

The commitment of decision makers in the cancer centers is essential

A key success factor in addressing these challenges is the construction of integrated data warehouses that collect, link and store all relevant data sets [ 9 , 35 ]. This infrastructure should be built in a manner that ensures comparability of the data for the purpose of conducting research studies [ 33 ]. Technically, this can be facilitated by common standards of electronic data exchange such as HL7 and tools designed to ‘extract-transform-load’ (ETL). The data can thereby be extracted from a source, transformed into the desired format, and loaded into a target infrastructure. This process should be supported by medical ontologies and in practice by the diagnosing physicians and the treating oncologists. Eventually, this can result, under the leadership of specialized IT personnel, in a common data model, which will also greatly advance data comparability across cancer centers in the RWE network. Of course, tools for data protection and pseudonymization/de-identification of patient data need to be embedded in the technical solution.
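
The extract-transform-load flow and the embedded pseudonymization described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the infrastructure used by the authors: the source rows, the field names (`PatID`, `patient`, `dose_mg`), the common record layout, and the use of a keyed hash (HMAC-SHA256) for pseudonyms are all hypothetical choices made for the example.

```python
import hashlib
import hmac

# Hypothetical secret held by a trusted unit inside the center (assumption).
PSEUDONYM_KEY = b"replace-with-a-secret-key"

def pseudonymize(patient_id: str) -> str:
    """Map a patient ID to a stable pseudonym with a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(PSEUDONYM_KEY, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def extract():
    """Extract: rows as they might arrive from two source systems (made-up data)."""
    lab_rows = [{"PatID": "A-001", "test": "HB", "value": "13.2", "unit": "g/dL"}]
    pharmacy_rows = [{"patient": "A-001", "drug": "Carboplatin", "dose_mg": 450}]
    return lab_rows, pharmacy_rows

def transform(lab_rows, pharmacy_rows):
    """Transform: map both sources to one common layout and drop direct identifiers."""
    records = []
    for row in lab_rows:
        records.append({
            "pseudonym": pseudonymize(row["PatID"]),
            "domain": "laboratory",
            "item": row["test"],
            "value": float(row["value"]),
            "unit": row["unit"],
        })
    for row in pharmacy_rows:
        records.append({
            "pseudonym": pseudonymize(row["patient"]),
            "domain": "medication",
            "item": row["drug"],
            "value": float(row["dose_mg"]),
            "unit": "mg",
        })
    return records

def load(records, warehouse):
    """Load: append harmonized records to the target store (here simply a list)."""
    warehouse.extend(records)
    return warehouse

warehouse = load(transform(*extract()), [])
```

Because the same keyed hash is applied to every source, records of one patient from different systems end up under the same pseudonym and can be linked without the original identifier being stored in the warehouse.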

Besides these technological requirements, an essential criterion for the success of such a project is the commitment of key decision makers in the cancer centers [ 13 ]. Some cancer centers have already started to build such solutions but clearly further efforts and advances are necessary in order to fully utilize the potential [ 27 ].

Data quality

Another important aspect is the quality of the data. The systems that routinely collect and store patient data have usually not been designed with the objective of eventually using the data for research purposes. Similarly, data entry into documentation systems is rather unpopular (not only) with physicians because it significantly limits the time available for direct patient contact. However, completeness and validity of the data collected in routine clinical care are of utmost importance for a meaningful, analysis-ready RWE network [ 5 , 29 ]. Specifically, there are four distinct yet related challenges: the completeness, common structure, and accuracy of the data as well as the availability of novel types of data.

Missing data is a common issue with health data in general [ 2 ]. This limits the size of the patient cohort that can be completely analyzed and may also introduce a bias into the data set that could potentially invalidate the findings [ 35 ]. Moreover, cancer patients are often treated by more than one department and unit within a center as well as by office-based oncologists. Cancer centers would need to strive to achieve a nearly complete follow-up to enable outcome research with a comprehensive and long-term view. It is obvious that the success of a high-quality RWE program depends on data sets that are nearly complete and in particular it requires that any incompleteness is not due to a systematic bias [ 34 ]. Within an institution, data completeness may be improved by a change in the front-end data capture coupled with illustrating the value of capturing full records. Across institutions, technology in the sense of common standards and interfaces may also contribute to the analytical integration of commonly collected data. In addition, the commitment among the decision makers and sufficient capacity of trained personnel are both instrumental in ensuring comprehensive follow-up together with partner institutions.
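
As a simple illustration of how completeness could be monitored, the sketch below reports the share of records with a non-missing value per variable. The field names and the four example records are invented for the example, and treating `None` and empty strings as missing is an assumption about how gaps are encoded.

```python
def completeness(records, fields):
    """Return the share of records with a non-missing value for each field."""
    total = len(records)
    report = {}
    for field in fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = filled / total if total else 0.0
    return report

# Made-up example records; None or "" marks a missing entry.
records = [
    {"stage": "IIIC", "ecog": 1, "last_follow_up": "2017-03-01"},
    {"stage": "IV", "ecog": None, "last_follow_up": None},
    {"stage": "", "ecog": 0, "last_follow_up": None},
    {"stage": "IIIC", "ecog": 2, "last_follow_up": "2017-06-30"},
]
report = completeness(records, ["stage", "ecog", "last_follow_up"])
```

A report of this kind only quantifies how much is missing. Whether the gaps are systematic still has to be examined separately, for instance by comparing missingness across departments or patient groups.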

Some types of data in electronic medical records, such as anamnestic data, comorbidities or toxicity, are frequently recorded as free text instead of being stored as structured data variables [ 24 ]. One advantage of real-world data is the ability to construct data sets with long follow-up periods; however, the potential to fully utilize the theoretically available data is limited by historical, paper-based documents. Both challenges can increasingly be addressed by a mixture of technological and organizational measures, for example by changing the front-end systems to require structured input of key data points, along with motivating the staff of a cancer center [ 35 ]. In addition, natural language processing (NLP) software that utilizes medical terminologies (e.g., SNOMED, LOINC) can transform unstructured data both retrospectively and prospectively [ 12 ]. Such tools should not be used in isolation but rather by medical coding teams.
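
To make the idea of dictionary-based structuring of free text concrete, here is a deliberately small sketch. It is far simpler than real NLP software: the dictionary, the placeholder concept codes (which are not real SNOMED or LOINC codes), and the two-token negation window are all assumptions made for illustration.

```python
import re

# Hypothetical mini-dictionary: surface forms mapped to placeholder concept codes.
# A real project would map to a licensed terminology instead.
DICTIONARY = {
    "nausea": "CONCEPT_NAUSEA",
    "vomiting": "CONCEPT_VOMITING",
    "emesis": "CONCEPT_VOMITING",
    "neuropathy": "CONCEPT_NEUROPATHY",
}
NEGATIONS = ("no", "denies", "without")

def extract_concepts(note):
    """Return sorted concept codes mentioned without a preceding negation cue."""
    tokens = re.findall(r"[a-z]+", note.lower())
    found = set()
    for i, token in enumerate(tokens):
        if token in DICTIONARY:
            window = tokens[max(0, i - 2):i]  # the two tokens before the term
            if not any(cue in window for cue in NEGATIONS):
                found.add(DICTIONARY[token])
    return sorted(found)

note = "Patient reports nausea after cycle 2, denies vomiting. Mild neuropathy."
concepts = extract_concepts(note)
```

In this example the negated mention of vomiting is skipped while nausea and neuropathy are coded. Rules this crude will miss and mislabel mentions, which is one reason the output should be reviewed by medical coding staff.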

Various factors can endanger the accuracy of data. For example, data may be inaccurate due to an error in creating or entering patient data, or due to a change in classification schemes. Data accuracy can be fostered by a combination of electronic means of quality assurance, e.g., validity checks at the point of data entry or business quality rules, and periodic quality checks. Reviewing the distribution of data variables and conducting logic checks may uncover systematic inaccuracies.
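
Validity checks at the point of entry and simple logic checks could look like the following sketch. The record layout, the simplified list of allowed stage values, and the specific rules are assumptions chosen for illustration; a real rule set would be defined together with clinicians.

```python
from datetime import date

def validate(record):
    """Return a list of rule violations for one patient record (empty if clean)."""
    errors = []
    if record["stage"] not in {"I", "II", "III", "IV"}:
        errors.append("stage: value not in allowed list")
    if not 0 <= record["ecog"] <= 5:
        errors.append("ecog: outside 0-5")
    if record["diagnosis_date"] > record["treatment_start"]:
        errors.append("dates: treatment starts before diagnosis")
    if record["treatment_start"] > date.today():
        errors.append("dates: treatment start lies in the future")
    return errors

# Two made-up records: one plausible, one with several entry errors.
clean = {"stage": "III", "ecog": 1,
         "diagnosis_date": date(2017, 1, 10), "treatment_start": date(2017, 2, 1)}
flawed = {"stage": "5", "ecog": 7,
          "diagnosis_date": date(2017, 5, 1), "treatment_start": date(2017, 4, 1)}

violations = validate(flawed)
```

Rules like these flag implausible entries for review; they do not decide by themselves which value is correct.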

Some, primarily scientific, types of data such as new biomarkers, genomics data, or novel laboratory tests are currently collected either not systematically or in a heterogeneous manner. This also applies to patient-reported outcomes (PRO) such as structured assessments of patients’ quality of life. Increasingly, (psycho-) oncologists suggest that quality of life data should be key outcome parameters in oncology. Unfortunately, novel therapies still yield only marginal improvements in overall survival in many cases, but they may result in meaningful differences in patients’ quality of life [ 28 ]. Considering PRO data may also be helpful in uncovering symptomatic toxicities such as nausea or vomiting that are often captured incompletely [ 21 ]. An RWE network should therefore plan to incorporate these novel types of data systematically into its routine clinical practice [ 12 ]. Of particular relevance is the establishment of a user-friendly process that captures patients’ quality of life assessments [ 28 ].

Data privacy and protection

The fundamental importance and the indispensable cornerstones of the protection of patient data [ 5 ] have recently been reiterated by the (German) National Ethics Committee (Deutscher Nationaler Ethikrat) in a comprehensive report in the context of big data in healthcare [ 7 ]. In parallel, the new General Data Protection Regulation (GDPR) will come into effect in the European Union as of May 2018. This updated regulation further expands the scope of data privacy and protection by including new principles such as data protection and privacy by design, which obligate the implementation of technical and organizational measures that secure patient data from the design of systems onward [ 8 ]. Two legitimate claims must be weighed against each other here: the individual's right to data privacy and the population's right to improvements in cancer therapy developed on the basis of data analyses.

Sophisticated solutions have been developed for the pseudonymization or de-identification of data. Various technical solutions are available to protect the data. They should be combined with well-established organizational processes and training of personnel. Furthermore, the data sets could stay within the confines of each cancer center and be analyzed only by staff associated with the cancer center. Multicenter studies could be conducted by means of federated data analysis in this scheme.
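
The federated scheme outlined above, in which patient-level data stay inside each center and only aggregates are shared, can be sketched as follows. The function names, the minimum cell size of 5, and the example cohorts are hypothetical, and pooling a simple event rate stands in for whatever analysis a real study would run.

```python
def local_summary(patients, min_cell_size=5):
    """Runs inside one center: return aggregate counts only, never patient rows."""
    n = len(patients)
    events = sum(1 for p in patients if p["event"])
    if n < min_cell_size:
        return None  # suppress small cells that could identify individuals
    return {"n": n, "events": events}

def pooled_event_rate(summaries):
    """Runs at the coordinator: combine the aggregates sent by the centers."""
    usable = [s for s in summaries if s is not None]
    total_n = sum(s["n"] for s in usable)
    total_events = sum(s["events"] for s in usable)
    return total_events / total_n if total_n else None

# Made-up cohorts held separately by three centers.
center_a = [{"event": i < 3} for i in range(10)]   # 3 events in 10 patients
center_b = [{"event": i < 9} for i in range(30)]   # 9 events in 30 patients
center_c = [{"event": True} for _ in range(2)]     # too small, suppressed

summaries = [local_summary(c) for c in (center_a, center_b, center_c)]
rate = pooled_event_rate(summaries)
```

The design choice here is that the coordinator never sees individual records: it receives one small dictionary per center, and centers with very small cohorts contribute nothing rather than risk re-identification.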

Integrating data from fragmented systems, ensuring and further enhancing data quality, and securing data privacy all require a strong governance framework within a cancer center. The framework needs to describe the decision rights, roles, and responsibilities of the various departments within a cancer center and the hospital. This is not only crucial in the formation of the network but will also govern how RWE studies are to be conducted within a center and in partnership with other centers. All requirements and tasks described above also necessitate a sufficient capacity of dedicated, specialized personnel [ 5 ]. Only this interplay can enable a high level of scientific and methodological rigor (Table 1).

Conducting RWE studies

For high-quality RWE studies there are additional requirements with respect to design, management, and publication.

Study design and execution

A critical review of phase IV trial protocols suggests that many of them neglect recognized measures of quality assurance in the design of these studies [ 14 ]. A clinically relevant research question needs to be formulated on the basis of sound medical theory, and the corresponding hypotheses need to be established. These may then be analyzed with an appropriate and sufficiently large dataset and by applying suitable methods [ 1 ]. This necessitates substantial medical expertise in oncology from the inception of the study [ 29 ]. Therefore, RWE studies should be conducted hand in hand with stakeholders who possess the requisite clinical and biometric expertise and who design and execute the studies independently and primarily under scientific considerations [ 2 ].

The tasks require sufficient and dedicated specialized personnel

Real-world data are vulnerable to a range of biases [ 35 ]. These include selection bias, information bias, measurement error, confounding, and Simpson’s paradox [ 11 ], as well as performance, detection or attrition bias [ 35 ]. It is thus necessary to employ stringent methods to assess and ascertain the data quality, for example, by integrating the Cochrane risk-of-bias approach [ 9 ].
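
Simpson’s paradox, one of the pitfalls listed above, is easiest to grasp with numbers. The counts below are illustrative only (patterned on a classic textbook example, not oncology data and not taken from this article): therapy A is given mostly to high-risk patients, therapy B mostly to low-risk patients.

```python
# therapy -> risk stratum -> (patients with a good outcome, patients treated)
counts = {
    "A": {"low_risk": (81, 87), "high_risk": (192, 263)},
    "B": {"low_risk": (234, 270), "high_risk": (55, 80)},
}

def rate(successes, total):
    """Share of patients with a good outcome."""
    return successes / total

def overall(therapy):
    """Success rate of one therapy when the risk strata are pooled."""
    successes = sum(s for s, _ in counts[therapy].values())
    total = sum(n for _, n in counts[therapy].values())
    return rate(successes, total)

for stratum in ("low_risk", "high_risk"):
    a = rate(*counts["A"][stratum])
    b = rate(*counts["B"][stratum])
    print(f"{stratum}: A={a:.3f}  B={b:.3f}")
print(f"overall: A={overall('A'):.3f}  B={overall('B'):.3f}")
```

With these counts, therapy A has the higher success rate within each risk stratum, yet therapy B looks better once the strata are pooled, because the two therapies were given to patients with different risk profiles. An unadjusted comparison of routine data can therefore point in the wrong direction.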

As discussed above, the key limitation of RWE studies is their lower internal validity [ 2 , 3 ]. In contrast to RCTs and, more generally, studies with an experimental design, RWE studies need to apply methods that isolate the effect of the treatment under investigation [ 27 ]. To this end, a number of statistical adjustment techniques are available, such as propensity score matching, inverse probability weighting, or stratification [ 17 ]. The propensity score method has some parallels with controlled trials [ 17 ]. Conceptually, it seeks to analytically generate a control group that closely resembles the treatment group with respect to patient characteristics and other influencing factors.
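
The following sketch shows inverse probability weighting, one of the techniques named above, on a made-up cohort with a single binary confounder `x` (for example, poor performance status). All counts are hypothetical. With only one binary covariate, the propensity score can be estimated as the treated share within each level of `x`; real studies typically estimate it with a regression model over many covariates, and matching rather than weighting is a separate technique not shown here.

```python
from collections import defaultdict

# (confounder x, treated t, outcome y) -> number of patients; hypothetical counts
COUNTS = {
    (0, 1, 1): 60, (0, 1, 0): 20, (0, 0, 1): 10, (0, 0, 0): 10,
    (1, 1, 1): 10, (1, 1, 0): 10, (1, 0, 1): 20, (1, 0, 0): 60,
}
cohort = [key for key, n in COUNTS.items() for _ in range(n)]

def naive_difference(rows):
    """Unadjusted difference in outcome rates, treated minus untreated."""
    treated = [y for _, t, y in rows if t == 1]
    control = [y for _, t, y in rows if t == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)

def propensity_scores(rows):
    """Estimate P(treated | x) as the treated share within each level of x."""
    n, n_treated = defaultdict(int), defaultdict(int)
    for x, t, _ in rows:
        n[x] += 1
        n_treated[x] += t
    return {x: n_treated[x] / n[x] for x in n}

def ipw_difference(rows):
    """Weighted difference in outcome means, weights 1/e(x) or 1/(1 - e(x))."""
    e = propensity_scores(rows)
    sums = {1: [0.0, 0.0], 0: [0.0, 0.0]}  # arm -> [weighted outcomes, weights]
    for x, t, y in rows:
        w = 1 / e[x] if t == 1 else 1 / (1 - e[x])
        sums[t][0] += w * y
        sums[t][1] += w
    return sums[1][0] / sums[1][1] - sums[0][0] / sums[0][1]

naive = naive_difference(cohort)
adjusted = ipw_difference(cohort)
```

In this constructed cohort the treatment raises the outcome rate by 0.25 within each level of `x`. The naive comparison gives about 0.40 because fitter patients were treated more often, while the weighted comparison recovers about 0.25. Weighting can only balance confounders that were actually recorded, which is one reason data completeness matters so much.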

Publication of RWE studies

A frequent criticism of post-marketing studies, which include RWE studies, is the occasional practice of opaque reporting of findings and selective publication [ 2 ]. A recent British Medical Journal (BMJ) article reported that only a small proportion of post-marketing studies were published in scientific journals [ 31 ]. This is problematic because it does not contribute to scientific progress and contravenes common principles of good scientific practice. It also raises concerns about the motivation for, and the scientific discussion of, post-marketing studies. A general obligation to publish (completed and discontinued) RWE studies conducted in an oncological RWE network should be agreed upon as early as the planning phase of a study [ 2 ]. The publications should be transparent with respect to the original research question, the design of the study, its analysis and interpretation ([ 35 ]; Table 2).

- “Big data” can be considered the material basis for RWE studies. These may be a complementary partner to RCTs and thereby a valuable tool for clinical research in oncology.

- Modern IT concepts and technologies enable the digital and structured capture of complex oncological information in addition to routine medical data.

- This makes it possible to analyze data longitudinally and to analyze data that have been collected with different methods.

- Depending on the composition of the network, the individual centers in an RWE study network may conduct national or international benchmarking in addition to analyzing their internal clinical context.

- This may yield clues not only about outcomes of current treatment pathways but also about alternative approaches and new research hypotheses.

- RWE studies are therefore a meaningful complement to RCTs, which typically analyze pre-selected patients, and to clinical registries, which usually operate on a reduced data scope.

- The ambition to conduct high-quality RWE studies in oncology poses significant challenges for all stakeholders with regard to IT, personnel, organizational, financial, and data privacy aspects.

- These challenges can only be overcome jointly in order to achieve the legitimate aim of a relevant improvement in the quality, effectiveness and safety of oncological care.

References

Antes G (2016) Ist das Zeitalter der Kausalität vorbei? Z Evid Fortbild Qual Gesundhwes 112:S16–S22

Berger ML, Sox H, Willke RJ et al (2017) Good practices for real-world data studies of treatment and/or comparative effectiveness: recommendations from the joint ISPOR-ISPE special task force on real-world evidence in health care decision making. Pharmacoepidemiol Drug Saf 26:1033–1039

Booth CM, Tannock IF (2014) Randomised controlled trials and population-based observational research: partners in the evolution of medical evidence. Br J Cancer 110:551–555

Brenner H, Weberpals J, Jansen L (2017) Epidemiologische Forschung mit Krebsregisterdaten. Onkologe 23:272–279

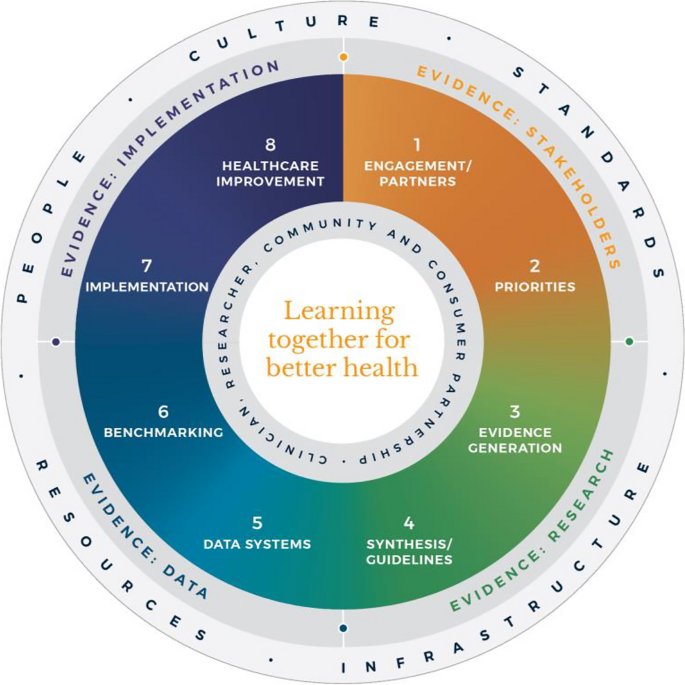

Califf RM, Robb MA, Bindman AB et al (2016) Transforming evidence generation to support health and health care decisions. N Engl J Med 375:2395–2400

Darby SC, Ewertz M, McGale P et al (2013) Risk of ischemic heart disease in women after radiotherapy for breast cancer. N Engl J Med 368:987–998

Deutscher Ethikrat (2017) Big Data und Gesundheit – Datensouveränität als informationelle Freiheitsgestaltung. Vorabfassung vom 30.11.2017

Google Scholar

E.U. Regulation (2016) 679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulat). Off J Eur Union L119:1–88

Elliott JH, Grimshaw J, Altman R et al (2015) Make sense of health data: develop the science of data synthesis to join up the myriad varieties of health information. Nature 527:31–33

Frieden TR (2017) Evidence for health decision making—beyond randomized, controlled trials. N Engl J Med 377:465–475

Hammer GP, du Prel JB, Blettner M (2009) Vermeidung verzerrter Ergebnisse in Beobachtungsstudien. Dtsch Arztebl 47:664–668

Hassett MJ (2017) Quality improvement in the era of big data. J Clin Oncol 35:3178–3180

Jaffee EM, Van Dang C, Agus DB et al (2017) Future cancer research priorities in the USA: a Lancet Oncology Commission. Lancet Oncol 18:e653–e706

von Jeinsen BK, Sudhop T (2013) A 1‑year cross-sectional analysis of non-interventional post-marketing study protocols submitted to the German Federal Institute for Drugs and Medical Devices (BfArM). Eur J Clin Pharmacol 69:1453–1466

Klinkhammer-Schalke M, Gerken M, Barlag H et al (2017) Bedeutung von Krebsregistern für die Versorgungsforschung. Onkologe 23:280–287

Kulkarni GS, Hermanns T, Wei Y et al (2017) Propensity score analysis of radical cystectomy versus bladder-sparing trimodal therapy in the setting of a multidisciplinary bladder cancer clinic. J Clin Oncol 35:2299–2307

Kuss O, Blettner M, Börgermann J (2016) Propensity score: an alternative method of analyzing treatment effects – part 23 of a series on evaluation of scientific publications. Dtsch Aerztebl Int 113:597–603

Lange S, Sauerland S, Lauterberg J, Windeler J (2017) The range and scientific value of randomized trials – part 24 of a series on evaluation of scientific publications. Dtsch Aerztebl Int 114:635–640

Lefering R (2016) Registerdaten zur Nutzenbewertung – Beispiel TraumaRegister DGU®. Z Evid Fortbild Qual Gesundhwes 112:11–S15

van Maaren MC, de Munck L, de Bock GH et al (2016) 10 year survival after breast-conserving surgery plus radiotherapy compared with mastectomy in early breast cancer in the Netherlands: a population-based study. Lancet Oncol 17:1158–1170

Di Maio M, Basch E, Bryce J, Perrone F (2016) Patient-reported outcomes in the evaluation of toxicity of anticancer treatments. Nat Rev Clin Oncol 13:319–325

Meyer LA, Cronin AM, Sun CC et al (2016) Use and Effectiveness of Neoadjuvant Chemotherapy for Treatment of Ovarian Cancer. J Clin Oncol 34:3854–3863

Nussbaum DP, Rushing CN, Lane WO et al (2016) Preoperative or postoperative radiotherapy versus surgery alone for retroperitoneal sarcoma: a case-control, propensity score-matched analysis of a nationwide clinical oncology database. Lancet Oncol 17:966–975

Obermeyer Z, Emanuel EJ (2016) Predicting the future—big data, machine learning, and clinical medicine. N Engl J Med 375:1216

Reiss KA, Yu S, Mamtani R et al (2017) Starting dose of sorafenib for the treatment of hepatocellular carcinoma: a retrospective, multi-institutional study. J Clin Oncol 35:3575–3585

Rothwell PM (2005) External validity of randomised controlled trials:“to whom do the results of this trial apply?”. Lancet 365:82–93

Schneeweiss S (2014) Learning from big health care data. N Engl J Med 370:2161–2163

Secord AA, Coleman RL, Havrilesky LJ et al (2015) Patient-reported outcomes as end points and outcome indicators in solid tumours. Nat Rev Clin Oncol 12:358–370

Sherman RE, Anderson SA, Dal Pan GJ et al (2016) Real-world evidence—what is it and what can it tell us. N Engl J Med 375:2293–2297

Sherman RE, Davies KM, Robb MA et al (2017) Accelerating development of scientific evidence for medical products within the existing US regulatory framework. Nat Rev Drug Discov 16:297–298

Spelsberg A, Prugger C, Doshi P et al (2017) Contribution of industry funded post-marketing studies to drug safety: survey of notifications submitted to regulatory agencies. BMJ 356:j337

Tannock IF, Amir E, Booth CM et al (2016) Relevance of randomised controlled trials in oncology. Lancet Oncol 17:e560–e567

The Academy of Medical Sciences (2015) Real world evidence: summary of a joint meeting held on 17 September 2015 by the Academy of Medical Sciences and the Association of the British Pharmaceutical Industry

U.S. Food and Drug Administration (2017) Use of real-world evidence to support regulatory decision-making for medical devices. Guidance for industry and food and drug administration staff

Visvanathan K, Levit LA, Raghavan D et al (2017) Untapped potential of observational research to inform clinical decision making: American Society of Clinical Oncology research statement. J Clin Oncol 35(16):1845–1854

Download references

Author information

Authors and affiliations.

IQVIA (formerly Quintiles & IMS Health), Landshuter Allee 10, 80637, Munich, Germany

Benedikt E. Maissenhaelter

IQVIA (formerly Quintiles & IMS Health), Paris, France

Ashley L. Woolmore

c/o Charité Comprehensive Cancer Center, Berlin, Germany

Peter M. Schlag

Corresponding author

Correspondence to Benedikt E. Maissenhaelter .

Ethics declarations

Conflict of interest.

B.E. Maissenhaelter and A.L. Woolmore are employees of IQVIA and are responsible for establishing a European Oncology Data and Evidence Network. P.M. Schlag advises IQVIA in establishing a European Oncology Data and Evidence Network and receives consulting fees from IQVIA.

This article does not contain any studies with human participants or animals performed by any of the authors.

Rights and permissions

Open Access. This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Maissenhaelter, B.E., Woolmore, A.L. & Schlag, P.M. Real-world evidence research based on big data. Onkologe 24 (Suppl 2), 91–98 (2018). https://doi.org/10.1007/s00761-018-0358-3

Published : 07 June 2018

Issue Date : November 2018

DOI : https://doi.org/10.1007/s00761-018-0358-3

Keywords

- Real-world data

- Evidence network

- Network of cancer centers

- Outcome research

- Quality of care

Keywords (German)

- Real-World-Data

- Evidenznetzwerk

- Netzwerk von Krebszentren

- Outcome-Forschung

- Versorgungsqualität

- Open access

- Published: 05 November 2022

Real-world data: a brief review of the methods, applications, challenges and opportunities

- Fang Liu ORCID: orcid.org/0000-0003-3028-5927 1 &

- Demosthenes Panagiotakos 2

BMC Medical Research Methodology volume 22, Article number: 287 (2022)

A Correction to this article was published on 02 May 2023

This article has been updated

The increased adoption of the internet, social media, wearable devices, e-health services, and other technology-driven services in medicine and healthcare has led to the rapid generation of various types of digital data, providing a valuable data source beyond the confines of traditional clinical trials, epidemiological studies, and lab-based experiments.

We provide a brief overview of the types and sources of real-world data (RWD) and the common models and approaches used to utilize and analyze real-world data. We discuss the challenges and opportunities of using real-world data for evidence-based decision making. This review does not aim to be comprehensive or to cover all aspects of the intriguing topic of RWD (from both the research and practical perspectives) but serves as a primer and provides useful sources for readers who are interested in this topic.

Results and Conclusions

Real-world data hold great potential for generating real-world evidence for designing and conducting confirmatory trials and answering questions that may not be addressed otherwise. The volume and complexity of real-world data also call for the development of more appropriate, sophisticated, and innovative data processing and analysis techniques that maintain scientific rigor in research findings, and for attention to data ethics, in order to harness the power of real-world data.

Introduction

Per the definition by the US FDA, real-world data (RWD) in the medical and healthcare field “are the data relating to patient health status and/or the delivery of health care routinely collected from a variety of sources” [ 1 ]. The wide usage of the internet, social media, wearable devices and mobile devices, claims and billing activities, electronic health records (EHRs), product and disease registries, e-health services, and other technology-driven services, together with increased capacity in data storage, has led to the rapid generation and availability of digital RWD [ 2 ].

The increasing accessibility of RWD and the fast development of artificial intelligence (AI) and machine learning (ML) techniques, together with rising costs and recognized limitations of traditional trials, have spurred great interest in the use of RWD to enhance the efficiency of clinical research and discoveries and to bridge the evidence gap between clinical research and practice. For example, during the COVID-19 pandemic, RWD have been used to generate or aid the generation of real-world evidence (RWE) on the effectiveness of COVID-19 vaccination [ 3 , 4 , 5 ], to model localized COVID-19 control strategies [ 6 ], to characterize COVID-19 and flu using data from smartphones and wearables [ 7 ], to study behavioral and mental health changes in relation to the lockdown of public life [ 8 ], and to assist in decision and policy making, among others.

In what follows, we provide a brief review of the types and sources of RWD (Section 2) and the common models and approaches used to utilize and analyze RWD (Section 3), and discuss the challenges and opportunities of using RWD for evidence-based decision making (Section 4). This review does not aim to be comprehensive or to cover all aspects of the intriguing topic of RWD (from both the research and practical perspectives) but serves as a primer and provides useful sources for readers who are interested in this topic.

Characteristics, types and applications of RWD

RWD have several characteristics that distinguish them from data collected in randomized trials in controlled settings. First, RWD are observational as opposed to data gathered in a controlled setting. Second, many types of RWD are unstructured (e.g., texts, imaging, networks) and at times inconsistent due to entry variations across providers and health systems. Third, RWD may be generated in a high-frequency manner (e.g., measurements at the millisecond level from wearables), resulting in voluminous and dynamic data. Fourth, RWD may be incomplete and lack key endpoints for an analysis, given that the data were not originally collected for that purpose. For example, claims data usually do not have clinical endpoints, and registry data have limited follow-up. Fifth, RWD may be subject to bias and measurement errors (random and non-random). For example, data generated from the internet, mobile devices, and wearables can be subject to selection bias; an RWD dataset may be an unrepresentative sample of the underlying population that a study intends to understand; and claims data are known to contain fraudulent values. In summary, RWD are messy, incomplete, heterogeneous, and subject to different types of measurement errors and biases. A systematic scoping review of the literature suggests that the data quality of RWD is not consistent and that quality assessments are challenging due to the complex and heterogeneous nature of these data. The sub-optimal data quality of RWD is well recognized [ 9 , 10 , 11 , 12 ]; how to improve it (e.g., to regulatory grade) is work in progress [ 13 , 14 , 15 ].

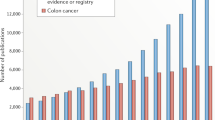

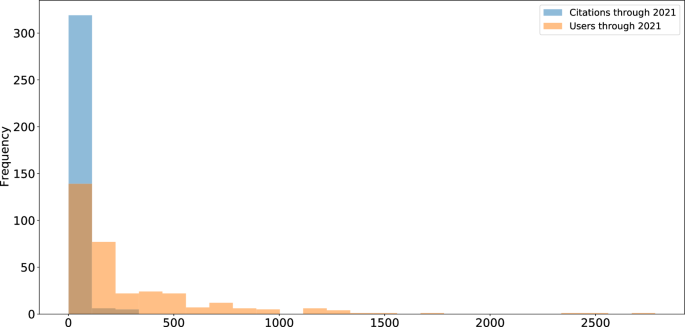

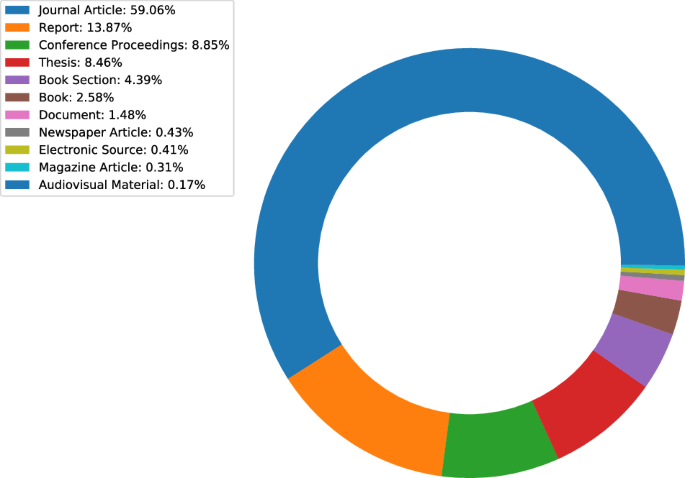

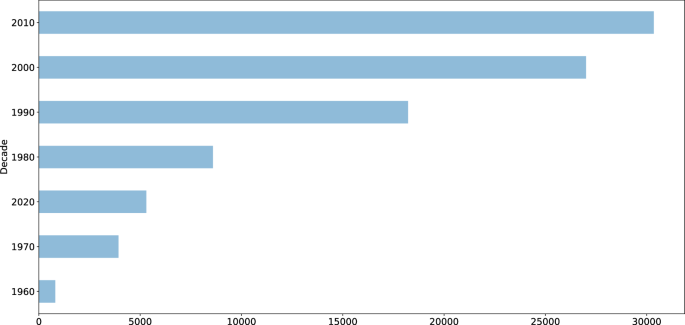

There are many different types of RWD. Figure 1 [ 16 ] provides a list of the RWD types and sources in medicine. We also refer readers to [ 11 ] for a comprehensive overview of RWD types. Here we use a few common RWD types, i.e., EHRs, registry data, claims data, patient-reported outcome (PRO) data, and data collected from wearables, as examples to demonstrate the variety of RWD and the purposes for which they can be used.

Fig. 1 RWD types and sources (source: Fig. 1 in [ 16 ], used with written permission from Dr. Brandon Swift)

EHRs are collected as part of routine care across clinics, hospitals, and healthcare institutions. EHR data are typical RWD: noisy, heterogeneous, dynamic, and both structured and unstructured (e.g., text, imaging). They require careful and intensive pre-processing efforts [ 17 ]. EHRs have created unprecedented opportunities for data-driven approaches to learn patterns, make new discoveries, and assist preoperative planning, diagnostics, and clinical prognostication, among others [ 18 , 19 , 20 , 21 , 22 , 23 , 24 , 25 , 26 , 27 ]; to improve predictions of selected outcomes, especially when linked with administrative and claims data and analyzed with proper machine learning techniques [ 27 , 28 , 29 , 30 ]; and to validate and replicate findings from clinical trials [ 31 ].

Registry data come in various types. For example, product registries include patients who have been exposed to a biopharmaceutical product or a medical device; health services registries consist of patients who have had a common procedure or hospitalization; and disease registries contain information about people diagnosed with a specific type of disease. Registry data enable the identification and sharing of best clinical practices, improve the accuracy of estimates, and provide valuable data for supporting regulatory decision-making [ 32 , 33 , 34 , 35 ]. Especially for rare diseases, where clinical trials are often small and data are subject to high variability, registries provide a valuable data source to help understand the course of a disease and provide critical information for confirmatory clinical trial design and translational research to develop treatments and improve patient care [ 34 , 36 , 37 ]. Readers may refer to [ 38 ] for a comprehensive overview of registry data and how they help in understanding patient outcomes.

Claims data refer to data generated during the processing of healthcare claims in health insurance plans or from practice management systems. Although claims data are collected and stored primarily for payment purposes, they have been used in healthcare to understand patients’ and prescribers’ behavior and how they interact, to estimate disease prevalence, to learn about disease progression, disease diagnosis, medication usage, and drug-drug interactions, and to validate and replicate findings from clinical trials [ 31 , 39 , 40 , 41 , 42 , 43 , 44 , 45 , 46 ]. A known pitfall of claims data, on top of the common data characteristics of RWD, is fraud, such as upcoding Footnote 1 [ 47 ]. The data fraud problem can be mitigated with detailed audits and the adoption of modern statistical, data mining, and ML techniques for fraud detection [ 48 , 49 , 50 , 51 ].

PRO data refer to data reported directly by patients on their health status. PRO data have been used to provide RWE on the effectiveness of interventions, symptom monitoring, and relationships between exposure and outcomes, among others [ 52 , 53 , 54 , 55 ]. PRO data are subject to recall bias and large inter-individual variability.

Wearable devices generate continuous streams of data. When combined with contextual data (e.g., location data, social media), they provide an opportunity to conduct expansive research studies, large in scale and scope [ 56 ], that would otherwise be infeasible in controlled trials. Examples of using wearable RWD to generate RWE include applications in neuroscience and environmental health [ 57 , 58 , 59 , 60 ]. Because wearables generate huge amounts of data, advances in data storage, real-time processing capabilities, and efficient battery technology are essential for the full utilization of wearable data.

Using and analyzing RWD

A wide range of research methods are available to make use of RWD. In what follows, we outline a few approaches, including pragmatic clinical trials, target trial emulation, and applications of ML and AI techniques.

Pragmatic clinical trials are trials designed to test the effectiveness of an intervention in the real-world clinical setting. Pragmatic trials leverage the increasingly integrated healthcare system and may use data from EHRs, claims, patient reminder systems, telephone-based care, etc. Due to the data characteristics of RWD, new guidelines and methodologies have been developed to mitigate bias in RWE generated from RWD for decision making and causal inference, especially for per-protocol analysis [ 61 , 62 ]. The research question under investigation in pragmatic trials is whether an intervention works in real life, and the trials are designed to maximize the applicability and generalizability of the intervention. Various types of outcomes can be measured in these trials, but they are mostly patient-centered, in contrast to the typical measurable symptoms or markers in explanatory trials. For example, the ADAPTABLE trial [ 63 , 64 ] is a high-profile pragmatic trial and the first large-scale, EHR-enabled clinical trial conducted within the U.S. It used EHR data to identify around 450,000 patients with established atherosclerotic cardiovascular disease (CVD) for recruitment and eventually enrolled about 15,000 individuals at 40 clinical centers, who were randomized to two aspirin dose arms. Electronic patient follow-up for patient-reported outcomes was completed every 3 to 6 months, with a median follow-up of 26.2 months, to determine the optimal dosage of aspirin in CVD patients, with the primary endpoint being the composite of all-cause mortality, hospitalization for nonfatal myocardial infarction, or hospitalization for nonfatal stroke. The cost of ADAPTABLE is estimated to be only 1/5 to 1/2 of that of a traditional RCT of that scale.

Target trial emulation is the application of trial design and analysis principles from (target) randomized trials to the analysis of observational data [ 65 ]. By precisely specifying the target trial’s inclusion/exclusion criteria, treatment strategies, treatment assignment, causal contrast, outcomes, follow-up period, and statistical analysis, one may draw valid causal inferences about an intervention from RWD. Target trial emulation can be an important tool, especially when comparative evaluation is not yet available or feasible in randomized trials. For example, [ 66 ] employs target trial emulation to evaluate real-world COVID-19 vaccine effectiveness, measured by protection against COVID-19 infection or related death, in racially and ethnically diverse, elderly populations by comparing newly vaccinated persons with matched unvaccinated controls using data from the US Department of Veterans Affairs health care system. The emulated trial was conducted with clearly defined inclusion/exclusion criteria and identification of matched controls, including matching based on propensity scores with careful selection of model covariates. Target trial emulation has also been used to evaluate the effect of colon cancer screening on cancer incidence over eight years of follow-up [ 67 ] and the risk of urinary tract infection among diabetic patients [ 68 ].
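The matching step mentioned above can be sketched in a few lines. This is a minimal illustration, not the procedure used in [ 66 ]: it assumes propensity scores have already been estimated (for example by logistic regression), uses greedy 1:1 nearest-neighbor matching with a caliper, and works on a made-up six-person cohort. The names `Person`, `match_one_to_one`, and `risk_difference` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Person:
    pid: str
    treated: bool       # e.g., newly vaccinated (hypothetical flag)
    propensity: float   # estimated P(treated | covariates), assumed precomputed
    event: bool         # outcome observed during follow-up


def match_one_to_one(people, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching on the propensity score, without replacement."""
    treated = [p for p in people if p.treated]
    controls = [p for p in people if not p.treated]
    pairs = []
    for t in sorted(treated, key=lambda p: p.propensity):
        if not controls:
            break
        best = min(controls, key=lambda c: abs(c.propensity - t.propensity))
        # Keep the pair only if the scores are close enough; otherwise drop the treated person.
        if abs(best.propensity - t.propensity) <= caliper:
            pairs.append((t, best))
            controls.remove(best)
    return pairs


def risk_difference(pairs):
    """Event risk among matched treated minus event risk among matched controls."""
    n = len(pairs)
    if n == 0:
        raise ValueError("no matched pairs")
    risk_treated = sum(t.event for t, _ in pairs) / n
    risk_control = sum(c.event for _, c in pairs) / n
    return risk_treated - risk_control


# Made-up illustrative cohort, not real data.
cohort = [
    Person("t1", True, 0.30, False),
    Person("t2", True, 0.62, False),
    Person("t3", True, 0.95, True),   # has no control within the caliper
    Person("c1", False, 0.31, True),
    Person("c2", False, 0.60, False),
    Person("c3", False, 0.10, True),
]
pairs = match_one_to_one(cohort)
estimate = risk_difference(pairs)
```

Treated persons without a sufficiently close control are dropped, which changes the population the estimate refers to; a real analysis would also check covariate balance after matching.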

RWD can also be used as historical controls and reference groups for controlled trials, provided that the quality and appropriateness of the RWD are assessed and proper statistical approaches are employed for analyzing the data [ 69 ]. Controlling for selection bias and confounding is key to the validity of this approach because of the lack of randomization and potentially unrecognized baseline differences, and the control group needs to be comparable with the treated group. RWD also provide a great opportunity to study rare events given the large volume of data [ 70 , 71 , 72 ]. These studies also highlight the need for improving RWD quality, developing surrogate endpoints, and standardizing data collection for outcome measures in registries.

In terms of analysis of RWD, statistical models and inferential approaches are necessary for making sense of RWD, obtaining causal relationships, testing/validating hypotheses, and generating regulatory-grade RWE to inform policymakers and regulators in decision making, just as in controlled trial settings. In fact, the motivation for pragmatic trials and target trial emulation, as well as their design and analysis principles, is to obtain causal inference, using more innovative methods beyond the traditional statistical methods to adjust for potential confounders and improve the capabilities of RWD for causal inference [ 73 , 74 , 75 , 76 ].
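As one standard illustration of confounder adjustment (a textbook identity, not taken from the cited works), the standardization formula expresses the mean outcome under treatment level $a$ in terms of observed quantities. The notation is an assumption introduced here: $Y$ is the outcome, $A$ the treatment, $L$ the measured (discrete) confounders, and $Y^{a}$ the potential outcome under treatment $a$. The identity holds only under conditional exchangeability given $L$, consistency, and positivity.

```latex
\mathbb{E}\left[Y^{a}\right] = \sum_{l} \mathbb{E}\left[Y \mid A = a,\, L = l\right] \Pr(L = l),
\qquad
\mathrm{ATE} = \mathbb{E}\left[Y^{1}\right] - \mathbb{E}\left[Y^{0}\right]
```

If an important confounder is missing from $L$, as can easily happen with RWD, the right-hand side can still be computed but no longer equals the causal quantity on the left.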

ML techniques are increasingly popular and are powerful tools for predictive modeling. One reason for their popularity is that modern ML techniques are very capable of dealing with voluminous, messy, multi-modal, and various unstructured data types without strong assumptions about the distribution of the data. For example, deep learning can learn abstract representations of large, complex, and unstructured data; natural language processing (NLP) and embedding methods can be used to process texts and clinical notes in EHRs and transform them into real-valued vectors for downstream learning tasks. Second, new and more powerful ML techniques are being developed rapidly, due to the high demand and the large group of researchers attracted to the field. Third, there are many open-source code repositories (e.g., on GitHub) and software libraries (e.g., TensorFlow, PyTorch, Keras) that facilitate the implementation of these techniques. Indeed, ML has enjoyed a rapid surge in the last decade or so for a wide range of applications in RWD, outperforming more conventional approaches [ 77 , 78 , 79 , 80 , 81 , 82 , 83 , 84 , 85 ]. For example, ML is widely applied in health informatics to generate RWE and formulate personalized healthcare [ 86 , 87 , 88 , 89 , 90 ] and was successfully employed on RWD collected during the COVID-19 pandemic to help understand the disease and evaluate its prevention and treatment strategies [ 91 , 92 , 93 , 94 , 95 ]. It should be noted that ML techniques are largely used for prediction and classification (e.g., disease diagnosis), variable selection (e.g., biomarker screening), data visualization, etc., rather than for generating regulatory-level RWE; but this may change soon as regulatory agencies are aggressively evaluating ML/AI for generating RWE and engaging stakeholders on the topic [ 96 , 97 , 98 , 99 ].

It would be more effective and powerful to combine the expertise from statistical inference and ML when it comes to generating RWE and learning causal relationships. One of the recent methodological developments is indeed in that direction: leveraging the advances in semi-parametric and empirical process theory and incorporating the benefits of ML into comparative effectiveness research using RWD. A well-known framework is targeted learning [ 100 , 101 , 102 ], which has been successfully applied to causal inference for dynamic treatment rules using EHR data [ 103 ] and to the efficacy of COVID-19 treatments [ 104 ], among others.

Regardless of which area an RWD project focuses on, whether causal inference or prediction and classification, it is critical that the RWD are representative of the population to which the conclusions from the project will be generalized. Otherwise, estimation or prediction can be misleading or even harmful. The information in RWD might not be adequate to validate the appropriateness of the data for generalization; in that case, the investigators should resist the temptation to generalize to groups that they are unsure about.

Challenges and opportunities

Various challenges, from data gathering to data quality control to decision making, still exist in all stages of the RWD life cycle despite all the excitement around the transformative potential of RWD. We list some of the challenges below, where plenty of opportunities for improvement exist and greater efforts are needed to harness the power of RWD.

Data quality: RWD are now often used for purposes other than those for which they were originally collected and thus may lack information on critical endpoints and may not always be positioned for generating regulatory-grade evidence. On top of that, RWD are messy, heterogeneous, and subject to various measurement errors, all of which contribute to the lower quality of RWD compared to data from controlled trials. As a result, the accuracy and precision of results based on RWD are negatively impacted, and misleading results or false conclusions can be generated. While these do not preclude the use of RWD in evidence generation and decision making, data quality issues need to be consistently documented and addressed as much as possible through data cleaning and pre-processing (e.g., imputation to fill in missing values, over-sampling for imbalanced data, denoising, combining disparate pieces of information across databases, etc.). If an issue cannot be addressed during the pre-processing stage, efforts should be made to correct it during data analysis, or caution should be used when interpreting the results. Early engagement of key stakeholders (e.g., regulatory agencies if needed, research institutes, industry, etc.) is encouraged to establish data quality standards and reduce unforeseen risks and issues.
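Two of the pre-processing steps named above, imputation and over-sampling, can be illustrated with a deliberately simple sketch. It uses only the Python standard library, made-up values, and the most naive variant of each technique (mean imputation and random duplication of minority-class rows); the function names are hypothetical and real projects would usually rely on more careful methods.

```python
import random
from statistics import mean


def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def oversample_minority(rows, label_key, seed=0):
    """Randomly duplicate rows of smaller classes until every class matches the largest one."""
    rng = random.Random(seed)  # fixed seed so the resampling is repeatable
    by_label = {}
    for row in rows:
        by_label.setdefault(row[label_key], []).append(row)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        balanced.extend(group + extra)
    return balanced


# Made-up laboratory values with two missing entries.
hemoglobin = [13.5, None, 12.0, 15.0, None]
imputed = impute_mean(hemoglobin)

# Made-up records: eight without the event, two with it.
records = [{"id": i, "event": 0} for i in range(8)]
records += [{"id": 8, "event": 1}, {"id": 9, "event": 1}]
balanced = oversample_minority(records, "event")
```

Mean imputation shrinks the variance of the imputed variable, and over-sampling is normally applied to training data only, so both steps should be documented alongside the results.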

Efficient and practical ML and statistical procedures: The fast growth of digital medical data and the influx of workforce and investment into the field are driving the rapid development and adoption of modern statistical procedures and ML algorithms to analyze the data. The availability of open-source platforms and software greatly facilitates the application of these procedures in practice. On the other hand, the noisiness, heterogeneity, incompleteness, and imbalance of RWD may cause considerable under-performance of existing statistical and ML procedures and demand new procedures that specifically target RWD and can be effectively deployed in the real world. Further, the availability of open-source platforms and software and the accompanying convenience, while offered with good intentions, also increase the chance that practitioners misuse the procedures if they are not equipped with proper training or do not understand the principles of the techniques before applying them to real-world situations. In addition, to maintain scientific rigor during the generation of RWE from RWD, results from statistical and ML procedures require medical validation, either using expert knowledge or through reproducibility and replicability studies, before they are used for decision making in the real world [ 105 ].

Explainability and interpretability: Modern ML approaches are often employed in a black-box fashion, and there is a lack of understanding of the relationships between input and output and of causal effects. Model selection, parameter initialization, and hyper-parameter tuning are also often conducted in a trial-and-error manner, without domain expert input. This is at odds with the needs of the medical and healthcare field, where interpretability is critical to building patient/user trust and doctors are unlikely to use technology that they do not understand. Promising and encouraging research work on this topic has already started [ 106 , 107 , 108 , 109 , 110 , 111 ], but more research is warranted.

Reproducibility and replicability: Reproducibility and replicability Footnote 2 are major principles in scientific research, RWD-based research included. If an analytical procedure is not robust and its output is not reproducible or replicable, the public would call into question the scientific rigor of the work and doubt the conclusions from an RWD-based study [ 113 , 114 , 115 ]. Result validation, reproducibility, and replicability can be challenging given the messiness, incompleteness, and unstructured nature of RWD, but need to be established, especially considering that the generated evidence could be used towards regulatory decisions and affect the lives of millions of people. Irreproducibility can be mitigated by sharing raw and processed data and code, assuming privacy is not compromised in the process. For replicability, given that RWD are not generated from controlled trials and every data set may have its own unique data characteristics, complete replicability can be difficult or even infeasible. Nevertheless, detailed documentation of data characteristics and pre-processing, pre-registration of analysis procedures, and adherence to open science principles (e.g., code repositories [ 116 ]) are critical for replicating findings on different RWD datasets, assuming they come from the same underlying population. Readers may refer to [ 117 , 118 , 119 ] for more suggestions and discussions on this topic.
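Two small habits support the documentation practices described above: recording a fingerprint of the exact data that were analyzed, and fixing the random seed of any stochastic step. The sketch below is one possible way to do this with the Python standard library; the function names, the record layout, and the toy length-of-stay data are assumptions made for illustration.

```python
import hashlib
import json
import random


def fingerprint(rows):
    """SHA-256 digest of the rows in a canonical JSON form (sorted keys, fixed separators)."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def bootstrap_mean(values, n_resamples, seed):
    """Average of bootstrap resample means; the explicit seed makes reruns identical."""
    rng = random.Random(seed)
    means = []
    for _ in range(n_resamples):
        sample = [rng.choice(values) for _ in values]
        means.append(sum(sample) / len(sample))
    return sum(means) / len(means)


# Made-up length-of-stay records.
data = [
    {"patient": "p1", "los_days": 3},
    {"patient": "p2", "los_days": 7},
    {"patient": "p3", "los_days": 4},
]

# A run record that could be stored next to the results and shared with the code.
run_record = {
    "data_sha256": fingerprint(data),
    "seed": 2022,
    "estimate": bootstrap_mean([row["los_days"] for row in data], n_resamples=200, seed=2022),
}
```

Anyone rerunning the analysis can compare the stored digest with the digest of their copy of the data before comparing estimates; the digest itself reveals nothing useful about individual records only if the underlying data are not guessable, so it complements rather than replaces privacy safeguards.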

Privacy: Ethical issues exist when an RWD project is implemented, among which privacy is a commonly discussed topic. Information in RWD is often sensitive, such as medical histories, disease status, financial situations, and social behaviors, among others. Privacy risk can increase dramatically when different databases (e.g., EHR, wearables, claims) are linked together, a common practice in the analysis of RWD. Data users and policymakers should make every effort to ensure that RWD collection, storage, sharing, and analysis follow established data privacy principles (i.e., lawfulness, fairness, purpose limitation, and data minimization). In addition, privacy-enhancing technology and privacy-preserving data sharing and analysis can be deployed; plenty of effective and well-accepted state-of-the-art concepts and approaches already exist, such as differential privacy Footnote 3 [ 120 ] and federated learning Footnote 4 [ 121 , 122 ]. Investigators and policymakers may consider integrating these concepts and technologies when collecting and analyzing RWD and disseminating the results and RWE from the RWD.
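To make the idea of differential privacy more concrete, the following sketch releases a noisy count using the Laplace mechanism, one of the standard building blocks of the field. It is an illustration under stated assumptions rather than a production implementation: the patient records are made up, `private_count` is a hypothetical helper, and the privacy budget `epsilon` is chosen arbitrarily.

```python
import random


def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes the
    count by at most 1), so the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    # The difference of two independent exponentials with rate epsilon
    # follows a zero-mean Laplace distribution with scale 1 / epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Made-up cohort: ages 40 to 79, with an arbitrary flag standing in for a diagnosis.
patients = [{"age": a, "diabetic": a % 3 == 0} for a in range(40, 80)]

rng = random.Random(7)
noisy = private_count(patients, lambda p: p["diabetic"], epsilon=0.5, rng=rng)
```

Smaller values of `epsilon` add more noise and give stronger privacy; each additional released statistic consumes more of the overall privacy budget, which this sketch does not track.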

Diversity, Equity, Algorithmic fairness, and Transparency (DEAT) : DEAT is another important ethical issue to consider in an RWD project. RWD may contain information from various demographic groups, which can be used to generate RWE with improved generalizability compared to data collected in controlled settings. On the other hand, certain types of RWD may be heavily biased and unbalanced toward a certain group, less diverse or inclusive, and in some cases may even exacerbate disparity (e.g., wearables and access to facilities and treatment may be limited to certain demographic groups). Greater effort will be needed to gain access to RWD from underrepresented groups and to effectively take into account the heterogeneity in RWD while being mindful of their limitations in diversity and equity. This topic also relates to algorithmic fairness, which aims at understanding and preventing bias in ML models. Algorithmic fairness is an increasingly popular research topic in the literature [ 123 , 124 , 125 , 126 , 127 ]. Incorrect and misleading conclusions may be drawn if the trained models systematically disadvantage a certain group (e.g., a trained algorithm might be less likely to detect cancer in black patients than in white patients, or in men than in women). Transparency means that information and communication concerning the processing of personal data must be easily accessible and easy to understand. Transparency ensures that data contributors are aware of how their data are being used and for what purposes, and that decision-makers can evaluate the quality of the methods and the applicability of the generated RWE [ 128 , 129 , 130 , 131 ]. Being transparent when working with RWD is critical for building trust among the key stakeholders during an RWD life cycle (individuals who supply the data, those who collect and manage the data, data curators who design studies and analyze the data, and decision and policy makers).
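
One simple way to check for the kind of systematic disadvantage described above is to compare a model's sensitivity (the share of true cases it detects) across demographic groups. The sketch below is a minimal illustration; the function names, group labels, and toy labels and predictions are hypothetical, and sensitivity is only one of several fairness metrics discussed in the literature.

```python
from collections import defaultdict


def sensitivity_by_group(y_true, y_pred, groups):
    """Return the true positive rate per group (groups with no true cases are omitted)."""
    detected = defaultdict(int)
    positives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                detected[group] += 1
    return {group: detected[group] / positives[group] for group in positives}


def sensitivity_gap(rates):
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical labels (1 = disease present) and model predictions for two groups.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5

rates = sensitivity_by_group(y_true, y_pred, groups)
print(rates)                   # {'A': 0.75, 'B': 0.25}
print(sensitivity_gap(rates))  # 0.5
```

In this toy example the model detects three of four true cases in group A but only one of four in group B, a gap that would warrant investigation before the model is used to support decisions.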

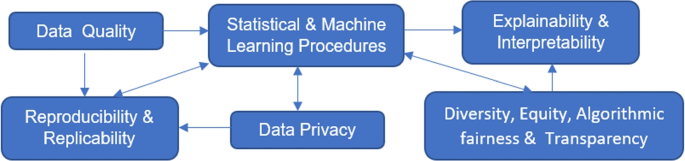

The above challenges are not isolated but connected, as depicted in Fig. 2 . Data quality affects the performance of statistical and ML procedures; data sources and the cleaning and pre-processing steps relate to result reproducibility and replicability. How data are analyzed and which statistical and ML procedures are used also affect reproducibility and replicability. Whether privacy-preserving procedures are used during data collection and analysis, and how information is shared and released, relate to data privacy, DEAT, and explainability and interpretability, which can in turn affect which ML procedures to apply and the development of new ML techniques.

Fig. 2 Challenges in RWD and their relations

Conclusions

RWD provide a valuable and rich data source beyond the confines of traditional epidemiological studies, clinical trials, and lab-based experiments, with lower data collection costs than these traditional sources. If used and analyzed appropriately, RWD have the potential to generate valid and unbiased RWE with savings in both cost and time compared to controlled trials, and to enhance the efficiency of medical and health-related research and decision-making. Procedures that improve the quality of the data and overcome the limitations of RWD, so as to make the best of them, have been and will continue to be developed. With the enthusiasm, commitment, and investment in RWD from all key stakeholders, we hope that the day when RWD unleash their full potential will come soon.

Availability of data and materials

Not applicable. This is a review article. No data or materials were generated or collected.

Change history

02 May 2023

A Correction to this paper has been published: https://doi.org/10.1186/s12874-023-01937-1

Footnote 1. Upcoding refers to instances in which a medical service provider obtains additional reimbursement from insurance by coding a service it provided as a more expensive service than what was actually performed.

Footnote 2. Reproducibility refers to “instances in which the original researcher’s data and computer codes are used to regenerate the results” and replicability refers to “instances in which a researcher collects new data to arrive at the same scientific findings as a previous study.” [ 112 ]

Footnote 3. Differential privacy provides a mathematically rigorous framework in which randomized procedures are used to guarantee individual privacy when releasing information.

Footnote 4. Federated learning enables local devices to collaboratively learn a shared model while keeping all training data on the local devices without sharing, mitigating privacy risks.

Abbreviations

AI: artificial intelligence

CVD: cardiovascular disease

COVID: coronavirus disease

DEAT: diversity, equity, algorithmic fairness, and transparency

EHR: electronic health records

ML: machine learning

NLP: natural language processing

PRO: patient-reported outcome

RWD: real-world data

RWE: real-world evidence

US Food and Drug Administration, et al. Real-World Evidence. 2022. https://www.fda.gov/science-research/science-and-research-special-topics/real-world-evidence . Accessed 1 Sep 2022.

Wikipedia. Real world data. 2022. https://en.wikipedia.org/wiki/Real_world_data . Accessed 19 Mar 2022.

Powell AA, Power L, Westrop S, McOwat K, Campbell H, Simmons R, et al. Real-world data shows increased reactogenicity in adults after heterologous compared to homologous prime-boost COVID-19 vaccination, March–June 2021, England. Eurosurveillance. 2021;26(28):2100634.

Hunter PR, Brainard JS. Estimating the effectiveness of the Pfizer COVID-19 BNT162b2 vaccine after a single dose. A reanalysis of a study of ’real-world’ vaccination outcomes from Israel. medRxiv. 2021.02.01.21250957. https://doi.org/10.1101/2021.02.01.21250957 .

Henry DA, Jones MA, Stehlik P, Glasziou PP. Effectiveness of COVID-19 vaccines: findings from real world studies. Med J Aust. 2021;215(4):149.

Firth JA, Hellewell J, Klepac P, Kissler S, Kucharski AJ, Spurgin LG. Using a real-world network to model localized COVID-19 control strategies. Nat Med. 2020;26(10):1616–22.

Shapiro A, Marinsek N, Clay I, Bradshaw B, Ramirez E, Min J, et al. Characterizing COVID-19 and influenza illnesses in the real world via person-generated health data. Patterns. 2021;2(1):100188.

Ahrens KF, Neumann RJ, Kollmann B, Plichta MM, Lieb K, Tüscher O, et al. Differential impact of COVID-related lockdown on mental health in Germany. World Psychiatry. 2021;20(1):140.

Hernández MA, Stolfo SJ. Real-world data is dirty: Data cleansing and the merge/purge problem. Data Min Knowl Disc. 1998;2(1):9–37.

Corrigan-Curay J, Sacks L, Woodcock J. Real-world evidence and real-world data for evaluating drug safety and effectiveness. JAMA. 2018;320(9):867–8.

Makady A, de Boer A, Hillege H, Klungel O, Goettsch W, et al. What is real-world data? A review of definitions based on literature and stakeholder interviews. Value Health. 2017;20(7):858–65.

Franklin JM, Schneeweiss S. When and how can real world data analyses substitute for randomized controlled trials? Clin Pharmacol Ther. 2017;102(6):924–33.

Miksad RA, Abernethy AP. Harnessing the power of real-world evidence (RWE): a checklist to ensure regulatory-grade data quality. Clin Pharmacol Ther. 2018;103(2):202–5.

Curtis MD, Griffith SD, Tucker M, Taylor MD, Capra WB, Carrigan G, et al. Development and validation of a high-quality composite real-world mortality endpoint. Health Serv Res. 2018;53(6):4460–76.

Booth CM, Karim S, Mackillop WJ. Real-world data: towards achieving the achievable in cancer care. Nat Rev Clin Oncol. 2019;16(5):312–25.

Swift B, Jain L, White C, Chandrasekaran V, Bhandari A, Hughes DA, et al. Innovation at the intersection of clinical trials and real-world data science to advance patient care. Clin Transl Sci. 2018;11(5):450–60.

Sun W, Cai Z, Li Y, Liu F, Fang S, Wang G. Data processing and text mining technologies on electronic medical records: a review. J Healthc Eng. 2018;2018:4302425. https://doi.org/10.1155/2018/4302425 .

Wu J, Roy J, Stewart WF. Prediction modeling using EHR data: challenges, strategies, and a comparison of machine learning approaches. Med Care. 2010;48(6 Suppl):S106-13. https://doi.org/10.1097/MLR.0b013e3181de9e17 , https://pubmed.ncbi.nlm.nih.gov/20473190/ .

Botsis T, Hartvigsen G, Chen F, Weng C. Secondary use of EHR: data quality issues and informatics opportunities. Summit Transl Bioinforma. 2010;2010:1.

Kawaler E, Cobian A, Peissig P, Cross D, Yale S, Craven M. Learning to predict post-hospitalization VTE risk from EHR data. In: AMIA annual symposium proceedings. vol. 2012. American Medical Informatics Association; 2012. p. 436.

Shickel B, Tighe PJ, Bihorac A, Rashidi P. Deep EHR: a survey of recent advances in deep learning techniques for electronic health record (EHR) analysis. IEEE J Biomed Health Inform. 2017;22(5):1589–604.

Poirier C, Hswen Y, Bouzillé G, Cuggia M, Lavenu A, Brownstein JS, et al. Influenza forecasting for French regions combining EHR, web and climatic data sources with a machine learning ensemble approach. PloS ONE. 2021;16(5):e0250890.

Zheng T, Xie W, Xu L, He X, Zhang Y, You M, et al. A machine learning-based framework to identify type 2 diabetes through electronic health records. Int J Med Inform. 2017;97:120–7.

Pivovarov R, Perotte AJ, Grave E, Angiolillo J, Wiggins CH, Elhadad N. Learning probabilistic phenotypes from heterogeneous EHR data. J Biomed Inform. 2015;58:156–65.

Zhao D, Weng C. Combining PubMed knowledge and EHR data to develop a weighted bayesian network for pancreatic cancer prediction. J Biomed Inform. 2011;44(5):859–68.

Veturi Y, Lucas A, Bradford Y, Hui D, Dudek S, Theusch E, et al. A unified framework identifies new links between plasma lipids and diseases from electronic medical records across large-scale cohorts. Nat Genet. 2021;53(7):972–81.

Kwon BC, Choi MJ, Kim JT, Choi E, Kim YB, Kwon S, et al. Retainvis: Visual analytics with interpretable and interactive recurrent neural networks on electronic medical records. IEEE Trans Vis Comput Graph. 2018;25(1):299–309.

Mahmoudi E, Kamdar N, Kim N, Gonzales G, Singh K, Waljee AK. Use of electronic medical records in development and validation of risk prediction models of hospital readmission: systematic review. BMJ. 2020;369:m958.

Desai RJ, Wang SV, Vaduganathan M, Evers T, Schneeweiss S. Comparison of machine learning methods with traditional models for use of administrative claims with electronic medical records to predict heart failure outcomes. JAMA Netw Open. 2020;3(1):e1918962.

Huang L, Shea AL, Qian H, Masurkar A, Deng H, Liu D. Patient clustering improves efficiency of federated machine learning to predict mortality and hospital stay time using distributed electronic medical records. J Biomed Inform. 2019;99:103291.

Bartlett VL, Dhruva SS, Shah ND, Ryan P, Ross JS. Feasibility of using real-world data to replicate clinical trial evidence. JAMA Netw Open. 2019;2(10):e1912869.

Dreyer NA, Garner S. Registries for robust evidence. JAMA. 2009;302(7):790–1.

Larsson S, Lawyer P, Garellick G, Lindahl B, Lundström M. Use of 13 disease registries in 5 countries demonstrates the potential to use outcome data to improve health care’s value. Health Affairs. 2012;31(1):220–7.

McGettigan P, Alonso Olmo C, Plueschke K, Castillon M, Nogueras Zondag D, Bahri P, et al. Patient registries: an underused resource for medicines evaluation. Drug Saf. 2019;42(11):1343–51.

Izmirly PM, Parton H, Wang L, McCune WJ, Lim SS, Drenkard C, et al. Prevalence of systemic lupus erythematosus in the United States: estimates from a meta-analysis of the Centers for Disease Control and Prevention National Lupus Registries. Arthritis Rheumatol. 2021;73(6):991–6.

Jansen-Van Der Weide MC, Gaasterland CM, Roes KC, Pontes C, Vives R, Sancho A, et al. Rare disease registries: potential applications towards impact on development of new drug treatments. Orphanet J Rare Dis. 2018;13(1):1–11.

Lacaze P, Millis N, Fookes M, Zurynski Y, Jaffe A, Bellgard M, et al. Rare disease registries: a call to action. Intern Med J. 2017;47(9):1075–9.

Gliklich RE, Dreyer NA, Leavy MB, editors. Registries for Evaluating Patient Outcomes: A User's Guide. 3rd ed. Rockville (MD): Agency for Healthcare Research and Quality (US); 2014 Apr. Report No.: 13(14)-EHC111. PMID: 24945055.

Svarstad BL, Shireman TI, Sweeney J. Using drug claims data to assess the relationship of medication adherence with hospitalization and costs. Psychiatr Serv. 2001;52(6):805–11.

Izurieta HS, Wu X, Lu Y, Chillarige Y, Wernecke M, Lindaas A, et al. Zostavax vaccine effectiveness among US elderly using real-world evidence: Addressing unmeasured confounders by using multiple imputation after linking beneficiary surveys with Medicare claims. Pharmacoepidemiol Drug Saf. 2019;28(7):993–1001.

Allen AM, Van Houten HK, Sangaralingham LR, Talwalkar JA, McCoy RG. Healthcare cost and utilization in nonalcoholic fatty liver disease: real-world data from a large US claims database. Hepatology. 2018;68(6):2230–8.

Sruamsiri R, Iwasaki K, Tang W, Mahlich J. Persistence rates and medical costs of biological therapies for psoriasis treatment in Japan: a real-world data study using a claims database. BMC Dermatol. 2018;18(1):1–11.

Quock TP, Yan T, Chang E, Guthrie S, Broder MS. Epidemiology of AL amyloidosis: a real-world study using US claims data. Blood Adv. 2018;2(10):1046–53.

Herland M, Bauder RA, Khoshgoftaar TM. Medical provider specialty predictions for the detection of anomalous medicare insurance claims. In: 2017 IEEE international conference on information reuse and integration (IRI). New York City: IEEE; 2017. p. 579–88.

Momo K, Kobayashi H, Sugiura Y, Yasu T, Koinuma M, Kuroda SI. Prevalence of drug–drug interaction in atrial fibrillation patients based on a large claims data. PLoS ONE. 2019;14(12):e0225297.

Ghiani M, Maywald U, Wilke T, Heeg B. RW1 Bridging The Gap Between Clinical Trials And Real World Data: Evidence On Replicability Of Efficacy Results Using German Claims Data. Value Health. 2020;23:S757–8.

Silverman E, Skinner J. Medicare upcoding and hospital ownership. J Health Econ. 2004;23(2):369–89.

Kirlidog M, Asuk C. A fraud detection approach with data mining in health insurance. Procedia-Soc Behav Sci. 2012;62:989–94.

Li J, Huang KY, Jin J, Shi J. A survey on statistical methods for health care fraud detection. Health Care Manag Sci. 2008;11(3):275–87.