
How to Write a Literature Review | Guide, Examples, & Templates

Published on January 2, 2023 by Shona McCombes. Revised on September 11, 2023.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research that you can later apply to your paper, thesis, or dissertation topic .

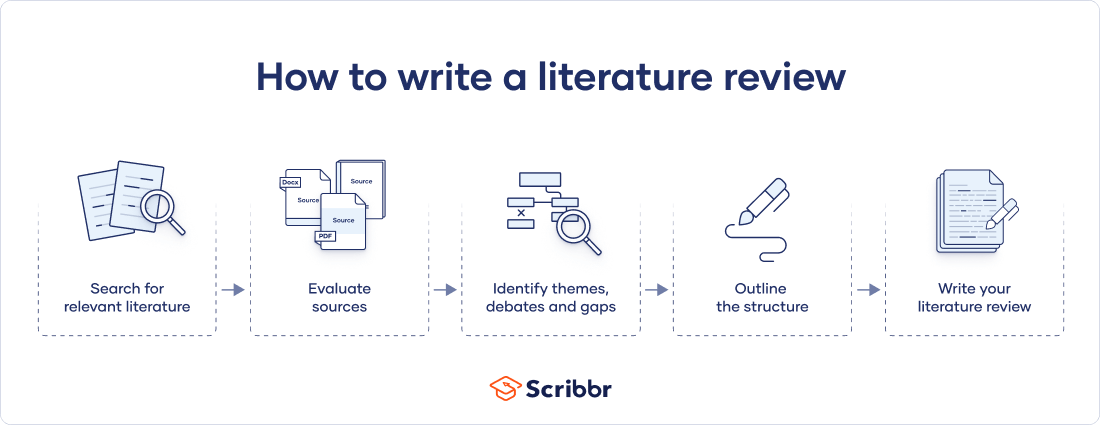

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates, and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarize sources; it analyzes, synthesizes, and critically evaluates them to give a clear picture of the state of knowledge on the subject.


What is the purpose of a literature review?

When you write a thesis, dissertation, or research paper, you will likely have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and its scholarly context

- Develop a theoretical framework and methodology for your research

- Position your work in relation to other researchers and theorists

- Show how your research addresses a gap or contributes to a debate

- Evaluate the current state of research and demonstrate your knowledge of the scholarly debates around your topic.

Writing literature reviews is a particularly important skill if you want to apply for graduate school or pursue a career in research. We’ve written a step-by-step guide that you can follow below.


Examples of literature reviews

Writing literature reviews can be quite challenging! A good starting point could be to look at some examples, depending on what kind of literature review you’d like to write.

- Example literature review #1: “Why Do People Migrate? A Review of the Theoretical Literature” (theoretical literature review about the development of economic migration theory from the 1950s to today)

- Example literature review #2: “Literature review as a research methodology: An overview and guidelines” (methodological literature review about interdisciplinary knowledge acquisition and production)

- Example literature review #3: “The Use of Technology in English Language Learning: A Literature Review” (thematic literature review about the effects of technology on language acquisition)

- Example literature review #4: “Learners’ Listening Comprehension Difficulties in English Language Learning: A Literature Review” (chronological literature review about how the concept of listening skills has changed over time)

You can also check out our templates with literature review examples and sample outlines.

Step 1 – Search for relevant literature

Before you begin searching for literature, you need a clearly defined topic.

If you are writing the literature review section of a dissertation or research paper, you will search for literature related to your research problem and questions.

Make a list of keywords

Start by creating a list of keywords related to your research question. Include each of the key concepts or variables you’re interested in, and list any synonyms and related terms. You can add to this list as you discover new keywords in the process of your literature search.

For example, for a research question about how social media use affects body image among Generation Z, your keyword list might include:

- Social media, Facebook, Instagram, Twitter, Snapchat, TikTok

- Body image, self-perception, self-esteem, mental health

- Generation Z, teenagers, adolescents, youth

Search for relevant sources

Use your keywords to begin searching for sources. Some useful databases to search for journals and articles include:

- Your university’s library catalogue

- Google Scholar

- Project MUSE (humanities and social sciences)

- Medline (life sciences and biomedicine)

- EconLit (economics)

- Inspec (physics, engineering and computer science)

You can also use Boolean operators (such as AND, OR, and NOT) to help narrow down your search.
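As a rough illustration of how Boolean operators combine keywords, the Python sketch below ORs the synonyms within each keyword group and ANDs the groups together, using a trimmed version of the social media and body image keyword list above. The function names are hypothetical, and the generic syntax (double-quoted phrases, parentheses, AND/OR) is an assumption: each database documents its own operators and phrase rules, so check its search help before pasting the result.

```python
# Sketch: turn keyword groups into one Boolean search string.
# Assumes generic AND/OR syntax with double-quoted phrases; databases differ.


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(groups: list[list[str]]) -> str:
    """OR together the synonyms in each group, then AND the groups together."""
    clauses = ["(" + " OR ".join(quote(t) for t in group) + ")" for group in groups]
    return " AND ".join(clauses)


# A trimmed version of the social media / body image keyword list.
keywords = [
    ["social media", "Instagram", "TikTok"],
    ["body image", "self-esteem"],
    ["Generation Z", "adolescents"],
]

print(build_query(keywords))
# ("social media" OR Instagram OR TikTok) AND ("body image" OR self-esteem) AND ("Generation Z" OR adolescents)
```

OR inside a group widens the search to synonyms, while AND between groups narrows the results to sources that touch every concept.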

Make sure to read the abstract to find out whether an article is relevant to your question. When you find a useful book or article, you can check the bibliography to find other relevant sources.

Step 2 – Evaluate and select sources

You likely won’t be able to read absolutely everything that has been written on your topic, so you will need to evaluate which sources are most relevant to your research question.

For each publication, ask yourself:

- What question or problem is the author addressing?

- What are the key concepts and how are they defined?

- What are the key theories, models, and methods?

- Does the research use established frameworks or take an innovative approach?

- What are the results and conclusions of the study?

- How does the publication relate to other literature in the field? Does it confirm, add to, or challenge established knowledge?

- What are the strengths and weaknesses of the research?

Make sure the sources you use are credible, and make sure you read any landmark studies and major theories in your field of research.

You can use our template to summarize and evaluate sources you’re thinking about using.

Take notes and cite your sources

As you read, you should also begin the writing process. Take notes that you can later incorporate into the text of your literature review.

It is important to keep track of your sources with citations to avoid plagiarism. It can be helpful to make an annotated bibliography, where you compile full citation information and write a paragraph of summary and analysis for each source. This helps you remember what you read and saves time later in the process.


Step 3 – Identify themes, debates, and gaps

To begin organizing your literature review’s argument and structure, make sure you understand the connections and relationships between the sources you’ve read. Based on your reading and notes, you can look for:

- Trends and patterns (in theory, method or results): do certain approaches become more or less popular over time?

- Themes: what questions or concepts recur across the literature?

- Debates, conflicts and contradictions: where do sources disagree?

- Pivotal publications: are there any influential theories or studies that changed the direction of the field?

- Gaps: what is missing from the literature? Are there weaknesses that need to be addressed?

This step will help you work out the structure of your literature review and (if applicable) show how your own research will contribute to existing knowledge.

For example, in reviewing the literature on social media and body image, you might note that:

- Most research has focused on young women.

- There is an increasing interest in the visual aspects of social media.

- But there is still a lack of robust research on highly visual platforms like Instagram and Snapchat. This is a gap that you could address in your own research.
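One way to check whether certain approaches become more or less popular over time is to tally your reading notes by period. The Python sketch below assumes you have recorded each source’s publication year and main research method; the `notes` entries and the function name are hypothetical placeholders, not real studies. A tally like this only points you toward patterns worth reading more closely; it does not replace interpreting the sources.

```python
from collections import Counter, defaultdict

PERIOD = 5  # length of each time window, in years

# Hypothetical reading notes: (publication year, main research method).
# Placeholder values for illustration only, not real studies.
notes = [
    (2012, "survey"),
    (2014, "survey"),
    (2016, "experiment"),
    (2018, "interviews"),
    (2019, "experiment"),
    (2021, "experiment"),
]


def methods_by_period(notes, period=PERIOD):
    """Count how often each method appears in each fixed-length period."""
    tally = defaultdict(Counter)
    for year, method in notes:
        start = year - year % period  # e.g. 2014 -> 2010 for 5-year periods
        tally[start][method] += 1
    return dict(sorted(tally.items()))


for start, counts in methods_by_period(notes).items():
    print(f"{start}-{start + PERIOD - 1}: {dict(counts)}")
```

Fixed-length windows keep the comparison simple; if your field has a clear turning point (a landmark study, a new platform), grouping sources before and after that point may be more informative.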

Step 4 – Outline your literature review’s structure

There are various approaches to organizing the body of a literature review. Depending on the length of your literature review, you can combine several of these strategies (for example, your overall structure might be thematic, but each theme is discussed chronologically).

Chronological

The simplest approach is to trace the development of the topic over time. However, if you choose this strategy, be careful to avoid simply listing and summarizing sources in order.

Try to analyze patterns, turning points and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred.

Thematic

If you have found some recurring central themes, you can organize your literature review into subsections that address different aspects of the topic.

For example, if you are reviewing literature about inequalities in migrant health outcomes, key themes might include healthcare policy, language barriers, cultural attitudes, legal status, and economic access.

Methodological

If you draw your sources from different disciplines or fields that use a variety of research methods, you might want to compare the results and conclusions that emerge from different approaches. For example:

- Look at what results have emerged in qualitative versus quantitative research

- Discuss how the topic has been approached by empirical versus theoretical scholarship

- Divide the literature into sociological, historical, and cultural sources

Theoretical

A literature review is often the foundation for a theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts.

You might argue for the relevance of a specific theoretical approach, or combine various theoretical concepts to create a framework for your research.

Step 5 – Write your literature review

Like any other academic text, your literature review should have an introduction, a main body, and a conclusion. What you include in each depends on the objective of your literature review.

The introduction should clearly establish the focus and purpose of the literature review.

Depending on the length of your literature review, you might want to divide the body into subsections. You can use a subheading for each theme, time period, or methodological approach.

As you write, you can follow these tips:

- Summarize and synthesize: give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: don’t just paraphrase other researchers; add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: use transition words and topic sentences to draw connections, comparisons and contrasts

In the conclusion, you should summarize the key findings you have taken from the literature and emphasize their significance.

When you’ve finished writing and revising your literature review, don’t forget to proofread thoroughly before submitting.


Frequently asked questions

What is a literature review?

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a thesis, dissertation, or research paper, in order to situate your work in relation to existing knowledge.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarize yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.

Where does the literature review go in a dissertation?

The literature review usually comes near the beginning of your thesis or dissertation. After the introduction, it grounds your research in a scholarly field and leads directly to your theoretical framework or methodology.

A literature review is a survey of credible sources on a topic, often used in dissertations, theses, and research papers. Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts, with an introduction, a main body, and a conclusion.

What is an annotated bibliography?

An annotated bibliography is a list of source references that has a short description (called an annotation) for each of the sources. It is often assigned as part of the research process for a paper.

Cite this Scribbr article


McCombes, S. (2023, September 11). How to Write a Literature Review | Guide, Examples, & Templates. Scribbr. Retrieved April 15, 2024, from https://www.scribbr.com/dissertation/literature-review/


- UWF Libraries

Literature Review: Conducting & Writing


Sample Lit Reviews from Communication Arts

Exemplary literature reviews:

- Literature Review Sample 1

- Literature Review Sample 2

- Literature Review Sample 3

Have you written a stellar literature review you care to share for teaching purposes?

Are you an instructor who has received an exemplary literature review and has permission from the student to post it?

Please contact Britt McGowan for inclusion in this guide. All disciplines are welcome and encouraged.


- Last Updated: Mar 22, 2024 9:37 AM

- URL: https://libguides.uwf.edu/litreview

Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Writing a Literature Review


This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

A literature review is a document or section of a document that collects key sources on a topic and discusses those sources in conversation with each other (also called synthesis). The lit review is an important genre in many disciplines, not just literature (i.e., the study of works of literature such as novels and plays). When we say “literature review” or refer to “the literature,” we are talking about the research (scholarship) in a given field. You will often see the terms “the research,” “the scholarship,” and “the literature” used mostly interchangeably.

Where, when, and why would I write a lit review?

There are a number of different situations where you might write a literature review, each with slightly different expectations; different disciplines, too, have field-specific expectations for what a literature review is and does. For instance, in the humanities, authors might include more overt argumentation and interpretation of source material in their literature reviews, whereas in the sciences, authors are more likely to report study designs and results in their literature reviews; these differences reflect these disciplines’ purposes and conventions in scholarship. You should always look at examples from your own discipline and talk to professors or mentors in your field to be sure you understand your discipline’s conventions, for literature reviews as well as for any other genre.

A literature review can be a part of a research paper or scholarly article, usually falling after the introduction and before the research methods section. In these cases, the lit review just needs to cover scholarship that is important to the issue you are writing about; sometimes it will also cover key sources that informed your research methodology.

Lit reviews can also be standalone pieces, either as assignments in a class or as publications. In a class, a lit review may be assigned to help students familiarize themselves with a topic and with scholarship in their field, get an idea of the other researchers working on the topic they’re interested in, find gaps in existing research in order to propose new projects, and/or develop a theoretical framework and methodology for later research. As a publication, a lit review usually is meant to help make other scholars’ lives easier by collecting and summarizing, synthesizing, and analyzing existing research on a topic. This can be especially helpful for students or scholars getting into a new research area, or for directing an entire community of scholars toward questions that have not yet been answered.

What are the parts of a lit review?

Most lit reviews use a basic introduction-body-conclusion structure; if your lit review is part of a larger paper, the introduction and conclusion pieces may be just a few sentences while you focus most of your attention on the body. If your lit review is a standalone piece, the introduction and conclusion take up more space and give you a place to discuss your goals, research methods, and conclusions separately from where you discuss the literature itself.

Introduction:

- An introductory paragraph that explains what your working topic and thesis are

- A forecast of key topics or texts that will appear in the review

- Potentially, a description of how you found sources and how you analyzed them for inclusion and discussion in the review (more often found in published, standalone literature reviews than in lit review sections in an article or research paper)

Body:

- Summarize and synthesize: Give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: Don’t just paraphrase other researchers – add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: Mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: Use transition words and topic sentences to draw connections, comparisons, and contrasts.

Conclusion:

- Summarize the key findings you have taken from the literature and emphasize their significance

- Connect them back to your primary research question

How should I organize my lit review?

Lit reviews can take many different organizational patterns depending on what you are trying to accomplish with the review. Here are some examples:

- Chronological: The simplest approach is to trace the development of the topic over time, which helps familiarize the audience with the topic (for instance, if you are introducing something that is not commonly known in your field). If you choose this strategy, be careful to avoid simply listing and summarizing sources in order. Try to analyze the patterns, turning points, and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred (as mentioned previously, this may not be appropriate in your discipline, so check with a teacher or mentor if you’re unsure).

- Thematic: If you have found some recurring central themes that you will continue working with throughout your piece, you can organize your literature review into subsections that address different aspects of the topic. For example, if you are reviewing literature about women and religion, key themes can include the role of women in churches and the religious attitude towards women.

- Methodological: If you draw your sources from different disciplines or fields that use a variety of research methods, you can compare the results and conclusions that emerge from different approaches. For example:

  - Qualitative versus quantitative research

  - Empirical versus theoretical scholarship

  - Divide the research by sociological, historical, or cultural sources

- Theoretical: In many humanities articles, the literature review is the foundation for the theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts. You can argue for the relevance of a specific theoretical approach or combine various theoretical concepts to create a framework for your research.

What are some strategies or tips I can use while writing my lit review?

Any lit review is only as good as the research it discusses; make sure your sources are well-chosen and your research is thorough. Don’t be afraid to do more research if you discover a new thread as you’re writing. More info on the research process is available in our "Conducting Research" resources.

As you’re doing your research, create an annotated bibliography (see our page on this type of document). Much of the information used in an annotated bibliography can also be used in a literature review, so you’ll not only be partially drafting your lit review as you research, but also developing your sense of the larger conversation going on among scholars, professionals, and any other stakeholders in your topic.

Usually you will need to synthesize research rather than just summarizing it. This means drawing connections between sources to create a picture of the scholarly conversation on a topic over time. Many student writers struggle to synthesize because they feel they don’t have anything to add to the scholars they are citing; here are some strategies to help you:

- It often helps to remember that the point of these kinds of syntheses is to show your readers how you understand your research, to help them read the rest of your paper.

- Writing teachers often say synthesis is like hosting a dinner party: imagine all your sources are together in a room, discussing your topic. What are they saying to each other?

- Look at the in-text citations in each paragraph. Are you citing just one source for each paragraph? This usually indicates summary only. When you have multiple sources cited in a paragraph, you are more likely to be synthesizing them (not always, but often).

- Read more about synthesis here.
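The in-text citation check described in the list above can be roughed out in code. The Python sketch below is a heuristic under stated assumptions: it counts the distinct parenthetical author-date citations (APA-like, e.g. "(Smith, 2019)") in each paragraph and flags paragraphs that cite at most one source. The function names and sample paragraphs are hypothetical. Narrative citations such as "Smith (2019) argues" and numbered citation styles are not detected, and a flagged paragraph is only a prompt to reread it, not proof that it merely summarizes.

```python
import re

# Any parenthetical containing a four-digit year, e.g. "(Smith, 2019)" or
# "(Smith & Lee, 2020; Jones et al., 2021)". Assumes APA-like author-date style.
PARENTHETICAL = re.compile(r"\(([^()]*\b\d{4}\b[^()]*)\)")
# Within one citation, capture the author part and the year.
AUTHOR_YEAR = re.compile(r"\s*(.+?),\s*(\d{4})")


def cited_sources(paragraph: str) -> set[str]:
    """Return the distinct 'Author, Year' citations found in a paragraph."""
    sources = set()
    for group in PARENTHETICAL.findall(paragraph):
        for citation in group.split(";"):  # several sources in one parenthetical
            match = AUTHOR_YEAR.match(citation)
            if match:
                sources.add(f"{match.group(1)}, {match.group(2)}")
    return sources


def flag_single_source(paragraphs: list[str]) -> list[int]:
    """Return 0-based indices of paragraphs that cite at most one source."""
    return [i for i, p in enumerate(paragraphs) if len(cited_sources(p)) <= 1]


# Hypothetical placeholder paragraphs, not real citations.
paragraphs = [
    "A first claim, supported by one study (Author A, 2015).",
    "A contrasting view (Author B, 2018; Author C et al., 2020) "
    "that also revisits the earlier study (Author A, 2015).",
]

print(flag_single_source(paragraphs))  # prints [0]
```

Counting distinct sources rather than citation marks means that citing the same study three times in one paragraph still counts as one source, which matches the point of the check.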

The most interesting literature reviews are often written as arguments (again, as mentioned at the beginning of the page, this is discipline-specific and doesn’t work for all situations). Often, the literature review is where you can establish your research as filling a particular gap or as relevant in a particular way. In an article, you have some chance to do this in your introduction, but the literature review section gives a more extended opportunity to establish the conversation in the way you would like your readers to see it. You can choose the intellectual lineage you would like to be part of and whose definitions matter most to your thinking (mostly humanities-specific, but this goes for sciences as well). In addressing these points, you argue for your place in the conversation, which tends to make the lit review more compelling than a simple reporting of other sources.


What is a Literature Review? | Guide, Template, & Examples

Published on 22 February 2022 by Shona McCombes. Revised on 7 June 2022.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research.

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarise sources – it analyses, synthesises, and critically evaluates to give a clear picture of the state of knowledge on the subject.


Why write a literature review?

When you write a dissertation or thesis, you will have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and scholarly context

- Develop a theoretical framework and methodology for your research

- Position yourself in relation to other researchers and theorists

- Show how your dissertation addresses a gap or contributes to a debate

You might also have to write a literature review as a stand-alone assignment. In this case, the purpose is to evaluate the current state of research and demonstrate your knowledge of scholarly debates around a topic.

The content will look slightly different in each case, but the process of conducting a literature review follows the same steps. We’ve written a step-by-step guide that you can follow below.


Step 1: Search for relevant literature

Before you begin searching for literature, you need a clearly defined topic.

If you are writing the literature review section of a dissertation or research paper, you will search for literature related to your research objectives and questions.

If you are writing a literature review as a stand-alone assignment, you will have to choose a focus and develop a central question to direct your search. Unlike a dissertation research question, this question has to be answerable without collecting original data. You should be able to answer it based only on a review of existing publications.

Make a list of keywords

Start by creating a list of keywords related to your research topic. Include each of the key concepts or variables you’re interested in, and list any synonyms and related terms. You can add to this list if you discover new keywords in the process of your literature search.

For example, for a review of how social media use affects body image among Generation Z, your keyword list might include:

- Social media, Facebook, Instagram, Twitter, Snapchat, TikTok

- Body image, self-perception, self-esteem, mental health

- Generation Z, teenagers, adolescents, youth

Search for relevant sources

Use your keywords to begin searching for sources. Some databases to search for journals and articles include:

- Your university’s library catalogue

- Google Scholar

- Project MUSE (humanities and social sciences)

- Medline (life sciences and biomedicine)

- EconLit (economics)

- Inspec (physics, engineering and computer science)

You can use Boolean operators to help narrow down your search.

Read the abstract to find out whether an article is relevant to your question. When you find a useful book or article, you can check the bibliography to find other relevant sources.

To identify the most important publications on your topic, take note of recurring citations. If the same authors, books or articles keep appearing in your reading, make sure to seek them out.
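Spotting recurring citations by eye works for a handful of sources; with more, a simple tally helps. The Python sketch below assumes you have copied the reference lists of the articles you have read and reduced each entry to a consistent short key such as "Author (Year)"; all entries and names are hypothetical placeholders, not real publications. It counts how many of your sources cite each work, counting a work only once per citing article.

```python
from collections import Counter

# Hypothetical reference lists from three articles you have read, reduced to
# short "Author (Year)" keys. Placeholders only, not real publications.
reference_lists = {
    "Article 1": ["Author A (2010)", "Author B (2015)", "Author C (2018)"],
    "Article 2": ["Author A (2010)", "Author C (2018)"],
    "Article 3": ["Author A (2010)", "Author D (2020)"],
}


def recurring_citations(reference_lists, min_count=2):
    """Return (work, count) pairs for works cited by at least min_count sources."""
    counts = Counter()
    for references in reference_lists.values():
        counts.update(set(references))  # count each work once per citing article
    return [(work, n) for work, n in counts.most_common() if n >= min_count]


for work, n in recurring_citations(reference_lists):
    print(f"{work}: cited by {n} of your sources")
```

The tally is only as reliable as the keys: the same work written two ways (for example, with and without an initial) will be counted separately, so normalize entries before counting.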

Step 2: Evaluate and select sources

You probably won’t be able to read absolutely everything that has been written on the topic – you’ll have to evaluate which sources are most relevant to your questions.

For each publication, ask yourself:

- What question or problem is the author addressing?

- What are the key concepts and how are they defined?

- What are the key theories, models and methods? Does the research use established frameworks or take an innovative approach?

- What are the results and conclusions of the study?

- How does the publication relate to other literature in the field? Does it confirm, add to, or challenge established knowledge?

- How does the publication contribute to your understanding of the topic? What are its key insights and arguments?

- What are the strengths and weaknesses of the research?

Make sure the sources you use are credible, and make sure you read any landmark studies and major theories in your field of research.

You can find out how many times an article has been cited on Google Scholar – a high citation count means the article has been influential in the field and should certainly be included in your literature review.

The scope of your review will depend on your topic and discipline: in the sciences you usually only review recent literature, but in the humanities you might take a long historical perspective (for example, to trace how a concept has changed in meaning over time).

Remember that you can use our template to summarise and evaluate sources you’re thinking about using!

Take notes and cite your sources

As you read, you should also begin the writing process. Take notes that you can later incorporate into the text of your literature review.

It’s important to keep track of your sources with references to avoid plagiarism. It can be helpful to make an annotated bibliography, where you compile full reference information and write a paragraph of summary and analysis for each source. This helps you remember what you read and saves time later in the process.


To begin organising your literature review’s argument and structure, you need to understand the connections and relationships between the sources you’ve read. Based on your reading and notes, you can look for:

- Trends and patterns (in theory, method or results): do certain approaches become more or less popular over time?

- Themes: what questions or concepts recur across the literature?

- Debates, conflicts and contradictions: where do sources disagree?

- Pivotal publications: are there any influential theories or studies that changed the direction of the field?

- Gaps: what is missing from the literature? Are there weaknesses that need to be addressed?

This step will help you work out the structure of your literature review and (if applicable) show how your own research will contribute to existing knowledge.

For example, in a review of research on social media, you might notice that:

- Most research has focused on young women.

- There is an increasing interest in the visual aspects of social media.

- But there is still a lack of robust research on highly visual platforms like Instagram and Snapchat – this is a gap that you could address in your own research.

There are various approaches to organising the body of a literature review. You should have a rough idea of your strategy before you start writing.

Depending on the length of your literature review, you can combine several of these strategies (for example, your overall structure might be thematic, but each theme is discussed chronologically).

Chronological

The simplest approach is to trace the development of the topic over time. However, if you choose this strategy, be careful to avoid simply listing and summarising sources in order.

Try to analyse patterns, turning points and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred.

Thematic

If you have found some recurring central themes, you can organise your literature review into subsections that address different aspects of the topic.

For example, if you are reviewing literature about inequalities in migrant health outcomes, key themes might include healthcare policy, language barriers, cultural attitudes, legal status, and economic access.

Methodological

If you draw your sources from different disciplines or fields that use a variety of research methods, you might want to compare the results and conclusions that emerge from different approaches. For example:

- Look at what results have emerged in qualitative versus quantitative research

- Discuss how the topic has been approached by empirical versus theoretical scholarship

- Divide the literature into sociological, historical, and cultural sources

Theoretical

A literature review is often the foundation for a theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts.

You might argue for the relevance of a specific theoretical approach, or combine various theoretical concepts to create a framework for your research.

Like any other academic text, your literature review should have an introduction, a main body, and a conclusion. What you include in each depends on the objective of your literature review.

The introduction should clearly establish the focus and purpose of the literature review.

If you are writing the literature review as part of your dissertation or thesis, reiterate your central problem or research question and give a brief summary of the scholarly context. You can emphasise the timeliness of the topic (“many recent studies have focused on the problem of x”) or highlight a gap in the literature (“while there has been much research on x, few researchers have taken y into consideration”).

Depending on the length of your literature review, you might want to divide the body into subsections. You can use a subheading for each theme, time period, or methodological approach.

As you write, make sure to follow these tips:

- Summarise and synthesise: give an overview of the main points of each source and combine them into a coherent whole.

- Analyse and interpret: don’t just paraphrase other researchers – add your own interpretations, discussing the significance of findings in relation to the literature as a whole.

- Critically evaluate: mention the strengths and weaknesses of your sources.

- Write in well-structured paragraphs: use transitions and topic sentences to draw connections, comparisons and contrasts.

In the conclusion, you should summarise the key findings you have taken from the literature and emphasise their significance.

If the literature review is part of your dissertation or thesis, reiterate how your research addresses gaps and contributes new knowledge, or discuss how you have drawn on existing theories and methods to build a framework for your research. This can lead directly into your methodology section.

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a dissertation, thesis, research paper, or proposal.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarise yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.

The literature review usually comes near the beginning of your dissertation. After the introduction, it grounds your research in a scholarly field and leads directly to your theoretical framework or methodology.

Cite this Scribbr article


McCombes, S. (2022, June 07). What is a Literature Review? | Guide, Template, & Examples. Scribbr. Retrieved 15 April 2024, from https://www.scribbr.co.uk/thesis-dissertation/literature-review/


Shona McCombes


How To Write A Literature Review - A Complete Guide


A literature review is much more than just another section in your research paper. It forms the very foundation of your research. It is a formal piece of writing where you analyze the existing theoretical framework, principles, and assumptions and use that as a base to shape your approach to the research question.

Curating and drafting a solid literature review section not only lends more credibility to your research paper but also makes your research tighter and better focused. However, writing a literature review is difficult. It requires extensive reading, and you also have to account for market trends and technological and political changes, which can shift very quickly.

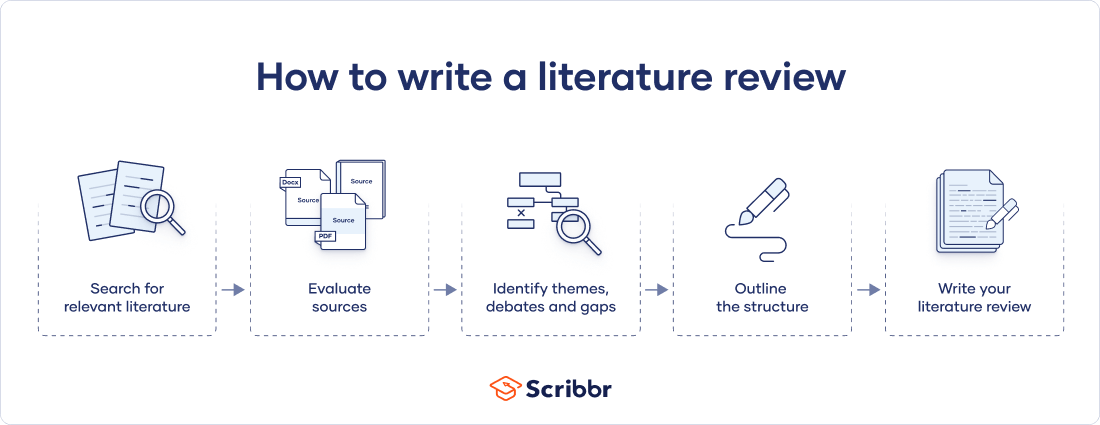

Now streamline your literature review process with the help of SciSpace Copilot. With this AI research assistant, you can efficiently synthesize and analyze a vast amount of information, identify key themes and trends, and uncover gaps in the existing research. Get real-time explanations, summaries, and answers to your questions for the paper you're reviewing, making navigating and understanding the complex literature landscape easier.

In this comprehensive guide, we will explore everything from the definition of a literature review, its appropriate length, various types of literature reviews, and how to write one.

What is a literature review?

A literature review is a survey, critical evaluation, and assessment of the existing literature in a chosen field.

Eminent researcher and academic Arlene Fink, in her book Conducting Research Literature Reviews, defines it as follows:

“A literature review surveys books, scholarly articles, and any other sources relevant to a particular issue, area of research, or theory, and by so doing, provides a description, summary, and critical evaluation of these works in relation to the research problem being investigated.

Literature reviews are designed to provide an overview of sources you have explored while researching a particular topic, and to demonstrate to your readers how your research fits within a larger field of study.”

Simply put, a literature review is a critical discussion of relevant existing research on your research question that carves out a definitive place for your study in the existing body of knowledge. Literature reviews can be presented in multiple ways: as a section of an article, as the whole research paper itself, or as a chapter of your thesis.

A literature review does function as a summary of sources, but it also allows you to analyze, interpret, and examine the stated theories, methods, and viewpoints and, of course, the gaps in the existing research.

As an author, you can discuss and interpret the research question and its various aspects, and justify the methods you adopted to support your claim.

What is the purpose of a literature review?

A literature review is meant to help your readers understand the relevance of your research question and where it fits within the existing body of knowledge. As a researcher, you should use it to set the context, build your argument, and establish the need for your study.

What is the importance of a literature review?

The literature review is a critical part of research papers because it helps you:

- Gain an in-depth understanding of your research question and the surrounding area

- Convey that you have a thorough understanding of your research area and are up to date with the latest changes and advancements

- Establish how your research is connected to or builds on the existing body of knowledge and how it could contribute to further research

- Elaborate on the validity and suitability of your theoretical framework and research methodology

- Identify and highlight gaps and shortcomings in the existing body of knowledge and how things need to change

- Convey to readers how your study is different or how it contributes to the research area

How long should a literature review be?

As a rough guide, the literature review should take up 15% to 40% of the total length of your manuscript. So, for a 10,000-word research paper, the literature review would be roughly 1,500 to 4,000 words.
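The 15% to 40% rule of thumb is simple arithmetic. Here is a minimal Python sketch that turns a manuscript length into a suggested word range for the review. The function name `review_word_range` is hypothetical, and the shares are the rough guide above, not a fixed requirement.

```python
def review_word_range(manuscript_words: int,
                      low_share: float = 0.15,
                      high_share: float = 0.40) -> tuple[int, int]:
    """Return a (minimum, maximum) word count for the literature review,
    using the rough 15%-40% share of the whole manuscript by default."""
    if manuscript_words <= 0:
        raise ValueError("manuscript_words must be positive")
    return round(manuscript_words * low_share), round(manuscript_words * high_share)


# A 10,000-word paper gives a range of 1,500 to 4,000 words.
print(review_word_range(10_000))  # (1500, 4000)
```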

The format of your literature review depends heavily on the kind of manuscript you are writing. It can range from part of the introductory section in a research article, to an entire chapter in a doctoral thesis, to a full-fledged review article that examines the previously published research on a topic.

Another determining factor is the type of research you are doing. The literature review section tends to be longer for secondary research projects than for primary research projects.

What are the different types of literature reviews?

Not all literature reviews are the same. There are a variety of possible approaches you can take, depending on the type of research you are pursuing.

Here are the different types of literature reviews:

Argumentative review

An argumentative review selectively presents literature that supports or counters a specific argument or premise in order to establish a viewpoint.

Integrative review

It is a type of literature review focused on building a comprehensive understanding of a topic by combining available theoretical frameworks and empirical evidence.

Methodological review

This approach examines the “how” as well as the “what” of the research question: you cannot look at the outcome in isolation; you should also review the methodology used.

Systematic review

This form consists of an overview of existing evidence pertinent to a clearly formulated research question, which uses pre-specified and standardized methods to identify and critically appraise relevant research and collect, report, and analyze data from the studies included in the review.

Meta-analysis review

Meta-analysis uses statistical methods to summarize the results of independent studies. By combining information from all relevant studies, meta-analysis can provide more precise estimates of the effects than those derived from the individual studies included within a review.

Historical review

Historical literature reviews focus on examining research throughout a period, often starting with the first time an issue, concept, theory, or phenomenon emerged in the literature, then tracing its evolution within the scholarship of a discipline. The purpose is to place research in a historical context to show familiarity with state-of-the-art developments and identify future research's likely directions.

Theoretical review

This form aims to examine the corpus of theory accumulated regarding an issue, concept, theory, or phenomenon. The theoretical literature review helps to establish what theories exist, the relationships between them, and the degree to which existing approaches have been investigated, and to develop new hypotheses to be tested.

Scoping review

The scoping review is often used at the beginning of an article, dissertation, or research proposal. It is conducted before the research to highlight gaps in the existing body of knowledge and explain why the project should be greenlit.

State-of-the-art review

The state-of-the-art review is conducted periodically, focusing on the most recent research. It describes what is currently known, understood, or agreed upon regarding the research topic and highlights where there are still disagreements.

Can you use the first person in a literature review?

Many guides advise avoiding first-person pronouns in literature reviews, although conventions vary by discipline. This means that instead of "I argue that" or "we argue that," you would write "this research paper argues that."

Do you need an abstract for a literature review?

Ideally, yes. It is always good to have a condensed summary that is self-contained and independent of the rest of your review. You can draft it using the same basic approach as for any other abstract. It should also include:

- The research topic and your motivation behind selecting it

- A one-sentence thesis statement

- An explanation of the kinds of literature featured in the review

- A summary of what you've learned

- Conclusions you drew from the literature you reviewed

- Potential implications and future scope for research


Is a literature review written in the past tense?

Largely, yes. Findings from the literature are usually reported in the past tense, and the future tense is generally not appropriate. There are two exceptions. For events that happened earlier than the literature you are reviewing, you can use the past perfect. For events that are still occurring, you can use the present perfect.

How many sources for a literature review?

There are multiple approaches to deciding how many sources to include in a literature review section. The first approach is to look at the level you are at as a researcher. For instance, a doctoral thesis might need 60 or more sources, whereas at the undergraduate level you might only need 5 to 15.

The second approach is based on the kind of literature review you are doing: whether it is just one chapter of your paper or a self-contained paper in itself. When it is just a chapter, a rule of thumb is to have as many sources as there are pages in your article's body. When it is a self-contained paper, you need at least three times as many sources as there are pages in your work.
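The page-based rule of thumb in the second approach can be written as a small function. This is a sketch of that heuristic only. `suggested_min_sources` is a hypothetical name, and the result is a starting point rather than a requirement.

```python
def suggested_min_sources(body_pages: int, standalone: bool) -> int:
    """Page-based rule of thumb for the number of sources.

    About one source per page of the body when the review is a chapter or
    section, and at least three per page when it is a self-contained paper.
    """
    if body_pages <= 0:
        raise ValueError("body_pages must be positive")
    per_page = 3 if standalone else 1
    return body_pages * per_page


print(suggested_min_sources(20, standalone=False))  # 20
print(suggested_min_sources(20, standalone=True))   # 60
```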

Quick tips on how to write a literature review

To know how to write a literature review, you must clearly understand its impact and role in establishing your work as substantive research material.

To write a literature review, follow these steps:

- Outline the purpose behind the literature review

- Search relevant literature

- Examine and assess the relevant resources

- Discover connections by drawing deep insights from the resources

- Plan the structure of your literature review

1. Outline and identify the purpose of a literature review

As a first step, you must know what the research question or topic is and what shape you want your literature review to take. Make sure you understand the research topic inside out, or else seek clarification. Before you start, you should be able to answer the following questions:

- How many sources do I need to include?

- What kind of sources should I analyze?

- How much should I critically evaluate each source?

- Should I summarize, synthesize or offer a critique of the sources?

- Do I need to include any background information or definitions?

Additionally, the narrower your research topic is, the easier it will be to limit the number of sources you need to analyze.

2. Search relevant literature

Search thoroughly to discover what has already been published on your chosen topic. Make sure you go through appropriate reference sources like books, reports, journal articles, government documents, and web-based resources.

Prepare a list of keywords and their different variations. You can start your search in a library’s catalog, provided you are an active member of that institution. You can then use the same keywords to extend your search to other databases and academic search engines like:

- Google Scholar

- Microsoft Academic (discontinued at the end of 2021)

- Science.gov
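One common way to turn a keyword list and its variations into a single search string is to join the variants of each concept with OR and then join the concepts with AND. The sketch below assumes a database that accepts standard Boolean operators and double-quoted phrases. Check each database's own help pages, because syntax differs. `build_query` and the sample keywords are hypothetical.

```python
def build_query(concepts: list[list[str]]) -> str:
    """Combine keyword variants into a Boolean search string.

    Each inner list holds the variants (synonyms, alternative spellings) of
    one concept. Variants are joined with OR, and the concept groups are
    joined with AND. Multi-word phrases are wrapped in double quotes.
    """
    groups = []
    for variants in concepts:
        terms = [f'"{v}"' if " " in v else v for v in variants]
        groups.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(groups)


query = build_query([
    ["migrant", "immigrant", "refugee"],
    ["health outcomes", "health inequalities"],
])
print(query)
# (migrant OR immigrant OR refugee) AND ("health outcomes" OR "health inequalities")
```

Adding a variant to one group broadens the search. Adding a new group narrows it.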

You don't need to go through every resource word by word. Instead, start by reading the abstract and then decide whether the source is relevant to your research.

Additionally, you should spend enough time assessing the quality and relevance of resources. It helps to keep a running list of citations to make sure no publication or article appears twice in your literature review.
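If you keep that citation list as structured records, checking it for duplicates can be automated. The following is a minimal sketch. It assumes each record is a dictionary with a `title` and an optional `doi`; these field names, the function names, and the sample records are hypothetical.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase the title, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", "", title.lower())
    return " ".join(cleaned.split())


def deduplicate(citations: list[dict]) -> list[dict]:
    """Keep the first occurrence of each citation.

    Entries are matched on DOI when one is present, otherwise on the
    normalized title.
    """
    seen: set[str] = set()
    unique = []
    for citation in citations:
        doi = citation.get("doi")
        key = "doi:" + doi.lower() if doi else "title:" + normalize_title(citation["title"])
        if key not in seen:
            seen.add(key)
            unique.append(citation)
    return unique


records = [
    {"title": "A Study of X", "doi": "10.1000/xyz123"},
    {"title": "A study of X.", "doi": "10.1000/XYZ123"},
    {"title": "Another Study", "doi": None},
    {"title": "Another study!"},
]
print(len(deduplicate(records)))  # 2
```

One limitation is that an entry with a DOI and a copy of it without one are not matched, so a manual check is still worthwhile.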

3. Examine and assess the sources

It is nearly impossible to go through every detail of every research article. So rather than trying to capture every detail, analyze the sources and decide which ones are most closely related and relevant to your chosen domain.

While analyzing the sources, look for answers to questions like:

- What question or problem has the author been describing and debating?

- How are the critical concepts defined?

- How well have the theories, approach, and methodology been explained?

- Does the research use a conventional or a new, innovative approach?

- How relevant are the key findings of the work?

- In what ways does it relate to other sources on the same topic?

- What challenges does this research paper pose to the existing theory?

- What are the possible contributions or benefits it adds to the subject domain?

Always make sure you refer only to credible and authentic resources. It is best to take references from different publications to validate your theory.

Keep track of important information or data you can present in your literature review right from the beginning. This will help you avoid plagiarism and make it easier to curate an annotated bibliography or reference section.

4. Discover connections

At this stage, you must start deciding on the argument and structure of your literature review. To do this, identify the relationships and connections between the various resources.

A few aspects to look out for include:

- Rise to prominence: Theories and methods that have gained reputation and supporters over time.

- Constant scrutiny: Concepts or theories that have repeatedly come under examination.

- Contradictions and conflicts: Theories that support or contradict one another on the research topic.

- Knowledge gaps: What does the existing literature fail to address, and how could further research bridge those gaps?

- Influential resources: Significant research projects that have been upheld as milestones, or work that could shift current trends.

Once you join the dots between various past research works, it will be easier for you to draw a conclusion and identify your contribution to the existing knowledge base.

5. Plan the structure of your literature review

There are different ways to plan and execute the structure of a literature review. The format varies and depends on the length of the research.

Like any other research paper, a literature review must contain three sections: introduction, body, and conclusion. The goals and objectives of the research question determine what goes inside these three sections.

Within the body, a good literature review can be structured according to a chronological, thematic, methodological, or theoretical framework approach.

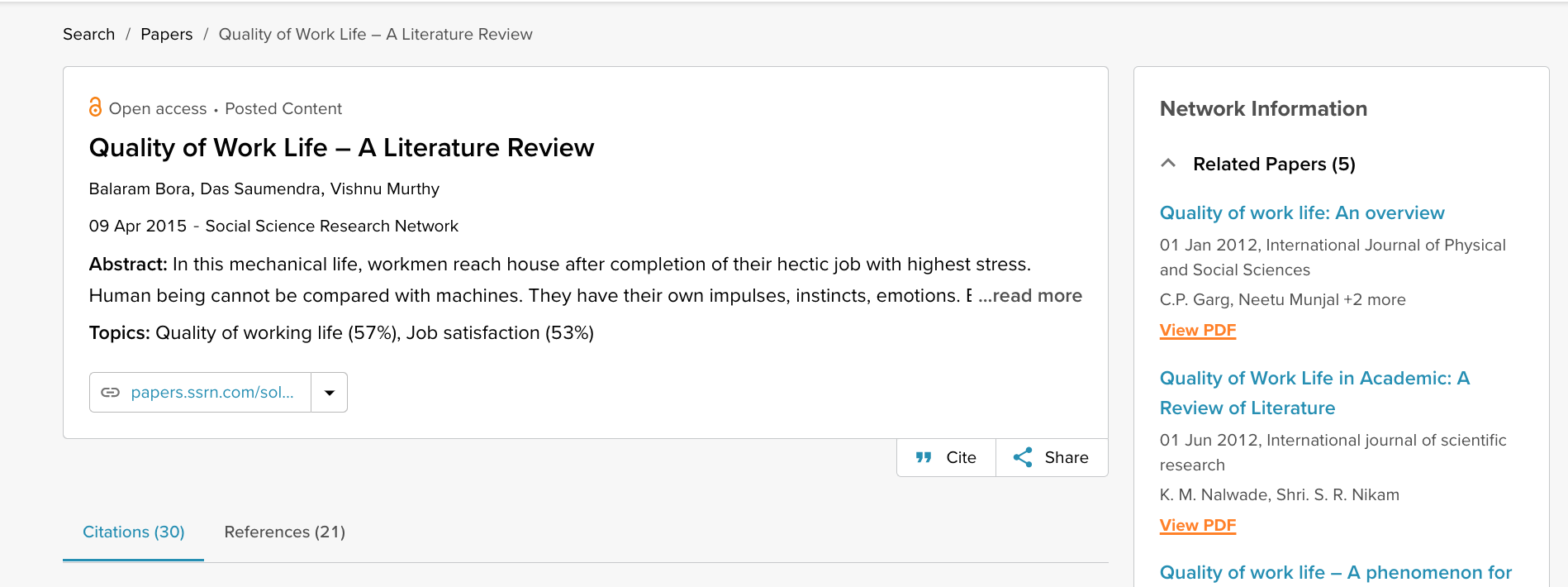


How SciSpace Discover makes literature reviews a breeze

SciSpace Discover is a one-stop solution for effective literature searches and barrier-free access to scientific knowledge. It is an excellent repository where you can find millions of peer-reviewed articles and full-text PDF files. Here’s more on how you can use it:

Find the right information

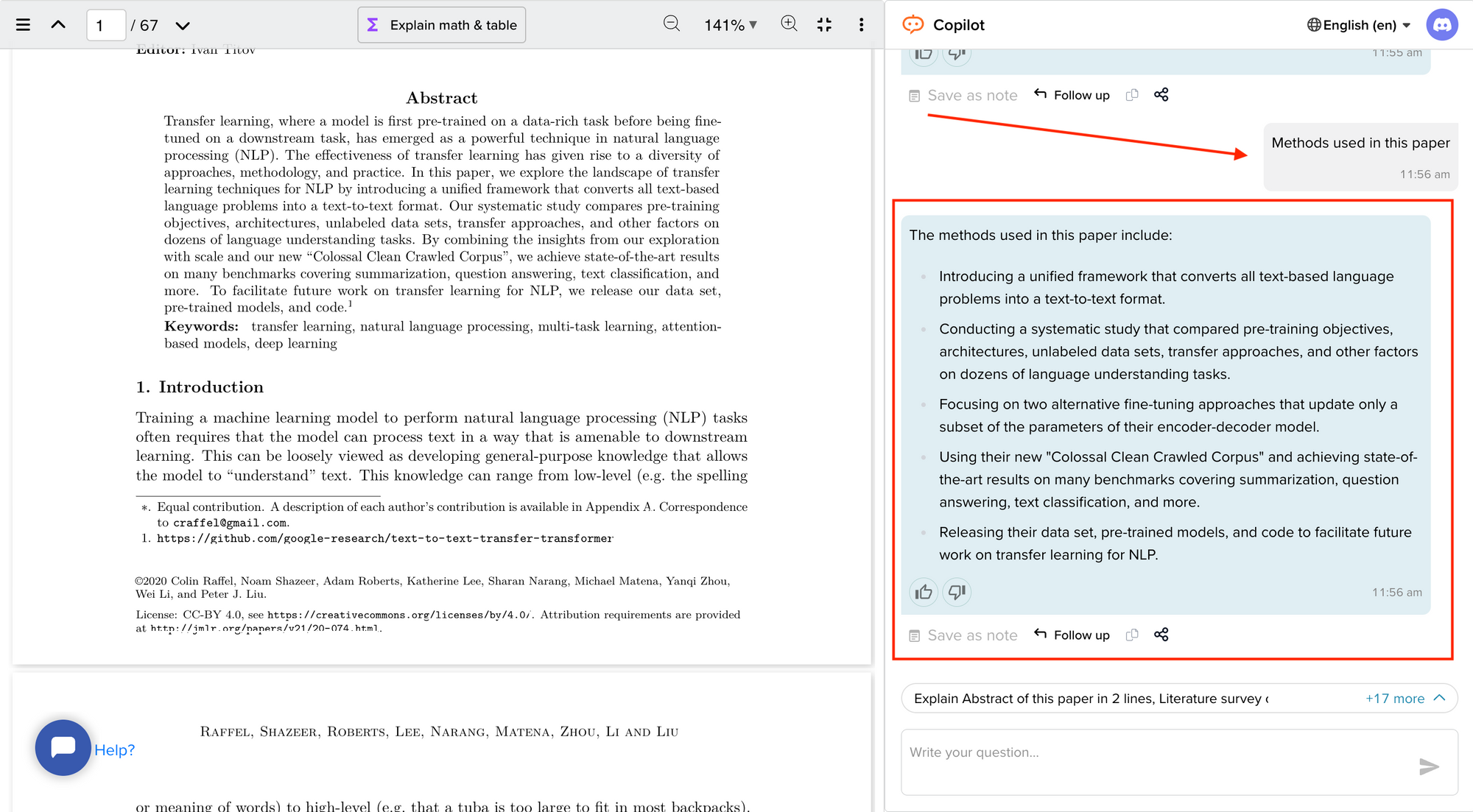

Find what you want quickly and easily with comprehensive search filters that let you narrow down papers according to PDF availability, year of publishing, document type, and affiliated institution. Moreover, you can sort the results based on the publishing date, citation count, and relevance.

Assess credibility of papers quickly

When doing the literature review, it is critical to establish the quality of your sources. They form the foundation of your research. SciSpace Discover helps you assess the quality of a source by providing an overview of its references, citations, and performance metrics.

Get the complete picture in no time

SciSpace Discover’s personalized suggestion engine helps you stay on course and get the complete picture of the topic from one place. Every time you visit an article page, it provides links to related papers. It also shows you what’s trending, who the top authors are, and who the leading publishers on a topic are.

Make referring sources super easy

To ensure you don't lose track of your sources, start noting down your references as you do the literature review. SciSpace Discover makes this step effortless: click the 'cite' button on an article page, and you will receive preloaded citation text in multiple styles. All you have to do is copy and paste it into your manuscript.

Final tips on how to write a literature review

A massive chunk of time and effort is required to write a good literature review. But, if you go about it systematically, you'll be able to save a ton of time and build a solid foundation for your research.

We hope this guide has helped you answer several key questions you have about writing literature reviews.


Frequently Asked Questions (FAQs)

1. How to start a literature review?

• What questions do you want to answer?

• What sources do you need to answer these questions?

• What information do these sources contain?

• How can you use this information to answer your questions?

2. What to include in a literature review?

• A brief background of the problem or issue

• What has previously been done to address the problem or issue

• A description of what you will do in your project

• How this study will contribute to research on the subject

3. Why is a literature review important?

The literature review is an important part of any research project because it allows the writer to look at previous studies on a topic and determine existing gaps in the literature, as well as what has already been done. It will also help them to choose the most appropriate method for their own study.

4. How to cite a literature review in APA format?

To cite a literature review in APA style, you need to provide the authors' names, the year of publication, the title of the article, and the source details (journal name, volume, issue, and page range). For example: Patel, A. B., & Stokes, G. S. (2012). The relationship between personality and intelligence: A meta-analysis of longitudinal research. Personality and Individual Differences, 53(1), 16-21.

5. What are the components of a literature review?

• A brief introduction to the topic, including its background and context. The introduction should also include a rationale for why the study is being conducted and what it will accomplish.

• A description of the methodologies used in the study. This can include information about data collection methods, sample size, and statistical analyses.

• A presentation of the findings in an organized format that helps readers follow along with the author's conclusions.

6. What are common errors in writing a literature review?

• Not spending enough time critically evaluating the relevance of resources, observations, and conclusions.

• Relying entirely on secondary data while ignoring primary data.

• Letting your personal bias seep into your interpretation of existing literature.

• Not explaining in detail how you searched for and identified the literature you reviewed.

7. What are the 5 C's of writing a literature review?

• Cite - the sources you utilized and referenced in your research.

• Compare - existing arguments, hypotheses, methodologies, and conclusions found in the knowledge base.

• Contrast - the arguments, topics, methodologies, approaches, and disputes that may be found in the literature.

• Critique - the literature and describe the ideas and opinions you find more convincing and why.

• Connect - the various studies you reviewed in your research.

8. How many sources should a literature review have?

When it is just a chapter, a rule of thumb is to have as many sources as there are pages in your article's body. If it is a self-contained paper in itself, you need at least three times as many sources as there are pages in your work.

9. Can a literature review have diagrams?

Yes. Diagrams can be useful:

• To represent an abstract idea or concept

• To explain the steps of a process or procedure

• To help readers understand the relationships between different concepts

10. How old should sources be in a literature review?

Sources for a literature review should be as current as possible, and generally no older than ten years. The only exception to this rule is if you are reviewing a historical topic and need to use older sources.
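If your sources are stored with their publication years, you can apply the ten-year guideline as a filter. Below is a minimal sketch. It assumes each source is a dictionary with a `year` field. The function name, the `historical_topic` switch, and the sample records are hypothetical.

```python
from datetime import date
from typing import Optional


def filter_recent(sources: list[dict], max_age_years: int = 10,
                  current_year: Optional[int] = None,
                  historical_topic: bool = False) -> list[dict]:
    """Keep sources published within the last `max_age_years` years.

    For a historical topic the age limit does not apply, so every source is
    kept. `current_year` defaults to this year; pass it explicitly to get
    reproducible results.
    """
    if historical_topic:
        return list(sources)
    year = current_year if current_year is not None else date.today().year
    cutoff = year - max_age_years
    return [s for s in sources if s["year"] >= cutoff]


sources = [
    {"title": "Study A", "year": 2010},
    {"title": "Study B", "year": 2015},
    {"title": "Study C", "year": 2023},
]
print([s["title"] for s in filter_recent(sources, current_year=2024)])
# ['Study B', 'Study C']
```

Landmark studies that fall outside the cutoff may still belong in the review, so treat the filtered list as a prompt for judgment rather than a final selection.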

11. What are the types of literature review?

• Argumentative review

• Integrative review

• Methodological review

• Systematic review

• Meta-analysis review

• Historical review

• Theoretical review

• Scoping review

• State-of-the-Art review

12. Is a literature review mandatory?

Yes. A literature review is a mandatory part of any research project. It is a critical step in the process that allows you to establish the scope of your research and provide a background for the rest of your work.


Harvey Cushing/John Hay Whitney Medical Library


YSN Doctoral Programs: Steps in Conducting a Literature Review


What is a literature review?

A literature review is an integrated analysis, not just a summary, of scholarly writings and other relevant evidence related directly to your research question. That is, it represents a synthesis of the evidence that provides background information on your topic and shows an association between the evidence and your research question.

A literature review may be a standalone work or the introduction to a larger research paper, depending on the assignment. Rely heavily on the guidelines your instructor has given you.

Why is it important?

A literature review is important because it:

- Explains the background of research on a topic.

- Demonstrates why a topic is significant to a subject area.

- Discovers relationships between research studies/ideas.

- Identifies major themes, concepts, and researchers on a topic.

- Identifies critical gaps and points of disagreement.

- Discusses further research questions that logically come out of the previous studies.


1. Choose a topic. Define your research question.

Your literature review should be guided by your central research question. The literature represents background and research developments related to a specific research question, interpreted and analyzed by you in a synthesized way.

- Make sure your research question is not too broad or too narrow. Is it manageable?

- Begin writing down terms that are related to your question. These will be useful for searches later.

- If you have the opportunity, discuss your topic with your professor and your classmates.

2. Decide on the scope of your review

How many studies do you need to look at? How comprehensive should it be? How many years should it cover?

- This may depend on your assignment. How many sources does the assignment require?

3. Select the databases you will use to conduct your searches.

Make a list of the databases you will search.

Where to find databases:

- use the tabs on this guide

- Find other databases in the Nursing Information Resources web page

- More on the Medical Library web page

- ... and more on the Yale University Library web page

4. Conduct your searches to find the evidence. Keep track of your searches.

- Use the key words in your question, as well as synonyms for those words, as terms in your search. Use the database tutorials for help.

- Save the searches in the databases. This saves time when you want to redo or modify the searches. It is also helpful to use them as a guide if the searches are not finding any useful results.

- Review the abstracts of research studies carefully. This will save you time.

- Use the bibliographies and references of research studies you find to locate others.

- Check with your professor, or a subject expert in the field, if you are missing any key works in the field.

- Ask your librarian for help at any time.

- Use a citation manager, such as EndNote, as the repository for your citations. See the EndNote tutorials for help.

5. Review the literature.

Some questions to help you analyze the research:

- What was the research question of the study you are reviewing? What were the authors trying to discover?

- Was the research funded by a source that could influence the findings?

- What research methodologies were used? Analyze the study's literature review, the samples and variables used, the results, and the conclusions.

- Does the research seem to be complete? Could it have been conducted more soundly? What further questions does it raise?

- If there are conflicting studies, why do you think that is?

- How are the authors viewed in the field? Has this study been cited? If so, how has it been analyzed?

Tips:

- Review the abstracts carefully.

- Keep careful notes so that you can track your thinking throughout the research process.

- Create a matrix of the studies to make it easier to analyze and synthesize findings across all of them.
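A matrix of studies is essentially a table with one row per study and one column per attribute you want to compare. The sketch below assumes a hypothetical set of columns and uses placeholder rows ("Study A" and so on are not real studies); the helper names are also illustrative. Grouping rows by theme shows which studies can be synthesized together, and listing the study designs under each theme hints at where the evidence is methodologically thin.

```python
import csv
import io
from collections import defaultdict

# Hypothetical matrix columns; adapt them to what your review compares
COLUMNS = ["citation", "design", "sample", "theme", "key_finding", "limitations"]


def row(citation, design, theme):
    """Build a placeholder row; fill the note fields from your reading."""
    return {"citation": citation, "design": design, "sample": "(your notes)",
            "theme": theme, "key_finding": "(your notes)",
            "limitations": "(your notes)"}


def group_by_theme(matrix):
    """Map each theme to the studies that address it."""
    themes = defaultdict(list)
    for r in matrix:
        themes[r["theme"]].append(r["citation"])
    return dict(themes)


def designs_by_theme(matrix):
    """Map each theme to the sorted set of study designs used to investigate it."""
    designs = defaultdict(set)
    for r in matrix:
        designs[r["theme"]].add(r["design"])
    return {theme: sorted(found) for theme, found in designs.items()}


def to_csv(matrix):
    """Serialize the matrix so it can be opened in a spreadsheet."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(matrix)
    return buf.getvalue()


# Placeholder studies, for illustration only
matrix = [
    row("Study A", "survey", "access"),
    row("Study B", "trial", "adherence"),
    row("Study C", "interview", "access"),
]
print(group_by_theme(matrix))
# {'access': ['Study A', 'Study C'], 'adherence': ['Study B']}
print(designs_by_theme(matrix))
# {'access': ['interview', 'survey'], 'adherence': ['trial']}
```

A spreadsheet does the same job; the point is that fixed columns force you to record the same information for every study, which is what makes comparison across studies possible.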


- Last Updated: Jan 4, 2024 10:52 AM

- URL: https://guides.library.yale.edu/YSNDoctoral

The Sheridan Libraries

- Write a Literature Review



Introduction

Literature reviews take time. Here is some general information to know before you start.

- VIDEO: This video gives a great overview of the entire process (2020; North Carolina State University Libraries). The transcript is included. It is for everyone, so ignore the mention of "graduate students." It runs 9.5 minutes, and every second is important.

- OVERVIEW: Read this page from Purdue's OWL. It is short and gives tips that fill in what you just learned from the video.

- NOT A RESEARCH ARTICLE: A literature review follows a different style, format, and structure from a research article.

Steps to Completing a Literature Review


- Last Updated: Sep 26, 2023 10:25 AM

- URL: https://guides.library.jhu.edu/lit-review


What is a Literature Review? How to Write It (with Examples)

A literature review is a critical analysis and synthesis of existing research on a particular topic. It provides an overview of the current state of knowledge, identifies gaps, and highlights key findings in the literature.[1] The purpose of a literature review is to situate your own research within the context of existing scholarship, demonstrating your understanding of the topic and showing how your work contributes to the ongoing conversation in the field. Knowing how to write a literature review is therefore a critical skill for successful research: your ability to summarize and synthesize prior research on a topic demonstrates your grasp of it and deepens your own learning.


What is a literature review?

A well-conducted literature review demonstrates the researcher’s familiarity with the existing literature, establishes the context for their own research, and contributes to scholarly conversations on the topic. It also helps researchers avoid duplicating previous work and ensures that their research is informed by and builds upon the existing body of knowledge.

What is the purpose of literature review?

A literature review serves several important purposes within academic and research contexts. Here are some of its key objectives and functions:[2]

- Contextualizing the Research Problem: The literature review provides a background and context for the research problem under investigation. It helps to situate the study within the existing body of knowledge.

- Identifying Gaps in Knowledge: By identifying gaps, contradictions, or areas requiring further research, the researcher can shape the research question and justify the significance of the study. This is crucial for ensuring that the new research contributes something novel to the field.

- Understanding Theoretical and Conceptual Frameworks: Literature reviews help researchers gain an understanding of the theoretical and conceptual frameworks used in previous studies. This aids in the development of a theoretical framework for the current research.

- Providing Methodological Insights: Literature reviews also allow researchers to learn about the methodologies employed in previous studies. This can help them choose appropriate research methods for the current study and avoid pitfalls that others have encountered.

- Establishing Credibility: A well-conducted literature review demonstrates the researcher’s familiarity with existing scholarship, establishing their credibility and expertise in the field. It also helps in building a solid foundation for the new research.

- Informing Hypotheses or Research Questions: The literature review guides the formulation of hypotheses or research questions by highlighting relevant findings and areas of uncertainty in existing literature.

Literature review example

Consider an example. Suppose your literature review is about the impact of climate change on biodiversity. You might organize it into sections such as the effects of climate change on habitat loss and species extinction, phenological changes, and marine biodiversity. Each section would summarize and analyze relevant studies in that area, highlighting key findings and identifying gaps in the research. The review would conclude by emphasizing the need for further research on specific aspects of the relationship between climate change and biodiversity. The following literature review template gives a glimpse of the recommended structure and content, showing how research findings are organized around specific themes within a broader topic.

Literature Review on Climate Change Impacts on Biodiversity:

Climate change is a global phenomenon with far-reaching consequences, including significant impacts on biodiversity. This literature review synthesizes key findings from various studies:

a. Habitat Loss and Species Extinction:

Climate change-induced alterations in temperature and precipitation patterns contribute to habitat loss, affecting numerous species (Thomas et al., 2004). The review discusses how these changes increase the risk of extinction, particularly for species with specific habitat requirements.

b. Range Shifts and Phenological Changes:

Observations of range shifts and changes in the timing of biological events (phenology) are documented in response to changing climatic conditions (Parmesan & Yohe, 2003). These shifts affect ecosystems and may lead to mismatches between species and their resources.

c. Ocean Acidification and Coral Reefs:

The review explores the impact of climate change on marine biodiversity, emphasizing ocean acidification’s threat to coral reefs (Hoegh-Guldberg et al., 2007). Changes in pH levels negatively affect coral calcification, disrupting the delicate balance of marine ecosystems.

d. Adaptive Strategies and Conservation Efforts:

Recognizing the urgency of the situation, the literature review discusses various adaptive strategies adopted by species and conservation efforts aimed at mitigating the impacts of climate change on biodiversity (Hannah et al., 2007). It emphasizes the importance of interdisciplinary approaches for effective conservation planning.

How to write a good literature review

Writing a literature review involves summarizing and synthesizing existing research on a particular topic. A good literature review typically includes the following elements.

Introduction: The introduction sets the stage for your literature review, providing context and introducing the main focus of your review.

- Opening Statement: Begin with a general statement about the broader topic and its significance in the field.

- Scope and Purpose: Clearly define the scope of your literature review. Explain the specific research question or objective you aim to address.

- Organizational Framework: Briefly outline the structure of your literature review, indicating how you will categorize and discuss the existing research.

- Significance of the Study: Highlight why your literature review is important and how it contributes to the understanding of the chosen topic.

- Thesis Statement: Conclude the introduction with a concise thesis statement that outlines the main argument or perspective you will develop in the body of the literature review.

Body: The body of the literature review is where you provide a comprehensive analysis of existing literature, grouping studies based on themes, methodologies, or other relevant criteria.

- Organize by Theme or Concept: Group studies that share common themes, concepts, or methodologies. Discuss each theme or concept in detail, summarizing key findings and identifying gaps or areas of disagreement.

- Critical Analysis: Evaluate the strengths and weaknesses of each study. Discuss the methodologies used, the quality of evidence, and the overall contribution of each work to the understanding of the topic.

- Synthesis of Findings: Synthesize the information from different studies to highlight trends, patterns, or areas of consensus in the literature.

- Identification of Gaps: Discuss any gaps or limitations in the existing research and explain how your review contributes to filling these gaps.

- Transition between Sections: Provide smooth transitions between different themes or concepts to maintain the flow of your literature review.

Conclusion: The conclusion of your literature review should summarize the main findings, highlight the contributions of the review, and suggest avenues for future research.

- Summary of Key Findings: Recap the main findings from the literature and restate how they contribute to your research question or objective.

- Contributions to the Field: Discuss the overall contribution of your literature review to the existing knowledge in the field.

- Implications and Applications: Explore the practical implications of the findings and suggest how they might impact future research or practice.

- Recommendations for Future Research: Identify areas that require further investigation and propose potential directions for future research in the field.

- Final Thoughts: Conclude with a final reflection on the importance of your literature review and its relevance to the broader academic community.

Conducting a literature review

Conducting a literature review is an essential step in research that involves reviewing and analyzing existing literature on a specific topic. To do a literature review effectively, follow these steps:[1]

Choose a Topic and Define the Research Question:

- Select a topic that is relevant to your field of study.

- Clearly define your research question or objective. Determine which specific aspect of the topic you want to explore.

Decide on the Scope of Your Review:

- Determine the timeframe for your literature review. Are you focusing on recent developments, or do you want a historical overview?

- Consider the geographical scope. Is your review global, or are you focusing on a specific region?

- Define the inclusion and exclusion criteria. What types of sources will you include? Are there specific types of studies or publications you will exclude?
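Writing the scope decisions above (timeframe, geographical scope, inclusion and exclusion criteria) as explicit rules makes screening consistent and leaves a reason for every excluded source. The sketch below is illustrative only: `Criteria`, `Record`, and `screen` are hypothetical names, and the years, languages, and publication types are example values, not recommendations.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple


@dataclass
class Criteria:
    """Hypothetical inclusion/exclusion rules for one review."""
    start_year: int
    end_year: int
    languages: Set[str]
    excluded_types: Set[str]
    regions: Optional[Set[str]] = None  # None means a global review


@dataclass
class Record:
    title: str
    year: int
    language: str
    pub_type: str
    region: str


def screen(record: Record, c: Criteria) -> Tuple[bool, str]:
    """Return (include?, reason) so every screening decision is documented."""
    if not c.start_year <= record.year <= c.end_year:
        return False, "outside timeframe"
    if c.regions is not None and record.region not in c.regions:
        return False, "outside geographical scope"
    if record.language not in c.languages:
        return False, "language not included"
    if record.pub_type in c.excluded_types:
        return False, f"excluded publication type: {record.pub_type}"
    return True, "meets criteria"


# Example values only; set these from your own scope decisions
criteria = Criteria(2014, 2024, {"English"}, {"editorial", "opinion piece"})
print(screen(Record("Hypothetical trial", 2019, "English",
                    "journal article", "Europe"), criteria))
# (True, 'meets criteria')
print(screen(Record("Hypothetical commentary", 2021, "English",
                    "editorial", "Asia"), criteria))
# (False, 'excluded publication type: editorial')
```

The checks run in a fixed order and stop at the first failed rule, so each excluded record gets exactly one recorded reason; you could instead collect every failed rule if you want a fuller audit trail.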

Select Databases for Searches:

- Identify relevant databases for your field. Examples include PubMed, IEEE Xplore, Scopus, Web of Science, and Google Scholar.

- Consider searching in library catalogs, institutional repositories, and specialized databases related to your topic.

Conduct Searches and Keep Track:

- Develop a systematic search strategy using keywords, Boolean operators (AND, OR, NOT), and other search techniques.