- Open access

- Published: 17 August 2023

Data visualisation in scoping reviews and evidence maps on health topics: a cross-sectional analysis

- Emily South ORCID: orcid.org/0000-0003-2187-4762 1 &

- Mark Rodgers 1

Systematic Reviews volume 12, Article number: 142 (2023)

Scoping reviews and evidence maps are forms of evidence synthesis that aim to map the available literature on a topic and are well-suited to visual presentation of results. A range of data visualisation methods and interactive data visualisation tools exist that may make scoping reviews more useful to knowledge users. The aim of this study was to explore the use of data visualisation in a sample of recent scoping reviews and evidence maps on health topics, with a particular focus on interactive data visualisation.

Ovid MEDLINE ALL was searched for recent scoping reviews and evidence maps (June 2020-May 2021), and a sample of 300 papers that met basic selection criteria was taken. Data were extracted on the aim of each review and the use of data visualisation, including types of data visualisation used, variables presented and the use of interactivity. Descriptive data analysis was undertaken of the 238 reviews that aimed to map evidence.

Of the 238 scoping reviews or evidence maps in our analysis, just over one-third (37.8%) included some form of data visualisation. Thirty-five different types of data visualisation were used across this sample, although most data visualisations identified were simple bar charts (standard, stacked or multi-set), pie charts or cross-tabulations (60.8%). Most data visualisations presented a single variable (64.4%) or two variables (26.1%). Almost a third of the reviews that used data visualisation did not use any colour (28.9%). Only two reviews presented interactive data visualisation, and few reported the software used to create visualisations.

Conclusions

Data visualisation is currently underused by scoping review authors. In particular, there is potential for much greater use of more innovative forms of data visualisation and interactive data visualisation. Where more innovative data visualisation is used, scoping reviews have made use of a wide range of different methods. Increased use of these more engaging visualisations may make scoping reviews more useful for a range of stakeholders.

Scoping reviews are “a type of evidence synthesis that aims to systematically identify and map the breadth of evidence available on a particular topic, field, concept, or issue” ([ 1 ], p. 950). While they include some of the same steps as a systematic review, such as systematic searches and the use of predetermined eligibility criteria, scoping reviews often address broader research questions and do not typically involve the quality appraisal of studies or synthesis of data [ 2 ]. Reasons for conducting a scoping review include the following: to map types of evidence available, to explore research design and conduct, to clarify concepts or definitions and to map characteristics or factors related to a concept [ 3 ]. Scoping reviews can also be undertaken to inform a future systematic review (e.g. to assure authors there will be adequate studies) or to identify knowledge gaps [ 3 ]. Other evidence synthesis approaches with similar aims have been described as evidence maps, mapping reviews or systematic maps [ 4 ]. While this terminology is used inconsistently, evidence maps can be used to identify evidence gaps and present them in a user-friendly (and often visual) way [ 5 ].

Scoping reviews are often targeted to an audience of healthcare professionals or policy-makers [ 6 ], suggesting that it is important to present results in a user-friendly and informative way. Until recently, there was little guidance on how to present the findings of scoping reviews. In recent literature, there has been some discussion of the importance of clearly presenting data for the intended audience of a scoping review, with creative and innovative use of visual methods if appropriate [ 7 , 8 , 9 ]. Lockwood et al. suggest that innovative visual presentation should be considered over dense sections of text or long tables in many cases [ 8 ]. Khalil et al. suggest that inspiration could be drawn from the field of data visualisation [ 7 ]. JBI guidance on scoping reviews recommends that reviewers carefully consider the best format for presenting data at the protocol development stage and provides a number of examples of possible methods [ 10 ].

Interactive resources are another option for presentation in scoping reviews [ 9 ]. Researchers without the relevant programming skills can now use several online platforms (such as Tableau [ 11 ] and Flourish [ 12 ]) to create interactive data visualisations. The benefits of using interactive visualisation in research include the ability to easily present more than two variables [ 13 ] and increased engagement of users [ 14 ]. Unlike static graphs, interactive visualisations can allow users to view hierarchical data at different levels, exploring both the “big picture” and the finer detail ([ 15 ], p. 291). Interactive visualisations are often targeted at practitioners and decision-makers [ 13 ], and there is some evidence from qualitative research that they are valued by policy-makers [ 16 , 17 , 18 ].

Given their focus on mapping evidence, we believe that scoping reviews are particularly well-suited to visually presenting data and the use of interactive data visualisation tools. However, it is unknown how many recent scoping reviews visually map data or which types of data visualisation are used. The aim of this study was to explore the use of data visualisation methods in a large sample of recent scoping reviews and evidence maps on health topics. In particular, we were interested in the extent to which these forms of synthesis use any form of interactive data visualisation.

This study was a cross-sectional analysis of studies labelled as scoping reviews or evidence maps (or synonyms of these terms) in the title or abstract.

The search strategy was developed with help from an information specialist. Ovid MEDLINE® ALL was searched in June 2021 for studies added to the database in the previous 12 months. The search was limited to English language studies only.

The search strategy was as follows:

Ovid MEDLINE(R) ALL

1. (scoping review or evidence map or systematic map or mapping review or scoping study or scoping project or scoping exercise or literature mapping or evidence mapping or systematic mapping or literature scoping or evidence gap map).ab,ti.

2. limit 1 to english language

3. (202006* or 202007* or 202008* or 202009* or 202010* or 202011* or 202012* or 202101* or 202102* or 202103* or 202104* or 202105*).dt.
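The date limit in the strategy above is a list of twelve consecutive year-month prefixes. As a minimal sketch (the function names `month_prefixes` and `build_dt_clause` are our own and not part of any Ovid tooling), such a clause could be generated in Python rather than typed by hand:

```python
def month_prefixes(start_year, start_month, n_months):
    """Return truncated year-month terms such as '202006*' for n consecutive months."""
    prefixes = []
    year, month = start_year, start_month
    for _ in range(n_months):
        prefixes.append(f"{year}{month:02d}*")
        month += 1
        if month > 12:  # roll over into the next calendar year
            month = 1
            year += 1
    return prefixes


def build_dt_clause(prefixes):
    """Join the prefixes with 'or' and apply the .dt. field suffix used in the strategy."""
    return "(" + " or ".join(prefixes) + ").dt."


# Twelve months starting June 2020, matching the window described in the text.
clause = build_dt_clause(month_prefixes(2020, 6, 12))
print(clause)
```

Generating the clause this way may reduce the risk of skipping or mistyping a month when the search window is updated.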

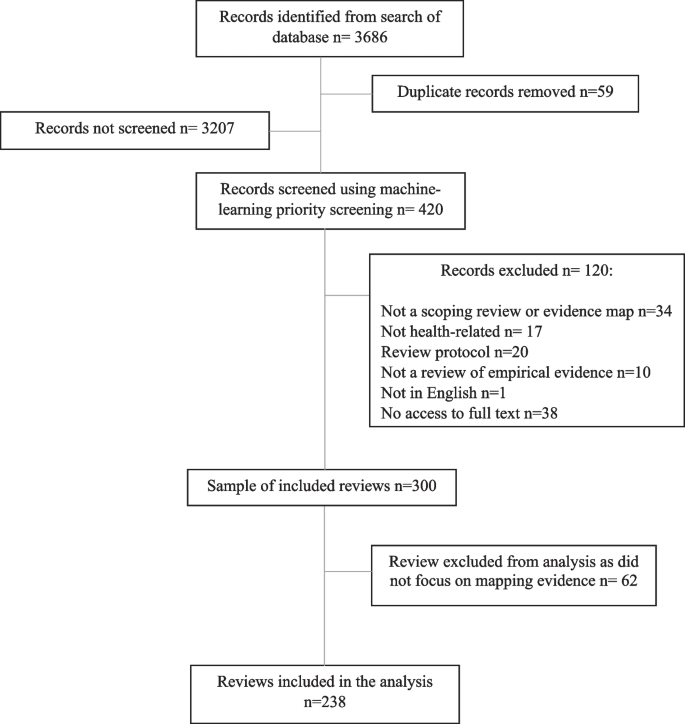

The search returned 3686 records. Records were de-duplicated in EndNote 20 software, leaving 3627 unique records.
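The matching rules that EndNote applies are not described in this paper. Purely as an illustration of the general idea, the sketch below removes duplicates using a simplified key (normalised title plus year). The key, the function names and the three example records are all invented for this example and do not come from the study data.

```python
import re


def dedup_key(record):
    """Build a simplified matching key: lower-cased title without punctuation, plus year."""
    title = re.sub(r"[^a-z0-9]+", " ", record["title"].lower()).strip()
    return (title, record.get("year"))


def deduplicate(records):
    """Keep the first record seen for each key and drop later matches."""
    seen = set()
    unique = []
    for record in records:
        key = dedup_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical records: the first two differ only in case and punctuation.
records = [
    {"title": "Music and mental illness: a scoping review", "year": 2021},
    {"title": "Music and Mental Illness - A Scoping Review.", "year": 2021},
    {"title": "An evidence map of falls prevention", "year": 2020},
]
unique = deduplicate(records)

# Counts reported in the text: 3686 records retrieved, 3627 unique records retained.
duplicates_removed = 3686 - 3627
```

With the counts reported above, `duplicates_removed` shows that 59 records were removed as duplicates.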

A sample of these reviews was taken by screening the search results against basic selection criteria (Table 1 ). These criteria were piloted and refined after discussion between the two researchers. A single researcher (E.S.) screened the records in EPPI-Reviewer Web software using the machine-learning priority screening function. Where a second opinion was needed, decisions were checked by a second researcher (M.R.).

Our initial plan for sampling, informed by pilot searching, was to screen records and extract data in batches of 50 included reviews. We planned to stop screening when a batch of 50 reviews had been extracted that included no new types of data visualisation or after screening time had reached 2 days. However, once data extraction was underway, we found the sample to be richer in terms of data visualisation than anticipated. After the inclusion of 300 reviews, we took the decision to end screening in order to ensure the study was manageable.
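The originally planned stopping rule (which was ultimately replaced by the decision to stop at 300 reviews) can be expressed as a simple check. This is a sketch under our own assumptions: the function name `should_stop`, its parameters and the example visualisation types are illustrative only.

```python
def should_stop(seen_types, batch_types, days_screening, max_days=2.0):
    """Planned rule: stop if the latest batch adds no new visualisation types,
    or if the screening time limit has been reached."""
    no_new_types = set(batch_types) <= set(seen_types)
    return no_new_types or days_screening >= max_days


# Illustrative batches of visualisation types (not study data).
seen = {"bar chart", "pie chart"}
batch_with_new_type = {"bar chart", "treemap"}
batch_without_new_type = {"pie chart"}

continue_case = should_stop(seen, batch_with_new_type, days_screening=0.5)
stop_case = should_stop(seen, batch_without_new_type, days_screening=0.5)
```

Here `continue_case` is `False` because the batch introduces a treemap, while `stop_case` is `True` because the batch contains nothing new.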

Data extraction

A data extraction form was developed in EPPI-Reviewer Web, piloted on 50 reviews and refined. Data were extracted by one researcher (E. S. or M. R.), with a second researcher (M. R. or E. S.) providing a second opinion when needed. The data items extracted were as follows: type of review (term used by authors), aim of review (mapping evidence vs. answering specific question vs. borderline), number of visualisations (if any), types of data visualisation used, variables/domains presented by each visualisation type, interactivity, use of colour and any software requirements.

When categorising review aims, we considered “mapping evidence” to incorporate all of the six purposes for conducting a scoping review proposed by Munn et al. [ 3 ]. Reviews were categorised as “answering a specific question” if they aimed to synthesise study findings to answer a particular question, for example on effectiveness of an intervention. We were inclusive with our definition of “mapping evidence” and included reviews with mixed aims in this category. However, some reviews were difficult to categorise (for example where aims were unclear or the stated aims did not match the actual focus of the paper) and were considered to be “borderline”. It became clear that a proportion of identified records that described themselves as “scoping” or “mapping” reviews were in fact pseudo-systematic reviews that failed to undertake key systematic review processes. Such reviews attempted to integrate the findings of included studies rather than map the evidence, and so reviews categorised as “answering a specific question” were excluded from the main analysis. Data visualisation methods for meta-analyses have been explored previously [ 19 ]. Figure 1 shows the flow of records from search results to final analysis sample.

Flow diagram of the sampling process

Data visualisation was defined as any graph or diagram that presented results data, including tables with a visual mapping element, such as cross-tabulations and heat maps. However, tables which displayed data at a study level (e.g. tables summarising key characteristics of each included study) were not included, even if they used symbols, shading or colour. Flow diagrams showing the study selection process were also excluded. Data visualisations in appendices or supplementary information were included, as well as any in publicly available dissemination products (e.g. visualisations hosted online) if mentioned in papers.

The typology used to categorise data visualisation methods was based on an existing online catalogue [ 20 ]. Specific types of data visualisation were categorised in five broad categories: graphs, diagrams, tables, maps/geographical and other. If a data visualisation appeared in our sample that did not feature in the original catalogue, we checked a second online catalogue [ 21 ] for an appropriate term, followed by wider Internet searches. These additional visualisation methods were added to the appropriate section of the typology. The final typology can be found in Additional file 1 .

We conducted descriptive data analysis in Microsoft Excel 2019 and present frequencies and percentages. Where appropriate, data are presented using graphs or other data visualisations created using Flourish. We also link to interactive versions of some of these visualisations.

Almost all of the 300 reviews in the total sample were labelled by review authors as “scoping reviews” ( n = 293, 97.7%). There were also four “mapping reviews”, one “scoping study”, one “evidence mapping” and one that was described as a “scoping review and evidence map”. Included reviews were all published in 2020 or 2021, with the exception of one review published in 2018. Just over one-third of these reviews ( n = 105, 35.0%) included some form of data visualisation. However, we excluded 62 reviews that did not focus on mapping evidence from the following analysis (see “ Methods ” section). Of the 238 remaining reviews (that either clearly aimed to map evidence or were judged to be “borderline”), 90 reviews (37.8%) included at least one data visualisation. The references for these reviews can be found in Additional file 2 .
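The sample flow reported above can be re-derived with a few lines of arithmetic. The following is a minimal sketch in Python; the helper `pct` and the variable names are ours, and the counts are those given in the text.

```python
def pct(n, total):
    """Percentage of total, rounded to one decimal place as reported in the paper."""
    return round(100 * n / total, 1)


total_sample = 300         # reviews meeting the basic selection criteria
labelled_scoping = 293     # labelled "scoping review" by their authors
with_vis_total = 105       # reviews in the total sample with any data visualisation
excluded = 62              # reviews that did not focus on mapping evidence
with_vis_analysed = 90     # reviews with visualisation in the analysis sample

analysed = total_sample - excluded                          # 238 reviews analysed
with_vis_excluded = with_vis_total - with_vis_analysed      # visualisation users among excluded reviews

print(pct(labelled_scoping, total_sample))   # share labelled as scoping reviews
print(pct(with_vis_total, total_sample))     # share of total sample with visualisation
print(pct(with_vis_analysed, analysed))      # share of analysis sample with visualisation
```

The derived figure `with_vis_excluded` is not reported in the text; it follows from subtracting the two reported counts.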

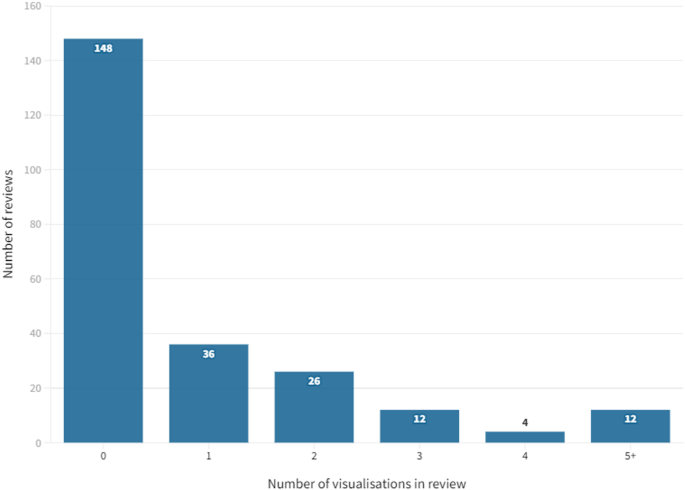

Number of visualisations

Thirty-six (40.0%) of these 90 reviews included just one example of data visualisation (Fig. 2 ). Less than a third ( n = 28, 31.1%) included three or more visualisations. The greatest number of data visualisations in one review was 17 (all bar or pie charts). In total, 222 individual data visualisations were identified across the sample of 238 reviews.

Number of data visualisations per review

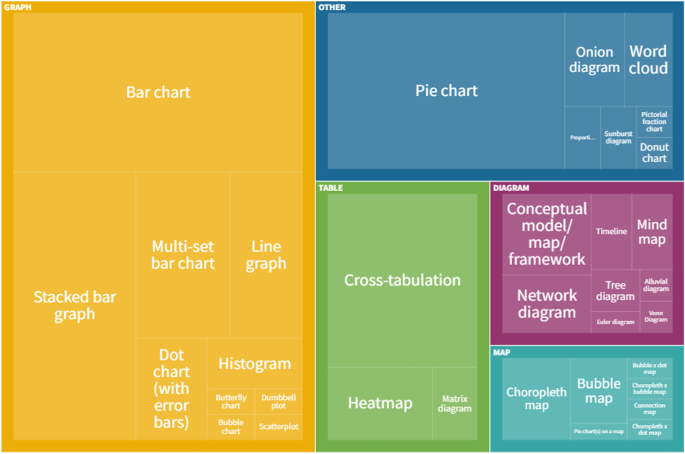

Categories of data visualisation

Graphs were the most frequently used category of data visualisation in the sample. Almost two-thirds of the reviews with data visualisation included at least one graph ( n = 59, 65.6%). The least frequently used category was maps, with 15.6% ( n = 14) of these reviews including a map.

Of the total number of 222 individual data visualisations, 102 were graphs (45.9%), 34 were tables (15.3%), 23 were diagrams (10.4%), 15 were maps (6.8%) and 48 were classified as “other” in the typology (21.6%).
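As a quick consistency check, the category counts above can be tallied and turned into a rough text chart. This sketch uses only the counts reported in the text; the dictionary name and the bar scaling (one `#` per two percentage points) are our own choices.

```python
# Counts of individual data visualisations per category, as reported above.
category_counts = {
    "graphs": 102,
    "tables": 34,
    "diagrams": 23,
    "maps": 15,
    "other": 48,
}

total = sum(category_counts.values())
shares = {name: round(100 * n / total, 1) for name, n in category_counts.items()}

# Print categories from most to least frequent with a crude horizontal bar.
for name, n in sorted(category_counts.items(), key=lambda item: item[1], reverse=True):
    bar = "#" * round(shares[name] / 2)
    print(f"{name:<9}{n:>4}  {shares[name]:>5.1f}%  {bar}")
```

The counts sum to the 222 visualisations identified, and the computed shares agree with the percentages given in the paragraph.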

Types of data visualisation

All of the types of data visualisation identified in our sample are reported in Table 2 . In total, 35 different types were used across the sample of reviews.

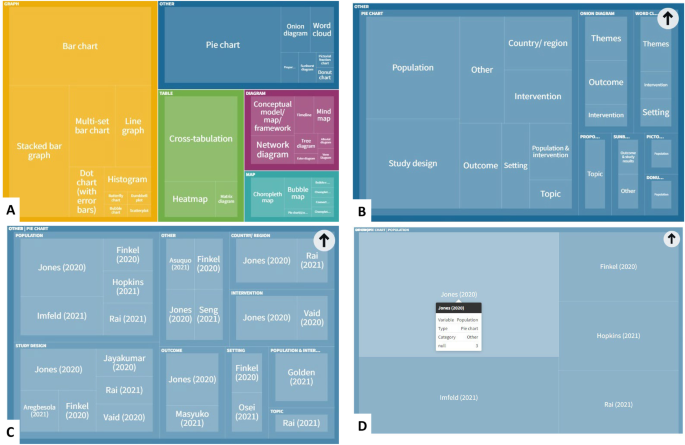

The most frequently used data visualisation type was a bar chart. Of 222 total data visualisations, 78 (35.1%) were a variation on a bar chart (either standard bar chart, stacked bar chart or multi-set bar chart). There were also 33 pie charts (14.9% of data visualisations) and 24 cross-tabulations (10.8% of data visualisations). In total, these five types of data visualisation accounted for 60.8% ( n = 135) of all data visualisations. Figure 3 shows the frequency of each data visualisation category and type; an interactive online version of this treemap is also available ( https://public.flourish.studio/visualisation/9396133/ ). Figure 4 shows how users can further explore the data using the interactive treemap.

Data visualisation categories and types. An interactive version of this treemap is available online: https://public.flourish.studio/visualisation/9396133/ . Through the interactive version, users can further explore the data (see Fig. 4 ). The unit of this treemap is the individual data visualisation, so multiple data visualisations within the same scoping review are represented in this map. Created with flourish.studio ( https://flourish.studio )

Screenshots showing how users of the interactive treemap can explore the data further. Users can explore each level of the hierarchical treemap ( A Visualisation category > B Visualisation subcategory > C Variables presented in visualisation > D Individual references reporting this category/subcategory/variable permutation). Created with flourish.studio ( https://flourish.studio )
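The four levels described in this caption (category, subcategory, variable, reference) correspond to a nested data structure. The sketch below shows one way such a hierarchy might be assembled from flat rows before loading it into a treemap tool. The rows, field names and function names are hypothetical; this is not the dataset or the input format used for the Flourish treemap.

```python
def build_hierarchy(rows):
    """Nest flat rows as category > type > variable > list of references."""
    tree = {}
    for row in rows:
        leaf = (
            tree.setdefault(row["category"], {})
            .setdefault(row["type"], {})
            .setdefault(row["variable"], [])
        )
        leaf.append(row["reference"])
    return tree


def count_leaves(node):
    """Count the references under any node; this sets the size of a treemap tile."""
    if isinstance(node, list):
        return len(node)
    return sum(count_leaves(child) for child in node.values())


# Hypothetical rows, one per individual data visualisation.
rows = [
    {"category": "Graphs", "type": "Bar chart", "variable": "Year", "reference": "Review A"},
    {"category": "Graphs", "type": "Bar chart", "variable": "Year", "reference": "Review B"},
    {"category": "Graphs", "type": "Pie chart", "variable": "Population", "reference": "Review A"},
    {"category": "Tables", "type": "Cross-tabulation", "variable": "Themes", "reference": "Review C"},
]

tree = build_hierarchy(rows)
graphs_total = count_leaves(tree["Graphs"])
```

Because the unit is the individual visualisation, the same review can appear under several branches, as "Review A" does here.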

Data presented

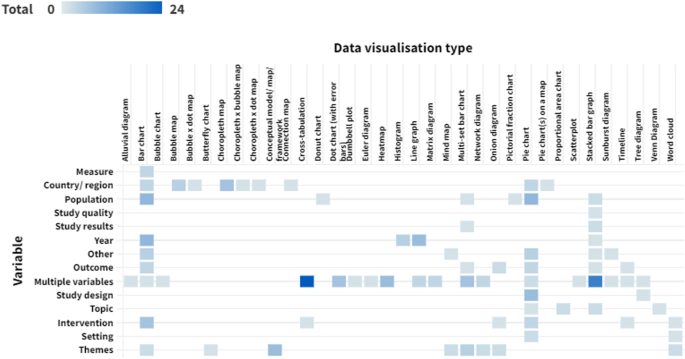

Around two-thirds of data visualisations in the sample presented a single variable ( n = 143, 64.4%). The most frequently presented single variables were themes ( n = 22, 9.9% of data visualisations), population ( n = 21, 9.5%), country or region ( n = 21, 9.5%) and year ( n = 20, 9.0%). There were 58 visualisations (26.1%) that presented two different variables. The remaining 21 data visualisations (9.5%) presented three or more variables. Figure 5 shows the variables presented by each different type of data visualisation (an interactive version of this figure is available online).

Variables presented by each data visualisation type. Darker cells indicate a larger number of data visualisations. An interactive version of this heat map is available online: https://public.flourish.studio/visualisation/10632665/ . Users can hover over each cell to see the number of data visualisations for that combination of data visualisation type and variable. The unit of this heat map is the individual data visualisation, so multiple data visualisations within a single scoping review are represented in this map. Created with flourish.studio ( https://flourish.studio )
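A heat map of this kind rests on a cross-tabulation of visualisation type against variable. The following is a minimal sketch of that counting step using Python's standard library; the input records and function names are invented for illustration and are not the study data.

```python
from collections import Counter


def cross_tabulate(visualisations):
    """Count individual visualisations for each (type, variable) combination."""
    return Counter((v["type"], v["variable"]) for v in visualisations)


def as_grid(counts):
    """Arrange the counts as rows (types) by columns (variables), filling gaps with 0."""
    types = sorted({t for t, _ in counts})
    variables = sorted({v for _, v in counts})
    grid = [[counts.get((t, v), 0) for v in variables] for t in types]
    return types, variables, grid


# Hypothetical records, one per individual data visualisation.
visualisations = [
    {"type": "Bar chart", "variable": "Year"},
    {"type": "Bar chart", "variable": "Year"},
    {"type": "Bar chart", "variable": "Country or region"},
    {"type": "Pie chart", "variable": "Population"},
]

counts = cross_tabulate(visualisations)
types, variables, grid = as_grid(counts)
```

Each cell of `grid` would then be shaded according to its count, so that empty combinations remain visible as zero-valued cells rather than being omitted.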

Most reviews presented at least one data visualisation in colour ( n = 64, 71.1%). However, almost a third ( n = 26, 28.9%) used only black and white or greyscale.

Interactivity

Only two of the reviews included data visualisations with any level of interactivity. One scoping review on music and serious mental illness [ 22 ] linked to an interactive bubble chart hosted online on Tableau. Functionality included the ability to filter the studies displayed by various attributes.

The other review was an example of evidence mapping from the environmental health field [ 23 ]. All four of the data visualisations included in the paper were available in an interactive format hosted either by the review management software or on Tableau. The interactive versions linked to the relevant references so users could directly explore the evidence base. This was the only review that provided this feature.

Software requirements

Nine reviews clearly reported the software used to create data visualisations. Three reviews used Tableau (one of them also used review management software as discussed above) [ 22 , 23 , 24 ]. Two reviews generated maps using ArcGIS [ 25 ] or ArcMap [ 26 ]. One review used Leximancer for a lexical analysis [ 27 ]. One review undertook a bibliometric analysis using VOSviewer [ 28 ], and another explored citation patterns using CitNetExplorer [ 29 ]. Other reviews used Excel [ 30 ] or R [ 26 ].

To our knowledge, this is the first systematic and in-depth exploration of the use of data visualisation techniques in scoping reviews. Our findings suggest that the majority of scoping reviews do not use any data visualisation at all, and, in particular, more innovative examples of data visualisation are rare. Around 60% of data visualisations in our sample were simple bar charts, pie charts or cross-tabulations. There appears to be very limited use of interactive online visualisation, despite the potential this has for communicating results to a range of stakeholders. While it is not always appropriate to use data visualisation (or a simple bar chart may be the most user-friendly way of presenting the data), these findings suggest that data visualisation is being underused in scoping reviews. In a large minority of reviews, visualisations were not published in colour, potentially limiting how user-friendly and attractive papers are to decision-makers and other stakeholders. Also, very few reviews clearly reported the software used to create data visualisations. However, 35 different types of data visualisation were used across the sample, highlighting the wide range of methods that are potentially available to scoping review authors.

Our results build on the limited research that has previously been undertaken in this area. Two previous publications also found limited use of graphs in scoping reviews. Results were “mapped graphically” in 29% of scoping reviews in any field in one 2014 publication [ 31 ] and 17% of healthcare scoping reviews in a 2016 article [ 6 ]. Our results suggest that the use of data visualisation has increased somewhat since these reviews were conducted. Scoping review methods have also evolved in the last 10 years; formal guidance on scoping review conduct was published in 2014 [ 32 ], and an extension of the PRISMA checklist for scoping reviews was published in 2018 [ 33 ]. It is possible that an overall increase in use of data visualisation reflects increased quality of published scoping reviews. There is also some literature supporting our findings on the wide range of data visualisation methods that are used in evidence synthesis. An investigation of methods to identify, prioritise or display health research gaps (25/139 included studies were scoping reviews; 6/139 were evidence maps) identified 14 different methods used to display gaps or priorities, with half being “more advanced” (e.g. treemaps, radial bar plots) ([ 34 ], p. 107). A review of data visualisation methods used in papers reporting meta-analyses found over 200 different ways of displaying data [ 19 ].

Only two reviews in our sample used interactive data visualisation, and one of these was an example of systematic evidence mapping from the environmental health field rather than a scoping review (in environmental health, systematic evidence mapping explicitly involves producing a searchable database [ 35 ]). A scoping review of papers on the use of interactive data visualisation in population health or health services research found a range of examples but still limited use overall [ 13 ]. For example, the authors noted the currently underdeveloped potential for using interactive visualisation in research on health inequalities. It is possible that the use of interactive data visualisation in academic papers is restricted by academic publishing requirements; for example, it is currently difficult to incorporate an interactive figure into a journal article without linking to an external host or platform. However, we believe that there is a lot of potential to add value to future scoping reviews by using interactive data visualisation software. Few reviews in our sample presented three or more variables in a single visualisation, something which can easily be achieved using interactive data visualisation tools. We have previously used EPPI-Mapper [ 36 ] to present results of a scoping review of systematic reviews on behaviour change in disadvantaged groups, with links to the maps provided in the paper [ 37 ]. These interactive maps allowed policy-makers to explore the evidence on different behaviours and disadvantaged groups and access full publications of the included studies directly from the map.

We acknowledge there are barriers to use for some of the data visualisation software available. EPPI-Mapper and some of the software used by reviews in our sample incur a cost. Some software requires a certain level of knowledge and skill in its use. However, numerous free online data visualisation tools and resources exist. We have used Flourish to present data for this review, a basic version of which is currently freely available and easy to use. Previous health research has been found to have used a range of different interactive data visualisation software, much of which does not require advanced knowledge or skills to use [ 13 ].

There are likely to be other barriers to the use of data visualisation in scoping reviews. Journal guidelines and policies may present barriers for using innovative data visualisation. For example, some journals charge a fee for publication of figures in colour. As previously mentioned, there are limited options for incorporating interactive data visualisation into journal articles. Authors may also be unaware of the data visualisation methods and tools that are available. Producing data visualisations can be time-consuming, particularly if authors lack experience and skills in this. It is possible that many authors prioritise speed of publication over spending time producing innovative data visualisations, particularly in a context where there is pressure to achieve publications.

Limitations

A limitation of this study was that we did not assess how appropriate the use of data visualisation was in our sample as this would have been highly subjective. Simple descriptive or tabular presentation of results may be the most appropriate approach for some scoping review objectives [ 7 , 8 , 10 ], and the scoping review literature cautions against “over-using” different visual presentation methods [ 7 , 8 ]. It cannot be assumed that all of the reviews that did not include data visualisation should have done so. Likewise, we do not know how many reviews used methods of data visualisation that were not well suited to their data.

We initially relied on authors’ own use of the term “scoping review” (or equivalent) to sample reviews but identified a relatively large number of papers labelled as scoping reviews that did not meet the basic definition, despite the availability of guidance and reporting guidelines [ 10 , 33 ]. It has previously been noted that scoping reviews may be undertaken inappropriately because they are seen as “easier” to conduct than a systematic review ([ 3 ], p.6), and that reviews are often labelled as “scoping reviews” while not appearing to follow any established framework or guidance [ 2 ]. We therefore took the decision to remove these reviews from our main analysis. However, decisions on how to classify review aims were subjective, and we did include some reviews that were of borderline relevance.

A further limitation is that this was a sample of published reviews, rather than a comprehensive systematic scoping review of the kind previously undertaken [ 6 , 31 ]. The number of published scoping reviews has increased rapidly, and a comprehensive review would now be difficult to undertake. As this was a sample, not all relevant scoping reviews or evidence maps that would have met our criteria were included. We used machine learning to screen our search results for pragmatic reasons (to reduce screening time), but we see no reason why our sample would not be broadly reflective of the wider literature.

Data visualisation, and in particular more innovative examples of it, is currently underused in published scoping reviews on health topics. The examples that we have found highlight the wide range of methods that scoping review authors could draw upon to present their data in an engaging way. In particular, we believe that interactive data visualisation has significant potential for mapping the available literature on a topic. Appropriate use of data visualisation may increase the usefulness, and thus uptake, of scoping reviews as a way of identifying existing evidence or research gaps by decision-makers, researchers and commissioners of research. We recommend that scoping review authors explore the extensive free resources and online tools available for data visualisation. However, we also think that it would be useful for publishers to explore allowing easier integration of interactive tools into academic publishing, given the fact that papers are now predominantly accessed online. Future research may be helpful to explore which methods are particularly useful to scoping review users.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

JBI: Organisation formerly known as Joanna Briggs Institute

PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

Munn Z, Pollock D, Khalil H, Alexander L, McInerney P, Godfrey CM, Peters M, Tricco AC. What are scoping reviews? Providing a formal definition of scoping reviews as a type of evidence synthesis. JBI Evid Synth. 2022;20:950–952.

Peters MDJ, Marnie C, Colquhoun H, Garritty CM, Hempel S, Horsley T, Langlois EV, Lillie E, O’Brien KK, Tunçalp Ö, et al. Scoping reviews: reinforcing and advancing the methodology and application. Syst Rev. 2021;10:263.

Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018;18:143.

Sutton A, Clowes M, Preston L, Booth A. Meeting the review family: exploring review types and associated information retrieval requirements. Health Info Libr J. 2019;36:202–22.

Miake-Lye IM, Hempel S, Shanman R, Shekelle PG. What is an evidence map? A systematic review of published evidence maps and their definitions, methods, and products. Syst Rev. 2016;5:28.

Tricco AC, Lillie E, Zarin W, O’Brien K, Colquhoun H, Kastner M, Levac D, Ng C, Sharpe JP, Wilson K, et al. A scoping review on the conduct and reporting of scoping reviews. BMC Med Res Methodol. 2016;16:15.

Khalil H, Peters MDJ, Tricco AC, Pollock D, Alexander L, McInerney P, Godfrey CM, Munn Z. Conducting high quality scoping reviews-challenges and solutions. J Clin Epidemiol. 2021;130:156–60.

Lockwood C, dos Santos KB, Pap R. Practical guidance for knowledge synthesis: scoping review methods. Asian Nurs Res. 2019;13:287–94.

Pollock D, Peters MDJ, Khalil H, McInerney P, Alexander L, Tricco AC, Evans C, de Moraes ÉB, Godfrey CM, Pieper D, et al. Recommendations for the extraction, analysis, and presentation of results in scoping reviews. JBI Evidence Synthesis. 2022;10:11124.

Peters MDJ, Godfrey C, McInerney P, Munn Z, Tricco AC, Khalil H. Chapter 11: Scoping reviews (2020 version). In: Aromataris E, Munn Z, editors. JBI Manual for Evidence Synthesis. JBI; 2020. Available from https://synthesismanual.jbi.global . Accessed 1 Feb 2023.

Tableau Public. https://www.tableau.com/en-gb/products/public . Accessed 24 January 2023.

flourish.studio. https://flourish.studio/ . Accessed 24 January 2023.

Chishtie J, Bielska IA, Barrera A, Marchand J-S, Imran M, Tirmizi SFA, Turcotte LA, Munce S, Shepherd J, Senthinathan A, et al. Interactive visualization applications in population health and health services research: systematic scoping review. J Med Internet Res. 2022;24:e27534.

Isett KR, Hicks DM. Providing public servants what they need: revealing the “unseen” through data visualization. Public Adm Rev. 2018;78:479–85.

Carroll LN, Au AP, Detwiler LT, Fu T-c, Painter IS, Abernethy NF. Visualization and analytics tools for infectious disease epidemiology: a systematic review. J Biomed Inform. 2014;51:287–298.

Lundkvist A, El-Khatib Z, Kalra N, Pantoja T, Leach-Kemon K, Gapp C, Kuchenmüller T. Policy-makers’ views on translating burden of disease estimates in health policies: bridging the gap through data visualization. Arch Public Health. 2021;79:17.

Zakkar M, Sedig K. Interactive visualization of public health indicators to support policymaking: an exploratory study. Online J Public Health Inform. 2017;9:e190–e190.

Park S, Bekemeier B, Flaxman AD. Understanding data use and preference of data visualization for public health professionals: a qualitative study. Public Health Nurs. 2021;38:531–41.

Kossmeier M, Tran US, Voracek M. Charting the landscape of graphical displays for meta-analysis and systematic reviews: a comprehensive review, taxonomy, and feature analysis. BMC Med Res Methodol. 2020;20:26.

Ribecca, S. The Data Visualisation Catalogue. https://datavizcatalogue.com/index.html . Accessed 23 November 2021.

Ferdio. Data Viz Project. https://datavizproject.com/ . Accessed 23 November 2021.

Golden TL, Springs S, Kimmel HJ, Gupta S, Tiedemann A, Sandu CC, Magsamen S. The use of music in the treatment and management of serious mental illness: a global scoping review of the literature. Front Psychol. 2021;12:649840.

Keshava C, Davis JA, Stanek J, Thayer KA, Galizia A, Keshava N, Gift J, Vulimiri SV, Woodall G, Gigot C, et al. Application of systematic evidence mapping to assess the impact of new research when updating health reference values: a case example using acrolein. Environ Int. 2020;143:105956.

Jayakumar P, Lin E, Galea V, Mathew AJ, Panda N, Vetter I, Haynes AB. Digital phenotyping and patient-generated health data for outcome measurement in surgical care: a scoping review. J Pers Med. 2020;10:282.

Qu LG, Perera M, Lawrentschuk N, Umbas R, Klotz L. Scoping review: hotspots for COVID-19 urological research: what is being published and from where? World J Urol. 2021;39:3151–60.

Rossa-Roccor V, Acheson ES, Andrade-Rivas F, Coombe M, Ogura S, Super L, Hong A. Scoping review and bibliometric analysis of the term “planetary health” in the peer-reviewed literature. Front Public Health. 2020;8:343.

Hewitt L, Dahlen HG, Hartz DL, Dadich A. Leadership and management in midwifery-led continuity of care models: a thematic and lexical analysis of a scoping review. Midwifery. 2021;98:102986.

Xia H, Tan S, Huang S, Gan P, Zhong C, Lu M, Peng Y, Zhou X, Tang X. Scoping review and bibliometric analysis of the most influential publications in achalasia research from 1995 to 2020. Biomed Res Int. 2021;2021:8836395.

Vigliotti V, Taggart T, Walker M, Kusmastuti S, Ransome Y. Religion, faith, and spirituality influences on HIV prevention activities: a scoping review. PLoS ONE. 2020;15:e0234720.

van Heemskerken P, Broekhuizen H, Gajewski J, Brugha R, Bijlmakers L. Barriers to surgery performed by non-physician clinicians in sub-Saharan Africa-a scoping review. Hum Resour Health. 2020;18:51.

Pham MT, Rajić A, Greig JD, Sargeant JM, Papadopoulos A, McEwen SA. A scoping review of scoping reviews: advancing the approach and enhancing the consistency. Res Synth Methods. 2014;5:371–85.

Peters MDJ, Marnie C, Tricco AC, Pollock D, Munn Z, Alexander L, McInerney P, Godfrey CM, Khalil H. Updated methodological guidance for the conduct of scoping reviews. JBI Evid Synth. 2020;18:2119–26.

Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, Moher D, Peters MDJ, Horsley T, Weeks L, et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169:467–73.

Nyanchoka L, Tudur-Smith C, Thu VN, Iversen V, Tricco AC, Porcher R. A scoping review describes methods used to identify, prioritize and display gaps in health research. J Clin Epidemiol. 2019;109:99–110.

Wolffe TAM, Whaley P, Halsall C, Rooney AA, Walker VR. Systematic evidence maps as a novel tool to support evidence-based decision-making in chemicals policy and risk management. Environ Int. 2019;130:104871.

Digital Solution Foundry and EPPI-Centre. EPPI-Mapper, Version 2.0.1. EPPI-Centre, UCL Social Research Institute, University College London. 2020. https://eppi.ioe.ac.uk/cms/Default.aspx?tabid=3790 .

South E, Rodgers M, Wright K, Whitehead M, Sowden A. Reducing lifestyle risk behaviours in disadvantaged groups in high-income countries: a scoping review of systematic reviews. Prev Med. 2022;154:106916.


Acknowledgements

We would like to thank Melissa Harden, Senior Information Specialist, Centre for Reviews and Dissemination, for advice on developing the search strategy.

This work received no external funding.

Author information

Authors and affiliations.

Centre for Reviews and Dissemination, University of York, York, YO10 5DD, UK

Emily South & Mark Rodgers


Contributions

Both authors conceptualised and designed the study and contributed to screening, data extraction and the interpretation of results. ES undertook the literature searches, analysed data, produced the data visualisations and drafted the manuscript. MR contributed to revising the manuscript, and both authors read and approved the final version.

Corresponding author

Correspondence to Emily South .

Ethics declarations

Ethics approval and consent to participate.

Not applicable.

Consent for publication

Competing interests.

The authors declare that they have no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Typology of data visualisation methods.

Additional file 2.

References of scoping reviews included in main dataset.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.


About this article

Cite this article.

South, E., Rodgers, M. Data visualisation in scoping reviews and evidence maps on health topics: a cross-sectional analysis. Syst Rev 12 , 142 (2023). https://doi.org/10.1186/s13643-023-02309-y


Received : 21 February 2023

Accepted : 07 August 2023

Published : 17 August 2023

DOI : https://doi.org/10.1186/s13643-023-02309-y


- Scoping review

- Evidence map

- Data visualisation

Systematic Reviews

ISSN: 2046-4053


Dimensions of teachers’ data literacy: A systematic review of literature from 1990 to 2021

- Open access

- Published: 06 May 2024


- Jihyun Lee ORCID: orcid.org/0000-0001-5896-0686 1 ,

- Dennis Alonzo 1 ,

- Kim Beswick 1 ,

- Jan Michael Vincent Abril 1 ,

- Adrian W. Chew 1 &

- Cherry Zin Oo 2


The current study presents a systematic review of teachers’ data literacy, arising from a synthesis of 83 empirical studies published between 1990 and 2021. Our review identified 95 distinct indicators across five dimensions: (a) knowledge about data, (b) skills in using data, (c) dispositions towards data use, (d) data application for various purposes, and (e) data-related behaviors. Our findings indicate that teachers' data literacy goes beyond addressing the needs of supporting student learning and includes elements such as teacher reflection, collaboration, communication, and participation in professional development. Considering these findings, future policies should acknowledge the significance of teacher dispositions and behaviors in relation to data, recognizing that they are as important as knowledge and skills acquisition. Additionally, prioritizing the provision of system-level support to foster teacher collaboration within in-school professional development programs may prove useful in enhancing teachers’ data literacy.


1 Introduction

In recent years, there has been a growing recognition of the importance of teachers’ data literacy for educational policy, research, and practice. This trend was ignited in 2009 when Arne Duncan, the former Secretary of Education of the United States, advocated evidence-driven practices in schools to enhance student performance (Mandinach & Gummer, 2016 ). Since then, there has been an increasing expectation for teachers to engage in data-informed practices to guide teaching and decision-making in schools. Following this trend, educational researchers have also increasingly directed their attention towards offering conceptual and theoretical foundations for teachers’ data literacy.

Various organizations and researchers have provided definitions of teachers’ data literacy. For example, drawing on the opinions of diverse stakeholder groups, Data Quality Campaign ( 2014 ) defined teachers’ data literacy as teachers’ capabilities to “continuously, effectively, and ethically access, interpret, act on, and communicate multiple types of data from state, local, classroom, and other sources to improve outcomes for students in a manner appropriate to educators' professional roles and responsibilities” (p. 1). Kippers et al. ( 2018 ) defined teachers’ data literacy as “educators’ ability to set a purpose, collect, analyze, and interpret data and take instructional action” (p. 21). Similarly, teachers’ data literacy has been defined as “one’s ability, or the broad knowledge and skills, needed to engage in data use or implement a data use inquiry process” (Abrams et al., 2021 , p. 100,868).

The data literacy for teachers (DLFT) framework proposed by Mandinach and Gummer defined teachers’ data literacy as “… the ability to transform information into actionable instructional knowledge and practices by collecting, analyzing, and interpreting all types of data to help determine instructional steps” (Gummer & Mandinach, 2015 , p. 2). In recent years, much of the research effort to provide a theoretical framework for teachers’ data literacy has been led by Mandinach and Gummer (Gummer & Mandinach, 2015 ; Mandinach & Gummer, 2012 , 2013a , 2016 ; Mandinach et al., 2015 ). As far as we can ascertain, their work presents the most comprehensive framework of teachers’ data literacy in the current literature. The primary sources of Mandinach and Gummer’s DLFT framework were their previous works, Mandinach and Gummer ( 2012 ) and Mandinach et al. ( 2015 ). Their DLFT framework was developed from an analysis of teacher licensure documents across the US states (Mandinach et al., 2015 ) and a text analysis of the perspectives and definitions provided by 55 researchers and professional development providers during a brainstorming session at a conference held in 2012 (cf. Mandinach & Gummer, 2012 ). There are five components in the framework: (a) identifying problems and framing questions, (b) using data, (c) transforming data into information, (d) transforming information into decisions, and (e) evaluating outcomes. Their framework aimed to identify “the specific knowledge, skills, and dispositions teachers need to use data effectively and responsibly” (Mandinach & Gummer, 2016 , p. 366). However, a potential sixth dimension, “dispositions, habits of mind, or factors that influence data use” (Mandinach & Gummer, 2016 , p. 372), was mentioned but not included in the framework.

2 The present study

In the present study, we conducted a systematic review of the empirical studies on teachers’ data literacy and data use published in academic journals between 1990 and 2021. Our primary purpose was to enhance the conceptual clarity of teachers’ data literacy by providing its updated definition, indicators, and dimensions.

We argue that there are several reasons to justify the need for this systematic review. Firstly, we update, complement, and compare our review outcomes and the DLFT framework in Mandinach and Gummer ( 2016 ). A systematic review of research studies on teachers’ data use was conducted by Mandinach and Gummer ( 2013b ), but the study selection was limited to years between 2001 and 2009. Therefore, one of the aims of the present study is to compare our systematic review outcomes against the dimensions and specific indicators identified in the DLFT framework (Mandinach & Gummer, 2016 ). The present literature search spans a period from 1990 to 2021. We have set 1990 as the lower-boundary year because “during the 1990s, a new hypothesis – that the quality of teaching would provide a high-leverage policy target – began to gain currency” (Darling-Hammond et al., 2003 , p. 5).

Secondly, it appears that much work on teachers’ data literacy, including that of Mandinach and Gummer, has tended to focus on teachers’ data use in relation to teaching (e.g., Beck et al., 2020 ; Datnow et al., 2012 ) and instructional improvement (e.g., Datnow et al., 2021 ; Kerr et al., 2006 ; Wachen et al., 2018 ) or in relation to student academic performance (e.g., Poortman & Schildkamp, 2016 ; Staman et al., 2017 ). However, we argue that classroom teachers’ tasks and responsibilities go beyond teaching itself and include many other tasks such as advising/counselling, organising excursions, and administrative work (e.g., Albiladi et al., 2020 ; Kallemeyn, 2014 ). Our review, therefore, examines how teachers’ data use practices may be manifested across a range of teacher responsibilities beyond teaching and teaching-related tasks.

Thirdly, there has been a relative lack of attention to teachers’ personal dispositions in data literacy research. Dispositions refer to a person's inherent tendencies, attitudes, approaches, and inclinations towards ways of thinking, behaving, and believing (Lee & Stankov, 2018 ; Mischel & Shoda, 1995 ). According to Katz ( 1993 ), a disposition can be defined as “a tendency to exhibit frequently, consciously, and voluntarily a pattern of behavior that is directed to a broad goal” (p. 2). In the context of education, disposition refers to the attitudes, beliefs, and values that influence a teacher’s actions, decision-making, and interactions with various stakeholders including students, colleagues, and school leaders (Darling-Hammond et al., 2003 ). While teachers’ dispositions were mentioned in Mandinach and Gummer ( 2016 ), dispositions were not included in their DLFT framework. Teacher educators have long emphasized that accomplished teachers need to possess extensive knowledge, skills, and a range of dispositions to support the learning of all students in the classroom, engage in on-going professional development, and continuously strive to enhance their own learning throughout their careers (Darling-Hammond et al., 2003 ; Sykes, 1999 ). Therefore, we aim to identify a range of teachers’ dispositions in relation to data literacy and data use in the school contexts.

Fourthly, we argue that teachers’ data literacy may be more important in the current context of the rapidly evolving data and digital landscape influenced by the technical advancements in artificial intelligence. Teachers may encounter significant challenges in comprehending and addressing a wide array of issues, both anticipated and unforeseen, as well as observed and unobserved situations, stemming from various artificial intelligence tools and automated machines. In this sense, comprehending the nature, types, and functions of data is crucial for teachers. Without such understanding, the educational community and teaching workforce may soon find themselves in an increasingly worrisome situation when it comes to evaluating data and information.

Finally, we argue that there is a need to update conceptual clarity regarding teachers’ data literacy in the current literature. Several systematic review studies have focused on features in professional development interventions (PDIs) aimed at improving teachers’ data use in schools (e.g., Ansyari et al., 2020 ; 2022 ; Espin et al., 2021 ), emphasizing the need to understand data literacy as a continuum spanning from pre-service to in-service teachers and from novice to veteran educators (Beck & Nunnaley, 2021 ). Other systematic review studies have given substantial attention to data-based decision-making (DBDM) in the schools (e.g., Espin et al., 2021 ; Filderman et al., 2018 ; Gesel et al., 2021 ; Hoogland et al., 2016 ). For example, Hoogland et al. ( 2016 ) investigated the prerequisites for data-based decision-making (DBDM) in the classroom, highlighting nine themes that influence DBDM, such as collaboration, leadership, culture, time, and resources. These systematic reviews are highly relevant to the current review, as the PDIs, understanding the continuum, or data-based decision-making would require a clear and updated understanding of what teachers’ data literacy should be. We hope that the current study’s definition, indicators, and dimensions of teachers’ data literacy may be useful in conjunction with other systematic review studies on teachers’ data use and factors influencing teachers’ data use.

3.1 Data sources and selection of the studies

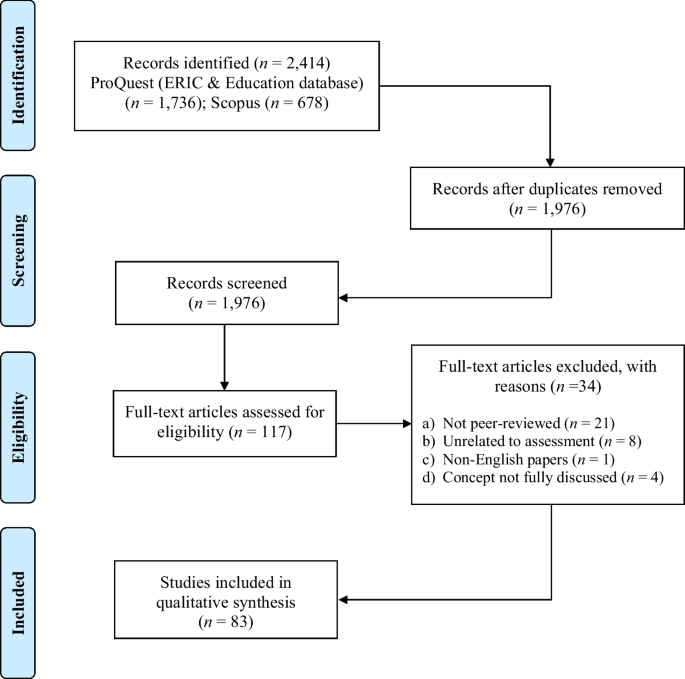

Our strategies for literature search were based on the guidelines of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), a framework for reporting and synthesising literature review (Moher et al., 2009 ). In accordance with PRISMA suggestions, we followed the four steps in locating and reviewing the relevant studies. First, we conducted initial searches to identify relevant studies, using three databases: Scopus, ProQuest, and Web of Science. Keywords in our search were teacher, school, data, data use, data literacy, evidence-based, and decision-making (see Table 1 for the detailed search strategy syntax). This initial search, using the combination of the identified keywords, yielded 2,414 journal articles (see Fig. 1 ). After removing duplicates, 1,976 articles remained.

Fig. 1 Study selection flow using PRISMA guidelines

Secondly, we set and applied the inclusion criteria to screen the studies. The inclusion criteria were: (a) topics relating to the keywords, (b) school context of primary or secondary school settings (i.e., excluding studies focusing on university, vocational education, and adult learning), (c) the full text written in English (excluding studies if the full text was presented in another language or if only the abstract was presented in English), (d) peer-reviewed empirical studies (across quantitative, qualitative, and mixed-methods) published in academic journals (excluding book chapters, conference papers, and theses) to ensure the inclusion of published work that has undergone a peer-review process, and finally, (e) published studies from 1990 onwards. The titles and abstracts of the studies were reviewed to assess their eligibility based on the inclusion criteria. As a result of applying these criteria, 117 articles were selected for the next step, full-text review.

Thirdly, we evaluated the eligibility of the full-text versions of the published studies. This full-text review resulted in a further exclusion of 34 studies as they were found to not meet all the inclusion criteria. We also examined whether the studies included data literacy or data-driven decision-making. Following these assessments, we identified 83 articles that met all the inclusion criteria.

Finally, we reviewed, coded, and analyzed the final set of the selected studies. The analysis approaches are described below.
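The counts reported in the selection steps above can be cross-checked with simple arithmetic. The following is a minimal sketch in Python; the variable names are illustrative, and the derived figures (duplicates removed and records excluded at title and abstract screening) are computed here from the reported totals rather than stated in the text.

```python
# Counts reported in the study selection description (PRISMA flow).
identified = 2414          # records from Scopus, ProQuest, and Web of Science
after_dedup = 1976         # records remaining after duplicate removal
full_text_reviewed = 117   # records passing title and abstract screening
full_text_excluded = 34    # records excluded at full-text review

# Derived quantities (computed here, not stated explicitly in the text).
duplicates_removed = identified - after_dedup
excluded_at_screening = after_dedup - full_text_reviewed
included = full_text_reviewed - full_text_excluded

print(f"Duplicates removed:               {duplicates_removed}")
print(f"Excluded at title/abstract stage: {excluded_at_screening}")
print(f"Included in the review:           {included}")
```

The final figure agrees with the 83 articles reported as meeting all the inclusion criteria.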

3.2 Approach to analysis

We employed a thematic synthesis methodology, following the framework outlined by Thomas & Harden ( 2008 ). The coding and analysis process consisted of three main stages: (a) conducting a line-by-line reading and coding of the text, (b) identifying specific descriptive codes, and (c) generating analytical themes by grouping conceptually inter-related descriptive codes. The final analytic process was, therefore, categorizing and naming related descriptive codes to produce analytical themes. During the development of the analytic themes, we utilized an inductive approach, organizing conceptually interconnected codes into broader themes.

The first author developed the descriptive and analytical themes, which were then reviewed by another two authors. To ensure coding rigor and consistency, three authors independently coded the same two articles, and then compared the coding to address any inconsistencies and reach a consensus. This process was repeated in four iterations. Once the three authors who were involved in the initial coding reached the consensus, the remaining authors double-checked the final outputs of the thematic analysis (i.e., codes, and themes). We have labelled descriptive codes as ‘indicators’ of teachers' data literacy, while the broader groups of descriptive codes, referred to as analytic themes, represent ‘dimensions’ of teachers’ data literacy.

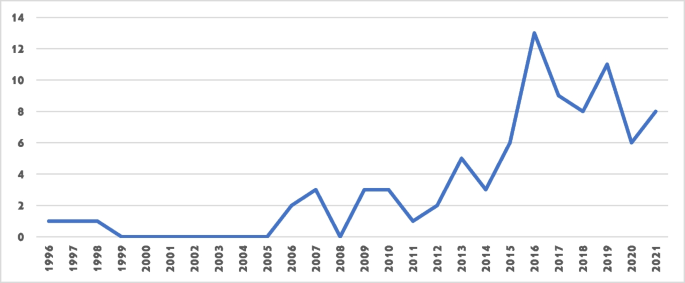

4.1 Characteristics of the reviewed studies

The main purpose of the present study was to examine the conceptualization of teachers’ data literacy from 83 peer-reviewed empirical studies. Table 2 presents the studies included in our systematic review, along with the summary of the study characteristics such as country, school-level, study focus (i.e., main constructs), study purposes/objectives, research method, data collection tools, and sample size. Figure 2 presents the number of the reviewed studies by publication year. We found that since 2015, there has been an increase in the number of published empirical studies on teachers' data literacy.

Fig. 2 Number of the reviewed studies by publication year

Out of 83 studies, 50 were conducted in the United States. Thirteen studies were from the Netherlands, four from Belgium, three from Australia, two from each of Canada and the United Kingdom, and one study from each of the following ten countries: China, Denmark, Germany, Indonesia, Ireland, Kenya, Korea, Norway, South Africa, and Sweden. Therefore, more than half of the studies (i.e., 58 studies, 70%) were conducted in English-speaking countries. In terms of school settings, studies were mostly conducted in primary school settings, either alone or in combination with secondary school settings: 36 studies in primary school settings, 16 in secondary school settings, and 30 in both primary and secondary school settings. The most common design was qualitative ( n = 35 studies), followed by mixed methods ( n = 30) and quantitative ( n = 18). Multiple sources of data collection (e.g., interview and survey) were used in 22 studies. The most commonly used data collection tool was the interview ( n = 55), followed by surveys ( n = 37) and observation ( n = 25). A smaller set of studies used focus group discussion ( n = 18) and document analysis ( n = 19). A few studies used students’ standardised assessment data ( n = 4), field notes ( n = 4), and teacher performance on a data literacy test ( n = 4).

We also reviewed the study topics and found that there are seven foci among the reviewed studies: (a) factors influencing teachers’ data use ( n = 29), (b) specific practices in teachers’ data use ( n = 27), (c) teachers’ data use to enhance teaching practices ( n = 25), (d) teachers’ data use for various purposes ( n = 24), (e) approaches to improve teachers’ data literacy ( n = 22), (f) approaches to improve teachers’ assessment literacy ( n = 19), and (g) teachers’ data use to improve student learning outcomes ( n = 19).
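The proportions reported for the study characteristics can be reproduced from the counts given above. The sketch below is illustrative only: the variable names are ours, and the grouping of the United States, Australia, Canada, the United Kingdom, and Ireland as the English-speaking countries is an assumption, chosen because it reproduces the reported figure of 58 studies.

```python
total_studies = 83

# Assumed grouping of English-speaking countries (not listed in the text).
english_speaking = {
    "United States": 50,
    "Australia": 3,
    "Canada": 2,
    "United Kingdom": 2,
    "Ireland": 1,
}
n_english = sum(english_speaking.values())
share_english = round(100 * n_english / total_studies)

# Research designs as reported; these three counts sum to the 83 studies.
designs = {"qualitative": 35, "mixed methods": 30, "quantitative": 18}
design_share = {
    name: round(100 * count / total_studies, 1)
    for name, count in designs.items()
}

print(f"English-speaking countries: {n_english} studies ({share_english}%)")
for name, pct in design_share.items():
    print(f"{name}: {designs[name]} studies ({pct}%)")
```

Because individual studies could address more than one focus and use more than one data collection tool, those counts are not expected to sum to 83 and are left out of the sketch.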

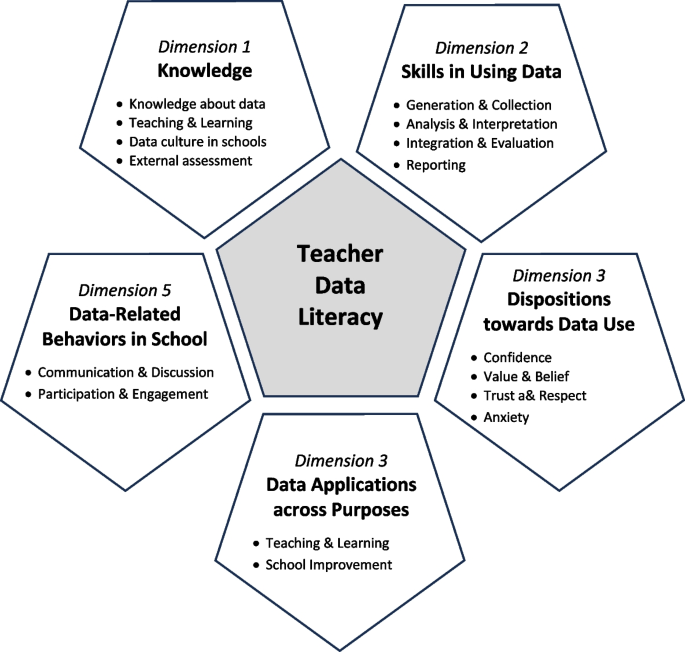

4.2 Dimensions and indicators of teachers’ data literacy

Our thematic analysis identified 95 descriptive codes (see Table 3 ). Careful review of the identified descriptive codes suggested that they can be viewed as indicators of teachers’ knowledge, attitudes, behaviors, and dispositions in data use. These indicators were further organized into inter-related concepts, which formed analytic themes; we refer to these as ‘dimensions’ (see Table 3 ). There were five broad dimensions that emerged from the indicators: knowledge about data (Dimension 1), skills in using data (Dimension 2), dispositions towards data use (Dimension 3), data application for various purposes (Dimension 4), and data-related behaviors (Dimension 5).

It is necessary to point out that Dimension 1 pertains to understanding the nature of data itself, focusing on knowledge about data. On the other hand, Dimension 2 revolves around data-related skills in the actual use of data, encompassing a spectrum of sequences including data generation, processing, and production. These two dimensions, i.e., knowledge and skills, are highly interconnected and complement each other. Proficiency in data-use skills (Dimension 2) may not be developed without a solid understanding of how data can be utilised, for instance, in teaching practices or school improvement in data use (Dimension 1). Conversely, teachers' understanding of how data can enhance teaching practices (Dimension 1) can guide them in determining specific approaches to analysing particular datasets (Dimension 2). While we acknowledge the complementary nature of knowledge and skills, it is important to note that certain aspects of knowledge and skills may not completely overlap. For instance, a teacher who understands the process of creating state-level assessment data may not necessarily possess the technical expertise required to analyze state-level data, taking into account measurement errors. Therefore, we maintain knowledge and skills as two distinct dimensions to highlight both as the core components of teachers’ data literacy.

Within each of the five broad dimensions, we also uncovered sub-themes to illuminate the constituent elements of those dimensions. Under Dimension 1, four sub-themes emerged: “knowledge about data”, knowledge about data for “teaching practices”, understanding “data culture in the school”, and understanding the use of “external assessment”. Dimension 2 featured sub-themes highlighting the sequential stages of data utilization: “data generation & collection”, “data analysis”, “data interpretation”, “data integration”, “evaluation”, and “reporting”. Within Dimension 3, we identified dispositions towards data use, encompassing sub-themes such as confidence, values/beliefs, trust/respect, and anxiety. Dimension 4 revealed various purposes of data applications, categorized into three sub-themes: “teaching,” “student learning,” and “school improvement.” Lastly, Dimension 5 delineated teachers’ behaviors related to data into two sub-themes: “communication & discussion” and “participation & engagement.”
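The structure of dimensions and sub-themes described above can be written down as a simple mapping, which may be convenient when coding further studies against the same scheme. This is a minimal sketch: the dictionary name and layout are ours, while the labels are taken from the text. The 95 indicators listed in Table 3 are not reproduced.

```python
# Dimensions of teachers' data literacy and their sub-themes, as named in the text.
DIMENSIONS = {
    "1. Knowledge about data": [
        "knowledge about data",
        "teaching practices",
        "data culture in the school",
        "external assessment",
    ],
    "2. Skills in using data": [
        "data generation & collection",
        "data analysis",
        "data interpretation",
        "data integration",
        "evaluation",
        "reporting",
    ],
    "3. Dispositions towards data use": [
        "confidence",
        "values/beliefs",
        "trust/respect",
        "anxiety",
    ],
    "4. Data application for various purposes": [
        "teaching",
        "student learning",
        "school improvement",
    ],
    "5. Data-related behaviors": [
        "communication & discussion",
        "participation & engagement",
    ],
}

n_subthemes = sum(len(subthemes) for subthemes in DIMENSIONS.values())
for dimension, subthemes in DIMENSIONS.items():
    print(f"{dimension}: {len(subthemes)} sub-themes")
print(f"Total sub-themes: {n_subthemes}")
```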

In the following passages we provide detailed descriptions of the indicators and their associated dimensions. Figure 3 presents a visual summary of these indicators and dimensions.

Fig. 3 A summary of the dimensions and indicators of teachers’ data literacy

4.2.1 Dimension 1. Knowledge about data

The first dimension of teachers’ data literacy pertains to teachers’ knowledge about data. Many studies recognized the importance of data-related knowledge to be utilized in the schools (e.g., Jacobs et al., 2009 ; Omoso et al., 2019 ; Schildkamp et al., 2017 ). Our review revealed four major ways that teachers' data-related knowledge can be manifested. Firstly, teachers’ knowledge about data involves their understanding of the necessary steps in data analysis procedures (Ebbeler et al., 2016 ; Snodgrass Rangel et al., 2016 ; Vanlommel et al., 2021 ; Wardrip & Herman, 2018 ) and understanding of different data types to be used for varying purposes (Abdusyakur & Poortman, 2019 ; Beck et al., 2020 ; Howley et al., 2013 ; Reeves et al., 2016 ).

Secondly, teachers’ knowledge about data involves their capability to relate the insights gleaned from data to inform their teaching practices (Abrams et al., 2016 ; Jimerson et al., 2016 ). Specifically, data-literate teachers leverage student assessment data to evaluate learning progress (Abrams et al., 2016 ; Jimerson, 2014 ; Jimerson & Wayman, 2015 ; Jimerson et al., 2016 ; Snodgrass Rangel et al., 2016 ), to tailor classroom instruction based on data insights (Mokhtari et al., 2009 ; Poortman & Schildkamp, 2016 ; Staman et al., 2017 ; van der Scheer & Visscher, 2018 ), and to ensure alignment between instructional approaches and appropriate assessment methods (Howley et al., 2013 ; Marsh & Farrell, 2015 ; van der Scheer & Visscher, 2018 ).

Thirdly, teachers’ data literacy extends to understanding of the school culture surrounding data utilization (e.g., Andersen, 2020 ; Schildkamp, 2019 ; Wachen et al., 2018 ). This encompasses recognizing the conditions that may facilitate or hinder teachers’ data use (Abdusyakur & Poortman, 2019 ; Anderson et al., 2010 ; Keuning et al., 2017 ) and navigating various challenges associated with using assessment data in the school (Datnow et al., 2012 ; Ford, 2018 ; Kanjee & Moloi, 2014 ; Thomas & Huffman, 2011 ).

Lastly, teachers’ knowledge about data includes understanding of externally administered assessment data and data system, such as state-level assessment policies related to data use (Copp, 2017 ; Hardy, 2019 ; Reed, 2015 ) and understanding the broader state-level contexts that impact data utilization within the school (Datnow et al., 2013 ; Dunn et al., 2013a ; Ford, 2018 ; Omoso et al., 2019 ; Powell et al., 2021 ). Teachers may need to have thorough knowledge of educational government policies to ensure alignment between state-level curriculum initiatives and school-level assessment policies (Anderson et al., 2010 ; Copp, 2017 ; Gelderblom et al., 2016 ; Hardy, 2015 ).

In summary, existing literature highlights that data-literate teachers would have a comprehensive understanding of a diverse range of data sources and purposes, regularly reviewing and evaluating student outcomes from various channels. Consequently, if teachers face excessive pressure to meet accountability measures and improve standardized testing results, it could potentially hinder their overall development and growth in a broad spectrum of data-related knowledge.

4.2.2 Dimension 2. Skills in using data

Skills in using data is the second key dimension in teachers’ data literacy. A wide range of specific data skills was mentioned in the literature, spanning from data generation and collection (Farley-Ripple et al., 2019 ; Jimerson & Wayman, 2015 ) to data analysis (Farley-Ripple et al., 2019 ; Jimerson & Wayman, 2015 ; Marsh et al., 2010 ), data interpretation and integration (Jimerson & Wayman, 2015 ; Marsh et al., 2010 ), evaluation (Andersen, 2020 ; Dunn et al., 2013b ; Thomas & Huffman, 2011 ), and report writing (Farley-Ripple et al., 2019 ; Jimerson & Wayman, 2015 ). These indicators (see Table 3 ) emphasize that teachers’ data literacy requires proficiency across the entire sequence of data generation, processing, and production.

Teachers’ skills in data use also involve selecting specific data types appropriate for different purposes (Anderson et al., 2010 ; Jimerson et al., 2016 ; Kanjee & Moloi, 2014 ), analysing multiple sources of data on student learning outcomes (Datnow et al., 2012 ; Vanlommel et al., 2021 ; von der Embse et al., 2021 ), and integrating multiple data sources to arrive at a holistic assessment of student progress (Brunner et al., 2005 ; Farley-Ripple et al., 2019 ; Ford, 2018 ; Jacobs et al., 2009 ; Mausethagen et al., 2018 ). For example, teachers may need to apply different data analytic approaches when evaluating student outcomes based on school-based versus externally administered standardized assessments (Copp, 2017 ; Curry et al., 2016 ; Omoso et al., 2019 ; Wardrip & Herman, 2018 ; Zeuch et al., 2017 ). Data-literate teachers may also plan data analysis for targeted purposes, such as analyzing students’ social-emotional outcomes (Abrams et al., 2021 ; Jimerson et al., 2021 ; von der Embse et al., 2021 ; Wardrip & Herman, 2018 ), identifying individual students’ learning needs, making recommendations for curriculum revisions, or evaluating pedagogical approaches (Dunn et al., 2013a ; Snodgrass Rangel et al., 2016 ; Wolff et al., 2019 ; Young, 2006 ).

In summary, this “skills” dimension highlights the importance of teachers possessing a diverse array of competencies to leverage data effectively. The literature reviewed identified various aspects of teachers’ data use, spanning the spectrum from data collection and generation to analysis, interpretation, integration across multiple sources, evaluation, and reporting.

4.2.3 Dimension 3. Dispositions towards data use

While somewhat overlooked in the data literacy literature, teachers’ dispositions are a crucial component of their data literacy. Our review identified four major types of such dispositions in the context of teachers’ data literacy (see Table 3). Firstly, studies have underscored that teachers’ confidence in using data may be necessary when making data-driven school-level decisions, for example, to design intervention programs (Andersen, 2020; Keuning et al., 2017; Staman et al., 2017; Thompson, 2012), or to develop strategic plans for school improvement (Dunn et al., 2013b; Poortman & Schildkamp, 2016). Researchers also claimed that teachers may need to feel confident at many steps of the data process, including accessing, analyzing, interpreting, evaluating, and discussing data within the school environment (Abrams et al., 2021; Dunn et al., 2013a; von der Embse et al., 2021).

The second disposition pertains to teachers valuing and believing in the importance of data use in schools. Data-literate teachers would recognize the usefulness of data in informing school improvement and enhancing student performance (Howley et al., 2013; Poortman & Schildkamp, 2016; Prenger & Schildkamp, 2018). They would also place value on collaboration among colleagues and actively seek institutional support for effective data use (Kallemeyn, 2014; Marsh & Farrell, 2015; Nicholson et al., 2017; Poortman & Schildkamp, 2016). Furthermore, they would appreciate the pivotal role of school leaders in supporting and promoting teachers’ data use within the school (Albiladi et al., 2020; Curry et al., 2016; Joo, 2020; Young, 2006).

A third type of teacher disposition that our review identified is trust in and respect towards colleagues and school leaders. Teachers often work collaboratively in the school environment when they learn about and utilize school-level data. In this sense, teacher collaboration and sustained trusting relationships are fundamental to fostering a school culture that appreciates data-driven decision-making, as well as to encouraging teachers to further develop their own data knowledge and skills (Abrams et al., 2021; Andersen, 2020; Keuning et al., 2017). Mutual trust and respect among teachers can allow them to have open and honest conversations about their experiences and share any concerns arising from data use in the school context (Andersen, 2020; Datnow et al., 2013; Ford, 2018; Wachen et al., 2018).

Lastly, data anxiety may play a role when teachers use or are expected to use data in the school (Abrams et al., 2021; Dunn et al., 2013b; Reeves et al., 2016). Teachers may experience data anxiety when they are expected to effectively analyze student assessment outcomes (Dunn et al., 2013b; Powell et al., 2021), when they are introduced to new data management systems in the school, when they feel pressured to quickly grasp the school’s data management system (Andersen, 2020; Dunn et al., 2013a), or when they are tasked with developing specific strategies to assess and enhance student learning outcomes (Dunn et al., 2013a, b; Jimerson et al., 2019). These types of teacher responsibilities demand proficient data skills and knowledge, which not all teachers may possess, and thus, anxiety may hinder their ability to further develop their data literacy.

In summary, teacher dispositions towards data use can impact their effective utilization of data or impede the capacity to further develop their own data literacy. Our review also illuminated that it is not just individual teachers’ confidence or anxiety towards data use, but also the social dynamics within the school environment, including teacher collaboration, trust and respect, and relationships with the school management team, that can influence teachers’ data literacy. Therefore, fostering a collaborative climate within the school community and creating more opportunities for data use may strengthen a data-driven culture within the school.

4.2.4 Dimension 4. Data applications for various purposes

Our review suggests that teachers’ data literacy can be manifested in their use of data for multiple purposes, primarily in three areas: (a) to enhance teaching practices (e.g., Datnow et al., 2012, 2021; Farrell, 2015; Gelderblom et al., 2016; Wachen et al., 2018), (b) to support student learning (e.g., Joo, 2020; Lockton et al., 2020; Staman et al., 2017; Vanlommel et al., 2021; van der Scheer & Visscher, 2018), and (c) to make plans and strategies for school improvement (e.g., Abdusyakur & Poortman, 2019; Jimerson et al., 2021; Kallemeyn, 2014).

With respect to teaching enhancement purposes, teachers use data to inform their lesson plans (Ford, 2018; Gelderblom et al., 2016; Snodgrass Rangel et al., 2016; Reeves et al., 2016), set lesson objectives (Kallemeyn, 2014; Snodgrass Rangel et al., 2016; Reeves et al., 2016), develop differentiated instruction (Beck et al., 2020; Datnow et al., 2012; Farley-Ripple et al., 2019), and provide feedback to students (Gelderblom et al., 2016; Andersen, 2020; Jimerson et al., 2019; Marsh & Farrell, 2015). Furthermore, teachers use data to reflect on their own teaching practices (Datnow et al., 2021; Ford, 2018; Jimerson et al., 2019; Snodgrass Rangel et al., 2016) and evaluate the impact of using data on teaching and learning outcomes (Gelderblom et al., 2016; Marsh & Farrell, 2015).

In relation to supporting student learning, teachers use data to recognize individual students’ learning needs (Curry et al., 2016; Gelderblom et al., 2016), guide students in learning new or challenging concepts (Abrams et al., 2021; Keuning et al., 2017; Marsh et al., 2010; Reeves et al., 2016), set learning goals (Abdusyakur & Poortman, 2019; Curry et al., 2016), and monitor learning progress (Curry et al., 2016; Gelderblom et al., 2016; Marsh et al., 2010).

In terms of guiding school improvement strategies, teachers use data to develop school-based intervention programs (Abdusyakur & Poortman, 2019; Jimerson et al., 2021; Kallemeyn, 2014; Thompson, 2012), make decisions about school directions (Huffman & Kalnin, 2003; Prenger & Schildkamp, 2018; Schildkamp, 2019), and evaluate school performance against accountability requirements (Hardy, 2015; Jacobs et al., 2009; Jimerson & Wayman, 2015; Marsh et al., 2010; Omoso et al., 2019; Snodgrass Rangel et al., 2019).

In summary, the literature indicates that data-literate teachers use data for multiple purposes and consider it essential in fulfilling their various roles and responsibilities within the school. Teachers’ data use for supporting student learning tends to focus primarily on helping students achieve better learning outcomes; in contrast, teachers’ data use for teaching enhancement includes a broader range of data processes and practices.

4.2.5 Dimension 5. Data-related behavior

The fifth and final dimension we identified pertains to teachers’ data-related behaviors within and outside the school context. Within this dimension, there appear to be two distinctive sets of teacher behaviors: (a) teachers’ data use to enhance communication and discussion with various stakeholders such as colleagues (Datnow et al., 2013; Van Gasse et al., 2017), school leaders (Jimerson, 2014; Marsh & Farrell, 2015; Nicholson et al., 2017), and parents (Jimerson & Wayman, 2015; Jimerson et al., 2019); and (b) teachers’ participation in and engagement with learning about data use (Schildkamp et al., 2019; Wardrip & Herman, 2018) and data culture in schools (Datnow et al., 2021; Keuning et al., 2016). These behaviors were found to be integral aspects of teachers’ data literacy. Teacher engagement with data is manifested in multiple ways, such as involvement in team-based approaches to data utilization (Michaud, 2016; Schildkamp et al., 2017; Wardrip & Herman, 2018; Young, 2006), active participation in creating a school culture of data use (Abrams et al., 2021; Albiladi et al., 2020), evaluation of the organizational culture and conditions pertaining to data use (Andersen, 2020; Datnow et al., 2021; Lockton et al., 2020), and participation in professional development opportunities focused on data literacy (Ebbeler et al., 2016; O’Brien et al., 2022; Schildkamp et al., 2017).

In summary, this dimension highlights that teachers’ data literacy includes various forms of active engagement and behavior that enhance the effective use and understanding of data. Our findings also indicate that teachers’ communication and discussions about data with various stakeholder groups, including colleagues, school leaders, and parents, primarily focus on student assessment data.

5 Discussion

The present study reviewed 83 empirical studies on teachers’ data literacy published in peer-reviewed journals from 1990 to 2021 and identified 95 specific indicators categorized across five dimensions: (a) knowledge about data, (b) skills in using data, (c) dispositions towards data use, (d) data applications for various purposes, and (e) data-related behaviors in the school. Our review of these indicators has led to the following definition of teachers’ data literacy:

A set of knowledge, skills, and dispositions that empower teachers to utilize data for various purposes, including generating, collecting, analyzing, interpreting, integrating, evaluating, reporting, and communicating, aimed at enhancing teaching, supporting student learning, engaging in school improvement, and fostering self-reflection. Teachers’ data literacy also involves the appreciation for working together with colleagues and school leaders to (a) assess organizational conditions for data use, (b) foster a supportive school culture, and (c) engage in ongoing learning to optimize the effective utilization of data.

Our analysis also revealed several noteworthy findings that are presented in the following sections.

5.1 Teachers’ data literacy and assessment literacy

Scholars have expressed concerns about conceptual fuzziness in teachers’ data literacy and assessment literacy (cf. Brookhart, 2011; Ebbeler et al., 2016; Mandinach, 2014; Mandinach & Gummer, 2016). Indeed, student assessment data are the most salient form of data in the school (Mandinach & Schildkamp, 2021). The research trend of recognizing the importance of teachers’ data literacy is often based on the premise that teachers’ data literacy would enhance teaching and ultimately improve student outcomes (cf. Ebbeler et al., 2016; Mandinach & Gummer, 2016; Poortman & Schildkamp, 2016; Thompson, 2012; Van Gasse et al., 2018; Zhao et al., 2016). Furthermore, the systemic pressure on schools to meet accountability requirements has also impacted their endeavors to utilize, assess, and demonstrate school performance based on student assessment data in recent years (Abdusyakur & Poortman, 2019; Farrell, 2015; Schildkamp et al., 2017; Weiss, 2012). In these contexts, it is not surprising that educational practitioners would think about student assessment data when they are expected to improve their data skills.

In this light, we have tallied the teacher data literacy indicators that directly relate to student assessment or to students’ learning outcomes. In Table 3, the symbol “⁑” is used for the indicators related to student assessment, and “ξ” is used for the indicators related to students’ learning outcomes. We found that only 19 of the 95 indicators directly related to student assessment (e.g., knowledge about different purposes of assessment, understanding the alignment between instruction and assessment, understanding state-level assessment policies on data use). Similarly, only 13 of the 95 indicators directly related to students’ learning outcomes (e.g., identifying evidence of student learning outcomes, understanding student learning outcomes using multiple sources).
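
As a quick arithmetic check, the two tallies can be expressed as shares of the 95 indicators. The following is a minimal sketch only: the variable and function names are illustrative assumptions rather than part of the review, and no assumption is made about whether the two sets of indicators overlap.

```python
# Counts as reported in the text; all names here are illustrative only.
TOTAL_INDICATORS = 95
tallies = {
    "student assessment": 19,
    "students' learning outcomes": 13,
}


def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Percentage of all indicators that fall under each category.
shares = {label: share(n, TOTAL_INDICATORS) for label, n in tallies.items()}

for label, pct in shares.items():
    print(f"{label}: {pct}% of {TOTAL_INDICATORS} indicators")
```

On these counts, about 20.0% of the indicators relate directly to student assessment and about 13.7% to students’ learning outcomes.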

Our review demonstrates that teachers regularly interact with a diverse array of data and undertake various tasks closely associated with its utilization. Therefore, teachers' data literacy encompasses more than just its use in student assessment and learning outcomes; it extends to understanding students’ social-emotional learning and higher-order thinking skills, assessing school conditions for data use, reflecting on teaching practices, and communicating with colleagues. Consequently, limiting the perspective of teachers’ data literacy solely to assessment literacy may impede their full utilization and appreciation of data applications essential to their multifaceted work in supporting and enhancing student and school outcomes.

5.2 Teachers’ data literacy and data-related dispositions

We found that one of the key aspects of teachers’ data literacy is teachers’ dispositions towards data use. As noted by Mandinach and Gummer (2012, 2016), this aspect of teacher characteristics has not received as much research attention as data knowledge or data skills. This is perhaps due to ‘literacy’ being traditionally linked to knowledge and skills (Shavelson et al., 2005; also see Mandinach & Gummer, 2012) or due to the research trend of unpacking teachers’ needs and pedagogical approaches in specific subject/learning domains (Sykes, 1999; see Mandinach & Gummer, 2016). However, our review suggests that teacher dispositions towards data use are required in virtually all aspects of data use and data analysis processes. We also found that the most important data-related teacher disposition was confidence. The data literacy literature recognized the importance of teacher confidence with respect to accessing, collecting, analyzing, integrating, evaluating, discussing, and making decisions, suggesting that for teachers to be data literate, confidence may be required in every step of data use. Extensive research has demonstrated a strong link between confidence and learning motivation, indicating that individuals tend to gravitate towards domains in which they feel comfortable and confident (e.g., Lee & Durksen, 2018; Lee & Stankov, 2018; Stankov & Lee, 2008). Our review findings contribute to this existing body of research, emphasizing the importance of confidence in teachers’ data utilization. This underscores the necessity for policies and professional development initiatives aimed at enhancing teachers’ data use to also prioritize strategies for building teachers’ confidence in this area.