Research on the application of robot welding technology in modern architecture

- Original article

- Published: 13 November 2021

- Volume 14, pages 681–690 (2023)


- Tao Guan ORCID: orcid.org/0000-0001-9124-8818 1


To explore the application of robot welding technology in modern buildings, this paper analyzes robot welding technology, uses machine vision to study the visual calibration of the welding robot, and corrects the calibration results with experimental data to obtain the robot's hand-eye parameters. The paper uses the Rodrigues transformation to convert the rotation vector into a rotation matrix, and combines it with the translation vector to obtain the transformation matrix from the camera coordinate system to the calibration board coordinate system. A simulation test is then used to evaluate how well robot welding technology performs in application. The simulation results indicate that robot welding technology can meet the welding needs of modern buildings. Finally, the paper analyzes the application of robotic welding technology in modern buildings and concludes that it can play an important role there.
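The rotation-vector step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the rotation vector encodes the rotation axis scaled by the angle in radians; the function names `rodrigues` and `homogeneous` and the sample values are illustrative and do not come from the paper. Which coordinate system the resulting matrix maps from and to depends on how the rotation and translation vectors were obtained during calibration.

```python
import math


def rodrigues(rvec):
    """Convert a rotation vector (axis * angle, radians) to a 3x3 rotation matrix."""
    theta = math.sqrt(sum(component * component for component in rvec))
    if theta < 1e-12:
        # Zero rotation: return the identity matrix.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (component / theta for component in rvec)  # unit rotation axis
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    # Rodrigues formula: R = cos(t) I + (1 - cos(t)) k k^T + sin(t) [k]_x
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def homogeneous(rvec, tvec):
    """Combine rotation and translation into a 4x4 transform [R | t; 0 0 0 1]."""
    rotation = rodrigues(rvec)
    rows = [rotation[i] + [float(tvec[i])] for i in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]


# Hypothetical values: a 90 degree rotation about the z axis plus a translation.
T = homogeneous([0.0, 0.0, math.pi / 2], [0.1, 0.0, 0.5])
for row in T:
    print([round(value, 3) for value in row])
```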



There was no outside funding or grants received that assisted in this study.

Author information

Authors and affiliations.

Architectural Engineering Institute, Xinyang Vocational and Technical College, Xinyang, 464000, China


Corresponding author

Correspondence to Tao Guan.

Ethics declarations

Conflict of interest.

The author declares no conflict of interest regarding this work, and no commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Guan, T. Research on the application of robot welding technology in modern architecture. Int J Syst Assur Eng Manag 14, 681–690 (2023). https://doi.org/10.1007/s13198-021-01473-5


Received: 24 August 2021

Revised: 01 October 2021

Accepted: 22 October 2021

Published: 13 November 2021

Issue Date: April 2023

DOI: https://doi.org/10.1007/s13198-021-01473-5


- Welding technology

- Modern technology

- Application analysis


- Open access

- Published: 31 December 2022

Design and analysis of welding inspection robot

- Pengyu Zhang 1 ,

- Ji Wang 1 ,

- Feng Zhang 1 ,

- Peiquan Xu 1 ,

- Leijun Li 2 &

- Baoming Li 3

Scientific Reports volume 12, Article number: 22651 (2022)


- Electrical and electronic engineering

- Mechanical engineering

Periodic inspection of weld seam quality, commonly performed by a technician, is important for assessing equipment reliability. To save labor costs and improve efficiency, an autonomous navigation and inspection robot is developed. The development process involves the design of the chassis damping, the target detection mechanism, the control system, and the algorithms. For weld inspection in complex outdoor environments, an obstacle avoidance algorithm is developed for the robot. This algorithm plans the inspection route and is based on an improved timed-elastic-band (TEB) algorithm. The developed robot is capable of conducting inspection tasks in complex and dangerous environments efficiently and autonomously.


Introduction.

With rapid developments in robotics, robots can perform simple or complex tasks in dangerous environments that are beyond people's reach. The use of robots as work aids has become increasingly common in both industrial and consumer settings. Robots can reduce labor costs, save time, improve safety, and improve the quality of work 1. Robots also play a significant role in welding, for example spot welding of automobiles, arc welding of bridge girders, and welding of polymer-matrix composites. Welding is widely used for metallic materials, from vessels and pipelines to bridges and railways 2. Good weld quality ensures the strength and toughness of the joints. During service, welds often deteriorate through corrosion and fatigue cracking, which can result in structural failures. The most serious failures often involve welds in critical locations, where damage caused by corrosion leads to cracking, leakage, or bursting of vessels. In large-scale infrastructure where welds deteriorate over a large area, inspecting them manually is impractical and cumbersome. The use of robots becomes necessary and feasible in these cases 3. The design of weld inspection robots revolves around two questions: how can the robot accurately reach the weld, and how can it accurately inspect the weld?

Researchers have proposed different solutions to these two questions. A robotic climber with multiple breathing chambers was designed for the inspection of concrete walls 4. Its propulsion system consisted of three omnidirectional drive wheels with high maneuverability. The robot combined a vacuum system, comprising seven controllable vacuum chambers and a large fluid reservoir operating system, with integrated pressure sensors and valves for control. Shang et al. introduced a method that used neodymium permanent magnets for adhesion, giving the robot a payload-carrying capacity 5. The arrangement of the magnets improved the ground clearance, enabling the robot to overcome obstacles. To work on curved surfaces, a wheeled robot with two articulated segments was designed, which had the advantages of high speed and good maneuverability.

A robot with magnetic wheels and vision sensors was designed for defect detection, and the drive mechanisms of inspection robots have taken three forms: tracks, wheels, and legs 6, 7. Vacuum adsorption or magnetic attraction has been the most common way of holding weld inspection robots to the surface being inspected. The sensors detected the weld seam, extracted its geometry, and corrected the robot's position in real time 8, 9, but the degree of automation was low and the requirements for sensor accuracy were high.

Inspection robots have recently become semi-autonomous or autonomous, which saves inspection time and reduces labor costs. Nita and other researchers 10 studied a semi-autonomous tracked inspection robot for detecting defects in building ceilings. The developed inspection robots were equipped with wireless cameras and data processing functions, which could provide valuable information for the repair of damaged structures. The inspection robot was able to assess the damage without the need for an engineer on site. Krenich 11 designed a six-axis robot that could move autonomously for inspection, while a human could also inspect around the weld seam with a camera carried by the robot. Bruzzone 12 introduced a mobile robot with a hybrid wheel and leg design, in which the wheels rolled on flat ground at high speed while the legs enabled the robot to avoid obstacles and to climb hills 13.

Detection algorithms were developed for corrosion and cracking in aged welds. Advanced vision algorithms based on deep learning and machine learning made it possible to detect and recognize the defects. Sun et al. introduced an algorithm based on an improved Gaussian mixture model for weld seam detection and classification 14. It could classify and identify weld defects with high accuracy and in real time. Li et al. proposed a deep learning-based algorithm for weld seam image recognition 15, which accelerated neural network training on several thousand weld images. The disadvantage of this algorithm was that it was too computationally intensive and hardware demanding. Yang et al. proposed an improved U-net to increase the accuracy of automatic defect localization in weld detection 16. For low-cost robots built on low- to mid-cost main control boards, less computationally intensive algorithms need to be developed to identify and localize weld defects.

Based on the above survey of literature, the objectives of the present research are: (1) Design of an autonomous weld inspection robot and its control system. The robot can inspect welds autonomously and can pass smoothly and quickly through narrow spaces while autonomously avoiding obstacles. (2) Design of a YOLOv5-based target detection algorithm for weld seam detection and identification in complex environments.

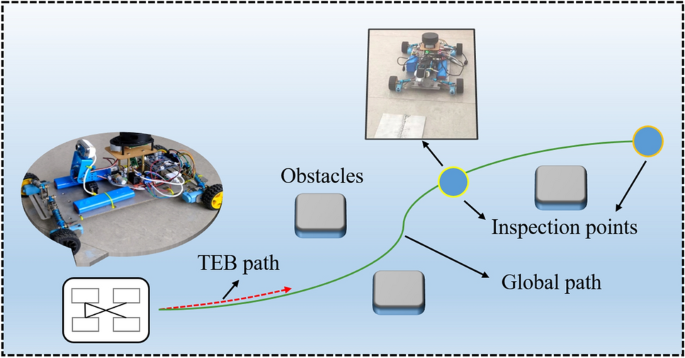

Figure 1 shows an overview of the scope-of-work for the autonomous weld inspection robot, which dodges obstacles in a complex environment and performs inspection of the weld seam at the inspection point.

Work overview of weld inspection robot.

Design layout

The design of the autonomous inspection robot is divided into four different components: mechanical structure design, chassis motion control design, vision detection system design, and control system architecture design.

Structural design of the robot

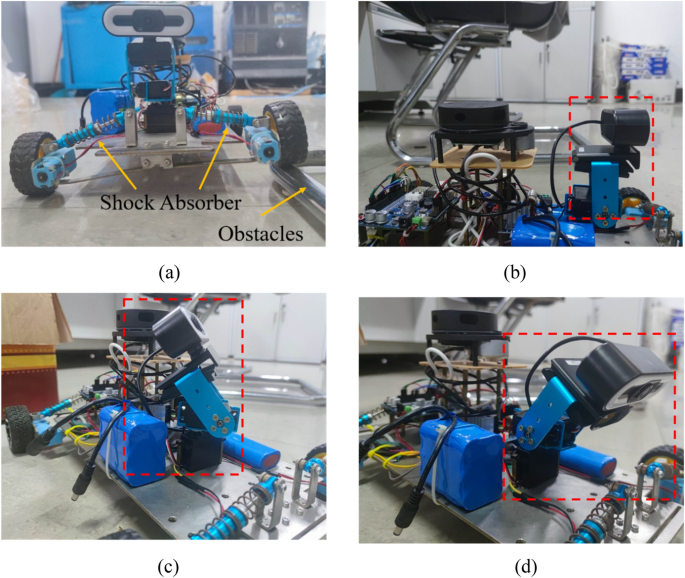

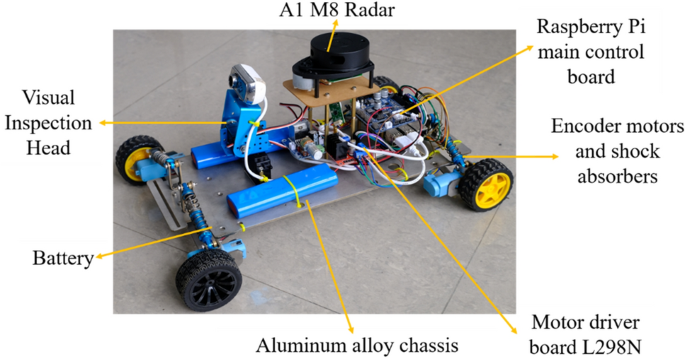

An aluminum chassis is used as the main frame of the robot, and each motor is individually attached to the chassis through a motor mounting plate. Shock absorbers are placed between the motor mounting plate and the chassis to reduce vibration and to help the robot pass over obstacles, as shown in Fig. 2a, so that the robot maintains its posture when the wheels cross an obstacle. Each wheel is driven by a separate gear motor for proper power distribution and flexible control. A 1:90 gear ratio allows large torques to be transmitted to the wheels, and the high-precision motors with encoders provide precise feedback of speed and position. The four-wheel drive allows fast turning and easier passage over complex roads. The main control system is mounted on the chassis together with a radar sensor for obstacle detection. Because the radar is mounted high, it cannot detect smaller obstacles, so ultrasonic and infrared sensors are used to prevent collisions in the radar's blind spots. For weld seam detection, the robot's detection system consists of a camera, two servos, two brackets, and several bolts. Adjusting the orientation of the servos allows multi-directional detection, as shown in Fig. 2b–d. The information received by the inspection system is transmitted to a PC, on which it can be viewed and analyzed remotely. The complete structural design of the robot is shown in Fig. 3.

Status of the shock absorber and visual head.

Structural design of the robot.

Chassis motion control design

Popular control models for chassis drive include the wheel differential model, the Ackermann model, and the omnidirectional model. The turning radius of the Ackermann model cannot be 0, so the robot cannot turn in place in a narrow space. The omnidirectional model requires Mecanum wheels instead of common wheels, and the gaps between the small rollers of a Mecanum wheel are liable to catch foreign objects, which impairs the robot's movement. Therefore, the wheel differential model is selected and optimized for turning around tight corners and for reducing sliding friction.

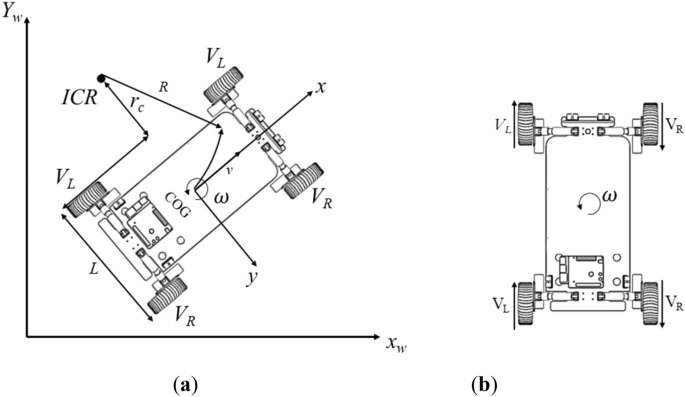

For the kinematics of the robot, a local coordinate frame, denoted as (x, y, z), is located at the center of gravity (COG) of the model. The robot moves on the horizontal plane formed by the $X_w$ and $Y_w$ axes of the world coordinate system, as shown in Fig. 4a.

(a) Motion model of the differential speed robot. (b) The steering model.

The instantaneous center of rotation of the robot is ICR, the linear velocity of the left wheel is $V_L$, the linear velocity of the right wheel is $V_R$, the angular velocity is $\omega$, the distance between the two wheels is $L$, and the distance from the left wheel to the center of the turning circle is $r_c$.

The physical relationship between angular velocity, linear velocity (v), and radius of motion of the differential robot is as follows:

The decomposition of the velocity of the left wheel and the right wheel can be found as:

From this, the relationship between the overall linear velocity of the robot, the angular velocity, and the left and right wheels can be solved as follows:
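A sketch of these three relations in their standard differential-drive form is given below. It assumes that the center of the turning circle lies to the left of the robot, so that the chassis center moves on a circle of radius $r = r_c + L/2$; the symbol $r$ and this sign convention are assumptions made for illustration, not notation taken from the original equations.

```latex
\begin{align}
v &= \omega\, r \\
V_L &= \omega\, r_c, \qquad V_R = \omega\,(r_c + L) \\
v &= \frac{V_L + V_R}{2}, \qquad \omega = \frac{V_R - V_L}{L}
\end{align}
```

The last line follows from the second by adding and subtracting the two wheel velocities: $V_L + V_R = \omega\,(2 r_c + L) = 2\,\omega\, r$ and $V_R - V_L = \omega L$.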

As shown in Fig. 4b, the left and right wheels of the robot are configured in parallel, and the robot turns by means of the speed difference between the left and right wheels. For a given forward speed, the turning radius decreases (the curvature of the turn increases) as the speed difference between the left and right wheels grows. When the robot works in a narrow space, it turns around its central vertical axis, i.e., the left and right wheels have the same speed but opposite directions, and the turning radius is 0.
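The turning behavior of the differential model can be checked numerically. The following is a minimal sketch, assuming the standard relations $v = (V_L + V_R)/2$ and $\omega = (V_R - V_L)/L$; the function names and the track width of 0.30 m are hypothetical and are not taken from the robot described here.

```python
import math


def body_velocity(v_left, v_right, track_width):
    """Return (v, omega) of the chassis center from the wheel linear speeds."""
    v = (v_left + v_right) / 2.0
    omega = (v_right - v_left) / track_width
    return v, omega


def turning_radius(v_left, v_right, track_width):
    """Signed turning radius of the chassis center; math.inf when driving straight."""
    v, omega = body_velocity(v_left, v_right, track_width)
    if omega == 0.0:
        return math.inf
    return v / omega


TRACK_WIDTH = 0.30  # hypothetical distance between left and right wheels (m)

print(turning_radius(0.2, 0.2, TRACK_WIDTH))   # equal speeds: straight line
print(turning_radius(0.1, 0.3, TRACK_WIDTH))   # moderate speed difference
print(turning_radius(0.0, 0.4, TRACK_WIDTH))   # larger difference, same mean speed
print(turning_radius(-0.2, 0.2, TRACK_WIDTH))  # equal and opposite: turn in place
```

With the mean speed held at 0.2 m/s, the larger speed difference gives the smaller radius, and equal and opposite wheel speeds give a radius of 0.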

The performance of key parts of the robot needs to be analyzed in specialized software before deployment to avoid wasting time and money 17. To investigate the slippage of the above motion model, a simulation is carried out in Gazebo. A dynamics tag is added to the tire link tag and the ground link tag in the XACRO file of the robot model to specify the friction coefficient between the tire and the ground. The parameters are shown in Table 1. Figure 5a shows the rotation speed of the inspection robot around its own central axis. Figure 5b shows the speed test of the inspection robot travelling in a straight line. The simulation shows that the motion model can drive straight and rotate with only mild slippage.

Testing the chassis in a simulation environment: (a) Turning test. (b) Straight-line speed test.

Vision inspection system

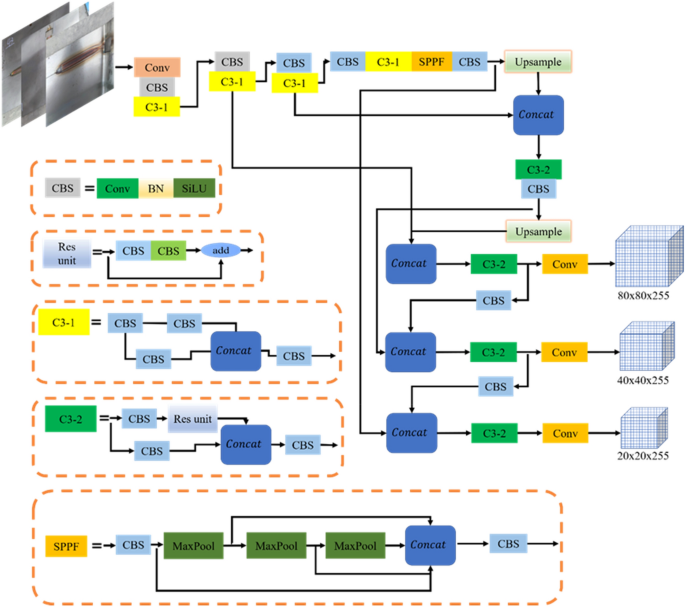

The visual inspection function identifies and locates the weld seam. Images are captured on the robot, and the weld seam is identified on the computer using the YOLOv5 detection algorithm, which offers high detection speed and a lightweight model for deployment. YOLOv5 comes in four variants (s, m, l, and x) that differ in network depth and width. The deeper and wider variants are more accurate, but their response becomes progressively slower. The device in this study requires a lightweight model, real-time response, and support for input images of any size, so YOLOv5s is chosen as the benchmark model; its structure is shown in Fig. 6.

YOLOv5 network structure.

A brief description of the YOLOv5s model

The YOLOv5 network consists of the input, backbone, neck, and detection head. The input stage includes mosaic data augmentation, adaptive anchor box calculation, and adaptive image scaling. The backbone is mainly composed of the CBS (convolution, BN layer, SiLU activation function), SPPF (spatial pyramid pooling-fast), and C3 (concentrated-comprehensive convolution) modules 18. The batch normalization (BN) layer mitigates vanishing and exploding gradients by normalizing the data. The sigmoid-weighted linear unit (SiLU) activation function is smooth and non-monotonic, and it prevents the gradient from gradually decaying to 0 when training is slow.
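The two properties attributed to SiLU, smoothness and non-monotonicity, can be seen directly from its definition $\mathrm{SiLU}(x) = x \cdot \sigma(x)$. The sketch below is a plain-Python illustration and is not taken from the YOLOv5 code base; the function names are chosen for this example.

```python
import math


def sigmoid(x):
    """Logistic sigmoid; adequate for the moderate inputs used here."""
    return 1.0 / (1.0 + math.exp(-x))


def silu(x):
    """SiLU (sigmoid-weighted linear unit): x * sigmoid(x)."""
    return x * sigmoid(x)


def silu_grad(x):
    """Derivative of SiLU: sigmoid(x) * (1 + x * (1 - sigmoid(x)))."""
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


# The output dips below zero for negative inputs and then rises back towards
# zero, so the function is not monotonic; the gradient stays non-zero around 0.
for x in (-5.0, -2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"x={x:+.1f}  silu={silu(x):+.4f}  grad={silu_grad(x):+.4f}")
```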

The neck is a combination of FPN 19 and PANet 20. The deep feature maps contain stronger semantic features and weaker localization information, while the shallow feature maps contain stronger location information and weaker semantic features. FPN transfers the semantic features from the deep layers to the shallow layers to enhance the semantic representation at multiple scales, while PANet transfers the location information from the shallow layers to the deep layers to enhance localization at multiple scales. PANet thus adds a bottom-up enhancement path on top of FPN.

For network training, the loss function, which measures the difference between the model's predicted and actual values, plays an important role in the weld detection model. In YOLOv5s, a joint loss function is used to train bounding box regression, classification, and confidence. The loss function ($L_{loss}$) is as follows 21:

where $L_{cls}$ is the classification error, $L_{box}$ is the bounding box regression error, and $L_{conf}$ is the confidence error.
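Given the three terms defined above, the joint loss presumably takes the form of a sum. The expression below is a sketch under that assumption, written as an unweighted sum; implementations commonly multiply each term by a tunable gain, which is not shown here.

```latex
\begin{equation}
L_{loss} = L_{cls} + L_{box} + L_{conf}
\end{equation}
```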

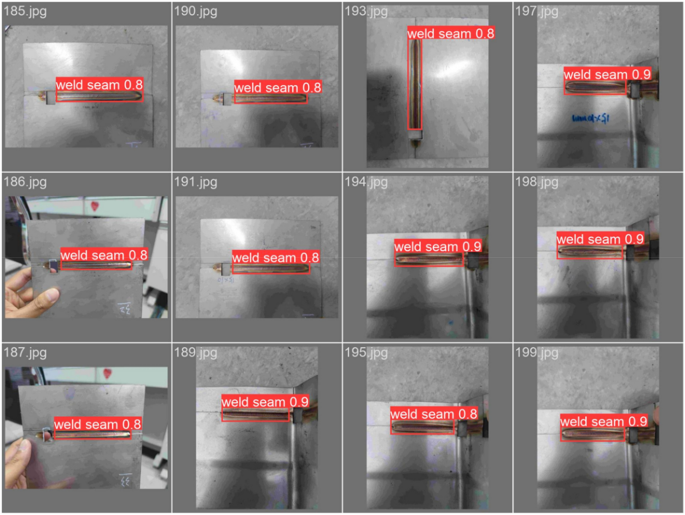

Acquisition and annotation of images

The images acquired for inspection show completed weld seams. The image acquisition device is a camera (China Vistra Q16 2k USB camera) with an f-number of 1.8. Photographs of the weld seam are collected under different conditions (partially obscured weld seams, and weld seams at various distances from the camera). A total of 300 images of the weld seam are collected at a distance of about 20–30 cm. To speed up model training, the images are compressed to 512 × 341 pixels and saved in JPG format. Example images are shown in Table 2. The collected images are annotated using LabelImg, which produces XML annotation files for model training. The data are divided into a training set, a validation set, and a test set in a ratio of 7:2:1, with no duplication among the sets.
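A 7:2:1 split without duplication can be produced by shuffling the image list once and slicing it. The sketch below illustrates this for 300 images; the file names, the function name `split_dataset`, and the fixed random seed are assumptions for the example, not details of the original pipeline.

```python
import random


def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle items once and slice them into train/val/test lists by integer ratios."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


names = [f"weld_{i:03d}.jpg" for i in range(300)]  # hypothetical file names
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))  # 210 60 30
```

Because the three lists are disjoint slices of one shuffled list, no image can appear in more than one set.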

Experimental environment and parameter setting

The weld detection model is trained on an HP Shadow Wizard computer with the configuration shown in Table 3.

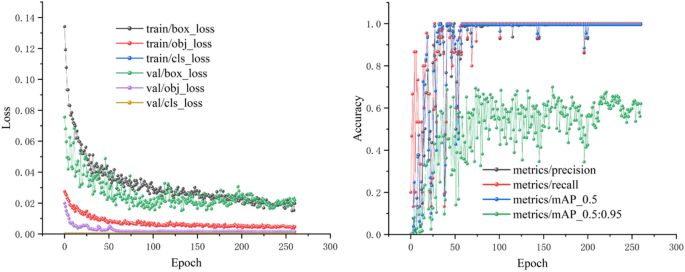

The hyperparameters used for training on the above hardware are: 150 epochs, a learning rate of 0.01, a momentum of 0.937, a weight decay of 0.0005, a batch size of 16, 8 workers, and the stochastic gradient descent (SGD) optimizer; a single graphics processing unit (GPU) is used to speed up the training. All hyperparameters are checked in pre-training on the validation set. The change in the loss during pre-training is shown in Fig. 7. The loss decreases rapidly at the beginning of training, and after about 50 epochs it flattens and converges, with no sign of underfitting or overfitting.

Convergence results of the pre-training model.

Control architecture

Electronics and control.

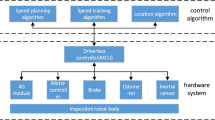

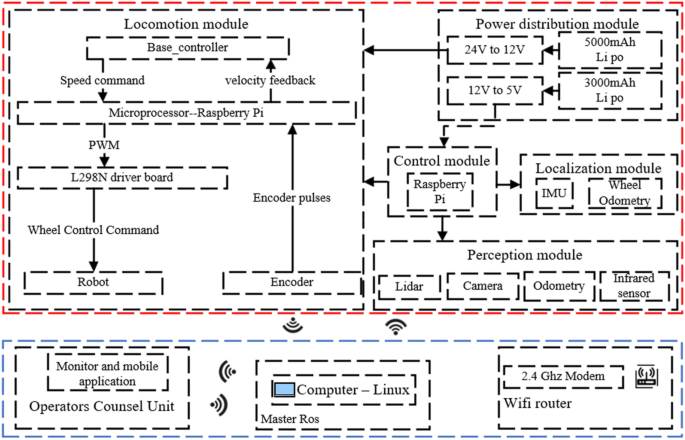

The control architecture of the robot is shown in Fig. 8. The motion module consists of four DC motors with encoders (gear ratio 1:90, size 25 × 25 × 80 mm), two on each side of the chassis, all connected to the L298N motor driver board through Dupont cables. The motor driver board and the Raspberry Pi main control board are connected through the IO port. The Raspberry Pi reads the encoder data through the IO port, processes it, and sends speed commands to the motors. The sensing module of the robot consists of the LIDAR (Lidar A1M8), a camera head, an odometer, and an infrared sensor. The LIDAR communicates with the Raspberry Pi through a USB port and is used for mapping the surrounding environment, positioning, and avoiding obstacles. However, the LIDAR has a blind scanning area, and obstacles in a complex environment may go undetected, so the infrared sensor is used to supplement the obstacle sensing. The odometer is used for robot positioning, and the LIDAR positioning is used to improve the odometer accuracy. The camera head is connected to the Raspberry Pi via a USB port for weld detection. The power supply module consists of two batteries (12 V 5000 mAh; 12 V 3000 mAh). The 5000 mAh battery supplies power to the L298N motor driver board. The 3000 mAh battery supplies power to the Raspberry Pi mainboard, and the sensors are powered through a USB or IO port.

Control system of the robot.

The motors are not driven through an STM32 or Arduino controller; instead, the motor driver board is connected directly to the IO port on the Raspberry Pi, which improves the convenience of operation and the responsiveness of control. The core controller of the robot is the Raspberry Pi 4B, which runs Ubuntu 18.04 Linux and the Robot Operating System (ROS). The amount of data processed on the robot is moderate, so the Raspberry Pi 4B is sufficient. The data collected by the sensors are passed to ROS for processing. The sensors, main control board, GUI, and PC are integrated through the ROS framework. To reduce the processing load on the Raspberry Pi, the collected data are transferred over Wi-Fi from the Raspberry Pi to the PC and processed there using the distributed framework of ROS.

Graphical user interface (GUI)

When the robot and the PC are connected to the same network, the robot can be remotely monitored and controlled from the PC through the distributed ROS. Communication between the robot and the control device is carried out through Message Queuing Telemetry Transport (MQTT) in ROS. When the navigation control node is started on the robot, a message is received on the PC, and a map building command can be executed on the PC at the same time, which directs the robot to build a map and issues a point-to-point cruise operation. This arrangement reduces the computational load on the Raspberry Pi 4B onboard the robot. A graphical user interface for monitoring and control is developed for the PC using Qt. The GUI provides control buttons and video output, so the robot can be viewed and controlled while it works in an unknown environment.

Navigation and control in complex environments

Selection of local path planning.

Path planning requires global and local path planning to work together. Global path planning carries less weight in obstacle avoidance than local path planning, so this study emphasizes local path planning. The most critical requirement is that the robot safely avoids both static and dynamic obstacles during an inspection. Local path planning methods for complex environments include the Artificial Potential Field method (APF) 22, the Genetic Algorithm 23, the Dynamic Window Approach (DWA) 24, Neural Network algorithms, and other intelligent algorithms. The APF method tends to fall into local minima and fail to reach the target position 25, and the Neural Network algorithm is too demanding on the performance of the main control board 26. All of these algorithms converge slowly, and none of them can avoid local extrema.

An improved TEB algorithm is, therefore, selected to implement the local path planning. The TEB algorithm was proposed by Rösmann 27 and is based on the classical elastic band algorithm; it avoids obstacles through multi-objective trajectory optimization. Compared with the local path planning algorithms described above, the TEB algorithm can set multiple constraints as needed, which ensures its applicability. Each objective in the TEB optimization depends on only a few consecutive states, so the optimization problem has a sparse system matrix. Rösmann et al. proposed that this sparse, hypergraph-based problem can be solved quickly and efficiently using the g2o framework, which improves the computational speed. However, mobile robots using the TEB algorithm can become trapped in local minima and be unable to pass obstacles when navigating complex environments. To solve this problem, Rösmann et al. proposed an extension of the TEB technique that plans trajectories in parallel in spatially distinct topologies 28, 29. However, these approaches considered only the location of obstacles and not potential collisions between the robot and surrounding obstacles. Lan et al. 30 proposed an active timed elastic band (PTEB) technique for autonomous mobile robot navigation systems in dynamic environments. Previous work to improve the effectiveness of TEB algorithms in complex environments has focused on obstacle avoidance. Most of the related research, however, only sought to avoid local minima and to smooth the planned paths in complex environments. It did not consider the shortest local path, so the planned local path might not be optimal 31. As a result, these improved TEB algorithms still suffer from the robot backing up during turns, taking local detours, and being unable to enter narrow areas.

The improved TEB algorithm proposed in this study reduces local detours and reversing, adds an angular acceleration constraint to the constraints of the multi-objective optimization, and accounts for the time consumed by excessive turning. Experiments show that the proposed TEB algorithm achieves fast turning and less reversing, extends the detection range of the inspection robot, and reduces the time cost of the inspection.

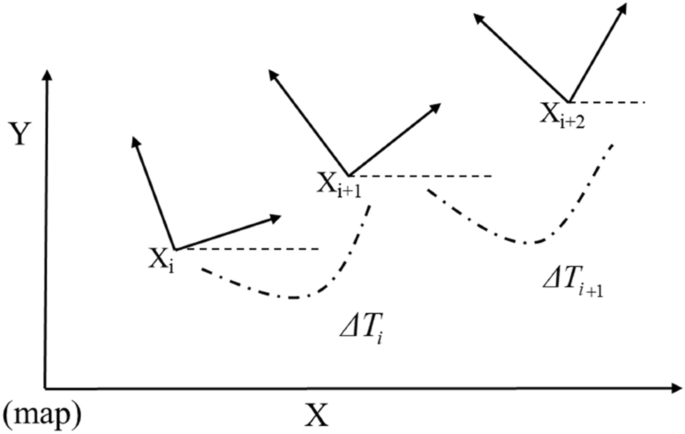

Timed elastic band algorithm (TEB) model construction

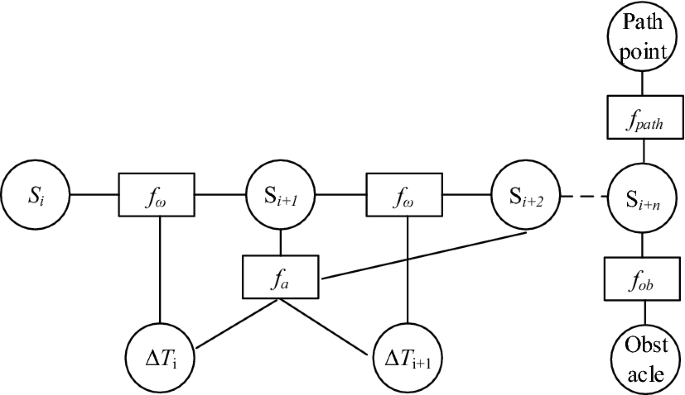

The proposed TEB algorithm is based on the elastic band algorithm with the addition of temporal information between the poses of the sequence, as shown in Eq. (5). It considers the dynamic constraints of the robot and modifies the trajectory directly, instead of modifying the path. The TEB algorithm takes the path points of the existing global path, found by the initial search, and converts their position information into a trajectory sequence with time information, as shown in Fig. 9. The resulting sparse system is solved with the large-scale optimization algorithms of the g2o framework to obtain the optimal control quantities that satisfy the constraints, and the robot drive system is commanded directly by the computed control variables $v$ and $\omega$, as in Eq. (6):

where X_i is the pose at time i, and Q is the sequence of poses; ΔT_i is the time interval between adjacent poses, and τ is the sequence of time intervals; the pose sequence and the time-interval sequence are combined into the trajectory sequence B.
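
The definitions above match the way a timed elastic band is usually written in the TEB literature. The following is a sketch of that standard form, not a reproduction of the paper's Eq. (5); the heading symbol β_i and the index ranges are assumptions taken from the common formulation.

```latex
X_i = (x_i,\; y_i,\; \beta_i)^{\mathsf{T}}, \qquad
Q = \{X_i\}_{i=0,\dots,n}, \qquad
\tau = \{\Delta T_i\}_{i=0,\dots,n-1}, \qquad
B := (Q,\ \tau)
```

Here a trajectory with n + 1 poses has n time intervals, one between each pair of adjacent poses.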

Fig. 9. Pose and time interval of the mobile robot in the world coordinate system.

Because the objective function of the TEB algorithm depends on only a few consecutive pose states, the system matrix is sparse. The constraints are expressed as objectives through a piecewise-continuous, differentiable cost function that penalizes violation of the constraint boundaries, as in Eq. (7).

where x_r is the critical value (the bound), S is the scaling factor, and n is the polynomial order, which usually takes the value 2; ε is a small margin around the critical value.
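
A penalty with these parameters can be sketched in a few lines. The form below is the polynomial penalty commonly used in the TEB literature and is an assumption here, since the paper's Eq. (7) is not reproduced in this text; the function name `penalty` and the default values are illustrative only.

```python
def penalty(x: float, x_r: float, eps: float = 0.1,
            S: float = 1.0, n: int = 2) -> float:
    """Polynomial penalty for a quantity x that should stay below the bound x_r.

    Assumed form: zero while x <= x_r - eps, then ((x - (x_r - eps)) / S) ** n.
    """
    threshold = x_r - eps          # penalty starts slightly before the bound
    if x <= threshold:
        return 0.0                 # constraint satisfied with margin: no cost
    return ((x - threshold) / S) ** n


# Example: a velocity bound of 1.0 with margin 0.1
print(penalty(0.5, 1.0))   # well inside the bound
print(penalty(1.0, 1.0))   # at the bound: small positive cost
```

Because the cost is zero inside the bound and grows smoothly outside it, the optimizer can treat the constraint as one more term in a least-squares objective.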

The multi-objective optimization function is shown in Eq. ( 8 ).

where f_k(B) is a constraint function shown in Fig. 10, and γ_k is the weight corresponding to that constraint function.
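
With these symbols, the multi-objective function is usually written as a weighted sum that is minimized over the trajectory. The block below is a sketch of that standard form under the definitions just given; it is not copied from the paper's Eq. (8), and B* is a symbol introduced here for the optimized trajectory.

```latex
f(B) = \sum_{k} \gamma_k \, f_k(B), \qquad
B^{*} = \arg\min_{B} f(B)
```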

Fig. 10. The improved hypergraph.

The trajectory constraints of the TEB algorithm are divided into two parts. The first part is constrained by global path planning; the second part is constrained by velocity, acceleration, and its own kinematic model. In this study, the focus is on optimizing the velocity, acceleration, and obstacle constraints.

The obstacle constraint is the most critical condition for ensuring that the robot avoids obstacles completely. The minimum distance allowed between the robot and an obstacle is denoted d_min, and the actual distance between the robot and the obstacle is denoted D. The positions of obstacles on the map are obtained from sensors such as LIDAR. To ensure the safety of the planned trajectory, each pose on the TEB trajectory is associated with the obstacles appearing on the map, and the penalty function is triggered when the distance D between the robot and an obstacle falls below d_min. The penalty function is expressed as Eq. (9):
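
The obstacle term can be illustrated with a short sketch. It assumes a polynomial penalty on the shortfall d_min − D, which is one common choice and not necessarily the paper's Eq. (9); the function names, the optional margin `eps`, and the sample coordinates are hypothetical.

```python
import math


def obstacle_penalty(D: float, d_min: float, eps: float = 0.0,
                     S: float = 1.0, n: int = 2) -> float:
    """Cost for one pose: zero when D >= d_min + eps, polynomial otherwise."""
    violation = (d_min + eps) - D
    if violation <= 0.0:
        return 0.0
    return (violation / S) ** n


def trajectory_obstacle_cost(poses, obstacles, d_min, **kwargs) -> float:
    """Sum the penalty over all poses, each paired with its nearest obstacle."""
    cost = 0.0
    for pose in poses:
        if not obstacles:
            continue
        D = min(math.dist(pose, obs) for obs in obstacles)
        cost += obstacle_penalty(D, d_min, **kwargs)
    return cost


# Made-up example: three poses along the x-axis and one obstacle near the middle
poses = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
obstacles = [(1.0, 0.3)]
print(trajectory_obstacle_cost(poses, obstacles, d_min=0.5))
```

Only the middle pose is closer than d_min to the obstacle, so it alone contributes to the cost.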

The velocity and acceleration constraints are described by penalty functions similar to those used for the geometric constraints. The linear velocity is approximated from the Euclidean distance between adjacent poses, and the angular velocity from the change in heading angle between them, as expressed in Eq. (10).

The acceleration relates two consecutive average velocities, so three consecutive poses are needed to compute it, as expressed in Eq. (11).
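
The finite-difference approximations described above can be sketched as follows. The formulas are the ones commonly used with TEB trajectories and are assumed here rather than taken from the paper's Eqs. (10) and (11); the pose layout `(x, y, beta)` and the function names are illustrative.

```python
import math


def wrap_angle(a: float) -> float:
    """Map an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def velocities(poses, dts):
    """Average linear and angular velocity between adjacent poses.

    poses: list of (x, y, beta); dts: list of time intervals, len(poses) - 1.
    The linear velocity is an unsigned speed (Euclidean distance / time).
    """
    v, w = [], []
    for (x0, y0, b0), (x1, y1, b1), dt in zip(poses, poses[1:], dts):
        v.append(math.hypot(x1 - x0, y1 - y0) / dt)
        w.append(wrap_angle(b1 - b0) / dt)
    return v, w


def accelerations(vel, dts):
    """Acceleration from two consecutive average velocities (three poses)."""
    return [2.0 * (v1 - v0) / (dt0 + dt1)
            for v0, v1, dt0, dt1 in zip(vel, vel[1:], dts, dts[1:])]


# Made-up example: the robot speeds up along a straight line
poses = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
dts = [1.0, 1.0]
v, w = velocities(poses, dts)
print(v, w, accelerations(v, dts))
```

The same `accelerations` helper applies to the angular velocities, which is where an angular-acceleration constraint would be evaluated.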

Constraints Based on Improved TEB Algorithm

To decrease energy consumption, the control of the turning angular velocity in the TEB algorithm is considered next. The angular-velocity control is optimized to address the reversing and detour motions of the robot during obstacle avoidance. When the target point of the robot is given, the position of the robot is set to (x_i, y_j). The adjacent path points are (x_i, y_i) and (x_{i+1}, y_{i+1}), and the angle between the line connecting these two points and the robot's initial pose is θ_i. A minimum threshold angle θ_imin is set. When θ_i is greater than this threshold, the angular velocity is set to its maximum, so the robot turns faster and avoids reversing; as θ_i becomes smaller, the angular velocity also decreases to achieve a smooth turn. The penalty function can be expressed as Eq. (12):
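
The rule described above can be sketched as a small function. The saturation at the maximum angular velocity follows the text; the linear ramp below the threshold is an assumption made for illustration, because the paper's Eq. (12) is not reproduced here. All names are hypothetical.

```python
import math


def heading_error(robot_yaw: float, p_i, p_next) -> float:
    """Angle theta_i between the segment p_i -> p_next and the robot heading."""
    segment = math.atan2(p_next[1] - p_i[1], p_next[0] - p_i[0])
    d = segment - robot_yaw
    return math.atan2(math.sin(d), math.cos(d))   # wrap to (-pi, pi]


def angular_velocity_command(theta: float, theta_min: float,
                             omega_max: float) -> float:
    """Turn at full rate for large errors, slow down as the error shrinks."""
    if abs(theta) > theta_min:
        return math.copysign(omega_max, theta)    # saturate: fast turn
    return omega_max * theta / theta_min          # assumed linear ramp


# Made-up example: path segment points straight "up", robot faces along +x
theta = heading_error(0.0, (0.0, 0.0), (0.0, 1.0))
print(theta, angular_velocity_command(theta, theta_min=0.5, omega_max=2.0))
```

The ramp makes the command continuous at the threshold, which is one way to obtain the smooth transition the text describes.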

The angular-velocity control described above is used as an angular steering constraint, and the corresponding angular-velocity constraint edges are added to the hypergraph. A new hypergraph is thus constructed, as shown in Fig. 10. The optimized angular-velocity constraint function is connected to two pose vertices, S_i and S_{i+1}. The optimization problem is transformed into a hypergraph and solved using a large-scale algorithm for sparse systems in the G2O framework. The robot is driven directly by the computed control variables v and ω.

Experiments and discussion

Simulation experiment

The simulation experiments are conducted on the ROS platform: the simulation environment is first built in Gazebo, and the motion of the robot equipped with the improved TEB algorithm is then observed in the RViz visualization tool. The robot is modeled as a four-wheel-drive differential platform, with the left and right wheels controlled separately. The simulation parameters are given in Table 4.

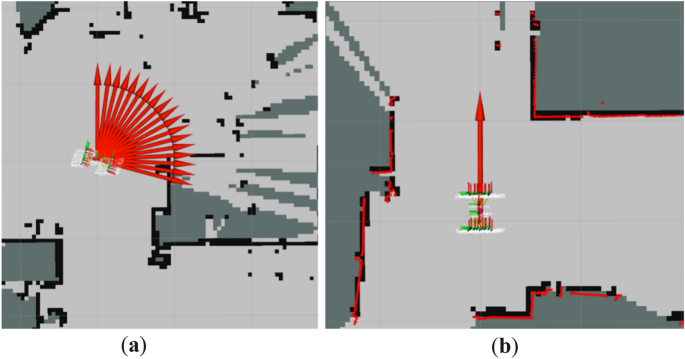

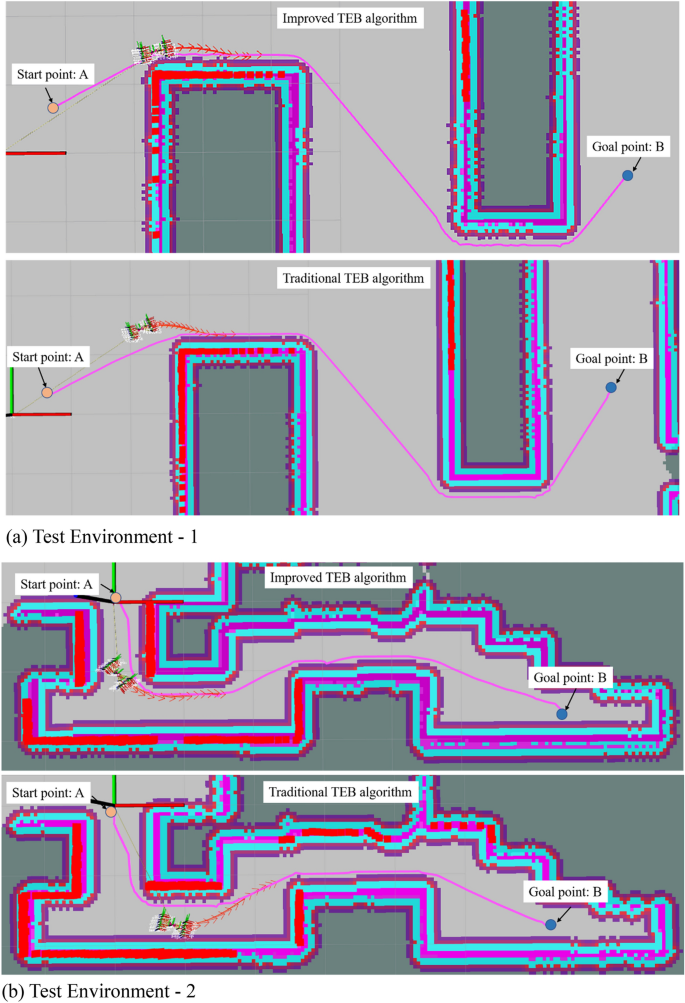

Figure 11 shows the motion of the robot under the improved and the traditional TEB algorithms in two different environments. With the improved TEB algorithm, the robot turns close to the global path in both Environment 1 and Environment 2, which shortens the running time while still avoiding the obstacles. With the traditional TEB algorithm, the robot clearly makes larger detours at the turns, which increases the running time at a given average speed. As can be seen from Table 5, the running time of the improved algorithm in the different scenarios is about 5 s shorter than that of the traditional algorithm. The improved TEB algorithm shortens the running time by 12% while keeping the motion smooth; because the robot stays close to the global path, it also avoids the energy loss caused by oversteering.

Fig. 11. Test environments for robots.

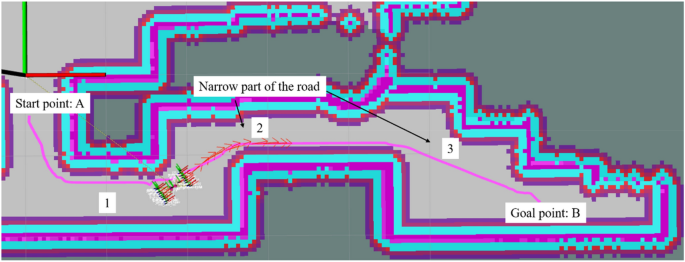

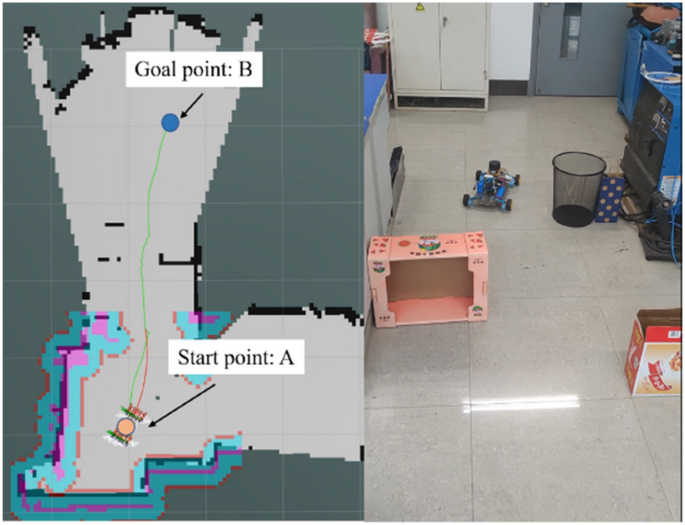

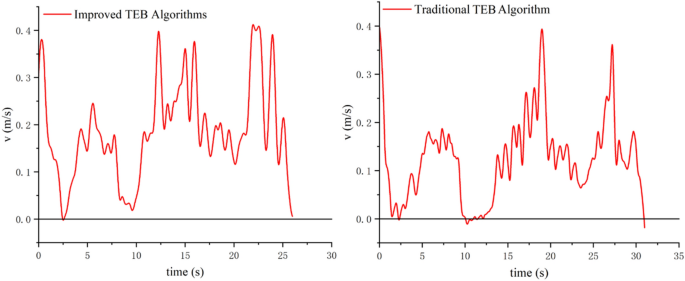

Fig. 12 shows the robot encountering successive narrow road sections, a scenario used to verify the turning sensitivity of the inspection robot equipped with the TEB algorithm and its ability to avoid reversing in narrow spaces. The robot starts from point A and passes through the narrow sections (near points 1, 2, and 3) to reach point B. The speed profiles generated at each stage are compared to assess reversing before and after the improvement of the TEB algorithm.

Fig. 12. The robot passes through the narrow road.

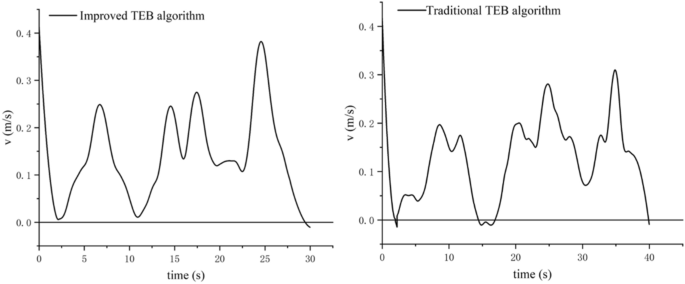

The velocity output curve of the robot from the starting point A to the target point B, across the successive narrow road sections, is shown in Fig. 13. With the traditional TEB algorithm, the robot reverses (i.e., the velocity becomes negative) when it passes the narrow road sections, where collisions are very likely; with the improved TEB algorithm, reversing is reduced, and turning efficiency, safety, and smoothness are improved. The reversing at the end of both curves is a fine adjustment to get closer to the target point.
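
Since reversing is identified here by a negative velocity, the comparison of two speed profiles can be made concrete with a small helper that extracts the reversing intervals from a logged profile. This is an illustrative sketch only: the function name and the sample values are made up and are not data from the paper.

```python
def reversing_intervals(times, velocities, tol=0.0):
    """Return (start, end) time pairs during which the velocity is below -tol."""
    intervals = []
    start = None
    for t, v in zip(times, velocities):
        if v < -tol and start is None:
            start = t                      # reversing begins
        elif v >= -tol and start is not None:
            intervals.append((start, t))   # reversing ends
            start = None
    if start is not None:                  # profile ends while still reversing
        intervals.append((start, times[-1]))
    return intervals


# Made-up sample profile (seconds, m/s)
times = [0, 1, 2, 3, 4, 5]
velocities = [0.2, -0.1, -0.1, 0.3, 0.2, -0.05]
print(reversing_intervals(times, velocities))
```

Counting the intervals, or summing their durations, gives a simple number for comparing two planners on the same route; a small positive `tol` can be used to ignore sensor noise around zero.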

Fig. 13. Robot speed output curves of the traditional TEB algorithm and the proposed TEB algorithm.

Real robot experiment

An experimental platform for the automatic inspection robot was built to verify whether the robot's reversing during obstacle avoidance and its turning behavior in narrow environments are significantly improved.

Analysis of robot backing behavior in a real-world environment

Figure 14 shows the experimental environment for testing the reversing behavior. The actual speed profile of the robot is shown in Fig. 15. The improved algorithm reduces the backing behavior of the robot and shortens its running time by about 5 s, which increases the running efficiency by 15%. These real-world results confirm the earlier simulations.

Fig. 14. Robot testing environment.

Fig. 15. Speed profiles of the robot using (a) the improved TEB algorithm and (b) the traditional TEB algorithm.

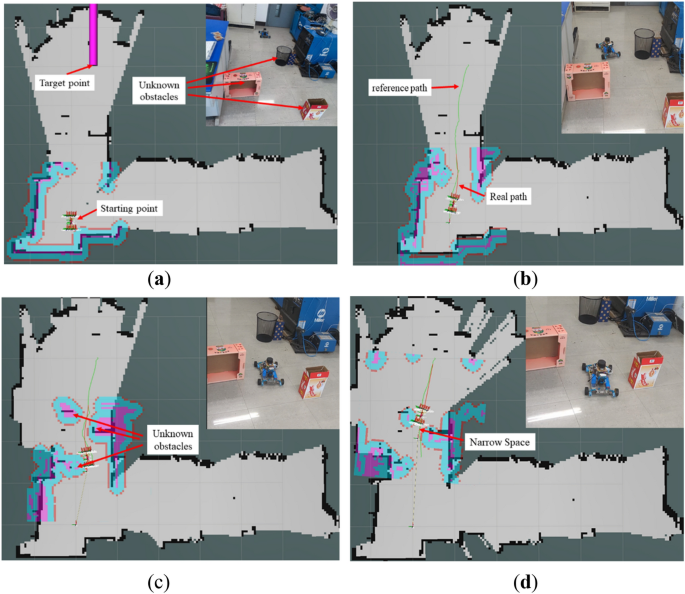

Inspection robot obstacle avoidance motion analysis

Figure 16 shows the actual position of the inspection robot, visualized in ROS RViz, as it navigates among unknown obstacles in a realistic environment. The green line represents the global reference path; the red line represents the local path planned in real time by the TEB algorithm. To test the inspection and the robot's obstacle avoidance with unknown obstacles, the obstacles are not included in the original map. The robot has no prior knowledge of these obstacles and must sense them in real time during the inspection. Multiple small boxes, scattered at different locations, act as static obstacles that substantially obstruct the robot's trajectory toward the target.

Fig. 16. Movement of the inspection robot avoiding unknown obstacles and passing through narrow spaces. (a) Position of robot and obstacles. (b) Robot dodging the first obstacle. (c) Robot detects the second obstacle and re-plans the path. (d) Robot passes through the narrow space to reach the target position.

Figure 16a shows the actual positions of the inspection robot and the target. Figure 16b–d show the motion of the inspection robot as it encounters unknown static obstacles and successfully reaches the target position in a complex environment. Driven by the improved TEB algorithm, the robot is able to carry out the inspection in this environment. In Fig. 16d, the robot passes smoothly through the narrow gap between the obstacles without colliding with them and without reversing. This experiment verifies that the inspection robot can move flexibly in a complex environment and can re-plan its path in real time when it encounters obstacles.

Weld seam inspection experiment

The images used in this experiment are of weld seams photographed by the robot, and the detection results are shown in Fig. 17. The recognition rate of the detection algorithm is above 90%, which demonstrates the effectiveness of the algorithm. The weld seam detection system proposed in this paper is mainly designed to locate and identify the weld seam. After locating the weld seam, the detection system guides the inspector to observe and inspect the weld seam for defects, which is the key part of this study.

Fig. 17. Welding seam test results.

This study uses an unmanned vehicle (the robot) with autonomous navigation and obstacle avoidance as a carrier to inspect and locate weld seams using vision detection algorithms. The inspector can set the inspection location as needed, and the robot can independently reach that location, identify and locate the weld seam, and provide inspection information to the inspector. The vast majority of current weld inspection robots 8 , 9 , 32 could only detect weld seams in specific scenarios and required staff to adjust the robot's position under real-time monitoring. They relied on clear, continuous weld seams and on a limited span between two weld seams. This study has tested some new ideas and new designs for realistic autonomous weld seam inspection.

This paper presents the design of a novel flexible inspection robot. The inspection robot is equipped with a four-wheel independent suspension adapted to undulating ground, a flexible inspection head that can detect in all directions, and a control algorithm that can operate in narrow passages, all of which improve the efficiency of the robot's inspection. Detection route planning is simulated with an improved Timed-Elastic-Band (TEB) algorithm. Experiments on the path planning algorithm, a key problem for the robot, show that the improved planning algorithm can effectively control the robot in a narrow space, ensuring that the robot does not collide with obstacles and shortening the running time by 12%. The YOLOv5s object detection algorithm is also used to train a weld seam detection model, which achieves a detection accuracy above 90%; using the photos provided by the robot, the model identifies and locates the weld seam and provides this information to the weld inspector. A shortcoming of this study is that the robot recognizes only a single type of weld and does not detect or classify weld defects.

Data availability

The data used in the manuscript are available from the corresponding author on reasonable request.

Salama, S., Hajjaj, H. & Khalid, I. B. Design and development of an inspection robot for oil and gas applications. Int. J. Eng. Technol. (IJET) 7 , 5–10. https://doi.org/10.14419/IJET.V7I4.35.22310 (2018).

Feng, X. et al. Application of wall climbing welding robot in automatic welding of island spherical tank. J. Coastal. Res. 107 , 1–4. https://doi.org/10.2112/JCR-SI107-001.1 (2020).

Nguyen, L. & Miro, J. V. Efficient evaluation of remaining wall thickness in corroded water pipes using pulsed eddy current data. IEEE Sens. J. 20 , 14465–14473. https://doi.org/10.1109/JSEN.2020.3007868 (2020).

Hillenbrand, C., Schmidt, D. & Berns, K. CROMSCI: Development of a climbing robot with negative pressure adhesion for inspections. Ind. Robot. 35 , 228–237. https://doi.org/10.1108/01439910810868552 (2008).

Shang, J., Bridge, B., Sattar, T., Mondal, S. & Brenner, A. Development of a climbing robot for inspection of long weld lines. Ind. Robot. 35 , 217–223. https://doi.org/10.1108/01439910810868534 (2008).

Fischer, W. et al. Foldable magnetic wheeled climbing robot for the inspection of gas turbines and similar environments with very narrow access holes. Ind. Robot. 37 , 244–249. https://doi.org/10.1108/01439911011037631 (2010).

Okamoto, J. et al. Development of an autonomous robot for gas storage spheres inspection. J. Intell. Robot. Syst. 66 , 23–35. https://doi.org/10.1007/s10846-011-9607-z (2012).

Wang, Y. et al. Design and adsorption force optimization analysis of TOFD-based weld inspection robot. J. Phys. Conf. Ser. 1303 , 012022. https://doi.org/10.1088/1742-6596/1303/1/012022 (2019).

Li, J., Li, B., Dong, L., Wang, X. & Tian, M. Weld seam identification and tracking of inspection robot based on deep learning network. Drones 6 , 216. https://doi.org/10.3390/drones6080216 (2022).

Nitta, Y. et al. Damage assessment methodology for nonstructural components with inspection robot. Key Eng. Mater. 558 , 297–304. https://doi.org/10.4028/www.scientific.net/KEM.558.297 (2013).

Krenich, S. & Urbanczyk, M. Six-legged walking robot for inspection tasks. Solid State Phenom. 180 , 137–144. https://doi.org/10.4028/www.scientific.net/SSP.180.137 (2012).

Bruzzone, L. & Fanghella, P. Functional redesign of Mantis 2.0, a hybrid leg-wheel robot for surveillance and inspection. J. Intell. Robot Syst. 81 , 215–230. https://doi.org/10.1007/s10846-015-0240-0 (2016).

Kim, S. H., Choi, H. H. & Yu, Y. S. Improvements in adhesion force and smart embedded programming of wall inspection robot. J. Supercomput. 72 , 2635–2650. https://doi.org/10.1007/s11227-015-1549-y (2016).

Sun, J., Li, C., Wu, X. J., Palade, V. & Fang, W. An effective method of weld defect detection and classification based on machine vision. IEEE Trans. Ind. Inform. 15 , 6322–6333. https://doi.org/10.1109/TII.2019.2896357 (2019).

Li, Y., Hu, M. & Wang, T. Weld image recognition algorithm based on deep learning. Int. J. Pattern Recognit. 34 (08), 2052004. https://doi.org/10.1142/S0218001420520047 (2020).

Yang, L., Wang, H., Huo, B., Li, F. & Liu, Y. An automatic welding defect location algorithm based on deep learning. NDT E Int. 120 , 102435. https://doi.org/10.1016/j.ndteint.2021.102435 (2021).

Shanmugasundar, G., Sivaramakrishnan, R. & Venugopal, S. Modeling, design and static analysis of seven degree of freedom articulated inspection robot. Adv. Mat. Res. 655 , 1053–1056. https://doi.org/10.4028/www.scientific.net/AMR.655-657.1053 (2013).

Li, S., Zhang, S., Xue, J. & Sun, H. Lightweight target detection for the field flat jujube based on improved YOLOv5. Comput. Electron. Agricult. 202 , 107391. https://doi.org/10.1016/j.compag.2022.107391 (2022).

Lin, T., Dollár, P., Girshick, R., He, K., Hariharan, B., & Belongie, S. Feature pyramid networks for object detection. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2117–2125. (2017)

Liu, S., Qi, L., Qin, H., Shi, J., & Jia, J. Path aggregation network for instance segmentation. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition . 8759–8768 (2018)

Li, J. et al. An improved YOLOv5-based vegetable disease detection method. Comput. Electron. Agric. 202 , 107345. https://doi.org/10.1016/j.compag.2022.107345 (2022).

Chen, W., Wu, X. & Lu, Y. An improved path planning method based on artificial potential field for a mobile robot. CIT 15 , 181–191. https://doi.org/10.1515/cait-2015-0037 (2015).

Ding, S., Su, C. & Yu, J. An optimizing BP neural network algorithm based on genetic algorithm. Artif. Intell. Rev. 36 , 153–162. https://doi.org/10.1007/s10462-011-9208-z (2011).

Bounini, F., Gingras, D., Pollart, H. & Gruyer, D. Modified artificial potential field method for online path planning applications. in IEEE Intelligent Vehicles Symposium Proceedings . 180–185. https://doi.org/10.1109/IVS.2017.7995717 (2017)

Seddaoui, A. & Saaj, C. M. Collision-free optimal trajectory generation for a space robot using genetic algorithm. Acta Astronaut. 179 , 311–321. https://doi.org/10.1016/j.actaastro.2020.11.001 (2021).

Saranrittichai, P., Niparnan, N. & Sudsang, A. Robust local obstacle avoidance for mobile robot based on dynamic window approach. in 2013 10th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Krabi, Thailand. 1–4 https://doi.org/10.1109/ECTICon.2013.6559615 (2013)

Rösmann, C., Feiten, W., Wösch, T., Hoffmann, F. & Bertram, T. Trajectory modification considering dynamic constraints of autonomous robots. in ROBOTIK 2012; 7th German Conference on Robotics, Munich, Germany . 1–6 (2012).

Rösmann, C., Hoffmann, F. & Bertram, T. Integrated online trajectory planning and optimization in distinctive topologies. Robot. Auton. Syst. 88 , 142–153. https://doi.org/10.1016/j.robot.2016.11.007 (2017).

Rösmann, C., Oeljeklaus, M., Hoffmann, F. & Bertram, T. Online trajectory prediction and planning for social robot navigation. in 2017 IEEE International Conference on Advanced Intelligent Mechatronics (AIM) , Munich, Germany. 1255–1260. https://doi.org/10.1109/AIM.2017.8014190 (2017).

Nguyen, L. A., Pham, T. D., Ngo, T. D. & Truong, X. T. A proactive trajectory planning algorithm for autonomous mobile robots in dynamic social environments. in 2020 17th International Conference on Ubiquitous Robots (UR) Kyoto, Japan . 309–314. https://doi.org/10.1109/UR49135.2020.9144925 (2020).

Wu, J., Ma, X., Peng, T. & Wang, H. An improved timed elastic band (TEB) algorithm of autonomous ground vehicle (AGV) in complex environment. Sensors. 21 , 8312. https://doi.org/10.3390/s21248312 (2021).

Giang, H. N., Anh, N. K., Quang, N. K. & Nguyen, L. An inspection robot for detecting and tracking welding seam. in 2021 Innovations in Intelligent Systems and Applications Conference (ASYU) . 1–6. https://doi.org/10.1109/ASYU52992.2021.9599065 (2021)

Acknowledgements

This work was supported by the Natural Science Foundation of Shanghai [Grant number: 20ZR1422700]. Peiquan Xu has received research support from Science and Technology Commission of Shanghai Municipality (STCSM).

Author information

Authors and affiliations.

School of Materials Science and Engineering, Shanghai University of Engineering Science, Shanghai, 201620, China

Pengyu Zhang, Ji Wang, Feng Zhang & Peiquan Xu

Department of Chemical and Materials Engineering, University of Alberta, Edmonton, T6G 1H9, Canada

Yanfeng Visteon Electronic Technology (Shanghai) Co., Ltd, Shanghai, 200235, China

Contributions

P.Z. and P.X. designed the whole research plan and directed writing of the manuscript. P.Z., J.W., B.L., L.L. F.Z. and P.X. analyzed the simulation data and wrote the manuscript. The photos were taken by P.Z. and F.Z. L.L. and P.X. reviewed and revised the manuscript. All authors have read and agreed to the published version of the manuscript.

Corresponding authors

Correspondence to Peiquan Xu or Leijun Li .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Zhang, P., Wang, J., Zhang, F. et al. Design and analysis of welding inspection robot. Sci Rep 12 , 22651 (2022). https://doi.org/10.1038/s41598-022-27209-4

Received : 14 June 2022

Accepted : 28 December 2022

Published : 31 December 2022

DOI : https://doi.org/10.1038/s41598-022-27209-4

- Review Article

- Open access

- Published: 17 July 2015

Robotic arc welding sensors and programming in industrial applications

- M Shrestha 1 ,

- E Hiltunen 1 &

- J Martikainen 1

International Journal of Mechanical and Materials Engineering, volume 10, Article number: 13 (2015)

Technical innovations in robotic welding and greater availability of sensor-based control features have enabled manual welding processes in harsh work environments with excessive heat and fumes to be replaced with robotic welding. The use of industrial robots or mechanized equipment for high-volume productivity has become increasingly common, with robotized gas metal arc welding (GMAW) generally being used. More widespread use of robotic welding has necessitated greater capability to control welding parameters and robotic motion and improved fault detection and fault correction. Semi-autonomous robotic welding (i.e., highly automated systems requiring only minor operator intervention) faces a number of problems, the most common of which are the need to compensate for inaccuracies in fixtures for the workpiece, variations in workpiece dimensions, imperfect edge preparation, and in-process thermal distortions. Major challenges are joint edge detection, joint seam tracking, weld penetration control, and measurement of the width or profile of a joint. Such problems can be most effectively solved with the use of sensory feedback signals from the weld joint. Thus, sensors play an important role in robotic arc welding systems with adaptive and intelligent control system features that can track the joint, monitor in-process quality of the weld, and account for variation in joint location and geometry. This work describes various aspects of robotic welding, programming of robotic welding systems, and problems associated with the technique. It further discusses commercially available seam-tracking and seam-finding sensors and presents a practical case application of sensors for semi-autonomous robotic welding. This study increases familiarity with robotic welding and the role of sensors in robotic welding and their associated problems.

Introduction

Industrial robots and mechanized equipment have become indispensable for industrial welding for high-volume productivity because manual welding yields low production rates due to the harsh work environment and extreme physical demands (Laiping et al. 2005). Dynamic market behavior and strong competition are forcing manufacturing companies to search for optimal production procedures. As shown in Fig. 1 (Pires et al. 2003), for small/medium production volumes, robotic production yields the best cost per unit performance when compared to manual and hard automation. In addition to competitive unit costs, robotic welding systems bring other advantages, such as improved productivity, safety, weld quality, flexibility and workspace utilization, and reduced labor costs (Robot et al. 2013a; Robert et al. 2013). The increase in the range of applications of robotic welding technology has led to a need to reduce operator input and enhance automated control over welding parameters, path of robotic motion, fault detection, and fault correction (Schwab et al. 2008). Even though the level of complexity and sophistication of these robotic systems is high, their ability to adapt to real-time changes in environmental conditions cannot equal the ability of human senses to adapt to the weld environment (Hohn and Holmes 1982).

Fig. 1. Industrial robotics zone (Pires et al. 2003; Myhr 1999)

According to the Robot Institute of America, a robot is a “reprogrammable, multifunctional manipulator designed to move materials, parts, tools, or specialized devices through variable programmed motions for the performance of a variety of tasks.” Although the first industrial robot was developed by Joseph Engelberger as early as the mid-1950s, it was not until the mid-1970s that robotic arc welding was first used in production. Since then, robots have been adopted for many welding processes. The advantages of robotic welding vary from process to process, but common benefits generally include improved weld quality, increased productivity, reduced weld costs, and more repeatable, consistent welds (Lane 1987).

Robots in arc welding

Welding is an integral part of advanced industrial manufacturing, and robotic welding is considered the main symbol of modern welding technology (Cui et al. 2013). In the earliest applications of robotic welding, so-called first-generation robotic welding systems, welding was performed as a two-pass weld system, in which the first pass was dedicated to learning the seam geometry and was then followed by the actual tracking and welding of the seam in the second pass. With developments in technology came the second generation of robotic welding systems, which tracked the seam in real time, performing the learning and the seam-tracking phases simultaneously. The latest technology in robotic welding is third-generation systems, in which the system not only operates in real time but also learns the rapidly changing geometry of the seam while operating within unstructured environments (Pires et al. 2006). Figure 2 shows the major components of a robotic arc welding system (Cary and Helzer 2005).

Fig. 2. Robotic arc welding system (Cary and Helzer 2005)

The following sections briefly discuss some of the key aspects of robotics in welding technology.

Robotic configurations

Robots can be categorized based on criteria such as degrees of freedom, kinematic structure, drive technology, workspace geometry, and motion characteristics (Tsai 2000). When selecting a robot for a specific application, all of these factors need to be considered. In terms of workspace geometry, robots with a revolute (or jointed-arm) configuration are the type most commonly used in industrial robotic arc welding (Ross et al. 2010). Figure 3 illustrates an example of a revolute configuration robot.

Fig. 3. Vertically articulated (revolute configuration) robot with five revolute joints (Ross et al. 2010)

Phases in welding operations

The welding operation consists of three different phases that need critical consideration in designing a fully automated robotic welding system to achieve good performance and weld quality (Pires et al. 2006):

Preparation phase

In this phase, the weld operator sets up the parts to be welded, the apparatus (power source, robot, robot program, etc.) and the weld parameters, along with the type of gas and electrode wires. When CAD/CAM or other offline programming is used, a robot weld pre-program is available and placed online. Consequently, the robotic program might only need minor tuning for calibration, which can be easily done by the weld operator performing selected online simulations of the process.

Welding phase

Automatic equipment requires the same capabilities as manual welding, i.e., the system should be capable of maintaining a torch orientation that follows the desired trajectory (which may be different from planned), performing seam tracking, and changing weld parameters in real time, thus emulating the adaptive behavior of manual welders.

Analysis phase

The analysis phase is generally a post-welding phase where the welding operator examines the obtained weld to ascertain if it is acceptable or whether changes are required in the previous two phases. Use of advanced sensors, such as 3D laser cameras, enables execution of this phase online during the welding phase.

Robotic programming modes

Different methods exist for teaching or programming a robot controller, namely manual methods, online programming (walk-through, lead-through), and offline programming. Manual methods are primarily used for pick-and-place robots and are not used for arc welding robots (Cary and Helzer 2005).

Online programming

This category of robotic programming includes lead-through and walk-through programming. Manual online programming requires no special hardware or software on-site beyond what is already used for the manufacturing process. Its major drawback is that it is quite inflexible and can only handle simple robot paths (Pan et al. 2012a). In the walk-through method, the operator moves the torch manually through the desired sequence of movements, which are recorded in memory for playback during welding. The walk-through method was adopted in a few early welding robots (Cary and Helzer 2005) but did not gain widespread use. The conventional method for programming welding robots is online programming with a teach pendant, i.e., lead-through programming. In this approach, the programmer jogs the robot to the desired position using the control keys on the teach pendant, and the desired positions and sequence of motions are recorded. The main disadvantage of online teaching is that production must stop while the robot is being programmed (McWhirter 2012).
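
The record-and-replay idea behind lead-through programming can be illustrated with a toy sketch. It is not modeled on any real robot controller or vendor API; the class names, the `(x, y, z)` pose layout, and the sample points are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple

Pose = Tuple[float, float, float]   # hypothetical (x, y, z) torch position


@dataclass
class TaughtPoint:
    pose: Pose
    arc_on: bool                    # whether the arc is on when reaching this point


@dataclass
class TeachProgram:
    points: List[TaughtPoint] = field(default_factory=list)

    def record(self, pose: Pose, arc_on: bool = False) -> None:
        """Store the pose the programmer has jogged the robot to."""
        self.points.append(TaughtPoint(pose, arc_on))

    def playback(self) -> Iterator[TaughtPoint]:
        """Replay the taught points in the order they were recorded."""
        yield from self.points


# Teach a single straight weld: approach, weld start, weld end, retract
program = TeachProgram()
program.record((0.0, 0.0, 0.1))
program.record((0.0, 0.0, 0.0), arc_on=True)
program.record((0.2, 0.0, 0.0), arc_on=True)
program.record((0.2, 0.0, 0.1))
replayed = list(program.playback())
print([p.pose for p in replayed])
```

Playback here is open loop: the same points are replayed regardless of how the actual workpiece is fitted, which is the inflexibility the text attributes to teach-and-playback operation.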

The teach and playback mode has limited flexibility because it cannot adapt to the many problems that might be encountered in the welding operation, for example, errors in pre-machining and fitting of the workpiece, and in-process thermal distortion leading to changes in gap size. Thus, advanced applications of robotic welding require an automatic control system that can adapt and adjust the welding parameters and motion of the welding robots (Hongyuan et al. 2009). Hongyuan et al. (2009) developed a closed-loop control system for teach-and-playback robots that uses real-time vision sensing of the topside weld pool width and the seam gap to control weld formation in multi-pass gas tungsten arc welding with gap variation. In spite of all the abovementioned drawbacks, online programming is still the only programming choice for most small to medium-sized enterprises (SMEs). Online programming methods using more intuitive human-machine interfaces (HMI) and sensor information have been proposed by several institutions (Zhang et al. 2006; Sugita et al. 2003). These methods can be categorized into operator-assisted online programming and sensor-guided online programming. Although considerable progress has been made in making online programming more intuitive, less reliant on operator skill, and more automatic, most of the research outcomes are not commercially available, aside from Sugita et al. 2003.

Offline programming

Offline programming (OLP) with simulation software allows programming of the welding path and operation sequence from a computer rather than from the robot itself. 3D CAD models of the workpieces, robots, and fixtures used in the cell are required for OLP. The simulation software matches these 3D CAD models, permitting programming of the robot’s welding trajectory from a computer instead of a teach pendant in the welding cell as in online programming. After simulation and testing of the program, the instructions can be exported from the computer to the robot controller via an Ethernet communication network. Ongoing research suggests, however, that the use of sensing technology would make it feasible to completely program the final trajectory only with OLP (Miller Electric Mfg Co. 2013). Pan et al. (2012a) developed an automated offline programming method with software that allows automatic planning and programming (with CAD models as input) for a robotic welding system with high degrees of freedom without any programming effort. The main advantages of OLP are its reusable code, flexibility for modification, ability to generate complex paths, and reduction in production downtime in the programming phase for setup of a new part. Nevertheless, OLP is mostly used to generate complex robot paths for large production volumes because the time and cost required to generate code for complex robotic systems are similar to, if not greater than, those of online programming (Pan et al. 2012a). Currently, for a complex manufacturing process with small to medium production volume, very few robotic automation solutions are used to replace manual production because of this expensive and time-consuming programming overhead. Although OLP has the abovementioned advantages, it is not popular among small to medium-sized enterprise (SME) users because of its obvious drawbacks.
It is difficult to economically justify an OLP for smaller product values due to the high cost of the OLP package and the programming overhead required to customize the software for a specific application. Development of customized software for offline programming is time-consuming and requires high-level programming skills. Typically, these skills are not available from the process engineers and operators who often perform the robot programming in-process today. As OLP methods rely on accurate modeling of the robot and work cell, additional calibration procedures using extra sensors are in many cases inevitable to meet requirements (Pan et al. 2012b).
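The dependence of OLP on calibration can be made concrete with a small sketch: a seam defined in the CAD frame is only usable once it has been mapped into the robot base frame by a calibrated transform. The function names, the restriction to a rotation about the vertical axis, and all numerical values below are assumptions made for illustration, not part of any OLP package.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def make_transform(yaw_deg: float, offset: Point) -> List[List[float]]:
    """Build a 4x4 homogeneous transform: rotation about z plus a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, -s, 0.0, offset[0]],
        [s, c, 0.0, offset[1]],
        [0.0, 0.0, 1.0, offset[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(t: List[List[float]], p: Point) -> Point:
    """Map a point from the CAD frame into the robot base frame."""
    x, y, z = p
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3))


# Hypothetical seam in CAD coordinates (mm): a straight 100 mm weld along x.
cad_seam = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0)]

# Hypothetical calibration result: the part is rotated 90 degrees about z
# and shifted by (500, 200, 0) mm relative to the robot base.
cad_to_robot = make_transform(90.0, (500.0, 200.0, 0.0))

robot_path = [apply_transform(cad_to_robot, p) for p in cad_seam]
```

Any error in `cad_to_robot` shifts every programmed point by the same amount, which is why additional calibration with extra sensors is often needed before an offline program can be run on the real cell.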

Intelligent robot

It is very difficult, and even impossible, to anticipate and identify all situations that the robot could encounter during task execution. Therefore, the software developer must specify the categories of situations and provide the robot with sufficient intelligence and the ability to solve problems of any class covered by its program. Sometimes, when situations are ambiguous and uncertain, the robot must be able to evaluate different possible actions. If the robot’s environment does not change, the robot is given a model of its environment so that it can predict the outcome of its actions. But if the environment changes, the robot should learn. This, among other prerequisites, calls for the development and embedding in robot systems of artificial intelligence (AI) capable of learning, reasoning, and problem solving (Tzafestas and Verbruggen 1995).

Most welding robots serving in practical production are still of the teach and playback type and cannot adequately meet the quality and diversification requirements of welding production, because these robots lack automatic functions to adapt to changes in circumstances and uncertain disturbances (errors in pre-machining and fitting of the workpiece, heat conduction, dispersion during the welding process) during welding (Tarn et al. 2004; Tarn et al. 2007). In order to overcome or restrict the different uncertainties that influence the quality of the weld, an effective approach is to develop and improve intelligent technologies for welding robots, such as vision sensing, multi-sensing, recognition of the welding environment, self-guiding and seam tracking, and intelligent real-time control procedures. To this end, the development of intelligent technology that improves on the current teach and playback programming of welding robots is essential to achieve the high quality and flexibility expected of welded products (Chen and Wu 2008; Chen 2007).

Intelligent robots are expected to take an active role in joining work, which makes up as large a part of the machine industry as machining. The intelligent robot can perform highly accurate assembly jobs, such as picking up a workpiece from randomly piled workpieces on a tray and assembling it with a fitting clearance of 10 μm or less using its force sensors, as well as high-speed resistance spot welding and arc welding in automotive welding and painting. However, although industrial intelligent robots have a high level of skill, there are still tasks in which they cannot compete with skilled workers, such as assembling flexible objects like a wire harness. Several research and development activities are under way worldwide to address these challenges (Nof 2009).

Problems in robotic welding

Despite the benefits from using robotic systems, associated problems require due consideration. Issues include the following:

The consistency required for making part after part, which, in the absence of proper control, might fluctuate due to poor fixturing or variations in the metal forming process.

In the case of low to medium volume manufacturing or repair work, the time and effort taken to program the robot to weld a new part can be quite high (Dinham and Fang 2013 ).

Robotic welding requires proper joint design, consistent gap conditions and gap tolerance not exceeding 0.5 to 1 mm. Variation in gap condition requires the use of sensing technologies for gap filling (Robot et al. 2013b ).

Automation of welding by robotic systems has high initial cost, so accurate calculation of return on investment (ROI) is essential (Rochelle 2010 ).

Possible shortages of skilled welders with the requisite knowledge and training pose limitations.

Unlike adaptive human welders, robots cannot make corrective decisions on their own and must be supplemented by sensors and a robust control system for decision-making.

Robotic welding cannot easily be performed in some areas like pressure vessels, interior tanks, and ship bodies due to workspace constraints (Robotics Bible 2011 ).

The majority of sensor-based intelligent systems available in the market are not tightly integrated with the robot controller, which limits the performance of the robotic system as most industrial robots only offer around a 20-Hz feedback loop through the programming interface. Consequently, the robot cannot respond to the sensor information quickly, resulting in sluggish and sometimes unstable performance.
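To give a sense of scale for the 20-Hz figure, the following sketch computes the update period and the distance the torch travels between two consecutive corrections. The travel speed of 10 mm/s is an assumed value for illustration only and is not taken from the text.

```python
def distance_per_update(travel_speed_mm_s: float, loop_rate_hz: float) -> float:
    """Distance the torch travels between two consecutive path corrections."""
    if loop_rate_hz <= 0:
        raise ValueError("loop rate must be positive")
    return travel_speed_mm_s / loop_rate_hz


LOOP_RATE_HZ = 20.0           # feedback rate quoted in the text
ASSUMED_TRAVEL_SPEED = 10.0   # mm/s, hypothetical welding travel speed

update_period_ms = 1000.0 / LOOP_RATE_HZ
blind_travel_mm = distance_per_update(ASSUMED_TRAVEL_SPEED, LOOP_RATE_HZ)
```

Under these assumptions, the controller receives a new correction every 50 ms, during which the torch advances 0.5 mm. That distance is of the same order as the 0.5 to 1 mm gap tolerance mentioned above, which suggests why a slow interface can limit tracking performance.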

Sensors in robotic welding

Need for sensors in robotic welding

At present, welding robots are predominantly found in automatic manufacturing processes, most of which use teach and playback robots that require a great deal of time for training, path planning, and so on. Furthermore, teaching and programming need to be repeated if the dimensions of the weld workpieces change, because such robots cannot self-rectify during the welding process. The seam position in particular is often disturbed in practice due to various problems. The use of sensors is a way to address these problems in automated robotic welding processes (Xu et al. 2012). The main use of sensors in robotic welding is to detect and measure process features and parameters, such as joint geometry and weld pool geometry and location, and to control the welding process online. Sensors are additionally used for weld inspection of defects and quality evaluation (Pires et al. 2006). The ideal sensor for robot application should measure the welding point (avoidance of tracking misalignment), should detect in advance (finding the start point of the seam, recognizing corners, avoiding collisions), and should be as small as possible (no restriction in accessibility). An ideal sensor that combines all three requirements does not exist; therefore, one must select a sensor that is suitable for the individual welding job (Bolmsjö and Olsson 2005). Sensors that measure geometrical parameters are mainly used to provide the robot with seam-tracking capability and/or search capability, allowing the path of the robot to be adapted according to geometrical deviations from the nominal path. Technological sensors measure parameters within the welding process for its stability and are mostly used for monitoring and/or controlling purposes (Pires et al. 2006). Table 1 presents different sensor applications and summarizes their advantages and drawbacks for specific times during the welding operation.

Contact-type sensors, such as nozzle or finger sensors, are less expensive and easier to use than non-contact sensors. However, this type of sensor cannot be used for butt joints and thin lap joints. Non-contact sensors referred to as through-the-arc sensors may be used for tee joints, U and V grooves, or lap joints over a certain thickness. These sensors are appropriate for welding of larger pieces with weaving when penetration control is not necessary. However, they are not applicable to materials with high reflectivity such as aluminum. Joint sensing has received great attention from welding personnel since the 1980s. The principal types of industrial arc-welding sensors that have been employed are optical and arc sensors (Nomura et al. 1986). Some of the most important uses of sensors in robotic welding are discussed below:

Seam finding

Seam finding (or joint finding) is a process in which the seam is located using one or more searches to make sure that the weld bead is precisely deposited in the joint. Seam finding is done by adjusting the robotic manipulator and weld torch to the right position and orientation in relation to the welding groove or by adjusting the machine program, prior to welding (Servo Robot Inc 2013a ). Many robotic applications, especially in the auto industry, involve producing a series of short and repeated welds for which real-time tracking is not required; however, it is necessary to begin each weld in the correct place, which necessitates the use of seam-finding sensors (Meta Vision Systems Ltd 2006 ).
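The "adjusting the machine program" variant of seam finding can be sketched as follows: a search measures where the weld start actually is, and the programmed path is shifted by the difference before welding begins. The function names and coordinates are hypothetical, and the sketch corrects translation only; a real system may also correct orientation using several searches.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def seam_offset(nominal_start: Point, found_start: Point) -> Point:
    """Offset between the programmed weld start and the one found by the search."""
    return tuple(f - n for n, f in zip(nominal_start, found_start))


def shift_path(path: List[Point], offset: Point) -> List[Point]:
    """Apply the same translation to every programmed point of the weld."""
    return [tuple(c + d for c, d in zip(p, offset)) for p in path]


# Hypothetical programmed weld (mm) and hypothetical search result.
nominal_path = [(0.0, 0.0, 0.0), (50.0, 0.0, 0.0)]
found_start = (1.5, -0.5, 0.0)

offset = seam_offset(nominal_path[0], found_start)
corrected_path = shift_path(nominal_path, offset)
```

Since the correction is computed once before the arc starts, this approach suits the short, repeated welds described above, where real-time tracking is not required.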

Seam tracking

Seam tracking enables the welding torch to follow the weld seam groove automatically and adjusts the robotic manipulator accordingly, in order to counter the effects of variation in the seam caused by distortion, uneven heat transfer, variability of gap size, staggered edges, etc. (Xu et al. 2012).
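A minimal sketch of one seam-tracking update is given below, assuming a sensor that reports the lateral position and height of the seam and a simple proportional correction. The gain, the standoff distance, and the function name are illustrative assumptions; commercial trackers use their own, typically more elaborate, control laws.

```python
from typing import Tuple


def track_step(torch_y: float, torch_z: float,
               seam_y: float, seam_z: float,
               standoff: float, gain: float = 0.5) -> Tuple[float, float]:
    """One tracking update: move the torch a fraction of the measured error."""
    err_y = seam_y - torch_y                 # horizontal (cross-seam) error
    err_z = (seam_z + standoff) - torch_z    # vertical (torch height) error
    return torch_y + gain * err_y, torch_z + gain * err_z


# Hypothetical situation: the seam has drifted 2 mm sideways due to distortion,
# while the torch height is already correct (15 mm standoff).
y, z = 0.0, 15.0
for _ in range(10):
    y, z = track_step(y, z, seam_y=2.0, seam_z=0.0, standoff=15.0)
```

With a gain of 0.5 the remaining lateral error halves at every update, so after ten updates the torch is within a few micrometres of the drifted seam, while the height is left unchanged.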

Reliable seam-tracking sensors provide the following advantages (Björkelund 1987 ):

Automatic vertical and horizontal correction of the path (even path changes necessitated by thermal distortion)

Less stringent accuracy demands on objects and fixtures

Welding parameter adaptation

Reduced programming time

Lower rejection rates

Higher welding quality

Viability of short series

Adaptive control

In adaptive control welding, i.e., a closed-loop system using feedback-sensing devices and adaptive control, a process control system detects changes in welding conditions automatically with the aid of sensors and directs the equipment to take appropriate action. Sensors are needed in adaptive control welding to find the joint, assess root penetration, conduct bead placement and seam tracking, and ensure proper joint fill (Cary and Helzer 2005). Sensors enable real-time control and adjustment of process parameters such as welding current and voltage. For example, because sensors can find the seam, identify joint penetration and joint filling, and verify root penetration and acceptable weld bead shape, the relevant welding parameters can be corrected so that constant weld quality is maintained (Cary and Helzer 2005; Drews and Starke 1986). An adaptive welding robot should be able to address two main aspects. The first is the control of the end effector’s path and orientation so that the robot can track the joint to be welded with high precision. The second is the control of welding process variables in real time, for example, controlling the amount of metal deposited into the joint according to the dimensions of the gap separating the parts to be welded.
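The second aspect, matching metal deposition to the gap, can be illustrated with a simple volume balance: the wire volume fed per second must equal the joint volume filled per second. The sketch below assumes a square-edge butt joint whose cross-section is gap times plate thickness, ignores weld reinforcement, and assumes that all of the wire ends up in the joint. These simplifications and all numerical values are assumptions for illustration.

```python
import math


def wire_feed_speed(gap_mm: float, thickness_mm: float,
                    travel_speed_mm_s: float, wire_diameter_mm: float) -> float:
    """Wire feed speed (mm/s) that fills a square-edge gap by volume balance."""
    joint_area = gap_mm * thickness_mm                 # mm^2 to fill per mm of weld
    wire_area = math.pi * wire_diameter_mm ** 2 / 4.0  # mm^2 of wire cross-section
    return joint_area * travel_speed_mm_s / wire_area


# Hypothetical case: 3 mm plate, 5 mm/s travel speed, 1.2 mm wire.
wfs_narrow = wire_feed_speed(1.0, 3.0, 5.0, 1.2)   # gap of 1.0 mm
wfs_wide = wire_feed_speed(2.0, 3.0, 5.0, 1.2)     # gap of 2.0 mm
```

Under these assumptions the required feed speed scales linearly with the gap, so a gap that doubles along the seam calls for twice the wire feed speed at constant travel speed, or alternatively a lower travel speed.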

Chen et al. (2007) studied the use of laser vision sensing for adaptive welding of an aluminum alloy, in which the wire feed speed and the welding current are adjusted automatically according to the groove conditions. The sensor was used to measure the weld groove precisely and for automatic seam tracking, involving automatic torch traverse alignment and torch height adjustment during welding. Adaptive software was employed that calculated the wire feed rate according to the variation in the gap and the weld area. The software included extraction of groove geometry, calculation and filtering, querying of the adaptive table (ADAP table as shown in Table 2), and generation of the control output signal.
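The sequence of filtering, table query, and output generation can be sketched in a few lines. This is not the software of Chen et al. (2007): the table entries are placeholders rather than the values of Table 2, the median filter is an assumed choice, and the function names are hypothetical.

```python
from bisect import bisect_right
from statistics import median
from typing import List, Tuple

# Hypothetical adaptive table: (minimum gap in mm, wire feed speed in m/min,
# welding current in A). Placeholder values, NOT the entries of Table 2.
ADAP_TABLE: List[Tuple[float, float, float]] = [
    (0.0, 6.0, 140.0),
    (0.5, 7.0, 150.0),
    (1.0, 8.0, 160.0),
    (1.5, 9.0, 170.0),
]


def filter_gap(samples: List[float]) -> float:
    """Suppress outliers in recent gap measurements (assumed median filter)."""
    return median(samples)


def query_adap(gap_mm: float) -> Tuple[float, float]:
    """Return (wire feed speed, current) from the row whose range contains the gap."""
    if gap_mm < 0:
        raise ValueError("gap must be non-negative")
    lower_bounds = [row[0] for row in ADAP_TABLE]
    index = bisect_right(lower_bounds, gap_mm) - 1
    _, wire_feed, current = ADAP_TABLE[index]
    return wire_feed, current


# Hypothetical laser measurements (mm); 3.0 is a spurious reading.
gap = filter_gap([0.9, 1.1, 3.0, 1.0, 1.2])
control_output = query_adap(gap)
```

Here the spurious 3.0 mm reading does not affect the result: the filtered gap is 1.1 mm, which selects the row starting at 1.0 mm. A table lookup keeps the mapping from groove geometry to parameters easy to inspect and adjust, at the cost of stepwise rather than continuous changes in the output.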

Figure 4 shows the control flow module for adaptive control of weld parameters for the system.

Diagram of welding parameter adaptive control (Chen et al. 2007 )