
10 Experimental research

Experimental research, often considered to be the ‘gold standard’ in research designs, is one of the most rigorous of all research designs. In this design, one or more independent variables are manipulated by the researcher (as treatments), subjects are randomly assigned to different treatment levels (random assignment), and the results of the treatments on outcomes (dependent variables) are observed. The unique strength of experimental research is its internal validity (causality), which comes from its ability to link cause and effect through treatment manipulation while controlling for the spurious effects of extraneous variables.

Experimental research is best suited for explanatory research, where the goal of the study is to examine cause-effect relationships, rather than for descriptive or exploratory research. It also works well for research that involves a relatively limited and well-defined set of independent variables that can either be manipulated or controlled. Experimental research can be conducted in laboratory or field settings. Laboratory experiments, conducted in laboratory (artificial) settings, tend to be high in internal validity, but this comes at the cost of low external validity (generalisability), because the artificial setting in which the study is conducted may not reflect the real world. Field experiments are conducted in field settings, such as a real organisation, and are high in both internal and external validity. However, such experiments are relatively rare because of the difficulty of manipulating treatments and controlling for extraneous effects in a field setting.

Experimental research can be grouped into two broad categories: true experimental designs and quasi-experimental designs. Both designs require treatment manipulation, but while true experiments also require random assignment, quasi-experiments do not. Sometimes, we also refer to non-experimental research, which is not really a research design, but an all-inclusive term that includes all types of research that do not employ treatment manipulation or random assignment, such as survey research, observational research, and correlational studies.

Basic concepts

Treatment and control groups. In experimental research, some subjects are administered one or more experimental stimuli called a treatment (the treatment group), while other subjects are not given such a stimulus (the control group). The treatment may be considered successful if subjects in the treatment group rate more favourably on outcome variables than control group subjects. Multiple levels of experimental stimulus may be administered, in which case there may be more than one treatment group. For example, in order to test the effects of a new drug intended to treat a certain medical condition like dementia, if a sample of dementia patients is randomly divided into three groups, with the first group receiving a high dosage of the drug, the second group receiving a low dosage, and the third group receiving a placebo such as a sugar pill (control group), then the first two groups are experimental groups and the third group is a control group. After administering the drug for a period of time, if the condition of the experimental group subjects improved significantly more than that of the control group subjects, we can say that the drug is effective. We can also compare the conditions of the high and low dosage experimental groups to determine if the high dose is more effective than the low dose.

Treatment manipulation. Treatments are the unique feature of experimental research that sets this design apart from all other research methods. Treatment manipulation helps control for the ‘cause’ in cause-effect relationships. Naturally, the validity of experimental research depends on how well the treatment was manipulated. Treatment manipulation must be checked using pretests and pilot tests prior to the experimental study. Any measurements conducted before the treatment is administered are called pretest measures, while those conducted after the treatment are posttest measures.

Random selection and assignment. Random selection is the process of randomly drawing a sample from a population or a sampling frame. This approach is typically employed in survey research, and ensures that each unit in the population has a positive chance of being selected into the sample. Random assignment, however, is a process of randomly assigning subjects to experimental or control groups. This is a standard practice in true experimental research to ensure that treatment groups are similar (equivalent) to each other and to the control group prior to treatment administration. Random selection is related to sampling, and is therefore more closely related to the external validity (generalisability) of findings. However, random assignment is related to design, and is therefore most related to internal validity. It is possible to have both random selection and random assignment in well-designed experimental research, but quasi-experimental research involves neither random selection nor random assignment.
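The distinction between random selection and random assignment can be made concrete with a short Python sketch. It assumes a hypothetical sampling frame of student IDs; `random_selection` and `random_assignment` are illustrative helper names, not part of any standard package. Selection draws the sample from the frame (a sampling step, tied to external validity), while assignment splits that sample into groups (a design step, tied to internal validity).

```python
import random


def random_selection(sampling_frame, n, seed=None):
    """Random selection: draw a simple random sample of n units from the sampling frame."""
    rng = random.Random(seed)
    return rng.sample(sampling_frame, n)


def random_assignment(subjects, seed=None):
    """Random assignment: shuffle the subjects and split them into treatment and control groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}


# Hypothetical sampling frame of 1,000 student IDs
frame = [f"student_{i:04d}" for i in range(1000)]

sample = random_selection(frame, 40, seed=1)  # sampling step: bears on external validity
groups = random_assignment(sample, seed=2)    # design step: bears on internal validity
print(len(groups["treatment"]), len(groups["control"]))  # 20 20
```

A quasi-experiment would skip the `random_assignment` step and work with intact groups instead.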

Threats to internal validity. Although experimental designs are considered more rigorous than other research methods in terms of the internal validity of their inferences (by virtue of their ability to control causes through treatment manipulation), they are not immune to internal validity threats. Some of these threats to internal validity are described below, within the context of a study of the impact of a special remedial math tutoring program for improving the math abilities of high school students.

History threat is the possibility that the observed effects (dependent variables) are caused by extraneous or historical events rather than by the experimental treatment. For instance, students’ post-remedial math score improvement may have been caused by their preparation for a math exam at their school, rather than the remedial math program.

Maturation threat refers to the possibility that observed effects are caused by natural maturation of subjects (e.g., a general improvement in their intellectual ability to understand complex concepts) rather than the experimental treatment.

Testing threat is a threat in pre-post designs where subjects’ posttest responses are conditioned by their pretest responses. For instance, if students remember their answers from the pretest evaluation, they may tend to repeat them in the posttest exam. Not conducting a pretest can help avoid this threat.

Instrumentation threat, which also occurs in pre-post designs, refers to the possibility that the difference between pretest and posttest scores is not due to the remedial math program, but due to changes in the administered test, such as the posttest having a higher or lower degree of difficulty than the pretest.

Mortality threat refers to the possibility that subjects may drop out of the study at different rates in the treatment and control groups for a systematic reason; for example, the dropouts may be mostly students who scored low on the pretest. If the low-performing students drop out, the results of the posttest will be artificially inflated by the preponderance of high-performing students.

Regression threat (also called regression to the mean) refers to the statistical tendency of a group’s overall performance to regress toward the mean during a posttest rather than in the anticipated direction. For instance, if subjects scored high on a pretest, they will have a tendency to score lower on the posttest (closer to the mean), because their high pretest scores (away from the mean) were possibly a statistical aberration. This problem tends to be more prevalent in non-random samples and when the two measures are imperfectly correlated.
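Regression to the mean can be demonstrated without any treatment at all. The simulation below is a sketch under an assumed measurement model (each observed score is a stable true ability plus independent test-day noise; the means and standard deviations are made up): students selected for their high pretest scores score lower, on average, on the posttest, even though nothing was done to them.

```python
import random

rng = random.Random(42)

# Assumed model: observed score = stable true ability + independent test-day noise.
# No treatment is given between the two tests.
true_ability = [rng.gauss(70, 10) for _ in range(10_000)]
pretest = [a + rng.gauss(0, 8) for a in true_ability]
posttest = [a + rng.gauss(0, 8) for a in true_ability]

# Select the students who scored in the top 10% on the pretest
cutoff = sorted(pretest)[int(0.9 * len(pretest))]
top = [i for i, score in enumerate(pretest) if score >= cutoff]

mean_pre_top = sum(pretest[i] for i in top) / len(top)
mean_post_top = sum(posttest[i] for i in top) / len(top)
print(f"top group pretest mean:  {mean_pre_top:.1f}")
print(f"top group posttest mean: {mean_post_top:.1f}")  # lower, i.e. closer to the overall mean of about 70
```

A remedial program evaluated on such a high-scoring group would look harmful, and one evaluated on a low-scoring group would look helpful, purely because of this statistical tendency.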

Two-group experimental designs

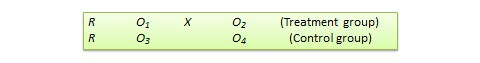

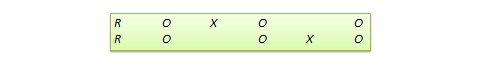

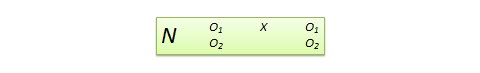

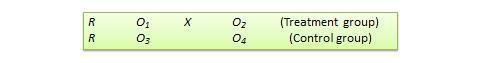

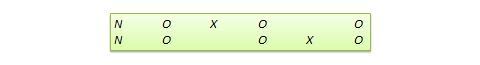

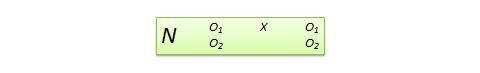

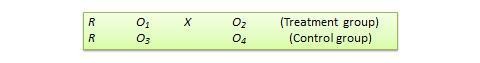

Pretest-posttest control group design. In this design, subjects are randomly assigned to treatment and control groups and given an initial (pretest) measurement of the dependent variables of interest; the treatment group is then administered a treatment (representing the independent variable of interest), and the dependent variables are measured again (posttest). The notation of this design is shown in Figure 10.1.

Statistical analysis of this design involves a simple analysis of variance (ANOVA) between the treatment and control groups. The pretest-posttest design handles several threats to internal validity, such as maturation, testing, and regression, since these threats can be expected to influence both treatment and control groups in a similar (random) manner. The selection threat is controlled via random assignment. However, additional threats to internal validity may exist. For instance, mortality can be a problem if there are differential dropout rates between the two groups, and the pretest measurement may bias the posttest measurement—especially if the pretest introduces unusual topics or content.
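In the pretest-posttest control group design, one common way to express the treatment effect is as the difference between the groups’ average gains from pretest to posttest. The sketch below computes that quantity for a few made-up scores; `gain_score_effect` is a hypothetical helper, and a real analysis would add a significance test such as an ANOVA on the gain scores.

```python
from statistics import mean


def gain_score_effect(pre_t, post_t, pre_c, post_c):
    """Treatment effect = mean gain of the treatment group minus mean gain of the control group."""
    gains_t = [post - pre for pre, post in zip(pre_t, post_t)]
    gains_c = [post - pre for pre, post in zip(pre_c, post_c)]
    return mean(gains_t) - mean(gains_c)


# Hypothetical math scores for four students per group
pre_treatment, post_treatment = [52, 48, 55, 50], [68, 63, 70, 66]
pre_control, post_control = [51, 49, 54, 50], [55, 52, 58, 53]

effect = gain_score_effect(pre_treatment, post_treatment, pre_control, post_control)
print(effect)  # 12.0
```

Subtracting the control group’s gain (3.5 points here) removes change that would have occurred anyway, such as maturation or practice effects, which is why the design handles those threats.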

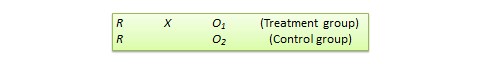

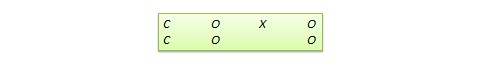

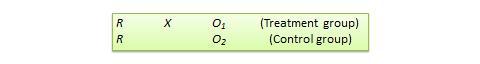

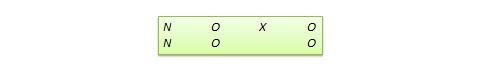

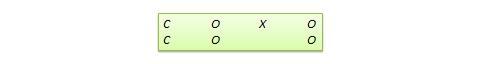

Posttest-only control group design. This design is a simpler version of the pretest-posttest design in which pretest measurements are omitted. The design notation is shown in Figure 10.2.

The treatment effect is measured simply as the difference in the posttest scores between the two groups.

The appropriate statistical analysis of this design is also a two-group analysis of variance (ANOVA). The simplicity of this design makes it more attractive than the pretest-posttest design in terms of internal validity. This design controls for maturation, testing, regression, selection, and pretest-posttest interaction, though the mortality threat may continue to exist.
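For the posttest-only design, the treatment effect is the difference in mean posttest scores, and a two-group one-way ANOVA tests whether that difference is larger than chance. The following sketch computes both by hand from made-up scores using only the standard library; `one_way_anova_f` is an illustrative helper. In practice a library routine such as SciPy’s `scipy.stats.f_oneway` would also return the p-value.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """One-way ANOVA F statistic: between-group mean square / within-group mean square."""
    scores = [x for g in groups for x in g]
    grand_mean = mean(scores)
    k, n = len(groups), len(scores)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical posttest scores (no pretest was taken)
treatment = [78, 82, 75, 85, 80]
control = [70, 72, 68, 74, 71]

effect = mean(treatment) - mean(control)
f_stat = one_way_anova_f(treatment, control)
print(effect, round(f_stat, 2))  # 9 20.77
```

With only two groups, this F equals the square of the pooled-variance t statistic, so the ANOVA and an independent-samples t-test lead to the same conclusion.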

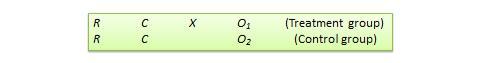

Covariance design. In this design, the pretest measure is not a measurement of the dependent variable but rather of a covariate, so the treatment effect is measured as the difference in the posttest scores between the treatment and control groups.

Because of the presence of covariates, the appropriate statistical analysis of this design is a two-group analysis of covariance (ANCOVA). This design has all the advantages of the posttest-only design, but with improved internal validity due to the control of covariates. Covariance designs can also be extended to the pretest-posttest control group design.
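ANCOVA adjusts the posttest difference for any pre-existing difference in the covariate. A minimal sketch, assuming a single covariate and a common regression slope in both groups (the usual ANCOVA assumption): estimate the pooled within-group slope, then subtract the part of the raw posttest gap that the covariate gap predicts. The data are constructed, noise-free values chosen so the answer is easy to check, and `ancova_adjusted_effect` is a hypothetical helper name.

```python
from statistics import mean


def ancova_adjusted_effect(x_t, y_t, x_c, y_c):
    """Covariate-adjusted treatment effect for one covariate, assuming equal slopes.

    x_t, x_c: covariate scores; y_t, y_c: posttest scores (t = treatment, c = control).
    Returns (adjusted effect, pooled within-group slope).
    """
    def centred_sums(x, y):
        mx, my = mean(x), mean(y)
        sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
        sxx = sum((a - mx) ** 2 for a in x)
        return sxy, sxx

    sxy_t, sxx_t = centred_sums(x_t, y_t)
    sxy_c, sxx_c = centred_sums(x_c, y_c)
    slope = (sxy_t + sxy_c) / (sxx_t + sxx_c)  # pooled within-group regression slope
    raw_gap = mean(y_t) - mean(y_c)
    return raw_gap - slope * (mean(x_t) - mean(x_c)), slope


# Constructed data: posttest = 2 + 0.5 * covariate, plus 3 points for the treatment group
x_treat = [60, 65, 70, 75, 80]
x_ctrl = [50, 55, 60, 65, 70]
y_treat = [2 + 0.5 * x + 3 for x in x_treat]
y_ctrl = [2 + 0.5 * x for x in x_ctrl]

adjusted, slope = ancova_adjusted_effect(x_treat, y_treat, x_ctrl, y_ctrl)
print(mean(y_treat) - mean(y_ctrl), adjusted, slope)  # 8.0 3.0 0.5
```

The raw posttest gap of 8 points overstates the effect because the treatment group started 10 covariate points higher; the adjustment attributes 5 of those points to the covariate. With real, noisy data a statistics package would perform the same adjustment and also supply standard errors.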

Factorial designs

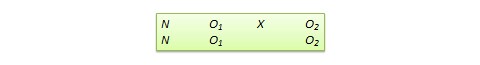

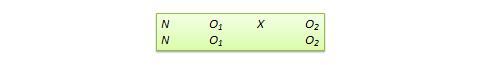

Two-group designs are inadequate if your research requires manipulation of two or more independent variables (treatments). In such cases, you would need designs with four or more groups. Such designs, quite popular in experimental research, are commonly called factorial designs. Each independent variable in this design is called a factor, and each subdivision of a factor is called a level. Factorial designs enable the researcher to examine not only the individual effect of each treatment on the dependent variables (called main effects), but also their joint effect (called interaction effects).

In a factorial design, a main effect is said to exist if the dependent variable shows a significant difference between multiple levels of one factor, at all levels of other factors. No change in the dependent variable across factor levels is the null case (baseline), from which main effects are evaluated. For example, in a study that crosses instructional type with instructional time, you may see a main effect of instructional type, instructional time, or both on learning outcomes. An interaction effect exists when the effect of differences in one factor depends upon the level of a second factor. In our example, if the effect of instructional type on learning outcomes is greater for three hours/week of instructional time than for one and a half hours/week, then we can say that there is an interaction effect between instructional type and instructional time on learning outcomes. Note that the presence of interaction effects dominates and makes main effects irrelevant: it is not meaningful to interpret main effects if interaction effects are significant.
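For the simplest 2 × 2 factorial design, main effects and the interaction can be read off the four cell means. The sketch below uses hypothetical mean learning outcomes for two instructional types (labelled A and B, since the levels are illustrative) crossed with one and a half versus three hours/week of instruction. It only computes effect sizes; testing their significance would require a two-way ANOVA on the individual scores.

```python
def two_by_two_effects(m):
    """Main effects and interaction contrast for a 2 x 2 factorial design.

    m[(i, j)] is the mean outcome at level i of factor 1 and level j of factor 2 (i, j in {0, 1}).
    """
    # Main effect of factor 1: change from level 0 to level 1, averaged over factor 2's levels
    main_1 = ((m[(1, 0)] - m[(0, 0)]) + (m[(1, 1)] - m[(0, 1)])) / 2
    # Main effect of factor 2: change from level 0 to level 1, averaged over factor 1's levels
    main_2 = ((m[(0, 1)] - m[(0, 0)]) + (m[(1, 1)] - m[(1, 0)])) / 2
    # Interaction: does the effect of factor 1 differ between the two levels of factor 2?
    interaction = (m[(1, 1)] - m[(0, 1)]) - (m[(1, 0)] - m[(0, 0)])
    return main_1, main_2, interaction


# Hypothetical cell means. Factor 1: instructional type (0 = A, 1 = B).
# Factor 2: instructional time (0 = 1.5 hours/week, 1 = 3 hours/week).
cell_means = {(0, 0): 60, (0, 1): 70, (1, 0): 65, (1, 1): 85}

type_effect, time_effect, interaction = two_by_two_effects(cell_means)
print(type_effect, time_effect, interaction)  # 10.0 15.0 10
```

Here type B beats type A by 5 points at 1.5 hours/week but by 15 points at 3 hours/week, so the averaged main effect of 10 points hides a dependence on instructional time; this is the kind of interaction that makes the main effect alone misleading.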

Hybrid experimental designs

Hybrid designs are those that are formed by combining features of more established designs. Three such hybrid designs are the randomised block design, the Solomon four-group design, and the switched replications design.

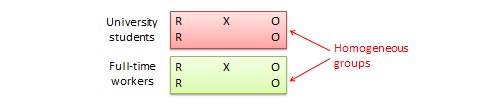

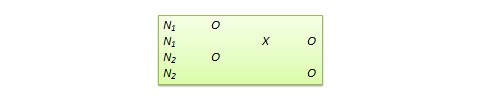

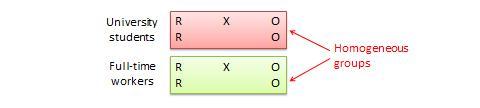

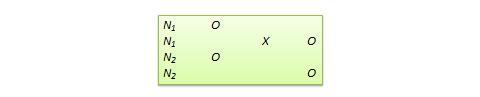

Randomised block design. This is a variation of the posttest-only or pretest-posttest control group design where the subject population can be grouped into relatively homogeneous subgroups (called blocks) within which the experiment is replicated. For instance, if you want to replicate the same posttest-only design among university students and full-time working professionals (two homogeneous blocks), subjects in both blocks are randomly split between the treatment group (receiving the same treatment) and the control group (see Figure 10.5). The purpose of this design is to reduce the ‘noise’ or variance in data that may be attributable to differences between the blocks so that the actual effect of interest can be detected more accurately.
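Random assignment within blocks can be sketched as follows. The example assumes two hypothetical blocks (university students and working professionals) identified by a lookup table; subjects are shuffled and split in half separately inside each block, so each block contributes equally to both groups. The helper name is illustrative.

```python
import random
from collections import defaultdict


def randomised_block_assignment(subjects, block_of, seed=None):
    """Randomly split subjects into treatment and control separately within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for s in subjects:
        blocks[block_of[s]].append(s)

    assignment = {}
    for block, members in blocks.items():
        rng.shuffle(members)
        half = len(members) // 2
        for s in members[:half]:
            assignment[s] = (block, "treatment")
        for s in members[half:]:
            assignment[s] = (block, "control")
    return assignment


# Hypothetical subjects: six university students and four working professionals
block_of = {f"s{i}": "student" for i in range(1, 7)}
block_of.update({f"p{i}": "professional" for i in range(1, 5)})

assignment = randomised_block_assignment(list(block_of), block_of, seed=3)
for subject, (block, group) in sorted(assignment.items()):
    print(subject, block, group)
```

During analysis, block membership is then included as a factor, so that variance due to block differences is separated from the treatment effect.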

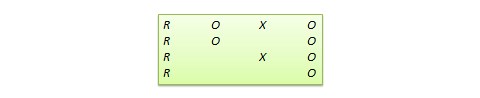

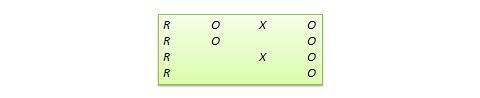

Solomon four-group design. In this design, the sample is divided into two treatment groups and two control groups. One treatment group and one control group receive the pretest, and the other two groups do not. This design represents a combination of posttest-only and pretest-posttest control group design, and is intended to test for the potential biasing effect of pretest measurement on posttest measures that tends to occur in pretest-posttest designs, but not in posttest-only designs. The design notation is shown in Figure 10.6.
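With four groups, the Solomon design lets the influence of the pretest be estimated directly. A rough sketch with hypothetical group mean posttest scores: the treatment effect is computed separately among the pretested and the non-pretested groups, and the difference between the two estimates indicates whether pretesting changed how subjects responded to the treatment. A formal analysis would treat pretesting and treatment as the two factors of a 2 × 2 ANOVA on posttest scores; the helper name is illustrative.

```python
def solomon_contrasts(post):
    """post maps (pretested, treated) to the mean posttest score of each of the four groups."""
    effect_with_pretest = post[(True, True)] - post[(True, False)]
    effect_without_pretest = post[(False, True)] - post[(False, False)]
    # A non-zero value suggests the pretest altered the response to the treatment
    pretest_sensitisation = effect_with_pretest - effect_without_pretest
    return effect_with_pretest, effect_without_pretest, pretest_sensitisation


# Hypothetical mean posttest scores for the four Solomon groups
posttest_means = {
    (True, True): 82,    # pretest, treatment, posttest
    (True, False): 70,   # pretest, no treatment, posttest
    (False, True): 78,   # treatment, posttest only
    (False, False): 71,  # posttest only
}
print(solomon_contrasts(posttest_means))  # (12, 7, 5)
```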

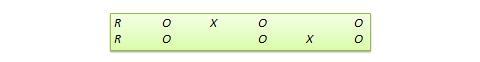

Switched replication design. This is a two-group design implemented in two phases with three waves of measurement. The treatment group in the first phase serves as the control group in the second phase, and the control group in the first phase becomes the treatment group in the second phase, as illustrated in Figure 10.7. In other words, the original design is repeated or replicated temporally with treatment/control roles switched between the two groups. By the end of the study, all participants will have received the treatment either during the first or the second phase. This design is most feasible in organisational contexts where organisational programs (e.g., employee training) are implemented in a phased manner or are repeated at regular intervals.
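Because each group serves once as the treatment group and once as the control group, the switched replication design yields two estimates of the same effect. A sketch under assumed conditions: each group’s mean score is recorded at the three measurement waves, group 1 is treated between waves 1 and 2, group 2 between waves 2 and 3, and each phase’s effect is taken as the difference in gains between the treated group and the group serving as control. The data and helper name are hypothetical.

```python
def switched_replication_effects(g1, g2):
    """g1, g2: mean scores of each group at waves 1, 2 and 3.

    Group 1 is treated between waves 1 and 2; group 2 between waves 2 and 3.
    """
    phase1 = (g1[1] - g1[0]) - (g2[1] - g2[0])  # group 1 treated, group 2 is the control
    phase2 = (g2[2] - g2[1]) - (g1[2] - g1[1])  # roles switched
    return phase1, phase2


# Hypothetical mean scores at the three waves
group_1 = [50, 62, 63]
group_2 = [51, 52, 64]
print(switched_replication_effects(group_1, group_2))  # (11, 11)
```

Similar estimates in both phases amount to a replication of the effect; a large discrepancy would suggest that something other than the treatment, such as a history event during one phase, is at work.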

Quasi-experimental designs

Quasi-experimental designs are almost identical to true experimental designs, but lack one key ingredient: random assignment. For instance, one entire class section or one organisation is used as the treatment group, while another section of the same class or a different organisation in the same industry is used as the control group. This lack of random assignment potentially results in groups that are non-equivalent, such as one group possessing greater mastery of certain content than the other group, say by virtue of having had a better teacher in a previous semester, which introduces the possibility of selection bias. Quasi-experimental designs are therefore inferior to true experimental designs in internal validity due to the presence of a variety of selection-related threats, such as the selection-maturation threat (the treatment and control groups maturing at different rates), selection-history threat (the treatment and control groups being differentially impacted by extraneous or historical events), selection-regression threat (the treatment and control groups regressing toward the mean between pretest and posttest at different rates), selection-instrumentation threat (the treatment and control groups responding differently to the measurement), selection-testing threat (the treatment and control groups responding differently to the pretest), and selection-mortality threat (the treatment and control groups demonstrating differential dropout rates). Given these selection threats, it is generally preferable to avoid quasi-experimental designs to the greatest extent possible.

In addition, there are quite a few unique non-equivalent designs without corresponding true experimental design cousins. Some of the more useful of these designs are discussed next.

Regression discontinuity (RD) design. This is a non-equivalent pretest-posttest design where subjects are assigned to the treatment or control group based on a cut-off score on a preprogram measure. For instance, patients who are severely ill may be assigned to a treatment group to test the efficacy of a new drug or treatment protocol, and those who are mildly ill assigned to the control group. In another example, students who are lagging behind on standardised test scores may be selected for a remedial curriculum program intended to improve their performance, while those who score high on such tests are not selected for the remedial program.

Because of the use of a cut-off score, it is possible that the observed results may be a function of the cut-off score rather than the treatment, which introduces a new threat to internal validity. However, using the cut-off score also ensures that limited or costly resources are distributed to people who need them the most, rather than randomly across a population, while simultaneously allowing a quasi-experimental treatment. The control group scores in the RD design do not serve as a benchmark for comparing treatment group scores, given the systematic non-equivalence between the two groups. Rather, if there is no discontinuity between pretest and posttest scores in the control group, but such a discontinuity persists in the treatment group, then this discontinuity is viewed as evidence of the treatment effect.
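A regression discontinuity analysis looks for a jump in the pretest-posttest relationship exactly at the cut-off. The sketch below assumes a straight-line relationship on each side of the cut-off and a remedial program given to students scoring below it; it fits an ordinary least squares line to each side and reports the gap between the two lines at the cut-off. The data are constructed without noise, with a built-in 5-point program effect so the result can be checked, and the helper names are hypothetical.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sum((xi - mx) ** 2 for xi in x)
    return my - b * mx, b


def rd_effect(pre, post, cutoff):
    """Gap at the cut-off between the lines fitted to treated (below) and untreated (at or above) subjects."""
    treated = [(x, y) for x, y in zip(pre, post) if x < cutoff]
    untreated = [(x, y) for x, y in zip(pre, post) if x >= cutoff]
    a_t, b_t = fit_line(*zip(*treated))
    a_u, b_u = fit_line(*zip(*untreated))
    return (a_t + b_t * cutoff) - (a_u + b_u * cutoff)


# Constructed data: posttest = 10 + 0.8 * pretest, plus 5 points for students below the cut-off
pretest = list(range(40, 81))
posttest = [10 + 0.8 * x + (5 if x < 60 else 0) for x in pretest]

print(round(rd_effect(pretest, posttest, cutoff=60), 6))  # 5.0
```

With real data the linearity assumption should be checked, since curvature near the cut-off can masquerade as a discontinuity.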

Proxy pretest design. This design, shown in Figure 10.11, looks very similar to the standard non-equivalent groups design (NEGD) with a pretest and posttest, with one critical difference: the pretest score is collected after the treatment is administered. A typical application of this design is when a researcher is brought in to test the efficacy of a program (e.g., an educational program) after the program has already started and pretest data is not available. Under such circumstances, the best option for the researcher is often to use a different prerecorded measure, such as students’ grade point average before the start of the program, as a proxy for pretest data. A variation of the proxy pretest design is to use subjects’ posttest recollection of pretest data, which may be subject to recall bias, but nevertheless may provide a measure of perceived gain or change in the dependent variable.

Separate pretest-posttest samples design. This design is useful if it is not possible to collect pretest and posttest data from the same subjects for some reason. As shown in Figure 10.12, there are four groups in this design, but two groups come from a single non-equivalent group, while the other two groups come from a different non-equivalent group. For instance, say you want to test customer satisfaction with a new online service that is implemented in one city but not in another. In this case, customers in the first city serve as the treatment group and those in the second city constitute the control group. If it is not possible to obtain pretest and posttest measures from the same customers, you can measure customer satisfaction at one point in time, implement the new service program, and measure customer satisfaction (with a different set of customers) after the program is implemented. Customer satisfaction is also measured in the control group at the same times as in the treatment group, but without the new program implementation. The design is not particularly strong, because you cannot examine the changes in any specific customer’s satisfaction score before and after the implementation, but you can only examine average customer satisfaction scores. Despite the lower internal validity, this design may still be a useful way of collecting quasi-experimental data when pretest and posttest data is not available from the same subjects.
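Because different customers are measured before and after, the analysis can only compare averages. A minimal sketch, assuming satisfaction is rated on a hypothetical 1 to 10 scale: the program effect is estimated as the change in mean satisfaction in the treatment city minus the change in the control city, a difference-in-differences of group means. The helper name and ratings are illustrative.

```python
from statistics import mean


def separate_samples_effect(t_before, t_after, c_before, c_after):
    """Difference in mean change between cities; each list comes from a different set of customers."""
    return (mean(t_after) - mean(t_before)) - (mean(c_after) - mean(c_before))


# Hypothetical satisfaction ratings (1-10) from four different customers per measurement
treatment_city_before = [6, 7, 5, 6]
treatment_city_after = [8, 9, 7, 8]
control_city_before = [6, 6, 5, 7]
control_city_after = [6, 7, 6, 7]

print(separate_samples_effect(treatment_city_before, treatment_city_after,
                              control_city_before, control_city_after))  # 1.5
```

Subtracting the control city’s change (0.5 points here) guards against a city-wide shift in satisfaction being mistaken for a program effect, but individual-level change remains unobservable.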

An interesting variation of the non-equivalent dependent variables (NEDV) design is a pattern-matching NEDV design, which employs multiple outcome variables and a theory that explains how much each variable will be affected by the treatment. The researcher can then examine if the theoretical prediction is matched in actual observations. This pattern-matching technique, based on the degree of correspondence between theoretical and observed patterns, is a powerful way of alleviating internal validity concerns in the original NEDV design.
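The degree of correspondence between theoretical and observed patterns is often summarised with a correlation. The sketch below assumes the theory ranks five hypothetical outcome variables by how strongly the treatment should affect them (coded 3 = large down to 0 = none) and correlates those predictions with made-up observed changes; a high correlation means the observed pattern matches the theory. The helper name is illustrative.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical: predicted effect size per outcome (3 = large ... 0 = none) and observed change
predicted = [3, 2, 1, 0, 0]
observed = [2.8, 2.1, 0.9, 0.2, -0.1]

r = pearson_r(predicted, observed)
print(round(r, 2))  # 0.99
```

A rival explanation would have to reproduce the whole predicted pattern across several outcomes, not just a single difference, which is why pattern matching strengthens internal validity.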

Perils of experimental research

Experimental research is one of the most difficult of research designs, and should not be taken lightly. This type of research is often beset with a multitude of methodological problems. First, though experimental research requires theories for framing hypotheses for testing, much of current experimental research is atheoretical. Without theories, the hypotheses being tested tend to be ad hoc, possibly illogical, and meaningless. Second, many of the measurement instruments used in experimental research are not tested for reliability and validity, and are incomparable across studies. Consequently, results generated using such instruments are also incomparable. Third, experimental research often uses inappropriate research designs, such as irrelevant dependent variables, no interaction effects, no experimental controls, and non-equivalent stimuli across treatment groups. Findings from such studies tend to lack internal validity and are highly suspect. Fourth, the treatments (tasks) used in experimental research may be diverse, incomparable, and inconsistent across studies, and sometimes inappropriate for the subject population. For instance, undergraduate student subjects are often asked to pretend that they are marketing managers and to perform a complex budget allocation task in which they have no experience or expertise. The use of such inappropriate tasks introduces new threats to internal validity (i.e., subjects’ performance may be an artefact of the content or difficulty of the task setting), generates findings that are non-interpretable and meaningless, and makes integration of findings across studies impossible.

The design of proper experimental treatments is a very important task in experimental design, because the treatment is the raison d’être of the experimental method, and must never be rushed or neglected. To design an adequate and appropriate task, researchers should use prevalidated tasks if available, conduct treatment manipulation checks to check the adequacy of such tasks (by debriefing subjects after they perform the assigned task), conduct pilot tests (repeatedly, if necessary), and, if in doubt, use tasks that are simple and familiar for the respondent sample rather than tasks that are complex or unfamiliar.

In summary, this chapter introduced key concepts in the experimental design research method and introduced a variety of true experimental and quasi-experimental designs. Although these designs vary widely in internal validity, designs with less internal validity should not be overlooked and may sometimes be useful under specific circumstances and empirical contingencies.

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


A Complete Guide to Experimental Research

Published by Carmen Troy on August 14th, 2021. Revised on August 25, 2023.

A Quick Guide to Experimental Research

Experimental research refers to experiments conducted in a laboratory, or to observation under controlled conditions. Researchers try to establish the cause-and-effect relationship between two or more variables.

The subjects/participants in the experiment are selected and observed. They receive treatments, such as changes in room temperature, diet, or atmosphere, or a new drug, and the resulting changes are observed. Experiments can range from personal, informal natural comparisons to tightly controlled laboratory tests. Experimental research involves three types of variables:

- Independent variable

- Dependent variable

- Controlled variable

Before conducting experimental research, you need a clear understanding of the experimental design. A true experimental design involves identifying a problem, formulating a hypothesis, determining the number of variables, selecting and assigning the participants, choosing among types of research designs, meeting ethical requirements, etc.

There are many types of research methods that can be classified based on:

- The nature of the problem to be studied

- Number of participants (individual or groups)

- Number of groups involved (Single group or multiple groups)

- Types of data collection methods (Qualitative/Quantitative/Mixed methods)

- Number of variables (a single independent variable, or two or more independent variables in a factorial design)

- The experimental design

Types of Experimental Research

Laboratory Experiment

Also described simply as experimental research, this type of research is conducted in a laboratory, where the researcher can manipulate and control the variables of the experiment.

Example: Milgram’s experiment on obedience.

Field Experiment

Field experiments are conducted in the participants’ everyday environment, with a few artificial changes introduced. Researchers have less control over the variables being measured than in a laboratory. Participants may know that they are taking part in the experiment.

Natural Experiments

The experiment is conducted in the natural environment of the participants. The participants are generally not informed about the experiment being conducted on them.

Examples: Estimating the health condition of the population. Did the increase in tobacco prices decrease the sale of tobacco? Did the usage of helmets decrease the number of head injuries of the bikers?

Quasi-Experiments

A quasi-experiment is an experiment that takes advantage of natural occurrences. Researchers cannot randomly assign participants to groups.

Example: Comparing the academic performance of two schools.


How to Conduct Experimental Research?

Step 1. Identify and Define the Problem

You need to identify a problem in your field of study and describe your research question.

Example: You want to know about the effects of social media on the behaviour of youngsters. You would need to find out how much time students spend on the internet daily.

Example: You want to find out the adverse effects of junk food on human health. You would need to find out how frequent consumption of junk food can affect an individual’s health.

Step 2. Determine the Number of Levels of Variables

You need to determine the number of variables. The independent variable is the predictor and is manipulated by the researcher, while the dependent variable is the outcome that changes in response to the independent variable.

In the first example, we predicted that increased social media usage is associated with more negative behaviour among youngsters.

In the second example, we predicted a positive correlation between a balanced diet and good health, and a positive correlation between junk food consumption and multiple health issues.

Step 3. Formulate the Hypothesis

One of the essential aspects of experimental research is formulating a hypothesis. A researcher studies the cause-and-effect relationship between the independent and dependent variables while controlling for confounding variables. The null hypothesis, denoted H0, states that there is no significant relationship between the independent and dependent variables; the researcher aims to reject it. The alternative hypothesis, denoted H1 or HA, states the relationship that the researcher seeks to support.
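One way to make the null and alternative hypotheses concrete is a permutation test. Under H0 (the treatment has no effect), the group labels are arbitrary, so randomly shuffling them should fairly often produce mean differences as large as the observed one; a small p-value is evidence against H0 and in favour of H1. This is a minimal sketch with hypothetical scores; `permutation_test` is an illustrative helper, and t-tests or ANOVA are equally common choices.

```python
import random


def permutation_test(treatment, control, n_permutations=10_000, seed=None):
    """Two-sided permutation test of H0: the treatment and control means do not differ."""
    rng = random.Random(seed)
    n_t = len(treatment)
    pooled = list(treatment) + list(control)
    observed = abs(sum(treatment) / n_t - sum(control) / len(control))

    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # relabel subjects at random, as if H0 were true
        diff = abs(sum(pooled[:n_t]) / n_t - sum(pooled[n_t:]) / (len(pooled) - n_t))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value above zero
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical outcome scores
treated_scores = [10, 11, 12, 13, 14]
control_scores = [1, 2, 3, 4, 5]
p_value = permutation_test(treated_scores, control_scores, seed=0)
print(p_value < 0.05)  # True: reject H0 at the 5% level
```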


Step 4. Selection and Assignment of the Subjects

Selecting and assigning subjects is an essential feature that differentiates the experimental design from other research designs. You need to select the number of participants based on the requirements of your experiment. Participants are then assigned either to the treatment group or to a control group, which receives no treatment and provides a baseline against which outcomes in the experimental group can be compared.

Randomisation: When participants are selected randomly from the population, this is known as probability sampling; if the selection is not random, it is considered non-probability sampling. The selected participants are then randomly assigned to the experimental or control group.

Stratified sampling: A type of random selection in which the population is divided into strata (subgroups) and participants are randomly selected from each stratum.

Matching: Even when participants are selected randomly, they still have to be assigned to the various comparison groups. Matching is another procedure for doing so: participants in the control group are selected to match participants in the experimental group on characteristics that could influence the dependent variable.
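Stratified sampling and matching can both be sketched in a few lines. The helpers below are hypothetical: `stratified_sample` draws the same fraction at random from each stratum, and `match_controls` pairs each experimental participant with the unused control candidate closest on one covariate (age, in this made-up example). Greedy matching on a single covariate, without replacement, is a simplifying assumption; real studies often match on several characteristics.

```python
import random
from collections import defaultdict


def stratified_sample(population, stratum_of, fraction, seed=None):
    """Randomly select the same fraction of units from every stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, round(len(members) * fraction)))
    return sample


def match_controls(treated, candidates, covariate):
    """Greedy one-to-one matching on a single covariate, without replacement."""
    available = list(candidates)
    pairs = []
    for t in treated:
        best = min(available, key=lambda c: abs(covariate[c] - covariate[t]))
        available.remove(best)
        pairs.append((t, best))
    return pairs


# Hypothetical population: 60 urban and 40 rural residents, sampled at 10% per stratum
population = [(i, "urban" if i < 60 else "rural") for i in range(100)]
sample = stratified_sample(population, stratum_of=lambda unit: unit[1], fraction=0.1, seed=4)

# Hypothetical ages used to match experimental participants with control candidates
age = {"t1": 20, "t2": 35, "c1": 34, "c2": 21, "c3": 50}
print(match_controls(["t1", "t2"], ["c1", "c2", "c3"], age))  # [('t1', 'c2'), ('t2', 'c1')]
```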

What is Replicability?

When a researcher repeats an experiment using the same methodology with the same or comparable subject groups and obtains similar results, the findings are said to be replicable. Researchers often replicate their own work to strengthen external validity.

Step 5. Select a Research Design

You need to select a research design according to the requirements of your experiment, since there are many types of experimental designs to choose from.

Step 6. Meet Ethical and Legal Requirements

- Participants of the research should not be harmed.

- The dignity and confidentiality of the participants should be maintained.

- Informed consent should be obtained from participants before the experiment.

- The privacy of the participants should be ensured.

- Research data should remain confidential.

- The anonymity of the participants should be ensured.

- The rules and objectives of the experiments should be followed strictly.

- Misleading information and falsified data should be avoided.

Tips for Meeting the Ethical Considerations

To meet the ethical considerations, you need to ensure that:

- Participants have the right to withdraw from the experiment.

- Participants are given the information they need about the experiment.

- Questions in interviews, questionnaires, or focus groups avoid offensive or unacceptable language.

- The privacy and anonymity of the participants are protected.

- Sources and authors are acknowledged in your dissertation using a referencing style such as APA, MLA, or Harvard.

Step 7. Collect and Analyse Data.

Collect data using methods suited to your experiment’s requirements, such as observations, case studies, surveys, interviews, or questionnaires. Then analyse the data you have obtained.

Step 8. Present and Conclude the Findings of the Study.

Write the report of your research. Present, conclude, and explain the outcomes of your study.

Frequently Asked Questions

What is the first step in conducting experimental research?

The first step in conducting experimental research is to define your research question or hypothesis. Clearly outline the purpose and expectations of your experiment to guide the entire research process.


A Quick Guide to Experimental Design | 5 Steps & Examples

Published on 11 April 2022 by Rebecca Bevans. Revised on 5 December 2022.

Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables.

Experimental design means creating a set of procedures to systematically test a hypothesis. A good experimental design requires a strong understanding of the system you are studying.

There are five key steps in designing an experiment:

- Consider your variables and how they are related

- Write a specific, testable hypothesis

- Design experimental treatments to manipulate your independent variable

- Assign subjects to groups, either between-subjects or within-subjects

- Plan how you will measure your dependent variable

For valid conclusions, you also need to select a representative sample and control any extraneous variables that might influence your results. If random assignment of participants to control and treatment groups is impossible, unethical, or highly difficult, consider an observational study instead.

Table of contents

- Step 1: Define your variables

- Step 2: Write your hypothesis

- Step 3: Design your experimental treatments

- Step 4: Assign your subjects to treatment groups

- Step 5: Measure your dependent variable

- Frequently asked questions about experimental design

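Step 1: Define your variables

You should begin with a specific research question. We will work with two research question examples, one from health sciences (how phone use affects sleep) and one from ecology (how temperature affects soil respiration).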

To translate your research question into an experimental hypothesis, you need to define the main variables and make predictions about how they are related.

Start by simply listing the independent and dependent variables.

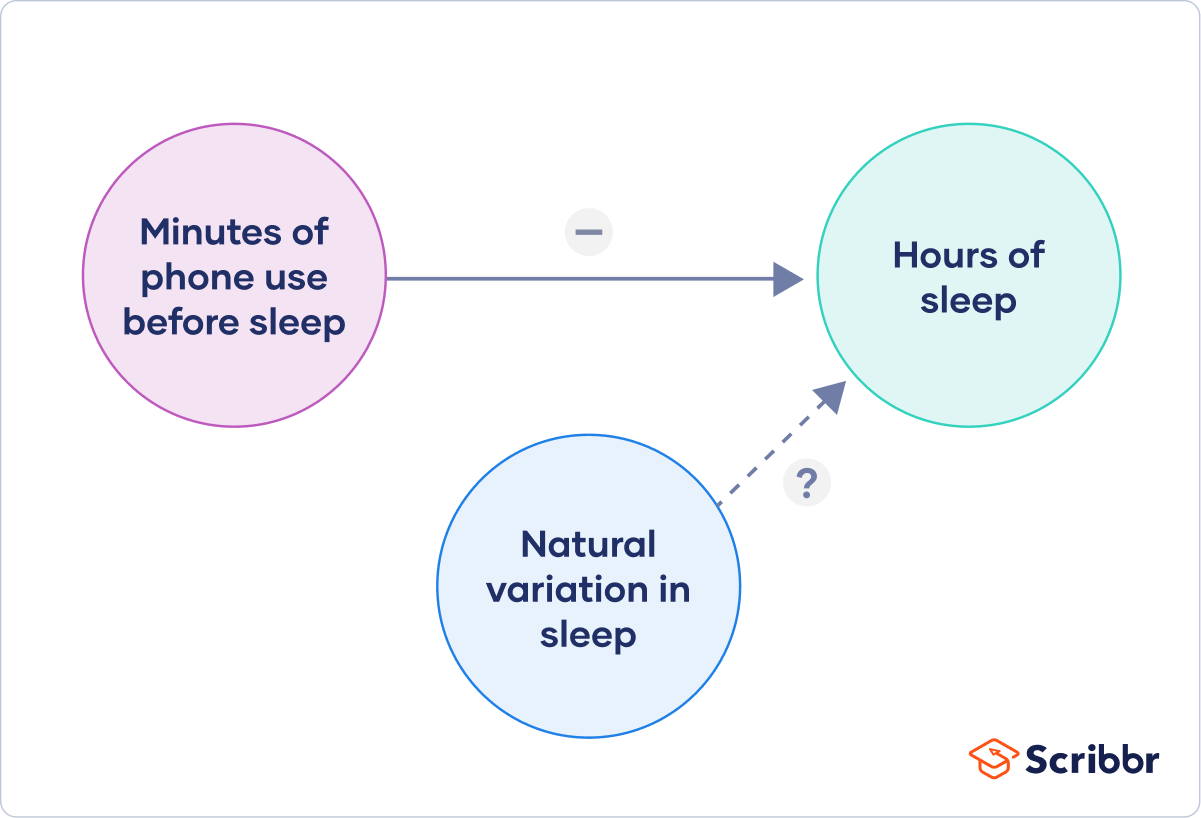

Then you need to think about possible extraneous and confounding variables and consider how you might control them in your experiment.

Finally, you can put these variables together into a diagram. Use arrows to show the possible relationships between variables and include signs to show the expected direction of the relationships.

Here we predict that increasing temperature will increase soil respiration and decrease soil moisture, while decreasing soil moisture will lead to decreased soil respiration.
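The signed diagram can also be written down as data. In the minimal sketch below, the variable names and the `path_sign` helper are hypothetical. Each hypothesised arrow is stored with a sign of +1 or -1, and the signs along a path are multiplied, the usual rule for signed path diagrams. This makes one consequence of the prediction explicit: the indirect route through soil moisture points in the opposite direction to the direct effect of temperature, so the net effect depends on their relative strengths, which the experiment has to measure.

```python
# Illustrative sketch; names are hypothetical.
# Each (cause, effect) pair maps to the expected sign of the relationship.
EDGES = {
    ("temperature", "soil_respiration"): +1,    # warming increases respiration
    ("temperature", "soil_moisture"): -1,       # warming dries the soil
    ("soil_moisture", "soil_respiration"): +1,  # drier soil, less respiration
}


def path_sign(path, edges=EDGES):
    """Return the expected direction (+1 or -1) of the effect along a causal path."""
    sign = 1
    for cause, effect in zip(path, path[1:]):
        sign *= edges[(cause, effect)]
    return sign


direct = path_sign(["temperature", "soil_respiration"])
indirect = path_sign(["temperature", "soil_moisture", "soil_respiration"])
print(direct, indirect)  # 1 -1
```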


Step 2: Write your hypothesis

Now that you have a strong conceptual understanding of the system you are studying, you should be able to write a specific, testable hypothesis that addresses your research question.
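For the soil-respiration example, one way to state a testable hypothesis formally is as a null and an alternative hypothesis about group means. This sketch assumes the comparison is between warmed plots and unwarmed control plots; the symbol μ (mean soil respiration) and the group labels are introduced for illustration. The alternative is one-sided because the prediction specifies a direction.

```latex
H_0:\ \mu_{\text{warmed}} = \mu_{\text{control}}
\qquad\text{vs.}\qquad
H_1:\ \mu_{\text{warmed}} > \mu_{\text{control}}
```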

The next steps will describe how to design a controlled experiment. In a controlled experiment, you must be able to:

- Systematically and precisely manipulate the independent variable(s).

- Precisely measure the dependent variable(s).

- Control any potential confounding variables.

If your study system doesn’t match these criteria, there are other types of research you can use to answer your research question.

Step 3: Design your experimental treatments

How you manipulate the independent variable can affect the experiment’s external validity – that is, the extent to which the results can be generalised and applied to the broader world.

First, you may need to decide how widely to vary your independent variable. In the soil-warming example, you could vary temperature:

- just slightly above the natural range for your study region.

- over a wider range of temperatures to mimic future warming.

- over an extreme range that is beyond any possible natural variation.

Second, you may need to choose how finely to vary your independent variable. Sometimes this choice is made for you by your experimental system, but often you will need to decide, and this will affect how much you can infer from your results. In the phone-use example, you could treat phone use as:

- a categorical variable: either binary (yes/no) or as levels of a factor (no phone use, low phone use, high phone use).

- a continuous variable (minutes of phone use measured every night).
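To see why this choice matters, the sketch below takes a continuous measurement (minutes of phone use per night) and collapses it into the three factor levels. The cut-offs of 0 and 30 minutes and the function name are hypothetical. Note that 12 and 30 minutes land in the same category: treating the variable as categorical discards detail that the continuous measurement keeps.

```python
def phone_use_level(minutes):
    """Map nightly phone-use minutes onto three factor levels.

    The cut-offs (0 and 30 minutes) are hypothetical, chosen for illustration.
    """
    if minutes < 0:
        raise ValueError("minutes cannot be negative")
    if minutes == 0:
        return "no phone use"
    if minutes <= 30:
        return "low phone use"
    return "high phone use"


nightly_minutes = [0, 12, 45, 30, 90]  # continuous measurement
levels = [phone_use_level(m) for m in nightly_minutes]  # categorical version
print(levels)
```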

Step 4: Assign your subjects to treatment groups

How you apply your experimental treatments to your test subjects is crucial for obtaining valid and reliable results.

First, you need to consider the study size: how many individuals will be included in the experiment? In general, the more subjects you include, the greater your experiment’s statistical power, that is, the probability that the experiment will detect a real treatment effect if one exists. Higher power gives you more confidence in your results.
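As a rough, hedged guide to study size, the sketch below uses the common normal-approximation formula for comparing two group means with equal group sizes: n per group ≈ 2(z₁₋α/₂ + z_power)² / d², where d is the standardised effect size (the expected difference in means divided by the standard deviation). The function name is hypothetical, and the t-distribution correction used by dedicated power software gives slightly larger numbers, so treat the result as a lower bound.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate subjects needed per group (two-sided test, two equal groups).

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2,
    where d is the standardised effect size. Function name is hypothetical.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


print(n_per_group(0.5))              # 63 per group for a medium effect
print(n_per_group(0.2))              # a small effect needs far more subjects
print(n_per_group(0.5, power=0.90))  # higher power also needs more subjects
```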

Then you need to randomly assign your subjects to treatment groups. Each group receives a different level of the treatment (e.g. no phone use, low phone use, high phone use).

You should also include a control group, which receives no treatment. The control group tells us what would have happened to your test subjects without any experimental intervention.

When assigning your subjects to groups, there are two main choices you need to make:

- A completely randomised design vs a randomised block design.

- A between-subjects design vs a within-subjects design.

Randomisation

An experiment can be completely randomised or randomised within blocks (aka strata):

- In a completely randomised design, every subject is assigned to a treatment group at random.

- In a randomised block design (aka stratified random design), subjects are first grouped according to a characteristic they share, and then randomly assigned to treatments within those groups.
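The two randomisation schemes can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed procedure: the function names, participant IDs, and the age-group blocking variable are hypothetical. Both functions shuffle subjects and then deal them out to treatments in turn so group sizes stay balanced; the block version does this separately inside each block, starting at a random treatment so leftover subjects are not always given the first one.

```python
import random
from collections import Counter, defaultdict


def completely_randomised(subjects, treatments, seed=None):
    """Assign every subject to a treatment at random, keeping group sizes balanced."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {s: treatments[i % len(treatments)] for i, s in enumerate(shuffled)}


def randomised_block(subjects, block_of, treatments, seed=None):
    """Group subjects by a shared characteristic, then randomise within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for s in subjects:
        blocks[block_of[s]].append(s)
    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)
        offset = rng.randrange(len(treatments))  # random starting treatment per block
        for i, s in enumerate(members):
            assignment[s] = treatments[(i + offset) % len(treatments)]
    return assignment


# Hypothetical example: 12 participants in two age-group blocks of six.
subjects = [f"P{i}" for i in range(1, 13)]
age_group = {s: ("younger" if i < 6 else "older") for i, s in enumerate(subjects)}
levels = ["no phone use", "low phone use", "high phone use"]

simple = completely_randomised(subjects, levels, seed=42)
blocked = randomised_block(subjects, age_group, levels, seed=42)
print(Counter(simple.values()))
print(Counter((age_group[s], t) for s, t in blocked.items()))
```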

Sometimes randomisation isn’t practical or ethical, so researchers create partially random or even non-random designs. An experimental design where treatments aren’t randomly assigned is called a quasi-experimental design.

Between-subjects vs within-subjects

In a between-subjects design (also known as an independent measures design or classic ANOVA design), individuals receive only one of the possible levels of an experimental treatment.

In medical or social research, you might also use matched pairs within your between-subjects design to make sure that each treatment group contains the same variety of test subjects in the same proportions.
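One common way to build matched pairs is sketched here, assuming subjects are matched on a single numeric baseline measurement: sort subjects by that measurement, pair neighbours, then randomly decide which member of each pair receives the treatment. The function name, participant labels, and baseline sleep values are hypothetical.

```python
import random


def matched_pairs(subjects, baseline, seed=None):
    """Pair subjects with similar baseline values, then randomise within each pair.

    Returns (treatment_group, control_group). Requires an even number of subjects.
    """
    if len(subjects) % 2:
        raise ValueError("matched pairs needs an even number of subjects")
    rng = random.Random(seed)
    ordered = sorted(subjects, key=lambda s: baseline[s])
    treatment, control = [], []
    for first, second in zip(ordered[::2], ordered[1::2]):
        if rng.random() < 0.5:  # coin flip decides who gets the treatment
            first, second = second, first
        treatment.append(first)
        control.append(second)
    return treatment, control


# Hypothetical baseline: average hours of sleep before the study.
baseline_sleep = {"A": 6.1, "B": 7.4, "C": 5.2, "D": 7.0, "E": 5.5, "F": 6.3}
treated, controls = matched_pairs(list(baseline_sleep), baseline_sleep, seed=3)
print(list(zip(treated, controls)))
```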

In a within-subjects design (also known as a repeated measures design), every individual receives each of the experimental treatments consecutively, and their responses to each treatment are measured.

Within-subjects or repeated measures can also refer to an experimental design where an effect emerges over time, and individual responses are measured over time in order to measure this effect as it emerges.

Counterbalancing (randomising or reversing the order of treatments among subjects) is often used in within-subjects designs to ensure that the order of treatment application doesn’t influence the results of the experiment.
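A minimal sketch of counterbalancing follows. It covers reversing (the original order and its reverse) and, as an assumed extension, full counterbalancing (every possible order), with subjects randomly allocated to orders so that each order is used about equally often. The function names, participant IDs, and condition labels are hypothetical.

```python
import random
from collections import Counter
from itertools import permutations


def full_counterbalance(treatments):
    """Every possible order of the treatments (k! orders for k treatments)."""
    return [tuple(p) for p in permutations(treatments)]


def reverse_counterbalance(treatments):
    """Just two orders: the original sequence and its reverse (e.g. ABC, CBA)."""
    return [tuple(treatments), tuple(reversed(treatments))]


def assign_orders(subjects, orders, seed=None):
    """Randomly give each subject an order, using each order about equally often."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {s: orders[i % len(orders)] for i, s in enumerate(shuffled)}


conditions = ["no phone use", "low phone use", "high phone use"]
orders = full_counterbalance(conditions)  # 6 orders for 3 treatments
schedule = assign_orders([f"P{i}" for i in range(1, 13)], orders, seed=1)
print(Counter(schedule.values()))  # each of the 6 orders used by 2 participants
```

Full counterbalancing grows factorially with the number of treatments, which is why designs with many treatments often fall back on reversing or a subset of orders.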

Step 5: Measure your dependent variable

Finally, you need to decide how you’ll collect data on your dependent variable outcomes. You should aim for reliable and valid measurements that minimise bias or error.

Some variables, like temperature, can be objectively measured with scientific instruments. Others may need to be operationalised to turn them into measurable observations. For example, to measure participants’ sleep, you could:

- Ask participants to record what time they go to sleep and get up each day.

- Ask participants to wear a sleep tracker.
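If participants record their bedtimes and waking times (the first option), those records still have to be turned into a number. A minimal sketch, assuming times are recorded as 24-hour "HH:MM" strings and that time between going to bed and getting up is an acceptable proxy for sleep; a sleep tracker would measure actual sleep more directly. The function name is hypothetical.

```python
from datetime import datetime, timedelta


def sleep_hours(bedtime, wake_time):
    """Hours between a 'HH:MM' bedtime and wake time, allowing for crossing midnight."""
    fmt = "%H:%M"
    start = datetime.strptime(bedtime, fmt)
    end = datetime.strptime(wake_time, fmt)
    if end <= start:  # woke up on the following calendar day
        end += timedelta(days=1)
    return (end - start).total_seconds() / 3600


print(sleep_hours("23:30", "07:00"))  # 7.5
print(sleep_hours("01:00", "08:15"))  # 7.25
```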

How precisely you measure your dependent variable also affects the kinds of statistical analysis you can use on your data.

Experiments are always context-dependent, and a good experimental design will take into account all of the unique considerations of your study system to produce information that is both valid and relevant to your research question.

Frequently asked questions about experimental design

Experimental designs are a set of procedures that you plan in order to examine the relationship between variables that interest you.

To design a successful experiment, first identify:

- A testable hypothesis

- One or more independent variables that you will manipulate

- One or more dependent variables that you will measure

When designing the experiment, first decide:

- How your variable(s) will be manipulated

- How you will control for any potential confounding or lurking variables

- How many subjects you will include

- How you will assign treatments to your subjects

The key difference between observational studies and experiments is that, done correctly, an observational study will never influence the responses or behaviours of participants. Experimental designs will have a treatment condition applied to at least a portion of participants.

A confounding variable, also called a confounder or confounding factor, is a third variable in a study examining a potential cause-and-effect relationship.

A confounding variable is related to both the supposed cause and the supposed effect of the study. It can be difficult to separate the true effect of the independent variable from the effect of the confounding variable.

In your research design, it’s important to identify potential confounding variables and plan how you will reduce their impact.
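Why random assignment helps with confounders can be shown with a small simulation. In this hypothetical set-up, a confounder Z influences both X and Y, while X has no effect on Y at all; X and Y are still correlated (the theoretical correlation here is 0.5). When X is instead set at random, as happens when a researcher assigns treatments randomly, its link with Z is broken and the association disappears. The linear model, the variable names, and the `pearson_r` helper are assumptions made for illustration.

```python
import math
import random
from statistics import fmean


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length lists (hypothetical helper)."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sx = math.sqrt(sum((a - mx) ** 2 for a in xs))
    sy = math.sqrt(sum((b - my) ** 2 for b in ys))
    return cov / (sx * sy)


rng = random.Random(1)
n = 5_000

# Hypothetical model: Z drives both X and Y; X itself has no effect on Y.
z = [rng.gauss(0, 1) for _ in range(n)]
x_observed = [zi + rng.gauss(0, 1) for zi in z]
y = [zi + rng.gauss(0, 1) for zi in z]
r_confounded = pearson_r(x_observed, y)

# Random assignment: X is set independently of Z.
x_randomised = [rng.gauss(0, 1) for _ in range(n)]
r_random = pearson_r(x_randomised, y)

print(f"observational r = {r_confounded:.2f}, randomised r = {r_random:.2f}")
```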

In a between-subjects design, every participant experiences only one condition, and researchers assess group differences between participants in various conditions.

In a within-subjects design, each participant experiences all conditions, and researchers test the same participants repeatedly for differences between conditions.

The word ‘between’ means that you’re comparing different conditions between groups, while the word ‘within’ means you’re comparing different conditions within the same group.
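The distinction has a practical consequence for analysis. In a within-subjects design, each participant’s outcome under one condition is compared with their own outcome under another, which removes stable differences between people. The hypothetical sketch below computes a paired t statistic from per-participant differences and, only for contrast, Welch’s independent-samples t statistic on the same numbers (which would be the wrong analysis for within-subjects data). The sleep values and function names are illustrative assumptions.

```python
import math
from statistics import mean, stdev


def paired_t(cond_a, cond_b):
    """Within-subjects: t statistic on each participant's difference between conditions."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


def welch_t(group_a, group_b):
    """Between-subjects: Welch's t statistic treating the two lists as independent groups."""
    se = math.sqrt(stdev(group_a) ** 2 / len(group_a) + stdev(group_b) ** 2 / len(group_b))
    return (mean(group_a) - mean(group_b)) / se


# Hypothetical hours of sleep for the same five participants under two conditions.
no_phone = [7.9, 6.2, 8.4, 5.9, 7.1]
high_phone = [7.4, 5.8, 7.8, 5.5, 6.6]
# Same mean difference, but the paired statistic is far larger because
# person-to-person variation in sleep has been removed.
print(round(paired_t(no_phone, high_phone), 2), round(welch_t(no_phone, high_phone), 2))
```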

Cite this Scribbr article


Bevans, R. (2022, December 05). A Quick Guide to Experimental Design | 5 Steps & Examples. Scribbr. Retrieved 9 April 2024, from https://www.scribbr.co.uk/research-methods/guide-to-experimental-design/


Neag School of Education

Educational Research Basics by Del Siegle

Experimental Research

The major feature that distinguishes experimental research from other types of research is that the researcher manipulates the independent variable. There are a number of group designs used in experimental research. Some of these qualify as experimental research; others do not.

- In true experimental research, the researcher not only manipulates the independent variable but also randomly assigns individuals to the various treatment categories (i.e., control and treatment).

- In quasi-experimental research, the researcher does not randomly assign subjects to treatment and control groups. In other words, the treatment is not distributed among participants randomly. In some cases, a researcher may randomly assign one whole group to treatment and one whole group to control. In this case, quasi-experimental research involves using intact groups in an experiment, rather than assigning individuals at random to research conditions. (Some researchers define this latter situation differently; for our course, we will allow this definition.)

- In causal-comparative (ex post facto) research, the groups are already formed. It does not meet the standards of an experiment because the independent variable is not manipulated.

Statistics by themselves have no meaning. They only take on meaning within the design of your study. If we just examine stats, bread can be deadly. The term validity is used in three ways in research:

- In the sampling unit, we learn about external validity (generalizability).

- In the survey unit, we learn about instrument validity.

- In this unit, we learn about internal validity and external validity. Internal validity means that the differences we found between groups on the dependent variable in an experiment were directly related to what the researcher did to the independent variable, and not due to some other unintended variable (a confounding variable). Simply stated, the question addressed by internal validity is “Was the study done well?” Once the researcher is satisfied that the study was done well and the independent variable caused the change in the dependent variable (internal validity), the researcher examines external validity: under what conditions [ecological] and with whom [population] can these results be replicated? (Will I get the same results with a different group of people or under different circumstances?) If a study is not internally valid, then considering external validity is a moot point: if the independent variable did not cause the change in the dependent variable, there is no point in applying (generalizing) the results to other situations. Interestingly, as one tightens a study to control for threats to internal validity, one decreases the generalizability of the study (to whom and under what conditions one can generalize the results).

There are several common threats to internal validity in experimental research. They are described in our text. I have reviewed each below (this material is also included in the PowerPoint Presentation on Experimental Research for this unit):

- Subject Characteristics (Selection Bias/Differential Selection) — The groups may have been different from the start. If you were testing instructional strategies to improve reading and one group enjoyed reading more than the other group, they may improve more in their reading because they enjoy it, rather than the instructional strategy you used.

- Loss of Subjects (Mortality) — All of the high- or low-scoring subjects may have dropped out or been missing from one of the groups. If we collected posttest data on a day when the honor society was on a field trip at the treatment school, the mean for the treatment group would probably be much lower than it really should have been.

- Location — Perhaps one group was at a disadvantage because of its location. The city may have been demolishing a building next to one of the schools in our study, and the constant distractions interfere with our treatment.

- Instrumentation (Instrument Decay) — The testing instruments may not be scored similarly. Perhaps the person grading the posttest is fatigued and pays less attention to the last set of papers reviewed. It may be that those papers are from one of our groups and will receive different scores than the earlier group’s papers.

- Data Collector Characteristics — The subjects of one group may react differently to the data collector than the other group. A male interviewing males and females about their attitudes toward a type of math instruction may not receive the same responses from females as a female interviewing females would.

- Data Collector Bias — The person collecting data may favor one group, or some characteristic some subjects possess, over another. A principal who favors strict classroom management may rate students’ attention under different teaching conditions with a bias toward one of the teaching conditions.

- Testing — The act of taking a pretest or posttest may influence the results of the experiment. Suppose we were conducting a unit to increase student sensitivity to prejudice. As a pretest, we have the control and treatment groups watch Schindler’s List and write a reaction essay. The pretest may have actually increased both groups’ sensitivity, and we find that our treatment group didn’t score any higher on a posttest given later than the control group did. If we hadn’t given the pretest, we might have seen differences in the groups at the end of the study.

- History — Something may happen at one site during our study that influences the results. Perhaps a classmate dies in a car accident at the control site for a study teaching children bike safety. The control group may actually demonstrate more concern about bike safety than the treatment group.

- Maturation – There may be natural changes in the subjects that can account for the changes found in a study. A critical thinking unit may appear more effective if it is taught during a time when children are developing abstract reasoning.

- Hawthorne Effect — The subjects may respond differently just because they are being studied. The name comes from a classic study in which researchers were studying the effect of lighting on worker productivity. As the intensity of the factory lights increased, so did worker productivity. One researcher suggested that they reverse the treatment and lower the lights. The productivity of the workers continued to increase. It appears that being observed by the researchers was increasing productivity, not the intensity of the lights.

- John Henry Effect — One group may view itself as being in competition with the other group and may work harder than it would under normal circumstances. This generally is applied to the control group “taking on” the treatment group. The term refers to the classic story of John Henry laying railroad track.

- Resentful Demoralization of the Control Group — The control group may become discouraged because it is not receiving the special attention that is given to the treatment group. It may perform lower than usual because of this.

- Regression (Statistical Regression) — A class that scores particularly low can be expected to score slightly higher just by chance. Likewise, a class that scores particularly high will have a tendency to score slightly lower by chance. The change in these scores may have nothing to do with the treatment.

- Implementation – The treatment may not be implemented as intended. A study where teachers are asked to use student modeling techniques may not show positive results, not because modeling techniques don’t work, but because the teacher didn’t implement them or didn’t implement them as they were designed.

- Compensatory Equalization of Treatment — Someone may feel sorry for the control group because it is not receiving much attention and give it special treatment. For example, a researcher could be studying the effect of laptop computers on students’ attitudes toward math. The teacher feels sorry for the class that doesn’t have computers and sponsors a popcorn party during math class. The control group begins to develop a more positive attitude about mathematics.

- Experimental Treatment Diffusion — Sometimes the control group actually implements the treatment. If two different techniques are being tested in two different third grades in the same building, the teachers may share what they are doing. Unconsciously, the control teacher may use some of the techniques she or he learned from the treatment teacher.
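The regression threat can be demonstrated without any treatment at all. In the hypothetical simulation below, each class has a stable true ability and each test adds independent noise; nobody receives a treatment. Classes selected for their low pretest scores nonetheless score higher on the posttest, because part of their low pretest score was bad luck that does not repeat. The distributions, noise level, and sample size are assumptions chosen for illustration; with less noisy tests the effect would be smaller.

```python
import random
from statistics import mean

rng = random.Random(7)
n_classes = 1_000

# Hypothetical model: stable true ability plus independent test-day noise.
true_ability = [rng.gauss(50, 10) for _ in range(n_classes)]
pretest = [a + rng.gauss(0, 10) for a in true_ability]
posttest = [a + rng.gauss(0, 10) for a in true_ability]  # no treatment given

# Select the 10% of classes with the lowest pretest scores.
cutoff = sorted(pretest)[n_classes // 10]
low = [i for i in range(n_classes) if pretest[i] < cutoff]

pre_low = mean([pretest[i] for i in low])
post_low = mean([posttest[i] for i in low])
print(f"low scorers: pretest mean {pre_low:.1f}, posttest mean {post_low:.1f}")
```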

When planning a study, it is important to consider the threats to internal validity as we finalize the study design. After we complete our study, we should reconsider each of the threats to internal validity as we review our data and draw conclusions.

Del Siegle, Ph.D., Neag School of Education – University of Connecticut, www.delsiegle.com

Experimental Research

- First Online: 25 February 2021


- C. George Thomas


Experiments are part of the scientific method and help to decide between two or more competing hypotheses or explanations of a phenomenon. The term ‘experiment’ comes from the Latin experiri, which means ‘to try’. The knowledge that accrues from experiments differs from other types of knowledge in that it is always based on observation or experience. In other words, experiments generate empirical knowledge. In fact, the emphasis on experimentation in the sixteenth and seventeenth centuries for establishing causal relationships for various phenomena in nature heralded the emergence of modern science from its roots in ancient philosophy, spearheaded by great Greek philosophers such as Aristotle.

“The strongest arguments prove nothing so long as the conclusions are not verified by experience. Experimental science is the queen of sciences and the goal of all speculation.” (Roger Bacon, 1214–1294)



Author information

Authors and affiliations.

Kerala Agricultural University, Thrissur, Kerala, India

C. George Thomas


Copyright information

© 2021 The Author(s)

About this chapter

Thomas, C.G. (2021). Experimental Research. In: Research Methodology and Scientific Writing. Springer, Cham. https://doi.org/10.1007/978-3-030-64865-7_5


Print ISBN : 978-3-030-64864-0

Online ISBN : 978-3-030-64865-7


QuestionPro


Experimental Research: What it is + Types of designs

Research that manipulates variables under controlled, scientifically acceptable conditions uses experimental methods. The success of experimental studies hinges on researchers confirming that the change in a variable is based solely on the manipulation of the independent variable. The research should establish a clear cause-and-effect relationship.

What is Experimental Research?

Experimental research is a study conducted with a scientific approach using two sets of variables. The first set (the independent variables) is controlled by the researcher, and you use it to measure differences in the second set (the dependent variables). Experimental research is one form of quantitative research, although not all quantitative methods are experimental.

If you don’t have enough data to support your decisions, you must first determine the facts. This research gathers the data necessary to help you make better decisions.

You can conduct experimental research in the following situations:

- Time is a vital factor in establishing a relationship between cause and effect.

- The relationship between cause and effect is invariable.

- You wish to understand the importance of cause and effect.

Experimental Research Design Types

The classic experimental design definition is: “The methods used to collect data in experimental studies.”

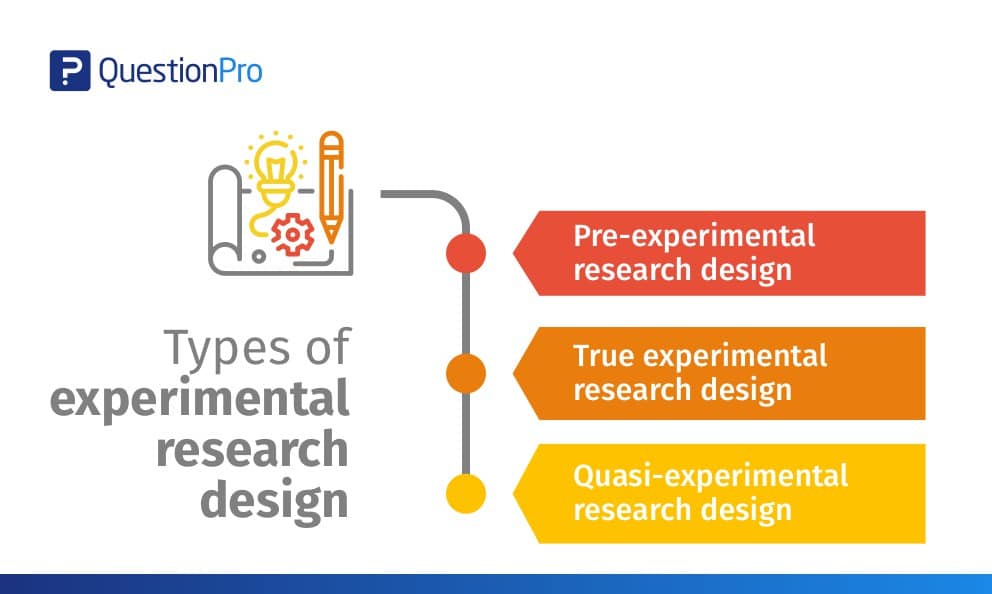

There are three primary types of experimental design:

- Pre-experimental research design

- True experimental research design

- Quasi-experimental research design

The way you classify research subjects based on conditions or groups determines the type of research design you should use.

1. Pre-Experimental Design

One or more groups are kept under observation after the factors presumed to cause an effect have been introduced. You’ll conduct this research to understand whether further investigation of these particular groups is necessary.

You can break down pre-experimental research further into three types:

- One-shot Case Study Research Design

- One-group Pretest-posttest Research Design

- Static-group Comparison

2. True Experimental Design

A true experimental design relies on statistical analysis to support or refute a hypothesis, which makes it the most rigorous of the three designs. Of the types of experimental design, only a true experimental design can establish a cause-and-effect relationship. In a true experiment, three conditions need to be satisfied:

- There is a control group, which won’t be subject to changes, and an experimental group, which will experience the changed variables.

- There is a variable that the researcher can manipulate.

- Subjects are randomly assigned to groups.

This experimental research method commonly occurs in the physical sciences.

3. Quasi-Experimental Design

The word “quasi” indicates similarity. A quasi-experimental design is similar to a true experimental one, but it is not the same. The difference lies in how participants are assigned to groups: in this research, an independent variable is manipulated, but participants are not randomly assigned to groups. Quasi-experimental research is often used in field settings where random assignment is impractical or impossible.

Importance of Experimental Design

Experimental research is a powerful tool for understanding cause-and-effect relationships. It allows us to manipulate variables and observe the effects, which is crucial for understanding how different factors influence the outcome of a study.

But the importance of experimental research goes beyond that. It’s a critical method for many scientific and academic studies. It allows us to test theories, develop new products, and make groundbreaking discoveries.

For example, this research is essential for developing new drugs and medical treatments. Researchers can understand how a new drug works by manipulating dosage and administration variables and identifying potential side effects.

Similarly, experimental research is used in the field of psychology to test theories and understand human behavior. By manipulating variables such as stimuli, researchers can gain insights into how the brain works and identify new treatment options for mental health disorders.

It is also widely used in the field of education. It allows educators to test new teaching methods and identify what works best. By manipulating variables such as class size, teaching style, and curriculum, researchers can understand how students learn and identify new ways to improve educational outcomes.

In addition, experimental research is a powerful tool for businesses and organizations. By manipulating variables such as marketing strategies, product design, and customer service, companies can understand what works best and identify new opportunities for growth.

Advantages of Experimental Research

Experimentation is part of everyday human life. Babies do their own rudimentary experiments (such as putting objects in their mouths) to learn about the world around them, while older children and teens do experiments at school to learn more about science.

Scientists of earlier centuries used experiments to test their hypotheses. For example, Galileo Galilei and Antoine Lavoisier conducted various experiments to discover key concepts in physics and chemistry. The same is true of modern experts, who use this scientific method to see if new drugs are effective, discover treatments for diseases, and create new electronic devices (among others).

It’s vital to test new ideas or theories. Why put time, effort, and funding into something that may not work?

This research allows you to test your idea in a controlled environment before marketing. It also provides the best method to test your theory thanks to the following advantages:

- Researchers have greater control over variables.

- The subject or industry does not impact the effectiveness of experimental research. Any industry can implement it for research purposes.

- The results are specific.

- After analyzing the results, you can apply your findings to similar ideas or situations.

- You can identify the cause and effect of a hypothesis. Researchers can further analyze this relationship to determine more in-depth ideas.

- Experimental research makes an ideal starting point. The data you collect is a foundation for building more ideas and conducting more action research.

Whether you want to know how the public will react to a new product or whether a certain food increases the chance of disease, experimental research is a good place to start.


Experimental design: Guide, steps, examples

Last updated: 27 April 2023

Reviewed by: Miroslav Damyanov

Experimental research design is a scientific framework that allows you to manipulate one or more variables while controlling the test environment.

When testing a theory or new product, it can be helpful to have a certain level of control and manipulate variables to discover different outcomes. You can use these experiments to determine cause and effect or study variable associations.

This guide explores the types of experimental design, the steps in designing an experiment, and the advantages and limitations of experimental design.


- What is experimental research design?

You can determine the relationship between the variables by:

Manipulating one or more independent variables (i.e., stimuli or treatments)

Measuring the effect of those changes on one or more dependent variables (i.e., outcomes)

Because you analyze the relationship between variables using measurable data, you can draw more accurate conclusions.

What is a good experimental design?

A good experimental design requires:

Significant planning to ensure control over the testing environment

Sound experimental treatments

Properly assigning subjects to treatment groups

Without proper planning, unexpected external variables can alter an experiment's outcome.

To meet your research goals, your experimental design should include these characteristics:

Provide unbiased estimates of inputs and associated uncertainties

Enable the researcher to detect differences caused by independent variables

Include a plan for analysis and reporting of the results

Provide easily interpretable results with specific conclusions

What's the difference between experimental and quasi-experimental design?

The major difference between experimental and quasi-experimental design is the random assignment of subjects to groups.

A true experiment relies on certain controls. Typically, the researcher designs the treatment and randomly assigns subjects to control and treatment groups.

However, these conditions are unethical or impossible to achieve in some situations.

When it's unethical or impractical to assign participants randomly, that’s when a quasi-experimental design comes in.

This design allows researchers to conduct a similar experiment by assigning subjects to groups based on non-random criteria.

Another type of quasi-experimental design might occur when the researcher doesn't have control over the treatment but studies pre-existing groups after they receive different treatments.

When can a researcher conduct experimental research?

Various settings and professions can use experimental research to gather information and observe behavior in controlled settings.

Basically, a researcher can conduct experimental research any time they want to test a theory by manipulating an independent variable and measuring a dependent variable under controlled conditions.

Experimental research is an option when the project includes an independent variable and a desire to understand the relationship between cause and effect.

- The importance of experimental research design

Experimental research enables researchers to conduct studies that provide specific, definitive answers to questions and hypotheses.

Researchers can test independent variables in controlled settings to:

Test the effectiveness of a new medication

Design better products for consumers

Answer questions about human health and behavior

Developing a quality research plan helps a researcher answer vital research questions accurately and with minimal error. As a result, the conclusions can guide decisions about the independent variable, such as whether to adopt a new medication or product design.

Types of experimental research designs

There are three main types of experimental research design. The research type you use will depend on the criteria of your experiment, your research budget, and environmental limitations.

Pre-experimental research design

A pre-experimental research study is the most basic type of design. It observes the effects of an independent variable but lacks random assignment and, in some cases, a control group.

During research, you observe one or more groups after applying a treatment to test whether the treatment causes any change.

The three subtypes of pre-experimental research design are:

One-shot case study research design

This research method exposes a single test group to a single stimulus and measures the results after the exposure.

Because there is no pretest or comparison group, researchers must presume that any change they observe was caused by the stimulus or treatment.

One-group pretest-posttest design

This method uses a single test group but includes a pretest study as a benchmark. The researcher applies a test before and after the group’s exposure to a specific stimulus.
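A minimal sketch of how the pretest serves as a benchmark in this design: the Python below averages each subject's change from pretest to posttest. The function name `mean_change` and the scores are hypothetical. Because there is no control group, the result shows how much scores changed, not whether the stimulus caused that change.

```python
from statistics import mean


def mean_change(pretest, posttest):
    """Average within-subject change from pretest to posttest.

    pretest[i] and posttest[i] must belong to the same subject.
    """
    if len(pretest) != len(posttest):
        raise ValueError("each subject needs both a pretest and a posttest score")
    return mean(post - pre for pre, post in zip(pretest, posttest))


# Hypothetical scores for five subjects, before and after the stimulus
pre = [62, 70, 55, 68, 74]
post = [68, 71, 60, 75, 77]
print(mean_change(pre, post))
```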

Static group comparison design

This method includes two or more groups, enabling the researcher to use one group as a control. They apply a stimulus to one group and leave the other group untreated. Because the groups are not randomly assigned, they may already differ before the study begins.

A posttest study compares the results among groups.

True experimental research design

A true experiment is the most rigorous type of experimental research design. It relies on statistical analysis to support or refute a specific hypothesis.

Under fully controlled experimental conditions, researchers expose participants in two or more randomly assigned groups to different stimuli.

Random assignment reduces the potential for selection bias, providing more reliable results.
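Random assignment itself is simple to carry out. The sketch below, using only Python's standard `random` module, shuffles the participant list and then deals participants into groups in turn, so which group a person joins depends only on the shuffle and not on any of their characteristics. The function name, group labels, and participant IDs are hypothetical.

```python
import random


def randomly_assign(participants, group_names=("control", "treatment"), seed=None):
    """Randomly assign participants to groups of (nearly) equal size.

    The list is shuffled, then participants are dealt out to the groups in
    turn, so group sizes differ by at most one.
    """
    rng = random.Random(seed)  # passing a seed makes the assignment reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    k = len(group_names)
    return {name: shuffled[i::k] for i, name in enumerate(group_names)}


groups = randomly_assign([f"P{i}" for i in range(1, 11)], seed=42)
for name, members in groups.items():
    print(name, members)
```

Recording the seed lets another researcher reproduce exactly the same assignment when auditing or replicating the study.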

These are the three main sub-groups of true experimental research design:

Posttest-only control group design

This structure requires the researcher to randomly assign participants to two groups. One group receives no stimulus and acts as a control, while the other group is exposed to the stimulus.

Researchers perform a test at the end of the experiment to measure the effect of the stimulus.
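One common way to analyze a posttest-only design is to compare the two groups' posttest means. The sketch below computes the difference in means and Welch's t statistic, which does not assume the groups have equal variances. The function name and scores are hypothetical, and the sketch stops at the test statistic; a statistics library such as SciPy (`scipy.stats.ttest_ind` with `equal_var=False`) reports the same statistic together with a p-value.

```python
import math
from statistics import mean, variance


def welch_t(treatment, control):
    """Difference in posttest means and Welch's t statistic.

    Each group's sample variance is used separately, so the two groups
    are not assumed to have equal variances.
    """
    diff = mean(treatment) - mean(control)
    se = math.sqrt(variance(treatment) / len(treatment)
                   + variance(control) / len(control))
    return diff, diff / se


# Hypothetical posttest scores after random assignment
treatment_scores = [78, 85, 80, 90, 84]
control_scores = [72, 75, 70, 81, 77]
difference, t_stat = welch_t(treatment_scores, control_scores)
print(f"difference in means = {difference:.2f}, t = {t_stat:.2f}")
```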

Pretest-posttest control group design

This test also requires two groups. It includes a pretest as a benchmark before introducing the stimulus.

The pretest adds more ways to compare subjects. For instance, if the control group also changes between the pretest and posttest, that suggests something other than the stimulus, such as taking the test twice, affected the results.
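The control group's change can be used directly in the analysis. A minimal sketch, assuming paired pretest and posttest scores for each subject: compute the average gain in each group and subtract the control gain from the treatment gain, so that change which also happened without the stimulus (such as a practice effect from taking the test twice) is removed. This gain-score comparison is one simple option, not the only analysis for this design; the names and data are hypothetical.

```python
from statistics import mean


def mean_gain(pretest, posttest):
    """Average per-subject change; pretest[i] and posttest[i] are the same subject."""
    if len(pretest) != len(posttest):
        raise ValueError("pretest and posttest must have the same length")
    return mean(post - pre for pre, post in zip(pretest, posttest))


def gain_score_effect(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Treatment gain minus control gain (an estimate of the stimulus effect)."""
    treat_gain = mean_gain(treat_pre, treat_post)
    ctrl_gain = mean_gain(ctrl_pre, ctrl_post)  # change that occurred without the stimulus
    return treat_gain - ctrl_gain


# Hypothetical scores for three subjects per group
effect = gain_score_effect([50, 55, 60], [60, 63, 67],
                           [52, 54, 58], [55, 56, 61])
print(round(effect, 2))
```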

Solomon four-group design

This structure divides subjects into four groups, two of which are control groups. Researchers give the first control group a posttest only and the second control group both a pretest and a posttest.

The two treatment groups mirror the control groups (one receives a pretest and one does not), but researchers expose them to the stimulus. The ability to compare groups in multiple ways provides researchers with more testing approaches for data-based conclusions.
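Because the four groups cross two factors (stimulus or not, pretest or not), their posttest means can be read as a 2 × 2 layout. The sketch below computes point estimates of the stimulus effect, the effect of having taken a pretest, and their interaction. The group labels and scores are hypothetical, and a full analysis would typically use a two-way ANOVA on the posttest scores rather than these raw differences.

```python
from statistics import mean


def solomon_effects(posttest):
    """Point estimates for a Solomon four-group design, from posttest scores.

    posttest maps a (hypothetical) group label to a list of posttest scores:
      "pre_treat": pretest + stimulus     "pre_ctrl": pretest, no stimulus
      "treat":     stimulus, no pretest   "ctrl":     posttest only
    """
    g1, g2, g3, g4 = (mean(posttest[k]) for k in ("pre_treat", "pre_ctrl", "treat", "ctrl"))
    return {
        # average effect of the stimulus, with and without a pretest
        "stimulus_effect": ((g1 - g2) + (g3 - g4)) / 2,
        # average effect of simply having taken the pretest
        "pretest_effect": ((g1 - g3) + (g2 - g4)) / 2,
        # does taking the pretest change how subjects respond to the stimulus?
        "interaction": (g1 - g2) - (g3 - g4),
    }


scores = {
    "pre_treat": [78, 82],
    "pre_ctrl": [64, 66],
    "treat": [75, 77],
    "ctrl": [61, 63],
}
print(solomon_effects(scores))
```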

Quasi-experimental research design

Although closely related to a true experiment, quasi-experimental research design differs in approach and scope.

Quasi-experimental research design doesn't randomly assign participants to groups. Researchers typically form groups based on pre-existing differences.

Quasi-experimental research is more common in educational studies, nursing, or other research projects where it's not ethical or practical to use randomized subject groups.

- 5 steps for designing an experiment

Experimental research requires a clearly defined plan to outline the research parameters and expected goals.

Here are five key steps in designing a successful experiment:

Step 1: Define variables and their relationship

Your experiment should begin with a question: What are you hoping to learn through your experiment?

The relationship between variables in your study will determine your answer.

Define the independent variable (the intended stimulus) and the dependent variable (the expected effect of the stimulus). After identifying these variables, consider how you might control them in your experiment.

Could natural variations affect your research? If so, your experiment should include a pretest and posttest.

Step 2: Develop a specific, testable hypothesis

With a firm understanding of the system you intend to study, you can write a specific, testable hypothesis.

What is the expected outcome of your study?

Develop a prediction about how the independent variable will affect the dependent variable.

How will the stimuli in your experiment affect your test subjects?

Your hypothesis should provide a prediction of the answer to your research question.
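For a study that compares an average outcome between a treatment group and a control group, one common way to state a testable hypothesis is as a null hypothesis and an alternative hypothesis. Here μ denotes the population mean of the dependent variable in each group, which assumes your outcome is summarized by a mean:

```latex
H_0:\ \mu_{\text{treatment}} = \mu_{\text{control}}
\qquad
H_1:\ \mu_{\text{treatment}} \neq \mu_{\text{control}}
```

If your prediction states a direction (for example, that the stimulus raises scores), the alternative becomes one-sided, H_1: μ_treatment > μ_control.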

Step 3: Design experimental treatments to manipulate your independent variable

Depending on your experiment, your independent variable may be a fixed stimulus (like a medical treatment) or a variable stimulus (like a period during which an activity occurs).

Determine which type of stimulus meets your experiment’s needs and how widely or finely to vary your stimuli.

Step 4: Assign subjects to groups

When you have a clear idea of how to carry out your experiment, you can determine how to assemble test groups for an accurate study.

When choosing your study groups, consider:

The size of your experiment

Whether you can select groups randomly

Your target audience for the outcome of the study

You should be able to create groups with an equal number of subjects and include subjects that match your target audience. Remember, you should assign one group as a control and use one or more groups to study the effects of variables.
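To judge the size of your experiment, a rough per-group sample size for comparing two means can be computed from the normal approximation n = 2((z₁₋α/₂ + z_power)·σ/δ)². The sketch below assumes equal group sizes, the same standard deviation σ in both groups, and a smallest meaningful difference δ that you choose in advance; all of these are assumptions you must supply. Dedicated power-analysis tools, which use the t distribution, usually give a slightly larger number.

```python
import math
from statistics import NormalDist


def n_per_group(sigma, delta, alpha=0.05, power=0.80):
    """Approximate subjects needed per group to compare two means.

    sigma: assumed standard deviation of the outcome (same in both groups)
    delta: smallest difference in means worth detecting
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = z.inv_cdf(power)
    n = 2 * ((z_alpha + z_power) * sigma / delta) ** 2
    return math.ceil(n)  # round up: you cannot recruit a fraction of a person


print(n_per_group(sigma=10, delta=5))
```

Halving the difference you want to detect roughly quadruples the required sample, which is why this decision belongs early in the design.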

Step 5: Plan how to measure your dependent variable

This step determines how you'll collect data to determine the study's outcome. You should seek reliable and valid measurements that minimize research bias or error.

You can measure some data with scientific tools, while you’ll need to operationalize other forms to turn them into measurable observations.
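Operationalizing often means turning answers to several questions into a single number. As a hypothetical example, suppose "customer satisfaction" is measured with a short questionnaire scored on a 1–5 Likert scale, where some items are worded negatively. The sketch below flips those items and averages the responses into one score per participant; the item set, scale, and function name are assumptions for illustration only.

```python
from statistics import mean


def satisfaction_score(responses, reverse_items=(), scale_max=5):
    """Turn one participant's Likert responses (1..scale_max) into one score.

    Items whose positions are listed in reverse_items are negatively worded,
    so they are flipped (e.g. 2 becomes 4 on a 1-5 scale) before averaging.
    """
    if any(not 1 <= r <= scale_max for r in responses):
        raise ValueError("responses must be between 1 and scale_max")
    adjusted = [
        scale_max + 1 - r if i in reverse_items else r
        for i, r in enumerate(responses)
    ]
    return mean(adjusted)


# Hypothetical four-item questionnaire; the item at index 2 is negatively worded
print(satisfaction_score([4, 5, 2, 4], reverse_items={2}))
```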

- Advantages of experimental research

Experimental research is an integral part of our world. It allows researchers to conduct experiments that answer specific questions.

While researchers use many methods to conduct different experiments, experimental research offers these distinct benefits:

Researchers can determine cause and effect by manipulating variables.

It gives researchers a high level of control.

Researchers can test multiple variables within a single experiment.

All industries and fields of knowledge can use it.

Researchers can replicate the study to confirm its results and support its validity.

Because natural settings can be replicated quickly, research can begin right away.

Researchers can combine it with other research methods.

It provides specific conclusions about the validity of a product, theory, or idea.

- Disadvantages (or limitations) of experimental research

Unfortunately, no research type yields ideal conditions or perfect results.

While experimental research might be the right choice for some studies, certain conditions could render experiments useless or even dangerous.

Before conducting experimental research, consider these disadvantages and limitations:

Required professional qualification

Rigorous experimental research generally requires competent professionals with relevant academic training. Their expertise helps keep results unbiased and valid.

Limited scope

Experimental research may not capture the complexity of some phenomena, such as social interactions or cultural norms. These are difficult to control in a laboratory setting.

Resource-intensive

Experimental research can be expensive and time-consuming, and it can require significant resources, such as specialized equipment or trained personnel.

Limited generalizability

The controlled nature means the research findings may not fully apply to real-world situations or people outside the experimental setting.

Practical or ethical concerns

Some experiments may involve manipulating variables that could harm participants or violate ethical guidelines.

Researchers must ensure their experiments do not cause harm or discomfort to participants.

It can also be difficult to recruit a sample of people who can be randomly assigned.

- Experimental research design example

Experiments across all industries and research realms provide scientists, developers, and other researchers with definitive answers. These experiments can solve problems, create inventions, and heal illnesses.

Product design testing is an excellent example of experimental research.

A company in the product development phase creates multiple prototypes for testing. After randomly assigning participants to test groups, researchers introduce each group to a different prototype.

When groups experience different product designs, the company can assess which option most appeals to potential customers.
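If each participant simply indicates whether they would buy the prototype they saw, two prototypes can be compared with a two-proportion z test. The sketch below uses hypothetical counts and computes the difference in purchase-intent rates and the z statistic with a pooled standard error; a value beyond roughly ±1.96 corresponds to p < 0.05 (two-sided). With more than two prototypes, a chi-square test across all groups is a common alternative.

```python
import math


def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Difference in proportions and z statistic (pooled standard error)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return p_a - p_b, (p_a - p_b) / se


# Hypothetical counts: participants in each group who said they would buy
diff, z = two_proportion_z(60, 200, 40, 200)
print(f"prototype A - prototype B = {diff:.2f}, z = {z:.2f}")
```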

Experimental research design provides researchers with a controlled environment to conduct experiments that evaluate cause and effect.

Following the five steps to develop a research plan helps you anticipate and control external variables while answering important questions.
