Systematic Review Article: A systematic review of the effectiveness of online learning in higher education during the COVID-19 pandemic period

- 1 Department of Basic Education, Beihai Campus, Guilin University of Electronic Technology Beihai, Beihai, Guangxi, China

- 2 School of Sports and Arts, Harbin Sport University, Harbin, Heilongjiang, China

- 3 School of Music, Harbin Normal University, Harbin, Heilongjiang, China

- 4 School of General Education, Beihai Vocational College, Beihai, Guangxi, China

- 5 School of Economics and Management, Beihai Campus, Guilin University of Electronic Technology, Guilin, Guangxi, China

Background: The effectiveness of online learning in higher education during the COVID-19 pandemic period is a debated topic but a systematic review on this topic is absent.

Methods: The present study implemented a systematic review of 25 selected articles to comprehensively evaluate online learning effectiveness during the pandemic period and identify factors that influence such effectiveness.

Results: It was concluded that past studies failed to achieve a consensus over online learning effectiveness and that research results are largely shaped by how learning effectiveness was assessed, e.g., self-reported online learning effectiveness, longitudinal comparison, and RCT. Meanwhile, a set of factors that positively or negatively influence the effectiveness of online learning were identified, including infrastructure factors, instructional factors, the lack of social interaction, negative emotions, flexibility, and convenience.

Discussion: Although the effectiveness of online learning during the pandemic period is debated, it is generally believed that the pandemic brought many challenges and difficulties to higher education and that these challenges and difficulties are more prominent in developing countries. In addition, this review critically assesses limitations in past research, develops pedagogical implications, and proposes recommendations for future research.

1 Introduction

1.1 Research background

The COVID-19 pandemic, which first broke out in early 2020, has considerably shaped the higher education landscape globally. To restrain viral transmission, universities worldwide locked down, and teaching and learning activities were transferred to online platforms. Although online learning is a relatively mature learning model and is increasingly integrated into higher education, the sudden and unprepared transition to wholly online learning caused by the pandemic posed formidable challenges to higher education stakeholders, e.g., policymakers, instructors, and students, especially at the early stage of the pandemic ( García-Morales et al., 2021 ; Grafton-Clarke et al., 2022 ). Correspondingly, the effectiveness of online learning during the pandemic period is still questionable as online learning during this period has some unique characteristics, e.g., the lack of preparation, the sudden transition, the huge scale of implementation, and social distancing policies ( Sharma et al., 2020 ; Rahman, 2021 ; Tsang et al., 2021 ; Hollister et al., 2022 ; Zhang and Chen, 2023 ). This question is more prominent in developing or underdeveloped countries because of insufficient Internet access, network problems, the lack of electronic devices, and poor network infrastructure ( Adnan and Anwar, 2020 ; Muthuprasad et al., 2021 ; Rahman, 2021 ; Chandrasiri and Weerakoon, 2022 ).

Learning effectiveness is a key consideration of education as it reflects the extent to which learning and teaching objectives are achieved and learners’ needs are satisfied ( Joy and Garcia, 2000 ; Swan, 2003 ). Online learning was generally found to be effective within a higher education context ( Kebritchi et al., 2017 ) prior to the pandemic. Information and communication technologies (ICTs) have fundamentally shaped the process of learning as they allow learners to learn anywhere and anytime, interact with others efficiently and conveniently, and freely acquire a large volume of learning materials online ( Kebritchi et al., 2017 ; Choudhury and Pattnaik, 2020 ). Such benefits may be offset by the challenges brought about by the pandemic. Many empirical studies worldwide have investigated the effectiveness of online learning, but systematic reviews that comprehensively evaluate online learning effectiveness across these studies and identify the factors that influence it are currently scarce.

At present, although the vast majority of countries have implemented reopening policies to deal with the pandemic and higher education institutes have resumed offline teaching and learning, assessing the effectiveness of online learning during the pandemic period via a systematic review is still essential. First, it is necessary to summarize, learn from, and reflect on the lessons and experiences of online learning practices during the pandemic period to offer implications for future practices and research. Second, the review of online learning research carried out during the pandemic period is likely to generate interesting knowledge because of the unique research context. Third, higher education institutes still need a contingency plan for emergency online learning to deal with potential crises in the future, e.g., wars, pandemics, and natural disasters. A systematic review of research on the effectiveness of online learning during the pandemic period offers valuable knowledge for designing a contingency plan for the future.

1.2 Related concepts

1.2.1 Online learning

Online learning should not be simply understood as learning on the Internet or the integration of ICTs with learning because it is a systematic framework consisting of a set of pedagogies, technologies, implementations, and processes ( Kebritchi et al., 2017 ; Choudhury and Pattnaik, 2020). Choudhury and Pattnaik (2020; p.2) summarized prior definitions of online learning and provided a comprehensive and up-to-date definition, i.e., online learning refers to “ the transfer of knowledge and skills, in a well-designed course content that has established accreditations, through an electronic media like the Internet, Web 4.0, intranets and extranets .” Online learning differs from traditional learning because of not only technological differences, but also differences in social development and pedagogies ( Camargo et al., 2020 ). Online learning has also considerably shaped the patterns by which knowledge is stored, shared, and transferred and skills are practiced, as well as the way in which stakeholders (e.g., teachers and students) interact ( Desai et al., 2008 ; Anderson and Hajhashemi, 2013 ). In addition, online learning has altered educational objectives and learning requirements. Memorizing knowledge was traditionally viewed as vital to learning but it is now less important since required knowledge can be conveniently searched and acquired on the Internet, while the reflection on and application of knowledge become more important ( Gamage et al., 2023 ). Online learning also demands more of learners’ self-regulated learning ability than traditional learning because the online learning environment imposes less external regulation and provides more autonomy and flexibility ( Barnard-Brak et al., 2010 ; Wong et al., 2019 ). The above differences imply that traditional pedagogies may not apply to online learning.

There are a variety of online learning models according to the differences in learning methods, processes, outcomes, and the application of technologies ( Zeitoun, 2008 ). As ICTs can be used as either the foundation of learning or auxiliary means, online learning can be classified into assistant, blended, and wholly online models. Here, assistant online learning refers to the scenario where online learning technologies are used to supplement and support traditional learning; blended online learning refers to the integration or mixture of online and offline methods; and wholly online learning refers to the exclusive use of the Internet for learning ( Arkorful and Abaidoo, 2015 ). The present review focuses on wholly online learning because the review is interested in the COVID-19 pandemic context where learning activities are fully switched to online platforms.

1.2.2 Learning effectiveness

Learning effectiveness can be broadly defined as the extent to which learning and teaching objectives have been effectively and efficiently achieved via educational activities ( Swan, 2003 ) or the extent to which learners’ needs are satisfied by learning activities ( Joy and Garcia, 2000 ). It is a multi-dimensional construct because learning objectives and needs are always diversified ( Joy and Garcia, 2000 ; Swan, 2003 ). Assessing learning effectiveness is a key challenge in educational research and researchers generally use a set of subjective and objective indicators to assess learning effectiveness, e.g., examination scores, assignment performance, perceived effectiveness, student satisfaction, learning motivation, engagement in learning, and learning experience ( Rajaram and Collins, 2013 ; Noesgaard and Ørngreen, 2015 ). Prior research related to the effectiveness of online learning was diversified in terms of learning outcomes, e.g., satisfaction, perceived effectiveness, motivation, and learning engagement, and there is no consensus over which outcomes are valid indicators of learning effectiveness. The present study adopts a broad definition of learning effectiveness and considers various learning outcomes that are closely associated with learning objectives and needs.

1.3 Previous review research

To date, online learning during the COVID-19 pandemic period has attracted considerable attention from academia and there is a large body of related review research. Some review research analyzed the trends and major topics in related research. Pratama et al. (2020) tracked the trend of using online meeting applications in online learning during the pandemic period based on a systematic review of 12 articles. It was reported that the use of these applications kept rising and that this use helped promote learning and teaching processes. However, this review was descriptive and failed to identify the problems and limitations of these applications. Zhang et al. (2022) implemented a bibliometric review to provide a holistic view of research on online learning in higher education during the COVID-19 pandemic period. They concluded that the majority of research focused on identifying the use of strategies and technologies, psychological impacts brought by the pandemic, and student perceptions. Meanwhile, collaborative learning, hands-on learning, discovery learning, and inquiry-based learning were the most frequently discussed instructional approaches. In addition, chemical and medical education were found to be the most investigated disciplines. This review hence offered a relatively comprehensive landscape of related research in the field. However, since it was a bibliometric review, it merely analyzed the superficial characteristics of past articles in the field without a detailed analysis of their research contributions. Bughrara et al. (2023) categorized the major research topics in the field of online medical education during the pandemic period via a scoping review. A total of 174 articles were included in the review and seven major topics were found, including students’ mental health, stigma, student vaccination, use of telehealth, students’ physical health, online modifications and educational adaptations, and students’ attitudes and knowledge. Overall, the review comprehensively reveals major topics in the focused field.

Some scholars believed that online learning during the pandemic period brought about many problems and that both students and teachers encountered many challenges. García-Morales et al. (2021) implemented a systematic review to identify the challenges encountered by higher education in an online learning scenario during the pandemic period. A total of seven studies were included and it was found that higher education suddenly transferred to online learning and a lot of technologies and platforms were used to support online learning. However, this transition was hasty and forced by the extreme situation. Thus, various stakeholders in learning and teaching (e.g., students, universities, and teachers) encountered difficulties in adapting to this sudden change. To deal with these challenges, universities need to utilize the potential of technologies, improve learning experience, and meet students’ expectations. The major limitation of the review by García-Morales et al. (2021) is its small sample. Meanwhile, García-Morales et al. (2021) also failed to systematically categorize various types of challenges. Stojan et al. (2022) investigated the changes to medical education brought about by the shift to online learning in the COVID-19 pandemic context as well as the lessons and impacts of these changes via a systematic review. A total of 56 articles were included in the analysis, and it was reported that small groups and didactics were the most prevalent instructional methods. Although learning engagement was always interactive, teachers mainly integrated technologies to amplify and replace, rather than transform, learning. Based on this, they argued that the use of asynchronous and synchronous formats promoted online learning engagement and offered self-directed and flexible learning. The major limitation of this review is that it is somewhat descriptive and lacks a critical evaluation of the problems of online learning.

Review research has also focused on the changes and impacts brought by online learning during the pandemic period. Camargo et al. (2020) implemented a meta-analysis on seven empirical studies regarding online learning methods during the pandemic period to evaluate feasible online learning platforms, effective online learning models, and the optimal duration of online lectures, as well as the perceptions of teachers and students in the online learning process. Overall, it was concluded that the shift from offline to online learning is feasible and that effective online learning needs a well-trained and integrated team to identify students’ and teachers’ needs, respond in a timely manner, and support them via digital tools. In addition, the pandemic has brought more or less difficulties to online learning. An obvious limitation of this review is its small sample ( N = 7), which offers very limited information even though the review tries to answer four questions. Grafton-Clarke et al. (2022) investigated the innovations/adaptations implemented, their impacts, and the reasons for their selection in the shift to online learning in medical education during the pandemic period via a systematic review of 55 articles. The major adaptations implemented include the rapid shift to the virtual space, pre-recorded videos or live streaming of surgical procedures, remote adaptations for clinical visits, and multidisciplinary ward rounds and team meetings. Major challenges encountered by students and teachers include the need for technical resources, faculty time, and devices, the shortage of standardized telemedicine curricula, and the lack of personal interactions. Based on this, they criticized the quality of online medical education. Tang (2023) explored the impact of the pandemic on primary, secondary, and tertiary education via a systematic review of 41 articles. It was reported that the majority of these impacts are negative, e.g., learning loss among learners, assessment and experiential learning in the virtual environment, limitations in instructions, technology-related constraints, the lack of learning materials and resources, and deteriorated psychosocial well-being. These negative impacts are amplified by the unequal distribution of resources, unfair socioeconomic status, ethnicity, gender, physical conditions, and learning ability. Overall, this review comprehensively criticizes the problems brought about by online learning during the pandemic period.

Very little review research evaluated students’ responses to online learning during the pandemic period. For instance, Salas-Pilco et al. (2022) evaluated the engagement in online learning in Latin American higher education during the COVID-19 pandemic period via a systematic review of 23 studies. They considered three dimensions of engagement, including affective, cognitive, and behavioral engagement. They described the characteristics of learning engagement and proposed suggestions for enhancing engagement, including improving Internet connectivity, providing professional training, transforming higher education, ensuring quality, and offering emotional support. A key limitation of the review is that these authors focused on describing the characteristics of engagement without identifying factors that influence engagement.

A synthesis of previous review research offers some implications. First, although learning effectiveness is an important consideration in educational research, review research is scarce on this topic and hence there is a lack of comprehensive knowledge regarding the extent to which online learning is effective during the COVID-19 pandemic period. Second, according to past review research that summarized the major topics of related research, e.g., Bughrara et al. (2023) and Zhang et al. (2022) , the effectiveness of online learning is not a major topic in prior empirical research and hence the author of this article argues that this topic has not received due attention from researchers. Third, some review research has identified a lot of problems in online learning during the pandemic period, e.g., García-Morales et al. (2021) and Stojan et al. (2022) . Many of these problems are caused by the sudden and rapid shift to online learning as well as the unique context of the pandemic. These problems may undermine the effectiveness of online learning. However, the extent to which these problems influence online learning effectiveness is still under-investigated.

1.4 Purpose of the review research

The research is carried out based on a systematic review of past empirical research to answer the following two research questions:

Q1: To what extent is online learning in higher education effective during the COVID-19 pandemic period?

Q2: What factors shape the effectiveness of online learning in higher education during the COVID-19 pandemic period?

2 Research methodology

2.1 Literature review as a research methodology

Regardless of discipline, all academic research activities should be related to and based on existing knowledge. As a result, scholars must identify related research on the topic of interest, critically assess the quality and content of existing research, and synthesize available results ( Linnenluecke et al., 2020 ). However, this task is increasingly challenging for scholars because of the exponential growth of academic knowledge, which makes it difficult to be at the forefront and keep up with state-of-the-art research ( Snyder, 2019 ). Correspondingly, the literature review as a research methodology is more relevant than before ( Snyder, 2019 ; Linnenluecke et al., 2020 ). A well-implemented review provides a solid foundation for facilitating theory development and advancing knowledge ( Webster and Watson, 2002 ). Here, a literature review is broadly defined as a more or less systematic way of collecting and synthesizing past studies ( Tranfield et al., 2003 ). It allows researchers to integrate perspectives and results from a large body of past research and is able to address research questions unanswered by a single study ( Snyder, 2019 ).

There are generally three types of literature review, including meta-analysis, bibliometric review, and systematic review ( Snyder, 2019 ). A meta-analysis refers to a statistical technique for integrating results from a large volume of empirical research (mainly quantitative research) to compare, identify, and evaluate patterns, relationships, agreements, and disagreements generated by research on the same topic ( Davis et al., 2014 ). This study does not adopt a meta-analysis for two reasons. First, research on the effectiveness of online learning in the context of the COVID-19 pandemic has been published only since 2020 and currently, there is a limited volume of empirical evidence. If the study adopted a meta-analysis, the sample size would be small, resulting in limited statistical power. Second, as mentioned above, there are a variety of indicators, e.g., motivation, satisfaction, experience, test score, and perceived effectiveness ( Rajaram and Collins, 2013 ; Noesgaard and Ørngreen, 2015 ), that reflect different aspects of online learning effectiveness. The use of diversified effectiveness indicators increases the difficulty of carrying out a meta-analysis.

A bibliometric review refers to the analysis of a large volume of empirical research in terms of publication characteristics (e.g., year, journal, and citation), theories, methods, research questions, countries, and authors ( Donthu et al., 2021 ) and it is useful in tracing the trend, distribution, relationship, and general patterns of research published in a focused topic ( Wallin, 2005 ). A bibliometric review does not fit the present study for two reasons. First, at present, research on online learning effectiveness during the pandemic has a history of fewer than 4 years. Hence the volume of relevant research is limited and the publication trend is currently unclear. Second, this study is interested in the inner content and results of articles published, rather than their external characteristics.

A systematic review is a method and process of critically identifying and appraising research in a specific field based on predefined inclusion and exclusion criteria to test a hypothesis, answer a research question, evaluate problems in past research, identify research gaps, and/or point out the avenue for future research ( Liberati et al., 2009 ; Moher et al., 2009 ). This type of review is particularly suitable to the present study as there are still a lot of unanswered questions regarding the effectiveness of online learning in the pandemic context, a need for indicating future research direction, a lack of summary of relevant research in this field, and a scarcity of critical appraisal of problems in past research.

Adopting a systematic review methodology brings multiple benefits to the present study. First, it is helpful for distinguishing what needs to be done from what has been done, identifying major contributions made by past research, finding out gaps in past research, avoiding fruitless research, and providing insights for future research in the focused field ( Linnenluecke et al., 2020 ). Second, it is also beneficial for finding out new research directions, needs for theory development, and potential solutions for limitations in past research ( Snyder, 2019 ). Third, this methodology helps scholars to efficiently gain an overview of valuable research results and theories generated by past research, which inspires their research design, ideas, and perspectives ( Callahan, 2014 ).

Commonly, a systematic review can be either author-centric or theme-centric ( Webster and Watson, 2002 ) and the present review is theme-centric. Specifically, an author-centric review focuses on works published by a certain author or a group of authors and summarizes the major contributions made by the author(s) ( Webster and Watson, 2002 ). This type of review is problematic in terms of its incompleteness of research conclusions in a specific field and descriptive nature ( Linnenluecke et al., 2020 ). A theme-centric review is more common where a researcher guides readers through reviewing themes, concepts, and interesting phenomena according to a certain logic ( Callahan, 2014 ). A theme in this review can be further structured into several related sub-themes and this type of review helps researchers to gain a comprehensive understanding of relevant academic knowledge ( Papaioannou et al., 2016 ).

2.2 Research procedures

This study follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guideline ( Liberati et al., 2009 ) to implement a systematic review. The guideline indicates four phases of performing a systematic review, including (1) identifying possible research, (2) abstract screening, (3) assessing full-text for eligibility, and (4) qualitatively synthesizing included research. Figure 1 provides a flowchart of the process and the number of articles excluded and included in each phase.

Figure 1 . PRISMA flowchart concerning the selection of articles.

This study uses multiple academic databases to identify possible research, e.g., Academic Search Complete, IGI Global, ACM Digital Library, Elsevier (SCOPUS), Emerald, IEEE Xplore, Web of Science, Science Direct, ProQuest, Wiley Online Library, Taylor and Francis, and EBSCO. Since the COVID-19 pandemic broke out in January 2020, this study limits the literature search to articles published from January 2020 to August 2023. During this period, online learning was highly prevalent in schools globally and a considerable volume of articles were published to investigate various aspects of online learning in this period. Keywords used for searching possible research include pandemic, COVID, SARS-CoV-2, 2019-nCoV, coronavirus, online learning, e-learning, electronic learning, higher education, tertiary education, universities, learning effectiveness, learning satisfaction, learning engagement, and learning motivation. Aside from searching from databases, this study also manually checks the reference lists of relevant articles and uses Google Scholar to find out other articles that have cited these articles.
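The keyword list above can be combined into a Boolean search string. The sketch below is illustrative only: the review does not state how the keywords were grouped or joined, so the four concept groups, the OR-within/AND-between logic, and the function name `build_query` are all assumptions, and each database has its own query syntax.

```python
# Hypothetical grouping of the review's keywords into four concepts.
# The grouping and the Boolean logic are assumptions, not the authors' stated method.
KEYWORD_GROUPS = [
    ["pandemic", "COVID", "SARS-CoV-2", "2019-nCoV", "coronavirus"],
    ["online learning", "e-learning", "electronic learning"],
    ["higher education", "tertiary education", "universities"],
    ["learning effectiveness", "learning satisfaction",
     "learning engagement", "learning motivation"],
]


def build_query(groups):
    """Join terms with OR inside a group and AND between groups."""
    clauses = []
    for terms in groups:
        # Quote multi-word phrases so they are searched as exact phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


if __name__ == "__main__":
    print(build_query(KEYWORD_GROUPS))
```

Keeping the groups as data makes it easy to adapt the string to a database that uses different operators or field tags.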

2.3 Inclusion and exclusion criteria

Articles included in the review must meet the following criteria. First, articles have to be written in English and published in peer-reviewed journals. English was chosen as the academic language because it is in the Q zone of the specified search engines. Second, the research must be carried out in an online learning context. Third, the research must have collected and analyzed empirical data. Fourth, the research should be implemented in a higher education context and during the pandemic period. Fifth, the outcome variable must be a factor related to learning effectiveness, and included studies must have reported quantitative results for online learning effectiveness. The outcome variable should be measured by data collected from students, rather than other individuals (e.g., instructors). For instance, the research of Rahayu and Wirza (2020) used teacher perception as a measurement of online learning effectiveness and was hence excluded from the sample. According to the above criteria, a total of 25 articles were included in the review.
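The criteria above can be read as a screening checklist applied to each candidate article. The following is a minimal sketch under stated assumptions: the `Candidate` record, its field names, and the two example records are hypothetical and are not taken from the review's actual screening data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    """Screening record for one article; all field names are hypothetical."""
    in_english: bool
    peer_reviewed: bool
    online_context: bool
    empirical_data: bool
    higher_education: bool
    during_pandemic: bool
    reports_quantitative_effectiveness: bool
    outcome_source: str  # e.g. "students" or "instructors"


def failed_criteria(c: Candidate) -> List[str]:
    """Return the names of the inclusion criteria that the article fails."""
    checks = {
        "English, peer-reviewed journal": c.in_english and c.peer_reviewed,
        "online learning context": c.online_context,
        "empirical data": c.empirical_data,
        "higher education during the pandemic":
            c.higher_education and c.during_pandemic,
        "quantitative effectiveness results":
            c.reports_quantitative_effectiveness,
        "outcome measured from students": c.outcome_source == "students",
    }
    return [name for name, passed in checks.items() if not passed]


def is_included(c: Candidate) -> bool:
    """An article is included only if it fails none of the criteria."""
    return not failed_criteria(c)


if __name__ == "__main__":
    # Hypothetical records: one student-rated study, one teacher-rated study.
    student_rated = Candidate(True, True, True, True, True, True, True, "students")
    teacher_rated = Candidate(True, True, True, True, True, True, True, "instructors")
    print(is_included(student_rated), failed_criteria(teacher_rated))
```

Returning the list of failed criteria, rather than a bare yes or no, keeps a record of why each article was excluded, which is the kind of information a PRISMA flowchart reports.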

2.4 Data extraction and analysis

Content analysis is performed on included articles and an inductive approach is used to answer the two research questions. First, to understand the basic characteristics of the 25 articles/studies, the researcher summarizes their types, research designs, and samples and categorizes them into several groups. The researcher carefully reads the full text of these articles and codes valuable pieces of content. In this process, an inductive approach is used, and key themes in these studies are extracted and summarized. Second, the researcher further categorizes these studies into different groups according to their similarities and differences in research findings. In this way, these studies are broadly categorized into three groups, i.e., (1) ineffective, (2) neutral, and (3) effective. Based on this, the researcher answers the first research question and indicates the percentage of studies that evidenced online learning as effective in a COVID-19 pandemic context. The researcher also discusses how online learning is effective by analyzing the learning outcomes brought by online learning. Third, the researcher analyzes and compares the characteristics of the three groups of studies and extracts key themes that are relevant to the conditional effectiveness of online learning from these studies. Based on this, the researcher identifies factors that influence the effectiveness of online learning in a pandemic context. In this way, the two research questions are answered.

3 Research results and discussion

3.1 Study characteristics

Table 1 shows the statistics of the 25 studies while Table 2 shows a summary of these studies. Overall, these studies varied greatly in terms of research design, research subjects, contexts, measurements of learning effectiveness, and eventually research findings. Approximately half of the studies were published in 2021 and the number of studies declined in 2022 and 2023, which may be attributed to the fact that universities gradually implemented reopening policies after 2020. China accounted for the largest number of studies ( N = 5), followed by India ( N = 4) and the United States ( N = 3). The sample sizes of the majority of studies (88.0%) ranged between 101 and 500. As this review excluded qualitative studies, all studies included adopted a purely quantitative design (88.0%) or a mixed design (12.0%). The majority of the studies were cross-sectional (72%) and a few studies ( N = 2; 8%) were experimental.

Table 1 . Statistics of studies included in the review.

Table 2 . A summary of studies reviewed.

3.2 The effectiveness of online learning

Overall, the 25 studies generated mixed results regarding the effectiveness of online learning during the pandemic period. Nine studies (36%) reported online learning as effective, 13 studies (52%) reported online learning as ineffective, and the remaining 3 studies (12%) produced neutral results. However, it should be noted that the results generated by these studies are not directly comparable as they used different approaches to evaluate the effectiveness of online learning. According to the approach of evaluating online learning effectiveness, these studies are categorized into four groups, including (1) cross-sectional evaluation of online learning effectiveness without a comparison with offline learning, without a control group ( N = 14; 56%), (2) cross-sectional comparison of the effectiveness of online learning with offline learning, without a control group ( N = 7; 28%), (3) longitudinal comparison of the effectiveness of online learning with offline learning, without a control group ( N = 2; 8%), and (4) Randomized Controlled Trial (RCT), with a control group ( N = 2; 8%).
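The percentages in this paragraph follow directly from the reported counts. As a quick arithmetic check, the sketch below recomputes them; the counts are taken from the text above, while the variable names, the short group labels, and the `shares` helper are illustrative.

```python
TOTAL_STUDIES = 25

# Counts as reported in the text.
OUTCOME_COUNTS = {"effective": 9, "ineffective": 13, "neutral": 3}
DESIGN_COUNTS = {
    "cross-sectional, no offline comparison": 14,
    "cross-sectional, compared with offline": 7,
    "longitudinal comparison": 2,
    "RCT": 2,
}


def shares(counts, total):
    """Convert counts to percentages, checking that they add up to the total."""
    if sum(counts.values()) != total:
        raise ValueError("counts do not sum to the stated total")
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


if __name__ == "__main__":
    print(shares(OUTCOME_COUNTS, TOTAL_STUDIES))
    print(shares(DESIGN_COUNTS, TOTAL_STUDIES))
```

With 25 studies, each study contributes 4 percentage points, so every reported share should be a multiple of 4%.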

The first group of studies asked students to report the extent to which they perceived online learning as effective, had achieved expected learning outcomes through online learning, or were satisfied with the online learning experience or outcomes, without a comparison with offline learning. Six out of 14 studies reported online learning as ineffective, including Adnan and Anwar (2020) , Hong et al. (2021) , Mok et al. (2021) , Baber (2022) , Chandrasiri and Weerakoon (2022) , and Lalduhawma et al. (2022) . Five out of 14 studies reported online learning as effective, including Almusharraf and Khahro (2020) , Sharma et al. (2020) , Mahyoob (2021) , Rahman (2021) , and Haningsih and Rohmi (2022) . In addition, 3 out of 14 studies reported neutral results, including Cranfield et al. (2021) , Tsang et al. (2021) , and Conrad et al. (2022) . It should be noted that this measurement approach is problematic in three aspects. First, researchers used various survey instruments to measure learning effectiveness without reaching a consensus over a widely accepted instrument. As a result, these studies measured different aspects of learning effectiveness and hence their results may be incomparable. Second, these studies relied on students’ self-reports to evaluate learning effectiveness, which are subjective and may be inaccurate. Third, even if students perceived online learning as effective, this does not imply that online learning is more effective than offline learning because no comparison was made.

The second group of studies asked students to compare online learning with offline learning to evaluate learning effectiveness. Interestingly, all 7 studies, including Alawamleh et al. (2020), Almahasees et al. (2021), Gonzalez-Ramirez et al. (2021), Muthuprasad et al. (2021), Selco and Habbak (2021), Hollister et al. (2022), and Zhang and Chen (2023), reported that online learning was perceived by participants as less effective than offline learning. It should be noted that these results were specific to the COVID-19 pandemic context, in which strict social distancing policies were implemented. Consequently, these results should be interpreted as follows: online learning during the school lockdown period was perceived by participants as less effective than offline learning during the pre-pandemic period. A key problem with the measurement of learning effectiveness in these studies is subjectivity, i.e., students’ self-reported online learning effectiveness relative to offline learning may be subjective and influenced by many factors caused by the pandemic, such as negative emotions (e.g., fear, loneliness, and anxiety).

Only two studies implemented a longitudinal comparison of the effectiveness of online learning with offline learning, i.e., Chang et al. (2021) and Fyllos et al. (2021). Interestingly, both studies reported that participants perceived online learning as more effective than offline learning, which contradicts the second group of studies. In the two studies, the same group of students participated in offline learning and online learning successively and rated the effectiveness of the two learning approaches, respectively. The two studies arose from a coincidence of timing, i.e., the researchers unexpectedly encountered the pandemic and the subsequent school lockdown while they were investigating learning effectiveness. This coincidence enabled them to compare the effectiveness of offline and online learning. However, this research design has three key problems. First, the content of learning in the online and offline learning periods was different and hence the evaluations of learning effectiveness of the two periods are not comparable. Second, self-reported learning effectiveness is subjective. Third, students are likely to obtain better scores in online examinations than in offline examinations because online examinations offer more opportunities for cheating and are less fair than offline examinations. As reported by Fyllos et al. (2021), the examination score after online learning was significantly higher than after offline learning. Chang et al. (2021) reported that participants generally believed that offline examinations are fairer than online examinations.

Lastly, only two studies, i.e., Jiang et al. (2023) and Shirahmadi et al. (2023), implemented an RCT design, which is more persuasive, objective, and accurate than the designs of the studies reviewed above. Indeed, implementing an RCT to evaluate the effectiveness of online learning was a formidable challenge during the pandemic period because of viral transmission and social distancing policies. Both studies reported that online learning was more effective than offline learning during the pandemic period. However, the extent to which such results were affected by health- and safety-related issues is questionable. It is reasonable to infer that online learning was perceived by students as safer than offline learning during the pandemic period and that such perceptions may have affected learning effectiveness.

Overall, it is difficult to conclude whether online learning is effective during the pandemic period. Nevertheless, it is possible to identify factors that shape the effectiveness of online learning, which are discussed in the next section.

3.3 Factors that shape online learning effectiveness

Infrastructure factors were reported as the most salient factors that determine online learning effectiveness. It seems that research from developed countries generated more positive results for online learning than research from less developed countries. This view was supported by the cross-country comparative study of Cranfield et al. (2021). Indeed, online learning requires the support of ICT infrastructure, and hence ICT-related factors, e.g., Internet connectivity, technical issues, network speed, and the accessibility of digital devices, considerably influence the effectiveness of online learning (García-Morales et al., 2021; Grafton-Clarke et al., 2022). Prior review research, e.g., Tang (2023), also suggested that the unequal distribution of resources and socioeconomic inequality intensified the problems brought about by online learning during the pandemic period. Salas-Pilco et al. (2022) suggested that improving Internet connectivity would increase students’ engagement in online learning during the pandemic period.

The study by Adnan and Anwar (2020) is one of the most cited works in this field. They reported that online learning is ineffective in Pakistan because of problems with Internet access caused by monetary and technical issues. These problems hinder students from carrying out online learning activities, making online learning ineffective. Likewise, research from India by Lalduhawma et al. (2022) indicated that online learning is ineffective because of poor network interactivity, slow data speed, low data limits, and the high cost of devices. As a result, online learning during the COVID-19 pandemic may have widened the education gap between developed and developing countries because of developing countries’ infrastructure disadvantages. More attention to online learning infrastructure problems in developing countries is needed.

Instructional factors, e.g., course management and design, instructor characteristics, instructor-student interaction, assignments, and assessments, were found to affect online learning effectiveness (Sharma et al., 2020; Rahman, 2021; Tsang et al., 2021; Hollister et al., 2022; Zhang and Chen, 2023). Although these instructional factors have been well documented as significant drivers of learning effectiveness in the traditional learning literature, they had some unique characteristics in the pandemic period. Neither students nor teachers were well prepared for wholly online instruction and learning in 2020, and hence they encountered many problems in course management and design, learning interactions, assignments, and assessments (Stojan et al., 2022; Tang, 2023). The review by García-Morales et al. (2021) also suggested that various stakeholders in learning and teaching encountered difficulties in adapting to the sudden, hasty, and forced transition from offline to online learning. Consequently, these instructional factors became salient in affecting online learning effectiveness.

The negative role of the lack of social interaction caused by social distancing in affecting online learning effectiveness was highlighted by many studies (Almahasees et al., 2021; Baber, 2022; Conrad et al., 2022; Hollister et al., 2022). Baber (2022) argued that people give more importance to saving lives than socializing in the online environment and hence social interactions in learning are considerably reduced by social distancing norms. The negative impact of the lack of social interaction on online learning effectiveness is reflected in two aspects. First, according to a constructivist view, interaction is an indispensable element of learning because knowledge is actively constructed by learners in social interactions (Woo and Reeves, 2007). Consequently, online learning effectiveness during the pandemic period is reduced by the lack of social interaction. Second, the lack of social interaction brings many negative emotions, e.g., feelings of isolation, loneliness, anxiety, and depression (Alawamleh et al., 2020; Gonzalez-Ramirez et al., 2021; Selco and Habbak, 2021). Such negative emotions undermine online learning effectiveness.

Negative emotions caused by the pandemic and school lockdown were also found to be detrimental to online learning effectiveness. In this context, it was reported that many students experienced negative emotions, e.g., feelings of isolation, exhaustion, loneliness, and distraction (Alawamleh et al., 2020; Gonzalez-Ramirez et al., 2021; Selco and Habbak, 2021). Such negative emotions, as mentioned above, reduce online learning effectiveness.

Several factors were also found to increase online learning effectiveness during the pandemic period, e.g., convenience and flexibility (Hong et al., 2021; Muthuprasad et al., 2021; Selco and Habbak, 2021). Students with strong self-regulated learning abilities gain more benefits from convenience and flexibility in online learning (Hong et al., 2021).

Overall, although the effectiveness of online learning during the pandemic period is debated, it is generally believed that the pandemic brought many challenges and difficulties to higher education. Meanwhile, the majority of students prefer offline learning to online learning. These challenges and difficulties are more prominent in developing countries than in developed countries.

3.4 Pedagogical implications

The results generated by the systematic review offer several pedagogical implications. First, online learning requires the support of ICT infrastructure, and infrastructure defects strongly undermine learning effectiveness (García-Morales et al., 2021; Grafton-Clarke et al., 2022). Given that online learning is increasingly integrated into higher education (Kebritchi et al., 2017) regardless of the presence of the pandemic, governments globally should increase investment in learning-related ICT infrastructure in higher education institutes. Meanwhile, schools should consider whether students can afford digital devices and network fees when implementing online learning activities. It is important to offer material support for students with poor economic status. Infrastructure issues are more prominent in developing countries because of the lack of monetary resources and a poor infrastructure base. Thus, international collaboration and aid are recommended to address these issues.

Second, since the lack of social interaction is a key factor that reduces online learning effectiveness, it is important to increase social interactions during the implementation of online learning activities. On the one hand, both students and instructors are encouraged to utilize network technologies to promote inter-individual interactions. On the other hand, the two parties are also encouraged to engage in offline interaction activities if the risk is acceptable.

Third, special attention should be paid to students’ emotions during the online learning process, as online learning may bring many negative emotions to students, which undermine learning effectiveness (Alawamleh et al., 2020; Gonzalez-Ramirez et al., 2021; Selco and Habbak, 2021). In addition, higher education institutes should prepare a contingency plan for emergency online learning to deal with potential crises in the future, e.g., wars, pandemics, and natural disasters.

3.5 Limitations and suggestions for future research

There are several limitations in past research regarding online learning effectiveness during the pandemic period. The first is the lack of rigor in assessing learning effectiveness. Evidently, there is a scarcity of empirical research with an RCT design, which is considered to be accurate, objective, and rigorous in assessing pedagogical models (Torgerson and Torgerson, 2001). The scarcity of RCT research makes it difficult to accurately assess the effectiveness of online learning and compare it with offline learning. Second, widely accepted criteria for assessing learning effectiveness are absent, and past empirical studies used diverse procedures, techniques, instruments, and criteria for measuring online learning effectiveness, which makes it difficult to compare research results. Third, learning effectiveness is a multi-dimensional construct, but its multidimensionality was largely ignored by past research. Therefore, it is difficult to evaluate which dimensions of learning effectiveness are promoted or undermined by online learning, and it is also difficult to compare the results of different studies. Finally, there is very limited knowledge about the difference in online learning effectiveness between different subjects. It is likely that subjects that depend on lab-based work (e.g., experimental physics, organic chemistry, and cell biology) are less appropriate for online learning than subjects that depend on desk-based work (e.g., economics, psychology, and literature).

To deal with the above limitations, there are several recommendations for future research on online learning effectiveness. First, future research is encouraged to adopt an RCT design and collect a large sample to objectively, rigorously, and accurately quantify the effectiveness of online learning. Second, scholars are also encouraged to develop a new framework to assess learning effectiveness comprehensively. This framework should cover multiple dimensions of learning effectiveness and have strong generalizability. Finally, future research is recommended to compare the effectiveness of online learning between different subjects.

4 Conclusion

This study carried out a systematic review of 25 empirical studies published between 2020 and 2023 to evaluate the effectiveness of online learning during the COVID-19 pandemic period. According to how online learning effectiveness was assessed, these 25 studies were categorized into four groups. The first group of studies employed a cross-sectional design and assessed online learning based on students’ perceptions without a control group. Less than half of these studies reported online learning as effective. The second group of studies also employed a cross-sectional design and asked students to compare the effectiveness of online learning with offline learning. All these studies reported that online learning is less effective than offline learning. The third group of studies employed a longitudinal design and compared the effectiveness of online learning with offline learning but without a control group and this group includes only 2 studies. It was reported that online learning is more effective than offline learning. The fourth group of studies employed an RCT design and this group includes only 2 studies. Both studies reported online learning as more effective than offline learning.

Overall, it is difficult to conclude whether online learning is effective during the pandemic period because of the diverse research contexts, methods, and approaches in past research. Nevertheless, the review identifies a set of factors that positively or negatively influence the effectiveness of online learning, including infrastructure factors, instructional factors, the lack of social interaction, negative emotions, flexibility, and convenience. Although the effectiveness of online learning during the pandemic period is debated, it is generally believed that the pandemic brought many challenges and difficulties to higher education. Meanwhile, the majority of students prefer offline learning to online learning. In addition, developing countries face more challenges and difficulties in online learning because of monetary and infrastructure issues.

The findings of this review offer significant pedagogical implications for online learning in higher education institutes, including enhancing the development of ICT infrastructure, providing material support for students with poor economic status, enhancing social interactions, paying attention to students’ emotional status, and preparing a contingency plan for emergency online learning.

The review also identifies several limitations in past research regarding online learning effectiveness during the pandemic period, including the lack of rigor in assessing learning effectiveness, the absence of accepted criteria for assessing learning effectiveness, the neglect of the multidimensionality of learning effectiveness, and limited knowledge about the difference in online learning effectiveness between different subjects.

To deal with the above limitations, there are several recommendations for future research on online learning effectiveness. First, future research is encouraged to adopt an RCT design and collect a large sample to objectively, rigorously, and accurately quantify the effectiveness of online learning. Second, scholars are also encouraged to develop a new framework to assess learning effectiveness comprehensively. This framework should cover multiple dimensions of learning effectiveness and have strong generalizability. Finally, future research is recommended to compare the effectiveness of online learning between different subjects.

It should be noted that this review is not free of problems. First, only studies that quantitatively measured online learning effectiveness were included in the review and hence a lot of other studies (e.g., qualitative studies) that investigated factors that influence online learning effectiveness were excluded, resulting in a relatively small-sized sample and incomplete synthesis of past research contributions. Second, since this review was qualitative, it was difficult to accurately quantify the level of online learning effectiveness.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

WM: Writing – original draft, Writing – review & editing. LY: Writing – original draft, Writing – review & editing. CL: Writing – review & editing. NP: Writing – review & editing. XP: Writing – review & editing. YZ: Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Adnan, M., and Anwar, K. (2020). Online learning amid the COVID-19 pandemic: Students' perspectives. J. Pedagogical Sociol. Psychol. 1, 45–51. doi: 10.33902/JPSP.2020261309

Alawamleh, M., Al-Twait, L. M., and Al-Saht, G. R. (2020). The effect of online learning on communication between instructors and students during Covid-19 pandemic. Asian Educ. Develop. Stud. 11, 380–400. doi: 10.1108/AEDS-06-2020-0131

Almahasees, Z., Mohsen, K., and Amin, M. O. (2021). Faculty’s and students’ perceptions of online learning during COVID-19. Front. Educ. 6:638470. doi: 10.3389/feduc.2021.638470

Almusharraf, N., and Khahro, S. (2020). Students satisfaction with online learning experiences during the COVID-19 pandemic. Int. J. Emerg. Technol. Learn. (iJET) 15, 246–267. doi: 10.3991/ijet.v15i21.15647

Anderson, N., and Hajhashemi, K. (2013). Online learning: from a specialized distance education paradigm to a ubiquitous element of contemporary education. In 4th international conference on e-learning and e-teaching (ICELET 2013) (pp. 91–94). IEEE.

Arkorful, V., and Abaidoo, N. (2015). The role of e-learning, advantages and disadvantages of its adoption in higher education. Int. J. Instructional Technol. Distance Learn. 12, 29–42.

Baber, H. (2022). Social interaction and effectiveness of the online learning–a moderating role of maintaining social distance during the pandemic COVID-19. Asian Educ. Develop. Stud. 11, 159–171. doi: 10.1108/AEDS-09-2020-0209

Barnard-Brak, L., Paton, V. O., and Lan, W. Y. (2010). Profiles in self-regulated learning in the online learning environment. Int. Rev. Res. Open Dist. Learn. 11, 61–80. doi: 10.19173/irrodl.v11i1.769

Bughrara, M. S., Swanberg, S. M., Lucia, V. C., Schmitz, K., Jung, D., and Wunderlich-Barillas, T. (2023). Beyond COVID-19: the impact of recent pandemics on medical students and their education: a scoping review. Med. Educ. Online 28:2139657. doi: 10.1080/10872981.2022.2139657

Callahan, J. L. (2014). Writing literature reviews: a reprise and update. Hum. Resour. Dev. Rev. 13, 271–275. doi: 10.1177/1534484314536705

Camargo, C. P., Tempski, P. Z., Busnardo, F. F., Martins, M. D. A., and Gemperli, R. (2020). Online learning and COVID-19: a meta-synthesis analysis. Clinics 75:e2286. doi: 10.6061/clinics/2020/e2286

Choudhury, S., and Pattnaik, S. (2020). Emerging themes in e-learning: a review from the stakeholders’ perspective. Comput. Educ. 144:103657. doi: 10.1016/j.compedu.2019.103657

Chandrasiri, N. R., and Weerakoon, B. S. (2022). Online learning during the COVID-19 pandemic: perceptions of allied health sciences undergraduates. Radiography 28, 545–549. doi: 10.1016/j.radi.2021.11.008

Chang, J. Y. F., Wang, L. H., Lin, T. C., Cheng, F. C., and Chiang, C. P. (2021). Comparison of learning effectiveness between physical classroom and online learning for dental education during the COVID-19 pandemic. J. Dental Sci. 16, 1281–1289. doi: 10.1016/j.jds.2021.07.016

Conrad, C., Deng, Q., Caron, I., Shkurska, O., Skerrett, P., and Sundararajan, B. (2022). How student perceptions about online learning difficulty influenced their satisfaction during Canada's Covid-19 response. Br. J. Educ. Technol. 53, 534–557. doi: 10.1111/bjet.13206

Cranfield, D. J., Tick, A., Venter, I. M., Blignaut, R. J., and Renaud, K. (2021). Higher education students’ perceptions of online learning during COVID-19—a comparative study. Educ. Sci. 11, 403–420. doi: 10.3390/educsci11080403

Desai, M. S., Hart, J., and Richards, T. C. (2008). E-learning: paradigm shift in education. Education 129, 1–20.

Davis, J., Mengersen, K., Bennett, S., and Mazerolle, L. (2014). Viewing systematic reviews and meta-analysis in social research through different lenses. SpringerPlus 3, 1–9. doi: 10.1186/2193-1801-3-511

Donthu, N., Kumar, S., Mukherjee, D., Pandey, N., and Lim, W. M. (2021). How to conduct a bibliometric analysis: an overview and guidelines. J. Bus. Res. 133, 264–269. doi: 10.1016/j.jbusres.2021.04.070

Fyllos, A., Kanellopoulos, A., Kitixis, P., Cojocari, D. V., Markou, A., Raoulis, V., et al. (2021). University students perception of online education: is engagement enough? Acta Informatica Medica 29, 4–9. doi: 10.5455/aim.2021.29.4-9

Gamage, D., Ruipérez-Valiente, J. A., and Reich, J. (2023). A paradigm shift in designing education technology for online learning: opportunities and challenges. Front. Educ. 8:1194979. doi: 10.3389/feduc.2023.1194979

García-Morales, V. J., Garrido-Moreno, A., and Martín-Rojas, R. (2021). The transformation of higher education after the COVID disruption: emerging challenges in an online learning scenario. Front. Psychol. 12:616059. doi: 10.3389/fpsyg.2021.616059

Gonzalez-Ramirez, J., Mulqueen, K., Zealand, R., Silverstein, S., Mulqueen, C., and BuShell, S. (2021). Emergency online learning: college students' perceptions during the COVID-19 pandemic. Coll. Stud. J. 55, 29–46.

Grafton-Clarke, C., Uraiby, H., Gordon, M., Clarke, N., Rees, E., Park, S., et al. (2022). Pivot to online learning for adapting or continuing workplace-based clinical learning in medical education following the COVID-19 pandemic: a BEME systematic review: BEME guide no. 70. Med. Teach. 44, 227–243. doi: 10.1080/0142159X.2021.1992372

Haningsih, S., and Rohmi, P. (2022). The pattern of hybrid learning to maintain learning effectiveness at the higher education level post-COVID-19 pandemic. Eurasian J. Educ. Res. 11, 243–257. doi: 10.12973/eu-jer.11.1.243

Hollister, B., Nair, P., Hill-Lindsay, S., and Chukoskie, L. (2022). Engagement in online learning: student attitudes and behavior during COVID-19. Front. Educ. 7:851019. doi: 10.3389/feduc.2022.851019

Hong, J. C., Lee, Y. F., and Ye, J. H. (2021). Procrastination predicts online self-regulated learning and online learning ineffectiveness during the coronavirus lockdown. Personal. Individ. Differ. 174:110673. doi: 10.1016/j.paid.2021.110673

Jiang, P., Namaziandost, E., Azizi, Z., and Razmi, M. H. (2023). Exploring the effects of online learning on EFL learners’ motivation, anxiety, and attitudes during the COVID-19 pandemic: a focus on Iran. Curr. Psychol. 42, 2310–2324. doi: 10.1007/s12144-022-04013-x

Joy, E. H., and Garcia, F. E. (2000). Measuring learning effectiveness: a new look at no-significant-difference findings. JALN 4, 33–39.

Kebritchi, M., Lipschuetz, A., and Santiague, L. (2017). Issues and challenges for teaching successful online courses in higher education: a literature review. J. Educ. Technol. Syst. 46, 4–29. doi: 10.1177/0047239516661713

Lalduhawma, L. P., Thangmawia, L., and Hussain, J. (2022). Effectiveness of online learning during the COVID-19 pandemic in Mizoram. J. Educ. e-Learning Res. 9, 175–183. doi: 10.20448/jeelr.v9i3.4162

Liberati, A., Altman, D. G., Tetzlaff, J., Mulrow, C., Gotzsche, P. C., Ioannidis, J. P., et al. (2009). The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Ann. Intern. Med. 151, W-65. doi: 10.7326/0003-4819-151-4-200908180-00136

Linnenluecke, M. K., Marrone, M., and Singh, A. K. (2020). Conducting systematic literature reviews and bibliometric analyses. Aust. J. Manag. 45, 175–194. doi: 10.1177/0312896219877678

Mahyoob, M. (2021). Online learning effectiveness during the COVID-19 pandemic: a case study of Saudi universities. Int. J. Info. Commun. Technol. Educ. (IJICTE) 17, 1–14. doi: 10.4018/IJICTE.20211001.oa7

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., and PRISMA Group (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann. Intern. Med. 151, 264–269. doi: 10.3736/jcim20090918

Mok, K. H., Xiong, W., and Bin Aedy Rahman, H. N. (2021). COVID-19 pandemic’s disruption on university teaching and learning and competence cultivation: student evaluation of online learning experiences in Hong Kong. Int. J. Chinese Educ. 10:221258682110070. doi: 10.1177/22125868211007011

Muthuprasad, T., Aiswarya, S., Aditya, K. S., and Jha, G. K. (2021). Students’ perception and preference for online education in India during COVID-19 pandemic. Soc. Sci. Humanities open 3:100101. doi: 10.1016/j.ssaho.2020.100101

Noesgaard, S. S., and Ørngreen, R. (2015). The effectiveness of e-learning: an explorative and integrative review of the definitions, methodologies and factors that promote e-learning effectiveness. Electronic J. E-learning 13, 278–290.

Papaioannou, D., Sutton, A., and Booth, A. (2016). Systematic approaches to a successful literature review. London: Sage.

Pratama, H., Azman, M. N. A., Kassymova, G. K., and Duisenbayeva, S. S. (2020). The trend in using online meeting applications for learning during the period of pandemic COVID-19: a literature review. J. Innovation in Educ. Cultural Res. 1, 58–68. doi: 10.46843/jiecr.v1i2.15

Rahayu, R. P., and Wirza, Y. (2020). Teachers’ perception of online learning during pandemic covid-19. Jurnal penelitian pendidikan 20, 392–406. doi: 10.17509/jpp.v20i3.29226

Rahman, A. (2021). Using students’ experience to derive effectiveness of COVID-19-lockdown-induced emergency online learning at undergraduate level: evidence from Assam, India. Higher Education for the Future 8, 71–89. doi: 10.1177/2347631120980549

Rajaram, K., and Collins, B. (2013). Qualitative identification of learning effectiveness indicators among mainland Chinese students in culturally dislocated study environments. J. Int. Educ. Bus. 6, 179–199. doi: 10.1108/JIEB-03-2013-0010

Salas-Pilco, S. Z., Yang, Y., and Zhang, Z. (2022). Student engagement in online learning in Latin American higher education during the COVID-19 pandemic: a systematic review. Br. J. Educ. Technol. 53, 593–619. doi: 10.1111/bjet.13190

Selco, J. I., and Habbak, M. (2021). Stem students’ perceptions on emergency online learning during the covid-19 pandemic: challenges and successes. Educ. Sci. 11:799. doi: 10.3390/educsci11120799

Sharma, K., Deo, G., Timalsina, S., Joshi, A., Shrestha, N., and Neupane, H. C. (2020). Online learning in the face of COVID-19 pandemic: assessment of students’ satisfaction at Chitwan medical college of Nepal. Kathmandu Univ. Med. J. 18, 40–47. doi: 10.3126/kumj.v18i2.32943

Shirahmadi, S., Hazavehei, S. M. M., Abbasi, H., Otogara, M., Etesamifard, T., Roshanaei, G., et al. (2023). Effectiveness of online practical education on vaccination training in the students of bachelor programs during the Covid-19 pandemic. PLoS One 18:e0280312. doi: 10.1371/journal.pone.0280312

Snyder, H. (2019). Literature review as a research methodology: an overview and guidelines. J. Bus. Res. 104, 333–339. doi: 10.1016/j.jbusres.2019.07.039

Stojan, J., Haas, M., Thammasitboon, S., Lander, L., Evans, S., Pawlik, C., et al. (2022). Online learning developments in undergraduate medical education in response to the COVID-19 pandemic: a BEME systematic review: BEME guide no. 69. Med. Teach. 44, 109–129. doi: 10.1080/0142159X.2021.1992373

Swan, K. (2003). Learning effectiveness online: what the research tells us. Elements of quality online education, practice and direction 4, 13–47.

Tang, K. H. D. (2023). Impacts of COVID-19 on primary, secondary and tertiary education: a comprehensive review and recommendations for educational practices. Educ. Res. Policy Prac. 22, 23–61. doi: 10.1007/s10671-022-09319-y

Torgerson, C. J., and Torgerson, D. J. (2001). The need for randomised controlled trials in educational research. Br. J. Educ. Stud. 49, 316–328. doi: 10.1111/1467-8527.t01-1-00178

Tranfield, D., Denyer, D., and Smart, P. (2003). Towards a methodology for developing evidence-informed management knowledge by means of systematic review. Br. J. Manag. 14, 207–222. doi: 10.1111/1467-8551.00375

Tsang, J. T., So, M. K., Chong, A. C., Lam, B. S., and Chu, A. M. (2021). Higher education during the pandemic: the predictive factors of learning effectiveness in COVID-19 online learning. Educ. Sci. 11:446. doi: 10.3390/educsci11080446

Wallin, J. A. (2005). Bibliometric methods: pitfalls and possibilities. Basic Clin. Pharmacol. Toxicol. 97, 261–275. doi: 10.1111/j.1742-7843.2005.pto_139.x

Webster, J., and Watson, R. T. (2002). Analyzing the past to prepare for the future: writing a literature review. MIS Q. 26, 13–23.

Wong, J., Baars, M., Davis, D., Van Der Zee, T., Houben, G. J., and Paas, F. (2019). Supporting self-regulated learning in online learning environments and MOOCs: a systematic review. Int. J. Human–Computer Interaction 35, 356–373. doi: 10.1080/10447318.2018.1543084

Woo, Y., and Reeves, T. C. (2007). Meaningful interaction in web-based learning: a social constructivist interpretation. Internet High. Educ. 10, 15–25. doi: 10.1016/j.iheduc.2006.10.005

Zeitoun, H. (2008). E-learning: Concept, Issues, Application, Evaluation. Riyadh: Dar Alsolateah Publication.

Zhang, L., Carter, R. A. Jr., Qian, X., Yang, S., Rujimora, J., and Wen, S. (2022). Academia's responses to crisis: a bibliometric analysis of literature on online learning in higher education during COVID-19. Br. J. Educ. Technol. 53, 620–646. doi: 10.1111/bjet.13191

Zhang, Y., and Chen, X. (2023). Students’ perceptions of online learning in the post-COVID era: a focused case from the universities of applied sciences in China. Sustain. For. 15:946. doi: 10.3390/su15020946

Keywords: COVID-19 pandemic, higher education, online learning, learning effectiveness, systematic review

Citation: Meng W, Yu L, Liu C, Pan N, Pang X and Zhu Y (2024) A systematic review of the effectiveness of online learning in higher education during the COVID-19 pandemic period. Front. Educ. 8:1334153. doi: 10.3389/feduc.2023.1334153

Received: 06 November 2023; Accepted: 27 December 2023; Published: 17 January 2024.

Copyright © 2024 Meng, Yu, Liu, Pan, Pang and Zhu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lei Yu, [email protected]

The effects of online education on academic success: A meta-analysis study

- Published: 06 September 2021

- Volume 27 , pages 429–450, ( 2022 )

- Hakan Ulum ORCID: orcid.org/0000-0002-1398-6935 1

79k Accesses

26 Citations

11 Altmetric

Explore all metrics

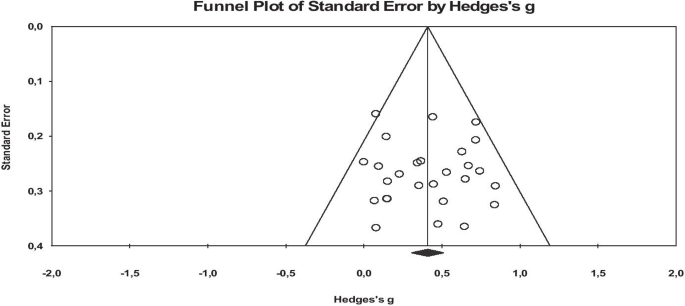

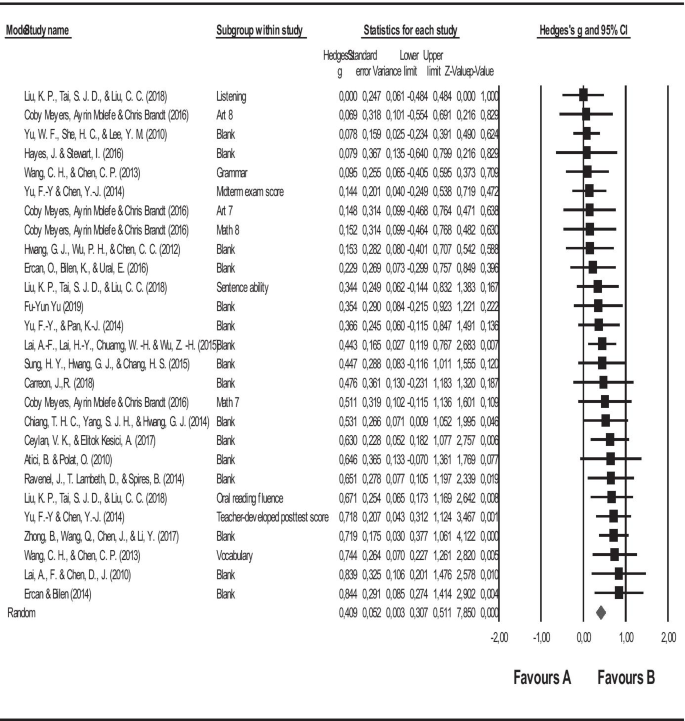

The purpose of this study is to analyze the effect on student achievement of online education, which has been used extensively since the beginning of the pandemic. In line with this purpose, a meta-analysis was carried out of studies conducted in several countries between 2010 and 2021 on the effect of online education on students’ academic achievement. Furthermore, this study will provide a source to assist future studies in comparing the effect of online education on academic achievement before and after the pandemic. The meta-analysis consists of 27 studies in total, conducted in the USA, Taiwan, Turkey, China, the Philippines, Ireland, and Georgia. The included studies are experimental, and the total sample size is 1772. The funnel plot, Duval and Tweedie’s Trim and Fill Analysis, Orwin’s Fail-Safe N Analysis, and Egger’s Regression Test were used to assess publication bias, which was found to be quite low. In addition, Hedges’ g statistic was employed to measure the effect size for the difference between means, in accordance with the random effects model. The results show that the effect size of online education on academic achievement is at a medium level. The heterogeneity test results show that the effect size does not differ in terms of the class level, country, online education approach, and lecture moderators.

1 Introduction

Information and communication technologies have become a powerful force in transforming educational settings around the world. The pandemic has been an important factor in moving traditional physical classrooms online through the adoption of information and communication technologies, and it has accelerated this transformation. The literature indicates that students are highly satisfied with learning environments connected to information and communication technologies. Therefore, interest in technology-based learning environments needs to be maintained. Technology has clearly had a huge impact on young people's online lives, and this digital revolution can bring together the educational ambitions and interests of digitally addicted students. In essence, COVID-19 has provided an opportunity to embrace online learning, as education systems have to keep up with the rapid emergence of new technologies.

Information and communication technologies, which affect all spheres of life, are also actively used in the field of education. With recent developments, using technology in education has become inevitable for both personal and social reasons (Usta, 2011a). Online education is one example of the use of information and communication technologies that has resulted from these technological developments. Online learning is also clearly a popular way of obtaining instruction (Demiralay et al., 2016; Pillay et al., 2007). Horton (2000) defines it as a form of education carried out through a web browser or an online application without requiring extra software or learning resources. Furthermore, online learning is described as a way of using the internet to obtain learning resources during the learning process, to interact with the content, the teacher, and other learners, and to get support throughout the learning process (Ally, 2004). The benefits of online learning include learning independently at any time and place (Vrasidas & McIsaac, 2000), convenience (Poole, 2000), flexibility (Chizmar & Walbert, 1999), self-regulation skills (Usta, 2011b), collaborative learning, and the opportunity to plan one's own learning process.

Even though online education practices were not previously as comprehensive as they are now, the internet and computers have long been used in education as alternative learning tools, in step with advances in technology. The first distance education attempt in the world was initiated by the ‘Steno Courses’ announcement published in a Boston newspaper in 1728. Furthermore, in the nineteenth century, Sweden University started the “Correspondence Composition Courses” for women, and University Correspondence College was afterwards founded for correspondence courses in 1843 (Arat & Bakan, 2011). More recently, distance education has been delivered through computers with the support of internet technologies, and it soon evolved into a mobile education practice, driven by faster internet connections and the development of mobile devices.

With the emergence of the Covid-19 pandemic, face-to-face education almost came to a halt, and online education gained significant importance. The Microsoft management team reported having 750 users involved in online education activities on March 10, just before the pandemic; by March 24, however, they reported that the number of users had increased significantly, reaching 138,698 (OECD, 2020). This event supports the view that it is better to use online education widely rather than to treat it merely as an alternative educational tool for when students do not have the opportunity for face-to-face education (Geostat, 2019). The Covid-19 pandemic emerged as a sudden period of limited opportunities, during which face-to-face education stopped for a long time. The global spread of Covid-19 affected more than 850 million students around the world and caused the suspension of face-to-face education. Different countries proposed several solutions in order to maintain the education process during the pandemic. Schools had to change their curricula, and many countries supported online education practices soon after the pandemic began. In other words, traditional education gave way to online education practices. At least 96 countries have been motivated to use online libraries, TV broadcasts, instructions, resources, video lectures, and online channels (UNESCO, 2020). In such a painful period, educational institutions carried out online education with the help of large companies and platforms such as Microsoft, Google, Zoom, Skype, FaceTime, and Slack. Thus, online education has been discussed on the education agenda more intensively than ever before.

Although online education approaches were not previously used as comprehensively as they have been recently, they have long been used as an alternative learning approach in education, in parallel with the development of technology, the internet, and computers. Online education approaches are often employed with the aim of promoting students’ academic achievement. In this regard, academics in various countries have conducted many studies evaluating online education approaches and have published the related results. However, the accumulation of scientific data on online education approaches makes it difficult to keep, organize, and synthesize the findings. Studies in this research area are being conducted at an increasing rate, making it difficult for scientists to be aware of all the research outside their own expertise. Another problem in this area is that online education studies are repetitive. Studies often use slightly different methods, measures, and/or examples to avoid duplication. This erroneous approach makes it difficult to identify significant differences in the related results. In other words, if there are significant differences in the results of the studies, it may be difficult to say which variation explains them. One obvious solution to these problems is to systematically review the results of various studies and uncover the sources of these differences. One method of performing such a systematic synthesis is meta-analysis, a methodological and statistical approach to drawing conclusions from the literature. At this point, how effective online education applications are in increasing academic success is an important question. Has online education, which is likely to be encountered frequently as the pandemic continues, been successful in the last ten years? If so, how large was the impact? Did different variables influence this effect?

Academics across the globe have carried out studies evaluating online education platforms and have published the related results (Chiao et al., 2018). It is important to evaluate the results of the studies that have been published so far and of those that will be published in the future. Has online education been successful? If it has been, how big is the impact? Do different variables affect this impact? What should we consider in future online education practices? These questions motivated us to carry out this study. We have conducted a comprehensive meta-analysis that seeks to provide a platform for discussing how educators and policy makers can develop efficient online programs. It does so by reviewing the related studies on online education, presenting the effect size, and revealing the effect of diverse variables on the general impact.

There have been many critical discussions and comprehensive studies on the differences between online and face-to-face learning. The focus of this paper is different: it clarifies the magnitude of the effect of the online education and teaching process, and it shows which factors should be controlled to help increase the effect size. The purpose here is to support informed decisions in the implementation of the online education process.

This study examines the general impact of online education on academic achievement. It therefore provides an opportunity to gain a general overview of online education, which has been practiced and discussed intensively in the pandemic period. Moreover, the general impact of online education on academic achievement is analyzed with respect to different variables. In other words, the current study makes it possible to evaluate the results in the related literature as a whole and to analyze them across several cultures, lectures, and class levels. Considering all these points, this study seeks to answer the following research questions:

What is the effect size of online education on academic achievement?

How do the effect sizes of online education on academic achievement change according to the moderator variable of the country?

How do the effect sizes of online education on academic achievement change according to the moderator variable of the class level?

How do the effect sizes of online education on academic achievement change according to the moderator variable of the lecture?

How do the effect sizes of online education on academic achievement change according to the moderator variable of the online education approaches?
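
The moderator questions above amount to asking whether pooled effect sizes differ between subgroups of studies (for example, by country or class level). The following is a minimal sketch of one common way to examine this, a between-groups heterogeneity statistic computed with inverse-variance weights. It is an illustration only and not necessarily the procedure used in this study; the function names and the numbers in the usage example are hypothetical.

```python
def pooled_fixed(effects, variances):
    """Inverse-variance weighted mean of one subgroup and its total weight."""
    weights = [1.0 / v for v in variances]
    total_w = sum(weights)
    mean = sum(w * y for w, y in zip(weights, effects)) / total_w
    return mean, total_w


def q_between(subgroups):
    """Between-groups heterogeneity statistic (Q_between) and its degrees of freedom.

    `subgroups` maps a moderator level (e.g. a country name) to a pair
    (effect sizes, sampling variances) for the studies at that level.
    """
    summaries = [pooled_fixed(e, v) for e, v in subgroups.values()]
    grand_w = sum(w for _, w in summaries)
    grand_mean = sum(w * m for m, w in summaries) / grand_w
    q_b = sum(w * (m - grand_mean) ** 2 for m, w in summaries)
    return q_b, len(summaries) - 1


# Hypothetical effect sizes and variances, for illustration only.
example = {
    "level_A": ([0.2, 0.2], [0.1, 0.1]),
    "level_B": ([0.8, 0.8], [0.1, 0.1]),
}
q_b, df = q_between(example)
print(q_b, df)
```

Under the usual assumptions, the statistic is compared against a chi-square distribution with the returned degrees of freedom; a small value suggests that the effect size does not differ across the levels of the moderator.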

This study aims to determine, by means of meta-analysis, the effect size of online education, which has been used extensively since the beginning of the pandemic, on students’ academic achievement in different courses. Meta-analysis is a synthesis method that makes it possible to combine the results of several studies accurately and efficiently and to obtain an overall result (Tsagris & Fragkos, 2018).
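
To make the idea of combining study results concrete, the sketch below computes Hedges' g for a single two-group comparison and then pools several effect sizes under a random effects model. It assumes the DerSimonian-Laird estimator for the between-study variance, which is one common choice and is not confirmed by the source as the estimator used here. The function names and the numbers in the usage example are hypothetical.

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Hedges' g (bias-corrected standardized mean difference) and its variance."""
    df = n1 + n2 - 2
    s_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (m1 - m2) / s_pooled                 # Cohen's d
    j = 1 - 3 / (4 * df - 1)                 # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return j * d, j ** 2 * var_d


def random_effects_pool(effects, variances):
    """Pool effect sizes with DerSimonian-Laird random effects weights."""
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0   # between-study variance
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, se, tau2, q


# Hypothetical group statistics (online group vs. control group), for illustration only.
g, var_g = hedges_g(m1=10, sd1=2, n1=20, m2=8, sd2=2, n2=20)
pooled, se, tau2, q = random_effects_pool([g, 0.4, 0.6], [var_g, 0.05, 0.08])
print(round(g, 3), round(pooled, 3), round(se, 3))
```

The random effects weights add the estimated between-study variance to each study's sampling variance, so no single large study dominates the pooled estimate when the studies disagree.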

2.1 Selecting and coding the data (studies)

The literature required for the meta-analysis was reviewed in July 2020, and a follow-up review was conducted in September 2020. The purpose of the follow-up review was to include studies that were published while this study was being conducted and that met the inclusion criteria. However, the follow-up review identified no additional studies to include.