
The 7 main types of learning styles (and how to teach to them)

Understanding the 7 main types of learning styles and how to teach them will help both your students and your courses be more successful.

When it comes to learning something new, we all absorb information at different rates and understand it differently too. Some students get new concepts right away; others need to sit and ponder for some time before they can arrive at similar conclusions.

Why? The answer lies in the type of learning styles different students feel more comfortable with. In other words, we respond to information in different ways depending on how it is presented to us.

Clearly, different types of learning styles exist, and there are lots of debates in pedagogy about what they are and how to adapt to them.

For practical purposes, it’s recommended to ensure that your course or presentation covers the 7 main types of learning.

In this article, we’ll break down the 7 types of learning styles and give practical tips for improving your own teaching style, whether it’s in higher education or an online course you plan to create on the side.

Skip ahead:

- What are the 7 types of learning styles?

- How to accommodate different types of learning styles online

- How to help students understand their different types of learning styles

- How to create an online course for all

What are the 7 types of learning styles?

In the academic literature, the most common model of learning types is referred to as VARK.

VARK is an acronym that stands for Visual, Auditory, Reading & Writing, and Kinesthetic. While these learning methods are the most recognized, some people do not fit into these boxes and prefer to learn differently. So we’re adding three more learning types to our list: Logical, Social, and Solitary.

Visual learners

Visual learners are individuals that learn more through images, diagrams, charts, graphs, presentations, and anything that illustrates ideas. These people often doodle and make all kinds of visual notes of their own as it helps them retain information better.

When teaching visual learners, the goal isn’t just to incorporate images and infographics into your lesson. It’s about helping them visualize the relationships between different pieces of data or information as they learn.

Gamified lessons are a great way to teach visual learners as they’re interactive and aesthetically appealing. You should also give handouts, create presentations, and search for useful infographics to support your lessons.

Since visual information can be pretty dense, give your students enough time to absorb all the new knowledge and make their own connections between visual cues.

Auditory/aural learners

The auditory style of learning is quite the opposite of the visual one. Auditory learners are people that absorb information better when it is presented in audio format (i.e. the lessons are spoken). This type of learner prefers to learn by listening and might not take any notes at all. They also ask questions often or repeat what they have just heard aloud to remember it better.

Aural learners are often not afraid of speaking up and are great at explaining themselves. When teaching auditory learners, keep in mind that they shouldn’t stay quiet for long periods of time. So plan a few activities where you can exchange ideas or ask questions. Watching videos or listening to audio during class will also help with retaining new information.

Reading and writing (or verbal) learners

Reading & Writing learners absorb information best when they use words, whether they’re reading or writing them. To verbal learners, written words are more powerful and granular than images or spoken words, so they’re excellent at writing essays, articles, books, etc.

To support the way reading-writing students learn best, ensure they have time to take ample notes and allocate extra time for reading. This type of learner also does really well at remote learning, on their own schedule. Including reading materials and writing assignments in their homework should also yield good results.

Kinesthetic/tactile learners

Kinesthetic learners use different senses to absorb information. They prefer to learn by doing or experiencing what they’re being taught. These types of learners are tactile and need to live through experiences to truly understand something new. This makes it a bit challenging to prepare for them in a regular class setting.

As you try to teach tactile learners, note that they can’t sit still for long and need more frequent breaks than others. You need to get them moving and come up with activities that reinforce the information that was just covered in class. Acting out different roles is great; games are excellent; even collaborative writing on a whiteboard should work fine. If applicable, you can also organize hands-on laboratory sessions, immersions, and workshops.

In general, try to bring every abstract idea into the real world to help kinesthetic learners succeed.

Logical/analytical learners

As the name implies, logical learners rely on logic to process information and understand a particular subject. They search for causes and patterns to create a connection between different kinds of information. Many times, these connections are not obvious to people who learn differently, but they make perfect sense to logical learners.

Logical learners generally do well with facts, statistics, sequential lists, and problem-solving tasks, to mention a few.

As a teacher, you can engage logical learners by asking open-ended or obscure questions that require them to apply their own interpretation. You should also use teaching material that helps them hone their problem-solving skills and encourages them to form conclusions based on facts and critical thinking.

Social/interpersonal learners

Social or interpersonal learners love socializing with others and working in groups, so they learn best during lessons that require them to interact with their peers. Think study groups, peer discussions, and class quizzes.

To effectively teach interpersonal learners, you’ll need to make teamwork a core part of your lessons. Encourage student interaction by asking questions and sharing stories. You can also incorporate group activities and role-playing into your lessons, and divide the students into study groups.

Solitary/intrapersonal learners

Solitary learning is the opposite of social learning. Solitary, or solo, learners prefer to study alone without interacting with other people. These learners are quite good at motivating themselves and doing individual work. In contrast, they generally don’t do well with teamwork or group discussions.

To help students like this, you should encourage activities that require individual work, such as journaling, which allows them to reflect on themselves and improve their skills. You should also acknowledge your students’ individual accomplishments and help them refine their problem-solving skills.

Are there any unique intelligence types commonly shared by your students? Adapting to these different types of intelligence can help you design a course best suited to help your students succeed.


How to help students understand their different types of learning styles

Unless you’re teaching preschoolers, most students probably already realize the type of learning style that fits them best. But some students do get it wrong.

The key here is to observe every student carefully and plan your content for different learning styles right from the start.

Another idea is to implement as much individual learning as you can and then customize that learning for each student. So you can have visual and auditory activities, riddles for logical learners, games for kinesthetic learners, reading activities, writing tasks, drawing challenges, and more.

How to accommodate different types of learning styles online

When you’re creating your first course online, it’s important to dedicate enough time to planning out its structure. Don’t just think that a successful course consists of five uploaded videos.

Think about how you present the new knowledge: where it makes sense to pause and give students time to reflect, and where to include activities to review the new material. Adapting to the different learning types that people exhibit can help you design an online course best suited to help your students succeed.

That being said, here are some tips to help you tailor your course to each learning style, or at least create enough balance.

Visual learners

Since visual learners like to see or observe images, diagrams, demonstrations, etc., to understand a topic, here’s how you can create a course for them:

- Include graphics, cartoons, or illustrations of concepts

- Use flashcards to review course material

- Use flow charts or maps to organize materials

- Highlight and color code notes to organize materials

- Use color-coded tables to compare and contrast elements

- Use a whiteboard to explain important information

- Have students play around with different font styles and sizes to improve readability

Auditory/aural learners

Auditory learners prefer to absorb information by listening to spoken words, so they do well when teachers give spoken instructions and lessons. Here’s how to cater to this learning type through your online course:

- Converse with your students about the subject or topic

- Ask your students questions after each lesson and have them answer you (through the spoken word)

- Have them record lectures and review them with you

- Have articles, essays, and comprehension passages read out to them

- As you teach, explain your methods, questions, and answers

- Ask for oral summaries of the course material

- If you teach math or any other math-related course, use a talking calculator

- Create an audio file that your students can listen to

- Create a video of you teaching your lesson to your student

- Include a YouTube video or podcast episode for your students to listen to

- Organize a live Q & A session where students can talk to you and other learners to help them better understand the subject

Reading and writing (or verbal) learners

This one is pretty straightforward. Verbal learners learn best when they read or write (or both), so here are some practical ways to include that in your online course:

- Have your students write summaries about the lesson

- If you teach language or literature, assign them stories and essays that they’d have to read out loud to understand

- If your course is video-based, add transcripts to aid your students’ learning process

- Make lists of important parts of your lesson to help your students memorize them

- Provide downloadable notes and checklists that your students can review after they’ve finished each chapter of your course

- Encourage extra reading by including links to a post on your blog or another website in the course

- Use some type of body movement or rhythm, such as snapping your fingers, mouthing, or pacing, while reciting the material your students should learn

Kinesthetic/tactile learners

Kinesthetic learners like to experience what they learn hands-on, using their senses: holding, touching, hearing, and doing. So instead of churning out instructions and expecting them to follow, try these:

- Encourage them to experiment with textured paper, and different sizes of pencils, pens, and crayons to jot down information

- If you teach diction or language, give them words that they should incorporate into their daily conversations with other people

- Encourage students to dramatize or act out lesson concepts to understand them better

Logical/analytical learners

Logical learners are great at recognizing patterns, analyzing information, and solving problems. So in your online course, you need to structure your lessons to help them hone these abilities. Here are some things you can do:

- Come up with tasks that require them to solve problems. This is easy if you teach math or a math-related course

- Create charts and graphs that your students need to interpret to fully grasp the lesson

- Ask open-ended questions that require critical thinking

- Create a mystery for your students to solve with clues that require logical thinking or math

- Pose an issue/topic to your students and ask them to address it from multiple perspectives

Social/interpersonal learners

Since social learners prefer to discuss or interact with others, you should set up your course to include group activities. Here’s how you can do that:

- Encourage them to discuss the course concept with their classmates

- Get your students involved in forum discussions

- Create a platform (via Slack, Discord, etc.) for group discussions

- Pair two or more social students to teach each other the course material

- If you’re offering a cohort-based course, you can encourage students to make their own presentations and explain them to the rest of the class

Solitary/intrapersonal learners

Solitary learners prefer to learn alone. So when designing your course, you need to take that into consideration and provide these learners a means to work by themselves. Here are some things you can try:

- Encourage them to do assignments by themselves

- Break down big projects into smaller ones to help them manage time efficiently

- Give them activities that require them to do research on their own

- When they’re faced with problems regarding the topic, let them try to work around it on their own. But let them know that they are welcome to ask you for help if they need to

- Encourage them to speak up when you ask them questions as it builds their communication skills

- Explore blended learning, if possible, by combining teacher-led classes with self-guided assignments and extra ideas that students can explore on their own

How to create an online course for all

Now that you’re ready to teach something to everyone, you might be wondering what you actually need to do to create your online courses. Well, start with a platform.

Thinkific is an intuitive and easy-to-use platform any instructor can use to create online courses that would resonate with all types of learning styles. Include videos, audio, presentations, quizzes, and assignments in your curriculum. Guide courses in real-time or pre-record information in advance. It’s your choice.

In addition, creating a course on Thinkific doesn’t require you to know any programming. You can use a professionally designed template and customize it with a drag-and-drop editor to get exactly the course you want in just a few hours. Try it yourself to see how easy it can be.

This blog was originally published in August 2017 and was updated in March 2023.

Althea Storm is a B2B SaaS writer who specializes in creating data-driven content that drives traffic and increases conversions for businesses. She has worked with top companies like AdEspresso, HubSpot, Aura, and Thinkific. When she's not writing web content, she's curled up in a chair reading a crime thriller or solving a Rubik's cube.


Center for Teaching

Learning styles

What are learning styles?

The term learning styles is widely used to describe how learners gather, sift through, interpret, organize, come to conclusions about, and “store” information for further use. As spelled out in VARK (one of the most popular learning styles inventories), these styles are often categorized by sensory approaches: visual, aural, verbal [reading/writing], and kinesthetic. Many of the models that don’t resemble the VARK’s sensory focus are reminiscent of Felder and Silverman’s Index of Learning Styles, with a continuum of descriptors for how learners process and organize information: active-reflective, sensing-intuitive, verbal-visual, and sequential-global.

There are well over 70 different learning styles schemes (Coffield, 2004), most of which are supported by “a thriving industry devoted to publishing learning-styles tests and guidebooks” and “professional development workshops for teachers and educators” (Pashler, et al., 2009, p. 105).

Despite the variation in categories, the fundamental idea behind learning styles is the same: that each of us has a specific learning style (sometimes called a “preference”), and we learn best when information is presented to us in this style. For example, visual learners would learn any subject matter best if given graphically or through other kinds of visual images, kinesthetic learners would learn more effectively if they could involve bodily movements in the learning process, and so on. The message thus given to instructors is that “optimal instruction requires diagnosing individuals’ learning style[s] and tailoring instruction accordingly” (Pashler, et al., 2009, p. 105).

Despite the popularity of learning styles and inventories such as the VARK, it’s important to know that there is no evidence to support the idea that matching activities to one’s learning style improves learning. It’s not simply a matter of “the absence of evidence doesn’t mean the evidence of absence.” On the contrary, for years researchers have tried to make this connection through hundreds of studies.

In 2009, Psychological Science in the Public Interest commissioned cognitive psychologists Harold Pashler, Mark McDaniel, Doug Rohrer, and Robert Bjork to evaluate the research on learning styles to determine whether there is credible evidence to support using learning styles in instruction. They came to a startling but clear conclusion: “Although the literature on learning styles is enormous,” they “found virtually no evidence” supporting the idea that “instruction is best provided in a format that matches the preference of the learner.” Many of those studies suffered from weak research design, rendering them far from convincing. Others with an effective experimental design “found results that flatly contradict the popular” assumptions about learning styles (p. 105). In sum,

“The contrast between the enormous popularity of the learning-styles approach within education and the lack of credible evidence for its utility is, in our opinion, striking and disturbing” (p. 117).

Why are they so popular?

Pashler and his colleagues point to some reasons why learning styles have gained, and kept, such traction, aside from the enormous industry that supports the concept. First, people like to identify themselves and others by “type.” Such categories help order the social environment and offer quick ways of understanding each other. Also, this approach appeals to the idea that learners should be recognized as “unique individuals” (or, more precisely, that differences among students should be acknowledged) rather than treated as a number in a crowd or a faceless class of students (p. 107). Carried further, teaching to different learning styles suggests that “all people have the potential to learn effectively and easily if only instruction is tailored to their individual learning styles” (p. 107).

There may be another reason why this approach to learning styles is so widely accepted: learning styles very loosely resemble the concept of metacognition, or the process of thinking about one’s thinking. For instance, having your students describe which study strategies and conditions for their last exam worked for them and which didn’t is likely to improve their studying on the next exam (Tanner, 2012). Integrating such metacognitive activities into the classroom, unlike learning styles, is supported by a wealth of research (e.g., Askell-Williams, Lawson, & Murray-Harvey, 2007; Bransford, Brown, & Cocking, 2000; Butler & Winne, 1995; Isaacson & Fujita, 2006; Nelson & Dunlosky, 1991; Tobias & Everson, 2002).

Importantly, metacognition is focused on planning, monitoring, and evaluating any kind of thinking about thinking and does nothing to connect one’s identity or abilities to any singular approach to knowledge. (For more information about metacognition, see CFT Assistant Director Cynthia Brame’s “Thinking about Metacognition” blog post, and stay tuned for a Teaching Guide on metacognition this spring.)

There is, however, something you can take away from these different approaches to learning, based not on the learner but on the content being learned. To explore the persistence of the belief in learning styles, CFT Assistant Director Nancy Chick interviewed Dr. Bill Cerbin, Professor of Psychology and Director of the Center for Advancing Teaching and Learning at the University of Wisconsin-La Crosse and former Carnegie Scholar with the Carnegie Academy for the Scholarship of Teaching and Learning. He points out that the differences identified by the labels “visual, auditory, kinesthetic, and reading/writing” are more appropriately connected to the nature of the discipline:

“There may be evidence that indicates that there are some ways to teach some subjects that are just better than others, despite the learning styles of individuals…. If you’re thinking about teaching sculpture, I’m not sure that long tracts of verbal descriptions of statues or of sculptures would be a particularly effective way for individuals to learn about works of art. Naturally, these are physical objects and you need to take a look at them, you might even need to handle them.” (Cerbin, 2011, 7:45-8:30)

Pashler and his colleagues agree: “An obvious point is that the optimal instructional method is likely to vary across disciplines” (p. 116). In other words, it makes disciplinary sense to include kinesthetic activities in sculpture and anatomy courses, reading/writing activities in literature and history courses, visual activities in geography and engineering courses, and auditory activities in music, foreign language, and speech courses. Obvious or not, it aligns teaching and learning with the contours of the subject matter, without limiting the potential abilities of the learners.

- Askell-Williams, H., Lawson, M., & Murray-Harvey, R. (2007). ‘What happens in my university classes that helps me to learn?’: Teacher education students’ instructional metacognitive knowledge. International Journal of the Scholarship of Teaching and Learning, 1, 1-21.

- Bransford, J. D., Brown, A. L., & Cocking, R. R. (Eds.). (2000). How people learn: Brain, mind, experience, and school (Expanded Edition). Washington, D.C.: National Academy Press.

- Butler, D. L., & Winne, P. H. (1995). Feedback and self-regulated learning: A theoretical synthesis. Review of Educational Research, 65, 245-281.

- Cerbin, W. (2011). Understanding learning styles: A conversation with Dr. Bill Cerbin. Interview with Nancy Chick. UW Colleges Virtual Teaching and Learning Center.

- Coffield, F., Moseley, D., Hall, E., & Ecclestone, K. (2004). Learning styles and pedagogy in post-16 learning: A systematic and critical review. London: Learning and Skills Research Centre.

- Isaacson, R. M., & Fujita, F. (2006). Metacognitive knowledge monitoring and self-regulated learning: Academic success and reflections on learning. Journal of the Scholarship of Teaching and Learning, 6, 39-55.

- Nelson, T. O., & Dunlosky, J. (1991). The delayed-JOL effect: When delaying your judgments of learning can improve the accuracy of your metacognitive monitoring. Psychological Science, 2, 267-270.

- Pashler, H., McDaniel, M., Rohrer, D., & Bjork, R. (2008). Learning styles: Concepts and evidence. Psychological Science in the Public Interest, 9(3), 103-119.

- Tobias, S., & Everson, H. (2002). Knowing what you know and what you don’t: Further research on metacognitive knowledge monitoring. College Board Report No. 2002-3. College Board, NY.


What is a Presentation of Learning and Why Do We Do It?

Alec Patton

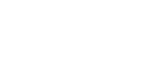

A Presentation of Learning (POL) requires students to present their learning to an audience, in order to prove that they are ready to progress. Effective POLs include both academic content and the student’s reflection on their social and personal growth. They are important rituals – literally “rites of passage” for students.

At my school, every student gives two POLs per year – one at the end of fall semester, and one at the end of the year. They happen at the same time that most schools have their final exams, and serve a similar function. However, unlike exams, POLs happen in front of an audience that includes their teachers, parents, and peers. By requiring students to present to an audience, reflect on their learning, and answer probing questions on the spot, we are helping students build skills that they will use for the rest of their life. Taking an exam, on the other hand, is a skill that students will rarely, if ever, need to utilize after they finish college.

Every team’s POL expectations are slightly different, but they all fall into one of two broad categories: “presentation” or “discussion”.

Presentation

The presentation is the “classic” version of the POL. A student gives a prepared presentation on their own, and takes questions. Designing a POL structure is a balancing act for the teacher: require students to cover too much material, and every one of your students will march in and recite a near-identical list of assignments completed and skills learned. On the other hand, make the requirements too open-ended and the POL can become an empty facsimile of reflection – or, as students have described it to me, “BS-ing”!

I once saw a POL assignment that included the phrase “it has to have some magic”, which students were free to interpret as they saw fit. It led to unpredictable and delightful presentations, and inspired more thought and extra work than any rubric could have.

Discussion

The “Discussion of Learning” trades the presentation structure for a seminar structure: a small group of students facilitates their own hour-long discussion, with the teachers initially just listening, then adding questions to enrich and drive the discussion. The parents are invited in for the final fifteen minutes, when the students summarize the discussion thus far and invite the parents to participate.

In my experience, this format tends to lead to meatier, more honest reflection than presentations. Especially when students are allowed to choose their own groups, they tend to make themselves more vulnerable than in other contexts. This format also opens up a space for students whose voices aren’t always heard in the classroom. The most memorable POL I’ve ever been a part of was a discussion by a group of girls, all of them native Spanish speakers, who talked about having been made uncomfortably aware of their accents by peers, and struggling to make their voices heard within our team. It was powerful, effective, thoughtful – everything I would have wanted from a POL, but it never would have happened if the structure had been different.

Which format should I choose, and when?

Students will be best served by experiencing both the “presentation” and “discussion” formats at some point in their academic careers.

I like to end fall semester with a presentation, because individual presentations give me the clearest sense of which skills a student has successfully developed, and what they will need more help with in the coming semester. I then end the year with a discussion, because at this point I know the students very well, and in a small-group setting we can speak frankly both about their successes, and the potential problems they will face in the coming year. I end this discussion with every student setting goals for the summer and coming year that I record and email to the student and their parents, so that they leave my class with the best possible trajectory into the future.


Learning Styles Presentation

Free Google Slides theme, PowerPoint template, and Canva presentation template

Contrary to popular belief, there are lots of different ways of teaching, and they aren’t incompatible. In fact, they complement each other. Some work better for some subjects than others. Eliana Delacour knows this very well, and that’s why she has been the one in charge of the content of this template. These slides about education include real content written by a pedagogist and an amazing design curated by Slidesgo. The perfect combination for teachers, right?

Features of this template

- Designed for teachers and parents

- 100% editable and easy to modify

- 22 different slides to impress your audience

- Contains easy-to-edit graphics such as graphs, maps, tables, timelines and mockups

- Includes 500+ icons and Flaticon’s extension for customizing your slides

- Designed to be used in Google Slides, Canva, and Microsoft PowerPoint

- 16:9 widescreen format suitable for all types of screens

- Includes information about fonts, colors, and credits of the resources used

- Available in different languages


Attribution required: If you are a free user, you must attribute Slidesgo by keeping the slide where the credits appear.


- Center for Innovative Teaching and Learning

- Instructional Guide

Teaching with PowerPoint

When effectively planned and used, PowerPoint (or similar tools, like Google Slides) can enhance instruction. People are divided on the effectiveness of this ubiquitous presentation program—some say that PowerPoint is wonderful while others bemoan its pervasiveness. No matter which side you take, PowerPoint does offer effective ways to enhance instruction when used and designed appropriately.

PowerPoint can be an effective tool to present material in the classroom and encourage student learning. You can use PowerPoint to project visuals that would otherwise be difficult to bring to class. For example, in an anthropology class, a single PowerPoint presentation could project images of an anthropological dig from a remote area, questions asking students about the topic, a chart of related statistics, and a mini quiz about what was just discussed that provides students with information that is visual, challenging, and engaging.


This section is organized in three major segments: Part I will help faculty identify and use basic but important design elements, Part II will cover ways to enhance teaching and learning with PowerPoint, and Part III will list ways to engage students with PowerPoint.

PART I: Designing the PowerPoint Presentation

Accessibility

- Student accessibility—students with visual or hearing impairments may not be able to fully access a PowerPoint presentation, especially those with graphics, images, and sound.

- Use an accessible layout. Built-in slide template layouts were designed to be accessible: “the reading order is the same for people with vision and for people who use assistive technology such as screen readers” (University of Washington, n.d.). If you want to alter the layout of a theme, use the Slide Master; this will ensure your slides will retain accessibility.

- Use unique and specific slide titles so students can access the material they need.

- Consider how you display hyperlinks. Since screen readers read what is on the page, you may want to consider creating a hyperlink using a descriptive title instead of displaying the URL.

- All visuals and tables should include alt text. Alt text should describe the visual or table in detail so that students with visual impairments can “read” the images with their screen readers. Avoid using too many decorative visuals.

- All video and audio content should be captioned for students with hearing impairments. Transcripts can also be useful as an additional resource, but captioning ensures students can follow along with what is on the screen in real-time.

- Simplify your tables. If you use tables on your slides, ensure they are not overly complex and do not include blank cells. Screen readers may have difficulty providing information about the table if there are too many columns and rows, and they may “think” the table is complete if they come to a blank cell.

- Set a reading order for text on your slides. The order that text appears on the slide may not be the reading order of the text. Check that your reading order is correct by using the Selection Pane (organized bottom-up).

- Use Microsoft’s Accessibility Checker to identify potential accessibility issues in your completed PowerPoint. Use the feedback to improve your PowerPoint’s accessibility. You could also send your file to the Disability Resource Center to have them assess its accessibility (send it far in advance of when you will need to use it).

- Save your PowerPoint presentation as a PDF file to distribute to students with visual impairments.

Preparing for the presentation

- Consider time and effort in preparing a PowerPoint presentation; give yourself plenty of lead time for design and development.

- PowerPoint is especially useful when providing course material online. Consider student technology compatibility with PowerPoint material put on the web; ensure images and graphics have been compressed for access by computers using dial-up connection.


- Be aware of copyright law when displaying course materials, and properly cite source material. This is especially important when using visuals obtained from the internet or other sources. This also models proper citation for your students.

- Think about message interpretation for PowerPoint use online: will students be able to understand material in a PowerPoint presentation outside of the classroom? Will you need to provide notes and/or other material to help students understand complex information, data, or graphics?

- If you will be using your own laptop, make sure the classroom is equipped with the proper cables, drivers, and other means to display your presentation the way you have intended.

Slide content

- Avoid text-dense slides. It’s better to have more slides than trying to place too much text on one slide. Use brief points instead of long sentences or paragraphs and outline key points rather than transcribing your lecture. Use PowerPoint to cue and guide the presentation.

- Use the Notes feature to add content to your presentation that the audience will not see. You can access the Notes section for each slide by sliding the bottom of the slide window up to reveal the notes section or by clicking “View” and choosing “Notes Page” from the Presentation Views options.

- Relate PowerPoint material to course objectives to reinforce their purpose for students.

Number of slides

- As a rule of thumb, plan to show one slide per minute to allow time for discussion and for students to absorb the material.

- Reduce redundant or text-heavy sentences or bullets to ensure a more professional appearance.

- Incorporate active learning throughout the presentation to hold students’ interest and reinforce learning.
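The one-slide-per-minute rule of thumb can be turned into a quick planning calculation. The sketch below is only an illustration: the function name `slide_budget` and its parameters are hypothetical, and subtracting time set aside for active-learning activities is an assumption layered on top of the guideline, not part of it.

```python
import math


def slide_budget(class_minutes: float,
                 minutes_per_slide: float = 1.0,
                 activity_minutes: float = 0.0) -> int:
    """Estimate how many slides fit in a class session.

    minutes_per_slide defaults to 1.0, the rule of thumb from the guide.
    activity_minutes is an assumed allowance for active-learning
    activities that are not spent on slides.
    """
    if class_minutes < 0 or activity_minutes < 0:
        raise ValueError("times must be non-negative")
    if minutes_per_slide <= 0:
        raise ValueError("minutes_per_slide must be positive")

    # Time left for slides once activities are taken out (never negative).
    lecture_minutes = max(class_minutes - activity_minutes, 0.0)
    # Round down so the plan never overruns the session.
    return math.floor(lecture_minutes / minutes_per_slide)


if __name__ == "__main__":
    # A 50-minute class with 10 minutes reserved for activities.
    print(slide_budget(50, activity_minutes=10))
```

Raising `minutes_per_slide` for dense material is one simple way to leave more room for discussion.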

Emphasizing content

- Use italics, bold, and color for emphasizing content.

- Use of a light background (white, beige, yellow) with dark typeface or a dark background (blue, purple, brown) with a light typeface is easy to read in a large room.

- Avoid using too many colors or shifting colors too many times within the presentation, which can be distracting to students.

- Avoid using underlines for emphasis; underlining typically signifies hypertext in digital media.


- Limit the number of typeface styles to no more than two per slide. Try to keep typeface consistent throughout your presentation so it does not become a distraction.

- Avoid overly ornate or specialty fonts that may be harder for students to read. Stick to basic fonts so as not to distract students from the content.

- Ensure the typeface is large enough to read from anywhere in the room: titles and headings should be no less than 36-40-point font. The subtext should be no less than 32-point font.
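The typeface guidelines above are numeric, so they can be checked mechanically. The following is a minimal sketch under stated assumptions: `SlideSpec` and `check_slide` are hypothetical names, the slide details are entered by hand rather than read from a PowerPoint file, and the thresholds use the lower bounds quoted above (36-point titles, 32-point subtext, two typefaces per slide).

```python
from dataclasses import dataclass
from typing import List, Tuple

# Thresholds taken from the guidelines above.
MIN_TITLE_PT = 36
MIN_BODY_PT = 32
MAX_TYPEFACES = 2


@dataclass
class SlideSpec:
    """Hand-entered description of one slide (hypothetical structure)."""
    title_pt: float
    body_pt: float
    typefaces: Tuple[str, ...]


def check_slide(spec: SlideSpec) -> List[str]:
    """Return a list of guideline problems; an empty list means none found."""
    problems = []
    if spec.title_pt < MIN_TITLE_PT:
        problems.append(f"title is {spec.title_pt} pt, below {MIN_TITLE_PT} pt")
    if spec.body_pt < MIN_BODY_PT:
        problems.append(f"subtext is {spec.body_pt} pt, below {MIN_BODY_PT} pt")
    # Count distinct typefaces so repeats are not penalized.
    if len(set(spec.typefaces)) > MAX_TYPEFACES:
        problems.append(f"more than {MAX_TYPEFACES} typefaces on one slide")
    return problems


if __name__ == "__main__":
    slide = SlideSpec(title_pt=28, body_pt=24,
                      typefaces=("Arial", "Georgia", "Verdana"))
    for problem in check_slide(slide):
        print(problem)
```

A check like this only covers the measurable guidelines; whether a font is too ornate remains a judgment call.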

Clip art and graphics

- Use clip art and graphics sparingly. Research shows that it’s best to use graphics only when they support the content. Irrelevant graphics and images have been proven to hinder student learning.

- Photographs can be used to add realism. Again, only use photographs that are relevant to the content and serve a pedagogical purpose. Images for decorative purposes are distracting.

- Size and place graphics appropriately on the slide—consider wrapping text around a graphic.

- Use two-dimensional pie and bar graphs rather than 3D styles which can interfere with the intended message.


Animation and sound

- Add motion, sound, or music only when necessary. When in doubt, do without.

- Avoid distracting animations and transitions. Excessive movement within or between slides can interfere with the message and students find them distracting. Avoid them or use only simple screen transitions.

Final check

- Check for spelling, correct word usage, flow of material, and overall appearance of the presentation.

- Colleagues can be helpful to check your presentation for accuracy and appeal. Note: Errors are more obvious when they are projected.

- Schedule at least one practice session to check for timing and flow.

- PowerPoint’s Slide Sorter View is especially helpful to check slides for proper sequencing as well as information gaps and redundancy. You can also use the preview pane on the left of the screen when you are editing the PowerPoint in “Normal” view.

- Prepare for plan “B” in case you have trouble with the technology in the classroom: how will you provide material located on your flash drive or computer? Have an alternate method of instruction ready (printing a copy of your PowerPoint with notes is one idea).


PowerPoint Handouts

PowerPoint provides multiple options for print-based handouts that can be distributed at various points in the class.

Before class: students might like having materials available to help them prepare and formulate questions before the class period.

During class: you could distribute a handout with three slides and lines for notes to encourage students to take notes on the details of your lecture so they have notes alongside the slide material (and aren’t just taking notes on the slide content).

After class: some instructors wait to make the presentation available after the class period so that students concentrate on the presentation rather than reading ahead on the handout.

Never: Some instructors do not distribute the PowerPoint to students so that students don’t rely on access to the presentation and neglect to pay attention in class as a result.

- PowerPoint slides can be printed in the form of handouts—with one, two, three, four, six, or nine slides on a page—that can be given to students for reference during and after the presentation. The three-slides-per-page handout includes lined space to assist in note-taking.

- Notes Pages. Detailed notes can be printed and used during the presentation, or if they are notes intended for students, they can be distributed before the presentation.

- Outline View. PowerPoint presentations can be printed as an outline, which provides all the text from each slide. Outlines offer a welcome alternative to slide handouts and can be modified from the original presentation to provide more or less information than the projected presentation.

The Presentation

Alley, Schreiber, Ramsdell, and Muffo (2006) suggest that PowerPoint slide headline design “affects audience retention,” and they conclude that “succinct sentence headlines are more effective” in information recall than headlines of short phrases or single words (p. 233). In other words, create slide titles with as much information as is used for newspapers and journals to help students better understand the content of the slide.

- PowerPoint should provide key words, concepts, and images to enhance your presentation (but PowerPoint should not replace you as the presenter).

- Avoid reading from the slide—reading the material can be perceived as though you don’t know the material. If you must read the material, provide it in a handout instead of a projected PowerPoint slide.

- Avoid moving a laser pointer across the slide rapidly. If using a laser pointer, use one with a dot large enough to be seen from all areas of the room and move it slowly and intentionally.


- Use a blank screen to allow students to reflect on what has just been discussed or to gain their attention (Press B for a black screen or W for a white screen while delivering your slide show; press these keys again to return to the live presentation). This pause can also be used for a break period or when transitioning to new content.

- Stand to one side of the screen and face the audience while presenting. Using Presenter View will display your slide notes to you on the computer monitor while projecting only the slides to students on the projector screen.

- Leave classroom lights on and turn off lights directly over the projection screen if possible. A completely dark or dim classroom will impede notetaking (and may encourage nap-taking).

- Learn to use PowerPoint efficiently and have a back-up plan in case of technical failure.

- Give yourself enough time to finish the presentation. Trying to rush through slides can give the impression of an unorganized presentation and may be difficult for students to follow or learn.

PART II: Enhancing Teaching and Learning with PowerPoint

Class preparation

PowerPoint can be used to prepare lectures and presentations by helping instructors refine their material to salient points and content. Class lectures can be typed in outline format, which can then be refined as slides. Lecture notes can be printed as notes pages (printed pages that display the author’s notes beneath the slide they accompany) and could also be given as handouts to accompany the presentation.

Multimodal Learning

Using PowerPoint can help you present information in multiple ways (a multimodal approach) through the projection of color, images, and video for the visual mode; sound and music for the auditory mode; text and writing prompts for the reading/writing mode; and interactive slides that ask students to do something, e.g. a group or class activity in which students practice concepts, for the kinesthetic mode (see Part III: Engaging Students with PowerPoint for more details). Providing information in multiple modalities helps improve comprehension and recall for all students.


Type-on Live Slides

PowerPoint allows users to type directly during the slide show, which provides another form of interaction. These write-on slides can be used to project students’ comments and ideas for the entire class to see. When the presentation is over, the new material can be saved to the original file and posted electronically. This feature requires advance preparation in the PowerPoint file while creating your presentation. For instructions on how to set up your type-on slide text box, visit this tutorial from AddictiveTips.

Write or Highlight on Slides

PowerPoint also allows users to use tools to highlight or write directly onto a presentation while it is live. When you are presenting your PowerPoint, move your cursor over the slide to reveal tools in the lower-left corner. One of the tools is a pen icon. Click this icon to choose either a laser pointer, pen, or highlighter. You can use your cursor for these options, or you can use the stylus for your smart podium computer monitor or touch-screen laptop monitor (if applicable).

Just-In-Time Course Material

You can make your PowerPoint slides, outline, and/or notes pages available online 24/7 through Blackboard, OneDrive, or other websites. Students can review the material before class, bring printouts to class, and better prepare themselves for listening rather than taking a lot of notes during the class period. They can also come to class prepared with questions about the material so you can address their comprehension of the concepts.

PART III: Engaging Students with PowerPoint

The following techniques can be incorporated into PowerPoint presentations to increase interactivity and engagement between students and between students and the instructor. Each technique can be projected as a separate PowerPoint slide.

Running Slide Show as Students Arrive in the Classroom

This technique provides visual interest and can include a series of questions for students to answer as they sit waiting for class to begin. These questions could be on future texts or quizzes.

- Opening Question: project an opening question, e.g. “Take a moment to reflect on ___.”

- Think of what you know about ___.

- Turn to a partner and share your knowledge about ___.

- Share with the class what you have discussed with your partner.

- Focused Listing helps with recall of pertinent information, e.g. “list as many characteristics of ___, or write down as many words related to ___ as you can think of.”

- Brainstorming stretches the mind and promotes deep thinking and recall of prior knowledge, e.g. “What do you know about ___? Start with your clearest thoughts and then move on to those that are kind of ‘out there.’”

- Questions: ask students if they have any questions roughly every 15 minutes. This technique provides time for students to reflect and is also a good time for a scheduled break or for the instructor to interact with students.

- Note Check: ask students to “take a few minutes to compare notes with a partner,” or “…summarize the most important information,” or “…identify and clarify any sticking points,” etc.

- Question and Answer Pairs: have students “take a minute to come up with one question then see if you can stump your partner!”

- The Two-Minute Paper allows the instructor to check the class progress, e.g. “summarize the most important points of today’s lecture.” Have students submit the paper at the end of class.

- “If You Could Ask One Last Question—What Would It Be?” This technique allows for students to think more deeply about the topic and apply what they have learned in a question format.

- A Classroom Opinion Poll provides a sense of where students stand on certain topics, e.g. “do you believe in ___,” or “what are your thoughts on ___?”

- Muddiest Point allows anonymous feedback to inform the instructor if changes and/or additions need to be made to the class, e.g. “What parts of today’s material still confuse you?”

- Most Useful Point can tell the instructor where the course is on track, e.g. “What is the most useful point in today’s material, and how can you illustrate its use in a practical setting?”

Positive Features of PowerPoint

- PowerPoint saves time and energy—once the presentation has been created, it is easy to update or modify for other courses.

- PowerPoint is portable and can be shared easily with students and colleagues.

- PowerPoint supports multimedia, such as video, audio, images, and animation.

Potential Drawbacks of PowerPoint

- PowerPoint could reduce the opportunity for classroom interaction by being the primary method of information dissemination or designed without built-in opportunities for interaction.

- PowerPoint could lead to information overload, especially with the inclusion of long sentences and paragraphs or lecture-heavy presentations with little opportunity for practical application or active learning.

- PowerPoint could “drive” the instruction and minimize the opportunity for spontaneity and creative teaching unless the instructor incorporates the potential for ingenuity into the presentation.

As with any technology, the way PowerPoint is used will determine its pedagogical effectiveness. By strategically using the points described above, PowerPoint can be used to enhance instruction and engage students.

Alley, M., Schreiber, M., Ramsdell, K., & Muffo, J. (2006). How the design of headlines in presentation slides affects audience retention. Technical Communication, 53(2), 225-234. Retrieved from https://www.jstor.org/stable/43090718

University of Washington, Accessible Technology. (n.d.). Creating accessible presentations in Microsoft PowerPoint. Retrieved from https://www.washington.edu/accessibility/documents/powerpoint/

Selected Resources

Brill, F. (2016). PowerPoint for teachers: Creating interactive lessons. LinkedIn Learning. Retrieved from https://www.lynda.com/PowerPoint-tutorials/PowerPoint-Teachers-Create-Interactive-Lessons/472427-2.html

Huston, S. (2011). Active learning with PowerPoint [PDF file]. DE Oracle @ UMUC. Retrieved from http://contentdm.umuc.edu/digital/api/collection/p16240coll5/id/78/download

Microsoft Office Support. (n.d.). Make your PowerPoint presentations accessible to people with disabilities. Retrieved from https://support.office.com/en-us/article/make-your-powerpoint-presentations-accessible-to-people-with-disabilities-6f7772b2-2f33-4bd2-8ca7-ae3b2b3ef25

Tufte, E. R. (2006). The cognitive style of PowerPoint: Pitching out corrupts within. Cheshire, CT: Graphics Press LLC.

University of Nebraska Medical Center, College of Medicine. (n.d.). Active Learning with a PowerPoint. Retrieved from https://www.unmc.edu/com/_documents/active-learning-ppt.pdf

University of Washington, Department of English. (n.d.). Teaching with PowerPoint. Retrieved from https://english.washington.edu/teaching/teaching-powerpoint

Vanderbilt University, Center for Teaching. (n.d.). Making better PowerPoint presentations. Retrieved from https://cft.vanderbilt.edu/guides-sub-pages/making-better-powerpoint-presentations/

Suggested citation

Northern Illinois University Center for Innovative Teaching and Learning. (2020). Teaching with PowerPoint. In Instructional guide for university faculty and teaching assistants. Retrieved from https://www.niu.edu/citl/resources/guides/instructional-guide


Ace the Presentation

5 Learning Styles to Consider for Memorable Presentations

There is no consensus on foolproof tricks to satisfy the audience in a presentation, and each researcher certainly has tips on this based on their personal experience.

However, one aspect often escapes attention and directly influences the appeal the presented material has for the audience: the different learning styles.

Professionals who want to succeed in their corporate presentations should know the different learning styles in depth. After all, each individual has their own way of capturing information and sending it to the brain to turn it into knowledge.

With this challenge for presenters in mind, we put together this article on the primary learning styles. By mastering each of them, you’ll be able to create personalized and more efficient presentations that meet the expectations of all participants.

What are Learning Styles?

Learning styles are the cognitive approaches each person uses to retain specific topics, which means that how a subject is learned varies from person to person.

Depending on their personal learning strategy, a person learns more efficiently with one style and has greater difficulty understanding information presented in others.

The VARK method, developed by New Zealand professor Neil Fleming and Colleen Mills, proposes that learning takes place through five skills: auditory, visual, kinesthetic, reading and writing, and multimodal (when learning occurs through two or more skills).

VARK is an acronym that designates the four modes of learning: Visual, Auditory, Read/Write, and Kinesthetic.

5 Learning Styles to use when structuring Public Presentations

1. Visual learning style

The visual learning style is the one in which the person learns through vision. Information is easiest for them to assimilate when it is recorded in graphs, videos, images, diagrams, maps, symbols, and lists.

People with a visual learning style tend to take notes to assimilate something or commit it to memory; just listening to the presenter is not enough for them.

For you to capture the attention of a visual audience, your presentation needs some features. Some rich features that can boost presentations are:

- Photography;

2. Auditory learning style

A person with a strong aural style learns by listening to information. Such an individual can participate in discussions, explain concepts, listen to podcasts, read aloud, and listen to recordings of lessons.

When people with an auditory learning style hear information, their brain memorizes it.

So during a presentation, expect them to speak up and repeat the content in order to memorize certain subjects, even if their ideas have not been thought through much before being shared with the room.

Knowing this, here is what you need when setting up your presentations to cater for these people:

- Podcast extracts.

3-4. Reading/Writing

This modality stands apart because it is tied to written content. People who identify with this style deal easily with information expressed in words.

In addition, they find it easy to express abstract knowledge in written language.

They prefer to study through books, workbooks, dictionaries, online texts, articles, and research because they learn more quickly that way. Most of them also like to read a lot.

5. Kinesthetic learning style

This term may seem a little strange, but if we search the origin and meaning of this word, we will realize that it is associated with the perception of muscle movements.

At this point, you may wonder: what does this have to do with learning style?

The kinesthetic learning style is related to muscular movement because some individuals learn best through practical, real-life situations.

That is, they are highly active. They do not cling too much to theories.

Think of people who are seen as restless. In the case of schoolchildren, teachers often consider them hyperactive. Even as adults, they cannot spend much time listening to a story or reading a lengthy text in a very quiet place.

For this audience, a presentation should not be dull or monotonous; otherwise, they may get up from their chairs and leave, or spend some time outside the auditorium.

Here are some tips to keep people with a kinesthetic learning style interested in your presentation:

- Hold debates;

- If possible, take short breaks;

- Move on the stage;

- Give practical examples;

- Avoid staying only in theory;

- Make provocations and inquiries.

This is especially true in presentations to large audiences, in which the presenter tends to want to deliver a high-impact presentation.

With a great diversity of information, technological resources, or a lot of interactivity, the presenter often forgets to ask whether the presentation will capture the attention of the majority.

Given this knowledge of different learning styles, the idea is to create a clear, objective presentation and coordinate appeals that satisfy the four types.


Learning styles are the ways each person learns the proposed content: each person finds one particular style easy and has more difficulty learning from others. Therefore, each person is unique in their way of learning!

The presenter must adapt their presentation to the audience’s primary intelligence, which is also the secret of good interpersonal communication.

Learning styles represent part of our five senses – vision, hearing, touch, smell, and taste. Each person usually has one or more of them developed.



How a Simple Presentation Framework Helps Students Learn

Explaining concepts to their peers helps students shore up their content knowledge and improve their communication skills.

A few years ago, my colleague and I were awarded a Hawai‘i Innovation Fund Grant. The joy of being awarded the grant was met with dread and despair when we were informed that we would have to deliver a 15-minute presentation on our grant write-up to a room full of educational leaders. If that wasn’t intimidating enough, my colleague informed me that he was not going to be in Hawai‘i at the time of the presentation. I had “one shot,” just a 15-minute presentation to encapsulate all of the 17 pages of the grant I had cowritten, but how?

I worked hard to construct and deliver a presentation that was concise yet explicit. I was clear on the big picture of what the grant was composed of and provided a visual of it in practice. I made sure the audience understood the “why” behind the grant. I showed how it worked, the concrete elements of it, and how they made it successful. I finished with a scaffold that would help others know how to initiate it within their context, giving them the freedom to make it authentically their own.

I received good feedback from the presentation, and more important, what was shared positively impacted student learning in other classrooms across the state.

A Simple Framework for Presentations

That first presentation took me over a month to prepare, but afterward I noticed that my prep time for presentations shrank exponentially from a few months to a few (uninterrupted) days. Interestingly enough, as a by-product of creating the original presentation, I created an abstract framework that I have used for every professional learning presentation I have delivered since then. The “What, Why, How, and How-To” framework goes as follows:

- What? What can the audience easily connect to and know as a bridge to the unknown for the rest of the experience?

- Why? Why should they care to listen to (and learn from) the rest of the presentation? What’s in it for them to shift from passive listeners to actively engaged? The audience needs to know why you believe in this so much that you are compelled to share it.

- How? What are the key elements that make it unique? How is it effective in doing what it does? What are the intricacies of how it works?

- How-to? How could they start doing this on their own? How could this knowledge serve as a foundational springboard? Connect it to “why.”

Benefits for Students

One of the best parts of presentations is that they help the presenter to improve their communication skills. The presenter is learning how to give a presentation by doing it. To prepare a presentation, the presenter must know the intricate elements of what they are presenting and the rationale for their importance. In the presentation delivery, the presenter must be articulate and meticulous to ensure that everyone in the audience is able (and willing) to process the information provided.

It didn’t take long for me to realize that preparing and delivering presentations could provide a valuable learning opportunity for my students.

I recall teaching mathematical concepts whereby students would immediately apply knowledge learned to accomplish the task in silence and without any deeper questioning. Only after I asked them to provide presentations on these concepts did they regularly ask me, “Why is this important, again?” or “What makes this so special?” My students’ mathematical literacy grew through preparing presentations with the “What, Why, How, and How-To” framework, which supported them in their ability to demonstrate content knowledge through mathematical rigor (balancing conceptual understanding, skills and procedural fluency, and real-world application).

- The “what” served as the mathematical concept.

- The “why” demonstrated the real-world application of the concept.

- The “how” demonstrated conceptual understanding of the concept.

- The “how-to” demonstrated skills and procedures of the concept.

In addition to content knowledge, the sequential competencies of clarity, cohesiveness, and captivation ensured that the presenter could successfully share the information with their audience. When combined, these framed a rubric that supported students in optimizing their presentation deliveries. The competencies are as follows:

1. Content knowledge. The presenter must display a deep understanding of what they are delivering in order to share the “what, why, how, and how-to” of the topic.

2. Clarity. The presenter must be clear and use precise, academic language. As the content they deliver may be new to the audience, any lack of clarity will alienate the audience. Providing multiple modes of representation helps address the varied processing needs of a diverse audience.

3. Cohesiveness. By making clear connections, the presenter bridges the gaps between discrete components and shows how they all work together as integral elements of the topic. Gaps that are too large may make the elements look disjointed or, worse, leave the audience feeling lost.

4. Captivation. The presenter must captivate the audience through any combination of audience engagement or storytelling. They make the presentation flow with the energy of a song, and in the end, they leave the audience with a delicate balance of feeling fulfilled and inspired to learn more.

Anyone can build an effective presentation with the “What, Why, How, and How-To” framework, along with competencies of content knowledge, clarity, cohesiveness, and captivation. The better we teach and coach others on how to create and deliver presentations, the more we learn from these individuals through their work.

In my class, one multilingual learner responded to the prompt “What are the non-math (life lessons) you have found valuable from this class?” with “I learn what is learning and teaching... I truly understood how teaching is actually learning when I had presentation. I found a bit of desire to being a teacher. I hope you also learned something from this class.” I always learn from my students when they present.


General Teaching Methods


A presentation delivers content through oral, audio and visual channels allowing teacher-learner interaction and making the learning process more attractive. Through presentations, teachers can clearly introduce difficult concepts by illustrating the key principles and by engaging the audience in active discussions. When presentations are designed by learners, their knowledge sharing competences, their communication skills and their confidence are developed.

- Define the objectives of the presentation in accordance with the lesson plan (lesson planning)

- Prepare the structure of the presentation, including text, illustrations and other content (lesson planning)

- Set up and test the presentation equipment and provide a conducive seating arrangement and environment for the audience (lesson planning)

- Invite the audience to reflect on the presentation and give feedback (lesson delivery)

- After the presentation, propose activities or tasks to check the learners’ understanding

- Use Mentimeter for interactive presentations and to get instant feedback from your audience (consult this written tutorial on how to use Mentimeter).

- An infographic, a graphic visual representation of information, data, or knowledge, is an innovative way to present. Use the digital tool Canva to create your own infographics (consult this written tutorial on how to use Canva).

- Use Google Slides or Microsoft PowerPoint to easily create digital presentations.

- The purpose of a presentation is to visually reinforce what you are saying. Therefore, the text should contain few words and concise ideas organised in bullet points.

- Support your text using images.

- Provide time for reflection and interaction between the presenter and the audience, for example by using Mentimeter.


Creating Presentations to Connect with Each Type of Learner

When you’re presenting a topic, you want it to resonate with your entire audience. However, adults have different learning styles that affect how they absorb information. By understanding how people take in and retain information, you can tailor aspects of your presentation to different kinds of learners so that no one feels left behind.

How to Craft Presentations that Connect with Different Types of Learners

Each person learns and understands information differently. Imagine that you’ve gotten turned around in an unfamiliar city and need to find your way back to your hotel. If you refer to a map, you might be a visual learner. If you ask for directions, you might be an auditory learner, but if you take the time to write those directions down, you might be a reading/writing learner. If you prefer to wander and find your way on your own, your learning style might be more kinesthetic. While this is a very simple example of different learning styles, it’s easy to see that what works for one person may not work for an entire audience at a presentation.

Learn more about the different styles of learning and how to tailor your presentations to include each one.

Visual Learners

Use charts, graphics, and videos to appeal to visual learners.

How they learn

A visual learner absorbs and retains information that’s presented visually. If you’re trying to show the relationship between a set of numbers, a chart or a graph is your best bet. To make an impact on a visual learner, you’ll need to use something other than just words so that they can see the relationships between data and concepts.

Tactics for reaching them

Your presentation should lean on visual aids. A few examples of this include:

- Sharing an outline of what information is going to be covered during the presentation.

- Using graphs and diagrams to present your data.

- Using a bright color scheme that incorporates complementary colors to draw their eye to what you’re sharing.

- Encouraging your audience to take notes and write down key facts.

- Visually mapping out your concepts and connecting information with arrows. Infographics are a great tool for this.

- Making your presentation more engaging by embedding videos.

Auditory Learners

Speak loudly and clearly to connect with auditory learners.

In school, the auditory learners in your classes would remember everything their teachers said instead of taking notes. They find it easiest to remember information that they hear, and they may best understand and retain knowledge gleaned from lectures, discussions, audiobooks, podcasts, and conversations with other people. It may also be normal for an auditory learner to recite facts to themselves as a way to retain information. They may ask repetitive questions as a way to memorize a concept.

Most presentations rely on a speaker sharing information, which is incredibly helpful to an auditory learner. However, there are other ways that you can help them retain the content you’re presenting: