Organizing Your Social Sciences Research Paper

- 7. The Results

The results section is where you report the findings of your study based upon the methodology [or methodologies] you applied to gather information. The results section should state the findings of the research arranged in a logical sequence without bias or interpretation. A section describing results should be particularly detailed if your paper includes data generated from your own research.

Annesley, Thomas M. "Show Your Cards: The Results Section and the Poker Game." Clinical Chemistry 56 (July 2010): 1066-1070.

Importance of a Good Results Section

When formulating the results section, it's important to remember that the results of a study do not prove anything. Findings can only confirm or reject the hypothesis underpinning your study. However, the act of articulating the results helps you to understand the problem from within, to break it into pieces, and to view the research problem from various perspectives.

The page length of this section is set by the amount and types of data to be reported. Be concise. Use non-textual elements appropriately, such as figures and tables, to present findings more effectively. In deciding what data to describe in your results section, you must clearly distinguish information that would normally be included in a research paper from any raw data or other content that could be included as an appendix. In general, raw data that has not been summarized should not be included in the main text of your paper unless requested to do so by your professor.
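As a rough illustration of the difference between raw and summarized data, the sketch below condenses a small set of raw scores into one summary row per group, the kind of row that might appear in a results table while the raw values go in an appendix. The group names, the scores, and the `summarize` helper are invented for this example and do not come from any study or from this guide.

```python
from statistics import mean, stdev

# Hypothetical raw data: test scores keyed by study group (invented for illustration).
raw_scores = {
    "control": [62, 70, 68, 75, 71],
    "treatment": [74, 81, 79, 85, 77],
}

def summarize(groups):
    """Return one summary row (n, mean, sample standard deviation) per group."""
    rows = []
    for name, values in groups.items():
        rows.append({
            "group": name,
            "n": len(values),
            "mean": round(mean(values), 2),
            "sd": round(stdev(values), 2),
        })
    return rows

summary = summarize(raw_scores)

# Print each group as a compact line suitable for a small results table.
for row in summary:
    print(f"{row['group']:<10} n={row['n']}  M={row['mean']:.2f}  SD={row['sd']:.2f}")
```

Which statistics belong in the table depends on your research question and the conventions of your field; the mean and standard deviation are used here only as common examples.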

Avoid providing data that is not critical to answering the research question. The background information you described in the introduction section should provide the reader with any additional context or explanation needed to understand the results. A good strategy is to always re-read the background section of your paper after you have written up your results to ensure that the reader has enough context to understand the results [and, later, how you interpreted the results in the discussion section of your paper that follows].

Bavdekar, Sandeep B. and Sneha Chandak. "Results: Unraveling the Findings." Journal of the Association of Physicians of India 63 (September 2015): 44-46; Brett, Paul. "A Genre Analysis of the Results Section of Sociology Articles." English for Specific Purposes 13 (1994): 47-59; Burton, Neil et al. Doing Your Education Research Project. Los Angeles, CA: SAGE, 2008; Results. The Structure, Format, Content, and Style of a Journal-Style Scientific Paper. Department of Biology. Bates College; Kretchmer, Paul. Twelve Steps to Writing an Effective Results Section. San Francisco Edit; "Reporting Findings." In Making Sense of Social Research. Malcolm Williams, editor. (London: SAGE Publications, 2003), pp. 188-207.

Structure and Writing Style

I. Organization and Approach

For most research papers in the social and behavioral sciences, there are two possible ways of organizing the results. Both approaches are appropriate in how you report your findings, but use only one approach.

- Present a synopsis of the results followed by an explanation of key findings. This approach can be used to highlight important findings. For example, you may have noticed an unusual correlation between two variables during the analysis of your findings. It is appropriate to highlight this finding in the results section. However, speculating as to why this correlation exists and offering a hypothesis about what may be happening belongs in the discussion section of your paper.

- Present a result and then explain it, before presenting the next result then explaining it, and so on, then end with an overall synopsis. This is the preferred approach if you have multiple results of equal significance. It is more common in longer papers because it helps the reader to better understand each finding. In this model, it is helpful to provide a brief conclusion that ties each of the findings together and provides a narrative bridge to the discussion section of your paper.

NOTE: Just as the literature review should be arranged under conceptual categories rather than systematically describing each source, you should also organize your findings under key themes related to addressing the research problem. This can be done under either format noted above [i.e., a thorough explanation of the key results or a sequential, thematic description and explanation of each finding].

II. Content

In general, the content of your results section should include the following:

- Introductory context for understanding the results by restating the research problem underpinning your study. This is useful in re-orientating the reader's focus back to the research problem after having read a review of the literature and your explanation of the methods used for gathering and analyzing information.

- Inclusion of non-textual elements, such as figures, charts, photos, maps, tables, etc., to further illustrate key findings, if appropriate. Rather than relying entirely on descriptive text, consider how your findings can be presented visually. This is a helpful way of condensing a lot of data into one place that can then be referred to in the text. Consider referring to appendices if there are many non-textual elements.

- A systematic description of your results, highlighting for the reader observations that are most relevant to the topic under investigation. Not all results that emerge from the methodology used to gather information may be related to answering the "So What?" question. Do not confuse observations with interpretations; observations in this context refer to highlighting important findings you discovered through a process of reviewing prior literature and gathering data.

- The page length of your results section is guided by the amount and types of data to be reported. However, focus on findings that are important and related to addressing the research problem. It is not uncommon to have unanticipated results that are not relevant to answering the research question. This is not to say that you should ignore tangential findings; in fact, they can be noted as areas for further research in the conclusion of your paper. However, spending time in the results section describing tangential findings clutters your overall results section and distracts the reader.

- A short paragraph that concludes the results section by synthesizing the key findings of the study. Highlight the most important findings you want readers to remember as they transition into the discussion section. This is particularly important if, for example, there are many results to report, the findings are complicated or unanticipated, or they are impactful or actionable in some way [i.e., able to be pursued in a feasible way and applied to practice].

NOTE: Always use the past tense when referring to your study's findings. Reference to findings should always be described as having already happened because the method used to gather the information has been completed.

III. Problems to Avoid

When writing the results section, avoid doing the following:

- Discussing or interpreting your results. Save this for the discussion section of your paper, although where appropriate, you should compare or contrast specific results to those found in other studies [e.g., "Similar to the work of Smith [1990], one of the findings of this study is the strong correlation between motivation and academic achievement...."].

- Reporting background information or attempting to explain your findings. This should have been done in your introduction section, but don't panic! Often the results of a study point to the need for additional background information or to explain the topic further, so don't think you did something wrong. Writing up research is rarely a linear process. Always revise your introduction as needed.

- Ignoring negative results. A negative result generally refers to a finding that does not support the underlying assumptions of your study. Do not ignore them. Document these findings and then state in your discussion section why you believe a negative result emerged from your study. Note that negative results, and how you handle them, can give you an opportunity to write a more engaging discussion section; therefore, don't be hesitant to highlight them.

- Including raw data or intermediate calculations. Ask your professor if you need to include any raw data generated by your study, such as transcripts from interviews or data files. If raw data is to be included, place it in an appendix or set of appendices that are referred to in the text.

- Using vague or non-specific phrases. Be as factual and concise as possible in reporting your findings. Do not use phrases such as "appeared to be greater than other variables..." or "demonstrates promising trends that...." Subjective modifiers should be explained in the discussion section of the paper [i.e., why did one variable appear greater? Or, how does the finding demonstrate a promising trend?].

- Presenting the same data or repeating the same information more than once. If you want to highlight a particular finding, it is appropriate to do so in the results section. However, you should emphasize its significance in relation to addressing the research problem in the discussion section. Do not repeat it in your results section because you can do that in the conclusion of your paper.

- Confusing figures with tables. Be sure to properly label any non-textual elements in your paper. Don't call a chart an illustration or a figure a table.

Annesley, Thomas M. "Show Your Cards: The Results Section and the Poker Game." Clinical Chemistry 56 (July 2010): 1066-1070; Bavdekar, Sandeep B. and Sneha Chandak. "Results: Unraveling the Findings." Journal of the Association of Physicians of India 63 (September 2015): 44-46; Burton, Neil et al. Doing Your Education Research Project. Los Angeles, CA: SAGE, 2008; Caprette, David R. Writing Research Papers. Experimental Biosciences Resources. Rice University; Hancock, Dawson R. and Bob Algozzine. Doing Case Study Research: A Practical Guide for Beginning Researchers. 2nd ed. New York: Teachers College Press, 2011; Introduction to Nursing Research: Reporting Research Findings. Nursing Research: Open Access Nursing Research and Review Articles. (January 4, 2012); Kretchmer, Paul. Twelve Steps to Writing an Effective Results Section. San Francisco Edit; Ng, K. H. and W. C. Peh. "Writing the Results." Singapore Medical Journal 49 (2008): 967-968; Reporting Research Findings. Wilder Research, in partnership with the Minnesota Department of Human Services. (February 2009); Results. The Structure, Format, Content, and Style of a Journal-Style Scientific Paper. Department of Biology. Bates College; Schafer, Mickey S. Writing the Results. Thesis Writing in the Sciences. Course Syllabus. University of Florida.

Writing Tip

Why Don't I Just Combine the Results Section with the Discussion Section?

It's not unusual to find articles in scholarly social science journals where the author(s) have combined a description of the findings with a discussion about their significance and implications. You could do this. However, if you are inexperienced in writing research papers, consider creating two distinct sections in your paper, one for the results and one for the discussion, as a way to better organize your thoughts and, by extension, your paper. Think of the results section as the place where you report what your study found; think of the discussion section as the place where you interpret the information and answer the "So What?" question. As you become more skilled at writing research papers, you can consider melding the results of your study with a discussion of its implications.

Driscoll, Dana Lynn and Aleksandra Kasztalska. Writing the Experimental Report: Methods, Results, and Discussion. The Writing Lab and The OWL. Purdue University.

- Last Updated: Mar 26, 2024 10:40 AM

- URL: https://libguides.usc.edu/writingguide

- How to Write Discussions and Conclusions

The discussion section contains the results and outcomes of a study. An effective discussion informs readers what can be learned from your experiment and provides context for the results.

What makes an effective discussion?

When you’re ready to write your discussion, you’ve already introduced the purpose of your study and provided an in-depth description of the methodology. The discussion informs readers about the larger implications of your study based on the results. Highlighting these implications while not overstating the findings can be challenging, especially when you’re submitting to a journal that selects articles based on novelty or potential impact. Regardless of what journal you are submitting to, the discussion section always serves the same purpose: concluding what your study results actually mean.

A successful discussion section puts your findings in context. It should include:

- the results of your research,

- a discussion of related research, and

- a comparison between your results and initial hypothesis.

Tip: Not all journals share the same naming conventions.

You can apply the advice in this article to the conclusion, results or discussion sections of your manuscript.

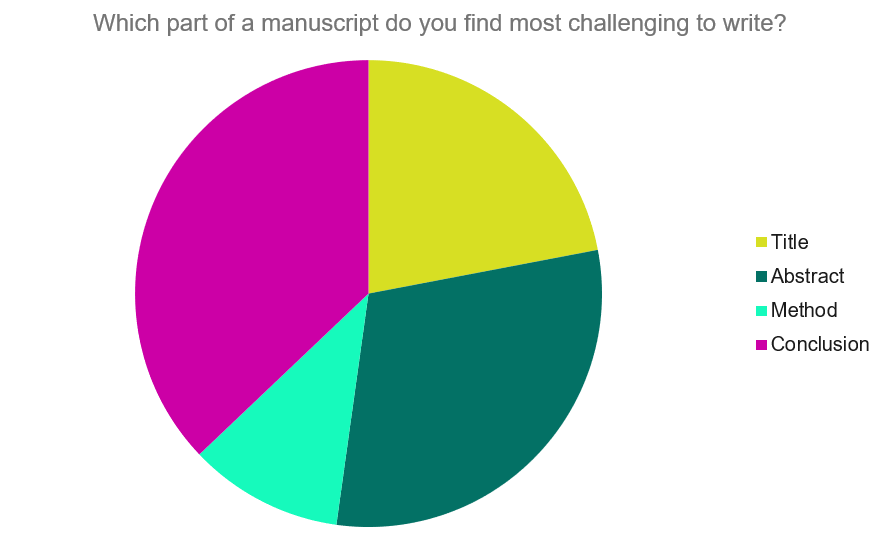

Our Early Career Researcher community tells us that the conclusion is often considered the most difficult aspect of a manuscript to write. To help, this guide provides questions to ask yourself, a basic structure on which to model your discussion, and examples from published manuscripts.

Questions to ask yourself:

- Was my hypothesis correct?

- If my hypothesis is partially correct or entirely different, what can be learned from the results?

- How do the conclusions reshape or add to the existing knowledge in the field? What does previous research say about the topic?

- Why are the results important or relevant to your audience? Do they add further evidence to a scientific consensus or disprove prior studies?

- How can future research build on these observations? What are the key experiments that must be done?

- What is the “take-home” message you want your reader to leave with?

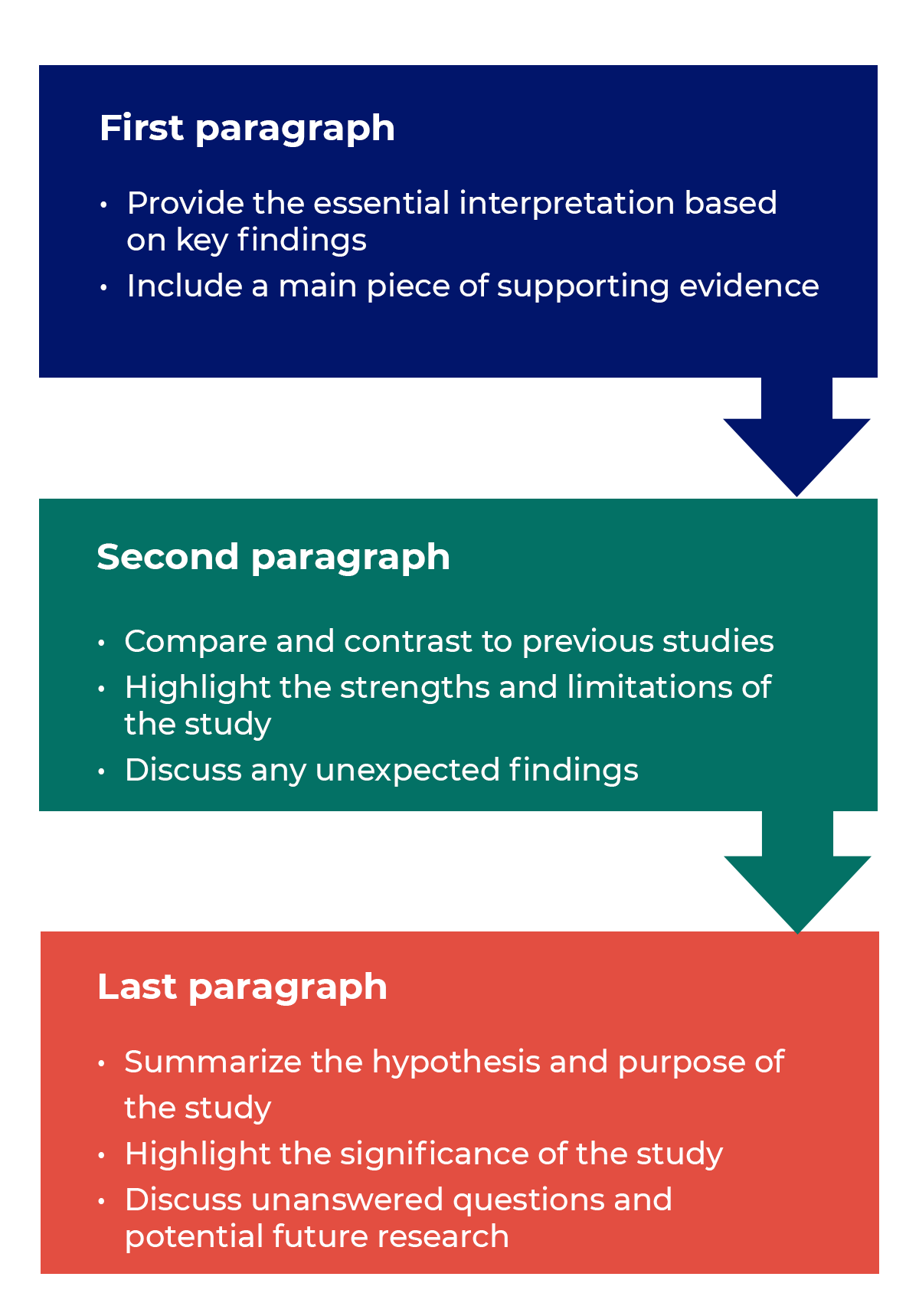

How to structure a discussion

Trying to fit a complete discussion into a single paragraph can add unnecessary stress to the writing process. If possible, you’ll want to give yourself two or three paragraphs to give the reader a comprehensive understanding of your study as a whole. Here’s one way to structure an effective discussion:

Writing Tips

While the above sections can help you brainstorm and structure your discussion, there are many common mistakes that writers revert to when having difficulties with their paper. Writing a discussion can be a delicate balance between summarizing your results, providing proper context for your research and avoiding introducing new information. Remember that your paper should be both confident and honest about the results!

Do

- Read the journal’s guidelines on the discussion and conclusion sections. If possible, learn about the guidelines before writing the discussion to ensure you’re writing to meet their expectations.

- Begin with a clear statement of the principal findings. This will reinforce the main take-away for the reader and set up the rest of the discussion.

- Explain why the outcomes of your study are important to the reader. Discuss the implications of your findings realistically based on previous literature, highlighting both the strengths and limitations of the research.

- State whether the results prove or disprove your hypothesis. If your hypothesis was disproved, what might be the reasons?

- Introduce new or expanded ways to think about the research question. Indicate what next steps can be taken to further pursue any unresolved questions.

- If dealing with a contemporary or ongoing problem, such as climate change, discuss possible consequences if the problem is left unaddressed.

- Be concise. Adding unnecessary detail can distract from the main findings.

Don’t

- Rewrite your abstract. Statements with “we investigated” or “we studied” generally do not belong in the discussion.

- Include new arguments or evidence not previously discussed. Necessary information and evidence should be introduced in the main body of the paper.

- Apologize. Even if your research contains significant limitations, don’t undermine your authority by including statements that doubt your methodology or execution.

- Shy away from speaking on limitations or negative results. Including limitations and negative results will give readers a complete understanding of the presented research. Potential limitations include sources of potential bias, threats to internal or external validity, barriers to implementing an intervention and other issues inherent to the study design.

- Overstate the importance of your findings. Making grand statements about how a study will fully resolve large questions can lead readers to doubt the success of the research.

Snippets of Effective Discussions:

Consumer-based actions to reduce plastic pollution in rivers: A multi-criteria decision analysis approach

Identifying reliable indicators of fitness in polar bears

Writing a Research Paper Conclusion | Step-by-Step Guide

Published on October 30, 2022 by Jack Caulfield. Revised on April 13, 2023.

- Restate the problem statement addressed in the paper

- Summarize your overall arguments or findings

- Suggest the key takeaways from your paper

The content of the conclusion varies depending on whether your paper presents the results of original empirical research or constructs an argument through engagement with sources.

Table of contents

- Step 1: Restate the problem

- Step 2: Sum up the paper

- Step 3: Discuss the implications

- Research paper conclusion examples

- Frequently asked questions about research paper conclusions

Step 1: Restate the problem

The first task of your conclusion is to remind the reader of your research problem. You will have discussed this problem in depth throughout the body, but now the point is to zoom back out from the details to the bigger picture.

While you are restating a problem you’ve already introduced, you should avoid phrasing it identically to how it appeared in the introduction. Ideally, you’ll find a novel way to circle back to the problem from the more detailed ideas discussed in the body.

For example, an argumentative paper advocating new measures to reduce the environmental impact of agriculture might restate its problem as follows:

Meanwhile, an empirical paper studying the relationship of Instagram use with body image issues might present its problem like this:

“In conclusion …”

Avoid starting your conclusion with phrases like “In conclusion” or “To conclude,” as this can come across as too obvious and make your writing seem unsophisticated. The content and placement of your conclusion should make its function clear without the need for additional signposting.

Step 2: Sum up the paper

Having zoomed back in on the problem, it’s time to summarize how the body of the paper went about addressing it, and what conclusions this approach led to.

Depending on the nature of your research paper, this might mean restating your thesis and arguments, or summarizing your overall findings.

Argumentative paper: Restate your thesis and arguments

In an argumentative paper, you will have presented a thesis statement in your introduction, expressing the overall claim your paper argues for. In the conclusion, you should restate the thesis and show how it has been developed through the body of the paper.

Briefly summarize the key arguments made in the body, showing how each of them contributes to proving your thesis. You may also mention any counterarguments you addressed, emphasizing why your thesis holds up against them, particularly if your argument is a controversial one.

Don’t go into the details of your evidence or present new ideas; focus on outlining in broad strokes the argument you have made.

Empirical paper: Summarize your findings

In an empirical paper, this is the time to summarize your key findings. Don’t go into great detail here (you will have presented your in-depth results and discussion already), but do clearly express the answers to the research questions you investigated.

Describe your main findings, even if they weren’t necessarily the ones you expected or hoped for, and explain the overall conclusion they led you to.

Step 3: Discuss the implications

Having summed up your key arguments or findings, the conclusion ends by considering the broader implications of your research. This means expressing the key takeaways, practical or theoretical, from your paper, often in the form of a call for action or suggestions for future research.

Argumentative paper: Strong closing statement

An argumentative paper generally ends with a strong closing statement. In the case of a practical argument, make a call for action: What actions do you think should be taken by the people or organizations concerned in response to your argument?

If your topic is more theoretical and unsuitable for a call for action, your closing statement should express the significance of your argument—for example, in proposing a new understanding of a topic or laying the groundwork for future research.

Empirical paper: Future research directions

In a more empirical paper, you can close by either making recommendations for practice (for example, in clinical or policy papers), or suggesting directions for future research.

Whatever the scope of your own research, there will always be room for further investigation of related topics, and you’ll often discover new questions and problems during the research process.

Finish your paper on a forward-looking note by suggesting how you or other researchers might build on this topic in the future and address any limitations of the current paper.

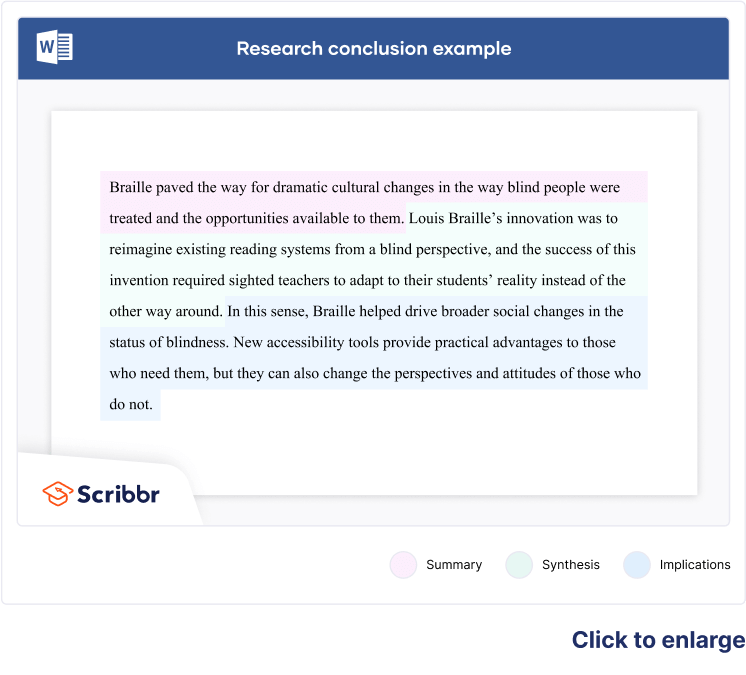

Research paper conclusion examples

Full examples of research paper conclusions are shown below: one for an argumentative paper, the other for an empirical paper.

Argumentative paper

While the role of cattle in climate change is by now common knowledge, countries like the Netherlands continually fail to confront this issue with the urgency it deserves. The evidence is clear: To create a truly futureproof agricultural sector, Dutch farmers must be incentivized to transition from livestock farming to sustainable vegetable farming. As well as dramatically lowering emissions, plant-based agriculture, if approached in the right way, can produce more food with less land, providing opportunities for nature regeneration areas that will themselves contribute to climate targets. Although this approach would have economic ramifications, from a long-term perspective, it would represent a significant step towards a more sustainable and resilient national economy. Transitioning to sustainable vegetable farming will make the Netherlands greener and healthier, setting an example for other European governments. Farmers, policymakers, and consumers must focus on the future, not just on their own short-term interests, and work to implement this transition now.

Empirical paper

As social media becomes increasingly central to young people’s everyday lives, it is important to understand how different platforms affect their developing self-conception. By testing the effect of daily Instagram use among teenage girls, this study established that highly visual social media does indeed have a significant effect on body image concerns, with a strong correlation between the amount of time spent on the platform and participants’ self-reported dissatisfaction with their appearance. However, the strength of this effect was moderated by pre-test self-esteem ratings: Participants with higher self-esteem were less likely to experience an increase in body image concerns after using Instagram. This suggests that, while Instagram does impact body image, it is also important to consider the wider social and psychological context in which this usage occurs: Teenagers who are already predisposed to self-esteem issues may be at greater risk of experiencing negative effects. Future research into Instagram and other highly visual social media should focus on establishing a clearer picture of how self-esteem and related constructs influence young people’s experiences of these platforms. Furthermore, while this experiment measured Instagram usage in terms of time spent on the platform, observational studies are required to gain more insight into different patterns of usage, for instance to investigate whether active posting is associated with different effects than passive consumption of social media content.

If you’re unsure about the conclusion, it can be helpful to ask a friend or fellow student to read your conclusion and summarize the main takeaways.

- Do they understand from your conclusion what your research was about?

- Are they able to summarize the implications of your findings?

- Can they answer your research question based on your conclusion?

Frequently asked questions about research paper conclusions

The conclusion of a research paper has several key elements you should make sure to include:

- A restatement of the research problem

- A summary of your key arguments and/or findings

- A short discussion of the implications of your research

No, it’s not appropriate to present new arguments or evidence in the conclusion. While you might be tempted to save a striking argument for last, research papers follow a more formal structure than this.

All your findings and arguments should be presented in the body of the text (more specifically in the results and discussion sections if you are following a scientific structure). The conclusion is meant to summarize and reflect on the evidence and arguments you have already presented, not introduce new ones.

Caulfield, J. (2023, April 13). Writing a Research Paper Conclusion | Step-by-Step Guide. Scribbr. Retrieved March 29, 2024, from https://www.scribbr.com/research-paper/research-paper-conclusion/

Research Guide

Chapter 7: Presenting Your Findings

Now that you have worked so hard on your project, how do you ensure that you communicate your findings effectively and efficiently? In this section, I will introduce a few tips that could help you prepare your slides and get ready for your final presentation.

7.1 Sections of the Presentation

When preparing your slides, you need to ensure that you have a clear roadmap. You have a limited time to explain the context of your study, your results, and the main takeaways. Thus, you need to be organized and efficient when deciding what material will be included in the slides.

You need to ensure that your presentation contains the following sections:

- Motivation: Why did you choose your topic? What is the bigger question?

- Research question: Needs to be clear and concise. Include secondary questions, if available, but be clear about what your main research question is.

- Literature Review: How does your paper fit in the overall literature? What are your contributions?

- Context: Give an overview of the issue and the population/countries that you analyzed.

- Study Characteristics: This section is key, as it needs to include your model, identification strategy, and introduce your data (sources, summary statistics, etc.).

- Results: In this section, you need to answer your research question(s). Include tables that are readable.

- Additional analysis: Here, include any additional information that your audience needs to know. For instance, did you try different specifications? Did you run into an obstacle (e.g., your data is very noisy, the sample is very small, or something else) that may bias your results or create issues in your analysis? Tell your audience! No research project is perfect, but you need to be clear about the imperfections of your project.

- Conclusion: Be repetitive! What was your research question? How did you answer it? What did you find? What is next in this topic?

7.2 How to prepare your slides

When preparing your slides, remember that humans have a limited capacity to pay attention. If you want to convey your message in an effective way, you need to ensure that the message is simple and that you keep your audience's attention. Here are some strategies that you may want to follow:

- Have a clear roadmap at the beginning of the presentation. Tell your audience what to expect.

- Number your slides. This will help you and your audience to know where you are in your analysis.

- Ensure that each slide has a purpose

- Ensure that each slide is connected to your key point.

- Make just one argument per slide

- State the objective of each slide in the headline

- Use bullet points. Do not include more than one sentence per bullet point.

- Choose a simple background.

- If you want to direct your audience's attention to a specific point, make it stand out (for example, by using a different font color).

- Each slide needs to have a similar structure (going from the general to the particular details).

- Use images/graphs when possible. Ensure that the axes for the graphs are clear.

- Use a large font for your tables. Keep them as simple as possible.

- If you can say it with an image, choose it over a table.

- Have an Appendix with slides that address potential questions.

7.3 How to prepare your presentation

One of the main constraints of a simple presentation is that you cannot rely on the slides or read from them. Instead, you need to prepare extra notes and memorize them so that you can explain things beyond what is on your slides. The following are some suggestions on how to ensure you communicate effectively during your presentation.

- Practice, practice, practice!

- Keep the right volume (practice will help you with that)

- Be journalistic about your presentation. Indicate what you want to say, then say it.

- Ensure that your audience knows where you are going

- Avoid passive voice.

- Be consistent with the terms you are using. You do not want to confuse your audience, even if using synonyms.

- Face your audience and maintain eye contact.

- Do not read your slides.

- Ensure that your audience is focused on what you are presenting and there are no other distractions that you can control.

- Do not rush your presentation. Speak calmly and in a controlled manner.

- Be comprehensive when answering questions. Avoid yes/no answers. Instead, rephrase the question (to ensure you are answering the right question), then give a short answer, then develop it.

- If you lose track, do not panic. Go back a little bit or ask your audience for assistance.

- Again, practice is the secret.

You have worked so hard on your final project, and the presentation is your opportunity to share that work with the rest of the world. Use this opportunity to shine and enjoy it.



How to Write the Results/Findings Section in Research

What is the research paper Results section and what does it do?

The Results section of a scientific research paper represents the core findings of a study derived from the methods applied to gather and analyze information. It presents these findings in a logical sequence without bias or interpretation from the author, setting up the reader for later interpretation and evaluation in the Discussion section. A major purpose of the Results section is to break down the data into sentences that show its significance to the research question(s).

The Results section appears third in the section sequence in most scientific papers. It follows the Methods and Materials and comes before the Discussion section, although the Results and Discussion are presented together in many journals. This section answers the basic question “What did you find in your research?”

What is included in the Results section?

The Results section should include the findings of your study and ONLY the findings of your study. The findings include:

- Data presented in tables, charts, graphs, and other figures (may be placed into the text or on separate pages at the end of the manuscript)

- A contextual analysis of this data explaining its meaning in sentence form

- All data that corresponds to the central research question(s)

- All secondary findings (secondary outcomes, subgroup analyses, etc.)

If the scope of the study is broad, if you studied a variety of variables, or if the methodology used yields a wide range of different results, present only those results that are most relevant to the research question stated in the Introduction section.

As a general rule, any information that does not present the direct findings or outcome of the study should be left out of this section. Unless the journal requests that authors combine the Results and Discussion sections, explanations and interpretations should be omitted from the Results.

How are the results organized?

The best way to organize your Results section is “logically.” One logical and clear method of organizing research results is to provide them alongside the research questions—within each research question, present the type of data that addresses that research question.

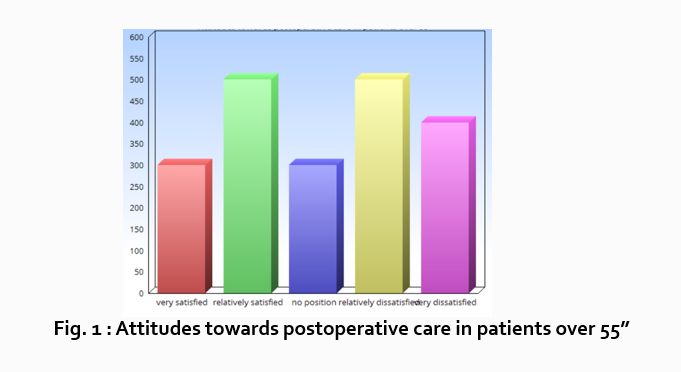

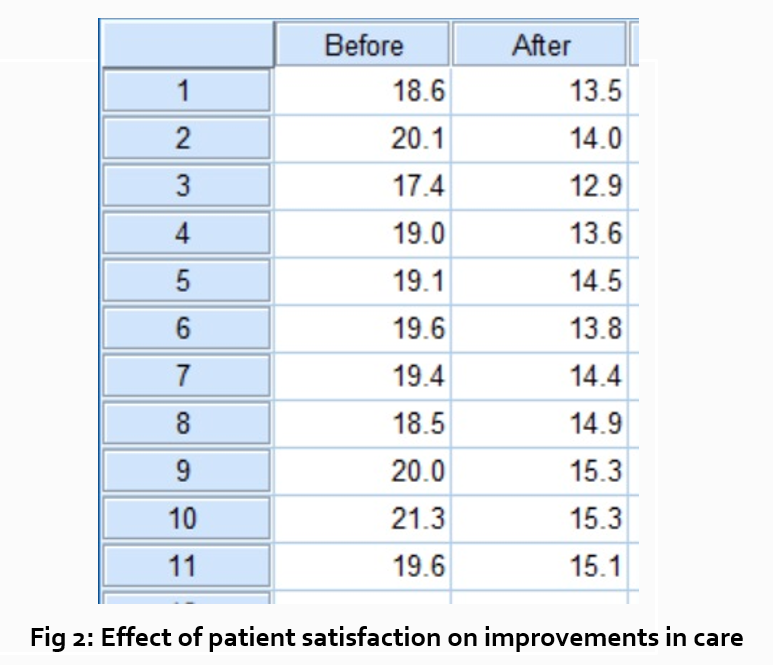

Let’s look at an example. Your research question is based on a survey among patients who were treated at a hospital and received postoperative care. Let’s say your first research question is:

“What do hospital patients over age 55 think about postoperative care?”

This can actually be represented as a heading within your Results section, though it might be presented as a statement rather than a question:

Attitudes towards postoperative care in patients over the age of 55

Now present the results that address this specific research question first. In this case, perhaps a table illustrating data from a survey. Likert items can be included in this example. Tables can also present standard deviations, probabilities, correlation matrices, etc.

Following this, present a content analysis, in words, of one end of the spectrum of the survey or data table. In our example case, start with the POSITIVE survey responses regarding postoperative care, using descriptive phrases. For example:

“Sixty-five percent of patients over 55 responded positively to the question ‘Are you satisfied with your hospital’s postoperative care?’ (Fig. 2).”

Include other results such as subcategory analyses. The amount of textual description used will depend on how much interpretation of tables and figures is necessary and how many examples the reader needs in order to understand the significance of your research findings.

Next, present a content analysis of another part of the spectrum of the same research question, perhaps the NEGATIVE or NEUTRAL responses to the survey. For instance:

“As Figure 1 shows, 15 out of 60 patients in Group A responded negatively to Question 2.”

After you have assessed the data in one figure and explained it sufficiently, move on to your next research question. For example:

“How does patient satisfaction correspond to in-hospital improvements made to postoperative care?”

This kind of data may be presented through a figure or set of figures (for instance, a paired T-test table).

Explain the data you present, here in a table, with a concise content analysis:

“The p-value for the comparison between the before and after groups of patients was .03% (Fig. 2), indicating that the greater the dissatisfaction among patients, the more frequent the improvements that were made to postoperative care.”

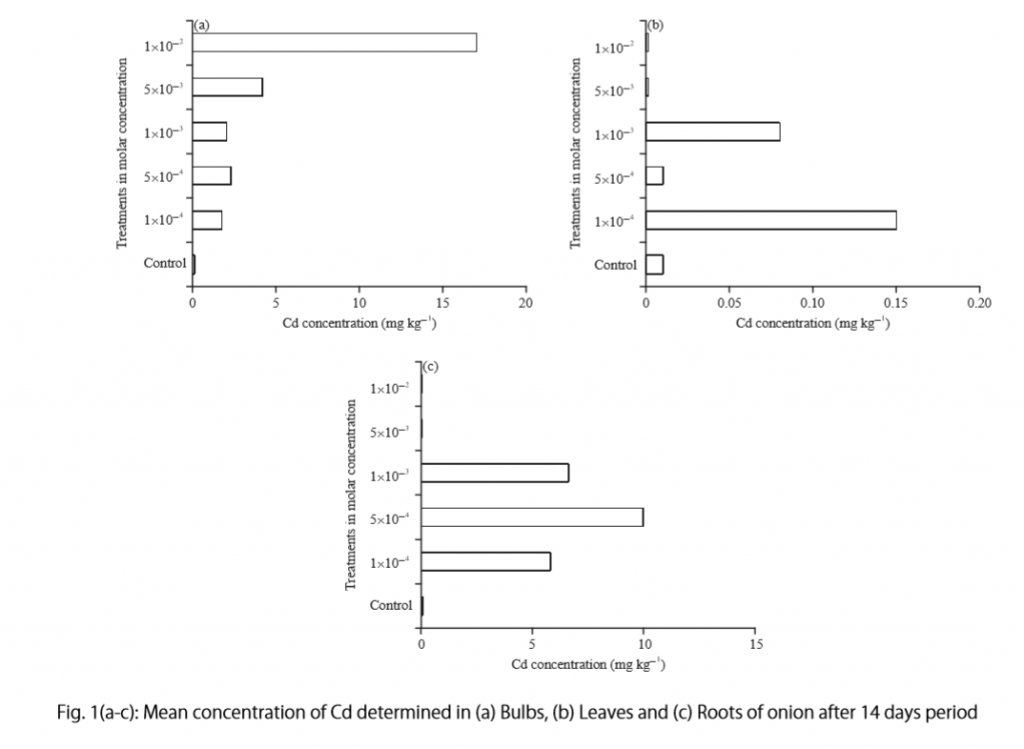

Let’s examine another example of a Results section from a study on plant tolerance to heavy metal stress . In the Introduction section, the aims of the study are presented as “determining the physiological and morphological responses of Allium cepa L. towards increased cadmium toxicity” and “evaluating its potential to accumulate the metal and its associated environmental consequences.” The Results section presents data showing how these aims are achieved in tables alongside a content analysis, beginning with an overview of the findings:

“Cadmium caused inhibition of root and leave elongation, with increasing effects at higher exposure doses (Fig. 1a-c).”

The figure containing this data is cited in parentheses. Note that this author has combined three graphs into one single figure. Separating the data into separate graphs focusing on specific aspects makes it easier for the reader to assess the findings, and consolidating this information into one figure saves space and makes it easy to locate the most relevant results.

Following this overall summary, the relevant data in the tables is broken down into greater detail in text form in the Results section.

- “Results on the bio-accumulation of cadmium were found to be the highest (17.5 mg kg⁻¹) in the bulb, when the concentration of cadmium in the solution was 1×10⁻² M and lowest (0.11 mg kg⁻¹) in the leaves when the concentration was 1×10⁻³ M.”

Captioning and Referencing Tables and Figures

Tables and figures are central components of your Results section and you need to carefully think about the most effective way to use graphs and tables to present your findings . Therefore, it is crucial to know how to write strong figure captions and to refer to them within the text of the Results section.

The most important advice one can give here as well as throughout the paper is to check the requirements and standards of the journal to which you are submitting your work. Every journal has its own design and layout standards, which you can find in the author instructions on the target journal’s website. Perusing a journal’s published articles will also give you an idea of the proper number, size, and complexity of your figures.

Regardless of which format you use, the figures should be placed in the order they are referenced in the Results section and be as clear and easy to understand as possible. If there are multiple variables being considered (within one or more research questions), it can be a good idea to split these up into separate figures. Subsequently, these can be referenced and analyzed under separate headings and paragraphs in the text.

To create a caption, consider the research question being asked and change it into a phrase. For instance, if one question is “Which color did participants choose?”, the caption might be “Color choice by participant group.” Or in our last research paper example, where the question was “What is the concentration of cadmium in different parts of the onion after 14 days?” the caption reads:

“Fig. 1(a-c): Mean concentration of Cd determined in (a) bulbs, (b) leaves, and (c) roots of onions after a 14-day period.”

Steps for Composing the Results Section

Because each study is unique, there is no one-size-fits-all approach when it comes to designing a strategy for structuring and writing the section of a research paper where findings are presented. The content and layout of this section will be determined by the specific area of research, the design of the study and its particular methodologies, and the guidelines of the target journal and its editors. However, the following steps can be used to compose the results of most scientific research studies and are essential for researchers who are new to preparing a manuscript for publication or who need a reminder of how to construct the Results section.

Step 1 : Consult the guidelines or instructions that the target journal or publisher provides authors and read research papers it has published, especially those with similar topics, methods, or results to your study.

- The guidelines will generally outline specific requirements for the results or findings section, and the published articles will provide sound examples of successful approaches.

- Note length limitations and restrictions on content. For instance, while many journals require the Results and Discussion sections to be separate, others do not; qualitative research papers often include results and interpretations in the same section (“Results and Discussion”).

- Reading the aims and scope in the journal’s “ guide for authors ” section and understanding the interests of its readers will be invaluable in preparing to write the Results section.

Step 2 : Consider your research results in relation to the journal’s requirements and catalogue your results.

- Focus on experimental results and other findings that are especially relevant to your research questions and objectives and include them even if they are unexpected or do not support your ideas and hypotheses.

- Catalogue your findings—use subheadings to streamline and clarify your report. This will help you avoid excessive and peripheral details as you write and also help your reader understand and remember your findings. Create appendices that might interest specialists but prove too long or distracting for other readers.

- Decide how you will structure your results. You might match the order of the research questions and hypotheses to your results, or you could arrange them according to the order presented in the Methods section. A chronological order or even a hierarchy of importance or meaningful grouping of main themes or categories might prove effective. Consider your audience, evidence, and most importantly, the objectives of your research when choosing a structure for presenting your findings.

Step 3 : Design figures and tables to present and illustrate your data.

- Tables and figures should be numbered according to the order in which they are mentioned in the main text of the paper.

- Information in figures should be relatively self-explanatory (with the aid of captions), and their design should include all definitions and other information necessary for readers to understand the findings without reading all of the text.

- Use tables and figures as a focal point to tell a clear and informative story about your research and avoid repeating information. But remember that while figures clarify and enhance the text, they cannot replace it.
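The numbering rule in the first bullet above can be checked mechanically on a draft. The sketch below is one possible approach, not a standard tool: it scans plain text for citations written as "Fig. N", "Fig N", or "Figure N" (or "Table N"), records the order in which each number first appears, and checks that this order is 1, 2, 3, and so on. The function names and the citation pattern are assumptions for illustration; journals with other citation styles would need a different pattern.

```python
import re


def first_mention_order(text, label="Fig"):
    """Return item numbers in the order they are first mentioned in the text."""
    # Matches e.g. "Fig 1", "Fig. 1", "Figure 1" when label is "Fig",
    # or "Table 1" when label is "Table".
    pattern = re.compile(rf"\b{label}(?:ure|\.)?\s*(\d+)")
    seen = []
    for match in pattern.finditer(text):
        number = int(match.group(1))
        if number not in seen:
            seen.append(number)
    return seen


def is_numbered_in_order(text, label="Fig"):
    """True if first mentions run 1, 2, 3, ... with no gaps or swaps."""
    order = first_mention_order(text, label)
    return order == list(range(1, len(order) + 1))


# Hypothetical draft sentences used only to exercise the check.
good_draft = "Growth slowed (Fig. 1). Figure 2 shows uptake; see also Fig. 1."
bad_draft = "Most patients were satisfied (Fig. 2). As Figure 1 shows, some were not."

print(is_numbered_in_order(good_draft))
print(is_numbered_in_order(bad_draft))
```

A check like this only catches ordering problems; whether each figure is clear and self-explanatory still has to be judged by a reader.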

Step 4 : Draft your Results section using the findings and figures you have organized.

- The goal is to communicate this complex information as clearly and precisely as possible; precise and compact phrases and sentences are most effective.

- In the opening paragraph of this section, restate your research questions or aims to focus the reader’s attention on what the results are trying to show. It is also a good idea to summarize key findings at the end of this section to create a logical transition to the interpretation and discussion that follows.

- Try to write in the past tense and the active voice to relay the findings since the research has already been done and the agent is usually clear. This will ensure that your explanations are also clear and logical.

- Make sure that any specialized terminology or abbreviation you have used here has been defined and clarified in the Introduction section .

Step 5 : Review your draft; edit and revise until it reports results exactly as you would like to have them reported to your readers.

- Double-check the accuracy and consistency of all the data, as well as all of the visual elements included.

- Read your draft aloud to catch language errors (grammar, spelling, and mechanics), awkward phrases, and missing transitions.

- Ensure that your results are presented in the best order to focus on objectives and prepare readers for interpretations, evaluations, and recommendations in the Discussion section. Look back over the paper’s Introduction and background while anticipating the Discussion and Conclusion sections to ensure that the presentation of your results is consistent and effective.

- Consider seeking additional guidance on your paper. Find additional readers to look over your Results section and see if it can be improved in any way. Peers, professors, or qualified experts can provide valuable insights.


As the representation of your study’s data output, the Results section presents the core information in your research paper. By writing with clarity and conciseness and by highlighting and explaining the crucial findings of their study, authors increase the impact and effectiveness of their research manuscripts.



Cochrane Training

Chapter 14: Completing ‘Summary of findings’ tables and grading the certainty of the evidence

Holger J Schünemann, Julian PT Higgins, Gunn E Vist, Paul Glasziou, Elie A Akl, Nicole Skoetz, Gordon H Guyatt; on behalf of the Cochrane GRADEing Methods Group (formerly Applicability and Recommendations Methods Group) and the Cochrane Statistical Methods Group

Key Points:

- A ‘Summary of findings’ table for a given comparison of interventions provides key information concerning the magnitudes of relative and absolute effects of the interventions examined, the amount of available evidence and the certainty (or quality) of available evidence.

- ‘Summary of findings’ tables include a row for each important outcome (up to a maximum of seven). Accepted formats of ‘Summary of findings’ tables and interactive ‘Summary of findings’ tables can be produced using GRADE’s software GRADEpro GDT.

- Cochrane has adopted the GRADE approach (Grading of Recommendations Assessment, Development and Evaluation) for assessing certainty (or quality) of a body of evidence.

- The GRADE approach specifies four levels of the certainty for a body of evidence for a given outcome: high, moderate, low and very low.

- GRADE assessments of certainty are determined through consideration of five domains: risk of bias, inconsistency, indirectness, imprecision and publication bias. For evidence from non-randomized studies and rarely randomized studies, assessments can then be upgraded through consideration of three further domains.

Cite this chapter as: Schünemann HJ, Higgins JPT, Vist GE, Glasziou P, Akl EA, Skoetz N, Guyatt GH. Chapter 14: Completing ‘Summary of findings’ tables and grading the certainty of the evidence. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook .

14.1 ‘Summary of findings’ tables

14.1.1 Introduction to ‘Summary of findings’ tables

‘Summary of findings’ tables present the main findings of a review in a transparent, structured and simple tabular format. In particular, they provide key information concerning the certainty or quality of evidence (i.e. the confidence or certainty in the range of an effect estimate or an association), the magnitude of effect of the interventions examined, and the sum of available data on the main outcomes. Cochrane Reviews should incorporate ‘Summary of findings’ tables during planning and publication, and should have at least one key ‘Summary of findings’ table representing the most important comparisons. Some reviews may include more than one ‘Summary of findings’ table, for example if the review addresses more than one major comparison, or includes substantially different populations that require separate tables (e.g. because the effects differ or it is important to show results separately). In the Cochrane Database of Systematic Reviews (CDSR), all ‘Summary of findings’ tables for a review appear at the beginning, before the Background section.

14.1.2 Selecting outcomes for ‘Summary of findings’ tables

Planning for the ‘Summary of findings’ table starts early in the systematic review, with the selection of the outcomes to be included in: (i) the review; and (ii) the ‘Summary of findings’ table. This is a crucial step, and one that review authors need to address carefully.

To ensure production of optimally useful information, Cochrane Reviews begin by developing a review question and by listing all main outcomes that are important to patients and other decision makers (see Chapter 2 and Chapter 3 ). The GRADE approach to assessing the certainty of the evidence (see Section 14.2 ) defines and operationalizes a rating process that helps separate outcomes into those that are critical, important or not important for decision making. Consultation and feedback on the review protocol, including from consumers and other decision makers, can enhance this process.

Critical outcomes are likely to include clearly important endpoints; typical examples include mortality and major morbidity (such as strokes and myocardial infarction). However, they may also represent frequent minor and rare major side effects, symptoms, quality of life, burdens associated with treatment, and resource issues (costs). Burdens represent the impact of healthcare workload on patient function and well-being, and include the demands of adhering to an intervention that patients or caregivers (e.g. family) may dislike, such as having to undergo more frequent tests, or the restrictions on lifestyle that certain interventions require (Spencer-Bonilla et al 2017).

Frequently, when formulating questions that include all patient-important outcomes for decision making, review authors will confront reports of studies that have not included all these outcomes. This is particularly true for adverse outcomes. For instance, randomized trials might contribute evidence on intended effects, and on frequent, relatively minor side effects, but not report on rare adverse outcomes such as suicide attempts. Chapter 19 discusses strategies for addressing adverse effects. To obtain data for all important outcomes it may be necessary to examine the results of non-randomized studies (see Chapter 24 ). Cochrane, in collaboration with others, has developed guidance for review authors to support their decision about when to look for and include non-randomized studies (Schünemann et al 2013).

If a review includes only randomized trials, these trials may not address all important outcomes and it may therefore not be possible to address these outcomes within the constraints of the review. Review authors should acknowledge these limitations and make them transparent to readers. Review authors are encouraged to include non-randomized studies to examine rare or long-term adverse effects that may not adequately be studied in randomized trials. This raises the possibility that harm outcomes may come from studies in which participants differ from those in studies used in the analysis of benefit. Review authors will then need to consider how much such differences are likely to impact on the findings, and this will influence the certainty of evidence because of concerns about indirectness related to the population (see Section 14.2.2 ).

Non-randomized studies can provide important information not only when randomized trials do not report on an outcome or randomized trials suffer from indirectness, but also when the evidence from randomized trials is rated as very low and non-randomized studies provide evidence of higher certainty. Further discussion of these issues appears also in Chapter 24 .

14.1.3 General template for ‘Summary of findings’ tables

Several alternative standard versions of ‘Summary of findings’ tables have been developed to ensure consistency and ease of use across reviews, inclusion of the most important information needed by decision makers, and optimal presentation (see examples at Figures 14.1.a and 14.1.b ). These formats are supported by research that focused on improved understanding of the information they intend to convey (Carrasco-Labra et al 2016, Langendam et al 2016, Santesso et al 2016). They are available through GRADE’s official software package developed to support the GRADE approach: GRADEpro GDT (www.gradepro.org).

Standard Cochrane ‘Summary of findings’ tables include the following elements using one of the accepted formats. Further guidance on each of these is provided in Section 14.1.6 .

- A brief description of the population and setting addressed by the available evidence (which may be slightly different to or narrower than those defined by the review question).

- A brief description of the comparison addressed in the ‘Summary of findings’ table, including both the experimental and comparison interventions.

- A list of the most critical and/or important health outcomes, both desirable and undesirable, limited to seven or fewer outcomes.

- A measure of the typical burden of each outcome (e.g. illustrative risk, or illustrative mean, on comparator intervention).

- The absolute and relative magnitude of effect measured for each (if both are appropriate).

- The numbers of participants and studies contributing to the analysis of each outcome.

- A GRADE assessment of the overall certainty of the body of evidence for each outcome (which may vary by outcome).

- Space for comments.

- Explanations (formerly known as footnotes).

Ideally, ‘Summary of findings’ tables are supported by more detailed tables (known as ‘evidence profiles’) to which the review may be linked, which provide more detailed explanations. Evidence profiles include the same important health outcomes, and provide greater detail than ‘Summary of findings’ tables of both the individual considerations feeding into the grading of certainty and the results of the studies (Guyatt et al 2011a). They ensure that a structured approach is used to rate the certainty of evidence. Although they are rarely published in Cochrane Reviews, evidence profiles are often used, for example, by guideline developers in considering the certainty of the evidence to support guideline recommendations. Review authors will find it easier to develop the ‘Summary of findings’ table by completing the rating of the certainty of evidence in the evidence profile first in GRADEpro GDT. They can then automatically convert this to one of the ‘Summary of findings’ formats in GRADEpro GDT, including an interactive ‘Summary of findings’ for publication.

As a measure of the magnitude of effect for dichotomous outcomes, the ‘Summary of findings’ table should provide a relative measure of effect (e.g. risk ratio, odds ratio, hazard ratio) and measures of absolute risk. For other types of data, an absolute measure alone (such as a difference in means for continuous data) might be sufficient. It is important that the magnitude of effect is presented in a meaningful way, which may require some transformation of the result of a meta-analysis (see also Chapter 15, Section 15.4 and Section 15.5). Reviews with more than one main comparison should include a separate ‘Summary of findings’ table for each comparison.

Figure 14.1.a provides an example of a ‘Summary of findings’ table. Figure 14.1.b provides an alternative format that may further facilitate users’ understanding and interpretation of the review’s findings. Evidence evaluating different formats suggests that the ‘Summary of findings’ table should include a risk difference as a measure of the absolute effect and authors should preferably use a format that includes a risk difference.

A detailed description of the contents of a ‘Summary of findings’ table appears in Section 14.1.6 .

Figure 14.1.a Example of a ‘Summary of findings’ table

Summary of findings

a All the stockings in the nine studies included in this review were below-knee compression stockings. In four studies the compression strength was 20 mmHg to 30 mmHg at the ankle. It was 10 mmHg to 20 mmHg in the other four studies. Stockings come in different sizes. If a stocking is too tight around the knee it can prevent essential venous return causing the blood to pool around the knee. Compression stockings should be fitted properly. A stocking that is too tight could cut into the skin on a long flight and potentially cause ulceration and increased risk of DVT. Some stockings can be slightly thicker than normal leg covering and can be potentially restrictive with tight foot wear. It is a good idea to wear stockings around the house prior to travel to ensure a good, comfortable fit. Participants put their stockings on two to three hours before the flight in most of the studies. The availability and cost of stockings can vary.

b Two studies recruited high risk participants defined as those with previous episodes of DVT, coagulation disorders, severe obesity, limited mobility due to bone or joint problems, neoplastic disease within the previous two years, large varicose veins or, in one of the studies, participants taller than 190 cm and heavier than 90 kg. The incidence for the seven studies that excluded high risk participants was 1.45% and the incidence for the two studies that recruited high-risk participants (with at least one risk factor) was 2.43%. We have used 10 and 30 per 1000 to express different risk strata, respectively.

c The confidence interval crosses no difference and does not rule out a small increase.

d The measurement of oedema was not validated (indirectness of the outcome) or blinded to the intervention (risk of bias).

e If there are very few or no events and the number of participants is large, judgement about the certainty of evidence (particularly judgements about imprecision) may be based on the absolute effect. Here the certainty rating may be considered ‘high’ if the outcome was appropriately assessed and the event, in fact, did not occur in 2821 studied participants.

f None of the other studies reported adverse effects, apart from four cases of superficial vein thrombosis in varicose veins in the knee region that were compressed by the upper edge of the stocking in one study.

Figure 14.1.b Example of alternative ‘Summary of findings’ table

14.1.4 Producing ‘Summary of findings’ tables

The GRADE Working Group’s software, GRADEpro GDT ( www.gradepro.org ), including GRADE’s interactive handbook, is available to assist review authors in the preparation of ‘Summary of findings’ tables. GRADEpro can use data on the comparator group risk and the effect estimate (entered by the review authors or imported from files generated in RevMan) to produce the relative effects and absolute risks associated with experimental interventions. In addition, it leads the user through the process of a GRADE assessment, and produces a table that can be used as a standalone table in a review (including by direct import into software such as RevMan or integration with RevMan Web), or an interactive ‘Summary of findings’ table (see help resources in GRADEpro).

14.1.5 Statistical considerations in ‘Summary of findings’ tables

14.1.5.1 Dichotomous outcomes

‘Summary of findings’ tables should include both absolute and relative measures of effect for dichotomous outcomes. Risk ratios, odds ratios and risk differences are different ways of comparing two groups with dichotomous outcome data (see Chapter 6, Section 6.4.1 ). Furthermore, there are two distinct risk ratios, depending on which event (e.g. ‘yes’ or ‘no’) is the focus of the analysis (see Chapter 6, Section 6.4.1.5 ). In the presence of a non-zero intervention effect, any variation across studies in the comparator group risks (i.e. variation in the risk of the event occurring without the intervention of interest, for example in different populations) makes it impossible for more than one of these measures to be truly the same in every study.

It has long been assumed in epidemiology that relative measures of effect are more consistent than absolute measures of effect from one scenario to another. There is empirical evidence to support this assumption (Engels et al 2000, Deeks and Altman 2001, Furukawa et al 2002). For this reason, meta-analyses should generally use either a risk ratio or an odds ratio as a measure of effect (see Chapter 10, Section 10.4.3 ). Correspondingly, a single estimate of relative effect is likely to be a more appropriate summary than a single estimate of absolute effect. If a relative effect is indeed consistent across studies, then different comparator group risks will have different implications for absolute benefit. For instance, if the risk ratio is consistently 0.75, then the experimental intervention would reduce a comparator group risk of 80% to 60% in the intervention group (an absolute risk reduction of 20 percentage points), but would also reduce a comparator group risk of 20% to 15% in the intervention group (an absolute risk reduction of 5 percentage points).
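The arithmetic in this example can be sketched in a few lines of Python. The helper name `intervention_risk` is hypothetical, and the sketch assumes that the same risk ratio applies at every comparator group risk.

```python
def intervention_risk(comparator_risk: float, risk_ratio: float) -> float:
    """Risk in the intervention group, assuming a constant risk ratio."""
    return comparator_risk * risk_ratio


# Same relative effect (RR = 0.75) applied to a high and a low comparator risk.
for comparator_risk in (0.80, 0.20):
    risk = intervention_risk(comparator_risk, 0.75)
    reduction_points = (comparator_risk - risk) * 100
    print(
        f"comparator {comparator_risk:.0%} -> intervention {risk:.0%}, "
        f"absolute reduction {reduction_points:.0f} percentage points"
    )
```

The relative effect is identical in both rows, but the absolute benefit is four times larger in the high-risk population.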

‘Summary of findings’ tables are built around the assumption of a consistent relative effect. It is therefore important to consider the implications of this effect for different comparator group risks (these can be derived or estimated from a number of sources, see Section 14.1.6.3 ), which may require an assessment of the certainty of evidence for prognostic evidence (Spencer et al 2012, Iorio et al 2015). For any comparator group risk, it is possible to estimate a corresponding intervention group risk (i.e. the absolute risk with the intervention) from the meta-analytic risk ratio or odds ratio. Note that the numbers provided in the ‘Corresponding risk’ column are specific to the ‘risks’ in the adjacent column.

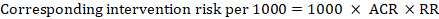

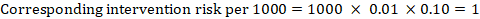

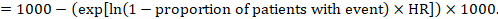

For the meta-analytic risk ratio (RR) and assumed comparator risk (ACR) the corresponding intervention risk is obtained as:

As an example, in Figure 14.1.a , the meta-analytic risk ratio for symptomless deep vein thrombosis (DVT) is RR = 0.10 (95% CI 0.04 to 0.26). Assuming a comparator risk of ACR = 10 per 1000 = 0.01, we obtain:

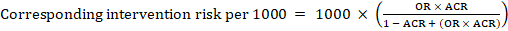
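As a minimal sketch of this calculation, assuming the usual relationship in which the corresponding intervention risk per 1000 equals 1000 × ACR × RR, the DVT example can be reproduced as follows. The function name `corresponding_risk_per_1000` is a hypothetical helper, not part of any software mentioned in this chapter.

```python
def corresponding_risk_per_1000(acr: float, rr: float) -> float:
    """Intervention risk per 1000 from an assumed comparator risk and a risk ratio."""
    return 1000 * acr * rr


acr = 0.01  # assumed comparator risk: 10 per 1000

# Point estimate and 95% confidence limits of the risk ratio from the example.
for label, rr in (("estimate", 0.10), ("lower limit", 0.04), ("upper limit", 0.26)):
    print(f"{label}: {corresponding_risk_per_1000(acr, rr):.1f} per 1000")
```

Under these assumptions the corresponding intervention risk is about 1 per 1000, with limits of roughly 0.4 and 2.6 per 1000.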

For the meta-analytic odds ratio (OR) and assumed comparator risk, ACR, the corresponding intervention risk is obtained as:
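The conversion from an odds ratio can be sketched as below. It assumes the standard relationship between odds and risk, which gives an intervention risk of OR × ACR / (1 − ACR + OR × ACR). The function name and the numerical inputs (OR = 0.5, ACR = 0.2) are hypothetical values chosen only for illustration and do not come from either figure.

```python
def corresponding_risk_from_or(acr: float, odds_ratio: float) -> float:
    """Intervention risk implied by an odds ratio and an assumed comparator risk."""
    if not 0 <= acr < 1:
        raise ValueError("acr must be a proportion in [0, 1)")
    return (odds_ratio * acr) / (1 - acr + odds_ratio * acr)


# Hypothetical illustration: OR = 0.5 applied to a comparator risk of 200 per 1000.
risk = corresponding_risk_from_or(acr=0.2, odds_ratio=0.5)
print(f"{1000 * risk:.0f} per 1000")
```

Unlike the risk ratio calculation, the result is not a simple multiple of the ACR, so the same odds ratio implies a different risk ratio at each comparator risk.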

Upper and lower confidence limits for the corresponding intervention risk are obtained by replacing RR or OR by their upper and lower confidence limits, respectively (e.g. replacing 0.10 with 0.04, then with 0.26, in the example). Such confidence intervals do not incorporate uncertainty in the assumed comparator risks.

When dealing with risk ratios, it is critical that the same definition of ‘event’ is used as was used for the meta-analysis. For example, if the meta-analysis focused on ‘death’ (as opposed to survival) as the event, then corresponding risks in the ‘Summary of findings’ table must also refer to ‘death’.

In (rare) circumstances in which there is clear rationale to assume a consistent risk difference in the meta-analysis, in principle it is possible to present this for relevant ‘assumed risks’ and their corresponding risks, and to present the corresponding (different) relative effects for each assumed risk.

The risk difference expresses the difference between the ACR and the corresponding intervention risk (or the difference between the experimental and the comparator intervention).

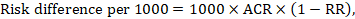

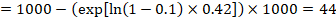

For the meta-analytic risk ratio (RR) and assumed comparator risk (ACR) the corresponding risk difference is obtained as (note that risks can also be expressed using percentage or percentage points):

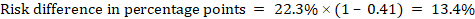

As an example, in Figure 14.1.b the meta-analytic risk ratio is 0.41 (95% CI 0.29 to 0.55) for diarrhoea in children less than 5 years of age. Assuming a comparator group risk of 22.3% we obtain:

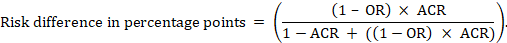
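A minimal sketch of this calculation follows, assuming the risk difference is expressed as the reduction from the comparator risk, that is ACR × (1 − RR). The function name `risk_difference_from_rr` is hypothetical.

```python
def risk_difference_from_rr(acr_percent: float, rr: float) -> float:
    """Absolute risk reduction in percentage points, given ACR (in %) and a risk ratio."""
    return acr_percent * (1 - rr)


# Diarrhoea in children under 5 years: ACR = 22.3%, RR = 0.41.
reduction = risk_difference_from_rr(22.3, 0.41)
print(f"{reduction:.1f} percentage points fewer")
```

With these inputs the reduction is about 13.2 percentage points. A positive value here means fewer events with the intervention; the sign would flip if the difference were defined as intervention risk minus comparator risk.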

For the meta-analytic odds ratio (OR) and assumed comparator risk (ACR) the absolute risk difference is obtained as (percentage points):
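One way to sketch this is to convert the odds ratio to an intervention risk first and then subtract it from the ACR. The function name and the inputs (OR = 0.5, ACR = 20%) are hypothetical illustrations, not values from the figures, and the difference is again expressed as a reduction from the comparator risk.

```python
def risk_difference_from_or(acr: float, odds_ratio: float) -> float:
    """Absolute risk reduction in percentage points, given ACR (a proportion) and an odds ratio."""
    intervention_risk = (odds_ratio * acr) / (1 - acr + odds_ratio * acr)
    return 100 * (acr - intervention_risk)


# Hypothetical illustration: OR = 0.5 with a comparator risk of 20%.
print(f"{risk_difference_from_or(0.2, 0.5):.1f} percentage points fewer")
```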

Upper and lower confidence limits for the absolute risk difference are obtained by re-running the calculation above while replacing RR or OR by their upper and lower confidence limits, respectively (e.g. replacing 0.41 with 0.29, then with 0.55, in the example). Such confidence intervals do not incorporate uncertainty in the assumed comparator risks.

14.1.5.2 Time-to-event outcomes

Time-to-event outcomes measure whether and when a particular event (e.g. death) occurs (van Dalen et al 2007). The impact of the experimental intervention relative to the comparison group on time-to-event outcomes is usually measured using a hazard ratio (HR) (see Chapter 6, Section 6.8.1 ).

A hazard ratio expresses a relative effect estimate. It may be used in various ways to obtain absolute risks and other interpretable quantities for a specific population. Here we describe how to re-express hazard ratios in terms of: (i) absolute risk of event-free survival within a particular period of time; (ii) absolute risk of an event within a particular period of time; and (iii) median time to the event. All methods are built on an assumption of consistent relative effects (i.e. that the hazard ratio does not vary over time).

(i) Absolute risk of event-free survival within a particular period of time

Event-free survival (e.g. overall survival) is commonly reported by individual studies. To obtain absolute effects for time-to-event outcomes measured as event-free survival, the summary HR can be used in conjunction with an assumed proportion of patients who are event-free in the comparator group (Tierney et al 2007). This proportion of patients will be specific to a period of time of observation. However, it is not strictly necessary to specify this period of time. For instance, a proportion of 50% of event-free patients might apply to patients with a high event rate observed over 1 year, or to patients with a low event rate observed over 2 years.

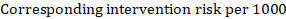

As an example, suppose the meta-analytic hazard ratio is 0.42 (95% CI 0.25 to 0.72). Assuming a comparator group risk of event-free survival (e.g. for overall survival people being alive) at 2 years of ACR = 900 per 1000 = 0.9 we obtain:

so that 956 per 1000 people will be alive with the experimental intervention at 2 years. The derivation of the risk should be explained in a comment or footnote.
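The calculation behind this figure can be sketched as follows, assuming proportional hazards so that the event-free proportion with the intervention is exp(HR × ln(ACR)), which is the same as ACR raised to the power HR. The function name `event_free_proportion` is hypothetical.

```python
import math


def event_free_proportion(acr_event_free: float, hazard_ratio: float) -> float:
    """Event-free proportion with the intervention, assuming a constant hazard ratio."""
    return math.exp(hazard_ratio * math.log(acr_event_free))


# HR = 0.42 and 900 per 1000 event-free in the comparator group at 2 years.
proportion = event_free_proportion(0.9, 0.42)
print(f"{proportion:.4f}")
```

The unrounded result is a little under 0.957, in line with the roughly 956 per 1000 quoted in the text.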

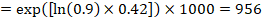

(ii) Absolute risk of an event within a particular period of time

To obtain this absolute effect, again the summary HR can be used (Tierney et al 2007):

In the example, suppose we assume a comparator group risk of events (e.g. for mortality, people being dead) at 2 years of ACR = 100 per 1000 = 0.1. We obtain:

so that 44 per 1000 people will be dead with the experimental intervention at 2 years.
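A sketch of this second conversion, again assuming proportional hazards: the event risk with the intervention is taken as 1 − exp(HR × ln(1 − ACR)), where ACR is now the comparator group risk of the event. The function name `event_risk` is hypothetical.

```python
import math


def event_risk(acr_event: float, hazard_ratio: float) -> float:
    """Risk of the event with the intervention, assuming a constant hazard ratio."""
    return 1 - math.exp(hazard_ratio * math.log(1 - acr_event))


# HR = 0.42 and a comparator group event risk of 100 per 1000 at 2 years.
risk = event_risk(0.1, 0.42)
print(f"{risk:.4f}")
```

The unrounded result is about 0.043, close to the 44 per 1000 quoted in the text; small differences arise from rounding. It is the complement of the event-free proportion obtained in (i).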

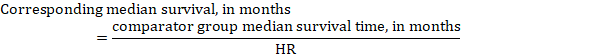

(iii) Median time to the event

Instead of absolute numbers, the time to the event in the intervention and comparison groups can be expressed as median survival time in months or years. To obtain median survival time the pooled HR can be applied to an assumed median survival time in the comparator group (Tierney et al 2007):

In the example, assuming a comparator group median survival time of 80 months, we obtain:
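As a minimal sketch, assuming the intervention group median is obtained by dividing the comparator group median by the hazard ratio (which holds under a constant hazard ratio and approximately exponential survival), the example works out as follows. The function name `intervention_median` is hypothetical.

```python
def intervention_median(comparator_median: float, hazard_ratio: float) -> float:
    """Median survival time with the intervention, in the units of the comparator median."""
    if hazard_ratio <= 0:
        raise ValueError("hazard_ratio must be positive")
    return comparator_median / hazard_ratio


# HR = 0.42 and a comparator group median survival time of 80 months.
print(f"{intervention_median(80, 0.42):.0f} months")
```

Under these assumptions the median survival time with the intervention is about 190 months.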

For all three of these options for re-expressing results of time-to-event analyses, upper and lower confidence limits for the corresponding intervention risk are obtained by replacing HR by its upper and lower confidence limits, respectively (e.g. replacing 0.42 with 0.25, then with 0.72, in the example). Again, as for dichotomous outcomes, such confidence intervals do not incorporate uncertainty in the assumed comparator group risks. This is of special concern for long-term survival with a low or moderate mortality rate and a correspondingly high number of censored patients (i.e. a low number of patients at risk and a high censoring rate).

14.1.6 Detailed contents of a ‘Summary of findings’ table

14.1.6.1 Table title and header

The title of each ‘Summary of findings’ table should specify the healthcare question, framed in terms of the population and making it clear exactly which interventions are being compared. In Figure 14.1.a , the population is people taking long aeroplane flights, the intervention is compression stockings, and the control is no compression stockings.

The first rows of each ‘Summary of findings’ table should provide the following ‘header’ information:

Patients or population: This further clarifies the population (and possibly the subpopulations) of interest and ideally the magnitude of risk of the most crucial adverse outcome at which an intervention is directed. For instance, people on a long-haul flight may be at different risks for DVT; those using selective serotonin reuptake inhibitors (SSRIs) might be at different risk for side effects; while those with atrial fibrillation may be at low (< 1%), moderate (1% to 4%) or high (> 4%) yearly risk of stroke.

Setting: This should state any specific characteristics of the settings of the healthcare question that might limit the applicability of the summary of findings to other settings (e.g. primary care in Europe and North America).

Intervention: The experimental intervention.

Comparison: The comparator intervention (including no specific intervention).

14.1.6.2 Outcomes

The rows of a ‘Summary of findings’ table should include all desirable and undesirable health outcomes (listed in order of importance) that are essential for decision making, up to a maximum of seven outcomes. If there are more outcomes in the review, review authors will need to omit the less important outcomes from the table, and the decision about which outcomes are critical or important to the review should be made during protocol development (see Chapter 3 ). Review authors should provide time frames for the measurement of the outcomes (e.g. 90 days or 12 months) and the type of instrument scores (e.g. ranging from 0 to 100).

Note that review authors should include the pre-specified critical and important outcomes in the table whether data are available or not. However, they should be alert to the possibility that the importance of an outcome (e.g. a serious adverse effect) may only become known after the protocol was written or the analysis was carried out, and should take appropriate actions to include these in the ‘Summary of findings’ table.

The ‘Summary of findings’ table can include effects in subgroups of the population for different comparator risks and effect sizes separately. For instance, in Figure 14.1.b effects are presented for children younger and older than 5 years separately. Review authors may also opt to produce separate ‘Summary of findings’ tables for different populations.

Review authors should include serious adverse events, but it might be possible to combine minor adverse events as a single outcome, and describe this in an explanatory footnote (note that it is not appropriate to add events together unless they are independent, that is, a participant who has experienced one adverse event has an unaffected chance of experiencing the other adverse event).

Outcomes measured at multiple time points represent a particular problem. In general, to keep the table simple, review authors should present multiple time points only for outcomes critical to decision making, where either the result or the decision made is likely to vary over time. The remainder should be presented at a common time point where possible.

Review authors can present continuous outcome measures in the ‘Summary of findings’ table and should endeavour to make these interpretable to the target audience. This requires that the units are clear and readily interpretable, for example, days of pain, or frequency of headache, and the name and scale of any measurement tools used should be stated (e.g. a Visual Analogue Scale, ranging from 0 to 100). However, many measurement instruments are not readily interpretable by non-specialist clinicians or patients, for example, points on a Beck Depression Inventory or quality of life score. For these, a more interpretable presentation might involve converting a continuous to a dichotomous outcome, such as >50% improvement (see Chapter 15, Section 15.5 ).

14.1.6.3 Best estimate of risk with comparator intervention