The P value: What it really means

As nurses, we must administer nursing care based on the best available scientific evidence. But for many nurses, critical appraisal, the process used to determine the best available evidence, can seem intimidating. To make critical appraisal more approachable, let’s examine the P value and make sure we know what it is and what it isn’t.

Defining P value

The P value is the probability of obtaining results at least as extreme as those observed in a study if chance alone were at work. To better understand this definition, consider the role of chance.

The concept of chance is illustrated with every flip of a coin. The true probability of obtaining heads in any single flip is 0.5, meaning that over many flips heads would come up about half of the time and tails about half of the time. But if you were to flip a coin 10 times, you likely would not obtain exactly five heads and five tails. You’d be more likely to see an uneven result, such as a six-to-four or a seven-to-three split. Chance is responsible for this variation in results.
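The coin-flip claim can be checked directly with the binomial formula. The sketch below is illustrative only; the function name `prob_heads` is an assumption made for this example and does not come from the article.

```python
from math import comb


def prob_heads(k: int, n: int = 10, p: float = 0.5) -> float:
    """Binomial probability of exactly k heads in n flips of a coin."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Exactly five heads and five tails.
p_even = prob_heads(5)
# A six-to-four split in either direction (6 heads or 4 heads).
p_six_four = prob_heads(6) + prob_heads(4)
# A seven-to-three split in either direction (7 heads or 3 heads).
p_seven_three = prob_heads(7) + prob_heads(3)

print(f"5-5 split: {p_even:.3f}")
print(f"6-4 split: {p_six_four:.3f}")
print(f"7-3 split: {p_seven_three:.3f}")
```

An exact five-to-five split turns up in roughly a quarter of 10-flip runs, so an uneven split is the more common outcome even with a fair coin.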

Just as chance plays a role in determining the flip of a coin, it plays a role in the sampling of a population for a scientific study. When subjects are selected, chance may produce an unequal distribution of a characteristic that can affect the outcome of the study. Statistical inquiry and the P value are designed to help us determine just how large a role chance plays in study results. We begin a study with the assumption that there is no difference between the experimental and control groups. This assumption is called the null hypothesis. When the results of the study indicate that there is a difference, the P value tells us how likely a difference at least that large would be if chance alone were responsible.

Competing hypotheses

In every study, researchers put forth two kinds of hypotheses: the research or alternative hypothesis and the null hypothesis. The research hypothesis reflects what the researchers hope to show—that there is a difference between the experimental group and the control group. The null hypothesis directly competes with the research hypothesis. It states that there is no difference between the experimental group and the control group.

It may seem logical that researchers would test the research hypothesis (that is, that they would test what they hope to show). But probability theory requires that they test the null hypothesis instead. To support the research hypothesis, the data must contradict the null hypothesis. By demonstrating a difference between the two groups, the data contradict the null hypothesis.

Testing the null hypothesis

Now that you know why we test the null hypothesis, let’s look at how we test the null hypothesis.

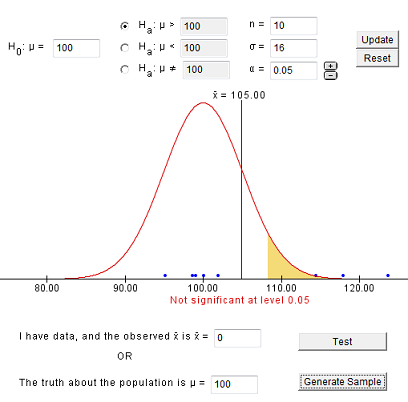

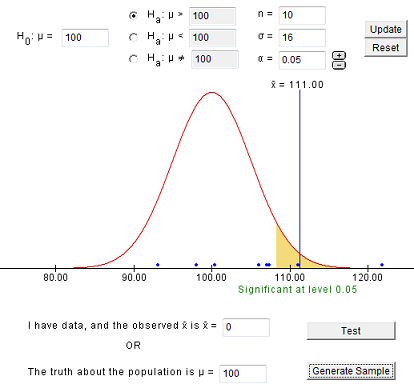

After formulating the null and research hypotheses, researchers decide on a test statistic they can use to determine whether to reject the null hypothesis. They also propose a fixed-level P value (also called the significance level, or alpha). The fixed-level P value is often set at .05 and serves as the value against which the test-generated P value is compared. (See Why .05?)

A comparison of the two P values determines whether the null hypothesis is rejected. If the P value associated with the test statistic is less than the fixed-level P value, the null hypothesis is rejected and the difference between the two groups is declared statistically significant. If the P value associated with the test statistic is greater than the fixed-level P value, the null hypothesis is not rejected (often loosely described as "accepted") because no statistically significant difference between the groups has been shown.
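The comparison described above amounts to a one-line decision rule. The following is a minimal sketch; the function name `decide` and its wording are assumptions made for illustration.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Compare a test-generated P value with the fixed-level P value (alpha)."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("a P value must lie between 0 and 1")
    if p_value < alpha:
        return "reject the null hypothesis"
    # Not rejecting is weaker than proving the null hypothesis true.
    return "fail to reject the null hypothesis"


print(decide(0.01))  # test-generated P value below the .05 threshold
print(decide(0.20))  # test-generated P value above the .05 threshold
```

The second outcome is phrased as "fail to reject" on purpose: a large P value means the data did not contradict the null hypothesis, not that the null hypothesis was shown to be true.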

Why .05?

The decision to use .05 as the threshold in testing the null hypothesis is completely arbitrary. The researchers credited with establishing this threshold warned against strictly adhering to it.

Remember that warning when appraising a study in which the P value is greater than .05. The savvy reader will consider other important measurements, including effect size, confidence intervals, and power analyses, when deciding whether to accept or reject scientific findings that could influence nursing practice.

Real-world hypothesis testing

How does this play out in real life? Let’s assume that you and a nurse colleague are conducting a study to find out if patients who receive backrubs fall asleep faster than patients who do not receive backrubs.

1. State your null and research hypotheses

Your null hypothesis will be that there will be no difference in the average amount of time it takes patients in each group to fall asleep. Your research hypothesis will be that patients who receive backrubs fall asleep, on average, faster than those who do not receive backrubs. You will be testing the null hypothesis in hopes of supporting your research hypothesis.

2. Propose a fixed-level P value

Although you can choose any value as your fixed-level P value, you and your research colleague decide you’ll stay with the conventional .05. If you were testing a new medical product or a new drug, you would choose a much smaller P value (perhaps as small as .0001). That’s because you would want to be as sure as possible that any difference you see between groups is attributable to the new product or drug and not to chance. A fixed-level P value of .0001 would mean that, if there were truly no difference between the groups, chance alone would produce a significant result only about 1 time out of 10,000. For a study on backrubs, however, .05 seems appropriate.

3. Conduct hypothesis testing to calculate a probability value

You and your research colleague agree that a randomized controlled study will help you best achieve your research goals, and you design the process accordingly. After consenting to participate in the study, patients are randomized to one of two groups:

- the experimental group that receives the intervention—the backrub group

- the control group—the non-backrub group.

After several nights of measuring the number of minutes it takes each participant to fall asleep, you and your research colleague find that on average, the backrub group takes 19 minutes to fall asleep and the non-backrub group takes 24 minutes to fall asleep.

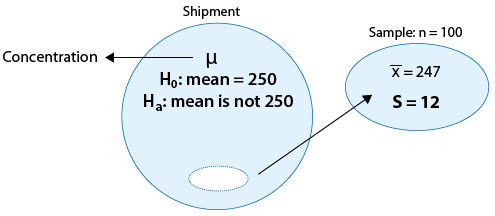

Now the question is: Would you have the same results if you conducted the study using two different groups of people? That is, what role did chance play in helping the backrub group fall asleep 5 minutes faster than the non-backrub group? To answer this, you and your colleague will use an independent samples t-test to calculate a probability value.

An independent samples t-test is a kind of hypothesis test that compares the mean values of two groups (backrub and non-backrub) on a given variable (time to fall asleep).
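To make the test concrete, here is a minimal sketch of the test statistic behind an independent samples t-test, using the pooled-variance (equal variances assumed) form. The sleep times are hypothetical numbers invented for this example so that the group means match the 19 and 24 minutes in the scenario; they are not data from the article.

```python
from math import sqrt
from statistics import mean, variance

# Hypothetical minutes-to-sleep data (invented for illustration).
backrub = [15, 17, 18, 19, 19, 20, 21, 23]      # mean = 19
no_backrub = [20, 22, 23, 24, 24, 25, 26, 28]   # mean = 24


def pooled_t(a, b):
    """Return the pooled-variance t statistic and its degrees of freedom."""
    na, nb = len(a), len(b)
    # Weighted average of the two sample variances.
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    # Standard error of the difference between the two means.
    se_diff = sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / se_diff, na + nb - 2


t_stat, df = pooled_t(backrub, no_backrub)
print(f"t = {t_stat:.2f} with {df} degrees of freedom")
```

Statistical software then converts the t statistic and its degrees of freedom into a P value using the t distribution; that step is omitted here to keep the sketch dependency-free.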

Hypothesis testing is really nothing more than testing the null hypothesis. In this case, the null hypothesis is that the amount of time needed to fall asleep is the same for the experimental group and the control group. The hypothesis test addresses this question: If there’s really no difference between the groups, what is the probability of observing a difference of 5 minutes or more, say 10 minutes or 15 minutes?
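The question "if there is really no difference, how often would a gap of 5 minutes or more appear?" can also be answered by brute force. The sketch below uses a permutation test, which is a different technique from the t-test named in the scenario, and it runs on hypothetical data invented for illustration. It shuffles the group labels many times and counts how often chance alone produces a difference at least as large as the one observed, in either direction.

```python
import random
from statistics import mean

# Hypothetical minutes-to-sleep data (invented for illustration).
backrub = [15, 17, 18, 19, 19, 20, 21, 23]      # mean = 19
no_backrub = [20, 22, 23, 24, 24, 25, 26, 28]   # mean = 24


def permutation_p_value(a, b, n_shuffles=10_000, seed=0):
    """Estimate a two-sided P value by randomly reassigning group labels."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_shuffles):
        # Under the null hypothesis the labels are interchangeable.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[: len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    return hits / n_shuffles


p_value = permutation_p_value(backrub, no_backrub)
print(f"Estimated P value: {p_value:.4f}")
```

With these made-up numbers very few shuffles reproduce a 5-minute gap, so the estimated P value is small. Real data with more overlap between groups would give a larger value.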

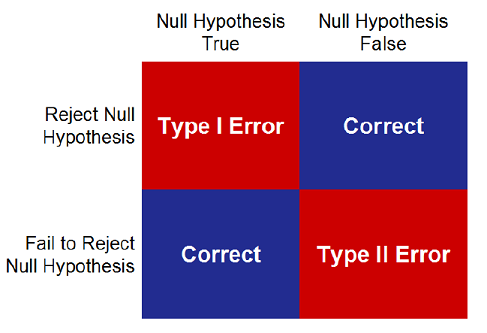

We can define the P value as the probability of observing a time difference at least as large as the one you found if chance alone were at work. Some find it easier to understand the P value when they think of it in relationship to error. In this case, the fixed-level P value is the probability of committing a Type 1 error that you are willing to tolerate. (Type 1 error occurs when a true null hypothesis is incorrectly rejected.)

4. Compare and interpret the P value

Early on in your study, you and your colleague selected a fixed-level P value of .05, meaning that you were willing to accept a 5% risk of declaring a difference that chance alone produced. Also, you used an independent samples t-test to arrive at a probability value that will help you determine the role chance played in obtaining your results. Let’s assume, for the sake of this example, that the probability value generated by the independent samples t-test is .01 (P = .01). Because this P value associated with the test statistic is less than the fixed-level P value (.01 < .05), you can reject the null hypothesis. By doing so, you declare that there is a statistically significant difference between the experimental and control groups. (See Putting the P value in context.)

In effect, you’re saying that the chance of observing a difference of 5 minutes or more, when in fact there is no difference, is less than 5 in 100. If the P value associated with the test statistic had been greater than .05, you would fail to reject (or, loosely, "accept") the null hypothesis, which would mean that there is no statistically significant difference between the control and experimental groups. In that case, a difference of 5 minutes or more between the two groups would occur by chance alone more than 5 times in 100.

Putting the P value in context

Although the P value helps you interpret study results, keep in mind that many factors can influence the P value and your decision to reject or retain the null hypothesis. These factors include the following:

- Insufficient power. The study may not have been designed appropriately to detect an effect of the independent variable on the dependent variable. Therefore, a change may have occurred without your knowing it, causing you to incorrectly retain the null hypothesis (a Type 2 error).

- Unreliable measures. Instruments that don’t meet consistency or reliability standards may have been used to measure a particular phenomenon.

- Threats to internal validity. Various biases, such as selection of patients, regression, history, and testing bias, may unduly influence study outcomes.

A decision to accept or reject study findings should focus not only on P value but also on other metrics including the following:

- Confidence intervals (an estimated range of values with a high probability of including the true population value of a given parameter)

- Effect size (a value that measures the magnitude of a treatment effect)

Remember, the P value tells you only whether a difference between groups is statistically significant. It doesn’t tell you the magnitude of the difference.

5. Communicate your findings

The final step in hypothesis testing is communicating your findings. When sharing research findings (hypotheses) in writing or discussion, understand that they are statements of relationships or differences in populations. Your findings are not proved or disproved. Scientific findings are always subject to change. But each study leads to better understanding and, ideally, better outcomes for patients.

Key concepts

The P value isn’t the only concept you need to understand to analyze research findings. But it is a very important one. And chances are that understanding the P value will make it easier to understand other key analytical concepts.


Kenneth J. Rempher, PhD, RN, MBA, CCRN, APRN,BC, is Director, Professional Nursing Practice at Sinai Hospital of Baltimore (Md.). Kathleen Urquico, BSN, RN, is a Direct Care Nurse in the Rubin Institute of Advanced Orthopedics at Sinai Hospital of Baltimore.


Hypothesis Testing, P Values, Confidence Intervals, and Significance

Definition/Introduction

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

Issues of Concern


Without a foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance, healthcare providers may be unable to make clinical decisions without relying purely on the research investigators' deemed level of significance. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

Hypothesis Testing

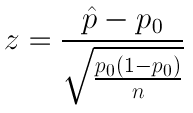

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis. An example of a null hypothesis is below.

Null Hypothesis: There are no significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22.

The null hypothesis is deemed true until a study presents significant data to support rejecting the null hypothesis. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (they could not provide evidence that there were significant differences or associations).

Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent. Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p-value can be very low even when the difference between groups is small.
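The link between sample size and the p-value can be illustrated with a simplified calculation. The sketch below assumes a two-sided z-test with a known, equal standard deviation in both groups and a fixed true difference of 0.2 standard deviations; these settings and the function name `p_for_fixed_effect` are assumptions chosen for illustration, not values from the article.

```python
from math import sqrt
from statistics import NormalDist


def p_for_fixed_effect(n_per_group: int, effect_in_sd: float = 0.2) -> float:
    """Two-sided p-value when the observed difference equals effect_in_sd.

    With equal group sizes and a known SD, the z statistic for a difference
    of d standard deviations is d * sqrt(n / 2).
    """
    z = effect_in_sd * sqrt(n_per_group / 2)
    return 2 * (1 - NormalDist().cdf(z))


# The same small effect, evaluated at three different sample sizes.
p_values = {n: p_for_fixed_effect(n) for n in (10, 100, 1000)}
for n, p in p_values.items():
    print(f"n = {n:>4} per group -> p = {p:.6f}")
```

The effect is identical in every row; only the sample size changes. This is one reason a very small p-value from a very large study should be read alongside the size of the effect.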

To test a hypothesis, researchers obtain data on a representative sample to determine whether to reject or fail to reject a null hypothesis. In most research studies, it is not feasible to obtain data for an entire population. Using a sampling procedure allows for statistical inference, though this involves a certain possibility of error. [1] When determining whether to reject or fail to reject the null hypothesis, mistakes can be made: Type I and Type II errors. Though it is impossible to ensure that these errors have not occurred, researchers should limit the possibilities of these faults. [2]

Significance

Significance is a term to describe the substantive importance of medical research. Statistical significance concerns the likelihood that results are due to chance. [3] Healthcare providers should always delineate statistical significance from clinical significance; conflating the two is a common error when reviewing biomedical research. [4] When conceptualizing findings reported as either significant or not significant, healthcare providers should not simply accept researchers' results or conclusions without considering the clinical significance. Healthcare professionals should consider the clinical importance of findings and understand both p values and confidence intervals so they do not have to rely on the researchers to determine the level of significance. [5] One criterion often used to determine statistical significance is the utilization of p values.

P values are used in research to determine whether the sample estimate is significantly different from a hypothesized value. The p-value is the probability that an effect at least as large as the one observed within the study would have occurred by chance if, in reality, there was no true effect. Conventionally, data yielding a p<0.05 or p<0.01 is considered statistically significant. While some have argued that the 0.05 level should be lowered, it is still widely practiced. [6] Hypothesis testing allows us to determine whether an effect can be distinguished from chance; on its own, it does not determine the size of the effect.

Examples of findings reported with p values are below:

Statement: Drug 23 reduced patients' symptoms compared to Drug 22. Patients who received Drug 23 (n=100) were 2.1 times less likely than patients who received Drug 22 (n = 100) to experience symptoms of Disease A, p<0.05.

Statement: Individuals who were prescribed Drug 23 experienced fewer symptoms (M = 1.3, SD = 0.7) compared to individuals who were prescribed Drug 22 (M = 5.3, SD = 1.9). This finding was statistically significant, p = 0.02.

For either statement, if the threshold had been set at 0.05, the null hypothesis (that there was no relationship) should be rejected, and we should conclude significant differences. As can be seen in the two statements above, some researchers will report findings with < or > and others will provide an exact p-value (0.000001) but never zero. [6] When examining research, readers should understand how p values are reported. The best practice is to report all p values for all variables within a study design, rather than only providing p values for variables with significant findings. [7] The inclusion of all p values provides evidence for study validity and limits suspicion of selective reporting or data mining.

While researchers have historically used p values, experts who find p values problematic encourage the use of confidence intervals. [8] P-values alone do not allow us to understand the size or the extent of the differences or associations. [3] In March 2016, the American Statistical Association (ASA) released a statement on p values, noting that scientific decision-making and conclusions should not be based on a fixed p-value threshold (e.g., 0.05). They recommend focusing on the significance of results in the context of study design, quality of measurements, and validity of data. Ultimately, the ASA statement noted that in isolation, a p-value does not provide strong evidence. [9]

When conceptualizing clinical work, healthcare professionals should consider p values with a concurrent appraisal of study design validity. For example, a p-value from a double-blinded randomized clinical trial (designed to minimize bias) should be weighted higher than one from a retrospective observational study. [7] The p-value debate has smoldered since the 1950s, [10] and replacement with confidence intervals has been suggested since the 1980s. [11]

Confidence Intervals

A confidence interval provides a range of values that, with a given level of confidence (e.g., 95%), includes the true value of the statistical parameter within a targeted population. [12] Most research uses a 95% CI, but investigators can set any level (e.g., 90% CI, 99% CI). [13] A CI provides a range with the lower bound and upper bound limits of a difference or association that would be plausible for a population. [14] Therefore, a CI of 95% indicates that if a study were to be carried out 100 times, the range would contain the true value in about 95 of them. [15] Confidence intervals provide more evidence regarding the precision of an estimate compared to p-values. [6]
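The "95 out of 100 studies" reading of a 95% CI can be checked by simulation. The sketch below repeatedly draws samples from a population whose true mean is known, builds an approximate 95% CI around each sample mean using the normal multiplier 1.96, and counts how often the interval contains the true mean. The population values (mean 10, SD 2), the sample size, and the function name `coverage` are assumptions chosen for illustration.

```python
import random
from math import sqrt
from statistics import fmean, stdev


def coverage(trials=2000, n=50, mu=10.0, sigma=2.0, seed=2):
    """Fraction of simulated studies whose 95% CI contains the true mean."""
    rng = random.Random(seed)
    covered = 0
    for _ in range(trials):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        m = fmean(sample)
        # Half-width of the interval: 1.96 standard errors of the mean.
        half_width = 1.96 * stdev(sample) / sqrt(n)
        if m - half_width <= mu <= m + half_width:
            covered += 1
    return covered / trials


coverage_rate = coverage()
print(f"Intervals containing the true mean: {coverage_rate:.1%}")
```

The result lands close to 95%. It is typically a little lower because 1.96 is a normal approximation; for small samples a multiplier from the t distribution would be more appropriate.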

In consideration of the similar research example provided above, one could make the following statement with 95% CI:

Statement: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22; the mean difference in days to recovery between the two groups was 4.2 days (95% CI: 1.9 – 7.8).

It is important to note that the width of the CI is affected by the standard error and the sample size; reducing the sample size of a study will reduce the precision of the CI (increase the width). [14] A larger width indicates a smaller sample size or a larger variability. [16] A researcher would want to increase the precision of the CI. For example, a 95% CI of 1.43 – 1.47 is much more precise than the one provided in the example above. In research and clinical practice, CIs provide valuable information on whether the interval includes or excludes any clinically significant values. [14]
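How strongly sample size drives CI width can be seen from the usual normal-approximation formula, in which the width is 2 × 1.96 × SD / √n. The sketch below is illustrative; the standard deviation of 10 and the function name `ci_width` are assumptions made for this example.

```python
from math import sqrt


def ci_width(sd: float, n: int, z: float = 1.96) -> float:
    """Full width of an approximate 95% CI for a mean."""
    return 2 * z * sd / sqrt(n)


# Same variability, three different sample sizes.
widths = {n: ci_width(10.0, n) for n in (25, 100, 400)}
for n, w in widths.items():
    print(f"n = {n:>3} -> CI width = {w:.2f}")
```

Because the width shrinks with the square root of n, quadrupling the sample size only halves the width of the interval.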

CIs are sometimes checked against a null value (zero for differences and 1 for ratios). However, CIs provide more information than that. [15] Consider this example: A hospital implements a new protocol that reduces wait time for patients in the emergency department by an average of 25 minutes (95% CI: -2.5 – 41 minutes). Because the range crosses zero, implementing this protocol in different populations could result in longer wait times; however, most of the range lies on the positive side. Thus, while the p-value used to detect statistical significance for this may result in "not significant" findings, individuals should examine this range, consider the study design, and weigh whether or not it is still worth piloting in their workplace.
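Checking an interval against its null value is a simple comparison. The sketch below applies it to the two intervals quoted in this section; the function name `includes_null` is an assumption made for illustration.

```python
def includes_null(lower: float, upper: float, null_value: float = 0.0) -> bool:
    """True when the null value lies inside the confidence interval.

    Use null_value=0 for differences and null_value=1 for ratios.
    """
    return lower <= null_value <= upper


# Emergency department wait-time example: 95% CI of -2.5 to 41 minutes.
print(includes_null(-2.5, 41.0))  # interval crosses zero
# Drug 23 versus Drug 22 example: 95% CI of 1.9 to 7.8 days.
print(includes_null(1.9, 7.8))    # interval excludes zero
```

The check only answers the yes-or-no question of statistical significance. The position and width of the interval still need to be read for clinical relevance.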

Similarly to p-values, 95% CIs cannot control for researchers' errors (e.g., study bias or improper data analysis). [14] In consideration of whether to report p-values or CIs, researchers should examine journal preferences. When in doubt, reporting both may be beneficial. [13] An example is below:

Reporting both: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22, p = 0.009. The mean difference in days to recovery between the two groups was 4.2 days (95% CI: 1.9 – 7.8).

Clinical Significance

Recall that clinical significance and statistical significance are two different concepts. Healthcare providers should remember that a study with statistically significant differences and a large sample size may be of no interest to clinicians, whereas a study with a smaller sample size and statistically non-significant results could impact clinical practice. [14] Additionally, as previously mentioned, a non-significant finding may reflect the study design itself rather than relationships between variables.

Healthcare providers using evidence-based medicine to inform practice should use clinical judgment to determine the practical importance of studies through careful evaluation of the design, sample size, power, likelihood of type I and type II errors, data analysis, and reporting of statistical findings (p values, 95% CI, or both). [4] Interestingly, some experts have called for "statistically significant" or "not significant" to be excluded from work, as statistical significance never has been and never will be equivalent to clinical significance. [17]

The decision on what is clinically significant can be challenging, depending on the providers' experience and especially the severity of the disease. Providers should use their knowledge and experiences to determine the meaningfulness of study results and make inferences based not only on significant or insignificant results by researchers but through their understanding of study limitations and practical implications.

Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts in this chapter. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care.

References

[1] Jones M, Gebski V, Onslow M, Packman A. Statistical power in stuttering research: a tutorial. Journal of speech, language, and hearing research : JSLHR. 2002 Apr:45(2):243-55 [PubMed PMID: 12003508]

[2] Sedgwick P. Pitfalls of statistical hypothesis testing: type I and type II errors. BMJ (Clinical research ed.). 2014 Jul 3:349():g4287. doi: 10.1136/bmj.g4287. Epub 2014 Jul 3 [PubMed PMID: 24994622]

[3] Fethney J. Statistical and clinical significance, and how to use confidence intervals to help interpret both. Australian critical care : official journal of the Confederation of Australian Critical Care Nurses. 2010 May:23(2):93-7. doi: 10.1016/j.aucc.2010.03.001. Epub 2010 Mar 29 [PubMed PMID: 20347326]

[4] Hayat MJ. Understanding statistical significance. Nursing research. 2010 May-Jun:59(3):219-23. doi: 10.1097/NNR.0b013e3181dbb2cc. [PubMed PMID: 20445438]

[5] Ferrill MJ, Brown DA, Kyle JA. Clinical versus statistical significance: interpreting P values and confidence intervals related to measures of association to guide decision making. Journal of pharmacy practice. 2010 Aug:23(4):344-51. doi: 10.1177/0897190009358774. Epub 2010 Apr 13 [PubMed PMID: 21507834]

[6] Infanger D, Schmidt-Trucksäss A. P value functions: An underused method to present research results and to promote quantitative reasoning. Statistics in medicine. 2019 Sep 20:38(21):4189-4197. doi: 10.1002/sim.8293. Epub 2019 Jul 3 [PubMed PMID: 31270842]

[7] Dorey F. Statistics in brief: Interpretation and use of p values: all p values are not equal. Clinical orthopaedics and related research. 2011 Nov:469(11):3259-61. doi: 10.1007/s11999-011-2053-1. [PubMed PMID: 21918804]

[8] Liu XS. Implications of statistical power for confidence intervals. The British journal of mathematical and statistical psychology. 2012 Nov:65(3):427-37. doi: 10.1111/j.2044-8317.2011.02035.x. Epub 2011 Oct 25 [PubMed PMID: 22026811]

[9] Tijssen JG, Kolm P. Demystifying the New Statistical Recommendations: The Use and Reporting of p Values. Journal of the American College of Cardiology. 2016 Jul 12:68(2):231-3. doi: 10.1016/j.jacc.2016.05.026. [PubMed PMID: 27386779]

[10] Spanos A. Recurring controversies about P values and confidence intervals revisited. Ecology. 2014 Mar:95(3):645-51 [PubMed PMID: 24804448]

[11] Freire APCF, Elkins MR, Ramos EMC, Moseley AM. Use of 95% confidence intervals in the reporting of between-group differences in randomized controlled trials: analysis of a representative sample of 200 physical therapy trials. Brazilian journal of physical therapy. 2019 Jul-Aug:23(4):302-310. doi: 10.1016/j.bjpt.2018.10.004. Epub 2018 Oct 16 [PubMed PMID: 30366845]

[12] Dorey FJ. In brief: statistics in brief: Confidence intervals: what is the real result in the target population? Clinical orthopaedics and related research. 2010 Nov:468(11):3137-8. doi: 10.1007/s11999-010-1407-4. [PubMed PMID: 20532716]

[13] Porcher R. Reporting results of orthopaedic research: confidence intervals and p values. Clinical orthopaedics and related research. 2009 Oct:467(10):2736-7. doi: 10.1007/s11999-009-0952-1. Epub 2009 Jun 30 [PubMed PMID: 19565303]

[14] Gardner MJ, Altman DG. Confidence intervals rather than P values: estimation rather than hypothesis testing. British medical journal (Clinical research ed.). 1986 Mar 15:292(6522):746-50 [PubMed PMID: 3082422]

[15] Cooper RJ, Wears RL, Schriger DL. Reporting research results: recommendations for improving communication. Annals of emergency medicine. 2003 Apr:41(4):561-4 [PubMed PMID: 12658257]

[16] Doll H, Carney S. Statistical approaches to uncertainty: P values and confidence intervals unpacked. Equine veterinary journal. 2007 May:39(3):275-6 [PubMed PMID: 17520981]

[17] Colquhoun D. The reproducibility of research and the misinterpretation of p-values. Royal Society open science. 2017 Dec:4(12):171085. doi: 10.1098/rsos.171085. Epub 2017 Dec 6 [PubMed PMID: 29308247]


- v.19(7); 2019 Jul

Hypothesis tests


- Hypothesis tests are used to assess whether a difference between two samples represents a real difference between the populations from which the samples were taken.

- A null hypothesis of ‘no difference’ is taken as a starting point, and we calculate the probability of seeing a difference at least as large as the one observed if both sets of data came from the same population. This probability is expressed as a p-value.

- When the null hypothesis is false, p-values tend to be small. When the null hypothesis is true, any p-value is equally likely.
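The last key point can be illustrated by simulation. The sketch below generates many two-group studies, first with no true difference and then with a true difference of one standard deviation, and compares how the resulting p-values are spread. It uses a two-sided z-test with a known standard deviation for simplicity; the sample size, effect size, and function names are assumptions chosen for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def simulate_p_values(true_diff, trials=2000, n=30, sigma=1.0, seed=3):
    """Return p-values from repeated two-group studies of size n each."""
    rng = random.Random(seed)
    se = sigma * sqrt(2 / n)  # standard error of the difference in means
    p_values = []
    for _ in range(trials):
        a = [rng.gauss(0.0, sigma) for _ in range(n)]
        b = [rng.gauss(true_diff, sigma) for _ in range(n)]
        z = (fmean(b) - fmean(a)) / se
        p_values.append(2 * (1 - NormalDist().cdf(abs(z))))
    return p_values


def share_below(p_values, cutoff):
    """Fraction of p-values smaller than the cutoff."""
    return sum(p < cutoff for p in p_values) / len(p_values)


null_ps = simulate_p_values(true_diff=0.0)  # null hypothesis is true
alt_ps = simulate_p_values(true_diff=1.0)   # null hypothesis is false

print(f"Null true:  {share_below(null_ps, 0.05):.1%} below 0.05, "
      f"{share_below(null_ps, 0.5):.1%} below 0.5")
print(f"Null false: {share_below(alt_ps, 0.05):.1%} below 0.05")
```

When the null hypothesis is true, roughly 5% of p-values fall below 0.05 and roughly half fall below 0.5, as expected for values that are spread evenly between 0 and 1. When it is false, most p-values pile up near zero.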

Learning objectives

By reading this article, you should be able to:

- Explain why hypothesis testing is used.

- Use a table to determine which hypothesis test should be used for a particular situation.

- Interpret a p-value.

A hypothesis test is a procedure used in statistics to assess whether a particular viewpoint is likely to be true. Hypothesis tests follow a strict protocol, and they generate a ‘p-value’, on the basis of which a decision is made about the truth of the hypothesis under investigation. The routine statistical ‘tests’ used in research (t-tests, χ² tests, Mann–Whitney tests, etc.) are all hypothesis tests, and in spite of their differences they are all used in essentially the same way. But why do we use them at all?

Comparing the heights of two individuals is easy: we can measure their height in a standardised way and compare them. When we want to compare the heights of two small well-defined groups (for example two groups of children), we need to use a summary statistic that we can calculate for each group. Such summaries (means, medians, etc.) form the basis of descriptive statistics, and are well described elsewhere. 1 However, a problem arises when we try to compare very large groups or populations: it may be impractical or even impossible to take a measurement from everyone in the population, and by the time you do so, the population itself will have changed. A similar problem arises when we try to describe the effects of drugs—for example by how much on average does a particular vasopressor increase MAP?

To solve this problem, we use random samples to estimate values for populations. By convention, the values we calculate from samples are referred to as statistics and denoted by Latin letters (\(\bar{x}\) for sample mean; SD for sample standard deviation), while the unknown population values are called parameters and denoted by Greek letters (μ for population mean, σ for population standard deviation).

Inferential statistics describes the methods we use to estimate population parameters from random samples; how we can quantify the level of inaccuracy in a sample statistic; and how we can go on to use these estimates to compare populations.

Sampling error

There are many reasons why a sample may give an inaccurate picture of the population it represents: it may be biased, it may not be big enough, and it may not be truly random. However, even if we have been careful to avoid these pitfalls, there is an inherent difference between the sample and the population at large. To illustrate this, let us imagine that the actual average height of males in London is 174 cm. If I were to sample 100 male Londoners and take a mean of their heights, I would be very unlikely to get exactly 174 cm. Furthermore, if somebody else were to perform the same exercise, it would be unlikely that they would get the same answer as I did. The sample mean is different each time it is taken, and the way it differs from the actual mean of the population is described by the standard error of the mean (standard error, or SEM ). The standard error is larger if there is a lot of variation in the population, and becomes smaller as the sample size increases. It is calculated thus:

\[ \mathrm{SEM} = \frac{\mathrm{SD}}{\sqrt{n}} \]

where SD is the sample standard deviation, and n is the sample size.

As errors are normally distributed, we can use this to estimate a 95% confidence interval on our sample mean as follows:

\[ 95\%\ \mathrm{CI} = \bar{x} \pm (1.96 \times \mathrm{SEM}) \]

We can interpret this as meaning ‘We are 95% confident that the actual mean is within this range.’

Some confusion arises at this point between the SD and the standard error. The SD is a measure of variation in the sample. The range \(\bar{x} \pm (1.96 \times \mathrm{SD})\) will normally contain 95% of all your data. It can be used to illustrate the spread of the data and shows what values are likely. In contrast, standard error tells you about the precision of the mean and is used to calculate confidence intervals.
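As a rough illustration of this distinction, the sketch below computes the SD, the standard error, and both ranges for one small sample. The height values are invented for the example, and the 1.96 multiplier follows the normal approximation used in the text; with only 10 values a t-based multiplier would arguably be more appropriate.

```python
import math
import statistics

# Hypothetical heights (cm); these values are invented for illustration.
heights = [168.0, 171.5, 174.0, 176.5, 169.0, 181.0, 173.5, 177.0, 172.0, 178.5]

n = len(heights)
mean = statistics.mean(heights)
sd = statistics.stdev(heights)   # sample SD (n - 1 denominator)
sem = sd / math.sqrt(n)          # standard error of the mean

# Range expected to hold about 95% of individual values (spread of the data).
data_range = (mean - 1.96 * sd, mean + 1.96 * sd)
# 95% confidence interval for the mean (precision of the estimate).
ci_95 = (mean - 1.96 * sem, mean + 1.96 * sem)

print(f"mean = {mean:.1f} cm, SD = {sd:.2f}, SEM = {sem:.2f}")
print(f"mean ± 1.96 SD : {data_range[0]:.1f} to {data_range[1]:.1f}")
print(f"95% CI of mean : {ci_95[0]:.1f} to {ci_95[1]:.1f}")
```

The confidence interval is always narrower than the SD-based range by a factor of √n, which is why the two should not be used interchangeably.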

One straightforward way to compare two samples is to use confidence intervals. If we calculate the mean height of two groups and find that the 95% confidence intervals do not overlap, this can be taken as evidence of a difference between the two means. This method of statistical inference is reasonably intuitive and can be used in many situations. 2 Many journals, however, prefer to report inferential statistics using p-values.

Inference testing using a null hypothesis

In 1925, the British statistician R.A. Fisher described a technique for comparing groups using a null hypothesis, a method which has dominated statistical comparison ever since. The technique itself is rather straightforward, but often gets lost in the mechanics of how it is done. To illustrate, imagine we want to compare the HR of two different groups of people. We take a random sample from each group, which we call our data. Then:

- (i) Assume that both samples came from the same group. This is our ‘null hypothesis’.

- (ii) Calculate the probability that an experiment would give us these data, assuming that the null hypothesis is true. We express this probability as a p-value, a number between 0 and 1, where 0 is ‘impossible’ and 1 is ‘certain’.

- (iii) If the probability of the data is low, we reject the null hypothesis and conclude that there must be a difference between the two groups.

Formally, we can define a p-value as ‘the probability of finding the observed result or a more extreme result, if the null hypothesis were true.’ Standard practice is to set a cut-off at p<0.05 (this cut-off is termed the alpha value). If the null hypothesis were true, a result such as this would only occur 5% of the time or less; this in turn would indicate that the null hypothesis itself is unlikely. Fisher described the process as follows: ‘Set a low standard of significance at the 5 per cent point, and ignore entirely all results which fail to reach this level. A scientific fact should be regarded as experimentally established only if a properly designed experiment rarely fails to give this level of significance.’ 3 This probably remains the most succinct description of the procedure.
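The three steps can be sketched directly in code. The example below is a minimal sketch that uses a permutation test, which is not the method described in the article but follows the same logic: assume the group labels are interchangeable (the null hypothesis), then count how often a shuffled dataset gives a difference at least as extreme as the one observed. The heart rate values and the function name `permutation_p_value` are invented for illustration.

```python
import random

def mean(values):
    return sum(values) / len(values)

def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation p-value for a difference in means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    as_extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are arbitrary,
        # so reshuffle them and recompute the difference.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            as_extreme += 1
    # Add 1 to numerator and denominator so the estimate is never exactly 0.
    return (as_extreme + 1) / (n_permutations + 1)

# Hypothetical heart rates (beats per minute), invented for illustration.
hr_group_1 = [62, 71, 68, 75, 66, 70, 73, 64]
hr_group_2 = [78, 74, 81, 69, 85, 77, 80, 76]

p = permutation_p_value(hr_group_1, hr_group_2)
print(f"p = {p:.4f}")
print("reject the null hypothesis" if p < 0.05 else "retain the null hypothesis")
```

Note that the count includes results "at least as extreme" as the observed one, which mirrors the formal definition of the p-value given above.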

A question which often arises at this point is ‘Why do we use a null hypothesis?’ The simple answer is that it is easy: we can readily describe what we would expect of our data under a null hypothesis, we know how data would behave, and we can readily work out the probability of getting the result that we did. It therefore makes a very simple starting point for our probability assessment. All probabilities require a set of starting conditions, in much the same way that measuring the distance to London needs a starting point. The null hypothesis can be thought of as an easy place to put the start of your ruler.

If a null hypothesis is rejected, an alternate hypothesis must be adopted in its place. The null and alternate hypotheses must be mutually exclusive, but must also between them describe all situations. If a null hypothesis is ‘no difference exists’ then the alternate should be simply ‘a difference exists’.

Hypothesis testing in practice

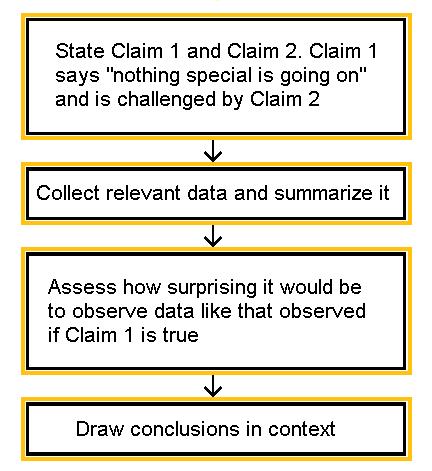

The components of a hypothesis test can be readily described using the acronym GOST: identify the Groups you wish to compare; define the Outcome to be measured; collect and Summarise the data; then evaluate the likelihood of the null hypothesis, using a Test statistic.

When considering groups, think first about how many. Is there just one group being compared against an audit standard, or are you comparing one group with another? Some studies may wish to compare more than two groups. Another situation may involve a single group measured at different points in time, for example before or after a particular treatment. In this situation each participant is compared with themselves, and this is often referred to as a ‘paired’ or a ‘repeated measures’ design. It is possible to combine these types of groups—for example a researcher may measure arterial BP on a number of different occasions in five different groups of patients. Such studies can be difficult, both to analyse and interpret.

In other studies we may want to see how a continuous variable (such as age or height) affects the outcomes. These techniques involve regression analysis, and are beyond the scope of this article.

The outcome measures are the data being collected. This may be a continuous measure, such as temperature or BMI, or it may be a categorical measure, such as ASA status or surgical specialty. Often, inexperienced researchers will strive to collect lots of outcome measures in an attempt to find something that differs between the groups of interest; if this is done, a ‘primary outcome measure’ should be identified before the research begins. In addition, the results of any hypothesis tests will need to be corrected for multiple measures.

The summary and the test statistic will be defined by the type of data that have been collected. The test statistic is calculated, then transformed into a p-value using tables or software. It is worth looking at two common tests in a little more detail: the χ² test and the t-test.

Categorical data: the χ² test

The χ² test of independence is a test for comparing categorical outcomes in two or more groups. For example, a number of trials have compared surgical site infections in patients who have been given different concentrations of oxygen perioperatively. In the PROXI trial, 4 a total of 685 patients received oxygen 80%, and 701 patients received oxygen 30%. In the 80% group there were 131 infections, while in the 30% group there were 141 infections. In this study, the groups were oxygen 80% and oxygen 30%, and the outcome measure was the presence of a surgical site infection.

The summary is a table (Table 1), and the hypothesis test compares this table (the ‘observed’ table) with the table that would be expected if the proportion of infections in each group was the same (the ‘expected’ table). The test statistic is χ², from which a p-value is calculated. In this instance the p-value is 0.64, which means that a difference at least as large as this would occur 64% of the time if the null hypothesis were true. We thus have no evidence to reject the null hypothesis; the observed difference is entirely consistent with sampling variation rather than an inherent difference between the two groups.
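The calculation can be reproduced from the counts quoted above. The sketch below builds the observed table, derives the expected table from the row and column totals, and sums the χ² contributions. It assumes no continuity correction is applied, and it uses the fact that for one degree of freedom the upper tail of the χ² distribution can be written with the complementary error function; the variable names are our own.

```python
import math

# Observed counts from the PROXI figures quoted in the text:
# rows = oxygen group, columns = (infection, no infection).
observed = [
    [131, 685 - 131],   # oxygen 80%
    [141, 701 - 141],   # oxygen 30%
]

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
grand_total = sum(row_totals)

chi2 = 0.0
for i, row in enumerate(observed):
    for j, obs in enumerate(row):
        # Expected count if infection were independent of oxygen group.
        expected = row_totals[i] * col_totals[j] / grand_total
        chi2 += (obs - expected) ** 2 / expected

# A 2x2 table has 1 degree of freedom; for 1 df the upper tail of the
# chi-squared distribution equals erfc(sqrt(chi2 / 2)).
p_value = math.erfc(math.sqrt(chi2 / 2))

print(f"chi-squared = {chi2:.3f}, p = {p_value:.2f}")
```

With these counts the statistic is about 0.22 and the p-value about 0.64, in agreement with the figure reported in the text.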

Table 1

Summary of the results of the PROXI trial. Figures are numbers of patients.

Continuous data: the t-test

The t-test is a statistical method for comparing means, and is one of the most widely used hypothesis tests. Imagine a study where we try to see if there is a difference in the onset time of a new neuromuscular blocking agent compared with suxamethonium. We could enlist 100 volunteers, give them a general anaesthetic, and randomise 50 of them to receive the new drug and 50 of them to receive suxamethonium. We then time how long it takes (in seconds) to have ideal intubation conditions, as measured by a quantitative nerve stimulator. Our data are therefore a list of times. In this case, the groups are ‘new drug’ and suxamethonium, and the outcome is time, measured in seconds. This can be summarised by using means; the hypothesis test will compare the means of the two groups, using a p-value calculated from a ‘t statistic’. Hopefully it is becoming obvious at this point that the test statistic is usually identified by a letter, and this letter is often cited in the name of the test.

The t-test comes in a number of guises, depending on the comparison being made. A single sample can be compared with a standard (Is the BMI of school leavers in this town different from the national average?); two samples can be compared with each other, as in the example above; or the same study subjects can be measured at two different times. The latter case is referred to as a paired t-test, because each participant provides a pair of measurements, such as in a pre- and postintervention study.
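For the two-sample case, the t statistic is simply the difference in means divided by the standard error of that difference. The following is a minimal sketch using simulated onset times; the means, SDs and random seed are invented, and the function name `two_sample_t` is our own. Because the standard library has no t distribution, the p-value is approximated with the normal distribution, which is an assumption that is only reasonable here because there are about 100 degrees of freedom.

```python
import math
import random
import statistics
from statistics import NormalDist

def two_sample_t(sample_a, sample_b):
    """Student's t statistic (pooled variance) and its degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    var_a, var_b = statistics.variance(sample_a), statistics.variance(sample_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    se_diff = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / se_diff, df

# Simulated onset times in seconds; the means and SDs are invented.
rng = random.Random(42)
new_drug = [rng.gauss(55, 10) for _ in range(50)]
suxamethonium = [rng.gauss(50, 10) for _ in range(50)]

t_stat, df = two_sample_t(new_drug, suxamethonium)
# Assumption: with about 100 degrees of freedom the t distribution is close
# to the standard normal, so the normal tail is used as an approximation.
p_approx = 2 * (1 - NormalDist().cdf(abs(t_stat)))

print(f"t = {t_stat:.2f}, df = {df}, approximate p = {p_approx:.4f}")
```

In practice a statistics package would convert t to an exact p-value using the t distribution with the stated degrees of freedom.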

A large number of methods for testing hypotheses exist; the commonest ones and their uses are described in Table 2. In each case, the test can be described by detailing the groups being compared (Table 2, columns), the outcome measures (rows), the summary, and the test statistic. The decision to use a particular test or method should be made during the planning stages of a trial or experiment. At this stage, an estimate needs to be made of how many test subjects will be needed. Such calculations are described in detail elsewhere. 5

Table 2

The principal types of hypothesis test. Tests comparing more than two samples can indicate that one group differs from the others, but will not identify which. Subsequent ‘post hoc’ testing is required if a difference is found.

Controversies surrounding hypothesis testing

Although hypothesis tests have been the basis of modern science since the middle of the 20th century, they have been plagued by misconceptions from the outset; this has led to what has been described as a crisis in science in the last few years: some journals have gone so far as to ban p-values outright. 6 This is not because of any flaw in the concept of a p-value, but because of a lack of understanding of what they mean.

Possibly the most pervasive misunderstanding is the belief that the p-value is the chance that the null hypothesis is true, or that the p-value represents the frequency with which you will be wrong if you reject the null hypothesis (i.e. claim to have found a difference). This interpretation has frequently made it into the literature, and is a very easy trap to fall into when discussing hypothesis tests. To avoid this, it is important to remember that the p-value is telling us something about our sample, not about the null hypothesis. Put in simple terms, we would like to know the probability that the null hypothesis is true, given our data. The p-value tells us the probability of getting these data if the null hypothesis were true, which is not the same thing. This fallacy is referred to as ‘flipping the conditional’; the probability of an outcome under certain conditions is not the same as the probability of those conditions given that the outcome has happened.

A useful example is to imagine a magic trick in which you select a card from a normal deck of 52 cards, and the performer reveals your chosen card in a surprising manner. If the performer were relying purely on chance, this would only happen on average once in every 52 attempts. On the basis of this, we conclude that it is unlikely that the magician is simply relying on chance. Although simple, we have just performed an entire hypothesis test. We have declared a null hypothesis (the performer was relying on chance); we have even calculated a p-value (1 in 52, ≈0.02); and on the basis of this low p-value we have rejected our null hypothesis. We would, however, be wrong to suggest that there is a probability of 0.02 that the performer is relying on chance; that is not what our figure of 0.02 is telling us.

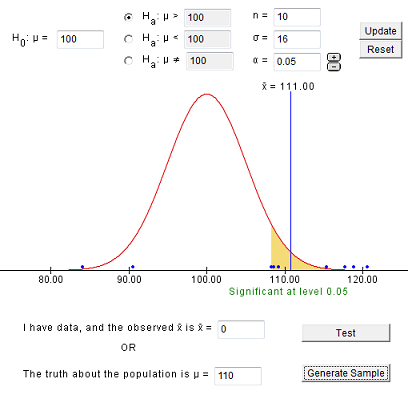

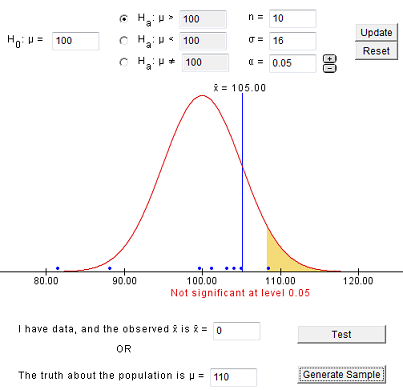

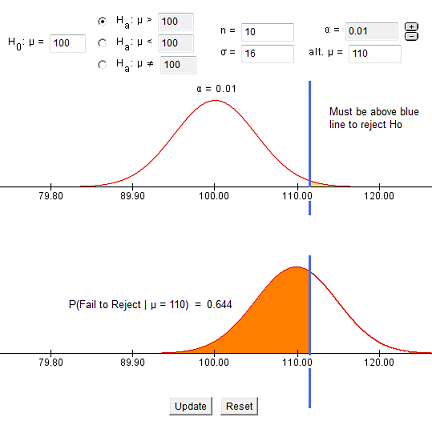

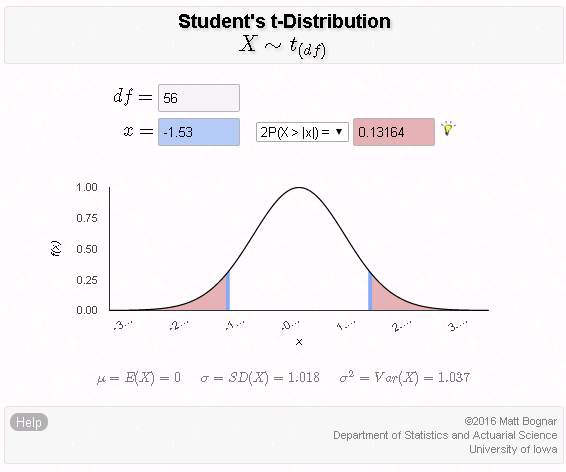

To explore this further we can create two populations, and watch what happens when we use simulation to take repeated samples to compare these populations. Computers allow us to do this repeatedly, and to see what p-values are generated (see Supplementary online material). 7 Fig 1 illustrates the results of 100,000 simulated t-tests, generated in two sets of circumstances. In Fig 1a, we have a situation in which there is a difference between the two populations. The p-values cluster below the 0.05 cut-off, although there is a small proportion with p>0.05. Interestingly, the proportion of comparisons where p<0.05 is 0.8 or 80%, which is the power of the study (the sample size was specifically calculated to give a power of 80%).

The p-values generated when 100,000 t-tests are used to compare two samples taken from defined populations. (a) The populations have a difference and the p-values are mostly significant. (b) The samples were taken from the same population (i.e. the null hypothesis is true) and the p-values are distributed uniformly.

Figure 1b depicts the situation where repeated samples are taken from the same parent population (i.e. the null hypothesis is true). Somewhat surprisingly, all p-values occur with equal frequency, with p<0.05 occurring exactly 5% of the time. Thus, when the null hypothesis is true, a type I error will occur with a frequency equal to the alpha significance cut-off.
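A simulation of this kind is straightforward to sketch. The version below is not the author's supplementary code: it uses far fewer repetitions, and it swaps the t-test for a z-test with a known SD so that only the standard library is needed. The effect size (half an SD), the group size of 63 (chosen to give roughly 80% power under these assumptions) and all function names are our own.

```python
import math
import random
from statistics import NormalDist

def z_test_p(sample_a, sample_b, sigma):
    """Two-sided p-value for a difference in means with known SD sigma."""
    n_a, n_b = len(sample_a), len(sample_b)
    diff = sum(sample_a) / n_a - sum(sample_b) / n_b
    z = diff / (sigma * math.sqrt(1 / n_a + 1 / n_b))
    return 2 * (1 - NormalDist().cdf(abs(z)))

def proportion_significant(true_difference, n_per_group=63, sigma=1.0,
                           n_experiments=3000, alpha=0.05, seed=1):
    """Fraction of simulated experiments that give p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        a = [rng.gauss(0.0, sigma) for _ in range(n_per_group)]
        b = [rng.gauss(true_difference, sigma) for _ in range(n_per_group)]
        if z_test_p(a, b, sigma) < alpha:
            hits += 1
    return hits / n_experiments

# Null hypothesis true: both samples come from the same population.
type_1_rate = proportion_significant(true_difference=0.0)
# Real difference of half an SD; 63 per group gives roughly 80% power.
power = proportion_significant(true_difference=0.5)

print(f"p < 0.05 when the null is true : {type_1_rate:.3f}")
print(f"p < 0.05 when the null is false: {power:.3f}")
```

The first proportion should sit close to the alpha cut-off and the second close to the planned power, although both will vary a little from run to run with a different seed.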

Figure 1 highlights the underlying problem: when presented with a p-value <0.05, is it possible, with no further information, to determine whether you are looking at something from Fig 1a or Fig 1b?

Finally, it cannot be stressed enough that although hypothesis testing identifies whether or not a difference is likely, it is up to us as clinicians to decide whether or not a statistically significant difference is also significant clinically.

Hypothesis testing: what next?

As mentioned above, some have suggested moving away from p-values, but it is not entirely clear what we should use instead. Some sources have advocated focussing more on effect size; however, without a measure of significance we have merely returned to our original problem: how do we know that our difference is not just a result of sampling variation?

One solution is to use Bayesian statistics. Up until very recently, these techniques have been considered both too difficult and not sufficiently rigorous. However, recent advances in computing have led to the development of Bayesian equivalents of a number of standard hypothesis tests. 8 These generate a ‘Bayes Factor’ (BF), which tells us how much more (or less) likely the alternative hypothesis is after our experiment. A BF of 1.0 indicates that the likelihood of the alternate hypothesis has not changed. A BF of 10 indicates that the alternate hypothesis is 10 times more likely than we originally thought. A number of classifications for BF exist; a BF greater than 10 can be considered ‘strong evidence’, while a BF greater than 100 can be classed as ‘decisive’.
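To make the effect of a BF concrete, the sketch below applies it in the usual odds form of Bayes' rule (posterior odds = prior odds × BF). The article does not spell out this formula, so treat it as our assumption about how the ‘10 times more likely’ statement is applied; the prior probabilities are invented and the function name is hypothetical.

```python
def update_probability(prior_probability, bayes_factor):
    """Posterior probability of the alternative hypothesis given a Bayes factor."""
    prior_odds = prior_probability / (1 - prior_probability)
    posterior_odds = prior_odds * bayes_factor   # Bayes' rule in odds form
    return posterior_odds / (1 + posterior_odds)

# Hypothetical prior beliefs that the alternative hypothesis is true.
for prior in (0.1, 0.5):
    for bf in (1.0, 10.0, 100.0):
        posterior = update_probability(prior, bf)
        print(f"prior = {prior:.2f}, BF = {bf:>5.0f} -> posterior = {posterior:.3f}")
```

The same BF leads to different posterior probabilities depending on the starting belief, which is one reason a BF is reported as a ratio rather than as a probability.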

Figures such as the BF can be quoted in conjunction with the traditional p-value, but it remains to be seen whether they will become mainstream.

Declaration of interest

The author declares that they have no conflict of interest.

The associated MCQs (to support CME/CPD activity) will be accessible at www.bjaed.org/cme/home by subscribers to BJA Education .

Jason Walker FRCA FRSS BSc (Hons) Math Stat is a consultant anaesthetist at Ysbyty Gwynedd Hospital, Bangor, Wales, and an honorary senior lecturer at Bangor University. He is vice chair of his local research ethics committee, and an examiner for the Primary FRCA.

Matrix codes: 1A03, 2A04, 3J03

Supplementary data to this article can be found online at https://doi.org/10.1016/j.bjae.2019.03.006 .


Hypothesis testing: selection and use of statistical tests

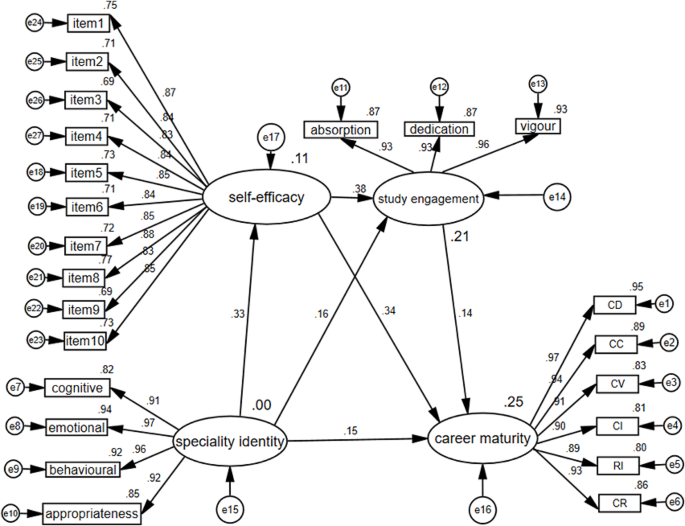

Chapter 20 contents

- Introduction
- The logic of hypothesis testing
- Steps in hypothesis testing
- Illustrations of hypothesis testing
- The relationship between descriptive and inferential statistics
- Selection of the appropriate inferential test
- The χ² test
- χ² and contingency tables
- Statistical packages
- Summary

Introduction

Hypotheses are statements about the association between variables as pertaining to a specific person or population. For example, ‘penicillin is an effective treatment for pneumonia’ or ‘obesity is a risk factor for heart disease’. Hypotheses addressing the state of populations are tested using sample data. Inferences are conclusions based on data using samples and are therefore always open to the possibility of error. In this chapter we will examine the use of inferential statistics for establishing the probable truth of hypotheses, as tested through sample data.

Inferential statistics are based on applied probability theory and entail the use of statistical tests. There are numerous statistical tests available that are used in a similar fashion to analyse clinical data. That is, all statistical tests involve setting up the relevant hypotheses, \(H_0\) and \(H_A\), and then, on the basis of the appropriate inferential statistics, computing the probability of the sample statistics obtained occurring by chance alone. We are not going to attempt to examine all statistical tests in this introductory book. These are described in various statistics textbooks or in data analysis manuals. Rather, in this chapter we will examine the criteria used for selecting tests appropriate for the analysis of the data obtained in specific investigations. To illustrate the use of statistical tests we will examine the use of the chi-square test (χ²). This is a statistical test commonly employed to analyse categorical data.
Finally, we will briefly examine the uses of the Statistical Package for Social Sciences™ (SPSS) for data analysis in general.

The aims of this chapter are to:

1. Discuss the criteria by which a statistical test is selected for analysing the data for a specific study.
2. Demonstrate the use of the χ² test for analysing nominal scale data.
3. Explain how statistical packages are used for quantitative data analysis.

The logic of hypothesis testing

Hypothesis testing is the process of deciding, using statistics, whether the findings of an investigation reflect chance or real effects at a given level of probability or certainty. If the results seem not to represent chance effects, then we say that the results are statistically significant. That is, when we say that our results are statistically significant we mean that the patterns or differences seen in the sample data are likely to be generalizable to the wider population from our study sample.

The mathematical procedures for hypothesis testing are based on the application of probability theory and sampling, as discussed previously. Because of the probabilistic nature of the process, decision errors in hypothesis testing cannot be entirely eliminated. However, the procedures outlined in this chapter enable us to specify the probability level at which we can claim that the data obtained in an investigation support experimental hypotheses. This procedure is fundamental for determining the statistical significance of the data as well as being relevant to the logic of clinical decision making.

Steps in hypothesis testing

The following steps are conventionally followed in hypothesis testing:

1. State the alternative hypothesis (\(H_A\)), which is based on the research hypothesis. The \(H_A\) asserts that the results are ‘real’ or ‘significant’, i.e. that the independent variable influenced the dependent variable, or that there is a real difference among groups.
The important point here is that \(H_A\) is a statement concerning the population. A real or significant effect means that the results in the sample data can be generalized to the population.

2. State the null hypothesis (\(H_0\)), which is the logical opposite of the \(H_A\). The \(H_0\) claims that any differences in the data were just due to chance: that the independent variable had no effect on the dependent variable, or that any difference among groups is due to random effects. In other words, if the \(H_0\) is retained, differences or patterns seen in the sample data should not be generalized to the population.

3. Set the decision level, α (alpha). There are two mutually exclusive hypotheses (\(H_A\) and \(H_0\)) competing to explain the results of an investigation. Hypothesis testing, or statistical decision making, involves establishing the probability, p, of obtaining the observed results if \(H_0\) is true. If this probability is very small, we are in a position to reject the \(H_0\). You might ask ‘How small should the probability be for rejecting \(H_0\)?’ By convention, we use α = 0.05: if the calculated probability is less than 0.05, we can reject \(H_0\). We can choose an α of 0.05, but not more. That is, by convention among researchers, results are not characterized as significant if p > 0.05.

4. Calculate the probability of the results under \(H_0\). That is, we assume that \(H_0\) is true and calculate the probability of the outcome of the investigation being due to chance alone, i.e. due to random effects. We must use an appropriate sampling distribution for this calculation.

5. Make a decision concerning \(H_0\). The following decision rule is used. If the calculated probability is less than or equal to α, then we reject \(H_0\) at the level of significance set by α. However, if the calculated probability is greater than α, then we must retain \(H_0\). In other words, if:

a. p ≤ α, reject \(H_0\)
b. p > α, retain \(H_0\)

It follows that if we reject \(H_0\) we are in a position to accept \(H_A\), its logical alternative.
If p ≤ 0.05 then we reject \(H_0\), and decide that \(H_A\) is probably true.

Illustrations of hypothesis testing

One of the simplest forms of gambling is betting on the fall of a coin. Let us play a little game. We, the authors, will toss a coin. If it comes out heads (H) you will give us £1; if it comes out tails (T) we will give you £1. To make things interesting, let us have 10 tosses. The results are:

H H H H H H H H H H

Oh dear, you seem to have lost. Never mind, we were just lucky, so send along your cheque for £10. Are you a little hesitant? Are you saying that we ‘fixed’ the game? There is a systematic procedure for demonstrating the probable truth of your allegations:

1. We can state two competing hypotheses concerning the outcome of the game:

a. The authors fixed the game; that is, the outcome did not reflect the fair throwing of a coin. Let us call this statement the ‘alternative hypothesis’, \(H_A\). In effect, the \(H_A\) claims that the sample of 10 heads came from a population other than P (probability of heads) = Q (probability of tails) = 0.5.
b. The authors did not fix the game; that is, the outcome is due to the tossing of a fair coin. Let us call this statement the ‘null hypothesis’, or \(H_0\). \(H_0\) suggests that the sample of 10 heads was a random sample from a population where P = Q = 0.5.

2. It can be shown that the probability of tossing 10 consecutive heads with a fair coin is p = 0.001, as discussed previously (see Ch. 19). That is, the probability of obtaining such a sample from a population where P = Q = 0.5 is extremely low.

3. Now we can decide between \(H_0\) and \(H_A\). It was shown that the probability of the observed outcome under \(H_0\) was p = 0.001 (1 in 1000). Therefore, on the balance of probabilities, we can reject \(H_0\) and accept \(H_A\), which is the logical alternative. In other words, it is likely that the game was fixed and no £10 cheque needs to be posted.

The calculated probability depends on the number of tosses (n = the sample size).
For instance, the probability of obtaining heads every time with five coin tosses is shown in Table 19.4. As the sample size (n) becomes larger, the probability for which it is possible to reject \(H_0\) becomes smaller. With only a few tosses we really cannot be sure if the game is fixed or not: without sufficient information it becomes hard to reject \(H_0\) at a reasonable level of probability.

A question emerges: ‘What is a reasonable level of probability for rejecting \(H_0\)?’ As we shall see, there are conventions for specifying these probabilities. One way to proceed, however, is to set the appropriate probability for rejecting \(H_0\) on the basis of the implications of erroneous decisions.

Obviously, any decision made on a probabilistic basis might be in error. Two types of decision errors are identified in statistics as type I and type II errors. A type I error involves mistakenly rejecting \(H_0\), while a type II error involves mistakenly retaining the \(H_0\). Researchers can make mistakes about the truth or falsity of hypotheses using sample research data. Statistical method does not provide a guarantee against making a mistake, but it is the most rigorous way of making these decisions.

In the above example, a type I error would involve deciding that the outcome was not due to chance when in fact it was. The practical outcome of this would be to falsely accuse the authors of fixing the game. A type II error would represent the decision that the outcome was due to chance, when in fact it was due to a ‘fix’. The practical outcome of this would be to send your hard-earned £10 to a couple of crooks. Clearly, in a situation like this, a type II error would be more odious than a type I error, and you would set a fairly high probability for rejecting \(H_0\). However, if you were gambling with a villain, who had a loaded revolver handy, you would tend to set a very low probability for rejecting \(H_0\).
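Returning to the coin game, the dependence of the calculated probability on the number of tosses is easy to check. The short sketch below assumes a fair coin and independent tosses, and compares the probability of an unbroken run of heads against α = 0.05; the function name `prob_all_heads` is our own.

```python
def prob_all_heads(n_tosses, p_heads=0.5):
    """Probability that every one of n_tosses independent tosses is heads."""
    return p_heads ** n_tosses

alpha = 0.05
for n in (3, 5, 10):
    p = prob_all_heads(n)
    decision = "reject H0" if p <= alpha else "retain H0"
    print(f"{n:>2} heads in {n:>2} tosses: p = {p:.5f} -> {decision}")
```

Ten heads in a row has probability 1/1024, which rounds to the 0.001 quoted in the text, whereas a run of only three heads is far too likely under a fair coin to justify rejecting the null hypothesis.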
We will examine these ideas more formally in subsequent parts of this chapter.

Let us look at another example. A rehabilitation therapist has devised an exercise program which is expected to reduce the time taken for people to leave hospital following orthopaedic surgery. Previous records show that the recovery time for patients had been µ = 30 days, with σ = 8 days. A sample of 64 patients was treated with the exercise program, and their mean recovery time was found to be \(\bar{x}\) = 24 days. Do these results show that patients who had the treatment recovered significantly faster than previous patients? We can apply the steps for hypothesis testing to make our decision.

1. State \(H_A\): ‘The exercise program reduces the time taken for patients to recover from orthopaedic surgery’. That is, the researcher claims that the independent variable (the treatment) has a ‘real’ or ‘generalizable’ effect on the dependent variable (time to recover).

2. State \(H_0\): ‘The exercise program does not reduce the time taken for patients to recover from orthopaedic surgery’. That is, the statement claims that the independent variable has no effect on the dependent variable. The statement implies that the treated sample with \(\bar{x}\) = 24 and n = 64 is in fact a random sample from the population with µ = 30, σ = 8. Any difference between \(\bar{x}\) and µ can be attributed to sampling error.

3. The decision level, α, is set before the results are analysed. The value of α depends on how certain the investigator wants to be that the results show real differences. If the investigator sets α = 0.01, then the probability of falsely rejecting a true \(H_0\) is less than or equal to 0.01 (1/100). If the investigator sets α = 0.05, then the probability of falsely rejecting a true \(H_0\) is less than or equal to 0.05 (1/20). That is, the smaller the α, the more confident the researcher is that the results support the alternative hypothesis. We also call α the level of significance.
The smaller the α, the more significant the findings for a study, if we can reject \(H_0\). In this case, say that the researcher sets α = 0.01. (Note: by convention, α should not be greater than 0.05.)

4. Calculate the probability of the result under \(H_0\). As stated above, \(H_0\) implies that the sample with \(\bar{x}\) = 24 is a random sample from the population with µ = 30, σ = 8. How probable is such a sample if this statement is true? To calculate this probability, we must generate an appropriate sampling distribution. As we have seen in Chapter 17, the sampling distribution of the mean will enable us to calculate the probability of obtaining a sample mean of \(\bar{x}\) = 24 or more extreme from a population with known parameters. As shown in Figure 20.1, we can calculate the probability of drawing a sample mean of \(\bar{x}\) = 24 or less. Here the standard error of the mean is σ/√n = 8/√64 = 1 day, so the sample mean lies z = (24 − 30)/1 = −6 standard errors from µ. Using the table of normal curves (Appendix A), as outlined previously, we find that the probability of randomly selecting a sample mean of \(\bar{x}\) = 24 (or less) is extremely small. In terms of our table, which only shows exact probabilities up to z = 4.00, we can see that the present probability is less than 0.00003. Therefore, the probability of obtaining this result if \(H_0\) is true is less than 0.00003.

Figure 20.1 Sampling distribution of means. Sample size = 64, population mean = 30, standard deviation = 8.

5. Make a decision. We have set α = 0.01. The calculated probability was less than 0.00003. Clearly, the calculated probability is far less than α, indicating that the difference is unlikely to be due to chance. Therefore, the investigator can reject \(H_0\) and accept \(H_A\), that patients in general treated with the exercise program recover earlier than the population of untreated patients.

The relationship between descriptive and inferential statistics

As we have seen in the previous chapters, statistics may be classified as descriptive or inferential.
Descriptive statistics describe the characteristics of data and are concerned with issues such as ‘What is the average length of hospitalization of a group of patients?’ Inferential statistics are used to address issues such as whether the average lengths of hospitalization of patients in two groups are significantly different statistically. Thus, descriptive statistics describe aspects of the data such as the frequencies of scores, and the average or the range of values for samples, whereas inferential statistics enable researchers to decide (infer) whether differences between groups or relationships between variables represent persistent and reproducible trends in the populations.

In Section 5 we saw that the selection of appropriate descriptive statistics depends on the type of data being described. For example, in a variable such as incomes of patients, the best statistics to represent the typical income would be the mean and/or the median. If you had a millionaire in the group of patients, the mean statistic might give a distorted impression of the central tendency. In this situation the median statistic would be the most appropriate one to use. The mode is most commonly used when the data being described are categorical data. For example, if in a questionnaire respondents were asked to indicate their sex and 65% said they were male and 35% said they were female, then ‘male’ is the modal response. It is quite unusual to use the mode with data that are not nominal. As a rule, the scale of measurement used to obtain the data and its distribution determine which descriptive statistics are selected.

In the same way, the appropriate inferential statistics are determined by the characteristics of the data being analysed. For example, where the mean is the appropriate descriptive statistic, the inferential statistics will determine if the differences between the means are statistically significant.
In the case of ordinal data, the appropriate inferential statistics will make it possible to decide if either the medians or the rank orders are significantly different. With nominal data, the appropriate inferential statistic will decide if proportions of cases falling into specific categories are significantly different. Thus, when the data have been adequately described, the appropriate inferential statistic will follow logically. However, when selecting an appropriate statistical test, the design of the investigation must also be taken into account.
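To make the earlier worked example concrete (sample size 64, x̄ = 24, µ = 30, σ = 8, α = 0.01), the calculation in steps 4 and 5 can be sketched in Python. This is a minimal illustration, not the textbook's own procedure: it replaces the printed table of normal curves with the standard normal distribution computed via `math.erfc`, and the variable names are chosen here for readability.

```python
import math

def normal_cdf(z: float) -> float:
    """Cumulative probability of the standard normal distribution at z."""
    return 0.5 * math.erfc(-z / math.sqrt(2))

# Values taken from the worked example in the text.
mu = 30.0      # population mean under H0
sigma = 8.0    # population standard deviation
n = 64         # sample size
x_bar = 24.0   # observed sample mean
alpha = 0.01   # significance level chosen by the researcher

# Standard error of the mean and the z score of the observed sample mean.
standard_error = sigma / math.sqrt(n)
z = (x_bar - mu) / standard_error

# Probability of a sample mean of 24 or less if H0 is true (lower tail).
p_lower = normal_cdf(z)

# Decision rule from step 5: reject H0 when the probability is below alpha.
reject_h0 = p_lower < alpha

print(f"standard error = {standard_error}, z = {z}")
print(f"P(sample mean <= {x_bar} | H0) = {p_lower:.3g}")
print("Reject H0" if reject_h0 else "Fail to reject H0")
```

Because the exact tail probability is far smaller than anything a table ending at z = 4.00 can show, the table-based bound of 0.00003 and the computed value lead to the same decision.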


6.6 - Confidence Intervals & Hypothesis Testing

Confidence intervals and hypothesis tests are similar in that they are both inferential methods that rely on an approximated sampling distribution. Confidence intervals use data from a sample to estimate a population parameter. Hypothesis tests use data from a sample to test a specified hypothesis. Hypothesis testing requires that we have a hypothesized parameter.

The simulation methods used to construct bootstrap distributions and randomization distributions are similar. One primary difference is a bootstrap distribution is centered on the observed sample statistic while a randomization distribution is centered on the value in the null hypothesis.
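The difference in centering can be seen in a small simulation. The sketch below uses only the Python standard library and a synthetic sample generated for illustration (it is not the StatKey Body Temperature data). It also assumes one common way of building a randomization distribution for a single mean: shift the sample so its mean equals the null value, then resample with replacement. Whether a given tool does exactly this is an assumption here.

```python
import random
import statistics

random.seed(0)

# Synthetic sample, for illustration only (not real body temperature data).
sample = [random.gauss(98.25, 0.7) for _ in range(50)]
null_value = 98.6
sample_mean = statistics.mean(sample)

def resample_means(data, reps=2000):
    """Means of `reps` resamples drawn with replacement from `data`."""
    n = len(data)
    return [statistics.mean(random.choices(data, k=n)) for _ in range(reps)]

# Bootstrap distribution: resample the observed data as-is.
boot_means = resample_means(sample)

# Randomization distribution (assumed approach): shift the data so that
# the null hypothesis holds, then resample.
shifted = [x - sample_mean + null_value for x in sample]
rand_means = resample_means(shifted)

boot_center = statistics.mean(boot_means)
rand_center = statistics.mean(rand_means)

print(f"sample mean         = {sample_mean:.3f}")
print(f"bootstrap center    = {boot_center:.3f}")
print(f"null value          = {null_value:.3f}")
print(f"randomization center = {rand_center:.3f}")
```

The two distributions have similar spread; only their centers differ, which is why a confidence interval and a two-tailed test built from them tend to agree.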

In Lesson 4, we learned confidence intervals contain a range of reasonable estimates of the population parameter. All of the confidence intervals we constructed in this course were two-tailed. These two-tailed confidence intervals go hand-in-hand with the two-tailed hypothesis tests we learned in Lesson 5. The conclusion drawn from a two-tailed confidence interval is usually the same as the conclusion drawn from a two-tailed hypothesis test. In other words, if the 95% confidence interval contains the hypothesized parameter, then a hypothesis test at the 0.05 \(\alpha\) level will almost always fail to reject the null hypothesis. If the 95% confidence interval does not contain the hypothesized parameter, then a hypothesis test at the 0.05 \(\alpha\) level will almost always reject the null hypothesis.

Example: Mean

This example uses the Body Temperature dataset built in to StatKey for constructing a bootstrap confidence interval and conducting a randomization test.

Let's start by constructing a 95% confidence interval using the percentile method in StatKey:

The 95% confidence interval for the mean body temperature in the population is [98.044, 98.474].

Now, what if we want to know if there is enough evidence that the mean body temperature is different from 98.6 degrees? We can conduct a hypothesis test. Because 98.6 is not contained within the 95% confidence interval, it is not a reasonable estimate of the population mean. We should expect to have a p value less than 0.05 and to reject the null hypothesis.

\(H_0: \mu=98.6\)

\(H_a: \mu \ne 98.6\)

\(p = 2*0.00080=0.00160\)

\(p \leq 0.05\), reject the null hypothesis

There is evidence that the population mean is different from 98.6 degrees.
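The agreement between the two approaches in this example can be checked with a few lines of arithmetic. The sketch below takes the interval endpoints, the hypothesized value, and the one-tail proportion (0.00080) directly from the example above; the variable names are illustrative.

```python
# Values reported in the body temperature example.
ci_low, ci_high = 98.044, 98.474   # 95% bootstrap confidence interval
null_value = 98.6                  # hypothesized population mean
one_tail_proportion = 0.00080      # proportion in one tail of the randomization distribution
alpha = 0.05

# Two-tailed p value: double the one-tail proportion.
p_value = 2 * one_tail_proportion

# Confidence interval view: is the hypothesized value a reasonable estimate?
ci_contains_null = ci_low <= null_value <= ci_high

# Hypothesis test view: reject when p <= alpha.
reject_null = p_value <= alpha

print(f"p value = {p_value:.5f}")
print(f"CI contains {null_value}: {ci_contains_null}")
print(f"Reject the null hypothesis: {reject_null}")
```

Here the interval excludes 98.6 and the test rejects the null hypothesis, so both procedures point to the same conclusion.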

Selecting the Appropriate Procedure

The decision of whether to use a confidence interval or a hypothesis test depends on the research question. If we want to estimate a population parameter, we use a confidence interval. If we are given a specific population parameter (i.e., hypothesized value), and want to determine the likelihood that a population with that parameter would produce a sample as different as our sample, we use a hypothesis test. Below are a few examples of selecting the appropriate procedure.

Example: Cheese Consumption

Research question: How much cheese (in pounds) does an average American adult consume annually?

What is the appropriate inferential procedure?

Cheese consumption, in pounds, is a quantitative variable. We have one group: American adults. We are not given a specific value to test, so the appropriate procedure here is a confidence interval for a single mean.

Example: Age

Research question: Is the average age in the population of all STAT 200 students greater than 30 years?

There is one group: STAT 200 students. The variable of interest is age in years, which is quantitative. The research question includes a specific population parameter to test: 30 years. The appropriate procedure is a hypothesis test for a single mean.

Try it!

For each research question, identify the variables and the parameter of interest, and decide on the appropriate inferential procedure.

Research question: How strong is the correlation between height (in inches) and weight (in pounds) in American teenagers?

There are two variables of interest: (1) height in inches and (2) weight in pounds. Both are quantitative variables. The parameter of interest is the correlation between these two variables.

We are not given a specific correlation to test. We are being asked to estimate the strength of the correlation. The appropriate procedure here is a confidence interval for a correlation.

Research question: Are the majority of registered voters planning to vote in the next presidential election?

The parameter that is being tested here is a single proportion. We have one group: registered voters. "The majority" would be more than 50%, or p > 0.50. This is a specific parameter that we are testing. The appropriate procedure here is a hypothesis test for a single proportion.

Research question: On average, are STAT 200 students younger than STAT 500 students?

We have two independent groups: STAT 200 students and STAT 500 students. We are comparing them in terms of average (i.e., mean) age.

If STAT 200 students are younger than STAT 500 students, that translates to \(\mu_{200}<\mu_{500}\) which is an alternative hypothesis. This could also be written as \(\mu_{200}-\mu_{500}<0\), where 0 is a specific population parameter that we are testing.

The appropriate procedure here is a hypothesis test for the difference in two means.

Research question: On average, how much taller are adult male giraffes compared to adult female giraffes?

There are two groups: males and females. The response variable is height, which is quantitative. We are not given a specific parameter to test; instead, we are asked to estimate "how much" taller males are than females. The appropriate procedure is a confidence interval for the difference in two means.

Research question: Are STAT 500 students more likely than STAT 200 students to be employed full-time?

There are two independent groups: STAT 500 students and STAT 200 students. The response variable is full-time employment status which is categorical with two levels: yes/no.

If STAT 500 students are more likely than STAT 200 students to be employed full-time, that translates to \(p_{500}>p_{200}\) which is an alternative hypothesis. This could also be written as \(p_{500}-p_{200}>0\), where 0 is a specific parameter that we are testing. The appropriate procedure is a hypothesis test for the difference in two proportions.

Research question: Is there a relationship between outdoor temperature (in Fahrenheit) and coffee sales (in cups per day)?

There are two variables here: (1) temperature in Fahrenheit and (2) cups of coffee sold in a day. Both variables are quantitative. The parameter of interest is the correlation between these two variables.

If there is a relationship between the variables, that means that the correlation is different from zero. This is a specific parameter that we are testing. The appropriate procedure is a hypothesis test for a correlation.
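The decision rule used throughout these examples can be summarized in a small helper. This is only a sketch of the reasoning, with a hypothetical function name and arguments: it assumes the reader has already identified the parameter of interest and whether the research question supplies a specific value to test.

```python
def choose_procedure(parameter: str, hypothesized_value_given: bool) -> str:
    """Return the inferential procedure suggested by the lesson's rule.

    parameter: description of the parameter of interest,
        e.g. "a single mean" or "the difference in two proportions".
    hypothesized_value_given: True when the research question names a
        specific parameter value to test, False when it asks for an estimate.
    """
    if hypothesized_value_given:
        kind = "hypothesis test"
    else:
        kind = "confidence interval"
    return f"{kind} for {parameter}"

# Research questions from the examples above.
print(choose_procedure("a single mean", False))                     # cheese consumption
print(choose_procedure("a single mean", True))                      # STAT 200 age vs. 30 years
print(choose_procedure("a correlation", False))                     # height and weight
print(choose_procedure("the difference in two proportions", True))  # full-time employment
```

The helper deliberately leaves the harder step, identifying the variables and the parameter, to the reader, since that judgment depends on the wording of each research question.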


Hypothesis Testing


CO-6: Apply basic concepts of probability, random variation, and commonly used statistical probability distributions.

Learning Objectives

LO 6.26: Outline the logic and process of hypothesis testing.

LO 6.27: Explain what the p-value is and how it is used to draw conclusions.

Video: Hypothesis Testing (8:43)

Introduction

We are in the middle of the part of the course that has to do with inference for one variable.

So far, we talked about point estimation and learned how interval estimation enhances it by quantifying the magnitude of the estimation error (with a certain level of confidence) in the form of the margin of error. The result is the confidence interval — an interval that, with a certain confidence, we believe captures the unknown parameter.

We are now moving to the other kind of inference, hypothesis testing. We say that hypothesis testing is “the other kind” because, unlike the inferential methods we presented so far, where the goal was estimating the unknown parameter, the idea, logic and goal of hypothesis testing are quite different.

In the first two parts of this section we will discuss the idea behind hypothesis testing, explain how it works, and introduce new terminology that emerges in this form of inference. The final two parts will be more specific and will discuss hypothesis testing for the population proportion (p) and the population mean (μ, mu).

If this is your first statistics course, you will need to spend considerable time on this topic as there are many new ideas. Many students find this process and its logic difficult to understand in the beginning.

In this section, we will use the hypothesis test for a population proportion to motivate our understanding of the process. We will conduct these tests manually. For all future hypothesis test procedures, including problems involving means, we will use software to obtain the results and focus on interpreting them in the context of our scenario.

General Idea and Logic of Hypothesis Testing

The purpose of this section is to gradually build your understanding about how statistical hypothesis testing works. We start by explaining the general logic behind the process of hypothesis testing. Once we are confident that you understand this logic, we will add some more details and terminology.

To start our discussion about the idea behind statistical hypothesis testing, consider the following example:

A case of suspected cheating on an exam is brought in front of the disciplinary committee at a certain university.

There are two opposing claims in this case:

- The student’s claim: I did not cheat on the exam.

- The instructor’s claim: The student did cheat on the exam.

Adhering to the principle “innocent until proven guilty,” the committee asks the instructor for evidence to support his claim. The instructor explains that the exam had two versions, and shows the committee members that on three separate exam questions, the student's solutions used numbers that were given in the other version of the exam.