
Helping Students Hone Their Critical Thinking Skills

Used consistently, these strategies can help middle and high school teachers guide students to improve much-needed skills.

Critical thinking skills are important in every discipline, at and beyond school. From managing money to choosing which candidates to vote for in elections to making difficult career choices, students need to be prepared to take in, synthesize, and act on new information in a world that is constantly changing.

While critical thinking might seem like an abstract idea that is tough to directly instruct, there are many engaging ways to help students strengthen these skills through active learning.

Make Time for Metacognitive Reflection

Create space for students to both reflect on their ideas and discuss the power of doing so. Show students how they can push back on their own thinking to analyze and question their assumptions. Students might ask themselves, “Why is this the best answer? What information supports my answer? What might someone with a counterargument say?”

Through this reflection, students and teachers (who can model reflecting on their own thinking) gain deeper understandings of their ideas and do a better job articulating their beliefs. In a world that is go-go-go, it is important to help students understand that it is OK to take a breath and think about their ideas before putting them out into the world. And taking time for reflection helps us more thoughtfully consider others’ ideas, too.

Teach Reasoning Skills

Reasoning skills are another key component of critical thinking, involving the abilities to think logically, evaluate evidence, identify assumptions, and analyze arguments. Students who learn how to use reasoning skills will be better equipped to make informed decisions, form and defend opinions, and solve problems.

One way to teach reasoning is to use problem-solving activities that require students to apply their skills to practical contexts. For example, give students a real problem to solve, and ask them to use reasoning skills to develop a solution. They can then present their solution and defend their reasoning to the class and engage in discussion about whether and how their thinking changed when listening to peers’ perspectives.

A great example I have seen involved students identifying an underutilized part of their school and creating a presentation about one way to redesign it. This project allowed students to feel a sense of connection to the problem and come up with creative solutions that could help others at school. For more examples, you might visit PBS's Design Squad, a resource that brings to life real-world problem-solving.

Ask Open-Ended Questions

Moving beyond the repetition of facts, critical thinking requires students to take positions and explain their beliefs through research, evidence, and explanations of credibility.

When we pose open-ended questions, we create space for classroom discourse inclusive of diverse, perhaps opposing, ideas—grounds for rich exchanges that support deep thinking and analysis.

For example, “How would you approach the problem?” and “Where might you look to find resources to address this issue?” are two open-ended questions that position students to think less about the “right” answer and more about the variety of solutions that might already exist.

Journaling, whether digitally or physically in a notebook, is another great way to have students answer these open-ended prompts—giving them time to think and organize their thoughts before contributing to a conversation, which can ensure that more voices are heard.

Once students process in their journal, small group or whole class conversations help bring their ideas to life. Discovering similarities between answers helps reveal to students that they are not alone, which can encourage future participation in constructive civil discourse.

Teach Information Literacy

Education has moved far past the idea of “Be careful of what is on Wikipedia, because it might not be true.” With AI innovations making their way into classrooms, teachers know that informed readers must question everything.

Understanding what is and is not a reliable source and knowing how to vet information are important skills for students to build and utilize when making informed decisions. You might start by introducing the idea of bias: Articles, ads, memes, videos, and every other form of media can push an agenda that students may not see on the surface. Discuss credibility, subjectivity, and objectivity, and look at examples and nonexamples of trusted information to prepare students to be well-informed members of a democracy.

One of my favorite lessons is about the Pacific Northwest tree octopus. This project asks students to explore what appears to be a very real website that provides information on this supposedly endangered animal. It is a wonderful, albeit over-the-top, example of how something might look official even when untrue, revealing that we need critical thinking to break down "facts" and determine the validity of the information we consume.

A fun extension is to have students create their own website or newsletter about something untrue going on in school. Perhaps a change in dress code requires everyone to wear their clothes inside out, or a change to the lunch menu requires students to eat Brussels sprouts every day.

Giving students the ability to create their own falsified information can help them better identify it in other contexts. Understanding that information can be “too good to be true” can help them identify future falsehoods.

Provide Diverse Perspectives

Consider how to keep the classroom from becoming an echo chamber. If students come from the same community, they may have similar perspectives. And those who have differing perspectives may not feel comfortable sharing them in the face of an opposing majority.

To support varying viewpoints, bring diverse voices into the classroom as much as possible, especially when discussing current events. Use primary sources: videos from YouTube, essays and articles written by people who experienced current events firsthand, documentaries that dive deeply into topics that require some nuance, and any other resources that provide a varied look at topics.

I like to use the Smithsonian "OurStory" page, which shares a wide variety of stories from people in the United States. The page on Japanese American internment camps is very powerful because of its first-person perspectives.

Practice Makes Perfect

To make the above strategies and thinking routines a consistent part of your classroom, spread them out—and build upon them—over the course of the school year. You might challenge students with information and/or examples that require them to use their critical thinking skills; work these skills explicitly into lessons, projects, rubrics, and self-assessments; or have students practice identifying misinformation or unsupported arguments.

Critical thinking is not learned in isolation. It needs to be explored in English language arts, social studies, science, physical education, and math. Every discipline requires students to take a careful look at something and find the best solution. Often, these skills are taken for granted, viewed as a by-product of a good education, but true critical thinking doesn't just happen. It requires consistency and commitment.

In a moment when information and misinformation abound, and students must parse reams of information, it is imperative that we support and model critical thinking in the classroom to support the development of well-informed citizens.

Why Schools Need to Change

Yes, We Can Define, Teach, and Assess Critical Thinking Skills

Jeff Heyck-Williams (He, His, Him) Director of the Two Rivers Learning Institute in Washington, DC

Today’s learners face an uncertain present and a rapidly changing future that demand far different skills and knowledge than were needed in the 20th century. We also know so much more about enabling deep, powerful learning than we ever did before. Our collective future depends on how well young people prepare for the challenges and opportunities of 21st-century life.


While the idea of teaching critical thinking has been bandied around in education circles since at least the time of John Dewey, it has taken greater prominence in the education debates with the advent of the term “21st century skills” and discussions of deeper learning. There is increasing agreement among education reformers that critical thinking is an essential ingredient for long-term success for all of our students.

However, there are still those in the education establishment and in the media who argue that critical thinking isn’t really a thing, or that these skills aren’t well defined and, even if they could be defined, they can’t be taught or assessed.

To those naysayers, I have to disagree. Critical thinking is a thing. We can define it; we can teach it; and we can assess it. In fact, as part of a multi-year Assessment for Learning Project, Two Rivers Public Charter School in Washington, D.C., has done just that.

Before I dive into what we have done, I want to acknowledge that some of the criticism has merit.

First, there are those who argue that critical thinking can only exist when students have a vast fund of knowledge. That is, a student cannot think critically without something substantive to think about. I agree. Students do need a robust foundation of core content knowledge to think critically, and schools still have a responsibility for building students' content knowledge.

However, I would argue that students don’t need to wait to think critically until after they have mastered some arbitrary amount of knowledge. They can start building critical thinking skills when they walk in the door. All students come to school with experience and knowledge which they can immediately think critically about. In fact, some of the thinking that they learn to do helps augment and solidify the discipline-specific academic knowledge that they are learning.

The second criticism is that critical thinking skills are always highly contextual. In this argument, critics make the point that the types of thinking students do in history are categorically different from the types of thinking students do in science or math. Thus, they argue, teaching broadly defined, content-neutral critical thinking skills is impossible. I agree that there are domain-specific thinking skills that students should learn in each discipline. However, I also believe that there are several generalizable skills that elementary school students can learn that have broad applicability to their academic and social lives. That is what we have done at Two Rivers.

Defining Critical Thinking Skills

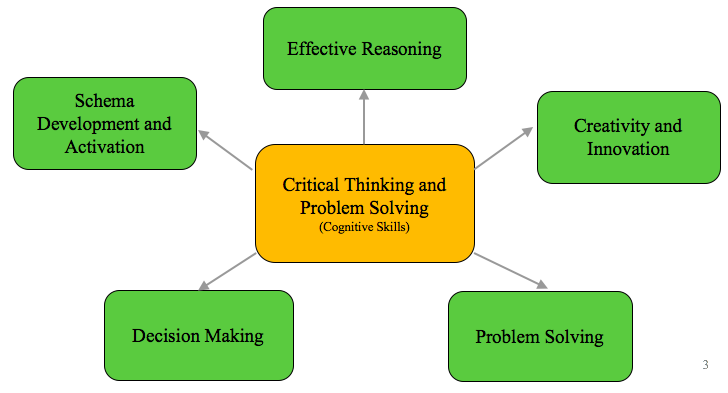

We began this work by first defining what we mean by critical thinking. After a review of the literature and looking at the practice at other schools, we identified five constructs that encompass a set of broadly applicable skills: schema development and activation; effective reasoning; creativity and innovation; problem solving; and decision making.

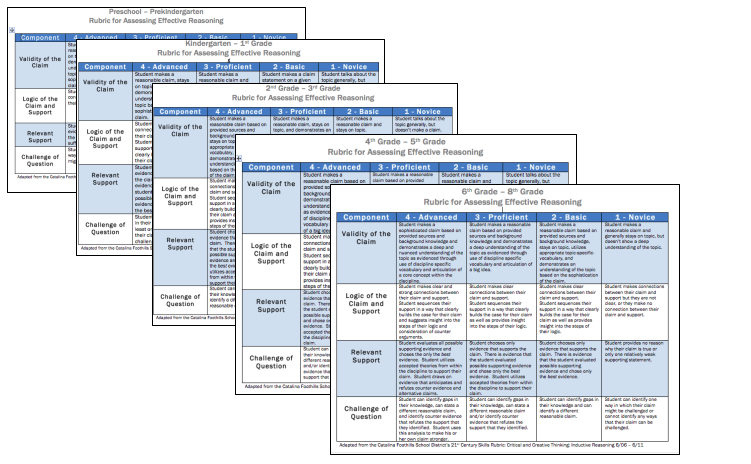

We then created rubrics to provide a concrete vision of what each of these constructs looks like in practice. Working with the Stanford Center for Assessment, Learning and Equity (SCALE), we refined these rubrics to capture clear and discrete skills.

For example, we defined effective reasoning as the skill of creating an evidence-based claim: students need to construct a claim, identify relevant support, link their support to their claim, and identify possible questions or counter claims. Rubrics provide an explicit vision of the skill of effective reasoning for students and teachers. By breaking the rubrics down for different grade bands, we have been able not only to describe what reasoning is but also to delineate how the skills develop in students from preschool through 8th grade.

Before moving on, I want to freely acknowledge that in narrowly defining reasoning as the construction of evidence-based claims, we have disregarded some elements of reasoning that students can and should learn. For example, our definition does not highlight the difference between constructing claims through deductive versus inductive means. However, by privileging a definition that has broad applicability across disciplines, we are able to gain traction in developing the roots of critical thinking: in this case, the ability to formulate well-supported claims or arguments.

Teaching Critical Thinking Skills

The definitions of critical thinking constructs were only useful to us inasmuch as they translated into practical skills that teachers could teach and students could learn and use. We found that to teach a set of cognitive skills, we needed thinking routines that defined the regular application of these critical thinking and problem-solving skills across domains. Building on Harvard's Project Zero Visible Thinking work, we have named routines aligned with each of our constructs.

For example, with the construct of effective reasoning, we aligned the Claim-Support-Question thinking routine to our rubric. Teachers then were able to teach students that whenever they were making an argument, the norm in the class was to use the routine in constructing their claim and support. The flexibility of the routine has allowed us to apply it from preschool through 8th grade and across disciplines from science to economics and from math to literacy.

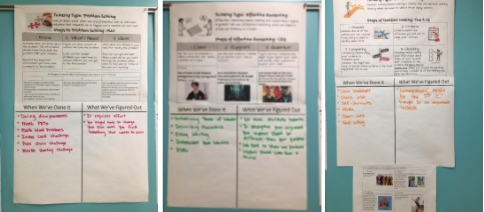

Kathryn Mancino, a 5th grade teacher at Two Rivers, has deliberately taught three of our thinking routines to students using the anchor charts above. Her charts name the components of each routine and have a place for students to record when they've used it and what they have figured out about the routine. Because the charts can be added to throughout the year, students see the routines as broadly applicable across disciplines and are able to refine their application over time.

Assessing Critical Thinking Skills

By defining specific constructs of critical thinking and building thinking routines that support their implementation in classrooms, we have operated under the assumption that students are developing skills that they will be able to transfer to other settings. However, we recognized both the importance and the challenge of gathering reliable data to confirm this.

With this in mind, we have developed a series of short performance tasks around novel discipline-neutral contexts in which students can apply the constructs of thinking. Through these tasks, we have been able to provide an opportunity for students to demonstrate their ability to transfer the types of thinking beyond the original classroom setting. Once again, we have worked with SCALE to define tasks where students easily access the content but where the cognitive lift requires them to demonstrate their thinking abilities.

These assessments demonstrate that it is possible to capture meaningful data on students' critical thinking abilities. They are not intended to be high-stakes accountability measures. Instead, they are designed to give students, teachers, and school leaders discrete formative data on hard-to-measure skills.

While it is clearly difficult, and we have not solved all of the challenges to scaling assessments of critical thinking, we can define, teach, and assess these skills. In fact, knowing how important they are for the economy of the future and our democracy, it is essential that we do.


Jeff Heyck-Williams is the director of the Two Rivers Learning Institute and a founder of Two Rivers Public Charter School. He has led work around creating school-wide cultures of mathematics, developing assessments of critical thinking and problem-solving, and supporting project-based learning.



Critical Thinking Skills Toolbox


CTS Tools for Faculty and Student Assessment


A number of critical thinking skills inventories and measures have been developed:

- Watson-Glaser Critical Thinking Appraisal (WGCTA)
- Cornell Critical Thinking Test
- California Critical Thinking Disposition Inventory (CCTDI)
- California Critical Thinking Skills Test (CCTST)
- Health Science Reasoning Test (HSRT)
- Professional Judgment Rating Form (PJRF)
- Teaching for Thinking Student Course Evaluation Form
- Holistic Critical Thinking Scoring Rubric
- Peer Evaluation of Group Presentation Form

With the exception of the Watson-Glaser Critical Thinking Appraisal and the Cornell Critical Thinking Test, the instruments listed above were developed by Facione and Facione. However, all of these measures are of questionable utility for dental educators because their content is general rather than specific to dental education. (See Critical Thinking and Assessment.)

Table 7. Purposes of Critical Thinking Skills Instruments

Reliability and Validity

Reliability means that individual scores from an instrument should be the same or nearly the same from one administration of the instrument to another. If an instrument is reliable, it can be assumed to be relatively free of bias and measurement error (68). Alpha coefficients are often used to report an estimate of internal consistency. Alpha values of .70 or higher indicate sufficient reliability when the stakes are moderate; values of .80 and higher are appropriate when the stakes are high.
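The text does not name the alpha coefficient it has in mind. The sketch below assumes it is Cronbach's alpha, α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜₒₜₐₗ), where k is the number of items. The Python is illustrative only. It takes a respondents-by-items table of scores, computes alpha and applies the .70/.80 rule of thumb from the paragraph above. The function names `cronbach_alpha` and `adequate_for` are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha from a table of scores.

    scores: one list per respondent, each holding that respondent's item scores.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*scores))  # transpose to one tuple per item
    item_var_sum = sum(pvariance(item) for item in items)
    # Population variance is used for items and totals alike, so the ratio is unaffected.
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have no variance; alpha is undefined")
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Rule of thumb from the text: .70+ for moderate stakes, .80+ for high stakes.
THRESHOLDS = {"moderate": 0.70, "high": 0.80}


def adequate_for(alpha, stakes):
    """Return True if alpha meets the threshold for the given stakes level."""
    return alpha >= THRESHOLDS[stakes]
```

For example, a coefficient of .75 would pass `adequate_for(0.75, "moderate")` but not `adequate_for(0.75, "high")`. The bands are the text's guideline, not a universal standard.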

Validity means that individual scores from a particular instrument are meaningful, make sense, and allow researchers to draw conclusions from the sample to the population that is being studied (69). Researchers often refer to "content" or "face" validity. Content validity or face validity is the extent to which questions on an instrument are representative of the possible questions that a researcher could ask about that particular content or skills.

Watson-Glaser Critical Thinking Appraisal-FS (WGCTA-FS)

The WGCTA-FS is a 40-item inventory created to replace Forms A and B of the original test, which participants reported were too long (70). This inventory assesses test takers' skills in:

- Inference: whether an individual can discriminate among degrees of truth or falsity of inferences drawn from given data
- Recognition of assumptions: whether an individual recognizes unstated assumptions or presuppositions in given statements
- Deduction: whether an individual decides if certain conclusions follow from the information provided
- Interpretation: whether an individual weighs the evidence provided and determines whether generalizations from data are warranted
- Evaluation of arguments: whether an individual distinguishes strong and relevant arguments from weak and irrelevant arguments

Researchers investigated the reliability and validity of the WGCTA-FS for subjects in academic fields. Participants included 586 university students. Internal consistencies for the total WGCTA-FS among students majoring in psychology, educational psychology, and special education, including undergraduates and graduates, ranged from .74 to .92. The correlations between course grades and total WGCTA-FS scores for all groups ranged from .24 to .62 and were significant at the p < .05 or p < .01 level. In addition, internal consistency and test-retest reliability for the WGCTA-FS have been measured at .81. The WGCTA-FS was found to be a reliable and valid instrument for measuring critical thinking (71).

Cornell Critical Thinking Test (CCTT)

There are two forms of the CCTT, X and Z. Form X is for students in grades 4-14. Form Z is for advanced and gifted high school students, undergraduate and graduate students, and adults. Reliability estimates for Form Z range from .49 to .87 across the 42 groups who have been tested. Measures of validity were computed in standard conditions, roughly defined as conditions that do not adversely affect test performance. Correlations between Level Z and other measures of critical thinking are about .50 (72). The CCTT is reportedly as predictive of graduate school grades as the Graduate Record Exam (GRE), a measure of aptitude, and the Miller Analogies Test, and tends to correlate between .2 and .4 (73).

California Critical Thinking Disposition Inventory (CCTDI)

Facione and Facione have reported significant relationships between the CCTDI and the CCTST. When faculty focus on critical thinking in planning curriculum development, modest cross-sectional and longitudinal gains have been demonstrated in students' CTS (74). The CCTDI consists of seven subscales and an overall score. The recommended cut-off score for each scale is 40, the suggested target score is 50, and the maximum score is 60. A score below 40 on a specific scale indicates weakness in that CT disposition, and a score above 50 indicates strength in that dispositional aspect. The overall score is the sum of the seven subscales. An overall score of 280 (all seven subscales at the cut-off of 40) therefore shows serious deficiency in disposition toward CT. An overall score of 350 (all seven at the target of 50), which is rare, shows across-the-board strength. The seven subscales are analyticity, self-confidence, inquisitiveness, maturity, open-mindedness, systematicity, and truth seeking (75).
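The scoring bands above can be expressed as a small lookup. This Python sketch is hypothetical and is not Insight Assessment's scoring software. It labels subscale scores with the 40/50 thresholds and labels the overall score against 280 and 350. The text gives 280 and 350 only as anchor points, so treating "at or below 280" and "at or above 350" as the band edges is an assumption flagged in the code.

```python
# Thresholds taken from the CCTDI description above.
SUBSCALES = (
    "analyticity", "self-confidence", "inquisitiveness", "maturity",
    "open-mindedness", "systematicity", "truth seeking",
)
CUT_OFF, TARGET, MAXIMUM = 40, 50, 60


def interpret_subscale(score):
    """Label one subscale score: below 40 is weak, above 50 is strong."""
    if score > MAXIMUM:
        raise ValueError(f"subscale scores cannot exceed {MAXIMUM}")
    if score < CUT_OFF:
        return "weak"
    if score > TARGET:
        return "strong"
    return "between cut-off and target"


def interpret_overall(subscale_scores):
    """Sum the seven subscales and label the overall score.

    Assumption: the text gives 280 and 350 as anchor points, so totals at or
    below 280 are treated as deficient and totals at or above 350 as strong.
    """
    total = sum(subscale_scores[name] for name in SUBSCALES)
    if total <= len(SUBSCALES) * CUT_OFF:  # 7 * 40 = 280
        label = "serious deficiency"
    elif total >= len(SUBSCALES) * TARGET:  # 7 * 50 = 350
        label = "across-the-board strength"
    else:
        label = "intermediate"
    return total, label
```

A student scoring 45 on every subscale would total 315 and fall in the intermediate band. In that case the subscale labels are more informative than the overall label.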

In a study of instructional strategies and their influence on the development of critical thinking among undergraduate nursing students, Tiwari, Lai, and Yuen compared PBL students with lecture students. PBL students showed significantly greater improvement on several measures:

- From the first to the second time points: overall CCTDI (p = .0048), Truth seeking (p = .0008), Analyticity (p = .0368) and Critical Thinking Self-confidence (p = .0342) subscales.
- From the second to the third time points: overall CCTDI (p = .0083), Truth seeking (p = .0090), and Analyticity (p = .0354) subscales.
- From the first to the fourth time points: Truth seeking (p = .0173) and Systematicity (p = .0440) subscales (76).

California Critical Thinking Skills Test (CCTST)

Studies have shown that the California Critical Thinking Skills Test captured gain scores in students' critical thinking over one quarter or one semester. Multiple health science programs have demonstrated significant gains in students' critical thinking using site-specific curricula. Studies conducted to control for retest bias showed no testing effect from pre- to post-test means using two independent groups of CT students. Behavioral science measures can be affected by social-desirability bias (the participant's desire to answer in ways that would please the researcher). For this reason, researchers are urged to have participants complete the Marlowe-Crowne Social Desirability Scale at the same time when measuring pre- and post-test changes in critical thinking skills. The CCTST is a 34-item instrument. In a sample of 1,557 nursing education students, its correlation with the CCTDI was r = .201, significant at p < .001. Significant relationships between the CCTST and other measures have also been reported, including the GRE total, GRE-Analytic, GRE-Verbal, GRE-Quantitative, the WGCTA, and the SAT Math and Verbal. The two forms of the CCTST, A and B, are considered statistically equivalent. Depending on the testing context, their KR-20 alphas range from .70 to .75. The newest version is CCTST Form 2000, for which KR-20 alphas range from .78 to .84, again depending on the testing context (77).
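One way to check a reported correlation like r = .201 is the standard t test for a Pearson correlation: t = r√(n − 2) / √(1 − r²), with n − 2 degrees of freedom. The Python sketch below computes r from paired scores and converts r to t. The function names `pearson_r` and `t_for_r` are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_for_r(r, n):
    """t statistic for H0: population correlation = 0, with n - 2 degrees of freedom."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)
```

For the nursing sample, `t_for_r(0.201, 1557)` comes out to about 8.09. That is well above the roughly 3.3 needed for p < .001 (two-tailed) at this many degrees of freedom, so the reported significance is consistent. The correlation itself is still weak: r² ≈ .04.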

The Health Science Reasoning Test (HSRT)

Items within this inventory cover the domain of CT cognitive skills identified by a Delphi group of experts whose work resulted in the development of the CCTDI and CCTST. This test measures health science undergraduate and graduate students' CTS. Although test items are set in health sciences and clinical practice contexts, test takers are not required to have discipline-specific health sciences knowledge. For this reason, the test may have limited utility in dental education (78).

Preliminary estimates of internal consistency show that overall KR-20 coefficients range from .77 to .83 (79). The instrument has only moderate reliability on the analysis and inference subscales, although the factor loadings appear adequate. The low KR-20 coefficients may be the result of small sample size, variance in item response, or both (see following table).
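KR-20 (Kuder-Richardson Formula 20) is the internal-consistency coefficient for items scored right/wrong: KR-20 = k/(k − 1) · (1 − Σpᵢqᵢ / σ²ₜₒₜₐₗ). Here pᵢ is the proportion answering item i correctly and qᵢ = 1 − pᵢ. The Python sketch below applies this formula to a 0/1 response table. The function name `kr20` is hypothetical.

```python
from statistics import pvariance


def kr20(responses):
    """Kuder-Richardson Formula 20 for dichotomously scored (0/1) items.

    responses: one list per examinee, each holding that examinee's 0/1 item scores.
    """
    n_people = len(responses)
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("KR-20 needs at least two items")

    # Sum of p * q over items, where p is the proportion answering correctly.
    pq_sum = 0.0
    for item in zip(*responses):
        p = sum(item) / n_people
        pq_sum += p * (1 - p)

    # Population variance of total scores, matching the p * q item variances.
    total_var = pvariance([sum(person) for person in responses])
    if total_var == 0:
        raise ValueError("total scores have no variance; KR-20 is undefined")
    return (n_items / (n_items - 1)) * (1 - pq_sum / total_var)
```

Both the item terms and the total-score variance are estimated from the sample. With few examinees, or with items that nearly everyone answers the same way, these estimates rest on little information and the coefficient can shift noticeably. This fits the text's suggestion that sample size and item-response variance may explain the low subscale values.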

Table 8. Estimates of Internal Consistency and Factor Loading by Subscale for HSRT

Professional Judgment Rating Form (PJRF)

The scale consists of two sets of descriptors. The first set relates primarily to the attitudinal (habits of mind) dimension of CT. The second set relates primarily to CTS.

A single rater should know the student well enough to respond to at least 17 of the 20 descriptors with confidence; otherwise, the validity of the ratings may be questionable. If a single rater is used and ratings over time show some consistency, comparisons between ratings may be used to assess changes. If more than one rater is used, inter-rater reliability must be established among the raters to yield meaningful results. The PJRF can be used to assess the effectiveness of training programs for individuals or groups. However, participants' actual skills are best measured by an objective tool such as the California Critical Thinking Skills Test.
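The text does not say which statistic should be used to establish inter-rater reliability. The Python sketch below swaps in Cohen's kappa, one common choice for two raters assigning categorical ratings. It also checks the 17-of-20 coverage rule. The PJRF's actual rating scale is not described here, so the category labels in any input are placeholders. The function names `enough_descriptors_rated` and `cohens_kappa` are hypothetical.

```python
from collections import Counter

MIN_RATED = 17  # "at least 17 of the 20 descriptors," per the PJRF guidance


def enough_descriptors_rated(ratings):
    """ratings: one entry per descriptor; None marks one the rater could not rate confidently."""
    return sum(r is not None for r in ratings) >= MIN_RATED


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters, using only descriptors both raters rated."""
    pairs = [(a, b) for a, b in zip(rater_a, rater_b) if a is not None and b is not None]
    n = len(pairs)
    if n == 0:
        raise ValueError("no descriptors rated by both raters")
    observed = sum(a == b for a, b in pairs) / n
    count_a = Counter(a for a, _ in pairs)
    count_b = Counter(b for _, b in pairs)
    # Agreement expected by chance, from each rater's category frequencies.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)
```

Kappa is 1 for perfect agreement and 0 for agreement no better than chance. With more than two raters, or with ordered rating levels, a different statistic would be more appropriate. Options include Fleiss' kappa, weighted kappa and an intraclass correlation.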

Teaching for Thinking Student Course Evaluation Form

Course evaluations typically ask for responses of "agree" or "disagree" to items focusing on teacher behavior, and the questions rarely solicit information about student learning. Contemporary thinking about curriculum is interested in student learning. This form was therefore developed to address the differences in pedagogy and subject matter, learning outcomes, student demographics, and course level that are characteristic of education today. The form also grew out of a recognition of the limitations of a "one size fits all" approach to teaching evaluations. It offers information about how a particular course enhances student knowledge, sensitivities, and dispositions. The form gives students an opportunity to provide feedback that can be used to improve instruction.

Holistic Critical Thinking Scoring Rubric

This assessment tool uses a four-point classification schema that lists particular opposing reasoning skills for select criteria. One advantage of a rubric is that it offers clearly delineated components and scales for evaluating outcomes. This rubric explains how students' CTS will be evaluated, and it provides a consistent framework for the professor as evaluator. Users can add or delete any of the statements to reflect their institution's effort to measure CT. Like most rubrics, this form is likely to have high face validity since the items tend to be relevant or descriptive of the target concept. This rubric can be used to rate student work or to assess learning outcomes. Experienced evaluators should engage in a process leading to consensus regarding what kinds of things should be classified and in what ways (80). If used improperly or by inexperienced evaluators, unreliable results may occur.

Peer Evaluation of Group Presentation Form

This form offers a common set of criteria to be used by peers and the instructor to evaluate student-led group presentations regarding concepts, analysis of arguments or positions, and conclusions (81). Users have an opportunity to rate the degree to which each component was demonstrated. Open-ended questions give users an opportunity to cite examples of how concepts, the analysis of arguments or positions, and conclusions were demonstrated.

Table 8. Proposed Universal Criteria for Evaluating Students' Critical Thinking Skills

Aside from the use of the above-mentioned assessment tools, Dexter et al. recommended that all schools develop universal criteria for evaluating students' development of critical thinking skills (82).

Their rationale for the proposed criteria is that if faculty give feedback using these criteria, graduates will internalize these skills and use them to monitor their own thinking and practice (see Table 4).


16 Critical Thinking Questions For Students

Critical thinking is an essential skill that empowers students to reason carefully and make informed decisions. It encourages them to explore different perspectives, analyze information, and develop logical reasoning. To foster critical thinking skills, it is crucial to ask students thought-provoking questions that challenge their assumptions and encourage deeper analysis. Here are 16 critical thinking questions to help students strengthen their problem-solving abilities:

- What evidence supports this argument?

- Can you identify any biases in this article?

- How does this relate to what we have learned previously?

- What alternative solutions can you propose to this problem?

- How might different cultures perceive this situation?

- What assumptions underlie this theory?

- How reliable is the source of this information?

- Can you identify any logical fallacies in this argument?

- What impact does this decision have on various stakeholders?

- What are the strengths and weaknesses of this argument?

- How might you approach this problem differently?

- What ethical considerations need to be taken into account?

- Can you identify any gaps in the evidence provided?

- How does this concept apply to real-world situations?

- What are the potential consequences of this decision?

- How might you evaluate the credibility of this research?

By incorporating these critical thinking questions, educators can help students develop essential skills such as analyzing information, evaluating arguments, and problem-solving. Encouraging students to think critically will not only benefit their academic performance but also prepare them for success in various aspects of their lives.

Remember, critical thinking is a skill that can be nurtured and strengthened with practice. By guiding students to ask and answer these thought-provoking questions, educators can create a learning environment that fosters independent thinking and creativity.


Writing quiz questions that assess student understanding and critical thinking

This article discusses how to write effective multiple-choice quiz questions that assess students' understanding and critical thinking skills, and offers guidance on designing quiz questions that provide a reliable and valid measure of learning.

Designing questions to assess higher-order thinking

One of the greatest challenges of multiple-choice design is assessing higher-order thinking skills. The term 'higher-order thinking' is often used to refer to 'transfer', 'critical thinking' and 'problem-solving.'

When designing a quiz that assesses higher-order thinking skills, it is necessary to write questions/problems that require students to:

- use information, methods, concepts, or theories in new situations

- predict sequences and outcomes

- solve problems in which students must select the approach to use

- see patterns and organization of parts (e.g. classify, order)

- determine the quality/importance of different pieces of information

- discriminate among ideas

- examine pieces of evidence to determine the likelihood of certain outcomes/scenarios

- make choices based on reasoned argument.

The number of questions included in a quiz will vary depending on the content and purpose of the quiz. Generally, 10-20 questions are appropriate to ensure that students are only assessed on terms and concepts that are directly connected to learning outcomes. It is essential to consider including different question types to design a quiz that evaluates different levels of cognition and aligns with principles of academic integrity. Below the video there is an overview of the different question types available in Blackboard tests.

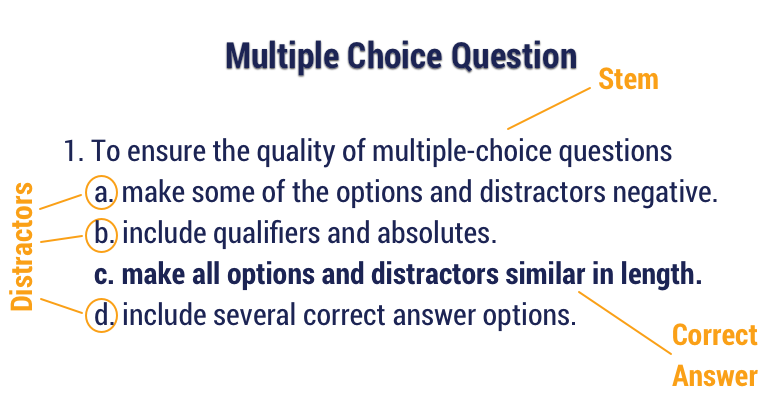

Parts of a Quiz

A typical multiple-choice question consists of three parts: the stem, the distractors and a single correct answer. For a simple, lower-order thinking or knowledge-based question, this usually suffices.

However, an effective quiz question that addresses critical thinking should be made up of the following components:

- Context/introduction

- Question/problem (also known as the 'stem')

- Correct answer/s

- Distractors (wrong answer/s)

Adding a context or introduction is important. Because students must examine more information relevant to the question being asked, they are much less likely to guess or to depend on the 'recall' of facts.

Increase question complexity

Asking students to select multiple correct answers increases the number of possible responses, requires students to exercise discrimination, and reduces the risk that students can "guess" the right answer.
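A rough sketch of the arithmetic behind the guessing claim, assuming all-or-nothing scoring (a response earns credit only if it matches the key exactly) and a student who guesses blindly and uniformly. With one correct answer among n options, the chance of a lucky guess is 1/n; a "select all that apply" item with n options has 2ⁿ − 1 possible non-empty responses. The function names are hypothetical, and partial-credit scoring would change these numbers.

```python
from fractions import Fraction


def p_guess_single(n_options: int) -> Fraction:
    """Chance of guessing a single-answer item by picking one option at random."""
    return Fraction(1, n_options)


def p_guess_multiple(n_options: int) -> Fraction:
    """Chance of guessing a 'select all that apply' item, assuming all-or-nothing
    scoring and a blind, uniform guess among every non-empty subset of options."""
    return Fraction(1, 2 ** n_options - 1)


for n in (3, 4, 5):
    # Prints: option count, single-answer odds, multiple-answer odds
    print(n, p_guess_single(n), p_guess_multiple(n))
# 3 1/3 1/7
# 4 1/4 1/15
# 5 1/5 1/31
```

With four options, moving from a single answer to "select all that apply" drops the blind-guess rate from 1 in 4 to 1 in 15 under these assumptions.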

The sections below outline how to write each component of an effective quiz question.

1. Writing the context/introduction

It is critical to introduce the question and to relate it to a ‘real world’ context so that students can relate to what is being asked. A well-written context sets the stage for the question and should be phrased concisely using familiar vocabulary.

After making a documentation error, which action should the nurse take?

a) Use correcting liquid to cover the mistake and make a new entry b) Draw a line through it and write error above the entry c) Draw a line and write through it and write mistaken entry above d) Draw a line and write through it and write mistaken entry and initials above

Imagine you are a nurse in the palliative care unit of a hospital. During your shift, one of your patients has an allergic reaction to a new medication. As you are documenting the allergic reaction, you accidentally record the wrong medication.

How do you correct the documentation error?

a) Use liquid paper correction fluid to cover the mistake and write the correct medication on top b) Strikethrough the error and write the correct medication above c) Draw a line and write mistaken entry and your initials then write the correct medication above d) Always write in pencil and use an eraser to correct the entry

Using visuals to provide context

Images can help to convey context in very few words. Where appropriate, you could consider using charts and graphs to provide the context for the question as this will require students to carefully interpret the information.

2. Writing the question

A well-written question enables students to:

- Understand the question without reading it several times

- Answer the question without reading all the options

- Answer the question without knowing the answers to other quiz questions

The question should be:

- A direct, complete question rather than an incomplete statement

- Concise and brief, avoiding undue complexity, redundancy, and irrelevant information

- Stated in a positive form, as negatively phrased questions are easily misunderstood

- Written in familiar language, avoiding any unfamiliar terminology

To increase the validity and reliability of the quiz, it is also a good idea to randomize the position of the correct responses throughout the quiz. One way to ensure this is to consistently order the answer options alphabetically or from lowest to highest number. Blackboard also gives an option to randomize the order of questions and the order of answers for each student. Using these features when designing a quiz is a way to further align it with SCU values connected to academic integrity.

Example:

A hotel attempting to match customers’ purchase patterns and their demand for guest rooms with future occupancy forecasts is known as:

a) Integrated management b) Yield management c) Sophisticated management d) Reservations management

Imagine you are the manager at a busy hotel. As the manager, you are asked to match customer purchase patterns and demand for guest rooms with future occupancy forecasts.

What is this process known as?

a) Integrated management b) Yield management c) Sophisticated management d) Reservations management
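Blackboard handles answer randomization through its own test settings. To show the underlying idea outside any particular platform, here is a minimal sketch (hypothetical helper names, not a Blackboard API) of two ways to vary where the correct answer sits: ordering the options alphabetically, and shuffling them per student with a seed so each student's order is reproducible. It reuses the options from the hotel example.

```python
import random


def alphabetical_options(options, correct_index):
    """Order options alphabetically so the question writer does not choose the key's position.
    Returns the reordered options and the new index of the correct answer."""
    correct = options[correct_index]
    ordered = sorted(options, key=str.lower)
    return ordered, ordered.index(correct)


def shuffled_options(options, correct_index, student_id, question_id):
    """Shuffle options in a per-student order. Seeding with the student and question IDs
    makes the same student see the same order if the quiz is regenerated."""
    rng = random.Random(f"{student_id}:{question_id}")
    order = list(range(len(options)))
    rng.shuffle(order)
    new_options = [options[i] for i in order]
    return new_options, order.index(correct_index)


options = ["Integrated management", "Yield management",
           "Sophisticated management", "Reservations management"]
opts, key = shuffled_options(options, 1, student_id="s001", question_id="q7")
print(opts, "correct:", opts[key])
```

Seeding per student and question is one design choice among several; it trades true unpredictability for being able to reconstruct exactly what each student saw when reviewing a disputed answer.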

3. Writing the answer

A well-written answer enables students to select the right response without having to sort out complexities that have nothing to do with knowing the correct answer. However, students should not be able to guess the correct answer from the way the responses are written.

Quiz questions often have around 4 options for the students to choose from. However, in some situations it may make sense to have fewer or more response options.

The best way to achieve more accurate and achievable budgets is to:

a) Have the budget committee monitor actual results on a frequent basis so that quick punitive action can be taken when actual results do not comply with budgeted expectations b) Have the budget prepared by top executives only c) Have all employees participate in the preparation of the budget d) All of the above

What is the best way to achieve more accurate and achievable budgets?

a) Have a budget committee that frequently monitors results b) Have a budget that is prepared by executives only c) Have all employees participate in monitoring results d) Have a budget that is created by employees

4. Writing effective distractors

It is critical to write distractors of the same length and complexity as the correct answer that are neither so similar to it that the answer becomes vague or arguable, nor so obviously wrong that anyone could guess. An appropriate distractor:

- Mirrors the correct answer in length, style, complexity and phrasing

- Is plausible rather than exaggerated or unrealistic in a way that gives away the correct answer

Avoid the following:

- Distractors that contain minuscule or vague distinctions from the correct answer, as these may confuse students (except where these distinctions are significant to demonstrating the unit learning outcomes).

- “All of the above” or “both a) & c)”, which make it easier for students to guess the correct answer with only partial knowledge. Instead, use a multiple answer question type or add more appropriate distractors.

- Verbal or grammatical clues that give away the correct answer.

- “None of the above”, unless there is an objectively correct answer (e.g. a mathematics quiz).

Breathing rate may increase as a result of:

a) A small decrease in oxygen levels in the body b) A small increase in carbon dioxide levels in the body c) A decrease in blood pH d) Both b and c

Imagine you are going for a run. As you run faster, you begin to breathe faster as well.

What reactions in the body cause the breathing rate to increase? (Select all that apply)

a) A decrease in oxygen levels in the body b) An increase in carbon dioxide levels in the body c) A decrease in blood pH d) An increase in blood pH

All other things being equal, an increased seat turnover will:

a) Increase total revenue b) Has no impact on the average check c) Increase the average check d) Decrease total revenue

If all other variables are unchanged, what will an increased turnover result in?

a) An increase in total revenue b) An increase in the average check c) A decrease in total revenue d) A decrease in the average check

Reflecting on quiz questions used for assessing student learning

Once you have written a quiz question, reflect on it carefully and analytically to ensure that:

- Students can't recall the answer from a case study already covered in class (where the question requires critical thinking)

- The distractors are plausible

- The correct answer is not notably longer or shorter than the others

- Spelling and grammar are correct

Also make sure to test the quiz out with others, especially those familiar with the unit content.

Analysing Blackboard Tests

Where you are using Blackboard tests for online quizzes it is possible to analyse the student responses to determine the discrimination and difficulty of a particular quiz. Please see the following article: Analysing questions/responses for a Blackboard test (quiz)
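Blackboard produces these statistics for you, but it can help to know roughly what they measure. Below is a minimal sketch of two classical item-analysis indices, assuming each item is scored 1 (correct) or 0 (incorrect): difficulty as the proportion of students who answered correctly, and an upper-lower discrimination index comparing the top and bottom 27% of students ranked by total quiz score. The 27% cut-off is a common textbook convention, the function names and toy data are hypothetical, and Blackboard's own report may use a different discrimination statistic.

```python
from typing import Sequence


def item_difficulty(item_scores: Sequence[int]) -> float:
    """Proportion of students who answered the item correctly (1 = correct, 0 = incorrect).
    Higher values mean an easier item."""
    return sum(item_scores) / len(item_scores)


def item_discrimination(item_scores: Sequence[int],
                        total_scores: Sequence[float],
                        fraction: float = 0.27) -> float:
    """Upper-lower discrimination index: the item's difficulty in the top-scoring group
    minus its difficulty in the bottom-scoring group, with groups formed from total scores.
    Values near 0 (or negative) flag items that do not separate stronger from weaker students."""
    n = len(total_scores)
    k = max(1, round(n * fraction))
    ranked = sorted(range(n), key=lambda i: total_scores[i])  # lowest total first
    lower = [item_scores[i] for i in ranked[:k]]
    upper = [item_scores[i] for i in ranked[-k:]]
    return item_difficulty(upper) - item_difficulty(lower)


# Toy data for one item across ten students (invented for illustration).
item = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
totals = [18, 17, 15, 14, 13, 11, 10, 9, 7, 5]
print(item_difficulty(item))                        # 0.5
print(round(item_discrimination(item, totals), 2))  # 0.67
```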

Further Resources

- Is This a Trick Question? A Short Guide to Writing Effective Test Questions

- Multiple choice questions - Charles Sturt University

The Importance of Critical Thinking Skills for Students


Brains at Work!

If you’re moving toward the end of your high school career, you’ve likely heard a lot about college life and how different it is from high school. Classes are more intense, professors are stricter, and the curriculum is more complicated. All in all, it’s very different compared to high school.

Different doesn’t have to mean scary, though. If you’re nervous about beginning college and you’re worried about how you’ll learn in a place so different from high school, there are steps you can take to help you thrive in your college career.

If you’re wondering how to get accepted into college and how to succeed as a freshman in such a new environment, the answer is simple: harness the power of critical thinking.

What is critical thinking?

Critical thinking entails using reasoning and questioning assumptions to address problems, assess information, identify biases, and more. It is a crucial skill set for students navigating their academic journey and beyond, including the process of getting accepted into college. At its core, critical thinking has everything to do with self-discipline and making active decisions to 'think outside the box,' allowing individuals to think beyond a concept alone in order to understand it better.

Critical thinking is highly encouraged in every educational setting, and with good reason. Strong critical thinking skills will make you a better student and, frankly, help you gain valuable life skills. Not only will you be more efficient in gathering knowledge and processing information, but you will also be better able to analyse and comprehend it.

Importance of critical thinking for students

Developing critical thinking skills is essential for success at all academic levels, particularly in college. Critical thinking introduces reflection and perspective while encouraging you to question what you’re learning, even when you’ve been presented with solid facts. Asking questions, considering other perspectives, and reflecting on your own thinking cultivate resilient students with endless potential for learning, retention, and personal growth. A well-developed set of critical thinking skills will help students excel in many areas, including:

1. Decision-making

If you’re thinking critically, you’re not making impulse decisions or snap judgments; you’re taking the time to weigh the pros and cons. You’re making informed decisions. Critical thinking skills for students can make all the difference.

2. Problem-solving

Students with critical thinking skills are more effective in problem-solving. This reflective thinking process helps you use your own experiences to ideate innovations, solutions, and decisions.

3. Communication

Strong communication skills are closely tied to critical thinking. How can you learn without asking questions? Critical thinking helps students come up with questions they may never have thought to ask. As a critical thinker, you’ll get better at expressing your ideas concisely and logically, facilitating thoughtful discussion, and learning from your teachers and peers.

4. Analytical skills

Developing analytical skills is a key component of strong critical thinking skills for students. It goes beyond study tips on reviewing data or learning a concept. It’s about the “Who? What? Where? Why? When? How?” When you’re thinking critically, these questions will come naturally, and you’ll be an expert learner because of it.

How can students develop critical thinking skills?

Although critical thinking is an important and necessary skill, it isn’t necessarily difficult to develop. All it takes is a conscious effort and a little bit of practice. Here are a few tips to get you started:

1. Never stop asking questions

This is the best way to build critical thinking skills. As stated earlier, ask questions, even if you’re presented with facts to begin with. When you’re examining a problem or learning a concept, ask as many questions as you can. Not only will you become better acquainted with what you’re learning, but the process will soon become second nature in every class you take, which can help you improve your GPA.

2. Practice active listening

As important as asking questions is, it is equally vital to be a good listener to your peers. It is astounding how much we can learn from each other in a collaborative environment! Diverse perspectives are key to fostering critical thinking skills for students. Keep an open mind and view every discussion as an opportunity to learn.

3. Dive into your creativity

Although a college environment is vastly different from high school classrooms, one thing remains constant through all levels of education: the importance of creativity. Creativity is a guiding factor through all facets of critical thinking skills for students. It fosters collaborative discussion, innovative solutions, and thoughtful analyses.

4. Engage in debates and discussions

Participating in debates and discussions helps you articulate your thoughts clearly and consider opposing viewpoints. It challenges you to evaluate the evidence presented, decode arguments, and construct logical reasoning. Look for debate and discussion opportunities in class, in online forums, or in extracurricular activities.

5. Look out for diverse sources of information

In today's digital age, information is easily available from a variety of sources. Make it a habit to explore different opinions, perspectives, and sources of information. This not only broadens your understanding of a subject but also helps you distinguish between reliable and biased sources, honing your critical thinking skills.


6. Practice problem-solving

Try tackling challenging problems, riddles, or puzzles that require critical thinking to solve. Whether it's solving mathematical equations, tackling complex scenarios in literature, or analysing data in science experiments, regular practice of problem-solving tasks sharpens your analytical skills and enhances your ability to think critically under pressure.

Nurturing critical thinking skills equips students with the tools to navigate the complexities of academia and beyond. By developing active listening, curiosity, creativity, and problem-solving, students can build a sturdy foundation for lifelong learning. By building upon all these skills, you’ll be an expert critical thinker in no time, and you’ll be ready to conquer all that college has to offer!



amber © 2023. All rights reserved.


- Front Psychol

Constructing a critical thinking evaluation framework for college students majoring in the humanities

Associated data.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author(s).

Introduction

Education for sustainable development (ESD) has focused on promoting sustainable thinking skills, capacities, or abilities among learners at different educational stages. Critical thinking (CT) plays an important role in the lifelong development of college students and is also one of the key competencies in ESD. Developing a valuable framework for assessing college students’ CT is important for understanding their level of CT. Therefore, this study aimed to construct a reliable self-evaluation CT framework for college students majoring in the humanities.

Exploratory factor analysis (EFA), confirmatory factor analysis (CFA), and item analysis were conducted to explore the reliability and validity of the CT evaluation framework. Data were collected from 642 college students majoring in the humanities. The sample was randomly divided into two subsamples ($n_1 = 321$, $n_2 = 321$).
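The abstract does not say how the random split was performed. A minimal sketch, assuming a simple seeded shuffle, of dividing 642 respondents into two halves of 321. The seed, the function name `split_half`, and the idea of running EFA on one half and CFA on the other are illustrative assumptions, not details reported by the authors.

```python
import random


def split_half(records, seed=2023):
    """Randomly split a sample into two subsamples whose sizes differ by at most one.
    The fixed seed (an arbitrary, hypothetical choice) makes the split reproducible."""
    rng = random.Random(seed)
    shuffled = list(records)
    rng.shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


sample = list(range(642))  # stand-ins for 642 respondent IDs
efa_half, cfa_half = split_half(sample)
print(len(efa_half), len(cfa_half))  # 321 321
```

Keeping the two halves disjoint is the point of the design: a structure found exploratorily in one subsample is then tested on respondents who played no part in finding it.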

The Cronbach’s alpha coefficient for the whole scale was 0.909, and the Cronbach’s alpha coefficients for the individual factors of the scale ranged from 0.724 to 0.878. CFA was then conducted as part of the validity study of the scale, confirming the structure of the 7-factor scale. Results indicated that the constructed evaluation framework performed consistently with the collected data. CFA also confirmed a good model fit for the relevant 22 factors of the college students’ CT framework ($\chi^2/df = 3.110$, RMSEA = 0.056, GFI = 0.927, AGFI = 0.902, NFI = 0.923, and CFI = 0.946).
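The reliability figures above are Cronbach’s alpha values. As a rough illustration of what that statistic measures, the sketch below computes α = k/(k − 1) · (1 − Σ sᵢ² / s_T²), where k is the number of items, sᵢ² is the variance of item i, and s_T² is the variance of respondents’ total scores. The function name and the toy ratings are invented for illustration; they are not the study’s data, and the sketch does not reproduce the reported values.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items matrix of numeric scores.

    scores: list of rows, one row per respondent, one column per item.
    Uses sample variances (n - 1 denominator) throughout; the ratio is the
    same with population variances as long as the choice is consistent.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*scores))  # column-wise: one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Invented ratings: three respondents answering two items.
toy = [[2, 3], [4, 5], [3, 3]]
print(round(cronbach_alpha(toy), 3))  # 0.923
```

Alpha rises when items co-vary, because the total-score variance then grows faster than the sum of the individual item variances.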

These findings revealed that the CT abilities self-evaluation scale was a valid and reliable instrument for measuring the CT abilities of college students in the humanities. The college students’ CT self-evaluation framework included three dimensions: discipline cognition (DC), CT disposition, and CT skills. CT disposition consisted of motivation (MO), attention (AT), and open-mindedness (OM), while CT skills included clarification skills (CS), organization skills (OS), and reflection (RE). This framework can therefore be an effective instrument to support the measurement of college students’ CT. Suggestions are also put forward regarding how to apply the instrument in future studies.

Nowadays, individuals need the abilities to identify problems, think in depth, and generate effective solutions to cope with the various risks and challenges caused by the rapid development of science and technology (Arisoy and Aybek, 2021). In this context, critical thinking (CT) is gaining increasing attention. Promoting college students’ CT is an important way of improving their problem-solving and decision-making abilities, which in turn enhances their lifelong development (Feng et al., 2010). Although human beings are not born with CT abilities (Scriven and Paul, 2005), these abilities can be acquired through learning and training, and they are sustainable (Barta et al., 2022).

CT should be especially valued in the field of education (Pnevmatikos et al., 2019). Students should be good thinkers who can apply critical evaluation, find and collate evidence for their views, and maintain a skeptical attitude regarding the validity of facts provided by their teachers or other students (Sulaiman et al., 2010). Many countries have regarded the development of students’ CT as one of the fundamental educational goals (Flores et al., 2012; Ennis, 2018). CT helps students develop constructive, creative, and productive thinking and fosters their independence (Wechsler et al., 2018; Odebiyi and Odebiyi, 2021). It also broadens their horizons (Les and Moroz, 2021). Meanwhile, college students with a high level of CT ability are likely to perform better in their future careers (Stone et al., 2017; Cáceres et al., 2020). Therefore, college students should learn to access knowledge, solve problems, and embrace different ideas to develop their CT ability (Ulger, 2018; Arisoy and Aybek, 2021).

Because CT abilities matter at all education levels and in various disciplines, how to cultivate students’ CT abilities has been the focus of CT-related research (Fernández-Santín and Feliu-Torruella, 2020). Many studies have shown that inquiry-based learning activities or programs are an effective way to exercise and enhance students’ CT abilities (Thaiposri and Wannapiroon, 2015; Liang and Fung, 2020; Boso et al., 2021; Chen et al., 2022). In such activities, students need not only the motivation and belief to participate actively and commit to problem solving, but also the learning skills to cope with the problems they may encounter in problem-solving-oriented learning activities. These requirements are in line with the cultivation of students’ CT abilities. Meanwhile, research has also indicated that problem solving and CT are interrelated (Dunne, 2015; Kanbay and Okanlı, 2017).

However, another important issue is how to test whether learning activities contribute to improving students’ CT abilities. CT measurement instruments offer an effective way to measure these abilities, and some CT measurement frameworks have been developed to meet the need to cultivate CT abilities in teaching and learning activities (Saad and Zainudin, 2022). However, these existing CT evaluation frameworks still have some limitations. For example, most studies on college students’ CT are in the field of science, with very little research on students in the humanities, and even less on developing CT assessment frameworks specifically for college students in the humanities. Only Khandaghi et al. (2011) conducted a study on the CT disposition of college students in the humanities, and the result indicated that their CT abilities were at an intermediate level. Beyond this, there are few descriptions of the CT of college students with a background in humanities disciplines. Compared to humanities disciplines, science disciplines seem to place more emphasis on logical and rational thinking, which might cater more to the development of CT abilities (Li, 2021). However, it is also vital for college students in the humanities to engage in rational thinking processes (Al-Khatib, 2019). Hence, it is worth evaluating the CT abilities of college students in the humanities by constructing a CT evaluation framework specifically for such students. In addition, previous measurements of CT have tended to be constructed according to only one dimension of CT, either CT skills or CT disposition. CT skills and disposition are equally important factors, and the level of CT abilities can be assessed more comprehensively and accurately by measuring both dimensions simultaneously.

Therefore, the purpose of this study was to develop a self-evaluation CT framework for college students that integrates both CT skills and disposition dimensions to comprehensively evaluate the CT ability of college students in the humanities.

Literature review

CT of college students in the humanities

CT is hardly a new concept, as it can be traced back 2,500 years to the dialogs of Socrates (Giannouli and Giannoulis, 2021). In his book How We Think, Dewey (1933, p. 9; first edition, 1910) noted that thinking critically can help us move forward in our thinking. Since then, researchers have offered different explanations of CT from different perspectives. Some researchers think that CT means thinking with logic and reasonableness (Mulnix and Mulnix, 2010), while others suggest that CT refers to the specific learning process in which learners need to think critically to achieve learning objectives through making decisions and solving problems (Ennis, 1987).

There is general consensus that CT involves two aspects: CT skills and CT disposition (Bensley et al., 2010; Sosu, 2013). CT skills refer to the abilities to understand problems and produce reasonable solutions to them, such as analysis, interpretation, and the drawing of conclusions (Chan, 2019; Ahmady and Shahbazi, 2020). CT disposition emphasizes the willingness of individuals to apply these skills when there is a problem or issue that needs to be solved (Chen et al., 2020). CT disposition urges people to engage in a reflective, inferential thinking process about the information they receive (Álvarez-Huerta et al., 2022), and specific CT skills are then applied in specific problem-solving processes. CT disposition is the motivation for critical behavior and an important quality for learning and using critical skills (Lederer, 2007; Jiang et al., 2018).

For college students, the cultivation of CT abilities is usually based on specific learning curricula (O’Reilly et al., 2022). Hence, many studies of students’ CT have been conducted in various disciplines. For example, in science education, Ma et al.’s (2021) study confirmed a significant relationship between CT and science achievement, so they suggested that it might be valuable to consider fostering CT as an important outcome of science education. In political science, when developing college students’ CT, teachers should focus not only on the development of skills but also on meta-awareness (Berdahl et al., 2021), which emphasizes the importance of CT disposition; that is, learners not only need to acquire CT skills, such as analysis, inference, and interpretation, but also need a clear cognitive awareness of how to apply these skills. Duro et al. (2013) found that psychology students valued explicit CT training. For students majoring in mathematics, Basri and Rahman (2019) developed an assessment framework to investigate students’ CT when solving mathematical problems. As this review shows, there have been many studies on CT in various disciplines, which reflects the importance of CT for the development of students across disciplines. However, most studies on CT have been conducted in science subjects, such as mathematics, business, nursing, and so on (Kim et al., 2014; Siew and Mapeala, 2016; Basri and Rahman, 2019), while there have been few studies on the CT of students in the humanities (Ennis, 2018).

There is a widespread stereotype that science majors are more logical than humanities majors, and so more attention should be paid to their CT (Lin, 2016). This raises the question: are all students in the humanities (e.g., history, pedagogy, and Chinese language and literature) emotional or “romantic” thinkers? Do they not also need to develop independent, logical, and critical thinking? Can they rely on “romantic” thinking alone? This view may well be a prejudice. In fact, the humanities are disciplines that focus on humanity and society (Lin, 2020), and they should be seen as an end in themselves rather than as a tool. Academic literacy in the humanities must be developed and enhanced through a long-term, subtle learning process (Bhatt and Samanhudi, 2022), and its significance for individuals is profound. Hence, the humanities and the sciences play equally important roles in an individual’s lifelong development. What, then, should students majoring in the humanities do to develop and enhance their professional competence? Chen and Wei (2021) suggested that individuals in the humanities should be able to identify and tackle unstructured problems in order to adapt to changing environments, a suggestion in line with a developmental pathway for fostering CT. Developing CT abilities is therefore an important way to foster the humanistic literacy of humanities students. Specifically, these students need to be able to think independently and inquisitively, to read on their own, and to interpret texts in depth and from multiple perspectives. They also need to learn and understand the content of texts and evaluate the views of others in order to broaden their thinking (Barrett, 2005). Moreover, they need the ability to analyze issues dialectically and rationally, to reflect continually on their own thinking, and to offer constructive comments (Klugman, 2018; Dumitru, 2019).
At the college level, CT skills are taught through either independent courses or embedded modules (Zhang et al., 2022), and the humanities are no exception. Yang (2007) designed thematic history projects as independent courses to foster students’ CT disposition in history, and the results showed that the projects supported learners’ development of historical literacy and CT. In short, the humanities play an important role in fostering college students’ CT, aesthetic appreciation and creativity, and cultural heritage and understanding (Jomli et al., 2021). Good CT therefore also plays a crucial role in the lifelong development of students in the humanities.

An accurate assessment of CT ability levels is an important prerequisite for the targeted improvement of students’ CT abilities in specific disciplines (Braeuning et al., 2021). It may therefore be worthwhile to construct a CT self-evaluation framework for college students in the humanities that reflects the characteristics of their disciplines.

Evaluating college students’ CT

Given that CT can be cultivated (Butler et al., 2017), increasing attention has been paid to how instruction and learning can improve students’ CT abilities (Araya, 2020; Suh et al., 2021). However, it is also important to examine how CT can be better assessed, since evaluating thinking helps students think at higher levels (Kilic et al., 2020). Although the definition of CT remains contested (Hashemi and Ghanizadeh, 2012), many researchers agree on its two main components, skills and disposition (Bensley et al., 2016), and various CT evaluation frameworks have been developed around one of these two dimensions. For example, Li and Liu (2021) developed a five-skill framework for high school students comprising analysis, inference, evaluation, construction, and self-reflection. In recent years, the assessment of CT disposition has also attracted a growing number of researchers. Sosu (2013) developed the Critical Thinking Disposition Scale (CTDS), which includes two dimensions: critical openness and reflective skepticism. The specific taxonomies of evaluation frameworks for CT skills and dispositions are shown in Table 1. As Table 1 illustrates, some core items are commonly used to describe CT skills, of which interpretation, analysis, inference, and evaluation are the most important sub-dimensions. These skills are usually applied over the general course of learning activities (Hsu et al., 2022). For instance, at the beginning of a learning activity, students should use interpretation skills to gain a clear understanding of the issues raised and the knowledge involved. Likewise, some core items are commonly used to describe CT dispositions, such as open-mindedness, attentiveness, flexibility, and curiosity.

Table 1. Taxonomies of the evaluation framework of CT skills and dispositions.

For a good critical thinker, CT disposition and CT skills are equally important. Students need to be aware of when to apply CT to problem-solving and then be able to use a variety of CT skills in specific problem-solving processes. We therefore argue that a CT self-evaluation framework integrating both dimensions will provide a more comprehensive assessment of college students’ CT. For CT disposition, motivation, attentiveness, and open-mindedness were included as the three sub-dimensions. Motivation is an important prerequisite for all thinking activities (Rodríguez-Sabiote et al., 2022); especially in problem-solving-oriented learning activities, the development of CT abilities is significantly influenced by motivation level (Berestova et al., 2021). Attentiveness refers to the learner’s state of concentration during the learning process; it reflects the learner’s level of commitment to learning and plays a crucial role in the development of CT abilities. Open-mindedness requires learners to remain open to others’ views when engaging in learning activities. Together, these three sub-dimensions reflect learners’ disposition to think critically. In the humanities especially, a clash of ideas and an improvement in abilities can occur only through in-depth communication between learners (Liu et al., 2022), so it is essential that learners maintain high levels of motivation, attentiveness, and open-mindedness in this process to develop their CT abilities. For CT skills, three sub-dimensions were likewise selected to measure learners’ skill levels: clarification skills, organization skills, and reflection.
In the humanities, students need to be able to understand, analyze, and describe literature and problems comprehensively and accurately (Chen and Wei, 2021). They then need to extract key information about the problem and to organize and process it, for example with organizational tools such as diagrams and mind maps. Finally, they reflect on and evaluate the whole problem-solving process (Ghanizadeh, 2016); research has shown that reflective learning interventions can significantly improve learners’ CT abilities (Chen et al., 2019).

Research purpose

CT plays an important role in college students’ academic and lifelong career development (Din, 2020). Current research on measuring college students’ CT can be improved in two main ways.

First, previous studies have paid insufficient attention to discipline cognition as it relates to CT. Generally, students’ CT abilities can be cultivated in two contexts: subject-specific instruction and general skills instruction (Ennis, 1989; Swartz, 2018). In authentic teaching and learning contexts, CT usually emerges and develops in problem-oriented learning activities (Liang and Fung, 2020), in which students achieve their learning objectives by identifying and solving problems. According to Willingham (2007), thinking critically requires a sound knowledge base on the problem or topic of enquiry and the ability to view it from multiple perspectives. Because disciplines differ in nature, the format of learning activities should also vary. Hence, adequate cognition of the discipline is an important prerequisite for learning activities, and college students’ level of cognition regarding their discipline should also be an important criterion for assessing their own CT abilities. Cognition refers to the acquisition of knowledge through mental activity (e.g., forming concepts, perceptions, judgments, or imagination; Colling et al., 2022), and learners’ thinking, beliefs, and feelings affect how they behave (Han et al., 2021). By analogy, discipline cognition refers to an individual’s understanding of the background and knowledge of their discipline (Flynn et al., 2021). Cognition should be an important variable in CT instruction (Ma and Luo, 2020). In the current study, we therefore added discipline cognition as a dimension of the CT self-evaluation framework for college students in the humanities.
Moreover, to represent the learning contexts of humanities disciplines, the item descriptions refer specifically to knowledge of the humanities (e.g., “I can recognize the strengths and limitations of the discipline I am majoring in.” and “Through studying this subject, my understanding of the world and life is constantly developing.”).

Second, the measurement factors for CT skills and disposition should be tailored to the humanities. Previous studies tended to measure students’ CT in terms of only one of its two dimensions. CT skills have typically been measured in terms of analysis, interpretation, inference, self-regulation, and evaluation. However, in specific learning processes, how should students concretely analyze and interpret the problems they encounter, and how can they regulate their learning processes and evaluate their learning outcomes? These questions should also be considered in order to evaluate college students’ CT abilities more accurately. The current study therefore attempted to construct a more specific CT framework that integrates both CT disposition and CT skills. Which specific factors, then, would work well as dimensions for evaluating the CT abilities of college students in the humanities? In the current study, students’ disposition to think critically is first assessed in terms of three sub-dimensions (motivation, attentiveness, and open-mindedness) to help students understand the strength of their own willingness to engage in CT (Bravo et al., 2020). Motivation is an important prerequisite for all thinking activities (Rodríguez-Sabiote et al., 2022), and it can contribute to engagement, behavior, and the analysis of problems (Berestova et al., 2021). Moreover, academic motivation is positively related to CT. Motivation is therefore retained as a crucial factor in the current study. Attentiveness is another important measurement factor; it assesses how persistently students sustain their attention.
Attentiveness also has a positive influence on a variety of student behaviors (Reynolds, 2008). The sub-dimension of open-mindedness mainly assesses the flexibility of college students’ thinking, which is also an important factor in CT (Southworth, 2020); a good critical thinker should be open-minded and receptive to views that may challenge their prior beliefs (Southworth, 2022). Second, college students’ CT skills are assessed in three sub-dimensions (clarification skills, organization skills, and reflection) to understand how well students use CT skills in the problem-solving process (Tumkaya et al., 2009). These three sub-dimensions are consistent with the learning process of problem solving, which begins with a clear description and understanding of the problem, that is, with clarification skills. In the humanities, students need to be able to understand, analyze, and describe literature and problems comprehensively and accurately (Chen and Wei, 2021).