15 Types Of Computers (Analog To Quantum)

As a computer science student, I use computers for nearly the whole day, often several different types. For example, I work from my desktop computer (with lovely dual monitors), my Chromebook, and sometimes even my phone. However, I recently learned of supercomputers while studying the history of computers, which piqued my curiosity. So I spent some time learning about what a computer is, as well as the different types of computers. And let me tell you, there are quite a few!

What Is A Computer?

A computer is a device that takes in some form of input data, processes it, then produces logical output. Computers used to be mechanical machines. However, in recent history, they’ve transformed into electrical devices. The earliest computers were simply calculators designed to assist in scientific computation. However, computers have since evolved to process data at incredible rates, even storing data and program instructions in their internal memory.

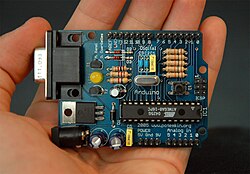

Within the last 60 years, computers have gone from taking up entire rooms and costing millions of dollars to being the size of a credit card and costing a mere $35. I’m referring to the first supercomputer, the CDC 6600, and the Raspberry Pi single-board computer, respectively. Not only is the Raspberry Pi nearly a million times less expensive and many times smaller, but it’s also more than ten times faster.

“What a computer is to me is, it’s the most remarkable tool that we’ve ever come up with. It’s the equivalent to a bicycle for our minds.” -Steve Jobs

How Do Computers Work?

If you’re unsure how computers work, they probably seem like magic to you. That’s how computers seemed to me before I lifted the veil and discovered their inner workings. There are four basic functions of computers that define how they work:

- Input – Refers to information fed into the computer

- Memory – Refers to data and algorithms stored within the computer

- Processing – Refers to the act of processing input data

- Output – Refers to the processed data coming out of the computer
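To make the four functions concrete, here is a minimal toy sketch in Python. The function name `run_computer` and the dictionary used as "memory" are hypothetical, chosen only for illustration; a real computer is of course far more involved.

```python
# Toy model of the four basic functions (all names are illustrative assumptions).
def run_computer(input_data, memory):
    # Input: information fed into the computer
    numbers = list(input_data)

    # Memory: the data and the algorithm are both stored inside the computer
    memory["data"] = numbers
    algorithm = memory["algorithm"]

    # Processing: apply the stored algorithm to the stored data
    result = algorithm(memory["data"])

    # Output: the processed data coming out of the computer
    return result


memory = {"algorithm": sum}          # the stored "program" here is just sum()
output = run_computer([2, 3, 5], memory)
print(output)
```

Swapping `sum` for another function such as `max` changes the stored algorithm without changing the input, which is the idea behind storing program instructions in memory.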

Types of Computers

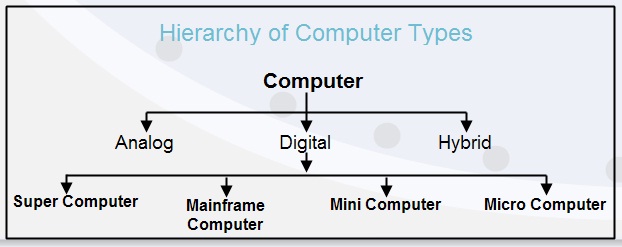

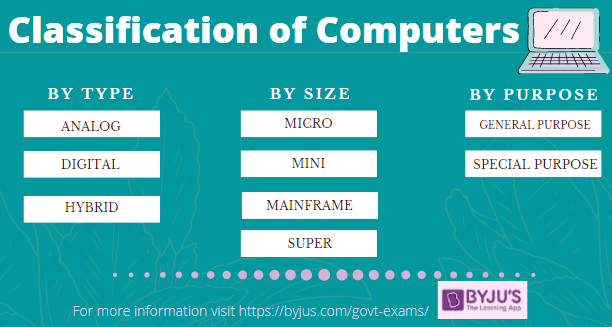

There used to be only a few different types of computers but today, there are at least 15 types of computers in the world. These include analog computers, digital computers, hybrid computers, PCs, tablets, mainframes, servers, supercomputers, minicomputers, quantum computers, smartphones, smartwatches, and more. Additionally, with the continuously decreasing size of computers over time, a growing number of appliances are coming online, referred to as the Internet of Things (IoT).

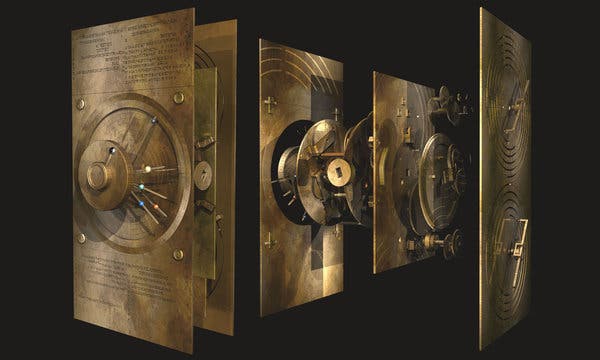

Analog Computers

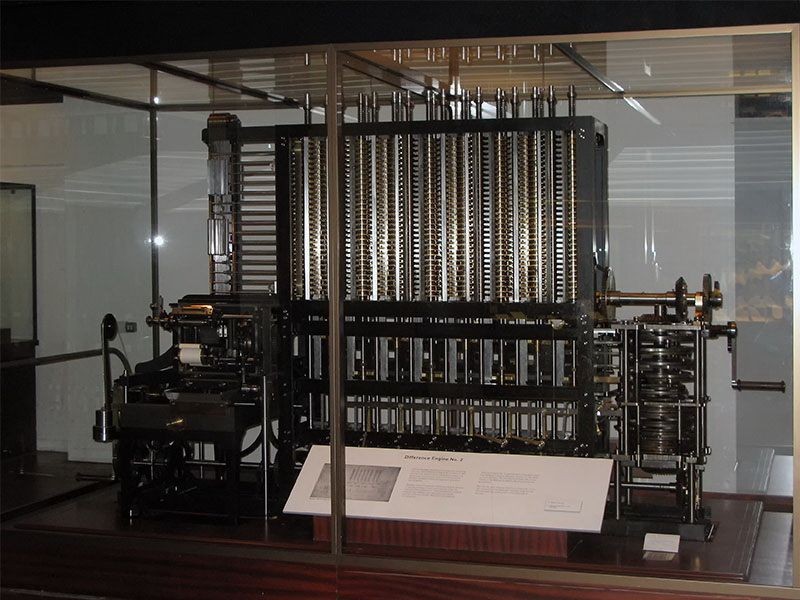

Analog computers have been around for at least 2,000 years, dating back to the Antikythera Mechanism (pictured below). However, analog computers peaked in popularity sometime around the 1950s. Their most famous role came when they were used inside the Saturn V rocket and assisted in the Apollo Moon landings.

The invention of the transistor, the integrated circuit, and the microprocessor around the same period led to much faster, smaller, and less expensive digital computers. Since then, analog computers haven’t disappeared, but they’ve become far less popular, with very few still in use today.

What Is An Analog Computer?

Analog computers are a type of computer that uses constantly changing mechanisms and displays output data in an analog fashion. For example, an analog watch has many complex gears that are constantly turning precisely and continuously and display the time with turning hands. On the other hand, a digital watch has electric components that compute the time and display it in a still, digital fashion.

Features of Analog Computers

- Mechanical parts such as gears and levers

- Continuously changing mechanisms

- Hydraulic components such as pipes and valves

- Electrical components such as resistors and capacitors

Digital Computers

Nearly every type of computer in the world today is classified as digital. This includes all of our personal computers and wearables, supercomputers and minicomputers, and IoT devices. Digital computers process information in a different way than analog computers. Rather than processing continuously changing data, digital computers process the simplest language in the world: binary.

What Is Binary?

Binary, a base-2 number system, is referred to as ‘machine language’ because it’s the language that computers understand. It contains only two digits in the entire number system: 0 and 1. With these two simple digits, computers can represent two different states: ‘off’ and ‘on.’ The beauty of modern digital computers is that they can process many series of binary inputs in a short time.
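If base-2 feels abstract, a small Python sketch may help. The helper names `to_binary` and `from_binary` are my own for this example (Python's built-in `bin()` and `int(s, 2)` do the same job); the sketch just spells out the arithmetic by hand.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to base-2 by repeated division by 2."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))   # the remainder is the next binary digit
        n //= 2
    return "".join(reversed(digits))  # digits were collected lowest-first


def from_binary(bits: str) -> int:
    """Convert a string of 0s and 1s back to an integer."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift left one place, then add the digit
    return value


print(to_binary(13))        # 1101  (8 + 4 + 0 + 1)
print(from_binary("1101"))  # 13
```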

What Is A Digital Computer?

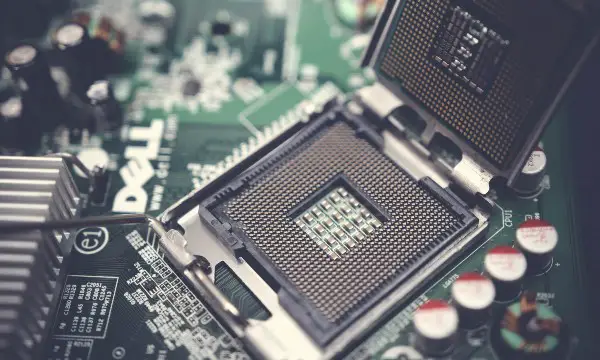

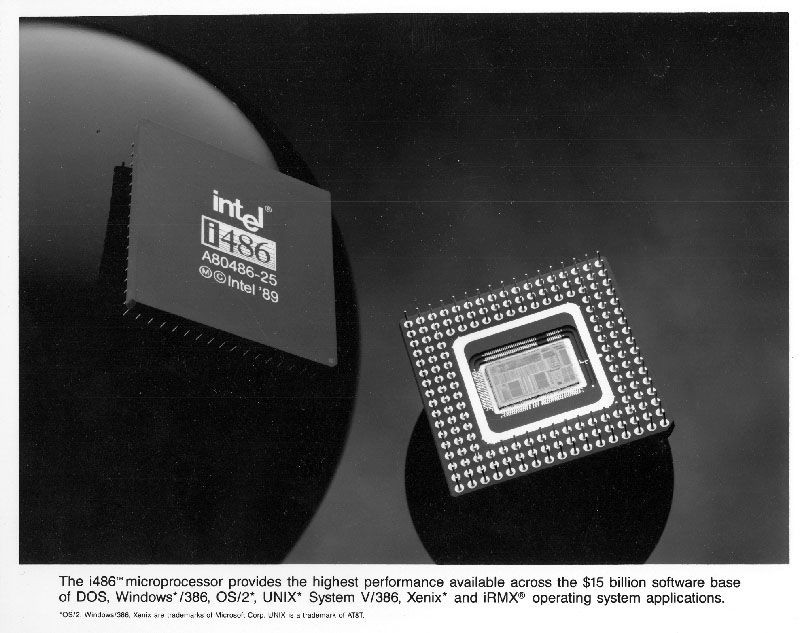

Digital computers process digital data, often in binary format. Technically, the abacus, invented more than 4,000 years ago, was the first digital computer. However, we typically think of digital computers as the modern digital electrical computing powerhouses of today. Typically, digital computers consist of input devices such as a keyboard and mouse and output devices such as screens and speakers. The ‘brain’ of a digital computer is its CPU, or Central Processing Unit.

Main Components Of Digital Computers

- Motherboard: Circuit board connecting all components

- Processor: (CPU) Central Processing Unit

- Video Card: (GPU) Graphics Processing Unit

- Memory: (RAM) Random Access Memory

- Storage: (SSD) Solid-State Drive or (HDD) Hard Disk Drive

- Power Supply Unit: Takes in power to use for computer

- Input/Output Devices: Keyboard, mouse, screen, speakers

Hybrid Computers

The history of hybrid computers dates back to the 1960s. In fact, the first hybrid computer, the HYCOMP 250, was created in 1961. Other hybrid computers came about in the 1960s, such as the HYDAC 2400 in 1963, but they never quite became mainstream devices. However, they were still made even in the 1980s, when Marconi Space and Defense System Limited came out with their Starglow Hybrid Computer. Since then, hybrid computers have dwindled in popularity.

What Is A Hybrid Computer?

Hybrid computers combine aspects of both digital and analog computers. Essentially, you get the high speeds and complexity of analog computers combined with the accuracy of digital computers. Also, there are often digital components of hybrid computers that act as controllers.

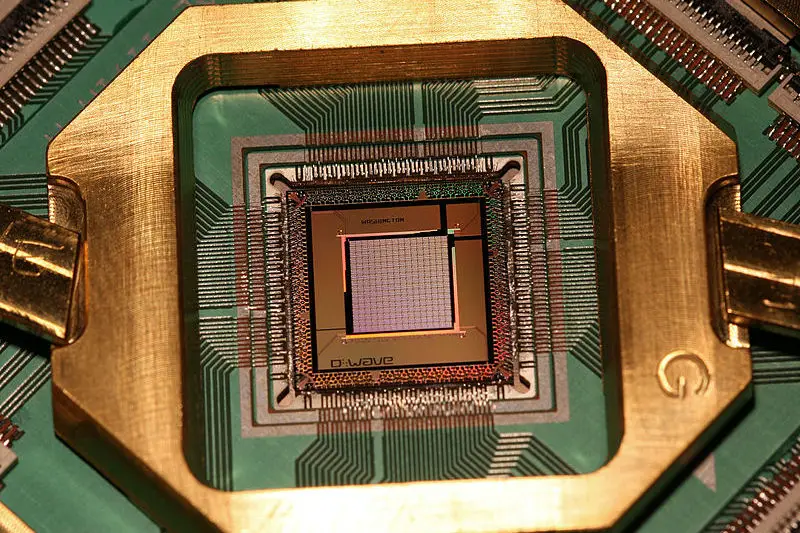

Quantum Computers

Quantum computers are a mysterious new type of computer, separate from digital and analog computers. However, they do take principles from digital computers, borrowing the binary system and extending it with qubits. The first quantum computer, a 2-qubit device, was created fairly recently, in 1998, by three leading quantum computer scientists. Quantum computers have since made tremendous progress.

Just two years later, in 2000, a functioning 4-qubit quantum computer was created by David Wineland and others of the U.S. National Institute of Standards and Technology (NIST). Only a week later, a 7-qubit quantum computer was completed by another group of researchers by utilizing trans-crotonic acid in the development of the device.

In the last 20 years, quantum computers have made… (dare I say it?) quantum leaps. Some of the leaders in the space today include IBM, Google, and the world’s first quantum computing company, D-Wave Systems Inc. Just recently, in 2015, D-Wave broke a barrier in the field, the 1000-qubit barrier, when it developed a 1000-qubit quantum annealing processor chip. This processor opened a world of possibilities.

What Is A Qubit?

Qubit is short for a quantum bit. A classic bit refers to a ‘0’ or a ‘1’ and is the basis of all digital computers. However, a qubit can maintain a state of ‘0’, ‘1’, as well as both simultaneously. This seemingly magical phenomenon of the two states occurring simultaneously stems from quantum theory and is commonly referred to as superposition.
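One common way to picture superposition is to model a single qubit as a pair of amplitudes, one for ‘0’ and one for ‘1’, whose squared magnitudes give the odds of measuring each value. The Python sketch below is a simplified classroom model, not how a real quantum computer is programmed; the function names are my own, and the Hadamard gate is a standard operation I am bringing in for illustration.

```python
import math

# A qubit is modeled as two amplitudes (a, b) for the states 0 and 1,
# with |a|^2 + |b|^2 = 1. This is a simplified single-qubit picture.

def probabilities(state):
    """Return the chance of measuring 0 and the chance of measuring 1."""
    a, b = state
    return abs(a) ** 2, abs(b) ** 2


def hadamard(state):
    """Apply the Hadamard gate, which turns a definite 0 into an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


zero = (1 + 0j, 0 + 0j)        # a qubit that is definitely 0
plus = hadamard(zero)          # now an equal mix of 0 and 1
p0, p1 = probabilities(plus)
print(round(p0, 3), round(p1, 3))  # 0.5 0.5
```

Measuring the qubit still yields a plain ‘0’ or ‘1’; the superposition only shows up in the probabilities, which is why the "both simultaneously" description should be read loosely.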

What Is A Quantum Computer?

Quantum computers are a type of computer that utilizes concepts from quantum physics such as superposition. Unlike digital computers, which rely on bits, quantum computers use qubits, or quantum bits, which can exist in superposition. Because of the quantum state of the qubits, quantum computers are expected to perform certain computations at unprecedented speeds and to soon attain quantum supremacy, leaving digital computers in the dust.

Mainframe Computers

Some of the first digital computers were large mainframe computers. They’re known to be huge computers, dubbed “big iron” from their bulky origins. The first mainframe, the Harvard Mark I, goes back to the 1940s. It cost $200,000 to develop and was as large as a room, weighing five tons!

Mainframe computers took off in the 1960s and 1970s. However, demand began to shrink in the 1980s when, in 1984, sales of personal computers surpassed those of mainframe computers. This was shortly after the release of the Apple II and IBM’s PC, the IBM Model 5150.

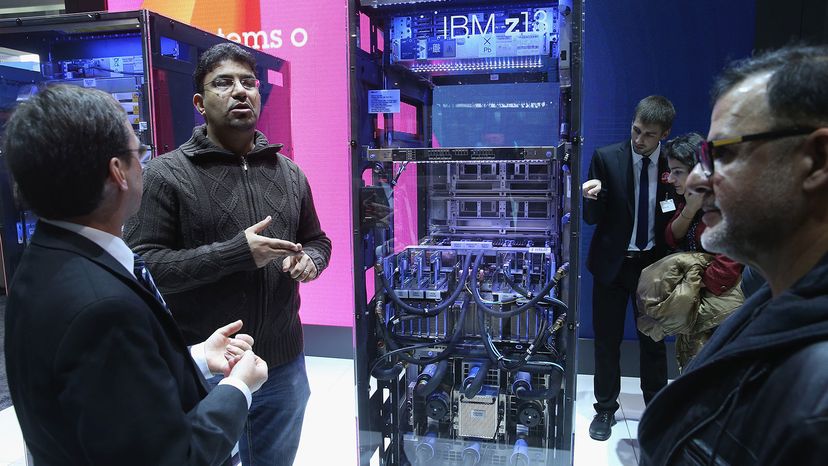

Although mainframe computers have dwindled in popularity, they’re still very widely used today and will continue to be relevant in the future. To this day, roughly 70% of Fortune 500 businesses use mainframes in some regard. Additionally, innovations are still being made in mainframes. The IBM z13 (shown below) was created in 2015 and the Rockhopper (shown next to the z13) was created in late 2018.

What Are Mainframe Computers?

Mainframe computers are also known simply as ‘mainframes’ or even as ‘big iron.’ They were referred to as such because they were extremely large and powerful computers. The main function of mainframes is to process extremely large amounts of data very quickly. Although popularity has dwindled in recent times, they’re still very useful today, especially in enterprise applications.

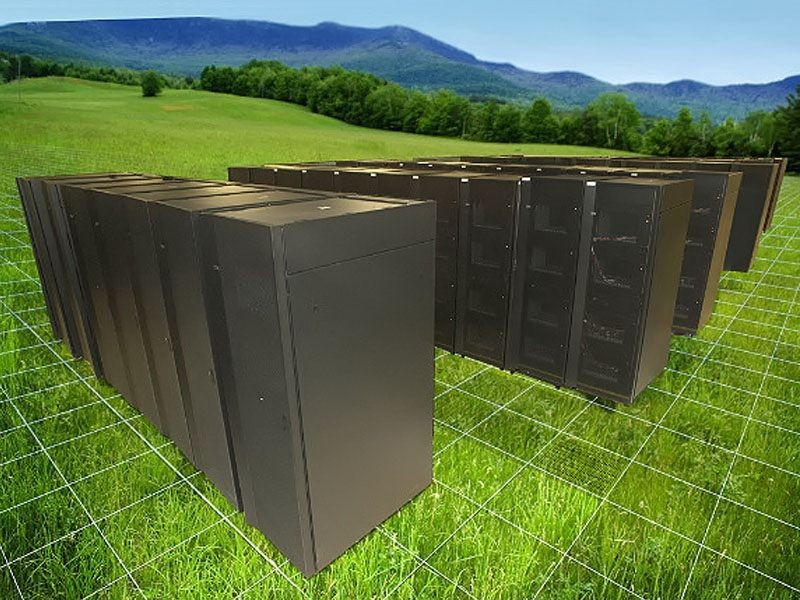

Server Computers

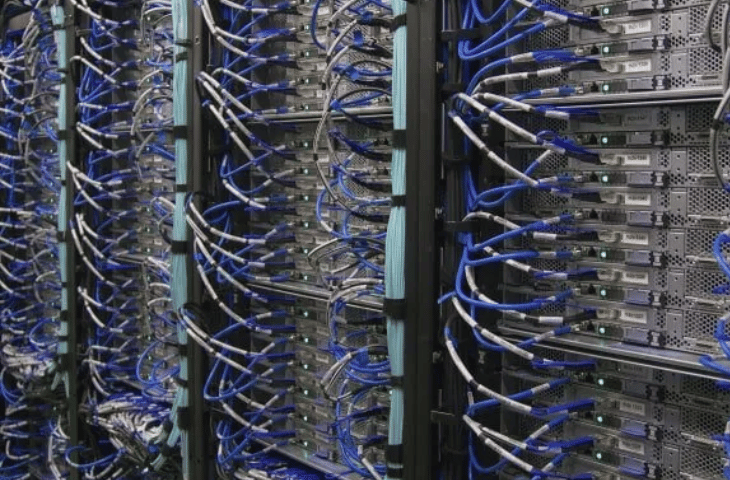

Servers have played a major role in computing ever since IBM launched the first list server in 1981, the IBM VM Machine. Of course, there was also the first web server, created in 1991, which launched the World Wide Web. In more recent years, physical servers have waned and virtual cloud servers have quickly become the market leader, hosting most of today’s web pages and applications.

Over the years, many types of server computers have come into existence. In fact, there are several types of servers in use today, in addition to list servers, web servers, and virtual cloud servers. Here is a brief list of some of the different types of servers.

Types of Servers

- Application Server

- Cloud Server

- Communication Server

- Computing Server

- Database Server

- File Server

- Game Server

- Mail Server

- Media Server

- Proxy Server

- Virtual Server

What Is A Server Computer?

There are several types of server computers, also known simply as ‘servers.’ In general, a server is a computer (or program) that provides functionality for other computers, referred to as ‘clients.’ Perhaps the most popular servers are web servers, which deliver web pages to other computers, such as a PC (Personal Computer), over the internet.
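The client and server relationship is easier to see in a tiny example. Below is a minimal sketch using only Python's standard library: a toy web server and a client that run on the same machine. The handler class `HelloHandler`, the helper `fetch_once`, and the response text are all made up for illustration; a production web server would look quite different.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Server side: answers every GET request with a short text message."""

    def do_GET(self):
        body = b"Hello from the server"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default request logging for this demo


def fetch_once():
    """Start the toy server, make one client request, then shut the server down."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0 = any free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        # Client side: connect, send a request, read the response
        conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
        conn.request("GET", "/")
        response = conn.getresponse()
        status, text = response.status, response.read().decode()
        conn.close()
        return status, text
    finally:
        server.shutdown()
        server.server_close()


status, text = fetch_once()
print(status, text)
```

Here both roles live in one script for convenience. On the real web, the client is your browser and the server is a machine somewhere else on the internet.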

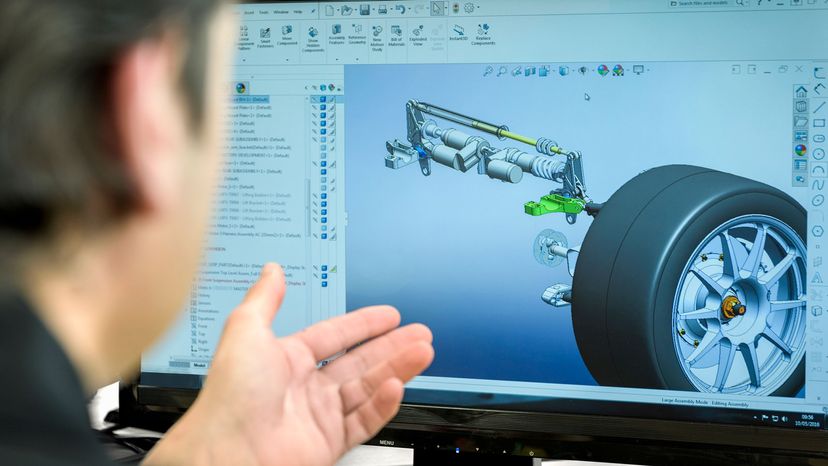

Supercomputers

Supercomputers are actually some of the earliest digital computers, with the first being the CDC 6600, which was built back in 1964. It was a highly sought-after computer for any scientists who needed to run complex computations. The same scientists who developed the CDC 6600 also invented several other supercomputers going into the 1970s, including the Cray-1, followed by the liquid-cooled Cray-2 in the 1980s.

Through the 1990s and early 2000s, supercomputers continued advancing until, in 2008, the IBM Roadrunner broke the petaFLOPS barrier. One petaFLOPS is a computing speed of one thousand million million (10^15) floating-point operations per second. In other words, it’s fast. Yet, as unthinkably fast as the IBM Roadrunner was, it pales in comparison to the latest supercomputers and became obsolete just 5 years after it was made.

The Fugaku supercomputer, the successor of the 2011 Fujitsu K Computer (shown below), was operational as of June 2020. The amazing thing about the Fugaku supercomputer is that it reaches speeds of up to 415 PetaFLOPS. That’s more than three times faster than the next fastest supercomputer, the IBM Summit, which runs as fast as 122 PetaFLOPS. It won’t be long now until supercomputers reach ExaFLOPS (1,000 PetaFLOPS) territory.
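To put those numbers side by side, here is a quick back-of-the-envelope calculation in Python. The 415 and 122 PetaFLOPS figures are simply the ones quoted above, not independent measurements, and the variable names are my own.

```python
PETA = 10 ** 15   # one petaFLOPS = 10^15 floating-point operations per second
EXA = 10 ** 18    # one exaFLOPS  = 1,000 petaFLOPS

fugaku_pflops = 415   # figure quoted in the text
summit_pflops = 122   # figure quoted in the text

# How many times faster is Fugaku than Summit, using the quoted figures?
ratio = fugaku_pflops / summit_pflops

# How far along is Fugaku toward one exaFLOPS?
fugaku_flops = fugaku_pflops * PETA
fraction_of_exa = fugaku_flops / EXA

print(f"{ratio:.2f}")     # 3.40
print(fraction_of_exa)    # 0.415
```

So by these figures Fugaku is a bit under halfway to the exaFLOPS mark.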

What Is A Supercomputer?

Supercomputers are the fastest digital computers on the planet, rivaled only by quantum computers. Many will make the claim that supercomputers are also similar to mainframes because of their size and structure, but mainframes don’t come close in terms of processing speed. Supercomputers are often used for scientific work.

Minicomputers

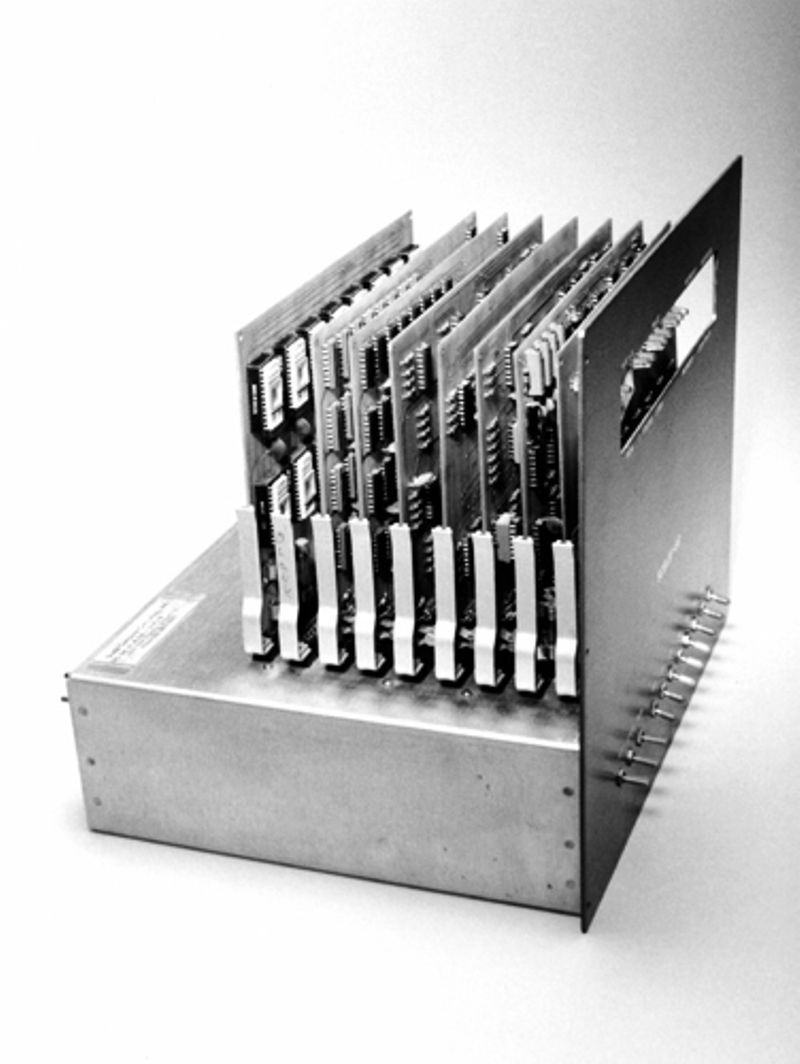

Minicomputers, also referred to as ‘minis,’ first appeared in the 1960s with the first mini being the DEC PDP-8 (shown below). By today’s standards, these computers were anything but mini. However, when compared to the previous generation of computers in the 1950s that used vacuum tubes and occupied an entire room, you realize they were indeed very small.

What made minicomputers possible was the invention of the transistor in 1947 and the integrated circuit in 1958. These new inventions replaced vacuum tubes, making computers smaller and cheaper. The DEC PDP-8 weighed 250 pounds and cost $20,000, which was smaller and cheaper than most computers available at the time.

Through the 1960s and 1970s, computers continued to make consistent strides, as described by Moore’s Law. Thus, with the inventions of the personal computer and laptop, the demand for minicomputers quickly dwindled. The decline in popularity for minis began in the 1980s and accelerated sharply in the 1990s as newer computers utilized microprocessors, spelling the end for minicomputers.

What Is A Minicomputer?

A minicomputer, or mini, is a computer that’s smaller and less powerful than a mainframe yet larger and more powerful than a personal computer. According to a 1970 article in The New York Times, minicomputers by definition must also cost less than $25,000. Unlike personal computers, which are very much general-purpose, minicomputers were often designed for a specific function.

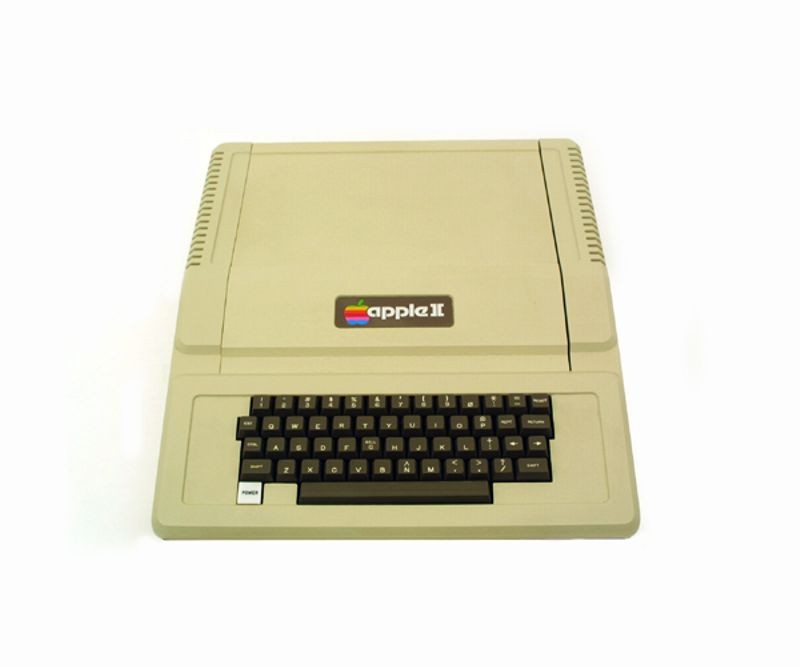

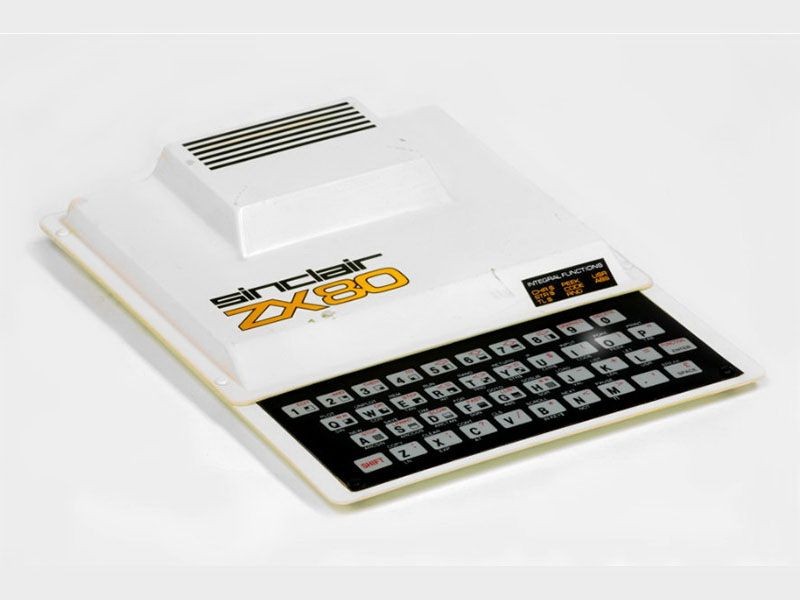

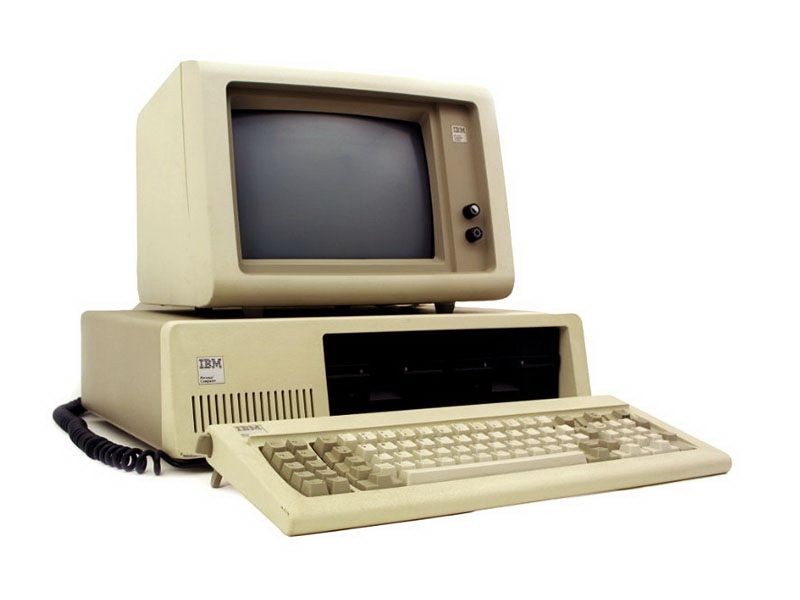

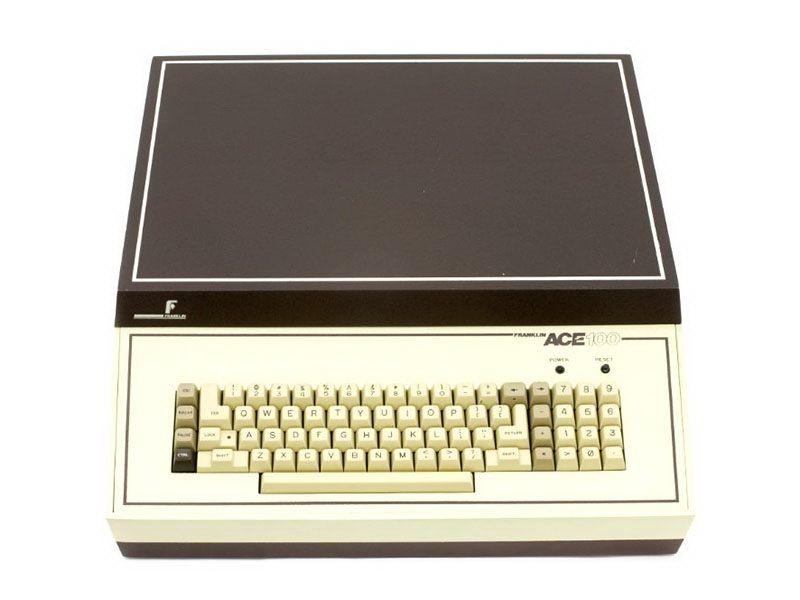

Personal Computer

Many people claim that the first-ever Personal Computer was the Kenbak-1, created in 1971 by John Blankenbaker. This first Personal Computer, or PC, cost a reasonable $750 and had a whopping 256 bytes of RAM. The concept caught on and in 1977, the Apple II was released, becoming the first mass-produced personal computer.

Fast forward to today and PCs have reached a whole new level. Today, PCs come in several shapes and sizes. There are desktops, laptops, tablets, smartphones, and even wearable computers such as smartwatches. Personal Computers in all of their variety have empowered people like never before. We’re all more connected than ever and have boundless opportunities.

Anything we want to learn is just a click away, including coding. Anyone could learn to code and launch their own product or website, just like this one. The Personal Computer, especially those on the market today, is the single most empowering invention in modern history.

What Is A Personal Computer?

A Personal Computer, more commonly known as a PC, is a computer intended for personal use. PCs are general-purpose and highly capable devices of varying types. Desktop computers, laptops, tablets, smartphones, and smartwatches are all classified as Personal Computers.

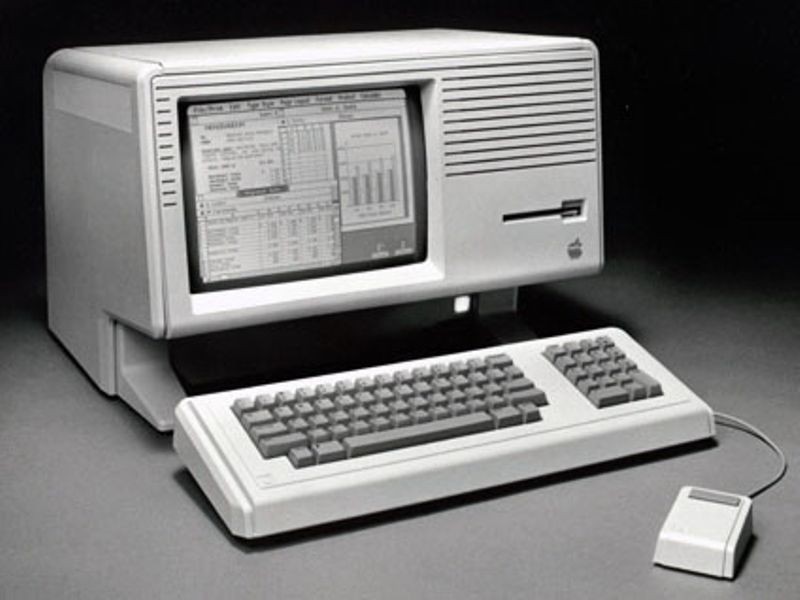

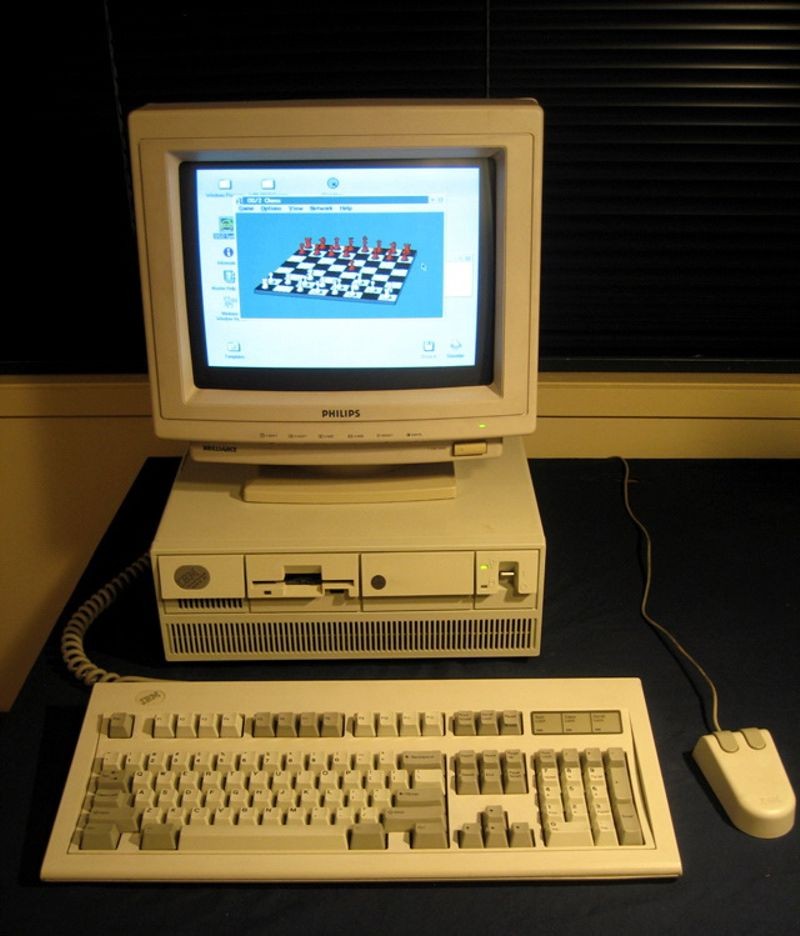

Desktop Computer

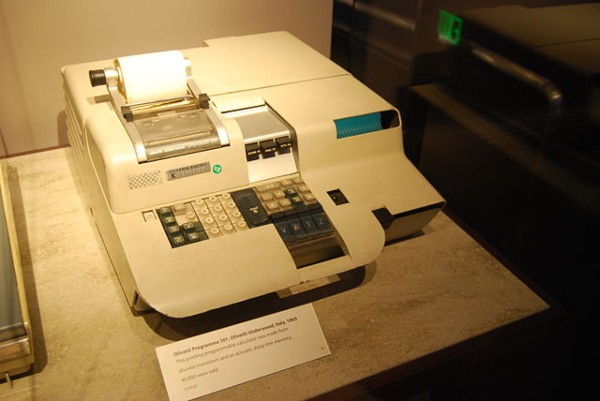

The first-ever desktop computer was the Programma 101 (shown below), invented by Pier Giorgio Perotto in 1964. However, it’s not at all like the desktop computers of today. The Programma 101, also known as the P101, didn’t have a monitor for an output device, nor did it have a mouse for an input device.

For input, it had a small keyboard consisting of numbers, a few letters, and a few arithmetic operators. The output was printed onto a small roll of paper. One of the amazing things about this initial desktop computer is that it was capable of playing a simple mathematical dice game, which became the first game to ever run on a desktop.

Truth be told, the Programma 101 was more of a calculator than a computer by today’s standards. However, it was remarkable at the time and it served to push computers forward into the modern era of computing. It can be likened to the grandfather of the Apple II, which is the grandfather of modern desktop computers.

The lineage of desktop computers runs deeper than you might have expected. Desktop computers saw a massive boost in popularity during the 1980s when desktops became cheaper and more practical for the average person. However, since around the mid-2000s, the laptop computer has overtaken the desktop in popularity.

What Is A Desktop Computer?

A desktop computer, also referred to simply as a desktop, is a type of personal computer that is intended to sit atop a desk. Modern desktops have a monitor, a keyboard, and a mouse as input and output devices. Desktops differ from laptops in that laptops are more mobile and compact and can sit atop the user’s lap.

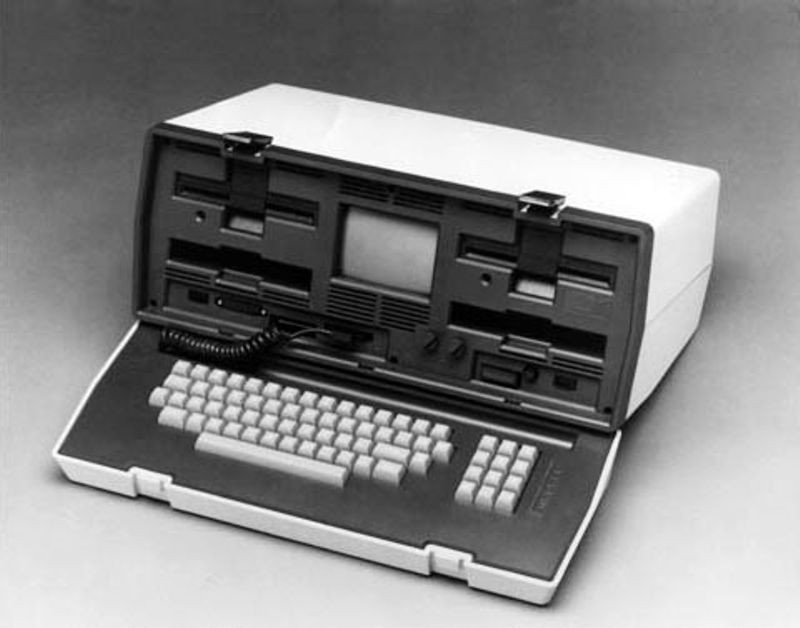

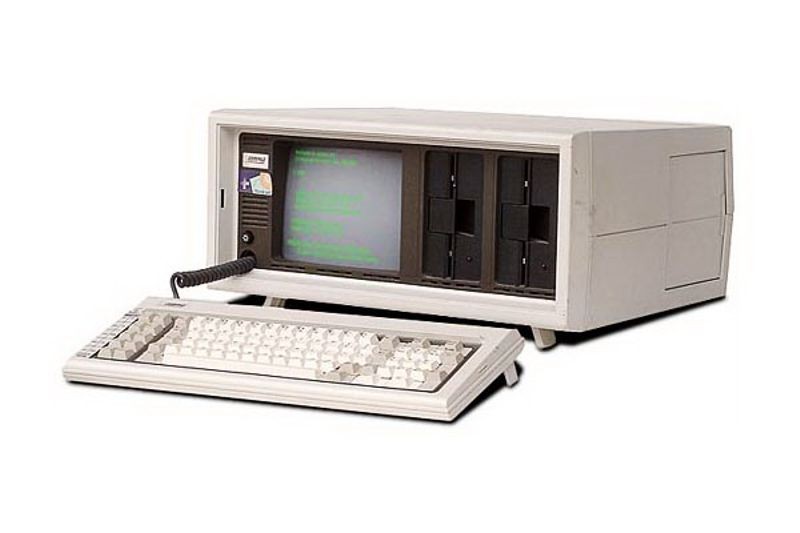

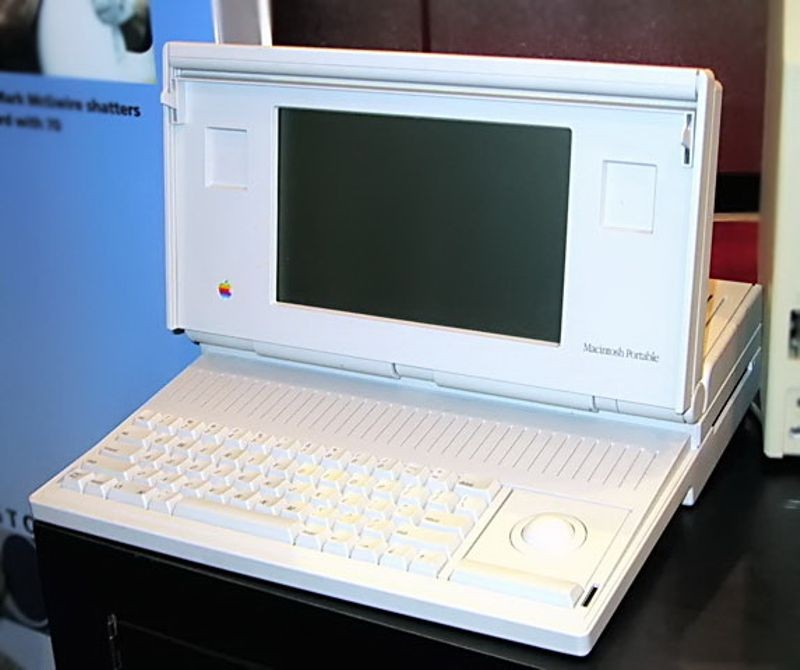

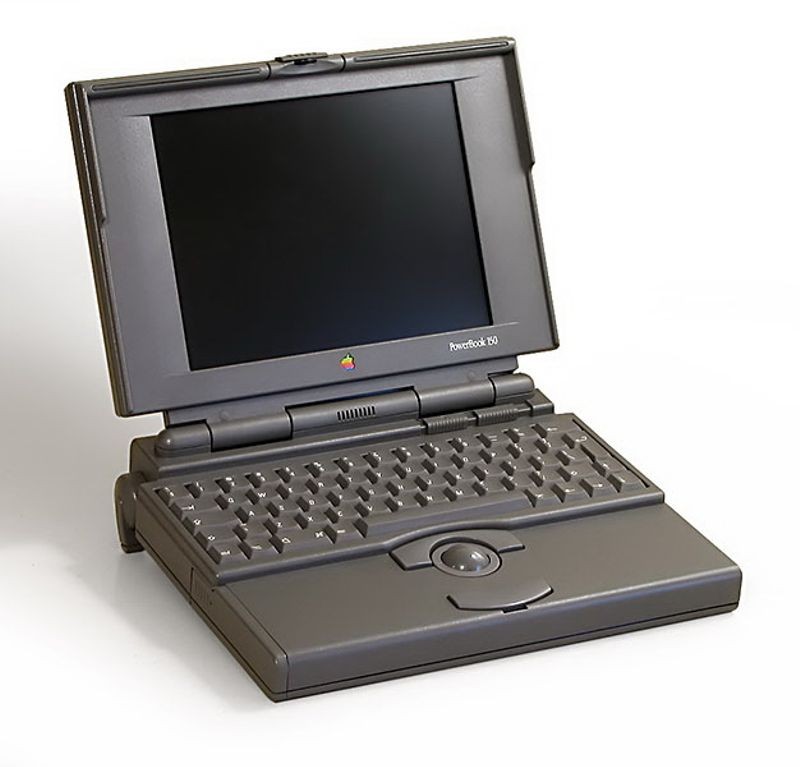

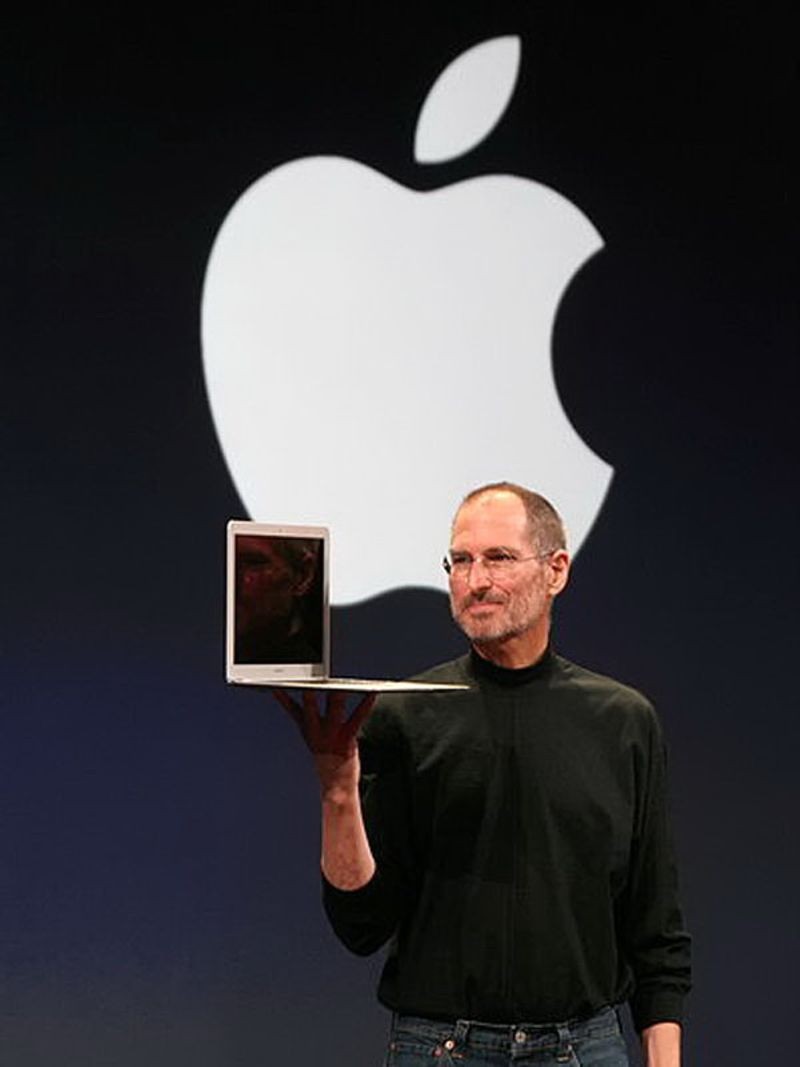

Laptop Computers

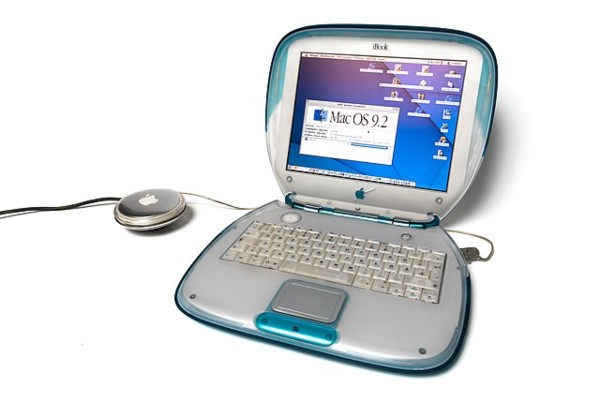

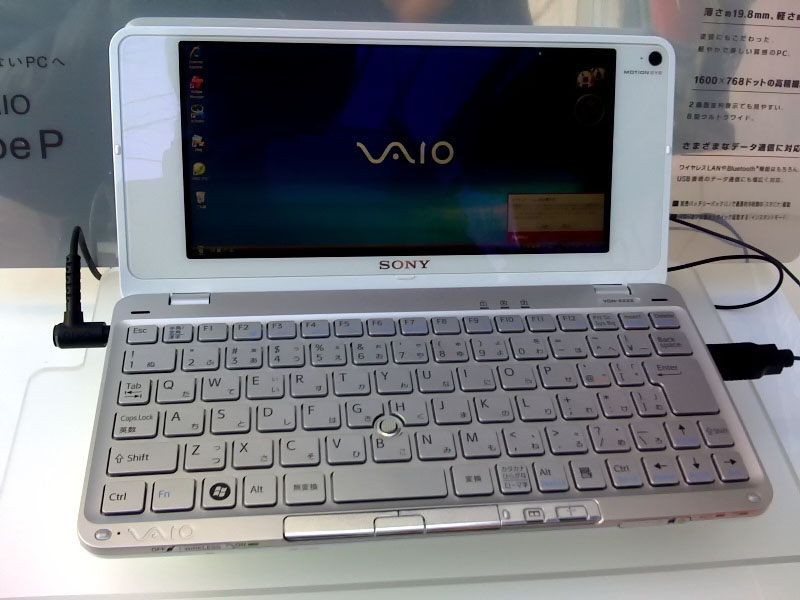

Laptop computers have been around since the early 1980s. However, they really took off during the 1990s. In fact, one laptop, in particular, ended the 1990s with a lot of flash, style, and performance. Apple has paved the way for the best new personal computers since the 1980s and the Apple iBook (as shown below) is no exception.

The iBook laptop dazzled with its looks and its groundbreaking wireless technology. It was the first laptop of its kind to offer wireless networking, using Apple’s AirPort. Suddenly, there was a compact, sleek laptop computer that could wirelessly surf the web and send emails.

Today, as advanced as laptops are, they’re still decreasing in popularity as newer, smaller computers have taken over. I’m referring of course to smartphones. However, even though laptops aren’t the most popular computer, they’re still the first choice for many who need more capability than that contained in today’s smartphones.

What Is A Laptop Computer?

A laptop computer, or simply a laptop, is a portable personal computer that can rest on the user’s lap, or a desk. Laptops are more portable than desktops, yet offer very similar performance, making them extremely popular with students and enterprises. Modern laptops are all Wi-Fi enabled, adding to their portability.

Smartphones

The first smartphone was the Simon Personal Communicator, created in 1994. In the nineties, you were the coolest person alive if you had one of these bricks. However, fast forward to 2007 and there’s a brand new hot product sweeping the market: the iPhone.

Smartphones were able to access the internet earlier in the 2000s. However, the iPhone greatly improved the experience. Also, while other smartphones at the time had built-in apps, the iPhone had an app store. The truly groundbreaking thing about the iPhone’s relation to applications is that it opened its app store up to 3rd-party developers.

Suddenly, a whole new industry was created, as well as a whole new class of developers: mobile application developers. Before long, we had social media apps and awesome games like Angry Birds. Don’t even get me started on Flappy Bird! With most people having a smartphone in their pocket, the smartphone has quickly become the most popular personal computer in the world and remains so.

What Is A Smartphone?

A smartphone is a mobile phone with nearly all the same functionality as a desktop or laptop computer. Unlike older generations of mobile phones, smartphones also have large touchscreens that function as both input and output. Not only can smartphones connect to the internet wirelessly, but they can also access a wide range of applications that provide additional functionality.

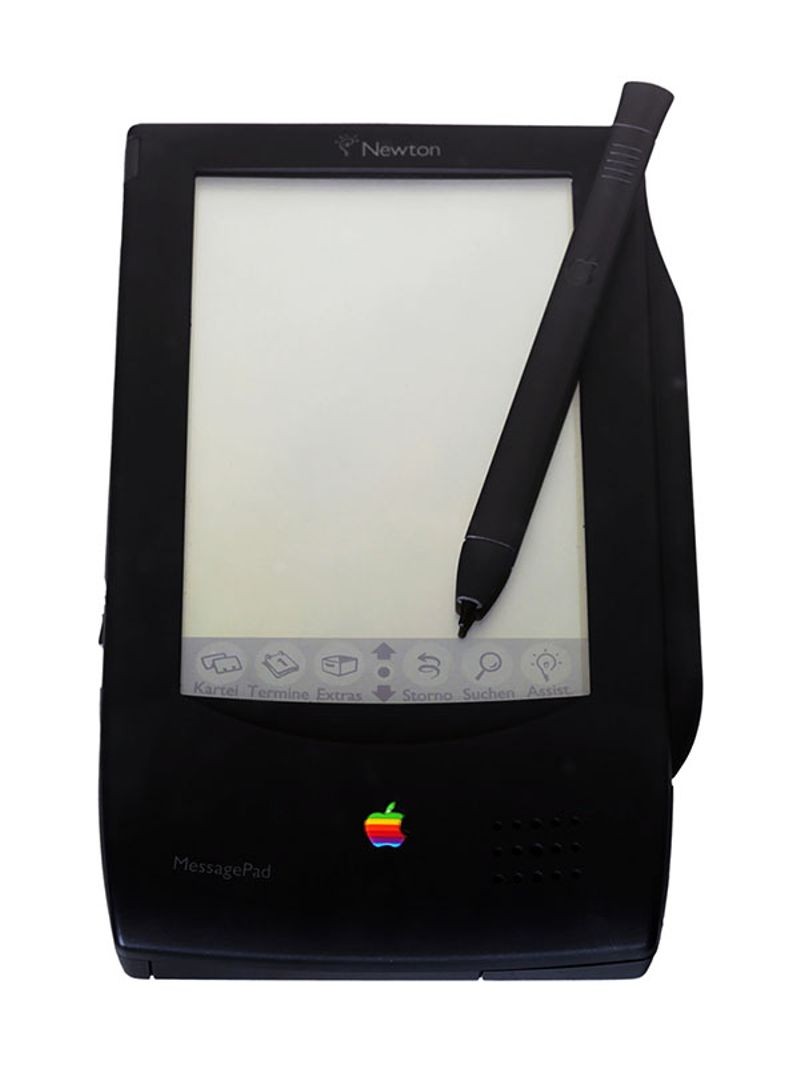

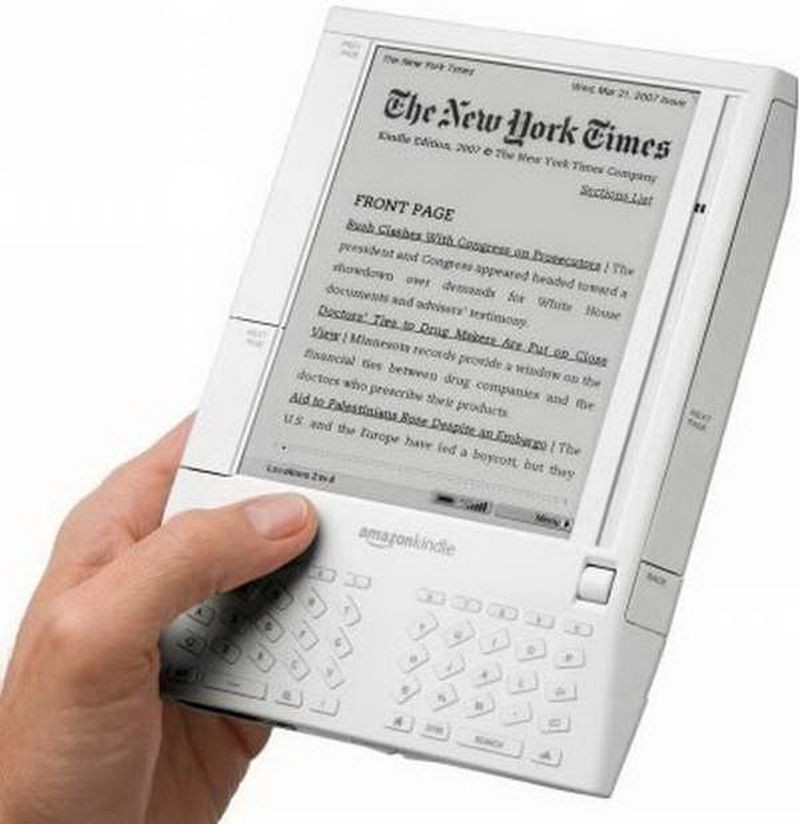

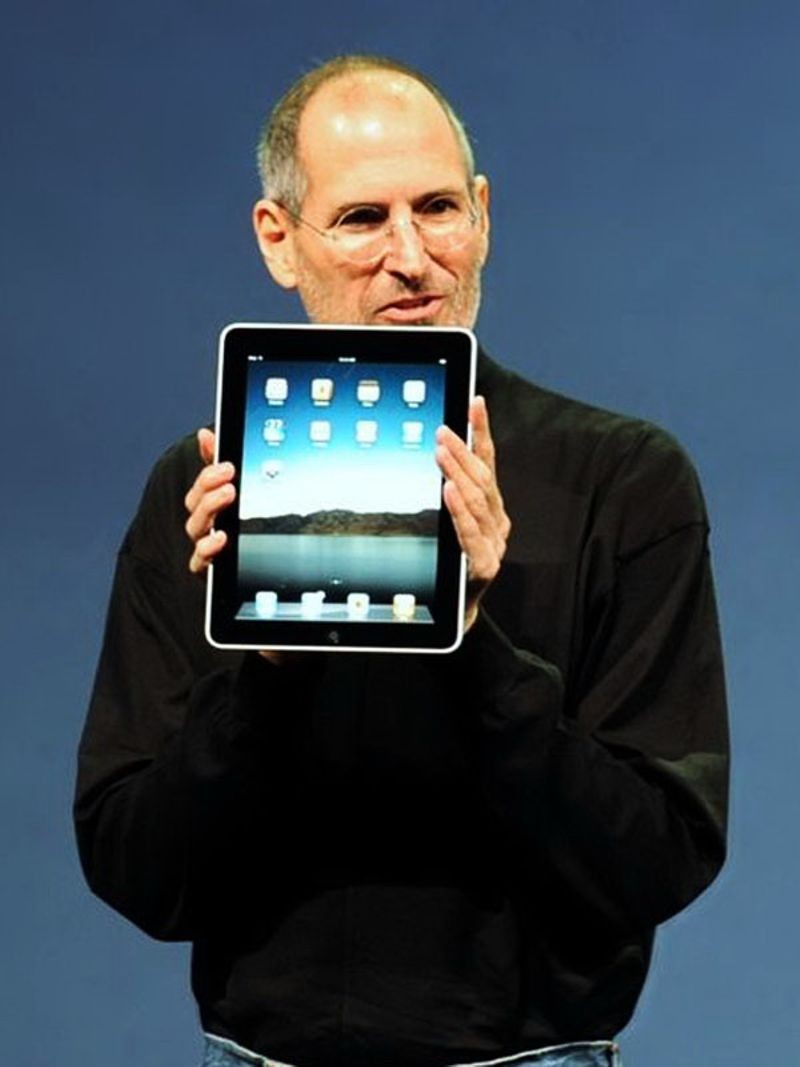

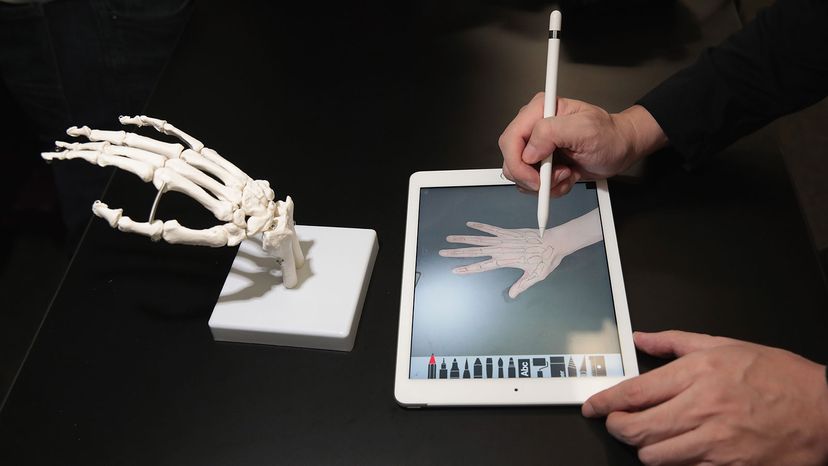

Tablet Computers

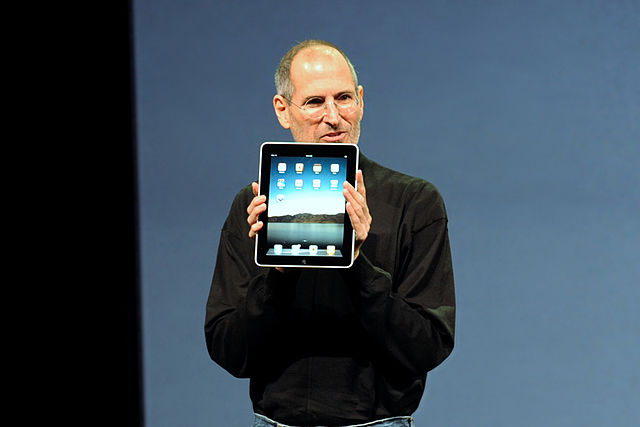

Tablet computers are still pretty new, relative to the other types of computers on this list. The prototype tablets were various PDAs (Personal Digital Assistants) such as Apple’s Newton MessagePad in 1993. However, Microsoft coined the phrase “tablet computer” when it released arguably the first true tablet in the year 2000: the Microsoft Tablet PC.

Only 10 years later, in 2010, the legendary Steve Jobs presented the Apple iPad (shown below) and once again stunned the crowd. Unlike other tablets, Apple’s iPad had access to the App Store and all of the applications within it. However, it was also simply an amazing new personal computer with the look, feel, and performance you would expect from any Apple product.

Just a year later, Apple launched the iPad 2 with even more features, including a front-facing camera for FaceTime video calls. It was also thinner and more powerful. Other competitors have released fantastic tablets such as Amazon’s Kindle Fire tablet, which I personally own. These two tablets are on completely opposite sides of the pricing spectrum. However, they both offer a lot of value.

What Is A Tablet Computer?

A tablet computer, also known simply as a tablet, is a flat, mobile computer with a touchscreen display. Tablets can be compared to smartphones, as they’re both very similar. Tablets are typically larger and faster than smartphones, yet lack the capability of making phone calls.

Wearable Computers

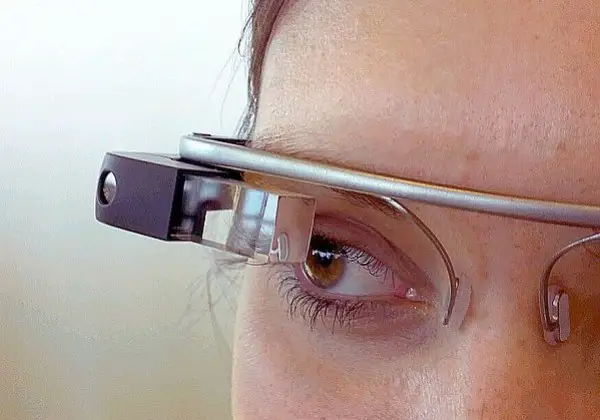

The newest and smallest PCs don’t reside in our pockets. Rather, they live on our wrists and even on our faces. I’m referring to smartwatches and smart glasses. The two leaders in the space are, to no one’s surprise, Apple and Google. Google released its smart glasses, Google Glass (shown below), in 2013 on a limited basis, and Apple released the Apple Watch in 2015.

Wearable computers haven’t been around long, but they’re certainly here to stay, although they will continue to evolve. In fact, the next generation of personal computers will likely reside in us, rather than on us. Elon Musk’s company Neuralink is developing computers that will interface directly with the human brain. Prototypes are already functioning inside pigs and human trials are right around the corner.

What Are Wearable Computers?

A wearable computer, also known as a wearable, is a type of computer that is worn on the body. Smartwatches such as the Apple Watch and smart glasses such as the Google Glass are two prime examples of wearable computers. Wearables are changing the way people interface with computers.

IoT Devices

The Internet of Things (IoT) refers to the growing number of items with embedded computers and internet access. The term IoT was coined by Kevin Ashton in 1999 during the internet boom. The following year, LG announced its first smart fridge with a large digital touchscreen display on the front of it.

IoT devices continued to grow in popularity at an extraordinary rate through the 2000s and in 2008, the number of “things” online surpassed the number of people on the internet. In 2009, Google began testing self-driving cars that recorded and relayed sensory data via the internet.

You probably remember the Nest thermostat (shown below), which took the internet by storm in 2011. What was once an ugly albeit small appliance was now shiny, cool, and cost-effective, as it saved money on heating bills. But the wave was just starting to roll in the world of IoT, and many tech companies rode that wave brilliantly.

What Is An IoT Device?

An IoT device is anything that has an embedded computer and sensors that send sensory data via the internet. There are many types of IoT-enabled devices including thermostats, refrigerators, doorbell cameras, drones, light bulbs, light switches, smoke detectors, air purifiers, and the list goes on.

Final Thoughts

In conclusion, the world of computing has come a long way since the invention of the first analog computer. Today, we have a vast range of computing devices at our disposal, ranging from personal computers and smartphones to supercomputers and quantum computers.

Each type of computer has its unique features, advantages, and limitations, making them suitable for various applications. Whether you need a powerful machine to run complex simulations, a portable device to stay connected on the go, or a wearable gadget to track your fitness goals, there’s a computer out there for you.

With the rise of IoT devices, we can expect computing technology to become even more integrated into our daily lives in the future, making our lives more convenient, efficient, and connected.

Tim Statler

Tim Statler is a Computer Science student at Governors State University and the creator of Comp Sci Central. He lives in Crete, IL with his wife, Stefanie, and their cats, Beyoncé and Monte. When he's not studying or writing for Comp Sci Central, he's probably just hanging out or making some delicious food.


Unit 6. Basic computer terminologies

Topic B: Types of computers


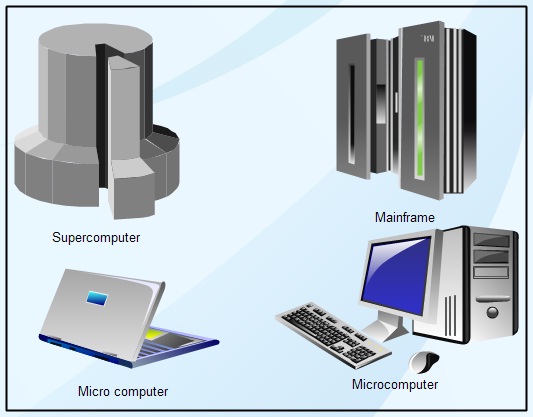

Classification of Computers by Size

- Supercomputers

- Mainframe computers

- Minicomputers

- Personal computers (PCs) or microcomputers

Supercomputer – a powerful computer that can process large amounts of data and do a great amount of computation very quickly.

Supercomputers are used for areas related to:

- Engineering

Supercomputers are useful for applications involving very large databases or that require a great amount of computation.

Supercomputers are used for complex tasks, such as:

- Weather forecasting

- Climate research

- Scientific simulation

- Oil and gas exploration

- Quantum mechanics

- Cryptanalysis

Mainframe computer – a high-performance computer used for large information processing jobs.

Mainframe computers are primarily used in:

- Institutions

- Health care

- Large businesses

- Financial institutions

- Stock brokerage firms

- Insurance agencies

Mainframe computers are useful for tasks related to:

- Census taking

- Industry and consumer statistics

- Enterprise resource planning

- Transaction processing

- e-business and e-commerce

Minicomputer – a mid-range computer that is intermediate in size, power, speed, storage capacity, etc., between a mainframe and a microcomputer.

Minicomputers are used by small organizations.

“Minicomputer” is a term that is no longer used much. In recent years, minicomputers are often referred to as small or midsize servers (a server is a central computer that provides information to other computers).

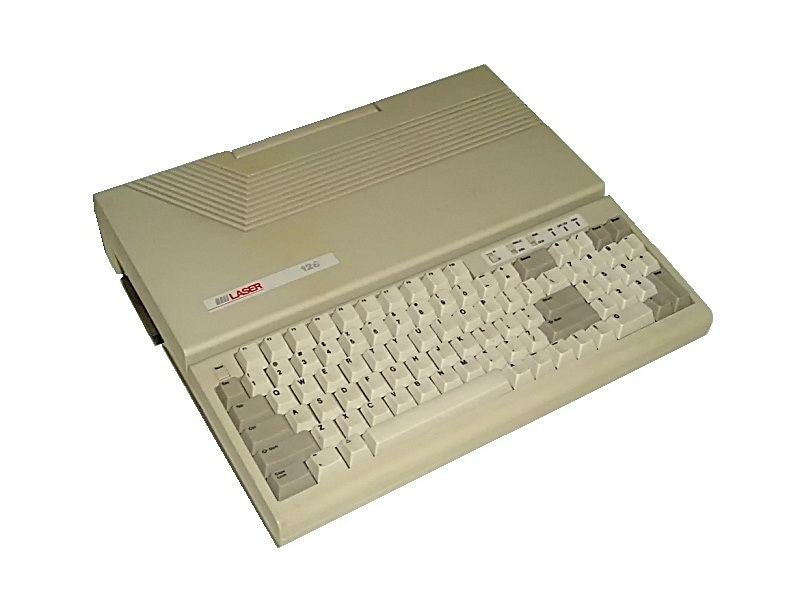

Personal computers

Personal computer (PC) – a small computer designed for use by a single user at a time.

A PC or microcomputer uses a single chip (microprocessor) for its central processing unit (CPU).

“Microcomputer” is now primarily used to mean a PC, but it can refer to any kind of small computer, such as a desktop computer, laptop computer, tablet, smartphone, or wearable.

Types of personal computers

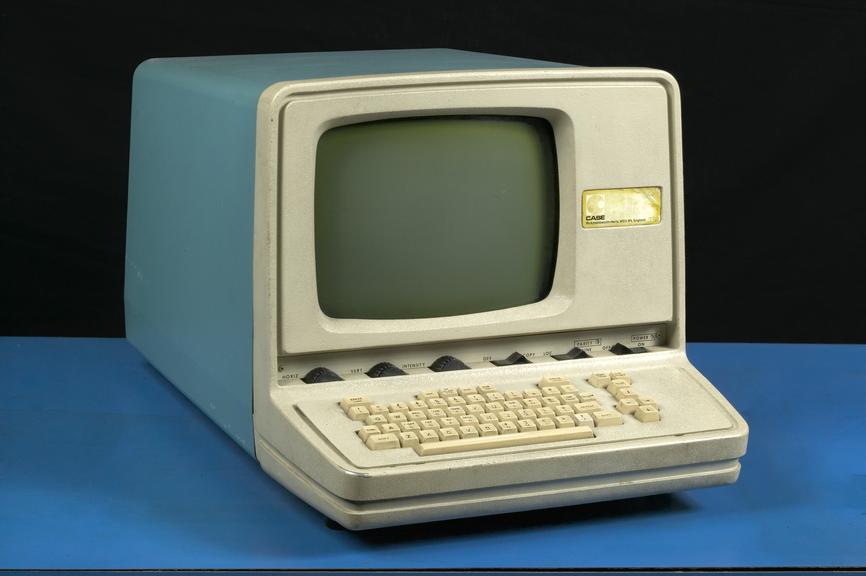

Desktop computer – a personal computer that is designed to stay at one location and fits on or under a desk. It typically has a monitor, keyboard, mouse, and a tower (system unit).

Laptop computer (or notebook) – A portable personal computer that is small enough to rest on the user’s lap and can be powered by a battery. It includes a flip down screen and a keyboard with a touchpad.

Tablet – A wireless touchscreen PC that is slightly smaller and weighs less than the average laptop.

Smartphone – A mobile phone that performs many of the functions of a personal computer.


Key Concepts of Computer Studies Copyright © 2020 by Meizhong Wang is licensed under a Creative Commons Attribution 4.0 International License, except where otherwise noted.


Bell Laboratories scientist George Stibitz uses relays for a demonstration adder

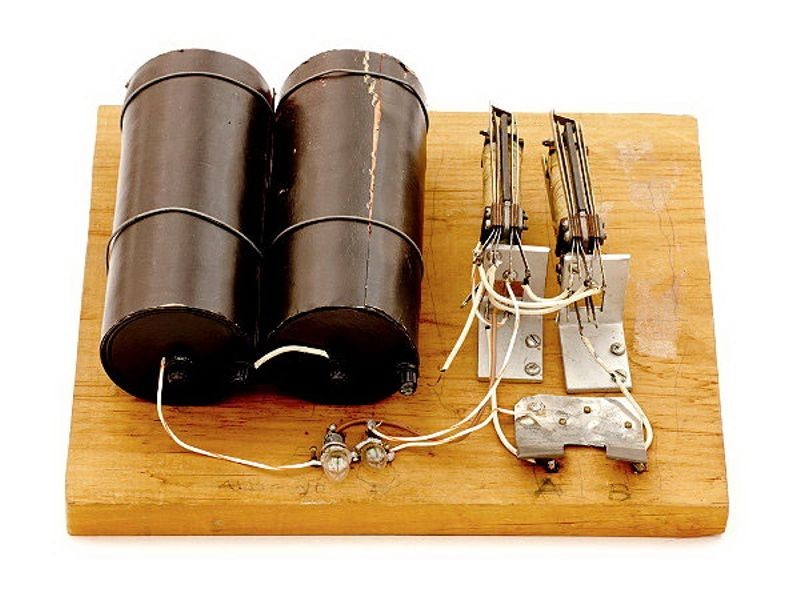

“Model K” Adder

Called the “Model K” Adder because he built it on his “Kitchen” table, this simple demonstration circuit provides proof of concept for applying Boolean logic to the design of computers, resulting in construction of the relay-based Model I Complex Calculator in 1939. That same year in Germany, engineer Konrad Zuse built his Z2 computer, also using telephone company relays.

Hewlett-Packard is founded

Hewlett and Packard in their garage workshop

David Packard and Bill Hewlett found their company in a Palo Alto, California garage. Their first product, the HP 200A Audio Oscillator, rapidly became a popular piece of test equipment for engineers. Walt Disney Pictures ordered eight of the 200B model to test recording equipment and speaker systems for the 12 specially equipped theatres that showed the movie “Fantasia” in 1940.

The Complex Number Calculator (CNC) is completed

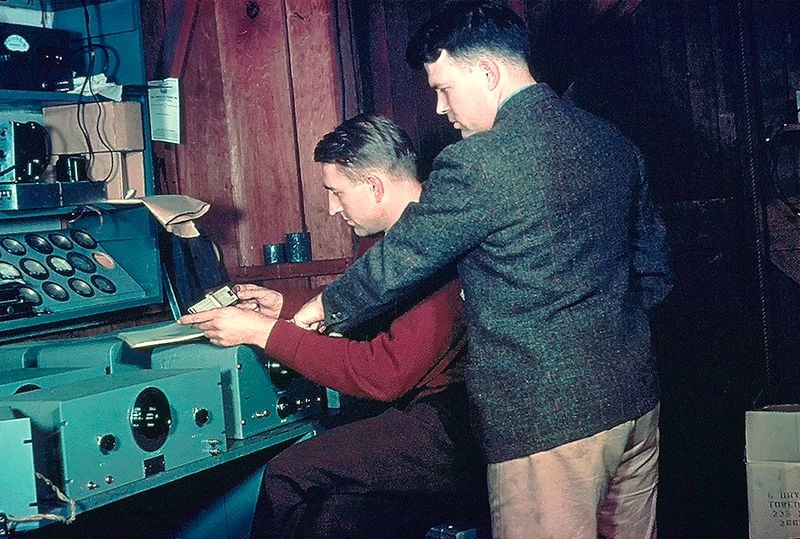

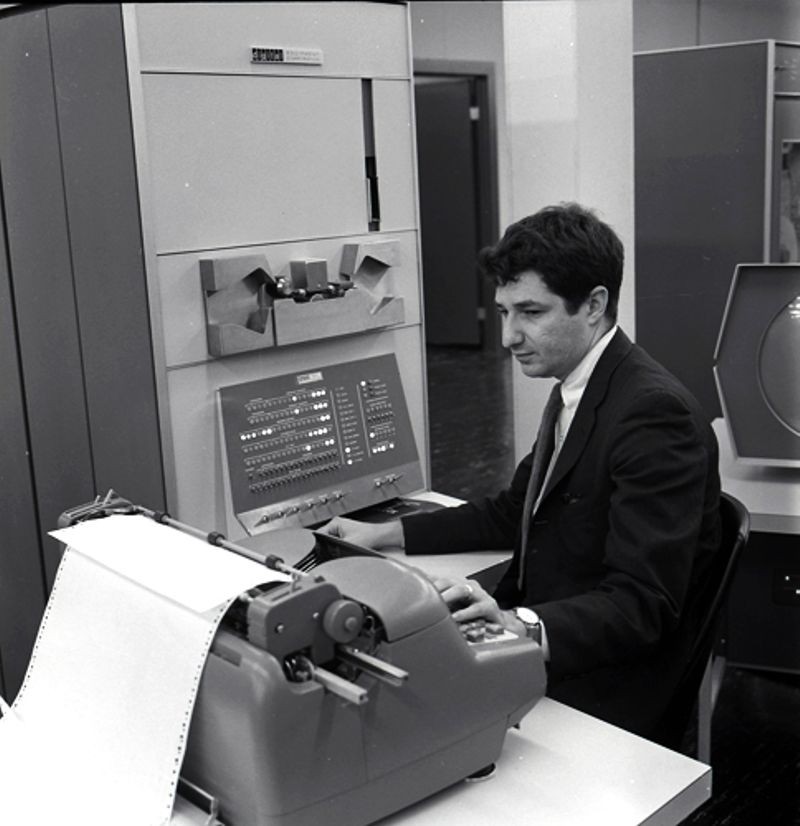

Operator at Complex Number Calculator (CNC)

In 1939, Bell Telephone Laboratories completes this calculator, designed by scientist George Stibitz. In 1940, Stibitz demonstrated the CNC at an American Mathematical Society conference held at Dartmouth College. Stibitz stunned the group by performing calculations remotely on the CNC (located in New York City) using a Teletype terminal connected to New York over special telephone lines. This is likely the first example of remote access computing.

Konrad Zuse finishes the Z3 Computer

The Zuse Z3 Computer

The Z3, an early computer built by German engineer Konrad Zuse working in complete isolation from developments elsewhere, uses 2,300 relays, performs floating point binary arithmetic, and has a 22-bit word length. The Z3 was used for aerodynamic calculations but was destroyed in a bombing raid on Berlin in late 1943. Zuse later supervised a reconstruction of the Z3 in the 1960s, which is currently on display at the Deutsches Museum in Munich.

The first Bombe is completed

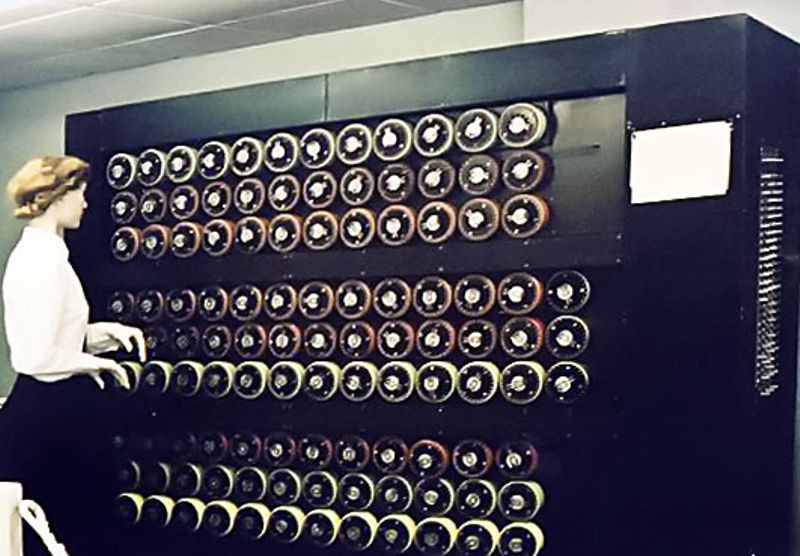

Bombe replica, Bletchley Park, UK

Built as an electro-mechanical means of decrypting Nazi ENIGMA-based military communications during World War II, the British Bombe is conceived of by computer pioneer Alan Turing and Harold Keen of the British Tabulating Machine Company. Hundreds of Allied bombes were built in order to determine the daily rotor start positions of Enigma cipher machines, which in turn allowed the Allies to decrypt German messages. The basic idea for bombes came from Polish code-breaker Marian Rejewski's 1938 "Bomba."

The Atanasoff-Berry Computer (ABC) is completed

The Atanasoff-Berry Computer

After successfully demonstrating a proof-of-concept prototype in 1939, Professor John Vincent Atanasoff receives funds to build a full-scale machine at Iowa State College (now University). The machine was designed and built by Atanasoff and graduate student Clifford Berry between 1939 and 1942. The ABC was at the center of a patent dispute related to the invention of the computer, which was resolved in 1973 when it was shown that ENIAC co-designer John Mauchly had seen the ABC shortly after it became functional.

The legal result was a landmark: Atanasoff was declared the originator of several basic computer ideas, but the computer as a concept was declared un-patentable and thus freely open to all. A full-scale working replica of the ABC was completed in 1997, proving that the ABC machine functioned as Atanasoff had claimed. The replica is currently on display at the Computer History Museum.

Bell Labs Relay Interpolator is completed

George Stibitz circa 1940

The US Army asked Bell Laboratories to design a machine to assist in testing its M-9 gun director, a type of analog computer that aims large guns to their targets. Mathematician George Stibitz recommends using a relay-based calculator for the project. The result was the Relay Interpolator, later called the Bell Labs Model II. The Relay Interpolator used 440 relays, and since it was programmable by paper tape, was used for other applications following the war.

Curt Herzstark designs Curta calculator

Curta Model 1 calculator

Curt Herzstark was an Austrian engineer who worked in his family’s manufacturing business until he was arrested by the Nazis in 1943. While imprisoned at Buchenwald concentration camp for the rest of World War II, he refines his pre-war design of a calculator featuring a modified version of Leibniz’s “stepped drum” design. After the war, Herzstark’s Curta made history as the smallest all-mechanical, four-function calculator ever built.

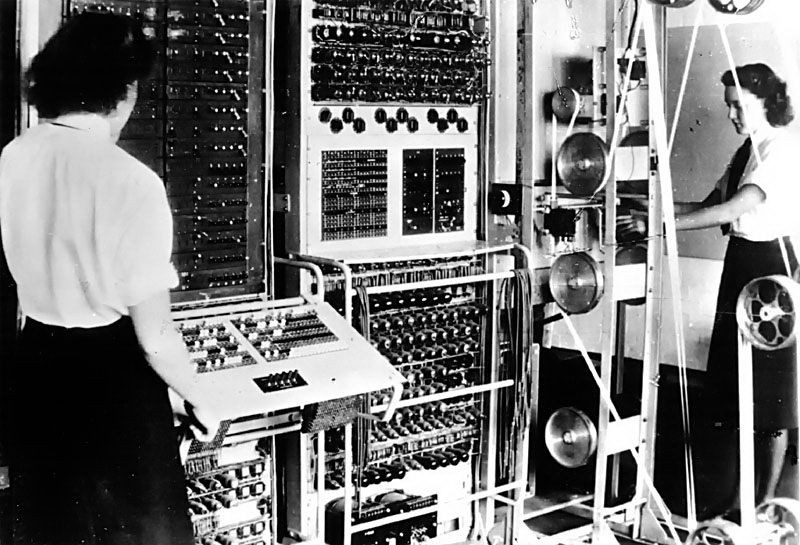

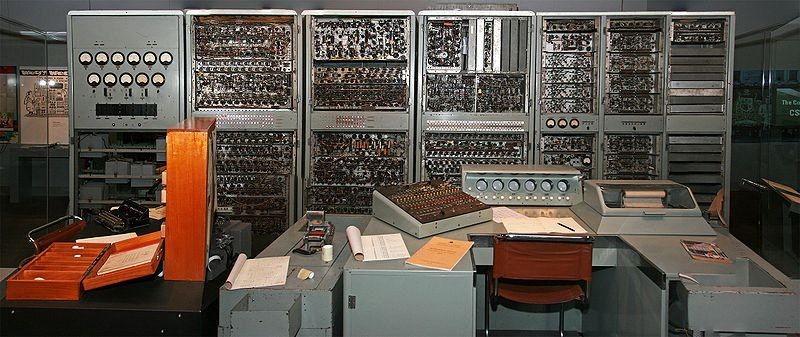

First Colossus operational at Bletchley Park

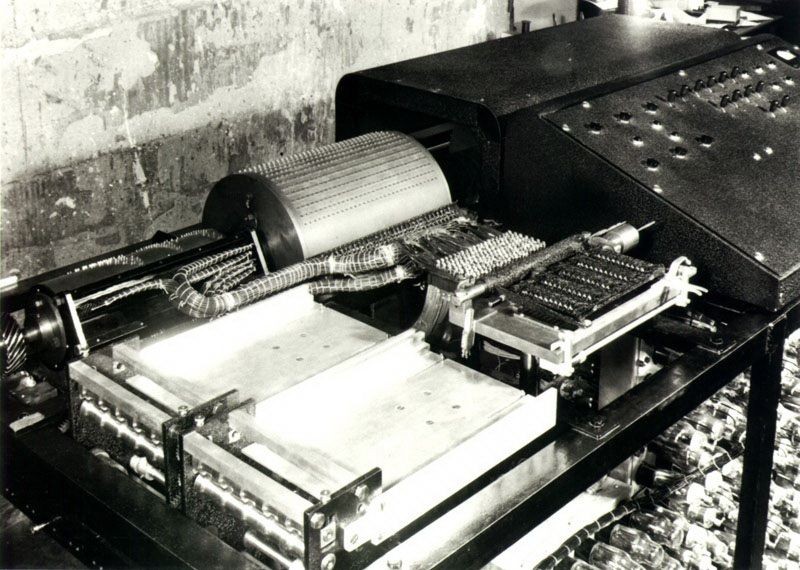

The Colossus at work at Bletchley Park

Designed by British engineer Tommy Flowers, the Colossus is built to break the complex Lorenz ciphers used by the Nazis during World War II. A total of ten Colossi were delivered, each using as many as 2,500 vacuum tubes. A series of pulleys transported continuous rolls of punched paper tape containing possible solutions to a particular code. Colossus reduced the time to break Lorenz messages from weeks to hours. Most historians believe that the use of Colossus machines significantly shortened the war by providing evidence of enemy intentions and beliefs. The machine’s existence was not made public until the 1970s.

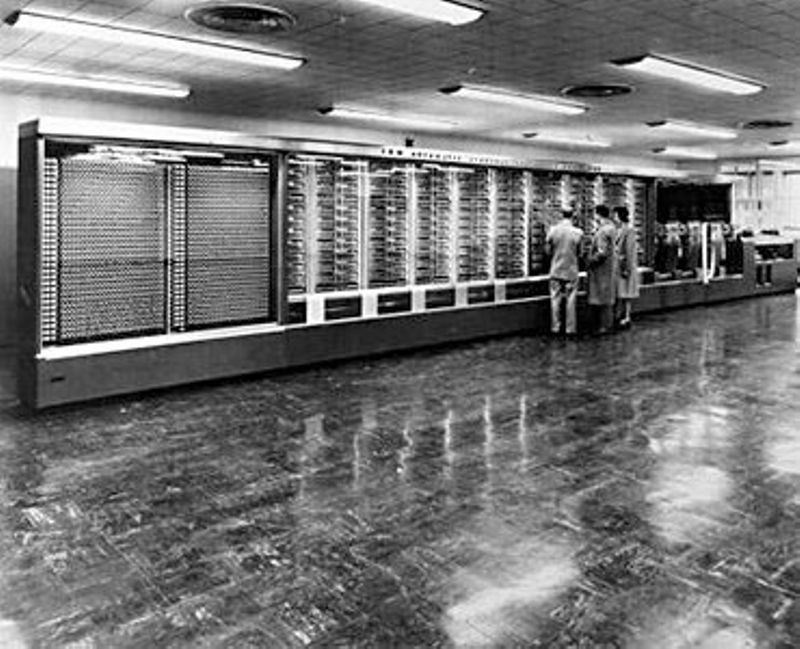

Harvard Mark 1 is completed

Conceived by Harvard physics professor Howard Aiken, and designed and built by IBM, the Harvard Mark 1 is a room-sized, relay-based calculator. The machine had a fifty-foot-long camshaft running the length of the machine that synchronized its thousands of component parts, and it used 3,500 relays. The Mark 1 produced mathematical tables but was soon superseded by electronic stored-program computers.

John von Neumann writes First Draft of a Report on the EDVAC

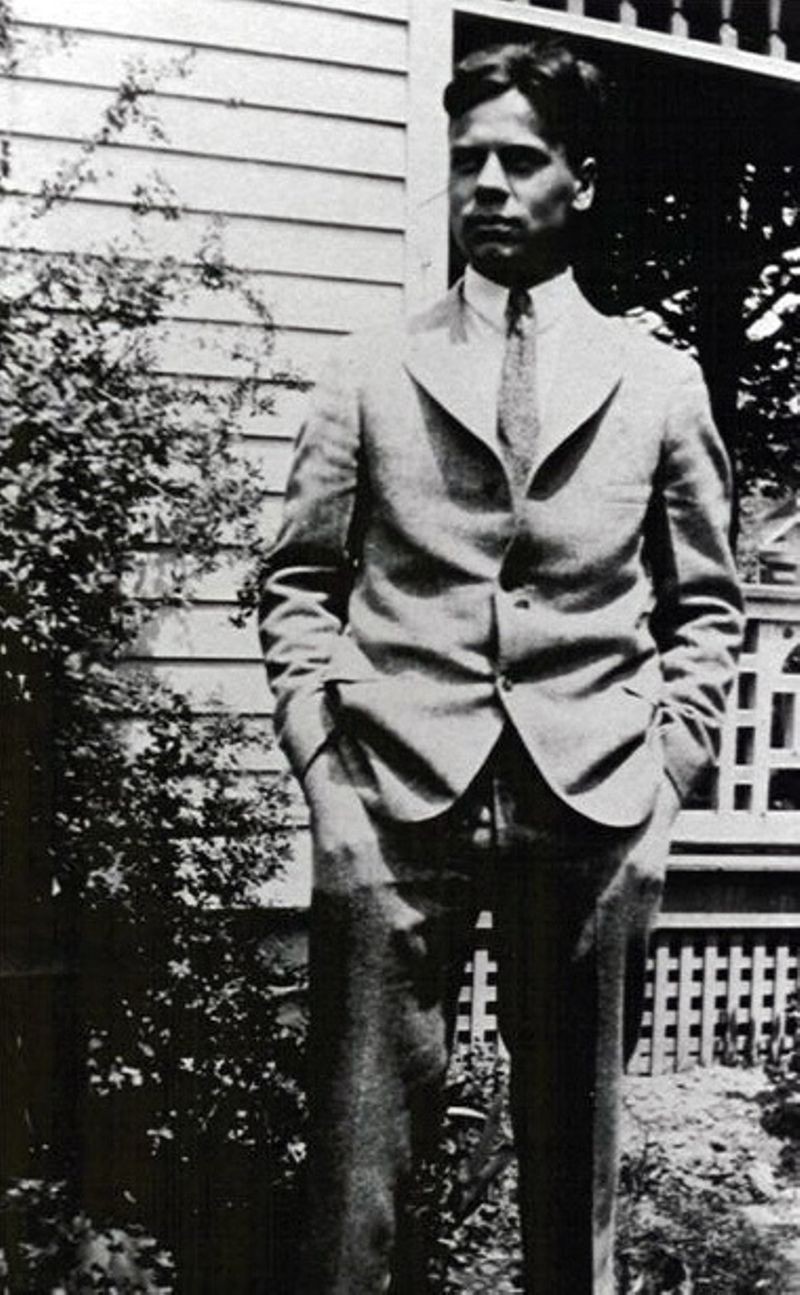

John von Neumann

In a widely circulated paper, mathematician John von Neumann outlines the architecture of a stored-program computer, including electronic storage of programming information and data -- which eliminates the need for more clumsy methods of programming such as plugboards, punched cards and paper. Hungarian-born von Neumann demonstrated prodigious expertise in hydrodynamics, ballistics, meteorology, game theory, statistics, and the use of mechanical devices for computation. After the war, he concentrated on the development of the computer at Princeton’s Institute for Advanced Study.

Moore School lectures take place

The Moore School Building at the University of Pennsylvania

An inspiring summer school on computing at the University of Pennsylvania’s Moore School of Electrical Engineering stimulates construction of stored-program computers at universities and research institutions in the US, France, the UK, and Germany. Among the lecturers were early computer designers like John von Neumann, Howard Aiken, J. Presper Eckert and John Mauchly, as well as mathematicians including Derrick Lehmer, George Stibitz, and Douglas Hartree. Students included future computing pioneers such as Maurice Wilkes, Claude Shannon, David Rees, and Jay Forrester. This free, public set of lectures inspired the EDSAC, BINAC, and, later, IAS machine clones like the AVIDAC.

Project Whirlwind begins

Whirlwind installation at MIT

During World War II, the US Navy approaches the Massachusetts Institute of Technology (MIT) about building a flight simulator to train bomber crews. Under the leadership of MIT's Gordon Brown and Jay Forrester, the team first built a small analog simulator, but found it inaccurate and inflexible. News of the groundbreaking electronic ENIAC computer that same year inspired the group to change course and attempt a digital solution, whereby flight variables could be rapidly programmed in software. Completed in 1951, Whirlwind remains one of the most important computer projects in the history of computing. Foremost among its developments was Forrester’s perfection of magnetic core memory, which became the dominant form of high-speed random access memory for computers until the mid-1970s.

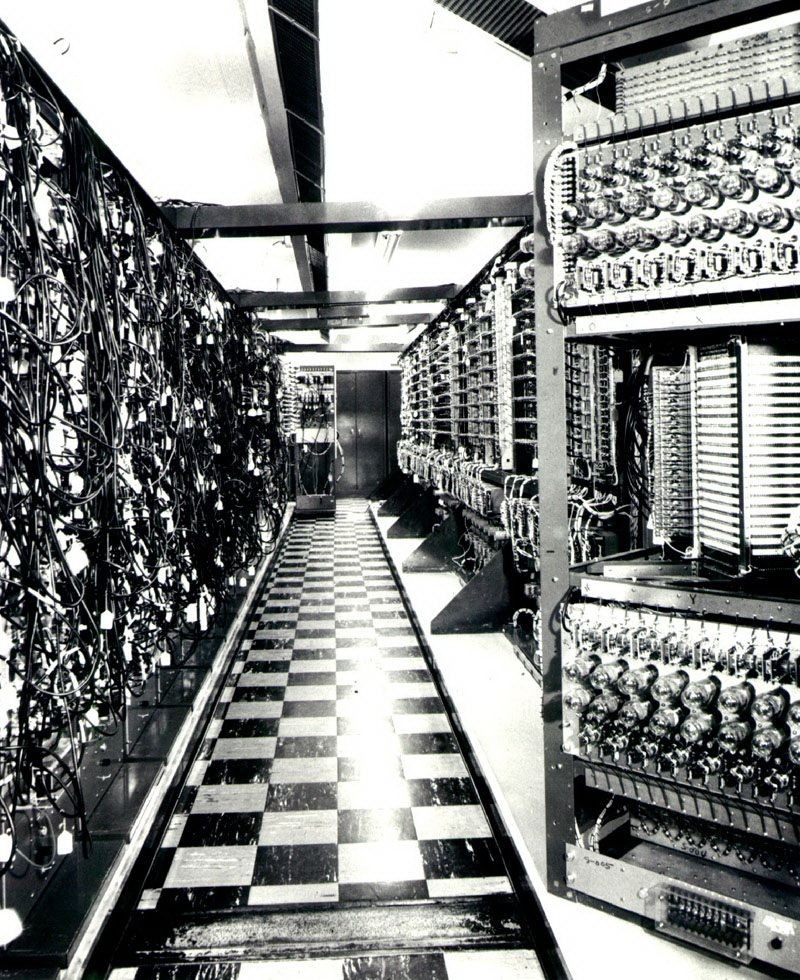

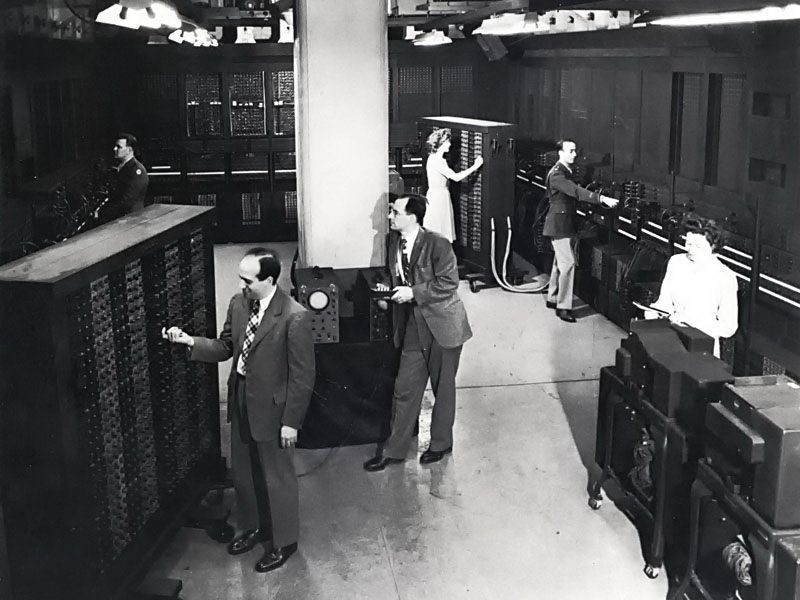

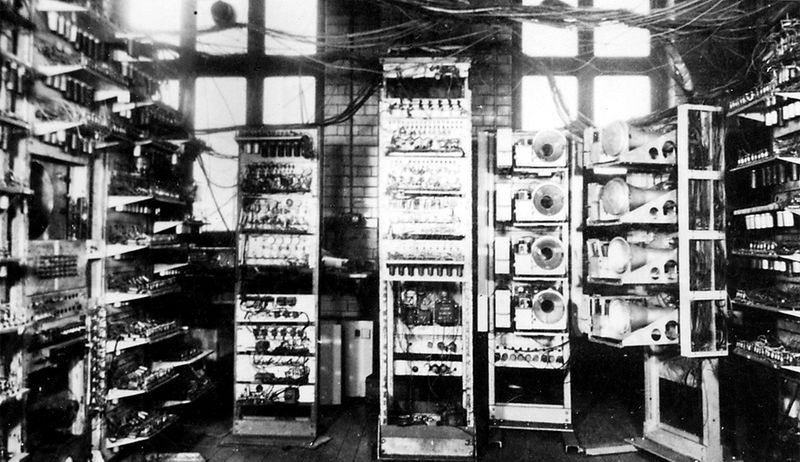

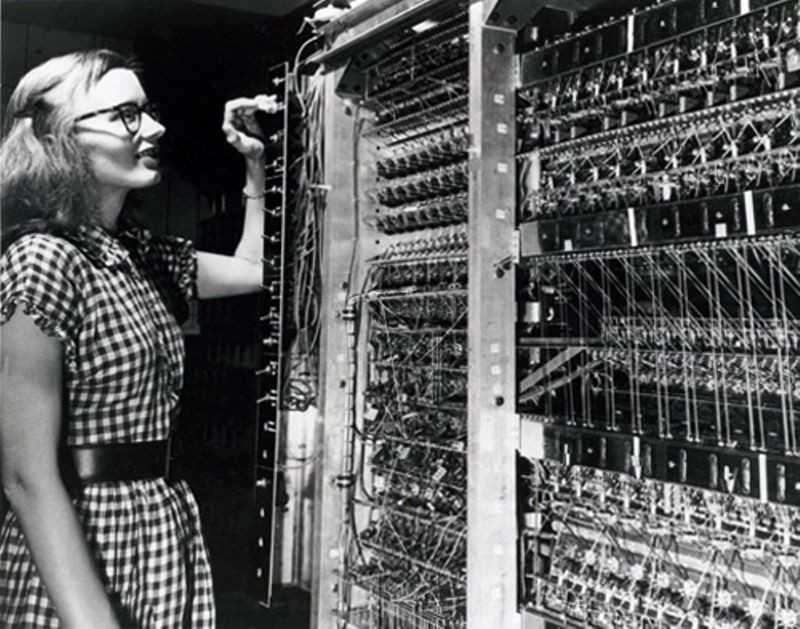

Public unveiling of ENIAC

Started in 1943, the ENIAC computing system was built by John Mauchly and J. Presper Eckert at the Moore School of Electrical Engineering of the University of Pennsylvania. Because of its electronic, as opposed to electromechanical, technology, it is over 1,000 times faster than any previous computer. ENIAC used panel-to-panel wiring and switches for programming, occupied more than 1,000 square feet, used about 18,000 vacuum tubes and weighed 30 tons. It was believed that ENIAC had done more calculation over the ten years it was in operation than all of humanity had until that time.

First Computer Program to Run on a Computer

Kilburn (left) and Williams in front of 'Baby'

University of Manchester researchers Frederic Williams, Tom Kilburn, and Geoff Toothill develop the Small-Scale Experimental Machine (SSEM), better known as the Manchester "Baby." The Baby was built to test a new memory technology developed by Williams and Kilburn -- soon known as the Williams Tube -- which was the first high-speed electronic random access memory for computers. Their first program, consisting of seventeen instructions and written by Kilburn, ran on June 21st, 1948. This was the first program in history to run on a digital, electronic, stored-program computer.

SSEC goes on display

IBM Selective Sequence Electronic Calculator (SSEC)

The Selective Sequence Electronic Calculator (SSEC) project, led by IBM engineer Wallace Eckert, uses both relays and vacuum tubes to process scientific data at the rate of 50 multiplications of two 14-digit numbers per second. Before its decommissioning in 1952, the SSEC produced the moon position tables used in early planning of the 1969 Apollo XII moon landing. These tables were later confirmed by using more modern computers for the actual flights. The SSEC was one of the last of the generation of 'super calculators' to be built using electromechanical technology.

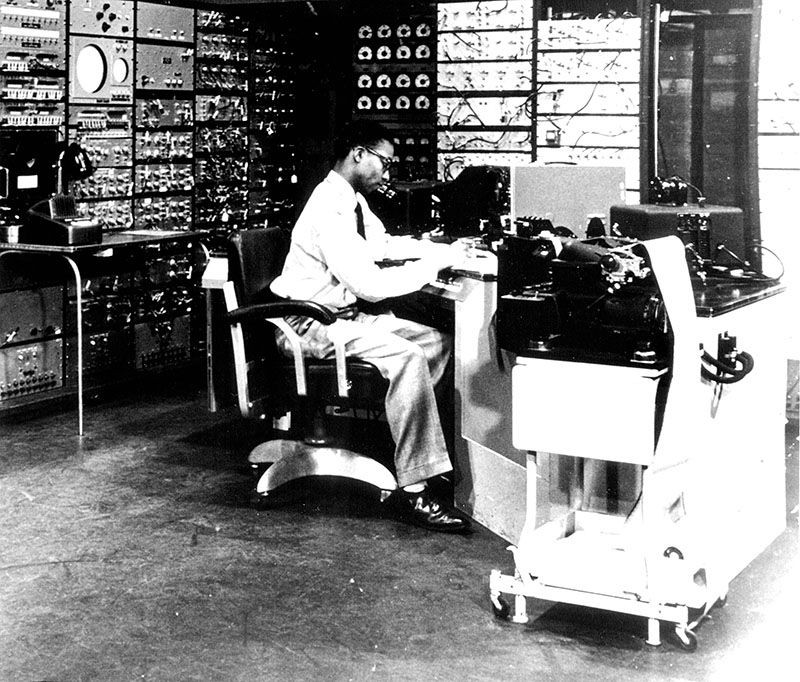

CSIRAC runs first program

While many early digital computers were based on similar designs, such as the IAS and its copies, others are unique designs, like the CSIRAC. Built in Sydney, Australia by the Council for Scientific and Industrial Research for use in its Radiophysics Laboratory, CSIRAC was designed by British-born Trevor Pearcey, and used unusual 12-hole paper tape. It was transferred to the Department of Physics at the University of Melbourne in 1955 and remained in service until 1964.

EDSAC completed

The first practical stored-program computer to provide a regular computing service, EDSAC is built at Cambridge University using vacuum tubes and mercury delay lines for memory. The EDSAC project was led by Cambridge professor and director of the Cambridge Computation Laboratory, Maurice Wilkes. Wilkes' ideas grew out of the Moore School lectures he had attended three years earlier. One major advance in programming was Wilkes' use of a library of short programs, called “subroutines,” stored on punched paper tapes and used for performing common repetitive calculations within a larger program.

MADDIDA developed

MADDIDA (Magnetic Drum Digital Differential Analyzer) prototype

MADDIDA is a digital drum-based differential analyzer. This type of computer is useful in solving many of the mathematical equations scientists and engineers encounter in their work. It was originally created for a nuclear missile design project in 1949 by a team led by Fred Steele. It used 53 vacuum tubes and hundreds of germanium diodes, with a magnetic drum for memory. Tracks on the drum did the mathematical integration. MADDIDA was flown across the country for a demonstration to John von Neumann, who was impressed. Northrop was initially reluctant to make MADDIDA a commercial product, but by the end of 1952, six had sold.

Manchester Mark I completed

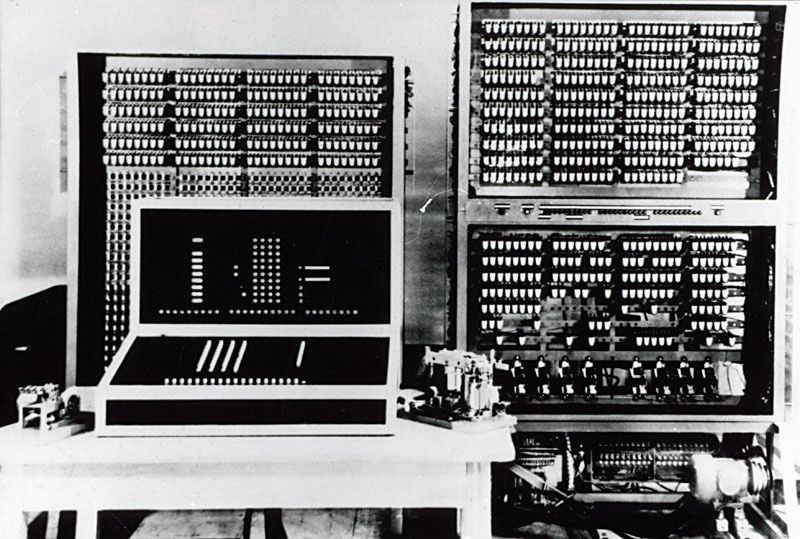

Manchester Mark I

Built by a team led by engineers Frederic Williams and Tom Kilburn, the Mark I serves as the prototype for Ferranti’s first computer – the Ferranti Mark 1. The Manchester Mark I used more than 1,300 vacuum tubes and occupied an area the size of a medium room. Its “Williams-Kilburn tube” memory system was later adopted by several other early computer systems around the world.

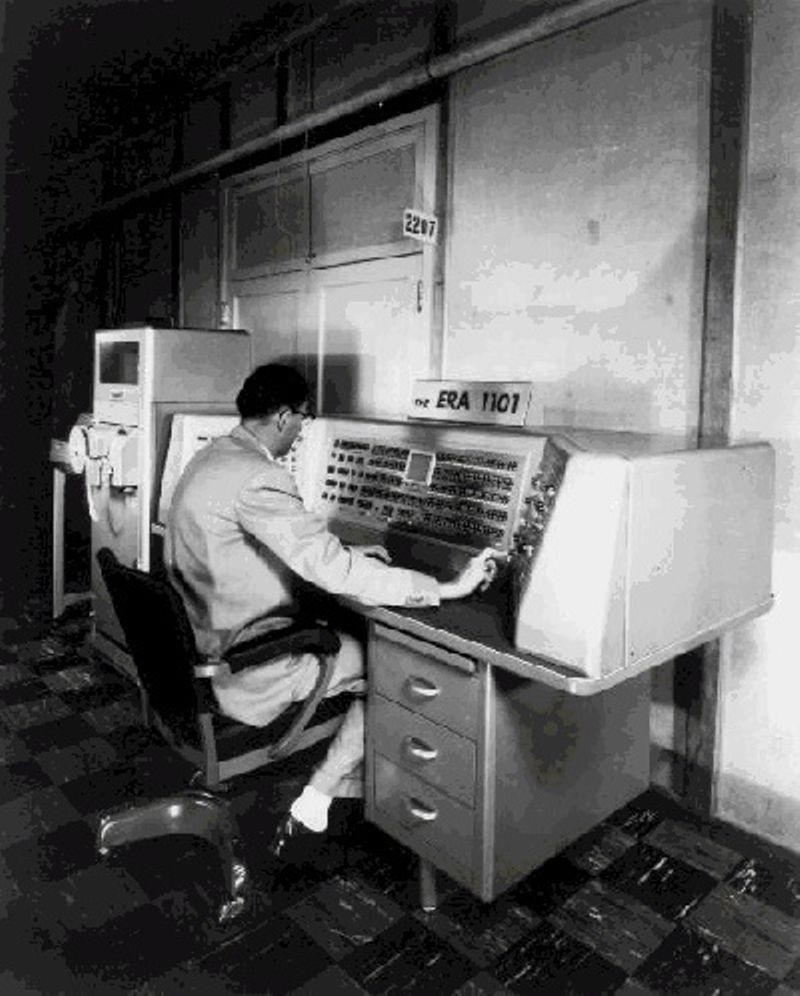

ERA 1101 introduced

The ERA 1101 is one of the first commercially produced computers, and the company’s first customer was the US Navy. Designed by ERA but built by Remington-Rand, the 1101 was intended for high-speed computing and stored 1 million bits on its magnetic drum, one of the earliest magnetic storage devices and a technology which ERA had done much to perfect in its own laboratories. Many of the 1101’s basic architectural details were used again in later Remington-Rand computers until the 1960s.

NPL Pilot ACE completed

Based on ideas from Alan Turing, Britain’s Pilot ACE computer is constructed at the National Physical Laboratory. "We are trying to build a machine to do all kinds of different things simply by programming rather than by the addition of extra apparatus," Turing said at a symposium on large-scale digital calculating machinery in 1947 in Cambridge, Massachusetts. The design packed 800 vacuum tubes into a relatively compact 12 square feet.

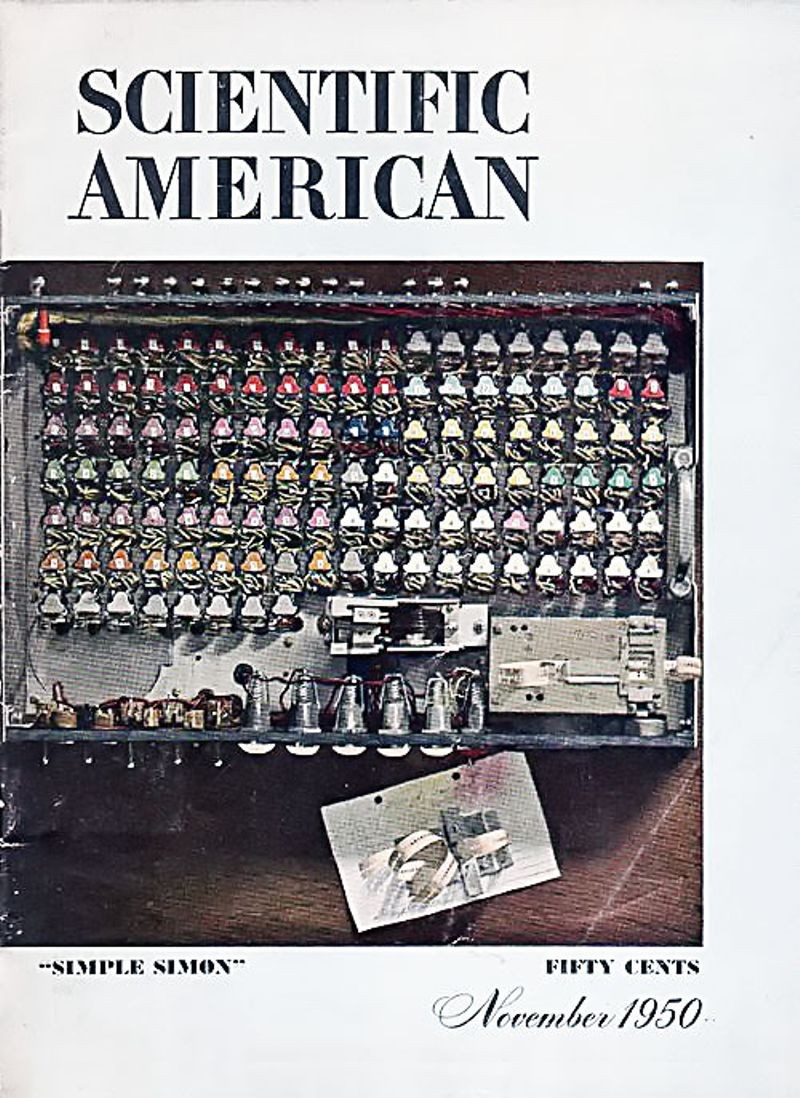

Plans to build the Simon 1 relay logic machine are published

Simon featured on the November 1950 Scientific American cover

The hobbyist magazine Radio Electronics publishes Edmund Berkeley's design for the Simon 1 relay computer from 1950 to 1951. The Simon 1 used relay logic and cost about $600 to build. In his book Giant Brains, Berkeley noted: “We shall now consider how we can design a very simple machine that will think. Let us call it Simon, because of its predecessor, Simple Simon... Simon is so simple and so small in fact that it could be built to fill up less space than a grocery-store box; about four cubic feet.”

SEAC and SWAC completed

The Standards Eastern Automatic Computer (SEAC) is among the first stored program computers completed in the United States. It was built in Washington DC as a test-bed for evaluating components and systems as well as for setting computer standards. It was also one of the first computers to use all-diode logic, a technology more reliable than vacuum tubes. The world's first scanned image was made on SEAC by engineer Russell Kirsch in 1957.

The National Bureau of Standards (NBS), which built the SEAC, also built the Standards Western Automatic Computer (SWAC) at the Institute for Numerical Analysis on the UCLA campus. Rather than testing components like the SEAC, the SWAC was built using already-developed technology. SWAC was used to solve problems in numerical analysis, including developing climate models and discovering five previously unknown Mersenne prime numbers.

Ferranti Mark I sold

Ferranti Mark 1

The title of “first commercially available general-purpose computer” probably goes to Britain’s Ferranti Mark I, the first of which is sold to Manchester University. The Mark 1 was a refinement of the experimental Manchester “Baby” and Manchester Mark 1 computers, also at Manchester University. A British government contract spurred its initial development, but a change in government led to loss of funding, and the second and only other Mark I was sold at a major loss to the University of Toronto, where it was re-christened FERUT.

First Univac 1 delivered to US Census Bureau

Univac 1 installation

The Univac 1 is the first commercial computer to attract widespread public attention. Although manufactured by Remington Rand, the machine was often mistakenly referred to as “the IBM Univac.” Univac computers were used in many different applications, but utilities, insurance companies and the US military were major customers. One biblical scholar even used a Univac 1 to compile a concordance to the King James version of the Bible. Created by Presper Eckert and John Mauchly -- designers of the earlier ENIAC computer -- the Univac 1 used 5,200 vacuum tubes and weighed 29,000 pounds. Remington Rand eventually sold 46 Univac 1s at more than $1 million each.

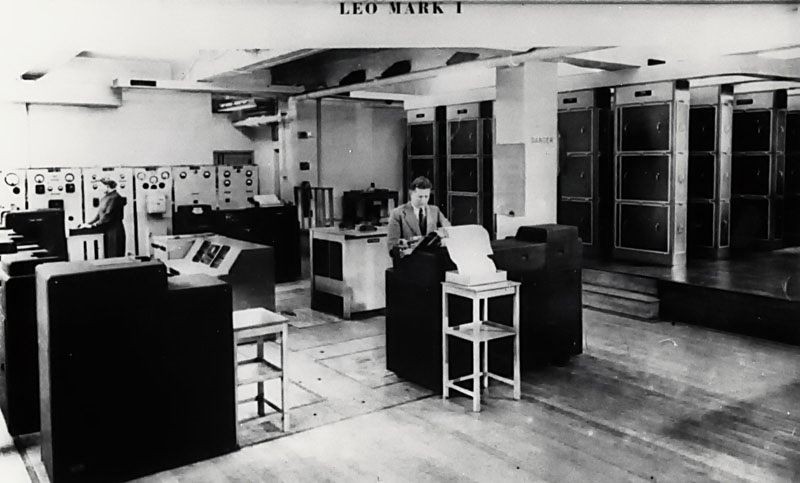

J. Lyons & Company introduce LEO-1

The president of Lyons Tea Co. has the LEO, a computer modeled after the Cambridge University EDSAC, built to solve the problem of production scheduling and delivery of cakes to the hundreds of Lyons tea shops around England. After the success of the first LEO, Lyons went into business manufacturing computers to meet the growing need for data processing systems in business. The LEO was England’s first commercial computer and was performing useful work before any other commercial computer system in the world.

IAS computer operational

MANIAC at Los Alamos

The Institute for Advanced Study (IAS) computer is a multi-year research project conducted under the overall supervision of world-famous mathematician John von Neumann. The notion of storing both data and instructions in memory became known as the ‘stored program concept’ to distinguish it from earlier methods of instructing a computer. The IAS computer was designed for scientific calculations and it performed essential work for the US atomic weapons program. Over the next few years, the basic design of the IAS machine was copied in at least 17 places and given similar-sounding names, for example, the MANIAC at Los Alamos Scientific Laboratory; the ILLIAC at the University of Illinois; the Johnniac at The Rand Corporation; and the SILLIAC in Australia.
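The stored program concept can be illustrated with a toy machine whose instructions and data sit in the same memory. This is a minimal sketch: the four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the `run` function are hypothetical and are not the IAS machine's actual instruction set.

```python
def run(memory: list) -> list:
    """Execute a program held in the same list as the data it works on."""
    acc = 0   # accumulator register
    pc = 0    # program counter: index of the next instruction in memory
    while True:
        op, arg = memory[pc]          # fetch the instruction from memory
        pc += 1
        if op == "LOAD":
            acc = memory[arg]         # copy a data word into the accumulator
        elif op == "ADD":
            acc += memory[arg]        # add a data word to the accumulator
        elif op == "STORE":
            memory[arg] = acc         # write the accumulator back to memory
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r}")


# Cells 0-3 hold instructions; cells 4-6 hold data. Both live in one memory.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,   # cell 4: first operand
    3,   # cell 5: second operand
    0,   # cell 6: result goes here
]
```

Calling `run(list(program))` leaves the sum of cells 4 and 5 in cell 6. Because the program is just memory contents, changing what the machine does means changing stored values rather than rewiring plugboards.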

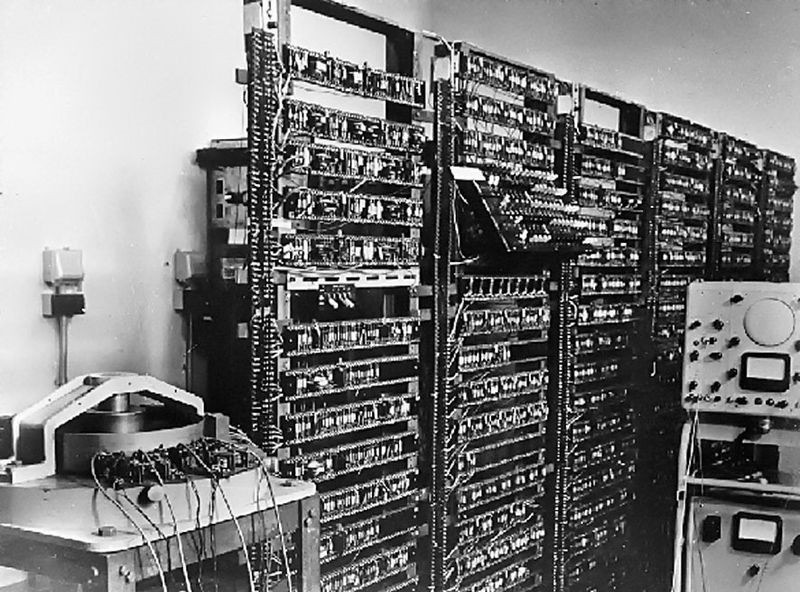

Grimsdale and Webb build early transistorized computer

Manchester transistorized computer

Working under Tom Kilburn at England’s Manchester University, Richard Grimsdale and Douglas Webb demonstrate a prototype transistorized computer, the "Manchester TC", on November 16, 1953. The 48-bit machine used 92 point-contact transistors and 550 diodes.

IBM ships its Model 701 Electronic Data Processing Machine

Cuthbert Hurd (standing) and Thomas Watson, Sr. at IBM 701 console

During three years of production, IBM sells 19 701s to research laboratories, aircraft companies, and the federal government. Also known inside IBM as the “Defense Calculator,” the 701 rented for $15,000 a month. Programmer Arthur Samuel used the 701 to write the first computer program designed to play checkers. The 701 introduction also marked the beginning of IBM’s entry into the large-scale computer market, a market it came to dominate in later decades.

RAND Corporation completes Johnniac computer

RAND Corporation’s Johnniac

The Johnniac computer is one of 17 computers that followed the basic design of Princeton's Institute for Advanced Study (IAS) computer. It was named after John von Neumann, a world famous mathematician and computer pioneer of the day. Johnniac was used for scientific and engineering calculations. It was also repeatedly expanded and improved throughout its 13-year lifespan. Many innovative programs were created for Johnniac, including the time-sharing system JOSS that allowed many users to simultaneously access the machine.

IBM 650 magnetic drum calculator introduced

IBM establishes the 650 as its first mass-produced computer, with the company selling 450 in just one year. Spinning at 12,500 rpm, the 650’s magnetic data-storage drum allowed much faster access to stored information than other drum-based machines. The Model 650 was also highly popular in universities, where a generation of students first learned programming.

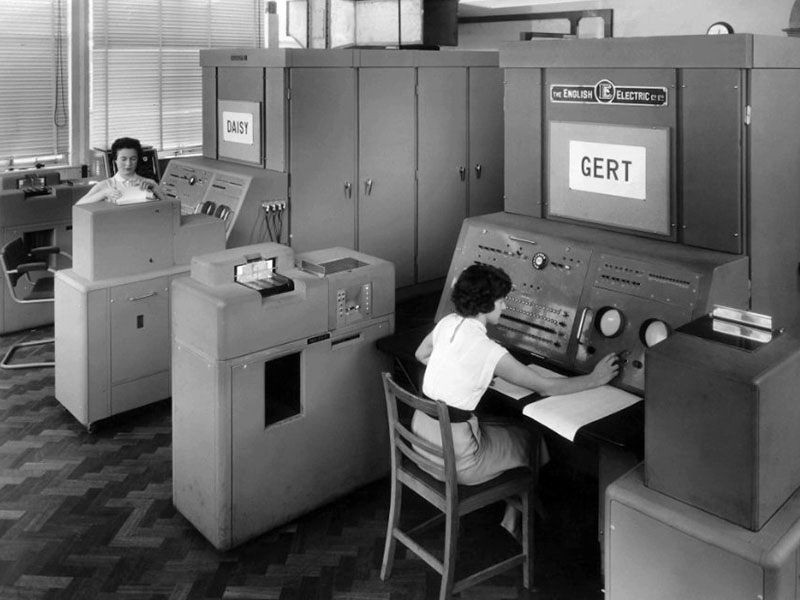

English Electric DEUCE introduced

English Electric DEUCE

A commercial version of Alan Turing's Pilot ACE, called DEUCE -- the Digital Electronic Universal Computing Engine -- is used mostly for science and engineering problems and a few commercial applications. Over 30 were completed, including one delivered to Australia.

Direct keyboard input to computers

Joe Thompson at Whirlwind console, ca. 1951

At MIT, researchers begin experimenting with direct keyboard input to computers, a precursor to today’s normal mode of operation. Typically, computer users of the time fed their programs into a computer using punched cards or paper tape. Doug Ross wrote a memo advocating direct access in February. Ross contended that a Flexowriter -- an electrically-controlled typewriter -- connected to an MIT computer could function as a keyboard input device due to its low cost and flexibility. An experiment conducted five months later on the MIT Whirlwind computer confirmed how useful and convenient a keyboard input device could be.

Librascope LGP-30 introduced

Physicist Stan Frankel, intrigued by small, general-purpose computers, developed the MINAC at Caltech. The Librascope division of defense contractor General Precision buys Frankel’s design, renaming it the LGP-30 in 1956. Used for science and engineering as well as simple data processing, the LGP-30 was a “bargain” at less than $50,000 and an early example of a ‘personal computer,’ that is, a computer made for a single user.

MIT researchers build the TX-0

TX-0 at MIT

The TX-0 (“Transistor eXperimental - 0”) is the first general-purpose programmable computer built with transistors. For easy replacement, designers placed each transistor circuit inside a "bottle," similar to a vacuum tube. Constructed at MIT’s Lincoln Laboratory, the TX-0 moved to the MIT Research Laboratory of Electronics, where it hosted some early imaginative tests of programming, including writing a Western movie shown on television, 3-D tic-tac-toe, and a maze in which a mouse found martinis and became increasingly inebriated.

Digital Equipment Corporation (DEC) founded

The Maynard mill

DEC is founded initially to make electronic modules for test, measurement, prototyping and control markets. Its founders were Ken and Stan Olsen, and Harlan Anderson. Headquartered in Maynard, Massachusetts, Digital Equipment Corporation took over 8,680 square feet of leased space in a nineteenth-century mill that once produced blankets and uniforms for soldiers who fought in the Civil War. General Georges Doriot and his pioneering venture capital firm, American Research and Development, invested $70,000 for 70% of DEC’s stock to launch the company in 1957. The mill is still in use today as an office park (Clock Tower Place).

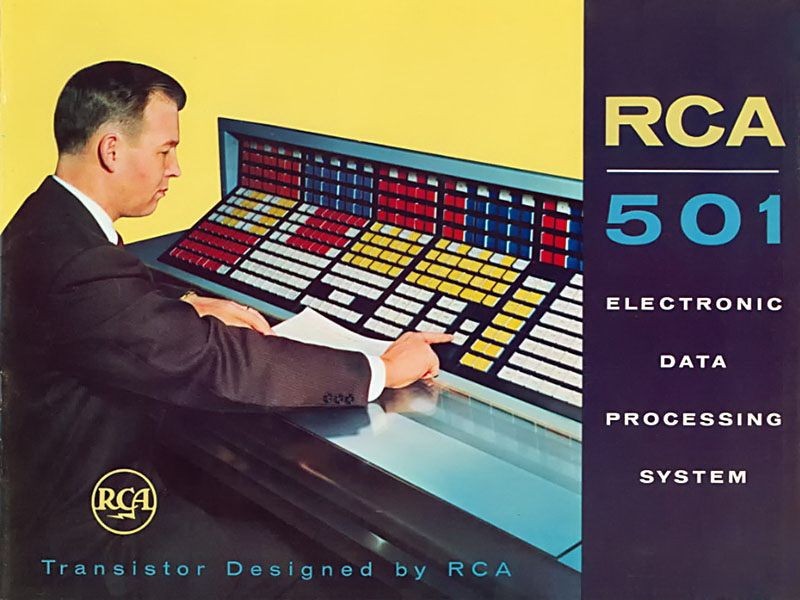

RCA introduces its Model 501 transistorized computer

RCA 501 brochure cover

The 501 is built on a 'building block' concept, which makes it highly flexible for many different uses, and it can simultaneously control up to 63 tape drives, which is very useful for large databases of information. For many business users, quick access to this huge storage capability outweighed its relatively slow processing speed. Customers included the US military as well as industry.

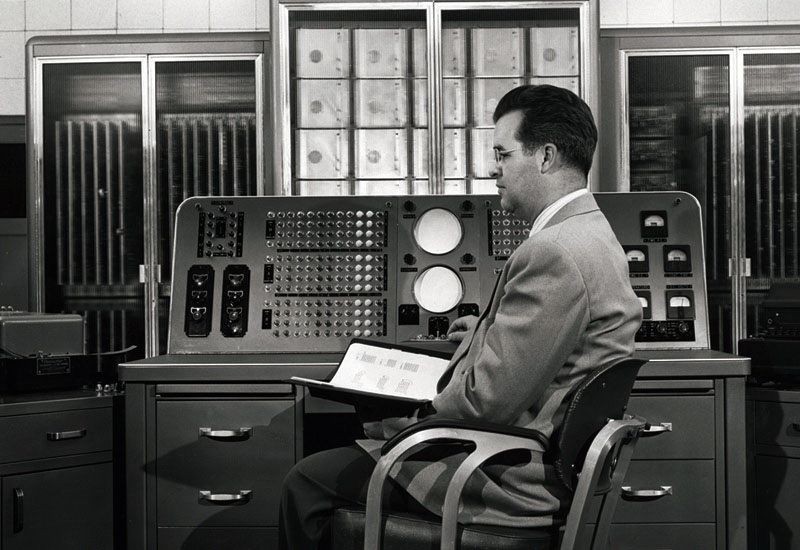

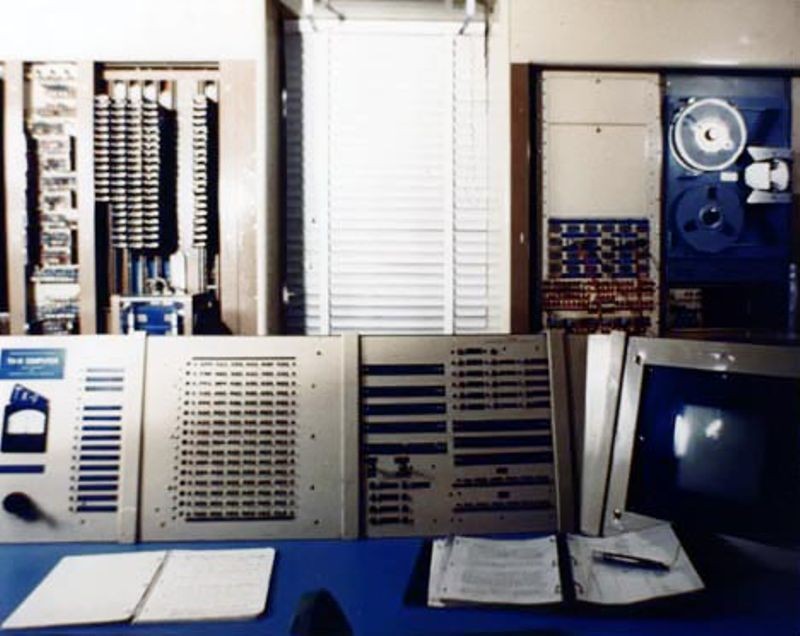

SAGE system goes online

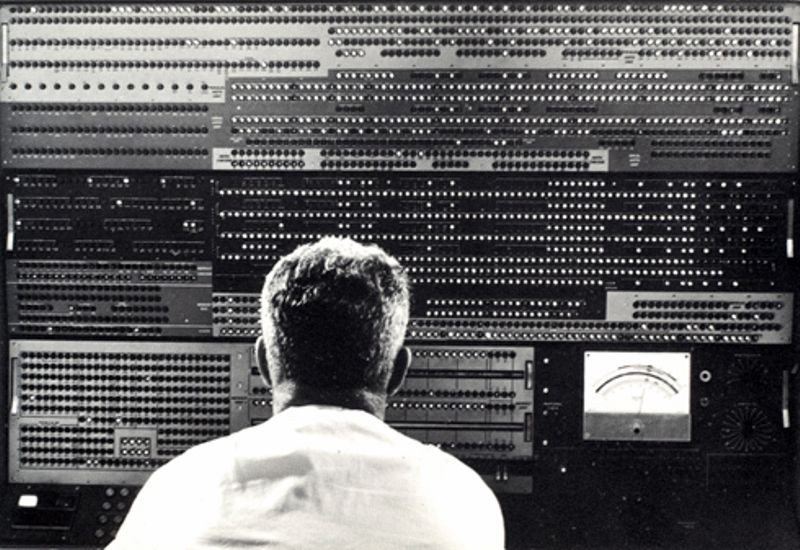

SAGE Operator Station

The first large-scale computer communications network, SAGE connects 23 hardened computer sites in the US and Canada. Its task was to detect incoming Soviet bombers and direct interceptor aircraft to destroy them. Operators directed actions by touching a light gun to the SAGE airspace display. The air defense system used two AN/FSQ-7 computers, each of which used a full megawatt of power to drive its 55,000 vacuum tubes, 175,000 diodes and 13,000 transistors.

DEC PDP-1 introduced

Ed Fredkin at DEC PDP-1

The typical PDP-1 computer system, which sells for about $120,000, includes a cathode ray tube graphic display and paper tape input/output, needs no air conditioning, and requires only one operator, all of which become standards for minicomputers. Its large scope intrigued early hackers at MIT, who wrote the first computerized video game, SpaceWar!, as well as programs to play music. More than 50 PDP-1s were sold.

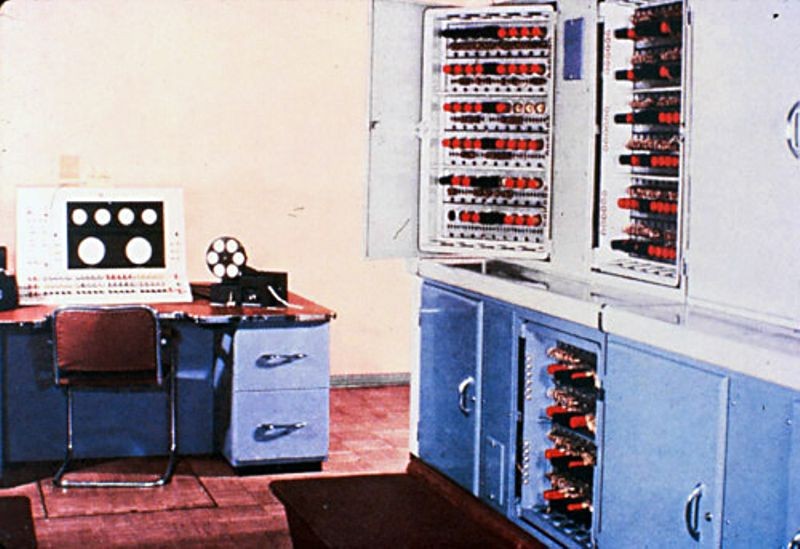

NEAC 2203 goes online

NEAC 2203 transistorized computer

An early transistorized computer, the NEAC (Nippon Electric Automatic Computer) includes a CPU, console, paper tape reader and punch, printer and magnetic tape units. It was sold exclusively in Japan, but could process alphabetic and Japanese kana characters. Only about thirty NEACs were sold. It managed Japan's first on-line, real-time reservation system for Kinki Nippon Railways in 1960. The last one was decommissioned in 1979.

IBM 7030 (“Stretch”) completed

IBM Stretch

IBM’s 7000 series of mainframe computers are the company’s first to use transistors. At the top of the line was the Model 7030, also known as "Stretch." Nine of the computers, which featured dozens of advanced design innovations, were sold, mainly to national laboratories and major scientific users. A special version, known as HARVEST, was developed for the US National Security Agency (NSA). The knowledge and technologies developed for the Stretch project played a major role in the design, management, and manufacture of the later IBM System/360 -- the most successful computer family in IBM history.

IBM Introduces 1400 series

The 1401 mainframe, the first in the series, replaces earlier vacuum tube technology with smaller, more reliable transistors. Demand called for more than 12,000 of the 1401 computers, and the machine’s success made a strong case for using general-purpose computers rather than specialized systems. By the mid-1960s, nearly half of all computers in the world were IBM 1401s.

Minuteman I missile guidance computer developed

Minuteman Guidance computer

Minuteman missiles use transistorized computers to continuously calculate their position in flight. The computer had to be rugged and fast, with advanced circuit design and reliable packaging able to withstand the forces of a missile launch. The military’s high standards for its transistors pushed manufacturers to improve quality control. When the Minuteman I was decommissioned, some universities received these computers for use by students.

Naval Tactical Data System introduced

Naval Tactical Data System (NTDS)

The US Navy Tactical Data System uses computers to integrate and display shipboard radar, sonar and communications data. This real-time information system began operating in the early 1960s. In October 1961, the Navy tested the NTDS on the USS Oriskany carrier and the USS King and USS Mahan frigates. After being successfully used for decades, NTDS was phased out in favor of the newer AEGIS system in the 1980s.

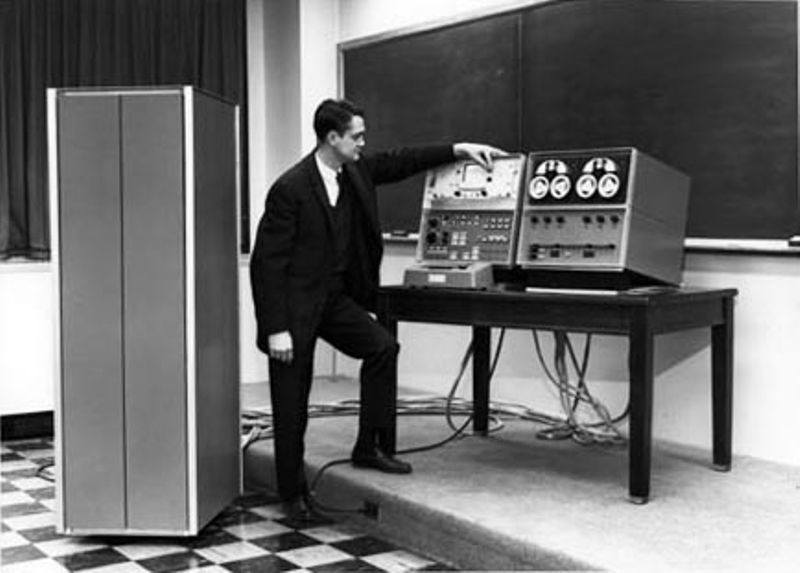

MIT LINC introduced

Wesley Clark with LINC

The LINC is an early and important example of a ‘personal computer,’ that is, a computer designed for only one user. It was designed by MIT Lincoln Laboratory engineer Wesley Clark. Under the auspices of a National Institutes of Health (NIH) grant, biomedical research faculty from around the United States came to a workshop at MIT to build their own LINCs, and then bring them back to their home institutions where they would be used. For research, Digital Equipment Corporation (DEC) supplied the components, and 50 original LINCs were made. The LINC was later commercialized by DEC and sold as the LINC-8.

The Atlas Computer debuts

Chilton Atlas installation

A joint project of England’s Manchester University, Ferranti Computers, and Plessey, Atlas comes online nine years after Manchester’s computer lab begins exploring transistor technology. Atlas was the fastest computer in the world at the time and introduced the concept of “virtual memory,” that is, using a disk or drum as an extension of main memory. System control was provided through the Atlas Supervisor, which some consider to be the first true operating system.
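The idea of using a drum or disk as an extension of main memory can be sketched in a few lines. The example below assumes a tiny main memory that holds only a fixed number of pages and evicts the oldest resident page on a miss. The class name `PagedMemory` and the first-in, first-out eviction policy are assumptions made for illustration, not a description of how the Atlas actually chose pages.

```python
from collections import OrderedDict


class PagedMemory:
    """Toy virtual memory: a few fast frames backed by a larger, slower store."""

    def __init__(self, frames: int, backing_store: dict):
        self.frames = frames                  # how many pages fit in main memory
        self.backing_store = backing_store    # page number -> list of words (the "drum")
        self.resident = OrderedDict()         # pages currently in main memory, oldest first
        self.faults = 0                       # number of times a page had to be fetched

    def _load(self, page: int) -> list:
        if page in self.resident:
            return self.resident[page]
        self.faults += 1
        if len(self.resident) >= self.frames:
            # Main memory is full: evict the oldest page and write it back.
            old_page, old_words = self.resident.popitem(last=False)
            self.backing_store[old_page] = old_words
        # Copy the page in from the backing store.
        self.resident[page] = list(self.backing_store[page])
        return self.resident[page]

    def read(self, page: int, offset: int) -> int:
        return self._load(page)[offset]

    def write(self, page: int, offset: int, value: int) -> None:
        self._load(page)[offset] = value
```

A program using `read` and `write` sees three pages of storage even when only two frames exist; the cost shows up as page faults rather than as a smaller address space.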

CDC 6600 supercomputer introduced

The Control Data Corporation (CDC) 6600 performs up to 3 million instructions per second, three times faster than its closest competitor, the IBM 7030 supercomputer. The 6600 retained the distinction of being the fastest computer in the world until surpassed by its successor, the CDC 7600, in 1968. Part of the speed came from the computer’s design, which used 10 small computers, known as peripheral processing units, to offload the workload from the central processor.

Digital Equipment Corporation introduces the PDP-8

PDP-8 advertisement

The Canadian Chalk River Nuclear Lab needed a special device to monitor a reactor. Instead of designing a custom controller, two young engineers from Digital Equipment Corporation (DEC) -- Gordon Bell and Edson de Castro -- do something unusual: they develop a small, general purpose computer and program it to do the job. A later version of that machine became the PDP-8, the first commercially successful minicomputer. The PDP-8 sold for $18,000, one-fifth the price of a small IBM System/360 mainframe. Because of its speed, small size, and reasonable cost, the PDP-8 was sold by the thousands to manufacturing plants, small businesses, and scientific laboratories around the world.

IBM announces System/360

IBM 360 Model 40

System/360 is a major event in the history of computing. On April 7, IBM announced five models of System/360, spanning a 50-to-1 performance range. At the same press conference, IBM also announced 40 completely new peripherals for the new family. System/360 was aimed at both business and scientific customers and all models could run the same software, largely without modification. IBM’s initial investment of $5 billion was quickly returned as orders for the system climbed to 1,000 per month within two years. At the time IBM released the System/360, the company had just made the transition from discrete transistors to integrated circuits, and its major source of revenue began to move from punched card equipment to electronic computer systems.

SABRE comes on-line

Airline reservation agents working with SABRE

SABRE is a joint project between American Airlines and IBM. Operational by 1964, it was not the first computerized reservation system, but it was well publicized and became very influential. Running on dual IBM 7090 mainframe computer systems, SABRE was inspired by IBM’s earlier work on the SAGE air-defense system. Eventually, SABRE expanded, even making airline reservations available via on-line services such as CompuServe, Genie, and America Online.

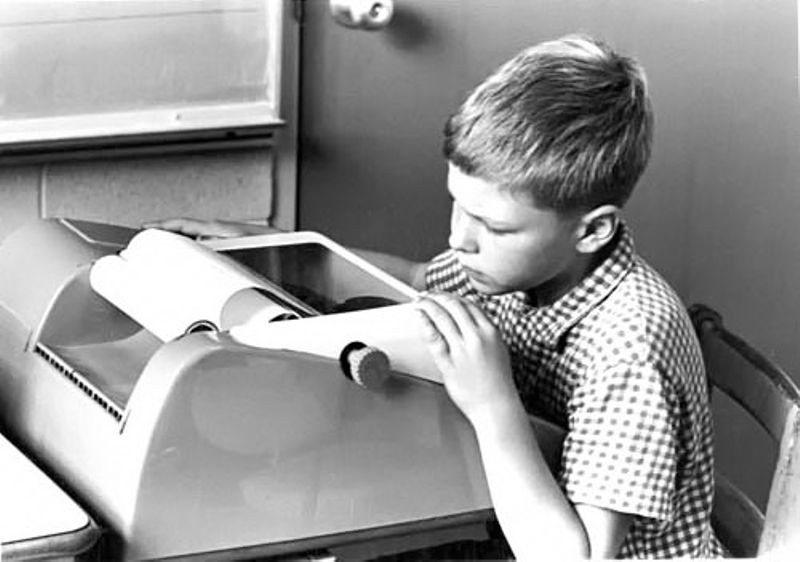

Teletype introduced its ASR-33 Teletype

Student using ASR-33

At a cost to computer makers of roughly $700, the ASR-33 Teletype is originally designed as a low cost terminal for the Western Union communications network. Throughout the 1960s and ’70s, the ASR-33 was a popular and inexpensive choice of input and output device for minicomputers and many of the first generation of microcomputers.

3C DDP-116 introduced

DDP-116 General Purpose Computer

Designed by engineer Gardner Hendrie for Computer Control Corporation (CCC), the DDP-116 is announced at the 1965 Spring Joint Computer Conference. It was the world's first commercial 16-bit minicomputer and 172 systems were sold. The basic computer cost $28,500.

Olivetti Programma 101 is released

Olivetti Programma 101

Announced the previous year at the New York World's Fair, the Programma 101 goes on sale. This printing programmable calculator was made from discrete transistors and an acoustic delay-line memory. The Programma 101 could do addition, subtraction, multiplication, and division, as well as calculate square roots. 40,000 were sold, including 10 to NASA for use on the Apollo space project.

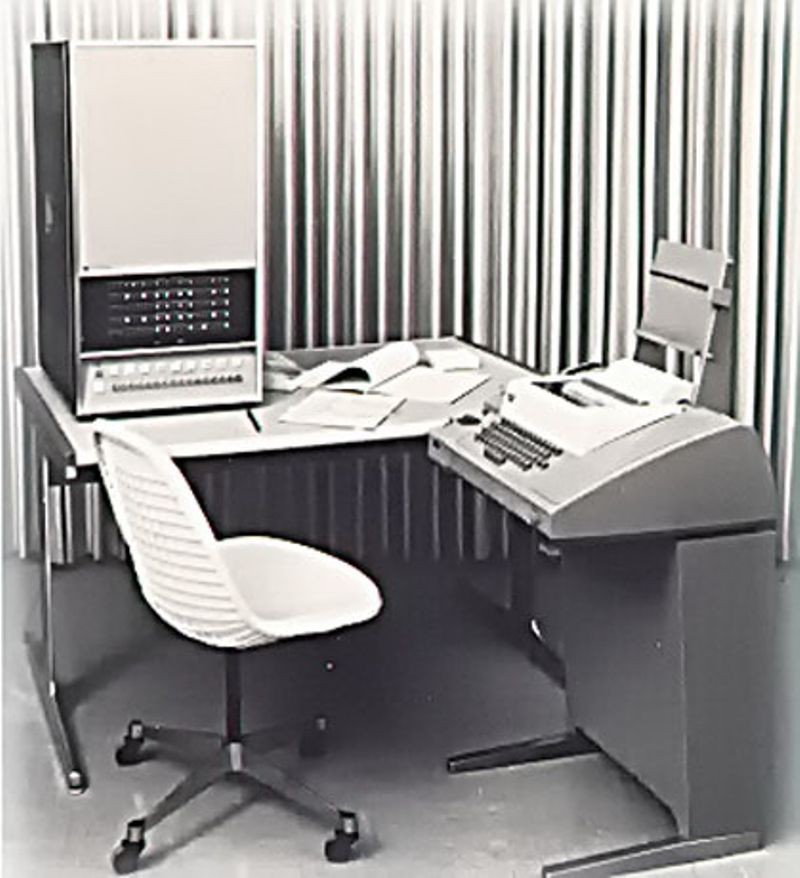

HP introduces the HP 2116A

HP 2116A system

The 2116A is HP’s first computer. It was developed as a versatile instrument controller for HP's growing family of programmable test and measurement products. It interfaced with a wide number of standard laboratory instruments, allowing customers to computerize their instrument systems. The 2116A also marked HP's first use of integrated circuits in a commercial product.

ILLIAC IV project begins

A large parallel processing computer, the ILLIAC IV does not operate until 1972. It was eventually housed at NASA’s Ames Research Center in Mountain View, California. The most ambitious massively parallel computer at the time, the ILLIAC IV was plagued with design and production problems. Once finally completed, it achieved a computational speed of 200 million instructions per second and 1 billion bits per second of I/O transfer via a unique combination of its parallel architecture and the overlapping or "pipelining" structure of its 64 processing elements.

RCA announces its Spectra series of computers

Image from RCA Spectra-70 brochure

The first large commercial computers to use integrated circuits, the Spectra systems let RCA highlight the IC's advantage over IBM’s custom SLT modules. Spectra systems were marketed on the basis of their compatibility with the IBM System/360 series of computers, since they implemented the IBM 360 instruction set and could run most IBM software with little or no modification.

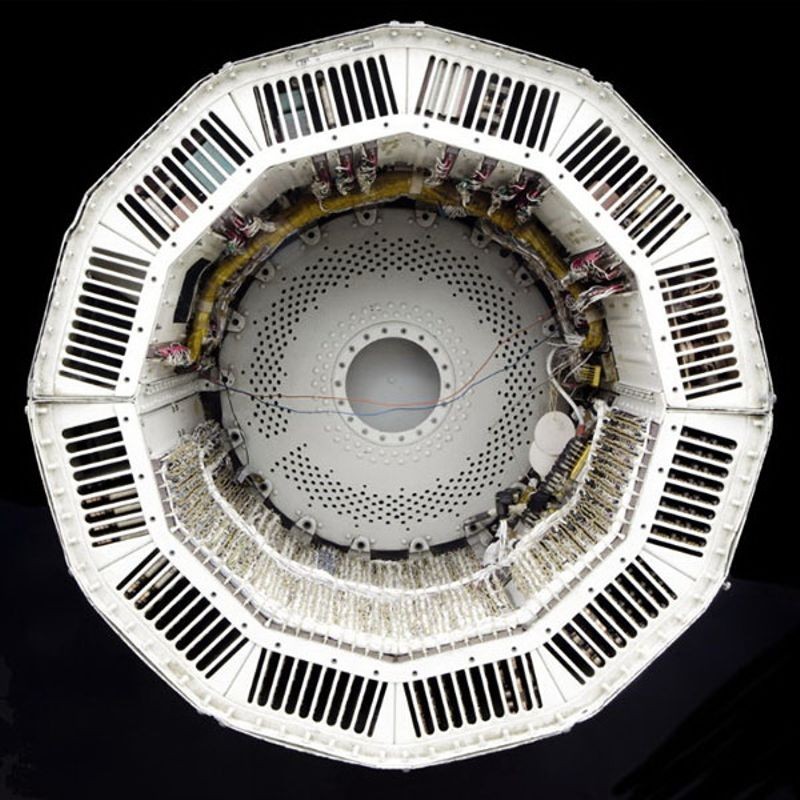

Apollo Guidance Computer (AGC) makes its debut

DSKY interface for the Apollo Guidance Computer

Designed by scientists and engineers at MIT’s Instrumentation Laboratory, the Apollo Guidance Computer (AGC) is the culmination of years of work to reduce the size of the Apollo spacecraft computer from the size of seven refrigerators side-by-side to a compact unit weighing only 70 lbs. and taking up a volume of less than 1 cubic foot. The AGC’s first flight was on Apollo 7. A year later, it steered Apollo 11 to the lunar surface. Astronauts communicated with the computer by punching two-digit codes into the display and keyboard unit (DSKY). The AGC was one of the earliest uses of integrated circuits, and used core memory, as well as read-only magnetic rope memory. The astronauts were responsible for entering more than 10,000 commands into the AGC for each trip between Earth and the Moon.

Data General Corporation introduces the Nova Minicomputer

Edson de Castro with a Data General Nova

Started by a group of engineers who left Digital Equipment Corporation (DEC), Data General designs the Nova minicomputer. It had 32 KB of memory and sold for $8,000. Ed de Castro, its main designer and co-founder of Data General, had earlier led the team that created the DEC PDP-8. The Nova line of computers continued through the 1970s, and influenced later systems like the Xerox Alto and Apple-1.

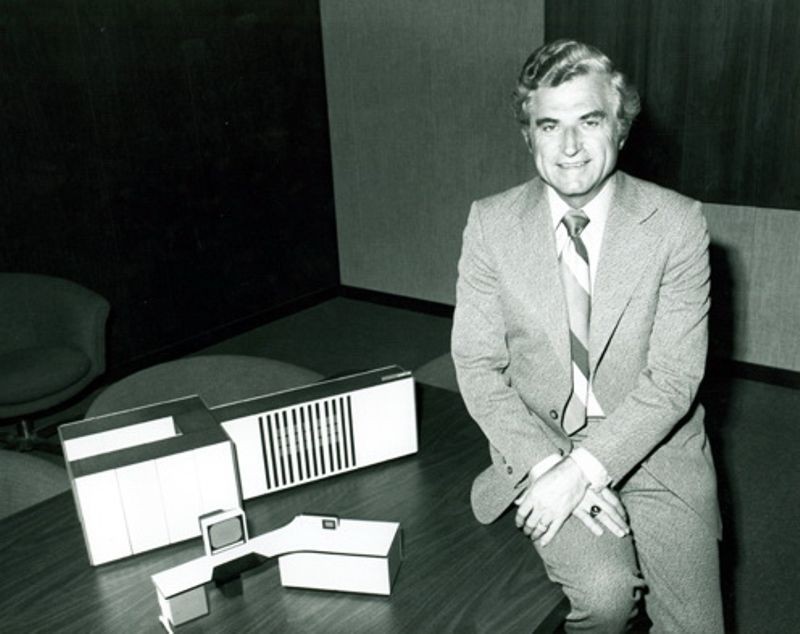

Amdahl Corporation introduces the Amdahl 470

Gene Amdahl with 470V/6 model

Gene Amdahl, father of the IBM System/360, starts his own company, Amdahl Corporation, to compete with IBM in mainframe computer systems. The 470V/6 was the company’s first product and ran the same software as IBM System/370 computers but cost less and was smaller and faster.

First Kenbak-1 is sold

One of the earliest personal computers, the Kenbak-1 is advertised for $750 in Scientific American magazine. Designed by John V. Blankenbaker using standard medium- and small-scale integrated circuits, the Kenbak-1 relied on switches for input and lights for output from its 256-byte memory. In 1973, after selling only 40 machines, Kenbak Corporation closed its doors.

Hewlett-Packard introduces the HP-35

HP-35 handheld calculator

The HP-35 is initially designed for internal use by HP employees. Co-founder Bill Hewlett issues a challenge to his engineers in 1971: fit all of the features of their desktop scientific calculator into a package small enough for his shirt pocket. They did. Marketed as “a fast, extremely accurate electronic slide rule” with a solid-state memory similar to that of a computer, the HP-35 distinguished itself from its competitors by its ability to perform a broad variety of logarithmic and trigonometric functions, to store more intermediate solutions for later use, and to accept and display entries in a form similar to standard scientific notation. The HP-35 helped HP become one of the most dominant companies in the handheld calculator market for more than two decades.

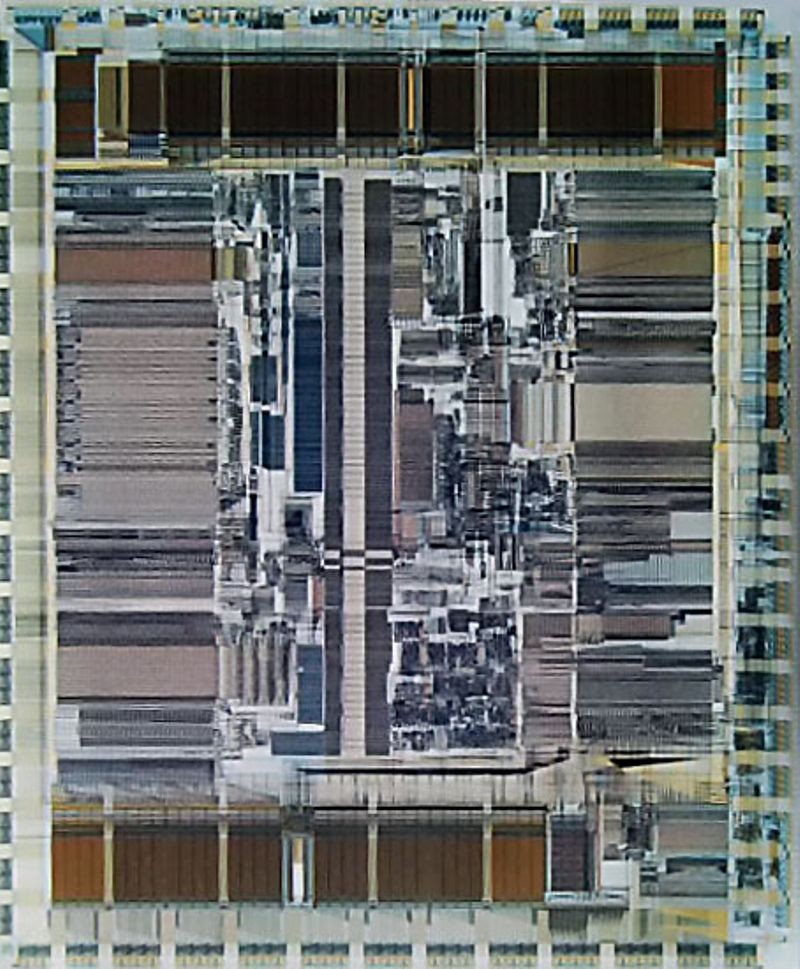

Intel introduces the first microprocessor

Advertisement for Intel's 4004

Computer History Museum

The first advertisement for a microprocessor, the Intel 4004, appears in Electronic News. Developed for Busicom, a Japanese calculator maker, the 4004 had 2250 transistors and could perform up to 90,000 operations per second in four-bit chunks. Federico Faggin led the design and Ted Hoff led the architecture.

Laser printer invented at Xerox PARC

Dover laser printer

Xerox physicist Gary Starkweather realizes in 1967 that exposing a copy machine’s light-sensitive drum to a paper original isn’t the only way to create an image. A computer could “write” it with a laser instead. Xerox wasn’t interested. So in 1971, Starkweather transferred to the Xerox Palo Alto Research Center (PARC), away from corporate oversight. Within a year, he had built the world’s first laser printer, launching a new era in computer printing and generating billions of dollars in revenue for Xerox. The laser printer was used with PARC’s Alto computer, and was commercialized as the Xerox 9700.

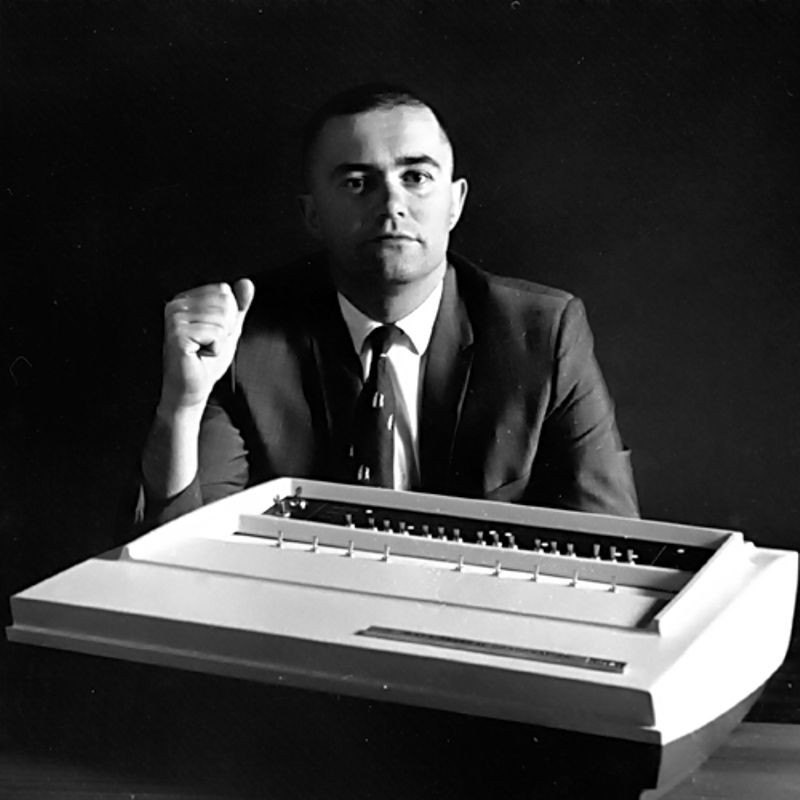

IBM SCAMP is developed

Dr. Paul Friedl with SCAMP prototype

Under the direction of engineer Dr. Paul Friedl, the Special Computer APL Machine Portable (SCAMP) personal computer prototype is developed at IBM's Los Gatos and Palo Alto, California laboratories. IBM’s first personal computer, the system was designed to run the APL programming language in a compact, briefcase-like enclosure which comprised a keyboard, CRT display, and cassette tape storage. Friedl used the SCAMP prototype to gain approval within IBM to promote and develop IBM’s 5100 family of computers, including the most successful, the 5150, also known as the IBM Personal Computer (PC), introduced in 1981. From concept to finished system, SCAMP took only six months to develop.

Micral is released

Based on the Intel 8008 microprocessor, the Micral is one of the earliest commercial, non-kit personal computers. Designer Thi Truong developed the computer while Philippe Kahn wrote the software. Truong, founder and president of the French company R2E, created the Micral as a replacement for minicomputers in situations that did not require high performance, such as process control and highway toll collection. Selling for $1,750, the Micral never penetrated the U.S. market. In 1979, Truong sold R2E to Bull.

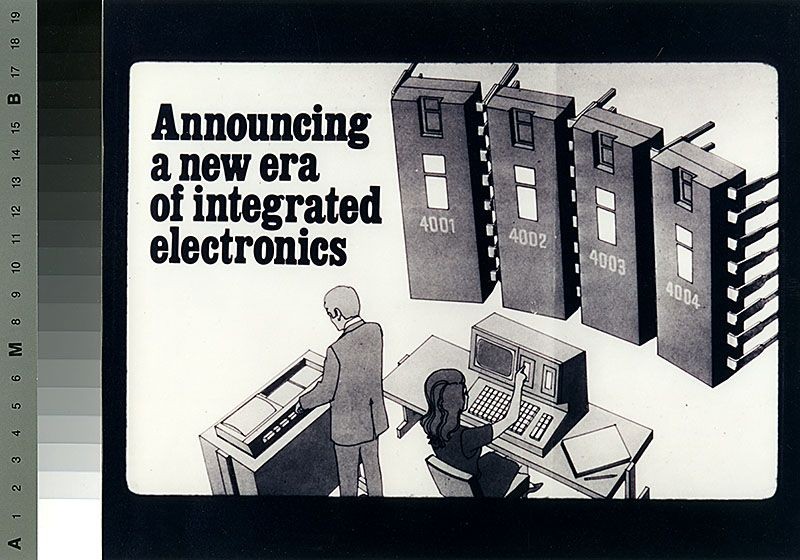

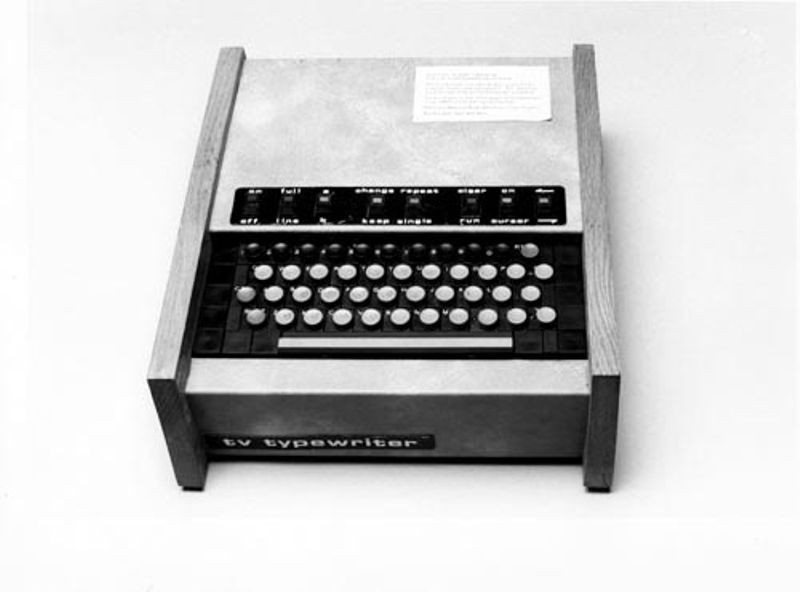

The TV Typewriter plans are published

TV Typewriter

Designed by Don Lancaster, the TV Typewriter is an easy-to-build kit that can display alphanumeric information on an ordinary television set. It used $120 worth of electronics components, as outlined in the September 1973 issue of hobbyist magazine Radio-Electronics. The original design included two memory boards and could generate and store 512 characters as 16 lines of 32 characters. A cassette tape interface provided supplementary storage for text. The TV Typewriter was used by many small television stations well into the 1990s.

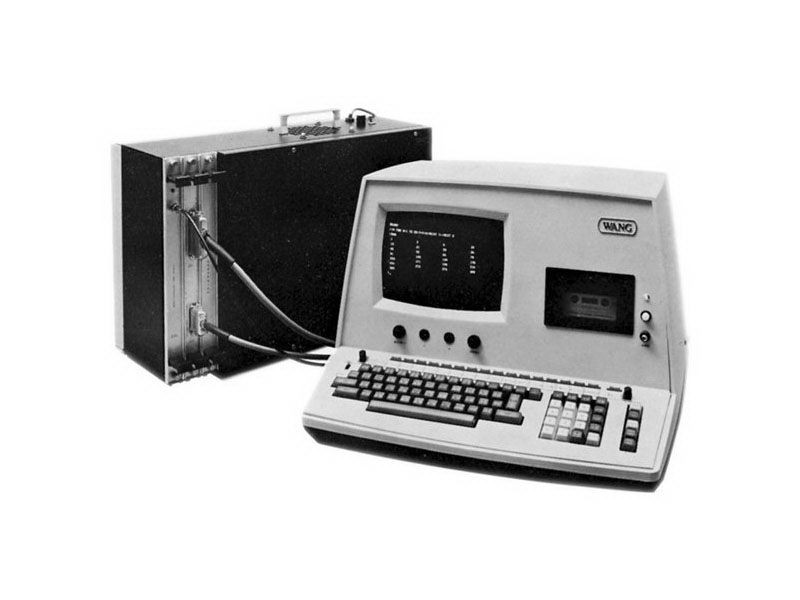

Wang Laboratories releases the Wang 2200

Wang was a successful calculator manufacturer, then a successful word processor company. The 1973 Wang 2200 makes it a successful computer company, too. Wang sold the 2200 primarily through Value Added Resellers, who added special software to solve specific customer problems. The 2200 used a built-in CRT, cassette tape for storage, and ran the programming language BASIC. The PC era ended Wang’s success, and it filed for bankruptcy in 1992.

Scelbi advertises its 8H computer

The first commercially advertised US computer based on a microprocessor (the Intel 8008), the Scelbi has 4 KB of internal memory and a cassette tape interface, as well as Teletype and oscilloscope interfaces. Scelbi aimed the 8H, available both in kit form and fully assembled, at scientific, electronic, and biological applications. In 1975, Scelbi introduced the 8B version with 16 KB of memory for the business market. The company sold about 200 machines, losing $500 per unit.

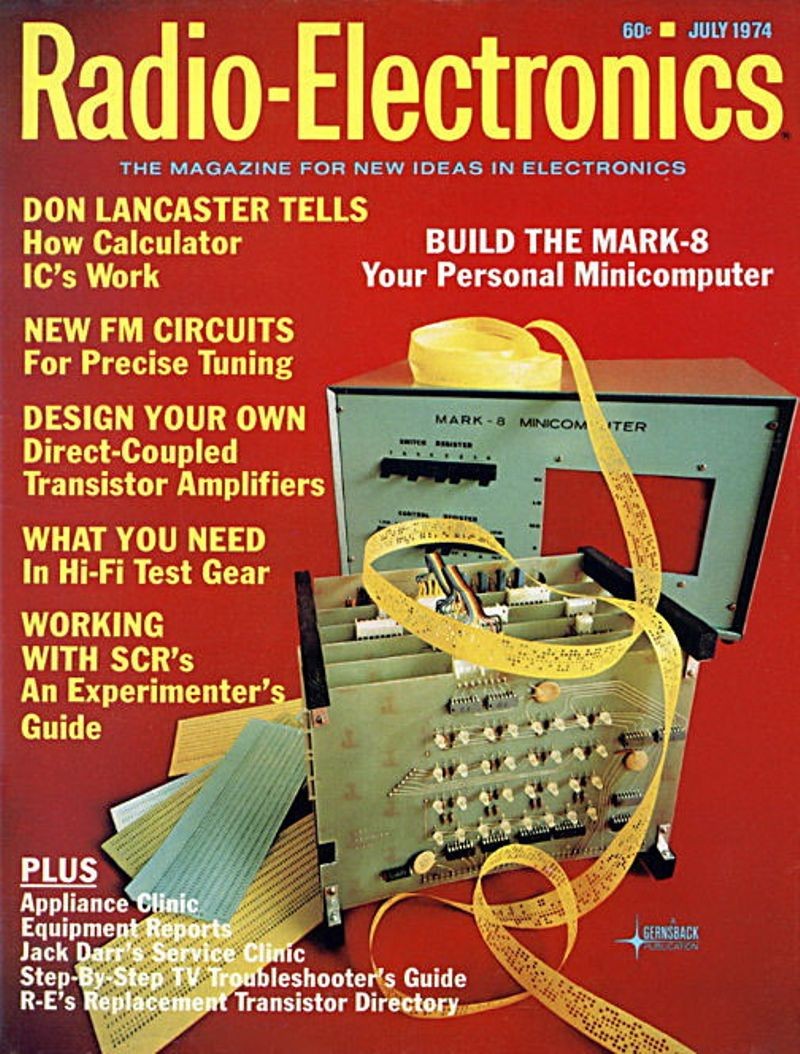

The Mark-8 appears in the pages of Radio-Electronics

Mark-8 featured on Radio-Electronics July 1974 cover

The Mark-8 “Do-It-Yourself” kit is designed by graduate student John Titus and uses the Intel 8008 microprocessor. The kit was the cover story of hobbyist magazine Radio-Electronics in July 1974, six months before the MITS Altair 8800 appeared in rival Popular Electronics magazine. Plans for the Mark-8 cost $5 and the blank circuit boards were available for $50.

Xerox PARC Alto introduced

The Alto is a groundbreaking computer with wide influence on the computer industry. It was based on a graphical user interface using windows, icons, and a mouse, and worked together with other Altos over a local area network. It could also share files and print out documents on an advanced Xerox laser printer. Applications were also highly innovative: a WYSIWYG word processor known as “Bravo,” a paint program, a graphics editor, and email, for example. Apple’s inspiration for the Lisa and Macintosh computers came from the Xerox Alto.

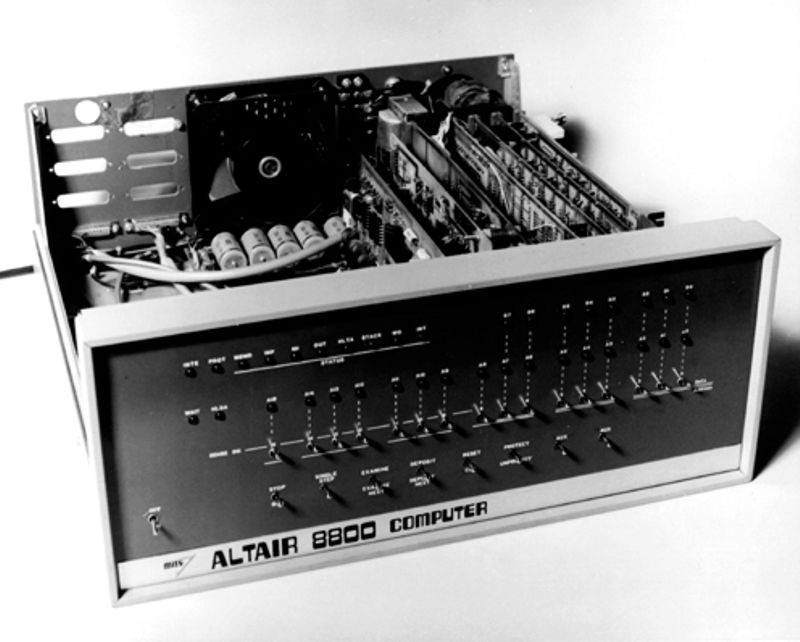

MITS Altair 8800 kit appears in Popular Electronics

Altair 8800

For its January issue, hobbyist magazine Popular Electronics runs a cover story on a new computer kit, the Altair 8800. Within weeks of its appearance, customers inundated its maker, MITS, with orders. Bill Gates and Paul Allen licensed their BASIC programming language interpreter to MITS as the main language for the Altair. MITS co-founder Ed Roberts invented the Altair 8800 (which sold for $297, or $395 with a case) and coined the term “personal computer”. The machine came with 256 bytes of memory (expandable to 64 KB) and an open 100-line bus structure that evolved into the “S-100” standard widely used in hobbyist and personal computers of this era. In 1977, MITS was sold to Pertec, which continued producing Altairs in 1978.

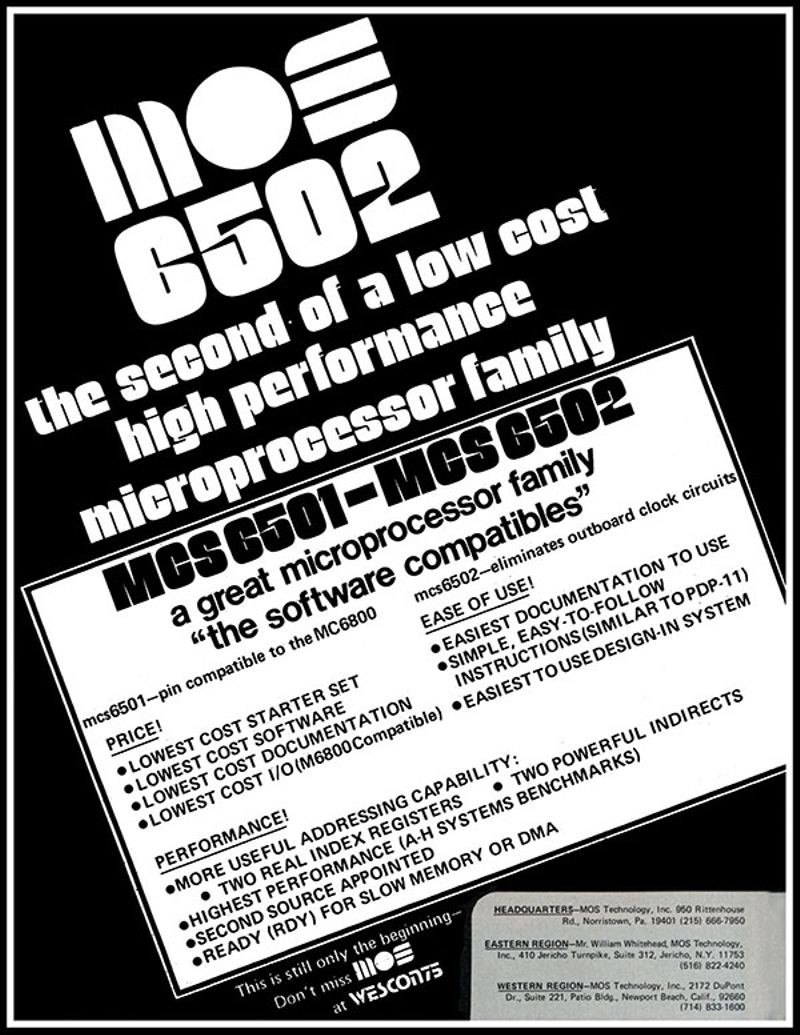

MOS 6502 is introduced

MOS 6502 ad from IEEE Computer, Sept. 1975

Chuck Peddle leads a small team of former Motorola employees to build a low-cost microprocessor. The MOS 6502 was introduced at a conference in San Francisco at a cost of $25, far less than comparable processors from Intel and Motorola, leading some attendees to believe that the company was perpetrating a hoax. The chip quickly became popular with designers of early personal computers like the Apple II and Commodore PET, as well as game consoles like the Nintendo Entertainment System. The 6502 and its progeny are still used today, usually in embedded applications.

Southwest Technical Products introduces the SWTPC 6800

Southwest Technical Products 6800

Southwest Technical Products is founded by Daniel Meyer as DEMCO in the 1960s to provide a source for kit versions of projects published in electronics hobbyist magazines. SWTPC introduces many computer kits based on the Motorola 6800, and later, the 6809. Of the dozens of different SWTP kits available, the 6800 proved the most popular.

Tandem Computers releases the Tandem-16

Dual-processor Tandem 16 system

Tailored for online transaction processing, the Tandem-16 is one of the first commercial fault-tolerant computers. The banking industry rushed to adopt the machine, built to run during repair or expansion. The Tandem-16 eventually led to the “Non-Stop” series of systems, which were used for early ATMs and to monitor stock trades.

VDM prototype built

The Video Display Module (VDM)

The Video Display Module (VDM) marks the first implementation of a memory-mapped alphanumeric video display for personal computers. Introduced at the Altair Convention in Albuquerque in March 1976, the VDM enabled the use of personal computers for interactive games.
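In a memory-mapped display, the characters on screen are simply bytes in a region of the computer's memory, so a program draws text by writing to those addresses. The sketch below models that idea in Python. The 16-by-64 screen size and all names are assumptions chosen for illustration, not details of the VDM itself.

```python
ROWS, COLS = 16, 64  # assumed screen size, for illustration only

# Stand-in for the block of RAM that display hardware would scan out.
video_memory = bytearray(b" " * (ROWS * COLS))

def put_text(row: int, col: int, text: str) -> None:
    """Write ASCII text into video memory starting at (row, col)."""
    data = text.encode("ascii")
    start = row * COLS + col
    in_bounds = 0 <= row < ROWS and 0 <= col < COLS
    if not in_bounds or start + len(data) > len(video_memory):
        raise ValueError("text does not fit on screen")
    # Drawing is just a memory write: one byte per character cell.
    video_memory[start:start + len(data)] = data

def render() -> str:
    """Return what the display would show: one line per row of memory."""
    return "\n".join(
        video_memory[r * COLS:(r + 1) * COLS].decode("ascii")
        for r in range(ROWS)
    )

put_text(0, 0, "HELLO")
put_text(1, 4, "WORLD")
print(render())
```

Because the screen is ordinary memory, a game can update a single character cell with one write, which is part of what makes interactive programs practical on such a display.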

Cray-1 supercomputer introduced

Cray I 'Self-portrait'

The Cray-1 is the fastest machine of its day. Its speed comes partly from its shape, a "C," which reduces the length of wires and thus the time signals need to travel across them. High packaging density of integrated circuits and a novel Freon cooling system also contributed to its speed. Each Cray-1 took a full year to assemble and test and cost about $10 million. Typical applications included US national defense work, including the design and simulation of nuclear weapons, and weather forecasting.

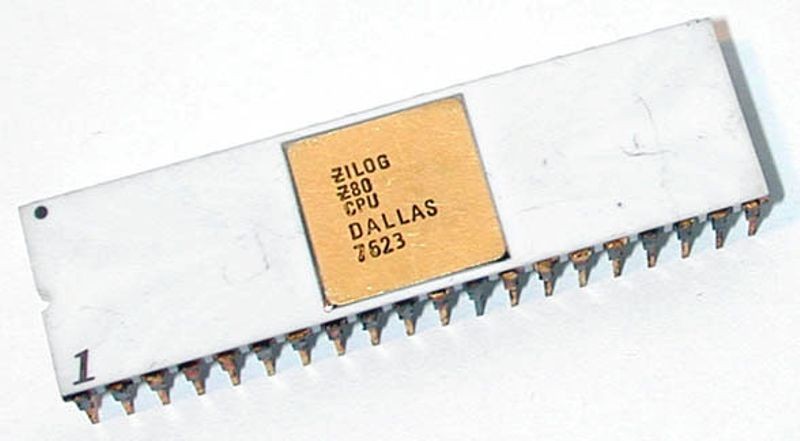

Intel 8080 and Zilog Z-80

Zilog Z-80 microprocessor

Image by Gennadiy Shvets

Intel and Zilog introduce new microprocessors. Five times faster than its predecessor, the 8008, the Intel 8080 could address four times as many bytes for a total of 64 kilobytes. The Zilog Z-80 could run any program written for the 8080 and included twice as many built-in machine instructions.
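The 64-kilobyte figure follows from the width of the address bus. As a rough sketch, assuming the commonly cited 14-bit address width for the 8008 and 16-bit width for the 8080 (neither width is stated above, and the function name is only illustrative):

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with the given address width."""
    return 2 ** address_bits

# Assumed address widths (not given in the text above).
I8008_ADDRESS_BITS = 14
I8080_ADDRESS_BITS = 16

i8008_bytes = addressable_bytes(I8008_ADDRESS_BITS)
i8080_bytes = addressable_bytes(I8080_ADDRESS_BITS)

print(i8008_bytes // 1024, "KB")   # 16 KB
print(i8080_bytes // 1024, "KB")   # 64 KB
print(i8080_bytes // i8008_bytes)  # 4
```

Each extra address bit doubles the reachable memory, so two more bits give the fourfold increase.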

Steve Wozniak completes the Apple-1

Designed by Sunnyvale, California native Steve Wozniak, and marketed by his friend Steve Jobs, the Apple-1 is a single-board computer for hobbyists. With an order for 50 assembled systems from Mountain View, California computer store The Byte Shop in hand, the pair started a new company, naming it Apple Computer, Inc. In all, about 200 of the boards were sold before Apple announced the follow-on Apple II a year later as a ready-to-use computer for consumers, a model which sold in the millions for nearly two decades.

Apple II introduced

Sold complete with a main logic board, switching power supply, keyboard, case, manual, game paddles, and cassette tape containing the game Breakout, the Apple II finds popularity far beyond the hobbyist community which made up Apple’s user community until then. When connected to a color television set, the Apple II produced brilliant color graphics for the time. Millions of Apple IIs were sold between 1977 and 1993, making it one of the longest-lived lines of personal computers. Apple gave away thousands of Apple IIs to schools, giving a new generation their first access to personal computers.

Tandy Radio Shack introduces its TRS-80

Tandy Radio Shack's first desktop computer, the TRS-80, sells 10,000 units in the first month after its release, far better than the company's projection of 3,000 units for the first year. The TRS-80 was priced at $599.95 and included a Z80 microprocessor, video display, 4 KB of memory, a built-in BASIC programming language interpreter, cassette storage, and easy-to-understand manuals that assumed no prior knowledge on the part of the user. The TRS-80 proved popular with schools, as well as for home use. The TRS-80 line of computers later included color, portable, and handheld versions before being discontinued in the early 1990s.

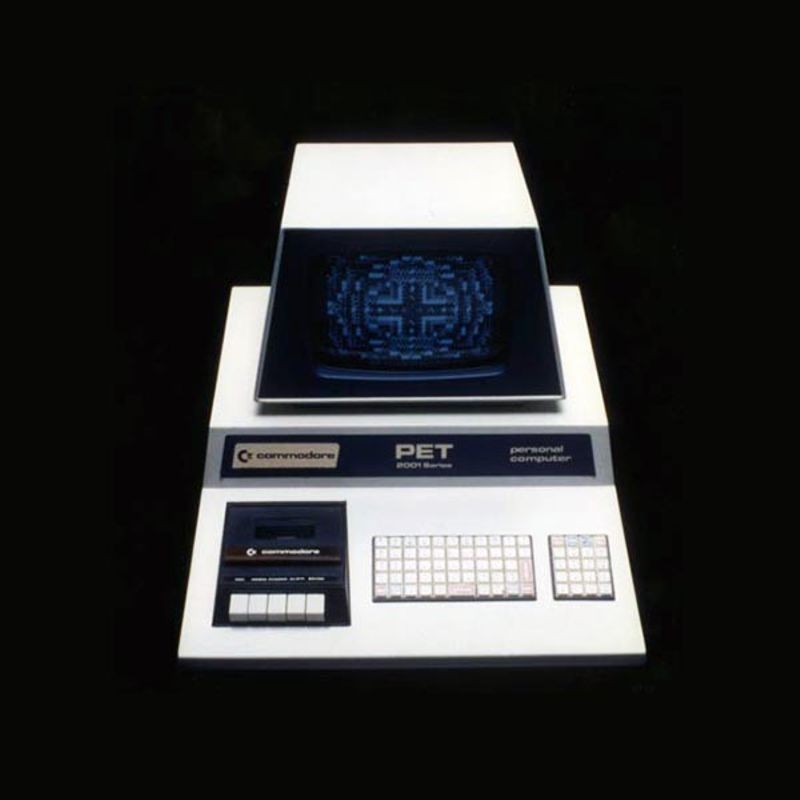

The Commodore PET (Personal Electronic Transactor) introduced

Commodore PET

The first of several personal computers released in 1977, the PET comes fully assembled with either 4 or 8 KB of memory, a built-in cassette tape drive, and a membrane keyboard. The PET was popular with schools and for use as a home computer. It used a MOS Technology 6502 microprocessor running at 1 MHz. After the success of the PET, Commodore remained a major player in the personal computer market into the 1990s.

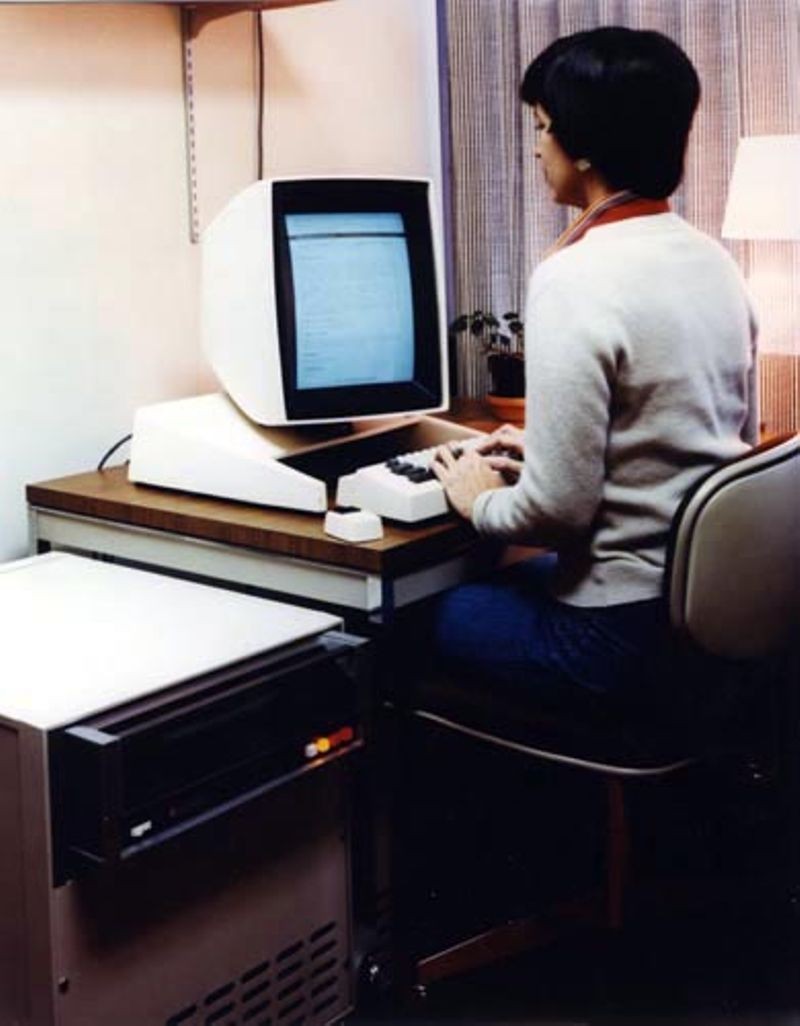

The DEC VAX introduced

DEC VAX 11/780

Beginning with the VAX-11/780, the Digital Equipment Corporation (DEC) VAX family of computers rivals much more expensive mainframe computers in performance and features the ability to address over 4 GB of virtual memory, hundreds of times the capacity of most minicomputers. Called a “complex instruction set computer,” VAX systems were backward compatible and so preserved the investment owners of previous DEC computers had in software. The success of the VAX family of computers transformed DEC into the second-largest computer company in the world, as VAX systems became the de facto standard computing system for industry, the sciences, engineering, and research.

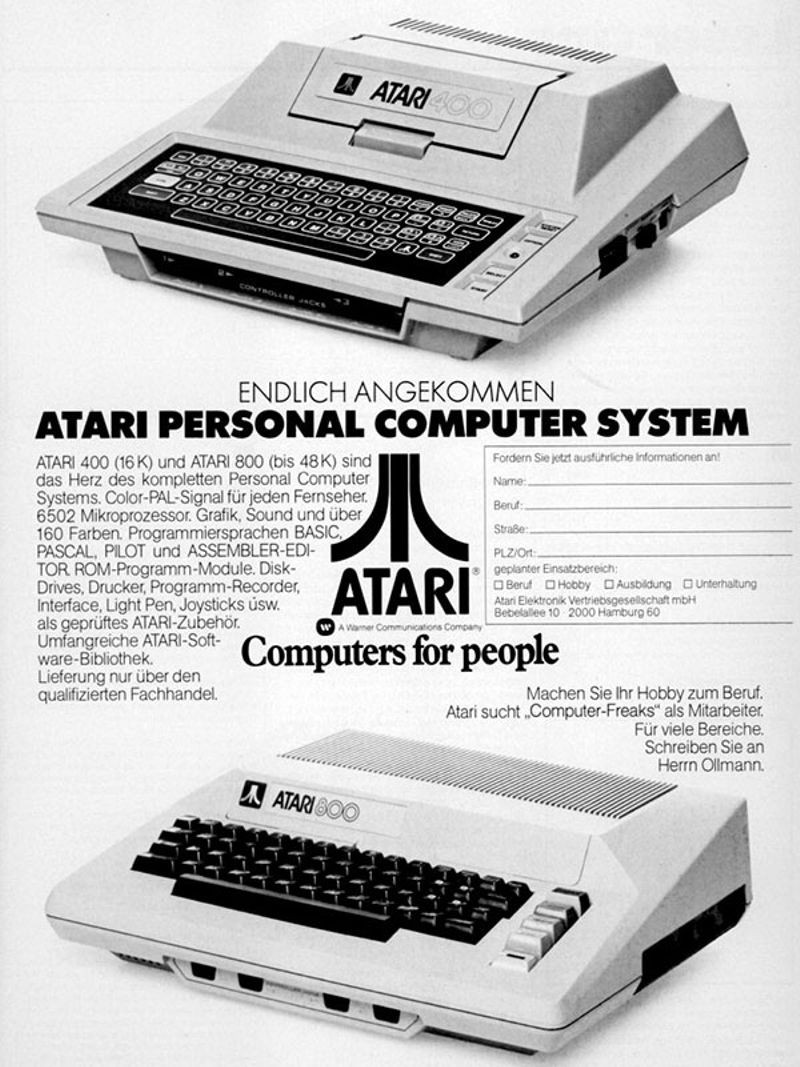

Atari introduces its Model 400 and 800 computers

Early Atari 400/800 advertisement

Shortly after delivery of the Atari VCS game console, Atari designs two microcomputers with game capabilities: the Model 400 and Model 800. The 400 served primarily as a game console, while the 800 was more of a home computer. Both faced strong competition from the Apple II, Commodore PET, and TRS-80 computers. Atari's 8-bit computers were influential in the arts, especially in the emerging demoscene culture of the 1980s and '90s.

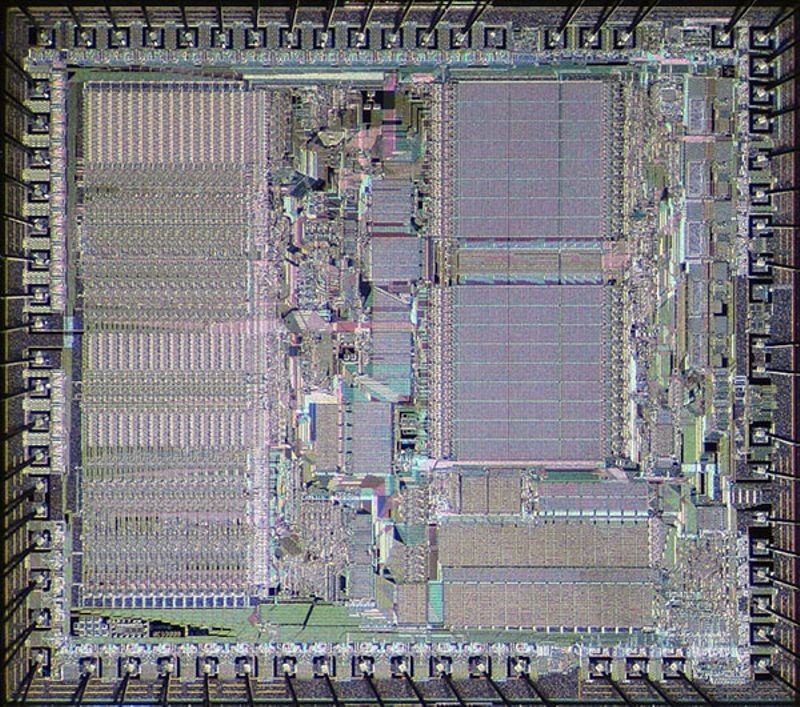

Motorola introduces the 68000 microprocessor

Die shot of Motorola 68000

Image by Pauli Rautakorpi

The Motorola 68000 microprocessor exhibited a processing speed far greater than that of its contemporaries. This high-performance processor found its place in powerful workstations intended for graphics-intensive programs common in engineering.

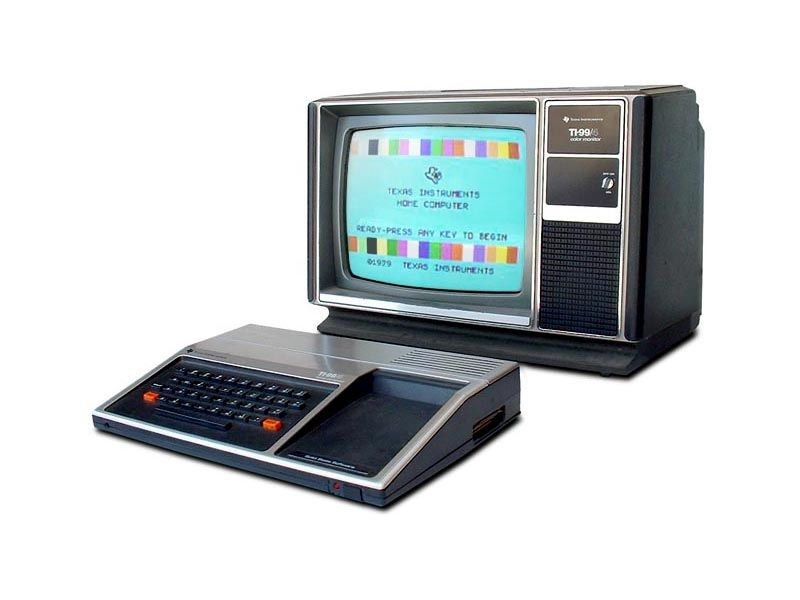

Texas Instruments TI 99/4 is released

Texas Instruments TI 99/4 microcomputer