The Importance of Data Analysis in Research

Studying data is amongst the everyday chores of researchers. It’s not a big deal for them to go through hundreds of pages per day to extract useful information from them. However, recent times have seen a massive jump in the amount of data available. While it’s certainly good news for researchers to get their hands on more data that could result in better studies, it’s also no less than a headache.

Thankfully, the rise of data science in recent years has also brought a sharp rise in data analysis techniques. These tools and techniques save a lot of time in the hefty processes a researcher has to go through, and can compress days of work into minutes!

As a famous saying goes,

“Information is the oil of the 21st century, and analytics is the combustion engine.”

– Peter Sondergaard, senior vice president, Gartner Research.

So, if you’re also a researcher or just curious about the most important data analysis techniques in research, this article is for you. Make sure you give it a thorough read, as I’ll be dropping some very important points throughout the article.

What is the Importance of Data Analysis in Research?

Data analysis is important in research because it makes studying data a lot simpler and more accurate. It helps researchers interpret the data in a straightforward way, so that they don’t overlook anything that could help them derive insights from it.

Data analysis is a way to study and analyze huge amounts of data. Research often involves going through heaps of data, and the volume researchers have to handle keeps growing with every passing minute.

Hence, data analysis knowledge is a huge edge for researchers in the current era, making them very efficient and productive.

What is Data Analysis?

Once the data is cleaned, transformed, and ready to use, it can do wonders. Not only does it contain a variety of useful information, but studying the data collectively also uncovers very minor patterns and details that would otherwise have been overlooked.

So, you can see why it has such a huge role to play in research. Research is all about studying patterns and trends, then forming hypotheses and testing them. All of this is supported by appropriate data.

Further in the article, we’ll see some of the most important types of data analysis that you should be aware of as a researcher so you can put them to use.

Types of Data Analysis: Qualitative Vs Quantitative

Looking at it from a broader perspective, data analysis boils down to two major types: qualitative data analysis and quantitative data analysis. While the latter deals with numerical data, the former deals with data in non-numerical form. It can be anything such as summaries, images, symbols, and so on.

Both types have different methods to deal with them and we’ll be taking a look at both of them so you can use whatever suits your requirements.

Qualitative Data Analysis

As mentioned before, qualitative data comprises non-numerical data, and it can be in the form of text, images, and so on. So, how do we analyze such data? Before we start, here are a few common tips that you should always follow before applying any technique.

Now, let’s move ahead and see where the qualitative data analysis techniques come in. Even though there are a lot of professional ways to achieve this, here are some of them that you’ll need to know as a beginner.

Narrative Analysis

If your research is based upon collecting answers from people in interviews or other scenarios, this might be one of the best analysis techniques for you. Narrative analysis helps to analyze the narratives of various people, which are available in textual form. The stories, experiences, and other answers from respondents are used to power the analysis.

The important thing to note here is that the data has to be available in the form of text only. Narrative analysis cannot be performed on other data types such as images.

Content Analysis

Content analysis is amongst the most used methods in analyzing qualitative data. This method doesn’t put a restriction on the form of data. You can use any kind of data here, whether it’s in the form of images, text, or even real-life items.

Here, an important application is when you already know the questions you need answered. Once you have collected the answers, you can apply this method to analyze them and extract insights to use in your research. It’s a full-fledged method and a lot of analytical studies are based solely on this.

Grounded Theory

Grounded theory is used when the researchers want to know the reason behind the occurrence of a certain event. They may have to go through a lot of different use cases and compare them to each other while following this approach. It’s an iterative approach, and the explanations keep being modified or re-created until the researchers arrive at a suitable conclusion that satisfies their specific conditions.

So, make sure you employ this method if you have qualitative data at hand and need to know why something happened, based on that data.

Discourse Analysis

Discourse analysis is quite similar to narrative analysis in the sense that it also uses interactions with people for the analysis purpose. The only difference is that the focal point here is different. Instead of analyzing the narrative, the researchers focus on the context in which the conversation is happening.

The complete background of the person being questioned, including their everyday environment, is used to perform the research.

Quantitative Analysis

Quantitative analysis involves any kind of analysis that’s being done on numbers. From the most basic to the most advanced, quantitative analysis covers a huge range of techniques. No matter what level of research you need to do, if it’s based on numerical data, you’ll always have efficient analysis methods to use.

There are two broad approaches here: descriptive statistics and inferential analysis.

However, before applying the analysis methods to numerical data, there are a few pre-processing steps that need to be done. These steps make the data ‘ready’ for the analysis methods.

Make sure you don’t miss these steps, or you will end up drawing biased conclusions from the data analysis.

Descriptive Statistics

Descriptive statistics is the most basic step that researchers can use to draw conclusions from data. It helps to find patterns and helps the data ‘speak’. Let’s see some of the most common data analysis techniques used to perform descriptive statistics.

- Mean

The mean is simply the average of all the data at hand. The formula is simple (the sum of the values divided by their count) and tells you what average value to expect throughout the data.

- Median

The median is the middle value in the data. It lets the researchers estimate where the mid-point of the data is. It’s important to note that the data needs to be sorted to find the median.

- Mode

The mode is simply the most frequently occurring value in the dataset. For example, if you’re studying the ages of students in a particular class, the mode will be the age shared by the most students in the class.

- Standard Deviation

Numerical data is often spread over a wide range, and finding out how widely it is spread is quite important. Standard deviation is what lets us achieve this. It tells us how far, on average, the data points lie from the mean.
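
To make the four measures above concrete, here is a minimal sketch using Python’s built-in `statistics` module. The list of ages is made-up illustrative data, not something from a real study, and the population standard deviation (`pstdev`) is just one reasonable choice; for a sample you would likely use `stdev` instead.

```python
import statistics

# Hypothetical ages of students in one class (illustrative data only).
ages = [14, 15, 15, 16, 15, 14, 17, 15, 16]

mean_age = statistics.mean(ages)      # sum of the values divided by their count
median_age = statistics.median(ages)  # middle value; the function sorts internally
mode_age = statistics.mode(ages)      # most frequently occurring value
spread = statistics.pstdev(ages)      # population standard deviation

print(f"mean={mean_age:.2f} median={median_age} mode={mode_age} stdev={spread:.2f}")
```

A small standard deviation relative to the mean, as in this example, suggests the ages are tightly clustered around the average.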

Inferential Analysis

Inferential statistics refers to techniques used to draw conclusions that go beyond the data at hand, such as predicting future occurrences of data. These methods help establish relationships in the data, and once that’s done, predicting future data becomes possible.

- Correlation

Correlation is the measure of the relationship between two numerical variables. It measures the degree to which they are related, regardless of whether the relationship is causal.

For example, the age and height of a child are highly correlated. As the child’s age increases, height is also likely to increase. This is called a positive correlation.

A negative correlation means that as one variable increases, the other decreases. An example would be the relationship between the age of a car and its resale value.
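
As a rough illustration of positive and negative correlation, the sketch below computes the Pearson correlation coefficient by hand. The function name `pearson_r` and both datasets (a child’s age versus height, and a car’s age versus resale value) are assumptions made up for this example.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations, and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical measurements, for illustration only.
child_age_years = [4, 6, 8, 10, 12]
child_height_cm = [102, 115, 127, 138, 149]
car_age_years = [1, 3, 5, 7, 9]
car_value_usd = [22000, 17500, 13800, 10900, 8200]

r_pos = pearson_r(child_age_years, child_height_cm)  # close to +1
r_neg = pearson_r(car_age_years, car_value_usd)      # close to -1
```

The coefficient ranges from -1 to +1. Note that this sketch does not handle a variable with zero variance, and a value near either extreme still says nothing about causation.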

- Regression

Regression aims to find the mathematical relationship between a set of variables. While correlation is a statistical measure of how strongly variables are related, regression expresses that relationship as an equation. Once the relationship between the variables is established, one variable can be used to predict the other.

This method has a huge application when it comes to predicting future data. If your research is based upon calculating future occurrences of some data based on past data and then testing it, make sure you use this method.
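
Here is a minimal sketch of the simplest case, a straight-line fit with one predictor, using ordinary least squares. The helper `fit_line` and the hours-versus-score data are hypothetical and only meant to show the idea of fitting on past data and then predicting a new value.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical past data: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 58, 61, 67, 72]

slope, intercept = fit_line(hours, scores)

# Use the fitted relationship to predict the score for 6 hours of study.
predicted = slope * 6 + intercept
```

In practice you would then test the prediction against new observations, since a line fitted to past data may not hold outside the range it was fitted on.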

A Summary of Data Analysis Methods

Now that we’re done with some of the most common methods for both quantitative and qualitative data, let’s summarize them in a tabular form so you would have something to take home in the end.

That’s it! We have seen why data analysis is such an important tool when it comes to research and how it saves researchers a huge amount of time, making them both more efficient and more productive.

Moreover, the article covers some of the most important data analysis techniques that one needs to know for research purposes in today’s age. We’ve gone through the analysis methods for both quantitative and qualitative data in a basic way so that they are easy to understand for beginners.

Emidio Amadebai

I am an IT Engineer who is passionate about learning and sharing. I have worked with and learned quite a bit from Data Engineers, Data Analysts, Business Analysts, and Key Decision Makers over almost the past 5 years. I am interested in learning more about Data Science and how to leverage it for better decision-making in my business, and hopefully I can help you do the same in yours.

Data Analysis in Research: Types & Methods

Content Index

- What is data analysis in research?
- Why analyze data in research?
- Types of data in research
- Finding patterns in the qualitative data
- Methods used for data analysis in qualitative research
- Preparing data for analysis
- Methods used for data analysis in quantitative research
- Considerations in research data analysis

What is data analysis in research?

Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process used by researchers to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments that make sense.

Three essential things occur during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third is the data analysis itself, which researchers do in both top-down and bottom-up fashion.

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Why analyze data in research?

Researchers rely heavily on data as they have a story to tell or research problems to solve. It starts with a question, and data is nothing but an answer to that question. But, what if there is no question to ask? Well! It is possible to explore data even without a problem – we call it ‘Data Mining’, which often reveals some interesting patterns within the data that are worth exploring.

Irrespective of the type of data researchers explore, their mission and their audience’s vision guide them in finding the patterns that shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember, data analysis sometimes tells the most unforeseen yet exciting stories, ones that were not expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Types of data in research

Every kind of data describes something once a specific value is assigned to it. For analysis, you need to organize these values, process them, and present them in a given context to make them useful. Data can take different forms; here are the primary data types.

- Qualitative data: When the data presented consists of words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze in research, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: answers to questions about age, rank, cost, length, weight, scores, and so on all come under this type of data. You can present such data in graphs and charts, or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: It is data presented in groups. However, an item included in the categorical data cannot belong to more than one group. Example: A person responding to a survey by stating their lifestyle, marital status, smoking habit, or drinking habit provides categorical data. A chi-square test is a standard method used to analyze this data.
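
The chi-square test mentioned for categorical data can be sketched as follows. This is a minimal, hand-rolled computation of the Pearson chi-square statistic for a contingency table; the function name `chi_square_statistic` and the survey counts are hypothetical, and a statistics package would normally also report a p-value.

```python
def chi_square_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table.

    `table` is a list of rows, each a list of observed counts.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof

# Hypothetical survey counts: rows = marital status, columns = smoker / non-smoker.
observed = [
    [20, 30],  # single
    [10, 40],  # married
]
stat, dof = chi_square_statistic(observed)
```

With one degree of freedom, the commonly used 5% critical value is about 3.84, so a statistic above that would suggest the two categorical variables are not independent in this made-up sample.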

Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data, as qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complex information is a complicated process. Hence it is typically used for exploratory research and data analysis.

Finding patterns in the qualitative data

Although there are several ways to find patterns in textual information, a word-based method is the most relied-upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is manual. Here the researchers usually read the available data and find repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
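
Although the text describes this as a manual process, the word-counting step can be sketched in code. The responses and the stopword list below are invented for illustration, and the tokenization is deliberately naive.

```python
import re
from collections import Counter

# Hypothetical open-ended survey responses (illustrative only).
responses = [
    "Food is too expensive and hunger is common in my village.",
    "We worry about hunger every dry season.",
    "Access to food and clean water is the biggest problem.",
    "Food prices keep rising.",
]

# A tiny, hand-picked stopword list; a real study would use a fuller one.
STOPWORDS = {"is", "too", "and", "in", "my", "we", "about", "to", "the"}

def word_frequencies(texts, stopwords=STOPWORDS):
    """Count how often each non-stopword appears across all texts."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in stopwords)
    return counts

top_words = word_frequencies(responses).most_common(2)
```

The most frequent words are only candidates for further analysis; the researcher still has to read them in context to decide what they mean.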

The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example, researchers conducting research and data analysis to study the concept of ‘diabetes’ amongst respondents might analyze the context of when and how the respondent has used or referred to the word ‘diabetes.’
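
A keyword-in-context listing can be sketched as below. The function `keyword_in_context`, the window size, and the sample answer are assumptions for illustration; the idea is simply to pull out the few words on either side of each occurrence of the keyword.

```python
import re

def keyword_in_context(text, keyword, window=3):
    """Return (left words, keyword, right words) for each occurrence of keyword."""
    tokens = re.findall(r"\w+", text.lower())
    hits = []
    for i, token in enumerate(tokens):
        if token == keyword.lower():
            left = tokens[max(0, i - window):i]
            right = tokens[i + 1:i + 1 + window]
            hits.append((" ".join(left), token, " ".join(right)))
    return hits

# Hypothetical interview answer (illustrative only).
answer = ("My father had diabetes so I watch my sugar. "
          "I was told diabetes runs in families.")

contexts = keyword_in_context(answer, "diabetes", window=3)
for left, word, right in contexts:
    print(f"{left} [{word}] {right}")
```

Reading the surrounding words side by side makes it easier to see whether respondents talk about the keyword in terms of family history, diet, fear, and so on.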

The scrutiny-based technique is also one of the highly recommended text analysis methods for identifying patterns in qualitative data. Compare and contrast is the widely used method under this technique to determine how pieces of text are similar to or different from each other.

For example: To find out the “importance of resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method for analyzing polls with single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.

Methods used for data analysis in qualitative research

There are several techniques for analyzing data in qualitative research; here are some commonly used methods:

- Content Analysis: It is widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. When and where to use this method depends on the research questions.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, the stories or opinions shared by people are examined to find answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze interactions with people. Nevertheless, this particular method considers the social context within which the communication between the researcher and respondent takes place. In addition, discourse analysis also takes the respondent’s lifestyle and day-to-day environment into account when deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, using grounded theory to analyze qualitative data is the best resort. Grounded theory is applied to study data about a host of similar cases occurring in different settings. When researchers use this method, they might alter explanations or produce new ones until they arrive at some conclusion.

Data analysis in quantitative research

Preparing data for analysis

The first stage in research and data analysis is to prepare the data for analysis so that the raw data can be converted into something meaningful. Data preparation consists of the phases below.

Phase I: Data Validation

Data validation is done to understand whether the collected data sample meets the pre-set standards or is a biased sample. It is divided into four stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey or, in an interview, that the interviewer has asked all the questions devised in the questionnaire.

Phase II: Data Editing

Often, an extensive research data sample comes loaded with errors. Respondents sometimes fill in some fields incorrectly or skip them accidentally. Data editing is a process wherein the researchers have to confirm that the provided data is free of such errors. They need to conduct the necessary checks, including outlier checks, to edit the raw data and make it ready for analysis.
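
A completeness check and a basic range check of the kind described in Phases I and II could look something like the sketch below. The field names, the valid ranges, and the sample responses are all hypothetical; a real survey would define its own rules.

```python
# Hypothetical survey schema: which fields must be present, and which
# numeric ranges count as plausible. Both are assumptions for this example.
REQUIRED_FIELDS = ("respondent_id", "age", "satisfaction")
VALID_RANGES = {"age": (18, 99), "satisfaction": (1, 5)}

def check_response(response):
    """Return a list of problems found in one survey response (a dict)."""
    problems = []
    # Completeness: every required field must have a value.
    for field in REQUIRED_FIELDS:
        if response.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Editing: flag numeric values outside the plausible range.
    for field, (low, high) in VALID_RANGES.items():
        value = response.get(field)
        if isinstance(value, (int, float)) and not low <= value <= high:
            problems.append(f"{field} out of range: {value}")
    return problems

responses = [
    {"respondent_id": 1, "age": 34, "satisfaction": 4},
    {"respondent_id": 2, "age": None, "satisfaction": 5},
    {"respondent_id": 3, "age": 230, "satisfaction": 3},
]

report = {r["respondent_id"]: check_response(r) for r in responses}
```

Flagged responses are not necessarily discarded; whether to correct, follow up on, or drop them is a judgment the researcher makes.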

Phase III: Data Coding

Out of all three, this is the most critical phase of data preparation. It involves grouping and assigning values to the survey responses. If a survey is completed with a sample size of 1,000, the researcher might create age brackets to distinguish the respondents based on their age. It then becomes easier to analyze small data buckets rather than deal with the massive data pile.
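
The age-bracket example can be sketched as a small coding function. The bracket boundaries and the list of ages below are assumptions chosen for illustration, not a recommended standard.

```python
from collections import Counter

# Hypothetical age brackets; both bounds are inclusive.
AGE_BRACKETS = [(18, 24), (25, 34), (35, 44), (45, 54), (55, 64)]

def code_age(age):
    """Map a raw age to the label of the bracket it falls into."""
    for low, high in AGE_BRACKETS:
        if low <= age <= high:
            return f"{low}-{high}"
    return "65+" if age >= 65 else "under 18"

# Illustrative respondent ages.
ages = [19, 23, 31, 38, 41, 47, 52, 58, 66, 71]

# Count how many respondents fall into each coded bucket.
bracket_counts = Counter(code_age(a) for a in ages)
```

Once every response carries a bracket label, the later analysis can work with a handful of buckets instead of a thousand individual ages.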

Methods used for data analysis in quantitative research

After the data is prepared for analysis, researchers are open to using different research and data analysis methods to derive meaningful insights. Statistical analysis is by far the most favored way to analyze numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. The method is again classified into two groups: first, ‘descriptive statistics’, used to describe data; second, ‘inferential statistics’, which helps in comparing the data.

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in such a meaningful way that patterns in the data start making sense. Nevertheless, descriptive analysis does not go beyond describing the data; any conclusions drawn remain based on the hypotheses researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.

Measures of Central Tendency

- Mean, Median, Mode

- The method is widely used to summarize a distribution with a single representative point.

- Researchers use this method when they want to showcase the most common or the average response.

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Range = the difference between the high and low points.

- Variance and standard deviation are based on the differences between the observed scores and the mean.

- It is used to identify the spread of scores by stating intervals.

- Researchers use this method to show how spread out the data is. It helps them identify how far the data spreads, to the point that it directly affects the mean.

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores helping researchers to identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average count.
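
The measures of frequency, dispersion, and position listed above can be sketched with Python’s standard library. The survey answers and test scores are made-up data, and `percentile_rank` is a hypothetical helper using one common definition (the share of values at or below a score); other definitions exist.

```python
import statistics
from collections import Counter

# Measures of frequency: hypothetical answers to a single-choice question.
answers = ["yes", "no", "yes", "yes", "unsure", "no", "yes", "yes"]
counts = Counter(answers)
percents = {k: 100 * v / len(answers) for k, v in counts.items()}

# Measures of dispersion: hypothetical test scores.
scores = [55, 60, 62, 68, 70, 74, 78, 81, 85, 92]
score_range = max(scores) - min(scores)
variance = statistics.pvariance(scores)  # mean squared deviation from the mean
std_dev = statistics.pstdev(scores)      # square root of the variance

# Measures of position: quartile cut points and a percentile rank.
q1, q2, q3 = statistics.quantiles(scores, n=4)

def percentile_rank(values, score):
    """Percentage of values at or below the given score."""
    return 100 * sum(v <= score for v in values) / len(values)

rank_of_78 = percentile_rank(scores, 78)
```

Here the second quartile equals the median, so the measures of position tie back to the measures of central tendency.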

In quantitative research, descriptive analysis often gives absolute numbers, but those numbers alone are never sufficient to demonstrate the rationale behind them. Nevertheless, it is necessary to think of the method of research and data analysis that best suits your survey questionnaire and the story you want to tell. For example, the mean is the best way to demonstrate the students’ average scores in schools. It is better to rely on descriptive statistics when the researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare average voting in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after research and data analysis of a sample collected from that population. For example, you can ask about 100 audience members at a movie theater if they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: It’s about sampling research data to answer the survey research questions. For example, researchers might be interested to understand if the new shade of lipstick recently launched is good or not, or if the multivitamin capsules help children to perform better at games.
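
Parameter estimation for the movie-theater example can be sketched as a confidence interval for a proportion. The figure of 85 positive answers out of 100 is an assumption for illustration, and the normal-approximation (Wald) interval used here is only one of several possible methods.

```python
import math

def proportion_confidence_interval(successes, n, z=1.96):
    """Normal-approximation (Wald) interval for a population proportion.

    z=1.96 corresponds to roughly 95% confidence.
    """
    p_hat = successes / n  # sample proportion
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return max(0.0, p_hat - margin), min(1.0, p_hat + margin)

# Hypothetical sample: 85 of 100 audience members said they liked the movie.
low, high = proportion_confidence_interval(85, 100)
print(f"Estimated share who like the movie: {low:.0%} to {high:.0%}")
```

The interval expresses how far the true population share might plausibly be from the sample share, which is why the conclusion is stated as a range rather than a single number.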

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. It is often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental or quasi-experimental research but are interested in understanding the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose provided data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation helps for seamless data analysis and research by showing the number of males and females in each age category.

- Regression analysis: To understand the strength of the relationship between variables, researchers commonly turn to regression analysis, which is also a type of predictive analysis. In this method, you have an essential factor called the dependent variable. You also have one or more independent variables. You undertake efforts to find out the impact of the independent variables on the dependent variable. The values of both independent and dependent variables are assumed to have been ascertained in an error-free random manner.

- Frequency tables: A frequency table lists each distinct value or category of a variable alongside the number of times it occurs in the data.

- Analysis of variance: This statistical procedure is used to test the degree to which two or more groups vary or differ in an experiment. A considerable degree of variation between the groups, relative to the variation within them, suggests the research findings are significant. In many contexts, the terms ANOVA testing and variance analysis are used interchangeably.
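
Two of the methods in the list above, cross-tabulation and analysis of variance, can be sketched in a few lines. The helper names, the respondent records, and the three groups of scores are all hypothetical. The ANOVA function returns only the F statistic; judging significance would additionally require comparing it against an F distribution.

```python
from collections import Counter

def cross_tabulate(records, row_key, col_key):
    """Count records for each (row value, column value) pair."""
    return Counter((r[row_key], r[col_key]) for r in records)

def one_way_anova_f(groups):
    """F statistic for a one-way ANOVA over a list of numeric groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((v - sum(g) / len(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Hypothetical respondents for the age-by-gender table.
respondents = [
    {"age_group": "18-34", "gender": "female"},
    {"age_group": "18-34", "gender": "male"},
    {"age_group": "18-34", "gender": "female"},
    {"age_group": "35-54", "gender": "male"},
    {"age_group": "35-54", "gender": "male"},
]
table = cross_tabulate(respondents, "age_group", "gender")

# Hypothetical scores from three experimental groups.
groups = [[4, 5, 6], [6, 7, 8], [9, 10, 11]]
f_stat = one_way_anova_f(groups)
```

A large F statistic means the group means differ by much more than the scatter inside each group would explain.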

Considerations in research data analysis

- Researchers must have the necessary research skills to analyze and manipulate the data, and should be trained to demonstrate a high standard of research practice. Ideally, researchers must possess more than a basic understanding of the rationale for selecting one statistical method over another to obtain better data insights.

- Usually, research and data analytics projects differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps design a survey questionnaire, select data collection methods, and choose samples.

- The primary aim of data research and analysis is to derive ultimate insights that are unbiased. Any mistake in, or bias while, collecting data, selecting an analysis method, or choosing an audience sample will lead to a biased inference.

- No amount of sophistication in research data and analysis is enough to rectify poorly defined objective outcome measurements. Whether the design is at fault or the intentions are unclear, a lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining, or developing graphical representation.

The sheer amount of data generated daily is frightening, especially now that data analysis has taken center stage. In 2018, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that enterprises that want to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to new market needs.

QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.

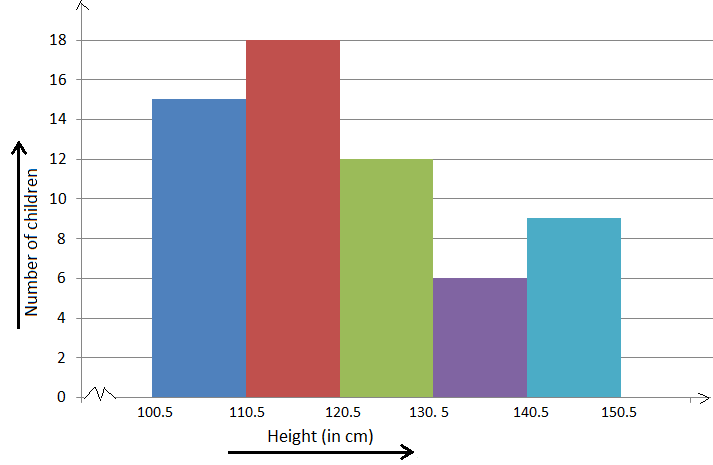

It is the simplest form of data presentation, often used in schools or universities to give students a clearer picture, since they are better able to grasp concepts through a pictorial presentation of simple data.

2. Column chart

It is a simplified version of the pictorial presentation that handles a larger amount of data being shared during presentations and provides suitable clarity on the insights of the data.

3. Pie Charts

Pie charts provide a very descriptive 2D depiction of data, used for comparisons or resemblance of data in two separate fields.

4. Bar charts

A bar chart shows the accumulation of data with rectangular bars of different lengths, which are directly proportional to the values they represent. The bars can be placed either vertically or horizontally depending on the data being represented.

5. Histograms

A histogram presents the spread (distribution) of numerical data. The main difference between bar graphs and histograms is the gaps: bar graphs have gaps between the bars, whereas the bars of a histogram are adjacent.
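To make the idea of a histogram concrete, here is a minimal sketch of the binning step that underlies one. The function name `histogram_counts`, the bin width, and the exam scores are all made up for illustration; a plotting library would normally draw the bars.

```python
from collections import Counter

def histogram_counts(values, bin_width):
    """Count how many values fall into each bin [start, start + bin_width)."""
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))

# Hypothetical exam scores, for illustration only.
scores = [52, 58, 61, 64, 67, 70, 71, 75, 78, 83, 88, 95]

# Print a rough text histogram: one '#' per value in the bin.
for start, count in histogram_counts(scores, 10).items():
    print(f"{start:>3}-{start + 9:<3} {'#' * count}")
```

Because every score lands in exactly one bin and the bins touch each other, there are no gaps between the bars, which is what distinguishes a histogram from a bar graph.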

6. Box plots

A box plot (or box-and-whisker plot) represents groups of numerical data through their quartiles. This style of graph makes it easier to compare groups of data down to minute differences.
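The quartiles behind a box plot can be computed directly. The sketch below uses Python's standard `statistics.quantiles`; the helper name `five_number_summary` and the sample data are assumptions for illustration. Note that several quartile conventions exist, so other tools may report slightly different values for the same data.

```python
import statistics

def five_number_summary(values):
    """Return (min, Q1, median, Q3, max), the values a box plot draws."""
    # method="inclusive" treats the data as the whole population of interest.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, median, q3, max(values)

# Hypothetical measurements, for illustration only.
data = [1, 2, 3, 4, 5, 6, 7, 8, 9]
low, q1, median, q3, high = five_number_summary(data)
print("box spans", q1, "to", q3, "with the median at", median)
print("interquartile range:", q3 - q1)
```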

Map data graphs present data over a geographic area and highlight the areas of concern. They are useful for depicting data accurately across a large region.

All these visual presentations share a common goal: creating meaningful insights and a way to understand and manage data, so that a deeper understanding of the details can inform future decisions or actions.

Importance of Data Presentation

Data presentation can be either a deal maker or a deal breaker, depending on how the content is delivered visually.

Data presentation tools are powerful communication tools. They simplify data, making it easy to understand and read while attracting and keeping the interest of readers, and they showcase large amounts of complex data in a simplified manner.

If the user can create an insightful presentation of the data in hand with the same sets of facts and figures, then the results promise to be impressive.

There have been situations where a user had plenty of data and a vision for expansion, but a poor presentation drowned that vision.

To impress the higher management and top brass of a firm, effective presentation of data is needed.

Data presentation spares clients or the audience from spending time grasping the concept and the future alternatives of the business, and it helps convince them to invest in the company and make it profitable for both the investors and the company.

Although data presentation has a lot to offer, the following are some of the major reasons why an effective presentation is essential:

- Many consumers or higher authorities are interested in the interpretation of data, not the raw data itself. Therefore, after analyzing the data, users should present it visually for better understanding.

- The user should not overwhelm the audience with too many slides or too much text, since pictures speak for themselves.

- Data presentation often happens in a nutshell, with each department showcasing its contribution to company growth through a graph or a histogram.

- Providing a brief description helps the user gain attention in a short amount of time while informing the audience about the context of the presentation.

- Including pictures, charts, graphs, and tables in the presentation helps the audience better understand the potential outcomes.

- An effective presentation allows the organization to see how it differs from its peers and to acknowledge its flaws. Comparing data in this way assists decision-making.



Table of Contents

- What Is Data Analysis?

- Why Is Data Analysis Important?

- What Is the Data Analysis Process?

- Data Analysis Methods

- Applications of Data Analysis

- Top Data Analysis Techniques to Analyze Data

- What Is the Importance of Data Analysis in Research?

- Future Trends in Data Analysis

- Choose the Right Program

What Is Data Analysis: A Comprehensive Guide

In the contemporary business landscape, gaining a competitive edge is imperative, given the challenges such as rapidly evolving markets, economic unpredictability, fluctuating political environments, capricious consumer sentiments, and even global health crises. These challenges have reduced the room for error in business operations. For companies striving not only to survive but also to thrive in this demanding environment, the key lies in embracing the concept of data analysis . This involves strategically accumulating valuable, actionable information, which is leveraged to enhance decision-making processes.


What Is Data Analysis?

Data analysis is the process of inspecting, cleaning, transforming, and modeling data to extract insights and support decision-making. As a data analyst, your role involves dissecting vast datasets, unearthing hidden patterns, and translating numbers into actionable information.

Why Is Data Analysis Important?

Data analysis plays a pivotal role in today's data-driven world. It helps organizations harness the power of data, enabling them to make decisions, optimize processes, and gain a competitive edge. By turning raw data into meaningful insights, data analysis empowers businesses to identify opportunities, mitigate risks, and enhance their overall performance.

1. Informed Decision-Making

Data analysis is the compass that guides decision-makers through a sea of information. It enables organizations to base their choices on concrete evidence rather than intuition or guesswork. In business, this means making decisions more likely to lead to success, whether choosing the right marketing strategy, optimizing supply chains, or launching new products. By analyzing data, decision-makers can assess various options' potential risks and rewards, leading to better choices.

2. Improved Understanding

Data analysis provides a deeper understanding of processes, behaviors, and trends. It allows organizations to gain insights into customer preferences, market dynamics, and operational efficiency.

3. Competitive Advantage

Organizations can identify opportunities and threats by analyzing market trends, consumer behavior, and competitor performance. They can pivot their strategies to respond effectively, staying one step ahead of the competition. This ability to adapt and innovate based on data insights can lead to a significant competitive advantage.


4. Risk Mitigation

Data analysis is a valuable tool for risk assessment and management. Organizations can assess potential issues and take preventive measures by analyzing historical data. For instance, data analysis detects fraudulent activities in the finance industry by identifying unusual transaction patterns. This not only helps minimize financial losses but also safeguards the reputation and trust of customers.

5. Efficient Resource Allocation

Data analysis helps organizations optimize resource allocation. Whether it's allocating budgets, human resources, or manufacturing capacities, data-driven insights can ensure that resources are utilized efficiently. For example, data analysis can help hospitals allocate staff and resources to the areas with the highest patient demand, ensuring that patient care remains efficient and effective.

6. Continuous Improvement

Data analysis is a catalyst for continuous improvement. It allows organizations to monitor performance metrics, track progress, and identify areas for enhancement. This iterative process of analyzing data, implementing changes, and analyzing again leads to ongoing refinement and excellence in processes and products.

What Is the Data Analysis Process?

The data analysis process is a structured sequence of steps that leads from raw data to actionable insights. The steps are:

- Data Collection: Gather relevant data from various sources, ensuring data quality and integrity.

- Data Cleaning: Identify and rectify errors, missing values, and inconsistencies in the dataset. Clean data is crucial for accurate analysis.

- Exploratory Data Analysis (EDA): Conduct preliminary analysis to understand the data's characteristics, distributions, and relationships. Visualization techniques are often used here.

- Data Transformation: Prepare the data for analysis by encoding categorical variables, scaling features, and handling outliers, if necessary.

- Model Building: Depending on the objectives, apply appropriate data analysis methods, such as regression, clustering, or deep learning.

- Model Evaluation: Depending on the problem type, assess the models' performance using metrics like Mean Absolute Error, Root Mean Squared Error, or others.

- Interpretation and Visualization: Translate the model's results into actionable insights. Visualizations, tables, and summary statistics help in conveying findings effectively.

- Deployment: Implement the insights into real-world solutions or strategies, ensuring that the data-driven recommendations are implemented.
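The Model Evaluation step mentions Mean Absolute Error and Root Mean Squared Error. As a minimal sketch, both can be computed from paired actual and predicted values as follows; the function names and the numbers are hypothetical and chosen only so the arithmetic is easy to follow.

```python
import math

def mean_absolute_error(actual, predicted):
    """Average size of the errors, ignoring their sign."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def root_mean_squared_error(actual, predicted):
    """Square root of the average squared error; penalizes large misses more."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))

# Hypothetical model output, for illustration only.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.0, 5.0, 8.0, 11.0]

mae = mean_absolute_error(actual, predicted)        # errors: 1, 0, 1, 2
rmse = root_mean_squared_error(actual, predicted)   # sqrt((1 + 0 + 1 + 4) / 4)
print(f"MAE = {mae:.3f}, RMSE = {rmse:.3f}")
```

RMSE is never smaller than MAE on the same data, and the gap between them grows when a few predictions miss by a lot.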

Data Analysis Methods

1. Regression Analysis

Regression analysis is a powerful method for understanding the relationship between a dependent and one or more independent variables. It is applied in economics, finance, and social sciences. By fitting a regression model, you can make predictions, analyze cause-and-effect relationships, and uncover trends within your data.
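As an illustration of fitting a regression model, the sketch below estimates a straight line by ordinary least squares with one independent variable. The function `fit_line` and the advertising and sales figures are assumptions made up for this example, not data from the article.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums (the 1/n factors cancel).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data that lies exactly on y = 2x + 1.
ad_spend = [1, 2, 3, 4, 5]
sales = [3, 5, 7, 9, 11]

slope, intercept = fit_line(ad_spend, sales)
print(f"sales = {slope:.2f} * ad_spend + {intercept:.2f}")
print("predicted sales at ad_spend = 6:", slope * 6 + intercept)
```

A fitted line describes an association in the data. Whether it reflects cause and effect depends on how the data was collected, not on the fit itself.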

2. Statistical Analysis

Statistical analysis encompasses a broad range of techniques for summarizing and interpreting data. It involves descriptive statistics (mean, median, standard deviation), inferential statistics (hypothesis testing, confidence intervals), and multivariate analysis. Statistical methods help make inferences about populations from sample data, draw conclusions, and assess the significance of results.

3. Cohort Analysis

Cohort analysis focuses on understanding the behavior of specific groups or cohorts over time. It can reveal patterns, retention rates, and customer lifetime value, helping businesses tailor their strategies.
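One common form of cohort analysis is a retention table: group users by signup month, then measure what share of each group is still active in later months. The sketch below assumes a hypothetical event log of `(user_id, signup_month, active_month)` tuples; the names and data are illustrative only.

```python
from collections import defaultdict

# Hypothetical activity log: (user_id, signup_month, active_month).
events = [
    ("u1", "2024-01", "2024-01"), ("u1", "2024-01", "2024-02"),
    ("u2", "2024-01", "2024-01"),
    ("u3", "2024-02", "2024-02"), ("u3", "2024-02", "2024-03"),
    ("u4", "2024-02", "2024-02"), ("u4", "2024-02", "2024-03"),
]

def months_between(start, end):
    """Whole months from 'YYYY-MM' start to 'YYYY-MM' end."""
    start_year, start_month = map(int, start.split("-"))
    end_year, end_month = map(int, end.split("-"))
    return (end_year - start_year) * 12 + (end_month - start_month)

def retention_by_cohort(events):
    """Map (cohort, months_since_signup) to the share of the cohort active."""
    cohort_users = defaultdict(set)
    active = defaultdict(set)
    for user, signup, month in events:
        cohort_users[signup].add(user)
        active[(signup, months_between(signup, month))].add(user)
    return {
        key: len(users) / len(cohort_users[key[0]])
        for key, users in sorted(active.items())
    }

for (cohort, offset), share in retention_by_cohort(events).items():
    print(f"cohort {cohort}, month +{offset}: {share:.0%} retained")
```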

4. Content Analysis

It is a qualitative data analysis method used to study the content of textual, visual, or multimedia data. Social sciences, journalism, and marketing often employ it to analyze themes, sentiments, or patterns within documents or media. Content analysis can help researchers gain insights from large volumes of unstructured data.

5. Factor Analysis

Factor analysis is a technique for uncovering underlying latent factors that explain the variance in observed variables. It is commonly used in psychology and the social sciences to reduce the dimensionality of data and identify underlying constructs. Factor analysis can simplify complex datasets, making them easier to interpret and analyze.

6. Monte Carlo Method

This method is a simulation technique that uses random sampling to solve complex problems and make probabilistic predictions. Monte Carlo simulations allow analysts to model uncertainty and risk, making it a valuable tool for decision-making.
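A minimal sketch of the idea: repeat a random trial many times and use the fraction of successes as an estimate of the probability. The example uses two dice because the exact answer (6 of 36 outcomes, about 0.167) is known, which makes the estimate easy to check. The function names, trial count, and seed are arbitrary choices for illustration.

```python
import random

def estimate_probability(trial, n_trials=100_000, seed=0):
    """Run `trial` repeatedly and return the fraction of successful runs."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits = sum(trial(rng) for _ in range(n_trials))
    return hits / n_trials

def two_dice_total_at_least_10(rng):
    """One simulated trial: roll two dice, succeed if the total is 10 or more."""
    return rng.randint(1, 6) + rng.randint(1, 6) >= 10

p = estimate_probability(two_dice_total_at_least_10)
print(f"estimated probability: {p:.4f} (exact value is 1/6)")
```

In a real risk model the trial function would draw from distributions that describe the uncertain inputs, such as costs or demand, and the same counting logic would apply.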

7. Text Analysis

Also known as text mining, this method involves extracting insights from textual data. It analyzes large volumes of text, such as social media posts, customer reviews, or documents. Text analysis can uncover sentiment, topics, and trends, enabling organizations to understand public opinion, customer feedback, and emerging issues.
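A very simple form of text analysis is counting which terms appear most often after dropping common filler words. The sketch below is only a starting point; the stopword list, the function `top_terms`, and the reviews are invented for illustration, and real projects usually rely on a dedicated NLP library.

```python
import re
from collections import Counter

# A tiny, hand-picked stopword list (an assumption for this example).
STOPWORDS = {"the", "a", "is", "and", "was", "but", "it", "to"}

def top_terms(documents, n=3):
    """Return the n most frequent non-stopword terms across all documents."""
    words = []
    for doc in documents:
        tokens = re.findall(r"[a-z']+", doc.lower())
        words.extend(t for t in tokens if t not in STOPWORDS)
    return Counter(words).most_common(n)

# Hypothetical customer reviews.
reviews = [
    "The delivery was fast and the support was great",
    "Great product but the delivery was late",
    "Support is great",
]

for term, count in top_terms(reviews):
    print(term, count)
```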

8. Time Series Analysis

Time series analysis deals with data collected at regular intervals over time. It is essential for forecasting, trend analysis, and understanding temporal patterns. Time series methods include moving averages, exponential smoothing, and autoregressive integrated moving average (ARIMA) models. They are widely used in finance for stock price prediction, meteorology for weather forecasting, and economics for economic modeling.
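Two of the methods named above, moving averages and exponential smoothing, are short enough to sketch directly. The function names, the smoothing factor `alpha`, and the sales figures are assumptions for illustration; ARIMA models are more involved and are usually fitted with a statistics library.

```python
def moving_average(series, window):
    """Average of each run of `window` consecutive values."""
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]

def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: each value blends the new observation
    with the previous smoothed value, weighted by alpha."""
    smoothed = [series[0]]
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed

# Hypothetical monthly sales figures.
monthly_sales = [10, 12, 14, 16, 18]

print(moving_average(monthly_sales, window=3))
print(exponential_smoothing(monthly_sales, alpha=0.5))
```

A larger window or a smaller `alpha` gives a smoother line that reacts more slowly to recent changes.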

9. Descriptive Analysis

Descriptive analysis involves summarizing and describing the main features of a dataset. It focuses on organizing and presenting the data in a meaningful way, often using measures such as mean, median, mode, and standard deviation. It provides an overview of the data and helps identify patterns or trends.
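The measures mentioned above are available in Python's standard `statistics` module. The ratings below are hypothetical and chosen so the results are round numbers.

```python
import statistics

# Hypothetical survey ratings.
ratings = [2, 4, 4, 4, 5, 5, 7, 9]

print("mean:              ", statistics.mean(ratings))
print("median:            ", statistics.median(ratings))
print("mode:              ", statistics.mode(ratings))
# pstdev treats the data as the whole population; use stdev for a sample.
print("standard deviation:", statistics.pstdev(ratings))
```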

10. Inferential Analysis

Inferential analysis aims to make inferences or predictions about a larger population based on sample data. It involves applying statistical techniques such as hypothesis testing, confidence intervals, and regression analysis. It helps generalize findings from a sample to a larger population.
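As a sketch of generalizing from a sample, the code below computes an approximate confidence interval for a population mean. It uses the normal approximation, which is an assumption that works better for larger samples; for small samples a t-distribution is the usual choice. The function name and the sample values are hypothetical.

```python
import math
import statistics

def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for the mean (normal approximation)."""
    n = len(sample)
    mean = statistics.mean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)
    # z is about 1.96 for a 95% interval.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std_err, mean + z * std_err

# Hypothetical sample of response times in seconds.
sample = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3, 12.2, 11.7, 12.0, 12.1]
low, high = mean_confidence_interval(sample)
print(f"95% interval for the mean: {low:.2f} to {high:.2f}")
```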

11. Exploratory Data Analysis (EDA)

EDA focuses on exploring and understanding the data without preconceived hypotheses. It involves visualizations, summary statistics, and data profiling techniques to uncover patterns, relationships, and interesting features. It helps generate hypotheses for further analysis.

12. Diagnostic Analysis

Diagnostic analysis aims to understand the cause-and-effect relationships within the data. It investigates the factors or variables that contribute to specific outcomes or behaviors. Techniques such as regression analysis, ANOVA (Analysis of Variance), or correlation analysis are commonly used in diagnostic analysis.
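Correlation analysis, one of the techniques listed above, can be sketched with the Pearson coefficient, which ranges from -1 to 1. The function `pearson_r` and the data are invented for illustration. A strong correlation points to a relationship worth investigating, but on its own it does not establish cause and effect.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: hours of training versus support tickets raised.
training_hours = [1, 2, 3, 4, 5]
tickets = [10, 8, 6, 4, 2]

print("correlation:", pearson_r(training_hours, tickets))
```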

13. Predictive Analysis

Predictive analysis involves using historical data to make predictions or forecasts about future outcomes. It utilizes statistical modeling techniques, machine learning algorithms, and time series analysis to identify patterns and build predictive models. It is often used for forecasting sales, predicting customer behavior, or estimating risk.

14. Prescriptive Analysis

Prescriptive analysis goes beyond predictive analysis by recommending actions or decisions based on the predictions. It combines historical data, optimization algorithms, and business rules to provide actionable insights and optimize outcomes. It helps in decision-making and resource allocation.


Applications of Data Analysis

Data analysis is a versatile and indispensable tool that finds applications across various industries and domains. Its ability to extract actionable insights from data has made it a fundamental component of decision-making and problem-solving. Let's explore some of the key applications of data analysis:

1. Business and Marketing

- Market Research: Data analysis helps businesses understand market trends, consumer preferences, and competitive landscapes. It aids in identifying opportunities for product development, pricing strategies, and market expansion.

- Sales Forecasting: Data analysis models can predict future sales based on historical data, seasonality, and external factors. This helps businesses optimize inventory management and resource allocation.

2. Healthcare and Life Sciences

- Disease Diagnosis: Data analysis is vital in medical diagnostics, from interpreting medical images (e.g., MRI, X-rays) to analyzing patient records. Machine learning models can assist in early disease detection.

- Drug Discovery: Pharmaceutical companies use data analysis to identify potential drug candidates, predict their efficacy, and optimize clinical trials.

- Genomics and Personalized Medicine: Genomic data analysis enables personalized treatment plans by identifying genetic markers that influence disease susceptibility and response to therapies.

3. Finance

- Risk Management: Financial institutions use data analysis to assess credit risk, detect fraudulent activities, and model market risks.

- Algorithmic Trading: Data analysis is integral to developing trading algorithms that analyze market data and execute trades automatically based on predefined strategies.

- Fraud Detection: Credit card companies and banks employ data analysis to identify unusual transaction patterns and detect fraudulent activities in real time.

4. Manufacturing and Supply Chain

- Quality Control: Data analysis monitors and controls product quality on manufacturing lines. It helps detect defects and ensure consistency in production processes.

- Inventory Optimization: By analyzing demand patterns and supply chain data, businesses can optimize inventory levels, reduce carrying costs, and ensure timely deliveries.

5. Social Sciences and Academia

- Social Research: Researchers in social sciences analyze survey data, interviews, and textual data to study human behavior, attitudes, and trends. It helps in policy development and understanding societal issues.

- Academic Research: Data analysis is crucial to scientific research in physics, biology, and environmental science. It assists in interpreting experimental results and drawing conclusions.

6. Internet and Technology

- Search Engines: Google uses complex data analysis algorithms to retrieve and rank search results based on user behavior and relevance.

- Recommendation Systems: Services like Netflix and Amazon leverage data analysis to recommend content and products to users based on their past preferences and behaviors.

7. Environmental Science

- Climate Modeling: Data analysis is essential in climate science. It analyzes temperature, precipitation, and other environmental data. It helps in understanding climate patterns and predicting future trends.

- Environmental Monitoring: Remote sensing data analysis monitors ecological changes, including deforestation, water quality, and air pollution.

Top Data Analysis Techniques to Analyze Data

1. Descriptive Statistics

Descriptive statistics provide a snapshot of a dataset's central tendencies and variability. These techniques help summarize and understand the data's basic characteristics.

2. Inferential Statistics

Inferential statistics involve making predictions or inferences based on a sample of data. Techniques include hypothesis testing, confidence intervals, and regression analysis. These methods are crucial for drawing conclusions from data and assessing the significance of findings.

3. Regression Analysis

It explores the relationship between one or more independent variables and a dependent variable. It is widely used for prediction and understanding causal links. Linear, logistic, and multiple regression are common in various fields.

4. Clustering Analysis

It is an unsupervised learning method that groups similar data points. K-means clustering and hierarchical clustering are examples. This technique is used for customer segmentation, anomaly detection, and pattern recognition.
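To show how K-means groups similar points, here is a minimal one-dimensional sketch: assign each point to its nearest centroid, move each centroid to the mean of its points, and repeat. The function `kmeans_1d`, the starting centroids, and the spending figures are assumptions for illustration; library implementations handle many dimensions and choose starting centroids more carefully.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Basic K-means on a list of numbers, starting from the given centroids."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: put each point with its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical monthly spend per customer; two obvious groups.
spend = [1, 2, 3, 10, 11, 12]
centroids, clusters = kmeans_1d(spend, centroids=[1, 12])
print("centroids:", centroids)
print("segments: ", clusters)
```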

5. Classification Analysis

Classification analysis assigns data points to predefined categories or classes. It's often used in applications like spam email detection, image recognition, and sentiment analysis. Popular algorithms include decision trees, support vector machines, and neural networks.

6. Time Series Analysis

Time series analysis deals with data collected over time, making it suitable for forecasting and trend analysis. Techniques like moving averages, autoregressive integrated moving averages (ARIMA), and exponential smoothing are applied in fields like finance, economics, and weather forecasting.

7. Text Analysis (Natural Language Processing - NLP)

Text analysis techniques, part of NLP, enable extracting insights from textual data. These methods include sentiment analysis, topic modeling, and named entity recognition. Text analysis is widely used for analyzing customer reviews, social media content, and news articles.

8. Principal Component Analysis

It is a dimensionality reduction technique that simplifies complex datasets while retaining important information. It transforms correlated variables into a set of linearly uncorrelated variables, making it easier to analyze and visualize high-dimensional data.

9. Anomaly Detection

Anomaly detection identifies unusual patterns or outliers in data. It's critical in fraud detection, network security, and quality control. Techniques like statistical methods, clustering-based approaches, and machine learning algorithms are employed for anomaly detection.
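One of the simplest statistical methods is the z-score rule: flag values that lie more than a chosen number of standard deviations from the mean. The sketch below assumes a threshold of 2 and uses made-up transaction amounts. Because extreme values inflate the mean and standard deviation themselves, more robust variants (for example, ones based on the median) are often preferred in practice.

```python
import statistics

def zscore_outliers(values, threshold=2.0):
    """Return the values whose z-score exceeds the threshold in absolute value."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return []  # all values identical, so nothing stands out
    return [v for v in values if abs(v - mean) / sd > threshold]

# Hypothetical transaction amounts with one unusual entry.
amounts = [20, 22, 19, 21, 20, 23, 18, 500]
print("flagged:", zscore_outliers(amounts))
```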

10. Data Mining

Data mining involves the automated discovery of patterns, associations, and relationships within large datasets. Techniques like association rule mining, frequent pattern analysis, and decision tree mining extract valuable knowledge from data.

11. Machine Learning and Deep Learning

ML and deep learning algorithms are applied for predictive modeling, classification, and regression tasks. Techniques like random forests, support vector machines, and convolutional neural networks (CNNs) have revolutionized various industries, including healthcare, finance, and image recognition.

12. Geographic Information Systems (GIS) Analysis

GIS analysis combines geographical data with spatial analysis techniques to solve location-based problems. It's widely used in urban planning, environmental management, and disaster response.

What Is the Importance of Data Analysis in Research?

- Uncovering Patterns and Trends: Data analysis allows researchers to identify patterns, trends, and relationships within the data. By examining these patterns, researchers can better understand the phenomena under investigation. For example, in epidemiological research, data analysis can reveal the trends and patterns of disease outbreaks, helping public health officials take proactive measures.

- Testing Hypotheses: Research often involves formulating hypotheses and testing them. Data analysis provides the means to evaluate hypotheses rigorously. Through statistical tests and inferential analysis, researchers can determine whether the observed patterns in the data are statistically significant or simply due to chance.

- Making Informed Conclusions: Data analysis helps researchers draw meaningful and evidence-based conclusions from their research findings. It provides a quantitative basis for making claims and recommendations. In academic research, these conclusions form the basis for scholarly publications and contribute to the body of knowledge in a particular field.

- Enhancing Data Quality: Data analysis includes data cleaning and validation processes that improve the quality and reliability of the dataset. Identifying and addressing errors, missing values, and outliers ensures that the research results accurately reflect the phenomena being studied.

- Supporting Decision-Making: In applied research, data analysis assists decision-makers in various sectors, such as business, government, and healthcare. Policy decisions, marketing strategies, and resource allocations are often based on research findings.

- Identifying Outliers and Anomalies: Outliers and anomalies in data can hold valuable information or indicate errors. Data analysis techniques can help identify these exceptional cases, whether in medical diagnoses, financial fraud detection, or product quality control.

- Revealing Insights: Research data often contain hidden insights that are not immediately apparent. Data analysis techniques, such as clustering or text analysis, can uncover these insights. For example, in the social sciences, sentiment analysis of social media data can reveal public sentiment and trends on various topics.

- Forecasting and Prediction: Data analysis allows for the development of predictive models. Researchers can use historical data to build models forecasting future trends or outcomes. This is valuable in fields like finance for stock price predictions, meteorology for weather forecasting, and epidemiology for disease spread projections.

- Optimizing Resources: Research often involves resource allocation. Data analysis helps researchers and organizations optimize resource use by identifying areas where improvements can be made, or costs can be reduced.

- Continuous Improvement: Data analysis supports the iterative nature of research. Researchers can analyze data, draw conclusions, and refine their hypotheses or research designs based on their findings. This cycle of analysis and refinement leads to continuous improvement in research methods and understanding.

Future Trends in Data Analysis

Data analysis is an ever-evolving field driven by technological advancements. The future of data analysis promises exciting developments that will reshape how data is collected, processed, and utilized. Here are some of the key trends in data analysis:

1. Artificial Intelligence and Machine Learning Integration

Artificial intelligence (AI) and machine learning (ML) are expected to play a central role in data analysis. These technologies can automate complex data processing tasks, identify patterns at scale, and make highly accurate predictions. AI-driven analytics tools will become more accessible, enabling organizations to harness the power of ML without requiring extensive expertise.

2. Augmented Analytics

Augmented analytics combines AI and natural language processing (NLP) to assist data analysts in finding insights. These tools can automatically generate narratives, suggest visualizations, and highlight important trends within data. They enhance the speed and efficiency of data analysis, making it more accessible to a broader audience.

3. Data Privacy and Ethical Considerations

As data collection becomes more pervasive, privacy concerns and ethical considerations will gain prominence. Future data analysis trends will prioritize responsible data handling, transparency, and compliance with regulations like GDPR. Differential privacy techniques and data anonymization will be crucial in balancing data utility with privacy protection.

4. Real-time and Streaming Data Analysis

The demand for real-time insights will drive the adoption of real-time and streaming data analysis. Organizations will leverage technologies like Apache Kafka and Apache Flink to process and analyze data as it is generated. This trend is essential for fraud detection, IoT analytics, and monitoring systems.

5. Quantum Computing

Quantum computing could potentially revolutionize data analysis by solving certain complex problems far faster than classical computers. Although quantum computing is in its infancy, its impact on optimization, cryptography, and simulations is expected to be significant once practical quantum computers become available.

6. Edge Analytics

With the proliferation of edge devices in the Internet of Things (IoT), data analysis is moving closer to the data source. Edge analytics allows for real-time processing and decision-making at the network's edge, reducing latency and bandwidth requirements.

7. Explainable AI (XAI)

Interpretable and explainable AI models will become crucial, especially in applications where trust and transparency are paramount. XAI techniques aim to make AI decisions more understandable and accountable, which is critical in healthcare and finance.

8. Data Democratization

The future of data analysis will see more democratization of data access and analysis tools. Non-technical users will have easier access to data and analytics through intuitive interfaces and self-service BI tools, reducing the reliance on data specialists.

9. Advanced Data Visualization

Data visualization tools will continue to evolve, offering more interactivity, 3D visualization, and augmented reality (AR) capabilities. Advanced visualizations will help users explore data in new and immersive ways.

10. Ethnographic Data Analysis

Ethnographic data analysis will gain importance as organizations seek to understand human behavior, cultural dynamics, and social trends. This qualitative approach, combined with quantitative methods, will provide a holistic understanding of complex issues.

11. Data Analytics Ethics and Bias Mitigation

Ethical considerations in data analysis will remain a key trend. Efforts to identify and mitigate bias in algorithms and models will become standard practice, ensuring fair and equitable outcomes.


1. What is the difference between data analysis and data science?

Data analysis primarily involves extracting meaningful insights from existing data using statistical techniques and visualization tools. Data science, by contrast, covers a broader spectrum: it includes data analysis as a subset and also involves machine learning, deep learning, and predictive modeling to build data-driven solutions and algorithms.

2. What are the common mistakes to avoid in data analysis?

Common mistakes to avoid in data analysis include neglecting data quality issues, failing to define clear objectives, overcomplicating visualizations, not considering algorithmic biases, and disregarding the importance of proper data preprocessing and cleaning. It is also crucial to avoid making unwarranted assumptions and misinterpreting correlation as causation in your analysis.

Present Your Data Like a Pro

- Joel Schwartzberg

Demystify the numbers. Your audience will thank you.

While a good presentation has data, data alone doesn’t guarantee a good presentation. It’s all about how that data is presented. The quickest way to confuse your audience is by sharing too many details at once. The only data points you should share are those that significantly support your point — and ideally, one point per chart. To avoid the debacle of sheepishly translating hard-to-see numbers and labels, rehearse your presentation with colleagues sitting as far away as the actual audience would. While you’ve been working with the same chart for weeks or months, your audience will be exposed to it for mere seconds. Give them the best chance of comprehending your data by using simple, clear, and complete language to identify X and Y axes, pie pieces, bars, and other diagrammatic elements. Try to avoid abbreviations that aren’t obvious, and don’t assume labeled components on one slide will be remembered on subsequent slides. Every valuable chart or pie graph has an “Aha!” zone — a number or range of data that reveals something crucial to your point. Make sure you visually highlight the “Aha!” zone, reinforcing the moment by explaining it to your audience.

With so many ways to spin and distort information these days, a presentation needs to do more than simply share great ideas — it needs to support those ideas with credible data. That’s true whether you’re an executive pitching new business clients, a vendor selling her services, or a CEO making a case for change.

- Joel Schwartzberg oversees executive communications for a major national nonprofit, is a professional presentation coach, and is the author of Get to the Point! Sharpen Your Message and Make Your Words Matter and The Language of Leadership: How to Engage and Inspire Your Team. You can find him on LinkedIn and X (TheJoelTruth).

Data Analysis PPT: Definition, Types and Process

Although many groups, organizations, and experts approach data analysis in different ways, most of these approaches can be distilled into a one-size-fits-all definition.

Data analysis is the process of cleaning, transforming, and processing raw data, and extracting actionable, relevant information that helps businesses make informed decisions. The process helps reduce the risks inherent in decision-making by providing useful insights and statistics, often presented in charts, images, tables, and graphs. A simple example of data analysis can be seen whenever we make a decision in our daily lives by evaluating what has happened in the past or what will happen if we make that decision.

Table of Content

- Introduction

- Why is Data Analysis Important?

- Data Analysis Process

- Types of Data Analysis

Python Data Analytics, pp 1–13

An Introduction to Data Analysis

- Fabio Nelli

- First Online: 02 September 2023

In this chapter, you take the first steps in the world of data analysis, learning in detail about all the concepts and processes that make up this discipline. The concepts discussed in this chapter are helpful background for the following chapters, where these concepts and procedures are applied in the form of Python code, through the use of several libraries that are discussed in later chapters.

Author information

Authors and affiliations.

Rome, Italy

Fabio Nelli

Copyright information

© 2023 The Author(s), under exclusive license to APress Media, LLC, part of Springer Nature

About this chapter

Cite this chapter.

Nelli, F. (2023). An Introduction to Data Analysis. In: Python Data Analytics. Apress, Berkeley, CA. https://doi.org/10.1007/978-1-4842-9532-8_1

DOI : https://doi.org/10.1007/978-1-4842-9532-8_1

Published : 02 September 2023

Publisher Name : Apress, Berkeley, CA

Print ISBN : 978-1-4842-9531-1

Online ISBN : 978-1-4842-9532-8

eBook Packages : Professional and Applied Computing Apress Access Books Professional and Applied Computing (R0)

Can J Hosp Pharm, v.68(4); Jul-Aug 2015

Creating a Data Analysis Plan: What to Consider When Choosing Statistics for a Study

There are three kinds of lies: lies, damned lies, and statistics. – Mark Twain 1

INTRODUCTION

Statistics represent an essential part of a study because, regardless of the study design, investigators need to summarize the collected information for interpretation and presentation to others. It is therefore important for us to heed Mr Twain’s concern when creating the data analysis plan. In fact, even before data collection begins, we need to have a clear analysis plan that will guide us from the initial stages of summarizing and describing the data through to testing our hypotheses.

The purpose of this article is to help you create a data analysis plan for a quantitative study. For those interested in conducting qualitative research, previous articles in this Research Primer series have provided information on the design and analysis of such studies. 2 , 3 Information in the current article is divided into 3 main sections: an overview of terms and concepts used in data analysis, a review of common methods used to summarize study data, and a process to help identify relevant statistical tests. My intention here is to introduce the main elements of data analysis and provide a place for you to start when planning this part of your study. Biostatistical experts, textbooks, statistical software packages, and other resources can certainly add more breadth and depth to this topic when you need additional information and advice.

TERMS AND CONCEPTS USED IN DATA ANALYSIS

When analyzing information from a quantitative study, we are often dealing with numbers; therefore, it is important to begin with an understanding of the source of the numbers. Let us start with the term variable , which defines a specific item of information collected in a study. Examples of variables include age, sex or gender, ethnicity, exercise frequency, weight, treatment group, and blood glucose. Each variable will have a group of categories, which are referred to as values , to help describe the characteristic of an individual study participant. For example, the variable “sex” would have values of “male” and “female”.

Although variables can be defined or grouped in various ways, I will focus on 2 methods at this introductory stage. First, variables can be defined according to the level of measurement. The categories in a nominal variable are names, for example, male and female for the variable “sex”; white, Aboriginal, black, Latin American, South Asian, and East Asian for the variable “ethnicity”; and intervention and control for the variable “treatment group”. Nominal variables with only 2 categories are also referred to as dichotomous variables because the study group can be divided into 2 subgroups based on information in the variable. For example, a study sample can be split into 2 groups (patients receiving the intervention and controls) using the dichotomous variable “treatment group”. An ordinal variable implies that the categories can be placed in a meaningful order, as would be the case for exercise frequency (never, sometimes, often, or always). Nominal-level and ordinal-level variables are also referred to as categorical variables, because each category in the variable can be completely separated from the others. The categories for an interval variable can be placed in a meaningful order, with the interval between consecutive categories also having meaning. Age, weight, and blood glucose can be considered as interval variables, but also as ratio variables, because the ratio between values has meaning (e.g., a 15-year-old is half the age of a 30-year-old). Interval-level and ratio-level variables are also referred to as continuous variables because of the underlying continuity among categories.
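As a rough illustration of the levels of measurement described above, the following Python sketch tags the example variables with a level and groups them into categorical and continuous. The `Level` enum, the `VARIABLES` mapping, and the helper names are hypothetical and chosen only for this example; they are not part of any statistical package or of the original article.

```python
from enum import IntEnum


class Level(IntEnum):
    """Levels of measurement, ordered from least to most informative."""
    NOMINAL = 1
    ORDINAL = 2
    INTERVAL = 3
    RATIO = 4


# Variables named in the text, tagged with the level described there.
# Age, weight, and blood glucose are tagged RATIO because the text notes
# that the ratio between their values has meaning.
VARIABLES = {
    "sex": Level.NOMINAL,
    "ethnicity": Level.NOMINAL,
    "treatment group": Level.NOMINAL,
    "exercise frequency": Level.ORDINAL,
    "age": Level.RATIO,
    "weight": Level.RATIO,
    "blood glucose": Level.RATIO,
}


def is_categorical(level):
    """Nominal-level and ordinal-level variables are categorical."""
    return level in (Level.NOMINAL, Level.ORDINAL)


def is_continuous(level):
    """Interval-level and ratio-level variables are continuous."""
    return level in (Level.INTERVAL, Level.RATIO)


# Ordinal categories have a meaningful order, so responses can be sorted.
EXERCISE_ORDER = ["never", "sometimes", "often", "always"]

responses = ["often", "never", "always", "sometimes"]
sorted_responses = sorted(responses, key=EXERCISE_ORDER.index)

categorical = sorted(n for n, lvl in VARIABLES.items() if is_categorical(lvl))
continuous = sorted(n for n, lvl in VARIABLES.items() if is_continuous(lvl))

print("categorical:", categorical)
print("continuous:", continuous)
print("ordered responses:", sorted_responses)
```

Sorting is possible for the ordinal variable only because its categories carry an order; a nominal variable such as "ethnicity" has no comparable ranking.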

As we progress through the levels of measurement from nominal to ratio variables, we gather more information about the study participant. The amount of information that a variable provides will become important in the analysis stage, because we lose information when variables are reduced or aggregated—a common practice that is not recommended. 4 For example, if age is reduced from a ratio-level variable (measured in years) to an ordinal variable (categories of < 65 and ≥ 65 years) we lose the ability to make comparisons across the entire age range and introduce error into the data analysis. 4
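The information loss described above can be seen in a small Python sketch. The ages below are made up for illustration, and the `age_group` helper is a hypothetical name; the only element taken from the text is the 65-year cutoff.

```python
# Made-up ages in years, for illustration only.
ages = [23, 41, 64, 65, 66, 90]


def age_group(age, cutoff=65):
    """Reduce a ratio-level age to one of two ordered categories."""
    return "< 65" if age < cutoff else ">= 65"


groups = [age_group(a) for a in ages]

# Six distinct ages collapse into two categories.
distinct_before = len(set(ages))
distinct_after = len(set(groups))

# A 1-year gap that straddles the cutoff is kept as a difference,
# while a 25-year gap above the cutoff disappears entirely.
one_year_gap_kept = age_group(64) != age_group(65)
large_gap_lost = age_group(65) == age_group(90)

print(groups)
print(distinct_before, "->", distinct_after)
print(one_year_gap_kept, large_gap_lost)
```

After grouping, a 65-year-old and a 90-year-old are indistinguishable, which is why comparisons across the full age range are no longer possible.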

A second method of defining variables is to consider them as either dependent or independent. As the terms imply, the value of a dependent variable depends on the value of other variables, whereas the value of an independent variable does not rely on other variables. In addition, an investigator can influence the value of an independent variable, such as treatment-group assignment. Independent variables are also referred to as predictors because we can use information from these variables to predict the value of a dependent variable. Building on the group of variables listed in the first paragraph of this section, blood glucose could be considered a dependent variable, because its value may depend on values of the independent variables age, sex, ethnicity, exercise frequency, weight, and treatment group.
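To make the idea of a predictor concrete, here is a minimal Python sketch that fits a straight line predicting a dependent variable (blood glucose) from a single independent variable (weight) by ordinary least squares. The data are invented and chosen to lie exactly on a line, the units are assumptions, and using one predictor is a simplification for illustration; this is not an analysis method taken from the article.

```python
from statistics import mean

# Invented data for illustration: weight (kg) is the independent variable,
# blood glucose (assumed mmol/L) is the dependent variable.
weight_kg = [60, 70, 80, 90]
glucose = [5.0, 5.5, 6.0, 6.5]


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


slope, intercept = fit_line(weight_kg, glucose)


def predict_glucose(weight):
    """Predict the dependent variable from the independent variable."""
    return intercept + slope * weight


print(round(slope, 3), round(intercept, 3))
print(round(predict_glucose(75), 2))
```

A real study would usually involve several independent variables (age, sex, ethnicity, exercise frequency, weight, and treatment group in the example above) and data that do not fall exactly on a line.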