Chapter 2. Research Design

Getting started.

When I teach undergraduates qualitative research methods, the final product of the course is a “research proposal” that draws on everything they have learned about qualitative research methods in an original design that addresses a particular research question. I highly recommend you think about designing your own research study as you progress through this textbook. Even if you don’t have a study in mind yet, it can be a helpful exercise as you progress through the course. But how to start? How can you design a research study before you even know what research looks like? This chapter will serve as a brief overview of the research design process to orient you to what will be coming in later chapters. Think of it as a “skeleton” of what you will read in more detail in later chapters. Ideally, you will read this chapter both now (in sequence) and later during your reading of the remainder of the text. Do not worry if you have questions the first time you read this chapter. Many things will become clearer as the text advances and as you gain a deeper understanding of all the components of good qualitative research. This is just a preliminary map to get you on the right road.

Research Design Steps

Before you even get started, you will need to have a broad topic of interest in mind. [1] In my experience, students can confuse this broad topic with the actual research question, so it is important to clearly distinguish the two. And the place to start is the broad topic. It might be, as was the case with me, working-class college students. But what about working-class college students? What’s it like to be one? Why are there so few compared to others? How do colleges assist (or fail to assist) them? What interested me was something I could barely articulate at first and went something like this: “Why was it so difficult and lonely to be me?” And by extension, “Did others share this experience?”

Once you have a general topic, reflect on why this is important to you. Sometimes we connect with a topic and we don’t really know why. Even if you are not willing to share the real underlying reason you are interested in a topic, it is important that you know the deeper reasons that motivate you. Otherwise, it is quite possible that at some point during the research, you will find yourself turned around facing the wrong direction. I have seen it happen many times. The reason is that the research question is not the same thing as the general topic of interest, and if you don’t know the reasons for your interest, you are likely to design a study answering a research question that is beside the point—to you, at least. And this means you will be much less motivated to carry your research to completion.

Researcher Note

Why do you employ qualitative research methods in your area of study? What are the advantages of qualitative research methods for studying mentorship?

Qualitative research methods are a huge opportunity to increase access, equity, inclusion, and social justice. Qualitative research allows us to engage and examine the uniquenesses/nuances within minoritized and dominant identities and our experiences with these identities. Qualitative research allows us to explore a specific topic, and through that exploration, we can link history to experiences and look for patterns or offer up a unique phenomenon. There’s such beauty in being able to tell a particular story, and qualitative research is a great mode for that! For our work, we examined the relationships we typically use the term mentorship for but didn’t feel that was quite the right word. Qualitative research allowed us to pick apart what we did and how we engaged in our relationships, which then allowed us to more accurately describe what was unique about our mentorship relationships, which we ultimately named liberationships (McAloney and Long 2021). Qualitative research gave us the means to explore, process, and name our experiences; what a powerful tool!

How do you come up with ideas for what to study (and how to study it)? Where did you get the idea for studying mentorship?

Coming up with ideas for research, for me, is kind of like Googling a question I have, not finding enough information, and then deciding to dig a little deeper to get the answer. The idea to study mentorship actually came up in conversation with my mentorship triad. We were talking in one of our meetings about our relationship (kind of meta, huh?). We discussed how we felt that mentorship was not quite the right term for the relationships we had built. One of us asked what was different about our relationships and mentorship. This all happened when I was taking an ethnography course. During the next session of class, we were discussing auto- and duoethnography, and it hit me: let’s explore our version of mentorship, which we later went on to name liberationships (McAloney and Long 2021). The idea and questions came out of being curious and wanting to find an answer. As I continue to research, I see opportunities in questions I have about my work or during conversations that, in our search for answers, end up exposing gaps in the literature. If I can’t find the answer already out there, I can study it.

—Kim McAloney, PhD, College Student Services Administration Ecampus coordinator and instructor

When you have a better idea of why you are interested in what it is that interests you, you may be surprised to learn that the obvious approaches to the topic are not the only ones. For example, let’s say you think you are interested in preserving coastal wildlife. And as a social scientist, you are interested in policies and practices that affect the long-term viability of coastal wildlife, especially around fishing communities. It would be natural then to consider designing a research study around fishing communities and how they manage their ecosystems. But when you really think about it, you realize that what interests you the most is how people whose livelihoods depend on a particular resource act in ways that deplete that resource. Or, even deeper, you contemplate the puzzle, “How do people justify actions that damage their surroundings?” Now, there are many ways to design a study that gets at that broader question, and not all of them are about fishing communities, although that is certainly one way to go. Maybe you could design an interview-based study that includes and compares loggers, fishers, and desert golfers (those who golf in arid lands that require a great deal of wasteful irrigation). Or design a case study around one particular example where resources were completely used up by a community. Without knowing what it is you are really interested in, what motivates your interest in a surface phenomenon, you are unlikely to come up with the appropriate research design.

These first stages of research design are often the most difficult, but have patience. Taking the time to consider why you are going to go through a lot of trouble to get answers will prevent a lot of wasted energy in the future.

There are distinct reasons for pursuing particular research questions, and it is helpful to distinguish between them. First, you may be personally motivated. This is probably the most important and the most often overlooked. What is it about the social world that sparks your curiosity? What bothers you? What answers do you need in order to keep living? For me, I knew I needed to get a handle on what higher education was for before I kept going at it. I needed to understand why I felt so different from my peers and whether this whole “higher education” thing was “for the likes of me” before I could complete my degree. That is the personal motivation question. Your personal motivation might also be political in nature, in that you want to change the world in a particular way. It’s all right to acknowledge this. In fact, it is better to acknowledge it than to hide it.

There are also academic and professional motivations for a particular study. If you are an absolute beginner, these may be difficult to find. We’ll talk more about this when we discuss reviewing the literature. Simply put, you are probably not the only person in the world to have thought about this question or issue and those related to it. So how does your interest area fit into what others have studied? Perhaps there is a good study out there of fishing communities, but no one has quite asked the “justification” question. You are motivated to address this to “fill the gap” in our collective knowledge. And maybe you are really not at all sure of what interests you, but you do know that [insert your topic] interests a lot of people, so you would like to work in this area too. You want to be involved in the academic conversation. That is a professional motivation and a very important one to articulate.

Practical and strategic motivations are a third kind. Perhaps you want to encourage people to take better care of the natural resources around them. If this is also part of your motivation, you will want to design your research project in a way that might have an impact on how people behave in the future. There are many ways to do this, one of which is using qualitative research methods rather than quantitative research methods, as the findings of qualitative research are often easier to communicate to a broader audience than the results of quantitative research. You might even be able to engage the community you are studying in the collecting and analyzing of data, something taboo in quantitative research but actively embraced and encouraged by qualitative researchers. But there are other practical reasons, such as getting “done” with your research in a certain amount of time or having access (or no access) to certain information. There is nothing wrong with considering constraints and opportunities when designing your study. Or maybe one of your practical or strategic goals is to gain competence in this area so that you can demonstrate to future employers your ability to conduct interviews and focus groups. Keeping that in mind will help shape your study and prevent you from getting sidetracked using a technique that you are less invested in learning about.

STOP HERE for a moment

I recommend you write a paragraph (at least) explaining your aims and goals. Include a sentence about each of the following: personal/political goals, professional/academic goals, and practical/strategic goals. Think through how all of the goals are related and can be achieved by this particular research study. If they can’t, have a rethink. Perhaps this is not the best way to go about it.

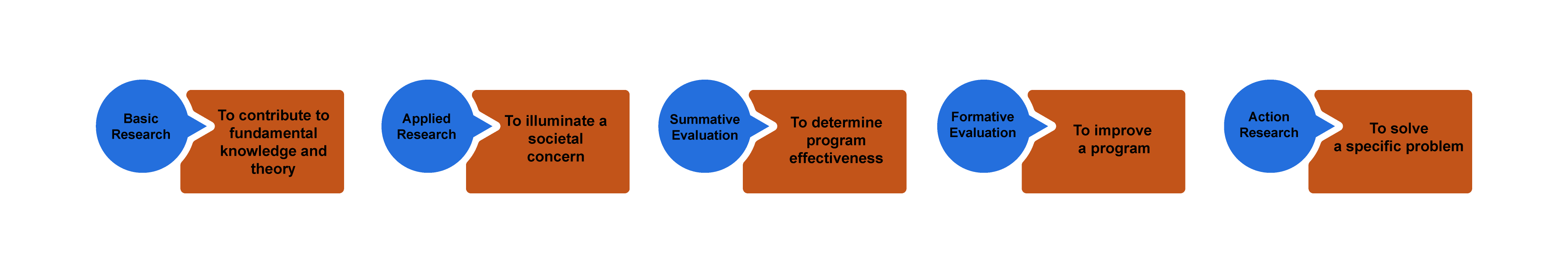

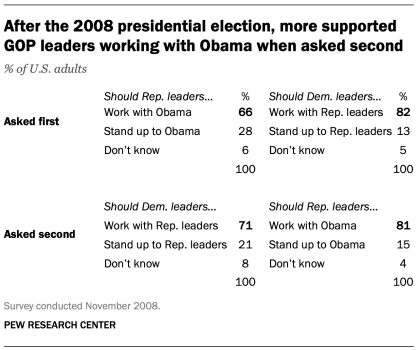

You will also want to be clear about the purpose of your study. “Wait, didn’t we just do this?” you might ask. No! Your goals are not the same as the purpose of the study, although they are related. You can think about purpose lying on a continuum from “theory” to “action” (figure 2.1). Sometimes you are doing research to discover new knowledge about the world, while other times you are doing a study because you want to measure an impact or make a difference in the world.

Basic research involves research that is done for the sake of “pure” knowledge—that is, knowledge that, at least at this moment in time, may not have any apparent use or application. Often, and this is very important, knowledge of this kind is later found to be extremely helpful in solving problems. So one way of thinking about basic research is that it is knowledge for which no use is yet known but will probably one day prove to be extremely useful. If you are doing basic research, you do not need to argue its usefulness, as the whole point is that we just don’t know yet what this might be.

Researchers engaged in basic research want to understand how the world operates. They are interested in investigating a phenomenon to get at the nature of reality with regard to that phenomenon. The basic researcher’s purpose is to understand and explain (Patton 2002:215).

Basic research is interested in generating and testing hypotheses about how the world works. Grounded Theory is one approach to qualitative research methods that exemplifies basic research (see chapter 4). Most academic journal articles publish basic research findings. If you are working in academia (e.g., writing your dissertation), the default expectation is that you are conducting basic research.

Applied research in the social sciences is research that addresses human and social problems. Unlike basic research, the researcher has expectations that the research will help contribute to resolving a problem, if only by identifying its contours, history, or context. From my experience, most students have this as their baseline assumption about research. Why do a study if not to make things better? But assuming that everyone shares this view is a common mistake. Students and their committee members are often working with different default assumptions here, the former thinking about applied research as their purpose, the latter thinking about basic research: “The purpose of applied research is to contribute knowledge that will help people to understand the nature of a problem in order to intervene, thereby allowing human beings to more effectively control their environment. While in basic research the source of questions is the tradition within a scholarly discipline, in applied research the source of questions is in the problems and concerns experienced by people and by policymakers” (Patton 2002:217).

Applied research is less geared toward theory in two ways. First, its questions do not derive from previous literature. For this reason, applied research studies have much more limited literature reviews than those found in basic research (although they make up for this by having much more “background” about the problem). Second, it does not generate theory in the same way as basic research does. The findings of an applied research project may not be generalizable beyond the boundaries of this particular problem or context. The findings are more limited. They are useful now but may be less useful later. This is why basic research remains the default “gold standard” of academic research.

Evaluation research is research that is designed to evaluate or test the effectiveness of specific solutions and programs addressing specific social problems. We already know the problems, and someone has already come up with solutions. There might be a program, say, for first-generation college students on your campus. Does this program work? Are first-generation students who participate in the program more likely to graduate than those who do not? These are the types of questions addressed by evaluation research. There are two types of research within this broader frame, however, one more action-oriented than the other. In summative evaluation, an overall judgment about the effectiveness of a program or policy is made. Should we continue our first-gen program? Is it a good model for other campuses? Because the purpose of such summative evaluation is to measure success and to determine whether this success is scalable (capable of being generalized beyond the specific case), quantitative data is more often used than qualitative data. In our example, we might have “outcomes” data for thousands of students, and we might run various tests to determine if the better outcomes of those in the program are statistically significant so that we can generalize the findings and recommend similar programs elsewhere. Qualitative data in the form of focus groups or interviews can then be used for illustrative purposes, providing more depth to the quantitative analyses. In contrast, formative evaluation attempts to improve a program or policy (to help “form” or shape its effectiveness). Formative evaluations rely more heavily on qualitative data (case studies, interviews, focus groups). The findings are meant not to generalize beyond the particular but to improve this program.

If you are a student seeking to improve your qualitative research skills and you do not care about generating basic research, formative evaluation studies might be an attractive option for you to pursue, as there are always local programs that need evaluation and suggestions for improvement. Again, be very clear about your purpose when talking through your research proposal with your committee.

Action research takes a further step beyond evaluation, even formative evaluation, to being part of the solution itself. This is about as far from basic research as one could get and definitely falls beyond the scope of “science,” as conventionally defined. The distinction between action and research is blurry, the research methods are often in constant flux, and the only “findings” are specific to the problem or case at hand and often are findings about the process of intervention itself. Rather than evaluate a program as a whole, action research often seeks to change and improve some particular aspect that may not be working: maybe there is not enough diversity in an organization or maybe women’s voices are muted during meetings and the organization wonders why and would like to change this. In a further step, participatory action research, those women would become part of the research team, attempting to amplify their voices in the organization through participation in the action research. As action research employs methods that involve people in the process, focus groups are quite common.

If you are working on a thesis or dissertation, chances are your committee will expect you to be contributing to fundamental knowledge and theory (basic research). If your interests lie more toward the action end of the continuum, however, it is helpful to talk to your committee about this before you get started. Knowing your purpose in advance will help avoid misunderstandings during the later stages of the research process!

The Research Question

Once you have written your paragraph and clarified your purpose and truly know that this study is the best study for you to be doing right now, you are ready to write and refine your actual research question. Know that research questions are often moving targets in qualitative research and that they can be refined up to the very end of data collection and analysis. But you do have to have a working research question at all stages. This is your “anchor” when you get lost in the data. What are you addressing? What are you looking at and why? Your research question guides you through the thicket. It is common to have a whole host of questions about a phenomenon or case, both at the outset and throughout the study, but you should be able to pare them down to no more than two or three sentences when asked. These sentences should both clarify the intent of the research and explain why this is an important question to answer. More on refining your research question can be found in chapter 4.

Chances are, you will have already done some prior reading before coming up with your interest and your questions, but you may not have conducted a systematic literature review. This is the next crucial stage to be completed before venturing further. You don’t want to start collecting data and then realize that someone has already beaten you to the punch. A review of the literature that is already out there will let you know (1) if others have already done the study you are envisioning; (2) if others have done similar studies, which can help you out; and (3) what ideas or concepts are out there that can help you frame your study and make sense of your findings. More on literature reviews can be found in chapter 9.

In addition to reviewing the literature for similar studies to what you are proposing, it can be extremely helpful to find a study that inspires you. This may have absolutely nothing to do with the topic you are interested in but is written so beautifully or organized so interestingly or otherwise speaks to you in such a way that you want to post it somewhere to remind you of what you want to be doing. You might not understand this in the early stages—why would you find a study that has nothing to do with the one you are doing helpful? But trust me, when you are deep into analysis and writing, having an inspirational model in view can help you push through. If you are motivated to do something that might change the world, you probably have read something somewhere that inspired you. Go back to that original inspiration and read it carefully and see how they managed to convey the passion that you so appreciate.

At this stage, you are still just getting started. There are a lot of things to do before setting forth to collect data! You’ll want to consider and choose a research tradition and a set of data-collection techniques that both help you answer your research question and match all your aims and goals. For example, if you really want to help migrant workers speak for themselves, you might draw on feminist theory and participatory action research models. Chapters 3 and 4 will provide you with more information on epistemologies and approaches.

Next, you have to clarify your “units of analysis.” What is the level at which you are focusing your study? Often, the unit in qualitative research methods is individual people, or “human subjects.” But your units of analysis could just as well be organizations (colleges, hospitals) or programs or even whole nations. Think about what it is you want to be saying at the end of your study—are the insights you are hoping to make about people or about organizations or about something else entirely? A unit of analysis can even be a historical period! Every unit of analysis will call for a different kind of data collection and analysis and will produce different kinds of “findings” at the conclusion of your study. [2]

Regardless of what unit of analysis you select, you will probably have to consider the “human subjects” involved in your research. [3] Who are they? What interactions will you have with them—that is, what kind of data will you be collecting? Before answering these questions, define your population of interest and your research setting. Use your research question to help guide you.

Let’s use an example from a real study. In Geographies of Campus Inequality, Benson and Lee (2020) list three related research questions: “(1) What are the different ways that first-generation students organize their social, extracurricular, and academic activities at selective and highly selective colleges? (2) how do first-generation students sort themselves and get sorted into these different types of campus lives; and (3) how do these different patterns of campus engagement prepare first-generation students for their post-college lives?” (3).

Note that we are jumping into this a bit late, after Benson and Lee have described previous studies (the literature review) and what is known about first-generation college students and what is not known. They want to know about differences within this group, and they are interested in ones attending certain kinds of colleges because those colleges will be sites where academic and extracurricular pressures compete. That is the context for their three related research questions. What is the population of interest here? First-generation college students. What is the research setting? Selective and highly selective colleges. But a host of questions remain. Which students in the real world, which colleges? What about gender, race, and other identity markers? Will the students be asked questions? Are the students still in college, or will they be asked about what college was like for them? Will they be observed? Will they be shadowed? Will they be surveyed? Will they be asked to keep diaries of their time in college? How many students? How many colleges? For how long will they be observed?

Recommendation

Take a moment and write down suggestions for Benson and Lee before continuing on to what they actually did.

Have you written down your own suggestions? Good. Now let’s compare those with what they actually did. Benson and Lee drew on two sources of data: in-depth interviews with sixty-four first-generation students and survey data from a preexisting national survey of students at twenty-eight selective colleges. Let’s ignore the survey for our purposes here and focus on those interviews. The interviews were conducted between 2014 and 2016 at a single selective college, “Hilltop” (a pseudonym). They employed a “purposive” sampling strategy to ensure an equal number of male-identifying and female-identifying students as well as equal numbers of White, Black, and Latinx students. Each student was interviewed once. Hilltop is a selective liberal arts college in the northeast that enrolls about three thousand students.

How did your suggestions match up to those actually used by the researchers in this study? Is it possible your suggestions were too ambitious? Beginning qualitative researchers can often make that mistake. You want a research design that is both effective (it matches your question and goals) and doable. You will never be able to collect data from your entire population of interest (unless your research question is really so narrow as to be relevant to very few people!), so you will need to come up with a good sample. Define the criteria for this sample, as Benson and Lee did when deciding to interview an equal number of students by gender and race categories. Define the criteria for your sample setting too. Hilltop is typical for selective colleges. That was a research choice made by Benson and Lee. For more on sampling and sampling choices, see chapter 5.

Benson and Lee chose to employ interviews. If you also would like to include interviews, you have to think about what will be asked in them. Most interview-based research involves an interview guide, a set of questions or question areas that will be asked of each participant. The research question helps you create a relevant interview guide. You want to ask questions whose answers will provide insight into your research question. Again, your research question is the anchor you will continually come back to as you plan for and conduct your study. It may be that once you begin interviewing, you find that people are telling you something totally unexpected, and this makes you rethink your research question. That is fine. Then you have a new anchor. But you always have an anchor. More on interviewing can be found in chapter 11.

Let’s imagine Benson and Lee also observed college students as they went about doing the things college students do, both in the classroom and in the clubs and social activities in which they participate. They would have needed a plan for this. Would they sit in on classes? Which ones and how many? Would they attend club meetings and sports events? Which ones and how many? Would they participate themselves? How would they record their observations? More on observation techniques can be found in both chapters 13 and 14.

At this point, the design is almost complete. You know why you are doing this study, you have a clear research question to guide you, you have identified your population of interest and research setting, and you have a reasonable sample of each. You also have put together a plan for data collection, which might include drafting an interview guide or making plans for observations. And so you know exactly what you will be doing for the next several months (or years!). To put the project into action, there are a few more things necessary before actually going into the field.

First, you will need to make sure you have any necessary supplies, including recording technology. These days, many researchers use their phones to record interviews. Second, you will need to draft a few documents for your participants. These include informed consent forms and recruiting materials, such as posters or email texts, that explain what this study is in clear language. Third, you will draft a research protocol to submit to your institutional review board (IRB); this research protocol will include the interview guide (if you are using one), the consent form template, and all examples of recruiting material. Depending on your institution and the details of your study design, it may take weeks or even, in some unfortunate cases, months before you secure IRB approval. Make sure you plan on this time in your project timeline. While you wait, you can continue to review the literature and possibly begin drafting a section on the literature review for your eventual presentation/publication. More on IRB procedures can be found in chapter 8 and more general ethical considerations in chapter 7.

Once you have approval, you can begin!

Research Design Checklist

Before data collection begins, do the following:

- Write a paragraph explaining your aims and goals (personal/political, practical/strategic, professional/academic).

- Define your research question; write two to three sentences that clarify the intent of the research and why this is an important question to answer.

- Review the literature for similar studies that address your research question or similar research questions; think laterally about some literature that might be helpful or illuminating but is not exactly about the same topic.

- Find a written study that inspires you—it may or may not be on the research question you have chosen.

- Consider and choose a research tradition and set of data-collection techniques that (1) help answer your research question and (2) match your aims and goals.

- Define your population of interest and your research setting.

- Define the criteria for your sample (How many? Why these? How will you find them, gain access, and acquire consent?).

- If you are conducting interviews, draft an interview guide.

- If you are making observations, create a plan for observations (sites, times, recording, access).

- Acquire any necessary technology (recording devices/software).

- Draft consent forms that clearly identify the research focus and selection process.

- Create recruiting materials (posters, email, texts).

- Apply for IRB approval (proposal plus consent form plus recruiting materials).

- Block out time for collecting data.

[1] At the end of the chapter, you will find a "Research Design Checklist" that summarizes the main recommendations made here.

[2] For example, if your focus is society and culture, you might collect data through observation or a case study. If your focus is individual lived experience, you are probably going to be interviewing some people. And if your focus is language and communication, you will probably be analyzing text (written or visual) (Marshall and Rossman 2016:16).

[3] You may not have any "live" human subjects. There are qualitative research methods that do not require interactions with live human beings; see chapter 16, "Archival and Historical Sources." But for the most part, you are probably reading this textbook because you are interested in doing research with people. The rest of the chapter will assume this is the case.

One of the primary methodological traditions of inquiry in qualitative research, ethnography is the study of a group or group culture, largely through observational fieldwork supplemented by interviews. It is a form of fieldwork that may include participant-observation data collection. See chapter 14 for a discussion of deep ethnography.

A methodological tradition of inquiry and research design that focuses on an individual case (e.g., setting, institution, or sometimes an individual) in order to explore its complexity, history, and interactive parts. As an approach, it is particularly useful for obtaining a deep appreciation of an issue, event, or phenomenon of interest in its particular context.

The controlling force in research; can be understood as lying on a continuum from basic research (knowledge production) to action research (effecting change).

In its most basic sense, a theory is a story we tell about how the world works that can be tested with empirical evidence. In qualitative research, we use the term in a variety of ways, many of which differ from how it is used by quantitative researchers. Although some qualitative research can be described as “testing theory,” it is more common to “build theory” from the data using inductive reasoning, as done in Grounded Theory. There are so-called “grand theories” that seek to integrate a whole series of findings and stories into an overarching paradigm about how the world works, and much smaller theories or concepts about particular processes and relationships. Theory can even be used to explain particular methodological perspectives or approaches, as in Institutional Ethnography, which is both a way of doing research and a theory about how the world works.

Research that is interested in generating and testing hypotheses about how the world works.

A methodological tradition of inquiry and approach to analyzing qualitative data in which theories emerge from a rigorous and systematic process of induction. This approach was pioneered by the sociologists Glaser and Strauss (1967). The elements of theory generated from comparative analysis of data are, first, conceptual categories and their properties and, second, hypotheses or generalized relations among the categories and their properties – “The constant comparing of many groups draws the [researcher’s] attention to their many similarities and differences. Considering these leads [the researcher] to generate abstract categories and their properties, which, since they emerge from the data, will clearly be important to a theory explaining the kind of behavior under observation.” (36).

An approach to research that is “multimethod in focus, involving an interpretative, naturalistic approach to its subject matter. This means that qualitative researchers study things in their natural settings, attempting to make sense of, or interpret, phenomena in terms of the meanings people bring to them. Qualitative research involves the studied use and collection of a variety of empirical materials – case study, personal experience, introspective, life story, interview, observational, historical, interactional, and visual texts – that describe routine and problematic moments and meanings in individuals’ lives” (Denzin and Lincoln 2005:2). Contrast with quantitative research.

Research that contributes knowledge that will help people to understand the nature of a problem in order to intervene, thereby allowing human beings to more effectively control their environment.

Research that is designed to evaluate or test the effectiveness of specific solutions and programs addressing specific social problems. There are two kinds: summative and formative.

Research in which an overall judgment about the effectiveness of a program or policy is made, often for the purpose of generalizing to other cases or programs. Generally uses qualitative research as a supplement to primary quantitative data analyses. Contrast formative evaluation research.

Research designed to improve a program or policy (to help “form” or shape its effectiveness); relies heavily on qualitative research methods. Contrast summative evaluation research.

Research carried out at a particular organizational or community site with the intention of effecting change; often involves research subjects as participants of the study. See also participatory action research.

Research in which both researchers and participants work together to understand a problematic situation and change it for the better.

The level of the focus of analysis (e.g., individual people, organizations, programs, neighborhoods).

The large group of interest to the researcher. Although it will likely be impossible to design a study that incorporates or reaches all members of the population of interest, this should be clearly defined at the outset of a study so that a reasonable sample of the population can be taken. For example, if one is studying working-class college students, the sample may include twenty such students attending a particular college, while the population is “working-class college students.” In quantitative research, clearly defining the general population of interest is a necessary step in generalizing results from a sample. In qualitative research, defining the population is conceptually important for clarity.

A fictional name assigned to give anonymity to a person, group, or place. Pseudonyms are important ways of protecting the identity of research participants while still providing a “human element” in the presentation of qualitative data. There are ethical considerations to be made in selecting pseudonyms; some researchers allow research participants to choose their own.

A requirement for research involving human participants; the documentation of informed consent. In some cases, oral consent or assent may be sufficient, but the default standard is a single-page easy-to-understand form that both the researcher and the participant sign and date. Under federal guidelines, all researchers "shall seek such consent only under circumstances that provide the prospective subject or the representative sufficient opportunity to consider whether or not to participate and that minimize the possibility of coercion or undue influence. The information that is given to the subject or the representative shall be in language understandable to the subject or the representative. No informed consent, whether oral or written, may include any exculpatory language through which the subject or the representative is made to waive or appear to waive any of the subject's rights or releases or appears to release the investigator, the sponsor, the institution, or its agents from liability for negligence" (21 CFR 50.20). Your IRB office will be able to provide a template for use in your study.

An administrative body established to protect the rights and welfare of human research subjects recruited to participate in research activities conducted under the auspices of the institution with which it is affiliated. The IRB is charged with the responsibility of reviewing all research involving human participants. The IRB is concerned with protecting the welfare, rights, and privacy of human subjects. The IRB has the authority to approve, disapprove, monitor, and require modifications in all research activities that fall within its jurisdiction as specified by both the federal regulations and institutional policy.

Introduction to Qualitative Research Methods Copyright © 2023 by Allison Hurst is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License , except where otherwise noted.

Research Design | Step-by-Step Guide with Examples

Published on 5 May 2022 by Shona McCombes. Revised on 20 March 2023.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall aims and approach

- The type of research design you’ll use

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research aims and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities – start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.

Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

It’s also possible to use a mixed methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by travelling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.

Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

Quantitative designs can be split into four main types. Experimental and quasi-experimental designs allow you to test cause-and-effect relationships, while descriptive and correlational designs allow you to measure variables and describe relationships between them.

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).

Experiments are the strongest way to test cause-and-effect relationships without the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re often also more difficult and expensive to implement.

Types of qualitative research designs

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analysing the data.

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study – plants, animals, organisations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region, or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalise your results to the population as a whole.

Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
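The contrast between the two approaches can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the sampling frame of 500 student IDs is made up, and `simple_random_sample` and `convenience_sample` are hypothetical helper names, not part of any established toolkit.

```python
import random

# Hypothetical sampling frame: ID strings for 500 students (illustrative only).
population = [f"student_{i:03d}" for i in range(500)]


def simple_random_sample(frame, n, seed=None):
    """Probability sampling: every unit has the same chance of selection."""
    rng = random.Random(seed)  # seeding makes the draw reproducible
    return rng.sample(frame, n)  # draws n distinct units without replacement


def convenience_sample(frame, n):
    """Non-probability sampling: take whoever is easiest to reach
    (modelled here as simply the first n entries in the list)."""
    return frame[:n]


probability_sample = simple_random_sample(population, 50, seed=42)
convenient_sample = convenience_sample(population, 50)
```

The convenience sample always contains the same first fifty IDs, which is one way to picture why non-probability samples can be systematically unrepresentative.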

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalise to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviours, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.

Observation methods

Observations allow you to collect data unobtrusively, observing characteristics, behaviours, or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.

Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers already collected – for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are reliable and valid.

Operationalisation

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalisation means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in – for example, questionnaires or inventories whose reliability and validity has already been established.
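As a rough sketch of what operationalisation can look like in practice, the Python below turns the abstract concept of "satisfaction" into a single score averaged over survey items. Everything specific here is an assumption made for illustration: the 1 to 5 response range, the item names `q1` to `q3`, and the choice to treat `q3` as a negatively worded (reverse-scored) item.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5   # assumed response range for every item
REVERSE_SCORED = {"q3"}       # hypothetical negatively worded item


def satisfaction_score(responses):
    """Turn one participant's item responses into a single indicator."""
    adjusted = []
    for item, value in responses.items():
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"{item} is outside the {SCALE_MIN}-{SCALE_MAX} range")
        if item in REVERSE_SCORED:
            # Flip the scale so that a high value always means "more satisfied".
            value = SCALE_MIN + SCALE_MAX - value
        adjusted.append(value)
    return mean(adjusted)


participant = {"q1": 4, "q2": 5, "q3": 2}
score = satisfaction_score(participant)
```

Writing the scoring rule down this explicitly forces the decisions the text describes: which questions count, what responses are allowed, and how they combine into one measure.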

Reliability and validity

Reliability means your results can be consistently reproduced, while validity means that you’re actually measuring the concept you’re interested in.

For valid and reliable results, your measurement materials should be thoroughly researched and carefully designed. Plan your procedures to make sure you carry out the same steps in the same way for each participant.

If you’re developing a new questionnaire or other instrument to measure a specific concept, running a pilot study allows you to check its validity and reliability in advance.
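One common way to check the internal consistency of a multi-item questionnaire after a pilot is Cronbach's alpha, which is not named in the text above and is offered here only as one possible reliability check. The sketch uses the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores); the pilot responses are invented.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a set of questionnaire items.

    item_scores: one list per item, each holding one score per participant,
    with participants in the same order in every list.
    """
    k = len(item_scores)
    # Each participant's total score across all items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    item_variance_sum = sum(variance(scores) for scores in item_scores)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Invented pilot data: 3 items answered by 5 participants.
pilot = [
    [4, 3, 5, 2, 4],
    [5, 3, 4, 2, 4],
    [4, 2, 5, 1, 3],
]
alpha = cronbach_alpha(pilot)
```

Values closer to 1 suggest the items vary together; what counts as acceptable depends on the field and the purpose of the instrument.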

Sampling procedures

As well as choosing an appropriate sampling method, you need a concrete plan for how you’ll actually contact and recruit your selected sample.

That means making decisions about things like:

- How many participants do you need for an adequate sample size?

- What inclusion and exclusion criteria will you use to identify eligible participants?

- How will you contact your sample – by mail, online, by phone, or in person?

If you’re using a probability sampling method, it’s important that everyone who is randomly selected actually participates in the study. How will you ensure a high response rate?

If you’re using a non-probability method, how will you avoid bias and ensure a representative sample?
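Inclusion and exclusion criteria are easiest to apply consistently when they are written down as explicit rules. The sketch below assumes a hypothetical study of currently enrolled first-generation students that excludes anyone under 18; the `Candidate` fields and the criteria themselves are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    age: int
    enrolled: bool
    first_generation: bool


def is_eligible(candidate):
    """Apply the (hypothetical) inclusion and exclusion criteria."""
    meets_inclusion = candidate.enrolled and candidate.first_generation
    meets_exclusion = candidate.age < 18  # minors are excluded in this sketch
    return meets_inclusion and not meets_exclusion


candidates = [
    Candidate("A", 19, True, True),    # eligible
    Candidate("B", 17, True, True),    # excluded: under 18
    Candidate("C", 22, False, True),   # not currently enrolled
    Candidate("D", 20, True, False),   # not first-generation
]
eligible = [c.name for c in candidates if is_eligible(c)]
```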

Data management

It’s also important to create a data management plan for organising and storing your data.

Will you need to transcribe interviews or perform data entry for observations? You should anonymise and safeguard any sensitive data, and make sure it’s backed up regularly.

Keeping your data well organised will save time when it comes to analysing them. It can also help other researchers validate and add to your findings.
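One possible way to anonymise participant names before storing or sharing records is to replace each name with a stable code. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library; the key, the names, the file names, and the `P-` code format are all placeholders, and a real project should follow its own ethics board's requirements.

```python
import hashlib
import hmac


def pseudonymise(participant_name, secret_key):
    """Map a name to a stable code that is hard to link back to the
    name without access to the secret key."""
    digest = hmac.new(secret_key, participant_name.encode("utf-8"), hashlib.sha256)
    return "P-" + digest.hexdigest()[:8]


# Placeholder key: in practice, store it separately from the data.
key = b"replace-with-a-secret-stored-separately"

records = [
    {"name": "Alex Example", "transcript": "interview_01.txt"},
    {"name": "Sam Sample", "transcript": "interview_02.txt"},
]

# Keep only the code and the transcript reference, dropping the real name.
anonymised = [
    {"participant": pseudonymise(r["name"], key), "transcript": r["transcript"]}
    for r in records
]
```

Because the same name always yields the same code, multiple records from one participant can still be linked to each other during analysis.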

On their own, raw data can’t answer your research question. The last step of designing your research is planning how you’ll analyse the data.

Quantitative data analysis

In quantitative research, you’ll most likely use some form of statistical analysis. With statistics, you can summarise your sample data, make estimates, and test hypotheses.

Using descriptive statistics, you can summarise your sample data in terms of:

- The distribution of the data (e.g., the frequency of each score on a test)

- The central tendency of the data (e.g., the mean to describe the average score)

- The variability of the data (e.g., the standard deviation to describe how spread out the scores are)

The specific calculations you can do depend on the level of measurement of your variables.
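The three kinds of summary listed above can be computed with Python's standard library. The test scores below are made up for illustration.

```python
from collections import Counter
from statistics import mean, stdev

scores = [70, 85, 85, 90, 60, 75, 85, 90]  # invented test scores

distribution = Counter(scores)     # frequency of each score
central_tendency = mean(scores)    # the average score
variability = stdev(scores)        # sample standard deviation

for score, count in sorted(distribution.items()):
    print(f"score {score}: {count} participant(s)")
print(f"mean = {central_tendency}, standard deviation = {variability:.2f}")
```

Note that `stdev` computes the sample standard deviation (dividing by n − 1), which is the usual choice when the data are a sample rather than the whole population.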

Using inferential statistics, you can:

- Make estimates about the population based on your sample data.

- Test hypotheses about a relationship between variables.

Regression and correlation tests look for associations between two or more variables, while comparison tests (such as t tests and ANOVAs) look for differences in the outcomes of different groups.

Your choice of statistical test depends on various aspects of your research design, including the types of variables you’re dealing with and the distribution of your data.
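To make the idea of a comparison test concrete, the sketch below computes the test statistic for Welch's t test, one variant of the t test that does not assume equal variances in the two groups. The group names and scores are invented, and the code stops at the statistic: a full test would also compare it against a t distribution to obtain a p value, which is usually left to statistical software.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic for the difference between two group means."""
    standard_error = sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    return (mean(group_a) - mean(group_b)) / standard_error


# Invented outcome scores for two groups of five participants.
control = [72, 75, 78, 71, 74]
treatment = [80, 82, 79, 85, 84]

t_statistic = welch_t(treatment, control)
```

A statistic far from zero indicates that the difference between the group means is large relative to the variation within the groups.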

Qualitative data analysis

In qualitative research, your data will usually be very dense with information and ideas. Instead of summing it up in numbers, you’ll need to comb through the data in detail, interpret its meanings, identify patterns, and extract the parts that are most relevant to your research question.

Two of the most common approaches to doing this are thematic analysis and discourse analysis.

There are many other ways of analysing qualitative data depending on the aims of your research. To get a sense of potential approaches, try reading some qualitative research papers in your field.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Cite this Scribbr article

McCombes, S. (2023, March 20). Research Design | Step-by-Step Guide with Examples. Scribbr. Retrieved 29 April 2024, from https://www.scribbr.co.uk/research-methods/research-design/

Qualitative design research methods.

- Michael Domínguez, San Diego State University

- https://doi.org/10.1093/acrefore/9780190264093.013.170

- Published online: 19 December 2017

Emerging in the learning sciences field in the early 1990s, qualitative design-based research (DBR) is a relatively new methodological approach to social science and education research. As its name implies, DBR is focused on the design of educational innovations, and the testing of these innovations in the complex and interconnected venue of naturalistic settings. As such, DBR is an explicitly interventionist approach to conducting research, situating the researcher as a part of the complex ecology in which learning and educational innovation takes place.

With this in mind, DBR is distinct from more traditional methodologies, including laboratory experiments, ethnographic research, and large-scale implementation. The goal of DBR is not to prove the merits of any particular intervention, or to reflect passively on a context in which learning occurs, but to examine the practical application of theories of learning themselves in specific, situated contexts. By designing purposeful, naturalistic, and sustainable educational ecologies, researchers can test, extend, or modify their theories and innovations based on their pragmatic viability. This process offers the prospect of generating theory-developing, contextualized knowledge claims that can complement the claims produced by other forms of research.

Because of this interventionist, naturalistic stance, DBR has also been the subject of ongoing debate concerning the rigor of its methodology. In many ways, these debates obscure the varied ways DBR has been practiced, the varied types of questions being asked, and the theoretical breadth of researchers who practice DBR. With this in mind, DBR research may involve a diverse range of methods as researchers from a variety of intellectual traditions within the learning sciences and education research design pragmatic innovations based on their theories of learning, and document these complex ecologies using the methodologies and tools most applicable to their questions, focuses, and academic communities.

DBR has gained increasing interest in recent years. While it remains a popular methodology for developmental and cognitive learning scientists seeking to explore theory in naturalistic settings, it has also grown in importance to cultural psychology and cultural studies researchers as a methodological approach that aligns in important ways with the participatory commitments of liberatory research. As such, internal tension within the DBR field has also emerged. Yet, though approaches vary, and have distinct genealogies and commitments, DBR might be seen as the broad methodological genre in which Change Laboratory, design-based implementation research (DBIR), social design-based experiments (SDBE), participatory design research (PDR), and research-practice partnerships might be categorized. These critically oriented iterations of DBR have important implications for educational research and educational innovation in historically marginalized settings and the Global South.

- design-based research

- learning sciences

- social-design experiment

- qualitative research

- research methods

Educational research, perhaps more than many other disciplines, is a situated field of study. Learning happens around us every day, at all times, in both formal and informal settings. Our worlds are replete with complex, dynamic, diverse communities, contexts, and institutions, many of which are actively seeking guidance and support in the endless quest for educational innovation. Educational researchers—as a source of potential expertise—are necessarily implicated in this complexity, linked to the communities and institutions through their very presence in spaces of learning, poised to contribute with possible solutions, yet often positioned as separate from the activities they observe, creating dilemmas of responsibility and engagement.

So what are educational scholars and researchers to do? These tensions invite a unique methodological challenge for the contextually invested researcher, begging them to not just produce knowledge about learning, but to participate in the ecology, collaborating on innovations in the complex contexts in which learning is taking place. In short, for many educational researchers, our backgrounds as educators, our connections to community partners, and our sociopolitical commitments to the process of educational innovation push us to ensure that our work is generative, and that our theories and ideas—our expertise—about learning and education are made pragmatic, actionable, and sustainable. We want to test what we know outside of laboratories, designing, supporting, and guiding educational innovation to see if our theories of learning are accurate, and useful to the challenges faced in schools and communities where learning is messy, collaborative, and contested. Through such a process, we learn, and can modify our theories to better serve the real needs of communities. It is from this impulse that qualitative design-based research (DBR) emerged as a new methodological paradigm for education research.

Qualitative design-based research will be examined, documenting its origins, the major tenets of the genre, implementation considerations, and methodological issues, as well as variance within the paradigm. As a relatively new methodology, much tension remains in what constitutes DBR, and what design should mean, and for whom. These tensions and questions, as well as broad perspectives and emergent iterations of the methodology, will be discussed, and considerations for researchers looking toward the future of this paradigm will be considered.

The Origins of Design-Based Research

Qualitative design-based research (DBR) first emerged in the learning sciences field among a group of scholars in the early 1990s, with the first articulation of DBR as a distinct methodological construct appearing in the work of Ann Brown (1992) and Allan Collins (1992). For learning scientists in the 1970s and 1980s, the traditional methodologies of laboratory experiments, ethnographies, and large-scale educational interventions were the only methods available. During these decades, a growing community of learning science and educational researchers (e.g., Bereiter & Scardamalia, 1989; Brown, Campione, Webber, & McGilley, 1992; Cobb & Steffe, 1983; Cole, 1995; Scardamalia & Bereiter, 1991; Schoenfeld, 1982, 1985; Scribner & Cole, 1978) interested in educational innovation and classroom interventions in situated contexts began to find the prevailing methodologies insufficient for the types of learning they wished to document, the roles they wished to play in research, and the kinds of knowledge claims they wished to explore. The laboratory, or laboratory-like settings, where research on learning was at the time happening, was divorced from the complexity of real life, and necessarily limiting. Alternatively, most ethnographic research, while more attuned to capturing these complexities and dynamics, regularly assumed a passive stance [1] and avoided interceding in the learning process, or allowing researchers to see what possibility for innovation existed from enacting nascent learning theories. Finally, large-scale interventions could test innovations in practice but lost sight of the nuance of development and implementation in local contexts (Brown, 1992; Collins, Joseph, & Bielaczyc, 2004).

Dissatisfied with these options, and recognizing that in order to study and understand learning in the messiness of socially, culturally, and historically situated settings, new methods were required, Brown (1992) proposed an alternative: Why not involve ourselves in the messiness of the process, taking an active, grounded role in disseminating our theories and expertise by becoming designers and implementers of educational innovations? Rather than observing from afar, DBR researchers could trace their own iterative processes of design, implementation, tinkering, redesign, and evaluation, as it unfolded in shared work with teachers, students, learners, and other partners in lived contexts. This premise, initially articulated as “design experiments” (Brown, 1992), would be variously discussed over the next decade as “design research” (Edelson, 2002), “developmental research” (Gravemeijer, 1994), and “design-based research” (Design-Based Research Collective, 2003), all of which reflect the original, interventionist, design-oriented concept. The latter term, “design-based research” (DBR), is used here, recognizing this as the prevailing terminology used to refer to this research approach at present. [2]

Regardless of the evolving moniker, the prospects of such a methodology were extremely attractive to researchers. Learning scientists acutely aware of various aspects of situated context, and interested in studying the applied outcomes of learning theories (a task of inquiry into situated learning for which canonical methods were rather insufficient), found DBR a welcome development (Bell, 2004). As Barab and Squire (2004) explain: “learning scientists . . . found that they must develop technological tools, curriculum, and especially theories that help them systematically understand and predict how learning occurs” (p. 2), and DBR methodologies allowed them to do this in proactive, hands-on ways. Thus, rather than emerging as a strict alternative to more traditional methodologies, DBR was proposed to fill a niche that other methodologies were ill-equipped to cover.

Effectively, while its development is indeed linked to an inherent critique of previous research paradigms, neither Brown nor Collins saw DBR in opposition to other forms of research. Rather, DBR provided a bridge from the laboratory to the real world, where learning theories and proposed innovations could interact and be implemented in the complexity of lived socio-ecological contexts (Hoadley, 2004), and new possibilities emerged. Learning researchers might “trace the evolution of learning in complex, messy classrooms and schools, test and build theories of teaching and learning, and produce instructional tools that survive the challenges of everyday practice” (Shavelson, Phillips, Towne, & Feuer, 2003, p. 25). Thus, DBR could complement the findings of laboratory, ethnographic, and large-scale studies, answering important questions about the implementation, sustainability, limitations, and usefulness of theories, interventions, and learning when introduced as innovative designs into situated contexts of learning. Moreover, while studies involving these traditional methodologies often concluded by pointing toward implications (insights subsequent studies would need to take up), DBR allowed researchers to address implications iteratively and directly. No subsequent research was necessary, as emerging implications could be reflexively explored in the context of the initial design, offering considerable insight into how research is translated into theory and practice.

Since its emergence in 1992, DBR as a methodological approach to educational and learning research has quickly grown and evolved, used by researchers from a variety of intellectual traditions in the learning sciences, including developmental and cognitive psychology (e.g., Brown & Campione, 1996, 1998; diSessa & Minstrell, 1998), cultural psychology (e.g., Cole, 1996, 2007; Newman, Griffin, & Cole, 1989; Gutiérrez, Bien, Selland, & Pierce, 2011), cultural anthropology (e.g., Barab, Kinster, Moore, Cunningham, & the ILF Design Team, 2001; Polman, 2000; Stevens, 2000; Suchman, 1995), and cultural-historical activity theory (e.g., Engeström, 2011; Espinoza, 2009; Espinoza & Vossoughi, 2014; Gutiérrez, 2008; Sannino, 2011). Given this plurality of epistemological and theoretical fields that employ DBR, it might best be understood as a broad methodology of educational research, realized in many different, contested, heterogeneous, and distinct iterations, and engaging a variety of qualitative tools and methods (Bell, 2004). Despite tensions among these iterations, and substantial and important variances in the ways they employ design-as-research in community settings, there are several common methodological threads that unite the broad array of research that might be classified as DBR under a shared, though pluralistic, paradigmatic umbrella.

The Tenets of Design-Based Research

Why design-based research.

As we turn to the core tenets of the design-based research (DBR) paradigm, it is worth considering an obvious question: Why use DBR as a methodology for educational research? To answer this, it is helpful to reflect on the original intentions for DBR, particularly that it is not simply the study of a particular, isolated intervention. Rather, DBR methodologies were conceived of as the complete, iterative process of designing, modifying, and assessing the impact of an educational innovation in a contextual, situated learning environment (Barab & Kirshner, 2001; Brown, 1992; Cole & Engeström, 2007). The design process itself (inclusive of the theory of learning employed, the relationships among participants, contextual factors and constraints, the pedagogical approach, any particular intervention, as well as any changes made to various aspects of this broad design as it proceeds) is what is under study.

Considering this, DBR offers a compelling framework for the researcher interested in having an active and collaborative hand in designing for educational innovation, and interested in creating knowledge about how particular theories of learning, pedagogical or learning practices, or social arrangements function in a context of learning. It is a methodology that can put the researcher in the position of engineer, actively experimenting with aspects of learning and sociopolitical ecologies to arrive at new knowledge and productive outcomes. As Cobb, Confrey, diSessa, Lehrer, and Schauble (2003) explain:

Prototypically, design experiments entail both “engineering” particular forms of learning and systematically studying those forms of learning within the context defined by the means of supporting them. This designed context is subject to test and revision, and the successive iterations that result play a role similar to that of systematic variation in experiment. (p. 9)

This being said, how directive an engineering role the researcher takes on varies considerably among iterations of DBR. Indeed, recent approaches have argued strongly for researchers to take on more egalitarian positionalities with respect to the community partners with whom they work (e.g., Zavala, 2016), acting as collaborative designers rather than authoritative engineers.

Method and Methodology in Design-Based Research

Having established why we might use DBR, we turn to a recurring question that has faced the DBR paradigm: whether DBR is a methodology at all. Given the variety of intellectual and ontological traditions that employ it, and thus the pluralism of methods used in DBR to enact the “engineering” role (whatever shape that may take) that the researcher assumes, it has been argued that DBR is not, in actuality, a methodology at all (Kelly, 2004). The proliferation and diversity of approaches, methods, and types of analysis purporting to be DBR have been described as a lack of coherence that shows there is no “argumentative grammar” or methodology present in DBR (Kelly, 2004).

The conclusions one eventually draws in this debate will depend on one’s orientations and commitments, but it is useful to note that these demands for “coherence” emerge from previous paradigms in which methodology was largely marked by a shared, coherent toolkit for data collection and data analysis. These previous paradigmatic rules make for an odd fit when considering DBR. Yet if we proceed, within the qualitative tradition from which DBR emerges, by defining methodology as an approach to research that is shaped by the ontological and epistemological commitments of the particular researcher, and methods as the tools for research, data collection, and analysis that are chosen by the researcher with respect to said commitments (Gutiérrez, Engeström, & Sannino, 2016), then a compelling case for DBR as a methodology can be made (Bell, 2004).

Effectively, despite the considerable variation in how DBR has been and is employed, and tensions within the DBR field, we might point to considerable shared epistemic common ground among DBR researchers, all of whom are invested in an approach to research that involves engaging actively and iteratively in the design and exploration of learning theory in situated, natural contexts. This common epistemic ground, even in the face of pluralistic ideologies and choices of methods, invites a new type of methodological coherence, marked by “intersubjectivity without agreement” (Matusov, 1996), that links traditional developmental and cognitive psychology models of DBR (e.g., Brown, 1992; Brown & Campione, 1998; Collins, 1992) to more recent critical and sociocultural manifestations (e.g., Bang & Vossoughi, 2016; Engeström, 2011; Gutiérrez, 2016), and everything in between.

Put in other terms, even as DBR researchers may choose heterogeneous methods for data collection, data analysis, and reporting results that are complementary to the ideological and sociopolitical commitments of the particular researcher and the types of research questions under examination (Bell, 2004), a shared epistemic commitment gives the methodology shape. Indeed, the common commitment toward design innovation emerges clearly across examples of DBR methodological studies ranging in method from ethnographic analyses (Salvador, Bell, & Anderson, 1999) to studies of critical discourse within a design (Kärkkäinen, 1999), to focused examinations of metacognition of individual learners (White & Frederiksen, 1998), and beyond. Rather than indicating a lack of methodology, or methodological weakness, the use of varying qualitative methods for framing data collection and retrospective analyses within DBR, and the tensions within the epistemic common ground itself, simply reflect the scope of its utility. Learning in context is complex, contested, and messy, and the plurality of methods present across DBR allows researchers to respond dynamically to context as needed, employing the tools that fit best to consider the questions that are present or may arise.

All this being the case, it is useful to look toward the coherent elements (the “argumentative grammar” of DBR, if you will) that can be identified across the varied iterations of DBR. Understanding these shared features, in the context and terms of the methodology itself, helps us to appreciate what is involved in developing robust and thorough DBR research, and how DBR seeks to make strong, meaningful claims around the types of research questions it takes up.

Coherent Features of Design-Based Research

Several scholars have provided comprehensive overviews and listings of what they see as the cross-cutting features of DBR, both in the context of more traditional models of DBR (e.g., Cobb et al., 2003; Design-Based Research Collective, 2003) and with regard to newer iterations (e.g., Gutiérrez & Jurow, 2016; Bang & Vossoughi, 2016). Rather than try to offer an overview of each of these increasingly pluralistic classifications, the intent here is to attend to three broad elements that are shared across articulations of DBR and reflect the essential elements that constitute the methodological approach DBR offers to educational researchers.

Design research is concerned with the development, testing, and evolution of learning theory in situated contexts

This first element is perhaps most central to what DBR of all types is, anchored in what Brown (1992) was initially most interested in: testing the pragmatic validity of theories of learning by designing interventions that engaged with, or proposed, entire naturalistic ecologies of learning. Put another way, while DBR studies may have various units of analysis, focuses, and variables, and may organize learning in many different ways, it is the theoretically informed design for educational innovation that is most centrally under evaluation. DBR actively and centrally exists as a paradigm that is engaged in the development of theory, not just the evaluation of aspects of its usage (Bell, 2004; Design-Based Research Collective, 2003; Lesh & Kelly, 2000; van den Akker, 1999).

Effectively, where DBR is taking place, theory as a lived possibility is under examination. Specifically, in most DBR, this means a focus on “intermediate-level” theories of learning, rather than “grand” ones. In essence, DBR does not contend directly with “grand” learning theories (such as developmental or sociocultural theory writ large) (diSessa, 1991). Rather, DBR seeks to offer constructive insights by directly engaging with particular learning processes that flow from these theories on a “grounded,” “intermediate” level. This is not, however, to say DBR is limited in what knowledge it can produce; rather, tinkering in this “intermediate” realm can produce knowledge that informs the “grand” theory (Gravemeijer, 1994). For example, while cognitive and motivational psychology provide “grand” theoretical frames, interest-driven learning (IDL) is an “intermediate” theory that flows from these and can be explored in DBR to both inform the development of IDL designs in practice and inform cognitive and motivational psychology more broadly (Joseph, 2004).

Crucially, however, DBR entails putting the theory in question under intense scrutiny, or “into harm’s way” (Cobb et al., 2003). This is an especially central element of DBR, and one that distinguishes it from the proliferation of educational-reform or educational-entrepreneurship efforts that similarly take up the discourse of “design” and “innovation.” Not only is the reflexive, often participatory element of DBR (that is, questioning and modifying the design to suit the learning needs of the context and partners) absent from such efforts, but the theory driving these efforts is never in question and, in many cases, may be actively obscured. Indeed, it is more common to see educational-entrepreneur design innovations seek to modify a context (such as the way charter schools engage in selective pupil recruitment and intensive disciplinary practices; e.g., Carnoy et al., 2005; Ravitch, 2010; Saltman, 2007) rather than modify their design itself, and thus allow for humility in their theory. Such “innovations” and “design” efforts are distinct from DBR, which must, in the spirit of scientific inquiry, be willing to see the learning theory flail and struggle, be modified, and evolve.

This growth and evolution of theory and knowledge is of course central to DBR as a rigorous research paradigm, moving it beyond simply the design of local educational programs, interventions, or innovations. As Barab and Squire (2004) explain: