Market Research Recruitment Screener Survey Template

By Chris Martin


A market research recruitment screener is a questionnaire used to identify and select potential participants for a market research study, panel or community. A screener is typically a set of questions designed to filter out individuals who don't meet the specific criteria required, or to achieve a balanced quota. These criteria may include demographic information, purchasing behaviour, brand usage, interests, or other relevant characteristics that align with the research objectives.

The purpose of the recruitment screener is to ensure that the participants chosen for the study, panel or community represent the intended target audience or user group. By using a screener, market researchers can save time and resources by focusing on recruiting only those participants who are likely to provide valuable insights and feedback.

Here are some common elements that a market research recruitment screener may include:

- Demographics: Age, gender, ethnicity, location, household income, education level, occupation, etc.

- Usage behaviour: Questions about product or service usage, frequency of usage, and experiences with specific brands or products.

- Attitudes and opinions: Questions to assess participants' attitudes, preferences, or opinions related to the research topic.

- Purchase behaviour: Questions about past purchasing habits or intentions to purchase certain products or services.

- Media consumption: Questions about where participants get their information, or about their media preferences.

- Exclusion criteria: Identifying factors that would disqualify a participant from the study.

- Open-ended questions: Additional questions to gather more specific insights or information that may not fit into the predefined categories.
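To make the elements above more concrete, here is a minimal Python sketch of how a screener's exclusion, demographic and behavioural criteria might be applied to one respondent's answers. The field names, target regions and thresholds are hypothetical examples chosen for illustration; they are not part of any InsightHub template.

```python
from dataclasses import dataclass


@dataclass
class ScreenerResponse:
    # Hypothetical answers one respondent gave to the screener
    age: int
    region: str
    uses_brand: bool
    purchases_last_year: int
    works_in_market_research: bool


TARGET_REGIONS = {"North", "South"}  # illustrative regions, not real quotas


def qualifies(r: ScreenerResponse) -> bool:
    """Return True if a respondent meets the hypothetical study criteria."""
    # Exclusion criteria first: screen out industry insiders
    if r.works_in_market_research:
        return False
    # Demographics: adults aged 18 to 54 living in a target region
    if (not 18 <= r.age <= 54) or (r.region not in TARGET_REGIONS):
        return False
    # Usage and purchase behaviour: current users who bought at least twice
    return r.uses_brand and r.purchases_last_year >= 2


print(qualifies(ScreenerResponse(34, "North", True, 3, False)))  # True
print(qualifies(ScreenerResponse(34, "North", True, 3, True)))   # False
```

Checking exclusion criteria first means a disqualified respondent never needs to be scored against the remaining criteria.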

The responses from the screener can help your team identify individuals who closely match the desired participant profile. Once the potential participants have been selected, they are usually contacted for further screening and, if they qualify, invited to take part in the actual study, which may involve focus groups, interviews, surveys, or other research methods.

Recruitment Screener Survey Template

The following panel recruitment screener is an example of an online survey built with InsightHub. Across multiple screens and questions, the survey collects basic demographic data, plus additional behavioural and psychographic information to assess fit with the panel's objectives.

As with all surveys built on the platform, routing, piping and complex logic can be applied between screens depending on your specific requirements. For instance, you may wish to ask customers which of your products they have purchased before, then use the response data to ask about purchase frequency and location on the following screen. Depending on the quotas you have set, you may only be looking for participants who have purchased a particular product multiple times, or not at all. By splitting such questions across multiple pages, it’s easier to avoid bias and leading language.
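The piping and routing example above (ask which products someone has bought, then reuse those answers on the next screen) could be sketched in Python like this. This is not InsightHub's API; the product names, question wording and quota rule (repeat buyers or non-buyers of one product) are hypothetical.

```python
def build_frequency_screen(purchased: list[str]) -> list[str]:
    """Pipe each previously selected product into a follow-up question."""
    # Routing: respondents who bought nothing get no frequency questions at all
    return [
        f"How many times have you bought {p} in the past 12 months?"
        for p in purchased
    ]


def meets_quota(purchase_counts: dict[str, int], target: str) -> bool:
    """Hypothetical quota: repeat buyers (2+) of the target product, or non-buyers."""
    count = purchase_counts.get(target, 0)
    return count == 0 or count >= 2


screen = build_frequency_screen(["Product A", "Product C"])
print(screen[0])  # How many times have you bought Product A in the past 12 months?
print(meets_quota({"Product A": 1}, "Product A"))  # False
```

Keeping the product question and the frequency question on separate screens, as the text recommends, also means the respondent never sees which purchase pattern the quota is looking for.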

This simple survey includes template text that you can adapt for your own recruitment screeners. It's important not only to ask the questions you want answered, but also to give potential participants adequate information about the project, panel or community they are signing up to. Therefore, you should try to be clear about both expectations and incentives.

You may also want to collect contact information (such as a valid email address) so that you are able to send invitations should a participant meet your criteria. Even if a participant doesn’t meet your current criteria, consider asking whether they would be willing to be contacted about future opportunities. This tactic can help you expand your pool of potential research participants quickly and cost-effectively.
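As a minimal Python sketch of that tactic, assuming each respondent record carries a qualification flag, an email address and an answer to a "happy to be contacted about future research?" question (all hypothetical field names), the contacts can be split into current invitees and a future pool:

```python
def sort_contacts(respondents: list[dict]) -> tuple[list[str], list[str]]:
    """Split emails into current invitees and a future-contact pool."""
    invite, future_pool = [], []
    for r in respondents:
        if r["qualified"]:
            invite.append(r["email"])
        elif r["future_opt_in"]:
            # Not eligible now, but consented to be contacted for later studies
            future_pool.append(r["email"])
    return invite, future_pool


people = [
    {"email": "a@example.com", "qualified": True, "future_opt_in": False},
    {"email": "b@example.com", "qualified": False, "future_opt_in": True},
    {"email": "c@example.com", "qualified": False, "future_opt_in": False},
]
print(sort_contacts(people))  # (['a@example.com'], ['b@example.com'])
```

Respondents who neither qualify nor opt in are simply not kept, which fits the advice to request only necessary personal information.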


Market Research Recruitment Best Practices

Effective market research recruitment screeners can significantly impact the quality of your research by ensuring you recruit the right participants who meet your study's objectives. Here are some best practices to follow when designing market research recruitment screeners.

First, clearly define your research objectives before creating the screener. Identify the specific characteristics, behaviours, or attitudes you are looking for in your participants to ensure they align with your research goals.

When writing your screener, keep it concise. A long and complex screener may discourage potential participants from completing it, so keep it as short and straightforward as possible and focus on the most critical qualifying questions. Similarly, favour closed questions: multiple-choice options make it easier for participants to respond quickly and accurately, which streamlines the screening process.

Another best practice is to avoid leading questions. Ensure the questions are neutral and do not lead participants to a specific answer. Biased questions can influence responses and impact the representativeness of your sample.

While leading questions should be avoided, there are other questions you should actively consider including, such as verification questions. These help identify inconsistent responses or participants who may not be paying attention, which helps ensure data quality. If certain questions are only relevant to specific subsets of participants, use skip logic to direct participants to the appropriate questions based on their previous responses.
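One common form of verification question asks for the same fact twice in different ways and checks that the answers agree. The Python sketch below assumes a screener with two hypothetical questions, stated age and year of birth, and allows a one-year tolerance because a respondent may not yet have had this year's birthday.

```python
from datetime import date
from typing import Optional


def age_is_consistent(stated_age: int, birth_year: int, today: Optional[date] = None) -> bool:
    """Check a stated age against a separately reported year of birth."""
    today = today or date.today()
    # Someone born in birth_year is either (year - birth_year) or one year
    # younger, depending on whether their birthday has passed yet this year
    implied = today.year - birth_year
    return stated_age in (implied - 1, implied)


print(age_is_consistent(34, 1990, date(2024, 6, 1)))  # True
print(age_is_consistent(25, 1990, date(2024, 6, 1)))  # False
```

Placing the two questions on different screens makes it harder for an inattentive or dishonest respondent to keep the answers aligned.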

You can check the quality of your screener, and uncover any unintended leading questions, by testing it before launch. Send the survey to a small group to identify any issues, ambiguities, or misunderstandings, then revise and refine the screener based on their feedback to improve its effectiveness.

Throughout the survey design, it’s important to respect participants' privacy. Request only necessary personal information and assure participants that their data will be treated confidentially and used solely for research purposes.

Lastly, consider whether a screener survey alone is sufficient. While it is likely the most efficient option for recruiting into a panel or community, more complex studies (especially those with small sample sizes, longitudinal designs or demanding participation requirements) may require a screening call to verify participants' eligibility and willingness to participate.

About FlexMR

We are The Insights Empowerment Company. We help research, product and marketing teams drive informed decisions with efficient, scalable & impactful insight.

About Chris Martin

Chris is an experienced executive and marketing strategist in the insight and technology sectors. He also hosts our MRX Lab podcast.


Survey Screening Questions: Good & Bad Examples

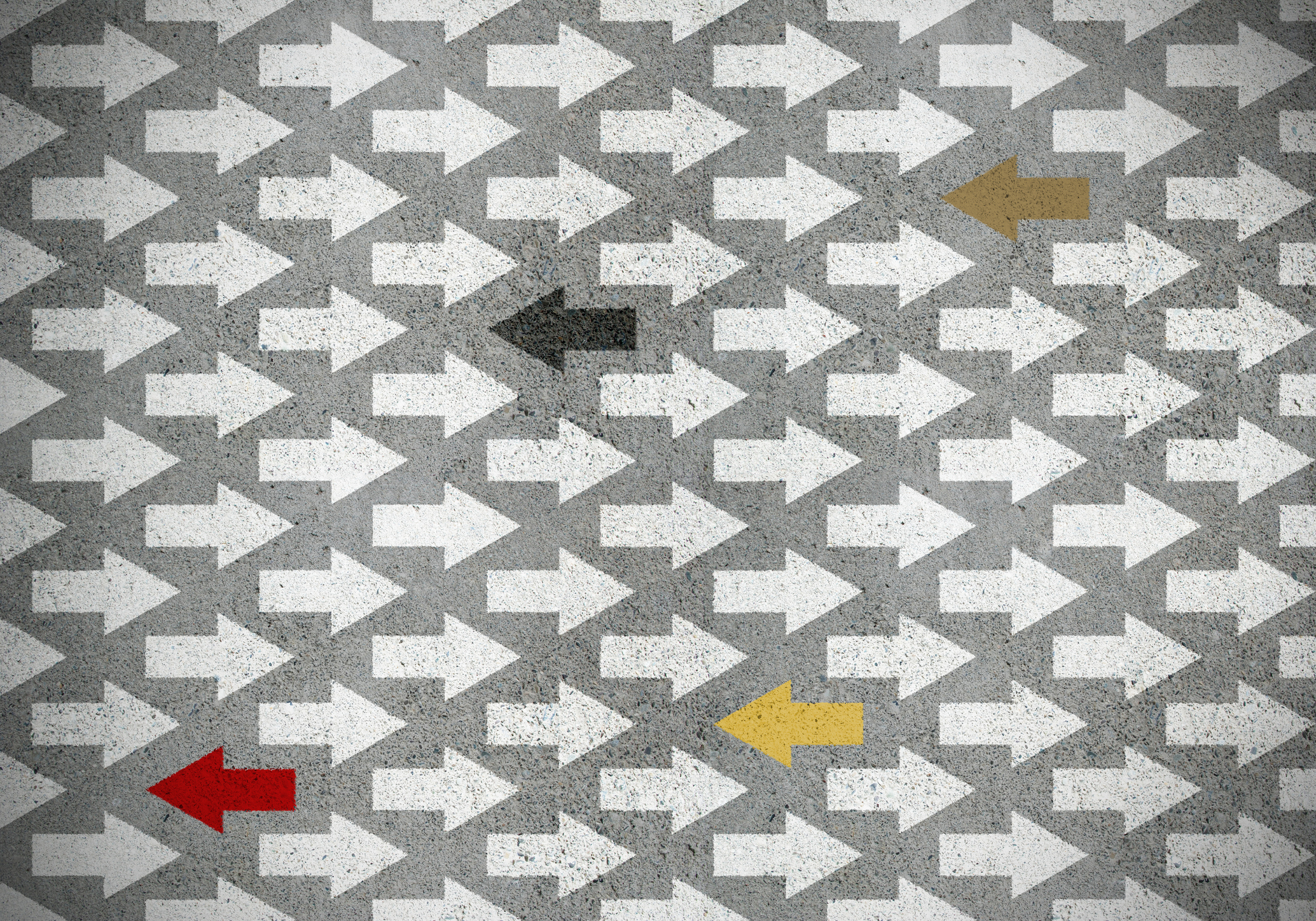

The idea behind survey screening questions is pretty simple: to identify people who are eligible for your study, you can… just… ask. People who qualify continue to the survey, while those who do not are directed out.

Although the idea may be simple, writing effective screening questions is a lot more complicated. Screening questions need to be short and easy for respondents to answer. They should avoid binary response options but also should not include too many response options. You should order the response options in the right way, and the overall screener shouldn’t contain too many questions. Above all, you absolutely need to ensure you don’t lead people to guess which attributes you’re looking for.

To balance all these objectives—and to demonstrate examples of good and bad survey screening questions—we wrote this blog. Let’s dig in.

Why Use Screening Questions?

As mentioned above, the purpose of survey screening questions is simple. But you might ask: why do I need these questions at all? Why can’t I just find people who I know meet my criteria?

In many cases you can. Nearly all online research platforms collect demographic data from participants in a process known as “profiling.” The data a platform gathers can then be used to “target” participants who hold certain characteristics.

The problem, however, is that no platform can ask in advance every question researchers may want to use when sampling. That is why some platforms, such as Prime Panels, allow researchers to screen participants just as they enter a study: those who qualify continue directly into the study, while those who don’t are redirected away from it. The sections below offer advice on how to construct these screening questions.

* NOTE: Platforms like Amazon Mechanical Turk and other “microtask sites” generally don’t allow survey screeners within a study, because participants accept tasks one at a time and compensating people who do not qualify is difficult. Instead of a within-survey screener, you can set up a two-step screening process to identify people with specific characteristics on Mechanical Turk. After you identify people who meet your criteria in Step 1, you can use CloudResearch’s tools to “include” those workers in Step 2 of your study.
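Step 1 of that two-step process boils down to filtering first-round results into a list of eligible worker IDs. Here is a minimal Python sketch, assuming the Step 1 results were exported as CSV text with hypothetical `worker_id` and `qualified` columns; how the list is then loaded into CloudResearch’s include feature is not shown.

```python
import csv
import io


def eligible_ids(step1_csv: str) -> list[str]:
    """Return unique worker IDs from Step 1 that met the screening criteria."""
    reader = csv.DictReader(io.StringIO(step1_csv))
    # dict.fromkeys de-duplicates while keeping first-seen order,
    # in case a worker appears more than once in the export
    return list(dict.fromkeys(
        row["worker_id"] for row in reader if row["qualified"] == "1"
    ))


data = "worker_id,qualified\nA1,1\nB2,0\nA1,1\nC3,1\n"
print(eligible_ids(data))  # ['A1', 'C3']
```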

How To Construct Screening Questions: Do’s and Don’ts

1. Maximize Efficiency

Think of your survey screening questions like that old game Guess Who? You want to zero in on your target respondents with as few questions as possible. This means you should start broad and then get specific.

For example, imagine you want to sample people who watched some event on television the night before. Say, a Presidential debate or a major sports championship.

Start with a question asking about general TV viewing habits. Then get more specific by asking people which kinds of programming they like to watch, and finish by asking if they watched the program you are interested in.

The series of questions may look something like this:

How often do you watch TV?

a Every day

b A few times per week

c Once per week

d Less than once per week

What kinds of programming do you like to watch?

a Live sports

c Entertainment

d Soap Operas

e Cable news/politics

g Documentaries

Which of the following did you watch on TV last night?

a National evening news

b The Presidential debate

c Live sports

d Saturday Night Live

e The Tonight Show

g Blue Planet

As you can see, it is okay to ask more than one question to refine your sample. Typically, though, you want to qualify people for the survey with four questions or fewer.
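The broad-to-specific funnel above can be modeled as an ordered list of questions, each with a set of qualifying answers, where a respondent is screened out at the first question they fail. In this Python sketch, the qualifying answers (watching TV at least weekly, liking cable news/politics, having watched the debate) are illustrative assumptions based on the debate example.

```python
# Each step: (question id, answers that keep the respondent in the funnel)
FUNNEL = [
    ("tv_frequency", {"Every day", "A few times per week", "Once per week"}),
    ("programming", {"Cable news/politics"}),
    ("last_night", {"The Presidential debate"}),
]
assert len(FUNNEL) <= 4, "keep the screener to four questions or fewer"


def run_funnel(answers: dict[str, set[str]]) -> tuple[bool, int]:
    """Return (qualified, number of questions asked) for one respondent."""
    for asked, (qid, qualifying) in enumerate(FUNNEL, start=1):
        # A multi-select answer passes if any chosen option is qualifying
        if not answers.get(qid, set()) & qualifying:
            return False, asked
    return True, len(FUNNEL)


print(run_funnel({"tv_frequency": {"Every day"}, "programming": {"Live sports"}}))
# (False, 2)
```

Because a multi-select answer passes if any chosen option qualifies, this approach works best alongside the check for “maximizers” described in tip 6.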

2. Avoid “Yes” or “No” Response Options

It’s tempting to think that a series of questions with “yes or no” response options is an efficient way to zero in on your participants. However, “yes or no” questions have important limitations.

For one, these questions give respondents a 50/50 chance of qualifying for your study even if they are not paying attention! Questions with “yes or no” response options may also lead respondents to say “yes” more often than “no,” either because they want to appear agreeable or because saying “yes” is just a little easier than disagreeing with a statement. This response pattern is known as acquiescence bias.

You may occasionally need to ask a yes-or-no question, but avoid relying on these too often, and do not use one as the only question that qualifies people for your study.
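The 50/50 point is simple arithmetic. Assuming an inattentive respondent picks answers at random and each question has exactly one qualifying option (a simplifying assumption), the chance of passing is the product of 1/k across the questions, where k is the number of response options. A short Python sketch:

```python
from fractions import Fraction
from math import prod


def random_pass_chance(options_per_question: list[int]) -> Fraction:
    """Probability a random guesser passes, assuming one qualifying option per question."""
    return prod((Fraction(1, k) for k in options_per_question), start=Fraction(1))


print(random_pass_chance([2]))        # 1/2
print(random_pass_chance([2, 2, 2]))  # 1/8
print(random_pass_chance([5, 5]))     # 1/25
```

Two five-option questions screen out random guessers far more effectively than three yes/no questions, and acquiescence bias would push the real pass rate for yes/no questions even higher than this estimate.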

3. Consider the “Right” Number of Response Options

If two response options are too few, is more always better? Should questions be written with ten or fifteen response options? The answer is that it depends.

Fifteen response options is likely too many for all but the most unusual circumstances, because as you add response options to a question, you also decrease the odds that people will select the options that qualify them for the study. In other words, if your criteria are so refined that few people will meet them, you will have trouble filling your sample.

On the other side of the equation, you don’t want to provide people with too few options because doing so can make it easier to guess or answer dishonestly and still qualify for the study. The risk of having too few answer choices is clear with a question like the one asking about which events people watched on TV the night before (see above).

Somewhere between five and seven multiple choice options is often best. However, there are times where you may scale this number up or down depending on your needs.

4. Don’t Neglect the Order of Response Options

Now that you know how many response options to shoot for, don’t forget to consider how to arrange them.

Many questions have response options with an assumed order. For instance, a question that asks people how often they watch TV has a clear order.

How often do you watch TV?

For other question types, however, there is no implied order. For example, suppose you wanted to ask how people typically watch TV shows and movies:

How do you prefer to watch TV shows and movies?

b On my computer (laptop or desktop)

c On a tablet

d On my phone

e Through a streaming player (e.g., Roku, Chromecast)

When no order is inherent to your response options, consider randomizing or shuffling how they appear to participants. Randomizing answer options reduces sources of answer bias and does a little more to ensure the people you screen actually qualify for your study.
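Per-respondent randomization is easy to sketch in Python using the unordered viewing options above. Seeding the generator with a (hypothetical) respondent ID is a design choice, not a requirement: it keeps each person’s order stable if they reload the page, while different respondents see different orders.

```python
import random

DEVICE_OPTIONS = [
    "On my computer (laptop or desktop)",
    "On a tablet",
    "On my phone",
    "Through a streaming player (e.g., Roku, Chromecast)",
]


def shuffled_options(options: list[str], respondent_id: str) -> list[str]:
    """Return a per-respondent random order without mutating the master list."""
    rng = random.Random(respondent_id)  # same ID -> same order on every page load
    order = options.copy()
    rng.shuffle(order)
    return order


print(shuffled_options(DEVICE_OPTIONS, "resp-001"))
```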

5. Don’t Lead Respondents

Writing good survey questions is both a science and an art. But one basic principle is that questions shouldn’t lead respondents to provide certain answers.

When writing survey screening questions, you want to ensure that your questions do not tip off respondents to the criteria that will help them qualify for the study. For example, if you are looking to recruit parents of young children, you should first establish that people have children before you ask how many or for specific ages. Questions that are loaded with assumptions may bias people’s answers in a certain direction or make it easier for fraudsters to misrepresent themselves.

Possibly Leading Question

How many children do you have?

e Four or more

Better Approach

Do you have children under the age of 18?

How many children under age 18 do you have?

d Four or more

6. Watch for “Maximizers”

Some participants may seek to maximize their chances of qualifying for a survey by selecting several (or all) answer choices within screening questions. Keep an eye out for these maximizers, especially when the odds of someone endorsing more than half of the items in a question are low.

For example, anyone who selects more than half of the answer options in a question asking what they watched on TV last night can probably be safely removed from the study, as it is unlikely that any one person watched so many things in one evening. Combined with in-survey questions, disqualifying maximizers during screening can help you reduce fraud and low-quality responses.
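The “more than half” rule can be expressed as a one-line check. This Python sketch assumes a multi-select question and uses the six options shown in the last-night viewing example; whether to drop flagged respondents outright or review them manually is a judgment call.

```python
LAST_NIGHT_OPTIONS = [
    "National evening news",
    "The Presidential debate",
    "Live sports",
    "Saturday Night Live",
    "The Tonight Show",
    "Blue Planet",
]


def is_maximizer(selected: set[str], options: list[str]) -> bool:
    """Flag a respondent who ticks more than half of a multi-select question's options."""
    # Count only selections that are genuine options for this question
    chosen = len(selected & set(options))
    return chosen > len(options) / 2


print(is_maximizer(set(LAST_NIGHT_OPTIONS[:4]), LAST_NIGHT_OPTIONS))  # True
print(is_maximizer(set(LAST_NIGHT_OPTIONS[:3]), LAST_NIGHT_OPTIONS))  # False
```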

How Screening Affects Incidence Rates

Survey screening questions raise an important concept that affects the cost of your research: incidence rate. Within market research, the incidence rate is the percentage of respondents who pass your screening questions and go on to participate in your survey.

Because studies with a low incidence rate require screening lots of participants to find just a few who qualify, low incidence rate studies cost more than those with participants who are easier to sample.
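The cost effect follows from simple arithmetic: incidence rate = qualified ÷ screened, and the number of people you must screen is roughly your target completes ÷ incidence rate. This Python sketch assumes you have counts from a pilot or soft launch; the numbers are illustrative only.

```python
def incidence_rate(qualified: int, screened: int) -> float:
    """Share of screened respondents who pass the screener."""
    return qualified / screened


def screens_needed(target_completes: int, qualified: int, screened: int) -> int:
    """People to screen for the target, given pilot counts (rounded up)."""
    # Integer ceiling division avoids floating-point rounding surprises
    return -(-target_completes * screened // qualified)


print(incidence_rate(60, 400))       # 0.15
print(screens_needed(300, 60, 400))  # 2000
print(screens_needed(300, 5, 100))   # 6000
```

At a 15% incidence rate, 300 completes require screening about 2,000 people; at 5%, the same sample requires about 6,000.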

Another thing to know about survey screening is what to do with participants who are ineligible for the study. These participants must be redirected to a specific URL, because online panels use a standardized set of codes to understand why participants are dropped from studies. You can usually find this URL during the survey setup process.
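Here is a minimal Python sketch of the routing decision at the end of a screener. The termination URL and the `rid` parameter are placeholders, not any panel’s real scheme; in practice, copy the exact redirect link your panel provides during survey setup.

```python
from urllib.parse import urlencode

# Placeholder endpoint: replace with the termination URL from your panel's setup screen
TERMINATE_URL = "https://panel.example.com/terminate"


def next_step(qualified: bool, respondent_id: str) -> str:
    """Continue qualified respondents; redirect ineligible ones to the panel."""
    if qualified:
        return "continue"  # proceed into the main survey
    # urlencode escapes the ID safely (spaces become '+')
    return f"{TERMINATE_URL}?{urlencode({'rid': respondent_id})}"


print(next_step(False, "abc 123"))  # https://panel.example.com/terminate?rid=abc+123
```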

Finding Qualified Participants with CloudResearch

Regardless of where you recruit participants, finding people who meet your demographic qualifications is only half the battle. The other half is finding people who are willing to invest the time, effort, and attention necessary to provide quality data. At CloudResearch this is where we excel.

We improve data quality from any source with our patented Sentry system. Sentry vets participants with both technological and behavioral measures before they enter your study. Just as survey screening questions seek to identify participants with specific characteristics, Sentry identifies people who are likely to provide high quality data. People who are inattentive, show suspicious signs, or misrepresent themselves are kept from even starting your survey. To learn more about Sentry and how CloudResearch can help you reach the participants you need, contact us today!
