Cochrane Training

Chapter 5: Collecting data

Tianjing Li, Julian PT Higgins, Jonathan J Deeks

Key Points:

- Systematic reviews have studies, rather than reports, as the unit of interest, and so multiple reports of the same study need to be identified and linked together before or after data extraction.

- Because of the increasing availability of data sources (e.g. trials registers, regulatory documents, clinical study reports), review authors should decide on which sources may contain the most useful information for the review, and have a plan to resolve discrepancies if information is inconsistent across sources.

- Review authors are encouraged to develop outlines of tables and figures that will appear in the review to facilitate the design of data collection forms. The key to successful data collection is to construct easy-to-use forms and collect sufficient and unambiguous data that faithfully represent the source in a structured and organized manner.

- Effort should be made to identify data needed for meta-analyses, which often need to be calculated or converted from data reported in diverse formats.

- Data should be collected and archived in a form that allows future access and data sharing.

Cite this chapter as: Li T, Higgins JPT, Deeks JJ (editors). Chapter 5: Collecting data. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook.

5.1 Introduction

Systematic reviews aim to identify all studies that are relevant to their research questions and to synthesize data about the design, risk of bias, and results of those studies. Consequently, the findings of a systematic review depend critically on decisions relating to which data from these studies are presented and analysed. Data collected for systematic reviews should be accurate, complete, and accessible for future updates of the review and for data sharing. Methods used for these decisions must be transparent; they should be chosen to minimize biases and human error. Here we describe approaches that should be used in systematic reviews for collecting data, including extraction of data directly from journal articles and other reports of studies.

5.2 Sources of data

Studies are reported in a range of sources, which are detailed below. As discussed in Section 5.2.1, it is important to link together multiple reports of the same study. The relative strengths and weaknesses of each type of source are discussed in Section 5.2.2. For guidance on searching for and selecting reports of studies, refer to Chapter 4.

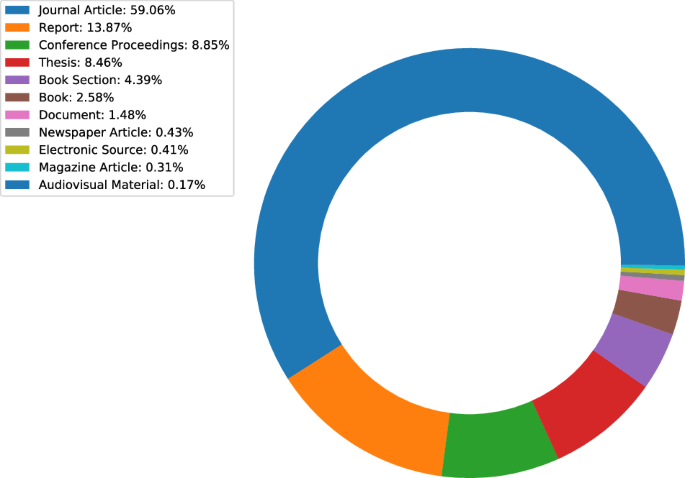

Journal articles are the source of the majority of data included in systematic reviews. Note that a study can be reported in multiple journal articles, each focusing on some aspect of the study (e.g. design, main results, and other results).

Conference abstracts are commonly available. However, the information presented in conference abstracts is highly variable in reliability, accuracy, and level of detail (Li et al 2017).

Errata and letters can be important sources of information about studies, including critical weaknesses and retractions, and review authors should examine these if they are identified (see MECIR Box 5.2.a).

Trials registers (e.g. ClinicalTrials.gov) catalogue trials that have been planned or started, and have become an important data source for identifying trials, for comparing published outcomes and results with those planned, and for obtaining efficacy and safety data that are not available elsewhere (Ross et al 2009, Jones et al 2015, Baudard et al 2017).

Clinical study reports (CSRs) contain unabridged and comprehensive descriptions of the clinical problem, design, conduct and results of clinical trials, following a structure and content guidance prescribed by the International Conference on Harmonisation (ICH 1995). To obtain marketing approval of drugs and biologics for a specific indication, pharmaceutical companies submit CSRs and other required materials to regulatory authorities. Because CSRs also incorporate tables and figures, with appendices containing the protocol, statistical analysis plan, sample case report forms, and patient data listings (including narratives of all serious adverse events), they can be thousands of pages in length. CSRs often contain more data about trial methods and results than any other single data source (Mayo-Wilson et al 2018). CSRs are often difficult to access, and are usually not publicly available. Review authors could request CSRs from the European Medicines Agency (Davis and Miller 2017). The US Food and Drug Administration had historically avoided releasing CSRs but launched a pilot programme in 2018 whereby selected portions of CSRs for new drug applications were posted on the agency’s website. Many CSRs are obtained through unsealed litigation documents, repositories (e.g. clinicalstudydatarequest.com), and other open data and data-sharing channels (e.g. The Yale University Open Data Access Project) (Doshi et al 2013, Wieland et al 2014, Mayo-Wilson et al 2018).

Regulatory reviews such as those available from the US Food and Drug Administration or European Medicines Agency provide useful information about trials of drugs, biologics, and medical devices submitted by manufacturers for marketing approval (Turner 2013). These documents are summaries of CSRs and related documents, prepared by agency staff as part of the process of approving the products for marketing, after reanalysing the original trial data. Regulatory reviews often are available only for the first approved use of an intervention and not for later applications (although review authors may request those documents, which are usually brief). Using regulatory reviews from the US Food and Drug Administration as an example, drug approval packages are available on the agency’s website for drugs approved since 1997 (Turner 2013); for drugs approved before 1997, information must be requested through a freedom of information request. The drug approval packages contain various documents: approval letter(s), medical review(s), chemistry review(s), clinical pharmacology review(s), and statistical review(s).

Individual participant data (IPD) are usually sought directly from the researchers responsible for the study, or may be identified from open data repositories (e.g. www.clinicalstudydatarequest.com). These data typically include variables that represent the characteristics of each participant, intervention (or exposure) group, prognostic factors, and measurements of outcomes (Stewart et al 2015). Access to IPD has the advantage of allowing review authors to reanalyse the data flexibly, in accordance with the preferred analysis methods outlined in the protocol, and can reduce the variation in analysis methods across studies included in the review. IPD reviews are addressed in detail in Chapter 26.

MECIR Box 5.2.a Relevant expectations for conduct of intervention reviews

5.2.1 Studies (not reports) as the unit of interest

In a systematic review, studies rather than reports of studies are the principal unit of interest. Since a study may have been reported in several sources, a comprehensive search for studies for the review may identify many reports from a potentially relevant study (Mayo-Wilson et al 2017a, Mayo-Wilson et al 2018). Conversely, a report may describe more than one study.

Multiple reports of the same study should be linked together (see MECIR Box 5.2.b). Some authors prefer to link reports before they collect data, and collect data from across the reports onto a single form. Other authors prefer to collect data from each report and then link together the collected data across reports. Either strategy may be appropriate, depending on the nature of the reports at hand. It may not be clear that two reports relate to the same study until data collection has commenced. Although sometimes there is a single report for each study, it should never be assumed that this is the case.

MECIR Box 5.2.b Relevant expectations for conduct of intervention reviews

It can be difficult to link multiple reports from the same study, and review authors may need to do some ‘detective work’. Multiple sources about the same trial may not reference each other, may not share common authors (Gøtzsche 1989, Tramèr et al 1997), or may report discrepant information about the study design, characteristics, outcomes, and results (von Elm et al 2004, Mayo-Wilson et al 2017a).

Some of the most useful criteria for linking reports are:

- trial registration numbers;

- authors’ names;

- sponsor for the study and sponsor identifiers (e.g. grant or contract numbers);

- location and setting (particularly if institutions, such as hospitals, are named);

- specific details of the interventions (e.g. dose, frequency);

- numbers of participants and baseline data; and

- date and duration of the study (which also can clarify whether different sample sizes are due to different periods of recruitment), length of follow-up, or subgroups selected to address secondary goals.

Review authors should use as many trial characteristics as possible to link multiple reports. When uncertainties remain after considering these and other factors, it may be necessary to correspond with the study authors or sponsors for confirmation.
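The linking criteria listed above can be applied semi-automatically to flag pairs of reports that may describe the same study. The following is only a minimal sketch: the `Report` fields, the `candidate_links` helper and the threshold of two shared characteristics are illustrative assumptions, not part of any Cochrane tool, and every flagged pair would still need to be checked by a review author.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical record of the characteristics extracted from one report.
@dataclass(frozen=True)
class Report:
    report_id: str
    registration_number: Optional[str] = None
    first_author: Optional[str] = None
    sponsor: Optional[str] = None
    location: Optional[str] = None
    n_participants: Optional[int] = None

LINK_FIELDS = ["registration_number", "first_author", "sponsor",
               "location", "n_participants"]

def shared_characteristics(a: Report, b: Report) -> list:
    """Names of the characteristics that are recorded and identical in both reports."""
    return [f for f in LINK_FIELDS
            if getattr(a, f) is not None and getattr(a, f) == getattr(b, f)]

def candidate_links(reports, min_shared=2):
    """Flag pairs that share a registration number or at least `min_shared`
    other characteristics (an assumed threshold). Output is for manual review."""
    pairs = []
    for i, a in enumerate(reports):
        for b in reports[i + 1:]:
            shared = shared_characteristics(a, b)
            if "registration_number" in shared or len(shared) >= min_shared:
                pairs.append((a.report_id, b.report_id, shared))
    return pairs

# Invented example data.
reports = [
    Report("r1", "NCT001", "Smith", "Acme", "Boston", 120),
    Report("r2", "NCT001", "Jones", None, "Boston", 120),
    Report("r3", None, "Lee", "Other", "Paris", 60),
]
links = candidate_links(reports)
```

Here `r1` and `r2` are flagged even though they share no author, which mirrors the point that reports of the same trial may not have common authors.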

5.2.2 Determining which sources might be most useful

A comprehensive search to identify all eligible studies from all possible sources is resource-intensive but necessary for a high-quality systematic review (see Chapter 4 ). Because some data sources are more useful than others (Mayo-Wilson et al 2018), review authors should consider which data sources may be available and which may contain the most useful information for the review. These considerations should be described in the protocol. Table 5.2.a summarizes the strengths and limitations of different data sources (Mayo-Wilson et al 2018). Gaining access to CSRs and IPD often takes a long time. Review authors should begin searching repositories and contact trial investigators and sponsors as early as possible to negotiate data usage agreements (Mayo-Wilson et al 2015, Mayo-Wilson et al 2018).

Table 5.2.a Strengths and limitations of different data sources for systematic reviews

5.2.3 Correspondence with investigators

Review authors often find that they are unable to obtain all the information they seek from available reports about the details of the study design, the full range of outcomes measured and the numerical results. In such circumstances, authors are strongly encouraged to contact the original investigators (see MECIR Box 5.2.c ). Contact details of study authors, when not available from the study reports, often can be obtained from more recent publications, from university or institutional staff listings, from membership directories of professional societies, or by a general search of the web. If the contact author named in the study report cannot be contacted or does not respond, it is worthwhile attempting to contact other authors.

Review authors should consider the nature of the information they require and make their request accordingly. For descriptive information about the conduct of the trial, it may be most appropriate to ask open-ended questions (e.g. how was the allocation process conducted, or how were missing data handled?). If specific numerical data are required, it may be more helpful to request them specifically, possibly providing a short data collection form (either uncompleted or partially completed). If IPD are required, they should be specifically requested (see also Chapter 26 ). In some cases, study investigators may find it more convenient to provide IPD rather than conduct additional analyses to obtain the specific statistics requested.

MECIR Box 5.2.c Relevant expectations for conduct of intervention reviews

5.3 What data to collect

5.3.1 What are data?

For the purposes of this chapter, we define ‘data’ to be any information about (or derived from) a study, including details of methods, participants, setting, context, interventions, outcomes, results, publications, and investigators. Review authors should plan in advance what data will be required for their systematic review, and develop a strategy for obtaining them (see MECIR Box 5.3.a ). The involvement of consumers and other stakeholders can be helpful in ensuring that the categories of data collected are sufficiently aligned with the needs of review users ( Chapter 1, Section 1.3 ). The data to be sought should be described in the protocol, with consideration wherever possible of the issues raised in the rest of this chapter.

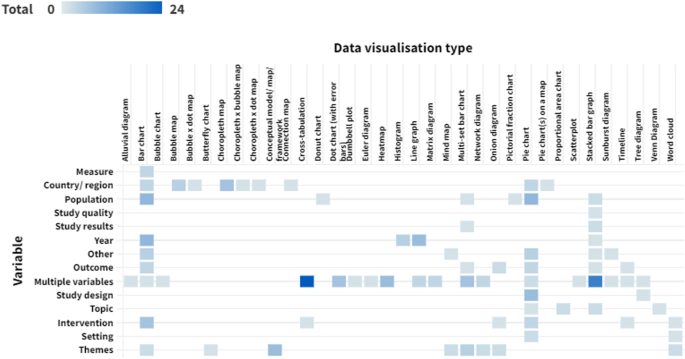

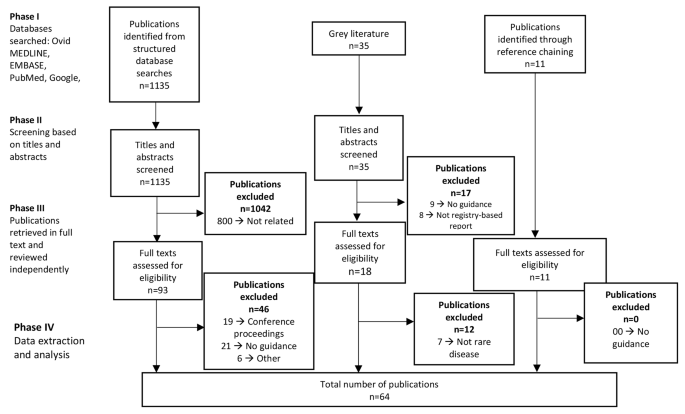

The data collected for a review should adequately describe the included studies, support the construction of tables and figures, facilitate the risk of bias assessment, and enable syntheses and meta-analyses. Review authors should familiarize themselves with reporting guidelines for systematic reviews (see online Chapter III and the PRISMA statement (Liberati et al 2009)) to ensure that relevant elements and sections are incorporated. The following sections review the types of information that should be sought, and these are summarized in Table 5.3.a (Li et al 2015).

MECIR Box 5.3.a Relevant expectations for conduct of intervention reviews

Table 5.3.a Checklist of items to consider in data collection

*Full description required for assessments of risk of bias (see Chapter 8, Chapter 23 and Chapter 25).

5.3.2 Study methods and potential sources of bias

Different research methods can influence study outcomes by introducing different biases into results. Important study design characteristics should be collected to allow the selection of appropriate methods for assessment and analysis, and to enable description of the design of each included study in a table of ‘Characteristics of included studies’, including whether the study is randomized, whether the study has a cluster or crossover design, and the duration of the study. If the review includes non-randomized studies, appropriate features of the studies should be described (see Chapter 24 ).

Detailed information should be collected to facilitate assessment of the risk of bias in each included study. Risk-of-bias assessment should be conducted using the tool most appropriate for the design of each study, and the information required to complete the assessment will depend on the tool. Randomized studies should be assessed using the tool described in Chapter 8 . The tool covers bias arising from the randomization process, due to deviations from intended interventions, due to missing outcome data, in measurement of the outcome, and in selection of the reported result. For each item in the tool, a description of what happened in the study is required, which may include verbatim quotes from study reports. Information for assessment of bias due to missing outcome data and selection of the reported result may be most conveniently collected alongside information on outcomes and results. Chapter 7 (Section 7.3.1) discusses some issues in the collection of information for assessments of risk of bias. For non-randomized studies, the most appropriate tool is described in Chapter 25 . A separate tool also covers bias due to missing results in meta-analysis (see Chapter 13 ).

A particularly important piece of information is the funding source of the study and potential conflicts of interest of the study authors.

Some review authors will wish to collect additional information on study characteristics that bear on the quality of the study’s conduct but that may not lead directly to risk of bias, such as whether ethical approval was obtained and whether a sample size calculation was performed a priori.

5.3.3 Participants and setting

Details of participants are collected to enable an understanding of the comparability of, and differences between, the participants within and between included studies, and to allow assessment of how directly or completely the participants in the included studies reflect the original review question.

Typically, aspects that should be collected are those that could (or are believed to) affect presence or magnitude of an intervention effect and those that could help review users assess applicability to populations beyond the review. For example, if the review authors suspect important differences in intervention effect between different socio-economic groups, this information should be collected. If intervention effects are thought constant over such groups, and if such information would not be useful to help apply results, it should not be collected. Participant characteristics that are often useful for assessing applicability include age and sex. Summary information about these should always be collected unless they are obvious from the context. These characteristics are likely to be presented in different formats (e.g. ages as means or medians, with standard deviations or ranges; sex as percentages or counts for the whole study or for each intervention group separately). Review authors should seek consistent quantities where possible, and decide whether it is more relevant to summarize characteristics for the study as a whole or by intervention group. It may not be possible to select the most consistent statistics until data collection is complete across all or most included studies. Other characteristics that are sometimes important include ethnicity, socio-demographic details (e.g. education level) and the presence of comorbid conditions. Clinical characteristics relevant to the review question (e.g. glucose level for reviews on diabetes) also are important for understanding the severity or stage of the disease.

Diagnostic criteria that were used to define the condition of interest can be a particularly important source of diversity across studies and should be collected. For example, in a review of drug therapy for congestive heart failure, it is important to know how the definition and severity of heart failure was determined in each study (e.g. systolic or diastolic dysfunction, severe systolic dysfunction with ejection fractions below 20%). Similarly, in a review of antihypertensive therapy, it is important to describe baseline levels of blood pressure of participants.

If the settings of studies may influence intervention effects or applicability, then information on these should be collected. Typical settings of healthcare intervention studies include acute care hospitals, emergency facilities, general practice, and extended care facilities such as nursing homes, offices, schools, and communities. Sometimes studies are conducted in different geographical regions with important differences that could affect delivery of an intervention and its outcomes, such as cultural characteristics, economic context, or rural versus city settings. Timing of the study may be associated with important technology differences or trends over time. If such information is important for the interpretation of the review, it should be collected.

Important characteristics of the participants in each included study should be summarized for the reader in the table of ‘Characteristics of included studies’.

5.3.4 Interventions

Details of all experimental and comparator interventions of relevance to the review should be collected. Again, details are required for aspects that could affect the presence or magnitude of an effect or that could help review users assess applicability to their own circumstances. Where feasible, information should be sought (and presented in the review) that is sufficient for replication of the interventions under study. This includes any co-interventions administered as part of the study, and applies similarly to comparators such as ‘usual care’. Review authors may need to request missing information from study authors.

The Template for Intervention Description and Replication (TIDieR) provides a comprehensive framework for full description of interventions and has been proposed for use in systematic reviews as well as reports of primary studies (Hoffmann et al 2014). The checklist includes descriptions of:

- the rationale for the intervention and how it is expected to work;

- any documentation that instructs the recipient on the intervention;

- what the providers do to deliver the intervention (procedures and processes);

- who provides the intervention (including their skill level), how (e.g. face to face, web-based) and in what setting (e.g. home, school, or hospital);

- the timing and intensity;

- whether any variation is permitted or expected, and whether modifications were actually made; and

- any strategies used to ensure or assess fidelity or adherence to the intervention, and the extent to which the intervention was delivered as planned.

For clinical trials of pharmacological interventions, key information to collect will often include routes of delivery (e.g. oral or intravenous delivery), doses (e.g. amount or intensity of each treatment, frequency of delivery), timing (e.g. within 24 hours of diagnosis), and length of treatment. For other interventions, such as those that evaluate psychotherapy, behavioural and educational approaches, or healthcare delivery strategies, the amount of information required to characterize the intervention will typically be greater, including information about multiple elements of the intervention, who delivered it, and the format and timing of delivery. Chapter 17 provides further information on how to manage intervention complexity, and how the intervention Complexity Assessment Tool (iCAT) can facilitate data collection (Lewin et al 2017).

Important characteristics of the interventions in each included study should be summarized for the reader in the table of ‘Characteristics of included studies’. Additional tables or diagrams such as logic models (Chapter 2, Section 2.5.1) can assist descriptions of multi-component interventions so that review users can better assess review applicability to their context.

5.3.4.1 Integrity of interventions

The degree to which specified procedures or components of the intervention are implemented as planned can have important consequences for the findings from a study. We describe this as intervention integrity; related terms include adherence, compliance and fidelity (Carroll et al 2007). The verification of intervention integrity may be particularly important in reviews of non-pharmacological trials such as behavioural interventions and complex interventions, which are often implemented in conditions that present numerous obstacles to idealized delivery.

It is generally expected that reports of randomized trials provide detailed accounts of intervention implementation (Zwarenstein et al 2008, Moher et al 2010). In assessing whether interventions were implemented as planned, review authors should bear in mind that some interventions are standardized (with no deviations permitted in the intervention protocol), whereas others explicitly allow a degree of tailoring (Zwarenstein et al 2008). In addition, the growing field of implementation science has led to an increased awareness of the impact of setting and context on delivery of interventions (Damschroder et al 2009). (See Chapter 17, Section 17.1.2.1 for further information and discussion about how an intervention may be tailored to local conditions in order to preserve its integrity.)

Information about integrity can help determine whether unpromising results are due to a poorly conceptualized intervention or to an incomplete delivery of the prescribed components. It can also reveal important information about the feasibility of implementing a given intervention in real life settings. If it is difficult to achieve full implementation in practice, the intervention will have low feasibility (Dusenbury et al 2003).

Whether a lack of intervention integrity leads to a risk of bias in the estimate of its effect depends on whether review authors and users are interested in the effect of assignment to intervention or the effect of adhering to intervention, as discussed in more detail in Chapter 8, Section 8.2.2 . Assessment of deviations from intended interventions is important for assessing risk of bias in the latter, but not the former (see Chapter 8, Section 8.4 ), but both may be of interest to decision makers in different ways.

An example of a Cochrane Review evaluating intervention integrity is provided by a review of smoking cessation in pregnancy (Chamberlain et al 2017). The authors found that process evaluation of the intervention occurred in only some trials and that the implementation was less than ideal in others, including some of the largest trials. The review highlighted how the transfer of an intervention from one setting to another may reduce its effectiveness when elements are changed, or aspects of the materials are culturally inappropriate.

5.3.4.2 Process evaluations

Process evaluations seek to evaluate the process (and mechanisms) between the intervention’s intended implementation and the actual effect on the outcome (Moore et al 2015). Process evaluation studies are characterized by a flexible approach to data collection and the use of numerous methods to generate a range of different types of data, encompassing both quantitative and qualitative methods. Guidance for including process evaluations in systematic reviews is provided in Chapter 21 . When it is considered important, review authors should aim to collect information on whether the trial accounted for, or measured, key process factors and whether the trials that thoroughly addressed integrity showed a greater impact. Process evaluations can be a useful source of factors that potentially influence the effectiveness of an intervention.

5.3.5 Outcomes

An outcome is an event or a measurement value observed or recorded for a particular person or intervention unit in a study during or following an intervention, and that is used to assess the efficacy and safety of the studied intervention (Meinert 2012). Review authors should indicate in advance whether they plan to collect information about all outcomes measured in a study or only those outcomes of (pre-specified) interest in the review. Research has shown that trials addressing the same condition and intervention seldom agree on which outcomes are the most important, and consequently report on numerous different outcomes (Dwan et al 2014, Ismail et al 2014, Denniston et al 2015, Saldanha et al 2017a). The selection of outcomes across systematic reviews of the same condition is also inconsistent (Page et al 2014, Saldanha et al 2014, Saldanha et al 2016, Liu et al 2017). Outcomes used in trials and in systematic reviews of the same condition have limited overlap (Saldanha et al 2017a, Saldanha et al 2017b).

We recommend that only the outcomes defined in the protocol be described in detail. However, a complete list of the names of all outcomes measured may allow a more detailed assessment of the risk of bias due to missing outcome data (see Chapter 13 ).

Review authors should collect all five elements of an outcome (Zarin et al 2011, Saldanha et al 2014):

1. outcome domain or title (e.g. anxiety);

2. measurement tool or instrument (including definition of clinical outcomes or endpoints); for a scale, name of the scale (e.g. the Hamilton Anxiety Rating Scale), upper and lower limits, and whether a high or low score is favourable, definitions of any thresholds if appropriate;

3. specific metric used to characterize each participant’s results (e.g. post-intervention anxiety, or change in anxiety from baseline to a post-intervention time point, or post-intervention presence of anxiety (yes/no));

4. method of aggregation (e.g. mean and standard deviation of anxiety scores in each group, or proportion of people with anxiety);

5. timing of outcome measurements (e.g. assessments at end of eight-week intervention period, events occurring during eight-week intervention period).
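The five elements above map naturally onto one structured record per outcome, which makes incomplete extractions easy to spot. The sketch below is one possible layout only; the class name `OutcomeSpec`, its field names and the `missing_elements` helper are assumptions made for illustration, not a prescribed data collection form.

```python
from dataclasses import dataclass, fields

# Hypothetical record holding the five elements of a fully specified outcome.
@dataclass
class OutcomeSpec:
    domain: str            # 1. outcome domain or title
    measurement_tool: str  # 2. measurement tool or instrument
    metric: str            # 3. specific metric for each participant's result
    aggregation: str       # 4. method of aggregation
    time_point: str        # 5. timing of outcome measurement

def missing_elements(spec: OutcomeSpec) -> list:
    """Return the names of elements left blank during data collection."""
    return [f.name for f in fields(spec) if not getattr(spec, f.name).strip()]

# Example values taken from the list above; the time point is left blank
# to show how an incomplete record is detected.
anxiety = OutcomeSpec(
    domain="anxiety",
    measurement_tool="Hamilton Anxiety Rating Scale",
    metric="change in anxiety from baseline",
    aggregation="mean and standard deviation in each group",
    time_point="",
)
gaps = missing_elements(anxiety)
```

A blank element is a prompt to re-check the report or to contact the investigators, rather than something to be filled in by guesswork.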

Further considerations for economics outcomes are discussed in Chapter 20, and for patient-reported outcomes in Chapter 18.

5.3.5.1 Adverse effects

Collection of information about the harmful effects of an intervention can pose particular difficulties, discussed in detail in Chapter 19. These outcomes may be described using multiple terms, including ‘adverse event’, ‘adverse effect’, ‘adverse drug reaction’, ‘side effect’ and ‘complication’. Many of these terms are used interchangeably in the literature, although some are technically different. Harms might additionally be interpreted to include undesirable changes in other outcomes measured during a study, such as a decrease in quality of life where an improvement may have been anticipated.

In clinical trials, adverse events can be collected either systematically or non-systematically. Systematic collection refers to collecting adverse events in the same manner for each participant using defined methods such as a questionnaire or a laboratory test. For systematically collected outcomes representing harm, data can be collected by review authors in the same way as efficacy outcomes (see Section 5.3.5).

Non-systematic collection refers to collection of information on adverse events using methods such as open-ended questions (e.g. ‘Have you noticed any symptoms since your last visit?’), or reported by participants spontaneously. In either case, adverse events may be selectively reported based on their severity, and whether the participant suspected that the effect may have been caused by the intervention, which could lead to bias in the available data. Unfortunately, most adverse events are collected non-systematically rather than systematically, creating a challenge for review authors. The following pieces of information are useful and worth collecting (Nicole Fusco, personal communication):

- any coding system or standard medical terminology used (e.g. COSTART, MedDRA), including version number;

- name of the adverse events (e.g. dizziness);

- reported intensity of the adverse event (e.g. mild, moderate, severe);

- whether the trial investigators categorized the adverse event as ‘serious’;

- whether the trial investigators identified the adverse event as being related to the intervention;

- time point (most commonly measured as a count over the duration of the study);

- any reported methods for how adverse events were selected for inclusion in the publication (e.g. ‘We reported all adverse events that occurred in at least 5% of participants’); and

- associated results.

Different collection methods lead to very different accounting of adverse events (Safer 2002, Bent et al 2006, Ioannidis et al 2006, Carvajal et al 2011, Allen et al 2013). Non-systematic collection methods tend to underestimate how frequently an adverse event occurs. It is particularly problematic when the adverse event of interest to the review is collected systematically in some studies but non-systematically in other studies. Different collection methods introduce an important source of heterogeneity. In addition, when non-systematic adverse events are reported based on quantitative selection criteria (e.g. only adverse events that occurred in at least 5% of participants were included in the publication), use of reported data alone may bias the results of meta-analyses. Review authors should be cautious of (or refrain from) synthesizing adverse events that are collected differently.
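The items listed earlier in this section, together with the method by which each trial collected adverse events, can be stored in one record per reported event so that differences in collection method are visible before any synthesis is attempted. This is a sketch under stated assumptions: the `AdverseEventRecord` fields, the two labels for `collection_method` and the helper `collection_methods_differ` are hypothetical, and the example values are invented.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical record of one adverse event as reported by one study.
@dataclass
class AdverseEventRecord:
    study_id: str
    event_name: str                          # e.g. dizziness
    collection_method: str                   # "systematic" or "non-systematic"
    coding_system: Optional[str] = None      # e.g. MedDRA, with version number
    intensity: Optional[str] = None          # e.g. mild, moderate, severe
    serious: Optional[bool] = None           # as categorized by trial investigators
    related_to_intervention: Optional[bool] = None
    time_point: Optional[str] = None
    selection_rule: Optional[str] = None     # e.g. "reported if in >= 5% of participants"
    result: Optional[str] = None             # associated results, as reported

def collection_methods_differ(records) -> bool:
    """True if the records mix systematic and non-systematic collection,
    which is a signal to be cautious about pooling them."""
    return len({r.collection_method for r in records}) > 1

# Invented example data.
records = [
    AdverseEventRecord("study A", "dizziness", "systematic"),
    AdverseEventRecord("study B", "dizziness", "non-systematic",
                       selection_rule="reported if in >= 5% of participants"),
]
needs_caution = collection_methods_differ(records)
```

The flag does not decide whether synthesis is appropriate; it only surfaces the heterogeneity in collection methods so that the decision is made deliberately.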

Regardless of the collection methods, precise definitions of adverse effect outcomes and their intensity should be recorded, since they may vary between studies. For example, in a review of aspirin and gastrointestinal haemorrhage, some trials simply reported gastrointestinal bleeds, while others reported specific categories of bleeding, such as haematemesis, melaena, and proctorrhagia (Derry and Loke 2000). The definition and reporting of severity of the haemorrhages (e.g. major, severe, requiring hospital admission) also varied considerably among the trials (Zanchetti and Hansson 1999). Moreover, a particular adverse effect may be described or measured in different ways among the studies. For example, the terms ‘tiredness’, ‘fatigue’ or ‘lethargy’ may all be used in reporting of adverse effects. Study authors also may use different thresholds for ‘abnormal’ results (e.g. hypokalaemia diagnosed at a serum potassium concentration of 3.0 mmol/L or 3.5 mmol/L).

No mention of adverse events in trial reports does not necessarily mean that no adverse events occurred. It is usually safest to assume that they were not reported. Quality of life measures are sometimes used as a measure of the participants’ experience during the study, but these are usually general measures that do not look specifically at particular adverse effects of the intervention. While quality of life measures are important and can be used to gauge overall participant well-being, they should not be regarded as substitutes for a detailed evaluation of safety and tolerability.

5.3.6 Results

Results data arise from the measurement or ascertainment of outcomes for individual participants in an intervention study. Results data may be available for each individual in a study (i.e. individual participant data; see Chapter 26 ), or summarized at arm level, or summarized at study level into an intervention effect by comparing two intervention arms. Results data should be collected only for the intervention groups and outcomes specified to be of interest in the protocol (see MECIR Box 5.3.b ). Results for other outcomes should not be collected unless the protocol is modified to add them. Any modification should be reported in the review. However, review authors should be alert to the possibility of important, unexpected findings, particularly serious adverse effects.

MECIR Box 5.3.b Relevant expectations for conduct of intervention reviews

Reports of studies often include several results for the same outcome. For example, different measurement scales might be used, results may be presented separately for different subgroups, and outcomes may have been measured at different follow-up time points. Variation in the results can be very large, depending on which data are selected (Gøtzsche et al 2007, Mayo-Wilson et al 2017a). Review protocols should be as specific as possible about which outcome domains, measurement tools, time points, and summary statistics (e.g. final values versus change from baseline) are to be collected (Mayo-Wilson et al 2017b). A framework should be pre-specified in the protocol to facilitate making choices between multiple eligible measures or results. For example, a hierarchy of preferred measures might be created, or plans articulated to select the result with the median effect size, or to average across all eligible results for a particular outcome domain (see also Chapter 9, Section 9.3.3 ). Any additional decisions or changes to this framework made once the data are collected should be reported in the review as changes to the protocol.
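One way to make such a pre-specified framework concrete is sketched below. This is a minimal illustration rather than a method prescribed here: the measure names in `PREFERRED_MEASURES`, the dictionary keys, and both function names are hypothetical and would be replaced by whatever the protocol specifies.

```python
from statistics import median_low

# Hypothetical preference order; a real protocol would pre-specify its own.
PREFERRED_MEASURES = ["HAM-D", "MADRS", "BDI"]


def select_by_hierarchy(results, hierarchy=PREFERRED_MEASURES):
    """Return the result measured with the highest-ranked tool, or None."""
    for measure in hierarchy:
        for result in results:
            if result["measure"] == measure:
                return result
    return None


def select_median_effect(results):
    """Return the result whose effect size is the (lower) median."""
    target = median_low([r["effect"] for r in results])
    return next(r for r in results if r["effect"] == target)
```

Whichever rule is used, the point is that it is fixed before the results are seen, so that the choice among eligible results cannot be driven by their size or direction.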

Section 5.6 describes the numbers that will be required to perform meta-analysis, if appropriate. The unit of analysis (e.g. participant, cluster, body part, treatment period) should be recorded for each result when it is not obvious (see Chapter 6, Section 6.2 ). The type of outcome data determines the nature of the numbers that will be sought for each outcome. For example, for a dichotomous (‘yes’ or ‘no’) outcome, the number of participants and the number who experienced the outcome will be sought for each group. It is important to collect the sample size relevant to each result, although this is not always obvious. A flow diagram as recommended in the CONSORT Statement (Moher et al 2001) can help to determine the flow of participants through a study. If one is not available in a published report, review authors can consider drawing one (available from www.consort-statement.org ).

The numbers required for meta-analysis are not always available. Often, other statistics can be collected and converted into the required format. For example, for a continuous outcome, it is usually most convenient to seek the number of participants, the mean and the standard deviation for each intervention group. These are often not available directly, especially the standard deviation. Alternative statistics enable calculation or estimation of the missing standard deviation (such as a standard error, a confidence interval, a test statistic (e.g. from a t-test or F-test) or a P value). These should be extracted if they provide potentially useful information (see MECIR Box 5.3.c ). Details of recalculation are provided in Section 5.6 . Further considerations for dealing with missing data are discussed in Chapter 10, Section 10.12 .
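As a simple illustration of such conversions, the sketch below recovers a standard deviation for a single group from a standard error, or from a confidence interval for that group's mean. It assumes a large sample, so that a 95% confidence interval spans 2 × 1.96 standard errors; small samples call for a value from the t distribution instead. The function names are illustrative, and Section 5.6 remains the reference for the full set of recalculations.

```python
import math


def sd_from_se(se, n):
    """SD of a single group from the standard error of its mean."""
    return se * math.sqrt(n)


def sd_from_ci(lower, upper, n, z=1.96):
    """SD of a single group from a confidence interval for its mean.

    Assumes a large sample: the interval width is 2 * z standard errors
    (z = 1.96 for a 95% interval). For small samples, pass the matching
    t-distribution value as z.
    """
    se = (upper - lower) / (2 * z)
    return sd_from_se(se, n)
```

Recording which statistic was reported, alongside the converted value, keeps the transformation traceable to the source.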

MECIR Box 5.3.c Relevant expectations for conduct of intervention reviews

5.3.7 Other information to collect

We recommend that review authors collect the key conclusions of the included study as reported by its authors. It is not necessary to report these conclusions in the review, but they should be used to verify the results of analyses undertaken by the review authors, particularly in relation to the direction of effect. Further comments by the study authors, for example any explanations they provide for unexpected findings, may be noted. References to other studies that are cited in the study report may be useful, although review authors should be aware of the possibility of citation bias (see Chapter 7, Section 7.2.3.2 ). Documentation of any correspondence with the study authors is important for review transparency.

5.4 Data collection tools

5.4.1 Rationale for data collection forms

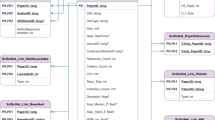

Data collection for systematic reviews should be performed using structured data collection forms (see MECIR Box 5.4.a ). These can be paper forms, electronic forms (e.g. Google Form), or commercially or custom-built data systems (e.g. Covidence, EPPI-Reviewer, Systematic Review Data Repository (SRDR)) that allow online form building, data entry by several users, data sharing, and efficient data management (Li et al 2015). All different means of data collection require data collection forms.

MECIR Box 5.4.a Relevant expectations for conduct of intervention reviews

The data collection form is a bridge between what is reported by the original investigators (e.g. in journal articles, abstracts, personal correspondence) and what is ultimately reported by the review authors. The data collection form serves several important functions (Meade and Richardson 1997). First, the form is linked directly to the review question and criteria for assessing eligibility of studies, and provides a clear summary of these that can be used to identify and structure the data to be extracted from study reports. Second, the data collection form is the historical record of the provenance of the data used in the review, as well as the multitude of decisions (and changes to decisions) that occur throughout the review process. Third, the form is the source of data for inclusion in an analysis.

Given the important functions of data collection forms, ample time and thought should be invested in their design. Because each review is different, data collection forms will vary across reviews. However, there are many similarities in the types of information that are important. Thus, forms can be adapted from one review to the next. Although we use the term ‘data collection form’ in the singular, in practice it may be a series of forms used for different purposes: for example, a separate form could be used to assess the eligibility of studies for inclusion in the review to assist in the quick identification of studies to be excluded from or included in the review.

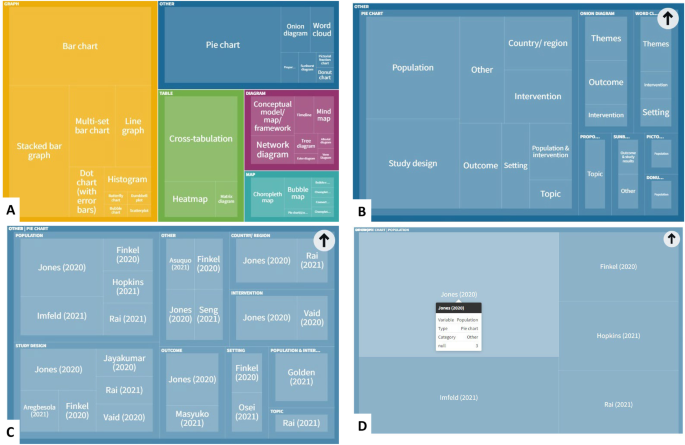

5.4.2 Considerations in selecting data collection tools

The choice of data collection tool is largely dependent on review authors’ preferences, the size of the review, and resources available to the author team. Potential advantages and considerations of selecting one data collection tool over another are outlined in Table 5.4.a (Li et al 2015). A significant advantage that data systems have is in data management ( Chapter 1, Section 1.6 ) and re-use. They make review updates more efficient, and also facilitate methodological research across reviews. Numerous ‘meta-epidemiological’ studies have been carried out using Cochrane Review data, resulting in methodological advances which would not have been possible if thousands of studies had not all been described using the same data structures in the same system.

Some data collection tools, such as CSV (Excel) and Covidence, facilitate automatic import of extracted data into RevMan (Cochrane’s authoring tool). Details are available at https://documentation.cochrane.org/revman-kb/populate-study-data-260702462.html

Table 5.4.a Considerations in selecting data collection tools

5.4.3 Design of a data collection form

Regardless of whether data are collected using a paper or electronic form, or a data system, the key to successful data collection is to construct easy-to-use forms and collect sufficient and unambiguous data that faithfully represent the source in a structured and organized manner (Li et al 2015). In most cases, a document format should be developed for the form before building an electronic form or a data system. This can be distributed to others, including programmers and data analysts, and used as a guide for creating an electronic form and any guidance or codebook to be used by data extractors. Review authors also should consider compatibility of any electronic form or data system with analytical software, as well as mechanisms for recording, assessing and correcting data entry errors.

Data described in multiple reports (or even within a single report) of a study may not be consistent. Review authors will need to describe how they work with multiple reports in the protocol, for example, by pre-specifying which report will be used when sources contain conflicting data that cannot be resolved by contacting the investigators. Likewise, when there is only one report identified for a study, review authors should specify the section within the report (e.g. abstract, methods, results, tables, and figures) for use in case of inconsistent information.

If review authors wish to automatically import their extracted data into RevMan, it is advised that their data collection forms match the data extraction templates available via the RevMan Knowledge Base. Details available here https://documentation.cochrane.org/revman-kb/data-extraction-templates-260702375.html.

A good data collection form should minimize the need to go back to the source documents. When designing a data collection form, review authors should involve all members of the team, that is, content area experts, authors with experience in systematic review methods and data collection form design, statisticians, and persons who will perform data extraction. Here are suggested steps and some tips for designing a data collection form, based on the informal collation of experiences from numerous review authors (Li et al 2015).

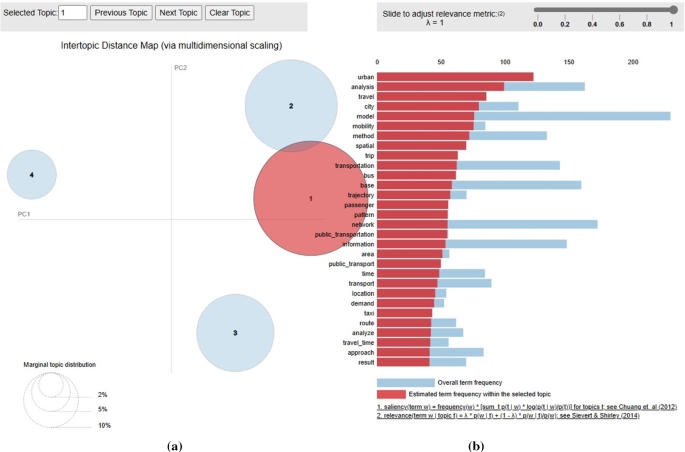

Step 1. Develop outlines of tables and figures expected to appear in the systematic review, considering the comparisons to be made between different interventions within the review, and the various outcomes to be measured. This step will help review authors decide the right amount of data to collect (not too much or too little). Collecting too much information can lead to forms that are longer than original study reports, and can be very wasteful of time. Collection of too little information, or omission of key data, can lead to the need to return to study reports later in the review process.

Step 2. Assemble and group data elements to facilitate form development. Review authors should consult Table 5.3.a , in which the data elements are grouped to facilitate form development and data collection. Note that it may be more efficient to group data elements in the order in which they are usually found in study reports (e.g. starting with reference information, followed by eligibility criteria, intervention description, statistical methods, baseline characteristics and results).

Step 3. Identify the optimal way of framing the data items. Much has been written about how to frame data items for developing robust data collection forms in primary research studies. We summarize a few key points and highlight issues that are pertinent to systematic reviews.

- Ask closed-ended questions (i.e. questions that define a list of permissible responses) as much as possible. Closed-ended questions do not require post hoc coding and provide better control over data quality than open-ended questions. When setting up a closed-ended question, one must anticipate and structure possible responses and include an ‘other, specify’ category because the anticipated list may not be exhaustive. Avoid asking data extractors to summarize data into uncoded text, no matter how short it is.

- Avoid asking a question in a way that the response may be left blank. Include ‘not applicable’, ‘not reported’ and ‘cannot tell’ options as needed. The ‘cannot tell’ option tags uncertain items that may prompt review authors to contact study authors for clarification, especially on data items critical to reaching conclusions.

- Remember that the form will focus on what is reported in the article rather than what has been done in the study. The study report may not fully reflect how the study was actually conducted. For example, a question ‘Did the article report that the participants were masked to the intervention?’ is more appropriate than ‘Were participants masked to the intervention?’

- Where a judgement is required, record the raw data (i.e. quote directly from the source document) used to make the judgement. It is also important to record the source of information collected, including where it was found in a report or whether information was obtained from unpublished sources or personal communications. As much as possible, questions should be asked in a way that minimizes subjective interpretation and judgement to facilitate data comparison and adjudication.

- Incorporate flexibility to allow for variation in how data are reported. It is strongly recommended that outcome data be collected in the format in which they were reported and transformed in a subsequent step if required. Review authors also should consider the software they will use for analysis and for publishing the review (e.g. RevMan).
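The points above can be sketched as a small data structure for a single closed-ended item. This is only an illustration under stated assumptions: the class name `ClosedItem`, its fields, and the `query_study_authors` flag are hypothetical and not part of any Cochrane tool.

```python
from dataclasses import dataclass

OTHER = "other, specify"
# Options that stop a response from being left blank.
STANDARD_OPTIONS = ("not applicable", "not reported", "cannot tell")


@dataclass(frozen=True)
class ClosedItem:
    """A closed-ended question with a fixed list of permissible responses."""
    question: str
    options: tuple

    def permitted(self):
        return self.options + (OTHER,) + STANDARD_OPTIONS

    def record(self, response, other_text="", quote="", location=""):
        """Validate a response and keep the supporting quote and its location."""
        if response not in self.permitted():
            raise ValueError(f"{response!r} is not a permitted response")
        if response == OTHER and not other_text.strip():
            raise ValueError("'other, specify' needs accompanying text")
        return {
            "question": self.question,
            "response": response,
            "other_text": other_text,
            "quote": quote,          # raw text used to make the judgement
            "location": location,    # where in the report it was found
            "query_study_authors": response == "cannot tell",
        }
```

For example, `ClosedItem("Did the article report that the participants were masked to the intervention?", ("yes", "no"))` accepts only coded answers, while the quote and location fields preserve the source text behind each judgement.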

Step 4. Develop and pilot-test data collection forms, ensuring that they provide data in the right format and structure for subsequent analysis. In addition to data items described in Step 2, data collection forms should record the title of the review as well as the person who is completing the form and the date of completion. Forms occasionally need revision; forms should therefore include the version number and version date to reduce the chances of using an outdated form by mistake. Because a study may be associated with multiple reports, it is important to record the study ID as well as the report ID. Definitions and instructions helpful for answering a question should appear next to the question to improve quality and consistency across data extractors (Stock 1994). Provide space for notes, regardless of whether paper or electronic forms are used.
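The administrative fields listed in Step 4 might be captured as follows. This is a sketch only: the field names and the `CURRENT_FORM_VERSION` constant are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date

CURRENT_FORM_VERSION = "1.2"  # hypothetical version label


@dataclass(frozen=True)
class FormHeader:
    """Administrative fields recorded on every completed form."""
    review_title: str
    study_id: str        # one study may have several reports
    report_id: str
    extractor: str
    completed_on: date
    form_version: str
    form_version_date: date
    notes: str = ""


def is_outdated(header, current_version=CURRENT_FORM_VERSION):
    """Flag forms completed on a version other than the current one."""
    return header.form_version != current_version
```

Keeping the study ID and report ID as separate fields allows several completed forms to be linked to the same study later.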

All data collection forms and data systems should be thoroughly pilot-tested before launch (see MECIR Box 5.4.a ). Testing should involve several people extracting data from at least a few articles. The initial testing focuses on the clarity and completeness of questions. Users of the form may provide feedback that certain coding instructions are confusing or incomplete (e.g. a list of options may not cover all situations). The testing may identify data that are missing from the form, or likely to be superfluous. After initial testing, accuracy of the extracted data should be checked against the source document or verified data to identify problematic areas. It is wise to draft entries for the table of ‘Characteristics of included studies’ and complete a risk of bias assessment ( Chapter 8 ) using these pilot reports to ensure all necessary information is collected. A consensus between review authors may be required before the form is modified to avoid any misunderstandings or later disagreements. It may be necessary to repeat the pilot testing on a new set of reports if major changes are needed after the first pilot test.

Problems with the data collection form may surface after pilot testing has been completed, and the form may need to be revised after data extraction has started. When changes are made to the form or coding instructions, it may be necessary to return to reports that have already undergone data extraction. In some situations, it may be necessary to clarify only coding instructions without modifying the actual data collection form.

5.5 Extracting data from reports

5.5.1 Introduction

In most systematic reviews, the primary source of information about each study is published reports of studies, usually in the form of journal articles. Despite recent developments in machine learning models to automate data extraction in systematic reviews (see Section 5.5.9 ), data extraction is still largely a manual process. Electronic searches for text can provide a useful aid to locating information within a report. Examples include using search facilities in PDF viewers, internet browsers and word processing software. However, text searching should not be considered a replacement for reading the report, since information may be presented using variable terminology and presented in multiple formats.

5.5.2 Who should extract data?

Data extractors should have at least a basic understanding of the topic, and have knowledge of study design, data analysis and statistics. They should pay attention to detail while following instructions on the forms. Because errors that occur at the data extraction stage are rarely detected by peer reviewers, editors, or users of systematic reviews, it is recommended that more than one person extract data from every report to minimize errors and reduce introduction of potential biases by review authors (see MECIR Box 5.5.a ). As a minimum, information that involves subjective interpretation and information that is critical to the interpretation of results (e.g. outcome data) should be extracted independently by at least two people (see MECIR Box 5.5.a ). In common with implementation of the selection process ( Chapter 4, Section 4.6 ), it is preferable that data extractors are from complementary disciplines, for example a methodologist and a topic area specialist. It is important that everyone involved in data extraction has practice using the form and, if the form was designed by someone else, receives appropriate training.

Evidence in support of duplicate data extraction comes from several indirect sources. One study observed that independent data extraction by two authors resulted in fewer errors than data extraction by a single author followed by verification by a second (Buscemi et al 2006). A high prevalence of data extraction errors (errors in 20 out of 34 reviews) has been observed (Jones et al 2005). A further study of data extraction to compute standardized mean differences found that a minimum of seven out of 27 reviews had substantial errors (Gøtzsche et al 2007).

MECIR Box 5.5.a Relevant expectations for conduct of intervention reviews

5.5.3 Training data extractors

Training of data extractors is intended to familiarize them with the review topic and methods, the data collection form or data system, and issues that may arise during data extraction. Results of the pilot testing of the form should prompt discussion among review authors and extractors of ambiguous questions or responses to establish consistency. Training should take place at the onset of the data extraction process and periodically over the course of the project (Li et al 2015). For example, when data related to a single item on the form are present in multiple locations within a report (e.g. abstract, main body of text, tables, and figures) or in several sources (e.g. publications, ClinicalTrials.gov, or CSRs), the development and documentation of instructions to follow an agreed algorithm are critical and should be reinforced during the training sessions.

Some have proposed that some information in a report, such as its authors, be blinded to the review author prior to data extraction and assessment of risk of bias (Jadad et al 1996). However, blinding of review authors to aspects of study reports generally is not recommended for Cochrane Reviews as there is little evidence that it alters the decisions made (Berlin 1997).

5.5.4 Extracting data from multiple reports of the same study

Studies frequently are reported in more than one publication or in more than one source (Tramèr et al 1997, von Elm et al 2004). A single source rarely provides complete information about a study; on the other hand, multiple sources may contain conflicting information about the same study (Mayo-Wilson et al 2017a, Mayo-Wilson et al 2017b, Mayo-Wilson et al 2018). Because the unit of interest in a systematic review is the study and not the report, information from multiple reports often needs to be collated and reconciled. It is not appropriate to discard any report of an included study without careful examination, since it may contain valuable information not included in the primary report. Review authors will need to decide between two strategies:

- Extract data from each report separately, then combine information across multiple data collection forms.

- Extract data from all reports directly into a single data collection form.

The choice of which strategy to use will depend on the nature of the reports and may vary across studies and across reports. For example, when a full journal article and multiple conference abstracts are available, it is likely that the majority of information will be obtained from the journal article; completing a new data collection form for each conference abstract may be a waste of time. Conversely, when there are two or more detailed journal articles, perhaps relating to different periods of follow-up, then it is likely to be easier to perform data extraction separately for these articles and collate information from the data collection forms afterwards. When data from all reports are extracted into a single data collection form, review authors should identify the ‘main’ data source for each study when sources include conflicting data and these differences cannot be resolved by contacting authors (Mayo-Wilson et al 2018). Flow diagrams such as those modified from the PRISMA statement can be particularly helpful when collating and documenting information from multiple reports (Mayo-Wilson et al 2018).
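When data are extracted from each report separately, the collation step could look something like the sketch below. It assumes each completed form is a dictionary of item names to hashable values, with `None` for items a report does not address; the function name and return structure are hypothetical.

```python
def collate_reports(forms, main_report_id):
    """Combine per-report forms into one study-level record.

    forms: dict mapping report ID -> dict of item -> value (None if absent).
    Returns (study, conflicts). Where reports disagree, the value from the
    pre-specified main report is used (None if that report lacks the item)
    and every reported value is kept in `conflicts` for follow-up.
    """
    by_item = {}
    for report_id, form in forms.items():
        for item, value in form.items():
            if value is not None:
                by_item.setdefault(item, {})[report_id] = value

    study, conflicts = {}, {}
    for item, values in by_item.items():
        distinct = set(values.values())
        if len(distinct) == 1:
            study[item] = distinct.pop()
        else:
            conflicts[item] = values
            study[item] = values.get(main_report_id)
    return study, conflicts
```

The `conflicts` dictionary lists the items that might be raised with the study investigators before falling back on the main source.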

5.5.5 Reliability and reaching consensus

When more than one author extracts data from the same reports, there is potential for disagreement. After data have been extracted independently by two or more extractors, responses must be compared to assure agreement or to identify discrepancies. An explicit procedure or decision rule should be specified in the protocol for identifying and resolving disagreements. Most often, the source of the disagreement is an error by one of the extractors and is easily resolved. Thus, discussion among the authors is a sensible first step. More rarely, a disagreement may require arbitration by another person. Any disagreement that cannot be resolved should be addressed by contacting the study authors; if this is unsuccessful, the disagreement should be reported in the review.

The presence and resolution of disagreements should be carefully recorded. Maintaining a copy of the data ‘as extracted’ (in addition to the consensus data) allows assessment of reliability of coding. Examples of ways in which this can be achieved include the following:

- Use one author’s (paper) data collection form and record changes after consensus in a different ink colour.

- Enter consensus data onto an electronic form.

- Record original data extracted and consensus data in separate forms (some online tools do this automatically).
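For electronic forms, comparing responses and keeping the data ‘as extracted’ alongside the consensus data could be sketched as follows. The function names and record layout are illustrative assumptions, not a description of how any particular online tool works.

```python
def find_discrepancies(form_a, form_b):
    """Return items on which two extractors' responses differ.

    An item missing from one form is treated as None for that extractor.
    """
    items = sorted(set(form_a) | set(form_b))
    return {
        item: (form_a.get(item), form_b.get(item))
        for item in items
        if form_a.get(item) != form_b.get(item)
    }


def build_record(form_a, form_b, consensus):
    """Store both original extractions next to the agreed consensus data."""
    return {
        "as_extracted": {"extractor_a": dict(form_a), "extractor_b": dict(form_b)},
        "discrepancies": find_discrepancies(form_a, form_b),
        "consensus": dict(consensus),
    }
```

Because the original extractions are copied rather than overwritten, reliability of coding can still be assessed after consensus has been reached.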

Agreement of coded items before reaching consensus can be quantified, for example using kappa statistics (Orwin 1994), although this is not routinely done in Cochrane Reviews. If agreement is assessed, this should be done only for the most important data (e.g. key risk of bias assessments, or availability of key outcomes).
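For readers who do wish to quantify agreement, a minimal sketch of Cohen’s kappa for two extractors coding the same items is given below. The function name is illustrative, and the calculation is the standard unweighted kappa: observed agreement corrected for the agreement expected by chance.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two raters coding the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    count_a, count_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement: product of the raters' marginal proportions per category.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n ** 2
    if expected == 1:
        return float("nan")  # both raters used one identical category; kappa is undefined
    return (observed - expected) / (1 - expected)
```

With four items coded low/low/high/high by one extractor and low/high/high/high by the other, observed agreement is 0.75, chance agreement is 0.5, and kappa is 0.5.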

Throughout the review process informal consideration should be given to the reliability of data extraction. For example, if after reaching consensus on the first few studies, the authors note a frequent disagreement for specific data, then coding instructions may need modification. Furthermore, an author’s coding strategy may change over time, as the coding rules are forgotten, indicating a need for retraining and, possibly, some recoding.

5.5.6 Extracting data from clinical study reports

Clinical study reports (CSRs) obtained for a systematic review are likely to be in PDF format. Although CSRs can be thousands of pages in length and very time-consuming to review, they typically follow the content and format required by the International Conference on Harmonisation (ICH 1995). Information in CSRs is usually presented in a structured and logical way. For example, numerical data pertaining to important demographic, efficacy, and safety variables are placed within the main text in tables and figures. Because of the clarity and completeness of information provided in CSRs, data extraction from CSRs may be clearer and conducted more confidently than from journal articles or other short reports.

To extract data from CSRs efficiently, review authors should familiarize themselves with the structure of the CSRs. In practice, review authors may want to browse or create ‘bookmarks’ within a PDF document that record section headers and subheaders and search key words related to the data extraction (e.g. randomization). In addition, it may be useful to utilize optical character recognition software to convert tables of data in the PDF to an analysable format when additional analyses are required, saving time and minimizing transcription errors.

CSRs may contain many outcomes and present many results for a single outcome (due to different analyses) (Mayo-Wilson et al 2017b). We recommend review authors extract results only for outcomes of interest to the review (Section 5.3.6 ). With regard to different methods of analysis, review authors should have a plan and pre-specify preferred metrics in their protocol for extracting results pertaining to different populations (e.g. ‘all randomized’, ‘all participants taking at least one dose of medication’), methods for handling missing data (e.g. ‘complete case analysis’, ‘multiple imputation’), and adjustment (e.g. unadjusted, adjusted for baseline covariates). It may be important to record the range of analysis options available, even if not all are extracted in detail. In some cases it may be preferable to use metrics that are comparable across multiple included studies, which may not be clear until data collection for all studies is complete.

CSRs are particularly useful for identifying outcomes assessed but not presented to the public. For efficacy outcomes and systematically collected adverse events, review authors can compare what is described in the CSRs with what is reported in published reports to assess the risk of bias due to missing outcome data ( Chapter 8, Section 8.5 ) and in selection of the reported result ( Chapter 8, Section 8.7 ). Note that non-systematically collected adverse events are not amenable to such comparisons because these adverse events may not be known ahead of time and thus not pre-specified in the protocol.

5.5.7 Extracting data from regulatory reviews

Data most relevant to systematic reviews can be found in the medical and statistical review sections of a regulatory review. Both of these are substantially longer than journal articles (Turner 2013). A list of all trials on a drug usually can be found in the medical review. Because trials are referenced by a combination of numbers and letters, it may be difficult for the review authors to link the trial with other reports of the same trial (Section 5.2.1 ).

Many of the documents downloaded from the US Food and Drug Administration’s website for older drugs are scanned copies and are not searchable because of redaction of confidential information (Turner 2013). Optical character recognition software can convert most of the text. Reviews for newer drugs have been redacted electronically; documents remain searchable as a result.

Compared to CSRs, regulatory reviews contain less information about trial design, execution, and results. They provide limited information for assessing the risk of bias. In terms of extracting outcomes and results, review authors should follow the guidance provided for CSRs (Section 5.5.6 ).

5.5.8 Extracting data from figures with software

Sometimes numerical data needed for systematic reviews are only presented in figures. Review authors may request the data from the study investigators, or alternatively, extract the data from the figures either manually (e.g. with a ruler) or by using software. Numerous tools are available, many of which are free. Those available at the time of writing include Plot Digitizer, WebPlotDigitizer, Engauge, Dexter, ycasd, and GetData Graph Digitizer. The software works by taking an image of a figure and then digitizing the data points off the figure using the axes and scales set by the users. The numbers exported can be used for systematic reviews, although additional calculations may be needed to obtain the summary statistics, such as calculation of means and standard deviations from individual-level data points (or conversion of time-to-event data presented on Kaplan-Meier plots to hazard ratios; see Chapter 6, Section 6.8.2 ).

It has been demonstrated that software is more convenient and accurate than visual estimation or use of a ruler (Gross et al 2014, Jelicic Kadic et al 2016). Review authors should consider using software for extracting numerical data from figures when the data are not available elsewhere.
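As an example of the additional calculations that may follow digitization, the sketch below summarizes individual-level data points for one intervention group. It assumes the digitized values have already been exported as a list of numbers; the function name is illustrative and is not tied to any of the tools listed above.

```python
from statistics import mean, stdev


def summarize_points(values):
    """Number of points, mean and sample SD for one group's digitized values."""
    if len(values) < 2:
        raise ValueError("at least two data points are needed for an SD")
    return {"n": len(values), "mean": mean(values), "sd": stdev(values)}
```

It is worth checking that the number of digitized points matches the sample size reported for the group, since overlapping points in a figure are easily missed.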

5.5.9 Automating data extraction in systematic reviews

Because data extraction is time-consuming and error-prone, automating or semi-automating this step may make the extraction process more efficient and accurate. The state of science relevant to automating data extraction is summarized here (Jonnalagadda et al 2015).

- At least 26 studies have tested various natural language processing and machine learning approaches for facilitating data extraction for systematic reviews.

- Each tool focuses on only a limited number of data elements (ranges from one to seven). Most of the existing tools focus on the PICO information (e.g. number of participants, their age, sex, country, recruiting centres, intervention groups, outcomes, and time points). A few are able to extract study design and results (e.g. objectives, study duration, participant flow), and two extract risk of bias information (Marshall et al 2016, Millard et al 2016). To date, well over half of the data elements needed for systematic reviews have not been explored for automated extraction.

- Most tools highlight the sentence(s) that may contain the data elements as opposed to directly recording these data elements into a data collection form or a data system.

- There is no gold standard or common dataset to evaluate the performance of these tools, limiting our ability to interpret the significance of the reported accuracy measures.

At the time of writing, we cannot recommend a specific tool for automating data extraction for routine systematic review production. There is a need for review authors to work with experts in informatics to refine these tools and evaluate them rigorously. Such investigations should address how the tool will fit into existing workflows. For example, the automated or semi-automated data extraction approaches may first act as checks for manual data extraction before they can replace it.

5.5.10 Suspicions of scientific misconduct

Systematic review authors can uncover suspected misconduct in the published literature. Misconduct includes fabrication or falsification of data or results, plagiarism, and research that does not adhere to ethical norms. Review authors need to be aware of scientific misconduct because the inclusion of fraudulent material could undermine the reliability of a review’s findings. Plagiarism of results data in the form of duplicated publication (either by the same or by different authors) may, if undetected, lead to study participants being double counted in a synthesis.

It is preferable to identify potential problems before, rather than after, publication of the systematic review, so that readers are not misled. However, empirical evidence indicates that the extent to which systematic review authors explore misconduct varies widely (Elia et al 2016). Text-matching software and systems such as CrossCheck may be helpful for detecting plagiarism, but they can detect only matching text, so data tables or figures need to be inspected by hand or using other systems (e.g. to detect image manipulation). Lists of data such as in a meta-analysis can be a useful means of detecting duplicated studies. Furthermore, examination of baseline data can lead to suspicions of misconduct for an individual randomized trial (Carlisle et al 2015). For example, Al-Marzouki and colleagues concluded that a trial report was fabricated or falsified on the basis of highly unlikely baseline differences between two randomized groups (Al-Marzouki et al 2005).

Cochrane Review authors are advised to consult with Cochrane editors if cases of suspected misconduct are identified. Searching for comments, letters or retractions may uncover additional information. Sensitivity analyses can be used to determine whether the studies arousing suspicion are influential in the conclusions of the review. Guidance for editors on addressing suspected misconduct will be available from Cochrane’s Editorial Publishing and Policy Resource (see community.cochrane.org). Further information is available from the Committee on Publication Ethics (COPE; publicationethics.org), including a series of flowcharts on how to proceed if various types of misconduct are suspected. Cases should be followed up, typically including an approach to the editors of the journals in which suspect reports were published. It may be useful to write first to the primary investigators to request clarification of apparent inconsistencies or unusual observations.

Because investigations may take time, and institutions may not always be responsive (Wager 2011), articles suspected of being fraudulent should be classified as ‘awaiting assessment’. If a misconduct investigation indicates that the publication is unreliable, or if a publication is retracted, it should not be included in the systematic review, and the reason should be noted in the ‘excluded studies’ section.
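
The sensitivity analysis mentioned above can be illustrated with a minimal sketch. It assumes a fixed-effect inverse-variance pooling of effect estimates, which is only one of several possible synthesis models and is chosen here for brevity. The function names, the record fields (`id`, `effect`, `se`) and the numbers are hypothetical and do not come from any real review.

```python
import math


def pooled_fixed_effect(studies):
    """Inverse-variance fixed-effect pooled estimate and its standard error.

    Each study is a dict with hypothetical keys 'effect' and 'se'.
    """
    if not studies:
        raise ValueError("at least one study is required")
    weights = [1.0 / (s["se"] ** 2) for s in studies]
    total_weight = sum(weights)
    estimate = sum(w * s["effect"] for w, s in zip(weights, studies)) / total_weight
    return estimate, math.sqrt(1.0 / total_weight)


def sensitivity_analysis(studies, suspect_ids):
    """Pool all studies, then pool again without the studies under suspicion."""
    retained = [s for s in studies if s["id"] not in suspect_ids]
    return {
        "all": pooled_fixed_effect(studies),
        "excluding_suspect": pooled_fixed_effect(retained),
    }


# Illustrative data only (not taken from any real trial).
example_studies = [
    {"id": "A", "effect": 0.0, "se": 1.0},
    {"id": "B", "effect": 0.0, "se": 1.0},
    {"id": "C", "effect": 3.0, "se": 1.0},  # the study arousing suspicion
]
example_result = sensitivity_analysis(example_studies, suspect_ids={"C"})
```

If the two pooled estimates differ materially, the suspect study is influential and the review's conclusions depend on how the case is resolved.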

5.5.11 Key points in planning and reporting data extraction

In summary, the methods section of both the protocol and the review should detail:

- the data categories that are to be extracted;

- how extracted data from each report will be verified (e.g. extraction by two review authors, independently);

- whether data extraction is undertaken by content area experts, methodologists, or both;

- pilot testing, training and existence of coding instructions for the data collection form;

- how data are extracted from multiple reports from the same study; and

- how disagreements are handled when more than one author extracts data from each report.
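
As one illustration of the verification and disagreement items in the list above, the following sketch compares two independently completed extraction records for the same report and lists the fields that need to be resolved. The field names and the dictionary layout are assumptions made for the example; real data collection forms will differ.

```python
def find_disagreements(extraction_a, extraction_b):
    """Return (field, value_a, value_b) for every field on which two extractors differ.

    A field missing from one record is reported with None for that extractor.
    """
    fields = sorted(set(extraction_a) | set(extraction_b))
    return [
        (field, extraction_a.get(field), extraction_b.get(field))
        for field in fields
        if extraction_a.get(field) != extraction_b.get(field)
    ]


# Hypothetical records from two review authors working independently.
author_1 = {"n_randomized": 120, "mean_age": 54.2}
author_2 = {"n_randomized": 102, "mean_age": 54.2, "country": "Kenya"}

disagreements = find_disagreements(author_1, author_2)
```

The resulting list can be passed to whatever resolution process the protocol specifies, such as discussion or referral to a third review author.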

5.6 Extracting study results and converting to the desired format

In most cases, it is desirable to collect summary data separately for each intervention group of interest and to enter these into software in which effect estimates can be calculated, such as RevMan. Sometimes the required data may be obtained only indirectly, and the relevant results may not be obvious. Chapter 6 provides many useful tips and techniques to deal with common situations. When summary data cannot be obtained from each intervention group, or where it is important to use results of adjusted analyses (for example to account for correlations in crossover or cluster-randomized trials), effect estimates may be available directly.

5.7 Managing and sharing data

When data have been collected for each individual study, it is helpful to organize them into a comprehensive electronic format, such as a database or spreadsheet, before entering data into a meta-analysis or other synthesis. When data are collated electronically, all or a subset of them can easily be exported for cleaning, consistency checks and analysis.
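
The consistency checks mentioned above might look something like the following sketch, which flags implausible values in collated records before analysis. The record structure (`study_id`, `arms`, `n`, `events`, `sd`) is hypothetical, and the three checks shown are examples rather than a complete set.

```python
def check_record(record):
    """Return a list of human-readable problems found in one study record."""
    problems = []
    for arm in record["arms"]:
        label = f'{record["study_id"]}/{arm["name"]}'
        n = arm.get("n")
        events = arm.get("events")
        sd = arm.get("sd")
        if n is None or n <= 0:
            problems.append(f"{label}: missing or non-positive sample size")
        elif events is not None and not 0 <= events <= n:
            problems.append(f"{label}: events ({events}) outside 0..n ({n})")
        if sd is not None and sd < 0:
            problems.append(f"{label}: negative SD ({sd})")
    return problems


# Illustrative record containing two deliberate errors.
example_record = {
    "study_id": "S1",
    "arms": [
        {"name": "control", "n": 50, "events": 60},
        {"name": "treatment", "n": 50, "events": 10, "sd": -1.0},
    ],
}
example_problems = check_record(example_record)
```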

Tabulation of collected information about studies can facilitate classification of studies into appropriate comparisons and subgroups. It also allows identification of comparable outcome measures and statistics across studies. It will often be necessary to perform calculations to obtain the required statistics for presentation or synthesis. It is important through this process to retain clear information on the provenance of the data, with a clear distinction between data from a source document and data obtained through calculations. Statistical conversions, for example from standard errors to standard deviations, should ideally be undertaken with a computer rather than a hand calculator, so that a permanent record is kept of the original and calculated numbers as well as the actual calculations used.
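
A minimal sketch of such a recorded conversion follows. It assumes the standard error is that of a single group mean, so that SD = SE × √n. The `DerivedValue` container and its field names are hypothetical and simply illustrate keeping the inputs, the formula and the result together.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DerivedValue:
    """A calculated number stored with its provenance."""
    value: float
    source: str    # "calculated", to distinguish it from values read from a report
    formula: str   # the calculation used, in words
    inputs: dict   # the original numbers taken from the source document


def sd_from_se(se, n):
    """Convert the standard error of a single group mean to a standard deviation."""
    if n <= 0 or se < 0:
        raise ValueError("n must be positive and se must be non-negative")
    return DerivedValue(
        value=se * math.sqrt(n),
        source="calculated",
        formula="SD = SE * sqrt(n)",
        inputs={"se": se, "n": n},
    )


example_sd = sd_from_se(se=0.5, n=100)
```

Storing the inputs alongside the result means the calculation can be checked or repeated later without returning to the source report.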

Ideally, data only need to be extracted once and should be stored in a secure and stable location for future updates of the review, regardless of whether the original review authors or a different group of authors update the review (Ip et al 2012). Standardizing and sharing data collection tools as well as data management systems among review authors working in similar topic areas can streamline systematic review production. Review authors have the opportunity to work with trialists, journal editors, funders, regulators, and other stakeholders to make study data (e.g. CSRs, IPD, and any other form of study data) publicly available, increasing the transparency of research. When legal and ethical to do so, we encourage review authors to share the data used in their systematic reviews. Sharing reduces waste, because data will not have to be extracted again for future use, and allows verification and reanalysis (Mayo-Wilson et al 2018).

5.8 Chapter information

Editors: Tianjing Li, Julian PT Higgins, Jonathan J Deeks

Acknowledgements: This chapter builds on earlier versions of the Handbook. For details of previous authors and editors of the Handbook, see Preface. Andrew Herxheimer, Nicki Jackson, Yoon Loke, Deirdre Price and Helen Thomas contributed text. Stephanie Taylor and Sonja Hood contributed suggestions for designing data collection forms. We are grateful to Judith Anzures, Mike Clarke, Miranda Cumpston and Peter Gøtzsche for helpful comments.

Funding: JPTH is a member of the National Institute for Health Research (NIHR) Biomedical Research Centre at University Hospitals Bristol NHS Foundation Trust and the University of Bristol. JJD received support from the NIHR Birmingham Biomedical Research Centre at the University Hospitals Birmingham NHS Foundation Trust and the University of Birmingham. JPTH received funding from National Institute for Health Research Senior Investigator award NF-SI-0617-10145. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health.

5.9 References

Al-Marzouki S, Evans S, Marshall T, Roberts I. Are these data real? Statistical methods for the detection of data fabrication in clinical trials. BMJ 2005; 331 : 267-270.

Allen EN, Mushi AK, Massawe IS, Vestergaard LS, Lemnge M, Staedke SG, Mehta U, Barnes KI, Chandler CI. How experiences become data: the process of eliciting adverse event, medical history and concomitant medication reports in antimalarial and antiretroviral interaction trials. BMC Medical Research Methodology 2013; 13 : 140.

Baudard M, Yavchitz A, Ravaud P, Perrodeau E, Boutron I. Impact of searching clinical trial registries in systematic reviews of pharmaceutical treatments: methodological systematic review and reanalysis of meta-analyses. BMJ 2017; 356 : j448.

Bent S, Padula A, Avins AL. Better ways to question patients about adverse medical events: a randomized, controlled trial. Annals of Internal Medicine 2006; 144 : 257-261.

Berlin JA. Does blinding of readers affect the results of meta-analyses? University of Pennsylvania Meta-analysis Blinding Study Group. Lancet 1997; 350 : 185-186.

Buscemi N, Hartling L, Vandermeer B, Tjosvold L, Klassen TP. Single data extraction generated more errors than double data extraction in systematic reviews. Journal of Clinical Epidemiology 2006; 59 : 697-703.

Carlisle JB, Dexter F, Pandit JJ, Shafer SL, Yentis SM. Calculating the probability of random sampling for continuous variables in submitted or published randomised controlled trials. Anaesthesia 2015; 70 : 848-858.

Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implementation Science 2007; 2 : 40.

Carvajal A, Ortega PG, Sainz M, Velasco V, Salado I, Arias LHM, Eiros JM, Rubio AP, Castrodeza J. Adverse events associated with pandemic influenza vaccines: Comparison of the results of a follow-up study with those coming from spontaneous reporting. Vaccine 2011; 29 : 519-522.

Chamberlain C, O'Mara-Eves A, Porter J, Coleman T, Perlen SM, Thomas J, McKenzie JE. Psychosocial interventions for supporting women to stop smoking in pregnancy. Cochrane Database of Systematic Reviews 2017; 2 : CD001055.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science 2009; 4 : 50.

Davis AL, Miller JD. The European Medicines Agency and publication of clinical study reports: a challenge for the US FDA. JAMA 2017; 317 : 905-906.

Denniston AK, Holland GN, Kidess A, Nussenblatt RB, Okada AA, Rosenbaum JT, Dick AD. Heterogeneity of primary outcome measures used in clinical trials of treatments for intermediate, posterior, and panuveitis. Orphanet Journal of Rare Diseases 2015; 10 : 97.

Derry S, Loke YK. Risk of gastrointestinal haemorrhage with long term use of aspirin: meta-analysis. BMJ 2000; 321 : 1183-1187.

Doshi P, Dickersin K, Healy D, Vedula SS, Jefferson T. Restoring invisible and abandoned trials: a call for people to publish the findings. BMJ 2013; 346 : f2865.

Dusenbury L, Brannigan R, Falco M, Hansen WB. A review of research on fidelity of implementation: implications for drug abuse prevention in school settings. Health Education Research 2003; 18 : 237-256.

Dwan K, Altman DG, Clarke M, Gamble C, Higgins JPT, Sterne JAC, Williamson PR, Kirkham JJ. Evidence for the selective reporting of analyses and discrepancies in clinical trials: a systematic review of cohort studies of clinical trials. PLoS Medicine 2014; 11 : e1001666.

Elia N, von Elm E, Chatagner A, Popping DM, Tramèr MR. How do authors of systematic reviews deal with research malpractice and misconduct in original studies? A cross-sectional analysis of systematic reviews and survey of their authors. BMJ Open 2016; 6 : e010442.

Gøtzsche PC. Multiple publication of reports of drug trials. European Journal of Clinical Pharmacology 1989; 36 : 429-432.

Gøtzsche PC, Hróbjartsson A, Maric K, Tendal B. Data extraction errors in meta-analyses that use standardized mean differences. JAMA 2007; 298 : 430-437.

Gross A, Schirm S, Scholz M. Ycasd - a tool for capturing and scaling data from graphical representations. BMC Bioinformatics 2014; 15 : 219.

Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M, Lamb SE, Dixon-Woods M, McCulloch P, Wyatt JC, Chan AW, Michie S. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014; 348 : g1687.

ICH. ICH Harmonised tripartite guideline: Structure and content of clinical study reports E3. ICH; 1995. www.ich.org/fileadmin/Public_Web_Site/ICH_Products/Guidelines/Efficacy/E3/E3_Guideline.pdf .

Ioannidis JPA, Mulrow CD, Goodman SN. Adverse events: The more you search, the more you find. Annals of Internal Medicine 2006; 144 : 298-300.

Ip S, Hadar N, Keefe S, Parkin C, Iovin R, Balk EM, Lau J. A web-based archive of systematic review data. Systematic Reviews 2012; 1 : 15.

Ismail R, Azuara-Blanco A, Ramsay CR. Variation of clinical outcomes used in glaucoma randomised controlled trials: a systematic review. British Journal of Ophthalmology 2014; 98 : 464-468.

Jadad AR, Moore RA, Carroll D, Jenkinson C, Reynolds DJM, Gavaghan DJ, McQuay H. Assessing the quality of reports of randomized clinical trials: Is blinding necessary? Controlled Clinical Trials 1996; 17 : 1-12.

Jelicic Kadic A, Vucic K, Dosenovic S, Sapunar D, Puljak L. Extracting data from figures with software was faster, with higher interrater reliability than manual extraction. Journal of Clinical Epidemiology 2016; 74 : 119-123.

Jones AP, Remmington T, Williamson PR, Ashby D, Smyth RL. High prevalence but low impact of data extraction and reporting errors were found in Cochrane systematic reviews. Journal of Clinical Epidemiology 2005; 58 : 741-742.

Jones CW, Keil LG, Holland WC, Caughey MC, Platts-Mills TF. Comparison of registered and published outcomes in randomized controlled trials: a systematic review. BMC Medicine 2015; 13 : 282.

Jonnalagadda SR, Goyal P, Huffman MD. Automating data extraction in systematic reviews: a systematic review. Systematic Reviews 2015; 4 : 78.