

- Funder(s): Memorial Sloan Kettering Cancer Center

- Award Id(s): P30 CA008748

- Principal Award Recipient(s): K.S. Panageas, M.A. Postow, C. Thompson

- Funder(s): PCORI

- Award Id(s): ME-1507-31108

- Principal Award Recipient(s): K.M. Kidwell


- Version of Record February 14 2018

- Proof January 25 2018

- Accepted Manuscript August 23 2017

Kelley M. Kidwell , Michael A. Postow , Katherine S. Panageas; Sequential, Multiple Assignment, Randomized Trial Designs in Immuno-oncology Research. Clin Cancer Res 15 February 2018; 24 (4): 730–736. https://doi.org/10.1158/1078-0432.CCR-17-1355


Clinical trials investigating immune checkpoint inhibitors have led to the approval of anti–CTLA-4 (cytotoxic T-lymphocyte antigen-4), anti–PD-1 (programmed death-1), and anti–PD-L1 (PD-ligand 1) drugs by the FDA for numerous tumor types. In the treatment of metastatic melanoma, combinations of checkpoint inhibitors are more effective than single-agent inhibitors, but combination immunotherapy is associated with increased frequency and severity of toxicity. There are questions about the use of combination immunotherapy or single-agent anti–PD-1 as initial therapy and the number of doses of either approach required to sustain a response. In this article, we describe a novel use of sequential, multiple assignment, randomized trial (SMART) design to evaluate immune checkpoint inhibitors to find treatment regimens that adapt within an individual based on intermediate response and lead to the longest overall survival. We provide a hypothetical example SMART design for BRAF wild-type metastatic melanoma as a framework for investigating immunotherapy treatment regimens. We compare implementing a SMART design to implementing multiple traditional randomized clinical trials. We illustrate the benefits of a SMART over traditional trial designs and acknowledge the complexity of a SMART. SMART designs may be an optimal way to find treatment strategies that yield durable response, longer survival, and lower toxicity. Clin Cancer Res; 24(4); 730–6. ©2017 AACR.

Clinical trials investigating immune checkpoint inhibitors have led to the approval of anti–CTLA-4 (cytotoxic T-lymphocyte antigen-4), anti–PD-1 (programmed death-1), and anti–PD-L1 (PD-ligand 1) drugs by the FDA for numerous tumor types. Immune checkpoint inhibitors are a novel class of immunotherapy agents that block negative regulatory proteins on T cells, which normally restrain immune responses, and thereby enable immune system activation. Because they activate the immune system rather than directly attack the cancer, immunotherapy drugs differ from cytotoxic chemotherapy and oncogene-directed molecularly targeted agents. Cytotoxic chemotherapy and molecularly targeted agents generally provide clinical benefit during treatment but usually not after treatment discontinuation, whereas the benefit of immunotherapy may persist after treatment discontinuation.

The anti–CTLA-4 drug ipilimumab was approved for the treatment of metastatic melanoma in 2011 and as adjuvant therapy for resected stage III melanoma in 2015. Inhibition of CTLA-4 is also being tested in other malignancies. In melanoma, ipilimumab improves overall survival but is associated with a 20% rate of grade 3/4 immune-related adverse events ( 1–6 ). Agents that inhibit PD-1 and PD-L1 cause fewer immune-related adverse events than CTLA-4–blocking agents ( 7 ). PD-1 and PD-L1 agents have been approved by the FDA for use in multiple malignancies including, but not limited to, melanoma (nivolumab and pembrolizumab), non–small cell lung cancer (NSCLC; nivolumab, pembrolizumab, and atezolizumab), renal cell carcinoma (nivolumab), and urothelial carcinoma (atezolizumab; refs. 8–10 ). In patients with melanoma, combinations of checkpoint inhibitors that block both CTLA-4 and PD-1 are more effective than CTLA-4 blockade alone (ipilimumab), but combination immunotherapy is associated with increased frequency and severity of toxicity. Although we build our framework on the FDA-approved combination of anti–PD-1 therapy and ipilimumab, because this reflects the current landscape, the anti–PD-1 and ipilimumab combination could be replaced with anti–PD-1 plus any other drug to reflect novel combination agents that may become available in the future, such as inhibitors of indoleamine-2,3-dioxygenase (IDO).

Some individuals may not need combination therapy because they may respond to a single agent, and these individuals should not be subjected to the increased toxicities associated with combination therapy. Defining this group of individuals, however, is difficult. Many trials are being proposed to evaluate combinations or sequences of immunotherapy drugs, alone or in combination with other treatments such as chemotherapy, radiation, and targeted therapies, or with varied doses and schedules (sequential versus concurrent). The goal of these trials is to increase efficacy and decrease toxicity ( 11 ).

The long-term effect of immune activation by these drugs is unknown, as is whether individuals need continued treatment. In the clinic, oncologists must balance observed efficacy against toxicity and informally implement treatment pathways so that treatment may change depending on the individual's status. Many of these treatment pathways are ad hoc, based on the physician's experience and judgement or on information pieced together from several randomized clinical trials. Formalized, evidence-based treatment pathways to inform decision-making over the course of care are needed. Formal, evidence-based treatment guidelines that adapt treatment based on a patient's outcomes, including efficacy and toxicity, are known as treatment pathways, dynamic treatment regimens ( 12 ), or adaptive interventions ( 13 ). Specifically, a treatment pathway is a sequence of treatment guidelines or decisions that indicate if, when, and how to modify the dosage or duration of interventions at decision stages throughout clinical care ( 14 ). For example, in treating individuals with stage III or stage IV Hodgkin lymphoma, one treatment pathway is as follows: “Treat with two cycles of doxorubicin, bleomycin, vinblastine and dacarbazine (ABVD). At the end of therapy (6 to 8 weeks), perform positron emission tomography/computed tomography (PET/CT) imaging. Treat with an additional 4 cycles of ABVD if the scan scores 1–3 on the Deauville scale (considered a negative scan). Otherwise, if the scan scores 4–5 on the Deauville scale (considered a positive scan), switch treatment to escalated bleomycin, etoposide, doxorubicin, cyclophosphamide, vincristine, procarbazine and prednisone (eBEACOPP) for 6 cycles ( 15 ).” Note that a single treatment pathway specifies an initial treatment followed by a subsequent treatment for every possible value of the intermediate outcome.
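The quoted Hodgkin lymphoma pathway is, in effect, a decision rule that maps the intermediate outcome (the interim Deauville score) to the next treatment. The minimal Python sketch below makes that mapping explicit; the function name and treatment labels are our own illustrative choices, not part of the cited guideline, and the code is not clinical software.

```python
def hodgkin_next_treatment(deauville_score: int) -> str:
    """Next step of the ABVD-based pathway quoted above (illustrative only).

    The input is the Deauville score (1-5) from the PET/CT performed after
    the first two cycles of ABVD.
    """
    if not isinstance(deauville_score, int) or not 1 <= deauville_score <= 5:
        raise ValueError("Deauville score must be an integer from 1 to 5")
    if deauville_score <= 3:
        # Scores 1-3 are considered a negative scan.
        return "4 additional cycles of ABVD"
    # Scores 4-5 are considered a positive scan.
    return "6 cycles of eBEACOPP"


# The rule assigns a next treatment for every possible intermediate outcome.
for score in range(1, 6):
    print(score, hodgkin_next_treatment(score))
```

Because the function returns a treatment for every score from 1 to 5, it has the defining property of a treatment pathway: no possible intermediate outcome is left without a next step.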

Treatment pathways are difficult to develop in traditional randomized clinical trial settings because they specify how treatment adapts over time for an individual based on response and/or toxicity. Treatments may have delayed effects such that the best initial treatment is not part of the best overall treatment regimen. For example, one treatment may produce the best initial response rate but be so aggressive that those who do not respond cannot tolerate additional treatment, whereas another treatment may produce a lower initial proportion of responders but can be followed by an additional treatment that rescues more nonresponders, leading to a better overall response rate and longer survival. Thus, the treatment that performs best at one stage, considered in isolation, does not necessarily belong to the sequence with the best overall outcome. The sequential, multiple assignment, randomized trial (SMART; refs. 16, 17 ) is a multistage trial designed to develop and investigate treatment pathways. SMART designs can investigate delayed effects as well as treatment synergies and antagonisms, and provide robust evidence about the timing, sequences, and combinations of immunotherapies. Furthermore, treatment pathways may be individualized by identifying baseline and time-varying clinical and pathologic characteristics associated with optimal response.
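The delayed-effect example above reduces to simple arithmetic. In the Python sketch below, all rates are invented for illustration and are not taken from any trial; it assumes that first-stage responders remain responders. The treatment with the higher initial response rate ends up with the lower overall response rate once rescue of nonresponders is taken into account.

```python
def overall_response_rate(initial_rate: float, rescue_rate: float) -> float:
    """Proportion responding after two stages.

    initial_rate: proportion who respond to the first treatment.
    rescue_rate: proportion of first-stage nonresponders who respond to
        the follow-up (rescue) treatment.
    Responders to the first treatment are assumed to stay responders.
    """
    return initial_rate + (1.0 - initial_rate) * rescue_rate


# Hypothetical rates, for illustration only.
aggressive_first = overall_response_rate(initial_rate=0.50, rescue_rate=0.10)
gentler_first = overall_response_rate(initial_rate=0.40, rescue_rate=0.40)
print(round(aggressive_first, 2), round(gentler_first, 2))  # 0.55 0.64
```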

In this article, we describe a novel use of SMART design to evaluate immuno-oncologic agents. We provide a hypothetical example SMART design for metastatic melanoma as a framework for investigating immunotherapy treatment. We compare implementation of a SMART design with implementation of multiple traditional randomized clinical trials. We illustrate the benefits of a SMART over traditional trial designs and acknowledge the complexity of a SMART. SMART designs may be an optimal way to find treatment strategies that yield durable response, longer survival, and lower toxicity.

A SMART is a multistage, randomized trial in which each stage corresponds to an important treatment decision point. Participants are enrolled in a SMART and followed throughout the trial, but each participant may be randomized more than once. Subsequent randomizations allow for unbiased comparisons of the treatments given after the initial randomization and comparisons of treatment pathways. The goal of a SMART is to develop and find evidence of effective treatment pathways that mimic clinical practice.

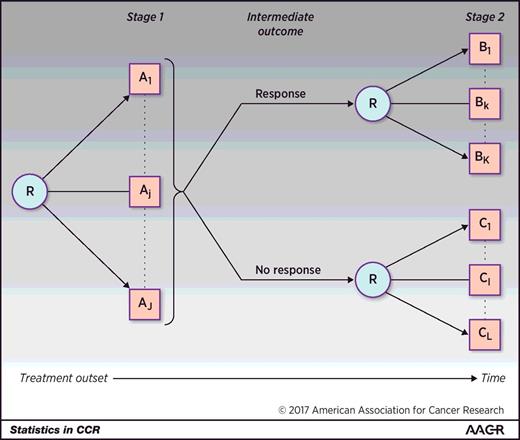

In a generic two-stage SMART, participants are randomized between several treatments (usually 2–3; Fig. 1 ). Participants are followed, and an intermediate outcome is assessed over time or at a specific time. On the basis of the intermediate outcome, participants may be classified into groups and re-randomized to subsequent treatment. The intermediate outcome is a measure of early success or failure that identifies those who may benefit from a treatment change. This intermediate outcome, also known as a tailoring variable, should have only a few categories so that it is a low-dimensional summary that is well defined, agreed upon, implementable in practice, and informative early about the overall endpoint. The intermediate outcome need not be defined as response/nonresponse, or more specifically as tumor response; it may instead be defined as, for example, adherence to treatment, a composite of efficacy measures, or a combination of efficacy and toxicity measures. It is imperative that the intermediate outcome be validated and replicable. Although the two-stage design is most commonly used, SMARTs are not limited to two stages, as illustrated by a SMART that investigated treatment strategies in prostate cancer ( 18 ).

A generic two-stage SMART design where participants are randomized between any number of treatments A 1 to A J . Response is measured at some intermediate time point or over time such that responders are re-randomized in the second stage between any number of treatments B 1 to B K and nonresponders are re-randomized between any number of treatments C 1 to C L . The same participants are followed throughout the trial. R denotes randomization.
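The assignment logic of Fig. 1 can be sketched in a few lines of Python. This is a simulation of treatment assignment only, under stated assumptions: equal-probability randomization at both stages, a hypothetical per-arm response probability, and placeholder arm labels (A1, B1, C1, and so on) standing in for A_j, B_k, and C_l.

```python
import random
from dataclasses import dataclass


@dataclass
class Participant:
    pid: int
    first_stage: str   # treatment from the first randomization (A_j)
    responded: bool    # intermediate outcome (tailoring variable)
    second_stage: str  # treatment from the second randomization (B_k or C_l)


def simulate_two_stage_smart(n, first_arms, responder_arms, nonresponder_arms,
                             response_prob, seed=0):
    """Simulate treatment assignment in a generic two-stage SMART.

    Randomization is equal-probability at both stages; response is drawn
    from a hypothetical per-arm response probability.
    """
    rng = random.Random(seed)
    participants = []
    for pid in range(n):
        first = rng.choice(first_arms)                   # first randomization
        responded = rng.random() < response_prob[first]  # intermediate outcome
        options = responder_arms if responded else nonresponder_arms
        second = rng.choice(options)                     # re-randomization
        participants.append(Participant(pid, first, responded, second))
    return participants


cohort = simulate_two_stage_smart(
    n=200,
    first_arms=["A1", "A2"],
    responder_arms=["B1", "B2"],
    nonresponder_arms=["C1", "C2"],
    response_prob={"A1": 0.40, "A2": 0.55},  # hypothetical rates
)
print(cohort[:3])
```

The key design feature is visible in the loop: the same participant is carried from the first randomization to the second, and the set of second-stage options depends on that participant's intermediate outcome.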

A SMART is similar to other commonly used trial designs but has unique features that enable the development of robust evidence of effective treatment strategies. The SMART design is a type of sequential factorial trial design in which the second-stage treatment is restricted based on the previous response. A SMART design is similar to a crossover trial in that the same participants are followed throughout the trial and participants may receive multiple treatments. However, in a SMART, subsequent treatment is based on the response to the previous treatment, and a SMART design takes advantage of treatment interactions as opposed to washing out treatment effects (i.e., a SMART does not require time in between treatments to eliminate carryover effects from the initial treatment on the assessment of the second-stage treatment).

We focus this overview on SMART designs that are nonadaptive. In a nonadaptive SMART, the operating characteristics of the trial, including randomization probabilities and eligibility criteria, are predetermined and fixed throughout the trial. Treatment may adapt within a participant based on intermediate response, but randomization probabilities or other trial-operating characteristics do not change for future participants based on previous participants' results.

By following the same participants over the trial, a SMART enables the development of evidence for treatment pathways that specify an initial treatment, followed by a maintenance treatment for responders and rescue treatment for nonresponders. These treatment pathways are embedded within a SMART design, but within the trial, participants are randomized to treatments based on the intermediate outcome to enable unbiased comparisons and valid causal inference. The end goal of the trial is to provide definitive evidence for treatment pathways to be used in practice. The SMART design has been used in oncology ( 19, 20 ), mental health ( 21 ), and other areas ( 22 ), but to our knowledge, this is the first description of using a SMART in immuno-oncology.

Ipilimumab and anti–PD-1 therapy are currently approved to treat metastatic melanoma. However, combinations of these and other immunotherapy drugs may cause toxic events, and it remains unclear whether patients should start with these combinations or start with single-agent anti–PD-1 therapy and receive the additional treatments upon disease progression. There are also questions about the number of doses required to sustain a response to single-agent or combination therapy. The best treatment strategy, one that provides enough therapy for a sustained response while limiting toxicities, is unknown. A SMART design may address these questions to provide rigorous evidence for the best immunotherapy treatment pathway for individuals. Our proposed example focuses on patients with BRAF wild-type metastatic melanoma to avoid the complexity of additionally incorporating BRAF and MEK inhibitors into the treatment regimen of patients with BRAF-mutant melanoma.

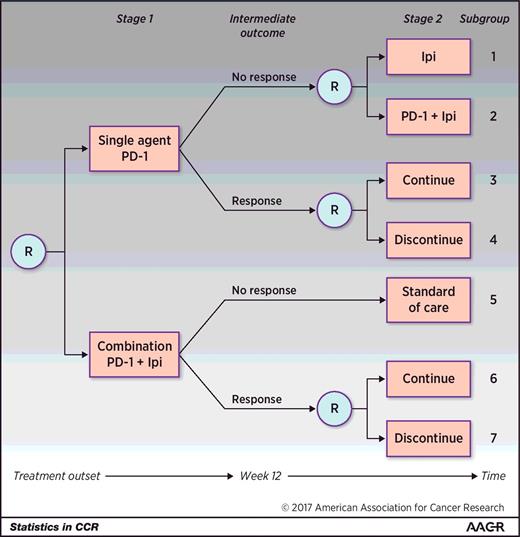

In a hypothetical SMART design to investigate treatment strategies that include anti–PD-1 therapy and ipilimumab, participants may be randomized in the first stage to receive four doses of either single-agent anti–PD-1 therapy (pembrolizumab 2 mg/kg or nivolumab 240 mg) or combination nivolumab (1 mg/kg) and ipilimumab (3 mg/kg; Fig. 2 ; note that these drugs could be replaced with any novel immunotherapy or approved drug). During follow-up, participants would be evaluated for tumor response; the intermediate outcome in this SMART would be defined by disease response after four doses of immunotherapy (week 12). Although Response Evaluation Criteria in Solid Tumors (RECIST) could be used to define disease response, favorable response could also be defined as any decline in total tumor burden, even in the presence of new lesions, following the principles of immune-related response criteria ( 23 ).
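The two candidate definitions of the week-12 tailoring variable can be contrasted in a deliberately simplified Python sketch. It assumes that burden is summarized as the sum of lesion sizes and ignores many details of both RECIST and the immune-related response criteria (measurement method, nadir reference, confirmation scans, non-target lesions); the function names are hypothetical.

```python
def total_burden(sizes_mm):
    """Total tumor burden as the sum of lesion sizes (e.g., diameters in mm)."""
    return sum(sizes_mm)


def favorable_by_total_burden(baseline_mm, existing_wk12_mm, new_wk12_mm):
    """Favorable if total burden at week 12, including new lesions, declined."""
    baseline = total_burden(baseline_mm)
    week12 = total_burden(existing_wk12_mm) + total_burden(new_wk12_mm)
    return week12 < baseline


def favorable_recist_like(baseline_mm, existing_wk12_mm, new_wk12_mm):
    """Simplified RECIST-style rule: no new lesions and >=30% shrinkage."""
    if new_wk12_mm:
        return False  # any new lesion counts as progression under RECIST
    return total_burden(existing_wk12_mm) <= 0.7 * total_burden(baseline_mm)


# Hypothetical patient: existing lesions shrink, but one small new lesion appears.
baseline = [30, 20]
existing = [20, 15]
new = [10]
print(favorable_by_total_burden(baseline, existing, new))  # True  (45 < 50)
print(favorable_recist_like(baseline, existing, new))       # False (new lesion)
```

The same patient is a responder under one definition and a nonresponder under the other, which is why the tailoring variable must be fixed and agreed upon before the trial starts.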

A hypothetical two-stage SMART design in the setting of BRAF wild-type metastatic melanoma. Participants are initially randomized to either single-agent anti–PD-1 therapy or to a combination of anti–PD-1 therapy + ipilimumab (Ipi). Note that Ipi may be replaced by any novel combination agent. After four doses or approximately 12 weeks, response is measured. Those who did not respond to the single agent are re-randomized to receive Ipi or the combination. Those who did respond to single-agent anti–PD-1 are re-randomized to continue the single agent or discontinue therapy. Those who did not respond initially to the combination receive standard of care and those who did respond are re-randomized to continue the combination or discontinue therapy. Subgroups 1 to 7 denote the subgroups that any one participant may fall into. There are six embedded treatment pathways in this SMART, and each one is made up of 2 subgroups: {1,3}, {1,4}, {2,3}, {2,4}, {5,6}, and {5,7}. R denotes randomization.

In the second stage of the trial, responders to either initial treatment would be re-randomized to continue versus discontinue their initial treatment. Specifically, participants who responded to single-agent anti–PD-1 would be re-randomized to continue current treatment for additional doses up to 2 years or to discontinue treatment, and participants who responded to the combination of anti–PD-1 + ipilimumab would be re-randomized to continue anti–PD-1 maintenance or discontinue treatment. Participants who did not respond to single-agent anti–PD-1 by 12 weeks would be re-randomized to receive ipilimumab or the combination of anti–PD-1 and ipilimumab. Participants who did not respond to the combination therapy would receive the standard of care (e.g., oncogene-directed targeted therapy if appropriate, chemotherapy, or consideration for clinical trials; Fig. 2 ). As newer drugs that are promising for nonresponders to combination therapy become available, we anticipate that there could be an additional randomization for these nonresponders to explore additional treatment pathways. All participants would be followed for at least 28 months. The overall outcome of the trial would be overall survival. Any participant who experienced major toxicity at any time, or progressive disease in the second stage, would be removed from the study and treated as directed by the treating physician.

In a SMART, each participant belongs to exactly one subgroup ( Fig. 2 ). Two subgroups make up one treatment pathway, because a treatment pathway describes the clinical guidelines for initial treatment and for subsequent treatment of both responders and nonresponders ( Fig. 2 ). Although a participant may belong to any one of seven subgroups, there are six embedded treatment pathways in this SMART design. The six treatment pathways are as follows:

(1) First begin with single-agent anti–PD-1 therapy. If no response to single-agent anti–PD-1 therapy, then switch to single-agent ipilimumab. If response to single-agent anti–PD-1, then continue treatment (subgroups 1 and 3);

(2) First begin with single-agent anti–PD-1 therapy. If no response to single-agent anti–PD-1 therapy, then switch to single-agent ipilimumab. If response to single-agent anti–PD-1, then discontinue treatment (subgroups 1 and 4);

(3) First begin with single-agent anti–PD-1 therapy. If no response to single-agent anti–PD-1 therapy, then add ipilimumab to anti–PD-1 therapy. If response to single-agent anti–PD-1 therapy, then continue treatment (subgroups 2 and 3);

(4) First begin with single-agent anti–PD-1 therapy. If no response to single-agent anti–PD-1 therapy, then add ipilimumab to anti–PD-1 therapy. If response to single-agent anti–PD-1 therapy, then discontinue treatment (subgroups 2 and 4);

(5) First begin with combination anti–PD-1 therapy + ipilimumab. If no response to combination anti–PD-1 therapy + ipilimumab, then receive standard of care. If response to combination anti–PD-1 therapy + ipilimumab, then continue treatment (subgroups 5 and 6); and

(6) First begin with combination anti–PD-1 therapy + ipilimumab. If no response to combination anti–PD-1 therapy + ipilimumab, then receive standard of care. If response to combination anti–PD-1 therapy + ipilimumab, then discontinue treatment (subgroups 5 and 7).
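The counting argument behind the six pathways (two nonresponder options times two responder options in the single-agent arm, plus one times two in the combination arm) can be checked with a short Python sketch. The option labels are our own shorthand for the Fig. 2 boxes; the subgroup numbers follow Fig. 2.

```python
from itertools import product

# Second-stage options and their subgroup numbers, following Fig. 2.
SINGLE_AGENT_NONRESPONDER = {"switch to ipilimumab": 1, "add ipilimumab": 2}
SINGLE_AGENT_RESPONDER = {"continue": 3, "discontinue": 4}
COMBINATION_NONRESPONDER = {"standard of care": 5}
COMBINATION_RESPONDER = {"continue": 6, "discontinue": 7}


def embedded_pathways():
    """Each pathway = initial treatment + one nonresponder option + one responder option."""
    arms = [
        ("anti-PD-1", SINGLE_AGENT_NONRESPONDER, SINGLE_AGENT_RESPONDER),
        ("anti-PD-1 + ipilimumab", COMBINATION_NONRESPONDER, COMBINATION_RESPONDER),
    ]
    pathways = []
    for initial, nonresp_opts, resp_opts in arms:
        for (nonresp, nr_sub), (resp, r_sub) in product(nonresp_opts.items(), resp_opts.items()):
            pathways.append((initial, nonresp, resp, (nr_sub, r_sub)))
    return pathways


for number, pathway in enumerate(embedded_pathways(), start=1):
    print(number, pathway)
```

The enumeration reproduces the numbered list above, including the subgroup pairs {1,3}, {1,4}, {2,3}, {2,4}, {5,6}, and {5,7}.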

A SMART may have several scientific aims, some of which resemble those of traditional trials and others, pertaining to the treatment pathways, that differ. As in standard trials, it is important to identify a primary aim and to power the trial on it. Secondary aims and multiple comparisons may additionally be powered for, using any method that controls the type I error rate ( 24 ). In metastatic melanoma, the primary aim of the SMART could be any one of the following four questions:

(1) Does a treatment strategy that begins with single-agent anti–PD-1 or combination anti–PD-1 and ipilimumab therapy lead to the longest overall survival?

(2) For responders to initial therapy, does continuing or discontinuing treatment provide the longest overall survival?

(3) For nonresponders to single-agent anti–PD-1 therapy, does ipilimumab or the combination of ipilimumab and anti–PD-1 therapy provide the longest overall survival?

(4) Is there a difference in the overall survival between the six embedded treatment pathways?

Questions similar to numbers 1, 2, and 3 could be answered in three separate, traditional, parallel-arm clinical trials. The traditional paradigm would run a single-stage trial (e.g., single-agent vs. combination therapy) to determine the most effective therapy. A first trial may investigate single-agent anti–PD-1 versus the combination of anti–PD-1 and ipilimumab. A second trial with a randomized discontinuation design could identify whether continuing or discontinuing treatment leads to longer overall survival for individuals who received the most effective therapy (e.g., anti–PD-1 alone or in combination with ipilimumab). A third trial could determine, for patients refractory to anti–PD-1 therapy, whether ipilimumab or the combination of ipilimumab and anti–PD-1 therapy results in longer survival. For each of these three traditional trials, power and analyses are standard: each is powered for and analyzed as a two-group comparison with a survival outcome.

If question 1, 2, or 3 is the primary aim of a SMART, the sample size and analysis plan are also standard; however, for questions 2 and 3, the calculated sample size must be inflated. For question 2, the sample size must be inflated on the basis of the assumed response rates to first-stage therapies. Specifically, if 40% respond to single-agent therapy and 55% to combination therapy, the sample size calculated for a two-group comparison must be inflated according to these response rates (i.e., divided by the expected proportion of responders) to ensure that the SMART will have sufficient responders in the second stage. For question 3, the sample size must likewise be inflated for the expected percentage of nonresponders to anti–PD-1 therapy. Similarly, in a standard one-stage trial to address question 2 (or 3), more patients would need to be screened to account for response status, but unlike in a SMART, the nonresponders (responders) would not be followed. Furthermore, implementing three separate trials may not provide robust evidence for entire treatment pathways and may instead provide evidence only for the best treatments at specific time points.
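The inflation step can be made concrete with a small Python sketch. It assumes 1:1 first-stage randomization and uses a hypothetical two-group sample size of 300 (not a number from the text); it works with expected counts only, so in practice some additional margin for variability in the number of responders may be warranted.

```python
import math

ALLOCATION = {"anti-PD-1": 0.5, "combination": 0.5}  # 1:1 first-stage randomization (assumed)
RESPONSE = {"anti-PD-1": 0.40, "combination": 0.55}  # response rates from the text


def inflate(n_two_group, eligible_fraction):
    """Total enrollment so the expected number re-randomized equals n_two_group."""
    return math.ceil(n_two_group / eligible_fraction)


# Question 2: responders from both first-stage arms are re-randomized.
frac_q2 = sum(ALLOCATION[arm] * RESPONSE[arm] for arm in ALLOCATION)  # 0.475
# Question 3: only nonresponders to single-agent anti-PD-1 are re-randomized.
frac_q3 = ALLOCATION["anti-PD-1"] * (1 - RESPONSE["anti-PD-1"])     # 0.30

n_two_group = 300  # hypothetical sample size from a standard two-group calculation
print(inflate(n_two_group, frac_q2))  # 632
print(inflate(n_two_group, frac_q3))
```

Question 3 requires the larger inflation because only one first-stage arm, and only its nonresponders, feed the comparison.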

For a SMART powered on question 1, 2, or 3, the analysis of treatment pathways would be exploratory and hypothesis generating, to be confirmed in a follow-up trial. Alternatively, the SMART may be powered to compare the embedded treatment pathways (question 4) rather than stage-specific differences. Comparisons of pathways require power calculations and analytic methods specific to SMART designs. Currently, the only sample-size calculator available for a SMART design with a survival outcome compares two specific treatment pathways using a weighted log-rank test. This calculator is applicable to designs similar to the hypothetical melanoma SMART only if the nonresponders to anti–PD-1 therapy are not re-randomized (i.e., if there are 4 embedded treatment pathways instead of 6; ref. 25 ). Any other SMART design (e.g., our hypothetical design in Fig. 2 ) or any other test (e.g., a global test of equality across all treatment pathways, or identification of the best set of treatment pathways using multiple comparisons with the best) requires statistical simulation. Other sample-size calculations exist for survival outcomes but lack an easy-to-implement calculator ( 26, 27 ). Methods are available to estimate survival ( 28, 29 ) and to compare treatment pathways with survival outcomes ( 25, 26, 30–32 ), and R packages ( 33 ) can aid in the analysis.
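Pathway comparisons in a SMART commonly rely on inverse probability weighting: a participant may be consistent with more than one embedded pathway, and each participant is weighted by the inverse of the probability of receiving his or her observed treatment sequence. The Python sketch below shows only that weighting idea for Fig. 2, under the assumption of 1:1 randomization at every randomization point and with a hypothetical binary, uncensored outcome; it is not the weighted log-rank test and not any R package's implementation.

```python
P_FIRST = 0.5  # 1:1 first-stage randomization (assumed)
# Probability of the observed second-stage assignment, by subgroup in Fig. 2
# (1:1 re-randomization assumed; subgroup 5 is not re-randomized).
P_SECOND = {1: 0.5, 2: 0.5, 3: 0.5, 4: 0.5, 5: 1.0, 6: 0.5, 7: 0.5}
# Subgroups consistent with each embedded pathway (from Fig. 2).
PATHWAY_SUBGROUPS = {1: {1, 3}, 2: {1, 4}, 3: {2, 3}, 4: {2, 4}, 5: {5, 6}, 6: {5, 7}}


def ipw_weight(subgroup):
    """Inverse of the probability of receiving one's observed treatment sequence."""
    return 1.0 / (P_FIRST * P_SECOND[subgroup])


def weighted_pathway_mean(records, pathway):
    """Weighted mean outcome among participants consistent with `pathway`.

    records: iterable of (subgroup, outcome) pairs; here outcome is a
    hypothetical binary indicator of being alive at 1 year (no censoring).
    """
    num = den = 0.0
    for subgroup, outcome in records:
        if subgroup in PATHWAY_SUBGROUPS[pathway]:
            w = ipw_weight(subgroup)
            num += w * outcome
            den += w
    return num / den if den > 0 else float("nan")


# Hypothetical toy data: (subgroup, alive at 1 year).
records = [(1, 1), (1, 0), (3, 1), (4, 1), (5, 0), (6, 1), (7, 1)]
for pathway in PATHWAY_SUBGROUPS:
    print(pathway, round(weighted_pathway_mean(records, pathway), 3))
```

Participants in subgroup 5 receive half the weight of re-randomized participants because they were not re-randomized, and participants in subgroup 1 contribute to both pathways 1 and 2; both features are why standard two-group methods do not apply directly.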

In this example, we calculate the sample sizes required to implement three single-stage trials versus one trial using a SMART design. For the first single-stage trial, we assume a log-rank test, 1-year survival rates of 80% and 68% for combination and single-agent anti–PD-1, respectively, exponential survival distributions, 1 year of accrual, and an additional 2.5 years of follow-up. The same assumptions were applied to the trial of continuing versus discontinuing the initial treatment (this sample size is likely an underestimate for that trial, and thus conservative for our comparison, because the 1-year survival rates would likely be closer together and require more patients). To keep assumptions consistent across the single-stage trials and the SMART design, the 1-year survival rate for those who did not respond to single-agent anti–PD-1 therapy and received ipilimumab was set to 68%, and for those who received the combination of anti–PD-1 and ipilimumab it was set to 74%. Parameters for the SMART were specified to mimic the single-stage settings, with the additional assumptions of a 40% response rate to initial therapy and 1-year survival rates of 69%, 68%, 75%, 74%, 80%, and 74% for treatment pathways 1 through 6, respectively. For the SMART, power for a weighted log-rank test of any difference among the six treatment pathways was determined via simulation ( 30, 33 ). With these assumptions, 570 participants are required to detect any difference among the six embedded treatment pathways within one SMART ( Table 1 ). This sample size is less than the 1,142 participants obtained by summing the sample sizes, under the same assumptions, of three traditional single-stage trials. We note that using a global test in the SMART allows for fewer participants, and that one of the single-stage trials might be dropped on the basis of previous trial results. However, a SMART allows us to answer many questions simultaneously and to find optimal treatment pathways potentially ignored in the single-stage setting.
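For readers who want to see how a single-stage log-rank sample size of this kind is typically obtained, the Python sketch below uses Schoenfeld's approximation for the required number of events with 1:1 allocation, converts 1-year survival to exponential hazards, and divides by the probability of observing an event given uniform accrual over 1 year plus 2.5 years of additional follow-up. The two-sided type I error of 0.05 and 80% power are our assumptions (the text does not state them), and the sketch is not claimed to reproduce the numbers in Table 1, which may rest on different assumptions or software.

```python
import math
from statistics import NormalDist


def hazard_from_survival(surv, t=1.0):
    """Exponential hazard implied by survival probability `surv` at time t."""
    return -math.log(surv) / t


def schoenfeld_events(hazard_ratio, alpha=0.05, power=0.80):
    """Required number of events for a two-sided log-rank test, 1:1 allocation."""
    z = NormalDist().inv_cdf
    return 4 * (z(1 - alpha / 2) + z(power)) ** 2 / math.log(hazard_ratio) ** 2


def prob_event(hazard, accrual, followup):
    """P(event by analysis), uniform accrual on [0, accrual], analysis at accrual + followup."""
    return 1 - (math.exp(-hazard * followup)
                - math.exp(-hazard * (accrual + followup))) / (hazard * accrual)


def log_rank_total_n(surv_a, surv_b, accrual=1.0, followup=2.5,
                     alpha=0.05, power=0.80):
    """Total two-arm sample size: required events / average P(event)."""
    h_a, h_b = hazard_from_survival(surv_a), hazard_from_survival(surv_b)
    events = schoenfeld_events(h_b / h_a, alpha, power)
    p_event = 0.5 * (prob_event(h_a, accrual, followup)
                     + prob_event(h_b, accrual, followup))
    return math.ceil(events / p_event)


# 1-year survival assumptions stated in the text.
print(log_rank_total_n(0.80, 0.68))  # combination vs. single-agent anti-PD-1
print(log_rank_total_n(0.74, 0.68))  # combination vs. ipilimumab after anti-PD-1 failure
```

Because the required number of events depends on the squared log hazard ratio, survival rates that are closer together (74% vs. 68%) drive the sample size up sharply, which is the point made above about the continuation trial.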

Table 1. Comparison of the sample sizes needed for three single-stage trials versus one SMART design

NOTE: The trials in approach 1 would require a total of 1,142 participants versus 570 total participants from one SMART.

A SMART would most likely require less time from start to finish than the single-stage trials because it is unlikely that the single-stage trials would run simultaneously (because the trials based on response to initial treatment would require an actionable result from the first trial; ref. 34 ). Furthermore, because participants are followed throughout the trial and offered follow-up treatment, individuals may be more likely to enroll in the SMART (i.e., the sample of participants in a SMART may be more generalizable) and adhere to treatment ( 34 ).

Beyond the sequences of treatments in a SMART design that are tailored to an individual based on the intermediate outcome, additional analyses (like subgroup analyses in traditional trials) may evaluate more individualized treatment pathways. Information, including demographic, clinical, and pathologic data collected at baseline and between baseline and the measurement of the intermediate outcome, may be used to further individualize treatment sequences for better overall survival. Such further personalization requires analytic methods specific to SMART data, such as Q-learning or similar methods ( 35, 36 ). Briefly, Q-learning, borrowed from computer science, is an extension of regression to sequential treatments ( 37 ). Q-learning fits a series of regressions, working backward from the final stage, to construct a sequence of treatment guidelines that maximize the outcome (e.g., to find more detailed treatment pathways that include the baseline and time-varying variables associated with the longest survival). For some individuals, single-agent therapy may be as beneficial as combination therapy, even when combination therapy is better on average across all individuals. In addition, a subgroup of individuals may benefit more from single-agent therapy because of savings in cost and toxicity compared with combination therapy. These questions are unlikely to be powered for in the SMART, but a priori hypotheses can direct the analysis and lead to the identification of more personalized treatment pathways that can be validated in subsequent trials.
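The backward-regression logic of Q-learning can be illustrated with a two-stage sketch in Python using NumPy least squares. Everything here is hypothetical: a simulated continuous outcome stands in for survival (real survival data need censoring-aware models), treatments are coded -1/+1, every participant is re-randomized at stage 2 (unlike Fig. 2), and the working models are simple linear regressions with treatment-by-covariate interactions.

```python
import numpy as np


def ols(design, y):
    """Least-squares coefficients."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def stage2_design(x1, a1, x2, a2):
    # History (x1, a1, x2) main effects, a1-by-x1 interaction, and a2 terms.
    return np.column_stack([np.ones_like(x1), x1, a1, a1 * x1, x2, a2, a2 * x2])


def stage1_design(x1, a1):
    return np.column_stack([np.ones_like(x1), x1, a1, a1 * x1])


def q_learning(x1, a1, x2, a2, y):
    """Two-stage Q-learning with linear working models; treatments coded -1/+1."""
    # Stage 2: regress the final outcome on stage-2 history and treatment.
    beta2 = ols(stage2_design(x1, a1, x2, a2), y)
    # Pseudo-outcome: predicted outcome under the better stage-2 treatment.
    plus = stage2_design(x1, a1, x2, np.ones_like(a2)) @ beta2
    minus = stage2_design(x1, a1, x2, -np.ones_like(a2)) @ beta2
    pseudo = np.maximum(plus, minus)
    # Stage 1: regress the pseudo-outcome on baseline history and treatment.
    beta1 = ols(stage1_design(x1, a1), pseudo)

    def rule1(x1_new):
        return np.where(beta1[2] + beta1[3] * x1_new > 0, 1, -1)

    def rule2(x2_new):
        return np.where(beta2[5] + beta2[6] * x2_new > 0, 1, -1)

    return rule1, rule2


# Simulated data (hypothetical): the best a1 is sign(x1); the best a2 is sign(x2).
rng = np.random.default_rng(2018)
n = 2000
x1 = rng.normal(size=n)                 # baseline covariate
a1 = rng.choice([-1.0, 1.0], size=n)    # first randomization
x2 = 0.5 * x1 + rng.normal(size=n)      # intermediate covariate
a2 = rng.choice([-1.0, 1.0], size=n)    # second randomization
y = 1 + x1 + 0.5 * a1 * x1 + x2 + a2 * x2 + rng.normal(size=n)

rule1, rule2 = q_learning(x1, a1, x2, a2, y)
print(rule1(np.array([-2.0, -1.0, 1.0, 2.0])))  # estimated stage-1 decisions
print(rule2(np.array([-2.0, -1.0, 1.0, 2.0])))  # estimated stage-2 decisions
```

The stage-1 regression uses the pseudo-outcome rather than the observed outcome, so the estimated first-stage rule already assumes that the best second-stage treatment will follow; this is how Q-learning accounts for delayed effects.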

This article has focused on an example SMART in BRAF wild-type metastatic melanoma to answer questions about the best treatment pathways involving ipilimumab and anti–PD-1 therapy. As new immunotherapies become available for trials, ipilimumab may ultimately be replaced in this type of design by one of the more novel drugs (e.g., inhibitors of the immunosuppressive enzyme IDO or other checkpoint inhibitors such as drugs targeting lymphocyte-activation gene 3, “LAG-3”). Our proposed SMART design could serve as a template for testing any number of these possible future combinations.

A SMART design may be a more efficient trial design to understand which immunotherapy treatment pathways in BRAF wild-type metastatic melanoma lead to the longest overall survival. SMARTs can definitively evaluate the treatment pathways that many physicians use in practice, leading to the recommendation of treatments over time based on individual response. A single SMART can enroll and continue to follow participants throughout the course of care to provide evidence for beginning treatment with single-agent anti–PD-1 or combination therapy and the optimal number of doses needed to sustain a response while limiting toxicity.

Of course, a SMART design is not limited to providing robust evidence for treatment pathways in BRAF wild-type metastatic melanoma but can help develop and test treatment pathways that lead to optimal outcomes in other melanomas, cancers, and diseases. We acknowledge our SMART proposal is inherently limited by heterogeneity in some of the treatment pathways, such as in the “Standard-of-care” box in subgroup 5. In our melanoma example, this box could include diverse treatments such as chemotherapy, inhibitors of other molecular drivers such as imatinib for patients with KIT mutations, and other potentially effective immunotherapy agents. How the various treatments within this pathway affect overall outcomes remains unknown in our proposed design.

A SMART requires fewer participants overall and can be implemented and analyzed in a shorter period of time than several single-stage, standard two-arm trials ( 34 ). However, a SMART requires a commitment to more participants at trial initiation than any individual standard trial, and logistics may be more complex because participants are re-randomized at an intermediate time point ( 34 ). With current technology that can handle multisite interim randomizations, or the option of randomizing participants upfront to follow particular treatment pathways, the increased logistics should not outweigh the benefits of finding optimal immunotherapy treatment pathways from SMART designs.
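As one way to picture the upfront alternative mentioned above, the Python sketch below assigns each participant at enrollment to one of the six embedded pathways of Fig. 2, with probabilities chosen so that the resulting treatment assignments have the same distribution as sequential 1:1 randomizations. The probabilities assume 1:1 randomization at every randomization point; the function name is hypothetical. A participant assigned to pathway 1, for example, would receive ipilimumab if they did not respond to anti–PD-1 and would continue anti–PD-1 if they responded.

```python
import random

# Pathway probabilities implied by 1:1 randomization at every point in Fig. 2:
# pathways 1-4: 1/2 (first stage) x 1/2 (responder option) x 1/2 (nonresponder option)
# pathways 5-6: 1/2 (first stage) x 1/2 (responder option); nonresponders not re-randomized
PATHWAY_PROBS = {1: 1 / 8, 2: 1 / 8, 3: 1 / 8, 4: 1 / 8, 5: 1 / 4, 6: 1 / 4}


def randomize_upfront(n, seed=0):
    """Assign each of n participants to one embedded pathway at enrollment."""
    rng = random.Random(seed)
    pathways = list(PATHWAY_PROBS)
    weights = [PATHWAY_PROBS[p] for p in pathways]
    return rng.choices(pathways, weights=weights, k=n)


assignments = randomize_upfront(600)
print({p: assignments.count(p) for p in PATHWAY_PROBS})
```

Summing the probabilities shows why this is equivalent: pathways 1 to 4 together receive one half of participants (the single-agent arm), and within that arm each responder option and each nonresponder option is chosen with probability one half, independently.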

The SMART design, even when powered on questions regarding the best initial treatment in a pathway or the best strategy for responders or nonresponders (i.e., question 1, 2, or 3 from the previous section), may be more beneficial than multiple traditional single-stage designs. A SMART can conclusively answer one question, while additional analyses address questions concerning treatment pathways that may be relevant to clinical practice, such as how long to remain on immunotherapy. Furthermore, SMART designs can identify treatment interactions when treatments differ between the first and second stages (i.e., a SMART design that differs from that in Fig. 2 by re-randomizing to different treatments in the second stage, as opposed to continuing or discontinuing the initial treatment), and there may be delayed effects of initial treatments that modify the effects of follow-up treatments. Single-stage trials cannot evaluate these interactions between first- and second-stage treatments that depend on intermediate outcomes.

Novel trial designs such as the SMART may be needed to answer pertinent treatment questions and provide robust evidence for effective treatment regimens, especially in immuno-oncology research, where novel combinations are frequently proposed. A SMART can examine sequences and combinations of immunotherapies and other drugs to identify those that lead to the longest overall survival with fewer toxicities. SMART designs may also be able to verify potentially optimal treatment pathways identified through dynamic mathematical modeling ( 38 ). SMARTs may require a paradigm shift for practicing physicians, pharmaceutical companies, and guidance agencies: to begin testing and approving treatment regimens that adapt within an individual over the course of care, rather than testing and approving agents at isolated snapshots in time and piecing those snapshots together in the hope that they tell the full story.

M.A. Postow reports receiving commercial research grants from Bristol-Myers Squibb, speakers bureau honoraria from Bristol-Myers Squibb and Merck, and is a consultant/advisory board member for Array BioPharma, Bristol-Myers Squibb, Merck, and Novartis. No potential conflicts of interest were disclosed by the other authors.

Conception and design: K.M. Kidwell, M.A. Postow, K.S. Panageas

Development of methodology: K.M. Kidwell, K.S. Panageas

Analysis and interpretation of data (e.g., statistical analysis, biostatistics, computational analysis): K.M. Kidwell, K.S. Panageas

Writing, review, and/or revision of the manuscript: K.M. Kidwell, M.A. Postow, K.S. Panageas

Study supervision: K.S. Panageas

This study was supported by the Memorial Sloan Kettering Cancer Center Core grant (P30 CA008748; to K.S. Panageas and M.A. Postow; principal investigator: C. Thompson) and a PCORI Award (ME-1507-31108; to K.M. Kidwell).


- Online ISSN 1557-3265

- Print ISSN 1078-0432


Sequential, Multiple Assignment, Randomized Trials (SMART)

- Living reference work entry

- First Online: 12 August 2021


Nicholas J. Seewald, Olivia Hackworth & Daniel Almirall


A dynamic treatment regimen (DTR) is a prespecified set of decision rules that can be used to guide important clinical decisions about treatment planning. This includes decisions concerning how to begin treatment based on a patient’s characteristics at entry, as well as how to tailor treatment over time based on the patient’s changing needs. Sequential, multiple assignment, randomized trials (SMARTs) are a type of experimental design that can be used to build effective dynamic treatment regimens (DTRs). This chapter provides an introduction to DTRs, common types of scientific questions researchers may have concerning the development of a highly effective DTR, and how SMARTs can be used to address such questions. To illustrate ideas, we discuss the design of a SMART used to answer critical questions in the development of a DTR for individuals diagnosed with alcohol use disorder.
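To make the definition concrete, here is a minimal Python sketch that represents a two-stage DTR as a pair of prespecified decision rules: one for how to begin treatment from baseline characteristics and one for how to adapt it once a tailoring variable has been observed. Everything in it (the `severity` score, the heavy-drinking-day tailoring variable, the thresholds, and the treatment labels) is hypothetical and is not taken from the chapter's alcohol use disorder example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

Baseline = Dict[str, float]


@dataclass(frozen=True)
class TwoStageDTR:
    """A DTR as a pair of prespecified decision rules (hypothetical structure)."""
    initial_rule: Callable[[Baseline], str]
    # Second-stage rule: sees baseline data, the first treatment, and the tailoring variable.
    tailoring_rule: Callable[[Baseline, str, float], str]

    def decide(self, baseline: Baseline, tailoring_value: float) -> Tuple[str, str]:
        first = self.initial_rule(baseline)
        second = self.tailoring_rule(baseline, first, tailoring_value)
        return first, second


def start_rule(baseline: Baseline) -> str:
    # Hypothetical: begin with a more intensive option for higher baseline severity.
    return "medication + counseling" if baseline["severity"] >= 7 else "medication"


def make_adapt_rule(nonresponse_threshold: float) -> Callable[[Baseline, str, float], str]:
    def adapt(baseline: Baseline, first: str, heavy_days: float) -> str:
        # Hypothetical tailoring variable: heavy-drinking days during the first stage.
        if heavy_days >= nonresponse_threshold:
            return first + " + augmented therapy"  # nonresponder: intensify
        return "telephone monitoring"  # responder: step down
    return adapt


lenient = TwoStageDTR(start_rule, make_adapt_rule(5))
stringent = TwoStageDTR(start_rule, make_adapt_rule(2))

patient = {"severity": 5.0}
print(lenient.decide(patient, tailoring_value=3))    # ('medication', 'telephone monitoring')
print(stringent.decide(patient, tailoring_value=3))  # ('medication', 'medication + augmented therapy')
```

The two regimens share the same initial rule and differ only in how strictly nonresponse is defined, so the same patient is stepped down under one and intensified under the other. Comparing regimens that differ in this way is the kind of question a SMART can be designed to answer.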

- Dynamic treatment regimen

- Adaptive intervention

- Tailoring variable

- Sequential randomization

- Multistage randomized trial



Acknowledgments

Funding was provided by the National Institutes of Health (P50DA039838, R01DA039901) and the Institute for Education Sciences (R324B180003). Funding for the ExTENd study, which was used to illustrate ideas, was provided by the National Institutes of Health (R01AA014851; PI: David Oslin).

Author information

Authors and affiliations.

University of Michigan, Ann Arbor, MI, USA

Nicholas J. Seewald, Olivia Hackworth & Daniel Almirall


Corresponding author

Correspondence to Nicholas J. Seewald.

Editor information

Editors and affiliations.

Samuel Oschin Comprehensive Cancer Institute, West Hollywood, CA, USA

Steven Piantadosi

Johns Hopkins Center for Clinical Trials, Bloomberg School of Public Health, Baltimore, MD, USA

Curtis L. Meinert

Section Editor information

Statistician, MRC Clinical Trials Unit at UCL, Institute of Clinical Trials and Methodology, London, UK

Babak Choodari-Oskooei

MRC Clinical Trials Unit and Institute of Clinical Trials and Methodology, University College London, London, England

Mahesh Parmar

The Johns Hopkins Center for Clinical Trials and Evidence Synthesis, Johns Hopkins School of Public Health, Baltimore, MD, USA

Brigham and Women’s Hospital, Harvard Medical School, Boston, MA, USA


Copyright information

© 2021 Springer Nature Switzerland AG

About this entry

Cite this entry.

Seewald, N.J., Hackworth, O., Almirall, D. (2021). Sequential, Multiple Assignment, Randomized Trials (SMART). In: Piantadosi, S., Meinert, C.L. (eds) Principles and Practice of Clinical Trials. Springer, Cham. https://doi.org/10.1007/978-3-319-52677-5_280-1


DOI: https://doi.org/10.1007/978-3-319-52677-5_280-1

Received: 09 April 2021

Accepted: 28 April 2021

Published: 12 August 2021

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-52677-5

Online ISBN: 978-3-319-52677-5

eBook Packages : Springer Reference Mathematics Reference Module Computer Science and Engineering


A Sequential Adaptive Intervention Strategy Targeting Remission and Functional Recovery in Young People at Ultrahigh Risk of Psychosis: The Staged Treatment in Early Psychosis (STEP) Sequential Multiple Assignment Randomized Trial

Affiliations.

- 1 Orygen, Melbourne, Victoria, Australia.

- 2 Centre for Youth Mental Health, The University of Melbourne, Melbourne, Victoria, Australia.

- 3 Orygen Specialist Program, Melbourne, Victoria, Australia.

- 4 Department of Psychiatry, Columbia University, New York, New York.

- 5 Department of Psychiatry and Behavioral Sciences, University of California, Davis, Sacramento.

- 6 Department of Psychiatry and Behavioral Sciences, University of California, San Francisco.

- PMID: 37378974

- PMCID: PMC10308298

- DOI: 10.1001/jamapsychiatry.2023.1947

Importance: Clinical trials have not established the optimal type, sequence, and duration of interventions for people at ultrahigh risk of psychosis.

Objective: To determine the effectiveness of a sequential and adaptive intervention strategy for individuals at ultrahigh risk of psychosis.

Design, setting, and participants: The Staged Treatment in Early Psychosis (STEP) sequential multiple assignment randomized trial took place within the clinical program at Orygen, Melbourne, Australia. Individuals aged 12 to 25 years who were seeking treatment and met criteria for ultrahigh risk of psychosis according to the Comprehensive Assessment of At-Risk Mental States were recruited between April 2016 and January 2019. Of 1343 individuals considered, 342 were recruited.

Interventions: Step 1: 6 weeks of support and problem solving (SPS); step 2: 20 weeks of cognitive-behavioral case management (CBCM) vs SPS; and step 3: 26 weeks of CBCM with fluoxetine vs CBCM with placebo with an embedded fast-fail option of ω-3 fatty acids or low-dose antipsychotic medication. Individuals who did not remit progressed through these steps; those who remitted received SPS or monitoring for up to 12 months.

Main outcomes and measures: Global Functioning: Social and Role scales (primary outcome), Brief Psychiatric Rating Scale, Scale for the Assessment of Negative Symptoms, Montgomery-Åsberg Depression Rating Scale, quality of life, transition to psychosis, and remission and relapse rates.

Results: The sample comprised 342 participants (198 female; mean [SD] age, 17.7 [3.1] years). Remission rates, reflecting sustained symptomatic and functional improvement, were 8.5%, 10.3%, and 11.4% at steps 1, 2, and 3, respectively. A total of 27.2% met remission criteria at any step. Relapse rates among those who remitted did not significantly differ between SPS and monitoring (step 1: 65.1% vs 58.3%; step 2: 37.7% vs 47.5%). There was no significant difference in functioning, symptoms, and transition rates between SPS and CBCM and between CBCM with fluoxetine and CBCM with placebo. Twelve-month transition rates to psychosis were 13.5% (entire sample), 3.3% (those who ever remitted), and 17.4% (those with no remission).
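The abstract does not state the denominators for the step-specific remission rates. A quick consistency check is possible under one assumption: if each rate applies only to participants who entered that step without having remitted earlier, the proportion remitting at any step compounds as 1 - (1 - r1)(1 - r2)(1 - r3). The Python sketch below computes this; it is an illustrative calculation, not the trial's own analysis.

```python
from math import prod

# Step-specific remission rates reported in the abstract (steps 1, 2, 3).
step_rates = [0.085, 0.103, 0.114]


def cumulative_remission(rates):
    """Probability of remitting at some step, ASSUMING each rate is conditional on
    having entered that step without remitting earlier (no dropout modeled)."""
    return 1.0 - prod(1.0 - r for r in rates)


print(round(cumulative_remission(step_rates), 4))  # 0.2728
print(round(sum(step_rates), 3))                    # 0.302
```

The compounded value (about 27.3%) is close to the reported 27.2%, whereas simply adding the rates, as if each were a share of the full sample, gives 30.2%. This is consistent with the per-step reading, although rounding, dropout, or different denominators could also account for the small remaining gap.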

Conclusions and relevance: In this sequential multiple assignment randomized trial, transition rates to psychosis were moderate, and remission rates were lower than expected, partly reflecting the ambitious criteria set and challenges with real-world treatment fidelity and adherence. While all groups showed mild to moderate functional and symptomatic improvement, this was typically short of remission. While further adaptive trials that address these challenges are needed, findings confirm substantial and sustained morbidity and reveal relatively poor responsiveness to existing treatments.

Trial registration: ClinicalTrials.gov Identifier: NCT02751632 .

Publication types

- Randomized Controlled Trial

- Research Support, Non-U.S. Gov't

- Research Support, N.I.H., Extramural

- Antipsychotic Agents* / therapeutic use

- Fluoxetine / therapeutic use

- Psychotic Disorders* / diagnosis

- Quality of Life

- Treatment Outcome

- Antipsychotic Agents

Associated data

- ClinicalTrials.gov/NCT02751632

Grants and funding

- U01 MH105258/MH/NIMH NIH HHS/United States


Follow-up was through routine National Health Service (NHS) electronic vital status and cancer registry databases, covering diagnoses and deaths that occurred up to March 31, 2021, and were notified by November 17, 2021.

a Practices were randomized prior to invitation to take part in the trial. Randomization details are given in the Randomization subsection of the Methods section.

b Numbers of men are as of November 17, 2021, and are subject to small changes over time because of continued updates from NHS Digital (eg, changes to the trace status of the men, men newly successfully traced). Note that not all men traced at 15 years were traced at 10 years.

c Pseudo-anonymized follow-up.

d NHS Digital national data opt-outs (previously type 2 opt-outs), which prevent NHS data from being used for research. 14

P values are from a random-effects Poisson model (see Statistical Analysis subsection of the Methods section).

Trial Protocol and Statistical Analysis Plan

eTable 1. Prostate cancer-specific diagnoses and mortality and all-cause mortality at 10-years, 15-years and 18-years post-randomization (and at 18 months for prostate cancer diagnoses) by random allocation and an as randomized estimate of the difference between groups

eTable 2. Underlying causes of death in intervention vs control groups at 15-year median follow-up (not including prostate cancer)

eTable 3. Effect of the CAP trial intervention on characteristics of prostate cancer cases at diagnosis

eTable 4. Sensitivity analyses employing alternative definitions of prostate cancer deaths

eTable 5. Estimated mean and median sojourn time and probability of overdiagnosis

eFigure 1. CAP trial design

eFigure 2. Cumulative incidence of prostate cancer by TNM stage at diagnosis

eFigure 3. Cumulative incidence of prostate cancer by Gleason score at diagnosis

eFigure 4. Comparing simulated data to empirical data for the cumulative prostate cancer incidence and cancer-specific and all-other cause mortality risk among the screened men and the unscreened group

eFigure 5. Comparison of the number of subjects per 100,000 cohort at death from all causes, by age, between simulated data and CAP data

eFigure 6. Transition diagram for multi-state survival models

CAP Trial Group

Data Sharing Statement


Martin RM, Turner EL, Young GJ, et al. Prostate-Specific Antigen Screening and 15-Year Prostate Cancer Mortality: A Secondary Analysis of the CAP Randomized Clinical Trial. JAMA. Published online April 06, 2024. doi:10.1001/jama.2024.4011

© 2024

Prostate-Specific Antigen Screening and 15-Year Prostate Cancer Mortality: A Secondary Analysis of the CAP Randomized Clinical Trial

- 1 Department of Population Health Sciences, Bristol Medical School, University of Bristol, Bristol, United Kingdom

- 2 National Institute for Health Research Bristol Biomedical Research Centre, University Hospitals Bristol and Weston NHS Foundation Trust and University of Bristol, Bristol, United Kingdom

- 3 MRC Integrative Epidemiology Unit, University of Bristol, Bristol, United Kingdom

- 4 Health Data Research UK South-West, University of Bristol, Bristol, United Kingdom

- 5 Nuffield Department of Surgical Sciences, University of Oxford, Oxford, United Kingdom

- 6 Department of Applied Health Research, University College London, London, United Kingdom

- 7 Division of Urology, University of Connecticut Health Center, Farmington

- 8 Department of Radiation Medicine and Applied Sciences, University of California San Diego, La Jolla

- 9 Department of Radiology, University of California San Diego, La Jolla

- 10 Department of Bioengineering, University of California San Diego, La Jolla

- 11 Department of Radiation Oncology, Massachusetts General Hospital, Harvard Medical School, Boston

- 12 Department of Cellular Pathology, North Bristol NHS Trust, Bristol, United Kingdom

- 13 Department of Clinical Science, Intervention and Technology, Karolinska Institutet, Stockholm, Sweden

- 14 School of Medicine, Cardiff University, Cardiff, Wales, United Kingdom


Question In men aged 50 to 69 years, does a single invitation for a prostate-specific antigen (PSA) screening test reduce prostate cancer mortality at 15-year follow-up compared with no invitation for testing?

Findings In this secondary analysis of a randomized clinical trial of 415 357 men aged 50 to 69 years randomized to a single invitation for PSA screening (n = 195 912) or a control group without PSA screening (n = 219 445) and followed up for a median of 15 years, risk of death from prostate cancer was lower in the group invited to screening than in the control group (0.69% vs 0.78%; mean difference, 0.09%).

Meaning Compared with no invitation for routine PSA testing, a single invitation for a PSA screening test reduced prostate cancer mortality at a median follow-up of 15 years, but the absolute mortality benefit was small.
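To make the size of the absolute benefit concrete, the 0.09 percentage-point difference can be restated as deaths per 10 000 men. The short Python sketch below does only that unit conversion on the rounded percentages quoted above; it is an illustration of the arithmetic, not a reanalysis of the trial, and the function names are hypothetical.

```python
# Reported prostate cancer mortality at a median 15-year follow-up (percent),
# copied from the Findings above.
RISK_INVITED_PCT = 0.69
RISK_CONTROL_PCT = 0.78


def absolute_difference_pct_points(control_pct: float, invited_pct: float) -> float:
    """Control-group risk minus invited-group risk, in percentage points."""
    return round(control_pct - invited_pct, 2)


def difference_per_10000(control_pct: float, invited_pct: float) -> float:
    """Express the same absolute difference as deaths per 10 000 men."""
    return (control_pct - invited_pct) / 100 * 10_000


print(absolute_difference_pct_points(RISK_CONTROL_PCT, RISK_INVITED_PCT))  # 0.09
print(round(difference_per_10000(RISK_CONTROL_PCT, RISK_INVITED_PCT), 1))  # 9.0
```

Because the inputs are rounded to two decimals, the result (about 9 fewer prostate cancer deaths per 10 000 men invited) is approximate.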

Importance The Cluster Randomized Trial of PSA Testing for Prostate Cancer (CAP) reported no effect of prostate-specific antigen (PSA) screening on prostate cancer mortality at a median 10-year follow-up (primary outcome), but the long-term effects of PSA screening on prostate cancer mortality remain unclear.

Objective To evaluate the effect of a single invitation for PSA screening on prostate cancer–specific mortality at a median 15-year follow-up compared with no invitation for screening.

Design, Setting, and Participants This secondary analysis of the CAP randomized clinical trial included men aged 50 to 69 years identified at 573 primary care practices in England and Wales. Primary care practices were randomized between September 25, 2001, and August 24, 2007, and men were enrolled between January 8, 2002, and January 20, 2009. Follow-up was completed on March 31, 2021.

Intervention Men received a single invitation for a PSA screening test with subsequent diagnostic tests if the PSA level was 3.0 ng/mL or higher. The control group received standard practice (no invitation).

Main Outcomes and Measures The primary outcome was reported previously. Of 8 prespecified secondary outcomes, results of 4 were reported previously. The 4 remaining prespecified secondary outcomes at 15-year follow-up were prostate cancer–specific mortality, all-cause mortality, and prostate cancer stage and Gleason grade at diagnosis.

Results Of 415 357 eligible men (mean [SD] age, 59.0 [5.6] years), 98% were included in these analyses. Overall, 12 013 and 12 958 men with a prostate cancer diagnosis were in the intervention and control groups, respectively (15-year cumulative risk, 7.08% [95% CI, 6.95%-7.21%] and 6.94% [95% CI, 6.82%-7.06%], respectively). At a median 15-year follow-up, 1199 men in the intervention group (0.69% [95% CI, 0.65%-0.73%]) and 1451 men in the control group (0.78% [95% CI, 0.73%-0.82%]) died of prostate cancer (rate ratio [RR], 0.92 [95% CI, 0.85-0.99]; P = .03). Compared with the control, the PSA screening intervention increased detection of low-grade (Gleason score [GS] ≤6: 2.2% vs 1.6%; P < .001) and localized (T1/T2: 3.6% vs 3.1%; P < .001) disease but not intermediate (GS of 7), high-grade (GS ≥8), locally advanced (T3), or distally advanced (T4/N1/M1) tumors. There were 45 084 all-cause deaths in the intervention group (23.2% [95% CI, 23.0%-23.4%]) and 50 336 deaths in the control group (23.3% [95% CI, 23.1%-23.5%]) (RR, 0.97 [95% CI, 0.94-1.01]; P = .11). Eight of the prostate cancer deaths in the intervention group (0.7%) and 7 deaths in the control group (0.5%) were related to a diagnostic biopsy or prostate cancer treatment.
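The percentages of prostate cancer deaths related to a diagnostic biopsy or to treatment follow directly from the counts in the Results. A minimal Python check, using only the numbers reported above (the helper name is hypothetical):

```python
def share_pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Counts copied from the Results paragraph.
prostate_cancer_deaths = {"intervention": 1199, "control": 1451}
biopsy_or_treatment_related = {"intervention": 8, "control": 7}

for arm in prostate_cancer_deaths:
    print(arm, share_pct(biopsy_or_treatment_related[arm], prostate_cancer_deaths[arm]))
# intervention 0.7
# control 0.5
```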

Conclusions and Relevance In this secondary analysis of a randomized clinical trial, a single invitation for PSA screening compared with standard practice without routine screening reduced prostate cancer deaths at a median follow-up of 15 years. However, the absolute reduction in deaths was small.

Trial Registration isrctn.org Identifier: ISRCTN92187251

In England, the number of men diagnosed with prostate cancer increased by 68% from 28 216 in 2001 to 47 479 in 2019, 1 reflecting population aging and increased prostate-specific antigen (PSA) testing. 2 In the US, approximately 3.3 million men currently live with a diagnosis of prostate cancer. 3 While low-risk prostate cancer progresses slowly and is associated with a low risk of mortality, 4 - 7 aggressive prostate cancer currently causes approximately 12 000 deaths in the UK and 34 700 deaths in the US annually. 3 , 8 The goal of PSA screening is to reduce prostate cancer mortality by early detection of curable disease. However, uncertainty remains regarding the long-term effect of PSA-based screening on mortality. 9 - 11
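The 68% figure is the relative change between the 2001 and 2019 counts. A one-function Python sketch of that calculation (the function name is illustrative):

```python
def percent_increase(old: int, new: int) -> float:
    """Relative change from `old` to `new`, in percent, rounded to one decimal."""
    return round(100 * (new - old) / old, 1)


# Prostate cancer diagnoses in England, 2001 and 2019, as quoted in the text.
print(percent_increase(28_216, 47_479))  # 68.3
```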

The Cluster Randomized Trial of PSA Testing for Prostate Cancer (CAP) (N = 415 357) showed that compared with usual care (no screening), an invitation to a single PSA screening test increased the number of prostate cancers diagnosed during the first 18 months of follow-up (the period when PSA testing and subsequent biopsies for men with an elevated PSA level took place). In this trial, rates of diagnosed prostate cancer in the first 18 months were 2.2 per 1000 person-years in the control group and 10.4 per 1000 person-years in the intervention group (P < .001). 10 However, at a median 10-year follow-up, the single invitation to PSA screening did not reduce prostate cancer mortality compared with the control (0.29% vs 0.30%; rate ratio, 0.96 [95% CI, 0.85-1.08]; P = .50). 10 This secondary analysis of the CAP trial describes the effects of this single invitation to a PSA screening test, with subsequent diagnostic tests if the PSA level was 3.0 ng/mL or higher (to convert to micrograms per liter, multiply by 1.0), on the prespecified secondary outcome of prostate cancer mortality at 15-year follow-up compared with standard practice (no screening). 12
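Rates such as "per 1000 person-years" divide event counts by total follow-up time. The Python sketch below shows that calculation and a simple ratio of two rates; the event and person-year counts in the example are hypothetical, chosen only to reproduce a rate of 10.4, and the ratio of the two rounded published rates is a crude illustration, not an estimate reported by CAP.

```python
def rate_per_1000_person_years(events: int, person_years: float) -> float:
    """Events per 1000 person-years of follow-up."""
    if person_years <= 0:
        raise ValueError("person_years must be positive")
    return 1000 * events / person_years


def rate_ratio(rate_intervention: float, rate_control: float) -> float:
    """Ratio of the intervention-group rate to the control-group rate."""
    return rate_intervention / rate_control


# Hypothetical counts: 52 diagnoses over 5000 person-years.
print(rate_per_1000_person_years(52, 5_000))  # 10.4

# Crude ratio of the two rounded rates quoted in the text (10.4 vs 2.2).
print(round(rate_ratio(10.4, 2.2), 1))  # 4.7
```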

The Derby National Research Ethics Service Committee East Midlands approved this study (ISRCTN92187251). The trial protocol and statistical analysis plan are available in Supplement 1. Participants were enrolled between January 8, 2002, and January 20, 2009. Final follow-up occurred on March 31, 2021. Men who attended PSA testing in the intervention group gave individual written informed consent via the ProtecT study. 13 Individual consent was not sought from men in the control group or from nonresponders in the intervention group. Instead, approval for their identification and linkage to routine electronic records was obtained under Section 251 of the National Health Service Act 2006 from the UK Patient Information Advisory Group (now Confidentiality Advisory Group). 10 All clinical centers had local research governance approval. This study followed the Consolidated Standards of Reporting Trials (CONSORT) reporting guideline. Study data were collected using REDCap electronic data capture tools hosted at the University of Bristol.

The CAP trial was a primary care–based cluster randomized clinical trial that tested the effects of a single invitation for a PSA screening test (eFigure 1 in Supplement 2) compared with usual care (no screening) on the primary outcome of prostate cancer mortality at a median follow-up of 10 years. The primary outcome has been reported previously. 10 Between September 25, 2001, and August 24, 2007, 785 eligible general practices in the catchment area of 8 hospitals across England and Wales (located in Birmingham, Bristol, Cambridge, Cardiff, Leeds, Leicester, Newcastle, and Sheffield) were randomized before recruitment (Zelen design) to intervention or control groups, and practices were invited to consent to participate. Randomization was blocked and stratified within groups of 10 to 12 neighboring practices using a computerized random number generator. Because allocation preceded the invitation for practices to participate, it was not possible to conceal allocation. A total of 573 practices (73%), including 271 (68%) randomized to the intervention group and 302 (78%) randomized to the control group, agreed to participate (Figure 1).
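Stratified cluster randomization allocates whole practices, not individual men, and does so separately within each stratum of neighboring practices so that the arms stay balanced locally. The Python sketch below is one plausible reading of that step, assuming 1:1 allocation with each stratum treated as a single block; the trial's actual software and allocation ratio are not described here, and all practice and stratum names are hypothetical.

```python
import random


def randomize_within_strata(strata: dict[str, list[str]], seed: int) -> dict[str, str]:
    """Allocate whole practices (clusters) to arms separately within each stratum.

    Assumption: 1:1 allocation within each stratum. When a stratum has an odd
    number of practices, a random draw decides which arm gets the extra one.
    """
    rng = random.Random(seed)  # seeded generator so the allocation is reproducible
    allocation: dict[str, str] = {}
    for stratum_id in sorted(strata):  # fixed processing order for reproducibility
        practices = list(strata[stratum_id])
        rng.shuffle(practices)  # random order of practices within the stratum
        n_intervention = len(practices) // 2
        if len(practices) % 2 == 1 and rng.random() < 0.5:
            n_intervention += 1  # odd-sized stratum: extra practice to intervention
        for position, practice in enumerate(practices):
            allocation[practice] = "intervention" if position < n_intervention else "control"
    return allocation


# Hypothetical strata of 11 and 12 neighboring practices.
example_strata = {
    "stratum_1": [f"practice_1_{i}" for i in range(11)],
    "stratum_2": [f"practice_2_{i}" for i in range(12)],
}
allocation = randomize_within_strata(example_strata, seed=2001)
for stratum_id, practices in example_strata.items():
    n_int = sum(allocation[p] == "intervention" for p in practices)
    print(stratum_id, n_int, len(practices) - n_int)
```

In a Zelen-type design such as CAP, an allocation like this is produced before practices are asked to consent, which is why allocation could not be concealed.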

Men aged 50 to 69 years in each participating randomized general practice were included. Men with prostate cancer on or before the randomization date and those registered as a patient with participating practices on a temporary or emergency basis were excluded. Race and ethnicity for men attending the intervention group PSA test clinic were ascertained by a nurse using standardized definitions as one of a range of baseline characteristics to assess generalizability. 13 Race and ethnicity were defined using UK Office for National Statistics Census categories and recoded as White and other (all other categories were collapsed because few participants were not White). Race and ethnicity data were not available in the National Health Service (NHS) routine data that we had access to at the time, so these data could not be reported for the control group.
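The inclusion and exclusion rules above can be expressed as a single predicate. The Python sketch below assumes a hypothetical record layout and assumes that age is assessed at one reference date; the trial's actual data fields and the exact date at which age was assessed are not stated here.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class RegisteredMan:
    # Hypothetical record layout, not the trial's actual data model.
    age_years: int                             # age at the assessment date (assumption)
    prostate_cancer_diagnosis: Optional[date]  # None if never diagnosed
    registration_type: str                     # e.g. "permanent", "temporary", "emergency"


def is_eligible(man: RegisteredMan, randomization_date: date) -> bool:
    """Apply the stated inclusion and exclusion rules to one registered man."""
    if not 50 <= man.age_years <= 69:
        return False  # outside the 50 to 69 age range
    diagnosis = man.prostate_cancer_diagnosis
    if diagnosis is not None and diagnosis <= randomization_date:
        return False  # prostate cancer on or before the randomization date
    if man.registration_type in {"temporary", "emergency"}:
        return False  # temporary or emergency registrations are excluded
    return True


practice_randomized_on = date(2003, 6, 1)  # hypothetical randomization date
print(is_eligible(RegisteredMan(60, None, "permanent"), practice_randomized_on))  # True
print(is_eligible(RegisteredMan(60, date(2003, 6, 1), "permanent"), practice_randomized_on))  # False
```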

Men in practices randomized to the intervention received a single invitation for a PSA test after counseling. If the resulting PSA level was 3.0 to 19.9 ng/mL, they were offered 10-core transrectal ultrasonography-guided biopsies. All laboratories participated in the UK National External Quality Assessment Service for PSA testing. Test results that did not meet laboratory quality assurance requirements or were lost were considered nonvalid, as were tests for which consent was ambiguous or insufficient blood was obtained. Men in the intervention group diagnosed with localized prostate cancer were invited to participate in a second randomized clinical trial, the ProtecT treatment trial, which randomized participants to active monitoring (consisting of regular PSA testing and clinical review), radical prostatectomy, or radical conformal radiotherapy with neoadjuvant androgen deprivation (eFigure 1 in Supplement 2). 15 Men with a PSA level of 20 ng/mL or higher were referred to a urologist and received standard care.
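The PSA-based pathway in the intervention group reduces to three thresholds plus a check for nonvalid tests. A minimal Python sketch follows; the function name and return labels are hypothetical, and treating any value below 20.0 as falling in the 3.0 to 19.9 band assumes results are reported to one decimal place.

```python
from typing import Optional


def triage_psa(psa_ng_per_ml: Optional[float], valid: bool = True) -> str:
    """Map a single PSA result (ng/mL) to the next step described in the text."""
    if not valid or psa_ng_per_ml is None:
        return "nonvalid"  # failed QA, lost, ambiguous consent, or insufficient blood
    if psa_ng_per_ml < 3.0:
        return "no further testing"  # below the 3.0 ng/mL threshold
    if psa_ng_per_ml < 20.0:
        return "offer 10-core TRUS-guided biopsy"  # 3.0 to 19.9 ng/mL
    return "refer to urologist"  # 20 ng/mL or higher


for value in (1.2, 3.0, 19.9, 20.0):
    print(value, triage_psa(value))
```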