
GitHub Copilot: Parrot or Crow? A first look at rote learning in GitHub Copilot suggestions.

Introduction

GitHub Copilot is trained on billions of lines of public code. The suggestions it makes to you are adapted to your code, but the processing behind it is ultimately informed by code written by others.

How direct is the relationship between the suggested code and the code that informed it? In a recent thought-provoking paper 1 , Bender, Gebru et al. coined the phrase “stochastic parrots” for artificial intelligence systems, like the ones that power GitHub Copilot. Or, as a fellow machine learning engineer at GitHub 2 remarked during a water cooler chat: these systems can feel like “a toddler with a photographic memory.”

These are deliberate oversimplifications. Many GitHub Copilot suggestions feel specifically tailored to the particular code base the user is working on. Often, it looks less like a parrot and more like a crow building novel tools out of small blocks 3 . Yet there’s no denying that GitHub Copilot has an impressive memory:

Here, I intentionally directed 4 GitHub Copilot to recite a well-known text it obviously knows by heart. I, too, know a couple of texts by heart. For example, I still remember some poems I learned in school. Yet no matter the topic, not once have I been tempted to derail a conversation by falling into iambic tetrameter and waxing about daffodils.

So, is that (or rather the coding equivalent of it) something GitHub Copilot is prone to doing? How many of its suggestions are unique, and how often does it just parrot some likely looking code it has seen during training?

The experiment

During GitHub Copilot’s early development, nearly 300 employees used it in their daily work as part of an internal trial. This trial provided a good dataset to test for recitation. I wanted to find out how often GitHub Copilot gave them a suggestion that was quoted from something it had seen before.

I limited the investigation to Python suggestions with a cutoff on May 7, 2021 (the day we started extracting that data). That left 453,780 suggestions spread out over 396 “user weeks”, that is, calendar weeks during which a user actively used GitHub Copilot on Python code.

Automatic filtering

Though 453,780 suggestions are a lot, many of them can be dismissed immediately. To get to the interesting cases, consider sequences of “words” that occur in the suggestion in the same order as in the code GitHub Copilot has been trained on. In this context, punctuation, brackets, or other special characters all count as “words,” while tabs, spaces, or even line breaks are ignored completely. After all, a quote is still a quote, whether it’s indented by one tab or eight spaces.
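To make this notion of a “word” concrete, here is a minimal Python sketch. It assumes a simple regex tokenizer: every identifier, keyword or number is one word, and every other non-whitespace character is a word of its own. The post doesn’t describe the tokenizer actually used, and `tokenize` is a hypothetical name.

```python
import re

# Assumed tokenizer: a run of letters/digits/underscores is one "word";
# every other non-whitespace character (punctuation, brackets, operators)
# is a "word" on its own. Whitespace produces no tokens at all.
WORD = re.compile(r"\w+|[^\w\s]")


def tokenize(code: str) -> list[str]:
    """Split source code into 'words', ignoring tabs, spaces and line breaks."""
    return WORD.findall(code)


tab_indented = "if x:\n\treturn [x, 1]"
space_indented = "if x:\n        return [x,1]"

# Indentation style does not change the word sequence.
assert tokenize(tab_indented) == tokenize(space_indented)
print(tokenize(tab_indented))  # ['if', 'x', ':', 'return', '[', 'x', ',', '1', ']']
```

Because whitespace never produces a token, the same snippet indented with one tab or with eight spaces yields an identical word sequence.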

For example, one of GitHub Copilot’s suggestions was the following regex for numbers separated by whitespace:

This would be exactly 100 “words” in the sense above, but it’s a particularly dense example. The average non-empty line of code has only 10 “words.” I’ve restricted this investigation to cases where the overlap with the code GitHub Copilot was trained on contains at least 60 such “words”. We must set the cut somewhere, and I think it’s rather rare that shorter sequences would be of great interest. In fact, most of the interesting cases identified later are well clear of that threshold of 60.

If the overlap extends to what the user has already written, that also counts for the length. After all, the user may have written that context with the help of GitHub Copilot as well!
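The filter can then be sketched as a search for any run of at least 60 consecutive words that also occurs in the training data, where the run is allowed to start in the code the user had already written. This is a toy illustration under stated assumptions: it keeps every 60-word window of the training files in an in-memory set (a real index over billions of lines would need hashing or a suffix-based structure), and `build_index` and `is_flagged` are hypothetical helpers, not GitHub’s implementation.

```python
import re

# Assumed tokenizer: identifiers/numbers are one word, any other symbol is one word.
WORD = re.compile(r"\w+|[^\w\s]")
MIN_WORDS = 60  # threshold used in the post


def tokenize(code: str) -> list[str]:
    return WORD.findall(code)


def windows(tokens: list[str], n: int):
    """Yield (start_index, window) for every run of n consecutive tokens."""
    for i in range(len(tokens) - n + 1):
        yield i, tuple(tokens[i:i + n])


def build_index(training_files: list[str], n: int = MIN_WORDS) -> set:
    """Toy index: the set of all n-word windows occurring in the training files."""
    index = set()
    for text in training_files:
        for _, window in windows(tokenize(text), n):
            index.add(window)
    return index


def is_flagged(context: str, suggestion: str, index: set, n: int = MIN_WORDS) -> bool:
    """True if some run of n words overlapping the suggestion occurs in training.

    The run may begin in the code the user had already typed (the context),
    so that code counts towards the threshold. Context and suggestion are
    tokenized separately, a simplification at the cursor boundary.
    """
    ctx = tokenize(context)
    tokens = ctx + tokenize(suggestion)
    for start, window in windows(tokens, n):
        # Ignore windows lying entirely inside the context: the suggestion
        # must contribute at least one word to the matched run.
        if start + n > len(ctx) and window in index:
            return True
    return False


training = [" ".join(f"t{i}" for i in range(70))]      # one 70-word "file"
index = build_index(training)
context = " ".join(f"t{i}" for i in range(40))         # 40 words already typed
suggestion = " ".join(f"t{i}" for i in range(40, 70))  # 30 suggested words

assert is_flagged(context, suggestion, index)  # 40 + 30 matching words: flagged
assert not is_flagged("", suggestion, index)   # 30 words alone stay below 60
```

The usage lines mirror the “very common snippet” case: the completion on its own is too short, but together with what the user already typed it clears the threshold.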

In the following example, the user has started writing a very common snippet. GitHub Copilot completes it. Even though the completion itself is rather short, together with the already existing code, it clears the threshold and is retained.

This procedure is permissive enough to let many relatively “boring” examples through, like the two above. But it’s still effective at focusing the human analysis on the interesting cases, filtering out more than 99% of GitHub Copilot suggestions.

Manual bucketing

After filtering, there were 473 suggestions left. However, they came in very different forms:

- Some were basically just repeats of another case that passed filtering. For example, sometimes GitHub Copilot makes a suggestion, the developer types a comment line, and GitHub Copilot offers a very similar suggestion again. I removed these cases from the analysis as duplicates.

- Then there are all other cases: those with at least some specific overlap in either code or comments. These are what interest me the most, and what I’m going to concentrate on moving forward.

This bucketing necessarily has some edge cases 6 , and your mileage may vary in how you think they should be classified. Maybe you even disagree with the whole set of buckets in the first place.

That’s why we’ve open sourced that dataset 7 . So, if you feel a bit differently about the bucketing, or if you’re interested in other aspects of GitHub Copilot parroting its training set, you’re very welcome to ignore my next section and draw your own conclusions.

For most of GitHub Copilot’s suggestions, our automatic filter didn’t find any significant overlap with the code used for training. Yet it did bring 473 cases to our attention. Removing the first bucket (cases that look very similar to other cases) left me with 185 suggestions. Of these suggestions, 144 got sorted out in buckets 2 – 4. This left 41 cases in the last bucket, the “recitations,” in the meaning of the term I have in mind.

That corresponds to one recitation event every 10 user weeks (95% confidence interval: 7 – 13 weeks, using a Poisson test).
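The post doesn’t say exactly which Poisson procedure produced that interval. One standard choice is the exact (Garwood) interval for a Poisson count, which needs only the standard library. Dividing the 396 user weeks by the interval bounds for 41 events gives roughly 7 to 13 weeks, in line with the range quoted above. The helper names below are hypothetical.

```python
import math


def poisson_cdf(k: int, mu: float) -> float:
    """P(X <= k) for X ~ Poisson(mu); terms computed in log space."""
    return sum(
        math.exp(-mu + i * math.log(mu) - math.lgamma(i + 1)) for i in range(k + 1)
    )


def solve_decreasing(f, target: float, lo: float, hi: float, iters: int = 200) -> float:
    """Bisection for f(mu) == target, where f decreases as mu grows."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) > target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def poisson_exact_ci(x: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact (Garwood) two-sided confidence interval for a Poisson count x >= 1."""
    search_max = 10.0 * x + 10.0  # comfortably above any plausible bound
    lower = solve_decreasing(lambda mu: poisson_cdf(x - 1, mu), 1 - alpha / 2, 1e-9, search_max)
    upper = solve_decreasing(lambda mu: poisson_cdf(x, mu), alpha / 2, 1e-9, search_max)
    return lower, upper


events, user_weeks = 41, 396
low_count, high_count = poisson_exact_ci(events)
# More events means fewer weeks between them, so the bounds swap places.
print(f"one recitation every {user_weeks / events:.1f} user weeks")
print(f"95% CI: every {user_weeks / high_count:.1f} to {user_weeks / low_count:.1f} weeks")
```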

Naturally, this was measured on the GitHub and Microsoft developers who tried out GitHub Copilot. If your coding behavior is very different from theirs, your results might differ. Some of these developers work only part-time on Python projects. I could not distinguish that and therefore counted everyone who wrote some Python in a given week as a user.

One event in 10 weeks doesn’t sound like a lot, but it’s not 0 either. Also, I found three things that struck me.

GitHub Copilot quotes when it lacks specific context

If I want to learn the lyrics to a song, I must listen to it many times. GitHub Copilot is no different: To learn a snippet of code by heart, it must see that snippet a lot. Each file is only shown to GitHub Copilot once, so the snippet needs to exist in many different files in public code.

Of the 41 main cases we singled out during manual labelling, none appears in fewer than 10 different files. Most (35 cases) appear more than a hundred times. In one instance, GitHub Copilot suggested starting an empty file with something it had seen more than a whopping 700,000 different times during training: the GNU General Public License.

The following plot shows the number of matched files for the results in bucket 5 (one red mark on the bottom for each result), compared with buckets 2-4. I left out bucket 1, which is really just a mix of duplicates of bucket 2-4 cases and duplicates of bucket 5 cases. The inferred distribution is displayed as a red line; it peaks between 100 and 1000 matches.

GitHub Copilot mostly quotes in generic contexts

As time goes on, each file becomes unique. Yet GitHub Copilot doesn’t wait for that 8 . It will offer its solutions while your file is still extremely generic. And in the absence of anything specific to go on, it’s much more likely to quote from somewhere else than it would be otherwise.

Of course, software developers spend most of their time deep inside the files, where the context is unique enough that GitHub Copilot will offer unique suggestions. In contrast, the suggestions at the beginning are rather hit-and-miss, since GitHub Copilot cannot know what the program will be. Yet sometimes, especially in toy projects or standalone scripts, a modest amount of context can be enough to hazard a reasonable guess of what the user wanted to do. And sometimes the context is still generic enough that GitHub Copilot thinks one of the solutions it knows by heart looks promising:

This is all but directly taken from coursework for a robotics class uploaded in different variations 9 .

Detection is only as good as the tool that does the detecting

In its current form, the filter will turn up a good number of uninteresting cases when applied broadly, but it should not produce too much noise. For the internal users in the experiment, it would have flagged a bit more than one find per week on average (473 finds over 396 user weeks, albeit likely in bursts!). Of these finds, roughly 17% (95% confidence interval using a binomial test: 14%-21%) would be in the fifth bucket.

Nothing is ever foolproof, of course, so this too can be tricked. Some cases are rather hard for the tool we’re building to detect, but still have an obvious source. To return to the Zen of Python:

Conclusion and next steps

This investigation demonstrates that GitHub Copilot can quote a body of code verbatim, yet it rarely does so, and when it does, it mostly quotes code that everybody quotes, typically at the beginning of a file, as if to break the ice.

However, there’s still one big difference between GitHub Copilot reciting code and me reciting a poem: I know when I’m quoting. I would also like to know when Copilot is echoing existing code rather than coming up with its own ideas. That way, I can look up background information about that code and include credit where credit is due.

The answer is obvious: sharing the prefiltering solution we used in this analysis to detect overlap with the training set. When a suggestion contains snippets copied from the training set, the UI should simply tell you where it’s quoted from. You can then either include proper attribution, or decide against using that code altogether.

This duplication search is not yet integrated into the technical preview, but we plan to do so. We will continue to work both on decreasing rates of recitation and on making its detection more precise.

1: On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?

2: Tiferet Gazit

3: See von Bayern et al. about the creative wisdom of crows: Compound tool construction by New Caledonian crows

4: See Carlini et al. about deliberately triggering the recall of training data: Extracting Training Data from Large Language Models

5: jaeteekae: DelayedTwitter

6: Probably not too many, though. I asked some developers to help me label the cases, and everyone was prompted to flag any uncertainty in their judgement. That happened in only 34 cases, i.e. less than 10%.

7: In the public dataset, I list the part of Copilot’s suggestion that was also found in the training set, how often it was found, and a link to an example where it occurs in public code. For privacy reasons, I don’t include the unmatched part of the completion or the code context the user had typed (only an indication of its length).

8: In fact, since this experiment was run, GitHub Copilot has changed to require a minimum amount of file content. So some of the suggestions flagged here would not have been shown by the current version.

9: For example, jenevans33: CS8803-1


How to read Machine Learning and Deep Learning Research papers

Tips on preparing a literature survey of a field and on how to read ML/DL research papers. The 3-pass method for reading ML or DL research papers is discussed.

Jul 31, 2021 • Sai Amrit Patnaik • 23 min read


Introduction


Knowing how to read a research paper is probably the most important skill to master for anyone doing research, or for anyone who simply wants to stay up to date with the latest advances in a field. When someone starts out in a domain, the first advice they usually get is to look for the relevant literature and read papers to develop an understanding of the domain. Papers are the most reliable and up-to-date source of information about a particular domain: a research paper is the result of days of brainstorming and of structured, systematic experimentation to develop and validate an approach.

But why is reading papers considered such an important skill? Why is reading papers even necessary? Let’s first build some motivation for why reading papers is important for keeping up with the latest advances.

This article is a summary of a talk I delivered for the Introductory Paper Reading Session, generously supported by Weights and Biases. The recorded version can be found here and the slides can be found here.

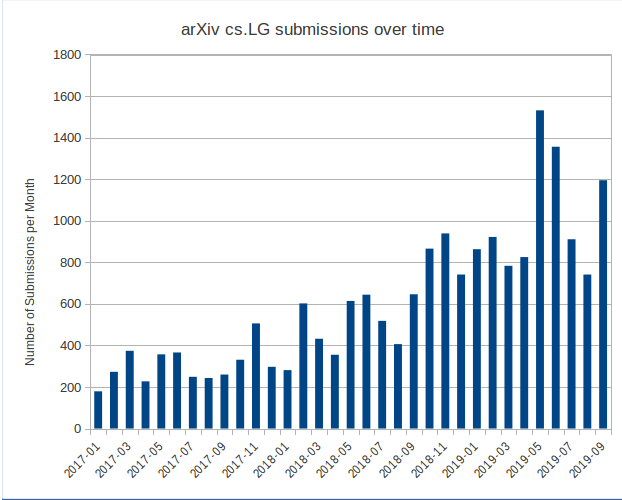

The field of deep learning has grown very rapidly in recent years. One way to quantify the growth of a field is by the number of papers published every day. Here is an illustration from one of the studies by arXiv, the platform where almost all papers, whether published or unpublished, are put up.

From the figure, we can see that the average number of papers has grown 5X, from around 300 papers per month in 2017 to around 1,500 papers per month in 2019. The figure is probably close to or above 2k papers per month in 2021. That is a huge number of papers coming out every day, and it shows how dynamic the field currently is: it keeps growing exponentially, both in the number of papers and in the amount of new ideas and experiments.
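To put the 5X figure in perspective, here is the compound-growth arithmetic using only the two numbers quoted above (about 300 papers per month in 2017 and about 1,500 in 2019), under the simplifying assumption of smooth exponential growth between them. `growth_stats` is a hypothetical helper.

```python
import math


def growth_stats(start: float, end: float, years: float) -> tuple[float, float, float, float]:
    """Overall factor, yearly factor, monthly growth rate and doubling time (months),
    assuming smooth exponential growth between the two data points."""
    total = end / start
    yearly = total ** (1 / years)
    monthly = total ** (1 / (12 * years)) - 1
    doubling_months = math.log(2) / math.log(1 + monthly)
    return total, yearly, monthly, doubling_months


# Figures quoted in the text: ~300 papers/month in 2017, ~1,500 papers/month in 2019.
total, yearly, monthly, doubling = growth_stats(300, 1500, 2)
print(f"{total:.1f}x overall, {yearly:.2f}x per year, "
      f"{monthly:.1%} per month, doubling every {doubling:.1f} months")
```

A 5X increase over two years works out to roughly 2.24X per year, or about 7% more papers every month, so the monthly count doubles roughly every 10 months.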

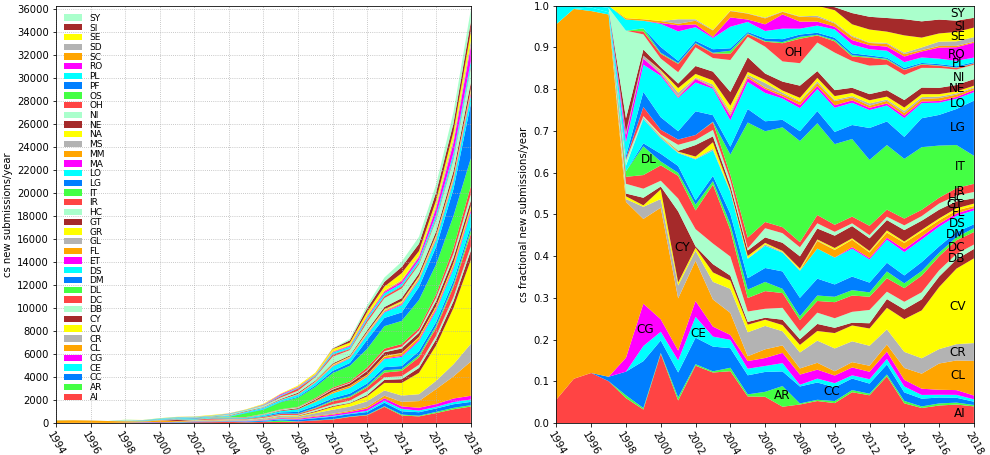

Let’s look at another figure, from another study by arXiv.

From this figure, the number of papers in Computer Science has grown along a steep exponential curve: around 36k papers now come out each year, of which around 24k are in ML and DL, as we saw in the previous section. Both figures also show that DL (in green) and CV (in yellow) have been among the dominant areas, in terms of the percentage of papers published each year, since the early 2000s, and that CV has grown and opened up a lot since 2012, probably when the prominent work on image classification with deep networks showed significant performance gains. These studies clearly show how fast the field of Computer Science is growing and, within it, how fast the sub-areas related to Machine Learning and Deep Learning are evolving.

I hope these give a good idea of how fast the field has been evolving, and it will likely evolve even faster in the future. But in such a fast-evolving field, how can we keep up with the pace and develop expertise?

In the words of Dr. Jennifer Raff: “To form a truly educated opinion on a scientific subject, you need to become familiar with current research in that field. And to be able to distinguish between good and bad interpretations of research, you have to be willing and able to read the primary research literature for yourself.”

- To have a better grasp and understanding of the field: There may be plenty of video lectures and books on a particular field, but at the rate the field is growing, no book or video lecture can include the latest results as soon as they are published. Research papers provide the most up-to-date and reliable information in the field.

- To be able to contribute novel ideas to the field: When we start working in a field, the first thing we are advised to do is an extensive literature survey, going through the latest papers published in the field to date. Reading papers gives us a very good understanding of the directions of work in the field and of how the people actively working in it are thinking. Only then can we start coming up with our own ideas to experiment with.

- To develop confidence in the field: Once we start learning about the latest work in the field and develop a good understanding of it through an extensive literature survey, we become more confident in performing experiments and exploring the field more deeply.

- Most condensed and authentic source of the latest knowledge in the field: A research paper comes out of days, months, or sometimes even years of brainstorming ideas, performing extensive experiments and validating the expected outcomes. A research paper is where the authors express these experiments and ideas in their most condensed form. Any new state-of-the-art work in a field appears in research papers: they are how work that pushes the limits of knowledge in a field comes out.

Motivated enough?

Now that we have enough motivation for why we should read research papers, let’s look at how to do a literature survey in a domain.

Let’s do it!

Literature survey of a domain

The basic steps to perform literature survey in a field are the following:

- Assemble a collection of resources in the form of research papers, Medium articles, blog posts, videos, GitHub repositories, etc.

- Conduct a deep dive to classify the relevant and irrelevant material.

- Take structured notes that summarise the key discoveries, findings and techniques in each paper.

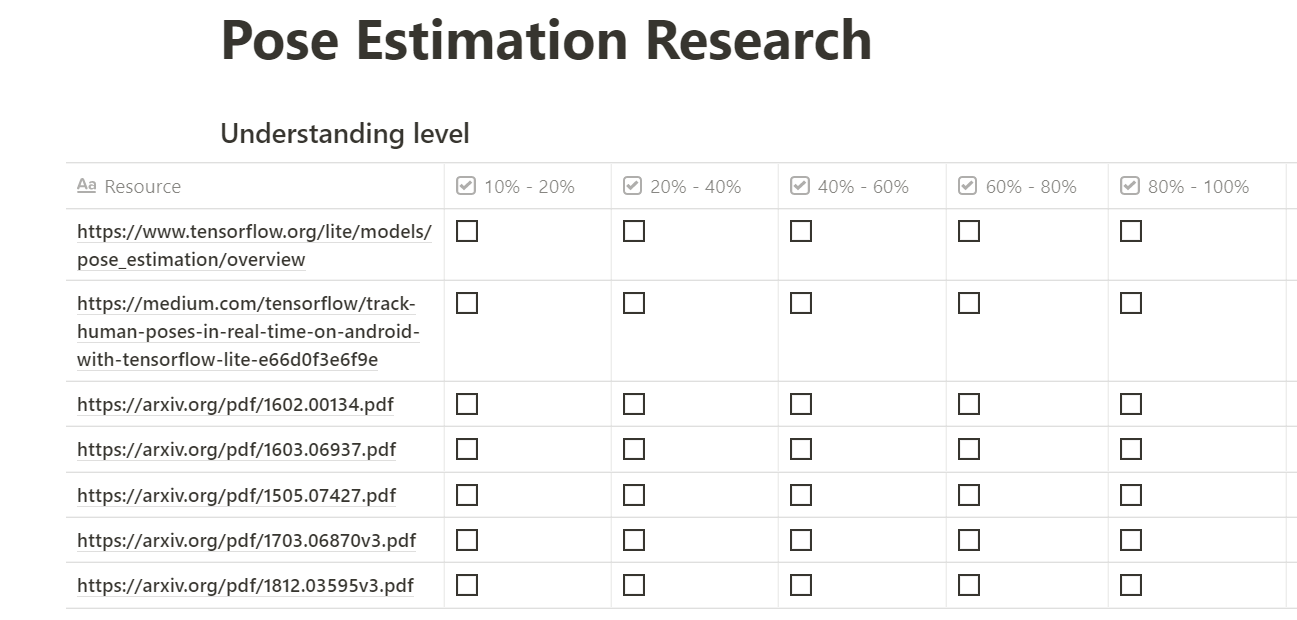

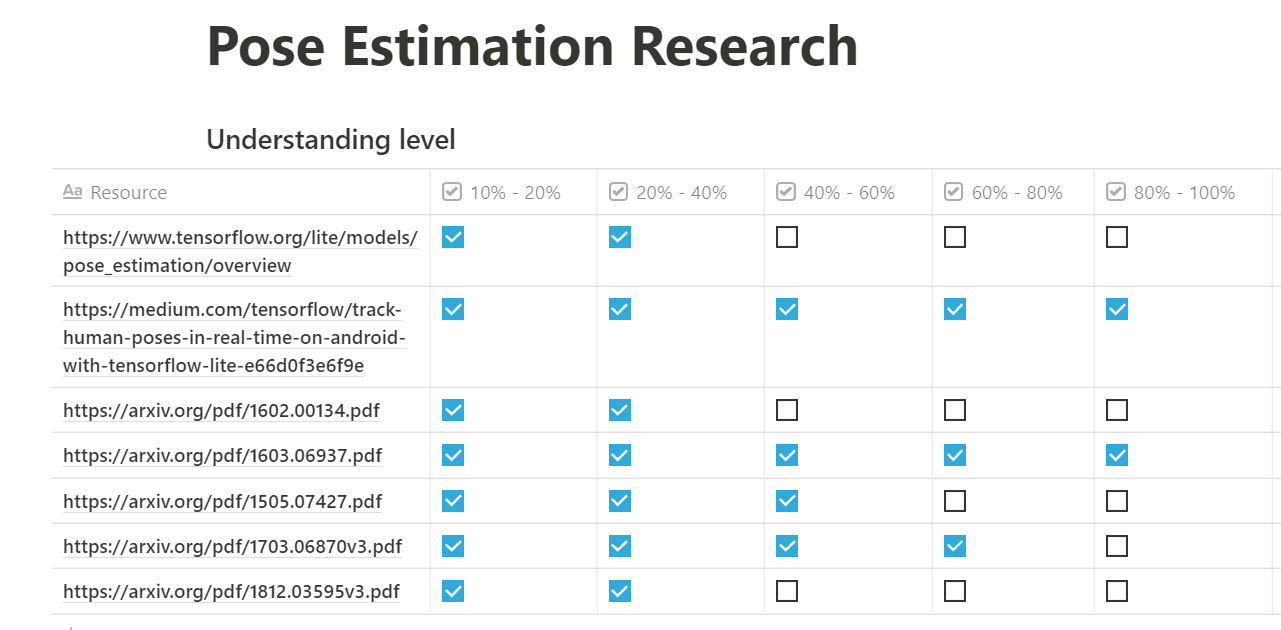

We shall take pose estimation as an example domain and go through each step.

First, we collect all the resources available in the field, in the form of blog posts, GitHub repositories, Medium articles and research papers; in our case, the field is pose estimation. The important question here is: where can we find relevant resources in the field?

Following are sources where we can find the latest papers and resources:

- Twitter: We can follow top researchers, groups and labs actively working and publishing in our domain and stay updated on what they are currently working on.

- ML subreddit

- arXiv: A platform where almost all papers, whether accepted at a conference or not, are uploaded.

- Arxiv Sanity Preserver: Created by Andrej Karpathy, it uses ML techniques to suggest relevant papers based on previous searches and interests.

- Papers With Code: Links to each paper’s abstract page on arXiv and to open-source implementations of the paper, along with links to the datasets used and a lot of other analysis and meta-information, such as the current state-of-the-art method and a comparison of the performance of all previous methods in the field.

- Top ML and DL conferences (CVPR, ICCV, NeurIPS, ICML, ICLR, etc.): The proceedings of these conferences are a great place to look for the latest works accepted in their domains.

- Google Search
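Part of this collection step can be scripted. arXiv offers a public query API at export.arxiv.org that returns results as an Atom feed; the sketch below builds a query for the most recently submitted papers matching a phrase and extracts titles and links using only the Python standard library. The helper names are hypothetical, and you should check arXiv’s API usage guidelines (e.g. on request rates) before running queries in a loop.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET

ARXIV_API = "http://export.arxiv.org/api/query"
ATOM = "{http://www.w3.org/2005/Atom}"  # namespace of the Atom feed


def build_query_url(phrase: str, max_results: int = 20) -> str:
    """URL for the newest arXiv papers whose metadata contains an exact phrase."""
    params = {
        "search_query": f'all:"{phrase}"',
        "start": 0,
        "max_results": max_results,
        "sortBy": "submittedDate",
        "sortOrder": "descending",
    }
    return f"{ARXIV_API}?{urllib.parse.urlencode(params)}"


def parse_feed(atom_xml: str) -> list[dict]:
    """Extract title, abstract-page URL and submission date from each entry."""
    root = ET.fromstring(atom_xml)
    papers = []
    for entry in root.findall(f"{ATOM}entry"):
        papers.append({
            # Titles in the feed may contain line breaks; collapse whitespace.
            "title": " ".join(entry.findtext(f"{ATOM}title", "").split()),
            "url": entry.findtext(f"{ATOM}id", ""),
            "published": entry.findtext(f"{ATOM}published", ""),
        })
    return papers


def fetch_papers(phrase: str, max_results: int = 20) -> list[dict]:
    """Run the query against the live API (requires network access)."""
    with urllib.request.urlopen(build_query_url(phrase, max_results)) as resp:
        return parse_feed(resp.read().decode("utf-8"))
```

For example, `fetch_papers("pose estimation", 10)` returns the ten most recently submitted matching papers, ready to be pasted into the first column of your survey table; `parse_feed` also works on a saved response.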

Once we have listed all the papers we wish to look at, and every resource we could find, relevant or irrelevant, we can prepare a table in the format shown in figure 3 and list all the collected resources in its first column.

With the table prepared, the next step is to keep the relevant resources and reject the unnecessary ones that may not be directly related to what we want to work on or to our research objectives. The following steps do that:

For every resource listed, read about 10% of it (a first-pass reading, which we will discuss later). If we find that it is not related to our research objective, we can reject it.

If the resource is related to our objective and is relevant and important to us, read it completely. If its references contain other relevant works, mark them in the original paper, add them to the list, and repeat the same process for each new paper or resource.
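The filtering procedure above is essentially a worklist algorithm: skim everything, read the relevant items fully, and feed newly discovered references back into the queue. A sketch in Python, where `skim_is_relevant` and `read_fully` are hypothetical callbacks standing in for you, the reader:

```python
from collections import deque


def triage(resources, skim_is_relevant, read_fully):
    """Worklist version of the filtering steps above.

    skim_is_relevant(r) -> bool : the ~10% first-pass judgement.
    read_fully(r) -> list       : a complete read, returning relevant references found in r.
    Returns (kept, rejected) in the order the resources were processed.
    """
    queue = deque(dict.fromkeys(resources))  # preserve order, drop duplicates
    seen = set(queue)
    kept, rejected = [], []
    while queue:
        r = queue.popleft()
        if not skim_is_relevant(r):
            rejected.append(r)
            continue
        kept.append(r)
        for ref in read_fully(r):
            if ref not in seen:  # each new reference joins the list exactly once
                seen.add(ref)
                queue.append(ref)
    return kept, rejected


# Toy example: A cites C and E, C cites D; only A, C and D are relevant.
relevant = {"A", "C", "D"}
citations = {"A": ["C", "E"], "C": ["D", "A"]}
kept, rejected = triage(["A", "B"], lambda r: r in relevant, lambda r: citations.get(r, []))
print(kept, rejected)  # ['A', 'C', 'D'] ['B', 'E']
```

The `seen` set is what keeps a reference that several papers cite from being added to the list, and read, more than once.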

After this, the final table might look like this:

Notice that the 2nd, 4th and 6th resources were important and relevant, so we read them in detail. The others were either not very important or not entirely relevant, so we read only the portion of each that was necessary and left the rest.

Such a table can be really useful when we return to it after some months or years to recall what we have read, or which papers we have already looked at and rejected. It saves a lot of time otherwise spent iterating over unnecessary resources and helps us dedicate our time effectively to the useful ones.
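If you’d rather keep this table in plain text, for example next to your notes in a GitHub repository, a CSV file is enough. A small sketch; the columns are an assumption modelled loosely on the table described here (how much of each resource was read, whether it is relevant, and notes), not a copy of figure 3.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class Entry:
    resource: str       # title or URL of the paper, blog post, repository, ...
    percent_read: int   # e.g. 10 after a first pass, 100 after a full read
    relevant: bool
    notes: str = ""


def to_csv(entries: list[Entry]) -> str:
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(Entry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))  # booleans are written as "True"/"False"
    return buf.getvalue()


def from_csv(text: str) -> list[Entry]:
    return [
        Entry(row["resource"], int(row["percent_read"]), row["relevant"] == "True", row["notes"])
        for row in csv.DictReader(io.StringIO(text))
    ]


table = [
    Entry("Resource 1", 10, False, "different task, rejected after skim"),
    Entry("Resource 2", 100, True, "read fully; follow up on its references"),
]
assert from_csv(to_csv(table)) == table
print([e.resource for e in table if e.relevant])  # ['Resource 2']
```

Plain CSV diffs cleanly under version control and opens in any spreadsheet tool, which makes it easy to revisit the table months later.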

Once we have decided which papers to read, how to take notes is up to the individual. I personally use an annotation tool to annotate different sections of the paper in whatever way I find comfortable. I prepare flow charts for the overall flow of the paper, write some explanatory notes on it, and summarise each paper to the best of my understanding in a GitHub repository. Here I would like to give a shoutout to Akshay Uppal, who generously shared his blog post with his annotated version of the MLP-Mixer paper for the Weights and Biases paper reading group. I would also like to share one of my own literature-survey repositories, from when I started working in the field of face spoofing.

Tip: Use whatever method you are comfortable with for understanding the content and taking notes, and organise your notes on GitHub, Notion, Google Docs, etc.

The majority of papers follow, more or less, the same convention of organization:

- Title: Hopefully catchy! Also includes information about the authors and their institutions.

- Abstract: High level summary of the entire work of the paper.

- Introduction: Background info on the field and related research leading up to this paper.

- Related works: Describes the existing literature in the particular domain.

- Methods: Highly detailed section on the study that was conducted, how it was set up, any instruments used, and finally, the process and workflow.

- Results: The authors present the data that was created or collected; this section should read as an unbiased account of what occurred.

- Discussion: Here the authors interpret the results and try to convince readers of their findings and hypotheses.

- References: Any other work that was cited in the body of the text will show up here.

- Appendix: More figures, additional treatments on related math, or extra items of interest can find their way in an appendix.

Finally, we come to the most awaited section of this blog post!

Now that we know the different sections of a paper, to understand how to read a paper we need to understand how an author writes one. An author writes a paper with the intention of getting it accepted at a conference. At conferences, reviewers read all the submissions and make a decision based on the work and on the scope and expectations of the conference. Let’s take a quick, very high-level look at how the review process works.

Warning: Reading a paper sequentially one section after another is not a good option.

Most top conferences have two submission deadlines: first, the abstract submission deadline, and second, the full paper submission deadline. So why exactly are there two deadlines? A separate abstract deadline, even before the paper deadline, implies that the abstract is an important part of the paper. But why is the abstract important?

Note: When considering submitting to a conference, always check for two deadlines: one for the abstract submission and a second for the full paper submission.

Every year, a lot of papers are submitted to each conference. The number of submissions runs into the thousands, and it is not feasible to read every paper in full, however many reviewers the conference has. So, to make the review process easier and quicker, there are guidelines for how the different sections of a paper must be written, and reviewers read papers following the same pattern.

The first level of review is always abstract filtering. The abstract is supposed to summarise the entire work and to state the problem statement and the solution clearly and briefly. If the abstract does not satisfy these criteria, the paper is rejected at this stage, so the abstract has to explain the gist of the work clearly. Likewise, when we read a paper, the abstract is where we find its gist stated clearly and briefly, which is why the abstract is read first to get an overall idea of the work. Authors also put a lot of effort into one figure that gives a visual illustration of the entire approach or a complete flow chart of the work. This figure, too, contains the gist of the paper’s method: the authors try to pack as much information about their work as possible into a single figure.

Note: The abstract is one of the most important sections of a paper: it explains the gist of the paper in brief, and the most important figure summarises the method adopted.

Reviewers then read the introduction section, as it should explain the problem statement in detail along with the main proposal and contributions of the paper. Immediately after this, once you know what the paper is claiming, the conclusion section tells you the outcome of the work and whether the assumptions and expectations presented in the introduction were satisfied.

Note: The introduction section is supposed to explain the problem statement in detail and the major contributions of the paper. We get to know the intent of the author from this section. The Conclusion section validates the assumptions and propositions given in the introduction through experiments and proofs.

After confirming that the propositions have been validated successfully, reviewers look at the method section in detail to see what approach was taken to achieve the goal. The discussion section explains, through the experiments, why exactly the proposed method works. This is basically how a reviewer reads a paper, and it is the same approach that readers like us should take.

Three-pass approach to reading a research paper

We take a 3-pass approach to reading research papers. The content covered in each pass mirrors the review procedure discussed in the last section. Here are the 3 passes:

- First Pass: Read the title, abstract and the summarising figure. You should be able to answer the five C’s (Category, Context, Correctness, Contribution, Clarity).

- Second Pass: Read the Introduction, Conclusion and remaining figures, and skim the rest of the sections (ignoring details such as mathematical derivations, proofs, etc.).

- Third Pass: Read the entire paper with the intention of reimplementing it.

Let’s go through each pass in detail.

The main intention of the first pass is to understand the overall gist of the paper and get a bird’s-eye view of it: to understand the author’s view of the problem statement and the thought process behind the solution. This pass focuses on the abstract and the summarising figure, extracting the best possible information about the problem statement the paper addresses, the solution, and the method. The following points are what we cover in the first pass:

- Read through the Title, abstract and the summarising figure.

- Skip all other details of the paper.

- Glance at the paper and understand its overall structure.

- Category: What category of paper is it? An architecture paper, a new training strategy, a new loss function, a review paper, etc.

- Context: Which previous works and areas does it relate to? E.g., the DenseNet paper is an architecture paper that falls into the context of ResNet-style network architectures.

- Correctness: How valid is the problem statement the paper is addressing, and how correct does the proposed solution sound? Honestly, correctness can’t be fully judged from the first pass alone, since a complete answer requires looking at the conclusion section, but judge it as best you can with your current knowledge.

- Contribution: What exactly does the paper contribute to the community? E.g., the ResNet paper contributed the residual block with its skip connections.

- Clarity : How clearly does the abstract explain the problem statement and their approach towards it.

- Based on our understanding from the first pass, we decide whether to continue with the paper for a more detailed study in further passes, or to stop.
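The first-pass checklist maps naturally onto a small record, one field per C plus the go/no-go decision, which you could keep alongside the survey table. A hypothetical Python sketch; the 1-to-5 clarity scale and the DenseNet entries are illustrative choices, not part of the method:

```python
from dataclasses import dataclass


@dataclass
class FirstPass:
    """First-pass notes: one field per 'C' plus the decision to continue."""
    title: str
    category: str         # architecture, training strategy, loss function, review, ...
    context: str          # prior work it builds on
    correctness: str      # provisional; revisit after reading the conclusion
    contribution: str
    clarity: int          # 1 (unclear) to 5 (very clear), an arbitrary scale
    continue_reading: bool

    def __post_init__(self):
        if not 1 <= self.clarity <= 5:
            raise ValueError("clarity must be between 1 and 5")

    def summary(self) -> str:
        verdict = "second pass" if self.continue_reading else "stop here"
        return (f"{self.title} [{self.category}] builds on {self.context}; "
                f"contribution: {self.contribution}; clarity {self.clarity}/5 -> {verdict}")


notes = FirstPass(
    title="DenseNet",
    category="architecture",
    context="ResNet-style networks",
    correctness="plausible, check the conclusion",
    contribution="dense connections between layers",
    clarity=4,
    continue_reading=True,
)
print(notes.summary())
```

Writing the decision down explicitly is what makes the 10% skim of the literature survey cheap to revisit later.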

When discussing the literature survey, I mentioned reading about 10% of each resource to figure out whether it is relevant to us. That 10% basically means doing a first pass over each resource.

Note: After the first pass, we understand the gist of the paper and get into the intent and thinking of the author.

With the overall gist of the paper from the first pass, we head on to the second pass. Its main intention is to understand the paper in a little more detail: the problem statement, whether the paper validates the propositions it sets out to prove, the method, and the experiments (through the discussion section). The following is what we do in a second pass:

- Read the Introduction, Conclusion and other figures in more depth.

- Skip the literature survey, mathematical derivations, proofs, and anything else that seems complicated and needs extended study of the references or other resources.

- Understand the other figures in the paper properly, and develop an intuition for the tables, charts and analysis presented. These figures contain a lot of latent information and explain a lot more, so it is important to extract as much understanding from them as possible.

- Discuss the gist of the paper and main contents with a friend or colleague.

- Mark relevant references that may be required to be revisited later.

- Decide whether to go forward or stop based on this pass.

After the second pass, we have a good understanding of the paper’s method, its experiments and the conclusions drawn from them. Depending on that understanding, we decide whether to go on to the next pass.

Tip: A second pass is suitable for papers that you are interested in but that are not from your field or not directly related to your research goal.

After getting a more in-depth understanding of the paper from the second pass, we go on to the final and most detailed pass. This pass is only for papers that are most important to, and directly related to, the research objective we are working on. The key points for a third pass are:

- Reading with an intention to reimplement the paper.

- Consider every minor assumption and details and make note of it.

- Recreate the exact work in the paper and compare it with the original work.

- Identify, question and challenge every assumption in the paper.

- Make a flow chart of the entire process considering each step.

- Try deriving the mathematical derivations from scratch.

- Start looking at the code implementation of various components if an open source implementation is available else try to implement it.

After a third pass, we should know the paper inside out. That includes every minor assumption and detail, a clear understanding of the implementation, and a good understanding of the hyperparameters of each experiment performed and presented in the paper. After all three passes, we can claim to have a clear understanding of the research paper.

To validate our understanding of the paper, we can try to answer a few generic questions about it. If we can answer them, we have understood the paper well enough to use it in our own research, according to our requirements and objectives.

- The answer to this can be found briefly in the Abstract and in detail in the Introduction.

- A self-assessment of the problem statement.

- The answer to this can be found in the Introduction.

- The answer to this can be found in the contributions part of the Introduction and in the Methods section.

- The answer to this is the entire Methods section and the Discussion section.

- The answer to this is the entire Conclusion section.

- Often, a paper combines many key elements to solve its problem statement. Your problem statement may be just a subset of the paper's, or vice versa. A particular element of the paper may also solve a problem you are interested in while the others do not. So it is important to figure out which parts of the paper are useful to you.

- Some sections of the paper may seem complicated, or you may need to read earlier references to understand the work completely. You might also find papers among the citations that are useful for your own research. So figure out the necessary references and read them.

Being able to answer all these questions to the best of our understanding and abilities validates our level of understanding of the paper. We can attempt the questions right after the second pass to check our understanding at that point. We can then answer them again after the third pass and judge whether our understanding has improved, or whether another, deeper pass is needed.

Tip: Nothing teaches better than implementing the entire thing from scratch, experimenting with it, and comparing your results with the original results. Even if an open-source implementation is available, experimenting with the code, making your own tweaks, and running different hyperparameters can improve your understanding a lot.

Finally, an important note: reading papers is a skill that is learned through consistent practice over a long period of time. It is not a sprint but a marathon, and it demands a lot of patience and consistency.

I hope I have been able to justify the title of this blog post and explain in detail how to do a literature survey of a domain and how to read an ML/DL research paper. In case I missed anything or you have any other comments, reach out to me at @SaiAmritPatnaik.

Thank you !

- Andrew Ng’s lecture in CS230 on how to read research papers

- S. Keshav’s paper on how to read research papers

- Slides of the talk

- Blog Post 1 on reading Papers

- Blog Post 2 on reading Papers


Machine learning

Machine learning is the practice of teaching a computer to learn. The concept uses pattern recognition, as well as other forms of predictive algorithms, to make judgments on incoming data. This field is closely related to artificial intelligence and computational statistics.

Here are 124,998 public repositories matching this topic...

tensorflow / tensorflow

An Open Source Machine Learning Framework for Everyone

- Updated May 10, 2024

huggingface / transformers

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

pytorch / pytorch

Tensors and Dynamic neural networks in Python with strong GPU acceleration

netdata / netdata

The open-source observability platform everyone needs!

microsoft / ML-For-Beginners

12 weeks, 26 lessons, 52 quizzes, classic Machine Learning for all

- Updated May 4, 2024

Developer-Y / cs-video-courses

List of Computer Science courses with video lectures.

- Updated May 9, 2024

keras-team / keras

Deep Learning for humans

tesseract-ocr / tesseract

Tesseract Open Source OCR Engine (main repository)

- Updated May 3, 2024

scikit-learn / scikit-learn

scikit-learn: machine learning in Python

d2l-ai / d2l-zh

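Dive into Deep Learning (《动手学深度学习》): aimed at Chinese readers, runnable, and open to discussion. The Chinese and English editions are used for teaching at more than 500 universities in more than 70 countries.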

- Updated May 8, 2024

binhnguyennus / awesome-scalability

The Patterns of Scalable, Reliable, and Performant Large-Scale Systems

- Updated Apr 25, 2024

ageitgey / face_recognition

The world's simplest facial recognition api for Python and the command line

- Updated Feb 24, 2024

deepfakes / faceswap

Deepfakes Software For All

- Updated Apr 29, 2024

labmlai / annotated_deep_learning_paper_implementations

🧑🏫 60 Implementations/tutorials of deep learning papers with side-by-side notes 📝; including transformers (original, xl, switch, feedback, vit, ...), optimizers (adam, adabelief, sophia, ...), gans(cyclegan, stylegan2, ...), 🎮 reinforcement learning (ppo, dqn), capsnet, distillation, ... 🧠

- Updated Mar 23, 2024

- Jupyter Notebook

ultralytics / yolov5

YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite

iperov / DeepFaceLab

DeepFaceLab is the leading software for creating deepfakes.

- Updated Oct 24, 2023

JuliaLang / julia

The Julia Programming Language

Avik-Jain / 100-Days-Of-ML-Code

100 Days of ML Coding

- Updated Dec 29, 2023

aymericdamien / TensorFlow-Examples

TensorFlow Tutorial and Examples for Beginners (support TF v1 & v2)

- Updated Feb 5, 2024

LAION-AI / Open-Assistant

OpenAssistant is a chat-based assistant that understands tasks, can interact with third-party systems, and retrieve information dynamically to do so.

- Updated May 7, 2024


- LEARNING THEORY


Data-error scaling in machine learning on natural discrete combinatorial mutation-prone sets: Case studies on peptides and small molecules

8 May 2024 · Vanni Doffini, O. Anatole von Lilienfeld, Michael A. Nash

We investigate trends in the data-error scaling behavior of machine learning (ML) models trained on discrete combinatorial spaces that are prone-to-mutation, such as proteins or organic small molecules. We trained and evaluated kernel ridge regression machines using variable amounts of computationally generated training data. Our synthetic datasets comprise i) two naïve functions based on many-body theory; ii) binding energy estimates between a protein and a mutagenised peptide; and iii) solvation energies of two 6-heavy atom structural graphs. In contrast to typical data-error scaling, our results showed discontinuous monotonic phase transitions during learning, observed as rapid drops in the test error at particular thresholds of training data. We observed two learning regimes, which we call saturated and asymptotic decay, and found that they are conditioned by the level of complexity (i.e. number of mutations) enclosed in the training set. We show that during training on this class of problems, the predictions were clustered by the ML models employed in the calibration plots. Furthermore, we present an alternative strategy to normalize learning curves (LCs) and the concept of mutant based shuffling. This work has implications for machine learning on mutagenisable discrete spaces such as chemical properties or protein phenotype prediction, and improves basic understanding of concepts in statistical learning theory.
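The abstract's basic setup is kernel ridge regression evaluated on growing amounts of training data, and it can be sketched in a few lines. This is a minimal sketch, not the authors' code. It assumes a Gaussian (RBF) kernel, a hypothetical 1-D toy target sin(3x) in place of the paper's peptide and molecule datasets, and hand-picked values for the kernel width `gamma` and the ridge parameter `lam`. Fitting solves alpha = (K + lam * I)^-1 y, and predictions are f(x) = sum_i alpha_i k(x_i, x). The `learning_curve` helper (a hypothetical name) records the test error for each training-set size, which is what a learning curve plots.

```python
import math
from typing import Callable, List, Sequence


def rbf_kernel(a: Sequence[float], b: Sequence[float], gamma: float) -> float:
    """Gaussian (RBF) kernel: k(a, b) = exp(-gamma * ||a - b||^2)."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * sq_dist)


def solve(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve matrix @ x = rhs with Gaussian elimination and partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


class KernelRidge:
    """Kernel ridge regression: alpha = (K + lam * I)^-1 y, f(x) = sum_i alpha_i * k(x_i, x)."""

    def __init__(self, gamma: float = 1.0, lam: float = 1e-6) -> None:
        self.gamma = gamma
        self.lam = lam
        self.X_train: List[List[float]] = []
        self.alpha: List[float] = []

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[float]) -> "KernelRidge":
        n = len(X)
        # Regularized kernel matrix K + lam * I.
        K = [[rbf_kernel(X[i], X[j], self.gamma) + (self.lam if i == j else 0.0)
              for j in range(n)] for i in range(n)]
        self.alpha = solve(K, list(y))
        self.X_train = [list(x) for x in X]
        return self

    def predict(self, X: Sequence[Sequence[float]]) -> List[float]:
        return [sum(a * rbf_kernel(xt, x, self.gamma) for a, xt in zip(self.alpha, self.X_train))
                for x in X]


def learning_curve(target: Callable[[float], float], sizes: Sequence[int],
                   test_points: Sequence[float], gamma: float = 50.0,
                   lam: float = 1e-8) -> List[float]:
    """Test mean absolute error for each size n (n >= 2 evenly spaced training points in [0, 1])."""
    y_test = [target(x) for x in test_points]
    errors = []
    for n in sizes:
        xs = [i / (n - 1) for i in range(n)]
        model = KernelRidge(gamma, lam).fit([[x] for x in xs], [target(x) for x in xs])
        pred = model.predict([[x] for x in test_points])
        errors.append(sum(abs(p - t) for p, t in zip(pred, y_test)) / len(y_test))
    return errors


if __name__ == "__main__":
    test_xs = [i / 20 + 0.025 for i in range(20)]
    sizes = [3, 5, 9, 17]
    for n, err in zip(sizes, learning_curve(lambda x: math.sin(3 * x), sizes, test_xs)):
        print(f"n={n:3d}  test MAE={err:.4f}")
```

In practice, scikit-learn's `sklearn.kernel_ridge.KernelRidge` provides the same model; the hand-rolled solver only keeps the sketch dependency-free. Plotting the returned errors against n on log-log axes gives the usual learning-curve view. The paper reports that, for its mutation-prone datasets, such curves show sudden drops rather than the typical smooth decay.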


InstructLab: Advancing generative AI through open source

Make your mark on AI

While large language models (LLMs) offer incredible potential, they also come with their share of challenges. Working with LLMs demands high-quality training data, specialized skills and knowledge, and extensive computing resources. The process of forking and retraining a model is also time consuming and expensive.

The InstructLab project offers an open source approach to generative AI, sourcing community contributions to support regular builds of an enhanced version of an LLM. This approach is designed to lower costs, remove barriers to testing and experimentation, and improve alignment—that is, ensuring the model's answers are accurate, unbiased, and consistent with the values and goals of its users and creators.

What is InstructLab?

Initiated by IBM and Red Hat, the InstructLab project aims to democratize generative AI through the power of an open source community. It simplifies the LLM training phase through community skills and knowledge submissions.

InstructLab leverages the LAB (Large-scale Alignment for chatBots) methodology to enable community-driven development and model evolution. To learn more about the science behind LAB approach, see the InstructLab research paper posted by IBM .

Who is InstructLab for?

You don’t have to be a rocket scientist to contribute to InstructLab (but it’s great if you are!). With the InstructLab approach, there is minimal technical experience required. Contributions to the model are accepted in the form of knowledge and skills, with topics ranging from Beyoncé facts to professional law. This broad scope of topics makes the process approachable and entertaining.

We especially encourage contributions from experts in non-technical fields. Not only will this enhance the model's performance on a topic, but contributions from non-technical industry experts give them a voice in the AI conversation. InstructLab offers a practical way for less technical folks to contribute to a technical space that is poised to have lasting impact on the world.

What benefits does InstructLab offer?

InstructLab provides a cost-effective, community-driven solution for improving the alignment of LLMs and makes it easy for those with minimal machine learning experience to contribute.

Cost-effective

An open source approach makes InstructLab accessible to individuals and organizations regardless of their financial resources. As long as you have access to a laptop, you can download and use InstructLab tools, as we've designed it to run on laptop hardware. Such accessibility promotes a more inclusive environment for both developers and contributors.

Community-driven instruction tuning also drives the cost of model training down. By relying on the community, users cover topic generation by adding tasks of interest via skills and knowledge contributions. The synthetic data generation approach also means a smaller amount of data is needed from those contributions to have an impact on the model during training. This can all be tuned into a small-parameter, open source licensed model that is relatively cheap to both tune and serve for inferencing.

Community-driven

Opening up data generation for the instruction-tuning phase of model training to a large pool of contributors helps address innovation challenges that often arise during LLM training. A community brings together diverse talent by fostering collaboration among individuals with different backgrounds, expertise, and perspectives. This in turn encourages a wide range of contributions to make their way into the models. In addition, feedback from users, contributors, and code reviewers in the community can help inform topic selection, instead of relying solely on performance analysis and benchmarking data.

Ease of use

Non-technical people are typically deterred from contributing to software or AI due to perceived complexity and technical barriers. The vast array of models and tooling available and the perceived investment of time and effort required can be overwhelming for anyone, especially those without a technical background.

However, InstructLab removes most of these barriers. Thanks to YAML’s structured format and intuitive syntax, it’s easy to contribute knowledge and skill bounties in the form of a question-and-answer template. Contributors also benefit from an entire community with a wealth of resources including forums, docs, and user groups where individuals can seek support from one another.

Key features

- Regularly released models built with community contributions: Stay up to date by creating an account on HuggingFace.co and ‘liking’ the model(s) from the InstructLab repository .

- Pull request-focused contribution process in the open community: Keep track of new knowledge and skills contributions by watching the InstructLab/taxonomy repository on GitHub.

- Enhanced CLI tooling for contributing skills and knowledge and the ability to smoke test them in a locally-built model: Stay tuned by following our GitHub repo .

What’s next?

- Discover the developer preview of Red Hat Enterprise Linux AI , a foundation model platform to develop, test, and run Granite family large language models for enterprise applications.

- If you’re interested in building models that you can develop and serve yourself, check out Podman Desktop AI Lab , an open source extension for Podman Desktop to work with LLMs on a local environment.

Get started

Check out the InstructLab community page to get started now.


Announcing the NeurIPS 2023 Paper Awards

Communications Chairs 2023 · Categories: 2023 Conference, awards, neurips2023

By Amir Globerson, Kate Saenko, Moritz Hardt, Sergey Levine and Comms Chair, Sahra Ghalebikesabi

We are honored to announce the award-winning papers for NeurIPS 2023! This year’s prestigious awards consist of the Test of Time Award plus two Outstanding Paper Awards in each of these three categories:

- Two Outstanding Main Track Papers

- Two Outstanding Main Track Runner-Ups

- Two Outstanding Datasets and Benchmark Track Papers

This year’s organizers received a record number of paper submissions. The 13,300 submitted papers were reviewed by 968 area chairs, 98 senior area chairs, and 396 ethics reviewers. Of these, 3,540 were accepted, and 502 papers were flagged for ethics review.

We thank the awards committee for the main track: Yoav Artzi, Chelsea Finn, Ludwig Schmidt, Ricardo Silva, Isabel Valera, and Mengdi Wang. For the Datasets and Benchmarks track, we thank Sergio Escalera, Isabelle Guyon, Neil Lawrence, Dina Machuve, Olga Russakovsky, Hugo Jair Escalante, Deepti Ghadiyaram, and Serena Yeung. Conflicts of interest were taken into account in the decision process.

Congratulations to all the authors! See the poster sessions Tue-Thu in Great Hall & B1-B2 (level 1).

Outstanding Main Track Papers

Privacy Auditing with One (1) Training Run

Authors: Thomas Steinke · Milad Nasr · Matthew Jagielski

Poster session 2: Tue 12 Dec 5:15 p.m. — 7:15 p.m. CST, #1523

Oral: Tue 12 Dec 3:40 p.m. — 4:40 p.m. CST, Room R06-R09 (level 2)

Abstract: We propose a scheme for auditing differentially private machine learning systems with a single training run. This exploits the parallelism of being able to add or remove multiple training examples independently. We analyze this using the connection between differential privacy and statistical generalization, which avoids the cost of group privacy. Our auditing scheme requires minimal assumptions about the algorithm and can be applied in the black-box or white-box setting. We demonstrate the effectiveness of our framework by applying it to DP-SGD, where we can achieve meaningful empirical privacy lower bounds by training only one model. In contrast, standard methods would require training hundreds of models.
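The abstract only outlines how a single run yields a privacy bound. The sketch below covers only the final statistical step. The auditor makes `num_guesses` guesses about which randomly included training examples were actually used, `num_correct` of them are right, and any ε under which that many correct guesses would be too unlikely is ruled out. It assumes pure ε-DP (δ = 0). Under that assumption, the number of correct guesses is treated as stochastically dominated by a Binomial(r, e^ε / (e^ε + 1)) variable, which is how the paper handles the δ = 0 case. The canary insertion, the scoring used to produce guesses, and the δ > 0 case are not shown. The function names, confidence level, and search range are hypothetical choices.

```python
import math


def binomial_tail(r: int, p: float, v: int) -> float:
    """P[Binomial(r, p) >= v], summed in log space to avoid overflow for large r."""
    if v <= 0:
        return 1.0
    if v > r:
        return 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    total = 0.0
    for k in range(v, r + 1):
        log_term = (math.lgamma(r + 1) - math.lgamma(k + 1) - math.lgamma(r - k + 1)
                    + k * log_p + (r - k) * log_q)
        total += math.exp(log_term)
    return min(total, 1.0)


def epsilon_lower_bound(num_guesses: int, num_correct: int,
                        confidence: float = 0.95, eps_max: float = 20.0,
                        iters: int = 60) -> float:
    """Largest epsilon rejected at the given confidence, assuming pure eps-DP (delta = 0).

    An epsilon is rejected when seeing num_correct or more correct guesses would
    have probability below 1 - confidence under Binomial(num_guesses, e^eps / (e^eps + 1)).
    """
    beta = 1.0 - confidence

    def rejected(eps: float) -> bool:
        p = math.exp(eps) / (math.exp(eps) + 1.0)
        return binomial_tail(num_guesses, p, num_correct) < beta

    if not rejected(0.0):
        return 0.0
    lo, hi = 0.0, eps_max
    # The tail probability grows with eps, so rejected() switches from True to False once.
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if rejected(mid):
            lo = mid
        else:
            hi = mid
    return lo


if __name__ == "__main__":
    print(epsilon_lower_bound(num_guesses=100, num_correct=90))
```

Guessing at chance level gives a bound of zero. The more guesses are made, and the more of them are correct, the larger the ε that can be ruled out, all from one trained model.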

Are Emergent Abilities of Large Language Models a Mirage?

Authors: Rylan Schaeffer · Brando Miranda · Sanmi Koyejo

Poster session 6: Thu 14 Dec 5:00 p.m. — 7:00 p.m. CST, #1108

Oral: Thu 14 Dec 3:20 p.m. — 3:35 p.m. CST, Hall C2 (level 1)

Abstract: Recent work claims that large language models display emergent abilities, abilities not present in smaller-scale models that are present in larger-scale models. What makes emergent abilities intriguing is two-fold: their sharpness, transitioning seemingly instantaneously from not present to present, and their unpredictability, appearing at seemingly unforeseeable model scales. Here, we present an alternative explanation for emergent abilities: that for a particular task and model family, when analyzing fixed model outputs, emergent abilities appear due to the researcher’s choice of metric rather than due to fundamental changes in model behavior with scale. Specifically, nonlinear or discontinuous metrics produce apparent emergent abilities, whereas linear or continuous metrics produce smooth, continuous, predictable changes in model performance. We present our alternative explanation in a simple mathematical model, then test it in three complementary ways: we (1) make, test and confirm three predictions on the effect of metric choice using the InstructGPT/GPT-3 family on tasks with claimed emergent abilities, (2) make, test and confirm two predictions about metric choices in a meta-analysis of emergent abilities on BIG-Bench; and (3) show how to choose metrics to produce never-before-seen seemingly emergent abilities in multiple vision tasks across diverse deep networks. Via all three analyses, we provide evidence that alleged emergent abilities evaporate with different metrics or with better statistics, and may not be a fundamental property of scaling AI models.
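The metric-choice argument can be reproduced in a few lines. Suppose the probability of emitting each correct token improves smoothly with model size. Exact-match accuracy on an L-token answer then behaves like p^L and can look like a sudden jump, while a per-token metric changes smoothly. This is a minimal sketch under stated assumptions. The power-law constants `c` and `alpha`, the answer length, and the function names are made-up values for illustration. Treating tokens as independent is a simplifying assumption.

```python
import math


def per_token_accuracy(n_params: float, c: float = 1e5, alpha: float = 0.3) -> float:
    """Hypothetical smooth scaling: per-token cross-entropy CE(N) = (N / c) ** -alpha,
    so the probability of emitting the correct token is exp(-CE(N))."""
    cross_entropy = (n_params / c) ** (-alpha)
    return math.exp(-cross_entropy)


def exact_match(p_token: float, length: int) -> float:
    """Nonlinear metric: all `length` tokens must be right (tokens assumed independent)."""
    return p_token ** length


def expected_token_errors(p_token: float, length: int) -> float:
    """Linear metric: expected number of wrong tokens in the answer."""
    return length * (1.0 - p_token)


if __name__ == "__main__":
    length = 10
    for n in [1e7, 1e8, 1e9, 1e10, 1e11]:
        p = per_token_accuracy(n)
        print(f"N={n:.0e}  per-token={p:.3f}  "
              f"exact-match={exact_match(p, length):.3f}  "
              f"expected errors={expected_token_errors(p, length):.2f}")
```

The same underlying per-token improvement is displayed through two metrics. Raising p to the 10th power compresses the small-model values toward zero, which is the kind of apparent sharpness the paper attributes to the metric rather than to the model.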

Outstanding Main Track Runner-Ups

Scaling Data-Constrained Language Models

Authors: Niklas Muennighoff · Alexander Rush · Boaz Barak · Teven Le Scao · Nouamane Tazi · Aleksandra Piktus · Sampo Pyysalo · Thomas Wolf · Colin Raffel

Poster session 2: Tue 12 Dec 5:15 p.m. — 7:15 p.m. CST, #813

Oral: Tue 12 Dec 3:40 p.m. — 4:40 p.m. CST, Hall C2 (level 1)

Abstract: The current trend of scaling language models involves increasing both parameter count and training dataset size. Extrapolating this trend suggests that training dataset size may soon be limited by the amount of text data available on the internet. Motivated by this limit, we investigate scaling language models in data-constrained regimes. Specifically, we run a large set of experiments varying the extent of data repetition and compute budget, ranging up to 900 billion training tokens and 9 billion parameter models. We find that with constrained data for a fixed compute budget, training with up to 4 epochs of repeated data yields negligible changes to loss compared to having unique data. However, with more repetition, the value of adding compute eventually decays to zero. We propose and empirically validate a scaling law for compute optimality that accounts for the decreasing value of repeated tokens and excess parameters. Finally, we experiment with approaches mitigating data scarcity, including augmenting the training dataset with code data or removing commonly used filters. Models and datasets from our 400 training runs are freely available at https://github.com/huggingface/datablations.

Direct Preference Optimization: Your Language Model is Secretly a Reward Model

Authors: Rafael Rafailov · Archit Sharma · Eric Mitchell · Christopher D Manning · Stefano Ermon · Chelsea Finn

Poster session 6: Thu 14 Dec 5:00 p.m. — 7:00 p.m. CST, #625

Oral: Thu 14 Dec 3:50 p.m. — 4:05 p.m. CST, Ballroom A-C (level 2)

Abstract: While large-scale unsupervised language models (LMs) learn broad world knowledge and some reasoning skills, achieving precise control of their behavior is difficult due to the completely unsupervised nature of their training. Existing methods for gaining such steerability collect human labels of the relative quality of model generations and fine-tune the unsupervised LM to align with these preferences, often with reinforcement learning from human feedback (RLHF). However, RLHF is a complex and often unstable procedure, first fitting a reward model that reflects the human preferences, and then fine-tuning the large unsupervised LM using reinforcement learning to maximize this estimated reward without drifting too far from the original model. In this paper, we leverage a mapping between reward functions and optimal policies to show that this constrained reward maximization problem can be optimized exactly with a single stage of policy training, essentially solving a classification problem on the human preference data. The resulting algorithm, which we call Direct Preference Optimization (DPO), is stable, performant, and computationally lightweight, eliminating the need for fitting a reward model, sampling from the LM during fine-tuning, or performing significant hyperparameter tuning. Our experiments show that DPO can fine-tune LMs to align with human preferences as well as or better than existing methods. Notably, fine-tuning with DPO exceeds RLHF’s ability to control sentiment of generations and improves response quality in summarization and single-turn dialogue while being substantially simpler to implement and train.
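The DPO objective itself is short. Take a prompt x with a preferred response y_w and a rejected response y_l. The loss is -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]). The sketch below evaluates it on plain floats. It assumes the four summed response log-probabilities have already been computed by the policy being trained and by the frozen reference model. The default β = 0.1 and the helper names are illustrative choices. In a real training loop these values would be tensors, and gradients would flow only through the policy terms.

```python
import math
from typing import Sequence, Tuple


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair (chosen y_w, rejected y_l).

    Each argument is the summed log-probability of a full response under the
    trained policy or the frozen reference model.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    return -log_sigmoid(beta * (chosen_logratio - rejected_logratio))


def mean_dpo_loss(batch: Sequence[Tuple[float, float, float, float]],
                  beta: float = 0.1) -> float:
    """Average DPO loss over (policy_chosen, policy_rejected, ref_chosen, ref_rejected) tuples."""
    return sum(dpo_loss(*pair, beta=beta) for pair in batch) / len(batch)
```

When the policy equals the reference, the loss is log 2. Raising the policy's log-probability of the chosen response relative to the reference lowers the loss. This is the "classification problem on the human preference data" view from the abstract: no reward model is fitted and nothing is sampled during the update.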

Outstanding Datasets and Benchmarks Papers

In the dataset category:

ClimSim: A large multi-scale dataset for hybrid physics-ML climate emulation

Authors: Sungduk Yu · Walter Hannah · Liran Peng · Jerry Lin · Mohamed Aziz Bhouri · Ritwik Gupta · Björn Lütjens · Justus C. Will · Gunnar Behrens · Julius Busecke · Nora Loose · Charles Stern · Tom Beucler · Bryce Harrop · Benjamin Hillman · Andrea Jenney · Savannah L. Ferretti · Nana Liu · Animashree Anandkumar · Noah Brenowitz · Veronika Eyring · Nicholas Geneva · Pierre Gentine · Stephan Mandt · Jaideep Pathak · Akshay Subramaniam · Carl Vondrick · Rose Yu · Laure Zanna · Tian Zheng · Ryan Abernathey · Fiaz Ahmed · David Bader · Pierre Baldi · Elizabeth Barnes · Christopher Bretherton · Peter Caldwell · Wayne Chuang · Yilun Han · YU HUANG · Fernando Iglesias-Suarez · Sanket Jantre · Karthik Kashinath · Marat Khairoutdinov · Thorsten Kurth · Nicholas Lutsko · Po-Lun Ma · Griffin Mooers · J. David Neelin · David Randall · Sara Shamekh · Mark Taylor · Nathan Urban · Janni Yuval · Guang Zhang · Mike Pritchard

Poster session 4: Wed 13 Dec 5:00 p.m. — 7:00 p.m. CST, #105

Oral: Wed 13 Dec 3:45 p.m. — 4:00 p.m. CST, Ballroom A-C (level 2)

Abstract: Modern climate projections lack adequate spatial and temporal resolution due to computational constraints. A consequence is inaccurate and imprecise predictions of critical processes such as storms. Hybrid methods that combine physics with machine learning (ML) have introduced a new generation of higher fidelity climate simulators that can sidestep Moore’s Law by outsourcing compute-hungry, short, high-resolution simulations to ML emulators. However, this hybrid ML-physics simulation approach requires domain-specific treatment and has been inaccessible to ML experts because of lack of training data and relevant, easy-to-use workflows. We present ClimSim, the largest-ever dataset designed for hybrid ML-physics research. It comprises multi-scale climate simulations, developed by a consortium of climate scientists and ML researchers. It consists of 5.7 billion pairs of multivariate input and output vectors that isolate the influence of locally-nested, high-resolution, high-fidelity physics on a host climate simulator’s macro-scale physical state. The dataset is global in coverage, spans multiple years at high sampling frequency, and is designed such that resulting emulators are compatible with downstream coupling into operational climate simulators. We implement a range of deterministic and stochastic regression baselines to highlight the ML challenges and their scoring. The data (https://huggingface.co/datasets/LEAP/ClimSim_high-res) and code (https://leap-stc.github.io/ClimSim) are released openly to support the development of hybrid ML-physics and high-fidelity climate simulations for the benefit of science and society.

In the benchmark category:

DecodingTrust: A Comprehensive Assessment of Trustworthiness in GPT Models

Authors: Boxin Wang · Weixin Chen · Hengzhi Pei · Chulin Xie · Mintong Kang · Chenhui Zhang · Chejian Xu · Zidi Xiong · Ritik Dutta · Rylan Schaeffer · Sang Truong · Simran Arora · Mantas Mazeika · Dan Hendrycks · Zinan Lin · Yu Cheng · Sanmi Koyejo · Dawn Song · Bo Li

Poster session 1: Tue 12 Dec 10:45 a.m. — 12:45 p.m. CST, #1618

Oral: Tue 12 Dec 10:30 a.m. — 10:45 a.m. CST, Ballroom A-C (Level 2)

Abstract: Generative Pre-trained Transformer (GPT) models have exhibited exciting progress in capabilities, capturing the interest of practitioners and the public alike. Yet, while the literature on the trustworthiness of GPT models remains limited, practitioners have proposed employing capable GPT models for sensitive applications to healthcare and finance – where mistakes can be costly. To this end, this work proposes a comprehensive trustworthiness evaluation for large language models with a focus on GPT-4 and GPT-3.5, considering diverse perspectives – including toxicity, stereotype bias, adversarial robustness, out-of-distribution robustness, robustness on adversarial demonstrations, privacy, machine ethics, and fairness. Based on our evaluations, we discover previously unpublished vulnerabilities to trustworthiness threats. For instance, we find that GPT models can be easily misled to generate toxic and biased outputs and leak private information in both training data and conversation history. We also find that although GPT-4 is usually more trustworthy than GPT-3.5 on standard benchmarks, GPT-4 is more vulnerable given jailbreaking system or user prompts, potentially due to the reason that GPT-4 follows the (misleading) instructions more precisely. Our work illustrates a comprehensive trustworthiness evaluation of GPT models and sheds light on the trustworthiness gaps. Our benchmark is publicly available at https://decodingtrust.github.io/.

Test of Time

This year, following the usual practice, we chose a NeurIPS paper from 10 years ago to receive the Test of Time Award. The winner is “Distributed Representations of Words and Phrases and their Compositionality” by Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg Corrado, and Jeffrey Dean.

Published at NeurIPS 2013 and cited over 40,000 times, the work introduced the seminal word embedding technique word2vec. Demonstrating the power of learning from large amounts of unstructured text, the work catalyzed progress that marked the beginning of a new era in natural language processing.
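The core of the word2vec paper, skip-gram with negative sampling, can be written out for a single (center word, context word) pair. The inputs are a center vector v_I, a context output vector v'_O, and k negative samples drawn from a noise distribution. The quantity maximized is log σ(v'_O · v_I) + Σ_k log σ(-v'_k · v_I), and the sketch returns its negative as a loss. The paper draws negatives from the unigram distribution raised to the 3/4 power, which `noise_distribution` computes. This is a sketch only: vectors are plain lists, the function names are hypothetical, and the training loop (gradient updates, subsampling of frequent words, phrase detection) is omitted.

```python
import math
from typing import Dict, List, Sequence


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def sgns_loss(center: Sequence[float], context: Sequence[float],
              negatives: List[Sequence[float]]) -> float:
    """Negative skip-gram negative-sampling objective for one (center, context) pair:
    -[log sigma(v'_context . v_center) + sum_k log sigma(-v'_neg_k . v_center)]."""
    loss = -log_sigmoid(dot(context, center))
    for neg in negatives:
        loss -= log_sigmoid(-dot(neg, center))
    return loss


def noise_distribution(counts: Dict[str, int], power: float = 0.75) -> Dict[str, float]:
    """Unigram counts raised to the 3/4 power and normalized; negatives are drawn from this."""
    weights = {w: c ** power for w, c in counts.items()}
    total = sum(weights.values())
    return {w: x / total for w, x in weights.items()}
```

The loss falls when the center and true context vectors point the same way and the negatives point away. The 3/4 power flattens the unigram distribution, so rare words are sampled as negatives more often than their raw frequency would suggest.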

Greg Corrado and Jeffrey Dean will be giving a talk about this work and related research on Tuesday, 12 Dec at 3:05 – 3:25 pm CST in Hall F.


2019-10-28 Started must-read-papers-for-ml repo. 2019-10-29 Added analytics vidhya use case studies article links. 2019-10-30 Added Outlier/Anomaly detection paper, separated Boosting, CNN, Object Detection, NLP papers, and added Image captioning papers. 2019-10-31 Added Famous Blogs from Deep and Machine Learning Researchers

A list of research papers in the domain of machine learning, deep learning and related fields. I have curated a list of research papers that I come across and read. I'll keep on updating the list of papers and their summary as I read them every week.


Code Availability: For every open access machine learning paper, we check whether a code implementation is available on GitHub. The date axis is the publication date of the paper. We include both official and community implementations. Papers With Code highlights trending Machine Learning research and the code to implement it.

donutloop/machine-learning-research-papers

Open Science initiatives prompt machine learning (ML) researchers and experts to share source codes - "scientific artifacts" - alongside research papers via public repositories such as GitHub. Here we analyze the extent to which 1) the availability of GitHub repositories influences paper citation and 2) the popularity trend of ML frameworks (e.g., PyTorch and TensorFlow) affects article ...

Machine Learning papers (landing page) mlpapers. Collection of open machine learning papers. View on GitHub mlpapers/mlpapers.github.io. Follow on Twitter @mlpapers. Machine learning papers. Missing data; Feature extraction; Feature selection; Bayesian networks; Bayesian inference; Causal inference;

By Matthew Mayo, KDnuggets Managing Editor on December 31, 2018 in GitHub, Machine Learning, Research. Looking for papers with code? If so, this GitHub repository, accurately titled "Papers with Code," by Zaur Fataliyev, is just what you are after. Papers with code. Sorted by stars.

10,809 leaderboards • 4,846 tasks • 9,814 datasets • 126,982 papers with code.


With the advances in machine learning, there is a growing interest in AI-enabled tools for autocompleting source code. GitHub Copilot, also referred to as the "AI Pair Programmer", has been trained on billions of lines of open source GitHub code and is one such tool that has been increasingly used since its launch in June 2021. However, little effort has been devoted to understanding the ...

10 GitHub Repositories to Master Machine Learning, by Abid Ali Awan, KDnuggets Assistant Editor, December 1, 2023. The post covers machine learning courses, bootcamps, books, tools, interview questions, cheat sheets, MLOps platforms, and more, to help you master ML and land your dream job.

Machine learning is the practice of teaching a computer to learn. The concept uses pattern recognition, as well as other forms of predictive algorithms, to make judgments on incoming data. This field is closely related to artificial intelligence and computational statistics.

The number of research papers has increased steadily in recent years. This is particularly true for papers in AI-related fields, such as machine learning and computer vision. For instance, more than 60,000 papers have been published in the area of machine learning in each of the last few years [2]. Furthermore, it has become increasingly ...

In this paper, we introduce Linked Papers With Code (LPWC), an RDF knowledge graph that provides comprehensive, current information about almost 400,000 machine learning publications. This includes the tasks addressed, the datasets utilized, the methods implemented, and the evaluations conducted, along with their results.

Federated Learning is a machine learning approach that allows multiple devices or entities to collaboratively train a shared model without exchanging their data with each other. Instead of sending data to a central server for training, the model is trained locally on each device, and only the model updates are sent to the ...
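To make the "train locally, share only updates" loop concrete, here is a minimal sketch of Federated Averaging (FedAvg), one common aggregation rule. The snippet above does not name a specific algorithm, so FedAvg, the linear-regression model, the learning rate, and the helper names `local_train` and `fed_avg` are all assumptions for illustration. Each simulated client runs a few steps of gradient descent on its own data and returns only its weights; the server then averages those weights, weighting each client by how many examples it holds.

```python
import numpy as np

def local_train(weights, X, y, lr=0.1, epochs=5):
    """Hypothetical client step: full-batch gradient descent on local data.

    Only the updated weights are returned; X and y never leave the client."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w

def fed_avg(global_w, clients, rounds=50):
    """Hypothetical server loop: average client weights, weighted by dataset size."""
    for _ in range(rounds):
        updates = [local_train(global_w, X, y) for X, y in clients]
        sizes = np.array([len(y) for _, y in clients], dtype=float)
        global_w = np.average(np.stack(updates), axis=0, weights=sizes)
    return global_w

# Three simulated devices holding different amounts of private data
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
clients = []
for n in (30, 50, 20):
    X = rng.normal(size=(n, 2))
    y = X @ true_w + 0.01 * rng.normal(size=n)
    clients.append((X, y))

w = fed_avg(np.zeros(2), clients)
print(np.round(w, 2))
```

Weighting by dataset size lets clients with more data pull the shared model harder; an unweighted mean is a simpler alternative. Production systems typically also deal with client sampling, privacy protections, and non-identically distributed data, none of which this toy covers.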

The best AI papers of 2021, each with a clear video demo, a short read, the paper, and its code.

This paper presents an overview of more than 160 ML-based approaches developed to combat COVID-19. These approaches were collected from sources such as Elsevier, Springer, arXiv, medRxiv, and IEEE Xplore, then analyzed and classified into two categories: supervised learning-based approaches and deep learning-based ones.

This work has implications for machine learning on mutagenisable discrete spaces such as chemical properties or protein phenotype prediction, and improves basic understanding of concepts in statistical learning theory.

Overall, the paper shows promise: AutoCodeRover can significantly reduce the manual effort required in program maintenance and improvement tasks.

Conclusion

There are many machine learning papers to read in 2024; here are my recommended papers: HyperFast: Instant Classification for Tabular Data

Inspired by the Kolmogorov-Arnold representation theorem, we propose Kolmogorov-Arnold Networks (KANs) as promising alternatives to Multi-Layer Perceptrons (MLPs). While MLPs have fixed activation functions on nodes ("neurons"), KANs have learnable activation functions on edges ("weights"). KANs have no linear weights at all -- every weight parameter is replaced by a univariate function ...
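The contrast between fixed activations on nodes and learnable activations on edges can be illustrated with a toy forward pass. This is a simplified sketch, not the KAN authors' implementation: as an assumption, each edge function is a piecewise-linear curve defined by learnable values at fixed grid points (the paper itself parameterizes edge functions with B-splines), and the class name `KANLayer` is hypothetical. Output node j simply sums phi_{j,i}(x_i) over its inputs, so the layer contains no weight matrix at all.

```python
import numpy as np

class KANLayer:
    """Hypothetical toy Kolmogorov-Arnold layer: one learnable 1-D function per edge.

    Simplification (assumption): each edge function is piecewise linear over a
    fixed grid, rather than the B-spline parameterization used in the paper."""

    def __init__(self, in_dim, out_dim, grid_size=9, x_range=(-2.0, 2.0), seed=0):
        rng = np.random.default_rng(seed)
        self.grid = np.linspace(x_range[0], x_range[1], grid_size)
        # coeffs[j, i, k] = value of edge function phi_{j,i} at grid point k.
        # These values are the trainable parameters; there are no linear weights.
        self.coeffs = 0.1 * rng.normal(size=(out_dim, in_dim, grid_size))

    def edge_fn(self, j, i, x):
        # np.interp evaluates the piecewise-linear curve; outside the grid it
        # holds the first/last coefficient constant.
        return np.interp(x, self.grid, self.coeffs[j, i])

    def __call__(self, x):
        """x has shape (in_dim,). Output node j is the sum of phi_{j,i}(x_i) over i."""
        out_dim, in_dim, _ = self.coeffs.shape
        return np.array([
            sum(self.edge_fn(j, i, x[i]) for i in range(in_dim))
            for j in range(out_dim)
        ])

# Set the two edge functions by hand so the output is easy to check.
layer = KANLayer(in_dim=2, out_dim=1)
layer.coeffs[0, 0] = layer.grid ** 2   # phi_{0,0}(x) approximates x^2
layer.coeffs[0, 1] = 3.0 * layer.grid  # phi_{0,1}(x) = 3x
y = layer(np.array([1.0, 0.5]))
print(y)  # [2.5]
```

Here 1.0 and 0.5 fall exactly on grid points, so the result is 1.0² + 3 × 0.5 = 2.5. In a real model, `coeffs` would be fitted by gradient descent rather than set by hand.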

NeurIPS 2024 Datasets and Benchmarks Track. The Datasets and Benchmarks track serves as a venue for high-quality publications, talks, and posters on highly valuable machine learning datasets and benchmarks, as well as a forum for discussions on how to improve dataset development.

InstructLab provides a cost-effective, community-driven solution for improving the alignment of LLMs and makes it easy for those with minimal machine learning experience to contribute.

Cost-effective: an open source approach makes InstructLab accessible to individuals and organizations regardless of their financial resources.

Announcing the NeurIPS 2023 Paper Awards, by Amir Globerson, Kate Saenko, Moritz Hardt, Sergey Levine and Comms Chair Sahra Ghalebikesabi. We are honored to announce the award-winning papers for NeurIPS 2023! This year's prestigious awards consist of the Test of Time Award plus two Outstanding Paper Awards in each of these three categories: Two Outstanding Main Track Papers

GitHub Copilot is the evolution of the 'Bing Code Search' plugin for Visual Studio 2013, a Microsoft Research project released in February 2014. This plugin integrated with various sources, including MSDN and StackOverflow, to provide high-quality, contextually relevant code snippets in response to natural language queries.