16 Advantages and Disadvantages of Experimental Research

How do you make sure that a new product, theory, or idea is valid? There are several ways to test one, and one of the most common is experimental research. When one variable is held under complete control, the other variables can be manipulated to determine whether the proposed value or validity holds up.

Then, through a process of monitoring and administration, the true effects of what is being studied can be determined. This produces an accurate outcome from which conclusions about the final value potential can be drawn. It is an efficient process, but one that can also be easily manipulated to meet specific metrics if oversight is not properly performed.

Here are the advantages and disadvantages of experimental research to consider.

What Are the Advantages of Experimental Research?

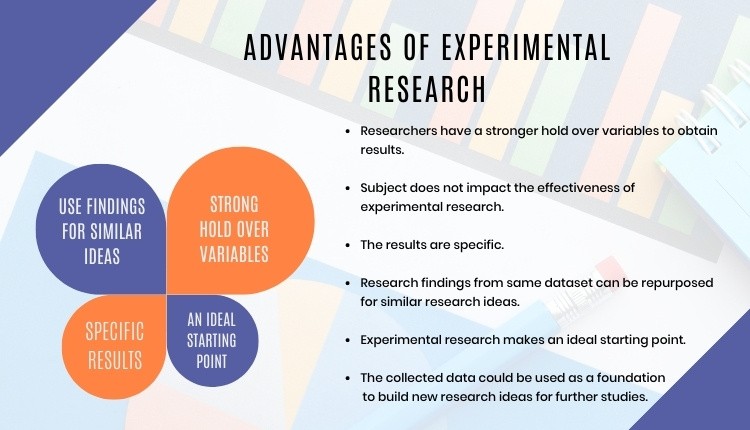

1. It provides researchers with a high level of control. By isolating specific variables, researchers can determine whether a potential outcome is viable. Each variable can be controlled on its own or in different combinations to study the possible outcomes for a product, theory, or idea. This is a tremendous advantage when trying to obtain accurate results.

2. There is no limit to the subject matter or industry involved. Experimental research is not limited to a specific industry or type of idea. It can be used in a wide variety of situations. Teachers might use experimental research to determine if a new method of teaching or a new curriculum is better than an older system. Pharmaceutical companies use experimental research to determine the viability of a new product.

3. Experimental research provides conclusions that are specific. Because experimental research provides such a high level of control, it can consistently produce results that are specific and relevant. Success or failure can be determined, so the validity of a product, theory, or idea can be understood in a much shorter amount of time than with other verification methods. You know the outcome of the research because you carry the variable through to its conclusion.

4. The results of experimental research can be duplicated. Experimental research is a straightforward, basic form of research that can be duplicated when others control the same variables. This helps to promote the validity of a concept for products, ideas, and theories. It also allows anyone to check and verify published results, which often leads to better results, because following the exact steps should produce the same results.

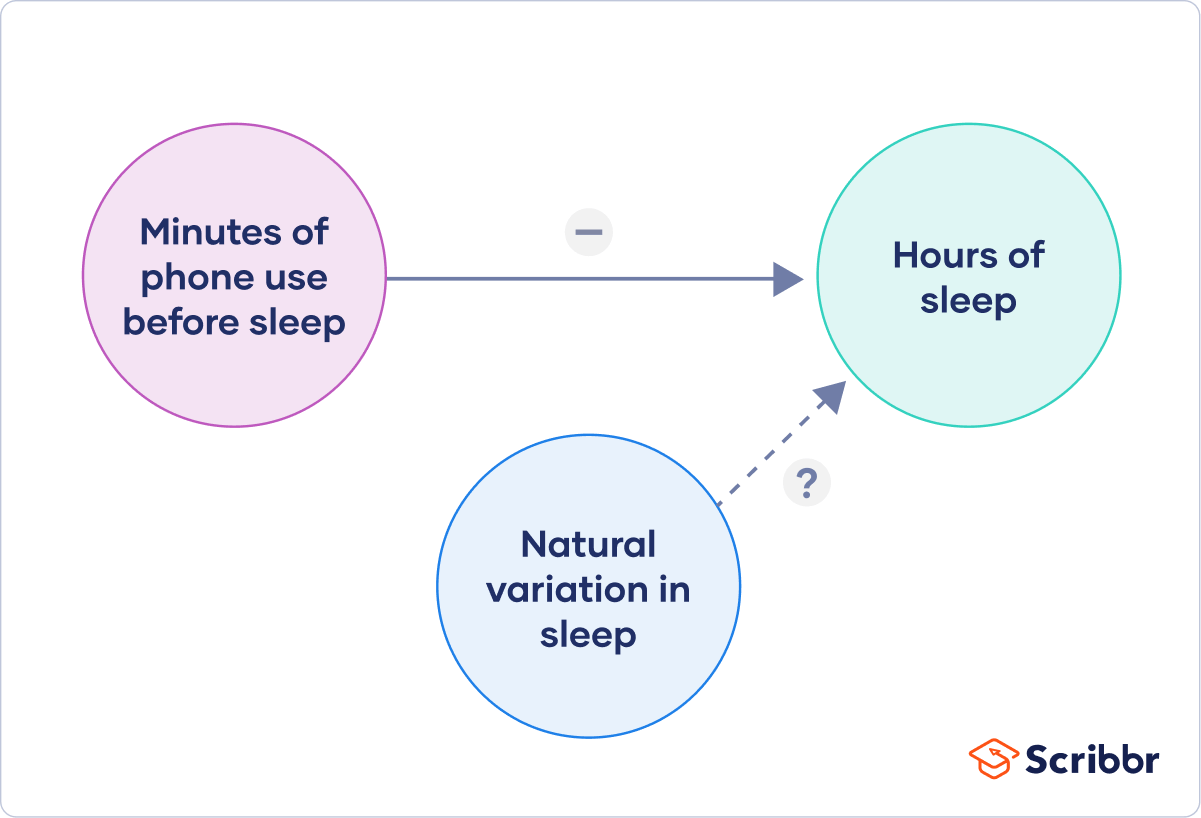

5. Natural settings can be replicated more quickly. When research is conducted in a laboratory environment, it becomes possible to replicate conditions that would take a long time to occur naturally, so that the variables can be tested appropriately. This also gives researchers greater control over any extraneous variables, limiting the unpredictability of nature while each variable is carefully studied.

6. Experimental research allows cause and effect to be determined. Manipulating variables allows researchers to examine the various cause-and-effect relationships that a product, theory, or idea can produce. It is a process which allows researchers to dig deeper into what is possible, showing how the various variable relationships can provide specific benefits. In turn, researchers gain a greater understanding of the specifics of the research, even if the reason why a relationship exists is not revealed to them.

7. It can be combined with other research methods. This allows experimental research to provide the scientific rigor that may be needed for the results to stand on their own. It makes it possible to determine what may be best for a specific demographic or population, while also offering better transferability than anecdotal research can typically provide.

What Are the Disadvantages of Experimental Research?

1. Results are highly subjective due to the possibility of human error. Because experimental research requires specific levels of variable control, it is at high risk of human error at some point during the research. Any error, whether systematic or random, can reveal information about the other variables, and that would eliminate the validity of the experiment and the research being conducted.

2. Experimental research can create situations that are not realistic. The variables of a product, theory, or idea are under such tight controls that the data being produced can be corrupted or inaccurate but still appear authentic. This can work against the researcher in two ways. First, the variables can be controlled in such a way that the data are skewed toward a favorable or desired result. Second, the data can be corrupted so that they seem positive, but because the real-life environment is so different from the controlled environment, the positive results could never be achieved outside of the experiment.

3. It is a time-consuming process. For it to be done properly, experimental research must isolate each variable and conduct testing on it. Then combinations of variables must also be considered. This process can be lengthy and require a large amount of financial and personnel resources. Those costs may never be offset by consumer sales if the product or idea never makes it to market. If what is being tested is a theory, it can lead to a false sense of validity that may change how others approach their own research.

4. There may be ethical or practical problems with variable control. It might seem like a good idea to test new pharmaceuticals on animals before humans to see if they will work, but what happens if the animal dies because of the experiment? Or what about human trials that fail and cause injury or death? Experimental research might be effective, but sometimes the approach has ethical or practical complications that cannot be ignored. Sometimes variables cannot be manipulated in the way they would need to be for results to be obtained.

5. Experimental research does not provide an actual explanation. Experimental research is an opportunity to answer a Yes or No question: it will show you either that something works as intended or that it does not. One could argue that partial results could be achieved, but that would still fit into the “No” category because the desired results were not fully achieved. The answer is nice to have, but there is no explanation of how you got to that answer. Experimental research is unable to answer the question of “Why” when looking at outcomes.

6. Extraneous variables cannot always be controlled. Although laboratory settings can control extraneous variables, natural environments present certain challenges. Some studies need to be completed in a natural setting to be accurate, and it may not always be possible to control the extraneous variables there because of the unpredictability of Mother Nature. Even if the variables are controlled, the study may achieve internal validity at the expense of external validity. Either way, applying the results to the general population can be quite challenging.

7. Participants can be influenced by their current situation. Human error isn’t confined to the researchers. Participants in an experimental research study can also be influenced by extraneous variables. There could be something in the environment, such as an allergen, that creates a distraction. In a conversation with a researcher, a participant may feel a physical attraction that changes their responses. Even internal triggers, such as a fear of enclosed spaces, could influence the results that are obtained. It is also very common for participants to “go along” with what they think a researcher wants to see instead of providing an honest response.

8. Manipulating variables isn’t necessarily an objective standpoint. For research to be effective, it must be objective, and the ability to manipulate variables reduces that objectivity. Although there are benefits to observing the consequences of such manipulation, those benefits may not provide realistic results that can be used in the future. Results from a sample reflect that sample and may not translate to the general population.

9. Human responses in experimental research can be difficult to measure. There are many pressures that can be placed on people, from political to personal, and everything in-between. Different life experiences can cause people to react to the same situation in different ways. Not only does this mean that groups may not be comparable in experimental research, but it also makes it difficult to measure the human responses that are obtained or observed.

The advantages and disadvantages of experimental research show that it is a useful system, but it must be tightly controlled in order to be beneficial. It produces results that can be replicated, but it can also be easily swayed by internal or external factors that may alter the outcomes. By taking these key points into account, you can decide whether this research process is appropriate for your next product, theory, or idea.


Perspect Med Educ. 2019 Aug; 8(4).

Limited by our limitations

Paula T. Ross

Medical School, University of Michigan, Ann Arbor, MI USA

Nikki L. Bibler Zaidi

Study limitations represent weaknesses within a research design that may influence outcomes and conclusions of the research. Researchers have an obligation to the academic community to present complete and honest limitations of a presented study. Too often, authors use generic descriptions to describe study limitations. Including redundant or irrelevant limitations is an ineffective use of the already limited word count. A meaningful presentation of study limitations should describe the potential limitation, explain the implication of the limitation, provide possible alternative approaches, and describe steps taken to mitigate the limitation. This includes placing research findings within their proper context to ensure readers do not overemphasize or minimize findings. A more complete presentation will enrich the readers’ understanding of the study’s limitations and support future investigation.

Introduction

Regardless of the format scholarship assumes, from qualitative research to clinical trials, all studies have limitations. Limitations represent weaknesses within the study that may influence outcomes and conclusions of the research. The goal of presenting limitations is to provide meaningful information to the reader; however, too often, limitations in medical education articles are overlooked or reduced to simplistic and minimally relevant themes (e.g., single institution study, use of self-reported data, or small sample size) [ 1 ]. This issue is prominent in other fields of inquiry in medicine as well. For example, despite the clinical implications, medical studies often fail to discuss how limitations could have affected the study findings and interpretations [ 2 ]. Further, observational research often fails to remind readers of the fundamental limitation inherent in the study design, which is the inability to attribute causation [ 3 ]. By reporting generic limitations or omitting them altogether, researchers miss opportunities to fully communicate the relevance of their work, illustrate how their work advances a larger field under study, and suggest potential areas for further investigation.

Goals of presenting limitations

Medical education scholarship should provide empirical evidence that deepens our knowledge and understanding of education [ 4 , 5 ], informs educational practice and process [ 6 , 7 ], and serves as a forum for educating other researchers [ 8 ]. Providing study limitations is indeed an important part of this scholarly process. Without them, research consumers are hard-pressed to fully grasp the potential exclusion areas or other biases that may affect the results and conclusions provided [ 9 ]. Study limitations should leave the reader thinking about opportunities to engage in prospective improvements [ 9 – 11 ] by presenting gaps in the current research and extant literature, thereby cultivating other researchers’ curiosity and interest in expanding the line of scholarly inquiry [ 9 ].

Presenting study limitations is also an ethical element of scientific inquiry [ 12 ]. It ensures transparency of both the research and the researchers [ 10 , 13 , 14 ], and it provides transferability [ 15 ] and reproducibility of methods. Presenting limitations also supports proper interpretation and validity of the findings [ 16 ]. A study’s limitations should place research findings within their proper context to ensure readers are fully able to discern the credibility of a study’s conclusions and can generalize findings appropriately [ 16 ].

Why some authors may fail to present limitations

As Price and Murnan [ 8 ] note, there may be overriding reasons why researchers do not sufficiently report the limitations of their study. For example, authors may not fully understand the importance and implications of their study’s limitations or assume that not discussing them may increase the likelihood of publication. Word limits imposed by journals may also prevent authors from providing thorough descriptions of their study’s limitations [ 17 ]. Still another possible reason for excluding limitations is a diffusion of responsibility in which some authors may incorrectly assume that the journal editor is responsible for identifying limitations. Regardless of reason or intent, researchers have an obligation to the academic community to present complete and honest study limitations.

A guide to presenting limitations

The presentation of limitations should describe the potential limitations, explain the implication of the limitations, provide possible alternative approaches, and describe steps taken to mitigate the limitations. Too often, authors only list the potential limitations, without including these other important elements.

Describe the limitations

When describing limitations, authors should identify the limitation type to clearly introduce the limitation and specify its origin. This helps to ensure readers are able to interpret and generalize findings appropriately. Here we outline various limitation types that can occur at different stages of the research process.

Study design

Some study limitations originate from conscious choices made by the researcher (also known as delimitations) to narrow the scope of the study [ 1 , 8 , 18 ]. For example, the researcher may have designed the study for a particular age group, sex, race, ethnicity, geographically defined region, or some other attribute that would limit to whom the findings can be generalized. Such delimitations involve conscious exclusionary and inclusionary decisions made during the development of the study plan, which may represent a systematic bias intentionally introduced into the study design or instrument by the researcher [ 8 ]. The clear description and delineation of delimitations and limitations will assist editors and reviewers in understanding any methodological issues.

Data collection

Study limitations can also be introduced during data collection. An unintentional consequence of human subjects research is the potential of the researcher to influence how participants respond to their questions. Even when appropriate methods for sampling have been employed, some studies remain limited by the use of data collected only from participants who decided to enrol in the study (self-selection bias) [ 11 , 19 ]. In some cases, participants may provide biased input by responding to questions they believe are favourable to the researcher rather than their authentic response (social desirability bias) [ 20 – 22 ]. Participants may influence the data collected by changing their behaviour when they are knowingly being observed (Hawthorne effect) [ 23 ]. Researchers, in their role as observers, may also bias the data they collect by allowing a first impression of a participant, formed from a single characteristic, to colour their judgement of other characteristics, either unfavourably (horns effect) or favourably (halo effect) [ 24 ].

Data analysis

Study limitations may arise as a consequence of the type of statistical analysis performed. Some studies may not follow the basic tenets of inferential statistical analyses when they use convenience sampling (i.e. non-probability sampling) rather than employing probability sampling from a target population [ 19 ]. Another limitation that can arise during statistical analyses occurs when studies employ unplanned post-hoc data analyses that were not specified before the initial analysis [ 25 ]. Unplanned post-hoc analysis may lead to statistical relationships that suggest associations but are no more than coincidental findings [ 23 ]. Therefore, when unplanned post-hoc analyses are conducted, this should be clearly stated to allow the reader to make proper interpretation and conclusions—especially when only a subset of the original sample is investigated [ 23 ].
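To see why unplanned post-hoc analyses can turn up coincidental findings, the following Python sketch simulates studies in which the treatment has no effect at all, yet each study tests 20 subgroups. The function names, the subgroup count, and the assumption of normally distributed scores with a known standard deviation of 1 are illustrative choices, not part of the original article.

```python
import math
import random

def two_sample_z_pvalue(a, b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known
    common standard deviation sigma (a simplifying assumption)."""
    se = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = (sum(a) / len(a) - sum(b) / len(b)) / se
    # P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))

def false_positive_rate(n_subgroups=20, n_per_group=20, n_studies=1000,
                        alpha=0.05, seed=0):
    """Simulate studies with NO true effect in which every subgroup is
    tested post hoc; return the share of studies that report at least
    one 'significant' subgroup."""
    rng = random.Random(seed)
    studies_with_hit = 0
    for _ in range(n_studies):
        hit = False
        for _ in range(n_subgroups):
            # Treatment and control come from the same distribution.
            treat = [rng.gauss(0, 1) for _ in range(n_per_group)]
            control = [rng.gauss(0, 1) for _ in range(n_per_group)]
            if two_sample_z_pvalue(treat, control) < alpha:
                hit = True
        studies_with_hit += hit
    return studies_with_hit / n_studies

print("share of null studies with a 'finding':", false_positive_rate())
```

With independent subgroups, roughly 1 - 0.95**20, or about 0.64, of the simulated studies should contain at least one "significant" subgroup even though no real effect exists, while a single pre-specified test stays near the nominal 5%.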

Study results

The limitations of any research study will be rooted in the validity of its results—specifically threats to internal or external validity [ 8 ]. Internal validity refers to reliability or accuracy of the study results [ 26 ], while external validity pertains to the generalizability of results from the study’s sample to the larger, target population [ 8 ].

Examples of threats to internal validity include: effects of events external to the study (history), changes in participants due to time instead of the studied effect (maturation), systematic reduction in participants related to a feature of the study (attrition), changes in participant responses due to repeatedly measuring participants (testing effect), modifications to the instrument (instrumentation) and selecting participants based on extreme scores that will regress towards the mean in repeat tests (regression to the mean) [ 27 ].

Threats to external validity include factors that might inhibit generalizability of results from the study’s sample to the larger, target population [ 8 , 27 ]. External validity is challenged when results from a study cannot be generalized to its larger population or to similar populations in terms of the context, setting, participants and time [ 18 ]. Therefore, limitations should be made transparent in the results to inform research consumers of any known or potentially hidden biases that may have affected the study and prevent generalization beyond the study parameters.

Explain the implication(s) of each limitation

Authors should include the potential impact of the limitations (e.g., likelihood, magnitude) [ 13 ] as well as address specific validity implications of the results and subsequent conclusions [ 16 , 28 ]. For example, self-reported data may lead to inaccuracies (e.g. due to social desirability bias) which threatens internal validity [ 19 ]. Even a researcher’s inappropriate attribution to a characteristic or outcome (e.g., stereotyping) can overemphasize (either positively or negatively) unrelated characteristics or outcomes (halo or horns effect) and impact the internal validity [ 24 ]. Participants’ awareness that they are part of a research study can also influence outcomes (Hawthorne effect) and limit external validity of findings [ 23 ]. External validity may also be threatened should the respondents’ propensity for participation be correlated with the substantive topic of study, as data will be biased and not represent the population of interest (self-selection bias) [ 29 ]. Having this explanation helps readers interpret the results and generalize the applicability of the results for their own setting.
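The self-selection problem described above can be illustrated with a short simulation in which the probability of participating rises with the very outcome being studied. The participation model, the score distribution, and the function name are hypothetical assumptions chosen for illustration.

```python
import random
import statistics

def self_selection_demo(n=10000, seed=1):
    """Return (population mean, respondent mean) when the chance of
    participating increases with the outcome itself."""
    rng = random.Random(seed)
    # Hypothetical outcome scores for the whole population of interest.
    population = [rng.gauss(50, 10) for _ in range(n)]
    respondents = []
    for score in population:
        # Hypothetical participation model: probability grows linearly
        # with the score, clipped to the range [0.05, 0.95].
        p_participate = min(0.95, max(0.05, (score - 30) / 40))
        if rng.random() < p_participate:
            respondents.append(score)
    return statistics.mean(population), statistics.mean(respondents)

pop_mean, resp_mean = self_selection_demo()
print(f"population mean {pop_mean:.1f}, respondent mean {resp_mean:.1f}")
```

Because higher scorers are more likely to enrol, the respondents' mean lands several points above the true population mean, which is the bias that threatens external validity.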

Provide potential alternative approaches and explanations

Often, researchers use other studies’ limitations as the first step in formulating new research questions and shaping the next phase of research. Therefore, it is important for readers to understand why potential alternative approaches (e.g. approaches taken by others exploring similar topics) were not taken. In addition to alternative approaches, authors can also present alternative explanations for their own study’s findings [ 13 ]. This information is valuable coming from the researcher because of the direct, relevant experience and insight gained as they conducted the study. The presentation of alternative approaches represents a major contribution to the scholarly community.

Describe steps taken to minimize each limitation

No research design is perfect and free from explicit and implicit biases; however, various methods can be employed to minimize the impact of study limitations. Some suggested steps to mitigate or minimize the limitations mentioned above include using neutral questions, randomized response technique, forced-choice items, or self-administered questionnaires to reduce respondents’ discomfort when answering sensitive questions (social desirability bias) [ 21 ]; using unobtrusive data collection measures (e.g., use of secondary data) that do not require the researcher to be present (Hawthorne effect) [ 11 , 30 ]; using standardized rubrics and objective assessment forms with clearly defined scoring instructions to minimize researcher bias, or making rater adjustments to assessment scores to account for rater tendencies (halo or horns effect) [ 24 ]; or using existing data or control groups (self-selection bias) [ 11 , 30 ]. When appropriate, researchers should provide sufficient evidence that demonstrates the steps taken to mitigate limitations as part of their study design [ 13 ].
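As one illustration of the randomized response technique mentioned above, the sketch below follows Warner's (1965) design, in which a private randomizing device decides whether the respondent answers the sensitive question or its negation. The researcher never learns an individual's true status, yet the population prevalence can still be estimated. The function names and the simulated survey are hypothetical, and other randomized response variants exist.

```python
import random

def warner_estimate(yes_share, p):
    """Estimate trait prevalence pi under Warner's design.
    With probability p the respondent answers "Do you have trait A?",
    otherwise "Do you NOT have trait A?", so
    P(yes) = p*pi + (1 - p)*(1 - pi), which is solved here for pi."""
    if p == 0.5:
        raise ValueError("p = 0.5 makes the prevalence unidentifiable")
    return (yes_share - (1 - p)) / (2 * p - 1)

def simulate_survey(true_prevalence, p, n, seed=2):
    """Simulate n respondents answering truthfully under Warner's design;
    return the observed share of 'yes' answers."""
    rng = random.Random(seed)
    yes = 0
    for _ in range(n):
        has_trait = rng.random() < true_prevalence
        asked_direct = rng.random() < p  # the device picks the question
        answer = has_trait if asked_direct else not has_trait
        yes += answer
    return yes / n

share = simulate_survey(true_prevalence=0.2, p=0.7, n=50000)
print("estimated prevalence:", warner_estimate(share, 0.7))
```

For example, with p = 0.7 and a true prevalence of 0.2, the expected share of "yes" answers is 0.7 × 0.2 + 0.3 × 0.8 = 0.38, and `warner_estimate(0.38, 0.7)` recovers 0.2.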

In conclusion, authors may be limiting the impact of their research by neglecting or providing abbreviated and generic limitations. We present several examples of limitations to consider; however, this should not be considered an exhaustive list nor should these examples be added to the growing list of generic and overused limitations. Instead, careful thought should go into presenting limitations after research has concluded and the major findings have been described. Limitations help focus the reader on key findings; therefore, it is important to only address the most salient limitations of the study [ 17 , 28 ] related to the specific research problem, not general limitations of most studies [ 1 ]. It is important not to minimize the limitations of study design or results. Rather, results, including their limitations, must help readers draw connections between current research and the extant literature.

The quality and rigor of our research is largely defined by our limitations [ 31 ]. In fact, one of the top reasons reviewers report recommending acceptance of medical education research manuscripts involves limitations: specifically, how the study’s interpretation accounts for its limitations [ 32 ]. Therefore, it is not only best for authors to acknowledge their study’s limitations rather than to have them identified by an editor or reviewer, but proper framing and presentation of limitations can actually increase the likelihood of acceptance. Perhaps these issues could be ameliorated if academic and research organizations adopted policies and/or expectations to guide authors in proper description of limitations.

Chapter 10 Experimental Research

Experimental research, often considered to be the “gold standard” in research designs, is one of the most rigorous of all research designs. In this design, one or more independent variables are manipulated by the researcher (as treatments), subjects are randomly assigned to different treatment levels (random assignment), and the results of the treatments on outcomes (dependent variables) are observed. The unique strength of experimental research is its internal validity (causality) due to its ability to link cause and effect through treatment manipulation, while controlling for the spurious effect of extraneous variables.

Experimental research is best suited for explanatory research (rather than for descriptive or exploratory research), where the goal of the study is to examine cause-effect relationships. It also works well for research that involves a relatively limited and well-defined set of independent variables that can either be manipulated or controlled. Experimental research can be conducted in laboratory or field settings. Laboratory experiments, conducted in laboratory (artificial) settings, tend to be high in internal validity, but this comes at the cost of low external validity (generalizability), because the artificial (laboratory) setting in which the study is conducted may not reflect the real world. Field experiments, conducted in field settings such as a real organization, are high in both internal and external validity. But such experiments are relatively rare, because of the difficulties associated with manipulating treatments and controlling for extraneous effects in a field setting.

Experimental research can be grouped into two broad categories: true experimental designs and quasi-experimental designs. Both designs require treatment manipulation, but while true experiments also require random assignment, quasi-experiments do not. Sometimes, we also refer to non-experimental research, which is not really a research design, but an all-inclusive term that includes all types of research that do not employ treatment manipulation or random assignment, such as survey research, observational research, and correlational studies.

Basic Concepts

Treatment and control groups. In experimental research, some subjects are administered one or more experimental stimuli called a treatment (the treatment group), while other subjects are not given such a stimulus (the control group). The treatment may be considered successful if subjects in the treatment group rate more favorably on outcome variables than control group subjects. Multiple levels of experimental stimulus may be administered, in which case there may be more than one treatment group. For example, to test the effects of a new drug intended to treat a medical condition like dementia, a sample of dementia patients might be randomly divided into three groups: the first group receives a high dosage of the drug, the second group receives a low dosage, and the third group receives a placebo such as a sugar pill (control group). The first two groups are experimental groups and the third group is a control group. After administering the drug for a period of time, if the condition of the experimental group subjects improved significantly more than that of the control group subjects, we can say that the drug is effective. We can also compare the conditions of the high and low dosage experimental groups to determine if the high dose is more effective than the low dose.
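The three-group dementia example can be sketched as a small simulation. The effect sizes, the prognosis distribution, and the function name are invented for illustration; the point is only the structure: random assignment to high-dose, low-dose, and placebo arms, followed by a comparison of mean improvement.

```python
import random
import statistics

def simulate_three_arm_trial(n_patients=300, seed=3):
    """Randomly assign patients to high-dose, low-dose and placebo arms and
    return each arm's mean improvement. All numbers are hypothetical."""
    rng = random.Random(seed)
    # Each patient's baseline prognosis exists before assignment; random
    # assignment spreads good and poor prognoses across the arms.
    prognosis = [rng.gauss(0, 3) for _ in range(n_patients)]
    # Invented true effect of each arm on improvement (placebo = none).
    assumed_effect = {"high": 4.0, "low": 2.0, "placebo": 0.0}
    order = list(range(n_patients))
    rng.shuffle(order)  # random assignment
    arms = {"high": order[0::3], "low": order[1::3], "placebo": order[2::3]}
    means = {}
    for arm, patients in arms.items():
        improvements = [prognosis[p] + assumed_effect[arm] + rng.gauss(0, 1)
                        for p in patients]
        means[arm] = statistics.mean(improvements)
    return means

means = simulate_three_arm_trial()
print("high vs placebo:", means["high"] - means["placebo"])
print("high vs low:", means["high"] - means["low"])
```

`means["high"] - means["placebo"]` corresponds to asking whether the drug is effective, and `means["high"] - means["low"]` to asking whether the high dose outperforms the low dose. A real trial would also test whether those differences are statistically significant.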

Treatment manipulation. Treatments are the unique feature of experimental research that sets this design apart from all other research methods. Treatment manipulation helps control for the “cause” in cause-effect relationships. Naturally, the validity of experimental research depends on how well the treatment was manipulated. Treatment manipulation must be checked using pretests and pilot tests prior to the experimental study. Any measurements conducted before the treatment is administered are called pretest measures, while those conducted after the treatment are posttest measures.

Random selection and assignment. Random selection is the process of randomly drawing a sample from a population or a sampling frame. This approach is typically employed in survey research, and ensures that each unit in the population has a positive chance of being selected into the sample. Random assignment, by contrast, is the process of randomly assigning subjects to experimental or control groups. This is a standard practice in true experimental research to ensure that treatment groups are similar (equivalent) to each other and to the control group prior to treatment administration. Random selection is related to sampling and is therefore more closely related to the external validity (generalizability) of findings. Random assignment, however, is related to design and is therefore most related to internal validity. It is possible to have both random selection and random assignment in well-designed experimental research, but quasi-experimental research involves neither random selection nor random assignment.
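The distinction between the two kinds of randomization can be sketched in a few lines of Python: random selection decides who enters the study, and random assignment decides who within the study gets the treatment. The function and the student identifiers are hypothetical.

```python
import random

def select_and_assign(sampling_frame, sample_size, seed=4):
    """Random selection, then random assignment (hypothetical helper).
    Returns (treatment_group, control_group)."""
    rng = random.Random(seed)
    # Random selection: a simple random sample, so every unit in the frame
    # has an equal chance of being drawn (bears on external validity).
    sample = rng.sample(sampling_frame, sample_size)
    # Random assignment: shuffle the selected subjects and split them, so
    # the groups are equivalent on average before treatment (internal validity).
    shuffled = list(sample)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

frame = [f"student_{i}" for i in range(1000)]
treatment, control = select_and_assign(frame, 100)
print(len(treatment), len(control))
```

A quasi-experiment would skip the shuffle and instead use pre-existing groups, such as two intact classrooms.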

Threats to internal validity. Although experimental designs are considered more rigorous than other research methods in terms of the internal validity of their inferences (by virtue of their ability to control causes through treatment manipulation), they are not immune to internal validity threats. Some of these threats to internal validity are described below, within the context of a study of the impact of a special remedial math tutoring program for improving the math abilities of high school students.

- History threat is the possibility that the observed effects (dependent variables) are caused by extraneous or historical events rather than by the experimental treatment. For instance, students’ post-remedial math score improvement may have been caused by their preparation for a math exam at their school, rather than the remedial math program.

- Maturation threat refers to the possibility that observed effects are caused by natural maturation of subjects (e.g., a general improvement in their intellectual ability to understand complex concepts) rather than the experimental treatment.

- Testing threat is a threat in pre-post designs where subjects’ posttest responses are conditioned by their pretest responses. For instance, if students remember their answers from the pretest evaluation, they may tend to repeat them in the posttest exam. Not conducting a pretest can help avoid this threat.

- Instrumentation threat, which also occurs in pre-post designs, refers to the possibility that the difference between pretest and posttest scores is not due to the remedial math program, but due to changes in the administered test, such as the posttest having a higher or lower degree of difficulty than the pretest.

- Mortality threat refers to the possibility that subjects may be dropping out of the study at differential rates between the treatment and control groups due to a systematic reason, such that the dropouts were mostly students who scored low on the pretest. If the low-performing students drop out, the results of the posttest will be artificially inflated by the preponderance of high-performing students.

- Regression threat, also called regression to the mean, refers to the statistical tendency of a group’s overall performance on a measure during a posttest to regress toward the mean of that measure rather than in the anticipated direction. For instance, if subjects scored high on a pretest, they will tend to score lower on the posttest (closer to the mean) because their high pretest scores (away from the mean) were possibly a statistical aberration. This problem tends to be more prevalent in non-random samples and when the two measures are imperfectly correlated.
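Regression to the mean can occur even when no treatment is given at all. The simulation below assumes each student has a stable true math ability plus independent test-day noise on the pretest and posttest (an illustrative measurement model, with made-up means and standard deviations) and then follows the students who scored in the top 10% on the pretest.

```python
import random
import statistics

def regression_to_mean_demo(n=10000, seed=5):
    """No treatment is given. Return (pretest mean, posttest mean) for the
    students who scored in the top 10% on the pretest."""
    rng = random.Random(seed)
    # Stable true ability, plus independent noise on each test occasion.
    ability = [rng.gauss(60, 8) for _ in range(n)]
    pretest = [a + rng.gauss(0, 8) for a in ability]
    posttest = [a + rng.gauss(0, 8) for a in ability]
    cutoff = sorted(pretest)[int(0.9 * n)]  # 90th-percentile pretest score
    top = [i for i in range(n) if pretest[i] >= cutoff]
    pre_mean = statistics.mean(pretest[i] for i in top)
    post_mean = statistics.mean(posttest[i] for i in top)
    return pre_mean, post_mean

pre, post = regression_to_mean_demo()
print(f"top group: pretest {pre:.1f}, posttest {post:.1f}")
```

Under these assumptions the pretest-posttest correlation is about 0.5, so the selected group's posttest mean falls roughly halfway back toward the overall mean of 60, a drop that a pre-post comparison without a control group could wrongly attribute to the treatment.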

Two-Group Experimental Designs

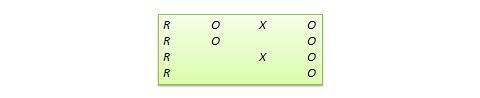

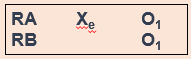

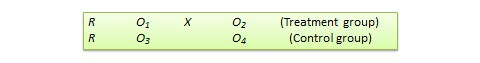

The simplest true experimental designs are two-group designs involving one treatment group and one control group, and are ideally suited for testing the effects of a single independent variable that can be manipulated as a treatment. The two basic two-group designs are the pretest-posttest control group design and the posttest-only control group design, while variations may include covariance designs. These designs are often depicted using a standardized design notation, where R represents random assignment of subjects to groups, X represents the treatment administered to the treatment group, and O represents pretest or posttest observations of the dependent variable (with different subscripts to distinguish between pretest and posttest observations of treatment and control groups).

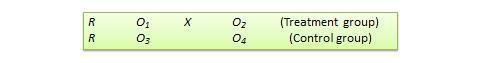

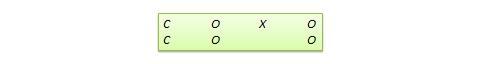

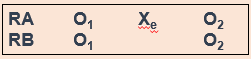

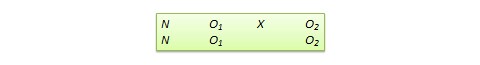

Pretest-posttest control group design. In this design, subjects are randomly assigned to treatment and control groups and given an initial (pretest) measurement of the dependent variables of interest; the treatment group is then administered a treatment (representing the independent variable of interest), and the dependent variables are measured again (posttest). The notation of this design is shown in Figure 10.1.

Figure 10.1. Pretest-posttest control group design

The effect E of the experimental treatment in the pretest-posttest design is measured as the difference between the treatment and control groups in their change from pretest to posttest scores:

$$E = (O_2 - O_1) - (O_4 - O_3)$$
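As a worked illustration, E can be computed from group means. This sketch assumes the usual reading of Figure 10.1, in which O1 and O2 are the treatment group's pretest and posttest observations and O3 and O4 are the control group's; the function name and scores are hypothetical.

```python
from statistics import mean


def pretest_posttest_effect(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """E = (O2 - O1) - (O4 - O3), with each O taken as a group mean.

    O1, O2: treatment group pretest and posttest
    O3, O4: control group pretest and posttest
    """
    o1, o2 = mean(treat_pre), mean(treat_post)
    o3, o4 = mean(ctrl_pre), mean(ctrl_post)
    return (o2 - o1) - (o4 - o3)


# Hypothetical math scores for four students per group
effect = pretest_posttest_effect(
    treat_pre=[52, 48, 55, 45], treat_post=[68, 63, 70, 59],
    ctrl_pre=[50, 47, 54, 49], ctrl_post=[56, 52, 60, 52],
)
print(effect)
```

Here the treatment group gains 15 points on average and the control group gains 5, so E = 15 - 5 = 10; subtracting the control group's gain removes change that both groups would have shown anyway.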

Statistical analysis of this design involves a simple analysis of variance (ANOVA) between the treatment and control groups. The pretest-posttest design handles several threats to internal validity, such as maturation, testing, and regression, since these threats can be expected to influence both treatment and control groups in a similar (random) manner. The selection threat is controlled via random assignment. However, additional threats to internal validity may exist. For instance, mortality can be a problem if there are differential dropout rates between the two groups, and the pretest measurement may bias the posttest measurement (especially if the pretest introduces unusual topics or content).

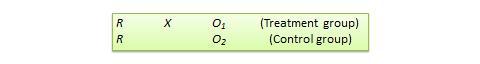

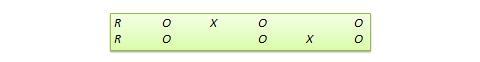

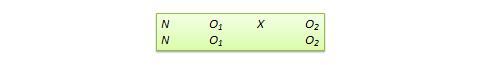

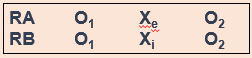

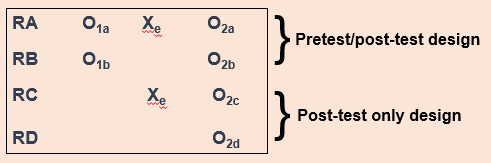

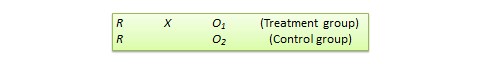

Posttest-only control group design. This design is a simpler version of the pretest-posttest design where pretest measurements are omitted. The design notation is shown in Figure 10.2.

Figure 10.2. Posttest-only control group design.

The treatment effect is measured simply as the difference in the posttest scores between the two groups:

$$E = O_1 - O_2$$

The appropriate statistical analysis of this design is also a two-group analysis of variance (ANOVA). The simplicity of this design makes it more attractive than the pretest-posttest design in terms of internal validity. This design controls for maturation, testing, regression, selection, and pretest-posttest interaction, though the mortality threat may continue to exist.
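To make the two-group ANOVA concrete, the sketch below computes the F statistic by hand (between-group mean square divided by within-group mean square), along with E = O1 - O2, for hypothetical posttest scores. It is a plain-Python illustration with a hypothetical function name; in practice a statistics library would also supply the p-value (for example, `scipy.stats.f_oneway` in SciPy returns both the F statistic and the p-value).

```python
from statistics import mean


def one_way_anova_f(*groups):
    """F = (between-group mean square) / (within-group mean square)."""
    scores = [x for g in groups for x in g]
    grand_mean = mean(scores)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(scores)

    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical posttest scores (O1 = treatment, O2 = control)
treatment = [68, 63, 70, 59]
control = [56, 52, 60, 52]
effect = mean(treatment) - mean(control)  # E = O1 - O2
f_stat = one_way_anova_f(treatment, control)
print(effect, round(f_stat, 2))
```

With only two groups, this F statistic equals the square of the pooled-variance two-sample t statistic, so the ANOVA and a t-test lead to the same conclusion.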

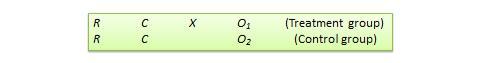

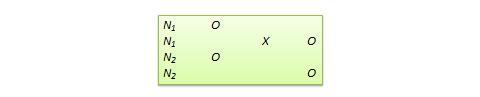

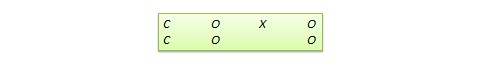

Covariance designs. Sometimes, measures of dependent variables may be influenced by extraneous variables called covariates. Covariates are those variables that are not of central interest to an experimental study, but should nevertheless be controlled in an experimental design in order to eliminate their potential effect on the dependent variable and therefore allow for a more accurate detection of the effects of the independent variables of interest. The experimental designs discussed earlier did not control for such covariates. A covariance design (also called a concomitant variable design) is a special type of pretest-posttest control group design where the pretest measure is essentially a measurement of the covariates of interest rather than that of the dependent variables. The design notation is shown in Figure 10.3, where C represents the covariates:

Figure 10.3. Covariance design

Because the pretest measure is not a measurement of the dependent variable, but rather a covariate, the treatment effect is measured as the difference in the posttest scores between the treatment and control groups as:

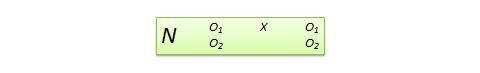
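Beyond the raw posttest difference, covariates are typically handled at the analysis stage. One common approach is analysis of covariance (ANCOVA, mentioned later in this document), which adjusts the posttest difference for any difference between the groups on the covariate. The sketch below is a minimal illustration, assuming a single covariate and the same linear slope relating covariate to posttest in both groups; the function name, covariate, and data are hypothetical.

```python
from statistics import mean


def covariate_adjusted_effect(treat, ctrl):
    """treat, ctrl: lists of (covariate, posttest) pairs, one pair per subject.

    Returns (raw posttest difference, covariate-adjusted difference).
    The adjustment uses the pooled within-group slope of posttest on covariate,
    which assumes the slope is the same in both groups.
    """
    def summarize(group):
        mc = mean(c for c, _ in group)
        my = mean(y for _, y in group)
        sxy = sum((c - mc) * (y - my) for c, y in group)
        sxx = sum((c - mc) ** 2 for c, _ in group)
        return mc, my, sxy, sxx

    t_c, t_y, t_sxy, t_sxx = summarize(treat)
    c_c, c_y, c_sxy, c_sxx = summarize(ctrl)
    slope = (t_sxy + c_sxy) / (t_sxx + c_sxx)

    raw = t_y - c_y
    # Remove the part of the raw gap explained by the groups' covariate difference.
    adjusted = raw - slope * (t_c - c_c)
    return raw, adjusted


# Hypothetical data: (prior achievement covariate, posttest score)
treat = [(2, 10), (3, 12), (4, 14), (5, 16)]
ctrl = [(1, 5), (2, 7), (3, 9), (4, 11)]
print(covariate_adjusted_effect(treat, ctrl))
```

In this example the treatment group started one point higher on the covariate, so part of its 5-point raw advantage is attributed to the covariate and the adjusted effect shrinks to 3.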

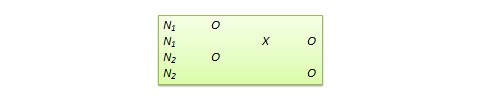

Figure 10.4. 2 x 2 factorial design

Factorial designs can also be depicted using a design notation, such as that shown on the right panel of Figure 10.4. R represents random assignment of subjects to treatment groups, X represents the treatment groups themselves (the subscripts of X represent the level of each factor), and O represents observations of the dependent variable. Notice that the 2 x 2 factorial design will have four treatment groups, corresponding to the four combinations of the two levels of each factor. Correspondingly, the 2 x 3 design will have six treatment groups, and the 2 x 2 x 2 design will have eight treatment groups. As a rule of thumb, each cell in a factorial design should have a minimum sample size of 20 (this estimate is derived from Cohen’s power calculations based on medium effect sizes). So a 2 x 2 x 2 factorial design requires a minimum total sample size of 160 subjects, with at least 20 subjects in each cell. As you can see, the cost of data collection can increase substantially with more levels or factors in your factorial design. Sometimes, due to resource constraints, some cells in such factorial designs may not receive any treatment at all; such designs are called incomplete factorial designs. Such incomplete designs hurt our ability to draw inferences about the incomplete factors.
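The cell counts and minimum sample sizes above follow from multiplying the number of levels of each factor. The sketch below enumerates the cells with `itertools.product` and applies the text's rule of thumb of 20 subjects per cell; the function and constant names are illustrative.

```python
from itertools import product

MIN_PER_CELL = 20  # rule of thumb from the text (medium effect sizes)


def factorial_cells(*levels):
    """List every treatment cell (combination of factor levels) in a factorial design."""
    return list(product(*(range(1, k + 1) for k in levels)))


for design in [(2, 2), (2, 3), (2, 2, 2)]:
    cells = factorial_cells(*design)
    label = " x ".join(str(k) for k in design)
    print(f"{label}: {len(cells)} cells, minimum N = {len(cells) * MIN_PER_CELL}")
```

This prints 4, 6, and 8 cells, with minimum total sample sizes of 80, 120, and 160, matching the figures in the paragraph above.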

In a factorial design, a main effect is said to exist if the dependent variable shows a significant difference between multiple levels of one factor, at all levels of other factors. No change in the dependent variable across factor levels is the null case (baseline), from which main effects are evaluated. In the above example, you may see a main effect of instructional type, instructional time, or both on learning outcomes. An interaction effect exists when the effect of differences in one factor depends upon the level of a second factor. In our example, if the effect of instructional type on learning outcomes is greater for 3 hours/week of instructional time than for 1.5 hours/week, then we can say that there is an interaction effect between instructional type and instructional time on learning outcomes. Note that significant interaction effects dominate and make main effects irrelevant: it is not meaningful to interpret main effects if interaction effects are significant.
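Main and interaction effects can be read off the cell means of a 2 x 2 design as simple contrasts. The sketch below uses hypothetical cell means and hypothetical labels A and B for the two instructional types, with 1.5 and 3 hours/week as the two levels of instructional time. Whether any of these contrasts is statistically significant would still need to be tested, for example with a factorial ANOVA.

```python
def two_by_two_effects(means):
    """Simple contrasts for a 2 x 2 design.

    `means` maps (instructional type, hours per week) to a cell mean.
    Type labels "A"/"B" are hypothetical placeholders.
    """
    a_lo, a_hi = means[("A", 1.5)], means[("A", 3.0)]
    b_lo, b_hi = means[("B", 1.5)], means[("B", 3.0)]
    main_type = ((b_lo + b_hi) - (a_lo + a_hi)) / 2  # B minus A, averaged over time
    main_time = ((a_hi + b_hi) - (a_lo + b_lo)) / 2  # 3.0 minus 1.5 h/week, averaged over type
    interaction = (b_hi - a_hi) - (b_lo - a_lo)      # change in the B-vs-A gap across time levels
    return main_type, main_time, interaction


cell_means = {("A", 1.5): 60, ("A", 3.0): 65, ("B", 1.5): 62, ("B", 3.0): 75}
print(two_by_two_effects(cell_means))  # (6.0, 9.0, 8)
```

Here the type B advantage is 2 points at 1.5 hours/week but 10 points at 3 hours/week, giving an interaction contrast of 8. If that interaction proved significant, then, following the text, the main effects (6.0 and 9.0) should not be interpreted on their own.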

Hybrid Experimental Designs

Hybrid designs are those that are formed by combining features of more established designs. Three such hybrid designs are the randomized blocks design, the Solomon four-group design, and the switched replication design.

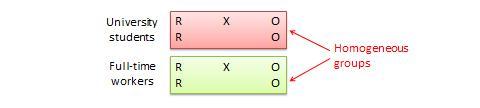

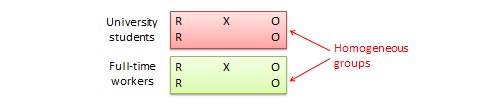

Randomized block design. This is a variation of the posttest-only or pretest-posttest control group design where the subject population can be grouped into relatively homogeneous subgroups (called blocks) within which the experiment is replicated. For instance, if you want to replicate the same posttest-only design among university students and full-time working professionals (two homogeneous blocks), subjects in both blocks are randomly split between the treatment group (receiving the same treatment) and the control group (see Figure 10.5). The purpose of this design is to reduce the “noise” or variance in data that may be attributable to differences between the blocks so that the actual effect of interest can be detected more accurately.

Figure 10.5. Randomized blocks design.
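A minimal sketch of the randomization step in a randomized block design: subjects are shuffled and split into treatment and control separately within each block, so every block contributes to both groups. The block names mirror the text's example; the subject IDs, seed, and function name are hypothetical.

```python
import random


def randomized_block_assignment(subjects_by_block, seed=None):
    """Randomly split each block's subjects into treatment and control halves.

    Returns a dict mapping subject ID -> (block, group).
    """
    rng = random.Random(seed)
    assignment = {}
    for block, subjects in subjects_by_block.items():
        shuffled = list(subjects)
        rng.shuffle(shuffled)  # randomize order within this block only
        half = len(shuffled) // 2
        for subject in shuffled[:half]:
            assignment[subject] = (block, "treatment")
        for subject in shuffled[half:]:
            assignment[subject] = (block, "control")
    return assignment


# Hypothetical subject IDs for the two blocks in the text's example
blocks = {
    "university students": [f"S{i}" for i in range(1, 9)],
    "working professionals": [f"P{i}" for i in range(1, 7)],
}
for subject, (block, group) in sorted(randomized_block_assignment(blocks, seed=42).items()):
    print(subject, block, group)
```

With 8 students and 6 professionals, every run places 4 students and 3 professionals in the treatment group; only which individuals land there changes with the seed.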

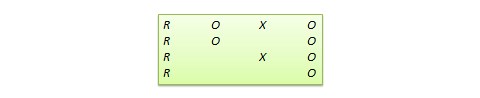

Solomon four-group design. In this design, the sample is divided into two treatment groups and two control groups. One treatment group and one control group receive the pretest, and the other two groups do not. This design represents a combination of the posttest-only and pretest-posttest control group designs, and is intended to test for the potential biasing effect of pretest measurement on posttest measures that tends to occur in pretest-posttest designs but not in posttest-only designs. The design notation is shown in Figure 10.6.

Figure 10.6. Solomon four-group design
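Because the Solomon design crosses two factors (pretest given or not, treatment given or not), its four posttest means can be compared as a 2 x 2 layout. The sketch below computes the treatment effect separately for the pretested and non-pretested groups; a gap between the two suggests the pretest biased the posttest. The scores and function name are hypothetical, and formal testing would typically use a 2 x 2 ANOVA on the posttest scores.

```python
from statistics import mean


def solomon_contrasts(pre_treat, pre_ctrl, nopre_treat, nopre_ctrl):
    """Each argument holds the posttest scores of one of the four Solomon groups."""
    effect_with_pretest = mean(pre_treat) - mean(pre_ctrl)
    effect_without_pretest = mean(nopre_treat) - mean(nopre_ctrl)
    # A nonzero gap suggests the pretest changed how subjects responded to the treatment.
    pretest_bias = effect_with_pretest - effect_without_pretest
    return effect_with_pretest, effect_without_pretest, pretest_bias


# Hypothetical posttest scores for the four groups
print(solomon_contrasts(
    pre_treat=[70, 74, 72], pre_ctrl=[60, 62, 61],
    nopre_treat=[66, 68, 67], nopre_ctrl=[59, 61, 60],
))  # (11, 7, 4)
```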

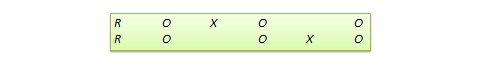

Switched replication design. This is a two-group design implemented in two phases with three waves of measurement. The treatment group in the first phase serves as the control group in the second phase, and the control group in the first phase becomes the treatment group in the second phase, as illustrated in Figure 10.7. In other words, the original design is repeated or replicated temporally with treatment/control roles switched between the two groups. By the end of the study, all participants will have received the treatment either during the first or the second phase. This design is most feasible in organizational contexts where organizational programs (e.g., employee training) are implemented in a phased manner or are repeated at regular intervals.

Figure 10.7. Switched replication design.

Quasi-Experimental Designs

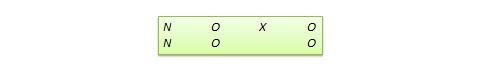

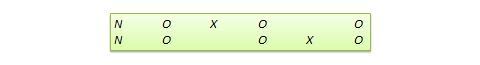

Quasi-experimental designs are almost identical to true experimental designs, but they lack one key ingredient: random assignment. For instance, one entire class section or one organization is used as the treatment group, while another section of the same class or a different organization in the same industry is used as the control group. This lack of random assignment potentially results in groups that are non-equivalent, such as one group possessing greater mastery of certain content than the other group, say by virtue of having had a better teacher in a previous semester, which introduces the possibility of selection bias. Quasi-experimental designs are therefore inferior to true experimental designs in internal validity due to the presence of a variety of selection-related threats such as selection-maturation threat (the treatment and control groups maturing at different rates), selection-history threat (the treatment and control groups being differentially impacted by extraneous or historical events), selection-regression threat (the treatment and control groups regressing toward the mean between pretest and posttest at different rates), selection-instrumentation threat (the treatment and control groups responding differently to the measurement), selection-testing threat (the treatment and control groups responding differently to the pretest), and selection-mortality threat (the treatment and control groups demonstrating differential dropout rates). Given these selection threats, it is generally preferable to avoid quasi-experimental designs to the greatest extent possible.

Many true experimental designs can be converted to quasi-experimental designs by omitting random assignment. For instance, the quasi-experimental version of the pretest-posttest control group design is called the nonequivalent groups design (NEGD), as shown in Figure 10.8, with random assignment R replaced by non-equivalent (non-random) assignment N. Likewise, the quasi-experimental version of the switched replication design is called the non-equivalent switched replication design (see Figure 10.9).

Figure 10.8. NEGD design.

Figure 10.9. Non-equivalent switched replication design.

In addition, there are quite a few unique non-equivalent designs without corresponding true experimental design cousins. Some of the more useful of these designs are discussed next.

Regression-discontinuity (RD) design. This is a non-equivalent pretest-posttest design where subjects are assigned to the treatment or control group based on a cutoff score on a preprogram measure. For instance, patients who are severely ill may be assigned to a treatment group to test the efficacy of a new drug or treatment protocol, and those who are mildly ill are assigned to the control group. In another example, students who are lagging behind on standardized test scores may be selected for a remedial curriculum program intended to improve their performance, while those who score high on such tests are not selected for the remedial program. The design notation can be represented as follows, where C represents the cutoff score:

Figure 10.10. RD design.

Because of the use of a cutoff score, it is possible that the observed results may be a function of the cutoff score rather than the treatment, which introduces a new threat to internal validity. However, using the cutoff score also ensures that limited or costly resources are distributed to the people who need them most rather than randomly across a population, while still allowing a quasi-experimental treatment. The control group scores in the RD design do not serve as a benchmark for comparing treatment group scores, given the systematic non-equivalence between the two groups. Rather, if there is no discontinuity between pretest and posttest scores in the control group, but such a discontinuity persists in the treatment group, then this discontinuity is viewed as evidence of the treatment effect.
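One common way to quantify the discontinuity described above is to fit separate regression lines of posttest on pretest scores on each side of the cutoff and measure the gap between them at the cutoff. The sketch below assumes straight-line relationships on both sides and, as in the remedial-program example, that students scoring below the cutoff received the treatment; the data and function names are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def rd_discontinuity(pre, post, cutoff):
    """Gap between the treated and control regression lines at the cutoff.

    Assumes subjects scoring below the cutoff received the treatment.
    """
    treated = [(x, y) for x, y in zip(pre, post) if x < cutoff]
    control = [(x, y) for x, y in zip(pre, post) if x >= cutoff]
    a_t, b_t = fit_line(*zip(*treated))
    a_c, b_c = fit_line(*zip(*control))
    return (a_t + b_t * cutoff) - (a_c + b_c * cutoff)


# Hypothetical scores: posttest = 10 + 0.5 * pretest, plus 5 points for treated students
pre = [30, 35, 40, 45, 55, 60, 65, 70]
post = [10 + 0.5 * x + (5 if x < 50 else 0) for x in pre]
print(rd_discontinuity(pre, post, cutoff=50))  # 5.0
```

Real data are noisy and the relationship may not be linear, so in practice the estimate is sensitive to how the two lines are modeled near the cutoff.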

Proxy pretest design. This design, shown in Figure 10.11, looks very similar to the standard NEGD (pretest-posttest) design, with one critical difference: the pretest score is collected after the treatment is administered. A typical application of this design is when a researcher is brought in to test the efficacy of a program (e.g., an educational program) after the program has already started and pretest data is not available. Under such circumstances, the best option for the researcher is often to use a different prerecorded measure, such as students’ grade point average before the start of the program, as a proxy for pretest data. A variation of the proxy pretest design is to use subjects’ posttest recollection of pretest data, which may be subject to recall bias, but nevertheless may provide a measure of perceived gain or change in the dependent variable.

Figure 10.11. Proxy pretest design.

Separate pretest-posttest samples design. This design is useful if it is not possible to collect pretest and posttest data from the same subjects for some reason. As shown in Figure 10.12, there are four groups in this design, but two groups come from a single non-equivalent group, while the other two groups come from a different non-equivalent group. For instance, suppose you want to test customer satisfaction with a new online service that is implemented in one city but not in another. In this case, customers in the first city serve as the treatment group and those in the second city constitute the control group. If it is not possible to obtain pretest and posttest measures from the same customers, you can measure customer satisfaction at one point in time, implement the new service program, and measure customer satisfaction (with a different set of customers) after the program is implemented. Customer satisfaction is also measured in the control group at the same times as in the treatment group, but without the new program implementation. The design is not particularly strong, because you cannot examine the changes in any specific customer’s satisfaction score before and after the implementation; you can only examine average customer satisfaction scores. Despite the lower internal validity, this design may still be a useful way of collecting quasi-experimental data when pretest and posttest data are not available from the same subjects.

Figure 10.12. Separate pretest-posttest samples design.

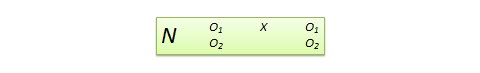

Nonequivalent dependent variable (NEDV) design. This is a single-group pre-post quasi-experimental design with two outcome measures, where one measure is theoretically expected to be influenced by the treatment and the other measure is not. For instance, if you are designing a new calculus curriculum for high school students, this curriculum is likely to influence students’ posttest calculus scores but not their algebra scores. However, the posttest algebra scores may still vary due to extraneous factors such as history or maturation. Hence, the pre-post algebra scores can be used as a control measure, while the pre-post calculus scores can be treated as the treatment measure. The design notation, shown in Figure 10.13, indicates the single group by a single N, followed by pretest $O_1$ and posttest $O_2$ for calculus and algebra for the same group of students. This design is weak in internal validity, but its advantage lies in not having to use a separate control group.

An interesting variation of the NEDV design is the pattern-matching NEDV design, which employs multiple outcome variables and a theory that explains how much each variable will be affected by the treatment. The researcher can then examine whether the theoretical prediction is matched in actual observations. This pattern-matching technique, based on the degree of correspondence between theoretical and observed patterns, is a powerful way of alleviating internal validity concerns in the original NEDV design.

Figure 10.13. NEDV design.
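The logic of the NEDV design can be sketched as a comparison of pre-post gains on the two measures: the algebra gain estimates change due to history or maturation, and the calculus gain beyond it is the part that may reflect the curriculum. This sketch assumes, hypothetically, that both tests are scored on comparable scales (otherwise the difference of gains is not meaningful); the scores and function name are illustrative.

```python
from statistics import mean


def nedv_gains(calc_pre, calc_post, alg_pre, alg_post):
    """Pre-post gains on the treatment measure (calculus) and control measure (algebra)."""
    calc_gain = mean(calc_post) - mean(calc_pre)
    alg_gain = mean(alg_post) - mean(alg_pre)
    # Gain on the treatment measure beyond what the control measure shows
    # (only meaningful if both tests are on comparable scales).
    return calc_gain, alg_gain, calc_gain - alg_gain


# Hypothetical scores for the same three students on both tests
print(nedv_gains(
    calc_pre=[40, 45, 50], calc_post=[58, 60, 62],
    alg_pre=[60, 62, 64], alg_post=[62, 64, 66],
))  # (15, 2, 13)
```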

Perils of Experimental Research

Experimental research is one of the most difficult of research designs, and should not be taken lightly. This type of research is often beset with a multitude of methodological problems. First, though experimental research requires theories for framing hypotheses for testing, much of current experimental research is atheoretical. Without theories, the hypotheses being tested tend to be ad hoc, possibly illogical, and meaningless. Second, many of the measurement instruments used in experimental research are not tested for reliability and validity, and are incomparable across studies. Consequently, results generated using such instruments are also incomparable. Third, many experimental studies use inappropriate research designs, such as irrelevant dependent variables, no interaction effects, no experimental controls, and non-equivalent stimuli across treatment groups. Findings from such studies tend to lack internal validity and are highly suspect. Fourth, the treatments (tasks) used in experimental research may be diverse, incomparable, and inconsistent across studies, and sometimes inappropriate for the subject population. For instance, undergraduate student subjects are often asked to pretend that they are marketing managers and to perform a complex budget allocation task in which they have no experience or expertise. The use of such inappropriate tasks introduces new threats to internal validity (i.e., subjects’ performance may be an artifact of the content or difficulty of the task setting), generates findings that are non-interpretable and meaningless, and makes integration of findings across studies impossible.

The design of proper experimental treatments is a very important task in experimental design, because the treatment is the raison d’être of the experimental method, and must never be rushed or neglected. To design an adequate and appropriate task, researchers should use prevalidated tasks if available, conduct treatment manipulation checks to check the adequacy of such tasks (by debriefing subjects after they perform the assigned task), conduct pilot tests (repeatedly, if necessary), and, if in doubt, use tasks that are simple and familiar to the respondent sample rather than tasks that are complex or unfamiliar.

In summary, this chapter introduced key concepts in the experimental design research method and introduced a variety of true experimental and quasi-experimental designs. Although these designs vary widely in internal validity, designs with less internal validity should not be overlooked and may sometimes be useful under specific circumstances and empirical contingencies.

- Social Science Research: Principles, Methods, and Practices. Authored by : Anol Bhattacherjee. Provided by : University of South Florida. Located at : http://scholarcommons.usf.edu/oa_textbooks/3/ . License : CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

Experimental and Quasi-Experimental Research

Guide Title: Experimental and Quasi-Experimental Research Guide ID: 64

You approach a stainless-steel wall, separated vertically along its middle where two halves meet. After looking to the left, you see two buttons on the wall to the right. You press the top button and it lights up. A soft tone sounds and the two halves of the wall slide apart to reveal a small room. You step into the room. Looking to the left, then to the right, you see a panel of more buttons. You know that you seek a room marked with the numbers 1-0-1-2, so you press the button marked "10." The halves slide shut and enclose you within the cubicle, which jolts upward. Soon, the soft tone sounds again. The door opens again. On the far wall, a sign silently proclaims, "10th floor."

You have engaged in a series of experiments. A ride in an elevator may not seem like an experiment, but it, and each step taken toward its ultimate outcome, are common examples of a search for a causal relationship, which is what experimentation is all about.

You started with the hypothesis that this is in fact an elevator. You proved that you were correct. You then hypothesized that the button to summon the elevator was on the left, which was incorrect, so then you hypothesized it was on the right, and you were correct. You hypothesized that pressing the button marked with the up arrow would not only bring an elevator to you, but that it would be an elevator heading in the up direction. You were right.

As this guide explains, the deliberate process of testing hypotheses and reaching conclusions is an extension of commonplace testing of cause and effect relationships.

Basic Concepts of Experimental and Quasi-Experimental Research

Discovering causal relationships is the key to experimental research. In abstract terms, this means the relationship between a certain action, X, which alone creates the effect Y. For example, turning the volume knob on your stereo clockwise causes the sound to get louder. In addition, you could observe that turning the knob clockwise alone, and nothing else, caused the sound level to increase. You could further conclude that a causal relationship exists between turning the knob clockwise and an increase in volume; not simply because one caused the other, but because you are certain that nothing else caused the effect.

Independent and Dependent Variables

Beyond discovering causal relationships, experimental research further seeks out how much cause will produce how much effect; in technical terms, how the independent variable will affect the dependent variable. You know that turning the knob clockwise will produce a louder noise, but by varying how much you turn it, you see how much sound is produced. On the other hand, you might find that although you turn the knob a great deal, sound doesn't increase dramatically. Or, you might find that turning the knob just a little adds more sound than expected. The amount that you turned the knob is the independent variable, the variable that the researcher controls, and the amount of sound that resulted from turning it is the dependent variable, the change that is caused by the independent variable.

Experimental research also looks into the effects of removing something. For example, if you remove a loud noise from the room, will the person next to you be able to hear you? Or how much noise needs to be removed before that person can hear you?

Treatment and Hypothesis

The term treatment refers to either removing or adding a stimulus in order to measure an effect (such as turning the knob a little or a lot, or reducing the noise level a little or a lot). Experimental researchers want to know how varying levels of treatment will affect what they are studying. As such, researchers often have an idea, or hypothesis, about what effect will occur when they cause something. Few experiments are performed where there is no idea of what will happen. From past experiences in life or from the knowledge we possess in our specific field of study, we know how some actions cause other reactions. Experiments confirm or reconfirm this fact.

Experimentation becomes more complex when the causal relationships being sought aren't as clear as in the stereo knob-turning example. Questions like "Will olestra cause cancer?" or "Will this new fertilizer help this plant grow better?" present more to consider. For example, any number of things could affect the growth rate of a plant: the temperature, how much water or sun it receives, or how much carbon dioxide is in the air. These variables can affect an experiment's results. An experimenter who wants to show that adding a certain fertilizer will help a plant grow better must ensure that it is the fertilizer, and nothing else, affecting the growth patterns of the plant. To do this, as many of these variables as possible must be controlled.

Matching and Randomization

In the example used in this guide (you'll find the example below), we discuss an experiment that focuses on three groups of plants: one that is treated with a fertilizer named MegaGro, another group treated with a fertilizer named Plant!, and yet another that is not treated with fertilizer (this latter group serves as a "control" group). In this example, even though the designers of the experiment have tried to remove all extraneous variables, results may appear merely coincidental. Since the goal of the experiment is to prove a causal relationship in which a single variable is responsible for the effect produced, the experiment would produce stronger proof if the results were replicated in larger treatment and control groups.

Selecting groups entails assigning subjects to the groups of an experiment in such a way that treatment and control groups are comparable in all respects except the application of the treatment. Groups can be created in two ways: matching and randomization. In the MegaGro experiment discussed below, the plants might be matched according to characteristics such as age, weight and whether they are blooming. This involves distributing these plants so that each plant in one group exactly matches characteristics of plants in the other groups. Matching may be problematic, though, because it "can promote a false sense of security by leading [the experimenter] to believe that [the] experimental and control groups were really equated at the outset, when in fact they were not equated on a host of variables" (Jones, 291). In other words, you may have flowers for your MegaGro experiment that you matched and distributed among groups, but other variables are unaccounted for. It would be difficult to have equal groupings.

Randomization, then, is preferred to matching. This method relies on chance rather than on the researcher's judgment: theoretically, any randomly assigned group of adequate size will reflect the distribution of characteristics in the population as a whole, so differences between groups will average out and the groups become more comparable. For many characteristics, that distribution follows the principle of normal distribution, which states that in a population most individuals will fall within the middle range of values for a given characteristic, with increasingly fewer toward either extreme (graphically represented as the ubiquitous "bell curve").
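A small simulation can illustrate how randomization equates groups on average. It assumes 1,000 hypothetical plants described only by their starting height; the plants are shuffled and split in half, and the two group means come out close to each other even though no one matched them by hand. The same balancing tends to occur for characteristics nobody measured, which is the advantage over matching described above. The function name, seeds, and heights are illustrative.

```python
import random
from statistics import mean


def random_assignment(subjects, seed=None):
    """Shuffle the subjects and split them into two equal-sized groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical starting heights (cm) of 1,000 plants, one value per plant
rng = random.Random(7)
heights = [rng.gauss(30, 5) for _ in range(1000)]

treatment, control = random_assignment(heights, seed=11)
print(f"treatment mean: {mean(treatment):.2f}  control mean: {mean(control):.2f}")
```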

Differences between Quasi-Experimental and Experimental Research

Thus far, we have explained that for experimental research we need:

- a hypothesis for a causal relationship;

- a control group and a treatment group;

- to eliminate confounding variables that might mess up the experiment and prevent displaying the causal relationship; and

- to have larger groups with a carefully sorted constituency; preferably randomized, in order to keep accidental differences from fouling things up.

But what if we don't have all of those? Do we still have an experiment? Not a true experiment in the strictest scientific sense of the term, but we can have a quasi-experiment, an attempt to uncover a causal relationship, even though the researcher cannot control all the factors that might affect the outcome.

A quasi-experimenter treats a given situation as an experiment even though it is not wholly by design. The independent variable may not be manipulated by the researcher, treatment and control groups may not be randomized or matched, or there may be no control group. The researcher is limited in what he or she can say conclusively.

The significant element of both experiments and quasi-experiments is the measure of the dependent variable, which allows for comparison. Some data is quite straightforward, but other measures, such as level of self-confidence in writing ability, increase in creativity, or gains in reading comprehension, are inescapably subjective. In such cases, quasi-experimentation often involves a number of strategies to make subjective measures comparable, such as rating data, testing, surveying, and content analysis.

Rating essentially is developing a rating scale to evaluate data. In testing, experimenters and quasi-experimenters use ANOVA (Analysis of Variance) and ANCOVA (Analysis of Co-Variance) tests to measure differences between control and experimental groups, as well as different correlations between groups.

Since we're mentioning the subject of statistics, note that experimental or quasi-experimental research cannot state beyond a shadow of a doubt that a single cause will always produce any one effect. It can do no more than show a probability that one thing causes another. The probability that a result at least as large as the one observed would arise from random chance alone is an important measure in statistical analysis and in experimental research.
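One way to estimate that probability without assuming any particular distribution is a permutation test: if the treatment had no effect, the group labels are arbitrary, so we check how often relabeling the same scores produces a difference at least as large as the one observed. This exact version enumerates every possible relabeling, which is only practical for small groups; the plant growth figures and function name are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the difference in group means.

    Counts how many of all possible ways of relabeling the pooled scores
    produce an absolute mean difference at least as large as the observed one.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = total = 0
    for chosen in combinations(range(len(pooled)), n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        if abs(mean(a) - mean(b)) >= observed - 1e-12:  # tolerance for float rounding
            extreme += 1
        total += 1
    return extreme / total


# Hypothetical growth (cm) for five MegaGro plants and five unfertilized controls
megagro = [6, 7, 8, 9, 10]
control = [1, 2, 3, 4, 5]
print(round(permutation_p_value(megagro, control), 4))  # 0.0079
```

Only 2 of the 252 possible relabelings give a mean difference of 5 or more, so p = 2/252, or about 0.008: a difference this large would rarely arise by chance alone if MegaGro had no effect.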

Example: Causality

Let's say you want to determine that your new fertilizer, MegaGro, will increase the growth rate of plants. You begin by getting a plant to go with your fertilizer. Since the experiment is concerned with proving that MegaGro works, you need another plant, using no fertilizer at all on it, to compare how much change your fertilized plant displays. This is what is known as a control group.

Set up with a control group, which will receive no treatment, and an experimental group, which will get MegaGro, you must then address those variables that could invalidate your experiment. This can be an extensive and exhaustive process. You must ensure that you use the same kind of plant; that both groups are put in the same kind of soil; that they receive equal amounts of water and sun; that they receive the same amount of exposure to carbon-dioxide-exhaling researchers, and so on. In short, any other variable that might affect the growth of those plants, other than the fertilizer, must be the same for both plants. Otherwise, you can't prove absolutely that MegaGro is the only explanation for the increased growth of one of those plants.

Such an experiment can be done on more than two groups. You may not only want to show that MegaGro is an effective fertilizer, but that it is better than its competitor brand of fertilizer, Plant! All you need to do, then, is have one experimental group receiving MegaGro, one receiving Plant! and the other (the control group) receiving no fertilizer. Those are the only variables that can be different between the three groups; all other variables must be the same for the experiment to be valid.

Controlling variables allows the researcher to identify conditions that may affect the experiment's outcome. This may lead to alternative explanations that the researcher is willing to entertain in order to isolate only variables judged significant. In the MegaGro experiment, you may be concerned with how fertile the soil is, but not with the plants' relative position in the window, as you don't think that the amount of shade they get will affect their growth rate. But what if it did? You would have to go about eliminating variables in order to determine which is the key factor. What if one receives more shade than the other and the MegaGro plant, which received more shade, died? This might prompt you to formulate a plausible alternative explanation, which is a way of accounting for a result that differs from what you expected. You would then want to redo the study with equal amounts of sunlight.

Methods: Five Steps

Experimental research can be roughly divided into five phases:

Identifying a research problem

The process starts by clearly identifying the problem you want to study and considering which methods might lead to a solution. Then you choose the method you want to test and formulate a hypothesis that predicts the outcome of the test.

For example, you may want to improve student essays, but you don't believe that teacher feedback alone is enough. You hypothesize that possible methods for improving writing include peer workshopping or reading more example essays. Favoring the former, you design an experiment to determine whether peer workshopping improves writing in high school seniors. You state your hypothesis: peer workshopping prior to turning in a final draft will improve the quality of students' essays.

Planning an experimental research study

The next step is to devise an experiment to test your hypothesis. In doing so, you must consider several factors. For example, how generalizable do you want your end results to be? Do you want to generalize about the entire population of high school seniors everywhere, or just the particular population of seniors at your specific school? The answer will determine how simple or complex the experiment will be. The amount of time and funding you have will also determine the size of your experiment.

Continuing the example from step one, you may want a small study at one school involving three teachers, each teaching two sections of the same course. The treatment in this experiment is peer workshopping. Each of the three teachers will give the same essay assignment to both sections; the treatment group will participate in peer workshopping, while the control group will receive only teacher comments on their drafts.

Conducting the experiment

At the start of an experiment, the control and treatment groups must be selected. Whereas the "hard" sciences have the luxury of attempting to create truly equal groups, educators often find themselves forced to conduct their experiments based on self-selected groups, rather than on randomization. As was highlighted in the Basic Concepts section, this makes the study a quasi-experiment, since the researchers cannot control all of the variables.

For the peer workshopping experiment, let's say that it involves six classes and three teachers, with a sample of students randomly selected from all the classes. Each teacher will have one class as a control group and one class as a treatment group. The essay assignment is given, and the teachers are instructed not to change any of their teaching methods other than the use of peer workshopping. This is an effort to control a possible confounding variable: variation in teaching style.
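The random elements of this design can be sketched in a few lines of Python. This is an illustration under assumptions: the section labels, rosters, sample size, seed, and the helper names `assign_sections` and `sample_students` are all hypothetical. One function randomly decides which of each teacher's two sections receives peer workshopping; the other draws a simple random sample of students from each section for evaluation. Randomizing which intact section gets the treatment does not undo students' self-selection into sections, so the study remains a quasi-experiment.

```python
import random
from typing import Dict, List, Tuple


def assign_sections(teachers: Dict[str, Tuple[str, str]], seed: int) -> Dict[str, str]:
    """Randomly label one of each teacher's two sections 'treatment' and the other 'control'."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible and auditable
    roles: Dict[str, str] = {}
    for teacher in sorted(teachers):
        first, second = teachers[teacher]
        if rng.random() < 0.5:
            roles[first], roles[second] = "treatment", "control"
        else:
            roles[first], roles[second] = "control", "treatment"
    return roles


def sample_students(rosters: Dict[str, List[str]], per_section: int, seed: int) -> Dict[str, List[str]]:
    """Draw a simple random sample (without replacement) of students from every section."""
    rng = random.Random(seed)
    return {section: rng.sample(rosters[section], per_section) for section in sorted(rosters)}


# Hypothetical teachers, sections, and rosters (25 students per section) for illustration.
teachers = {"Teacher A": ("A1", "A2"), "Teacher B": ("B1", "B2"), "Teacher C": ("C1", "C2")}
rosters = {
    section: [f"{section}-student{i}" for i in range(1, 26)]
    for pair in teachers.values()
    for section in pair
}

print(assign_sections(teachers, seed=2024))
print(sample_students(rosters, per_section=5, seed=2024))
```

Keeping the seed in the study records lets another researcher reproduce exactly which sections and students were chosen.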

Analyzing the data

The fourth step is to collect and analyze the data. This is not simply a step where you collect the papers, read them, and declare your methods a success. You must show how successful they were. To do so, you must devise a scale by which to evaluate the data you receive, which means deciding which indicators will, and will not, be important.

Continuing our example, the teachers' grades are recorded first; then the essays are evaluated for changes in sentence complexity, syntactical and grammatical errors, and overall length. Any statistical analysis you choose to perform is done at this time. Notice that the researcher has made judgments about what signals improved writing: it is not simply a matter of higher teacher grades, but of what the researcher believes constitutes improved use of the language.
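The text does not name a particular statistical test. As one common option, assumed here rather than taken from the source, the sketch below computes Welch's t statistic and its Welch-Satterthwaite degrees of freedom for the difference in mean essay scores between the treatment and control groups. It assumes each essay has already been reduced to a single number on the scale the researcher devised; the function name `welch_t` is hypothetical, and the scores in the usage lines are invented placeholders, not study data.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(treatment: Sequence[float], control: Sequence[float]) -> Tuple[float, float]:
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    A positive t means the treatment group's mean score is higher than the control group's.
    """
    if len(treatment) < 2 or len(control) < 2:
        raise ValueError("each group needs at least two scores")
    # Squared standard error of each group's mean; statistics.variance uses the n - 1 denominator.
    sq_se_treat = variance(treatment) / len(treatment)
    sq_se_ctrl = variance(control) / len(control)
    if sq_se_treat + sq_se_ctrl == 0:
        raise ValueError("scores show no variation; t is undefined")
    t = (mean(treatment) - mean(control)) / math.sqrt(sq_se_treat + sq_se_ctrl)
    df = (sq_se_treat + sq_se_ctrl) ** 2 / (
        sq_se_treat ** 2 / (len(treatment) - 1) + sq_se_ctrl ** 2 / (len(control) - 1)
    )
    return t, df


# Invented rubric scores (0-10) purely to show the call; not real results.
workshopped = [7.5, 8.0, 6.5, 9.0, 7.0]
teacher_comments_only = [6.0, 7.0, 6.5, 5.5, 7.5]
t, df = welch_t(workshopped, teacher_comments_only)
print(f"t = {t:.2f}, df = {df:.1f}")
```

To obtain a p-value, the statistic would be compared against a t distribution with those degrees of freedom; SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same Welch test in one call. Welch's version is used here because it does not assume the two groups have equal variances, which is rarely guaranteed with intact classes.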

Writing the paper/presentation describing the findings

Once you have completed the experiment, you will want to share your findings by publishing an academic paper (or giving a presentation). These papers usually follow the format below, but it is not necessary to follow it strictly. Sections can be combined or omitted, depending on the structure of the experiment and the journal to which you submit your paper.

- Abstract: Summarize the project: its aims, participants, basic methodology, results, and a brief interpretation.

- Introduction: Set the context of the experiment.

- Review of Literature: Review the literature in the specific area of study to show what work has already been done. This review should lead directly to the author's purpose for the study.

- Statement of Purpose: Present the problem to be studied.

- Participants: Describe in detail the participants involved in the study (e.g., how many there were). Provide as much information as possible.

- Materials and Procedures: Clearly describe materials and procedures. Provide enough information that the experiment can be replicated, but not so much that it becomes unreadable. Include how participants were chosen, the tasks assigned to them, how the tasks were conducted, how data were evaluated, etc.

- Results: Present the data in an organized fashion. If the data are quantifiable, analyze them statistically. Avoid interpretation at this stage.

- Discussion: After presenting the results, interpret what happened in the experiment. Base the discussion only on the data collected, and keep the interpretation as objective as possible. Hypothesizing is possible here.

- Limitations: Discuss factors that affect the results. Here, you can speculate about how much generalization, or more likely transferability, is possible based on the results. This section is important for quasi-experimentation, since a quasi-experiment cannot control all of the variables that might affect the outcome of a study. Discuss the variables you could not control.

- Conclusion: Synthesize all of the above sections.

- References: Document works cited in the correct format for the field.

Experimental and Quasi-Experimental Research: Issues and Commentary

Several issues are addressed in this section, including the use of experimental and quasi-experimental research in educational settings, the relevance of the methods to English studies, and ethical concerns regarding the methods.

Using Experimental and Quasi-Experimental Research in Educational Settings

Charting causal relationships in human settings.

Any time a human population is involved, prediction of causal relationships becomes cloudy and, some say, impossible. There are many reasons for this; for example,

- researchers in classrooms add a disturbing presence, causing students to act abnormally, consciously or unconsciously;

- subjects try to please the researcher, just because of an apparent interest in them (known as the Hawthorne Effect); or, perhaps

- the teacher as researcher is restricted by bias and time pressures.

But such confounding variables don't stop researchers from trying to identify causal relationships in education. Educators naturally experiment anyway, comparing groups, assessing the attributes of each, and making predictions based on an evaluation of alternatives. They look to research to support their intuitive practices, experimenting whenever they try to decide which instruction method will best encourage student improvement.

Combining Theory, Research, and Practice

The goal of educational research lies in combining theory, research, and practice. Educational researchers attempt to establish models of teaching practice, learning styles, curriculum development, and countless other educational issues. The aim is to "try to improve our understanding of education and to strive to find ways to have understanding contribute to the improvement of practice," one writer asserts (Floden 1996, p. 197).

In quasi-experimentation, researchers try to develop models by involving teachers as researchers, employing observational research techniques. Although results of this kind of research are context-dependent and difficult to generalize, they can act as a starting point for further study. The "educational researcher . . . provides guidelines and interpretive material intended to liberate the teacher's intelligence so that whatever artistry in teaching the teacher can achieve will be employed" (Eisner 1992, p. 8).

Bias and Rigor

Critics contend that the educational researcher is inherently biased, sample selection is arbitrary, and replication is impossible. The key to combating such criticism has to do with rigor. Rigor is established through close, proper attention to randomizing groups, time spent on a study, and questioning techniques. This allows more effective application of standards of quantitative research to qualitative research.

Often, teachers cannot wait for piles of experimental data to be analyzed before using new teaching methods (Lauer and Asher 1988). They ultimately must assess whether the results of a study in a distant classroom are applicable in their own classrooms. And they must continuously test the effectiveness of their methods by using experimental and qualitative research simultaneously. In addition to statistics (quantitative), researchers may perform case studies or observational research (qualitative) in conjunction with, or prior to, experimentation.

Relevance to English Studies

Situations in English Studies that might encourage the use of experimental methods.

Whenever a researcher would like to see if a causal relationship exists between groups, experimental and quasi-experimental research can be a viable research tool. Researchers in English Studies might use experimentation when they believe a relationship exists between two variables, and they want to show that these two variables have a significant correlation (or causal relationship).

A benefit of experimentation is the ability to control variables, such as the amount of treatment, when it is given, to whom and so forth. Controlling variables allows researchers to gain insight into the relationships they believe exist. For example, a researcher has an idea that writing under pseudonyms encourages student participation in newsgroups. Researchers can control which students write under pseudonyms and which do not, then measure the outcomes. Researchers can then analyze results and determine if this particular variable alone causes increased participation.

Transferability: Applying Results

Experimentation and quasi-experimentation allow researchers to generate transferable results, with the understanding that those results depend on experimental rigor. Transferability is an effective alternative to generalizability, which is difficult to rely upon in educational research. English scholars, reading the results of experiments with a critical eye, ultimately decide whether and how results will be implemented. They may even extend existing research by replicating experiments in order to generate new results and benefit from multiple perspectives. Such replications may either strengthen or discredit the original findings.

Concerns English Scholars Express about Experiments

Researchers should carefully consider whether a particular method is feasible in humanities studies, and whether it will yield the desired information. Some researchers recommend addressing pertinent issues by combining several research methods, such as survey, interview, ethnography, case study, content analysis, and experimentation (Lauer and Asher 1988).

Advantages and Disadvantages of Experimental Research: Discussion

In educational research, experimentation is a way to gain insight into methods of instruction. Although teaching is context-specific, results can provide a starting point for further study. Often, a teacher/researcher will have a "gut" feeling about an issue, which can be explored through experimentation and an examination of causal relationships. Through research, intuition can shape practice.

A preconception exists that information obtained through the scientific method is free of human inconsistencies. But since the scientific method is a human construction, it is subject to human error. The researcher's personal bias may intrude upon the experiment as well. For example, certain preconceptions may dictate the course of the research and affect the behavior of the subjects. The issue may be compounded because, although many researchers are aware of the effect that their personal bias exerts on their own research, they are pressured to produce research that is accepted in their field of study as "legitimate" experimental research.

The researcher does bring bias to experimentation, but bias does not limit the ability to be reflective. An ethical researcher thinks critically about results and reports those results after careful reflection. Concerns over bias can be leveled against any research method.

Often, the sample may not be representative of a population, because the researcher does not have an opportunity to ensure a representative sample. For example, subjects could be limited to one location, limited in number, studied under constrained conditions and for too short a time.

Despite such inconsistencies in educational research, the researcher has control over the variables, which makes it possible to determine the individual effect of each variable more precisely. It also becomes easier to determine how variables interact.

Even so, the results may be artificial. It can be argued that variables are manipulated so that the experiment measures what researchers want to examine; therefore, the results are merely contrived products with no bearing on material reality. Artificial results are difficult to apply in practical situations, which makes generalizing from the results of a controlled study questionable. Experimental research essentially decontextualizes a single question from a "real world" scenario, studies it under controlled conditions, and then tries to recontextualize the results back into the "real world" scenario. Results may also be difficult to replicate.

Groups in an experiment may also not be comparable. Quasi-experimentation is widespread in educational research because not only are many researchers also teachers, but many subjects are also students. With the classroom as laboratory, it is difficult to implement randomizing or matching strategies. Often, students self-select into certain sections of a course on the basis of their own agendas and scheduling needs. Thus when, as often happens, one class is treated and the other is used as a control, the groups may not actually be comparable. As one might imagine, people who register for a class that meets three times a week at eleven o'clock in the morning (young, no full-time job, night people) differ significantly from those who register for one on Monday evenings from seven to ten p.m. (older, full-time job, possibly more highly motivated). Each situation presents different variables, and your group might be completely different from the one in the study. Long-term studies are expensive and hard to reproduce. And although the same hypotheses are often tested by different researchers, various factors complicate attempts to compare or synthesize the results. It is nearly impossible to be as rigorous as the natural sciences model dictates.