
Unspoken science: exploring the significance of body language in science and academia


Mansi Patil, Vishal Patil, Unisha Katre, Unspoken science: exploring the significance of body language in science and academia, European Heart Journal, Volume 45, Issue 4, 21 January 2024, Pages 250–252, https://doi.org/10.1093/eurheartj/ehad598


Introduction

Science and academia are rooted in the pursuit of knowledge and the exchange of ideas, and they rely heavily on effective communication to share knowledge and foster collaboration. Scientific presentations, for example, give researchers a platform to share their work and engage with their peers. While the focus is often on the content of research papers, lectures, and presentations, another form of communication plays a significant role in these fields: body language. Non-verbal cues such as facial expressions, gestures, posture, and eye contact can convey a wealth of information, often subtly influencing interpersonal dynamics and the perception of scientific work. In this article, we explore the unspoken science of body language and its significance in science and academia, highlighting its impact on presentations, interactions, interviews, and collaborations. We also discuss cultural considerations and their implications for cross-cultural communication. By understanding body language, researchers and academics can enhance their communication skills and promote a more inclusive and productive scientific community.

The power of non-verbal communication

Communication is a multi-faceted process, and words are only one aspect of it. Research suggests that non-verbal communication constitutes a substantial portion of human interaction, often conveying information that words alone cannot. Body language directly affects how people perceive and interpret scientific ideas and findings [1]. For example, a presenter who maintains confident eye contact, uses purposeful gestures, and keeps an open posture is likely to be seen as more credible and persuasive than one who fidgets, avoids eye contact, and displays closed-off body language (Figure 1).

Figure 1 Types of non-verbal communication [2]. Non-verbal communication comprises haptics, gestures, proxemics, facial expressions, paralinguistics, body language, appearance, eye contact, and artefacts.

In academic settings

In academia, body language plays a crucial role in many contexts. During lectures, professors who use engaging body language, such as animated gestures and expressive faces, can captivate their students and enhance the learning experience. Similarly, students who show attentive and respectful body language, such as maintaining eye contact and nodding, signal interest and engagement in the subject matter [3].

Body language also influences interactions between colleagues and supervisors. In a laboratory, for instance, researchers who display confident and open body language are more likely to be perceived as competent and reliable by their peers. Conversely, individuals who show closed-off or defensive body language may inadvertently create an environment that inhibits collaboration and knowledge sharing. In research collaboration and networking, haptics (the use of touch) can strengthen interpersonal connections and convey emotion, fostering a deeper sense of empathy and engagement among participants.

The role of body language in interviews and evaluations

Interviews and evaluations are critical moments in academic and scientific careers, and body language can significantly affect their outcomes. Candidates who display confident body language, including good posture, firm handshakes, and appropriate gestures, are more likely to make a positive impression on interviewers or evaluators. Conversely, candidates who appear nervous or closed-off may unwittingly convey a lack of confidence or competence, even when their qualifications are strong. Recognizing the power of body language in these situations allows individuals to present themselves more effectively.

Non-verbal cues play a pivotal role during interviews and conferences, where researchers and academics showcase their work. When attending conferences or presenting research, scientists must be aware of their body language to effectively convey their expertise and credibility. Confident body language can inspire confidence in others, making it easier to establish professional connections, garner support for research projects, and secure collaborations.

Body language can also significantly affect the outcome of job interviews. An interviewee's facial non-verbal cues strongly influence their chances of being hired: the interviewer observes the face as a whole, the eyes, and the mouth when judging the applicant's ability to work effectively. Applicants who smile genuinely, so that the non-verbal message of their eyes matches that of their mouth, are more likely to be hired than those who do not. Because a first impression can form within milliseconds, it is crucial for an applicant to pass that first test, which sets the course for the rest of the interview [4].

Cultural considerations

While body language is a universal form of communication, its interpretation can vary across cultures. Different cultures have distinct norms and expectations regarding body language, and what is seen as confident in one culture may be interpreted differently in another [5]. Awareness of these cultural nuances is crucial for effective cross-cultural communication and understanding. Scientists and academics engaged in international collaborations should familiarize themselves with cultural differences to avoid misunderstandings and to promote respectful, inclusive communication.

The impact of body language on collaboration

Collaboration lies at the heart of scientific progress and academic success, and body language plays a significant role in building trust and effective collaboration among researchers and academics. Open and inviting body language, combined with active listening, fosters an environment in which ideas are exchanged freely, which can lead to innovative breakthroughs. In research collaboration and networking, proxemics (the use of personal space) can significantly affect trust and rapport between researchers. Respecting each other's personal space and keeping appropriate distances during interactions fosters a more positive and productive working relationship, with better communication and exchange of ideas (Figure 2). Awareness of cultural variations in proxemics further helps researchers navigate diverse networking contexts, promoting cross-cultural understanding and more fruitful international collaborations.

Figure 2 Overcoming communication barriers. Factors that help overcome communication barriers include using culturally appropriate language, being observant, assuming positive intentions, avoiding judgement, identifying and controlling bias, slowing down responses, emphasizing relationships, seeking help from interpreters, being eager to learn and adapt, and being empathetic.

On the other hand, negative body language, such as crossed arms, lack of eye contact, or dismissive gestures, can signal disinterest or disagreement, hindering collaboration and stifling the flow of ideas. Recognizing and addressing such non-verbal cues can help create a more inclusive and productive scientific community.

Effective communication is paramount in science and academia, where the exchange of ideas and knowledge fuels progress. Although much attention is given to verbal communication, the significance of non-verbal cues, specifically body language, cannot be overlooked, and it is crucial not to send conflicting verbal and non-verbal messages. Body language encompasses facial expressions, gestures, posture, eye contact, and other non-verbal behaviours that convey information beyond words.

Disclosure of Interest

The authors declare no conflicts of interest.

References

1. Baugh AD, Vanderbilt AA, Baugh RF. Communication training is inadequate: the role of deception, non-verbal communication, and cultural proficiency. Med Educ Online 2020;25:1820228. https://doi.org/10.1080/10872981.2020.1820228

2. Aralia. 8 Nonverbal Tips for Public Speaking. Aralia Education Technology. https://www.aralia.com/helpful-information/nonverbal-tips-public-speaking/ (22 July 2023, date last accessed)

3. Danesi M. Nonverbal communication. In: Understanding Nonverbal Communication. Bloomsbury Academic, 2022; 121–162. https://doi.org/10.5040/9781350152670.ch-001

4. Cortez R, Marshall D, Yang C, Luong L. First impressions, cultural assimilation, and hireability in job interviews: examining body language and facial expressions’ impact on employer’s perceptions of applicants. Concordia J Commun Res 2017;4. https://doi.org/10.54416/dgjn3336

5. Pozzer-Ardenghi L. Nonverbal aspects of communication and interaction and their role in teaching and learning science. In: The World of Science Education. Netherlands: Brill, 2009; 259–271. https://doi.org/10.1163/9789087907471_019


Healthcare (Basel)

Body Language Analysis in Healthcare: An Overview

Rawad Abdulghafor

1 Department of Computer Science, Faculty of Information and Communication Technology, International Islamic University Malaysia, Kuala Lumpur 53100, Malaysia

Sherzod Turaev

2 Department of Computer Science and Software Engineering, College of Information Technology, United Arab Emirates University, Al-Ain, Abu Dhabi P.O. Box 15556, United Arab Emirates

Mohammed A. H. Ali

3 Department of Mechanical Engineering, Faculty of Engineering, University of Malaya, Kuala Lumpur 50603, Malaysia

Associated Data

Not applicable.

Given the current COVID-19 pandemic, medical research today focuses on epidemic diseases. Innovative technology is incorporated in most medical applications, with an emphasis on the automatic recognition of physical and emotional states. Much of this research is concerned with automatically identifying the symptoms patients display by analyzing their body language. Technologies for recognizing and interpreting arm and leg gestures, facial features, and body postures are still at an early stage, and more extensive research is needed on artificial intelligence (AI) techniques for disease detection. This paper presents a comprehensive survey of research on body language processing. After defining and explaining the different types of body language, we justify the use of automatic recognition and its application in healthcare. We briefly describe an automatic recognition framework that uses AI to recognize various body language elements and discuss automatic gesture recognition approaches that help better identify the external symptoms of epidemic and pandemic diseases. From this study, we found that earlier studies have shown that the body has a language, known as body language, and that this language can be analyzed and understood by machine learning (ML). Because diseases also produce clear and distinct symptoms in the body, they affect body language and give it features specific to a particular disease. It is therefore possible to characterize the features and body language changes associated with each disease. Hence, ML can understand and detect pandemic, epidemic, and other diseases.

1. Introduction

Body language is one of the languages of communication. Languages of communication are classified as verbal or non-verbal, and body language is a non-verbal language in which the movements and behaviors of the body are used instead of words to express and convey information. Body language may involve hand movements, facial expressions and hints, eye movements, tone of voice, body movements and positions, gestures, use of space, and the like. This research focuses on interpretations of human body language, a field classified under kinesics.

Body language is entirely different from sign language, which, like verbal language, is a complete language with its own basic rules and complex grammar [1, 2]. Body language, by contrast, has no grammatical rules and usually belongs to, or is classified according to, particular cultures [3]. Interpretations of body language may therefore differ from country to country and from one culture to another, and it remains controversial whether body language can be regarded as a universal language for all people. Some researchers have concluded that most communication between individuals involves physical symbols or gestures, because body language enables speedy transmission and understanding of information [4]. According to [5], body language conveys more, and better, content than verbal language. For example, when someone speaks over the phone about an inquiry, the message can become unclear because body language cannot be seen. An individual sitting directly in front of an audience faces fewer such restrictions, so information is transmitted and received more easily, even more so if the speaker is standing and has more freedom of movement. Thus, body language enhances communication. This work attempts to show that body language enhances workplace positivity.

Several experiments were performed in [6] on how human emotions affect facial expressions and body movements. The study showed that facial expressions and body movements can accurately reveal human emotions. Three stages of experiments were conducted to determine whether the two kinds of expression need to be combined, and they confirmed that combining facial features with body movements is essential for analyzing and identifying human expressions.

Reading someone's eyes should not be ignored either, as it is an important factor in expressing and understanding emotions; we can often tell what others want from their eye movements. According to [7], the widening and narrowing of the eyes are affected by emotions and convey specific additional information to the observer. The human eye blinks, on average, 6 to 10 times per minute, but when someone is attracted to another person, they blink less often.

Study [8] found that human feelings can be identified through body position. For example, a person who feels angry pushes their body forward to express dominance over the other person, so that the upper body is tilted rather than upright. Conversely, someone who feels intimidated by an opponent signals submission by retreating backward or pulling their head back. A person's emotional state can also be inferred from their sitting position. Someone sitting on a chair with the upper body and head tilted slightly forward shows attentiveness and eagerness to follow what is being said, whereas sitting with legs and hands crossed suggests that they do not wish to engage and feel uncomfortable with what is being said or with the person saying it [5].
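An automatic system would need to measure cues such as the blink rate mentioned above from video rather than by eye. The code below is a minimal sketch of how this might be done. It assumes that six eye landmarks per frame are already supplied by some face-landmark detector, which is not specified here. It uses the eye aspect ratio (EAR), a heuristic from the blink-detection literature that we substitute for illustration; this is not a method described in [7]. The threshold of 0.2 and the two-frame minimum are assumed values that would need tuning for a given camera and subject.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio (EAR) for six landmarks p1..p6 around one eye.

    p1 and p4 are the eye corners; (p2, p6) and (p3, p5) are upper/lower
    lid pairs. The ratio stays roughly constant while the eye is open and
    falls towards zero as the lids close.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR < threshold."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # eye still closed when the recording ends
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float,
                      threshold: float = 0.2, min_frames: int = 2) -> float:
    """Blink rate over the whole recording, in blinks per minute."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, threshold, min_frames) / minutes


if __name__ == "__main__":
    # Synthetic one-minute clip at 30 frames/s: eyes open (EAR 0.3) except
    # for eight hypothetical 3-frame blinks (EAR 0.1).
    fps = 30.0
    ear = [0.3] * 1800
    for start in range(100, 1800, 220):
        for i in range(start, start + 3):
            ear[i] = 0.1
    print(blinks_per_minute(ear, fps))  # 8.0
```

Requiring a minimum run of closed-eye frames is a simple guard against single-frame landmark noise being counted as a blink. A real system would also need to handle head pose, occlusion, and frame drops.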

Body language analysis is also essential because a single movement can carry more than one meaning or purpose. An expressive movement may result from a physical disability or a compulsive movement rather than an intentional one, and the same movement may not mean the same thing for different people: for example, a person may rub their eyes because of itchiness rather than fatigue. Foreign cultures also require careful analysis because of their social differences. Although most body movements are universal, some are specific to each culture and may vary from country to country, region to region, and even between social groups.

Pandemic and epidemic diseases are a global risk factor responsible for the deaths of millions of people worldwide. The ability to detect and treat cases is limited, primarily because of a lack of human and technical resources, and when patients are not physically accessible, remote diagnosis is required. All pandemic and epidemic diseases are characterized by distinct body movements affecting the face, shoulders, chest, and hands, and AI technology has shown positive results in reading some of these gestures. The idea, therefore, is to use body language to detect epidemic diseases early and provide treatment. The primary catalyst for this study is COVID-19, which is currently affecting the whole world; as researchers in information technology and computer science, we must play our part in detecting this disease rapidly.

This paper reviews the previous literature to identify body language expressions that indicate disease. Body language is defined here as the expressions, movements, and gestures that point to the physical and emotional state of the person displaying them, and different parts of the body can express different characteristics or feelings. Some studies have demonstrated that particular facial expressions reflect certain emotional states (e.g., joy, sadness, surprise, and anger). As for the relationship between diseases and body language, diseases affect parts of the body and their functions, and these effects are reflected in the movements and expressions of those body parts. Different diseases affect different body parts in ways that can be measured, identified, and used for diagnosis.

This paper therefore studies diseases that can be diagnosed by identifying and measuring the external movements of the body, and it discusses the findings of previous studies to demonstrate the usefulness and contribution of AI in detecting diseases through body language. One of the biggest obstacles to treating COVID-19 patients effectively is the need for speedy diagnosis, while the large number of cases exceeds the capacity of most hospitals. AI offers a solution through ML, which can detect disease symptoms as manifested in a patient's body language and generate accurate readings and predictions.

The main contribution of this paper is therefore to show the potential of body language analysis in health care. The following sections discuss the importance of body language analysis in health care and the analysis of patients' body language using AI. Table 1 lists previous studies that used ML to identify symptoms through body expressions. The findings demonstrate that a patient's body language can be analyzed using ML for diagnostic purposes.

2. Methodology

The review proceeds in four stages (see also Figure 1). First, we highlight the importance of body language analysis, showing that body movements can be read and analyzed to produce outcomes useful for many applications. Second, we present body language analysis in health care to show the importance of body language for medical diagnosis in research. Third, we review the successful use of ML to identify characteristic symptoms. Fourth, Table 1 lists studies that used ML as a diagnostic tool, together with the algorithms they used. Each topic is discussed separately in the following sections.

Table 1. Some studies of AI methods for body language elements to identify symptoms.

| Reference | Title | Study Purpose | Method | Year | Result Evaluation | Future Work |
|---|---|---|---|---|---|---|
| [ ] | Early prediction of disease progression in COVID-19 pneumonia patients with chest CT and clinical characteristics | | | 2020 | | |
| [ ] | Individual-Level Fatality Prediction of COVID-19 Patients Using AI Methods | | | 2020 | | |
| [ ] | COVID-19 Prediction and Detection Using Deep Learning | | | 2020 | | |
| [ ] | Artificial Intelligence was applied to chest X-ray images to detect COVID-19 automatically. A thoughtful evaluation approach | | | 2020 | | |
| [ ] | A Machine Learning Model to Identify Early-Stage Symptoms of SARS-CoV-2 Infected Patients | | | 2020 | | |
| [ ] | A combined deep CNN-LSTM network for the detection of novel coronavirus (COVID-19) using X-ray image | | | 2020 | | |
| [ ] | Smart and automation technologies for ensuring the long-term operation of a factory amid the COVID-19 pandemic: An evolving fuzzy assessment approach | | | 2020 | | |
| [ ] | A Rapid, Accurate, and Machine-Agnostic Segmentation and Quantification Method for CT-Based COVID-19 Diagnosis | | | 2020 | | |
| [ ] | Coronavirus (COVID-19) Classification using CT Images by Machine Learning Methods | | | 2020 | | |
| [ ] | Early Prediction of Mortality Risk Among Severe COVID-19 Patients Using Machine Learning | | | 2020 | | |
| [ ] | Multi-task deep learning-based CT imaging analysis for COVID-19 pneumonia: Classification and segmentation | | | 2020 | | |
| [ ] | Artificial Intelligence and COVID-19: Deep Learning Approaches for Diagnosis and Treatment | | | 2020 | | |
| [ ] | COVID-19 Prediction and Detection Using Deep Learning | | | 2020 | | |
| [ ] | Automated detection and quantification of COVID-19 pneumonia: CT imaging analysis by a deep learning-based software | | | 2020 | | |
| [ ] | Common cardiovascular risk factors and in-hospital mortality in 3894 patients with COVID-19: survival analysis and machine learning-based findings from the multicenter Italian CORIST Study | | | 2020 | ; HR = 2.3; 1.5–3.6 for C-reactive protein levels ≥10 versus ≤3 mg/L). | |
| [ ] | InstaCovNet-19: A deep learning classification model for the detection of COVID-19 patients using Chest X-ray | | | 2020 | | |
| [ ] | Monitoring and analysis of the recovery rate of COVID-19 positive cases to prevent dangerous stages using IoT and sensors | | | 2020 | | |
| [ ] | COVID-19 Patient Detection from Telephone Quality Speech Data | | | 2020 | | |
| [ ] | Machine learning-based approaches to detect COVID-19 using clinical text data | | | 2020 | | |
| [ ] | Data science and the role of Artificial Intelligence in achieving the fast diagnosis of COVID-19 | | | 2020 | | |
| [ ] | DeepCOVIDNet: An Interpretable Deep Learning Model for Predictive Surveillance of COVID-19 Using Heterogeneous Features and Their Interactions | | | 2020 | | |
| [ ] | COVID-19 Detection Through Transfer Learning Using Multimodal Imaging Data | | | 2020 | | |
| [ ] | Early Detection of COVID19 by Deep Learning Transfer Model for Populations in Isolated Rural Areas | | The processes from image pre-processing, data augmentation, VGG16, VGG19, and transfer of knowledge using | 2020 | | |
| [ ] | Deep learning-based detection and analysis of COVID-19 on chest X-ray images | | | 2020 | | |
| [ ] | Analysis of novel coronavirus (COVID-19) using machine learning methods | | | 2020 | Results on average accuracy evaluation for the total number of cases across all four countries: For the average growth rate spread across all four countries: Simple Linear Average prediction score across all four countries: | |
| [ ] | Facial emotion detection using deep learning | | | 2016 | | |
| [ ] | Measuring facial expressions of emotion | | The three approaches of | 2007 | | |
| [ ] | PRATIT: a CNN-based emotion recognition system using histogram equalization and data augmentation | | Pre-processing procedures such as | 2020 | | |
| [ ] | Facial expression video analysis for depression detection in Chinese patients | | | 2018 | | |
| [ ] | A Novel Facial Thermal Feature Extraction Method for Non-Contact Healthcare System | | Four models are trained using | 2020 | | |
| [ ] | Emotion Detection Using Facial Recognition | | Emotion detection experiments are performed using | 2020 | | To extend facial recognition systems based on |
| [ ] | Stress and anxiety detection using facial cues from videos | | | 2017 | | |
| [ ] | Automatic Detection of ADHD and ASD from Expressive Behavior in RGBD Data | | | 2017 | | |
| [ ] | A Facial-Expression Monitoring System for Improved Healthcare in Smart Cities | | | 2017 | | |
| [ ] | Deep Pain: Exploiting Long Short-Term Memory Networks for Facial Expression Classification | | | 2017 | | |
| [ ] | Patient State Recognition System for Healthcare Using Speech and Facial Expressions | | | 2016 | | |
| [ ] | Facial expression monitoring system for predicting patient’s sudden movement during radiotherapy using deep learning | | | 2020 | | |
| [ ] | Patient Monitoring System from Facial Expressions using Python | | | 2020 | | |
| [ ] | Combining Facial Expressions and Electroencephalography to Enhance Emotion Recognition | | | 2019 | Each surpasses the most noteworthy performing single technique | |
| [ ] | Gestures Controlled Audio Assistive Software for the Voice Impaired and Paralysis Patients | | | 2019 | | |
| [ ] | Detecting speech impairments from temporal Visual facial features of aphasia patients | | | 2019 | | |
| [ ] | Gestures Controlled Audio Assistive Software for Voice Impaired and Paralysis Patients | | | 2019 | | |
| [ ] | Detecting Speech Impairments from Temporal Visual Facial Features of Aphasia Patients | | | 2018 | | |
| [ ] | The Elements of End-to-end Deep Face Recognition: A Survey of Recent Advances | | | 2021 | | |
| [ ] | Automated Facial Action Coding System for Dynamic Analysis of Facial Expressions in Neuropsychiatric Disorders | | The automated FACS system and its application to video analysis will be described. processed for feature extraction | 2011 | | |
| [ ] | Measuring facial expression of emotion | | | 2015 | | |
| [ ] | Classifying Facial Action | | Three methods are compared | 1996 | | |
| [ ] | Real-Time Gait Analysis Algorithm for Patient Activity Detection to Understand and Respond to the Movements | | | 2012 | | |
| [ ] | A Real-Time Patient Monitoring Framework for Fall Detection | | | 2019 | | |
| [ ] | Anomaly Detection of Elderly Patient Activities in Smart Homes using a Graph-Based Approach | | | 2018 | | |
| [ ] | Fall Detection and Activity Recognition with Machine Learning | | | 2008 | | |
| [ ] | Vision-based detection of unusual patient activity | | | 2011 | | |
| [ ] | MDS: Multi-level decision system for patient behavior analysis based on wearable device information | | | 2019 | | To widen the scope of decision-making and include other activities for different age groups. |
| [ ] | Proposal Gesture Recognition Algorithm Combining CNN for Health Monitoring | | | 2019 | | |
| [ ] | A robust method for VR-based hand gesture recognition using density-based CNN | | | 2020 | | |
| [ ] | Hand Gesture Recognition Using Convolutional Neural Network for People Who Have Experienced a Stroke | | | 2019 | | |
| [ ] | Determining the affective body language of older adults during socially assistive HRI | | | 2014 | | |
| [ ] | Vision based body gesture meta features for Affective Computing | | | 2020 | | |
| [ ] | Deep Learning and Medical Diagnosis: A Review of Literature | | | 2020 | | |
| [ ] | Machine learning classification of design team members’ body language patterns for real time emotional state detection | | | 2015 | | |
| [ ] | Towards Automatic Detection of Amyotrophic Lateral Sclerosis from Speech Acoustic and Articulatory Samples | | | 2016 | | |
| [ ] | Towards improving diagnosis of skin diseases by combining deep neural network and human knowledge | | | 2018 | | |
| [ ] | Early prediction of chronic disease using an efficient machine learning algorithm through adaptive probabilistic divergence-based feature selection approach | | | 2020 | | |
| [ ] | Multi-Modal Depression Detection and Estimation | | | 2019 | | |
| [ ] | Dual-hand detection for human-robot interaction by a parallel network based on hand detection and body pose estimation | | A deep parallel neural network uses two channels: | 2019 | | |
| [ ] | Gesture recognition based on multi-modal feature weight | | | 2021 | Simulation experiments show that: | |
| [ ] | Pose-based Body Language Recognition for Emotion and Psychiatric Symptom Interpretation | | | 2021 | ] of 5.2 percent, the performance reduction is significantly lower. | |

Figure 1. The review stages.

3. The Importance of Body Language Analysis

AI is one of the most significant technological developments of recent years; it is growing in popularity and is being applied in almost every domain. One of its most important applications is healthcare. Health is among the most important factors in human life, and in recent years AI has played a significant role in helping doctors detect diseases and improve patients’ health. Many of these applications rely on symptoms that appear on parts of the body. Such symptoms affect, and are reflected in, the movements and expressions of the body, which together make up body language. Features of body language can therefore serve as inputs to ML models that detect and classify disease symptoms. In this section, we explain why body language analysis by AI matters. AI can analyze features that appear in body language to solve problems in many applications. For example, facial expressions can be analyzed to infer a person’s emotions, which is useful in psychotherapy or for examining participants’ emotions in research studies. Similarly, movements of the hand, shoulder, or leg can be analyzed to extract valuable features for medicine, security, and other fields. Because body language has so many benefits and applications, we suggest that it could also be used, together with ML, to detect infectious diseases such as COVID-19.

This technology can now feasibly be employed in healthcare systems. Pandemic and epidemic diseases are an intractable problem that adversely affects human health, which is regarded as people’s most valuable asset. The greatest worry is that new pandemics or epidemics will appear suddenly and prove deadly, as COVID-19 did; at the time of writing, it had claimed nearly a million lives. This motivates us to develop AI technologies that help detect a disease’s external symptoms by analyzing patients’ body language. This section reviews general studies that demonstrate the importance of body language processing in various fields.

Most computer users interact with their devices via a mouse and keyboard. Researchers are now developing computer systems that interact and respond through body language, such as hand gestures and movements. In [ 8 ], a comprehensive survey evaluated the published literature on the visual interpretation of hand gestures for interacting with computing devices and introduced more advanced methods for analyzing body language as an alternative to mouse and keyboard input. The study in [ 9 ] considered the problem of recognition accuracy in robots. It proposed a fusion system that identified types of fall movements and abnormal directions with an accuracy of 99.37%. A facial coding system was developed in [ 10 ] to measure and analyze facial muscle movements and identify facial expressions. A database of 1100 images was created, and the system analyzed and classified facial creases and wrinkles according to the movements that produced them. Performance improved, reaching 92%. Combining facial features and movements with body movements is essential for analyzing an individual’s expressions: three separate experiments were conducted to determine whether facial expressions and body language should be combined, and they concluded that they should. Another study [ 11 ] used deep learning to identify the emotions revealed in facial expressions. It relied purely on convolutional neural networks and showed that such networks successfully recognize emotions, significantly improving usability. A new model was developed in [ 12 ] that detected body gestures and movements from a pair of digital video images, yielding a set of three-dimensional vectors.

The first study to describe the relationship between the contraction of the internal facial muscles and facial movements was that of Hjortsjo in 1970 [ 13 ], which developed a coding system by identifying the smallest units of facial muscle movement and then drawing coordinates that define facial expressions. The recognition of people’s emotions has received much attention; however, detecting emotions from facial expressions and speech remains problematic for researchers. The work presented in [ 14 ] offered a comprehensive survey to facilitate further research in this field. It focused on identifying gender-specific characteristics, setting up an automatic framework to determine the physical manifestation of emotions, and identifying static and dynamic body-shape cues. It also examined recent studies on learning emotions by identifying gestures in photos or video, and it discussed several methods that combine speech, body, and facial gestures to improve emotion identification. The study concluded that knowledge of how a person’s feelings are revealed through outward expression was still incomplete.

4. Body Language Analysis in Healthcare

In [ 10 ], a coding system was created to classify facial expressions by analyzing more than 1100 pictures. Three methods of classifying facial expressions were compared: analysis of gray-level image components, measurement of wrinkles, and a template-based model of facial movements. The coding system’s accuracy with the three methods was 89%, 57%, and 85%, respectively, and combining the methods raised accuracy to 92%. In online learning, a key challenge is gauging students’ participation in the learning process. In [ 15 ], an algorithm is introduced to assess student engagement and detect the problems students face. Two sources of evidence of participation were collected: facial expressions captured with a camera, and hand-movement data captured through the mouse. Two models were trained, one on facial data combined with mouse data and one on facial data alone. The first model outperformed the second, with an accuracy of 94.60% versus 91.51%. The survey in [ 14 ] reviewed the recognition of facial and speech gestures as part of a comprehensive account of body language. It provided a framework for automatically identifying dynamic and static emotional body gestures, combining them with facial and speech gestures to improve the recognition of a person’s emotions. Paper [ 16 ] interprets facial expressions by matching them with body postures. The work showed that emotional expressions are clearer when the main cues on the face agree with those shown by the body. However, the model produces different results depending on which properties it relies on: physical, dimensional, or latent. Another significant finding is that expressions of fear are recognized better when paired with facial expressions than during task performance.
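To make the effect of combining methods concrete, the sketch below fuses the per-image labels of three classifiers by simple majority vote. The summary of [ 10 ] does not state how the three methods were combined, so majority voting is an assumption here; the function names and toy labels are hypothetical, and the toy accuracies do not reproduce the figures reported in [ 10 ].

```python
from collections import Counter


def majority_vote(*predictions: list[str]) -> list[str]:
    """Fuse per-sample labels from several classifiers by majority vote.

    Ties are broken in favor of the classifier passed first.
    """
    fused = []
    for labels in zip(*predictions):  # one tuple of labels per sample
        counts = Counter(labels)
        top = max(counts.values())
        # first label, in classifier order, that reaches the top count
        fused.append(next(lbl for lbl in labels if counts[lbl] == top))
    return fused


def accuracy(pred: list[str], truth: list[str]) -> float:
    """Fraction of samples whose predicted label matches the ground truth."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


# Hypothetical outputs of three methods (gray-level, wrinkle, template)
truth = ["happy", "sad", "fear", "happy", "sad"]
grey = ["happy", "sad", "happy", "happy", "fear"]
wrinkle = ["sad", "sad", "fear", "fear", "sad"]
template = ["happy", "fear", "fear", "happy", "sad"]

fused = majority_vote(grey, wrinkle, template)
for name, pred in [("grey", grey), ("wrinkle", wrinkle),
                   ("template", template), ("fused", fused)]:
    print(name, accuracy(pred, truth))
# prints, one per line: grey 0.6, wrinkle 0.6, template 0.8, fused 1.0
```

In this toy case each method errs on different images, so the vote corrects them; when methods make the same mistakes, voting helps little.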

In [ 17 ], the authors stated that a medical advisor must have engaging communication qualities that make the patient comfortable enough to make a correct decision. They advised doctors to learn how to use facial expressions, the eyes, hand gestures, and other body expressions. A smile was described as the most powerful expression a doctor can use to communicate with patients, because it puts the patient at ease. A comfortable patient appears more confident and answers the doctor’s questions clearly, credibly, and confidently. Eye contact is also very important, because its absence may suggest to the patient that the doctor does not care about them. The research in [ 18 ] concludes that appropriate nonverbal communication by the doctor has a positive impact on the patient. Objective evidence showed that patients improved and recovered better and faster when the doctor smiled and made direct eye contact with them than when the doctor did not. It also concluded that patients who receive more attention, empathy, and involvement from the doctor respond better to treatment, since the doctor’s tone of voice, facial and body movements, and eye gaze all affect the patient. Clint [ 19 ] described his first day working in an intensive care unit. He felt fear and anxiety that day, as the unit was comprehensive and informative, and he asked himself, “Is it worth working in this unit?” One of his patients had her sister beside her. The patient noticed Clint’s nervousness and anxiety but did not dare ask him about it, so she whispered to her sister that the nurse was nervous. Her sister then asked Clint, “You seem worried and anxious today. Why? What is there to be so nervous about?” Clint tried to hide his nervousness and restore confidence; he smiled and replied, “I am not nervous.” Sometimes, however, nurses have to ask patients uncomfortable questions that make them tense. Clint notes that he could tell from the patient’s expression that he had not convinced her and had failed to hide his stress. He concluded that patients are affected by the body language and facial expressions of those caring for them, and that they can read their own situation from that body language. Clint realized he had been wrong: as his anxiety and stress passed to his patient, her condition could worsen.

In one of his articles [ 20 ], Henry wrote that treating a patient through behavior and body language can have a greater impact than drugs. The work in [ 21 ] concluded that non-verbal communication between doctors and patients plays a vital role in treatment. Doctors can use the non-verbal signals their patients send to gather information about the condition, which helps them decide on diagnosis and treatment. The research also found that the doctor’s own non-verbal behavior toward the patient helps in obtaining information and in the patient’s recovery. Examples include eye gaze, closeness to the patient, and relaxed facial and hand gestures. The research suggests that non-verbal cues have a positive effect on patients, and it recommends training doctors to use them deliberately as a way to speed up treatment.

5. Patient’s Body Language Analysis Using AI

Different AI methods and techniques have been used to analyze patients’ body language; we briefly discuss some of the studies conducted so far. Focusing on facial recognition, a simple system was introduced in [ 22 ] to analyze facial muscles and thereby identify different emotions. The proposed system automatically tracks faces in video and extracts the geometric shapes of facial features. The study was conducted on eight patients with schizophrenia and collected dynamic information on facial muscle movements. It showed that geometric measurements can be obtained for individual faces and that their exact differences can be determined for recognition purposes. In [ 23 ], three methods were used to measure facial expressions in order to define emotions and identify persons with mental illness. The study’s facial action coding system enabled the interpretation of emotional facial expressions and thus contributed to knowledge about therapeutic interventions for patients with mental illness.
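Geometric facial measurements of the kind mentioned for [ 22 ] can be illustrated with a small sketch: given 2-D landmark coordinates (assumed to come from some face tracker, which is not specified here), compute pairwise distances normalized by the inter-ocular distance, then compare two frames. The landmark names, the normalization choice, and the coordinates are hypothetical and are not the method of [ 22 ].

```python
import math
from itertools import combinations

Point = tuple[float, float]


def geometric_features(landmarks: dict[str, Point],
                       left_eye: str = "left_eye",
                       right_eye: str = "right_eye") -> dict[tuple[str, str], float]:
    """Pairwise landmark distances divided by the inter-ocular distance,
    so the features do not depend on face size or distance from the camera."""
    scale = math.dist(landmarks[left_eye], landmarks[right_eye])
    if scale == 0:
        raise ValueError("eye landmarks coincide; cannot normalize")
    names = sorted(landmarks)
    return {(a, b): math.dist(landmarks[a], landmarks[b]) / scale
            for a, b in combinations(names, 2)}


def feature_change(f1: dict, f2: dict) -> float:
    """Euclidean distance between two feature sets with the same keys,
    e.g. a neutral frame versus an expressive frame of the same face."""
    return math.sqrt(sum((f1[k] - f2[k]) ** 2 for k in f1))


# Hypothetical landmarks for two frames of one face
neutral = {"left_eye": (0.0, 0.0), "right_eye": (2.0, 0.0),
           "mouth_left": (0.5, -2.0), "mouth_right": (1.5, -2.0)}
smiling = {"left_eye": (0.0, 0.0), "right_eye": (2.0, 0.0),
           "mouth_left": (0.2, -1.9), "mouth_right": (1.8, -1.9)}
print(feature_change(geometric_features(neutral), geometric_features(smiling)))
```

Dividing by the eye distance is one simple way to make the features scale-invariant; a real system would also need to handle head rotation.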

Many people suffer from nervous system disorders that impair movement and cause falls without prior warning. The study in [ 24 ] aimed to improve the detection and identification rate of early warning signs using a platform (R). Wireless sensor devices were placed on the chest and waist, and the collected data were passed to an analysis algorithm that extracted features and triggered an alert when a risk was detected. The results showed that patients at risk performed specific typical movements that indicated an imminent fall. The authors further suggested applying the algorithm to patients with seizures to warn of an imminent attack and alert emergency services.
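The summary of [ 24 ] does not describe its algorithm. For orientation only, a common baseline in wearable fall detection is a two-threshold rule on the acceleration magnitude from a chest- or waist-worn sensor: a dip toward free fall followed shortly by an impact spike. The sketch below assumes readings in units of g; the thresholds and window length are illustrative assumptions, not values from [ 24 ], and unlike [ 24 ] it flags a fall as it happens rather than predicting one.

```python
import math


def magnitude(sample: tuple[float, float, float]) -> float:
    """Length of one 3-axis accelerometer reading (in g)."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def fall_alerts(samples, rate_hz: float, low_g: float = 0.4,
                high_g: float = 2.5, window_s: float = 1.0) -> list[int]:
    """Indices of impact samples where a free-fall dip (< low_g) is
    followed by an impact peak (> high_g) within window_s seconds."""
    window = int(window_s * rate_hz)
    mags = [magnitude(s) for s in samples]
    alerts = []
    i = 0
    while i < len(mags):
        if mags[i] < low_g:
            # look ahead for an impact within the window
            for j in range(i + 1, min(i + 1 + window, len(mags))):
                if mags[j] > high_g:
                    alerts.append(j)
                    i = j  # resume scanning after this event
                    break
        i += 1
    return alerts


# 10 Hz toy stream: standing still (1 g), brief free fall, impact, rest
stream = [(0, 0, 1.0)] * 5 + [(0, 0, 0.2)] * 2 + [(0, 0, 3.0)] + [(0, 0, 1.0)] * 5
print(fall_alerts(stream, rate_hz=10))  # [7]
```

Threshold rules are cheap enough to run on the sensor itself; learned models such as those surveyed here are typically needed to recognize the subtler pre-fall movements that [ 24 ] reports.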

In research [ 25 ], a computational framework was designed to monitor the movements of older adults in order to signal organ failure and other sudden declines in vital body functions. The system monitored the patient’s activity and determined its level using sensors placed on different parts of the body. Experiments showed that the system identified the correct locations in real time with an accuracy of 95.8%. Another data-analysis approach, presented in [ 26 ], used sensors in a smart home to monitor residents’ movements and behaviors. This system helps detect behaviors and forecast diseases or injuries that residents, especially older people, may experience, which helps doctors provide remote care and monitor their patients’ progress. The target-capture model proposed in [ 27 ] uses a region proposal network to detect grasp positions for a robotic manipulator, combining color and depth image information through deep learning. By fusing color-image information, it achieved a 94.3% grasp detection success rate on multiple target detection datasets. Paper [ 28 ] deals with older people and their struggle to continue living independently without relying on the support of others; the project compared machine learning algorithms used to monitor their body functions and movements. Among the eight algorithms studied, the support vector machine achieved the highest accuracy, 95%, using reference features. Some jobs require prolonged sitting, which can result in long-term spinal injury and nervous disease. Earlier studies designed sitting position monitoring systems (SPMS) that assess a seated person’s posture using sensors attached to the chair, but these required too many sensors. This problem was resolved in [ 29 ], which designed an SPMS that needed only four sensors.
The improved system distinguished six sitting positions by applying several machine learning algorithms to average body-weight measurements. The algorithms were then compared to find the one giving the highest accuracy, which ranged from 97.20% to 97.94%. In most hospitals, doctors are concerned that patients with mental illness may cause bodily harm, put staff at risk, or damage hospital equipment. The study in [ 30 ] devised a method to analyze patients’ movements and identify the risk of harmful behavior using visual data from cameras installed in their rooms. The method traced movement points, accumulated them, and extracted their properties, which were analyzed in terms of spacing, position, and speed. The study concluded that the method could also be used to extract features for other purposes, such as analyzing the type of disease and determining its stage of progression. In [ 31 ], wireless intelligent sensor applications and devices were designed to care for patient health, improve patient monitoring, and facilitate diagnosis. Wireless sensors worn on the body periodically monitored the patient’s health, updated the information, and sent it to a service center. The researchers investigated a multi-level decision system (MDS) that monitors patient behaviors and matches them against stored historical data, allowing decision makers in medical centers to give treatment recommendations. The system could also record new cases, store new disease data, and reduce the time and effort doctors spend examining patients. The results showed MDS to be accurate and reliable in predicting and monitoring patients’ conditions.
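The four-sensor sitting-posture idea in [ 29 ] can be sketched with a nearest-centroid classifier, used here purely as a simple stand-in for the machine learning algorithms that [ 29 ] actually compared. Each reading is divided by the total load so that posture, not body weight, drives the result. The sensor order (front-left, front-right, back-left, back-right), the posture labels, and the training values are hypothetical.

```python
import math


def normalize(reading: tuple[float, ...]) -> list[float]:
    """Share of the total load on each sensor, removing body-weight effects."""
    total = sum(reading)
    if total <= 0:
        raise ValueError("no load detected: seat is empty")
    return [v / total for v in reading]


def train_centroids(examples):
    """examples: (four-sensor reading, posture label) pairs.
    Returns label -> mean normalized reading."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for reading, label in examples:
        r = normalize(reading)
        acc = sums.setdefault(label, [0.0] * len(r))
        for k, v in enumerate(r):
            acc[k] += v
        counts[label] = counts.get(label, 0) + 1
    return {lbl: [v / counts[lbl] for v in acc] for lbl, acc in sums.items()}


def classify(reading, centroids) -> str:
    """Label of the centroid closest (Euclidean) to the normalized reading."""
    r = normalize(reading)
    return min(centroids, key=lambda lbl: math.dist(r, centroids[lbl]))


# Hypothetical load-cell readings (kg): front-left, front-right, back-left, back-right
training = [((25, 25, 25, 25), "upright"),
            ((40, 40, 10, 10), "lean_forward"),
            ((40, 10, 40, 10), "lean_left")]
centroids = train_centroids(training)
print(classify((50, 50, 12, 12), centroids))  # lean_forward
```

A heavier person leaning forward produces the same load shares as a lighter one, which is why the normalization step matters more here than the choice of classifier.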

The study in [ 32 ] applied the short-time Fourier transform (STFT) to monitor patients’ movements and voices through sensors and microphones. The system transmitted sound and accelerometer data and analyzed them to identify the patient’s condition, achieving high accuracy. Three experiments on the recognition of full-body expressions were conducted in [ 33 ]. The first matched body expressions across all emotions and found fear the most difficult emotion to express. The second focused on facial expressions, whose recognition was strongly influenced by body expression and was therefore ambiguous. The third examined the tone of voice to identify the emotions associated with the body. The authors concluded that the results of all three experiments must be pooled to reveal true bodily expression.
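The short-time Fourier transform named in [ 32 ] splits a signal into short overlapping frames and takes the spectrum of each, so changes in frequency content over time become visible. The numpy sketch below computes a magnitude STFT with a Hann window and reports the dominant frequency per frame; the frame length, hop size, and toy accelerometer trace are assumptions, not the settings of [ 32 ]. For production use, `scipy.signal.stft` provides a full implementation.

```python
import numpy as np


def stft_magnitude(signal, frame_len: int = 64, hop: int = 32) -> np.ndarray:
    """Magnitude STFT: Hann-windowed overlapping frames, real FFT per frame.
    Returns an array of shape (n_frames, frame_len // 2 + 1)."""
    x = np.asarray(signal, dtype=float)
    if len(x) < frame_len:
        raise ValueError("signal is shorter than one frame")
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop: i * hop + frame_len] * window
                       for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))


def dominant_frequencies(signal, rate_hz: float, frame_len: int = 64,
                         hop: int = 32) -> np.ndarray:
    """Frequency (Hz) with the largest magnitude in each frame."""
    mag = stft_magnitude(signal, frame_len, hop)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / rate_hz)
    return freqs[np.argmax(mag, axis=1)]


# Toy accelerometer trace at 64 Hz: 4 Hz motion for 1 s, then 10 Hz for 1 s
rate = 64
t = np.arange(2 * rate) / rate
sig = np.where(t < 1.0, np.sin(2 * np.pi * 4 * t), np.sin(2 * np.pi * 10 * t))
print(dominant_frequencies(sig, rate))
```

The first and last frames report 4 Hz and 10 Hz respectively; the middle frame straddles the change. Shorter frames track changes faster at the cost of coarser frequency resolution (here 1 Hz per bin).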

A notable study at MIT [ 34 ] developed a system that detects pain by analyzing brain activity data from a wearable device that scans brain nerves. This was shown to help diagnose and treat patients who have lost consciousness or the sense of touch. The researchers placed several fNIRS sensors on the patient’s forehead to measure activity in the frontal lobe and developed ML models that relate levels of oxygenated hemoglobin to pain. Pain was detected with an accuracy of 87%.

The study in [ 35 ] treated the heartbeat as a form of body language, since checking a patient’s heartbeat is a crucial medical examination. The researchers proposed a one-dimensional (1D) convolutional neural network (CNN) model that classified the vibration signals of regular and irregular heartbeats obtained through an electrocardiogram. The model used a denoising autoencoder (DAE), and the results showed that it classified heart sound signals with an accuracy of up to 99%.
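To make “one-dimensional CNN” concrete, the numpy sketch below shows only the forward pass of a single 1D convolutional layer followed by ReLU, global max pooling, and a logistic output unit. It is not the model of [ 35 ]: the denoising autoencoder and all training are omitted, and the kernels and output weights are hand-set, hypothetical values chosen so that a sharp spike in the signal raises the output score.

```python
import numpy as np


def conv1d(x: np.ndarray, kernels: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """'Valid' 1D convolution as used in CNN layers (cross-correlation).
    x: (length,), kernels: (n_filters, k), bias: (n_filters,).
    Returns feature maps of shape (n_filters, length - k + 1)."""
    k = kernels.shape[1]
    windows = np.stack([x[i:i + k] for i in range(len(x) - k + 1)])  # (L-k+1, k)
    return (windows @ kernels.T).T + bias[:, None]


def tiny_cnn_forward(x, kernels, bias, w_out, b_out) -> float:
    """conv -> ReLU -> global max pool -> logistic unit.
    Returns a score in (0, 1), e.g. a probability that a beat is irregular."""
    feature_maps = np.maximum(conv1d(x, kernels, bias), 0.0)  # ReLU
    pooled = feature_maps.max(axis=1)                        # one value per filter
    logit = float(pooled @ w_out + b_out)
    return 1.0 / (1.0 + np.exp(-logit))


# Hand-set (untrained) weights: filter 0 responds to sharp peaks, filter 1 to slopes
kernels = np.array([[-1.0, 2.0, -1.0], [-1.0, 0.0, 1.0]])
bias = np.zeros(2)
w_out, b_out = np.array([1.5, 0.5]), -2.0

smooth = np.sin(np.linspace(0, 2 * np.pi, 50))
spiky = smooth.copy()
spiky[25] += 3.0  # artificial sharp deflection
print(tiny_cnn_forward(smooth, kernels, bias, w_out, b_out),
      tiny_cnn_forward(spiky, kernels, bias, w_out, b_out))
```

In a trained network the kernels are learned from labeled signals rather than set by hand, and several such layers are usually stacked.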

6. Discussion

We conclude from this study that reading and understanding body language through AI could help detect epidemic diseases automatically. The sheer number of patients during an epidemic is a major obstacle to detecting every infected person; the most prominent current example is COVID-19. Developed, middle-income, and developing countries alike have faced great difficulty testing for the disease because of the large number of infected people and its rapid spread. As a result, infections rose sharply and testing struggled to keep pace. We propose a study to determine the body movements and gestures associated with epidemic diseases such as COVID-19, since we expect an epidemic disease to produce unique and distinct movements in some body parts. Thermal cameras that detect high body temperature certainly play a significant role in flagging a person with a disease. However, first, they cannot determine which disease the person has; second, a person with an epidemic disease may not have a significantly raised temperature; and third, fever may appear only late, when the patient is already in a critical stage. This study therefore focuses on the body language of epidemics, especially COVID-19, which changed our lives for the worse. This deadly enemy has taught us a harsh lesson: not to stand still. We must help our people, our countries, and the world defend against and attack this disease. Hence, we propose studying body language using AI, and in our ongoing work we hope to collect and identify the gestures of body parts that characterize the epidemic.

Table 1 lists studies that have used ML to discover diseases and symptoms through body gestures, hand movements, and facial expressions. The table indicates that CNN algorithms are the most common and efficient methods for identifying disease symptoms from facial expressions and hand gestures. Some studies show that analyzing other body parts with ML algorithms such as SVM and LSTM also helps identify certain types of diseases. It appears to us that combining CNN algorithms with a new algorithm for determining facial expressions could yield high-quality results in detecting some epidemic diseases. It is essential first to study the symptoms that characterize the epidemic disease and how they are reflected in body expressions, and then to select the machine learning algorithm that identifies these expressions most efficiently.

The studies in Table 1 are classified as follows:

- (1) Studies on medical diagnosis using AI for analyzing body language.

- (2) Studies on medical diagnosis using electronic devices and AI for analyzing body language.

- (3) Studies on COVID-19 diagnosis using other methods.

This study surveys research that uses ML algorithms to identify body features, movements, and expressions. Disease affects body movements, and each disease has a distinct effect on the body, so certain body parts undergo changes that point to a specific disease. We therefore propose that ML algorithms capture images of body movements and expressions, analyze them, and identify diseases. This study surveyed a selection of existing studies that use different ML algorithms to detect body movements and expressions. Because these studies do not apply this approach to epidemics, this study seeks to document the use of ML algorithms for discovering epidemics such as COVID-19. Our survey concludes that the reported results show that body movements and expressions can be identified, and that ML methods, particularly convolutional neural networks, are the most proficient at determining body language.

From an epidemiological, diagnostic, and pharmacological standpoint, AI has yet to play a substantial part in the fight against coronavirus. Its application is limited by a shortage of data, outlier data, and an abundance of noise. It is vital to create unbiased time series data for AI training. While the expanding number of worldwide activities in this area is promising, more diagnostic testing is required, not just for supplying training data for AI models but also for better controlling the epidemic and lowering the cost of human lives and economic harm. Clearly, data are crucial in determining if AI can be used to combat future diseases and pandemics. As [ 91 ] previously stated, the risk is that public health reasons will override data privacy concerns. Long after the epidemic has passed, governments may choose to continue the unparalleled surveillance of their population. As a result, worries regarding data privacy are reasonable.

7. Conclusions

According to patient surveys, communication is one of the most crucial skills a physician can have. However, communication encompasses more than what is spoken. From the time a patient first visits a physician, nonverbal communication, or “body language”, shapes the course of therapy. Body language encompasses all nonverbal forms of communication, including posture, facial expression, and body movements. Being aware of these habits can help doctors connect better with their patients, and effective nonverbal communication can influence patient involvement, compliance, and outcomes.

Pandemic and epidemic illnesses are a worldwide threat that might kill millions. Doctors’ ability to recognize and treat victims is limited, and human and technological resources remain in short supply during epidemics and pandemics. To improve treatment, and for cases where the patient cannot travel to a treatment facility, remote diagnosis is necessary and the patient’s status should be examined automatically. Changes in facial wrinkles, movements of the eyes and eyebrows, protrusion of the nose, changes in the lips, and certain motions of the hands, shoulders, chest, head, and other parts of the body can all be characteristics of pandemic and epidemic illnesses. AI technology has shown promise in interpreting such motions and cues in some cases. Because these diseases spread so quickly and kill so many people, the idea arose of using body language to identify epidemic diseases early, treat patients sooner, and help doctors recognize them. The COVID-19 pandemic, which horrified the entire world and transformed daily life everywhere, was the crucial motivation for this study, which we developed after reviewing research on body language analysis in healthcare and defining a framework for the automatic recognition of body language elements using AI.

As researchers in information technology and computer science, we aim to contribute to the development of an automatic gesture recognition model that better identifies the external symptoms of epidemic and pandemic diseases, for the benefit of humanity.

Acknowledgments

The first author’s research was supported by Grant RMCG20-023-0023, Malaysia International Islamic University, and the second author’s work was supported by the United Arab Emirates University Start-Up Grant 31T137.

Funding Statement

This research was funded by Grant RMCG20-023-0023, Malaysia International Islamic University, and United Arab Emirates University Start-Up Grant 31T137.

Author Contributions

Conceptualization, R.A. and S.T.; methodology, R.A.; software, R.A.; validation, R.A. and S.T.; formal analysis, R.A.; investigation, M.A.H.A.; resources, M.A.H.A.; data curation, R.A.; writing—original draft preparation, R.A.; writing—review and editing, S.T.; visualization, M.A.H.A.; supervision, R.A. and S.T.; project administration, R.A. and S.T.; funding acquisition, R.A. and S.T. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Body Language

Microexpressions

Reviewed by Psychology Today Staff

Body language is a silent orchestra, as people constantly give clues to what they’re thinking and feeling. Non-verbal messages including body movements, facial expressions, vocal tone and volume, and other signals are collectively known as body language.

Microexpressions (brief displays of emotion on the face), hand gestures, and posture all register in the human brain almost immediately, even when a person is not consciously aware of having perceived anything. For this reason, body language can strongly color how an individual is perceived, and how he or she, in turn, interprets others’ motivation, mood, and openness. Mirroring comes naturally: beginning in infancy, a newborn moves its body to the rhythm of the voice it hears.

Body language is a vital form of communication, but most of it happens below the level of conscious awareness. When you wait for a blind date to arrive, for instance, you may nervously tap your foot without even realizing that you’re doing it. Similarly, when you show up to meet your date, you may not consciously perceive that your date appears closed-off, but your unconscious mind may pick up on the crossed arms or averted gaze. Luckily, with knowledge and a little practice, it is possible to exert some measure of control over your own body language and to become more skilled at reading others.

The face is the first place to look: arching eyebrows might indicate an invitation of sorts, and smiling is another indication that the person welcomes you. Is the person standing or sitting close to you? If so, there is interest. Plus, open arms are just that: open.

If a person repeatedly touches your arm, places a light hand on your shoulder, or knocks elbows with you, the person is attracted to you and is demonstrating this with increased touch. People interested in each other smile more, and their mouths may even be slightly open. Engaging in eye contact is another indication. A person who leans towards you or mirrors your body language is also demonstrating interest.

A common form of body language is mirroring another person’s gestures and mannerisms; mirroring also includes mimicking another person’s speech patterns and even attitudes. This is a method of building rapport with others. We learn through imitating others, and it is mostly an unconscious action.

When you want to persuade or influence a person, mirroring can be an effective way to build rapport. Salespeople who use it with prospective clients pay close attention to them: they listen, observe, and mimic, with positive results.

People who are attracted to one another indeed copy each other’s movements and mannerisms. In fact, many animals mirror as well. That is why cats circle each other, and why chimpanzees stare at each other before intercourse.

If you tilt your head while looking at a baby, the baby relaxes. Why is that? The same applies to couples in love: tilting the head exposes the neck and perhaps shows vulnerability. A person with a tilted head is perceived as more interested, attentive, and caring, and as having less of an agenda.

Eye blocking, or covering your eyes, expresses emotions such as frustration and worry. Sometimes the eyelids shut to show determination, and sometimes they flutter to show that you have screwed up and feel embarrassed.

When you’re stressed out, touching or stroking the neck signals a pacifying behavior. We all rub our necks at the back, the sides, and also under the chin. The fleshy area under the chin has nerve endings and stroking it lowers heart rate and calms us.

The hands reveal a lot about a person. When you feel confident, the space between your fingers grows, but that space lessens when you feel insecure. And while rubbing the hands conveys stress, steepling the fingers means that a person feels confident.

In many cultures, a light touch on the arm conveys harmony and trust. In one study, people in the UK, the US, France, and Puerto Rico were observed while sitting at a coffee shop. The British and the Americans hardly touched, and the French and the Puerto Ricans freely touched in togetherness.

When standing, crossing your legs can make others feel comfortable and shows that you are interested in what the other person has to say. It also means, “Take your time.” Standing with crossed legs signals that you are comfortable with the other person.

Fidgety hands signal anxiousness or even boredom, and keeping your arms akimbo may telegraph arrogance. Crossing the arms and legs is, no doubt, a closed position, whereas sitting with open arms invites the other person in. If you are sitting and want to appear neutral, it’s best to hold your hands on your lap, just like the Queen of England.

Shake hands firmly while making eye contact, but do not squeeze the person’s hand: your goal is to make someone feel comfortable, not to assert dominance. It is important to be sensitive to cultural norms: if you receive a weak handshake, it may be that the person comes from a background in which a gentle handshake is the norm.

Most people think that crossed arms are a sign of aggression or refusal to cooperate. In fact, crossed arms can signal many other things, including anxiety, self-restraint, and even interest, if the person crossing their arms is mirroring someone who is doing the same.

For the most part, yes. All primates demonstrate behaviors including the freeze response and various self-soothing behaviors, such as touching the neck or twirling the hair in humans. We know that many non-verbal behaviors are innate because even blind children engage in them. Still, some behaviors are mysteries.

In males, wide shoulders and narrow hips are associated with strength and vitality; this is reflected in everything from the form of Greek statues to padded shoulders in men's suit jackets. How one holds one's shoulders conveys dominance and relative status within a hierarchy.

Freezing in place, rocking back and forth, and contorting into a fetal position are all known as "reserved behaviors," as they are used only when a person experiences extreme stress. Facial expressions alone can also signal this state: pursing or sucking in the lips, for example, is often seen when a person is upset or feels contrite.

As social animals, we evolved to display emotions, thoughts, and intentions, all of which are processed by the brain's limbic system. Because these reactions precede and at times even override conscious deliberation, body language is uniquely capable of revealing how a person feels, but only to an observer who is schooled in what these gestures indicate.




- Published: 01 March 2006

Towards the neurobiology of emotional body language

- Beatrice de Gelder

Nature Reviews Neuroscience volume 7, pages 242–249 (2006)


People's faces show fear in many different circumstances. However, when people are terrified, as well as showing emotion, they run for cover. When we see a bodily expression of emotion, we immediately know what specific action is associated with a particular emotion, leaving little need to interpret the signal, which is not the case for facial expressions. Research on emotional body language is rapidly emerging as a new field in cognitive and affective neuroscience. This article reviews how whole-body signals are automatically perceived and understood, and their role in emotional communication and decision-making.


Sprengelmeyer, R. et al. Knowing no fear. Proc. Biol. Sci. 266 , 2451–2456 (1999).

Article CAS PubMed PubMed Central Google Scholar

de Gelder, B., Snyder, J., Greve, D., Gerard, G. & Hadjikhani, N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc. Natl Acad. Sci. USA 101 , 16701–16706 (2004).

Dittrich, W. H., Troscianko, T., Lea, S. E. & Morgan, D. Perception of emotion from dynamic point-light displays represented in dance. Perception 25 , 727–738 (1996).

Article CAS PubMed Google Scholar

Atkinson, A. P., Dittrich, W. H., Gemmell, A. J. & Young, A. W. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33 , 717–746 (2004).

Article PubMed Google Scholar

Ekman, P. Differential communication of affect by head and body cues. J. Pers. Soc. Psychol. 2 , 726–735 (1965).

Panksepp, J. Affective Neuroscience: The Foundation of Human and Animal Emotions (Oxford Univ. Press, New York, 1998).

Google Scholar

Bulwer, J. Chirologia, or the Natural Language of the Hand (Harper, London1644).

Book Google Scholar

Bell, C. The Anatomy and Philosophy of Expression as Connected with the Fine Arts (George Bell and Sons, London, 1806).

Gratiolet, L. P. Mémoire sur les plis Cérébraux de l'homme et des Primates (A. Bertrand, Paris, 1854).

Duchene de Boulogne, G. B. Mécanismes de la Physionomie Humaine, ou Analyse Electro-physiologique de l'expression des Passions (Baillière, Paris, 1862).

Darwin, C. The Expression of the Emotions in Man and Animals (John Murray, London, 1872).

Frijda, N. H. The Emotions (Cambridge Univ. Press, Cambridge, 1986).

Schmidt, K. L. & Cohn, J. F. Human facial expressions as adaptations: evolutionary questions in facial expression research. Am. J. Phys. Anthropol. 33 (Suppl.), 3–24 (2001).

Davidson, R. J. & Irwin, W. The functional neuroanatomy of emotion and affective style. Trends Cogn. Sci. 3 , 11–21 (1999).

Damasio, A. R. The Feeling of What Happens (Harcourt Brace, New York, 1999).

LeDoux, J. E. The Emotional Brain: The Mysterious Underpinnings of Emotional Life 384 (Simon and Schuster, New York, USA, 1996).

Zald, D. H. The human amygdala and the emotional evaluation of sensory stimuli. Brain Res. Brain Res. Rev. 41 , 88–123 (2003).

Phelps, E. A. & Ledoux, J. E. Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron 48 , 175–187 (2005).

Brothers, L. The neural basis of primate social communication. Motiv. Emot. 14 , 81–91 (1990).

Article Google Scholar

Haxby, J. V., Hoffman, E. A. & Gobbini, M. I. The distributed human neural system for face perception. Trends Cogn. Sci. 4 , 223–233 (2000).

de Gelder, B., Frissen, I., Barton, J. & Hadjikhani, N. A modulatory role for facial expressions in prosopagnosia. Proc. Natl Acad. Sci. USA 100 , 13105–13110 (2003).

Rotshtein, P., Malach, R., Hadar, U., Graif, M. & Hendler, T. Feeling or features: different sensitivity to emotion in high-order visual cortex and amygdala. Neuron 32 , 747–757 (2001).

Adolphs, R. Neural systems for recognizing emotion. Curr. Opin. Neurobiol. 12 , 169–177 (2002).

Emery, N. J. & Amaral, D. G. in Cognitive Neuroscience of Emotion (eds Lane, R. D., Nadel, L. & Ahern, G.) 156–191 (Oxford Univ. Press, New York, 2000).

Graziano, M. S. & Cooke, D. F. Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia 8 Nov 2005 (10.1016/j.neuropsychologia.2005.09.009).

Argyle, M. Bodily Communication 363 (Methuen, London, 1988).

de Meijer, M. The contribution of general features of body movement to the attribution of emotions. J. Nonverbal Behav. 13 , 247–268 (1989).

Reed, C. L., Stone, V. E., Bozova, S. & Tanaka, J. The body-inversion effect. Psychol. Sci. 14 , 302–308 (2003).

Stekelenburg, J. J. & de Gelder, B. The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport 15 , 777–780 (2004).

Perrett, D. I., Hietanen, J. K., Oram, M. W. & Benson, P. J. Organization and functions of cells responsive to faces in the temporal cortex. Phil. Trans. R. Soc. Lond. B 335 , 23–30 (1992).

Article CAS Google Scholar

Rizzolatti, G., Fadiga, L., Gallese, V. & Fogassi, L. Premotor cortex and the recognition of motor actions. Brain Res. Cogn. Brain Res. 3 , 131–141 (1996).

Downing, P. E., Jiang, Y., Shuman, M. & Kanwisher, N. A cortical area selective for visual processing of the human body. Science 293 , 2470–2473 (2001).

Bonda, E., Petrides, M., Ostry, D. & Evans, A. Specific involvement of human parietal systems and the amygdala in the perception of biological motion. J. Neurosci. 16 , 3737–3744 (1996).

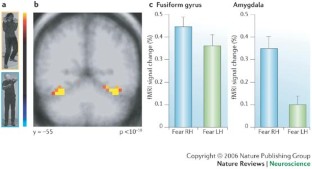

Hadjikhani, N. & de Gelder, B. Seeing fearful body expressions activates the fusiform cortex and amygdala. Curr. Biol. 13 , 2201–2205 (2003).

Peelen, M. V. & Downing, P. E. Selectivity for the human body in the fusiform gyrus. J. Neurophysiol. 93 , 603–608 (2005).

Johnson, M. H. Subcortical face processing. Nature Rev. Neurosci. 6 , 766–774 (2005).

Gliga, T. & Dehaene-Lambertz, G. Structural encoding of body and face in human infants and adults. J. Cogn. Neurosci. 17 , 1328–1340 (2005).

Meeren, H. K. M., Hadjikhani, N., Ahlfors, S. P., Hamalainen, M. S. & de Gelder, B. in Human Brain Mapping (Florence, Italy, 2006).

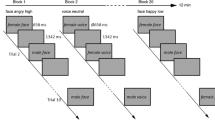

Meeren, H. K., van Heijnsbergen, C. C. & de Gelder, B. Rapid perceptual integration of facial expression and emotional body language. Proc. Natl Acad. Sci. USA 102 , 16518–16523 (2005).

Bentin, S., Sagiv, N., Mecklinger, A., Friederici, A. & von Cramon, Y. D. Priming visual face-processing mechanisms: electrophysiological evidence. Psychol. Sci. 13 , 190–193 (2002).

Damasio, A. R. et al. Subcortical and cortical brain activity during the feeling of self-generated emotions. Nature Neurosci. 3 , 1049–1056 (2000).

Singer, T. et al. Empathy for pain involves the affective but not sensory components of pain. Science 303 , 1157–1162 (2004).

Kilts, C. D., Egan, G., Gideon, D. A., Ely, T. D. & Hoffman, J. M. Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. Neuroimage 18 , 156–168 (2003).

Kourtzi, Z. & Kanwisher, N. Activation in human MT/MST by static images with implied motion. J. Cogn. Neurosci. 12 , 48–55 (2000).

Bertenthal, B. I., Proffitt, D. R. & Kramer, S. J. Perception of biomechanical motions by infants: implementation of various processing constraints. J. Exp. Psychol. Hum. Percept. Perform. 13 , 577–585 (1987).

Jellema, T. & Perrett, D. I. Cells in monkey STS responsive to articulated body motions and consequent static posture: a case of implied motion? Neuropsychologia 41 , 1728–1737 (2003).

Vallortigara, G., Regolin, L. & Marconato, F. Visually inexperienced chicks exhibit spontaneous preference for biological motion patterns. PLoS Biol. 3 , e208 (2005).

Heberlein, A. S. & Adolphs, R. Impaired spontaneous anthropomorphizing despite intact perception and social knowledge. Proc. Natl Acad. Sci. USA 101 , 7487–7491 (2004).

Anderson, A. K. & Phelps, E. A. Expression without recognition: contributions of the human amygdala to emotional communication. Psychol. Sci. 11 , 106–111 (2000).

Astafiev, S. V., Stanley, C. M., Shulman, G. L. & Corbetta, M. Extrastriate body area in human occipital cortex responds to the performance of motor actions. Nature Neurosci. 7 , 542–548 (2004).

Jeannerod, M. The Cognitive Neuroscience of Action (Blackwell, Oxford, 1997).

Gallese, V., Fadiga, L., Fogassi, L. & Rizzolatti, G. Action recognition in the premotor cortex. Brain 119 , 593–609 (1996).

Grèzes, J. et al. Does perception of biological motion rely on specific brain regions? Neuroimage 13 , 775–785 (2001).

Allison, T., Puce, A. & McCarthy, G. Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4 , 267–278 (2000).

Grèzes, J., Armony, J. L., Rowe, J. & Passingham, R. E. Activations related to 'mirror' and 'canonical' neurones in the human brain: an fMRI study. Neuroimage 18 , 928–937 (2003).

Fadiga, L., Fogassi, L., Gallese, V. & Rizzolatti, G. Visuomotor neurons: ambiguity of the discharge or 'motor' perception? Int. J. Psychophysiol. 35 , 165–177 (2000).

Rizzolatti, G. & Craighero, L. The mirror-neuron system. Annu. Rev. Neurosci. 27 , 169–192 (2004).

Gallese, V., Keysers, C. & Rizzolatti, G. A unifying view of the basis of social cognition. Trends Cogn. Sci. 8 , 396–403 (2004).