
Using Python Generators and yield: A Complete Guide

- February 15, 2023

In this tutorial, you’ll learn how to use generators in Python, including how to interpret the yield expression and how to use generator expressions. You’ll learn what the benefits of Python generators are and why they’re often referred to as lazy iteration. Then, you’ll learn how they work and how they’re different from normal functions.

Python generators provide you with the means to create your own iterators. They let you describe complex or memory-intensive sequences of values that are produced lazily, one at a time, which helps you better manage the memory of your Python program.

By the end of this tutorial, you’ll have learned:

- What Python generators are and how to use the yield expression

- How to use multiple yield keywords in a single generator

- How to use generator expressions to make generators simpler to write

- Some common use cases for Python generators


Understanding Python Generators

Before diving into what generators are, let’s explore what iterators are. Iterators are objects that produce their items one at a time. To be considered an iterator, an object needs to implement two methods: __iter__() and __next__(). Common examples of iterators in Python include file objects and the objects returned by iter(), map() and zip(). Constructs such as for loops and list comprehensions consume iterators behind the scenes.

Generators are a Pythonic way of creating iterators without explicitly implementing a class with __iter__() and __next__() methods. You also don’t need to keep track of the object’s internal state yourself. An important thing to note is that generators produce their values lazily, one at a time, rather than storing them all in memory.
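The following minimal sketch shows the same counting iterator written two ways: once as a class with __iter__() and __next__(), and once as a generator function. The names CountUpTo and count_up_to are hypothetical.

```python
class CountUpTo:
    """Class-based iterator: we track the state ourselves."""

    def __init__(self, n):
        self.n = n
        self.current = 0

    def __iter__(self):
        # An iterator returns itself from __iter__()
        return self

    def __next__(self):
        if self.current >= self.n:
            raise StopIteration
        value = self.current
        self.current += 1
        return value


def count_up_to(n):
    """Generator version: Python saves the state between yields."""
    current = 0
    while current < n:
        yield current
        current += 1


print(list(CountUpTo(3)))    # [0, 1, 2]
print(list(count_up_to(3)))  # [0, 1, 2]
```

The generator version needs no explicit state attributes and no manual StopIteration. Python handles both.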

The yield statement controls the flow of a generator function. It hands a value back to the caller and pauses the function, preserving its state, until the next() function asks for the next value.

Creating a Simple Generator

In this section, you’ll learn how to create a basic generator. One of the key syntactical differences between a normal function and a generator function is that the generator function includes a yield statement.

Let’s see how we can create a simple generator function:
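Based on the breakdown that follows, the generator function looks roughly like this (a sketch reconstructed from the description):

```python
def return_n_values(n):
    num = 0
    while num < n:
        # Pause here and hand num back to the caller
        yield num
        # Execution resumes here on the next call to next()
        num += 1
```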

Let’s break down what is happening here:

- We define a function, return_n_values() , which takes a single parameter, n

- In the function, we first set the value of num to 0

- We then enter a while loop that evaluates whether the value of num is less than our function argument, n

- While that condition is True , we yield the value of num

- Then, we increment the value of num using the augmented assignment operator

Two interesting things stand out immediately:

- We use yield instead of return

- A statement follows the yield statement, and it isn’t ignored: it runs when the generator resumes

Let’s see how we can actually use this function:
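A minimal sketch of that call follows. The function is repeated so the snippet runs on its own, and the memory address in the printed representation will differ on your machine.

```python
def return_n_values(n):
    num = 0
    while num < n:
        yield num
        num += 1

values = return_n_values(5)
print(values)  # <generator object return_n_values at 0x...>
```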

In the code above, we create a variable values , which is the result of calling our generator function with an argument of 5 passed in. When we print the value of values , a generator object is returned.

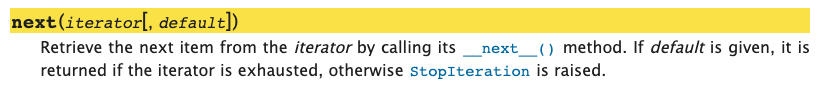

So, how do we access the values in our generator object? One way is the next() function, which calls the generator’s internal .__next__() method and returns the next yielded value.

The first call to next() returns 0. However, we know that the generator should produce the values 0 through 4. Because a Python generator remembers the function’s state, we can call the next() function multiple times: the second through fifth calls return 1, 2, 3 and 4.

In this case, we’ve yielded all of the values that the while loop will accept. Let’s see what happens when we call the next() function a sixth time:
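The calls described above can be sketched as follows. The sixth call is wrapped in try/except, an addition for illustration, so the script reports the exception instead of stopping with a traceback.

```python
def return_n_values(n):
    num = 0
    while num < n:
        yield num
        num += 1

values = return_n_values(5)
for _ in range(5):
    print(next(values))  # prints 0, 1, 2, 3, 4 on separate lines

try:
    next(values)  # the sixth call: the while loop has finished
except StopIteration:
    print("StopIteration raised: the generator is exhausted")
```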

We can see in the code sample above that when the condition of our while loop is no longer True , Python will raise StopIteration .

In the next section, you’ll learn how to create a Python generator using a for loop.

Creating a Python Generator with a For Loop

In the previous example, you learned how to create and use a simple generator. However, that example is complicated by the fact that we’re yielding a value and then incrementing it, which can make generators harder for beginners to understand.

Instead, we can use a for loop, rather than a while loop, for simpler generators . Let’s rewrite our previous generator using a for loop to make the process a little more intuitive:
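A minimal sketch of the for-loop version, keeping the same function name:

```python
def return_n_values(n):
    # range(n) produces 0, 1, ..., n - 1, so no manual counter is needed
    for num in range(n):
        yield num
```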

In the code block above, we used a for loop instead of a while loop. The Python range() function produces the values from 0 up to, but not including, n. This simplifies the generator a little, making it more approachable to readers of your code.

Unpacking a Generator with a For Loop

In many cases, you’ll see generators consumed by for loops, which exhaust all of their yielded values. In these cases, the benefit of generators is less about remembering state (though that still happens internally) and more about using memory wisely.
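A short sketch of exhausting the generator with a for loop, using print()'s end= parameter:

```python
def return_n_values(n):
    for num in range(n):
        yield num

for value in return_n_values(5):
    # end=' ' replaces print()'s default newline with a space
    print(value, end=' ')
print()  # finish the line
# Output: 0 1 2 3 4
```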

In the code block above, we used a for loop to iterate over each value of the generator. The loop implicitly calls the generator’s __next__() method. Note that we’re using the optional end= parameter of the print() function, which replaces the default newline character.

Creating a Python Generator with Multiple Yield Statements

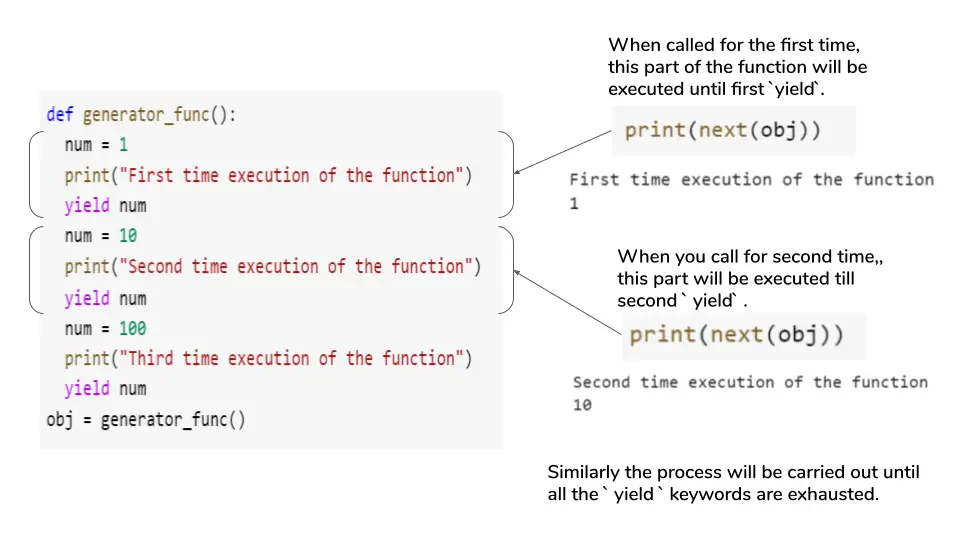

A very interesting difference between Python functions and generators is that a generator can contain more than one yield expression! While a function can contain more than one return statement, each call ends at the first return that executes, so nothing after it runs.

Let’s take a look at an example where we define a generator with more than one yield statement:
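A minimal sketch of such a generator. The function name and yielded strings are hypothetical.

```python
def multiple_yields():
    yield 'First value'
    yield 'Second value'
    yield 'Third value'

gen = multiple_yields()
print(next(gen))  # First value
print(next(gen))  # Second value
print(next(gen))  # Third value
# A fourth next(gen) would raise StopIteration
```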

In the code block above, our generator has more than one yield statement. When we call the first next() function, it returns only the first yielded value. We can keep calling the next() function until all the yielded values are depleted. At this point, the generator will raise a StopIteration exception.

Understanding the Performance of Python Generators

One of the key things to understand is why you’d want to use a Python generator. Because Python generators evaluate lazily, they use significantly less memory than an equivalent list holding all of the values.

For example, if we created a generator that yielded the first one million numbers, the generator doesn’t actually hold the values. Meanwhile, by using a list comprehension to create a list of the first one million values, the list actually holds the values. Let’s see what this looks like:
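A sketch of that comparison using sys.getsizeof() follows. Exact byte counts vary by Python version and platform, so no output is shown. getsizeof() measures only the container object itself, not the integers a list refers to, so the real gap is even larger.

```python
import sys

# Generator expression: values are produced on demand, not stored
values_gen = (i for i in range(1_000_000))
# List comprehension: all one million integers are stored up front
values_list = [i for i in range(1_000_000)]

print(sys.getsizeof(values_gen))   # a few hundred bytes at most
print(sys.getsizeof(values_list))  # several megabytes
```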

In the code block above, we import the sys library which allows us to access the getsizeof() function. We then print the size of both the generator and the list. We can see that the list is over 75,000 times larger.

In the following section, you’ll learn how to simplify creating generators by using generator expressions.

Creating Python Generator Expressions

When you want to create one-off generators, using a function can seem redundant. Similar to list and dictionary comprehensions, Python allows you to create generator expressions. This simplifies the process of creating generators, especially for generators that you only need to use once.

In order to create a generator expression, you wrap the expression in parentheses. Say you wanted to create a generator that yields the numbers from zero through four. Then, you could write (i for i in range(5)).
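A minimal sketch of that generator expression, consumed with next():

```python
values = (i for i in range(5))

# Five calls to next() consume the whole generator
print(next(values))  # 0
print(next(values))  # 1
print(next(values))  # 2
print(next(values))  # 3
print(next(values))  # 4
```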

In the example above, we used a generator expression to yield values from 0 to 4. We then call the next() function five times to print out the values in the generator.

In the following section, we’ll dive further into the yield statement.

Understanding the Python yield Statement

The Python yield statement can often feel unintuitive to newcomers to generators. What separates the yield statement from the return statement is that, rather than ending the function, it suspends the function’s execution.

The yield statement pauses the function and returns the yielded value. When next() is called again, execution resumes until the following value is yielded.

What is great about this is that the state of the function, including its local variables, is saved. This means that Python knows exactly where to pick up its iteration.

How to Throw Exceptions in Python Generators Using throw

Python generators have a special method, .throw(), which raises an exception inside the generator at the point where it is currently paused. This can be helpful if you know that an erroneous value may appear in the generator and you want to stop with an error when it does.

Let’s take a look at how we can use the .throw() method in a Python generator:
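A minimal sketch matching the breakdown below. The error message is hypothetical. The loop is wrapped in try/except, an addition so the script finishes cleanly. Because the generator expression does not handle the ValueError, it propagates back out of .throw() to the caller and the generator is finished.

```python
numbers = (i for i in range(10))
seen = []

try:
    for number in numbers:
        if number == 3:
            # Raise ValueError inside the generator at its paused yield;
            # the generator doesn't handle it, so it propagates back here
            numbers.throw(ValueError("Found the value 3"))
        seen.append(number)
        print(number)
except ValueError as exc:
    print(f"Caught: {exc}")
```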

Let’s break down how we can use the .throw() method to throw an exception in a Python generator:

- We create our generator using a generator expression

- We then use a for loop to loop over each value

- Within the for loop, we use an if statement to check if the value is equal to 3. If it is, we call the .throw() method, which raises an error

In some cases, you may simply want to stop a generator, rather than throwing an exception. This is what you’ll learn in the following section.

How to Stop a Python Generator Using close

Python allows you to stop a generator by calling its .close() method. This can be very helpful if you’re reading a file using a generator and you only want to read the file until a certain condition is met.

Let’s repeat our previous example, though we’ll stop the generator rather than throwing an exception:
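A minimal sketch of the same loop using .close(). The body of the current iteration still finishes. When the for loop then asks for the next value, the closed generator raises StopIteration, which the loop handles by ending quietly.

```python
numbers = (i for i in range(10))
seen = []

for number in numbers:
    if number == 3:
        # Finish the generator; the current loop body still completes
        numbers.close()
    seen.append(number)
    print(number)
# Prints 0, 1, 2, 3: the next request for a value finds the generator
# closed, so the for loop ends instead of raising an error
```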

In the code block above, we used the .close() method to stop the iteration. While the example is simple, the idea extends well to real tasks: when reading a file with a generator, rather than reading the entire file, you may only want to read it until you find a given line.

In this tutorial, you learned how to use generators in Python, including how to interpret the yield expression and how to use generator expressions. You learned what the benefits of Python generators are and why they’re often referred to as lazy iteration. Then, you learned how they work and how they’re different from normal functions.


Nik Piepenbreier

Nik is the author of datagy.io and has over a decade of experience working with data analytics, data science, and Python. He specializes in teaching developers how to use Python for data science using hands-on tutorials.


CodeFatherTech


Python Yield: Create Your Generators [With Examples]

The Python yield keyword is something that, at some point, you will encounter as a developer. What is yield? How can you use it in your programs?

The yield keyword is used to return a value to the caller of a Python function without losing the state of the function. When the caller asks for the next value, execution continues from the line after the yield expression. A function that uses the yield keyword is called a generator function.

This definition might not be enough to understand yield.

That’s why we will look at some examples of how to use the yield keyword in your Python code.

Let’s start coding!


Regular Functions and Generator Functions

Most developers are familiar with the Python return keyword. It is used to return a value from a function and it stops the execution of that function.

When you use return in your function any information about the state of that function is lost after the execution of the return statement.

The same doesn’t happen with yield…

When you use yield the function still returns a value to the caller with the difference that the state of the function is stored in memory. This means that the execution of the function can continue from the line of code after the yield expression when the function is called again.

That sounds complicated!?!

Here is an example…

The following regular function takes as input a list of numbers and returns a new list with every value multiplied by 2.

When you execute this code you get the following output:
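A minimal version of that regular function and its output might look like this. The name double_list and the input values are illustrative assumptions. Only the first three doubled values (6, 112 and 8) match those the article reports later.

```python
def double_list(numbers):
    double_numbers = []
    for number in numbers:
        double_numbers.append(2 * number)
    # The whole list is built in memory, then returned at once
    return double_numbers

numbers = [3, 56, 4, 76, 45]
print(double_list(numbers))  # [6, 112, 8, 152, 90]
```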

When the function reaches the return statement the execution of the function stops. At this point the Python interpreter doesn’t keep any details about its state in memory.

Let’s see how we can get the same result by using yield instead of return .
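A minimal sketch of the generator function double() described below. The input list is an illustrative assumption, and the address in the printed representation will differ on your machine.

```python
def double(numbers):
    for number in numbers:
        # Hand back one doubled value at a time
        yield 2 * number

numbers = [3, 56, 4, 76, 45]
print(double(numbers))  # <generator object double at 0x...>
```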

This new function is a lot simpler…

…here are the differences from the function that was using the return statement:

- We don’t need the new double_numbers list.

- We can remove the line that contains the return statement because we don’t need to return an entire list back.

- Inside the for loop we can directly use yield to return values to the caller one at a time.

What output do we get this time from the print statement?

A generator function returns a generator object.

In the next section we will see how to read values from this generator object.

Read the Output of Generator Functions

Firstly, let’s recap what yield does when it is used in a Python function:

A function that contains the yield keyword is called a generator function, as opposed to a regular function that uses the return keyword to return a value to the caller. The behaviour of yield is different from return because yield returns values one at a time and pauses the execution of the function until the next value is requested.

In the previous section we have seen that when we print the output of a generator function we get back a generator object.

But how can we get the values from the generator object in the same way we do with a regular Python list?

We can use a for loop. Remember that we were calling the generator function double(). Let’s assign the output of this function to a variable and then loop through it:

With a for loop we get back all the values from this generator object:
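A sketch of that loop. The variable name double_gen and the input list are illustrative assumptions.

```python
def double(numbers):
    for number in numbers:
        yield 2 * number

double_gen = double([3, 56, 4, 76, 45])
for value in double_gen:
    print(value)
# 6
# 112
# 8
# 152
# 90
```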

In exactly the same way, we could use this for loop to print the values in the list returned by the regular function we defined earlier, the one that uses the return statement.

So, what’s the difference between the two functions?

The regular function creates a list in memory and returns the full list using the return statement. The generator function doesn’t keep the full list of numbers in memory. Numbers are returned one by one, each time the for loop requests the next value from the generator.

We can also get values from the generator using the next() function.

The next function returns the next item in the generator every time we pass the generator object to it.

We are expecting back a sequence of five numbers. Let’s pass the generator to the next() function six times and see what happens:
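A sketch of the six calls, using the same illustrative input list. The sixth call is wrapped in try/except, an addition so the script reports the exception instead of stopping.

```python
def double(numbers):
    for number in numbers:
        yield 2 * number

double_gen = double([3, 56, 4, 76, 45])
for _ in range(5):
    print(next(double_gen))  # 6, 112, 8, 152, 90 on separate lines

try:
    next(double_gen)  # sixth call: nothing left to yield
except StopIteration:
    print("StopIteration")
```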

The first time we call the next() function we get back 6, then 112, then 8 and so on.

After the fifth time we call the next() function there are no more numbers to be returned by the generator. At that point we call the next() function again and we get back a StopIteration exception from the Python interpreter.

The exception is raised because no more values are available in the generator.

When you use the for loop to get the values from the generator you don’t see the StopIteration exception because the for loop handles it transparently.

Next Function and __next__() Generator Object Method

Using the dir() built-in function we can see that __next__ is one of the methods available for our generator object.

This is the method that is called when we pass the generator to the next() function .
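A short sketch confirming both points: __next__ appears in dir(), and calling it directly advances the generator just like next() does. The input list is illustrative.

```python
def double(numbers):
    for number in numbers:
        yield 2 * number

double_gen = double([3, 56, 4])
print('__next__' in dir(double_gen))  # True

# These two calls are equivalent ways to advance the generator
print(next(double_gen))        # 6
print(double_gen.__next__())   # 112
```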

Python methods whose names start and end with double underscores are called dunder methods.

How to Convert a Generator to a Python List

In our generator example, we have seen that when we print the value of the generator variable we get back a reference to a generator object.

But, how can we see all the values in the generator object without using a for loop or the next() function?

One way to do that is by converting the generator into a Python list using the list() function.
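A sketch of the conversion with an illustrative input list. Note that list() consumes the generator, so converting it a second time yields an empty list.

```python
def double(numbers):
    for number in numbers:
        yield 2 * number

double_gen = double([3, 56, 4, 76, 45])
print(list(double_gen))  # [6, 112, 8, 152, 90]
# The conversion consumed the generator, so a second list() is empty
print(list(double_gen))  # []
```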

As you can see we got back the list of numbers in the generator as a list.

This doesn’t necessarily make sense, considering that one of the reasons you would use a generator is that generators require a lot less memory than lists.

That’s because when you use a list, Python stores every single element of the list in memory, while a generator returns only one value at a time. Some additional memory is required to “pause” the generator function and remember its state.

When we convert the generator into a list using the list() function, we allocate the memory required for every element returned by the generator, just as happens with a regular list.

In one of the next sections we will analyse the difference in size between a list and a generator.

Generator Expressions

We have seen how to use the yield keyword to create a generator function.

This is not the only way to create generators: you can also use a generator expression.

To introduce generator expressions, we will start from an example of a list comprehension, a Python construct used to create lists based on existing lists in a single line.

Let’s say we want to write a list comprehension that returns the same output as the functions we have defined before.

The list comprehension takes a list and returns a new list where every element is multiplied by 2.

The list comprehension starts and ends with a square bracket and in a single line does what the functions we have defined before were doing with multiple lines of code.

As you can see the value returned by the list comprehension is of type list.
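A sketch of that list comprehension, with an illustrative input list:

```python
numbers = [3, 56, 4, 76, 45]
doubled = [2 * number for number in numbers]
print(doubled)        # [6, 112, 8, 152, 90]
print(type(doubled))  # <class 'list'>
```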

Now, let’s replace the square brackets of the list comprehension with parentheses. This is a generator expression.
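The same expression with parentheses instead of square brackets, using the illustrative input list (the printed address will differ):

```python
numbers = [3, 56, 4, 76, 45]
doubled_gen = (2 * number for number in numbers)
print(doubled_gen)        # <generator object <genexpr> at 0x...>
print(type(doubled_gen))  # <class 'generator'>
```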

This time the output is slightly different…

The object returned by the new expression is a generator, not a list.

We can go through this generator in the same way we have seen before by using either a for loop or the next function:
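A sketch that mixes both approaches on one generator expression. A for loop continues from wherever the earlier next() calls stopped.

```python
numbers = [3, 56, 4, 76, 45]
doubled_gen = (2 * number for number in numbers)

print(next(doubled_gen))  # 6
print(next(doubled_gen))  # 112

# A for loop picks up where next() left off
for value in doubled_gen:
    print(value)  # 8, 152, 90 on separate lines
```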

To convert a list comprehension into a generator expression replace the square brackets that surround the list comprehension with parentheses.

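Notice that there is a small difference in the way Python represents a generator object returned by a generator function and one returned by a generator expression. The first is shown with the function’s name, for example <generator object double at 0x...>, while the second is shown as <generator object <genexpr> at 0x...>.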
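A sketch comparing the two representations, with an illustrative input list. Generator objects also expose the name Python uses in their representation through the __name__ attribute.

```python
def double(numbers):
    for number in numbers:
        yield 2 * number

from_function = double([3, 56, 4])
from_expression = (2 * number for number in [3, 56, 4])

print(from_function)    # <generator object double at 0x...>
print(from_expression)  # <generator object <genexpr> at 0x...>
print(from_function.__name__, from_expression.__name__)  # double <genexpr>
```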

More About Using Yield in a Python Function

We have seen an example of how to use yield in a function, but I want to give you another example that clearly shows the behaviour of yield.

Let’s take the generator function we have created before and add some print statements to show exactly what happens when the function is called.

When we call the next() function and pass the generator we get the following:
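A sketch of the instrumented generator and its first two next() calls. The print messages and input list are illustrative assumptions. The comments show what each call prints, in order.

```python
def double(numbers):
    for number in numbers:
        print("Before yield")
        yield 2 * number
        print("After yield")

double_gen = double([3, 56, 4])

print(next(double_gen))
# Before yield
# 6

print(next(double_gen))
# After yield
# Before yield
# 112
```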

The first print statement and the yield statement are executed. After that the function is paused and the value in the yield expression is returned.

When we call next() again, the execution of the function continues from where it left off. Here is what the Python interpreter does:

- Execute the print statement after the yield expression.

- Start the next iteration of the for loop.

- Execute the print statement before the yield expression.

- Return the yielded value and pause the function.

This gives you a better understanding of how Python pauses and resumes the state of a generator function.

How To Yield a Tuple in Python

In the examples we have seen so far we have been using the yield keyword to return a single number.

Can we apply yield to a tuple instead?

Let’s say we want to pass the following list of tuples to our function:

We can modify the previous generator function to return tuples where we multiply every element by 2.

In the same way we have done before let’s call the next() function twice and see what happens:
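A sketch of the tuple version and the two next() calls. The list of tuples is an illustrative assumption.

```python
def double(items):
    for item in items:
        # Build a new tuple with every element multiplied by 2
        yield tuple(2 * value for value in item)

pairs = [(3, 4), (56, 7), (4, 12)]
double_gen = double(pairs)

print(next(double_gen))  # (6, 8)      first call
print(next(double_gen))  # (112, 14)   second call
```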


So, the behaviour is exactly the same.

Multiple Yield Statements in a Python Function

Can you use multiple yield statements in a single Python function?

Yes, you can!

The behaviour of the generator function doesn’t change from the scenario where you have a single yield expression.

Every time the __next__ method gets called on the generator object, the execution of the function continues where it left off until the next yield expression is reached.

Here is an example. Open the Python shell and create a generator function with two yield expressions. The first one returns a list and the second one returns a tuple:
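A sketch of such a generator. The function name and yielded values are hypothetical. The third call is wrapped in try/except so the script keeps running.

```python
def list_then_tuple():
    yield [1, 2, 3]  # first yield returns a list
    yield (4, 5, 6)  # second yield returns a tuple

gen = list_then_tuple()
print(next(gen))        # [1, 2, 3]
print(gen.__next__())   # (4, 5, 6), same as calling next(gen)

try:
    gen.__next__()      # no third yield
except StopIteration:
    print("StopIteration")
```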

When we pass the generator object gen to the next function we should get back the list first and then the tuple.

Passing the generator object to the next function is basically the same as calling the __next__ method of the generator object.

As expected the Python interpreter raises a StopIteration exception when we execute the __next__ method the third time. That’s because our generator function only contains two yield expressions.

Can I Use Yield and Return in the Same Function?

Have you wondered if you can use yield and return in the same function?

Let’s see what happens when we do that in the function we have created in the previous section.

Here we are using Python 3.8.5:
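A sketch of a generator that combines yield with return (the function name and values are hypothetical). It should behave the same way in any Python 3 version. The returned string travels with the StopIteration exception, both as its message and as its value attribute.

```python
def list_then_tuple():
    yield [1, 2, 3]
    yield (4, 5, 6)
    return 'done'  # ends the generator; 'done' is attached to StopIteration

gen = list_then_tuple()
print(next(gen))  # [1, 2, 3]
print(next(gen))  # (4, 5, 6)

try:
    next(gen)
except StopIteration as exc:
    print(repr(exc))  # StopIteration('done')
    print(exc.value)  # done
```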

The behaviour is similar to that of the function without the return statement. The first two times we call the next() function we get back the two values in the yield expressions.

The third time we call the next() function, the Python interpreter raises a StopIteration exception. The only difference is that the string in the return statement (‘done’) is attached to the exception: it appears as the exception message and is available as the exception’s value attribute.

If you try to run the same code with Python 2.7 you get a SyntaxError because a return statement with argument cannot be used inside a generator function.

Let’s try to remove the return argument:

All good this time.

This is just an experiment…

In reality it might not make sense to use yield and return as part of the same generator function.

Have you found a scenario where it might be useful to do that? Let me know in the comments.

Generators and Memory Usage

One of the reasons to use generators instead of lists is to save memory.

That’s because when working with lists, all the elements of a list are stored in memory, while the same doesn’t happen when working with generators.

We will generate a list made of 100,000 elements and see how much space it takes in memory using the sys module.

Let’s start by defining two functions, one regular function that returns a list of numbers and a generator function that returns a generator object for the same sequence of numbers.


Now, let’s get the list of numbers and the generator object back and calculate their size in bytes using the sys.getsizeof() function .
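A sketch of the two functions and the size comparison. The function names are hypothetical, and exact byte counts depend on the Python version and platform. As with any getsizeof() call, the list's figure covers only the list object and its internal array of references, not the integer objects themselves.

```python
import sys

def get_numbers_list(n):
    """Regular function: builds and returns the whole list."""
    numbers = []
    for i in range(n):
        numbers.append(i)
    return numbers

def get_numbers_generator(n):
    """Generator function: yields the same numbers one at a time."""
    for i in range(n):
        yield i

numbers_list = get_numbers_list(100_000)
numbers_gen = get_numbers_generator(100_000)

print(sys.getsizeof(numbers_list))  # size of the list object in bytes
print(sys.getsizeof(numbers_gen))   # size of the generator object in bytes
```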

The output is:

The list takes over 7000 times the memory required by the generator!

So, there is definitely a benefit in memory allocation when it comes to using generators. At the same time, iterating over a list that is already in memory is often faster, so it’s about finding a tradeoff between memory usage and performance.

You have learned the difference between return and yield in a Python function.

So now you know how to use the yield keyword to convert a regular function into a generator function.

I have also explained how generator expressions can be used as an alternative to generator functions.

Finally, we have compared generators and regular lists from a memory usage perspective and showed why you can use generators to save memory especially if you are working with big datasets.

Claudio Sabato is an IT expert with over 15 years of professional experience in Python programming, Linux Systems Administration, Bash programming, and IT Systems Design. He is a professional certified by the Linux Professional Institute.

With a Master’s degree in Computer Science, he has a strong foundation in Software Engineering and a passion for robotics with Raspberry Pi.


Yield Statement


Understanding the Yield Statement in Python


- 'return' gives back a value and terminates the function, while 'yield' produces a value and suspends the function’s execution, to be resumed later.

- 'return' sends a specified value back to its caller whereas 'yield' can produce a sequence of values.

- When a regular function is called, a new local scope is created for that call, and it is discarded when the function returns. In contrast, 'yield' allows the function to remember its state, so that when it is resumed, the function continues where it left off, with all local variables retaining their values.

- A function can contain several 'return' statements, but each call executes at most one of them and then ends; 'yield' can be executed many times in a single run, producing multiple results.

- The 'yield' statement can only be used inside a function.

- A function with a 'yield' statement is called a generator function.

- Generator functions return a special iterator called a generator. You can iterate over this generator to retrieve its values.

- When a generator function is called, it returns a generator object without even beginning execution of the function. When 'next()' is called for the first time, the function starts executing until it reaches the 'yield' statement, which returns the yielded value. The 'yield' keyword pauses the function and saves the local state so that it can be resumed right where it left off when 'next()' is called again.

- Generator functions allow you to declare a function that behaves like an iterator, i.e., it can be used in a 'for' loop.

- The difference between list comprehensions and generator expressions is that the former produces the entire list while the latter produces one item at a time, which is more memory-friendly.

- Both generator functions and generator expressions are a part of Python's iterator protocol and can be used with built-in functions that accept iterators, like 'sum()', 'max()', and 'min()'.

- Coroutines are a form of cooperative multitasking where the current task explicitly yields control of execution (using 'yield') to a scheduler, such as a main loop that decides which task runs next.

- Unlike threads, coroutines do not run in parallel and do not need OS-level thread context switches, so they are more lightweight.

- Coroutines can be used to simplify code that deals with problems which can be broken down into tasks that need to run concurrently, but do not necessarily need to run in parallel.

- The 'yield from' expression is available in Python 3.3 and later.

- 'yield from' allows a generator to delegate part of its operations to another generator.

- It simplifies the code by abstracting the call to the inner generator, eliminating the need for a loop in the outer generator.

- 'yield from' makes the code more readable and forwards values passed with send(), exceptions passed with throw(), and close() calls to the inner generator.
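The delegation described in these points can be sketched as follows. All function names are hypothetical. The first outer generator re-yields the inner values with a loop. The second uses yield from, which also captures the inner generator's return value.

```python
def inner():
    yield 1
    yield 2
    return 'inner done'  # becomes the value of the yield from expression

def outer_with_loop():
    # Manual delegation: re-yield every value from the inner generator
    for value in inner():
        yield value

def outer_with_yield_from():
    # Delegation with yield from; also captures inner's return value
    result = yield from inner()
    yield result

print(list(outer_with_loop()))        # [1, 2]
print(list(outer_with_yield_from()))  # [1, 2, 'inner done']
```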


Python Yield – What does the yield keyword do?

- September 7, 2020

- Venmani A D

Adding the yield keyword to a function makes the function return a generator object that can be iterated upon.

What does the yield keyword do?


yield in Python can be used like the return statement in a function. When it is, the function doesn’t return its output directly; instead, it returns a generator that can be iterated upon.

You can then iterate through the generator to extract items. Iterating is done using a for loop or simply using the next() function. But what exactly happens when you use yield ?

What the yield keyword does is as follows:

Each time you iterate, Python runs the code until it encounters a yield statement inside the function. Then, it sends the yielded value and pauses the function in that state without exiting.

When the function is invoked the next time, the state at which it was last paused is remembered and execution is continued from that point onwards. This continues until the generator is exhausted.

What does remembering the state mean?

It means that any local variable you may have created inside the function before yield was called will be available the next time you invoke the function. This is NOT the way a regular function usually behaves.
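A minimal sketch of remembered state. The body of simple_generator() here is a hypothetical illustration. The local variable x keeps its value between the two yields, and nothing runs until the first next() call.

```python
def simple_generator():
    print("Started")
    x = 10
    yield x        # pause and send 10 to the caller
    x += 1         # x is still 10 here: local state was kept
    yield x
    print("Finished")

gen = simple_generator()  # nothing printed yet: the body hasn't started
print(next(gen))  # prints "Started", then 10
print(next(gen))  # 11
```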

Now, how is it different from using the return keyword?

Had you used return in place of yield, the function would have returned the respective value, all the local variable values that the function had computed would be discarded, and the next time the function was called, its execution would start fresh.

Since yield enables the function to remember its ‘state’, this function can be used to generate values following logic defined by you. So, the function becomes a ‘generator’.

Now you can iterate through the generator object. But it works only once.

Iterating over the generator a second time won’t give anything, because the generator object is already exhausted and has to be re-created.

If you call next() on this exhausted generator, a StopIteration exception is raised.
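A sketch of generator exhaustion, using a hypothetical three-value simple_generator(). The final call is wrapped in try/except so the script finishes.

```python
def simple_generator():
    yield 1
    yield 2
    yield 3

gen = simple_generator()
print(list(gen))  # [1, 2, 3]
print(list(gen))  # []: the generator is already exhausted

try:
    next(gen)
except StopIteration:
    print("StopIteration: recreate the generator to iterate again")
```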

To overcome generator exhaustion, you can:

- Approach 1 : Replenish the generator by recreating it again and iterate over. You just saw how to do this.

- Approach 2 : Iterate by calling the function that created the generator in the first place

- Approach 3 (best): Convert it to a class that implements an __iter__() method. Each call to __iter__() returns a new iterator, so you don’t have to worry about the generator getting exhausted.

We’ve seen the first approach already. Approach 2: The second approach is to simply replace the generator with a call to the function that produced the generator, which is simple_generator() in this case. This will continue to work no matter how many times you iterate over it.
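A sketch of Approach 2 with a hypothetical three-value simple_generator(). Calling the function again creates a fresh generator each time.

```python
def simple_generator():
    yield 1
    yield 2
    yield 3

# Each call to simple_generator() creates a brand-new generator
for _ in range(2):
    for value in simple_generator():
        print(value, end=' ')
    print()
# 1 2 3
# 1 2 3
```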

Approach 3: Now, let’s try creating a class that implements a __iter__() method. It creates an iterator object every time, so you don’t have to keep recreating the generator.
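Here is a sketch of approaches 2 and 3. The class name SimpleIterable is hypothetical. Making __iter__() itself a generator function means every for loop gets a brand-new generator object:

```python
def simple_generator():
    yield 1
    yield 2
    yield 3

# Approach 2: call the generator function each time you need a fresh iterator
for _ in range(2):
    print(list(simple_generator()))  # [1, 2, 3] both times

# Approach 3: __iter__() is a generator function, so each iteration starts over
class SimpleIterable:
    def __iter__(self):
        yield 1
        yield 2
        yield 3

numbers = SimpleIterable()
print(list(numbers))  # [1, 2, 3]
print(list(numbers))  # [1, 2, 3] again: no exhaustion
```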

We often store data in a list if we want to materialize it at some point. If you do so, the contents of the list occupy tangible memory, and the larger the list gets, the more memory it occupies.

But if there is a certain logic behind producing the items you want, you don't have to store them in a list. Instead, you can write a generator that produces the items whenever you want them.

Let’s say, you want to iterate through squares of numbers from 1 to 10. There are at least two ways you can go about it: create the list beforehand and iterate. Or create a generator that will produce these numbers.

Let’s do the same with generators now.

Generators are memory efficient because the values are not materialized until they are requested. They can also be faster, for example when you only need the first few values, but whether they are depends on the workload. A generator is especially useful when you know the logic for producing the next number (or any object) you want to generate.
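A sketch comparing the two options for the squares of 1 to 10. The function name squares is hypothetical. sys.getsizeof() measures only the container object (the list's pointer array, or the generator object), not the integers themselves:

```python
import sys

# Option 1: build the whole list up front (every value lives in memory at once)
squares_list = [n * n for n in range(1, 11)]

# Option 2: a generator that computes each square only when asked
def squares(limit):
    for n in range(1, limit + 1):
        yield n * n

for sq in squares(10):
    print(sq, end=" ")  # 1 4 9 16 25 36 49 64 81 100
print()

# The gap grows with the size of the sequence
big_list = [n * n for n in range(1_000_000)]
big_gen = (n * n for n in range(1_000_000))
print(sys.getsizeof(big_list))  # millions of bytes for the list's pointer array
print(sys.getsizeof(big_gen))   # small, and independent of the range size
```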

Can a generator be materialized to a list?

Yes. You can do so easily using a list comprehension or by simply calling list().
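Both ways of materializing are shown below, with a hypothetical squares() generator. Either one consumes the generator:

```python
def squares(limit):
    for n in range(1, limit + 1):
        yield n * n

as_list = list(squares(5))                   # [1, 4, 9, 16, 25]
via_comprehension = [s for s in squares(5)]  # same values
print(as_list == via_comprehension)          # True
```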

yield is a keyword that hands a value back from the function without destroying the state of its local variables. When you replace return with yield in a function, calling the function gives its caller a generator object. In effect, yield pauses the function instead of exiting it, until the next time next() is called. Execution then resumes from the point where it paused.

Here, a function is created with the yield keyword. Calling it returns a generator object, obj, which is an iterator, so you access its values with the next() function. Each call runs the function until the next yield statement is reached.
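A minimal sketch of stepping through a generator with next(). The function name demo_generator is hypothetical. The print() calls show that the body only runs when next() asks for a value:

```python
def demo_generator():
    print("started")
    yield "first"
    print("resumed after first yield")
    yield "second"

obj = demo_generator()  # nothing printed yet: the body has not started
print(next(obj))        # prints "started", then "first"
print(next(obj))        # prints "resumed after first yield", then "second"
```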

Next, let's create a generator function that uses yield to produce the cube of each number, one at a time, until the cube reaches 1000. Only the value currently being produced needs to be held in memory, and it can be released once the caller is done with it.
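A hedged version of that cube generator, cubes_up_to (a hypothetical name). It assumes the limit is inclusive, so 1000 itself (10 cubed) is produced:

```python
def cubes_up_to(limit=1000):
    # yields 1, 8, 27, ... and stops once the next cube would exceed `limit`
    n = 1
    while n ** 3 <= limit:
        yield n ** 3
        n += 1

for cube in cubes_up_to():
    print(cube, end=" ")
# 1 8 27 64 125 216 343 512 729 1000
```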

Multiple generators can be pipelined (one generator consuming another) as a series of operations in the same code. Pipelining can also make the code more efficient and easier to read. To pipeline generators, pass one generator call as the argument of another, nesting the calls with parentheses.
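A small three-stage pipeline sketch. The stage names read_numbers, only_even and squared are hypothetical. Each stage pulls one value at a time from the previous one, so no intermediate list is built:

```python
def read_numbers(n):
    # stage 1: produce raw values
    for i in range(n):
        yield i

def only_even(numbers):
    # stage 2: filter
    for x in numbers:
        if x % 2 == 0:
            yield x

def squared(numbers):
    # stage 3: transform
    for x in numbers:
        yield x * x

pipeline = squared(only_even(read_numbers(10)))
print(sum(pipeline))  # 0 + 4 + 16 + 36 + 64 = 120
```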


© Machinelearningplus. All rights reserved.


Python | yield Keyword


In this article, we will cover the yield keyword in Python. Before starting, let's look at how the yield keyword is defined.

Syntax of the Yield Keyword in Python

What does the Yield Keyword do?

The yield keyword is used to create a generator function, a type of function that is memory efficient and can be used like an iterator object.

In layman's terms, when a function contains yield, calling it does not run the body right away. Instead, it returns a generator object to the caller. To obtain the values the generator produces, you must iterate over that object. The examples below show yield in action.
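This page's syntax snippet was lost, so here is a minimal sketch. The function name fun is hypothetical. Any def containing yield is a generator function, and calling it returns a generator object:

```python
import inspect
import types

def fun():
    yield 1
    yield 2

print(inspect.isgeneratorfunction(fun))      # True
gen = fun()
print(isinstance(gen, types.GeneratorType))  # True
print(list(gen))                             # [1, 2]
```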

Difference between return and yield Python

The yield keyword in Python is similar to a return statement in that both hand values back to the caller. However, a function that contains yield returns a generator object to its caller instead of simply returning a value. The main difference is that a return statement terminates the execution of the function, whereas a yield statement only pauses it. Another difference is that code placed after a return statement is never executed, whereas code after a yield statement runs when the function resumes its execution.
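A side-by-side sketch of that difference. Both function names are hypothetical. The line after return is unreachable, while the line after yield runs as soon as the generator is resumed:

```python
def with_return():
    return 1
    print("never runs")  # unreachable: return ends the function

def with_yield():
    yield 1
    print("runs when the generator is resumed")
    yield 2

print(with_return())       # 1
print(list(with_yield()))  # prints the message, then [1, 2]
```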

Advantages of yield:

- Using yield keyword is highly memory efficient, since the execution happens only when the caller iterates over the object.

- Because the state of the local variables is saved, execution can pause and resume from the same point, thus saving time.

Disadvantages of yield:

- The flow of code can become hard to follow, because a generator function hands back values multiple times.

- Calls to generator functions must be handled properly (for example, a generator is exhausted after one pass); otherwise they can cause errors in the program.

Example 1: Generator functions and yield Keyword in Python

Generator functions look and behave just like normal functions, but with one defining characteristic: instead of returning data, they use the yield keyword. A generator's main benefit is that Python automatically provides its __iter__() and __next__() methods. Generators offer a very tidy technique for producing data that is enormous or unbounded.
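A sketch for Example 1. The name fun_generator and its yielded values are hypothetical. It checks that the generator object comes with __iter__() and __next__() without any class being written:

```python
def fun_generator():
    yield "Hello"
    yield 51
    yield "World"

obj = fun_generator()
print(hasattr(obj, "__iter__"), hasattr(obj, "__next__"))  # True True
print(iter(obj) is obj)  # True: a generator is its own iterator
for item in obj:
    print(item)          # Hello, 51, World
```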


Example 2: Generating an Infinite Sequence

Here, we generate an infinite sequence of numbers with yield. Each time the generator is resumed, it yields the current num and then increments num by 1.

Note: num += 1 is executed after yield, whereas with return no code after the return statement is executed.
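A sketch of the infinite sequence. The generator never ends on its own, so itertools.islice() is used to take a finite slice:

```python
from itertools import islice

def infinite_sequence():
    num = 0
    while True:
        yield num
        num += 1  # executed when the generator is resumed

# islice() stops after five values, so this terminates
print(list(islice(infinite_sequence(), 5)))  # [0, 1, 2, 3, 4]
```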

Example 3: Demonstrating yield working with a list

Here, we extract the even numbers from a list.
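A minimal sketch for Example 3. The function name even_numbers and the sample list are hypothetical:

```python
def even_numbers(values):
    for v in values:
        if v % 2 == 0:
            yield v

test_list = [1, 4, 5, 6, 7]
print(list(even_numbers(test_list)))  # [4, 6]
```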

Example 4: Use of yield Keyword as Boolean

One possible practical application: when handling a large amount of data and searching it for particular items, yield lets the search stop at a match instead of restarting from the beginning, which saves time. There can be many other applications of yield depending on the use case.
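This example's original code was lost, so the sketch below is an assumption about the intent: a generator that yields True for each match, used as a boolean through any(). The function name matches and its inspected bookkeeping list are hypothetical. any() stops pulling values at the first True, so the rest of the data is never examined:

```python
def matches(items, target, inspected):
    # yields True for every match; `inspected` records which items were examined
    for item in items:
        inspected.append(item)
        if item == target:
            yield True

data = ["a", "b", "geeks", "c", "geeks", "d"]
seen = []
found = any(matches(data, "geeks", seen))
print(found)  # True
print(seen)   # ['a', 'b', 'geeks']: any() stopped at the first match
```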


PEP 572 – Assignment Expressions


This is a proposal for creating a way to assign to variables within an expression using the notation NAME := expr .

As part of this change, there is also an update to dictionary comprehension evaluation order to ensure key expressions are executed before value expressions (allowing the key to be bound to a name and then re-used as part of calculating the corresponding value).

During discussion of this PEP, the operator became informally known as “the walrus operator”. The construct’s formal name is “Assignment Expressions” (as per the PEP title), but they may also be referred to as “Named Expressions” (e.g. the CPython reference implementation uses that name internally).

Naming the result of an expression is an important part of programming, allowing a descriptive name to be used in place of a longer expression, and permitting reuse. Currently, this feature is available only in statement form, making it unavailable in list comprehensions and other expression contexts.

Additionally, naming sub-parts of a large expression can assist an interactive debugger, providing useful display hooks and partial results. Without a way to capture sub-expressions inline, this would require refactoring of the original code; with assignment expressions, this merely requires the insertion of a few name := markers. Removing the need to refactor reduces the likelihood that the code be inadvertently changed as part of debugging (a common cause of Heisenbugs), and is easier to dictate to another programmer.

During the development of this PEP many people (supporters and critics both) have had a tendency to focus on toy examples on the one hand, and on overly complex examples on the other.

The danger of toy examples is twofold: they are often too abstract to make anyone go “ooh, that’s compelling”, and they are easily refuted with “I would never write it that way anyway”.

The danger of overly complex examples is that they provide a convenient strawman for critics of the proposal to shoot down (“that’s obfuscated”).

Yet there is some use for both extremely simple and extremely complex examples: they are helpful to clarify the intended semantics. Therefore, there will be some of each below.

However, in order to be compelling , examples should be rooted in real code, i.e. code that was written without any thought of this PEP, as part of a useful application, however large or small. Tim Peters has been extremely helpful by going over his own personal code repository and picking examples of code he had written that (in his view) would have been clearer if rewritten with (sparing) use of assignment expressions. His conclusion: the current proposal would have allowed a modest but clear improvement in quite a few bits of code.

Another use of real code is to observe indirectly how much value programmers place on compactness. Guido van Rossum searched through a Dropbox code base and discovered some evidence that programmers value writing fewer lines over shorter lines.

Case in point: Guido found several examples where a programmer repeated a subexpression, slowing down the program, in order to save one line of code, e.g. instead of writing:

they would write:

Another example illustrates that programmers sometimes do more work to save an extra level of indentation:

This code tries to match pattern2 even if pattern1 has a match (in which case the match on pattern2 is never used). The more efficient rewrite would have been:
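A hedged illustration of the first pattern above: repeating a subexpression to save a line, and the assignment-expression spelling (Python 3.8+) that avoids both costs. The pattern and the data string are made up for the example:

```python
import re

data = "id=42"
pattern = re.compile(r"id=(\d+)")

# One line, but the match is computed twice
group = pattern.match(data).group(1) if pattern.match(data) else None

# One match, but two lines
match = pattern.match(data)
group = match.group(1) if match else None

# Python 3.8+: one line and one match (the condition is evaluated first)
group = m.group(1) if (m := pattern.match(data)) else None
print(group)  # 42
```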

Syntax and semantics

In most contexts where arbitrary Python expressions can be used, a named expression can appear. This is of the form NAME := expr where expr is any valid Python expression other than an unparenthesized tuple, and NAME is an identifier.

The value of such a named expression is the same as the incorporated expression, with the additional side-effect that the target is assigned that value:
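A minimal sketch of that rule (Python 3.8+). The expression evaluates to the assigned value, and the name stays bound afterwards:

```python
# The named expression evaluates to 10 and binds y as a side effect
print(y := 10)  # 10
print(y)        # 10

numbers = [3, 1, 4]
if (n := len(numbers)) > 2:
    print(f"list is too long ({n} elements, expected <= 2)")
```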

There are a few places where assignment expressions are not allowed, in order to avoid ambiguities or user confusion:

This rule is included to simplify the choice for the user between an assignment statement and an assignment expression – there is no syntactic position where both are valid.

Again, this rule is included to avoid two visually similar ways of saying the same thing.

This rule is included to disallow excessively confusing code, and because parsing keyword arguments is complex enough already.

This rule is included to discourage side effects in a position whose exact semantics are already confusing to many users (cf. the common style recommendation against mutable default values), and also to echo the similar prohibition in calls (the previous bullet).

The reasoning here is similar to the two previous cases; this ungrouped assortment of symbols and operators composed of : and = is hard to read correctly.

This allows lambda to always bind less tightly than := ; having a name binding at the top level inside a lambda function is unlikely to be of value, as there is no way to make use of it. In cases where the name will be used more than once, the expression is likely to need parenthesizing anyway, so this prohibition will rarely affect code.

This shows that what looks like an assignment operator in an f-string is not always an assignment operator. The f-string parser uses : to indicate formatting options. To preserve backwards compatibility, assignment operator usage inside of f-strings must be parenthesized. As noted above, this usage of the assignment operator is not recommended.

An assignment expression does not introduce a new scope. In most cases the scope in which the target will be bound is self-explanatory: it is the current scope. If this scope contains a nonlocal or global declaration for the target, the assignment expression honors that. A lambda (being an explicit, if anonymous, function definition) counts as a scope for this purpose.

There is one special case: an assignment expression occurring in a list, set or dict comprehension or in a generator expression (below collectively referred to as “comprehensions”) binds the target in the containing scope, honoring a nonlocal or global declaration for the target in that scope, if one exists. For the purpose of this rule the containing scope of a nested comprehension is the scope that contains the outermost comprehension. A lambda counts as a containing scope.

The motivation for this special case is twofold. First, it allows us to conveniently capture a “witness” for an any() expression, or a counterexample for all() , for example:
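A sketch of the witness and counterexample idea (Python 3.8+). The sample data is made up. Because the generator expression binds comment and counterexample in the containing scope, both are still available after any() or all() stops early:

```python
lines = ["ok", "fine", "# TODO: fix", "done"]

if any((comment := line).startswith("#") for line in lines):
    print("First comment:", comment)  # First comment: # TODO: fix
else:
    print("There are no comments")

values = [4, 8, 15, 16]
if all((counterexample := v) % 2 == 0 for v in values):
    print("All even")
else:
    print("First odd value:", counterexample)  # First odd value: 15
```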

Second, it allows a compact way of updating mutable state from a comprehension, for example:
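A sketch of updating state from a comprehension, here computing running totals over sample data. total must already be bound before the comprehension reads it:

```python
values = [3, 1, 4, 1, 5]

total = 0
partial_sums = [total := total + v for v in values]
print(partial_sums)     # [3, 4, 8, 9, 14]
print("Total:", total)  # Total: 14
```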

However, an assignment expression target name cannot be the same as a for -target name appearing in any comprehension containing the assignment expression. The latter names are local to the comprehension in which they appear, so it would be contradictory for a contained use of the same name to refer to the scope containing the outermost comprehension instead.

For example, [i := i+1 for i in range(5)] is invalid: the for i part establishes that i is local to the comprehension, but the i := part insists that i is not local to the comprehension. The same reason makes these examples invalid too:

While it’s technically possible to assign consistent semantics to these cases, it’s difficult to determine whether those semantics actually make sense in the absence of real use cases. Accordingly, the reference implementation [1] will ensure that such cases raise SyntaxError , rather than executing with implementation defined behaviour.

This restriction applies even if the assignment expression is never executed:

For the comprehension body (the part before the first “for” keyword) and the filter expression (the part after “if” and before any nested “for”), this restriction applies solely to target names that are also used as iteration variables in the comprehension. Lambda expressions appearing in these positions introduce a new explicit function scope, and hence may use assignment expressions with no additional restrictions.

Due to design constraints in the reference implementation (the symbol table analyser cannot easily detect when names are re-used between the leftmost comprehension iterable expression and the rest of the comprehension), named expressions are disallowed entirely as part of comprehension iterable expressions (the part after each “in”, and before any subsequent “if” or “for” keyword):

A further exception applies when an assignment expression occurs in a comprehension whose containing scope is a class scope. If the rules above were to result in the target being assigned in that class’s scope, the assignment expression is expressly invalid. This case also raises SyntaxError :

(The reason for the latter exception is the implicit function scope created for comprehensions – there is currently no runtime mechanism for a function to refer to a variable in the containing class scope, and we do not want to add such a mechanism. If this issue ever gets resolved this special case may be removed from the specification of assignment expressions. Note that the problem already exists for using a variable defined in the class scope from a comprehension.)
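The examples for these restrictions are missing here, so this sketch compiles some representative cases and prints the SyntaxError message for each. The helper name syntax_error_message is hypothetical, and the exact message text varies between Python versions:

```python
def syntax_error_message(source):
    """Return the SyntaxError message for `source`, or None if it compiles."""
    try:
        compile(source, "<example>", "exec")
    except SyntaxError as exc:
        return exc.msg
    return None

invalid = [
    "[i := i + 1 for i in range(5)]",                    # rebinds the iteration variable
    "[False and (i := 0) for i, j in stuff]",            # invalid even though never executed
    "[i + 1 for i in (j := stuff)]",                     # inside the iterable expression
    "class Example:\n    [(j := i) for i in range(5)]",  # target would land in class scope
]
for source in invalid:
    print(syntax_error_message(source))

# Allowed: the target is not an iteration variable and binds in the enclosing scope
print(syntax_error_message("[(last := i) for i in range(5)]"))  # None
```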

See Appendix B for some examples of how the rules for targets in comprehensions translate to equivalent code.

The := operator groups more tightly than a comma in all syntactic positions where it is legal, but less tightly than all other operators, including or , and , not , and conditional expressions ( A if C else B ). As follows from section “Exceptional cases” above, it is never allowed at the same level as = . In case a different grouping is desired, parentheses should be used.

The := operator may be used directly in a positional function call argument; however it is invalid directly in a keyword argument.
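A short sketch of these grouping rules (Python 3.8+), using made-up values:

```python
a, b = 0, 5

# := binds less tightly than `or`, so z receives the value of `a or b`
if z := a or b:
    print(z)  # 5

# ...but more tightly than a comma: only the first element is assigned
t = (x := 1, 2)
print(x, t)  # 1 (1, 2)

# Allowed directly in a positional argument
print(len(items := [1, 2, 3]), items)  # 3 [1, 2, 3]

# In a keyword argument it must be parenthesized
print(dict(size=(n := 4)), n)  # {'size': 4} 4
```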

Some examples to clarify what’s technically valid or invalid:

Most of the “valid” examples above are not recommended, since human readers of Python source code who are quickly glancing at some code may miss the distinction. But simple cases are not objectionable:

This PEP recommends always putting spaces around :=, similar to PEP 8's recommendation for = when used for assignment (whereas PEP 8 disallows spaces around = used for keyword arguments).

In order to have precisely defined semantics, the proposal requires evaluation order to be well-defined. This is technically not a new requirement, as function calls may already have side effects. Python already has a rule that subexpressions are generally evaluated from left to right. However, assignment expressions make these side effects more visible, and we propose a single change to the current evaluation order:

- In a dict comprehension {X: Y for ...} , Y is currently evaluated before X . We propose to change this so that X is evaluated before Y . (In a dict display like {X: Y} this is already the case, and also in dict((X, Y) for ...) which should clearly be equivalent to the dict comprehension.)
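A sketch that records the evaluation order with two hypothetical side-effecting helpers, key() and value(). On Python 3.8 and later the key is evaluated first. The last lines show why that matters: a name bound in the key can be reused in the value:

```python
order = []

def key(i):
    order.append(f"key {i}")
    return i

def value(i):
    order.append(f"value {i}")
    return i * 10

result = {key(i): value(i) for i in range(2)}
print(result)  # {0: 0, 1: 10}
print(order)   # ['key 0', 'value 0', 'key 1', 'value 1'] on Python 3.8+

# Bind the key to a name, then reuse it in the value
lengths = {(k := s.upper()): len(k) for s in ["ab", "c"]}
print(lengths)  # {'AB': 2, 'C': 1}
```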

Most importantly, since := is an expression, it can be used in contexts where statements are illegal, including lambda functions and comprehensions.

Conversely, assignment expressions don’t support the advanced features found in assignment statements:

- Multiple targets are not directly supported: x = y = z = 0 is equivalent to (z := (y := (x := 0))).

- Single assignment targets other than a single NAME are not supported: a[i] = x and self.rest = [] have no equivalent.

- Priority around commas is different: x = 1, 2 sets x to (1, 2), whereas (x := 1, 2) sets x to 1.

- Iterable packing and unpacking (both regular and extended forms) are not supported. The equivalent for packing needs extra parentheses: for loc = x, y use (loc := (x, y)), and for info = name, phone, *rest use (info := (name, phone, *rest)). Unpacking such as px, py, pz = position or name, phone, email, *other_info = contact has no equivalent.

- Inline type annotations are not supported: p: Optional[int] = None has no equivalent; the closest is declaring p: Optional[int] as a separate statement.

- Augmented assignment is not supported: total += tax is equivalent to (total := total + tax).
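A sketch of the spelled-out equivalents from the list above, with made-up values:

```python
# Chained assignment vs. nested assignment expressions
x = y = z = 0
print((c := (b := (a := 0))))  # 0; a, b and c are all bound

# Packing needs explicit parentheses around the tuple
(loc := (1, 2))
print(loc)  # (1, 2)

# Augmented assignment has no := form; spell it out
total, tax = 100, 7
print((total := total + tax))  # 107
```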

The following changes have been made based on implementation experience and additional review after the PEP was first accepted and before Python 3.8 was released:

- for consistency with other similar exceptions, and to avoid locking in an exception name that is not necessarily going to improve clarity for end users, the originally proposed TargetScopeError subclass of SyntaxError was dropped in favour of just raising SyntaxError directly. [3]

- due to a limitation in CPython’s symbol table analysis process, the reference implementation raises SyntaxError for all uses of named expressions inside comprehension iterable expressions, rather than only raising them when the named expression target conflicts with one of the iteration variables in the comprehension. This could be revisited given sufficiently compelling examples, but the extra complexity needed to implement the more selective restriction doesn’t seem worthwhile for purely hypothetical use cases.

Examples from the Python standard library

env_base is only used on these lines, putting its assignment on the if moves it as the “header” of the block.

- Current:

      env_base = os.environ.get("PYTHONUSERBASE", None)
      if env_base:
          return env_base

- Improved:

      if env_base := os.environ.get("PYTHONUSERBASE", None):
          return env_base

Avoid nested if and remove one indentation level.

- Current:

      if self._is_special:
          ans = self._check_nans(context=context)
          if ans:
              return ans

- Improved:

      if self._is_special and (ans := self._check_nans(context=context)):
          return ans

Code looks more regular and avoids multiple nested if statements. (See Appendix A for the origin of this example.)

- Current:

      reductor = dispatch_table.get(cls)
      if reductor:
          rv = reductor(x)
      else:
          reductor = getattr(x, "__reduce_ex__", None)
          if reductor:
              rv = reductor(4)
          else:
              reductor = getattr(x, "__reduce__", None)
              if reductor:
                  rv = reductor()
              else:
                  raise Error("un(deep)copyable object of type %s" % cls)

- Improved:

      if reductor := dispatch_table.get(cls):
          rv = reductor(x)
      elif reductor := getattr(x, "__reduce_ex__", None):
          rv = reductor(4)
      elif reductor := getattr(x, "__reduce__", None):
          rv = reductor()
      else:
          raise Error("un(deep)copyable object of type %s" % cls)

tz is only used for s += tz , moving its assignment inside the if helps to show its scope.

- Current:

      s = _format_time(self._hour, self._minute,
                       self._second, self._microsecond,
                       timespec)
      tz = self._tzstr()
      if tz:
          s += tz
      return s

- Improved:

      s = _format_time(self._hour, self._minute,
                       self._second, self._microsecond,
                       timespec)
      if tz := self._tzstr():
          s += tz
      return s

Calling fp.readline() in the while condition and calling .match() on the if lines make the code more compact without making it harder to understand.

- Current:

      while True:
          line = fp.readline()
          if not line:
              break
          m = define_rx.match(line)
          if m:
              n, v = m.group(1, 2)
              try:
                  v = int(v)
              except ValueError:
                  pass
              vars[n] = v
          else:
              m = undef_rx.match(line)
              if m:
                  vars[m.group(1)] = 0

- Improved:

      while line := fp.readline():
          if m := define_rx.match(line):
              n, v = m.group(1, 2)
              try:
                  v = int(v)
              except ValueError:
                  pass
              vars[n] = v
          elif m := undef_rx.match(line):
              vars[m.group(1)] = 0

A list comprehension can map and filter efficiently by capturing the condition:

Similarly, a subexpression can be reused within the main expression, by giving it a name on first use:

Note that in both cases the variable y is bound in the containing scope (i.e. at the same level as results or stuff ).
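A sketch of both comprehension patterns. The function f and the input lists are hypothetical stand-ins. It also confirms that y remains bound in the containing scope afterwards:

```python
def f(x):
    # hypothetical transformation used only for illustration
    return x - 2

input_data = [1, 2, 3, 4, 6]

# Map and filter in one pass: f(x) is computed once per element
results = [(x, y, x / y) for x in input_data if (y := f(x)) > 0]
print(results)  # [(3, 1, 3.0), (4, 2, 2.0), (6, 4, 1.5)]

# Reuse a subexpression inside the produced value
stuff = [[y := f(x), x / y] for x in [3, 4]]
print(stuff)  # [[1, 3.0], [2, 2.0]]
print(y)      # 2: y is bound in the containing scope
```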

Assignment expressions can be used to good effect in the header of an if or while statement:

Particularly with the while loop, this can remove the need to have an infinite loop, an assignment, and a condition. It also creates a smooth parallel between a loop which simply uses a function call as its condition, and one which uses that as its condition but also uses the actual value.
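A sketch of the while-loop form. An in-memory io.BytesIO stream stands in for a file or socket. read() returns an empty, falsy bytes object at end of input, which ends the loop:

```python
import io

stream = io.BytesIO(b"abcdefghij")  # stand-in for a file or socket

# Without :=, this needs `while True:`, an assignment, and a break
chunks = []
while chunk := stream.read(4):
    chunks.append(chunk)
print(chunks)  # [b'abcd', b'efgh', b'ij']
```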

An example from the low-level UNIX world:

Rejected alternative proposals

Proposals broadly similar to this one have come up frequently on python-ideas. Below are a number of alternative syntaxes, some of them specific to comprehensions, which have been rejected in favour of the one given above.

A previous version of this PEP proposed subtle changes to the scope rules for comprehensions, to make them more usable in class scope and to unify the scope of the “outermost iterable” and the rest of the comprehension. However, this part of the proposal would have caused backwards incompatibilities, and has been withdrawn so the PEP can focus on assignment expressions.

Broadly the same semantics as the current proposal, but spelled differently.

Since EXPR as NAME already has meaning in import , except and with statements (with different semantics), this would create unnecessary confusion or require special-casing (e.g. to forbid assignment within the headers of these statements).

(Note that with EXPR as VAR does not simply assign the value of EXPR to VAR – it calls EXPR.__enter__() and assigns the result of that to VAR .)

Additional reasons to prefer := over this spelling include:

- In if f(x) as y the assignment target doesn't jump out at you; it just reads like if f x blah blah, and it is too similar visually to if f(x) and y.

- In all other situations where an as clause is allowed, the keyword that starts the line leads readers to anticipate that clause, and the grammar ties that keyword closely to it:

  - import foo as bar

  - except Exc as var

  - with ctxmgr() as var

  To the contrary, the assignment expression does not belong to the if or while that starts the line, and we intentionally allow assignment expressions in other contexts as well.

- The parallel cadence between NAME = EXPR and if NAME := EXPR reinforces the visual recognition of assignment expressions.

The EXPR -> NAME spelling is inspired by languages such as R and Haskell, and some programmable calculators. (Note that a left-facing arrow y <- f(x) is not possible in Python, as it would be interpreted as less-than and unary minus.) This syntax has a slight advantage over 'as' in that it does not conflict with with, except and import, but otherwise is equivalent. But it is entirely unrelated to Python's other use of -> (function return type annotations), and compared to := (which dates back to Algol-58) it has a much weaker tradition.

This has the advantage that leaked usage can be readily detected, removing some forms of syntactic ambiguity. However, this would be the only place in Python where a variable’s scope is encoded into its name, making refactoring harder.

Execution order is inverted (the indented body is performed first, followed by the “header”). This requires a new keyword, unless an existing keyword is repurposed (most likely with: ). See PEP 3150 for prior discussion on this subject (with the proposed keyword being given: ).

The TARGET from EXPR spelling has fewer conflicts than as does (conflicting only with the raise Exc from Exc notation), but is otherwise comparable to it. Instead of paralleling with expr as target: (which can be useful but can also be confusing), this has no parallels, but is evocative.

One of the most popular use-cases is if and while statements. Instead of a more general solution, this proposal enhances the syntax of these two statements to add a means of capturing the compared value:

This works beautifully if and ONLY if the desired condition is based on the truthiness of the captured value. It is thus effective for specific use-cases (regex matches, socket reads that return '' when done), and completely useless in more complicated cases (e.g. where the condition is f(x) < 0 and you want to capture the value of f(x) ). It also has no benefit to list comprehensions.

Advantages: No syntactic ambiguities. Disadvantages: Answers only a fraction of possible use-cases, even in if / while statements.

Another common use-case is comprehensions (list/set/dict, and genexps). As above, proposals have been made for comprehension-specific solutions.

This brings the subexpression to a location in between the ‘for’ loop and the expression. It introduces an additional language keyword, which creates conflicts. Of the three, where reads the most cleanly, but also has the greatest potential for conflict (e.g. SQLAlchemy and numpy have where methods, as does tkinter.dnd.Icon in the standard library).

As above, but reusing the with keyword. Doesn’t read too badly, and needs no additional language keyword. Is restricted to comprehensions, though, and cannot as easily be transformed into “longhand” for-loop syntax. Has the C problem that an equals sign in an expression can now create a name binding, rather than performing a comparison. Would raise the question of why “with NAME = EXPR:” cannot be used as a statement on its own.

As per option 2, but using as rather than an equals sign. Aligns syntactically with other uses of as for name binding, but a simple transformation to for-loop longhand would create drastically different semantics; the meaning of with inside a comprehension would be completely different from the meaning as a stand-alone statement, while retaining identical syntax.

Regardless of the spelling chosen, this introduces a stark difference between comprehensions and the equivalent unrolled long-hand form of the loop. It is no longer possible to unwrap the loop into statement form without reworking any name bindings. The only keyword that can be repurposed to this task is with , thus giving it sneakily different semantics in a comprehension than in a statement; alternatively, a new keyword is needed, with all the costs therein.

There are two logical precedences for the := operator. Either it should bind as loosely as possible, as does statement-assignment; or it should bind more tightly than comparison operators. Placing its precedence between the comparison and arithmetic operators (to be precise: just lower than bitwise OR) allows most uses inside while and if conditions to be spelled without parentheses, as it is most likely that you wish to capture the value of something, then perform a comparison on it:

Once find() returns -1, the loop terminates. If := binds as loosely as = does, this would capture the result of the comparison (generally either True or False ), which is less useful.

While this behaviour would be convenient in many situations, it is also harder to explain than “the := operator behaves just like the assignment statement”, and as such, the precedence for := has been made as close as possible to that of = (with the exception that it binds tighter than comma).
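A sketch of what the adopted precedence means in practice, with a made-up string. Unparenthesized, := captures the whole comparison. Parentheses capture the index, which is then compared:

```python
text = "a-b-c"

# := binds more loosely than >=, so the comparison result is captured
if pos := text.find("-") >= 0:
    print(pos)  # True, not the index

# Parenthesize to capture the index, then compare it
positions = []
pos = -1
while (pos := text.find("-", pos + 1)) >= 0:
    positions.append(pos)
print(positions)  # [1, 3]
```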

Some critics have claimed that the assignment expressions should allow unparenthesized tuples on the right, so that these two would be equivalent:

(With the current version of the proposal, the latter would be equivalent to ((point := x), y).)

However, adopting this stance would logically lead to the conclusion that, when used in a function call, assignment expressions also bind less tightly than a comma, so we'd have the following confusing equivalence:

The less confusing option is to make := bind more tightly than comma.

It’s been proposed to just always require parentheses around an assignment expression. This would resolve many ambiguities, and indeed parentheses will frequently be needed to extract the desired subexpression. But in the following cases the extra parentheses feel redundant:

Frequently Raised Objections

C and its derivatives define the = operator as an expression, rather than a statement as is Python’s way. This allows assignments in more contexts, including contexts where comparisons are more common. The syntactic similarity between if (x == y) and if (x = y) belies their drastically different semantics. Thus this proposal uses := to clarify the distinction.

The two forms have different flexibilities. The := operator can be used inside a larger expression; the = statement can be augmented to += and its friends, can be chained, and can assign to attributes and subscripts.
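The differences described in the two paragraphs above can be checked mechanically by asking compile() whether a snippet is valid syntax. The helper name compiles() and the snippets are illustrative. The expected results in the comments reflect Python 3.8 and later:

```python
def compiles(source):
    """Return True if source is syntactically valid module code."""
    try:
        compile(source, "<example>", "exec")
    except SyntaxError:
        return False
    return True


cases = {
    "if x = 0: pass": False,       # = is a statement, unlike C's operator
    "if (x := 0): pass": True,     # := works inside a larger expression
    "x := 0": False,               # unparenthesized at statement level
    "x = y = 0": True,             # = can be chained
    "x = 0\nx += 1": True,         # = has augmented forms
    "(x.attr := 0)": False,        # := cannot target attributes
    "(x[0] := 0)": False,          # ...or subscripts
    "x.attr = 0; x[0] = 0": True,  # = can
}

for source, expected in cases.items():
    print(f"{source!r:26} valid={compiles(source)} expected={expected}")
```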

Previous revisions of this proposal involved sublocal scope (restricted to a single statement), preventing name leakage and namespace pollution. While a definite advantage in a number of situations, this increases complexity in many others, and the costs are not justified by the benefits. In the interests of language simplicity, the name bindings created here are exactly equivalent to any other name bindings, including that usage at class or module scope will create externally-visible names. This is no different from for loops or other constructs, and can be solved the same way: del the name once it is no longer needed, or prefix it with an underscore.
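A small sketch of the scoping rule (names are illustrative). A target bound inside a comprehension ends up in the enclosing function's scope, just as a for loop variable would. It stays visible after the comprehension and can be removed with del once it is no longer needed:

```python
def running_totals(values):
    total = 0
    # Each step rebinds total in running_totals' own scope.
    totals = [total := total + v for v in values]
    final = total  # still visible after the comprehension
    del total      # drop the name once it is no longer needed
    return totals, final


print(running_totals([1, 2, 3, 4]))  # ([1, 3, 6, 10], 10)
```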

(The author wishes to thank Guido van Rossum and Christoph Groth for their suggestions to move the proposal in this direction. [2])

As expression assignments can sometimes be used equivalently to statement assignments, the question of which should be preferred will arise. For the benefit of style guides such as PEP 8 , two recommendations are suggested.

- If either assignment statements or assignment expressions can be used, prefer statements; they are a clear declaration of intent.

- If using assignment expressions would lead to ambiguity about execution order, restructure it to use statements instead.

The authors wish to thank Alyssa Coghlan and Steven D’Aprano for their considerable contributions to this proposal, and members of the core-mentorship mailing list for assistance with implementation.

Appendix A: Tim Peters’s findings

Here’s a brief essay Tim Peters wrote on the topic.

I dislike “busy” lines of code, and also dislike putting conceptually unrelated logic on a single line. So, for example, rather than chaining several unrelated initializations into one assignment statement, I prefer to give each its own line. Given that, I suspected I’d find few places I’d want to use assignment expressions. I didn’t even consider them for lines already stretching halfway across the screen. In other cases, “unrelated” ruled:

is a vast improvement over the briefer:

The original two statements are doing entirely different conceptual things, and slamming them together is conceptually insane.
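Here is a sketch of this kind of trade-off, using a generator that walks a chain of (value, next) pairs. The chain layout and function names are assumptions made for illustration, not code from the essay. Both generators yield the same values. The first keeps "advance" and "yield" as separate statements, and the second slams them into one line:

```python
def walk_sparse(mylast):
    # Advance to the next link, then yield its value: two separate steps.
    while mylast[1] is not None:
        mylast = mylast[1]
        yield mylast[0]


def walk_dense(mylast):
    # Same logic, with the rebinding buried inside the yield expression.
    while mylast[1] is not None:
        yield (mylast := mylast[1])[0]


# A sentinel head link followed by three (value, next) links.
chain = (None, ("a", ("b", ("c", None))))
print(list(walk_sparse(chain)))  # ['a', 'b', 'c']
print(list(walk_dense(chain)))   # ['a', 'b', 'c']
```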

In other cases, combining related logic made it harder to understand, such as rewriting:

as the briefer:

The while test there is too subtle, crucially relying on strict left-to-right evaluation in a non-short-circuiting or method-chaining context. My brain isn’t wired that way.
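To make the subtlety concrete, here is a runnable version of that loop shape. The series being summed (the Taylor series for e**x) and the function names are stand-ins chosen for illustration. The dense loop test only works because the left operand, the old total, is evaluated before the assignment expression rebinds total:

```python
import math


def exp_series_loop_and_a_half(x):
    total, term, i = 0.0, 1.0, 1
    while True:
        old = total
        total += term
        if old == total:  # adding the term no longer changes the float
            return total
        term *= x / i
        i += 1


def exp_series_dense(x):
    total, term, i = 0.0, 1.0, 1
    # Left-to-right: the old total is read before := rebinds it.
    while total != (total := total + term):
        term *= x / i
        i += 1
    return total


print(exp_series_loop_and_a_half(1.0), exp_series_dense(1.0), math.e)
```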

But cases like that were rare. Name binding is very frequent, and “sparse is better than dense” does not mean “almost empty is better than sparse”. For example, I have many functions that return None or 0 to communicate “I have nothing useful to return in this case, but since that’s expected often I’m not going to annoy you with an exception”. This is essentially the same as regular expression search functions returning None when there is no match. So there was lots of code of the form:

I find that clearer, and certainly a bit less typing and pattern-matching reading, as:
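A minimal sketch of both spellings. It uses re.search() as a stand-in for a function that returns None when it has nothing useful to report, and the function names and regular expression are illustrative:

```python
import re


def first_number_two_steps(text):
    match = re.search(r"\d+", text)
    if match:
        return int(match.group())
    return None


def first_number(text):
    # Bind and test in one line; match is still available in the body.
    if match := re.search(r"\d+", text):
        return int(match.group())
    return None


print(first_number("order 66 shipped"))  # 66
print(first_number("no digits here"))    # None
```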

It’s also nice to trade away a small amount of horizontal whitespace to get another line of surrounding code on screen. I didn’t give much weight to this at first, but it was so very frequent it added up, and I soon enough became annoyed that I couldn’t actually run the briefer code. That surprised me!

There are other cases where assignment expressions really shine. Rather than pick another from my code, Kirill Balunov gave a lovely example from the standard library’s copy() function in copy.py:

The ever-increasing indentation is semantically misleading: the logic is conceptually flat, “the first test that succeeds wins”:

Using easy assignment expressions allows the visual structure of the code to emphasize the conceptual flatness of the logic; ever-increasing indentation obscured it.
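A simplified sketch of this "first test that succeeds wins" logic, modelled on the reductor lookup in copy.copy(). The function name, the explicit dispatch_table argument, and raising TypeError are simplifications for illustration, not the standard library's exact code. With assignment expressions each fallback becomes an elif at the same indentation level:

```python
def find_reduction(x, dispatch_table):
    cls = type(x)
    # The first lookup that returns something truthy wins.
    if reductor := dispatch_table.get(cls):
        return reductor(x)
    elif reductor := getattr(x, "__reduce_ex__", None):
        return reductor(4)
    elif reductor := getattr(x, "__reduce__", None):
        return reductor()
    else:
        raise TypeError(f"un(deep)copyable object of type {cls}")


class Point:
    def __init__(self, x, y):
        self.x, self.y = x, y


table = {Point: lambda p: ("custom", p.x, p.y)}
print(find_reduction(Point(1, 2), table))  # ('custom', 1, 2)
print(find_reduction(Point(1, 2), {}))     # falls through to __reduce_ex__(4)
```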

A smaller example from my code delighted me, both by allowing me to put inherently related logic on a single line and by allowing me to remove an annoying “artificial” indentation level:

That if is about as long as I want my lines to get, but remains easy to follow.
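A sketch of that kind of rewrite follows. It assumes a factor-search step that compares two values modulo n, and the function and variable names are illustrative. The nested version needs an extra indentation level just to hold diff. The flat version keeps the related tests on one line, and `and` still short-circuits, so gcd() only runs when diff is nonzero:

```python
from math import gcd


def factor_nested(x, x_base, n):
    diff = x - x_base
    if diff:
        g = gcd(diff, n)
        if g > 1:
            return g
    return None


def factor_flat(x, x_base, n):
    if (diff := x - x_base) and (g := gcd(diff, n)) > 1:
        return g
    return None


print(factor_flat(10, 4, 9))  # 3, since gcd(6, 9) == 3
print(factor_flat(5, 5, 9))   # None, since diff is 0
```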

So, in all, in most lines binding a name, I wouldn’t use assignment expressions, but because that construct is so very frequent, that leaves many places I would. In most of the latter, I found a small win that adds up due to how often it occurs, and in the rest I found a moderate to major win. I’d certainly use it more often than ternary if, but significantly less often than augmented assignment.

I have another example that quite impressed me at the time.

Where all variables are positive integers, and a is at least as large as the n’th root of x, this algorithm returns the floor of the n’th root of x, roughly doubling the number of accurate bits per iteration:

It’s not obvious why that works, but it’s no more obvious in the “loop and a half” form. It’s hard to prove correctness without building on the right insight (the “arithmetic mean - geometric mean inequality”), and knowing some non-trivial things about how nested floor functions behave. That is, the challenges are in the math, not really in the coding.

If you do know all that, then the assignment-expression form is easily read as “while the current guess is too large, get a smaller guess”, where the “too large?” test and the new guess share an expensive sub-expression.

To my eyes, the original form is harder to understand:
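Both forms can be written out as a sketch. The function names are hypothetical, and the code assumes, as the text does, that x, n and a are positive integers with a at least as large as the n’th root of x. In the assignment-expression form, the expensive x // a ** (n - 1) is shared between the "too large?" test and the new guess:

```python
def iroot_loop_and_a_half(x, n, a):
    while True:
        d = x // a ** (n - 1)
        if a <= d:
            break
        a = ((n - 1) * a + d) // n  # integer Newton step toward the root
    return a


def iroot(x, n, a):
    # "While the current guess is too large, get a smaller guess."
    while a > (d := x // a ** (n - 1)):
        a = ((n - 1) * a + d) // n
    return a


# Starting from a = x is safe for x >= 1, because x >= x ** (1 / n).
print(iroot(1000, 3, 1000))  # 10
```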

This appendix attempts to clarify (though not specify) the rules when a target occurs in a comprehension or in a generator expression. For a number of illustrative examples we show the original code, containing a comprehension, and the translation, where the comprehension has been replaced by an equivalent generator function plus some scaffolding.

Since [x for ...] is equivalent to list(x for ...) these examples all use list comprehensions without loss of generality. And since these examples are meant to clarify edge cases of the rules, they aren’t trying to look like real code.

Note: comprehensions are already implemented via synthesizing nested generator functions like those in this appendix. The new part is adding appropriate declarations to establish the intended scope of assignment expression targets (the same scope they resolve to as if the assignment were performed in the block containing the outermost comprehension). For type inference purposes, these illustrative expansions do not imply that assignment expression targets are always Optional (but they do indicate the target binding scope).

Let’s start with a reminder of what code is generated for a generator expression without assignment expression.

- Original code (EXPR usually references VAR):

```python
def f():
    a = [EXPR for VAR in ITERABLE]
```

- Translation (let’s not worry about name conflicts):

```python
def f():
    def genexpr(iterator):
        for VAR in iterator:
            yield EXPR
    a = list(genexpr(iter(ITERABLE)))
```

Let’s add a simple assignment expression.

- Original code:

```python
def f():
    a = [TARGET := EXPR for VAR in ITERABLE]
```

- Translation:

```python
def f():
    if False:
        TARGET = None  # Dead code to ensure TARGET is a local variable
    def genexpr(iterator):
        nonlocal TARGET
        for VAR in iterator:
            TARGET = EXPR
            yield TARGET
    a = list(genexpr(iter(ITERABLE)))
```
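The translation can be checked by running it with the placeholders filled in. Here EXPR, VAR, TARGET and ITERABLE become v * 10, v, last and a list argument, which are assumptions for the sake of the example. Both functions bind last in their own local scope and return the same result:

```python
def with_comprehension(values):
    a = [last := v * 10 for v in values]
    return a, last


def with_translation(values):
    if False:
        last = None  # Dead code to ensure last is a local variable
    def genexpr(iterator):
        nonlocal last
        for v in iterator:
            last = v * 10
            yield last
    a = list(genexpr(iter(values)))
    return a, last


print(with_comprehension([1, 2, 3]))  # ([10, 20, 30], 30)
print(with_translation([1, 2, 3]))    # ([10, 20, 30], 30)
```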

Let’s add a global TARGET declaration in f().

- Original code:

```python
def f():
    global TARGET
    a = [TARGET := EXPR for VAR in ITERABLE]
```

- Translation:

```python
def f():
    global TARGET
    def genexpr(iterator):
        global TARGET
        for VAR in iterator:
            TARGET = EXPR
            yield TARGET
    a = list(genexpr(iter(ITERABLE)))
```

Or instead let’s add a nonlocal TARGET declaration in f().

- Original code:

```python
def g():
    TARGET = ...
    def f():
        nonlocal TARGET
        a = [TARGET := EXPR for VAR in ITERABLE]
```

- Translation:

```python
def g():
    TARGET = ...
    def f():
        nonlocal TARGET
        def genexpr(iterator):
            nonlocal TARGET
            for VAR in iterator:
                TARGET = EXPR
                yield TARGET
        a = list(genexpr(iter(ITERABLE)))
```
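Here is a runnable sketch of both declarations, with illustrative names. Under global, the comprehension rebinds the module-level name. Under nonlocal, it rebinds the variable belonging to the enclosing g():

```python
counter = "unset"


def rebind_global():
    global counter
    return [counter := n for n in range(3)]


def g():
    latest = "unset"
    def f():
        nonlocal latest
        return [latest := n * n for n in range(4)]
    squares = f()
    return squares, latest


print(rebind_global(), counter)  # [0, 1, 2] 2
print(g())                       # ([0, 1, 4, 9], 9)
```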

Finally, let’s nest two comprehensions.

- Original code:

```python
def f():
    a = [[TARGET := i for i in range(3)] for j in range(2)]
    # I.e., a = [[0, 1, 2], [0, 1, 2]]
    print(TARGET)  # prints 2
```

- Translation:

```python
def f():
    if False:
        TARGET = None
    def outer_genexpr(outer_iterator):
        nonlocal TARGET
        def inner_generator(inner_iterator):
            nonlocal TARGET
            for i in inner_iterator:
                TARGET = i
                yield i
        for j in outer_iterator:
            yield list(inner_generator(range(3)))
    a = list(outer_genexpr(range(2)))
    print(TARGET)
```
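The nested case can also be run side by side. In this version, TARGET is renamed target and both functions return their values instead of printing them, which is an illustrative change. Even with two levels of comprehension, the target lands in the scope of the function that contains the outermost comprehension:

```python
def nested_comprehension():
    a = [[target := i for i in range(3)] for j in range(2)]
    return a, target


def nested_translation():
    if False:
        target = None  # Dead code to ensure target is local to this function
    def outer_genexpr(outer_iterator):
        nonlocal target
        def inner_generator(inner_iterator):
            nonlocal target
            for i in inner_iterator:
                target = i
                yield i
        for j in outer_iterator:
            yield list(inner_generator(range(3)))
    a = list(outer_genexpr(range(2)))
    return a, target


print(nested_comprehension())  # ([[0, 1, 2], [0, 1, 2]], 2)
print(nested_translation())    # ([[0, 1, 2], [0, 1, 2]], 2)
```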

Because it has been a point of confusion, note that nothing about Python’s scoping semantics is changed. Function-local scopes continue to be resolved at compile time, and to have indefinite temporal extent at run time (“full closures”). Example:
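The following sketch illustrates what “full closures” means in practice; the names are illustrative. The local variable of make_counter() outlives the call that created it, because the inner function keeps referring to it. Each call to make_counter() gets its own independent scope:

```python
def make_counter():
    count = 0
    def increment():
        nonlocal count
        count += 1
        return count
    return increment


tick = make_counter()
print(tick(), tick(), tick())  # 1 2 3
other = make_counter()
print(other())                 # 1: a fresh scope, independent of tick's
```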

This document has been placed in the public domain.

Source: https://github.com/python/peps/blob/main/peps/pep-0572.rst

Last modified: 2023-10-11 12:05:51 GMT


ast — Abstract Syntax Trees

Source code: Lib/ast.py

The ast module helps Python applications to process trees of the Python abstract syntax grammar. The abstract syntax itself might change with each Python release; this module helps to find out programmatically what the current grammar looks like.

An abstract syntax tree can be generated by passing ast.PyCF_ONLY_AST as a flag to the compile() built-in function, or using the parse() helper provided in this module. The result will be a tree of objects whose classes all inherit from ast.AST. An abstract syntax tree can be compiled into a Python code object using the built-in compile() function.
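A short sketch of the round trip just described: build the same tree with ast.parse() and with compile(..., ast.PyCF_ONLY_AST), then compile the tree into a code object and execute it. The source string is an arbitrary example:

```python
import ast

source = "answer = 6 * 7"

# Two ways to obtain an AST for the same source.
tree = ast.parse(source)
same_tree = compile(source, "<string>", "exec", ast.PyCF_ONLY_AST)

print(isinstance(tree, ast.AST), type(tree).__name__)  # True Module
print(ast.dump(tree) == ast.dump(same_tree))           # True

# The tree can then be compiled into a code object and executed.
code = compile(tree, "<string>", "exec")
namespace = {}
exec(code, namespace)
print(namespace["answer"])  # 42
```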

Abstract Grammar

The abstract grammar is currently defined as follows:

Node classes

ast.AST is the base of all AST node classes. The actual node classes are derived from the Parser/Python.asdl file, which is reproduced below. They are defined in the _ast C module and re-exported in ast.

There is one class defined for each left-hand side symbol in the abstract grammar (for example, ast.stmt or ast.expr). In addition, there is one class defined for each constructor on the right-hand side; these classes inherit from the classes for the left-hand side trees. For example, ast.BinOp inherits from ast.expr. For production rules with alternatives (aka “sums”), the left-hand side class is abstract: only instances of specific constructor nodes are ever created.

Each concrete class has an attribute _fields which gives the names of all child nodes.

Each instance of a concrete class has one attribute for each child node, of the type defined in the grammar. For example, ast.BinOp instances have an attribute left of type ast.expr.

If these attributes are marked as optional in the grammar (using a question mark), the value might be None. If the attributes can have zero-or-more values (marked with an asterisk), the values are represented as Python lists. All possible attributes must be present and have valid values when compiling an AST with compile().
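A quick way to see these rules is to parse a one-line assignment and inspect the nodes. The source string is an arbitrary example. Assign is a subclass of ast.stmt, BinOp is a subclass of ast.expr, the zero-or-more targets field is a list, and the optional type_comment field is None when absent:

```python
import ast

assign = ast.parse("total = price * qty").body[0]
binop = assign.value

print(type(assign).__name__, isinstance(assign, ast.stmt))  # Assign True
print(type(binop).__name__, isinstance(binop, ast.expr))    # BinOp True
print(ast.BinOp._fields)           # ('left', 'op', 'right')
print(type(binop.left).__name__)   # Name, a child node of type ast.expr
print(len(assign.targets))         # 1: a zero-or-more field stored as a list
print(assign.type_comment)         # None: an optional field that is absent
```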

Instances of ast.expr and ast.stmt subclasses have lineno, col_offset, end_lineno, and end_col_offset attributes. The lineno and end_lineno are the first and last line numbers of the source text span (1-indexed, so the first line is line 1), and the col_offset and end_col_offset are the corresponding UTF-8 byte offsets of the first and last tokens that generated the node. The UTF-8 offset is recorded because the parser uses UTF-8 internally.

Note that the end positions are not required by the compiler and are therefore optional. The end offset points just past the last symbol; for example, one can get the source segment of a one-line expression node using source_line[node.col_offset : node.end_col_offset].
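A minimal sketch of that slicing on an ASCII line, where byte offsets and character offsets coincide. For source that may contain non-ASCII text, ast.get_source_segment() (Python 3.8+) performs the byte-to-character conversion for you:

```python
import ast

source_line = "result = width + height * 2"
node = ast.parse(source_line).body[0].value  # the BinOp on the right-hand side

print(node.lineno, node.end_lineno)                      # 1 1
print(source_line[node.col_offset:node.end_col_offset])  # width + height * 2
print(ast.get_source_segment(source_line, node))         # width + height * 2
```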

The constructor of a class ast.T parses its arguments as follows:

If there are positional arguments, there must be as many as there are items in T._fields; they will be assigned as attributes of these names.

If there are keyword arguments, they will set the attributes of the same names to the given values.

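For example, to create and populate an ast.UnaryOp node, you could create an empty node and set its op and operand attributes one at a time, or use the more compact form that passes the child nodes straight to the constructor.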
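The following sketch builds the same ast.UnaryOp node for -5 with keyword arguments and with positional arguments, following ast.UnaryOp._fields. Rather than setting lineno and col_offset by hand, it calls ast.fix_missing_locations() to fill them in. It then compiles each node inside an ast.Expression and evaluates it:

```python
import ast

# Keyword arguments set the attributes of the same names.
by_keyword = ast.UnaryOp(op=ast.USub(), operand=ast.Constant(value=5))

# Positional arguments follow ast.UnaryOp._fields, i.e. ('op', 'operand').
compact = ast.UnaryOp(ast.USub(), ast.Constant(value=5))

results = []
for node in (by_keyword, compact):
    # compile() needs location attributes; fix_missing_locations adds them.
    tree = ast.fix_missing_locations(ast.Expression(body=node))
    results.append(eval(compile(tree, "<ast>", "eval")))

print(ast.UnaryOp._fields)  # ('op', 'operand')
print(results)              # [-5, -5]
```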

Changed in version 3.8: Class ast.Constant is now used for all constants.

Changed in version 3.9: Simple indices are represented by their value, extended slices are represented as tuples.