Null hypothesis

Null hypothesis n., plural: null hypotheses [nʌl haɪˈpɒθɪsɪs] Definition: a hypothesis that is valid or presumed true until invalidated by a statistical test


Null Hypothesis Definition

The null hypothesis is defined as “the commonly accepted fact (such as the sky is blue) that researchers aim to reject or nullify”.

More formally, we can define a null hypothesis as “a statistical theory suggesting that no statistical relationship exists between given observed variables”.

In biology, the null hypothesis is used to nullify or reject a common belief. The researcher carries out research aimed at rejecting the commonly accepted belief.

What Is a Null Hypothesis?

A hypothesis is a theory or an assumption that is based on limited evidence. It requires further experiments and testing for confirmation, and that testing can show it to be either true or false (Blackwelder, 1982).

For example, Susie assumes that mineral water helps plants grow and stay nourished better than distilled water does. To test this hypothesis, she runs an experiment for almost a month, watering some plants with mineral water and others with distilled water.

A hypothesis stating that there is no statistically significant relationship between the two variables is called a null hypothesis. The investigator tries to disprove it. In the plant example above, the null hypothesis is:

There is no statistical relationship between the type of water given to plants and their growth and nourishment.

Usually, an investigator tries to prove the null hypothesis wrong and to demonstrate a relationship between the two variables.

The opposite of the null hypothesis is known as the alternate hypothesis. In the plant example, the alternate hypothesis is:

There is a statistical relationship between the type of water given to plants and their growth and nourishment.

The example below shows the difference between null and alternative hypotheses:

Alternate Hypothesis: The world is round.

Null Hypothesis: The world is not round.

Copernicus and many other scientists tried to prove the null hypothesis wrong. Through their experiments and testing, they convinced people that the alternate hypothesis is correct. Had they not shown the null hypothesis to be wrong experimentally, people would not have believed them and would never have considered the alternative hypothesis true.

The alternative and null hypotheses for Susie’s assumption are:

- Null Hypothesis: If one plant is watered with distilled water and the other with mineral water, then there is no difference in the growth and nourishment of these two plants.

- Alternative Hypothesis: If one plant is watered with distilled water and the other with mineral water, then the plant with mineral water shows better growth and nourishment.

The null hypothesis suggests that there is no statistically significant relationship. The relationship can be either within a single set of variables or between two sets of variables.

Most people consider the null hypothesis true and correct. Scientists perform experiments and do a variety of research so that they can prove the null hypothesis wrong or nullify it. For this purpose, they design an alternate hypothesis that they think is correct. The null hypothesis symbol is H0 (read as H null or H zero).

Why is it named the “Null”?

The name “null” is given to this hypothesis to make clear that scientists are working to prove it false, i.e., to nullify it. The name sometimes confuses readers, who may think the statement is empty or blank, but it is not. It would be more appropriate to call it a nullifiable hypothesis instead of the null hypothesis.

Why do we need to assess it? Why not just verify an alternate one?

Science relies on the scientific method, a series of steps that scientists follow so that a hypothesis can be shown to be false or true. They do this to check whether the new hypothesis has any limitation or inadequacy. Experiments are designed with both the alternative and the null hypothesis in mind, which makes the research sound. Leaving the null hypothesis out of a study has a negative impact on the research: it suggests that you are not taking your research seriously and simply want to impose your results as correct.

Development of the Null

In statistics, the first step is to formulate the alternate and null hypotheses from the given problem. Splitting the problem into small steps makes the path to the solution easier.

How to write a null hypothesis?

Writing a null hypothesis consists of two steps:

- First, start by asking a question.

- Second, restate the question in a form that assumes there is no relationship among the variables.

In other words, assume that the treatment does not have any effect.

The usual recovery duration after knee surgery is considered to be about 8 weeks.

A researcher thinks that the recovery period may be longer if patients go to a physiotherapist for rehabilitation twice per week instead of three times per week, i.e., the recovery duration is shorter if the patient attends rehabilitation three times per week instead of two.

Step 1: Identify the hypothesis in the problem. The hypothesis can be expressed in words or as a mathematical statement. In the above example the hypothesis is:

“The expected recovery period in knee rehabilitation is more than 8 weeks”

Step 2: Make a mathematical statement from the hypothesis. The average is represented by μ, so the hypothesis can be written as:

H1: μ > 8

In the above equation, the hypothesis is denoted by H1, the average is denoted by μ, and > indicates that the average is greater than eight.

Step 3: State what happens if the hypothesis does not hold, i.e., the rehabilitation period does not exceed 8 weeks.

The only remaining possibility is that the recovery takes less than or equal to 8 weeks. This is the null hypothesis:

H0: μ ≤ 8

In the above equation, the null hypothesis is denoted by H0, the average is denoted by μ, and ≤ indicates that the average is less than or equal to eight.

What will happen if the scientist does not have any knowledge about the outcome?

Problem: An investigator studies the post-operative effect of radical exercise on patients who have had knee surgery. The exercise may either improve the recovery or make it worse. The usual recovery time is 8 weeks.

Step 1: Make a null hypothesis, i.e., the exercise has no effect and the recovery time remains about 8 weeks.

H0: μ = 8

In the above equation, the null hypothesis is denoted by H0, the average is denoted by μ, and the equal sign (=) shows that the average is equal to eight.

Step 2: Make the alternate hypothesis, which is the reverse of the null hypothesis. In particular, what will happen if the treatment (exercise) has an effect?

H1: μ ≠ 8

In the above equation, the alternate hypothesis is denoted by H1, the average is denoted by μ, and the not-equal sign (≠) indicates that the average is not equal to eight.
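The two knee-recovery setups above (H0: μ ≤ 8 against H1: μ > 8, and H0: μ = 8 against H1: μ ≠ 8) can be illustrated with a minimal sketch of a one-sample z-test. The recovery times, the helper name `z_test`, and the assumption that the population standard deviation is known (1 week) are all invented for illustration and do not come from the article.

```python
from math import sqrt
from statistics import NormalDist, mean


def z_test(sample, mu0, sigma, alternative):
    """Return (z, p_value) for a one-sample z-test against the value mu0.

    alternative="greater"   tests H1: mu > mu0  (H0: mu <= mu0)
    alternative="two-sided" tests H1: mu != mu0 (H0: mu = mu0)
    sigma is the population standard deviation, assumed known here.
    """
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / sqrt(n))
    std_normal = NormalDist()  # standard normal: mean 0, sd 1
    if alternative == "greater":
        p_value = 1.0 - std_normal.cdf(z)
    elif alternative == "two-sided":
        p_value = 2.0 * (1.0 - std_normal.cdf(abs(z)))
    else:
        raise ValueError("alternative must be 'greater' or 'two-sided'")
    return z, p_value


# Hypothetical recovery times in weeks (made up for this sketch).
recovery_weeks = [8.5, 9.0, 7.5, 9.5, 8.0, 10.0, 9.0, 8.5, 9.5, 8.5]

z, p_one_sided = z_test(recovery_weeks, mu0=8, sigma=1.0, alternative="greater")
_, p_two_sided = z_test(recovery_weeks, mu0=8, sigma=1.0, alternative="two-sided")
print(f"z = {z:.2f}, one-sided p = {p_one_sided:.4f}, two-sided p = {p_two_sided:.4f}")
```

For a positive z, the two-sided P-value is twice the one-sided one, which is why the choice between H1: μ > 8 and H1: μ ≠ 8 should be made before looking at the data.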

Significance Tests

To get a reasonable interpretation of statistics (data), a significance test is performed. The null hypothesis is not itself data. It is a statement that contains numerical figures about the population. These figures can take different forms, such as means or proportions, differences of means or proportions, or an odds ratio.

The following table will explain the symbols:

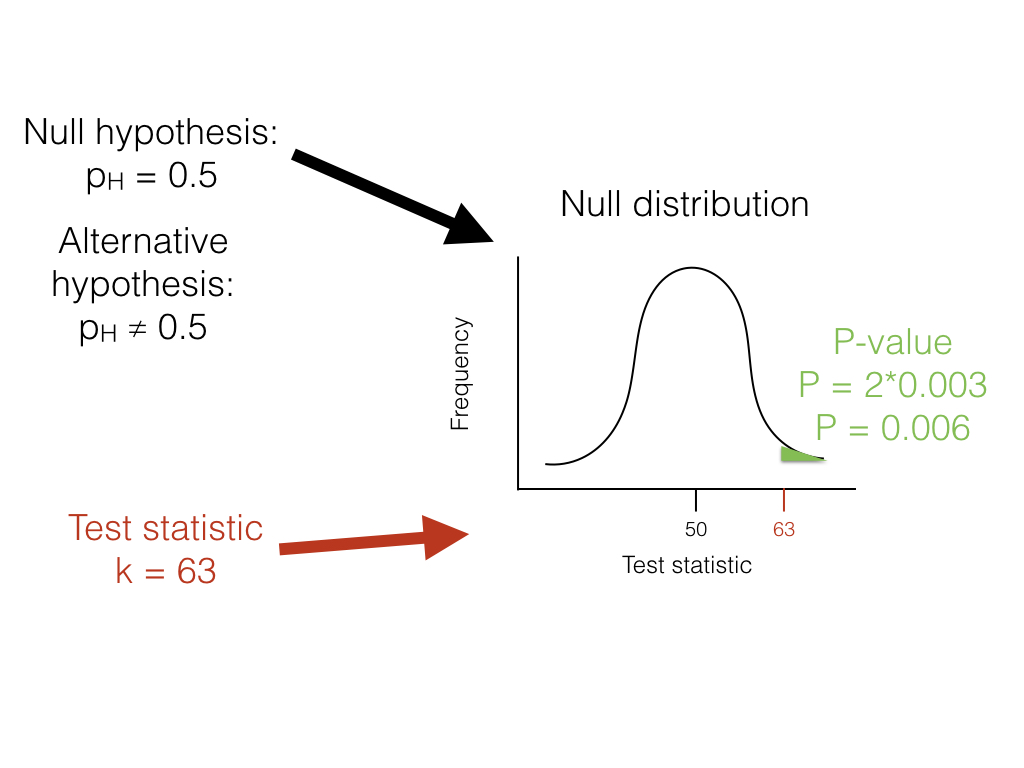

The P-value is the main statistical result of the significance test of the null hypothesis.

- P-value = Pr(data or data more extreme | H0 true)

- | = “given”

- Pr = probability

- H0 = the null hypothesis

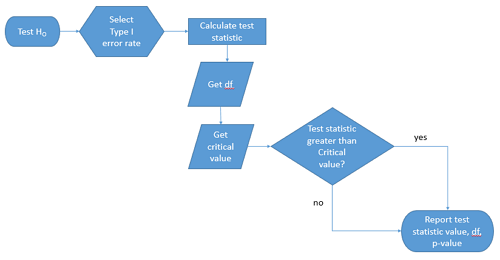

The first stage of Null Hypothesis Significance Testing (NHST) is to form an alternative and a null hypothesis. Together they summarize the research question.

Null Hypothesis = no effect of treatment, no difference, no association

Alternative Hypothesis = effective treatment, difference, association

When to reject the null hypothesis?

Researchers reject the null hypothesis if the experiment provides sufficient evidence against it. Until then, they treat the null hypothesis as true. Meanwhile, the researchers try to strengthen the alternate hypothesis. As an example, the binomial test is performed on a sample in the following series of steps (Frick, 1995).

Step 1: Read the research question carefully and formulate a null hypothesis. Verify that the sample supports a binomial proportion. Find the value of the binomial parameter that would hold if there is no difference.

Show the null hypothesis as:

H0: p = the value of p if H0 is true

Calculate the sample proportion to see how much the observed data differ from the value proposed by the null hypothesis.

Step 2: Choose the test statistic: the binomial test under the null hypothesis. The test should be based on exact probabilities. Also write down the probability mass function (pmf) that applies when the null hypothesis is true.

When H0 is true, X ~ b(n, p)

n = size of the sample

p = assumed value if H0 is true

Step 3: Find the P-value, the probability of obtaining data at least as extreme as those observed if H0 is true. Here X is the number of successes under H0 and x is the observed number of successes.

For an increase: P-value = Pr(X ≥ x)

For a decrease: P-value = Pr(X ≤ x)

Step 4: Report the findings in a detailed, descriptive way, including:

- Sample proportion

- The direction of difference (either increases or decreases)
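The binomial-test steps above can be sketched in a few lines of Python using exact probabilities. The function names `binom_pmf` and `binomial_p_value` and the counts (9 successes in 50 trials, tested against H0: p = 0.10) are hypothetical and chosen only for illustration.

```python
from math import comb


def binom_pmf(k, n, p):
    """Pr(X = k) when X ~ b(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def binomial_p_value(x, n, p0, direction):
    """Exact one-sided P-value under H0: p = p0.

    direction="increase" returns Pr(X >= x);
    direction="decrease" returns Pr(X <= x).
    """
    if direction == "increase":
        ks = range(x, n + 1)
    elif direction == "decrease":
        ks = range(0, x + 1)
    else:
        raise ValueError("direction must be 'increase' or 'decrease'")
    return sum(binom_pmf(k, n, p0) for k in ks)


# Hypothetical data: 9 successes observed in a sample of 50, H0: p = 0.10.
n, x, p0 = 50, 9, 0.10
sample_proportion = x / n
p_value = binomial_p_value(x, n, p0, "increase")
print(f"sample proportion = {sample_proportion:.2f} (increase), P-value = {p_value:.4f}")
```

Summing the pmf directly keeps the test exact rather than relying on a normal approximation, which matters for small samples.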

Perceived Problems With the Null Hypothesis

Variable or model selection and, in some cases, limited information are the main issues that affect testing of the null hypothesis. Statistical tests of the null hypothesis are not very powerful, and the threshold for significance is arbitrary (Gill, 1999). A further issue with testing null hypotheses is that, strictly speaking, they are almost always false from the outset.

There is another problem with the α-level. It is well known but often ignored. The value of the α-level has no theoretical basis, so the conventional values (most commonly 0.1, 0.05, or 0.01) are arbitrary. Using a fixed value of α splits results into two categories (significant and non-significant). This arbitrary rejection or non-rejection is also a problem when the practical question is the strength of the evidence bearing on a scientific matter.

The P-value is central to null hypothesis testing, but as an inferential tool it is difficult to interpret. The P-value is the probability of getting a test statistic at least as extreme as the observed one, given that the null hypothesis is true.

The main point about this definition is that the P-value is not based on the observed results alone; it also includes more extreme results that were not observed.

Moreover, the evidence against the null hypothesis can be overstated because of these unobserved results. The P-value is more than just a statement; it is meant to be a precise statement about the evidence from the observed results or data. For this reason, some researchers find P-values objectionable and prefer not to rely on null hypothesis testing. It is also clear that the P-value depends strictly on the null hypothesis, because it is computed under the assumption that the null hypothesis is true. In carefully designed experiments, the distribution of the test statistic under the null hypothesis and the actual sampling distribution are closely related, but this is usually not the case in observational studies.

Some researchers have pointed out that the P-value depends on the sample size. Even if the true difference is small, the null hypothesis may be rejected when the sample is large. This shows the difference between biological importance and statistical significance (Killeen, 2005).
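A short sketch can make the sample-size dependence concrete. It assumes a two-sided z-test, a fixed observed difference of 0.1 units, and a known standard deviation of 1; these numbers and the function name `two_sided_p` are hypothetical.

```python
from math import erfc, sqrt


def two_sided_p(effect, sigma, n):
    """Two-sided P-value of a z-test when the observed mean differs
    from the null value by `effect`, with known sd `sigma` and sample size n."""
    z = effect / (sigma / sqrt(n))
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for the standard normal.
    return erfc(abs(z) / sqrt(2))


# Same small observed difference, increasingly large samples.
for n in (10, 100, 1000, 10000):
    print(f"n = {n:>5}: P-value = {two_sided_p(effect=0.1, sigma=1.0, n=n):.3g}")
```

The observed difference never changes, yet the P-value shrinks as n grows, which is why reporting the effect size alongside the P-value is useful.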

Another issue is the fixed α-level, e.g., 0.1. With a fixed α-level, whether the null hypothesis is rejected or not can depend on the sample size. Even if the sample size is infinite and the null hypothesis is true, there is still a chance of a Type I error. For this reason the approach is not considered consistent or reliable. A further problem is that a significance test alone gives no information about the size of the estimated effect or its precision. The only solution is to state the size of the effect and its precision.

Null Hypothesis Examples

Here are some examples:

Example 1: Hypotheses with One Sample of One Categorical Variable

About 10% of the human population is left-handed, i.e., prefers to do tasks with the left hand. Suppose a researcher at Penn State claims that students at the College of Arts and Architecture are more likely to be left-handed than people in the general population. In this case there is only one sample, and the population proportion for that sample is compared with the known value for the general population.

- Research Question: Are artists more likely to be left-handed than people in the general population?

- Response Variable: Classification of each student as either left-handed or right-handed.

- Null Hypothesis: Students at the College of Arts and Architecture are no more likely to be left-handed than people in the general population (the proportion of left-handed students in the college population is 10%, or p = 0.10).

Example 2: Hypotheses with One Sample of One Measurement Variable

A generic brand of the antihistamine diphenhydramine is sold as capsules with a 50 mg dose. The manufacturer is concerned that the machine has gone out of calibration and is no longer making capsules with the correct dose.

- Research Question: Do the data suggest that the population mean dosage differs from 50 mg?

- Response Variable: Dosage of the active ingredient, measured by a chemical assay.

- Null Hypothesis: On average, the dosage of capsules of this brand is 50 mg (population mean dosage = 50 mg).

Example 3: Hypotheses with Two Samples of One Categorical Variable

Many people choose vegetarian meals on a daily basis. A researcher believes that females like vegetarian meals more than males do.

- Research Question: Do the data suggest that females (women) prefer vegetarian meals more than males (men) regularly?

- Response Variable: Classification of each person as vegetarian or non-vegetarian.

- Grouping Variable: Gender

- Null Hypothesis: Gender is not related to preference for vegetarian meals (population percent of women who eat vegetarian meals regularly = population percent of men who eat vegetarian meals regularly, or p women = p men).
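As a hedged illustration of how the null hypothesis p women = p men might be tested, the sketch below uses a pooled two-proportion z-test with a one-sided alternative (p women > p men). The survey counts and the function name `two_proportion_z_test` are invented for this example, and the normal approximation is an assumption that is only reasonable for fairly large samples.

```python
from math import erfc, sqrt


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled z-test of H0: p1 = p2 against H1: p1 > p2.

    x1, x2 are the counts of "successes"; n1, n2 are the group sizes.
    Returns (z, one_sided_p_value).
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion assumed under H0
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = 0.5 * erfc(z / sqrt(2))  # equals 1 - Phi(z)
    return z, p_value


# Hypothetical survey: 30 of 200 women and 18 of 200 men eat vegetarian meals regularly.
z, p_value = two_proportion_z_test(30, 200, 18, 200)
print(f"z = {z:.2f}, one-sided P-value = {p_value:.4f}")
```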

Example 4: Hypotheses with Two Samples of One Measurement Variable

Nowadays obesity and being overweight are among the major health issues. A study is performed to test whether a low-carbohydrate diet leads to faster weight loss than a low-fat diet.

- Research Question: Do the data suggest that, on average, a low-carbohydrate diet helps people lose weight faster than a low-fat diet?

- Response Variable: Weight loss (pounds)

- Explanatory Variable: Type of diet, either low-carbohydrate or low-fat

- Null Hypothesis: There is no difference between the mean weight loss of people on a low-carbohydrate diet and that of people on a low-fat diet (population mean weight loss on a low-carbohydrate diet = population mean weight loss on a low-fat diet).
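One way to test a null hypothesis of equal population means without distributional assumptions is a permutation test, sketched below. This is a swapped-in technique chosen for illustration, not a method named by the article; the weight-loss figures and the function name `permutation_p_value` are hypothetical.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=10000, seed=0):
    """Two-sided permutation P-value for H0: both groups have the same mean."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)
    pooled = list(group_a) + list(group_b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    # Add-one correction keeps the estimate strictly positive.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical weight loss in pounds (made up for this sketch).
low_carb = [12.1, 9.4, 15.0, 11.2, 13.5, 10.8, 14.2, 12.7]
low_fat = [8.3, 10.1, 7.5, 9.9, 11.0, 6.8, 9.2, 8.7]

p_value = permutation_p_value(low_carb, low_fat)
print(f"permutation P-value = {p_value:.4f}")
```

If the null hypothesis were true, the group labels would be interchangeable, so the P-value is simply the share of relabelings that produce a difference at least as large as the observed one.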

Example 5: Hypotheses about the relationship between Two Categorical Variables

A case-control study was performed. The study includes non-smoking stroke patients and controls. The subjects are matched on occupation and age, and each was asked whether someone in their home or close surroundings smokes.

- Research Question: Does second-hand smoke increase the chances of stroke?

- Variables: There are two categorical variables: case status (controls or stroke patients) and exposure (whether a smoker lives in the same house). The research hypothesis is that the chances of having a stroke are increased if a person lives with a smoker.

- Null Hypothesis: There is no relationship between passive smoking and stroke (the odds ratio between stroke and passive smoking is equal to 1).
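The odds ratio in this null hypothesis can be computed from a 2×2 table, as in the minimal sketch below. The counts are hypothetical, the function name `odds_ratio_with_ci` is invented, and the 95% interval uses the common Wald approximation on the log scale, which is an assumption rather than the article's stated method.

```python
from math import exp, log, sqrt


def odds_ratio_with_ci(a, b, c, d, z=1.96):
    """Odds ratio and approximate 95% Wald confidence interval.

    a: cases exposed      b: cases not exposed
    c: controls exposed   d: controls not exposed
    """
    odds_ratio = (a * d) / (b * c)
    se_log = sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of log(OR)
    lower = exp(log(odds_ratio) - z * se_log)
    upper = exp(log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


# Hypothetical counts: 40 of 100 stroke patients and 25 of 100 controls live with a smoker.
odds_ratio, lower, upper = odds_ratio_with_ci(a=40, b=60, c=25, d=75)
print(f"OR = {odds_ratio:.2f}, 95% CI = ({lower:.2f}, {upper:.2f})")
```

An interval that excludes 1 is evidence against the null hypothesis that the odds ratio equals 1.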

Example 6: Hypotheses about the relationship between Two Measurement Variables

A financial expert suspects that there is a positive relationship between the variation in stock price and the quantity of stock bought by non-management employees.

- Response Variable: Daily change in stock price

- Explanatory Variable: Stock bought by non-management employees

- Null Hypothesis: The correlation between the daily stock price change ($) and the daily stock buying by non-management employees ($) = 0.

Example 7: Hypotheses about comparing the relationship between Two Measurement Variables in Two Samples

- Research Question: Is the relationship between the bill paid in a restaurant and the tip given to the waiter linear? Is this relationship different for dining and family restaurants?

- Explanatory Variable: Total bill amount

- Response Variable: Amount of tip

- Null Hypothesis: The relationship between the total bill amount and the tip is the same at family and dining restaurants.


- Blackwelder, W. C. (1982). “Proving the null hypothesis” in clinical trials. Controlled Clinical Trials, 3(4), 345–353.

- Frick, R. W. (1995). Accepting the null hypothesis. Memory & Cognition, 23(1), 132–138.

- Gill, J. (1999). The insignificance of null hypothesis significance testing. Political Research Quarterly, 52(3), 647–674.

- Killeen, P. R. (2005). An alternative to null-hypothesis significance tests. Psychological Science, 16(5), 345–353.

©BiologyOnline.com. Content provided and moderated by Biology Online Editors.

Last updated on June 16th, 2022


How to Write a Null Hypothesis (5 Examples)

A hypothesis test uses sample data to determine whether or not some claim about a population parameter is true.

Whenever we perform a hypothesis test, we always write a null hypothesis and an alternative hypothesis, which take the following forms:

H0 (Null Hypothesis): Population parameter =, ≤, ≥ some value

HA (Alternative Hypothesis): Population parameter <, >, ≠ some value

Note that the null hypothesis always contains the equal sign.

We interpret the hypotheses as follows:

Null hypothesis: The sample data provides no evidence to support some claim being made by an individual.

Alternative hypothesis: The sample data does provide sufficient evidence to support the claim being made by an individual.

For example, suppose it’s assumed that the average height of a certain species of plant is 20 inches tall. However, one botanist claims the true average height is greater than 20 inches.

To test this claim, she may go out and collect a random sample of plants. She can then use this sample data to perform a hypothesis test using the following two hypotheses:

H0: μ ≤ 20 (the true mean height of plants is equal to or even less than 20 inches)

HA: μ > 20 (the true mean height of plants is greater than 20 inches)

If the sample data gathered by the botanist shows that the mean height of this species of plants is significantly greater than 20 inches, she can reject the null hypothesis and conclude that the mean height is greater than 20 inches.

Read through the following examples to gain a better understanding of how to write a null hypothesis in different situations.

Example 1: Weight of Turtles

A biologist wants to test whether or not the true mean weight of a certain species of turtles is 300 pounds. To test this, he goes out and measures the weight of a random sample of 40 turtles.

Here is how to write the null and alternative hypotheses for this scenario:

H0: μ = 300 (the true mean weight is equal to 300 pounds)

HA: μ ≠ 300 (the true mean weight is not equal to 300 pounds)

Example 2: Height of Males

It’s assumed that the mean height of males in a certain city is 68 inches. However, an independent researcher believes the true mean height is greater than 68 inches. To test this, he goes out and collects the height of 50 males in the city.

H0: μ ≤ 68 (the true mean height is equal to or even less than 68 inches)

HA: μ > 68 (the true mean height is greater than 68 inches)

Example 3: Graduation Rates

A university states that 80% of all students graduate on time. However, an independent researcher believes that less than 80% of all students graduate on time. To test this, she collects data on the proportion of students who graduated on time last year at the university.

H0: p ≥ 0.80 (the true proportion of students who graduate on time is 80% or higher)

HA: p < 0.80 (the true proportion of students who graduate on time is less than 80%)

Example 4: Burger Weights

A food researcher wants to test whether or not the true mean weight of a burger at a certain restaurant is 7 ounces. To test this, he goes out and measures the weight of a random sample of 20 burgers from this restaurant.

H0: μ = 7 (the true mean weight is equal to 7 ounces)

HA: μ ≠ 7 (the true mean weight is not equal to 7 ounces)

Example 5: Citizen Support

A politician claims that less than 30% of citizens in a certain town support a certain law. To test this, he goes out and surveys 200 citizens on whether or not they support the law.

H0: p ≥ 0.30 (the true proportion of citizens who support the law is greater than or equal to 30%)

HA: p < 0.30 (the true proportion of citizens who support the law is less than 30%)

Additional Resources

- Introduction to Hypothesis Testing

- Introduction to Confidence Intervals

- An Explanation of P-Values and Statistical Significance

Published by Zach


Hypothesis Examples

A hypothesis is a prediction of the outcome of a test. It forms the basis for designing an experiment in the scientific method. A good hypothesis is testable, meaning it makes a prediction you can check with observation or experimentation. Here are different hypothesis examples.

Null Hypothesis Examples

The null hypothesis (H0) is also known as the zero-difference or no-difference hypothesis. It predicts that changing one variable (independent variable) will have no effect on the variable being measured (dependent variable). Here are null hypothesis examples:

- Plant growth is unaffected by temperature.

- Increasing temperature has no effect on the solubility of salt.

- Incidence of skin cancer is unrelated to ultraviolet light exposure.

- All brands of light bulb last equally long.

- Cats have no preference for the color of cat food.

- All daisies have the same number of petals.

Sometimes the null hypothesis is used to show that a suspected correlation between two variables exists. For example, if you think plant growth is affected by temperature, you state the null hypothesis: “Plant growth is not affected by temperature.” Why do you do this, rather than say “If you change temperature, plant growth will be affected”? The answer is that it’s easier to apply a statistical test that shows, with a high level of confidence, whether a null hypothesis is correct or incorrect.

Research Hypothesis Examples

A research hypothesis (H1) is a type of hypothesis used to design an experiment. This type of hypothesis is often written as an if-then statement because it’s easy to identify the independent and dependent variables and see how one affects the other. If-then statements explore cause and effect. In other cases, the hypothesis shows a correlation between two variables. Here are some research hypothesis examples:

- If you leave the lights on, then it takes longer for people to fall asleep.

- If you refrigerate apples, they last longer before going bad.

- If you keep the curtains closed, then you need less electricity to heat or cool the house (the electric bill is lower).

- If you leave a bucket of water uncovered, then it evaporates more quickly.

- Goldfish lose their color if they are not exposed to light.

- Workers who take vacations are more productive than those who never take time off.

Is It Okay to Disprove a Hypothesis?

Yes! You may even choose to write your hypothesis in such a way that it can be disproved because it’s easier to prove a statement is wrong than to prove it is right. In other cases, if your prediction is incorrect, that doesn’t mean the science is bad. Revising a hypothesis is common. It demonstrates you learned something you did not know before you conducted the experiment.




Null & Alternative Hypotheses | Definitions, Templates & Examples

Published on May 6, 2022 by Shaun Turney. Revised on June 22, 2023.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test:

- Null hypothesis (H0): There’s no effect in the population.

- Alternative hypothesis (Ha or H1): There’s an effect in the population.

Table of contents

- Answering your research question with hypotheses

- What is a null hypothesis?

- What is an alternative hypothesis?

- Similarities and differences between null and alternative hypotheses

- How to write null and alternative hypotheses

- Other interesting articles

- Frequently asked questions

The null and alternative hypotheses offer competing answers to your research question. When the research question asks “Does the independent variable affect the dependent variable?”:

- The null hypothesis (H0) answers “No, there’s no effect in the population.”

- The alternative hypothesis (Ha) answers “Yes, there is an effect in the population.”

The null and alternative are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample. Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample. It’s critical for your research to write strong hypotheses.

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypothesis. However, the hypotheses can also be phrased in a general way that applies to any test.


The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population (p ≤ α), then we can reject the null hypothesis. Otherwise, we fail to reject the null hypothesis.

Although “fail to reject” may sound awkward, it’s the only wording that statisticians accept. Be careful not to say you “prove” or “accept” the null hypothesis.

Null hypotheses often include phrases such as “no effect,” “no difference,” or “no relationship.” When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

You can never know with complete certainty whether there is an effect in the population. Some percentage of the time, your inference about the population will be incorrect. When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s a type II error.
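A small simulation can illustrate what a type I error rate means in practice. In this sketch the null hypothesis is true by construction (samples are drawn from a normal distribution with mean 0), so every rejection is a type I error. The function names, the z-test with known standard deviation, and all parameter values are assumptions made for illustration.

```python
import random
from math import erfc, sqrt


def z_test_p(sample, mu0, sigma):
    """Two-sided P-value of a one-sample z-test with known sd sigma."""
    n = len(sample)
    z = (sum(sample) / n - mu0) / (sigma / sqrt(n))
    return erfc(abs(z) / sqrt(2))


def type_i_error_rate(alpha=0.05, n=30, trials=5000, seed=1):
    """Share of simulated studies that reject H0: mu = 0 although it is true."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # H0 holds here
        if z_test_p(sample, mu0=0.0, sigma=1.0) <= alpha:
            rejections += 1
    return rejections / trials


rate = type_i_error_rate()
print(f"observed type I error rate = {rate:.3f} (alpha = 0.05)")
```

The observed rejection rate should land close to α, which is the sense in which α controls the long-run frequency of type I errors.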

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

*Note that some researchers prefer to always write the null hypothesis in terms of “no effect” and “=”. It would be fine to say that daily meditation has no effect on the incidence of depression and p1 = p2.

The alternative hypothesis (Ha) is the other answer to your research question. It claims that there’s an effect in the population.

Often, your alternative hypothesis is the same as your research hypothesis. In other words, it’s the claim that you expect or hope will be true.

The alternative hypothesis is the complement to the null hypothesis. Null and alternative hypotheses are exhaustive, meaning that together they cover every possible outcome. They are also mutually exclusive, meaning that only one can be true at a time.

Alternative hypotheses often include phrases such as “an effect,” “a difference,” or “a relationship.” When alternative hypotheses are written in mathematical terms, they always include an inequality (usually ≠, but sometimes < or >). As with null hypotheses, there are many acceptable ways to phrase an alternative hypothesis.

Examples of alternative hypotheses

The table below gives examples of research questions and alternative hypotheses to help you get started with formulating your own.

Null and alternative hypotheses are similar in some ways:

- They’re both answers to the research question.

- They both make claims about the population.

- They’re both evaluated by statistical tests.

However, there are important differences between the two types of hypotheses, summarized in the following table.

To help you write your hypotheses, you can use the template sentences below. If you know which statistical test you’re going to use, you can use the test-specific template sentences. Otherwise, you can use the general template sentences.

General template sentences

The only things you need to know to use these general template sentences are your dependent and independent variables. To write your research question, null hypothesis, and alternative hypothesis, fill in the following sentences with your variables:

Does independent variable affect dependent variable?

- Null hypothesis (H0): Independent variable does not affect dependent variable.

- Alternative hypothesis (Ha): Independent variable affects dependent variable.

Test-specific template sentences

Once you know the statistical test you’ll be using, you can write your hypotheses in a more precise and mathematical way specific to the test you chose. The table below provides template sentences for common statistical tests.

Note: The template sentences above assume that you’re performing two-tailed tests. Two-tailed tests are appropriate for most studies.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

The null hypothesis is often abbreviated as H0. When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The alternative hypothesis is often abbreviated as Ha or H1. When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (“x affects y because …”).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article

Turney, S. (2023, June 22). Null & Alternative Hypotheses | Definitions, Templates & Examples. Scribbr. Retrieved April 9, 2024, from https://www.scribbr.com/statistics/null-and-alternative-hypotheses/

Defining the null hypothesis

Emma Saxon

BMC Biology volume 13, Article number: 68 (2015)

Open access. Published: 29 August 2015

Virus B is a newly emerged viral strain for which there is no current treatment. Drug A was identified as a potential treatment for infection with virus B. In this pre-clinical phase of drug testing, the effect of drug A on survival after infection with virus B was tested. There was no significant difference in survival between control (dark blue) and drug A-treated, virus B-infected mice (green), but a significant difference in survival between control and virus B-infected mice without drug treatment (light blue, z-test for proportions P < 0.05, n = 30 in each group). The authors therefore concluded that drug A is effective in reducing mouse mortality due to virus B.

Some studies report conclusions based on a null hypothesis different from the one that is actually tested. In this example, the authors tested the effect of a novel antiviral drug on mouse survival 7 days after infection with a virus. The virus alone reduced mouse survival (the light blue bar in Fig. 1 , z-test P < 0.05), but there was no significant difference between uninfected, untreated control mice (dark blue) and infected, drug A-treated mice (green), so the authors concluded that the drug significantly increased the survival time of infected mice.

Fig. 1. The effects of drug A on the relative survival of mice infected with virus B. Relative survival is significantly decreased in infected mice (light blue), but not in infected mice treated with drug A (green), compared with the control (dark blue); n = 30, z-test for proportions, *P < 0.05; n/s, not significant.

But the statistical test used to support the claim was applied inappropriately. In order to conclude that the drug increased the survival of infected mice, the authors would have had to compare infected treated mice (green) with infected untreated mice (light blue), and not with uninfected mice (dark blue). Their results do show that the survival of virus-infected mice was significantly lower than that of uninfected control mice, by 20 %. But the difference between infected untreated and infected treated mice (the light blue versus green bars in Fig. 1 , the correct comparison for testing the drug effect) is only 10 %: as the non-significant difference in survival between uninfected control (dark blue) and infected drug-treated mice (green) was also 10 %, it, too, will be non-significant. In this case, the data support the null hypothesis, contrary to the authors’ conclusions.
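The comparison logic above can be sketched with a pooled two-proportion z-test. The article reports only the group size (n = 30) and the size of the differences (20 % and 10 %), not the underlying counts, so the survivor counts below are hypothetical values chosen to reproduce those differences; the function name is also an assumption made for this illustration.

```python
import math


def two_proportion_z_test(survivors_a, n_a, survivors_b, n_b):
    """Two-tailed z-test for H0: both groups share one survival proportion."""
    p_a = survivors_a / n_a
    p_b = survivors_b / n_b
    pooled = (survivors_a + survivors_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-tailed normal p-value
    return z, p_value


# Hypothetical survivor counts out of n = 30 per group (NOT from the article),
# chosen only so that the differences match the 20 % and 10 % described above.
N = 30
control, treated, untreated = 30, 27, 24

comparisons = {
    "control vs infected untreated": (control, untreated),
    "control vs infected treated": (control, treated),
    "infected treated vs infected untreated": (treated, untreated),
}
results = {}
for label, (a, b) in comparisons.items():
    z, p = two_proportion_z_test(a, N, b, N)
    results[label] = p
    print(f"{label}: z = {z:.2f}, p = {p:.3f}")
```

Under these assumed counts, only the comparison between control and infected untreated mice falls below 0.05. The comparison that actually tests the drug effect (infected treated versus infected untreated) does not, which is the point the article makes.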

Note also that the effects are not large — the majority of infected animals survive — and that with 30 animals in each group the differences amount to six animals at most between the groups. This makes it difficult to know realistically what to make of the results. To address this problem, the authors would need to increase the power of their study by using larger sample sizes, which would show whether there is a significant increase in survival with drug treatment or not.

Indeed, UK funding agencies recently changed their animal experimental guidelines to reflect growing concerns that sample size is commonly too small in studies like this, which therefore may not have sufficient statistical power to detect real differences [ 1 ]. Appropriate sample sizes can be calculated based on the study design, and new tools are being developed to help researchers with this: one example is the Experimental Design Assistant, from the National Centre for the Replacement, Refinement & Reduction of Animals in Research [ 2 ], expected to launch later in 2015.
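As a rough illustration of how a sample size can be calculated from the study design, the sketch below uses the standard normal-approximation formula for comparing two proportions with a two-tailed test. The survival proportions (0.90 and 0.80), the 5 % significance level, and the 80 % power target are assumptions for illustration, not values from the article, and this is not the method used by the Experimental Design Assistant.

```python
import math
from statistics import NormalDist


def sample_size_two_proportions(p1, p2, alpha=0.05, power=0.80):
    """Approximate animals per group for a two-tailed two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_power * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


# Hypothetical survival proportions with a 10-point difference (assumed values).
n_per_group = sample_size_two_proportions(0.90, 0.80)
print(f"Animals needed per group: {n_per_group}")
```

Under these assumptions the required group size is several times larger than the 30 animals per group used in the example, which is consistent with the concern that such studies are underpowered.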

1. Cressey D. UK funders demand strong statistics for animal studies. Nature. 2015;520:271–2.

2. Experimental Design Assistant. https://www.nc3rs.org.uk/experimental-design-assistant-eda

Author information

Authors and affiliations.

BMC Biology, BioMed Central, 236 Gray’s Inn Road, London, WC1X 8HB, UK

Corresponding author

Correspondence to Emma Saxon .

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article.

Saxon, E. Defining the null hypothesis. BMC Biol 13, 68 (2015). https://doi.org/10.1186/s12915-015-0181-x



9.1 Null and Alternative Hypotheses

The actual test begins by considering two hypotheses. They are called the null hypothesis and the alternative hypothesis. These hypotheses contain opposing viewpoints.

H0, the null hypothesis: a statement of no difference between sample means or proportions or no difference between a sample mean or proportion and a population mean or proportion. In other words, the difference equals 0.

Ha, the alternative hypothesis: a claim about the population that is contradictory to H0 and what we conclude when we reject H0.

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options: reject H0 if the sample information favors the alternative hypothesis, or do not reject H0 (decline to reject H0) if the sample information is insufficient to reject the null hypothesis.

Mathematical Symbols Used in H0 and Ha:

H0 always has a symbol with an equal in it. Ha never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.
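The symbol in the alternative hypothesis determines which tail or tails of the sampling distribution count as evidence against the null. The sketch below shows one way to express this for a z statistic. The function name, the ASCII string codes standing in for the symbols, and the example value z = 1.8 are assumptions for illustration. In each case the p-value is computed at the boundary value of the null, which is one reason writing = in the null hypothesis leads to the same decision.

```python
from statistics import NormalDist


def p_value_from_z(z, alternative):
    """Convert a z statistic into a p-value for the symbol used in Ha.

    `alternative` is "!=" (two-tailed), "<" (left-tailed), or ">" (right-tailed).
    """
    cdf = NormalDist().cdf
    if alternative == "!=":  # extreme values in either direction count
        return 2 * (1 - cdf(abs(z)))
    if alternative == "<":   # only small values count against the null
        return cdf(z)
    if alternative == ">":   # only large values count against the null
        return 1 - cdf(z)
    raise ValueError("alternative must be '!=', '<', or '>'")


z = 1.8  # hypothetical test statistic
for symbol in ("!=", "<", ">"):
    print(f"Ha uses {symbol}: p = {p_value_from_z(z, symbol):.4f}")
```

For a positive z, the right-tailed p-value is half the two-tailed one, so the choice of symbol in the alternative hypothesis can change whether the null is rejected at a given α.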

Example 9.1

- H0: No more than 30 percent of the registered voters in Santa Clara County voted in the primary election. p ≤ 0.30

- Ha: More than 30 percent of the registered voters in Santa Clara County voted in the primary election. p > 0.30

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25 percent. State the null and alternative hypotheses.

Example 9.2

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are the following:

- H0: μ = 2.0

- Ha: μ ≠ 2.0

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H0: μ __ 66

- Ha: μ __ 66

Example 9.3

We want to test if college students take fewer than five years to graduate from college, on the average. The null and alternative hypotheses are the following:

- H0: μ ≥ 5

- Ha: μ < 5

We want to test if it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol ( =, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H0: μ __ 45

- Ha: μ __ 45

Example 9.4

An article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third of the students pass. The same article stated that 6.6 percent of U.S. students take advanced placement exams and 4.4 percent pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6 percent. State the null and alternative hypotheses.

- H0: p ≤ 0.066

- Ha: p > 0.066
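Once the hypotheses in this example are stated, the test itself could be carried out roughly as follows. This is a minimal sketch of a right-tailed one-proportion z-test; the sample (80 of 1,000 students) and the significance level are hypothetical values that do not come from the text, and the function name is an assumption.

```python
import math
from statistics import NormalDist


def one_proportion_right_tailed(successes, n, p0):
    """Right-tailed z-test for H0: p <= p0 versus Ha: p > p0."""
    p_hat = successes / n
    # The standard error uses p0, the boundary value of the null hypothesis.
    standard_error = math.sqrt(p0 * (1 - p0) / n)
    z = (p_hat - p0) / standard_error
    return z, 1 - NormalDist().cdf(z)


# Hypothetical sample (NOT from the text): 80 of 1,000 U.S. students took AP exams.
z, p_value = one_proportion_right_tailed(successes=80, n=1000, p0=0.066)
alpha = 0.05  # assumed significance level
decision = "reject H0" if p_value <= alpha else "do not reject H0"
print(f"z = {z:.2f}, p = {p_value:.4f}: {decision}")
```

Note that the only two possible decisions are "reject H0" and "do not reject H0", matching the wording used earlier in this section.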

On a state driver’s test, about 40 percent pass the test on the first try. We want to test if more than 40 percent pass on the first try. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H0: p __ 0.40

- Ha: p __ 0.40

Collaborative Exercise

Bring to class a newspaper, some news magazines, and some internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/9-1-null-and-alternative-hypotheses

© Jan 23, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


- v.11(11); 2021 Jun

When are hypotheses useful in ecology and evolution?

Matthew G. Betts

1 Forest Biodiversity Research Network, Department of Forest Ecosystems and Society, Oregon State University, Corvallis OR, USA

Adam S. Hadley

David W. Frey, Sarah J. K. Frey, Dusty Gannon, Scott H. Harris, Urs G. Kormann, Kara Leimberger, Katie Moriarty

2 USDA Forest Service, Pacific Northwest Research Station, Corvallis OR, USA

Joseph M. Northrup

3 Wildlife Research and Monitoring Section, Ontario Ministry of Natural Resources and Forestry, Environmental and Life Sciences Graduate Program, Trent University, Peterborough ON, Canada

Josée S. Rousseau

Thomas D. Stokely, Jonathon J. Valente, Diego Zárrate‐Charry

Associated Data

Data for the analysis of hypothesis use in ecology and evolution publications is available at https://figshare.com/articles/dataset/Betts_et_al_2021_When_are_hypotheses_useful_in_ecology_and_evolution_Ecology_and_Evolution/14110289 .

Research hypotheses have been a cornerstone of science since before Galileo. Many have argued that hypotheses (1) encourage discovery of mechanisms, and (2) reduce bias—both features that should increase transferability and reproducibility. However, we are entering a new era of big data and highly predictive models where some argue the hypothesis is outmoded. We hypothesized that hypothesis use has declined in ecology and evolution since the 1990s, given the substantial advancement of tools further facilitating descriptive, correlative research. Alternatively, hypothesis use may have become more frequent due to the strong recommendation by some journals and funding agencies that submissions have hypothesis statements. Using a detailed literature analysis ( N = 268 articles), we found prevalence of hypotheses in eco–evo research is very low (6.7%–26%) and static from 1990–2015, a pattern mirrored in an extensive literature search ( N = 302,558 articles). Our literature review also indicates that neither grant success nor citation rates were related to the inclusion of hypotheses, which may provide disincentive for hypothesis formulation. Here, we review common justifications for avoiding hypotheses and present new arguments based on benefits to the individual researcher. We argue that stating multiple alternative hypotheses increases research clarity and precision, and is more likely to address the mechanisms for observed patterns in nature. Although hypotheses are not always necessary, we expect their continued and increased use will help our fields move toward greater understanding, reproducibility, prediction, and effective conservation of nature.

We use a quantitative literature review to show that use of a priori hypotheses is still rare in the fields of ecology and evolution. We provide suggestions about the group and individual‐level benefits of hypothesis use.

1. INTRODUCTION

Why should ecologists have hypotheses? At the beginning of most science careers, there comes a time of “hypothesis angst” where students question the need for the hypothetico‐deductive approach their elders have deemed essential for good science. Why is it not sufficient to just have a research objective or question? Why can't we just collect observations and describe those in our research papers?

Research hypotheses are explanations for an observed phenomenon (Loehle, 1987 ; Wolff & Krebs, 2008 ) (see Box 1 ) and have been proposed as a central tool of science since Galileo and Francis Bacon in the mid‐1600s (Glass & Hall, 2008 ). Over the past century, there have been repeated calls for rigorous application of hypotheses in science, and arguments that hypothesis use is the cornerstone of the scientific method (Chamberlin, 1890 ; Popper, 1959 ; Romesburg, 1981 ). In a seminal paper in Science, Platt ( 1964 ) challenged all scientific fields to adopt and rigorously test multiple hypotheses (sensu Chamberlin, 1890 ), arguing that without such hypothesis tests, disciplines would be prone to “stamp collecting” (Landy, 1986 ). To constitute “strong inference,” Platt required the scientific method to be a three‐step process including (1) developing alternative hypotheses, (2) devising a set of “crucial” experiments to eliminate all but one hypothesis, and (3) performing the experiments (Elliott & Brook, 2007 ).

Box 1. Definitions of hypotheses and associated terms

Hypothesis : An explanation for an observed phenomenon.

Research Hypothesis: A statement about a phenomenon that also includes the potential mechanism or cause of that phenomenon. Though a research hypothesis doesn't need to adhere to this strict framework it is often best described as the “if” in an “if‐then” statement. In other words, “if X is true” (where X is the mechanism or cause for an observed phenomenon) “then Y” (where Y is the outcome of a crucial test that supports the hypothesis). These can also be thought of as “ mechanistic hypotheses ” since they link with a causal mechanism. For example, trees grow slowly at high elevation because of nutrient limitation (hypothesis); if this is the case, fertilizing trees should result in more rapid growth (prediction).

Prediction: The potential outcome of a test that would support a hypothesis. Most researchers call the second part of the if‐then statement a “prediction”.

Multiple alternative hypotheses: Multiple plausible explanations for the same phenomenon.

Descriptive Hypothesis: Descriptive statements or predictions with the word “hypothesis” in front of them. Typically researchers state their guess about the results they expect and call this the “hypothesis” (e.g., “I hypothesize trees at higher elevation will grow slowly”).

Statistical Hypothesis : A predicted pattern in data that should occur if a research hypothesis is true.

Null Hypothesis : A concise statement expressing the concept of “no difference” between a sample and the population mean.

The commonly touted strengths of hypotheses are two‐fold. First, by adopting multiple plausible explanations for a phenomenon (hereafter “ multiple alternative hypotheses ”; Box 1 ), a researcher reduces the chance that they will become attached to a single possibility, thereby biasing research in favor of this outcome (Chamberlin, 1890 ); this “confirmation bias” is a well‐known human trait (Loehle, 1987 ; Rosen, 2016 ) and likely decreases reproducibility (Munafò et al., 2017 ). Second, various authors have argued that the a priori hypothesis framework forces one to think in advance about—and then test—various causes for patterns in nature (Wolff & Krebs, 2008 ), rather than simply examining the patterns themselves and coming up with explanations after the fact (so called “inductive research;” Romesburg, 1981 ). By understanding and testing mechanisms, science becomes more reliable and transferable (Ayres & Lombardero, 2017 ; Houlahan et al., 2017 ; Sutherland et al., 2013 ) (Figure 1 ). Importantly, both of these strengths should have strong, positive impacts on reproducibility of ecological and evolutionary studies (see Discussion).

Figure 1. Understanding mechanisms often increases model transferability. Panels (a and b) show snowshoe hares in winter and summer coloration, respectively. If a correlative (i.e., nonmechanistic) model for hare survival as a function of color was trained only on hares during the winter and then extrapolated into the summer months, it would perform poorly (white hares would die disproportionately under no‐snow conditions). On the other hand, a researcher testing mechanisms for hare survival would (ideally via experimentation) arrive at the conclusion that it is not the whiteness of hares, but rather blending with the background that confers survival (the “camouflage” hypothesis). Understanding mechanism results in model predictions being robust to novel conditions. Panel (c) shows x and y geographic locations of training (blue filled circles) and testing (blue open circles) locations for a hypothetical correlative model. Even if the model performs well on these independent test data (predicting open to closed circles), there is no guarantee that it will predict well outside of the spatial bounds of the existing data (red circles). Nonstationarity (in this case caused by a nonlinear relationship between predictor and response variable; panel d) could result in correlative relationships shifting substantially if extrapolated to new times or places. However, mechanistic hypotheses aimed at understanding the underlying factors driving the distribution of this species would be more likely to elucidate this nonlinear relationship. In both of these examples, understanding drivers behind ecological patterns, via testing mechanistic hypotheses, is likely to enhance model transferability.

However, we are entering a new era of ecological and evolutionary science that is characterized by massive datasets on genomes, species distributions, climate, land cover, and other remotely sensed information (e.g., bioacoustics, camera traps; Pettorelli et al., 2017 ). Exceptional computing power and new statistical and machine‐learning algorithms now enable thousands of statistical models to be run in minutes. Such datasets and methods allow for pattern recognition at unprecedented spatial scales and for huge numbers of taxa and processes. Indeed, there have been recent arguments in both the scientific literature and popular press to do away with the traditional scientific method and a priori hypotheses (Glass & Hall, 2008 ; Golub, 2010 ). These arguments go something along the lines of “if we can get predictions right most of the time, why do we need to know the cause?”

In this paper, we sought to understand if hypothesis use in ecology and evolution has shifted in response to these pressures on the discipline. We, therefore, hypothesized that hypothesis use has declined in ecology and evolution since the 1990s, given the substantial advancement of tools further facilitating descriptive, correlative research (e.g., Cutler et al., 2007 ; Elith et al., 2008 ). We predicted that this decline should be particularly evident in the applied conservation literature—where the emergence of machine‐learning models has resulted in an explosion of conservation‐oriented species distribution models (Elith et al., 2006 ). Our alternative hypothesis was that hypothesis use has become more frequent. The mechanism for such increases is that higher‐profile journals (e.g., Functional Ecology , Proceedings of the Royal Society of London Ser. B ) and competitive granting agencies (e.g., the U.S. National Science Foundation) now require or strongly encourage hypothesis statements.

As noted above, many have argued that hypotheses are useful and important for overall progress in science, because they facilitate the discovery of mechanisms, reduce bias, and increase reproducibility (Platt, 1964 ). However, for hypothesis use to be propagated among scientists, one would also expect hypotheses to confer benefits to the individual. We, therefore, tested whether hypothesis use was associated with individual‐level incentives relevant to academic success: publications, citations, and grants (Weinberg, 2010 ). If hypothesis use confers individual‐level advantages, then hypothesis‐based research should be (1) published in more highly ranked journals, (2) have higher citation rates, and (3) be supported by highly competitive funding sources.

Finally, we also present some common justifications for absence of hypotheses and suggest potential counterpoints researchers should consider prior to dismissing hypothesis use, including potential benefits to the individual researcher. We hope this communication provides practical recommendations for improving hypothesis use in ecology and evolution, particularly for new practitioners in the field (Box 2).

Box 2. Recommendations for improving hypothesis use in ecology and evolution

Authors: Know that you are human, prone to confirmation bias, and highly effective at false pattern recognition. Thus, inductive research and single working hypotheses should be rare in your research. Remember that if your work is to have a real “impact”, it needs to withstand multiple tests from other labs over the coming decades.

Editors and Reviewers: Reward research that is conducted using principles of sound scientific method. Be skeptical of research that smacks of data dredging, post hoc hypothesis development, and single hypotheses. If no hypotheses are stated in a paper and/or the paper is purely descriptive, ask whether the novelty of the system and question warrant this, or if the field would have been better served by a study with mechanistic hypotheses. If only single hypotheses are stated, ask whether appropriate precautions were taken for the researcher to avoid finding support for a pet idea (e.g., blinded experiments, randomized attribution of treatments, etc.). To paraphrase Platt (1964): beware of the person with only one method or one instrument, either experimental or theoretical.

Mentors: Encourage your advisees to think carefully about hypothesis use and teach them how to construct sound multiple, mechanistic hypotheses. Importantly, explain why hypotheses are important to the scientific method, the individual and group consequences of excluding them, and the rare instances where they may not be necessary.

Policymakers/media/educators/students/readers: Read scientific articles with skepticism; keep a critical eye out for single‐hypothesis studies and p‐hacking. Reward multi‐hypothesis, mechanistic, predictive science by giving it greater weight in policy decisions (Sutherland et al., 2013), more coverage in the media, greater leverage in education, and more citations in reports.

2.1. Literature analysis

To examine hypothesis use over time and test whether hypothesis presence was associated with research type (basic vs. applied), journal impact factor, citation rates, and grants, we sampled the ecology and evolution literature using a stratified random sample of ecology and evolution journals in existence before 1991. First, we randomly selected 19 journals across impact factor (IF) strata ranging from 0.5–10.0 in two bins (<3 IF and ≥3 IF; see Figure 3 for full journal list). We then added three multidisciplinary journals that regularly publish ecology and evolution articles ( Proceedings of the National Academy of Sciences, Science, and Nature ). From this sample of 22 journals, we randomly selected ecology and evolution articles within 5‐year strata beginning in 1991 (3 articles/journal per 5‐year bin) to ensure the full date range was evenly sampled. We removed articles in the following categories: editorials, corrections, reviews, opinions, and methods papers. In multidisciplinary journals, we examined only ecology, evolution, and conservation biology articles, as indicated by section headers in each journal. Once selected, articles were randomly distributed to the authors of the current paper (hereafter “reviewers:” MGB, ASH, DF, SF, DG, SH, HK, UK, KL, KM, JN, BP, JSR, TSS, JV, DZC) for detailed examination. On rare occasions, an article was not found, or reviewers were not able to complete their review. Ultimately, our final sample comprised 268 articles.

Figure 3. Frequency distributions showing proportion of various hypothesis types across ecology and evolution journals included in our detailed literature search. Hypothesis use varied greatly across publication outlets. We considered J. Applied Ecology, J. Wildlife Management, J. Soil and Water Cons., Ecological Applications, Conservation Biology, and Biological Conservation to be applied journals; both applied and basic journals varied greatly in the prevalence of hypotheses.

Reviewers were given a maximum of 10 min to find research hypothesis statements within the abstract or introduction of articles. We chose 10 min to simulate the amount of time that a journal editor pressed for time might spend evaluating the introductory material in an article. After this initial 10 min period, we determined: (1) whether or not an article contained at least one hypothesis, (2) whether hypotheses were mechanistic or not (i.e., the authors claimed to examine the mechanism for an observed phenomenon), (3) whether multiple alternative hypotheses were considered (sensu Chamberlin, 1890 ), and (4) whether hypotheses were “descriptive” (that is, they did not explore a mechanism but simply stated the expected direction of an effect; we define this as a “prediction” [Box 1 ]). It is important to note that to be identified as having hypotheses, articles did not need to contain the actual term “hypothesis” under our protocol; we also included articles using phrases such as “If X is true, we expected …” or “ we anticipated, ” both of which reflect a priori expectations from the data. We categorized each article as either basic (fundamental research without applications as a focus) or applied (clear management or conservation focus to article). Finally, we also examined all articles for funding sources and noted the presence of a national or international‐level competitive grant (e.g., National Science Foundation, European Union, Natural Sciences and Engineering Research Council). We assumed that published articles would have fidelity to the hypotheses stated in original grant proposals that funded the research, therefore, the acknowledgment of a successful grant is an indicator of financial reward for including hypotheses in initial proposals. Journal impact factors and individual article citation rates were gleaned directly from Web of Science. 
We reasoned that many researchers seek out journals with higher impact factors for the first submission of their manuscripts (Paine & Fox, 2018). Our assumption was that studies with more careful experimental design, including hypotheses, should be published where initially submitted, whereas those without may be eventually published, on average, in lower impact journals (Opthof et al., 2000). Ideally, we could have included articles that were rejected and never published in our analysis, but such articles are notoriously difficult to track (Thornton & Lee, 2000).

To support our detailed literature analysis, we also tested for temporal trends in hypothesis use within a broader sample of the ecology and evolution literature. For the same set of 22 journals in our detailed sample, we conducted a Web of Science search for articles containing “hypoth*” in the title or abstract. To calculate the proportion of articles with hypotheses (from 1990–2018), we divided the number of articles with hypotheses by the total number of articles (N = 302,558). Because our search method does not include the main text of articles and excludes more subtle ways of stating hypotheses (e.g., “We expected…,” “We predicted…”), we acknowledge that the proportion of papers identified is likely to be an underestimate of the true proportion. Nevertheless, we do not expect that the degree of underestimation would change over time, so temporal trends in the proportion of papers containing hypotheses should be unbiased.
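As an illustration of the proportion calculation described above, the following is a minimal Python sketch, not the code used in the study. The function name `yearly_proportions`, the record layout (year, title plus abstract), and the example records are all assumptions made up for this sketch; the regular expression is only an approximation of the wildcard search “hypoth*”.

```python
import re
from collections import Counter

# Word-initial, case-insensitive stem match approximating the search term "hypoth*".
HYPOTH = re.compile(r"\bhypoth", re.IGNORECASE)

def yearly_proportions(records):
    """Return {year: share of records whose text matches the 'hypoth' stem}.

    records: iterable of (year, text) pairs, where text is title + abstract.
    """
    totals = Counter()
    hits = Counter()
    for year, text in records:
        totals[year] += 1
        if HYPOTH.search(text):
            hits[year] += 1
    return {year: hits[year] / totals[year] for year in sorted(totals)}

# Made-up records for illustration only (not data from the study).
records = [
    (1990, "We test the hypothesis that predation limits recruitment"),
    (1990, "A survey of beetle diversity in managed forests"),
    (1991, "Hypothetical drivers of range shifts in alpine plants"),
    (1991, "We expected higher survival in larger patches"),  # missed by the stem search
]
proportions = yearly_proportions(records)
```

The last record shows why a title and abstract search of this kind tends to undercount: an a priori expectation phrased as “We expected…” contains no word starting with “hypoth”.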

2.2. Statistical analysis

We used generalized linear mixed models (GLMMs) to test for change in the prevalence of various hypothesis types over time (descriptive, mechanistic, multiple, any hypothesis). Presence of a hypothesis was modeled as dichotomous (0,1) with binomial error structure, and “journal” was included as a random effect to account for potential lack of independence among articles published in the same outlet. The predictor variable (i.e., year) was scaled to enable convergence. Similarly, we tested for differences in hypothesis prevalence between basic and applied articles using GLMMs with “journal” as a random effect. Finally, we tested the hypothesis that hypothesis use might decline over time due to the emergence of machine‐learning in the applied conservation literature; specifically, we modeled “hypothesis presence” as a function of the statistical interaction between “year” and “basic versus applied” articles. We conducted this test for all hypothesis types. GLMMs were implemented in R (version 3.60) using the lme4 package (Bates et al., 2018). In three of our models, the “journal” random effect standard deviation was estimated to be zero or nearly zero (i.e., 10⁻⁸). In such cases, the model with the random effect is exceptionally difficult to estimate, and the random effect standard deviation being estimated as approximately zero indicates the random effect was likely not needed.
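The following Python sketch illustrates the simplest case mentioned above: a logistic model of hypothesis presence on scaled year without the “journal” random effect. It is not the lme4 analysis from the study. The z-score scaling, the Newton-Raphson fitting routine, the function names, and the data are all assumptions chosen for illustration (the source says only that year was “scaled”).

```python
import math

def zscore(values):
    """Center to mean 0 and scale to unit sample standard deviation."""
    mean = sum(values) / len(values)
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (len(values) - 1))
    return [(v - mean) / sd for v in values]

def fit_logistic(x, y, iterations=25):
    """Fit logit(P(y = 1)) = b0 + b1 * x by Newton-Raphson.

    Returns (b0, b1, se_b1). The standard error comes from the information
    matrix at the last iterate, which equals the converged value once the
    updates have become negligible.
    """
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = 0.0          # score (gradient) entries
        h00 = h01 = h11 = 0.0  # information matrix entries
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p
            g1 += (yi - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step: add (information matrix)^-1 times the score.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    se_b1 = math.sqrt(h00 / det)
    return b0, b1, se_b1

# Made-up data: one article per year, hypothesis present every fourth year.
years = list(range(1991, 2016))
has_hypothesis = [1 if i % 4 == 0 else 0 for i in range(len(years))]

b0, b1, se_b1 = fit_logistic(zscore(years), has_hypothesis)
z = b1 / se_b1  # Wald z statistic for the year effect
```

Because the made-up presences are spread symmetrically across the period, the fitted slope is essentially zero and the intercept equals the logit of the overall prevalence (7 of 25 articles).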

We tested whether the presence of hypotheses influenced the likelihood of publication in a high‐impact journal using generalized linear models with a Gaussian error structure. We used the log of journal impact factor (+0.5) as the response variable to improve normality of model residuals. We tested the association between major competitive grants and the presence of hypotheses using generalized linear models (logistic regression) with “hypothesis presence” (0,1) as a predictor and presence of a grant (0,1) as a response.
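With a single 0/1 predictor, a Gaussian generalized linear model with identity link reduces to ordinary least squares, and the slope is simply the difference between the two group means of the response. The Python sketch below shows this for a log(impact factor + 0.5) response. It assumes the natural logarithm (the source says only “log”), and the function names and impact factor values are hypothetical.

```python
import math
from statistics import mean

def log_impact(impact_factor, offset=0.5):
    """Response transform: natural log of (impact factor + offset)."""
    return math.log(impact_factor + offset)

def binary_ols(predictor, response):
    """Least-squares fit of response on a 0/1 predictor.

    Returns (intercept, slope, t), where slope is the difference in group
    means and t uses the pooled residual variance with n - 2 degrees of freedom.
    """
    group0 = [r for h, r in zip(predictor, response) if h == 0]
    group1 = [r for h, r in zip(predictor, response) if h == 1]
    m0, m1 = mean(group0), mean(group1)
    rss = sum((r - m0) ** 2 for r in group0) + sum((r - m1) ** 2 for r in group1)
    sigma2 = rss / (len(group0) + len(group1) - 2)
    se = math.sqrt(sigma2 * (1 / len(group0) + 1 / len(group1)))
    slope = m1 - m0
    return m0, slope, slope / se

# Made-up impact factors for illustration only (not data from the study).
has_hypothesis = [0, 0, 0, 1, 1, 1]
impact_factors = [1.5, 2.5, 3.5, 3.5, 4.5, 5.5]

response = [log_impact(v) for v in impact_factors]
intercept, slope, t = binary_ols(has_hypothesis, response)
```

On the log scale, a slope of β̂ corresponds to multiplying (impact factor + 0.5) by exp(β̂), which is one way to read coefficients from a model of this form.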

Finally, we tested whether hypotheses increase citation rates using linear mixed effects models (LMMs); presence of various hypotheses (0,1) were predictors in univariate models and average citations per year (log‐transformed) was the response. “Journal” was treated as a random effect, which assumes that articles within a particular journal are unlikely to be independent in their citation rates. LMMs were implemented in R using the lme4 package (Bates et al., 2015).

3.1. Trends in hypothesis use in ecology and evolution

In the ecology and evolution articles we examined in detail, the prevalence of multiple alternative hypotheses (6.7%) and mechanistic hypotheses (26%) was very low and showed no temporal trend (GLMM: multiple alternative: β̂ = 0.098 [95% CI: −0.383, 0.595], z = 0.40, p = 0.69, mechanistic: β̂ = 0.131 [95% CI: −0.149, 0.418], z = 0.92, p = 0.36, Figure 2a,b). Descriptive hypothesis use was also low (8.5%), and although we observed a slight tendency to increase over time, 95% confidence intervals overlapped zero (GLMM: β̂ = 0.351 [95% CI: −0.088, 0.819], z = 1.53, p = 0.13, Figure 2c). Although the proportion of papers containing no hypotheses appears to have declined (Figure 2d), this effect was not statistically significant (GLMM: β̂ = −0.201 [95% CI: −0.483, 0.074], z = −1.41, p = 0.15). This overall pattern is consistent with a Web of Science search (N = 302,558 articles) for the term “hypoth*” in titles or abstracts that shows essentially no trend over the same time period (Figure 2e,f).
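For readers who want to see how a z statistic relates to a p value, the Python sketch below computes the two-sided p value under a standard normal reference distribution, plus a Wald-type 95% interval from an estimate and its z statistic. This is a generic textbook calculation, not the authors' code; the function names are hypothetical, and the Wald interval is an assumption that need not reproduce the intervals reported above, which are not exactly symmetric about β̂.

```python
import math

def two_sided_p(z):
    """Two-sided p value for a standard normal test statistic: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2))

def wald_interval(estimate, z, critical=1.96):
    """Wald-type interval, recovering the standard error as estimate / z."""
    se = abs(estimate / z)
    return estimate - critical * se, estimate + critical * se

# z statistics taken from the paragraph above.
p_multiple = two_sided_p(0.40)     # multiple alternative hypotheses
p_mechanistic = two_sided_p(0.92)  # mechanistic hypotheses
p_descriptive = two_sided_p(1.53)  # descriptive hypotheses

low, high = wald_interval(0.098, 0.40)
```

Rounded to two decimals, these three p values agree with those reported in the text (0.69, 0.36 and 0.13), and the Wald interval for the multiple alternative estimate spans zero.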

Trends in hypothesis use from 1991–2015 in a sample of the ecological and evolutionary literature (N = 268): (a) multiple alternative hypotheses, (b) mechanistic hypotheses, (c) descriptive hypotheses [predictions], and (d) no hypotheses present. We detected no temporal trend in any of these variables. Lines reflect LOESS smoothing with 95% confidence intervals. Dots show raw data with darker colors indicating overlapping data points. The total number of publications in ecology and evolution in selected journals has increased (e), but use of the term “hypoth*” in the titles or abstracts of these 302,558 articles has remained flat, and at very low prevalence (f).

Counter to our hypothesis, applied and basic articles did not show a statistically significant difference in the prevalence of either mechanistic (GLMM: β̂ = 0.054 [95% CI: −0.620, 0.728], z = 0.16, p = 0.875) or multiple alternative hypotheses (GLMM: β̂ = 0.517 [95% CI: −0.582, 1.80], z = 0.88, p = 0.375). Although both basic and applied ecology and evolution articles containing hypotheses were similarly rare overall, there was a tendency for applied ecology articles to show increasing prevalence of mechanistic hypothesis use over time, whereas basic ecology articles have remained relatively unchanged (Table S1, Figure S1). However, there was substantial variation across both basic and applied journals in the prevalence of hypotheses (Figure 3).

3.2. Do hypotheses “pay?”

We found little evidence that presence of hypotheses increased paper citation rates. Papers with mechanistic (LMM: β̂ = −0.109 [95% CI: −0.329, 0.115], t = 0.042, p = 0.97, Figure 4a, middle panel) or multiple alternative hypotheses (LMM: β̂ = −0.008 [95% CI: −0.369, 0.391], t = 0.042, p = 0.96, Figure 4a, bottom panel) did not have higher average annual citation rates, nor did papers with at least one hypothesis type (LMM: β̂ = −0.024 [95% CI: −0.239, 0.194], t = 0.218, p = 0.83, Figure 4a, top panel).

Results of our detailed literature search showing the relationship between having a hypothesis (or not) and three commonly sought‐after scientific rewards (average number of times a paper is cited per year, journal impact factor, and the likelihood of having a major national competitive grant). We found no statistically significant relationships between having a hypothesis and citation rates or grants, but articles with hypotheses tended to be published in higher impact journals.

On the other hand, journal articles containing mechanistic hypotheses tended to be published in higher impact journals (GLM: β̂ = 0.290 [95% CI: 0.083, 0.497], t = 2.74, p = 0.006) but only slightly so (Figure 4b, middle panel). Including multiple alternative hypotheses in papers did not have a statistically significant effect (GLM: β̂ = 0.339 [95% CI: −0.029, 0.707], t = 1.80, p = 0.072, Figure 4b, bottom panel).

Finally, we found no association between obtaining a competitive national or international grant and the presence of a hypothesis (logistic regression: mechanistic: β̂ = −0.090 [95% CI: −0.637, 0.453], z = −0.36, p = 0.745; multiple alternative: β̂ = 0.080 [95% CI: −0.891, 1.052], z = 0.49, p = 0.870; any hypothesis: β̂ = −0.005 [95% CI: −0.536, 0.525], z = −0.02, p = 0.986, Figure 4c).

4. DISCUSSION

Overall, the prevalence of hypothesis use in the ecological and evolutionary literature is strikingly low and has been so for the past 25 years despite repeated calls to reverse this pattern (Elliott & Brook, 2007; Peters, 1991; Rosen, 2016; Sells et al., 2018). Why is this the case?

Clearly, hypotheses are not always necessary and a portion of the sampled articles may represent situations where hypotheses are truly not useful (see Box 3: “When Are Hypotheses Not Useful?”). Some authors (Wolff & Krebs, 2008) overlook knowledge gathering and descriptive research as a crucial first step for making observations about natural phenomena, from which hypotheses can be formulated. This descriptive work is an important part of ecological science (Tewksbury et al., 2014), but may not benefit from strict use of hypotheses. Similarly, some efforts are simply designed to be predictive, such as auto‐recognition of species via machine learning (Briggs et al., 2012) or for prioritizing conservation efforts (Wilson et al., 2006), where the primary concern is correct identification and prediction rather than the biological or computational reasons for correct predictions (Box 3). However, it would be surprising if 75% of ecology since 1990 has been purely descriptive work from little‐known systems or purely predictive in nature. Indeed, the majority of the articles we observed did not fall into these categories.

When are hypotheses not useful?

Of course, there are a number of instances where hypotheses might not be useful or needed. It is important to recognize these instances to prevent the pendulum from swinging so far that research without hypotheses ceases to be considered science (Wolff & Krebs, 2008). Below are several important types of ecological research where formulating hypotheses may not always be beneficial.

When the goal is prediction rather than understanding. Examples of this exception include species distribution models (Elith et al., 2008) where the question is not why species are distributed as they are, but simply where species are predicted to be. Such results can be useful in conservation planning (Guisan et al., 2013; see below). Another example lies in auto‐recognition of species (Briggs et al., 2012) where the primary concern is getting identification right rather than the biological or computational reasons for correct predictions. In such instances, complex algorithms can be very effective at uncovering patterns (e.g., deep learning). A caveat and critical component of such efforts is to ensure that such models are tested on independent data. Further, if model predictions are made beyond the spatial or temporal bounds of training or test data, extreme caution should be applied (see Figure 4).
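One simple way to act on the caution about predicting beyond the bounds of the training data is to flag any new prediction point whose predictor values fall outside the range seen during training. The Python sketch below shows such a check under stated assumptions: the function name, the predictor names (`elevation_m`, `year`) and the values are hypothetical. A per-variable range check is a crude screen that does not detect novel combinations of predictors.

```python
def outside_training_range(train_rows, new_row):
    """Return the predictors in new_row that lie outside the training min/max.

    train_rows: list of dicts mapping predictor name -> numeric value.
    new_row: dict with the same keys for the point to be predicted.
    """
    flagged = []
    for name, value in new_row.items():
        seen = [row[name] for row in train_rows]
        if value < min(seen) or value > max(seen):
            flagged.append(name)
    return flagged

# Made-up training data for illustration only.
train_rows = [
    {"elevation_m": 120.0, "year": 2001},
    {"elevation_m": 850.0, "year": 2008},
    {"elevation_m": 430.0, "year": 2012},
]

# Elevation is within the training range, but the year is a temporal extrapolation.
new_row = {"elevation_m": 600.0, "year": 2030}
flagged = outside_training_range(train_rows, new_row)
```

Predictions for flagged points are extrapolations and deserve the extra caution the text describes.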