May 25, 2023

Here’s Why AI May Be Extremely Dangerous—Whether It’s Conscious or Not

Artificial intelligence algorithms will soon reach a point of rapid self-improvement that threatens our ability to control them and poses great potential risk to humanity

By Tamlyn Hunt

devrimb/Getty Images

“The idea that this stuff could actually get smarter than people.... I thought it was way off…. Obviously, I no longer think that,” Geoffrey Hinton, one of Google's top artificial intelligence scientists, also known as “the godfather of AI,” said after he quit his job in April so that he can warn about the dangers of this technology.

He’s not the only one worried. A 2023 survey of AI experts found that 36 percent fear that AI development may result in a “nuclear-level catastrophe.” Almost 28,000 people have signed on to an open letter written by the Future of Life Institute, including Steve Wozniak, Elon Musk, the CEOs of several AI companies and many other prominent technologists, asking for a six-month pause or a moratorium on new advanced AI development.

As a researcher in consciousness, I share these strong concerns about the rapid development of AI, and I am a co-signer of the Future of Life open letter.

Why are we all so concerned? In short: AI development is going way too fast.

The key issue is the profoundly rapid improvement in conversing among the new crop of advanced "chatbots," or what are technically called “large language models” (LLMs). With this coming “AI explosion,” we will probably have just one chance to get this right.

If we get it wrong, we may not live to tell the tale. This is not hyperbole.

This rapid acceleration promises to soon result in “artificial general intelligence” (AGI), and when that happens, AI will be able to improve itself with no human intervention. It will do this in the same way that, for example, Google’s AlphaZero AI learned how to play chess better than even the very best human or other AI chess players in just nine hours from when it was first turned on. It achieved this feat by playing itself millions of times over.

A team of Microsoft researchers analyzing OpenAI’s GPT-4, which I think is the best of the new advanced chatbots currently available, said in a new preprint paper that it had “sparks of advanced general intelligence.”

In testing, GPT-4 performed better than 90 percent of human test takers on the Uniform Bar Exam, a standardized test used to certify lawyers for practice in many states. The previous version, GPT-3.5, which was trained on a smaller data set, outperformed just 10 percent of test takers. The researchers found similar improvements in dozens of other standardized tests.

Most of these tests are tests of reasoning. This is the main reason why the Microsoft team, led by Sébastien Bubeck, concluded that GPT-4 “could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.”

This pace of change is why Hinton told the New York Times: “Look at how it was five years ago and how it is now. Take the difference and propagate it forwards. That’s scary.” In a mid-May Senate hearing on the potential of AI, Sam Altman, the head of OpenAI, called regulation “crucial.”

Once AI can improve itself, which may be not more than a few years away, and could in fact already be here now, we have no way of knowing what the AI will do or how we can control it. This is because superintelligent AI (which by definition can surpass humans in a broad range of activities) will—and this is what I worry about the most—be able to run circles around programmers and any other human by manipulating humans to do its will; it will also have the capacity to act in the virtual world through its electronic connections, and to act in the physical world through robot bodies.

This is known as the “control problem” or the “alignment problem” (see philosopher Nick Bostrom’s book Superintelligence for a good overview) and has been studied and argued about by philosophers and scientists, such as Bostrom, Seth Baum and Eliezer Yudkowsky, for decades now.

I think of it this way: Why would we expect a newborn baby to beat a grandmaster in chess? We wouldn’t. Similarly, why would we expect to be able to control superintelligent AI systems? (No, we won’t be able to simply hit the off switch, because superintelligent AI will have thought of every possible way that we might do that and taken actions to prevent being shut off.)

Here’s another way of looking at it: a superintelligent AI will be able to do in about one second what it would take a team of 100 human software engineers a year or more to complete. Or pick any task, like designing a new advanced airplane or weapon system, and superintelligent AI could do this in about a second.

Once AI systems are built into robots, they will be able to act in the real world, rather than only the virtual (electronic) world, with the same degree of superintelligence, and will of course be able to replicate and improve themselves at a superhuman pace.

Any defenses or protections we attempt to build into these AI “gods,” on their way toward godhood, will be anticipated and neutralized with ease by the AI once it reaches superintelligence status. This is what it means to be superintelligent.

We won’t be able to control them because anything we think of, they will have already thought of, a million times faster than us. Any defenses we’ve built in will be undone, like Gulliver throwing off the tiny strands the Lilliputians used to try and restrain him.

Some argue that these LLMs are just automation machines with zero consciousness, the implication being that if they’re not conscious they have less chance of breaking free from their programming. Even if these language models, now or in the future, aren’t at all conscious, this doesn’t matter. For the record, I agree that it’s unlikely that they have any actual consciousness at this juncture—though I remain open to new facts as they come in.

Regardless, a nuclear bomb can kill millions without any consciousness whatsoever. In the same way, AI could kill millions with zero consciousness, in myriad ways, potentially including the use of nuclear bombs either directly (much less likely) or through manipulated human intermediaries (more likely).

So, the debates about consciousness and AI really don’t figure very much into the debates about AI safety.

Yes, language models based on GPT-4 and many other models are already circulating widely. But the moratorium being called for is to stop development of any new models more powerful than GPT-4, and this can be enforced, with force if required. Training these more powerful models requires massive server farms and energy. They can be shut down.

My ethical compass tells me that it is very unwise to create these systems when we know already we won’t be able to control them, even in the relatively near future. Discernment is knowing when to pull back from the edge. Now is that time.

We should not open Pandora’s box any more than it already has been opened.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.

12 Risks and Dangers of Artificial Intelligence (AI)

As AI grows more sophisticated and widespread, the voices warning against the potential dangers of artificial intelligence grow louder.

“These things could get more intelligent than us and could decide to take over, and we need to worry now about how we prevent that happening,” said Geoffrey Hinton, known as the “Godfather of AI” for his foundational work on machine learning and neural network algorithms. In 2023, Hinton left his position at Google so that he could “talk about the dangers of AI,” noting a part of him even regrets his life’s work.

The renowned computer scientist isn’t alone in his concerns.

Tesla and SpaceX founder Elon Musk, along with over 1,000 other tech leaders, urged a pause on large AI experiments in a 2023 open letter, warning that the technology can “pose profound risks to society and humanity.”

Dangers of Artificial Intelligence

- Automation-spurred job loss

- Privacy violations

- Algorithmic bias caused by bad data

- Socioeconomic inequality

- Market volatility

- Weapons automatization

- Uncontrollable self-aware AI

Whether it’s the increasing automation of certain jobs, gender and racially biased algorithms or autonomous weapons that operate without human oversight (to name just a few), unease abounds on a number of fronts. And we’re still in the very early stages of what AI is really capable of.

12 Dangers of AI

Questions about who’s developing AI and for what purposes make it all the more essential to understand its potential downsides. Below we take a closer look at the possible dangers of artificial intelligence and explore how to manage its risks.

Is AI Dangerous?

The tech community has long debated the threats posed by artificial intelligence. Automation of jobs, the spread of fake news and a dangerous arms race of AI-powered weaponry have been mentioned as some of the biggest dangers posed by AI.

1. Lack of AI Transparency and Explainability

AI and deep learning models can be difficult to understand, even for those who work directly with the technology. This leads to a lack of transparency about how and why AI comes to its conclusions, leaving unexplained what data AI algorithms use or why they may make biased or unsafe decisions. These concerns have given rise to the use of explainable AI, but there’s still a long way to go before transparent AI systems become common practice.

2. Job Losses Due to AI Automation

AI-powered job automation is a pressing concern as the technology is adopted in industries like marketing, manufacturing and healthcare. By 2030, tasks that account for up to 30 percent of hours currently being worked in the U.S. economy could be automated — with Black and Hispanic employees left especially vulnerable to the change — according to McKinsey. Goldman Sachs even states 300 million full-time jobs could be lost to AI automation.

“The reason we have a low unemployment rate, which doesn’t actually capture people that aren’t looking for work, is largely that lower-wage service sector jobs have been pretty robustly created by this economy,” futurist Martin Ford told Built In. With AI on the rise, though, “I don’t think that’s going to continue.”

As AI robots become smarter and more dexterous, the same tasks will require fewer humans. And while AI is estimated to create 97 million new jobs by 2025, many employees won’t have the skills needed for these technical roles and could get left behind if companies don’t upskill their workforces.

“If you’re flipping burgers at McDonald’s and more automation comes in, is one of these new jobs going to be a good match for you?” Ford said. “Or is it likely that the new job requires lots of education or training or maybe even intrinsic talents — really strong interpersonal skills or creativity — that you might not have? Because those are the things that, at least so far, computers are not very good at.”

Even professions that require graduate degrees and additional post-college training aren’t immune to AI displacement.

As technology strategist Chris Messina has pointed out, fields like law and accounting are primed for an AI takeover. In fact, Messina said, some of them may well be decimated. AI already is having a significant impact on medicine. Law and accounting are next, Messina said, the former being poised for “a massive shakeup.”

“Think about the complexity of contracts, and really diving in and understanding what it takes to create a perfect deal structure,” he said of the legal field. “It’s a lot of attorneys reading through a lot of information — hundreds or thousands of pages of data and documents. It’s really easy to miss things. So AI that has the ability to comb through and comprehensively deliver the best possible contract for the outcome you’re trying to achieve is probably going to replace a lot of corporate attorneys.”

3. Social Manipulation Through AI Algorithms

Social manipulation also stands as a danger of artificial intelligence. This fear has become a reality as politicians rely on platforms to promote their viewpoints, with one example being Ferdinand Marcos, Jr., wielding a TikTok troll army to capture the votes of younger Filipinos during the Philippines’ 2022 election.

TikTok, which is just one example of a social media platform that relies on AI algorithms, fills a user’s feed with content related to previous media they’ve viewed on the platform. Criticism of the app targets this process and the algorithm’s failure to filter out harmful and inaccurate content, raising concerns over TikTok’s ability to protect its users from misleading information.

Online media and news have become even murkier in light of AI-generated images and videos, AI voice changers and deepfakes infiltrating political and social spheres. These technologies make it easy to create realistic photos, videos and audio clips, or to replace the image of one figure with another in an existing picture or video. As a result, bad actors have another avenue for sharing misinformation and war propaganda, creating a nightmare scenario where it can be nearly impossible to distinguish between credible and faulty news.

“No one knows what’s real and what’s not,” Ford said. “So it really leads to a situation where you literally cannot believe your own eyes and ears; you can’t rely on what, historically, we’ve considered to be the best possible evidence... That’s going to be a huge issue.”

4. Social Surveillance With AI Technology

In addition to its more existential threat, Ford is focused on the way AI will adversely affect privacy and security. A prime example is China’s use of facial recognition technology in offices, schools and other venues. Besides tracking a person’s movements, the Chinese government may be able to gather enough data to monitor a person’s activities, relationships and political views.

Another example is U.S. police departments embracing predictive policing algorithms to anticipate where crimes will occur. The problem is that these algorithms are influenced by arrest rates, which disproportionately impact Black communities. Police departments then double down on these communities, leading to over-policing and questions over whether self-proclaimed democracies can resist turning AI into an authoritarian weapon.

“Authoritarian regimes use or are going to use it,” Ford said. “The question is, How much does it invade Western countries, democracies, and what constraints do we put on it?”

5. Lack of Data Privacy Using AI Tools

If you’ve played around with an AI chatbot or tried out an AI face filter online, your data is being collected — but where is it going and how is it being used? AI systems often collect personal data to customize user experiences or to help train the AI models you’re using (especially if the AI tool is free). Data given to an AI system may not even be secure from other users: one bug incident that occurred with ChatGPT in 2023 “allowed some users to see titles from another active user’s chat history.” While some laws protect personal information in certain cases in the United States, there is no explicit federal law that protects citizens from data privacy harm caused by AI.

6. Biases Due to AI

Various forms of AI bias are detrimental too. Speaking to the New York Times, Princeton computer science professor Olga Russakovsky said AI bias goes well beyond gender and race. In addition to data and algorithmic bias (the latter of which can “amplify” the former), AI is developed by humans — and humans are inherently biased.

“A.I. researchers are primarily people who are male, who come from certain racial demographics, who grew up in high socioeconomic areas, primarily people without disabilities,” Russakovsky said. “We’re a fairly homogeneous population, so it’s a challenge to think broadly about world issues.”

The limited experiences of AI creators may explain why speech-recognition AI often fails to understand certain dialects and accents, or why companies fail to consider the consequences of a chatbot impersonating notorious figures in human history. Developers and businesses should exercise greater care to avoid recreating powerful biases and prejudices that put minority populations at risk.

7. Socioeconomic Inequality as a Result of AI

If companies refuse to acknowledge the inherent biases baked into AI algorithms, they may compromise their DEI initiatives through AI-powered recruiting. The idea that AI can measure the traits of a candidate through facial and voice analyses is still tainted by racial biases, reproducing the same discriminatory hiring practices businesses claim to be eliminating.

Widening socioeconomic inequality sparked by AI-driven job loss is another cause for concern, revealing the class biases of how AI is applied. Workers who perform more manual, repetitive tasks have experienced wage declines as high as 70 percent because of automation, with office and desk workers remaining largely untouched in AI’s early stages. However, the increase in generative AI use is already affecting office jobs, making for a wide range of roles that may be more vulnerable to wage or job loss than others.

Sweeping claims that AI has somehow overcome social boundaries or created more jobs fail to paint a complete picture of its effects. It’s crucial to account for differences based on race, class and other categories. Otherwise, discerning how AI and automation benefit certain individuals and groups at the expense of others becomes more difficult.

8. Weakening Ethics and Goodwill Because of AI

Along with technologists, journalists and political figures, even religious leaders are sounding the alarm on AI’s potential pitfalls. In a 2023 Vatican meeting and in his message for the 2024 World Day of Peace, Pope Francis called for nations to create and adopt a binding international treaty that regulates the development and use of AI.

Pope Francis warned against AI’s ability to be misused, and “create statements that at first glance appear plausible but are unfounded or betray biases.” He stressed how this could bolster campaigns of disinformation, distrust in communications media, interference in elections and more — ultimately increasing the risk of “fueling conflicts and hindering peace.”

The rapid rise of generative AI tools gives these concerns more substance. Many users have applied the technology to get out of writing assignments, threatening academic integrity and creativity. Plus, biased AI could be used to determine whether an individual is suitable for a job, mortgage, social assistance or political asylum, producing possible injustices and discrimination, noted Pope Francis.

“The unique human capacity for moral judgment and ethical decision-making is more than a complex collection of algorithms,” he said. “And that capacity cannot be reduced to programming a machine.”

9. Autonomous Weapons Powered By AI

As is too often the case, technological advancements have been harnessed for the purpose of warfare. When it comes to AI, some are keen to do something about it before it’s too late: In a 2016 open letter, over 30,000 individuals, including AI and robotics researchers, pushed back against the investment in AI-fueled autonomous weapons.

“The key question for humanity today is whether to start a global AI arms race or to prevent it from starting,” they wrote. “If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow.”

This prediction has come to fruition in the form of Lethal Autonomous Weapon Systems, which locate and destroy targets on their own while abiding by few regulations. Because of the proliferation of potent and complex weapons, some of the world’s most powerful nations have given in to anxieties and contributed to a tech cold war.

Many of these new weapons pose major risks to civilians on the ground, but the danger becomes amplified when autonomous weapons fall into the wrong hands. Hackers have mastered various types of cyber attacks, so it’s not hard to imagine a malicious actor infiltrating autonomous weapons and instigating absolute armageddon.

If political rivalries and warmongering tendencies are not kept in check, artificial intelligence could end up being applied with the worst intentions. Some fear that, no matter how many powerful figures point out the dangers of artificial intelligence, we’re going to keep pushing the envelope with it if there’s money to be made.

“The mentality is, ‘If we can do it, we should try it; let’s see what happens,’” Messina said. “‘And if we can make money off it, we’ll do a whole bunch of it.’ But that’s not unique to technology. That’s been happening forever.”

10. Financial Crises Brought About By AI Algorithms

The financial industry has become more receptive to AI technology’s involvement in everyday finance and trading processes. As a result, algorithmic trading could be responsible for our next major financial crisis in the markets.

While AI algorithms aren’t clouded by human judgment or emotions, they also don’t take into account contexts, the interconnectedness of markets and factors like human trust and fear. These algorithms then make thousands of trades at a blistering pace with the goal of selling a few seconds later for small profits. Selling off thousands of trades could scare investors into doing the same thing, leading to sudden crashes and extreme market volatility.

Instances like the 2010 Flash Crash and the Knight Capital Flash Crash serve as reminders of what could happen when trade-happy algorithms go berserk, regardless of whether rapid and massive trading is intentional.

This isn’t to say that AI has nothing to offer to the finance world. In fact, AI algorithms can help investors make smarter and more informed decisions on the market. But finance organizations need to make sure they understand their AI algorithms and how those algorithms make decisions. Companies should consider whether AI raises or lowers their confidence before introducing the technology to avoid stoking fears among investors and creating financial chaos.

11. Loss of Human Influence

An overreliance on AI technology could result in the loss of human influence — and a decline in human functioning — in some parts of society. Using AI in healthcare could result in reduced human empathy and reasoning, for instance. And applying generative AI for creative endeavors could diminish human creativity and emotional expression. Interacting with AI systems too much could even cause reduced peer communication and social skills. So while AI can be very helpful for automating daily tasks, some question if it might hold back overall human intelligence, abilities and need for community.

12. Uncontrollable Self-Aware AI

There is also a worry that AI will progress in intelligence so rapidly that it will become sentient and act beyond humans’ control, possibly in a malicious manner. Reports of alleged sentience have already surfaced, with one popular account coming from a former Google engineer who stated the AI chatbot LaMDA was sentient and speaking to him just as a person would. As AI’s next big milestones involve making systems with artificial general intelligence, and eventually artificial superintelligence, cries to completely stop these developments continue to rise.

How to Mitigate the Risks of AI

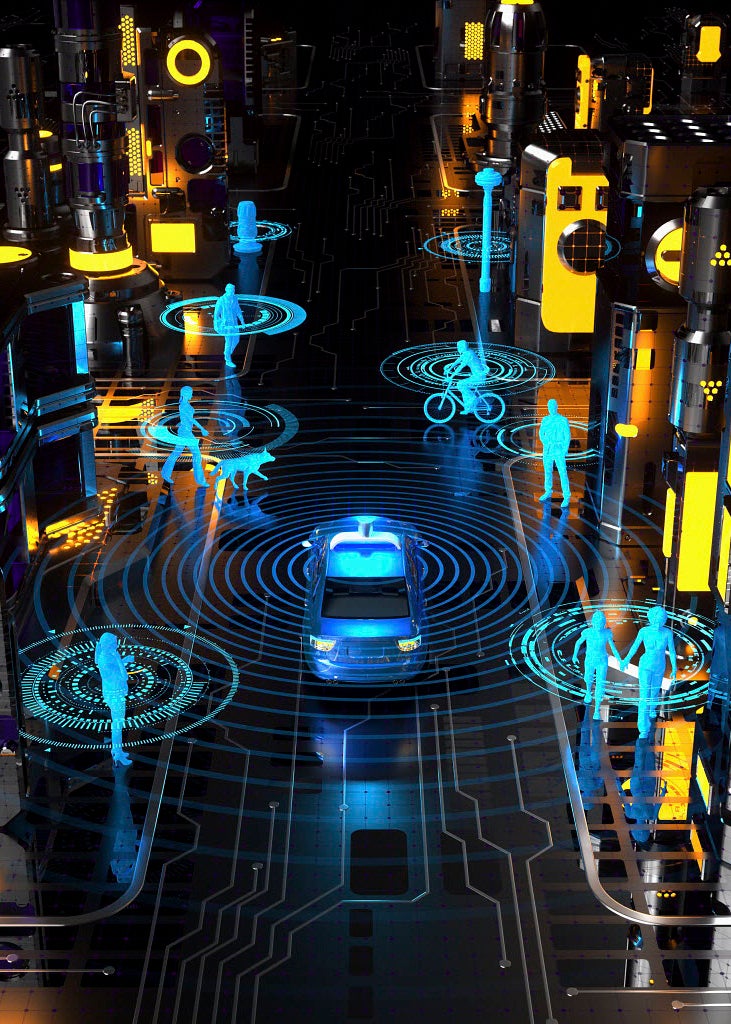

AI still has numerous benefits, like organizing health data and powering self-driving cars. To get the most out of this promising technology, though, some argue that plenty of regulation is necessary.

“There’s a serious danger that we’ll get [AI systems] smarter than us fairly soon and that these things might get bad motives and take control,” Hinton told NPR. “This isn’t just a science fiction problem. This is a serious problem that’s probably going to arrive fairly soon, and politicians need to be thinking about what to do about it now.”

Develop Legal Regulations

AI regulation has been a main focus for dozens of countries, and now the U.S. and European Union are creating more clear-cut measures to manage the rising sophistication of artificial intelligence. In fact, the White House Office of Science and Technology Policy (OSTP) published the AI Bill of Rights in 2022, a document outlining principles to help responsibly guide AI use and development. Additionally, President Joe Biden issued an executive order in 2023 requiring federal agencies to develop new rules and guidelines for AI safety and security.

Although legal regulations mean certain AI technologies could eventually be banned, that doesn’t prevent societies from exploring the field.

Ford argues that AI is essential for countries looking to innovate and keep up with the rest of the world.

“You regulate the way AI is used, but you don’t hold back progress in basic technology. I think that would be wrong-headed and potentially dangerous,” Ford said. “We decide where we want AI and where we don’t; where it’s acceptable and where it’s not. And different countries are going to make different choices.”

Establish Organizational AI Standards and Discussions

On a company level, there are many steps businesses can take when integrating AI into their operations. Organizations can develop processes for monitoring algorithms, compiling high-quality data and explaining the findings of AI algorithms. Leaders could even make AI a part of their company culture and routine business discussions, establishing standards to determine acceptable AI technologies.

Guide Tech With Humanities Perspectives

When it comes to society as a whole, though, there should be a greater push for tech to embrace the diverse perspectives of the humanities. Stanford University AI researchers Fei-Fei Li and John Etchemendy make this argument in a 2019 blog post that calls for national and global leadership in regulating artificial intelligence:

“The creators of AI must seek the insights, experiences and concerns of people across ethnicities, genders, cultures and socio-economic groups, as well as those from other fields, such as economics, law, medicine, philosophy, history, sociology, communications, human-computer-interaction, psychology, and Science and Technology Studies (STS).”

Balancing high-tech innovation with human-centered thinking is an ideal method for producing responsible AI technology and ensuring the future of AI remains hopeful for the next generation. The dangers of artificial intelligence should always be a topic of discussion, so leaders can figure out ways to wield the technology for noble purposes.

“I think we can talk about all these risks, and they’re very real,” Ford said. “But AI is also going to be the most important tool in our toolbox for solving the biggest challenges we face.”

Frequently Asked Questions

What is AI?

AI (artificial intelligence) describes a machine's ability to perform tasks and mimic intelligence at a similar level as humans.

Is AI dangerous?

AI has the potential to be dangerous, but these dangers may be mitigated by implementing legal regulations and by guiding AI development with human-centered thinking.

Can AI cause human extinction?

If AI algorithms are biased or used in a malicious manner — such as in the form of deliberate disinformation campaigns or autonomous lethal weapons — they could cause significant harm toward humans. Though as of right now, it is unknown whether AI is capable of causing human extinction.

What happens if AI becomes self-aware?

Self-aware AI has yet to be created, so it is not fully known what will happen if or when this development occurs.

Some suggest self-aware AI may become a helpful counterpart to humans in everyday living, while others suggest that it may act beyond human control and purposely harm humans.

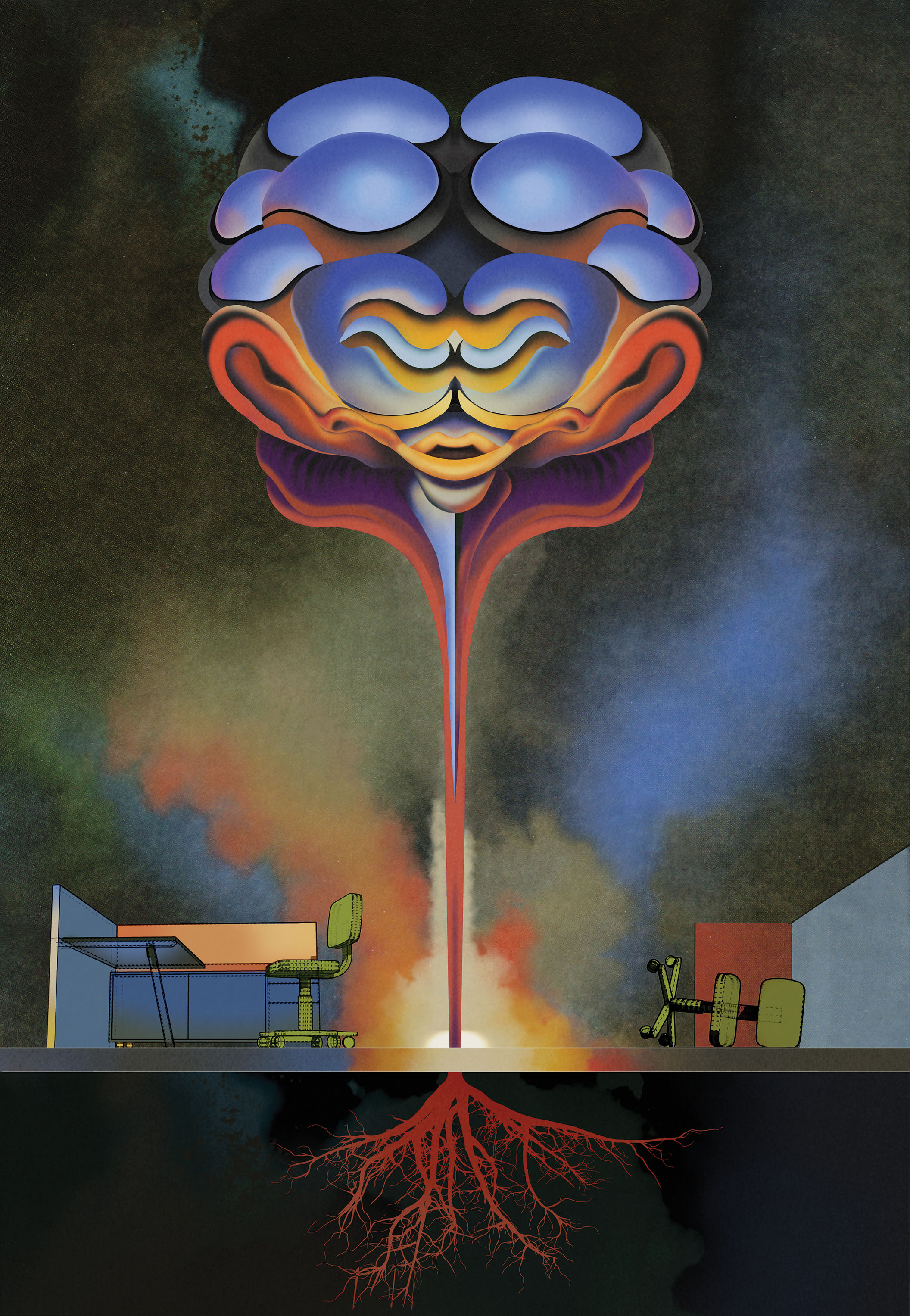

Opinion Guest Essay

The True Threat of Artificial Intelligence

Credit... Mathieu Larone

By Evgeny Morozov

Mr. Morozov is the author of “To Save Everything, Click Here: The Folly of Technological Solutionism” and the host of the forthcoming podcast “The Santiago Boys.”

June 30, 2023

In May, more than 350 technology executives, researchers and academics signed a statement warning of the existential dangers of artificial intelligence. “Mitigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” the signatories warned.

This came on the heels of another high-profile letter, signed by the likes of Elon Musk and Steve Wozniak, a co-founder of Apple, calling for a six-month moratorium on the development of advanced A.I. systems.

Meanwhile, the Biden administration has urged responsible A.I. innovation, stating that “in order to seize the opportunities” it offers, we “must first manage its risks.” In Congress, Senator Chuck Schumer called for “first of their kind” listening sessions on the potential and risks of A.I., a crash course of sorts from industry executives, academics, civil rights activists and other stakeholders.

The mounting anxiety about A.I. isn’t because of the boring but reliable technologies that autocomplete our text messages or direct robot vacuums to dodge obstacles in our living rooms. It is the rise of artificial general intelligence, or A.G.I., that worries the experts.

A.G.I. doesn’t exist yet, but some believe that the rapidly growing capabilities of OpenAI’s ChatGPT suggest its emergence is near. Sam Altman, a co-founder of OpenAI, has described it as “systems that are generally smarter than humans.” Building such systems remains a daunting — some say impossible — task. But the benefits appear truly tantalizing.

Imagine Roombas, no longer condemned to vacuuming the floors, that evolve into all-purpose robots, happy to brew morning coffee or fold laundry — without ever being programmed to do these things.

Sounds appealing. But should these A.G.I. Roombas get too powerful, their mission to create a spotless utopia might get messy for their dust-spreading human masters. At least we’ve had a good run.

Discussions of A.G.I. are rife with such apocalyptic scenarios. Yet a nascent A.G.I. lobby of academics, investors and entrepreneurs counter that, once made safe, A.G.I. would be a boon to civilization. Mr. Altman, the face of this campaign, embarked on a global tour to charm lawmakers. Earlier this year he wrote that A.G.I. might even turbocharge the economy, boost scientific knowledge and “elevate humanity by increasing abundance.”

This is why, for all the hand-wringing, so many smart people in the tech industry are toiling to build this controversial technology: not using it to save the world seems immoral.

They are beholden to an ideology that views this new technology as inevitable and, in a safe version, as universally beneficial. Its proponents can think of no better alternatives for fixing humanity and expanding its intelligence.

But this ideology — call it A.G.I.-ism — is mistaken. The real risks of A.G.I. are political and won’t be fixed by taming rebellious robots. The safest of A.G.I.s would not deliver the progressive panacea promised by its lobby. And in presenting its emergence as all but inevitable, A.G.I.-ism distracts from finding better ways to augment intelligence.

Unbeknown to its proponents, A.G.I.-ism is just a bastard child of a much grander ideology, one preaching that, as Margaret Thatcher memorably put it, there is no alternative, not to the market.

Rather than breaking capitalism, as Mr. Altman has hinted it could do, A.G.I. — or at least the rush to build it — is more likely to create a powerful (and much hipper) ally for capitalism’s most destructive creed: neoliberalism.

Fascinated with privatization, competition and free trade, the architects of neoliberalism wanted to dynamize and transform a stagnant and labor-friendly economy through markets and deregulation.

Some of these transformations worked, but they came at an immense cost. Over the years, neoliberalism drew many, many critics, who blamed it for the Great Recession and financial crisis, Trumpism, Brexit and much else.

It is not surprising, then, that the Biden administration has distanced itself from the ideology, acknowledging that markets sometimes get it wrong. Foundations, think tanks and academics have even dared to imagine a post-neoliberal future.

Yet neoliberalism is far from dead. Worse, it has found an ally in A.G.I.-ism, which stands to reinforce and replicate its main biases: that private actors outperform public ones (the market bias), that adapting to reality beats transforming it (the adaptation bias) and that efficiency trumps social concerns (the efficiency bias).

These biases turn the alluring promise behind A.G.I. on its head: Instead of saving the world, the quest to build it will make things only worse. Here is how.

A.G.I. will never overcome the market’s demands for profit.

Remember when Uber, with its cheap rates, was courting cities to serve as their public transportation systems?

It all began nicely, with Uber promising implausibly cheap rides, courtesy of a future with self-driving cars and minimal labor costs. Deep-pocketed investors loved this vision, even absorbing Uber’s multibillion-dollar losses.

But when reality descended, the self-driving cars were still a pipe dream. The investors demanded returns and Uber was forced to raise prices. Users who relied on it to replace public buses and trains were left on the sidewalk.

The neoliberal instinct behind Uber’s business model is that the private sector can do better than the public sector — the market bias.

It’s not just cities and public transit. Hospitals, police departments and even the Pentagon increasingly rely on Silicon Valley to accomplish their missions.

With A.G.I., this reliance will only deepen, not least because A.G.I. is unbounded in its scope and ambition. No administrative or government services would be immune to its promise of disruption.

Moreover, A.G.I. doesn’t even have to exist to lure them in. This, at any rate, is the lesson of Theranos, a start-up that promised to “solve” health care through a revolutionary blood-testing technology and a former darling of America’s elites. Its victims are real, even if its technology never was.

After so many Uber- and Theranos-like traumas, we already know what to expect of an A.G.I. rollout. It will consist of two phases. First, the charm offensive of heavily subsidized services. Then the ugly retrenchment, with the overdependent users and agencies shouldering the costs of making them profitable.

As always, Silicon Valley mavens play down the market’s role. In a recent essay titled “Why A.I. Will Save the World,” Marc Andreessen, a prominent tech investor, even proclaims that A.I. “is owned by people and controlled by people, like any other technology.”

Only a venture capitalist can traffic in such exquisite euphemisms. Most modern technologies are owned by corporations. And they — not the mythical “people” — will be the ones that will monetize saving the world.

And are they really saving it? The record, so far, is poor. Companies like Airbnb and TaskRabbit were welcomed as saviors for the beleaguered middle class; Tesla’s electric cars were seen as a remedy to a warming planet. Soylent, the meal-replacement shake, embarked on a mission to “solve” global hunger, while Facebook vowed to “solve” connectivity issues in the Global South. None of these companies saved the world.

A decade ago, I called this solutionism, but “digital neoliberalism” would be just as fitting. This worldview reframes social problems in light of for-profit technological solutions. As a result, concerns that belong in the public domain are reimagined as entrepreneurial opportunities in the marketplace.

A.G.I.-ism has rekindled this solutionist fervor. Last year, Mr. Altman stated that “A.G.I. is probably necessary for humanity to survive” because “our problems seem too big” for us to “solve without better tools.” He’s recently asserted that A.G.I. will be a catalyst for human flourishing.

But companies need profits, and such benevolence, especially from unprofitable firms burning investors’ billions, is uncommon. OpenAI, having accepted billions from Microsoft, has contemplated raising another $100 billion to build A.G.I. Those investments will need to be earned back — against the service’s staggering invisible costs. (One estimate from February put the expense of operating ChatGPT at $700,000 per day.)

Thus, the ugly retrenchment phase, with aggressive price hikes to make an A.G.I. service profitable, might arrive before “abundance” and “flourishing.” But how many public institutions would mistake fickle markets for affordable technologies and become dependent on OpenAI’s expensive offerings by then?

And if you dislike your town outsourcing public transportation to a fragile start-up, would you want it farming out welfare services, waste management and public safety to the possibly even more volatile A.G.I. firms?

A.G.I. will dull the pain of our thorniest problems without fixing them.

Neoliberalism has a knack for mobilizing technology to make society’s miseries bearable. I recall an innovative tech venture from 2017 that promised to improve commuters’ use of a Chicago subway line. It offered rewards to discourage metro riders from traveling at peak times. Its creators leveraged technology to influence the demand side (the riders), seeing structural changes to the supply side (like raising public transport funding) as too difficult. Tech would help make Chicagoans adapt to the city’s deteriorating infrastructure rather than fixing it in order to meet the public’s needs.

This is the adaptation bias — the aspiration that, with a technological wand, we can become desensitized to our plight. It’s the product of neoliberalism’s relentless cheerleading for self-reliance and resilience.

The message is clear: gear up, enhance your human capital and chart your course like a start-up. And A.G.I.-ism echoes this tune. Bill Gates has trumpeted that A.I. can “help people everywhere improve their lives.”

The solutionist feast is only getting started: Whether it’s fighting the next pandemic, the loneliness epidemic or inflation, A.I. is already pitched as an all-purpose hammer for many real and imaginary nails. However, the decade lost to the solutionist folly reveals the limits of such technological fixes.

To be sure, Silicon Valley’s many apps — to monitor our spending, calories and workout regimes — are occasionally helpful. But they mostly ignore the underlying causes of poverty or obesity. And without tackling the causes, we remain stuck in the realm of adaptation, not transformation.

There’s a difference between nudging us to follow our walking routines — a solution that favors individual adaptation — and understanding why our towns have no public spaces to walk on — a prerequisite for a politics-friendly solution that favors collective and institutional transformation.

But A.G.I.-ism, like neoliberalism, sees public institutions as unimaginative and not particularly productive. They should just adapt to A.G.I., at least according to Mr. Altman, who recently said he was nervous about “the speed with which our institutions can adapt” — part of the reason, he added, “of why we want to start deploying these systems really early, while they’re really weak, so that people have as much time as possible to do this.”

But should institutions only adapt? Can’t they develop their own transformative agendas for improving humanity’s intelligence? Or do we use institutions only to mitigate the risks of Silicon Valley’s own technologies?

A.G.I. undermines civic virtues and amplifies trends we already dislike.

A common criticism of neoliberalism is that it has flattened our political life, rearranging it around efficiency. “The Problem of Social Cost,” a 1960 article that has become a classic of the neoliberal canon, preaches that a polluting factory and its victims should not bother bringing their disputes to court. Such fights are inefficient — who needs justice, anyway? — and stand in the way of market activity. Instead, the parties should privately bargain over compensation and get on with their business.

This fixation on efficiency is how we arrived at “solving” climate change by letting the worst offenders continue as before. The way to avoid the shackles of regulation is to devise a scheme — in this case, taxing carbon — that lets polluters buy credits to match the extra carbon they emit.

This culture of efficiency, in which markets measure the worth of things and substitute for justice, inevitably corrodes civic virtues.

And the problems this creates are visible everywhere. Academics fret that, under neoliberalism, research and teaching have become commodities. Doctors lament that hospitals prioritize more profitable services such as elective surgery over emergency care. Journalists hate that the worth of their articles is measured in eyeballs.

Now imagine unleashing A.G.I. on these esteemed institutions — the university, the hospital, the newspaper — with the noble mission of “fixing” them. Their implicit civic missions would remain invisible to A.G.I., for those missions are rarely quantified even in their annual reports — the sort of materials that go into training the models behind A.G.I.

After all, who likes to boast that his class on Renaissance history got only a handful of students? Or that her article on corruption in some faraway land got only a dozen page views? Inefficient and unprofitable, such outliers miraculously survive even in the current system. The rest of the institution quietly subsidizes them, prioritizing values other than profit-driven “efficiency.”

Will this still be the case in the A.G.I. utopia? Or will fixing our institutions through A.G.I. be like handing them over to ruthless consultants? They, too, offer data-bolstered “solutions” for maximizing efficiency. But these solutions often fail to grasp the messy interplay of values, missions and traditions at the heart of institutions — an interplay that is rarely visible if you only scratch their data surface.

In fact, the remarkable performance of ChatGPT-like services is, by design, a refusal to grasp reality at a deeper level, beyond the data’s surface. So whereas earlier A.I. systems relied on explicit rules and required someone like Newton to theorize gravity — to ask how and why apples fall — newer systems like A.G.I. simply learn to predict gravity’s effects by observing millions of apples fall to the ground.

However, if all that A.G.I. sees are cash-strapped institutions fighting for survival, it may never infer their true ethos. Good luck discerning the meaning of the Hippocratic oath by observing hospitals that have been turned into profit centers.

Margaret Thatcher’s other famous neoliberal dictum was that “there is no such thing as society.”

The A.G.I. lobby unwittingly shares this grim view. For them, the kind of intelligence worth replicating is a function of what happens in individuals’ heads rather than in society at large.

But human intelligence is as much a product of policies and institutions as it is of genes and individual aptitudes. It’s easier to be smart on a fellowship in the Library of Congress than while working several jobs in a place without a bookstore or even decent Wi-Fi.

It doesn’t seem all that controversial to suggest that more scholarships and public libraries will do wonders for boosting human intelligence. But for the solutionist crowd in Silicon Valley, augmenting intelligence is primarily a technological problem — hence the excitement about A.G.I.

However, if A.G.I.-ism really is neoliberalism by other means, then we should be ready to see fewer — not more — intelligence-enabling institutions. After all, they are the remnants of that dreaded “society” that, for neoliberals, doesn’t really exist. A.G.I.’s grand project of amplifying intelligence may end up shrinking it.

Because of such solutionist bias, even seemingly innovative policy ideas around A.G.I. fail to excite. Take the recent proposal for a “Manhattan Project for A.I. Safety.” This is premised on the false idea that there’s no alternative to A.G.I.

But wouldn’t our quest for augmenting intelligence be far more effective if the government funded a Manhattan Project for culture and education and the institutions that nurture them instead?

Without such efforts, the vast cultural resources of our existing public institutions risk becoming mere training data sets for A.G.I. start-ups, reinforcing the falsehood that society doesn’t exist.

Depending on how (and if) the robot rebellion unfolds, A.G.I. may or may not prove an existential threat. But with its antisocial bent and its neoliberal biases, A.G.I.-ism already is: We don’t need to wait for the magic Roombas to question its tenets.

Evgeny Morozov, the author of “To Save Everything, Click Here: The Folly of Technological Solutionism,” is the founder and publisher of The Syllabus and the host of the podcast “The Santiago Boys.”

Major new report explains the risks and rewards of artificial intelligence

AI has begun to permeate every aspect of our lives. Image: Unsplash/Hitesh Choudhary

Toby Walsh

Liz Sonenberg

- A new report has just been released, highlighting the changes in AI over the last 5 years, and predicted future trends.

- It was co-written by people across the world, with backgrounds in computer science, engineering, law, political science, policy, sociology and economics.

- In the last 5 years, AI has become an increasing part of our lives, revolutionizing a number of industries, but is still not free from risk.

A major new report on the state of artificial intelligence (AI) has just been released. Think of it as the AI equivalent of an Intergovernmental Panel on Climate Change report, in that it identifies where AI is at today, and the promise and perils in view.

From language generation and molecular medicine to disinformation and algorithmic bias, AI has begun to permeate every aspect of our lives.

The report argues that we are at an inflection point where researchers and governments must think and act carefully to contain the risks AI presents and make the most of its benefits.

A century-long study of AI

The report comes out of the AI100 project, which aims to study and anticipate the effects of AI rippling out through our lives over the course of the next 100 years.

AI100 produces a new report every five years: the first was published in 2016, and this is the second. As two points define a line, this second report lets us see the direction AI is taking us in.

One of us (Liz Sonenberg) is a member of the standing committee overseeing the AI100 project, and the other (Toby Walsh) was on the study panel that wrote this particular report. Members of the panel came from across the world, with backgrounds in computer science, engineering, law, political science, policy, sociology and economics.

The promises and perils of AI are becoming real

The report highlights the remarkable progress made in AI over the past five years. AI is leaving the laboratory and has entered our lives, having a “real-world impact on people, institutions, and culture”. Read the news on any given day and you’re likely to find multiple stories about some new advance in AI or some new use of AI.

For example, in natural language processing (NLP), computers can now analyse and even generate realistic human language. To demonstrate, we asked OpenAI’s GPT-3 system, one of the largest neural networks ever built, to summarise the AI100 report for you. It did a pretty good job, even if the summary confronts our sense of self by being written in the first person:

In the coming decade, I expect that AI will play an increasingly prominent role in the lives of people everywhere. AI-infused services will become more common, and AI will become increasingly embedded in the daily lives of people across the world.

I believe that this will bring with it great economic and societal benefits, but that it will also require us to address the many challenges to ensure that the benefits are broadly shared and that people are not marginalised by these new technologies.

A key insight of AI research is that it is easier to build things than to understand why they work. However, defining what success looks like for an AI application is not straightforward.

For example, the AI systems that are used in healthcare to analyse symptoms, recommend diagnoses, or choose treatments are often far better than anything that could be built by a human, but their success is hard to quantify.

As a second example of the recent and remarkable progress in AI, consider the latest breakthrough from Google’s DeepMind. AlphaFold is an AI program that provides a huge step forward in our ability to predict how proteins fold.

This will likely lead to major advances in life sciences and medicine, accelerating efforts to understand the building blocks of life and enabling quicker and more sophisticated drug discovery. Most of the planet now knows to its cost how the unique shape of the spike proteins in the SARS-CoV-2 virus is key to its ability to invade our cells, and also to the vaccines developed to combat its deadly progress.

The AI100 report argues that worries about super-intelligent machines and wide-scale job loss from automation are still premature, requiring AI that is far more capable than available today. The main concern the report raises is not malevolent machines of superior intelligence to humans, but incompetent machines of inferior intelligence.

Once again, it’s easy to find in the news real-life stories of risks and threats to our democratic discourse and mental health posed by AI-powered tools. For instance, Facebook uses machine learning to sort its news feed and give each of its 2 billion users a unique but often inflammatory view of the world.

The time to act is now

It’s clear we’re at an inflection point: we need to think seriously and urgently about the downsides and risks the increasing application of AI is revealing. The ever-improving capabilities of AI are a double-edged sword. Harms may be intentional, like deepfake videos, or unintended, like algorithms that reinforce racial and other biases.

AI research has traditionally been undertaken by computer and cognitive scientists. But the challenges being raised by AI today are not just technical. All areas of human inquiry, and especially the social sciences, need to be included in a broad conversation about the future of the field. Minimising negative impacts on society and enhancing the positives requires consideration from across academia and with societal input.

Governments also have a crucial role to play in shaping the development and application of AI. Indeed, governments around the world have begun to consider and address the opportunities and challenges posed by AI. But they remain behind the curve.

A greater investment of time and resources is needed to meet the challenges posed by the rapidly evolving technologies of AI and associated fields. In addition to regulation, governments also need to educate. In an AI-enabled world, our citizens, from the youngest to the oldest, need to be literate in these new digital technologies.

At the end of the day, the success of AI research will be measured by how it has empowered all people, helping tackle the many wicked problems facing the planet, from the climate emergency to increasing inequality within and between countries.

AI will have failed if it harms or devalues the very people we are trying to help.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.

Illustration by Ben Boothman

Great promise but potential for peril

Christina Pazzanese

Harvard Staff Writer

Ethical concerns mount as AI takes bigger decision-making role in more industries

Second in a four-part series that taps the expertise of the Harvard community to examine the promise and potential pitfalls of the rising age of artificial intelligence and machine learning, and how to humanize them.

For decades, artificial intelligence, or AI, was the engine of high-level STEM research. Most consumers became aware of the technology’s power and potential through internet platforms like Google and Facebook, and retailer Amazon. Today, AI is essential across a vast array of industries, including health care, banking, retail, and manufacturing.

But its game-changing promise to do things like improve efficiency, bring down costs, and accelerate research and development has been tempered of late with worries that these complex, opaque systems may do more societal harm than economic good. With virtually no U.S. government oversight, private companies use AI software to make determinations about health and medicine, employment, creditworthiness, and even criminal justice without having to answer for how they’re ensuring that programs aren’t encoded, consciously or unconsciously, with structural biases.

Its growing appeal and utility are undeniable. Worldwide business spending on AI is expected to hit $50 billion this year and $110 billion annually by 2024, even after the global economic slump caused by the COVID-19 pandemic, according to a forecast released in August by technology research firm IDC. Retail and banking industries spent the most this year, at more than $5 billion each. The company expects the media industry and federal and central governments will invest most heavily between 2018 and 2023 and predicts that AI will be “the disrupting influence changing entire industries over the next decade.”

“Virtually every big company now has multiple AI systems and counts the deployment of AI as integral to their strategy,” said Joseph Fuller, professor of management practice at Harvard Business School, who co-leads Managing the Future of Work, a research project that studies, in part, the development and implementation of AI, including machine learning, robotics, sensors, and industrial automation, in business and the work world.

Early on, it was popularly assumed that the future of AI would involve the automation of simple repetitive tasks requiring low-level decision-making. But AI has rapidly grown in sophistication, owing to more powerful computers and the compilation of huge data sets. One branch, machine learning, notable for its ability to sort and analyze massive amounts of data and to learn over time, has transformed countless fields, including education.

Firms now use AI to manage sourcing of materials and products from suppliers and to integrate vast troves of information to aid in strategic decision-making, and because of its capacity to process data so quickly, AI tools are helping to minimize time in the pricey trial-and-error of product development — a critical advance for an industry like pharmaceuticals, where it costs $1 billion to bring a new pill to market, Fuller said.

Health care experts see many possible uses for AI, including with billing and processing necessary paperwork. And medical professionals expect that the biggest, most immediate impact will be in analysis of data, imaging, and diagnosis. Imagine, they say, having the ability to bring all of the medical knowledge available on a disease to any given treatment decision.

In employment, AI software culls and processes resumes and analyzes job interviewees’ voices and facial expressions in hiring, and it is driving the growth of what’s known as “hybrid” jobs. Rather than replacing employees, AI takes on important technical tasks of their work, like routing for package delivery trucks, which potentially frees workers to focus on other responsibilities, making them more productive and therefore more valuable to employers.

“It’s allowing them to do more stuff better, or to make fewer errors, or to capture their expertise and disseminate it more effectively in the organization,” said Fuller, who has studied the effects and attitudes of workers who have lost or are likeliest to lose their jobs to AI.

“Can smart machines outthink us, or are certain elements of human judgment indispensable in deciding some of the most important things in life?”

— Michael Sandel, political philosopher and Anne T. and Robert M. Bass Professor of Government

Though automation is here to stay, the elimination of entire job categories, like highway toll-takers who were replaced by sensors because of AI’s proliferation, is not likely, according to Fuller.

“What we’re going to see is jobs that require human interaction, empathy, that require applying judgment to what the machine is creating [will] have robustness,” he said.

While big business already has a huge head start, small businesses could also potentially be transformed by AI, says Karen Mills ’75, M.B.A. ’77, who ran the U.S. Small Business Administration from 2009 to 2013. With half the country employed by small businesses before the COVID-19 pandemic, that could have major implications for the national economy over the long haul.

Rather than hamper small businesses, the technology could give their owners detailed new insights into sales trends, cash flow, ordering, and other important financial information in real time so they can better understand how the business is doing and where problem areas might loom without having to hire anyone, become a financial expert, or spend hours laboring over the books every week, Mills said.

One area where AI could “completely change the game” is lending, where access to capital is difficult in part because banks often struggle to get an accurate picture of a small business’s viability and creditworthiness.

“It’s much harder to look inside a business operation and know what’s going on” than it is to assess an individual, she said.

Information opacity makes the lending process laborious and expensive for both would-be borrowers and lenders, and applications are designed to analyze larger companies or those who’ve already borrowed, a built-in disadvantage for certain types of businesses and for historically underserved borrowers, like women and minority business owners, said Mills, a senior fellow at HBS.

But with AI-powered software pulling information from a business’s bank account, taxes, and online bookkeeping records and comparing it with data from thousands of similar businesses, even small community banks will be able to make informed assessments in minutes, without the agony of paperwork and delays, and, like blind auditions for musicians, without fear that any inequity crept into the decision-making.

“All of that goes away,” she said.

A veneer of objectivity

Not everyone sees blue skies on the horizon, however. Many worry whether the coming age of AI will bring new, faster, and frictionless ways to discriminate and divide at scale.

“Part of the appeal of algorithmic decision-making is that it seems to offer an objective way of overcoming human subjectivity, bias, and prejudice,” said political philosopher Michael Sandel, Anne T. and Robert M. Bass Professor of Government. “But we are discovering that many of the algorithms that decide who should get parole, for example, or who should be presented with employment opportunities or housing … replicate and embed the biases that already exist in our society.”

AI presents three major areas of ethical concern for society: privacy and surveillance, bias and discrimination, and perhaps the deepest, most difficult philosophical question of the era, the role of human judgment, said Sandel, who teaches a course in the moral, social, and political implications of new technologies.

“Debates about privacy safeguards and about how to overcome bias in algorithmic decision-making in sentencing, parole, and employment practices are by now familiar,” said Sandel, referring to conscious and unconscious prejudices of program developers and those built into datasets used to train the software. “But we’ve not yet wrapped our minds around the hardest question: Can smart machines outthink us, or are certain elements of human judgment indispensable in deciding some of the most important things in life?”

Panic over AI suddenly injecting bias into everyday life en masse is overstated, says Fuller. First, the business world and the workplace, rife with human decision-making, have always been riddled with “all sorts” of biases that prevent people from making deals or landing contracts and jobs.

When calibrated carefully and deployed thoughtfully, resume-screening software allows a wider pool of applicants to be considered than could be done otherwise, and should minimize the potential for favoritism that comes with human gatekeepers, Fuller said.

Sandel disagrees. “AI not only replicates human biases, it confers on these biases a kind of scientific credibility. It makes it seem that these predictions and judgments have an objective status,” he said.

In the world of lending, algorithm-driven decisions do have a potential “dark side,” Mills said. As machines learn from data sets they’re fed, chances are “pretty high” they may replicate many of the banking industry’s past failings that resulted in systematic disparate treatment of African Americans and other marginalized consumers.

“If we’re not thoughtful and careful, we’re going to end up with redlining again,” she said.

A highly regulated industry, banks are legally on the hook if the algorithms they use to evaluate loan applications end up inappropriately discriminating against classes of consumers, so those “at the top levels” in the field are “very focused” right now on this issue, said Mills, who closely studies the rapid changes in financial technology, or “fintech.”

“They really don’t want to discriminate. They want to get access to capital to the most creditworthy borrowers,” she said. “That’s good business for them, too.”

Oversight overwhelmed

Given its power and expected ubiquity, some argue that the use of AI should be tightly regulated. But there’s little consensus on how that should be done and who should make the rules.

Thus far, companies that develop or use AI systems largely self-police, relying on existing laws and market forces, like negative reactions from consumers and shareholders or the demands of highly prized AI technical talent, to keep them in line.

“There’s no businessperson on the planet at an enterprise of any size that isn’t concerned about this and trying to reflect on what’s going to be politically, legally, regulatorily, [or] ethically acceptable,” said Fuller.

Firms already consider their own potential liability from misuse before a product launch, but it’s not realistic to expect companies to anticipate and prevent every possible unintended consequence of their product, he said.

Few think the federal government is up to the job, or will ever be.

“The regulatory bodies are not equipped with the expertise in artificial intelligence to engage in [oversight] without some real focus and investment,” said Fuller, noting the rapid rate of technological change means even the most informed legislators can’t keep pace. Requiring every new product using AI to be prescreened for potential social harms is not only impractical, but would create a huge drag on innovation.

Jason Furman, a professor of the practice of economic policy at Harvard Kennedy School, agrees that government regulators need “a much better technical understanding of artificial intelligence to do that job well,” but says they could do it.

Existing bodies like the National Highway Traffic Safety Administration, which oversees vehicle safety, could handle potential AI issues in autonomous vehicles, for example, rather than leaving them to a single watchdog agency, he said.

“I wouldn’t have a central AI group that has a division that does cars, I would have the car people have a division of people who are really good at AI,” said Furman, a former top economic adviser to President Barack Obama.

Though keeping AI regulation within industries does leave open the possibility of co-opted enforcement, Furman said industry-specific panels would be far more knowledgeable about the overarching technology of which AI is simply one piece, making for more thorough oversight.

While the European Union already has rigorous data-privacy laws and the European Commission is considering a formal regulatory framework for ethical use of AI, the U.S. government has historically been late when it comes to tech regulation.

“I think we should’ve started three decades ago, but better late than never,” said Furman, who thinks there needs to be a “greater sense of urgency” to make lawmakers act.

Business leaders “can’t have it both ways,” refusing responsibility for AI’s harmful consequences while also fighting government oversight, Sandel maintains.

“The problem is these big tech companies are neither self-regulating, nor subject to adequate government regulation. I think there needs to be more of both,” he said, later adding: “We can’t assume that market forces by themselves will sort it out. That’s a mistake, as we’ve seen with Facebook and other tech giants.”

Last fall, Sandel taught “Tech Ethics,” a popular new Gen Ed course with Doug Melton, co-director of Harvard’s Stem Cell Institute. As in his legendary “Justice” course, students consider and debate the big questions about new technologies, everything from gene editing and robots to privacy and surveillance.

“Companies have to think seriously about the ethical dimensions of what they’re doing and we, as democratic citizens, have to educate ourselves about tech and its social and ethical implications — not only to decide what the regulations should be, but also to decide what role we want big tech and social media to play in our lives,” said Sandel.

Doing that will require a major educational intervention, both at Harvard and in higher education more broadly, he said.

“We have to enable all students to learn enough about tech and about the ethical implications of new technologies so that when they are running companies or when they are acting as democratic citizens, they will be able to ensure that technology serves human purposes rather than undermines a decent civic life.”

To Err is Not Human: The Dangers of AI-assisted Academic Writing

Artificial intelligence (AI)-powered writing tools are becoming increasingly popular among researchers. AI tools can improve several important aspects of writing, such as readability, grammar, spelling, and tone, providing authors with a competitive edge when drafting grant proposals and academic articles. In recent years, there has also been an increase in the use of “Generative AI,” which can produce write-ups that appear to have been drafted by humans. However, despite AI’s enormous potential in academic writing, there are several significant pitfalls in its use.

Inauthentic Sources

AI tools are built on rapidly evolving deep learning algorithms that fetch answers to your queries or “prompts”. Owing to advances in computation, and the rapid growth in the amount of data that algorithms can access, these tools are often accurate in their answers. However, at times AI can make mistakes and give you inaccurate data. What is worrying is that these data may look authentic at first glance, which increases the risk of their being incorporated into research articles. Failing to scrutinise information and data sources provided by AI can therefore impair scientific credibility and trigger a chain of falsification in the research community.

Why Human Supervision Is Advisable

AI-generated output is frequently generic and reliant on swapped-in synonyms, and AI tools may not be able to critically analyse the scientific context when writing manuscripts.

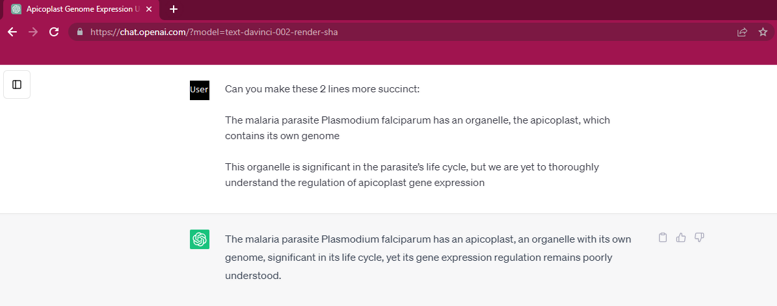

Consider an example in which the AI tool ‘ChatGPT’ was used to generate a one-line summary of the following sentences:

The malaria parasite Plasmodium falciparum has an organelle, the apicoplast, which contains its own genome.

This organelle is significant in the Plasmodium’s lifecycle, but we are yet to thoroughly understand the regulation of apicoplast gene expression.

The following is a human-generated one-line summary:

The malaria parasite Plasmodium falciparum has an organelle that is significant in its lifecycle called an apicoplast, which contains its own genome, but the regulation of apicoplast gene expression is poorly understood.

On the other hand, the AI-generated summary is as follows:

The malaria parasite Plasmodium falciparum has an apicoplast, an organelle with its own genome, significant in its life cycle, yet its gene expression regulation remains poorly understood.

In the AI-generated text, it is not clear what ‘its’ refers to in each instance, because it could refer either to Plasmodium falciparum or to the apicoplast. Moreover, while the expression ‘gene expression regulation’ is technically correct, ‘regulation of gene expression’ makes for better sentence structure and writing style.

This is why we need humans to supervise AI bots and verify the accuracy of all information submitted for publication. We request that authors who have used AI or AI-assisted tools include a declaration statement at the end of their manuscript where they specify the tool and the reason for using it.

An example of AI-generated text using the software ChatGPT

Data Leakage