Static Single Assignment (with relevant examples)


Static Single Assignment was presented in 1988 by Barry K. Rosen, Mark N. Wegman, and F. Kenneth Zadeck.

In compiler design, Static Single Assignment (SSA) is a way of structuring the intermediate representation (IR) so that every variable is assigned a value exactly once and every variable is defined before its use. The main benefit of SSA is that it simplifies the properties of variables, which in turn simplifies compiler optimisation algorithms and improves their results. Some algorithms improved by the application of SSA:

- Constant Propagation – Translation of calculations from runtime to compile time. E.g. – the instruction v = 2*7+13 is treated like v = 27

- Value Range Propagation – Finding the possible range of values a calculation could result in.

- Dead Code Elimination – Removing code that is unreachable or that has no effect on the results whatsoever.

- Strength Reduction – Replacing computationally expensive calculations by inexpensive ones.

- Register Allocation – Optimising the use of registers for calculations.

Any code can be converted to SSA form by replacing the target variable of each assignment with a new version of that variable and substituting each use of a variable with the version that reaches that point. Where control-flow paths merge, a φ function selects among the incoming versions. Versions are created by splitting the original variables in the IR and are written as the original name with a subscript, so that every assignment gets its own version.
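The renaming step can be sketched for straight-line code (no branches, so no φ functions are needed). This is a minimal illustration, not a production algorithm: the function name `to_ssa` and the "target = expression" string format are assumptions made for the example, and versions are numbered from 1 rather than leaving the first version unsubscripted.

```python
import re

def to_ssa(lines):
    """Rename straight-line 'target = expression' statements into SSA form.

    Hypothetical helper for illustration only: it handles no control flow,
    so it never needs to insert a phi function.
    """
    version = {}  # variable name -> latest version number defined so far
    out = []
    for line in lines:
        target, expr = [part.strip() for part in line.split("=", 1)]

        # Every use refers to the latest version that reaches this point.
        # Names never assigned before (inputs) are left unchanged.
        def latest(match):
            name = match.group(0)
            return f"{name}_{version[name]}" if name in version else name

        new_expr = re.sub(r"[A-Za-z_]\w*", latest, expr)

        # Every assignment creates a fresh version of its target.
        version[target] = version.get(target, 0) + 1
        out.append(f"{target}_{version[target]} = {new_expr}")
    return out

for stmt in to_ssa(["x = y + z", "x = x * 2", "s = x + 1"]):
    print(stmt)
```

Note that the right-hand side is rewritten before the target's version is bumped, so `x = x * 2` reads the old version and defines a new one.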

Example #1:

Convert the following code segment to SSA form:

Here x, y, z, s, p, q are original variables and x_2, s_2, s_3, s_4 are versions of x and s.

Example #2:

Here a, b, c, d, e, q, s are original variables and a_2, q_2, q_3 are versions of a and q.


ENOSUCHBLOG

Programming, philosophy, pedaling.

Understanding static single assignment forms

Oct 23, 2020. Tags: llvm, programming.


With thanks to Niki Carroll, winny, and kurufu for their invaluable proofreading and advice.

By popular demand, I’m doing another LLVM post. This time, it’s static single assignment (or SSA) form, a common feature in the intermediate representations of optimizing compilers.

Like the last one, SSA is a topic in compiler and IR design that I mostly understand but could benefit from some self-guided education on. So here we are.

How to represent a program

At the highest level, a compiler’s job is singular: to turn some source language input into some machine language output. Internally, this breaks down into a sequence of clearly delineated [1] tasks:

1. Lexing the source into a sequence of tokens

2. Parsing the token stream into an abstract syntax tree, or AST [2]

3. Validating the AST (e.g., ensuring that all uses of identifiers are consistent with the source language’s scoping and definition rules) [3]

4. Translating the AST into machine code, with all of its complexities (instruction selection, register allocation, frame generation, &c)

In a single-pass compiler, (4) is monolithic: machine code is generated as the compiler walks the AST, with no revisiting of previously generated code. This is extremely fast (in terms of compiler performance) in exchange for a few significant limitations:

Optimization potential: because machine code is generated in a single pass, it can’t be revisited for optimizations. Single-pass compilers tend to generate extremely slow and conservative machine code.

By way of example: the System V ABI (used by Linux and macOS) defines a special 128-byte region beyond the current stack pointer (%rsp), known as the red zone, that can be used by leaf functions whose stack frames fit within it. This, in turn, saves a few stack management instructions in the function prologue and epilogue.

A single-pass compiler will struggle to take advantage of this ABI-supplied optimization: it needs to emit a stack slot for each automatic variable as they’re visited, and cannot revisit its function prologue for erasure if all variables fit within the red zone.

Language limitations: single-pass compilers struggle with common language design decisions, like allowing use of identifiers before their declaration or definition. For example, the following is valid C++:

C and C++ generally require pre-declaration and/or definition for identifiers, but member function bodies may reference the entire class scope. This will frustrate a single-pass compiler, which expects Rect::width and Rect::height to already exist in some symbol lookup table for call generation.

Consequently, (virtually) all modern compilers are multi-pass.

Multi-pass compilers break the translation phase down even more:

- The AST is lowered into an intermediate representation, or IR

- Analyses (or passes) are performed on the IR, refining it according to some optimization profile (code size, performance, &c)

- The IR is either translated to machine code or lowered to another IR, for further target specialization or optimization [4]

So, we want an IR that’s easy to correctly transform and that’s amenable to optimization. Let’s talk about why IRs that have the static single assignment property fill that niche.

At its core, the SSA form of any source program introduces only one new constraint: all variables are assigned (i.e., stored to) exactly once.

By way of example: the following (not actually very helpful) function is not in a valid SSA form with respect to the flags variable:

Why? Because flags is stored to twice: once for initialization, and (potentially) again inside the conditional body.

As programmers, we could rewrite helpful_open to only ever store once to each automatic variable:

But this is clumsy and repetitive: we essentially need to duplicate every chain of uses that follow any variable that is stored to more than once. That’s not great for readability, maintainability, or code size.

So, we do what we always do: make the compiler do the hard work for us. Fortunately there exists a transformation from every valid program into an equivalent SSA form, conditioned on two simple rules.

Rule #1: Whenever we see a store to an already-stored variable, we replace it with a brand new “version” of that variable.

Using rule #1 and the example above, we can rewrite flags using _N suffixes to indicate versions:

But wait a second: we’ve made a mistake!

- open(..., flags_1, ...) is incorrect: it unconditionally assigns O_CREAT , which wasn’t in the original function semantics.

- open(..., flags_0, ...) is also incorrect: it never assigns O_CREAT , and thus is wrong for the same reason.

So, what do we do? We use rule 2!

Rule #2: Whenever we need to choose a variable based on control flow, we use the Phi function (φ) to introduce a new variable based on our choice.

Using our example once more:

Our quandary is resolved: open always takes flags_2 , where flags_2 is a fresh SSA variable produced applying φ to flags_0 and flags_1 .

Observe, too, that φ is a symbolic function: compilers that use SSA forms internally do not emit real φ functions in generated code [5]. φ exists solely to reconcile rule #1 with the existence of control flow.

As such, it’s a little bit silly to talk about SSA forms with C examples (since C and other high-level languages are what we’re translating from in the first place). Let’s dive into how LLVM’s IR actually represents them.

SSA in LLVM

First of all, let’s see what happens when we run our very first helpful_open through clang with no optimizations:


So, we call open with %3 , which comes from…a load from an i32* named %flags ? Where’s the φ?

This is something that consistently trips me up when reading LLVM’s IR: only values, not memory, are in SSA form. Because we’ve compiled with optimizations disabled, %flags is just a stack slot that we can store into as many times as we please, and that’s exactly what LLVM has elected to do above.

As such, LLVM’s SSA-based optimizations aren’t all that useful when passed IR that makes direct use of stack slots. We want to maximize our use of SSA variables, whenever possible, to make future optimization passes as effective as possible.

This is where mem2reg comes in:

This file (optimization pass) promotes memory references to be register references. It promotes alloca instructions which only have loads and stores as uses. An alloca is transformed by using dominator frontiers to place phi nodes, then traversing the function in depth-first order to rewrite loads and stores as appropriate. This is just the standard SSA construction algorithm to construct “pruned” SSA form.

(Parenthetical mine.)

mem2reg gets run at -O1 and higher, so let’s do exactly that:

Foiled again! Our stack slots are gone thanks to mem2reg , but LLVM has actually optimized too far : it figured out that our flags value is wholly dependent on the return value of our access call and erased the conditional entirely.

Instead of a φ node, we got this select :

which the LLVM Language Reference describes concisely:

The ‘select’ instruction is used to choose one value based on a condition, without IR-level branching.

So we need a better example. Let’s do something that LLVM can’t trivially optimize into a select (or sequence of selects), like adding an else if with a function that we’ve only provided the declaration for:

That’s more like it! Here’s our magical φ:

LLVM’s phi is slightly more complicated than the φ(flags_0, flags_1) that I made up before, but not by much: it takes a list of pairs (two, in this case), with each pair containing a possible value and that value’s originating basic block (which, by construction, is always a predecessor block in the context of the φ node).

The Language Reference backs us up:

The type of the incoming values is specified with the first type field. After this, the ‘phi’ instruction takes a list of pairs as arguments, with one pair for each predecessor basic block of the current block. Only values of first class type may be used as the value arguments to the PHI node. Only labels may be used as the label arguments. There must be no non-phi instructions between the start of a basic block and the PHI instructions: i.e. PHI instructions must be first in a basic block.

Observe, too, that LLVM is still being clever: one of our φ choices is a computed select ( %spec.select ), so LLVM still managed to partially erase the original control flow.

So that’s cool. But there’s a piece of control flow that we’ve conspicuously ignored.

What about loops?

Not one, not two, but three φs! In order of appearance:

Because we supply the loop bounds via count , LLVM has no way to ensure that we actually enter the loop body. Consequently, our very first φ selects between the initial %base and %add . LLVM’s phi syntax helpfully tells us that %base comes from the entry block and %add from the loop, just as we expect. I have no idea why LLVM selected such a hideous name for the resulting value ( %base.addr.0.lcssa ).

Our index variable is initialized once and then updated with each for iteration, so it also needs a φ. Our selections here are %inc (which each body computes from %i.07 ) and the 0 literal (i.e., our initialization value).

Finally, the heart of our loop body: we need to get base , where base is either the initial base value ( %base ) or the value computed as part of the prior loop ( %add ). One last φ gets us there.

The rest of the IR is bookkeeping: we need separate SSA variables to compute the addition ( %add ), increment ( %inc ), and exit check ( %exitcond.not ) with each loop iteration.

So now we know what an SSA form is, and how LLVM represents them [6]. Why should we care?

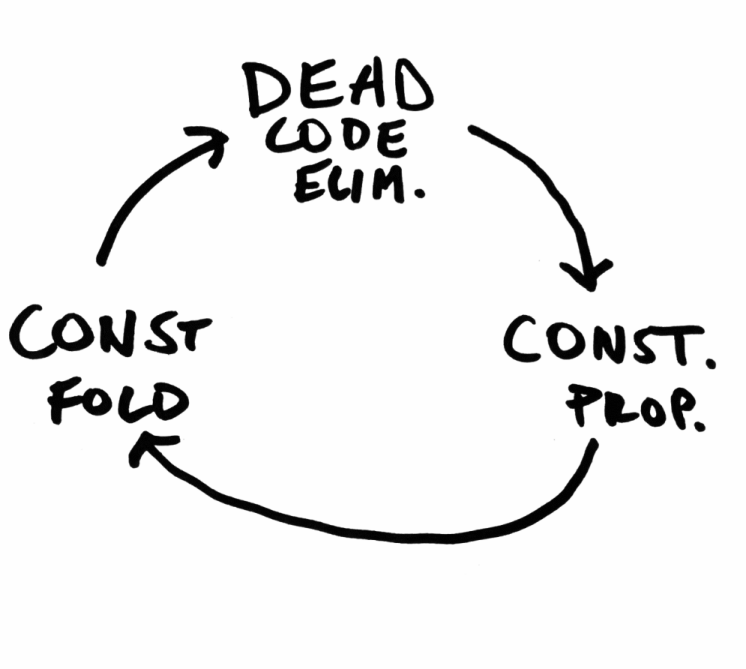

As I briefly alluded to early in the post, it comes down to optimization potential: the SSA forms of programs are particularly suited to a number of effective optimizations.

Let’s go through a select few of them.

Dead code elimination

One of the simplest things that an optimizing compiler can do is remove code that cannot possibly be executed. This makes the resulting binary smaller (and usually faster, since more of it can fit in the instruction cache).

“Dead” code falls into several categories [7], but a common one is assignments that cannot affect program behavior, like redundant initialization:

Without an SSA form, an optimizing compiler would need to check whether any use of x reaches its original definition ( x = 100 ). Tedious. In SSA form, the impossibility of that is obvious:

And sure enough, LLVM eliminates the initial assignment of 100 entirely:

Constant propagation

Compilers can also optimize a program by substituting uses of a constant variable for the constant value itself. Let’s take a look at another blob of C:

As humans, we can see that y and z are trivially assigned and never modified [8]. For the compiler, however, this is a variant of the reaching definition problem from above: before it can replace y and z with 7 and 10 respectively, it needs to make sure that y and z are never assigned a different value.

Let’s do our SSA reduction:

This is virtually identical to our original form, but with one critical difference: the compiler can now see that every load of y and z is the original assignment. In other words, they’re all safe to replace!

So we’ve gotten rid of a few potential register operations, which is nice. But here’s the really critical part: we’ve set ourselves up for several other optimizations:

Now that we’ve propagated some of our constants, we can do some trivial constant folding: 7 + 10 becomes 17, and so forth.

In SSA form, it’s trivial to observe that only x and a_{1..4} can affect the program’s behavior. So we can apply our dead code elimination from above and delete y and z entirely!

This is the real magic of an optimizing compiler: each individual optimization is simple and largely independent, but together they produce a virtuous cycle that can be repeated until gains diminish.
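To make that cycle concrete, here is a minimal Python sketch of constant propagation, constant folding, and dead code elimination run to a fixed point. It is not how LLVM implements these passes: the tuple-based IR `(dest, op, args)`, the `optimize` function, and the variable names are all made up for illustration, and the only instruction treated as having a side effect is a hypothetical `ret`.

```python
def optimize(program):
    """Repeat constant propagation/folding and dead code elimination on a toy
    SSA program until nothing changes.

    Each instruction is a made-up tuple (dest, op, args); dest is None for
    instructions kept for their effect (here only "ret").
    """
    fold = {"add": lambda a, b: a + b, "mul": lambda a, b: a * b}
    changed = True
    while changed:
        changed = False

        # Constant propagation: in SSA every name has exactly one definition,
        # so a name defined by "const" can be replaced by its value everywhere.
        consts = {dest: args[0] for dest, op, args in program if op == "const"}
        rewritten = []
        for dest, op, args in program:
            new_args = tuple(consts.get(a, a) if isinstance(a, str) else a
                             for a in args)
            new_op = op
            # Constant folding: evaluate operations whose operands are all known.
            if op in fold and all(isinstance(a, int) for a in new_args):
                new_op, new_args = "const", (fold[op](*new_args),)
            if (new_op, new_args) != (op, args):
                changed = True
            rewritten.append((dest, new_op, new_args))

        # Dead code elimination: drop definitions that no instruction uses.
        used = {a for _, _, args in rewritten for a in args if isinstance(a, str)}
        kept = [ins for ins in rewritten if ins[0] is None or ins[0] in used]
        if len(kept) != len(rewritten):
            changed = True
        program = kept
    return program

example = [
    ("y", "const", (7,)),
    ("z", "const", (10,)),
    ("a_1", "add", ("y", "z")),
    ("a_2", "mul", ("a_1", "x")),   # x is a function parameter, not a constant
    (None, "ret", ("a_2",)),
]
print(optimize(example))
```

Each round is individually simple: propagation exposes the fold of `7 + 10`, the fold makes `y` and `z` unused, and elimination then deletes them, after which `a_1` itself becomes a propagatable constant.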

Register allocation

Register allocation (alternatively: register scheduling) is less of an optimization itself, and more of an unavoidable problem in compiler engineering: it’s fun to pretend to have access to an infinite number of addressable variables, but the compiler eventually insists that we boil our operations down to a small, fixed set of CPU registers.

The constraints and complexities of register allocation vary by architecture: x86 (prior to AMD64) is notoriously starved for registers [9] (only 8 full general purpose registers, of which 6 might be usable within a function’s scope [10]), while RISC architectures typically employ larger numbers of registers to compensate for the lack of register-memory operations.

Just as above, reductions to SSA form have both indirect and direct advantages for the register allocator:

Indirectly: Eliminations of redundant loads and stores reduces the overall pressure on the register allocator, allowing it to avoid expensive spills (i.e., having to temporarily transfer a live register to main memory to accommodate another instruction).

Directly: Compilers have historically lowered φs into copies before register allocation, meaning that register allocators traditionally haven’t benefited from the SSA form itself [11]. There is, however, (semi-)recent research on direct application of SSA forms to both linear and coloring allocators [12] [13].

A concrete example: modern JavaScript engines use JITs to accelerate program evaluation. These JITs frequently use linear register allocators for their acceptable tradeoff between register selection speed (linear, as the name suggests) and acceptable register scheduling. Converting out of SSA form is a time-consuming operation of its own, so linear allocation on the SSA representation itself is appealing in JITs and other contexts where compile time is part of execution time.

There are many things about SSA that I didn’t cover in this post: dominance frontiers, tradeoffs between “pruned” and less optimal SSA forms, and feedback mechanisms between the SSA form of a program and the compiler’s decision to cease optimizing, among others. Each of these could be its own blog post, and maybe will be in the future!

[1] In the sense that each task is conceptually isolated and has well-defined inputs and outputs. Individual compilers have some flexibility with respect to whether they combine or further split the tasks.

[2] The distinction between an AST and an intermediate representation is hazy: Rust converts its AST to HIR early in the compilation process, and languages can be designed to have ASTs that are amenable to analyses that would otherwise be best on an IR.

[3] This can be broken up into lexical validation (e.g. use of an undeclared identifier) and semantic validation (e.g. incorrect initialization of a type).

[4] This is what LLVM does: LLVM IR is lowered to MIR (not to be confused with Rust’s MIR), which is subsequently lowered to machine code.

[5] Not because they can’t: the SSA form of a program can be executed by evaluating φ with concrete control flow.

[6] We haven’t talked at all about minimal or pruned SSAs, and I don’t plan on doing so in this post. The TL;DR of them: naïve SSA form generation can lead to lots of unnecessary φ nodes, impeding analyses. LLVM (and GCC, and anything else that uses SSAs probably) will attempt to translate any initial SSA form into one with a minimally viable number of φs. For LLVM, this is tied directly to the rest of mem2reg.

[7] Including removing code that has undefined behavior in it, since “doesn’t run at all” is a valid consequence of invoking UB.

[8] And are also function scoped, meaning that another translation unit can’t address them.

[9] x86 makes up for this by not being a load-store architecture: many instructions can pay the price of a memory round-trip in exchange for saving a register.

[10] Assuming that %esp and %ebp are being used by the compiler to manage the function’s frame.

[11] LLVM, for example, lowers all φs as one of its very first preparations for register allocation. See this 2009 LLVM Developers’ Meeting talk.

[12] Wimmer 2010a: “Linear Scan Register Allocation on SSA Form”

[13] Hack 2005: “Towards Register Allocation for Programs in SSA-form”


13.3 Static Single Assignment

Most of the tree optimizers rely on the data flow information provided by the Static Single Assignment (SSA) form. We implement the SSA form as described in R. Cytron, J. Ferrante, B. Rosen, M. Wegman, and K. Zadeck. Efficiently Computing Static Single Assignment Form and the Control Dependence Graph. ACM Transactions on Programming Languages and Systems, 13(4):451-490, October 1991.

The SSA form is based on the premise that program variables are assigned in exactly one location in the program. Multiple assignments to the same variable create new versions of that variable. Naturally, actual programs are seldom in SSA form initially because variables tend to be assigned multiple times. The compiler modifies the program representation so that every time a variable is assigned in the code, a new version of the variable is created. Different versions of the same variable are distinguished by subscripting the variable name with its version number. Variables used in the right-hand side of expressions are renamed so that their version number matches that of the most recent assignment.

We represent variable versions using SSA_NAME nodes. The renaming process in tree-ssa.cc wraps every real and virtual operand with an SSA_NAME node which contains the version number and the statement that created the SSA_NAME . Only definitions and virtual definitions may create new SSA_NAME nodes.

Sometimes, flow of control makes it impossible to determine the most recent version of a variable. In these cases, the compiler inserts an artificial definition for that variable called a PHI function or PHI node. This new definition merges all the incoming versions of the variable to create a new name for it. For instance,

Since it is not possible to determine which of the three branches will be taken at runtime, we don’t know which of a_1 , a_2 or a_3 to use at the return statement. So, the SSA renamer creates a new version a_4 which is assigned the result of “merging” a_1 , a_2 and a_3 . Hence, PHI nodes mean “one of these operands. I don’t know which”.

The following functions can be used to examine PHI nodes

Returns the SSA_NAME created by PHI node phi (i.e., phi ’s LHS).

Returns the number of arguments in phi . This number is exactly the number of incoming edges to the basic block holding phi .

Returns i th argument of phi .

Returns the incoming edge for the i th argument of phi .

Returns the SSA_NAME for the i th argument of phi .


13.3.1 Preserving the SSA form

Some optimization passes make changes to the function that invalidate the SSA property. This can happen when a pass has added new symbols or changed the program so that variables that were previously aliased aren’t anymore. Whenever something like this happens, the affected symbols must be renamed into SSA form again. Transformations that emit new code or replicate existing statements will also need to update the SSA form.

Since GCC implements two different SSA forms for register and virtual variables, keeping the SSA form up to date depends on whether you are updating register or virtual names. In both cases, the general idea behind incremental SSA updates is similar: when new SSA names are created, they typically are meant to replace other existing names in the program.

For instance, given the following code:

Suppose that we insert new names x_10 and x_11 (lines 4 and 8 ).

We want to replace all the uses of x_1 with the new definitions of x_10 and x_11 . Note that the only uses that should be replaced are those at lines 5 , 9 and 11 . Also, the use of x_7 at line 9 should not be replaced (this is why we cannot just mark symbol x for renaming).

Additionally, we may need to insert a PHI node at line 11 because that is a merge point for x_10 and x_11 . So the use of x_1 at line 11 will be replaced with the new PHI node. The insertion of PHI nodes is optional. They are not strictly necessary to preserve the SSA form, and depending on what the caller inserted, they may not even be useful for the optimizers.

Updating the SSA form is a two-step process. First, the pass has to identify which names need to be updated and/or which symbols need to be renamed into SSA form for the first time. When new names are introduced to replace existing names in the program, the mapping between the old and the new names is registered by calling register_new_name_mapping (note that if your pass creates new code by duplicating basic blocks, the call to tree_duplicate_bb will set up the necessary mappings automatically).

After the replacement mappings have been registered and new symbols marked for renaming, a call to update_ssa makes the registered changes. This can be done with an explicit call or by creating TODO flags in the tree_opt_pass structure for your pass. There are several TODO flags that control the behavior of update_ssa :

- TODO_update_ssa . Update the SSA form inserting PHI nodes for newly exposed symbols and virtual names marked for updating. When updating real names, only insert PHI nodes for a real name O_j in blocks reached by all the new and old definitions for O_j . If the iterated dominance frontier for O_j is not pruned, we may end up inserting PHI nodes in blocks that have one or more edges with no incoming definition for O_j . This would lead to uninitialized warnings for O_j ’s symbol.

- TODO_update_ssa_no_phi . Update the SSA form without inserting any new PHI nodes at all. This is used by passes that have either inserted all the PHI nodes themselves or passes that need only to patch use-def and def-def chains for virtuals (e.g., DCE).

WARNING: If you need to use this flag, chances are that your pass may be doing something wrong. Inserting PHI nodes for an old name where not all edges carry a new replacement may lead to silent codegen errors or spurious uninitialized warnings.

- TODO_update_ssa_only_virtuals . Passes that update the SSA form on their own may want to delegate the updating of virtual names to the generic updater. Since FUD chains are easier to maintain, this simplifies the work they need to do. NOTE: If this flag is used, any OLD->NEW mappings for real names are explicitly destroyed and only the symbols marked for renaming are processed.

13.3.2 Examining SSA_NAME nodes

The following macros can be used to examine SSA_NAME nodes

Returns the statement s that creates the SSA_NAME var . If s is an empty statement (i.e., IS_EMPTY_STMT ( s ) returns true ), it means that the first reference to this variable is a USE or a VUSE.

Returns the version number of the SSA_NAME object var .

13.3.3 Walking the dominator tree

This function walks the dominator tree for the current CFG calling a set of callback functions defined in struct dom_walk_data in domwalk.h . The call back functions you need to define give you hooks to execute custom code at various points during traversal:

1. Once to initialize any local data needed while processing bb and its children. This local data is pushed into an internal stack which is automatically pushed and popped as the walker traverses the dominator tree.

2. Once before traversing all the statements in the bb.

3. Once for every statement inside bb.

4. Once after traversing all the statements and before recursing into bb’s dominator children.

5. It then recurses into all the dominator children of bb.

6. After recursing into all the dominator children of bb it can, optionally, traverse every statement in bb again (i.e., repeating steps 2 and 3).

7. Once after walking the statements in bb and bb’s dominator children. At this stage, the block local data stack is popped.

Lesson 5: Global Analysis & SSA

- global analysis & optimization

- static single assignment

- SSA slides from Todd Mowry at CMU: another presentation of the pseudocode for various algorithms herein

- Revisiting Out-of-SSA Translation for Correctness, Code Quality, and Efficiency by Boissinot, on more sophisticated ways to translate out of SSA form

- tasks due October 7

Lots of definitions!

- Reminders: Successors & predecessors. Paths in CFGs.

- A dominates B iff all paths from the entry to B include A .

- The dominator tree is a convenient data structure for storing the dominance relationships in an entire function. The recursive children of a given node in a tree are the nodes that that node dominates.

- A strictly dominates B iff A dominates B and A ≠ B . (Dominance is reflexive, so "strict" dominance just takes that part away.)

- A immediately dominates B iff A dominates B but A does not strictly dominate any other node that strictly dominates B . (In which case A is B 's direct parent in the dominator tree.)

- A dominance frontier is the set of nodes that are just "one edge away" from being dominated by a given node. Put differently, A 's dominance frontier contains B iff A does not strictly dominate B , but A does dominate some predecessor of B .

- Post-dominance is the reverse of dominance. A post-dominates B iff all paths from B to the exit include A . (You can extend the strict version, the immediate version, trees, etc. to post-dominance.)
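These definitions can be checked directly, if inefficiently, in a few lines of Python. The sketch below is only meant to mirror the wording of the definitions: it decides dominance by deleting a block and seeing what becomes unreachable, then reads the dominance frontier straight off its definition. The CFG encoding (a dict from block name to successor list) and all function names are assumptions for the example.

```python
def reachable(cfg, entry, removed=None):
    """Blocks reachable from entry without passing through `removed`."""
    if entry == removed:
        return set()
    seen, stack = {entry}, [entry]
    while stack:
        for succ in cfg[stack.pop()]:
            if succ != removed and succ not in seen:
                seen.add(succ)
                stack.append(succ)
    return seen

def dominators_by_definition(cfg, entry):
    """dom[b] = every block a such that all paths from entry to b include a."""
    blocks = reachable(cfg, entry)
    dom = {b: {b} for b in blocks}  # dominance is reflexive
    for a in blocks:
        # If deleting a cuts b off from the entry, every path to b used a.
        for b in blocks - reachable(cfg, entry, removed=a):
            dom[b].add(a)
    return dom

def dominance_frontier(cfg, dom, a):
    """Blocks b that a does not strictly dominate, although a dominates
    some predecessor of b."""
    frontier = set()
    for pred, succs in cfg.items():
        if pred in dom and a in dom[pred]:
            for b in succs:
                strictly_dominates = a in dom[b] and a != b
                if not strictly_dominates:
                    frontier.add(b)
    return frontier

# A diamond: entry branches to left/right, which merge at join.
cfg = {"entry": ["left", "right"], "left": ["join"], "right": ["join"],
       "join": ["exit"], "exit": []}
dom = dominators_by_definition(cfg, "entry")
print(dom["join"], dominance_frontier(cfg, dom, "left"))
```

In the diamond, `left` dominates only itself, and `join` is one edge past it, so `join` is exactly where a ϕ-node for a variable assigned in `left` would be needed.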

An algorithm for finding dominators:

The dom relation will, in the end, map each block to its set of dominators. We initialize it as the "complete" relation, i.e., mapping every block to the set of all blocks. The loop pares down the sets by iterating to convergence.

The running time is O(n²) in the worst case. But there's a trick: if you iterate over the CFG in reverse post-order , and the CFG is well behaved (reducible), it runs in linear time—the outer loop runs a constant number of times.
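A runnable Python sketch of this iterate-to-convergence scheme, under a few assumptions: the CFG is a dict from block name to successor list, every block is reachable from the entry, and blocks are visited in plain dict order rather than reverse post-order (so this version does not get the linear-time behavior). The name `find_dominators` is made up for the example.

```python
def find_dominators(cfg, entry):
    """Map each block to its set of dominators by iterating to convergence.

    Assumes every block in `cfg` is reachable from `entry`.
    """
    # Build the predecessor map from the successor lists.
    preds = {b: set() for b in cfg}
    for block, succs in cfg.items():
        for succ in succs:
            preds[succ].add(block)

    # Start from the "complete" relation: every block dominated by all blocks.
    dom = {b: set(cfg) for b in cfg}
    dom[entry] = {entry}

    changed = True
    while changed:
        changed = False
        for block in cfg:
            if block == entry:
                continue
            incoming = [dom[p] for p in preds[block]]
            # A block's dominators are itself plus whatever dominates
            # all of its predecessors.
            new = {block} | (set.intersection(*incoming) if incoming else set())
            if new != dom[block]:
                dom[block] = new
                changed = True
    return dom

# A simple loop: header is re-entered from body.
cfg = {"entry": ["header"], "header": ["body", "exit"],
       "body": ["header"], "exit": []}
for block, doms in find_dominators(cfg, "entry").items():
    print(block, sorted(doms))
```

The sets only ever shrink, which is why starting from the complete relation and intersecting is guaranteed to terminate.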

Natural Loops

Some things about loops:

- Natural loops are strongly connected components in the CFG with a single entry.

- Natural loops are formed around backedges , which are edges from A to B where B dominates A .

- A natural loop is the smallest set of vertices L including A and B such that, for every v in L , either all the predecessors of v are in L or v = B .

- A language that only has for , while , if , break , continue , etc. can only generate reducible CFGs. You need goto or something to generate irreducible CFGs.
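Given a backedge from A to B, the natural loop can be collected by walking predecessors backward from A and stopping at B. The following Python sketch assumes the caller has already checked that B dominates A (i.e., that the edge really is a backedge); the CFG encoding and the name `natural_loop` are hypothetical.

```python
def natural_loop(cfg, tail, header):
    """Blocks in the natural loop of the backedge tail -> header.

    Assumes `header` dominates `tail`; that check is left to the caller.
    """
    # Build the predecessor map from the successor lists.
    preds = {b: set() for b in cfg}
    for block, succs in cfg.items():
        for succ in succs:
            preds[succ].add(block)

    loop = {header, tail}
    # Walk backward from the tail. The header is already in the set, so the
    # walk never continues past it to blocks outside the loop.
    stack = [tail] if tail != header else []
    while stack:
        block = stack.pop()
        for pred in preds[block]:
            if pred not in loop:
                loop.add(pred)
                stack.append(pred)
    return loop

cfg = {"entry": ["header"], "header": ["body", "exit"],
       "body": ["latch"], "latch": ["header"], "exit": []}
print(sorted(natural_loop(cfg, "latch", "header")))
```

This yields the smallest set satisfying the definition above: every block other than the header is added together with all of its predecessors.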

Loop-Invariant Code Motion (LICM)

And finally, loop-invariant code motion (LICM) is an optimization that works on natural loops. It moves code from inside a loop to before the loop, if the computation always does the same thing on every iteration of the loop.

A loop's preheader is its header's unique predecessor. LICM moves code to the preheader. But while natural loops need to have a unique header, the header does not necessarily have a unique predecessor. So it's often convenient to invent an empty preheader block that jumps directly to the header, and then move all the in-edges to the header to point there instead.

LICM needs two ingredients: identifying loop-invariant instructions in the loop body, and deciding when it's safe to move one from the body to the preheader.

To identify loop-invariant instructions:

(This determination requires that you already calculated reaching definitions! Presumably using data flow.)
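One common way to phrase the marking step, sketched in Python: an instruction is loop-invariant if, for each argument, either all of its reaching definitions lie outside the loop, or it has exactly one reaching definition and that definition is already marked invariant. The data layout here is an assumption made for the example: `loop_instrs` maps hypothetical instruction ids to their argument lists, and `reaching` maps an (instruction id, argument) pair to the ids of the definitions that reach that use. Side effects are ignored.

```python
def loop_invariant(loop_instrs, reaching):
    """Return the ids of loop-invariant instructions, iterating to convergence.

    loop_instrs: {instr_id: {"dest": name, "args": [names]}} for the loop body.
    reaching:    {(instr_id, arg): set of definition ids reaching that use},
                 assumed to be precomputed by a data flow analysis.
    """
    invariant = set()
    changed = True
    while changed:
        changed = False
        for iid, instr in loop_instrs.items():
            if iid in invariant:
                continue

            def arg_is_invariant(arg):
                defs = reaching[(iid, arg)]
                all_outside = all(d not in loop_instrs for d in defs)
                single_invariant = (len(defs) == 1
                                    and next(iter(defs)) in invariant)
                return all_outside or single_invariant

            if all(arg_is_invariant(a) for a in instr["args"]):
                invariant.add(iid)
                changed = True
    return invariant

# Loop body: t = n * 2; u = t + 1; i = i + u
# d0 defines n and d1 defines i, both before the loop.
loop_instrs = {
    "L1": {"dest": "t", "args": ["n"]},
    "L2": {"dest": "u", "args": ["t"]},
    "L3": {"dest": "i", "args": ["i", "u"]},
}
reaching = {
    ("L1", "n"): {"d0"},
    ("L2", "t"): {"L1"},
    ("L3", "i"): {"d1", "L3"},   # i is redefined inside the loop
    ("L3", "u"): {"L2"},
}
print(sorted(loop_invariant(loop_instrs, reaching)))
```

Here `L2` only becomes invariant after `L1` has been marked, which is why the marking has to iterate until nothing changes.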

It's safe to move a loop-invariant instruction to the preheader iff:

- The definition dominates all of its uses, and

- No other definitions of the same variable exist in the loop, and

- The instruction dominates all loop exits.

The last criterion is somewhat tricky: it ensures that the computation would have been computed eventually anyway, so it's safe to just do it earlier. But it's not true of loops that may execute zero times, which, when you think about it, rules out most for loops! It's possible to relax this condition if:

- The assigned-to variable is dead after the loop, and

- The instruction can't have side effects, including exceptions—generally ruling out division because it might divide by zero. (A thing that you generally need to be careful of in such speculative optimizations that do computations that might not actually be necessary.)

Static Single Assignment (SSA)

You have undoubtedly noticed by now that many of the annoying problems in implementing analyses & optimizations stem from variable name conflicts. Wouldn't it be nice if every assignment in a program used a unique variable name? Of course, people don't write programs that way, so we're out of luck. Right?

Wrong! Many compilers convert programs into static single assignment (SSA) form, which does exactly what it says: it ensures that, globally, every variable has exactly one static assignment location. (Of course, that statement might be executed multiple times, which is why it's not dynamic single assignment.) In Bril terms, we convert a program like this:

Into a program like this, by renaming all the variables:
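As a rough illustration of that renaming for straight-line code (no control flow yet), here is a minimal Python sketch. The `(dest, op, args)` tuple encoding and the `name.N` versioning scheme are assumptions made for this example, not Bril's actual format.

```python
def rename_straight_line(instrs):
    """Give every assignment a fresh name and point uses at the latest one.

    instrs: list of (dest, op, args) tuples; dest is None for
            effect-only instructions such as print.
    """
    version = {}  # variable -> number of its most recent version
    out = []
    for dest, op, args in instrs:
        # Rewrite uses first, so `a = add a b` reads the *old* a.
        new_args = [f"{a}.{version[a]}" if a in version else a for a in args]
        if dest is not None:
            version[dest] = version.get(dest, 0) + 1
            dest = f"{dest}.{version[dest]}"
        out.append((dest, op, new_args))
    return out


# Hypothetical input program that reassigns both a and b.
prog = [
    ("a", "const", [4]),
    ("b", "const", [2]),
    ("a", "add", ["a", "b"]),
    ("b", "add", ["a", "b"]),
    (None, "print", ["b"]),
]
ssa = rename_straight_line(prog)
```

After the call, the second `add` reads `a.2` and `b.1` and writes `b.2`, and the `print` reads `b.2`.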

Of course, things will get a little more complicated when there is control flow. And because real machines are not SSA, using separate variables (i.e., memory locations and registers) for everything is bound to be inefficient. The idea in SSA is to convert general programs into SSA form, do all our optimization there, and then convert back to a standard mutating form before we generate backend code.

Just renaming assignments willy-nilly will quickly run into problems. Consider this program:

If we start renaming all the occurrences of `a`, everything goes fine until we try to write that last `print a`. Which "version" of `a` should it use?

To match the expressiveness of unrestricted programs, SSA adds a new kind of instruction: a ϕ-node. ϕ-nodes are flow-sensitive copy instructions: they get a value from one of several variables, depending on which incoming CFG edge was most recently taken to get to them.

In Bril, a ϕ-node appears as a phi instruction:

The phi instruction chooses between any number of variables, and it picks between them based on labels. If the program most recently executed a basic block with the given label, then the phi instruction takes its value from the corresponding variable.
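To make that "most recently executed block" rule concrete, here is a toy interpreter sketched in Python. Everything about the encoding (tuples for instructions, a `(instrs, terminator)` pair per block, the `a.N` names) is an assumption for illustration; it is not how the Bril reference interpreter is written. It also evaluates ϕ-nodes one at a time, which is fine for a single ϕ but glosses over the simultaneous-read semantics you would want with several ϕ-nodes in one block.

```python
def run(blocks, entry, env):
    """Interpret a tiny CFG whose blocks may start with phi instructions.

    blocks: label -> (list of (dest, op, args), terminator)
    terminator: ("jmp", label) | ("br", cond, then_label, else_label) | ("ret", var)
    """
    prev, cur = None, entry
    while True:
        instrs, term = blocks[cur]
        for dest, op, args in instrs:
            if op == "phi":
                # args is a list of (label, variable) pairs; pick the entry
                # whose label is the block we just came from.
                env[dest] = env[dict(args)[prev]]
            elif op == "const":
                env[dest] = args[0]
            elif op == "add":
                env[dest] = env[args[0]] + env[args[1]]
            else:
                raise ValueError(f"unknown op {op}")
        if term[0] == "ret":
            return env[term[1]]
        prev = cur
        if term[0] == "jmp":
            cur = term[1]
        else:  # ("br", cond, then_label, else_label)
            cur = term[2] if env[term[1]] else term[3]


# A hypothetical diamond: each branch defines its own version of a.
blocks = {
    "entry": ([("a.1", "const", [0])], ("br", "cond", "left", "right")),
    "left": ([("a.2", "const", [1])], ("jmp", "end")),
    "right": ([("a.3", "const", [2])], ("jmp", "end")),
    "end": ([("a.4", "phi", [("left", "a.2"), ("right", "a.3")])], ("ret", "a.4")),
}
took_left = run(blocks, "entry", {"cond": True})
took_right = run(blocks, "entry", {"cond": False})
```

Running with `cond` true returns the value defined in `left`; with `cond` false it returns the one from `right`.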

You can write the above program in SSA like this:

It can also be useful to see how ϕ-nodes crop up in loops.

(An aside: some recent SSA-form IRs, such as MLIR and Swift's IR, use an alternative to ϕ-nodes called basic block arguments. Instead of making ϕ-nodes look like weird instructions, these IRs bake the need for ϕ-like conditional copies into the structure of the CFG. Basic blocks have named parameters, and whenever you jump to a block, you must provide arguments for those parameters. With ϕ-nodes, a basic block enumerates all the possible sources for a given variable, one for each in-edge in the CFG; with basic block arguments, the sources are distributed to the "other end" of the CFG edge. Basic block arguments are a nice alternative for "SSA-native" IRs because they avoid messy problems that arise when needing to treat ϕ-nodes differently from every other kind of instruction.)

Bril in SSA

Bril has an SSA extension. It adds support for a `phi` instruction. Beyond that, SSA form is just a restriction on the normal expressiveness of Bril: if you solemnly promise never to assign statically to the same variable twice, you are writing "SSA Bril."

The reference interpreter has built-in support for `phi`, so you can execute your SSA-form Bril programs without fuss.

The SSA Philosophy

In addition to a language form, SSA is also a philosophy! It can fundamentally change the way you think about programs. In the SSA philosophy:

- definitions == variables

- instructions == values

- arguments == data flow graph edges

In LLVM, for example, instructions do not refer to argument variables by name; an argument is a pointer to the defining instruction.

Converting to SSA

To convert to SSA, we want to insert ϕ-nodes whenever there are distinct paths containing distinct definitions of a variable. We don't need ϕ-nodes in places that are dominated by a definition of the variable. So what's a way to know when control reachable from a definition is not dominated by that definition? The dominance frontier!

We do it in two steps. First, insert ϕ-nodes:
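A minimal Python sketch of one way to do this first step, assuming you already have the dominance frontier of every block. The `defs` and `frontier` dictionaries are hypothetical inputs; the key detail is that a block that receives a ϕ-node now also counts as a definition site, so it goes back on the worklist.

```python
def insert_phis(defs, frontier):
    """Decide which blocks need a phi for which variables.

    defs:     variable -> set of blocks that assign to it
    frontier: block -> set of blocks in its dominance frontier
    Returns:  block -> set of variables that need a phi there
    """
    phis = {block: set() for block in frontier}
    for var, def_blocks in defs.items():
        work = list(def_blocks)
        seen = set(def_blocks)
        while work:
            d = work.pop()
            for block in frontier[d]:
                if var not in phis[block]:
                    phis[block].add(var)
                    # The phi is itself a new definition of var.
                    if block not in seen:
                        seen.add(block)
                        work.append(block)
    return phis


# Hypothetical diamond CFG: entry -> {left, right} -> end
frontier = {"entry": set(), "left": {"end"}, "right": {"end"}, "end": set()}
defs = {"a": {"entry", "left", "right"}, "cond": {"entry"}}
phis = insert_phis(defs, frontier)
```

Here only `end` gets a ϕ-node, and only for `a`; `cond` is defined once in a block that dominates everything, so it needs none.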

Then, rename variables:
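One way to sketch the renaming step in Python is a recursive walk over the dominator tree with a stack of current names per variable. The data layout here (mutable `[dest, op, args]` lists, a `phis` dictionary with `dest` and `args` slots, `dom_children` for the dominator tree) is assumed for the example. Variables that are undefined along some path are simply left out of the ϕ-node's arguments, which is one of several possible ways to handle that case.

```python
from collections import defaultdict


def rename(blocks, succs, dom_children, phis, entry):
    """Rename variables in place so every definition gets a fresh name."""
    stack = defaultdict(list)   # variable -> stack of current SSA names
    counter = defaultdict(int)  # variable -> number of names handed out

    def fresh(var):
        counter[var] += 1
        name = f"{var}.{counter[var]}"
        stack[var].append(name)
        return name

    def visit(label):
        pushed = []
        # Phi-nodes at the top of the block define new names first.
        for var, phi in phis[label].items():
            phi["dest"] = fresh(var)
            pushed.append(var)
        for instr in blocks[label]:
            # Replace each argument with the name on top of its stack.
            instr[2] = [stack[a][-1] if stack.get(a) else a for a in instr[2]]
            if instr[0] is not None:
                var = instr[0]
                instr[0] = fresh(var)
                pushed.append(var)
        # Tell each successor's phi-nodes which name arrives from here.
        for s in succs[label]:
            for var, phi in phis[s].items():
                if stack.get(var):
                    phi["args"][label] = stack[var][-1]
        for child in dom_children[label]:
            visit(child)
        # Undo this block's pushes before returning to the parent.
        for var in pushed:
            stack[var].pop()

    visit(entry)


# Hypothetical diamond; "end" already has an empty phi for a.
blocks = {
    "entry": [["a", "const", [0]], ["cond", "const", [True]]],
    "left": [["a", "const", [1]]],
    "right": [["a", "add", ["a", "a"]]],
    "end": [[None, "print", ["a"]]],
}
succs = {"entry": ["left", "right"], "left": ["end"], "right": ["end"], "end": []}
dom_children = {"entry": ["left", "right", "end"], "left": [], "right": [], "end": []}
phis = {"entry": {}, "left": {}, "right": {}, "end": {"a": {"dest": None, "args": {}}}}
rename(blocks, succs, dom_children, phis, "entry")
```

After the call, the ϕ-node in `end` defines `a.4` from `a.2` (via `left`) and `a.3` (via `right`), and the `print` reads `a.4`.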

Converting from SSA

Eventually, we need to convert out of SSA form to generate efficient code for real machines that don't have `phi`-nodes and do have finite space for variable storage.

The basic algorithm is pretty straightforward. Suppose you have a ϕ-node that defines `v` by choosing between two variables, `x` and `y`.

Then there must be assignments to `x` and `y` (recursively) preceding this statement in the CFG. The paths from `x` to the `phi`-containing block and from `y` to the same block must "converge" at that block. So insert code into the `phi`-containing block's immediate predecessors along each of those two paths: one that does `v = id x` and one that does `v = id y`. Then you can delete the `phi` instruction.

This basic approach can introduce some redundant copying. (Take a look at the code it generates after you implement it!) Non-SSA copy propagation optimization can work well as a post-processing step. For a more extensive take on how to translate out of SSA efficiently, see “Revisiting Out-of-SSA Translation for Correctness, Code Quality, and Efficiency” by Boissinot et al.
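Here is a minimal Python sketch of that basic approach, under some simplifying assumptions: instructions are `(dest, op, args)` tuples, a ϕ-node's `args` is a list of `(predecessor label, variable)` pairs, and terminators are stored elsewhere so that appending to a block means "just before the jump." It deliberately ignores the subtleties (critical edges, ϕ-nodes that read each other's results) that the more careful algorithms deal with.

```python
def remove_phis(blocks):
    """Replace every phi with `id` copies at the end of its predecessors.

    blocks: label -> list of (dest, op, args)
    Returns a new dictionary; the input is not modified.
    """
    out = {
        label: [ins for ins in instrs if ins[1] != "phi"]
        for label, instrs in blocks.items()
    }
    for instrs in blocks.values():
        for dest, op, args in instrs:
            if op == "phi":
                for pred, var in args:
                    # v = id x, placed in the predecessor that supplies x
                    out[pred].append((dest, "id", [var]))
    return out


# Hypothetical SSA-form diamond.
ssa_blocks = {
    "entry": [],
    "left": [("a.2", "const", [1])],
    "right": [("a.3", "const", [2])],
    "end": [("a.4", "phi", [("left", "a.2"), ("right", "a.3")]),
            (None, "print", ["a.4"])],
}
plain = remove_phis(ssa_blocks)
```

The result has no `phi` in `end`, and each of `left` and `right` ends with a copy into `a.4`. Those copies are exactly the redundancy that a later copy-propagation pass can often clean up.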

- Find dominators for a function.

- Construct the dominance tree.

- Compute the dominance frontier.

- One thing to watch out for: a tricky part of the translation from the pseudocode to the real world is dealing with variables that are undefined along some paths.

- You will want to make sure the output of your "to SSA" pass is actually in SSA form. There's a really simple is_ssa.py script that can check that for you.

- You'll also want to make sure that programs do the same thing when converted to SSA form and back again. Fortunately, brili supports the phi instruction, so you can interpret your SSA-form programs if you want to check the midpoint of that round trip.

- For bonus "points," implement global value numbering for SSA-form Bril code.


In compiler design, static single assignment form (often abbreviated as SSA form or simply SSA) is a property of an intermediate representation (IR), which requires that each variable be assigned exactly once, and every variable be defined before it is used. Existing variables in the original IR are split into versions, new variables typically indicated by the original name with a subscript in textbooks, so that every definition gets its own version. In SSA form, use-def chains are explicit and each contains a single element. SSA was proposed by Barry K. Rosen, Mark N. Wegman, and F. Kenneth Zadeck in 1988. Ron Cytron, Jeanne Ferrante and the previous three researchers at IBM developed an algorithm that can compute the SSA form efficiently. One can expect to find SSA in a compiler for Fortran, C or C++, whereas in functional language compilers, such as those for Scheme and ML, continuation-passing style (CPS) is generally used. SSA is formally equivalent to a well-behaved subset of CPS excluding non-local control flow, which does not occur when CPS is used as intermediate representation. So optimizations and transformations formulated in terms of one immediately apply to the other.

1. Benefits

The primary usefulness of SSA comes from how it simultaneously simplifies and improves the results of a variety of compiler optimizations, by simplifying the properties of variables. For example, consider this piece of code:

Humans can see that the first assignment is not necessary, and that the value of y being used in the third line comes from the second assignment of y . A program would have to perform reaching definition analysis to determine this. But if the program is in SSA form, both of these are immediate:
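As a small illustration, assume a three-line program matching that description: `y` is assigned twice and then copied into `x`, which in SSA form becomes `y1`, `y2` and `x1`. The Python sketch below (the tuple encoding and the `live_out` set are assumptions for the example) shows that both facts reduce to dictionary lookups once every name has a single definition.

```python
# Assumed SSA form of: y = 1; y = 2; x = y
ssa = [
    ("y1", "const", [1]),
    ("y2", "const", [2]),
    ("x1", "copy", ["y2"]),
]

# Each name has exactly one definition, so a plain dict is a use-def chain.
def_of = {dest: (op, args) for dest, op, args in ssa}

# Which definition feeds x1? No reaching-definitions analysis is needed.
source_of_x1 = def_of["x1"][1][0]
value_source = def_of[source_of_x1]

# A definition is unnecessary if nothing uses it and it is not an output.
live_out = {"x1"}  # assumption: x1 is needed after this fragment
used = {a for _, _, args in ssa for a in args if a in def_of}
unnecessary = [d for d in def_of if d not in used and d not in live_out]
```

Here `unnecessary` contains only `y1`, and the value read by `x1` is traced directly to the definition of `y2`.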

Compiler optimization algorithms which are either enabled or strongly enhanced by the use of SSA include:

- Constant propagation – conversion of computations from runtime to compile time, e.g. treat the instruction a=3*4+5; as if it were a=17;

- Value range propagation [ 1 ] – precompute the potential ranges a calculation could be, allowing for the creation of branch predictions in advance

- Sparse conditional constant propagation – range-check some values, allowing tests to predict the most likely branch

- Dead code elimination – remove code that will have no effect on the results

- Global value numbering – replace duplicate calculations producing the same result

- Partial redundancy elimination – removing duplicate calculations previously performed in some branches of the program

- Strength reduction – replacing expensive operations by less expensive but equivalent ones, e.g. replace integer multiply or divide by powers of 2 with the potentially less expensive shift left (for multiply) or shift right (for divide).

- Register allocation – optimize how the limited number of machine registers may be used for calculations
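To show why single assignment helps the first item in this list, here is a minimal Python sketch of constant propagation and folding over straight-line SSA code. The `(dest, op, args)` encoding, the two supported operators and the convention that strings are variable names while other values are literals are all assumptions of the sketch. Because no name is ever reassigned, the table of known constants never has to be invalidated.

```python
import operator

OPS = {"add": operator.add, "mul": operator.mul}


def fold_constants(instrs):
    """Propagate and fold constants in straight-line SSA code.

    instrs: list of (dest, op, args); string args are variable names,
            anything else is a literal. Definitions precede uses.
    """
    known = {}  # SSA name -> constant value (valid forever in SSA)
    out = []
    for dest, op, args in instrs:
        # Substitute known constants for variable arguments.
        vals = [known.get(a, a) if isinstance(a, str) else a for a in args]
        if op == "const":
            known[dest] = vals[0]
        elif op in OPS and all(not isinstance(v, str) for v in vals):
            known[dest] = OPS[op](*vals)
            op, vals = "const", [known[dest]]
        out.append((dest, op, vals))
    return out


# a = 3*4+5 split into SSA temporaries, plus one use of an unknown input n0.
prog = [
    ("t1", "mul", [3, 4]),
    ("a1", "add", ["t1", 5]),
    ("b1", "add", ["a1", "n0"]),
]
folded = fold_constants(prog)
```

The definition of `a1` becomes the constant 17, and `b1` keeps its addition because `n0` is not known at compile time.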

2. Converting to SSA

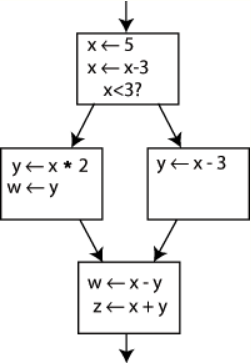

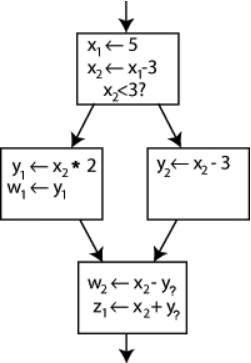

Converting ordinary code into SSA form is primarily a matter of replacing the target of each assignment with a new variable, and replacing each use of a variable with the "version" of the variable reaching that point. For example, consider the following control-flow graph:

An example control-flow graph, before conversion to SSA. https://handwiki.org/wiki/index.php?curid=1887224

Changing the name on the left hand side of "x ← x - 3" and changing the following uses of x to that new name would leave the program unaltered. This can be exploited in SSA by creating two new variables: x₁ and x₂, each of which is assigned only once. Likewise, giving distinguishing subscripts to all the other variables yields:

An example control-flow graph, partially converted to SSA. https://handwiki.org/wiki/index.php?curid=1989184

It is clear which definition each use is referring to, except for one case: both uses of y in the bottom block could be referring to either y₁ or y₂, depending on which path the control flow took.

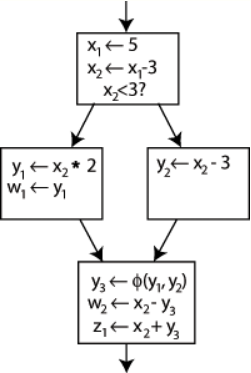

To resolve this, a special statement is inserted in the last block, called a Φ (Phi) function. This statement will generate a new definition of y called y₃ by "choosing" either y₁ or y₂, depending on the control flow in the past.

An example control-flow graph, fully converted to SSA. https://handwiki.org/wiki/index.php?curid=1820048

Now, the last block can simply use y₃, and the correct value will be obtained either way. A Φ function for x is not needed: only one version of x, namely x₂, is reaching this place, so there is no problem (in other words, Φ(x₂, x₂) = x₂).

Given an arbitrary control-flow graph, it can be difficult to tell where to insert Φ functions, and for which variables. This general question has an efficient solution that can be computed using a concept called dominance frontiers (see below).

Φ functions are not implemented as machine operations on most machines. A compiler can implement a Φ function by inserting "move" operations at the end of every predecessor block. In the example above, the compiler might insert a move from y₁ to y₃ at the end of the middle-left block and a move from y₂ to y₃ at the end of the middle-right block. These move operations might not end up in the final code based on the compiler's register allocation procedure. However, this approach may not work when simultaneous operations are speculatively producing inputs to a Φ function, as can happen on wide-issue machines. Typically, a wide-issue machine has a selection instruction used in such situations by the compiler to implement the Φ function.

According to Kenny Zadeck, [ 2 ] Φ functions were originally known as phony functions while SSA was being developed at IBM Research in the 1980s. The formal name of a Φ function was only adopted when the work was first published in an academic paper.

2.1. Computing Minimal SSA using Dominance Frontiers

First, we need the concept of a dominator: we say that a node A strictly dominates a different node B in the control-flow graph if it is impossible to reach B without passing through A first. This is useful, because if we ever reach B we know that any code in A has run. We say that A dominates B (B is dominated by A) if either A strictly dominates B or A = B.

Now we can define the dominance frontier: a node B is in the dominance frontier of a node A if A does not strictly dominate B but does dominate some immediate predecessor of B. Because every node dominates itself, this includes the case where A is itself an immediate predecessor of B (and does not strictly dominate B). From A's point of view, these are the nodes at which other control paths, which don't go through A, make their earliest appearance.

Dominance frontiers capture the precise places at which we need Φ functions: if the node A defines a certain variable, then that definition and that definition alone (or redefinitions) will reach every node A dominates. Only when we leave these nodes and enter the dominance frontier must we account for other flows bringing in other definitions of the same variable. Moreover, no other Φ functions are needed in the control-flow graph to deal with A's definitions, and we can do with no less.

There is an efficient algorithm for finding the dominance frontier of each node. This algorithm was originally described in Cytron et al. 1991; see the paper for more details. [ 3 ] Also useful is chapter 19 of the book "Modern Compiler Implementation in Java" by Andrew Appel (Cambridge University Press, 2002).

Keith D. Cooper, Timothy J. Harvey, and Ken Kennedy of Rice University describe an algorithm in their paper titled A Simple, Fast Dominance Algorithm. [ 4 ] The algorithm uses well-engineered data structures to improve performance. It is expressed simply as follows: [ 4 ]

Note: in the code above, an immediate predecessor of node n is any node from which control is transferred to node n, and idom(b) is the node that immediately dominates node b (a singleton set).
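The following Python sketch illustrates the definitions above in the spirit of the runner-based formulation attributed to Cooper, Harvey and Kennedy. It is an illustration under stated assumptions rather than a transcription of their paper: dominator sets are computed with a simple (not especially fast) fixed-point iteration, every node is assumed reachable from the entry, and `idom[entry]` is set to the entry itself by convention. All function and variable names are hypothetical.

```python
def dominators(preds, entry):
    """Map each node to the set of nodes that dominate it."""
    nodes = set(preds)
    dom = {n: set(nodes) for n in nodes}
    dom[entry] = {entry}
    changed = True
    while changed:
        changed = False
        for n in nodes - {entry}:
            # A node is dominated by itself plus whatever dominates
            # all of its immediate predecessors.
            new = set.intersection(*(dom[p] for p in preds[n])) | {n}
            if new != dom[n]:
                dom[n] = new
                changed = True
    return dom


def immediate_dominators(dom, entry):
    """The closest strict dominator of each node (entry maps to itself)."""
    idom = {entry: entry}
    for n, ds in dom.items():
        if n != entry:
            # Strict dominators form a chain; the closest one is the
            # one that itself has the most dominators.
            idom[n] = max(ds - {n}, key=lambda d: len(dom[d]))
    return idom


def dominance_frontiers(preds, idom):
    """Walk up from each predecessor of a join node until reaching its idom."""
    df = {b: set() for b in preds}
    for b, ps in preds.items():
        if len(ps) >= 2:
            for p in ps:
                runner = p
                while runner != idom[b]:
                    df[runner].add(b)
                    runner = idom[runner]
    return df


# Hypothetical diamond CFG: entry -> {left, right} -> end
preds = {"entry": [], "left": ["entry"], "right": ["entry"], "end": ["left", "right"]}
dom = dominators(preds, "entry")
idom = immediate_dominators(dom, "entry")
df = dominance_frontiers(preds, idom)
```

For the diamond, `end` is immediately dominated by `entry`, and it is the only node in the dominance frontier of both `left` and `right`, which is where a Φ function for a variable assigned in either branch would go.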

3. Variations That Reduce the Number of Φ Functions

"Minimal" SSA inserts the minimal number of Φ functions required to ensure that each name is assigned a value exactly once and that each reference (use) of a name in the original program can still refer to a unique name. (The latter requirement is needed to ensure that the compiler can write down a name for each operand in each operation.)

However, some of these Φ functions could be dead. For this reason, minimal SSA does not necessarily produce the fewest Φ functions that are needed by a specific procedure. For some types of analysis, these Φ functions are superfluous and can cause the analysis to run less efficiently.

3.1. Pruned SSA

Pruned SSA form is based on a simple observation: Φ functions are only needed for variables that are "live" after the Φ function. (Here, "live" means that the value is used along some path that begins at the Φ function in question.) If a variable is not live, the result of the Φ function cannot be used and the assignment by the Φ function is dead.

Construction of pruned SSA form uses live variable information in the Φ function insertion phase to decide whether a given Φ function is needed. If the original variable name isn't live at the Φ function insertion point, the Φ function isn't inserted.

Another possibility is to treat pruning as a dead code elimination problem. Then, a Φ function is live only if any use in the input program will be rewritten to it, or if it will be used as an argument in another Φ function. When entering SSA form, each use is rewritten to the nearest definition that dominates it. A Φ function will then be considered live as long as it is the nearest definition that dominates at least one use, or at least one argument of a live Φ.

3.2. Semi-Pruned SSA

Semi-pruned SSA form [ 5 ] is an attempt to reduce the number of Φ functions without incurring the relatively high cost of computing live variable information. It is based on the following observation: if a variable is never live upon entry into a basic block, it never needs a Φ function. During SSA construction, Φ functions for any "block-local" variables are omitted.

Computing the set of block-local variables is a simpler and faster procedure than full live variable analysis, making semi-pruned SSA form more efficient to compute than pruned SSA form. On the other hand, semi-pruned SSA form will contain more Φ functions.
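A minimal Python sketch of how the non-block-local names might be found, assuming blocks are lists of `(dest, op, args)` tuples and that string arguments are variable names. A name is treated as non-local if some block reads it before writing it; only those names would then be considered for Φ insertion. The function name is hypothetical.

```python
def non_local_names(blocks):
    """Names that are read in some block before being written in that block."""
    non_local = set()
    for instrs in blocks.values():
        killed = set()  # names already assigned earlier in this block
        for dest, op, args in instrs:
            for a in args:
                if isinstance(a, str) and a not in killed:
                    non_local.add(a)
            if dest is not None:
                killed.add(dest)
    return non_local


# Hypothetical program: t is assigned in two blocks but never crosses a block
# boundary, while a is read in "end" without being assigned there.
blocks = {
    "entry": [("a", "const", [0]), ("t", "add", ["a", "a"])],
    "left": [("a", "const", [1]), ("t", "const", [5]), ("u", "add", ["t", "t"])],
    "end": [(None, "print", ["a"])],
}
candidates = non_local_names(blocks)
```

Only `a` is reported, so `t` would receive no Φ functions even though it has several definitions. This is a single linear pass over the code, with no iteration to a fixed point.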

3.3. Block Arguments

Block arguments are an alternative to Φ functions that is representationally identical but in practice can be more convenient during optimization. Blocks are named and take a list of block arguments, notated as function parameters. When calling a block the block arguments are bound to specified values. Swift and LLVM's MLIR use block arguments. [ 6 ]
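The correspondence between the two representations can be sketched in Python as a mechanical conversion. The data layout is an assumption for the example: each Φ function is a pair of its destination and a mapping from predecessor label to source variable. Each Φ destination becomes a block parameter, and each source moves to the jump along the corresponding edge.

```python
def phis_to_block_args(phis):
    """Convert phi functions into block parameters and per-edge arguments.

    phis: label -> list of (dest, {predecessor label: source variable})
    Returns (params, edge_args) where params[label] lists the block's
    parameters and edge_args[(pred, label)] lists the values passed
    when jumping from pred to label, in parameter order.
    """
    params = {}
    edge_args = {}
    for label, nodes in phis.items():
        params[label] = [dest for dest, _ in nodes]
        for dest, sources in nodes:
            for pred, var in sources.items():
                edge_args.setdefault((pred, label), []).append(var)
    return params, edge_args


# Hypothetical join block with two phi functions.
phis = {
    "end": [
        ("y3", {"left": "y1", "right": "y2"}),
        ("z3", {"left": "z1", "right": "z2"}),
    ]
}
params, edge_args = phis_to_block_args(phis)
```

The block `end` ends up with parameters `y3` and `z3`; a jump from `left` passes `y1` and `z1`, and a jump from `right` passes `y2` and `z2`.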

4. Converting out of SSA Form

SSA form is not normally used for direct execution (although it is possible to interpret SSA [ 7 ] ), and it is frequently used "on top of" another IR with which it remains in direct correspondence. This can be accomplished by "constructing" SSA as a set of functions which map between parts of the existing IR (basic blocks, instructions, operands, etc. ) and its SSA counterpart. When the SSA form is no longer needed, these mapping functions may be discarded, leaving only the now-optimized IR.

Performing optimizations on SSA form usually leads to entangled SSA-Webs, meaning there are Φ instructions whose operands do not all have the same root operand. In such cases color-out algorithms are used to come out of SSA. Naive algorithms introduce a copy along each predecessor path that supplies the Φ with a source whose root symbol differs from that of the Φ's destination. There are multiple algorithms for coming out of SSA with fewer copies; most use interference graphs or some approximation of them to do copy coalescing. [ 8 ]

5. Extensions

Extensions to SSA form can be divided into two categories.

Renaming scheme extensions alter the renaming criterion. Recall that SSA form renames each variable when it is assigned a value. Alternative schemes include static single use form (which renames each variable at each statement when it is used) and static single information form (which renames each variable when it is assigned a value, and at the post-dominance frontier).

Feature-specific extensions retain the single assignment property for variables, but incorporate new semantics to model additional features. Some feature-specific extensions model high-level programming language features like arrays, objects and aliased pointers. Other feature-specific extensions model low-level architectural features like speculation and predication.

6. Compilers Using SSA Form

SSA form is a relatively recent development in the compiler community. As such, many older compilers only use SSA form for some part of the compilation or optimization process, but most do not rely on it. Examples of compilers that rely heavily on SSA form include:

- The ETH Oberon-2 compiler was one of the first public projects to incorporate "GSA", a variant of SSA.

- The LLVM Compiler Infrastructure uses SSA form for all scalar register values (everything except memory) in its primary code representation. SSA form is only eliminated once register allocation occurs, late in the compile process (often at link time).

- The Open64 compiler uses SSA form in its global scalar optimizer, though the code is brought into SSA form before and taken out of SSA form afterwards. Open64 uses extensions to SSA form to represent memory in SSA form as well as scalar values.

- As of version 4 (released in April 2005) GCC, the GNU Compiler Collection, makes extensive use of SSA. The frontends generate "GENERIC" code which is then converted into "GIMPLE" code by the "gimplifier". High-level optimizations are then applied on the SSA form of "GIMPLE". The resulting optimized intermediate code is then translated into RTL, on which low-level optimizations are applied. The architecture-specific backends finally turn RTL into assembly language.

- IBM's open source adaptive Java virtual machine, Jikes RVM, uses extended Array SSA, an extension of SSA that allows analysis of scalars, arrays, and object fields in a unified framework. Extended Array SSA analysis is only enabled at the maximum optimization level, which is applied to the most frequently executed portions of code.

- In 2002, researchers modified IBM's JikesRVM (named Jalapeño at the time) to run both standard Java bytecode and a typesafe SSA (SafeTSA) bytecode class files, and demonstrated significant performance benefits to using the SSA bytecode.

- Oracle's HotSpot Java Virtual Machine uses an SSA-based intermediate language in its JIT compiler. [ 9 ]

- The Microsoft Visual C++ compiler backend available in Microsoft Visual Studio 2015 Update 3 uses SSA. [ 10 ]

- Mono uses SSA in its JIT compiler called Mini.

- jackcc is an open-source compiler for the academic instruction set Jackal 3.0. It uses a simple 3-operand code with SSA for its intermediate representation. As an interesting variant, it replaces Φ functions with a so-called SAME instruction, which instructs the register allocator to place the two live ranges into the same physical register.

- Although not a compiler, the Boomerang decompiler uses SSA form in its internal representation. SSA is used to simplify expression propagation, identifying parameters and returns, preservation analysis, and more.

- Portable.NET uses SSA in its JIT compiler.

- libFirm is a completely graph-based SSA intermediate representation for compilers. It uses SSA form for all scalar register values until code generation by use of an SSA-aware register allocator.

- The Illinois Concert Compiler circa 1994 [ 11 ] used a variant of SSA called SSU (Static Single Use) which renames each variable when it is assigned a value, and in each conditional context in which that variable is used; essentially the static single information form mentioned above. The SSU form is documented in John Plevyak's Ph.D Thesis.

- The COINS compiler uses SSA form optimizations.

- The Mozilla Firefox SpiderMonkey JavaScript engine uses SSA-based IR. [ 12 ]

- The Chromium V8 JavaScript engine implements SSA in its Crankshaft compiler infrastructure, as announced in December 2010.

- PyPy uses a linear SSA representation for traces in its JIT compiler.

- Android's Dalvik virtual machine uses SSA in its JIT compiler.

- Android's new optimizing compiler for the Android Runtime uses SSA for its IR.

- The Standard ML compiler MLton uses SSA in one of its intermediate languages.

- LuaJIT makes heavy use of SSA-based optimizations. [ 13 ]

- The PHP and Hack compiler HHVM uses SSA in its IR. [ 14 ]

- Reservoir Labs' R-Stream compiler supports non-SSA (quad list), SSA and SSI (Static Single Information [ 15 ] ) forms. [ 16 ]

- The Go compiler uses SSA (1.7: for x86-64 architecture only; 1.8: for all supported architectures). [ 17 ] [ 18 ]

- SPIR-V, the shading language standard for the Vulkan graphics API and kernel language for OpenCL compute API, is an SSA representation. [ 19 ]

- Various Mesa drivers via NIR, an SSA representation for shading languages. [ 20 ]

- WebKit uses SSA in its JIT compilers. [ 21 ] [ 22 ]

- Swift defines its own SSA form above LLVM IR, called SIL (Swift Intermediate Language). [ 23 ] [ 24 ]

- Erlang rewrote their compiler in OTP 22.0 to "internally use an intermediate representation based on Static Single Assignment (SSA)", with plans for further optimizations built on top of SSA in future releases. [ 25 ]

- value range propagation http://llvm.org/devmtg/2007-05/05-Lewycky-Predsimplify.pdf

- see page 43 ["The Origin of Ф-Functions and the Name"] of Zadeck, F. Kenneth, Presentation on the History of SSA at the SSA'09 Seminar, Autrans, France, April 2009 http://citi2.rice.edu/WS07/KennethZadeck.pdf

- Cytron, Ron; Ferrante, Jeanne; Rosen, Barry K.; Wegman, Mark N.; Zadeck, F. Kenneth (1 October 1991). "Efficiently computing static single assignment form and the control dependence graph". ACM Transactions on Programming Languages and Systems 13 (4): 451–490. doi:10.1145/115372.115320. https://dx.doi.org/10.1145%2F115372.115320

- Cooper, Keith D.; Harvey, Timothy J.; Kennedy, Ken (2001). A Simple, Fast Dominance Algorithm. https://web.archive.org/web/20200130142038/http://citi2.rice.edu/WS07/KennethZadeck.pdf.

- Briggs, Preston; Cooper, Keith D.; Harvey, Timothy J.; Simpson, L. Taylor (1998). Practical Improvements to the Construction and Destruction of Static Single Assignment Form. http://www.cs.rice.edu/~harv/my_papers/ssa.pdf.

- "Block Arguments vs PHI nodes - MLIR Rationale". https://mlir.llvm.org/docs/Rationale/Rationale/#block-arguments-vs-phi-nodes.

- von Ronne, Jeffery; Ning Wang; Michael Franz (2004). "Interpreting programs in static single assignment form". Proceedings of the 2004 workshop on Interpreters, virtual machines and emulators - IVME '04. p. 23. doi:10.1145/1059579.1059585. ISBN 1581139098. http://dl.acm.org/citation.cfm?doid=1059579.1059585.

- Boissinot, Benoit; Darte, Alain; Rastello, Fabrice; Dinechin, Benoît Dupont de; Guillon, Christophe (2008). "Revisiting Out-of-SSA Translation for Correctness, Code Quality, and Efficiency" (in en). HAL-Inria Cs.DS: 14. https://hal.inria.fr/inria-00349925.

- "The Java HotSpot Performance Engine Architecture". Oracle Corporation. http://www.oracle.com/technetwork/java/whitepaper-135217.html.

- "Introducing a new, advanced Visual C++ code optimizer". 4 May 2016. https://blogs.msdn.microsoft.com/vcblog/2016/05/04/new-code-optimizer.

- "Illinois Concert Project". http://www-csag.ucsd.edu/projects/concert.html.

- "IonMonkey Overview". https://wiki.mozilla.org/IonMonkey/Overview.

- "Bytecode Optimizations". the LuaJIT project. http://wiki.luajit.org/Optimizations.

- "HipHop Intermediate Representation (HHIR)". 30 October 2021. https://github.com/facebook/hhvm/blob/master/hphp/doc/ir.specification.

- Ananian, C. Scott; Rinard, Martin (1999). Static Single Information Form.

- "Encyclopedia of Parallel Computing". https://www.springer.com/us/book/9780387097657.

- "Go 1.7 Release Notes - The Go Programming Language". https://golang.org/doc/go1.7#compiler.

- "Go 1.8 Release Notes - The Go Programming Language". https://golang.org/doc/go1.8#compiler.

- "SPIR-V spec". https://www.khronos.org/registry/spir-v/specs/1.0/SPIRV.pdf.

- Ekstrand, Jason. "Reintroducing NIR, a new IR for mesa". https://lists.freedesktop.org/archives/mesa-dev/2014-December/072761.html.

- "Introducing the WebKit FTL JIT". 13 May 2014. https://webkit.org/blog/3362/introducing-the-webkit-ftl-jit/.

- "Introducing the B3 JIT Compiler". 15 February 2016. https://webkit.org/blog/5852/introducing-the-b3-jit-compiler/.

- "Swift Intermediate Language (GitHub)". 30 October 2021. https://github.com/apple/swift/blob/master/docs/SIL.rst.

- "Swift's High-Level IR: A Case Study of Complementing LLVM IR with Language-Specific Optimization, LLVM Developers Meetup 10/2015". https://www.youtube.com/watch?v=Ntj8ab-5cvE.

- "OTP 22.0 Release Notes". http://www.erlang.org/news/132.


Gender pay gap in U.S. hasn’t changed much in two decades

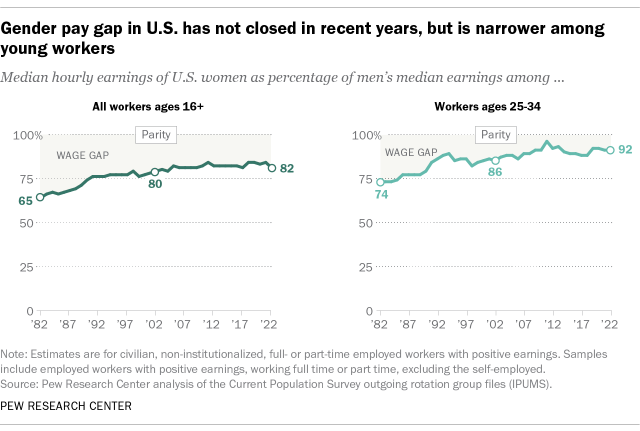

The gender gap in pay has remained relatively stable in the United States over the past 20 years or so. In 2022, women earned an average of 82% of what men earned, according to a new Pew Research Center analysis of median hourly earnings of both full- and part-time workers. These results are similar to where the pay gap stood in 2002, when women earned 80% as much as men.

As has long been the case, the wage gap is smaller for workers ages 25 to 34 than for all workers 16 and older. In 2022, women ages 25 to 34 earned an average of 92 cents for every dollar earned by a man in the same age group – an 8-cent gap. By comparison, the gender pay gap among workers of all ages that year was 18 cents.

While the gender pay gap has not changed much in the last two decades, it has narrowed considerably when looking at the longer term, both among all workers ages 16 and older and among those ages 25 to 34. The estimated 18-cent gender pay gap among all workers in 2022 was down from 35 cents in 1982. And the 8-cent gap among workers ages 25 to 34 in 2022 was down from a 26-cent gap four decades earlier.

The gender pay gap measures the difference in median hourly earnings between men and women who work full or part time in the United States. Pew Research Center’s estimate of the pay gap is based on an analysis of Current Population Survey (CPS) monthly outgoing rotation group files (IPUMS) from January 1982 to December 2022, combined to create annual files. To understand how we calculate the gender pay gap, read our 2013 post, “How Pew Research Center measured the gender pay gap.”

The COVID-19 outbreak affected data collection efforts by the U.S. government in its surveys, especially in 2020 and 2021, limiting in-person data collection and affecting response rates. It is possible that some measures of economic outcomes and how they vary across demographic groups are affected by these changes in data collection.

In addition to findings about the gender wage gap, this analysis includes information from a Pew Research Center survey about the perceived reasons for the pay gap, as well as the pressures and career goals of U.S. men and women. The survey was conducted among 5,098 adults and includes a subset of questions asked only for 2,048 adults who are employed part time or full time, from Oct. 10-16, 2022. Everyone who took part is a member of the Center’s American Trends Panel (ATP), an online survey panel that is recruited through national, random sampling of residential addresses. This way nearly all U.S. adults have a chance of selection. The survey is weighted to be representative of the U.S. adult population by gender, race, ethnicity, partisan affiliation, education and other categories. Read more about the ATP’s methodology.

Here are the questions used in this analysis, along with responses, and its methodology.

The U.S. Census Bureau has also analyzed the gender pay gap, though its analysis looks only at full-time workers (as opposed to full- and part-time workers). In 2021, full-time, year-round working women earned 84% of what their male counterparts earned, on average, according to the Census Bureau’s most recent analysis.

Much of the gender pay gap has been explained by measurable factors such as educational attainment, occupational segregation and work experience. The narrowing of the gap over the long term is attributable in large part to gains women have made in each of these dimensions.

Related: The Enduring Grip of the Gender Pay Gap

Even though women have increased their presence in higher-paying jobs traditionally dominated by men, such as professional and managerial positions, women as a whole continue to be overrepresented in lower-paying occupations relative to their share of the workforce. This may contribute to gender differences in pay.

Other factors that are difficult to measure, including gender discrimination, may also contribute to the ongoing wage discrepancy.

Perceived reasons for the gender wage gap

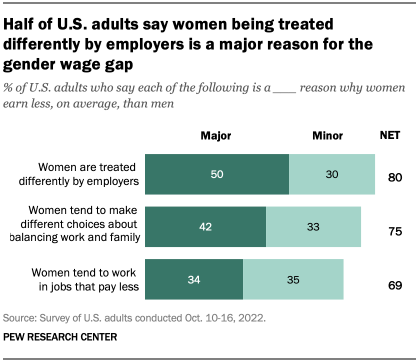

When asked about the factors that may play a role in the gender wage gap, half of U.S. adults point to women being treated differently by employers as a major reason, according to a Pew Research Center survey conducted in October 2022. Smaller shares point to women making different choices about how to balance work and family (42%) and working in jobs that pay less (34%).

There are some notable differences between men and women in views of what’s behind the gender wage gap. Women are much more likely than men (61% vs. 37%) to say a major reason for the gap is that employers treat women differently. And while 45% of women say a major factor is that women make different choices about how to balance work and family, men are slightly less likely to hold that view (40% say this).

Parents with children younger than 18 in the household are more likely than those who don’t have young kids at home (48% vs. 40%) to say a major reason for the pay gap is the choices that women make about how to balance family and work. On this question, differences by parental status are evident among both men and women.

Views about reasons for the gender wage gap also differ by party. About two-thirds of Democrats and Democratic-leaning independents (68%) say a major factor behind wage differences is that employers treat women differently, but far fewer Republicans and Republican leaners (30%) say the same. Conversely, Republicans are more likely than Democrats to say women’s choices about how to balance family and work (50% vs. 36%) and their tendency to work in jobs that pay less (39% vs. 30%) are major reasons why women earn less than men.

Democratic and Republican women are more likely than their male counterparts in the same party to say a major reason for the gender wage gap is that employers treat women differently. About three-quarters of Democratic women (76%) say this, compared with 59% of Democratic men. And while 43% of Republican women say unequal treatment by employers is a major reason for the gender wage gap, just 18% of GOP men share that view.

Pressures facing working women and men

Family caregiving responsibilities bring different pressures for working women and men, and research has shown that being a mother can reduce women’s earnings, while fatherhood can increase men’s earnings.

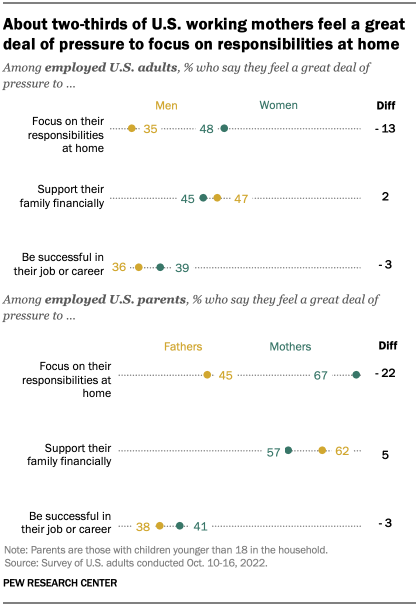

Employed women and men are about equally likely to say they feel a great deal of pressure to support their family financially and to be successful in their jobs and careers, according to the Center’s October survey. But women, and particularly working mothers, are more likely than men to say they feel a great deal of pressure to focus on responsibilities at home.

About half of employed women (48%) report feeling a great deal of pressure to focus on their responsibilities at home, compared with 35% of employed men. Among working mothers with children younger than 18 in the household, two-thirds (67%) say the same, compared with 45% of working dads.

When it comes to supporting their family financially, similar shares of working moms and dads (57% vs. 62%) report they feel a great deal of pressure, but this is driven mainly by the large share of unmarried working mothers who say they feel a great deal of pressure in this regard (77%). Among those who are married, working dads are far more likely than working moms (60% vs. 43%) to say they feel a great deal of pressure to support their family financially. (There were not enough unmarried working fathers in the sample to analyze separately.)

About four-in-ten working parents say they feel a great deal of pressure to be successful at their job or career. These findings don’t differ by gender.

Gender differences in job roles, aspirations

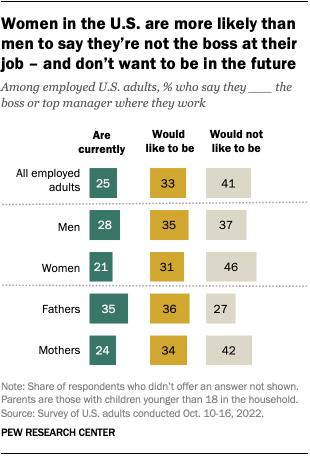

Overall, a quarter of employed U.S. adults say they are currently the boss or one of the top managers where they work, according to the Center’s survey. Another 33% say they are not currently the boss but would like to be in the future, while 41% are not and do not aspire to be the boss or one of the top managers.

Men are more likely than women to be a boss or a top manager where they work (28% vs. 21%). This is especially the case among employed fathers, 35% of whom say they are the boss or one of the top managers where they work. (The varying attitudes between fathers and men without children at least partly reflect differences in marital status and educational attainment between the two groups.)

In addition to being less likely than men to say they are currently the boss or a top manager at work, women are also more likely to say they wouldn’t want to be in this type of position in the future. More than four-in-ten employed women (46%) say this, compared with 37% of men. Similar shares of men (35%) and women (31%) say they are not currently the boss but would like to be one day. These patterns are similar among parents.

Note: This is an update of a post originally published on March 22, 2019. Anna Brown and former Pew Research Center writer/editor Amanda Barroso contributed to an earlier version of this analysis.


Carolina Aragão is a research associate focusing on social and demographic trends at Pew Research Center.


In compiler design, static single assignment form (often abbreviated as SSA form or simply SSA) is a property of an intermediate representation (IR) that requires each variable to be assigned exactly once and defined before it is used.

Static Single Assignment was presented in 1988 by Barry K. Rosen, Mark N. Wegman, and F. Kenneth Zadeck. In compiler design, Static Single Assignment (abbreviated SSA) is a means of structuring the IR (intermediate representation) such that every variable is assigned a value only once and every variable is defined before its use. The prime use of SSA is it simplifies and improves the ...

Many compilers convert programs into static single assignment (SSA) form, which does exactly what it says: it ensures that, globally, every variable has exactly one static assignment location. (Of course, that statement might be executed multiple times, which is why it's not dynamic single assignment.) In Bril terms, we convert a program like ...

SSA form. Static single-assignment form arranges for every value computed by a program to have a unique assignment (aka "definition"). A procedure is in SSA form if every variable has (statically) exactly one definition. SSA form simplifies several important optimizations, including various forms of redundancy elimination. Example.

With thanks to Niki Carroll, winny, and kurufu for their invaluable proofreading and advice. Preword. By popular demand, I'm doing another LLVM post. This time, it's single static assignment (or SSA) form, a common feature in the intermediate representations of optimizing compilers. Like the last one, SSA is a topic in compiler and IR design that I mostly understand but could benefit from ...

SSA connects definitions of variables with their uses. Dataflow facts propagate directly from defs to uses, rather than through the control flow graph. In SSA form, def-use chains are linear in the size of the original program; in non-SSA form they may be quadratic. There is a relationship between SSA form and the dominator structure of the CFG.

The assignment is static because it is defined once per each couple of HS and MS sensors to be used for the fusion. These algorithms compute a similarity metric between a HS band and the set of MS bands. The maximum similarity criterion is used for the assignment. Different metrics lead to different algorithms.

SSA. Static single assignment is an IR where every variable is assigned a value at most once in the program text. Easy for a basic block: assign to a fresh variable at each stmt; each use uses the most recently defined var. (Similar to Value Numbering.) Straight-line SSA.

Static Single Assignment Form Many of the complexities of optimization and code generation arise from the fact that a given variable may be assigned to in many different places. Thus reaching definition analysis gives us the set of assignments that may reach a given use of a variable. Live range analysis must track all assignments that may ...

Static Single Assignment Form (and dominators, post-dominators, dominance frontiers…) CS252r Spring 2011 ... •If node X contains assignment to a, put Φ function for a in dominance frontier of X •Adding Φ fn may require introducing additional Φ fn •Step 2: Rename variables so only one definition ...
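The Φ-placement step in the snippet above can be sketched as a worklist over dominance frontiers. This is a minimal illustration, not the course's own code: the function name `place_phi`, the `defsites` and `df` dictionaries, and the block names are assumptions, and the dominance frontiers are taken as already computed.

```python
def place_phi(defsites, df):
    """Return, for each variable, the set of blocks that need a phi function.

    defsites: variable -> set of blocks containing an assignment to it
    df:       block -> set of blocks in its dominance frontier (precomputed)
    Both layouts are assumptions made for this sketch.
    """
    phis = {}
    for var, sites in defsites.items():
        placed = set()        # blocks that already received a phi for var
        seen = set(sites)     # blocks already queued as definition sites
        work = list(sites)
        while work:
            block = work.pop()
            for frontier_block in df.get(block, ()):
                if frontier_block not in placed:
                    placed.add(frontier_block)
                    # A phi is itself a new definition of var, so its block
                    # may force further phis in its own dominance frontier.
                    if frontier_block not in seen:
                        seen.add(frontier_block)
                        work.append(frontier_block)
        phis[var] = placed
    return phis


# Diamond CFG: entry -> then/else -> join, with "a" assigned in both arms.
diamond_df = {"then": {"join"}, "else": {"join"}}
print(place_phi({"a": {"then", "else"}}, diamond_df))  # {'a': {'join'}}
```

The inner `if frontier_block not in seen` branch is what implements "adding a Φ function may require introducing additional Φ functions".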

Static single assignment is an IR where every variable is assigned a value at most once in the program text. Easy for a basic block (reminiscent of Value Numbering): Visit each instruction in program order: LHS: assign to a fresh version of the variable. RHS: use the most recent version of each variable. = x + y.
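The basic-block procedure described above can be sketched in a few lines. The instruction layout `(dest, op, args)` and the function name `rename_basic_block` are assumptions chosen for illustration; variables that are not defined inside the block are left unrenamed.

```python
from collections import defaultdict


def rename_basic_block(instructions):
    """Rename one straight-line block into SSA form.

    Each instruction is a tuple (dest, op, args); args holds variable names
    (strings) or integer constants. This layout is assumed for the sketch.
    """
    version = defaultdict(int)  # last version number handed out per variable
    current = {}                # variable -> most recent SSA name
    renamed = []
    for dest, op, args in instructions:
        # RHS: use the most recent version of each variable.
        new_args = [current.get(a, a) if isinstance(a, str) else a for a in args]
        # LHS: assign to a fresh version of the variable.
        version[dest] += 1
        new_dest = f"{dest}{version[dest]}"
        current[dest] = new_dest
        renamed.append((new_dest, op, new_args))
    return renamed


block = [
    ("x", "add", ["a", "b"]),
    ("y", "mul", ["x", 2]),
    ("x", "sub", ["y", "x"]),
]
for instr in rename_basic_block(block):
    print(instr)
# ('x1', 'add', ['a', 'b'])
# ('y1', 'mul', ['x1', 2])
# ('x2', 'sub', ['y1', 'x1'])
```

The arguments are renamed before the destination, so an instruction such as `x = y - x` reads the old version of `x` and writes the new one.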

Static Single Assignment Form. A property of an intermediate representation. Each variable is assigned exactly once. Control-flow and data-flow are explicit. Simplifies and improves a variety of compiler optimizations. Simple Example.

Static single-assignment form. SSA form. an ir that has a value-based name system, created by renaming and use of pseudooperations called ϕ-functions ssa encodes both control and value flow. It is used widely in optimization (see Section 9.3). (ssa) is an ir and a naming discipline that many modern compilers use to encode information about both the flow of control and the flow of values in ...

1. Introduction. Most current compilers and virtual machines, including the well-known GNU Compiler Collection (GCC) 1, the LLVM Compiler Infrastructure (LLVM) 2, and the Java Hotspot 3, use the so-called static single assignment (SSA) form as an intermediate representation (IR) of programs. SSA programs are often used for efficient program analysis, transformation, optimization, and efficient ...

A definition d of some variable v reaches operation i if there is at least one path leading from that definition to operation i before v is redefined. REACHES(n): the set of definitions that reach the start of node n. DEDEF(n): the set of downward-exposed definitions in n, i.e. their defined variables are not redefined before leaving n.
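One common way to compute REACHES is a fixed-point iteration over the control flow graph. The sketch below is an assumption-laden illustration: the `defkill` sets (definitions whose variable is redefined in a node) do not appear in the text above, and the dictionary layout and function name are hypothetical.

```python
def reaching_definitions(preds, dedef, defkill):
    """Iterate to a fixed point of
    REACHES(n) = union over predecessors p of DEDEF(p) | (REACHES(p) - DEFKILL(p)).

    preds:   node -> list of predecessor nodes (every node must be a key)
    dedef:   node -> set of downward-exposed definitions in the node
    defkill: node -> set of definitions killed by the node (assumed input)
    """
    reaches = {n: set() for n in preds}
    changed = True
    while changed:
        changed = False
        for n in preds:
            new = set()
            for p in preds[n]:
                new |= dedef[p] | (reaches[p] - defkill[p])
            if new != reaches[n]:
                reaches[n] = new
                changed = True
    return reaches


# CFG: A -> B -> C and A -> C; d1 defines x in A, d2 redefines x in B.
preds = {"A": [], "B": ["A"], "C": ["A", "B"]}
dedef = {"A": {"d1"}, "B": {"d2"}, "C": set()}
defkill = {"A": {"d2"}, "B": {"d1"}, "C": set()}
print(reaching_definitions(preds, dedef, defkill)["C"] == {"d1", "d2"})  # True
```

Both definitions of x reach C here, which is exactly the situation a Φ function resolves in SSA form.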

13.3 Static Single Assignment. Most of the tree optimizers rely on the data flow information provided by the Static Single Assignment (SSA) form. We implement the SSA form as described in R. Cytron, J. Ferrante, B. Rosen, M. Wegman, and K. Zadeck. Efficiently Computing Static Single Assignment Form and the Control Dependence Graph.

static single assignment form (SSA form). SSA was conceived to make program analyses more efficient by compactly representing use-def chains. Over the last ... names have no meaning otherwise, in particular they are not local variables in the sense of an imperative language. 1 This acts like a let binding in a functional language. In fact, SSA ...


In this way the compiler can hop quickly from use to definition to use to definition. An improvement on the idea of def-use chains is static single-assignment form, or SSA form, an intermediate representation in which each variable has only one definition in the program text. The one (static) definition-site may be in a loop that is executed ...

The static single-assignment (SSA) form is a program representation in which variables are split into "instances." Every new assignment to a variable — or more generally, every new definition of a variable — results in a new instance. The variable instances are numbered so that each use of a variable may be easily