Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Writing a Literature Review


This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

A literature review is a document or section of a document that collects key sources on a topic and discusses those sources in conversation with each other (also called synthesis). The lit review is an important genre in many disciplines, not just literature (i.e., the study of works of literature such as novels and plays). When we say “literature review” or refer to “the literature,” we are talking about the research (scholarship) in a given field. You will often see the terms “the research,” “the scholarship,” and “the literature” used mostly interchangeably.

Where, when, and why would I write a lit review?

There are a number of different situations where you might write a literature review, each with slightly different expectations; different disciplines, too, have field-specific expectations for what a literature review is and does. For instance, in the humanities, authors might include more overt argumentation and interpretation of source material in their literature reviews, whereas in the sciences, authors are more likely to report study designs and results in their literature reviews; these differences reflect these disciplines’ purposes and conventions in scholarship. You should always look at examples from your own discipline and talk to professors or mentors in your field to be sure you understand your discipline’s conventions, for literature reviews as well as for any other genre.

A literature review can be part of a research paper or scholarly article, usually falling after the introduction and before the research methods section. In these cases, the lit review just needs to cover scholarship that is important to the issue you are writing about; sometimes it will also cover key sources that informed your research methodology.

Lit reviews can also be standalone pieces, either as assignments in a class or as publications. In a class, a lit review may be assigned to help students familiarize themselves with a topic and with scholarship in their field, get an idea of the other researchers working on the topic they’re interested in, find gaps in existing research in order to propose new projects, and/or develop a theoretical framework and methodology for later research. As a publication, a lit review usually is meant to help make other scholars’ lives easier by collecting and summarizing, synthesizing, and analyzing existing research on a topic. This can be especially helpful for students or scholars getting into a new research area, or for directing an entire community of scholars toward questions that have not yet been answered.

What are the parts of a lit review?

Most lit reviews use a basic introduction-body-conclusion structure; if your lit review is part of a larger paper, the introduction and conclusion pieces may be just a few sentences while you focus most of your attention on the body. If your lit review is a standalone piece, the introduction and conclusion take up more space and give you a place to discuss your goals, research methods, and conclusions separately from where you discuss the literature itself.

Introduction:

- An introductory paragraph that explains your working topic and thesis

- A forecast of key topics or texts that will appear in the review

- Potentially, a description of how you found sources and how you analyzed them for inclusion and discussion in the review (more often found in published, standalone literature reviews than in lit review sections in an article or research paper)

Body:

- Summarize and synthesize: Give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: Don’t just paraphrase other researchers – add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: Mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: Use transition words and topic sentences to draw connections, comparisons, and contrasts.

Conclusion:

- Summarize the key findings you have taken from the literature and emphasize their significance

- Connect it back to your primary research question

How should I organize my lit review?

Lit reviews can take many different organizational patterns depending on what you are trying to accomplish with the review. Here are some examples:

- Chronological: The simplest approach is to trace the development of the topic over time, which helps familiarize the audience with the topic (for instance, if you are introducing something that is not commonly known in your field). If you choose this strategy, be careful to avoid simply listing and summarizing sources in order. Try to analyze the patterns, turning points, and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred (as mentioned previously, this may not be appropriate in your discipline; check with a teacher or mentor if you’re unsure).

- Thematic: If you have found some recurring central themes that you will continue working with throughout your piece, you can organize your literature review into subsections that address different aspects of the topic. For example, if you are reviewing literature about women and religion, key themes can include the role of women in churches and the religious attitude towards women.

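- Methodological: If you draw your sources from different disciplines or fields that use a variety of research methods, you can compare the results and conclusions that emerge from different approaches. For example:

  - Qualitative versus quantitative research

  - Empirical versus theoretical scholarship

  - Divide the research by sociological, historical, or cultural sources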

- Theoretical: In many humanities articles, the literature review is the foundation for the theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts. You can argue for the relevance of a specific theoretical approach or combine various theoretical concepts to create a framework for your research.

What are some strategies or tips I can use while writing my lit review?

Any lit review is only as good as the research it discusses; make sure your sources are well-chosen and your research is thorough. Don’t be afraid to do more research if you discover a new thread as you’re writing. More information on the research process is available in our "Conducting Research" resources.

As you’re doing your research, create an annotated bibliography (see our page on this type of document). Much of the information used in an annotated bibliography can also be used in a literature review, so you’ll not only be partially drafting your lit review as you research, but also developing your sense of the larger conversation going on among scholars, professionals, and any other stakeholders in your topic.

Usually you will need to synthesize research rather than just summarizing it. This means drawing connections between sources to create a picture of the scholarly conversation on a topic over time. Many student writers struggle to synthesize because they feel they don’t have anything to add to the scholars they are citing; here are some strategies to help you:

- It often helps to remember that the point of these kinds of syntheses is to show your readers how you understand your research, to help them read the rest of your paper.

- Writing teachers often say synthesis is like hosting a dinner party: imagine all your sources are together in a room, discussing your topic. What are they saying to each other?

- Look at the in-text citations in each paragraph. Are you citing just one source for each paragraph? This usually indicates summary only. When you have multiple sources cited in a paragraph, you are more likely to be synthesizing them (not always, but often).
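The one-source-per-paragraph check above can be roughed out in code if your draft is in plain text. This is a minimal sketch under loud assumptions: the function names are hypothetical, it only recognises APA-style parenthetical citations such as (Smith, 2019), it misses narrative citations like "Smith (2019) argues", and it treats "(Smith, 2019, p. 4)" as a different source from "(Smith, 2019)". A flagged paragraph is only a prompt to reread it, not proof that you are summarizing.

```python
import re

# A pair of parentheses containing a four-digit year, e.g. "(Smith, 2019)"
# or "(Lee & Park, 2020; Jones et al., 2018)". Assumes APA-style citations.
PAREN_CITATION = re.compile(r"\(([^()]*\b\d{4}[a-z]?\b[^()]*)\)")


def sources_per_paragraph(text: str) -> list[set[str]]:
    """Return the distinct parenthetical citations found in each paragraph."""
    # Paragraphs are assumed to be separated by at least one blank line.
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    result = []
    for paragraph in paragraphs:
        cited = set()
        for group in PAREN_CITATION.findall(paragraph):
            # Several sources in one pair of parentheses are separated by ";".
            for citation in group.split(";"):
                citation = " ".join(citation.split())
                # Skip year-only groups such as the "(2019)" in "Smith (2019)".
                if re.search(r"[A-Za-z]{2}", citation):
                    cited.add(citation)
        result.append(cited)
    return result


def single_source_paragraphs(text: str) -> list[int]:
    """Return 1-based numbers of paragraphs that cite exactly one source."""
    return [
        number
        for number, cited in enumerate(sources_per_paragraph(text), start=1)
        if len(cited) == 1
    ]


draft = (
    "Social media use is linked to lower self-esteem (Smith, 2019).\n\n"
    "Two studies agree (Lee & Park, 2020; Jones et al., 2018), "
    "while Smith (2019) disagrees (Smith, 2019)."
)
print(single_source_paragraphs(draft))  # [1]
```

Here the first paragraph leans on a single source, while the second puts three sources in conversation, which is closer to synthesis.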

The most interesting literature reviews are often written as arguments (again, as mentioned at the beginning of the page, this is discipline-specific and doesn’t work for all situations). Often, the literature review is where you can establish your research as filling a particular gap or as relevant in a particular way. You can begin to do this in the introduction of an article, but the literature review section gives you more room to establish the conversation in the way you would like your readers to see it. You can choose the intellectual lineage you would like to be part of and whose definitions matter most to your thinking (mostly humanities-specific, but this goes for sciences as well). In addressing these points, you argue for your place in the conversation, which tends to make the lit review more compelling than a simple reporting of other sources.


What is a Literature Review? | Guide, Template, & Examples

Published on 22 February 2022 by Shona McCombes. Revised on 7 June 2022.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research.

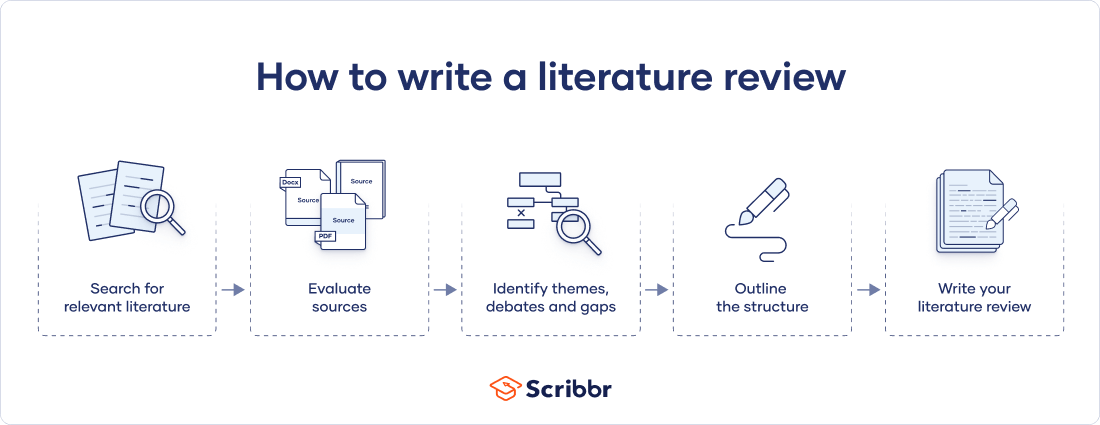

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarise sources – it analyses, synthesises, and critically evaluates to give a clear picture of the state of knowledge on the subject.



Why write a literature review

When you write a dissertation or thesis, you will have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and scholarly context

- Develop a theoretical framework and methodology for your research

- Position yourself in relation to other researchers and theorists

- Show how your dissertation addresses a gap or contributes to a debate

You might also have to write a literature review as a stand-alone assignment. In this case, the purpose is to evaluate the current state of research and demonstrate your knowledge of scholarly debates around a topic.

The content will look slightly different in each case, but the process of conducting a literature review follows the same steps. We’ve written a step-by-step guide that you can follow below.


Examples of literature reviews

Writing literature reviews can be quite challenging! A good starting point could be to look at some examples, depending on what kind of literature review you’d like to write.

- Example literature review #1: “Why Do People Migrate? A Review of the Theoretical Literature” (Theoretical literature review about the development of economic migration theory from the 1950s to today.)

- Example literature review #2: “Literature review as a research methodology: An overview and guidelines” (Methodological literature review about interdisciplinary knowledge acquisition and production.)

- Example literature review #3: “The Use of Technology in English Language Learning: A Literature Review” (Thematic literature review about the effects of technology on language acquisition.)

- Example literature review #4: “Learners’ Listening Comprehension Difficulties in English Language Learning: A Literature Review” (Chronological literature review about how the concept of listening skills has changed over time.)


Step 1: Search for relevant literature

Before you begin searching for literature, you need a clearly defined topic.

If you are writing the literature review section of a dissertation or research paper, you will search for literature related to your research objectives and questions.

If you are writing a literature review as a stand-alone assignment, you will have to choose a focus and develop a central question to direct your search. Unlike a dissertation research question, this question has to be answerable without collecting original data. You should be able to answer it based only on a review of existing publications.

Make a list of keywords

Start by creating a list of keywords related to your research topic. Include each of the key concepts or variables you’re interested in, and list any synonyms and related terms. You can add to this list if you discover new keywords in the process of your literature search. For example, a review of research on social media and body image among young people might group its keywords like this:

- Social media, Facebook, Instagram, Twitter, Snapchat, TikTok

- Body image, self-perception, self-esteem, mental health

- Generation Z, teenagers, adolescents, youth

Search for relevant sources

Use your keywords to begin searching for sources. Some databases to search for journals and articles include:

- Your university’s library catalogue

- Google Scholar

- Project Muse (humanities and social sciences)

- Medline (life sciences and biomedicine)

- EconLit (economics)

- Inspec (physics, engineering and computer science)

You can use Boolean operators such as AND, OR and NOT to help narrow down your search.
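If you keep your keyword groups in a list, you can assemble a search string from them mechanically. The sketch below is a hypothetical Python helper (`build_query` and `as_search_term` are illustrative names, not part of any database's tools). It assumes the common convention of OR between synonyms for one concept, AND between different concepts, NOT for excluded terms, and quotation marks around multi-word phrases. Databases differ in their exact syntax (for example, some use a minus sign rather than NOT), so check each database's search help before pasting the result in.

```python
def as_search_term(term: str) -> str:
    """Quote multi-word terms so that databases search for the exact phrase."""
    return f'"{term}"' if " " in term else term


def build_query(concept_groups: list[list[str]], exclude: tuple[str, ...] = ()) -> str:
    """Combine keyword groups into one Boolean search string."""
    # Synonyms for the same concept are alternatives, so join them with OR.
    groups = [
        "(" + " OR ".join(as_search_term(t) for t in group) + ")"
        for group in concept_groups
    ]
    # Every concept should appear in a result, so join the groups with AND.
    query = " AND ".join(groups)
    # Terms you want to rule out are appended with NOT.
    for term in exclude:
        query += " NOT " + as_search_term(term)
    return query


keywords = [
    ["social media", "Instagram", "TikTok"],
    ["body image", "self-esteem"],
    ["teenagers", "adolescents"],
]
print(build_query(keywords))
# ("social media" OR Instagram OR TikTok) AND ("body image" OR self-esteem) AND (teenagers OR adolescents)
```

Adding a synonym to a group broadens the search, while adding a whole new group narrows it, which is why it helps to keep the groups separate as you refine your keyword list.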

Read the abstract to find out whether an article is relevant to your question. When you find a useful book or article, you can check the bibliography to find other relevant sources.

To identify the most important publications on your topic, take note of recurring citations. If the same authors, books or articles keep appearing in your reading, make sure to seek them out.
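Spotting recurring citations is essentially a counting exercise. As a rough sketch, assuming you have typed each paper's bibliography into a list of normalised keys such as "Smith 2019" (both that key format and the `recurring_sources` function are hypothetical), you could tally how many of the papers you have read cite each source:

```python
from collections import Counter


def recurring_sources(
    reference_lists: dict[str, list[str]], min_count: int = 2
) -> list[tuple[str, int]]:
    """Return sources cited by at least `min_count` of the papers you have read."""
    counts = Counter()
    for cited in reference_lists.values():
        # A set ensures that a paper citing the same source twice counts once.
        counts.update(set(cited))
    recurring = [(source, n) for source, n in counts.items() if n >= min_count]
    # Most frequently cited first; ties broken alphabetically.
    return sorted(recurring, key=lambda item: (-item[1], item[0]))


reading = {
    "Paper A": ["Smith 2019", "Lee 2020", "Jones 2018"],
    "Paper B": ["Smith 2019", "Lee 2020"],
    "Paper C": ["Smith 2019", "Brown 2021", "Smith 2019"],
}
print(recurring_sources(reading))  # [('Smith 2019', 3), ('Lee 2020', 2)]
```

Counting each paper's citations as a set means the tally reflects how many different authors rely on a source, rather than how often one author repeats it.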

Step 2: Evaluate and select sources

You probably won’t be able to read absolutely everything that has been written on the topic – you’ll have to evaluate which sources are most relevant to your questions.

For each publication, ask yourself:

- What question or problem is the author addressing?

- What are the key concepts and how are they defined?

- What are the key theories, models and methods? Does the research use established frameworks or take an innovative approach?

- What are the results and conclusions of the study?

- How does the publication relate to other literature in the field? Does it confirm, add to, or challenge established knowledge?

- How does the publication contribute to your understanding of the topic? What are its key insights and arguments?

- What are the strengths and weaknesses of the research?

Make sure the sources you use are credible, and make sure you read any landmark studies and major theories in your field of research.

You can find out how many times an article has been cited on Google Scholar. A high citation count usually indicates that the article has been influential in the field, which makes it a strong candidate for inclusion in your literature review.

The scope of your review will depend on your topic and discipline: in the sciences you usually only review recent literature, but in the humanities you might take a long historical perspective (for example, to trace how a concept has changed in meaning over time).

Remember that you can use our template to summarise and evaluate sources you’re thinking about using!

Take notes and cite your sources

As you read, you should also begin the writing process. Take notes that you can later incorporate into the text of your literature review.

It’s important to keep track of your sources with references to avoid plagiarism. It can be helpful to make an annotated bibliography, where you compile full reference information and write a paragraph of summary and analysis for each source. This helps you remember what you read and saves time later in the process.


Step 3: Identify themes, debates and gaps

To begin organising your literature review’s argument and structure, you need to understand the connections and relationships between the sources you’ve read. Based on your reading and notes, you can look for:

- Trends and patterns (in theory, method or results): do certain approaches become more or less popular over time?

- Themes: what questions or concepts recur across the literature?

- Debates, conflicts and contradictions: where do sources disagree?

- Pivotal publications: are there any influential theories or studies that changed the direction of the field?

- Gaps: what is missing from the literature? Are there weaknesses that need to be addressed?

This step will help you work out the structure of your literature review and (if applicable) show how your own research will contribute to existing knowledge.

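For example, if you are reviewing literature on social media and body image, you might notice that:

- Most research has focused on young women.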

- There is an increasing interest in the visual aspects of social media.

- But there is still a lack of robust research on highly-visual platforms like Instagram and Snapchat – this is a gap that you could address in your own research.

Step 4: Outline your literature review’s structure

There are various approaches to organising the body of a literature review. You should have a rough idea of your strategy before you start writing.

Depending on the length of your literature review, you can combine several of these strategies (for example, your overall structure might be thematic, but each theme is discussed chronologically).

Chronological

The simplest approach is to trace the development of the topic over time. However, if you choose this strategy, be careful to avoid simply listing and summarising sources in order.

Try to analyse patterns, turning points and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred.

Thematic

If you have found some recurring central themes, you can organise your literature review into subsections that address different aspects of the topic.

For example, if you are reviewing literature about inequalities in migrant health outcomes, key themes might include healthcare policy, language barriers, cultural attitudes, legal status, and economic access.

Methodological

If you draw your sources from different disciplines or fields that use a variety of research methods, you might want to compare the results and conclusions that emerge from different approaches. For example:

- Look at what results have emerged in qualitative versus quantitative research

- Discuss how the topic has been approached by empirical versus theoretical scholarship

- Divide the literature into sociological, historical, and cultural sources

Theoretical

A literature review is often the foundation for a theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts.

You might argue for the relevance of a specific theoretical approach, or combine various theoretical concepts to create a framework for your research.

Step 5: Write your literature review

Like any other academic text, your literature review should have an introduction, a main body, and a conclusion. What you include in each depends on the objective of your literature review.

The introduction should clearly establish the focus and purpose of the literature review.

If you are writing the literature review as part of your dissertation or thesis, reiterate your central problem or research question and give a brief summary of the scholarly context. You can emphasise the timeliness of the topic (“many recent studies have focused on the problem of x”) or highlight a gap in the literature (“while there has been much research on x, few researchers have taken y into consideration”).

Depending on the length of your literature review, you might want to divide the body into subsections. You can use a subheading for each theme, time period, or methodological approach.

As you write, make sure to follow these tips:

- Summarise and synthesise: give an overview of the main points of each source and combine them into a coherent whole.

- Analyse and interpret: don’t just paraphrase other researchers – add your own interpretations, discussing the significance of findings in relation to the literature as a whole.

- Critically evaluate: mention the strengths and weaknesses of your sources.

- Write in well-structured paragraphs: use transitions and topic sentences to draw connections, comparisons and contrasts.

In the conclusion, you should summarise the key findings you have taken from the literature and emphasise their significance.

If the literature review is part of your dissertation or thesis, reiterate how your research addresses gaps and contributes new knowledge, or discuss how you have drawn on existing theories and methods to build a framework for your research. This can lead directly into your methodology section.

Frequently asked questions about literature reviews

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a dissertation, thesis, research paper, or proposal.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarise yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.

The literature review usually comes near the beginning of your dissertation. After the introduction, it grounds your research in a scholarly field and leads directly to your theoretical framework or methodology.

Cite this Scribbr article


McCombes, S. (2022, June 07). What is a Literature Review? | Guide, Template, & Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/thesis-dissertation/literature-review/


Methodological Approaches to Literature Review

Living reference work entry, first online 09 May 2023

Dennis Thomas, Elida Zairina & Johnson George


The literature review can serve various functions in the contexts of education and research. It aids in identifying knowledge gaps, informing research methodology, and developing a theoretical framework during the planning stages of a research study or project, as well as in reporting review findings in the context of the existing literature. This chapter discusses the methodological approaches to conducting a literature review and offers an overview of different types of reviews, including narrative reviews, scoping reviews, and systematic reviews with reporting strategies such as meta-analysis and meta-synthesis. Review authors should consider the scope of the literature review when selecting a type and method. Being focused is essential for a successful review; however, this must be balanced against the relevance of the review to a broad audience.

This is a preview of subscription content, log in via an institution to check access.

Access this chapter

Institutional subscriptions

Akobeng AK. Principles of evidence based medicine. Arch Dis Child. 2005;90(8):837–40.

Article CAS PubMed PubMed Central Google Scholar

Alharbi A, Stevenson M. Refining Boolean queries to identify relevant studies for systematic review updates. J Am Med Inform Assoc. 2020;27(11):1658–66.

Article PubMed PubMed Central Google Scholar

Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32.

Article Google Scholar

Aromataris E MZE. JBI manual for evidence synthesis. 2020.

Google Scholar

Aromataris E, Pearson A. The systematic review: an overview. Am J Nurs. 2014;114(3):53–8.

Article PubMed Google Scholar

Aromataris E, Riitano D. Constructing a search strategy and searching for evidence. A guide to the literature search for a systematic review. Am J Nurs. 2014;114(5):49–56.

Babineau J. Product review: covidence (systematic review software). J Canad Health Libr Assoc Canada. 2014;35(2):68–71.

Baker JD. The purpose, process, and methods of writing a literature review. AORN J. 2016;103(3):265–9.

Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 2010;7(9):e1000326.

Bramer WM, Rethlefsen ML, Kleijnen J, Franco OH. Optimal database combinations for literature searches in systematic reviews: a prospective exploratory study. Syst Rev. 2017;6(1):1–12.

Brown D. A review of the PubMed PICO tool: using evidence-based practice in health education. Health Promot Pract. 2020;21(4):496–8.

Cargo M, Harris J, Pantoja T, et al. Cochrane qualitative and implementation methods group guidance series – paper 4: methods for assessing evidence on intervention implementation. J Clin Epidemiol. 2018;97:59–69.

Cook DJ, Mulrow CD, Haynes RB. Systematic reviews: synthesis of best evidence for clinical decisions. Ann Intern Med. 1997;126(5):376–80.

Article CAS PubMed Google Scholar

Counsell C. Formulating questions and locating primary studies for inclusion in systematic reviews. Ann Intern Med. 1997;127(5):380–7.

Cummings SR, Browner WS, Hulley SB. Conceiving the research question and developing the study plan. In: Cummings SR, Browner WS, Hulley SB, editors. Designing Clinical Research: An Epidemiological Approach. 4th ed. Philadelphia (PA): P Lippincott Williams & Wilkins; 2007. p. 14–22.

Eriksen MB, Frandsen TF. The impact of patient, intervention, comparison, outcome (PICO) as a search strategy tool on literature search quality: a systematic review. JMLA. 2018;106(4):420.

Ferrari R. Writing narrative style literature reviews. Medical Writing. 2015;24(4):230–5.

Flemming K, Booth A, Hannes K, Cargo M, Noyes J. Cochrane qualitative and implementation methods group guidance series – paper 6: reporting guidelines for qualitative, implementation, and process evaluation evidence syntheses. J Clin Epidemiol. 2018;97:79–85.

Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Inf Libr J. 2009;26(2):91–108.

Green BN, Johnson CD, Adams A. Writing narrative literature reviews for peer-reviewed journals: secrets of the trade. J Chiropr Med. 2006;5(3):101–17.

Gregory AT, Denniss AR. An introduction to writing narrative and systematic reviews; tasks, tips and traps for aspiring authors. Heart Lung Circ. 2018;27(7):893–8.

Harden A, Thomas J, Cargo M, et al. Cochrane qualitative and implementation methods group guidance series – paper 5: methods for integrating qualitative and implementation evidence within intervention effectiveness reviews. J Clin Epidemiol. 2018;97:70–8.

Harris JL, Booth A, Cargo M, et al. Cochrane qualitative and implementation methods group guidance series – paper 2: methods for question formulation, searching, and protocol development for qualitative evidence synthesis. J Clin Epidemiol. 2018;97:39–48.

Higgins J, Thomas J. In: Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions version 6.3, updated February 2022). Available from www.training.cochrane.org/handbook.: Cochrane; 2022.

International prospective register of systematic reviews (PROSPERO). Available from https://www.crd.york.ac.uk/prospero/ .

Khan KS, Kunz R, Kleijnen J, Antes G. Five steps to conducting a systematic review. J R Soc Med. 2003;96(3):118–21.

Landhuis E. Scientific literature: information overload. Nature. 2016;535(7612):457–8.

Lockwood C, Porritt K, Munn Z, Rittenmeyer L, Salmond S, Bjerrum M, Loveday H, Carrier J, Stannard D. Chapter 2: Systematic reviews of qualitative evidence. In: Aromataris E, Munn Z, editors. JBI Manual for Evidence Synthesis. JBI; 2020. Available from https://synthesismanual.jbi.global . https://doi.org/10.46658/JBIMES-20-03 .

Chapter Google Scholar

Lorenzetti DL, Topfer L-A, Dennett L, Clement F. Value of databases other than medline for rapid health technology assessments. Int J Technol Assess Health Care. 2014;30(2):173–8.

Moher D, Liberati A, Tetzlaff J, Altman DG, the PRISMA Group. Preferred reporting items for (SR) and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;6:264–9.

Mulrow CD. Systematic reviews: rationale for systematic reviews. BMJ. 1994;309(6954):597–9.

Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018;18(1):143.

Munthe-Kaas HM, Glenton C, Booth A, Noyes J, Lewin S. Systematic mapping of existing tools to appraise methodological strengths and limitations of qualitative research: first stage in the development of the CAMELOT tool. BMC Med Res Methodol. 2019;19(1):1–13.

Murphy CM. Writing an effective review article. J Med Toxicol. 2012;8(2):89–90.

NHMRC. Guidelines for guidelines: assessing risk of bias. Available at https://nhmrc.gov.au/guidelinesforguidelines/develop/assessing-risk-bias . Last published 29 August 2019. Accessed 29 Aug 2022.

Noyes J, Booth A, Cargo M, et al. Cochrane qualitative and implementation methods group guidance series – paper 1: introduction. J Clin Epidemiol. 2018b;97:35–8.

Noyes J, Booth A, Flemming K, et al. Cochrane qualitative and implementation methods group guidance series – paper 3: methods for assessing methodological limitations, data extraction and synthesis, and confidence in synthesized qualitative findings. J Clin Epidemiol. 2018a;97:49–58.

Noyes J, Booth A, Moore G, Flemming K, Tunçalp Ö, Shakibazadeh E. Synthesising quantitative and qualitative evidence to inform guidelines on complex interventions: clarifying the purposes, designs and outlining some methods. BMJ Glob Health. 2019;4(Suppl 1):e000893.

Peters MD, Godfrey CM, Khalil H, McInerney P, Parker D, Soares CB. Guidance for conducting systematic scoping reviews. Int J Evid Healthcare. 2015;13(3):141–6.

Polanin JR, Pigott TD, Espelage DL, Grotpeter JK. Best practice guidelines for abstract screening large-evidence systematic reviews and meta-analyses. Res Synth Methods. 2019;10(3):330–42.

Article PubMed Central Google Scholar

Shea BJ, Grimshaw JM, Wells GA, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7(1):1–7.

Shea BJ, Reeves BC, Wells G, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. Brit Med J. 2017;358

Sterne JA, Hernán MA, Reeves BC, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. Br Med J. 2016;355

Stroup DF, Berlin JA, Morton SC, et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. JAMA. 2000;283(15):2008–12.

Tawfik GM, Dila KAS, Mohamed MYF, et al. A step by step guide for conducting a systematic review and meta-analysis with simulation data. Trop Med Health. 2019;47(1):1–9.

The Critical Appraisal Program. Critical appraisal skills program. Available at https://casp-uk.net/ . 2022. Accessed 29 Aug 2022.

The University of Melbourne. Writing a literature review in Research Techniques 2022. Available at https://students.unimelb.edu.au/academic-skills/explore-our-resources/research-techniques/reviewing-the-literature . Accessed 29 Aug 2022.

The Writing Center, University of Wisconsin-Madison. Learn how to write a literature review in The Writer’s Handbook – Academic Professional Writing. 2022. Available at https://writing.wisc.edu/handbook/assignments/reviewofliterature/ . Accessed 29 Aug 2022.

Thompson SG, Sharp SJ. Explaining heterogeneity in meta-analysis: a comparison of methods. Stat Med. 1999;18(20):2693–708.

Tricco AC, Lillie E, Zarin W, et al. A scoping review on the conduct and reporting of scoping reviews. BMC Med Res Methodol. 2016;16(1):15.

Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–73.

Yoneoka D, Henmi M. Clinical heterogeneity in random-effect meta-analysis: between-study boundary estimate problem. Stat Med. 2019;38(21):4131–45.

Yuan Y, Hunt RH. Systematic reviews: the good, the bad, and the ugly. Am J Gastroenterol. 2009;104(5):1086–92.

Author information

Authors and affiliations.

Centre of Excellence in Treatable Traits, College of Health, Medicine and Wellbeing, University of Newcastle, Hunter Medical Research Institute Asthma and Breathing Programme, Newcastle, NSW, Australia

Dennis Thomas

Department of Pharmacy Practice, Faculty of Pharmacy, Universitas Airlangga, Surabaya, Indonesia

Elida Zairina

Centre for Medicine Use and Safety, Monash Institute of Pharmaceutical Sciences, Faculty of Pharmacy and Pharmaceutical Sciences, Monash University, Parkville, VIC, Australia

Johnson George

Corresponding author

Correspondence to Johnson George.

Section Editor information

College of Pharmacy, Qatar University, Doha, Qatar

Derek Charles Stewart

Department of Pharmacy, University of Huddersfield, Huddersfield, United Kingdom

Zaheer-Ud-Din Babar

Copyright information

© 2023 Springer Nature Switzerland AG

About this entry

Cite this entry.

Thomas, D., Zairina, E., George, J. (2023). Methodological Approaches to Literature Review. In: Encyclopedia of Evidence in Pharmaceutical Public Health and Health Services Research in Pharmacy. Springer, Cham. https://doi.org/10.1007/978-3-030-50247-8_57-1

DOI: https://doi.org/10.1007/978-3-030-50247-8_57-1

Received: 22 February 2023

Accepted: 22 February 2023

Published: 09 May 2023

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-50247-8

Online ISBN: 978-3-030-50247-8

eBook Packages: Springer Reference Biomedicine and Life Sciences; Reference Module Biomedical and Life Sciences

What is a Literature Review? How to Write It (with Examples)

A literature review is a critical analysis and synthesis of existing research on a particular topic. It provides an overview of the current state of knowledge, identifies gaps, and highlights key findings in the literature. 1 The purpose of a literature review is to situate your own research within the context of existing scholarship, demonstrating your understanding of the topic and showing how your work contributes to the ongoing conversation in the field. Knowing how to write a literature review is therefore a critical skill for successful research: your ability to summarize and synthesize prior research on a topic demonstrates your grasp of the subject and supports your own learning.

Table of Contents

- What is the purpose of literature review?

- a. Habitat Loss and Species Extinction:

- b. Range Shifts and Phenological Changes:

- c. Ocean Acidification and Coral Reefs:

- d. Adaptive Strategies and Conservation Efforts:

- How to write a good literature review

- Choose a Topic and Define the Research Question:

- Decide on the Scope of Your Review:

- Select Databases for Searches:

- Conduct Searches and Keep Track:

- Review the Literature:

- Organize and Write Your Literature Review:

- Frequently asked questions

What is a literature review?

A well-conducted literature review demonstrates the researcher’s familiarity with the existing literature, establishes the context for their own research, and contributes to scholarly conversations on the topic. It also helps researchers avoid duplicating previous work and ensures that their research is informed by and builds upon the existing body of knowledge.

What is the purpose of literature review?

A literature review serves several important purposes within academic and research contexts. Here are some key objectives and functions of a literature review: 2

- Contextualizing the Research Problem: The literature review provides a background and context for the research problem under investigation. It helps to situate the study within the existing body of knowledge.

- Identifying Gaps in Knowledge: By identifying gaps, contradictions, or areas requiring further research, the researcher can shape the research question and justify the significance of the study. This is crucial for ensuring that the new research contributes something novel to the field.

- Understanding Theoretical and Conceptual Frameworks: Literature reviews help researchers gain an understanding of the theoretical and conceptual frameworks used in previous studies. This aids in the development of a theoretical framework for the current research.

- Providing Methodological Insights: Literature reviews also allow researchers to learn about the methodologies employed in previous studies. This can help in choosing appropriate research methods for the current study and avoiding pitfalls that others may have encountered.

- Establishing Credibility: A well-conducted literature review demonstrates the researcher’s familiarity with existing scholarship, establishing their credibility and expertise in the field. It also helps in building a solid foundation for the new research.

- Informing Hypotheses or Research Questions: The literature review guides the formulation of hypotheses or research questions by highlighting relevant findings and areas of uncertainty in existing literature.

Literature review example

Consider an example: suppose your literature review is about the impact of climate change on biodiversity. You might organize it into sections on the effects of climate change on habitat loss and species extinction, on phenological changes, and on marine biodiversity. Each section would summarize and analyze relevant studies in that area, highlighting key findings and identifying gaps in the research. The review would conclude by emphasizing the need for further research on specific aspects of the relationship between climate change and biodiversity. The following template illustrates the recommended structure and content of a literature review, showing how research findings are organized around specific themes within a broader topic.

Literature Review on Climate Change Impacts on Biodiversity:

Climate change is a global phenomenon with far-reaching consequences, including significant impacts on biodiversity. This literature review synthesizes key findings from various studies:

a. Habitat Loss and Species Extinction:

Climate change-induced alterations in temperature and precipitation patterns contribute to habitat loss, affecting numerous species (Thomas et al., 2004). The review discusses how these changes increase the risk of extinction, particularly for species with specific habitat requirements.

b. Range Shifts and Phenological Changes:

Observations of range shifts and changes in the timing of biological events (phenology) are documented in response to changing climatic conditions (Parmesan & Yohe, 2003). These shifts affect ecosystems and may lead to mismatches between species and their resources.

c. Ocean Acidification and Coral Reefs:

The review explores the impact of climate change on marine biodiversity, emphasizing ocean acidification’s threat to coral reefs (Hoegh-Guldberg et al., 2007). Changes in pH levels negatively affect coral calcification, disrupting the delicate balance of marine ecosystems.

d. Adaptive Strategies and Conservation Efforts:

Recognizing the urgency of the situation, the literature review discusses various adaptive strategies adopted by species and conservation efforts aimed at mitigating the impacts of climate change on biodiversity (Hannah et al., 2007). It emphasizes the importance of interdisciplinary approaches for effective conservation planning.

How to write a good literature review

Writing a literature review involves summarizing and synthesizing existing research on a particular topic. A good literature review format should include the following elements.

Introduction: The introduction sets the stage for your literature review, providing context and introducing the main focus of your review.

- Opening Statement: Begin with a general statement about the broader topic and its significance in the field.

- Scope and Purpose: Clearly define the scope of your literature review. Explain the specific research question or objective you aim to address.

- Organizational Framework: Briefly outline the structure of your literature review, indicating how you will categorize and discuss the existing research.

- Significance of the Study: Highlight why your literature review is important and how it contributes to the understanding of the chosen topic.

- Thesis Statement: Conclude the introduction with a concise thesis statement that outlines the main argument or perspective you will develop in the body of the literature review.

Body: The body of the literature review is where you provide a comprehensive analysis of existing literature, grouping studies based on themes, methodologies, or other relevant criteria.

- Organize by Theme or Concept: Group studies that share common themes, concepts, or methodologies. Discuss each theme or concept in detail, summarizing key findings and identifying gaps or areas of disagreement.

- Critical Analysis: Evaluate the strengths and weaknesses of each study. Discuss the methodologies used, the quality of evidence, and the overall contribution of each work to the understanding of the topic.

- Synthesis of Findings: Synthesize the information from different studies to highlight trends, patterns, or areas of consensus in the literature.

- Identification of Gaps: Discuss any gaps or limitations in the existing research and explain how your review contributes to filling these gaps.

- Transition between Sections: Provide smooth transitions between different themes or concepts to maintain the flow of your literature review.

Conclusion: The conclusion of your literature review should summarize the main findings, highlight the contributions of the review, and suggest avenues for future research.

- Summary of Key Findings: Recap the main findings from the literature and restate how they contribute to your research question or objective.

- Contributions to the Field: Discuss the overall contribution of your literature review to the existing knowledge in the field.

- Implications and Applications: Explore the practical implications of the findings and suggest how they might impact future research or practice.

- Recommendations for Future Research: Identify areas that require further investigation and propose potential directions for future research in the field.

- Final Thoughts: Conclude with a final reflection on the importance of your literature review and its relevance to the broader academic community.

Conducting a literature review

Conducting a literature review is an essential step in research that involves reviewing and analyzing existing literature on a specific topic. It’s important to know how to do a literature review effectively, so here are the steps to follow: 1

Choose a Topic and Define the Research Question:

- Select a topic that is relevant to your field of study.

- Clearly define your research question or objective. What specific aspect of the topic do you want to explore?

Decide on the Scope of Your Review:

- Determine the timeframe for your literature review. Are you focusing on recent developments, or do you want a historical overview?

- Consider the geographical scope. Is your review global, or are you focusing on a specific region?

- Define the inclusion and exclusion criteria. What types of sources will you include? Are there specific types of studies or publications you will exclude?
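Writing inclusion and exclusion criteria down as explicit rules makes it easier to apply them the same way to every record and to report them later. The Python sketch below is illustrative only: the `Record` fields, the year range, the regions, the excluded source types, and the example titles are hypothetical placeholders rather than recommended criteria, and real screening usually also involves reading titles and abstracts.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """One search result; these fields are illustrative, not a database export format."""
    title: str
    year: int
    region: str
    study_type: str


# Hypothetical criteria: a timeframe, a geographical scope, and excluded source types.
YEAR_RANGE = (2010, 2023)
INCLUDED_REGIONS = {"global", "australia"}
EXCLUDED_TYPES = {"editorial", "conference abstract"}


def meets_criteria(record: Record) -> bool:
    """Return True only if the record passes every inclusion rule and no exclusion rule."""
    start, end = YEAR_RANGE
    in_timeframe = start <= record.year <= end
    in_scope = record.region.lower() in INCLUDED_REGIONS
    not_excluded = record.study_type.lower() not in EXCLUDED_TYPES
    return in_timeframe and in_scope and not_excluded


# Hypothetical records used only to exercise the rules.
records = [
    Record("Habitat loss in alpine species", 2015, "Global", "cohort study"),
    Record("Editorial on conservation funding", 2018, "Global", "Editorial"),
    Record("Early range-shift survey", 2004, "Australia", "survey"),
]

included = [r for r in records if meets_criteria(r)]
for r in included:
    print(r.title)  # prints "Habitat loss in alpine species"
```

Keeping the criteria as named constants means they can be copied directly into a methods description and changed in one place if the scope of the review shifts.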

Select Databases for Searches:

- Identify relevant databases for your field. Examples include PubMed, IEEE Xplore, Scopus, Web of Science, and Google Scholar.

- Consider searching in library catalogs, institutional repositories, and specialized databases related to your topic.

Conduct Searches and Keep Track:

- Develop a systematic search strategy using keywords, Boolean operators (AND, OR, NOT), and other search techniques.

- Record and document your search strategy for transparency and replicability.

- Keep track of the articles, including publication details, abstracts, and links. Use citation management tools like EndNote, Zotero, or Mendeley to organize your references.
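A Boolean search strategy typically groups the synonyms for each concept with OR, joins the concepts with AND, and uses NOT to exclude unwanted terms. The minimal Python sketch below assembles such a query string. The function names and example terms are hypothetical, and because phrase quoting, operator precedence, and field tags differ between databases such as PubMed and Scopus, check each database's search help before reusing the output.

```python
from typing import Sequence


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concepts: Sequence[Sequence[str]], exclude: Sequence[str] = ()) -> str:
    """OR together the synonyms for each concept, AND the concepts, then NOT each excluded term."""
    groups = ["(" + " OR ".join(quote(t) for t in synonyms) + ")" for synonyms in concepts]
    query = " AND ".join(groups)
    for term in exclude:
        query += " NOT " + quote(term)
    return query


# Hypothetical concepts and synonyms for the climate change and biodiversity example.
query = build_query(
    [["climate change", "global warming"], ["biodiversity", "species richness"]],
    exclude=["agriculture"],
)
print(query)
# ("climate change" OR "global warming") AND (biodiversity OR "species richness") NOT agriculture
```

Parenthesizing each OR group keeps the synonym grouping explicit instead of relying on a database's default precedence, and the generated string can be pasted into a search log so the strategy stays replicable.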

Review the Literature:

- Evaluate the relevance and quality of each source. Consider the methodology, sample size, and results of studies.

- Organize the literature by themes or key concepts. Identify patterns, trends, and gaps in the existing research.

- Summarize key findings and arguments from each source. Compare and contrast different perspectives.

- Identify areas where there is a consensus in the literature and where there are conflicting opinions.

- Provide critical analysis and synthesis of the literature. What are the strengths and weaknesses of existing research?

Organize and Write Your Literature Review:

- Base your literature review outline on themes, chronological order, or methodological approaches.

- Write a clear and coherent narrative that synthesizes the information gathered.

- Use proper citations for each source and ensure consistency in your citation style (APA, MLA, Chicago, etc.).

- Conclude your literature review by summarizing key findings, identifying gaps, and suggesting areas for future research.

The literature review sample and detailed advice on writing and conducting a review will help you produce a well-structured report. But remember that a literature review is an ongoing process, and it may be necessary to revisit and update it as your research progresses.

Frequently asked questions

A literature review is a critical and comprehensive analysis of existing literature (published and unpublished works) on a specific topic or research question and provides a synthesis of the current state of knowledge in a particular field. A well-conducted literature review is crucial for researchers to build upon existing knowledge, avoid duplication of efforts, and contribute to the advancement of their field. It also helps researchers situate their work within a broader context and facilitates the development of a sound theoretical and conceptual framework for their studies.

A literature review is a crucial component of research writing, providing a solid background for a research paper’s investigation. Its aim is to keep professionals up to date on ongoing developments within a specific field, including the research methods and experimental techniques used in that field, and to present that knowledge in a written report. The depth and breadth of the literature review also demonstrate the scholar’s credibility in his or her field.

Before writing a literature review, it’s essential to undertake several preparatory steps to ensure that your review is well-researched, organized, and focused. This includes choosing a topic of general interest to you and doing exploratory research on that topic, writing an annotated bibliography, and noting major points, especially those that relate to the position you have taken on the topic.

Literature reviews and academic research papers are essential components of scholarly work but serve different purposes within the academic realm. 3 A literature review aims to provide a foundation for understanding the current state of research on a particular topic, identify gaps or controversies, and lay the groundwork for future research. Therefore, it draws heavily from existing academic sources, including books, journal articles, and other scholarly publications. In contrast, an academic research paper aims to present new knowledge, contribute to the academic discourse, and advance the understanding of a specific research question. Therefore, it involves a mix of existing literature (in the introduction and literature review sections) and original data or findings obtained through research methods.

Literature reviews are essential components of academic and research papers, and various strategies can be employed to conduct them effectively. If you want to know how to write a literature review for a research paper, here are four common approaches that are often used by researchers.

- Chronological Review: This strategy involves organizing the literature based on the chronological order of publication. It helps to trace the development of a topic over time, showing how ideas, theories, and research have evolved.

- Thematic Review: Thematic reviews focus on identifying and analyzing themes or topics that cut across different studies. Instead of organizing the literature chronologically, it is grouped by key themes or concepts, allowing for a comprehensive exploration of various aspects of the topic.

- Methodological Review: This strategy involves organizing the literature based on the research methods employed in different studies. It helps to highlight the strengths and weaknesses of various methodologies and allows the reader to evaluate the reliability and validity of the research findings.

- Theoretical Review: A theoretical review examines the literature based on the theoretical frameworks used in different studies. This approach helps to identify the key theories that have been applied to the topic and assess their contributions to the understanding of the subject.

It’s important to note that these strategies are not mutually exclusive, and a literature review may combine elements of more than one approach. The choice of strategy depends on the research question, the nature of the literature available, and the goals of the review. Additionally, other strategies, such as integrative reviews or systematic reviews, may be employed depending on the specific requirements of the research.

The literature review format can vary depending on the specific publication guidelines. However, there are some common elements and structures that are often followed. Here is a general guideline for the format of a literature review:

Introduction:

- Provide an overview of the topic.

- Define the scope and purpose of the literature review.

- State the research question or objective.

Body:

- Organize the literature by themes, concepts, or chronology.

- Critically analyze and evaluate each source.

- Discuss the strengths and weaknesses of the studies.

- Highlight any methodological limitations or biases.

- Identify patterns, connections, or contradictions in the existing research.

Conclusion:

- Summarize the key points discussed in the literature review.

- Highlight the research gap.

- Address the research question or objective stated in the introduction.

- Highlight the contributions of the review and suggest directions for future research.

Both annotated bibliographies and literature reviews involve the examination of scholarly sources. While annotated bibliographies focus on individual sources with brief annotations, literature reviews provide a more in-depth, integrated, and comprehensive analysis of existing literature on a specific topic. The key differences are as follows:

References

- Denney, A. S., & Tewksbury, R. (2013). How to write a literature review. Journal of Criminal Justice Education, 24(2), 218–234.

- Pan, M. L. (2016). Preparing literature reviews: Qualitative and quantitative approaches. Taylor & Francis.

- Cantero, C. (2019). How to write a literature review. San José State University Writing Center.

Writing a literature review

Find out how to write a lit review.

What is a literature review?

A literature review explores and evaluates the literature on a specific topic or question. It synthesises the contributions of the different authors, often to identify areas that need further exploration.

You may be required to write a literature review as a standalone document or part of a larger body of research, such as a thesis.

- The point of a standalone literature review is to demonstrate that you have read widely in your field and you understand the main arguments.

- As part of a thesis or research paper, the literature review defines your project by establishing how your work will extend or differ from previous work and what contribution it will make.

What are markers looking for?

In the best literature reviews, the writer:

- Has a clear understanding of key concepts within the topic.

- Clarifies important definitions and terminology.

- Covers the breadth of the specific topic.

- Critically discusses the ideas in the literature and evaluates how authors present them.

- Clearly indicates a research gap for future enquiry.

How do I write a literature review?

This video outlines a step-by-step approach to help you evaluate readings, organise ideas and write critically. It provides examples of how to connect, interpret and critique ideas to make sure your voice comes through strongly.

Tips for research, reading and writing

You may be given a specific question to research or broad topics which must be refined to a question that can be reasonably addressed in the time and word limit available.

Use your early reading to help you determine and refine your topic.

- Too much literature? You probably need to narrow your scope. Try to identify a more specific issue of interest.

- Not enough literature? Your topic may be too specific and needs to be broader.

Start with readings suggested by your lecturers or supervisors. Then do your own research; the best place to start is the Library Website.

You can also use the Library Guides or speak to a librarian to identify the most useful databases for you and to learn how to search for sources effectively and efficiently.

Cover the field

Make sure your literature search covers a broad range of views and information relevant to your topic. Focussing on a narrow selection of sources may result in a lack of depth. You are not expected to cover all research and scholarly opinions on your topic, but you need to identify and include important viewpoints. A quality literature review examines and evaluates different viewpoints based on the evidence presented, rather than providing only material that reinforces a bias.

Use reading strategies

Survey, skim and scan to find the most relevant articles, and the most relevant parts of those articles. These can be re-read more closely later when you have acquired an overview of your topic.

Take notes as you read

This helps to organise and develop your thoughts. Record your own reactions to the text in your notes, perhaps in a separate column. These notes can form the basis of your critical evaluation of the text. Record any facts, opinions or direct quotes that are likely to be useful to your review, noting the page numbers, author and year.

Stop reading when you have enough

When to stop depends on the word count required for the literature review. A review of one thousand words can cover only the major ideas and will probably cite fewer than ten references. Longer reviews that form part of a large research paper will include more than fifty. Your tutor or supervisor should be able to suggest a suitable number.

As you read, ask yourself these questions:

- Have I answered my question without any obvious gaps?

- Have I read this before? Are there any new related issues coming up as I search the literature?

- Have I found multiple references which cover the same material or just enough to prove agreement?

There are many possible ways to organise the material. For example:

- chronologically

- by theoretical perspective

- from most to least important

- by issue or theme

It is important to remember that you are not merely cataloguing or describing the literature you read. Therefore, you need to choose an organisation that will enable you to compare the various authors' treatment of ideas. This is often best achieved by organising thematically, or grouping ideas into sets of common issues tackled in the various texts. These themes will form the basis of the different threads that are the focus of your study.

A standalone literature review

A standalone literature review is structured much like an academic essay.

- Introduction - establish the context for your topic and outline your main contentions about the literature

- Main body - explain and support these contentions

- Conclusion - summarise your main points and restate the contention.

The main difference between an essay and this kind of literature review is that an essay focuses on a topic and uses the literature as a support for the arguments. In a standalone literature review, the literature itself is the topic of discussion and evaluation. This means you evaluate and discuss not only the informational content but also the quality of the author’s handling of that content.

A literature review as part of a larger research paper

As part of a larger research paper, the literature review may take many forms, depending on your discipline, your topic and the logic of your research. Traditionally, in empirical research, the literature review is included in the introduction, or a standalone chapter immediately following the introduction. For other forms of research, you may need to engage more extensively with the literature and thus, the literature review may spread over more than one chapter, or even be distributed throughout the thesis.

Start writing early. Writing will clarify your thinking on the topic and reveal any gaps in information and logic. If your ideas change, sections and paragraphs can be reworked to change your contentions or include extra information.

Similarly, draft an overall plan for your review as soon as you are ready, but be prepared to rework sections of it to reflect your developing argument.

The most important thing to remember is that you are writing a review. That means you must move past describing what other authors have written by connecting, interpreting and critiquing their ideas and presenting your own analysis and interpretation.

Harvey Cushing/John Hay Whitney Medical Library

YSN Doctoral Programs: Steps in Conducting a Literature Review

What is a literature review?

A literature review is an integrated analysis, not just a summary, of scholarly writings and other relevant evidence related directly to your research question. That is, it represents a synthesis of the evidence that provides background information on your topic and shows an association between the evidence and your research question.

A literature review may be a standalone work or the introduction to a larger research paper, depending on the assignment. Rely heavily on the guidelines your instructor has given you.

Why is it important?

A literature review is important because it:

- Explains the background of research on a topic.

- Demonstrates why a topic is significant to a subject area.

- Discovers relationships between research studies/ideas.

- Identifies major themes, concepts, and researchers on a topic.

- Identifies critical gaps and points of disagreement.

- Discusses further research questions that logically come out of the previous studies.

1. Choose a topic. Define your research question.

Your literature review should be guided by your central research question. The literature represents background and research developments related to a specific research question, interpreted and analyzed by you in a synthesized way.

- Make sure your research question is not too broad or too narrow. Is it manageable?

- Begin writing down terms that are related to your question. These will be useful for searches later.

- If you have the opportunity, discuss your topic with your professor and your classmates.

2. Decide on the scope of your review

How many studies do you need to look at? How comprehensive should it be? How many years should it cover?

- This may depend on your assignment. How many sources does the assignment require?

3. Select the databases you will use to conduct your searches.

Make a list of the databases you will search.

Where to find databases:

- Use the tabs on this guide

- Find other databases in the Nursing Information Resources web page

- More on the Medical Library web page

- ... and more on the Yale University Library web page

4. Conduct your searches to find the evidence. Keep track of your searches.

- Use the key words in your question, as well as synonyms for those words, as terms in your search. Use the database tutorials for help.

- Save the searches in the databases. This saves time when you want to redo or modify them, and saved searches are also a helpful guide if your searches are not finding any useful results.

- Review the abstracts of research studies carefully. This will save you time.

- Use the bibliographies and references of research studies you find to locate others.

- Check with your professor, or a subject expert in the field, if you are missing any key works in the field.

- Ask your librarian for help at any time.

- Use a citation manager, such as EndNote, as the repository for your citations. See the EndNote tutorials for help.

Review the literature

Some questions to help you analyze the research:

- What was the research question of the study you are reviewing? What were the authors trying to discover?

- Was the research funded by a source that could influence the findings?

- What research methodologies were used? Analyze the study's literature review, the samples and variables used, the results, and the conclusions.

- Does the research seem to be complete? Could it have been conducted more soundly? What further questions does it raise?

- If there are conflicting studies, why do you think that is?

- How are the authors viewed in the field? Has this study been cited? If so, how has it been analyzed?

Tips:

- Review the abstracts carefully.

- Keep careful notes so that you may track your thought processes during the research process.

- Create a matrix of the studies to make analysis and synthesis across all of them easier.

- Last Updated: Jan 4, 2024 10:52 AM

- URL: https://guides.library.yale.edu/YSNDoctoral


SWRK 330 - Social Work Research Methods


What is a Literature Review?


A literature review summarizes and discusses previous publications on a topic.

It should also:

- explore past research and its strengths and weaknesses.

- be used to validate the target and methods you have chosen for your proposed research.

- consist of books and scholarly journals that provide research examples of populations or settings similar to your own, as well as community resources to document the need for your proposed research.

- be completed in the correct citation format requested by your professor (see the Citations tab).

The literature review does not present new primary scholarship.

Access Purdue OWL's Social Work Literature Review Guidelines here.

Empirical research is research that is based on experimentation or observation, that is, on evidence. Such research is often conducted to answer a specific question or to test a hypothesis (an educated guess).

How do you know if a study is empirical? Read the subheadings within the article, book, or report and look for a description of the research "methodology." Ask yourself: Could I recreate this study and test these results?

These are some key features to look for when identifying empirical research.

NOTE: Not every empirical research article will include all of these features, so use this list only as a guide.

- Statement of methodology

- Research questions are clear and measurable

- The individuals, groups, or subjects being studied are identified and defined

- Data on the findings are presented

- Controls or instruments, such as surveys or tests, are used

- There is a literature review

- The results are discussed

- Citations/references are included

See also Empirical Research Guide


- Last Updated: Feb 6, 2024 8:38 AM

- URL: https://libguides.csuchico.edu/SWRK330

Meriam Library | CSU, Chico


Module 2 Chapter 3: What is Empirical Literature & Where can it be Found?

In Module 1, you read about the problem of pseudoscience. Here, we revisit the issue by addressing how to locate and assess scientific or empirical literature. In this chapter you will read about:

- distinguishing between what IS and IS NOT empirical literature

- how and where to locate empirical literature for understanding diverse populations, social work problems, and social phenomena.

Probably the most important take-home lesson from this chapter is that one source is not sufficient for being well-informed on a topic. It is important to locate multiple sources of information and to critically appraise the points of convergence and divergence in the information acquired from different sources. This is especially true for emerging and poorly understood topics, as well as for answering complex questions.

What Is Empirical Literature

Social workers often need to locate valid, reliable information concerning the dimensions of a population group or subgroup, a social work problem, or a social phenomenon. They might also seek information about the way specific problems or resources are distributed among the populations encountered in professional practice. Or, social workers might be interested in finding out how certain people experience an event or phenomenon. Empirical literature resources may provide answers to many of these types of social work questions. In addition, resources containing data on social indicators may also prove helpful. Social indicators are the “facts and figures” statistics that describe the social, economic, and psychological factors that have an impact on the well-being of a community or other population group. The United Nations (UN) and the World Health Organization (WHO) are examples of organizations that monitor social indicators at a global level, for example: population trends (size, composition, growth/loss), health status (physical, mental, behavioral, life expectancy, maternal and infant mortality, fertility/child-bearing, and diseases like HIV/AIDS), housing and quality of sanitation (water supply, waste disposal), education and literacy, and work/income/unemployment/economics.

Three characteristics stand out in empirical literature compared to other types of information available on a topic of interest: systematic observation and methodology, objectivity, and transparency/replicability/reproducibility. Let’s look a little more closely at these three features.

Systematic Observation and Methodology. The hallmark of empiricism is “repeated or reinforced observation of the facts or phenomena” (Holosko, 2006, p. 6). In empirical literature, established research methodologies and procedures are systematically applied to answer the questions of interest.

Objectivity. Gathering “facts,” whatever they may be, drives the search for empirical evidence (Holosko, 2006). Authors of empirical literature are expected to report the facts as observed, whether or not these facts support the investigators’ original hypotheses. Research integrity demands that the information be provided in an objective manner, reducing sources of investigator bias to the greatest possible extent.

Transparency and Replicability/Reproducibility. Empirical literature is reported in such a manner that other investigators understand precisely what was done and what was found in a particular research study, to the extent that they could replicate the study to determine whether the findings are reproduced when it is repeated. The outcomes of an original study and its replication may differ, but a reader should be able to interpret easily the methods and procedures leading to each study’s findings.

What is NOT Empirical Literature

By now, it is probably obvious to you that literature based on “evidence” that is not developed in a systematic, objective, transparent manner is not empirical literature. That said, non-empirical types of professional literature may have great significance to social workers. For example, social work scholars may produce articles that are clearly identified as describing a new intervention or program without evaluative evidence, critiquing a policy or practice, or offering a tentative, untested theory about a phenomenon. These resources are useful in educating ourselves about possible issues or concerns. But even if they are informed by evidence, they are not empirical literature. Here is a list of several sources of information that do not meet the standard of being called empirical literature:

- your course instructor’s lectures

- political statements

- advertisements

- newspapers & magazines (journalism)

- television news reports & analyses (journalism)

- many websites, Facebook postings, Twitter tweets, and blog postings

- the introductory literature review in an empirical article

You may be surprised to see the last two included in this list. Like the other sources of information listed, these sources might also lead you to look for evidence. But they are not themselves sources of evidence. They may summarize existing evidence, but in the process of summarizing (like your instructor’s lectures), information is transformed, modified, reduced, condensed, and otherwise manipulated in such a manner that you may not see the entire, objective story. These are called secondary sources, as opposed to the original, primary source of evidence. In relying solely on secondary sources, you sacrifice your own critical appraisal and thinking about the original work: you are “buying” someone else’s interpretation and opinion about the original work, rather than developing your own. What if they got it wrong? How would you know if you did not examine the primary source for yourself? Consider the following example of “getting it wrong” being perpetuated.

Example: Bullying and School Shootings. One result of the heavily publicized April 1999 school shooting incident at Columbine High School (Colorado) was a heavy emphasis on bullying as a causal factor in these incidents (Mears, Moon, & Thielo, 2017), “creating a powerful master narrative about school shootings” (Raitanen, Sandberg, & Oksanen, 2017, p. 3). Naturally, with an identified cause, a great deal of effort was devoted to anti-bullying campaigns and to interventions for enhancing resilience among youth who experience bullying. However important these strategies might be for promoting positive mental health, preventing poor mental health, and possibly preventing suicide among school-aged children and youth, it is mistaken to believe that they can prevent school shootings (Mears, Moon, & Thielo, 2017). Often, reports that the perpetrators had been bullied come from potentially inaccurate third-party accounts rather than from the perpetrators themselves; bullying was not involved in all instances of school shooting; a perpetrator’s perception of being bullied or persecuted is not necessarily accurate; many who experience severe bullying do not perpetrate these incidents; bullies are the least targeted shooting victims; perpetrators of the shooting incidents were often bullying others; and bullying is only one of many important factors associated with perpetrating such an incident (Ioannou, Hammond, & Simpson, 2015; Mears, Moon, & Thielo, 2017; Newman & Fox, 2009; Raitanen, Sandberg, & Oksanen, 2017). While mass media reports present bullying as a way of explaining the inexplicable, the reality is not so simple: “The connection between bullying and school shootings is elusive” (Langman, 2014), and “the relationship between bullying and school shooting is, at best, tenuous” (Mears, Moon, & Thielo, 2017, p. 940).

The point is that when a narrative becomes this widely accepted, it is difficult to sort out truth and reality without going back to the original sources of information and evidence.

What May or May Not Be Empirical Literature: Literature Reviews