
Methods Used in Economic Research: An Empirical Study of Trends and Levels

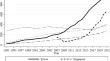

The methods used in economic research are analyzed on a sample of all 3,415 regular research papers published in 10 general interest journals every 5th year from 1997 to 2017. The papers are classified into three main groups by method: theory, experiments, and empirics. The theory and empirics groups are almost equally large. Most empirical papers use the classical method, which derives an operational model from theory and runs regressions. The number of papers published increases by 3.3% p.a. Two trends are highly significant: The fraction of theoretical papers has fallen by 26 pp (percentage points), while the fraction of papers using the classical method has increased by 15 pp. Economic theory predicts that such papers exaggerate, and the papers that have been analyzed by meta-analysis confirm the prediction. The paper discusses whether other methods have smaller problems.

1 Introduction

This paper studies the pattern in the research methods in economics using a sample of 3,415 regular papers published in the years 1997, 2002, 2007, 2012, and 2017 in 10 journals. The analysis builds on the beliefs that truth exists, but it is difficult to find, and that all the methods listed in the next paragraph have problems as discussed in Sections 2 and 4. I do not imply that all – or even most – papers have these problems, but we rarely know how serious they are when we read a paper. A key aspect of the problem is that a “perfect” study is very demanding and requires far too much space to report, especially if the paper looks for usable results. Thus, each paper is just one look at an aspect of the problem analyzed. Only when many studies using different methods reach a joint finding can we trust that it is true.

Section 2 discusses the classification of papers by method into three main categories: (M1) Theory, with three subgroups: (M1.1) economic theory, (M1.2) statistical methods, and (M1.3) surveys. (M2) Experiments, with two subgroups: (M2.1) lab experiments and (M2.2) natural experiments. (M3) Empirics, with three subgroups: (M3.1) descriptive, (M3.2) classical empirics, and (M3.3) newer empirics. More than 90% of the papers are easy to classify, but a stochastic element enters in the classification of the rest. Thus, the study has some – hopefully random – measurement errors.

Section 3 discusses the sample of journals chosen. The choice has been limited by the following main criteria: They should be good journals below the top ten A-journals, i.e., my article covers B-journals, which are the journals where most research economists publish. They should be general interest journals, and they should be so different that it is likely that patterns that generalize across these journals apply to more (most?) journals. The Appendix gives some crude counts of researchers, departments, and journals. It assesses that there are about 150 B-level journals, but fewer than half meet the criteria, so I have selected about 15% of the possible ones. This is the most problematic element in the study. If the reader accepts my choice, the paper tells an interesting story about economic research.

All B-level journals try hard to have a serious refereeing process. If our selection is representative, the 150 journals have increased the annual number of papers published from about 7,500 in 1997 to about 14,000 papers in 2017, giving about 200,000 papers for the period. Thus, the B-level dominates our science. Our sample is about 6% for the years covered, but less than 2% of all papers published in B-journals in the period. However, it is a larger fraction of the papers in general interest journals.
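The two sample shares quoted above can be checked with a rough calculation. The sketch below assumes, purely for illustration, that annual B-journal output grows at a constant rate between the two endpoint figures in the text; the function names are hypothetical and the result is only an order-of-magnitude check.

```python
# Back-of-the-envelope check of the sample shares quoted in the text.
# Assumption (not from the paper): annual B-journal output grows at a
# constant rate between the 1997 and 2017 figures given above.

SAMPLE_PAPERS = 3415   # papers in the sample
PAPERS_1997 = 7500     # approximate annual B-journal output in 1997
PAPERS_2017 = 14000    # approximate annual B-journal output in 2017
SAMPLE_YEARS = [1997, 2002, 2007, 2012, 2017]


def annual_output(year: int) -> float:
    """Interpolate annual output geometrically between 1997 and 2017."""
    growth = (PAPERS_2017 / PAPERS_1997) ** (1 / 20)
    return PAPERS_1997 * growth ** (year - 1997)


def sample_shares() -> tuple[float, float]:
    """Return (share of the five sampled years, share of the whole period)."""
    sampled = sum(annual_output(y) for y in SAMPLE_YEARS)
    whole = sum(annual_output(y) for y in range(1997, 2018))
    return SAMPLE_PAPERS / sampled, SAMPLE_PAPERS / whole


if __name__ == "__main__":
    share_sampled, share_all = sample_shares()
    print(f"share of the sampled years: {share_sampled:.1%}")
    print(f"share of the whole period:  {share_all:.1%}")
```

Under this assumption, the first share comes out between 6% and 7% and the second between 1.5% and 2%, in line with the figures stated in the text.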

It is impossible for anyone to read more than a small fraction of this flood of papers. Consequently, researchers compete for space in journals and for attention from the readers, as measured in the form of citations. It should be uncontroversial that papers that hold a clear message are easier to publish and get more citations. Thus, an element of sales promotion may enter papers in the form of exaggeration, which is a joint problem for all eight methods. This is in accordance with economic theory that predicts that rational researchers report exaggerated results; see Paldam (2016, 2018). For empirical papers, meta-methods exist to summarize the results from many papers, notably papers using regressions. Section 4.4 reports that meta-studies find that exaggeration is common.

The empirical literature surveying the use of research methods is quite small, as I have found only two articles: Hamermesh (2013) covers 748 articles from 6 years, each a decade apart, in three A-journals, using a slightly different classification of methods, [1] while my study covers B-journals. Angrist, Azoulay, Ellison, Hill, and Lu (2017) use a machine-learning classification of 134,000 papers in 80 journals to look at the three main methods. My study subdivides the three categories into eight. The machine-learning algorithm is only sketched, so the paper is difficult to replicate, but it is surely a major effort. A key result in both articles is the strong decrease of theory in economic publications. This finding is confirmed here, and it is shown that the corresponding increase in empirical articles is concentrated on the classical method.

I have tried to explain what I have done, so that everything is easy to replicate, in full or for one journal or one year. The coding of each article is available at least for the next five years. I should add that I have been in economic research for half a century. Some of the assessments in the paper will reflect my observations/experience during this period (indicated as my assessments). This especially applies to the judgements expressed in Section 4.

2 The eight categories

Table 1 reports that the annual number of papers in the ten journals has increased 1.9 times, or by 3.3% per year. The Appendix gives the full counts per category, journal, and year. By looking at data over two decades, I study how economic research develops. The increase in the production of papers is caused by two factors: (i) the increase in the number of researchers and (ii) the increasing importance of publications for the careers of researchers.
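The link between the 1.9-fold increase and the annual growth rate can be written out as a short worked equation. It assumes constant compound growth over the 20 years from 1997 to 2017; the symbol g for the annual growth rate is introduced here for illustration.

```latex
(1+g)^{20} = 1.9
\quad\Longrightarrow\quad
g = 1.9^{1/20} - 1 = e^{\ln(1.9)/20} - 1 \approx e^{0.0321} - 1 \approx 0.033
```

That is, a 1.9-fold increase over two decades corresponds to roughly 3.3% growth per year.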

Table 1: The 3,415 papers

2.1 (M1) Theory: subgroups (M1.1) to (M1.3)

Table 2 lists the groups and main numbers discussed in the rest of the paper. Section 2.1 discusses (M1) theory. Section 2.2 covers (M2) experimental methods, while Section 2.3 looks at (M3) empirical methods using statistical inference from data.

Table 2: The 3,415 papers – fractions in percent

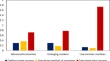

Table 3: The change of the fractions from 1997 to 2017 in percentage points

Note: Section 3.4 tests if the pattern observed in Table 3 is statistically significant. The Appendix reports the full data.

2.1.1 (M1.1) Economic theory

These are papers where the main content is the development of a theoretical model. The ideal theory paper presents a (simple) new model that recasts the way we look at something important. Such papers are rare and obtain large numbers of citations. Most theoretical papers present variants of known models and obtain few citations.

In a few papers, the analysis is verbal, but more than 95% rely on mathematics, though the technical level differs. Theory papers may start by a descriptive introduction giving the stylized fact the model explains, but the bulk of the paper is the formal analysis, building a model and deriving proofs of some propositions from the model. It is often demonstrated how the model works by a set of simulations, including a calibration made to look realistic. However, the calibrations differ greatly by the efforts made to reach realism. Often, the simulations are in lieu of an analytical solution or just an illustration suggesting the magnitudes of the results reached.

Theoretical papers suffer from the problem known as T-hacking , [2] where the able author by a careful selection of assumptions can tailor the theory to give the results desired. Thus, the proofs made from the model may represent the ability and preferences of the researcher rather than the properties of the economy.

2.1.2 (M1.2) Statistical method

Papers reporting new estimators and tests are published in a handful of specialized journals in econometrics and mathematical statistics – such journals are not included. In our general interest journals, some papers compare estimators on actual data sets. If the demonstration of a methodological improvement is the main feature of the paper, it belongs to (M1.2), but if the economic interpretation is the main point of the paper, it belongs to (M3.2) or (M3.3). [3]

Some papers, including a special issue of Empirical Economics (vol. 53–1), deal with forecasting models. Such models normally have a weak relation to economic theory. They are sometimes justified precisely because of their eclectic nature. They are classified as either (M1.2) or (M3.1), depending upon the focus. It appears that different methods work better on different data sets, and perhaps a trade-off exists between the user-friendliness of the model and the improvement reached.

2.1.3 (M1.3) Surveys

When the literature in a certain field becomes substantial, it normally presents a motley picture with an amazing variation, especially when different schools exist in the field. Thus, a survey is needed, and our sample contains 68 survey articles. They are of two types, where the second type is still rare:

2.1.3.1 (M1.3.1) Assessed surveys

Here, the author reads the papers and assesses what the most reliable results are. Such assessments require judgement that is often quite difficult to distinguish from priors, even for the author of the survey.

2.1.3.2 (M1.3.2) Meta-studies

They are quantitative surveys of estimates of parameters claimed to be the same. Over the two decades from 1997 to 2017, about 500 meta-studies have been made in economics. Our sample includes five, which is 0.15%. [4] Meta-analysis has two levels: The basic level collects and codes the estimates and studies their distribution. This is a rather objective exercise where results seem to replicate rather well. [5] The second level analyzes the variation between the results. This is less objective. The papers analyzed by meta-studies are empirical studies using method (M3.2), though a few use estimates from (M3.1) and (M3.3).

2.2 (M2) Experimental methods: subgroups (M2.1) and (M2.2)

Experiments are of three distinct types. The last two, which take place in real life, are rare, so they are lumped together.

2.2.1 (M2.1) Lab experiments

The sample had 1.9% papers using this method in 1997, and it has expanded to 9.7% in 2017. It is a technique that is much easier to apply to micro- than to macroeconomics, so it has spread unequally in the 10 journals, and many experiments are reported in a couple of special journals that are not included in our sample.

Most of these experiments take place in a laboratory, where the subjects communicate with a computer, giving a controlled, but artificial, environment. [6] A number of subjects are told a (more or less abstract) story and paid to react in either of a number of possible ways. A great deal of ingenuity has gone into the construction of such experiments and in the methods used to analyze the results. Lab experiments do allow studies of behavior that are hard to analyze in any other way, and they frequently show sides of human behavior that are difficult to rationalize by economic theory. It appears that such demonstration is a strong argument for the publication of a study.

However, everything is artificial – even the payment. In some cases, the stories told are so elaborate and abstract that framing must be a substantial risk; [7] see Levitt and List ( 2007 ) for a lucid summary, and Bergh and Wichardt ( 2018 ) for a striking example. In addition, experiments cost money, which limits the number of subjects. It is also worth pointing to the difference between expressive and real behavior. It is typically much cheaper for the subject to “express” nice behavior in a lab than to be nice in the real world.

(M2.2) Event studies are studies of real world experiments. They are of two types:

(M2.2.1) Field experiments analyze cases where some people get a certain treatment and others do not. The “gold standard” for such experiments is double blind random sampling, where everything (but the result!) is preannounced; see Christensen and Miguel ( 2018 ). Experiments with humans require permission from the relevant authorities, and the experiment takes time too. In the process, things may happen that compromise the strict rules of the standard. [8] Controlled experiments are expensive, as they require a team of researchers. Our sample of papers contains no study that fulfills the gold standard requirements, but there are a few less stringent studies of real life experiments.

(M2.2.2) Natural experiments take advantage of a discontinuity in the environment, i.e., the period before and after an (unpredicted) change of a law, an earthquake, etc. Methods have been developed to find the effect of the discontinuity. Often, such studies look like (M3.2) classical studies with many controls that may or may not belong. Thus, the problems discussed under (M3.2) will also apply.

2.3 (M3) Empirical methods: subgroups (M3.1) to (M3.3)

The remaining methods are studies making inference from “real” data, which are data samples where the researcher chooses the sample, but has no control over the data generating process.

(M3.1) Descriptive studies are deductive. The researcher describes the data aiming at finding structures that tell a story, which can be interpreted. The findings may call for a formal test. If one clean test follows from the description, [9] the paper is classified under (M3.1). If a more elaborate regression analysis is used, it is classified as (M3.2). Descriptive studies often contain a great deal of theory.

Some descriptive studies present a new data set developed by the author to analyze a debated issue. In these cases, it is often possible to make a clean test, so to the extent that biases sneak in, they are hidden in the details of the assessments made when the data are compiled.

(M3.2) Classical empirics has three steps: It starts by a theory, which is developed into an operational model. Then it presents the data set, and finally it runs regressions.

The significance levels of the t-ratios on the estimated coefficients assume that the regression is the first meeting of the estimation model and the data. We all know that this is rarely the case; see also point (m1) in Section 4.4. In practice, the classical method is often just a presentation technique. The great virtue of the method is that it can be applied to real problems outside academia. The relevance comes with a price: The method is quite flexible as many choices have to be made, and they often give different results. Preferences and interests, as discussed in Sections 4.3 and 4.4 below, notably as point (m2), may affect these choices.

(M3.3) Newer empirics . Partly as a reaction to the problems of (M3.2), the last 3–4 decades have seen a whole set of newer empirical techniques. [10] They include different types of VARs, Bayesian techniques, causality/co-integration tests, Kalman Filters, hazard functions, etc. I have found 162 (or 4.7%) papers where these techniques are the main ones used. The fraction was highest in 1997. Since then it has varied, but with no trend.

I think that the main reason for the lack of success for the new empirics is that it is quite bulky to report a careful set of co-integration tests or VARs, and they often show results that are far from useful in the sense that they are unclear and difficult to interpret. With some introduction and discussion, there is not much space left in the article. Therefore, we are dealing with a cookbook that makes for rather dull dishes, which are difficult to sell in the market.

Note the contrast between (M3.2) and (M3.3): (M3.2) makes it possible to write papers that are too good, while (M3.3) often makes them too dull. This helps explain why (M3.2) is getting (even) more popular while (M3.3) has had little success, but then, it is arguable that it is more dangerous to act on exaggerated results than on results that are weak.

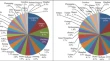

3 The 10 journals

The 10 journals chosen are: (J1) Can [Canadian Journal of Economics], (J2) Emp [Empirical Economics], (J3) EER [European Economic Review], (J4) EJPE [European Journal of Political Economy], (J5) JEBO [Journal of Economic Behavior & Organization], (J6) Inter [Journal of International Economics], (J7) Macro [Journal of Macroeconomics], (J8) Kyklos, (J9) PuCh [Public Choice], and (J10) SJE [Scandinavian Journal of Economics].

Section 3.1 discusses the choice of journals, while Section 3.2 considers how journals deal with the pressure for publication. Section 3.3 shows the marked difference in publication profile of the journals, and Section 3.4 tests if the trends in methods are significant.

3.1 The selection of journals

(i) They should be general interest journals – methodological journals are excluded. By general interest, I mean that they bring papers where an executive summary may interest policymakers and people in general. (ii) They should be journals in English (the Canadian Journal includes one paper in French), which are open to researchers from all countries, so that the majority of the authors are from outside the country of the journal. [11] (iii) They should be sufficiently different so that it is likely that patterns, which apply to these journals, tell a believable story about economic research. Note that (i) and (iii) require some compromises, as is evident in the choice of (J2), (J6), (J7), and (J8) (Table 4).

Table 4: The 10 journals covered

Note. Growth is the average annual growth from 1997 to 2017 in the number of papers published.

Methodological journals are excluded, as they are not interesting to outsiders. However, new methods are developed to be used in general interest journals. From studies of citations, we know that useful methodological papers are highly cited. If they remain unused, we presume that it is because they are useless, though, of course, there may be a long lag.

The choice of journals may contain some subjectivity, but I think that they are sufficiently diverse so that patterns that generalize across these journals will also generalize across a broader range of good journals.

The papers included are the regular research articles. Consequently, I exclude short notes to other papers and book reviews, [12] except for a few article-long discussions of controversial books.

3.2 Creating space in journals

As mentioned in the introduction, the annual production of research papers in economics has now reached about 1,000 papers in top journals, and about 14,000 papers in the group of good journals. [13] The production has grown by 3.3% per year, and thus it has doubled over the last twenty years. The hard-working researcher will read less than 100 papers a year. I know of no signs that this number is increasing. Thus, the upward trend in publication must be due to the large increase in the importance of publications for the careers of researchers, which has greatly increased the production of papers. There has also been a large increase in the number of researchers, but as citations are increasingly skewed toward the top journals (see Heckman & Moktan, 2018), it has not increased demand for papers correspondingly. The pressures from the supply side have caused journals to look for ways to create space.

Book reviews have dropped to less than 1/3. Perhaps, it also indicates that economists read fewer books than they used to. Journals have increasingly come to use smaller fonts and larger pages, allowing more words per page. The journals from North-Holland Elsevier have managed to cram almost two old pages into one new one. [14] This makes it easier to publish papers, while they become harder to read.

Many journals have changed their numbering system for the annual issues, making it less transparent how much they publish. Only three – Canadian Journal of Economics, Kyklos, and Scandinavian Journal of Economics – have kept the schedule of publishing one volume of four issues per year. It gives about 40 papers per year. Public Choice has a (fairly) consistent system with four volumes of two double issues per year – this gives about 100 papers. The remaining journals have changed their numbering system and increased the number of papers published per year – often dramatically.

Thus, I assess that the wave of publications is caused by the increased supply of papers and not by the demand for reading material. Consequently, the study confirms and updates the observation by Temple (1918, p. 242): “… as the world gets older the more people are inclined to write but the less they are inclined to read.”

3.3 How different are the journals?

The Appendix reports the counts for each year and journal of the research methods. From these counts, a set of χ²-scores is calculated for the three main groups of methods – they are reported in Table 5. It gives the χ²-test comparing the profile of each journal to that of the other nine journals, taken to be the theoretical distribution.

Table 5: The methodological profile of the journals – χ²-scores for main groups

Note: The χ²-scores are calculated relative to all other journals. The sign (+) or (−) indicates whether the journal has relatively too many or too few papers in the category. The P-values for the χ²(3)-test always reject that the journal has the same methodological profile as the other nine journals.

The test rejects that the distribution is the same as the average for any of the journals. The closest to the average are EJPE and Public Choice. The two most deviating scores are for the most micro-oriented journal JEBO, which brings many experimental papers, and of course, Empirical Economics, which brings many empirical papers.
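The kind of comparison described above can be sketched in a few lines. This is an illustrative reading of the procedure, not the author's code: the function name and the counts are hypothetical, and the shares of the other nine journals are treated as the theoretical distribution, as the text states.

```python
def chi2_profile_scores(journal_counts, other_counts):
    """Signed chi-square contributions of one journal against the others.

    Both arguments map a method group to a paper count. The shares of the
    other journals are treated as the theoretical distribution.
    """
    n_journal = sum(journal_counts.values())
    n_other = sum(other_counts.values())
    scores = {}
    for group, observed in journal_counts.items():
        # Papers the journal would have in this group under the others' profile.
        expected = n_journal * other_counts[group] / n_other
        score = (observed - expected) ** 2 / expected
        sign = "+" if observed > expected else "-"
        scores[group] = (round(score, 2), sign)
    return scores


# Hypothetical counts, for illustration only (not taken from the paper).
journal = {"theory": 20, "experiments": 30, "empirics": 50}
others = {"theory": 500, "experiments": 100, "empirics": 400}

if __name__ == "__main__":
    for group, (score, sign) in chi2_profile_scores(journal, others).items():
        print(f"{group}: {score} ({sign})")
```

With these made-up numbers, the journal has too few theory papers and too many experimental ones relative to the others. The sum of the three contributions is the overall χ²-score for the journal.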

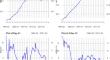

3.4 Trends in the use of the methods

Table 3 already gave an impression of the main trends in the methods preferred by economists. I now test if these impressions are statistically significant. The tests have to be tailored to disregard three differences between the journals: their methodological profiles, the number of papers they publish, and the trend in the number. Table 6 reports a set of distribution free tests, which overcome these differences. The tests are done on the shares of each research method for each journal. As the data cover five years, it gives 10 pairs of years to compare. [15] The three trend-scores in the []-brackets count how often the shares go up, down, or stay the same in the 10 cases. This is the count done for a Kendall rank correlation comparing the five shares with a positive trend (such as 1, 2, 3, 4, and 5).

Table 6: Trend-scores and tests for the eight subgroups of methods across the 10 journals

Note: The three trend-scores in each [I₁, I₂, I₃]-bracket are a Kendall-count over all 10 combinations of years. I₁ counts how often the share goes up, I₂ counts how often it goes down, and I₃ counts the number of ties. Most ties occur when there are no observations in either year. Thus, I₁ + I₂ + I₃ = 10. The tests are two-sided binomial tests disregarding the zeroes. The test results in bold are significant at the 5% level.

The first set of trend-scores for (M1.1) and (J1) is [1, 9, 0]. It means that 1 of the 10 share-pairs increases, while nine decrease and no ties are found. The two-sided binomial test gives a P-value of 2%, so such a pattern is unlikely to arise by chance. Nine of the ten journals in the (M1.1)-column have a majority of falling shares. The important point is that the counts in one column can be added – as is done in the all-row; this gives a powerful trend test that disregards differences between journals and the number of papers published. (Table A1)
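The 2% figure for the trend-scores [1, 9, 0] can be reproduced with a short sign-test calculation. The sketch below assumes that ties are dropped and that ups and downs are equally likely under the null hypothesis; the function names are hypothetical, and the second triple in the usage example is invented to show how columns are added.

```python
from math import comb


def two_sided_binomial_p(ups: int, downs: int) -> float:
    """Two-sided sign-test P-value when up and down are equally likely.

    Ties are assumed to have been dropped before the call.
    """
    n = ups + downs
    if n == 0:
        return 1.0
    k = min(ups, downs)
    # Probability of a split at least this lopsided in one direction.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def add_trend_scores(scores):
    """Add [up, down, ties] triples across journals, as in the all-row."""
    return [sum(column) for column in zip(*scores)]


if __name__ == "__main__":
    # Trend-scores for (M1.1) and (J1) as quoted in the text.
    print(round(two_sided_binomial_p(1, 9), 4))
    # The second triple is hypothetical, only to illustrate the addition.
    print(add_trend_scores([[1, 9, 0], [2, 7, 1]]))
```

For [1, 9, 0] the P-value is 22/1024, about 0.021, which rounds to the 2% stated above. Adding the triples before testing pools the evidence across journals without weighting by the number of papers each publishes.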

Four of the trend-tests are significant: the fall in theoretical papers, the rise in classical papers, and the rises in the shares of statistical method papers and event studies. It is surprising that there is no trend in the number of experimental studies, but see Table A2 (in Appendix).

4 An attempt to interpret the pattern found

The development in the methods pursued by researchers in economics is a reaction to the demand and supply forces on the market for economic papers. As already argued, it seems that a key factor is the increasing production of papers.

The shares add to 100, so the decline of one method means that the others rise. Section 4.1 looks at the biggest change – the reduction in theory papers. Section 4.2 discusses the rise in two new categories. Section 4.3 considers the large increase in the classical method, while Section 4.4 looks at what we know about that method from meta-analysis.

4.1 The decline of theory: economics suffers from theory fatigue [16]

The papers in economic theory have dropped from 59.5 to 33.6% – this is the largest change for any of the eight subgroups. [17] It is highly significant in the trend test. I attribute this drop to theory fatigue.

As mentioned in Section 2.1, the ideal theory paper presents a (simple) new model that recasts the way we look at something important. However, most theory papers are less exciting: They start from the standard model and argue that a well-known conclusion reached from the model hinges upon a debatable assumption – if it changes, so does the conclusion. Such papers are useful. From a literature on one main model, the profession learns its strengths and weaknesses. It appears that no generally accepted method exists to summarize this knowledge in a systematic way, though many thoughtful summaries have appeared.

I think that there is a deeper problem explaining theory fatigue. It is that many theoretical papers are quite unconvincing. Granted that the calculations are done right, believability hinges on the realism of the assumptions at the start and of the results presented at the end. In order for a model to convince, it should (at least) demonstrate the realism of either the assumptions or the outcome. [18] If both ends appear to hang in the air, it becomes a game giving little new knowledge about the world, however skillfully played.

The theory fatigue has caused a demand for simulations demonstrating that the models can mimic something in the world. Kydland and Prescott pioneered calibration methods (see Kydland and Prescott, 1991). Calibrations may be carefully done, but they often appear like a numerical solution of a model that is too complex to allow an analytical solution.

4.2 Two examples of waves: one that is still rising and another that is fizzling out

When a new method of gaining insights in the economy first appears, it is surrounded by doubts, but it also promises a high marginal productivity of knowledge. Gradually the doubts subside, and many researchers enter the field. After some time this will cause the marginal productivity of the method to fall, and it becomes less interesting. The eight methods include two newer ones: Lab experiments and newer stats. [19]

It is not surprising that papers with lab experiments are increasing, though it did take a long time: The seminal paper presenting the technique was Smith ( 1962 ), but only a handful of papers are from the 1960s. Charles Plott organized the first experimental lab 10 years later – this created a new standard for experiments, but required an investment in a lab and some staff. Labs became more common in the 1990s as PCs got cheaper and software was developed to handle experiments, but only 1.9% of the papers in the 10 journals reported lab experiments in 1997. This has now increased to 9.7%, so the wave is still rising. The trend in experiments is concentrated in a few journals, so the trend test in Table 6 is insignificant, but it is significant in the Appendix Table A2 , where it is done on the sum of articles irrespective of the journal.

In addition to the rising share of lab experiment papers in some journals, the journal Experimental Economics was started in 1998, when it published 281 pages in three issues. In 2017, it had reached 1,006 pages in four issues, [20] which is an annual increase of 6.5%.

Compared with the success of experimental economics, the motley category of newer empirics has had a more modest success, as the fractions of papers in the five years are 5.8, 5.2, 3.5, 5.4, and 4.2%, which show no trend. Newer stats also require investment, but mainly in human capital. [21] Some of the papers using the classical methodology contain a table with Dickey-Fuller tests or some eigenvalues of the data matrix, but they are normally peripheral to the analysis. A couple of papers use Kalman filters, and a dozen papers use Bayesian VARs. However, it is clear that the newer empirics have made little headway into our sample of general interest journals.
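The Kendall-style count from Section 3.4 can be applied to these five aggregate shares as a quick illustration. Note the assumption: the paper's tests are run on the shares within each journal, whereas this sketch uses the pooled shares quoted above, and the function name is hypothetical.

```python
from itertools import combinations


def kendall_trend_scores(shares):
    """Count how often a later share is above, below, or equal to an earlier one."""
    ups = downs = ties = 0
    # combinations() keeps the input order, so each pair is (earlier, later).
    for earlier, later in combinations(shares, 2):
        if later > earlier:
            ups += 1
        elif later < earlier:
            downs += 1
        else:
            ties += 1
    return [ups, downs, ties]


# Shares of newer empirics in percent for 1997, 2002, 2007, 2012, and 2017,
# as quoted in the text.
NEWER_EMPIRICS_SHARES = [5.8, 5.2, 3.5, 5.4, 4.2]

if __name__ == "__main__":
    print(kendall_trend_scores(NEWER_EMPIRICS_SHARES))  # [3, 7, 0]
```

Three of the ten pairs go up and seven go down. A two-sided sign test on a 3 to 7 split gives a P-value of about 0.34, which is consistent with the statement that the shares show no trend.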

4.3 The steady rise of the classical method: flexibility rewarded

The typical classical paper provides estimates of a key effect that decision-makers outside academia want to know. This makes the paper policy relevant right from the start, and in many cases, it is possible to write a one page executive summary to the said decision-makers.

The three-step convention (see Section 2.3) is often followed rather loosely. The estimation model is nearly always much simpler than the theory. Thus, while the model can be derived from a theory, the reverse does not apply. Sometimes, the model seems to follow straight from common sense, and if the link from the theory to the model is thin, it begs the question: Is the theory really necessary? In such cases, it is hard to be convinced that the tests “confirm” the theory, but then, of course, tests only say that the data do not reject the theory.

The classical method is often only a presentation device. Think of a researcher who has reached a nice publishable result through a long and tortuous path, including some failed attempts to find such results. It is not possible to describe that path within the severely limited space of an article. In addition, such a presentation would be rather dull to read, and none of us likes to talk about wasted efforts that in hindsight seem a bit silly. Here, the classical method becomes a convenient presentation device.

The biggest source of variation in the results is the choice of control/modifier variables. All datasets presumably contain some general and some special information, where the latter depends on the circumstances prevailing when the data were compiled. The regression should be controlled for these circumstances in order to reach the general result. Such ceteris paribus controls are not part of the theory, so many possible controls may be added. The ones chosen for publication often appear to be the ones delivering the “right” results by the priors of the researcher. The justification for their inclusion is often thin, and if two-stage regressions are used, the first stage instruments often have an even thinner justification.

Thus, the classical method is rather malleable to the preferences and interests of researchers and sponsors. This means that some papers using the classical technique are not what they pretend to be, as already pointed out by Leamer (1983); see also Paldam (2018) for new references and theory. The fact that data mining is tempting suggests that it is often possible to reach smashing results, making the paper nice to read. This may be precisely why it is cited.

Many papers using the classical method throw in some exotic statistical techniques to demonstrate the robustness of the result and the ability of the researcher. This presumably helps to generate credibility.

4.4 Knowledge about classical papers reached from meta-studies

Individual studies using the classical method often look better than they are, and thus they are more uncertain than they appear, but we may think of the value to which the estimates converge for large Ns (numbers of observations) as the truth. The exaggeration is largest at the beginning of a new literature, but it gradually becomes smaller. Thus, the classical method does generate truth when the effect searched for has been studied from many sides. The word research does mean that the search has to be repeated! It is highly risky to trust only a few papers.
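The convergence argument can be illustrated with a small simulation. This is only a stylized sketch under stated assumptions, not the model used in the paper: each hypothetical paper tries `J` specifications, treats them as independent draws around a hypothetical `TRUE_EFFECT`, and reports the largest one. The standard error shrinks with the number of observations, so the exaggeration shrinks as well.

```python
import random
import statistics

# All numbers below are hypothetical and chosen only for illustration.
TRUE_EFFECT = 0.10   # assumed true size of the effect
SIGMA = 1.0          # assumed residual sd, so that se = SIGMA / sqrt(N)
J = 5                # assumed number of specifications tried per paper


def reported_estimate(n_obs, rng):
    """Return the largest of J noisy estimates (a stylized specification search)."""
    se = SIGMA / n_obs ** 0.5
    candidates = [rng.gauss(TRUE_EFFECT, se) for _ in range(J)]
    return max(candidates)


def mean_reported(n_obs, n_papers=2000, seed=7):
    """Average reported estimate over many simulated papers with n_obs observations."""
    rng = random.Random(seed)
    return statistics.mean([reported_estimate(n_obs, rng) for _ in range(n_papers)])


small_n = mean_reported(25)       # papers with few observations
large_n = mean_reported(10_000)   # papers with many observations

print(f"mean reported estimate, N = 25:     {small_n:.3f}")
print(f"mean reported estimate, N = 10,000: {large_n:.3f}")
print(f"assumed true effect:                {TRUE_EFFECT:.3f}")
```

In this sketch the average reported estimate lies well above the assumed true effect when N is small and close to it when N is large, which is the pattern the paragraph describes. Real specifications run on the same data are correlated, so the independence assumption overstates the gain from searching.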

Meta-analysis has found other results such as: Results in top journals do not stand out. It is necessary to look at many journals, as many papers on the same effect are needed. Little of the large variation between results is due to the choice of estimators.

A similar development should also occur for experimental economics. Experiments fall in families: A large number cover prisoner’s dilemma games, but there are also many studies of dictator games, auction games, etc. Surveys summarizing what we have learned about these games seem highly needed. Assessed summaries of old experiments are common, notably in introductions to papers reporting new ones. It should be possible to extract the knowledge reached by sets of related lab experiments in a quantitative way, by some sort of meta-technique, but this has barely started. The first pioneering meta-studies of lab experiments do find the usual wide variation of results from seemingly closely related experiments. [25] A recent large-scale replicability study by Camerer et al. (2018) finds that published experiments in the high-quality journals Nature and Science exaggerate by a factor of two, just like regression studies using the classical method.

5 Conclusion

The study presents evidence that over the last 20 years economic research has moved away from theory towards empirical work using the classical method.

From the eighties onward, there has been a steady stream of papers pointing out that the classical method suffers from excess flexibility. It does deliver relevant results, but they tend to be too good. [26] While, increasingly, we know the size of the problems of the classical method, systematic knowledge about the problems of the other methods is weaker. It is possible that the problems are smaller, but we do not know.

Therefore, it is clear that obtaining solid knowledge about the size of an important effect requires a great many papers analyzing many aspects of the effect, followed by a careful quantitative survey. It is a well-known principle in the harder sciences that results need repeated independent replication to be truly trustworthy. In economics, this is only accepted in principle.

The classical method of empirical research is gradually winning, and this is a fine development: It does give answers to important policy questions. These answers are highly variable and often exaggerated, but through the efforts of many competing researchers, solid knowledge will gradually emerge.

Home page: http://www.martin.paldam.dk

Acknowledgments

The paper has been presented at the 2018 MAER-Net Colloquium in Melbourne, the Kiel Aarhus workshop in 2018, and at the European Public Choice 2019 Meeting in Jerusalem. I am grateful for all comments, especially from Chris Doucouliagos, Eelke de Jong, and Bob Reed. In addition, I thank the referees for constructive advice.

Conflict of interest: Author states no conflict of interest.

Appendix: Two tables and some assessments of the size of the profession

The text needs some numbers to assess the representativity of the results reached. These numbers just need to be orders of magnitude. I use the standard three-level classification in A, B, and C of researchers, departments, and journals. The connections between the three categories are dynamic and rely on complex sorting mechanisms. In an international setting, it matters that researchers have preferences for countries, notably their own. The relation between the three categories has a stochastic element.

The World of Learning organization reports on 36,000 universities, colleges, and other institutes of tertiary education and research. Many of these institutions are mainly engaged in undergraduate teaching, and some are quite modest. If half of these institutions have a program in economics, with a staff of at least five, the total stock of academic economists is 100,000, of which most are at the C-level.
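The order of magnitude can be checked directly from the numbers given in the paragraph. The variable names are illustrative only; the inputs are the figures stated in the text.

```python
# Order-of-magnitude check of the stock of academic economists.
institutions = 36_000        # institutions reported by the World of Learning
share_with_economics = 0.5   # the "if half" assumption in the text
min_staff = 5                # "a staff of at least five"

# Minimum stock implied by the stated assumptions.
lower_bound = int(institutions * share_with_economics * min_staff)
print(lower_bound)  # 90000
```

Since five is a minimum staff size, 90,000 is a lower bound, which fits the rounded total of 100,000 used in the text.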

The A-level of about 500 tenured researchers working at the top ten universities (mainly) publishes in the top 10 journals, which bring fewer than 1,000 papers per year; [27] see Heckman and Moktan (2020). They (mainly) cite each other, but they greatly influence other researchers. [28] The B-level consists of about 15,000–20,000 researchers who work at 400–500 research universities with graduate programs and ambitions to publish. They (mainly) publish in the next level of about 150 journals. [29] In addition, there are at least another 1,000 institutions that strive to move up in the hierarchy.

The counts for each of the 10 journals

Counts, shares, and changes for all ten journals for subgroups

Note: The trend-scores are calculated as in Table 6. Compared to the results in Table 6, the results are similar, but the power is less than before. However, note that the results in Column (M2.1) dealing with experiments are stronger in Table A2. This has to do with the way missing observations are treated in the test.

Angrist, J., Azoulay, P., Ellison, G., Hill, R., & Lu, S. F. (2017). Economic research evolves: Fields and styles. American Economic Review (Papers & Proceedings), 107, 293–297. doi:10.1257/aer.p20171117

Bergh, A., & Wichardt, P. C. (2018). Mine, ours or yours? Unintended framing effects in dictator games (INF Working Papers, No 1205). Research Institute of Industrial Econ, Stockholm. München: CESifo. doi:10.2139/ssrn.3208589

Brodeur, A., Cook, N., & Heyes, A. (2020). Methods matter: p-Hacking and publication bias in causal analysis in economics. American Economic Review, 110(11), 3634–3660. doi:10.1257/aer.20190687

Camerer, C. F., Dreber, A., Holzmaster, F., Ho, T.-H., Huber, J., Johannesson, M., … Wu, H. (27 August 2018). Nature Human Behaviour. https://www.nature.com/articles/M2.11562-018-0399-z

Card, D., & DellaVigna, A. (2013). Nine facts about top journals in economics. Journal of Economic Literature, 51, 144–161. doi:10.3386/w18665

Christensen, G., & Miguel, E. (2018). Transparency, reproducibility, and the credibility of economics research. Journal of Economic Literature, 56, 920–980. doi:10.3386/w22989

Doucouliagos, H., Paldam, M., & Stanley, T. D. (2018). Skating on thin evidence: Implications for public policy. European Journal of Political Economy, 54, 16–25. doi:10.1016/j.ejpoleco.2018.03.004

Engel, C. (2011). Dictator games: A meta study. Experimental Economics, 14, 583–610. doi:10.1007/s10683-011-9283-7

Fiala, L., & Suentes, S. (2017). Transparency and cooperation in repeated dilemma games: A meta study. Experimental Economics, 20, 755–771. doi:10.1007/s10683-017-9517-4

Friedman, M. (1953). Essays in positive economics. Chicago: University of Chicago Press.

Hamermesh, D. (2013). Six decades of top economics publishing: Who and how? Journal of Economic Literature, 51, 162–172. doi:10.3386/w18635

Heckman, J. J., & Moktan, S. (2018). Publishing and promotion in economics: The tyranny of the top five. Journal of Economic Literature, 51, 419–470. doi:10.3386/w25093

Ioannidis, J. P. A., Stanley, T. D., & Doucouliagos, H. (2017). The power of bias in economics research. Economic Journal, 127, F236–F265. doi:10.1111/ecoj.12461

Johansen, S., & Juselius, K. (1990). Maximum likelihood estimation and inference on cointegration – with application to the demand for money. Oxford Bulletin of Economics and Statistics, 52, 169–210. doi:10.1111/j.1468-0084.1990.mp52002003.x

Justman, M. (2018). Randomized controlled trials informing public policy: Lessons from the project STAR and class size reduction. European Journal of Political Economy, 54, 167–174. doi:10.1016/j.ejpoleco.2018.04.005

Kydland, F., & Prescott, E. C. (1991). The econometrics of the general equilibrium approach to business cycles. Scandinavian Journal of Economics, 93, 161–178. doi:10.2307/3440324

Leamer, E. E. (1983). Let’s take the con out of econometrics. American Economic Review, 73, 31–43.

Levitt, S. D., & List, J. A. (2007). On the generalizability of lab behaviour to the field. Canadian Journal of Economics, 40, 347–370. doi:10.1111/j.1365-2966.2007.00412.x

Paldam, M. (April 14th 2015). Meta-analysis in a nutshell: Techniques and general findings. Economics. The Open-Access, Open-Assessment E-Journal, 9, 1–4. doi:10.5018/economics-ejournal.ja.2015-11

Paldam, M. (2016). Simulating an empirical paper by the rational economist. Empirical Economics, 50, 1383–1407. doi:10.1007/s00181-015-0971-6

Paldam, M. (2018). A model of the representative economist, as researcher and policy advisor. European Journal of Political Economy, 54, 6–15. doi:10.1016/j.ejpoleco.2018.03.005

Smith, V. (1962). An experimental study of competitive market behavior. Journal of Political Economy, 70, 111–137. doi:10.1017/CBO9780511528354.003

Stanley, T. D., & Doucouliagos, H. (2012). Meta-regression analysis in economics and business. Abingdon: Routledge. doi:10.4324/9780203111710

Temple, C. L. (1918). Native races and their rulers; sketches and studies of official life and administrative problems in Nigeria. Cape Town: Argus.

© 2021 Martin Paldam, published by De Gruyter

This work is licensed under the Creative Commons Attribution 4.0 International License.


Empirical Economics

Journal of the Institute for Advanced Studies, Vienna, Austria

- Exemplary topics are treatment effect estimation, policy evaluation, forecasting, and econometric methods.

- Contributions may focus on the estimation of established relationships between economic variables or on the testing of hypotheses.

- Emphasizes the replicability of empirical results: replication studies may be published as short papers.

- Follows a single-blind review procedure (authors’ names are known to reviewers, but reviewers are anonymous to authors).

- Submissions that have poor chances of receiving positive reviews are routinely rejected without sending the papers for review.


- Robert M. Kunst

- Bertrand Candelon,

- Subal C. Kumbhakar,

- Arthur H. O. van Soest,

- Joakim Westerlund

Societies and partnerships

Latest issue

Volume 66, Issue 5

Latest articles

Revisiting the countercyclicality of fiscal policy.

- João Tovar Jalles

- Youssouf Kiendrebeogo

- Roberto Piazza

Money demand stability in India: allowing for an unknown number of breaks

- Masudul Hasan Adil

- Aditi Chaubal

Output, employment, and price effects of U.S. narrative tax changes: a factor-augmented vector autoregression approach

Uncovering heterogeneous regional impacts of Chinese monetary policy

- Andrew Tsang

The impact of robots on labor demand: evidence from job vacancy data in South Korea

Journal updates

Lawrence R. Klein Award 2021/2022

This biannual prize is awarded for the best paper published in the journal Empirical Economics.

The Empirical Economics prize was awarded for the first time by Springer in 2006, and was renamed in honor of the Nobel prize winner Lawrence R. Klein in 2013.

Call for Papers: Special Issue on Applications of Efficiency and Productivity Analysis

Guest Editors: Subal C. Kumbhakar, Christopher F. Parmeter and Emir Malikov

Deadline for paper submission: February 29, 2020

Journal information

- ABS Academic Journal Quality Guide

- Australian Business Deans Council (ABDC) Journal Quality List

- Engineering Village – GEOBASE

- Google Scholar

- Japanese Science and Technology Agency (JST)

- Norwegian Register for Scientific Journals and Series

- OCLC WorldCat Discovery Service

- Research Papers in Economics (RePEc)

- Social Science Citation Index

- TD Net Discovery Service

- UGC-CARE List (India)


© Springer-Verlag GmbH Germany, part of Springer Nature


MIT News | Massachusetts Institute of Technology


The case for economics — by the numbers


In recent years, criticism has been levelled at economics for being insular and unconcerned about real-world problems. But a new study led by MIT scholars finds the field increasingly overlaps with the work of other disciplines, and, in a related development, has become more empirical and data-driven, while producing less work of pure theory.

The study examines 140,000 economics papers published over a 45-year span, from 1970 to 2015, tallying the “extramural” citations that economics papers received in 16 other academic fields — ranging from other social sciences such as sociology to medicine and public health. In seven of those fields, economics is the social science most likely to be cited, and it is virtually tied for first in citations in another two disciplines.

In psychology journals, for instance, citations of economics papers have more than doubled since 2000. Public health papers now cite economics work twice as often as they did 10 years ago, and citations of economics research in fields from operations research to computer science have risen sharply as well.

While citations of economics papers in the field of finance have risen slightly in the last two decades, that rate of growth is no higher than it is in many other fields, and the overall interaction between economics and finance has not changed much. That suggests economics has not been unusually oriented toward finance issues — as some critics have claimed since the banking-sector crash of 2007-2008. And the study’s authors contend that as economics becomes more empirical, it is less dogmatic.

“If you ask me, economics has never been better,” says Josh Angrist, an MIT economist who led the study. “It’s never been more useful. It’s never been more scientific and more evidence-based.”

Indeed, the proportion of economics papers based on empirical work — as opposed to theory or methodology — cited in top journals within the field has risen by roughly 20 percentage points since 1990.

The paper, “Inside Job or Deep Impact? Extramural Citations and the Influence of Economic Scholarship,” appears in this month’s issue of the Journal of Economic Literature .

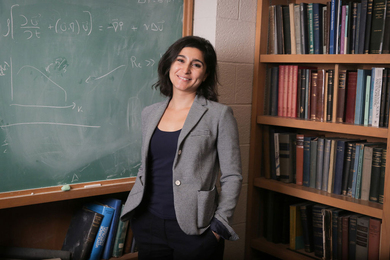

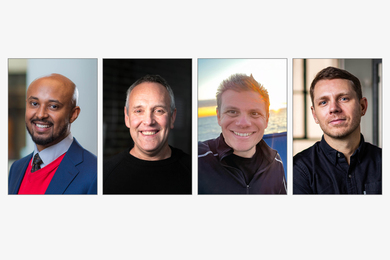

The co-authors are Angrist, who is the Ford Professor of Economics in MIT’s Department of Economics; Pierre Azoulay, the International Programs Professor of Management at the MIT Sloan School of Management; Glenn Ellison, the Gregory K. Palm Professor of Economics and associate head of the Department of Economics; Ryan Hill, a doctoral candidate in MIT’s Department of Economics; and Susan Feng Lu, an associate professor of management in Purdue University’s Krannert School of Management.

Taking critics seriously

As Angrist acknowledges, one impetus for the study was the wave of criticism the economics profession has faced over the last decade, after the banking crisis and the “Great Recession” of 2008-2009, which included the finance-sector crash of 2008. The paper’s title alludes to the film “Inside Job” — whose thesis holds that, as Angrist puts it, “economics scholarship as an academic enterprise was captured somehow by finance, and that academic economists should therefore be blamed for the Great Recession.”

To conduct the study, the researchers used the Web of Science, a comprehensive bibliographic database, to examine citations between 1970 and 2015. The scholars developed machine-learning techniques to classify economics papers into subfields (such as macroeconomics or industrial organization) and by research “style” — meaning whether papers are primarily concerned with economic theory, empirical analysis, or econometric methods.

“We did a lot of fine-tuning of that,” says Hill, noting that for a study of this size, a machine-learning approach is a necessity.

The study also details the relationship between economics and four additional social science disciplines: anthropology, political science, psychology, and sociology. Among these, political science has overtaken sociology as the discipline most engaged with economics. Psychology papers now cite economics research about as often as they cite works of sociology.

The new intellectual connectivity between economics and psychology appears to be a product of the growth of behavioral economics, which examines the irrational, short-sighted financial decision-making of individuals — a different paradigm than the assumptions about rational decision-making found in neoclassical economics. During the study’s entire time period, one of the economics papers cited most often by other disciplines is the classic article “Prospect Theory: An Analysis of Decision under Risk,” by behavioral economists Daniel Kahneman and Amos Tversky.

Beyond the social sciences, other academic disciplines for which the researchers studied the influence of economics include four classic business fields — accounting, finance, management, and marketing — as well as computer science, mathematics, medicine, operations research, physics, public health, and statistics.

The researchers believe these “extramural” citations of economics are a good indicator of economics’ scientific value and relevance.

“Economics is getting more citations from computer science and sociology, political science, and psychology, but we also see fields like public health and medicine starting to cite economics papers,” Angrist says. “The empirical share of the economics publication output is growing. That’s a fairly marked change. But even more dramatic is the proportion of citations that flow to empirical work.”

Ellison emphasizes that because other disciplines are citing empirical economics more often, it shows that the growth of empirical research in economics is not just a self-reinforcing change, in which scholars chase trendy ideas. Instead, he notes, economists are producing broadly useful empirical research.

“Political scientists would feel totally free to ignore what economists were writing if what economists were writing today wasn’t of interest to them,” Ellison says. “But we’ve had this big shift in what we do, and other disciplines are showing their interest.”

It may also be that the empirical methods used in economics now more closely match those in other disciplines as well.

“What’s new is that economics is producing more accessible empirical work,” Hill says. “Our methods are becoming more similar … through randomized controlled trials, lab experiments, and other experimental approaches.”

But as the scholars note, there are exceptions to the general pattern in which greater empiricism in economics corresponds to greater interest from other fields. Computer science and operations research papers, which increasingly cite economists’ research, are mostly interested in the theory side of economics. And the growing overlap between psychology and economics involves a mix of theory and data-driven work.

In a big country

Angrist says he hopes the paper will help journalists and the general public appreciate how varied economics research is.

“To talk about economics is sort of like talking about [the United States of] America,” Angrist says. “America is a big, diverse country, and economics scholarship is a big, diverse enterprise, with many fields.”

He adds: “I think economics is incredibly eclectic.”

Ellison emphasizes this point as well, observing that the sheer breadth of the discipline gives economics the ability to have an impact in so many other fields.

“It really seems to be the diversity of economics that makes it do well in influencing other fields,” Ellison says. “Operations research, computer science, and psychology are paying a lot of attention to economic theory. Sociologists are paying a lot of attention to labor economics, marketing and management are paying attention to industrial organization, statisticians are paying attention to econometrics, and the public health people are paying attention to health economics. Just about everything in economics is influential somewhere.”

For his part, Angrist notes that he is a biased observer: He is a dedicated empiricist and a leading practitioner of research that uses quasi-experimental methods. His studies leverage circumstances in which, say, policy changes or random assignments in civic life allow researchers to study two otherwise similar groups of people separated by one thing, such as access to health care.

Angrist was also a graduate-school advisor of Esther Duflo PhD ’99, who won the Nobel Prize in economics last fall, along with MIT’s Abhijit Banerjee — and Duflo thanked Angrist at their Nobel press conference, citing his methodological influence on her work. Duflo and Banerjee, as co-founders of MIT’s Abdul Latif Jameel Poverty Action Lab (J-PAL), are advocates of using field experiments in economics, which is still another way of producing empirical results with policy implications.

“More and more of our empirical work is worth paying attention to, and people do increasingly pay attention to it,” Angrist says. “At the same time, economists are much less inward-looking than they used to be.”


Empirical Strategies in Economics: Illuminating the Path from Cause to Effect

The view that empirical strategies in economics should be transparent and credible now goes almost without saying. The local average treatment effects (LATE) framework for causal inference helped make this so. The LATE theorem tells us for whom particular instrumental variables (IV) and regression discontinuity estimates are valid. This lecture uses several empirical examples, mostly involving charter and exam schools, to highlight the value of LATE. A surprising exclusion restriction, an assumption central to the LATE interpretation of IV estimates, is shown to explain why enrollment at Chicago exam schools reduces student achievement. I also make two broader points: IV exclusion restrictions formalize commitment to clear and consistent explanations of reduced-form causal effects; compelling applications demonstrate the power of simple empirical strategies to generate new causal knowledge.
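The logic of the LATE theorem can be sketched with a simulated lottery. Everything in this example is hypothetical and chosen for illustration (the population shares, the effect sizes, and the function name `wald_estimate`); it is not taken from the lecture. The instrument `z` is randomly assigned, affects the outcome only through treatment `d` (the exclusion restriction), and there are no defiers (monotonicity). Under these assumptions the ratio of the reduced form to the first stage, the Wald estimator, recovers the average effect for compliers only.

```python
import random


def wald_estimate(n=100_000, seed=0):
    """Simulate a randomized offer and return (reduced form, first stage, Wald ratio)."""
    rng = random.Random(seed)
    sum_y = [0.0, 0.0]   # outcome totals by instrument value
    sum_d = [0.0, 0.0]   # treatment totals by instrument value
    count = [0, 0]
    for _ in range(n):
        z = rng.randint(0, 1)   # randomly assigned offer
        u = rng.random()
        if u < 0.2:             # hypothetical always-takers: treated regardless of z
            d, effect = 1, 5.0
        elif u < 0.7:           # hypothetical compliers: treated only if offered
            d, effect = z, 2.0
        else:                   # hypothetical never-takers: never treated
            d, effect = 0, -1.0
        # Exclusion restriction: z enters the outcome only through d.
        y = rng.gauss(0.0, 1.0) + effect * d
        sum_y[z] += y
        sum_d[z] += d
        count[z] += 1
    reduced_form = sum_y[1] / count[1] - sum_y[0] / count[0]
    first_stage = sum_d[1] / count[1] - sum_d[0] / count[0]
    return reduced_form, first_stage, reduced_form / first_stage


reduced_form, first_stage, late = wald_estimate()
print(f"first stage: {first_stage:.3f}")
print(f"Wald (IV) estimate: {late:.3f}")
```

In this design the always-takers contribute equally to both arms and cancel out, and the never-takers are never treated, so the estimate should lie near the assumed complier effect of 2.0 rather than near the effect for any other group.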

This is a revised version of my recorded Nobel Memorial Lecture posted December 8, 2021. Many thanks to Jimmy Chin and Vendela Norman for their help preparing this lecture and to Noam Angrist, Hank Farber, Peter Ganong, Guido Imbens, and Parag Pathak for comments on an earlier draft. Thanks also go to my coauthors and Blueprint Labs colleagues, from whom I’ve learned so much over the years. Special thanks are due to my co-laureates, David Card and Guido Imbens, for their guidance and partnership. We three share a debt to our absent friend, Alan Krueger, with whom we collaborated so fruitfully. This lecture incorporates empirical findings from joint work with Atila Abdulkadiroğlu, Sue Dynarski, Bill Evans, Iván Fernández-Val, Tom Kane, Victor Lavy, Yusuke Narita, Parag Pathak, Chris Walters, and Román Zárate. The views expressed herein are those of the author and do not necessarily reflect the views of the National Bureau of Economic Research.

The work discussed here was funded in part by the Laura and John Arnold Foundation, the National Science Foundation, and the W.T. Grant Foundation. Joshua Angrist's daughter teaches in a Boston charter school.


How to do empirical economics


(University of North Carolina)

(University of Bonn)

(Université de Paris 1)

(Northwestern University)

- Joshua D Angrist

- Jean-Marc Robin


- American Economic Review

Design-Based Research in Empirical Microeconomics


JEL Classification

- J53 Labor-Management Relations; Industrial Jurisprudence


Gravity models and empirical trade.

- Scott Baier, College of Business, Clemson University

- and Samuel Standaert, Institute on Comparative Regional Integration Studies, United Nations University

- https://doi.org/10.1093/acrefore/9780190625979.013.327

- Published online: 31 March 2020

The gravity model of international trade states that the volume of trade between two countries is proportional to their economic mass and a measure of their relative trade frictions. Perhaps because of its intuitive appeal, the gravity model has been the workhorse model of international trade for more than 50 years. While the initial empirical work using the gravity model lacked sound theoretical underpinnings, the theoretical developments have highlighted how a gravity-like specification can be derived from many models with varying assumptions about preferences, technology, and market structure. Along with the strengthening of the theoretical roots of the gravity model, the way in which it is estimated has also evolved significantly since the start of the new millennium. Depending on the exact characteristics of the regression, different estimation methods should be used to estimate the gravity model.

- international trade

- bilateral trade

- the gravity equation

- structural gravity

- trade costs

- new trade theory

- heterogeneous firms

The Workhorse of International Trade

For more than 50 years, the gravity model has been the workhorse model of empirical international trade. Originally the model was presented as a simple analogy between Newton’s Universal Law of Gravitation and factors that would influence bilateral trade flows. The flow of trade between two countries was posited to be proportional to the economic size of the trading partners and inversely related to their distance from each other. As formulated, the gravity equation of international trade could be rewritten as a log-linear empirical specification that could be easily estimated. A large number of studies showed that the empirical findings were consistent with the naïve gravity model. In particular, the coefficient estimates of the elasticity of bilateral trade to importer and exporter GDP were close to unity, and the elasticity of trade with respect to bilateral distance was negative; moreover, the empirical specification was able to account for a reasonable amount of the observed variation in trade.
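The step from the Newtonian analogy to the log-linear specification can be written out as follows. The notation is an assumption made for this sketch and is not taken from the article: $T_{ij}$ is the trade flow from country $i$ to country $j$, $Y_i$ and $Y_j$ are their GDPs, $D_{ij}$ is bilateral distance, $G$ is a constant, and $\varepsilon_{ij}$ is an error term.

```latex
% Naive gravity equation (notation assumed for illustration)
T_{ij} = G \, Y_i^{\beta_1} \, Y_j^{\beta_2} \, D_{ij}^{\beta_3}, \qquad \beta_3 < 0

% Taking logs gives the log-linear specification, with \beta_0 = \ln G
\ln T_{ij} = \beta_0 + \beta_1 \ln Y_i + \beta_2 \ln Y_j + \beta_3 \ln D_{ij} + \varepsilon_{ij}
```

In this form the findings described above correspond to estimates of $\beta_1$ and $\beta_2$ close to one and a negative estimate of $\beta_3$.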

Even though the model was an empirical success, the gravity equation lacked a sound theoretical background. Beginning in the late 1970s, several authors showed that a gravity-like specification would emerge from a variety of standard assumptions regarding preferences, technology, market structure, and trade. At the same time, empirical trade economists became more concerned about the estimation strategy, in particular that estimation using ordinary least squares might lead to biased coefficient estimates. The purpose of this review is to trace the history of the gravity equation of international trade and provide context for its evolution. The review also describes the current state of the field and highlights areas for future research. 1

Since much of the work on the gravity equation has been designed to identify factors that may reduce or enhance bilateral trade, the paper starts by using a naïve gravity specification to show how geography, history, culture, and government policies appear to influence trade flows by looking at the cross-section of data for 145 countries in 2014. It goes on to provide an overview of theoretical models and empirical specifications from 1970 through 2001. The subsequent section works through four of the standard models of international trade and shows how each model leads to a similar empirical specification: the structural gravity model. This section also briefly covers how these models can be extended to include tariffs, intermediate goods, and multiple sectors; it concludes by reviewing recent theoretical models that lead to a gravity-like empirical specification.

The final section of the article reviews the state of the empirical specifications. It starts with a discussion of the conditions under which the log-linear gravity model estimated by ordinary least squares will yield consistent estimates of the coefficients of interest. In most cases, however, these conditions are not satisfied, and an alternative estimator is needed. Santos Silva and Tenreyro (2006) showed that the Poisson Pseudo Maximum Likelihood Estimator has desirable properties that make it attractive for empirical gravity work. This estimator is contrasted with the Gamma Pseudo Maximum Likelihood Estimator and Nonlinear Least Squares, and different specification tests are discussed that may assist in choosing among them. Another issue that can arise in estimating the gravity model is the endogeneity of the control variables, the typical solutions for which are also briefly discussed. The section concludes with a discussion of the path for future work.

Gravity: A First Look at the Data

The early empirical gravity models of international trade were rooted in a simple and intuitive analogy to Newton’s Law of Universal Gravitation. According to Newton’s law, the force of attraction between two bodies is proportional to the product of their masses and inversely proportional to their distance squared. These early gravity models of trade postulated a similar relationship between the bilateral trade flows between two countries, their economic sizes, and a measure of trade frictions. The lack of theoretical underpinnings for this relationship is the reason why it is referred to as the naïve gravity model. Mathematically, it can be expressed as

$$X_{ij} = \beta_0 \, Y_i^{\beta_1} \, Y_j^{\beta_2} \, \mathit{dist}_{ij}^{\beta_3} \, \varepsilon_{ij} \tag{1}$$

where $X_{ij}$ is bilateral trade between exporting country $i$ and importing country $j$, $Y_i$ ($Y_j$) is the gross domestic product in country $i$ ($j$), $\mathit{dist}_{ij}$ is the bilateral distance between countries $i$ and $j$, and $\beta_0$ is a constant. $\varepsilon_{ij}$ is typically assumed to be a log-normally distributed error term. Given the multiplicative structure and the assumption on the error term, Equation 1 can be estimated by taking the natural logarithm, which leads to the log-linear specification

$$\ln X_{ij} = \ln \beta_0 + \beta_1 \ln Y_i + \beta_2 \ln Y_j + \beta_3 \ln \mathit{dist}_{ij} + \ln \varepsilon_{ij} \tag{2}$$

Literally hundreds of papers have estimated the gravity equation by ordinary least squares. Intuitively, one would expect the economic size of the countries to have a positive effect ($\beta_1 > 0$, $\beta_2 > 0$) and distance to have a negative effect ($\beta_3 < 0$). While the coefficient estimates have varied from study to study depending on the period and the sample of countries, the estimates of $\beta_1$ and $\beta_2$ were typically found to be close to unity, while that of $\beta_3$ was typically negative and statistically significant. What cemented the gravity model’s popularity was its ability to explain much of the observed variation in bilateral trade flows.
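To make the estimation step concrete, the following is a minimal sketch of fitting the log-linear specification by ordinary least squares. It runs on synthetic data only: the sample size, the "true" coefficients, the error variance, and the function names `simulate_pairs` and `ols_log_gravity` are all illustrative assumptions, not values or code from the literature reviewed here.

```python
import numpy as np


def simulate_pairs(n, beta, seed=0):
    """Draw synthetic (not real) log GDPs, log distances and log trade flows.

    beta = (constant, beta1, beta2, beta3) are assumed 'true' parameters.
    """
    rng = np.random.default_rng(seed)
    ln_yi = rng.normal(10.0, 1.5, n)    # log exporter GDP
    ln_yj = rng.normal(10.0, 1.5, n)    # log importer GDP
    ln_dist = rng.normal(8.0, 0.8, n)   # log bilateral distance
    ln_eps = rng.normal(0.0, 0.5, n)    # log of a log-normal error term
    ln_x = (beta[0] + beta[1] * ln_yi + beta[2] * ln_yj
            + beta[3] * ln_dist + ln_eps)
    return ln_x, ln_yi, ln_yj, ln_dist


def ols_log_gravity(ln_x, ln_yi, ln_yj, ln_dist):
    """Least-squares fit of ln X on a constant, ln Y_i, ln Y_j and ln dist."""
    design = np.column_stack([np.ones_like(ln_x), ln_yi, ln_yj, ln_dist])
    coef, *_ = np.linalg.lstsq(design, ln_x, rcond=None)
    return coef  # order: constant, beta1, beta2, beta3


# Assumed parameters: unit income elasticities and a distance elasticity of -1.
true_beta = (-5.0, 1.0, 1.0, -1.0)
coef = ols_log_gravity(*simulate_pairs(5000, true_beta))
print(np.round(coef, 2))
```

Because the simulated error is log-normal and independent of the regressors, least squares recovers the assumed elasticities closely in this setting. The sketch drops zero trade flows by construction and says nothing about the cases, discussed later in the review, in which the log-linear estimator is inconsistent.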

The core relationship of gravity models can be easily illustrated using the overall patterns in trade data. In a world without trade frictions, a simple gravity relationship is given by $X_{ij} = \frac{Y_i Y_j}{Y_W}$ where, as before, $Y_i$ ($Y_j$) is the GDP of country $i$ ($j$) and $Y_W$ is world income. The frictionless gravity equation can be rearranged to relate country $j$'s expenditure share on goods produced in country $i$ ($X_{ij}/Y_j$) to the latter’s share of world production ($Y_i/Y_W$). 2 Using trade data from 2014 for 125 countries, Figure 1 plots the relationship between expenditure shares and production shares on a logarithmic scale. The high correlation between these variables shown in this graph is consistent with, and part of the appeal of, the empirical structure of the gravity equation. 3 At the same time, Figure 1 also shows that more than 90% of all expenditure shares remain below the 45-degree line. That these import shares fall consistently below the production shares indicates that the world is far from frictionless.

Figure 1. Expenditure and production shares.
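As a short worked step that uses only the frictionless relationship stated above, dividing both sides by $Y_j$ and taking logarithms shows why the 45-degree line is the natural benchmark in Figure 1:

$$X_{ij} = \frac{Y_i Y_j}{Y_W} \;\Longrightarrow\; \frac{X_{ij}}{Y_j} = \frac{Y_i}{Y_W} \;\Longrightarrow\; \ln \frac{X_{ij}}{Y_j} = \ln \frac{Y_i}{Y_W}$$

Under this benchmark every country pair would sit exactly on the 45-degree line. Points below the line correspond to pairs with $X_{ij}/Y_j < Y_i/Y_W$, that is, to bilateral trade that is lower than the frictionless benchmark predicts.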

Modifying the frictionless gravity equation gives a crude measure of trade costs. To start, we rewrite the bilateral trade flows as

$$X_{ij} = \frac{Y_i Y_j}{Y_W} \, \phi_{ij}^{-\epsilon}$$

where $\phi_{ij}$ is the bilateral cost of trading and the strictly positive $\epsilon$ is the elasticity of bilateral trade flows with respect to these trade costs. Much of the empirical gravity literature has been devoted to identifying and quantifying the factors that influence trade costs. They can be classified as costs induced by geography (natural trade costs), costs associated with historical and cultural linkages, and costs induced by policies (sometimes referred to as “unnatural trade costs”). Researchers interested in international trade and economic geography have emphasized the role of natural trade costs (often referred to as second nature geography) and how these natural trade costs are associated with the respective location of the economic agents. An obvious empirical measure of such costs is the distance between countries. Limao and Venables (2001) and Hummels (2007) investigated the empirical relationship between distance and observed CIF-FOB trade costs (that is, all the costs associated with shipping the goods and insuring them against damage during transport). These authors found a positive correlation between distance and trade costs. Indeed, one of the most robust findings in the empirical gravity literature is the negative relationship between distance and bilateral trade, or its equivalent: the positive relationship between the natural logarithm of distance and trade costs. A rough measure of trade costs is obtained by rewriting the naïve gravity equation as

$$\phi_{ij}^{\epsilon} = \frac{Y_i Y_j}{Y_W X_{ij}}$$

Figure 2 plots the relationship between this measure of trade costs and distance, depicting their clear positive relationship. The fitted line indicates that trade falls by 1.4% for every 1% increase in distance.

Figure 2. Trade costs and distance.
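The construction behind a plot such as Figure 2 can be sketched in a few lines. The sketch assumes the trade-cost proxy is the ratio of frictionless trade, $Y_i Y_j / Y_W$, to observed trade $X_{ij}$, and that the fitted line is a bivariate least-squares fit in logarithms. The function names and all numbers are hypothetical; the data are generated so that the slope equals 1.4 by construction, purely to echo the magnitude reported above.

```python
import numpy as np


def trade_cost_proxy(x_ij, y_i, y_j, y_w):
    """Ratio of frictionless trade Y_i * Y_j / Y_W to observed trade X_ij."""
    return (y_i * y_j / y_w) / x_ij


def distance_slope(cost, dist):
    """Slope of ln(cost) on ln(dist) from a bivariate least-squares fit."""
    slope, _intercept = np.polyfit(np.log(dist), np.log(cost), 1)
    return slope


# Hypothetical inputs (not real data): GDPs, world income and distances.
y_i = np.array([100.0, 200.0, 50.0, 400.0])
y_j = np.array([300.0, 80.0, 120.0, 60.0])
dist = np.array([500.0, 1000.0, 2000.0, 8000.0])
y_w = 5000.0

# Observed trade is generated with an assumed distance elasticity of -1.4.
x_ij = (y_i * y_j / y_w) * dist ** -1.4

cost = trade_cost_proxy(x_ij, y_i, y_j, y_w)
slope = distance_slope(cost, dist)
print(round(slope, 3))
```

A slope of 1.4 in this log-log fit means that the proxy rises by about 1.4% for each 1% increase in distance, which is the same statement as trade falling by 1.4% relative to the frictionless benchmark.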

Other geographical factors are also posited to influence trade, including whether the countries share a border. It is frequently argued that contiguous countries have lower trade costs because their common border lowers both pecuniary and non-pecuniary costs of trade. Figure 3 depicts the relationship between trade costs and distance, where contiguous country-pairs are depicted with the plus symbol (+). On average, the points that correspond to the bilateral pairs that share a border lie below the least-squares line representing the relationship between trade costs and distance, indicating that contiguous countries face lower trade costs. 4

Figure 3. Trade costs and distance for contiguous (+) and non-contiguous (o) countries.

In addition to geography, cultural and historical factors are likely to influence trade costs. For example, Figure 4 depicts the relationship between trade costs and distance, this time with bilateral pairs that have ever had a colonial relationship indicated with a diamond symbol. Since these country pairs fall on average below the fitted line, the figure again suggests that trade costs are lower for bilateral pairs that share a colonial history. 5 Potential explanations for this may be that the colonial history implies more familiarity with each other or more similar institutions. Alternatively, differences in resources that increase trade between the two countries may have been a reason for the colonial relationship in the first place.

Figure 4. Trade costs and distance for countries with (♦) and without (●) a colonial relationship.

Finally, some trade costs are likely attributable to government policies. For example, higher tariffs, economic sanctions, and other forms of regulations likely raise the cost of trading and hence reduce trade. Free trade agreements, on the other hand, are typically designed to lower trade costs and boost trade. Figure 5a depicts the relationship between trade costs and distance where countries that have a free trade agreement are depicted with a gold cross.

However, it is not immediately evident that conditional on distance, countries that have free trade agreements face lower trade costs. A potential explanation may be that other factors associated with trade costs also need to be included. Alternatively, the formation of the trade agreements could be in response to other factors such as high trade costs. Figure 5b depicts the separate fitted lines for the relationship between trade costs and distance with and without trade agreements. These fitted lines indicate that over shorter distances trade costs are lower, on average, for bilateral pairs that have a trade agreement. However, as the distance between the countries increases, free trade agreements appear to have a smaller impact on trade costs. 6

Figure 5. Distance and trade costs for countries with (x) and without (●) a free trade agreement. (a) Combined fitted line. (b) Separate fitted lines.
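The comparison of separate fitted lines can be sketched as follows: fit one least-squares line of log trade costs on log distance for pairs with a trade agreement and one for pairs without, then compare the two lines at short and long distances. The function names, the indicator `fta`, and every number below are hypothetical and chosen only so that the gap shrinks with distance; they are not estimates from the data behind Figure 5.

```python
import numpy as np


def fit_lines_by_group(ln_dist, ln_cost, fta):
    """Fit separate least-squares lines for pairs with and without an FTA.

    Returns a dict mapping group label to (intercept, slope).
    """
    ln_dist = np.asarray(ln_dist, dtype=float)
    ln_cost = np.asarray(ln_cost, dtype=float)
    fta = np.asarray(fta, dtype=bool)
    lines = {}
    for label, mask in (("fta", fta), ("no_fta", ~fta)):
        slope, intercept = np.polyfit(ln_dist[mask], ln_cost[mask], 1)
        lines[label] = (intercept, slope)
    return lines


def cost_gap(lines, ln_d):
    """Fitted log trade cost without an FTA minus that with an FTA at ln_d."""
    a0, b0 = lines["no_fta"]
    a1, b1 = lines["fta"]
    return (a0 + b0 * ln_d) - (a1 + b1 * ln_d)


# Synthetic, noise-free example: FTA pairs have a lower intercept but a
# steeper slope, so the fitted gap narrows as distance grows.
ln_dist = np.linspace(5.0, 9.0, 40)
fta = np.arange(40) % 2 == 0
ln_cost = np.where(fta, 0.0 + 1.1 * ln_dist, 1.0 + 1.0 * ln_dist)

lines = fit_lines_by_group(ln_dist, ln_cost, fta)
gap_near = cost_gap(lines, 5.0)
gap_far = cost_gap(lines, 9.0)
print(round(gap_near, 3), round(gap_far, 3))
```

Fitting the groups separately is equivalent to a single regression with an agreement indicator and its interaction with log distance. As the text notes, such a comparison is only suggestive, since the formation of agreements may itself respond to trade costs.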

While these figures are only suggestive of the relationships between bilateral trade and geography, history, and government policies, the theories described in subsequent sections provide guidance on how other confounding factors can be controlled for when specifying an empirical gravity model.

Early Theoretical Developments and Empirical Applications

The gravity framework initially was appealing to researchers because the log-linear model was a simple and intuitive empirical way to assess the relationship between bilateral trade flows, production, income, and variables that could conceivably be viewed as factors that distort bilateral trade. When applied to trade data, the coefficient estimates were typically economically and statistically significant, and the simple gravity specification seemed to account for a large share of the variation of bilateral trade flows. Even though the gravity equation was considered an empirical success, it was often criticized for lacking sound theoretical foundations. Many of the early attempts to provide a theoretical foundation for the gravity model showed that bilateral trade was a function of incomes but did not provide an explicit rationale for the inclusion of distance and other trade costs. For example, Leamer and Stern (1970) presented a probabilistic model of bilateral trade flows. In their model, it was assumed that each transaction was of the same size ($\gamma$) and that the likelihood that an exporter in $i$ would meet and trade with an importer in $j$ would depend on the trade capacity of each of the two countries relative to total trade. If the trade capacity of the exporting (importing) country $i$ ($j$) is given by $F_i$ ($F_j$), then the probability of trade between an exporter and an importer is given by $p_{ij} = \frac{F_i}{F_W} \frac{F_j}{F_W}$, where $F_W$ represents total world trade. If there are $N$ transactions of size $\gamma$, then total world trade would be given by $F_W = N\gamma$ and the volume of trade between $i$ and $j$ would be given by