Longitudinal Study Design

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.


Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

A longitudinal study is a type of observational and correlational study that involves monitoring a population over an extended period of time. It allows researchers to track changes and developments in the subjects over time.

What is a Longitudinal Study?

In longitudinal studies, researchers do not manipulate any variables or interfere with the environment. Instead, they simply conduct observations on the same group of subjects over a period of time.

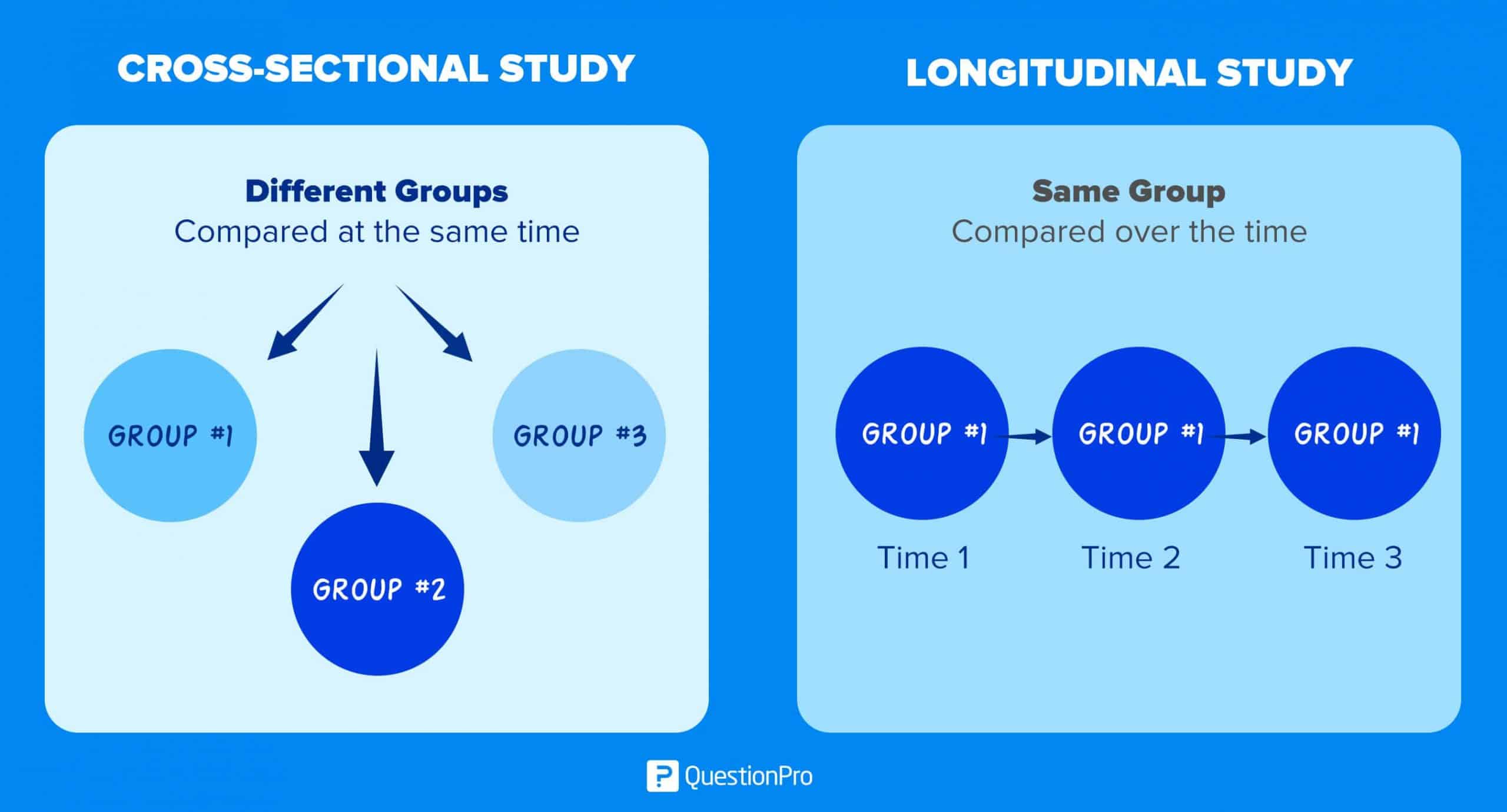

These research studies can last as little as a week or as long as multiple years or even decades. Unlike cross-sectional studies, which measure a single moment in time, longitudinal studies follow participants across time. This lets researchers establish the order in which events occur, which provides stronger (though not conclusive) evidence about cause-and-effect relationships between variables.

They are beneficial for recognizing any changes, developments, or patterns in the characteristics of a target population. Longitudinal studies are often used in clinical and developmental psychology to study shifts in behaviors, thoughts, emotions, and trends throughout a lifetime.

For example, a longitudinal study could be used to examine the progress and well-being of children at critical age periods from birth to adulthood.

The Harvard Study of Adult Development is one of the longest longitudinal studies to date. Researchers in this study have followed the same group of men for over 80 years, observing psychosocial variables and biological processes for healthy aging and well-being in late life (see Harvard Second Generation Study).

When designing longitudinal studies, researchers must consider issues like sample selection and generalizability, attrition and selectivity bias, effects of repeated exposure to measures, selection of appropriate statistical models, and coverage of the necessary timespan to capture the phenomena of interest.

Panel Study

- A panel study is a type of longitudinal study design in which the same set of participants are measured repeatedly over time.

- Data is gathered on the same variables of interest at each time point using consistent methods. This allows studying continuity and changes within individuals over time on the key measured constructs.

- Prominent examples include national panel surveys on topics like health, aging, employment, and economics. Panel studies are a type of prospective study.

Cohort Study

- A cohort study is a type of longitudinal study that samples a group of people sharing a common experience or demographic trait within a defined period, such as year of birth.

- Researchers observe a population based on the shared experience of a specific event, such as birth, geographic location, or historical experience. These studies are typically used among medical researchers.

- Cohorts are identified and selected at a starting point (e.g. birth, starting school, entering a job field) and followed forward in time.

- As they age, data is collected on cohort subgroups to determine their differing trajectories. For example, investigating how health outcomes diverge for groups born in 1950s, 1960s, and 1970s.

- Cohort studies do not require the same individuals to be assessed over time; they just require representation from the cohort.

Retrospective Study

- In a retrospective study, researchers either collect data on events that have already occurred or use existing data from databases, medical records, or interviews to gain insights about a population.

- Retrospective designs are appropriate when prospectively following participants from a past starting point is infeasible or unethical, for example when studying the early origins of diseases that emerge later in life.

- Retrospective studies efficiently provide a “snapshot summary” of the past in relation to present status. However, quality concerns with retrospective data make careful interpretation necessary when inferring causality. Memory biases and selective retention influence quality of retrospective data.

Benefits

Allows researchers to look at changes over time

Because longitudinal studies observe variables over extended periods of time, researchers can use their data to study developmental shifts and understand how certain things change as we age.

High validity

Because objectives and procedures are established before data collection begins, the measurements are less open to post hoc adjustment, which supports the validity of the findings.

Reduces recall bias

Recall bias occurs when participants do not remember past events accurately or omit details from previous experiences. Because prospective longitudinal studies record information as events unfold, they rely far less on participants' memories.

Flexibility

The variables in longitudinal studies can change throughout the study. Even if the study was created to study a specific pattern or characteristic, the data collection could show new data points or relationships that are unique and worth investigating further.

Limitations

Costly and time-consuming

Longitudinal studies can take months or years to complete, rendering them expensive and time-consuming. Because of this, researchers tend to have difficulty recruiting participants, leading to smaller sample sizes.

Large sample size needed

Longitudinal studies tend to be challenging to conduct because large samples are needed for any relationships or patterns to be meaningful. Researchers are unable to generate results if there is not enough data.

Participants tend to drop out

Not only is it a struggle to recruit participants, but subjects also tend to leave or drop out of the study due to various reasons such as illness, relocation, or a lack of motivation to complete the full study.

This tendency is known as attrition. When the participants who drop out differ systematically from those who remain, it is called selective attrition and can threaten the validity of the study. For this reason, researchers using this approach typically recruit many participants, expecting a substantial number to drop out before the end.
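The over-recruitment logic can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that a constant fraction of the remaining participants drops out between consecutive waves; the function name and the numbers are hypothetical, and real dropout rates usually vary from wave to wave.

```python
import math


def required_recruitment(target_completers: int,
                         dropout_per_wave: float,
                         follow_up_waves: int) -> int:
    """Participants to recruit so that roughly `target_completers` remain
    after `follow_up_waves` follow-ups, assuming a constant dropout rate."""
    if not 0 <= dropout_per_wave < 1:
        raise ValueError("dropout_per_wave must be in [0, 1)")
    # Share of the original sample expected to remain at the final wave.
    retention = (1 - dropout_per_wave) ** follow_up_waves
    return math.ceil(target_completers / retention)


# Hypothetical plan: 200 completers wanted, 10% dropout per wave, 4 follow-ups.
print(required_recruitment(200, 0.10, 4))  # 305
```

Under these assumptions, about two thirds of the recruited sample is expected to reach the final wave, so roughly 305 people would need to be enrolled to end with 200.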

Report bias is possible

Longitudinal studies will sometimes rely on surveys and questionnaires, which could result in inaccurate reporting as there is no way to verify the information presented.

- In a study of postinstitutionalized Romanian adoptees (Le Mare & Audet, 2006), data were collected for each child at three time points: 11 months after adoption, 4.5 years of age, and 10.5 years of age. The first two sets of results showed that the adoptees were behind the non-institutionalised group; however, by 10.5 years old there was no difference between the two groups. The Romanian orphans had caught up with the children raised in normal Canadian families.

- The role of positive psychology constructs in predicting mental health and academic achievement in children and adolescents (Marques, Pais-Ribeiro, & Lopez, 2011)

- The correlation between dieting behavior and the development of bulimia nervosa (Stice et al., 1998)

- The stress of educational bottlenecks negatively impacting students’ wellbeing (Cruwys, Greenaway, & Haslam, 2015)

- The effects of job insecurity on psychological health and withdrawal (Dekker & Schaufeli, 1995)

- The relationship between loneliness, health, and mortality in adults aged 50 years and over (Luo et al., 2012)

- The influence of parental attachment and parental control on early onset of alcohol consumption in adolescence (Van der Vorst et al., 2006)

- The relationship between religion and health outcomes in medical rehabilitation patients (Fitchett et al., 1999)

Goals of Longitudinal Data and Longitudinal Research

The objectives of longitudinal data collection and research as outlined by Baltes and Nesselroade (1979):

- Identify intraindividual change: Examine changes at the individual level over time, including long-term trends or short-term fluctuations. Requires multiple measurements and individual-level analysis.

- Identify interindividual differences in intraindividual change: Evaluate whether changes vary across individuals and relate that to other variables. Requires repeated measures for multiple individuals plus relevant covariates.

- Analyze interrelationships in change: Study how two or more processes unfold and influence each other over time. Requires longitudinal data on multiple variables and appropriate statistical models.

- Analyze causes of intraindividual change: This objective refers to identifying factors or mechanisms that explain changes within individuals over time. For example, a researcher might want to understand what drives a person’s mood fluctuations over days or weeks, or what leads to systematic gains or losses in one’s cognitive abilities across the lifespan.

- Analyze causes of interindividual differences in intraindividual change: Identify mechanisms that explain within-person changes and differences in changes across people. Requires repeated data on outcomes and covariates for multiple individuals plus dynamic statistical models.

How to Perform a Longitudinal Study

When beginning to develop your longitudinal study, you must first decide if you want to collect your own data or use data that has already been gathered.

Using already collected data will save you time, but it will be more restrictive than collecting it yourself. When collecting your own data, you can choose to conduct either a retrospective or prospective study.

In a retrospective study, you are collecting data on events that have already occurred. You can examine historical information, such as medical records, in order to understand the past. In a prospective study, on the other hand, you are collecting data in real-time. Prospective studies are more common for psychology research.

Once you determine the type of longitudinal study you will conduct, you then must determine how, when, where, and on whom the data will be collected.

A standardized study design is vital for efficiently measuring a population. Once a study design is created, researchers must maintain the same study procedures over time to uphold the validity of the observation.

A schedule should be maintained, complete results should be recorded with each observation, and observer variability should be minimized.

Researchers must observe each subject under the same conditions to compare them. In this type of study design, each subject is the control.
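As a rough illustration of keeping to a schedule and recording complete results, the sketch below checks a set of records against the planned waves and lists every participant-wave combination with no recorded result. The `Observation` class, the participant IDs, and the scores are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Observation:
    participant_id: str
    wave: int                # 1 = baseline, 2 = first follow-up, ...
    score: Optional[float]   # None means the result was not recorded


def missing_observations(observations, participants, waves):
    """Return (participant, wave) pairs that have no recorded result."""
    recorded = {(o.participant_id, o.wave)
                for o in observations if o.score is not None}
    return [(p, w) for p in participants for w in waves
            if (p, w) not in recorded]


# Hypothetical records for two participants over three scheduled waves.
records = [
    Observation("p01", 1, 12.0),
    Observation("p01", 2, 13.5),
    Observation("p01", 3, 15.0),
    Observation("p02", 1, 9.0),
    Observation("p02", 2, None),  # attended, but the result was not recorded
]

gaps = missing_observations(records, ["p01", "p02"], [1, 2, 3])
print(gaps)  # [('p02', 2), ('p02', 3)]
```

Running a check like this after every wave makes gaps visible while there is still time to follow up with participants.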

Methodological Considerations

Important methodological considerations include testing measurement invariance of constructs across time, appropriately handling missing data, and using accelerated longitudinal designs that sample different age cohorts over overlapping time periods.

Testing measurement invariance

Testing measurement invariance involves evaluating whether the same construct is being measured in a consistent, comparable way across multiple time points in longitudinal research.

This includes assessing configural, metric, and scalar invariance through confirmatory factor analytic approaches. Ensuring invariance gives more confidence when drawing inferences about change over time.

Missing data

Missing data can occur during initial sampling if certain groups are underrepresented or fail to respond.

Attrition over time is the main source – participants dropping out for various reasons. The consequences of missing data are reduced statistical power and potential bias if dropout is nonrandom.

Handling missing data appropriately in longitudinal studies is critical to reducing bias and maintaining power.

It is important to minimize attrition by tracking participants, keeping contact info up to date, engaging them, and providing incentives over time.

Techniques like maximum likelihood estimation and multiple imputation are better alternatives to older methods like listwise deletion. Assumptions about missing data mechanisms (e.g., missing at random) shape the analytic approaches taken.
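Before choosing a missing-data technique, a common first check is whether dropout looks nonrandom. The sketch below does not implement maximum likelihood or multiple imputation; it only compares the baseline scores of participants who completed the follow-up with those who did not, using made-up data. A large gap suggests that simply deleting incomplete cases would bias the results.

```python
from statistics import mean


def baseline_by_completion(baseline, follow_up):
    """Mean baseline score of completers and of dropouts.

    `follow_up[p]` is None (or absent) when participant p dropped out.
    Raises statistics.StatisticsError if either group is empty.
    """
    completers = [baseline[p] for p in baseline if follow_up.get(p) is not None]
    dropouts = [baseline[p] for p in baseline if follow_up.get(p) is None]
    return mean(completers), mean(dropouts)


# Hypothetical data: the two lowest scorers at baseline drop out.
baseline = {"p1": 10.0, "p2": 12.0, "p3": 14.0,
            "p4": 16.0, "p5": 18.0, "p6": 20.0}
follow_up = {"p1": None, "p2": None, "p3": 15.0,
             "p4": 17.0, "p5": 19.0, "p6": 21.0}

completer_mean, dropout_mean = baseline_by_completion(baseline, follow_up)
print(mean(baseline.values()), completer_mean, dropout_mean)  # 15.0 17.0 11.0
```

In this toy example the completers started two points above the full-sample baseline mean, so an analysis restricted to complete cases would describe a more advantaged group than the one originally sampled.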

Accelerated longitudinal designs

Accelerated longitudinal designs strategically sample different age cohorts over overlapping periods. This purposefully creates missing data across age groups, because no single cohort is observed at every age. For example, following cohorts that start in 6th, 7th, and 8th grade for three yearly assessments covers development from 6th through 10th grade in a 3-year study, whereas a single cohort would need five years to span the same range.

This increases the speed and cost-efficiency of longitudinal data collection and enables the examination of age/cohort effects. Appropriate multilevel statistical models are required to analyze the resulting complex data structure.
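How much of the developmental range an accelerated design covers depends on the cohorts' starting points and the number of waves each cohort contributes. The sketch below lays out that grid for a hypothetical design with three cohorts and three yearly waves; the function name and the numbers are illustrative assumptions.

```python
def accelerated_design(start_grades, n_waves):
    """Map each cohort (keyed by starting grade) to the grades at which
    it is observed, assuming one assessment per year."""
    return {g: [g + w for w in range(n_waves)] for g in start_grades}


# Hypothetical design: cohorts starting in grades 6, 7, and 8; three waves.
design = accelerated_design([6, 7, 8], 3)
covered = sorted({grade for grades in design.values() for grade in grades})

for start, grades in design.items():
    # Grades in the covered range that this cohort never contributes data for.
    planned_missing = [g for g in covered if g not in grades]
    print(start, grades, planned_missing)

print(covered)  # [6, 7, 8, 9, 10]
```

Each cohort has planned gaps at the grades it is never observed in, and the overlap between cohorts (for example, grades 7 and 8) is what allows their trajectories to be linked, provided the cohorts can be treated as equivalent.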

In addition to those considerations, optimizing the time lags between measurements, maximizing participant retention, and thoughtfully selecting analysis models that align with the research questions and hypotheses are also vital in ensuring robust longitudinal research.

So, careful methodology is key throughout the design and analysis process when working with repeated-measures data.

Cohort effects

A cohort refers to a group born in the same year or time period. Cohort effects occur when different cohorts show differing trajectories over time.

Cohort effects can bias results if not accounted for, especially in accelerated longitudinal designs which assume cohort equivalence.

Detecting cohort effects is important but can be challenging as they are confounded with age and time of measurement effects.

Cohort effects can also interfere with estimating other effects like retest effects. This happens because comparing groups to estimate retest effects relies on cohort equivalence.

Overall, researchers need to test for and control cohort effects, which could otherwise lead to invalid conclusions. Careful study design and analysis are required.
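The confounding of cohort with age and time of measurement follows from simple arithmetic: birth year equals the year of measurement minus age, so any two of the three fix the third. The short sketch below, with hypothetical years and ages, illustrates this dependency.

```python
def cohort_of(measurement_year: int, age: int) -> int:
    """Birth year implied by the year of measurement and age at measurement."""
    return measurement_year - age


# Hypothetical design: 12-year-olds assessed in three different years.
# Holding age constant forces each assessment to come from a different cohort.
for year in (2000, 2005, 2010):
    print(year, 12, cohort_of(year, 12))

# Following one cohort (born 1990): age and year of measurement rise together.
same_cohort = [(year, year - 1990) for year in (2000, 2005, 2010)]
print(same_cohort)
```

Because the three quantities cannot vary independently, separating their effects requires additional assumptions or designs that combine several cohorts.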

Retest effects

Retest effects refer to gains in performance that occur when the same or similar test is administered on multiple occasions.

For example, familiarity with test items and procedures may allow participants to improve their scores over repeated testing above and beyond any true change.

Specific examples include:

- Memory tests – Learning which items tend to be tested can artificially boost performance over time

- Cognitive tests – Becoming familiar with the testing format and particular test demands can inflate scores

- Survey measures – Remembering previous responses can bias future responses over multiple administrations

- Interviews – Comfort with the interviewer and process can lead to increased openness or recall

To estimate retest effects, performance of retested groups is compared to groups taking the test for the first time. Any divergence suggests inflated scores due to retesting rather than true change.

If unchecked in analysis, retest gains can be confused with genuine intraindividual change or interindividual differences.

This undermines the validity of longitudinal findings. Thus, testing and controlling for retest effects are important considerations in longitudinal research.
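The comparison described above can be sketched as a simple difference in means. The example assumes that the retested group and the first-time group are otherwise equivalent (same age at testing, comparable cohorts); the scores are made up for illustration.

```python
from statistics import mean


def retest_effect(retested_scores, first_time_scores):
    """Mean difference between a retested group and a comparable group
    taking the test for the first time at the same occasion."""
    return mean(retested_scores) - mean(first_time_scores)


# Hypothetical scores at the second measurement occasion.
retested = [54.0, 57.0, 60.0, 53.0]      # second time taking the test
first_time = [50.0, 52.0, 55.0, 51.0]    # same age, first exposure

print(retest_effect(retested, first_time))  # 4.0
```

If the groups differ in other ways, such as belonging to different cohorts, this difference mixes retest gains with those other influences.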

Data Analysis

Longitudinal data involves repeated assessments of variables over time, allowing researchers to study stability and change. A variety of statistical models can be used to analyze longitudinal data, including latent growth curve models, multilevel models, latent state-trait models, and more.

Latent growth curve models allow researchers to model intraindividual change over time. For example, one could estimate parameters related to individuals’ baseline levels on some measure, linear or nonlinear trajectory of change over time, and variability around those growth parameters. These models require multiple waves of longitudinal data to estimate.
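To give a feel for what baseline levels and trajectories mean, the sketch below fits a separate straight line to each person's scores by ordinary least squares. This is a simplified two-step description, not a latent growth curve model, which would estimate all trajectories jointly and separate measurement error; the data and names are hypothetical.

```python
from statistics import mean


def fit_line(times, scores):
    """Ordinary least squares intercept and slope for one person."""
    t_bar, y_bar = mean(times), mean(scores)
    sxx = sum((t - t_bar) ** 2 for t in times)
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in zip(times, scores))
    slope = sxy / sxx
    return y_bar - slope * t_bar, slope


# Hypothetical scores for three people measured at four waves (time 0 to 3).
waves = [0, 1, 2, 3]
scores = {
    "A": [10.0, 12.0, 14.0, 16.0],
    "B": [20.0, 21.0, 22.0, 23.0],
    "C": [15.0, 15.0, 15.0, 15.0],
}

trajectories = {person: fit_line(waves, y) for person, y in scores.items()}
for person, (intercept, slope) in trajectories.items():
    print(person, intercept, slope)

# Average rate of change across people, and how much individuals differ.
slopes = [slope for _, slope in trajectories.values()]
print(mean(slopes), max(slopes) - min(slopes))
```

The intercepts correspond to baseline levels, the slopes to rates of change, and the spread of slopes to interindividual differences in change.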

Multilevel models are useful for hierarchically structured longitudinal data, with lower-level observations (e.g., repeated measures) nested within higher-level units (e.g., individuals). They can model variability both within and between individuals over time.
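One quantity a multilevel model formalizes is the share of total variance that lies between individuals rather than within them. The sketch below computes a one-way intraclass correlation from mean squares, assuming balanced data (the same number of waves for every person); it is a descriptive shortcut, not a fitted multilevel model, and the scores are made up.

```python
from statistics import mean


def icc_oneway(scores_by_person):
    """One-way intraclass correlation for balanced repeated measures."""
    groups = list(scores_by_person.values())
    n, k = len(groups), len(groups[0])
    if any(len(g) != k for g in groups):
        raise ValueError("this sketch assumes the same number of waves per person")
    grand = mean([x for g in groups for x in g])
    person_means = [mean(g) for g in groups]
    # Between-person and within-person mean squares.
    ms_between = k * sum((m - grand) ** 2 for m in person_means) / (n - 1)
    ms_within = sum((x - m) ** 2
                    for g, m in zip(groups, person_means)
                    for x in g) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical scores: three people, three waves each.
data = {
    "A": [10.0, 12.0, 11.0],
    "B": [20.0, 19.0, 21.0],
    "C": [15.0, 16.0, 14.0],
}
icc = icc_oneway(data)
print(round(icc, 3))
```

A value close to 1 means most of the variability reflects stable differences between people; a value close to 0 means most of it reflects fluctuation within people over time.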

Latent state-trait models decompose the covariance between longitudinal measurements into time-invariant trait factors, time-specific state residuals, and error variance. This allows separating stable between-person differences from within-person fluctuations.

There are many other techniques like latent transition analysis, event history analysis, and time series models that have specialized uses for particular research questions with longitudinal data. The choice of model depends on the hypotheses, timescale of measurements, age range covered, and other factors.

In general, these various statistical models allow investigation of important questions about developmental processes, change and stability over time, causal sequencing, and both between- and within-person sources of variability. However, researchers must carefully consider the assumptions behind the models they choose.

Longitudinal vs. Cross-Sectional Studies

Longitudinal studies and cross-sectional studies are two different observational study designs where researchers analyze a target population without manipulating or altering the natural environment in which the participants exist.

Yet, there are apparent differences between these two forms of study. One key difference is that longitudinal studies follow the same sample of people over an extended period of time, while cross-sectional studies look at the characteristics of different populations at a given moment in time.

Longitudinal studies tend to require more time and resources, but they can establish the sequence of events and patterns among subjects, which gives stronger evidence about possible cause-and-effect relationships.

On the other hand, cross-sectional studies tend to be cheaper and quicker but can only provide a snapshot of a point in time and thus cannot identify cause-and-effect relationships.

Both studies are valuable for psychologists to observe a given group of subjects. Still, cross-sectional studies are more beneficial for establishing associations between variables, while longitudinal studies are necessary for examining a sequence of events.

1. Are longitudinal studies qualitative or quantitative?

Longitudinal studies are typically quantitative. They collect numerical data from the same subjects to track changes and identify trends or patterns.

However, they can also include qualitative elements, such as interviews or observations, to provide a more in-depth understanding of the studied phenomena.

2. What’s the difference between a longitudinal and case-control study?

Case-control studies compare groups retrospectively and cannot be used to calculate relative risk. Longitudinal studies, though, can compare groups either retrospectively or prospectively.

In case-control studies, researchers study one group of people who have developed a particular condition and compare them to a sample without the disease.

Case-control studies start from an outcome and look backward at earlier exposures, whereas longitudinal studies follow a group of subjects over time.
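The point about relative risk can be illustrated with a small worked example. Relative risk compares the incidence of an outcome between exposed and unexposed groups, which requires following a defined group forward in time. In a case-control study the researcher chooses how many cases and controls to include, so incidence cannot be estimated and the odds ratio is typically reported instead. The counts below are hypothetical.

```python
def relative_risk(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Ratio of outcome incidence in exposed vs. unexposed groups."""
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)


def odds_ratio(exposed_cases, exposed_controls, unexposed_cases, unexposed_controls):
    """Ratio of the odds of exposure among cases to that among controls."""
    return (exposed_cases * unexposed_controls) / (exposed_controls * unexposed_cases)


# Hypothetical cohort followed over time: 30 of 100 exposed people and
# 10 of 100 unexposed people develop the condition.
rr = relative_risk(30, 100, 10, 100)
orr = odds_ratio(30, 70, 10, 90)
print(round(rr, 2), round(orr, 2))  # 3.0 3.86
```

The two measures are close only when the outcome is rare; here the outcome is common, so the odds ratio overstates the relative risk.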

3. Does a longitudinal study have a control group?

Yes, a longitudinal study can have a control group. In such a design, one group (the experimental group) would receive treatment or intervention, while the other group (the control group) would not.

Both groups would then be observed over time to see if there are differences in outcomes, which could suggest an effect of the treatment or intervention.

However, not all longitudinal studies have a control group, especially observational ones that are not testing a specific intervention.

Baltes, P. B., & Nesselroade, J. R. (1979). History and rationale of longitudinal research. In J. R. Nesselroade & P. B. Baltes (Eds.), (pp. 1–39). Academic Press.

Cook, N. R., & Ware, J. H. (1983). Design and analysis methods for longitudinal research. Annual review of public health, 4, 1–23.

Fitchett, G., Rybarczyk, B., Demarco, G., & Nicholas, J.J. (1999). The role of religion in medical rehabilitation outcomes: A longitudinal study. Rehabilitation Psychology, 44, 333-353.

Harvard Second Generation Study. (n.d.). Harvard Second Generation Grant and Glueck Study. Harvard Study of Adult Development. Retrieved from https://www.adultdevelopmentstudy.org.

Le Mare, L., & Audet, K. (2006). A longitudinal study of the physical growth and health of postinstitutionalized Romanian adoptees. Pediatrics & child health, 11 (2), 85-91.

Luo, Y., Hawkley, L. C., Waite, L. J., & Cacioppo, J. T. (2012). Loneliness, health, and mortality in old age: a national longitudinal study. Social science & medicine (1982), 74 (6), 907–914.

Marques, S. C., Pais-Ribeiro, J. L., & Lopez, S. J. (2011). The role of positive psychology constructs in predicting mental health and academic achievement in children and adolescents: A two-year longitudinal study. Journal of Happiness Studies: An Interdisciplinary Forum on Subjective Well-Being, 12 (6), 1049–1062.

Dekker, S. W. A., & Schaufeli, W. B. (1995). The effects of job insecurity on psychological health and withdrawal: A longitudinal study. Australian Psychologist, 30 (1), 57-63.

Stice, E., Mazotti, L., Krebs, M., & Martin, S. (1998). Predictors of adolescent dieting behaviors: A longitudinal study. Psychology of Addictive Behaviors, 12 (3), 195–205.

Cruwys, T., Greenaway, K. H., & Haslam, S. A. (2015). The stress of passing through an educational bottleneck: A longitudinal study of psychology honours students. Australian Psychologist, 50 (5), 372-381.

Thomas, L. (2020). What is a longitudinal study? Scribbr. Retrieved from https://www.scribbr.com/methodology/longitudinal-study/

Van der Vorst, H., Engels, R. C. M. E., Meeus, W., & Deković, M. (2006). Parental attachment, parental control, and early development of alcohol use: A longitudinal study. Psychology of Addictive Behaviors, 20 (2), 107–116.

Further Information

- Schaie, K. W. (2005). What can we learn from longitudinal studies of adult development?. Research in human development, 2 (3), 133-158.

- Caruana, E. J., Roman, M., Hernández-Sánchez, J., & Solli, P. (2015). Longitudinal studies. Journal of thoracic disease, 7 (11), E537.

- What’s a Longitudinal Study? Types, Uses & Examples

Research can take anything from a few minutes to years or even decades to complete. When a systematic investigation goes on for an extended period, it’s most likely that the researcher is carrying out a longitudinal study of the sample population. So how does this work?

In the simplest terms, a longitudinal study involves repeatedly observing the variables of interest in your research population as participants encounter various factors in their everyday lives, and documenting the changes that follow. Because it records the order in which things happen, it can point toward possible causal relationships within your sample population.

In this article, we’ll show you several ways to adopt longitudinal studies for your systematic investigation and how to avoid common pitfalls.

What is a Longitudinal Study?

A longitudinal study is a correlational research method that helps discover the relationship between variables in a specific target population. It is pretty similar to a cross-sectional study, although in its case, the researcher observes the variables for a longer time, sometimes lasting many years.

For example, let’s say you are researching social interactions among wild cats. You go ahead to recruit a set of newly-born lion cubs and study how they relate with each other as they grow. Periodically, you collect the same types of data from the group to track their development.

The advantage of this extended observation is that the researcher can witness the sequence of events leading to the changes in the traits of both the target population and the different groups. It can identify the causal factors for these changes and their long-term impact.

Characteristics of Longitudinal Studies

1. Non-interference: In longitudinal studies, the researcher doesn’t interfere with the participants’ day-to-day activities in any way. When it’s time to collect their responses, the researcher administers a survey with qualitative and quantitative questions.

2. Observational: As we mentioned earlier, longitudinal studies involve observing the research participants throughout the study and recording any changes in traits that you notice.

3. Timeline: A longitudinal study can span weeks, months, years, or even decades. This contrasts sharply with cross-sectional studies, which collect data at a single point in time.

Cross-Sectional vs. Longitudinal Studies

- Definition

A cross-sectional study is a type of observational study in which the researcher collects data from variables at a specific moment to establish a relationship among them. On the other hand, longitudinal research observes variables for an extended period and records all the changes in their relationship.

Longitudinal studies take a longer time to complete. In some cases, the researchers can spend years documenting the changes among the variables plus their relationships. For cross-sectional studies, this isn’t the case. Instead, the researcher collects information in a relatively short time frame and makes relevant inferences from this data.

While cross-sectional studies give you a snapshot of the situation in the research environment, longitudinal studies are better suited for contexts where you need to analyze a problem long-term.

- Sample Data

Longitudinal studies repeatedly observe the same sample population, while cross-sectional studies are conducted with different research samples.

Because longitudinal studies span over a more extended time, they typically cost more money than cross-sectional observations.

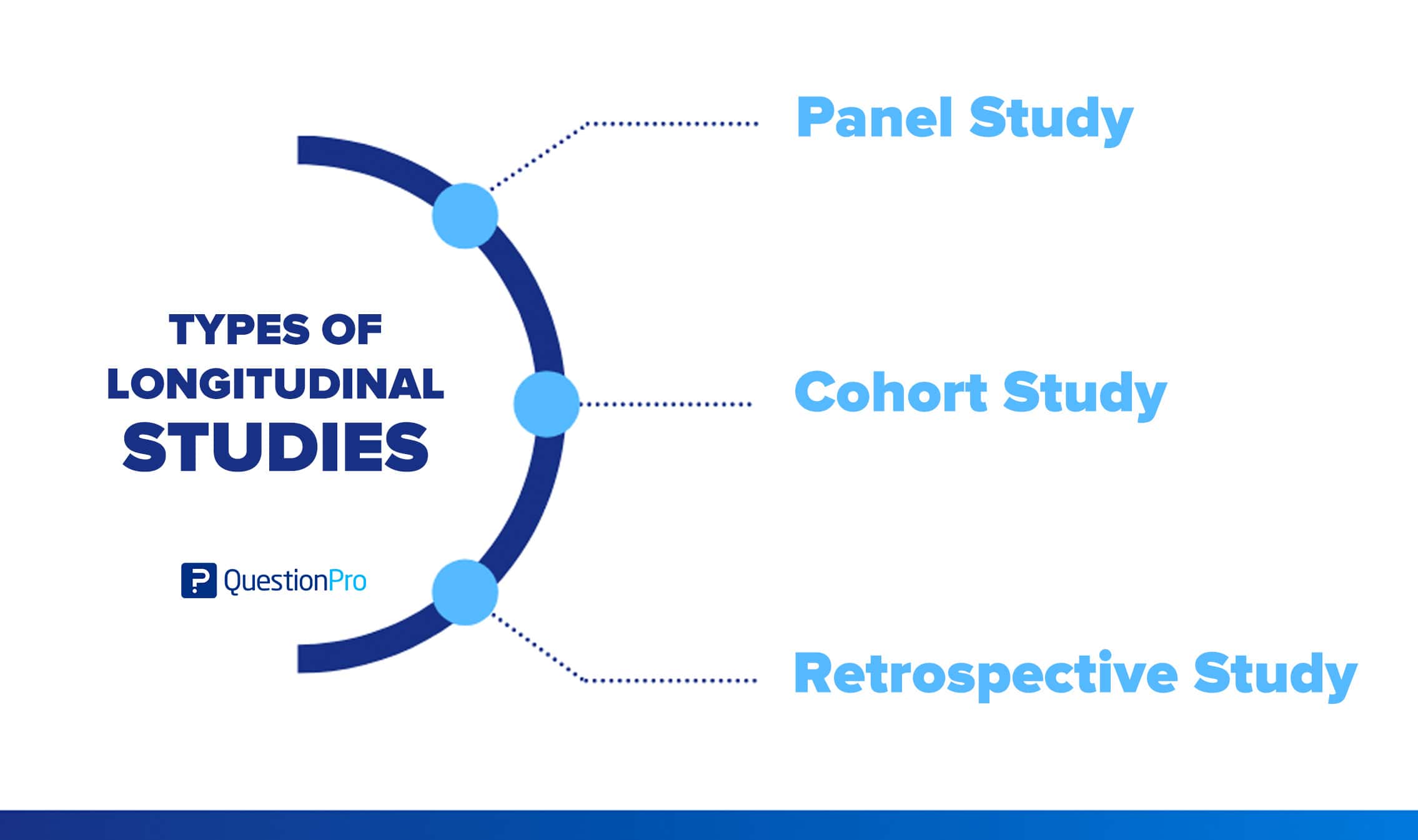

Types of Longitudinal Studies

The three main types of longitudinal studies are:

- Panel Study

- Retrospective Study

- Cohort Study

These methods help researchers to study variables and account for qualitative and quantitative data from the research sample.

1. Panel Study

In a panel study, the researcher uses data collection methods like surveys to gather information on a fixed set of variables at regular but widely spaced intervals, often spanning a few years. It’s primarily designed for quantitative research, although you can use this method for qualitative data analysis.

When To Use Panel Study

If you want to have first-hand, factual information about the changes in a sample population, then you should opt for a panel study. For example, medical researchers rely on panel studies to identify the causes of age-related changes and their consequences.

Advantages of Panel Study

- It helps you identify the causal factors of changes in a research sample.

- It also allows you to witness the impact of these changes on the properties of the variables and information needed at different points of their existing relationship.

- Panel studies can be used to obtain historical data from the sample population.

Disadvantages of Panel Studies

- Conducting a panel study is pretty expensive in terms of time and resources.

- It might be challenging to gather the same quality of data from respondents at every interval.

2. Retrospective Study

In a retrospective study, the researcher depends on existing information from previous systematic investigations to discover patterns leading to the study outcomes. In other words, a retrospective study looks backward. It examines exposures to suspected risk or protection factors concerning an outcome established at the start of the study.

When To Use Retrospective Study

Retrospective studies are best for research contexts where you want to quickly estimate an exposure’s effect on an outcome. It also helps you to discover preliminary measures of association in your data.

Medical researchers adopt retrospective study methods when they need to research rare conditions.

Advantages of Retrospective Study

- Retrospective studies happen at a relatively smaller scale and do not require much time to complete.

- It helps you to study rare outcomes when prospective surveys are not feasible.

Disadvantages of Retrospective Study

- It is easily affected by recall bias or misclassification bias.

- It often depends on convenience sampling, which is prone to selection bias.

3. Cohort Study

A cohort study entails collecting information from a group of people who share specific traits or have experienced a particular occurrence simultaneously. For example, a researcher might conduct a cohort study on a group of Black school children in the U.K.

During a cohort study, the researcher identifies which group members have been exposed to a specific characteristic or risk factor. Then, she records the outcomes among exposed and unexposed members over time.

When To Use Cohort Study

You should conduct a cohort study if you’re looking to establish a causal relationship within your data sets. For example, in medical research, cohort studies investigate the causes of disease and establish links between risk factors and effects.

Advantages of Cohort Studies

- It allows you to study multiple outcomes that can be associated with one risk factor.

- Cohort studies are designed to help you measure all variables of interest.

Disadvantages of Cohort Studies

- Cohort studies are expensive to conduct.

- Throughout the process, the researcher has less control over variables.

When Would You Use a Longitudinal Study?

If you’re looking to discover the relationship between variables and the causal factors responsible for changes, you should adopt a longitudinal approach to your systematic investigation. Longitudinal studies help you to analyze change over a meaningful time.

How to Perform a Longitudinal Study?

There are only two approaches you can take when performing a longitudinal study. You can either source your own data or use previously gathered data.

1. Sourcing for your own data

Collecting your own data gives you control over what is measured and how, which makes the data easier to verify. The way you collect your data also depends heavily on the type of study you’re conducting.

If you’re conducting a retrospective study, you’d have to collect data on events that have already happened. An example is going through records to find patterns in cancer patients.

For a prospective study, you collect the data in real-time. This means finding a sample population, following them, and documenting your findings over the course of your study.

Irrespective of the study type, you need a versatile data collection tool to help you accurately record your data.

2. Using previously gathered data

Governmental and research institutes often carry out longitudinal studies and make the data available to the public. So you can pick up their previously researched data and use them for your own study. An example is the UK Data Service website.

Using previously gathered data isn’t just easy; it also allows you to carry out research covering a long period of time.

The downside to this method is that it’s very restrictive because you can only use the data set available to you. You also have to thoroughly examine the source of the data given to you.

Advantages of a Longitudinal Study

- Longitudinal studies help you discover variable patterns over time, leading to more precise causal relationships and research outcomes.

- When researching developmental trends, longitudinal studies allow you to discover changes across lifespans and arrive at valid research outcomes.

- They are highly flexible, which means the researcher can adjust the study’s focus while it is ongoing.

- Unlike other research methods, longitudinal studies collect unique, long-term data and highlight relationships that cannot be discovered in a short-term investigation.

- You can collect additional data to study unexpected findings at any point in your systematic investigation.

Disadvantages and Limitations of a Longitudinal Study

- It’s difficult to predict the results of longitudinal studies because of the extended time frame. Also, it may take several years before the data begins to produce observable patterns or relationships that can be monitored.

- It costs lots of money to sustain a research effort for years. You’ll keep incurring costs every year compared to other forms of research that can be completed in a smaller fraction of the time.

- Longitudinal studies require a large sample size which might be challenging to achieve. Without this, the entire investigation will have little or no impact.

- Longitudinal studies often experience panel attrition. This happens when some members of the research sample are unable to complete the study due to several reasons like changes in contact details, refusal, incapacity, and even death.

Longitudinal Studies Examples

How does a longitudinal study work in the real world? To answer this, let’s consider a few typical scenarios.

Example 1

A researcher wants to know the effects of a low-carb diet on weight loss. So, he gathers a group of obese men and starts the investigation using his preferred longitudinal study method. At different points in the study, he records information such as how much the participants weigh and the number of carbs in their diet. This data helps him arrive at valid research outcomes.

Example 2

A researcher wants to know if there’s any relationship between drinking milk before school and high classroom performance in children. First, he uses a sampling technique to gather a large research population.

Then, he conducts a baseline survey to establish the premise of the research for later comparison. Next, the researcher gives a log to each participant to keep track of predetermined research variables.

Example 3

You decide to study how a particular diet affects athletes’ performance over time. First, you gather your sample population, establish a baseline for the research, and observe and record the required data.

Longitudinal Studies Frequently Asked Questions (FAQs)

- Are Longitudinal Studies Quantitative or Qualitative?

Longitudinal studies can be qualitative, quantitative, or both. The researcher observes and records changes in variables over an extended period, and whether those records are descriptive observations or numeric measurements depends on your research context.

- What Is Most Likely the Biggest Problem with Longitudinal Research?

The biggest challenge with longitudinal research is panel attrition. Due to the length of the research process, some participants might be unable to complete the study for one reason or another. When this happens, it can distort your data and research outcomes.

- What is Longitudinal Data Collection?

Longitudinal data collection is the process of gathering information from the same sample population over a long period. Longitudinal data collection uses interviews, surveys, and observation to collect the required information from research sources.

- What is the Difference Between Longitudinal Data and a Time Series Analysis?

Because longitudinal studies collect data over a long period, they are often mistaken for time series analysis. So what’s the real difference between these two concepts?

In a time series analysis, the researcher focuses on a single individual at multiple time intervals. Meanwhile, longitudinal data focuses on multiple individuals at various time intervals.
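One way to picture this difference is in the shape of the data. The sketch below uses made-up values and hypothetical names (`time_series`, `longitudinal`, `participant_id`, `weight_kg`); it is only an illustration of the distinction described above, not a prescribed storage format.

```python
# Time series: a single unit (here, one hypothetical participant)
# measured at several time points.
time_series = [
    {"wave": 1, "weight_kg": 92.0},
    {"wave": 2, "weight_kg": 90.5},
    {"wave": 3, "weight_kg": 89.0},
]

# Longitudinal data: several individuals, each measured at several time points.
longitudinal = [
    {"participant_id": "A", "wave": 1, "weight_kg": 92.0},
    {"participant_id": "A", "wave": 2, "weight_kg": 90.5},
    {"participant_id": "A", "wave": 3, "weight_kg": 89.0},
    {"participant_id": "B", "wave": 1, "weight_kg": 101.0},
    {"participant_id": "B", "wave": 2, "weight_kg": 100.0},
    {"participant_id": "B", "wave": 3, "weight_kg": 98.5},
]


def count_units_and_waves(rows):
    """Return (number of distinct individuals, number of distinct waves)."""
    # Rows without a participant_id are treated as belonging to one unit.
    units = {row.get("participant_id", "single") for row in rows}
    waves = {row["wave"] for row in rows}
    return len(units), len(waves)


print(count_units_and_waves(time_series))   # (1, 3)
print(count_units_and_waves(longitudinal))  # (2, 3)
```

Both data sets cover three time points; what changes is how many individuals are followed.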


What Is a Longitudinal Study?

Tracking Variables Over Time

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amanda Tust is a fact-checker, researcher, and writer with a Master of Science in Journalism from Northwestern University's Medill School of Journalism.


The Typical Longitudinal Study


A longitudinal study follows what happens to selected variables over an extended time. Psychologists use the longitudinal study design to explore possible relationships among variables in the same group of individuals over an extended period.

Once researchers have determined the study's scope, participants, and procedures, most longitudinal studies begin with baseline data collection. In the days, months, years, or even decades that follow, they continually gather more information so they can observe how variables change over time relative to the baseline.
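To make "change over time relative to the baseline" concrete, here is a minimal sketch. It assumes each participant's scores are stored in wave order with the baseline first; the function name `change_from_baseline` and the sample scores are hypothetical.

```python
def change_from_baseline(measurements):
    """Subtract each participant's baseline (first) score from every later score.

    measurements: dict mapping a participant id to a list of scores,
    ordered by wave, where the first entry is the baseline.
    """
    return {
        pid: [score - scores[0] for score in scores]
        for pid, scores in measurements.items()
    }


# Made-up cognitive test scores for two participants across three waves.
scores_by_participant = {
    "P1": [50.0, 52.0, 55.0],
    "P2": [48.0, 47.0, 45.5],
}

changes = change_from_baseline(scores_by_participant)
print(changes)
# {'P1': [0.0, 2.0, 5.0], 'P2': [0.0, -1.0, -2.5]}
```

Expressing every wave as a difference from the baseline makes it easier to compare participants who started at different levels.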

For example, imagine that researchers are interested in the mental health benefits of exercise in middle age and how exercise affects cognitive health as people age. The researchers hypothesize that people who are more physically fit in their 40s and 50s will be less likely to experience cognitive declines in their 70s and 80s.

To test this hypothesis, the researchers recruit participants who are in their mid-40s to early 50s. They collect data related to current physical fitness, exercise habits, and performance on cognitive function tests. The researchers continue to track activity levels and test results for a certain number of years, look for trends in and relationships among the studied variables, and test the data against their hypothesis to form a conclusion.

Longitudinal vs. Cross-Sectional Studies

Longitudinal studies, a type of correlational research, are usually observational. They differ from cross-sectional research in their time frame: longitudinal research involves collecting data over an extended time, whereas cross-sectional research involves collecting data at a single point.

Examples of Early Longitudinal Study Design

Examples of longitudinal studies extend back to the 17th century, when King Louis XIV periodically gathered information from his Canadian subjects, including their ages, marital statuses, occupations, and assets such as livestock and land. He used the data to spot trends over the years and understand his colonies' health and economic viability.

In the 18th century, Count Philibert Gueneau de Montbeillard conducted the first recorded longitudinal study when he measured his son every six months and published the information in "Histoire Naturelle."

The Genetic Studies of Genius (also known as the Terman Study of the Gifted), which began in 1921, is one of the first studies to follow participants from childhood into adulthood. Psychologist Lewis Terman's goal was to examine the similarities among gifted children and disprove the common assumption at the time that gifted children were "socially inept."

Types of Longitudinal Studies

Longitudinal studies fall into three main categories.

- Panel study: Sampling of a cross-section of individuals

- Cohort study: Sampling of a group based on a specific event, such as birth, geographic location, or experience

- Retrospective study: Review of historical information such as medical records

Benefits of Longitudinal Research

A longitudinal study can provide valuable insight that other studies can't. They're particularly useful when studying developmental and lifespan issues because they allow glimpses into changes and possible reasons for them.

For example, some longitudinal studies have explored differences and similarities among identical twins, some reared together and some apart. In these types of studies, researchers tracked participants from childhood into adulthood to see how environment influences personality, achievement, and other areas.

Because the participants share the same genetics, researchers chalked up any differences to environmental factors. Researchers can then look at what the participants have in common and where they differ to see which characteristics are more strongly influenced by either genetics or experience. Note that adoption agencies no longer separate twins, so such studies are unlikely today. Longitudinal studies on twins have shifted to those within the same household.

Potential Pitfalls

As with other types of psychology research, researchers must take into account some common challenges when considering, designing, and performing a longitudinal study.

Longitudinal studies require time and are often quite expensive. Because of this, these studies often have only a small group of subjects, which makes it difficult to apply the results to a larger population.

Selective Attrition

Participants sometimes drop out of a study for any number of reasons, like moving away from the area, illness, or simply losing motivation. This tendency, known as selective attrition, shrinks the sample size and decreases the amount of data collected.

If the final group no longer reflects the original representative sample, attrition can threaten the validity of the study. Validity refers to whether or not a test or experiment accurately measures what it claims to measure. If the final group of participants doesn't represent the larger group accurately, generalizing the study's conclusions is difficult.
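A simple first check is to measure how many participants were lost and whether those who left differed at baseline from those who stayed. The sketch below is one way to do that, under the assumption that a single baseline characteristic (age here) is recorded for everyone; the function `attrition_summary` and the data are hypothetical.

```python
from statistics import mean


def attrition_summary(baseline, final_ids):
    """Summarize attrition between the first and last wave.

    baseline: dict mapping participant id -> a baseline value (e.g. age).
    final_ids: set of participant ids still present at the last wave.
    """
    completers = [v for pid, v in baseline.items() if pid in final_ids]
    dropouts = [v for pid, v in baseline.items() if pid not in final_ids]
    return {
        "attrition_rate": len(dropouts) / len(baseline),
        "completer_mean": mean(completers) if completers else None,
        "dropout_mean": mean(dropouts) if dropouts else None,
    }


# Made-up baseline ages; P4 and P5 left before the final wave.
baseline_age = {"P1": 45, "P2": 51, "P3": 48, "P4": 60, "P5": 58}
still_enrolled = {"P1", "P2", "P3"}

summary = attrition_summary(baseline_age, still_enrolled)
print(summary)
```

In this made-up example, 40% of the sample is lost and the dropouts are noticeably older on average than the completers, which would suggest the final group no longer mirrors the original sample on age.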

The World’s Longest-Running Longitudinal Study

Lewis Terman aimed to investigate how highly intelligent children develop into adulthood with his "Genetic Studies of Genius." Results from this study were still being compiled into the 2000s. Terman was a proponent of eugenics and has been accused of letting his own sexism, racism, and economic prejudice influence his study and of drawing major conclusions from weak evidence. Even so, his study remains influential in longitudinal research. For example, a recent study found new information on the original Terman sample, which indicated that men who skipped a grade as children went on to have higher incomes than those who didn't.

A Word From Verywell

Longitudinal studies can provide a wealth of valuable information that would be difficult to gather any other way. Despite the typical expense and time involved, longitudinal studies from the past continue to influence and inspire researchers and students today.

Frequently Asked Questions

A longitudinal study follows up with the same sample (i.e., group of people) over time, whereas a cross-sectional study examines one sample at a single point in time, like a snapshot.

A longitudinal study can occur over any length of time, from a few weeks to a few decades or even longer.

The number of participants depends on what researchers are investigating. A researcher can measure data on just one participant or thousands over time. The larger the sample size, of course, the more likely the study is to yield results that can be extrapolated.


By Kendra Cherry, MSEd

Longitudinal Studies: Methods, Benefits and Challenges

Introduction


Longitudinal research refers to any study that collects the same sample of data from the same group of people at different points in time. While time-consuming and potentially costly in terms of resources and effort, a longitudinal study has enormous utility in understanding complex phenomena that might change as time passes.

In this article, we will explore the nature and importance of longitudinal studies to allow you to decide whether your research inquiry warrants a longitudinal inquiry or if a cross-sectional study is more appropriate.

What is a longitudinal study?

To understand a longitudinal study, let's start with a simple survey as an example. Determining the popularity of a particular product or service at a specific point in time can simply be a matter of collecting and analyzing survey responses from a certain number of people within a population. The qualitative and quantitative data collected from these surveys can tell you what people think at the moment those surveys were conducted. This is what is known as a cross-sectional study.

Now imagine the product that you're trying to assess is seasonal like a brand of ice cream or hot chocolate. What's popular in summer may not be popular in winter, and trends come and go as competing products enter the market. In this context, the one survey that was conducted is merely a snapshot of a moving phenomenon at a single point in time.

In a longitudinal study design, that same survey will be distributed to the same group of people at different time intervals (e.g., twice a year or once a month) to allow researchers to see if there are any changes. Perhaps there is an ice cream that is as popular in the winter as it is in the summer, which may be worth identifying to expand profitability. A longitudinal study would thus be useful to explore this question.

Longitudinal research isn't conducted simply for the sake of being able to say research was conducted over an extended period of time. A longitudinal analysis collects data at different points in time to observe changes in the characteristics of the object of inquiry. Ultimately, collecting data for a longitudinal study can help identify cause-and-effect relationships that cannot otherwise be perceived in discrete or cross-sectional studies.

What are examples of longitudinal studies?

Longitudinal studies are found in many research fields where time is an important factor. Let's look at examples in three different research areas.

Classroom research is often longitudinal because of the acknowledgment that successful learning takes place over time and not merely in a single class session. Such studies take place over several classes, perhaps over a semester or an entire academic year. A researcher might observe the same group of students as they progress academically or, conversely, identify any significant decline in learning outcomes to determine how changes in teaching and learning over time might affect student development.

Health sciences

Medical research often relies on longitudinal studies to determine the effectiveness and risk factors involved with drugs, treatments, or other medical remedies. Consider a dietary supplement that is purported to help people lose weight. Perhaps, in the beginning, people who take this supplement actually do lose weight. But what happens later on? Do they keep the weight off, gain it back or, even worse, gain even more weight in the long term? A longitudinal study can help researchers determine if that supplement produces sustainable results or is merely a quick fix that has negative side effects later on.

Product life cycles and market trends can take extended periods of time to manifest. In the meantime, competing products might enter the market and consequently affect customer loyalty and product image. If a cross-sectional study captures a snapshot of opinions in the marketplace, then think of a longitudinal study as several snapshots spread out over time to allow researchers to observe changes in market behavior and their underlying causes as time passes.

Longitudinal studies vs. cross-sectional studies

Cross-sectional studies are discrete studies that capture data within a particular context at a particular point in time. These kinds of studies are more appropriate for research inquiries that don't examine some form of development or evolution, such as concepts or phenomena that are generally static or unchanging over extended periods of time.

To determine which type of study would be more appropriate for your research inquiry, it's important to identify the object of inquiry that is being studied. Ask yourself the following questions when planning your study:

- Do you need an extended period of time to sufficiently capture the phenomenon?

- Is the sample of data collected likely to change over time?

- Is it feasible to commit time and resources to an extended study?

If you said yes to all of these questions, a longitudinal study would be suited to addressing your research questions. Otherwise, cross-sectional studies may be more appropriate for your research.


Benefits of longitudinal studies

A longitudinal study can provide many benefits potentially relevant to the research question you are looking to address. Here are three different advantages you might consider.

Abundance of data

In many cases, research rigor is served by collecting abundant data. Research approaches like thematic analysis and content analysis benefit from a large set of data that helps you identify the most frequently occurring phenomena within a research context. Large data sets collected through longitudinal studies can be useful for separating abundance from anecdotes.

Identification of patterns

Analyzing patterns often implies exploring how things interact sequentially or over time, which is best captured with longitudinal data. Think about, for example, how sports competitions and political elections take place over a year or even multiple years. Construction of ships and buildings can be a long and protracted process. Doctoral students can spend four or more years before earning their degree. A simple cross-sectional study in such contexts may not gather sufficient data captured over a period of time long enough to observe sequences of related events.

Observation of relationships

Certain relationships between different phenomena can only be observed longitudinally. The famous marshmallow test that asserted connections between behaviors in childhood and later life outcomes spawned decades of longitudinal study. Even if your research is much simpler, your research question might involve the observation of distant but related phenomena that only a longitudinal study can capture.

Types of longitudinal studies

There are two types of longitudinal studies to choose from, primarily depending on what you are looking to examine. Keep in mind that longitudinal study design, no matter what type of study you might pursue, is a matter of sustaining a research inquiry over time to capture the necessary data. It's important that your decision-making process is both transparent and intentional for the sake of research rigor.

Cohort studies

A cohort study examines a group of people that share a common trait. This trait could be a similar age group, a common level of education, or a shared experience.

An example of a cohort study is one that looks to identify factors related to successful aging found in lifestyles among people of middle age. Such a study could observe a group of people, all of whom are similar in age, to identify a common range of lifestyles and activities that are applicable for others of the same age group.

Panel studies

The difference between a cohort study and a panel study is that panel studies collect data from within a general population, rather than a specific set of particular individuals with a common characteristic. The goal of a panel study is to examine a representative sample of a larger population rather than a specific subset of people.

A longitudinal survey that adopts a panel study model, for example, would randomly sample a population and send out questionnaires to the same sample of people over time. Such a survey could look at changes in everyday habits regarding spending or work-life balance and how they might be influenced by environmental or economic shifts from one period of time to the next.

How do you conduct a longitudinal study?

Planning a prospective or future research study that is longitudinal requires careful attention to detail prior to conducting the study. By itself, a longitudinal study can be considered a repeated sequence of the same discrete study across different periods of time.

However, ensuring that multiple iterations of the same study are conducted repeatedly and rigorously is the challenge in longitudinal studies. With that in mind, let's look at some of the different research methods that might be employed in longitudinal research.

Observational research

Action research and ethnographies rely on longitudinal observations to provide sufficient depth to the cultural practices and interactions that are under study. In anthropological and sociological research, some phenomena are so complex or dynamic that they can only be observed longitudinally.

Organizational research, for example, employs longitudinal research to identify how people in the workplace or other similar settings interact with each other. This kind of research is useful for understanding how rapport is established and whether productivity increases as a result.

A longitudinal survey can address research questions that deal with opinions and perspectives that may change over time. Unlike a cross-sectional survey from a particular point in time, longitudinal surveys are administered repeatedly to the same group of people to collect data on changes or developments.

A personal wellness study, for example, might examine how healthy habits (or the lack thereof) affect health by asking respondents questions about their daily routine. By comparing their routines over time with information such as blood pressure, weight, and waist size, survey data on lifestyle routines can allow researchers to identify what habits can cause the greatest improvement in individual health.

Experiments

Various experimental studies, especially in medical research, can be longitudinal in nature. A longitudinal experiment usually collects data from a control group and an experimental group to observe the effects of a certain treatment on the same participants over a period of time.

This type of research is commonly employed to examine the effects of medical treatments on outcomes such as cardiovascular disease or diabetes. The requirements for governmental approval are incredibly stringent and call for rigorous data collection that establishes causality.

Challenges of longitudinal research

Needless to say, longitudinal studies tend to be time-consuming. The most obvious drawback of longitudinal studies is that they take up a significant portion of researchers' time and effort.

However, there are other disadvantages of longitudinal studies, particularly the likelihood of participant attrition. In other words, the more lengthy the study, the more likely it is that participants may drop out of the study. This is especially true when working with vulnerable or marginalized populations such as migrant workers or homeless people, populations that may not always be easy to contact for collecting data.

Over the course of time, the research context that a researcher studies may change with the appearance of new technologies, trends, or other developments that may not have been anticipated. While confounding influences are possible in any study, they are likely to be more abundant in studies on a longitudinal scale. As a result, it's important for the researcher to try to account for these influences when analyzing the data. It could even be worthwhile to examine how the appearance of such a development affected a relevant outcome of interest in your area.


What is a longitudinal study?

Last updated: 20 February 2023

Longitudinal studies are common in epidemiology, economics, and medicine. People also use them in other medical and social sciences, such as to study customer trends. Researchers periodically observe and collect data on the variables of interest without manipulating the study environment.

A company may conduct a tracking study, surveying a target audience to measure changes in attitudes and behaviors over time. The questions asked stay the same, and the time interval between surveys remains consistent. This longitudinal study can measure brand awareness, customer satisfaction, and consumer opinions and analyze the impact of an advertising campaign.


Types of longitudinal studies

There are two types of longitudinal studies: cohort and panel studies.

Panel study

A panel study is a type of longitudinal study that involves collecting data from a fixed number of variables at regular but distant intervals. Researchers follow a group or groups of people over time. Panel studies are designed for quantitative analysis but are also usable for qualitative analysis .

A panel study may research the causes of age-related changes and their effects. Researchers may measure the health markers of a group over time, such as their blood pressure, blood cholesterol, and mental acuity. Then, they can compare the scores to understand how age positively or negatively correlates with these measures.
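As a rough illustration of checking whether age correlates positively or negatively with a health marker, the sketch below computes a Pearson correlation coefficient by hand. The function name `pearson_r` and the numbers are made up, and pooling all observations this way ignores that repeated measurements come from the same people, so treat it as a starting point rather than a full longitudinal analysis.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical ages at each measurement and systolic blood pressure readings.
ages = [50, 55, 60, 65, 70]
systolic = [120, 124, 129, 133, 140]

r = pearson_r(ages, systolic)
print(round(r, 3))  # a value close to +1 indicates a strong positive correlation
```

A coefficient near +1 or -1 indicates a strong positive or negative association; it does not by itself show that age causes the change.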

Cohort study

A cohort longitudinal study involves gathering information from a group of people with something in common, such as a specific trait or experience of the same event. The researchers observe behaviors and other details of the group over time. Unlike panel studies, which follow the same individuals throughout, cohort studies let you sample a different group of cohort members at each round of data collection.

An example of a cohort study could be a drug manufacturer studying the effects on a group of users taking a new drug over a period. A drinks company may want to research consumers with common characteristics, like regular purchasers of sugar-free sodas. This will help the company understand trends within its target market.

Benefits of longitudinal research

If you want to study the relationship between variables and causal factors responsible for certain outcomes, you should adopt a longitudinal approach to your investigation.

The benefits of longitudinal research over other research methods include the following:

Insights over time

It gives insights into how and why certain things change over time.

Better information

Researchers can better establish sequences of events and identify trends.

No recall bias

The participants won't have recall bias if you use a prospective longitudinal study. Recall bias is an error that occurs in a study if respondents don't wholly or accurately recall the details of their actions, attitudes, or behaviors.

Because variables can change during the study, researchers can discover new relationships or data points worth further investigation.

Small groups

Longitudinal studies don't need a large group of participants.

Potential pitfalls

The challenges and potential pitfalls of longitudinal studies include the following:

A longitudinal survey takes a long time, involves multiple data collections, and requires complex processes, making it more expensive than other research methods.

Unpredictability

Because they take a long time, longitudinal studies are unpredictable. Unexpected events can cause changes in the variables, making earlier data potentially less valuable.

Slow insights

Researchers can take a long time to uncover insights from the study as it involves multiple observations.

Participants can drop out of the study, limiting the data set and making it harder to draw valid conclusions from the results.

Overly specific data

If you study a smaller group to reduce research costs, results will be less generalizable to larger populations versus a study with a larger group.

Despite these potential pitfalls, you can still derive significant value from a well-designed longitudinal study by uncovering long-term patterns and relationships.

Longitudinal study designs

Longitudinal studies can take three forms: Repeated cross-sectional, prospective, and retrospective.

Repeated cross-sectional studies

Repeated cross-sectional studies are a type of longitudinal study where participants change across sampling periods. For example, as part of a brand awareness survey, you ask different people from the same customer population about their brand preferences.

Prospective studies

A prospective study is a longitudinal study that involves real-time data collection, and you follow the same participants over a period. Prospective longitudinal studies can be cohort, where participants have similar characteristics or experiences. They can also be panel studies, where you choose the population sample randomly.
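The difference between following the same participants and drawing new participants each period can be sketched as two sampling routines. The function names (`panel_waves`, `repeated_cross_section_waves`) and the customer list are hypothetical, and real studies would use a sampling frame and design suited to their population.

```python
import random


def panel_waves(population, sample_size, n_waves, seed=0):
    """Draw one random sample and reuse the same participants in every wave."""
    rng = random.Random(seed)
    panel = rng.sample(population, sample_size)
    return [list(panel) for _ in range(n_waves)]


def repeated_cross_section_waves(population, sample_size, n_waves, seed=0):
    """Draw a fresh random sample from the same population for each wave."""
    rng = random.Random(seed)
    return [rng.sample(population, sample_size) for _ in range(n_waves)]


# A made-up customer population of 100 people.
customers = [f"customer_{i}" for i in range(100)]

panel = panel_waves(customers, sample_size=5, n_waves=3)
repeated = repeated_cross_section_waves(customers, sample_size=5, n_waves=3)

# In the panel design, every wave contains exactly the same people.
print(all(wave == panel[0] for wave in panel))  # True
# In the repeated cross-sectional design, membership can differ by wave.
for wave in repeated:
    print(wave)
```

Because the panel design keeps membership fixed, changes can be traced within individuals; the repeated cross-sectional design only supports comparisons at the population level.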

Retrospective studies

Retrospective studies are longitudinal studies that involve collecting data on events that some participants have already experienced. Researchers examine historical information to identify patterns that led to an outcome they established at the start of the study. Retrospective studies are the most time and cost-efficient of the three.

- How to perform a longitudinal study

When developing a longitudinal study plan, you must decide whether to collect your own data or use data from other sources. Each choice has its benefits and drawbacks.

Using data from other sources

You can freely access data from many previous longitudinal studies, especially studies conducted by governments and research institutes. For example, anyone can access data from the 1970 British Cohort Study on the UK Data Service website.

Using data from other sources saves the time and money you would have spent gathering data. However, the data is more restrictive than the data you collect yourself. You are limited to the variables the original researcher was investigating, and they may have aggregated the data, obscuring some details.

If you can't find data or longitudinal research that applies to your study, the only option is to collect it yourself.

Collecting your own data

Collecting your own data enhances its relevance, integrity, reliability, and verifiability. Your data collection methods depend on the type of longitudinal study you want to perform. For example, a retrospective longitudinal study collects historical data, while a prospective longitudinal study collects real-time data.

An effective and versatile data collection tool helps ensure that your data is relevant and reliable. It can also improve the speed and accuracy of the information you collect.

What is a longitudinal study in research?

A longitudinal study is a research design that involves studying the same variables over time by gathering data continuously or repeatedly at consistent intervals.

What is an example of a longitudinal study?

An excellent example of a longitudinal study is market research to identify market trends. The organization's researchers collect data on customers' likes and dislikes to assess market trends and conditions. An organization can also conduct longitudinal studies after launching a new product to understand customers' perceptions and how it is doing in the market.

Why is it called a longitudinal study?

It’s a longitudinal study because you collect data over an extended period. Longitudinal data tracks the same type of information on the same variables at multiple points in time. You collect the data over repeated observations.

What is a longitudinal study vs. a cross-sectional study?

A longitudinal study follows the same people over an extended period, while a cross-sectional study looks at the characteristics of different people or groups at a given time. Longitudinal studies provide insights over an extended period and can establish patterns among variables.

Cross-sectional studies provide insights about a point in time, so they cannot identify cause-and-effect relationships.


Longitudinal Research: A Panel Discussion on Conceptual Issues, Research Design, and Statistical Techniques

All authors contributed equally to this article and the order of authorship is arranged arbitrarily. Correspondence concerning this article should be addressed to Mo Wang, Warrington College of Business, Department of Management, University of Florida, Gainesville, FL 32611. E-mail: [email protected]

Decision Editor: Donald Truxillo, PhD


Mo Wang, Daniel J. Beal, David Chan, Daniel A. Newman, Jeffrey B. Vancouver, Robert J. Vandenberg, Longitudinal Research: A Panel Discussion on Conceptual Issues, Research Design, and Statistical Techniques, Work, Aging and Retirement, Volume 3, Issue 1, 1 January 2017, Pages 1–24, https://doi.org/10.1093/workar/waw033


The goal of this article is to clarify the conceptual, methodological, and practical issues that frequently emerge when conducting longitudinal research, as well as in the journal review process. Using a panel discussion format, the current authors address 13 questions associated with 3 aspects of longitudinal research: conceptual issues, research design, and statistical techniques. These questions are intentionally framed at a general level so that the authors could address them from their diverse perspectives. The authors’ perspectives and recommendations provide a useful guide for conducting and reviewing longitudinal studies in work, aging, and retirement research.

An important meta-trend in work, aging, and retirement research is the heightened appreciation of the temporal nature of the phenomena under investigation and the important role that longitudinal study designs play in understanding them (e.g., Heybroek, Haynes, & Baxter, 2015; Madero-Cabib, Gauthier, & Le Goff, 2016; Wang, 2007; Warren, 2015; Weikamp & Göritz, 2015). This echoes the trend in more general research on work and organizational phenomena, where the discussion of time and longitudinal designs has evolved from explicating conceptual and methodological issues involved in the assessment of changes over time (e.g., McGrath & Rotchford, 1983) to the development and application of data analytic techniques (e.g., Chan, 1998; Chan & Schmitt, 2000; DeShon, 2012; Liu, Mo, Song, & Wang, 2016; Wang & Bodner, 2007; Wang & Chan, 2011; Wang, Zhou, & Zhang, 2016), theory rendering (e.g., Ancona et al., 2001; Mitchell & James, 2001; Vancouver, Tamanini, & Yoder, 2010; Wang et al., 2016), and methodological decisions in conducting longitudinal research (e.g., Beal, 2015; Bolger, Davis, & Rafaeli, 2003; Ployhart & Vandenberg, 2010). Given the importance of and the repeated call for longitudinal studies to investigate work, aging, and retirement-related phenomena (e.g., Fisher, Chaffee, & Sonnega, 2016; Wang, Henkens, & van Solinge, 2011), there is a need for more nontechnical discussions of the relevant conceptual and methodological issues. Such discussions would help researchers to make more informed decisions about longitudinal research and to conduct studies that would both strengthen the validity of inferences and avoid misleading interpretations.

In this article, using a panel discussion format, the authors address 13 questions associated with three aspects of longitudinal research: conceptual issues, research design, and statistical techniques. These questions, as summarized in Table 1, are intentionally framed at a general level (i.e., not solely in aging-related research), so that the authors could address them from diverse perspectives. The goal of this article is to clarify the conceptual, methodological, and practical issues that frequently emerge in the process of conducting longitudinal research, as well as in the related journal review process. Thus, the authors’ perspectives and recommendations provide a useful guide for conducting and reviewing longitudinal studies, not only those dealing with aging and retirement, but also in the broader fields of work and organizational research.

Questions Regarding Longitudinal Research Addressed in This Article

Conceptual Issue Question 1: Conceptually, what is the essence of longitudinal research?

This is a fundamental question to ask given the confusion in the literature. It is common to see authors attribute their high confidence in their causal inferences to the longitudinal design they use. It is also common to see authors attribute greater confidence in their measurement because of using a longitudinal design. Less common, but with increasing frequency, authors claim to be examining the role of time in their theoretical models via the use of longitudinal designs. These different assumptions by authors illustrate the need for clarifying when specific attributions about longitudinal research are appropriate. Hence, a discussion of the essence of longitudinal research and what it provides is in order.

Oddly, definitions of longitudinal research are rare. One exception is a definition by Taris (2000), who explained that longitudinal “data are collected for the same set of research units (which might differ from the sampling units/respondents) for (but not necessarily at) two or more occasions, in principle allowing for intra-individual comparison across time” (pp. 1–2). Perhaps more directly relevant for the current discussion of longitudinal research related to work and aging phenomena, Ployhart and Vandenberg (2010) defined “longitudinal research as research emphasizing the study of change and containing at minimum three repeated observations (although more than three is better) on at least one of the substantive constructs of interest” (p. 97; italics in original). Compared to Taris (2000), Ployhart and Vandenberg’s (2010) definition explicitly emphasizes change and encourages the collection of many waves of repeated measures. However, Ployhart and Vandenberg’s definition may be overly restrictive. For example, it precludes designs often classified as longitudinal such as the prospective design. In a prospective design, some criterion (i.e., presumed effect) is measured at Times 1 and 2, so that one can examine change in the criterion as a function of events (i.e., presumed causes) happening (or not) between the waves of data collection. For example, a researcher can use this design to assess the psychological and behavioral effects of retirement that occur before and after retirement. That is, psychological and behavioral variables are measured before and after retirement. Though not as internally valid as an experiment (which is not possible because we cannot randomly assign participants into retirement and non-retirement conditions), this prospective design is a substantial improvement over the typical design where the criteria are only measured at one time. This is because it allows one to more directly examine change in a criterion as a function of differences between events or person variables. Otherwise, one must draw inferences based on retrospective accounts of the change in criterion along with the retrospective accounts of the events; further, one may worry that the covariance between the criterion and person variables is due to changes in the criterion that are also changing the person. Of course, this design does not eliminate the possibility that changes in criterion may cause differences in events (e.g., changes observed in psychological and behavioral variables lead people to decide to retire).
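The two-wave prospective design described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' analysis: the records, the score scale, and the function name `mean_change_by_event` are all hypothetical. Each record holds whether the event (e.g., retirement) occurred between the waves, plus the criterion score at Time 1 and Time 2.

```python
from statistics import mean


def mean_change_by_event(records):
    """Average Time 2 minus Time 1 change, split by whether the event occurred.

    `records` is a list of (event_occurred, score_t1, score_t2) tuples.
    """
    changes = {True: [], False: []}
    for event_occurred, score_t1, score_t2 in records:
        changes[event_occurred].append(score_t2 - score_t1)
    # Skip a group entirely if nobody falls into it.
    return {event: mean(vals) for event, vals in changes.items() if vals}


# Hypothetical well-being scores for four participants.
records = [
    (True, 5.0, 7.0),   # retired between waves
    (True, 4.0, 5.0),   # retired between waves
    (False, 5.0, 5.0),  # still working
    (False, 6.0, 7.0),  # still working
]
result = mean_change_by_event(records)
print(result)  # {True: 1.5, False: 0.5}
```

Comparing the two averages shows change in the criterion as a function of the event. As the passage notes, a difference here does not rule out the reverse direction, in which changes in the criterion lead people to retire.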