How To Use ChatGPT To Write A Literature Review: Prompts & References

In the rapidly evolving world of academic research, the integration of AI tools like ChatGPT has transformed the traditional approach to literature reviews. As a researcher, you can leverage this tool to make your research work easier.

In this post, we explore how ChatGPT can enhance the literature review process. We’ll look at how specific prompts can guide this AI model to generate insightful content, while ensuring accuracy, relevance, and academic integrity in your scholarly work.

How to Use ChatGPT for Writing a Literature Review

Understanding ChatGPT’s Limitations

While ChatGPT can efficiently generate content, streamline the research process, and help you build an understanding of relevant literature, its capabilities are not without constraints. Here are some to consider:

Dependence On Pre-Existing Datasets

Since ChatGPT is a language model trained on available data, it may not include the most recent research papers or cutting-edge findings in a specific field. This gap can lead to a lack of current state-of-research insights, particularly crucial in fields like technology and science where advancements happen rapidly.

May Lack Depth And Context

ChatGPT, while able to produce summaries and synthesize information, might not fully grasp the nuanced arguments or complex theories specific to a research topic. This limitation necessitates that researchers critically evaluate and supplement AI-generated text with thorough analysis and insights from recent systematic reviews and primary sources.

Risk Of Plagiarism

Although ChatGPT can generate human-like text, it’s vital to ensure that the content for your literature review is original and properly cited. Relying solely on ChatGPT to write a literature review defeats the purpose of engaging deeply with the material and developing a personal understanding of the literature.

Not A Total Replacement of A Researcher

While ChatGPT can assist non-native English speakers in crafting clear and concise academic writing, it’s not a replacement for the human ability to contextualize and interpret research findings. Researchers must guide the AI model with specific prompts and leverage it as a tool rather than a substitute for comprehensive analysis.

By keeping these limitations in mind, ChatGPT can be a valuable aid in the literature review process, but it should be used judiciously and in conjunction with traditional research methods.

Defining Research Objective

When you start writing a literature review, the initial step involves using ChatGPT to help define your research question or hypothesis.

The AI model’s ability to respond with a summary of the current state of research in your field can provide a comprehensive understanding, especially for systematic reviews or research papers.

For example, by inputting a prompt related to your research topic, ChatGPT can generate human-like text, summarizing prior research and highlighting relevant literature.

One insider tip for effectively using ChatGPT in the literature review process is to leverage its natural language processing capabilities to identify relevant keywords.

These keywords are crucial for non-native English speakers or those new to a research field, as they streamline the search for pertinent academic writing. Additionally, ChatGPT can guide you in understanding the context of your research topic, offering insights that are often challenging to find.

Using AI language models like ChatGPT for generating content for your literature review is efficient and effective, saving valuable time. However, it’s vital to critically evaluate the generated text to ensure it aligns with your research objectives and to avoid plagiarism.

ChatGPT’s ability to synthesize large amounts of information can aid in developing a clear and concise outline, but remember, it’s a guide, not a replacement for human analysis.

Despite these limitations, ChatGPT provides a unique advantage in conducting literature reviews. It can automate mundane tasks, allowing researchers to focus on analysis and critical thinking.

Identifying Keywords and Search Terms

Using ChatGPT to identify relevant keywords related to your research topic can significantly streamline your workflow.

For instance, when you input a summary of your research question into ChatGPT, the AI model can generate a list of pertinent keywords.

These keywords are not randomly selected; they reflect patterns in the large body of text ChatGPT was trained on, which often makes them relevant to your research field, although they may miss the newest terminology.

An insider tip for leveraging ChatGPT effectively is to guide the AI with clear and concise prompts.

For example, asking ChatGPT to: “summarize key themes in [specific field] research papers from the last five years” can yield a list of keywords and phrases that are not only relevant but also reflective of the current state of research.

This approach is particularly beneficial for conducting systematic reviews or for non-native English speakers who might be unfamiliar with specific academic jargon.

While ChatGPT can provide a comprehensive understanding of relevant literature and help automate the identification of keywords, it’s important to critically evaluate the generated content.

Researchers should use ChatGPT as a tool to augment their research process, not as a replacement for human insight.

It’s crucial to mind the limitations of the AI model and ensure that the keywords identified align with the research topic and objectives.
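Once you have checked a keyword list, one common next step is to combine the terms into a Boolean search string for a literature database. The snippet below is a minimal sketch of that idea, not a feature of ChatGPT: the function name `build_search_query` and the sample keyword groups are assumptions made for illustration, and the exact query syntax accepted by your database may differ.

```python
def build_search_query(concept_groups):
    """Join synonyms with OR inside each group, then join the groups with AND."""
    clauses = []
    for synonyms in concept_groups:
        # Quote multi-word phrases so they are searched as exact phrases.
        terms = [f'"{term}"' if " " in term else term for term in synonyms]
        clauses.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(clauses)


# Hypothetical keyword groups, e.g. suggested by ChatGPT and then
# verified by hand against the literature.
groups = [
    ["organic photovoltaic", "OPV"],
    ["efficiency", "stability"],
]
print(build_search_query(groups))
# prints: ("organic photovoltaic" OR OPV) AND (efficiency OR stability)
```

Grouping synonyms with OR widens each concept, while AND between groups keeps the results focused on papers that mention every concept.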

Creating an Initial Literature Review Outline

The key to using ChatGPT effectively in crafting an initial outline lies in its ability to generate content based on specific prompts.

For instance, a researcher working on organic photovoltaic devices can input a prompt into ChatGPT, such as “Help me create a structure for a literature review on organic photovoltaic devices.”

The AI model, using its comprehensive understanding of the research topic, can then produce a preliminary structure, including sections like:

- Introduction

- Advances in materials and technology, performance, and efficiency.

This generated outline serves as a valuable starting point. It helps in organizing thoughts and determining the key areas that the literature review should cover.

Importantly, researchers can refine and expand this initial outline as they delve deeper into their topic, ensuring it aligns with their specific research question and the current state of research.

However, while ChatGPT can streamline the review process and save valuable time in creating an initial outline, researchers should not solely rely on it.

The content generated by ChatGPT must be critically evaluated and supplemented with in-depth research. This involves:

- Reading systematic reviews

- Reading research papers, and

- Summarizing relevant literature to ensure the review is comprehensive and up-to-date.

Get ChatGPT To Help You During Research, Using The Right Prompts

The key to effectively using ChatGPT in this process lies in crafting the right prompts, guiding the AI to generate relevant and useful content.

When initiating a literature review, the prompt should aim for a broad understanding of the research topic. For instance, asking ChatGPT to:

- “Give a brief overview of research done on [topic]”

- “What are some of the recent findings on the [topic] in research?” or

- “Summarize the historical development of [topic] in academia”

helps in capturing the general landscape of the field. These prompts assist in identifying key theories, methodologies, and authors within the research area. As the review progresses, more specific prompts are necessary to delve deeper into individual studies. Queries like:

- “Summarize the main arguments and findings of [specific paper]” or

- “What are the strengths and weaknesses of [specific paper]?”

enable ChatGPT to provide detailed insights into particular research papers, aiding in understanding their contribution to the broader field. Comparative prompts are also crucial in synthesizing information across multiple works. Asking ChatGPT to:

- “Compare and contrast the methodologies of [paper 1] and [paper 2]” or

- “How do the findings of [paper 1] and [paper 2] agree or disagree?”

helps in discerning the nuances and disparities in the literature. In the final stages of the literature review, prompts should focus on summarizing findings and identifying emerging trends or gaps. For example:

- “What trends or patterns have emerged from the literature on [topic]?” or

- “What future research directions are suggested by the literature on [topic]?”
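If you reuse the staged prompts above across several topics or papers, it can help to keep them as fill-in templates. The following is a small sketch under stated assumptions: the dictionary `PROMPT_TEMPLATES`, the stage names, and the helper `make_prompt` are hypothetical names chosen for this example, and the output is plain text that you paste into ChatGPT yourself.

```python
# Prompt wording taken from the examples above; the stage names are illustrative.
PROMPT_TEMPLATES = {
    "overview": "Give a brief overview of research done on {topic}",
    "single_paper": "Summarize the main arguments and findings of {paper}",
    "compare": "Compare and contrast the methodologies of {paper_1} and {paper_2}",
    "gaps": "What future research directions are suggested by the literature on {topic}?",
}


def make_prompt(stage, **fields):
    """Fill the template for one review stage; raises KeyError if a field is missing."""
    return PROMPT_TEMPLATES[stage].format(**fields)


print(make_prompt("overview", topic="organic photovoltaic devices"))
# prints: Give a brief overview of research done on organic photovoltaic devices
```

Keeping the wording in one place makes it easier to ask the same question about every paper, which in turn makes the answers easier to compare.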

We share more of these ChatGPT prompts later in this post.

Reviewing ChatGPT’s Responses

When using ChatGPT to write a literature review, it’s crucial to critically evaluate its responses.

Firstly, researchers should cross-reference the information provided by ChatGPT with actual research papers.

This step ensures the accuracy of the data and helps in identifying any discrepancies or outdated information, given that ChatGPT’s dataset may not include the most recent studies.
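One way to make this cross-referencing systematic is to pull every DOI out of a response and look each one up by hand. The sketch below assumes the response text has been pasted into a Python string; the function name `extract_dois` is hypothetical, the sample DOIs are made-up placeholders, and the pattern is a simplification that covers typical modern DOIs only. Extracting a DOI does not confirm that the paper exists or says what the summary claims, so each one still needs to be checked against the actual publication.

```python
import re

# Simplified pattern: "10.", a 4-9 digit registrant code, "/", then a suffix.
DOI_PATTERN = re.compile(r'10\.\d{4,9}/[^\s"<>]+')


def extract_dois(text):
    """Return the unique DOIs found in text, in order of first appearance."""
    found = []
    for match in DOI_PATTERN.findall(text):
        doi = match.rstrip(".,;)")  # drop trailing sentence punctuation
        if doi not in found:
            found.append(doi)
    return found


# Placeholder response text; these DOIs are invented for the example.
response = (
    "See the first study (doi:10.1234/placeholder.one), and a follow-up at "
    "https://doi.org/10.1234/placeholder.two."
)
for doi in extract_dois(response):
    print("Verify manually:", doi)
```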

Another essential aspect is assessing the coherence and depth of the AI-generated text. ChatGPT can summarize and synthesize information efficiently, but it might not capture the nuances of complex theories or research arguments.

Researchers should ensure that the content aligns with their research question and systematically reviews the topic comprehensively. This is where a researcher’s value comes in.

Additionally, verifying the originality of the content is vital to avoid plagiarism. While ChatGPT can generate human-like text, researchers must ensure that the AI-generated content is used as a guide rather than a verbatim source.

Proper citations and references are essential to maintain the integrity of the literature review. Avoid torpedoing your own research by committing plagiarism.

Ensuring Coherence and Flow

One of the challenges when using such advanced AI language models is ensuring the coherence and flow of the final document. This aspect is crucial as it determines the readability and academic rigor of the literature review.

ChatGPT can generate vast amounts of content on a wide range of topics, responding efficiently to prompts and synthesizing information from its extensive dataset.

However, the content generated by ChatGPT, while informative, might not always align seamlessly with the specific research question or maintain a consistent narrative flow.

To tackle this, researchers need to take an active role in guiding ChatGPT and subsequently refining its output.

A practical approach is to use ChatGPT as a starting point, leveraging its ability to quickly provide summaries, synthesize relevant literature, and identify key references and keywords related to the research topic. For example, prompts like:

- “Summarize the current research on [topic]” or

- “Identify key debates in [topic]”

can yield valuable initial insights.

Once this foundational information is obtained, the crucial task is to carefully review and edit the AI-generated content.

This involves connecting the dots between different sections, ensuring that each part contributes meaningfully to addressing the research question, and refining the language to maintain academic standards.

It’s also essential to check for and avoid plagiarism, ensuring that all sources are correctly cited.

In addition, considering the vast amounts of information ChatGPT can access, it’s vital to verify the accuracy and relevance of the content.

Researchers should cross-reference AI-generated summaries with actual research papers, especially the most recent ones, as ChatGPT’s dataset may not include the latest studies.

Editing and Proofreading

Now that your literature review is mostly written, focus on editing and proofreading. The content generated by ChatGPT needs to be meticulously reviewed and edited. Here are the steps:

- Verify the accuracy of the information. Cross-check the AI-generated content against actual research papers and systematic reviews so that the latest studies are accurately represented.

- Improve coherence and flow. Restructure sentences, ensure a logical progression of ideas, and maintain a consistent academic tone throughout the document.

- Check for plagiarism. Despite ChatGPT’s ability to generate human-like text, ensure that all sources are correctly cited and that the review does not inadvertently replicate existing material.

- Check grammar and spelling. Editing should encompass grammar checks, vocabulary refinement, and ensuring that the language is appropriate for an academic audience.

- Update citations. Review the in-text citations and reference list to ensure everything is cited correctly and formatted to your required standard, be it MLA, Chicago, or APA.
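The citation step can be partly automated with a rough consistency check. The sketch below is an illustration only: it assumes simple author-year citations such as "(Smith, 2021)" or "(Lee et al., 2022)", the function name `missing_from_reference_list` is hypothetical, and the sample text and reference are placeholders. Other styles, such as numeric or MLA citations, would need a different pattern, and a match on surname and year does not replace reading the reference list yourself.

```python
import re

# Matches "(Surname, 2021)" and "(Surname et al., 2021)" only.
CITATION_PATTERN = re.compile(r"\(([A-Z][A-Za-z\-]+)(?: et al\.)?,\s*(\d{4})\)")


def missing_from_reference_list(body, references):
    """Return (author, year) pairs cited in body with no matching reference entry."""
    missing = []
    for author, year in CITATION_PATTERN.findall(body):
        listed = any(author in ref and year in ref for ref in references)
        if not listed and (author, year) not in missing:
            missing.append((author, year))
    return missing


# Placeholder review text and reference list, invented for the example.
body = (
    "Efficiency has improved (Smith, 2021), although stability "
    "remains an issue (Lee et al., 2022)."
)
references = ["Smith, J. (2021). Placeholder title. Placeholder Journal."]
print(missing_from_reference_list(body, references))
# prints: [('Lee', '2022')]
```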

What ChatGPT Prompts To Use When Writing A Literature Review?

There are many ways to use ChatGPT to write a literature review, usually by using the right prompts. Here’s how specific types of prompts can be effectively employed, with multiple examples for each category:

- “Provide a comprehensive overview of the latest research on [topic].”

- “Summarize the current understanding and key findings in the field of [topic].”

- “Detail the dominant theoretical frameworks currently used in [topic].”

- “Describe the evolution of theoretical approaches in [topic] over the past decade.”

- “Identify and discuss the major debates or controversies in [topic].”

- “What are the conflicting viewpoints or schools of thought in [topic]?”

- “List the leading researchers in [topic] and summarize their key contributions.”

- “Who are the emerging authors in [topic], and what unique perspectives do they offer?”

- “Explain the most common research methodologies used in studies about [topic].”

- “How have the methodologies in [topic] research evolved recently?”

- “Trace the historical development and major milestones in [topic].”

- “Provide a timeline of the key discoveries and shifts in understanding in [topic].”

- “What significant paradigm shifts have occurred in [topic] in the last twenty years?”

- “How has the focus of research in [topic] changed over time?”

- “Analyze the methodology and conclusions of [specific paper].”

- “Discuss the impact and reception of [specific paper] in the field of [topic].”

- “Compare the results and methodologies of [paper 1] and [paper 2] in [topic].”

- “How do [paper 1] and [paper 2] differ in their approach to [topic]?”

- “Based on current literature, what are the suggested future research directions in [topic]?”

- “Identify gaps in the literature of [topic] that could be explored in future studies.”

By using these types of prompts, researchers can guide ChatGPT to produce content that is not only relevant to their literature review but also rich in detail and scope.

Wrapping Up: Use Other AI Tools Too, Not Just ChatGPT

In conclusion, while ChatGPT serves as a powerful ally in the literature review process, it’s important to recognize it as one of many AI tools available to researchers. Diversifying your AI toolkit can enhance the depth and breadth of your review, offering varied perspectives and methodologies.

As AI continues to evolve, embracing a range of these tools can lead to more comprehensive, nuanced, and innovative academic writing, expanding the horizons of research and scholarly exploration beyond what we currently envision.

Dr Andrew Stapleton has a Masters and PhD in Chemistry from the UK and Australia. He has many years of research experience and has worked as a Postdoctoral Fellow and Associate at a number of universities. Despite having secured funding for his own research, he left academia to help others with his YouTube channel, which covers the inner workings of academia and how to make it work for you.


2024 © Academia Insider


Step-by-Step Guide: How to Use ChatGPT to Write a Literature Review With Prompts


Writing a literature review can be a challenging task for researchers and students alike. It requires a comprehensive understanding of the existing body of research on a particular topic. However, with the advent of advanced language models like ChatGPT, the process has become more accessible and efficient.

In this step-by-step guide, iLovePhD explores how you can leverage ChatGPT to write a compelling and informative literature review.

A Step-by-Step Guide: How to Use ChatGPT for Writing a Literature Review

Step 1: Defining Your Research Objective

Before diving into the literature review process, it is crucial to define your research objective.

Clearly articulate the topic, research question, or hypothesis you aim to address through your literature review. This step will help you maintain focus and guide your search for relevant sources.

Step 2: Identifying Keywords and Search Terms

To effectively use ChatGPT to assist in your literature review, you need to identify relevant keywords and search terms related to your research topic.

These keywords will help you narrow down your search and gather pertinent information. Consider using tools like Google Keyword Planner or other keyword research tools to discover commonly used terms in your field.

Step 3: Familiarizing Yourself with ChatGPT

Before engaging with ChatGPT, it is essential to understand its capabilities and limitations. Familiarize yourself with the prompts and commands that work best with the model.

Keep in mind that ChatGPT is an AI language model trained on a vast amount of data, so it can provide valuable insights and suggestions, but it’s important to critically evaluate and validate the information it generates.

Step 4: Generating an Initial Literature Review Outline

Start by creating an outline for your literature review. Outline the main sections, such as the introduction, methodology, results, discussion, and conclusion.

Within each section, jot down the key points or subtopics you want to cover. This will help you organize your thoughts and structure your review effectively.

Step 5: Engaging with ChatGPT for Research Assistance

Once you have your outline ready, engage with ChatGPT for research assistance.

Begin by providing a clear and concise prompt that specifies the topic, context, and any specific questions you have. For example, “What are the current trends in [your research topic]?” or “Can you provide an overview of the main theories on [your research question]?”

Step 6: Reviewing and Selecting Generated Content

ChatGPT will generate a response based on your prompt. Carefully review the content generated, considering its relevance, accuracy, and coherence.

Extract key points, relevant references, and insightful arguments from the response and incorporate them into your literature review. Be sure to cite and attribute the sources appropriately.

Step 7: Ensuring Coherence and Flow

While ChatGPT can provide valuable content, it’s important to ensure the coherence and flow of your literature review.

Use your critical thinking skills to connect the generated content with your research objective and existing knowledge. Rearrange, rephrase, and expand upon the generated text to ensure it aligns with the structure and purpose of your review.

Step 8: Editing and Proofreading

Once you have incorporated the generated content into your literature review, thoroughly edit and proofread the document.

Check for grammatical errors, consistency in referencing, and overall clarity. This step is crucial to ensure your literature review is polished and professional.

ChatGPT Prompts to Write a Literature Review

Prompts you can use when engaging with ChatGPT for research assistance in writing a literature review:

- “Can you provide an overview of the main theories and concepts related to [your research topic]?”

- “What are the current trends and developments in [your research field]?”

- “Can you suggest some key studies or research papers on [specific aspect of your research topic]?”

- “What are the main methodologies used in conducting research on [your research topic]?”

- “Can you provide a critical analysis of the existing literature on [your research question]?”

- “Are there any gaps or areas of controversy in the literature on [your research topic] that need further exploration?”

- “What are the key findings and conclusions from the most recent studies on [your research topic]?”

- “Can you suggest some reputable journals or publications to explore for relevant literature in [your research field]?”

- “What are the different perspectives or schools of thought in the literature on [your research topic]?”

- “Can you provide a summary of the historical background and evolution of research on [your research topic]?”

Remember to provide clear and specific instructions in your prompts to guide ChatGPT in generating relevant and accurate content for your literature review.

Using ChatGPT to write a literature review can greatly facilitate the research process. By following a step-by-step approach, researchers can effectively leverage ChatGPT’s capabilities to gather insights, generate content, and enhance the quality of their literature review. However, it is important to approach the generated content critically, validate it with reliable sources, and ensure coherence within the review.


Copyright © 2024 iLovePhD. All rights reserved


Artificial Intelligence Now: ChatGPT + AI Literacy Toolbox: Literature Reviews with Prompts


Resources on Lit Reviews & AI

- How to Use ChatGPT to Accelerate Literature Review I’d like to show you how I use ChatGPT to speed up my literature review process. The topic I am exploring is heterogeneity (diversity) learning. I started with the keyword “Info-GAIL” as I read about it when using GAIL in the past.

- ChatGPT Simple Literature Review Template The evolution of library services in the digital age has seen a significant shift towards automation and artificial intelligence applications, with OpenAI's ChatGPT being one of the most popular tools. This literature review explores the trends in the application of ChatGPT in library settings, focusing on user engagement and support services from 2015 to 2023.

- ChatGPT as a Tool for Library Research – Some Notes and Suggestions I see ChatGPT and its alternatives as having partial value as tools for library searching. You can use them without any training, but they will perform better when you know some details about them.

9 Ways To Use ChatGPT To Write A Literature Review (WITHOUT Plagiarism) Video


How to Use ChatGPT to Write a Literature Review With Prompts

Dr. Somasundaram R | https://www.ilovephd.com/ | Copyright © 2019-2023 – iLovePhD | May 19, 2023


Discover how to effectively utilize ChatGPT as a research assistant to write a comprehensive and SEO-friendly literature review. Follow our step-by-step guide to leverage this powerful tool, optimize your review for search engines, and contribute to the scholarly conversation in your field.



- Last Updated: May 2, 2024 3:03 PM

- URL: https://library.fiu.edu/ai

Using ChatGPT to conduct a literature review

Affiliation.

- 1 Department of Humanities, Czech University of Life Sciences Prague; Prague, Czech Republic.

- PMID: 36879536

- DOI: 10.1080/08989621.2023.2185514

80+ ChatGPT Prompts for Literature Review

Conducting a literature review can often seem like a daunting task. It's an essential part of academic and research work, involving comprehensive and critical analysis of previously published research related to a specific topic. The goal is to gain a deep understanding of the state of knowledge on the topic, identify gaps, and provide a foundation for further research.

Traditionally, literature reviews have been manual, time-consuming processes. However, the advancement of technology, especially Artificial Intelligence (AI), is reshaping this landscape. One such development is ChatGPT, an AI developed by OpenAI. This tool can assist researchers in conducting more efficient and thorough literature reviews.

In this blog post, we will delve into the ways that ChatGPT, with its remarkable language understanding and generation capabilities, can be used to streamline your literature review process.

From explaining the basic capabilities of ChatGPT to providing essential prompts and tips to conduct a literature review, this post will serve as a comprehensive guide for those looking to leverage AI for academic research.

Stay tuned as we navigate this fascinating convergence of AI and research, and explore the potential of ChatGPT in making literature reviews more efficient and effective.


The Power of AI in Literature Reviews

The role of Artificial Intelligence (AI) in academia and research is expanding rapidly. AI has emerged as a game-changing tool that can significantly enhance the process of conducting literature reviews.

The Benefits of AI in Conducting Literature Reviews

There are numerous advantages to using AI for literature reviews. Let's delve into these benefits:

Time Efficiency

AI can streamline the literature review process, significantly reducing the time taken. With AI, you can quickly scan vast amounts of literature, saving valuable time that can be used for analysis and synthesis.

Comprehensive Coverage

AI algorithms are capable of scanning and analyzing a far greater volume of literature than a human could reasonably manage. This ensures a comprehensive and exhaustive review of existing literature.

Consistency and Bias Reduction

AI maintains a level of consistency that is hard to achieve manually. It does not suffer from fatigue, so it can apply the same criteria to every source. It can, however, reflect biases present in its training data, so its output still needs to be reviewed.

The Role of ChatGPT in Literature Reviews

ChatGPT, developed by OpenAI, has proven to be a valuable tool for conducting literature reviews. It is a language model that can understand and generate human-like text, making it an excellent tool for understanding complex academic literature and providing summaries or insights.

Here are some example prompts that can be used to guide ChatGPT in assisting with literature reviews:

Example Prompts:

Generate a summary of this research paper.

What are the key findings of this study?

How does this research contribute to the field of [specific field]?

Identify the strengths and weaknesses of this study.

Compare this study with the previous study on the same topic.

What are the research gaps identified in this literature?

What future research directions are suggested by this study?

Preparing for a ChatGPT-Assisted Literature Review

Before diving into a literature review with ChatGPT, you need to set the stage right. Preparation is crucial to ensuring you get the most out of your AI-assisted literature review process.

Detailed Steps on How to Set Up for a Literature Review Using ChatGPT

- Understand Your Topic : Before starting, ensure you have a clear understanding of your topic and the specific aspects you want to focus on in the literature review.

- Gather Your Materials : Collect all the papers, articles, and sources you plan to include in your literature review.

- Organize Your Materials : Categorize your sources based on various parameters like theme, methodology, results, etc. This will make it easier to navigate through them during the review.

- Prepare Your Prompts : Create a list of prompts you plan to use with ChatGPT. These prompts should be designed to extract the information you need from each source.
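The preparation steps above can be sketched in code. This is a minimal illustration, assuming prompts are stored as templates with a `{paper}` placeholder; the names `PROMPT_TEMPLATES` and `build_prompts` and the placeholder source titles are hypothetical and not part of any ChatGPT tooling.

```python
# Hypothetical helper: pairs each collected source with each prepared prompt.
PROMPT_TEMPLATES = [
    "Summarize the main arguments and findings of {paper}.",
    "What methodology does {paper} use and why?",
    "What are the strengths and weaknesses of {paper}?",
]


def build_prompts(sources, templates=PROMPT_TEMPLATES):
    """Return a dict mapping each source title to its list of filled-in prompts."""
    return {
        title: [template.format(paper=title) for template in templates]
        for title in sources
    }


if __name__ == "__main__":
    # Placeholder titles; replace them with the sources you gathered.
    sources = ["[paper 1]", "[paper 2]"]
    for title, prompts in build_prompts(sources).items():
        print(title)
        for prompt in prompts:
            print("  -", prompt)
```

Keeping the templates in one list makes it easy to ask the same questions of every source, which helps when you later compare the answers.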

Tips for Effective Use of ChatGPT in Literature Review

Preparing a List of Keywords or Topics

Start with a broad overview of your research topic and gradually narrow down the scope by identifying specific sub-topics, methodologies, or research questions you want to explore. Use these as keywords for your prompts.

Having Clear Objectives for the Review

Before beginning your review, clearly outline what you hope to achieve. This could be understanding the current state of research, identifying gaps, comparing methodologies, etc.

Understanding the Limits of AI Assistance

While ChatGPT is a powerful tool, it's essential to remember it is a tool and not a replacement for human analysis. Use it to process and summarize information, but the interpretation and synthesis of this information should be done by you.

Provide an overview of the literature on [specific topic].

Summarize the methodologies used in the studies on [specific topic].

Identify common themes in the literature on [specific topic].

What are the disagreements in the literature about [specific topic]?

Analyze the impact of [specific study] on the broader field of [specific topic].

What are the emerging trends in the literature on [specific topic]?

What are the commonly cited sources in the literature on [specific topic]?

Essential ChatGPT Prompts for Literature Review

ChatGPT is a powerful tool for conducting literature reviews, and the key to using it effectively is crafting the right prompts. These prompts guide the AI in scanning, analyzing, and summarizing the literature based on your specific needs.


Initial Exploration of a Topic

When you're just starting a literature review, you'll need prompts that help you gain a broad understanding of your research topic. These prompts can assist in grasping the general landscape of the literature.

Give a brief overview of research done on [topic].

What are the prominent theories or models in [topic]?

What are the key debates or controversies in [topic]?

Who are the key authors or researchers in [topic]?

What methodologies are commonly used in studies on [topic]?

Summarize the historical development of [topic] in academia.

What have been the significant shifts or changes in [topic] over time?

Deep Diving into Specific Studies

Once you have a broad understanding, you'll want to delve into specific studies or papers. These prompts help you extract detailed information from individual sources.

Summarize the main arguments and findings of [specific paper].

What methodology does [specific paper] use and why?

What are the strengths and weaknesses of [specific paper]?

How does [specific paper] contribute to the broader field of [topic]?

Compare the findings of [specific paper] with [another specific paper].

What future research does [specific paper] suggest or inspire?

How has [specific paper] been received or critiqued by other scholars in the field?

Comparing and Contrasting Different Works

ChatGPT can also help you identify similarities and differences between various sources. These prompts are useful for synthesizing information across multiple works.

Compare and contrast the methodologies of [paper 1] and [paper 2].

How do the findings of [paper 1] and [paper 2] agree or disagree?

How does [author 1]'s perspective on [topic] differ from [author 2]'s?

Compare the theoretical frameworks used in [paper 1] and [paper 2].

How does the approach to [topic] change over time as seen in [earlier paper] vs. [later paper]?

What themes are common across these studies on [topic]?

What disparities or contradictions exist in the literature on [topic]?

Summarizing Findings

Finally, as you conclude your literature review, you'll want to summarize your findings. These prompts help you consolidate your insights and present them clearly.

Summarize the key findings from my literature review on [topic].

What trends or patterns have emerged from the literature on [topic]?

What gaps or unanswered questions exist in the literature on [topic]?

How have perspectives on [topic] evolved over time, based on the literature?

What consensus, if any, exists in the literature on [topic]?

How does the literature inform our understanding of [topic]?

What future research directions are suggested by the literature on [topic]?

How to Create Effective ChatGPT Prompts

Crafting effective prompts for ChatGPT is part art, part science. It involves understanding the workings of the AI model, your research objectives, and the specific information you seek from your literature review.

Guidelines for Creating Effective ChatGPT Prompts

Here are some general guidelines to follow when crafting prompts for ChatGPT:

- Be Specific : The more specific your prompt, the more focused and relevant the AI's response will be.

- Use Clear Language : Make your prompts as clear and concise as possible. Avoid ambiguous terms and complex phrasing.

- Guide the AI : To get the best results, guide the AI towards the kind of response you're looking for. For example, if you want a summary, explicitly ask for a summary.

- Experiment and Iterate : Different prompts can yield different results. Don't be afraid to tweak your prompts and try different approaches to find what works best.

Tips for Refining and Iterating on Prompts

Prompts may not always work perfectly on the first try. Here are some tips for refining and iterating on your prompts:

- Analyze the Responses : Look at the AI's responses to understand how it is interpreting your prompts. If the responses are not what you expected, tweak your prompt to guide the AI more accurately.

- Try Different Angles : If a prompt isn't working, try approaching the question from a different angle or rephrasing the prompt.

- Split Complex Prompts : If a prompt is too complex, try splitting it into multiple simpler prompts.

Provide a summary of the main arguments in [specific paper].

What are the primary findings of [specific paper]?

Analyze the methodology used in [specific paper].

What are the implications of [specific paper] for future research?

Compare the findings of [specific paper] with those of [another specific paper].

What critiques have been made about [specific paper]?

What are the key themes across the literature on [specific topic]?

Examples of Good and Less Effective Prompts

Here are some examples of good and less effective prompts to help guide your prompt crafting:

Good Prompts:

Less Effective Prompts:

- Tell me about [specific paper]. (This is too vague and doesn't guide the AI towards the specific information you're seeking.)

- What's interesting about [specific paper]? (This prompt is subjective and may not yield useful information for a literature review.)

- What's everyone saying about [topic]? (This prompt is overly broad and might result in a wide range of responses that aren't focused enough for a literature review.)

Navigating Challenges in Using ChatGPT for Literature Reviews

While ChatGPT can be a powerful tool for conducting literature reviews, it's important to understand its limitations and potential challenges. Being aware of these can help you navigate the process more effectively.

Understanding the Limitations of ChatGPT

Despite its sophisticated capabilities, ChatGPT is not without its limitations. Here are some to keep in mind:

- Dependency on Input Quality : The quality of ChatGPT's responses depends on the quality and clarity of the prompts you provide.

- Lack of Critical Analysis : While ChatGPT can summarize and synthesize information, it can't provide the same level of critical analysis and judgment that a human researcher can.

- Potential for Misinterpretation : ChatGPT may occasionally misinterpret prompts or generate responses that miss the mark.

Overcoming Challenges in Using ChatGPT for Literature Reviews

Despite these limitations, there are ways to overcome the challenges in using ChatGPT for literature reviews:

- Carefully Craft Your Prompts : As noted earlier, the quality of your prompts greatly influences the AI's output. Spend time crafting and refining your prompts.

- Double-Check the Information : Always cross-check the AI's responses against the original sources to ensure accuracy.

- Combine AI and Human Analysis : Use ChatGPT as a tool to assist with your literature review, not as a replacement for your own analysis.

Here are some example prompts that can help you navigate potential challenges:

If the response seems off: Could you provide another summary of [specific paper]?

For double-checking information: What are the key findings of [specific paper]?

To ensure comprehensive coverage: Are there any other significant points in [specific paper] that haven't been mentioned?

If the response is too broad: Could you provide a more focused summary of the methodology in [specific paper]?

If the response is too narrow: What are the broader implications of [specific paper] for the field?

To clarify a complex point: Can you explain the main argument in [specific paper] in simpler terms?

To guide the AI's interpretation: Based on [specific paper], what are the potential areas for future research in this field?

More Great ChatGPT Prompts for Literature Reviews

An engaging literature review requires delving into a range of perspectives, methodologies, and findings within your chosen area of study. Crafting prompts that encourage varied and in-depth analysis can help you gain a comprehensive understanding of your topic. Here are more unique prompt ideas to enrich your literature review process using ChatGPT:

What are the key variables studied in relation to [topic]?

What theoretical frameworks have been applied to the study of [topic]?

Explain the significance of [specific study] in the field of [topic].

What are the ethical considerations discussed in the literature on [topic]?

How does [specific paper] challenge the prevailing understanding of [topic]?

What statistical methods are commonly used in studies on [topic]?

Discuss the influence of [specific author] on the research of [topic].

How have the research methods evolved over time in the study of [topic]?

What are the socio-cultural considerations in the literature on [topic]?

How does [specific paper] address the limitations of previous studies on [topic]?

Describe the study design of [specific paper] in the context of [topic].

What contributions does [specific paper] make to the existing literature on [topic]?

How have policy changes affected the research on [topic] over time?

What is the role of technology in the research of [topic]?

Identify the key longitudinal studies in the field of [topic].

What are the demographic considerations in studies on [topic]?

How does [specific paper] incorporate interdisciplinary approaches to [topic]?

What biases or assumptions are present in the literature on [topic]?

How does [specific paper] use empirical data to support its arguments on [topic]?

Discuss the replication studies in the field of [topic].

What are the implications of the literature on [topic] for practitioners?

How does the literature on [topic] align or contrast with public opinion?

What are the critical reviews or meta-analyses in the field of [topic]?

How does [specific paper] discuss the limitations of its own study on [topic]?

Identify the seminal works in the field of [topic] and explain their significance.

In the rapidly evolving world of AI, tools like ChatGPT have the potential to revolutionize the way we approach tasks such as literature reviews. This powerful AI model, when provided with carefully crafted prompts, can sift through and synthesize vast amounts of information, assisting you in navigating the complex landscape of academic literature.

Remember, the power of ChatGPT lies in its ability to respond to diverse prompts. While this blog post has offered several useful prompts and tips for conducting a literature review, don't hesitate to experiment and innovate with your own prompts. Your creativity and critical thinking, combined with ChatGPT's capabilities, can turn the daunting task of literature review into a more engaging and manageable process.

However, it's important to bear in mind the limitations of AI and to always complement AI-generated insights with your own critical analysis.

As you embark on your journey of exploration and discovery in your chosen field of study, may ChatGPT prove a valuable ally in helping you uncover the rich tapestry of ideas, debates, and breakthroughs that form the essence of academic literature.


About R.J. Adams

R.J. is an artificial intelligence aficionado, committed to demystifying the intricacies of this cutting-edge technology for enthusiasts and novices alike. Leveraging his comprehensive background in internet marketing, online tools, and communications, he continually ventures into the landscape of newly released AI tools, conducting thorough tests and analyses, as well as finding the best prompts to use on the latest AI tools. He can also solve Rubik's Cube in under 2 minutes.


© 2024 AIHabit.net

Literature Reviews


UM's Report on GenerativeAI

The initial report from the U-M Generative Artificial Intelligence Advisory (GAIA) Committee.

- A user-friendly interface that allows faculty, staff, and students to engage in chat-based queries and benefit from the expertise of GenAI technology

- U-M GPT enhances teaching, learning, research, and collaboration, providing a valuable and equitable resource for the entire university community

- Designed to be accessible, including for use with screen readers

- Provides access to GPT-3.5 and GPT-4 at no charge

https://chat.openai.com

- The knowledge cutoff for ChatGPT 3.5 is September 2021, and it has no access to the Internet. Academic users may consider alternatives such as Semantic Scholar, Elicit, Consensus, or other AI-powered tools for doing real-time literature reviews.

- Input/output length: ChatGPT-3.5 allows a maximum of 4096 tokens. According to ChatGPT, "On average, a token in English is roughly equivalent to 4 bytes or characters. English words are typically around 5 characters long. This means that, very roughly, you could fit around 800 to 1000 English words within 4096 tokens."
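The arithmetic behind such estimates can be sketched as follows. This is a rough illustration, assuming the figure of about 4 characters per token quoted above plus the commonly cited rule of thumb of about 0.75 English words per token; real counts depend on the tokenizer and the text, and the function names are hypothetical.

```python
CHARS_PER_TOKEN = 4      # figure quoted above; an approximation only
WORDS_PER_TOKEN = 0.75   # assumption: commonly cited rule of thumb for English


def estimate_tokens(text: str) -> int:
    """Rough token estimate from the character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def approx_word_budget(token_limit: int) -> int:
    """Approximate number of English words that fit in a token limit."""
    return int(token_limit * WORDS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_reply: int, token_limit: int = 4096) -> bool:
    """Check whether the prompt plus the tokens reserved for the reply fit the limit."""
    return estimate_tokens(prompt) + reserved_for_reply <= token_limit


if __name__ == "__main__":
    print(approx_word_budget(4096))
    print(fits_in_context("Summarize the methodologies used in these studies.", 1000))
```

Under these assumptions a 4096-token window corresponds to roughly 3,000 English words shared between the prompt and the reply, noticeably more than the 800 to 1000 words in the quoted estimate. Either way, long papers usually need to be pasted in sections.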

- Notes : (1) According to ChatGPT, the generated responses are non-deterministic by default. So if you run the searches again and get slightly or very different results than the ones listed on this guide, it's likely due to this factor. (2) While ChatGPT or Bing Chat searches offer conversational interaction, they might not be a substitute for the rigor and reliability of traditional academic database searches.

KEYWORD GENERATION

Write a literature review abstract based on the following keywords: Keywords: Artificial Intelligence, Employment Trends, Skill Development, Future Workforce

- Infant death

- Neonatal mortality (referring specifically to deaths within the first 28 days of life)

- Perinatal mortality (referring to deaths occurring during the period from 22 completed weeks of gestation to 7 completed days after birth)

- Early childhood mortality (referring to deaths occurring within the first five years of life)

- Child mortality (referring to deaths occurring before the age of 18)

[Press the Regenerate button to get more.]

- Example - RELATED WORDS What are the related words of infant mortality?

Neonatal mortality, Post-neonatal mortality, Stillbirths, Low birth weight, Malnutrition, Infectious diseases, Vaccination, Maternal health, Access to healthcare, Poverty, Social inequality, Sanitation, Hygiene, Water quality, Childbirth complications, Congenital abnormalities, Birth defects, Maternal age, Under-five mortality, Child mortality, Perinatal mortality, Preterm birth, Low birth weight, Sudden infant death syndrome (SIDS), Maternal mortality, Postnatal care, Malnutrition, Immunization, Access to healthcare, Clean water and sanitation, Congenital anomalies, Infant health disparities, Infant mortality rate (IMR), Infant survival.

(("Infant Death"[Mesh] OR "Sudden Infant Death Syndrome"[Mesh] OR "Infant Mortality"[Mesh] OR "Perinatal Mortality"[Mesh] OR "Neonatal Mortality"[Mesh] OR "Fetal Mortality"[Mesh] OR "Infant Death" OR "Sudden Infant Death Syndrome" OR "Infant Mortality" OR "Perinatal Mortality" OR "Neonatal Mortality" OR "Fetal Mortality") AND ("Risk Factors"[Mesh] OR "Etiology"[Mesh] OR "Prevention and Control"[Mesh] OR "Interventions"[Mesh] OR "Public Health"[Mesh] OR "Health Disparities"[Mesh] OR "Healthcare Disparities"[Mesh] OR "Poverty"[Mesh] OR "Malnutrition"[Mesh] OR "Infection"[Mesh] OR "Congenital Abnormalities"[Mesh] OR "Prematurity"[Mesh] OR "Low Birth Weight"[Mesh] OR "Maternal Education"[Mesh] OR "Maternal Health"[Mesh] OR "Risk Factors" OR "Etiology" OR "Prevention and Control" OR "Interventions" OR "Public Health" OR "Health Disparities" OR "Healthcare Disparities" OR "Poverty" OR "Malnutrition" OR "Infection" OR "Congenital Abnormalities" OR "Prematurity" OR "Low Birth Weight" OR "Maternal Education" OR "Maternal Health"))

Comments and Suggestions:

- #1 - This search string may not work on all database platforms. For example, Medline (OVID) may not be able to run it, and Medline & CINAHL (EBSCO) can run it without any problems, while PubMed will run it but will indicate which MeSH terms are not indexed. See PubMed's search History and Details under Advanced.

- #2 - This is just one possible search string, and you may need to adjust it based on your specific research questions and the databases you are using.

- #3 - It might be easier to break the task into three parts: ask the chatbot to give you the synonyms, then ask it to give you the thesaurus terms, and finally ask it to combine them.
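The three-part approach in suggestion #3 (synonyms, then thesaurus terms, then a combined string) can also be assembled programmatically once the chatbot has supplied the term lists. The sketch below is illustrative only: `or_block` and `build_search_string` are hypothetical helpers, the `[Mesh]` tag follows the PubMed-style syntax used in the string above, and every suggested thesaurus term still needs to be checked against the database itself.

```python
def or_block(mesh_terms, free_text_terms):
    """Join thesaurus terms (tagged [Mesh]) and free-text terms with OR."""
    tagged = [f'"{term}"[Mesh]' for term in mesh_terms]
    quoted = [f'"{term}"' for term in free_text_terms]
    return "(" + " OR ".join(tagged + quoted) + ")"


def build_search_string(concepts):
    """Combine one OR block per concept with AND.

    `concepts` is a list of (mesh_terms, free_text_terms) pairs.
    """
    return "(" + " AND ".join(or_block(m, f) for m, f in concepts) + ")"


if __name__ == "__main__":
    outcome = (["Infant Mortality"], ["Infant Mortality", "Neonatal Mortality"])
    factors = (["Risk Factors"], ["Risk Factors"])
    print(build_search_string([outcome, factors]))
```

Building the string from lists makes it easier to drop a term that a database reports as not indexed and rerun the search.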

RESEARCH QUESTION

- Increased risk of sudden infant death syndrome (SIDS)

- Increased risk of premature birth

- Increased risk of low birth weight

- Increased risk of respiratory problems in newborns

- Increased risk of respiratory problems in infants exposed to secondhand smoke

- Increased risk of developing asthma and other respiratory illnesses later in life for infants exposed to secondhand smoke

Please generate a literature review that addresses the research question: 'What are the effects of social media on the mental health of adolescents?'

[Author's note: If you ask for specific studies, ChatGPT may generate non-existent references or false knowledge. To find out why Large Language Models hallucinate, check out the Wikipedia article "Hallucination (artificial intelligence)" and the blog post "A Gentle Introduction to Hallucinations in Large Language Models" by Adrian Tam.]

DATABASE RECOMMENDATIONS

Health Science - Which databases to use to find articles on the EFFECTS OF MATERNAL SMOKING ON INFANT DEATH?

- PubMed: This is a database of biomedical literature from the National Library of Medicine. It includes articles from medical journals and other sources on a wide range of health-related topics.

- Embase: This is a database of biomedical literature that covers more international and pharmaceutical content than PubMed. It includes articles from medical journals and other sources on a wide range of health-related topics.

- Cochrane Library: This is a collection of databases that provides systematic reviews and meta-analyses of healthcare interventions, including interventions related to maternal smoking and infant death.

- Scopus: This is a multidisciplinary database that covers research from a wide range of fields, including health sciences. It includes articles from scientific journals, books, and conference proceedings.

- Web of Science: This is a database that covers research from a wide range of fields, including health sciences. It includes articles from scientific journals, books, and conference proceedings.

- CINAHL: This is a database of nursing and allied health literature, including articles on maternal smoking and infant death.

[Note: The response above was copied and pasted directly from ChatGPT. No editing was added.]

Education - I am doing a meta-analysis on K-12 STEM education. What databases should I use?

- ERIC: This is a database of education research and information, including articles from journals, reports, and conference papers. It is a commonly used database for educational research.

- Education Source: This is a comprehensive database of education research and information, including articles from journals, books, and conference papers.

- Web of Science: This is a multidisciplinary database that covers research from a wide range of fields, including education. It includes articles from scientific journals, books, and conference proceedings.

- Scopus: This is another multidisciplinary database that covers research from a wide range of fields, including education. It includes articles from scientific journals, books, and conference proceedings.

- PsycINFO: This is a database of psychology research, including articles from journals, books, and dissertations. It can be useful for research on STEM education that includes psychological factors.

- Cochrane Library: This is a collection of databases that provides systematic reviews and meta-analyses of healthcare interventions, including interventions related to education.

Example: ACT-AS-AN-EXPERT PROMPT

Here's an alternative way to consolidate all the questions into a single consultation by using the popular Act-As-An-Expert prompt, also known as a system message prompt:

I am going to conduct a systematic review on games and mathematics related to K-12 education. I want you to act as a systematic review expert. I will ask you some questions related to my research topic and you advise me on how to do it.
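When working through an API rather than the chat window, an Act-As-An-Expert prompt is typically sent as a system message ahead of the user's question. The following is a minimal sketch, assuming the role/content message format used by chat-style APIs such as OpenAI's Chat Completions. It only builds the message list and makes no network call, and `build_expert_messages` is a hypothetical helper name.

```python
def build_expert_messages(expert_role, topic, question):
    """Build a [system, user] message list for an Act-As-An-Expert consultation."""
    system = (
        f"I am going to conduct a systematic review on {topic}. "
        f"I want you to act as {expert_role}. "
        "I will ask you some questions related to my research topic "
        "and you advise me on how to do it."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    messages = build_expert_messages(
        expert_role="a systematic review expert",
        topic="games and mathematics related to K-12 education",
        question="Which databases should I search?",
    )
    for message in messages:
        print(f"{message['role']}: {message['content']}")
```

In the chat window the same effect is achieved by pasting the expert instruction as the first message and asking the questions afterwards.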

GREY LITERATURE

Please show me all the resources that offer grey literature for conducting research in the field of education

- ERIC (Education Resources Information Center): https://eric.ed.gov/

- OpenGrey: http://www.opengrey.eu/

- British Education Index: https://www.ebscohost.com/academic/british-education-index

- WorldCat: https://www.worldcat.org/

- ProQuest Dissertations & Theses Global: https://www.proquest.com/products-services/pqdtglobal.html

- EThOS (Electronic Theses Online Service): https://ethos.bl.uk/

- Institutional Repositories of universities and academic institutions (search for specific institution repositories)

- American Educational Research Association (AERA): https://www.aera.net/

- National Education Association (NEA): https://www.nea.org/

- Association for Supervision and Curriculum Development (ASCD): http://www.ascd.org/

- RAND Corporation: https://www.rand.org/

- National Center for Education Research (NCER): https://ies.ed.gov/ncer/

- National Center for Education Statistics (NCES): https://nces.ed.gov/

- European Educational Research Association (EERA): https://www.eera-ecer.de/

- UNESCO International Institute for Educational Planning (IIEP): http://www.iiep.unesco.org/

- Organization for Economic Cooperation and Development (OECD): https://www.oecd.org/education/

- Education Development Center (EDC): https://www.edc.org/

- National Institute for Educational Policy Research (NIER) - Japan: https://www.nier.go.jp/

- Australian Council for Educational Research (ACER): https://www.acer.org/

- Canadian Education Association (CEA): https://www.cea-ace.ca/

My topic is about STEM education. Which grey literature databases should I use?

- STEM Education Research Centre (SERC) Grey Literature Database

- National STEM Education Research Repository (NSERR)

- National Science Digital Library (NSDL)

- STEM Education Resource Center (SERC)

- GrayLit Network

- STEM Education Grey Literature Library


- Last Updated: Feb 29, 2024 10:31 AM

- URL: https://guides.lib.umich.edu/litreview

How to Use ChatGPT to Write a Literature Review With Prompts

Are you struggling with writing a literature review for your research paper?

Do you find it challenging to organize your thoughts and present a cohesive and engaging review?

Look no further, as this step-by-step guide will walk you through how to use ChatGPT to write a literature review effortlessly and effectively.

With the assistance of ChatGPT, you can enhance your writing process, create a well-structured literature review that will impress your readers, and save time on research.


Step 1: Familiarize Yourself with ChatGPT

To get started, visit the official website of ChatGPT at chat.openai.com

Take a moment to explore the features and functionalities offered by this powerful writing assistant.

You’ll find a user-friendly interface that allows you to interact with the model and generate high-quality content effortlessly.

Step 2: Understanding Prompts

Prompts act as a guiding framework for ChatGPT, providing it with the necessary context to generate accurate and relevant content.

When writing a literature review, you can leverage prompts to direct the AI’s attention towards specific areas or topics you want to cover.

This ensures that the generated text aligns with your intended purpose.

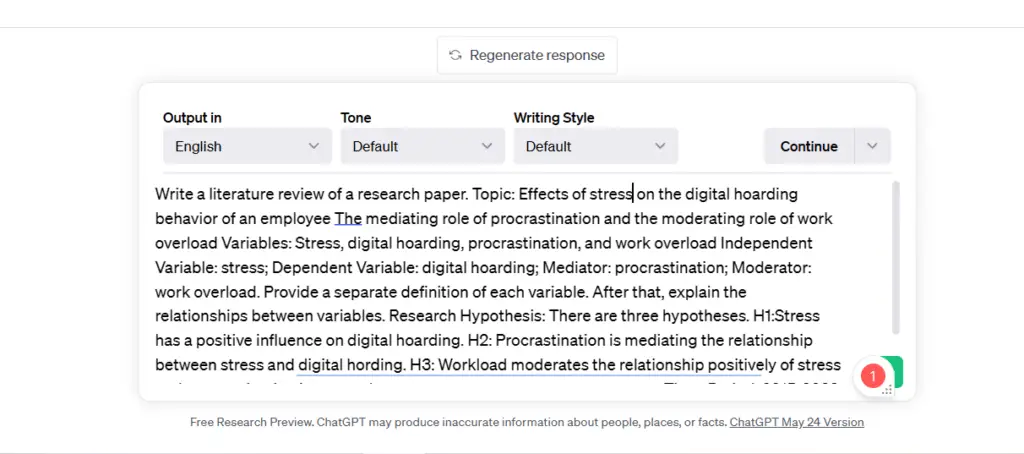

Step 3: Crafting an Effective Prompt

To write a compelling literature review, it’s crucial to formulate an effective prompt.

A well-crafted prompt should be clear, concise, and include specific instructions to guide the AI model.

Craft a prompt according to the requirements of your study. Describe in detail what you want your literature review to include: the variables used in the study, the relationships between them, and the research hypotheses.

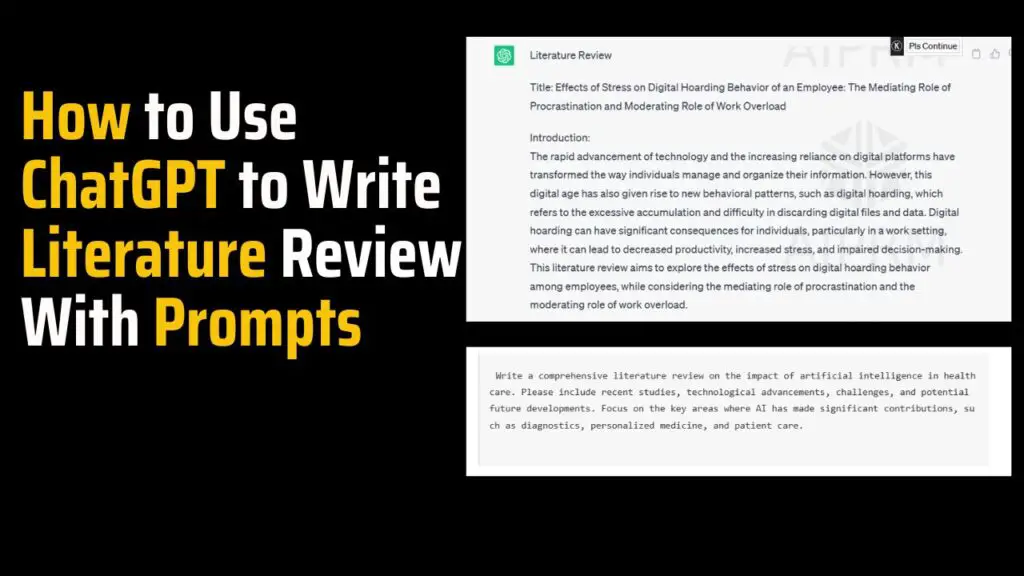

Example: Prompt # 1

Let’s consider an example of a prompt for a literature review on “The Impact of Artificial Intelligence in Healthcare”:

Example: Prompt # 2

Write a literature review of a research paper. Topic: Effects of Stress on digital hoarding behavior of an Employee. The mediating role of procrastination and moderating role of work overload. Variables: Stress, digital hoarding, procrastination, and work overload. Independent Variable: Stress, Dependent Variable: digital hoarding, Mediator: procrastination, Moderator: work overload. Provide a separate definition of each variable. After that explain the relationships between variables. Research Hypothesis: There are 3 hypotheses. H1: Stress has a positive influence on digital hoarding. H2: Procrastination mediates the relationship between stress and digital hoarding. H3: Work overload positively moderates the relationship between stress and procrastination in an employee. Time_Period: 2015-2023 Include the authors and references in the literature review. Also include citations. The references should be provided at the end.

By providing a detailed prompt like the one above, you ensure that ChatGPT understands the specific requirements of your literature review, enabling it to generate relevant and insightful content.
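A detailed prompt of this kind can also be assembled from structured fields, which makes it easier to reuse across studies. This is a minimal sketch rather than a prescribed method: the `ReviewSpec` class and `build_review_prompt` function are hypothetical names, and the field layout simply mirrors the variables, hypotheses, and time period in the example prompt.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewSpec:
    """Hypothetical container for the details a literature review prompt needs."""
    topic: str
    independent: str
    dependent: str
    mediator: str
    moderator: str
    hypotheses: list = field(default_factory=list)
    time_period: str = ""


def build_review_prompt(spec: ReviewSpec) -> str:
    """Assemble one prompt string from the structured fields."""
    lines = [
        "Write a literature review of a research paper.",
        f"Topic: {spec.topic}",
        f"Independent Variable: {spec.independent}",
        f"Dependent Variable: {spec.dependent}",
        f"Mediator: {spec.mediator}",
        f"Moderator: {spec.moderator}",
        "Provide a separate definition of each variable, "
        "then explain the relationships between the variables.",
    ]
    # Number the hypotheses H1, H2, ... in the order given.
    lines += [f"H{i}: {h}" for i, h in enumerate(spec.hypotheses, start=1)]
    if spec.time_period:
        lines.append(f"Time period: {spec.time_period}")
    lines.append("Include citations in the text and a reference list at the end.")
    return "\n".join(lines)


if __name__ == "__main__":
    spec = ReviewSpec(
        topic="Effects of stress on digital hoarding behavior of an employee",
        independent="stress",
        dependent="digital hoarding",
        mediator="procrastination",
        moderator="work overload",
        hypotheses=["Stress has a positive influence on digital hoarding."],
        time_period="2015-2023",
    )
    print(build_review_prompt(spec))
```

Because ChatGPT may generate non-existent references, any citations it returns in response to such a prompt should be checked against the original sources.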


Step 4: Interacting with ChatGPT

Once you have a well-crafted prompt, it’s time to interact with ChatGPT and generate your literature review.

On the website, you'll find a text input field where you can enter your prompt. Simply copy and paste the prompt we discussed in the previous step into the input field and press Enter or click the send button.

ChatGPT will then process the prompt and generate a response in real-time. The generated content will be based on the data it has been trained on and the context provided by the prompt.

Output Provided by ChatGPT

Literature Review

Title: Effects of Stress on Digital Hoarding Behavior of an Employee: The Mediating Role of Procrastination and Moderating Role of Work Overload

Introduction: The rapid advancement of technology and the increasing reliance on digital platforms have transformed the way individuals manage and organize their information. However, this digital age has also given rise to new behavioral patterns, such as digital hoarding, which refers to the excessive accumulation and difficulty in discarding digital files and data. Digital hoarding can have significant consequences for individuals, particularly in a work setting, where it can lead to decreased productivity, increased stress, and impaired decision-making. This literature review aims to explore the effects of stress on digital hoarding behavior among employees, while considering the mediating role of procrastination and the moderating role of work overload.

Definition of Variables:

- Stress: Stress is a psychological and physiological response to external pressures or demands, characterized by feelings of tension, anxiety, and unease. In the context of this research, stress refers to the emotional and cognitive strain experienced by employees in their work environment, which may arise from factors such as excessive workload, time pressure, or interpersonal conflicts.

- Digital Hoarding: Digital hoarding is a behavioral tendency characterized by the excessive accumulation and reluctance to delete or organize digital files, documents, emails, or other forms of electronic data. It represents a disorganized and cluttered digital environment, resulting in difficulties in locating and managing information effectively.

- Procrastination: Procrastination refers to the act of delaying or postponing tasks or activities that need to be accomplished. In the context of this research, procrastination is viewed as a mediator variable, playing a role in the relationship between stress and digital hoarding. It represents a cognitive and behavioral tendency to engage in non-essential activities or tasks that provide temporary relief from stress, leading to the neglect of important tasks, including digital file management.

- Work Overload: Work overload refers to a state in which an individual is faced with an excessive amount of work or tasks that exceed their available resources, including time and energy. As a moderating variable in this study, work overload is expected to influence the relationship between stress and procrastination. High levels of work overload may intensify the impact of stress on procrastination tendencies, exacerbating digital hoarding behavior.

Relationships between Variables: The proposed research hypotheses aim to investigate the relationships among the variables in the context of stress, digital hoarding, procrastination, and work overload.

Hypothesis 1 (H1): Stress has a positive influence on digital hoarding. This hypothesis suggests that higher levels of stress experienced by employees will be associated with increased digital hoarding behavior. Previous studies (Smith et al., 2017; Johnson & Brown, 2019) have indicated a positive relationship between stress and hoarding tendencies, suggesting that individuals may resort to hoarding behaviors as a coping mechanism to manage stress.

Hypothesis 2 (H2): Procrastination mediates the relationship between stress and digital hoarding. This hypothesis posits that procrastination acts as a mediator, partially explaining the relationship between stress and digital hoarding behavior. Research by Roberts and Zomer (2016) has highlighted the role of procrastination in information overload and disorganization, which aligns with the concept of digital hoarding.

Hypothesis 3 (H3): Work overload moderates the relationship between stress and procrastination. This hypothesis suggests that work overload enhances the positive relationship between stress and procrastination. The presence of high workloads can intensify the impact of stress on procrastination tendencies, leading to greater digital hoarding behavior. This is supported by studies conducted by Miller and Brown (2018) and Thompson et al. (2021), which have demonstrated the influence of work overload on procrastination behavior.

Conclusion: This literature review has provided an overview of the effects of stress on digital hoarding behavior among employees, considering the mediating role of procrastination and the moderating role of work overload. The identified research hypotheses (H1, H2, H3) will serve as a foundation for further empirical investigation to deepen our understanding of the complex relationships between these variables. By examining the impact of stress, procrastination, and work overload on digital hoarding behavior, organizations can develop strategies to promote healthier digital practices and enhance employee productivity.

References: Johnson, A. M., & Brown, T. A. (2019). Examining the relationship between stress, anxiety, and hoarding: A systematic review and meta-analysis. Journal of Obsessive-Compulsive and Related Disorders, 23, 100468.

Miller, R. B., & Brown, T. A. (2018). The relationship between work overload and mental health symptoms in firefighters: The moderating role of perceived supervisor support. Journal of Workplace Behavioral Health, 33(3), 213-229.

Roberts, L. D., & Zomer, A. C. (2016). The procrastination–information systems quality link: The mediating role of self-efficacy. Computers in Human Behavior, 65, 167-176.

Smith, C. G., et al. (2017). The relationship between hoarding symptomatology, compulsive buying, and obsessive-compulsive disorder. International Journal of Cognitive Therapy, 10(4), 332-348.

Thompson, M. A., et al. (2021). Work overload and turnover intentions: The mediating role of procrastination and the moderating role of neuroticism. Journal of Vocational Behavior, 128, 103603.

Step 5: Refining and Polishing

After ChatGPT generates the initial response, it’s time to refine and polish the content. Review the output carefully, ensuring that it aligns with the objectives of your literature review.
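One concrete review step is to check that every in-text citation in the generated draft has a matching entry in its reference list. The sketch below is an assumption-laden illustration: `cited_keys` and `missing_references` are hypothetical helper names, and the regular expression only handles simple author-year citations with plain surnames. It checks internal consistency only; it cannot tell you whether a reference actually exists, so each entry still needs to be looked up in a database or on the publisher's site.

```python
import re

# Matches author-year citations such as "Smith et al., 2017",
# "Johnson & Brown, 2019", and "Roberts and Zomer (2016)".
CITATION = re.compile(
    r"([A-Z][a-z]+)"                                # first author's surname
    r"(?:\s+(?:et al\.|(?:&|and)\s+[A-Z][a-z]+))?"  # optional co-authors
    r"(?:,\s*|\s+\()(\d{4})"                        # ", 2017" or " (2017"
)


def cited_keys(text):
    """Return the set of (surname, year) pairs cited in the text."""
    return set(CITATION.findall(text))


def missing_references(text, references):
    """List cited (surname, year) pairs with no matching reference entry."""
    missing = []
    for surname, year in sorted(cited_keys(text)):
        found = any(
            ref.startswith(surname + ",") and f"({year})" in ref
            for ref in references
        )
        if not found:
            missing.append((surname, year))
    return missing


draft = (
    "Studies (Smith et al., 2017; Johnson & Brown, 2019) "
    "and Lee (2020) disagree."
)
references = [
    "Johnson, A. M., & Brown, T. A. (2019). Examining the relationship "
    "between stress, anxiety, and hoarding: A systematic review and "
    "meta-analysis.",
    "Smith, C. G., et al. (2017). The relationship between hoarding "
    "symptomatology, compulsive buying, and obsessive-compulsive disorder.",
]
print(missing_references(draft, references))
```

Here the hypothetical citation "Lee (2020)" is reported because no reference entry starts with that surname and contains that year.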

You can ask ChatGPT to explain any of the variables in more detail.

It’s good to give ChatGPT a detailed prompt: the more specific the prompt, the more closely the literature review will match your needs.

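Note: ChatGPT writes new text rather than copying passages verbatim, but that does not guarantee a plagiarism-free literature review. Run the draft through a plagiarism checker, and confirm that every reference it cites actually exists before you rely on it.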

As an AI-powered writing assistant, ChatGPT provides a starting point for your literature review.

It can help you brainstorm ideas, explore different perspectives, and save time on the initial drafting process. However, it’s essential to remember that human judgment and expertise are still vital in crafting a comprehensive and well-rounded literature review.

Step 6: Iterative Process

Writing a literature review is often an iterative process. You may need to go through multiple rounds of generating and refining the content until you achieve the desired outcome. Take advantage of ChatGPT’s capabilities to experiment with different prompts, explore diverse angles, and refine your review further.

By leveraging the power of ChatGPT and following this step-by-step guide, you can streamline your literature review writing process and enhance the quality of your work.

ChatGPT is a valuable tool for writing literature reviews. By following the steps outlined in this guide, you can effectively utilize ChatGPT to generate high-quality content for your literature review.
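If you script these rounds instead of typing them into the website, the conversation is usually kept as a growing list of messages so that each follow-up request builds on the earlier draft. The sketch below only shows that bookkeeping, assuming the common role/content message format used by chat-style APIs; `start_conversation` and `add_round` are hypothetical helper names, and no API call is made here.

```python
def start_conversation(prompt):
    """Begin a message history with the initial literature-review prompt."""
    return [{"role": "user", "content": prompt}]


def add_round(history, reply, follow_up):
    """Return a new history with the model's reply and the next request."""
    return history + [
        {"role": "assistant", "content": reply},
        {"role": "user", "content": follow_up},
    ]


history = start_conversation("Write a literature review of a research paper.")
# The reply text below is a placeholder, not real model output.
history = add_round(
    history,
    reply="(first draft of the literature review)",
    follow_up="Expand the definition of digital hoarding.",
)
history = add_round(
    history,
    reply="(revised draft)",
    follow_up="Explain the moderating role of work overload in more detail.",
)

for message in history:
    print(message["role"], ":", message["content"])
```

Keeping the full history means each refinement request is read in the context of the previous drafts, which mirrors what happens when you continue the same chat on the website.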

Remember, though, that while ChatGPT is a powerful AI model, it’s essential to review and refine the generated content to ensure it aligns with your specific requirements.

Misbah Rashid, an expert in Technology Management, holds an MBA and an MS in Information Systems and Technology Management. She has experience teaching marketing and technology in business at the university level.

Step-by-Step Guide: How to Use ChatGPT to Write a Literature Review With Prompts

Writing a literature review can be a challenging task for researchers and students alike. It requires a comprehensive understanding of the existing body of research on a particular topic. However, with the advent of advanced language models like ChatGPT, the process has become more accessible and efficient.

In this step-by-step guide, ilovephd explores how you can leverage ChatGPT to write a compelling and informative literature review.

Discover how to effectively utilize ChatGPT as a research assistant to write a comprehensive and SEO-friendly literature review. Follow our step-by-step guide to leverage this powerful tool, optimize your review for search engines, and contribute to the scholarly conversation in your field.

A Step-by-Step Guide: How to Use ChatGPT for Writing a Literature Review

Step 1: Defining Your Research Objective

Before diving into the literature review process, it is crucial to define your research objective.

Clearly articulate the topic, research question, or hypothesis you aim to address through your literature review. This step will help you maintain focus and guide your search for relevant sources.

Step 2: Identifying Keywords and Search Terms

To effectively use ChatGPT to assist in your literature review, you need to identify relevant keywords and search terms related to your research topic.

These keywords will help you narrow down your search and gather pertinent information. Consider using tools like Google Keyword Planner or other keyword research tools to discover commonly used terms in your field.
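Once you have a keyword list, one common way to use it is to combine synonyms with OR and separate concepts with AND to form a search string for a scholarly database. The following is a minimal sketch of that idea; `boolean_query` is a hypothetical helper name, and the exact operator syntax accepted varies between databases, so treat the output as a starting point.

```python
def boolean_query(concepts):
    """Join synonym groups with OR, and the groups themselves with AND.

    `concepts` is a list of lists: each inner list holds alternative
    terms for one concept. Multi-word terms are wrapped in quotes so
    they are searched as phrases.
    """
    groups = []
    for terms in concepts:
        quoted = [f'"{term}"' if " " in term else term for term in terms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


query = boolean_query([
    ["stress", "strain"],
    ["digital hoarding", "data hoarding"],
    ["procrastination"],
])
print(query)
# (stress OR strain) AND ("digital hoarding" OR "data hoarding") AND (procrastination)
```

The same keyword groups can also be listed in your ChatGPT prompt so that the generated review stays focused on the concepts you searched for.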