The Visual Display of Quantitative Information

Product details

- Publisher: Graphics Press; 2nd edition (February 14, 2001)

- Language: English

- Paperback: 197 pages

- ISBN-10: 1930824130

- ISBN-13: 978-1930824133

- Item Weight: 1.65 pounds

- Dimensions: 11 x 9 x 1 inches

About the author

Edward R. Tufte

Statistician/visualizer/artist Edward Tufte is Professor Emeritus of Political Science, Statistics, and Computer Science at Yale University. He wrote, designed, and self-published 5 classic books on data visualization.

The New York Times described Tufte as the "Leonardo da Vinci of data," and Bloomberg as the "Galileo of graphics."

Having completed his book Seeing With Fresh Eyes: Meaning, Space, Data, Truth, ET is now constructing a 234-acre tree farm and sculpture park in northwest Connecticut, which will show his artworks and remain open space in perpetuity.

He founded Graphics Press, ET Modern Gallery/Studio, and Hogpen Hill Farms.

1.3: Visual Representation of Data II - Quantitative Variables

- Jonathan A. Poritz

- Colorado State University – Pueblo

Now suppose we have a population and a quantitative variable in which we are interested. We get a sample, which could be large or small, and look at the values of our variable for the individuals in that sample. There are two ways we tend to make pictures of datasets like this: stem-and-leaf plots and histograms.

Stem-and-leaf Plots

One somewhat old-fashioned way to handle a modest amount of quantitative data produces something between simply a list of all the data values and a graph. It’s not a bad technique to know about in case one has to write down a dataset by hand, but it is very tedious (and quite unnecessary, if one uses modern electronic tools instead) if the dataset has more than a couple dozen values. The easiest case of this technique is where the data are all whole numbers in the range \(0\) to \(99\). In that case, one can take off the tens place of each number, call it the stem, and put it on the left side of a vertical bar, and then line up all the ones places, each of which is a leaf, to the right of that stem. The whole thing is called a stem-and-leaf plot or, sometimes, just a stemplot.

It’s important not to skip any stems which are in the middle of the dataset, even if there are no corresponding leaves. It is also a good idea to allow repeated leaves, if there are repeated numbers in the dataset, so that the length of the row of leaves will give a good representation of how much data is in that general group of data values.

Example 1.3.1. Here is a list of the scores of 30 students on a statistics test: \[\begin{matrix} 86 & 80 & 25 & 77 & 73 & 76 & 88 & 90 & 69 & 93\\ 90 & 83 & 70 & 73 & 73 & 70 & 90 & 83 & 71 & 95\\ 40 & 58 & 68 & 69 & 100 & 78 & 87 & 25 & 92 & 74 \end{matrix}\] As we said, using the tens place (and the hundreds place as well, for the data value \(100\)) as the stem and the ones place as the leaf, we get

 2 | 5 5
 3 |
 4 | 0
 5 | 8
 6 | 8 9 9
 7 | 0 0 1 3 3 3 4 6 7 8
 8 | 0 3 3 6 7 8
 9 | 0 0 0 2 3 5
10 | 0
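The construction in Example 1.3.1 can be sketched in Python as follows. This is a minimal illustration, assuming the data are non-negative whole numbers: the stem is `x // 10` (so \(100\) lands on stem 10, as in the example) and the leaf is `x % 10`. Every stem between the smallest and largest is printed even when it has no leaves, and repeated leaves are kept. The function name `stemplot` is only illustrative.

```python
def stemplot(data):
    """Return stem-and-leaf rows for non-empty data of non-negative whole numbers.

    Stem = everything but the ones place (x // 10); leaf = ones place (x % 10).
    Empty stems in the middle are kept, and repeated leaves are all listed.
    """
    leaves = {}
    for x in sorted(data):  # sorting first puts each row's leaves in order
        leaves.setdefault(x // 10, []).append(x % 10)
    lo, hi = min(leaves), max(leaves)
    width = len(str(hi))  # right-align stems so the bars line up
    rows = []
    for stem in range(lo, hi + 1):  # do not skip empty stems
        leaf_text = " ".join(str(leaf) for leaf in leaves.get(stem, []))
        rows.append(f"{stem:>{width}} | {leaf_text}".rstrip())
    return rows


# Test scores from Example 1.3.1
scores = [86, 80, 25, 77, 73, 76, 88, 90, 69, 93,
          90, 83, 70, 73, 73, 70, 90, 83, 71, 95,
          40, 58, 68, 69, 100, 78, 87, 25, 92, 74]

for row in stemplot(scores):
    print(row)
```

Because the rows are built from every stem in the range, the length of each printed row of leaves is exactly the count of scores with that stem.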

One nice feature stem-and-leaf plots have is that they contain all of the data values; they do not lose anything (unlike our next visualization method, for example).

[Frequency] Histograms

The most important visual representation of quantitative data is a histogram. Histograms actually look a lot like a stem-and-leaf plot, except turned on its side and with each row of numbers turned into a vertical bar, like a bar graph. The height of each of these bars would be how many leaves were in the corresponding row of the stem-and-leaf plot.

Another way of saying that is that we would be making bars whose heights were determined by how many scores were in each group of ten. Note there is still a question of into which bar a value right on the edge would count: e.g., does the data value \(50\) count in the bar to the left of that number, or the bar to the right? It doesn’t actually matter which side, but it is important to state which choice is being made.

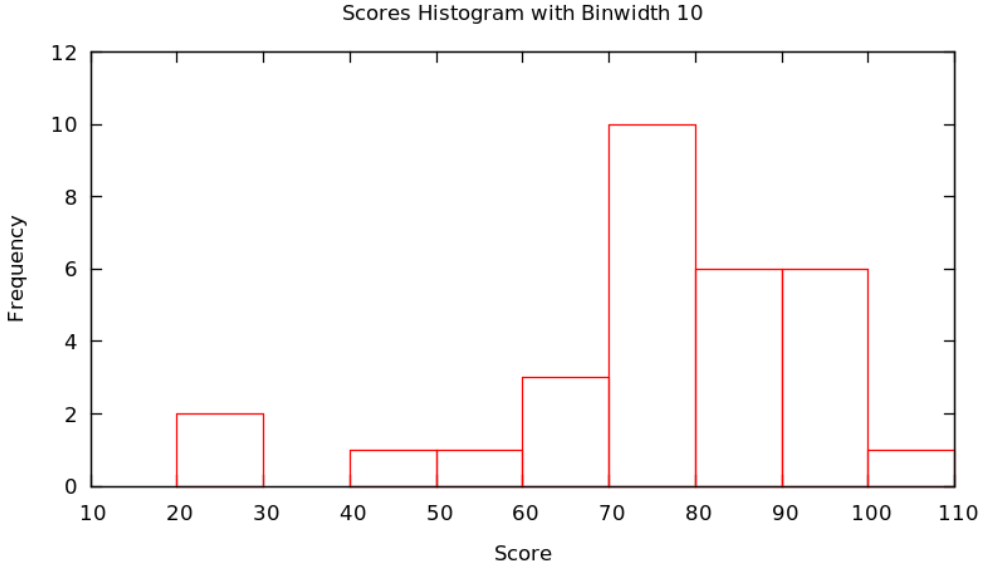

Example 1.3.2. Continuing with the score data in Example 1.3.1 and putting all data values \(x\) satisfying \(20\le x<30\) in the first bar, values \(x\) satisfying \(30\le x<40\) in the second, values \(x\) satisfying \(40\le x<50\) in the third, etc. (that is, putting data values on the edges in the bar to the right), we get the figure
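The counting behind Example 1.3.2 can be sketched in Python. Assuming bins of width 10 that start at 20, each closed on the left and open on the right (so a value on an edge goes to the bar on the right), a value \(x\) lands in bin number \(\lfloor (x-20)/10 \rfloor\). The names `frequency_counts`, `start`, and `width` are illustrative, and the text-mode bars stand in for a real plot.

```python
def frequency_counts(data, start, width):
    """Count values in bins [start + k*width, start + (k+1)*width).

    Assumes start <= min(data). Integer division sends a value sitting on an
    edge to the bin on its right. Empty bins up to the last occupied one are kept.
    """
    n_bins = (max(data) - start) // width + 1
    counts = [0] * n_bins
    for x in data:
        counts[(x - start) // width] += 1
    return counts


# Test scores from Example 1.3.1
scores = [86, 80, 25, 77, 73, 76, 88, 90, 69, 93,
          90, 83, 70, 73, 73, 70, 90, 83, 71, 95,
          40, 58, 68, 69, 100, 78, 87, 25, 92, 74]

counts = frequency_counts(scores, start=20, width=10)
for k, c in enumerate(counts):
    left = 20 + 10 * k
    print(f"{left:>3} <= x < {left + 10:<3} {'#' * c}")
```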

Actually, there is no reason that the bars always have to be ten units wide: it is important that they are all the same size and that the way they handle the edge cases (whether the left or right bar gets a data value on an edge) is stated, but they could be any size. We call the successive ranges of \(x\) coordinates which get put together for each bar the bins or classes, and it is up to the statistician to choose whichever bins (where they start and how wide they are) show the data best.
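To make the edge-case choice explicit, here is a hedged sketch of a bin-index function with a hypothetical `right_closed` flag (not from the text). With the default, bins are \([a, b)\) and an edge value goes to the bar on the right, as in Example 1.3.2; with `right_closed=True`, bins are \((a, b]\) and the same value goes to the bar on the left. Either choice is fine, as long as it is stated.

```python
import math


def bin_index(x, start, width, right_closed=False):
    """Index k of the bin containing x, for equal-width bins beginning at start.

    right_closed=False: bins are [start + k*width, start + (k+1)*width),
                        so a value on an edge belongs to the bin on its right.
    right_closed=True:  bins are (start + k*width, start + (k+1)*width],
                        so a value on an edge belongs to the bin on its left.
    """
    if right_closed:
        return math.ceil((x - start) / width) - 1
    return math.floor((x - start) / width)


# The value 50 sits on the edge between the 40s bar and the 50s bar.
print(bin_index(50, start=20, width=10))                     # 3, the bin 50 <= x < 60
print(bin_index(50, start=20, width=10, right_closed=True))  # 2, the bin 40 < x <= 50
```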

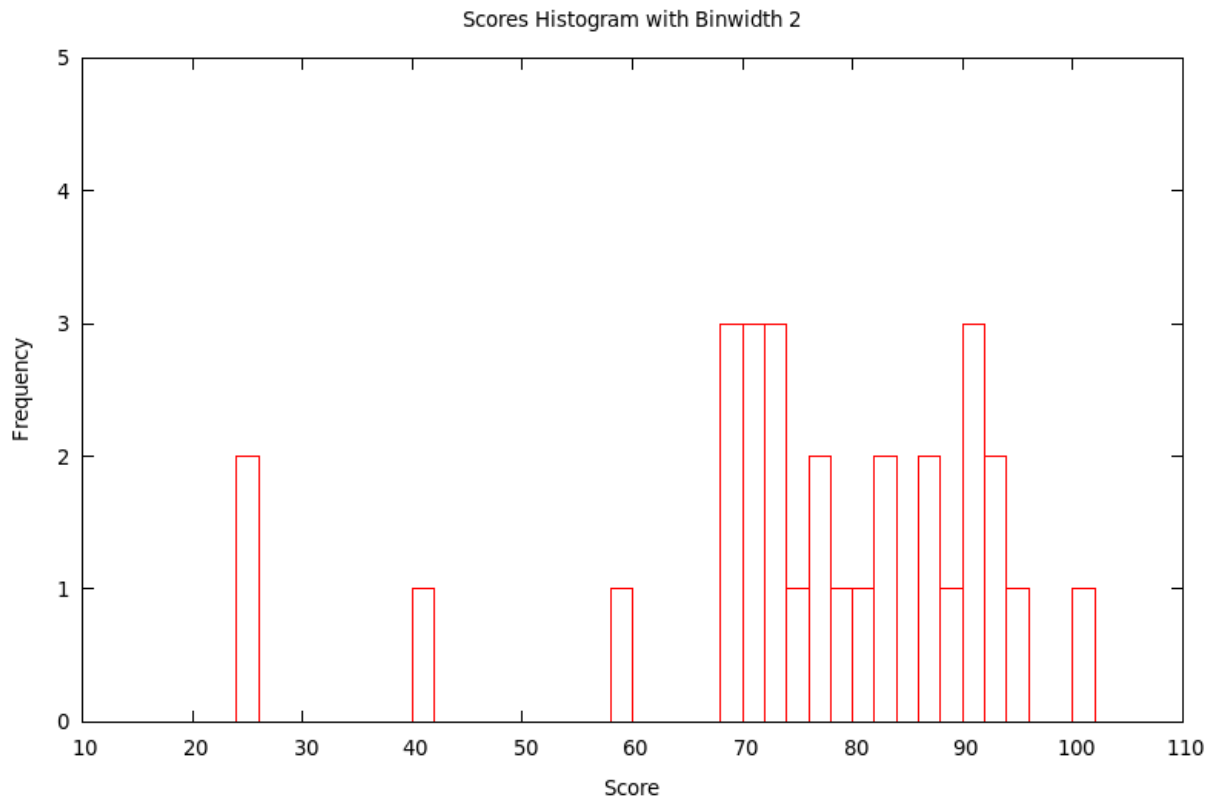

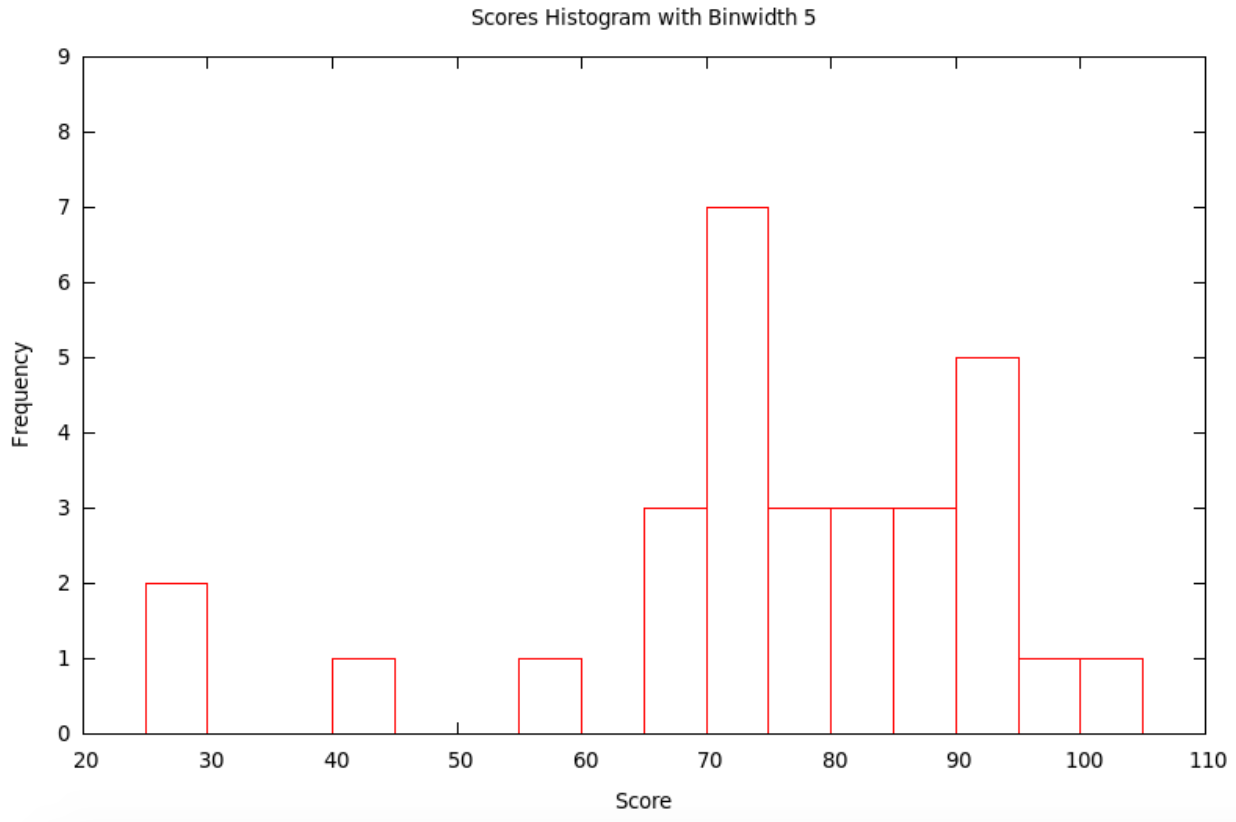

Typically, the smaller the bin size, the more variation (precision) can be seen in the bars ... but sometimes there is so much variation that the result seems to have a lot of random jumps up and down, like static on the radio. On the other hand, using a large bin size makes the picture smoother ... but sometimes, it is so smooth that very little information is left. Some of this is shown in the following example.

Example 1.3.3. Continuing with the score data in Example 1.3.1 and now using the bins with \(x\) satisfying \(10\le x<12\), then \(12\le x<14\), etc., we get the histogram with bins of width 2:

If we use the bins with \(x\) satisfying \(10\le x<15\), then \(15\le x<20\), etc., we get the histogram with bins of width 5:

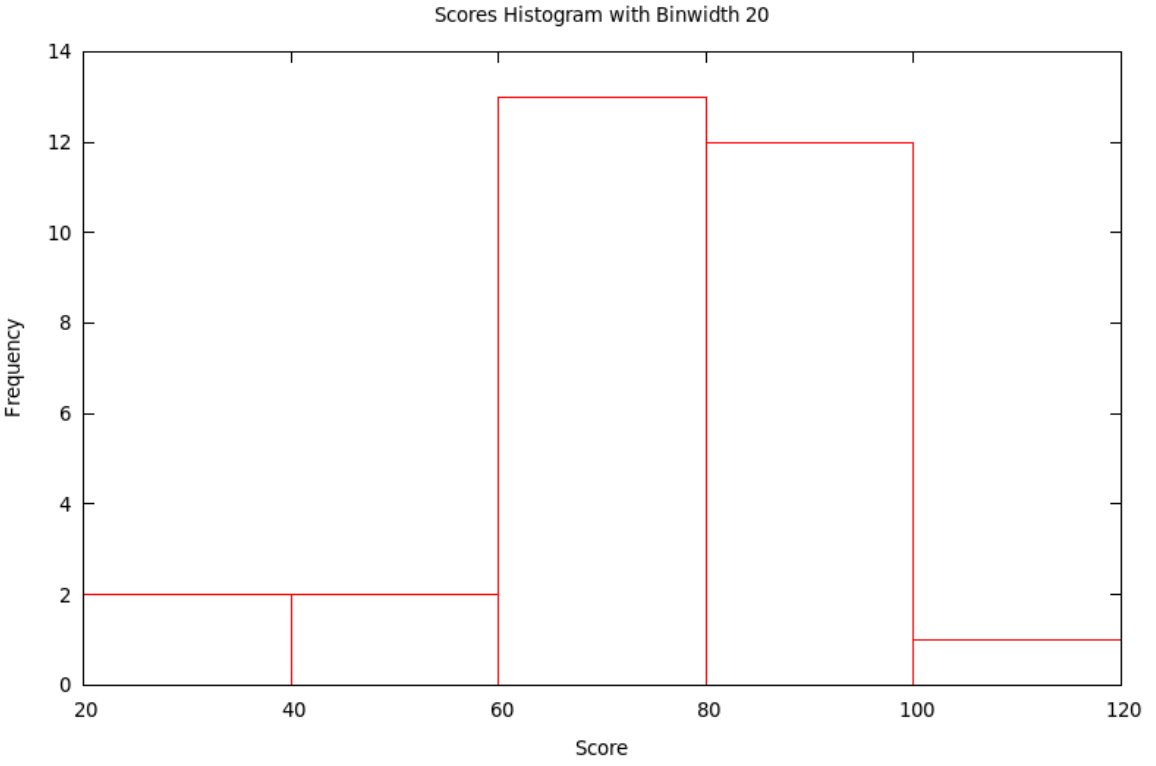

If we use the bins with \(x\) satisfying \(20\le x<40\), then \(40\le x<60\), etc., we get the histogram with bins of width 20:

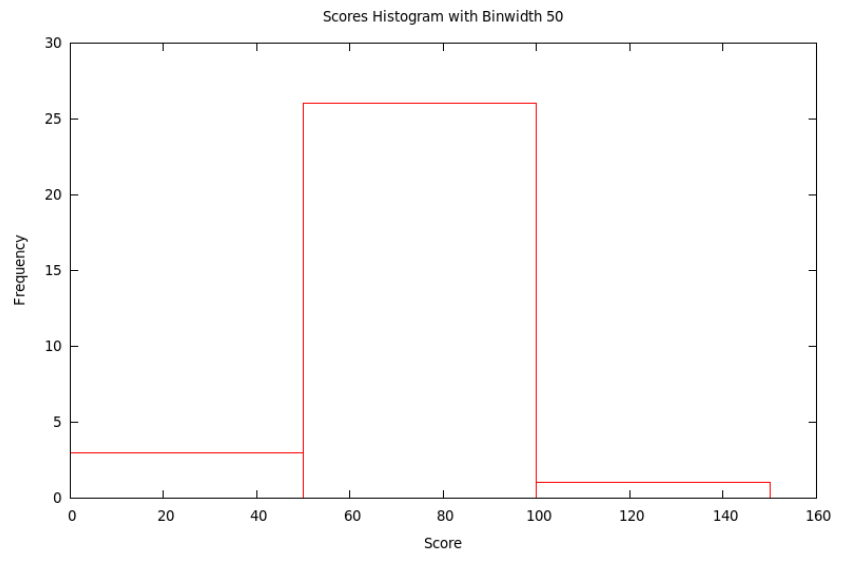

Finally, if we use the bins with \(x\) satisfying \(0\le x<50\), then \(50\le x<100\), and then \(100\le x<150\), we get the histogram with bins of width 50:
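The trade-off in Example 1.3.3 can also be checked numerically. The sketch below assumes left-closed bins and the starting points used in the example (10 for widths 2 and 5, 20 for width 20, 0 for width 50), and reports how many bars each choice produces and how tall the tallest one is: narrow bins give many short, jumpy bars, while wide bins give a few tall ones. The function name `bin_counts` is illustrative.

```python
from collections import Counter


def bin_counts(data, start, width):
    """Counts for left-closed bins [start + k*width, start + (k+1)*width).

    Assumes start <= min(data); empty bins from the first bin up to the
    last occupied one are included with count 0.
    """
    tally = Counter((x - start) // width for x in data)
    return [tally[k] for k in range(max(tally) + 1)]


# Test scores from Example 1.3.1
scores = [86, 80, 25, 77, 73, 76, 88, 90, 69, 93,
          90, 83, 70, 73, 73, 70, 90, 83, 71, 95,
          40, 58, 68, 69, 100, 78, 87, 25, 92, 74]

for width, start in [(2, 10), (5, 10), (20, 20), (50, 0)]:
    counts = bin_counts(scores, start, width)
    print(f"width {width:>2}: {len(counts):>2} bars, tallest bar = {max(counts):>2}")
```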

[Relative Frequency] Histograms

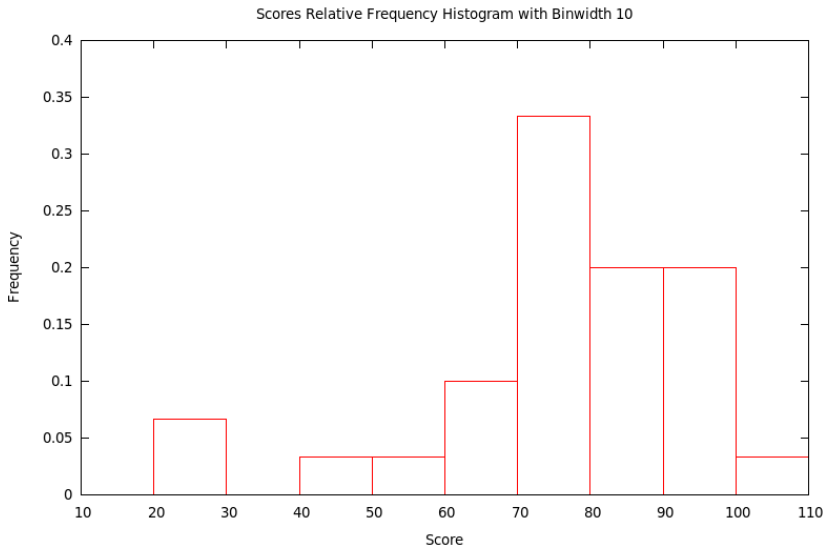

Just as we could have bar charts with absolute (§2.1) or relative (§2.2) frequencies, we can do the same for histograms. Above, in §3.2, we made absolute frequency histograms. If, instead, we divide each of the counts used to determine the heights of the bars by the total sample size, we will get fractions or percents, that is, relative frequencies. We should then change the label on the \(y\)-axis and the tick-mark numbers on the \(y\)-axis, but otherwise the graph will look exactly the same (as it did with relative frequency bar charts compared with absolute frequency bar charts).

Example 1.3.4. Let’s make the relative frequency histogram corresponding to the absolute frequency histogram in Example 1.3.2 based on the data from Example 1.3.1 – all we have to do is change the numbers used to make heights of the bars in the graph by dividing them by the sample size, 30, and then also change the \(y\) -axis label and tick mark numbers.
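The arithmetic of Example 1.3.4 is one division per bar. This sketch recomputes the width-10 counts from the Example 1.3.1 scores (left-closed bins starting at 20) and divides each by the sample size, using `fractions.Fraction` so the heights are exact and visibly sum to 1.

```python
from fractions import Fraction

# Test scores from Example 1.3.1
scores = [86, 80, 25, 77, 73, 76, 88, 90, 69, 93,
          90, 83, 70, 73, 73, 70, 90, 83, 71, 95,
          40, 58, 68, 69, 100, 78, 87, 25, 92, 74]
n = len(scores)  # sample size, 30

# Absolute frequencies for left-closed bins of width 10 starting at 20.
counts = [0] * ((max(scores) - 20) // 10 + 1)
for x in scores:
    counts[(x - 20) // 10] += 1

# Relative frequency = count / sample size; only bar heights and y-axis labels change.
rel_freq = [Fraction(c, n) for c in counts]

for k, (c, r) in enumerate(zip(counts, rel_freq)):
    left = 20 + 10 * k
    print(f"{left:>3} <= x < {left + 10:<3}  count = {c:>2}  relative = {float(r):.3f} ({float(r):.1%})")
```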

How to Talk About Histograms

Histograms of course tell us what the data values are (the location of a bar along the \(x\)-axis is the value of the variable) and how many of them have each particular value (the height of the bar tells how many data values are in that bin). This information is also given a technical name:

Definition. Given a variable defined on a population, or at least on a sample, the distribution of that variable is a list of all the values the variable actually takes on and how many times it takes on these values.
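Read literally, this definition is a table of distinct values and their counts. For whole-number data, Python's `collections.Counter` builds it directly. The sketch below uses the scores from Example 1.3.1; a histogram then groups these values into bins.

```python
from collections import Counter

# Test scores from Example 1.3.1
scores = [86, 80, 25, 77, 73, 76, 88, 90, 69, 93,
          90, 83, 70, 73, 73, 70, 90, 83, 71, 95,
          40, 58, 68, 69, 100, 78, 87, 25, 92, 74]

# Distribution: each value the variable actually takes, with how many times it occurs.
distribution = Counter(scores)

for value in sorted(distribution):
    print(value, distribution[value])
```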

The reason we like the visual version of a distribution, its histogram, is that our visual intuition can then help us answer general, qualitative questions about what those data must be telling us. The first questions we usually want to answer quickly about the data are:

- What is the shape of the histogram?

- Where is its center?

- How much variability [also called spread] does it show?

When we talk about the general shape of a histogram, we often use the following terms.

Definition. A histogram is symmetric if the left half is (approximately) the mirror image of the right half.

We say a histogram is skewed left if the tail on the left side is longer than on the right. In other words, left skew is when the left half of the histogram (half in the sense that the total of the bars in this left part is half of the size of the dataset) extends farther to the left than the right does to the right. Conversely, the histogram is skewed right if the right half extends farther to the right than the left does to the left.
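The half-based description of skew can be turned into a rough numerical check. In the sketch below, which is one possible reading of the definition rather than a standard statistical test, the median splits the data into halves, and we compare how far each half reaches: `median - min` for the left half and `max - median` for the right. The function name and its `tolerance` parameter are hypothetical, and a single extreme value can dominate the result.

```python
import statistics


def skew_direction(data, tolerance=0.1):
    """Rough skew label: compare the reach of the left half with that of the right half.

    The median splits the data into halves; the left half reaches (median - min)
    and the right half reaches (max - median). If the two reaches differ by at
    most `tolerance` times the range, call the shape roughly symmetric.
    """
    med = statistics.median(data)
    left_reach = med - min(data)
    right_reach = max(data) - med
    if abs(left_reach - right_reach) <= tolerance * (max(data) - min(data)):
        return "roughly symmetric"
    return "skewed left" if left_reach > right_reach else "skewed right"


# Test scores from Example 1.3.1: median 76.5, so the left half reaches 51.5, the right only 23.5.
scores = [86, 80, 25, 77, 73, 76, 88, 90, 69, 93,
          90, 83, 70, 73, 73, 70, 90, 83, 71, 95,
          40, 58, 68, 69, 100, 78, 87, 25, 92, 74]
print(skew_direction(scores))
```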

If the shape of the histogram has one significant peak, then we say it is unimodal, while if it has several such, we say it is multimodal.
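Whether a peak is “significant” is a judgment call, but a simple rule of thumb can be sketched. The code below counts runs of bars that are taller than the runs on both sides (treating the space beyond the ends as height 0, and merging equal neighbouring bars so a flat-topped peak counts once). It also requires each peak to reach at least `min_fraction` of the tallest bar. The 0.5 threshold and both function names are arbitrary assumptions.

```python
from itertools import groupby


def count_peaks(heights, min_fraction=0.5):
    """Count significant peaks in a list of bar heights.

    Equal consecutive bars are merged into one run. A run is a peak if it is
    taller than the runs on both sides (zero padding at the ends) and at
    least min_fraction of the tallest bar.
    """
    runs = [h for h, _ in groupby([0, *heights, 0])]
    threshold = min_fraction * max(heights)
    return sum(
        1
        for i in range(1, len(runs) - 1)
        if runs[i - 1] < runs[i] > runs[i + 1] and runs[i] >= threshold
    )


def modality(heights, min_fraction=0.5):
    n = count_peaks(heights, min_fraction)
    return "unimodal" if n == 1 else "multimodal" if n > 1 else "no clear peak"


# Width-10 bar heights for the Example 1.3.1 scores: the small bar at the far left is ignored.
print(modality([2, 0, 1, 1, 3, 10, 6, 6, 1]))
```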

It is often easy to point to where the center of a distribution looks like it lies, but it is hard to be precise. It is particularly difficult if the histogram is “noisy,” maybe multimodal. Similarly, looking at a histogram, it is often easy to say it is “quite spread out” or “very concentrated in the center,” but it is then hard to go beyond this general sense.

Precision in our discussion of the center and spread of a dataset will only be possible in the next section, when we work with numerical measures of these features.

17 Data Visualization Techniques All Professionals Should Know

- 17 Sep 2019

There’s a growing demand for business analytics and data expertise in the workforce. But you don’t need to be a professional analyst to benefit from data-related skills.

Becoming skilled at common data visualization techniques can help you reap the rewards of data-driven decision-making, including increased confidence and potential cost savings. Learning how to effectively visualize data could be the first step toward using data analytics and data science to your advantage to add value to your organization.

Several data visualization techniques can help you become more effective in your role. Here are 17 essential data visualization techniques all professionals should know, as well as tips to help you effectively present your data.

What Is Data Visualization?

Data visualization is the process of creating graphical representations of information. This process helps the presenter communicate data in a way that’s easy for the viewer to interpret and draw conclusions from.

There are many different techniques and tools you can leverage to visualize data, so you want to know which ones to use and when. Here are some of the most important data visualization techniques all professionals should know.

Data Visualization Techniques

The type of data visualization technique you leverage will vary based on the type of data you’re working with, in addition to the story you’re telling with your data.

Here are some important data visualization techniques to know:

- Pie Chart

- Bar Chart

- Histogram

- Gantt Chart

- Heat Map

- Box and Whisker Plot

- Waterfall Chart

- Area Chart

- Scatter Plot

- Pictogram Chart

- Timeline

- Highlight Table

- Bullet Graph

- Choropleth Map

- Word Cloud

- Network Diagram

- Correlation Matrices

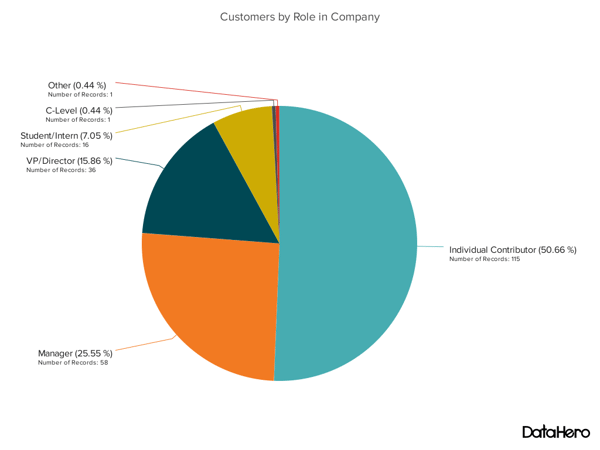

1. Pie Chart

Pie charts are one of the most common and basic data visualization techniques, used across a wide range of applications. Pie charts are ideal for illustrating proportions, or part-to-whole comparisons.

Because pie charts are relatively simple and easy to read, they’re best suited for audiences who might be unfamiliar with the information or are only interested in the key takeaways. For viewers who require a more thorough explanation of the data, pie charts fall short in their ability to display complex information.

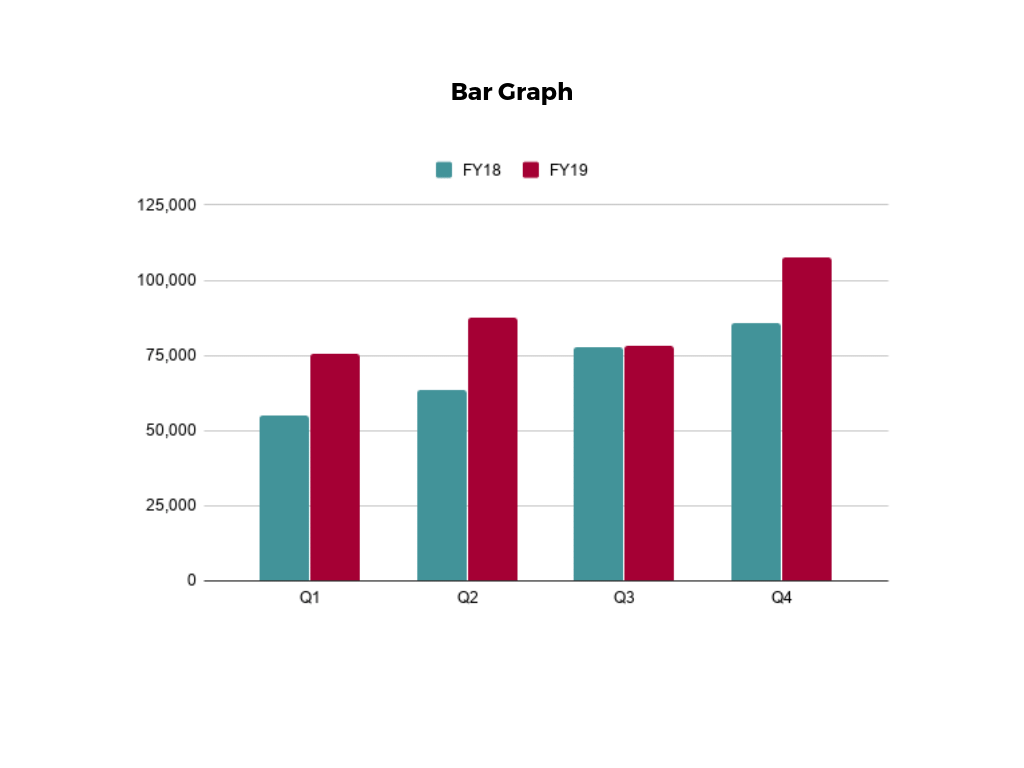

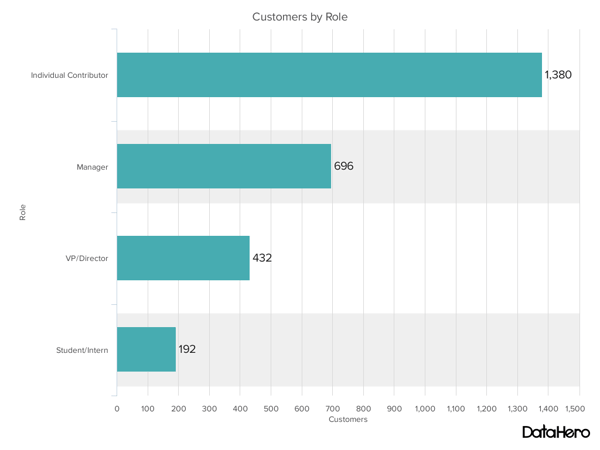

2. Bar Chart

The classic bar chart, or bar graph, is another common and easy-to-use method of data visualization. In this type of visualization, one axis of the chart shows the categories being compared, and the other, a measured value. The length of the bar indicates how each group measures according to the value.

One drawback is that labeling and clarity can become problematic when there are too many categories included. Like pie charts, they can also be too simple for more complex data sets.

3. Histogram

Unlike bar charts, histograms illustrate the distribution of data over a continuous interval or defined period. These visualizations are helpful in identifying where values are concentrated, as well as where there are gaps or unusual values.

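Histograms are especially useful for showing how often values fall within particular ranges. For instance, if you’ve recorded how many clicks your website received each day over the last several months, a histogram can show how many days fell into each range of daily clicks (say, 0 to 99, 100 to 199, and so on). From this visualization, you can quickly see which daily click totals are typical and which are unusually high or low. (To compare the click totals of individual days, such as each day of the last week, a bar chart is the better fit.)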

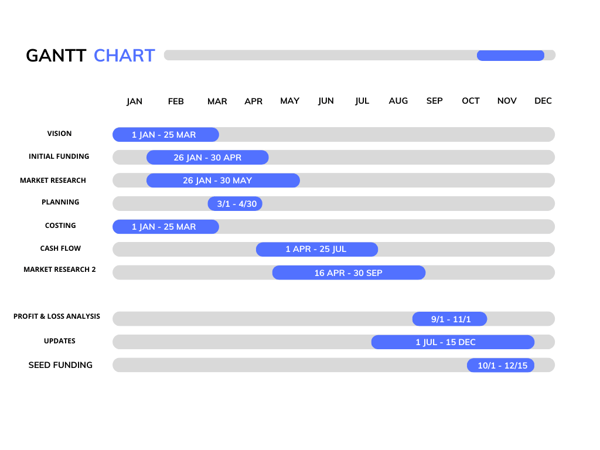

4. Gantt Chart

Gantt charts are particularly common in project management, as they’re useful in illustrating a project timeline or progression of tasks. In this type of chart, tasks to be performed are listed on the vertical axis and time intervals on the horizontal axis. Horizontal bars in the body of the chart represent the duration of each activity.

Utilizing Gantt charts to display timelines can be incredibly helpful, enabling team members to keep track of every aspect of a project. Even if you’re not a project management professional, familiarizing yourself with Gantt charts can help you stay organized.

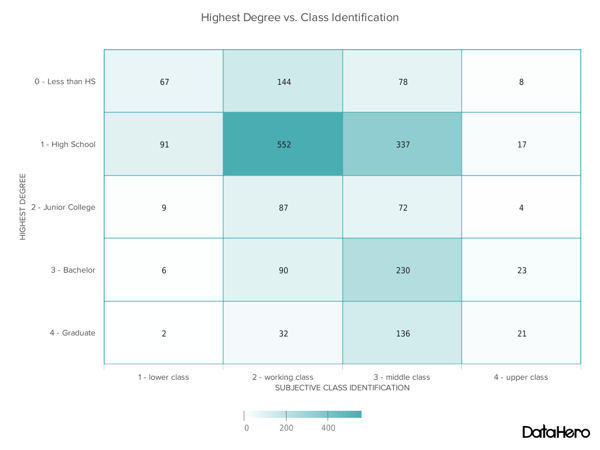

5. Heat Map

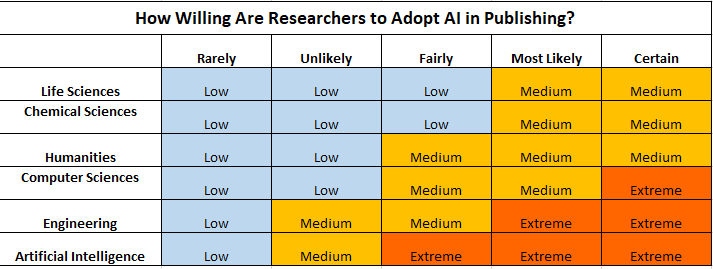

A heat map is a type of visualization used to show differences in data through variations in color. These charts use color to communicate values in a way that makes it easy for the viewer to quickly identify trends. Having a clear legend is necessary in order for a user to successfully read and interpret a heatmap.

There are many possible applications of heat maps. For example, if you want to analyze which time of day a retail store makes the most sales, you can use a heat map that shows the day of the week on the vertical axis and time of day on the horizontal axis. Then, by shading in the matrix with colors that correspond to the number of sales at each time of day, you can identify trends in the data that show when your store experiences the most sales.
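Behind a heat map like the retail example is a grid of counts. A minimal sketch, assuming each sale is recorded as a hypothetical `(weekday, hour)` pair, with invented sample records used purely for illustration: tally the pairs into a 7 × 24 matrix (days as rows, hours as columns). A plotting tool would then pick each cell’s color from its count, a step not shown here.

```python
DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]


def sales_grid(sales):
    """Tally (weekday_index, hour) records into a 7 x 24 grid: rows = days, columns = hours."""
    grid = [[0] * 24 for _ in range(7)]
    for day, hour in sales:
        grid[day][hour] += 1
    return grid


def busiest_cell(grid):
    """Return (day_name, hour, count) for the cell with the most sales (first one on ties)."""
    day, hour = max(((d, h) for d in range(7) for h in range(24)),
                    key=lambda cell: grid[cell[0]][cell[1]])
    return DAYS[day], hour, grid[day][hour]


# Hypothetical sales records: (weekday index with 0 = Monday, hour of day 0-23).
sample_sales = [(5, 14), (5, 14), (5, 15), (4, 18), (5, 14), (0, 9), (4, 18)]

grid = sales_grid(sample_sales)
print(busiest_cell(grid))
```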

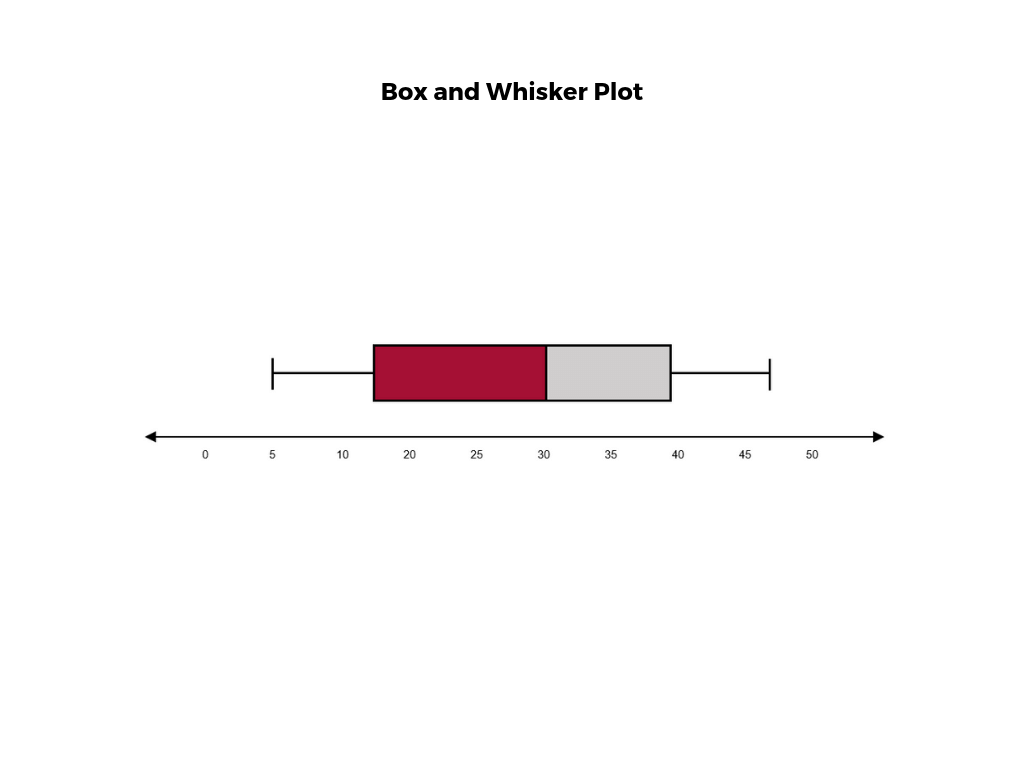

6. Box and Whisker Plot

A box and whisker plot, or box plot, provides a visual summary of data through its quartiles. First, a box is drawn from the first quartile to the third quartile of the data set. A line within the box represents the median. “Whiskers,” or lines, are then drawn extending from the box toward the minimum (lower extreme) and maximum (upper extreme). When outliers are shown, they are represented by individual points in line with the whiskers, and the whiskers stop at the most extreme values that are not outliers.

This type of chart is helpful in quickly identifying whether or not the data is symmetrical or skewed, as well as providing a visual summary of the data set that can be easily interpreted.
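The numbers a box plot draws can be computed with Python’s standard library. The sketch below assumes two conventions the text does not fix: quartiles come from `statistics.quantiles(..., method="inclusive")` (tools define quartiles slightly differently), and outliers are flagged with the common 1.5 × IQR rule, with whiskers ending at the most extreme non-outlying values. The sample data are the 30 test scores from Example 1.3.1 earlier in this document.

```python
import statistics


def box_plot_summary(data):
    """Quartiles, whisker ends, and outliers for a box-and-whisker plot (1.5 x IQR rule)."""
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [x for x in data if low_fence <= x <= high_fence]
    outliers = sorted(x for x in data if x < low_fence or x > high_fence)
    return {
        "q1": q1, "median": median, "q3": q3,
        "whiskers": (min(inside), max(inside)),  # most extreme non-outlying values
        "outliers": outliers,                     # drawn as individual points
    }


scores = [86, 80, 25, 77, 73, 76, 88, 90, 69, 93,
          90, 83, 70, 73, 73, 70, 90, 83, 71, 95,
          40, 58, 68, 69, 100, 78, 87, 25, 92, 74]
summary = box_plot_summary(scores)
print(summary)
```

Here the long lower whisker region is cut short by three low outliers, which is the visual cue for the left skew in these scores.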

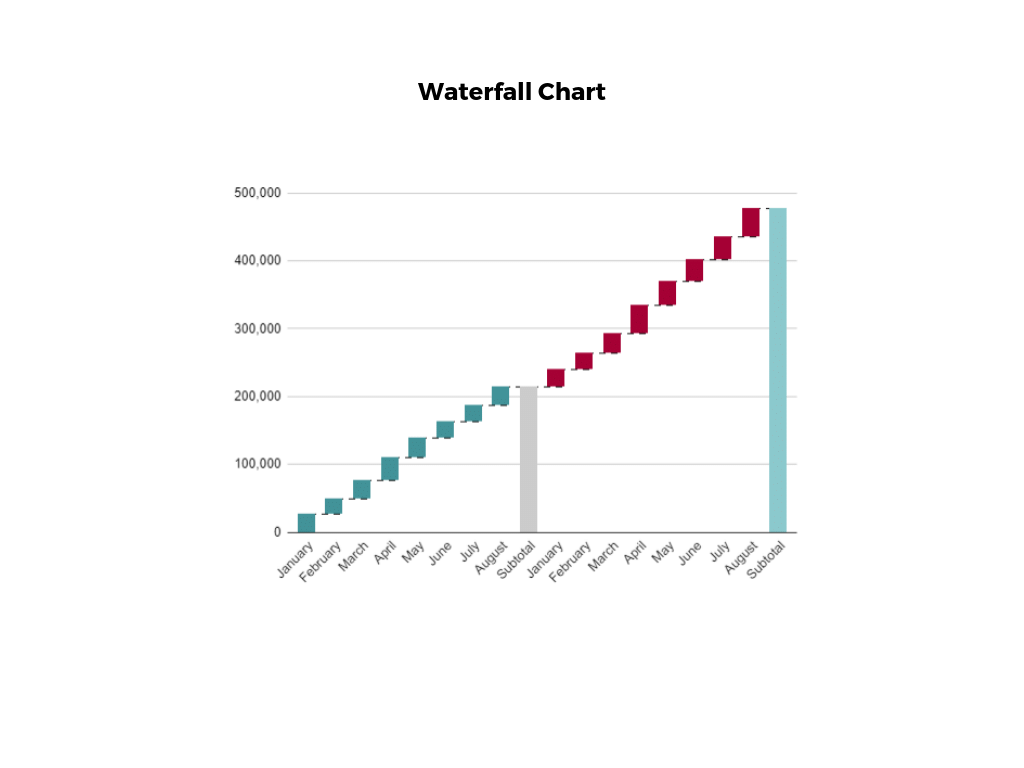

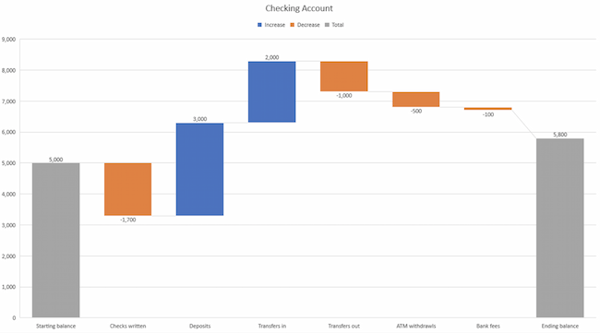

7. Waterfall Chart

A waterfall chart is a visual representation that illustrates how a value changes as it’s influenced by different factors, such as time. The main goal of this chart is to show the viewer how a value has grown or declined over a defined period. For example, waterfall charts are popular for showing spending or earnings over time.
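Each bar in a waterfall chart floats between the running total before and after one change. A minimal sketch of that arithmetic, assuming a hypothetical starting value and list of labelled changes (the figures are invented for illustration), also tags each bar as "up" or "down" so it can be colored accordingly.

```python
def waterfall_bars(start, changes):
    """Return (label, bottom, top, kind) for each bar of a waterfall chart.

    The first and last bars are full totals drawn from 0. Each change spans from
    the lower to the higher of the running totals before and after it, so
    decreases hang down from the previous level and increases rise from it.
    """
    bars = [("Start", 0, start, "total")]
    total = start
    for label, delta in changes:
        new_total = total + delta
        kind = "up" if delta >= 0 else "down"
        bars.append((label, min(total, new_total), max(total, new_total), kind))
        total = new_total
    bars.append(("End", 0, total, "total"))
    return bars


# Hypothetical monthly figures (in thousands).
bars = waterfall_bars(100, [("Sales", 40), ("Refunds", -15), ("Costs", -30), ("Other", 5)])
for bar in bars:
    print(bar)
```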

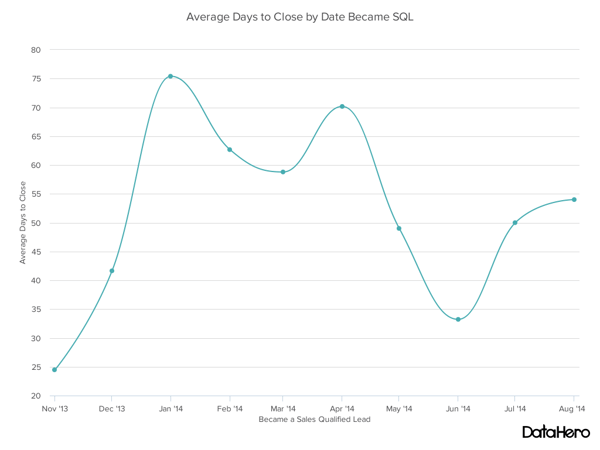

8. Area Chart

An area chart , or area graph, is a variation on a basic line graph in which the area underneath the line is shaded to represent the total value of each data point. When several data series must be compared on the same graph, stacked area charts are used.

This method of data visualization is useful for showing changes in one or more quantities over time, as well as showing how each quantity combines to make up the whole. Stacked area charts are effective in showing part-to-whole comparisons.

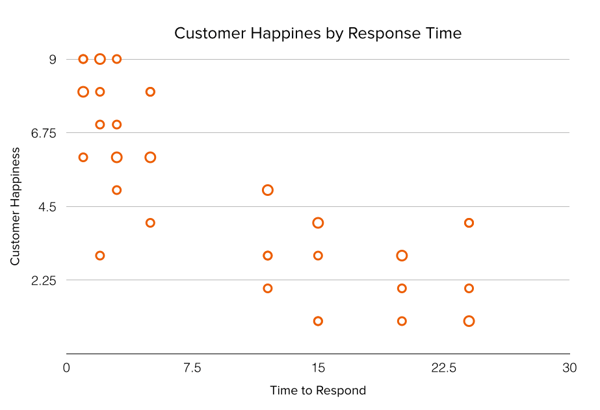

9. Scatter Plot

Another technique commonly used to display data is a scatter plot. A scatter plot displays data for two variables as points plotted against the horizontal and vertical axes. This type of data visualization is useful in illustrating the relationships that exist between variables and can be used to identify trends or correlations in data.

Scatter plots are most effective for fairly large data sets, since it’s often easier to identify trends when there are more data points present. Additionally, the more tightly the data points cluster around a line or curve, the stronger the correlation or trend tends to be.

10. Pictogram Chart

Pictogram charts, or pictograph charts, are particularly useful for presenting simple data in a more visual and engaging way. These charts use icons to visualize data, with each icon representing a different value or category. For example, data about time might be represented by icons of clocks or watches. Each icon can correspond to either a single unit or a set number of units (for example, each icon represents 100 units).

In addition to making the data more engaging, pictogram charts are helpful in situations where language or cultural differences might be a barrier to the audience’s understanding of the data.

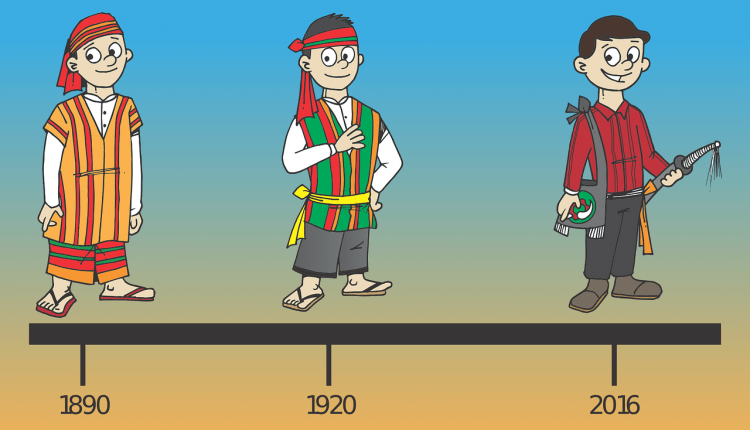

11. Timeline

Timelines are the most effective way to visualize a sequence of events in chronological order. They’re typically linear, with key events outlined along the axis. Timelines are used to communicate time-related information and display historical data.

Timelines allow you to highlight the most important events that occurred, or need to occur in the future, and make it easy for the viewer to identify any patterns appearing within the selected time period. While timelines are often relatively simple linear visualizations, they can be made more visually appealing by adding images, colors, fonts, and decorative shapes.

12. Highlight Table

A highlight table is a more engaging alternative to traditional tables. By highlighting cells in the table with color, you can make it easier for viewers to quickly spot trends and patterns in the data. These visualizations are useful for comparing categorical data.

Depending on the data visualization tool you’re using, you may be able to add conditional formatting rules to the table that automatically color cells that meet specified conditions. For instance, when using a highlight table to visualize a company’s sales data, you may color cells red if the sales data is below the goal, or green if sales were above the goal. Unlike a heat map, the colors in a highlight table are discrete and represent a single meaning or value.

13. Bullet Graph

A bullet graph is a variation of a bar graph that can act as an alternative to dashboard gauges to represent performance data. The main use for a bullet graph is to inform the viewer of how a business is performing in comparison to benchmarks that are in place for key business metrics.

In a bullet graph, the darker horizontal bar in the middle of the chart represents the actual value, while the vertical line represents a comparative value, or target. If the horizontal bar passes the vertical line, the target for that metric has been surpassed. Additionally, the segmented colored sections behind the horizontal bar represent range scores, such as “poor,” “fair,” or “good.”

14. Choropleth Map

A choropleth map uses color, shading, and other patterns to visualize numerical values across geographic regions. These visualizations use a progression of color (or shading) on a spectrum to distinguish high values from low.

Choropleth maps allow viewers to see how a variable changes from one region to the next. A potential downside to this type of visualization is that the exact numerical values aren’t easily accessible because the colors represent a range of values. Some data visualization tools, however, allow you to add interactivity to your map so the exact values are accessible.
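Because each color in a choropleth stands for a range of values, the map first needs a rule for sorting regions into classes. One common choice is equal-width classes. The sketch below assumes that rule, five hypothetical shade names, and invented regional values, and shows why exact values are hidden: regions with different values can share a shade.

```python
def equal_width_classes(values, n_classes):
    """Map each region to a class index 0..n_classes-1 using equal-width value ranges."""
    lo, hi = min(values.values()), max(values.values())
    width = (hi - lo) / n_classes or 1  # avoid dividing by zero when all values match
    return {
        region: min(int((v - lo) / width), n_classes - 1)  # the maximum goes in the last class
        for region, v in values.items()
    }


SHADES = ["lightest", "light", "medium", "dark", "darkest"]  # hypothetical palette
# Hypothetical values per region (for example, a rate per 1,000 people).
rates = {"North": 12.0, "South": 30.5, "East": 21.0, "West": 47.0, "Central": 8.0}

classes = equal_width_classes(rates, len(SHADES))
for region, cls in classes.items():
    print(f"{region:<8} {rates[region]:>5}  {SHADES[cls]}")
```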

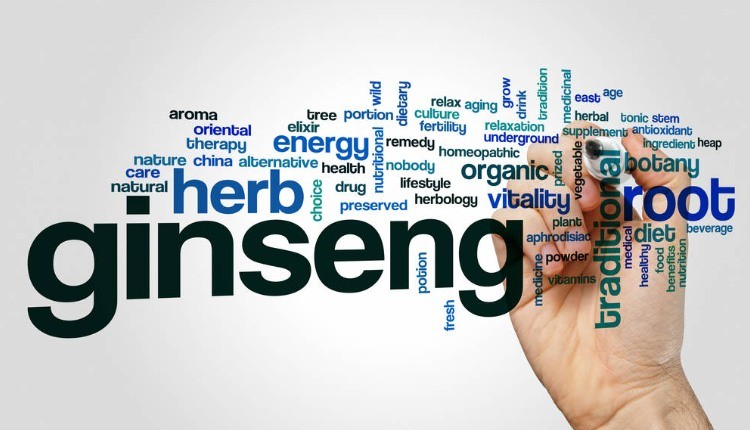

15. Word Cloud

A word cloud, or tag cloud, is a visual representation of text data in which the size of the word is proportional to its frequency. The more often a specific word appears in a dataset, the larger it appears in the visualization. In addition to size, words often appear bolder or follow a specific color scheme depending on their frequency.

Word clouds are often used on websites and blogs to identify significant keywords and compare differences in textual data between two sources. They are also useful when analyzing qualitative datasets, such as the specific words consumers used to describe a product.
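The core of a word cloud is a word count mapped to a font size. The sketch below assumes a simple tokenizer (lower-cased runs of letters and apostrophes via `re.findall`), a hypothetical and deliberately tiny stop-word list, and linear scaling between a minimum and maximum point size. Real word-cloud tools also handle layout, color, and more careful text cleaning.

```python
import re
from collections import Counter

STOP_WORDS = {"the", "a", "and", "is", "it", "to", "of"}  # hypothetical, deliberately tiny


def word_sizes(text, min_size=10, max_size=48, top_n=10):
    """Return {word: font_size} for the top_n most frequent non-stop-words.

    Font size grows linearly with frequency, from min_size for the rarest
    word shown to max_size for the most frequent one.
    """
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]
    counts = Counter(words).most_common(top_n)
    if not counts:
        return {}
    hi, lo = counts[0][1], counts[-1][1]
    span = (hi - lo) or 1  # all shown words equally frequent: avoid dividing by zero
    return {w: round(min_size + (c - lo) / span * (max_size - min_size)) for w, c in counts}


# Hypothetical product-review text.
reviews = "Fast delivery, great price. Great quality and fast shipping. The price is great."
sizes = word_sizes(reviews)
print(sizes)
```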

16. Network Diagram

Network diagrams are a type of data visualization that represent relationships between qualitative data points. These visualizations are composed of nodes and links, also called edges. Nodes are singular data points that are connected to other nodes through edges, which show the relationship between multiple nodes.

There are many use cases for network diagrams, including depicting social networks, highlighting the relationships between employees at an organization, or visualizing product sales across geographic regions.

17. Correlation Matrix

A correlation matrix is a table that shows correlation coefficients between variables. Each cell represents the relationship between two variables, and a color scale is used to communicate whether the variables are correlated and to what extent.

Correlation matrices are useful to summarize and find patterns in large data sets. In business, a correlation matrix might be used to analyze how different data points about a specific product might be related, such as price, advertising spend, launch date, etc.
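Each cell of a correlation matrix is commonly a Pearson correlation coefficient between two variables (other coefficients, such as Spearman’s, are also used). A self-contained sketch with the coefficient written out by hand, applied to hypothetical product figures (price, advertising spend, units sold) invented for illustration:

```python
import math


def pearson(x, y):
    """Pearson correlation r between two equal-length sequences (undefined if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def correlation_matrix(columns):
    """Return (names, matrix) where matrix[i][j] is r between columns i and j."""
    names = list(columns)
    matrix = [[pearson(columns[a], columns[b]) for b in names] for a in names]
    return names, matrix


# Hypothetical data for five product launches.
data = {
    "price":    [10, 12, 15, 18, 20],
    "ad_spend": [5, 7, 6, 9, 11],
    "units":    [500, 460, 430, 380, 350],
}

names, matrix = correlation_matrix(data)
print(" " * 9 + "".join(f"{n:>9}" for n in names))
for name, row in zip(names, matrix):
    print(f"{name:>9}" + "".join(f"{r:9.2f}" for r in row))
```

A plotting tool would then color each cell on a scale from -1 to 1; the diagonal is always 1 because every variable correlates perfectly with itself.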

Other Data Visualization Options

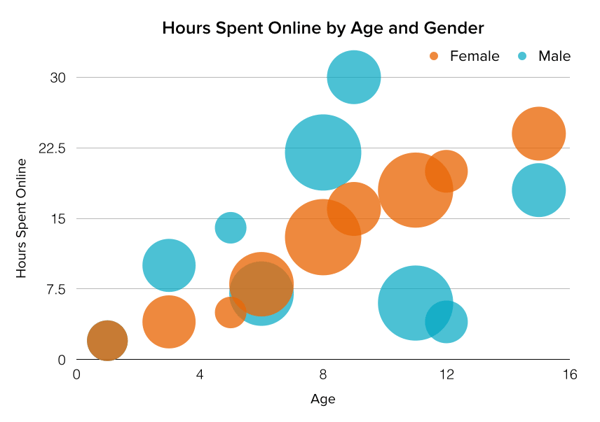

While the examples listed above are some of the most commonly used techniques, there are many other ways you can visualize data to become a more effective communicator. Some other data visualization options include:

- Bubble clouds

- Circle views

- Dendrograms

- Dot distribution maps

- Open-high-low-close charts

- Polar areas

- Radial trees

- Ring charts

- Sankey diagrams

- Span charts

- Streamgraphs

- Wedge stack graphs

- Violin plots

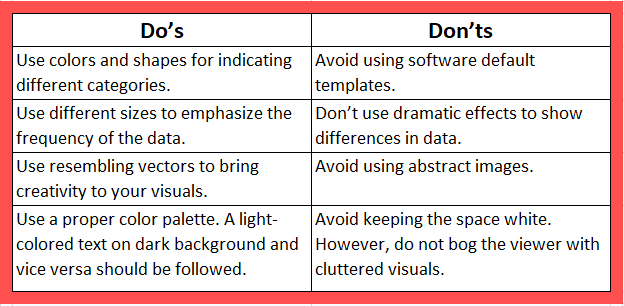

Tips For Creating Effective Visualizations

Creating effective data visualizations requires more than just knowing how to choose the best technique for your needs. There are several considerations you should take into account to maximize your effectiveness when it comes to presenting data.

One of the most important steps is to evaluate your audience. For example, if you’re presenting financial data to a team that works in an unrelated department, you’ll want to choose a fairly simple illustration. On the other hand, if you’re presenting financial data to a team of finance experts, it’s likely you can safely include more complex information.

Another helpful tip is to avoid unnecessary distractions. Although visual elements like animation can be a great way to add interest, they can also distract from the key points the illustration is trying to convey and hinder the viewer’s ability to quickly understand the information.

Finally, be mindful of the colors you utilize, as well as your overall design. While it’s important that your graphs or charts are visually appealing, there are more practical reasons you might choose one color palette over another. For instance, using low contrast colors can make it difficult for your audience to discern differences between data points. Using colors that are too bold, however, can make the illustration overwhelming or distracting for the viewer.

Visuals to Interpret and Share Information

No matter your role or title within an organization, data visualization is a skill that’s important for all professionals. Being able to effectively present complex data through easy-to-understand visual representations is invaluable when it comes to communicating information with members both inside and outside your business.

There’s no shortage of ways data visualization can be applied in the real world. Data is playing an increasingly important role in the marketplace today, and data literacy is the first step in understanding how analytics can be used in business.

This post was updated on January 20, 2022. It was originally published on September 17, 2019.

Ohio University Libraries

Research Data Literacy 101

Tutorials & Books on Visualization

There are endless videos and tutorials freely available on the web, even for specific programs.

- Data Visualization for Dummies (eBook through Alden Library) - This full-color guide introduces you to a variety of ways to handle and synthesize data in much more interesting ways than mere columns and rows of numbers.

- Information Visualization: An Introduction (eBook from Alden Library) - Information visualization is the act of gaining insight into data, and is carried out by virtually everyone. It is usually facilitated by turning data, often a collection of numbers, into images that allow much easier comprehension.

- Information Visualization: Perception for Design, 3rd ed. (eBook from Alden Library)

- Designing Creative Content: How Visualising Data Helps Us See - Data vis is as much about what story you want to tell as it is about visual design. This presentation digs into case studies and projects with a particular focus on answering the why, what, and how of data visualization.

What is Data Visualization?

Data visualization “...helps the human eye perceive huge amounts of data in an understandable way. It enables researchers to represent, draft, and display data visually in a way that allows humans to more efficiently identify, visualize, and remember it.” (Orkodashvili, 2014)

Building an information visualization can aid your research process in many ways, but predominantly:

- Early in the research process: information visualization can help you explore and understand patterns in your data in a non-traditional way, giving you a different perspective.

- Later in the research process: visualization can help you communicate important aspects of your data in a (hopefully) easy-to-understand fashion.

Orkodashvili, Mariam, PhD. Salem Press Encyclopedia, January 2014.

Let's Get Started

Choosing your visualization method is important. Ask yourself these questions:

- Who is my audience? Are they familiar with this information?

- What am I trying to convey? What am I showing them? What is my audience supposed to understand?

- How much time or space do I have? Does that matter?

Here is a cheat sheet to help you choose the graphic that best suits your goal: Mike Parkinson's Graphic Cheat Sheet.

There are six types of visualization strategies, and the Periodic Table of Visualization Methods describes each of them further with examples:

- Data visualization - visual representations of quantitative data in a schematic form

- Information visualization - interactive, visual representations of data to amplify understanding. Data is transformed into an interactive image.

- Concept visualization - methods to elaborate mostly qualitative concepts, ideas, plans and analysis

- Strategy visualization - systematic use of complementary visual representations of the analysis, conclusion, and implementation of strategies

- Metaphor visualization - position information graphically to organize and structure information and convey insight about the information

- Compound visualization - complementary use of different graphic representation formats in one scheme

Data is Beautiful - TED Talk

David McCandless turns complex data sets (like worldwide military spending, media buzz, Facebook status updates) into beautiful, simple diagrams that tease out unseen patterns and connections. Good design, he suggests, is the best way to navigate information glut — and it may just change the way we see the world.



What is a data display? Definition, Types, & Examples

You may have heard that “data is the new oil” — the most valuable commodity of the 21st century. But just like oil is useless until refined, data is useless until simplified and communicated. Data displays are a tool to help analysts do just that.

Because they are so useful for making sense of data, data displays appear in virtually every discipline that deals with large amounts of information. As a result, the precise meaning of the term has become blurred, leaving a lot of unanswered questions, many of which you may have already asked yourself.

The purpose of this article is to clear things up. I will briefly define data displays, show examples of 17 popular displays, answer some common questions, compare data displays and data visualization, cover data displays in the context of qualitative data, and explore common data visualization tools.


Data Display Definition

Also known as data visualization, a data display is a visual representation of raw or processed data that aims to communicate a small number of insights about the behavior of an underlying table, which would otherwise be difficult or impossible to understand with the naked eye. Common examples include graphs and charts, but any visual depiction of information, even a map, can be considered a data display.

Additionally, the term “data display” can refer to a legal agreement in which a publishing entity, often a stock exchange, obtains the rights from a partner to publicly display the partner’s data. This is important to know, but it’s outside the scope of this article.

Types of Data Display: 17 Actionable Visualizations with Examples

The most common types of data displays are the 17 that follow:

- bar charts,

- column charts,

- stacked bar charts,

- tree maps,

- line graphs,

- area charts,

- stacked area charts,

- unstacked area charts,

- combo bar-and-line charts,

- waterfall charts,

- tree diagrams,

- bullet graphs,

- scatter plots,

- histograms,

- heat maps,

- packed bubble charts, and

- box & whisker plots.

Let’s look at each of these with an example. I’ll be using Tableau software to show these, but many of them are available in Excel.

Bar Charts

Bar charts show the value of dimensions as horizontal rectangles. They’re useful for comparing simple items side-by-side. This image shows total checkouts for two book IDs.

Column Charts

Column charts show the value of dimensions as vertical rectangles. Like bar charts, they’re useful for comparing simple items side-by-side. This image shows total checkouts for two book IDs.

Stacked Bar/Stacked Columns Charts

Stacked bar or column charts show the value of dimensions with more granular dimensions “inside.” They’re useful for comparing dimensions with additional breakdown. In this image, the columns represent total checkouts by book ID, and the colors represent month of checkout.

Tree Maps

Tree maps show the value of multiple dimensions by their relative size and split them into rectangles in a “spiral” fashion. As you can see here, each book ID is sized by its number of checkouts.

Line Graphs

Line graphs show the relationship between two continuous dimensions, most often where one of the dimensions is time. This image shows five book IDs by number of checkouts over time.

Area Charts

Area charts show the value of a dimension as all the space under a line (often over time).

Stacked Area Charts

Stacked area charts show two or more values as areas stacked on top of each other, such that each one starts where the one below it ends on the vertical axis.

Unstacked Area Charts

Unstacked area charts show two area charts layered on top of each other such that both start from zero. As you can see below, this view is useful for comparing two values against each other over time.

Combo Bar-and-Line Charts

A bar-and-line chart shows two different measures — one as a line and the other as bars. These are particularly useful when showing a running total as a line and the individual values of the total as bars.

Waterfall Charts

Waterfall charts show a beginning balance, additions, subtractions, and an ending balance, all as a sequence of connected bars. They are useful for showing additions and subtractions, or corkscrew calculations, around a project or account.
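The bar positions in a waterfall chart follow directly from a running balance. As a rough sketch in plain Python (not a Tableau or Excel feature), the hypothetical helper `waterfall_segments` below computes where each floating bar starts and ends. The opening balance and changes are made-up values. The running balances it builds are also what a combo bar-and-line chart would draw as its running-total line.

```python
from itertools import accumulate

def waterfall_segments(start, changes):
    """Return (bottom, top) for each change bar, plus the ending balance.

    Each bar floats between the running balance before and after the change,
    so a positive change rises and a negative change falls.
    """
    # Running balances: start, start + c1, start + c1 + c2, ...
    balances = list(accumulate(changes, initial=start))
    segments = []
    for before, after in zip(balances, balances[1:]):
        # A bar spans from the lower to the higher of the two balances.
        segments.append((min(before, after), max(before, after)))
    return segments, balances[-1]

# Hypothetical account: opening balance 100, then +30, -20, +10.
segments, ending = waterfall_segments(100, [30, -20, 10])
print(segments)  # [(100, 130), (110, 130), (110, 120)]
print(ending)    # 120
```

Drawing each pair as a bar from bottom to top, colored by the sign of its change, gives the familiar waterfall shape. The `initial=` argument of `accumulate` requires Python 3.8 or later.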

Tree Diagrams

Tree diagrams show the hierarchical relationship between elements of a system.

Bullet Graphs

Bullet graphs show the actual value as a bar (blue), a marker for a target number (the small black vertical lines), and shading at different intervals to indicate quality of performance such as bad, acceptable, and good.

Scatter Plots

Scatter plots show points on a plane where two variables meet. They are very similar to line graphs but can compare any kind of variables, not just a value over time.

Histograms

Histograms show bars representing groupings of a given dimension. This is easier to understand in the picture: each column represents the number of entries that fall into a range, e.g. 10 values fall into a bin ranging from 1-4, 29 values fall into a bin of 5-8, etc.

Heat Maps

Heat maps show the intensity of a grid of values through color shading and size.

Packed Bubble Charts

Packed bubble charts show the intensity of dimension values based on relative size of “bubbles,” which are nothing more than circles.

Box & Whisker Plots

Box & whisker plots summarize a series with five markers: the minimum, the lower quartile (25th percentile), the median, the upper quartile (75th percentile), and the maximum.
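The following minimal sketch shows where those five markers come from. It uses Python's standard `statistics` module (Python 3.8+), with invented sample values and a hypothetical helper name, `five_number_summary`. Quartile definitions differ between tools. This sketch uses the `inclusive` method, which gives the same results as Excel's QUARTILE.INC, so Tableau or another tool may report slightly different quartiles. Many tools also stop the whiskers at 1.5 times the interquartile range instead of at the true minimum and maximum.

```python
import statistics

def five_number_summary(values):
    """Return (min, Q1, median, Q3, max), the markers a box plot draws."""
    data = sorted(values)
    # 'inclusive' interpolation agrees with Excel's QUARTILE.INC;
    # other quartile methods can give slightly different Q1 and Q3.
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    return data[0], q1, median, q3, data[-1]

print(five_number_summary([7, 2, 10, 4, 12, 5, 9, 4]))
# (2, 4.0, 6.0, 9.25, 12)
```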

Don’t Use Pie Charts!

One of the most common chart types is a pie chart, and I’m asking you to never use one. Why? Because pie charts don’t provide any value to the viewer.

A pie chart shows the percent that parts of a total represent. But what does that mean for the viewer? Visually, it’s difficult to distinguish which slices are largest, unless you have one slice that dominates 80% or more of the pie — or you use labels on each slice.

If you want to show percent of total, use a percent of total bar chart. Or better yet, use a waterfall chart! These will be much more informative to the viewer.

Packed Bubble Charts: the New Cool Thing

I’m not totally against bubble charts, but they’re not the most insightful visual we can provide. A bubble chart has no structure, so it’s not possible to compare different values. They’re similar to pie charts in that it’s difficult to draw insight.

That said, there is some creative value to the viewer. Bubble charts grab attention, which means you can use them to draw in users and show them more insightful charts.

Why display data in different ways?

In all of the example charts above, I used the same two data tables. This means that any given data set can be represented in many different data displays. So why would we represent data in different ways?

The simple answer is that it helps the viewer think differently about information. When I showed the stacked area chart of number of checkouts for two different books, it appeared as though the books followed the same trend.

However, when I showed them in an unstacked view, we could clearly see that the book colored orange performed slightly better in Q1 – Q3, whereas the book colored blue performed better in Q4.

Displaying data in different ways allows us to think differently about it, to gain insights and understand it in new and creative ways.

Another reason for using multiple data displays is for an analyst to cater to their audience. For example, take another look at the bullet graph and scatter plot above.

Managers in a bookselling firm are likely very interested in the performance of sales in Q1 vs Q2, so the bullet graph is better for them. However, a writer looking to better understand the relationship between sales of individual books in Q1 vs Q2 will prefer the scatter plot.

Which data display shows the number of observations?

I’m not sure where this question comes from, but it’s asked a lot. An observation is nothing more than one line in a data table, and many wonder what data display shows the total number of these lines.

In short, any data display can show the number of observations in the underlying data set — it’s only a question of granularity of dimensions. However, the most common data display showing number of observations is a scatter plot. As long as you include a measure at the observation level of detail, the scatter will show the number of observations.

If the goal, however, is simply to count the number of observations, most data table software has a simple count function. In Excel, it’s COUNTA(array of one column). In Tableau, it’s COUNT([observation metric]).
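The same idea is easy to express outside Excel and Tableau. Here is a minimal Python sketch. It assumes the data table is held as a list of dictionaries with hypothetical `book_id` and `checkouts` columns. The number of observations is simply the number of rows. The hypothetical `count_non_empty` helper mimics the COUNTA idea of counting only non-empty cells, treating `None` as an empty cell.

```python
# A tiny data table: each dict is one observation (one row).
rows = [
    {"book_id": "A", "checkouts": 12},
    {"book_id": "B", "checkouts": None},  # missing measure
    {"book_id": "A", "checkouts": 7},
]

# Counting rows is the most direct way to count observations.
n_observations = len(rows)

def count_non_empty(rows, column):
    """Rough analogue of COUNTA on one column: count cells that are not None."""
    return sum(1 for row in rows if row.get(column) is not None)

print(n_observations)                      # 3
print(count_non_empty(rows, "checkouts"))  # 2
print(count_non_empty(rows, "book_id"))    # 3
```

The two counts differ when a column has gaps. That is why you should count a column that is filled in for every observation.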

What data display uses intervals and frequency?

Another common question, and this one is easy. Take another look at the histogram above. It sets up intervals and counts the number of records within each interval. The number of records is also known as frequency. In short, the data display showing intervals and frequency is a histogram.
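The binning behind a histogram takes only a few lines. This plain Python sketch rests on stated assumptions: the data are integers, all bins have equal width, and the helper name `histogram` and the sample values are hypothetical. Each value is assigned to an interval, and the frequency is the number of values in that interval. Empty intervals between the lowest and highest bins are reported as 0.

```python
from collections import Counter

def histogram(values, start, width):
    """Count how many integer values fall into each interval of equal width.

    Bin k covers start + k*width through start + (k+1)*width - 1 (inclusive).
    Returns {(low, high): frequency} for every bin from the lowest to the
    highest occupied one. Assumes a non-empty list of integers.
    """
    counts = Counter((v - start) // width for v in values)
    return {
        (start + k * width, start + (k + 1) * width - 1): counts[k]
        for k in range(min(counts), max(counts) + 1)
    }

print(histogram([1, 3, 4, 5, 6, 8, 8, 11], start=1, width=4))
# {(1, 4): 3, (5, 8): 4, (9, 12): 1}
```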

Which data display is misleading?

You may have heard the term “misleading data.” Unfortunately, misleading data is an unavoidable reality in the world of informatics. While any data display can be misleading, the most common examples are bar charts in which the value axis starts at a non-zero number and line charts in which the date axis (x-axis) is reversed. The first exaggerates the visual differences between bars, and the second reverses the apparent direction of a trend over time.
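The arithmetic behind a non-zero baseline is simple: a bar for value v is drawn with a height of v minus the baseline. The hypothetical Python helper `apparent_ratio` below compares drawn bar heights for two invented values. It shows how a 10% difference can look like a threefold one when the axis starts at 95 instead of 0.

```python
def apparent_ratio(a, b, baseline=0):
    """Ratio of drawn bar heights when the value axis starts at `baseline`.

    A bar for value v is drawn with height (v - baseline), so raising the
    baseline above zero exaggerates the visual gap between bars.
    """
    return (b - baseline) / (a - baseline)

# Hypothetical sales figures: 100 vs 110, a true difference of 10%.
print(apparent_ratio(100, 110))               # 1.1  (axis starts at 0)
print(apparent_ratio(100, 110, baseline=95))  # 3.0  (axis starts at 95)
```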

Misleading data is a huge topic and is outside the scope of this article. If you’re interested in it, check out these articles:

- Data Distortion: What is it? And how is it misleading?

- Pros & Cons of Data Visualization: the Good, Bad, & Ugly

Data Visualization vs Data Display

Finally, we arrive at what is likely the most common source of confusion surrounding data displays: the difference between a data display and a data visualization.

In most cases, there is no difference between a data visualization and a data display — they are synonyms. However, the term “visualization” is a buzzword that invites the image of aesthetically-pleasing data displays, whereas “data display” can refer to visualizations OR aesthetically-simple charts and graphs like those used in academic papers.

What is a data display in qualitative research?

Because data displays represent quantities, applying them to qualitative research can be challenging, but it’s 100% possible. It requires converting qualitative data into quantitative data. In most cases qualitative data consists of words, so “conversion” involves counting words. In practice, counting takes the form of (1) idea coding and/or (2) determining word frequency.

Idea coding consists of reading through text, assigning a designated phrase (a code) to each idea covered, then counting the number of times each code appears. Word frequency consists of passing a text through word analyzer software and counting the most common words and combinations. The details of these techniques are outside the scope of this article, but you can learn more in the article Qualitative Content Analysis: a Simple Guide with Examples.

Once converted into numbers, qualitative data can be displayed just like the quantitative data in the 17 Actionable Visualizations. So, how do we answer the question “what is a data display in qualitative research?”
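A few lines of Python's standard library can approximate word frequency. This sketch only assumes what a word analyzer does in its simplest form. It lowercases the text, splits it into single words with a regular expression, drops a hand-picked list of stop words, and counts what remains with `collections.Counter`. The sample text, the stop-word list, and the helper name `word_frequencies` are invented. Real tools typically also count multi-word combinations.

```python
import re
from collections import Counter

def word_frequencies(text, stop_words=frozenset()):
    """Count how often each lowercase word appears, skipping stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w not in stop_words)

responses = "The checkout process was slow. Slow pages and a slow checkout."
freq = word_frequencies(responses, stop_words={"the", "was", "and", "a"})
print(freq.most_common(2))  # [('slow', 3), ('checkout', 2)]
```

The resulting counts are ordinary numbers. They can feed a bar chart or word cloud like any other quantitative data.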

In short, a data display in qualitative research is the visualization of words after they have been quantified through idea coding, word frequency, or both.

Data Display Tools and Products (5 Examples)

Any article on data displays worth its salt shows data tools. Here are five data visualization tools, most of which offer a free version, that you can get started with today.

Excel

Admittedly, Excel is not a data display tool in the strict sense of the term. However, it offers several user-friendly visualization options. You can navigate to them via the Insert ribbon. The options include:

- Column charts,

- Bar charts,

- Line graphs,

- Histograms,

- Box & Whisker Plots,

- Waterfall charts,

- Pie charts (but don’t use them!),

- Scatter plots, and

- Combo charts

They’re displayed in the icons as shown in the below picture:

Tableau

Tableau is the leading data visualization software, and for good reason. It’s what I used to build all of the data displays earlier in this article. Tableau interacts directly with data stored in Excel, on a local server, in Google Sheets, and many other sources.

It provides one of the most flexible interfaces available, allowing you to rapidly “slice and dice” different dimensions and measures and switch between visualizations with the click of a button.

The one downside is that Tableau takes some time to learn. Its flexibility requires the use of many functional buttons, and you’ll need some time to understand them.

You can download Tableau Public, the free version of the paid product.

Flourish

I only recently learned about Flourish. It’s a pre-set data display tool that’s much less flexible than Tableau, but much easier to get started on. Given a set of static and dynamic charts to choose from, Flourish prompts you to fill in data in a format compatible with the chart.

Have you seen those “bar chart race” videos where GDP per country or market cap by company is shown over time, with the leaders moving to the top over the years? You can build that in Flourish.

Infogram

Infogram allows the creation of a fixed number of data display types, similar to those available in Excel. Its added value, however, is that Infogram is aesthetically pleasing and browser-based. This means you won’t bore an audience with classic Excel charts, and you can access your work anywhere you have an internet connection. Check out Infogram here.

Datawrapper

Datawrapper is similar to Flourish and Infogram. The key difference is that you have a wide variety of displays to choose from like Flourish, but it requires a standard input format like Infogram.

At the end of the day, Tableau is by far the best visualization software in terms of flexibility and power. But if you’re looking for a simple, accessible solution, Flourish, Infogram and Datawrapper will do the trick. Try them out to see which is best for you!

Data Display in Excel

A quick note on data display in Excel: in addition to using the visualizations discussed above on a normal range, you can use them on a pivot table.

What are the steps to display data in a pivot table?

Imagine you have a normal range in Excel that you want to convert to a pivot table. You can do so by highlighting the range and navigating to Insert > Tables > PivotTable. Once the PivotTable Fields pane appears, drag the dimensions and measures you want into the Rows, Columns, and Values areas.

From there, you can create a pivot table data display by placing your cursor anywhere in the pivot table and navigating to Insert and clicking a visualization. The data display is now connected to the pivot table and will change with it.
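Conceptually, a pivot table does two things. It groups rows by the fields placed in Rows and Columns, and it aggregates the field placed in Values. The Python sketch below reproduces that grouping with a sum using only the standard library. It is not Excel's implementation, and the helper `pivot_sum` and the field names `book_id`, `month`, and `checkouts` are hypothetical.

```python
from collections import defaultdict

def pivot_sum(rows, row_field, col_field, value_field):
    """Sum value_field for each (row_field, col_field) pair, like a pivot
    table with one field in Rows, one in Columns, and a sum in Values."""
    table = defaultdict(lambda: defaultdict(int))
    for r in rows:
        table[r[row_field]][r[col_field]] += r[value_field]
    # Convert the nested defaultdicts to plain dicts for readable output.
    return {row: dict(cols) for row, cols in table.items()}

checkouts = [
    {"book_id": "A", "month": "Jan", "checkouts": 5},
    {"book_id": "A", "month": "Feb", "checkouts": 3},
    {"book_id": "B", "month": "Jan", "checkouts": 4},
    {"book_id": "A", "month": "Jan", "checkouts": 2},
]
print(pivot_sum(checkouts, "book_id", "month", "checkouts"))
# {'A': {'Jan': 7, 'Feb': 3}, 'B': {'Jan': 4}}
```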

Data Display from Database

So far we’ve discussed the data display definition, types of displays, answered some common questions, compared data displays and data visualization, covered data displays with qualitative data, and explored common tools.

All of these items can be considered “front-end” topics, meaning they don’t require you to work with programming languages and underlying datasets. However, it’s worth addressing how to create a data display from a database.

At its core, a database is a storage location with 2 or more joinable tables. While IT professionals would laugh at me saying this, two tabs in Excel with a data table in each could technically be considered a database. This means that any time you create a data display or visualization using data from a structure of this nature, you’re displaying from a database.

But this would be an oversimplification!

In reality, serious databases are stored on servers accessible with SQL. Displaying data from those databases requires a tool, such as Tableau, capable of accessing those servers directly. Otherwise, you would need to export the data into Excel first, then display it with a tool.

In short, displaying data from a database requires either a powerful visualization tool or preparatory export from the database into Excel using SQL.
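Here is a minimal sketch of the export route. It uses Python's standard `sqlite3` module, with an in-memory database standing in for a real server. The `checkouts` table and its columns are hypothetical. The example aggregates in SQL and then writes the small result as CSV text that Excel or another display tool could open. Connecting to a production server would instead require that server's own driver and credentials.

```python
import sqlite3

# An in-memory SQLite database stands in for a real database server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE checkouts (book_id TEXT, month TEXT, n INTEGER)")
conn.executemany(
    "INSERT INTO checkouts VALUES (?, ?, ?)",
    [("A", "Jan", 5), ("A", "Feb", 3), ("B", "Jan", 4)],
)

# Aggregate in SQL so only the small summary leaves the database.
rows = conn.execute(
    "SELECT book_id, SUM(n) AS total FROM checkouts "
    "GROUP BY book_id ORDER BY book_id"
).fetchall()
print(rows)  # [('A', 8), ('B', 4)]

# Write the summary as CSV text for a spreadsheet or charting tool.
csv_text = "book_id,total\n" + "\n".join(f"{b},{t}" for b, t in rows)
print(csv_text)
conn.close()
```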

What is a data display in math?

Because we’re talking about data, the connection to math comes up often. Data displays are used in math insofar as math is used in almost every discipline. This means we don’t need to explore it extensively. However, the specific use of data displays in statistics is important.

Data Displays and Statistics

Statistics is the branch of math that deals with datasets. More specifically, it deals with descriptive and inferential analysis. In short, descriptive analysis tries to understand distribution, which can be broken down into central tendency and dispersion.
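Python's standard `statistics` module can compute both halves of descriptive analysis. In this sketch the monthly checkout counts are invented, and one of them is deliberately large. Mean and median measure central tendency, while standard deviation and range measure dispersion.

```python
import statistics

checkouts = [4, 8, 6, 5, 7, 30]  # hypothetical monthly checkouts for one book

# Central tendency: where the data is centered.
mean = statistics.mean(checkouts)
median = statistics.median(checkouts)

# Dispersion: how spread out the data is.
spread = statistics.stdev(checkouts)  # sample standard deviation
value_range = max(checkouts) - min(checkouts)

print(f"mean={mean:.1f} median={median:.1f} stdev={spread:.2f} range={value_range}")
# mean=10.0 median=6.5 stdev=9.90 range=26
```

The single outlier pulls the mean well above the median. A box plot or histogram would make that visible at a glance.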

Inferential analysis, on the other hand, uses descriptive statistics on known data to draw conclusions about a broader population. If a penny is copper (descriptive), and all pennies are the same, then all pennies are copper (inferential).

Data displays in statistics can be used for both descriptive and inferential analysis. They help the analyst understand how well their models represent the data.

Much of statistics is polluted with discipline-specific jargon, and it’s not the goal of this article to deep-dive into that world. Instead, I encourage you to get ahold of one of the data display tools we discussed and start playing with it. This is the best way to learn data display skills.

About the Author

Noah is the founder & Editor-in-Chief at AnalystAnswers. He is a transatlantic professional and entrepreneur with 5+ years of corporate finance and data analytics experience, as well as 3+ years in consumer financial products and business software. He started AnalystAnswers to provide aspiring professionals with accessible explanations of otherwise dense finance and data concepts. Noah believes everyone can benefit from an analytical mindset in a growing digital world. When he's not busy at work, Noah likes to explore new European cities, exercise, and spend time with friends and family.


Data visualization is the representation of data through the use of common graphics, such as charts, plots, infographics and even animations. These visual displays of information communicate complex data relationships and data-driven insights in a way that is easy to understand.

Data visualization can be used for a variety of purposes, and it is not reserved only for data teams. Management also leverages it to convey organizational structure and hierarchy, while data analysts and data scientists use it to discover and explain patterns and trends. Harvard Business Review categorizes data visualization into four key purposes: idea generation, idea illustration, visual discovery, and everyday dataviz. We’ll delve deeper into these below:

Idea generation

Data visualization is commonly used to spur idea generation across teams. Visualizations are frequently leveraged during brainstorming or Design Thinking sessions at the start of a project, where they support the collection of different perspectives and highlight the common concerns of the collective. While these visualizations are usually unpolished and unrefined, they help lay the foundation of the project by ensuring that the team is aligned on the problem it is looking to address for key stakeholders.

Idea illustration

Data visualization for idea illustration assists in conveying an idea, such as a tactic or process. It is commonly used in learning settings, such as tutorials, certification courses, and centers of excellence, but it can also be used to represent organization structures or processes, facilitating communication between the right individuals for specific tasks. Project managers frequently use Gantt charts and waterfall charts to illustrate workflows. Data modeling also uses abstraction to represent and better understand data flow within an enterprise’s information system, making it easier for developers, business analysts, data architects, and others to understand the relationships in a database or data warehouse.

Visual discovery

Visual discovery and everyday dataviz are more closely aligned with data teams. While visual discovery helps data analysts, data scientists, and other data professionals identify patterns and trends within a dataset, everyday dataviz supports the subsequent storytelling after a new insight has been found.

Everyday dataviz

Data visualization is a critical step in the data science process, helping teams and individuals convey data more effectively to colleagues and decision makers. Teams that manage reporting systems typically use defined template views to monitor performance. However, data visualization isn’t limited to performance dashboards. For example, while text mining, an analyst may use a word cloud to capture key concepts, trends, and hidden relationships within unstructured data. Alternatively, they may use a graph structure to illustrate relationships between entities in a knowledge graph. There are a number of ways to represent different types of data, and it’s important to remember that data visualization is a skill set that should extend beyond your core analytics team.


The earliest forms of data visualization, used largely to assist in navigation, can be traced back to the Egyptians, long before the 17th century. As time progressed, people applied data visualization more broadly, in disciplines such as economics, social science, and health. Perhaps most notably, Edward Tufte published The Visual Display of Quantitative Information in 1983, which showed that individuals could use data visualization to present data more effectively. His book continues to stand the test of time, especially as companies turn to dashboards to report their performance metrics in real time. Dashboards are effective data visualization tools for tracking and visualizing data from multiple data sources, providing visibility into how specific behaviors by a team, or an adjacent one, affect performance. Dashboards include common visualization techniques, such as:

- Tables: These consist of rows and columns used to compare variables. Tables can show a great deal of information in a structured way, but they can also overwhelm users who are simply looking for high-level trends.

- Pie charts and stacked bar charts: These graphs are divided into sections that represent parts of a whole. They provide a simple way to organize data and compare the size of each component to one another.

- Line charts and area charts: These visuals show change in one or more quantities by plotting a series of data points over time and are frequently used within predictive analytics. Line graphs utilize lines to demonstrate these changes while area charts connect data points with line segments, stacking variables on top of one another and using color to distinguish between variables.

- Histograms: This graph plots a distribution of numbers using a bar chart (with no spaces between the bars), representing the quantity of data that falls within a particular range. This visual makes it easy for an end user to identify outliers within a given dataset.

- Scatter plots: These visuals are beneficial in revealing the relationship between two variables, and they are commonly used within regression data analysis. However, these can sometimes be confused with bubble charts, which are used to visualize three variables via the x-axis, the y-axis, and the size of the bubble.

- Heat maps: These graphical representation displays are helpful in visualizing behavioral data by location. This can be a location on a map, or even a webpage.

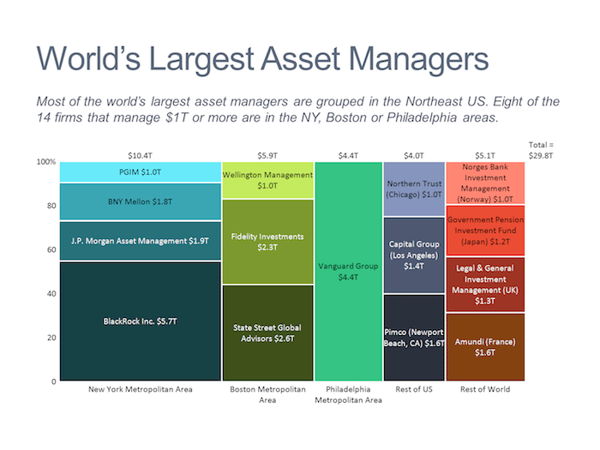

- Tree maps: These display hierarchical data as a set of nested shapes, typically rectangles. Tree maps are great for comparing the proportions between categories via their area size.

Access to data visualization tools has never been easier. Open source libraries, such as D3.js, provide a way for analysts to present data in an interactive way, allowing them to engage a broader audience with new data. Some of the most popular open source visualization libraries include:

- D3.js: A front-end JavaScript library for producing dynamic, interactive data visualizations in web browsers. D3.js uses HTML, CSS, and SVG to create visual representations of data that can be viewed in any browser. It also provides features for interactions and animations.

- ECharts: A powerful charting and visualization library that offers an easy way to add intuitive, interactive, and highly customizable charts to products, research papers, presentations, etc. ECharts is written in JavaScript and built on ZRender, a lightweight canvas library.

- Vega: Vega describes itself as a “visualization grammar,” providing support for customizing visualizations across large datasets that are accessible from the web.

- deck.gl: Part of Uber's open source visualization framework suite, deck.gl is a framework used for exploratory data analysis on big data. It helps build high-performance, GPU-powered visualizations on the web.

With so many data visualization tools readily available, there has also been a rise in ineffective information visualization. Visual communication should be simple and deliberate to ensure that your data visualization helps your target audience arrive at your intended insight or conclusion. The following best practices can help ensure your data visualization is useful and clear:

Set the context: It’s important to provide general background information so the audience understands why this particular data point matters. For example, if e-mail open rates were underperforming, we may want to illustrate how a company’s open rate compares to the overall industry, demonstrating that the company has a problem within this marketing channel. To drive an action, the audience needs to understand how current performance compares to something tangible, like a goal, benchmark, or other key performance indicators (KPIs).

Know your audience(s): Think about who your visualization is designed for and then make sure your data visualization fits their needs. What is that person trying to accomplish? What kind of questions do they care about? Does your visualization address their concerns? You’ll want the data that you provide to motivate people to act within the scope of their role. If you’re unsure whether the visualization is clear, present it to one or two people within your target audience to get feedback, allowing you to make additional edits prior to a large presentation.

Choose an effective visual: Specific visuals are designed for specific types of datasets. For instance, scatter plots display the relationship between two variables well, while line graphs display time series data well. Ensure that the visual actually helps the audience understand your main takeaway. Misalignment of charts and data can have the opposite effect, confusing your audience further rather than providing clarity.

Keep it simple: Data visualization tools can make it easy to add all sorts of information to your visual. However, just because you can, it doesn’t mean that you should! In data visualization, you want to be very deliberate about the additional information that you add to focus user attention. For example, do you need data labels on every bar in your bar chart? Perhaps you only need one or two to help illustrate your point. Do you need a variety of colors to communicate your idea? Are you using colors that are accessible to a wide range of audiences (e.g. accounting for color blind audiences)? Design your data visualization for maximum impact by eliminating information that may distract your target audience.


Presentation of Quantitative Data: Data Visualization

- First Online: 15 December 2018


- Hector Guerrero


We often think of data as numerical values, and in business, those values are often stated in units of currency (dollars, pesos, dinars, etc.). Although data in the form of currency are ubiquitous, it is easy to imagine other numerical units: percentages, counts in categories, units of sales, etc. This chapter, in conjunction with Chap. 3, discusses how we can best use Excel’s graphic capabilities to effectively present quantitative data (ratio and interval) to inform and influence an audience, whether the data are in euros or some other quantitative measure. In Chaps. 4 and 5, we will acknowledge that not all data are numerical by focusing on qualitative (categorical/nominal or ordinal) data. The process of data gathering often produces a combination of data types, and throughout our discussions it will be impossible to ignore this fact: quantitative and qualitative data often occur together. Let us begin our study of data visualization.


Author information

Authors and affiliations.

College of William & Mary, Mason School of Business, Williamsburg, VA, USA

Hector Guerrero



Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Guerrero, H. (2019). Presentation of Quantitative Data: Data Visualization. In: Excel Data Analysis. Springer, Cham. https://doi.org/10.1007/978-3-030-01279-3_2

DOI: https://doi.org/10.1007/978-3-030-01279-3_2

Published: 15 December 2018

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01278-6

Online ISBN: 978-3-030-01279-3

eBook Packages: Business and Management (R0)


Perspect Behav Sci, v.45(1); 2022 Mar

Quantitative Techniques and Graphical Representations for Interpreting Results from Alternating Treatment Design

Rumen Manolov

1 Department of Social Psychology and Quantitative Psychology, University of Barcelona, Passeig de la Vall d’Hebron 171, 08035 Barcelona, Spain

René Tanious

2 Methodology of Educational Sciences Research Group, KU Leuven - University of Leuven, Leuven, Belgium

Patrick Onghena

Associated Data

The data used for the illustrations are available from https://osf.io/ks4p2/

Multiple quantitative methods for single-case experimental design data have been applied to multiple-baseline, withdrawal, and reversal designs. The advanced data analytic techniques historically applied to single-case design data are primarily applicable to designs that involve clear sequential phases, such as repeated measurement during baseline and treatment phases, but these techniques may not be valid for alternating treatment design (ATD) data, in which two or more treatments are rapidly alternated. Some recently proposed data analytic techniques applicable to ATDs are reviewed. For ATDs with random assignment of condition ordering, Edgington’s randomization test is one type of inferential statistical technique that can complement descriptive data analytic techniques for comparing data paths and for assessing the consistency of effects across blocks in which different conditions are being compared. In addition, several recently developed graphical representations are presented alongside the commonly used time series line graph. The quantitative and graphical data analytic techniques are illustrated with two previously published data sets. Apart from discussing the potential advantages of each of these data analytic techniques, barriers to applying them are reduced through the dissemination of open access software to quantify or graph data from ATDs.

Alternating treatment design (ATD) is a single-case experimental design (SCED¹) characterized by a rapid and frequent alternation of conditions (Barlow & Hayes, 1979; Kratochwill & Levin, 1980) that can be used to compare two (or more) different treatments, or a control and a treatment condition. An ATD can be understood as a type of “multielement design” (see Hammond et al., 2013; Kennedy, 2005; Riley-Tillman et al., 2020; see Barlow & Hayes, 1979, for a discussion), but it is important to mention two potential distinctions. On the one hand, the term “multielement design” is employed when an ATD is used for test-control pairwise functional analysis methodology (Hagopian et al., 1997; Hall et al., 2020; Hammond et al., 2013; Iwata et al., 1994). On the other hand, a multielement design can be used for assessing contextual variables and an ATD for assessing interventions (Ledford et al., 2019). Previous publications on best practices for applying ATDs recommend a minimum of five data points per condition and limiting consecutive repeated exposure to two sessions of any one condition (What Works Clearinghouse, 2020; Wolery et al., 2018). The rapid alternation between conditions distinguishes ATDs from other SCEDs, which are characterized by more consecutive repeated measurements of the same condition (Onghena & Edgington, 2005).
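The two design guidelines cited above (at least five data points per condition; no more than two consecutive sessions of one condition) can be checked mechanically. The following is a minimal Python sketch, assuming a sequence is written as a string of condition letters such as "ABBABAAB"; the function name `check_atd_sequence` and its parameters are hypothetical illustrations, not part of any published software.

```python
from collections import Counter
from itertools import groupby


def check_atd_sequence(sequence: str, min_points: int = 5, max_run: int = 2) -> dict:
    """Hypothetical check of an ATD condition sequence against two guidelines.

    `sequence` is a string of condition labels, e.g. "ABBABAABAB".
    Returns the per-condition counts, the longest run of one condition,
    and whether each guideline is met.
    """
    if not sequence:
        raise ValueError("sequence must contain at least one session")
    counts = Counter(sequence)
    # groupby collapses consecutive identical labels into runs.
    longest_run = max(len(list(run)) for _, run in groupby(sequence))
    return {
        "counts": dict(counts),
        "longest_run": longest_run,
        "enough_points": all(n >= min_points for n in counts.values()),
        "runs_ok": longest_run <= max_run,
    }


if __name__ == "__main__":
    for seq in ("ABBABAABAB", "AAAAABBBBB"):
        print(seq, check_atd_sequence(seq))
```

With these defaults, "ABBABAABAB" passes both checks, while "AAAAABBBBB" has enough points per condition but contains a run of five. Only conditions that actually appear in the string are counted, so a planned condition that is missing entirely would not be flagged.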

Given these distinguishing features of ATDs, it is important to identify the conditions under which this design is most useful and should be recommended to applied researchers. ATDs are applicable to reversible behaviors (Wolery et al., 2018) that are sensitive to interventions that can be introduced and removed quickly, prior to the maintenance and generalization phases of treatment analyses. For nonreversible behaviors, an AB design (Michiels & Onghena, 2019), a multiple-baseline design, and/or a changing-criterion design can be used instead (Ledford et al., 2019), whereas for reversible behaviors and interventions that require more time to demonstrate a treatment effect (or for an effect to wear off), an ABAB design is typically recommended.

ATDs can be useful for applied researchers for several reasons. First, an ATD can be used to compare the efficiency of different interventions (Holcombe et al., 1994), instead of only comparing a baseline to an intervention condition. Second, an ATD enables researchers to make several attempts, in a brief period of time, to demonstrate whether one condition is superior to the other. This rapid alternation of conditions helps reduce the threat of history because it decreases the likelihood that confounding external events coincide exactly with the changes in condition (Petursdottir & Carr, 2018). It also helps reduce the threat of maturation, which usually entails a gradual process (Petursdottir & Carr, 2018), because the total duration of an ATD study is likely to be shorter when conditions change rapidly and the same condition is not in place for many consecutive measurements. Third, an ATD with a random determination of the sequence of conditions further increases internal validity and makes the design equivalent to medical N-of-1 trials, which also entail block randomization and are considered Level-1 empirical evidence for treatment effectiveness for individual cases (Howick et al., 2011). The use of randomization when determining the alternating sequence has been recommended (Barlow & Hayes, 1979; Horner & Odom, 2014; Kazdin, 2011) and is relatively common: Manolov and Onghena (2018) report that 51%, and Tanious and Onghena (2020) that 59%, of ATD studies use randomization in the design. Because randomization is not always used, the data analysis options available to the investigator are limited. In the following paragraphs, we refer to different options for determining the condition sequence in ATDs. It is important to note that the way in which the sequence is determined affects the number of options available for data analysis.

Among the possibilities for random determination of condition ordering, a completely randomized design (Onghena & Edgington, 2005) entails that the conditions are randomly alternated without any restriction, but this could lead to problematic sequences such as AAAAABBBBB or AAABBBBBAA. Because such sequences do not allow for a rapid alternation of conditions, other randomization techniques are more commonly used to select the ordering of conditions. In particular, a “random alternation with no condition repeating until all have been conducted” (Wolery et al., 2018, p. 304) describes block randomization (Ledford, 2018) or a randomized block design (Onghena & Edgington, 2005), in which all conditions are grouped in blocks and the order of conditions within each block is determined at random. For instance, sequences such as AB-BA-BA-AB-BA and BA-AB-BA-BA-AB can be obtained. A randomly determined sequence arising from an ATD with block randomization is equivalent to the N-of-1 trials used in the health sciences (Guyatt et al., 1990; Krone et al., 2020; Nikles & Mitchell, 2015), in which several random-order blocks are referred to as multiple crossovers. Another option is to use “random alternation with no more than two consecutive sessions in a single condition” (Wolery et al., 2018, p. 304). Such an ATD with restricted randomization could lead to a sequence such as ABBABAABAB or AABABBABBA, the latter being impossible under block randomization. An alternative procedure for determining the sequence is counterbalancing (Barlow & Hayes, 1979; Kennedy, 2005), which is especially relevant when there are multiple conditions and participants. Counterbalancing enables different orderings of the conditions to be used for different participants. For instance, the sequence could be ABBABAAB for participant 1 and BAABABBA for participant 2.
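The sequence-generation options described above can be sketched in Python. This is an illustration under stated assumptions, not the procedure used by any particular software: conditions are single letters; the hypothetical `completely_randomized` shuffles a fixed number of sessions per condition; `block_randomized` shuffles the order within each block and joins blocks with hyphens, as in the examples above; `restricted_sequences` enumerates by brute force every sequence with equal counts and no run longer than `max_run` (feasible only for small designs, because it inspects k^n candidates for k conditions and n sessions); and `counterbalance` swaps A and B, which reproduces the two-participant example in the text but is only one of many ways to counterbalance.

```python
import random
from itertools import groupby, product


def completely_randomized(n_per_condition, conditions="AB", rng=None):
    """Shuffle all sessions with no restriction (may yield AAAAABBBBB)."""
    rng = rng if rng is not None else random.Random()
    sessions = list(conditions) * n_per_condition
    rng.shuffle(sessions)
    return "".join(sessions)


def block_randomized(n_blocks, conditions="AB", rng=None):
    """Each block contains every condition once, in random order."""
    rng = rng if rng is not None else random.Random()
    blocks = []
    for _ in range(n_blocks):
        block = list(conditions)
        rng.shuffle(block)
        blocks.append("".join(block))
    return "-".join(blocks)


def restricted_sequences(n_per_condition, max_run=2, conditions="AB"):
    """All sequences with equal counts and no run longer than max_run."""
    length = n_per_condition * len(conditions)
    admissible = []
    for seq in product(conditions, repeat=length):
        if any(seq.count(c) != n_per_condition for c in conditions):
            continue
        if max(len(list(run)) for _, run in groupby(seq)) > max_run:
            continue
        admissible.append("".join(seq))
    return admissible


def counterbalance(sequence):
    """Mirror a two-condition sequence by swapping A and B."""
    return sequence.translate(str.maketrans("AB", "BA"))


if __name__ == "__main__":
    rng = random.Random(2024)
    print(completely_randomized(5, rng=rng))
    print(block_randomized(5, rng=rng))
    options = restricted_sequences(5)
    print(len(options), rng.choice(options))
    print(counterbalance("ABBABAAB"))
```

Drawing one element uniformly at random from `restricted_sequences(5)` implements restricted randomization; the same list is also the set of admissible sequences against which a randomization test for such a design would be computed.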

Aims and Organization

In the remaining sections of this manuscript, the emphasis is on data analysis options for ATD data. In particular, we illustrate the use of several quantitative techniques as complements to (rather than substitutes for) visual analysis. Quantifications are highlighted because of their importance for increasing the objectivity of the assessment of intervention effectiveness (Cox & Friedel, 2020; Laraway et al., 2019), for reducing difficulties in accurately identifying clear differences between ATD data paths (Kranak et al., 2021), and for making ATD results more likely to meet the requirements for inclusion in meta-analyses (Onghena et al., 2018). The descriptive quantifications of differences in treatment effects and the inferential technique (i.e., a randomization test) are applicable to ATDs with both block randomization and restricted randomization. However, the quantifications for assessing the consistency of effects across blocks are applicable only to ATDs with block randomization of the conditions. The analytical options this manuscript focuses on are scattered across several texts published since 2018. This article aims to provide behavior analysts with additional data analytic options, using freely available web-based software.
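To make the idea of a randomization test for block-randomized ATD data concrete, here is a minimal Python sketch. It assumes two conditions, one A and one B measurement per block, and uses the mean difference (B minus A) as the test statistic; these choices, the hypothetical function names, and the example data are illustrative assumptions, not the specific statistic or software discussed in the article. Because swapping the labels within a block simply flips the sign of that block's difference, the randomization distribution can be obtained by enumerating all 2^k sign patterns for k blocks. The consistency summary shown is simply the proportion of blocks in which B exceeds A, one basic way to describe consistency across blocks.

```python
from itertools import product


def block_randomization_test(blocks, tol=1e-9):
    """One-sided randomization test for a two-condition block design.

    `blocks` is a list of (a_value, b_value) pairs, one pair per block.
    Returns (observed mean difference B - A, p-value), where the p-value is
    the proportion of within-block relabelings whose statistic is at least
    as large as the observed one.
    """
    diffs = [b - a for a, b in blocks]
    # Comparing sums is equivalent to comparing means, since k is fixed.
    observed = sum(diffs)
    at_least_as_large = 0
    n_assignments = 0
    # Each sign pattern corresponds to one possible within-block ordering.
    for signs in product((1, -1), repeat=len(diffs)):
        n_assignments += 1
        stat = sum(s * d for s, d in zip(signs, diffs))
        if stat >= observed - tol:  # tolerance guards against float round-off
            at_least_as_large += 1
    return observed / len(diffs), at_least_as_large / n_assignments


def proportion_of_blocks_favoring_b(blocks):
    """Share of blocks in which the B measurement exceeds the A measurement."""
    return sum(b > a for a, b in blocks) / len(blocks)


if __name__ == "__main__":
    # Hypothetical data: five blocks, (A, B) score in each.
    data = [(2, 5), (3, 6), (1, 4), (2, 3), (4, 7)]
    print(block_randomization_test(data))
    print(proportion_of_blocks_favoring_b(data))
```

For these hypothetical data every block favors B, so only the observed labeling reaches the observed statistic and the p-value is 1/32 = 0.03125; with five blocks this is also the smallest attainable p-value. The sign-flip shortcut applies only to block randomization; for restricted randomization, the reference distribution would instead be computed over the admissible sequences of the design.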

In the following text, we first discuss visual analysis, several descriptive quantitative techniques, and one inferential statistical technique. Second, we outline potential advantages of the proposed quantifications as complements to visual inspection of graphed ATD data. Third, to enhance the applicability of the techniques and to make it possible to replicate the results presented, we describe several existing software tools for these data analysis options. Finally, we illustrate these quantitative data analytic techniques with two previously published ATD data sets.

Data Analysis Options for Alternating Treatment Design

Visual Analysis