
- Front Psychol

Levels of Reading Comprehension in Higher Education: Systematic Review and Meta-Analysis

Cristina de-la-Peña

1 Departamento de Métodos de Investigación y Diagnóstico en Educación, Universidad Internacional de la Rioja, Logroño, Spain

María Jesús Luque-Rojas

2 Department of Theory and History of Education and Research Methods and Diagnosis in Education, University of Malaga, Málaga, Spain

Associated Data

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Abstract

Higher education aims for university students to produce knowledge from the critical reflection of scientific texts. Therefore, it is necessary to develop a deep mental representation of written information. The objective of this research was to determine, through a systematic review and meta-analysis, the proportion of university students who perform optimally at each level of reading comprehension. The systematic review of empirical studies was limited to the period from 2010 to March 2021 and used the Web of Science, Scopus, Medline, and PsycINFO databases. Two reviewers performed data extraction independently. A random-effects model of proportions was used for the meta-analysis, and heterogeneity was assessed with I². Meta-regression was used to analyze the influence of moderating variables, and two methods were used to study publication bias. Seven articles were identified, with a total sample of 1,044 students. The proportion of students at the literal level was 56% (95% CI = 39–72%, I² = 96.3%), inferential level 33% (95% CI = 19–46%, I² = 95.2%), critical level 22% (95% CI = 9–35%, I² = 99.04%), and organizational level 22% (95% CI = 6–37%, I² = 99.67%). Comparing reading comprehension levels, a significantly higher proportion of university students have an optimal level at the literal level than at the other levels of reading comprehension. The results have to be interpreted with caution but are a guide for future research.

Introduction

Reading comprehension allows the integration of knowledge that facilitates training processes and successful coping with academic and personal situations. In higher education, reading comprehension has to provide students with the autonomy to self-direct their academic and professional learning and to think critically in favor of community service (UNESCO, 2009). However, research in recent years (Bharuthram, 2012; Afflerbach et al., 2015) indicates that some university students are not prepared to deal successfully with academic texts or have reading difficulties (Smagorinsky, 2001; Cox et al., 2014), which may limit academic training focused on written texts. This work aims to review the level of reading comprehension reported by studies carried out in different countries, considering the heterogeneity of existing educational models.

The level of reading comprehension refers to the type of mental representation that is made of the written text. The reader builds a mental model that integrates explicit and implicit data from the text with experiences and previous knowledge (Kucer, 2016; van den Broek et al., 2016). Within the framework of the construction-integration model (Kintsch and van Dijk, 1978; Kintsch, 1998), the most accepted model of reading comprehension, two processing levels are differentiated: a superficial level that identifies or memorizes data forming the basis of the text, and a deep level in which the situation model of the text is elaborated by integrating previous experiences and knowledge. According to the domain-learning model (Alexander, 2004), the cognitive strategies used at these levels of processing differ, ranging from basic coding to a transformation of the text. In the scientific literature, there are investigations (Yussof et al., 2013; Ulum, 2016) that also identify levels of reading comprehension ranging from a literal level of identification of ideas to inferential and critical levels that require the elaboration of inferences and the transformation of data.

Studies focused on higher education (Barletta et al., 2005; Yáñez Botello, 2013) show that university students are at a literal or basic level of understanding. They often have difficulties in making inferences and recognizing the macrostructure of the written text, so they would not develop a situation model of the text. These results point in the same direction as the research on reading comprehension in the mother tongue in the university population. Bharuthram (2012) indicates that university students do not access or develop effective strategies for reading comprehension, such as the capacity for abstraction and synthesis-analysis. Later, Livingston et al. (2015) found that first-year education students present limited reading strategies and difficulties in understanding written texts. Ntereke and Ramoroka (2017) found that only 12.4% of students perform well in a reading comprehension task, with 34.3% presenting a low level of execution in the task.

Factors related to the level of understanding of written information are the mode of presentation of the text (printed vs. digital), the type of metacognitive strategies used (planning, making inferences, inhibition, monitoring, etc.), the type of text and its difficulty (a novel vs. a science passage), the mode of writing (text vs. multimodal), the type of reading comprehension task, and the diversity of the students. For example, several studies (Tuncer and Bahadir, 2014; Trakhman et al., 2019; Kazazoglu, 2020) indicate that reading is more efficient, with better performance in reading comprehension tests, for printed texts than for the same text in digital form, and according to Spencer (2006), college students prefer to read print rather than digital texts. Metacognitive strategies are involved in reading written text (Amril et al., 2019), but studies (Channa et al., 2018) seem to indicate that students do not use them for reading comprehension; specifically, Korotaeva (2012) finds that only 7% of students use them. Concerning the type of text and its difficulty, for Wolfe and Woodwyk (2010), expository texts benefit more from the construction of a situational model of the text than narrative texts, although Feng (2011) finds that expository texts are more difficult to read than narrative texts. Regarding the modality of the text, Mayer (2009) and Guo et al. (2020) indicate that multimodal texts that incorporate images into the text improve reading comprehension. A study by Kobayashi (2002) using open questions, cloze tasks, and multiple-choice questions shows that the type and format of the reading comprehension assessment test significantly influence student performance and that more structured tests better differentiate good from poor readers. Finally, regarding student diversity, studies link reading comprehension with the interest and intrinsic motivation of university students (Cartwright et al., 2019; Dewi et al., 2020), with gender (Saracaloglu and Karasakaloglu, 2011), finding that women present a better level of reading comprehension than men, and with knowledge related to reading (Perfetti et al., 1987). In this research, all the texts were controlled to be printed and unimodal, that is, text only. This is essential because the cognitive processes involved in reading comprehension can vary with these factors (Butcher and Kintsch, 2003; Xu et al., 2020).

The Present Study

Regardless of the educational context, in any university discipline, preparing essays or developing arguments are formative tasks that require a deep level of reading comprehension (inferences and transformation of information) that allows the elaboration of a situation model, and not having this level can lead to limited formative learning. Therefore, the objective of this research was to determine the state of reading comprehension levels in higher education; specifically, the proportion of university students who perform optimally at each level of reading comprehension. It is important to note that there is not much information about the different levels in university students and that this is the only meta-analytic review that explores different levels of reading comprehension at this educational stage. This is a relevant issue because the university system requires that students produce knowledge from the critical reflection of scientific texts, preparing them for innovation, employability, and coexistence in society.

Materials and Methods

Eligibility Criteria: Inclusion and Exclusion

Empirical studies written in Spanish or English that analyze the reading comprehension level of university students are selected.

The exclusion criteria are as follows: (a) book chapters or review books or publications; (b) articles in other languages; (c) studies of lower educational levels; (d) articles that do not identify the age of the sample; (e) second language studies; (f) students with learning difficulties or other disorders; (g) publications that do not indicate the level of reading comprehension; (h) studies that relate reading competence with other variables but do not report reading comprehension levels; (i) pre-post program application work; (j) studies with experimental and control groups; (k) articles comparing pre-university stages or adults; (l) publications that use multi-texts; (m) studies that use some type of technology (computer, hypertext, web, psychophysiological, online questionnaire, etc.); and (n) studies unrelated to the subject of interest.

Only those publications that meet the following criteria are included: (a) empirical research (article, thesis, final degree or master’s degree project, or conference proceedings book); (b) university stage; (c) data or some measure of the level of reading comprehension that allows the effect size to be calculated; (d) written in English or Spanish; (e) reading comprehension in the first language or mother tongue; and (f) published between January 2010 and March 2021.

Search Strategies

A three-step procedure is used to select the studies included in the meta-analysis. In the first step, research and empirical articles in English and Spanish published from January 2010 to March 2021 are reviewed. The search is carried out in online databases covering Spanish and English, namely Web of Science (WoS), Scopus, Medline, and PsycINFO, to review empirical productions that analyze the level of reading comprehension in university students. In the second step, the following terms (searched in titles, abstracts, keywords, and full text) are used to select the articles: reading comprehension and higher education, university students, in Spanish and English, combined with the Boolean operators AND and OR. In the last step, secondary sources, such as the Google search engine, Theseus, and references in publications, are explored.

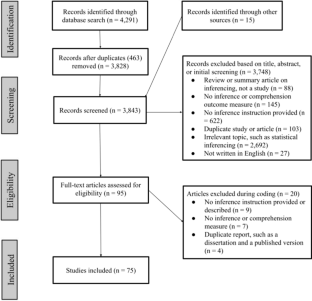

The search returns 4,294 publications (articles, theses, and conference proceedings books) in the databases, specifically 1,989 from WoS, 2,001 from Scopus, 42 from Medline, and 262 from PsycINFO, plus eight records from secondary references. Of the total (4,302 records including the secondary references), 1,568 are eliminated as duplicates, leaving 2,734 valid records. Next, titles and abstracts are reviewed and 2,659 records are excluded because they do not meet the inclusion criteria. The sample of 75 publications is reduced to 40 by excluding 35 because the full text could not be accessed (the authors were contacted but did not respond), the full text did not report specific statistical data, or the study used online questionnaires or computerized presentations of the text. Finally, seven articles in Spanish were selected for the meta-analysis of the reading comprehension level of university students. No data beyond those included in the articles were requested from the authors of the selected studies.
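The record counts reported above can be checked with simple arithmetic. The following sketch uses variable names chosen here for illustration and assumes that the eight secondary records are added to the database records before duplicates are removed, which is the reading under which the reported figure of 2,734 is reproduced.

```python
# Record counts as reported in the search description.
database_hits = {"WoS": 1989, "Scopus": 2001, "Medline": 42, "PsycINFO": 262}
secondary_records = 8
duplicates_removed = 1568
excluded_on_title_abstract = 2659
excluded_on_full_text = 35


def screening_flow():
    """Return the number of records left after each screening step."""
    database_total = sum(database_hits.values())
    # Assumption: secondary records are pooled with database records
    # before de-duplication.
    identified = database_total + secondary_records
    after_dedup = identified - duplicates_removed
    full_text_assessed = after_dedup - excluded_on_title_abstract
    remaining = full_text_assessed - excluded_on_full_text
    return {
        "database_total": database_total,
        "identified": identified,
        "after_dedup": after_dedup,
        "full_text_assessed": full_text_assessed,
        "remaining": remaining,
    }


flow = screening_flow()
for step, count in flow.items():
    print(f"{step}: {count}")
```

The text does not itemize how the 40 remaining publications were narrowed to the seven included studies, so the sketch stops at 40.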

The PRISMA-P guidelines (Moher et al., 2015) are followed to perform the meta-analysis, and the flow chart for the selection of publications relevant to the subject is shown in Figure 1.

Figure 1. Flow diagram for the selection of articles.

Encoding Procedure

This research complies with the manual of systematic reviews (Higgins and Green, 2008), which requires clear objectives, specific search terms, and previously defined eligibility criteria. Two independent coders carry out the study search process, reaching 100% agreement. Subsequently, the studies are coded. For this, a coding protocol is used as a guide to help resolve ambiguities between the coders; the proposals are considered and discussed and discrepancies are resolved, reaching a degree of agreement between the two coders of 97%.

For all studies, the reference, country, research objective, sample size, age and gender, reading comprehension test, other tests, and reading comprehension results (in percentages) were coded. All this information was later systematized in Table 1.

Table 1. Results of the empirical studies included in the meta-analysis.

In relation to the type of reading comprehension level, it was coded based on the levels of the scientific literature as follows: 1 = literal; 2 = inferential; 3 = critical; and 4 = organizational.

Regarding the possible moderating variables, each study was coded according to whether it used a standardized reading comprehension measure (value = 1) or a non-standardized one (value = 0). This research considers as non-standardized those measures created by the researchers themselves for their studies and questionnaires taken from other authors. By type of evaluation test, studies were coded as multiple-choice (value = 0) or multiple-choice plus open questions (value = 1). By type of text, they were coded as argumentative (value = 1) or unknown (value = 0). By type of career, they were coded as social sciences (value = 1) or other careers (health sciences; value = 0). Finally, by type of publication, they were coded as article (value = 1) or doctoral thesis (value = 0).

Effect Size and Statistical Analysis

This descriptive study, with k = 7 studies and a total sample of 1,044 university students, used a continuous variable, with proportions as the effect size, to analyze the proportion of students who performed optimally at each level of reading comprehension. The percentages reported for each level of reading comprehension were transformed into absolute frequencies. A random-effects model (Borenstein et al., 2009) was used to pool the effect sizes. Random-effects models allow the conclusions to be generalized more broadly and make it possible to estimate the effects of different sources of variation (moderating variables). The DerSimonian and Laird method (Egger et al., 2001) was used, calculating the raw proportion and, for each proportion, its standard error, p-value, and 95% confidence interval (CI).
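As an illustration of the pooling step, the following is a minimal sketch of DerSimonian and Laird random-effects pooling applied to raw proportions. It is not the Jamovi implementation used in the study; the function name, the within-study variance formula p(1 - p)/n, and the example counts are assumptions made here for illustration.

```python
import math


def pool_proportions_dl(events, totals, z=1.96):
    """Pool raw proportions with a DerSimonian-Laird random-effects model.

    Assumes every study proportion lies strictly between 0 and 1, so that
    the within-study variance p(1 - p) / n is never zero.
    """
    props = [e / n for e, n in zip(events, totals)]
    variances = [p * (1 - p) / n for p, n in zip(props, totals)]

    # Fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * pi for wi, pi in zip(w, props)) / sum_w

    # Cochran's Q and the method-of-moments estimate of tau^2.
    q = sum(wi * (pi - fixed) ** 2 for wi, pi in zip(w, props))
    df = len(props) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each within-study variance.
    w_star = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * pi for wi, pi in zip(w_star, props)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return {
        "pooled": pooled,
        "se": se,
        "ci": (pooled - z * se, pooled + z * se),
        "q": q,
        "tau2": tau2,
    }


# Hypothetical counts, NOT the data of the seven included studies.
example = pool_proportions_dl(events=[30, 45, 20], totals=[50, 100, 80])
print(example)
```

When the studies disagree strongly, the estimated between-study variance dominates the weights, so small and large studies contribute almost equally to the pooled proportion.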

To examine variability between studies, Cochran’s Q test (which tests the null hypothesis of homogeneity between studies) and I² (the proportion of variability due to heterogeneity) were used. According to Higgins et al. (2003), if I² reaches 25%, heterogeneity is considered low; if it reaches 50%, moderate; and if it exceeds 75%, high. A meta-regression analysis was used to investigate the effect of the moderator variables (type of measure, type of evaluation test, type of text, type of career, and type of publication) at each level of reading comprehension of the sample studies. For each moderating variable, all the necessary statistics were calculated (estimate, standard error, CI, Q, and I²).
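The relationship between Q and I² can be made concrete with the usual formula I² = (Q - df) / Q, where df = k - 1. The sketch below applies it to the Q values reported in the Results, assuming k = 7 (df = 6) for every level; under that assumption the reported I² values are reproduced. The function names and the cut-offs used for labelling are illustrative choices based on the 25%, 50%, and 75% benchmarks.

```python
def i_squared(q, k):
    """Return I^2 as a percentage, given Cochran's Q and the number of studies k."""
    df = k - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


def heterogeneity_label(i2):
    """Label I^2 using the 25/50/75 benchmarks (cut-offs are an assumption)."""
    if i2 >= 75:
        return "high"
    if i2 >= 50:
        return "moderate"
    if i2 >= 25:
        return "low"
    return "negligible"


# Q statistics as reported for each level of reading comprehension.
reported_q = {
    "literal": 162.066,
    "inferential": 125.123,
    "critical": 627.044,
    "organizational": 1799.366,
}

for level, q in reported_q.items():
    i2 = i_squared(q, k=7)
    print(f"{level}: I2 = {i2:.2f}% ({heterogeneity_label(i2)})")
```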

To compare the effect sizes of each level (literal, inferential, critical, and organizational) of reading comprehension, the chi-square test for the proportion recommended by Campbell (2007) was used.
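A comparison of two proportions of this kind can be sketched as follows, assuming that the recommended test is the "N - 1" chi-square variant for 2 x 2 tables. The function name and the counts in the example are hypothetical and are not taken from the included studies.

```python
import math


def n_minus_1_chi_square(x1, n1, x2, n2):
    """Compare two independent proportions x1/n1 and x2/n2.

    Uses the 'N - 1' chi-square statistic for a 2 x 2 table with one
    degree of freedom and returns (chi2, p_value).
    """
    a, b = x1, n1 - x1
    c, d = x2, n2 - x2
    n = n1 + n2
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("every row and column total must be positive")
    chi2 = (a * d - b * c) ** 2 * (n - 1) / denom
    # Upper-tail probability of a chi-square variable with 1 df.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts: 56 of 100 students optimal at one level, 33 of 100 at another.
chi2, p_value = n_minus_1_chi_square(56, 100, 33, 100)
print(f"chi2 = {chi2:.3f}, p = {p_value:.4f}")
```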

Finally, to analyze publication bias, this study uses two methods: Rosenthal’s fail-safe number and a regression test. Rosenthal’s fail-safe number is the number of missing studies with null effects that would be needed to make the pooled effect non-significant (Borenstein et al., 2009). Large values suggest that there is no bias. In the regression test, a non-significant regression suggests that there is no bias.
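Rosenthal’s fail-safe number can be sketched from the z scores of the individual studies. The version below uses the common formulation N = (sum of z)² / z_alpha² - k with a one-tailed critical value of 1.645; the z scores in the example are hypothetical, and the software used in the study may differ in detail.

```python
def rosenthal_fail_safe_n(z_scores, z_alpha=1.645):
    """Number of unpublished null-result studies needed to bring the
    combined result down to the significance threshold z_alpha."""
    k = len(z_scores)
    if k == 0:
        raise ValueError("at least one study is required")
    return (sum(z_scores) ** 2) / (z_alpha ** 2) - k


# Hypothetical z scores for three studies (illustration only).
fail_safe = rosenthal_fail_safe_n([2.0, 2.5, 3.0])
print(f"fail-safe N = {fail_safe:.1f}")
```

A fail-safe number that is large relative to the number of included studies is usually read as a sign that unpublished null results are unlikely to overturn the finding.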

Microsoft Excel was used to classify and encode the data and to produce descriptive statistics, and the free software Jamovi (version 1.6) was used to perform the meta-analysis.

Results

The results of the meta-analysis are presented in three parts: the general descriptive analysis of the included studies; the meta-analytic analysis with the effect size, heterogeneity, moderating variables, and comparison of effect sizes; and the study of publication bias.

Overview of Included Studies

The search of the scientific literature on the subject published from 2010 to March 2021 yielded a small number of publications because it was limited to the higher education stage and required clear statistical data on reading comprehension.

Table 1 presents all the publications reviewed in this meta-analysis. The total number of students evaluated in the reviewed works is 1,044, with the smallest sample comprising 30 students (Del Pino-Yépez et al., 2019) and the largest 570 (Guevara Benítez et al., 2014). Regarding gender, 72% of the participants were women and 28% were men. Most of the samples come from university degrees in social sciences, such as psychology and education (71.42%), followed by health sciences (14.28%), engineering, and one publication (14.28%) that does not indicate the degree. The publications selected according to the inclusion criteria come from several countries with a variety of educational systems, all of them in Latin America. Specifically, the country with the most studies is Mexico (28.57%), followed by Colombia, Chile, Bolivia, Peru, and Ecuador with 14.28% each. By year of publication, 28.57% of the studies appeared in each of 2018 and 2016, and 14.28% in each of 2019, 2014, and 2013.

A total of 57% of the studies analyze four levels of reading comprehension (literal, inferential, critical, and organizational) and 43% investigate three levels (literal, inferential, and critical). Regarding the moderating variables, 57% of the studies use standardized reading comprehension measures and 43% use non-standardized measures. According to the evaluation test used, 29% use multiple-choice questions and 71% combine multiple-choice questions with open questions. In terms of text type, 43% use an argumentative text and 57% use other types of texts (not indicated in the studies). By type of career, 71% of the samples are students of social sciences and 29% are students of other careers, such as engineering or health sciences. In addition, 71% of the publications are articles and 29% are research works (theses and degree projects).
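Because only seven studies are included, each percentage in this overview corresponds to a whole number of studies. The short sketch below makes that mapping explicit; the helper name is illustrative, and the counts are inferred here from the reported percentages rather than stated in the text.

```python
K_STUDIES = 7  # number of studies included in the meta-analysis


def share_of_studies(count, k=K_STUDIES):
    """Percentage of the k studies represented by `count`, rounded to an integer."""
    return round(100 * count / k)


# Study counts inferred from the reported percentages (an assumption).
for count in (2, 3, 4, 5):
    print(f"{count} of {K_STUDIES} studies = {share_of_studies(count)}%")
```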

Table 2 shows the reading comprehension assessment instruments used by the authors of the empirical research integrated into the meta-analysis.

Table 2. Reading comprehension assessment tests used in higher education.

Meta-Analytic Analysis of the Level of Reading Comprehension

The literal level presents a mean proportion effect size of 56% (95% CI = 39–72%; Figure 2). The variability between the different samples of the literal level of reading comprehension was significant (Q = 162.066, p < 0.001; I² = 96.3%). No moderating variable used in this research had a significant contribution to heterogeneity: type of measurement (p = 0.520), type of test (p = 0.114), type of text (p = 0.520), type of career (p = 0.235), and type of publication (p = 0.585). The high variability is explained by other factors not considered in this work, such as the characteristics of the students (cognitive abilities) or other issues.

Figure 2. Forest plot of literal level.

The inferential level presents a mean proportion effect size of 33% (95% CI = 19–46%; Figure 3). The variability between the different samples of the inferential level of reading comprehension was significant (Q = 125.123, p < 0.001; I² = 95.2%). The type of measure (p = 0.011) and the type of text (p = 0.011) had a significant contribution to heterogeneity. The rest of the variables were not significant: type of test (p = 0.214), type of career (p = 0.449), and type of publication (p = 0.218). According to the type of measure, the proportion of students who have an optimal inferential level when a standardized test is administered is 28.7% lower than when a non-standardized test is administered. The type of measure reduces variability by 2.57% and explains the differences between the results of the studies at the inferential level. According to the type of text, the proportion of students who have an optimal inferential level when an argumentative text is used is 28.7% lower than when another type of text is used. The type of text reduces the variability by 2.57% and explains the differences between the results of the studies at the inferential level.
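The moderator effects reported here come from meta-regression with a binary (0/1) moderator. As a simplified sketch of that idea, and not the mixed-effects routine run in Jamovi, the following weighted least squares regression shows that the slope for a dummy-coded moderator equals the difference between the weighted mean proportions of the two groups. The function name, the fixed value of tau², and the example data are hypothetical.

```python
import math


def dummy_meta_regression(effects, variances, moderator, tau2=0.0):
    """Weighted least squares regression of study effects on a 0/1 moderator.

    Weights are 1 / (within-study variance + tau2). Returns the intercept
    (estimate for moderator = 0), the slope (difference for moderator = 1),
    the standard error of the slope, and a two-sided p-value from a z test.
    """
    w = [1 / (v + tau2) for v in variances]
    sum_w = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, moderator)) / sum_w
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, moderator))
    if sxx == 0:
        raise ValueError("the moderator must take both values")
    sxy = sum(
        wi * (xi - x_bar) * (yi - y_bar)
        for wi, xi, yi in zip(w, moderator, effects)
    )
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    se_slope = math.sqrt(1 / sxx)
    p_value = math.erfc(abs(slope / se_slope) / math.sqrt(2))
    return intercept, slope, se_slope, p_value


# Hypothetical proportions for four studies; moderator 1 = standardized test.
intercept, slope, se_slope, p_value = dummy_meta_regression(
    effects=[0.20, 0.30, 0.50, 0.60],
    variances=[0.01, 0.01, 0.01, 0.01],
    moderator=[1, 1, 0, 0],
)
print(f"intercept = {intercept:.2f}, slope = {slope:.2f}, p = {p_value:.4f}")
```

In this toy example the slope of -0.30 means that the proportion is 30 percentage points lower in the studies coded 1 than in the studies coded 0.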

Figure 3. Forest plot of inferential level.

The critical level presents a mean proportion effect size of 22% (95% CI = 9–35%; Figure 4). The variability between the different samples of the critical level of reading comprehension was significant (Q = 627.044, p < 0.001; I² = 99.04%). No moderating variable used in this research had a significant contribution to heterogeneity: type of measurement (p = 0.575), type of test (p = 0.691), type of text (p = 0.575), type of career (p = 0.699), and type of publication (p = 0.293). The high variability is explained by other factors not considered in this work, such as the characteristics of the students (cognitive abilities).

Figure 4. Forest plot of critical level.

The organizational level presents a mean proportion effect size of 22% (95% CI = 6–37%; Figure 5). The variability between the different samples of the organizational level of reading comprehension was significant (Q = 1799.366, p < 0.001; I² = 99.67%). The type of test made a significant contribution to heterogeneity (p = 0.289). The other moderating variables were not significant in this research: type of measurement (p = 0.289), type of text (p = 0.289), type of career (p = 0.361), and type of publication (p = 0.371). Depending on the type of test, the proportion of students who have an optimal organizational level with multiple-choice tests plus open questions is 37% higher than with multiple-choice tests alone. The type of test reduces the variability by 0.27% and explains the differences between the results of the studies at the organizational level.

Figure 5. Forest plot of organizational level.

Table 3 shows the differences between the estimated effect sizes and their significance. A larger proportion of students have an optimal level of reading comprehension at the literal level than at the inferential, critical, and organizational levels, and a larger proportion have an optimal level at the inferential level than at the critical and organizational levels.

Table 3. Results of effect size comparison.

Analysis of Publication Bias

This research uses two methods to verify the existence of bias independently of the sample size. Table 4 shows the results; no publication bias is detected at any level of reading comprehension.

Table 4. Publication bias results.

Discussion

This research used a systematic literature search and meta-analysis to estimate the proportion of university students who have an optimal level at each of the different levels of reading comprehension. All the information available on the subject at the international level was analyzed using international databases in English and Spanish, but the potentially relevant publications were limited. Only seven Spanish-language studies were identified internationally. In these seven studies, the optimal performance at each level of reading comprehension varied, and the estimates showed very high heterogeneity, which indicates that the summary estimates have to be interpreted with caution and in the context of the sample and the variables used in this meta-analysis.

In this research, the effects of the type of measure, type of test, type of text, type of career, and type of publication have been analyzed. Due to the limited information in the publications, it was not possible to assess the effect of any more moderating variables.

We found that some factors significantly influence heterogeneity, depending on the level of reading comprehension considered. The type of measure influenced the optimal performance of students at the inferential level of reading comprehension; specifically, the proportion of students who have an optimal inferential level is lower if the test is standardized. Several studies (Pike, 1996; Koretz, 2002) identify differences between standardized and non-standardized measures in reading comprehension and favor non-standardized measures developed by the researchers (Pyle et al., 2017). The ability of each individual to generate inferences may be difficult to standardize because each person identifies the relationship between the parts of the text differently and integrates it with their previous knowledge (Oakhill, 1982; Cain et al., 2004). This mental representation of the meaning of the text is necessary to create a situation model and a deep understanding (McNamara and Magliano, 2009; van den Broek and Espin, 2012).

The type of test was significant for the organizational level of reading comprehension. The proportion of students who have an optimal organizational level is higher if the reading comprehension assessment test combines multiple-choice and open questions. The organizational level requires the reordering of written information through analysis and synthesis processes (Guevara Benítez et al., 2014); therefore, it constitutes a production task that is better reflected in open questions than in reproduction questions such as multiple choice (Dinsmore and Alexander, 2015). McNamara and Kintsch (1996) identify that open tasks require an effort to make inferences related to previous knowledge and multidisciplinary knowledge. It is important to note that different evaluation test formats can measure different aspects of reading comprehension (Zheng et al., 2007).

The type of text significantly influenced the inferential level of reading comprehension. The proportion of students who have an optimal inferential level decreases with an argumentative text. The expectations created when facing an argumentative text made it difficult to generate inferences and, therefore, to construct the meaning of the text. This result runs in the opposite direction to the study by Diakidoy et al. (2011), who find that refutation texts, such as argumentative ones, facilitate the elaboration of inferences compared to other types of texts. It is possible that the argumentative text, given its dialogical nature of arguments and counterarguments and a subject unknown to the students, reduced the number of inferences because of their scarce previous knowledge of the subject, so that they needed help to elaborate the structure of the text read (Reznitskaya et al., 2007). It should be pointed out that 43% of the studies in this meta-analysis use argumentative texts. Knowing the type of text is relevant for generating inferences. For instance, according to Baretta et al. (2009), different types of text are processed differently in the brain, generating more or fewer inferences; specifically, using the N400 component, they find that expository texts generate more inferences from the text read.

For the type of career and the type of publication, no significance was found at any level of reading comprehension in this sample. This seems to indicate that university students have the same level of performance in tasks of literal, inferential, critical, and organizational understanding regardless of whether they are studying social sciences, health sciences, or engineering. Nor does the type of publication affect the state of the different levels of reading comprehension in higher education.

The remaining high heterogeneity at all levels of reading comprehension was not captured in this review, indicating that there are other factors, such as student characteristics, gender, or other issues, that moderate and explain the variability at the literal, inferential, critical, and organizational levels of reading comprehension in university students.

Regarding the comparison between the different levels of reading comprehension, the literal level has a significantly higher proportion of students with an optimal level than the inferential, critical, and organizational levels. The inferential level has a significantly higher proportion of students with an optimal level than the critical and organizational levels. This corresponds with data from other investigations (Márquez et al., 2016; Del Pino-Yépez et al., 2019) indicating that university students are most successful at the literal level and find the inferential, organizational, and critical levels more difficult, with less success. This indicates that the university students of this sample do not generate a coherent situation model that provides them with a global mental representation of the text read, according to the model of Kintsch (1998), but rather make a literal analysis of the explicit content of the text. This level of understanding can lead to less desirable results in educational terms (Dinsmore and Alexander, 2015).

The educational implications of this meta-analysis in this sample are aimed at making universities aware of the state of reading comprehension levels of university students and at designing strategies (courses and workshops) to optimize them, thereby improving the training and employability of students. Some proposals can be directed to the use of reflection tasks, integration of information, graphic organizers, evaluation, interpretation, or paraphrasing (Rahmani, 2011). Some studies (Hong-Nam and Leavell, 2011; Parr and Woloshyn, 2013) demonstrate the effectiveness of instructional courses in improving performance in reading comprehension and metacognitive strategies. In addition, it is necessary to design reading comprehension assessment tests for higher education that are balanced, validated, and reliable and that provide data for the different levels of reading comprehension.

Limitations and Conclusion

This meta-analysis can be used as a starting point to report on reading comprehension levels in higher education, but the results should be interpreted with caution and in the context of the study sample and variables. Publications without sufficient data and inaccessible articles, together with a sample of only seven studies, may have limited the international perspective. The focus on reading comprehension in the mother tongue, using only unimodal texts, without the influence of technology, and restricted to English and Spanish has also limited the review. The limited amount of data in the studies has limited the meta-regression.

This review is a guide to direct future research, broadening the study focus on the level of reading comprehension using digital technology, experimental designs, second languages, and investigations that relate reading comprehension with other factors (gender, cognitive abilities, etc.) that can explain the heterogeneity in the different levels of reading comprehension. The possibility of developing a comprehensive reading comprehension assessment test in higher education could also be explored.

This review contributes to the scientific literature in several ways. First, this meta-analytic review is the only one that analyzes the proportion of university students who have an optimal performance at the different levels of reading comprehension. The review draws on international publications, although the topic is mostly investigated in Latin America. Second, optimal performance can be improved at all levels of reading comprehension, particularly the inferential, critical, and organizational levels. The literal level shows a significantly higher proportion of optimal performance among university students than the other levels. Third, the students in this sample show optimal performance at the inferential level when non-argumentative texts and non-standardized measures are used, and, in the analyzed works, there is optimal performance at the organizational level when multiple-choice questions plus open questions are used.

The current research is linked to the research project “Study of reading comprehension in higher education” of Asociación Educar para el Desarrollo Humano from Argentina.

Data Availability Statement

Author Contributions

Cd-l-P had the idea for the article and analyzed the data. ML-R searched the data. Cd-l-P and ML-R selected the data, provided comments, and contributed to writing the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The handling editor declared a shared affiliation, though no other collaboration, with one of the authors, ML-R, at the time of the review.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Funding

This paper was funded by the Universidad Internacional de la Rioja and Universidad de Málaga.

References

- Afflerbach P., Cho B.-Y., Kim J.-Y. (2015). Conceptualizing and assessing higher-order thinking in reading. Theory Pract. 54, 203–212. doi: 10.1080/00405841.2015.1044367

- Alexander P. A. (2004). “A model of domain learning: reinterpreting expertise as a multidimensional, multistage process,” in Motivation, Emotion, and Cognition: Integrative Perspectives on Intellectual Functioning and Development. eds. Dai D. Y., Sternberg R. J. (Mahwah, NJ: Erlbaum), 273–298.

- Amril A., Hasanuddin W. S., Atmazaki (2019). The contributions of reading strategies and reading frequencies toward students’ reading comprehension skill in higher education. Int. J. Eng. Adv. Technol. (IJEAT) 8, 593–595. doi: 10.35940/ijeat.F1105.0986S319

- Baretta L., Braga Tomitch L. M., MacNair N., Kwan Lim V., Waldie K. E. (2009). Inference making while reading narrative and expository texts: an ERP study. Psychol. Neurosci. 2, 137–145. doi: 10.3922/j.psns.2009.2.005

- Barletta M., Bovea V., Delgado P., Del Villar L., Lozano A., May O., et al. (2005). Comprensión y Competencias Lectoras en Estudiantes Universitarios. Barranquilla: Uninorte.

- Bharuthram S. (2012). Making a case for the teaching of reading across the curriculum in higher education. S. Afr. J. Educ. 32, 205–214. doi: 10.15700/saje.v32n2a557

- Borenstein M., Hedges L. V., Higgins J. P. T., Rothstein H. R. (2009). Introduction to Meta-Analysis. United Kingdom: John Wiley and Sons, Ltd, 45–49.

- Butcher K. R., Kintsch W. (2003). “Text comprehension and discourse processing,” in Handbook of Psychology: Experimental Psychology. 2nd Edn. Vol. 4. eds. Healy A. F., Proctor R. W., Weiner I. B. (New Jersey: John Wiley and Sons, Inc.), 575–595.

- Cain K., Oakhill J., Bryant P. (2004). Children’s reading comprehension ability: concurrent prediction by working memory, verbal ability, and component skills. J. Educ. Psychol. 96, 31–42. doi: 10.1037/0022-0663.96.1.31

- Campbell I. (2007). Chi-squared and Fisher-Irwin tests of two-by-two tables with small sample recommendations. Stat. Med. 26, 3661–3675. doi: 10.1002/sim.2832

- Cartwright K. B., Lee S. A., Barber A. T., DeWyngaert L. U., Lane A. B., Singleton T. (2019). Contributions of executive function and cognitive intrinsic motivation to university students’ reading comprehension. Read. Res. Q. 55, 345–369. doi: 10.1002/rrq.273

- Channa M. A., Abassi A. M., John S., Sahito J. K. M. (2018). Reading comprehension and metacognitive strategies in first-year engineering university students in Pakistan. Int. J. Engl. Ling. 8, 78–87. doi: 10.5539/ijel.v8n6p78

- Cox S. R., Friesner D. L., Khayum M. (2014). Do reading skills courses help underprepared readers achieve academic success in college? J. College Reading and Learn. 33, 170–196. doi: 10.1080/10790195.2003.10850147

- Del Pino-Yépez G. M., Saltos-Rodríguez L. J., Moreira-Aguayo P. Y. (2019). Estrategias didácticas para el afianzamiento de la comprensión lectora en estudiantes universitarios. Revista científica Dominio de las Ciencias 5, 171–187. doi: 10.23857/dc.v5i1.1038

- Dewi R. S., Fahrurrozi, Hasanah U., Wahyudi A. (2020). Reading interest and reading comprehension: a correlational study in Syarif Hidayatullah State Islamic University, Jakarta. Talent Dev. Excell. 12, 241–250.

- Diakidoy I. N., Mouskounti T., Ioannides C. (2011). Comprehension and learning from refutation and expository texts. Read. Res. Q. 46, 22–38. doi: 10.1598/RRQ.46.1.2

- Dinsmore D. J., Alexander P. A. (2015). A multidimensional investigation of deep-level and surface-level processing. J. Exp. Educ. 84, 213–244. doi: 10.1080/00220973.2014.979126

- Egger M., Smith D., Altman D. G. (2001). Systematic Reviews in Health Care: Meta-Analysis in Context. London: BMJ Publishing Group.

- Feng L. (2011). A short analysis of the text variables affecting reading and testing reading. Stud. Lit. Lang. 2, 44–49.

- Figueroa Romero R. L., Castañeda Sánchez W., Tamay Carranza I. A. (2016). Nivel de comprensión lectora en los estudiantes del primer ciclo de la Universidad San Pedro, filial Caraz, 2016. (Trabajo de investigación, Universidad San Pedro). Repositorio Institucional USP. Available at: http://repositorio.usanpedro.edu.pe/bitstream/handle/USANPEDRO/305/PI1640418.pdf?sequence=1andisAllowed=y (Accessed February 15, 2021).

- Guevara Benítez Y., Guerra García J., Delgado Sánchez U., Flores Rubí C. (2014). Evaluación de distintos niveles de comprensión lectora en estudiantes mexicanos de Psicología. Acta Colombiana de Psicología 17, 113–121. doi: 10.14718/ACP.2014.17.2.12

- Guo D., Zhang S., Wright K. L., McTigue E. M. (2020). Do you get the picture? A meta-analysis of the effect of graphics on reading comprehension. AERA Open 6, 1–20. doi: 10.1177/2332858420901696

- Higgins J. P., Green S. (2008). Cochrane Handbook for Systematic Reviews of Interventions. The Cochrane Collaboration.

- Higgins J. P., Thompson S. G., Deeks J. J., Altman D. G. (2003). Measuring inconsistency in meta-analyses. BMJ 327, 327–557. doi: 10.1136/bmj.327.7414.557

- Hong-Nam K., Leavell A. G. (2011). Reading strategy instruction, metacognitive awareness, and self-perception of striving college developmental readers. J. College Literacy Learn. 37, 3–17.

- Kazazoglu S. (2020). Is printed text the best choice? A mixed-method case study on reading comprehension. J. Lang. Linguistic Stud. 16, 458–473. doi: 10.17263/jlls.712879

- Kintsch W. (1998). Comprehension: A Paradigm for Cognition. New York: Cambridge University Press.

- Kintsch W., van Dijk T. A. (1978). Toward a model of text comprehension and production. Psychol. Rev. 85, 363–394. doi: 10.1037/0033-295X.85.5.363

- Kobayashi M. (2002). Method effects on reading comprehension test performance: test organization and response format. Lang. Test. 19, 193–220. doi: 10.1191/0265532202lt227oa

- Koretz D. (2002). Limitations in the use of achievement tests as measures of educators’ productivity. J. Hum. Resour. 37, 752–777. doi: 10.2307/3069616

- Korotaeva I. V. (2012). Metacognitive strategies in reading comprehension of education majors. Procedural-Social and Behav. Sci. 69, 1895–1900. doi: 10.1016/j.sbspro.2012.12.143

- Kucer S. B. (2016). Accuracy, miscues, and the comprehension of complex literary and scientific texts. Read. Psychol. 37, 1076–1095. doi: 10.1080/02702711.2016.1159632

- Livingston C., Klopper B., Cox S., Uys C. (2015). The impact of an academic reading program in the bachelor of education (intermediate and senior phase) degree. Read. Writ. 6, 1–11. doi: 10.4102/rw.v6i1.66

- Márquez H., Díaz C., Muñoz R., Fuentes R. (2016). Evaluación de los niveles de comprensión lectora en estudiantes universitarios pertenecientes a las carreras de Kinesiología y Nutrición y Dietética de la Universidad Andrés Bello, Concepción. Revista de Educación en Ciencias de la Salud 13, 154–160.

- Mayer R. E. (ed.) (2009). “Modality principle,” in Multimedia Learning. United States: Cambridge University Press, 200–2020.

- McNamara D. S., Kintsch W. (1996). Learning from texts: effects of prior knowledge and text coherence. Discourse Process 22, 247–288. doi: 10.1080/01638539609544975

- McNamara D. S., Magliano J. (2009). “Toward a comprehensive model of comprehension,” in The psychology of learning and motivation. ed. Ross E. B. (New York: Elsevier), 297–384.

- Moher D., Shamseer L., Clarke M., Ghersi D., Liberati A., Petticrew M., et al. (2015). Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 4:1. doi: 10.1186/2046-4053-4-1

- Ntereke B. B., Ramoroka B. T. (2017). Reading competency of first-year undergraduate students at the University of Botswana: a case study. Read. Writ. 8:a123. doi: 10.4102/rw.v8i1.123

- Oakhill J. (1982). Constructive processes in skilled and less skilled comprehenders’ memory for sentences. Br. J. Psychol. 73, 13–20. doi: 10.1111/j.2044-8295.1982.tb01785.x

- Parr C., Woloshyn V. (2013). Reading comprehension strategy instruction in a first-year course: an instructor’s self-study. Can. J. Scholarship Teach. Learn. 4:3. doi: 10.5206/cjsotl-rcacea.2013.2.3

- Perfetti C. A., Beck I., Bell L. C., Hughes C. (1987). Phonemic knowledge and learning to read are reciprocal: a longitudinal study of first-grade children. Merrill-Palmer Q. 33, 283–319.

- Pike G. R. (1996). Limitations of using students’ self-reports of academic development as proxies for traditional achievement measures. Res. High. Educ. 37, 89–114. doi: 10.1007/BF01680043

- Pyle N., Vasquez A. C., Lignugaris B., Gillam S. L., Reutzel D. R., Olszewski A., et al. (2017). Effects of expository text structure interventions on comprehension: a meta-analysis. Read. Res. Q. 52, 469–501. doi: 10.1002/rrq.179

- Rahmani M. (2011). Effects of note-taking training on reading comprehension and recall. Reading Matrix: An Int. Online J. 11, 116–126.

- Reznitskaya A., Anderson R., Kuo L. J. (2007). Teaching and learning argumentation. Elem. Sch. J. 107, 449–472. doi: 10.1086/518623

- Sáez Sánchez B. K. (2018). La comprensión lectora en jóvenes universitarios de una escuela formadora de docentes. Revista Electrónica Científica de Investigación Educativa 4, 609–618.

- Sanabria Mantilla T. R. (2018). Relación entre comprensión lectora y rendimiento académico en estudiantes de primer año de Psicología de la Universidad Pontificia Bolivariana. (Trabajo de grado, Universidad Pontificia Bolivariana). Repositorio Institucional UPB. Available at: https://repository.upb.edu.co/bitstream/handle/20.500.11912/5443/digital_36863.pdf?sequence=1andisAllowed=y (Accessed February 15, 2021).

- Saracaloglu A. S., Karasakaloglu N. (2011). An investigation of prospective teachers’ reading comprehension levels and study and learning strategies related to some variables. Egit. ve Bilim 36, 98–115.

- Smagorinsky P. (2001). If meaning is constructed, what is it made from? Toward a cultural theory of reading. Rev. Educ. Res. 71, 133–169. doi: 10.3102/00346543071001133

- Spencer C. (2006). Research on learners’ preferences for reading from a printed text or a computer screen. J. Dist. Educ. 21, 33–50.

- Trakhman L. M. S., Alexander P., Berkowitz L. E. (2019). Effects of processing time on comprehension and calibration in print and digital mediums. J. Exp. Educ. 87, 101–115. doi: 10.1080/00220973.2017.1411877

- Tuncer M., Bhadir F. (2014). Effect of screen reading and reading from printed out material on student success and permanency in introduction to computer lesson. Turk. Online J. Educ. Technol. 13, 41–49.

- Ulum O. G. (2016). A descriptive content analysis of the extent of Bloom’s taxonomy in the reading comprehension questions of the course book Q: skills for success 4 reading and writing. Qual. Rep. 21, 1674–1683.

- UNESCO (2009). “Conferencia mundial sobre la Educación Superior – 2009.” La nueva dinámica de la educación superior y la investigación para el cambio social y el desarrollo; July 5-8, 2009; Paris.

- van den Broek P., Espin C. A. (2012). Connecting cognitive theory and assessment: measuring individual differences in reading comprehension. Sch. Psychol. Rev. 43, 315–325. doi: 10.1080/02796015.2012.12087512

- van den Broek P., Mouw J. M., Kraal A. (2016). “Individual differences in reading comprehension,” in Handbook of Individual Differences in Reading: Reader, Text, and Context. ed. Afflerbach E. P. (New York: Routledge), 138–150.

- Wolfe M. B. W., Woodwyk J. M. (2010). Processing and memory of information presented in narrative or expository texts. Br. J. Educ. Psychol. 80, 341–362. doi: 10.1348/000709910X485700

- Xu Y., Wong R., He S., Veldre A., Andrews S. (2020). Is it smart to read on your phone? The impact of reading format and culture on the continued influence of misinformation. Mem. Cogn. 48, 1112–1127. doi: 10.3758/s13421-020-01046-0

- Yáñez Botello C. R. (2013). Caracterización de los procesos cognoscitivos y competencias involucrados en los niveles de comprensión lectora en Estudiantes Universitarios. Cuadernos Hispanoamericanos de Psicología 13, 75–90. doi: 10.18270/chps.v13i2.1350

- Yussof Y. M., Jamian A. R., Hamzah Z. A. Z., Roslan A. (2013). Students’ reading comprehension performance with emotional. Int. J. Edu. Literacy Stud. 1, 82–88. doi: 10.7575/aiac.ijels.v.1n.1p.82

- Zheng Y., Cheng L., Klinger D. A. (2007). Do test format in reading comprehension affect second-language students’ test performance differently? TESL Can. J. 25, 65–78. doi: 10.18806/tesl.v25i1.108

An IERI – International Educational Research Institute Journal

- Open access

- Published: 28 October 2021

The achievement gap in reading competence: the effect of measurement non-invariance across school types

- Theresa Rohm (ORCID: orcid.org/0000-0001-9203-327X)

- Claus H. Carstensen

- Luise Fischer

- Timo Gnambs

Large-scale Assessments in Education, volume 9, Article number: 23 (2021)

After elementary school, students in Germany are separated into different school tracks (i.e., school types) with the aim of creating homogeneous student groups in secondary school. Consequently, the development of students’ reading achievement diverges across school types. Findings on this achievement gap have been criticized as depending on the quality of the administered measure. Therefore, the present study examined to what degree differential item functioning affects estimates of the achievement gap in reading competence.

Using data from the German National Educational Panel Study, reading competence was investigated across three timepoints during secondary school: in grades 5, 7, and 9 ( N = 7276). First, using the invariance alignment method, measurement invariance across school types was tested. Then, multilevel structural equation models were used to examine whether a lack of measurement invariance between school types affected the results regarding reading development.

Our analyses revealed some measurement non-invariant items that did not alter the patterns of competence development found among school types in the longitudinal modeling approach. However, misleading conclusions about the development of reading competence in different school types emerged when the hierarchical data structure (i.e., students being nested in schools) was not taken into account.

Conclusions

We assessed the relevance of measurement invariance and accounting for clustering in the context of longitudinal competence measurement. Even though differential item functioning between school types was found for each measurement occasion, taking these differences in item estimates into account did not alter the parallel pattern of reading competence development across German secondary school types. However, ignoring the clustered data structure of students being nested within schools led to an overestimation of the statistical significance of school type effects.

Introduction

Evaluating measurement invariance is a prerequisite for the meaningful interpretation of differences in latent constructs between groups or over time (Brown, 2006). Assessing measurement invariance helps ensure that observed changes represent true change rather than differences in how items are interpreted. The present study investigates measurement invariance between secondary school types for student reading competence, which is the cornerstone of learning. Reading competences develop in secondary school from reading simple texts, retrieving information and making inferences from what is explicitly stated, up to the level of being a fluent reader by reading longer and more complex texts and being able to infer from what is not explicitly stated in the text (Chall, 1983). In particular, students’ reading competence is essential for the comprehension of educational content in secondary school (Edossa et al., 2019; O’Brien et al., 2001). Reading development is often investigated either from a school-level perspective or by focusing on individual-level differences. When taking a school-level perspective on reading competence growth within the German secondary school system, the high degree of segregation after the end of primary school must be considered. Most students are separated into different school tracks on the basis of their fourth-grade achievement level to obtain homogenous student groups in secondary school (Köller & Baumert, 2002). This homogenization based on proficiency levels is supposed to optimize teaching and education to account for students’ preconditions, enhancing learning for all students (Baumert et al., 2006; Gamoran & Mare, 1989). Consequently, divergence in competence attainment already exists at the beginning of secondary school and might increase among the school tracks over the school years.

Previous studies comparing reading competence development between different German secondary school types have presented ambiguous results by finding either a comparable increase in reading competence development (e.g., Retelsdorf & Möller, 2008; Schneider & Stefanek, 2004) or a widening gap between upper, middle, and lower academic school tracks (e.g., Pfost & Artelt, 2013) for the same schooling years. Increasing performance differences in reading over time are termed “Matthew effects”, after the biblical analogy of the rich getting richer and the poor getting poorer (e.g., Bast & Reitsma, 1998; Walberg & Tsai, 1983). This Matthew effect hypothesis was first used in the educational context by Stanovich (1986) to examine individual differences in reading competence development. Besides the widening pattern described by the Matthew effect, parallel or compensatory patterns in reading development can also be present. Development is parallel when the studied groups initially differ in their reading competence and increase similarly over time. A compensatory pattern describes a development in which an initial difference in reading competence between groups narrows over time.
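The three patterns can be told apart by comparing the gap between two groups at the first and last measurement occasion. The following is a minimal sketch of that comparison, not a procedure from the study: the function name, the tolerance `tol`, and the example values are hypothetical and serve only to illustrate the definitions.

```python
def classify_gap_pattern(gap_start: float, gap_end: float, tol: float = 0.05) -> str:
    """Label how an achievement gap between two groups changes over time.

    gap_start, gap_end: absolute difference in mean competence between the
    groups at the first and last measurement occasion (same metric).
    tol: hypothetical tolerance below which a change counts as no change.
    """
    change = gap_end - gap_start
    if abs(change) <= tol:
        return "parallel"        # groups differ initially and grow similarly
    if change > 0:
        return "widening"        # Matthew effect: the gap increases
    return "compensatory"        # the initial gap narrows


# Illustrative values only, not results from any cited study.
for start, end in [(0.8, 0.8), (0.8, 1.1), (0.8, 0.5)]:
    print(start, end, classify_gap_pattern(start, end))
```

In practice the choice of tolerance would be replaced by a statistical test of the group-by-time interaction, so the threshold here should be read as a placeholder.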

Moreover, findings on the divergence in competence attainment have been criticized as being dependent on the quality of the measurement construct (Pfost et al., 2014 ; Protopapas et al., 2016 ). More precisely, the psychometric properties of the administered tests, such as the measurement (non-)invariance of items, can distort individual- or school-level differences. A core assumption of many measurement models pertains to comparable item functioning across groups, meaning that differences between item parameters are zero across groups, or in case of approximate measurement invariance, approximately zero. In practice, this often holds for only a subset of items and partial invariance can then be applied, where some item parameters (i.e., intercepts) are held constant across groups and others are allowed to be freely estimated (Van de Schoot et al., 2013 ). Using data from the German National Educational Panel Study (NEPS; Blossfeld et al., 2011 ), we focus on school-level differences in reading competence across three timepoints. We aim to examine the degree to which measurement non-invariance distorts comparisons of competence development across school types. We therefore compare a model that assumes partial measurement invariance across school types with a model that does not take differences in item estimates between school types into account. Finally, we demonstrate the need to account for clustering (i.e., students nested in schools) in longitudinal reading competence measurement when German secondary school types are compared.

School segregation and reading competence development

Ability tracking of students can take place within schools (e.g., differentiation through course assignment as, for example, in U.S. high schools) or between schools with a curricular differentiation between school types and with distinct learning certificates being offered by each school track, as is the German case (Heck et al., 2004 ; LeTendre et al., 2003 ; Oakes & Wells, 1996 ). The different kinds of curricula at each school type are tailored to the prerequisites of the students and provide different learning opportunities. German students are assigned to different school types based on primary school recommendations that take primary school performance during fourth grade into account, but factors such as support within the family are also considered (Cortina & Trommer, 2009 ; Pfost & Artelt, 2013 ; Retelsdorf et al., 2012 ). Nevertheless, this recommendation is not equally binding across German federal states, leaving room for parents to decide on their children’s school track. Consequently, student achievement in secondary school is associated with the cognitive abilities of students but also with their social characteristics and family background (Baumert et al., 2006 ; Ditton et al., 2005 ). This explicit between-school tracking after fourth grade has consequences for students’ achievement of reading competence in secondary school.

There might be several reasons why different trajectories of competence attainment are observed in the tracked secondary school system (Becker et al., 2006 ). First, students might already differ in their initial achievement and learning rates at the beginning of secondary school. This is related to curricular differentiation, as early separation aims to create homogenous student groups in terms of student proficiency levels and, in effect, enhances learning for all students by providing targeted learning opportunities (Baumert et al., 2003 ; Köller & Baumert, 2002 ; Retelsdorf & Möller, 2008 ). Hence, different learning rates are expected due to selection at the beginning of secondary school (Becker et al., 2006 ). Second, there are differences in learning and teaching methods among the school tracks, as learning settings are targeted towards students’ preconditions. Differences among school types are related to cognitive activation, the amount of support from the teacher in problem solving and demands regarding students’ accomplishments (Baumert et al., 2003 ). Third, composition effects due to the different socioeconomic and ethnic compositions of schools can shape student achievement. Not only belonging to a particular school type but also individual student characteristics determine student achievement. Moreover, the mixture of student characteristics might have decisive effects (Neumann et al., 2007 ). For example, average achievement rates and the characteristics of students’ social backgrounds were found to have additional effects on competence attainment in secondary school (Baumert et al., 2006 ), beyond mere school track affiliation and individual characteristics. Hence, schools of the same school type were found to differ greatly from each other in their attainment levels and their social compositions (Baumert et al., 2003 ).

Findings from the cross-sectional Programme for International Student Assessment (PISA) studies, conducted on behalf of the OECD every three years since 2000, unanimously show large differences between school tracks in reading competence for German students in ninth grade (Baumert et al., 2001 , 2003 ; Nagy et al., 2017 ; Naumann et al., 2010 ; Weis et al., 2016 , 2020 ). Students in upper academic track schools have, on average, higher reading achievement scores than students in the middle and lower academic tracks. Reading competence is thereby highly correlated with other assessed competencies, such as mathematics and science, where these differences between school tracks hold as well.

A few studies have also examined between-school track differences in the development of reading competence in German secondary schools, with most studies focusing on fifth and seventh grade in selected German federal states (e.g., Bos et al., 2009 ; Lehmann & Lenkeit, 2008 ; Lehmann et al., 1999 ; Pfost & Artelt, 2013 ; Retelsdorf & Möller, 2008 ). While some studies reported parallel developments in reading competence from fifth to seventh grade between school types (Retelsdorf & Möller, 2008 ; Schneider & Stefanek, 2004 ), others found a widening gap (Pfost & Artelt, 2013 ; Pfost et al., 2010 ). A widening gap between school types was also found for other competence domains, such as mathematics (Baumert et al., 2003 , 2006 ; Becker et al., 2006 ; Köller & Baumert, 2001 ), while parallel developments were rarely observed (Schneider & Stefanek, 2004 ).

In summary, there might be different school milieus created by the processes of selection into secondary school and formed by the social and ethnic origins of the students (Baumert et al., 2003 ). This has consequences for reading competence development during secondary school, which can follow a parallel, widening or compensatory pattern across school types. The cross-sectional PISA study regularly indicates large differences among German school types in ninth grade but does not offer insight into whether these differences already existed at the beginning of secondary school or how they developed throughout secondary school. In comparison, longitudinal studies have indicated a pattern in reading competence development through secondary school, but the studies conducted in the past were regionally limited and presented inconsistent findings on reading competence development among German secondary school types. In addition to differences in curricula, learning and teaching methods, students’ social backgrounds, family support, and student composition, the manner in which competence development during secondary school is measured and analyzed might contribute to the observed pattern in reading competence development.

Measuring differences in reading development

A meaningful longitudinal comparison of reading competence between school types and across grades requires a scale with a common metric. To be more specific, the relationships between the latent trait score and each observed item should not depend on group membership. The interpretability of scales has been questioned due to scaling issues (Protopapas et al., 2016 ). While the item response theory (IRT) calibration is assumed to be theoretically invariant, it depends in practice on the sample, item fit, and equivalence of item properties (e.g., discrimination and difficulty) among test takers and compared groups. Hence, empirically discovered between-group differences might be confounded with the psychometric properties of the administered tests. For example, Pfost et al. ( 2014 ) concluded from a meta-analysis of 28 studies on Matthew effects in primary school (i.e., the longitudinally widening achievement gap between good and poor readers) that low measurement precision (e.g., constructs presenting floor or ceiling effects) is strongly linked with compensatory patterns in reading achievement. Consequently, measuring changes using reading competence scores might depend on the quality of the measurement. Regarding competence development in secondary school, measurement precision is enhanced through the consideration of measurement error, the consideration of the multilevel data structure, and measurement invariance across groups. A biased measurement model might result when measurement error or the multilevel data structure are ignored, while the presence of differential item functioning (DIF) can be evidence of test-internal item bias. Moreover, the presence of statistical item bias might also contribute to test unfairness and, thus, invalid systematic disadvantages for specific groups (Camilli, 2006 ).

Latent variable modeling for reading competence, such as latent change models (Raykov, 1999 ; Steyer et al., 2000 ), can be advantageous compared to using composite scores. When using composite scores representing latent competences, measurement error is ignored (Lüdtke et al., 2011 ). Hence, biased estimates might be obtained if the construct is represented by composite scores instead of a latent variable measured by multiple indicators and accounting for measurement error (Lüdtke et al., 2008 ). Investigating student competence growth in secondary school poses a further challenge, as the clustered structure of the data needs to be taken into account. This can for example be achieved using cluster robust standard error estimation methods or through hierarchical linear modeling (cf. McNeish et al., 2017 ). If the school is the primary sampling unit, students are nested within schools and classes. Ignoring this hierarchical structure during estimation might result in inaccurate standard errors and biased significance tests, as standard errors would be underestimated. In turn, the statistical significance of the effects would be overestimated (Finch & Bolin, 2017 ; Hox, 2002 ; Raudenbush & Bryk, 2002 ; Silva et al., 2019 ). As one solution, multilevel structural equation modeling (MSEM) takes the hierarchical structure of the data into account while allowing for the estimation of latent variables with dichotomous and ordered categorical indicators (Kaplan et al., 2009 ; Marsh et al., 2009 ; Rabe-Hesketh et al., 2007 ). Although explicitly modeling the multilevel structure (as compared to cluster robust standard error estimation) involves additional assumptions regarding the distribution of the random effects and the covariance structure of random effects, it allows for the partitioning of variance to different hierarchical levels and for cluster-specific inferences (McNeish et al., 2017 ).
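The underestimation of standard errors under clustering can be illustrated with the well-known Kish design effect, which approximates how much the sampling variance of a mean is inflated when observations within clusters are correlated. This is a rough sketch under simplifying assumptions (equal cluster sizes, a single intraclass correlation); it is not the estimation method used in the study, and the cluster size and intraclass correlation below are hypothetical.

```python
import math


def design_effect(avg_cluster_size: float, icc: float) -> float:
    """Kish design effect: 1 + (m - 1) * ICC, assuming equal cluster sizes."""
    return 1.0 + (avg_cluster_size - 1.0) * icc


def adjusted_standard_error(naive_se: float, avg_cluster_size: float, icc: float) -> float:
    """Inflate a standard error that was computed as if students were independent."""
    return naive_se * math.sqrt(design_effect(avg_cluster_size, icc))


# Hypothetical example: 25 students per school, intraclass correlation of 0.20.
naive_se = 0.05
deff = design_effect(25, 0.20)
print("design effect:", round(deff, 2))
print("naive SE:", naive_se)
print("adjusted SE:", round(adjusted_standard_error(naive_se, 25, 0.20), 3))
```

With any positive intraclass correlation and more than one student per school, the adjusted standard error is larger than the naive one, which is why ignoring the nesting makes effects look more significant than they are.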

Furthermore, regarding the longitudinal modeling of performance divergence, an interpretation of growth relies on the assumption that the same attributes are measured across all timepoints (Williamson et al., 1991 ) and that the administered instrument (e.g., reading competence test items) is measurement invariant across groups (Jöreskog, 1971 ; Schweig, 2014 ). The assumption of measurement invariance presupposes that all items discriminate comparably across groups as well as timepoints and are equally difficult, independent of group membership and measurement occasion. Hence, the item parameters of a measurement model have to be constant across groups, meaning that the probability of answering an item correctly should be the same for members of different groups and at different timepoints when they have equal ability levels (Holland & Wainer, 1993 ; Millsap & Everson, 1993 ). When an item parameter is not independent of group membership, DIF is present.
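
The invariance condition can be made concrete with a small sketch of a two-parameter item response function. The logistic form is used here only for illustration (the estimation software may rely on a different link function), and all parameter values are hypothetical.

```python
import math


def p_correct(theta: float, discrimination: float, difficulty: float) -> float:
    """Two-parameter logistic item response function P(correct | theta)."""
    return 1.0 / (1.0 + math.exp(-discrimination * (theta - difficulty)))


theta = 0.0  # the same ability level in both groups

# Invariant item: identical parameters in both groups (hypothetical values).
reference = p_correct(theta, discrimination=1.2, difficulty=0.0)
focal_invariant = p_correct(theta, discrimination=1.2, difficulty=0.0)

# Item with DIF: the item is harder for the focal group at equal ability.
focal_dif = p_correct(theta, discrimination=1.2, difficulty=0.5)

print(reference == focal_invariant)  # equal probabilities: no DIF
print(focal_dif < reference)         # lower probability at equal ability: DIF
```

A group-specific difficulty of this kind corresponds to uniform DIF; a group-specific discrimination would instead make the size of the probability gap depend on the ability level.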

The aim of our study is to investigate the effects of measurement non-invariance among school types on the achievement gap in reading competence development in German secondary schools. Measurement invariance between secondary school types is investigated for each measurement occasion to test whether items are biased among the school types. Then, we embed detected DIF into the longitudinal estimation of reading competence development between school types. A model considering school-type-specific item discrimination and difficulty for items exhibiting non-invariance between school types is therefore compared to a model that does not consider these school-type specificities. To achieve measurement precision for this longitudinal competence measurement, we consider measurement error and the clustered data structure through multilevel latent variable modeling. Finally, we present the same models without consideration of the clustered data structure and compare school type effects on reading competence development.

It is our goal to investigate whether the longitudinal development of reading competence is sensitive to the consideration of measurement non-invariance between the analyzed groups and to the consideration of the clustered data structure. This has practical relevance for all studies on reading competence development, where comparisons between school types are of interest and where schools were the primary sampling unit. Such evaluations increase the certainty that observed changes between school types reflect true changes.

Sample and procedure

The sample consisted of N = 7276 German secondary school students, repeatedly tested and interviewed in 2010 and 2011 (grade 5), 2012 and 2013 (grade 7), and 2014 and 2015 (grade 9) as part of the NEPS. Approximately half of the sample was female (48.08%), and 25.46% had a migration background (defined as either the student or at least one parent born abroad). Please note that migration background is unequally distributed across school types: 22.1% of high school students, 26.9% of middle secondary school students, 38.5% of lower secondary school students, 31.2% of comprehensive school students, and 15.2% of students from schools offering all tracks of secondary education except the high school track had a migration background. In fifth grade, the students’ ages ranged from 9 to 15 years (M = 11.17, SD = 0.54). Students were tested within their class context through written questionnaires and achievement tests. For the first timepoint in grade 5, immediately after students were assigned to different school tracks, a representative sample of German secondary schools was drawn using a stratified multistage sampling design (Aßmann et al., 2011). First, schools that teach at the secondary level were randomly drawn, and second, two grade 5 classes were randomly selected within these schools. Five types of schools were distinguished and served as strata in the first step: high schools (“Gymnasium”), middle secondary schools (“Realschule”), lower secondary schools (“Hauptschule”), comprehensive schools (“Gesamtschule”), and schools offering all tracks of secondary education except the high school track (“Schule mit mehreren Bildungsgängen”). The schools were drawn from these strata proportional to their number of classes. Finally, all students of the selected classes for whom parental consent was obtained before panel participation were asked to take part in the study.
At the second measurement timepoint in 2012 to 2013, when students attended grade 7, a refreshment sample was drawn due to German federal state-specific differences in the timing of the transition to lower secondary education (N = 2170; 29.82% of the total sample). The sampling design of the refreshment sample resembles the sampling design of the original sample (Steinhauer & Zinn, 2016). The ninth-grade sample in 2014 and 2015 was taken at the third measurement timepoint and was a follow-up survey for the students from regular schools in both the original and the refreshment sample. Students were tested at their schools, but N = 1797 students (24.70% of the total sample) had to be tested at one or more measurement timepoints through an individual follow-up within their home context. In both cases, the competence assessments were conducted by a professional survey institute that sent test administrators to the participating schools or households. For an overview of the students being tested per measurement timepoint per school type, within the school or home context, as well as information on temporary and final sample attrition, see Table 1.

To group students into their corresponding school type, we used the information on the survey wave when the students were sampled (original sample in grade 5, refreshment sample in grade 7). Overall, most of the sampled students attended high schools ( N = 3224; 44.31%), 23.65% attended middle secondary schools ( N = 1721), 13.95% attended lower secondary schools ( N = 1015), 11.96% of students attended schools offering all tracks of secondary education except the high school track ( N = 870), and 6.13% attended comprehensive schools ( N = 446). Altogether, the students attended 299 different schools, with a median of 24 students per school. Further details on the survey and the data collection process are presented on the project website ( http://www.neps-data.de/ ).

Instruments

During each assessment, reading competence was measured with a paper-based achievement test, including 32 items in fifth grade, 40 items in seventh grade administered in easy (27 items) and difficult (29 items) booklet versions, and 46 items in ninth grade administered in easy (30 items) and difficult (32 items) booklet versions. The items were specifically constructed for the administration of the NEPS, and each item was administered once (Krannich et al., 2017; Pohl et al., 2012; Scharl et al., 2017). Because memory effects might distort responses if items are repeatedly administered, the linking of the reading measurements in the NEPS is based on an anchor-group design (Fischer et al., 2016). With two independent link samples (one to link the grade 5 and grade 7 reading competence tests and the other to link the grade 7 with the grade 9 test), drawn from the same population as the original sample, a mean/mean linking was performed (Loyd & Hoover, 1980). In addition, the unidimensionality of the tests and the measurement invariance of the items, both across grade levels and across relevant sample characteristics (i.e., gender and migration background), were demonstrated (Fischer et al., 2016; Krannich et al., 2017; Pohl et al., 2012; Scharl et al., 2017). Marginal reliabilities were reported as good, with 0.81 in grade 5, 0.83 in grade 7, and 0.81 in grade 9.

Each test administered to the respondents consisted of five different text types (domains: information, instruction, advertising, commenting and literary text) with subsequent questions in either a simple or complex multiple-choice format or a matching response format. In addition, but unrelated to the five text types, the questions covered three types of cognitive requirements (finding information in the text, drawing text-related conclusions, and reflecting and assessing). To answer the respective question types, these cognitive processes needed to be activated. These dimensional concepts and question types are linked to the frameworks of other large-scale assessment studies, such as PISA (OECD, 2017 ) or the International Adult Literacy Survey (IALS/ALL; e.g., OECD & Statistics Canada 1995 ). Further details on the reading test construction and development are presented by Gehrer et al. ( 2003 ).

Statistical analysis

We adopted the multilevel structural equation modeling framework to model the development of student reading competence and fitted a two-level factor model with categorical indicators (Kamata & Vaughn, 2010) to the reading competence tests. Each of the three measurement occasions was modeled as a latent factor. Please note that MSEM is the more general framework for fitting multilevel item response theory models (Fox, 2010; Fox & Glas, 2001; Kamata & Vaughn, 2010; Lu et al., 2005; Muthén & Asparouhov, 2012), and therefore, each factor in our model resembles a unidimensional, two-parameter IRT model. The model setup was the same for the student and the school level: discrimination parameters (i.e., item loadings) were constrained to be equal at the within and between levels, while difficulty estimates (i.e., item thresholds) and item residual variances were estimated at the between level (i.e., school level). School type variables were included as binary predictors of latent abilities at the school level.

The multilevel structural equation models for longitudinal competence measurement were estimated using Bayesian MCMC estimation methods in the Mplus software program (version 8.0, Muthén and Muthén 1998–2020). Two Markov chains were implemented for each parameter, and chain convergence was assessed using the potential scale reduction (PSR, Gelman & Rubin, 1992) criterion, where values below 1.10 indicate convergence (Gelman et al., 2004). Furthermore, successful convergence of the estimates was evaluated based on trace plots for each parameter. To determine whether the estimated models delivered reliable estimates, autocorrelation plots were investigated. The mean of the posterior distribution and the Bayesian 95% credibility interval were used to evaluate the model parameters. Using the Kolmogorov–Smirnov test, the hypothesis that both MCMC chains have an equal distribution was evaluated using 100 draws from each of the two chains per parameter. For all estimated models, the PSR criterion (i.e., Gelman and Rubin diagnostic) indicated that convergence was achieved, which was confirmed by a visual inspection of the trace plots for each model parameter.
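
As an illustration of the convergence criterion, the following minimal sketch computes a potential scale reduction value for a single parameter from two chains, following the general Gelman and Rubin form (pooled variance estimate divided by the mean within-chain variance). The exact computation inside Mplus may differ in detail, and the simulated chains are hypothetical stand-ins for MCMC output.

```python
import random
import statistics


def potential_scale_reduction(chains):
    """Gelman-Rubin PSR for one parameter from equal-length chains."""
    n = len(chains[0])
    chain_means = [statistics.fmean(chain) for chain in chains]
    # Mean of the within-chain sample variances (W).
    within = statistics.fmean([statistics.variance(chain) for chain in chains])
    # Sample variance of the chain means, i.e. B / n.
    between_over_n = statistics.variance(chain_means)
    pooled = (n - 1) / n * within + between_over_n
    return (pooled / within) ** 0.5


rng = random.Random(1)
# Two chains sampling the same distribution (hypothetical, well mixed).
mixed = [[rng.gauss(0.0, 1.0) for _ in range(2000)] for _ in range(2)]
# Two chains stuck in different regions (hypothetical, not converged).
stuck = [
    [rng.gauss(0.0, 1.0) for _ in range(2000)],
    [rng.gauss(3.0, 1.0) for _ in range(2000)],
]

print(potential_scale_reduction(mixed) < 1.10)
print(potential_scale_reduction(stuck) < 1.10)
```

The first pair of chains falls below the 1.10 threshold, whereas the second pair does not, because the variance between the chain means dominates the pooled estimate.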

Diffuse priors were used: normal priors with mean zero and infinite variance, N(0, ∞), for parameters of continuous indicators (e.g., intercepts, loadings, or regression slopes); normal priors with mean zero and a variance of 5, N(0, 5), for parameters of categorical indicators; inverse-gamma priors, IG(−1, 0), for residual variances; and inverse-Wishart priors, IW(0, −4), for variances and covariances.

Model fit was assessed using the posterior predictive p-value (PPP), obtained through a fit statistic based on the likelihood-ratio \({\chi }^{2}\) test of an \({H}_{0}\) model against an unrestricted \({H}_{1}\) model, as implemented in Mplus. A low PPP indicates poor fit, while an acceptable model fit starts with PPP > 0.05, and an excellent-fitting model has a PPP value of approximately 0.5 (Asparouhov & Muthén, 2010 ).
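
The logic of the PPP can be sketched as follows: for each MCMC draw, a discrepancy statistic is computed for the observed data and for data replicated from the model, and the PPP is the proportion of draws in which the replicated discrepancy is at least as large as the observed one. The function names and the discrepancy values are hypothetical; computing the actual likelihood-ratio statistic is left to the estimation software.

```python
def posterior_predictive_p(observed_stats, replicated_stats):
    """Share of draws where the replicated discrepancy >= the observed one."""
    if not observed_stats or len(observed_stats) != len(replicated_stats):
        raise ValueError("need two non-empty sequences of equal length")
    exceed = sum(rep >= obs for obs, rep in zip(observed_stats, replicated_stats))
    return exceed / len(observed_stats)


def classify_fit(ppp, threshold=0.05):
    """Apply the PPP > 0.05 rule of thumb described in the text."""
    return "acceptable" if ppp > threshold else "poor"


# Hypothetical discrepancy values for four MCMC draws (illustration only).
observed = [10.0, 12.0, 11.0, 13.0]
replicated = [11.0, 11.0, 12.0, 12.0]

ppp = posterior_predictive_p(observed, replicated)
print(ppp, classify_fit(ppp))
```

A value near 0.5 means that the observed data look no more discrepant than data generated by the model itself, which is why it is read as excellent fit.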

Differential item functioning was examined using the invariance alignment method (IA; Asparouhov & Muthén, 2014; Kim et al., 2017; Muthén & Asparouhov, 2014). These models were estimated with maximum likelihood estimation using numerical integration and taking the nested data structure into account through cluster robust estimation. The alignment can be run either with one group fixed as the reference (fixed alignment) or with all groups freely estimated (free alignment). As the fixed alignment was shown to slightly outperform the free alignment in a simulation study (Kim et al., 2017), we applied fixed alignment and ran several models, fixing each of the five school types once. Item information for items exhibiting DIF between school types was then split between the respective non-aligning group and the remaining student groups. Hence, new pseudo-items were introduced for the models that take school-type-specific item properties into account.
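
The item-splitting step can be sketched in a few lines. In this minimal, hypothetical example, a flagged item is replaced by two pseudo-items: one that carries the responses of the non-aligning school type and one that carries the responses of all remaining students, with the respective other pseudo-item set to missing. The item names, group labels, and data layout are assumptions made for illustration.

```python
def split_item(responses, groups, item, dif_group):
    """Replace `item` by two pseudo-items: `dif_group` versus all other groups."""
    split_rows = []
    for row, group in zip(responses, groups):
        new_row = {name: value for name, value in row.items() if name != item}
        in_dif_group = group == dif_group
        # Each student contributes to exactly one pseudo-item; the other is missing.
        new_row[f"{item}_{dif_group}"] = row[item] if in_dif_group else None
        new_row[f"{item}_rest"] = None if in_dif_group else row[item]
        split_rows.append(new_row)
    return split_rows


# Hypothetical scored responses (1 = correct, 0 = incorrect) and school types.
responses = [
    {"item01": 1, "item02": 0},
    {"item01": 0, "item02": 1},
    {"item01": 1, "item02": 1},
]
groups = ["high_school", "lower_secondary", "high_school"]

for row in split_item(responses, groups, item="item02", dif_group="lower_secondary"):
    print(row)
```

Because the two pseudo-items are never observed for the same student, each receives its own discrimination and difficulty estimates, while the unflagged items continue to define the common metric.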