
Research Design | Step-by-Step Guide with Examples

Published on 5 May 2022 by Shona McCombes. Revised on 20 March 2023.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall aims and approach

- The type of research design you’ll use

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research aims and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions

Step 1: Consider your aims and approach

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities – start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.

Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

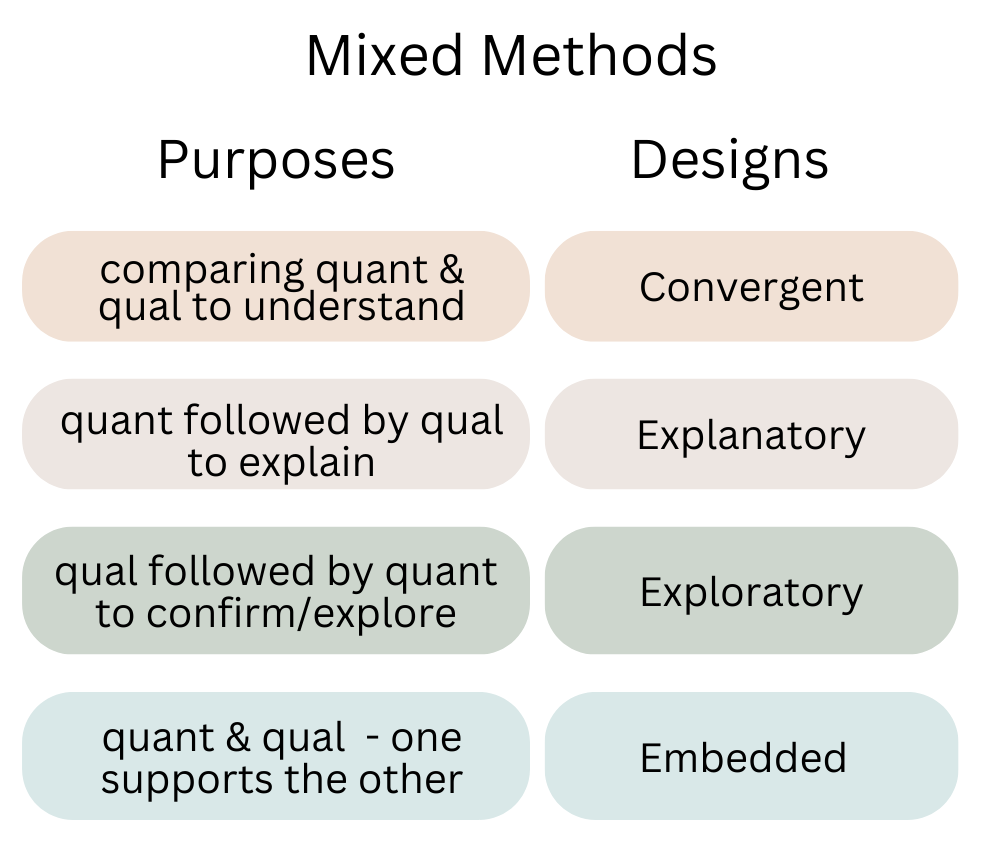

It’s also possible to use a mixed methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by travelling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.


Step 2: Choose a type of research design

Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

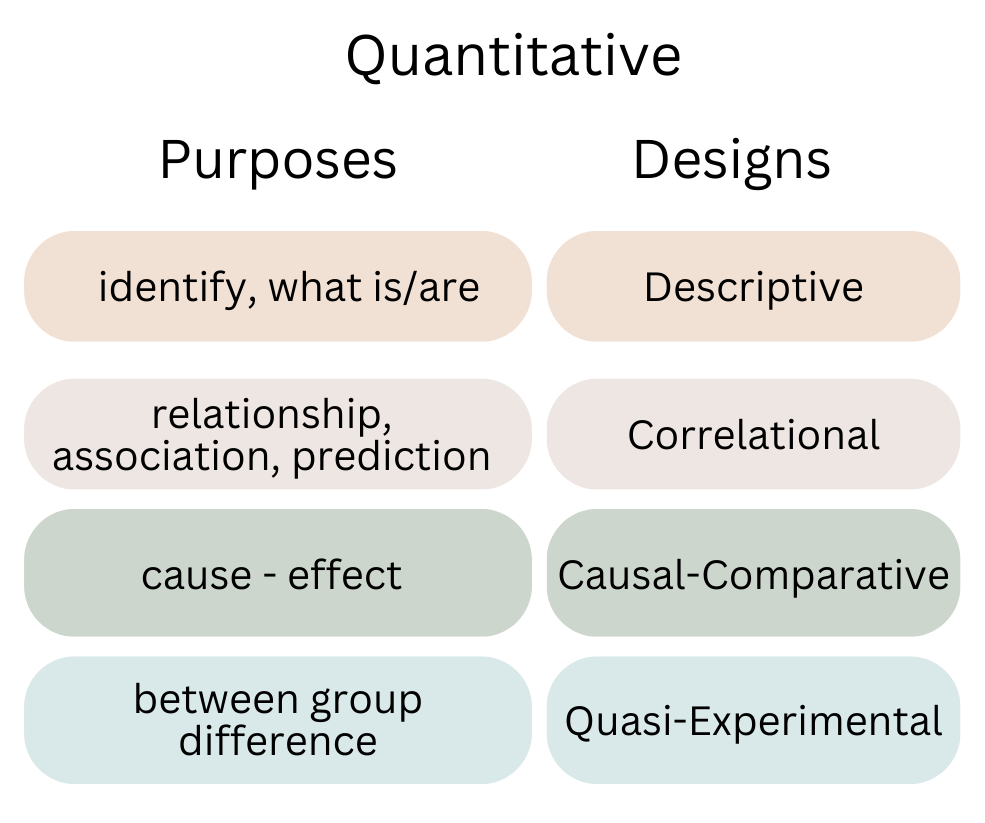

Quantitative designs can be split into four main types. Experimental and quasi-experimental designs allow you to test cause-and-effect relationships, while descriptive and correlational designs allow you to measure variables and describe relationships between them.

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).

Experiments are the strongest way to test cause-and-effect relationships, because controlling the conditions of the study minimises the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re often also more difficult and expensive to implement.
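In a true experiment, other variables are usually kept from influencing the results through random assignment: each participant has the same chance of ending up in the treatment or the control group. The following is a minimal Python sketch of that idea, assuming a simple two-group design; the participant IDs and the `randomly_assign` helper are hypothetical.

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two near-equal groups.

    Returns a dict with 'treatment' and 'control' lists. The optional seed
    only makes the assignment reproducible (e.g. for an audit trail).
    """
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}

ids = [f"P{i:02d}" for i in range(1, 21)]  # 20 hypothetical participant IDs
groups = randomly_assign(ids, seed=42)
print(len(groups["treatment"]), len(groups["control"]))
```

Because the shuffle, not the researcher, decides who gets the treatment, systematic differences between the groups (motivation, prior knowledge) should even out on average as the sample grows.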

Types of qualitative research designs

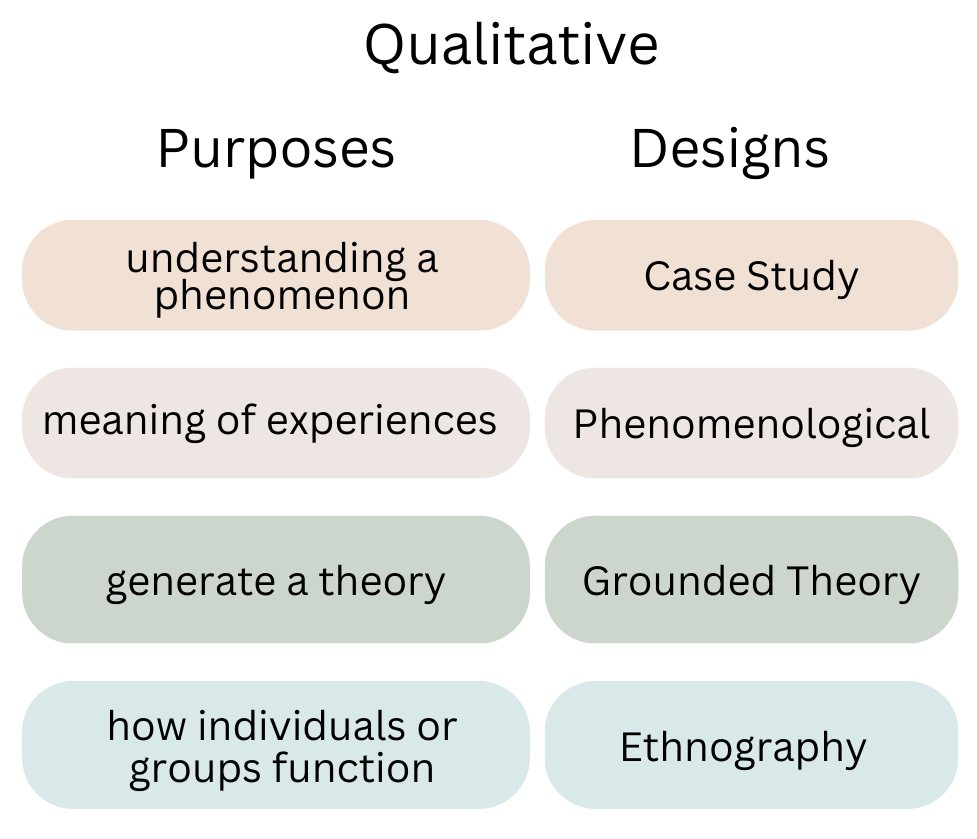

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analysing the data.

Step 3: Identify your population and sampling method

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study – plants, animals, organisations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region, or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalise your results to the population as a whole.

Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
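The difference between the two approaches can be sketched in a few lines of Python. This is an illustration only: it assumes you have a complete sampling frame (a list of every member of the population), and the convenience sample is crudely modelled as "the first people on the list", standing in for whoever is easiest to reach.

```python
import random

# Hypothetical sampling frame: a list of every member of the population.
population = [f"student_{i}" for i in range(1, 5001)]

def simple_random_sample(frame, n, seed=None):
    """Probability sampling: every member has an equal chance of selection."""
    rng = random.Random(seed)
    return rng.sample(frame, n)  # draws n distinct members without replacement

def convenience_sample(frame, n):
    """Non-probability sampling: take whoever is easiest to reach.
    Crudely modelled here as the first n entries in the frame."""
    return frame[:n]

probability = simple_random_sample(population, 100, seed=1)
convenience = convenience_sample(population, 100)
print(len(probability), len(set(probability)))
```

If the frame happens to be ordered by something related to your variables (year of study, enrolment date), the convenience sample inherits that bias, while the random sample does not.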

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalise to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Step 4: Choose your data collection methods

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviours, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.

Observation methods

Observations allow you to collect data unobtrusively, observing characteristics, behaviours, or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.

Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers have already collected – for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

Step 5: Plan your data collection procedures

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are reliable and valid.

Operationalisation

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalisation means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in – for example, questionnaires or inventories whose reliability and validity have already been established.
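To make operationalisation concrete, here is a minimal Python sketch that turns answers to a hypothetical three-item "job satisfaction" questionnaire into a single score. The item names, the 1 to 5 response scale and the choice of which item is reverse-coded are all assumptions for illustration, not an established instrument.

```python
# Hypothetical 5-point Likert items (1 = strongly disagree, 5 = strongly agree)
# intended to measure "job satisfaction". Item names are illustrative only.
SCALE_MIN, SCALE_MAX = 1, 5
REVERSE_CODED = {"q3_want_to_quit"}  # agreeing here signals LOW satisfaction

def satisfaction_score(responses):
    """Turn one participant's item responses into a single mean score."""
    scored = []
    for item, value in responses.items():
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"{item}: {value} is outside the response range")
        if item in REVERSE_CODED:
            value = SCALE_MIN + SCALE_MAX - value  # flips 5 -> 1, 4 -> 2, ...
        scored.append(value)
    return sum(scored) / len(scored)

participant = {"q1_enjoy_work": 4, "q2_feel_valued": 5, "q3_want_to_quit": 2}
print(satisfaction_score(participant))
```

The decisions encoded here (which items count, how responses are scored, how items are combined) are exactly the operationalisation choices the questions above ask you to make explicit.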

Reliability and validity

Reliability means your results can be consistently reproduced, while validity means that you’re actually measuring the concept you’re interested in.

For valid and reliable results, your measurement materials should be thoroughly researched and carefully designed. Plan your procedures to make sure you carry out the same steps in the same way for each participant.

If you’re developing a new questionnaire or other instrument to measure a specific concept, running a pilot study allows you to check its validity and reliability in advance.
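One common way to check a multi-item questionnaire's reliability in a pilot study is Cronbach's alpha, a measure of internal consistency (how closely the items agree with each other). The article does not prescribe a statistic; this is a minimal Python sketch using the standard formula, with hypothetical pilot data from five participants answering three items.

```python
from statistics import variance

def cronbach_alpha(items):
    """Internal-consistency reliability for a multi-item scale.

    items: list of k lists, one per item, each holding every participant's score.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances are used throughout.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each participant's total
    item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / variance(totals))

# Hypothetical pilot data: 3 items, 5 participants (one list per item).
pilot_items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
print(round(cronbach_alpha(pilot_items), 2))
```

Alpha only speaks to consistency, not validity: a scale can be highly consistent while still measuring the wrong concept.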

Sampling procedures

As well as choosing an appropriate sampling method, you need a concrete plan for how you’ll actually contact and recruit your selected sample.

That means making decisions about things like:

- How many participants do you need for an adequate sample size?

- What inclusion and exclusion criteria will you use to identify eligible participants?

- How will you contact your sample – by mail, online, by phone, or in person?

If you’re using a probability sampling method, it’s important that everyone who is randomly selected actually participates in the study. How will you ensure a high response rate?

If you’re using a non-probability method, how will you avoid bias and ensure a representative sample?

Data management

It’s also important to create a data management plan for organising and storing your data.

Will you need to transcribe interviews or perform data entry for observations? You should anonymise and safeguard any sensitive data, and make sure it’s backed up regularly.

Keeping your data well organised will save time when it comes to analysing them. It can also help other researchers validate and add to your findings.
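As one illustration of safeguarding sensitive data, direct identifiers such as names and email addresses can be replaced with stable pseudonyms before analysis. The following minimal Python sketch assumes a keyed hash (HMAC-SHA256) and a secret key stored separately from the dataset; the field names are hypothetical. Note that this is pseudonymisation rather than full anonymisation: anyone with the key can re-link records, and the remaining variables may still identify people.

```python
import hashlib
import hmac

# Assumption: in practice this key is long, random, and stored separately
# from the data. Anyone holding it can re-link pseudonyms to identities.
SECRET_KEY = b"replace-with-a-long-random-secret"

def pseudonymise(identifier: str) -> str:
    """Replace a direct identifier with a stable, keyed pseudonym."""
    digest = hmac.new(SECRET_KEY, identifier.encode("utf-8"), hashlib.sha256)
    return "ID-" + digest.hexdigest()[:12]

records = [
    {"name": "Alex Smith", "email": "alex@example.com", "score": 42},
    {"name": "Sam Jones", "email": "sam@example.com", "score": 37},
]

# Keep the analysis variables, drop the direct identifiers.
clean = [{"pid": pseudonymise(r["email"]), "score": r["score"]} for r in records]
```

Because the same email always maps to the same pseudonym, data collected at different time points can still be linked without storing names alongside the analysis file.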

Step 6: Decide on your data analysis strategies

On their own, raw data can’t answer your research question. The last step of designing your research is planning how you’ll analyse the data.

Quantitative data analysis

In quantitative research, you’ll most likely use some form of statistical analysis. With statistics, you can summarise your sample data, make estimates, and test hypotheses.

Using descriptive statistics, you can summarise your sample data in terms of:

- The distribution of the data (e.g., the frequency of each score on a test)

- The central tendency of the data (e.g., the mean to describe the average score)

- The variability of the data (e.g., the standard deviation to describe how spread out the scores are)

The specific calculations you can do depend on the level of measurement of your variables.
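The three kinds of descriptive summary listed above can be computed with Python's standard `statistics` and `collections` modules. This sketch uses hypothetical test scores and assumes they are measured at the interval level, so that a mean and standard deviation are meaningful.

```python
from collections import Counter
from statistics import mean, median, stdev

scores = [7, 8, 8, 6, 9, 8, 5, 7, 10, 7]  # hypothetical test scores out of 10

# Distribution: how often each score occurs, as (score, frequency) pairs.
distribution = sorted(Counter(scores).items())

print("Distribution:", distribution)
print("Mean:", mean(scores))          # central tendency
print("Median:", median(scores))      # central tendency, robust to outliers
print("Standard deviation:", round(stdev(scores), 2))  # variability (sample SD)
```

For ordinal data (e.g. rankings), the median and frequency table would still be appropriate, but the mean and standard deviation would not.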

Using inferential statistics, you can:

- Make estimates about the population based on your sample data.

- Test hypotheses about a relationship between variables.

Regression and correlation tests look for associations between two or more variables, while comparison tests (such as t tests and ANOVAs) look for differences in the outcomes of different groups.

Your choice of statistical test depends on various aspects of your research design, including the types of variables you’re dealing with and the distribution of your data.
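To show the difference between an association test and a comparison test, the following minimal Python sketch computes the two test statistics by hand: Pearson's correlation coefficient r, and Welch's t statistic for two independent groups (a t test variant that does not assume equal variances). All data are hypothetical. Turning t into a p-value requires the t distribution; in practice a library such as SciPy (`scipy.stats.ttest_ind` with `equal_var=False`, or `scipy.stats.pearsonr`) would usually do the whole calculation.

```python
from math import sqrt
from statistics import mean, variance

def pearson_r(x, y):
    """Association: strength of the linear relationship between two variables."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def welch_t(group_a, group_b):
    """Comparison: t statistic for the difference between two group means,
    using Welch's standard error (no equal-variance assumption)."""
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    return (mean(group_a) - mean(group_b)) / se

# Hypothetical correlational data: hours studied vs exam score.
hours_studied = [2, 4, 5, 7, 9]
exam_score = [55, 62, 66, 74, 83]
print(round(pearson_r(hours_studied, exam_score), 3))

# Hypothetical comparison data: scores under two teaching methods.
method_a = [72, 75, 78, 80, 85]
method_b = [65, 70, 71, 74, 76]
print(round(welch_t(method_a, method_b), 2))
```

Even a strong r here would only show association; only the experimental design behind the group comparison could support a causal claim.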

Qualitative data analysis

In qualitative research, your data will usually be very dense with information and ideas. Instead of summing it up in numbers, you’ll need to comb through the data in detail, interpret its meanings, identify patterns, and extract the parts that are most relevant to your research question.

Two of the most common approaches to doing this are thematic analysis and discourse analysis.

There are many other ways of analysing qualitative data depending on the aims of your research. To get a sense of potential approaches, try reading some qualitative research papers in your field.

Frequently asked questions

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Cite this Scribbr article


McCombes, S. (2023, March 20). Research Design | Step-by-Step Guide with Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/research-design/


Research Components

12 Research Design

The story continues….

The next morning, as Harry and Physicus finished the last cold slice of George’s pizza, they also finished collecting the literature for their review. The friends concluded that their theory about the number of mice influencing Pickles’ behavior had considerable support in the research literature. Once they had written the Literature Review, their research questions would be fully grounded, justified, and worth answering.

“So, how do we go about answering our research questions?” asked Harry.

Physicus explained that they would have to analyze their research questions to see what types of answers were required. Knowing this would guide their decisions about how to design the project to answer their questions.

“There are two basic types of answers to research questions, quantitative and qualitative. The types of answers the research questions require tell us what type of research design we need,” said Physicus.

“I guess if I ask how we decide which type of research design to choose, you will say, ‘It depends’?” asked Harry.

Physicus’ face brightened as he blurted out, “Absolutely not! Negative!” Physicus continued, “If the research questions are stated well, there will only be two ways in which they can be answered. The research questions are king; they make all the decisions.”

“How come?” Harry appeared confused.

“Well, let us see. Think about our first question. How many mice will Pickles attack at one time? What type of answer does this question require? It requires a numeric answer, correct?” Physicus asked.

“Yes, that is correct,” Harry said.

Physicus continued, “Good. So, does our second question also require a numeric answer?”

“The second question is also answered with a number,” replied Harry.

Physicus blurted, “Correct! This means we need to use a quantitative research design!”

Physicus continued, “Now if we had research questions that could not be answered with numbers, we would need to use a qualitative research design to answer our questions with words or phrases instead.”

Harry now appeared relieved, “I get it. So in designing a research project, we simply look for a way to answer the research questions. That’s easy!”

“Well, it depends,” answered Physicus smiling.

Interpreting the Story

There are qualitative, quantitative, mixed methods, and applied research designs. Once the research questions are clear, the appropriate research design becomes obvious. Physicus led Harry to determine that their study would need a quantitative design, because they only needed numerical data to answer their research questions. If Harry’s questions could only be answered with words or phrases, a qualitative design would be needed. If the friends had questions that needed to be answered with both numbers and phrases, then either a mixed methods or an applied research design would have been the choice.

Research Design

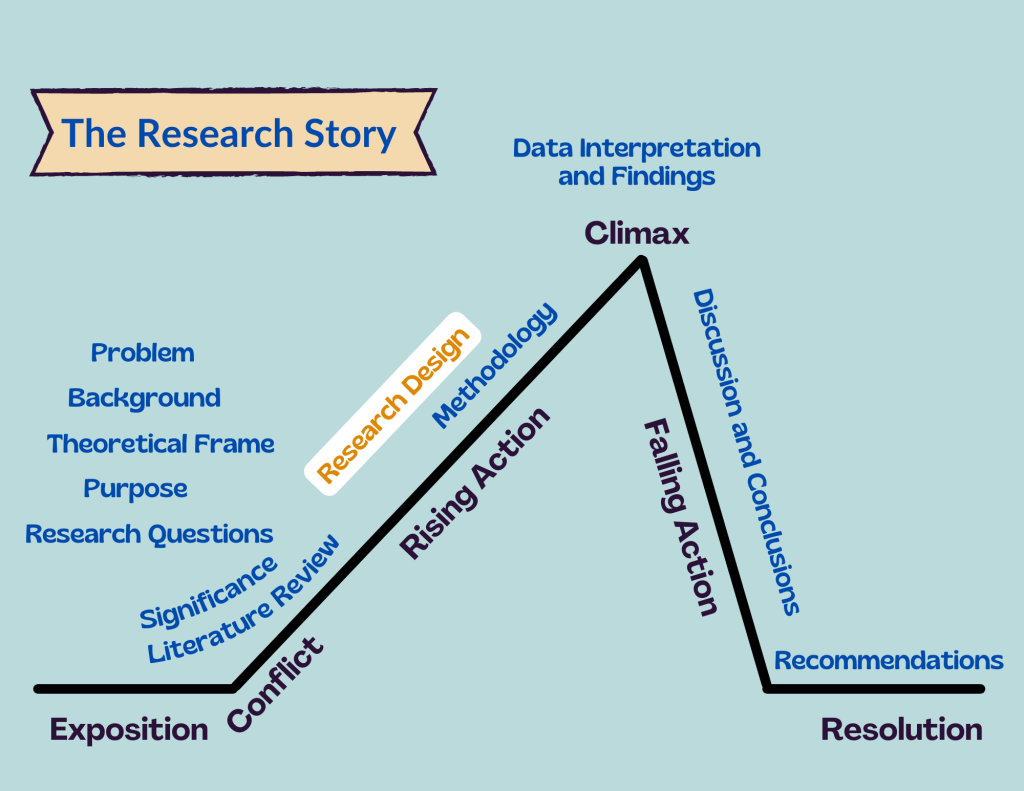

The Research Design explains what type of research is being conducted. The writing in this heading also explains why this type of research is needed to obtain the answers to the research or guiding questions for the project. The design provides a blueprint for the methodology. Articulating the nature of the research design is critical for explaining the Methodology (see the next chapter).

There are four categories of research designs used in educational research and a variety of specific research designs in each category. The first step in determining which category to use is to identify what type of data will answer the research questions. As in our story, Harry and Physicus had research questions that required quantitative answers, so the category of their research design is quantitative.

The next step in finding the specific research design is to consider the purpose (goal) of the research project. The research design must support the purpose. In our story, Harry and Physicus need a quantitative research design that supports their goal of determining how the number of mice Pickles encounters at one time affects his behavior. A causal-comparative or quasi-experimental research design is the best choice for the friends because these are specific quantitative designs used to identify cause-and-effect relationships.

Quantitative Research Designs

Quantitative research designs seek results based on statistical analyses of the collected numerical data. The primary quantitative designs used in educational research include descriptive, correlational, causal-comparative, and quasi-experimental designs. Numerical data are collected and analyzed using statistical calculations appropriate for the design. For example, statistics such as the mean, median, mode, and range are used to describe or explain a phenomenon observed in a descriptive research design. A correlational research design uses statistics such as correlation coefficients or regression analyses to explain how two phenomena are related. Causal-comparative and quasi-experimental designs use analyses suited to establishing causal relationships, such as comparing pre-test and post-test scores or measuring behavior change (as in our story).

The use of numerical data guides both the methodology and the analysis protocols. The design also guides and limits how the results are interpreted. Examples of quantitative data found in educational research include test scores, grade point averages, and dropout rates.

Qualitative Research Designs

Qualitative research designs involve obtaining verbal, perspective, and/or visual results using code-based analyses of collected data. Typical qualitative designs used in educational research include the case study, phenomenological, grounded theory, and ethnography. These designs involve exploring behaviors, perceptions/feelings, and social/cultural phenomena found in educational settings.

Qualitative designs result in a written description of the findings. Data collection strategies include observations, interviews, focus groups, surveys, and documentation reviews. The data are recorded as words, phrases, sentences, and paragraphs. Data are then grouped together to form themes. The process of grouping data to form themes is called coding. The labeled themes become the “code” used to interpret the data. The coding can be determined before data are collected, or it can emerge from the collected data. Data collection strategies often include media such as video and audio recordings, which are transcribed into words to allow for the coding analysis.
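When the codes are determined ahead of time (an a priori codebook), the bookkeeping side of coding can be sketched in Python. This is a deliberately crude toy: it matches hypothetical indicator phrases to transcript segments, whereas real qualitative coding depends on the researcher's interpretation of meaning and context. The themes, phrases, and transcript lines are all invented for illustration.

```python
# Hypothetical a priori codebook: theme -> indicator phrases.
CODEBOOK = {
    "workload": ["too much homework", "no time", "overwhelmed"],
    "belonging": ["friends", "welcome", "fit in"],
    "teacher support": ["teacher helped", "explained", "encouraged"],
}

def code_segment(segment, codebook=CODEBOOK):
    """Return the themes whose indicator phrases appear in a text segment."""
    text = segment.lower()
    return [theme for theme, phrases in codebook.items()
            if any(phrase in text for phrase in phrases)]

# Hypothetical transcribed interview segments.
transcript = [
    "I felt overwhelmed in the first term, there was no time for anything.",
    "My teacher helped me after class and explained it again.",
    "Everyone made me feel welcome, I made friends quickly.",
]

# Tally how many segments were coded under each theme.
theme_counts = {theme: 0 for theme in CODEBOOK}
for segment in transcript:
    for theme in code_segment(segment):
        theme_counts[theme] += 1
```

Writing the codebook down explicitly, even in a simple form like this, is one way to document the coding analysis so readers can follow how themes relate to the research questions.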

The use of qualitative data guides both the methodology and the analysis protocols. The “squishy” nature of qualitative data (words vs. numbers) and the data coding analysis limits the interpretation and conclusions made from the results. It is important to explain the coding analysis used to provide clear reasoning for the themes and how these relate to the research questions.

Mixed Method Designs

Mixed Methods research designs are used when the research questions must be answered with results that are both quantitative and qualitative. These designs integrate the data results to arrive at conclusions. A mixed method design is used when there are greater benefits to using multiple data types, sources, and analyses. Examples of typical mixed methods design approaches in education include convergent, explanatory, exploratory, and embedded designs. Using mixed methods approaches in educational research allows the researcher to triangulate, complement, or expand understanding using multiple types of data.

The use of mixed methods data guides the methodology, analysis, and interpretation of the results. Using both quantitative (quant) and qualitative (qual) data analyses provides a clearer or more balanced picture of the results. Data are analyzed sequentially or concurrently depending on the design. While the quantitative and qualitative data are analyzed independently, the results are interpreted integratively. The findings are a synthesis of the quantitative and qualitative analyses.

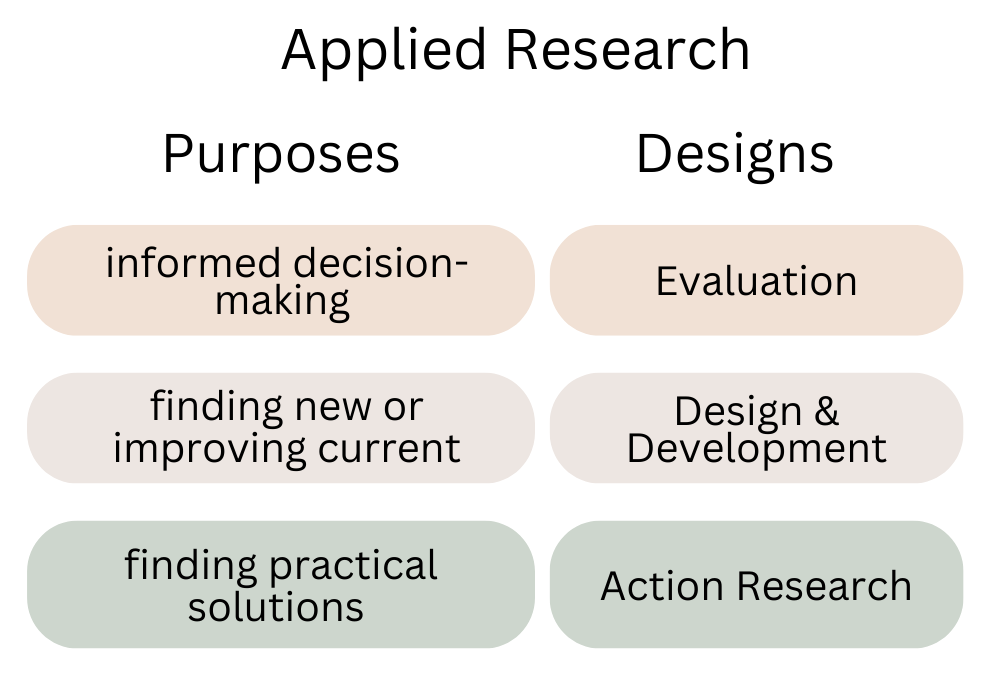

Applied Research Designs

Applied research designs seek both quantitative and qualitative results to address issues of educational practice. Applied research designs include evaluation, design and development, and action research. The purposes of applied research are to identify best practices, to innovate or improve current practices or policies, to test pedagogy, and to evaluate effectiveness. The results of applied research designs provide practical solutions to problems in educational practice.

Applied designs use both theoretical and empirical data. Theoretical data are collected from published theories or other research. Empirical data are obtained by conducting a needs assessment or other data collection methods. Data analyses include both quantitative and qualitative procedures. The findings are interpreted integratively as in mixed methods approaches, and then “applied” to the problem to form a solution.

Telling the research story

The Research Design in a research project tells the story of what direction the plot of the story will take. The writing in this heading sets the stage for the rising action of the plot in the research story. The Research Design describes the journey that is about to take place. It functions to guide the reader in understanding the type of path the story will follow. The Research Design is the overall direction of the research story and is determined before deciding on the specific steps to take in obtaining and analyzing the data.

The Research Design heading appears in Chapter 3 of thesis and dissertation projects, under the Methodology heading. A literature review project does not have this heading.

Graduate Research in Education: Learning the Research Story Copyright © 2022 by Kimberly Chappell and Greg I. Voykhansky is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Quantitative Research Designs in Educational Research

by James H. McMillan, Richard S. Mohn, and Micol V. Hammack. Last reviewed: 24 July 2013. Last modified: 24 July 2013. DOI: 10.1093/obo/9780199756810-0113

The field of education has embraced quantitative research designs since early in the 20th century. These designs were founded primarily on the psychological literature, and psychology and the social sciences more generally continued to have a strong influence on quantitative designs until the assimilation of qualitative designs in the 1970s and 1980s. More recently, a renewed emphasis on quasi-experimental and nonexperimental quantitative designs to infer causal conclusions has resulted in many newer sources specifically targeting these approaches to the field of education. This bibliography begins with a discussion of general introductions to all quantitative designs in the educational literature. The sources in this section tend to be textbooks or well-known sources written many years ago, though they remain very relevant and helpful. It should be noted that there are many other sources in the social sciences more generally that contain principles of quantitative designs that are applicable to education. This article then classifies quantitative designs primarily as either nonexperimental or experimental but also emphasizes the use of nonexperimental designs for making causal inferences. Among experimental designs, the article distinguishes between those that include random assignment of subjects, those that are quasi-experimental (with no random assignment), and those that are single-case (single-subject) designs. Quasi-experimental and nonexperimental designs used for making causal inferences are becoming more popular in education given the practical difficulties and expense of conducting well-controlled experiments, particularly with the use of structural equation modeling (SEM). There have also been recent developments in statistical analyses that allow stronger causal inferences. Historically, quantitative designs have been tied closely to sampling, measurement, and statistics. This bibliography includes important sources on newer statistical procedures needed for particular designs, especially single-case designs, but gives relatively little attention to sampling or measurement. Few sources other than general overview textbooks address quantitative designs in education in a focused or comprehensive way. Those sources that do include the range of designs are introductory in nature; more advanced designs and statistical analyses tend to be found in journal articles and other individual documents, with a couple of exceptions. Another new trend in educational research designs is the use of mixed-method designs (both quantitative and qualitative), though this article does not emphasize these designs.

For many years there have been textbooks that present the range of quantitative research designs, both in education and the social sciences more broadly. Indeed, most of the quantitative design research principles are much the same for education, psychology, and other social sciences. These sources provide an introduction to basic designs that are used within the broader context of other educational research methodologies such as qualitative and mixed-method. Examples of these textbooks written specifically for education include Johnson and Christensen 2012; Mertens 2010; Arthur, et al. 2012; and Creswell 2012. An example of a similar text written for the social sciences, including education, that is dedicated only to quantitative research is Gliner, et al. 2009. In these texts separate chapters are devoted to different types of quantitative designs. For example, Creswell 2012 contains three quantitative design chapters: experimental, which includes both randomized and quasi-experimental designs; correlational (nonexperimental); and survey (also nonexperimental). Johnson and Christensen 2012 also includes three quantitative design chapters, with greater emphasis on quasi-experimental and single-subject research. Mertens 2010 includes a chapter on causal-comparative designs (nonexperimental). Often survey research is addressed as a distinct type of quantitative research with an emphasis on sampling and measurement (how to design surveys). Green, et al. 2006 also presents introductory chapters on different types of quantitative designs, but each of the chapters has different authors. In this book, chapters extend basic designs by examining in greater detail nonexperimental methodologies structured for causal inferences and scaled-up experiments. Two additional sources are noted because they represent the types of publications for the social sciences more broadly that discuss many of the same principles of quantitative design among other types of designs. Bickman and Rog 2009 uses different chapter authors to cover topics such as statistical power for designs, sampling, randomized controlled trials, and quasi-experiments, and educational researchers will find this information helpful in designing their studies. Little 2012 provides comprehensive coverage of topics related to quantitative methods in the social, behavioral, and education fields.

Arthur, James, Michael Waring, Robert Coe, and Larry V. Hedges, eds. 2012. Research methods & methodologies in education. Thousand Oaks, CA: SAGE.

Readers will find this book more of a handbook than a textbook. Each chapter has a different author, and together the chapters represent quantitative, qualitative, and mixed-method designs. The quantitative chapters treat advanced statistical applications, including analysis of variance, regression, and multilevel analysis.

Bickman, Leonard, and Debra J. Rog, eds. 2009. The SAGE handbook of applied social research methods. 2d ed. Thousand Oaks, CA: SAGE.

This handbook includes quantitative design chapters written for the social sciences broadly. It offers relatively advanced treatments of statistical power, randomized controlled trials, and sampling in quantitative designs, though its coverage of additional topics is not as complete as that of other sources in this section.

Creswell, John W. 2012. Educational research: Planning, conducting, and evaluating quantitative and qualitative research. 4th ed. Boston: Pearson.

Creswell presents an introduction to all major types of research designs. Three chapters cover quantitative designs: experimental, correlational, and survey research. Both the correlational and survey research chapters focus on nonexperimental designs. Overall, the introductions are complete and helpful to those beginning their study of quantitative research designs.

Gliner, Jeffrey A., George A. Morgan, and Nancy L. Leech. 2009. Research methods in applied settings: An integrated approach to design and analysis. 2d ed. New York: Routledge.

This text, unlike others in this section, is devoted solely to quantitative research. As such, all aspects of quantitative designs are covered. There are separate chapters on experimental, nonexperimental, and single-subject designs and on internal validity, sampling, and data-collection techniques for quantitative studies. The content of the book is somewhat more advanced than others listed in this section and is unique in its quantitative focus.

Green, Judith L., Gregory Camilli, and Patricia B. Elmore, eds. 2006. Handbook of complementary methods in education research. Mahwah, NJ: Lawrence Erlbaum.

Green, Camilli, and Elmore edited forty-six chapters that represent many contemporary issues and topics related to quantitative designs. Written by noted researchers, the chapters cover design experiments, quasi-experimentation, randomized experiments, and survey methods. Other chapters include statistical topics that have relevance for quantitative designs.

Johnson, Burke, and Larry B. Christensen. 2012. Educational research: Quantitative, qualitative, and mixed approaches. 4th ed. Thousand Oaks, CA: SAGE.

This comprehensive textbook of educational research methods covers qualitative and mixed-method designs extensively, along with quantitative designs. Three of its twenty chapters focus on quantitative designs, covering experimental, quasi-experimental, and single-case designs as well as nonexperimental designs, including longitudinal and retrospective ones. The material is pitched at a relatively advanced level, and there are introductory chapters on sampling and quantitative analyses.

Little, Todd D., ed. 2012. The Oxford handbook of quantitative methods. Vol. 1, Foundations. New York: Oxford Univ. Press.

This handbook offers a relatively advanced treatment of quantitative design and statistical analyses. Multiple authors address the strengths and weaknesses of many different issues and methods, including advanced statistical tools.

Mertens, Donna M. 2010. Research and evaluation in education and psychology: Integrating diversity with quantitative, qualitative, and mixed methods. 3d ed. Thousand Oaks, CA: SAGE.

This textbook introduces all types of educational designs and includes four chapters devoted to quantitative research: experimental and quasi-experimental, causal-comparative and correlational, survey, and single-case research. The author’s treatment of some topics is somewhat more advanced than that of texts such as Creswell 2012, with extensive attention to threats to internal validity for some of the designs.


Educational Design Research

- First Online: 01 January 2013


- Susan McKenney

- Thomas C. Reeves


Educational design research is a genre of research in which the iterative development of solutions to practical and complex educational problems provides the setting for scientific inquiry. The solutions can be educational products, processes, programs, or policies. Educational design research not only targets solving significant problems facing educational practitioners but at the same time seeks to discover new knowledge that can inform the work of others facing similar problems. Working systematically and simultaneously toward these dual goals is perhaps the most defining feature of educational design research. This chapter seeks to clarify the nature of educational design research by distinguishing it from other types of inquiry conducted in the field of educational communications and technology. Examples of design research conducted by different researchers working in the field of educational communications and technology are described. The chapter concludes with a discussion of several important issues facing educational design researchers as they pursue future work using this innovative research approach.


Anderson, T., & Shattuck, J. (2012). Design-based research: A decade of progress in education research? Educational Researcher, 41 (1), 16–25.

Google Scholar

Bannan-Ritland, B., & Baek, J. (2008). Teacher design research: An emerging paradigm for teachers’ professional development. In A. Kelly, R. Lesh, & J. Baek (Eds.), Handbook of design research methods in education: Innovations in science, technology, engineering, and mathematics learning and teaching . London: Routledge.

Barab, S., & Squire, K. (2004). Design-based research: Putting a stake in the ground. The Journal of the Learning Sciences, 13 (1), 1–14.

Article Google Scholar

Basham, J. D., Meyer, H., & Perry, E. (2010). The design and application of the digital backpack. Journal of Research on Technology in Education, 42 (4), 339–359.

Bell, P. (2004). On the theoretical breadth of design-based research in education. Educational Psychologist, 39 (4), 243–253.

Bell, P., Hoadley, C., & Linn, M. (2004). Design-based research in education. In M. Linn, E. Davis, & P. Bell (Eds.), Internet environments of science education (pp. 73–85). Mahwah, NJ: Lawrence Earlbaum Associates.

Bereiter, C. (2002). Design research for sustained innovation. Cognitive Studies, 9 (3), 321–327.

Boote, D. N., & Beile, P. (2005). Scholars before researchers: On the centrality of the dissertation literature review in research preparation. Educational Researcher, 34 (6), 3–15.

Brar, R. (2010). The design and study of a learning environment to support growth and change in students’ knowledge of fraction multiplication . Unpublished doctoral dissertation, The University of California, Berkeley.

*Brown, A. L. (1992). Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. Journal of the Learning Sciences, 2 (2), 141–178.

Burkhardt, H. (2006). From design research to large-scale impact: Engineering research in education. In J. van den Akker, K. Gravemeijer, S. McKenney, & N. Nieveen (Eds.), Educational design research . London: Routledge.

Burkhardt, H. (2009). On strategic design. Educational Designer: Journal of the International Society for Design and Development in Education, 1 (3). Available online at: http://www.educationaldesigner.org/ed/volume1/issue3/article9/index.htm

Carter, C. A., Ruhe, M. C., Weyer, S. M., Litaker, D., Fry, R. E., & Stange, K. C. (2007). An appreciative inquiry approach to practice improvement and transformative change in health care settings. Quality Management in Health Care, 16 (3), 194–204.

Clarke, A. (1999). Evaluation research: An introduction to principles, methods and practice . London: Sage.

*Collins, A. (1992). Toward a design science of education. In E. Lagemann & L. Shulman (Eds.), Issues in education research: problems and possibilities (pp. 15–22). San Francisco, CA: Jossey-Bass.

Dede, C. (2004). If design-based research is the answer, what is the question? Journal of the Learning Sciences, 13 (1), 105–114.

Design-Based Research Collective. (2003). Design-based research: An emerging paradigm for educational inquiry. Educational Researcher, 32 (1), 5–8.

Dewey, J. (1900). Psychology and social practice. Psychological Review, 7 , 105–124.

Drexler, W. (2010). The networked student: A design-based research case study of student constructed personal learning environments in a middle school science course . Unpublished doctoral dissertation, The University of Florida.

Edelson, D. (2002). Design research: What we learn when we engage in design. The Journal of the Learning Sciences, 11 (1), 105–121.

Ejersbo, L., Engelhardt, R., Frølunde, L., Hanghøj, T., Magnussen, R., & Misfeldt, M. (2008). Balancing product design and theoretical insight. In A. Kelly, R. Lesh, & J. Baek (Eds.), The handbook of design research methods in education (pp. 149–163). Mahwah, NJ: Lawrence Erlbaum Associates.

*Firestone, W. A. (1993). Alternative arguments for generalizing from data as applied to qualitative research. Educational Researcher, 22 (4), 16–23.

Fullan, M., & Pomfret, A. (1977). Research on curriculum and instruction implementation. Review of Educational Research, 47 (2), 335–397.

Glaser, R. (1976). Components of a psychology of instruction: Toward a science of design. Review of Educational Research, 46 (1), 29–39.

Gravemeijer, K., & Cobb, P. (2006). Outline of a method for design research in mathematics education. In J. V. D. Akker, K. Gravemeijer, S. McKenney, & N. Nieveen (Eds.), Educational design research . London: Routledge.

Hall, G. E., Wallace, R. C., & Dossett, W. A. (1973). A developmental conceptualization of the adoption process within educational institutions . Austin, TX: The Research and Development Center for Teacher Education.

Havelock, R. (1971). Planning for innovation through dissemination and utilization of knowledge . Ann Arbor, MI: Center for Research on Utilization of Scientific Knowledge.

Kaestle, C. F. (1993). The awful reputation of education research. Educational Researcher, 22 (1), 23, 26–31.

Kali, Y. (2008). The design principles database as means for promoting design-based research. In A. Kelly, R. Lesh, & J. Baek (Eds.), Handbook of design research methods in education (pp. 423–438). London: Routledge.

Kelly, A. (2003). Research as design. Educational Researcher, 32 (1), 3–4.

*Kelly, A., Lesh, R., & Baek, J. (Eds.). (2008). Handbook of design research methods in education . New York, NY: Routledge.

Kim, H., & Hannafin, M. (2008). Grounded design of web-enhanced case-based activity. Educational Technology Research and Development, 56 , 161–179.

Klopfer, E., & Squire, K. (2008). Environmental detectives—The development of an augmented reality platform for environmental simulations. Educational Technology Research and Development, 56 (2), 203–228.

Labaree, D. F. (2006). The trouble with ed schools . New Haven, CT: Yale University Press.

Lagemann, E. (2002). An elusive science: The troubling history of education research . Chicago: University of Chicago Press.

Laurel, B. (2003). Design research: Methods and perspectives . Cambridge, MA: MIT Press.

Lee, J. J. (2009). Understanding how identity supportive games can impact ethnic minority possible selves and learning: A design-based research study . Unpublished dissertation study, The Pennsylvania State University.

*Lincoln, Y. S., & Guba, E. G. (1985). Naturalistic inquiry. Newbury Park, CA: Sage Publications.

Linn, M., & Eylon, B. (2006). Science education: Integrating views of learning and instruction. In P. Alexander & P. Winne (Eds.), Handbook of educational psychology (2nd ed., pp. 511–544). Mahwah, NJ: Lawrence Erlbaum Associates.

McKenney, S. E., & Reeves, T. C. (2013). Systematic review of design-based research progress: Is a little knowledge a dangerous thing? Educational Researcher, 42 (2), 97–100.

*McKenney, S. E., & Reeves, T. C. (2012). Conducting educational design research . London: Routledge.

McKenney, S., van den Akker, J., & Nieveen, N. (2006). Design research from the curriculum perspective. In J. Van den Akker, K. Gravemeijer, S. McKenney, & N. Nieveen (Eds.), Educational design research (pp. 67–90). London: Routledge.

Mills, G. E. (2002). Action research: A guide for the teacher researcher (2nd ed.). Englewood Cliffs, NJ: Prentice-Hall.

Mishra, P., & Koehler, M. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108 (6), 1017–1054.

Münsterberg, H. (1899). Psychology and life . Boston, MA: Houghton Mifflin.

Book Google Scholar

Newman, D. (1990). Opportunities for research on the organizational impact of school computers. Educational Researcher, 19 (3), 8–13.

Oh, E. (2011). Collaborative group work in an online learning environment: A design research study . Unpublished doctoral dissertation, The University of Georgia.

Oh, E., & Reeves, T. (2010). The implications of the differences between design research and instructional systems design for educational technology researchers and practitioners. Educational Media International, 47 (4), 263–275.

*Penuel, W., Fishman, B., Cheng, B., & Sabelli, N. (2011). Organizing research and development at the intersection of learning, implementation and design. Educational Researcher, 40 (7), 331–337.

Plomp, T., & Nieveen, N. (Eds.). (2009). An introduction to educational design research: Proceedings of the seminar conducted at the East China Normal University, Shanghai . Enschede, The Netherlands: SLO—Netherlands Institute for Curriculum Institute.

Quintana, C., Reiser, B., Davis, E., Krajcik, J., Fretz, E., Duncan, R. G. et al. (2004). A scaffolding design framework for software to support science inquiry. The Journal of the Learning Sciences, 13 (3), 337–386.

Raval, H. (2010). Supporting para-teachers in an Indian NGO: The plan-enact-reflect cycle . Unpublished doctoral dissertation, Twente University.

Reeves, T. C. (2011). Can educational research be both rigorous and relevant? Educational Designer: Journal of the International Society for Design and Development in Education , 1 (4). Available online at: http://www.educationaldesigner.org/ed/volume1/issue4/

Reeves, T. C. (2006). Design research from the technology perspective. In J. V. Akker, K. Gravemeijer, S. McKenney, & N. Nieveen (Eds.), Educational design research (pp. 86–109). London: Routledge.

*Reinking, D., & Bradley, B. (2008). Formative and design experiments: Approaches to language and literacy research . New York, NY: Teachers College Press.

Reynolds, R., & Caperton, I. (2011). Contrasts in student engagement, meaning-making, dislikes, and challenges in a discovery-based program of game design learning. Educational Technology Research and Development, 59 (2), 267–289.

Richey, R., & Klein, J. (2007). Design and development research . Mahwah, NJ: Lawrence Erlbaum Associates.

Schoenfeld, A. H. (2009). Bridging the cultures of educational research and design. Educational Designer: Journal of the International Society for Design and Development in Education, 1 (2). Available online at: http://www.educationaldesigner.org/ed/volume1/issue2/article5/index.htm

Schwarz, B. B., & Asterhan, C. S. (2011). E-moderation of synchronous discussions in educational settings: A nascent practice. The Journal of the Learning Sciences, 20 (3), 395–442.

Shavelson, R., Phillips, D., Towne, L., & Feuer, M. (2003). On the science of education design studies. Educational Researcher, 32 (1), 25–28.

Stokes, D. (1997). Pasteurs quadrant: Basic science and technological innovation . Washington, DC: Brookings Institution Press.

Thomas, M. K., Barab, S. A., & Tuzun, H. (2009). Developing critical implementations of technology-rich innovations: A cross-case study of the implementation of Quest Atlantis. Journal of Educational Computing Research, 41 (2), 125–153.

van Aken, X. (2004). Management research based on the paradigm of the design sciences: The quest for field-tested and grounded technological rules. Journal of Management Studies, 41 (2), 219–246.

*van den Akker, J. (1999). Principles and methods of development research. In J. van den Akker, R. Branch, K. Gustafson, N. Nieveen, & T. Plomp (Eds.), Design approaches and tools in education and training (pp. 1–14). Dordrecht: Kluwer Academic Publishers.

van den Akker, J., Gravemeijer, K., McKenney, S., & Nieveen, N. (Eds.). (2006a). Educational design research . London: Routledge.

van den Akker, J., Gravemeijer, K., McKenney, S., & Nieveen, N. (2006b). Introducing educational design research. In J. Van den Akker, K. Gravemeijer, S. McKenney, & N. Nieveen (Eds.), Educational design research (pp. 3–7). London: Routledge.

Wang, F., & Hannafin, M. (2005). Design-based research and technology-enhanced learning environments. Educational Technology Research and Development, 53 (4), 5–23.

Xie, Y., & Sharma, P. (2011). Exploring evidence of reflective thinking in student artifacts of blogging-mapping tool: A design-based research approach. Instructional Science, 39 (5), 695–719.

Yin, R. (1989). Case study research: Design and methods . Newbury Park, CA: Sage Publishing.

Download references

Author information

Authors and affiliations.

Open University of the Netherlands & University of Twente, OWK/GW/UTwente, 217, Enschede, 7500 AE, The Netherlands

Susan McKenney

College of Education, The University of Georgia, 325C Aderhold Hall, Athens, GA, 30602-7144, USA

Thomas C. Reeves


Corresponding author

Correspondence to Susan McKenney.

Editor information

Editors and affiliations.

Department of Learning Technologies, C, University of North Texas, North Elm 3940, Denton, 76207-7102, Texas, USA

J. Michael Spector

W. Sunset Blvd. 1812, St. George, 84770, Utah, USA

M. David Merrill

Centr. Instructiepsychol.&-technologie, K.U. Leuven, Andreas Vesaliusstraat 2, Leuven, 3000, Belgium

Research Drive, Iacocca A109 111, Bethlehem, 18015, Pennsylvania, USA

M. J. Bishop


Copyright information

© 2014 Springer Science+Business Media New York

About this chapter

McKenney, S., Reeves, T.C. (2014). Educational Design Research. In: Spector, J., Merrill, M., Elen, J., Bishop, M. (eds) Handbook of Research on Educational Communications and Technology. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-3185-5_11


DOI: https://doi.org/10.1007/978-1-4614-3185-5_11

Published: 22 May 2013

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-3184-8

Online ISBN: 978-1-4614-3185-5

eBook Packages: Humanities, Social Sciences and Law Education (R0)


Qualitative Design Research Methods

- Michael Domínguez, San Diego State University

- https://doi.org/10.1093/acrefore/9780190264093.013.170

- Published online: 19 December 2017

Emerging in the learning sciences field in the early 1990s, qualitative design-based research (DBR) is a relatively new methodological approach to social science and education research. As its name implies, DBR is focused on the design of educational innovations and the testing of these innovations in the complex and interconnected venue of naturalistic settings. As such, DBR is an explicitly interventionist approach to conducting research, situating the researcher as a part of the complex ecology in which learning and educational innovation take place.

DBR is therefore distinct from more traditional methodologies, including laboratory experiments, ethnographic research, and large-scale implementation. The goal of DBR is not to prove the merits of any particular intervention, or to reflect passively on a context in which learning occurs, but to examine the practical application of theories of learning themselves in specific, situated contexts. By designing purposeful, naturalistic, and sustainable educational ecologies, researchers can test, extend, or modify their theories and innovations based on their pragmatic viability. This process offers the prospect of generating theory-developing, contextualized knowledge claims that can complement the claims produced by other forms of research.

Because of this interventionist, naturalistic stance, DBR has also been the subject of ongoing debate concerning the rigor of its methodology. In many ways, these debates obscure the varied ways DBR has been practiced, the varied types of questions being asked, and the theoretical breadth of the researchers who practice it. In fact, DBR may involve a diverse range of methods, as researchers from a variety of intellectual traditions within the learning sciences and education research design pragmatic innovations based on their theories of learning and document these complex ecologies using the methodologies and tools most applicable to their questions, focuses, and academic communities.

DBR has gained increasing interest in recent years. While it remains a popular methodology for developmental and cognitive learning scientists seeking to explore theory in naturalistic settings, it has also grown in importance to cultural psychology and cultural studies researchers as a methodological approach that aligns in important ways with the participatory commitments of liberatory research. With this growth, internal tensions within the DBR field have also emerged. Yet, though approaches vary and have distinct genealogies and commitments, DBR might be seen as the broad methodological genre encompassing Change Laboratory, design-based implementation research (DBIR), social design-based experiments (SDBE), participatory design research (PDR), and research-practice partnerships. These critically oriented iterations of DBR have important implications for educational research and educational innovation in historically marginalized settings and the Global South.

- design-based research

- learning sciences

- social-design experiment

- qualitative research

- research methods

Educational research, perhaps more than many other disciplines, is a situated field of study. Learning happens around us every day, at all times, in both formal and informal settings. Our worlds are replete with complex, dynamic, diverse communities, contexts, and institutions, many of which are actively seeking guidance and support in the endless quest for educational innovation. Educational researchers—as a source of potential expertise—are necessarily implicated in this complexity, linked to the communities and institutions through their very presence in spaces of learning, poised to contribute with possible solutions, yet often positioned as separate from the activities they observe, creating dilemmas of responsibility and engagement.

So what are educational scholars and researchers to do? These tensions pose a unique methodological challenge for the contextually invested researcher, pressing them not only to produce knowledge about learning but also to participate in the ecology, collaborating on innovations in the complex contexts in which learning is taking place. In short, for many educational researchers, our backgrounds as educators, our connections to community partners, and our sociopolitical commitments to the process of educational innovation push us to ensure that our work is generative, and that our theories and ideas about learning and education (our expertise) are made pragmatic, actionable, and sustainable. We want to test what we know outside of laboratories, designing, supporting, and guiding educational innovation to see whether our theories of learning are accurate and useful for the challenges faced in schools and communities, where learning is messy, collaborative, and contested. Through such a process, we learn, and we can modify our theories to better serve the real needs of communities. It is from this impulse that qualitative design-based research (DBR) emerged as a new methodological paradigm for education research.

This article examines qualitative design-based research, documenting its origins, the major tenets of the genre, implementation considerations, and methodological issues, as well as variance within the paradigm. Because DBR is a relatively new methodology, much tension remains over what constitutes DBR, what design should mean, and for whom. These tensions and questions, as well as broad perspectives and emergent iterations of the methodology, are discussed, and considerations are offered for researchers looking toward the future of this paradigm.

The Origins of Design-Based Research

Qualitative design-based research (DBR) first emerged in the learning sciences field among a group of scholars in the early 1990s, with the first articulation of DBR as a distinct methodological construct appearing in the work of Ann Brown (1992) and Allan Collins (1992). For learning scientists in the 1970s and 1980s, the traditional methodologies of laboratory experiments, ethnographies, and large-scale educational interventions were the only methods available. During these decades, a growing community of learning science and educational researchers (e.g., Bereiter & Scardamalia, 1989; Brown, Campione, Webber, & McGilley, 1992; Cobb & Steffe, 1983; Cole, 1995; Scardamalia & Bereiter, 1991; Schoenfeld, 1982, 1985; Scribner & Cole, 1978) interested in educational innovation and classroom interventions in situated contexts began to find the prevailing methodologies insufficient for the types of learning they wished to document, the roles they wished to play in research, and the kinds of knowledge claims they wished to explore. The laboratory and laboratory-like settings where research on learning was then taking place were divorced from the complexity of real life, and necessarily limiting. Alternatively, most ethnographic research, while more attuned to capturing these complexities and dynamics, regularly assumed a passive stance¹ and avoided interceding in the learning process, which kept researchers from seeing what possibilities for innovation might arise from enacting nascent learning theories. Finally, large-scale interventions could test innovations in practice but lost sight of the nuance of development and implementation in local contexts (Brown, 1992; Collins, Joseph, & Bielaczyc, 2004).

Dissatisfied with these options, and recognizing that new methods were required to study and understand learning in the messiness of socially, culturally, and historically situated settings, Brown (1992) proposed an alternative: Why not involve ourselves in the messiness of the process, taking an active, grounded role in disseminating our theories and expertise by becoming designers and implementers of educational innovations? Rather than observing from afar, DBR researchers could trace their own iterative processes of design, implementation, tinkering, redesign, and evaluation as they unfolded in shared work with teachers, students, learners, and other partners in lived contexts. This premise, initially articulated as “design experiments” (Brown, 1992), would be variously discussed over the next decade as “design research” (Edelson, 2002), “developmental research” (Gravemeijer, 1994), and “design-based research” (Design-Based Research Collective, 2003), all of which reflect the original interventionist, design-oriented concept. The latter term, “design-based research” (DBR), is used here because it is the prevailing terminology for this research approach at present.²

Regardless of the evolving moniker, the prospects of such a methodology were extremely attractive to researchers. Learning scientists who were acutely aware of various aspects of situated context, and interested in studying the applied outcomes of learning theories (a task of inquiry into situated learning for which canonical methods were rather insufficient), found DBR a welcome development (Bell, 2004). As Barab and Squire (2004) explain, “learning scientists . . . found that they must develop technological tools, curriculum, and especially theories that help them systematically understand and predict how learning occurs” (p. 2), and DBR methodologies allowed them to do this in proactive, hands-on ways. Thus, rather than emerging as a strict alternative to more traditional methodologies, DBR was proposed to fill a niche that other methodologies were ill-equipped to cover.