- Front Res Metr Anal

The Use of Research Methods in Psychological Research: A Systematised Review

Salomé Elizabeth Scholtz

1 Community Psychosocial Research (COMPRES), School of Psychosocial Health, North-West University, Potchefstroom, South Africa

Werner de Klerk

Leon T. de Beer

2 WorkWell Research Institute, North-West University, Potchefstroom, South Africa

Research methods play an imperative role in research quality as well as in educating young researchers; however, their application is unclear, which can be detrimental to the field of psychology. Therefore, this systematised review aimed to determine what research methods are being used, how these methods are being used, and for what topics in the field. Our review of 999 articles from five journals over a period of 5 years indicated that psychology research is conducted in 10 topics via predominantly quantitative research methods. Of these 10 topics, social psychology was the most popular. The remainder of the conducted methodology is described. It was also found that articles lacked rigour and transparency in the methodology used, which has implications for replicability. In conclusion, this article provides an overview of all reported methodologies used in a sample of psychology journals. It highlights the popularity and application of methods and designs throughout the article sample as well as an unexpected lack of rigour with regard to most aspects of methodology. Possible sample bias should be considered when interpreting the results of this study. It is recommended that future research utilise the results of this study to determine the possible impact on the field of psychology as a science and to further investigate the use of research methods. The results should prompt future research into the following: a lack of rigour and its implications for replication, the use of certain methods above others, publication bias, and the choice of sampling method.

Introduction

Psychology is an ever-growing and popular field (Gough and Lyons, 2016; Clay, 2017). Due to this growth and the need for science-based research to base health decisions on (Perestelo-Pérez, 2013), the use of research methods in the broad field of psychology is an essential point of investigation (Stangor, 2011; Aanstoos, 2014). Research methods are therefore viewed as important tools used by researchers to collect data (Nieuwenhuis, 2016) and include the following: quantitative, qualitative, mixed method and multi method (Maree, 2016). Additionally, researchers also employ various types of literature reviews to address research questions (Grant and Booth, 2009). According to the literature, which research method is used and why it is used is complex, as it depends on various factors that may include paradigm (O'Neil and Koekemoer, 2016), research question (Grix, 2002), or the skill and exposure of the researcher (Nind et al., 2015). How these research methods are employed is also difficult to discern, as research methods are often depicted as having fixed boundaries that are continuously crossed in research (Johnson et al., 2001; Sandelowski, 2011). Examples of this crossing include adding quantitative aspects to qualitative studies (Sandelowski et al., 2009), or stating that a study used a mixed-method design without the study having any characteristics of this design (Truscott et al., 2010).

The inappropriate use of research methods affects how students and researchers improve and utilise their research skills (Scott Jones and Goldring, 2015), how theories are developed (Ngulube, 2013), and the credibility of research results (Levitt et al., 2017). This, in turn, can be detrimental to the field (Nind et al., 2015), journal publication (Ketchen et al., 2008; Ezeh et al., 2010), and attempts to address public social issues through psychological research (Dweck, 2017). This is especially important given the now well-known replication crisis the field is facing (Earp and Trafimow, 2015; Hengartner, 2018).

Due to this lack of clarity on method use and the potential impact of inept use of research methods, the aim of this study was to explore the use of research methods in the field of psychology through a review of journal publications. Chaichanasakul et al. (2011) identify reviewing articles as an opportunity to examine the development, growth and progress of a research area and the overall quality of a journal. Studies such as Lee et al. (1999) and the review of qualitative methods by Bluhm et al. (2011) have attempted to synthesise the use of research methods and indicated the growth of qualitative research in American and European journals. Research has also focused on the use of research methods in specific sub-disciplines of psychology. For example, in the field of Industrial and Organisational psychology, Coetzee and Van Zyl (2014) found that South African publications tend to consist of cross-sectional quantitative research methods, with longitudinal studies underrepresented. Qualitative studies were found to make up 21% of the articles published from 1995 to 2015 in a similar study by O'Neil and Koekemoer (2016). Other methods in health psychology, such as mixed methods research, have also reportedly been growing in popularity (O'Cathain, 2009).

A broad overview of the use of research methods in the field of psychology as a whole is, however, not available in the literature. Therefore, our research focused on answering what research methods are being used, how these methods are being used, and for what topics in practice (i.e., journal publications) in order to provide a general perspective of method use in psychology publication. We synthesised the collected data into the following format: research topic [areas of scientific discourse in a field or the current needs of a population (Bittermann and Fischer, 2018)], method [data-gathering tools (Nieuwenhuis, 2016)], sampling [elements chosen from a population to partake in research (Ritchie et al., 2009)], data collection [techniques and research strategy (Maree, 2016)], and data analysis [discovering information by examining bodies of data (Ktepi, 2016)]. A systematised review of recent articles (2013 to 2017) collected from five different journals in the field of psychological research was conducted.

Grant and Booth (2009) describe systematised reviews as the review of choice for post-graduate studies, which is employed using some elements of a systematic review and seldom more than one or two databases to catalogue studies after a comprehensive literature search. The aspects used in this systematised review that are similar to those of a systematic review were a full search within the chosen database and data produced in tabular form (Grant and Booth, 2009).

Sample sizes and timelines vary in systematised reviews (see Lowe and Moore, 2014; Pericall and Taylor, 2014; Barr-Walker, 2017). With no clear parameters identified in the literature (see Grant and Booth, 2009), the sample size of this study was determined by the purpose of the sample (Strydom, 2011), and time and cost constraints (Maree and Pietersen, 2016). Thus, a non-probability purposive sample (Ritchie et al., 2009) of the top five psychology journals from 2013 to 2017 was included in this research study. Per Lee (2015), the American Psychological Association (APA) recommends the use of the most up-to-date sources for data collection with consideration of the context of the research study. As this research study focused on the most recent trends in research methods used in the broad field of psychology, the identified time frame was deemed appropriate.

Psychology journals were only included if they formed part of the top five English journals in the miscellaneous psychology domain of the Scimago Journal and Country Rank (Scimago Journal & Country Rank, 2017). The Scimago Journal and Country Rank provides a yearly updated list of publicly accessible journal and country-specific indicators derived from the Scopus® database (Scopus, 2017b) by means of the Scimago Journal Rank (SJR) indicator developed by Scimago from the algorithm Google PageRank™ (Scimago Journal & Country Rank, 2017). Scopus is the largest global database of abstracts and citations from peer-reviewed journals (Scopus, 2017a). The Scimago Journal and Country Rank list was developed to allow researchers to assess scientific domains, compare country rankings, and compare and analyse journals (Scimago Journal & Country Rank, 2017), which supported the aim of this research study. Additionally, the goals of the journals had to focus on topics in psychology in general, with no preference for specific research methods, and the journals had to provide full-text access to articles.

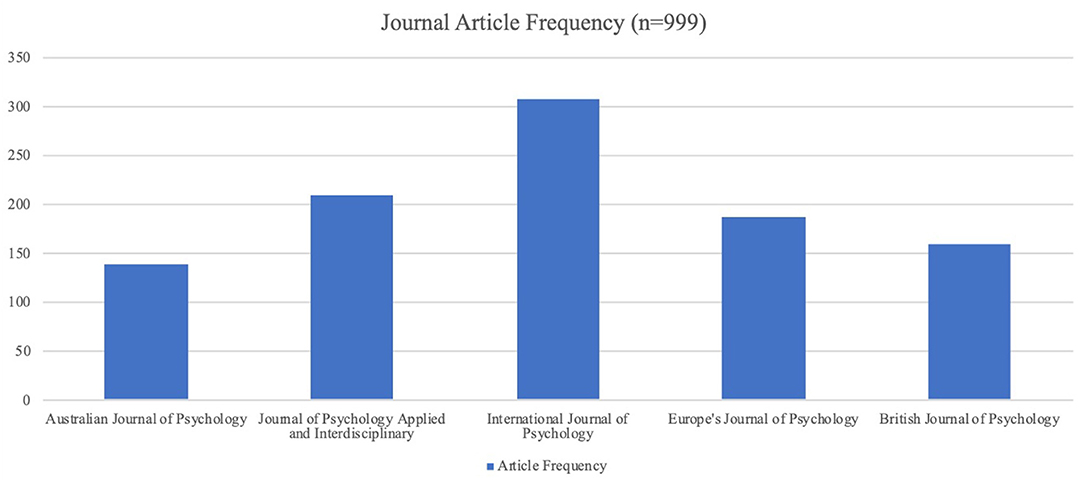

The following top five journals in 2018 fell within the abovementioned inclusion criteria: (1) Australian Journal of Psychology, (2) British Journal of Psychology, (3) Europe's Journal of Psychology, (4) International Journal of Psychology, and (5) Journal of Psychology Applied and Interdisciplinary.

Journals were excluded from this systematised review if no full-text versions of their articles were available, if journals explicitly stated a publication preference for certain research methods, or if the journal only published articles in a specific discipline of psychological research (for example, industrial psychology, clinical psychology etc.).

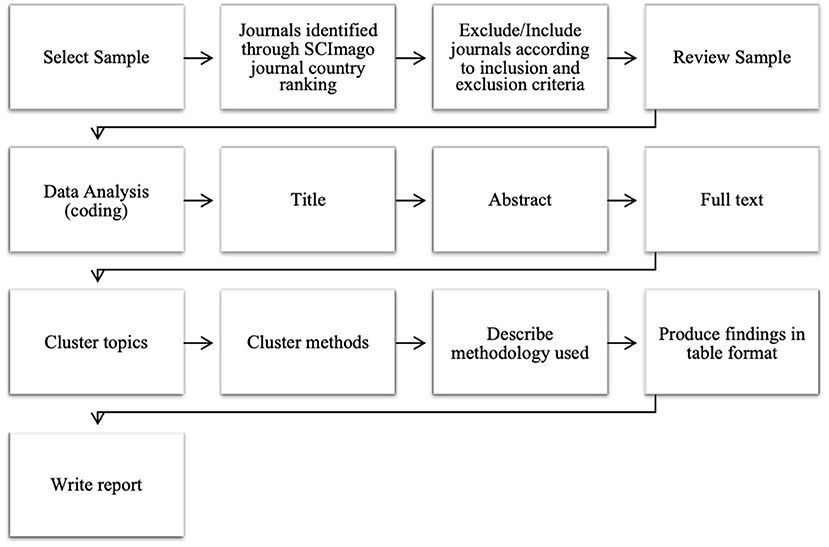

The researchers followed a procedure (see Figure 1) adapted from that of Ferreira et al. (2016) for systematised reviews. Data collection and categorisation commenced on 4 December 2017 and continued until 30 June 2019. All the data was systematically collected and coded manually (Grant and Booth, 2009) with an independent person acting as co-coder. Codes of interest included the research topic, method used, the design used, sampling method, and methodology (the method used for data collection and data analysis). These codes were derived from the wording in each article. Themes were created based on the derived codes and checked by the co-coder. Lastly, these themes were catalogued into a table as per the systematised review design.

Figure 1. Systematised review procedure.

According to Johnston et al. (2019), “literature screening, selection, and data extraction/analyses” (p. 7) are specifically tailored to the aim of a review. Therefore, the steps followed in a systematic review must be reported in a comprehensive and transparent manner. The chosen systematised design adhered to the rigour expected from systematic reviews with regard to full search and data produced in tabular form (Grant and Booth, 2009). The rigorous application of the systematic review is therefore discussed in relation to these two elements.

Firstly, to ensure a comprehensive search, this research study promoted review transparency by following a clear protocol outlined according to each review stage before collecting data (Johnston et al., 2019). This protocol was similar to that of Ferreira et al. (2016) and approved by three research committees/stakeholders and the researchers (Johnston et al., 2019). The eligibility criteria for article inclusion were based on the research question and clearly stated, and the process of inclusion was recorded on an electronic spreadsheet to create an evidence trail (Bandara et al., 2015; Johnston et al., 2019). Microsoft Excel spreadsheets are a popular tool for review studies and can increase the rigour of the review process (Bandara et al., 2015). Screening for appropriate articles for inclusion forms an integral part of a systematic review process (Johnston et al., 2019). This step was applied to two aspects of this research study: the choice of eligible journals and the choice of articles to be included. Suitable journals were selected by the first author and reviewed by the second and third authors. Initially, all articles from the chosen journals were included. Then, by process of elimination, those irrelevant to the research aim (for example, interview articles or discussions) were excluded.
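The screening step and its evidence trail can be illustrated with a minimal sketch. It is not the authors' actual spreadsheet workflow: the record fields, the `EXCLUDED_TYPES` set, and the sample articles are all hypothetical, and only the interview and discussion article types are taken from the examples named in the text.

```python
from dataclasses import dataclass

# Hypothetical article types treated as irrelevant to the review aim.
# The source names interviews and discussions as examples only.
EXCLUDED_TYPES = {"interview", "discussion"}


@dataclass
class ScreeningRecord:
    """One row of the evidence trail: the decision and the reason for it."""
    article_id: str
    journal: str
    article_type: str
    included: bool
    reason: str


def screen(articles):
    """Return one logged decision per article so every exclusion is traceable."""
    log = []
    for art in articles:
        excluded = art["type"] in EXCLUDED_TYPES
        if excluded:
            reason = f"excluded: {art['type']} article"
        else:
            reason = "included: meets criteria"
        log.append(
            ScreeningRecord(art["id"], art["journal"], art["type"], not excluded, reason)
        )
    return log


# Made-up records for illustration only.
sample = [
    {"id": "A1", "journal": "Journal X", "type": "empirical"},
    {"id": "A2", "journal": "Journal X", "type": "interview"},
    {"id": "A3", "journal": "Journal Y", "type": "review"},
]
log = screen(sample)
included = [record.article_id for record in log if record.included]
```

Keeping a reason next to every decision, rather than only a list of retained articles, is what lets a second reviewer audit the exclusions afterwards.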

To ensure rigorous data extraction, data was first extracted by one reviewer, and an independent person verified the results for completeness and accuracy (Johnston et al., 2019). The research question served as a guide for efficient, organised data extraction (Johnston et al., 2019). Data was categorised according to the codes of interest, along with article identifiers for audit trails such as authors, title and aims of articles. The categorised data was based on the aim of the review (Johnston et al., 2019) and synthesised in tabular form under methods used, how these methods were used, and for what topics in the field of psychology.

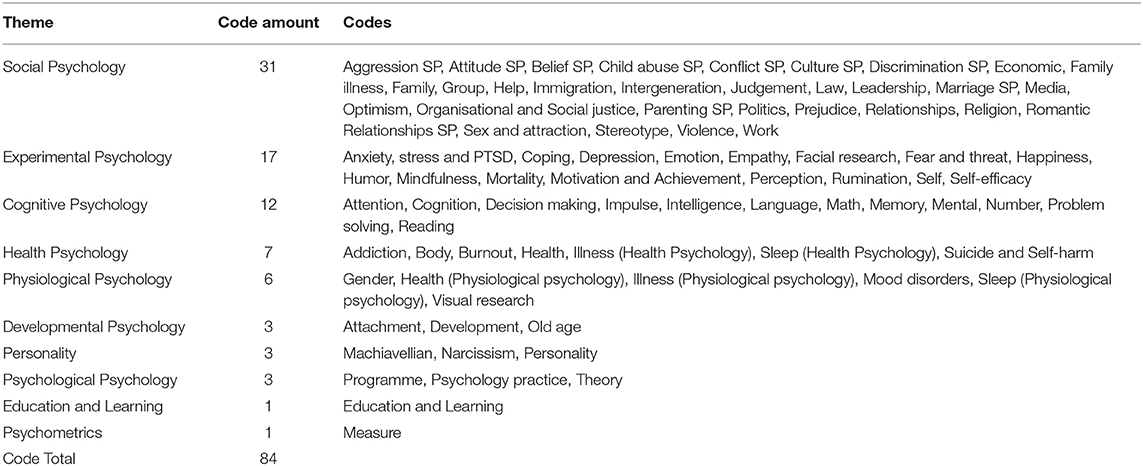

The initial search produced a total of 1,145 articles from the five journals identified. Inclusion and exclusion criteria resulted in a final sample of 999 articles (Figure 2). Articles were co-coded into 84 codes, from which 10 themes were derived (Table 1).

Figure 2. Journal article frequency.

Table 1. Codes used to form themes (research topics).

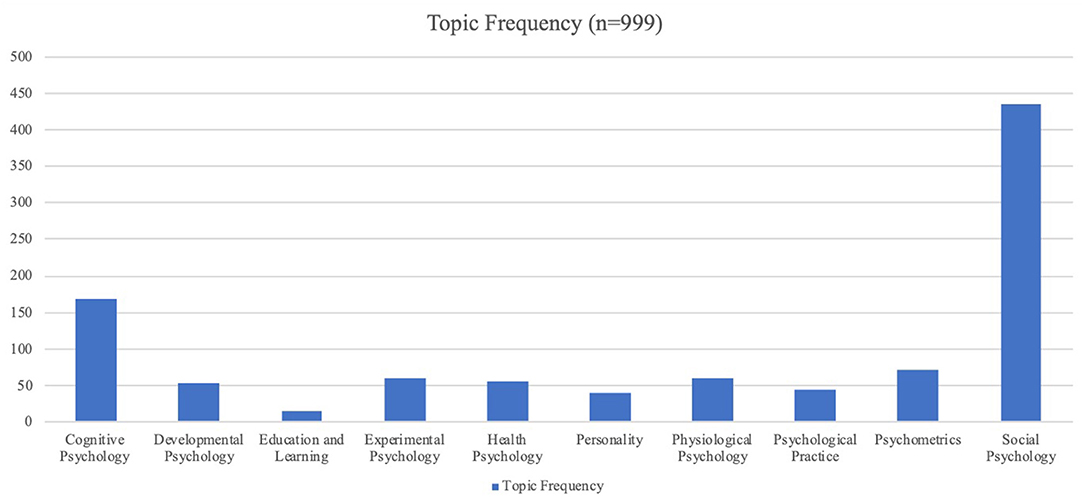

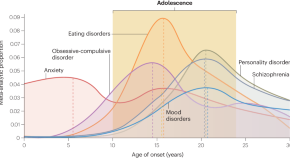

These 10 themes represent the topic section of our research question (Figure 3). All these topics, except for the final one, psychological practice, were found to concur with the research areas in psychology as identified by Weiten (2010). These research areas were chosen to represent the derived codes as they provided broad definitions that allowed for clear, concise categorisation of the vast amount of data. Article codes were categorised under particular themes/topics if they adhered to the research area definitions created by Weiten (2010). It is important to note that these areas of research do not refer to specific disciplines in psychology, such as industrial psychology, but to broader fields that may encompass sub-interests of these disciplines.

Figure 3. Topic frequency (international sample).

In the case of developmental psychology, researchers conduct research into human development from childhood to old age. Social psychology includes research on behaviour governed by social drivers. Researchers in the field of educational psychology study how people learn and the best way to teach them. Health psychology aims to determine the effect of psychological factors on physiological health. Physiological psychology, on the other hand, looks at the influence of physiological aspects on behaviour. Experimental psychology focuses on the traditional core topics of psychology (for example, sensation), although it is not the only theme that uses experimental research. Cognitive psychology studies the higher mental processes. Psychometrics is concerned with measuring capacity or behaviour. Personality research aims to assess and describe consistency in human behaviour (Weiten, 2010). The final theme of psychological practice refers to the experiences, techniques, and interventions employed by practitioners, researchers, and academia in the field of psychology.

Articles under these themes were further subdivided into methodologies: method, sampling, design, data collection, and data analysis. The categorisation was based on information stated in the articles and not inferred by the researchers. Data were compiled into two sets of results presented in this article. The first set addresses the aim of this study from the perspective of the topics identified. The second set represents a broad overview of the results from the perspective of the methodology employed. The second set of results is discussed in this article, while the first set is presented in table format. The discussion thus provides a broad overview of method use in psychology (across all themes), while the table format provides readers with in-depth insight into methods used in the individual themes identified. We believe that presenting the data from both perspectives allows readers a broad understanding of the results. Due to the large amount of information that made up our results, we followed Cichocka and Jost (2014) in simplifying our results. Please note that the numbers indicated in the table in terms of methodology differ from the total number of articles, because some articles employed more than one method/sampling technique/design/data collection method/data analysis in their studies.

What follows are the results for what methods are used, how these methods are used, and which topics in psychology they are applied to. Percentages are reported to the second decimal in order to highlight small differences in the occurrence of methodology.
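Because one article can contribute several coded entries, the percentages are shares of coded occurrences rather than shares of articles. The sketch below shows one way such shares could be computed and rounded to two decimals. The function name `occurrence_shares` and the three coded articles are hypothetical and are not taken from the review's data.

```python
from collections import Counter


def occurrence_shares(coded_articles):
    """Return (percentage share per code, total occurrences).

    The denominator is the number of coded occurrences, not the number of
    articles, so an article coded with two methods counts twice.
    """
    counts = Counter(
        code for codes in coded_articles.values() for code in codes
    )
    total = sum(counts.values())
    shares = {code: round(100 * n / total, 2) for code, n in counts.items()}
    return shares, total


# Made-up coding for illustration: article_2 reports two methods.
coded = {
    "article_1": ["quantitative"],
    "article_2": ["quantitative", "qualitative"],
    "article_3": ["review"],
}
shares, total = occurrence_shares(coded)
```

In this toy example three articles yield four occurrences, which is why totals in the methodology tables can exceed the number of articles.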

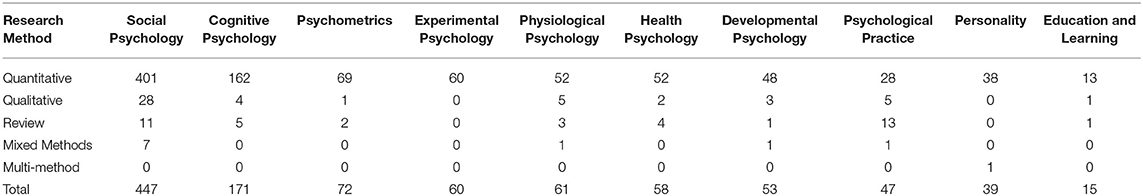

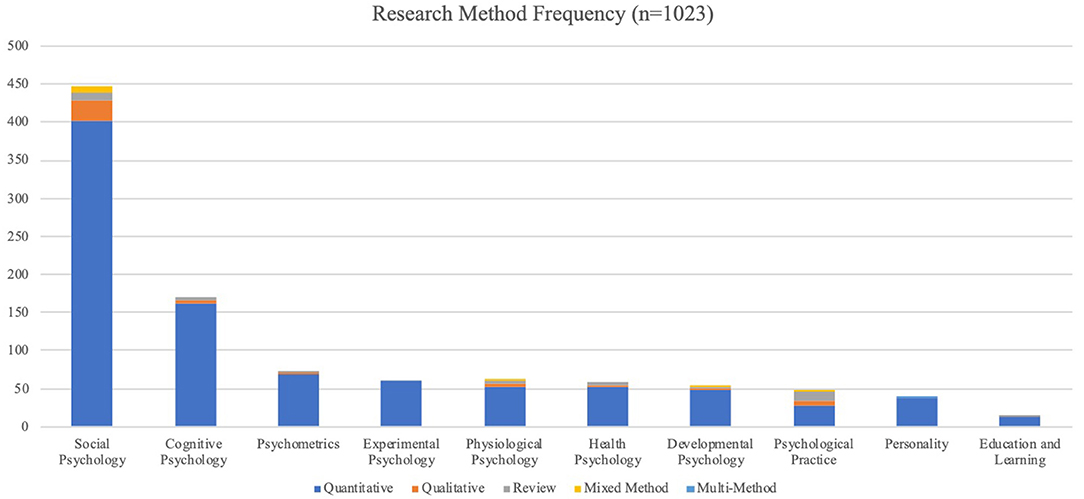

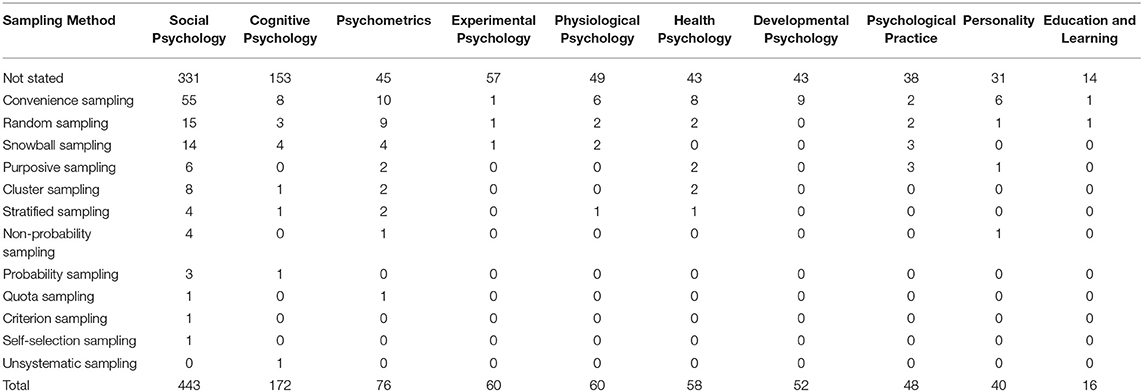

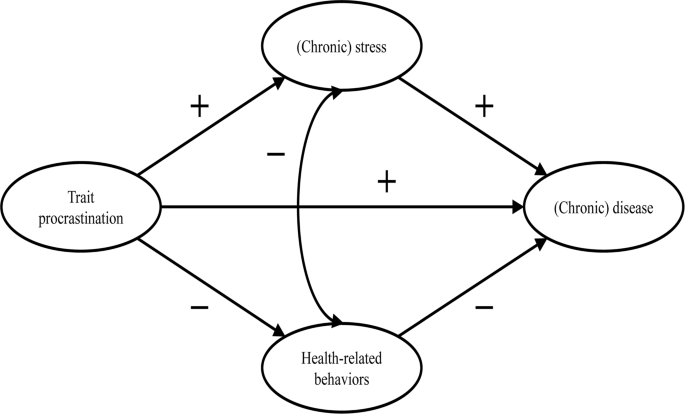

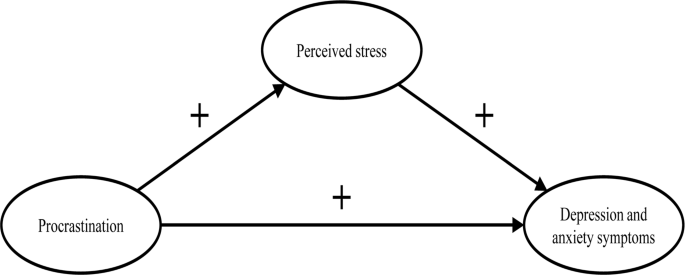

Firstly, with regard to the research methods used, our results show that researchers are more likely to use quantitative research methods (90.22%) than any other research method. Qualitative research was the second most common research method but made up only 4.79% of the general method usage. Reviews, the third most popular method, occurred almost as often as qualitative studies (3.91%). Mixed-methods research studies (0.98%) occurred across most themes, whereas multi-method research was indicated in only one study and amounted to 0.10% of the methods identified. The specific use of each method in the topics identified is shown in Table 2 and Figure 4.

Table 2. Research methods in psychology.

Figure 4. Research method frequency in topics.
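As a quick consistency check on the method shares reported in the text, the five percentages can be summed and ranked. The values below are copied from the text; the variable names are illustrative only.

```python
# Method shares (%) as reported in the text.
reported = {
    "quantitative": 90.22,
    "qualitative": 4.79,
    "review": 3.91,
    "mixed methods": 0.98,
    "multi-method": 0.10,
}

# Round to two decimals to absorb floating-point noise in the sum.
total = round(sum(reported.values()), 2)

# Methods ordered from most to least frequent.
ranking = sorted(reported, key=reported.get, reverse=True)
```

The shares add up to 100.00, which is consistent with the five categories covering all coded method occurrences.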

Secondly, in the case of how these research methods are employed, our study indicated the following.

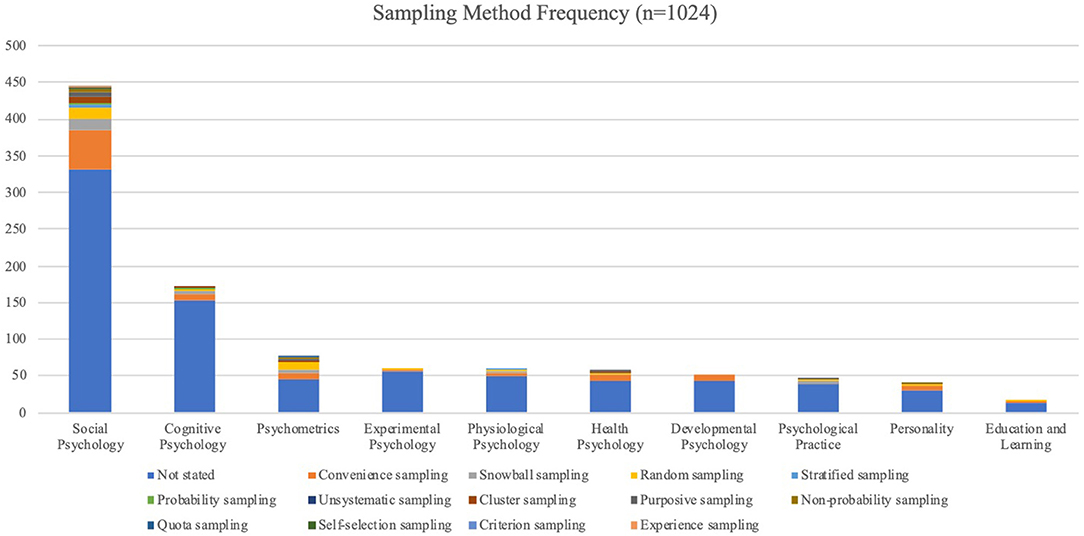

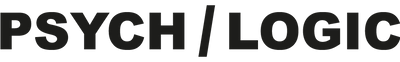

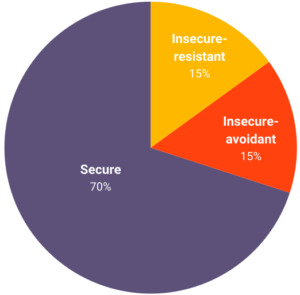

Sampling: 78.34% of the studies in the collected articles did not specify a sampling method. From the remainder of the studies, 13 types of sampling methods were identified. These sampling methods included broad categorisation of a sample as, for example, a probability or non-probability sample. General samples of convenience were the methods most likely to be applied (10.34%), followed by random sampling (3.51%), snowball sampling (2.73%), purposive sampling (1.37%), and cluster sampling (1.27%). The remainder of the sampling methods occurred to a more limited extent (0–1.0%). See Table 3 and Figure 5 for sampling methods employed in each topic.

Table 3. Sampling use in the field of psychology.

Figure 5. Sampling method frequency in topics.

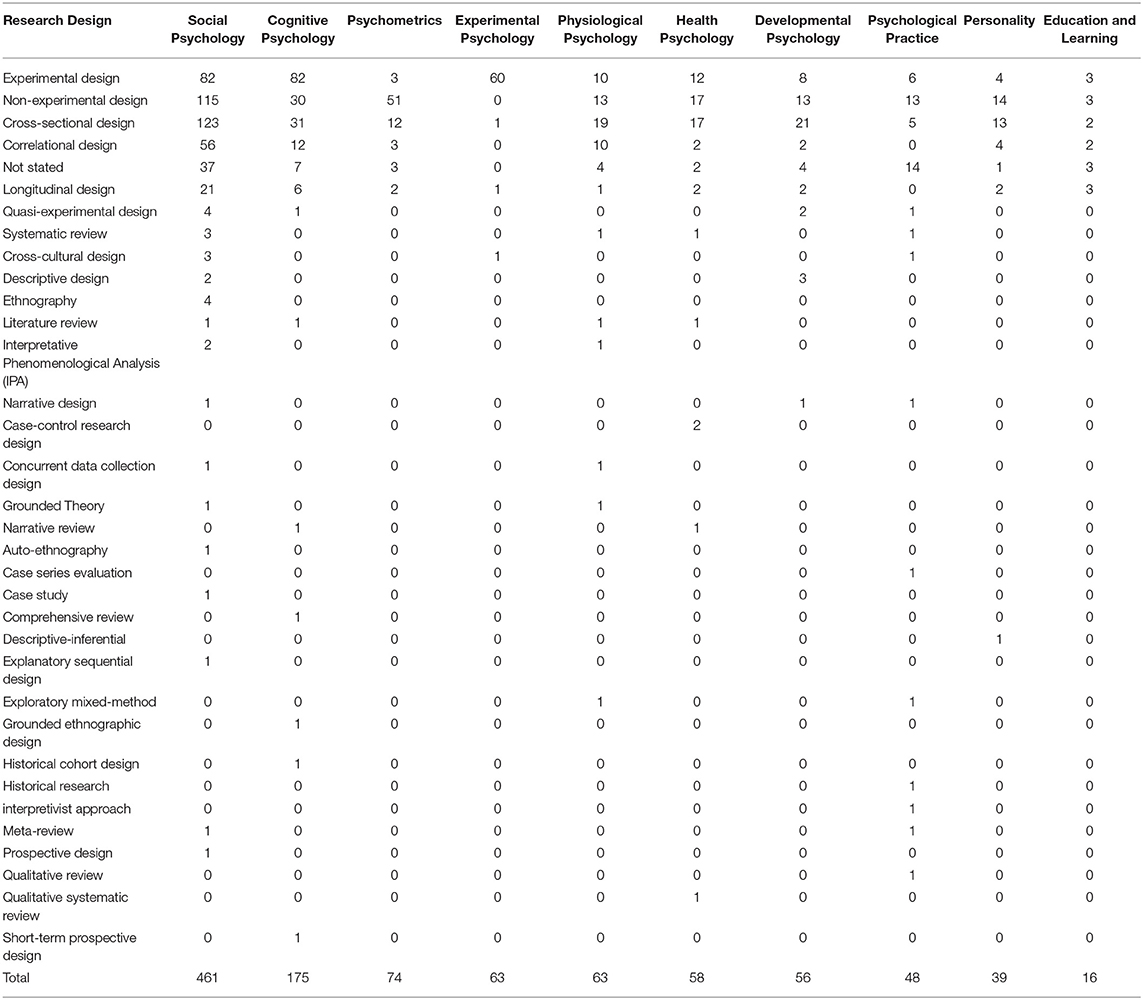

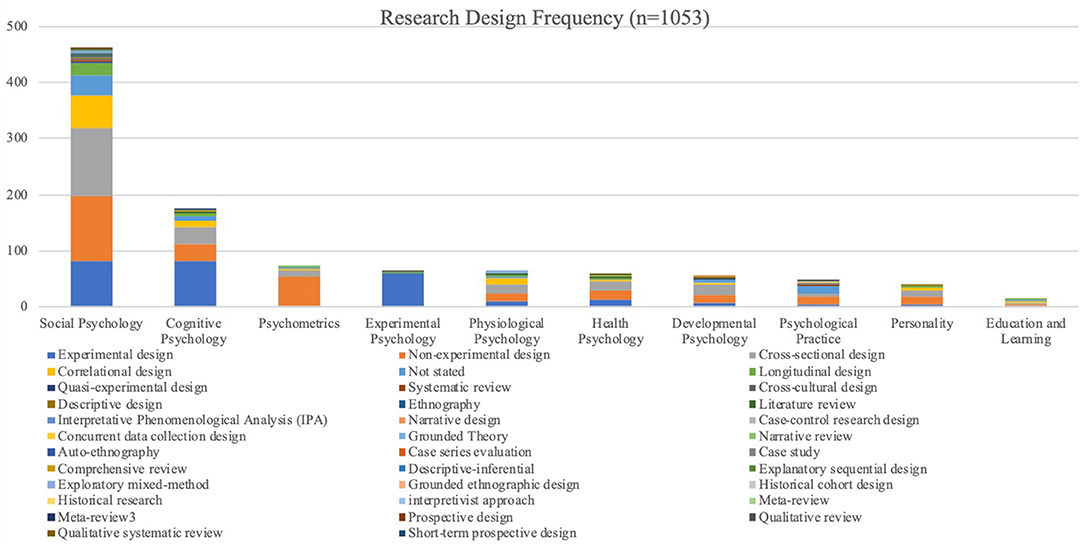

Designs were categorised based on how the articles stated them. Therefore, it is important to note that, in the case of quantitative studies, non-experimental designs (25.55%) were often indicated due to a lack of experiments and of any other indication of design, which, according to Laher (2016), is a reasonable categorisation. Non-experimental designs should thus be compared with experimental designs only in the description of data, as this category could include the use of correlational/cross-sectional designs that were not overtly stated by the authors. For the remainder of the research methods, “not stated” (7.12%) was assigned to articles without design types indicated.

Of the 36 identified designs, the most popular were cross-sectional (23.17%) and experimental (25.64%), which concurred with the high number of quantitative studies. Longitudinal designs (3.80%), the third most popular, were used in both quantitative and qualitative studies. Qualitative designs consisted of ethnography (0.38%), interpretative phenomenological designs/phenomenology (0.28%), as well as narrative designs (0.28%). Studies that employed the review method were mostly categorised as “not stated,” with the most often stated review design being systematic reviews (0.57%). The few mixed method studies employed exploratory, explanatory (0.09%), and concurrent designs (0.19%), with some studies referring to separate designs for the qualitative and quantitative methods. The one study that identified itself as a multi-method study used a longitudinal design. See Table 4 and Figure 6 for how these designs were employed in each specific topic.

Table 4. Design use in the field of psychology.

Figure 6. Design frequency in topics.

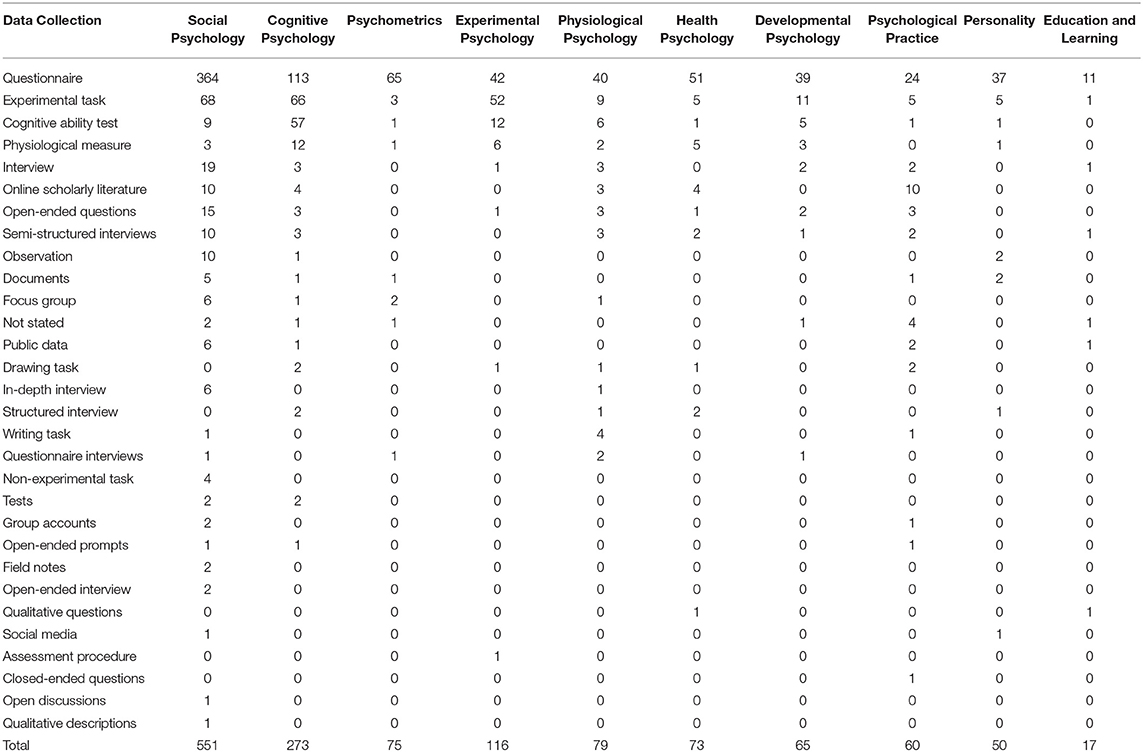

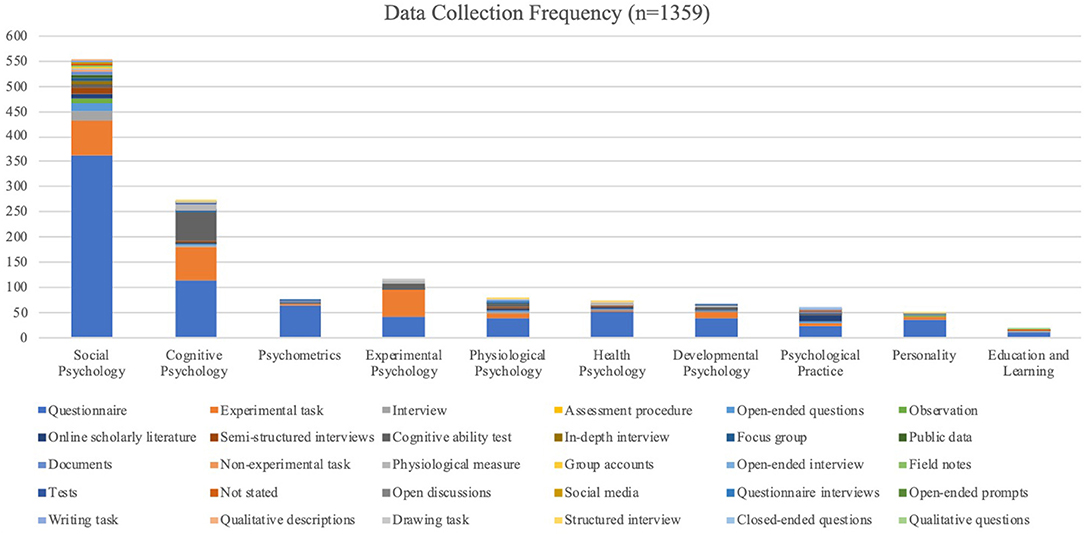

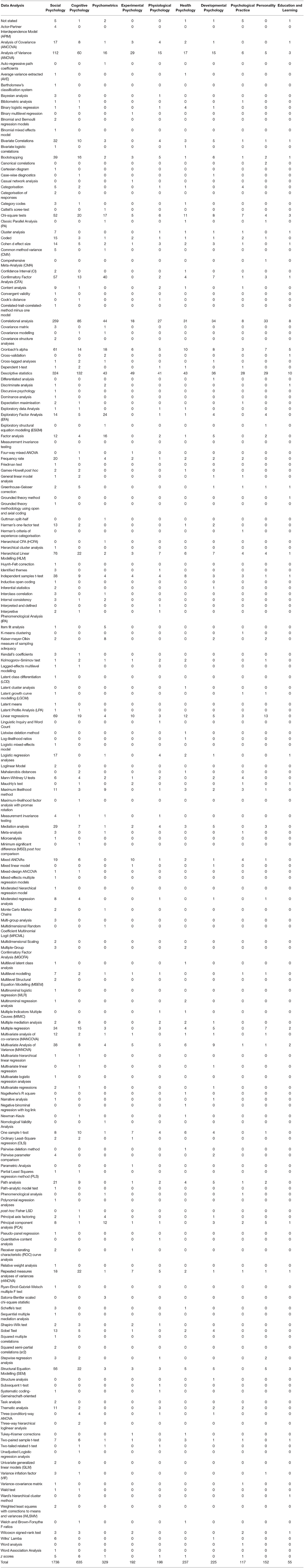

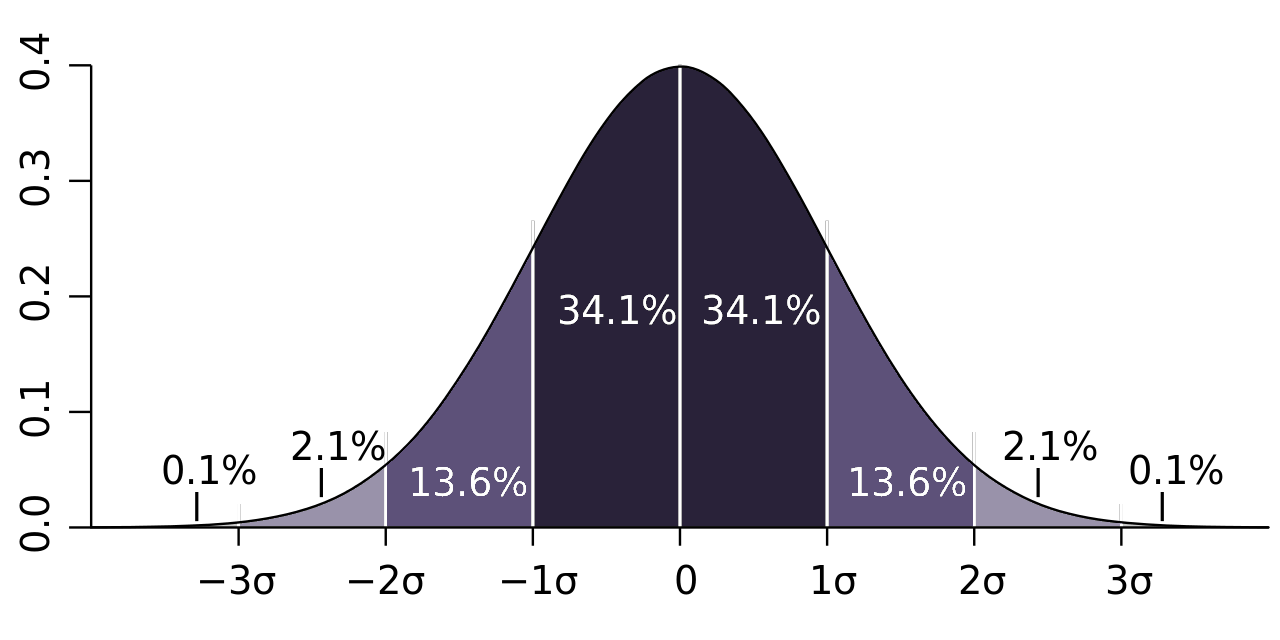

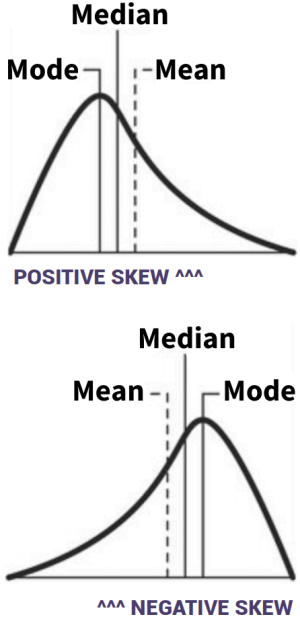

Data collection and analysis: data collection included 30 methods, with the data collection method most often employed being questionnaires (57.84%). The experimental task (16.56%) was the second most preferred collection method, which included established or unique tasks designed by the researchers. Cognitive ability tests (6.84%) were also regularly used, along with various forms of interviewing (7.66%). Table 5 and Figure 7 represent data collection use in the various topics. Data analysis consisted of 3,857 occurrences of data analysis categorised into ±188 data analysis techniques, shown in Table 6 and Figures 1–7. Descriptive statistics were the most commonly used (23.49%), along with correlational analysis (17.19%). When using a qualitative method, researchers generally employed thematic analysis (0.52%) or different forms of analysis that led to coding and the creation of themes. Review studies presented few data analysis methods, with most studies categorising their results. Mixed method and multi-method studies followed the analysis methods identified for the qualitative and quantitative studies included.

Table 5. Data collection in the field of psychology.

Figure 7. Data collection frequency in topics.

Table 6. Data analysis in the field of psychology.

Results of the topics researched in psychology can be seen in the tables, as previously stated in this article. It is noteworthy that, of the 10 topics, social psychology accounted for 43.54% of the studies, with cognitive psychology the second most popular research topic at 16.92%. The remainder of the topics each occurred in only 4.0–7.0% of the articles considered. A list of the included 999 articles is available under the section “View Articles” on the following website: https://methodgarden.xtrapolate.io/. This website was created by Scholtz et al. (2019) to visually present a research framework based on this article's results.

This systematised review categorised full-length articles from five international journals across the span of 5 years to provide insight into the use of research methods in the field of psychology. Results indicated what methods are used, how these methods are being used, and for what topics (why) in the included sample of articles. Because of the limited sample, the results should be seen as providing insight into method use and by no means as a comprehensive representation of the aforementioned aim. To our knowledge, this is the first research study to address this topic in this manner. Our discussion attempts to promote a productive way forward in terms of the key results for method use in psychology, especially in the field of academia (Holloway, 2008).

With regard to the methods used, our data stayed true to literature, finding only common research methods (Grant and Booth, 2009; Maree, 2016) that varied in the degree to which they were employed. Quantitative research was found to be the most popular method, as indicated by literature (Breen and Darlaston-Jones, 2010; Counsell and Harlow, 2017) and previous studies in specific areas of psychology (see Coetzee and Van Zyl, 2014). Its long history as the first research method (Leech et al., 2007) in the field of psychology as well as researchers' current application of mathematical approaches in their studies (Toomela, 2010) might contribute to its popularity today. Whatever the case may be, our results show that, despite the growth in qualitative research (Demuth, 2015; Smith and McGannon, 2018), quantitative research remains the first choice for article publication in these journals, even though the included journals indicate openness to articles that apply any research method. This finding may be due to qualitative research still being seen as a new method (Burman and Whelan, 2011) or reviewers' standards being higher for qualitative studies (Bluhm et al., 2011). Future research into possible publication bias towards certain research methods is encouraged. Additionally, further investigation with a different sample into the proclaimed growth of qualitative research may provide different results.

Review studies were found to outnumber multi-method and mixed method studies. To this effect, Grant and Booth (2009) state that increased awareness, journal contribution calls, and the efficiency of reviews in procuring research funds all promote the popularity of reviews. The low frequency of mixed method studies contradicts the view in literature that it is the third most utilised research method (Tashakkori and Teddlie, 2003). Its low occurrence in this sample could be due to opposing views on mixing methods (Gunasekare, 2015), to authors preferring to publish in mixed method journals when using this method, or to its relative novelty (Ivankova et al., 2016). Despite its low occurrence, the application of the mixed methods design in articles was methodologically clear in all cases, which was not the case for the remainder of the research methods.

Additionally, a substantial number of studies used a combination of methodologies without being mixed or multi-method studies. According to literature, perceived fixed boundaries are often set aside in order to investigate the aim of a study, as confirmed by this result, which could create a new and helpful way of understanding the world (Gunasekare, 2015). According to Toomela (2010), this is not unheard of and could be considered a form of “structural systemic science,” as in the case of qualitative methodology (observation) applied in quantitative studies (experimental design), for example. Based on this result, further research into this phenomenon as well as its implications for research methods such as multi and mixed methods is recommended.

Discerning how these research methods were applied presented some difficulty. In the case of sampling, most studies, regardless of method, did mention some form of inclusion and exclusion criteria, but no definite sampling method. This result, along with the fact that samples often consisted of students from the researchers' own academic institutions, can contribute to literature and debates among academics (Peterson and Merunka, 2014; Laher, 2016). Samples of convenience and students as participants especially raise questions about the generalisability and applicability of results (Peterson and Merunka, 2014). Attention to sampling is important because inappropriate sampling can debilitate the legitimacy of interpretations (Onwuegbuzie and Collins, 2017). Future investigation into the possible implications of this reported popular use of convenience samples for the field of psychology, as well as the reasons for this use, could provide interesting insight and is encouraged by this study.

Additionally, and this is indicated in Table 6, articles seldom report the research designs used, which highlights the pressing aspect of the lack of rigour in the included sample. Rigour with regard to the applied empirical method is imperative in promoting psychology as a science (American Psychological Association, 2020). Omitting parts of the research process in publication when they could have been used to inform others' research skills should be questioned, and the influence on the process of replicating results should be considered. Publications are often rejected due to a lack of rigour in the applied method and designs (Fonseca, 2013; Laher, 2016), calling for increased clarity and knowledge of method application. Replication is a critical part of any field of scientific research and requires the “complete articulation” of the study methods used (Drotar, 2010, p. 804). The lack of thorough description could be explained by the requirements of certain journals to only report on certain aspects of a research process, especially with regard to the applied design (Laher, 20). However, naming aspects such as sampling and designs is a requirement according to the APA's Journal Article Reporting Standards (JARS-Quant) (Appelbaum et al., 2018). With very little information on how a study was conducted, authors lose a valuable opportunity to enhance research validity, enrich the knowledge of others, and contribute to the growth of psychology and methodology as a whole. In the case of this research study, it also restricted our results to only reported samples and designs, which indicated a preference for certain designs, such as cross-sectional designs for quantitative studies.

Data collection and analysis were for the most part clearly stated. A key result was the versatile use of questionnaires. Researchers would apply a questionnaire in various ways, for example in questionnaire interviews, online surveys, and written questionnaires across most research methods. This may highlight a trend for future research.

With regard to the topics these methods were employed for, our research study found a new field named “psychological practice.” This result may show the growing consciousness of researchers as part of the research process (Denzin and Lincoln, 2003), psychological practice, and knowledge generation. The most popular of these topics was social psychology, which is generously covered in journals and by learned societies, a testament to the institutional support and richness that social psychology has in the field of psychology (Chryssochoou, 2015). The APA's perspective on 2018 trends in psychology also identifies an increased focus in psychology on how social determinants are influencing people's health (Deangelis, 2017).

This study was not without limitations, and the following should be taken into account. Firstly, this study used a sample of five specific journals to address the aim of the research study. Despite the general aims of these journals (as stated on journal websites), this inclusion signified a bias towards the research methods published in these specific journals only and limited generalisability. A broader sample of journals over a different period of time, or a single journal over a longer period of time, might provide different results. A second limitation is the use of Excel spreadsheets and an electronic system to log articles, which was a manual process and therefore left room for error (Bandara et al., 2015). To address this potential issue, co-coding was performed to reduce error. Lastly, this article categorised data based on the information presented in the article sample; there was no interpretation of what methodology could have been applied or whether the methods stated adhered to the criteria for the methods used. Thus, a large number of articles that did not clearly indicate a research method or design could influence the results of this review. However, this in itself was also a noteworthy result. Future research could review research methods of a broader sample of journals with an interpretive review tool that increases rigour. Additionally, the authors also encourage the future use of systematised review designs as a way to promote a concise procedure in applying this design.

Our research study presented the use of research methods for published articles in the field of psychology as well as recommendations for future research based on these results. Insight was gained into the complex questions identified in literature regarding what methods are used, how these methods are being used, and for what topics (why). This sample preferred quantitative methods, used convenience sampling and presented a lack of rigorous accounts for the remaining methodologies. All methodologies that were clearly indicated in the sample were tabulated to allow researchers insight into the general use of methods and not only the most frequently used methods. The lack of rigorous accounts of research methods in articles was represented in depth for each step in the research process and can be of vital importance in addressing the current replication crisis within the field of psychology. Recommendations for future research aimed to motivate research into the practical implications of the results for psychology, for example, publication bias and the use of convenience samples.

Ethics Statement

This study was cleared by the North-West University Health Research Ethics Committee: NWU-00115-17-S1.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

- Aanstoos C. M. (2014). Psychology . Available online at: http://eds.a.ebscohost.com.nwulib.nwu.ac.za/eds/detail/detail?sid=18de6c5c-2b03-4eac-94890145eb01bc70%40sessionmgr4006&vid=1&hid=4113&bdata=JnNpdGU9ZWRzLWxpdmU%3d#AN=93871882&db=ers

- American Psychological Association (2020). Science of Psychology . Available online at: https://www.apa.org/action/science/

- Appelbaum M., Cooper H., Kline R. B., Mayo-Wilson E., Nezu A. M., Rao S. M. (2018). Journal article reporting standards for quantitative research in psychology: the APA Publications and Communications Board task force report . Am. Psychol. 73 :3. 10.1037/amp0000191 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Bandara W., Furtmueller E., Gorbacheva E., Miskon S., Beekhuyzen J. (2015). Achieving rigor in literature reviews: insights from qualitative data analysis and tool-support . Commun. Ass. Inform. Syst. 37 , 154–204. 10.17705/1CAIS.03708 [ CrossRef ] [ Google Scholar ]

- Barr-Walker J. (2017). Evidence-based information needs of public health workers: a systematized review . J. Med. Libr. Assoc. 105 , 69–79. 10.5195/JMLA.2017.109 [ PMC free article ] [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Bittermann A., Fischer A. (2018). How to identify hot topics in psychology using topic modeling . Z. Psychol. 226 , 3–13. 10.1027/2151-2604/a000318 [ CrossRef ] [ Google Scholar ]

- Bluhm D. J., Harman W., Lee T. W., Mitchell T. R. (2011). Qualitative research in management: a decade of progress . J. Manage. Stud. 48 , 1866–1891. 10.1111/j.1467-6486.2010.00972.x [ CrossRef ] [ Google Scholar ]

- Breen L. J., Darlaston-Jones D. (2010). Moving beyond the enduring dominance of positivism in psychological research: implications for psychology in Australia . Aust. Psychol. 45 , 67–76. 10.1080/00050060903127481 [ CrossRef ] [ Google Scholar ]

- Burman E., Whelan P. (2011). Problems in / of Qualitative Research . Maidenhead: Open University Press/McGraw Hill. [ Google Scholar ]

- Chaichanasakul A., He Y., Chen H., Allen G. E. K., Khairallah T. S., Ramos K. (2011). Journal of Career Development: a 36-year content analysis (1972–2007) . J. Career. Dev. 38 , 440–455. 10.1177/0894845310380223 [ CrossRef ] [ Google Scholar ]

- Chryssochoou X. (2015). Social Psychology . Inter. Encycl. Soc. Behav. Sci. 22 , 532–537. 10.1016/B978-0-08-097086-8.24095-6 [ CrossRef ] [ Google Scholar ]

- Cichocka A., Jost J. T. (2014). Stripped of illusions? Exploring system justification processes in capitalist and post-Communist societies . Inter. J. Psychol. 49 , 6–29. 10.1002/ijop.12011 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Clay R. A. (2017). Psychology is More Popular Than Ever. Monitor on Psychology: Trends Report . Available online at: https://www.apa.org/monitor/2017/11/trends-popular

- Coetzee M., Van Zyl L. E. (2014). A review of a decade's scholarly publications (2004–2013) in the South African Journal of Industrial Psychology . SA. J. Psychol . 40 , 1–16. 10.4102/sajip.v40i1.1227 [ CrossRef ] [ Google Scholar ]

- Counsell A., Harlow L. (2017). Reporting practices and use of quantitative methods in Canadian journal articles in psychology . Can. Psychol. 58 , 140–147. 10.1037/cap0000074 [ PMC free article ] [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Deangelis T. (2017). Targeting Social Factors That Undermine Health. Monitor on Psychology: Trends Report . Available online at: https://www.apa.org/monitor/2017/11/trend-social-factors

- Demuth C. (2015). New directions in qualitative research in psychology . Integr. Psychol. Behav. Sci. 49 , 125–133. 10.1007/s12124-015-9303-9 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Denzin N. K., Lincoln Y. (2003). The Landscape of Qualitative Research: Theories and Issues , 2nd Edn. London: Sage. [ Google Scholar ]

- Drotar D. (2010). A call for replications of research in pediatric psychology and guidance for authors . J. Pediatr. Psychol. 35 , 801–805. 10.1093/jpepsy/jsq049 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Dweck C. S. (2017). Is psychology headed in the right direction? Yes, no, and maybe . Perspect. Psychol. Sci. 12 , 656–659. 10.1177/1745691616687747 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Earp B. D., Trafimow D. (2015). Replication, falsification, and the crisis of confidence in social psychology . Front. Psychol. 6 :621. 10.3389/fpsyg.2015.00621 [ PMC free article ] [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Ezeh A. C., Izugbara C. O., Kabiru C. W., Fonn S., Kahn K., Manderson L., et al. (2010). Building capacity for public and population health research in Africa: the consortium for advanced research training in Africa (CARTA) model . Glob. Health Action 3 :5693. 10.3402/gha.v3i0.5693 [ PMC free article ] [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Ferreira A. L. L., Bessa M. M. M., Drezett J., De Abreu L. C. (2016). Quality of life of the woman carrier of endometriosis: systematized review . Reprod. Clim. 31 , 48–54. 10.1016/j.recli.2015.12.002 [ CrossRef ] [ Google Scholar ]

- Fonseca M. (2013). Most Common Reasons for Journal Rejections . Available online at: http://www.editage.com/insights/most-common-reasons-for-journal-rejections

- Gough B., Lyons A. (2016). The future of qualitative research in psychology: accentuating the positive . Integr. Psychol. Behav. Sci. 50 , 234–243. 10.1007/s12124-015-9320-8 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Grant M. J., Booth A. (2009). A typology of reviews: an analysis of 14 review types and associated methodologies . Health Info. Libr. J. 26 , 91–108. 10.1111/j.1471-1842.2009.00848.x [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Grix J. (2002). Introducing students to the generic terminology of social research . Politics 22 , 175–186. 10.1111/1467-9256.00173 [ CrossRef ] [ Google Scholar ]

- Gunasekare U. L. T. P. (2015). Mixed research method as the third research paradigm: a literature review . Int. J. Sci. Res. 4 , 361–368. Available online at: https://ssrn.com/abstract=2735996 [ Google Scholar ]

- Hengartner M. P. (2018). Raising awareness for the replication crisis in clinical psychology by focusing on inconsistencies in psychotherapy Research: how much can we rely on published findings from efficacy trials? Front. Psychol. 9 :256. 10.3389/fpsyg.2018.00256 [ PMC free article ] [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Holloway W. (2008). Doing intellectual disagreement differently . Psychoanal. Cult. Soc. 13 , 385–396. 10.1057/pcs.2008.29 [ CrossRef ] [ Google Scholar ]

- Ivankova N. V., Creswell J. W., Plano Clark V. L. (2016). Foundations and approaches to mixed methods research , in First Steps in Research , 2nd Edn, ed Maree K. (Pretoria: Van Schaik Publishers; ), 306–335. [ Google Scholar ]

- Johnson M., Long T., White A. (2001). Arguments for British pluralism in qualitative health research . J. Adv. Nurs. 33 , 243–249. 10.1046/j.1365-2648.2001.01659.x [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Johnston A., Kelly S. E., Hsieh S. C., Skidmore B., Wells G. A. (2019). Systematic reviews of clinical practice guidelines: a methodological guide . J. Clin. Epidemiol. 108 , 64–72. 10.1016/j.jclinepi.2018.11.030 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Ketchen D. J., Jr., Boyd B. K., Bergh D. D. (2008). Research methodology in strategic management: past accomplishments and future challenges . Organ. Res. Methods 11 , 643–658. 10.1177/1094428108319843 [ CrossRef ] [ Google Scholar ]

- Ktepi B. (2016). Data Analytics (DA) . Available online at: https://eds-b-ebscohost-com.nwulib.nwu.ac.za/eds/detail/detail?vid=2&sid=24c978f0-6685-4ed8-ad85-fa5bb04669b9%40sessionmgr101&bdata=JnNpdGU9ZWRzLWxpdmU%3d#AN=113931286&db=ers

- Laher S. (2016). Ostinato rigore: establishing methodological rigour in quantitative research . S. Afr. J. Psychol. 46 , 316–327. 10.1177/0081246316649121 [ CrossRef ] [ Google Scholar ]

- Lee C. (2015). The Myth of the Off-Limits Source . Available online at: http://blog.apastyle.org/apastyle/research/

- Lee T. W., Mitchell T. R., Sablynski C. J. (1999). Qualitative research in organizational and vocational psychology, 1979–1999 . J. Vocat. Behav. 55 , 161–187. 10.1006/jvbe.1999.1707 [ CrossRef ] [ Google Scholar ]

- Leech N. L., Anthony J., Onwuegbuzie A. J. (2007). A typology of mixed methods research designs . Sci. Bus. Media B. V Qual. Quant 43 , 265–275. 10.1007/s11135-007-9105-3 [ CrossRef ] [ Google Scholar ]

- Levitt H. M., Motulsky S. L., Wertz F. J., Morrow S. L., Ponterotto J. G. (2017). Recommendations for designing and reviewing qualitative research in psychology: promoting methodological integrity . Qual. Psychol. 4 , 2–22. 10.1037/qup0000082 [ CrossRef ] [ Google Scholar ]

- Lowe S. M., Moore S. (2014). Social networks and female reproductive choices in the developing world: a systematized review . Rep. Health 11 :85. 10.1186/1742-4755-11-85 [ PMC free article ] [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Maree K. (2016). Planning a research proposal , in First Steps in Research , 2nd Edn, ed Maree K. (Pretoria: Van Schaik Publishers; ), 49–70. [ Google Scholar ]

- Maree K., Pietersen J. (2016). Sampling , in First Steps in Research, 2nd Edn , ed Maree K. (Pretoria: Van Schaik Publishers; ), 191–202. [ Google Scholar ]

- Ngulube P. (2013). Blending qualitative and quantitative research methods in library and information science in sub-Saharan Africa . ESARBICA J. 32 , 10–23. Available online at: http://hdl.handle.net/10500/22397 . [ Google Scholar ]

- Nieuwenhuis J. (2016). Qualitative research designs and data-gathering techniques , in First Steps in Research , 2nd Edn, ed Maree K. (Pretoria: Van Schaik Publishers; ), 71–102. [ Google Scholar ]

- Nind M., Kilburn D., Wiles R. (2015). Using video and dialogue to generate pedagogic knowledge: teachers, learners and researchers reflecting together on the pedagogy of social research methods . Int. J. Soc. Res. Methodol. 18 , 561–576. 10.1080/13645579.2015.1062628 [ CrossRef ] [ Google Scholar ]

- O'Cathain A. (2009). Editorial: mixed methods research in the health sciences—a quiet revolution . J. Mix. Methods 3 , 1–6. 10.1177/1558689808326272 [ CrossRef ] [ Google Scholar ]

- O'Neil S., Koekemoer E. (2016). Two decades of qualitative research in psychology, industrial and organisational psychology and human resource management within South Africa: a critical review . SA J. Indust. Psychol. 42 , 1–16. 10.4102/sajip.v42i1.1350 [ CrossRef ] [ Google Scholar ]

- Onwuegbuzie A. J., Collins K. M. (2017). The role of sampling in mixed methods research enhancing inference quality . Köln Z Soziol. 2 , 133–156. 10.1007/s11577-017-0455-0 [ CrossRef ] [ Google Scholar ]

- Perestelo-Pérez L. (2013). Standards on how to develop and report systematic reviews in psychology and health . Int. J. Clin. Health Psychol. 13 , 49–57. 10.1016/S1697-2600(13)70007-3 [ CrossRef ] [ Google Scholar ]

- Pericall L. M. T., Taylor E. (2014). Family function and its relationship to injury severity and psychiatric outcome in children with acquired brain injury: a systematized review . Dev. Med. Child Neurol. 56 , 19–30. 10.1111/dmcn.12237 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Peterson R. A., Merunka D. R. (2014). Convenience samples of college students and research reproducibility . J. Bus. Res. 67 , 1035–1041. 10.1016/j.jbusres.2013.08.010 [ CrossRef ] [ Google Scholar ]

- Ritchie J., Lewis J., Elam G. (2009). Designing and selecting samples , in Qualitative Research Practice: A Guide for Social Science Students and Researchers , 2nd Edn, ed Ritchie J., Lewis J. (London: Sage; ), 1–23. [ Google Scholar ]

- Sandelowski M. (2011). When a cigar is not just a cigar: alternative perspectives on data and data analysis . Res. Nurs. Health 34 , 342–352. 10.1002/nur.20437 [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Sandelowski M., Voils C. I., Knafl G. (2009). On quantitizing . J. Mix. Methods Res. 3 , 208–222. 10.1177/1558689809334210 [ PMC free article ] [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Scholtz S. E., De Klerk W., De Beer L. T. (2019). A data generated research framework for conducting research methods in psychological research .

- Scimago Journal & Country Rank (2017). Available online at: http://www.scimagojr.com/journalrank.php?category=3201&year=2015

- Scopus (2017a). About Scopus . Available online at: https://www.scopus.com/home.uri (accessed February 01, 2017).

- Scopus (2017b). Document Search . Available online at: https://www.scopus.com/home.uri (accessed February 01, 2017).

- Scott Jones J., Goldring J. E. (2015). ‘I'm not a quants person’; key strategies in building competence and confidence in staff who teach quantitative research methods . Int. J. Soc. Res. Methodol. 18 , 479–494. 10.1080/13645579.2015.1062623 [ CrossRef ] [ Google Scholar ]

- Smith B., McGannon K. R. (2018). Developing rigor in qualitative research: problems and opportunities within sport and exercise psychology . Int. Rev. Sport Exerc. Psychol. 11 , 101–121. 10.1080/1750984X.2017.1317357 [ CrossRef ] [ Google Scholar ]

- Stangor C. (2011). Introduction to Psychology . Available online at: http://www.saylor.org/books/

- Strydom H. (2011). Sampling in the quantitative paradigm , in Research at Grass Roots; For the Social Sciences and Human Service Professions , 4th Edn, eds de Vos A. S., Strydom H., Fouché C. B., Delport C. S. L. (Pretoria: Van Schaik Publishers; ), 221–234. [ Google Scholar ]

- Tashakkori A., Teddlie C. (2003). Handbook of Mixed Methods in Social & Behavioural Research . Thousand Oaks, CA: SAGE publications. [ Google Scholar ]

- Toomela A. (2010). Quantitative methods in psychology: inevitable and useless . Front. Psychol. 1 :29. 10.3389/fpsyg.2010.00029 [ PMC free article ] [ PubMed ] [ CrossRef ] [ Google Scholar ]

- Truscott D. M., Swars S., Smith S., Thornton-Reid F., Zhao Y., Dooley C., et al. (2010). A cross-disciplinary examination of the prevalence of mixed methods in educational research: 1995–2005 . Int. J. Soc. Res. Methodol. 13 , 317–328. 10.1080/13645570903097950 [ CrossRef ] [ Google Scholar ]

- Weiten W. (2010). Psychology Themes and Variations . Belmont, CA: Wadsworth. [ Google Scholar ]

Research Methods In Psychology

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Research methods in psychology are systematic procedures used to observe, describe, predict, and explain behavior and mental processes. They include experiments, surveys, case studies, and naturalistic observations, ensuring data collection is objective and reliable to understand and explain psychological phenomena.

Hypotheses are testable statements that predict the results of a study and can be supported or refuted by an investigation.

There are four types of hypotheses:

- Null hypotheses (H0) – these predict that no difference will be found in the results between the conditions. Typically these are written ‘There will be no difference…’

- Alternative Hypotheses (Ha or H1) – these predict that there will be a significant difference in the results between the two conditions. This is also known as the experimental hypothesis.

- One-tailed (directional) hypotheses – these state the specific direction the researcher expects the results to move in, e.g. higher, lower, more, less. In a correlation study, the predicted direction of the correlation can be either positive or negative.

- Two-tailed (non-directional) hypotheses – these state that a difference will be found between the conditions of the independent variable but do not state the direction of the difference or relationship. Typically these are written ‘There will be a difference ….’

All research has an alternative hypothesis (either one-tailed or two-tailed) and a corresponding null hypothesis.

Once the research is conducted and results are found, psychologists must decide between the two hypotheses.

So, if a significant difference is found, the psychologist rejects the null hypothesis and accepts the alternative. If no significant difference is found, the null hypothesis is retained (strictly speaking, the researcher fails to reject it).
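The difference between a one-tailed and a two-tailed hypothesis can be illustrated with a small exact permutation test. This is only a sketch under stated assumptions: the recall scores and the condition names (`with_music`, `in_silence`) are invented for illustration, and a permutation test is just one of several tests a researcher might choose.

```python
from itertools import combinations
from statistics import mean


def permutation_p_values(group_a, group_b):
    """Exact permutation test on the difference in means (a minus b).

    Returns (one_tailed_p, two_tailed_p). The one-tailed test assumes the
    directional prediction that group_a scores HIGHER than group_b.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = mean(group_a) - mean(group_b)

    diffs = []
    # Enumerate every possible way of splitting the pooled scores in two.
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        diffs.append(mean(a) - mean(b))

    eps = 1e-12  # tolerance for floating-point comparisons
    one_tailed = sum(d >= observed - eps for d in diffs) / len(diffs)
    two_tailed = sum(abs(d) >= abs(observed) - eps for d in diffs) / len(diffs)
    return one_tailed, two_tailed


# Hypothetical recall scores for two conditions (invented data)
with_music = [12, 15, 14, 16]
in_silence = [9, 11, 10, 13]

one_p, two_p = permutation_p_values(with_music, in_silence)
print(f"one-tailed p = {one_p:.3f}, two-tailed p = {two_p:.3f}")
```

When the observed difference lies in the predicted direction, the one-tailed p-value is never larger than the two-tailed one, because the two-tailed test also counts equally extreme results in the opposite direction.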

Sampling techniques

Sampling is the process of selecting a representative group from the population under study.

A sample is the participants you select from a target population (the group you are interested in) to make generalizations about.

Representative means the extent to which a sample mirrors a researcher’s target population and reflects its characteristics.

Generalisability means the extent to which a study's findings can be applied to the larger population of which the sample was a part.

- Volunteer sample: where participants pick themselves through newspaper adverts, noticeboards or online.

- Opportunity sampling: also known as convenience sampling, uses people who are available at the time the study is carried out and willing to take part. It is based on convenience.

- Random sampling: when every person in the target population has an equal chance of being selected. An example of random sampling would be picking names out of a hat.

- Systematic sampling: when a system is used to select participants, such as picking every Nth person from a list of all possible participants. N = the number of people in the research population / the number of people needed for the sample.

- Stratified sampling: when you identify the subgroups in the population and select participants from each in proportion to its occurrence in the population.

- Snowball sampling: when researchers find a few participants, and then ask them to recruit further participants themselves, and so on.

- Quota sampling: when researchers are told to ensure the sample fits certain quotas; for example, they might be told to find 90 participants, with 30 of them being unemployed.
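As a rough illustration, three of the techniques listed above (random, systematic and stratified sampling) can be sketched in Python. The function names, the population of 100 numbered participants and the student/staff strata are all assumptions made for this example, not part of any standard procedure.

```python
import random


def random_sample(population, k, seed=0):
    """Random sampling: every member has an equal chance of selection."""
    rng = random.Random(seed)  # fixed seed only so the example is repeatable
    return rng.sample(population, k)


def systematic_sample(population, k):
    """Systematic sampling: take every Nth member, N = population size / k."""
    step = len(population) // k
    # Starts at the first member for simplicity; a random start is also common.
    return population[::step][:k]


def stratified_sample(strata, k, seed=0):
    """Stratified sampling: draw from each subgroup in proportion to its size.

    `strata` maps a subgroup name to the list of its members. Rounding may
    make the total differ slightly from k when the proportions are not exact.
    """
    rng = random.Random(seed)
    total = sum(len(members) for members in strata.values())
    sample = []
    for members in strata.values():
        share = round(k * len(members) / total)
        sample.extend(rng.sample(members, share))
    return sample


# Hypothetical target population of 100 numbered participants
population = list(range(100))
strata = {"students": population[:60], "staff": population[60:]}

print(random_sample(population, 10))
print(systematic_sample(population, 10))
print(stratified_sample(strata, 10))
```

In this sketch the stratified sample contains six students and four staff members, mirroring the 60/40 split in the hypothetical population.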

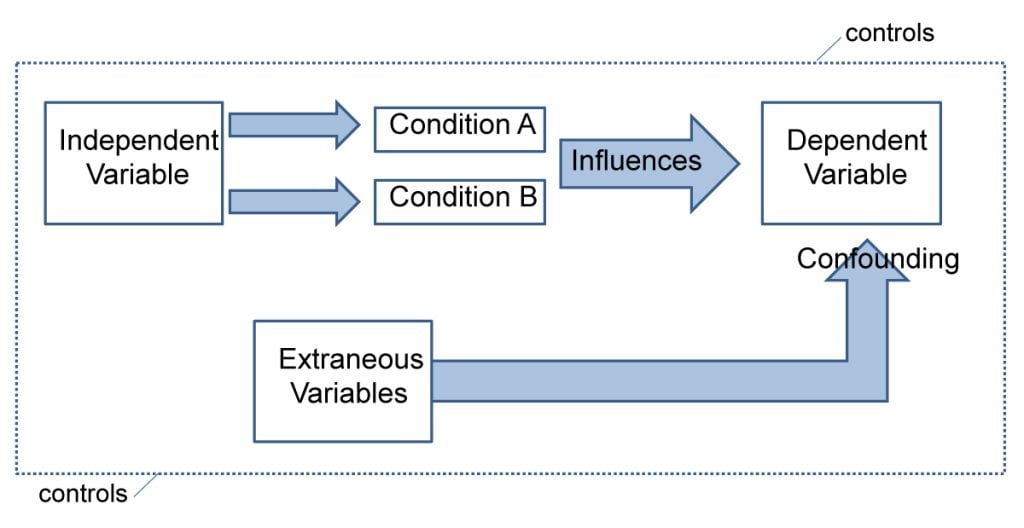

Experiments always have an independent and dependent variable.

- The independent variable is the one the experimenter manipulates (the thing that changes between the conditions the participants are placed into). It is assumed to have a direct effect on the dependent variable.

- The dependent variable is the thing being measured, or the results of the experiment.

Operationalization of variables means making them measurable/quantifiable. We must use operationalization to ensure that variables are in a form that can be easily tested.

For instance, we can’t really measure ‘happiness’, but we can measure how many times a person smiles within a two-hour period.

By operationalizing variables, we make it easy for someone else to replicate our research. Remember, this is important because we can check if our findings are reliable.

Extraneous variables are all variables other than the independent variable that could affect the results of the experiment.

They can be natural characteristics of the participant, such as intelligence level, gender, or age, or situational features of the environment, such as lighting or noise.

Demand characteristics are a type of extraneous variable that occurs when participants work out the aims of the research study and begin to behave in a certain way as a result.

For example, in Milgram’s research, critics argued that participants worked out that the shocks were not real and administered them because they thought this was what was required of them.

Extraneous variables must be controlled so that they do not affect (confound) the results.

Randomly allocating participants to their conditions or using a matched pairs experimental design can help to reduce participant variables.

Situational variables are controlled by using standardized procedures, ensuring every participant in a given condition is treated in the same way.

Experimental Design

Experimental design refers to how participants are allocated to each condition of the independent variable, such as a control or experimental group.

- Independent design (between-groups design): each participant is selected for only one group. With the independent design, the most common way of deciding which participants go into which group is by means of randomization.

- Matched participants design: each participant is selected for only one group, but the participants in the two groups are matched for some relevant factor or factors (e.g. ability; sex; age).

- Repeated measures design (within-groups design): each participant appears in both groups, so that there are exactly the same participants in each group.

- The main problem with the repeated measures design is that there may well be order effects. Their experiences during the experiment may change the participants in various ways.

- They may perform better when they appear in the second group because they have gained useful information about the experiment or about the task. On the other hand, they may perform less well on the second occasion because of tiredness or boredom.

- Counterbalancing is the usual way of stopping order effects from distorting the findings of an experiment. It involves ensuring that each condition is equally likely to be completed first and second by the participants, so that any order effects are balanced across the conditions rather than removed.

If we wish to compare two groups with respect to a given independent variable, it is essential to make sure that the two groups do not differ in any other important way.
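Random allocation for an independent design and counterbalancing for a repeated measures design can both be sketched briefly. This is a minimal illustration: the participant labels (`P01` to `P08`), the condition names `A` and `B`, and the function names are hypothetical.

```python
import random


def random_allocation(participants, seed=0):
    """Independent design: shuffle, then split into two equal-sized groups."""
    rng = random.Random(seed)  # fixed seed only so the example is repeatable
    shuffled = participants[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "experimental": shuffled[half:]}


def counterbalance(participants, seed=0):
    """Repeated measures: half complete A then B, the other half B then A."""
    rng = random.Random(seed)
    shuffled = participants[:]
    rng.shuffle(shuffled)
    orders = {}
    for i, person in enumerate(shuffled):
        # Alternate the order so each condition comes first equally often.
        orders[person] = ("A", "B") if i % 2 == 0 else ("B", "A")
    return orders


# Hypothetical participant labels
participants = [f"P{n:02d}" for n in range(1, 9)]

groups = random_allocation(participants)
orders = counterbalance(participants)
print(groups)
print(orders)
```

Counterbalancing does not stop practice or fatigue from occurring; it spreads them evenly so that neither condition is systematically advantaged.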

Experimental Methods

All experimental methods involve an IV (independent variable) and a DV (dependent variable).

- Field experiments are conducted in the everyday (natural) environment of the participants. The experimenter still manipulates the IV, but in a real-life setting. It may be possible to control extraneous variables, though such control is more difficult than in a lab experiment.

- Natural experiments investigate a naturally occurring IV that the researcher does not deliberately manipulate because it exists anyway. Participants are not randomly allocated, and the natural event may only occur rarely.

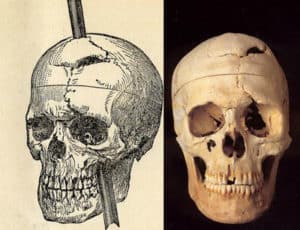

Case studies are in-depth investigations of a person, group, event, or community. They use information from a range of sources, such as the person concerned and their family and friends.

Many techniques may be used such as interviews, psychological tests, observations and experiments. Case studies are generally longitudinal: in other words, they follow the individual or group over an extended period of time.

Case studies are widely used in psychology, and among the best known are those carried out by Sigmund Freud. He conducted very detailed investigations into the private lives of his patients in an attempt to both understand and help them overcome their illnesses.

Case studies provide rich qualitative data and have high levels of ecological validity. However, it is difficult to generalize from individual cases as each one has unique characteristics.

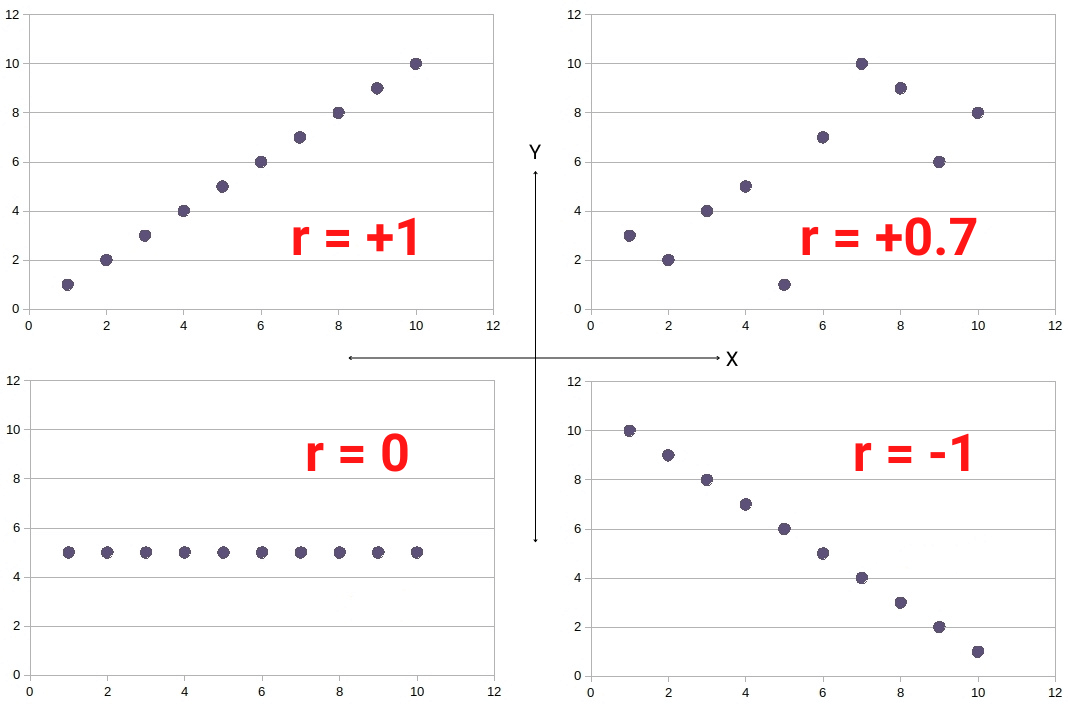

Correlational Studies

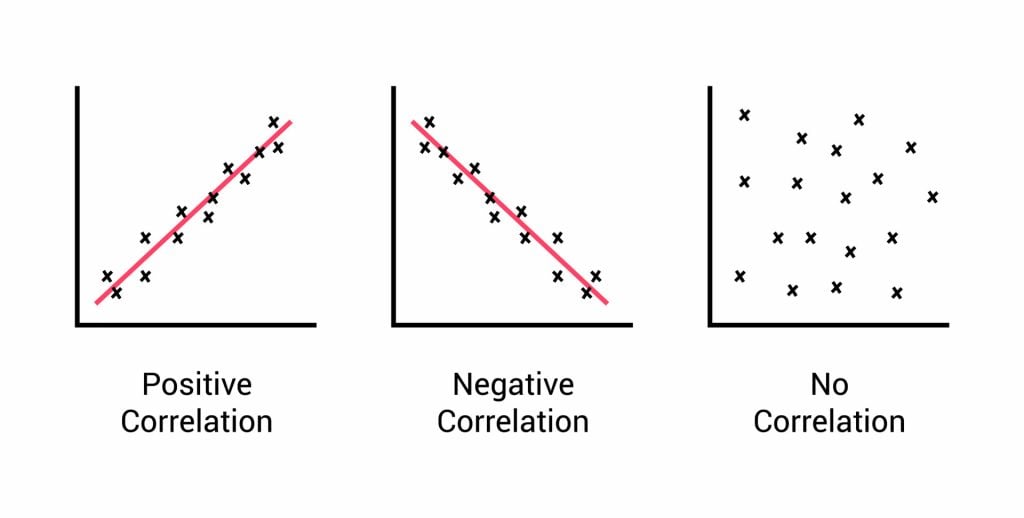

Correlation means association; it is a measure of the extent to which two variables are related. One of the variables can be regarded as the predictor variable with the other one as the outcome variable.

Correlational studies typically involve obtaining two different measures from a group of participants, and then assessing the degree of association between the measures.

The predictor variable can be seen as occurring before the outcome variable in some sense. It is called the predictor variable, because it forms the basis for predicting the value of the outcome variable.

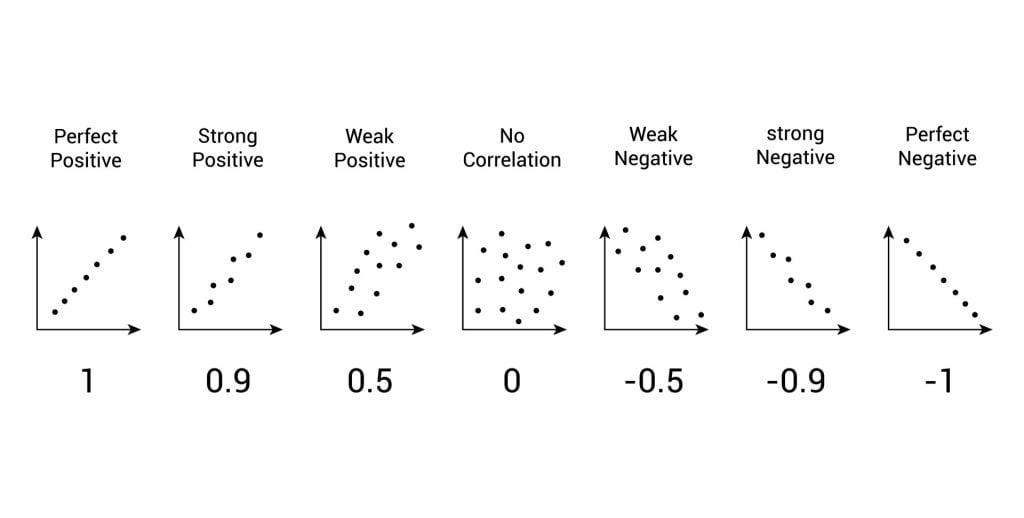

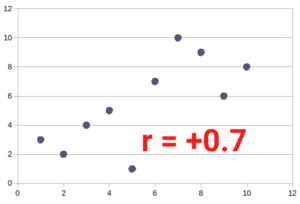

Relationships between variables can be displayed on a graph or as a numerical score called a correlation coefficient.

- If an increase in one variable tends to be associated with an increase in the other, then this is known as a positive correlation.

- If an increase in one variable tends to be associated with a decrease in the other, then this is known as a negative correlation.

- A zero correlation occurs when there is no relationship between variables.

After looking at the scattergraph, if we want to be sure that a significant relationship does exist between the two variables, a statistical test of correlation can be conducted, such as Spearman’s rho.

The test will give us a score, called a correlation coefficient. This is a value between -1 and +1, and the closer the score is to -1 or +1, the stronger the relationship between the variables; a score close to 0 indicates little or no relationship. The value can be positive, e.g. 0.63, or negative, e.g. -0.63.
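To make the calculation concrete, here is a minimal pure-Python sketch of Spearman's rho, computed as Pearson's correlation applied to the ranks of the scores. The revision and exam data are invented for illustration, and in practice a statistics package would normally be used instead.

```python
def ranks(values):
    """Return ranks (1 = smallest); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over any run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the ranked scores."""
    return pearson(ranks(x), ranks(y))


# Hypothetical data: hours of revision and exam score for five students
hours_revised = [1, 2, 3, 4, 5]
exam_score = [52, 55, 61, 70, 68]

rho = spearman_rho(hours_revised, exam_score)
print(round(rho, 2))  # 0.9, a strong positive correlation
```

Reversing one of the lists would give a coefficient with the same size but a negative sign, which is why the coefficient ranges from -1 to +1 rather than from 0 to 1.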

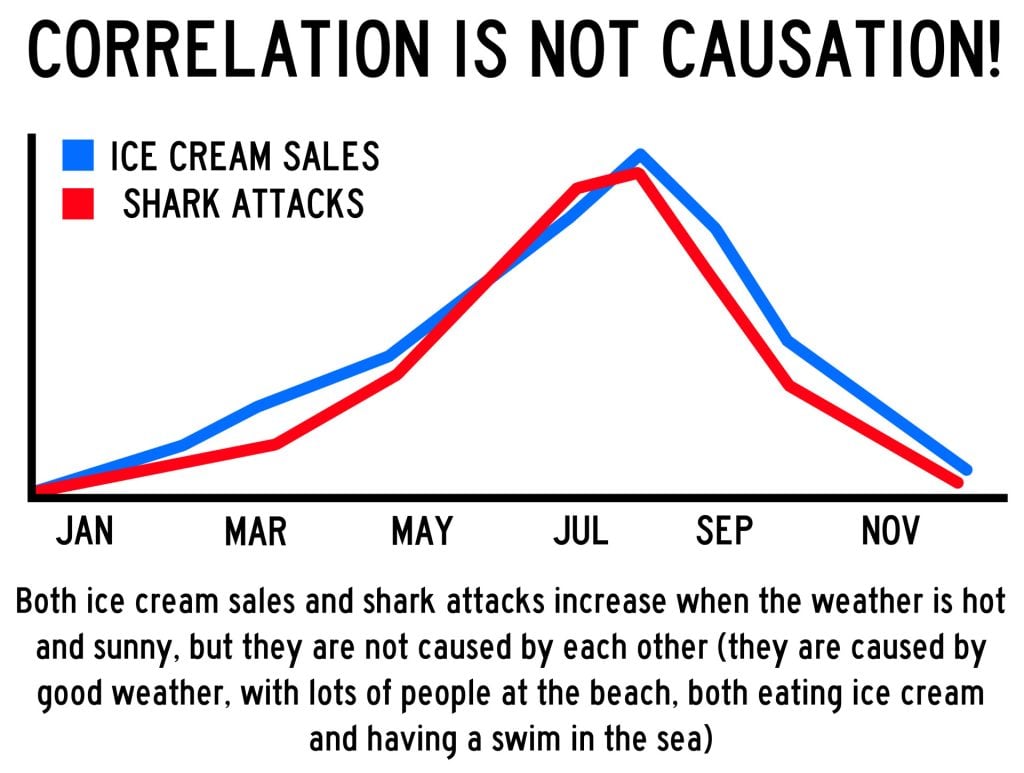

A correlation between variables, however, does not automatically mean that the change in one variable is the cause of the change in the values of the other variable. A correlation only shows if there is a relationship between variables.

Correlation on its own does not prove causation, as a third variable may be involved.

Interview Methods

Interviews are commonly divided into two types: structured and unstructured.

In a structured interview, a fixed, predetermined set of questions is put to every participant in the same order and in the same way.

Responses are recorded on a questionnaire, and the researcher presets the order and wording of questions, and sometimes the range of alternative answers.

The interviewer stays within their role and maintains social distance from the interviewee.

In an unstructured interview, there are no set questions, and the participant can raise whatever topics they feel are relevant and discuss them in their own way. Follow-up questions are posed in response to the participant's answers.

Unstructured interviews are most useful in qualitative research to analyze attitudes and values.

Though they rarely provide a valid basis for generalization, their main advantage is that they enable the researcher to probe social actors’ subjective point of view.

Questionnaire Method

Questionnaires can be thought of as a kind of written interview. They can be carried out face to face, by telephone, or by post.

The choice of questions is important because of the need to avoid bias or ambiguity in the questions, ‘leading’ the respondent or causing offense.

- Open questions are designed to encourage a full, meaningful answer using the subject’s own knowledge and feelings. They provide insights into feelings, opinions, and understanding. Example: “How do you feel about that situation?”

- Closed questions can be answered with a simple “yes” or “no” or specific information, limiting the depth of response. They are useful for gathering specific facts or confirming details. Example: “Do you feel anxious in crowds?”

The questionnaire's other practical advantages are that it is cheaper than face-to-face interviews and can be used to contact many respondents scattered over a wide area relatively quickly.

Observations

There are different types of observation methods:

- Covert observation is where the researcher doesn’t tell the participants they are being observed until after the study is complete. This method can raise ethical problems of deception and consent.

- Overt observation is where a researcher tells the participants they are being observed and what they are being observed for.

- Controlled: behavior is observed under controlled laboratory conditions (e.g., Bandura’s Bobo doll study).

- Natural: here, spontaneous behavior is recorded in a natural setting.

- Participant: here, the observer has direct contact with the group of people they are observing. The researcher becomes a member of the group they are researching.

- Non-participant (aka “fly on the wall”): the researcher does not have direct contact with the people being observed. The observation of participants’ behavior is from a distance.

Pilot Study

A pilot study is a small-scale preliminary study conducted in order to evaluate the feasibility of the key steps in a future, full-scale project.

A pilot study is an initial run-through of the procedures to be used in an investigation; it involves selecting a few people and trying out the study on them. It is possible to save time, and in some cases, money, by identifying any flaws in the procedures designed by the researcher.

A pilot study can help the researcher spot any ambiguities (i.e. unclear wording) or confusion in the information given to participants, or problems with the task devised.

Sometimes the task is too hard, and the researcher may get a floor effect, because none of the participants can score at all or complete the task, so all performances are low.

The opposite effect is a ceiling effect, when the task is so easy that all achieve virtually full marks or top performances and are “hitting the ceiling”.
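A pilot study's scores can be screened for floor and ceiling effects with a simple check. The sketch below is illustrative only: the function name, the 10% `margin` and the 80% `threshold` are arbitrary assumptions rather than established cut-offs, and the pilot scores are invented.

```python
def detect_floor_or_ceiling(scores, min_score, max_score,
                            margin=0.1, threshold=0.8):
    """Flag a floor or ceiling effect in a set of pilot scores.

    A score counts as "near" an extreme if it lies within `margin`
    (a fraction of the score range) of the minimum or maximum. An effect
    is flagged when at least `threshold` of scores are near one extreme.
    Both values are illustrative assumptions.
    """
    span = max_score - min_score
    n = len(scores)
    near_floor = sum(s <= min_score + margin * span for s in scores) / n
    near_ceiling = sum(s >= max_score - margin * span for s in scores) / n
    if near_floor >= threshold:
        return "floor effect"
    if near_ceiling >= threshold:
        return "ceiling effect"
    return "no floor or ceiling effect detected"


# Hypothetical pilot results on a task scored out of 20
too_hard = [0, 1, 0, 2, 1, 0, 1, 0]
too_easy = [20, 19, 20, 18, 20, 19]
about_right = [5, 12, 9, 15, 7, 11]

print(detect_floor_or_ceiling(too_hard, 0, 20))
print(detect_floor_or_ceiling(too_easy, 0, 20))
print(detect_floor_or_ceiling(about_right, 0, 20))
```

If either effect is flagged, the task difficulty can be adjusted before the full-scale study is run.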

Research Design

In cross-sectional research, a researcher compares multiple segments of the population at the same time.

Sometimes, we want to see how people change over time, as in studies of human development and lifespan. Longitudinal research is a research design in which data-gathering is administered repeatedly over an extended period of time.

In cohort studies, the participants must share a common factor or characteristic such as age, demographic, or occupation. A cohort study is a type of longitudinal study in which researchers monitor and observe a chosen population over an extended period.

Triangulation means using more than one research method to improve the study’s validity.

Reliability

Reliability is a measure of consistency: if a particular measurement is repeated and the same result is obtained, then it is described as being reliable.

- Test-retest reliability: assessing the same person on two different occasions, which shows the extent to which the test produces the same answers.

- Inter-observer reliability: the extent to which there is agreement between two or more observers.
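Inter-observer reliability is often quantified either as simple percentage agreement or with Cohen's kappa, which corrects for the agreement expected by chance. The sketch below assumes two observers who each coded the same ten playground behaviors as `play` or `aggr`; the codes and data are invented for illustration.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Proportion of observations on which the two observers agree."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement corrected for chance agreement.

    Undefined (division by zero) if chance agreement equals 1, e.g. when
    both observers use a single category for every observation.
    """
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of each observer's category proportions.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical codes from two observers watching the same ten behaviors
observer_1 = ["aggr", "aggr", "play", "play", "play",
              "aggr", "play", "play", "aggr", "play"]
observer_2 = ["aggr", "play", "play", "play", "play",
              "aggr", "play", "aggr", "aggr", "play"]

print(percent_agreement(observer_1, observer_2))  # 0.8
print(round(cohens_kappa(observer_1, observer_2), 2))  # 0.58
```

Kappa is lower than the raw agreement here because, with only two categories, the observers would be expected to agree on about half of the observations by chance alone. Test-retest reliability is usually assessed differently, by correlating the scores from the two occasions.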

Meta-Analysis

A meta-analysis is a form of systematic review that involves identifying an aim, searching for research studies that have addressed similar aims/hypotheses, and statistically combining their results.

The search is done by looking through various databases, and then decisions are made about which studies are to be included or excluded.

Strengths: Increases the validity of the conclusions, as they are based on a wider range of studies and participants.

Weaknesses: Research designs in studies can vary, so they are not truly comparable.
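The notes above do not say how the included studies are combined. One common approach, shown here only as a hedged sketch, is fixed-effect inverse-variance pooling, in which more precise studies (smaller standard errors) receive more weight. The function name and the effect sizes below are hypothetical.

```python
from math import sqrt


def pooled_effect(effects, std_errors):
    """Fixed-effect inverse-variance pooled estimate and its standard error."""
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("need one standard error per effect size")
    # Each study is weighted by the inverse of its variance (1 / SE^2).
    weights = [1 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    estimate = sum(w * e for w, e in zip(weights, effects)) / total_weight
    return estimate, sqrt(1 / total_weight)


# Hypothetical effect sizes and standard errors from three studies.
estimate, se = pooled_effect([0.2, 0.5, 0.3], [0.1, 0.2, 0.1])
print(round(estimate, 3), round(se, 3))
```

This sketch assumes the studies estimate the same underlying effect. When designs differ, as the weakness above notes, that assumption may not hold and the pooled value should be read with caution.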

Peer Review

A researcher submits an article to a journal. The choice of the journal may be determined by the journal’s audience or prestige.

The journal selects two or more appropriate experts (psychologists working in a similar field) to peer review the article without payment. The peer reviewers assess: the methods and designs used, originality of the findings, the validity of the original research findings and its content, structure and language.

Feedback from the reviewers informs whether the article is accepted. The article may be accepted as it is, accepted with revisions, sent back to the author to revise and resubmit, or rejected without the possibility of resubmission.

The editor makes the final decision whether to accept or reject the research report based on the reviewers' comments and recommendations.

Peer review is important because it prevents faulty data from entering the public domain, it provides a way of checking the validity of findings and the quality of the methodology, and it is used to assess the research rating of university departments.

Peer review may be an ideal, but in practice there are many problems. For example, it slows publication down and may prevent unusual, new work from being published. Some reviewers might use it as an opportunity to prevent competing researchers from publishing work.

Some people doubt whether peer review can really prevent the publication of fraudulent research.

The advent of the internet means that more research and academic comment is being published without official peer review than before, though systems are evolving on the internet where everyone has a chance to offer their opinions and police the quality of research.

Types of Data

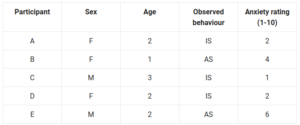

- Quantitative data is numerical data, e.g. reaction time or number of mistakes. It represents how much, how long, or how many there are of something. A tally of behavioral categories and closed questions in a questionnaire collect quantitative data.

- Qualitative data is virtually any type of information that can be observed and recorded that is not numerical in nature and can be in the form of written or verbal communication. Open questions in questionnaires and accounts from observational studies collect qualitative data.

- Primary data is first-hand data collected for the purpose of the investigation.

- Secondary data is information that has been collected by someone other than the person who is conducting the research e.g. taken from journals, books or articles.

Validity

Validity means how well a piece of research actually measures what it sets out to, or how well it reflects the reality it claims to represent.

Validity is whether the observed effect is genuine and represents what is actually out there in the world.

- Concurrent validity is the extent to which a psychological measure relates to an existing similar measure and obtains close results. For example, a new intelligence test compared to an established test.

- Face validity: does the test measure what it’s supposed to measure ‘on the face of it’. This is done by ‘eyeballing’ the measure or by passing it to an expert to check.

- Ecological validity is the extent to which findings from a research study can be generalized to other settings / real life.

- Temporal validity is the extent to which findings from a research study can be generalized to other historical times.

Features of Science

- Paradigm – A set of shared assumptions and agreed methods within a scientific discipline.

- Paradigm shift – The result of the scientific revolution: a significant change in the dominant unifying theory within a scientific discipline.

- Objectivity – When all sources of personal bias are minimised so as not to distort or influence the research process.

- Empirical method – Scientific approaches that are based on the gathering of evidence through direct observation and experience.

- Replicability – The extent to which scientific procedures and findings can be repeated by other researchers.

- Falsifiability – The principle that a theory cannot be considered scientific unless it admits the possibility of being proved untrue.

Statistical Testing

A significant result is one where there is a low probability that chance factors were responsible for any observed difference, correlation, or association in the variables tested.

If our test is significant, we can reject our null hypothesis and accept our alternative hypothesis.

If our test is not significant, we can accept our null hypothesis and reject our alternative hypothesis. A null hypothesis is a statement of no effect.

In Psychology, we use p < 0.05 (as it strikes a balance between making a type I and II error) but p < 0.01 is used in tests that could cause harm like introducing a new drug.

A type I error is when the null hypothesis is rejected when it should have been accepted (happens when a lenient significance level is used, an error of optimism).

A type II error is when the null hypothesis is accepted when it should have been rejected (happens when a stringent significance level is used, an error of pessimism).
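The decision rule above can be sketched in code. This example assumes a two-tailed sign test with an exact binomial p-value, chosen only because it needs no external libraries. The function names and the counts are hypothetical.

```python
from math import comb


def sign_test_p_value(n_plus, n_minus):
    """Two-tailed exact binomial p-value for a sign test (ties already excluded)."""
    n = n_plus + n_minus
    if n == 0:
        raise ValueError("need at least one non-tied pair")
    k = min(n_plus, n_minus)
    # Probability of k or fewer of the less frequent sign if the null (p = 0.5) is true.
    one_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * one_tail)


def decide(p_value, alpha=0.05):
    """Apply the significance level to a p-value."""
    if p_value < alpha:
        return "reject the null hypothesis"
    return "retain the null hypothesis"


# Hypothetical result: 9 participants improved, 1 got worse.
p = sign_test_p_value(9, 1)
print(p)
print(decide(p, alpha=0.05))
print(decide(p, alpha=0.01))
```

With these made-up counts the result is significant at p < 0.05 but not at the stricter p < 0.01, which illustrates the trade-off: a lenient level risks a type I error, while a stringent level risks a type II error.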

Ethical Issues

- Informed consent is when participants are told enough about the study to make an informed judgment about whether to take part. However, knowing the aims of the study may lead them to change their behavior.

- To deal with this, we can gain presumptive consent or ask participants to formally indicate their agreement to participate, but full disclosure may invalidate the purpose of the study and it is not guaranteed that participants will understand the information.

- Deception should only be used when it is approved by an ethics committee, as it involves deliberately misleading or withholding information. Participants should be fully debriefed after the study but debriefing can’t turn the clock back.

- All participants should be informed at the beginning that they have the right to withdraw if they ever feel distressed or uncomfortable.

- The right to withdraw can bias the sample, as those who stay may be more obedient, and some participants may not withdraw because they have been given incentives or feel that they would be spoiling the study. Researchers can offer the right to withdraw data after participation.

- Participants should all have protection from harm. The researcher should avoid risks greater than those experienced in everyday life and should stop the study if any harm is suspected. However, the harm may not be apparent at the time of the study.

- Confidentiality concerns the communication of personal information. Researchers should not record any names but use numbers or false names instead, though anonymity cannot always be guaranteed as it is sometimes possible to work out who the participants were.


Review Article: The Use of Research Methods in Psychological Research: A Systematised Review

- 1 Community Psychosocial Research (COMPRES), School of Psychosocial Health, North-West University, Potchefstroom, South Africa

- 2 WorkWell Research Institute, North-West University, Potchefstroom, South Africa

Research methods play an imperative role in research quality as well as in educating young researchers; however, the application thereof is unclear, which can be detrimental to the field of psychology. Therefore, this systematised review aimed to determine what research methods are being used, how these methods are being used and for what topics in the field. Our review of 999 articles from five journals over a period of 5 years indicated that psychology research is conducted in 10 topics via predominantly quantitative research methods. Of these 10 topics, social psychology was the most popular. The remainder of the conducted methodology is described. It was also found that articles lacked rigour and transparency in the methodology used, which has implications for replicability. In conclusion, this article provides an overview of all reported methodologies used in a sample of psychology journals. It highlights the popularity and application of methods and designs throughout the article sample as well as an unexpected lack of rigour with regard to most aspects of methodology. Possible sample bias should be considered when interpreting the results of this study. It is recommended that future research should utilise the results of this study to determine the possible impact on the field of psychology as a science and to further investigate the use of research methods. Results should prompt future research into the following: a lack of rigour and its implication for replication, the use of certain methods above others, publication bias and choice of sampling method.

Introduction

Psychology is an ever-growing and popular field (Gough and Lyons, 2016; Clay, 2017). Due to this growth and the need for science-based research to base health decisions on (Perestelo-Pérez, 2013), the use of research methods in the broad field of psychology is an essential point of investigation (Stangor, 2011; Aanstoos, 2014). Research methods are therefore viewed as important tools used by researchers to collect data (Nieuwenhuis, 2016) and include the following: quantitative, qualitative, mixed method and multi method (Maree, 2016). Additionally, researchers also employ various types of literature reviews to address research questions (Grant and Booth, 2009). According to literature, what research method is used and why a certain research method is used is complex as it depends on various factors that may include paradigm (O'Neil and Koekemoer, 2016), research question (Grix, 2002), or the skill and exposure of the researcher (Nind et al., 2015). How these research methods are employed is also difficult to discern as research methods are often depicted as having fixed boundaries that are continuously crossed in research (Johnson et al., 2001; Sandelowski, 2011). Examples of this crossing include adding quantitative aspects to qualitative studies (Sandelowski et al., 2009), or stating that a study used a mixed-method design without the study having any characteristics of this design (Truscott et al., 2010).

The inappropriate use of research methods affects how students and researchers improve and utilise their research skills (Scott Jones and Goldring, 2015), how theories are developed (Ngulube, 2013), and the credibility of research results (Levitt et al., 2017). This, in turn, can be detrimental to the field (Nind et al., 2015), journal publication (Ketchen et al., 2008; Ezeh et al., 2010), and attempts to address public social issues through psychological research (Dweck, 2017). This is especially important given the now well-known replication crisis the field is facing (Earp and Trafimow, 2015; Hengartner, 2018).

Due to this lack of clarity on method use and the potential impact of inept use of research methods, the aim of this study was to explore the use of research methods in the field of psychology through a review of journal publications. Chaichanasakul et al. (2011) identify reviewing articles as the opportunity to examine the development, growth and progress of a research area and overall quality of a journal. Studies such as Lee et al. (1999) and Bluhm et al.'s (2011) review of qualitative methods have attempted to synthesise the use of research methods and indicated the growth of qualitative research in American and European journals. Research has also focused on the use of research methods in specific sub-disciplines of psychology. For example, in the field of Industrial and Organisational psychology, Coetzee and Van Zyl (2014) found that South African publications tend to consist of cross-sectional quantitative research methods, with longitudinal studies underrepresented. Qualitative studies were found to make up 21% of the articles published from 1995 to 2015 in a similar study by O'Neil and Koekemoer (2016). Other methods in health psychology, such as mixed methods research, have also reportedly been growing in popularity (O'Cathain, 2009).

A broad overview of the use of research methods in the field of psychology as a whole is, however, not available in the literature. Therefore, our research focused on answering what research methods are being used, how these methods are being used and for what topics in practice (i.e., journal publications) in order to provide a general perspective of method use in psychology publication. We synthesised the collected data into the following format: research topic [areas of scientific discourse in a field or the current needs of a population (Bittermann and Fischer, 2018)], method [data-gathering tools (Nieuwenhuis, 2016)], sampling [elements chosen from a population to partake in research (Ritchie et al., 2009)], data collection [techniques and research strategy (Maree, 2016)], and data analysis [discovering information by examining bodies of data (Ktepi, 2016)]. A systematised review of recent articles (2013 to 2017) collected from five different journals in the field of psychological research was conducted.

Grant and Booth (2009) describe systematised reviews as the review of choice for post-graduate studies, which is employed using some elements of a systematic review and seldom more than one or two databases to catalogue studies after a comprehensive literature search. The aspects used in this systematised review that are similar to that of a systematic review were a full search within the chosen database and data produced in tabular form (Grant and Booth, 2009).

Sample sizes and timelines vary in systematised reviews (see Lowe and Moore, 2014; Pericall and Taylor, 2014; Barr-Walker, 2017). With no clear parameters identified in the literature (see Grant and Booth, 2009), the sample size of this study was determined by the purpose of the sample (Strydom, 2011), and time and cost constraints (Maree and Pietersen, 2016). Thus, a non-probability purposive sample (Ritchie et al., 2009) of the top five psychology journals from 2013 to 2017 was included in this research study. Per Lee (2015), the American Psychological Association (APA) recommends the use of the most up-to-date sources for data collection with consideration of the context of the research study. As this research study focused on the most recent trends in research methods used in the broad field of psychology, the identified time frame was deemed appropriate.