
Present Your Data Like a Pro

- Joel Schwartzberg

Demystify the numbers. Your audience will thank you.

While a good presentation has data, data alone doesn’t guarantee a good presentation. It’s all about how that data is presented. The quickest way to confuse your audience is by sharing too many details at once. The only data points you should share are those that significantly support your point — and ideally, one point per chart. To avoid the debacle of sheepishly translating hard-to-see numbers and labels, rehearse your presentation with colleagues sitting as far away as the actual audience would. While you’ve been working with the same chart for weeks or months, your audience will be exposed to it for mere seconds. Give them the best chance of comprehending your data by using simple, clear, and complete language to identify X and Y axes, pie pieces, bars, and other diagrammatic elements. Try to avoid abbreviations that aren’t obvious, and don’t assume labeled components on one slide will be remembered on subsequent slides. Every valuable chart or pie graph has an “Aha!” zone — a number or range of data that reveals something crucial to your point. Make sure you visually highlight the “Aha!” zone, reinforcing the moment by explaining it to your audience.

With so many ways to spin and distort information these days, a presentation needs to do more than simply share great ideas — it needs to support those ideas with credible data. That’s true whether you’re an executive pitching new business clients, a vendor selling her services, or a CEO making a case for change.

Joel Schwartzberg oversees executive communications for a major national nonprofit, is a professional presentation coach, and is the author of Get to the Point! Sharpen Your Message and Make Your Words Matter and The Language of Leadership: How to Engage and Inspire Your Team. You can find him on LinkedIn and X (TheJoelTruth).


10 Data Presentation Examples For Strategic Communication

By Krystle Wong, Sep 28, 2023

Knowing how to present data is like having a superpower.

Data presentation today is no longer just about numbers on a screen; it’s storytelling with a purpose. It’s about captivating your audience, making complex stuff look simple and inspiring action.

To help turn your data into stories that stick, influence decisions and make an impact, check out Venngage’s free chart maker, or follow me on a tour of data storytelling and data presentation templates that work across fields, from business boardrooms to the classroom and beyond. Keep scrolling to learn more!

10 Essential data presentation examples + methods you should know

Data presentation is a vital skill in today’s information-driven world. Whether you’re in business, academia, or simply want to convey information effectively, knowing the different ways of presenting data is crucial. For impactful data storytelling, consider these essential data presentation methods:

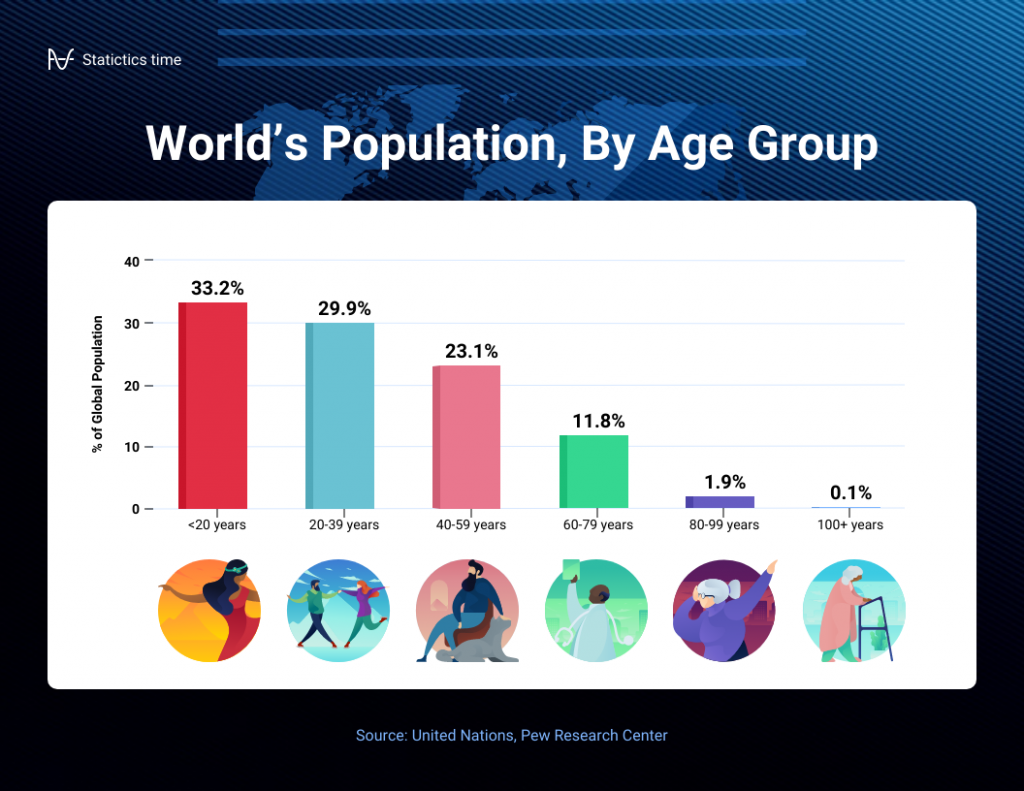

1. Bar graph

Ideal for comparing data across categories or showing trends over time.

Bar graphs, also known as bar charts, are the workhorses of data presentation. They’re like the Swiss Army knives of visualization methods because they can compare data across categories or show how data changes over time.

In a bar chart, categories are displayed on the x-axis and the corresponding values are represented by the height of the bars on the y-axis.

It’s a straightforward and effective way to showcase raw data, making it a staple in business reports, academic presentations and beyond.

Make sure your bar charts are concise with easy-to-read labels. Whether your bars go up or sideways, keep it simple by not overloading with too many categories.
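If you want to sanity-check proportions before building the real chart, a quick text mock-up helps. Here’s a minimal Python sketch (standard library only) that scales every bar to the largest value and sorts categories from biggest to smallest. The function name `text_bar_chart` and the regional sales figures are hypothetical, and it assumes non-negative values so every bar starts from a zero baseline.

```python
def text_bar_chart(data: dict[str, float], width: int = 30) -> list[str]:
    """Render categories as horizontal text bars scaled to the largest value."""
    if not data:
        return []
    longest = max(len(label) for label in data)
    peak = max(data.values())
    lines = []
    # Sort descending so the biggest category reads first
    for label, value in sorted(data.items(), key=lambda kv: kv[1], reverse=True):
        # Bar length is proportional to the value, measured from zero
        bar = "#" * round(value / peak * width) if peak > 0 else ""
        lines.append(f"{label:<{longest}} | {bar} {value:g}")
    return lines


if __name__ == "__main__":
    # Hypothetical quarterly sales by region
    for line in text_bar_chart({"North": 120, "South": 80, "East": 60}):
        print(line)
```

Sorting the bars is a design choice, not a rule: keep the original order when the categories have a natural sequence, such as months.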

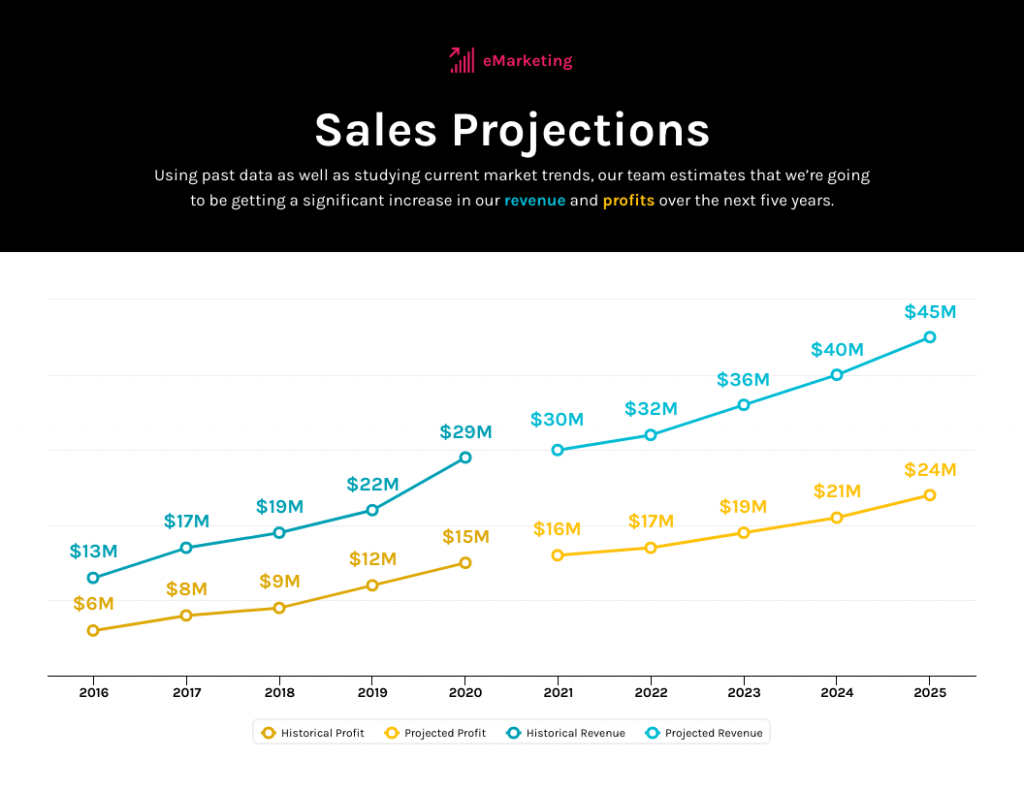

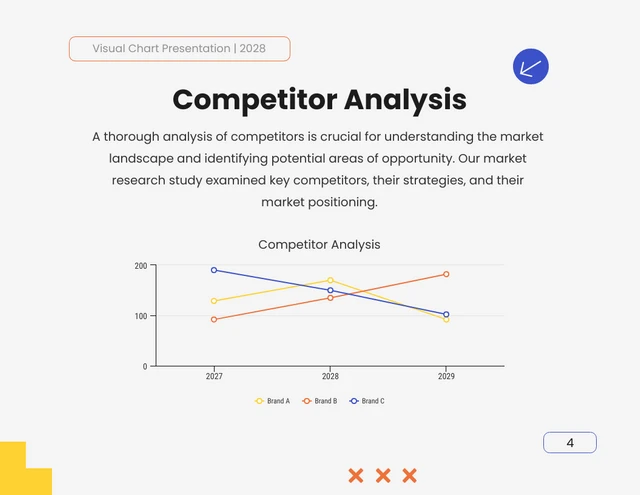

2. Line graph

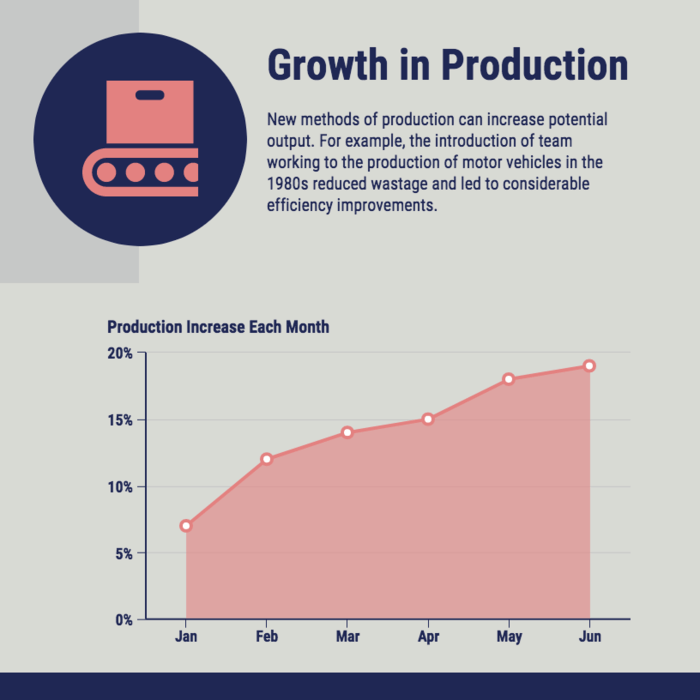

Great for displaying trends and variations in data points over time or continuous variables.

Line charts or line graphs are your go-to when you want to visualize trends and variations in data sets over time.

Line charts are among the best ways to present quantitative data. They work exceptionally well for continuous data, such as sales over the last couple of years or fluctuations in supply and demand.

The x-axis represents time or a continuous variable and the y-axis represents the data values. By connecting the data points with lines, you can easily spot trends and fluctuations.

A tip when presenting data with line charts: limit the number of lines so the chart doesn’t get crowded. Highlight the big changes, add labels and give it a catchy title.
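To decide which change deserves the highlight, you can let the data point it out. This Python sketch finds the pair of consecutive periods with the largest absolute change. The function name and the monthly figures are made up for illustration, and “biggest change” here means the largest single-period jump, which is only one reasonable definition.

```python
def biggest_change(periods: list[str], values: list[float]) -> tuple[str, str, float]:
    """Return (from_period, to_period, change) for the largest period-over-period move."""
    if len(values) < 2 or len(periods) != len(values):
        raise ValueError("need at least two periods, each with one value")
    # Index k compares values[k] with the previous point values[k - 1]
    i = max(range(1, len(values)), key=lambda k: abs(values[k] - values[k - 1]))
    return periods[i - 1], periods[i], values[i] - values[i - 1]


if __name__ == "__main__":
    months = ["Jan", "Feb", "Mar", "Apr"]
    sales = [100, 110, 90, 150]  # hypothetical monthly sales
    start, end, delta = biggest_change(months, sales)
    print(f"Biggest move: {start} to {end} ({delta:+g})")
```

The returned periods tell you where to place a callout or annotation on the chart.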

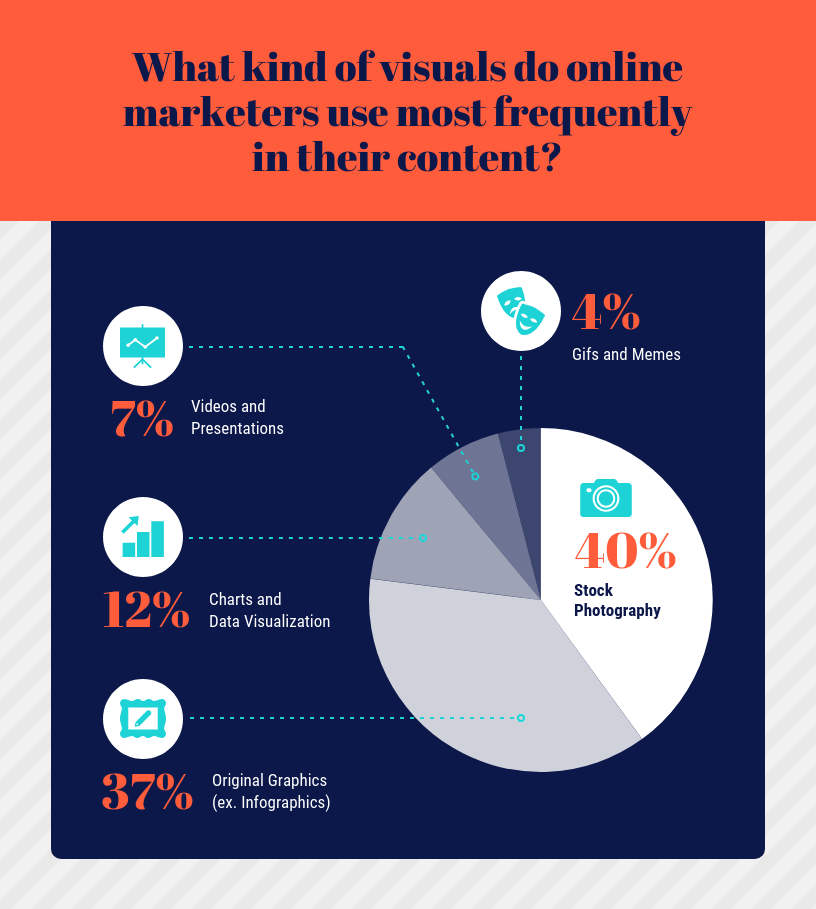

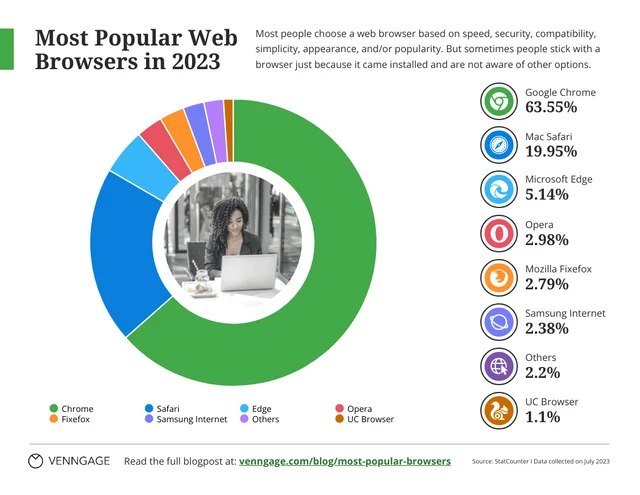

3. Pie chart

Useful for illustrating parts of a whole, such as percentages or proportions.

Pie charts are perfect for showing how a whole is divided into parts. They’re commonly used to represent percentages or proportions and are great for presenting survey results that involve demographic data.

Each “slice” of the pie represents a portion of the whole and the size of each slice corresponds to its share of the total.

While pie charts are handy for illustrating simple distributions, they can become confusing when dealing with too many categories or when the differences in proportions are subtle.

Don’t get carried away with slices. Label each slice with its percentage or value so people know what’s what, and consider using a legend when there are more categories.
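One common way to keep slices under control is to fold the smallest categories into an “Other” slice. The Python sketch below converts raw values into labeled percentages and merges any slice under a threshold. The 5% cutoff, the function name `pie_slices` and the survey numbers are assumptions to adjust for your own data.

```python
def pie_slices(data: dict[str, float], min_share: float = 0.05) -> dict[str, float]:
    """Convert raw values to percentages, folding slices below min_share into 'Other'."""
    total = sum(data.values())
    slices: dict[str, float] = {}
    other = 0.0
    for label, value in data.items():
        share = value / total
        if share < min_share:
            other += share  # too small to label on its own
        else:
            slices[label] = share
    if other:
        slices["Other"] = other
    # Percentages rounded to one decimal place for slice labels
    return {label: round(share * 100, 1) for label, share in slices.items()}


if __name__ == "__main__":
    # Hypothetical survey responses by age group
    print(pie_slices({"18-24": 50, "25-34": 30, "35-44": 17, "45-54": 2, "55+": 1}))
```

Because each label is rounded independently, the percentages may not add up to exactly 100.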

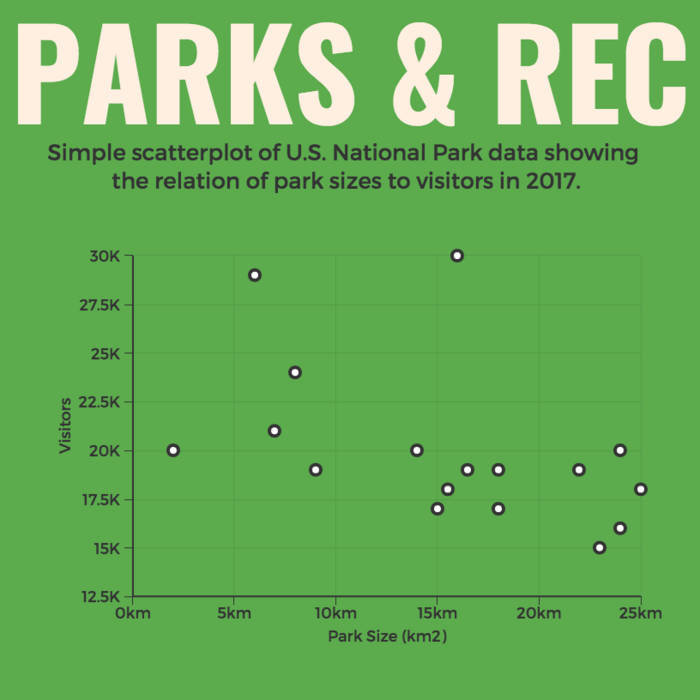

4. Scatter plot

Effective for showing the relationship between two variables and identifying correlations.

Scatter plots are all about exploring relationships between two variables. They’re great for uncovering correlations, trends or patterns in data.

In a scatter plot, every data point appears as a dot on the chart, with one variable marked on the horizontal x-axis and the other on the vertical y-axis.

By examining the scatter of points, you can discern the nature of the relationship between the variables, whether it’s positive, negative or no correlation at all.

If you’re using scatter plots to reveal relationships between two variables, be sure to add trendlines or regression analysis when appropriate to clarify patterns. Label data points selectively or provide tooltips for detailed information.
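If you’d rather compute the trendline yourself than rely on a charting tool, ordinary least squares and the Pearson correlation coefficient take only a few lines. This Python sketch (standard library only) returns the slope and intercept of the best-fit line plus r, which runs from -1 (perfect negative correlation) through 0 (no linear correlation) to +1 (perfect positive correlation). The function name `trendline` and the sample data are illustrative.

```python
import math


def trendline(xs: list[float], ys: list[float]) -> tuple[float, float, float]:
    """Least-squares slope and intercept plus Pearson's r for paired data."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Sums of squared deviations and cross-products around the means
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must each vary")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r


if __name__ == "__main__":
    ad_spend = [1, 2, 3, 4, 5]       # hypothetical, in $k
    signups = [12, 15, 21, 24, 30]   # hypothetical
    m, b, r = trendline(ad_spend, signups)
    print(f"y = {m:.2f}x + {b:.2f}, r = {r:.2f}")
```

Pearson’s r only measures linear relationships, so a curved pattern can show a weak r even when the variables are closely related.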

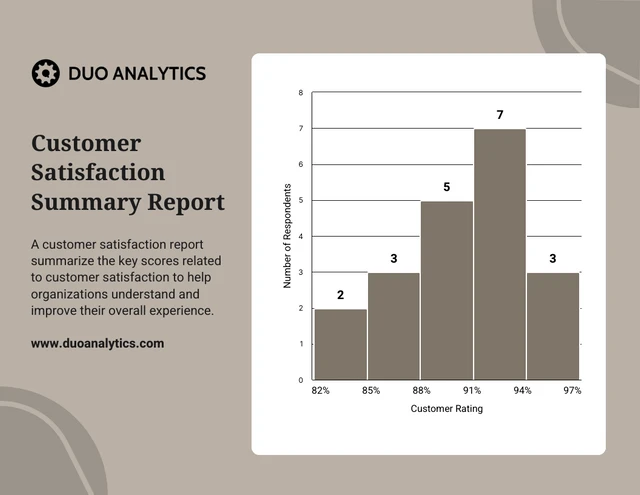

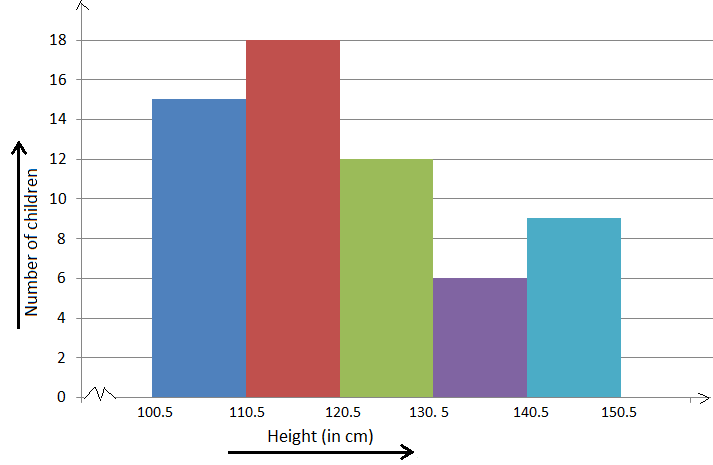

5. Histogram

Best for visualizing the distribution and frequency of a single variable.

Histograms are your choice when you want to understand the distribution and frequency of a single variable.

They divide the data into “bins” or intervals and the height of each bar represents the frequency or count of data points falling into that interval.

Histograms are excellent for revealing the shape of a distribution, such as peaks, gaps or skewness.

Make sure your histogram bins are sized to capture meaningful patterns: too few bins hide detail, while too many turn the chart into noise. Clear axis labels and titles also help explain the distribution of the data.

6. Stacked bar chart

Useful for showing how different components contribute to a whole over multiple categories.

Stacked bar charts are a handy choice when you want to illustrate how different components contribute to a whole across multiple categories.

Each bar represents a category and the bars are divided into segments to show the contribution of various components within each category.

This method is ideal for highlighting both the individual and collective significance of each component, making it a valuable tool for comparative analysis.

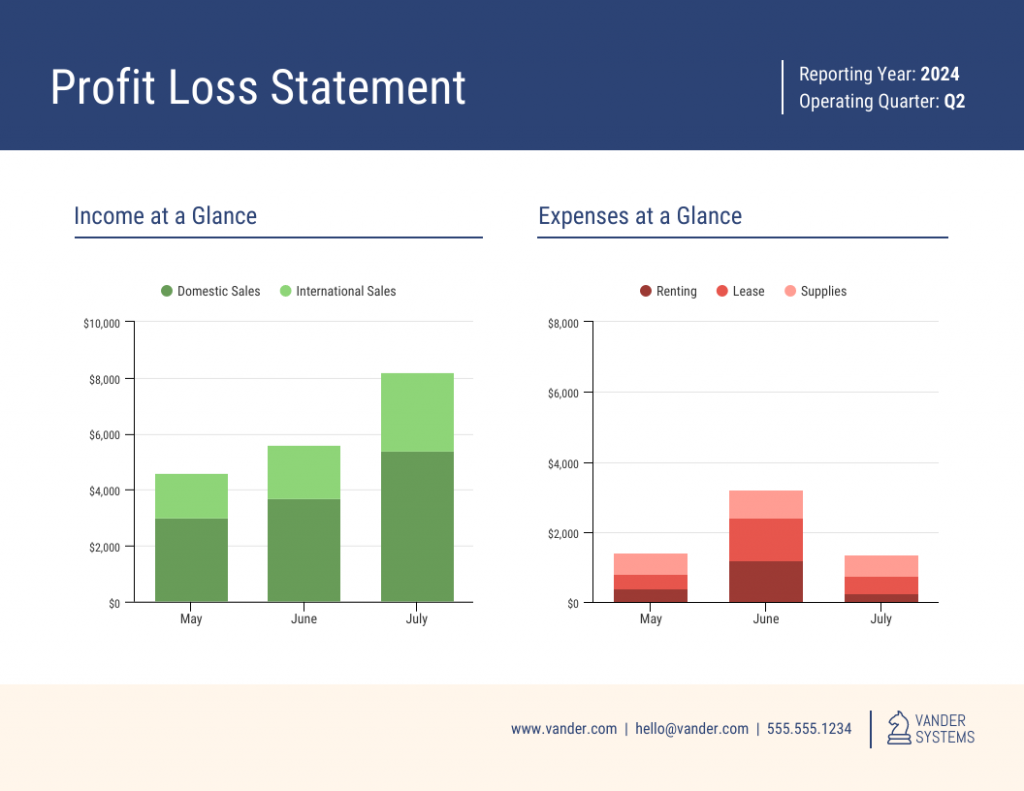

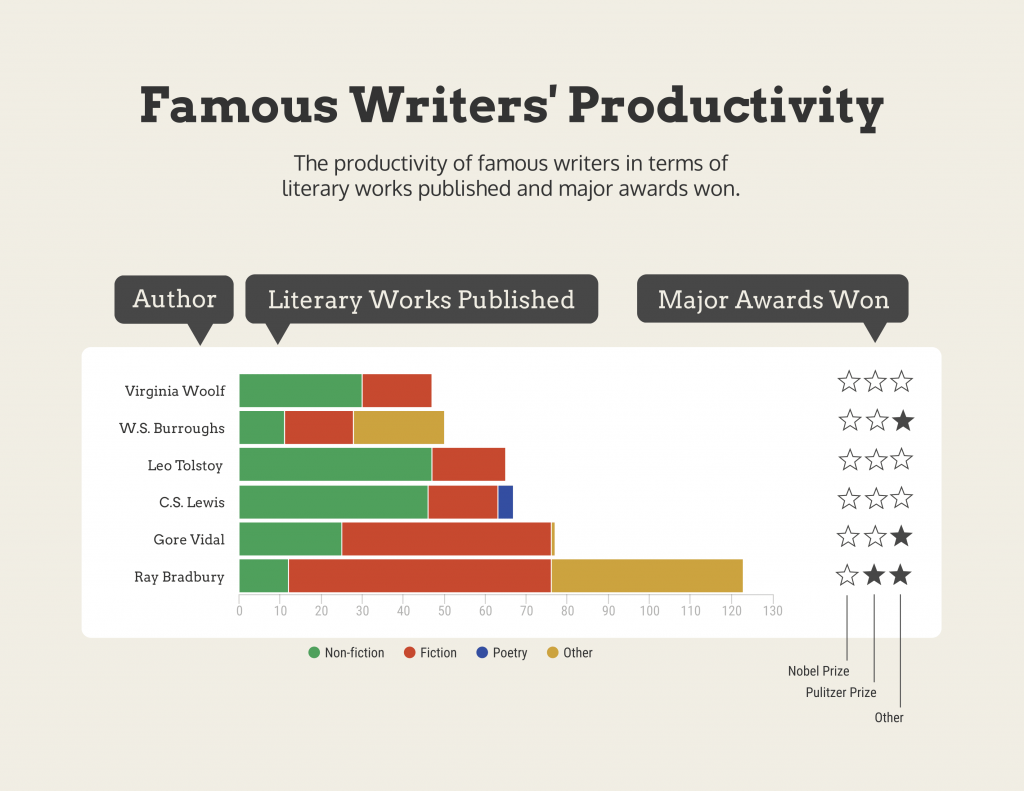

Stacked bar charts are like data sandwiches—label each layer so people know what’s what. Keep the order logical and don’t forget the paintbrush for snazzy colors. Here’s a data analysis presentation example on writers’ productivity using stacked bar charts:

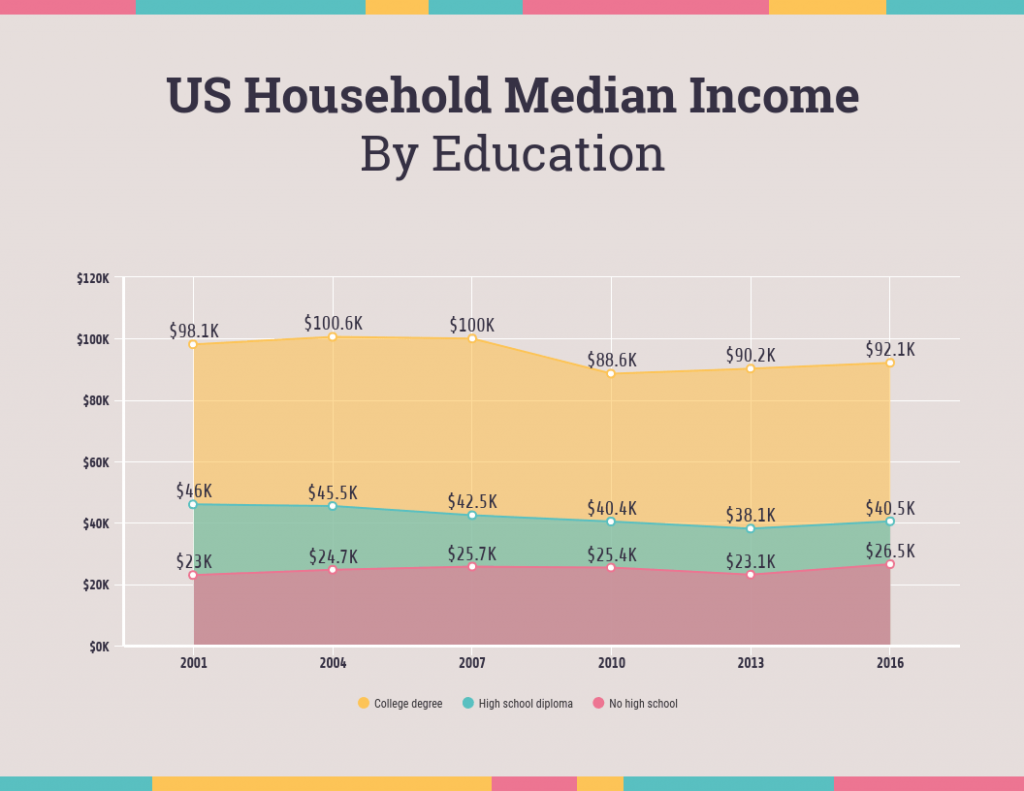
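To put numbers on both the individual and collective significance before you draw the chart, compute each bar’s total and each segment’s share of it. This Python sketch uses made-up daily hours for two writing tasks, loosely echoing the writers’ productivity example; the function name `stacked_summary` and the data are assumptions.

```python
def stacked_summary(data: dict[str, dict[str, float]]):
    """Return each bar's total and each component's percentage share of that bar.

    Assumes every bar has a positive total.
    """
    totals = {bar: sum(parts.values()) for bar, parts in data.items()}
    shares = {
        bar: {name: round(100 * value / totals[bar], 1) for name, value in parts.items()}
        for bar, parts in data.items()
    }
    return totals, shares


if __name__ == "__main__":
    # Hypothetical hours per day: one bar per weekday, one segment per task
    hours = {
        "Mon": {"Drafting": 3, "Editing": 1},
        "Tue": {"Drafting": 2, "Editing": 2},
    }
    totals, shares = stacked_summary(hours)
    print(totals)
    print(shares)
```

Python dicts keep insertion order, so listing the components in the same order for every bar keeps the stacking order consistent from bar to bar.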

7. Area chart

Similar to line charts but with the area below the lines filled, making them suitable for showing cumulative data.

Area charts are close cousins of line charts but come with a twist.

Imagine plotting the sales of a product over several months. In an area chart, the space between the line and the x-axis is filled, giving each month’s value visual weight; if you plot running totals, the filled area shows how the cumulative total builds up.

This makes it easy to see how values stack up over time, making area charts a valuable tool for tracking trends in data.

Use area charts to visualize cumulative data and trends, but avoid overcrowding the chart. Add labels, especially at significant points, and fill the area under the lines with a visually appealing color gradient.
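If you’re charting a cumulative total, the running sums (and, for a stacked area chart, the top edge of each layer) are easy to precompute. This Python sketch uses `itertools.accumulate` from the standard library; the function names `running_total` and `stack_layers` and the monthly figures are hypothetical.

```python
from itertools import accumulate


def running_total(values: list[float]) -> list[float]:
    """Cumulative sum over time, e.g. year-to-date sales."""
    return list(accumulate(values))


def stack_layers(series: dict[str, list[float]]) -> dict[str, list[float]]:
    """Upper edge of each layer in a stacked area chart, stacked in dict order."""
    tops: dict[str, list[float]] = {}
    base = None
    for name, values in series.items():
        # Each layer sits on top of the running sum of the layers below it
        base = list(values) if base is None else [b + v for b, v in zip(base, values)]
        tops[name] = base
    return tops


if __name__ == "__main__":
    print(running_total([10, 20, 15, 30]))  # hypothetical monthly sales
    print(stack_layers({"Online": [5, 8, 6], "In-store": [10, 9, 12]}))
```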

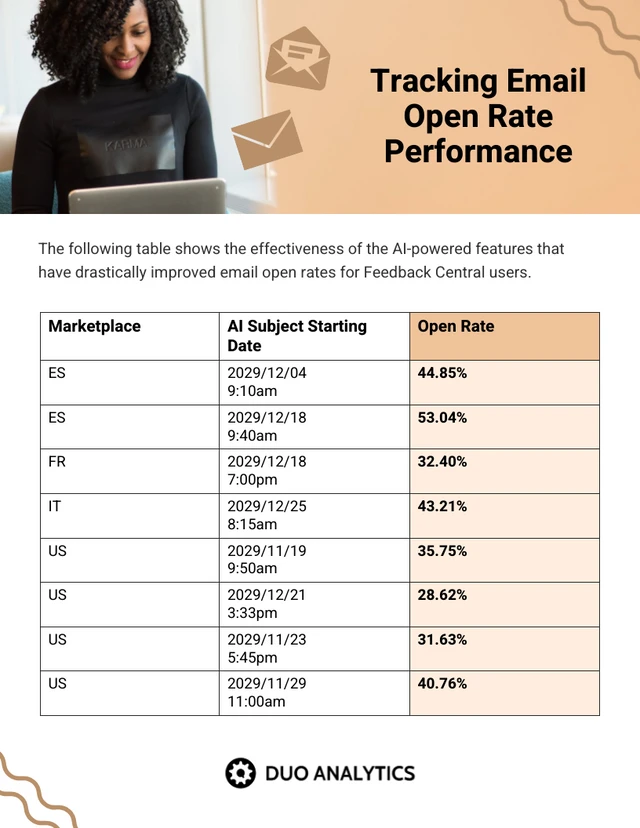

8. Tabular presentation

Presenting data in rows and columns, often used for precise data values and comparisons.

Tabular data presentation is all about clarity and precision. Think of it as presenting numerical data in a structured grid, with rows and columns clearly displaying individual data points.

Tables are invaluable for showcasing detailed data, facilitating comparisons and presenting numerical information that needs to be exact. They’re commonly used in reports, spreadsheets and academic papers.

When presenting tabular data, organize it neatly with clear headers and appropriate column widths. Highlight important data points or patterns using shading or font formatting for better readability.
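A common convention is to left-align text and right-align numbers so the digits line up for comparison. This Python sketch builds a plain-text table that way, with thousands separators. The function name `format_table` and the figures are hypothetical, and it decides whether a column is numeric by looking at the first data row.

```python
def format_table(headers: list[str], rows: list[list]) -> str:
    """Plain-text table: text columns left-aligned, numeric columns right-aligned."""
    def is_num(value) -> bool:
        return isinstance(value, (int, float)) and not isinstance(value, bool)

    # Numbers get thousands separators so large values stay readable
    body = [[f"{c:,}" if is_num(c) else str(c) for c in row] for row in rows]
    cells = [list(headers)] + body
    widths = [max(len(r[i]) for r in cells) for i in range(len(headers))]
    numeric = [is_num(c) for c in rows[0]] if rows else [False] * len(headers)

    def fmt(row: list[str]) -> str:
        return " | ".join(
            cell.rjust(w) if num else cell.ljust(w)
            for cell, w, num in zip(row, widths, numeric)
        )

    lines = [fmt(cells[0]), "-+-".join("-" * w for w in widths)]
    lines += [fmt(r) for r in body]
    return "\n".join(lines)


if __name__ == "__main__":
    print(format_table(["Region", "Revenue"], [["North", 1200], ["South", 950]]))
```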

9. Textual data

Utilizing written or descriptive content to explain or complement data, such as annotations or explanatory text.

Textual data presentation may not involve charts or graphs, but it’s one of the most used qualitative data presentation examples.

It involves using written content to provide context, explanations or annotations alongside data visuals. Think of it as the narrative that guides your audience through the data.

Well-crafted textual data can make complex information more accessible and help your audience understand the significance of the numbers and visuals.

Textual data is your chance to tell a story. Break down complex information into bullet points or short paragraphs and use headings to guide the reader’s attention.

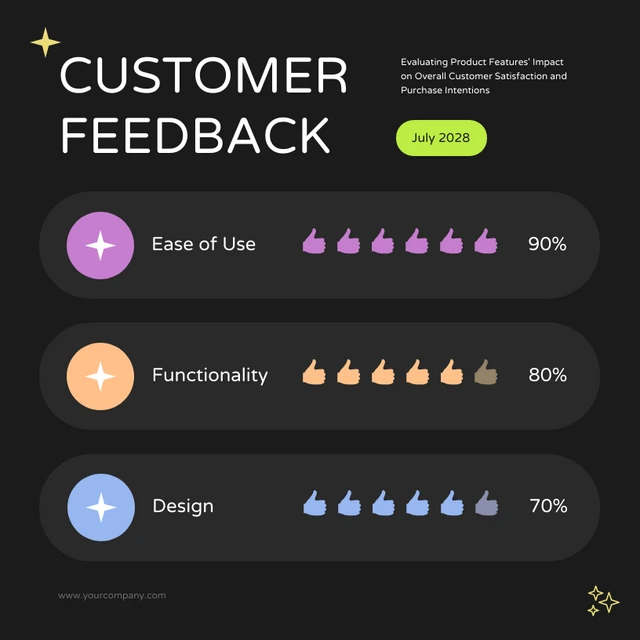

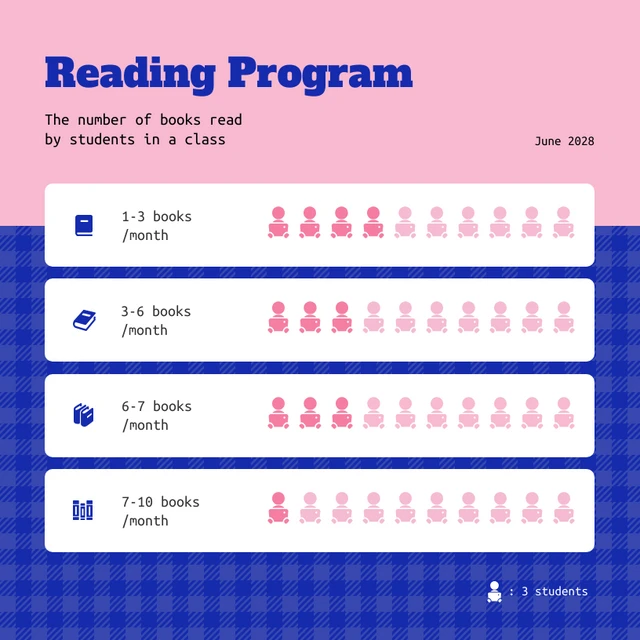

10. Pictogram

Using simple icons or images to represent data is especially useful for conveying information in a visually intuitive manner.

Pictograms are all about harnessing the power of images to convey data in an easy-to-understand way.

Instead of using numbers or complex graphs, you use simple icons or images to represent data points.

For instance, you could use smiley-face emojis to illustrate customer satisfaction levels, where each face represents a different level of satisfaction.

Pictograms are great for conveying data visually, so choose symbols that are easy to interpret and relevant to the data. Use consistent scaling and a legend to explain the symbols’ meanings, ensuring clarity in your presentation.
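Consistent scaling simply means every icon stands for the same amount everywhere in the chart. This Python sketch draws one icon per unit, adds a half icon when the leftover is at least half a unit (smaller leftovers are dropped, an assumed convention), and appends a legend line. The function names, the `#` and `+` icons and the customer counts are hypothetical.

```python
def pictogram(value: float, unit: float, icon: str = "#", half_icon: str = "+") -> str:
    """One icon per `unit`; a half icon if the leftover is at least half a unit.

    Assumes a non-negative value.
    """
    if unit <= 0:
        raise ValueError("unit must be positive")
    full, remainder = divmod(value, unit)
    return icon * int(full) + (half_icon if remainder >= unit / 2 else "")


def pictogram_chart(data: dict[str, float], unit: float, noun: str) -> list[str]:
    """One row per category using the default icons, plus a legend for the scale."""
    longest = max(len(label) for label in data)
    rows = [f"{label:<{longest}} {pictogram(v, unit)}" for label, v in data.items()]
    rows.append(f"Legend: # = {unit:g} {noun}, + = {unit / 2:g} {noun}")
    return rows


if __name__ == "__main__":
    # Hypothetical customer counts by satisfaction level
    for row in pictogram_chart({"Happy": 1250, "Neutral": 400, "Unhappy": 150}, 500, "customers"):
        print(row)
```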

Looking for more data presentation ideas? Use the Venngage graph maker or browse through our gallery of chart templates to pick a template and get started!

What should be included in a data presentation?

A comprehensive data presentation should include several key elements to effectively convey information and insights to your audience. Here’s a list of what should be included in a data presentation:

1. Title and objective

- Begin with a clear and informative title that sets the context for your presentation.

- State the primary objective or purpose of the presentation to provide a clear focus.

2. Key data points

- Present the most essential data points or findings that align with your objective.

- Use charts, graphical presentations or visuals to illustrate these key points for better comprehension.

3. Context and significance

- Provide a brief overview of the context in which the data was collected and why it’s significant.

- Explain how the data relates to the larger picture or the problem you’re addressing.

4. Key takeaways

- Summarize the main insights or conclusions that can be drawn from the data.

- Highlight the key takeaways that the audience should remember.

5. Visuals and charts

- Use clear and appropriate visual aids to complement the data.

- Ensure that visuals are easy to understand and support your narrative.

6. Implications or actions

- Discuss the practical implications of the data or any recommended actions.

- If applicable, outline next steps or decisions that should be taken based on the data.

7. Q&A and discussion

- Allocate time for questions and open discussion to engage the audience.

- Address queries and provide additional insights or context as needed.

What are some common mistakes to avoid when presenting data?

Presenting data is a crucial skill in various professional fields, from business to academia and beyond. To ensure your data presentations hit the mark, here are some common mistakes that you should steer clear of:

Overloading with data

Presenting too much data at once can overwhelm your audience. Focus on the key points and relevant information to keep the presentation concise and focused. Free data visualization tools can also help you convey data in an engaging and impactful way.

Assuming everyone’s on the same page

It’s easy to assume that your audience understands as much about the topic as you do. But this can lead to either dumbing things down too much or diving into a bunch of jargon that leaves folks scratching their heads. Take a beat to figure out where your audience is coming from and tailor your presentation accordingly.

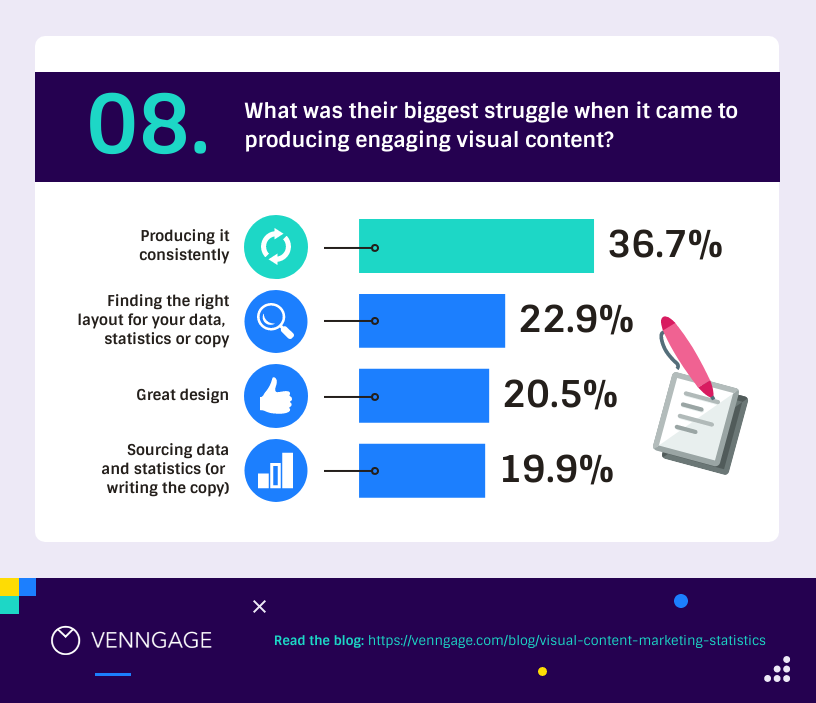

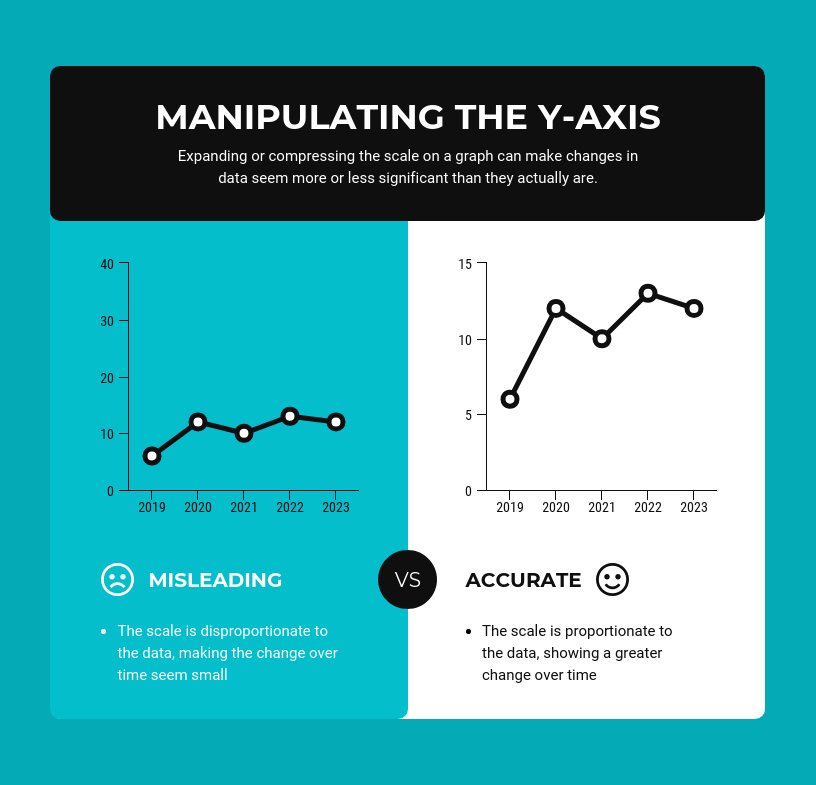

Misleading visuals

Misleading visuals, such as distorted scales or inappropriate chart types, can warp the data’s meaning. Choose infographics and charts your audience can readily understand, and make sure your visual representations accurately reflect the data.
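A truncated axis is a classic example of a distorted scale. Edward Tufte’s “lie factor” puts a number on it: the size of an effect as drawn divided by the size of the effect in the data, where a value near 1 is honest. The Python sketch below assumes bars are drawn up from the axis baseline and that the baseline sits below both values; the function names and sample values are hypothetical.

```python
def effect_size(first: float, second: float) -> float:
    """Relative change from the first value to the second."""
    return (second - first) / first


def lie_factor(first: float, second: float, baseline: float = 0.0) -> float:
    """Tufte's lie factor for two bars drawn up from an axis starting at `baseline`."""
    if first == second:
        raise ValueError("values are equal; there is no effect to distort")
    # Drawn bar heights are measured from the baseline, not from zero
    shown = effect_size(first - baseline, second - baseline)
    actual = effect_size(first, second)
    return shown / actual


if __name__ == "__main__":
    # Hypothetical: 50 vs 55 (a 10% rise) on an axis that starts at 45
    print(lie_factor(50, 55, baseline=45))
```

In that example the bars are 5 and 10 units tall, so a 10% rise is drawn as a 100% rise, a lie factor of about 10. Starting the axis at zero brings it back to 1.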

Not providing context

Data without context is like a puzzle piece with no picture on it. Without proper context, data may be meaningless or misinterpreted. Explain the background, methodology and significance of the data.

Not citing sources properly

Neglecting to cite the sources of your data can erode its credibility. Always attribute data to its source and rely on reputable sources for your presentation.

Not telling a story

Avoid simply presenting numbers. If your presentation lacks a clear, engaging story that takes your audience on a journey from the beginning (setting the scene) through the middle (data analysis) to the end (the big insights and recommendations), you’re likely to lose their interest.

Infographics are great for storytelling because they mix cool visuals with short and sweet text to explain complicated stuff in a fun and easy way. Create one with Venngage’s free infographic maker to tell a story your audience will remember.

Ignoring data quality

Presenting data without first checking its quality and accuracy can lead to misinformation. Validate and clean your data before presenting it.
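A basic validation pass catches a lot before anything reaches a slide. This Python sketch drops exact duplicate rows, rows with missing required fields and numeric values outside a plausible range, and counts each problem so you can say what was removed. The field names, the range and the function name `clean_records` are assumptions; real data will need checks specific to how it was collected.

```python
from typing import Any


def clean_records(records: list[dict[str, Any]], required: list[str],
                  numeric_field: str, low: float, high: float):
    """Return (clean_rows, issue_counts); assumes string keys and hashable values."""
    issues = {"duplicate": 0, "missing": 0, "out_of_range": 0}
    seen: set = set()
    clean = []
    fields = list(required) + [numeric_field]
    for rec in records:
        key = tuple(sorted(rec.items()))  # identical rows produce identical keys
        if key in seen:
            issues["duplicate"] += 1
            continue
        seen.add(key)
        if any(rec.get(f) in (None, "") for f in fields):
            issues["missing"] += 1
            continue
        if not low <= rec[numeric_field] <= high:
            issues["out_of_range"] += 1
            continue
        clean.append(rec)
    return clean, issues


if __name__ == "__main__":
    rows = [
        {"region": "North", "sales": 100},
        {"region": "North", "sales": 100},  # exact duplicate
        {"region": "", "sales": 80},        # missing region
        {"region": "East", "sales": -5},    # implausible negative sales
        {"region": "West", "sales": 120},
    ]
    kept, report = clean_records(rows, ["region"], "sales", 0, 10_000)
    print(report)
```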

Simplify your visuals

Fancy charts might look cool, but if they confuse people, what’s the point? Go for the simplest visual that gets your message across. Torn between presenting data with infographics vs. data design? This article on the difference between data design and infographics might help you out.

Missing the emotional connection

Data isn’t just about numbers; it’s about people and real-life situations. Don’t forget to sprinkle in some human touch, whether it’s through relatable stories, examples or showing how the data impacts real lives.

Skipping the actionable insights

At the end of the day, your audience wants to know what they should do with all the data. If you don’t wrap up with clear, actionable insights or recommendations, you’re leaving them hanging. Always finish up with practical takeaways and the next steps.

FAQs on data presentation examples

Can you provide some data presentation examples for business reports?

Business reports often benefit from data presentation through bar charts showing sales trends over time, pie charts displaying market share, or tables presenting financial performance metrics like revenue and profit margins.

What are some creative data presentation examples for academic presentations?

Creative data presentation ideas for academic presentations include using statistical infographics to illustrate research findings and statistical data, incorporating storytelling techniques to engage the audience or utilizing heat maps to visualize data patterns.

What are the key considerations when choosing the right data presentation format?

When choosing a chart format, consider factors like data complexity, audience expertise and the message you want to convey. Options include charts (e.g., bar, line, pie), tables, heat maps, data visualization infographics and interactive dashboards.

Knowing the type of data visualization that best serves your data is just half the battle. Here are some best practices for data visualization to make sure that the final output is optimized.

How can I choose the right data presentation method for my data?

To select the right data presentation method, start by defining your presentation’s purpose and audience. Then, match your data type (e.g., quantitative, qualitative) with suitable visualization techniques (e.g., histograms, word clouds) and choose an appropriate presentation format (e.g., slide deck, report, live demo).

For more presentation ideas, check out this guide on how to make a good presentation or use presentation software to simplify the process.

How can I make my data presentations more engaging and informative?

To enhance data presentations, use compelling narratives, relatable examples and fun data infographics that simplify complex data. Encourage audience interaction, offer actionable insights and incorporate storytelling elements to engage and inform effectively.

The opening of your presentation holds immense power in setting the stage for your audience. To design a presentation that conveys your data in an engaging and informative way, try out Venngage’s free presentation maker to pick the right presentation design for your audience and topic.

What is the difference between data visualization and data presentation?

Data presentation typically involves conveying data reports and insights to an audience, often using visuals like charts and graphs. Data visualization, on the other hand, focuses on creating those visual representations of data to facilitate understanding and analysis.

Transform your message with impactful data storytelling

Now that you’ve learned a thing or two about how to use these methods of data presentation to tell a compelling data story, it’s time to take these strategies and make them your own.

But here’s the deal: these aren’t just one-size-fits-all solutions. Remember that each example we’ve uncovered here is not a rigid template but a source of inspiration. It’s all about making your audience go, “Wow, I get it now!”

Think of your data presentations as your canvas – it’s where you paint your story, convey meaningful insights and make real change happen.

So, go forth, present your data with confidence and purpose and watch as your strategic influence grows, one compelling presentation at a time.


Data Analysis and Presentation Skills: the PwC Approach Specialization

Make Smarter Business Decisions With Data Analysis. Understand data, apply data analytics tools and create effective business intelligence presentations.


Instructor: Alex Mannella


Specialization - 5 course series


Skills you'll gain

- Data Analysis

- Microsoft Excel

- Data Visualization

- Presentation


Advance your subject-matter expertise

- Learn in-demand skills from university and industry experts

- Master a subject or tool with hands-on projects

- Develop a deep understanding of key concepts

- Earn a career certificate from PwC


If you are a PwC Employee, gain access to the PwC Specialization and Courses for free using the instructions on Vantage.

This Specialization will help you get practical with data analysis, turning business intelligence into real-world outcomes. We'll explore how a combination of better understanding, filtering, and application of data can help you solve problems faster - leading to smarter and more effective decision-making. You’ll learn how to use Microsoft Excel, PowerPoint, and other common data analysis and communication tools, and perhaps most importantly, we'll help you to present data to others in a way that gets them engaged in your story and motivated to act.

Please note: If you'd like to audit the courses in this Specialization, you'll need to enroll in each course separately and then you will see the audit option.

This specialization was created by PricewaterhouseCoopers LLP with an address at 300 Madison Avenue, New York, New York, 10017.

Applied Learning Project

This specialization will include a project at the end of each module and a capstone project at the end of the specialization. Each project will provide you the chance to apply the skills of that lesson. In the first module you'll plan an analysis approach, in the second and third modules you will analyze sets of data using the Excel skills you learn. In the fourth module you will prepare a business presentation.

In the final Capstone Project, you'll apply the skills you’ve learned by working through a mock client business problem. You'll analyze a set of data, looking for the business insights. Then you'll create and visualize your findings, before recording a video to present your recommendations to the client.

Data-driven Decision Making


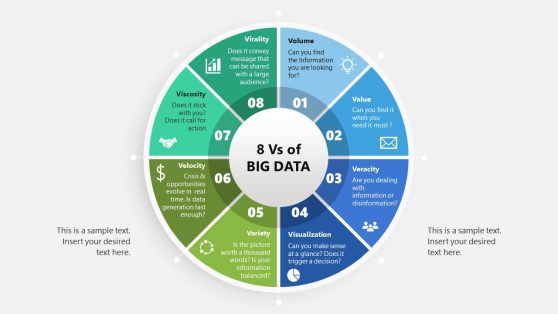

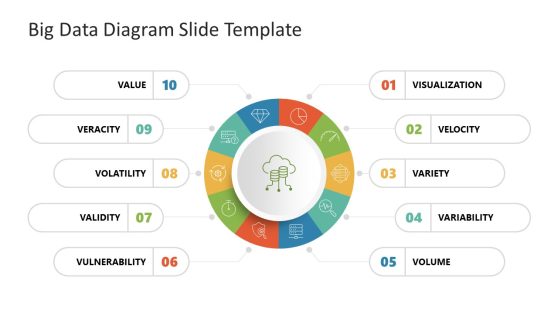

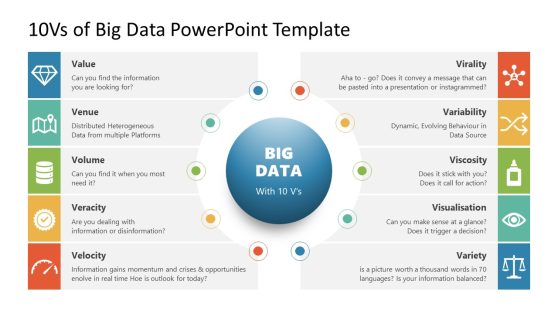

Welcome to Data-driven Decision Making. In this course, you'll get an introduction to Data Analytics and its role in business decisions. You'll learn why data is important and how it has evolved. You'll be introduced to “Big Data” and how it is used. You'll also be introduced to a framework for conducting Data Analysis and what tools and techniques are commonly used. Finally, you'll have a chance to put your knowledge to work in a simulated business setting.


Problem Solving with Excel

This course explores Excel as a tool for solving business problems. In this course you will learn the basic functions of Excel through guided demonstrations. Each week you will build on your Excel skills and be given an opportunity to practice what you’ve learned. Finally, you will have a chance to put your knowledge to work in a final project. Please note, the content in this course was developed using a Windows version of Excel 2013.

Data Visualization with Advanced Excel

In this course, you will get hands-on instruction in advanced Excel 2013 functions. You’ll learn to use PowerPivot to build databases and data models. We’ll show you how to perform different types of scenario and simulation analysis, and you’ll have an opportunity to practice these skills by leveraging some of Excel’s built-in tools, including Solver, data tables, Scenario Manager and Goal Seek. In the second half of the course, we will cover how to visualize data, tell a story and explore data by reviewing core principles of data visualization and dashboarding. You’ll use Excel to build complex graphs and Power View reports and then start to combine them into dynamic dashboards.

Note: Learners will need PowerPivot to complete some of the exercises. Please use MS Excel 2013. If you have another version of MS Excel or a Mac, you might not be able to complete all assignments.

Effective Business Presentations with Powerpoint

This course is all about presenting the story of the data, using PowerPoint. You'll learn how to structure a presentation, to include insights and supporting data. You'll also learn some design principles for effective visuals and slides. You'll gain skills for client-facing communication - including public speaking, executive presence and compelling storytelling. Finally, you'll be given a client profile, a business problem, and a set of basic Excel charts, which you'll need to turn into a presentation - which you'll deliver with iterative peer feedback.

Data Analysis and Presentation Skills: the PwC Approach Final Project

In this Capstone Project, you'll bring together all the new skills and insights you've learned through the four courses. You'll be given a 'mock' client problem and a data set. You'll need to analyze the data to gain business insights, research the client's domain area, and create recommendations. You'll then need to visualize the data in a client-facing presentation. You'll bring it all together in a recorded video presentation.

With offices in 157 countries and more than 208,000 people, PwC is among the leading professional services networks in the world. Our purpose is to build trust in society and solve important problems. We help organisations and individuals create the value they’re looking for, by delivering quality in assurance, tax and advisory services.


Frequently asked questions

How long does it take to complete the Specialization?

Exactly how long it takes will vary, depending on your schedule. Most learners complete the Specialization in five to six months.

What background knowledge is necessary?

You don't need any background knowledge. We've designed this Specialization for learners who are new to the field of data and analytics.

Do I need to take the courses in a specific order?

We recommend you take them in the order they appear on Coursera. Each course builds on the knowledge you learned in the last one.

Will I earn university credit for completing the Specialization?

Coursera courses and certificates don't carry university credit, though some universities may choose to accept Specialization Certificates for credit. You should check with your institution to find out more.

What will I be able to do upon completing the Specialization?

You'll be able to use the data and analytics framework to develop a plan to solve a business problem. You'll be able to use Excel to analyze data using formulas and present a series of visualizations with a summary recommendation to solve the business problem. You'll also be able to take data and create a dynamic data dashboard in Excel that accepts inputs and refreshes with new data. Finally, you'll be able to develop and deliver a presentation using PowerPoint and the results of your data analysis - so you can share your point of view on how to solve the business problem.


What tools do I need for this Specialization?

In the "Data Visualization with Advanced Excel" course, learners will need PowerPivot to complete some of the exercises. Please use MS Excel 2013. If you have another version of MS Excel or a Mac, you might not be able to complete all assignments.

Is this course really 100% online? Do I need to attend any classes in person?

This course is completely online, so there’s no need to show up to a classroom in person. You can access your lectures, readings and assignments anytime and anywhere via the web or your mobile device.

What is the refund policy?

If you subscribed, you get a 7-day free trial during which you can cancel at no penalty. After that, we don’t give refunds, but you can cancel your subscription at any time. See our full refund policy.

Can I just enroll in a single course?

Yes! To get started, click the course card that interests you and enroll. You can enroll and complete the course to earn a shareable certificate, or you can audit it to view the course materials for free. When you subscribe to a course that is part of a Specialization, you’re automatically subscribed to the full Specialization. Visit your learner dashboard to track your progress.

Is financial aid available?

Yes. In select learning programs, you can apply for financial aid or a scholarship if you can’t afford the enrollment fee. If financial aid or a scholarship is available for your learning program selection, you’ll find a link to apply on the description page.

Can I take the course for free?

When you enroll in the course, you get access to all of the courses in the Specialization, and you earn a certificate when you complete the work. If you only want to read and view the course content, you can audit the course for free. If you cannot afford the fee, you can apply for financial aid.


Your Modern Business Guide To Data Analysis Methods And Techniques

Table of Contents

1) What Is Data Analysis?

2) Why Is Data Analysis Important?

3) What Is The Data Analysis Process?

4) Types Of Data Analysis Methods

5) Top Data Analysis Techniques To Apply

6) Quality Criteria For Data Analysis

7) Data Analysis Limitations & Barriers

8) Data Analysis Skills

9) Data Analysis In The Big Data Environment

In our data-rich age, understanding how to analyze and extract true meaning from our business’s digital insights is one of the primary drivers of success.

Despite the colossal volume of data we create every day, a mere 0.5% is actually analyzed and used for data discovery, improvement, and intelligence. While that may not seem like much, considering the amount of digital information we have at our fingertips, half a percent still accounts for a vast amount of data.

With so much data and so little time, knowing how to collect, curate, organize, and make sense of all of this potentially business-boosting information can be a minefield – but online data analysis is the solution.

In science, data analysis uses a more complex approach with advanced techniques to explore and experiment with data. On the other hand, in a business context, data is used to make data-driven decisions that will enable the company to improve its overall performance. In this post, we will cover the analysis of data from an organizational point of view while still going through the scientific and statistical foundations that are fundamental to understanding the basics of data analysis.

To put all of that into perspective, we will answer a host of important analytical questions, explore analytical methods and techniques, and demonstrate how to perform analysis in the real world with a 17-step blueprint for success.

What Is Data Analysis?

Data analysis is the process of collecting, modeling, and analyzing data using various statistical and logical methods and techniques. Businesses rely on analytics processes and tools to extract insights that support strategic and operational decision-making.

All these various methods are largely based on two core areas: quantitative and qualitative research.


Gaining a better understanding of the different techniques and methods in quantitative research, as well as of qualitative insights, will give your analysis a more clearly defined direction, so it’s worth taking the time to let this knowledge sink in. It will also help you create a comprehensive analytical report that takes your analysis further.

Apart from the qualitative and quantitative categories, there are other types of data that you should be aware of before diving into complex data analysis processes. These categories include:

- Big data: Refers to massive data sets that need to be analyzed using advanced software to reveal patterns and trends. It is considered to be one of the best analytical assets as it provides larger volumes of data at a faster rate.

- Metadata: Put simply, metadata is data that provides information about other data. It summarizes key information about specific data, making it easier to find and reuse later.

- Real-time data: As its name suggests, real-time data is presented as soon as it is acquired. From an organizational perspective, this is the most valuable data, as it can help you make important decisions based on the latest developments. Our guide on real-time analytics will tell you more about the topic.

- Machine data: This is more complex data generated solely by machines, such as phones, computers, websites, and embedded systems, without prior human interaction.

Why Is Data Analysis Important?

Before we go into detail about the categories of analysis along with its methods and techniques, you must understand the potential that analyzing data can bring to your organization.

- Informed decision-making: From a management perspective, analyzing your data helps you make decisions based on facts rather than intuition alone. For instance, you can understand where to invest your capital, detect growth opportunities, predict your income, or tackle uncommon situations before they become problems. This way, you can extract relevant insights from all areas of your organization and, with the help of dashboard software, present the data in a professional and interactive way to different stakeholders.

- Reduce costs: Another great benefit is cost reduction. With the help of advanced technologies such as predictive analytics, businesses can spot improvement opportunities, trends, and patterns in their data and plan their strategies accordingly. Over time, this will help you avoid wasting money and resources on the wrong strategies. Moreover, by predicting scenarios such as sales and demand, you can also anticipate production and supply needs.

- Target customers better: Customers are arguably the most crucial element in any business. By using analytics to get a 360° view of all aspects related to your customers, you can understand which channels they use to communicate with you, their demographics, interests, habits, purchasing behaviors, and more. In the long run, this will make your marketing strategies more successful, allow you to identify new potential customers, and help you avoid wasting resources on targeting the wrong people or sending the wrong message. You can also track customer satisfaction by analyzing your clients’ reviews or your customer service department’s performance.

What Is The Data Analysis Process?

When we talk about analyzing data, there is an order to follow to reach the needed conclusions. The analysis process consists of five key stages. We will cover each of them in more detail later in the post, but to provide some context for what comes next, here is a rundown of the five essential steps of data analysis.

- Identify: Before you get your hands dirty with data, you first need to identify why you need it in the first place. The identification is the stage in which you establish the questions you will need to answer. For example, what is the customer's perception of our brand? Or what type of packaging is more engaging to our potential customers? Once the questions are outlined you are ready for the next step.

- Collect: As its name suggests, this is the stage where you start collecting the needed data. Here, you define which sources of data you will use and how you will use them. The collection of data can come in different forms such as internal or external sources, surveys, interviews, questionnaires, and focus groups, among others. An important note here is that the way you collect the data will be different in a quantitative and qualitative scenario.

- Clean: Once you have the necessary data, it is time to clean it and get it ready for analysis. Not all the data you collect will be useful; when you collect large amounts of data in different formats, you will very likely end up with duplicate or badly formatted records. Before you start working with your data, make sure to remove extra white space, duplicate records, and formatting errors. This way, you avoid undermining your analysis with bad-quality data.

- Analyze: With the help of various techniques such as statistical analysis, regressions, neural networks, text analysis, and more, you can start analyzing and manipulating your data to extract relevant conclusions. At this stage, you find trends, correlations, variations, and patterns that can help you answer the questions you first defined in the identify stage. Various technologies on the market help researchers and everyday users manage their data, including business intelligence and visualization software, predictive analytics, and data mining tools.

- Interpret: Last but not least comes one of the most important steps: interpreting your results. This is the stage where the researcher comes up with courses of action based on the findings. For example, here you would learn whether your clients prefer packaging that is red or green, plastic or paper, etc. At this stage, you can also identify limitations in your analysis and work on them.
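The clean step described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline. It assumes each record is a dictionary of string fields, and the field names (`name`, `email`) and sample records are hypothetical. The function collapses stray white space, lowercases emails, and drops records that become exact duplicates after that normalization.

```python
def clean_records(records):
    """Trim white space, normalize email case, and drop duplicate records."""
    seen = set()
    cleaned = []
    for record in records:
        # Strip leading/trailing white space and collapse internal runs of spaces.
        normalized = {key: " ".join(str(value).split()) for key, value in record.items()}
        # Emails are usually treated as case-insensitive, so compare them in lowercase.
        if "email" in normalized:
            normalized["email"] = normalized["email"].lower()
        # A sorted tuple of items serves as a hashable fingerprint of the record.
        fingerprint = tuple(sorted(normalized.items()))
        if fingerprint in seen:
            continue  # skip records that are exact duplicates after normalization
        seen.add(fingerprint)
        cleaned.append(normalized)
    return cleaned


# Hypothetical raw records with stray spaces, mixed case, and a hidden duplicate.
raw = [
    {"name": "  Ada Lovelace ", "email": "ADA@example.com"},
    {"name": "Ada   Lovelace", "email": "ada@example.com "},
    {"name": "Alan Turing", "email": "alan@example.com"},
]
print(clean_records(raw))
```

Normalizing before deduplicating matters here. The first two records only look different because of spacing and case.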

Now that you have a basic understanding of the key data analysis steps, let’s look at the top 17 essential methods.

17 Essential Types Of Data Analysis Methods

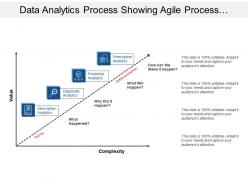

Before diving into the 17 essential types of methods, let’s quickly go over the main analysis categories. Moving from descriptive to prescriptive analysis, the complexity and effort of data evaluation increase, and so does the added value for the company.

a) Descriptive analysis - What happened.

The descriptive analysis method is the starting point for any analytic reflection, and it aims to answer the question: what happened? It does this by ordering, manipulating, and interpreting raw data from various sources to turn it into valuable insights for your organization.

Performing descriptive analysis is essential, as it enables us to present our insights in a meaningful way. It is worth mentioning that this analysis on its own will not allow you to predict future outcomes or explain why something happened, but it will leave your data organized and ready for further investigation.
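As a small illustration of descriptive analysis, the sketch below summarizes one week of orders using Python's standard `statistics` module. The order counts are invented for the example. The output describes what happened, but it does not explain or predict anything.

```python
import statistics

# Hypothetical daily order counts for one week (illustrative data only).
daily_orders = [120, 135, 128, 150, 90, 160, 142]

summary = {
    "count": len(daily_orders),
    "total": sum(daily_orders),
    "mean": statistics.mean(daily_orders),
    "median": statistics.median(daily_orders),
    "stdev": statistics.stdev(daily_orders),  # sample standard deviation
    "min": min(daily_orders),
    "max": max(daily_orders),
}

for name, value in summary.items():
    print(f"{name}: {round(value, 2)}")
```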

b) Exploratory analysis - How to explore data relationships.

As its name suggests, the main aim of exploratory analysis is to explore. Before it is carried out, there is still no notion of the relationship between the data and the variables. Once the data is investigated, exploratory analysis helps you find connections and generate hypotheses and solutions for specific problems. A typical area of application is data mining.
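One common exploratory technique is to scan every pair of variables for correlation. Strong values are then treated as hypotheses to investigate, not as conclusions. The sketch below computes Pearson's r by hand to stay dependency-free, and the metric names and values are hypothetical. A high r only says that two variables move together. It does not say that one causes the other.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists (neither may be constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical weekly metrics; the variable names are illustrative only.
data = {
    "ad_spend": [10, 12, 15, 11, 18, 20],
    "site_visits": [200, 230, 290, 210, 340, 380],
    "returns": [5, 7, 4, 6, 5, 6],
}

# Scan every pair of variables to surface candidate relationships worth a hypothesis.
for a, b in combinations(data, 2):
    print(f"{a} vs {b}: r = {pearson(data[a], data[b]):+.2f}")
```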

c) Diagnostic analysis - Why it happened.

Diagnostic data analytics empowers analysts and executives by helping them gain a firm contextual understanding of why something happened. If you know why something happened as well as how it happened, you will be able to pinpoint the exact ways of tackling the issue or challenge.

Designed to provide direct and actionable answers to specific questions, diagnostic analysis is one of the most important methods in research, and it also supports key organizational functions such as retail analytics.

d) Predictive analysis - What will happen.

The predictive method allows you to look into the future to answer the question: what will happen? To do this, it uses the results of the previously mentioned descriptive, exploratory, and diagnostic analyses, in addition to machine learning (ML) and artificial intelligence (AI). Through this, you can uncover future trends, potential problems or inefficiencies, connections, and causal relationships in your data.

With predictive analysis, you can develop initiatives that will not only enhance your various operational processes but also help you gain an all-important edge over the competition. If your data tells you why a trend, pattern, or event happened, you will be able to develop an informed projection of how things may unfold in particular areas of the business.

e) Prescriptive analysis - How will it happen.

Prescriptive analysis is another of the most effective types of analysis methods in research. Prescriptive data techniques cross over from predictive analysis in that they revolve around using patterns or trends to develop responsive, practical business strategies.

By drilling down into prescriptive analysis, you will play an active role in the data consumption process by taking well-arranged sets of visual data and using them as a powerful fix for emerging issues in a number of key areas, including marketing, sales, customer experience, HR, fulfillment, finance, logistics analytics, and others.

As mentioned at the beginning of the post, data analysis methods can be divided into two big categories: quantitative and qualitative. Each of these categories holds a powerful analytical value that changes depending on the scenario and type of data you are working with. Below, we will discuss 17 methods that are divided into qualitative and quantitative approaches.

Without further ado, here are the 17 essential types of data analysis methods with some use cases in the business world:

A. Quantitative Methods

To put it simply, quantitative analysis refers to all methods that use numerical data or data that can be turned into numbers (e.g., categorical variables like gender, age group, etc.) to extract valuable insights. It is used to draw conclusions about relationships and differences and to test hypotheses. Below we discuss some of the key quantitative methods.

1. Cluster analysis

Cluster analysis is the action of grouping a set of data elements so that elements in the same group are more similar (in a particular sense) to each other than to those in other groups, hence the term ‘cluster.’ Since there is no target variable when clustering, the method is often used to find hidden patterns in the data. The approach is also used to provide additional context to a trend or dataset.

Let's look at it from an organizational perspective. In a perfect world, marketers would be able to analyze each customer separately and give them the best personalized service, but let's face it: with a large customer base, doing that in a timely manner is practically impossible. That's where clustering comes in. By grouping customers into clusters based on demographics, purchasing behaviors, monetary value, or any other factor that might be relevant for your company, you will be able to optimize your efforts right away and give your customers the best experience based on their needs.
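The minimal sketch below makes the idea concrete with k-means. K-means is one of several clustering algorithms, and the text does not prescribe a specific one. The customer features and values are hypothetical, and the first k points serve as starting centroids so the result is reproducible. Real projects usually standardize features first, because k-means relies on raw Euclidean distance. They also tend to use smarter initialization, such as k-means++.

```python
from math import dist


def k_means(points, k, iterations=100):
    """Plain k-means: alternate nearest-centroid assignment and centroid updates."""
    # Deterministic start for reproducibility: the first k points become centroids.
    centroids = [tuple(p) for p in points[:k]]
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its closest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # assignments are stable, so the algorithm has converged
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customers: (orders per year, average order value in $).
customers = [(2, 15), (3, 18), (2, 20), (25, 60), (30, 55), (28, 65)]
centroids, clusters = k_means(customers, k=2)
for centroid, members in zip(centroids, clusters):
    print(f"centroid {tuple(round(c, 1) for c in centroid)}: {members}")
```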

2. Cohort analysis

This type of data analysis approach uses historical data to examine and compare the behavior of a defined segment of users, which can then be grouped with others that share similar characteristics. By using this methodology, it's possible to gain a wealth of insight into consumer needs or a firm understanding of a broader target group.

Cohort analysis can be really useful for performing analysis in marketing as it will allow you to understand the impact of your campaigns on specific groups of customers. To exemplify, imagine you send an email campaign encouraging customers to sign up for your site. For this, you create two versions of the campaign with different designs, CTAs, and ad content. Later on, you can use cohort analysis to track the performance of the campaign for a longer period of time and understand which type of content is driving your customers to sign up, repurchase, or engage in other ways.

Google Analytics is a useful tool for getting started with cohort analysis. You can learn more about the benefits and limitations of using cohorts in GA in this useful guide. In the image below, you can see an example of how a cohort is visualized in this tool. The segments (device traffic) are divided into date cohorts (device usage) and then analyzed week by week to extract insights into performance.
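Independently of any particular tool, a basic retention cohort table can be computed as shown below. Users are grouped by signup week, and each cell shows the share of a cohort that is active N weeks later. This is a sketch under assumptions. The `(user_id, signup_week, week_active)` event format and the sample log are hypothetical; they are not a Google Analytics export.

```python
from collections import defaultdict

# Hypothetical activity log: (user_id, signup_week, week_active).
events = [
    ("u1", 1, 1), ("u1", 1, 2), ("u1", 1, 3),
    ("u2", 1, 1), ("u2", 1, 3),
    ("u3", 2, 2), ("u3", 2, 3),
    ("u4", 2, 2),
]


def retention_table(events):
    """Share of each signup cohort that is active N weeks after signing up."""
    cohort_users = defaultdict(set)  # signup week -> users in that cohort
    active = defaultdict(set)        # (signup week, weeks since signup) -> active users
    for user, signup_week, week_active in events:
        cohort_users[signup_week].add(user)
        active[(signup_week, week_active - signup_week)].add(user)
    table = {}
    for cohort, users in sorted(cohort_users.items()):
        offsets = sorted(offset for (c, offset) in active if c == cohort)
        table[cohort] = {offset: len(active[(cohort, offset)]) / len(users) for offset in offsets}
    return table


for cohort, row in retention_table(events).items():
    print(f"week {cohort} cohort:", {k: f"{v:.0%}" for k, v in row.items()})
```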

3. Regression analysis

Regression uses historical data to understand how a dependent variable's value is affected when one (simple linear regression) or more (multiple regression) independent variables change or stay the same. By understanding each variable's relationship and how it developed in the past, you can anticipate possible outcomes and make better decisions in the future.

Let's illustrate this with an example. Imagine you did a regression analysis of your sales in 2019 and discovered that variables like product quality, store design, customer service, marketing campaigns, and sales channels affected the overall result. Now you want to use regression to analyze which of these variables changed, or whether any new ones appeared, during 2020. For example, you couldn’t sell as much in your physical store due to COVID lockdowns. Therefore, your sales could have either dropped in general or increased in your online channels. Through this, you can understand which independent variables affected the overall performance of your dependent variable, annual sales.
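The regression example above involves several independent variables. The simplest case has only one independent variable (simple linear regression), and it fits in a few lines using ordinary least squares. The spend and sales figures below are hypothetical. With several variables, as in the 2019/2020 example, you would normally use a statistics library rather than hand-rolled formulas.

```python
def fit_simple_regression(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = sum of cross-deviations / sum of squared x deviations.
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x  # the fitted line passes through the means
    return intercept, slope


# Hypothetical data: marketing spend vs. monthly sales, both in $1,000s.
spend = [1, 2, 3, 4, 5]
sales = [12, 14, 17, 18, 21]
intercept, slope = fit_simple_regression(spend, sales)
print(f"sales ~ {intercept:.1f} + {slope:.1f} * spend")
print("forecast at spend = 6:", round(intercept + slope * 6, 1))
```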

If you want to go deeper into this type of analysis, check out this article and learn more about how you can benefit from regression.

4. Neural networks

Neural networks form the basis for many of the intelligent algorithms of machine learning. They are a form of analytics that attempts, with minimal intervention, to mimic how the human brain generates insights and predicts values. Neural networks learn from the data they are trained on, which means they can evolve and improve over time as more data becomes available.

A typical area of application for neural networks is predictive analytics. There are BI reporting tools that have this feature implemented within them, such as the Predictive Analytics Tool from datapine. This tool enables users to quickly and easily generate all kinds of predictions. All you have to do is select the data to be processed based on your KPIs, and the software automatically calculates forecasts based on historical and current data. Thanks to its user-friendly interface, anyone in your organization can manage it; there’s no need to be an advanced data scientist.

Here is an example of how you can use the predictive analysis tool from datapine:


5. Factor analysis

Factor analysis, a form of “dimension reduction,” is a type of data analysis used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. The aim here is to uncover independent latent variables, which makes it an ideal method for streamlining specific segments.

A good way to understand this data analysis method is through a customer evaluation of a product. The initial assessment is based on different variables like color, shape, wearability, current trends, materials, comfort, the place where the product was bought, and frequency of usage. The list can go on, depending on what you want to track. In this case, factor analysis comes into the picture by summarizing all of these variables into homogeneous groups, for example, by grouping the variables color, materials, quality, and trends into a broader latent variable of design.

If you want to start analyzing data using factor analysis we recommend you take a look at this practical guide from UCLA.

6. Data mining

Data mining is an umbrella term for methods of engineering metrics and insights for additional value, direction, and context. By using exploratory statistical evaluation, data mining aims to identify dependencies, relations, patterns, and trends to generate advanced knowledge. When considering how to analyze data, adopting a data mining mindset is essential to success; as such, it’s an area that is worth exploring in greater detail.

An excellent use case of data mining is datapine's intelligent data alerts. With the help of artificial intelligence and machine learning, they provide automated signals based on particular commands or occurrences within a dataset. For example, if you’re monitoring supply chain KPIs, you could set an intelligent alarm to trigger when invalid or low-quality data appears. By doing so, you will be able to drill down into the issue and fix it swiftly and effectively.

In the following picture, you can see how the intelligent alarms from datapine work. By setting up ranges on daily orders, sessions, and revenues, the alarms will notify you if the goal was not met or if it exceeded expectations.
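At their core, the range-based alarms described above check each metric against a lower and an upper bound. The sketch below is not datapine's implementation, which the text says uses AI and machine learning. It illustrates only the fixed-range logic, and the `AlertRule` class, metric names, and thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    """Hypothetical range rule: flag values below `low` or above `high`."""
    metric: str
    low: float
    high: float


def check_alerts(rules, todays_values):
    """Return a readable message for every metric outside its expected range."""
    alerts = []
    for rule in rules:
        value = todays_values.get(rule.metric)
        if value is None:
            alerts.append(f"{rule.metric}: no data received")  # missing data is also a signal
        elif value < rule.low:
            alerts.append(f"{rule.metric}: {value} is below the target range ({rule.low})")
        elif value > rule.high:
            alerts.append(f"{rule.metric}: {value} exceeds expectations ({rule.high})")
    return alerts


# Hypothetical ranges for the three metrics named in the text.
rules = [
    AlertRule("daily_orders", low=100, high=300),
    AlertRule("sessions", low=2_000, high=10_000),
    AlertRule("revenue", low=5_000, high=20_000),
]
today = {"daily_orders": 85, "sessions": 4_500, "revenue": 24_000}
for message in check_alerts(rules, today):
    print(message)
```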

7. Time series analysis

As its name suggests, time series analysis is used to analyze a set of data points collected over a specified period of time. Analysts use this method to monitor data points at regular intervals rather than just intermittently, but time series analysis is not used solely for collecting data over time. Instead, it allows researchers to understand whether variables changed during the study, how the different variables depend on each other, and how the end result was reached.

In a business context, this method is used to understand the causes of different trends and patterns to extract valuable insights. Another way of using this method is with the help of time series forecasting. Powered by predictive technologies, businesses can analyze various data sets over a period of time and forecast different future events.

A great use case to put time series analysis into perspective is seasonality effects on sales. By using time series forecasting to analyze sales data of a specific product over time, you can understand if sales rise over a specific period of time (e.g. swimwear during summertime, or candy during Halloween). These insights allow you to predict demand and prepare production accordingly.
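The seasonality idea can be sketched with a simple seasonal index. You average each quarter across years and divide by the overall average, so values above 1 mark quarters that usually sell more than a typical quarter. This is the basic simple-average method. It ignores trend, which dedicated forecasting models handle. The swimwear figures are hypothetical.

```python
from statistics import mean

# Hypothetical quarterly swimwear sales (units) for three years, Q1..Q4 each year.
sales = [
    40, 120, 200, 60,   # year 1
    44, 130, 220, 66,   # year 2
    48, 140, 236, 70,   # year 3
]
season_length = 4

overall_mean = mean(sales)
# Seasonal index: each quarter's average across years relative to the overall average.
seasonal_index = [mean(sales[q::season_length]) / overall_mean for q in range(season_length)]
for quarter, index in enumerate(seasonal_index, start=1):
    print(f"Q{quarter}: {index:.2f}")

# Naive forecast for next year: last year's average level scaled by each index.
last_year_level = mean(sales[-season_length:])
forecast = [round(last_year_level * idx) for idx in seasonal_index]
print("next-year forecast:", forecast)
```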

8. Decision Trees

The decision tree analysis aims to act as a support tool to make smart and strategic decisions. By visually displaying potential outcomes, consequences, and costs in a tree-like model, researchers and company users can easily evaluate all factors involved and choose the best course of action. Decision trees are helpful to analyze quantitative data and they allow for an improved decision-making process by helping you spot improvement opportunities, reduce costs, and enhance operational efficiency and production.

But how does a decision tree actually work? This method works like a flowchart that starts with the main decision that you need to make and branches out based on the different outcomes and consequences of each decision. Each outcome will outline its own consequences, costs, and gains and, at the end of the analysis, you can compare each of them and make the smartest decision.

Businesses can use them to understand which project is more cost-effective and will bring more earnings in the long run. For example, imagine you need to decide if you want to update your software app or build a new app entirely. Here you would compare the total costs, the time needed to be invested, potential revenue, and any other factor that might affect your decision. In the end, you would be able to see which of these two options is more realistic and attainable for your company or research.
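A decision tree like the one described above is usually evaluated by rolling back expected values. At each chance node, you weight each outcome's payoff by its probability and then subtract the option's cost. The sketch below applies that approach to the update-versus-rebuild example. All costs, probabilities, and payoffs are invented for illustration.

```python
# Hypothetical decision: update the existing app or build a new one.
# Each option has an upfront cost and a chance node of (probability, payoff) outcomes.
options = {
    "update existing app": {"cost": 50_000, "outcomes": [(0.7, 120_000), (0.3, 60_000)]},
    "build new app": {"cost": 150_000, "outcomes": [(0.5, 300_000), (0.5, 100_000)]},
}


def expected_net_value(option):
    """Roll back one chance node: probability-weighted payoff minus the option's cost."""
    if abs(sum(p for p, _ in option["outcomes"]) - 1) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * payoff for p, payoff in option["outcomes"]) - option["cost"]


for name, option in options.items():
    print(f"{name}: expected net value = {expected_net_value(option):,.0f}")

best = max(options, key=lambda name: expected_net_value(options[name]))
print("choose:", best)
```

With these made-up numbers the two options are close. This is exactly the situation where laying out the tree explicitly helps you see which assumptions drive the decision.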

9. Conjoint analysis

Next, we have conjoint analysis. This approach is usually used in surveys to understand how individuals value different attributes of a product or service, and it is one of the most effective methods to extract consumer preferences. When it comes to purchasing, some clients might be more price-focused, others more features-focused, and others might have a sustainable focus. Whatever your customers' preferences are, you can find them with conjoint analysis. Through this, companies can define pricing strategies, packaging options, subscription packages, and more.

A great example of conjoint analysis is in marketing and sales. For instance, a cupcake brand might use conjoint analysis and find that its clients prefer gluten-free options and cupcakes with healthier toppings over super sugary ones. Thus, the cupcake brand can turn these insights into advertisements and promotions to increase sales of this particular type of product. And not just that, conjoint analysis can also help businesses segment their customers based on their interests. This allows them to send different messaging that will bring value to each of the segments.
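Consider a balanced full-factorial design, where every combination of attribute levels is rated. Under effects coding, the part-worth of each level equals the mean rating of profiles with that level minus the overall mean. The sketch below uses that shortcut on hypothetical cupcake ratings. Real conjoint studies typically use fractional designs and regression or hierarchical Bayes models, so treat this as an illustration of the idea only.

```python
from itertools import product
from statistics import mean

# Hypothetical attributes and levels for a cupcake brand.
attributes = {
    "base": ["regular", "gluten-free"],
    "topping": ["super sugary", "healthier"],
}
# Hypothetical average respondent ratings (1-10) for every profile.
ratings = {
    ("regular", "super sugary"): 4.0,
    ("regular", "healthier"): 6.0,
    ("gluten-free", "super sugary"): 6.5,
    ("gluten-free", "healthier"): 8.5,
}

# The shortcut below is only valid for a complete, balanced design, so check that first.
if set(ratings) != set(product(*attributes.values())):
    raise ValueError("ratings must cover every combination of attribute levels")

grand_mean = mean(ratings.values())
part_worths = {}
for position, (attribute, levels) in enumerate(attributes.items()):
    for level in levels:
        level_mean = mean(r for profile, r in ratings.items() if profile[position] == level)
        part_worths[(attribute, level)] = level_mean - grand_mean

# Relative importance: each attribute's part-worth range as a share of all ranges.
ranges = {
    attribute: max(part_worths[(attribute, lvl)] for lvl in levels)
    - min(part_worths[(attribute, lvl)] for lvl in levels)
    for attribute, levels in attributes.items()
}
importance = {attribute: r / sum(ranges.values()) for attribute, r in ranges.items()}

for (attribute, level), worth in part_worths.items():
    print(f"{attribute} = {level}: {worth:+.2f}")
print({attribute: f"{share:.0%}" for attribute, share in importance.items()})
```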

10. Correspondence Analysis

Also known as reciprocal averaging, correspondence analysis is a method used to analyze the relationship between categorical variables presented within a contingency table. A contingency table is a table that displays two (simple correspondence analysis) or more (multiple correspondence analysis) categorical variables across rows and columns that show the distribution of the data, which usually consists of answers to a survey or questionnaire on a specific topic.

This method starts by calculating an “expected value” for each cell, which is done by multiplying the cell's row total by its column total and dividing the result by the grand total of the table. The “expected value” is then subtracted from the original (observed) value, resulting in a “residual number,” which is what allows you to extract conclusions about relationships and distribution. The results of this analysis are later displayed using a map that represents the relationship between the different values. The closer two values are on the map, the stronger their relationship. Let’s put it into perspective with an example.

Imagine you are carrying out a market research analysis about outdoor clothing brands and how they are perceived by the public. For this analysis, you ask a group of people to match each brand with a certain attribute which can be durability, innovation, quality materials, etc. When calculating the residual numbers, you can see that brand A has a positive residual for innovation but a negative one for durability. This means that brand A is not positioned as a durable brand in the market, something that competitors could take advantage of.
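The residual step described above can be sketched for a brand-by-attribute table of hypothetical counts. Each expected count is the row total times the column total, divided by the grand total. A full correspondence analysis would then decompose the standardized residuals, typically with a singular value decomposition, to draw the map. This sketch does not go that far.

```python
# Hypothetical contingency table: respondents matching each brand to each attribute.
brands = ["Brand A", "Brand B", "Brand C"]
attributes = ["durability", "innovation", "quality materials"]
observed = [
    [10, 40, 20],  # Brand A
    [35, 15, 20],  # Brand B
    [25, 15, 40],  # Brand C
]

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
grand_total = sum(row_totals)

residuals = {}
for i, brand in enumerate(brands):
    for j, attribute in enumerate(attributes):
        # Expected count if brand and attribute were independent.
        expected = row_totals[i] * col_totals[j] / grand_total
        residual = observed[i][j] - expected
        residuals[(brand, attribute)] = residual
        # Standardized residual puts cells with different expected counts on one scale.
        standardized = residual / expected ** 0.5
        print(f"{brand} / {attribute}: residual {residual:+.1f}, standardized {standardized:+.2f}")
```

As in the example above, Brand A ends up with a positive residual for innovation and a negative one for durability.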

11. Multidimensional Scaling (MDS)

MDS is a method used to observe the similarities or disparities between objects, which can be colors, brands, people, geographical coordinates, and more. The objects are plotted on an “MDS map” that positions similar objects together and disparate ones far apart. The (dis)similarities between objects are represented using one or more dimensions that can be observed using a numerical scale. For example, if you want to know how people feel about the COVID-19 vaccine, you can use 1 for “don’t believe in the vaccine at all,” 10 for “firmly believe in the vaccine,” and 2 to 9 for in-between responses. When analyzing an MDS map, the only thing that matters is the distance between the objects; the orientation of the dimensions is arbitrary and has no meaning at all.

Multidimensional scaling is a valuable technique for market research, especially when it comes to evaluating product or brand positioning. For instance, if a cupcake brand wants to know how it is positioned compared to competitors, it can define 2-3 dimensions such as taste, ingredients, or shopping experience, and run a multidimensional scaling analysis to find improvement opportunities as well as areas in which competitors are currently leading.

Another business example is in procurement when deciding on different suppliers. Decision makers can generate an MDS map to see how the suppliers compare on price, delivery time, technical service, and more, and pick the one that best suits their needs.

A final example comes from a research paper titled "An Improved Study of Multilevel Semantic Network Visualization for Analyzing Sentiment Word of Movie Review Data." The researchers used a two-dimensional MDS map to display the distances and relationships between different sentiments in movie reviews. They used 36 sentiment words and distributed them based on their emotional distance, as we can see in the image below, where the words "outraged" and "sweet" are on opposite sides of the map, clearly marking the distance between the two emotions.

Aside from being a valuable technique to analyze dissimilarities, MDS also serves as a dimension-reduction technique for high-dimensional data.
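Classical (Torgerson) MDS is one common variant for computing an MDS map from a distance matrix. It double-centers the squared distances and then takes the leading eigenvectors. The sketch below uses power iteration instead of a linear algebra library to stay dependency-free, and it assumes the distances are (close to) Euclidean. In practice you would rely on a numerical library's eigendecomposition. The sanity check recovers a hypothetical rectangle. As the text notes, the resulting orientation is arbitrary, so only the distances between points are meaningful.

```python
from math import dist, sqrt


def classical_mds(d, dims=2, iterations=500):
    """Classical (Torgerson) MDS: place n items in `dims` dimensions from distances d."""
    n = len(d)
    sq = [[d[i][j] ** 2 for j in range(n)] for i in range(n)]
    means = [sum(row) / n for row in sq]  # row means (equal to column means: sq is symmetric)
    grand = sum(means) / n
    # Double-centering: B = -1/2 * J * D^2 * J, where J is the centering matrix.
    b = [[-0.5 * (sq[i][j] - means[i] - means[j] + grand) for j in range(n)] for i in range(n)]
    coords = [[0.0] * dims for _ in range(n)]
    for k in range(dims):
        # Power iteration: repeated multiplication converges to the dominant eigenvector.
        v = [float(i + 1) for i in range(n)]
        for _ in range(iterations):
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break  # no variance left along any further dimension
            v = [x / norm for x in w]
        bv = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
        eigenvalue = sum(v[i] * bv[i] for i in range(n))
        scale = sqrt(max(eigenvalue, 0.0))  # negative eigenvalues carry no Euclidean length
        for i in range(n):
            coords[i][k] = v[i] * scale
        # Deflation: subtract the found component so the next pass finds the next one.
        b = [[b[i][j] - eigenvalue * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords


# Sanity check with hypothetical positions: recover a 4 x 3 rectangle from its distances.
points = [(0, 0), (4, 0), (0, 3), (4, 3)]
distances = [[dist(p, q) for q in points] for p in points]
coords = classical_mds(distances, dims=2)
for p, c in zip(points, coords):
    print(p, "->", [round(x, 2) for x in c])
```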

B. Qualitative Methods

Qualitative data analysis methods are defined as the observation of non-numerical data that is gathered and produced using methods of observation such as interviews, focus groups, questionnaires, and more. Compared to quantitative data, qualitative data is more subjective and highly valuable in analyzing customer retention and product development.

12. Text analysis

Text analysis, also known in the industry as text mining, works by taking large sets of textual data and arranging them in a way that makes them easier to manage. By working through this cleansing process in stringent detail, you will be able to extract the data that is truly relevant to your organization and use it to develop actionable insights that will propel you forward.

Modern software accelerates the application of text analytics. Thanks to the combination of machine learning and intelligent algorithms, you can perform advanced analytical processes such as sentiment analysis. This technique allows you to understand the intentions and emotions of a text, for example, whether it's positive, negative, or neutral, and then give it a score depending on certain factors and categories that are relevant to your brand. Sentiment analysis is often used to monitor brand and product reputation and to understand how successful your customer experience is. To learn more about the topic, check out this insightful article.
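Sentiment scoring can be illustrated with a simple lexicon-based approach rather than the machine learning models mentioned above. The sketch below is deliberately minimal. The tiny `LEXICON`, the negation rule, and the reviews are hypothetical, and production tools use far larger lexicons or trained models.

```python
import re

# Tiny hypothetical lexicon: word -> sentiment weight.
LEXICON = {"love": 2, "great": 2, "good": 1, "fast": 1,
           "slow": -1, "bad": -1, "broken": -2, "terrible": -2}
NEGATORS = {"not", "never", "no"}


def sentiment(text):
    """Sum lexicon weights, flipping a word's sign when the previous word is a negator."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        value = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATORS:
            value = -value  # "not bad" counts as mildly positive
        score += value
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return score, label


# Hypothetical product reviews.
reviews = [
    "I love this jacket, great fit and fast delivery",
    "The zipper arrived broken and support was slow",
    "Not bad for the price",
    "It arrived on Tuesday",
]
for review in reviews:
    print(sentiment(review), "-", review)
```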

By analyzing data from various word-based sources, including product reviews, articles, social media communications, and survey responses, you will gain invaluable insights into your audience, as well as their needs, preferences, and pain points. This will allow you to create campaigns, services, and communications that meet your prospects’ needs on a personal level, growing your audience while boosting customer retention. There are various other “sub-methods” that are an extension of text analysis. Each of them serves a more specific purpose and we will look at them in detail next.

13. Content Analysis

This is a straightforward and very popular method that examines the presence and frequency of certain words, concepts, and subjects in different content formats such as text, image, audio, or video. For example, the number of times the name of a celebrity is mentioned on social media or online tabloids. It does this by coding text data that is later categorized and tabulated in a way that can provide valuable insights, making it the perfect mix of quantitative and qualitative analysis.

There are two types of content analysis. The first one is the conceptual analysis which focuses on explicit data, for instance, the number of times a concept or word is mentioned in a piece of content. The second one is relational analysis, which focuses on the relationship between different concepts or words and how they are connected within a specific context.
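The two types of content analysis can be sketched side by side. Conceptual analysis counts how often each coded concept appears, while relational analysis counts which concepts appear together. The coding scheme (`CONCEPTS`), the sample reviews, and the choice of a sentence as the unit of co-occurrence are all hypothetical assumptions.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical coding scheme: each concept is detected by a set of keywords.
CONCEPTS = {
    "price": {"price", "cheap", "expensive"},
    "quality": {"quality", "durable", "sturdy"},
    "delivery": {"delivery", "shipping", "arrived"},
}

# Hypothetical customer reviews.
reviews = [
    "Great quality for the price. Shipping was slow.",
    "Too expensive, but very durable.",
    "Arrived late. Sturdy and good quality though.",
]


def code_sentence(sentence):
    """Return the set of concepts whose keywords appear in one sentence."""
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    return {concept for concept, keywords in CONCEPTS.items() if words & keywords}


frequency = Counter()      # conceptual analysis: sentences mentioning each concept
co_occurrence = Counter()  # relational analysis: concept pairs mentioned together
for review in reviews:
    for sentence in re.split(r"[.!?]+", review):
        concepts = code_sentence(sentence)
        frequency.update(concepts)
        co_occurrence.update(combinations(sorted(concepts), 2))

print("concept frequency:", dict(frequency))
print("co-occurrence:", dict(co_occurrence))
```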

Content analysis is often used by marketers to measure brand reputation and customer behavior, for example, by analyzing customer reviews. It can also be used to analyze customer interviews and find directions for new product development. It is also important to note that, to extract the maximum potential out of this analysis method, you need a clearly defined research question.

14. Thematic Analysis

Very similar to content analysis, thematic analysis also helps in identifying and interpreting patterns in qualitative data, the main difference being that content analysis can also be applied to quantitative analysis. The thematic method analyzes large pieces of text data, such as focus group transcripts or interviews, and groups them into themes or categories that come up frequently within the text. It is a great method when trying to figure out people’s views and opinions about a certain topic. For example, if you are a brand that cares about sustainability, you can survey your customers to analyze their views and opinions about sustainability and how they apply it to their lives. You can also analyze customer service call transcripts to find common issues and improve your service.

Thematic analysis is a very subjective technique that relies on the researcher’s judgment. Therefore, to reduce bias, it follows six steps: familiarization, coding, generating themes, reviewing themes, defining and naming themes, and writing up. It is also important to note that, because it is a flexible approach, the data can be interpreted in multiple ways, and it can be hard to decide which data is most important to emphasize.

15. Narrative Analysis

Somewhat more complex than the two previous methods, narrative analysis is used to explore the meaning behind the stories people tell and, most importantly, how they tell them. By looking at the words people use to describe a situation, you can draw valuable conclusions about their perspective on a specific topic. Common sources of narrative data include autobiographies, family stories, opinion pieces, and testimonials, among others.

From a business perspective, narrative analysis can be useful for analyzing customers’ behaviors and feelings toward a specific product, service, or feature. It provides unique, deep insights that can be extremely valuable. However, it has some drawbacks.

The biggest weakness of this method is that the sample sizes are usually very small due to the complexity and time-consuming nature of the collection of narrative data. Plus, the way a subject tells a story will be significantly influenced by his or her specific experiences, making it very hard to replicate in a subsequent study.

16. Discourse Analysis

Discourse analysis is used to understand the meaning behind any type of written, verbal, or symbolic discourse based on its political, social, or cultural context. It combines the analysis of language with the analysis of the situation in which that language is used. This means that the way the content is constructed, and the meaning behind it, are significantly influenced by the culture and society in which it takes place. For example, if you are analyzing political speeches, you need to consider contextual elements such as the politician’s background, the current political climate of the country, the audience the speech is directed at, and so on.

From a business point of view, discourse analysis is a great market research tool. It allows marketers to understand how the norms and ideas of a specific market work and how their customers relate to those ideas. It can be very useful for building a brand mission or developing a unique tone of voice.

17. Grounded Theory Analysis

Traditionally, researchers decide on a method and hypothesis and then collect data to test that hypothesis. Grounded theory stands out because it doesn’t require an initial research question or hypothesis, as its value lies in generating new theories. With the grounded theory method, you can go into the analysis process with an open mind and explore the data to generate new theories through tests and revisions. In fact, you don’t need to finish collecting the data before you start analyzing it; researchers usually start to find valuable insights while they are still gathering the data.

All of these elements make grounded theory a very valuable method, as theories are fully backed by data instead of initial assumptions. It is a great technique for analyzing poorly researched topics or finding the causes behind specific company outcomes. For example, product managers and marketers might use grounded theory to look into customer surveys and reviews and develop new theories about the causes of high customer churn.

How To Analyze Data? Top 17 Data Analysis Techniques To Apply

Now that we’ve answered the questions “what is data analysis?” and “why is it important?”, and covered the different types of data analysis, it’s time to dig deeper into how to perform your analysis by working through these 17 essential techniques.

1. Collaborate on your needs

Before you begin analyzing or drilling down into any techniques, it’s crucial to sit down collaboratively with all key stakeholders within your organization, decide on your primary campaign or strategic goals, and gain a fundamental understanding of the types of insights that will best benefit your progress or provide you with the level of vision you need to evolve your organization.

2. Establish your questions

Once you’ve outlined your core objectives, you should consider which questions will need answering to help you achieve your mission. This is one of the most important techniques as it will shape the very foundations of your success.

To make sure your data works for you, you have to ask the right data analysis questions.

3. Data democratization

After giving your data analytics methodology some real direction, and knowing which questions need answering to extract optimum value from the information available to your organization, you should continue with democratization.

Data democratization is the practice of connecting data from various sources efficiently and quickly so that anyone in your organization can access it at any given moment. You can extract data in text, images, videos, numbers, or any other format, and then perform cross-database analysis to achieve more advanced insights to share interactively with the rest of the company.
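At its simplest, "connecting data from various sources" means joining records that describe the same entity. The sketch below is a minimal, hypothetical illustration in plain Python, assuming two exports (a CRM list and a web-analytics list) that share a `customer_id` field. It is not datapine’s connector feature or any vendor API, just the underlying idea of a left join.

```python
# Hypothetical exports from two separate systems, both keyed by customer ID.
crm_records = [
    {"customer_id": 1, "name": "Acme", "segment": "enterprise"},
    {"customer_id": 2, "name": "Globex", "segment": "smb"},
]
web_sessions = [
    {"customer_id": 1, "sessions": 120},
    {"customer_id": 3, "sessions": 15},
]


def join_sources(left, right, key):
    """Left-join two lists of dict records on `key`.

    Every left record is kept; fields from `right` are added when a match exists.
    If both sides share a field name, the right-hand value wins.
    """
    index = {row[key]: row for row in right}
    return [{**row, **index.get(row[key], {})} for row in left]


unified = join_sources(crm_records, web_sessions, "customer_id")
for row in unified:
    print(row)
```

A left join keeps every CRM customer even without web activity (Globex gets no `sessions` field), while the unmatched web record for customer 3 is dropped; which side to keep is a design choice that depends on the question you are answering.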

Once you have decided on your most valuable sources, you need to bring all of this data into a structured format to start collecting your insights. For this purpose, datapine offers an easy all-in-one data connectors feature to integrate all your internal and external sources and manage them as you see fit. Additionally, datapine’s end-to-end solution automatically updates your data, saving you time so you can focus on performing the right analysis to grow your company.

4. Think of governance

When collecting data in a business or research context, you always need to think about security and privacy. With data breaches becoming a growing concern for businesses, protecting your clients’ or subjects’ sensitive information becomes critical.

To ensure that all this is taken care of, you need a data governance strategy. According to Gartner, this concept refers to “the specification of decision rights and an accountability framework to ensure the appropriate behavior in the valuation, creation, consumption, and control of data and analytics.” In simpler words, data governance is a collection of processes, roles, and policies that ensure the efficient use of data while still achieving the main company goals. It establishes clear rules about who can access the information and how they can access it. Over time, this not only protects sensitive information but also makes the analysis more efficient as a whole.
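The "decision rights" and "accountability framework" parts of governance can be illustrated with a tiny role-based access check. This is a hypothetical sketch, assuming made-up roles and data categories; real governance tooling adds authentication, policy management, and persistent audit storage. It shows two ideas from the definition: access is granted only when a role explicitly allows it, and every decision is recorded.

```python
from dataclasses import dataclass

# Hypothetical policy: the data categories each role may read.
ACCESS_POLICY = {
    "analyst": {"sales", "marketing"},
    "hr_manager": {"employee_records"},
    "admin": {"sales", "marketing", "employee_records", "customer_pii"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def request_access(user: User, category: str, audit_log: list) -> bool:
    """Grant access only if the role explicitly allows the category, and record every decision."""
    allowed = category in ACCESS_POLICY.get(user.role, set())  # unknown roles get nothing
    audit_log.append((user.name, category, allowed))  # accountability trail
    return allowed


log = []
ana = User("ana", "analyst")
print(request_access(ana, "marketing", log))     # True
print(request_access(ana, "customer_pii", log))  # False
print(log)
```

Defaulting to "deny" for unknown roles is the safer design choice: forgetting to configure a role then blocks access instead of exposing sensitive data.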

5. Clean your data

After gathering data from so many sources, you will be left with a vast amount of information that can be overwhelming to deal with. At the same time, some of that data may be incorrect and could mislead your analysis. The smartest way to avoid these problems later is to clean the data. This is fundamental before visualizing it, as it helps ensure that the insights you extract are correct.

There are many things to look for in the cleaning process. The most important is to eliminate duplicate observations, which usually appear when you combine multiple internal and external sources of information. You can also add missing codes, fix empty fields, and eliminate incorrectly formatted data.
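Those three cleaning tasks can be sketched in a few lines of Python. This is a minimal example, assuming hypothetical order records in which `order_id` uniquely identifies an observation and a deliberately loose email pattern stands in for "correct formatting". Malformed rows are checked first, so a broken copy of a record can never block a valid duplicate from being kept.

```python
import re

# Hypothetical records merged from two sources; order A-100 arrived twice.
records = [
    {"order_id": "A-100", "email": "ana@example.com", "country": "DE"},
    {"order_id": "A-100", "email": "ana@example.com", "country": "DE"},
    {"order_id": "A-101", "email": "bo@example.com", "country": ""},
    {"order_id": "A-102", "email": "not-an-email", "country": "US"},
]

# Deliberately loose email check; it only rejects obviously malformed values.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[a-z]{2,}$", re.IGNORECASE)


def clean(rows, missing_country="UNKNOWN"):
    """Drop malformed rows, drop duplicates by order_id, and fill empty country fields."""
    seen = set()
    cleaned = []
    for row in rows:
        if not EMAIL_PATTERN.match(row["email"]):
            continue  # incorrectly formatted data
        if row["order_id"] in seen:
            continue  # duplicate observation (assumes order_id identifies a record)
        seen.add(row["order_id"])
        fixed = dict(row)  # copy so the raw input stays untouched
        if not fixed["country"]:
            fixed["country"] = missing_country  # explicit placeholder instead of a blank
        cleaned.append(fixed)
    return cleaned


for row in clean(records):
    print(row)
```

Filling blanks with an explicit placeholder, rather than guessing a value, keeps missing data visible in later reports instead of silently skewing them.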

Another common form of cleaning is done with text data. As we mentioned earlier, most companies today analyze customer reviews, social media comments, questionnaires, and several other text inputs. For algorithms to detect patterns, text data needs to be revised to remove invalid characters and correct syntax or spelling errors.
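Here is a minimal sketch of the character-level part of text cleaning, using only Python’s standard library. It assumes free-text inputs such as reviews or survey answers and normalizes Unicode, replaces control characters, lowercases, and strips punctuation. Spelling correction is not covered; that would need a dictionary or a dedicated spell-checking library.

```python
import re
import unicodedata


def clean_text(text: str) -> str:
    """Normalize a free-text response before pattern detection."""
    text = unicodedata.normalize("NFKC", text)  # unify equivalent Unicode forms
    # Replace control characters (e.g. NUL, newlines) with spaces rather than deleting them,
    # so words on either side do not get glued together.
    text = "".join(ch if ch.isprintable() else " " for ch in text)
    text = text.lower()
    text = re.sub(r"[^\w\s']", " ", text)  # punctuation and symbols become spaces
    return re.sub(r"\s+", " ", text).strip()  # collapse runs of whitespace


print(clean_text("  GREAT product!!\x00 Fast   shipping :)\n"))
# great product fast shipping
```

Apostrophes are kept on purpose so contractions like "don't" survive as single tokens; whether that helps depends on the algorithm you feed the text into.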

Most importantly, the aim of cleaning is to prevent you from arriving at false conclusions that can damage your company in the long run. By using clean data, you will also help BI solutions to interact better with your information and create better reports for your organization.

6. Set your KPIs

Once you’ve set your sources, cleaned your data, and established clear-cut questions you want your insights to answer, you need to set a host of key performance indicators (KPIs) that will help you track, measure, and shape your progress in a number of key areas.

KPIs are critical to both qualitative and quantitative research. This is one of the primary methods of data analysis that you certainly shouldn’t overlook.

To help you set the best possible KPIs for your initiatives and activities, here is an example of a relevant logistics KPI: transportation-related costs. If you want to see more, explore our collection of key performance indicator examples.
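As an illustration, the transportation-related costs KPI could be computed from a cost ledger like this. The sketch assumes a hypothetical ledger of `(month, category, amount)` entries and a hypothetical definition of which categories count as transportation; your own definition is a business decision. It reports both the absolute cost and its share of all logged costs, since the share is easier to compare month to month.

```python
from collections import defaultdict

# Hypothetical monthly cost ledger: (month, cost category, amount in EUR).
ledger = [
    ("2024-01", "freight", 12_000.0),
    ("2024-01", "fuel", 3_500.0),
    ("2024-01", "warehousing", 8_000.0),
    ("2024-02", "freight", 11_000.0),
    ("2024-02", "fuel", 4_200.0),
    ("2024-02", "warehousing", 7_500.0),
]

# Assumption: which categories count as "transportation-related" is a business decision.
TRANSPORT_CATEGORIES = {"freight", "fuel"}


def transportation_kpi(rows):
    """Per month: total transportation costs and their share of all logged costs (%)."""
    transport = defaultdict(float)
    total = defaultdict(float)
    for month, category, amount in rows:
        total[month] += amount
        if category in TRANSPORT_CATEGORIES:
            transport[month] += amount
    return {
        month: {
            "transport_costs": transport[month],
            "share_pct": round(100 * transport[month] / total[month], 1) if total[month] else None,
        }
        for month in sorted(total)
    }


for month, values in transportation_kpi(ledger).items():
    print(month, values)
```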

7. Omit useless data

Having given your data analysis tools and techniques a true purpose and defined your mission, you should explore the raw data you’ve collected from all sources and use your KPIs as a reference for cutting out any information you deem useless.

Trimming the informational fat is one of the most crucial methods of analysis as it will allow you to focus your analytical efforts and squeeze every drop of value from the remaining ‘lean’ information.

Any stats, facts, figures, or metrics that don’t align with your business goals or fit with your KPI management strategies should be eliminated from the equation.
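One way to use KPIs as the reference for trimming is to record which raw fields each KPI depends on and keep only those. The sketch below assumes a hypothetical KPI-to-field mapping and made-up column names; it simply drops every field that no selected KPI needs.

```python
# Hypothetical mapping from each KPI to the raw fields it actually needs.
KPI_FIELDS = {
    "transportation_costs": {"month", "freight_cost", "fuel_cost"},
    "orders_per_month": {"month", "order_count"},
}


def trim_to_kpis(rows, kpis):
    """Keep only the fields that at least one selected KPI depends on."""
    keep = set().union(*(KPI_FIELDS[kpi] for kpi in kpis))
    return [{field: value for field, value in row.items() if field in keep} for row in rows]


# Hypothetical raw row with a field that no KPI uses.
raw = [
    {"month": "2024-01", "freight_cost": 12000, "fuel_cost": 3500,
     "order_count": 410, "office_plants": 12},
]
print(trim_to_kpis(raw, ["transportation_costs"]))
```

Keeping the mapping explicit also documents *why* each field survived, which makes it easier to revisit the trimming when your KPIs change.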

8. Build a data management roadmap

While this particular step is optional at this point (you will already have gained a wealth of insight and formed a fairly sound strategy by now), creating a data management roadmap will help your data analysis methods and techniques succeed on a more sustainable basis. If developed properly, these roadmaps can also be tweaked and scaled over time.

Investing ample time in developing a roadmap that helps you store, manage, and handle your data internally will make your analysis techniques all the more fluid and functional, which makes this one of the most powerful data analysis methods available today.

9. Integrate technology

There are many ways to analyze data, but one of the most vital aspects of analytical success in a business context is integrating the right decision support software and technology.

Robust analysis platforms will not only allow you to pull critical data from your most valuable sources while working with dynamic KPIs that offer actionable insights; they will also present that data in a digestible, visual, interactive format from one central, live dashboard. That makes for a data methodology you can count on.

By integrating the right technology within your data analysis methodology, you’ll avoid fragmenting your insights, saving you time and effort while allowing you to enjoy the maximum value from your business’s most valuable insights.

To see what analysis software can do for your methods of analysis, glance over our selection of dashboard examples.

10. Answer your questions

By considering each of the above efforts, working with the right technology, and fostering a cohesive internal culture where everyone buys into the different ways to analyze data as well as the power of digital intelligence, you will swiftly start to answer your most burning business questions. Arguably, the best way to make your data concepts accessible across the organization is through data visualization.

11. Visualize your data

Online data visualization is a powerful tool because it lets you tell a story with your metrics, allowing users across the organization to extract meaningful insights that aid business evolution, and it applies to all the different ways of analyzing data.

The purpose of analyzing data is to make your entire organization more informed and intelligent, and with the right platform or dashboard, this is simpler than you think, as demonstrated by our marketing dashboard.

This visual, dynamic, and interactive online dashboard is a data analysis example designed to give Chief Marketing Officers (CMOs) an overview of relevant metrics to help them understand whether they achieved their monthly goals.

In detail, this example, generated with a modern dashboard creator, displays interactive charts for monthly revenue, costs, net income, and net income per customer; each is compared with the previous month so that you can see how the data fluctuated. In addition, it shows a detailed summary of the number of users, customers, SQLs (sales qualified leads), and MQLs (marketing qualified leads) per month to visualize the whole picture and extract relevant insights or trends for your marketing reports.
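The month-over-month comparison behind such a dashboard is straightforward arithmetic. Here is a minimal sketch, assuming hypothetical revenue, cost, and customer figures for two consecutive months; it is not how any particular dashboard tool computes its charts. Net income is revenue minus costs, net income per customer divides that by the customer count, and each metric is compared with the previous month as a percentage change.

```python
from typing import Optional


def monthly_metrics(revenue: float, costs: float, customers: int) -> dict:
    """Derive the dashboard-style metrics for one month."""
    net_income = revenue - costs
    return {
        "revenue": revenue,
        "costs": costs,
        "net_income": net_income,
        "net_income_per_customer": net_income / customers if customers else None,
    }


def pct_change(current: Optional[float], previous: Optional[float]) -> Optional[float]:
    """Percentage change versus the previous month; None when it is undefined."""
    if current is None or previous is None or previous == 0:
        return None
    return (current - previous) / abs(previous) * 100


# Hypothetical figures for two consecutive months.
previous = monthly_metrics(revenue=100_000, costs=60_000, customers=400)
current = monthly_metrics(revenue=110_000, costs=62_000, customers=480)

for metric in current:
    change = pct_change(current[metric], previous[metric])
    label = "n/a" if change is None else f"{change:+.1f}%"
    print(f"{metric}: {current[metric]} ({label})")
```

In this made-up example, net income grows 20% while net income per customer stays flat, because the customer base grew just as fast. That is the kind of nuance a side-by-side monthly comparison helps surface.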

The CMO dashboard is perfect for C-level management, as it can help them monitor the strategic outcome of their marketing efforts and make data-driven decisions that can benefit the company significantly.

12. Be careful with the interpretation

We already dedicated an entire post to data interpretation, as it is a fundamental part of the data analysis process. It gives meaning to the analytical information and aims to draw a concise conclusion from the analysis results. Since companies are usually dealing with data from many different sources, the interpretation stage needs to be done carefully and properly to avoid misinterpretations.

To help you through the process, here we list three common practices that you need to avoid at all costs when looking at your data:

- Correlation vs. causation: The human brain is wired to find patterns. This tendency leads to one of the most common mistakes in interpretation: confusing correlation with causation. Although the two can exist simultaneously, it is not correct to assume that because two things happened together, one caused the other. To avoid this mistake, never rely on intuition alone; trust the data. If there is no objective evidence of causation, always stick to correlation.

- Confirmation bias: This phenomenon describes the tendency to select and interpret only the data necessary to prove one hypothesis, often ignoring the elements that might disprove it. Even if it's not done on purpose, confirmation bias can represent a real problem, as excluding relevant information can lead to false conclusions and, therefore, bad business decisions. To avoid it, always try to disprove your hypothesis instead of proving it, share your analysis with other team members, and avoid drawing any conclusions before the entire analytical project is finalized.