Method of preparing a document for survey instrument validation by experts

Validation of a survey instrument is an important activity in the research process. Face validity and content validity, though qualitative methods, are essential steps in validating how far the survey instrument can measure what it is intended to measure. These techniques are used both in scale development and in questionnaires that may contain multiple scales. In face and content validation, a survey instrument is usually validated by academic experts and by practitioners from the field or industry. Researchers face challenges in conducting a proper validation because they lack an appropriate method for communicating the requirements and receiving the feedback.

In this paper, the authors develop a template that can be used for the validation of a survey instrument.

In the instrument development process, after the item pool is generated, the template is completed and sent to the reviewer. The reviewer can give the necessary feedback through the template, which helps the researcher improve the instrument.

Method details

Introduction

Survey instruments or questionnaires are the most popular data collection tools because of their many advantages. Benefits include collecting data from a large population in a limited time and at a lower cost, convenience for respondents, anonymity, lack of interviewer bias and standardization of questions. However, an important disadvantage of a questionnaire is poor data quality due to incomplete and inaccurate questions, wording problems and a poor development process. These problems are critical, but they can be avoided or mitigated [14].

To ensure the quality of the instrument, using a previously validated questionnaire is useful. This will save time and resources in the development process and in testing reliability and validity. However, there can be situations wherein a new questionnaire is needed [5]. Whenever a new scale or questionnaire needs to be developed, following a structured method will help to develop a quality instrument. There are many approaches to scale development, and all of them include stages for testing reliability and validity.

Even though much literature is available on reliability and validity procedures, many researchers struggle to operationalize the process. Collingridge [8] wrote in the Methodspace blog of Sage Publications that he repeatedly asked professors how to validate the questions in a survey and unfortunately did not get an answer. Most of the time, researchers send the completely designed questionnaire with the actual measurement scale without providing adequate information for the reviewers to give proper feedback. This paper is an effort to develop a document template that can capture the feedback of the expert reviewers of the instrument.

This paper is structured as follows. Section 1 introduces the need for a validation format for research. Section 2 discusses the fundamentals of validation and the factors involved in validation, drawing on the literature. Section 3 presents the methodology used in framing the validation format. Section 4 provides the results of the study. Section 5 explains how the format can be used and how the feedback can be processed. Finally, Section 6 concludes the paper with a note on contribution.

Review of literature

A questionnaire is explained as “an instrument for the measurement of one or more constructs by means of aggregated item scores, called scales” [21]. A questionnaire can be placed on a continuum from unstructured to structured [14]. A structured questionnaire will “have a similar format, are usually statements, questions, or stimulus words with structured response categories, and require a judgment or description by a respondent or rater” [21]. Research in social science with a positivist paradigm began in the 19th century. The first use of a questionnaire is attributed to the Statistical Society of London as early as 1838. Berthold Sigismund proposed the first guidelines for questionnaire development in 1856, which provided a definite plan for the questionnaire method [13]. In 1941, the British Association for the Advancement of Science's Acceptance of Quantitative Measures for Sensory Events [26] led to a much more pervasive application of questionnaires in research, as did the Guttman scale [15], the Thurstone scale [27] and the Likert scale [18].

Carpenter [6] argued that scholars do not follow the best practices in the measurement building procedure. The author claims that “the defaults in the statistical programs, inadequate training and numerous evaluation points can lead to improper practices”. Many researchers have proposed techniques for scale development. We trace the prominent methods from the literature. Table 1 presents various frameworks in scale development.

Table 1. Frameworks of scale development.

Reeves and Marbach-Ad [22] argued that the quantitative aspect of social science research is different from science in terms of quantifying the phenomena using instruments. Bollen [4] explained that a social science instrument measures latent variables that are not directly observed but are inferred from observable behaviour. Because of this characteristic of social science measures, there is a need to ensure that the instrument actually measures the intended phenomenon.

The concepts of reliability and validity evolved as early as 1896 with Pearson. From 1900 to 1950, validity theory basically dealt with the alignment of test scores with other measures, which was operationally tested by correlation. Validity theory was refined during the 1950s to include criterion, content and construct validity. Criterion validity is the correlation of the test measure with an accurate criterion score. In 1955, criterion validity was proposed in two forms, concurrent validity and predictive validity. Content validity provides “domain relevance and representativeness of the test instrument”. The concept of construct validity was introduced in 1954 and received increased emphasis, and from 1985 it took a central place as the appropriate test for validity. The new millennium saw a change in the perspectives of validity theory. Contemporary validity theory is a metamorphosis of epistemological and methodological perspectives. The argument-based approach and consequences-based validity are some of the new concepts that are evolving [24].

The American Educational Research Association (AERA), the American Psychological Association (APA) and the National Council on Measurement in Education (NCME) jointly developed the ‘Standards for educational and psychological testing’. The Standards describe validity as “the degree to which evidence and theory support the interpretations of test scores for proposed uses of tests” [1].

Based on the ‘Standards’, validity tests are classified by the type of evidence. Standards 1.11 to 1.25 describe the various kinds of evidence used to test validity [1]. Table 2 presents the different types of validity based on evidence, with their explanations.

Table 2. Types of validity.

(Source: [1])

Souza et al. [25] argued that “there is no statistical test to assess specifically the content validity; usually researchers use a qualitative approach, through the assessment of an experts committee, and then, a quantitative approach using the content validity index (CVI).”

Worthington and Whittaker [29] conducted a content analysis on new scales developed between 1995 and 2004. They specifically focused on the use of Exploratory and Confirmatory Factor Analysis (EFA & CFA) procedures in the validation of the scales. They argued that though the post-tests in the validation procedure, which are usually based on factor-analytic techniques, are more scientific and rigorous, the preliminary steps are necessary. Mistakes committed in the initial stages of scale development lead to problems in the later stages.

Messick [20] proposed six distinguishable notions of construct validity for educational and psychological measurements. Among the six, the foremost is content validity, which looks at the relevance of the content, its representativeness and its technical quality. In a similar way, Oosterveld et al. [21] developed a taxonomy of questionnaire design directed towards psychometric aspects. The taxonomy introduces the following questionnaire design methods: (1) coherent, (2) prototypical, (3) internal, (4) external, (5) construct and (6) facet design technique. These methods are related “to six psychometric features guiding them: face validity, process validity, homogeneity, criterion validity, construct validity and content validity”. The authors presented these methods under four stages: (1) concept review, (2) item generation, (3) scale development and (4) evaluation. After the definition of the construct in the first stage, the item pool is developed. The item production stage “comprises an item review by judges, e.g., experts, or potential respondents, and a pilot administration of the preliminary questionnaire, the results of which are subsequently used for refinement of the items”.

What needs to be checked?

This paper mainly focuses on the expert validation done under the face validity and content validity stages. Martinez [19] provides a clear distinction between content validity and face validity. “Face validity requires an examination of a measure and the items of which it is composed as sufficient and suitable ‘on its face’ for capturing a concept. A measure with face validity will be visibly relevant to the concept it is intended to measure, and less so to other concepts”. Though face validity is a quick and excellent first step for assessing the appropriateness of a measure to capture the concept, it is not sufficient. It needs to be interpreted along with other forms of measurement validity.

“Content validity focuses on the degree to which a measure captures the full dimension of a particular concept. A measure exhibiting high content validity is one that encompasses the full meaning of the concept it is intended to assess” [19]. An extensive review of literature and consultation with experts ensures the validity of the content.

From the review of the literature, we arrive at the details of validation that need to be done by experts. The recommended experts are domain or subject matter experts from both academia and industry, people with expertise in the construct being developed, people familiar with the target population on whom the instrument will be used, users of the instrument, data analysts and those who take decisions based on the scores of the test. Experts are consulted during the concept development stage and the item generation stage. Experts provide feedback on the content, sensitivity and standard settings [10].

During the concept development stage, experts provide inputs on the definition of the constructs, relate them to the domain and check them against related concepts. At the item generation stage, experts validate the representativeness and significance of each item to the construct, the accuracy of each item in measuring the concept, the inclusion or deletion of elements, the logical sequence of the items, and the scoring models. Experts also validate how the instrument can measure the concept among different groups of respondents. An item is checked for bias towards specific groups, such as gender, minority groups and linguistically different groups. Experts also provide standard scores or cutoff scores for decision making [10].

A second set of reviewers, who are experts in questionnaire development, checks the structural aspects of the instrument for common errors such as double-barreled, confusing and leading questions. This set also includes language experts, even if the questionnaire is developed in a popular language like English. Other language experts are required if the instrument involves translation.

There have been many attempts to standardize the validation of questionnaires. Forsyth et al. [11] developed a Forms Appraisal model, which was an exhaustive list of problems that occur in a questionnaire item. This was found to be tiresome for experts. Fowler and Roman [12] developed an ‘Interviewer Rating Form’, which allowed experts to comment on three qualities: (1) trouble reading the question, (2) respondent not understanding the meaning or ideas in the question and (3) respondent having difficulty in providing an answer. The experts had to code ‘A’ for ‘No evidence of a problem’, ‘B’ for ‘Possible problem’ and ‘C’ for ‘Definite problem’. Willis and Lessler [28] developed a shorter version of the coding scheme for evaluation of questionnaire items called the “Question Appraisal System (QAS)”. This system evaluates each item on 26 problem areas under seven heads. The expert just needs to code ‘Yes’ or ‘No’ for each item. Akkerboom and Dehue [2] developed a systematic review of questionnaires for interview and self-completion, with 26 problem items categorized under eight problem areas.

Hinkin [16] recommended, as a best practice, to “clearly cite the theoretical literature on which the new measures are based and describe the manner in which the items were developed and the sample used for item development”. The author claims that “in many articles, this information was lacking, and it was not clear whether there was little justification for the items chosen or if the methodology employed was simply not adequately presented”.

In addition to the qualitative analysis of the items, recent developments include quantitative assessments of the items. “The content adequacy of a set of newly developed items is assessed by asking respondents to rate the extent to which items corresponded with construct definitions” [16]. Souza et al. [25] suggest using the Content Validity Index (CVI) for the quantitative approach. Experts evaluate every item on a four-point scale, in which “1 = non-equivalent item; 2 = the item needs to be extensively revised so equivalence can be assessed; 3 = equivalent item, needs minor adjustments; and 4 = totally equivalent item”. The CVI is calculated by dividing the number of answers scored 3 or 4 by the total number of answers. The CVI value is therefore the proportion of judges who agree with an item; an index value of at least 0.80, and preferably higher than 0.90, is accepted.
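The CVI calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' tool: the function name `item_cvi`, the item codes and the expert ratings are all hypothetical, and the 0.80 cutoff is the lower acceptance bound quoted in the text.

```python
from typing import Dict, List


def item_cvi(ratings: List[int]) -> float:
    """Proportion of experts who rated the item 3 or 4 on the four-point scale."""
    if not ratings:
        raise ValueError("at least one expert rating is required")
    if any(r not in (1, 2, 3, 4) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 4")
    agreeing = sum(1 for r in ratings if r >= 3)
    return agreeing / len(ratings)


# Hypothetical ratings from five experts for three items (illustrative data only).
ratings_by_item: Dict[str, List[int]] = {
    "Q1": [4, 4, 3, 4, 3],
    "Q2": [4, 3, 2, 4, 3],
    "Q3": [2, 1, 3, 2, 4],
}

cvi_by_item = {code: item_cvi(r) for code, r in ratings_by_item.items()}

# Items below the 0.80 bound are candidates for revision or removal.
flagged = [code for code, cvi in cvi_by_item.items() if cvi < 0.80]

for code, cvi in cvi_by_item.items():
    print(f"{code}: CVI = {cvi:.2f}")
print("Items below 0.80:", flagged)
```

With these made-up ratings, only Q3 falls below the bound. Whether a flagged item is revised or dropped remains a judgment for the researcher, informed by the experts' qualitative remarks.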

Information to be provided to the experts

The problems with conducting face validation and content validation may be attributed to both the scale developer and the reviewer. Scale developers do not convey their requirements to the experts properly, and experts are not sure what the researcher expects. Therefore, a format is developed that captures the information required for scale validation from both the researcher and the experts.

Covering letter

A covering letter is an important part of sending a questionnaire for review. It can help in persuading a reviewer to support the research. It should be short and simple. A covering letter first invites the expert to take part in the review and shows esteem for the expert. Even if the questionnaire for review is handed over personally, a covering letter will serve as instructions for the review process and state the expectations from the reviewer.

Boateng et al. [3] recommended that the researcher specify the purpose of the construct or the questionnaire being developed. Justifying the development of a new instrument by confirming that no existing instrument serves the purpose is crucial. If similar instruments exist, the researcher should explain how the proposed one differs from them.

The covering letter can mention the maximum time required for the review and any compensation that the expert will be awarded. This will motivate the reviewer to contribute their expertise and efforts. Instructions on how to complete the review process, which aspects are to be checked, the coding systems and how to give the feedback are also provided in the covering letter. The covering letter ends with a thank-you note in advance and is personally signed by the instrument developer. Further contact details can also be provided at the end of the covering letter.

Introduction to research

Boateng et al. [3] proposed that it is an essential step to articulate the domain(s) before any validation process. They recommend that “the domain being examined should be decided upon and defined before any item activity. A well-defined domain will provide a working knowledge of the phenomenon under study, specify the boundaries of the domain, and ease the process of item generation and content validation”.

In the introduction section, the researcher can elaborate on the research problem being addressed, the existing theories, the proposed theory or model that will be investigated, and the list of variables or concepts that are to be measured. Guion [30] argued that, for those who do not accept content validity on the evaluation of the operational definition alone, five conditions will be a tentative answer: “(1) the content domain should be grounded in behavior with a commonly accepted meaning, (2) the content domain must be defined in a manner that is not open to more than one interpretation, (3) the content domain must be related to the purposes of measurement, (4) qualified judges must agree that the domain has been sufficiently sampled and (5) the response content must be dependably observed and evaluated.” Therefore, the information provided in the ‘Introduction’ section will help the expert to assess content validity as the first step.

Construct-wise item validation

After the need for the measure or the survey instrument is communicated, the domain is validated. The next step is to validate the items. Validation may be done for a scale that measures a single concept or for a questionnaire that measures multiple concepts. For a multiple-construct instrument, the validation is done construct-wise.

In an instrument with multiple constructs, the Introduction provides information at the theory level. The domain validation is done to assess the relevance of the theory to the problem. In the next section, the domain validation is done at the variable level. As in the Introduction, details about the construct are provided. The definition of the construct, the source of the definition, a description of the concept, and the operational definition are shared with the experts. Experts validate the construct by relating it to the relevant domain. If the conceptualization and definition are not done properly, the items will be poorly evaluated.

New items are developed by a deductive or an inductive method. In deductive methods, items are generated from already existing scales and indicators through literature review. In inductive methods, the items are generated through direct observation, individual interviews, focus group discussion and exploratory research. It is necessary to convey to the expert reviewer how each item was generated. Even when an item or a scale is adopted unaltered, it is necessary to validate it to assess its relevance to a particular culture or region. Even in such situations, the reviewer must be informed about the source of the items.

Experts review each item and the construct as a whole. For each item, the item code, the item statement, the measurement scale, the source of the item and a description of the item are provided. There are three options for reporting the source of the item. When the item is adopted as it is from a previous scale, the source is provided. If the item is adapted by modifying an earlier item, the source and the original item are given along with a description of the modification. If the item is developed by induction, the item source is mentioned. First, experts evaluate each item to assess whether it represents the domain of the construct, and record their evaluation on a 4-point or 3-point scale. When multiple experts are used for the validation process, this score can also be used for quantitative evaluation. The quality parameters of the item are then evaluated. Researchers may choose a questionnaire appraisal scheme from the many different systems available. An open remarks column is provided for experts to give any feedback that is not covered by the format. A comments section is provided at the end of the construct validation section, where the experts can give feedback such as underrepresentation of the construct by the items.
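The per-item information listed above maps naturally onto a small record type. The sketch below is one possible representation, assuming Python dataclasses; the class names, field names and sample item are hypothetical and are not taken from the template in the Appendix. The helper `missing_information` only checks the three source options described in the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class ItemSource(Enum):
    ADOPTED = "adopted"      # used as it is from a previous scale
    ADAPTED = "adapted"      # modified from an earlier item
    INDUCTIVE = "inductive"  # developed by induction


@dataclass
class ItemRecord:
    # Information supplied by the researcher
    item_code: str
    statement: str
    measurement_scale: str
    source: ItemSource
    source_reference: str = ""
    description: str = ""
    original_item: Optional[str] = None
    modification_note: Optional[str] = None
    # Feedback filled in by the expert
    relevance_rating: Optional[int] = None
    problem_codes: List[str] = field(default_factory=list)
    remarks: str = ""

    def missing_information(self) -> List[str]:
        """List the source details the researcher still has to supply."""
        missing = []
        if not self.source_reference:
            missing.append("source_reference")
        if self.source is ItemSource.ADAPTED:
            # Adapted items also need the original wording and the change made.
            if not self.original_item:
                missing.append("original_item")
            if not self.modification_note:
                missing.append("modification_note")
        return missing


# Hypothetical adapted item that lacks its original wording and modification note.
item = ItemRecord(
    item_code="JS1",
    statement="I am satisfied with my current job.",
    measurement_scale="5-point agreement scale",
    source=ItemSource.ADAPTED,
    source_reference="(hypothetical earlier scale)",
)
print(item.missing_information())
```

A check like this could be run before the document is sent out, so that the expert does not receive an adapted item without the original wording to compare against.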

Validation of demography items

In the same way, information about each of the demography items required in the questionnaire is included in the format. Finally, space is provided for the expert to comment on the entire instrument. The template of the evaluation form is provided in the Appendix.

Inferring the feedback

Since the feedback is qualitative, a mathematical or statistical approach is not required for inferring the review. The researcher can retain, remove or modify the statements of the questionnaire according to whether the experts marked them as essential, not essential or modify. As we have recommended using the quality parameters of QAS for describing the problems and issues, the researcher will get a precise idea of what needs to be corrected. Remarks by the experts carry additional information in the form of comments or suggestions that will be easy to follow when revising the items. General comments at the end of each scale or construct provide suggestions on adding further items to the construct.

Despite the various frameworks available to researchers for developing survey instruments, the quality of these instruments is not at the desirable level. Content validation of the measuring instrument is an essential requirement of every research study. A rigorous process of expert validation can avoid problems at later stages. However, researchers are disadvantaged in operationalising the instrument review process. Researchers are challenged with communicating the background information and collecting the feedback. This paper is an attempt to design a standard format for the expert validation of the survey instrument. Through a literature review, the expectations from the expert review for validation are identified. The domain of the construct, relevance, accuracy, inclusion or deletion of items, sensitivity, bias, and structural aspects such as language issues and double-barreled, negative, confusing and leading questions need to be validated by the experts. A format is designed with a covering page containing an invitation to the experts, their role, and an introduction to the research and the instrument. Information regarding the scale and the list of scale items is provided in the subsequent pages. The demography questions are also included for validation. The expert review format will provide standard communication and feedback between the researcher and the expert reviewer, which can help in developing rigorous, high-quality survey instruments.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Supplementary material associated with this article can be found, in the online version, at doi: 10.1016/j.mex.2021.101326.

Appendix. Supplementary materials

How to Determine the Validity and Reliability of an Instrument By: Yue Li

Validity and reliability are two important factors to consider when developing and testing any instrument (e.g., content assessment test, questionnaire) for use in a study. Attention to these considerations helps to ensure the quality of your measurement and of the data collected for your study.

Understanding and Testing Validity

Validity refers to the degree to which an instrument accurately measures what it intends to measure. Three common types of validity for researchers and evaluators to consider are content, construct, and criterion validities.

- Content validity indicates the extent to which items adequately measure or represent the content of the property or trait that the researcher wishes to measure. Subject matter expert review is often a good first step in instrument development to assess content validity, in relation to the area or field you are studying.

- Construct validity indicates the extent to which a measurement method accurately represents a construct (e.g., a latent variable or phenomenon that can’t be measured directly, such as a person’s attitude or belief) and produces an observation distinct from that which is produced by a measure of another construct. Common methods to assess construct validity include, but are not limited to, factor analysis, correlation tests, and item response theory models (including the Rasch model).

- Criterion-related validity indicates the extent to which the instrument’s scores correlate with an external criterion (i.e., usually another measurement from a different instrument) either at present ( concurrent validity ) or in the future ( predictive validity ). A common measurement of this type of validity is the correlation coefficient between two measures.
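As a rough illustration of the correlation coefficient mentioned for criterion-related validity, the sketch below computes Pearson's r between scores on a new instrument and scores on an external criterion. The function name `pearson_r` and both score lists are hypothetical, made up only to show the calculation; a real study would normally use an established statistics package and report confidence intervals as well.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two paired score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists with at least two scores each")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a list has no variance")
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for six respondents (illustrative data only).
new_instrument = [12, 15, 9, 20, 17, 11]
criterion_measure = [30, 36, 25, 44, 40, 29]

r = pearson_r(new_instrument, criterion_measure)
print(f"Correlation with the criterion: {r:.3f}")
```

If the criterion is measured at the same time as the new instrument, the coefficient speaks to concurrent validity; if the criterion is measured later, it speaks to predictive validity.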

Oftentimes, when developing, modifying, and interpreting the validity of a given instrument, researchers and evaluators do not view or test each type of validity individually. Instead, they test for evidence of several different forms of validity collectively (e.g., see Samuel Messick’s work regarding validity).

Understanding and Testing Reliability

Reliability refers to the degree to which an instrument yields consistent results. Common measures of reliability include internal consistency, test-retest, and inter-rater reliabilities.

- Internal consistency reliability looks at the consistency of the score of individual items on an instrument, with the scores of a set of items, or subscale, which typically consists of several items to measure a single construct. Cronbach’s alpha is one of the most common methods for checking internal consistency reliability. Group variability, score reliability, number of items, sample sizes, and difficulty level of the instrument also can impact the Cronbach’s alpha value.

- Test-retest measures the correlation between scores from one administration of an instrument to another, usually within an interval of 2 to 3 weeks. Unlike pre-post tests, no treatment occurs between the first and second administrations of the instrument when assessing test-retest reliability. A similar type of reliability, called alternate forms, involves using slightly different forms or versions of an instrument to see if different versions yield consistent results.

- Inter-rater reliability checks the degree of agreement among raters (i.e., those completing items on an instrument). Common situations where more than one rater is involved may occur when more than one person conducts classroom observations, uses an observation protocol or scores an open-ended test, using a rubric or other standard protocol. Kappa statistics, correlation coefficients, and intra-class correlation (ICC) coefficient are some of the commonly reported measures of inter-rater reliability.
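Two of the statistics named above can be sketched briefly in Python: Cronbach's alpha for internal consistency and Cohen's kappa as one member of the kappa family for two raters. This is a minimal sketch using the textbook formulas and only the standard library; the function names and all data are hypothetical, and a real analysis would typically rely on a tested statistics package.

```python
from collections import Counter
from statistics import variance
from typing import Hashable, List, Sequence


def cronbach_alpha(scores: List[List[float]]) -> float:
    """Cronbach's alpha; rows are respondents, columns are items of one subscale."""
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item and of the respondents' total scores.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def cohen_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of cases")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (observed - expected) / (1 - expected)


# Hypothetical responses of five people to a three-item subscale.
responses = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [3, 3, 3], [1, 2, 2]]
print(f"Cronbach's alpha: {cronbach_alpha(responses):.2f}")

# Hypothetical codes assigned by two observers to the same four cases.
observer_1 = ["yes", "yes", "no", "no"]
observer_2 = ["yes", "no", "no", "no"]
print(f"Cohen's kappa: {cohen_kappa(observer_1, observer_2):.2f}")
```

Test-retest reliability needs no separate formula here, since it is the correlation between the scores from the two administrations.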

Developing a valid and reliable instrument usually requires multiple iterations of piloting and testing, which can be resource intensive. Therefore, when available, I suggest using already established valid and reliable instruments, such as those published in peer-reviewed journal articles. However, even when using these instruments, you should re-check validity and reliability, using the methods of your study and your own participants’ data, before running additional statistical analyses. This process will confirm that the instrument performs as intended in your study with the population you are studying, even when your purpose and population are identical to those for which the instrument was initially developed. Below are a few additional, useful readings to further inform your understanding of validity and reliability.

Resources for Understanding and Testing Reliability

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. Washington, DC: Authors.

- Bond, T. G., & Fox, C. M. (2001). Applying the Rasch model: Fundamental measurement in the human sciences. Mahwah, NJ: Lawrence Erlbaum.

- Cronbach, L. (1990). Essentials of psychological testing. New York, NY: Harper & Row.

- Carmines, E., & Zeller, R. (1979). Reliability and validity assessment. Beverly Hills, CA: Sage Publications.

- Messick, S. (1987). Validity. ETS Research Report Series, 1987: i–208. doi: 10.1002/j.2330-8516.1987.tb00244.x

- Liu, X. (2010). Using and developing measurement instruments in science education: A Rasch modeling approach. Charlotte, NC: Information Age.

17.4.1 Validity of instruments

Validity has to do with whether the instrument is measuring what it is intended to measure. Empirical evidence that patient-reported outcomes (PROs) measure the domains of interest allows strong inferences regarding validity. To provide such evidence, investigators have borrowed validation strategies from psychologists, who for many years have struggled with determining whether questionnaires assessing intelligence and attitudes really measure what is intended.

Validation strategies include:

content-related: evidence that the items and domains of an instrument are appropriate and comprehensive relative to its intended measurement concept(s), population and use;

construct-related: evidence that relationships among items, domains, and concepts conform to a priori hypotheses concerning logical relationships that should exist with other measures or characteristics of patients and patient groups; and

criterion-related (for a PRO instrument used as diagnostic tool): the extent to which the scores of a PRO instrument are related to a criterion measure.

Establishing validity involves examining the logical relationships that should exist between assessment measures. For example, we would expect that patients with lower treadmill exercise capacity generally will have more shortness of breath in daily life than those with higher exercise capacity, and we would expect to see substantial correlations between a new measure of emotional function and existing emotional function questionnaires.

When we are interested in evaluating change over time, we examine correlations of change scores. For example, patients who deteriorate in their treadmill exercise capacity should, in general, show increases in dyspnoea, whereas those whose exercise capacity improves should experience less dyspnoea. Similarly, a new emotional function measure should show improvement in patients who improve on existing measures of emotional function. The technical term for this process is testing an instrument’s construct validity.
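The change-score reasoning above can be illustrated with a minimal sketch. The patient data below are invented for illustration only, and `pearson_r` is a hypothetical helper name, not something from the source. If the new measure behaves as hypothesised, improvement in treadmill capacity should go with a drop in dyspnoea, giving a negative correlation.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical data for six patients (made up for illustration).
treadmill_before = [300, 420, 380, 250, 500, 410]  # seconds on treadmill
treadmill_after = [340, 400, 450, 240, 560, 430]
dyspnoea_before = [5, 3, 4, 6, 2, 3]               # higher = more breathless
dyspnoea_after = [4, 4, 2, 6, 1, 3]

# Change score = follow-up value minus baseline value.
treadmill_change = [a - b for a, b in zip(treadmill_after, treadmill_before)]
dyspnoea_change = [a - b for a, b in zip(dyspnoea_after, dyspnoea_before)]

r_change = pearson_r(treadmill_change, dyspnoea_change)
print(f"correlation of change scores: {r_change:.2f}")
```

A clearly negative value is consistent with the a priori hypothesis. How strong the correlation needs to be is a judgment the investigators should state in advance.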

Review authors should look for, and evaluate the evidence of, the validity of PROs used in their included studies. Unfortunately, reports of randomized trials and other studies using PROs seldom review evidence of the validity of the instruments they use, but review authors can gain some reassurance from statements (backed by citations) that the questionnaires have been validated previously.

A final concern about validity arises if the measurement instrument is used with a different population, or in a culturally and linguistically different environment, than the one in which it was developed (typically, use of a non-English version of an English-language questionnaire). Ideally, one would have evidence of validity in the population enrolled in the randomized trial. Ideally PRO measures should be re-validated in each study using whatever data are available for the validation, for instance, other endpoints measured. Authors should note, in evaluating evidence of validity, when the population assessed in the trial is different from that used in validation studies.


Neag School of Education

Educational Research Basics by Del Siegle

Instrument validity.

Validity: An instrument is valid only to the extent that its scores permit appropriate inferences to be made about 1) a specific group of people for 2) specific purposes.

An instrument that is a valid measure of third graders' math skills probably is not a valid measure of high school calculus students' math skills. An instrument that is a valid predictor of how well students might do in school may not be a valid measure of how well they will do once they complete school. So we never say that an instrument is valid or not valid; we say it is valid for a specific purpose with a specific group of people. Validity is specific to the appropriateness of the interpretations we wish to make with the scores.

In the reliability section , we discussed a scale that consistently reported a weight of 15 pounds for someone. While it may be a reliable instrument, it is not a valid instrument to determine someone’s weight in pounds. Just as a measuring tape is a valid instrument to determine people’s height, it is not a valid instrument to determine their weight.

There are three general categories of instrument validity.

Content-Related Evidence (also known as Face Validity) Specialists in the content measured by the instrument are asked to judge the appropriateness of the items on the instrument. Do they cover the breadth of the content area (does the instrument contain a representative sample of the content being assessed)? Are they in a format that is appropriate for those using the instrument? A test that is intended to measure the quality of science instruction in fifth grade should cover material covered in the fifth grade science course in a manner appropriate for fifth graders. A national science test might not be a valid measure of local science instruction, although it might be a valid measure of national science standards.

Criterion-Related Evidence Criterion-related evidence is collected by comparing the instrument with some future or current criteria, thus the name criterion-related. The purpose of an instrument dictates whether predictive or concurrent validity is warranted.

– Predictive Validity If an instrument is purported to measure some future performance, predictive validity should be investigated. A comparison must be made between the instrument and some later behavior that it predicts. Suppose a screening test for 5-year-olds is purported to predict success in kindergarten. To investigate predictive validity, one would give the prescreening instrument to 5-year-olds prior to their entry into kindergarten. The children’s kindergarten performance would be assessed at the end of kindergarten and a correlation would be calculated between the screening instrument scores and the kindergarten performance scores.

– Concurrent Validity Concurrent validity compares scores on an instrument with current performance on some other measure. Unlike predictive validity, where the second measurement occurs later, concurrent validity requires a second measure at about the same time. Concurrent validity for a science test could be investigated by correlating scores for the test with scores from another established science test taken about the same time. Another way is to administer the instrument to two groups who are known to differ on the trait being measured by the instrument. One would have support for concurrent validity if the scores for the two groups were very different. An instrument that measures altruism should be able to discriminate those who possess it (nuns) from those who don’t (homicidal maniacs). One would expect the nuns to score significantly higher on the instrument.
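The known-groups comparison described above can be sketched as a standardised mean difference. This is only an illustration under stated assumptions: the scores are invented, `cohens_d` is a hypothetical helper, and Cohen's d is one common effect-size choice rather than a method prescribed by the source.

```python
from math import sqrt
from statistics import mean, stdev

def cohens_d(group_a, group_b):
    """Standardised mean difference using the pooled sample variance."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)

# Hypothetical instrument scores for two groups known to differ on the trait.
high_trait_group = [42, 45, 39, 47, 44]
low_trait_group = [21, 25, 19, 28, 22]

d = cohens_d(high_trait_group, low_trait_group)
print(f"standardised difference between groups: {d:.2f}")
```

A large positive difference in the expected direction supports the instrument's ability to separate the groups. A difference near zero would count against it.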

Construct-Related Evidence Construct validity is an on-going process. Please refer to pages 174-176 for more information. Construct validity will not be on the test.

– Discriminant Validity An instrument does not correlate significantly with variables from which it should differ.

– Convergent Validity An instrument correlates highly with other variables with which it should theoretically correlate.

Del Siegle, Ph.D. Neag School of Education – University of Connecticut www.delsiegle.info

How to Validate a Research Instrument


In the field of Psychology, research is a necessary component of determining whether a given treatment is effective and if our current understanding of human behavior is accurate. Therefore, the instruments used to evaluate research data must be valid and precise. If they are not, the information collected from a study is likely to be biased or factually flawed, doing more harm than good.

Protect construct validity. A construct is the behavior or outcome a researcher seeks to measure within a study, often revealed by the independent variable. Therefore, it is important to operationalize or define the construct precisely. For example, if you are studying depression but only measure the number of times a person cries, your measure does not capture the construct and your research will likely be skewed.

Protect internal validity. Internal validity refers to how well your experiment is free of outside influence that could taint its results. Thus, a research instrument that takes students’ grades into account but not their developmental age is not a valid determinant of intelligence. Because the grades on a test will vary within different age brackets, a valid instrument should control for differences and isolate true scores.

Protect external validity. External validity refers to how well your study reflects the real world and not just an artificial situation. An instrument may work perfectly with a group of white male college students but this does not mean its results are generalizable to children, blue-collar adults or those of varied gender and ethnicity. For an instrument to have high external validity, it must be applicable to a diverse group of people and a wide array of natural environments.

Protect conclusion validity. When the study is complete, researchers may still invalidate their data by making a conclusion error. Essentially, there are two types to guard against. A Type I error is claiming a relationship exists between experimental variables when the apparent correlation is merely the result of chance or flawed data. Conversely, a Type II error is concluding there is no relationship when, in fact, there is one.

Validating instruments and conducting experimental research is an extensive area within the mental health profession and should never be taken lightly. For an in-depth treatment of this topic, see Research Design in Counseling (3rd edition) by Heppner, Wampold, & Kivlighan.


David Kingsbury holds degrees in psychology and theology from Campbellsville University. He has published two university-level pieces and numerous freelance articles on culture and society, entertainment, and travel. His portfolio includes clips from "Polymancer" magazine and various online health and fitness sites, where he offers his services as a mental health writer.


Why is data validation important in research?


Data collection and analysis is one of the most important aspects of conducting research. High-quality data allows researchers to interpret findings accurately, act as a foundation for future studies, and give credibility to their research. As such, research often needs to go under the scanner to be free of suspicions of fraud and data falsification. At times, even unintentional errors in data could be viewed as research misconduct. Hence, data integrity is essential to protect your reputation and the reliability of your study.

Owing to the very nature of research and the sheer volume of data collected in large-scale studies, errors are bound to occur. One way to avoid “bad” or erroneous data is through data validation.

What is data validation?

Data validation is the process of examining the quality and accuracy of the collected data before processing and analysing it. It not only ensures the accuracy but also confirms the completeness of your data. However, data validation is time-consuming and can delay analysis significantly. So, is this step really important?

Importance of data validation

Data validation is important for several aspects of a well-conducted study:

- To ensure a robust dataset: The primary aim of data validation is to ensure an error-free dataset for further analysis. This is especially important if you or other researchers plan to use the dataset for future studies or to train machine learning models.

- To get a clearer picture of the data: Data validation also includes ‘cleaning-up’ of data, i.e., removing inputs that are incomplete, not standardized, or not within the range specified for your study. This process could also shed light on previously unknown patterns in the data and provide additional insights regarding the findings.

- To get accurate results: If your dataset has discrepancies, it will impact the final results and lead to inaccurate interpretations. Data validation can help identify errors, thus increasing the accuracy of your results.

- To mitigate the risk of forming incorrect hypotheses: Only those inferences and hypotheses that are backed by solid data are considered valid. Thus, data validation can help you form logical and reasonable speculations.

- To ensure the legitimacy of your findings: The integrity of your study is often determined by how reproducible it is. Data validation can enhance the reproducibility of your findings.
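As a minimal sketch of the clean-up described in the list above, the snippet below drops incomplete and duplicate records and standardises one text field. The field names in `REQUIRED_FIELDS` and the sample records are hypothetical and would need to match your own dataset.

```python
# Hypothetical required fields; adjust to the variables in your own study.
REQUIRED_FIELDS = ("respondent_id", "age", "country")

def clean_records(records):
    """Split records into cleaned and rejected lists.

    A record is rejected when a required field is missing or empty,
    or when its respondent_id has already been seen (a duplicate).
    """
    cleaned, rejected, seen_ids = [], [], set()
    for record in records:
        missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
        if missing or record["respondent_id"] in seen_ids:
            rejected.append(record)
            continue
        seen_ids.add(record["respondent_id"])
        # Standardise free-text country names, e.g. " peru " -> "Peru".
        cleaned.append({**record, "country": record["country"].strip().title()})
    return cleaned, rejected

raw_records = [
    {"respondent_id": 1, "age": 34, "country": " peru "},
    {"respondent_id": 2, "age": None, "country": "Peru"},   # incomplete
    {"respondent_id": 1, "age": 34, "country": "Peru"},     # duplicate id
    {"respondent_id": 3, "age": 27, "country": "NETHERLANDS"},
]
cleaned, rejected = clean_records(raw_records)
print(len(cleaned), "kept;", len(rejected), "rejected")
```

Keeping the rejected records in a separate list, rather than silently discarding them, leaves an audit trail that supports reproducibility.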

Data validation in research

Data validation is necessary for all types of research. For quantitative research, which utilizes measurable data points, the quality of data can be enhanced by selecting the correct methodology, avoiding biases in the study design, choosing an appropriate sample size and type, and conducting suitable statistical analyses.

In contrast, qualitative research, which includes surveys or behavioural studies, is prone to the use of incomplete and/or poor-quality data. This is because of the likelihood that the responses provided by survey participants are inaccurate and due to the subjective nature of observational studies. Thus, it is extremely important to validate data by incorporating a range of clear and objective questions in surveys, bullet-proofing multiple-choice questions, and setting standard parameters for data collection.

Importantly, for studies that utilize machine learning approaches or mathematical models, validating the data model is as important as validating the data inputs. Thus, for the generation of automated data validation protocols, one must rely on appropriate data structures, content, and file types to avoid errors due to automation.

Although data validation may seem like an unnecessary or time-consuming step, it is critical to the integrity of your study and well worth the effort.


How to validate a research instrument: definition and importance

The validation of a research instrument refers to the process of assessing the survey questions to ensure that they are reliable.

Because there are multiple hard-to-control factors that can influence the reliability of a question, this process is not a quick or easy task.

6 steps to validate a research instrument

Here are six steps for you to effectively validate a research instrument.

Step 1: Perform an Instrument Test

The first of the steps to validate a research instrument is divided into two parts. The first is to offer a survey to a group familiar with the research topic to assess whether the questions successfully capture it.

The second review should come from someone who is an expert in question construction, ensuring that your survey does not contain common mistakes, such as confusing or ambiguous questions.

Step 2: Run a pilot test

Another step in validating a research instrument is to select a subset of the survey participants and run a pilot survey. The suggested sample size varies, although around 10 percent of your total population is a solid number of participants. The more participants you can gather the better, although even a smaller sample can help you eliminate irrelevant questions.

Step 3: Clean the collected data

After going through the data collection process, you can export the raw data for cleaning. Exporting the data directly greatly reduces the risk of transcription error. The next step is to reverse-code the negatively worded questions.

If respondents have answered carefully, their responses to negatively worded questions should, once reverse-coded, be consistent with their responses to similar positively worded questions. If that is not the case, consider removing that respondent's survey.

Also check the minimum and maximum values for your general data set. For example, if you used a five-point scale and you see an answer that indicates the number six, you may have a data entry error.
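The reverse-coding and range checks above can be sketched as follows. This is a minimal illustration, assuming a five-point scale and a hypothetical set of negatively worded items (`NEGATIVE_ITEMS`). The respondent and question identifiers are invented.

```python
SCALE_MIN, SCALE_MAX = 1, 5   # five-point scale, as in the example above
NEGATIVE_ITEMS = {"q2"}       # hypothetical: q2 is negatively worded

def reverse_code(score):
    """Mirror a score on the scale: 1 <-> 5, 2 <-> 4, 3 stays 3."""
    return SCALE_MIN + SCALE_MAX - score

def find_out_of_range(responses):
    """Return (respondent, item, value) for every value outside the scale."""
    return [
        (respondent, item, value)
        for respondent, answers in responses.items()
        for item, value in answers.items()
        if not SCALE_MIN <= value <= SCALE_MAX
    ]

def recode(responses):
    """Return a copy of the responses with negatively worded items reversed."""
    return {
        respondent: {
            item: reverse_code(value) if item in NEGATIVE_ITEMS else value
            for item, value in answers.items()
        }
        for respondent, answers in responses.items()
    }

raw = {
    "r1": {"q1": 5, "q2": 1, "q3": 4},
    "r2": {"q1": 2, "q2": 4, "q3": 6},  # 6 is impossible on a 1-5 scale
}
errors = find_out_of_range(raw)
recoded = recode(raw)
print("possible data entry errors:", errors)
```

Running the range check on the raw data before recoding keeps the two concerns separate: an out-of-range value is flagged as entered, not after it has been transformed.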

Fortunately, survey software such as QuestionPro includes tools for quality control of survey data.

Step 4: Perform a Component Analysis

Another step to validate a research instrument is to perform a principal component analysis.

The goal of this stage is to determine what the underlying factors represent by looking for common themes in the questions. You can combine the questions that load onto the same factor and compare them during the final analysis.

The number of factor themes you can identify indicates the number of elements your survey is measuring.
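One way to sketch this step is to take the eigenvalues of the inter-item correlation matrix. The example below assumes NumPy is available and uses invented pilot data. The "eigenvalue greater than 1" retention rule (often called the Kaiser criterion) is an assumption added here for illustration, not something the text above prescribes.

```python
import numpy as np

# Hypothetical pilot responses: rows are respondents, columns are six questions.
responses = np.array([
    [5, 4, 5, 2, 1, 2],
    [4, 5, 4, 1, 2, 1],
    [2, 1, 2, 5, 4, 5],
    [1, 2, 1, 4, 5, 4],
    [4, 4, 5, 3, 3, 2],
    [2, 2, 1, 3, 4, 4],
    [5, 5, 4, 4, 5, 5],
    [1, 1, 2, 2, 1, 1],
])

# Correlation matrix between questions (columns).
corr = np.corrcoef(responses, rowvar=False)

# eigh suits symmetric matrices; it returns eigenvalues in ascending order.
eigenvalues, eigenvectors = np.linalg.eigh(corr)
order = np.argsort(eigenvalues)[::-1]
eigenvalues = np.clip(eigenvalues[order], 0.0, None)  # guard tiny negatives
eigenvectors = eigenvectors[:, order]

# Loadings: how strongly each question relates to each component.
loadings = eigenvectors * np.sqrt(eigenvalues)

# Assumed retention rule: keep components with eigenvalue > 1.
n_components = int((eigenvalues > 1.0).sum())
print("eigenvalues:", np.round(eigenvalues, 2))
print("components retained:", n_components)
```

Questions with large loadings on the same retained component are candidates for grouping under one theme. Dedicated statistical packages add rotation and fit diagnostics that this sketch omits.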

Step 5: Check the consistency of the questions

The next step in validating a research instrument is to check the consistency of the questions that load onto the same factor.

Checking the correlation between these questions measures their reliability by confirming that the survey responses are consistent.
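A common summary of this kind of internal consistency is Cronbach's alpha. The text above does not name a specific statistic, so treat the choice as an assumption. The sketch below computes it from invented responses to three questions assumed to load onto the same factor.

```python
from statistics import variance

def cronbach_alpha(item_scores):
    """Cronbach's alpha for rows of respondents and columns of items.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(item_scores[0])
    columns = list(zip(*item_scores))  # one tuple of scores per item
    item_variance_sum = sum(variance(column) for column in columns)
    total_variance = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)

# Hypothetical responses: five respondents, three questions on one factor.
factor_items = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [1, 2, 1],
    [3, 3, 4],
]
alpha = cronbach_alpha(factor_items)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Values closer to 1 indicate that the questions vary together. What counts as acceptable depends on the field and the purpose of the instrument.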

Step 6: Review your survey

The last of the steps to validate a research instrument is the final review of the survey based on the information obtained from the data analysis.

If you come across a question that doesn't relate to any of your survey's factors, you should delete it. If it is important, you can keep it and analyze it separately.

If only minor changes were made to the survey, you are likely ready to apply it after the final reviews. But if the changes are significant, another pilot survey and evaluation process will probably be needed.

Importance of validating a research instrument

Taking these steps to validate a research instrument is essential to ensure that the survey is truly reliable.

It is important to remember that you must include your instrument's validation methods when submitting your research results report.

Taking these steps to validate a research instrument not only strengthens its reliability, but also adds a mark of quality and professionalism to your final product.

Related Articles

Group interview definition/types/advantages/disadvantages, data collection methods in quantitative research.

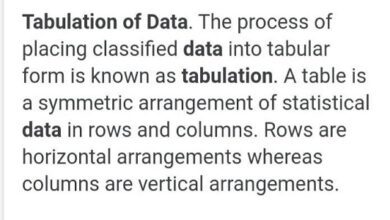

What is tabulation of data in survey types objective How to tabulate

What are periodicals Tips for finding How to find items Examples

Leave a reply cancel reply.

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

Please input characters displayed above.

Validation of the Teacher Questionnaire of Montessori Practice for Early Childhood in the Dutch Context

- Symen van der Zee Saxion University of Applied Sciences

Montessori education has existed for more than 100 years and counts almost 16,000 schools worldwide (Debs et al., 2022). Still, little is known about the implementation and fidelity of Montessori principles. Measuring implementations holds significant importance as it provides insight into current Montessori practices and because it is assumed that implementation might influence its effectiveness. In the Netherlands, it is especially important to measure fidelity because of the country’s history of flexible implementation of Montessori principles. No instruments currently exist that are specifically designed to measure Montessori implementation in the Dutch context. This study aims to validate a translated version of the Teacher Questionnaire for Montessori Practices, developed by Murray et al. (2019), within the Dutch early childhood education context. Additionally, it seeks to investigate the extent to which Montessori principles are implemented in Dutch early childhood schools. Data were collected from 131 early childhood Montessori teachers. Confirmatory factor analysis revealed that the Dutch dataset did not align with the factor structure proposed by Murray et al. (2019). Subsequent exploratory factor analysis led to the identification of a 3-factor solution, encompassing dimensions related to Children’s Freedom, Teacher Guidance, and Curriculum, which shows some similarities with Murray et al.’s (2019) factors. Implementation levels in the Netherlands varied, with the highest level of implementation observed in Children’s Freedom and the lowest in Curriculum.

Bartlett, M. S. (1954). A note on the multiplying factors for various χ 2 approximations. Journal of the Royal Statistical Society. Series B (Methodological), 296-298.

Berends, R. & de Brouwer, J. (2020). Perspectieven op Montessori [Perspectives on Montessori]. Saxion University Progressive Education University Press.

Brown, T. A. (2015). Confirmatory factor analysis for applied research (2nd ed.). Guilford Publications.

Debs, M. (2023). Introduction: Global Montessori Education. In M. McKenna, A. Murray, E. Alquist, & M. Debs (Eds.), Handbook of Montessori education. Bloomsbury Publications.

Debs, M., de Brouwer, J., Murray, A. K., Lawrence, L., Tyne, M., & Von der Wehl, C. (2022). Global diffusion of Montessori schools. Journal of Montessori Research, 8(2), 1–15. https://doi.org/10.17161/jomr.v8i2.18675

Demangeon, A., Claudel-Valentin, S., Aubry, A., & Tazouti, Y. (2023). A meta-analysis of the effects of Montessori education on five fields of development and learning in preschool and school-age children. Contemporary Educational Psychology, Article 102182. https://doi.org/10.1016/j.cedpsych.2023.102182

Dutch Montessori Association. (n.d.). Onze scholen op de kaart [Our schools on the map]. https://montessori.nl/scholen/

Dutch Montessori Association. (1983). Raamplan Montessori opleidingen [Framework plan for Montessori teacher training]. Nederlandse Montessori Vereniging.

Eyssen, C. H. (1919). Iets over de Montessori gedachten in de Fröbelschool [Thoughts about Montessori in Fröbel schools]. Correspondentieblad van den Bond van Onderwijzeressen bij het Fröbelonderwijs, 15, 37–38.

Fabrigar, L., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272–299. https://doi.org/10.1037/1082-989X.4.3.272

Field, A. (2018). Discovering statistics using IBM SPSS statistics. SAGE.

Gerker, H. E. (2023). Making sense of Montessori teacher identity, Montessori pedagogy, and educational policies in public schools. Journal of Montessori Research, 9(1), 1–15. https://doi.org/10.17161/jomr.v9i1.18861

Hardesty, D. M., & Bearden, W. O. (2004). The use of expert judges in scale development. Implications for improving face validity of measures of unobservable constructs. Journal of Business Research, 57(2), 98–107. https://doi.org/10.1016/S0148-2963(01)00295-8

Hazenoot, M. (2010). In rusteloze arbeid: de betekenis van Cornelia Philippi-Siewertsz van Reesema (1880-1963) voor de ontwikkeling van het onderwijs aan het jonge kind [In restless labor: The significance of Cornelia Philippi-Siewertsz van Reesema (1880-1963) for the development of early childhood education] [Unpublished doctoral dissertation]. University of Groningen.

Hoencamp, M., Exalto, J., de Muynck, A., & de Ruyter, D. (2022). A Dutch example of New Education: Philipp Abraham Kohnstamm (1875–1951) and his ideas about the New School. History of Education, 51(6), 789-806. https://doi.org/10.1080/0046760X.2022.2038697

Horn, J. L. (1965). A rationale and test for the number of factors in factor analysis. Psychometrika, 30(2), 179–185. https://psycnet.apa.org/doi/10.1007/BF02289447

Hu, L.-T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1–55. https://doi.org/10.1080/10705519909540118

Imelman, J. D., & Meijer, W. A. J. (1986). De nieuwe school, gisteren en vandaag [The new school, yesterday and today]. Elsevier.

Inspectorate of Education. (n.d.). Toezichtresultaten [Monitoring results]. https://toezichtresultaten.onderwijsinspectie.nl/zoek?q=montessori&page=1&sector=BAS

Joosten-Chotzen, R. (1937). Montessori opvoeding. Haar toepassing in ons land. [Montessori education. Its application in our country]. Bijleveld.

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika, 39(1), 31-36. https://doi.org/10.1007/bf02291575

Kramer, R. (1976). Maria Montessori: A biography. Diversion Books.

Ledesma, R. D., & Valero-Mora, P. (2007). Determining the number of factors to retain in EFA: An easy-to-use computer program for carrying out Parallel Analysis. Practical Assessment, Research, and Evaluation, 12(1). https://doi.org/10.7275/wjnc-nm63

Leenders, H. H. M. (1999). Montessori en fascistisch Italië: een receptiegeschiedenis [Montessori and fascist Italy: A reception history]. Intro.

Lillard, A. S. (2019). Shunned and admired: Montessori, self-determination, and a case for radical school reform. Educational Psychology Review, 31, 939–965. https://doi.org/10.1007/s10648-019-09483-3

Lillard, A. S. (2012). Preschool children’s development in classic Montessori, supplemented Montessori, and conventional programs. Journal of School Psychology, 50(3), 379–401. https://doi.org/10.1016/j.jsp.2012.01.001

Lillard, A. S., Heise, M. J., Richey, E. M., Tong, X., Hart, A., & Bray, P. M. (2017). Montessori preschool elevates and equalizes child outcomes: A longitudinal study. Frontiers in Psychology, 8. https://doi.org/10.3389/fpsyg.2017.01783

Montessori, M. (1935). De Methode der wetenschappelijke pedagogiek [The Montessori Method]. Van Holkema & Warendorf N.V. (original work published 1914)

Montessori, M. (1937). Het geheim van het kinderleven [The secret of childhood]. Van Holkema & Warendorf N.V.

Montessori, M. (1949). The absorbent mind. Theosophical Press.

Montessori, M. (1971). The formation of man. The Theosophical Publishing House. (original work published 1955)

Montessori, M. (1989). The child in the family. Clio Press. (original work published 1975)

Montessori, M. (1997). The California lectures of Maria Montessori, 1915. Clio Press.

Montessori, M. M. (1961). Waarom Montessori lycea [Why Montessori high schools]. In M. A. Allessie, O. Cornelissen, J. Haak, L. D. Misset, & W. P. Postma (Eds.), Montessori perspectieven (pp. 15–21). J. H. De Busy.

Montessori, M. M. (1973). Voorwoord [Preface]. In M. J. ten Cate, T. J. Hoeksema, & E. J. Lintvelt-Lemaire, (Eds.), Het montessori material deel 1 (pp. 3–5). Nederlandse Montessori Vereniging.

Murray, A. K., Chen, J., & Daoust, C. J. (2019). Developing instruments to measure Montessori instructional practices. Journal of Montessori Research, 5(1), 75–87. https://doi.org/10.17161/jomr.v5i1.9797

Murray, A. K., & Daoust, C. J. (2023). Fidelity issues in Montessori research. In M. McKenna, A. Murray, E. Alquist, & M. Debs (Eds.), Handbook of Montessori education. Bloomsbury Publications.

Philippi-Siewertsz van Reesema, C. (1924). Voorlopers van Montessori [Predecessors of Montessori]. Pedagogische Studiën, 5, 72–77.

Philippi-Siewertsz van Reesema, C. (1954). Kleuterwereld en kleuterschool [Toddler world and kindergarten]. Wereldbibliotheek. https://objects.library.uu.nl/reader/index.php?obj=1874-205180&lan=en#page//21/44/33/21443341246326260137183521089397551476.jpg/mode/1up

Prudon, P. (2015). Confirmatory factor analysis as a tool in research using questionnaires: A critique. Comprehensive Psychology. https://doi.org/10.2466/03.cp.4.10

Randolph, R. J., Bryson, A., Menon, L., Henderson, D., Mauel, A. K., Michaels, S., Rosenstein, D. L. W., McPherson, W., O’Grady, R., & Lillard, A. S. (2023). Montessori education’s impact on academic and nonacademic outcomes: A systematic review. Campbell Systematic Reviews, 19(3), 1–82. https://doi.org/10.1002/cl2.1330

Rietveld-van Wingerden, M., Sturm, J. C., & Miedema, S. (2003). Vrijheid van onderwijs en sociale cohesie in historisch perspectief [Freedom of education and social cohesion in historical perspective]. Pedagogiek, 23(2), 97–108. https://dspace.library.uu.nl/handle/1874/187625

den Ouden, M., et al. (n.d.). Saxion Ethische commissie [Saxion University of Applied Sciences Ethical commission]. https://srs.saxion.nl/ethische-commissie/

Schmitt, T. A. (2011). Current methodological considerations in exploratory and confirmatory factor analysis. Journal of Psychoeducational Assessment, 29(4), 304–321. https://doi.org/10.1177/0734282911406653

Schwegman, M. (1999). Maria Montessori 1870–1952. Amsterdam University Press.

Sins, P., & van der Zee, S. (2015). De toegevoegde waarde van traditioneel vernieuwingsonderwijs: een studie naar de verschillen in cognitieve en niet-cognitieve opbrengsten tussen daltonscholen en traditionele scholen voor primair onderwijs [The added value of progressive education: A study of the differences in cognitive and noncognitive outcomes between Dalton schools and traditional primary schools]. Pedagogische Studiën, 92(4). https://pedagogischestudien.nl/article/view/14174/15663

Sixma, J. (1956). De Montessori lagere school [The Montessori elementary school]. Wolters.

Slaman, P. (2018). In de regel vrij. 100 jaar politiek rond onderwijs, cultuur en wetenschap [Generally free. 100 years of politics around education, culture and science]. Ministerie van OCW.

Tavakol, M., & Dennick, R. (2011). Making sense of Cronbach’s alpha. International Journal of Medical Education, 2, 53–55. https://doi.org/10.5116/ijme.4dfb.8dfd

Tavakol, M., & Wetzel, A. (2020). Factor analysis: A means for theory and instrument development in support of construct validity. International Journal of Medical Education, 11, 245–247. https://doi.org/10.5116/ijme.5f96.0f4a

Thompson, B., & Daniel, L. G. (1996). Factor Analytic Evidence for the Construct Validity of Scores: A Historical Overview and Some Guidelines. Educational and Psychological Measurement, 56(2), 197–208. https://doi.org/10.1177/0013164496056002001

Vos, W. (2007). Aandacht voor kwaliteit [Focus on quality]. In M. Stefels & M. Rubinstein (Eds.), Montessorischolen in beweging (pp. 75–80). Garant Uitgevers.

Williams, B., Onsman, A., & Brown, T. (2010). Exploratory factor analysis: A five-step guide for novices. Australasian Journal of Paramedicine, 8, 1–13. https://doi.org/10.33151/ajp.8.3.93

Worthington, R. L., & Whittaker, T. A. (2006). Scale development research: a content analysis and recommendations for best practices. The Counseling Psychologist, 34(6), 806–838. https://doi.org/10.1177/0011000006288127

Copyright (c) 2024 Jaap de Brouwer, Vivian Morssink-Santing, Symen van der Zee

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License .

Authors retain copyright and grant the journal right of first publication with the work simultaneously licensed under a Creative Commons Attribution Non-Commercial License that allows others to share the work with an acknowledgement of the work's authorship and initial publication in this journal. Authors can view article download statistics for published articles within their accounts.

Journal of Montessori Research Author Agreement

The following is an agreement between the Author (the “Corresponding Author”) acting on behalf of all authors of the work (“Authors”) and the Journal of Montessori Research (the “Journal”) regarding your article (the “Work”) that is being submitted for consideration. Whereas the parties desire to promote effective scholarly communication that promotes local control of intellectual assets, the parties for valuable consideration agree as follows.

A. CORRESPONDING AUTHOR’S GRANT OF RIGHTS

After being accepted for publication, the Corresponding Author grants to the Journal, during the full term of copyright and any extensions or renewals of that term, the following:

1. An irrevocable non-exclusive right to reproduce, republish, transmit, sell, distribute, and otherwise use the Work in electronic and print editions of the Journal and in derivative works throughout the world, in all languages, and in all media now known or later developed.

2. An irrevocable non-exclusive right to create and store electronic archival copies of the Work, including the right to deposit the Work in open access digital repositories.

3. An irrevocable non-exclusive right to license others to reproduce, republish, transmit, and distribute the Work under the condition that the Authors are attributed. (Currently this is carried out by publishing the content under a Creative Commons Attribution Non-Commercial 4.0 license (CC BY-NC).)

4. Copyright in the Work remains with the Authors.

B. CORRESPONDING AUTHOR’S DUTIES

1. When distributing or re-publishing the Work, the Corresponding Author agrees to credit the Journal as the place of first publication.

2. The Corresponding Author agrees to inform the Journal of any changes in contact information.

C. CORRESPONDING AUTHOR’S WARRANTY

The Corresponding Author represents and warrants that the Work is the Authors’ original work and that it does not violate or infringe the law or the rights of any third party and, specifically, that the Work contains no matter that is defamatory or that infringes literary or proprietary rights, intellectual property rights, or any rights of privacy. The Corresponding Author also warrants that he or she has the full power to make this agreement, and if the Work was prepared jointly, the Corresponding Author agrees to inform the Authors of the terms of this Agreement and to obtain their written permission to sign on their behalf. The Corresponding Author agrees to hold the Journal harmless from any breach of the aforestated representations.

D. JOURNAL’S DUTIES

In consideration of the Author’s grant of rights, the Journal agrees to publish the Work, attributing the Work to the Authors.

E. ENTIRE AGREEMENT

This agreement reflects the entire understanding of the parties. This agreement may be amended only in writing by an addendum signed by the parties. Amendments are incorporated by reference to this agreement.

ACCEPTED AND AGREED BY THE CORRESPONDING AUTHOR ON BEHALF OF ALL AUTHORS CONTRIBUTING TO THIS WORK


This electronic publication is supported by the University of Kansas Libraries.

ISSN: 2378-3923

ORIGINAL RESEARCH article

Adaptation of the internet business self-efficacy scale for Peruvian students with a commercial profile

Provisionally accepted.

- 1 Peruvian Union University, Peru

- 2 Scientific University of the South, Peru

The final, formatted version of the article will be published soon.

Introduction: Given the lack of instruments to evaluate the sense of efficacy regarding entrepreneurial capacity in Peruvian university students, this study aims to translate into Spanish, adapt, and validate the Internet Entrepreneurial Self-efficacy Scale in Peruvian university students with a commercial profile. Method: An instrumental study was conducted with 743 students between 18 and 42 years old, enrolled in degree programs with a commercial profile (Administration, Accounting, Economics, and other related programs) from the three regions of Peru (Coast, Mountains, Jungle). Content-based validity was analyzed with Aiken's V coefficient, reliability with Cronbach's alpha coefficient, and internal structure with confirmatory factor analysis. Results: A back-translation was achieved in the appropriate time and context. All items proved to be valid (V > .70), and the reliability of the instrument was very good (α = 0.96). In the confirmatory factor analysis, the three-dimensional structure of the instrument showed an adequate fit (χ²(87) = 279.6, p < .001, CFI = .972, RMSEA = .049, SRMR = .025), which corroborates the original internal structure. Complementary analyses found that the instrument is invariant according to sex and university. Finally, it shows significant correlations with scales that measure similar constructs. Conclusions: The Internet Entrepreneurial Self-efficacy Scale shows adequate psychometric properties; therefore, it can be used as a management tool to analyze the entrepreneurial capacity of university students with a commercial profile. These findings allow universities to evaluate the entrepreneurial capabilities of students who can promote sustainable businesses, which in turn improves the relationship between the university, the state, and companies.

Keywords: entrepreneurial self-efficacy, Validation study, Entrepreneurship, university students, Peru

Received: 26 Jan 2024; Accepted: 13 May 2024.

Copyright: © 2024 Torres-Miranda, Ccama, Niño Valiente, Turpo Chaparro, Castillo-Blanco and Mamani-Benito. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

* Correspondence: Mx. Oscar Mamani-Benito, Peruvian Union University, Lima, 05000, Peru
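The abstract above reports Aiken's V as its content-validity index, with items counted as valid when V > .70. As a minimal sketch of how that coefficient is commonly computed for a single item, the Python below uses the standard formula V = Σ(r − lo) / (n · (hi − lo)), where r is each expert's rating and lo and hi are the ends of the rating scale. The function name `aikens_v`, the 1 to 5 scale, and the example ratings are illustrative assumptions, not data or code from the study.

```python
def aikens_v(ratings, lo, hi):
    """Aiken's V for one item.

    ratings: ratings given by the expert judges (hypothetical data here)
    lo, hi:  lowest and highest values of the rating scale
    """
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < lo or r > hi for r in ratings):
        raise ValueError("ratings must lie within [lo, hi]")
    n = len(ratings)
    # Sum of each judge's distance from the lowest category,
    # divided by the largest sum that n judges could produce.
    return sum(r - lo for r in ratings) / (n * (hi - lo))


# Hypothetical example: five judges rate one item on an assumed 1-5 scale.
v = aikens_v([4, 5, 5, 4, 3], lo=1, hi=5)
print(round(v, 2))
```

V runs from 0 (every judge chose the lowest category) to 1 (every judge chose the highest), so a cut-off such as .70 can be applied item by item.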


Validation of a survey instrument is an important activity in the research process. Face validity and content validity, though being qualitative methods, are essential steps in validating how far the survey instrument can measure what it is intended for. ... introduction to the research and the instrument. Information regarding the scale and ...

... measurement. An instrument development study might also be necessary if a researcher determines that an existing instrument is potentially psychometrically flawed (e.g., lacking reliability or validity evidence, step 7). ...

Learn about the types and methods of validity and reliability for instruments used in research and evaluation. Find resources and examples for testing and improving the quality of your measurement.

These measurements can be examined to aid the researcher in making informed decisions about revisions to the instrument. Phase four is validation. In this phase the researcher should conduct a quantitative pilot study and analyze the data. It may be helpful to ask the participants for feedback to allow for further refinement of the instrument.

to their specific research interests. The survey is not the focus of the research but a tool, an artifact of conducting research. Other people may decide to use the researcher's instrument as they see fit, though it was not the researcher's intention to provide a new instrument for other researchers to use.

New researchers are often confused about how to select and conduct the proper type of validity test for their research instrument (questionnaire/survey). This review article explores and describes the validity and reliability of a questionnaire/survey and also discusses various forms of validity and reliability tests.

Generally speaking, the first step in validating a survey is to establish face validity. There are two important steps in this process. The first is to have experts or people who understand your topic read through your questionnaire. They should evaluate whether the questions effectively capture the topic under investigation.

Learn how to evaluate the validity of patient-reported outcomes (PROs) instruments used in randomized trials and other studies. Find out the validation strategies, evidence, and challenges for different types of PROs.

Validity is the extent to which an instrument measures what it is supposed to measure and performs as it is designed to perform. It is rare, if not nearly impossible, for an instrument to be 100% valid, so validity is generally measured in degrees. As a process, validation involves collecting and analyzing data to assess the accuracy of an instrument.

Constructs can be characteristics of individuals, such as intelligence, obesity, job satisfaction, or depression; they can also be broader concepts applied to organizations or social groups, such as gender equality, corporate social responsibility, or freedom of speech.

They indicate how well a method, technique, or test measures something. Reliability is about the consistency of a measure, and validity is about the accuracy of a measure. It's important to consider reliability and validity when you are creating your research design, planning your methods, and writing up your results, especially in ...
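Reliability in the sense of internal consistency is often summarized with Cronbach's alpha. The sketch below shows one common way to compute it, α = k/(k − 1) · (1 − Σ item variances / variance of total scores), using only the Python standard library. The function name `cronbach_alpha` and the response matrix are hypothetical, and the use of sample variances is an assumption of this sketch.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents.

    scores: one row per respondent, each row holding that respondent's
            scores on the k items of the scale (hypothetical data here).
    """
    if len(scores) < 2:
        raise ValueError("at least two respondents are required")
    k = len(scores[0])
    if k < 2:
        raise ValueError("at least two items are required")
    if any(len(row) != k for row in scores):
        raise ValueError("every respondent must answer all k items")

    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")

    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical responses: five respondents, three items.
responses = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 5, 4],
    [3, 3, 4],
]
print(round(cronbach_alpha(responses), 3))
```

Alpha approaches 1 when the items rise and fall together across respondents. A high alpha is evidence of consistency only, so it does not show that the instrument is valid.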

This book aims to provide a useful guide for describing all the necessary steps of validating a questionnaire. Since different researchers may adopt slightly different approaches for validating a ...


Study instruments and tools must be provided at the time of protocol submission. Information that establishes the validity of the instrument/tool should be included in the protocol. Information about the validation of study tools assists the IRB in its deliberations about the scientific validity of the proposed study.

Instrument Validity. Validity (a concept map shows the various types of validity). An instrument is valid only to the extent that its scores permit appropriate inferences to be made about 1) a specific group of people for 2) specific purposes. An instrument that is a valid measure of third graders' math skills probably is not a valid ...

Learn how to protect construct, internal, external and conclusion validity in psychological research. Find out how to define, operationalize and measure your constructs, and avoid common errors and biases.

In Phase 2, expert validation was carried out: experts assessed the instrument by filling out the validation sheet with a rating scale of 1=very poor, 2=not good, 3=fair, 4=good, and ...

Validity in qualitative research can also be checked by a technique known as respondent validation. This technique involves testing initial results with participants to see if they still ring true. Although the research has been interpreted and condensed, participants should still recognize the results as authentic and, at this stage, may even ...

To ensure a robust dataset: The primary aim of data validation is to ensure an error-free dataset for further analysis. This is especially important if you or other researchers plan to use the dataset for future studies or to train machine learning models. To get a clearer picture of the data: Data validation also includes 'cleaning-up' of ...

Step 1: Perform an Instrument Test. The first of the steps to validate a research instrument is divided into two parts. The first is to offer a survey to a group familiar with the research topic to assess whether the questions successfully capture it. The second review should come from someone who is an expert in question construction, ensuring ...

No instruments currently exist that are specifically designed to measure Montessori implementation in the Dutch context. This study aims to validate a translated version of the Teacher Questionnaire for Montessori Practices, developed by Murray et al. (2019), within the Dutch early childhood education context. ... Scale development research: a ...

The coexistence of several definitions of green jobs and measurement instruments gives room for mismatches between those concepts and their application to research questions. This paper first presents an organizing framework for the existing definitions, measurement instruments, and policy frameworks.
