
9.1: Introduction to Hypothesis Testing


- Kyle Siegrist

- University of Alabama in Huntsville via Random Services

Basic Theory

Preliminaries.

As usual, our starting point is a random experiment with an underlying sample space and a probability measure \(\P\). In the basic statistical model, we have an observable random variable \(\bs{X}\) taking values in a set \(S\). In general, \(\bs{X}\) can have quite a complicated structure. For example, if the experiment is to sample \(n\) objects from a population and record various measurements of interest, then \[ \bs{X} = (X_1, X_2, \ldots, X_n) \] where \(X_i\) is the vector of measurements for the \(i\)th object. The most important special case occurs when \((X_1, X_2, \ldots, X_n)\) are independent and identically distributed. In this case, we have a random sample of size \(n\) from the common distribution.

The purpose of this section is to define and discuss the basic concepts of statistical hypothesis testing. Collectively, these concepts are sometimes referred to as the Neyman-Pearson framework, in honor of Jerzy Neyman and Egon Pearson, who first formalized them.

A statistical hypothesis is a statement about the distribution of \(\bs{X}\). Equivalently, a statistical hypothesis specifies a set of possible distributions of \(\bs{X}\): the set of distributions for which the statement is true. A hypothesis that specifies a single distribution for \(\bs{X}\) is called simple; a hypothesis that specifies more than one distribution for \(\bs{X}\) is called composite.

In hypothesis testing, the goal is to see if there is sufficient statistical evidence to reject a presumed null hypothesis in favor of a conjectured alternative hypothesis. The null hypothesis is usually denoted \(H_0\) while the alternative hypothesis is usually denoted \(H_1\).

An hypothesis test is a statistical decision; the conclusion will either be to reject the null hypothesis in favor of the alternative, or to fail to reject the null hypothesis. The decision that we make must, of course, be based on the observed value \(\bs{x}\) of the data vector \(\bs{X}\). Thus, we will find an appropriate subset \(R\) of the sample space \(S\) and reject \(H_0\) if and only if \(\bs{x} \in R\). The set \(R\) is known as the rejection region or the critical region. Note the asymmetry between the null and alternative hypotheses. This asymmetry is due to the fact that we assume the null hypothesis, in a sense, and then see if there is sufficient evidence in \(\bs{x}\) to overturn this assumption in favor of the alternative.

An hypothesis test is a statistical analogy to proof by contradiction, in a sense. Suppose for a moment that \(H_1\) is a statement in a mathematical theory and that \(H_0\) is its negation. One way that we can prove \(H_1\) is to assume \(H_0\) and work our way logically to a contradiction. In an hypothesis test, we don't prove anything of course, but there are similarities. We assume \(H_0\) and then see if the data \(\bs{x}\) are sufficiently at odds with that assumption that we feel justified in rejecting \(H_0\) in favor of \(H_1\).

Often, the critical region is defined in terms of a statistic \(w(\bs{X})\), known as a test statistic, where \(w\) is a function from \(S\) into another set \(T\). We find an appropriate rejection region \(R_T \subseteq T\) and reject \(H_0\) when the observed value \(w(\bs{x}) \in R_T\). Thus, the rejection region in \(S\) is then \(R = w^{-1}(R_T) = \left\{\bs{x} \in S: w(\bs{x}) \in R_T\right\}\). As usual, the use of a statistic often allows significant data reduction when the dimension of the test statistic is much smaller than the dimension of the data vector.

The ultimate decision may be correct or may be in error. There are two types of errors, depending on which of the hypotheses is actually true.

Types of errors:

- A type 1 error is rejecting the null hypothesis \(H_0\) when \(H_0\) is true.

- A type 2 error is failing to reject the null hypothesis \(H_0\) when the alternative hypothesis \(H_1\) is true.

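Similarly, there are two ways to make a correct decision: we could reject \(H_0\) when \(H_1\) is true or we could fail to reject \(H_0\) when \(H_0\) is true. The possibilities are summarized in the following table:

| State | Fail to reject \(H_0\) | Reject \(H_0\) |
|---|---|---|
| \(H_0\) true | Correct decision | Type 1 error |
| \(H_1\) true | Type 2 error | Correct decision |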

Of course, when we observe \(\bs{X} = \bs{x}\) and make our decision, either we will have made the correct decision or we will have committed an error, and usually we will never know which of these events has occurred. Prior to gathering the data, however, we can consider the probabilities of the various errors.

If \(H_0\) is true (that is, the distribution of \(\bs{X}\) is specified by \(H_0\)), then \(\P(\bs{X} \in R)\) is the probability of a type 1 error for this distribution. If \(H_0\) is composite, then \(H_0\) specifies a variety of different distributions for \(\bs{X}\) and thus there is a set of type 1 error probabilities.

The maximum probability of a type 1 error, over the set of distributions specified by \( H_0 \), is the significance level of the test or the size of the critical region.

The significance level is often denoted by \(\alpha\). Usually, the rejection region is constructed so that the significance level is a prescribed, small value (typically 0.1, 0.05, 0.01).

If \(H_1\) is true (that is, the distribution of \(\bs{X}\) is specified by \(H_1\)), then \(\P(\bs{X} \notin R)\) is the probability of a type 2 error for this distribution. Again, if \(H_1\) is composite then \(H_1\) specifies a variety of different distributions for \(\bs{X}\), and thus there will be a set of type 2 error probabilities. Generally, there is a tradeoff between the type 1 and type 2 error probabilities. If we reduce the probability of a type 1 error, by making the rejection region \(R\) smaller, we necessarily increase the probability of a type 2 error because the complementary region \(S \setminus R\) is larger.

The extreme cases can give us some insight. First consider the decision rule in which we never reject \(H_0\), regardless of the evidence \(\bs{x}\). This corresponds to the rejection region \(R = \emptyset\). A type 1 error is impossible, so the significance level is 0. On the other hand, the probability of a type 2 error is 1 for any distribution defined by \(H_1\). At the other extreme, consider the decision rule in which we always reject \(H_0\) regardless of the evidence \(\bs{x}\). This corresponds to the rejection region \(R = S\). A type 2 error is impossible, but now the probability of a type 1 error is 1 for any distribution defined by \(H_0\). In between these two worthless tests are meaningful tests that take the evidence \(\bs{x}\) into account.
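The tradeoff between the two error probabilities can be made concrete with a small numerical sketch. The setup below is an illustrative assumption, not part of the text: the data are summarized by the mean of \(n = 25\) normal observations with known standard deviation 10, the hypotheses are the simple pair \(\mu = 100\) versus \(\mu = 105\), and the rejection region has the form "sample mean exceeds a cutoff".

```python
from statistics import NormalDist

# Hypothetical setup (not from the text): mean of n = 25 normal
# observations with known standard deviation sigma = 10.
N, SIGMA = 25, 10.0
MU_0, MU_1 = 100.0, 105.0      # simple H0 and simple H1
SE = SIGMA / N ** 0.5          # standard deviation of the sample mean


def error_probabilities(cutoff):
    """Return (type 1, type 2) error probabilities for R = {mean > cutoff}."""
    type_1 = 1 - NormalDist(MU_0, SE).cdf(cutoff)  # reject although H0 holds
    type_2 = NormalDist(MU_1, SE).cdf(cutoff)      # fail to reject although H1 holds
    return type_1, type_2


# Raising the cutoff shrinks R: type 1 error falls, type 2 error rises.
for cutoff in (101.0, 102.0, 103.0, 104.0):
    a, b = error_probabilities(cutoff)
    print(f"cutoff={cutoff:5.1f}  type 1={a:.4f}  type 2={b:.4f}")
```

Moving the cutoff toward \(+\infty\) or \(-\infty\) approaches the two extreme tests described above.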

If \(H_1\) is true, so that the distribution of \(\bs{X}\) is specified by \(H_1\), then \(\P(\bs{X} \in R)\), the probability of rejecting \(H_0\), is the power of the test for that distribution.

Thus the power of the test for a distribution specified by \( H_1 \) is the probability of making the correct decision.

Suppose that we have two tests, corresponding to rejection regions \(R_1\) and \(R_2\), respectively, each having significance level \(\alpha\). The test with region \(R_1\) is uniformly more powerful than the test with region \(R_2\) if \[ \P(\bs{X} \in R_1) \ge \P(\bs{X} \in R_2) \text{ for every distribution of } \bs{X} \text{ specified by } H_1 \]

Naturally, in this case, we would prefer the first test. Often, however, two tests will not be uniformly ordered; one test will be more powerful for some distributions specified by \(H_1\) while the other test will be more powerful for other distributions specified by \(H_1\).

If a test has significance level \(\alpha\) and is uniformly more powerful than any other test with significance level \(\alpha\), then the test is said to be a uniformly most powerful test at level \(\alpha\).

Clearly a uniformly most powerful test is the best we can do.

\(P\)-value

In most cases, we have a general procedure that allows us to construct a test (that is, a rejection region \(R_\alpha\)) for any given significance level \(\alpha \in (0, 1)\). Typically, \(R_\alpha\) decreases (in the subset sense) as \(\alpha\) decreases.

The \(P\)-value of the observed value \(\bs{x}\) of \(\bs{X}\), denoted \(P(\bs{x})\), is defined to be the smallest \(\alpha\) for which \(\bs{x} \in R_\alpha\); that is, the smallest significance level for which \(H_0\) is rejected, given \(\bs{X} = \bs{x}\).

Knowing \(P(\bs{x})\) allows us to test \(H_0\) at any significance level for the given data \(\bs{x}\): If \(P(\bs{x}) \le \alpha\) then we would reject \(H_0\) at significance level \(\alpha\); if \(P(\bs{x}) \gt \alpha\) then we fail to reject \(H_0\) at significance level \(\alpha\). Note that \(P(\bs{X})\) is a statistic. Informally, \(P(\bs{x})\) can often be thought of as the probability of an outcome as or more extreme than the observed value \(\bs{x}\), where extreme is interpreted relative to the null hypothesis \(H_0\).
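As a minimal sketch of this definition, consider a right-tailed test based on a standard normal test statistic, with rejection regions \(R_\alpha = \{z \ge z_{1-\alpha}\}\). The choice of test and the observed value 2.1 are assumptions made only for illustration. In this case the smallest \(\alpha\) with \(z \in R_\alpha\) is \(1 - \Phi(z)\), and comparing it with \(\alpha\) gives the same decision as checking membership in \(R_\alpha\) directly.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()   # standard normal distribution


def in_rejection_region(z, alpha):
    """Right-tailed z test: R_alpha = {z >= quantile of order 1 - alpha}."""
    return z >= STD_NORMAL.inv_cdf(1 - alpha)


def p_value(z):
    """Smallest alpha for which z lies in R_alpha, here 1 - Phi(z)."""
    return 1 - STD_NORMAL.cdf(z)


z_obs = 2.1                 # hypothetical observed test statistic
p = p_value(z_obs)
print(f"P-value = {p:.4f}")
for alpha in (0.10, 0.05, 0.01):
    # The two columns agree: z in R_alpha exactly when P-value <= alpha.
    print(alpha, in_rejection_region(z_obs, alpha), p <= alpha)
```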

Analogy with Justice Systems

There is a helpful analogy between statistical hypothesis testing and the criminal justice system in the US and various other countries. Consider a person charged with a crime. The presumed null hypothesis is that the person is innocent of the crime; the conjectured alternative hypothesis is that the person is guilty of the crime. The test of the hypotheses is a trial with evidence presented by both sides playing the role of the data. After considering the evidence, the jury delivers the decision as either not guilty or guilty. Note that innocent is not a possible verdict of the jury, because it is not the point of the trial to prove the person innocent. Rather, the point of the trial is to see whether there is sufficient evidence to overturn the null hypothesis that the person is innocent in favor of the alternative hypothesis that the person is guilty. A type 1 error is convicting a person who is innocent; a type 2 error is acquitting a person who is guilty. Generally, a type 1 error is considered the more serious of the two possible errors, so in an attempt to hold the chance of a type 1 error to a very low level, the standard for conviction in serious criminal cases is beyond a reasonable doubt.

Tests of an Unknown Parameter

Hypothesis testing is a very general concept, but an important special class occurs when the distribution of the data variable \(\bs{X}\) depends on a parameter \(\theta\) taking values in a parameter space \(\Theta\). The parameter may be vector-valued, so that \(\bs{\theta} = (\theta_1, \theta_2, \ldots, \theta_k)\) and \(\Theta \subseteq \R^k\) for some \(k \in \N_+\). The hypotheses generally take the form \[ H_0: \theta \in \Theta_0 \text{ versus } H_1: \theta \notin \Theta_0 \] where \(\Theta_0\) is a prescribed subset of the parameter space \(\Theta\). In this setting, the probabilities of making an error or a correct decision depend on the true value of \(\theta\). If \(R\) is the rejection region, then the power function \( Q \) is given by \[ Q(\theta) = \P_\theta(\bs{X} \in R), \quad \theta \in \Theta \] The power function gives a lot of information about the test.

The power function satisfies the following properties:

- \(Q(\theta)\) is the probability of a type 1 error when \(\theta \in \Theta_0\).

- \(\max\left\{Q(\theta): \theta \in \Theta_0\right\}\) is the significance level of the test.

- \(1 - Q(\theta)\) is the probability of a type 2 error when \(\theta \notin \Theta_0\).

- \(Q(\theta)\) is the power of the test when \(\theta \notin \Theta_0\).
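These properties can be checked numerically for a specific test. The sketch below assumes, purely for illustration, a right-tailed test of \(H_0: \theta \le 0\) versus \(H_1: \theta \gt 0\) based on the mean of 16 normal observations with known standard deviation 1, rejecting when the sample mean exceeds a cutoff chosen to give level 0.05. None of these numbers come from the text.

```python
from statistics import NormalDist

# Hypothetical values chosen for illustration only.
THETA_0, SIGMA, N, ALPHA = 0.0, 1.0, 16, 0.05
SE = SIGMA / N ** 0.5
# Reject H0 when the sample mean exceeds this cutoff.
CUTOFF = THETA_0 + NormalDist().inv_cdf(1 - ALPHA) * SE


def power_function(theta):
    """Q(theta) = P_theta(sample mean > CUTOFF)."""
    return 1 - NormalDist(theta, SE).cdf(CUTOFF)


for theta in (-0.5, 0.0, 0.25, 0.5, 1.0):
    kind = "type 1 error prob." if theta <= THETA_0 else "power"
    print(f"theta={theta:5.2f}  Q(theta)={power_function(theta):.4f}  ({kind})")
```

For this test \(Q\) is increasing in \(\theta\), so its maximum over \(\Theta_0\) occurs at \(\theta_0\) and equals the significance level.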

If we have two tests, we can compare them by means of their power functions.

Suppose that we have two tests, corresponding to rejection regions \(R_1\) and \(R_2\), respectively, each having significance level \(\alpha\). The test with rejection region \(R_1\) is uniformly more powerful than the test with rejection region \(R_2\) if \( Q_1(\theta) \ge Q_2(\theta)\) for all \( \theta \notin \Theta_0 \).

Most hypothesis tests of an unknown real parameter \(\theta\) fall into three special cases:

Suppose that \( \theta \) is a real parameter and \( \theta_0 \in \Theta \) a specified value. The tests below are respectively the two-sided test, the left-tailed test, and the right-tailed test.

- \(H_0: \theta = \theta_0\) versus \(H_1: \theta \ne \theta_0\)

- \(H_0: \theta \ge \theta_0\) versus \(H_1: \theta \lt \theta_0\)

- \(H_0: \theta \le \theta_0\) versus \(H_1: \theta \gt \theta_0\)

Thus the tests are named after the conjectured alternative. Of course, there may be other unknown parameters besides \(\theta\) (known as nuisance parameters).

Equivalence Between Hypothesis Test and Confidence Sets

There is an equivalence between hypothesis tests and confidence sets for a parameter \(\theta\).

Suppose that \(C(\bs{x})\) is a \(1 - \alpha\) level confidence set for \(\theta\). The following test has significance level \(\alpha\) for the hypothesis \( H_0: \theta = \theta_0 \) versus \( H_1: \theta \ne \theta_0 \): Reject \(H_0\) if and only if \(\theta_0 \notin C(\bs{x})\)

By definition, \(\P[\theta \in C(\bs{X})] = 1 - \alpha\). Hence if \(H_0\) is true so that \(\theta = \theta_0\), then the probability of a type 1 error is \(\P[\theta \notin C(\bs{X})] = \alpha\).

Equivalently, we fail to reject \(H_0\) at significance level \(\alpha\) if and only if \(\theta_0\) is in the corresponding \(1 - \alpha\) level confidence set. In particular, this equivalence applies to interval estimates of a real parameter \(\theta\) and the common tests for \(\theta\) given above.

In each case below, the confidence interval has confidence level \(1 - \alpha\) and the test has significance level \(\alpha\).

- Suppose that \(\left[L(\bs{X}), U(\bs{X})\right]\) is a two-sided confidence interval for \(\theta\). Reject \(H_0: \theta = \theta_0\) versus \(H_1: \theta \ne \theta_0\) if and only if \(\theta_0 \lt L(\bs{X})\) or \(\theta_0 \gt U(\bs{X})\).

- Suppose that \(L(\bs{X})\) is a confidence lower bound for \(\theta\). Reject \(H_0: \theta \le \theta_0\) versus \(H_1: \theta \gt \theta_0\) if and only if \(\theta_0 \lt L(\bs{X})\).

- Suppose that \(U(\bs{X})\) is a confidence upper bound for \(\theta\). Reject \(H_0: \theta \ge \theta_0\) versus \(H_1: \theta \lt \theta_0\) if and only if \(\theta_0 \gt U(\bs{X})\).
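The two-sided case can be illustrated with a short sketch. It assumes the standard interval for a normal mean with known standard deviation, \(\bar{x} \pm z_{1-\alpha/2}\, \sigma / \sqrt{n}\), which is not derived in this section, and the numbers are hypothetical. The two functions reach the same decision by different routes: one checks whether \(\theta_0\) falls outside the interval, the other compares the standardized statistic with the critical value.

```python
from statistics import NormalDist


def confidence_interval(xbar, sigma, n, alpha):
    """Two-sided 1 - alpha interval for a normal mean, sigma known."""
    half_width = NormalDist().inv_cdf(1 - alpha / 2) * sigma / n ** 0.5
    return xbar - half_width, xbar + half_width


def reject_by_interval(theta_0, xbar, sigma, n, alpha):
    """Reject H0: theta = theta_0 when theta_0 lies outside the interval."""
    lower, upper = confidence_interval(xbar, sigma, n, alpha)
    return theta_0 < lower or theta_0 > upper


def reject_by_statistic(theta_0, xbar, sigma, n, alpha):
    """Reject H0: theta = theta_0 when |z| exceeds the critical value."""
    z = (xbar - theta_0) / (sigma / n ** 0.5)
    return abs(z) > NormalDist().inv_cdf(1 - alpha / 2)


# Hypothetical data summary: sample mean 10.3, sigma 2, n = 50.
for theta_0 in (9.5, 10.0, 11.0):
    print(theta_0,
          reject_by_interval(theta_0, 10.3, 2.0, 50, 0.05),
          reject_by_statistic(theta_0, 10.3, 2.0, 50, 0.05))
```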

Pivot Variables and Test Statistics

Recall that confidence sets of an unknown parameter \(\theta\) are often constructed through a pivot variable, that is, a random variable \(W(\bs{X}, \theta)\) that depends on the data vector \(\bs{X}\) and the parameter \(\theta\), but whose distribution does not depend on \(\theta\) and is known. In this case, a natural test statistic for the basic tests given above is \(W(\bs{X}, \theta_0)\).


Introduction to Hypothesis Testing

A statistical hypothesis is an assumption about a population parameter.

For example, we may assume that the mean height of a male in the U.S. is 70 inches.

The assumption about the height is the statistical hypothesis and the true mean height of a male in the U.S. is the population parameter.

A hypothesis test is a formal statistical test we use to reject or fail to reject a statistical hypothesis.

The Two Types of Statistical Hypotheses

To test whether a statistical hypothesis about a population parameter is true, we obtain a random sample from the population and perform a hypothesis test on the sample data.

There are two types of statistical hypotheses:

The null hypothesis, denoted as H0, is the hypothesis that the sample data occurs purely from chance.

The alternative hypothesis, denoted as H1 or Ha, is the hypothesis that the sample data is influenced by some non-random cause.

Hypothesis Tests

A hypothesis test consists of five steps:

1. State the hypotheses.

State the null and alternative hypotheses. These two hypotheses need to be mutually exclusive, so if one is true then the other must be false.

2. Determine a significance level to use for the hypothesis.

Decide on a significance level. Common choices are .01, .05, and .1.

3. Find the test statistic.

Find the test statistic and the corresponding p-value. Often we are analyzing a population mean or proportion and the general formula to find the test statistic is: (sample statistic – population parameter) / (standard deviation of statistic)

4. Reject or fail to reject the null hypothesis.

Using the test statistic or the p-value, determine if you can reject or fail to reject the null hypothesis based on the significance level.

The p-value measures the strength of evidence against the null hypothesis: the smaller the p-value, the stronger the evidence. If the p-value is less than the significance level, we reject the null hypothesis.

5. Interpret the results.

Interpret the results of the hypothesis test in the context of the question being asked.

The Two Types of Decision Errors

There are two types of decision errors that one can make when doing a hypothesis test:

Type I error: You reject the null hypothesis when it is actually true. The probability of committing a Type I error is equal to the significance level, often called alpha, and denoted as α.

Type II error: You fail to reject the null hypothesis when it is actually false. The probability of committing a Type II error is called beta, denoted as β. The power of the test is 1 – β, the probability of correctly rejecting a false null hypothesis.
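Both error rates can be estimated by simulation. The sketch below is only an illustration under assumed values: a two-tailed z test of H0: µ = 70 inches with a known standard deviation of 3 inches, samples of size 20, and α = .05. When the true mean is 70, the fraction of rejections estimates the Type I error rate; when the true mean is 72, the fraction of rejections estimates the probability of correctly rejecting, so one minus it estimates the Type II error rate.

```python
import random
from statistics import NormalDist, fmean


def rejection_rate(true_mu, mu_0=70.0, sigma=3.0, n=20, alpha=0.05,
                   trials=4000, seed=1):
    """Fraction of simulated samples in which a two-tailed z test rejects H0."""
    rng = random.Random(seed)              # fixed seed for reproducibility
    critical = NormalDist().inv_cdf(1 - alpha / 2)
    standard_error = sigma / n ** 0.5
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mu, sigma) for _ in range(n)]
        z = (fmean(sample) - mu_0) / standard_error
        if abs(z) > critical:
            rejections += 1
    return rejections / trials


# H0 true: the rejection rate estimates the Type I error rate (near alpha).
print("true mean 70:", rejection_rate(70.0))
# H0 false: the rejection rate estimates the chance of a correct rejection.
print("true mean 72:", rejection_rate(72.0))
```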

One-Tailed and Two-Tailed Tests

A statistical hypothesis can be one-tailed or two-tailed.

A one-tailed hypothesis involves making a “greater than” or “less than” statement.

For example, suppose we assume the mean height of a male in the U.S. is greater than or equal to 70 inches. The null hypothesis would be H0: µ ≥ 70 inches and the alternative hypothesis would be Ha: µ < 70 inches.

A two-tailed hypothesis involves making an “equal to” or “not equal to” statement.

For example, suppose we assume the mean height of a male in the U.S. is equal to 70 inches. The null hypothesis would be H0: µ = 70 inches and the alternative hypothesis would be Ha: µ ≠ 70 inches.

Note: The “equal” sign is always included in the null hypothesis, whether it is =, ≥, or ≤.


Types of Hypothesis Tests

There are many different types of hypothesis tests you can perform depending on the type of data you’re working with and the goal of your analysis.

The following tutorials provide an explanation of the most common types of hypothesis tests:

- Introduction to the One Sample t-test
- Introduction to the Two Sample t-test
- Introduction to the Paired Samples t-test
- Introduction to the One Proportion Z-Test
- Introduction to the Two Proportion Z-Test

Published by Zach



Hypothesis Testing

Hypothesis testing is a tool for making statistical inferences about the population data. It is an analysis tool that tests assumptions and determines how likely something is within a given standard of accuracy. Hypothesis testing provides a way to verify whether the results of an experiment are valid.

A null hypothesis and an alternative hypothesis are set up before performing the hypothesis testing. This helps to arrive at a conclusion regarding the sample obtained from the population. In this article, we will learn more about hypothesis testing, its types, steps to perform the testing, and associated examples.

What is Hypothesis Testing in Statistics?

Hypothesis testing uses sample data from the population to draw useful conclusions regarding the population probability distribution . It tests an assumption made about the data using different types of hypothesis testing methodologies. The hypothesis testing results in either rejecting or not rejecting the null hypothesis.

Hypothesis Testing Definition

Hypothesis testing can be defined as a statistical tool that is used to identify if the results of an experiment are meaningful or not. It involves setting up a null hypothesis and an alternative hypothesis. These two hypotheses will always be mutually exclusive. This means that if the null hypothesis is true then the alternative hypothesis is false and vice versa. An example of hypothesis testing is setting up a test to check if a new medicine works on a disease in a more efficient manner.

Null Hypothesis

The null hypothesis is a concise mathematical statement that is used to indicate that there is no difference between two possibilities. In other words, there is no difference between certain characteristics of data. This hypothesis assumes that the outcomes of an experiment are based on chance alone. It is denoted as \(H_{0}\). Hypothesis testing is used to conclude if the null hypothesis can be rejected or not. Suppose an experiment is conducted to check if girls are shorter than boys at the age of 5. The null hypothesis will say that they are the same height.

Alternative Hypothesis

The alternative hypothesis is an alternative to the null hypothesis. It is used to show that the observations of an experiment are due to some real effect. It states that there is a real difference between the two possibilities being compared and can be denoted as \(H_{1}\) or \(H_{a}\). For the above-mentioned example, the alternative hypothesis would be that girls are shorter than boys at the age of 5.

Hypothesis Testing P Value

In hypothesis testing, the p value is used to decide whether the results obtained after conducting a test are statistically significant or not. It is the probability, computed under the assumption that the null hypothesis is true, of obtaining a result at least as extreme as the one observed. This value is always a number between 0 and 1. The p value is compared to an alpha level, \(\alpha\), also called the significance level. The alpha level can be defined as the acceptable risk of incorrectly rejecting the null hypothesis. The alpha level is usually chosen between 1% and 5%.

Hypothesis Testing Critical region

All sets of values that lead to rejecting the null hypothesis lie in the critical region. Furthermore, the value that separates the critical region from the non-critical region is known as the critical value.

Hypothesis Testing Formula

Depending upon the type of data available and the size, different types of hypothesis testing are used to determine whether the null hypothesis can be rejected or not. The hypothesis testing formula for some important test statistics are given below:

- z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\). \(\overline{x}\) is the sample mean, \(\mu\) is the population mean, \(\sigma\) is the population standard deviation and n is the size of the sample.

- t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\). s is the sample standard deviation.

- \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\). \(O_{i}\) is the observed value and \(E_{i}\) is the expected value.
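The three formulas translate directly into code. The following is a minimal sketch using only the Python standard library; the function names and the sample values are hypothetical and chosen for illustration.

```python
from math import sqrt
from statistics import fmean, stdev


def z_statistic(sample, mu, sigma):
    """z = (sample mean - mu) / (sigma / sqrt(n)), population sigma known."""
    return (fmean(sample) - mu) / (sigma / sqrt(len(sample)))


def t_statistic(sample, mu):
    """t = (sample mean - mu) / (s / sqrt(n)), s = sample standard deviation."""
    return (fmean(sample) - mu) / (stdev(sample) / sqrt(len(sample)))


def chi_square_statistic(observed, expected):
    """Sum of (O_i - E_i)^2 / E_i over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


sample = [1.0, 2.0, 3.0, 4.0, 5.0]            # hypothetical data
print(z_statistic(sample, mu=2.0, sigma=2.0))
print(t_statistic(sample, mu=2.0))
print(chi_square_statistic([10, 20, 30], [20, 20, 20]))
```

Note that `statistics.stdev` uses the n - 1 divisor, which matches the usual definition of the sample standard deviation s.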

We will learn more about these test statistics in the upcoming section.

Types of Hypothesis Testing

Selecting the correct test for performing hypothesis testing can be confusing. These tests are used to determine a test statistic on the basis of which the null hypothesis can either be rejected or not rejected. Some of the important tests used for hypothesis testing are given below.

Hypothesis Testing Z Test

A z test is a way of hypothesis testing that is used for a large sample size (n ≥ 30). It is used to determine whether there is a difference between the population mean and the sample mean when the population standard deviation is known. It can also be used to compare the mean of two samples. It is used to compute the z test statistic. The formulas are given as follows:

- One sample: z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

- Two samples: z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).

Hypothesis Testing t Test

The t test is another method of hypothesis testing that is used for a small sample size (n < 30). It is also used to compare the sample mean and population mean. However, the population standard deviation is not known. Instead, the sample standard deviation is known. The mean of two samples can also be compared using the t test.

- One sample: t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\).

- Two samples: t = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{s_{1}^{2}}{n_{1}}+\frac{s_{2}^{2}}{n_{2}}}}\).

Hypothesis Testing Chi Square

The Chi square test is a hypothesis testing method that is used to check whether the variables in a population are independent or not. It is used when the test statistic is chi-squared distributed.
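For a test of independence, the expected count in each cell is usually taken to be (row total × column total) / grand total, and the degrees of freedom are (rows - 1) × (columns - 1). The section does not spell these out, so treat the sketch below as an illustration under that standard assumption; the contingency tables are made up.

```python
def chi_square_independence(table):
    """Return (chi-square statistic, degrees of freedom) for a 2-D count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the row and column variables are independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical 2 x 2 tables: the first shows association, the second none.
print(chi_square_independence([[10, 20], [20, 10]]))
print(chi_square_independence([[10, 20], [20, 40]]))
```

The statistic is then compared with a critical value from the chi-squared distribution with the returned degrees of freedom.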

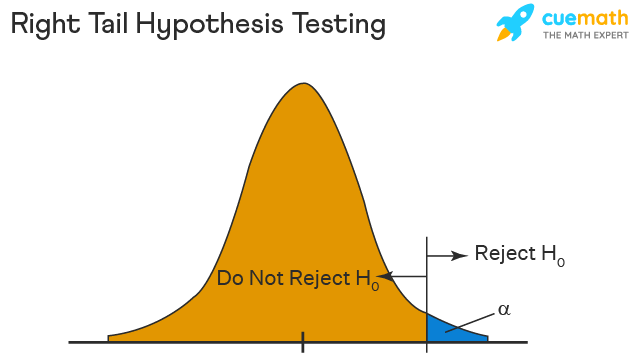

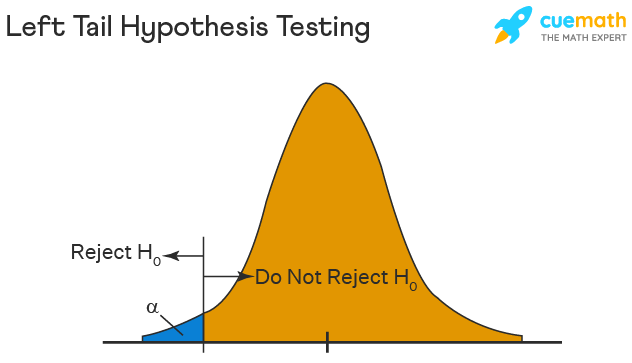

One Tailed Hypothesis Testing

One tailed hypothesis testing is done when the rejection region is only in one direction. It can also be known as directional hypothesis testing because the effects can be tested in one direction only. This type of testing is further classified into the right tailed test and left tailed test.

Right Tailed Hypothesis Testing

The right tail test is also known as the upper tail test. This test is used to check whether the population parameter is greater than some value. The null and alternative hypotheses for this test are given as follows:

\(H_{0}\): The population parameter is ≤ some value

\(H_{1}\): The population parameter is > some value.

If the test statistic has a greater value than the critical value then the null hypothesis is rejected.

Left Tailed Hypothesis Testing

The left tail test is also known as the lower tail test. It is used to check whether the population parameter is less than some value. The hypotheses for this hypothesis testing can be written as follows:

\(H_{0}\): The population parameter is ≥ some value

\(H_{1}\): The population parameter is < some value.

The null hypothesis is rejected if the test statistic has a value lesser than the critical value.

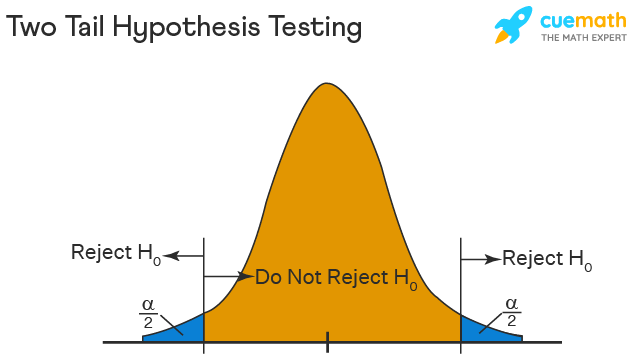

Two Tailed Hypothesis Testing

In this hypothesis testing method, the critical region lies on both sides of the sampling distribution. It is also known as a non-directional hypothesis testing method. The two-tailed test is used when it needs to be determined whether the population parameter is different from some value. The hypotheses can be set up as follows:

\(H_{0}\): the population parameter = some value

\(H_{1}\): the population parameter ≠ some value

The null hypothesis is rejected if the test statistic falls in either tail, that is, if it is less than the lower critical value or greater than the upper critical value.
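The right-tailed, left-tailed, and two-tailed decision rules can be sketched side by side for a z statistic. The function name and the `tail` labels are hypothetical; critical values come from the standard normal distribution via `statistics.NormalDist`.

```python
from statistics import NormalDist


def reject_null(z, alpha, tail):
    """Decide whether a z statistic falls in the critical region."""
    std_normal = NormalDist()
    if tail == "right":
        # Critical region: the upper tail with area alpha.
        return z > std_normal.inv_cdf(1 - alpha)
    if tail == "left":
        # Critical region: the lower tail with area alpha.
        return z < std_normal.inv_cdf(alpha)
    if tail == "two":
        # Critical region: both tails, each with area alpha / 2.
        return abs(z) > std_normal.inv_cdf(1 - alpha / 2)
    raise ValueError("tail must be 'right', 'left', or 'two'")


for tail in ("right", "left", "two"):
    print(tail, reject_null(1.8, 0.05, tail))
```

With z = 1.8 and α = 0.05 the right-tailed test rejects while the two-tailed test does not, because the two-tailed critical value is larger.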

Hypothesis Testing Steps

Hypothesis testing can be easily performed in five simple steps. The most important step is to correctly set up the hypotheses and identify the right method for hypothesis testing. The basic steps to perform hypothesis testing are as follows:

- Step 1: Set up the null hypothesis by correctly identifying whether it is the left-tailed, right-tailed, or two-tailed hypothesis testing.

- Step 2: Set up the alternative hypothesis.

- Step 3: Choose the correct significance level, \(\alpha\), and find the critical value.

- Step 4: Calculate the correct test statistic (z, t or \(\chi^{2}\)) and p-value.

- Step 5: Compare the test statistic with the critical value or compare the p-value with \(\alpha\) to arrive at a conclusion. In other words, decide if the null hypothesis is to be rejected or not.

Hypothesis Testing Example

The best way to solve a problem on hypothesis testing is by applying the 5 steps mentioned in the previous section. Suppose a researcher claims that the mean weight of men is greater than 100 kg, with a standard deviation of 15 kg. A sample of 30 men is chosen and has an average weight of 112.5 kg. Using hypothesis testing, check if there is enough evidence to support the researcher's claim. The confidence level is given as 95%.

Step 1: This is an example of a right-tailed test. Set up the null hypothesis as \(H_{0}\): \(\mu\) = 100.

Step 2: The alternative hypothesis is given by \(H_{1}\): \(\mu\) > 100.

Step 3: As this is a one-tailed test, \(\alpha\) = 100% - 95% = 5%. This can be used to determine the critical value.

1 - \(\alpha\) = 1 - 0.05 = 0.95

0.95 gives the required area under the curve. Now using a normal distribution table, the area 0.95 is at z = 1.645. A similar process can be followed for a t-test. The only additional requirement is to calculate the degrees of freedom given by n - 1.

Step 4: Calculate the z test statistic. The z test applies because the population standard deviation is known and the sample size is 30. The sample mean and the hypothesized population mean are also known.

z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

\(\mu\) = 100, \(\overline{x}\) = 112.5, n = 30, \(\sigma\) = 15

z = \(\frac{112.5-100}{\frac{15}{\sqrt{30}}}\) = 4.56

Step 5: Conclusion. As 4.56 > 1.645, the null hypothesis can be rejected.
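The arithmetic in this example can be checked with a few lines of Python. This is a sketch using only the numbers stated in the example; the variable names are illustrative, and the p-value line is an addition that the worked solution does not compute.

```python
from math import sqrt
from statistics import NormalDist

# Values taken from the worked example above.
mu_0 = 100      # hypothesized mean under H0 (kg)
x_bar = 112.5   # sample mean (kg)
sigma = 15      # population standard deviation (kg)
n = 30          # sample size
alpha = 0.05    # significance level

# Test statistic: distance of the sample mean from mu_0 in standard errors.
z = (x_bar - mu_0) / (sigma / sqrt(n))

# Right-tailed critical value: the point with area 1 - alpha to its left.
z_critical = NormalDist().inv_cdf(1 - alpha)

# Right-tailed p-value: area to the right of the observed z.
p_value = 1 - NormalDist().cdf(z)

reject = z > z_critical
print(f"z = {z:.2f}, critical value = {z_critical:.3f}, reject H0: {reject}")
```

Comparing the p-value with \(\alpha\) leads to the same decision as comparing z with the critical value, which is the equivalence stated in Step 5 of the general procedure.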

Hypothesis Testing and Confidence Intervals

Confidence intervals are closely tied to hypothesis testing, because the alpha level can be determined from a given confidence level. Suppose the confidence level is 95%. Subtract the confidence level from 100%. This gives 100% - 95% = 5%, or \(\alpha\) = 0.05. In a one-tailed test, the whole of \(\alpha\) lies in a single tail. In a two-tailed test, \(\alpha\) is split equally between the two tails, so each tail has area 0.05 / 2 = 0.025.
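As a quick illustration, the tail areas and the matching z critical values for a 95% confidence level can be computed as follows. This is a sketch that assumes a z test; the variable names are illustrative.

```python
from statistics import NormalDist

confidence_level = 0.95
alpha = 1 - confidence_level

# One-tailed test: all of alpha sits in a single tail.
one_tailed_critical = NormalDist().inv_cdf(1 - alpha)

# Two-tailed test: alpha / 2 sits in each tail.
tail_area = alpha / 2
two_tailed_critical = NormalDist().inv_cdf(1 - tail_area)

print(f"one-tailed critical z: {one_tailed_critical:.3f}")
print(f"two-tailed critical z: {two_tailed_critical:.2f} (tail area {tail_area:.3f})")
```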

Important Notes on Hypothesis Testing

- Hypothesis testing is a technique that is used to verify whether the results of an experiment are statistically significant.

- It involves the setting up of a null hypothesis and an alternate hypothesis.

- Three commonly used tests in hypothesis testing are the z test, the t test, and the chi-square test.

- Hypothesis testing can be classified as right tail, left tail, and two tail tests.

Examples on Hypothesis Testing

- Example 1: The average weight of a dumbbell in a gym is 90 lbs. However, a physical trainer believes that the average weight might be higher. A random sample of 5 dumbbells has an average weight of 110 lbs and a standard deviation of 18 lbs. Using hypothesis testing, check if the physical trainer's claim can be supported at a 95% confidence level. Solution: As the sample size is less than 30 and the population standard deviation is unknown, the t-test is used. \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) > 90. \(\overline{x}\) = 110, \(\mu\) = 90, n = 5, s = 18, \(\alpha\) = 0.05. Using the t-distribution table with n - 1 = 4 degrees of freedom, the critical value is 2.132. t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{110-90}{\frac{18}{\sqrt{5}}}\) = 2.484. As 2.484 > 2.132, the null hypothesis is rejected. Answer: There is enough evidence to support the claim that the average weight of the dumbbells is greater than 90 lbs.

- Example 2: The average score on a test is 80 with a standard deviation of 10. With a new teaching curriculum introduced, it is believed that this score will change. In a random sample of 36 students, the mean score was found to be 88. With a 0.05 significance level, is there any evidence to support this claim? Solution: This is an example of two-tail hypothesis testing. The z test will be used. \(H_{0}\): \(\mu\) = 80, \(H_{1}\): \(\mu\) ≠ 80. \(\overline{x}\) = 88, \(\mu\) = 80, n = 36, \(\sigma\) = 10. \(\alpha\) = 0.05, so each tail has area 0.05 / 2 = 0.025. The critical value using the normal distribution table is 1.96. z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\) = \(\frac{88-80}{\frac{10}{\sqrt{36}}}\) = 4.8. As 4.8 > 1.96, the null hypothesis is rejected. Answer: There is a difference in the scores after the new curriculum was introduced.

- Example 3: The average score of a class is 90. However, a teacher believes that the average score might be lower. The scores of 6 randomly selected students were measured. The mean was 82 with a standard deviation of 18. With a 0.05 significance level, use hypothesis testing to check if this claim is true. Solution: The t test will be used. \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) < 90. \(\overline{x}\) = 82, \(\mu\) = 90, n = 6, s = 18, \(\alpha\) = 0.05. The critical value from the t table with n - 1 = 5 degrees of freedom is -2.015. t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{82-90}{\frac{18}{\sqrt{6}}}\) = -1.089. As -1.089 > -2.015, we fail to reject the null hypothesis. Answer: There is not enough evidence to support the claim.
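The t statistics in Examples 1 and 3 can be reproduced with a small helper. This is a sketch: the function name `one_sample_t` is illustrative, and the critical values are copied from the examples (they come from a t table) rather than computed, since the Python standard library does not provide the t distribution.

```python
from math import sqrt


def one_sample_t(x_bar: float, mu_0: float, s: float, n: int) -> float:
    """t statistic for a one-sample test of the mean."""
    return (x_bar - mu_0) / (s / sqrt(n))


# Example 1: right-tailed test, critical value 2.132 (4 degrees of freedom).
t1 = one_sample_t(x_bar=110, mu_0=90, s=18, n=5)
reject1 = t1 > 2.132

# Example 3: left-tailed test, critical value -2.015 (5 degrees of freedom).
t3 = one_sample_t(x_bar=82, mu_0=90, s=18, n=6)
reject3 = t3 < -2.015

print(f"Example 1: t = {t1:.3f}, reject H0: {reject1}")
print(f"Example 3: t = {t3:.3f}, reject H0: {reject3}")
```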

FAQs on Hypothesis Testing

What is Hypothesis Testing?

Hypothesis testing in statistics is a tool that is used to make inferences about the population data. It is also used to check if the results of an experiment are valid.

What is the z Test in Hypothesis Testing?

The z test in hypothesis testing is used to find the z test statistic for normally distributed data. The z test is used when the standard deviation of the population is known and the sample size is greater than or equal to 30.

What is the t Test in Hypothesis Testing?

The t test in hypothesis testing is used when the test statistic follows a Student's t distribution. It is used when the sample size is less than 30 and the standard deviation of the population is not known.

What is the formula for z test in Hypothesis Testing?

The formula for a one sample z test in hypothesis testing is z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\) and for two samples is z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).
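The two-sample formula translates directly into code. The sketch below uses made-up inputs purely to exercise the formula; the function name `two_sample_z` and all numbers are hypothetical, and `mu_diff` stands for the hypothesized difference \(\mu_{1}-\mu_{2}\), which is often 0.

```python
from math import sqrt


def two_sample_z(x1_bar, x2_bar, sigma1, sigma2, n1, n2, mu_diff=0.0):
    """z statistic for the difference of two means with known sigmas."""
    standard_error = sqrt(sigma1**2 / n1 + sigma2**2 / n2)
    return ((x1_bar - x2_bar) - mu_diff) / standard_error


# Hypothetical inputs: sample means 52 and 50, sigmas 4 and 3, sizes 64 and 36.
z = two_sample_z(52.0, 50.0, sigma1=4.0, sigma2=3.0, n1=64, n2=36)
print(f"z = {z:.3f}")
```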

What is the p Value in Hypothesis Testing?

The p value helps to determine if the test results are statistically significant or not. In hypothesis testing, the null hypothesis can either be rejected or not rejected based on the comparison between the p value and the alpha level.

What is One Tail Hypothesis Testing?

When the rejection region is only on one side of the distribution curve then it is known as one tail hypothesis testing. The right tail test and the left tail test are two types of directional hypothesis testing.

What is the Alpha Level in Two Tail Hypothesis Testing?

In a two tail hypothesis test, the alpha level is split between the two rejection regions in the curve, so each tail has area \(\alpha\) / 2.

The P value, or calculated probability, is the probability of finding the observed, or more extreme, results when the null hypothesis (\(H_{0}\)) of a study question is true; the definition of "extreme" depends on how the hypothesis is being tested. P is also described in terms of rejecting \(H_{0}\) when it is actually true; however, it is not a direct probability of this state.

The null hypothesis is usually a hypothesis of "no difference", e.g. no difference between blood pressures in group A and group B. Define a null hypothesis for each study question clearly before the start of your study.

The only situation in which you should use a one sided P value is when a large change in an unexpected direction would have absolutely no relevance to your study. This situation is unusual; if you are in any doubt then use a two sided P value.

The term significance level (alpha) is used to refer to a pre-chosen probability and the term "P value" is used to indicate a probability that you calculate after a given study.

The alternative hypothesis (\(H_{1}\)) is the opposite of the null hypothesis; in plain language terms this is usually the hypothesis you set out to investigate. For example, the question is "is there a significant (not due to chance) difference in blood pressures between groups A and B if we give group A the test drug and group B a sugar pill?" and the alternative hypothesis is "there is a difference in blood pressures between groups A and B if we give group A the test drug and group B a sugar pill".

If your P value is less than the chosen significance level then you reject the null hypothesis i.e. accept that your sample gives reasonable evidence to support the alternative hypothesis. It does NOT imply a "meaningful" or "important" difference; that is for you to decide when considering the real-world relevance of your result.

The choice of significance level at which you reject \(H_{0}\) is arbitrary. Conventionally the 5% (less than 1 in 20 chance of wrongly rejecting a true \(H_{0}\)), 1% and 0.1% (P < 0.05, 0.01 and 0.001) levels have been used. These numbers can give a false sense of security.

In the ideal world, we would be able to define a "perfectly" random sample, the most appropriate test and one definitive conclusion. We simply cannot. What we can do is try to optimise all stages of our research to minimise sources of uncertainty. When presenting P values some groups find it helpful to use the asterisk rating system as well as quoting the P value:

P < 0.05 *

P < 0.01 **

P < 0.001 ***

Most authors refer to statistically significant as P < 0.05 and statistically highly significant as P < 0.001 (less than one in a thousand chance of wrongly rejecting a true null hypothesis).

The asterisk system avoids the woolly term "significant". Please note, however, that many statisticians do not like the asterisk rating system when it is used without showing P values. As a rule of thumb, if you can quote an exact P value then do. You might also want to refer to a quoted exact P value as an asterisk in text narrative or tables of contrasts elsewhere in a report.

At this point, a word about error. Type I error is the false rejection of the null hypothesis and type II error is the false acceptance of the null hypothesis. As an aide-mémoire: think that our cynical society rejects before it accepts.

The significance level (alpha) is the probability of type I error. The power of a test is one minus the probability of type II error (beta). Power should be maximised when selecting statistical methods. If you want to estimate sample sizes then you must understand all of the terms mentioned here.

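The following table shows the relationship between power and error in hypothesis testing:

| Decision | \(H_{0}\) is true | \(H_{0}\) is false |
| --- | --- | --- |
| Reject \(H_{0}\) | Type I error (probability alpha) | Correct decision (probability 1 - beta, the power) |
| Accept \(H_{0}\) | Correct decision (probability 1 - alpha) | Type II error (probability beta) |

If you are interested in further details of probability and sampling theory at this point then please refer to one of the general texts listed in the reference section.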

You must understand confidence intervals if you intend to quote P values in reports and papers. Statistical referees of scientific journals expect authors to quote confidence intervals with greater prominence than P values.

Notes about Type I error:

- is the incorrect rejection of the null hypothesis

- maximum probability is set in advance as alpha

- is not affected by sample size as it is set in advance

- increases with the number of tests or end points (i.e. carry out 20 tests in which \(H_{0}\) is true and 1 is likely to be wrongly significant for alpha = 0.05)
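The last point can be made concrete with a short calculation. The sketch below assumes the tests are independent and that every null hypothesis is true, which is a simplification; the function name `familywise_error_rate` is illustrative.

```python
def familywise_error_rate(alpha: float, n_tests: int) -> float:
    """Chance of at least one Type I error across independent tests."""
    return 1 - (1 - alpha) ** n_tests


alpha = 0.05
n_tests = 20

# Expected number of wrongly significant results when every H0 is true.
expected_false_positives = alpha * n_tests

# Probability that at least one of the tests is wrongly significant.
at_least_one = familywise_error_rate(alpha, n_tests)

print(f"expected false positives: {expected_false_positives:.1f}")
print(f"P(at least one Type I error): {at_least_one:.3f}")
```

Under these assumptions, the chance of at least one false rejection across 20 tests is well above the 5% that applies to a single test.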

Notes about Type II error:

- is the incorrect acceptance of the null hypothesis

- probability is beta

- beta depends upon sample size and alpha

- can't be estimated except as a function of the true population effect

- beta gets smaller as the sample size gets larger

- beta gets smaller as the number of tests or end points increases
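To illustrate how beta depends on sample size and alpha, here is a sketch for one specific case: a right-tailed z test with known standard deviation. The formula used is the standard power expression for that case; the function name `power_right_tailed_z` and the effect size and standard deviation below are hypothetical choices, not values from the text.

```python
from math import sqrt
from statistics import NormalDist


def power_right_tailed_z(effect, sigma, n, alpha=0.05):
    """Power of a right-tailed z test when the true mean is mu_0 + effect."""
    z_critical = NormalDist().inv_cdf(1 - alpha)
    # Under the alternative, the z statistic is shifted by effect * sqrt(n) / sigma.
    shift = effect * sqrt(n) / sigma
    return 1 - NormalDist().cdf(z_critical - shift)


# Hypothetical true effect of 5 units with a standard deviation of 15.
for n in (10, 30, 100):
    beta = 1 - power_right_tailed_z(effect=5, sigma=15, n=n)
    print(f"n = {n:3d}: beta = {beta:.3f}")
```

The loop shows beta shrinking as the sample size grows, and the dependence on the true effect is why beta cannot be estimated without assuming a population effect.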

Copyright © 2000-2024 StatsDirect Limited, all rights reserved.

Hypothesis Testing Framework

Now that we've seen an example and explored some of the themes for hypothesis testing, let's specify the procedure that we will follow.

Hypothesis Testing Steps

The formal framework and steps for hypothesis testing are as follows:

- Identify and define the parameter of interest

- Define the competing hypotheses to test

- Set the evidence threshold, formally called the significance level

- Generate or use theory to specify the sampling distribution and check conditions

- Calculate the test statistic and p-value

- Evaluate your results and write a conclusion in the context of the problem.

We'll discuss each of these steps below.

Identify Parameter of Interest

First, I like to specify and define the parameter of interest. What is the population that we are interested in? What characteristic are we measuring?

By defining our population of interest, we can confirm that we are truly using sample data. If we find that we actually have population data, our inference procedures are not needed. We could proceed by summarizing our population data.

By identifying and defining the parameter of interest, we can confirm that we use appropriate methods to summarize our variable of interest. We can also focus on the specific process needed for our parameter of interest.

In our example from the last page, the parameter of interest would be the population mean time that a host has been on Airbnb for the population of all Chicago listings on Airbnb in March 2023. We could represent this parameter with the symbol $\mu$. It is best practice to fully define $\mu$ in both words and symbols.

Define the Hypotheses

For hypothesis testing, we need to decide between two competing theories. These theories must be statements about the parameter. Although we won't have the population data to definitively select the correct theory, we will use our sample data to determine how reasonable our "skeptic's theory" is.

The first hypothesis is called the null hypothesis, $H_0$. This can be thought of as the "status quo", the "skeptic's theory", or that nothing is happening.

Examples of null hypotheses include that the population proportion is equal to 0.5 ($p = 0.5$), the population median is equal to 12 ($M = 12$), or the population mean is equal to 14.5 ($\mu = 14.5$).

The second hypothesis is called the alternative hypothesis, $H_a$ or $H_1$. This can be thought of as the "researcher's hypothesis" or that something is happening. This is what we'd like to convince the skeptic to believe. In most cases, the desired outcome of the researcher is to conclude that the alternative hypothesis is reasonable to use moving forward.

Examples of alternative hypotheses include that the population proportion is greater than 0.5 ($p > 0.5$), the population median is less than 12 ($M < 12$), or the population mean is not equal to 14.5 ($\mu \neq 14.5$).

There are a few requirements for the hypotheses:

- the hypotheses must be about the same population parameter,

- the hypotheses must have the same null value (provided number to compare to),

- the null hypothesis must have the equality (the equals sign must be in the null hypothesis),

- the alternative hypothesis must not have the equality (the equals sign cannot be in the alternative hypothesis),

- there must be no overlap between the null and alternative hypothesis.

You may have previously seen null hypotheses that include more than an equality (e.g. $p \le 0.5$). As long as there is an equality in the null hypothesis, this is allowed. For our purposes, we will simplify this statement to ($p = 0.5$).

To summarize from above, possible hypothesis statements are:

$H_0: p = 0.5$ vs. $H_a: p > 0.5$

$H_0: M = 12$ vs. $H_a: M < 12$

$H_0: \mu = 14.5$ vs. $H_a: \mu \neq 14.5$

In our second example about Airbnb hosts, our hypotheses would be:

$H_0: \mu = 2100$ vs. $H_a: \mu > 2100$.

Set Threshold (Significance Level)

There is one more step to complete before looking at the data. This is to set the threshold needed to convince the skeptic. This threshold is defined as an $\alpha$ significance level. We'll define exactly what the $\alpha$ significance level means later. For now, smaller $\alpha$s correspond to more evidence being required to convince the skeptic.

A few common $\alpha$ levels include 0.1, 0.05, and 0.01.

For our Airbnb hosts example, we'll set the threshold as 0.02.

Determine the Sampling Distribution of the Sample Statistic

The first step (as outlined above) is to identify the parameter of interest. What is the best estimate of the parameter of interest? Typically, it will be the sample statistic that corresponds to the parameter. This sample statistic, along with other features of the distribution, will prove especially helpful as we continue the hypothesis testing procedure.

However, we do have a decision at this step. We can choose to use simulations with a resampling approach or we can choose to rely on theory if we are using proportions or means. We then also need to confirm that our results and conclusions will be valid based on the available data.

Required Condition

The one required assumption, regardless of approach (resampling or theory), is that the sample is random and representative of the population of interest. In other words, we need our sample to be a reasonable sample of data from the population.

Using Simulations and Resampling

If we'd like to use a resampling approach, we have no (or minimal) additional assumptions to check. This is because we are relying on the available data instead of assumptions.

We do need to adjust our data to be consistent with the null hypothesis (or skeptic's claim). We can then rely on our resampling approach to estimate a plausible sampling distribution for our sample statistic.

Recall that we took this approach on the last page. Before simulating our estimated sampling distribution, we adjusted the mean of the data so that it matched our skeptic's claim.
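The code from the last page is not reproduced here. As a stand-in, the following is a minimal sketch of the mean-shifting idea followed by resampling with replacement. It is not the original analysis: the data are randomly generated placeholders (the real Airbnb sample is not available in this section), and every name and number other than the null value of 2100 and the sample size of 700 is an assumption.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable


def average(values):
    return sum(values) / len(values)


# Placeholder data standing in for the real sample of 700 host times (days).
sample = [random.gauss(2188, 1100) for _ in range(700)]
null_mean = 2100
observed_mean = average(sample)

# Shift every value so the data's mean equals the skeptic's claimed mean.
shifted = [x - observed_mean + null_mean for x in sample]

# Resample with replacement from the shifted data to build the
# simulated sampling distribution of the mean under the null hypothesis.
n_sims = 2000
simulated_means = [
    average(random.choices(shifted, k=len(shifted))) for _ in range(n_sims)
]

# Proportion of simulated means at least as large as the observed mean.
p_value = sum(m >= observed_mean for m in simulated_means) / n_sims
print(f"simulated p-value: {p_value:.4f}")
```

Shifting changes only the center of the data, so the spread and shape of the sample are preserved while the mean is forced to agree with the null hypothesis.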

We'll see a few more examples on the next page.

Using Theory

On the other hand, we could rely on theory in order to estimate the sampling distribution of our desired statistic. Recall that we had a few different options to rely on:

- the CLT for the sampling distribution of a sample mean

- the binomial distribution for the sampling distribution of a proportion (or count)

- the Normal approximation of a binomial distribution (using the CLT) for the sampling distribution of a proportion

If relying on the CLT to specify the underlying sampling distribution, you also need to confirm:

- having a random sample and

- having a sample size that is less than 10% of the population size if the sampling is done without replacement

- having a Normally distributed population for a quantitative variable OR

- having a large enough sample size (usually at least 25) for a quantitative variable

- having a large enough sample size for a categorical variable (defined by $np$ and $n(1-p)$ being at least 10)

If relying on the binomial distribution to specify the underlying sampling distribution, you need to confirm:

- having a set number of trials, $n$

- having the same probability of success, $p$ for each observation

After determining the appropriate theory to use, we should check our conditions and then specify the sampling distribution for our statistic.

For the Airbnb hosts example, we have what we've assumed to be a random sample. It is not taken with replacement, so we also need to assume that our sample size (700) is less than 10% of our population size. In other words, we need to assume that the population of Chicago Airbnbs in March 2023 was at least 7000. Since we do have our (presumed) population data available, we can confirm that there were at least 7000 Chicago Airbnbs in the population in 2023.

Additionally, we can confirm that the normality condition for the CLT is met. Our sample size is more than 25 and the parameter of interest is a mean, so the sampling distribution of the sample mean can be treated as approximately Normal.

With the conditions now met, we can estimate our sampling distribution. From the CLT, we know that the distribution for the sample mean should be $\bar{X} \sim N(\mu, \frac{\sigma}{\sqrt{n}})$.

Now, we face our next challenge -- what to plug in as the mean and standard error for this distribution. Since we are adopting the skeptic's point of view for the purpose of this approach, we can plug in the value of $\mu_0 = 2100$. We also know that the sample size $n$ is 700. But what should we plug in for the population standard deviation $\sigma$?

When we don't know the value of a parameter, we will generally plug in our best estimate for the parameter. In this case, that corresponds to plugging in $\hat{\sigma}$, or our sample standard deviation.

Now, our estimated sampling distribution based on the CLT is: $\bar{X} \sim N(2100, 41.4045)$.

If we compare to our corresponding skeptic's sampling distribution on the last page, we can confirm that the theoretical sampling distribution is similar to the simulated sampling distribution based on resampling.

Assumptions not met

What do we do if the necessary conditions aren't met for the sampling distribution? Because the simulation-based resampling approach has minimal assumptions, we should be able to use this approach to produce valid results as long as the provided data is representative of the population.

The theory-based approach has more conditions, and we may not be able to meet all of the necessary conditions. For example, if our parameter is something other than a mean or proportion, we may not have appropriate theory. Additionally, we may not have a large enough sample size.

- First, we could consider changing approaches to the simulation-based one.

- Second, we might look at how we could meet the necessary conditions better. In some cases, we may be able to redefine groups or make adjustments so that the setup of the test is closer to what is needed.

- As a last resort, we may be able to continue following the hypothesis testing steps. In this case, your calculations may not be valid or exact; however, you might be able to use them as an estimate or an approximation. It would be crucial to specify the violation and approximation in any conclusions or discussion of the test.

Calculate the evidence with statistics and p-values

Now, it's time to calculate how much evidence the sample contains to convince the skeptic to change their mind. As we saw above, we can convince the skeptic to change their mind by demonstrating that our sample is unlikely to occur if their theory is correct.

How do we do this? We do this by calculating a probability associated with our observed value for the statistic.

For example, for our situation, we want to convince the skeptic that the population mean is actually greater than 2100 days. We do that by calculating the probability that a sample mean would be as large or larger than what we observed in our actual sample, which was 2188 days. Why do we need the larger portion? We use the larger portion because a sample mean of 2200 days also provides evidence that the population mean is larger than 2100 days; it isn't limited to exactly what we observed in our sample. We call this specific probability the p-value.

That is, the p-value is the probability of observing a test statistic as extreme or more extreme (as determined by the alternative hypothesis), assuming the null hypothesis is true.

Our observed p-value for the Airbnb host example demonstrates that the probability of getting a sample mean host time of 2188 days (the value from our sample) or more is 1.46%, assuming that the true population mean is 2100 days.

Test statistic

Notice that the formal definition of a p-value mentions a test statistic. In most cases, this word can be replaced with "statistic" or "sample" for an equivalent statement.

Oftentimes, we'll see that our sample statistic can be used directly as the test statistic, as it was above. We could equivalently adjust our statistic to calculate a test statistic. This test statistic is often calculated as:

$\text{test statistic} = \frac{\text{estimate} - \text{hypothesized value}}{\text{standard error of estimate}}$

P-value Calculation Options

Note also that the p-value definition includes a probability associated with a test statistic being as extreme or more extreme (as determined by the alternative hypothesis). How do we determine the area that we consider when calculating the probability? This decision is determined by the inequality in the alternative hypothesis.

For example, when we were trying to convince the skeptic that the population mean is greater than 2100 days, we only considered those sample means that were at least as large as what we observed: 2188 days or more.

If instead we were trying to convince the skeptic that the population mean is less than 2100 days ($H_a: \mu < 2100$), we would consider all sample means that were at most what we observed: 2188 days or less. In this case, our p-value would be quite large; it would be around 98.5%, roughly one minus the 1.46% found above. This large p-value demonstrates that our sample does not support the alternative hypothesis. In fact, our sample would encourage us to choose the null hypothesis instead of the alternative hypothesis of $\mu < 2100$, as our sample directly contradicts the statement in the alternative hypothesis.

If we wanted to convince the skeptic that they were wrong and that the population mean is anything other than 2100 days ($H_a: \mu \neq 2100$), then we would want to calculate the probability that a sample mean is at least 88 days away from 2100 days. That is, we would calculate the probability corresponding to 2188 days or more or 2012 days or less. In this case, our p-value would be roughly twice the previously calculated p-value.

We could calculate all of those probabilities using our sampling distributions, either simulated or theoretical, that we generated in the previous step. If we chose to calculate a test statistic as defined in the previous section, we could also rely on standard normal distributions to calculate our p-value.
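The three options can be sketched with the theoretical sampling distribution $N(2100, 41.4045)$ given earlier. This assumes the Normal model holds exactly, so the numbers it produces need not match the 1.46% quoted above, which may have come from a different (for example, simulated) sampling distribution. Variable names are illustrative.

```python
from statistics import NormalDist

# Theoretical sampling distribution of the sample mean under H0.
null_dist = NormalDist(mu=2100, sigma=41.4045)
observed = 2188

# H_a: mu > 2100 -> area at or above the observed sample mean.
p_greater = 1 - null_dist.cdf(observed)

# H_a: mu < 2100 -> area at or below the observed sample mean.
p_less = null_dist.cdf(observed)

# H_a: mu != 2100 -> both tails at least 88 days from 2100.
p_two_sided = 2 * min(p_greater, p_less)

# Equivalent route: standardize first, then use the standard normal.
z = (observed - 2100) / 41.4045
p_greater_via_z = 1 - NormalDist().cdf(z)

print(f"greater: {p_greater:.4f}, less: {p_less:.4f}, two-sided: {p_two_sided:.4f}")
```

The one-sided p-values sum to 1 for a continuous distribution, and the two-sided value is twice the smaller of the two, matching the description above.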

Evaluate your results and write conclusion in context of problem

Once you've gathered your evidence, it's now time to make your final conclusions and determine how you might proceed.

In traditional hypothesis testing, you often make a decision. Recall that you have your threshold (significance level $\alpha$) and your level of evidence (p-value). We can compare the two to determine if your p-value is less than or equal to your threshold. If it is, you have enough evidence to persuade your skeptic to change their mind. If it is larger than the threshold, you don't have quite enough evidence to convince the skeptic.

Common formal conclusions (if given in context) would be:

- I have enough evidence to reject the null hypothesis (the skeptic's claim), and I have sufficient evidence to suggest that the alternative hypothesis is instead true.

- I do not have enough evidence to reject the null hypothesis (the skeptic's claim), and so I do not have sufficient evidence to suggest the alternative hypothesis is true.

The only decision that we can make is to either reject or fail to reject the null hypothesis (we cannot "accept" the null hypothesis). Because we aren't actively evaluating the alternative hypothesis, we don't want to make definitive decisions based on that hypothesis. However, when it comes to making our conclusion for what to use going forward, we frame this on whether we could successfully convince someone of the alternative hypothesis.

A less formal conclusion might look something like:

Based on our sample of Chicago Airbnb listings, it seems as if the mean time since a host has been on Airbnb (for all Chicago Airbnb listings) is more than 5.75 years.

Significance Level Interpretation

We've now seen how the significance level $\alpha$ is used as a threshold for hypothesis testing. What exactly is the significance level?

The significance level $\alpha$ has two primary definitions. One is that the significance level is the largest p-value at which we still reject the null hypothesis; this is based on how the significance level functions within the hypothesis testing framework. The second definition is that this is the probability of rejecting the null hypothesis when the null hypothesis is true; in other words, this is the probability of making a specific type of error called a Type I error.

Why do we have to be comfortable making a Type I error? There is always a chance that the skeptic was originally correct and we obtained a very unusual sample. We don't want the skeptic to be so convinced of their theory that no evidence can convince them. In this case, we need the skeptic to be convinced as long as the evidence is strong enough. Typically, the probability threshold will be low, to reduce the number of errors made. This also means that a decent amount of evidence will be needed to convince the skeptic to abandon their position in favor of the alternative theory.

p-value Limitations and Misconceptions

In comparison to the $\alpha$ significance level, we also need to calculate the evidence against the null hypothesis with the p-value.

The p-value is the probability of getting a test statistic as extreme or more extreme (in the direction of the alternative hypothesis), assuming the null hypothesis is true.

Recently, p-values have gotten some bad press in terms of how they are used. However, that doesn't mean that p-values should be abandoned, as they still provide some helpful information. Below, we'll describe what p-values don't mean, and how they should or shouldn't be used to make decisions.

Factors that affect a p-value

What features affect the size of a p-value?

- the null value, or the value assumed under the null hypothesis

- the effect size (the difference between the null value under the null hypothesis and the true value of the parameter)

- the sample size

More evidence against the null hypothesis will be obtained if the effect size is larger and if the sample size is larger.

Misconceptions

We gave a definition for p-values above. What are some things that p-values don't mean?

- A p-value is not the probability that the null hypothesis is correct

- A p-value is not the probability that the null hypothesis is incorrect

- A p-value is not the probability of getting your specific sample

- A p-value is not the probability that the alternative hypothesis is correct

- A p-value is not the probability that the alternative hypothesis is incorrect

- A p-value does not indicate the size of the effect

The p-value is a way of measuring the evidence that your sample provides against the null hypothesis, assuming the null hypothesis is in fact correct.

Using the p-value to make a decision

Why is there bad press for a p-value? You may have heard about the standard $\alpha$ level of 0.05. That is, we would be comfortable with rejecting the null hypothesis once in 20 attempts when the null hypothesis is really true. Recall that we reject the null hypothesis when the p-value is less than or equal to the significance level.

Consider what would happen if you have two different p-values: 0.049 and 0.051.

In essence, these two p-values represent two very similar probabilities (4.9% vs. 5.1%) and very similar levels of evidence against the null hypothesis. However, when we make our decision based on our threshold, we would make two different decisions (reject and fail to reject, respectively). Should this decision really be so simplistic? I would argue that the difference shouldn't be so severe when the sample statistics are likely very similar. For this reason, I (and many other experts) strongly recommend using the p-value as a measure of evidence and including it with your conclusion.
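The abruptness of the threshold rule is easy to see in code. This sketch uses a hypothetical helper, `decide`, that applies the "less than or equal to $\alpha$" rule described above.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Apply the threshold rule: reject when p-value <= alpha."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"


for p in (0.049, 0.051):
    # Reporting the p-value next to the decision keeps the evidence visible.
    print(f"p = {p:.3f}: {decide(p)}")
```

The two inputs differ by 0.002, yet the decisions differ, which is why reporting the p-value itself alongside the decision is recommended.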

Putting too much emphasis on the decision (and having a significant result) has created a culture of misusing p-values. For this reason, understanding your p-value itself is crucial.

Searching for p-values

The other concern with setting a definitive threshold of 0.05 is that some researchers will begin performing multiple tests until finding a p-value that is small enough. However, with a threshold of 0.05, we know that we will obtain a p-value of 0.05 or less about 1 time out of every 20, even when the null hypothesis is true.

This means that if researchers start hunting for p-values that are small (sometimes called p-hacking), then they are likely to identify a small p-value every once in a while by chance alone. Researchers might then publish that result, even though the result is actually not informative. For this reason, it is recommended that researchers write a definitive analysis plan to prevent performing multiple tests in search of a result that occurs by chance alone.
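A small simulation can illustrate how often small p-values appear by chance alone. This is a sketch under simple assumptions: every dataset is drawn from a standard normal population for which the null hypothesis ($\mu = 0$) is true, and each is tested with a two-sided z test with known standard deviation 1. The function name and all settings are illustrative.

```python
import random
from math import sqrt
from statistics import NormalDist

random.seed(1)  # fixed seed so the sketch is repeatable


def null_p_value(n: int = 25) -> float:
    """Two-sided z-test p-value for a sample drawn with H0 true."""
    sample = [random.gauss(0, 1) for _ in range(n)]
    z = (sum(sample) / n) / (1 / sqrt(n))
    return 2 * (1 - NormalDist().cdf(abs(z)))


n_tests = 2000
p_values = [null_p_value() for _ in range(n_tests)]

# Share of tests that look "significant" even though H0 is true every time.
false_positive_rate = sum(p < 0.05 for p in p_values) / n_tests
print(f"share of p-values below 0.05: {false_positive_rate:.3f}")
```

The share should land near 0.05, so a researcher who runs many tests and reports only the small p-values will regularly report results that arose by chance.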

Best Practices

With all of this in mind, what should we do when we have our p-value? How can we prevent or reduce misuse of a p-value?

- Report the p-value along with the conclusion

- Specify the effect size (the value of the statistic)

- Define an analysis plan before looking at the data

- Interpret the p-value clearly to specify what it indicates

- Consider using an alternate statistical approach, the confidence interval, discussed next, when appropriate


Hypothesis to Be Tested: Definition and 4 Steps for Testing with Example


What Is Hypothesis Testing?

Hypothesis testing, sometimes called significance testing, is a statistical procedure in which an analyst tests an assumption regarding a population parameter. The methodology employed by the analyst depends on the nature of the data used and the reason for the analysis.

Hypothesis testing is used to assess the plausibility of a hypothesis by using sample data. Such data may come from a larger population, or from a data-generating process. The word "population" will be used for both of these cases in the following descriptions.

Key Takeaways

- Hypothesis testing is used to assess the plausibility of a hypothesis by using sample data.

- The test provides evidence concerning the plausibility of the hypothesis, given the data.

- Statistical analysts test a hypothesis by measuring and examining a random sample of the population being analyzed.

- The four steps of hypothesis testing include stating the hypotheses, formulating an analysis plan, analyzing the sample data, and analyzing the result.

How Hypothesis Testing Works

In hypothesis testing, an analyst tests a statistical sample, with the goal of providing evidence on the plausibility of the null hypothesis.

Statistical analysts test a hypothesis by measuring and examining a random sample of the population being analyzed. All analysts use a random population sample to test two different hypotheses: the null hypothesis and the alternative hypothesis.

The null hypothesis is usually a hypothesis of equality between population parameters; e.g., a null hypothesis may state that the population mean return is equal to zero. The alternative hypothesis is effectively the opposite of a null hypothesis (e.g., the population mean return is not equal to zero). Thus, they are mutually exclusive, and only one can be true. However, one of the two hypotheses will always be true.

The null hypothesis is a statement about a population parameter, such as the population mean, that is assumed to be true.

4 Steps of Hypothesis Testing

All hypotheses are tested using a four-step process:

- The first step is for the analyst to state the hypotheses.

- The second step is to formulate an analysis plan, which outlines how the data will be evaluated.

- The third step is to carry out the plan and analyze the sample data.

- The final step is to analyze the results and either reject the null hypothesis, or state that the null hypothesis is plausible, given the data.

Real-World Example of Hypothesis Testing

If, for example, a person wants to test that a penny has exactly a 50% chance of landing on heads, the null hypothesis would be that 50% is correct, and the alternative hypothesis would be that 50% is not correct.

Mathematically, the null hypothesis would be represented as H0: p = 0.5. The alternative hypothesis would be denoted Ha and be identical to the null hypothesis except with a not-equal sign, Ha: p ≠ 0.5, meaning that the probability of heads does not equal 50%.

A random sample of 100 coin flips is taken, and the null hypothesis is then tested. If it is found that the 100 coin flips were distributed as 40 heads and 60 tails, the analyst would assume that a penny does not have a 50% chance of landing on heads and would reject the null hypothesis and accept the alternative hypothesis.

If, on the other hand, there were 48 heads and 52 tails, then it is plausible that the coin could be fair and still produce such a result. In cases such as this where the null hypothesis is "accepted," the analyst states that the difference between the expected results (50 heads and 50 tails) and the observed results (48 heads and 52 tails) is "explainable by chance alone."
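
The coin example can be worked through with an exact binomial test. This is a minimal sketch under stated assumptions: the article does not say which test the analyst uses, so the exact two-sided binomial test here is one reasonable choice, and the function name `binomial_two_sided_p` is hypothetical.

```python
from math import comb

def binomial_two_sided_p(heads, flips, p_null=0.5):
    """Exact two-sided p-value for a binomial test.

    Sums the probabilities of all outcomes that are no more likely
    than the observed one under the null hypothesis.
    """
    def pmf(k):
        return comb(flips, k) * p_null**k * (1 - p_null) ** (flips - k)

    observed = pmf(heads)
    # Small tolerance so outcomes tied with the observed one are counted.
    return sum(
        pmf(k) for k in range(flips + 1) if pmf(k) <= observed * (1 + 1e-9)
    )

for heads in (40, 48):
    p_value = binomial_two_sided_p(heads, 100)
    print(f"{heads} heads out of 100: p = {p_value:.4f}")
```

The p-value for 48 heads is large, matching the "explainable by chance alone" reading. The p-value for 40 heads lands close to the conventional 0.05 cutoff, so the final decision depends on the significance level and the specific test fixed in the analysis plan.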

Some statisticians attribute the first hypothesis tests to satirical writer John Arbuthnot in 1710, who studied male and female births in England after observing that in nearly every year, male births exceeded female births by a slight proportion. Arbuthnot calculated that the probability of this happening by chance was small, and therefore it was due to “divine providence.”

What is Hypothesis Testing?

Hypothesis testing refers to a process used by analysts to assess the plausibility of a hypothesis by using sample data. In hypothesis testing, statisticians formulate two hypotheses: the null hypothesis and the alternative hypothesis. A null hypothesis states that there is no difference between two groups or conditions, while the alternative hypothesis states that there is a difference. Researchers evaluate statistical significance based on the probability of obtaining results at least as extreme as those observed if the null hypothesis were true.

What are the Four Key Steps Involved in Hypothesis Testing?

Hypothesis testing begins with an analyst stating two hypotheses, with only one that can be right. The analyst then formulates an analysis plan, which outlines how the data will be evaluated. Next, they move to the testing phase and analyze the sample data. Finally, the analyst analyzes the results and either rejects the null hypothesis or states that the null hypothesis is plausible, given the data.

What are the Benefits of Hypothesis Testing?

Hypothesis testing helps assess the accuracy of new ideas or theories by testing them against data. This allows researchers to determine whether the evidence supports their hypothesis, helping to avoid false claims and conclusions. Hypothesis testing also provides a framework for decision-making based on data rather than personal opinions or biases. By relying on statistical analysis, hypothesis testing helps to reduce the effects of chance and confounding variables, providing a robust framework for making informed conclusions.

What are the Limitations of Hypothesis Testing?

Hypothesis testing relies exclusively on data and doesn’t provide a comprehensive understanding of the subject being studied. Additionally, the accuracy of the results depends on the quality of the available data and the statistical methods used. Inaccurate data or inappropriate hypothesis formulation may lead to incorrect conclusions or failed tests. Hypothesis testing can also lead to errors: an analyst may reject a null hypothesis that is actually true (a Type I error) or fail to reject one that is actually false (a Type II error). These errors may result in false conclusions or missed opportunities to identify significant patterns or relationships in the data.
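
The two kinds of error, rejecting a null hypothesis that is true and failing to reject one that is false, can be quantified for a simple coin-flip test. The sketch below uses a hypothetical decision rule (reject "fair coin" when 100 flips give 40 or fewer heads, or 60 or more) and a hypothetical biased coin with a 60% chance of heads. Neither value comes from the article.

```python
from math import comb

def prob_heads_between(low, high, flips, p_heads):
    """P(low <= heads <= high) for a coin with P(heads) = p_heads."""
    return sum(
        comb(flips, k) * p_heads**k * (1 - p_heads) ** (flips - k)
        for k in range(low, high + 1)
    )

FLIPS = 100
# Hypothetical rule: fail to reject when the head count is 41 through 59.
KEEP_LOW, KEEP_HIGH = 41, 59

# Rejecting although the coin is actually fair.
type_1 = 1 - prob_heads_between(KEEP_LOW, KEEP_HIGH, FLIPS, 0.5)
# Failing to reject although the coin is actually biased (60% heads).
type_2 = prob_heads_between(KEEP_LOW, KEEP_HIGH, FLIPS, 0.6)

print(f"Rate of rejecting a true null:          {type_1:.3f}")
print(f"Rate of failing to reject a false null: {type_2:.3f}")
```

Widening the "fail to reject" range lowers the first rate and raises the second, which is the trade-off an analysis plan has to settle before the data are examined.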

The Bottom Line

Hypothesis testing refers to a statistical process that helps researchers and analysts determine the reliability of a study. By using a well-formulated hypothesis and a set of statistical tests, individuals or businesses can make inferences about the population that they are studying and draw conclusions based on the data presented. There are different types of hypothesis testing, each with its own set of rules and procedures. However, all hypothesis testing methods share the same four-step process: stating the hypotheses, formulating an analysis plan, analyzing the sample data, and analyzing the result. Hypothesis testing plays a vital part in the scientific process, helping to test assumptions and make better data-based decisions.


Test statistics | Definition, Interpretation, and Examples

Published on July 17, 2020 by Rebecca Bevans. Revised on June 22, 2023.

The test statistic is a number calculated from a statistical test of a hypothesis. It shows how closely your observed data match the distribution expected under the null hypothesis of that statistical test.

The test statistic is used to calculate the p value of your results, helping to decide whether to reject your null hypothesis.


A test statistic describes how closely the distribution of your data matches the distribution predicted under the null hypothesis of the statistical test you are using.

The distribution of data is how often each observation occurs, and can be described by its central tendency and variation around that central tendency. Different statistical tests predict different types of distributions, so it’s important to choose the right statistical test for your hypothesis.

The test statistic summarizes your observed data into a single number using the central tendency, variation, sample size, and number of predictor variables in your statistical model.

Generally, the test statistic is calculated as the pattern in your data (i.e., the correlation between variables or difference between groups) divided by a measure of the variability in the data (e.g., the standard deviation or standard error).
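
As one concrete instance of "pattern divided by variability", the sketch below computes a two-sample t statistic in its Welch form: the difference between two group means divided by the estimated standard error of that difference. The choice of Welch's form, the function name, and the data are all assumptions made for illustration. The measurements are made up and do not come from the article.

```python
from math import sqrt
from statistics import mean, stdev

def welch_t_statistic(sample_a, sample_b):
    """Difference in sample means divided by its estimated standard error."""
    difference = mean(sample_a) - mean(sample_b)
    standard_error = sqrt(
        stdev(sample_a) ** 2 / len(sample_a)
        + stdev(sample_b) ** 2 / len(sample_b)
    )
    return difference / standard_error

# Hypothetical measurements for two groups (made up for illustration).
group_a = [2.0, 2.2, 2.1, 1.9, 2.3]
group_b = [2.5, 2.7, 2.6, 2.4, 2.8]

print(f"t = {welch_t_statistic(group_a, group_b):.2f}")
```

A larger gap between the group means or less spread within each group both push the statistic further from zero.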

- Null hypothesis ( H 0 ): There is no correlation between temperature and flowering date.

- Alternate hypothesis ( H A or H 1 ): There is a correlation between temperature and flowering date.


Below is a summary of the most common test statistics, their hypotheses, and the types of statistical tests that use them.

Different statistical tests will have slightly different ways of calculating these test statistics, but the underlying hypotheses and interpretations of the test statistic stay the same.

In practice, you will almost always calculate your test statistic using a statistical program (R, SPSS, Excel, etc.), which will also calculate the p value of the test statistic. However, formulas to calculate these statistics by hand can be found online.

- a regression coefficient of 0.36

- a t value comparing that coefficient to the predicted range of regression coefficients under the null hypothesis of no relationship

The t value of the regression test is 2.36 – this is your test statistic.

For any combination of sample sizes and number of predictor variables, a statistical test will produce a predicted distribution for the test statistic. This shows the most likely range of values that will occur if your data follows the null hypothesis of the statistical test.

The more extreme your test statistic – the further to the edge of the range of predicted test values it is – the less likely it is that your data could have been generated under the null hypothesis of that statistical test.

The agreement between your calculated test statistic and the predicted values is described by the p value. The smaller the p value, the less likely your test statistic is to have occurred under the null hypothesis of the statistical test.

Because the test statistic is generated from your observed data, this ultimately means that the smaller the p value, the less likely it is that your data could have occurred if the null hypothesis was true.
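
The link between a test statistic and its p value can be sketched for the simplest case. The example below assumes the test statistic follows a standard normal distribution under the null hypothesis (a z test). A t value such as the regression example's would instead need the t distribution and its degrees of freedom, which are not given here. The function name is hypothetical.

```python
from statistics import NormalDist

def two_sided_p_value(z_statistic):
    """P(|Z| >= |z_statistic|) when Z is standard normal under the null."""
    return 2 * (1 - NormalDist().cdf(abs(z_statistic)))

# More extreme statistics give smaller p values.
for z in (0.5, 1.96, 3.0):
    print(f"z = {z}: p = {two_sided_p_value(z):.4f}")
```

The output shrinks as the statistic moves away from zero, which is the relationship described above: the further the statistic sits toward the edge of the predicted range, the smaller the p value.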

Test statistics can be reported in the results section of your research paper along with the sample size, p value of the test, and any characteristics of your data that will help to put these results into context.

Whether or not you need to report the test statistic depends on the type of test you are reporting.

By surveying a random subset of 100 trees over 25 years we found a statistically significant (p < 0.01) positive correlation between temperature and flowering dates (R² = 0.36, SD = 0.057).

In our comparison of mouse diet A and mouse diet B, we found that the lifespan on diet A (M = 2.1 years; SD = 0.12) was significantly shorter than the lifespan on diet B (M = 2.6 years; SD = 0.1), with an average difference of 6 months (t(80) = -12.75; p < 0.01).

