- Last updated November 18, 2021

Top Machine Learning Research Papers Released In 2021

- Published on November 18, 2021

- by Dr. Nivash Jeevanandam

Advances in machine learning and deep learning research are reshaping our technology. Both fields accomplished several astounding feats in 2021, and key research papers have led to technical advances used by billions of people. Research in this sector is advancing at a breakneck pace, and this list is meant to help you keep up. Here is a collection of the most important recent research papers.

Rebooting ACGAN: Auxiliary Classifier GANs with Stable Training

The authors of this work examined why ACGAN training becomes unstable as the number of classes in the dataset grows. They found that the instability stems from a gradient explosion problem caused by the unboundedness of the input feature vectors and the classifier’s poor classification capabilities during the early training stage. To alleviate the instability and reinforce ACGAN, they presented the Data-to-Data Cross-Entropy loss (D2D-CE) and the Rebooted Auxiliary Classifier Generative Adversarial Network (ReACGAN). Additionally, extensive tests demonstrate that ReACGAN is robust to hyperparameter selection and is compatible with a variety of architectures and differentiable augmentations.
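The link between unbounded feature vectors and exploding gradients can be illustrated with a small sketch. It is not the authors' D2D-CE loss: it assumes a plain linear softmax classifier, and the function names (`ce_weight_grad_norm`, `l2_normalize`) are hypothetical. With an untrained classifier, the cross-entropy gradient with respect to the weights grows in proportion to the feature norm, while L2-normalising the feature keeps it bounded.

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ce_weight_grad_norm(weights, feature, label):
    """Frobenius norm of d(cross-entropy)/d(weights) for a linear softmax classifier."""
    logits = [sum(w * f for w, f in zip(row, feature)) for row in weights]
    probs = softmax(logits)
    squared = 0.0
    for c, p in enumerate(probs):
        # dL/dW[c][k] = (p_c - 1[c == label]) * feature[k]
        err = p - (1.0 if c == label else 0.0)
        for f in feature:
            squared += (err * f) ** 2
    return math.sqrt(squared)

def l2_normalize(vec):
    """Project a vector onto the unit hypersphere (assumed remedy, for illustration)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return list(vec) if norm == 0.0 else [v / norm for v in vec]

# An untrained classifier (all-zero weights) over three classes.
weights = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
for feature in ([3.0, 4.0], [300.0, 400.0]):
    raw = ce_weight_grad_norm(weights, feature, label=0)
    bounded = ce_weight_grad_norm(weights, l2_normalize(feature), label=0)
    print(feature, raw, bounded)
```

In this setup the gradient norm equals the norm of the probability error times the feature norm, so once the feature has unit length it can never exceed the square root of 2.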

This article is ranked #1 on CIFAR-10 for Conditional Image Generation.

For the research paper, read here.

For code, see here.

Dense Unsupervised Learning for Video Segmentation

In this study, the authors presented a simple and computationally fast unsupervised strategy for learning dense space-time representations from unlabeled videos. The approach demonstrates rapid training convergence and a high degree of data efficiency. Furthermore, the researchers obtain video object segmentation (VOS) accuracy superior to previous results despite using a fraction of the previously necessary training data. The researchers acknowledge that the findings may be used maliciously, such as for unlawful surveillance. Separately, they are excited to investigate how the approach might be used to learn a broader spectrum of invariances by exploiting larger temporal windows in videos with complex (ego-)motion, which are more prone to disocclusions.

This study is ranked #1 on DAVIS 2017 for Unsupervised Video Object Segmentation (val).

Temporally-Consistent Surface Reconstruction using Metrically-Consistent Atlases

The authors offer an atlas-based technique for producing unsupervised temporally consistent surface reconstructions by requiring a point on the canonical shape representation to map to metrically consistent 3D locations on the reconstructed surfaces. The researchers envisage many potential applications for the method. For example, by substituting an image-based loss for the Chamfer distance, one may apply the method to RGB video sequences, which the researchers feel will spur development in video-based 3D reconstruction.
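For readers unfamiliar with the Chamfer distance mentioned above, here is a minimal sketch of one common variant: the mean squared nearest-neighbour distance, summed over both directions. Other variants use unsquared distances or sums instead of means, and the paper's exact formulation is not given here, so treat the choice below as an assumption. The function names are illustrative.

```python
def squared_dist(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer distance between two non-empty point sets."""
    # For every point in A, distance to its nearest neighbour in B (and vice versa).
    a_to_b = sum(min(squared_dist(p, q) for q in points_b) for p in points_a)
    b_to_a = sum(min(squared_dist(q, p) for p in points_a) for q in points_b)
    return a_to_b / len(points_a) + b_to_a / len(points_b)

# Two small 3D point clouds that differ in one point.
cloud_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
cloud_b = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
print(chamfer_distance(cloud_a, cloud_b))
```

This brute-force version costs time proportional to the product of the two set sizes, which is fine for illustration but would normally be replaced by a spatial index or a batched GPU implementation.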

This article is ranked #1 on ANIM in the category of Surface Reconstruction.

EdgeFlow: Achieving Practical Interactive Segmentation with Edge-Guided Flow

The researchers propose a novel interactive architecture called EdgeFlow that uses user interaction information without resorting to post-processing or iterative optimisation. With its coarse-to-fine network design, the proposed technique achieves state-of-the-art performance on common benchmarks. Additionally, the researchers created an efficient interactive segmentation tool that lets the user improve the segmentation result incrementally through flexible options.

This paper is ranked #1 on Interactive Segmentation on PASCAL VOC.

Learning Transferable Visual Models From Natural Language Supervision

The authors of this work examined whether the success of task-agnostic web-scale pre-training in natural language processing can be transferred to another domain. The findings indicate that adopting this formula leads to similar behaviours emerging in computer vision, and the authors examine the social ramifications of this line of research. To optimise their training objective, CLIP models learn to perform a range of tasks during pre-training. Using natural language prompting, CLIP can then use this task learning to enable zero-shot transfer to many existing datasets. At a large enough scale, this technique can compete with task-specific supervised models, although there is still much room for improvement.
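Zero-shot transfer through natural language prompting can be sketched as a nearest-prompt lookup in a shared embedding space. The sketch below is only an illustration: the 3-dimensional vectors are made-up stand-ins for the outputs of an image encoder and a text encoder, and `zero_shot_classify` is a hypothetical helper, not part of any CLIP library.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def zero_shot_classify(image_embedding, prompt_embeddings):
    """Pick the text prompt whose embedding is most similar to the image embedding."""
    scores = {
        prompt: cosine(image_embedding, emb)
        for prompt, emb in prompt_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores

# Made-up embeddings; a real system would obtain these from trained encoders.
prompt_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.3, 0.1]

label, scores = zero_shot_classify(image_embedding, prompt_embeddings)
print(label)
```

No classifier is trained for the new label set: changing the task only means changing the list of prompts.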

This research is ranked #1 on Zero-Shot Transfer Image Classification on SUN.

CoAtNet: Marrying Convolution and Attention for All Data Sizes

In this article, the researchers conduct a thorough examination of the properties of convolutions and transformers, resulting in a principled approach for combining them into a new family of models dubbed CoAtNet. Extensive experiments demonstrate that CoAtNet combines the advantages of ConvNets and Transformers, achieving state-of-the-art performance across a range of data sizes and compute budgets. Note that the article currently concentrates on ImageNet classification for model development. However, the researchers believe their approach is relevant to a broader range of applications, such as object detection and semantic segmentation.

This paper is ranked #1 on Image Classification on ImageNet (using extra training data).

SwinIR: Image Restoration Using Swin Transformer

The authors of this article propose SwinIR, an image restoration model based on the Swin Transformer. The model comprises three modules: shallow feature extraction, deep feature extraction, and high-quality image reconstruction. For deep feature extraction, the researchers employ a stack of residual Swin Transformer blocks (RSTB), each composed of Swin Transformer layers, a convolution layer, and a residual connection.
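The structure of a residual block described above (a sequence of layers, then a convolution, then a skip connection back to the block input) can be sketched in a few lines. This is a structural illustration only: `layers` and `conv` are hypothetical placeholder callables operating on flat lists of numbers, not real Swin Transformer layers or convolutions.

```python
def residual_block(features, layers, conv):
    """Run the layers in order, apply conv, then add the block input back."""
    out = features
    for layer in layers:
        out = layer(out)
    out = conv(out)
    # Residual connection: the block learns a correction on top of its input.
    return [x + y for x, y in zip(features, out)]

def deep_feature_extraction(features, blocks):
    """Stack several residual blocks; each block is a (layers, conv) pair."""
    out = features
    for layers, conv in blocks:
        out = residual_block(out, layers, conv)
    return out

# Placeholder operations standing in for transformer layers and a convolution.
double = lambda v: [2.0 * x for x in v]
shift = lambda v: [x + 1.0 for x in v]

print(residual_block([1.0, 2.0], layers=[double, double], conv=shift))
print(deep_feature_extraction([1.0, 2.0], blocks=[([double], shift)] * 2))
```

If the inner layers and convolution output zeros, the block reduces to the identity, which is one reason residual designs of this kind are generally considered easier to optimise when stacked deeply.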

This research article is ranked #1 on Image Super-Resolution on Manga109 – 4x upscaling.


Machine learning articles from across Nature Portfolio

Machine learning is the ability of a machine to improve its performance based on previous results. Machine learning methods enable computers to learn without being explicitly programmed and have multiple applications, for example, in the improvement of data mining algorithms.

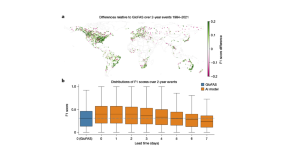

Artificial intelligence can provide accurate forecasts of extreme floods at global scale

Anthropogenic climate change is accelerating the hydrological cycle, causing an increase in the risk of flood-related disasters. A system that uses artificial intelligence allows the creation of reliable, global river flood forecasts, even in places where accurate local data are not available.

Capturing and modeling cellular niches from dissociated single-cell and spatial data

Cells interact with their local environment to enact global tissue function. By harnessing gene–gene covariation in cellular neighborhoods from spatial transcriptomics data, the covariance environment (COVET) niche representation and the environmental variational inference (ENVI) data integration method model phenotype–microenvironment interplay and reconstruct the spatial context of dissociated single-cell RNA sequencing datasets.

Creating a universal cell segmentation algorithm

Cell segmentation currently involves the use of various bespoke algorithms designed for specific cell types, tissues, staining methods and microscopy technologies. We present a universal algorithm that can segment all kinds of microscopy images and cell types across diverse imaging protocols.

Latest Research and Reviews

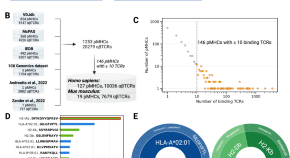

Deep learning predictions of TCR-epitope interactions reveal epitope-specific chains in dual alpha T cells

Prediction of the specificity of a T cell receptor from amino acid sequence has been performed using different methods and approaches. Here the authors use TCRab sequences with known specificity to develop a deep learning TCR-epitope interaction predictor and use this method to predict specificity of dual alpha chain TCRs and TCRs specific for different antigens.

- Giancarlo Croce

- Sara Bobisse

- David Gfeller

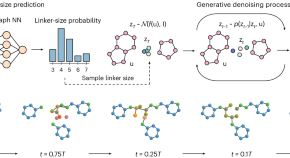

Equivariant 3D-conditional diffusion model for molecular linker design

Fragment-based molecular design uses chemical motifs and combines them into bio-active compounds. While this approach has grown in capability, molecular linker methods are restricted to linking fragments one by one, which makes the search for effective combinations harder. Igashov and colleagues use a conditional diffusion model to link multiple fragments in a one-shot generative process.

- Ilia Igashov

- Hannes Stärk

- Bruno Correia

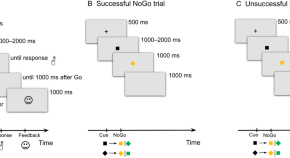

Machine learning reveals differential effects of depression and anxiety on reward and punishment processing

- Anna Grabowska

- Jakub Zabielski

- Magdalena Senderecka

Pathway-based signatures predict patient outcome, chemotherapy benefit and synthetic lethal dependencies in invasive lobular breast cancer

- John Alexander

- Koen Schipper

- Syed Haider

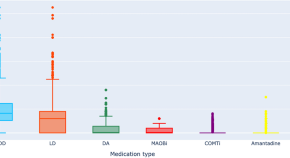

A decision support system based on recurrent neural networks to predict medication dosage for patients with Parkinson's disease

- Atiye Riasi

- Mehdi Delrobaei

- Mehri Salari

Deep learning assists in acute leukemia detection and cell classification via flow cytometry using the acute leukemia orientation tube

- Fu-Ming Cheng

- Shih-Chang Lo

- Kai-Cheng Hsu

News and Comment

Protein language models using convolutions.

Designer antibiotics by generative AI

Researchers developed an AI model that designs novel, synthesizable antibiotic compounds — several of which showed potent in vitro activity against priority pathogens.

- Karen O’Leary

Is ChatGPT corrupting peer review? Telltale words hint at AI use

A study of review reports identifies dozens of adjectives that could indicate text written with the help of chatbots.

- Dalmeet Singh Chawla

How to break big tech’s stranglehold on AI in academia

- Michał Woźniak

- Paweł Ksieniewicz

AI can help to tailor drugs for Africa — but Africans should lead the way

Computational models that require very little data could transform biomedical and drug development research in Africa, as long as infrastructure, trained staff and secure databases are available.

- Gemma Turon

- Mathew Njoroge

- Kelly Chibale

Three ways ChatGPT helps me in my academic writing

Generative AI can be a valuable aid in writing, editing and peer review – if you use it responsibly, says Dritjon Gruda.

- Dritjon Gruda

Journal of Machine Learning Research

The Journal of Machine Learning Research (JMLR), established in 2000, provides an international forum for the electronic and paper publication of high-quality scholarly articles in all areas of machine learning. All published papers are freely available online.

- 2024.02.18: Volume 24 completed; Volume 25 began.

- 2023.01.20: Volume 23 completed; Volume 24 began.

- 2022.07.20: New special issue on climate change.

- 2022.02.18: New blog post: Retrospectives from 20 Years of JMLR.

- 2022.01.25: Volume 22 completed; Volume 23 began.

- 2021.12.02: Message from outgoing co-EiC Bernhard Schölkopf.

- 2021.02.10: Volume 21 completed; Volume 22 began.

Latest papers

More PAC-Bayes bounds: From bounded losses, to losses with general tail behaviors, to anytime validity Borja Rodríguez-Gálvez, Ragnar Thobaben, Mikael Skoglund , 2024. [ abs ][ pdf ][ bib ]

Neural Hilbert Ladders: Multi-Layer Neural Networks in Function Space Zhengdao Chen , 2024. [ abs ][ pdf ][ bib ]

QDax: A Library for Quality-Diversity and Population-based Algorithms with Hardware Acceleration Felix Chalumeau, Bryan Lim, Raphaël Boige, Maxime Allard, Luca Grillotti, Manon Flageat, Valentin Macé, Guillaume Richard, Arthur Flajolet, Thomas Pierrot, Antoine Cully , 2024. (Machine Learning Open Source Software Paper) [ abs ][ pdf ][ bib ] [ code ]

Random Forest Weighted Local Fréchet Regression with Random Objects Rui Qiu, Zhou Yu, Ruoqing Zhu , 2024. [ abs ][ pdf ][ bib ] [ code ]

PhAST: Physics-Aware, Scalable, and Task-Specific GNNs for Accelerated Catalyst Design Alexandre Duval, Victor Schmidt, Santiago Miret, Yoshua Bengio, Alex Hernández-García, David Rolnick , 2024. [ abs ][ pdf ][ bib ] [ code ]

Unsupervised Anomaly Detection Algorithms on Real-world Data: How Many Do We Need? Roel Bouman, Zaharah Bukhsh, Tom Heskes , 2024. [ abs ][ pdf ][ bib ] [ code ]

Multi-class Probabilistic Bounds for Majority Vote Classifiers with Partially Labeled Data Vasilii Feofanov, Emilie Devijver, Massih-Reza Amini , 2024. [ abs ][ pdf ][ bib ]

Information Processing Equalities and the Information–Risk Bridge Robert C. Williamson, Zac Cranko , 2024. [ abs ][ pdf ][ bib ]

Nonparametric Regression for 3D Point Cloud Learning Xinyi Li, Shan Yu, Yueying Wang, Guannan Wang, Li Wang, Ming-Jun Lai , 2024. [ abs ][ pdf ][ bib ] [ code ]

AMLB: an AutoML Benchmark Pieter Gijsbers, Marcos L. P. Bueno, Stefan Coors, Erin LeDell, Sébastien Poirier, Janek Thomas, Bernd Bischl, Joaquin Vanschoren , 2024. [ abs ][ pdf ][ bib ] [ code ]

Materials Discovery using Max K-Armed Bandit Nobuaki Kikkawa, Hiroshi Ohno , 2024. [ abs ][ pdf ][ bib ]

Semi-supervised Inference for Block-wise Missing Data without Imputation Shanshan Song, Yuanyuan Lin, Yong Zhou , 2024. [ abs ][ pdf ][ bib ]

Adaptivity and Non-stationarity: Problem-dependent Dynamic Regret for Online Convex Optimization Peng Zhao, Yu-Jie Zhang, Lijun Zhang, Zhi-Hua Zhou , 2024. [ abs ][ pdf ][ bib ]

Scaling Speech Technology to 1,000+ Languages Vineel Pratap, Andros Tjandra, Bowen Shi, Paden Tomasello, Arun Babu, Sayani Kundu, Ali Elkahky, Zhaoheng Ni, Apoorv Vyas, Maryam Fazel-Zarandi, Alexei Baevski, Yossi Adi, Xiaohui Zhang, Wei-Ning Hsu, Alexis Conneau, Michael Auli , 2024. [ abs ][ pdf ][ bib ] [ code ]

MAP- and MLE-Based Teaching Hans Ulrich Simon, Jan Arne Telle , 2024. [ abs ][ pdf ][ bib ]

A General Framework for the Analysis of Kernel-based Tests Tamara Fernández, Nicolás Rivera , 2024. [ abs ][ pdf ][ bib ]

Overparametrized Multi-layer Neural Networks: Uniform Concentration of Neural Tangent Kernel and Convergence of Stochastic Gradient Descent Jiaming Xu, Hanjing Zhu , 2024. [ abs ][ pdf ][ bib ]

Sparse Representer Theorems for Learning in Reproducing Kernel Banach Spaces Rui Wang, Yuesheng Xu, Mingsong Yan , 2024. [ abs ][ pdf ][ bib ]

Exploration of the Search Space of Gaussian Graphical Models for Paired Data Alberto Roverato, Dung Ngoc Nguyen , 2024. [ abs ][ pdf ][ bib ]

The good, the bad and the ugly sides of data augmentation: An implicit spectral regularization perspective Chi-Heng Lin, Chiraag Kaushik, Eva L. Dyer, Vidya Muthukumar , 2024. [ abs ][ pdf ][ bib ] [ code ]

Stochastic Approximation with Decision-Dependent Distributions: Asymptotic Normality and Optimality Joshua Cutler, Mateo Díaz, Dmitriy Drusvyatskiy , 2024. [ abs ][ pdf ][ bib ]

Minimax Rates for High-Dimensional Random Tessellation Forests Eliza O'Reilly, Ngoc Mai Tran , 2024. [ abs ][ pdf ][ bib ]

Nonparametric Estimation of Non-Crossing Quantile Regression Process with Deep ReQU Neural Networks Guohao Shen, Yuling Jiao, Yuanyuan Lin, Joel L. Horowitz, Jian Huang , 2024. [ abs ][ pdf ][ bib ]

Spatial meshing for general Bayesian multivariate models Michele Peruzzi, David B. Dunson , 2024. [ abs ][ pdf ][ bib ] [ code ]

A Semi-parametric Estimation of Personalized Dose-response Function Using Instrumental Variables Wei Luo, Yeying Zhu, Xuekui Zhang, Lin Lin , 2024. [ abs ][ pdf ][ bib ]

Learning Non-Gaussian Graphical Models via Hessian Scores and Triangular Transport Ricardo Baptista, Youssef Marzouk, Rebecca Morrison, Olivier Zahm , 2024. [ abs ][ pdf ][ bib ] [ code ]

On the Learnability of Out-of-distribution Detection Zhen Fang, Yixuan Li, Feng Liu, Bo Han, Jie Lu , 2024. [ abs ][ pdf ][ bib ]

Win: Weight-Decay-Integrated Nesterov Acceleration for Faster Network Training Pan Zhou, Xingyu Xie, Zhouchen Lin, Kim-Chuan Toh, Shuicheng Yan , 2024. [ abs ][ pdf ][ bib ] [ code ]

On the Eigenvalue Decay Rates of a Class of Neural-Network Related Kernel Functions Defined on General Domains Yicheng Li, Zixiong Yu, Guhan Chen, Qian Lin , 2024. [ abs ][ pdf ][ bib ]

Tight Convergence Rate Bounds for Optimization Under Power Law Spectral Conditions Maksim Velikanov, Dmitry Yarotsky , 2024. [ abs ][ pdf ][ bib ]

ptwt - The PyTorch Wavelet Toolbox Moritz Wolter, Felix Blanke, Jochen Garcke, Charles Tapley Hoyt , 2024. (Machine Learning Open Source Software Paper) [ abs ][ pdf ][ bib ] [ code ]

Choosing the Number of Topics in LDA Models – A Monte Carlo Comparison of Selection Criteria Victor Bystrov, Viktoriia Naboka-Krell, Anna Staszewska-Bystrova, Peter Winker , 2024. [ abs ][ pdf ][ bib ] [ code ]

Functional Directed Acyclic Graphs Kuang-Yao Lee, Lexin Li, Bing Li , 2024. [ abs ][ pdf ][ bib ]

Unlabeled Principal Component Analysis and Matrix Completion Yunzhen Yao, Liangzu Peng, Manolis C. Tsakiris , 2024. [ abs ][ pdf ][ bib ] [ code ]

Distributed Estimation on Semi-Supervised Generalized Linear Model Jiyuan Tu, Weidong Liu, Xiaojun Mao , 2024. [ abs ][ pdf ][ bib ]

Towards Explainable Evaluation Metrics for Machine Translation Christoph Leiter, Piyawat Lertvittayakumjorn, Marina Fomicheva, Wei Zhao, Yang Gao, Steffen Eger , 2024. [ abs ][ pdf ][ bib ]

Differentially private methods for managing model uncertainty in linear regression Víctor Peña, Andrés F. Barrientos , 2024. [ abs ][ pdf ][ bib ]

Data Summarization via Bilevel Optimization Zalán Borsos, Mojmír Mutný, Marco Tagliasacchi, Andreas Krause , 2024. [ abs ][ pdf ][ bib ]

Pareto Smoothed Importance Sampling Aki Vehtari, Daniel Simpson, Andrew Gelman, Yuling Yao, Jonah Gabry , 2024. [ abs ][ pdf ][ bib ] [ code ]

Policy Gradient Methods in the Presence of Symmetries and State Abstractions Prakash Panangaden, Sahand Rezaei-Shoshtari, Rosie Zhao, David Meger, Doina Precup , 2024. [ abs ][ pdf ][ bib ] [ code ]

Scaling Instruction-Finetuned Language Models Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Yunxuan Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Alex Castro-Ros, Marie Pellat, Kevin Robinson, Dasha Valter, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, Jason Wei , 2024. [ abs ][ pdf ][ bib ]

Tangential Wasserstein Projections Florian Gunsilius, Meng Hsuan Hsieh, Myung Jin Lee , 2024. [ abs ][ pdf ][ bib ] [ code ]

Learnability of Linear Port-Hamiltonian Systems Juan-Pablo Ortega, Daiying Yin , 2024. [ abs ][ pdf ][ bib ] [ code ]

Off-Policy Action Anticipation in Multi-Agent Reinforcement Learning Ariyan Bighashdel, Daan de Geus, Pavol Jancura, Gijs Dubbelman , 2024. [ abs ][ pdf ][ bib ] [ code ]

On Unbiased Estimation for Partially Observed Diffusions Jeremy Heng, Jeremie Houssineau, Ajay Jasra , 2024. (Machine Learning Open Source Software Paper) [ abs ][ pdf ][ bib ] [ code ]

Improving Lipschitz-Constrained Neural Networks by Learning Activation Functions Stanislas Ducotterd, Alexis Goujon, Pakshal Bohra, Dimitris Perdios, Sebastian Neumayer, Michael Unser , 2024. [ abs ][ pdf ][ bib ] [ code ]

Mathematical Framework for Online Social Media Auditing Wasim Huleihel, Yehonathan Refael , 2024. [ abs ][ pdf ][ bib ]

An Embedding Framework for the Design and Analysis of Consistent Polyhedral Surrogates Jessie Finocchiaro, Rafael M. Frongillo, Bo Waggoner , 2024. [ abs ][ pdf ][ bib ]

Low-rank Variational Bayes correction to the Laplace method Janet van Niekerk, Haavard Rue , 2024. [ abs ][ pdf ][ bib ] [ code ]

Scaling the Convex Barrier with Sparse Dual Algorithms Alessandro De Palma, Harkirat Singh Behl, Rudy Bunel, Philip H.S. Torr, M. Pawan Kumar , 2024. [ abs ][ pdf ][ bib ] [ code ]

Causal-learn: Causal Discovery in Python Yujia Zheng, Biwei Huang, Wei Chen, Joseph Ramsey, Mingming Gong, Ruichu Cai, Shohei Shimizu, Peter Spirtes, Kun Zhang , 2024. (Machine Learning Open Source Software Paper) [ abs ][ pdf ][ bib ] [ code ]

Decomposed Linear Dynamical Systems (dLDS) for learning the latent components of neural dynamics Noga Mudrik, Yenho Chen, Eva Yezerets, Christopher J. Rozell, Adam S. Charles , 2024. [ abs ][ pdf ][ bib ] [ code ]

Existence and Minimax Theorems for Adversarial Surrogate Risks in Binary Classification Natalie S. Frank, Jonathan Niles-Weed , 2024. [ abs ][ pdf ][ bib ]

Data Thinning for Convolution-Closed Distributions Anna Neufeld, Ameer Dharamshi, Lucy L. Gao, Daniela Witten , 2024. [ abs ][ pdf ][ bib ] [ code ]

A projected semismooth Newton method for a class of nonconvex composite programs with strong prox-regularity Jiang Hu, Kangkang Deng, Jiayuan Wu, Quanzheng Li , 2024. [ abs ][ pdf ][ bib ]

Revisiting RIP Guarantees for Sketching Operators on Mixture Models Ayoub Belhadji, Rémi Gribonval , 2024. [ abs ][ pdf ][ bib ]

Monotonic Risk Relationships under Distribution Shifts for Regularized Risk Minimization Daniel LeJeune, Jiayu Liu, Reinhard Heckel , 2024. [ abs ][ pdf ][ bib ] [ code ]

Polygonal Unadjusted Langevin Algorithms: Creating stable and efficient adaptive algorithms for neural networks Dong-Young Lim, Sotirios Sabanis , 2024. [ abs ][ pdf ][ bib ]

Axiomatic effect propagation in structural causal models Raghav Singal, George Michailidis , 2024. [ abs ][ pdf ][ bib ]

Optimal First-Order Algorithms as a Function of Inequalities Chanwoo Park, Ernest K. Ryu , 2024. [ abs ][ pdf ][ bib ] [ code ]

Resource-Efficient Neural Networks for Embedded Systems Wolfgang Roth, Günther Schindler, Bernhard Klein, Robert Peharz, Sebastian Tschiatschek, Holger Fröning, Franz Pernkopf, Zoubin Ghahramani , 2024. [ abs ][ pdf ][ bib ]

Trained Transformers Learn Linear Models In-Context Ruiqi Zhang, Spencer Frei, Peter L. Bartlett , 2024. [ abs ][ pdf ][ bib ]

Adam-family Methods for Nonsmooth Optimization with Convergence Guarantees Nachuan Xiao, Xiaoyin Hu, Xin Liu, Kim-Chuan Toh , 2024. [ abs ][ pdf ][ bib ]

Efficient Modality Selection in Multimodal Learning Yifei He, Runxiang Cheng, Gargi Balasubramaniam, Yao-Hung Hubert Tsai, Han Zhao , 2024. [ abs ][ pdf ][ bib ]

A Multilabel Classification Framework for Approximate Nearest Neighbor Search Ville Hyvönen, Elias Jääsaari, Teemu Roos , 2024. [ abs ][ pdf ][ bib ] [ code ]

Probabilistic Forecasting with Generative Networks via Scoring Rule Minimization Lorenzo Pacchiardi, Rilwan A. Adewoyin, Peter Dueben, Ritabrata Dutta , 2024. [ abs ][ pdf ][ bib ] [ code ]

Multiple Descent in the Multiple Random Feature Model Xuran Meng, Jianfeng Yao, Yuan Cao , 2024. [ abs ][ pdf ][ bib ]

Mean-Square Analysis of Discretized Itô Diffusions for Heavy-tailed Sampling Ye He, Tyler Farghly, Krishnakumar Balasubramanian, Murat A. Erdogdu , 2024. [ abs ][ pdf ][ bib ]

Invariant and Equivariant Reynolds Networks Akiyoshi Sannai, Makoto Kawano, Wataru Kumagai , 2024. (Machine Learning Open Source Software Paper) [ abs ][ pdf ][ bib ] [ code ]

Personalized PCA: Decoupling Shared and Unique Features Naichen Shi, Raed Al Kontar , 2024. [ abs ][ pdf ][ bib ] [ code ]

Survival Kernets: Scalable and Interpretable Deep Kernel Survival Analysis with an Accuracy Guarantee George H. Chen , 2024. [ abs ][ pdf ][ bib ] [ code ]

On the Sample Complexity and Metastability of Heavy-tailed Policy Search in Continuous Control Amrit Singh Bedi, Anjaly Parayil, Junyu Zhang, Mengdi Wang, Alec Koppel , 2024. [ abs ][ pdf ][ bib ]

Convergence for nonconvex ADMM, with applications to CT imaging Rina Foygel Barber, Emil Y. Sidky , 2024. [ abs ][ pdf ][ bib ] [ code ]

Distributed Gaussian Mean Estimation under Communication Constraints: Optimal Rates and Communication-Efficient Algorithms T. Tony Cai, Hongji Wei , 2024. [ abs ][ pdf ][ bib ]

Sparse NMF with Archetypal Regularization: Computational and Robustness Properties Kayhan Behdin, Rahul Mazumder , 2024. [ abs ][ pdf ][ bib ] [ code ]

Deep Network Approximation: Beyond ReLU to Diverse Activation Functions Shijun Zhang, Jianfeng Lu, Hongkai Zhao , 2024. [ abs ][ pdf ][ bib ]

Effect-Invariant Mechanisms for Policy Generalization Sorawit Saengkyongam, Niklas Pfister, Predrag Klasnja, Susan Murphy, Jonas Peters , 2024. [ abs ][ pdf ][ bib ]

Pygmtools: A Python Graph Matching Toolkit Runzhong Wang, Ziao Guo, Wenzheng Pan, Jiale Ma, Yikai Zhang, Nan Yang, Qi Liu, Longxuan Wei, Hanxue Zhang, Chang Liu, Zetian Jiang, Xiaokang Yang, Junchi Yan , 2024. (Machine Learning Open Source Software Paper) [ abs ][ pdf ][ bib ] [ code ]

Heterogeneous-Agent Reinforcement Learning Yifan Zhong, Jakub Grudzien Kuba, Xidong Feng, Siyi Hu, Jiaming Ji, Yaodong Yang , 2024. [ abs ][ pdf ][ bib ] [ code ]

Sample-efficient Adversarial Imitation Learning Dahuin Jung, Hyungyu Lee, Sungroh Yoon , 2024. [ abs ][ pdf ][ bib ]

Stochastic Modified Flows, Mean-Field Limits and Dynamics of Stochastic Gradient Descent Benjamin Gess, Sebastian Kassing, Vitalii Konarovskyi , 2024. [ abs ][ pdf ][ bib ]

Rates of convergence for density estimation with generative adversarial networks Nikita Puchkin, Sergey Samsonov, Denis Belomestny, Eric Moulines, Alexey Naumov , 2024. [ abs ][ pdf ][ bib ]

Additive smoothing error in backward variational inference for general state-space models Mathis Chagneux, Elisabeth Gassiat, Pierre Gloaguen, Sylvain Le Corff , 2024. [ abs ][ pdf ][ bib ]

Optimal Bump Functions for Shallow ReLU networks: Weight Decay, Depth Separation, Curse of Dimensionality Stephan Wojtowytsch , 2024. [ abs ][ pdf ][ bib ]

Numerically Stable Sparse Gaussian Processes via Minimum Separation using Cover Trees Alexander Terenin, David R. Burt, Artem Artemev, Seth Flaxman, Mark van der Wilk, Carl Edward Rasmussen, Hong Ge , 2024. [ abs ][ pdf ][ bib ] [ code ]

On Tail Decay Rate Estimation of Loss Function Distributions Etrit Haxholli, Marco Lorenzi , 2024. [ abs ][ pdf ][ bib ] [ code ]

Deep Nonparametric Estimation of Operators between Infinite Dimensional Spaces Hao Liu, Haizhao Yang, Minshuo Chen, Tuo Zhao, Wenjing Liao , 2024. [ abs ][ pdf ][ bib ]

Post-Regularization Confidence Bands for Ordinary Differential Equations Xiaowu Dai, Lexin Li , 2024. [ abs ][ pdf ][ bib ]

On the Generalization of Stochastic Gradient Descent with Momentum Ali Ramezani-Kebrya, Kimon Antonakopoulos, Volkan Cevher, Ashish Khisti, Ben Liang , 2024. [ abs ][ pdf ][ bib ]

Pursuit of the Cluster Structure of Network Lasso: Recovery Condition and Non-convex Extension Shotaro Yagishita, Jun-ya Gotoh , 2024. [ abs ][ pdf ][ bib ]

Iterate Averaging in the Quest for Best Test Error Diego Granziol, Nicholas P. Baskerville, Xingchen Wan, Samuel Albanie, Stephen Roberts , 2024. [ abs ][ pdf ][ bib ] [ code ]

Nonparametric Inference under B-bits Quantization Kexuan Li, Ruiqi Liu, Ganggang Xu, Zuofeng Shang , 2024. [ abs ][ pdf ][ bib ]

Black Box Variational Inference with a Deterministic Objective: Faster, More Accurate, and Even More Black Box Ryan Giordano, Martin Ingram, Tamara Broderick , 2024. [ abs ][ pdf ][ bib ] [ code ]

On Sufficient Graphical Models Bing Li, Kyongwon Kim , 2024. [ abs ][ pdf ][ bib ]

Localized Debiased Machine Learning: Efficient Inference on Quantile Treatment Effects and Beyond. Nathan Kallus, Xiaojie Mao, Masatoshi Uehara, 2024.

On the Effect of Initialization: The Scaling Path of 2-Layer Neural Networks. Sebastian Neumayer, Lénaïc Chizat, Michael Unser, 2024.

Improving physics-informed neural networks with meta-learned optimization. Alex Bihlo, 2024.

A Comparison of Continuous-Time Approximations to Stochastic Gradient Descent. Stefan Ankirchner, Stefan Perko, 2024.

Critically Assessing the State of the Art in Neural Network Verification. Matthias König, Annelot W. Bosman, Holger H. Hoos, Jan N. van Rijn, 2024.

Estimating the Minimizer and the Minimum Value of a Regression Function under Passive Design. Arya Akhavan, Davit Gogolashvili, Alexandre B. Tsybakov, 2024.

Modeling Random Networks with Heterogeneous Reciprocity. Daniel Cirkovic, Tiandong Wang, 2024.

Exploration, Exploitation, and Engagement in Multi-Armed Bandits with Abandonment. Zixian Yang, Xin Liu, Lei Ying, 2024.

On Efficient and Scalable Computation of the Nonparametric Maximum Likelihood Estimator in Mixture Models. Yangjing Zhang, Ying Cui, Bodhisattva Sen, Kim-Chuan Toh, 2024.

Decorrelated Variable Importance. Isabella Verdinelli, Larry Wasserman, 2024.

Model-Free Representation Learning and Exploration in Low-Rank MDPs. Aditya Modi, Jinglin Chen, Akshay Krishnamurthy, Nan Jiang, Alekh Agarwal, 2024.

Seeded Graph Matching for the Correlated Gaussian Wigner Model via the Projected Power Method. Ernesto Araya, Guillaume Braun, Hemant Tyagi, 2024.

Fast Policy Extragradient Methods for Competitive Games with Entropy Regularization. Shicong Cen, Yuting Wei, Yuejie Chi, 2024.

Power of knockoff: The impact of ranking algorithm, augmented design, and symmetric statistic. Zheng Tracy Ke, Jun S. Liu, Yucong Ma, 2024.

Lower Complexity Bounds of Finite-Sum Optimization Problems: The Results and Construction. Yuze Han, Guangzeng Xie, Zhihua Zhang, 2024.

On Truthing Issues in Supervised Classification. Jonathan K. Su, 2024.

Top Machine Learning (ML) Research Papers Released in 2022

For every Machine Learning (ML) enthusiast, we bring you a curated list of the major breakthroughs in ML research in 2022.

Machine learning (ML) has gained much traction in recent years owing to the disruption and development it brings to existing technologies. Every month, hundreds of ML papers from various organizations and universities are uploaded to the internet to share the latest breakthroughs in this domain. As the year ends, we bring you the Top 22 ML research papers of 2022 that made a huge impact on the industry. The following list does not rank the papers; they were selected on the basis of the recognition and awards they received at international machine learning conferences.

- Bootstrapped Meta-Learning

Meta-learning is a promising field that investigates how machine learners or RL agents (including their hyperparameters) can learn how to learn more quickly and more robustly, and it is a crucial study area for enhancing the efficiency of AI agents.

This 2022 ML paper presents an algorithm that helps the meta-learner overcome difficult meta-optimization and myopic meta-objectives. The algorithm first bootstraps a target from the meta-learner and then optimizes the meta-learner by minimizing the distance to that target. Focusing on gradient-based meta-learning, the authors establish conditions that guarantee performance improvements and examine the potential benefits of bootstrapping. They highlight several interesting theoretical aspects of the algorithm, and the empirical results set a new state of the art (SOTA) for model-free agents on the Atari ALE benchmark and show increased efficiency in multi-task learning.

- Competition-level code generation with AlphaCode

One of the exciting uses for deep learning and large language models is programming. The rising need for coders has sparked a race to build tools that increase developer productivity and let non-developers create software. However, these models still perform badly on more challenging, unseen problems that require more than converting instructions into code.

This popular ML paper of 2022 introduces AlphaCode, a code generation system that, in simulated evaluations of programming contests on the Codeforces platform, achieved an average ranking in the top 54.3%. The paper describes the architecture, training, and testing of the deep-learning model.

- Restoring and attributing ancient texts using deep neural networks

Much of the epigraphic evidence of the ancient Greek era (inscriptions made on durable materials such as stone and pottery) was already damaged when it was discovered, rendering the inscribed texts illegible. Machine learning can help restore damaged inscriptions and identify their chronological and geographical origins, helping us better understand our past.

This ML paper proposed Ithaca, a machine learning model built by DeepMind for the textual restoration and the geographical and chronological attribution of ancient Greek inscriptions. Ithaca was trained on a database of just under 80,000 inscriptions from the Packard Humanities Institute. On its own, it restored damaged texts with 62% accuracy, compared with an average of 25% for historians working alone. When historians used Ithaca, their accuracy rose to 72%.

- Tensor Programs V: Tuning Large Neural Networks via Zero-Shot Hyperparameter Transfer

Tuning hyperparameters for large neural networks is expensive, because each candidate setting must be evaluated by training the network. This groundbreaking ML paper of 2022 suggests a novel zero-shot hyperparameter tuning paradigm for tuning massive neural networks more effectively. The research, co-authored by Microsoft Research and OpenAI, describes a method called µTransfer that leverages µP to zero-shot transfer hyperparameters from small models and produces near-optimal hyperparameters on large models without tuning them directly.

This method has been found to reduce the amount of trial and error necessary in the costly process of training large neural networks. By drastically lowering the need to guess which training hyperparameters to use, this approach speeds up research on massive neural networks like GPT-3 and perhaps its successors in the future.

- PaLM: Scaling Language Modeling with Pathways

Large neural networks trained for language generation and understanding have demonstrated outstanding results in various tasks in recent years. This trending 2022 ML paper introduced the Pathways Language Model (PaLM), a 540-billion-parameter dense, decoder-only autoregressive transformer trained on 780 billion tokens of high-quality text.

PaLM is based on a standard transformer architecture, but it uses only a decoder and adds modifications such as SwiGLU activations, parallel layers, multi-query attention, RoPE embeddings, shared input-output embeddings, no biases, and a SentencePiece vocabulary. The paper describes Google's latest flagship model surpassing several human baselines while achieving state-of-the-art results in numerous zero-, one-, and few-shot NLP tasks.
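To make one of these modifications concrete, here is a minimal sketch of a SwiGLU activation in plain Python. It assumes the usual definition, Swish(xW) multiplied elementwise by xV with no bias terms; the helper names (`swish`, `matvec`, `swiglu`) and the toy weight matrices are illustrative and are not taken from the PaLM codebase.

```python
import math

def swish(z):
    """Swish (SiLU) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))

def matvec(x, w):
    """Multiply a row vector x (length n) by a matrix w (n rows, m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def swiglu(x, w_gate, w_value):
    """SwiGLU(x) = Swish(x @ w_gate) * (x @ w_value), elementwise, without biases."""
    gate = [swish(g) for g in matvec(x, w_gate)]
    value = matvec(x, w_value)
    return [g * v for g, v in zip(gate, value)]

# Toy usage with hypothetical 2x2 identity weights.
identity = [[1.0, 0.0], [0.0, 1.0]]
hidden = swiglu([1.0, 2.0], identity, identity)
```

In a real transformer feed-forward block the output of `swiglu` would be projected back to the model dimension by a third weight matrix, which is omitted here to keep the sketch short.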

- Robust Speech Recognition via Large-Scale Weak Supervision

Machine learning developers have found it challenging to build speech-processing systems that learn from the vast volume of transcribed audio available on the internet. This year, OpenAI released Whisper, a new state-of-the-art (SotA) speech-to-text model that can transcribe audio and translate it into English. It was trained on 680,000 hours of voice data gathered from the internet. According to OpenAI, the model is robust to accents, background noise, and technical terminology. Additionally, it supports transcription in 99 different languages and translation from those languages into English.

The OpenAI ML paper notes that about one-third of the audio data is non-English. According to the paper, maintaining such a diverse dataset helped the model outperform other supervised state-of-the-art models.

- OPT: Open Pre-trained Transformer Language Models

Large language models have demonstrated extraordinary performance on numerous tasks (e.g., zero- and few-shot learning). However, these models are difficult to replicate without considerable funding because of their high computing costs. Although the public can occasionally interact with these models through paid APIs, full research access is still available only to a select group of well-funded labs. This limited access has hindered researchers' ability to understand how and why these language models work, which has stalled progress on initiatives to improve their robustness and reduce ethical drawbacks like bias and toxicity.

This popular 2022 ML paper introduces Open Pre-trained Transformers (OPT), a suite of decoder-only pre-trained transformers with 125 million to 175 billion parameters that the authors want to share freely and responsibly with interested researchers. The biggest model, OPT-175B (not included in the code repository but accessible upon request), is shown to perform similarly to GPT-3, which also has 175 billion parameters, while using just 15% of GPT-3's carbon footprint during development and training.

- A Path Towards Autonomous Machine Intelligence

Yann LeCun is a prominent and respected researcher in the field of artificial intelligence and machine learning. In June, his much-anticipated paper "A Path Towards Autonomous Machine Intelligence" was published on OpenReview. In it, LeCun offered a number of approaches and architectures that might be combined and used to create self-supervised autonomous machines.

He presented a modular architecture for autonomous machine intelligence that combines various models to operate as distinct elements of a machine's brain and mirror the animal brain. Because all the models are differentiable, they can be interconnected to power certain brain-like activities, such as identification and environmental response. The architecture incorporates ideas like a configurable predictive world model, behavior driven through intrinsic motivation, and hierarchical joint embedding architectures trained with self-supervised learning.

- LaMDA: Language Models for Dialog Applications

Despite tremendous advances in text generation, many of the chatbots available are still rather irritating and unhelpful. This 2022 ML paper from Google describes LaMDA (short for "Language Model for Dialogue Applications"), the system that caused an uproar this summer when a former Google engineer, Blake Lemoine, alleged that it was sentient. LaMDA is a family of large language models for dialog applications built on Google's Transformer architecture, which is known for its efficiency and speed in language tasks such as translation. Its most intriguing features are that it can be fine-tuned on human-annotated data and that it can consult external sources.

The model, which has 137 billion parameters, was pre-trained using 1.56 trillion words from publicly accessible conversation data and online publications. It is also fine-tuned on three metrics: quality, safety, and groundedness.

- Privacy for Free: How does Dataset Condensation Help Privacy?

One of the primary proposals in the award-winning ML paper is to use dataset condensation methods to retain data efficiency during model training while also providing membership privacy. The authors argue that dataset condensation, which was initially created to increase training effectiveness, is a better alternative to data generators for producing private data since it offers privacy for free.

Though existing data generators are used to produce differentially private data for model training to minimize unintended data leakage, they result in high training costs or subpar generalization performance for the sake of data privacy. This study was published by Sony AI and received the Outstanding Paper Award at ICML 2022.

- TranAD: Deep Transformer Networks for Anomaly Detection in Multivariate Time Series Data

The use of a model that converts time series into anomaly scores at each time step is essential in any system for detecting time series anomalies. Recognizing and diagnosing anomalies in multivariate time series data is critical for modern industrial applications. Unfortunately, developing a system capable of promptly and reliably identifying abnormal observations is challenging. This is attributed to a shortage of anomaly labels, excessive data volatility, and the expectations of modern applications for ultra-low inference times.

In this study, the authors present TranAD, a deep transformer network-based anomaly detection and diagnosis model that leverages attention-based sequence encoders to perform inference quickly while remaining aware of the broader temporal trends in the data. TranAD employs adversarial training to achieve stability and focus score-based self-conditioning to enable robust multi-modal feature extraction. The paper reports that extensive experiments on six publicly accessible datasets show that TranAD can outperform state-of-the-art baseline methods in detection and diagnosis, with data- and time-efficient training.
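The idea of turning a multivariate series into one anomaly score per time step can be sketched generically. The example below is not TranAD: it assumes some model has already produced a reconstruction of the series, scores each step by mean squared reconstruction error, and flags steps above a fixed threshold. The function names, the toy data, and the threshold value are all hypothetical.

```python
def anomaly_scores(observed, reconstructed):
    """Per-time-step score: mean squared error across the variables at that step."""
    return [
        sum((o - r) ** 2 for o, r in zip(obs, rec)) / len(obs)
        for obs, rec in zip(observed, reconstructed)
    ]

def flag_anomalies(scores, threshold):
    """Mark a time step as anomalous when its score exceeds the threshold."""
    return [s > threshold for s in scores]

# Toy series with two variables and three time steps (illustrative values only).
observed = [[1.0, 2.0], [1.0, 2.0], [5.0, 9.0]]
reconstructed = [[1.0, 2.0], [1.1, 2.0], [1.0, 2.0]]

scores = anomaly_scores(observed, reconstructed)
flags = flag_anomalies(scores, threshold=1.0)
```

In practice the threshold is usually chosen from the score distribution on held-out data rather than fixed by hand.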

- Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding

In the last few years, generative models called "diffusion models" have become increasingly popular. This year, these models captured the excitement of AI enthusiasts around the world.

Going beyond earlier text-to-image technology, this outstanding 2022 ML paper introduced Imagen, the viral text-to-image diffusion model from Google. The model achieves a new state-of-the-art FID score of 7.27 on the COCO dataset by combining the deep language understanding of transformer-based large language models with the photorealistic image-generating capabilities of diffusion models. A frozen text-only language model provides the text representation, and a base diffusion model followed by two super-resolution upsampling stages, up to 1024×1024, produces the images. It employs several training approaches, including classifier-free guidance, which trains the model for both conditional and unconditional generation. Another important feature of Imagen is dynamic thresholding, which stops pixel values from saturating in specific areas of the picture during the diffusion process, a behavior that reduces image quality, particularly when the weight placed on text-conditional generation is large.
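The two sampling techniques named above can be sketched on plain Python lists. This is a simplified illustration under stated assumptions, not Imagen's implementation: the guidance formula is the common form `uncond + w * (cond - uncond)`, the percentile is taken with a simple nearest-rank rule, and the function names and toy values are hypothetical.

```python
def guided_prediction(eps_cond, eps_uncond, w):
    """Classifier-free guidance: move the unconditional prediction toward
    the conditional one, scaled by the guidance weight w."""
    return [u + w * (c - u) for c, u in zip(eps_cond, eps_uncond)]

def dynamic_threshold(x0, percentile=0.995):
    """Dynamic thresholding: pick s as a high percentile of |x0|; if s > 1,
    clip x0 to [-s, s] and divide by s so values land back in [-1, 1]."""
    mags = sorted(abs(v) for v in x0)
    idx = min(len(mags) - 1, int(percentile * len(mags)))  # nearest-rank percentile
    s = max(1.0, mags[idx])
    return [max(-s, min(s, v)) / s for v in x0]

# Toy usage: a large guidance weight can push predicted pixels outside [-1, 1].
eps = guided_prediction([1.0, 2.0], [0.0, 1.0], w=3.0)
pixels = dynamic_threshold([0.5, -3.0, 1.5, 0.2], percentile=0.5)
```

When every predicted value already lies in [-1, 1], `s` stays at 1 and the input is returned unchanged, which is why the technique mainly matters at high guidance weights.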

- No Language Left Behind: Scaling Human-Centered Machine Translation

This ML paper introduced one of the most popular Meta projects of 2022: NLLB-200. The paper describes how Meta built and open-sourced this state-of-the-art AI model at FAIR, which is capable of translating between 200 languages. It covers every aspect of the technology: language analysis, ethical considerations, impact analysis, and benchmarking.

Making technology accessible in every language ensures that everyone can benefit from its growth, no matter what language they speak. Meta claims that several languages that NLLB-200 translates, such as Kamba and Lao, are not currently supported by any translation systems in use. The tech behemoth also created a dataset called "FLORES-200" to evaluate NLLB-200 and show that its translations are accurate. According to Meta, NLLB-200 offers translations that are on average 44% higher in quality than those of its prior model.

- A Generalist Agent

AI pundits believe that multimodality will play a huge role in the future of Artificial General Intelligence (AGI). One of the most talked ML papers of 2022 by DeepMind introduces Gato – a generalist agent . This AGI agent is a multi-modal, multi-task, multi-embodiment network, which means that the same neural network (i.e. a single architecture with a single set of weights) can do all tasks while integrating inherently diverse types of inputs and outputs.

DeepMind claims that the general agent can be improved with new data to perform even better on a wider range of tasks. They argue that having a general-purpose agent reduces the need for hand-crafting policy models for each region, enhances the volume and diversity of training data, and enables continuous advances in the data, computing, and model scales. A general-purpose agent can also be viewed as the first step toward artificial general intelligence, which is the ultimate goal of AGI.

Gato demonstrates the versatility of transformer-based machine learning architectures by exhibiting their use in a variety of applications. Unlike previous neural network systems, each tailored to a single task such as playing games, stacking blocks with a real robot arm, reading words, or captioning images, Gato is versatile enough to perform all of these tasks on its own, using only a single set of weights and a relatively simple architecture.

- The Forward-Forward Algorithm: Some Preliminary Investigations

AI pioneer Geoffrey Hinton is known for co-authoring influential papers on backpropagation and deep convolutional neural networks. In his latest paper, presented at NeurIPS 2022, Hinton proposed the “forward-forward algorithm,” a new learning algorithm for artificial neural networks based on our understanding of neural activations in the brain. This approach draws inspiration from Boltzmann machines (Hinton and Sejnowski, 1986) and noise contrastive estimation (Gutmann and Hyvärinen, 2010). According to Hinton, forward-forward, which is still in its experimental stages, can substitute the forward and backward passes of backpropagation with two forward passes, one with positive data and the other with negative data that the network itself could generate. Further, the algorithm could make better use of low-power analog hardware and provide a better explanation for the brain’s cortical learning process.
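The two-forward-pass idea can be sketched for a single layer. The example below is a toy illustration, not the paper's code: it assumes a "goodness" equal to the sum of squared ReLU activities and a logistic probability that an input is positive, and the threshold `theta`, learning rate, starting weights, and the two hand-picked inputs are all made up for the demonstration.

```python
import math


def layer_forward(weights, x):
    """ReLU layer: one output per weight row."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def goodness(activities):
    """Sum of squared activities of a layer."""
    return sum(a * a for a in activities)


def local_update(weights, x, is_positive, theta=1.0, lr=0.1):
    """One forward pass plus a purely local weight update (no backward pass).

    Raises goodness above theta for positive data, lowers it for negative data.
    """
    y = layer_forward(weights, x)
    z = max(-60.0, min(60.0, goodness(y) - theta))  # clamp to keep exp() safe
    p_positive = 1.0 / (1.0 + math.exp(-z))
    # Gradient of the logistic loss with respect to goodness.
    dloss_dgood = -(1.0 - p_positive) if is_positive else p_positive
    for j, row in enumerate(weights):
        for i in range(len(row)):
            # d(goodness)/d(w_ji) = 2 * y_j * x_i for an active ReLU unit.
            row[i] -= lr * dloss_dgood * 2.0 * y[j] * x[i]


def train_toy_layer(steps=200, theta=1.0):
    """Train on one positive and one negative input; return their goodness."""
    weights = [[0.5, 0.5], [0.3, 0.7]]  # hypothetical starting weights
    positive, negative = [1.0, 0.0], [0.0, 1.0]
    for _ in range(steps):
        local_update(weights, positive, True, theta)
        local_update(weights, negative, False, theta)
    return (goodness(layer_forward(weights, positive)),
            goodness(layer_forward(weights, negative)))
```

Each update only uses quantities available at the layer itself, which is the property that makes the scheme interesting as an alternative to propagating errors backward.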

Without employing complicated regularizers, the algorithm obtained a test error rate of about 1.4 percent on the MNIST dataset in an empirical study, suggesting that it can be about as effective as backpropagation on this task.

The paper also suggests a novel “mortal computation” model that the forward-forward algorithm could enable and that may help explain the brain’s energy-efficient processes.

- Focal Modulation Networks

In humans, the ciliary muscles alter the shape of the eye's lens, and hence its radius of curvature, to focus on near or distant objects. Changing the shape of the lens changes its focal length. Mimicking this behavior of focal modulation in computer vision systems can be tricky.

This machine learning paper introduces FocalNets, networks in which self-attention (SA) is replaced entirely by a focal modulation module for modeling token interactions in vision. The attention-free design outperforms SoTA self-attention techniques on a wide range of visual benchmarks. According to the paper, focal modulation consists of three parts:

a. hierarchical contextualization, implemented using a stack of depth-wise convolutional layers, to encode visual contexts from short to long ranges;

b. gated aggregation to selectively gather contexts for each query token based on its content; and

c. element-wise modulation or affine modification to inject the gathered context into the query.
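The three parts listed above can be illustrated with a deliberately tiny one-dimensional, single-channel sketch. It is not the FocalNet implementation: a moving average stands in for each depth-wise convolution, fixed `gate_weights` stand in for the learned projection that produces the gates, and the query projection is the identity. All names and default values here are assumptions.

```python
def local_average(values, radius):
    """Average each position with its neighbours within `radius`.

    Stand-in for a depth-wise convolution with a fixed, uniform kernel.
    """
    n = len(values)
    out = []
    for i in range(n):
        window = values[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def focal_modulation_1d(tokens, radii=(1, 2, 4), gate_weights=(0.5, 0.3, 0.2)):
    """Toy focal modulation over a list of scalar tokens."""
    if len(radii) != len(gate_weights):
        raise ValueError("need one gate weight per context level")

    # (a) Hierarchical contextualization: stacking the filters means each
    # level summarises a wider neighbourhood than the one before it.
    contexts = []
    current = tokens
    for radius in radii:
        current = local_average(current, radius)
        contexts.append(current)

    outputs = []
    for i, query in enumerate(tokens):
        # (b) Gated aggregation: gates depend on the token's own content.
        gates = [g * query for g in gate_weights]
        modulator = sum(gate * ctx[i] for gate, ctx in zip(gates, contexts))
        # (c) Element-wise modulation: inject the context into the query.
        outputs.append(query * modulator)
    return outputs
```

Unlike self-attention, nothing here compares every token with every other token; the context is gathered first and then applied to each query, so the cost grows linearly with the number of tokens.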

- Learning inverse folding from millions of predicted structures

The field of structural biology is being fundamentally changed by cutting-edge technologies in machine learning, protein structure prediction, and innovative ultrafast structural aligners. Time and money are no longer obstacles to obtaining precise protein models and extensively annotating their functionalities. However, determining a protein sequence from its backbone atom coordinates has remained a challenge for scientists. To date, machine learning approaches to this challenge have been constrained by the number of experimentally determined protein structures available.

In this ICML Outstanding Paper (Runner Up), the authors tackle this problem by increasing the training data by almost three orders of magnitude, using AlphaFold2 to predict structures for 12 million protein sequences. With this additional data, a sequence-to-sequence transformer with invariant geometric input processing layers achieves 51% native sequence recovery on structurally held-out backbones, and 72% recovery for buried residues. This is an improvement of almost 10 percentage points over previous techniques. The model also generalises to a range of more difficult tasks, including the design of protein complexes, partly masked structures, binding interfaces, and multiple states.
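The recovery figures quoted above are per-residue agreement rates between a designed sequence and the native one. A minimal sketch of that metric follows; the function name, the optional `positions` argument for restricting the count to a subset such as buried residues, and the example sequences are illustrative assumptions, not taken from the paper's code.

```python
def sequence_recovery(native, predicted, positions=None):
    """Fraction of positions where the predicted residue matches the native one.

    `positions` optionally restricts the comparison to a subset of indices,
    for example residues classified as buried.
    """
    if len(native) != len(predicted):
        raise ValueError("sequences must have the same length")
    indices = list(range(len(native)) if positions is None else positions)
    if not indices:
        raise ValueError("no positions to compare")
    matches = sum(1 for i in indices if native[i] == predicted[i])
    return matches / len(indices)


# Made-up eight-residue example: two of eight residues differ.
overall = sequence_recovery("MKTAYIAK", "MKSAYLAK")
# Same pair, restricted to a hypothetical set of buried positions.
buried = sequence_recovery("MKTAYIAK", "MKSAYLAK", positions=[2, 3, 4])
```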

- MineDojo: Building Open-Ended Embodied Agents with Internet-Scale Knowledge

Within the AI research community, using video games as a training medium for AI has gained popularity. Autonomous agents trained this way have had great success in Atari games, StarCraft, Dota, and Go. Despite these advances, the agents do not generalize beyond a narrow range of activities, in contrast to humans, who continually learn from open-ended tasks.

This thought-provoking 2022 ML paper proposes MineDojo, a unique framework for embodied agent research based on the well-known game Minecraft. In addition to building an internet-scale knowledge base of Minecraft videos, tutorials, wiki pages, and forum discussions, MineDojo provides a simulation suite with thousands of open-ended tasks. Using MineDojo data, the authors propose a unique agent learning methodology that employs large pre-trained video-language models as a learned reward function. Without requiring a manually designed dense shaping reward, the MineDojo agent can perform a wide range of open-ended tasks that are specified in free-form language.

- Is Out-of-Distribution Detection Learnable?

Supervised machine learning models are frequently trained under the closed-world assumption, which assumes that the distribution of the test data will resemble that of the training data. This assumption often fails in real-world use, which causes a considerable decline in performance. While this performance loss is acceptable for applications like product recommendations, it is not for safety-critical systems such as self-driving cars, where the data distribution typically drifts over time. For these systems, developing an out-of-distribution (OOD) detection algorithm is crucial to preventing inaccurate predictions.

In this paper, the authors explore the probably approximately correct (PAC) learning theory of OOD detection, which researchers have posed as an open problem, to study when OOD detection is learnable. They first identify a necessary condition for the learnability of OOD detection. They then prove a number of impossibility theorems for the learnability of OOD detection in several different scenarios.

- Gradient Descent: The Ultimate Optimizer

Gradient descent is a popular optimization approach for training machine learning models and neural networks. The ultimate aim of any machine learning (neural network) method is to optimize parameters, but selecting the ideal step size for an optimizer is difficult since it entails lengthy and error-prone manual work. Many strategies exist for automated hyperparameter optimization; however, they often incorporate additional hyperparameters to govern the hyperparameter optimization process. In this study, MIT CSAIL and Meta researchers offer a unique approach that allows gradient descent optimizers like SGD and Adam to tune their hyperparameters automatically.

They propose learning the hyperparameters by gradient descent itself, as well as learning the hyper-hyperparameters via gradient descent, and so on indefinitely. This paper describes an efficient approach for allowing gradient descent optimizers to autonomously adjust their own hyperparameters, which may be stacked recursively to many levels. As these gradient-based optimizer towers grow taller, they become substantially less sensitive to the choice of top-level hyperparameters, reducing the burden on the user to search for optimal values.
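A single level of this idea can be written out by hand for plain SGD. The sketch below is only an illustration under simplifying assumptions: it handles one scalar parameter, derives the gradient with respect to the step size manually rather than through automatic differentiation, and does not stack further levels. The function name and the values of `alpha` and `kappa` are hypothetical.

```python
def sgd_with_learned_step_size(grad_fn, w, alpha=0.01, kappa=0.001, steps=100):
    """SGD on a scalar parameter whose step size is itself tuned by gradient descent.

    alpha is the step size being learned; kappa is the fixed step size of the
    one-level "hyper-optimizer" that adjusts alpha.
    """
    prev_grad = None
    for _ in range(steps):
        grad = grad_fn(w)
        if prev_grad is not None:
            # Since w_t = w_{t-1} - alpha * grad_{t-1}, the chain rule gives
            # d f(w_t) / d alpha = -grad_t * grad_{t-1}.
            hypergrad = -grad * prev_grad
            alpha -= kappa * hypergrad
        w -= alpha * grad
        prev_grad = grad
    return w, alpha


# Minimise f(w) = (w - 3)^2, whose gradient is 2 * (w - 3), starting from w = 0.
final_w, final_alpha = sgd_with_learned_step_size(lambda w: 2.0 * (w - 3.0), 0.0)
```

When consecutive gradients point the same way, the step size grows; when they flip sign (an overshoot), it shrinks. A deliberately small initial `alpha` is therefore corrected during training, which is the reduced sensitivity the paragraph above describes.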

- ProcTHOR: Large-Scale Embodied AI Using Procedural Generation

Embodied AI is a developing field of study that has been influenced by recent advancements in artificial intelligence, machine learning, and computer vision. It studies agents that learn by interacting with their environment, as humans and animals do, and tries to carry that connection between perception and action over to artificial systems. The paper proposes ProcTHOR, a framework for procedural generation of Embodied AI environments. ProcTHOR allows researchers to sample arbitrarily large datasets of diverse, interactive, customisable, and performant virtual environments in order to train and assess embodied agents across navigation, interaction, and manipulation tasks.

According to the authors, models trained on ProcTHOR using only RGB images and without any explicit mapping or human task supervision achieve cutting-edge results in 6 embodied AI benchmarks for navigation, rearrangement, and arm manipulation, including the ongoing Habitat2022, AI2-THOR Rearrangement2022, and RoboTHOR challenges. The paper received the Outstanding Paper award at NeurIPS 2022.

- A Commonsense Knowledge Enhanced Network with Retrospective Loss for Emotion Recognition in Spoken Dialog

Emotion Recognition in Spoken Dialog (ERSD) has recently attracted a lot of attention due to the growth of open conversational data and the value of integrating emotional states into intelligent spoken human-computer interactions. Additionally, it has been demonstrated that recognizing emotions makes it possible to track the development of human-computer interactions, allowing conversational strategies to be adjusted dynamically and influencing the result (e.g., customer feedback). However, the limited size of current ERSD datasets restricts model development.

This ML paper proposes a Commonsense Knowledge Enhanced Network (CKE-Net) with a retrospective loss to carry out dialog modeling, external knowledge integration, and historical state retrospect hierarchically.

Machine Intelligence

Google is at the forefront of innovation in Machine Intelligence, with active research exploring virtually all aspects of machine learning, including deep learning and more classical algorithms. Exploring theory as well as application, much of our work on language, speech, translation, visual processing, ranking and prediction relies on Machine Intelligence. In all of those tasks and many others, we gather large volumes of direct or indirect evidence of relationships of interest, applying learning algorithms to understand and generalize.

Machine Intelligence at Google raises deep scientific and engineering challenges, allowing us to contribute to the broader academic research community through technical talks and publications in major conferences and journals. Contrary to much of current theory and practice, the statistics of the data we observe shift rapidly, the features of interest change as well, and the volume of data often requires enormous computation capacity. When learning systems are placed at the core of interactive services in a fast-changing and sometimes adversarial environment, techniques including deep learning and statistical models need to be combined with ideas from control and game theory.

Some of our teams.

Algorithms & optimization

Applied science

Climate and sustainability

Graph mining

Learning theory

Market algorithms

Operations research

Security, privacy and abuse

System performance

We're always looking for more talented, passionate people.

Advertisement

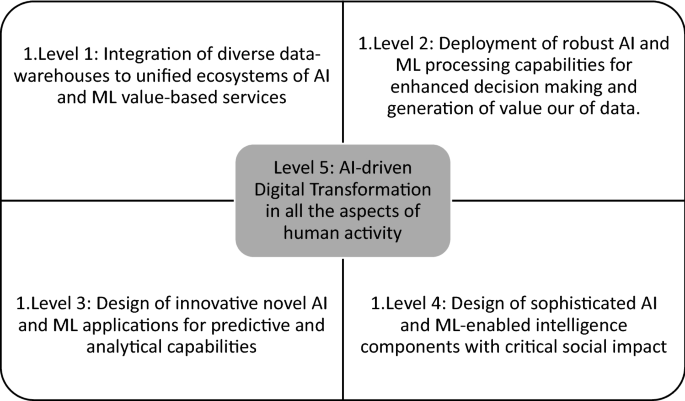

Artificial intelligence and machine learning research: towards digital transformation at a global scale

- Published: 17 April 2021

- Volume 13, pages 3319–3321 (2022)

- Akila Sarirete 1 ,

- Zain Balfagih 1 ,

- Tayeb Brahimi 1 ,

- Miltiadis D. Lytras 1 , 2 &

- Anna Visvizi 3 , 4
