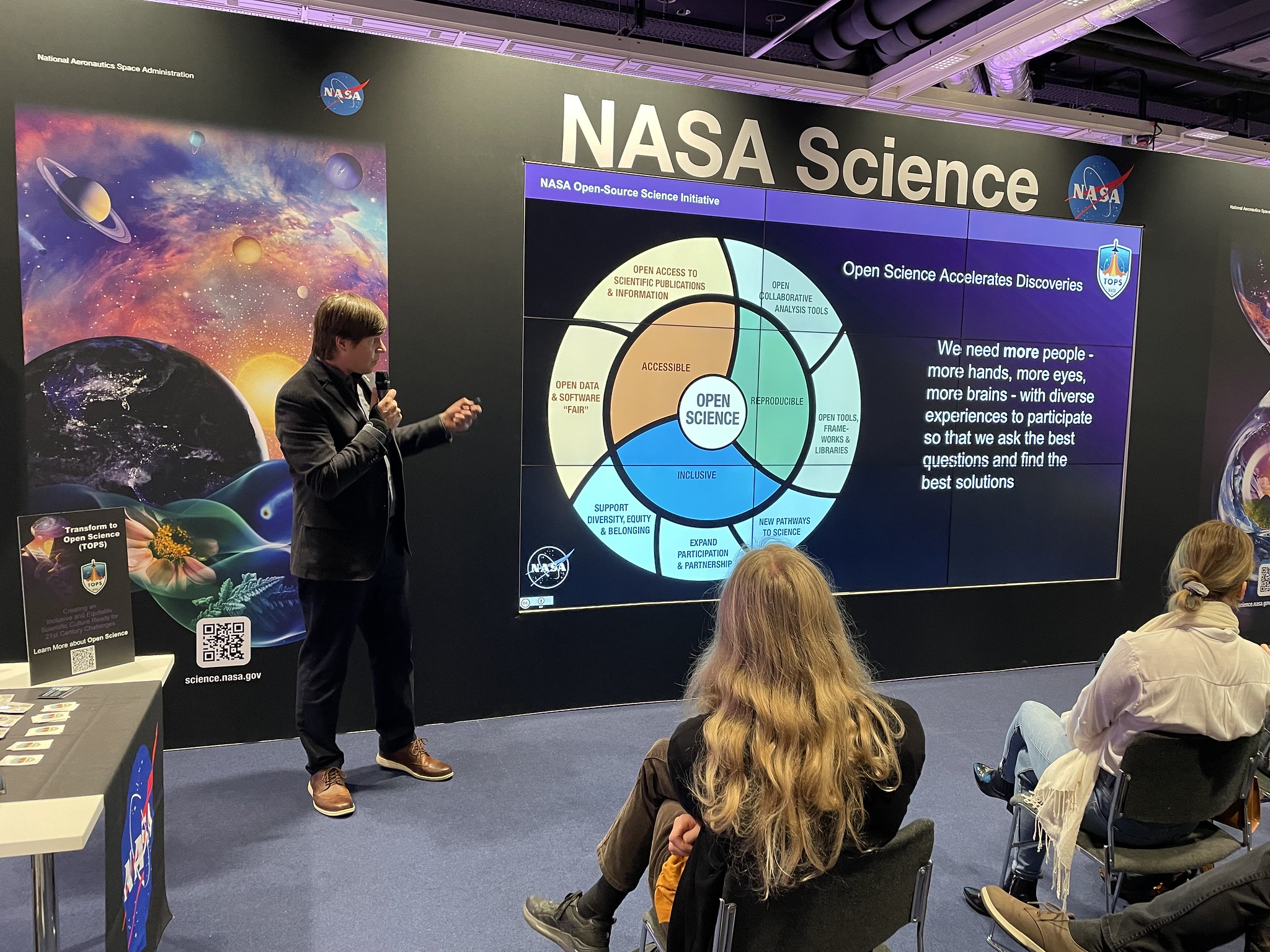

Open Science at NASA

NASA is making a long-term commitment to building an inclusive open science community over the next decade. Open-source science is a commitment to the open sharing of software, data, and knowledge (algorithms, papers, documents, ancillary information) as early as possible in the scientific process.

Open Principles

The principles of open-source science are to make publicly funded scientific research transparent, inclusive, accessible, and reproducible. Advances in technology, including collaborative tools and cloud computing, help enable open-source science, but technology alone is insufficient. Open-source science requires a culture shift to a more inclusive, transparent, and collaborative scientific process, which will increase the pace and quality of scientific progress.

Open Science Facts

Open Transparent Science: Both the scientific process and results should be visible, accessible, and understandable.

Open Inclusive Science: Process and participants should welcome participation by and collaboration with diverse people and organizations.

Open Accessible Science: Data, tools, software, documentation, and publications should be accessible to all (FAIR).

Open Reproducible Science: Scientific process and results should be open such that they are reproducible by members of the community.

Why Do Open Science?

- Broadens participation and fosters greater collaboration in scientific investigations by lowering the barriers to entry into scientific exploration

- Generates greater impact and more citations to scientific results

Open Culture

To help build a culture of open science, NASA is championing a new initiative: the Open-Source Science Initiative (OSSI). OSSI is a comprehensive program of activities to enable and support moving science towards openness, including policy adjustments, supporting open-source software, and enabling cyberinfrastructure. OSSI aims to implement NASA’s Strategy for Data Management and Computing for Groundbreaking Science 2019-2024, which was developed through community input.

Open Science Features and Events

Unveiling the Sun: NASA’s Open Data Approach to Solar Eclipse Research

As the world anticipates the upcoming total solar eclipse on April 8, 2024, NASA is preparing for an extraordinary opportunity for scientific discovery, open collaboration, and public engagement.

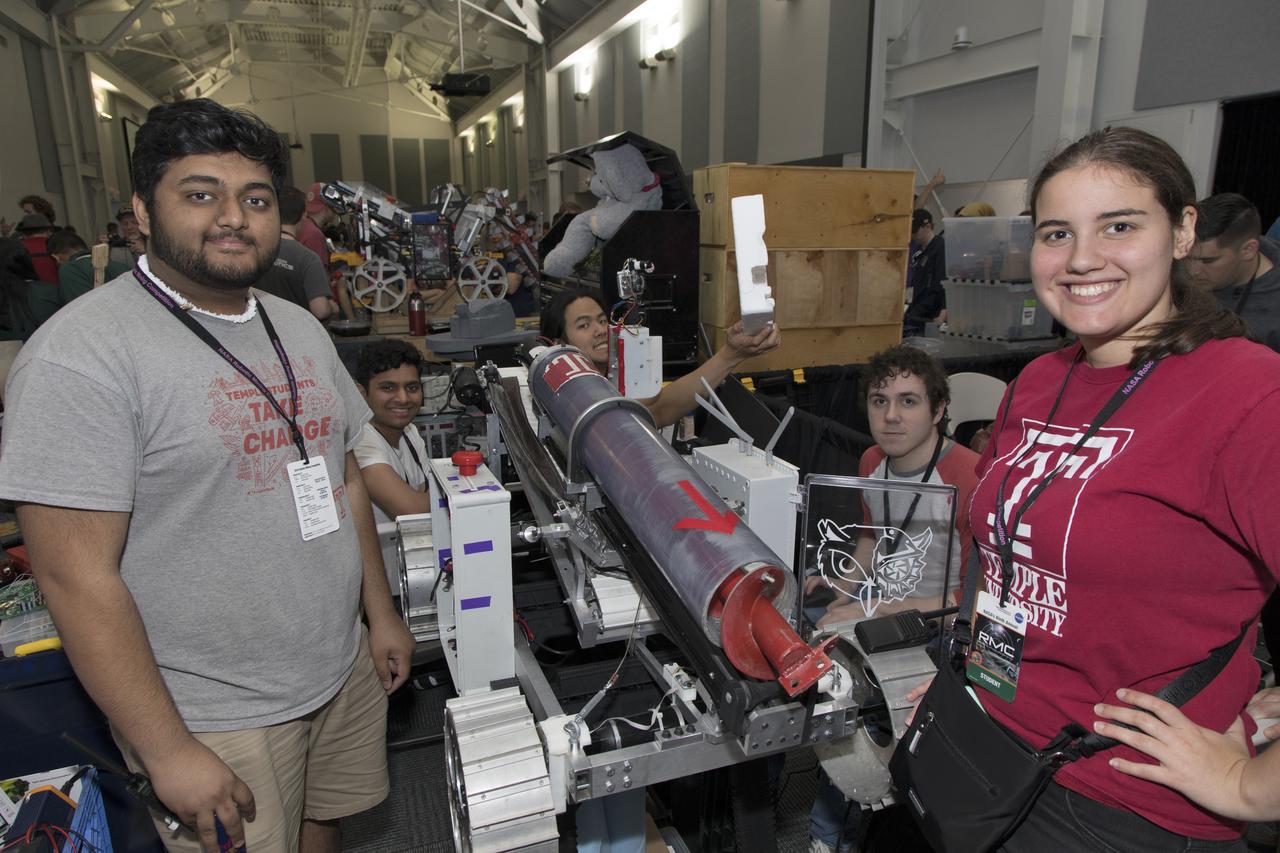

Transform to Open Science (TOPS) 2024 Total Solar Eclipse Activities

Explore the data-driven domain of eclipses to help understand how open science principles facilitate the sharing and analysis of information among researchers, students, and enthusiasts.

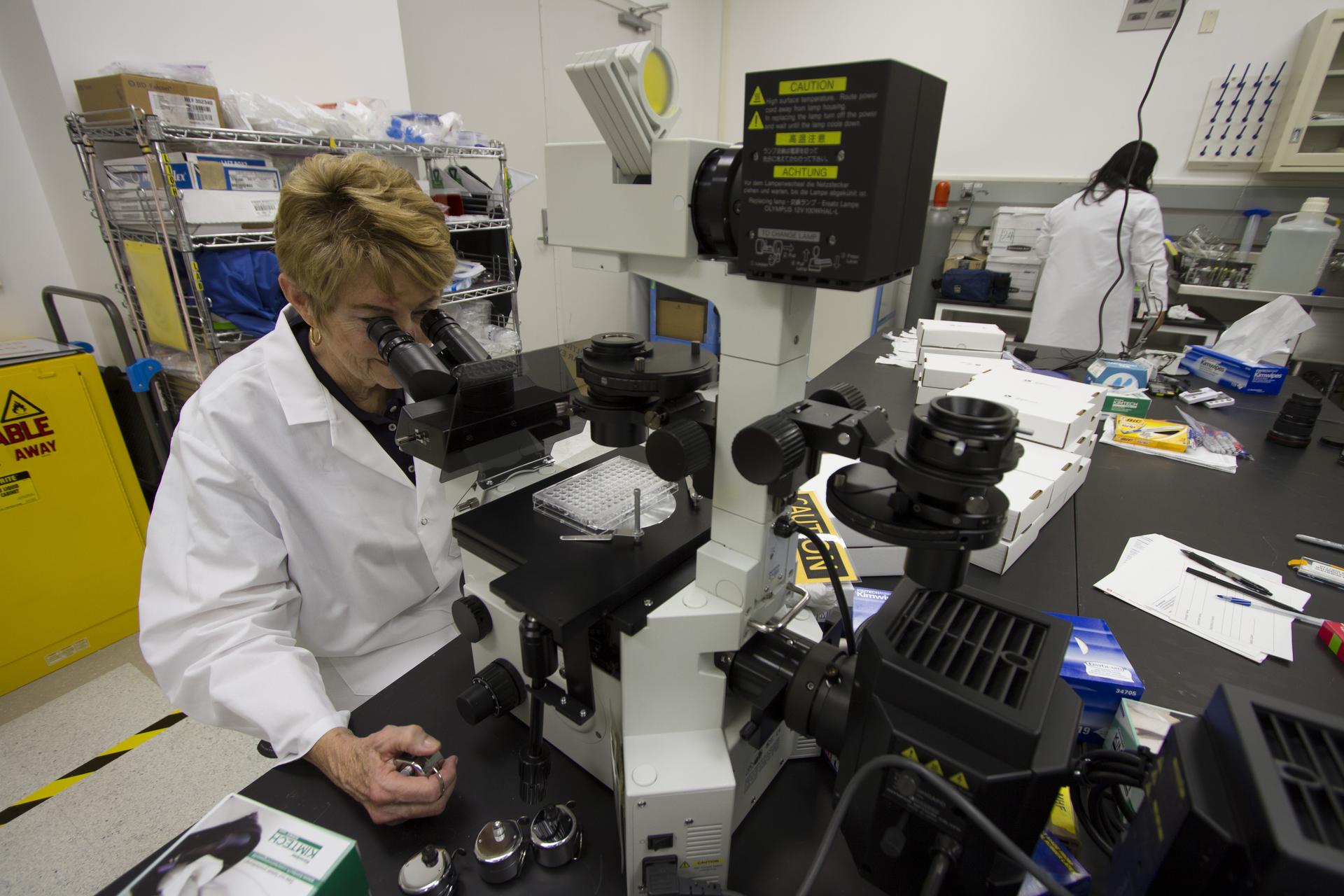

NASA GeneLab Open Science Success Story: Henry Cope

Hear PhD candidate Henry Cope’s unique journey and how his experience with the Open Science datasets propelled his career in medical and space biology.

Software for the NASA Science Mission Directorate Workshop 2024

This workshop aims to explore the current opportunities and challenges for the various categories of software relevant for activities funded by the NASA Science Mission Directorate (SMD).

Explore Open Science at NASA

Transform to Open Science (TOPS)

Provides the visibility, advocacy, and community resources to support and enable the shift to open science.

Core Data and Computing

The Core Data and Computing Services Program (CDCSP) will provide a layered architecture on which SMD science can scale.

Data and Computing Architecture Study

The Data and Computing Architecture study will investigate the technology needed to support NASA's open science goals.

Artificial Intelligence and Machine Learning

Artificial Intelligence and Machine Learning will play an important role in advancing NASA science.

Open-Source Science Awards

NASA supports open science through calls for new innovative programs, supplements to existing awards, and sustainability of software.

Scientific Information Policy

The information produced as part of NASA’s scientific research activities represents a significant public investment. Learn more about how and when it should be shared.

Science Mission Directorate Science Data

The Science Data Repository page provides a comprehensive list of NASA Science Data Repositories.


Open Research Library

The Open Research Library (ORL) is planned to include all Open Access book content worldwide on one platform for user-friendly discovery, offering a seamless experience navigating more than 20,000 Open Access books.


The fundamentals of open access and open research

What is open access and open research?

Open access (OA) refers to the free, immediate, online availability of research outputs such as journal articles or books, combined with the rights to use these outputs fully in the digital environment. OA content is open to all, with no access fees.

Open research goes beyond the boundaries of publications to consider all research outputs – from data to code and even open peer review. Making all outputs of research as open and accessible as possible means research can have a greater impact, and help to solve some of the world’s greatest challenges.

Learn more about gold open access

How can I publish my work open access?

As the author of a research article or book, you have the ability to ensure that your research can be accessed and used by the widest possible audience. Springer Nature supports immediate Gold OA as the most open, least restrictive form of OA: authors can choose to publish their research article in a fully OA journal, a hybrid or transformative journal, or as an OA book or OA chapter.

Alternatively, where articles, books or chapters are published via the subscription route, Springer Nature allows authors to archive the accepted version of their manuscript on their own personal website or their funder’s or institution’s repository, for public release after an embargo period (Green OA). Find out more.

Why should I publish OA?

What is CC BY?

The CC BY licence is the most open licence available and is considered the industry 'gold standard' for OA; it is also preferred by many funders. It lets others distribute, remix, tweak, and build upon your work, even commercially, as long as they credit you for the original creation. It offers maximum dissemination and use of licensed materials. All Springer Nature journals with OA options offer the CC BY licence, and this is now the default licence for the majority of Springer Nature fully OA journals. It is also the default licence for OA books and chapters. Other Creative Commons licences are available on request.

How do I pay for open access?

As costs are involved in every stage of the publication process, authors are asked to pay an open access fee in order for their article to be published open access under a Creative Commons licence. Springer Nature offers a free open access support service to make it easier for our authors to discover and apply for funding to cover article processing charges (APCs) and/or book processing charges (BPCs). Find out more.

What is open data?

We believe that all research data, including research files and code, should be as open as possible and want to make it easier for researchers to share the data that support their publications, making them accessible and reusable. Find out more about our research data services and policies.

What is a preprint?

A preprint is a version of a scientific manuscript posted on a public server prior to formal peer review. Once posted, the preprint becomes a permanent part of the scientific record, citable with its own unique DOI. Early sharing is recommended as it offers an opportunity to receive feedback on your work, claim priority for a discovery, and help research move faster. In Review is one of the most innovative preprint services available, offering real-time updates on your manuscript’s progress through peer review. Discover In Review and its benefits.

What is open peer review?

Open peer review refers to the process of making peer reviewer reports openly available. Many publishers and journals offer some form of open peer review, including BMC, which was one of the first publishers to open up peer review in 1999. Find out more.

Blog posts on open access from "The Source"

Open Research

How to publish open access with fees covered

Could you publish open access with fees covered under a Springer Nature open access agreement?

Celebrating our 2000th open access book

We are proud to celebrate the publication of our 2000th open access book. Take a look at how we achieved this milestone.

open access

Why is Gold OA best for researchers?

Explore the advantages of Gold OA, by reading some of the highlights from our white paper "Going for Gold".

How researchers are using open data in 2022

How are researchers using open data in 2022? Read this year’s State of Open Data Report, providing insights into the attitudes, motivations and challenges of researchers towards open data.

Ready to publish?

A pioneer of open access publishing, BMC is committed to innovation and offers an evolving portfolio of some 300 journals.

Open research is at the heart of Nature Research. Our portfolio includes Nature Communications, Scientific Reports and many more.

Springer offers a variety of open access options for journal articles and books across a number of disciplines.

Palgrave Macmillan is committed to developing sustainable models of open access for the HSS disciplines.

Apress is dedicated to meeting the information needs of developers, IT professionals, and tech communities worldwide.

Discover more tools and resources along with our author services

Author services

Early Career Resource Center

Journal Suggester

Using Your ORCID ID

The Transfer Desk

Tutorials and educational resources

How to Write a Manuscript

How to submit a journal article manuscript

Nature Masterclasses



- Open access

- Published: 01 February 2021

An open source machine learning framework for efficient and transparent systematic reviews

- Rens van de Schoot ORCID: orcid.org/0000-0001-7736-2091 1 ,

- Jonathan de Bruin ORCID: orcid.org/0000-0002-4297-0502 2 ,

- Raoul Schram 2 ,

- Parisa Zahedi ORCID: orcid.org/0000-0002-1610-3149 2 ,

- Jan de Boer ORCID: orcid.org/0000-0002-0531-3888 3 ,

- Felix Weijdema ORCID: orcid.org/0000-0001-5150-1102 3 ,

- Bianca Kramer ORCID: orcid.org/0000-0002-5965-6560 3 ,

- Martijn Huijts ORCID: orcid.org/0000-0002-8353-0853 4 ,

- Maarten Hoogerwerf ORCID: orcid.org/0000-0003-1498-2052 2 ,

- Gerbrich Ferdinands ORCID: orcid.org/0000-0002-4998-3293 1 ,

- Albert Harkema ORCID: orcid.org/0000-0002-7091-1147 1 ,

- Joukje Willemsen ORCID: orcid.org/0000-0002-7260-0828 1 ,

- Yongchao Ma ORCID: orcid.org/0000-0003-4100-5468 1 ,

- Qixiang Fang ORCID: orcid.org/0000-0003-2689-6653 1 ,

- Sybren Hindriks 1 ,

- Lars Tummers ORCID: orcid.org/0000-0001-9940-9874 5 &

- Daniel L. Oberski ORCID: orcid.org/0000-0001-7467-2297 1 , 6

Nature Machine Intelligence volume 3, pages 125–133 (2021)


- Computational biology and bioinformatics

- Computer science

- Medical research

A preprint version of the article is available at arXiv.

To help researchers conduct a systematic review or meta-analysis as efficiently and transparently as possible, we designed a tool to accelerate the step of screening titles and abstracts. For many tasks—including but not limited to systematic reviews and meta-analyses—the scientific literature needs to be checked systematically. Scholars and practitioners currently screen thousands of studies by hand to determine which studies to include in their review or meta-analysis. This is error prone and inefficient because of extremely imbalanced data: only a fraction of the screened studies is relevant. The future of systematic reviewing will be an interaction with machine learning algorithms to deal with the enormous increase of available text. We therefore developed an open source machine learning-aided pipeline applying active learning: ASReview. We demonstrate by means of simulation studies that active learning can yield far more efficient reviewing than manual reviewing while providing high quality. Furthermore, we describe the options of the free and open source research software and present the results from user experience tests. We invite the community to contribute to open source projects such as our own that provide measurable and reproducible improvements over current practice.


With the emergence of online publishing, the number of scientific manuscripts on many topics is skyrocketing 1. All of these textual data present opportunities to scholars and practitioners while simultaneously confronting them with new challenges. Scholars often conduct systematic reviews and meta-analyses to develop comprehensive overviews of the relevant topics 2. The process entails several explicit and, ideally, reproducible steps, including identifying all likely relevant publications in a standardized way, extracting data from eligible studies and synthesizing the results. Systematic reviews differ from traditional literature reviews in that they are more replicable and transparent 3, 4. Such systematic overviews of literature on a specific topic are pivotal not only for scholars, but also for clinicians, policy-makers, journalists and, ultimately, the general public 5, 6, 7.

Given that screening the entire research literature on a given topic is too labour intensive, scholars often develop quite narrow searches. Developing a search strategy for a systematic review is an iterative process aimed at balancing recall and precision 8 , 9 ; that is, including as many potentially relevant studies as possible while simultaneously limiting the total number of studies retrieved. The vast number of publications in the field of study often leads to a relatively precise search, with the risk of missing relevant studies. The process of systematic reviewing is error prone and extremely time intensive 10 . In fact, if the literature of a field is growing faster than the amount of time available for systematic reviews, adequate manual review of this field then becomes impossible 11 .
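The trade-off between recall and precision described above can be made concrete with a small calculation. The following is a minimal sketch; the record identifiers and the two example searches are hypothetical and serve only to illustrate the definitions.

```python
from __future__ import annotations


def recall_precision(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (recall, precision) of a search result against a known relevant set."""
    hits = len(retrieved & relevant)
    recall = hits / len(relevant) if relevant else 0.0
    precision = hits / len(retrieved) if retrieved else 0.0
    return recall, precision


# Hypothetical identifiers: "r*" are relevant studies, "x*" are irrelevant ones.
relevant = {"r1", "r2", "r3", "r4"}
narrow_search = {"r1", "r2", "x1"}
broad_search = {"r1", "r2", "r3", "r4", "x1", "x2", "x3", "x4", "x5", "x6"}

narrow = recall_precision(narrow_search, relevant)  # misses half of the relevant studies
broad = recall_precision(broad_search, relevant)    # finds all, but most hits are irrelevant
```

In this toy case the narrow search is more precise but misses relevant studies, whereas the broad search retrieves every relevant study at the cost of more records to screen.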

The rapidly evolving field of machine learning has aided researchers by allowing the development of software tools that assist in developing systematic reviews 11, 12, 13, 14. Machine learning offers approaches to overcome the manual and time-consuming screening of large numbers of studies by prioritizing relevant studies via active learning 15. Active learning is a type of machine learning in which a model can choose the data points (for example, records obtained from a systematic search) it would like to learn from and thereby drastically reduce the total number of records that require manual screening 16, 17, 18. In most so-called human-in-the-loop 19 machine-learning applications, the interaction between the machine-learning algorithm and the human is used to train a model with a minimum number of labelling tasks. Unique to systematic reviewing is that not only do all relevant records (that is, titles and abstracts) need to be seen by a researcher, but an extremely diverse range of concepts also needs to be learned, thereby requiring flexibility in the modelling approach as well as careful error evaluation 11. In the case of systematic reviewing, the algorithm(s) are interactively optimized for finding the most relevant records, instead of finding the most accurate model. The term researcher-in-the-loop was introduced 20 as a special case of human-in-the-loop with three unique components: (1) the primary output of the process is a selection of the records, not a trained machine learning model; (2) all records in the relevant selection are seen by a human at the end of the process 21; (3) the use case requires a reproducible workflow and complete transparency 22.

Existing tools that implement such an active learning cycle for systematic reviewing are described in Table 1 ; see the Supplementary Information for an overview of all of the software that we considered (note that this list was based on a review of software tools 12 ). However, existing tools have two main drawbacks. First, many are closed source applications with black box algorithms, which is problematic as transparency and data ownership are essential in the era of open science 22 . Second, to our knowledge, existing tools lack the necessary flexibility to deal with the large range of possible concepts to be learned by a screening machine. For example, in systematic reviews, the optimal type of classifier will depend on variable parameters, such as the proportion of relevant publications in the initial search and the complexity of the inclusion criteria used by the researcher 23 . For this reason, any successful system must allow for a wide range of classifier types. Benchmark testing is crucial to understand the real-world performance of any machine learning-aided system, but such benchmark options are currently mostly lacking.

In this paper we present an open source machine learning-aided pipeline with active learning for systematic reviews called ASReview. The goal of ASReview is to help scholars and practitioners to get an overview of the most relevant records for their work as efficiently as possible while being transparent in the process. The open, free and ready-to-use software ASReview addresses all concerns mentioned above: it is open source, uses active learning and allows multiple machine learning models. It also has a benchmark mode, which is especially useful for comparing and designing algorithms. Furthermore, it is intended to be easily extensible, allowing third parties to add modules that enhance the pipeline. Although we focus this paper on systematic reviews, ASReview can handle any text source.

In what follows, we first present the pipeline for manual versus machine learning-aided systematic reviews. We then show how ASReview has been set up and how ASReview can be used in different workflows by presenting several real-world use cases. We subsequently demonstrate the results of simulations that benchmark performance and present the results of a series of user-experience tests. Finally, we discuss future directions.

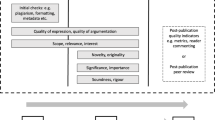

Pipeline for manual and machine learning-aided systematic reviews

The pipeline of a systematic review without active learning traditionally starts with researchers doing a comprehensive search in multiple databases 24, using free text words as well as controlled vocabulary to retrieve potentially relevant references. The researcher then typically verifies that the key papers they expect to find are indeed included in the search results. The researcher then downloads a file of records containing the text to be screened into a reference manager. In the case of systematic reviewing, this file contains the titles and abstracts (and potentially other metadata such as the authors’ names, journal name and DOI) of potentially relevant references. Ideally, two or more researchers then screen the records’ titles and abstracts on the basis of the eligibility criteria established beforehand 4. After all records have been screened, the full texts of the potentially relevant records are read to determine which of them will be ultimately included in the review. Most records are excluded in the title and abstract phase. Typically, only a small fraction of the records belong to the relevant class, making title and abstract screening an important bottleneck in the systematic reviewing process 25. For instance, a recent study analysed 10,115 records and excluded 9,847 after title and abstract screening, a drop of more than 95% 26. ASReview therefore focuses on this labour-intensive step.
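The size of this bottleneck follows directly from the two figures quoted above. A short worked calculation, using only those two numbers (the variable names are illustrative):

```python
total_records = 10_115          # records retrieved by the search
excluded_at_screening = 9_847   # records excluded after title and abstract screening

# Records that proceed to full-text reading.
remaining = total_records - excluded_at_screening

# Share of records removed at the title and abstract stage (about 97%).
excluded_share = excluded_at_screening / total_records
```

Fewer than 300 of the more than 10,000 screened records move on to the full-text stage, which is why the screening step dominates the manual effort.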

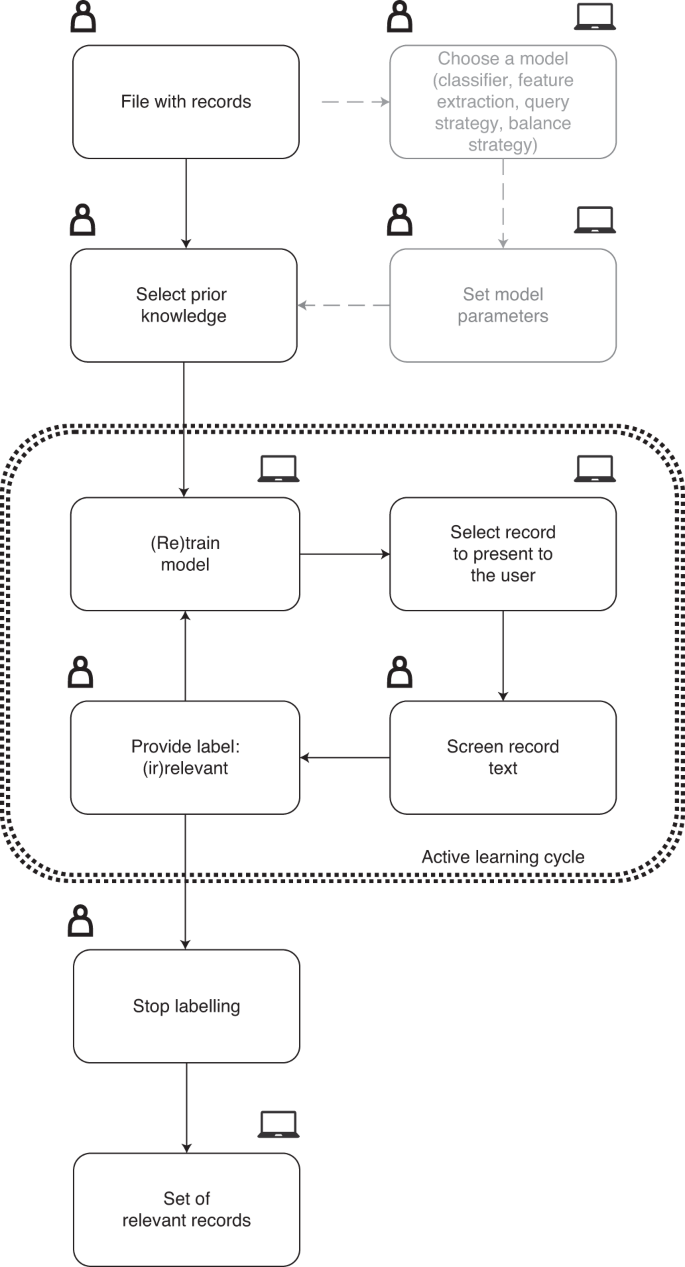

The research pipeline of ASReview is depicted in Fig. 1. The researcher starts with a search exactly as described above and subsequently uploads a file containing the records (that is, metadata containing the text of the titles and abstracts) into the software. Prior knowledge is then selected, which is used for training the first model and presenting the first record to the researcher. As screening is a binary classification problem, the reviewer must select at least one key record to include and at least one to exclude on the basis of background knowledge. More prior knowledge may result in improved efficiency of the active learning process.

Fig. 1: The symbols indicate whether an action is taken by a human or a computer, or whether both options are available.

A machine learning classifier is trained to predict study relevance (labels) from a representation of the record-containing text (feature space) on the basis of prior knowledge. We have purposefully chosen not to include an author name or citation network representation in the feature space to prevent authority bias in the inclusions. In the active learning cycle, the software presents one new record to be screened and labelled by the user. The user’s binary label (1 for relevant versus 0 for irrelevant) is subsequently used to train a new model, after which a new record is presented to the user. This cycle continues until a user-specified stopping criterion has been reached. The user now has a file with (1) records labelled as either relevant or irrelevant and (2) unlabelled records ordered from most to least probable to be relevant as predicted by the current model. This set-up helps to move through a large database much quicker than in the manual process, while the decision process simultaneously remains transparent.
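The cycle described above can be sketched in a few lines of plain Python. This is an illustrative toy, not the ASReview implementation: the naive Bayes classifier is hand-written, tokenisation is a simple whitespace split, the stopping criterion is a fixed labelling budget, and the records, labels and function names are all hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def train_naive_bayes(texts, labels):
    """Fit multinomial naive Bayes; assumes both classes (0 and 1) are present."""
    word_counts = {0: Counter(), 1: Counter()}
    doc_counts = Counter(labels)
    for text, label in zip(texts, labels):
        word_counts[label].update(tokenize(text))
    vocabulary = set(word_counts[0]) | set(word_counts[1])
    return word_counts, doc_counts, vocabulary


def relevance_probability(model, text):
    """Return P(relevant | text) using add-one smoothing."""
    word_counts, doc_counts, vocabulary = model
    n_docs = doc_counts[0] + doc_counts[1]
    log_score = {}
    for label in (0, 1):
        n_tokens = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / n_docs)
        for token in tokenize(text):
            if token in vocabulary:  # words never seen in training are ignored
                score += math.log((word_counts[label][token] + 1) / (n_tokens + len(vocabulary)))
        log_score[label] = score
    top = max(log_score.values())
    odds = {label: math.exp(s - top) for label, s in log_score.items()}
    return odds[1] / (odds[0] + odds[1])


def screen(records, oracle, prior, budget):
    """Retrain, present the most likely relevant record, ask for a label, repeat."""
    labelled = dict(prior)  # record index -> 0/1 label (prior knowledge)
    queried = []
    while True:
        idx = sorted(labelled)
        model = train_naive_bayes([records[i] for i in idx], [labelled[i] for i in idx])
        pool = [i for i in range(len(records)) if i not in labelled]
        ranked = sorted(pool, key=lambda i: relevance_probability(model, records[i]), reverse=True)
        if len(queried) >= budget or not ranked:  # stopping criterion (assumed: fixed budget)
            return labelled, queried, ranked
        labelled[ranked[0]] = oracle(ranked[0])   # the human supplies the label
        queried.append(ranked[0])


# Hypothetical toy records with known labels that stand in for the human reviewer.
records = [
    "active learning for systematic review screening",
    "soil chemistry of alpine meadows",
    "machine learning to screen abstracts in systematic reviews",
    "migration patterns of arctic birds",
    "text mining reduces screening workload in systematic review",
    "alpine soil bacteria and meadows",
]
true_labels = [1, 0, 1, 0, 1, 0]
labelled, queried, ranked = screen(records, lambda i: true_labels[i], prior={0: 1, 1: 0}, budget=2)
```

With one relevant and one irrelevant record as prior knowledge, the two queried records in this toy run are the two remaining relevant ones, and `ranked` holds the unlabelled records ordered from most to least likely to be relevant.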

Software implementation for ASReview

The source code 27 of ASReview is available open source under an Apache 2.0 license, including documentation 28 . Compiled and packaged versions of the software are available on the Python Package Index 29 or Docker Hub 30 . The free and ready-to-use software ASReview implements oracle, simulation and exploration modes. The oracle mode is used to perform a systematic review with interaction by the user, the simulation mode is used for simulation of the ASReview performance on existing datasets, and the exploration mode can be used for teaching purposes and includes several preloaded labelled datasets.

The oracle mode presents records to the researcher and the researcher classifies these. Multiple file formats are supported: (1) RIS files, which are used by digital libraries such as IEEE Xplore, Scopus and ScienceDirect; the citation managers Mendeley, RefWorks, Zotero and EndNote support the RIS format too. (2) Tabular datasets with the .csv, .xlsx and .xls file extensions. CSV files should be comma separated and UTF-8 encoded; for CSV files, the software accepts a set of predetermined labels in line with the ones used in RIS files. Each record in the dataset should hold the metadata on, for example, a scientific publication. Mandatory metadata is text and can, for example, be titles or abstracts from scientific papers. If available, both are used to train the model, but at least one is needed. An advanced option is available that splits the title and abstracts in the feature-extraction step and weights the two feature matrices independently (for TF–IDF only). Other metadata such as author, date, DOI and keywords are optional but not used for training the models. When using ASReview in the simulation or exploration mode, an additional binary variable is required to indicate historical labelling decisions. This column, which is automatically detected, can also be used in the oracle mode as background knowledge for previous selection of relevant papers before entering the active learning cycle. If unavailable, the user has to select at least one relevant record that can be identified by searching the pool of records. At least one irrelevant record should also be identified; the software allows the user to search for specific records or presents random records, which are most likely to be irrelevant because of the extremely imbalanced data.
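To illustrate the tabular input described above, the following sketch reads a comma-separated, UTF-8 dataset with Python's standard `csv` module. The column names `title`, `abstract` and `included`, the sample rows and the `load_records` helper are assumptions made for this example; they are not taken from the ASReview source code.

```python
import csv
import io

# Hypothetical dataset: the third row has no title and no historical label.
SAMPLE_CSV = """title,abstract,included
Active learning for screening,We test a screening tool.,1
Alpine soils,,0
,An abstract without a title.,
"""


def load_records(handle):
    """Read records, requiring a title or an abstract; the label column is optional."""
    records = []
    for row_number, row in enumerate(csv.DictReader(handle)):
        title = (row.get("title") or "").strip()
        abstract = (row.get("abstract") or "").strip()
        if not title and not abstract:
            raise ValueError(f"row {row_number} has neither a title nor an abstract")
        raw_label = (row.get("included") or "").strip()
        records.append({
            "row": row_number,  # records are identified by their row number
            "text": " ".join(part for part in (title, abstract) if part),
            "label": int(raw_label) if raw_label else None,  # None means unlabelled
        })
    return records


records = load_records(io.StringIO(SAMPLE_CSV))
```

When reading from disk, the same function could be called with `open(path, newline="", encoding="utf-8")` in place of the in-memory sample.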

The software has a simple yet extensible default model: a naive Bayes classifier, TF–IDF feature extraction, a dynamic resampling balance strategy 31 and certainty-based sampling 17 , 32 for the query strategy. These defaults were chosen on the basis of their consistently high performance in benchmark experiments across several datasets 31 . Moreover, the low computation time of these default settings makes them attractive in applications, given that the software should be able to run locally. Users can change the settings, shown in Table 2 , and technical details are described in our documentation 28 . Users can also add their own classifiers, feature extraction techniques, query strategies and balance strategies.

ASReview has a number of implemented features (see Table 2 ). First, there are several classifiers available: (1) naive Bayes; (2) support vector machines; (3) logistic regression; (4) neural networks; (5) random forests; (6) LSTM-base, which consists of an embedding layer, an LSTM layer with one output, a dense layer and a single sigmoid output node; and (7) LSTM-pool, which consists of an embedding layer, an LSTM layer with many outputs, a max pooling layer and a single sigmoid output node. The feature extraction techniques available are Doc2Vec 33 , embedding LSTM, embedding with IDF or TF–IDF 34 (the default is unigram, with the option to run n -grams while other parameters are set to the defaults of Scikit-learn 35 ) and sBERT 36 . The available query strategies for the active learning part are (1) random selection, ignoring model-assigned probabilities; (2) uncertainty-based sampling, which chooses the most uncertain record according to the model (that is, closest to 0.5 probability); (3) certainty-based sampling (max in ASReview), which chooses the record most likely to be included according to the model; and (4) mixed sampling, which uses a combination of random and certainty-based sampling.
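The four query strategies listed above differ only in how the next record is picked from the model-assigned probabilities. A minimal sketch follows; the function names and the 5% random share in the mixed strategy are assumptions chosen for illustration, not values taken from the software.

```python
import random


def random_query(probs, rng):
    """Pick any unlabelled record, ignoring the model-assigned probabilities."""
    return rng.randrange(len(probs))


def uncertainty_query(probs):
    """Pick the record whose probability of relevance is closest to 0.5."""
    return min(range(len(probs)), key=lambda i: abs(probs[i] - 0.5))


def certainty_query(probs):
    """Pick the record most likely to be relevant according to the model."""
    return max(range(len(probs)), key=lambda i: probs[i])


def mixed_query(probs, rng, random_fraction=0.05):
    """Mostly certainty-based, with an occasional random pick (fraction is assumed)."""
    if rng.random() < random_fraction:
        return random_query(probs, rng)
    return certainty_query(probs)


# Hypothetical relevance probabilities for four unlabelled records.
probs = [0.10, 0.48, 0.93, 0.70]
rng = random.Random(0)
most_uncertain = uncertainty_query(probs)  # index 1 (0.48 is closest to 0.5)
most_certain = certainty_query(probs)      # index 2 (0.93 is the highest)
```

Certainty-based sampling suits screening because the goal is to surface relevant records early, whereas uncertainty-based sampling targets records that would most improve the model.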

There are several balance strategies that rebalance and reorder the training data. This is necessary because the data are typically extremely imbalanced. We have therefore implemented the following balance strategies: (1) full sampling, which uses all of the labelled records; (2) undersampling the irrelevant records so that the included and excluded records are in some particular ratio (closer to one); and (3) dynamic resampling, a novel method similar to undersampling in that it decreases the imbalance of the training data 31. However, in dynamic resampling, the number of irrelevant records is decreased, whereas the number of relevant records is increased by duplication such that the total number of records in the training data remains the same. The ratio between relevant and irrelevant records is not fixed over interactions, but dynamically updated depending on the number of labelled records, the total number of records and the ratio between relevant and irrelevant records. Details on all of the described algorithms can be found in the code and documentation referred to above.
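The difference between undersampling and a resampling scheme that keeps the training set size constant can be sketched as follows. This is a simplified illustration only: the rule that ASReview uses to update the ratio dynamically is not reproduced here, so the target share of relevant records is passed in as an assumed parameter, and both function names are hypothetical. Both functions assume at least one relevant and one irrelevant record.

```python
import random


def undersample(relevant, irrelevant, irrelevant_per_relevant, rng):
    """Keep all relevant records and only a sample of the irrelevant ones."""
    n_keep = min(len(irrelevant), max(1, round(len(relevant) * irrelevant_per_relevant)))
    return list(relevant), rng.sample(irrelevant, n_keep)


def resample_fixed_total(relevant, irrelevant, relevant_share, rng):
    """Duplicate relevant records and drop irrelevant ones; total size is unchanged."""
    total = len(relevant) + len(irrelevant)
    n_relevant = min(total - 1, max(len(relevant), round(total * relevant_share)))
    n_irrelevant = total - n_relevant
    duplicates = [rng.choice(relevant) for _ in range(n_relevant - len(relevant))]
    return list(relevant) + duplicates, rng.sample(irrelevant, n_irrelevant)


# Hypothetical labelled set: 2 relevant and 18 irrelevant record indices.
relevant = [0, 1]
irrelevant = list(range(2, 20))
rng = random.Random(42)

under_rel, under_irr = undersample(relevant, irrelevant, irrelevant_per_relevant=3, rng=rng)
fixed_rel, fixed_irr = resample_fixed_total(relevant, irrelevant, relevant_share=0.5, rng=rng)
```

Undersampling shrinks the training set to 8 records here, while the fixed-total variant still trains on 20 records, half of which are duplicated relevant ones.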

By default, ASReview converts the records’ texts into a document-term matrix; terms are converted to lowercase and no stop words are removed (but this can be changed). As the document-term matrix is identical in each iteration of the active learning cycle, it is generated in advance of model training and stored in the (active learning) state file. Each row of the document-term matrix can easily be requested from the state file. Records are internally identified by their row number in the input dataset. In oracle mode, the record that is selected to be classified is retrieved from the state file and the record text and other metadata (such as title and abstract) are retrieved from the original dataset (from the file or the computer’s memory). ASReview can run on your local computer, or on a (self-hosted) local or remote server. Data (all records and their labels) remain on the user’s computer. Data ownership and confidentiality are crucial and no data are processed or used in any way by third parties. This is unique by comparison with some of the existing systems, as shown in the last column of Table 1.
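A document-term matrix of the kind described above can be built once and then queried by row number. The sketch below uses plain term counts and a whitespace tokeniser for brevity; both are simplifying assumptions, and the function name is hypothetical.

```python
from collections import Counter


def build_document_term_matrix(texts):
    """Lowercase each text, keep every word (no stop-word removal) and count terms."""
    tokenised = [text.lower().split() for text in texts]
    vocabulary = sorted({token for doc in tokenised for token in doc})
    matrix = []
    for doc in tokenised:
        counts = Counter(doc)
        matrix.append([counts.get(token, 0) for token in vocabulary])
    return vocabulary, matrix


# Hypothetical record texts; the row index identifies the record.
texts = ["The review of the trials", "Trials of a new drug"]
vocabulary, matrix = build_document_term_matrix(texts)
row_for_record_1 = matrix[1]  # features of the second record, looked up by row number
```

Because the matrix depends only on the texts and not on the labels, it does not need to be recomputed when the model is retrained in each cycle.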

Real-world use cases and high-level function descriptions

Below we highlight a number of real-world use cases and high-level function descriptions for using the pipeline of ASReview.

ASReview can be integrated in classic systematic reviews or meta-analyses. Such reviews or meta-analyses entail several explicit and reproducible steps, as outlined in the PRISMA guidelines 4. Scholars identify all likely relevant publications in a standardized way, screen retrieved publications to select eligible studies on the basis of defined eligibility criteria, extract data from eligible studies and synthesize the results. ASReview fits into this process, particularly in the abstract screening phase. ASReview does not replace the initial step of collecting all potentially relevant studies. As such, results from ASReview depend on the quality of the initial search process, including selection of databases 24 and construction of comprehensive searches using keywords and controlled vocabulary. However, ASReview can be used to broaden the scope of the search (by keyword expansion or omitting limitations in the search query), resulting in a higher number of initial papers to limit the risk of missing relevant papers during the search part (that is, more focus on recall instead of precision).

Furthermore, many reviewers nowadays move towards meta-reviews when analysing very large literature streams, that is, systematic reviews of systematic reviews 37 . This can be problematic as the various reviews included could use different eligibility criteria and are therefore not always directly comparable. Due to the efficiency of ASReview, scholars using the tool could conduct the study by analysing the papers directly instead of using the systematic reviews. Furthermore, ASReview supports the rapid update of a systematic review. The included papers from the initial review are used to train the machine learning model before screening of the updated set of papers starts. This allows the researcher to quickly screen the updated set of papers on the basis of decisions made in the initial run.

As an example case, let us look at the current literature on COVID-19 and the coronavirus. An enormous number of papers are being published on COVID-19. It is very time consuming to manually find relevant papers (for example, to develop treatment guidelines). This is especially problematic as urgent overviews are required. Medical guidelines rely on comprehensive systematic reviews, but the medical literature is growing at breakneck pace and the quality of the research is not universally adequate for summarization into policy 38 . Such reviews must entail adequate protocols with explicit and reproducible steps, including identifying all potentially relevant papers, extracting data from eligible studies, assessing potential for bias and synthesizing the results into medical guidelines. Researchers need to screen (tens of) thousands of COVID-19-related studies by hand to find relevant papers to include in their overview. Using ASReview, this can be done far more efficiently by selecting key papers that match their (COVID-19) research question in the first step; this starts the active learning cycle, after which the papers most relevant to their research question are presented next.

A plug-in was therefore developed for ASReview 39 , which contains three databases that are updated automatically whenever a new version is released by the owners of the data:

(1) The CORD-19 database, developed by the Allen Institute for AI, with publications on COVID-19 and other coronavirus research (for example, SARS and MERS) from PubMed Central, the WHO COVID-19 database of publications, the preprint servers bioRxiv and medRxiv and papers contributed by specific publishers 40 . The CORD-19 dataset is updated daily by the Allen Institute for AI and is likewise updated daily in the plug-in.

(2) In addition to the full dataset, a daily subset of the database is constructed automatically with studies published after December 1st, 2019, to search for relevant papers published during the COVID-19 crisis.

(3) A separate dataset of COVID-19-related preprints, containing metadata of preprints from over 15 preprint servers across disciplines, published since January 1st, 2020 41 . The preprint dataset is updated weekly by the maintainers and then automatically updated in ASReview as well. As this dataset is not readily available to researchers through regular search engines (for example, PubMed), its inclusion in ASReview provides added value to researchers interested in COVID-19 research, especially if they want a quick way to screen preprints specifically.
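The date-based subset in item (2) can be sketched as a simple filter. This is only an illustration under stated assumptions: the record layout, the `publish_time` field name and the ISO date format (`YYYY-MM-DD`) are hypothetical and may differ from the actual metadata used by the plug-in. Records with a missing or unparseable date are skipped rather than guessed.

```python
from datetime import date

# Cutoff taken from the text: studies published after December 1st, 2019.
CUTOFF = date(2019, 12, 1)


def recent_subset(records, cutoff=CUTOFF):
    """Return the records whose publication date is strictly after `cutoff`."""
    subset = []
    for record in records:
        published = record.get("publish_time")  # hypothetical field name
        if not published:
            continue  # no date available
        try:
            published_on = date.fromisoformat(published)
        except ValueError:
            continue  # date not in YYYY-MM-DD form
        if published_on > cutoff:
            subset.append(record)
    return subset


# Hypothetical example records.
records = [
    {"title": "Older coronavirus study", "publish_time": "2019-11-30"},
    {"title": "Published on the cutoff day", "publish_time": "2019-12-01"},
    {"title": "Pandemic-era study", "publish_time": "2020-03-15"},
    {"title": "Undated record", "publish_time": ""},
    {"title": "Malformed date", "publish_time": "not-a-date"},
]
subset = recent_subset(records)
```

Running such a filter each time the full dataset is refreshed would keep the subset in step with the daily updates.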

Simulation study

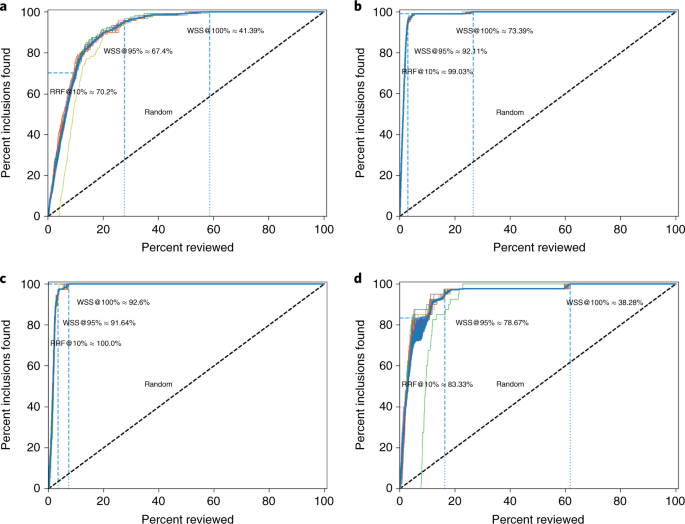

To evaluate the performance of ASReview on a labelled dataset, users can employ the simulation mode. As an example, we ran simulations based on four labelled datasets with version 0.7.2 of ASReview. All scripts to reproduce the results in this paper can be found on Zenodo ( https://doi.org/10.5281/zenodo.4024122 ) 42 , whereas the results are available at OSF ( https://doi.org/10.17605/OSF.IO/2JKD6 ) 43 .

First, we analysed the performance for a study systematically describing studies that performed viral metagenomic next-generation sequencing in common livestock such as cattle, small ruminants, poultry and pigs 44 . Studies were retrieved from Embase ( n = 1,806), Medline ( n = 1,384), Cochrane Central ( n = 1), Web of Science ( n = 977) and Google Scholar ( n = 200, the top relevant references). After deduplication this led to 2,481 studies obtained in the initial search, of which 120 were inclusions (4.84%).

A second simulation study was performed on the results for a systematic review of studies on fault prediction in software engineering 45 . Studies were obtained from ACM Digital Library, IEEE Xplore and the ISI Web of Science. Furthermore, a snowballing strategy and a manual search were conducted, yielding a total of 8,911 publications, of which 104 were included in the systematic review (1.2%).

A third simulation study was performed on a review of longitudinal studies that applied unsupervised machine learning techniques to longitudinal data of self-reported symptoms of post-traumatic stress assessed after trauma exposure 46 , 47 ; 5,782 studies were obtained by searching PubMed, Embase, PsycINFO and Scopus and through a snowballing strategy in which both the references and the citations of the included papers were screened. Thirty-eight studies were included in the review (0.66%).

A fourth simulation study was performed on the results for a systematic review on the efficacy of angiotensin-converting enzyme inhibitors, from a study collecting various systematic review datasets from the medical sciences 15 . The collection is a subset of 2,544 publications from the TREC 2004 Genomics Track document corpus 48 . This is a static subset from all MEDLINE records from 1994 through 2003, which allows for replicability of results. Forty-one publications were included in the review (1.6%).
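The inclusion rates quoted for the four datasets follow directly from the reported counts. The short sketch below recomputes them; the dictionary keys are shorthand labels introduced here for illustration, while the counts are those stated in the text.

```python
# (records after the search, records included in the review), as reported above.
datasets = {
    "virus metagenomics (ref. 44)": (2481, 120),
    "software fault prediction (ref. 45)": (8911, 104),
    "PTSD trajectories (refs. 46, 47)": (5782, 38),
    "ACE inhibitors (ref. 15)": (2544, 41),
}


def inclusion_rate(total, included):
    """Percentage of retrieved records that ended up in the review."""
    return 100.0 * included / total


rates = {name: inclusion_rate(*counts) for name, counts in datasets.items()}

# Records retrieved per source for the first dataset, before deduplication.
retrieved_before_dedup = 1806 + 1384 + 1 + 977 + 200

for name, rate in rates.items():
    print(f"{name}: {rate:.2f}% inclusions")
```

All four datasets are strongly imbalanced, with fewer than 5% relevant records, which is the setting in which prioritized screening has the most to offer.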

Performance metrics

We evaluated the four datasets using three performance metrics. We first assess the work saved over sampling (WSS), which is the percentage reduction in the number of records that need to be screened when using active learning instead of screening records at random; WSS is measured at a given level of recall of relevant records, for example 95% (WSS@95%), indicating the reduction in screening effort at the cost of failing to detect 5% of the relevant records. For some researchers it is essential that all relevant literature on the topic is retrieved; this entails that the recall should be 100% (that is, WSS@100%). We also propose the proportion of relevant references found after having screened the first 10% of the records (RRF10%). This is a useful metric for getting a quick overview of the relevant literature.
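As a rough illustration of these metrics, the sketch below computes WSS and RRF from a list of labels given in the order in which records were screened (1 = relevant, 0 = irrelevant). It assumes the commonly used formulation of WSS, the fraction of records left unscreened once the target recall is reached minus (1 - recall); whether this matches the exact computation in the simulation scripts is an assumption. The function names and the example ranking are hypothetical, and at least one relevant record is assumed.

```python
import math


def records_to_reach_recall(labels, recall):
    """Records screened, in ranked order, until `recall` of the relevant ones are found."""
    # The small offset guards against floating-point round-off before rounding up.
    target = math.ceil(recall * sum(labels) - 1e-9)
    found = 0
    for screened, label in enumerate(labels, start=1):
        found += label
        if found >= target:
            return screened
    return len(labels)


def wss(labels, recall=0.95):
    """Work saved over sampling at the given recall level, as a fraction."""
    n = len(labels)
    return (n - records_to_reach_recall(labels, recall)) / n - (1.0 - recall)


def rrf(labels, fraction=0.10):
    """Share of all relevant records found within the first `fraction` of records."""
    n_screened = math.ceil(fraction * len(labels) - 1e-9)
    return sum(labels[:n_screened]) / sum(labels)


# Hypothetical screening order: 20 records, 4 of them relevant.
ranking = [1, 1, 0, 1, 0, 0, 0, 0, 0, 1] + [0] * 10

wss_95 = wss(ranking, recall=0.95)
wss_100 = wss(ranking, recall=1.0)
rrf_10 = rrf(ranking, fraction=0.10)
```

In this toy ranking the last relevant record appears at position 10 of 20, so half of the records never need to be screened.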

For every dataset, 15 runs were performed with one random inclusion and one random exclusion (see Fig. 2 ). The classical review performance with randomly found inclusions is shown by the dashed line. The average work saved over sampling at 95% recall for ASReview is 83% and ranges from 67% to 92%. Hence, 95% of the eligible studies will be found after screening only 8% to 33% of the studies. Furthermore, the proportion of relevant abstracts found after reading 10% of the abstracts ranges from 70% to 100%. In short, our software would have saved many hours of work.
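The starting condition of each run, one random inclusion and one random exclusion as prior knowledge, can be sketched as follows. This is an illustration only, not the code used for the simulations: the function name `draw_priors`, the use of the run number as random seed and the example dataset are assumptions made here.

```python
import random

N_RUNS = 15  # number of runs per dataset, as described above


def draw_priors(labels, seed):
    """Pick the indices of one random inclusion and one random exclusion."""
    rng = random.Random(seed)  # a per-run seed keeps every run reproducible
    inclusions = [i for i, label in enumerate(labels) if label == 1]
    exclusions = [i for i, label in enumerate(labels) if label == 0]
    return rng.choice(inclusions), rng.choice(exclusions)


# Hypothetical labelled dataset: 95 irrelevant and 5 relevant records.
labels = [0] * 95 + [1] * 5

priors = [draw_priors(labels, seed) for seed in range(N_RUNS)]
```

Each pair of indices would seed one simulated screening run, and the spread across the runs indicates how sensitive the result is to the choice of starting papers.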

Fig. 2: a – d , Results of the simulation study for a systematic review of studies that performed viral metagenomic next-generation sequencing in common livestock ( a ), a systematic review of studies on fault prediction in software engineering ( b ), a review of longitudinal studies that applied unsupervised machine learning techniques to longitudinal data of self-reported symptoms of post-traumatic stress assessed after trauma exposure ( c ), and a systematic review on the efficacy of angiotensin-converting enzyme inhibitors ( d ). Fifteen runs (shown with separate lines) were performed for every dataset, with only one random inclusion and one random exclusion. The classical review performances with randomly found inclusions are shown by the dashed lines.

Usability testing (user experience testing)

We conducted a series of user experience tests to learn from end users how they experience the software and implement it in their workflow. The study was approved by the Ethics Committee of the Faculty of Social and Behavioral Sciences of Utrecht University (ID 20-104).

Unstructured interviews

The first user experience (UX) test—carried out in December 2019—was conducted with an academic research team in a substantive research field (public administration and organizational science) that has conducted various systematic reviews and meta-analyses. It was composed of three university professors (ranging from assistant to full) and three PhD candidates. In one 3.5 h session, the participants used the software and provided feedback via unstructured interviews and group discussions. The goal was to provide feedback on installing the software and testing the performance on their own data. After these sessions we prioritized the feedback in a meeting with the ASReview team, which resulted in the release of v.0.4 and v.0.6. An overview of all releases can be found on GitHub 27 .

A second UX test was conducted with four experienced researchers developing medical guidelines based on classical systematic reviews, and two experienced reviewers working at a pharmaceutical non-profit organization who work on updating reviews with new data. In four sessions, held in February to March 2020, these users tested the software following our testing protocol. After each session we implemented the feedback provided by the experts and asked them to review the software again. The main feedback was about how to upload datasets and select prior papers. Their feedback resulted in the release of v.0.7 and v.0.9.

Systematic UX test

In May 2020 we conducted a systematic UX test. Two groups of users were distinguished: an inexperienced group and an experienced group whose members had already used ASReview. Due to the COVID-19 lockdown, the usability tests were conducted via video calling, with one person giving instructions to the participant and one person observing, an approach called human-moderated remote testing 49 . During the tests, one person (SH) asked the questions and helped the participant with the tasks, while the other person (MH), a user experience professional at the IT department of Utrecht University, observed and made notes.

To analyse the notes, we used thematic analysis, a method that analyses data by dividing the information into subjects that each have a different meaning 50 , using the NVivo 12 software 51 . When something went wrong the text was coded as showstopper, when something did not go smoothly the text was coded as doubtful, and when something went well the text was coded as superb. The features the participants requested for future versions of the ASReview tool were discussed with the lead engineer of the ASReview team and were submitted to GitHub as issues or feature requests.

The answers to the quantitative questions can be found at the Open Science Framework 52 . The participants ( N = 11) rated the tool with a grade of 7.9 (s.d. = 0.9) on a scale from one to ten (Table 2 ). The inexperienced users on average rated the tool with an 8.0 (s.d. = 1.1, N = 6). The experienced users on average rated the tool with a 7.8 (s.d. = 0.9, N = 5). The participants described the usability test with words such as helpful, accessible, fun, clear and obvious.
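As a quick consistency check, the overall grade can be recovered from the two group means as a weighted average. The sketch uses only the numbers reported above; the variable names are introduced here for illustration.

```python
# (number of participants, mean grade) per group, as reported above.
groups = {
    "inexperienced": (6, 8.0),
    "experienced": (5, 7.8),
}

total_n = sum(n for n, _ in groups.values())
overall_mean = sum(n * mean for n, mean in groups.values()) / total_n

print(f"N = {total_n}, overall mean = {overall_mean:.1f}")
```

The weighted mean agrees with the reported overall grade when rounded to one decimal.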

The UX tests resulted in the new releases v0.10 and v0.10.1 and the major release v0.11, which is a major revision of the graphical user interface. The documentation has been upgraded to make installing and launching ASReview more straightforward. We made setting up the project, selecting a dataset and finding past knowledge more intuitive and flexible. We also added a project dashboard with information on your progress and advanced settings.

Continuous input via the open source community

Finally, the ASReview development team receives continuous feedback from the open science community about, among other things, the user experience. In every new release we implement features listed by our users. Recurring UX tests are performed to keep up with the needs of users and improve the value of the tool.

We designed a system to accelerate the step of screening titles and abstracts to help researchers conduct a systematic review or meta-analysis as efficiently and transparently as possible. Our system uses active learning to train a machine learning model that predicts relevance from texts using a limited number of labelled examples. The classifier, feature extraction technique, balance strategy and active learning query strategy are flexible. We provide an open source software implementation, ASReview with state-of-the-art systems across a wide range of real-world systematic reviewing applications. Based on our experiments, ASReview provides defaults on its parameters, which exhibited good performance on average across the applications we examined. However, we stress that in practical applications, these defaults should be carefully examined; for this purpose, the software provides a simulation mode to users. We encourage users and developers to perform further evaluation of the proposed approach in their application, and to take advantage of the open source nature of the project by contributing further developments.

Drawbacks of machine learning-based screening systems, including our own, remain. First, although the active learning step greatly reduces the number of manuscripts that must be screened, it also prevents a straightforward evaluation of the system’s error rates without further onerous labelling. Providing users with an accurate estimate of the system’s error rate in the application at hand is therefore a pressing open problem. Second, although, as argued above, the use of such systems is not limited in principle to reviewing, no empirical benchmarks of actual performance in these other situations yet exist to our knowledge. Third, machine learning-based screening systems automate the screening step only; although the screening step is time-consuming and a good target for automation, it is just one part of a much larger process, including the initial search, data extraction, coding for risk of bias, summarizing results and so on. Although some other works, similar to our own, have looked at (semi-)automating some of these steps in isolation 53 , 54 , to our knowledge the field is still far removed from an integrated system that would truly automate the review process while guaranteeing the quality of the produced evidence synthesis. Integrating the various tools that are currently under development to aid the systematic reviewing pipeline is therefore a worthwhile topic for future development.

Possible future research could also focus on the performance of identifying full text articles with different document length and domain-specific terminologies or even other types of text, such as newspaper articles and court cases. When the selection of past knowledge is not possible based on expert knowledge, alternative methods could be explored. For example, unsupervised learning or pseudolabelling algorithms could be used to improve training 55 , 56 . In addition, as the NLP community pushes forward the state of the art in feature extraction methods, these are easily added to our system as well. In all cases, performance benefits should be carefully evaluated using benchmarks for the task at hand. To this end, common benchmark challenges should be constructed that allow for an even comparison of the various tools now available. To facilitate such a benchmark, we have constructed a repository of publicly available systematic reviewing datasets 57 .

The future of systematic reviewing will be an interaction with machine learning algorithms to deal with the enormous increase of available text. We invite the community to contribute to open source projects such as our own, as well as to common benchmark challenges, so that we can provide measurable and reproducible improvement over current practice.

Data availability

The results described in this paper are available at the Open Science Framework ( https://doi.org/10.17605/OSF.IO/2JKD6 ) 43 . The answers to the quantitative questions of the UX test can be found at the Open Science Framework ( https://doi.org/10.17605/OSF.IO/7PQNM ) 52 .

Code availability

All code to reproduce the results described in this paper can be found on Zenodo ( https://doi.org/10.5281/zenodo.4024122 ) 42 . All code for the software ASReview is available under an Apache 2.0 license ( https://doi.org/10.5281/zenodo.3345592 ) 27 , is maintained on GitHub 63 and includes documentation ( https://doi.org/10.5281/zenodo.4287120 ) 28 .

Bornmann, L. & Mutz, R. Growth rates of modern science: a bibliometric analysis based on the number of publications and cited references. J. Assoc. Inf. Sci. Technol. 66 , 2215–2222 (2015).

Gough, D., Oliver, S. & Thomas, J. An Introduction to Systematic Reviews (Sage, 2017).

Cooper, H. Research Synthesis and Meta-analysis: A Step-by-Step Approach (SAGE Publications, 2015).

Liberati, A. et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J. Clin. Epidemiol. 62 , e1–e34 (2009).

Boaz, A. et al. Systematic Reviews: What have They Got to Offer Evidence Based Policy and Practice? (ESRC UK Centre for Evidence Based Policy and Practice London, 2002).

Oliver, S., Dickson, K. & Bangpan, M. Systematic Reviews: Making Them Policy Relevant. A Briefing for Policy Makers and Systematic Reviewers (UCL Institute of Education, 2015).

Petticrew, M. Systematic reviews from astronomy to zoology: myths and misconceptions. Brit. Med. J. 322 , 98–101 (2001).

Lefebvre, C., Manheimer, E. & Glanville, J. in Cochrane Handbook for Systematic Reviews of Interventions (eds. Higgins, J. P. & Green, S.) 95–150 (John Wiley & Sons, 2008); https://doi.org/10.1002/9780470712184.ch6 .

Sampson, M., Tetzlaff, J. & Urquhart, C. Precision of healthcare systematic review searches in a cross-sectional sample. Res. Synth. Methods 2 , 119–125 (2011).

Wang, Z., Nayfeh, T., Tetzlaff, J., O’Blenis, P. & Murad, M. H. Error rates of human reviewers during abstract screening in systematic reviews. PLoS ONE 15 , e0227742 (2020).

Marshall, I. J. & Wallace, B. C. Toward systematic review automation: a practical guide to using machine learning tools in research synthesis. Syst. Rev. 8 , 163 (2019).

Harrison, H., Griffin, S. J., Kuhn, I. & Usher-Smith, J. A. Software tools to support title and abstract screening for systematic reviews in healthcare: an evaluation. BMC Med. Res. Methodol. 20 , 7 (2020).

O’Mara-Eves, A., Thomas, J., McNaught, J., Miwa, M. & Ananiadou, S. Using text mining for study identification in systematic reviews: a systematic review of current approaches. Syst. Rev. 4 , 5 (2015).

Wallace, B. C., Trikalinos, T. A., Lau, J., Brodley, C. & Schmid, C. H. Semi-automated screening of biomedical citations for systematic reviews. BMC Bioinf. 11 , 55 (2010).

Cohen, A. M., Hersh, W. R., Peterson, K. & Yen, P.-Y. Reducing workload in systematic review preparation using automated citation classification. J. Am. Med. Inform. Assoc. 13 , 206–219 (2006).

Kremer, J., Steenstrup Pedersen, K. & Igel, C. Active learning with support vector machines. WIREs Data Min. Knowl. Discov. 4 , 313–326 (2014).

Miwa, M., Thomas, J., O’Mara-Eves, A. & Ananiadou, S. Reducing systematic review workload through certainty-based screening. J. Biomed. Inform. 51 , 242–253 (2014).

Settles, B. Active Learning Literature Survey (Minds@UW, 2009); https://minds.wisconsin.edu/handle/1793/60660

Holzinger, A. Interactive machine learning for health informatics: when do we need the human-in-the-loop? Brain Inform. 3 , 119–131 (2016).

Van de Schoot, R. & De Bruin, J. Researcher-in-the-loop for Systematic Reviewing of Text Databases (Zenodo, 2020); https://doi.org/10.5281/zenodo.4013207

Kim, D., Seo, D., Cho, S. & Kang, P. Multi-co-training for document classification using various document representations: TF–IDF, LDA, and Doc2Vec. Inf. Sci. 477 , 15–29 (2019).

Nosek, B. A. et al. Promoting an open research culture. Science 348 , 1422–1425 (2015).

Kilicoglu, H., Demner-Fushman, D., Rindflesch, T. C., Wilczynski, N. L. & Haynes, R. B. Towards automatic recognition of scientifically rigorous clinical research evidence. J. Am. Med. Inform. Assoc. 16 , 25–31 (2009).

Gusenbauer, M. & Haddaway, N. R. Which academic search systems are suitable for systematic reviews or meta‐analyses? Evaluating retrieval qualities of Google Scholar, PubMed, and 26 other resources. Res. Synth. Methods 11 , 181–217 (2020).

Borah, R., Brown, A. W., Capers, P. L. & Kaiser, K. A. Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry. BMJ Open 7 , e012545 (2017).

de Vries, H., Bekkers, V. & Tummers, L. Innovation in the Public Sector: a systematic review and future research agenda. Public Adm. 94 , 146–166 (2016).

Van de Schoot, R. et al. ASReview: Active Learning for Systematic Reviews (Zenodo, 2020); https://doi.org/10.5281/zenodo.3345592

De Bruin, J. et al. ASReview Software Documentation 0.14 (Zenodo, 2020); https://doi.org/10.5281/zenodo.4287120

ASReview PyPI Package (ASReview Core Development Team, 2020); https://pypi.org/project/asreview/

Docker container for ASReview (ASReview Core Development Team, 2020); https://hub.docker.com/r/asreview/asreview

Ferdinands, G. et al. Active Learning for Screening Prioritization in Systematic Reviews—A Simulation Study (OSF Preprints, 2020); https://doi.org/10.31219/osf.io/w6qbg

Fu, J. H. & Lee, S. L. Certainty-enhanced active learning for improving imbalanced data classification. In 2011 IEEE 11th International Conference on Data Mining Workshops 405–412 (IEEE, 2011).

Le, Q. V. & Mikolov, T. Distributed representations of sentences and documents. Preprint at https://arxiv.org/abs/1405.4053 (2014).

Ramos, J. Using TF–IDF to determine word relevance in document queries. In Proc. 1st Instructional Conference on Machine Learning Vol. 242, 133–142 (ICML, 2003).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12 , 2825–2830 (2011).

Reimers, N. & Gurevych, I. Sentence-BERT: sentence embeddings using siamese BERT-networks. Preprint at https://arxiv.org/abs/1908.10084 (2019).

Smith, V., Devane, D., Begley, C. M. & Clarke, M. Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Med. Res. Methodol. 11 , 15 (2011).

Wynants, L. et al. Prediction models for diagnosis and prognosis of COVID-19: systematic review and critical appraisal. Brit. Med. J. 369 , 1328 (2020).

Van de Schoot, R. et al. Extension for COVID-19 Related Datasets in ASReview (Zenodo, 2020); https://doi.org/10.5281/zenodo.3891420

Lu Wang, L. et al. CORD-19: The COVID-19 open research dataset. Preprint at https://arxiv.org/abs/2004.10706 (2020).

Fraser, N. & Kramer, B. Covid19_preprints (FigShare, 2020); https://doi.org/10.6084/m9.figshare.12033672.v18

Ferdinands, G., Schram, R., Van de Schoot, R. & De Bruin, J. Scripts for ‘ASReview: Open Source Software for Efficient and Transparent Active Learning for Systematic Reviews’ (Zenodo, 2020); https://doi.org/10.5281/zenodo.4024122

Ferdinands, G., Schram, R., van de Schoot, R. & de Bruin, J. Results for ‘ASReview: Open Source Software for Efficient and Transparent Active Learning for Systematic Reviews’ (OSF, 2020); https://doi.org/10.17605/OSF.IO/2JKD6

Kwok, K. T. T., Nieuwenhuijse, D. F., Phan, M. V. T. & Koopmans, M. P. G. Virus metagenomics in farm animals: a systematic review. Viruses 12 , 107 (2020).

Hall, T., Beecham, S., Bowes, D., Gray, D. & Counsell, S. A systematic literature review on fault prediction performance in software engineering. IEEE Trans. Softw. Eng. 38 , 1276–1304 (2012).

van de Schoot, R., Sijbrandij, M., Winter, S. D., Depaoli, S. & Vermunt, J. K. The GRoLTS-Checklist: guidelines for reporting on latent trajectory studies. Struct. Equ. Model. Multidiscip. J. 24 , 451–467 (2017).

van de Schoot, R. et al. Bayesian PTSD-trajectory analysis with informed priors based on a systematic literature search and expert elicitation. Multivar. Behav. Res. 53 , 267–291 (2018).

Cohen, A. M., Bhupatiraju, R. T. & Hersh, W. R. Feature generation, feature selection, classifiers, and conceptual drift for biomedical document triage. In Proc. 13th Text Retrieval Conference (TREC, 2004).

Vasalou, A., Ng, B. D., Wiemer-Hastings, P. & Oshlyansky, L. Human-moderated remote user testing: protocols and applications. In 8th ERCIM Workshop, User Interfaces for All Vol. 19 (ERCIM, 2004).

Joffe, H. in Qualitative Research Methods in Mental Health and Psychotherapy: A Guide for Students and Practitioners (eds Harper, D. & Thompson, A. R.) Ch. 15 (Wiley, 2012).

NVivo v. 12 (QSR International Pty, 2019).

Hindriks, S., Huijts, M. & van de Schoot, R. Data for UX-test ASReview - June 2020. OSF https://doi.org/10.17605/OSF.IO/7PQNM (2020).

Marshall, I. J., Kuiper, J. & Wallace, B. C. RobotReviewer: evaluation of a system for automatically assessing bias in clinical trials. J. Am. Med. Inform. Assoc. 23 , 193–201 (2016).

Nallapati, R., Zhou, B., dos Santos, C. N., Gulcehre, Ç. & Xiang, B. Abstractive text summarization using sequence-to-sequence RNNs and beyond. In Proc. 20th SIGNLL Conference on Computational Natural Language Learning 280–290 (Association for Computational Linguistics, 2016).

Xie, Q., Dai, Z., Hovy, E., Luong, M.-T. & Le, Q. V. Unsupervised data augmentation for consistency training. Preprint at https://arxiv.org/abs/1904.12848 (2019).

Ratner, A. et al. Snorkel: rapid training data creation with weak supervision. VLDB J. 29 , 709–730 (2020).

Systematic Review Datasets (ASReview Core Development Team, 2020); https://github.com/asreview/systematic-review-datasets

Wallace, B. C., Small, K., Brodley, C. E., Lau, J. & Trikalinos, T. A. Deploying an interactive machine learning system in an evidence-based practice center: Abstrackr. In Proc. 2nd ACM SIGHIT International Health Informatics Symposium 819–824 (Association for Computing Machinery, 2012).

Cheng, S. H. et al. Using machine learning to advance synthesis and use of conservation and environmental evidence. Conserv. Biol. 32 , 762–764 (2018).

Yu, Z., Kraft, N. & Menzies, T. Finding better active learners for faster literature reviews. Empir. Softw. Eng. 23 , 3161–3186 (2018).

Ouzzani, M., Hammady, H., Fedorowicz, Z. & Elmagarmid, A. Rayyan—a web and mobile app for systematic reviews. Syst. Rev. 5 , 210 (2016).

Przybyła, P. et al. Prioritising references for systematic reviews with RobotAnalyst: a user study. Res. Synth. Methods 9 , 470–488 (2018).

ASReview: Active learning for Systematic Reviews (ASReview Core Development Team, 2020); https://github.com/asreview/asreview

Acknowledgements

We would like to thank the Utrecht University Library, focus area Applied Data Science, and departments of Information and Technology Services, Test and Quality Services, and Methodology and Statistics, for their support. We also want to thank all researchers who shared data, participated in our user experience tests or who gave us feedback on ASReview in other ways. Furthermore, we would like to thank the editors and reviewers for providing constructive feedback. This project was funded by the Innovation Fund for IT in Research Projects, Utrecht University, the Netherlands.

Author information

Authors and affiliations.

Department of Methodology and Statistics, Faculty of Social and Behavioral Sciences, Utrecht University, Utrecht, the Netherlands

Rens van de Schoot, Gerbrich Ferdinands, Albert Harkema, Joukje Willemsen, Yongchao Ma, Qixiang Fang, Sybren Hindriks & Daniel L. Oberski

Department of Research and Data Management Services, Information Technology Services, Utrecht University, Utrecht, the Netherlands

Jonathan de Bruin, Raoul Schram, Parisa Zahedi & Maarten Hoogerwerf

Utrecht University Library, Utrecht University, Utrecht, the Netherlands

Jan de Boer, Felix Weijdema & Bianca Kramer

Department of Test and Quality Services, Information Technology Services, Utrecht University, Utrecht, the Netherlands

Martijn Huijts

School of Governance, Faculty of Law, Economics and Governance, Utrecht University, Utrecht, the Netherlands

Lars Tummers

Department of Biostatistics, Data management and Data Science, Julius Center, University Medical Center Utrecht, Utrecht, the Netherlands

Daniel L. Oberski

Contributions

R.v.d.S. and D.O. originally designed the project, with later input from L.T. J.d.Br. is the lead engineer, software architect and supervises the code base on GitHub. R.S. coded the algorithms and simulation studies. P.Z. coded the very first version of the software. J.d.Bo., F.W. and B.K. developed the systematic review pipeline. M.Huijts is leading the UX tests and was supported by S.H. M.Hoogerwerf developed the architecture of the produced (meta)data. G.F. conducted the simulation study together with R.S. A.H. performed the literature search comparing the different tools together with G.F. J.W. designed all the artwork and helped with formatting the manuscript. Y.M. and Q.F. are responsible for the preprocessing of the metadata under the supervision of J.d.Br. R.v.d.S, D.O. and L.T. wrote the paper with input from all authors. Each co-author has written parts of the manuscript.

Corresponding author

Correspondence to Rens van de Schoot .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Peer review information Nature Machine Intelligence thanks Jian Wu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Overview of software tools supporting systematic reviews.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

van de Schoot, R., de Bruin, J., Schram, R. et al. An open source machine learning framework for efficient and transparent systematic reviews. Nat Mach Intell 3 , 125–133 (2021). https://doi.org/10.1038/s42256-020-00287-7

Received : 04 June 2020

Accepted : 17 December 2020

Published : 01 February 2021

Issue Date : February 2021

DOI : https://doi.org/10.1038/s42256-020-00287-7


Open source research has “come of age”, according to a recent article published by The Economist. What was once the niche realm of a relatively small number of individuals with free time and obsessive internet habits is now informing research and journalism in a wide range of fields and institutions. It’s hard to imagine a better time to roll up your sleeves and set off on the path of the open source researcher.

The promise of open source research is that anyone — not just journalists or researchers at select institutions — can contribute to investigations that uncover wrongdoing and hold perpetrators of crimes and atrocities to account.

When we say “anyone”, we mean anyone: if you have an internet connection, free time, and a stubborn commitment to getting the facts right, then you, too, can be an open source researcher.

Getting started in open source research can be daunting, especially if the field is completely new to you. But there’s no reason to fear: this guide will cover concrete steps that you can take to develop skills, discover communities based on your interests, and eventually lend a helping hand to important research.

By following these steps, you’ll learn where to find open source researchers, how to observe and learn from their work, and how to practice the new skills that you’ll develop.

1. Take Stock of your Skills and Interests

Are you interested in a particular conflict? Or do you love solving puzzles, which could translate to geolocating images? Do you have a programming background, or knowledge of several languages? Or are you fascinated by military machinery and equipment?

Having an idea of which topics interest you and what you’re good at will help you find other researchers on social media whose work you might want to follow, and which you may eventually use to inform your own.

If you’re not sure how your skills and interests might translate to this field, then don’t worry: that just means that you’ll have more to discover.

2. Get on Twitter

The importance of this step cannot be overstated.

Twitter is the primary medium for identifying, debating, and disseminating open source research. It’s full of practitioners who are eager to engage in discussions with others about best methods and practices, and to share their own work and that of others. Having a Twitter account will allow you to follow researchers so that you can learn from their work, as well as ask questions and engage in discussions with like-minded researchers.

If you’re security conscious or have any reason to want to be anonymous, you can easily set up a Twitter account that doesn’t contain your real name or other personal information. The open source research community welcomes anonymous accounts, and operating anonymously doesn’t have any negative connotations (though you may find that eventually you’ll have to reveal your identity if you get approached with publication offers or job opportunities).

If you’re still Twitter-averse, remember that you don’t have to post anything — ever. Twitter’s primary purpose can be to show you what other researchers are saying and publishing, so you don’t ever have to interact with anyone unless you want to.

3. Find Your People (and Put Them on a List)

Once you’re on Twitter, you’ll want to follow lots of open source researchers. This will allow you to see what topics the field is interested in, which organisations tend to focus on which issues, and which methods they employ in their research. More importantly, you’ll be able to learn directly from the experts about methods, tools, and best practices.

If you’ve just learned about the world of open source research, it might be a good idea to cast a wide net and follow researchers at established institutions like the New York Times Visual Investigations, Bellingcat, or the Washington Post Visual Forensics team.

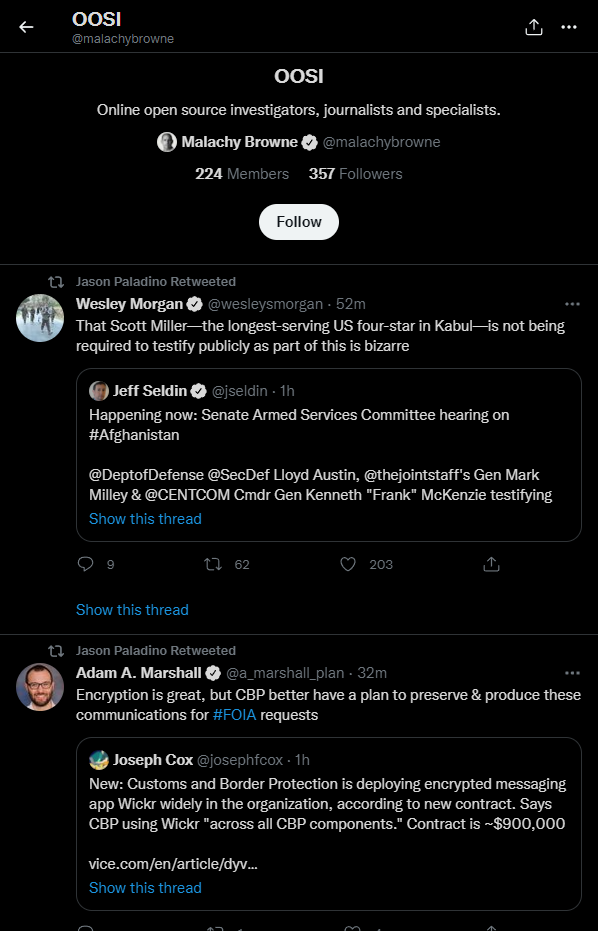

One easy way to find open source researchers is to follow Twitter lists. Any user can create a list of accounts, and some — like Malachy Browne of the New York Times’ Visual Investigations Team — have made those lists available. His “OOSI List” contains more than 200 open source researchers whose work you can keep up with by following the list.

Some people use the term “online open source investigations” (OOSI), while others use “open source investigations” (OSI), but the term that’s been around the longest and is used most often on social media is “open source intelligence” (OSINT). These terms are usually used interchangeably, but there are some differences among them that you might want to consider.

The difference between OOSI and OSI is in the name: while OOSI refers to investigations that only use online sources, you would use OSI to describe an investigation that also used offline open sources.

Some who use OOSI or OSI instead of OSINT do so because they feel that the name “OSINT” has direct connotations to intelligence agencies. For these agencies, OSINT is part of an ecosystem of intelligence sources that includes HUMINT (human intelligence), SOCMINT (social media intelligence), IMINT (imagery intelligence), and others. While some independent researchers might be justifiably uncomfortable with that connotation, the term is still widely used and is probably the most recognised.

In any case, we’d recommend using all of these search terms in order to broaden the resources at your disposal.

Malachy Browne’s OOSI Twitter List has over 200 researchers and organisations whose work you’re about to discover.

Other useful Twitter lists of researchers include:

- Gisela Pérez de Acha’s “OSINTers”

- Rawan Shaif’s “OSINT”

- Bianca Britton’s “Open Source”

- Julia Bayer’s “OSINT research verify”

As time goes by and you become more familiar with the field, you’ll start to notice how wide and varied it is. In some corners you’ll find researchers who focus exclusively on identifying weapons seen in videos from conflict zones; in others you’ll see people who dedicate their time to tracking aircraft or ships, while in others still you’ll find expert geolocators. You might decide that you want to create your own list of niche researchers, which you can do by following the instructions in this guide.

4. Find Community Branches

Open source researchers and enthusiasts tend to spend lots of time online, which means that they’re likely to be hanging out in digital spaces besides Twitter.

Discord is a popular messaging app on which several open source communities have chosen to set up base. These communities resemble the chatrooms of the early internet, and are called “servers” in Discord lingo.

Bellingcat’s Discord server is located here. Anyone can join and share tools, ask questions, and collaborate on research projects. The server is divided into topics where users are welcome to post relevant content for discussion.

Other open source communities with Discord servers that you should check out are:

- The OSINT Curious Project: Community hub for OSINT Curious, a website dedicated to sharing news and educational information about open source research.

- Project OWL: At any given moment, you’re likely to find thousands of members online in this sprawling server dedicated to every imaginable facet of open source research.

- Brigada Osint: This server is dedicated to sharing information and resources for the Spanish-speaking community.

There are many more open source-focused Discord servers out there, so never stop looking. Remember that open source research is a collaborative effort, so don’t be afraid to get out there and network.

Discord channels are a great place to meet and chat with others interested in research. In the Bellingcat Discord server, there’s a channel dedicated to sharing research tools and resources.

Reddit also hosts open source research communities, including r/Bellingcat, a community-run subreddit. r/OSINT boasts over 26,000 members, making it an active hub of questions and answers on all things related to the field.

The r/TraceAnObject subreddit is dedicated to bringing together people who want to help EUROPOL with its #TraceAnObject campaign and the FBI with its Endangered Child Alert Program. These campaigns allow law enforcement to request assistance from the public in identifying individuals, objects, and locations seen in child sexual abuse images. Spending time in these subreddits could allow you not only to assist in the rescue of a child but also, in the process, to develop your open source research skills. Bellingcat’s own Carlos Gonzales got started in open source research by helping out with these two campaigns part-time.

Joining these kinds of spaces and interacting with fellow enthusiasts and researchers might result in opportunities for you to contribute to important projects, or even inspire you to launch your own.

5. Observe, Learn, and Practice

Now that you’ve got a sense of what the community looks like, where researchers hang out, and who’s working on what, you can start to dedicate time to developing and practicing the new skills you’ll be picking up.

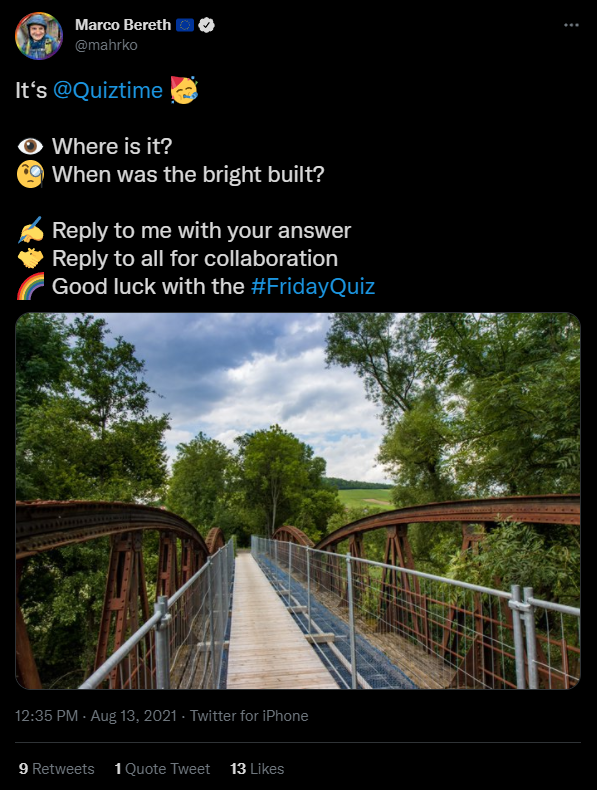

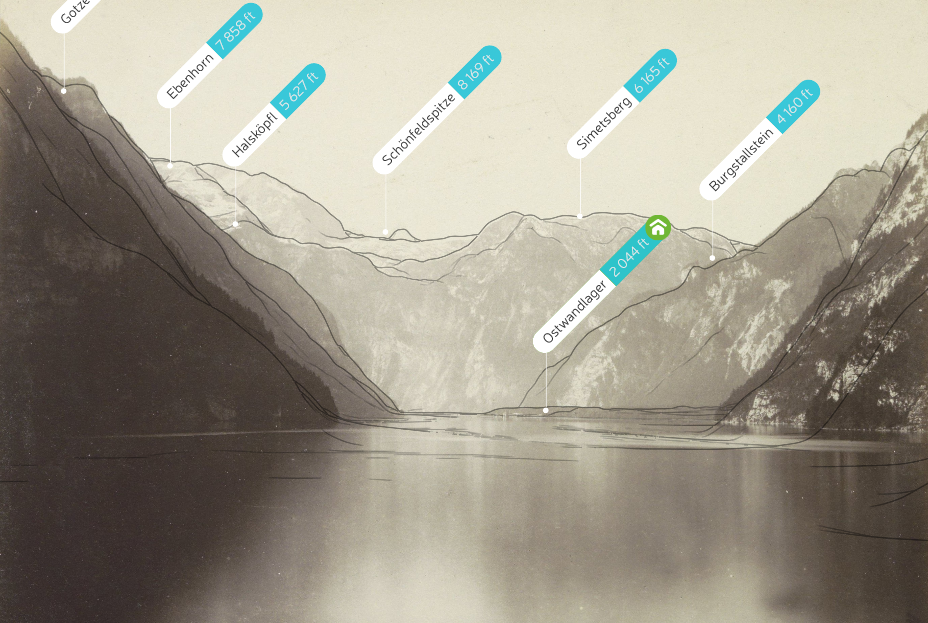

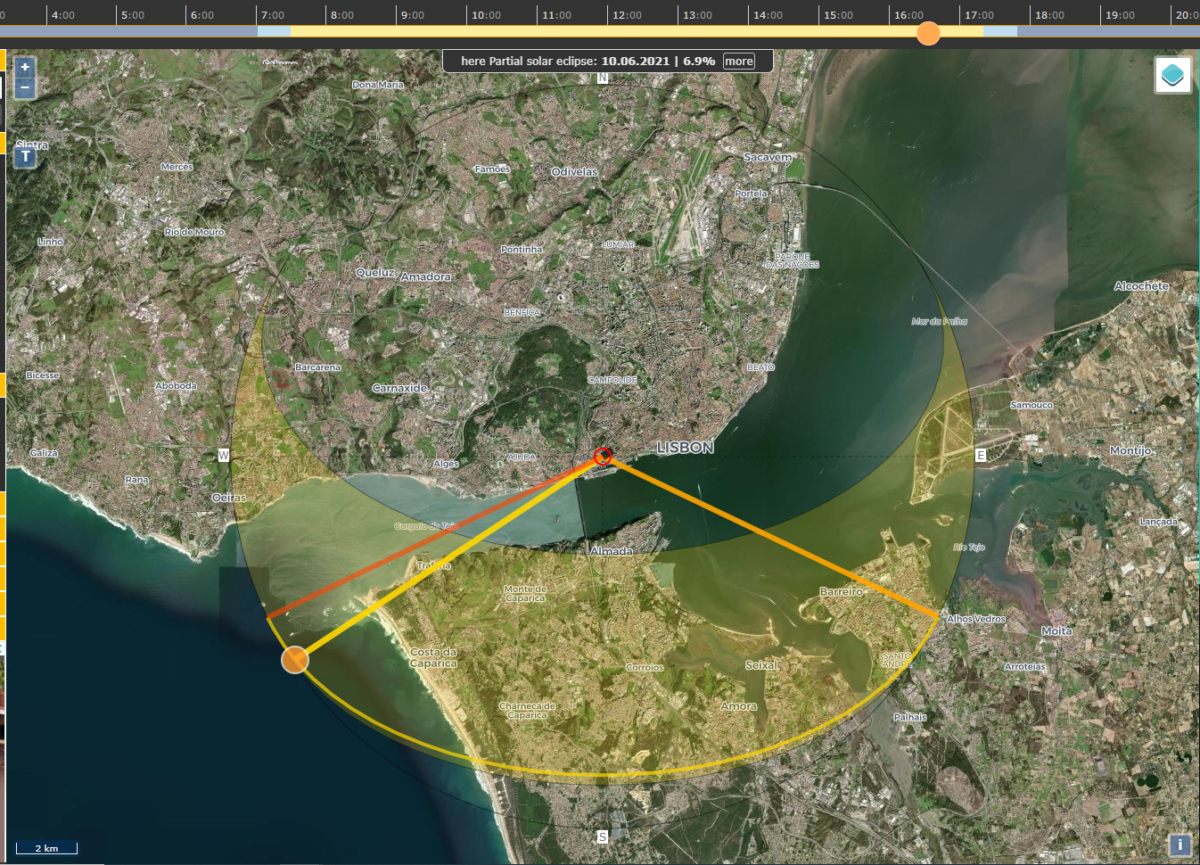

One excellent way to practice research skills is by trying out @Quiztime challenges on Twitter. This account posts daily images and challenges you to find out exactly where they were taken.

Sometimes the quizzes also build off an established geolocation for the image, asking you to determine what an object in the image is or when it was taken.

This Quiztime challenge asks you to determine where this bridge is located and when it was built. How would you go about doing that?

Geolocation is not the only skill that you could develop by doing Quiztime challenges.

With the example above in mind, how could you narrow down the number of search areas, from “anywhere on the planet” to a particular country or region? How might you determine what kind of bridge that was? Would a reverse image search work, or would you have to dig deeper into bridge design? Are there any bridge architecture databases or other resources that you could draw from? Google Maps would be the starting place for the geolocation, but many places in the world don’t have Google Street View. If that’s the case for the place where this bridge is located, how else might you find street-level imagery of this place?

These are just some of the questions that you might ask yourself if you were working to solve this challenge, and they’re the same kinds of questions that you might ask yourself if you were working on an open source project to verify images of atrocities in a conflict zone.

In a similar vein, Geoguessr is a geolocation game that is popular in the open source community (both Bellingcat and OSINT Curious, to name two examples, stream Geoguessr games on Twitch). The game drops you into a Google Street View image, and it’s your job to guess exactly where the picture was taken. After each guess, you’re given points. The closer your guess is to the actual spot, the more points you get.

You can play by different sets of rules, including no Googling for information or not moving from the spot where you land. These make the game well worth replaying and allow you to tweak its difficulty to your liking.
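To make the idea of distance-based scoring concrete, here is a minimal Python sketch. It is not Geoguessr’s actual formula, which this guide doesn’t describe: the `score_guess` function, the 5,000-point maximum, and the 2,000 km decay scale are all assumptions chosen for illustration. The only standard piece is the haversine formula, which gives the great-circle distance between two latitude/longitude points.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot before asin.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def score_guess(guess, actual, max_points=5000, scale_km=2000.0):
    """Hypothetical scoring rule: points decay exponentially with distance.

    `max_points` and `scale_km` are illustrative assumptions, not values
    taken from any real game.
    """
    distance = haversine_km(guess[0], guess[1], actual[0], actual[1])
    return round(max_points * math.exp(-distance / scale_km))


if __name__ == "__main__":
    actual = (48.8566, 2.3522)       # central Paris
    near_guess = (48.8049, 2.1204)   # Versailles, a short distance away
    far_guess = (52.5200, 13.4050)   # Berlin, several hundred kilometres away
    print("near guess:", score_guess(near_guess, actual))
    print("far guess:", score_guess(far_guess, actual))
```

Under this rule a perfect guess earns the full 5,000 points and the score falls smoothly as the guess moves away. The same distance calculation is handy outside the game, for example when checking how far a proposed geolocation sits from a known landmark.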

You could spend hours honing your geolocation skills on Geoguessr.

At this point, you can start putting some of these steps together. For example, you might decide that you want to collaborate on a Quiztime challenge with other Twitter users, or set up a Geoguessr Game Night with people you met in the Bellingcat Discord server.

You might also consider joining events like Trace Labs’ Search Party CTF, where four-person teams compete to find information about missing persons (you can read more about what exactly Trace Labs does and why on their “About” page). By participating in these events you’d not only be potentially helping find a missing person, but you’d also have the chance to work with (and learn from) other OSI enthusiasts by putting your skills into practice in a team setting.

Now that you’re all set up, here are a few more ideas to get you on your way.

Bookmark Community Resources

One of the great characteristics of the open source research community is its willingness to share knowledge. This knowledge-sharing sometimes takes the form of newsletters and community resource pages that feature tools and research projects for you to explore.

Sector035’s Week in OSINT is a weekly newsletter that looks back over the previous seven days in the world of open source research. The newsletter covers everything from new tools that the community has discovered or developed, to new articles and other resources that have just been published. Week in OSINT delivers the best and newest in open source research to your inbox, making it a guaranteed way to learn the ropes of the field.