
Korean J Anesthesiol, v.71(2); 2018 Apr

Introduction to systematic review and meta-analysis

1 Department of Anesthesiology and Pain Medicine, Inje University Seoul Paik Hospital, Seoul, Korea

2 Department of Anesthesiology and Pain Medicine, Chung-Ang University College of Medicine, Seoul, Korea

Systematic reviews and meta-analyses present results by combining and analyzing data from different studies conducted on similar research topics. In recent years, systematic reviews and meta-analyses have been actively performed in various fields, including anesthesiology. These research methods are powerful tools that can overcome the difficulties of performing large-scale randomized controlled trials. However, including biased studies, or studies whose quality of evidence has been improperly assessed, in systematic reviews and meta-analyses can yield misleading results. Various guidelines have therefore been proposed for conducting systematic reviews and meta-analyses, to help standardize them and improve their quality. Nonetheless, accepting the conclusions of many studies without understanding how the meta-analysis was performed can be dangerous. This article therefore provides clinicians with an accessible introduction to performing and understanding meta-analyses.

Introduction

A systematic review collects all possible studies related to a given topic and design, and reviews and analyzes their results [1]. During the systematic review process, the quality of the studies is evaluated, and a statistical meta-analysis of the study results is conducted on the basis of their quality. A meta-analysis is a valid, objective, and scientific method of analyzing and combining different results. To obtain more reliable results, a meta-analysis is usually conducted on randomized controlled trials (RCTs), which have a high level of evidence [2] (Fig. 1). Since 1999, various papers have presented guidelines for reporting meta-analyses of RCTs. Following the Quality of Reporting of Meta-analyses (QUOROM) statement [3] and the appearance of registers such as the Cochrane Library’s Methodology Register, a large number of systematic literature reviews have been registered. In 2009, the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [4] was published, and it greatly helped standardize and improve the quality of systematic reviews and meta-analyses [5].

Fig. 1. Levels of evidence.

In anesthesiology, the importance of systematic reviews and meta-analyses has been highlighted, and they provide diagnostic and therapeutic value in various areas, including not only perioperative management but also intensive care and outpatient anesthesia [6–13]. Topics covered by systematic reviews and meta-analyses include comparisons of treatments for postoperative nausea and vomiting [14, 15], of general and regional anesthesia [16–18], of airway maintenance devices [8, 19], and of methods of postoperative pain control (e.g., patient-controlled analgesia pumps, nerve block, or analgesics) [20–23], as well as comparisons of the precision of monitoring instruments [7] and dose-response meta-analyses of various drugs [12].

Thus, systematic reviews and meta-analyses are being conducted in diverse medical fields, and their importance lies in helping to extract accurate, good-quality data from the flood of data being produced. However, a lack of understanding of systematic reviews and meta-analyses can lead to incorrect conclusions being drawn from the review and analysis processes. If readers indiscriminately accept the results of the many meta-analyses that are published, they may be misled. Therefore, in this review, we aim to describe the contents and methods of systematic reviews and meta-analyses in a way that is easy to understand for future authors and readers.

Study Planning

It is easy to confuse systematic reviews and meta-analyses. A systematic review is an objective, reproducible method of finding answers to a research question by collecting all available studies related to that question and reviewing and analyzing their results. A meta-analysis differs from a systematic review in that it applies statistical methods to estimates from two or more different studies to form a pooled estimate [1]. If a pooled estimate cannot be formed after a systematic review, the review can be published as is without proceeding to a meta-analysis; if a pooled estimate can be formed from the extracted data, a meta-analysis can be attempted. Systematic reviews and meta-analyses usually proceed according to the flowchart presented in Fig. 2. We explain each stage below.

Fig. 2. Flowchart illustrating a systematic review.

Formulating research questions

A systematic review attempts to gather all available empirical research by using clearly defined, systematic methods to obtain answers to a specific question. A meta-analysis is the statistical process of analyzing and combining results from several similar studies. The word “similar” is not precisely defined here, but when selecting a topic for a meta-analysis, it is essential to ensure that the different studies present data that can be combined. If the studies contain combinable data on the same topic, a meta-analysis can be performed using data from as few as two studies. However, study selection via a systematic review is a precondition for performing a meta-analysis, and it is important to clearly define the Population, Intervention, Comparison, Outcomes (PICO) parameters that are central to evidence-based research. In addition, the research topic should be selected on the basis of logical evidence, and it is important to select a topic that is familiar to readers but for which the evidence has not yet been clearly confirmed [24].
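As a hedged illustration of how the PICO parameters can structure a literature search, the sketch below turns them into a generic Boolean query. The `PICO` class and its `to_query` method are hypothetical helpers, not part of any database's interface; the sketch assumes each element is a list of synonyms, joins synonyms with OR and elements with AND, and omits the field tags and controlled vocabulary (such as MeSH terms) that real Medline or Embase searches require.

```python
from dataclasses import dataclass, field


@dataclass
class PICO:
    """Hypothetical container for a PICO question; each element is a list of synonyms."""

    population: list[str]
    intervention: list[str]
    comparison: list[str] = field(default_factory=list)
    outcomes: list[str] = field(default_factory=list)

    def to_query(self) -> str:
        """Build a generic Boolean string: synonyms OR-ed, PICO elements AND-ed.

        Empty elements are skipped; multi-word terms are quoted as phrases.
        """
        blocks = []
        for terms in (self.population, self.intervention, self.comparison, self.outcomes):
            if terms:
                quoted = [f'"{t}"' if " " in t else t for t in terms]
                blocks.append("(" + " OR ".join(quoted) + ")")
        return " AND ".join(blocks)


# Illustrative question (hypothetical terms, not taken from any specific review)
question = PICO(
    population=["adults", "surgical patients"],
    intervention=["dexamethasone"],
    comparison=["placebo"],
    outcomes=["postoperative nausea", "PONV"],
)
print(question.to_query())
# (adults OR "surgical patients") AND (dexamethasone) AND (placebo) AND ("postoperative nausea" OR PONV)
```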

Protocols and registration

In systematic reviews, prior registration of a detailed research plan is very important. To make the research process transparent, the primary and secondary outcomes and the methods are set in advance, and if the methods change, other researchers and readers are informed of when, how, and why. Many studies are registered with an organization such as PROSPERO (http://www.crd.york.ac.uk/PROSPERO/), and the registration number is recorded when reporting the study, so that the protocol as planned can be shared.

Defining inclusion and exclusion criteria

The inclusion and exclusion criteria specify the study design, patient characteristics, publication status (published or unpublished), language, and research period. If there is a discrepancy between the number of patients included in a study and the number included in the analysis, it needs to be clearly explained when describing the patient characteristics, to avoid confusing the reader.

Literature search and study selection

To secure a proper basis for evidence-based research, it is essential to perform a broad search that includes as many studies as possible that meet the inclusion and exclusion criteria. Typically, three bibliographic databases are used: Medline, Embase, and the Cochrane Central Register of Controlled Trials (CENTRAL). For domestic (Korean) studies, the Korean databases KoreaMed, KMBASE, and RISS4U may also be included. Effort is required to identify not only published studies but also abstracts, ongoing studies, and studies awaiting publication. From the studies retrieved in the search, the researchers remove duplicates, select studies that meet the inclusion/exclusion criteria on the basis of their abstracts, and then make the final selection on the basis of the full texts. To maintain transparency and objectivity throughout this process, study selection is conducted independently by at least two investigators. When their opinions differ, the disagreement is resolved through discussion or by a third reviewer. The methods for this process also need to be planned in advance. It is essential to ensure that the literature selection process is reproducible [25].
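The duplicate removal and two-reviewer selection steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a reference implementation: each record is assumed to be a dictionary with a `title` key and an optional `doi` key, and `reconcile` and its `third_reviewer` callable are hypothetical names standing in for discussion or arbitration by a third reviewer.

```python
import re


def normalize_title(title: str) -> str:
    """Lower-case a title and collapse punctuation/whitespace so near-identical titles match."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", title.lower()).split())


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first occurrence of each record, matching on DOI or normalized title."""
    seen: set[str] = set()
    unique = []
    for rec in records:
        keys = {"title:" + normalize_title(rec["title"])}
        if rec.get("doi"):
            keys.add("doi:" + rec["doi"].lower())
        if keys & seen:  # shares a DOI or title with an earlier record
            continue
        seen |= keys
        unique.append(rec)
    return unique


def reconcile(decisions_a: dict, decisions_b: dict, third_reviewer) -> tuple[dict, list]:
    """Combine two reviewers' independent include (True) / exclude (False) decisions.

    Agreements are accepted as-is; disagreements are passed to `third_reviewer`,
    a callable taking a study ID and returning True or False.
    """
    final, disputed = {}, []
    for study_id, a in decisions_a.items():
        b = decisions_b[study_id]
        if a == b:
            final[study_id] = a
        else:
            disputed.append(study_id)
            final[study_id] = third_reviewer(study_id)
    return final, disputed


records = [
    {"title": "Dexamethasone for PONV: an RCT", "doi": "10.1000/ABC"},  # hypothetical
    {"title": "dexamethasone for PONV - an RCT"},  # same study, different formatting
    {"title": "A different trial"},
]
print(len(deduplicate(records)))  # 2
```

Matching on DOI or a normalized title is only a first pass; in practice, duplicates are usually also checked against authors, year, and journal before screening.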

Quality of evidence

However well planned a systematic review or meta-analysis is, if the quality of evidence in the included studies is low, the quality of the meta-analysis decreases and incorrect results can be obtained [26]. Even when randomized studies with a high quality of evidence are used, evaluating the quality of evidence precisely helps determine the strength of the recommendations in the meta-analysis. One method of evaluating the quality of evidence in non-randomized studies is the Newcastle-Ottawa Scale, provided by the Ottawa Hospital Research Institute 1). However, this article focuses mainly on meta-analyses of randomized studies.

If the Grading of Recommendations, Assessment, Development and Evaluations (GRADE) system (http://www.gradeworkinggroup.org/) is used, the quality of evidence is evaluated on the basis of study limitations, imprecision, inconsistency, indirectness of evidence, and risk of publication bias, and this is used to determine the strength of recommendations [27]. As shown in Table 1, study limitations are evaluated using the “risk of bias” method proposed by Cochrane 2). This method classifies the risk of bias in randomized studies as “low,” “high,” or “unclear” across six domains (random sequence generation, allocation concealment, blinding of participants or investigators, incomplete outcome data, selective reporting, and other biases) [28].

Table 1. The Cochrane Collaboration’s Tool for Assessing the Risk of Bias [28]
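The per-domain judgements are often summarized into a single rating per study. The sketch below assumes one common convention rather than a rule stated in this article: a study is rated “high” if any domain is high risk, “low” only if every domain is low risk, and “unclear” otherwise. The domain names are shortened labels for the six domains listed above, and `overall_risk_of_bias` is a hypothetical function; reviewers usually decide in advance which domains count as key for each outcome, whereas this sketch treats all six as key.

```python
DOMAINS = (
    "random sequence generation",
    "allocation concealment",
    "blinding",
    "incomplete outcome data",
    "selective reporting",
    "other bias",
)
VALID = {"low", "high", "unclear"}


def overall_risk_of_bias(judgements: dict[str, str]) -> str:
    """Summarize one study's domain-level judgements into a single rating.

    Assumed rule: 'high' if any domain is high, 'low' only if every domain is low,
    otherwise 'unclear'. All six domains are treated as key domains.
    """
    missing = set(DOMAINS) - set(judgements)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    values = [judgements[d] for d in DOMAINS]
    if any(v not in VALID for v in values):
        raise ValueError("judgements must be 'low', 'high', or 'unclear'")
    if "high" in values:
        return "high"
    if all(v == "low" for v in values):
        return "low"
    return "unclear"


study = dict.fromkeys(DOMAINS, "low")
study["allocation concealment"] = "unclear"
print(overall_risk_of_bias(study))  # unclear
```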

Data extraction

Two different investigators extract data according to the objectives and form of the study, and the extracted data are then reviewed. Because variables can be measured on different scales and in different formats across studies, the outcomes may also differ in scale and format, and some conversion may be required when combining the data [29]. If differences in the scale and format of the outcome variables make the data difficult to combine, as with the use of different evaluation instruments or different evaluation timepoints, the analysis may be limited to a systematic review. The investigators resolve differences of opinion through discussion, and if they fail to reach a consensus, a third reviewer is consulted.
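Because data are extracted independently by two investigators, their extraction forms can be compared field by field to list the disagreements that need discussion or a third reviewer. The sketch below assumes each extraction is a dictionary mapping field names to values; `find_discrepancies` and the field names in the example are hypothetical.

```python
import math


def find_discrepancies(extraction_a: dict, extraction_b: dict, rel_tol: float = 0.0) -> dict:
    """Return {field: (value_a, value_b)} for every field where two extractions disagree.

    Numbers are compared with math.isclose (exact by default); other values must be
    equal. A field recorded by only one investigator is reported with None for the other.
    """
    issues = {}
    for key in sorted(set(extraction_a) | set(extraction_b)):
        a, b = extraction_a.get(key), extraction_b.get(key)
        numeric = isinstance(a, (int, float)) and isinstance(b, (int, float))
        same = math.isclose(a, b, rel_tol=rel_tol) if numeric else a == b
        if not same:
            issues[key] = (a, b)
    return issues


first = {"n_intervention": 40, "n_control": 40, "events_intervention": 8, "timepoint": "24 h"}
second = {"n_intervention": 40, "n_control": 38, "events_intervention": 8, "timepoint": "24 h"}
print(find_discrepancies(first, second))  # {'n_control': (40, 38)}
```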

Data Analysis

The aim of a meta-analysis is to derive a conclusion with greater power and accuracy than could be achieved in the individual studies. Therefore, before the analysis, it is crucial to evaluate the direction of effect, size of effect, homogeneity of effects among studies, and strength of evidence [30]. Thereafter, the data are reviewed qualitatively and quantitatively. If the different research outcomes cannot be combined, all the results and characteristics of the individual studies are displayed in a table or in descriptive form; this is referred to as a qualitative review. A meta-analysis is a quantitative review, in which clinical effectiveness is evaluated by calculating a weighted pooled estimate of the intervention effect from at least two separate studies.

The pooled estimate is the outcome of the meta-analysis and is typically displayed in a forest plot (Figs. 3 and 4). In the forest plot, the black squares and their horizontal lines show the odds ratio (OR) and 95% confidence interval of each study, and the area of each square represents that study’s weight in the meta-analysis. The black diamond represents the OR and 95% confidence interval calculated across all the included studies. The bold vertical line represents no treatment effect (OR = 1); if a confidence interval includes OR = 1, no significant difference was found between the treatment and control groups.

Fig. 3. Forest plot analyzed by two different models using the same data. (A) Fixed-effect model. (B) Random-effect model. Each trial is depicted as a filled square sized according to its relative sample size, with a solid line showing the 95% confidence interval of the difference. The diamond indicates the pooled estimate and the uncertainty of the combined effect. The vertical line indicates no treatment effect (OR = 1); if a confidence interval includes 1, the result shows no evidence of a difference between the treatment and control groups.

Fig. 4. Forest plot representing homogeneous data.
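The weighted pooled estimate and the forest-plot reading described above can be sketched with inverse-variance weighting, one of the fixed-effect methods discussed later in this article. This is a minimal sketch assuming each study supplies a log odds ratio and its standard error: pooling is done on the log scale and the result is exponentiated back to an OR. The numbers are hypothetical, not the data behind Figs. 3 and 4.

```python
import math
from statistics import NormalDist


def pool_fixed_effect(estimates, std_errors, level=0.95):
    """Inverse-variance fixed-effect pooling on an additive scale (e.g., log OR).

    Returns (pooled estimate, standard error, CI lower, CI upper).
    """
    weights = [1 / se**2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    se = math.sqrt(1 / total)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    return pooled, se, pooled - z * se, pooled + z * se


def relative_weights(std_errors):
    """Percentage weight of each study (what the square areas in a forest plot reflect)."""
    weights = [1 / se**2 for se in std_errors]
    total = sum(weights)
    return [100 * w / total for w in weights]


# Hypothetical per-study log odds ratios and their standard errors
log_or = [math.log(0.6), math.log(0.8), math.log(1.1)]
std_errs = [0.30, 0.20, 0.40]
pooled, pooled_se, lo, hi = pool_fixed_effect(log_or, std_errs)
print(f"pooled OR = {math.exp(pooled):.2f} (95% CI {math.exp(lo):.2f} to {math.exp(hi):.2f})")
print("CI includes OR = 1:", lo <= 0 <= hi)
print([round(w, 1) for w in relative_weights(std_errs)])
```

A 95% confidence interval on the log scale that contains 0 corresponds to an OR interval that contains 1, the no-effect line in the forest plot.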

Dichotomous variables and continuous variables

In data analysis, outcome variables can be broadly divided into dichotomous variables and continuous variables. When combining data for continuous variables, the mean difference (MD) and standardized mean difference (SMD) are used (Table 2).

Table 2. Summary of Meta-analysis Methods Available in RevMan [28]

The MD is the absolute difference in mean values between the groups, and the SMD is the mean difference between the groups divided by the pooled standard deviation. When results are reported in the same units, the MD can be used; when they are reported in different units, the SMD should be used. When the MD is used, the units of the pooled result must be shown. A value of 0 for the MD or SMD indicates that the new treatment and the existing treatment have the same effect. When higher values indicate a better outcome, a value below 0 means the new treatment is less effective than the existing one, and a value above 0 means it is more effective; the interpretation is reversed for outcomes where lower values are better, such as pain scores.
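The MD and SMD can be computed from each study’s group means, standard deviations, and sample sizes. The sketch below is illustrative only: it assumes the SMD uses the pooled standard deviation and optionally applies the approximate Hedges’ small-sample correction, as many meta-analysis packages do, and it does not claim to reproduce any particular program’s output exactly. The pain-score values in the example are hypothetical.

```python
import math


def mean_difference(mean_t: float, mean_c: float) -> float:
    """MD: treatment mean minus control mean, in the outcome's original units."""
    return mean_t - mean_c


def standardized_mean_difference(mean_t, sd_t, n_t, mean_c, sd_c, n_c, hedges=True):
    """SMD: mean difference divided by the pooled standard deviation.

    With hedges=True, applies the approximate small-sample correction
    J = 1 - 3 / (4 * (n_t + n_c) - 9) (Hedges' g); otherwise returns Cohen's d.
    """
    pooled_sd = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / (n_t + n_c - 2))
    d = (mean_t - mean_c) / pooled_sd
    if hedges:
        d *= 1 - 3 / (4 * (n_t + n_c) - 9)
    return d


# Hypothetical pain scores: treatment 3.1 (SD 1.2, n 30) vs control 4.0 (SD 1.4, n 32).
# Negative values here mean lower (better) pain scores with treatment.
md = mean_difference(3.1, 4.0)
smd = standardized_mean_difference(3.1, 1.2, 30, 4.0, 1.4, 32)
print(round(md, 2), round(smd, 2))
```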

When combining data for dichotomous variables, the OR, risk ratio (RR), or risk difference (RD) can be used. The RR and RD can be used for RCTs, quasi-experimental studies, or cohort studies, whereas the OR can also be used for case-control or cross-sectional studies. However, because the OR is difficult to interpret, using the RR or RD is recommended where possible. If the outcome variable is dichotomous, the result can also be presented as the number needed to treat (NNT): the number of patients who need to be treated with the intervention, rather than the control, for one additional patient to avoid the event. Based on Table 3, in an RCT, if x is the probability of the event in the control group and y is the probability of the event in the intervention group, then x = c/(c + d), y = a/(a + b), and the absolute risk reduction (ARR) = x − y. The NNT is the reciprocal, 1/ARR.

Table 3. Calculation of the Number Needed to Treat in the Dichotomous Table
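The calculations based on Table 3 can be written out directly. This sketch assumes the table layout implied by the formulas above (a and b are the intervention group with and without the event; c and d are the control group with and without the event); `two_by_two_measures` is a hypothetical helper and the counts in the example are hypothetical.

```python
import math


def two_by_two_measures(a: int, b: int, c: int, d: int) -> dict:
    """Effect measures from a 2x2 table laid out as in Table 3.

    a, b: intervention group with / without the event
    c, d: control group with / without the event
    """
    y = a / (a + b)  # event probability in the intervention group
    x = c / (c + d)  # event probability in the control group
    arr = x - y  # absolute risk reduction
    return {
        "rr": y / x,
        "or": (a * d) / (b * c),  # undefined if b or c is zero
        "rd": y - x,
        "arr": arr,
        # NNT = 1/ARR; a negative value indicates harm (number needed to harm)
        "nnt": 1 / arr if arr != 0 else math.inf,
    }


m = two_by_two_measures(a=10, b=90, c=20, d=80)
print(m["rr"], m["nnt"])  # 0.5 10.0
```

By convention, a fractional NNT is usually rounded up to the next whole patient (for example, with math.ceil).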

Fixed-effect models and random-effect models

Two types of models can be used to analyze effect size: a fixed-effect model or a random-effect model. A fixed-effect model assumes that the treatment effect is the same in all studies and that variation between the results of different studies is due to random error alone. Thus, a fixed-effect model can be used when the studies are considered to have the same design and methodology, or when the variability in results among studies is small and is thought to be due to random error. Three common methods are used for weighted estimation in a fixed-effect model: 1) inverse variance-weighted estimation 3), 2) Mantel-Haenszel estimation 4), and 3) Peto estimation 5).
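Of the fixed-effect methods listed above, the Mantel-Haenszel pooled odds ratio has a compact closed form: the sum over studies of a·d/n divided by the sum of b·c/n, using the 2 × 2 layout of Table 3, where n is each study’s total sample size. The sketch below computes only the point estimate; a confidence interval would additionally need a variance estimator (such as the Robins-Breslow-Greenland formula), which is omitted. The tables in the example are hypothetical.

```python
def mantel_haenszel_or(tables):
    """Mantel-Haenszel pooled odds ratio across studies.

    Each table is (a, b, c, d): intervention events/non-events, control events/non-events.
    OR_MH = sum(a*d/n) / sum(b*c/n), where n = a + b + c + d for each study.
    """
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in tables)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in tables)
    return numerator / denominator


print(mantel_haenszel_or([(10, 90, 20, 80), (5, 45, 5, 45)]))  # about 0.556
```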

A random-effect model assumes heterogeneity between the studies being combined, and such models are used when the studies are assumed to differ, even if a heterogeneity test does not show a significant result. Unlike a fixed-effect model, a random-effect model assumes that the size of the treatment effect differs among studies. Thus, variation among studies is attributed not only to random error but also to between-study variability in the true effects. Consequently, studies with a small number of patients are not down-weighted as heavily as in a fixed-effect model. Among the methods for weighted estimation in a random-effect model, the DerSimonian and Laird method 6), the simplest, is mostly used for dichotomous variables, while inverse variance-weighted estimation is used for continuous variables, as in fixed-effect models. All four of these methods are available in Review Manager software (The Cochrane Collaboration, UK) and are described by Deeks et al. [31] (Table 2). However, when fewer than 10 studies are included in the analysis, the Hartung-Knapp-Sidik-Jonkman method 7) reduces the risk of type I error better than the DerSimonian and Laird method does [32].
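The DerSimonian and Laird method estimates the between-study variance (τ²) from Cochran’s Q and adds it to each study’s within-study variance before inverse-variance weighting, which is why small studies lose less weight than in a fixed-effect model. The sketch below is a minimal version assuming effects on an additive scale (such as log OR or MD) with known within-study variances; it uses a normal-based confidence interval and does not implement the Hartung-Knapp-Sidik-Jonkman adjustment.

```python
import math
from statistics import NormalDist


def dersimonian_laird(estimates, variances, level=0.95):
    """Random-effect pooling with the DerSimonian-Laird estimate of tau^2.

    estimates: per-study effects on an additive scale (e.g., log OR or MD)
    variances: their within-study variances
    """
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))  # Cochran's Q
    df = len(estimates) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # between-study variance
    w_star = [1 / (v + tau2) for v in variances]  # random-effect weights
    sum_ws = sum(w_star)
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sum_ws
    se = math.sqrt(1 / sum_ws)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return {"estimate": pooled, "se": se, "tau2": tau2, "ci": (pooled - z * se, pooled + z * se)}


result = dersimonian_laird([0.0, 1.0], [0.1, 0.1])
print(result["tau2"], result["estimate"])  # 0.4 0.5
```

When τ² is 0, the weights reduce to the fixed-effect inverse-variance weights, and the two models give the same pooled estimate.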

Fig. 3 shows the results of analyzing outcome data using a fixed-effect model (A) and a random-effect model (B). As shown in Fig. 3, the results of large studies are weighted more heavily in the fixed-effect model, whereas studies are given relatively similar weights irrespective of size in the random-effect model. Although identical data were analyzed, the significant result in the fixed-effect model was no longer significant in the random-effect model. A representative example of the small-study effect in a random-effect model is the meta-analysis by Li et al. [33]. In a large-scale study, intravenous magnesium had no effect in acute myocardial infarction, but in the random-effect model, which included numerous small studies, the small-study effect produced an apparent association between intravenous magnesium and myocardial infarction outcomes. This small-study effect can be examined using a sensitivity analysis, which assesses the contribution of each included study to the final meta-analysis result. In particular, when heterogeneity is suspected in the study methods or results, changing certain data or analytical methods makes it possible to verify whether the results are robust to those changes and to examine the causes of any differences [34].
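A leave-one-out analysis is one common way to carry out such a sensitivity analysis: the meta-analysis is repeated with each study omitted in turn, and large shifts in the pooled estimate point to influential studies. In this sketch, fixed-effect inverse-variance pooling is used for brevity as an assumption; the hypothetical `pool` parameter allows another pooling function (for example, a random-effect one) to be substituted.

```python
def pool_inverse_variance(estimates, variances):
    """Fixed-effect inverse-variance pooled estimate (used here for simplicity)."""
    weights = [1 / v for v in variances]
    return sum(w * y for w, y in zip(weights, estimates)) / sum(weights)


def leave_one_out(estimates, variances, pool=pool_inverse_variance):
    """Re-pool the data once per study, omitting that study each time.

    Returns a list whose i-th entry is the pooled estimate without study i;
    large shifts flag studies with a strong influence on the overall result.
    """
    estimates, variances = list(estimates), list(variances)
    results = []
    for i in range(len(estimates)):
        rest_y = estimates[:i] + estimates[i + 1:]
        rest_v = variances[:i] + variances[i + 1:]
        results.append(pool(rest_y, rest_v))
    return results


print(leave_one_out([0.0, 0.0, 1.0], [1.0, 1.0, 1.0]))  # [0.5, 0.5, 0.0]
```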

Heterogeneity

A homogeneity test examines whether the variation among the effect sizes calculated from several studies is greater than would be expected from sampling error alone; in other words, it tests whether the studies share the same effect size. Three types of homogeneity assessment can be used: 1) the forest plot, 2) Cochran’s Q test (chi-squared), and 3) Higgins’ I² statistic. In the forest plot, as shown in Fig. 4, greater overlap between the confidence intervals indicates greater homogeneity. For the Q statistic, when the P value of the chi-squared test, calculated from the forest plot in Fig. 4, is less than 0.1, statistical heterogeneity is considered present and a random-effect model can be used. Finally, I² can be used [35].

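I² is calculated from Cochran’s Q as

$$I^2 = \frac{Q - df}{Q} \times 100\%$$

where df is the degrees of freedom of Q (the number of studies minus 1); negative values are set to 0, so I² ranges from 0 to 100%. A value below 25% is considered to indicate strong homogeneity, a value of 50% moderate heterogeneity, and a value above 75% strong heterogeneity.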
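Q and I² can be computed directly from the study estimates and their variances. This minimal sketch returns Q, its degrees of freedom, and I² in percent; it does not compute the P value of the Q test, which would need the chi-squared distribution (for example, scipy.stats.chi2.sf(q, df) if SciPy is available, an extra dependency not used here). The example data are hypothetical.

```python
def cochran_q_and_i2(estimates, variances):
    """Cochran's Q, its degrees of freedom, and Higgins' I^2 (in percent)."""
    w = [1 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0  # negative values truncated to 0
    return q, df, i2


q, df, i2 = cochran_q_and_i2([0.0, 1.0], [0.1, 0.1])
print(q, df, round(i2, 1))  # 5.0 1 80.0
```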

Even when the data cannot be shown to be homogeneous, a fixed-effect model can be used while ignoring the heterogeneity, or all the study results can be presented individually without being combined. In many cases, however, a random-effect model is applied, as described above, and a subgroup analysis or meta-regression analysis is performed to explain the heterogeneity. In a subgroup analysis, the data are divided into subgroups that are expected to be homogeneous, and these subgroups are analyzed separately. Subgroup analyses need to be planned in the protocol before starting the meta-analysis. A meta-regression analysis is similar to an ordinary regression analysis, except that the heterogeneity between studies is modeled. It regresses the study effect estimates on study-level covariates, so it is usually not considered when fewer than 10 studies are available. Both univariate and multivariate regression analyses can be considered.
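A univariate meta-regression can be sketched as a weighted least-squares regression of the study estimates on one study-level covariate, with weights equal to 1/variance. This is a simplified fixed-effect version, assumed here for illustration: a random-effect meta-regression would also estimate a residual between-study variance and add it to each study’s variance, which this sketch omits. `meta_regression_fixed` is a hypothetical name, and the covariate values are hypothetical.

```python
import math


def meta_regression_fixed(estimates, variances, covariate):
    """Univariate fixed-effect meta-regression by weighted least squares.

    Weights are 1/variance. Returns (intercept, slope, se_slope); the slope
    describes how the effect estimate changes per unit of the study-level covariate.
    """
    w = [1 / v for v in variances]
    sum_w = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, covariate)) / sum_w
    y_bar = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, covariate))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, covariate, estimates))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    se_slope = math.sqrt(1 / sxx)  # valid when the variances are treated as known
    return intercept, slope, se_slope


intercept, slope, se_slope = meta_regression_fixed([1.0, 3.0, 5.0], [1.0, 1.0, 1.0], [0.0, 1.0, 2.0])
print(intercept, slope)  # 1.0 2.0
```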

Publication bias

Publication bias is the most common type of reporting bias in meta-analyses. It refers to the distortion of meta-analysis outcomes caused by statistically significant studies being more likely to be published than non-significant studies. To test for publication bias, a funnel plot can be used first (Fig. 5). Studies are plotted on a scatter plot with effect size on the x-axis and precision or total sample size on the y-axis. If the points form an upside-down funnel shape, with a broad base that narrows toward the top of the plot, publication bias is unlikely (Fig. 5A) [29, 36]. If, on the other hand, the plot is asymmetric, with no points on one side of the graph, publication bias can be suspected (Fig. 5B). Second, to test for publication bias statistically, Begg and Mazumdar’s rank correlation test 8) [37] or Egger’s test 9) [29] can be used. If publication bias is detected, the trim-and-fill method 10) can be used to correct for it [38]. Fig. 6 displays results showing publication bias in Egger’s test, which was then corrected with the trim-and-fill method in Comprehensive Meta-Analysis software (Biostat, USA).

Fig. 5. Funnel plot showing effect size on the x-axis and sample size on the y-axis as a scatter plot. (A) Funnel plot without publication bias: the points are spread broadly at the bottom and narrowly at the top. (B) Funnel plot with publication bias: the points are distributed asymmetrically.

Fig. 6. Funnel plot adjusted using the trim-and-fill method. White circles: comparisons included. Black circles: comparisons imputed using the trim-and-fill method. White diamond: pooled observed log risk ratio. Black diamond: pooled imputed log risk ratio.
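Egger’s test (footnote 9) regresses each study’s standard normal deviate (effect estimate divided by its standard error) on its precision (1/standard error) by ordinary least squares; an intercept far from 0 suggests funnel-plot asymmetry. The sketch below returns the intercept, its standard error, and the t statistic; the P value would come from a t distribution with k − 2 degrees of freedom (for example, via scipy.stats.t.sf, not used here). `egger_test` is a hypothetical name and the example data are hypothetical.

```python
import math


def egger_test(estimates, std_errors):
    """Egger's regression: standard normal deviate (y/se) regressed on precision (1/se).

    Returns (intercept, se_intercept, t); an intercept far from 0 suggests
    funnel-plot asymmetry. Requires at least three studies.
    """
    k = len(estimates)
    if k < 3:
        raise ValueError("Egger's test needs at least three studies")
    x = [1 / se for se in std_errors]  # precision
    z = [y / se for y, se in zip(estimates, std_errors)]  # standard normal deviate
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z)) / sxx
    intercept = z_bar - slope * x_bar
    resid_var = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z)) / (k - 2)
    se_intercept = math.sqrt(resid_var * (1 / k + x_bar**2 / sxx))
    t = intercept / se_intercept if se_intercept > 0 else math.nan
    return intercept, se_intercept, t


# Hypothetical data in which the smaller (less precise) studies report larger effects
intercept, se_int, t_stat = egger_test([0.9, 0.5, 0.4, 0.2], [0.5, 0.25, 0.2, 0.1])
print(round(intercept, 2), round(t_stat, 2))
```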

Result Presentation

When reporting the results of a systematic review or meta-analysis, the analytical content and methods should be described in detail. First, a flowchart of the literature search and of the selection process according to the inclusion/exclusion criteria is presented. Second, a table of the characteristics of the included studies is shown, along with a table of information related to the quality of evidence, such as GRADE (Table 4). Third, the results of the data analysis are shown in a forest plot and a funnel plot. Fourth, if the results involve dichotomous data, NNT values can be reported, as described above.

Table 4. The GRADE Evidence Quality for Each Outcome

N: number of studies, ROB: risk of bias, PON: postoperative nausea, POV: postoperative vomiting, PONV: postoperative nausea and vomiting, CI: confidence interval, RR: risk ratio, AR: absolute risk.

When Review Manager software (The Cochrane Collaboration, UK) is used for the analysis, two types of P values are given. The first is the P value from the z-test, which tests the null hypothesis that the intervention has no effect. The second is the P value from the chi-squared test, which tests the null hypothesis that there is no heterogeneity. The statistical result for the intervention effect, generally considered the most important result in a meta-analysis, is the z-test P value.
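The z-test P value for the intervention effect can be reproduced from the pooled estimate and its standard error under a normal approximation. This sketch assumes the estimate is on an additive scale where 0 means no effect (such as a log OR or MD); `z_test` is a hypothetical helper, it is not taken from Review Manager’s source code, and the example values are hypothetical.

```python
from statistics import NormalDist


def z_test(estimate: float, std_error: float) -> tuple[float, float]:
    """Two-sided z-test of the null hypothesis of no effect (estimate = 0 on an additive scale)."""
    z = estimate / std_error
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


z, p = z_test(-0.25, 0.15)  # hypothetical pooled log OR and its standard error
print(f"z = {z:.2f}, P = {p:.3f}")
```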

A common mistake when reporting results is to describe a z-test P value greater than 0.05 as showing “no statistical significance” or “no difference.” When evaluating statistical significance in a meta-analysis, a P value lower than 0.05 can be described as “a significant difference in the effects of the two treatment methods.” However, a non-significant P value can occur whether or not there is a true difference between the two treatment methods. In such a situation, it is better to state that “there was no strong evidence for an effect,” and to present the P value and confidence intervals. Another common mistake is to assume that a smaller P value indicates a larger effect. In meta-analyses of large-scale studies, the P value is affected more by the number of studies and patients included than by the magnitude of the effect; therefore, care should be taken when interpreting the results of a meta-analysis.

When performing a systematic literature review or meta-analysis, if the quality of studies is not properly evaluated or proper methodology is not strictly applied, the results can be biased and the conclusions incorrect. However, when properly implemented, systematic reviews and meta-analyses can yield powerful results that would otherwise usually require large-scale RCTs, which are difficult to perform as individual studies. As understanding of evidence-based medicine grows and its importance is better appreciated, the number of systematic reviews and meta-analyses will keep increasing. However, indiscriminately accepting the results of all these meta-analyses can be dangerous; we therefore recommend that their results be received critically, on the basis of an accurate understanding of their methods.

1) http://www.ohri.ca

2) http://methods.cochrane.org/bias/assessing-risk-bias-included-studies

3) The inverse variance-weighted estimation method is useful when there are few studies, each with a large sample size.

4) The Mantel-Haenszel estimation method is useful when there are many studies, each with a small sample size.

5) The Peto estimation method is useful if the event rate is low or one of the two groups shows zero incidence.

6) The most popular and simplest statistical method used in Review Manager and Comprehensive Meta-analysis software.

7) An alternative random-effect meta-analysis method that has more adequate error rates than the common DerSimonian and Laird method, especially when the number of studies is small. However, even with the Hartung-Knapp-Sidik-Jonkman method, extra caution is needed when there are fewer than five studies of very unequal sizes.

8) The Begg and Mazumdar rank correlation test uses the correlation between the ranks of effect sizes and the ranks of their variances [ 37 ].

9) The degree of funnel plot asymmetry as measured by the intercept from the regression of standard normal deviates against precision [ 29 ].

10) If there are more small studies on one side of the funnel plot, studies on the other side are presumed to have been suppressed. Trimming the asymmetric studies yields the adjusted effect size; the trimmed studies are then added back into the analysis together with a mirror image of each, which corrects the variance of the effects.

1.2.2 What is a systematic review?

A systematic review attempts to collate all empirical evidence that fits pre-specified eligibility criteria in order to answer a specific research question. It uses explicit, systematic methods that are selected with a view to minimizing bias, thus providing more reliable findings from which conclusions can be drawn and decisions made (Antman 1992, Oxman 1993). The key characteristics of a systematic review are:

- a clearly stated set of objectives with pre-defined eligibility criteria for studies;

- an explicit, reproducible methodology;

- a systematic search that attempts to identify all studies that would meet the eligibility criteria;

- an assessment of the validity of the findings of the included studies, for example through the assessment of risk of bias; and

- a systematic presentation, and synthesis, of the characteristics and findings of the included studies.

Many systematic reviews contain meta-analyses. Meta-analysis is the use of statistical methods to summarize the results of independent studies (Glass 1976). By combining information from all relevant studies, meta-analyses can provide more precise estimates of the effects of health care than those derived from the individual studies included within a review (see Chapter 9, Section 9.1.3). They also facilitate investigations of the consistency of evidence across studies, and the exploration of differences across studies.

Introduction to Systematic Reviews


What is a Systematic Review?

Knowledge synthesis is a term used to describe the method of synthesizing results from individual studies and interpreting these results within the larger body of knowledge on the topic. It requires highly structured, transparent and reproducible methods using quantitative and/or qualitative evidence. Systematic reviews, meta-analyses, scoping reviews, rapid reviews, narrative syntheses, and practice guidelines, among others, are all forms of knowledge synthesis.

A systematic review differs from an ordinary literature review in that it uses a comprehensive, methodical, transparent and reproducible search strategy to make its conclusions as unbiased and as close to the truth as possible. The Cochrane Handbook for Systematic Reviews of Interventions defines a systematic review as:

"A systematic review attempts to identify, appraise and synthesize all the empirical evidence that meets pre-specified eligibility criteria to answer a given research question. Researchers conducting systematic reviews use explicit methods aimed at minimizing bias, in order to produce more reliable findings that can be used to inform decision making [...] This involves: the a priori specification of a research question; clarity on the scope of the review and which studies are eligible for inclusion; making every effort to find all relevant research and to ensure that issues of bias in included studies are accounted for; and analysing the included studies in order to draw conclusions based on all the identified research in an impartial and objective way." ( Chapter 1: Starting a review )


- Last Updated: Nov 1, 2023 2:50 PM

- URL: https://laneguides.stanford.edu/systematicreviews


What is a Systematic Review?

A systematic review attempts to collate all empirical evidence that fits pre-specified eligibility criteria in order to answer a specific research question. The key characteristics of a systematic review are:

- a clearly defined question with inclusion and exclusion criteria;

- a rigorous and systematic search of the literature;

- two phases of screening (blinded, at least two independent screeners);

- data extraction and management;

- analysis and interpretation of results;

- risk of bias assessment of included studies;

- reporting for publication.

Medical Center Library & Archives Presentations

The following presentation is a recording of the Getting Started with Systematic Reviews workshop (4/2022), offered by the Duke Medical Center Library & Archives. A Duke NetID and password are required to access the tutorial via Warpwire.

- Last Updated: Mar 20, 2024 2:21 PM

- URL: https://guides.mclibrary.duke.edu/sysreview

- University of Texas Libraries

Systematic Reviews & Evidence Synthesis Methods

What is a Systematic Review?

A systematic review gathers, assesses, and synthesizes all available empirical research on a specific question using a comprehensive search method, with the aim of minimizing bias.

Or, put another way:

A systematic review begins with a specific research question. Authors of the review gather and evaluate all experimental studies that address the question. Bringing together the findings of these separate studies allows the review authors to draw new conclusions from what has been learned.

*The key characteristics of a systematic review are:

- A clearly stated set of objectives with pre-defined eligibility criteria for studies;

- An explicit, reproducible methodology;

- A systematic search that attempts to identify all relevant research;

- A critical appraisal of the included studies;

- A clear and objective synthesis and presentation of the characteristics and findings of the included studies.

*Lasserson T, Thomas J, Higgins JPT. Chapter 1: Starting a review. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook.

What is the difference between an evidence synthesis and a systematic review? A systematic review is a type of evidence synthesis, as is any literature review. For the various types of evidence syntheses and literature reviews, see the Types of Reviews page of this guide.

Systematic reviews are usually done as a team project, requiring cooperation and a commitment of (lots of) time and effort over an extended period. You will need at least 3 people and, depending on the scope of the project and the size of the database result sets, you should plan for 6-24 months from start to completion.

Things to Know Before You Begin . . .

Run exploratory searches on the topic to get a sense of the plausibility of your project.

A systematic review requires a research question that is already well covered in the primary literature. That is, if there has been little previous work on the topic, there will be little to analyze and conclusions will be hard to draw.

A narrowly-focused research question may add little to the knowledge of the field of study.

Make sure that no one else has already 1) written a recent systematic review on your topic or 2) started a similar systematic review project. Instructions on how to check are provided in this guide.

Team members will need to use research databases to search the literature. If these databases are neither available through library subscriptions nor freely available, using them may require payment or travel. Database recommendations are provided in this guide.

It is extremely important to develop a protocol for your project. Guidance on writing one is provided in this guide.

Tools such as a reference manager and a screening tool will save time.

Lynn Bostwick: Nursing, Nutrition, Pharmacy, Public Health

Meryl Brodsky: Communication and Information Studies

Hannah Chapman Tripp: Biology, Neuroscience

Carolyn Cunningham: Human Development & Family Sciences, Psychology, Sociology

Larayne Dallas: Engineering

Liz DeHart: Marine Science

Grant Hardaway: Educational Psychology, Kinesiology & Health Education, Social Work

Janelle Hedstrom: Special Education, Curriculum & Instruction, Ed Leadership & Policy

Susan Macicak: Linguistics

Imelda Vetter: Dell Medical School

- Last Updated: Apr 9, 2024 8:57 PM

- URL: https://guides.lib.utexas.edu/systematicreviews

Systematic Reviews (in the Health Sciences)

A systematic review is a research method that attempts to identify, appraise and synthesize all the empirical evidence that meets pre-specified eligibility criteria to answer a specific research question. Researchers conducting systematic reviews use explicit, systematic methods that are selected with a view to minimizing bias, to produce more reliable findings to inform decision making.

Systematic reviews cannot be done alone. They should be conducted by a team of researchers, at least one of whom has significant knowledge of the research conducted in the subject area. Systematic reviews follow established standards and methodologies for conducting and reporting systematic reviews.

- What is a Systematic Review? (About Cochrane Reviews)

Not all questions are appropriate for a systematic review. Depending on your question, another type of review, such as a scoping review or literature review, may be more appropriate.

A systematic review research question should be a well-formulated, clearly defined clinical question, commonly framed in PICO format.

- Decision Tree: What Type of Review is Right for You?

- Interactive Tool: Which Review is Right for You?

- PICO: Asking Focused Questions

- A typology of reviews: an analysis of 14 review types and associated methodologies.

- Last Updated: Apr 1, 2024 3:35 PM

- URL: https://libguides.usc.edu/healthsciences/systematicreviews

Cochrane Training

Chapter 1: Starting a review

Toby J Lasserson, James Thomas, Julian PT Higgins

Key Points:

- Systematic reviews address a need for health decision makers to be able to access high quality, relevant, accessible and up-to-date information.

- Systematic reviews aim to minimize bias through the use of pre-specified research questions and methods that are documented in protocols, and by basing their findings on reliable research.

- Systematic reviews should be conducted by a team that includes both domain expertise and methodological expertise, and whose members are free of potential conflicts of interest.

- People who might make – or be affected by – decisions around the use of interventions should be involved in important decisions about the review.

- Good data management, project management and quality assurance mechanisms are essential for the completion of a successful systematic review.

Cite this chapter as: Lasserson TJ, Thomas J, Higgins JPT. Chapter 1: Starting a review. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook.

1.1 Why do a systematic review?

Systematic reviews were developed out of a need to ensure that decisions affecting people’s lives can be informed by an up-to-date and complete understanding of the relevant research evidence. With the volume of research literature growing at an ever-increasing rate, it is impossible for individual decision makers to assess this vast quantity of primary research to enable them to make the most appropriate healthcare decisions that do more good than harm. By systematically assessing this primary research, systematic reviews aim to provide an up-to-date summary of the state of research knowledge on an intervention, diagnostic test, prognostic factor or other health or healthcare topic. Systematic reviews address the main problem with ad hoc searching and selection of research, namely that of bias. Just as primary research studies use methods to avoid bias, so should summaries and syntheses of that research.

A systematic review attempts to collate all the empirical evidence that fits pre-specified eligibility criteria in order to answer a specific research question. It uses explicit, systematic methods that are selected with a view to minimizing bias, thus providing more reliable findings from which conclusions can be drawn and decisions made (Antman et al 1992, Oxman and Guyatt 1993). Systematic review methodology, pioneered and developed by Cochrane, sets out a highly structured, transparent and reproducible methodology (Chandler and Hopewell 2013). This involves: the a priori specification of a research question; clarity on the scope of the review and which studies are eligible for inclusion; making every effort to find all relevant research and to ensure that issues of bias in included studies are accounted for; and analysing the included studies in order to draw conclusions based on all the identified research in an impartial and objective way.

This Handbook is about systematic reviews on the effects of interventions, and specifically about methods used by Cochrane to undertake them. Cochrane Reviews use primary research to generate new knowledge about the effects of an intervention (or interventions) used in clinical, public health or policy settings. They aim to provide users with a balanced summary of the potential benefits and harms of interventions and give an indication of how certain they can be of the findings. They can also compare the effectiveness of different interventions with one another and so help users to choose the most appropriate intervention in particular situations. The primary purpose of Cochrane Reviews is therefore to inform people making decisions about health or health care.

Systematic reviews are important for other reasons. New research should be designed or commissioned only if it does not unnecessarily duplicate existing research (Chalmers et al 2014). Therefore, a systematic review should typically be undertaken before embarking on new primary research. Such a review will identify current and ongoing studies, as well as indicate where specific gaps in knowledge exist, or evidence is lacking; for example, where existing studies have not used outcomes that are important to users of research (Macleod et al 2014). A systematic review may also reveal limitations in the conduct of previous studies that might be addressed in the new study or studies.

Systematic reviews are important, often rewarding and, at times, exciting research projects. They offer the opportunity for authors to make authoritative statements about the extent of human knowledge in important areas and to identify priorities for further research. They sometimes cover issues high on the political agenda and receive attention from the media. Conducting research with these impacts is not without its challenges, however, and completing a high-quality systematic review is often demanding and time-consuming. In this chapter we introduce some of the key considerations for potential review authors who are about to start a systematic review.

1.2 What is the review question?

Getting the research question right is critical for the success of a systematic review. Review authors should ensure that the review addresses a question that is important to those who are expected to use and act upon its conclusions.

We discuss the formulation of questions in detail in Chapter 2. For a question about the effects of an intervention, the PICO approach is usually used; PICO is an acronym for Population, Intervention, Comparison(s) and Outcome. Reviews may have additional questions, for example about how interventions were implemented, economic issues, equity issues or patient experience.
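To make the four PICO elements concrete, here is a minimal Python sketch that holds a review question as a small data structure and flags any element that has not been specified yet. The class name `PicoQuestion`, its fields, and the example question are hypothetical illustrations. They are not part of Cochrane guidance or of any review tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PicoQuestion:
    """A review question split into PICO elements (hypothetical structure)."""
    population: str
    intervention: str
    comparisons: List[str] = field(default_factory=list)
    outcomes: List[str] = field(default_factory=list)

    def missing_elements(self) -> List[str]:
        """Return the names of PICO elements that are still empty."""
        missing = []
        if not self.population.strip():
            missing.append("population")
        if not self.intervention.strip():
            missing.append("intervention")
        if not self.comparisons:
            missing.append("comparisons")
        if not self.outcomes:
            missing.append("outcomes")
        return missing

    def as_sentence(self) -> str:
        """Render the question as one readable sentence."""
        comparators = " or ".join(self.comparisons) if self.comparisons else "any comparator"
        outcomes = ", ".join(self.outcomes) if self.outcomes else "unspecified outcomes"
        return (f"In {self.population}, what are the effects of {self.intervention} "
                f"compared with {comparators} on {outcomes}?")


if __name__ == "__main__":
    # Hypothetical example question, for illustration only.
    q = PicoQuestion("adults with condition X", "intervention A",
                     ["placebo"], ["mortality", "adverse events"])
    print(q.as_sentence())
    print(q.missing_elements())
```

A structure like this can be filled in while the question is being formulated, so that each element is stated explicitly before any studies are examined.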

To ensure that the review addresses a relevant question in a way that benefits users, it is important to ensure wide input. In most cases, question formulation should therefore be informed by people with various relevant – but potentially different – perspectives (see Chapter 2, Section 2.4).

1.3 Who should do a systematic review?

Systematic reviews should be undertaken by a team. Indeed, Cochrane will not publish a review that is proposed to be undertaken by a single person. Working as a team not only spreads the effort, but ensures that tasks such as the selection of studies for eligibility, data extraction and rating the certainty of the evidence will be performed by at least two people independently, minimizing the likelihood of errors. First-time review authors are encouraged to work with others who are experienced in the process of systematic reviews and to attend relevant training.
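Independent duplicate assessment helps only when the two sets of judgements are compared, not silently merged. The minimal Python sketch below assumes that each screener's eligibility decisions are stored as a mapping from a record identifier to `"include"` or `"exclude"`. The function name `reconcile_screening` is hypothetical. Records on which both screeners agree are accepted. Every disagreement, and every record that only one person has screened, is set aside for resolution.

```python
from typing import Dict, List, Tuple

Decision = str  # assumed values: "include" or "exclude"


def reconcile_screening(
    screener_a: Dict[str, Decision],
    screener_b: Dict[str, Decision],
) -> Tuple[Dict[str, Decision], List[str]]:
    """Compare two independent screeners' decisions record by record.

    Returns (agreed, conflicts): decisions both screeners made identically,
    and record IDs needing resolution because the screeners disagreed or
    one of them has not screened the record yet.
    """
    agreed: Dict[str, Decision] = {}
    conflicts: List[str] = []
    for record_id in sorted(set(screener_a) | set(screener_b)):
        a = screener_a.get(record_id)
        b = screener_b.get(record_id)
        if a is not None and a == b:
            agreed[record_id] = a
        else:
            conflicts.append(record_id)
    return agreed, conflicts
```

How the conflicting records are then resolved (for example, by discussion between the two reviewers) is a methodological choice. Like other review methods, it is best documented in advance.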

Review teams must include expertise in the topic area under review. Topic expertise should not be overly narrow, to ensure that all relevant perspectives are considered. Perspectives from different disciplines can help to avoid assumptions or terminology stemming from an over-reliance on a single discipline. Review teams should also include expertise in systematic review methodology, including statistical expertise.

Arguments have been made that methodological expertise is sufficient to perform a review, and that content expertise should be avoided because of the risk of preconceptions about the effects of interventions (Gøtzsche and Ioannidis 2012). However, it is important that both topic and methodological expertise are present to ensure a good mix of skills, knowledge and objectivity, because topic expertise provides important insight into the implementation of the intervention(s), the nature of the condition being treated or prevented, the relationships between outcomes measured, and other factors that may have an impact on decision making.

A Cochrane Review should represent an independent assessment of the evidence, and avoiding financial and non-financial conflicts of interest often requires careful management. It will be important to consider whether there are any relevant interests that may constitute a conflict of interest. There are situations where employment, holding of patents and other financial support should prevent people from joining an author team. Funding of Cochrane Reviews by commercial organizations with an interest in the outcome of the review is not permitted. To ensure that any issues are identified early in the process, authors planning Cochrane Reviews should consult the Conflict of Interest Policy. Authors should make complete declarations of interest before registration of the review, and refresh these annually thereafter until publication and just prior to publication of the protocol and the review. For authors of review updates, this must be done at the time of the decision to update the review, annually thereafter until publication, and just prior to publication. Authors should also update declarations of interest at any point when their circumstances change.

1.3.1 Involving consumers and other stakeholders

Because the priorities of decision makers and consumers may be different from those of researchers, it is important that review authors consider carefully what questions are important to these different stakeholders. Systematic reviews are more likely to be relevant to a broad range of end users if they are informed by the involvement of people with a range of experiences, in terms of both the topic and the methodology (Thomas et al 2004, Rees and Oliver 2017). Engaging consumers and other stakeholders, such as policy makers, research funders and healthcare professionals, increases relevance, promotes mutual learning, improves uptake and decreases research waste.

Mapping out all potential stakeholders specific to the review question is a helpful first step to considering who might be invited to be involved in a review. Stakeholders typically include: patients and consumers; consumer advocates; policy makers and other public officials; guideline developers; professional organizations; researchers; funders of health services and research; healthcare practitioners, and, on occasion, journalists and other media professionals. The balance of seniority, credibility within the given field, and diversity should be considered. Review authors should also take account of the needs of resource-poor countries and regions in the review process (see Chapter 16) and invite appropriate input on the scope of the review and the questions it will address.

It is established good practice to ensure that consumers are involved and engaged in health research, including systematic reviews. Cochrane uses the term ‘consumers’ to refer to a wide range of people, including patients or people with personal experience of a healthcare condition, carers and family members, representatives of patients and carers, service users and members of the public. In 2017, a Statement of Principles for consumer involvement in Cochrane was agreed. This seeks to change the culture of research practice to one where both consumers and other stakeholders are joint partners in research from planning, conduct, and reporting to dissemination. Systematic reviews that have had consumer involvement should be more directly applicable to decision makers than those that have not (see online Chapter II).

1.3.2 Working with consumers and other stakeholders

Methods for working with consumers and other stakeholders include surveys, workshops, focus groups and involvement in advisory groups. Decisions about what methods to use will typically be based on resource availability, but review teams should be aware of the merits and limitations of such methods. Authors will need to decide who to involve and how to provide adequate support for their involvement. This can include financial reimbursement, the provision of training, and clearly stating expectations of involvement, possibly in the form of terms of reference.

While a small number of consumers or other stakeholders may be part of the review team and become co-authors of the subsequent review, it is sometimes important to bring in a wider range of perspectives and to recognize that not everyone has the capacity for, or interest in, becoming an author. Advisory groups offer a convenient approach to involving consumers and other relevant stakeholders, especially for topics in which opinions differ. Important points to ensure successful involvement include the following.

- The review team should co-ordinate the input of the advisory group to inform key review decisions.

- The advisory group’s input should continue throughout the systematic review process to ensure relevance of the review to end users is maintained.

- Advisory group membership should reflect the breadth of the review question, and consideration should be given to involving vulnerable and marginalized people (Steel 2004) to ensure that conclusions on the value of the interventions are well-informed and applicable to all groups in society (see Chapter 16).

Templates such as terms of reference, job descriptions, or person specifications for an advisory group help to ensure clarity about the task(s) required and are available from INVOLVE. The website also gives further information on setting up and organizing advisory groups. See also the Cochrane training website for further resources to support consumer involvement.

1.4 The importance of reliability

Systematic reviews aim to be an accurate representation of the current state of knowledge about a given issue. As understanding improves, the review can be updated. Nevertheless, it is important that the review itself is accurate at the time of publication. There are two main reasons for this imperative for accuracy. First, health decisions that affect people’s lives are increasingly taken based on systematic review findings. Current knowledge may be imperfect, but decisions will be better informed when taken in the light of the best of current knowledge. Second, systematic reviews form a critical component of legal and regulatory frameworks; for example, drug licensing or insurance coverage. Here, systematic reviews also need to hold up as auditable processes for legal examination. As systematic reviews need both to be correct and to be seen to be correct, detailed evidence-based methods have been developed to guide review authors as to the most appropriate procedures to follow, and what information to include in their reports to aid auditability.

1.4.1 Expectations for the conduct and reporting of Cochrane Reviews

Cochrane has developed methodological expectations for the conduct, reporting and updating of systematic reviews of interventions (MECIR) and their plain language summaries (Plain Language Expectations for Authors of Cochrane Summaries; PLEACS). Developed collaboratively by methodologists and Cochrane editors, they are intended to describe the desirable attributes of a Cochrane Review. The expectations are not all relevant at the same stage of review conduct, so care should be taken to identify those that are relevant at specific points during the review. Different methods should be used at different stages of the review in terms of the planning, conduct, reporting and updating of the review.

Each expectation has a title, a rationale and an elaboration. For the purposes of publication of a review with Cochrane, each has the status of either ‘mandatory’ or ‘highly desirable’. Items described as mandatory are expected to be applied, and if they are not then an appropriate justification should be provided; failure to implement such items may be used as a basis for deciding not to publish a review in the Cochrane Database of Systematic Reviews (CDSR). Items described as highly desirable should generally be implemented, but there are reasonable exceptions and justifications are not required.
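The difference between the two statuses can be expressed as a simple check: a mandatory item that was not applied needs a justification, whereas a highly desirable item may be left out without one. The sketch below assumes a hypothetical `Expectation` record with placeholder item titles. It is not a Cochrane tool and does not reproduce actual MECIR items.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Expectation:
    """One expectation and how a review handled it (hypothetical record)."""
    title: str
    status: str                          # assumed: "mandatory" or "highly desirable"
    applied: bool
    justification: Optional[str] = None  # reason given if not applied


def unjustified_mandatory(expectations: List[Expectation]) -> List[str]:
    """Titles of mandatory items that were not applied and lack a justification.

    Highly desirable items are never flagged, since omitting them
    does not require a justification.
    """
    return [
        e.title
        for e in expectations
        if e.status == "mandatory" and not e.applied and not e.justification
    ]
```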

All MECIR expectations for the conduct of a review are presented in the relevant chapters of this Handbook. Expectations for reporting of completed reviews (including PLEACS) are described in online Chapter III. The recommendations provided in the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) Statement have been incorporated into the Cochrane reporting expectations, ensuring compliance with the PRISMA recommendations and summarizing attributes of reporting that should allow a full assessment of the methods and findings of the review (Moher et al 2009).

1.5 Protocol development

Preparing a systematic review is complex and involves many judgements. To minimize the potential for bias in the review process, these judgements should be made as far as possible in ways that do not depend on the findings of the studies included in the review. Review authors’ prior knowledge of the evidence may, for example, influence the definition of a systematic review question, the choice of criteria for study eligibility, or the pre-specification of intervention comparisons and outcomes to analyse. It is important that the methods to be used should be established and documented in advance (see MECIR Box 1.5.a, MECIR Box 1.5.b and MECIR Box 1.5.c).

Publication of a protocol for a review that is written without knowledge of the available studies reduces the impact of review authors’ biases, promotes transparency of methods and processes, reduces the potential for duplication, allows peer review of the planned methods before they have been completed, and offers an opportunity for the review team to plan resources and logistics for undertaking the review itself. All chapters in the Handbook should be consulted when drafting the protocol. Since systematic reviews are by their nature retrospective, an element of knowledge of the evidence is often inevitable. This is one reason why non-content experts such as methodologists should be part of the review team (see Section 1.3). Two exceptions to the retrospective nature of a systematic review are a meta-analysis of a prospectively planned series of trials and some living systematic reviews, as described in Chapter 22.

The review question should determine the methods used in the review, and not vice versa. The question may concern a relatively straightforward comparison of one treatment with another; or it may necessitate plans to compare different treatments as part of a network meta-analysis, or assess differential effects of an intervention in different populations or delivered in different ways.

The protocol sets out the context in which the review is being conducted. It presents an opportunity to develop ideas that are foundational for the review. This concerns, most explicitly, definition of the eligibility criteria such as the study participants and the choice of comparators and outcomes. The eligibility criteria may also be defined following the development of a logic model (or an articulation of the aspects of an extant logic model that the review is addressing) to explain how the intervention might work (see Chapter 2, Section 2.5.1).

MECIR Box 1.5.a Relevant expectations for conduct of intervention reviews

A key purpose of the protocol is to make plans to minimize bias in the eventual findings of the review. Reliable synthesis of available evidence requires a planned, systematic approach. Threats to the validity of systematic reviews can come from the studies they include or the process by which reviews are conducted. Biases within the studies can arise from the method by which participants are allocated to the intervention groups, awareness of intervention group assignment, and the collection, analysis and reporting of data. Methods for examining these issues should be specified in the protocol. Review processes can generate bias through a failure to identify an unbiased (and preferably complete) set of studies, and poor quality assurance throughout the review. The availability of research may be influenced by the nature of the results (i.e. reporting bias). To reduce the impact of this form of bias, searching may need to include unpublished sources of evidence (Dwan et al 2013) (MECIR Box 1.5.b).

MECIR Box 1.5.b Relevant expectations for the conduct of intervention reviews

Developing a protocol for a systematic review has benefits beyond reducing bias. Investing effort in designing a systematic review will make the process more manageable and help to inform key priorities for the review. Defining the question, referring to it throughout, and using appropriate methods to address the question focuses the analysis and reporting, ensuring the review is most likely to inform treatment decisions for funders, policy makers, healthcare professionals and consumers. Details of the planned analyses, including investigations of variability across studies, should be specified in the protocol, along with methods for interpreting the results through the systematic consideration of factors that affect confidence in estimates of intervention effect (MECIR Box 1.5.c).

MECIR Box 1.5.c Relevant expectations for conduct of intervention reviews

While the intention should be that a review will adhere to the published protocol, changes in a review protocol are sometimes necessary. This is also the case for a protocol for a randomized trial, which must sometimes be changed to adapt to unanticipated circumstances such as problems with participant recruitment, data collection or event rates. While every effort should be made to adhere to a predetermined protocol, this is not always possible or appropriate. It is important, however, that changes in the protocol should not be made based on how they affect the outcome of the research study, whether it is a randomized trial or a systematic review. Post hoc decisions made when the impact on the results of the research is known, such as excluding selected studies from a systematic review, or changing the statistical analysis, are highly susceptible to bias and should therefore be avoided unless there are reasonable grounds for doing this.

Enabling access to a protocol through publication (all Cochrane Protocols are published in the CDSR) and registration on the PROSPERO register of systematic reviews reduces duplication of effort and research waste, and promotes accountability. Changes to the methods outlined in the protocol should be transparently declared.
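One practical way to make sure no change goes undeclared is to compare what the protocol planned with what the review reports. The Python sketch below does this for outcomes only, and it assumes outcomes are recorded as plain strings. The function name `protocol_changes` and the example outcomes are hypothetical. The same comparison could be applied to other pre-specified elements, such as comparisons or planned analyses.

```python
from typing import Dict, List


def protocol_changes(planned: List[str], reported: List[str]) -> Dict[str, List[str]]:
    """List outcomes added or dropped relative to the protocol.

    Every item returned is a deviation that should be declared
    (and justified) in the completed review.
    """
    planned_set, reported_set = set(planned), set(reported)
    return {
        "added": sorted(reported_set - planned_set),    # reported but not planned
        "dropped": sorted(planned_set - reported_set),  # planned but not reported
    }


if __name__ == "__main__":
    # Hypothetical outcomes, for illustration only.
    print(protocol_changes(
        planned=["mortality", "pain", "adverse events"],
        reported=["mortality", "pain", "quality of life"],
    ))
```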

This Handbook provides details of the systematic review methods developed or selected by Cochrane. They are intended to address the need for rigour, comprehensiveness and transparency in preparing a Cochrane systematic review. All relevant chapters – including those describing procedures to be followed in the later stages of the review – should be consulted during the preparation of the protocol. A more specific description of the structure of Cochrane Protocols is provided in online Chapter II.

1.6 Data management and quality assurance

Systematic reviews should be replicable, and retaining a record of the inclusion decisions, data collection, transformations or adjustment of data will help to establish a secure and retrievable audit trail. They can be operationally complex projects, often involving large research teams operating in different sites across the world. Good data management processes are essential to ensure that data are not inadvertently lost, facilitating the identification and correction of errors and supporting future efforts to update and maintain the review. Transparent reporting of review decisions enables readers to assess the reliability of the review for themselves.

Review management software, such as Covidence and EPPI-Reviewer, can be used to assist data management and maintain consistent and standardized records of decisions made throughout the review. These tools offer a central repository for review data that can be accessed remotely throughout the world by members of the review team. They record independent assessment of studies for inclusion, risk of bias and extraction of data, enabling checks to be made later in the process if needed. Research has shown that even experienced reviewers make mistakes and disagree with one another on risk-of-bias assessments, so it is particularly important to maintain quality assurance here, despite its cost in terms of author time. As more sophisticated information technology tools begin to be deployed in reviews (see Chapter 4, Section 4.6.6.2 and Chapter 22, Section 22.2.4), it is increasingly apparent that all review data – including the initial decisions about study eligibility – have value beyond the scope of the individual review. For example, review updates can be made more efficient through (semi-) automation when data from the original review are available for machine learning.
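The audit trail described here can be sketched as an append-only log. Each reviewer's decision on each study is recorded with a timestamp and is never overwritten. The minimal Python illustration below works under that assumption. The names `AuditTrail` and `DecisionRecord` are hypothetical and do not reflect how Covidence or EPPI-Reviewer actually store data.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class DecisionRecord:
    """One reviewer's decision on one study at one stage (immutable)."""
    study_id: str
    stage: str          # e.g. "eligibility", "risk of bias", "data extraction"
    reviewer: str
    decision: str
    note: str
    recorded_at: datetime


@dataclass
class AuditTrail:
    """Append-only log: entries are added but never edited or deleted."""
    _entries: List[DecisionRecord] = field(default_factory=list)

    def record(self, study_id: str, stage: str, reviewer: str,
               decision: str, note: str = "") -> DecisionRecord:
        """Append a new decision stamped with the current UTC time."""
        entry = DecisionRecord(study_id, stage, reviewer, decision, note,
                               datetime.now(timezone.utc))
        self._entries.append(entry)
        return entry

    def history(self, study_id: str) -> List[DecisionRecord]:
        """All decisions for one study, in the order they were recorded."""
        return [e for e in self._entries if e.study_id == study_id]
```

Entries are only ever appended, so the full history of decisions on a study stays available for later checking. That property is what makes the record auditable.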

1.7 Chapter information

Authors: Toby J Lasserson, James Thomas, Julian PT Higgins

Acknowledgements: This chapter builds on earlier versions of the Handbook. We would like to thank Ruth Foxlee, Richard Morley, Soumyadeep Bhaumik, Mona Nasser, Dan Fox and Sally Crowe for their contributions to Section 1.3.

Funding: JT is supported by the National Institute for Health Research (NIHR) Collaboration for Leadership in Applied Health Research and Care North Thames at Barts Health NHS Trust. JPTH is a member of the NIHR Biomedical Research Centre at University Hospitals Bristol NHS Foundation Trust and the University of Bristol. JPTH received funding from National Institute for Health Research Senior Investigator award NF-SI-0617-10145. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health.

1.8 References

Antman E, Lau J, Kupelnick B, Mosteller F, Chalmers T. A comparison of results of meta-analyses of randomized control trials and recommendations of clinical experts: treatment for myocardial infarction. JAMA 1992; 268: 240–248.

Chalmers I, Bracken MB, Djulbegovic B, Garattini S, Grant J, Gulmezoglu AM, Howells DW, Ioannidis JP, Oliver S. How to increase value and reduce waste when research priorities are set. Lancet 2014; 383: 156–165.

Chandler J, Hopewell S. Cochrane methods – twenty years experience in developing systematic review methods. Systematic Reviews 2013; 2: 76.

Dwan K, Gamble C, Williamson PR, Kirkham JJ, Reporting Bias Group. Systematic review of the empirical evidence of study publication bias and outcome reporting bias: an updated review. PLoS One 2013; 8: e66844.

Gøtzsche PC, Ioannidis JPA. Content area experts as authors: helpful or harmful for systematic reviews and meta-analyses? BMJ 2012; 345.

Macleod MR, Michie S, Roberts I, Dirnagl U, Chalmers I, Ioannidis JP, Al-Shahi Salman R, Chan AW, Glasziou P. Biomedical research: increasing value, reducing waste. Lancet 2014; 383: 101–104.

Moher D, Liberati A, Tetzlaff J, Altman D, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Medicine 2009; 6: e1000097.

Oxman A, Guyatt G. The science of reviewing research. Annals of the New York Academy of Sciences 1993; 703: 125–133.

Rees R, Oliver S. Stakeholder perspectives and participation in reviews. In: Gough D, Oliver S, Thomas J, editors. An Introduction to Systematic Reviews. 2nd ed. London: Sage; 2017. p. 17–34.

Steel R. Involving marginalised and vulnerable people in research: a consultation document (2nd revision). INVOLVE; 2004.

Thomas J, Harden A, Oakley A, Oliver S, Sutcliffe K, Rees R, Brunton G, Kavanagh J. Integrating qualitative research with trials in systematic reviews. BMJ 2004; 328: 1010–1012.


- Boston University Libraries

Systematic Reviews in the Social Sciences

What is a systematic review? Difference between a systematic review and a literature review


"A systematic review attempts to collate all empirical evidence that fits pre-specified eligibility criteria in order to answer a specific research question. It uses explicit, systematic methods that are selected with a view to minimizing bias, thus providing more reliable findings from which conclusions can be drawn and decisions made (Antman 1992, Oxman 1993). The key characteristics of a systematic review are:

- a clearly stated set of objectives with pre-defined eligibility criteria for studies;

- an explicit, reproducible methodology;

- a systematic search that attempts to identify all studies that would meet the eligibility criteria;

- an assessment of the validity of the findings of the included studies, for example through the assessment of risk of bias; and

- a systematic presentation, and synthesis, of the characteristics and findings of the included studies".

Cochrane Handbook for Systematic Reviews of Interventions (March 2011)

(Original author, Meredith Kirkpatrick, 2021)

Kysh, Lynn (2013): Difference between a systematic review and a literature review. Figshare. https://doi.org/10.6084/m9.figshare.766364.v1


- Last Updated: Sep 1, 2022 2:14 PM

- URL: https://library.bu.edu/systematic-reviews-social-sciences

Systematic Reviews


Definition of Systematic Review

"A systematic review attempts to identify, appraise, and synthesize all the empirical evidence that meets pre-specified eligibility criteria to answer a given research question. Researchers conducting systematic reviews use explicit methods aimed at minimizing bias, in order to produce more reliable findings that can be used to inform decision making."

- About Cochrane Reviews, Cochrane Library

Systematic reviews are part of a larger category of research methodologies known as evidence syntheses or knowledge syntheses. Many types of evidence synthesis exist; the methodologies described in this section are those that the Dana Health Sciences Library is prepared to support and collaborate on.

Review Methods

There are many types of reviews, and choosing the right one can be challenging. The list below provides a general overview of six popular review types. If you are still unsure which one to choose, please try the Right Review tool, which asks you a series of questions to help you determine which review methodology might be suitable for your project. When you are finished, please feel free to discuss the results with your librarian.

Chart adapted from: Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Info Libr J. 2009 Jun;26(2):91-108. doi: 10.1111/j.1471-1842.2009.00848.x.

Standards & Guidance References

Here are some standards and additional research synthesis methods papers to get you started:

Chandler, J., Churchill, R., Higgins, J., Lasserson, T., & Tovey, D. (2013). Methodological standards for the conduct of new Cochrane Intervention Reviews, Version 2.3. Available from: http://www.editorial-unit.cochrane.org/mecir

European Network for Health Technology Assessment. (2019). Guideline: Process of information retrieval for systematic reviews and health technology assessments on clinical effectiveness, Version 2.0, December 2019. Available from: https://www.eunethta.eu/wp-content/uploads/2020/01/EUnetHTA_Guideline_Information_Retrieval_v2-0.pdf

Higgins, J., & Green, S. (Eds.). (2011). Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. Available from: http://handbook.cochrane.org/

IOM (Institute of Medicine). (2011). Finding What Works in Health Care: Standards for Systematic Reviews. Available from: https://www.nap.edu/catalog/13059/finding-what-works-in-health-care-standards-for-systematic-reviews

Liberati, A., Altman, D. G., Tetzlaff, J., Mulrow, C., Gøtzsche, P. C., Ioannidis, J. P., . . . Moher, D. (2009). The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Medicine, 6(7), e1000100. doi: 10.1371/journal.pmed.1000100. Available from: https://journals.plos.org/plosmedicine/article/file?id=10.1371/journal.pmed.1000100&type=printable

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & PRISMA Group. (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Medicine, 6(7), e1000097. doi: 10.1371/journal.pmed.1000097. Available from: https://journals.plos.org/plosmedicine/article/file?id=10.1371/journal.pmed.1000097&type=printable

Moher, D., Shamseer, L., Clarke, M., Ghersi, D., Liberati, A., Petticrew, M., . . . PRISMA-P Group. (2015). Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Systematic Reviews, 4(1), 1. doi: 10.1186/2046-4053-4-1

Rader, T., Mann, M., Stansfield, C., Cooper, C., & Sampson, M. (2013). Methods for documenting systematic review searches: a discussion of common issues. Research Synthesis Methods (first published online 8 October 2013). doi: 10.1002/jrsm.1097. Available from: https://onlinelibrary.wiley.com/doi/epdf/10.1002/jrsm.1097

Rethlefsen, M. L., Murad, M., & Livingston, E. H. (2014). Engaging medical librarians to improve the quality of review articles. JAMA, 312(10), 999-1000. doi: 10.1001/jama.2014.9263. Available from: https://jamanetwork.com/journals/jama/fullarticle/1902238

Shamseer, L., Moher, D., Clarke, M., Ghersi, D., Liberati, A. D., Petticrew, M., . . . PRISMA-P Group. (2015). Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. BMJ, 349, g7647. doi: 10.1136/bmj.g7647. Available from: https://www.bmj.com/content/bmj/349/bmj.g7647.full.pdf

Umscheid, C. A. (2013). A Primer on Performing Systematic Reviews and Meta-analyses. Clinical Infectious Diseases, 57(5), 725-734. doi: 10.1093/cid/cit333. Available from: https://academic.oup.com/cid/article/57/5/725/311245

This website has been adapted from the Northwestern University Galter Health Sciences Library's Systematic Reviews guide.


- Last Updated: Apr 15, 2024 10:01 AM

- URL: https://researchguides.uvm.edu/reviews

- Systematic review

- Open access

- Published: 16 April 2020

A systematic review of empirical studies examining mechanisms of implementation in health

Cara C. Lewis, Meredith R. Boyd, Callie Walsh-Bailey, Aaron R. Lyon, Rinad Beidas, Brian Mittman, Gregory A. Aarons, Bryan J. Weiner & David A. Chambers

Implementation Science, volume 15, Article number: 21 (2020)


Background

Understanding the mechanisms of implementation strategies (i.e., the processes by which strategies produce desired effects) is important for research, to understand why a strategy did or did not achieve its intended effect, and for practice, to ensure that strategies are designed and selected to directly target determinants or barriers. This study is a systematic review that characterizes how mechanisms are conceptualized and measured, how they are studied and evaluated, and how much evidence exists for specific mechanisms.

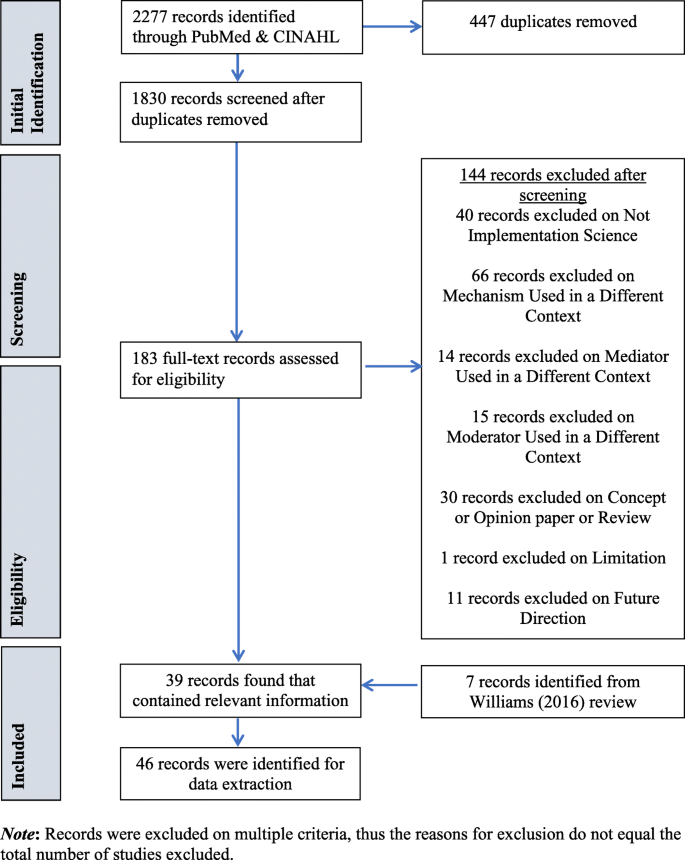

Methods

We systematically searched PubMed and CINAHL Plus for implementation studies published between January 1990 and August 2018 that included the terms “mechanism,” “mediator,” or “moderator.” Two authors independently reviewed titles and abstracts, and then full texts, against our inclusion criteria: empirical studies of implementation in health care contexts. Authors extracted general study information, methods, results, study design, and mechanism-specific information. Study quality was assessed with the Mixed Methods Appraisal Tool.

Results

Search strategies produced 2277 articles, of which 183 were included for full-text review. From these, 39 articles were included for data extraction, and an additional seven articles were hand-entered from the only other review of implementation mechanisms (total = 46 included articles). Most included studies employed quantitative methods (73.9%), while 10.9% were qualitative and 15.2% were mixed methods. Nine unique versions of models testing mechanisms emerged. Fifty-three percent of the studies met half or fewer of the quality indicators. The majority of studies (84.8%) met only three or fewer of the seven criteria stipulated for establishing mechanisms.
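The screening figures and percentages above can be cross-checked with simple arithmetic. In this Python sketch, the funnel numbers (2277 records, 183 full texts, 39 included from the search, 7 hand-entered) come from the abstract, but the per-design counts and the count of studies meeting three or fewer mechanism criteria are assumptions back-calculated from the reported percentages of 46 studies; they are not taken from the paper's tables.

```python
# Screening funnel as reported in the abstract.
records_found = 2277
full_text_reviewed = 183
included_from_search = 39
hand_entered = 7  # from the only other review of implementation mechanisms
total_included = included_from_search + hand_entered

# ASSUMPTION: per-design counts back-calculated from the reported percentages.
design_counts = {"quantitative": 34, "qualitative": 5, "mixed methods": 7}
# ASSUMPTION: back-calculated from the reported 84.8%.
met_three_or_fewer_criteria = 39


def pct(count: int, denominator: int = total_included) -> float:
    """Percentage of the denominator, rounded to one decimal place as in the abstract."""
    return round(100 * count / denominator, 1)


if __name__ == "__main__":
    print(f"excluded at title/abstract stage: {records_found - full_text_reviewed}")
    print(f"excluded at full-text stage: {full_text_reviewed - included_from_search}")
    print(f"total included: {total_included}")
    for design, count in design_counts.items():
        print(f"{design}: {count}/{total_included} = {pct(count)}%")
    print(f"met <= 3 of 7 mechanism criteria: {pct(met_three_or_fewer_criteria)}%")
```

Recomputing percentages from whole-number counts in this way is a quick consistency check on any reported study flow.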

Conclusions