6.894: Interactive Data Visualization

Assignment 2: Exploratory Data Analysis

In this assignment, you will identify a dataset of interest and perform an exploratory analysis to better understand the shape & structure of the data, investigate initial questions, and develop preliminary insights & hypotheses. Your final submission will take the form of a report consisting of captioned visualizations that convey key insights gained during your analysis.

Step 1: Data Selection

First, you will pick a topic area of interest to you and find a dataset that can provide insights into that topic. To streamline the assignment, we've pre-selected a number of datasets for you to choose from.

However, if you would like to investigate a different topic and dataset, you are free to do so. If working with a self-selected dataset, please check with the course staff to ensure it is appropriate for the course. Be advised that data collection and preparation (also known as data wrangling) can be a very tedious and time-consuming process. Be sure you leave sufficient time to conduct exploratory analysis after preparing the data.

After selecting a topic and dataset – but prior to analysis – you should write down an initial set of at least three questions you'd like to investigate.

Step 2: Exploratory Visual Analysis

Next, you will perform an exploratory analysis of your dataset using a visualization tool such as Tableau. You should consider two different phases of exploration.

In the first phase, you should seek to gain an overview of the shape & structure of your dataset. What variables does the dataset contain? How are they distributed? Are there any notable data quality issues? Are there any surprising relationships among the variables? Be sure to also perform "sanity checks" for patterns you expect to see!
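For a first-pass overview outside Tableau, you could use a minimal sketch like the one below. It uses the Python standard library only, and the function name `profile_columns` and the sample weather-style columns are hypothetical. For each variable it lists the number of distinct non-blank values. For columns where every non-blank value parses as a number, it also reports the observed range. Blank cells are simply skipped here; counting them is a separate data quality check.

```python
import csv
import io


def profile_columns(csv_text):
    """Summarize each column: distinct non-blank values and numeric range (if numeric)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    summary = {}
    for col in reader.fieldnames:
        # Short rows yield None for trailing columns; treat those as blank.
        present = [(r[col] or "").strip() for r in rows]
        present = [v for v in present if v != ""]
        try:
            numbers = [float(v) for v in present]
        except ValueError:
            numbers = []  # at least one value is non-numeric: treat the column as text
        summary[col] = {
            "distinct": len(set(present)),
            "numeric_range": (min(numbers), max(numbers)) if numbers else None,
        }
    return summary


sample = "station,date,tmax\nA,2017-01-01,12.5\nA,2017-01-02,\nB,2017-01-01,30.1\n"
for name, stats in profile_columns(sample).items():
    print(name, stats)
```

A column such as `date` that looks numeric to a person but fails to parse is reported as text. That kind of mismatch is worth a sanity check before charting.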

In the second phase, you should investigate your initial questions, as well as any new questions that arise during your exploration. For each question, start by creating a visualization that might provide a useful answer. Then refine the visualization (by adding additional variables, changing sorting or axis scales, filtering or subsetting data, etc.) to develop better perspectives, explore unexpected observations, or sanity check your assumptions. You should repeat this process for each of your questions, but feel free to revise your questions or branch off to explore new questions if the data warrants.

Final Deliverable

Your final submission should take the form of a Google Docs report – similar to a slide show or comic book – that consists of 10 or more captioned visualizations detailing your most important insights. Your "insights" can include important surprises or issues (such as data quality problems affecting your analysis) as well as responses to your analysis questions. To help you gauge the scope of this assignment, see this example report analyzing data about motion pictures. We've annotated and graded this example to help you calibrate for the breadth and depth of exploration we're looking for.

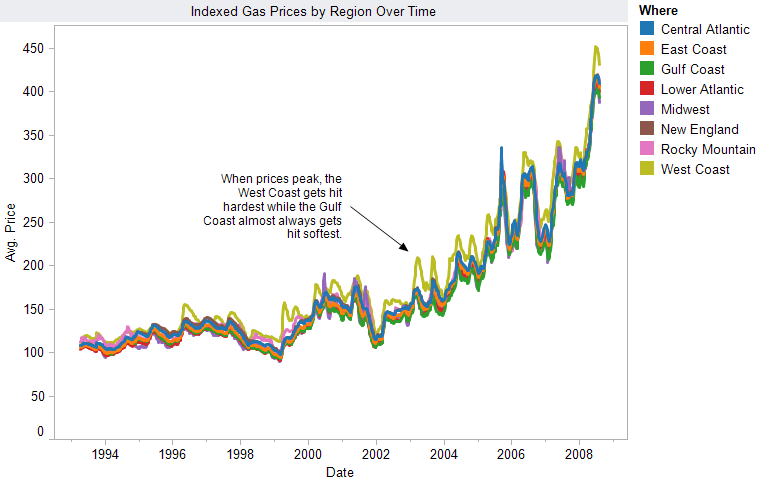

Each visualization image should be a screenshot exported from a visualization tool, accompanied by a title and descriptive caption (1-4 sentences long) describing the insight(s) learned from that view. Provide sufficient detail in each caption that anyone could read through your report and understand what you've learned. You are free, but not required, to annotate your images to draw attention to specific features of the data. You may perform highlighting within the visualization tool itself, or draw annotations on the exported image. To easily export images from Tableau, use the Worksheet > Export > Image... menu item.

The end of your report should include a brief summary of the main lessons learned.

Recommended Data Sources

To get up and running quickly with this assignment, we recommend exploring one of the following provided datasets:

World Bank Indicators, 1960–2017. The World Bank has tracked global human development through indicators such as climate change, economy, education, environment, gender equality, health, and science and technology since 1960. The linked repository contains indicators that have been formatted to facilitate use with Tableau and other data visualization tools. However, you're also welcome to browse and use the original data by indicator or by country. Click on an indicator category or country to download the CSV file.

Chicago Crimes, 2001–present (click Export to download a CSV file). This dataset reflects reported incidents of crime (with the exception of murders where data exists for each victim) that occurred in the City of Chicago from 2001 to present, minus the most recent seven days. Data is extracted from the Chicago Police Department's CLEAR (Citizen Law Enforcement Analysis and Reporting) system.

Daily Weather in the U.S., 2017. This dataset contains daily U.S. weather measurements in 2017, provided by the NOAA Daily Global Historical Climatology Network. This data has been transformed: some weather stations with only sparse measurements have been filtered out. See the accompanying weather.txt for descriptions of each column.

Social Mobility in the U.S. Raj Chetty's group at Harvard studies the factors that contribute to (or hinder) upward mobility in the United States (i.e., will our children earn more than we will?). Their work has been extensively featured in The New York Times. This page lists data from all of their papers, broken down by geographic level or by topic. We recommend downloading data in the CSV/Excel format, and encourage you to consider joining multiple datasets from the same paper (under the same heading on the page) for a sufficiently rich exploratory process.

The Yelp Open Dataset provides information about businesses, user reviews, and more from Yelp's database. The data is split into separate files (business, checkin, photos, review, tip, and user), and is available in either JSON or SQL format. You might use this to investigate the distributions of scores on Yelp, look at how many reviews users typically leave, or look for regional trends about restaurants. Note that this is a large, structured dataset, and you don't need to look at all of the data to answer interesting questions. In order to download the data you will need to enter your email and agree to Yelp's Dataset License.
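Because the Yelp files are large, one option is to stream records instead of loading everything at once. The sketch below assumes each JSON file is newline-delimited, with one object per line, and that business records carry a numeric `stars` field. Check both assumptions against the dataset's own documentation. The function name and its `limit` parameter are hypothetical.

```python
import json
from collections import Counter


def star_distribution(lines, limit=None):
    """Tally the 'stars' field over newline-delimited JSON records.

    Records are parsed one line at a time, so the full file is never held in
    memory; `limit` optionally stops after the first N lines to work on a subset.
    """
    counts = Counter()
    for i, line in enumerate(lines):
        if limit is not None and i >= limit:
            break
        line = line.strip()
        if not line:
            continue  # skip blank lines
        counts[json.loads(line).get("stars")] += 1
    return counts


# Usage (the file name is a placeholder for wherever you saved the business file):
# with open("business.json", encoding="utf-8") as f:
#     print(star_distribution(f, limit=50_000).most_common())
```

Starting with a `limit` lets you check the shape of the output quickly before committing to a full pass over the file.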

Additional Data Sources

If you want to investigate datasets other than those recommended above, here are some possible sources to consider. You are also free to use data from a source different from those included here. If you have any questions on whether your dataset is appropriate, please ask the course staff ASAP!

- data.boston.gov - City of Boston Open Data

- MassData - State of Massachusetts Open Data

- data.gov - U.S. Government Open Datasets

- U.S. Census Bureau - Census Datasets

- IPUMS.org - Integrated Census & Survey Data from around the World

- Federal Election Commission - Campaign Finance & Expenditures

- Federal Aviation Administration - FAA Data & Research

- fivethirtyeight.com - Data and Code behind the Stories and Interactives

- Buzzfeed News

- Socrata Open Data

- 17 places to find datasets for data science projects

Visualization Tools

You are free to use one or more visualization tools in this assignment. However, in the interest of time and for a friendlier learning curve, we strongly encourage you to use Tableau. Tableau provides a graphical interface focused on the task of visual data exploration. You will (with rare exceptions) be able to complete an initial data exploration more quickly and comprehensively than with a programming-based tool.

- Tableau - Desktop visual analysis software. Available for both Windows and MacOS; register for a free student license.

- Data Transforms in Vega-Lite. A tutorial on the various built-in data transformation operators available in Vega-Lite.

- Data Voyager, a research prototype from the UW Interactive Data Lab, combines a Tableau-style interface with visualization recommendations. Use at your own risk!

- R, using the ggplot2 library or with R's built-in plotting functions.

- Jupyter Notebooks (Python), using libraries such as Altair or Matplotlib.

Data Wrangling Tools

The data you choose may require reformatting, transformation or cleaning prior to visualization. Here are tools you can use for data preparation. We recommend first trying to import and process your data in the same tool you intend to use for visualization. If that fails, pick the most appropriate option among the tools below. Contact the course staff if you are unsure what might be the best option for your data!

Graphical Tools

- Tableau Prep - Tableau provides basic facilities for data import, transformation & blending. Tableau Prep is a more sophisticated data preparation tool.

- Trifacta Wrangler - Interactive tool for data transformation & visual profiling.

- OpenRefine - A free, open source tool for working with messy data.

Programming Tools

- JavaScript data utilities and/or the Datalib JS library.

- Pandas - Data table and manipulation utilities for Python.

- dplyr - A library for data manipulation in R.

- Or, the programming language and tools of your choice...

The assignment score is out of a maximum of 10 points. Submissions that squarely meet the requirements will receive a score of 8. We will determine scores by judging the breadth and depth of your analysis, whether visualizations meet the expressiveness and effectiveness principles, and how well-written and synthesized your insights are.

We will use the following rubric to grade your assignment. Note that rubric cells may not map exactly to specific point scores.

Submission Details

This is an individual assignment. You may not work in groups.

Your completed exploratory analysis report is due by noon on Wednesday 2/19. Submit a link to your Google Doc report using this submission form. Please double check your link to ensure it is viewable by others (e.g., try it in an incognito window).

Resubmissions. Resubmissions will be regraded by teaching staff, and you may earn back up to 50% of the points lost in the original submission. To resubmit this assignment, please use this form and follow the same submission process described above. Include a short, one-paragraph description summarizing the changes from the initial submission. Resubmissions without this summary will not be regraded. Resubmissions will be due by 11:59pm on Saturday, 3/14. Slack days may not be applied to extend the resubmission deadline. The teaching staff will only begin to regrade assignments once the Final Project phase begins, so please be patient.

- Due: 12pm, Wed 2/19


Assignment 2: Exploratory Data Analysis

In groups of 3-4, identify a dataset of interest and perform exploratory analysis in Tableau to understand the structure of the data, investigate hypotheses, and develop preliminary insights. Prepare a PDF or Google Slides report using this template outline: include a set of 10 or more visualizations that illustrate your findings, one summary “dashboard” visualization, as well as a write-up of your process and what you learned.

Submit your group’s report URL and, individually, your peer assessments for A2 by Monday 10/1, 11:59pm.

Week 1: Data Selection

First, choose a topic of interest to you and find a dataset that can provide insights into that topic. See below for recommended datasets to help you get started.

If working with a self-selected dataset, please check with the course staff to ensure it is appropriate for this assignment. Be advised that data collection and preparation (also known as data wrangling) can be a very tedious and time-consuming process. Be sure you leave sufficient time to conduct exploratory analysis after preparing the data.

After selecting a topic and dataset – but prior to analysis – you should write down an initial set of at least three questions you’d like to investigate.

Week 2: Exploratory Visual Analysis

Next, perform an exploratory analysis of your dataset using Tableau. You should consider two different phases of exploration.

In the first phase, you should seek to gain an overview of the shape & structure of your dataset. What variables does the dataset contain? How are they distributed? Are there any notable data quality issues? Are there any surprising relationships among the variables? Be sure to also perform “sanity checks” for patterns you expect to see!

In the second phase, you should investigate your initial questions, as well as any new questions that arise during your exploration. For each question, start by creating a visualization that might provide a useful answer. Then refine the visualization (by adding additional variables, changing sorting or axis scales, filtering or subsetting data, etc.) to develop better perspectives, explore unexpected observations, or sanity check your assumptions. You should repeat this process for each of your questions, but feel free to revise your questions or branch off to explore new questions if the data warrants.

Final Deliverable

Your final submission will be a written report, 10 or more captioned “quick and dirty” Tableau visualizations outlining your most important insights, and one summary Tableau “dashboard” visualization that answers one (or more) of your chosen hypotheses. The “dashboard” may have multiple charts to communicate your main findings, but it is a static image, so design and label it accordingly. Focus on the answers to your initial questions, but be sure to also describe surprises as well as challenges encountered along the way, e.g. data quality issues.

Each visualization image should be accompanied by a title and short caption (<2 sentences). Provide sufficient detail in each caption that anyone could read through your report and understand your findings. Feel free to annotate your images to draw attention to specific features of the data, keeping in mind the visual principles we’ve learned so far.

To easily export images from Tableau, use the Worksheet > Export > Image… menu item.

Grading Criteria

- Poses clear questions applicable to the chosen dataset.

- Appropriate data quality assessment and transformations.

- Breadth of analysis, exploring multiple questions.

- Depth of analysis, with appropriate follow-up questions.

- Expressive & effective visualizations appropriate to analysis questions.

- Clearly written, understandable captions that communicate primary insights.

Data Sources

- NYC Open Data: data on NYC trees, taxis, subway, citibike, 311 calls, land lot use, etc.

- data.gov: everything from hourly precipitation, fruit & vegetable prices, crime reports, to electricity usage.

- Dataset Search by Google Research: indexes public open datasets.

- Stanford Open Policing Dataset

- Physician Medicare Data

- Civil Rights Data Collection

- Data Is Plural newsletter’s Structured Archive: spreadsheet of public datasets ranging from curious to wide-reaching, e.g. “How often do Wikipedia editors edit?”, “Four years of rejected vanity license plate requests”

- Yelp Open Dataset

- U.S. Census Bureau: use their Discovery Tool

- US Health Data: central searchable repository of US health data (Centers for Disease Control and Prevention and National Center for Health Statistics), e.g. surveys on pregnancy, cause of death, health care access, obesity, etc.

- International Monetary Fund

- IPUMS.org: Integrated Census & Survey Data from around the World

- Federal Election Commission: Campaign Finance & Expenditures

- Stanford Mass Shootings in America Project: data up to 2016, with pointers to alternatives

- USGS Earthquake Catalog

- Federal Aviation Administration

- FiveThirtyEight Data: Datasets and code behind fivethirtyeight.com

- ProPublica Data Store: datasets collected by ProPublica or obtained via FOIA requests, e.g. Chicago parking ticket data

- Machine Learning Repository: large variety of maintained data sets

- Socrata Open Data

- 17 places to find datasets for data science projects

- Awesome Public Datasets (GitHub): topic-centric list of high-quality open datasets in public domains

- Open Syllabus: 6,059,459 syllabi

Tableau Resources

Tableau Training: Specifically the Getting Started video and Visual Analytics section. Most helpful when first getting off the ground.

Build-It-Yourself Exercises: Specifically, the sections Build Charts and Analyze Data > Build Common / Advanced Chart Types, and Build Data Views From Scratch > Analyze Data, are a good documentation resource.

Drawing With Numbers: blog with example walkthroughs in the Visualizations section. The Tableau Wiki has a bunch of useful links for the most common advanced exploratory / visualization Tableau techniques.

Additional Tools

Your dataset almost certainly will require reformatting, restructuring, or cleaning before visualization. Here are some tools for data preparation:

- Tableau includes basic functionality for data import, transformation & blending.

- R with ggplot2 library

- Python Jupyter notebooks with libraries, e.g. Altair or Matplotlib

- Trifacta Wrangler: interactive tool for data transformation & visual profiling.

- OpenRefine: free, open source tool for working with messy data.

- JavaScript data utilities or the Datalib JS library via Vega.

- Pandas: data table and manipulation utilities for Python.

- Or, the programming language and tools of your choice.

Assignment 2: Exploratory Data Analysis


Assignment Due: April 18, 2016

A wide variety of digital tools have been designed to help users visually explore data sets and confirm or disconfirm hypotheses about the data. The task in this assignment is to use existing software tools to formulate and answer a series of specific questions about a data set of your choice. After answering the questions you should create a final visualization that is designed to present the answer to your question to others. You should maintain a web notebook that documents all the questions you asked and the steps you performed from start to finish. The goal of this assignment is not to develop a new visualization tool, but to better understand the process of exploring data using off-the-shelf visualization tools.

Here is one way to start.

- Step 1. Pick a domain that you are interested in. Some good possibilities might be the physical properties of chemical elements, the types of stars, or the human genome. Feel free to use an example from your own research, but do not pick an example that you already have created visualizations for.

- Step 2. Pose an initial question that you would like to answer. For example: Is there a relationship between melting point and atomic number? Are the brightness and color of stars correlated? Are there different patterns of nucleotides in different regions in human DNA?

- Step 3. Assess the fitness of the data for answering your question. Inspect the data; it is invariably helpful to first look at the raw values. Does the data seem appropriate for answering your question? If not, you may need to start the process over. If so, does the data need to be reformatted or cleaned prior to analysis? Perform any steps necessary to get the data into shape prior to visual analysis.

You will need to iterate through these steps a few times. It may be challenging to find interesting questions and a dataset that has the information that you need to answer those questions.

Exploratory Analysis Process

After you have an initial question and a dataset, construct a visualization that provides an answer to your question. As you construct the visualization you will find that your question evolves; often it will become more specific. Keep track of this evolution and the other questions that occur to you along the way. Once you have answered all the questions to your satisfaction, think of a way to present the data and the answers as clearly as possible. In this assignment, you should use existing visualization software tools. You may find it beneficial to use more than one tool.

Before starting, write down the initial question clearly. And, as you go, maintain a wiki notebook of what you had to do to construct the visualizations and how the questions evolved. Include in the notebook where you got the data, and documentation about the format of the dataset. Describe any transformations or rearrangements of the dataset that you needed to perform; in particular, describe how you got the data into the format needed by the visualization system. Keep copies of any intermediate visualizations that helped you refine your question. After you have constructed the final visualization for presenting your answer, write a caption and a paragraph describing the visualization, and how it answers the question you posed. Think of the figure, the caption and the text as material you might include in a research paper.

Your assignment must be posted to the wiki before class on April 18, 2016.

You should look for data sets online in convenient formats such as Excel or a CSV file. The web contains a lot of raw data. In some cases you will need to convert the data to a format you can use. Format conversion is a big part of visualization research so it is worth learning techniques for doing such conversions. Although it is best to find a data set you are especially interested in, here are pointers to a few datasets: Online Datasets
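As a small example of such a conversion, the sketch below turns a JSON array of flat records into CSV text that Tableau or a spreadsheet can open. It uses only Python's standard library. The function name and sample fields are hypothetical, and nested JSON would need to be flattened first.

```python
import csv
import io
import json


def json_records_to_csv(json_text):
    """Convert a JSON array of flat objects into CSV text with a header row."""
    records = json.loads(json_text)
    # The union of keys across all records, in first-seen order, becomes the header.
    fieldnames = []
    for record in records:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)  # keys absent from a record become empty cells
    return out.getvalue()


print(json_records_to_csv('[{"element": "H", "atomic_number": 1}, {"element": "He"}]'))
```

Taking the union of keys means a field that appears in only some records still gets a column, and records that lack it get empty cells instead of causing an error.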

Visualization Software

To create the visualizations, we will be using Tableau, a commercial visualization tool that supports many different ways to interact with the data. Tableau has given us licenses so that you can install the software on your own computer. One goal of this assignment is for you to learn to use and evaluate the effectiveness of Tableau. Please talk to me if you think it won't be possible for you to use the tool. In addition to Tableau, you are free to also use other visualization tools as you see fit.

- Tableau Download Instructions

How to create your wiki page

Begin by creating a new wiki page for this assignment. The title of the page should be of the form:

A2-FirstnameLastname .

The wiki syntax will look like this: *[[A2-FirstnameLastname|Firstname Lastname]] . Hit the edit button for the next section to see how I created the link for my name.

To upload images to the wiki, first create a link for the image of the form [[Image:image_name.jpg]] (replacing image_name.jpg with a unique image name for use by the server). This will create a link you can follow that will then allow you to upload the image. Alternatively, you can use the "Upload file" link in the toolbox to upload the image first, and then subsequently create a link to it on your wiki page.

Add a link to your finished reports here

Once you are finished editing the page, add a link to it here with your full name as the link text.

- Maneesh Agrawala

- Pedro Dantas

- Emma Townley-Smith

- Matthew Pick

- Pascal Odek

- Flavia Grey

- Ernesto Ramirez

- Raymond Luong

- Haiyin Wang

- Christina Kao

- Leigh Hagestad

- Benjamin Wang

- Shenli Yuan

- Juan Marroquin

- Sarah Sterman

- Patrick Briggs

- Samuel Hansen

- Andrew McCabe

- Mackenzie Leake

- Christine Quan

- Janette Cheng

- Lorena Huang Liu

- Pontus Orraryd

- Oskar Ankarberg

- Brandon Liu

- Serena Wong

- Sarah Wymer

- Ben-han Sung

- Nikolas Martelaro

- Gloria Chua

- John Morgan

- Filippa Karrfelt

- Catherine Mullings

- Juliana Cook

- Andrei Terentiev

- Adrian Leven

- John Newcomb

- Tum Chaturapruek

- Sarah Nader

- Lucas Throckmorton

- Kyle Dumovic

- Kris Sankaran

- Chinmayi Dixit

- Shannon Kao

- Zach Maurer

- Maria Frank

- Sage Isabella

- Gabriella Brignardello

- Cong Qiaoben

- Erin Singer

- Jennifer Lu

- Kush Nijhawan

- Santiago Seira Silva-Herzog

- Joanne Jang

- Bradley Reyes

- Milan Doshi

- Sukhi Gulati

- Shirbi Ish-Shalom

- Lawrence Rogers

- Mark Schramm


- This page was last modified on 19 April 2016, at 12:11.


Data Analytics Coding Fundamentals: UVic BIDA302: Course Book

Assignment 2 - Week 3 - Exploratory Data Analysis

This chunk of R code loads the packages that we will be using.

Introduction

For this homework assignment, please write your answer for each question after the question text but before the line break before the next one.

In some cases, you will have to insert R code chunks, and run them to ensure that you’ve got the right result.

Use all of the R Markdown formatting you want! Bullets, bold text, etc. are welcome. And don’t forget to consider changes to the YAML.

Once you have finished your assignment, create an HTML document by “knitting” the document using either the “Preview” or “Knit” button in the top left of the script window frame.

New Homes Registry

The B.C. Ministry of Municipal Affairs and Housing publishes data from BC Housing’s New Homes Registry, by regional district and municipality, for three types of housing: single detached, multi-unit homes, and purpose built rental.

The name of the file is “bc-stats_2018-new-homes-data_tosend.xlsx”

Packages used

This exercise relies on the following packages:

- documentation for {readxl}

- in particular, review the “Overview” page and the “Articles”

- documentation for {ggplot2}

You will also require functions from {dplyr}, {tidyr}, and (potentially) {forcats}.

1. Explore the file

List the sheet names in the file. (You may wish to assign the long and cumbersome name of the source file to a character string object with a shorter, more concise name.)
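The exercise expects R and {readxl} here, where `readxl::excel_sheets()` does this job. For comparison, a standard-library Python sketch can read the sheet names directly. An .xlsx file is a ZIP archive, and its `xl/workbook.xml` part lists the sheets in order. The sketch assumes the common transitional Office Open XML namespace. If `openpyxl` is installed, `openpyxl.load_workbook(path, read_only=True).sheetnames` is the simpler route. The helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
import zipfile

# SpreadsheetML namespace used by <sheet> elements in transitional .xlsx files.
NS = {"main": "http://schemas.openxmlformats.org/spreadsheetml/2006/main"}


def sheet_names_from_workbook_xml(xml_text):
    """Return the name attribute of each <sheet>, in workbook order."""
    root = ET.fromstring(xml_text)
    return [sheet.get("name") for sheet in root.find("main:sheets", NS)]


def xlsx_sheet_names(path):
    """Open the .xlsx archive and read the sheet list from xl/workbook.xml."""
    with zipfile.ZipFile(path) as archive:
        return sheet_names_from_workbook_xml(archive.read("xl/workbook.xml"))


# A short alias for the long file name, as the question suggests:
# src = "bc-stats_2018-new-homes-data_tosend.xlsx"
# print(xlsx_sheet_names(src))
```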

2. Importing a sheet

Here’s a screenshot of the top rows of the sheet with single detached housing:

What problems do you anticipate with the way this sheet is laid out?

In Question 5, you will be making a plot that uses data from this sheet. Will you need all of the rows and columns that contain information of one kind or another?

What are the data types of each column in the Excel file? Ask yourself things like “What is the variable type of this column? What type do I think R will interpret this as?”

Read in the sheet, using no options. What is notable about the content of the R data table, compared to the Excel source?

Read the contents of the file again, so that the header rows are in the right place, and with the “Note:” column omitted.

(See this page on the {readxl} reference material for some tips.)

Note: there are many possible solutions to this problem. Once you’ve created an object that you can manipulate in the R environment, your solution may involve some of the {dplyr} data manipulations.
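In R this is usually handled with the `skip` argument of `readxl::read_excel()` plus a column selection. To see what those steps do independently of any tool, consider the Python sketch below. It takes the sheet as a list of rows, however you obtained them, and treats row `skip` as the header. It then drops columns whose header starts with "Note" and discards fully blank rows. The function name and the toy layout are hypothetical, since the real sheet's layout is only shown in the screenshot.

```python
def read_with_header(rows, skip, drop_prefix="Note"):
    """Use rows[skip] as the header, drop 'Note...' columns and fully blank rows."""
    header = [str(name).strip() for name in rows[skip]]
    keep = [i for i, name in enumerate(header) if not name.startswith(drop_prefix)]
    records = []
    for row in rows[skip + 1:]:
        # Pad short rows with None so every kept column has a value.
        cells = [row[i] if i < len(row) else None for i in keep]
        if all(cell in (None, "") for cell in cells):
            continue  # spacer row between blocks of data
        records.append({header[i]: cell for i, cell in zip(keep, cells)})
    return records


sheet = [
    ["Title row", "", "", ""],
    ["", "", "", ""],
    ["Municipality", "2016", "2017", "Note:"],
    ["Town A", 10, 12, "revised"],
    ["", "", "", ""],
    ["Town B", 5, 7, ""],
]
print(read_with_header(sheet, skip=2))
```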

3. Tidy data

Does this data frame violate any of the principles of tidy data?

If so, use the pivot functions from {tidyr} to turn it into a tidy structure.
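`tidyr::pivot_longer()` gathers column names (here, presumably the years) into one variable and their cell values into another. The core of that reshape is small enough to sketch in plain Python. The function and the `year`/`units` column names are hypothetical stand-ins, not the exercise's required names.

```python
def pivot_longer(records, id_cols, names_to="year", values_to="units"):
    """Wide-to-long reshape: one output row per (id columns, former column) pair."""
    long_rows = []
    for record in records:
        ids = {key: record[key] for key in id_cols}
        for column, value in record.items():
            if column in id_cols:
                continue  # identifier columns are repeated, not pivoted
            long_rows.append({**ids, names_to: column, values_to: value})
    return long_rows


wide = [{"Municipality": "Town A", "2016": 10, "2017": 12}]
for row in pivot_longer(wide, id_cols=["Municipality"]):
    print(row)
```

After the reshape, each row is one observation (a municipality in a year), which is the tidy-data condition the question asks about.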

4. Joining tables

Because the structure of the data in the Excel file is consistent, we can copy-and-paste and edit our code above, and assemble the contents of the three sheets into a single data table.

Repeat the import and tidy steps for the sheets containing the data for multi-unit homes and purpose built rental, and assign each to a unique object. At the end of this step you will have three tidy data frame objects in your environment, one each for single detached, multi-unit homes, and purpose built rentals.

Now join the three tables, creating a single table that contains all of the information that was previously stored in three separate sheets.
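One way to read "join" here is to stack the three tidy tables and add a column recording which sheet each row came from. This is what `dplyr::bind_rows()` does with its `.id` argument. If you prefer the housing types as separate columns, a key-based join such as `dplyr::full_join()` is the alternative. Below is a plain-Python sketch of the stacking approach, with a hypothetical `housing_type` label column.

```python
def stack_tables(tables, label_col="housing_type"):
    """Concatenate same-shaped tables, tagging each row with its source label.

    `tables` maps a label (e.g. the sheet's housing type) to a list of row dicts.
    """
    combined = []
    for label, rows in tables.items():
        for row in rows:
            combined.append({label_col: label, **row})
    return combined


single = [{"Municipality": "Town A", "year": "2016", "units": 10}]
rental = [{"Municipality": "Town A", "year": "2016", "units": 3}]
for row in stack_tables({"single detached": single, "purpose built rental": rental}):
    print(row)
```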

5. EDA: plotting

Now you’ve got a tidy structure, it’s time for some exploratory data analysis!

Plot the total number of housing units built in B.C. by municipality, but only the 10 municipalities with the greatest number of homes built, sorted from most to least. (I will leave it up to you to decide if you want to do that by a single year or by the total of all three years in the data.)

Hints and resources:

- The Data visualisation and Exploratory data analysis chapters of R for Data Science, 2nd ed. might be handy references

- The {ggplot2} reference pages

- The Factors chapter of R for Data Science, 2nd ed.

- The {forcats} reference pages

- You might need to do further data manipulation before you can plot what you want.

- Sometimes I find it very helpful to make a sketch of the plot I envision, and then write down which variables are associated with the bits on the plot.
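Whatever tool you plot with, the data step behind this question is the same: total the units per municipality, sort from most to least, and keep the first ten. In R that is typically `group_by()` + `summarise()` + `slice_max()`, with `forcats::fct_reorder()` to keep the bars in sorted order. The Python sketch below shows only the aggregation, and its names are hypothetical.

```python
from collections import defaultdict


def top_n_totals(rows, group_key, value_key, n=10):
    """Sum value_key within each group and return the n largest groups, descending."""
    totals = defaultdict(float)
    for row in rows:
        value = row[value_key]
        if value in (None, ""):
            continue  # blank cells are treated as missing, not as zero
        totals[row[group_key]] += float(value)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]


units = [
    {"Municipality": "Town A", "units": 3},
    {"Municipality": "Town B", "units": 5},
    {"Municipality": "Town A", "units": 4},
    {"Municipality": "Town C", "units": ""},
]
print(top_n_totals(units, "Municipality", "units", n=10))
```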

Exploratory data analysis (EDA) is used by data scientists to analyze and investigate data sets and summarize their main characteristics, often employing data visualization methods.

EDA helps determine how best to manipulate data sources to get the answers you need, making it easier for data scientists to discover patterns, spot anomalies, test a hypothesis, or check assumptions.

EDA is primarily used to see what data can reveal beyond the formal modeling or hypothesis testing task, and it provides a better understanding of data set variables and the relationships between them. It can also help determine if the statistical techniques you are considering for data analysis are appropriate. Originally developed by American mathematician John Tukey in the 1970s, EDA techniques continue to be a widely used method in the data discovery process today.


The main purpose of EDA is to help look at data before making any assumptions. It can help identify obvious errors, better understand patterns within the data, detect outliers or anomalous events, and find interesting relations among the variables.

Data scientists can use exploratory analysis to ensure the results they produce are valid and applicable to any desired business outcomes and goals. EDA also helps stakeholders by confirming they are asking the right questions. EDA can help answer questions about standard deviations, categorical variables, and confidence intervals. Once EDA is complete and insights are drawn, its features can then be used for more sophisticated data analysis or modeling, including machine learning.
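Outlier detection is one place where Tukey's own tools still apply directly. His box-plot "fences" flag values that fall more than 1.5 interquartile ranges below the first quartile or above the third. Below is a minimal sketch using Python's `statistics` module (3.8+). The `k=1.5` default follows Tukey's convention. The quartile method is one of several reasonable choices, so borderline points may be classified differently by different tools.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


print(iqr_outliers([1, 2, 3, 4, 5, 6, 7, 8, 100]))  # → [100]
```

A flagged value is a prompt to investigate (data entry error, unit mix-up, or a genuine extreme), not an automatic reason to delete it.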

Specific statistical functions and techniques you can perform with EDA tools include:

- Clustering and dimension reduction techniques, which help create graphical displays of high-dimensional data containing many variables.

- Univariate visualization of each field in the raw dataset, with summary statistics.

- Bivariate visualizations and summary statistics that allow you to assess the relationship between each variable in the dataset and the target variable you’re looking at.

- Multivariate visualizations, for mapping and understanding interactions between different fields in the data.

- K-means Clustering is a clustering method in unsupervised learning where data points are assigned into K groups, i.e. the number of clusters, based on the distance from each group’s centroid. The data points closest to a particular centroid will be clustered under the same category. K-means Clustering is commonly used in market segmentation, pattern recognition, and image compression.

- Predictive models, such as linear regression, use statistics and data to predict outcomes.
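The k-means procedure described above alternates two steps. First, each point is assigned to its nearest centroid. Then each centroid moves to the mean of its assigned points, and the cycle repeats until nothing changes. The minimal standard-library sketch below implements Lloyd's algorithm with initial centroids drawn at random from the data. Production libraries add smarter initialization (such as k-means++) and multiple restarts. The function name and parameters are illustrative.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on a list of coordinate tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct input points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return labels, centroids


pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
print(kmeans(pts, k=2))
```

Because the result depends on the starting centroids, it is worth rerunning with a few different seeds on real data.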

There are four primary types of EDA:

- Univariate non-graphical. This is the simplest form of data analysis, where the data being analyzed consists of just one variable. Since it’s a single variable, it doesn’t deal with causes or relationships. The main purpose of univariate analysis is to describe the data and find patterns that exist within it.

- Univariate graphical. Common types of univariate graphics include:

- Stem-and-leaf plots, which show all data values and the shape of the distribution.

- Histograms, a bar plot in which each bar represents the frequency (count) or proportion (count/total count) of cases for a range of values.

- Box plots, which graphically depict the five-number summary of minimum, first quartile, median, third quartile, and maximum.

- Multivariate nongraphical: Multivariate data arises from more than one variable. Multivariate non-graphical EDA techniques generally show the relationship between two or more variables of the data through cross-tabulation or statistics.

- Multivariate graphical: Multivariate data uses graphics to display relationships between two or more sets of data. The most used graphic is a grouped bar plot or bar chart with each group representing one level of one of the variables and each bar within a group representing the levels of the other variable.
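The sketch below illustrates the non-graphical techniques above. It computes the five-number summary that a box plot draws, the bin counts behind a histogram, and a cross-tabulation of two categorical variables. The data and column names are made up. Also note that pandas uses linear interpolation for quantiles by default, so its quartiles may differ slightly from those in some box-plot implementations.

```python
import numpy as np
import pandas as pd

# Made-up example data: one numeric variable and two categorical variables.
df = pd.DataFrame({
    "price":    [3, 5, 7, 8, 9, 12, 15, 21],
    "region":   ["N", "N", "S", "S", "N", "S", "N", "S"],
    "category": ["A", "A", "A", "B", "B", "B", "A", "B"],
})

# Univariate non-graphical: the five-number summary that a box plot draws.
q = df["price"].quantile([0.0, 0.25, 0.5, 0.75, 1.0])
five_num = dict(zip(["min", "q1", "median", "q3", "max"], q.tolist()))
print(five_num)

# The numbers behind a histogram: how many values fall in each bin.
# Bins are [0, 5), [5, 10), [10, 15), and [15, 25] (the last bin includes its right edge).
counts, edges = np.histogram(df["price"], bins=[0, 5, 10, 15, 25])
print(counts)

# Multivariate non-graphical: a cross-tabulation of two categorical variables.
print(pd.crosstab(df["region"], df["category"]))
```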

Other common types of multivariate graphics include:

- Scatter plot, which is used to plot data points on a horizontal and a vertical axis to show how much one variable is affected by another.

- Multivariate chart, which is a graphical representation of the relationships between factors and a response.

- Run chart, which is a line graph of data plotted over time.

- Bubble chart, which is a data visualization that displays multiple circles (bubbles) in a two-dimensional plot.

- Heat map, which is a graphical representation of data where values are depicted by color.

Some of the most common data science tools used to perform EDA include:

- Python: An interpreted, object-oriented programming language with dynamic semantics. Its high-level, built-in data structures, combined with dynamic typing and dynamic binding, make it very attractive for rapid application development, as well as for use as a scripting or glue language to connect existing components. With Python, EDA can be used to identify missing values in a data set, which helps you decide how to handle them before applying machine learning.

- R: An open-source programming language and free software environment for statistical computing and graphics, supported by the R Foundation for Statistical Computing. R is widely used by statisticians and data scientists for statistical modeling and data analysis.
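Here is a minimal pandas sketch of the missing-value check described for Python, using a small made-up table. It counts the missing entries in each column and lists the affected rows. You can then decide whether to drop those rows, impute values, or investigate further.

```python
import numpy as np
import pandas as pd

# Made-up records with gaps; pandas treats both None and np.nan as missing.
df = pd.DataFrame({
    "age":    [34, np.nan, 29, 41, np.nan],
    "income": [52000, 61000, None, 48000, 57000],
    "city":   ["Boston", "Austin", None, "Denver", "Austin"],
})

# Count and share of missing values per column.
missing = df.isna().sum()
share = df.isna().mean().round(2)
print(pd.DataFrame({"missing": missing, "share": share}))

# Rows with at least one missing value, to inspect before choosing how to handle them.
print(df[df.isna().any(axis=1)])
```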

For a deep dive into the differences between these approaches, check out "Python vs. R: What's the Difference?"


Exploratory Data Analysis: A Comprehensive Guide to Make Insightful Decisions

Today, data has become ubiquitous, affecting every aspect of our lives. From social media to financial transactions to healthcare, the generated data is abundant, which has ignited a data-driven revolution in every industry.

As data sets have grown, understanding them has become more complex, which makes exploratory data analysis increasingly important. The data analysis process involves many steps, such as cleansing, transforming, and analyzing the data to build models that yield relevant insights.

Exploratory Data Analysis is about knowing your data in depth, including significant correlations and repeating patterns, so that you can select the proper analysis methods. In this article, you will learn about Exploratory Data Analysis, its key features, how to perform EDA, its types, the integration challenges, and how to overcome them.

What is Exploratory Data Analysis?

Understanding your data in depth before performing any analysis on it is essential. You need to know the patterns, the variables, and how those variables relate to each other, among other things. EDA (exploratory data analysis) is the process of examining, summarizing, visualizing, and understanding your data to draw data-driven conclusions and guide further study.

Let’s look at some of the key attributes of EDA:

Summarizing the Data

EDA lets you summarize data using descriptive statistics, which help you understand the central tendency, dispersion (such as standard deviation), and distribution of the values.

Data Visualization

With EDA, you can use a variety of visualization techniques, such as histograms, line charts, and scatter plots, to explore patterns, relationships, or trends within the data set.

Data Cleansing

Data cleansing is crucial for driving meaningful insights and ensuring accuracy. EDA helps you clean your data by identifying errors, inconsistencies, duplicates, or missing values.
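The cleansing tasks named above (errors, inconsistencies, duplicates) can be sketched in pandas as follows. The records are invented. Treating a negative spend as an error is an assumption made only for this example.

```python
import pandas as pd

# Made-up customer records with inconsistent formatting and duplicates.
raw = pd.DataFrame({
    "customer": ["Ana Ruiz", "ana ruiz ", "Ben Lee", "Cara Diaz", "Ben Lee"],
    "state":    ["CA", "ca", "NY", "Tx", "NY"],
    "spend":    [120.0, 120.0, 80.0, -5.0, 80.0],
})

clean = raw.copy()
# Fix inconsistencies: normalize whitespace and letter case.
clean["customer"] = clean["customer"].str.strip().str.title()
clean["state"] = clean["state"].str.upper()
# Remove exact duplicates, including ones that only appear after normalization.
clean = clean.drop_duplicates().reset_index(drop=True)
# Flag likely errors (here, negative spend, assumed invalid) rather than silently dropping them.
errors = clean[clean["spend"] < 0]
print(clean)
print(errors)
```

Normalizing before deduplicating matters here. "Ana Ruiz" and "ana ruiz " only become exact duplicates once whitespace and case are made consistent.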

What are the Types of Exploratory Data Analysis?

Understanding exploratory data analysis techniques helps with efficient data analysis and meaningful decisions. Let’s look at three primary exploratory data analysis types.

1. Univariate Exploratory Data Analysis

It is a type of analysis in which you study one variable at a time. This helps you understand the characteristics of a particular variable without getting distracted by other factors. For example, focusing on product sales to know which product is doing better.

There are two types of Univariate Analysis:

- Graphical Methods: Graphical methods use visual tools to understand the characteristics of a single variable. The visual tools may include histograms, box plots, density plots, etc.

- Non-Graphical Methods: In non-graphical methods, numerical values are used to study the characteristics of a single variable. These numerical measures include descriptive statistics such as the mean, median, mode, measures of dispersion, or percentiles.
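For the non-graphical univariate measures, Python's standard-library `statistics` module is enough for a quick sketch. The sales figures are made up. Note that `statistics.quantiles` uses its "exclusive" method by default, so its quartiles can differ slightly from those of other tools.

```python
import statistics as st

# Made-up daily unit sales for a single product (one variable at a time).
sales = [12, 15, 15, 18, 20, 22, 22, 22, 30, 41]

summary = {
    "mean": st.mean(sales),
    "median": st.median(sales),
    "mode": st.mode(sales),
    "stdev": round(st.stdev(sales), 2),      # sample standard deviation
    "range": max(sales) - min(sales),
    "quartiles": st.quantiles(sales, n=4),   # cut points for Q1, median, Q3
}
print(summary)
```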

2. Bivariate Exploratory Data Analysis

Bivariate analysis focuses on studying a relationship between two variables. It examines how a change in one variable can impact another. For example, the relationship between customer age and product category can be analyzed to determine whether age influences buying preferences. There are multiple ways to examine this relationship, both graphically and non-graphically.

The graphical methods include scatter plots, line charts, and bubble charts to visualize the relationship between two numeric variables. The non-graphical methods examine the relationship between two variables through correlation, regression analysis, or a chi-square test.
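Two of the non-graphical bivariate measures can be sketched with NumPy on made-up data:

- the Pearson correlation coefficient, for two numeric variables;
- the chi-square statistic, for two categorical variables, computed from observed versus expected counts in a contingency table.

This sketch stops at the statistic. `scipy.stats.chi2_contingency` would also return a p-value. By default it applies Yates' continuity correction to 2×2 tables, so its statistic would differ from the uncorrected value computed here.

```python
import numpy as np

# Numeric vs numeric (made-up data): Pearson correlation coefficient.
ad_spend = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
revenue = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
r = np.corrcoef(ad_spend, revenue)[0, 1]
print(round(r, 3))

# Categorical vs categorical: chi-square statistic from a contingency table.
# Rows: age group (under 30, 30+); columns: product category (A, B). Made-up counts.
observed = np.array([[30, 10],
                     [20, 40]])
row_totals = observed.sum(axis=1, keepdims=True)
col_totals = observed.sum(axis=0, keepdims=True)
expected = row_totals * col_totals / observed.sum()  # counts expected under independence
chi2 = ((observed - expected) ** 2 / expected).sum()
dof = (observed.shape[0] - 1) * (observed.shape[1] - 1)
print(round(chi2, 2), dof)
```

A large correlation or chi-square value indicates an association between the two variables. On its own, it does not show which variable influences the other.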

3. Multivariate Exploratory Data Analysis

Multivariate analysis helps you analyze and understand the relationships among more than two variables simultaneously. It helps unveil more complex associations and patterns within the data. For example, it can explore the relationship between a person's height, weight, and age.

There are two types of Multivariate Analysis:

- Graphical Methods: These methods help analyze patterns or associations through visualization, such as scatter plots and heat maps.

- Non-Graphical Methods: These statistical techniques are used to make predictions, test a hypothesis, or draw conclusions. They include methods such as multiple regression analysis, factor analysis, cluster analysis, etc.
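Multiple regression is one of the non-graphical multivariate methods. It can be sketched with NumPy's least-squares solver, using the height/weight/age example above. The data are made up and deliberately follow an exact linear relation, so the fit recovers the coefficients used to generate them. Real data would leave residual error.

```python
import numpy as np

# Made-up data: predict weight (kg) from height (cm) and age (years).
height = np.array([150.0, 160.0, 165.0, 170.0, 180.0, 185.0])
age = np.array([25.0, 32.0, 41.0, 29.0, 50.0, 38.0])
weight = 0.9 * height + 0.2 * age - 90.0   # exact linear relation, for illustration only

# Design matrix with an intercept column; solve ordinary least squares for the coefficients.
X = np.column_stack([np.ones_like(height), height, age])
coef, residuals, rank, _ = np.linalg.lstsq(X, weight, rcond=None)
intercept, b_height, b_age = coef
print(round(intercept, 3), round(b_height, 3), round(b_age, 3))
```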

How to Perform Exploratory Data Analysis?

Let’s look at the steps of exploratory data analysis:

1. Data Collection

Identify the data relevant to your analysis and collect it from the appropriate sources, according to the purpose of your analysis.

2. Inspecting the Data Variables

Because the collected information can be vast, first identify the critical variables that would affect your outcome or inform your decision.

3. Data Cleansing

In this step, you need to clean your data by identifying the errors, missing values, inconsistencies, or duplicate values.

4. Identifying Patterns and Correlations

Visualize your data sets using different data visualization tools to understand the patterns and relationships between variables.

5. Performing Descriptive Statistics

Calculate a statistical summary of your data using statistical methods or formulas.

6. Perform Advanced Analysis

Conduct an advanced analysis beyond descriptive statistics by defining objectives and specifying the questions you want answered. This allows you to gain deeper insights into your data and identify complex relationships.

7. Interpret Data

Interpret the results of your analysis and generate insights and conclusions based on the relationships, patterns, and trends.

8. Document and Report

Document the results of your analysis, including all the steps and techniques you have used. Mention your key findings and summarize the data inside the report, which you can use to share insights with your stakeholders.

Exploratory Data Analysis Tools You Can Use

EDA can be conducted using different tools or software platforms. Let’s look at some of the popular tools used:

Python

Python’s simplicity makes it a preferred choice for data analysis. Its rich library ecosystem includes NumPy for numerical tasks, pandas for data manipulation, and scikit-learn for machine learning. These libraries provide versatile toolsets for EDA and visualization. With Python, you can effectively handle missing data and uncover valuable insights from large datasets.

R

R is a programming language designed for statistical computing, data analysis, and graphics. With R, you can handle and manipulate complex statistical datasets. It provides a rich set of tools for data cleaning, transformation, and analysis, allowing you to prepare data for further exploration. Additionally, R provides built-in analysis functionality for performing detailed EDA and uncovering valuable insights from your data.

MATLAB

MATLAB stands for Matrix Laboratory. It is a high-level programming language and an interactive environment designed to perform numerical computations, data analysis, and visualizations. MATLAB is widely used to solve problems and run numerical simulations in mathematics, physics, finance, and engineering.

Jupyter Notebooks

Jupyter is an interactive computing environment that allows you to create and share documents containing text, equations, live code, visualizations, and more. It supports multiple programming languages, which makes it a versatile data analysis and research tool. Jupyter Notebooks' flexible environment provides an interactive workspace for working on EDA projects.

What are the Challenges of EDA?

Before implementing EDA, it helps to know the challenges you might face. Let’s look at some of them:

Data Unification

One of the most significant hurdles is data unification. Combining data from multiple sources, such as APIs, cloud platforms, or databases, before analysis can be a complex task. The challenge lies in the differences in data format or structure across these sources. For instance, customer data from a CRM system can be structured differently than website traffic data from analytics applications.
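Here is a small pandas sketch of data unification. It assumes two made-up extracts, a CRM table and a web-analytics table, that name and type the customer key differently. The sketch aligns the columns first, then combines the tables with an outer join whose `indicator` column records which source each row came from.

```python
import pandas as pd

# Made-up extracts from two sources that describe customers differently.
crm = pd.DataFrame({
    "CustomerID": [101, 102, 103],
    "SignupDate": ["2023-01-05", "2023-02-11", "2023-03-20"],
})
web = pd.DataFrame({
    "customer_id": ["101", "103", "104"],   # IDs stored as strings in this source
    "sessions": [14, 3, 7],
})

# Align column names and key types before combining.
crm = crm.rename(columns={"CustomerID": "customer_id", "SignupDate": "signup_date"})
crm["signup_date"] = pd.to_datetime(crm["signup_date"])
web["customer_id"] = web["customer_id"].astype(int)

# An outer join keeps unmatched rows from both sides; the `_merge` column shows each row's origin.
unified = crm.merge(web, on="customer_id", how="outer", indicator=True)
print(unified)
```

Rows marked `left_only` or `right_only` are a quick way to spot records that exist in one system but not the other.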

Data Quality

Performing EDA involves ensuring the quality of the data. Data often comes from multiple sources, and these data sets may contain inconsistencies, missing values, or errors, which can lead to incorrect conclusions.

Data Security

When handling large amounts of sensitive data, unauthorized access and data breaches can become serious challenges.

Data Consistency

Updates or modifications must be reflected in all related data sets to keep the data consistent and your integration process synchronized.

Addressing these challenges might require a robust solution that combines best practices for smooth data integration.

Simplifying the EDA Process with Airbyte

EDA is a crucial step in any data science project. However, the initial stages of EDA can be slowed down by challenges related to data preparation and consolidation. Airbyte simplifies these challenges so you can focus on the crucial task of extracting insights from your data.

Here’s how Airbyte helps you streamline the EDA process:

Airbyte is a data integration and replication platform with an extensive library of 350+ pre-built connectors. The library covers a wide range of APIs, databases, and flat files, making the integration process smooth. With the Connector Development Kit (CDK), you can also build custom connectors for your specific needs.

For complex transformations, you can integrate Airbyte with dbt (data build tool). This combination allows you to use dbt’s functionality, such as data standardization, cleansing, and mapping, before loading the data into your target destination.

Airbyte also adheres to industry standards and regulations such as GDPR, SOC 2, and ISO certifications, supporting data security and compliance.

Use Cases & Examples of Exploratory Data Analysis

Exploratory data analysis is essential for understanding the trends and patterns among the data and using the information to derive insightful conclusions. Let’s look at exploratory data analysis examples and use cases.

EDA in Retail

EDA in retail can be performed to understand a particular product's sales patterns. Retailers can use EDA tools to improve their sales by studying how different variables, such as price, discount, and demographics, contribute to increases or decreases in sales. They can also learn which products are doing best and in which regions a particular product sells well.

EDA in Healthcare

EDA can be used to analyze clinical trial data and study the effectiveness of a particular drug or treatment. It helps you analyze patients' data, how they respond to a certain treatment, risk factors, etc. Insights from EDA can help healthcare professionals make more informed decisions about patients' care, treatment strategies, and resource allocation.

EDA isn’t just about examining data. It’s a robust technique that unlocks meaningful insights from even complex data structures. By employing EDA, you can gain a deeper understanding of your data, enabling you to identify areas for improvement and make strategic data-driven decisions for your business.


This is the repository for Course Project 2 of the Exploratory Data Analysis course from the "Data Science: Foundations using R Specialization".

AndersonUyekita/exploratory-data-analysis_course-project-2

Course Project 2: Exploratory Data Analysis

- 👨🏻💻 Author: Anderson H Uyekita

- 📚 Specialization: Data Science: Foundations using R Specialization

- 🧑🏫 Instructor: Roger D Peng

- 🚦 Start: Wednesday, 15 June 2022

- 🏁 Finish: Sunday, 19 June 2022

- 🌎 Rpubs: Interactive Document

- 📋 Instructions: Project Instructions

Course Project 2 aims to create six plots using the base graphics system and ggplot2 to answer six given questions. The datasets used for this assignment come from the National Emissions Inventory (NEI), which is recorded every three years. This project covers the years from 1999 to 2008. The data comprise two data frames:

- summarySCC_PM25.rds, with almost 6.5 million observations and 6 variables; and

- Source_Classification_Code.rds, with over 11.7 thousand observations and 15 variables.

Based on the plots created to address the questions, I found that PM2.5 emissions decreased over the years across the US. While Baltimore City shows a clear reduction in emissions from vehicles, in Los Angeles County PM2.5 emissions from vehicles increased until 2005 and then decreased by almost 9% in 2008.

Finally, coal PM2.5 emissions come mostly from electric generation, and coal PM2.5 dropped sharply between 2005 and 2008.

Feel free to look at the Codebook to go in-depth.

1. Project Course 2 Assignment

Project Course 2 consists of answering six questions. Each question asks for a single plot to answer it.

Question 1: Have total emissions from PM2.5 decreased in the United States from 1999 to 2008?

Using the base plotting system, make a plot showing the total PM2.5 emission from all sources for each of the years 1999, 2002, 2005, and 2008.

Over the years, emissions of fine particulate matter (PM2.5) decreased from 7.3 million tons to 3.5 million tons, a 52.7% reduction.
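The original project answers this question in R with the base plotting system. The sketch below reproduces the same aggregation in Python. It assumes the NEI summary table has been loaded into a pandas DataFrame with `Emissions` and `year` columns, for example with the third-party pyreadr package. It computes the yearly totals and the percent reduction. The rows are a tiny made-up stand-in, not the real NEI data, so the numbers do not match the figures above.

```python
import pandas as pd

# Toy stand-in for summarySCC_PM25.rds (made-up emissions in tons, NOT the real NEI values).
nei = pd.DataFrame({
    "fips":      ["24510", "24510", "06037", "06037", "24510", "06037"],
    "Emissions": [10.0, 5.0, 25.0, 6.0, 8.0, 6.0],
    "year":      [1999, 1999, 1999, 2008, 2008, 2008],
})

# Total PM2.5 emissions from all sources for each year.
totals = nei.groupby("year")["Emissions"].sum()
print(totals)

# Percent reduction between the first and last year.
reduction = (totals.loc[1999] - totals.loc[2008]) / totals.loc[1999] * 100
print(f"{reduction:.1f}% reduction")
```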

Question 2: Have total emissions from PM2.5 decreased in Baltimore City, Maryland (fips == "24510") from 1999 to 2008?

Use the base plotting system to make a plot answering this question.

Based on Plot 2, fine particulate matter (PM2.5) emissions decreased by 43.1% from 1999 to 2008. However, in 2005 Baltimore City's PM2.5 emissions exceeded the 2002 level, interrupting the downward trend.

Question 3: Of the four types of sources indicated by the type (point, nonpoint, onroad, nonroad) variable, which of these four sources have seen decreases in emissions from 1999–2008 for Baltimore City? Which have seen increases in emissions from 1999–2008?

Use the ggplot2 plotting system to make a plot answering this question.

According to the line graph in Plot 3, the non-road, nonpoint, and on-road types decreased their PM2.5 emissions between 1999 and 2008. On the other hand, emissions from the point type increased by 16.2%.
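Question 3 combines a filter on `fips` with a breakdown by source type. Here is a pandas sketch, assuming columns named `fips`, `type`, `year`, and `Emissions`. The rows are made up and are again not the real NEI values.

```python
import pandas as pd

# Toy stand-in for the NEI table (made-up values, not the real data).
nei = pd.DataFrame({
    "fips": ["24510"] * 6 + ["06037"] * 2,
    "type": ["POINT", "NONPOINT", "ON-ROAD", "POINT", "NONPOINT", "ON-ROAD", "POINT", "POINT"],
    "year": [1999, 1999, 1999, 2008, 2008, 2008, 1999, 2008],
    "Emissions": [100.0, 200.0, 50.0, 120.0, 150.0, 20.0, 400.0, 300.0],
})

# Keep Baltimore City only, then total the emissions for each source type and year.
balt = nei[nei["fips"] == "24510"]
by_type = balt.pivot_table(index="type", columns="year", values="Emissions", aggfunc="sum")

# Percent change per type from 1999 to 2008 (positive values are increases).
by_type["pct_change"] = (by_type[2008] - by_type[1999]) / by_type[1999] * 100
print(by_type.sort_values("pct_change"))
```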

Question 4: Across the United States, how have emissions from coal combustion-related sources changed from 1999–2008?

According to the SCC dataset, there are three sources of coal PM2.5 emissions:

- Fuel Comb - Comm/Institutional - Coal;

- Fuel Comb - Electric Generation - Coal, and;

- Fuel Comb - Industrial Boilers, ICEs - Coal.

PM2.5 emissions from coal decreased by 37.9%, mostly because of a 41.7% reduction in electric generation emissions observed from 2005 to 2008. In addition, the Comm/Institutional sector reduced its emissions by 74.2% in the same period. On the other hand, emissions from Industrial Boilers, ICEs increased by 34.3%, going in the opposite direction.
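To identify coal sources, you join the emissions table to the Source Classification Code table and filter on the sector description. Here is a pandas sketch, assuming the sector text lives in a column named `EI.Sector`. The SCC codes and emission values are made up.

```python
import pandas as pd

# Toy stand-ins for the two NEI tables (made-up rows; real SCC codes and values differ).
scc = pd.DataFrame({
    "SCC": ["A1", "A2", "A3", "B1"],
    "EI.Sector": [
        "Fuel Comb - Electric Generation - Coal",
        "Fuel Comb - Comm/Institutional - Coal",
        "Fuel Comb - Industrial Boilers, ICEs - Coal",
        "Mobile - On-Road Gasoline Light Duty Vehicles",
    ],
})
nei = pd.DataFrame({
    "SCC":       ["A1", "A2", "A3", "B1", "A1", "A2", "A3", "B1"],
    "year":      [1999, 1999, 1999, 1999, 2008, 2008, 2008, 2008],
    "Emissions": [100.0, 10.0, 20.0, 50.0, 60.0, 5.0, 25.0, 40.0],
})

# Attach each record's sector, then keep only coal combustion sectors.
merged = nei.merge(scc, on="SCC", how="left")
coal = merged[merged["EI.Sector"].str.contains("Coal", na=False)]

# Totals per coal sector and year, plus the overall coal change from 1999 to 2008.
by_sector = coal.pivot_table(index="EI.Sector", columns="year", values="Emissions", aggfunc="sum")
print(by_sector)
total = coal.groupby("year")["Emissions"].sum()
coal_change = (total.loc[2008] - total.loc[1999]) / total.loc[1999] * 100
print(round(coal_change, 1))
```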

Question 5: How have emissions from motor vehicle sources changed from 1999–2008 in Baltimore City?

Vehicle PM2.5 emissions decreased by 74.5% from 1999 to 2008. The most significant change occurred between 1999 and 2002.

Question 6: Compare emissions from motor vehicle sources in Baltimore City with emissions from motor vehicle sources in Los Angeles County, California (fips == "06037"). Which city has seen greater changes over time in motor vehicle emissions?

Baltimore City decreased its PM2.5 emissions from motor vehicles by 74.5%. In the opposite direction, Los Angeles County increased its vehicle PM2.5 emissions by 4.3%.

Los Angeles did not change its fine particulate matter emissions significantly, while Baltimore reduced them substantially, so Baltimore has seen the greater change in PM2.5 emissions over time.


Assignment 2: Exploratory Data Analysis

In this assignment, you will identify a dataset of interest and perform exploratory analysis to better understand the shape & structure of the data, identify data quality issues, investigate initial questions, and develop preliminary insights & hypotheses. Your final submission will take the form of a report consisting of annotated and/or captioned visualizations that convey key insights gained during your analysis process.

Step 1: Data Selection

First, pick a topic area of interest to you and find a dataset that can provide insights into that topic. To streamline the assignment, we've pre-selected a number of datasets included below for you to choose from (see the Recommended Data Sources section below).

However, if you would like to investigate a different topic and dataset, you are free to do so. If you are working with a self-selected dataset and have doubts about its appropriateness for the course, please check with the course staff. Be advised that data collection and preparation (also known as data wrangling) can be a very tedious and time-consuming process. Be sure you have sufficient time to conduct exploratory analysis after preparing the data.

After selecting a topic and dataset – but prior to analysis – you should write down an initial set of at least three questions you'd like to investigate. These questions should be clearly listed at the top of your final submission report.

Part 2: Exploratory Visual Analysis

Next, you will perform an exploratory analysis of your dataset using a visualization tool such as Vega-Lite or Tableau. You should consider two different phases of exploration.

In the first phase, you should seek to gain an overview of the shape & structure of your dataset. What variables does the dataset contain? How are they distributed? Are there any notable data quality issues? Are there any surprising relationships among the variables? Be sure to perform "sanity checks" for any patterns you expect the data to contain.

In the second phase, you should investigate your initial questions, as well as any new questions that arise during your exploration. For each question, start by creating a visualization that might provide a useful answer. Then refine the visualization (by adding additional variables, changing sorting or axis scales, filtering or subsetting data, etc.) to develop better perspectives, explore unexpected observations, or sanity check your assumptions. You should repeat this process for each of your questions, but feel free to revise your questions or branch off to explore new questions if the data warrants.

Final Deliverable

Your final submission should take the form of a sequence of images – similar to a comic book – that consists of 8 or more visualizations detailing your most important insights.

Your "insights" can include surprises or issues (such as data quality problems affecting your analysis) as well as responses to your analysis questions. Where appropriate, we encourage you to include annotated visualizations to guide viewers' attention and provide interpretive context. (See this page for some examples of what we mean by "annotated visualizations.")

Each image should be a visualization, including any titles or descriptive annotations highlighting the insight(s) shown in that view. For example, annotations could take the form of guidelines and text labels, differential coloring, and/or fading of non-focal elements. You are also free to include a short caption for each image, though no more than 2 sentences: be concise! You may create annotations using the visualization tools of your choice (see our tool recommendations below), or by adding them using image editing or vector graphics tools.

Provide sufficient detail such that anyone can read your report and understand what you've learned without already being familiar with the dataset. For example, be sure to provide a clear overview of what data is being visualized and what the data variables mean. To help gauge the scope of this assignment, see this example report analyzing motion picture data.

You must write up your report as an Observable notebook, similar to the example above. From a private notebook, click the "..." menu button in the upper right and select "Enable link sharing". Then submit the URL of your notebook on the Canvas A2 submission page.

Be sure to enable link sharing, otherwise the course staff will not be able to view your submission and you may face a late submission penalty! Also note that if you make changes to the page after link sharing is enabled, you must reshare the link from Observable.

- To export a Vega-Lite visualization, be sure you are using the "canvas" renderer, right click the image, and select "Save Image As...".

- To export images from Tableau, use the Worksheet > Export > Image... menu item.

- To add an image to an Observable notebook, first add your image as a notebook file attachment: click the "..." menu button and select "File attachments". Then load the image in a new notebook cell: FileAttachment("your-file-name.png").image()

Recommended Data Sources

To get up and running quickly with this assignment, we recommend using one of the following provided datasets or sources, but you are free to use any dataset of your choice.

The World Bank Data, 1960-2017

The World Bank has tracked global human development by indicators such as climate change, economy, education, environment, gender equality, health, and science and technology since 1960. We have 20 indicators from the World Bank for you to explore. Alternatively, you can browse the original data by indicators or by countries. Click on an indicator category or country to download the CSV file.

Data: https://github.com/ZeningQu/World-Bank-Data-by-Indicators

Daily Weather in the U.S., 2017

This dataset contains daily U.S. weather measurements in 2017, provided by the NOAA Daily Global Historical Climatology Network. This data has been transformed: some weather stations with only sparse measurements have been filtered out. See the accompanying weather.txt for descriptions of each column.

Data: weather.csv.gz (gzipped CSV)

Yelp Open Dataset

This dataset provides information about businesses, user reviews, and more from Yelp's database. The data is split into separate files (business, checkin, photos, review, tip, and user), and is available in either JSON or SQL format. You might use this to investigate the distributions of scores on Yelp, look at how many reviews users typically leave, or look for regional trends about restaurants. Note that this is a large, structured dataset and you don't need to look at all of the data to answer interesting questions.

Important Note: In order to download and use this data you will need to enter your email and agree to Yelp's Dataset License.

Data: Yelp Access Page (data available in JSON & SQL formats)

Additional Data Sources

Here are some other possible sources to consider. You are also free to use data from a source different from those included here. If you have any questions on whether a dataset is appropriate, please ask the course staff ASAP!

- data.seattle.gov - City of Seattle Open Data

- data.wa.gov - State of Washington Open Data

- nwdata.org - Open Data & Civic Tech Resources for the Pacific Northwest

- data.gov - U.S. Government Open Datasets

- U.S. Census Bureau - Census Datasets

- IPUMS.org - Integrated Census & Survey Data from around the World

- Federal Elections Commission - Campaign Finance & Expenditures

- Federal Aviation Administration - FAA Data & Research

- fivethirtyeight.com - Data and Code behind the Stories and Interactives

- Buzzfeed News - Open-source data from BuzzFeed's newsroom

- Kaggle Datasets - Datasets for Kaggle contests

- List of datasets useful for course projects - curated by Mike Freeman

Data Wrangling Tools

The data you choose may require reformatting, transformation, or cleaning prior to visualization. Here are tools you can use for data preparation. We recommend first trying to import and process your data in the same tool you intend to use for visualization. If that fails, pick the most appropriate option among the tools below. Contact the course staff if you are unsure what might be the best option for your data!

Graphical Tools

- Tableau - Tableau provides basic facilities for data import, transformation & blending.

- Trifacta Wrangler - Interactive tool for data transformation & visual profiling.

- OpenRefine - A free, open source tool for working with messy data.

Programming Tools

- Arquero - JavaScript library for wrangling and transforming data tables.

- JavaScript basics for manipulating data in the browser.

- Pandas - Data table and manipulation utilities for Python.

- dplyr - A library for data manipulation in R.

- Or, the programming language and tools of your choice...

Visualization Tools

You are free to use one or more visualization tools in this assignment. However, in the interest of time and for a friendlier learning curve, we strongly encourage you to use Tableau and/or Vega-Lite. To help you get up and running, the quiz sections on Thursday 1/14 will provide an introductory Tableau tutorial, so you may want to come prepared with questions.

- Tableau - Desktop visual analysis software. Available for both Windows and MacOS; you can register for a free student license from the Tableau website.

- Vega-Lite is a high-level grammar of interactive graphics. It provides a concise, declarative JSON syntax to create a large range of visualizations for data analysis and presentation.

- Jupyter Notebooks (Python), using libraries such as Altair or Matplotlib.

- Voyager - Research prototype from the UW Interactive Data Lab. Voyager combines a Tableau-style interface with visualization recommendations. Use at your own risk!
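A Vega-Lite specification is plain JSON, so you can sketch one as a Python dictionary and serialize it with the standard `json` module. The weather-style field names and values are made up. The `$schema` URL assumes Vega-Lite version 5. You could paste the resulting JSON into the online Vega editor to render the chart.

```python
import json

# Made-up rows: average daily high temperature (°F) per month at one station.
rows = [
    {"month": "Jan", "temp_max": 41},
    {"month": "Feb", "temp_max": 45},
    {"month": "Mar", "temp_max": 52},
]

# A Vega-Lite chart: inline data, a mark type, and encodings that map fields to visual channels.
spec = {
    "$schema": "https://vega.github.io/schema/vega-lite/v5.json",
    "data": {"values": rows},
    "mark": "bar",
    "encoding": {
        # "sort": None serializes to null, which keeps the months in data order.
        "x": {"field": "month", "type": "ordinal", "sort": None},
        "y": {"field": "temp_max", "type": "quantitative", "title": "Avg. daily high (°F)"},
    },
}
print(json.dumps(spec, indent=2))
```

Altair, mentioned above, builds this same kind of JSON specification from Python objects. The hand-written dictionary just makes the declarative structure visible.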

Grading Criteria

Each submission will be graded based on both the analysis process and included visualizations. Here are our grading criteria:

- Poses clear questions applicable to the chosen dataset.

- Appropriate data quality assessment and transformation.

- Sufficient breadth of analysis, exploring multiple questions.

- Sufficient depth of analysis, with appropriate follow-up questions.

- Expressive & effective visualizations crafted to investigate analysis questions.

- Clearly written, understandable annotations that communicate primary insights.

Submission Details

Your completed exploratory analysis report is due Monday 1/25, 11:59pm. As described above, your report should take the form of an Observable notebook. Submit the URL of your notebook (with link sharing enabled!) on the Canvas A2 page.

Note: If you enabled link sharing and/or submitted your link early, be sure to reshare the link from Observable when you are finished making changes to ensure all the changes are visible.

- Assignment 2: Exploratory Data Analysis

- by Kimberly Ouimette



Assignment 2: Exploratory Data Analysis. In groups of 3-4, identify a dataset of interest and perform exploratory analysis in Tableau to understand the structure of the data, investigate hypotheses, and develop preliminary insights. Prepare a PDF or Google Slides report using this template outline: include a set of 10 or more visualizations ...

Introduction. Exploratory Data Analysis (EDA) is the single most important task to conduct at the beginning of every data science project. In essence, it involves thoroughly examining and characterizing your data in order to find its underlying characteristics, possible anomalies, and hidden patterns and relationships.

Assignment. The overall goal of this assignment is to explore the National Emissions Inventory database and see what it say about fine particulate matter pollution in the United states over the 10-year period 1999 2008. You may use any R package you want to support your analysis.

Chapter 4 Exploratory Data Analysis. A rst look at the data. As mentioned in Chapter 1, exploratory data analysis or \EDA" is a critical rst step in analyzing the data from an experiment. Here are the main reasons we use EDA: detection of mistakes checking of assumptions preliminary selection of appropriate models determining relationships ...

What is Exploratory Data Analysis? Exploratory Data Analysis (EDA), also known as Data Exploration, is a step in the Data Analysis Process, where a number of techniques are used to better understand the dataset being used. 'Understanding the dataset' can refer to a number of things including but not limited to…

The goal of this assignment is not to develop a new visualization tool, but to understand better the process of exploring data using off-the-shelf visualization tools. Here is one way to start. Step 1. Pick a domain that you are interested in. Some good possibilities might be the physical properties of chemical elements, the types of stars, or ...

The Data visualisation and Exploratory data analysis chapters of R for Data Science, 2nd ed. might be handy references. The {ggplot2} reference pages. The Factors chapter of R for Data Science, 2nd ed. The {forcats} reference pages. You might need to do further data manipulation before you can plot what you want

3.c BOX PLOT: Box plot is an alternative and more robust way to illustrate a continuous variable. The vertical lines in the box plot have a specific meaning. The centerline in the box is the 50th percentile of the data (median). Variability is represented by a box that is formed by marking the first and third quartile.

Exploratory data analysis (EDA) is used by data scientists to analyze and investigate data sets and summarize their main characteristics, often employing data visualization methods. EDA helps determine how best to manipulate data sources to get the answers you need, making it easier for data scientists to discover patterns, spot anomalies, test ...

Exploratory Data Analysis in Python. Exploratory data analysis (EDA) is a critical initial step in the data science workflow. It involves using Python libraries to inspect, summarize, and visualize data to uncover trends, patterns, and relationships. Here's a breakdown of the key steps in performing EDA with Python: 1. Importing Libraries:

3. Multivariate Exploratory Data Analysis. Multivariate analysis helps to analyze and understand the relationship between two or more variables simultaneously. It helps unveil more complex associations and patterns within the data. For example, it explores the relationship between a person's height, weight, and age.

The data for this assignment are available from the course web site as a single zip file: Data for Peer Assessment [29Mb] The zip file contains two files: PM2.5 Emissions Data (summarySCC_PM25.rds): This file contains a data frame with all of the PM2.5 emissions data for 1999, 2002, 2005, and 2008. For each year, the table contains number of ...

University of Maryland University College DATA 610 - Decision Management Systems Assignment №2 - Exploratory Data Analysis (EDA) using Cognos Analytics Deadline: Last day of week 5, 11:59 pm Eastern Time Submission via LEO. This is an individual assignment. Each student will complete the assignment outlined below and post his/her written results to the appropriate assignment.

Course Project 2 aims to create six plots using base graphic and ggplot2 to answer six given questions. The datasets used for this assignment are from the National Emissions Inventory (NEI), which is recorded every three years.

University of Maryland University College DATA 610 - Decision Management Systems Assignment №2 - Exploratory Data Analysis (EDA) using Cognos Analytics Deadline: Last day of week 5, 11:59 pm Eastern Time Submission via LEO. This is an individual assignment. Each student will complete the assignment outlined below and post his/her written results to the appropriate assignment.

Assignment 2: Exploratory Data Analysis. In this assignment, you will identify a dataset of interest and perform exploratory analysis to better understand the shape & structure of the data, identify data quality issues, investigate initial questions, and develop preliminary insights & hypotheses. Your final submission will take the form of a ...

RPubs - [Course Project 2] Exploratory Data Analysis. [Course Project 2] Exploratory Data Analysis.

Coursera - Exploratory Data Analysis - Assignment 2. by Desiré De Waele. Last updated about 8 years ago.

Assignment 2: Exploratory Data Analysis; by Kimberly Ouimette; Last updated 10 months ago; Hide Comments (-) Share Hide Toolbars

Assignment 2: Exploratory Data Analysis (EDA) using Cognos Analytics. Introduction Nashville is ranked as the 4 th best real estate market in the United States by WalletHub, which analyzed 300 cities across 24 key indicators ("Nashville Real Estate", 2020).

Assignment 2 - Exploratory Analysis Course: MIS 576 - Data Mining for Business Analytics 1. Assignment 2: Exploratory Analysis Lesson Plan Overview and Data Set Details So far, we have discussed about the basic concepts of data mining techniques including various supervised (defined target variable) and unsupervised (no target variable ...