- Open access

- Published: 04 March 2017

Markers’ criteria in assessing English essays: an exploratory study of the higher secondary school certificate (HSCC) in the Punjab province of Pakistan

- Miguel Fernandez (ORCID: orcid.org/0000-0002-0826-3142)

- Athar Munir Siddiqui

Language Testing in Asia, volume 7, Article number: 6 (2017)

Marking of essays is mainly carried out by human raters who bring in their own subjective and idiosyncratic evaluation criteria, which sometimes lead to discrepancy. This discrepancy may in turn raise issues like reliability and fairness. The current research attempts to explore the evaluation criteria of markers on a national level high stakes examination conducted at 12th grade by three examination boards in the South of Pakistan.

Fifteen markers and 30 students participated in the study. Data came from both quantitative and qualitative sources. Quantitative data came in the form of scores on a set of three essays that all fifteen markers in the study marked. To replicate current practice, the markers were not provided with any rating scale. Qualitative data came from semi-structured interviews with the selected markers and from short written commentaries in which the markers justified their scores on the essays.

Many-facet Rasch model analyses present differences in raters’ consistency of scoring and the severity they exercised. Additionally, an analysis of the interviews and the commentaries written by raters justifying the scores they gave showed that there is a great deal of variability in their assessment criteria in terms of grammar, attitude towards mistakes, handwriting, length, creativity and organization and use of cohesive devices.

Conclusions

The study shows a great deal of variability amongst markers, in their actual scores as well as in the criteria they use to assess English essays. Even when they apply the same evaluation criteria, markers differ in the relative weight they give them.

Research has shown that, in contexts where essays are assessed by more than one rater, discrepancies often exist among the different raters because they do not apply scoring criteria consistently (Hamp-Lyons 1989 ; Lee 1998 ; Vann et al. 1991 ; Weir 1993 ). This study examines this issue in Pakistan, a context where composition writing is a standard feature of English assessment systems at the secondary & post-secondary levels, but where no research has been conducted into the criteria raters use in assessing written work. The particular focus of this project is a large-scale high-stakes examination conducted by the Board of Intermediate and Secondary Education (BISE) in the Punjab province of Pakistan. One factor that makes this context particularly interesting is that raters are not provided with formal criteria to guide their assessment and this makes it even more likely that variations in the criteria raters use will exist.

Literature review

Language testers and researchers emphasize the importance of reliability in scoring since scorer reliability is central to test reliability (Hughes 1989; Lumley 2002). Cho (1999, p. 3) believes that “rating discrepancy between raters may cause a very serious impediment to assuring test validation, thereby incurring the mistrust of the language assessment process itself.” Bachman and Alderson (2004), while openly acknowledging the difficulties raters face in assessing essays, consider writing to be one of the most difficult areas of language to assess. They note various factors which complicate the assessment process but believe that serious problems arise because of the subjectivity of judgement involved in rating students’ writing. The subjectivity of human raters, sometimes referred to as the rater factor, is considered the single most important factor affecting the reliability of scoring because raters (1) may come from different professional and linguistic backgrounds (Barkaoui 2010), (2) may have different systematic tendencies such as restriction of range or rater severity/leniency (Fernández Álvarez and Sanz Sainz 2011; Wiseman 2012), (3) may have different attitudes to errors (Huang 2009; Janopoulos 1992; Lunsford and Lunsford 2008; Santos 1988; Vann, Lorenz, and Mayer 1991), (4) may have very different expectations of good writing (Huang 2009; Powers, Fowles, Farnum, and Ramsey 1994; Shaw and Weir 2007; Weigle 2002), (5) may quickly become tired or be inattentive (Fernández Álvarez and Sanz Sainz 2011; Enright and Quinlan 2010) or (6) may have different teaching and testing experience (Barkaoui 2010). Therefore, language testing professionals (e.g., Alderson et al. 1995; Hughes 1989; Weir 2005) suggest constant training of raters and routine double scoring in order to achieve an acceptable level of inter-rater reliability. Research also supports the view that scorers’ reliability can be improved considerably by training the raters (Charney 1984; Cho 1999; Douglas 2010; Huot 1990; Weigle 1994), though training cannot completely eliminate the element of subjectivity (Kondo-Brown 2002; Weir 2005; Wiseman 2012).

In order to achieve an acceptable level of reliability, Weigle ( 2002 ), among others, has outlined detailed procedures to be followed while scoring ESL compositions. Key to these is the provision of a rating scale, rubric or scoring guide, which functions as the yardstick against which the raters judge a piece of writing or an oral performance. The importance of using a rating scale to help raters score consistently is so well-established in the field of language testing that it is taken for granted that one will always be available; the issue then becomes not whether to use a rating scale, but what form this should take (e.g., holistic or analytic - see Weigle 2002 for a discussion of rating scales).

In Pakistan, although essay writing is typically a key component in high-stakes examinations, no explicit criteria for the scoring of essays exist (Haq and Ghani 2009 ). Given this situation, this study examines the scoring criteria raters use and the extent to which these vary across raters.

An overview of the relevant assessment literature shows that a variety of qualitative and quantitative tools, such as introspective and retrospective think-aloud protocols (e.g., Cumming et al. 2001, 2002; Erdosy 2004), group or individual interviews (e.g., Erdosy 2004), written score explanations (e.g., Barkaoui 2010; Milanovic et al. 1996; Rinnert and Kobayashi 2001; Siddiqui 2016), questionnaires (e.g., Shi 2001) and panel discussions (e.g., Kuiken and Vedder 2014), have been used by different researchers to answer questions like Research Questions 1 and 2 outlined in the next section. Think Aloud Protocols (TAPs) or verbal protocols, for instance, which require the participants to verbalise their thoughts while they are actually rating, are the most widely used method for investigating the rating process of essays in English as a first language (Huot 1993; Wolfe et al. 1998) as well as in English as a second language contexts (Cumming et al. 2001; Lumley 2005). They have three major weaknesses, namely incompleteness (DeRemer 1998; Smith 2000), possible alteration of the rating due to simultaneous verbalization and rating (Barkaoui 2011; Lumley 2005) and the difficulty of administering TAPs (Barkaoui 2011; Siddiqui 2016). Keeping in mind the limitations inherent in each method, it was therefore decided to use two methods together (written commentaries and interviews) to counterbalance the weaknesses of any single method. To answer the third research question, Rasch analyses were carried out.

Research questions

Informed by the analysis of the literature and context above, the following research questions were proposed:

1. What criteria can be used in scoring essays?

2. What criteria do other raters use in scoring essays?

3. How consistent are raters in the criteria they apply?

The context for the study is the Higher Secondary School Certificate (HSCC) conducted by the BISE in the Punjab province of Pakistan. Out of a total of nine BISEs in the Punjab, three are responsible for conducting examinations in South Punjab (SP). These Boards, though independent, are closely interconnected at the provincial level and every year thousands of students from private and public schools/colleges take the exams administered by these Boards. The compulsory English paper, which carries 18% of the total scores, has many essay-type questions.

Participants

A sample of raters working in the three different Boards in SP was selected for this study. Given the large number of raters working in the region, five raters from each Board were chosen, giving a total of 15 participating raters. All the raters had at least 10 years of experience as a rater on the aforementioned BISEs. Their ages ranged from 40 to 52, while nine were male and six female.

Moreover, 30 students studying at a government college in the jurisdiction of SP were also part of the study. All of them were male pre-engineering students preparing to take the final examination conducted by BISE. They were assigned five essays to prepare for their send-up test (see Footnote 1), and the test contained four essay questions from which the students had to choose one. The essays were written under examination conditions. The essay titles were:

My first day at College.

Science, a mixed blessing.

My hero in history.

The place of women in our society.

Of the 30 students, eight attempted ‘My first day at College’ whereas nine each chose ‘Science, a mixed blessing’ and ‘My hero in history’. The remaining four students did not attempt this question. Thus, a total of 26 essays were produced. Out of this total one essay was randomly selected on each of the three topics. These three essays were anonymised, photocopied and given to the raters participating in the study.

Data collection

Scoring of essays.

The 15 raters in this study were given the same three essays to score and asked to score them as they would do in official scoring centres (including giving a score to each paper).

Written commentaries

Each rater was also asked to submit a short written commentary in which they justified the score they awarded to each script. The obvious advantages associated with this method are economy of time and ease of data collection. Unlike interviews and TAPs, short commentaries are readily available in written form and lend themselves to quick analysis, saving the researcher’s time and energy. Here is an example of one such commentary:

The candidate has attempted the given topic in a somewhat appropriate way. But there occurred some spelling mistakes and the candidate wrote over the words. The candidate has not been successful in fulfilling the required number of words as usually maintained by Intermediate student.

Handwriting is plausible and pages are well margined. The candidate does not make use of capital and small letters in writing main heading /title. Overall impression on my part is that the attempt is just average.

After the scoring was completed, each rater was interviewed in order to examine the criteria they used in assessing the scripts (see Appendix 1 for interview questions). The interviews were semi-structured. According to Wallace (1998), these are the most popular form of interviews. Unlike TAPs, which put additional load on the raters while they are simultaneously verbalising their thoughts and rating essays, interviews for the current study gave the participants ample opportunity to talk about their marking criteria in a relaxed atmosphere. Moreover, compared to other methods such as observation or TAPs, interviews are easy to administer, since raters who might refuse to be observed at work or decline to verbalise their thoughts while evaluating essays agreed to be interviewed. This readiness of the markers to be interviewed was not only in consonance with the ethical framework charted out for the study but was also advantageous, since the willing raters gave a truer and fuller account of their rating practices. This approach allowed the researchers to address a set of themes that they wanted to cover but also provided the flexibility to discuss any additional issues of interest that emerged during the conversation. Prior to the interviews, the commentaries the raters had written were reviewed and used to inform the direction of the discussion.

Data analysis

Many-facet Rasch model (MFRM) analyses were carried out using FACETS (Linacre and Wright 1993) to estimate raters’ performance (in terms of intra- and inter-rater reliability) and essay difficulty. Because raters vary in the severity of their scoring, MFRM analyses help identify particular elements within one facet that are problematic, such as a rater who is not consistent in the way he or she scores (Linacre 1989; Lynch and McNamara 1998; Bond and Fox 2001).
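
For readers unfamiliar with the model, the general rating-scale form of the many-facet Rasch model (Linacre 1989) is sketched below. This is the standard textbook formulation, not necessarily the exact specification run in FACETS for this study, which the text does not report; reading the item facet as the essay and the judge facet as the rater is an assumption made here for illustration.

```latex
% General many-facet Rasch model (rating-scale form).
% P_{nijk}: probability that examinee n receives category k on item i from rater j
% B_n: ability of examinee n        D_i: difficulty of item (essay) i
% C_j: severity of rater j          F_k: difficulty of step k relative to step k-1
\log\!\left(\frac{P_{nijk}}{P_{nij(k-1)}}\right) = B_n - D_i - C_j - F_k
```

All terms are expressed in logits, which is why rater severity and essay difficulty can be placed on the same scale in a single variable map.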

Results and findings

Consistency of scoring.

Table 1 summarizes the scores (out of a maximum of 15) given to each of the three essays by the 15 raters. These figures highlight variability in the assessment of these scripts. On essay 1, the scores ranged from 5 to 12, on essay 2 from 4 to 10 and on essay 3 from 6 to 10. Raters’ aggregate scores for the three essays ranged from 17 to 31.

Figure 1 presents the variable map generated by FACETS, in which the different variables are represented. The map allows us to see all the facets of the analysis at one time and to draw comparisons among them. It also summarizes key information about each facet, highlighting results from more detailed sections of the FACETS output.

FACETS Variable Map

The first column in the map shows the logit scale. Although this scale can take many values, the great majority of cases fall within the range of ±5 logits. In this case, it goes from +2 to -4. The location of point 0 on the scale is arbitrary, although it is usually placed at the average difficulty of the items. The second column (labeled “Raters”) compares the raters in terms of the level of severity or leniency that each rater exercised when rating the essays. Because more than one rater rated each essay, raters’ tendencies to rate responses higher or lower on average could be estimated. We refer to these as rater severity measures. More severe raters appear higher in the column, while more lenient raters appear lower. When we examine the map, we see that the harshest raters (Raters 1 and 11) had a severity measure of about 1.4 logits, while the most lenient raters (Raters 3, 10 and 13) had a severity measure of about -4.0 logits. The third column (labeled “Essays”) compares the three essays in terms of their relative difficulties. Essays appearing higher in the column were more difficult to receive high ratings on than essays appearing lower in the column. The most difficult essay topic was “My hero in history,” while the easiest was “Science, a mixed blessing.”

Raters’ severity

One of the central questions is whether raters differ in the severity with which they rate and how consistent they are in the criteria they apply. To answer these questions we need to examine Fig. 2 , where we can find the raters measurement report.

Raters Measurement Report (arranged by mN)

The logit measures of rater severity (in log-odds units) that were included in the map are shown under the column labeled Measure. Each rater has a severity measure with a standard error associated with it, shown in the column labeled Model S.E., indicating the precision of the severity measure. The rater severity measures range from -4.59 logits (for the most lenient rater, Rater 10) to 1.40 logits (for the most severe raters, Raters 1 and 11). The spread of the severity measures is about 6 logits. Comparing the fair averages of the most severe and most lenient raters, we would conclude that, on average, Rater 10 tended to give ratings that were 3.16 raw score points higher than Raters 1 and 11 (i.e., 8.90 − 5.74 = 3.16).
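
The arithmetic behind the two comparisons in this paragraph can be checked with a few lines of Python. This is only a worked illustration using the four values quoted in the text; the function names are hypothetical and are not part of the FACETS output.

```python
# Worked check of the severity spread and fair-average gap quoted in the text.
# The function names are illustrative; only the four numbers come from the article.

def severity_spread(most_severe_logit, most_lenient_logit):
    """Distance in logits between the harshest and the most lenient rater."""
    return round(most_severe_logit - most_lenient_logit, 2)


def fair_average_gap(lenient_fair_avg, severe_fair_avg):
    """Difference in raw score points between two raters' fair averages."""
    return round(lenient_fair_avg - severe_fair_avg, 2)


# Rater 10 (most lenient): -4.59 logits, fair average 8.90
# Raters 1 and 11 (most severe): 1.40 logits, fair average 5.74
spread = severity_spread(1.40, -4.59)   # 5.99 logits, i.e. "about 6 logits"
gap = fair_average_gap(8.90, 5.74)      # 3.16 raw score points
print(spread, gap)
```

On a 15-point scale, a gap of 3.16 points corresponds to roughly a fifth of the available marks.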

The reported reliability is the Reliability of the Rater Separation. This index provides information about how well one can differentiate among the raters in terms of their levels of severity. It is the Rasch equivalent of a KR-20 or a Cronbach Alpha. It is not a measure of inter-rater reliability. Rather, rater separation reliability is a measure of how different the raters are. In most situations, the most desirable result is to have a reliability of rater separation close to zero. This result would suggest that the raters were interchangeable, exercising very similar levels of severity. In our example, the high degree of rater separation (.66) suggests that the raters included in this analysis are well differentiated in terms of the levels of severity they exercised. There is evidence here of unwanted variation in rater severity that can affect examinee scores.
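
As a rough sketch of how a separation reliability of this kind is usually obtained, the index can be computed as the share of observed variance in the rater measures that is not attributable to measurement error. The function below follows that general definition; the choice of population variance and the example values are assumptions for illustration, not figures from this study, and FACETS may differ in detail.

```python
from statistics import mean, pvariance


def separation_reliability(measures, standard_errors):
    """Share of observed variance in the measures that is not measurement error.

    measures: rater severity measures in logits (hypothetical input)
    standard_errors: model standard error of each measure (hypothetical input)
    """
    observed_var = pvariance(measures)            # variance of the severity measures
    if observed_var == 0:
        return 0.0                                # identical raters: nothing to separate
    error_var = mean([se ** 2 for se in standard_errors])   # mean squared standard error
    true_var = max(observed_var - error_var, 0.0)            # error-adjusted variance
    return true_var / observed_var


# Invented example: three raters at -1, 0 and 1 logits, each with S.E. 0.5
print(separation_reliability([-1.0, 0.0, 1.0], [0.5, 0.5, 0.5]))
```

A value near zero would indicate that the raters are interchangeable in severity, which is the desirable outcome described above.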

Rater consistency

In order to see if the raters scored consistently, we would examine the rater fit statistics. FACETS produces mean-square fit statistics for each rater. These are shown on Fig. 2 under the columns labeled Infit and Outfit. Rater Infit is an estimate of the consistency with which a rater scores the essays. We can also think about rater infit as a measure of the rater’s ability to be internally consistent in his/her scoring. FACETS reports a mean-square infit statistic (MnSq) and a standardized infit statistic (ZStd) for each rater. Mean-square infit has an expected value of 1. Values greater than 1 signal more variation (i.e., unexplained, unmodeled variation) in the rater’s ratings than expected. Values smaller than 1 signal less variation than expected in the rater’s ratings. Generally, infit greater than 1 is more of a problem than infit less than 1, since highly surprising or unexpected ratings that do not fit with the other ratings tend to be more difficult to explain and defend than overly predictable ratings.
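
The mean-square statistics described above can be sketched as follows, using the standard Rasch definitions: outfit is the plain average of squared standardized residuals, while infit weights each squared residual by the model variance of that rating. The function and the example numbers are hypothetical; in practice the expected scores and variances come from the fitted model.

```python
def fit_mean_squares(observed, expected, variances):
    """Return (infit, outfit) mean squares for one rater.

    observed: ratings the rater actually gave
    expected: model-expected ratings for the same essays
    variances: model variance of each rating
    All three inputs are hypothetical here; FACETS derives them from the fitted model.
    """
    squared_residuals = [(x - e) ** 2 for x, e in zip(observed, expected)]
    # Infit: information-weighted, so ratings with larger model variance count more
    infit = sum(squared_residuals) / sum(variances)
    # Outfit: unweighted mean of squared standardized residuals
    outfit = sum(r / w for r, w in zip(squared_residuals, variances)) / len(squared_residuals)
    return infit, outfit


# Invented ratings for three essays
infit, outfit = fit_mean_squares([6, 8, 7], [6.5, 7.0, 7.5], [1.0, 2.0, 1.0])
print(infit, outfit)
```

Both statistics have an expected value of 1, so values well above 1 flag ratings that are more erratic than the model predicts.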

Data represented in Fig. 2 indicate that Rater 14 shows the least consistency in scoring, with an infit MnSq of 2.30, followed by Raters 15 (MnSq of 1.85), 5 (MnSq of 1.27), 12 (MnSq of 1.27) and 1 (MnSq of 1.17).

Raters’ scoring criteria

An analysis of the interviews and the commentaries written by raters justifying the scores they gave showed that there is a great deal of variability in their assessment criteria. Even where they assigned the same score to an essay, the rationale for doing so was often quite different.

Although all raters stressed the importance of grammatical accuracy, they disagreed on the relative weight that grammar should be given. Some raters were very particular about grammar and allocated nearly 50% of the total scores to it. For example, one respondent very ardently noted: “First thing is grammar. First of all we check whether the student has followed grammatical rules. If there are spelling, construction and grammatical mistakes, then we deduct 50% of the scores”. Others were less concerned about grammar and gave good scores to an essay, as long as the grammatical mistakes did not interfere with meaning.

Attitude towards mistakes

Raters also varied in how they treated repeated mistakes of form, such as spelling or grammar. When asked how they react if a student repeatedly misspells a word, some raters said that they counted it as a single mistake, while others said they counted it as a separate mistake each time it occurred. For others, it depended on how serious they felt the mistake was. For example, one respondent explained “generally I will count it as one but if there is a mistake in verb tense - for example if he is using present indefinite tense incorrectly again and again - I will deduct scores each time”.

For some raters, quotations and memorized extracts from literature were “a vital means to support a viewpoint”; such raters thus gave more credit to an essay which had quotations. For example, one respondent explained the following:

An essay… must have 5 to 6 quotations to support the arguments… Well, if the student is… able to convince without quotations I give him credit… But how this can happen? You see references are life line of your arguments.

Even those raters who were looking for quotations in the essays were not unanimous as to how many were required. Some thought there was no fixed number while others wanted at least six to seven quotations in an essay. Still others thought that they were not even necessary and an essay could be convincing without having quotations. One rater noted:

I reserve 50% scores for content and 50% for grammar and spellings. References and handwriting do not matter much and I give generous credit even if the essay is written in a very bad hand and has no quotations.

Handwriting

Some raters believed that one of the essential qualities of a well-developed essay is clear and legible handwriting - the essay should be pleasing to the eye. Raters from this group said that they gave 2–3 bonus points to an essay that is written in a neat hand. One rater, while rationalizing the scores she deducted from an essay, observed that “the candidate overwrote the words. Besides there are so many cuttings and the handwriting is also not plausible”. Other raters, in contrast, did not consider handwriting to be an important criterion in the assessment of the essays.

Though raters agreed that a well-written essay should meet the prescribed word limit, they varied in the way they assessed this criterion. Some raters said that since they had been scoring for a very long time their experience helped them judge whether the essay had the required number of words or not. Others said that if the essay had all the components it automatically had the required length. Still others said that the number of pages written gave them a clue to the length of essay - as one rater noted, “It’s quite obvious. A 300-word essay will be normally 3 pages of the answer sheet.”

Almost all raters looked for creativity in an essay and gave credit to original writing in line with the Boards’ instruction to give generous credit to a creative attempt. But they noted, with regret, that creativity at the intermediate level is virtually non-existent as the predictability of essay titles (similar titles were set each year) encouraged the candidates to memorize essays and reproduce these in the examination.

Organization and cohesive devices

Markers did not seem to give great weight to organization and cohesive devices in an essay at the intermediate level since they believed that 99% of the essays written in the examination were memorized ones and had been pre-organized. Only one rater mentioned it as a scoring criterion. In the discussion about the reproduction of memorised essays, the rater observed the following:

Some essays produced by students have superior organization as they are written by an expert. I always make it a point to give more credit to such essay, as the student must be credited with the choice of memorizing a good essay.

Raters’ awareness of inter-rater variability

The findings presented above show that there was variability in raters’ scoring and also in the criteria they used in assessing essays. Additionally, the study also showed that raters were aware of these issues and felt the discrepancies were partly the result of the lack of proper written assessment guidelines. One rater noted that:

Well, you know human beings are not machines… and every individual rater has different experiences, backgrounds and of course he has… quite different expectations. And they may look for different things in essay. Some may want good handwriting and others may look for some strong arguments.

The raters, then, were not at all surprised by the possibility of limited inter-rater reliability in the scoring of essays.

The study shows that there exists a great deal of variability amongst raters, both in their actual scores and in the criteria they use to assess English essays. Even when they apply the same criteria, raters vary in the relative importance they give them. This confirms earlier studies (e.g., Bridgeman and Carlson 1983; Lee 1998; Weir 1993) which suggest that different raters have different preferences. This research also lends support to earlier work (Connors and Lunsford 1988; James 1977; Williams 1981) which noted that different raters react differently to mistakes. Given the high-stakes nature of the examination that the raters in this study score, the variability highlighted here is very problematic and suggests that there is much room for the introduction of systems, including training, which would allow the assessment of BISE English essays to be more reliable.

One particular issue to emerge here was raters’ preference for memorized excerpts in the essays. This is not an issue that appears elsewhere in the literature, but its relevance is noteworthy. Firstly, the majority of BISE raters have a Master’s in English literature. These teachers thus believe that an essay will be improved if it uses literary quotations to support its viewpoint. Secondly, in Eastern culture, age and wisdom command respect. It is thus generally believed that an argument will be stronger and more convincing if it quotes some celebrated author. Religious scholars and authors often quote from the Holy Quran and verses from famous poets to impress the audience in Pakistan.

The issue that raters raised about memorised essays here is also worth highlighting. If it is indeed the case that essays produced under examination conditions have been written in advance (not necessarily by the students) and memorised, then this brings into question the validity of the examination itself; it is not actually assessing how well the students can write in English but other qualities such as their memories.

The study has some limitations. Since only experienced teachers from government institutes participated in the study these results cannot be generalized to novice raters or raters associated with private institutes. Moreover, the sample size was limited to 15 raters and a set of three essays. Additional research with a larger sample is needed to better understand how raters with varied teaching and scoring experience and from different socio-cultural backgrounds assess English essay writing.

The study has highlighted significant variability amongst raters working at the different Boards of Intermediate and Secondary Education in one region of Pakistan. This small scale research project serves two purposes. Firstly, by pointing out the great differences in the scores awarded by different examiners to the same essays, it sensitizes different stakeholders to the gravity of the situation and by the same token urges for more research into the phenomenon. Secondly, it makes a case for using a rating scale, training the raters and taking other appropriate measures to achieve an acceptable level of inter-rater reliability in the scoring of English essays on high-stakes examinations.

The research has implications for Board officials, policy makers and examiners. Many raters, even though they have been working for more than a decade, have difficulty determining what exactly they should look for in an essay at the intermediate level. It is the goal of this study to provide some guidance in the scoring process and to set the principles for future research studies on a larger scale.

Footnote 1: Send-up tests are preparatory examinations conducted by the college(s) locally just before the students take the final examination conducted by the BISE. These tests are modelled on the Boards’ examination.

Alderson, J. C., Clapham, C., & Wall, D. (1995). Language test construction and evaluation . Cambridge: Cambridge University Press.

Bachman, F., & Alderson, J. C. (2004). Statistical analyses for language assessment . Cambridge: Cambridge University Press.

Barkaoui, K. (2010). Do ESL Essay Raters’ Evaluation Criteria Change With Experience? A Mixed-Methods, Cross-Sectional Study. TESOL Quarterly, 44 (1), 31–57.

Barkaoui, K. (2011). Think-aloud protocols in research on essay rating: An empirical study of their veridicality and reactivity. Language Testing, 28 (1), 51–75.

Bond, T. G., & Fox, C. M. (2001). Applying the Rasch Model. Fundamental Measurement in the Human Sciences . Mahwah: Lawrence Erlbaum Associates, Inc.

Bridgeman, B., & Carlson, S. (1983). Survey of academic writing tasks required of graduate and undergraduate foreign students . Princeton: Educational Testing Service.

Charney, D. (1984). The validity of using holistic scoring to evaluate writing: A critical overview. Research in the Teaching of English, 18 (1), 65–87.

Cho, D. (1999). A study on ESL writing assessment: Intra-rater reliability of ESL compositions. Melbourne Papers in Language Testing, 8 (1), 1–24.

Connors, R., & Lunsford, A. (1988). Frequency of formal errors in current college writing or Ma and Pa Kettle do research. College Composition and Communication, 39 , 395–409.

Cumming, A., Kantor, R., & Powers, D. (2001). Scoring TOEFL essays and TOEFL 2000 prototype writing tasks: An investigation into raters’ decision making and development of a preliminary analytic framework (TOEFL Monograph Series N 22). Princeton: Educational Testing Service.

Cumming, A., Kantor, R., & Powers, D. E. (2002). Decision-making while rating ESL/EFL writing tasks: A descriptive framework. Modern Language Journal, 86 (1), 67–96.

DeRemer, M. (1998). Writing assessment: Raters’ elaboration of the rating task. Assessing Writing, 5 , 7–29.

Douglas, D. (2010). Understanding language testing . New York: Routledge.

Enright, M. K., & Quinlan, T. (2010). Complementing human judgment of essays written by English language learners with e-rater® scoring. Language Testing, 27 (3), 317–334.

Erdosy, M. U. (2004). Exploring variability in judging writing ability in second language: A study of four experienced raters of ESL compositions (TOEFL Research Report RR 03-17). Princeton: Educational Testing Service.

Fernández Álvarez, M., & Sanz Sainz, I. (2011). An Overview of Inter-rater Reliability, Severity and Consistency in Scoring Compositions using FACETS. In M. Gilda & M. Almitra (Eds.), Philological Research (pp. 103–116). Athens: Athens Institute for Education and Research.

Hamp-Lyons, L. (1989). Raters respond to rhetoric in writing. In H. W. Dechert & M. Raupach (Eds.), Interlingual Process (pp. 229–244). Tübingen: Narr.

Haq, N., & Ghani, M. (2009). Bias in grading: A truth that everybody knows but nobody talks about (Vol. 11, pp. 51–89). English Language and Literary Forum. Shah Abdul Latif University of Sindh: Pakistan.

Huang, J. (2009). Factors affecting the assessment of ESL students’ writing. International Journal of Applied Educational Studies, 5 (1), 1–17.

Hughes, A. (1989). Testing for language teachers . Cambridge: Cambridge University Press.

Huot, B. (1990). The literature of direct writing assessment: Major concerns and prevailing trends. Review of Educational Research, 60 , 237–263.

Huot, B. A. (1993). The influence of holistic scoring procedures on reading and rating student essays. In M. M. Williamson & B. A. Huot (Eds.), Validating holistic scoring for writing assessment: Theoretical and empirical foundations (pp. 206–236). Creskill: Hampton Press.

James, C. (1977). Judgments of error gravities. English Language Teaching Journal, 2 , 116–124.

Janopoulos, M. (1992). University faculty tolerance of NS and NNS writing errors: A comparison. Journal of Second Language Writing, 1 (2), 109–121.

Kondo-Brown, K. (2002). A FACETS analysis of rater bias in measuring Japanese second language writing performance. Language Testing, 19 (1), 3–31.

Kuiken, F., & Vedder, I. (2014). Rating written performance: What do raters do and why? Language Testing, 31 (3), 329–348.

Lee, Y. (1998). An investigation into Korean markers’ reliability for English writing assessment. English Teaching, 53 (1), 179–200.

Linacre, J. M. (1989). Many-facet Rasch Measurement. Chicago: MESA Press.

Linacre, J. M., & Wright, B. D. (1993). FACETS: Many-facet Rasch analysis (Version 3.71.4). Chicago: MESA Press.

Lumley, T. (2002). Assessment criteria in a large-scale writing test: What do they really mean to the raters? Language Testing, 19 (3), 246–276.

Lumley, T. (2005). Assessing second language writing: The rater’s perspective. New York: Peter Lang.

Lunsford, A., & Lunsford, K. (2008). ‘Mistakes are a fact of life’: A national comparative study. College Composition and Communication, 59, 781–806.

Lynch, T., & McNamara, T. F. (1998). Using G-theory and many-facet Rasch measurement in the development of performance assessments of the ESL speaking skills of immigrants. Language Testing, 15 (2), 158–180.

Milanovic, M., Saville, N., & Shuhong, S. (1996). A study of the decision-making behavior of composition markers. In M. Milanovic & N. Saville (Eds.), Performance testing, cognition and assessment: Selected papers from the 15th Language Testing Colloquium (pp. 92–114). Cambridge: Cambridge University Press.

Powers, D. E., Fowles, M. E., Farnum, M., & Ramsey, P. (1994). Will they think less of my handwritten essay if others word process theirs? Effects on essay scores of intermingling handwritten and word-processed essays. Journal of Educational Measurement, 31 (3), 220–233.

Rinnert, C., & Kobayashi, H. (2001). Differing perceptions of EFL writing among readers in Japan. The Modern Language Journal, 85, 189–209.

Santos, T. (1988). Professors’ reactions to the academic writing of nonnative-speaking students. TESOL Quarterly, 22, 69–90.

Shaw, S. D., & Weir, C. J. (2007). Examining writing research and practice in assessing second language writing. Cambridge: Cambridge University Press.

Shi, L. (2001). Native and non-native-speaking EFL teachers’ evaluation of Chinese students’ English writing. Language Testing, 18 (3), 303–325.

Siddiqui, M. A. (2016). An Evaluation of the Testing of English at Intermediate Level with reference to Board of Intermediate and Secondary Education in the Punjab. Unpublished doctoral thesis. Multan: Bahauddin Zakaria University Multan.

Smith, D. (2000). Rater judgments in the direct assessment of competency-based second language writing ability. In G. Brindley (Ed.), Studies in immigrant English language assessment (Vol. 1, pp. 159–189). Sydney: Macquarie University.

Vann, R. J., Lorenz, F. O., & Mayer, D. M. (1991). Error gravity: Faculty response to errors in the written discourse of nonnative speakers of English. In L. Hamp-Lyons (Ed.), Assessing second language writing in academic contexts (pp. 181–195). Norwood: Ablex Publishing Corporation.

Wallace, M. J. (1998). Action research for language teachers. Cambridge: Cambridge University Press.

Weigle, S. C. (1994). Effects of training on raters of ESL compositions. Language Testing, 11 (2), 197–223.

Weigle, S. C. (2002). Assessing writing. Cambridge: Cambridge University Press.

Weir, C. J. (1993). Understanding and developing language tests. New York: Prentice Hall.

Weir, C. J. (2005). Language testing and validation: An evidence-based approach. New York: Palgrave Macmillan.

Williams, J. (1981). The phenomenology of errors. College Composition and Communication, 32, 152–168.

Wiseman, C. S. (2012). Rater effects: Ego engagement in rater decision-making. Assessing Writing, 17, 150–173.

Wolfe, E. W., Kao, C. W., & Ranney, M. (1998). Cognitive differences in proficient and non-proficient essay scorers. Written Communication, 15, 465–492.

Acknowledgements

Authors’ contributions

The main author conducted the study, and the second author contributed to the data analysis and the review of the literature. Both authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Author information

Authors and affiliations.

Department of Early Childhood-Primary and Bilingual Education, Chicago State University, 9501 South King Drive, ED 215, Chicago, IL, 60628-1598, USA

Miguel Fernandez & Athar Munir Siddiqui

Corresponding author

Correspondence to Miguel Fernandez.

Interview Schedule for Raters

How long have you been working as a sub-examiner with the Board of Intermediate and Secondary Education?

How many Boards/centres have you worked at as a sub-examiner?

Have you ever had any opportunity to work as a paper setter, head examiner or random checker, or in any other capacity, with any Examination Board?

Do raters receive any instructions regarding scoring of essays, prior to the scoring or during the scoring?

If yes, what kind of instructions are usually given?

How far are these instructions useful in scoring especially essays?

If no, what criteria do raters use in evaluating essays?

Do the Board(s) arrange any training for the sub-examiner or head examiners?

What happens on the first day of scoring?

In your opinion what qualities should a well written essay at Intermediate level have?

What do you usually look for when you are scoring essays at the Intermediate level?

Do you deduct scores for grammar and spelling mistakes?

How do you count spelling mistakes? If a student misspells a word three times will you count it as one mistake or three?

Do you distinguish between pen mistakes and serious mistakes? If yes, how do you do it?

Do you credit or discredit on the basis of handwriting?

If an essay is well written and you are fully satisfied, what maximum score will you award it?

How much time do you usually spend in scoring an essay?

Do you read minutely or make quick judgments by the overall impression the essay has on you?

Rights and permissions

Open Access. This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article.

Fernandez, M., Siddiqui, A.M. Markers’ criteria in assessing English essays: an exploratory study of the higher secondary school certificate (HSCC) in the Punjab province of Pakistan. Lang Test Asia 7, 6 (2017). https://doi.org/10.1186/s40468-017-0037-0

Received: 29 December 2016

Accepted: 28 February 2017

Published: 04 March 2017

DOI: https://doi.org/10.1186/s40468-017-0037-0

- Evaluation criteria

- English essays

- High stakes exams

Cambridge C2 Proficiency (CPE): How Your Writing is Marked

Introduction

When I prepare my students for their Cambridge exams at any level, one of the biggest mysteries for them is how the writing exam is marked. Of course, the higher the level, the more detailed the criteria and the higher the expectations become, not only in terms of language but also in other aspects of good writing, such as cohesion and coherence, appropriate register and following the conventions of the type of text you write.

That’s why I’m going to show you everything you need to know in order to not only understand your own writing better, but also to get a grasp on what Cambridge examiners look for and expect from you when marking writing in C2 Proficiency.

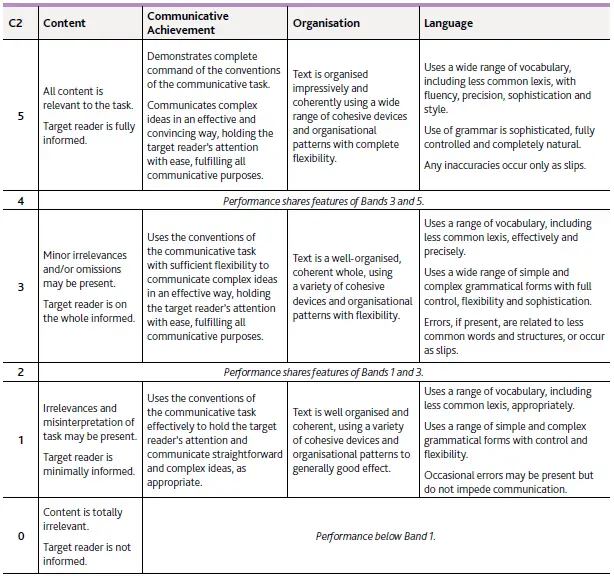

In a nutshell, there are four criteria your texts are assessed on:

Content

Communicative Achievement

Organisation

Language

Each of these criteria is scored on a scale from 0 to 5, so you can score a maximum of 20 marks per text. As you have to complete two tasks in the official exam, the total possible score is 40.
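To make the arithmetic concrete, here is a minimal Python sketch of the scoring rule described above: four criteria, each marked from 0 to 5, added up per text and then across the two tasks. The class and function names (`TaskMarks`, `writing_total`) are my own labels for illustration and not anything Cambridge publishes, and the sketch only covers raw marks, not how they are later converted to a final exam result.

```python
from dataclasses import dataclass

# The four criteria named in this article; each is marked from 0 to 5.
CRITERIA = ("content", "communicative_achievement", "organisation", "language")
MAX_BAND = 5


@dataclass(frozen=True)
class TaskMarks:
    """Raw marks for one written text (hypothetical helper, for illustration only)."""
    content: int
    communicative_achievement: int
    organisation: int
    language: int

    def __post_init__(self):
        # Reject any mark outside the 0-5 scale.
        for name in CRITERIA:
            band = getattr(self, name)
            if not 0 <= band <= MAX_BAND:
                raise ValueError(f"{name} must be between 0 and {MAX_BAND}, got {band}")

    def total(self) -> int:
        # Four criteria at up to 5 marks each give a maximum of 20 per text.
        return sum(getattr(self, name) for name in CRITERIA)


def writing_total(part1: TaskMarks, part2: TaskMarks) -> int:
    """Add the marks for both tasks: a maximum of 40 for the Writing paper."""
    return part1.total() + part2.total()


if __name__ == "__main__":
    essay = TaskMarks(content=4, communicative_achievement=3, organisation=4, language=3)
    report = TaskMarks(content=5, communicative_achievement=4, organisation=4, language=4)
    print(essay.total(), report.total(), writing_total(essay, report))
```

Keeping the marks per criterion, rather than only the total, makes it easy to see which of the four scales is pulling a text down.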

In the following chapter, we are going to look at these criteria in detail so that, once you’ve finished this article, you will be an absolute expert in C2 Proficiency writing.

The four marking scales in C2 Proficiency

As I mentioned in the previous section, there are four marking criteria which are scored on a scale from 0-5. To score zero marks in any of them is basically impossible as you pretty much get one mark for simply handing in something that, at least, talks about the topic of the task.

Above you can see the official marking criteria showing what is expected of you in each criterion and at each band from 0 to 5.

In the following sections, let’s have a look at each criterion, what the different descriptors actually mean and what you can do to score the highest possible marks.

The first marking scale looks at two things: how relevant the content of your text is in the context of the task and if the target reader is informed.

In other words, you need to make sure that everything you write addresses the different topic points in the task (relevant) and that you develop your ideas appropriately (informed reader) depending on the requirements of the task. For example, if the task asks you to state XYZ, you don’t have to expand on this point as much as you would if it wants you to describe or explain something.

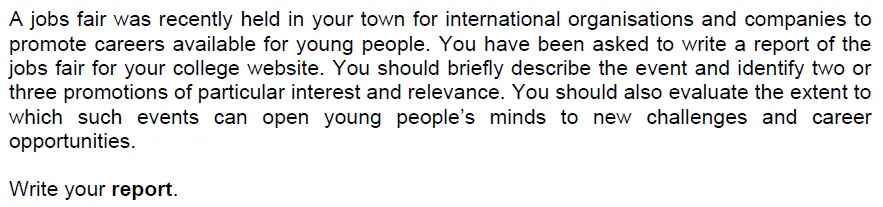

Above, you can see a report writing task. In order to ace the content criterion you should always ask yourself what the topic of the task is and what exactly you need to include in your text.

In this example, the topic is a jobs fair and you need to address the following points:

- Briefly describe the event

- Identify two or three promotions of particular interest and relevance

- Evaluate the extent to which such events can open young people’s minds to new challenges and career opportunities

I’ve highlighted the most important words for you so you get an idea of how detailed you need to be about each individual one. While the first topic point can be kept rather short the other two require a bit more development.

So, if you want to score five marks, you need to address all the topic points in the task in as much detail as the operators dictate (briefly describe, identify…of particular interest and relevance, evaluate).

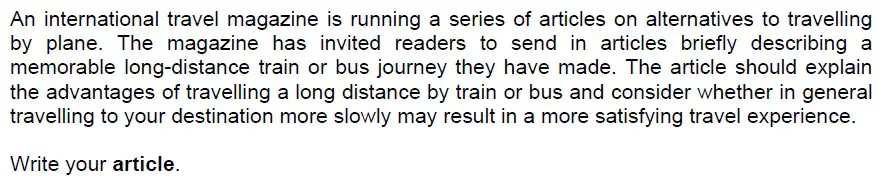

The next scale, called Communicative Achievement, examines three different things: how well you follow the conventions of the task, if you hold the reader’s attention and how well you communicate your straightforward and more complex ideas.

Conventions include things like genre and typical layout (e.g. letter vs. report), function and register (formal vs. informal). For example, in an essay (genre) you start with an introduction and finish with a conclusion (layout), you try to look at different sides of an argument and give your own opinion (function) and use a formal tone (register). This varies from one text type to the other and you need to familiarise yourself with the specifics of each of them.

Holding the reader’s attention looks at your ability to convey meaning in a way that enables the reader to still follow your thoughts easily without getting distracted because, for example, they have to read very carefully just to understand what you are trying to say.

When it comes to your ideas, you should be able to communicate straightforward ones which don’t demand higher rhetorical skills as well as complex ones. Complex ideas are not as concrete in nature and you have to use higher-level language and organisational skills to express them. For example, describing something is rather straightforward compared to evaluating something, which is a more complex idea.

Looking at this article task, we have to think about the typical conventions of an article (layout, engaging language, describing and explaining something, neutral to informal tone, etc.), as well as which topic points are rather straightforward (briefly describing a journey) and which ones are more complex to address (explain advantages; Is travelling more slowly more satisfying?). Feel free to have a look at what I did to express all these ideas.

The third marking scale examines how well you organise your text and connect your ideas by taking into account the paragraph structure you choose, linking words and other organisational patterns.

All the different types of text in C2 Proficiency need to be organised in paragraphs. How you do that depends on your preference as well as the conventions of the task. The most important thing to remember is to use a structure that makes sense and organises the text in a logical manner.

Within and across paragraphs, there is a wide variety of language and patterns we can employ to make our text more coherent and cohesive. The most common (and most basic) device is linking words which connect ideas in a very direct way, for example and, but, because, so, first of all, to sum up, etc. You should always include them instead of just using commas or full stops as these separate ideas rather than connect them.

Taking it up to the next level, we have other more advanced cohesive devices. These include less common linking expressions (e.g. moreover, furthermore, as a result, it may appear, etc.) but also other devices. Into this category fall grammatical structures like pronouns to refer back to a previously mentioned idea, substitution (There are three people in the picture. The one on the left…), ellipsis (My first car is yellow while the second is green) or repetition.

Last but not least, you should also include other organisational patterns to organise your writing, such as arranging your paragraphs and sentences in a logical order, parallelism (Give a man a fish, and you feed him for a day. Teach a man to fish, and you feed him for a lifetime.) or rhetorical questions.

This criterion is probably the most obvious, but we are going to look at it in as much detail as the other ones. Language examines the range and control of the vocabulary and grammar you use, how appropriate your words are for a specific task and the mistakes you make in your writing.

In terms of vocabulary, you need to be aware of the topic of the task and make sure you include words and expressions connected to it (appropriate). You also need to show that you know less common vocabulary instead of just high-frequency items and that you can use it with proper control and in the right context.

As for grammar, basic structures alone, like simple verb forms or clauses, are not enough at C2 Proficiency level. The examiners expect more and you should definitely be able to incorporate more complex structures with high accuracy in your writing.

If you make a mistake and it is clear that it is just a slip (a one-time error), don’t worry too much. However, if the examiner can see that you repeatedly get certain structures wrong or use them inappropriately, you might get penalised. So challenge yourself, but don’t overdo it.

Marking writing tasks in Cambridge C2 Proficiency is a science in itself and examiners are well trained and continuously monitored in order to give you a fair chance at success and provide everyone with a level playing field.

Even though it isn’t easy to understand every minute detail, I hope this article helps you a little bit going forward so you can assess your own writing in a more informed way and make the right changes and choices.

If you want, I can give you some writing feedback or even be your teacher in a private class. It would be my pleasure to work with you on your journey to C2 Proficiency glory.

Lots of love,

Teacher Phill 🙂

English A: Language and Literature Support Site

HLE assessment criteria

Criterion A: Knowledge, understanding and interpretation

- To what extent does the essay show knowledge and understanding of the work or body of work?

- To what extent are interpretations drawn from the work or body of work to explore the topic?

- To what extent are interpretations supported by relevant references to the work or body of work?

Criterion B: Analysis and evaluation

- To what extent does the essay show analysis and evaluation of how the author uses stylistic and structural features to construct meaning on the topic?

Criterion C: Coherence, focus and organisation

- To what extent does the essay show coherence, focus and organisation?

Criterion D: Language

- To what extent is the student’s use of vocabulary, tone, syntax, style and terminology accurate, varied and effective?

- Students: Have more opportunities to practice writing at their own pace, get immediate feedback and revise essays based on the feedback.

- Teachers: Can decrease their workload and free up time to concentrate on the content of students’ work and teach higher level writing skills.

- Administrators: Can make data driven decisions and easily monitor district, school and classroom writing performance.

For K–12 Education

Learn more about how the Criterion service can help you measure and improve your students' writing skills, adjust instruction and track student progress with greater efficiency.

There are four marking scales in the writing exam. Each of these scales looks at specific aspects of your writing. Generally speaking the four parts are: Content - answering the task, supporting your ideas. Communicative achievement - register, tone, clear ideas, conventions of the specific task type. Organisation - structure of the text ...

For more in-depth information on the marking scheme of O Level English Essays, click on this link. 3) A Level General Paper (GP) Essay Marking Scheme. As a JC tutor, when marking GP essays, you should primarily focus on content and use of English. Content. GP Paper 1 comprises 12 questions and students have to answer any one of the 12 ...

marking a piece of writing for an exam. For the B1 Preliminary for Schools exam, these are: Content, Communicative Achievement, Organisation and Language. 1. Writing Assessment Scale 2. Writing Assessment subscales 1. Assessment criteria 2. Assessment categories Each piece of writing gets four sets of marks for each of the subscales, from 0 ...

Figure 1. Steps in writing an essay: choosing a topic or question; analysing the topic or question chosen; reading and noting relevant material; drawing up an essay plan; writing the essay; reviewing and redrafting. (From “Marking and Commenting on Essays”, Chapter 6 of Tutoring and Demonstrating: A Handbook.)

Marking Criteria: Essays and Exams. Grade bands: 1st, 2.1, 2.2, 3rd, Fail. Criterion: relevance to the question. 1st: precise grasp of the question or topic, addresses it directly and keeps it in focus throughout. 2.1: shows a sound understanding of the question or topic and tackles it effectively. 2.2: shows an adequate understanding of the question or topic and shows

marking a piece of writing for an exam. For the B2 First for Schools exam, these are: Content, Communicative Achievement, Organisation and Language. 1. Writing Assessment Scale 2. Writing Assessment subscales 1. Assessment criteria 2. Assessment categories Each piece of writing gets four sets of marks for each of the subscales, from 0 (lowest)

Essay Rubric Directions: Your essay will be graded based on this rubric. Consequently, use this rubric as a guide when writing your essay and check it again before you submit your essay. Traits 4 3 2 1 Focus & Details There is one clear, well-focused topic. Main ideas are clear and are well supported by detailed and accurate information.

To prepare for the C1 Advanced exam, learners should: Read widely to familiarise themselves with the conventions and styles of different types of writing (i.e. reports, proposals, reviews, letters and essays). Write 10-minute plans for a variety of questions in past papers, so that planning becomes automatic and quick.

1. The brief. The first thing a marker will likely do is examine the writer's brief. This indicates essay type, subject and content focus, word limit and any other set requirements. The brief gives us the guidelines by which to mark the essay. With these expectations in mind, critical reading of your essay begins.

their writing satisfies the assessment criteria and which areas they need to improve. How the Writing paper is assessed . Cambridge English examiners consider four things when marking the Writing paper. Part 1 is marked out of a total of 20 marks and Part 2 is marked out of a total of 20 marks. Each criterion is worth 25% of the total mark. Content

Academic English criteria for marking writings, presentations, posters & seminars. Criteria are divided into sections of task, language etc. ... Marking criteria. Students; ... Essay writing criteria x 2 (updated 2023) There are two writing criteria in this download. One is a basic marking criteria that can be used to mark students' general ...

Always use the rubric when marking the creative essay (Paper 3, SECTION A). Marks from 0-50 have been divided into FIVE major level descriptors. In the Content, Language and Style criteria, each of the five level descriptors is divided into an upper-level and a lower level sub-

Descriptor. 1. The essay shows little analysis and evaluation of how the author uses stylistic and structural features to construct meaning on the topic. 2. The essay shows some analysis and evaluation of how the author uses stylistic and structural features to construct meaning on the topic. 3.

Marking must be objective. Give credit for relevant ideas. Use the 50-mark assessment rubric to mark the essays. The texts produced by candidates must be assessed according to the following criteria as set out in the assessment rubric: content and planning (30 marks); language, style and editing (15 marks); structure (5 marks).
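As a rough illustration of how the three components in this rubric add up to 50, the following Python sketch checks each component mark against its maximum and sums them. The dictionary keys and the function name `essay_total` are hypothetical labels chosen for this example; the rubric itself only states the three criteria and their mark allocations.

```python
# Maximum marks per component, as listed in the rubric excerpt above.
# The key names are illustrative labels, not official terminology.
RUBRIC_MAX = {
    "content_and_planning": 30,
    "language_style_and_editing": 15,
    "structure": 5,
}


def essay_total(marks):
    """Validate one essay's component marks and return the total out of 50."""
    if set(marks) != set(RUBRIC_MAX):
        raise ValueError("marks must cover exactly the three rubric components")
    for name, value in marks.items():
        if not 0 <= value <= RUBRIC_MAX[name]:
            raise ValueError(f"{name} must be between 0 and {RUBRIC_MAX[name]}")
    return sum(marks.values())


if __name__ == "__main__":
    sample = {
        "content_and_planning": 21,
        "language_style_and_editing": 10,
        "structure": 3,
    }
    print(essay_total(sample), "out of", sum(RUBRIC_MAX.values()))
```

Because content and planning carries 30 of the 50 marks, it accounts for 60% of the total, so differences between markers on that component have the largest effect on the final score.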

These general marking principles must be applied by all examiners when marking candidate answers. They should be applied alongside the specific content of the mark scheme or generic level descriptors for a question. Each question paper and mark scheme will also comply with these marking principles. GENERIC MARKING PRINCIPLE 1:

For example, if 9-6 best describes the candidate's work, reconsider the candidate's abilities in the three main areas: knowledge and understanding; analysis; evaluation. If the candidate just misses a 9, award an 8. If the candidate is slightly above a 6, award a 7.

marking a piece of writing for an exam. For the A2 Key for Schools exam, these are: Content, Organisation and Language. 1. Writing Assessment Scale 2. Writing Assessment subscales 1. Assessment criteria 2. Assessment categories Each piece of writing gets four sets of marks for each of the subscales, from 0 (lowest) to 5 (highest).

of essay marking criteria Norton et al. (2002) constructed an Essay Feedback Checklist (EFC). The EFC is a tool that consists of nine generic criteria (e.g. 'Addressed the question throughout the essay') for which students were asked to provide, before submitting their essay, a confidence rating indicating whether they felt they had met

Methodology of the research is mostly complete: Source(s) and/or method(s) to be used are generally relevant and appropriate given the topic and research question. There is some evidence that their selection(s) was informed. If the topic or research question is deemed inappropriate for the subject in which the essay is registered no more than ...

The Criterion® Online Writing Evaluation service from ETS is a web-based instructional writing tool that helps students plan, write and revise their essays, guided by instant diagnostic feedback and a Criterion score.