
Causal research: definition, examples and how to use it.

Causal research enables market researchers to predict hypothetical occurrences and outcomes while improving existing strategies. Discover how this research can improve employee retention and increase customer success for your business.

What is causal research?

Causal research, also known as explanatory research or causal-comparative research, identifies the extent and nature of cause-and-effect relationships between two or more variables.

It’s often used by companies to determine the impact of changes in products, features, or service processes on critical company metrics. Some examples:

- How does rebranding of a product influence intent to purchase?

- How would expansion to a new market segment affect projected sales?

- What would be the impact of a price increase or decrease on customer loyalty?

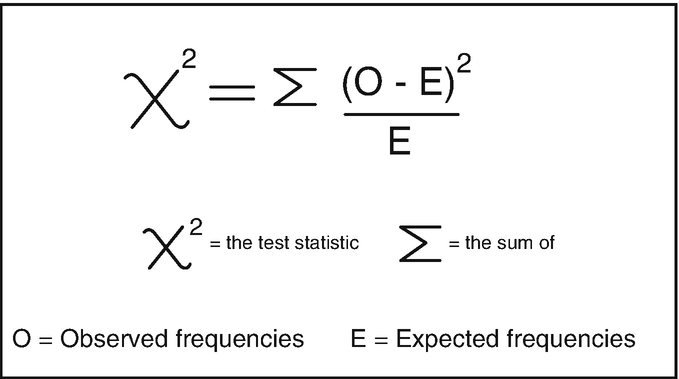

To maintain the accuracy of causal research, ‘confounding variables’ or influences — e.g. those that could distort the results — are controlled. This is done either by keeping them constant in the creation of data, or by using statistical methods. These variables are identified before the start of the research experiment.
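As a rough illustration of the statistical route, the sketch below compares a naive difference in means with a stratified one that holds a confounder constant within each group. The records, the `season` confounder, and both function names are hypothetical and chosen only for this example. Stratification is one common adjustment technique, not necessarily the one a given research team would use.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (saw_campaign, season, weekly_spend).
# Season is the confounder: it drives both campaign exposure and spend.
records = [
    (True, "high", 12), (True, "high", 12), (True, "high", 12), (False, "high", 10),
    (True, "low", 4), (False, "low", 2), (False, "low", 2), (False, "low", 2),
]

def naive_difference(rows):
    """Mean outcome of exposed rows minus mean outcome of unexposed rows."""
    treated = [spend for saw, _, spend in rows if saw]
    control = [spend for saw, _, spend in rows if not saw]
    return mean(treated) - mean(control)

def stratified_difference(rows):
    """Average the within-season differences, weighted by season size."""
    strata = defaultdict(list)
    for row in rows:
        strata[row[1]].append(row)
    total = len(rows)
    return sum(len(group) / total * naive_difference(group)
               for group in strata.values())

naive = naive_difference(records)
adjusted = stratified_difference(records)
```

With these made-up numbers, the naive comparison suggests a lift of 6, while comparing like with like within each season gives a lift of 2, because campaign exposure was concentrated in the high season.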

As well as the above, research teams will outline several other variables and principles in causal research:

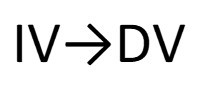

- Independent variables

The variables that may cause direct changes in another variable. For example, in a study of the effect of truancy on a student’s grade point average, the independent variable is class attendance.

- Control variables

These are the components that remain unchanged during the experiment so researchers can better understand what conditions create a cause-and-effect relationship.

- Causation

This describes the cause-and-effect relationship. When researchers find causation (or the cause), they’ve conducted all the processes necessary to prove it exists.

- Correlation

Any relationship between two variables in the experiment. It’s important to note that correlation doesn’t automatically mean causation. Researchers will typically establish correlation before proving cause-and-effect.

- Experimental design

Researchers use experimental design to define the parameters of the experiment — e.g. categorizing participants into different groups.

- Dependent variables

These are measurable variables that may change or are influenced by the independent variable. For example, in an experiment about whether or not terrain influences running speed, your dependent variable is running speed, and terrain is the independent variable.
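To make the gap between correlation and causation in the list above more concrete, here is a minimal sketch that computes a Pearson correlation by hand. The temperature, ice cream, and sunburn figures are invented for illustration, and `pearson_r` is a hypothetical helper name.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)

# Invented data: both series depend only on temperature, not on each other.
temperature = [10, 15, 20, 25, 30]
ice_cream_sales = [2 * t + 5 for t in temperature]
sunburn_cases = [0.5 * t - 3 for t in temperature]

r = pearson_r(ice_cream_sales, sunburn_cases)
```

Here the two series are perfectly correlated even though neither causes the other: temperature drives both. This is why a correlation is treated as a starting point for causal research rather than as proof.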

Why is causal research useful?

It’s useful because it enables market researchers to predict hypothetical occurrences and outcomes while improving existing strategies. This allows businesses to create plans that benefit the company. It’s also a great research method because researchers can immediately see how variables affect each other and under what circumstances.

Also, once the first experiment has been completed, researchers can use the learnings from the analysis to repeat the experiment or apply the findings to other scenarios. Because of this, it’s widely used to help understand the impact of changes in internal or commercial strategy to the business bottom line.

Some examples include:

- Understanding how overall training levels are improved by introducing new courses

- Examining which variations in wording make potential customers more interested in buying a product

- Testing a market’s response to a brand-new line of products and/or services

So, how does causal research compare and differ from other research types?

Well, there are a few research types that are used to find answers to some of the examples above:

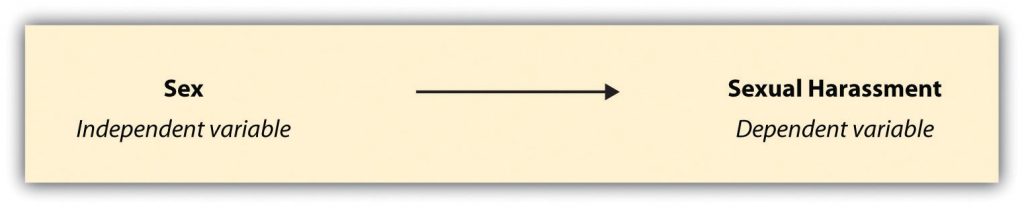

1. Exploratory research

As its name suggests, exploratory research involves assessing a situation (or situations) where the problem isn’t clear. Through this approach, researchers can test different avenues and ideas to establish facts and gain a better understanding.

Researchers can also use it to first navigate a topic and identify which variables are important. Because no area is off-limits, the research is flexible and adapts to the investigations as it progresses.

Finally, this approach is unstructured and often involves gathering qualitative data, giving the researcher freedom to progress the research according to their thoughts and assessment. However, this may make results susceptible to researcher bias and may limit the extent to which a topic is explored.

2. Descriptive research

Descriptive research is all about describing the characteristics of the population, phenomenon or scenario studied. It focuses more on the “what” of the research subject than the “why”.

For example, a clothing brand wants to understand the fashion purchasing trends amongst buyers in California — so they conduct a demographic survey of the region, gather population data and then run descriptive research. The study will help them to uncover purchasing patterns amongst fashion buyers in California, but not necessarily why those patterns exist.

As the research happens in a natural setting, variables can cross-contaminate other variables, making it harder to isolate cause and effect relationships. Therefore, further research will be required if more causal information is needed.


How is causal research different from the other two methods above?

Well, causal research looks at what variables are involved in a problem and ‘why’ they act a certain way. As the experiment takes place in a controlled setting (thanks to controlled variables) it’s easier to identify cause-and-effect amongst variables.

Furthermore, researchers can carry out causal research at any stage in the process, though it’s usually carried out in the later stages once more is known about a particular topic or situation.

Finally, compared to the other two methods, causal research is more structured, and researchers can combine it with exploratory and descriptive research to assist with research goals.

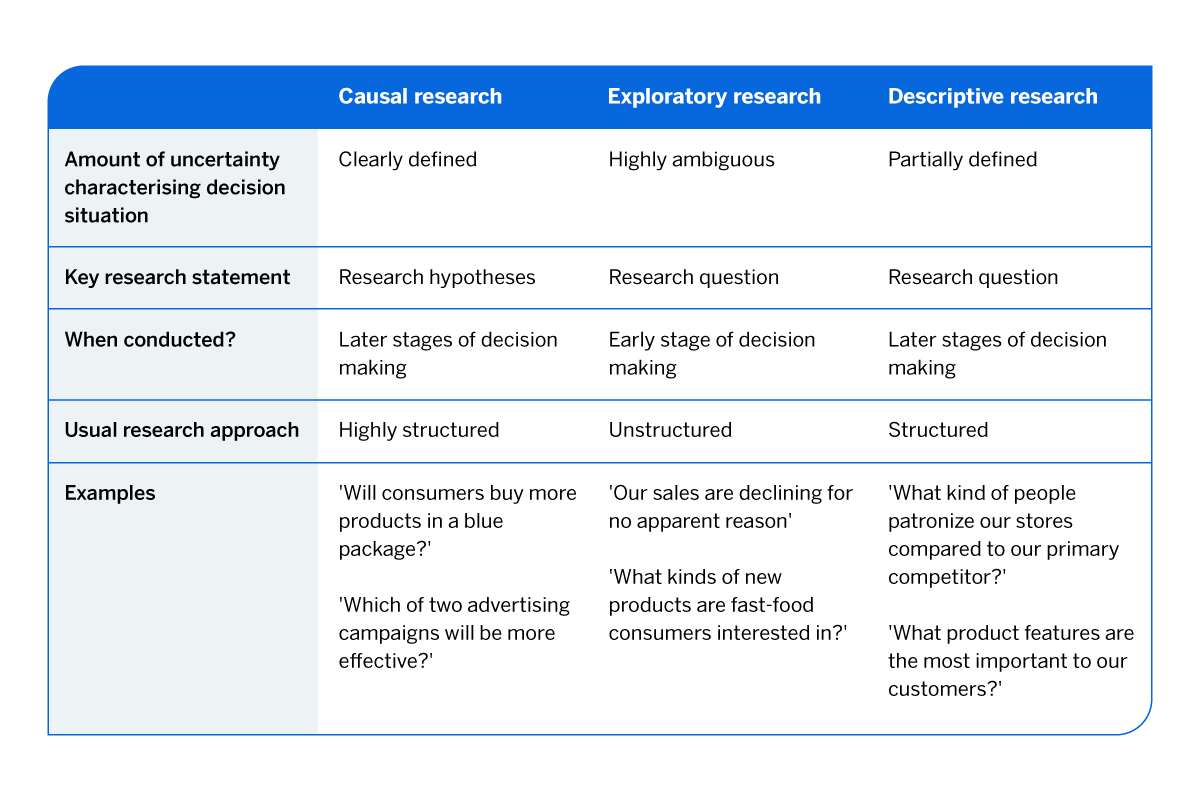

Summary of three research types

What are the advantages of causal research?

- Improve experiences

By understanding which variables have positive impacts on target variables (like sales revenue or customer loyalty), businesses can improve their processes, return on investment, and the experiences they offer customers and employees.

- Help companies improve internally

By conducting causal research, management can make informed decisions about improving their employee experience and internal operations. For example, understanding which variables led to an increase in staff turnover.

- Repeat experiments to enhance reliability and accuracy of results

When variables are identified, researchers can replicate cause-and-effect with ease, providing them with reliable data and results to draw insights from.

- Test out new theories or ideas

If causal research is able to pinpoint the exact outcome of mixing together different variables, research teams have the ability to test out ideas in the same way to create viable proof of concepts.

- Fix issues quickly

Once an undesirable effect’s cause is identified, researchers and management can take action to reduce the impact of it or remove it entirely, resulting in better outcomes.

What are the disadvantages of causal research?

- Provides information to competitors

If you plan to publish your research, it provides information about your plans to your competitors. For example, they might use your research outcomes to identify what you are up to and enter the market before you.

- Difficult to administer

Causal research is often difficult to administer because it’s not always possible to control the effects of extraneous variables.

- Time and money constraints

Budgetary and time constraints can make this type of research expensive to conduct and repeat. Also, if an initial attempt doesn’t provide a cause and effect relationship, the ROI is wasted and could impact the appetite for future repeat experiments.

- Requires additional research to ensure validity

You can’t rely solely on the outcomes of causal research, as they aren’t always accurate. It’s best to conduct other types of research alongside it to confirm its output.

- Trouble establishing cause and effect

Researchers might identify that two variables are connected, but struggle to determine which is the cause and which variable is the effect.

- Risk of contamination

There’s always the risk that people outside your market or area of study could affect the results of your research. For example, if you’re conducting a retail store study, shoppers outside your ‘test parameters’ might shop at your store and skew the results.

How can you use causal research effectively?

To better highlight how you can use causal research across functions or markets, here are a few examples:

Market and advertising research

A company might want to know if their new advertising campaign or marketing campaign is having a positive impact. So, their research team can carry out a causal research project to see which variables cause a positive or negative effect on the campaign.

For example, a cold-weather apparel company in a winter ski-resort town may see an increase in sales generated after a targeted campaign to skiers. To see if one caused the other, the research team could set up a duplicate experiment to see if the same campaign would generate sales from non-skiers. If the results reduce or change, then it’s likely that the campaign had a direct effect on skiers to encourage them to purchase products.

Improving customer experiences and loyalty levels

Customers enjoy shopping with brands that align with their own values, and they’re more likely to buy and present the brand positively to other potential shoppers as a result. So, it’s in your best interest to deliver great experiences and retain your customers.

For example, the Harvard Business Review found that an increase in customer retention rates by 5% increased profits by 25% to 95%. But let’s say you want to increase your own retention rate. How can you identify which variables contribute to it?

Using causal research, you can test hypotheses about which processes, strategies or changes influence customer retention. For example, is it the streamlined checkout? What about the personalized product suggestions? Or maybe it was a new solution that solved their problem? Causal research will help you find out.


Improving problematic employee turnover rates

If your company has a high attrition rate, causal research can help you narrow down the variables or reasons which have the greatest impact on people leaving. This allows you to prioritize your efforts on tackling the issues in the right order, for the best positive outcomes.

For example, through causal research, you might find that employee dissatisfaction due to a lack of communication and transparency from upper management leads to poor morale, which in turn influences employee retention.

To rectify the problem, you could implement a routine feedback loop or session that enables your people to talk to your company’s C-level executives so that they feel heard and understood.

How to conduct causal research

The first steps to getting started are:

1. Define the purpose of your research

What questions do you have? What do you expect to come out of your research? Think about which variables you need to test out the theory.

2. Pick a random sample if participants are needed

Using a technology solution to support your sampling, like a database, can help you define who you want your target audience to be, and how random or representative they should be.

3. Set up the controlled experiment

Once you’ve defined which variables you’d like to measure to see if they interact, think about how best to set up the experiment. This could be in-person or in-house via interviews, or it could be done remotely using online surveys.

4. Carry out the experiment

Make sure to keep all irrelevant variables the same, and only change the causal variable (the one that causes the effect) to gather the correct data. Depending on your method, you could be collecting qualitative or quantitative data, so make sure you note your findings across each regularly.

5. Analyze your findings

Either manually or using technology, analyze your data to see if any trends, patterns or correlations emerge. By looking at the data, you’ll be able to see what changes you might need to do next time, or if there are questions that require further research.

6. Verify your findings

Your first attempt gives you the baseline figures to compare the new results to. You can then run another experiment to verify your findings.

7. Do follow-up or supplemental research

You can supplement your original findings by carrying out research that goes deeper into causes or explores the topic in more detail. One of the best ways to do this is to use a survey. See ‘Use surveys to help your experiment’.
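The sampling, setup, and analysis steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the customer IDs, the placeholder scores, the fixed seed, and the function names `draw_sample`, `assign_groups`, and `estimate_effect` are all hypothetical, and a real study would substitute its own measurements in step 4.

```python
import random
from statistics import mean

def draw_sample(population, size, seed=42):
    """Step 2: draw a simple random sample without replacement."""
    return random.Random(seed).sample(population, size)

def assign_groups(sample, seed=42):
    """Step 3: randomly split the sample into treatment and control halves."""
    shuffled = list(sample)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def estimate_effect(treatment_outcomes, control_outcomes):
    """Step 5: difference in mean outcome between the two groups."""
    return mean(treatment_outcomes) - mean(control_outcomes)

population = list(range(1000))  # hypothetical customer IDs
sample = draw_sample(population, 40)
treatment, control = assign_groups(sample)

# Step 4 would collect real measurements; these scores are placeholders.
treatment_scores = [55, 57, 56, 58]
control_scores = [50, 52, 51, 53]
effect = estimate_effect(treatment_scores, control_scores)
```

Random assignment is what lets the difference in means be read as an effect of the change being tested: on average, it spreads every other characteristic evenly across the two groups. Fixing the seed only makes the split reproducible for step 6.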

Identifying causal relationships between variables

To verify if a causal relationship exists, you have to satisfy the following criteria:

- Nonspurious association

A clear correlation exists between one cause and the effect. In other words, no ‘third’ variable that relates to both (cause and effect) should exist.

- Temporal sequence

The cause occurs before the effect. For example, increased ad spend on product marketing must come before the rise in product sales it is meant to explain.

- Concomitant variation

The variation between the two variables is systematic. For example, if a company doesn’t change its IT policies and technology stack, then changes in employee productivity were not caused by IT policies or technology.

How can surveys help your causal research experiments?

There are some surveys that are perfect for assisting researchers with understanding cause and effect. These include:

- Employee Satisfaction Survey – An introductory employee satisfaction survey that provides you with an overview of your current employee experience.

- Manager Feedback Survey – An introductory manager feedback survey geared toward improving your skills as a leader with valuable feedback from your team.

- Net Promoter Score (NPS) Survey – Measure customer loyalty and understand how your customers feel about your product or service using one of the world’s best-recognized metrics.

- Employee Engagement Survey – An entry-level employee engagement survey that provides you with an overview of your current employee experience.

- Customer Satisfaction Survey – Evaluate how satisfied your customers are with your company, including the products and services you provide and how they are treated when they buy from you.

- Employee Exit Interview Survey – Understand why your employees are leaving and how they’ll speak about your company once they’re gone.

- Product Research Survey – Evaluate your consumers’ reaction to a new product or product feature across every stage of the product development journey.

- Brand Awareness Survey – Track the level of brand awareness in your target market, including current and potential future customers.

- Online Purchase Feedback Survey – Find out how well your online shopping experience performs against customer needs and expectations.

That covers the fundamentals of causal research and should give you a foundation for ongoing studies to assess opportunities, problems, and risks across your market, product, customer, and employee segments.

If you want to transform your research, empower your teams and get insights on tap to get ahead of the competition, maybe it’s time to leverage Qualtrics CoreXM.

Qualtrics CoreXM provides a single platform for data collection and analysis across every part of your business — from customer feedback to product concept testing. What’s more, you can integrate it with your existing tools and services thanks to a flexible API.

Qualtrics CoreXM offers you as much or as little power and complexity as you need, so whether you’re running simple surveys or more advanced forms of research, it can deliver every time.


What is causal research design?

Last updated: 14 May 2023

Examining these relationships gives researchers valuable insights into the mechanisms that drive the phenomena they are investigating.

Organizations primarily use causal research design to identify, determine, and explore the impact of changes within an organization and the market. You can use a causal research design to evaluate the effects of certain changes on existing procedures, norms, and more.

This article explores causal research design, including its elements, advantages, and disadvantages.


- Components of causal research

You can demonstrate the existence of cause-and-effect relationships between two factors or variables using specific causal information, allowing you to produce more meaningful results and research implications.

These are the key inputs for causal research:

The timeline of events

The cause must occur before the effect. You should review the timeline of two or more separate events to distinguish the independent variables (cause) from the dependent variables (effect) before developing a hypothesis.

If the cause occurs before the effect, you can link cause and effect and develop a hypothesis.

For instance, an organization may notice a sales increase. Determining the cause would help them reproduce these results.

Upon review, the business realizes that the sales boost occurred right after an advertising campaign. The business can leverage this time-based data to determine whether the advertising campaign is the independent variable that caused a change in sales.

Evaluation of confounding variables

In most cases, you need to pinpoint the variables that comprise a cause-and-effect relationship when using a causal research design. This uncovers a more accurate conclusion.

Co-variations between a cause and effect must be accurate, and a third factor shouldn’t relate to cause and effect.

Observing changes

Variation links between two variables must be clear. A quantitative change in effect must happen solely due to a quantitative change in the cause.

You can test whether the independent variable changes the dependent variable to evaluate the validity of a cause-and-effect relationship. A steady change between the two variables must occur to back up your hypothesis of a genuine causal effect.
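One simple way to check for a steady change is to fit a straight line and look at its slope. The sketch below does this with ordinary least squares. The ad spend and sales figures are invented, and `least_squares` is a hypothetical helper name.

```python
def least_squares(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Invented figures: monthly ad spend and sales, in the same currency unit.
ad_spend = [1, 2, 3, 4, 5]
sales = [12, 14, 16, 18, 20]

slope, intercept = least_squares(ad_spend, sales)
```

For this data the fitted line rises by 2 units of sales for each extra unit of ad spend, from a baseline of 10. A consistent slope supports the hypothesis but does not establish causation on its own; the timeline and confounding checks described earlier still apply.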

- Why is causal research useful?

Causal research allows market researchers to predict hypothetical occurrences and outcomes while enhancing existing strategies. Organizations can use this concept to develop beneficial plans.

Causal research is also useful as market researchers can immediately deduce the effect of the variables on each other under real-world conditions.

Once researchers complete their first experiment, they can use their findings. Applying them to alternative scenarios or repeating the experiment to confirm its validity can produce further insights.

Businesses widely use causal research to identify and comprehend the effect of strategic changes on their profits.

- How does causal research compare and differ from other research types?

Other research types that identify relationships between variables include exploratory and descriptive research.

Here’s how they compare and differ from causal research designs:

Exploratory research

An exploratory research design evaluates situations where a problem or opportunity's boundaries are unclear. You can use this research type to test various hypotheses and assumptions to establish facts and understand a situation more clearly.

You can also use exploratory research design to navigate a topic and discover the relevant variables. This research type allows flexibility and adaptability as the experiment progresses, particularly since no area is off-limits.

It’s worth noting that exploratory research is unstructured and typically involves collecting qualitative data. This provides the freedom to tweak and amend the research approach according to your ongoing thoughts and assessments.

Unfortunately, this exposes the findings to the risk of bias and may limit the extent to which a researcher can explore a topic.

This table compares the key characteristics of causal and exploratory research:

Descriptive research

This research design involves capturing and describing the traits of a population, situation, or phenomenon. Descriptive research focuses more on the "what" of the research subject and less on the "why."

Since descriptive research typically happens in a real-world setting, variables can cross-contaminate others. This increases the challenge of isolating cause-and-effect relationships.

You may require further research if you need more causal links.

This table compares the key characteristics of causal and descriptive research.

Causal research examines a research question’s variables and how they interact. It’s easier to pinpoint cause and effect since the experiment often happens in a controlled setting.

Researchers can conduct causal research at any stage, but they typically use it once they know more about the topic.

In contrast, causal research tends to be more structured and can be combined with exploratory and descriptive research to help you attain your research goals.

- How can you use causal research effectively?

Here are common ways that market researchers leverage causal research effectively:

Market and advertising research

Do you want to know if your new marketing campaign is affecting your organization positively? You can use causal research to determine the variables causing negative or positive impacts on your campaign.

Improving customer experiences and loyalty levels

Consumers generally enjoy purchasing from brands aligned with their values. They’re more likely to purchase from such brands and positively represent them to others.

You can use causal research to identify the variables contributing to increased or reduced customer acquisition and retention rates.

Could the cause of increased customer retention rates be streamlined checkout?

Perhaps you introduced a new solution geared towards directly solving their immediate problem.

Whatever the reason, causal research can help you identify the cause-and-effect relationship. You can use this to enhance your customer experiences and loyalty levels.

Improving problematic employee turnover rates

Is your organization experiencing skyrocketing attrition rates?

You can leverage the features and benefits of causal research to narrow down the possible explanations or variables with significant effects on employees quitting.

This way, you can prioritize interventions, focusing on the highest priority causal influences, and begin to tackle high employee turnover rates.

- Advantages of causal research

The main benefits of causal research include the following:

Effectively test new ideas

If causal research can pinpoint the precise outcome through combinations of different variables, researchers can test ideas in the same manner to form viable proof of concepts.

Achieve more objective results

Market researchers typically use random sampling techniques to choose experiment participants or subjects in causal research. This reduces the possibility of exterior, sample, or demography-based influences, generating more objective results.

Improved business processes

Causal research helps businesses understand which variables positively impact target variables, such as customer loyalty or sales revenues. This helps them improve their processes, ROI, and customer and employee experiences.

Guarantee reliable and accurate results

Upon identifying the correct variables, researchers can replicate cause and effect effortlessly. This creates reliable data and results to draw insights from.

Internal organization improvements

Businesses that conduct causal research can make informed decisions about improving their internal operations and enhancing employee experiences.

- Disadvantages of causal research

Like any other research method, causal research has its set of drawbacks, including:

Extra research to ensure validity

Researchers can't simply rely on the outcomes of causal research since it isn't always accurate. There may be a need to conduct other research types alongside it to ensure accurate output.

Coincidence

Coincidence tends to be the most significant error in causal research. Researchers often misinterpret a coincidental link between a cause and effect as a direct causal link.

Administration challenges

Causal research can be challenging to administer since it isn't always possible to control the impact of extraneous variables.

Giving away your competitive advantage

If you intend to publish your research, it exposes your information to the competition.

Competitors may use your research outcomes to identify your plans and strategies to enter the market before you.

- Causal research examples

Multiple fields can use causal research, so it serves different purposes, such as:

Customer loyalty research

Organizations and employees can use causal research to determine the best customer attraction and retention approaches.

They monitor interactions between customers and employees to identify cause-and-effect patterns. That could be a product demonstration technique resulting in higher or lower sales from the same customers.

Example: Business X introduces a new individual marketing strategy for a small customer group and notices a measurable increase in monthly subscriptions.

Upon getting identical results from different groups, the business concludes that the individual marketing strategy resulted in the intended causal relationship.

Advertising research

Businesses can also use causal research to implement and assess advertising campaigns.

Example: Business X notices a 7% increase in sales revenue a few months after it introduces a new advertisement in a certain region. The business can run the same ad in randomly selected regions to compare sales data over the same period.

This will help the company determine whether the ad caused the sales increase. If sales increase in these randomly selected regions, the business could conclude that advertising campaigns and sales share a cause-and-effect relationship.
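One way to judge whether such a difference is larger than chance would produce is an exact permutation test. The sketch below assumes the business also has sales data from comparison regions that did not run the ad, which the example above does not state. All figures are invented, and `permutation_p_value` is a hypothetical helper name.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(treated, control):
    """One-sided exact permutation test on the difference in means."""
    observed = mean(treated) - mean(control)
    pooled = treated + control
    positions = range(len(pooled))
    extreme = 0
    total = 0
    # Try every way of relabelling which regions "ran the ad".
    for chosen in combinations(positions, len(treated)):
        picked = set(chosen)
        group_a = [pooled[i] for i in positions if i in picked]
        group_b = [pooled[i] for i in positions if i not in picked]
        total += 1
        if mean(group_a) - mean(group_b) >= observed:
            extreme += 1
    return extreme / total

# Invented sales changes (%) in regions with and without the ad.
ad_regions = [7, 8, 6, 9]
comparison_regions = [1, 2, 0, 3]

p_value = permutation_p_value(ad_regions, comparison_regions)
```

With these invented figures, only one of the 70 possible splits is as extreme as the observed one, giving a p-value of 1/70 (about 0.014). Exhaustive enumeration is only practical for a handful of regions; larger studies would typically sample random relabellings instead.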

Educational research

Academics, teachers, and learners can use causal research to explore the impact of politics on learners and pinpoint learner behavior trends.

Example: College X notices that the dropout rate among IT students in their second year is 8% higher than in any other year.

The college administration can interview a random group of IT students to identify factors leading to this situation, including personal factors and influences.

With the help of in-depth statistical analysis, the institution's researchers can uncover the main factors causing dropout. They can create immediate solutions to address the problem.

Is a causal variable dependent or independent?

When two variables have a cause-and-effect relationship, the cause is often called the independent variable. As such, the effect variable is dependent, i.e., it depends on the independent causal variable. An independent variable is only causal under experimental conditions.

What are the three criteria for causality?

The three conditions for causality are:

Temporality/temporal precedence: The cause must precede the effect.

Rationality: One event predicts the other with an explanation, and the effect must vary in proportion to changes in the cause.

Control for extraneous variables: The covariables must not result from other variables.

Is causal research experimental?

Causal research is mostly explanatory. Causal studies focus on analyzing a situation to explore and explain the patterns of relationships between variables.

Further, experiments are the primary data collection methods in studies with causal research design. However, as a research design, causal research isn't entirely experimental.

What is the difference between experimental and causal research design?

One of the main differences between causal and experimental research is that in causal research, the research subjects are already in groups since the event has already happened.

On the other hand, researchers randomly choose subjects in experimental research before manipulating the variables.


Causal Research: Definition, Design, Tips, Examples

Appinio Research · 21.02.2024 · 33min read

Ever wondered why certain events lead to specific outcomes? Understanding causality—the relationship between cause and effect—is crucial for unraveling the mysteries of the world around us. In this guide on causal research, we delve into the methods, techniques, and principles behind identifying and establishing cause-and-effect relationships between variables. Whether you're a seasoned researcher or new to the field, this guide will equip you with the knowledge and tools to conduct rigorous causal research and draw meaningful conclusions that can inform decision-making and drive positive change.

What is Causal Research?

Causal research is a methodological approach used in scientific inquiry to investigate cause-and-effect relationships between variables. Unlike correlational or descriptive research, which merely examine associations or describe phenomena, causal research aims to determine whether changes in one variable cause changes in another variable.

Importance of Causal Research

Understanding the importance of causal research is crucial for appreciating its role in advancing knowledge and informing decision-making across various fields. Here are key reasons why causal research is significant:

- Establishing Causality: Causal research enables researchers to determine whether changes in one variable directly cause changes in another variable. This helps identify effective interventions, predict outcomes, and inform evidence-based practices.

- Guiding Policy and Practice: By identifying causal relationships, causal research provides empirical evidence to support policy decisions, program interventions, and business strategies. Decision-makers can use causal findings to allocate resources effectively and address societal challenges.

- Informing Predictive Modeling: Causal research contributes to the development of predictive models by elucidating the causal mechanisms underlying observed phenomena. Predictive models grounded in causal relationships tend to forecast future outcomes and trends more reliably, especially when conditions change.

- Advancing Scientific Knowledge: Causal research contributes to the cumulative body of scientific knowledge by testing hypotheses, refining theories, and uncovering underlying mechanisms of phenomena. It fosters a deeper understanding of complex systems and phenomena.

- Mitigating Confounding Factors: Understanding causal relationships allows researchers to control for confounding variables and reduce bias in their studies. By isolating the effects of specific variables, researchers can draw more valid and reliable conclusions.

Causal Research Distinction from Other Research

Understanding the distinctions between causal research and other types of research methodologies is essential for researchers to choose the most appropriate approach for their study objectives. Let's explore the differences and similarities between causal research and descriptive, exploratory, and correlational research methodologies.

Descriptive vs. Causal Research

Descriptive research focuses on describing characteristics, behaviors, or phenomena without manipulating variables or establishing causal relationships. It provides a snapshot of the current state of affairs but does not attempt to explain why certain phenomena occur.

Causal research, on the other hand, seeks to identify cause-and-effect relationships between variables by systematically manipulating independent variables and observing their effects on dependent variables. Unlike descriptive research, causal research aims to determine whether changes in one variable directly cause changes in another variable.

Similarities:

- Both descriptive and causal research involve empirical observation and data collection.

- Both types of research contribute to the scientific understanding of phenomena, albeit through different approaches.

Differences:

- Descriptive research focuses on describing phenomena, while causal research aims to explain why phenomena occur by identifying causal relationships.

- Descriptive research typically uses observational methods, while causal research often involves experimental designs or causal inference techniques to establish causality.

Exploratory vs. Causal Research

Exploratory research aims to explore new topics, generate hypotheses, or gain initial insights into phenomena. It is often conducted when little is known about a subject and seeks to generate ideas for further investigation.

Causal research, on the other hand, is concerned with testing hypotheses and establishing cause-and-effect relationships between variables. It builds on existing knowledge and seeks to confirm or refute causal hypotheses through systematic investigation.

Similarities:

- Both exploratory and causal research contribute to the generation of knowledge and theory development.

- Both types of research involve systematic inquiry and data analysis to answer research questions.

Differences:

- Exploratory research focuses on generating hypotheses and exploring new areas of inquiry, while causal research aims to test hypotheses and establish causal relationships.

- Exploratory research is more flexible and open-ended, while causal research follows a more structured and hypothesis-driven approach.

Correlational vs. Causal Research

Correlational research examines the relationship between variables without implying causation. It identifies patterns of association or co-occurrence between variables but does not establish the direction or causality of the relationship.

Causal research, on the other hand, seeks to establish cause-and-effect relationships between variables by systematically manipulating independent variables and observing their effects on dependent variables. It goes beyond mere association to determine whether changes in one variable directly cause changes in another variable.

Similarities:

- Both correlational and causal research involve analyzing relationships between variables.

- Both types of research contribute to understanding the nature of associations between variables.

Differences:

- Correlational research focuses on identifying patterns of association, while causal research aims to establish causal relationships.

- Correlational research does not manipulate variables, while causal research involves systematically manipulating independent variables to observe their effects on dependent variables.

How to Formulate Causal Research Hypotheses?

Crafting research questions and hypotheses is the foundational step in any research endeavor. Defining your variables clearly and articulating the causal relationship you aim to investigate is essential. Let's explore this process further.

1. Identify Variables

Identifying variables involves recognizing the key factors you will manipulate or measure in your study. These variables can be classified into independent, dependent, and confounding variables.

- Independent Variable (IV): This is the variable you manipulate or control in your study. It is the presumed cause that you want to test.

- Dependent Variable (DV): The dependent variable is the outcome or response you measure. It is affected by changes in the independent variable.

- Confounding Variables: These are extraneous factors that may influence the relationship between the independent and dependent variables, leading to spurious correlations or erroneous causal inferences. Identifying and controlling for confounding variables is crucial for establishing valid causal relationships.

2. Establish Causality

Establishing causality requires meeting specific criteria outlined by scientific methodology. While correlation between variables may suggest a relationship, it does not imply causation. To establish causality, researchers must demonstrate the following:

- Temporal Precedence: The cause must precede the effect in time. In other words, changes in the independent variable must occur before changes in the dependent variable.

- Covariation of Cause and Effect: Changes in the independent variable should be accompanied by corresponding changes in the dependent variable. This demonstrates a consistent pattern of association between the two variables.

- Elimination of Alternative Explanations: Researchers must rule out other possible explanations for the observed relationship between variables. This involves controlling for confounding variables and conducting rigorous experimental designs to isolate the effects of the independent variable.

3. Write Clear and Testable Hypotheses

Hypotheses serve as tentative explanations for the relationship between variables and provide a framework for empirical testing. A well-formulated hypothesis should be:

- Specific: Clearly state the expected relationship between the independent and dependent variables.

- Testable: The hypothesis should be capable of being empirically tested through observation or experimentation.

- Falsifiable: There should be a possibility of proving the hypothesis false through empirical evidence.

For example, a hypothesis in a study examining the effect of exercise on weight loss could be: "Increasing levels of physical activity (IV) will lead to greater weight loss (DV) among participants (compared to those with lower levels of physical activity)."

By formulating clear hypotheses and operationalizing variables, researchers can systematically investigate causal relationships and contribute to the advancement of scientific knowledge.

Causal Research Design

Designing your research study involves making critical decisions about how you will collect and analyze data to investigate causal relationships.

Experimental vs. Observational Designs

One of the first decisions you'll make when designing a study is whether to employ an experimental or observational design. Each approach has its strengths and limitations, and the choice depends on factors such as the research question, feasibility, and ethical considerations.

- Experimental Design: In experimental designs, researchers manipulate the independent variable and observe its effects on the dependent variable while controlling for confounding variables. Random assignment to experimental conditions allows for causal inferences to be drawn. Example: A study testing the effectiveness of a new teaching method on student performance by randomly assigning students to either the experimental group (receiving the new teaching method) or the control group (receiving the traditional method).

- Observational Design: Observational designs involve observing and measuring variables without intervention. Researchers may still examine relationships between variables but cannot establish causality as definitively as in experimental designs. Example: A study observing the association between socioeconomic status and health outcomes by collecting data on income, education level, and health indicators from a sample of participants.

Control and Randomization

Control and randomization are crucial aspects of experimental design that help ensure the validity of causal inferences.

- Control: Controlling for extraneous variables involves holding constant factors that could influence the dependent variable, except for the independent variable under investigation. This helps isolate the effects of the independent variable. Example: In a medication trial, controlling for factors such as age, gender, and pre-existing health conditions ensures that any observed differences in outcomes can be attributed to the medication rather than other variables.

- Randomization: Random assignment of participants to experimental conditions helps distribute potential confounders evenly across groups, reducing the likelihood of systematic biases and allowing for causal conclusions. Example: Randomly assigning patients to treatment and control groups in a clinical trial ensures that both groups are comparable in terms of baseline characteristics, minimizing the influence of extraneous variables on treatment outcomes.
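As a minimal sketch of the random assignment described above, the snippet below shuffles a list of participants and splits it into two groups. The function name `assign_groups`, the participant IDs, and the seed are illustrative assumptions, not part of any particular study protocol.

```python
import random


def assign_groups(participants, seed=None):
    """Randomly split participants into treatment and control groups."""
    rng = random.Random(seed)      # a seeded generator keeps the split reproducible
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}


# Hypothetical participant IDs P01 .. P20
participants = [f"P{i:02d}" for i in range(1, 21)]
groups = assign_groups(participants, seed=42)
print(len(groups["treatment"]), len(groups["control"]))
```

Because every participant has the same chance of landing in either group, baseline characteristics such as age or prior health tend to balance out across groups as the sample grows.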

Internal and External Validity

Two key concepts in research design are internal validity and external validity, which relate to the credibility and generalizability of study findings, respectively.

- Internal Validity: Internal validity refers to the extent to which the observed effects can be attributed to the manipulation of the independent variable rather than confounding factors. Experimental designs typically have higher internal validity due to their control over extraneous variables. Example: A study examining the impact of a training program on employee productivity would have high internal validity if it could confidently attribute changes in productivity to the training intervention.

- External Validity: External validity concerns the extent to which study findings can be generalized to other populations, settings, or contexts. While experimental designs prioritize internal validity, they may sacrifice external validity by using highly controlled conditions that do not reflect real-world scenarios. Example: Findings from a laboratory study on memory retention may have limited external validity if the experimental tasks and conditions differ significantly from real-life learning environments.

Types of Experimental Designs

Several types of experimental designs are commonly used in causal research, each with its own strengths and applications.

- Randomized Control Trials (RCTs): RCTs are considered the gold standard for assessing causality in research. Participants are randomly assigned to experimental and control groups, allowing researchers to make causal inferences. Example: A pharmaceutical company testing a new drug's efficacy would use an RCT to compare outcomes between participants receiving the drug and those receiving a placebo.

- Quasi-Experimental Designs: Quasi-experimental designs lack random assignment but still attempt to establish causality by controlling for confounding variables through design or statistical analysis. Example: A study evaluating the effectiveness of a smoking cessation program might compare outcomes between participants who voluntarily enroll in the program and a matched control group of non-enrollees.

By carefully selecting an appropriate research design and addressing considerations such as control, randomization, and validity, researchers can conduct studies that yield credible evidence of causal relationships and contribute valuable insights to their field of inquiry.

Causal Research Data Collection

Collecting data is a critical step in any research study, and the quality of the data directly impacts the validity and reliability of your findings.

Choosing Measurement Instruments

Selecting appropriate measurement instruments is essential for accurately capturing the variables of interest in your study. The choice of measurement instrument depends on factors such as the nature of the variables, the target population, and the research objectives.

- Surveys: Surveys are commonly used to collect self-reported data on attitudes, opinions, behaviors, and demographics. They can be administered through various methods, including paper-and-pencil surveys, online surveys, and telephone interviews.

- Observations: Observational methods involve systematically recording behaviors, events, or phenomena as they occur in natural settings. Observations can be structured (following a predetermined checklist) or unstructured (allowing for flexible data collection).

- Psychological Tests: Psychological tests are standardized instruments designed to measure specific psychological constructs, such as intelligence, personality traits, or emotional functioning. These tests often have established reliability and validity.

- Physiological Measures: Physiological measures, such as heart rate, blood pressure, or brain activity, provide objective data on bodily processes. They are commonly used in health-related research but require specialized equipment and expertise.

- Existing Databases: Researchers may also utilize existing datasets, such as government surveys, public health records, or organizational databases, to answer research questions. Secondary data analysis can be cost-effective and time-saving but may be limited by the availability and quality of data.

Ensuring accurate data collection is the cornerstone of any successful research endeavor. With the right tools in place, you can unlock invaluable insights to drive your causal research forward. From surveys to tests, each instrument offers a unique lens through which to explore your variables of interest.


Sampling Techniques

Sampling involves selecting a subset of individuals or units from a larger population to participate in the study. The goal of sampling is to obtain a representative sample that accurately reflects the characteristics of the population of interest.

- Probability Sampling: Probability sampling methods involve randomly selecting participants from the population, so that each member of the population has a known, non-zero chance of being included in the sample (an equal chance, in the case of simple random sampling). Common probability sampling techniques include simple random sampling, stratified sampling, and cluster sampling.

- Non-Probability Sampling: Non-probability sampling methods do not involve random selection and may introduce biases into the sample. Examples of non-probability sampling techniques include convenience sampling, purposive sampling, and snowball sampling.

The choice of sampling technique depends on factors such as the research objectives, population characteristics, resources available, and practical constraints. Researchers should strive to minimize sampling bias and maximize the representativeness of the sample to enhance the generalizability of their findings.
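To make the difference between two probability sampling techniques concrete, here is a small sketch using only Python's standard library. The population, the `region` attribute, and the function names are hypothetical and chosen purely for illustration.

```python
import random
from collections import defaultdict


def simple_random_sample(population, n, seed=None):
    """Draw n units so that every unit has the same chance of selection."""
    return random.Random(seed).sample(population, n)


def stratified_sample(population, stratum_of, fraction, seed=None):
    """Draw the same fraction from every stratum (proportionate allocation)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        k = round(len(members) * fraction)  # units to draw from this stratum
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical population: 60 units in the north, 40 in the south
population = [{"id": i, "region": "north" if i < 60 else "south"} for i in range(100)]

srs = simple_random_sample(population, 10, seed=1)
strat = stratified_sample(population, lambda u: u["region"], 0.1, seed=1)
north = sum(1 for u in strat if u["region"] == "north")
south = sum(1 for u in strat if u["region"] == "south")
print(north, south)
```

The simple random sample may over- or under-represent a region by chance, while the stratified sample always mirrors the 60/40 split of this population.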

Ethical Considerations

Ethical considerations are paramount in research and involve ensuring the rights, dignity, and well-being of research participants. Researchers must adhere to ethical principles and guidelines established by professional associations and institutional review boards (IRBs).

- Informed Consent: Participants should be fully informed about the nature and purpose of the study, potential risks and benefits, their rights as participants, and any confidentiality measures in place. Informed consent should be obtained voluntarily and without coercion.

- Privacy and Confidentiality: Researchers should take steps to protect the privacy and confidentiality of participants' personal information. This may involve anonymizing data, securing data storage, and limiting access to identifiable information.

- Minimizing Harm: Researchers should mitigate any potential physical, psychological, or social harm to participants. This may involve conducting risk assessments, providing appropriate support services, and debriefing participants after the study.

- Respect for Participants: Researchers should respect participants' autonomy, diversity, and cultural values. They should seek to foster a trusting and respectful relationship with participants throughout the research process.

- Publication and Dissemination: Researchers have a responsibility to accurately report their findings and acknowledge contributions from participants and collaborators. They should adhere to principles of academic integrity and transparency in disseminating research results.

By addressing ethical considerations in research design and conduct, researchers can uphold the integrity of their work, maintain trust with participants and the broader community, and contribute to the responsible advancement of knowledge in their field.

Causal Research Data Analysis

Once data is collected, it must be analyzed to draw meaningful conclusions and assess causal relationships.

Causal Inference Methods

Causal inference methods are statistical techniques used to identify and quantify causal relationships between variables in observational data. While experimental designs provide the most robust evidence for causality, observational studies often require more sophisticated methods to account for confounding factors.

- Difference-in-Differences (DiD): DiD compares changes in outcomes before and after an intervention between a treatment group and a control group. It estimates the average treatment effect by differencing the changes in outcomes between the two groups over time, under the assumption that both groups would have followed parallel trends in the absence of the intervention.

- Instrumental Variables (IV): IV analysis relies on instrumental variables, which affect the treatment variable but influence the outcome only through the treatment, to estimate causal effects in the presence of endogeneity. A valid instrument should be correlated with the treatment but uncorrelated with the error term in the outcome equation.

- Regression Discontinuity (RD): RD designs exploit naturally occurring thresholds or cutoff points to estimate causal effects near the threshold. Participants just above and below the threshold are compared, assuming that they are similar in every respect except which side of the threshold they fall on, and therefore whether they receive the treatment.

- Propensity Score Matching (PSM): PSM matches individuals or units based on their propensity scores (the estimated probability of receiving the treatment given their observed characteristics), creating comparable groups with similar observed characteristics. Matching reduces selection bias due to observed characteristics and supports causal inference in observational studies, although it cannot correct for unobserved confounders.
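The difference-in-differences logic from the list above can be illustrated with a few lines of arithmetic. This is a simplified sketch that compares group means only; the outcome values are made up, and a real analysis would typically use a regression model and check the parallel-trends assumption.

```python
from statistics import mean


def diff_in_diff(treat_pre, treat_post, control_pre, control_post):
    """Change in the treated group minus change in the control group."""
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical outcome measurements before and after an intervention
treat_pre = [10, 12, 11, 13]
treat_post = [15, 17, 16, 18]
control_pre = [9, 11, 10, 12]
control_post = [11, 13, 12, 14]

effect = diff_in_diff(treat_pre, treat_post, control_pre, control_post)
print(effect)  # treated change 5.0 minus control change 2.0 gives 3.0
```

The control group's change of 2.0 stands in for what would have happened to the treated group without the intervention, so only the remaining 3.0 is attributed to the treatment.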

Assessing Causality Strength

Assessing the strength of causality involves determining the magnitude and direction of causal effects between variables. While statistical significance indicates whether an observed relationship is unlikely to occur by chance, it does not necessarily imply a strong or meaningful effect.

- Effect Size: Effect size measures the magnitude of the relationship between variables, providing information about the practical significance of the results. Standard effect size measures include Cohen's d for mean differences and odds ratios for categorical outcomes.

- Confidence Intervals: Confidence intervals provide a range of values within which the actual effect size is likely to lie with a certain degree of certainty. Narrow confidence intervals indicate greater precision in estimating the true effect size.

- Practical Significance: Practical significance considers whether the observed effect is meaningful or relevant in real-world terms. Researchers should interpret results in the context of their field and the implications for stakeholders.
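As a rough illustration of the first two measures, the sketch below computes Cohen's d with a pooled standard deviation and an approximate 95% confidence interval for a difference in means. The scores are hypothetical, and the interval uses a normal approximation (z = 1.96), which is an assumption made for brevity; for small samples a t-based interval would be more appropriate.

```python
import math
from statistics import mean, variance


def cohens_d(a, b):
    """Standardized mean difference using the pooled sample variance."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


def mean_diff_ci(a, b, z=1.96):
    """Approximate confidence interval for mean(a) - mean(b)."""
    diff = mean(a) - mean(b)
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    return diff - z * se, diff + z * se


# Hypothetical scores for a treatment group and a control group
treated = [6, 7, 8, 9, 10]
control = [4, 5, 6, 7, 8]

d = cohens_d(treated, control)
low, high = mean_diff_ci(treated, control)
print(round(d, 2), round(low, 2), round(high, 2))
```

Here the effect size is large by conventional benchmarks, yet the interval is wide and only barely excludes zero, which shows why magnitude and precision should be reported together.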

Handling Confounding Variables

Confounding variables are extraneous factors that may distort the observed relationship between the independent and dependent variables, leading to spurious or biased conclusions. Addressing confounding variables is essential for establishing valid causal inferences.

- Statistical Control: Statistical control involves including confounding variables as covariates in regression models to partial out their effects on the outcome variable. Controlling for confounders reduces bias and strengthens the validity of causal inferences.

- Matching: Matching participants or units based on observed characteristics helps create comparable groups with similar distributions of confounding variables. Matching reduces selection bias and mimics the randomization process in experimental designs.

- Sensitivity Analysis: Sensitivity analysis assesses the robustness of study findings to changes in model specifications or assumptions. By varying analytical choices and examining their impact on results, researchers can identify potential sources of bias and evaluate the stability of causal estimates.

- Subgroup Analysis: Subgroup analysis explores whether the relationship between variables differs across subgroups defined by specific characteristics. Identifying effect modifiers helps understand the conditions under which causal effects may vary.
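The sketch below shows how a confounder can inflate a naive comparison and how adjusting for it changes the estimate. It uses stratification, a simpler stand-in for the regression adjustment described above, and every count in it is invented: `plan` plays the role of the confounder, `treated` means the user received a hypothetical onboarding program, and the outcome is retention.

```python
# Hypothetical (retained, total) counts per subscription plan and group
data = {
    "basic":   {"treated": (8, 20),  "control": (24, 80)},
    "premium": {"treated": (64, 80), "control": (14, 20)},
}


def naive_difference(data):
    """Compare overall retention rates, ignoring the confounder."""
    rates = {}
    for group in ("treated", "control"):
        retained = sum(stratum[group][0] for stratum in data.values())
        total = sum(stratum[group][1] for stratum in data.values())
        rates[group] = retained / total
    return rates["treated"] - rates["control"]


def stratified_difference(data):
    """Average the within-stratum differences, weighted by stratum size."""
    n_all = sum(s["treated"][1] + s["control"][1] for s in data.values())
    adjusted = 0.0
    for s in data.values():
        n_stratum = s["treated"][1] + s["control"][1]
        diff = s["treated"][0] / s["treated"][1] - s["control"][0] / s["control"][1]
        adjusted += (n_stratum / n_all) * diff
    return adjusted


naive = naive_difference(data)
adjusted = stratified_difference(data)
print(round(naive, 2), round(adjusted, 2))
```

In this made-up data, premium users are both more likely to be treated and more likely to stay, so the naive difference (about 0.34) overstates the effect seen within each plan (about 0.10).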

By employing rigorous causal inference methods, assessing the strength of causality, and addressing confounding variables, researchers can confidently draw valid conclusions about causal relationships in their studies, advancing scientific knowledge and informing evidence-based decision-making.

Causal Research Examples

Examples play a crucial role in understanding the application of causal research methods and their impact across various domains. Let's explore some detailed examples to illustrate how causal research is conducted and its real-world implications:

Example 1: Software as a Service (SaaS) User Retention Analysis

Suppose a SaaS company wants to understand the factors influencing user retention and engagement with their platform. The company conducts a longitudinal observational study, collecting data on user interactions, feature usage, and demographic information over several months.

- Design: The company employs an observational cohort study design, tracking cohorts of users over time to observe changes in retention and engagement metrics. They use analytics tools to collect data on user behavior, such as logins, feature usage, session duration, and customer support interactions.

- Data Collection: Data is collected from the company's platform logs, customer relationship management (CRM) system, and user surveys. Key metrics include user churn rates, active user counts, feature adoption rates, and Net Promoter Scores (NPS).

- Analysis: Using statistical techniques like survival analysis and regression modeling, the company identifies factors associated with user retention, such as feature usage patterns, onboarding experiences, customer support interactions, and subscription plan types.

- Findings: The analysis reveals that users who engage with specific features early in their lifecycle have higher retention rates, while those who encounter usability issues or lack personalized onboarding experiences are more likely to churn. The company uses these insights to optimize product features, improve onboarding processes, and enhance customer support strategies to increase user retention and satisfaction.
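The example mentions survival analysis without naming a specific estimator. One common choice is the Kaplan-Meier estimator, sketched below in plain Python as an assumption about how such an analysis might start. The observations are invented: each pair holds the months a user was observed and whether they churned (`True`) or were still active when observation ended (`False`, a censored record).

```python
def kaplan_meier(observations):
    """Return [(time, survival_probability)] at each observed churn time."""
    survival = 1.0
    curve = []
    churn_times = sorted({t for t, churned in observations if churned})
    for t in churn_times:
        # Users still under observation just before time t
        at_risk = sum(1 for duration, _ in observations if duration >= t)
        # Users who churned exactly at time t
        events = sum(1 for duration, churned in observations if duration == t and churned)
        survival *= 1 - events / at_risk
        curve.append((t, survival))
    return curve


# Hypothetical users: (months observed, churned?)
users = [(1, True), (2, False), (3, True), (3, True), (5, False), (6, True)]
curve = kaplan_meier(users)
for month, prob in curve:
    print(month, round(prob, 3))
```

Censored users still count toward the at-risk total until they drop out of observation, which is what separates this estimate from a simple churn percentage.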

Example 2: Business Impact of Digital Marketing Campaign

Consider a technology startup launching a digital marketing campaign to promote its new product offering. The company conducts an experimental study to evaluate the effectiveness of different marketing channels in driving website traffic, lead generation, and sales conversions.

- Design: The company implements an A/B testing design, randomly assigning website visitors to different marketing treatment conditions, such as Google Ads, social media ads, email campaigns, or content marketing efforts. They track user interactions and conversion events using web analytics tools and marketing automation platforms.

- Data Collection: Data is collected on website traffic, click-through rates, conversion rates, lead generation, and sales revenue. The company also gathers demographic information and user feedback through surveys and customer interviews to understand the impact of marketing messages and campaign creatives.

- Analysis: Utilizing statistical methods like hypothesis testing and multivariate analysis, the company compares key performance metrics across different marketing channels to assess their effectiveness in driving user engagement and conversion outcomes. They calculate return on investment (ROI) metrics to evaluate the cost-effectiveness of each marketing channel.

- Findings: The analysis reveals that social media ads outperform other marketing channels in generating website traffic and lead conversions, while email campaigns are more effective in nurturing leads and driving sales conversions. Armed with these insights, the company allocates marketing budgets strategically, focusing on channels that yield the highest ROI and adjusting messaging and targeting strategies to optimize campaign performance.
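The hypothesis-testing step in this example could look roughly like the sketch below, which compares the conversion rates of two channels with a two-proportion z-test. The visitor and conversion counts are invented, the function name is hypothetical, and the example compares only two channels rather than the four named in the case.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return z, p_value


# Hypothetical results: social media ads vs. email campaign
z, p_value = two_proportion_z_test(conv_a=120, n_a=1000, conv_b=90, n_b=1000)
print(round(z, 2), round(p_value, 3))
```

Because visitors were randomly assigned to conditions, a significant difference here can be read as an effect of the channel rather than of who happened to see it.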

These examples demonstrate the diverse applications of causal research methods in addressing important questions, informing policy decisions, and improving outcomes in various fields. By carefully designing studies, collecting relevant data, employing appropriate analysis techniques, and interpreting findings rigorously, researchers can generate valuable insights into causal relationships and contribute to positive social change.

How to Interpret Causal Research Results?

Interpreting and reporting research findings is a crucial step in the scientific process, ensuring that results are accurately communicated and understood by stakeholders.

Interpreting Statistical Significance

Statistical significance indicates whether the observed results are unlikely to occur by chance alone, but it does not necessarily imply practical or substantive importance. Interpreting statistical significance involves understanding the meaning of p-values and confidence intervals and considering their implications for the research findings.

- P-values: A p-value represents the probability of obtaining the observed results (or more extreme results) if the null hypothesis is true. A p-value below a predetermined threshold (typically 0.05) suggests that the observed results are statistically significant, indicating that the null hypothesis can be rejected in favor of the alternative hypothesis.

- Confidence Intervals: Confidence intervals provide a range of values within which the true population parameter is likely to lie with a certain degree of confidence (e.g., 95%). If the confidence interval does not include the null value, it suggests that the observed effect is statistically significant at the specified confidence level.

Interpreting statistical significance requires considering factors such as sample size, effect size, and the practical relevance of the results rather than relying solely on p-values to draw conclusions.
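One way to see what a p-value means is an exact permutation test, sketched below with hypothetical data. If the null hypothesis is true, group labels are interchangeable, so the p-value is simply the share of all possible relabelings that produce a mean difference at least as extreme as the one observed. This approach is only practical for small samples, since the number of relabelings grows quickly.

```python
from itertools import combinations


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation p-value for a difference in means."""
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)

    def mean_diff(sum_a):
        return sum_a / n_a - (total - sum_a) / n_b

    observed = abs(mean_diff(sum(group_a)))
    extreme = 0
    relabelings = 0
    # Enumerate every way of choosing which units are labelled "group A"
    for indices in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in indices)
        if abs(mean_diff(sum_a)) >= observed - 1e-12:  # tolerance for float rounding
            extreme += 1
        relabelings += 1
    return extreme / relabelings


# Hypothetical, clearly separated groups
p = permutation_p_value([10, 11, 12, 13], [1, 2, 3, 4])
print(round(p, 4))  # 2 of the 70 possible relabelings are this extreme
```

Even with perfectly separated groups, the p-value cannot fall below 2/70 here, which illustrates how sample size limits the evidence a study can provide.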

Discussing Practical Significance

While statistical significance indicates whether an observed effect is unlikely to be due to chance alone, practical significance evaluates the magnitude and meaningfulness of the effect in real-world terms. Discussing practical significance involves considering the relevance of the results to stakeholders and assessing their impact on decision-making and practice.

- Effect Size: Effect size measures the magnitude of the observed effect, providing information about its practical importance. Researchers should interpret effect sizes in the context of their field and the scale of measurement (e.g., small, medium, or large effect sizes).

- Contextual Relevance: Consider the implications of the results for stakeholders, policymakers, and practitioners. Are the observed effects meaningful in the context of existing knowledge, theory, or practical applications? How do the findings contribute to addressing real-world problems or informing decision-making?

Discussing practical significance helps contextualize research findings and guide their interpretation and application in practice, beyond statistical significance alone.

Addressing Limitations and Assumptions

No study is without limitations, and researchers should transparently acknowledge and address potential biases, constraints, and uncertainties in their research design and findings.

- Methodological Limitations: Identify any limitations in study design, data collection, or analysis that may affect the validity or generalizability of the results, such as sampling biases, measurement errors, or confounding variables.

- Assumptions: Discuss any assumptions made in the research process and their implications for the interpretation of results. Assumptions may relate to statistical models, causal inference methods, or theoretical frameworks underlying the study.

- Alternative Explanations: Consider alternative explanations for the observed results and discuss their potential impact on the validity of causal inferences. How robust are the findings to different interpretations or competing hypotheses?

Addressing limitations and assumptions demonstrates transparency and rigor in the research process, allowing readers to critically evaluate the validity and reliability of the findings.

Communicating Findings Clearly

Effectively communicating research findings is essential for disseminating knowledge, informing decision-making, and fostering collaboration and dialogue within the scientific community.

- Clarity and Accessibility: Present findings in a clear, concise, and accessible manner, using plain language and avoiding jargon or technical terminology. Organize information logically and use visual aids (e.g., tables, charts, graphs) to enhance understanding.

- Contextualization: Provide context for the results by summarizing key findings, highlighting their significance, and relating them to existing literature or theoretical frameworks. Discuss the implications of the findings for theory, practice, and future research directions.

- Transparency: Be transparent about the research process, including data collection procedures, analytical methods, and any limitations or uncertainties associated with the findings. Clearly state any conflicts of interest or funding sources that may influence interpretation.

By communicating findings clearly and transparently, researchers can facilitate knowledge exchange, foster trust and credibility, and contribute to evidence-based decision-making.

Causal Research Tips

When conducting causal research, it's essential to approach your study with careful planning, attention to detail, and methodological rigor. Here are some tips to help you navigate the complexities of causal research effectively:

- Define Clear Research Questions: Start by clearly defining your research questions and hypotheses. Articulate the causal relationship you aim to investigate and identify the variables involved.

- Consider Alternative Explanations: Be mindful of potential confounding variables and alternative explanations for the observed relationships. Take steps to control for confounders and address alternative hypotheses in your analysis.

- Prioritize Internal Validity: While external validity is important for generalizability, prioritize internal validity in your study design to ensure that observed effects can be attributed to the manipulation of the independent variable.

- Use Randomization When Possible: If feasible, employ randomization in experimental designs to distribute potential confounders evenly across experimental conditions and enhance the validity of causal inferences.

- Be Transparent About Methods: Provide detailed descriptions of your research methods, including data collection procedures, analytical techniques, and any assumptions or limitations associated with your study.

- Utilize Multiple Methods: Consider using a combination of experimental and observational methods to triangulate findings and strengthen the validity of causal inferences.

- Be Mindful of Sample Size: Ensure that your sample size is adequate to detect meaningful effects. At a fixed significance level, which caps the Type I error rate, a larger sample mainly reduces the risk of Type II errors, that is, missed effects. Conduct power analyses to determine the sample size needed to achieve sufficient statistical power.

- Validate Measurement Instruments: Validate your measurement instruments to ensure that they are reliable and valid for assessing the variables of interest in your study. Pilot test your instruments if necessary.

- Seek Feedback from Peers: Collaborate with colleagues or seek feedback from peer reviewers to solicit constructive criticism and improve the quality of your research design and analysis.
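Two of the tips above, power analysis and randomization, can be sketched in a few lines. This is a minimal illustration under stated assumptions: a two-sided comparison of two group means, the normal approximation to the sample-size formula, and hypothetical helper names (`sample_size_per_group`, `randomize`) that do not come from the original guide.

```python
import math
import random
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * ((z_(1 - alpha/2) + z_power) / d) ** 2,
    where d is the standardized effect size (Cohen's d).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


def randomize(units, seed=None):
    """Shuffle the units and split them into treatment and control halves."""
    rng = random.Random(seed)
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Medium effect (d = 0.5), 5% significance level, 80% power.
n = sample_size_per_group(effect_size=0.5)
print(n)  # 63 per group under the normal approximation

# Assign hypothetical participant IDs 0..2n-1 to the two conditions at random.
treatment, control = randomize(range(2 * n), seed=42)
```

An exact calculation based on the t distribution gives a slightly larger sample size, so treat the result as a lower bound for planning. Fixing the seed makes the assignment reproducible, which also supports the transparency tip.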

Conclusion for Causal Research

Mastering causal research empowers researchers to unlock the secrets of cause and effect, shedding light on the intricate relationships between variables in diverse fields. By employing rigorous methods such as experimental designs, causal inference techniques, and careful data analysis, you can uncover causal mechanisms, predict outcomes, and inform evidence-based practices. Through the lens of causal research, complex phenomena become more understandable, and interventions become more effective in addressing societal challenges and driving progress. In a world where understanding the reasons behind events is paramount, causal research serves as a beacon of clarity and insight. Armed with the knowledge and techniques outlined in this guide, you can navigate the complexities of causality with confidence, advancing scientific knowledge, guiding policy decisions, and ultimately making meaningful contributions to our understanding of the world.

How to Conduct Causal Research in Minutes?

Introducing Appinio, your gateway to lightning-fast causal research. As a real-time market research platform, we're revolutionizing how companies gain consumer insights to drive data-driven decisions. With Appinio, conducting your own market research is not only easy but also thrilling. Experience the excitement of market research with Appinio, where fast, intuitive, and impactful insights are just a click away.

Here's why you'll love Appinio:

- Instant Insights: Say goodbye to waiting days for research results. With our platform, you'll go from questions to insights in minutes, empowering you to make decisions at the speed of business.

- User-Friendly Interface: No need for a research degree here! Our intuitive platform is designed for anyone to use, making complex research tasks simple and accessible.

- Global Reach: Reach your target audience wherever they are. With access to over 90 countries and the ability to define precise target groups from 1200+ characteristics, you'll gather comprehensive data to inform your decisions.

Get free access to the platform!

Join the loop 💌

Be the first to hear about new updates, product news, and data insights. We'll send it all straight to your inbox.

Get the latest market research news straight to your inbox! 💌

Wait, there's more

17.04.2024 | 25min read

Quota Sampling: Definition, Types, Methods, Examples

15.04.2024 | 34min read

What is Market Share? Definition, Formula, Examples

11.04.2024 | 34min read

What is Data Analysis? Definition, Tools, Examples

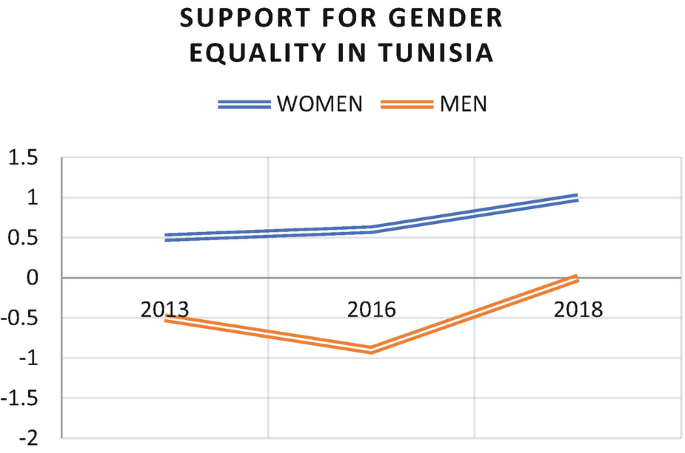

Bivariate Analysis: Associations, Hypotheses, and Causal Stories

- Open Access

- First Online: 04 October 2022


- Mark Tessler

Part of the book series: SpringerBriefs in Sociology (BRIEFSSOCY)


Every day, we encounter various phenomena that make us question how, why, and with what implications they vary. In responding to these questions, we often begin by considering bivariate relationships, meaning the way that two variables relate to one another. Such relationships are the focus of this chapter.


3.1 Description, Explanation, and Causal Stories

There are many reasons why we might be interested in the relationship between two variables. Suppose we observe that some of the respondents interviewed in Arab Barometer surveys and other surveys report that they have thought about emigrating, and we are interested in this variable. We may want to know how individuals’ consideration of emigration varies in relation to certain attributes or attitudes. In this case, our goal would be descriptive, sometimes described as the mapping of variance. Our goal may also or instead be explanation, such as when we want to know why individuals have thought about emigrating.

Description

Description means that we seek to increase our knowledge and refine our understanding of a single variable by looking at whether and how it varies in relation to one or more other variables. Descriptive information makes a valuable contribution when the structure and variance of an important phenomenon are not well known, or not well known in relation to other important variables.

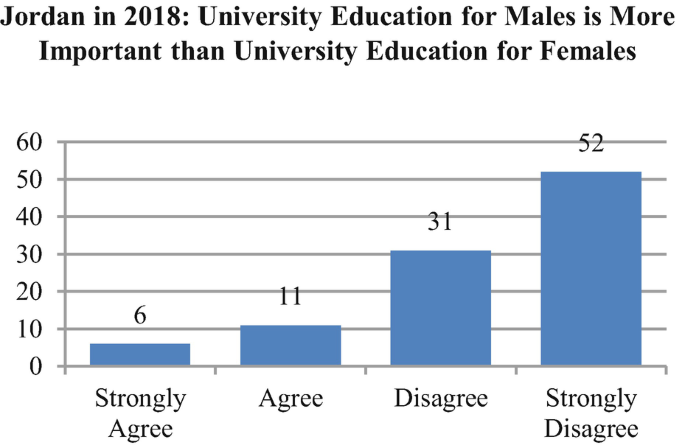

Returning to the example about emigration, suppose you notice that among Jordanians interviewed in 2018, 39.5 percent of the 2400 men and women interviewed reported that they have considered the possibility of emigrating.

Our objective may be to discover what these might-be migrants look like and what they are thinking. We do this by mapping the variance of emigration across attributes and orientations that provide some of this descriptive information, with the descriptions themselves each expressed as bivariate relationships. These relationships are also sometimes labeled “associations” or “correlations” since they are not considered causal relationships and are not concerned with explanation.

Of the 39.5 percent of Jordanians who told interviewers that they have considered emigrating, 57.3 percent are men and 42.7 percent are women. With respect to age, 34 percent are age 29 or younger and 19.2 percent are age 50 or older. It might have been expected that a higher percentage of respondents age 29 or younger would have considered emigrating. In fact, however, 56 percent of the 575 men and women in this age category have considered emigrating. And with respect to destination, the Arab country most frequently mentioned by those who have considered emigration is the UAE, named by 17 percent, followed by Qatar at 10 percent and Saudi Arabia at 9.8 percent. Non-Arab destinations were mentioned more frequently, with Turkey named by 18.1 percent, Canada by 21.1 percent, and the U.S. by 24.2 percent.
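The two age figures above answer different questions: 34 percent is the share of would-be emigrants who are young, while 56 percent is the share of young respondents who have considered emigrating. The short calculation below, using only the numbers reported in the text, shows that the two are consistent. The variable names are illustrative.

```python
n_respondents = 2400          # Jordanians interviewed in 2018
share_considered = 0.395      # share who have considered emigrating
n_young = 575                 # respondents age 29 or younger

# Number of respondents who have considered emigrating.
considered = n_respondents * share_considered

# 34 percent of those respondents are age 29 or younger.
young_considered = considered * 0.34

# Share of the young age category that has considered emigrating.
rate_among_young = young_considered / n_young

# Share of the whole sample that falls in the young age category.
share_young_in_sample = n_young / n_respondents

print(round(considered))                # 948
print(round(young_considered))          # 322
print(round(rate_among_young, 2))       # 0.56
print(round(share_young_in_sample, 2))  # 0.24
```

Respondents age 29 or younger make up about 24 percent of the sample but 34 percent of those who have considered emigrating, so they are over-represented among prospective emigrants even though they are a minority of that group.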

With the variables sex, age, and prospective destination added to the original variable, which is consideration of emigration, there are clearly more than two variables under consideration. But the variables are described two at a time and so each relationship is bivariate.

These bivariate relationships, between having considered emigration on the one hand and sex, age, and prospective destination on the other, provide descriptive information that is likely to be useful to analysts, policymakers, and others concerned with emigration. They tell, or begin to tell, as noted above, what might-be migrants look like and what they are thinking. Additional insight may be gained by adding descriptive bivariate relationships for Jordanians interviewed in a different year to those interviewed in 2018. In addition, still more information, and possibly a more refined understanding, may be gained by examining the attributes and orientations of prospective emigrants who are citizens of other Arab (and perhaps also non-Arab) countries.

With a focus on description, these bivariate relationships are not constructed to shed light on explanation, that is, to contribute to causal stories that seek to account for variance and tell why some individuals but not others have considered the possibility of emigrating. In fact, however, as useful as bivariate relationships that provide descriptive information may be, researchers usually are interested as much if not more in bivariate relationships that express causal stories and purport to provide explanations.

Explanation and Causal Stories

There is a difference in the origins of bivariate relationships that seek to provide descriptive information and those that seek to provide explanatory information. The former can be thought of as responding to “what” questions: What characterizes potential emigrants? What do they look like? What are their thoughts about this or that subject? If the objective is description, a researcher collects and uses her data to investigate the relationship between two variables without a specific and firm prediction about the relationship between them. Rather, she simply wonders about the “what” questions listed above and believes that finding out the answers will be instructive. In this case, therefore, she selects the bivariate relationships to be considered based on what she thinks it will be useful to know, and not based on assessing the accuracy of a previously articulated causal story that specifies the strength and structure of the effect that one variable has on the other.

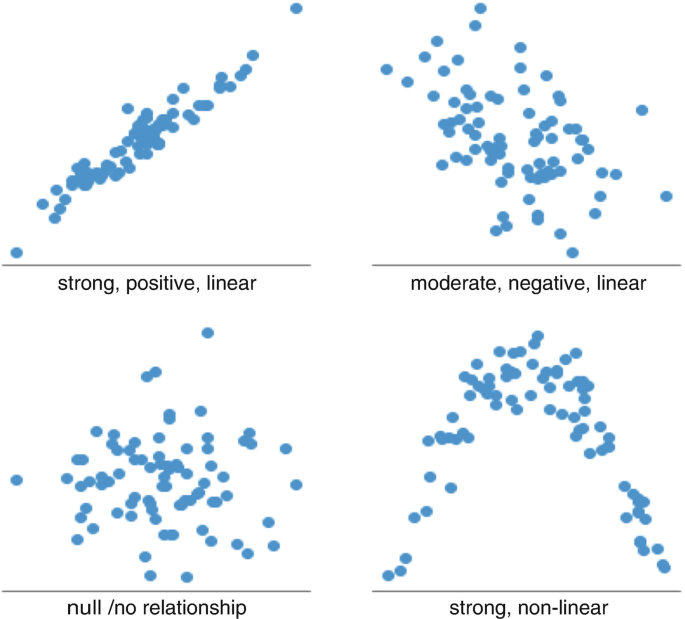

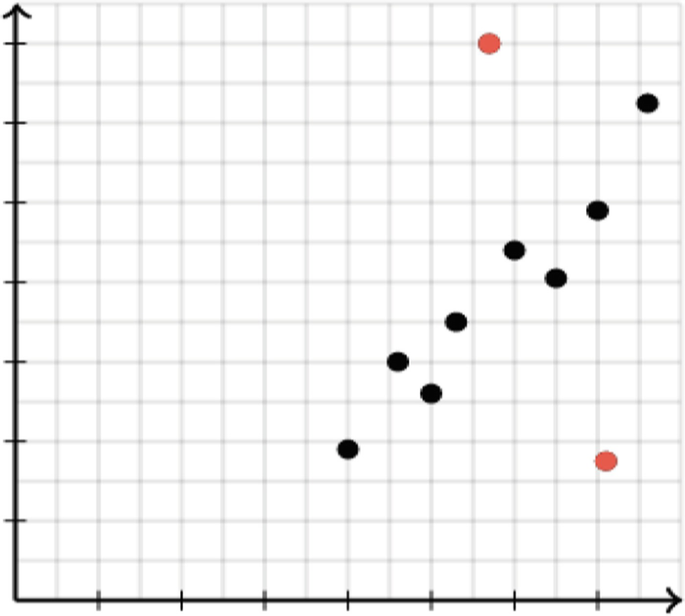

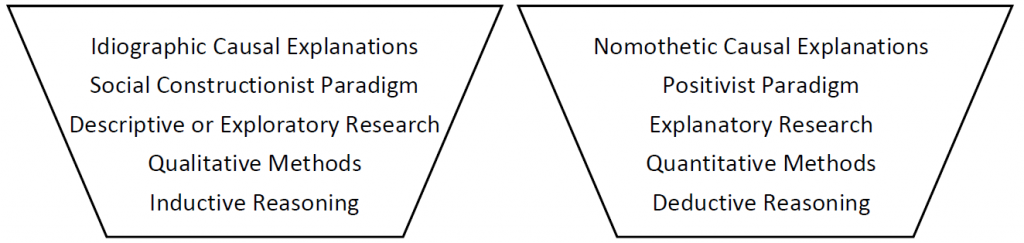

A researcher is often interested in causal stories and explanation, however, and this does usually begin with thinking about the relationship between two variables, one of which is the presumed cause and the other the presumed effect. The presumed cause is the independent variable, and the presumed effect is the dependent variable. Offering evidence that there is a strong relationship between two variables is not sufficient to demonstrate that the variables are likely to be causally related, but it is a necessary first step. In this respect it is a point of departure for the fuller, probably multivariate analysis, required to persuasively argue that a relationship is likely to be causal. In addition, as discussed in Chap. 4, multivariate analysis often not only strengthens the case for inferring that a relationship is causal, but also provides a more elaborate and more instructive causal story. The foundation, however, on which a multivariate analysis aimed at causal inference is built, is a bivariate relationship composed of a presumed independent variable and a presumed dependent variable.
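As a rough illustration of that first step, the sketch below measures the strength and direction of a bivariate relationship with Pearson's correlation coefficient. The data are entirely hypothetical and are not drawn from the Arab Barometer. The choice of years of schooling as the presumed independent variable is also an assumption made only for this example.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


# Hypothetical respondents: presumed cause (independent variable) and
# presumed effect (dependent variable, 1 = has considered emigrating).
years_of_schooling = [6, 9, 12, 12, 16, 18]
considered_emigrating = [0, 0, 1, 0, 1, 1]

r = pearson_r(years_of_schooling, considered_emigrating)
direction = "positive (direct)" if r > 0 else "negative (inverse)"
print(direction)
```

A sizeable coefficient of this kind establishes only that the two variables move together. It says nothing about confounders or about which variable influences the other, which is why the multivariate analysis mentioned above is still needed.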

A hypothesis that posits a causal relationship between two variables is not the same as a causal story, although the two are of course closely connected. The former specifies a presumed cause, a presumed determinant of variance on the dependent variable. It probably also specifies the structure of the relationship, such as linear as opposed to non-linear, or positive (also called direct) as opposed to negative (also called inverse).