
Neag School of Education

Educational Research Basics by Del Siegle

Instrument reliability.

Reliability (visit the concept map that shows the various types of reliability)

A test is reliable to the extent that whatever it measures, it measures it consistently. If I were to stand on a scale and the scale read 15 pounds, I might wonder. Suppose I were to step off the scale and stand on it again, and again it read 15 pounds. The scale is producing consistent results. From a research point of view, the scale seems to be reliable because whatever it is measuring, it is measuring it consistently. Whether those consistent results are valid is another question. However, an instrument cannot be valid if it is not reliable.

There are three major categories of reliability for most instruments: test-retest, equivalent-form, and internal-consistency. Each measures consistency a bit differently, and a given instrument need not meet the requirements of each. Test-retest measures consistency from one time to the next. Equivalent-form measures consistency between two versions of an instrument. Internal-consistency measures consistency within the instrument (consistency among the questions). A fourth category (scorer agreement) is often used with performance and product assessments. Scorer agreement is the consistency of ratings among different judges who are rating the same performance or product. Generally speaking, the longer a test is, the more reliable it tends to be (up to a point). For research purposes, a minimum reliability of .70 is generally expected for attitude instruments. Some researchers feel that it should be higher. A reliability of .70 indicates 70% consistency in the scores that are produced by the instrument; that is, about 70% of the variance in the obtained scores is consistent and the remainder is error. Many tests, such as achievement tests, strive for reliabilities of .90 or higher.
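The link between test length and reliability is commonly described with the Spearman-Brown prophecy formula. As a rough illustration, and assuming the added items are comparable in quality to the existing ones (an assumption not stated above), lengthening a test by a factor $k$ changes a reliability $r$ to approximately:

$$
r_k = \frac{k\,r}{1 + (k - 1)\,r}
$$

For example, doubling a test with $r = .70$ gives $r_2 = 1.40 / 1.70 \approx .82$, and quadrupling it gives $r_4 = 2.80 / 3.10 \approx .90$. The gain from each added block of items shrinks, which is one way to read "up to a point."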

Relationship of Test Forms and Testing Sessions Required for Reliability Procedures

Test-Retest Method (stability: measures error because of changes over time) The same instrument is given twice to the same group of people. The reliability is the correlation between the scores on the two administrations. If the results are consistent over time, the scores should be similar. The trick with test-retest reliability is determining how long to wait between the two administrations. One should wait long enough so the subjects don’t remember how they responded the first time they completed the instrument, but not so long that their knowledge of the material being measured has changed. This may be a couple of weeks to a couple of months.

If one were investigating the reliability of a test measuring mathematics skills, it would not be wise to wait two months. The subjects probably would have gained additional mathematics skills during the two months and thus would have scored differently the second time they completed the test. We would not want their knowledge to have changed between the first and second testing.
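The test-retest calculation described above can be sketched in a few lines. This is a minimal illustration, not the author's procedure: the function name `pearson_r` and the score lists are hypothetical, and the Pearson correlation is assumed as the correlation being used.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary within each administration")
    return sxy / sqrt(sxx * syy)

# Hypothetical scores for the same five subjects on two administrations
first_administration = [12, 15, 9, 20, 17]
second_administration = [13, 14, 10, 19, 18]

test_retest_reliability = pearson_r(first_administration, second_administration)
print(round(test_retest_reliability, 2))
```

A value near 1 suggests the subjects kept roughly the same rank order and spacing across the two administrations.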

Equivalent-Form (Parallel or Alternate-Form) Method (measures error because of differences in test forms) Two different versions of the instrument are created. We assume both measure the same thing. The same subjects complete both instruments during the same time period. The scores on the two instruments are correlated to calculate the consistency between the two forms of the instrument.

Internal-Consistency Method (measures error because of idiosyncrasies of the test items) Several internal-consistency methods exist. They have one thing in common. The subjects complete one instrument one time. For this reason, this is the easiest form of reliability to investigate. This method measures consistency within the instrument in three different ways.

– Split-Half A total score for the odd-numbered questions is correlated with a total score for the even-numbered questions (although it might be the first half with the second half). This is often used with dichotomous variables that are scored 0 for incorrect and 1 for correct. The Spearman-Brown prophecy formula is applied to the correlation to determine the reliability.

– Kuder-Richardson Formula 20 (K-R 20) and Kuder-Richardson Formula 21 (K-R 21) These are alternative formulas for calculating how consistent subject responses are among the questions on an instrument. Items on the instrument must be dichotomously scored (0 for incorrect and 1 for correct). All items are compared with each other, rather than half of the items with the other half of the items. It can be shown mathematically that the Kuder-Richardson reliability coefficient is actually the mean of all split-half coefficients (provided the Rulon formula is used) resulting from different splittings of a test. K-R 21 assumes that all of the questions are equally difficult. K-R 20 does not assume that. The formula for K-R 21 can be found on page 179.

– Cronbach’s Alpha When the items on an instrument are not scored right versus wrong, Cronbach’s alpha is often used to measure the internal consistency. This is often the case with attitude instruments that use the Likert scale. A computer program such as SPSS is often used to calculate Cronbach’s alpha. Although Cronbach’s alpha is usually used for scores which fall along a continuum, it will produce the same results as KR-20 with dichotomous data (0 or 1).

I have created an Excel spreadsheet that will calculate Spearman-Brown, KR-20, KR-21, and Cronbach’s alpha. The spreadsheet will handle data for a maximum of 1000 subjects with a maximum of 100 responses for each.
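For readers who prefer code to a spreadsheet, the four internal-consistency statistics named above can be sketched as follows. This is an illustrative sketch using the standard textbook formulas, not a reproduction of the spreadsheet: all function names and the data are hypothetical, and population variances (`statistics.pvariance`) are assumed throughout so that KR-20 and Cronbach's alpha agree on 0/1 data.

```python
from math import sqrt
from statistics import mean, pvariance

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def spearman_brown(r_half):
    """Step a half-test correlation up to full-test length."""
    return 2 * r_half / (1 + r_half)

def split_half(scores):
    """Odd-even split-half reliability with the Spearman-Brown correction."""
    odd = [sum(row[0::2]) for row in scores]   # items 1, 3, 5, ...
    even = [sum(row[1::2]) for row in scores]  # items 2, 4, 6, ...
    return spearman_brown(pearson_r(odd, even))

def cronbach_alpha(scores):
    """Cronbach's alpha; rows are subjects, columns are items."""
    k = len(scores[0])
    item_variances = [pvariance(col) for col in zip(*scores)]
    total_variance = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)

def kr20(scores):
    """KR-20 for items scored 0 (incorrect) or 1 (correct)."""
    k, n = len(scores[0]), len(scores)
    p = [sum(col) / n for col in zip(*scores)]  # proportion correct per item
    total_variance = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(pi * (1 - pi) for pi in p) / total_variance)

def kr21(scores):
    """KR-21, which assumes all items are equally difficult."""
    k = len(scores[0])
    totals = [sum(row) for row in scores]
    m = mean(totals)
    return k / (k - 1) * (1 - m * (k - m) / (k * pvariance(totals)))

# Hypothetical data: six subjects, four dichotomously scored items
scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]

for name, fn in [("split-half", split_half), ("KR-20", kr20),
                 ("KR-21", kr21), ("alpha", cronbach_alpha)]:
    print(name, round(fn(scores), 3))
```

On this data KR-20 and alpha coincide, as the text says they should for 0/1 items, while KR-21 comes out lower because the items are not equally difficult. The functions do not guard against a total-score variance of zero (every subject with the same total), which would make the statistics undefined.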

Scoring Agreement (measures error because of the scorer) Performance and product assessments are often based on scores by individuals who are trained to evaluate the performance or product. The consistency between ratings can be calculated in a variety of ways.

– Interrater Reliability Two judges can evaluate a group of student products and the correlation between their ratings can be calculated (r=.90 is a common cutoff).

– Percentage Agreement Two judges can evaluate a group of products and a percentage for the number of times they agree is calculated (80% is a common cutoff).
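Percentage agreement is simple enough to compute by hand, but a short sketch makes the definition concrete. The function name and the ratings below are hypothetical; exact matches are assumed to count as agreement, although some studies also accept adjacent ratings.

```python
def percent_agreement(ratings_a, ratings_b):
    """Percentage of products on which two judges gave the same rating."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of the same length")
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return 100 * matches / len(ratings_a)

# Hypothetical rubric scores (1 to 5) from two judges for ten student products
judge_1 = [4, 3, 5, 2, 4, 3, 5, 1, 2, 4]
judge_2 = [4, 3, 4, 2, 4, 3, 5, 1, 3, 4]

print(percent_agreement(judge_1, judge_2))  # 80.0
```

The two judges match on 8 of the 10 products, so this example sits exactly at the 80% cutoff mentioned above. The interrater correlation in the first bullet is an ordinary correlation between the two lists of ratings.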

———

All scores contain error. The error is what lowers an instrument’s reliability.

Obtained Score = True Score + Error Score
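In classical test theory this decomposition leads directly to a definition of reliability. The notation below is an assumption added for illustration ($X$ for the obtained score, $T$ for the true score, $E$ for the error score), as is the usual assumption that error is uncorrelated with the true score:

$$
X = T + E, \qquad \sigma_X^2 = \sigma_T^2 + \sigma_E^2, \qquad \text{reliability} = \frac{\sigma_T^2}{\sigma_X^2} = 1 - \frac{\sigma_E^2}{\sigma_X^2}
$$

As a worked example, if obtained scores have a variance of 100 and the error variance is 30, the reliability is $1 - 30/100 = .70$. The larger the error variance relative to the obtained-score variance, the lower the reliability.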

———-

There could be a number of reasons why the reliability estimate for a measure is low. Four common sources of inconsistencies of test scores are listed below:

– Test Taker: perhaps the subject is having a bad day

– Test Itself: the questions on the instrument may be unclear

– Testing Conditions: there may be distractions during the testing that draw the subject’s attention away

– Test Scoring: scorers may be applying different standards when evaluating the subjects’ responses

Del Siegle, Ph.D. Neag School of Education – University of Connecticut www.delsiegle.info

Created 9/24/2002 Edited 10/17/2013


How to Determine the Validity and Reliability of an Instrument By: Yue Li

Validity and reliability are two important factors to consider when developing and testing any instrument (e.g., content assessment test, questionnaire) for use in a study. Attention to these considerations helps to ensure the quality of your measurement and of the data collected for your study.

Understanding and Testing Validity

Validity refers to the degree to which an instrument accurately measures what it intends to measure. Three common types of validity for researchers and evaluators to consider are content, construct, and criterion validities.

- Content validity indicates the extent to which items adequately measure or represent the content of the property or trait that the researcher wishes to measure. Subject matter expert review is often a good first step in instrument development to assess content validity, in relation to the area or field you are studying.

- Construct validity indicates the extent to which a measurement method accurately represents a construct (e.g., a latent variable or phenomenon that can’t be measured directly, such as a person’s attitude or belief) and produces an observation distinct from that which is produced by a measure of another construct. Common methods to assess construct validity include, but are not limited to, factor analysis, correlation tests, and item response theory models (including the Rasch model).

- Criterion-related validity indicates the extent to which the instrument’s scores correlate with an external criterion (i.e., usually another measurement from a different instrument) either at present (concurrent validity) or in the future (predictive validity). A common measurement of this type of validity is the correlation coefficient between two measures.

Often, when developing, modifying, and interpreting the validity of a given instrument, researchers and evaluators do not view or test each type of validity individually; instead, they test for evidence of several forms of validity collectively (e.g., see Samuel Messick’s work regarding validity).

Understanding and Testing Reliability

Reliability refers to the degree to which an instrument yields consistent results. Common measures of reliability include internal consistency, test-retest, and inter-rater reliabilities.

- Internal consistency reliability looks at how consistently the scores on individual items agree with the scores on a set of items, or subscale, which typically consists of several items that measure a single construct. Cronbach’s alpha is one of the most common methods for checking internal consistency reliability. Group variability, score reliability, number of items, sample size, and the difficulty level of the instrument can also affect the Cronbach’s alpha value.

- Test-retest measures the correlation between scores from one administration of an instrument to another, usually within an interval of 2 to 3 weeks. Unlike pre-post tests, no treatment occurs between the first and second administrations of the instrument when assessing test-retest reliability. A similar type of reliability, called alternate forms, involves using slightly different forms or versions of an instrument to see if different versions yield consistent results.

- Inter-rater reliability checks the degree of agreement among raters (i.e., those completing items on an instrument). Common situations where more than one rater is involved may occur when more than one person conducts classroom observations, uses an observation protocol, or scores an open-ended test using a rubric or other standard protocol. Kappa statistics, correlation coefficients, and intraclass correlation coefficients (ICC) are some of the commonly reported measures of inter-rater reliability.
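Of the inter-rater measures listed above, the kappa statistic is the one that corrects for agreement expected by chance. The following is a minimal sketch of Cohen's kappa for two raters and categorical codes; the function name and the observation codes are hypothetical, and the sketch does not cover weighted kappa or more than two raters.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical codes."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of the same length")
    n = len(rater_a)
    # Observed proportion of items on which the raters agree
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category proportions
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)

# Hypothetical codes from two observers for ten classroom observations
observer_1 = ["yes", "yes", "no", "no", "yes", "no", "yes", "yes", "no", "no"]
observer_2 = ["yes", "no", "no", "no", "yes", "no", "yes", "yes", "yes", "no"]

print(round(cohens_kappa(observer_1, observer_2), 2))  # 0.6
```

Here the observers agree on 80% of the observations, but half of that agreement would be expected by chance given how often each uses "yes" and "no", so kappa is 0.6 rather than 0.8.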

Developing a valid and reliable instrument usually requires multiple iterations of piloting and testing, which can be resource intensive. Therefore, when available, I suggest using already established valid and reliable instruments, such as those published in peer-reviewed journal articles. However, even when using these instruments, you should re-check validity and reliability, using the methods of your study and your own participants’ data, before running additional statistical analyses. This process will confirm that the instrument performs as intended in your study with the population you are studying, even when these differ from the purpose and population for which the instrument was initially developed. Below are a few additional, useful readings to further inform your understanding of validity and reliability.

Resources for Understanding and Testing Reliability

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. Washington, DC: Authors.

- Bond, T. G., & Fox, C. M. (2001). Applying the Rasch model: Fundamental measurement in the human sciences. Mahwah, NJ: Lawrence Erlbaum.

- Cronbach, L. (1990). Essentials of psychological testing. New York, NY: Harper & Row.

- Carmines, E., & Zeller, R. (1979). Reliability and validity assessment. Beverly Hills, CA: Sage Publications.

- Messick, S. (1987). Validity. ETS Research Report Series, 1987: i–208. doi: 10.1002/j.2330-8516.1987.tb00244.x

- Liu, X. (2010). Using and developing measurement instruments in science education: A Rasch modeling approach. Charlotte, NC: Information Age.


Validity & Reliability In Research

A Plain-Language Explanation (With Examples)

By: Derek Jansen (MBA) | Expert Reviewer: Kerryn Warren (PhD) | September 2023

Validity and reliability are two related but distinctly different concepts within research. Understanding what they are and how to achieve them is critically important to any research project. In this post, we’ll unpack these two concepts as simply as possible.

This post is based on our popular online course, Research Methodology Bootcamp. In the course, we unpack the basics of methodology using straightforward language and loads of examples.

Overview: Validity & Reliability

- The big picture

- Validity 101

- Reliability 101

- Key takeaways

First, The Basics…

First, let’s start with a big-picture view and then we can zoom in to the finer details.

Validity and reliability are two incredibly important concepts in research, especially within the social sciences. Both validity and reliability have to do with the measurement of variables and/or constructs – for example, job satisfaction, intelligence, productivity, etc. When undertaking research, you’ll often want to measure these types of constructs and variables and, at the simplest level, validity and reliability are about ensuring the quality and accuracy of those measurements.

As you can probably imagine, if your measurements aren’t accurate or there are quality issues at play when you’re collecting your data, your entire study will be at risk. Therefore, validity and reliability are very important concepts to understand (and to get right). So, let’s unpack each of them.

What Is Validity?

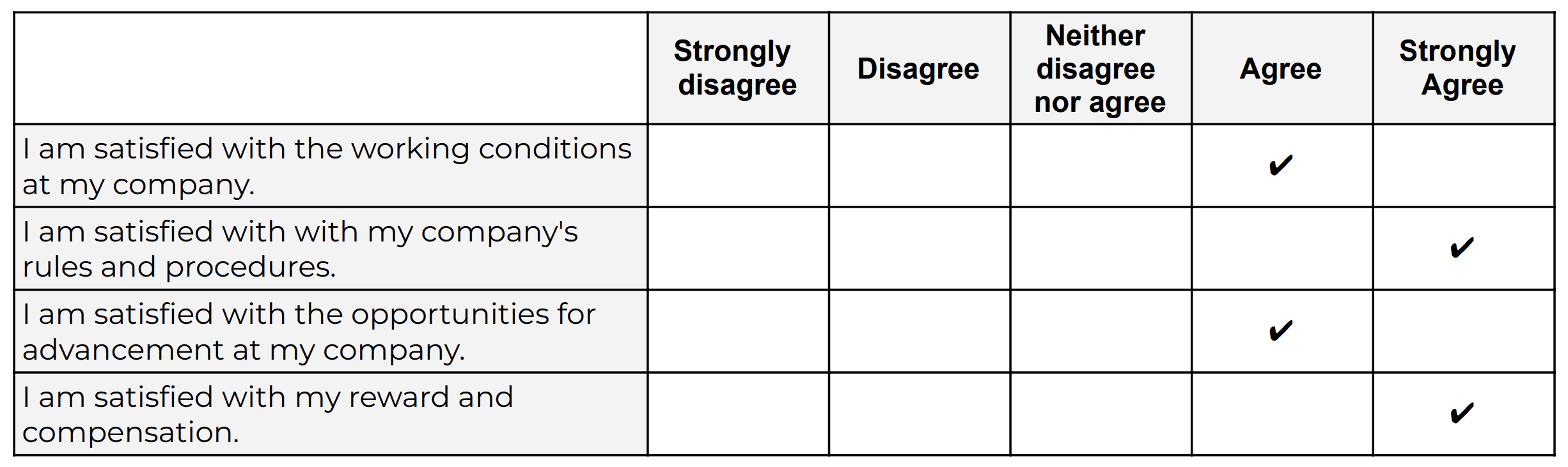

In simple terms, validity (also called “construct validity”) is all about whether a research instrument accurately measures what it’s supposed to measure.

For example, let’s say you have a set of Likert scales that are supposed to quantify someone’s level of overall job satisfaction. If this set of scales focused purely on only one dimension of job satisfaction, say pay satisfaction, this would not be a valid measurement, as it only captures one aspect of the multidimensional construct. In other words, pay satisfaction alone is only one contributing factor toward overall job satisfaction, and therefore it’s not a valid way to measure someone’s job satisfaction.

Oftentimes in quantitative studies, the way in which the researcher or survey designer interprets a question or statement can differ from how the study participants interpret it. Given that respondents don’t have the opportunity to ask clarifying questions when taking a survey, it’s easy for these sorts of misunderstandings to crop up. Naturally, if the respondents are interpreting the question in the wrong way, the data they provide will be pretty useless. Therefore, ensuring that a study’s measurement instruments are valid – in other words, that they are measuring what they intend to measure – is incredibly important.

There are various types of validity and we’re not going to go down that rabbit hole in this post, but it’s worth quickly highlighting the importance of making sure that your research instrument is tightly aligned with the theoretical construct you’re trying to measure. In other words, you need to pay careful attention to how the key theories within your study define the thing you’re trying to measure – and then make sure that your survey presents it in the same way.

For example, sticking with the “job satisfaction” construct we looked at earlier, you’d need to clearly define what you mean by job satisfaction within your study (and this definition would of course need to be underpinned by the relevant theory). You’d then need to make sure that your chosen definition is reflected in the types of questions or scales you’re using in your survey. Simply put, you need to make sure that your survey respondents are perceiving your key constructs in the same way you are. Or, even if they’re not, that your measurement instrument is capturing the necessary information that reflects your definition of the construct at hand.


What Is Reliability?

As with validity, reliability is an attribute of a measurement instrument – for example, a survey, a weight scale or even a blood pressure monitor. But while validity is concerned with whether the instrument is measuring the “thing” it’s supposed to be measuring, reliability is concerned with consistency and stability. In other words, reliability reflects the degree to which a measurement instrument produces consistent results when applied repeatedly to the same phenomenon, under the same conditions.

As you can probably imagine, a measurement instrument that achieves a high level of consistency is naturally more dependable (or reliable) than one that doesn’t – in other words, it can be trusted to provide consistent measurements. And that, of course, is what you want when undertaking empirical research. If you think about it within a more domestic context, just imagine if you found that your bathroom scale gave you a different number every time you hopped on and off of it – you wouldn’t feel too confident in its ability to measure the variable that is your body weight.

It’s worth mentioning that reliability also extends to the person using the measurement instrument. For example, if two researchers use the same instrument (let’s say a measuring tape) and they get different measurements, there’s likely an issue in terms of how one (or both) of them are using the measuring tape. So, when you think about reliability, consider both the instrument and the researcher as part of the equation.

As with validity, there are various types of reliability and various tests that can be used to assess the reliability of an instrument. A popular one that you’ll likely come across for survey instruments is Cronbach’s alpha, which is a statistical measure that quantifies the degree to which items within an instrument (for example, a set of Likert scales) measure the same underlying construct. In other words, Cronbach’s alpha indicates how closely related the items are and whether they consistently capture the same concept.

Recap: Key Takeaways

Alright, let’s quickly recap to cement your understanding of validity and reliability:

- Validity is concerned with whether an instrument (e.g., a set of Likert scales) is measuring what it’s supposed to measure.

- Reliability is concerned with whether that measurement is consistent and stable when measuring the same phenomenon under the same conditions.

In short, validity and reliability are both essential to ensuring that your data collection efforts deliver high-quality, accurate data that help you answer your research questions. So, be sure to always pay careful attention to the validity and reliability of your measurement instruments when collecting and analysing data. As the adage goes, “rubbish in, rubbish out” – make sure that your data inputs are rock-solid.


Reliability and Validity – Definitions, Types & Examples

Published by Alvin Nicolas on August 16th, 2021. Revised on October 26, 2023.

A researcher must test the collected data before drawing any conclusion. Every research design needs to be concerned with reliability and validity to measure the quality of the research.

What is Reliability?

Reliability refers to the consistency of the measurement. Reliability shows how trustworthy the score of the test is. If the collected data shows the same results after being tested using various methods and sample groups, the information is reliable. Reliability is necessary for validity, but it does not guarantee it: a reliable method can still produce results that are not valid.

Example: If you weigh yourself on a weighing scale several times throughout the day, you should get roughly the same results. These are considered reliable results obtained through repeated measures.

Example: If a teacher gives students a math test and repeats it the next week with the same questions, and the students get the same scores, then the reliability of the test is high.

What is Validity?

Validity refers to the accuracy of the measurement. Validity shows how suitable a specific test is for a particular situation. If the results are accurate according to the researcher’s situation, explanation, and prediction, then the research is valid.

If the method of measuring is accurate, then it’ll produce accurate results. A reliable method is not necessarily valid. In contrast, if a method is not reliable, it cannot be valid.

Example: Your weighing scale shows different results each time you weigh yourself within a day even after handling it carefully, and weighing before and after meals. Your weighing machine might be malfunctioning. It means your method had low reliability. Hence you are getting inaccurate or inconsistent results that are not valid.

Example: Suppose a questionnaire is distributed among a group of people to check the quality of a skincare product, and the same questionnaire is then repeated with many groups. If you get the same responses from the various groups of participants, the questionnaire has high reliability, which is a precondition for (though not proof of) its validity.

Most of the time, validity is difficult to measure even though the process of measurement is reliable. It isn’t easy to interpret the real situation.

Example: If the weighing scale shows the same result, let’s say 70 kg, each time, even though your actual weight is 55 kg, then the weighing scale is malfunctioning. It is showing consistent results, so it is reliable, but the results are not accurate, so it cannot be considered valid. It means the method has low validity.

Internal Vs. External Validity

One of the key features of randomised designs is that they tend to have high internal validity and, when participants are sampled to represent the population, good external validity.

Internal validity is the ability to draw a causal link between your treatment and the dependent variable of interest. It means the observed changes should be due to the experiment conducted, and any external factor should not influence the variables.

Examples of such external factors include age, level, height, and grade.

External validity is the ability to identify and generalise your study outcomes to the population at large. The relationship between the study’s situation and the situations outside the study is considered external validity.


Threats to Internal Validity

Threats to External Validity

How to Assess Reliability and Validity

Reliability can be measured by comparing the consistency of the procedure and its results. There are various methods to measure validity and reliability. Reliability can be measured through various statistical methods depending on the type of reliability, as explained below:

Types of Reliability

Types of Validity

As we discussed above, the reliability of the measurement alone cannot determine its validity. Validity is difficult to measure even if the method is reliable. The following types of tests are conducted for measuring validity.


How to Increase Reliability?

- Use an appropriate questionnaire to measure the competency level.

- Ensure a consistent environment for participants

- Make the participants familiar with the criteria of assessment.

- Train the participants appropriately.

- Analyse the research items regularly to avoid poor performance.

How to Increase Validity?

Ensuring validity is also not an easy job. Some practical ways to help ensure validity are given below:

- Reactivity should be minimised as a first concern.

- The Hawthorne effect should be reduced.

- The respondents should be motivated.

- The intervals between the pre-test and post-test should not be lengthy.

- High dropout rates should be avoided.

- The inter-rater reliability should be ensured.

- Control and experimental groups should be matched with each other.

How to Implement Reliability and Validity in your Thesis?

According to the experts, it is helpful to implement the concepts of reliability and validity. These concepts are widely adopted, especially in theses and dissertations. The method for implementation is given below:

Frequently Asked Questions

What is reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, measuring what the study intends to. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results are truly representative of the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, limiting their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It ensures that an instrument’s scores are not due to random error, making the findings dependable and reproducible in similar conditions.

What is test retest reliability?

Test-retest reliability assesses the consistency of measurements taken by a test over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the scores indicates that the test produces stable and consistent results over time.

How to improve reliability of an experiment?

- Standardise procedures and instructions.

- Use consistent and precise measurement tools.

- Train observers or raters to reduce subjective judgments.

- Increase sample size to reduce random errors.

- Conduct pilot studies to refine methods.

- Repeat measurements or use multiple methods.

- Address potential sources of variability.

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it’s intended to measure, ensuring accuracy and relevance. While a test can be reliable without being valid, a valid test must inherently be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to biases. The reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer biases are minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all life areas is contested. Cultural bias and over-reliance on tests are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if well-designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is ensured when questions accurately measure the intended construct. However, factors like poorly phrased questions, respondent bias, and lack of standardisation can compromise their reliability and validity.


Reliability and validity: Importance in Medical Research

Affiliations.

- 1 Al-Nafees Medical College,Isra University, Islamabad, Pakistan.

- 2 Fauji Foundation Hospital, Foundation University Medical College, Islamabad, Pakistan.

- PMID: 34974579

- DOI: 10.47391/JPMA.06-861

Reliability and validity are among the most important and fundamental domains in the assessment of any measuring methodology for data-collection in a good research. Validity is about what an instrument measures and how well it does so, whereas reliability concerns the truthfulness in the data obtained and the degree to which any measuring tool controls random error. The current narrative review was planned to discuss the importance of reliability and validity of data-collection or measurement techniques used in research. It describes and explores comprehensively the reliability and validity of research instruments and also discusses different forms of reliability and validity with concise examples. An attempt has been taken to give a brief literature review regarding the significance of reliability and validity in medical sciences.

Keywords: Validity, Reliability, Medical research, Methodology, Assessment, Research tools.



Reliability – Types, Examples and Guide


Reliability

Definition:

Reliability refers to the consistency, dependability, and trustworthiness of a system, process, or measurement to perform its intended function or produce consistent results over time. It is a desirable characteristic in various domains, including engineering, manufacturing, software development, and data analysis.

Reliability In Engineering

In engineering and manufacturing, reliability refers to the ability of a product, equipment, or system to function without failure or breakdown under normal operating conditions for a specified period. A reliable system consistently performs its intended functions, meets performance requirements, and withstands various environmental factors, stress, or wear and tear.

Reliability In Software Development

In software development, reliability relates to the stability and consistency of software applications or systems. A reliable software program operates consistently without crashing, produces accurate results, and handles errors or exceptions gracefully. Reliability is often measured by metrics such as mean time between failures (MTBF) and mean time to repair (MTTR).
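As a rough illustration of the two metrics just named, the sketch below computes them from a small failure log. The function name `mtbf_and_mttr` and the hour values are hypothetical, and the availability ratio is a commonly used companion figure rather than something taken from this article.

```python
def mtbf_and_mttr(uptime_hours, repair_hours):
    """Return (MTBF, MTTR, availability) from a simple failure log.

    uptime_hours: operating time before each failure (hypothetical data).
    repair_hours: time taken to repair each of those failures.
    """
    if not uptime_hours or len(uptime_hours) != len(repair_hours):
        raise ValueError("need one repair time per failure, and at least one failure")
    mtbf = sum(uptime_hours) / len(uptime_hours)   # mean time between failures
    mttr = sum(repair_hours) / len(repair_hours)   # mean time to repair
    availability = mtbf / (mtbf + mttr)            # share of time the system is up
    return mtbf, mttr, availability


# Three hypothetical failures: hours of operation before each, then hours to fix.
mtbf, mttr, availability = mtbf_and_mttr([120, 200, 160], [2, 4, 3])
print(f"MTBF = {mtbf:.1f} h, MTTR = {mttr:.1f} h, availability = {availability:.3f}")
```

A higher MTBF and a lower MTTR both push availability toward 1.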

Reliability In Data Analysis and Statistics

In data analysis and statistics, reliability refers to the consistency and repeatability of measurements or assessments. For example, if a measurement instrument consistently produces similar results when measuring the same quantity or if multiple raters consistently agree on the same assessment, it is considered reliable. Reliability is often assessed using statistical measures such as test-retest reliability, inter-rater reliability, or internal consistency.

Research Reliability

Research reliability refers to the consistency, stability, and repeatability of research findings. It indicates the extent to which a research study produces consistent and dependable results when conducted under similar conditions. In other words, research reliability assesses whether the same results would be obtained if the study were replicated with the same methodology, sample, and context.

What Affects Reliability in Research

Several factors can affect the reliability of research measurements and assessments. Here are some common factors that can impact reliability:

Measurement Error

Measurement error refers to the variability or inconsistency in the measurements that is not due to the construct being measured. It can arise from various sources, such as the limitations of the measurement instrument, environmental factors, or the characteristics of the participants. Measurement error reduces the reliability of the measure by introducing random variability into the data.
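One common way to formalise this idea is classical test theory, which is brought in here as an assumption rather than something this article states. Each observed score $X$ is treated as a true score $T$ plus random error $E$, with the error assumed to be uncorrelated with the true score. Under that assumption, reliability is the share of observed-score variance that comes from true scores:

```latex
X = T + E, \qquad
\sigma_X^2 = \sigma_T^2 + \sigma_E^2, \qquad
\rho_{XX'} = \frac{\sigma_T^2}{\sigma_X^2} = 1 - \frac{\sigma_E^2}{\sigma_X^2}
```

Read this way, more random error (a larger $\sigma_E^2$) directly lowers the reliability coefficient $\rho_{XX'}$.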

Rater/Observer Bias

In studies involving subjective assessments or ratings, the biases or subjective judgments of the raters or observers can affect reliability. If different raters interpret and evaluate the same phenomenon differently, it can lead to inconsistencies in the ratings, resulting in lower inter-rater reliability.

Participant Factors

Characteristics or factors related to the participants themselves can influence reliability. For example, factors such as fatigue, motivation, attention, or mood can introduce variability in responses, affecting the reliability of self-report measures or performance assessments.

Instrumentation

The quality and characteristics of the measurement instrument can impact reliability. If the instrument lacks clarity, has ambiguous items or instructions, or is prone to measurement errors, it can decrease the reliability of the measure. Poorly designed or unreliable instruments can introduce measurement error and decrease the consistency of the measurements.

Sample Size

Sample size affects how precisely reliability can be estimated, especially in studies where the reliability coefficient is based on correlations or variability within the sample. A larger sample generally provides a more stable estimate of reliability, while a smaller sample yields a less precise one.
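The effect of sample size on the stability of a reliability estimate can be seen in a small simulation. This is only a sketch under stated assumptions: true scores are drawn from a standard normal distribution, each testing occasion adds independent error with a standard deviation of 0.5 (which implies a true test-retest reliability of .80), and the names `pearson_r` and `simulate_retest_r` are hypothetical helpers.

```python
import random
from math import sqrt
from statistics import stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def simulate_retest_r(n_people, rng, error_sd=0.5):
    """One simulated test-retest study: true score plus fresh error at each occasion."""
    true_scores = [rng.gauss(0, 1) for _ in range(n_people)]
    time1 = [t + rng.gauss(0, error_sd) for t in true_scores]
    time2 = [t + rng.gauss(0, error_sd) for t in true_scores]
    return pearson_r(time1, time2)


rng = random.Random(42)  # fixed seed so the run is repeatable
spread = {}
for n in (10, 200):
    # Repeat the "study" 300 times and see how much the estimate varies.
    estimates = [simulate_retest_r(n, rng) for _ in range(300)]
    spread[n] = stdev(estimates)
    print(f"n = {n:3d}: SD of r across simulated studies = {spread[n]:.3f}")
```

The instrument is equally reliable in both conditions; only the precision of the estimate differs, with the small-sample estimates scattering much more widely.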

Time Interval

The time interval between test administrations can impact test-retest reliability. If the time interval is too short, participants may recall their previous responses and answer in a similar manner, artificially inflating the reliability coefficient. On the other hand, if the time interval is too long, true changes in the construct being measured may occur, leading to lower test-retest reliability.

Content Sampling

The specific items or questions included in a measure can affect reliability. If the items sample the construct unevenly or tap different things, responses to them may correlate weakly, lowering internal consistency. If the items are too similar or redundant, internal consistency can instead be inflated without the measure covering the construct any more fully.

Scoring and Data Handling

Errors in scoring, data entry, or data handling can introduce variability and impact reliability. Inaccurate or inconsistent scoring procedures, data entry mistakes, or mishandling of missing data can affect the reliability of the measurements.

Context and Environment

The context and environment in which measurements are obtained can influence reliability. Factors such as noise, distractions, lighting conditions, or the presence of others can introduce variability and affect the consistency of the measurements.

Types of Reliability

There are several types of reliability that are commonly discussed in research and measurement contexts. Here are some of the main types of reliability:

Test-Retest Reliability

This type of reliability assesses the consistency of a measure over time. It involves administering the same test or measure to the same group of individuals on two separate occasions and then comparing the results. If the scores are similar or highly correlated across the two testing points, it indicates good test-retest reliability.

Inter-Rater Reliability

Inter-rater reliability examines the degree of agreement or consistency between different raters or observers who are assessing the same phenomenon. It is commonly used in subjective evaluations or assessments where judgments are made by multiple individuals. High inter-rater reliability suggests that different observers are likely to reach the same conclusions or make consistent assessments.

Internal Consistency Reliability

Internal consistency reliability assesses the extent to which the items or questions within a measure are consistent with each other. It is commonly measured using techniques such as Cronbach’s alpha. High internal consistency reliability indicates that the items within a measure are measuring the same construct or concept consistently.

Parallel Forms Reliability

Parallel forms reliability assesses the consistency of different versions or forms of a test that are intended to measure the same construct. Two equivalent versions of a test are administered to the same group of individuals, and the scores are compared to determine the level of agreement between the forms.

Split-Half Reliability

Split-half reliability involves splitting a measure into two halves and examining the consistency between the two halves. It can be done by dividing the items into odd-even pairs or by randomly splitting the items. The scores from the two halves are then compared to assess the degree of consistency.

Alternate Forms Reliability

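Alternate forms reliability is closely related to parallel forms reliability, and the two terms are often used interchangeably. Where a distinction is drawn, parallel forms are expected to be statistically equivalent (for example, with equal means and variances), whereas alternate forms are simply different versions built to measure the same construct. In both cases the two forms are administered to the same group of individuals, and the scores are compared to assess the level of agreement.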

Applications of Reliability

Reliability has several important applications across various fields and disciplines. Here are some common applications of reliability:

Psychological and Educational Testing

Reliability is crucial in psychological and educational testing to ensure that the scores obtained from assessments are consistent and dependable. It helps to determine the accuracy and stability of measures such as intelligence tests, personality assessments, academic exams, and aptitude tests.

Market Research

In market research, reliability is important for ensuring consistent and dependable data collection. Surveys, questionnaires, and other data collection instruments need to have high reliability to obtain accurate and consistent responses from participants. Reliability analysis helps researchers identify and address any issues that may affect the consistency of the data.

Health and Medical Research

Reliability is essential in health and medical research to ensure that measurements and assessments used in studies are consistent and trustworthy. This includes the reliability of diagnostic tests, patient-reported outcome measures, observational measures, and psychometric scales. High reliability is crucial for making valid inferences and drawing reliable conclusions from research findings.

Quality Control and Manufacturing

Reliability analysis is widely used in industries such as manufacturing and quality control to assess the reliability of products and processes. It helps to identify and address sources of variation and inconsistency, ensuring that products meet the required standards and specifications consistently.

Social Science Research

Reliability plays a vital role in social science research, including fields such as sociology, anthropology, and political science. It is used to assess the consistency of measurement tools, such as surveys or observational protocols, to ensure that the data collected is reliable and can be trusted for analysis and interpretation.

Performance Evaluation

Reliability is important in performance evaluation systems used in organizations and workplaces. Whether it’s assessing employee performance, evaluating the reliability of scoring rubrics, or measuring the consistency of ratings by supervisors, reliability analysis helps ensure fairness and consistency in the evaluation process.

Psychometrics and Scale Development

Reliability analysis is a fundamental step in psychometrics, which involves developing and validating measurement scales. Researchers assess the reliability of items and subscales to ensure that the scale measures the intended construct consistently and accurately.

Examples of Reliability

Here are some examples of reliability in different contexts:

Test-Retest Reliability Example: A researcher administers a personality questionnaire to a group of participants and then administers the same questionnaire to the same participants after a certain period, such as two weeks. The scores obtained from the two administrations are highly correlated, indicating good test-retest reliability.

Inter-Rater Reliability Example: Multiple teachers assess the essays of a group of students using a standardized grading rubric. The ratings assigned by the teachers show a high level of agreement or correlation, indicating good inter-rater reliability.

Internal Consistency Reliability Example: A researcher develops a questionnaire to measure job satisfaction. The researcher administers the questionnaire to a group of employees and calculates Cronbach’s alpha to assess internal consistency. The calculated value of Cronbach’s alpha is high (e.g., above 0.8), indicating good internal consistency reliability.

Parallel Forms Reliability Example: Two versions of a mathematics exam are created, which are designed to measure the same mathematical skills. Both versions of the exam are administered to the same group of students, and the scores from the two versions are highly correlated, indicating good parallel forms reliability.

Split-Half Reliability Example: A researcher develops a survey to measure self-esteem. The survey consists of 20 items, and the researcher randomly divides the items into two halves. The scores obtained from each half of the survey show a high level of agreement or correlation, indicating good split-half reliability.

Alternate Forms Reliability Example: A researcher develops two versions of a language proficiency test, which are designed to measure the same language skills. Both versions of the test are administered to the same group of participants, and the scores from the two versions are highly correlated, indicating good alternate forms reliability.

Where to Write About Reliability in A Thesis

When writing about reliability in a thesis, there are several sections where you can address this topic. Here are some common sections in a thesis where you can discuss reliability:

Introduction:

In the introduction section of your thesis, you can provide an overview of the study and briefly introduce the concept of reliability. Explain why reliability is important in your research field and how it relates to your study objectives.

Theoretical Framework:

If your thesis includes a theoretical framework or a literature review, this is a suitable section to discuss reliability. Provide an overview of the relevant theories, models, or concepts related to reliability in your field. Discuss how other researchers have measured and assessed reliability in similar studies.

Methodology:

The methodology section is crucial for addressing reliability. Describe the research design, data collection methods, and measurement instruments used in your study. Explain how you ensured the reliability of your measurements or data collection procedures. This may involve discussing pilot studies, inter-rater reliability, test-retest reliability, or other techniques used to assess and improve reliability.

Data Analysis:

In the data analysis section, you can discuss the statistical techniques employed to assess the reliability of your data. This might include measures such as Cronbach’s alpha, Cohen’s kappa, or intraclass correlation coefficients (ICC), depending on the nature of your data and research design. Present the results of reliability analyses and interpret their implications for your study.

Discussion:

In the discussion section, analyze and interpret the reliability results in relation to your research findings and objectives. Discuss any limitations or challenges encountered in establishing or maintaining reliability in your study. Consider the implications of reliability for the validity and generalizability of your results.

Conclusion:

In the conclusion section, summarize the main points discussed in your thesis regarding reliability. Emphasize the importance of reliability in research and highlight any recommendations or suggestions for future studies to enhance reliability.

Importance of Reliability

Reliability is of utmost importance in research, measurement, and various practical applications. Here are some key reasons why reliability is important:

- Consistency : Reliability ensures consistency in measurements and assessments. Consistent results indicate that the measure or instrument is stable and produces similar outcomes when applied repeatedly. This consistency allows researchers and practitioners to have confidence in the reliability of the data collected and the conclusions drawn from it.

- Accuracy : Reliability is closely linked to accuracy, although it does not guarantee it. A reliable measure contains little random error, so its results are not scattered unpredictably around the true value or state of the phenomenon being measured; it can still be systematically off, which is a question of validity. When a measure is unreliable, it introduces error and uncertainty into the data, which can lead to incorrect interpretations and flawed decision-making.

- Trustworthiness : Reliability enhances the trustworthiness of measurements and assessments. When a measure is reliable, it indicates that it is dependable and can be trusted to provide consistent and accurate results. This is particularly important in fields where decisions and actions are based on the data collected, such as education, healthcare, and market research.

- Comparability : Reliability enables meaningful comparisons between different groups, individuals, or time points. When measures are reliable, differences or changes observed can be attributed to true differences in the underlying construct, rather than measurement error. This allows for valid comparisons and evaluations, both within a study and across different studies.

- Validity : Reliability is a prerequisite for validity. Validity refers to the extent to which a measure or assessment accurately captures the construct it is intended to measure. If a measure is unreliable, it cannot be valid, as it does not consistently reflect the construct of interest. Establishing reliability is an important step in establishing the validity of a measure.

- Decision-making : Reliability is crucial for making informed decisions based on data. Whether it’s evaluating employee performance, diagnosing medical conditions, or conducting research studies, reliable measurements and assessments provide a solid foundation for decision-making processes. They help to reduce uncertainty and increase confidence in the conclusions drawn from the data.

- Quality Assurance : Reliability is essential for maintaining quality assurance in various fields. It allows organizations to assess and monitor the consistency and dependability of their processes, products, and services. By ensuring reliability, organizations can identify areas of improvement, address sources of variation, and deliver consistent and high-quality outcomes.

Limitations of Reliability

Here are some limitations of reliability:

- Limited to consistency: Reliability primarily focuses on the consistency of measurements and findings. However, it does not guarantee the accuracy or validity of the measurements. A measurement can be consistent but still systematically biased or flawed, leading to inaccurate results. Reliability alone cannot address validity concerns.

- Context-dependent: Reliability can be influenced by the specific context, conditions, or population under study. A measurement or instrument that demonstrates high reliability in one context may not necessarily exhibit the same level of reliability in a different context. Researchers need to consider the specific characteristics and limitations of their study context when interpreting reliability.

- Inadequate for complex constructs: Reliability is often based on the assumption of unidimensionality, which means that a measurement instrument is designed to capture a single construct. However, many real-world phenomena are complex and multidimensional, making it challenging to assess reliability accurately. Reliability measures may not adequately capture the full complexity of such constructs.

- Susceptible to systematic errors: Reliability focuses on minimizing random errors, but it may not detect or address systematic errors or biases in measurements. Systematic errors can arise from flaws in the measurement instrument, data collection procedures, or sample selection. Reliability assessments may not fully capture or address these systematic errors, leading to biased or inaccurate results.

- Relies on assumptions: Reliability assessments often rely on certain assumptions, such as the assumption of measurement invariance or the assumption of stable conditions over time. These assumptions may not always hold true in real-world research settings, particularly when studying dynamic or evolving phenomena. Failure to meet these assumptions can compromise the reliability of the research.

- Limited to quantitative measures: Reliability is typically applied to quantitative measures and instruments, which can be problematic when studying qualitative or subjective phenomena. Reliability measures may not fully capture the richness and complexity of qualitative data, limiting their applicability in certain research domains.


About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Chapter 5: Psychological Measurement

Reliability and Validity of Measurement

Learning Objectives

- Define reliability, including the different types and how they are assessed.

- Define validity, including the different types and how they are assessed.

- Describe the kinds of evidence that would be relevant to assessing the reliability and validity of a particular measure.

Again, measurement involves assigning scores to individuals so that they represent some characteristic of the individuals. But how do researchers know that the scores actually represent the characteristic, especially when it is a construct like intelligence, self-esteem, depression, or working memory capacity? The answer is that they conduct research using the measure to confirm that the scores make sense based on their understanding of the construct being measured. This is an extremely important point. Psychologists do not simply assume that their measures work. Instead, they collect data to demonstrate that they work. If their research does not demonstrate that a measure works, they stop using it.

As an informal example, imagine that you have been dieting for a month. Your clothes seem to be fitting more loosely, and several friends have asked if you have lost weight. If at this point your bathroom scale indicated that you had lost 10 pounds, this would make sense and you would continue to use the scale. But if it indicated that you had gained 10 pounds, you would rightly conclude that it was broken and either fix it or get rid of it. In evaluating a measurement method, psychologists consider two general dimensions: reliability and validity.

Reliability

Reliability refers to the consistency of a measure. Psychologists consider three types of consistency: over time (test-retest reliability), across items (internal consistency), and across different researchers (inter-rater reliability).

Test-Retest Reliability

When researchers measure a construct that they assume to be consistent across time, then the scores they obtain should also be consistent across time. Test-retest reliability is the extent to which this is actually the case. For example, intelligence is generally thought to be consistent across time. A person who is highly intelligent today will be highly intelligent next week. This means that any good measure of intelligence should produce roughly the same scores for this individual next week as it does today. Clearly, a measure that produces highly inconsistent scores over time cannot be a very good measure of a construct that is supposed to be consistent.

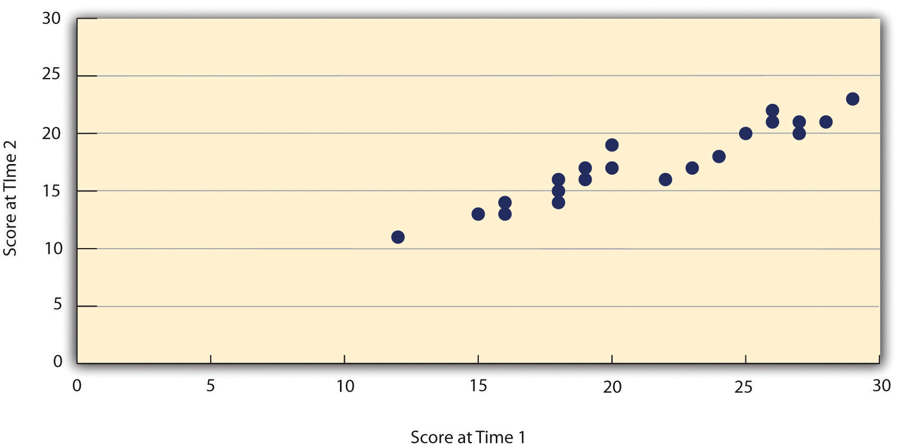

Assessing test-retest reliability requires using the measure on a group of people at one time, using it again on the same group of people at a later time, and then looking at the test-retest correlation between the two sets of scores. This is typically done by graphing the data in a scatterplot and computing Pearson’s r. Figure 5.2 shows the correlation between two sets of scores of several university students on the Rosenberg Self-Esteem Scale, administered two times, a week apart. Pearson’s r for these data is +.95. In general, a test-retest correlation of +.80 or greater is considered to indicate good reliability.
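The test-retest calculation can be sketched in a few lines of Python. The scores below are hypothetical and are not the data behind Figure 5.2; `pearson_r` is a hand-written helper included so that the example has no dependencies.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


# Hypothetical scale totals for six students, tested one week apart.
time1 = [22, 25, 18, 30, 27, 15]
time2 = [23, 24, 17, 29, 28, 16]

r = pearson_r(time1, time2)
print(f"test-retest r = {r:.2f}")
print("meets the +.80 guideline" if r >= 0.80 else "below the +.80 guideline")
```

Each student keeps roughly the same position in the group across the two occasions, so the correlation comes out well above the +.80 guideline.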

Again, high test-retest correlations make sense when the construct being measured is assumed to be consistent over time, which is the case for intelligence, self-esteem, and the Big Five personality dimensions. But other constructs are not assumed to be stable over time. The very nature of mood, for example, is that it changes. So a measure of mood that produced a low test-retest correlation over a period of a month would not be a cause for concern.

Internal Consistency

A second kind of reliability is internal consistency, which is the consistency of people’s responses across the items on a multiple-item measure. In general, all the items on such measures are supposed to reflect the same underlying construct, so people’s scores on those items should be correlated with each other. On the Rosenberg Self-Esteem Scale, people who agree that they are a person of worth should tend to agree that they have a number of good qualities. If people’s responses to the different items are not correlated with each other, then it would no longer make sense to claim that they are all measuring the same underlying construct. This is as true for behavioural and physiological measures as for self-report measures. For example, people might make a series of bets in a simulated game of roulette as a measure of their level of risk seeking. This measure would be internally consistent to the extent that individual participants’ bets were consistently high or low across trials.

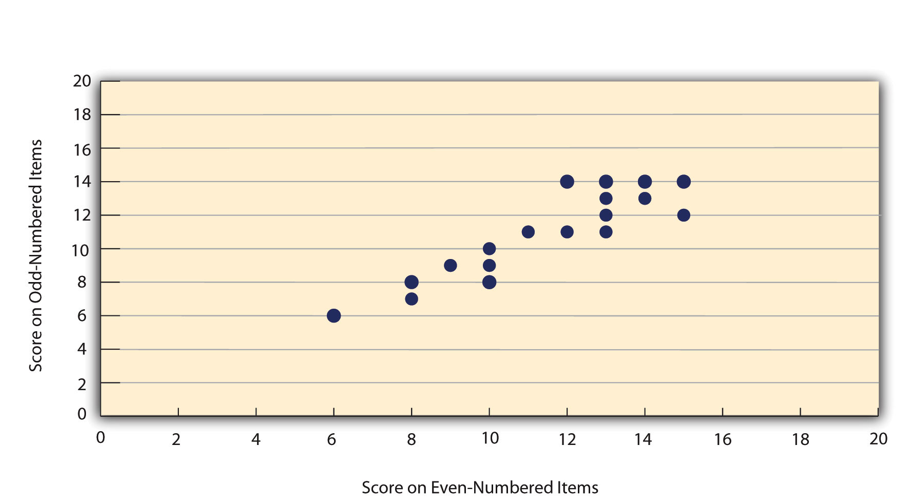

Like test-retest reliability, internal consistency can only be assessed by collecting and analyzing data. One approach is to look at a split-half correlation. This involves splitting the items into two sets, such as the first and second halves of the items or the even- and odd-numbered items. Then a score is computed for each set of items, and the relationship between the two sets of scores is examined. For example, Figure 5.3 shows the split-half correlation between several university students’ scores on the even-numbered items and their scores on the odd-numbered items of the Rosenberg Self-Esteem Scale. Pearson’s r for these data is +.88. A split-half correlation of +.80 or greater is generally considered good internal consistency.
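An odd-even split can be sketched as follows. The response matrix is hypothetical (six people, six items scored 1 to 4). The Spearman-Brown step is not mentioned in the text above; it is a standard adjustment, added here as an assumption, that estimates the reliability of the full-length measure from the correlation between its two halves.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def split_half(responses):
    """responses: one list of item scores per person. Returns (r_half, r_full)."""
    odd_totals = [sum(person[0::2]) for person in responses]   # items 1, 3, 5, ...
    even_totals = [sum(person[1::2]) for person in responses]  # items 2, 4, 6, ...
    r_half = pearson_r(odd_totals, even_totals)
    # Spearman-Brown step-up: each half is only half as long as the real measure.
    r_full = 2 * r_half / (1 + r_half)
    return r_half, r_full


# Hypothetical responses: rows are people, columns are items.
responses = [
    [4, 4, 3, 4, 4, 3],
    [2, 1, 2, 2, 1, 2],
    [3, 3, 3, 2, 3, 3],
    [1, 2, 1, 1, 2, 1],
    [4, 3, 4, 4, 3, 4],
    [2, 2, 3, 2, 2, 2],
]

r_half, r_full = split_half(responses)
print(f"split-half r = {r_half:.2f}, Spearman-Brown estimate = {r_full:.2f}")
```

A different split (for example, first half versus second half) would generally give a somewhat different correlation, which is one motivation for statistics that average over splits.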

Perhaps the most common measure of internal consistency used by researchers in psychology is a statistic called Cronbach’s α (the Greek letter alpha). Conceptually, α is the mean of all possible split-half correlations for a set of items. For example, there are 252 ways to pick five of 10 items for one half, which gives 126 distinct splits because each split is counted twice. Cronbach’s α would be the mean of the split-half correlations over all of these splits. Note that this is not how α is actually computed, but it is a correct way of interpreting the meaning of this statistic. Again, a value of +.80 or greater is generally taken to indicate good internal consistency.
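In practice α is usually computed from item and total-score variances rather than by averaging split-half correlations. The sketch below uses the standard variance formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), on a hypothetical response matrix; the function name `cronbach_alpha` is illustrative.

```python
from statistics import variance


def cronbach_alpha(responses):
    """responses: one list of item scores per person (same number of items each)."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Sample variance of each item across people.
    item_vars = [variance([person[i] for person in responses]) for i in range(k)]
    # Sample variance of each person's total score.
    total_var = variance([sum(person) for person in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical responses: rows are people, columns are six items scored 1 to 4.
responses = [
    [4, 4, 3, 4, 4, 3],
    [2, 1, 2, 2, 1, 2],
    [3, 3, 3, 2, 3, 3],
    [1, 2, 1, 1, 2, 1],
    [4, 3, 4, 4, 3, 4],
    [2, 2, 3, 2, 2, 2],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha = {alpha:.2f}")
```

When items rise and fall together across people, the variance of the total scores is large relative to the sum of the item variances, and α approaches 1.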

Inter-Rater Reliability

Many behavioural measures involve significant judgment on the part of an observer or a rater. Inter-rater reliability is the extent to which different observers are consistent in their judgments. For example, if you were interested in measuring university students’ social skills, you could make video recordings of them as they interacted with another student whom they were meeting for the first time. Then you could have two or more observers watch the videos and rate each student’s level of social skills. To the extent that each participant does in fact have some level of social skills that can be detected by an attentive observer, different observers’ ratings should be highly correlated with each other. Inter-rater reliability would also have been measured in Bandura’s Bobo doll study. In this case, the observers’ ratings of how many acts of aggression a particular child committed while playing with the Bobo doll should have been highly positively correlated. Inter-rater reliability is often assessed using Cronbach’s α when the judgments are quantitative or an analogous statistic called Cohen’s κ (the Greek letter kappa) when they are categorical.
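For categorical judgments, Cohen’s κ compares the agreement two raters actually reach with the agreement expected by chance given how often each rater uses each category. The sketch below uses hypothetical codes from two observers; the labels and the function name `cohen_kappa` are illustrative only.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each assign one category per case."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of cases")
    n = len(rater_a)
    # Proportion of cases on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten video clips: "agg" = aggressive act, "non" = not.
rater_a = ["agg", "agg", "non", "non", "agg", "non", "agg", "non", "non", "agg"]
rater_b = ["agg", "agg", "non", "agg", "agg", "non", "agg", "non", "non", "non"]

kappa = cohen_kappa(rater_a, rater_b)
print(f"Cohen's kappa = {kappa:.2f}")  # prints 0.60 for these data
```

Here the raters agree on 8 of 10 clips (80%), but because each uses both categories half the time, 50% agreement would be expected by chance, so κ is lower than the raw agreement rate.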

Validity

Validity is the extent to which the scores from a measure represent the variable they are intended to. But how do researchers make this judgment? We have already considered one factor that they take into account: reliability. When a measure has good test-retest reliability and internal consistency, researchers should be more confident that the scores represent what they are supposed to. There has to be more to it, however, because a measure can be extremely reliable but have no validity whatsoever. As an absurd example, imagine someone who believes that people’s index finger length reflects their self-esteem and therefore tries to measure self-esteem by holding a ruler up to people’s index fingers. Although this measure would have extremely good test-retest reliability, it would have absolutely no validity. The fact that one person’s index finger is a centimetre longer than another’s would indicate nothing about which one had higher self-esteem.

Discussions of validity usually divide it into several distinct “types.” But a good way to interpret these types is that they are other kinds of evidence—in addition to reliability—that should be taken into account when judging the validity of a measure. Here we consider three basic kinds: face validity, content validity, and criterion validity.

Face Validity

Face validity is the extent to which a measurement method appears “on its face” to measure the construct of interest. Most people would expect a self-esteem questionnaire to include items about whether they see themselves as a person of worth and whether they think they have good qualities. So a questionnaire that included these kinds of items would have good face validity. The finger-length method of measuring self-esteem, on the other hand, seems to have nothing to do with self-esteem and therefore has poor face validity. Although face validity can be assessed quantitatively—for example, by having a large sample of people rate a measure in terms of whether it appears to measure what it is intended to—it is usually assessed informally.

Face validity is at best a very weak kind of evidence that a measurement method is measuring what it is supposed to. One reason is that it is based on people’s intuitions about human behaviour, which are frequently wrong. It is also the case that many established measures in psychology work quite well despite lacking face validity. The Minnesota Multiphasic Personality Inventory-2 (MMPI-2) measures many personality characteristics and disorders by having people decide whether each of 567 different statements applies to them, where many of the statements do not have any obvious relationship to the construct that they measure. For example, the items “I enjoy detective or mystery stories” and “The sight of blood doesn’t frighten me or make me sick” both measure the suppression of aggression. In this case, it is not the participants’ literal answers to these questions that are of interest, but rather whether the pattern of the participants’ responses to a series of questions matches those of individuals who tend to suppress their aggression.

Content Validity

Content validity is the extent to which a measure “covers” the construct of interest. For example, if a researcher conceptually defines test anxiety as involving both sympathetic nervous system activation (leading to nervous feelings) and negative thoughts, then his measure of test anxiety should include items about both nervous feelings and negative thoughts. Or consider that attitudes are usually defined as involving thoughts, feelings, and actions toward something. By this conceptual definition, a person has a positive attitude toward exercise to the extent that he or she thinks positive thoughts about exercising, feels good about exercising, and actually exercises. So to have good content validity, a measure of people’s attitudes toward exercise would have to reflect all three of these aspects. Like face validity, content validity is not usually assessed quantitatively. Instead, it is assessed by carefully checking the measurement method against the conceptual definition of the construct.

Criterion Validity

Criterion validity is the extent to which people’s scores on a measure are correlated with other variables (known as criteria) that one would expect them to be correlated with. For example, people’s scores on a new measure of test anxiety should be negatively correlated with their performance on an important school exam. If it were found that people’s scores were in fact negatively correlated with their exam performance, then this would be a piece of evidence that these scores really represent people’s test anxiety. But if it were found that people scored equally well on the exam regardless of their test anxiety scores, then this would cast doubt on the validity of the measure.

A criterion can be any variable that one has reason to think should be correlated with the construct being measured, and there will usually be many of them. For example, one would expect test anxiety scores to be negatively correlated with exam performance and course grades and positively correlated with general anxiety and with blood pressure during an exam. Or imagine that a researcher develops a new measure of physical risk taking. People’s scores on this measure should be correlated with their participation in “extreme” activities such as snowboarding and rock climbing, the number of speeding tickets they have received, and even the number of broken bones they have had over the years. When the criterion is measured at the same time as the construct, criterion validity is referred to as concurrent validity; however, when the criterion is measured at some point in the future (after the construct has been measured), it is referred to as predictive validity (because scores on the measure have “predicted” a future outcome).
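
As a rough illustration of how such a check might be run, the sketch below computes Pearson’s r between scores on a new test anxiety measure and exam performance. The data are invented for illustration and the helper name `pearson_r` is hypothetical; a strongly negative r would count as one piece of criterion-validity evidence, not as proof of validity.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's r between two equal-length sequences of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("r is undefined when one variable has no variance")
    return cross / sqrt(ss_x * ss_y)


# Hypothetical data: one entry per participant (not from any real study).
test_anxiety = [12, 18, 25, 30, 35, 40]   # scores on the new measure
exam_score = [88, 85, 74, 70, 62, 55]     # the criterion

r_criterion = pearson_r(test_anxiety, exam_score)
print(f"r between test anxiety and exam score: {r_criterion:.2f}")
```

If the exam were taken at the same sitting as the anxiety measure, this would be a concurrent-validity check; if the exam came later, the same calculation would bear on predictive validity.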

Criteria can also include other measures of the same construct. For example, one would expect new measures of test anxiety or physical risk taking to be positively correlated with existing measures of the same constructs. This is known as convergent validity.

Assessing convergent validity requires collecting data using the measure. Researchers John Cacioppo and Richard Petty did this when they created their self-report Need for Cognition Scale to measure how much people value and engage in thinking (Cacioppo & Petty, 1982) [1]. In a series of studies, they showed that people’s scores were positively correlated with their scores on a standardized academic achievement test, and that their scores were negatively correlated with their scores on a measure of dogmatism (which represents a tendency toward obedience). In the years since it was created, the Need for Cognition Scale has been used in hundreds of studies and has been shown to be correlated with a wide variety of other variables, including the effectiveness of an advertisement, interest in politics, and juror decisions (Petty, Briñol, Loersch, & McCaslin, 2009) [2].

Discriminant Validity

Discriminant validity, on the other hand, is the extent to which scores on a measure are not correlated with measures of variables that are conceptually distinct. For example, self-esteem is a general attitude toward the self that is fairly stable over time. It is not the same as mood, which is how good or bad one happens to be feeling right now. So people’s scores on a new measure of self-esteem should not be very highly correlated with their moods. If the new measure of self-esteem were highly correlated with a measure of mood, it could be argued that the new measure is not really measuring self-esteem; it is measuring mood instead.

When they created the Need for Cognition Scale, Cacioppo and Petty also provided evidence of discriminant validity by showing that people’s scores were not correlated with certain other variables. For example, they found only a weak correlation between people’s need for cognition and a measure of their cognitive style—the extent to which they tend to think analytically by breaking ideas into smaller parts or holistically in terms of “the big picture.” They also found no correlation between people’s need for cognition and measures of their test anxiety and their tendency to respond in socially desirable ways. All these low correlations provide evidence that the measure is reflecting a conceptually distinct construct.
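
The contrast between convergent and discriminant evidence can be sketched by correlating one new measure with two other variables. Everything in the example below is an assumption made for illustration: the scores are invented, and the variable names are hypothetical. The expectation is a high correlation with an existing measure of the same construct and a near-zero correlation with a conceptually distinct one.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's r between two equal-length sequences of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for six participants (invented for illustration).
new_self_esteem = [10, 14, 18, 22, 26, 30]       # the new measure
existing_self_esteem = [12, 15, 17, 24, 25, 31]  # same construct
current_mood = [5, 3, 6, 4, 5, 4]                # conceptually distinct

r_convergent = pearson_r(new_self_esteem, existing_self_esteem)
r_discriminant = pearson_r(new_self_esteem, current_mood)

print(f"convergent   (vs. existing self-esteem measure): {r_convergent:.2f}")
print(f"discriminant (vs. current mood):                 {r_discriminant:.2f}")
```

With real data the pattern is rarely this clean, and six participants would be far too few; the point is only that both correlations are computed the same way and differ in what the researcher expects to find.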

Key Takeaways

- Psychological researchers do not simply assume that their measures work. Instead, they conduct research to show that they work. If they cannot show that they work, they stop using them.

- There are two distinct criteria by which researchers evaluate their measures: reliability and validity. Reliability is consistency across time (test-retest reliability), across items (internal consistency), and across researchers (interrater reliability). Validity is the extent to which the scores actually represent the variable they are intended to.

- Validity is a judgment based on various types of evidence. The relevant evidence includes the measure’s reliability, whether it covers the construct of interest, and whether the scores it produces are correlated with other variables they are expected to be correlated with and not correlated with variables that are conceptually distinct.

- The reliability and validity of a measure are not established by any single study but by the pattern of results across multiple studies. The assessment of reliability and validity is an ongoing process.

- Practice: Ask several friends to complete the Rosenberg Self-Esteem Scale. Then assess its internal consistency by making a scatterplot to show the split-half correlation (even- vs. odd-numbered items). Compute Pearson’s r too if you know how.

- Discussion: Think back to the last college exam you took and think of the exam as a psychological measure. What construct do you think it was intended to measure? Comment on its face and content validity. What data could you collect to assess its reliability and criterion validity?

- Cacioppo, J. T., & Petty, R. E. (1982). The need for cognition. Journal of Personality and Social Psychology, 42, 116–131.

- Petty, R. E., Briñol, P., Loersch, C., & McCaslin, M. J. (2009). The need for cognition. In M. R. Leary & R. H. Hoyle (Eds.), Handbook of individual differences in social behaviour (pp. 318–329). New York, NY: Guilford Press.


Research Methods in Psychology - 2nd Canadian Edition Copyright © 2015 by Paul C. Price, Rajiv Jhangiani, & I-Chant A. Chiang is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


- Volume 18, Issue 3

- Validity and reliability in quantitative studies


- Roberta Heale 1 ,

- Alison Twycross 2

- 1 School of Nursing, Laurentian University , Sudbury, Ontario , Canada

- 2 Faculty of Health and Social Care , London South Bank University , London , UK

- Correspondence to : Dr Roberta Heale, School of Nursing, Laurentian University, Ramsey Lake Road, Sudbury, Ontario, Canada P3E2C6; rheale{at}laurentian.ca

https://doi.org/10.1136/eb-2015-102129


Evidence-based practice includes, in part, implementation of the findings of well-conducted quality research studies. So being able to critique quantitative research is an important skill for nurses. Consideration must be given not only to the results of the study but also the rigour of the research. Rigour refers to the extent to which the researchers worked to enhance the quality of the studies. In quantitative research, this is achieved through measurement of the validity and reliability. 1


Types of validity

The first category is content validity. This category looks at whether the instrument adequately covers all the content that it should with respect to the variable. In other words, does the instrument cover the entire domain related to the variable, or construct, it was designed to measure? In an undergraduate nursing course with instruction about public health, an examination with content validity would cover all the content in the course, with greater emphasis on the topics that had received greater coverage or more depth. A subset of content validity is face validity, where experts are asked their opinion about whether an instrument measures the concept intended.

Construct validity refers to whether you can draw inferences about test scores related to the concept being studied. For example, if a person has a high score on a survey that measures anxiety, does this person truly have a high degree of anxiety? In another example, a test of knowledge of medications that requires dosage calculations may instead be testing maths knowledge.

There are three types of evidence that can be used to demonstrate a research instrument has construct validity:

Homogeneity: the instrument measures one construct.

Convergence: the instrument measures concepts similar to those measured by other instruments. This cannot be assessed if no similar instruments are available.

Theory evidence: behaviour is similar to the theoretical propositions of the construct measured in the instrument. For example, when an instrument measures anxiety, one would expect to see that participants who score high on the instrument for anxiety also demonstrate symptoms of anxiety in their day-to-day lives. 2

The final measure of validity is criterion validity . A criterion is any other instrument that measures the same variable. Correlations can be conducted to determine the extent to which the different instruments measure the same variable. Criterion validity is measured in three ways:

Convergent validity: shows that an instrument is highly correlated with instruments measuring similar variables.

Divergent validity: shows that an instrument is poorly correlated with instruments that measure different variables. In this case, for example, there should be a low correlation between an instrument that measures motivation and one that measures self-efficacy.

Predictive validity: means that the instrument should have high correlations with future criteria. 2 For example, a score of high self-efficacy related to performing a task should predict the likelihood of a participant completing the task.

Reliability

Reliability relates to the consistency of a measure. A participant completing an instrument meant to measure motivation should have approximately the same responses each time the test is completed. Although it is not possible to give an exact calculation of reliability, an estimate of reliability can be achieved through different measures. The three attributes of reliability are outlined in table 2. How each attribute is tested for is described below.

Attributes of reliability

Homogeneity (internal consistency) is assessed using item-to-total correlation, split-half reliability, Kuder-Richardson coefficient and Cronbach's α. In split-half reliability, the results of a test, or instrument, are divided in half. Correlations are calculated comparing both halves. Strong correlations indicate high reliability, while weak correlations indicate the instrument may not be reliable. The Kuder-Richardson test is a more complicated version of the split-half test. In this process the average of all possible split-half combinations is determined and a correlation between 0 and 1 is generated. This test is more accurate than the split-half test, but can only be completed on questions with two answers (eg, yes or no, 0 or 1). 3
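
A minimal sketch of these two calculations follows, assuming a small invented set of yes/no (1/0) answers with one row per participant. The split here is odd-numbered versus even-numbered items, which is only one of many possible splits, and the Kuder-Richardson coefficient is computed with the usual KR-20 formula based on item and total-score variances (population variances are used throughout; that choice is an assumption of this sketch). The function names are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's r between two equal-length sequences of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


def split_half_r(responses):
    """Correlate totals on odd-numbered items with totals on even-numbered items."""
    odd_totals = [sum(row[0::2]) for row in responses]   # items 1, 3, 5, ...
    even_totals = [sum(row[1::2]) for row in responses]  # items 2, 4, 6, ...
    return pearson_r(odd_totals, even_totals)


def kr20(responses):
    """KR-20 for dichotomous (0/1) items, using population variances."""
    n = len(responses)          # number of participants
    k = len(responses[0])       # number of items
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n
    if var_total == 0:
        raise ValueError("KR-20 is undefined when all total scores are equal")
    # For a 0/1 item, the variance is p * (1 - p), where p is the share answering 1.
    sum_pq = 0.0
    for i in range(k):
        p = sum(row[i] for row in responses) / n
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


# Hypothetical answers: 6 participants x 6 yes/no items (invented data).
answers = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0, 1],
    [1, 1, 0, 1, 1, 0],
    [1, 0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

r_halves = split_half_r(answers)
kr20_value = kr20(answers)
print(f"split-half r (odd vs. even items): {r_halves:.2f}")
print(f"KR-20: {kr20_value:.2f}")
```

The two figures need not agree: the split-half value depends on which items land in each half, whereas KR-20 does not depend on any particular split.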

Cronbach's α is the most commonly used test to determine the internal consistency of an instrument. In this test, the average of all correlations in every combination of split-halves is determined. Instruments with questions that have more than two responses can be used in this test. The Cronbach's α result is a number between 0 and 1. An acceptable reliability score is one that is 0.7 or higher. 1 , 3
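
In practice α is usually computed from item variances rather than by enumerating split-halves. The sketch below uses that standard variance-based formula, α = (k / (k − 1)) × (1 − Σ item variances / variance of total scores), on invented five-point ratings; the data and the function name `cronbach_alpha` are assumptions made for illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha from a list of rows (one row of item scores per participant)."""
    k = len(responses[0])                      # number of items
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 participants")
    # Sample variance of each item across participants.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of participants' total scores.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all total scores are equal")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings: 5 participants x 4 items on a 1-5 scale (invented data).
ratings = [
    [4, 5, 4, 5],
    [3, 3, 4, 3],
    [2, 2, 1, 2],
    [5, 4, 5, 5],
    [1, 2, 2, 1],
]

alpha = cronbach_alpha(ratings)
print(f"Cronbach's alpha: {alpha:.2f}")
print("meets the 0.7 guideline" if alpha >= 0.7 else "below the 0.7 guideline")
```

Because the formula only needs variances, it works for items with any number of response options, which is why it can be applied where the Kuder-Richardson coefficient cannot.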