- Open access

- Published: 10 April 2024

A hybrid particle swarm optimization algorithm for solving engineering problem

Jinwei Qiao (1, 2), Guangyuan Wang (1, 2), Zhi Yang (1, 2), Xiaochuan Luo (3), Jun Chen (1, 2), Kan Li (4) & Pengbo Liu (1, 2)

Scientific Reports volume 14, Article number: 8357 (2024)


- Computational science

- Mechanical engineering

To overcome the disadvantages of premature convergence and easy trapping in local optima, this paper proposes an improved particle swarm optimization algorithm (named NDWPSO) based on multiple hybrid strategies. First, the elite opposition-based learning method is used to initialize the particle position matrix. Second, dynamic inertia weight parameters are introduced to improve the global search speed in the early iterative phase. Third, a new local-optimum jump-out strategy is proposed to overcome the "premature" problem. Finally, the algorithm applies the spiral shrinkage search strategy from the whale optimization algorithm (WOA) and the Differential Evolution (DE) mutation strategy in later iterations to accelerate convergence. NDWPSO is further compared with 8 other well-known nature-inspired algorithms (3 PSO variants and 5 other intelligent algorithms) on 23 benchmark test functions and three practical engineering problems. Simulation results show that NDWPSO obtains better results than the other 3 PSO variants on all 49 sets of data. Compared with the 5 other intelligent algorithms, NDWPSO obtains 69.2%, 84.6%, and 84.6% of the best results on benchmark functions \(f_{1}\)–\(f_{13}\) in three dimensional settings (Dim = 30, 50, 100), and 80% of the best optimal solutions on the 10 fixed-dimensional multimodal benchmark functions. NDWPSO also obtains the best design solutions for all 3 classical practical engineering problems.


Introduction

In an ever-changing society, new optimization problems arise constantly in many fields, such as automation control 1, statistical physics 2, security prevention and temperature prediction 3, artificial intelligence 4, and telecommunication technology 5. Faced with a constant stream of practical engineering optimization problems, traditional solution methods gradually lose their efficiency and convenience, and solving these problems becomes increasingly expensive. Researchers have therefore developed many metaheuristic algorithms and successfully applied them to optimization problems. Among them, the particle swarm optimization (PSO) algorithm 6 is one of the most widely used swarm intelligence algorithms.

The basic PSO has a simple operating principle and solves problems efficiently with good computational performance, but it is easily trapped in local optima and suffers from premature convergence. To improve the overall performance of the particle swarm algorithm, this paper proposes an improved particle swarm optimization algorithm based on multiple hybrid strategies. Because the improved PSO incorporates the search ideas of other intelligent algorithms (DE, WOA), it is named NDWPSO. The main improvements fall into four parts. First, an elite opposition-based learning strategy is introduced into the initialization of particle positions; a high-quality initial position matrix can improve the convergence speed of the algorithm. Second, a dynamic weighting methodology is adopted that combines an iterative chaotic map for the inertia weight with linearly time-varying acceleration coefficients. It exploits the chaotic nature of the mapping function, the fast convergence of the dynamic weighting scheme, and the time-varying property of the acceleration coefficients, thereby balancing global and local search and speeding up the global search of the population. Third, a detection mechanism is set up to determine whether the algorithm has fallen into a local optimum. When the algorithm is judged "premature", the positions of 40% of the particles are reset to escape the local optimum. Finally, the spiral shrinking mechanism combined with the DE/best/2 position mutation is used in later iterations to further improve solution accuracy.

The remainder of the paper is structured as follows: Sect. “Literature review” reviews metaheuristic algorithms and improved PSO algorithms. Section “Particle swarm optimization (PSO)” describes the principle of the particle swarm algorithm. Section “Improved particle swarm optimization algorithm” details the improvement strategies and presents a comparison experiment on the inertia weight of the proposed NDWPSO. Section “Experiment and discussion” presents the experiments and discusses the performance of the improved algorithm. Section “Conclusions and future works” summarizes the main findings of this study.

Literature review

This section reviews several metaheuristic algorithms and improved PSO algorithms and briefly discusses recently proposed studies.

Metaheuristic algorithms

In recent years, a series of metaheuristic algorithms have been proposed using various innovative approaches. For instance, Lin et al. 7 proposed a novel artificial bee colony algorithm (ABCLGII) in 2018 and compared it with other outstanding ABC variants on 52 frequently used test functions. Abed-alguni et al. 8 proposed an exploratory cuckoo search (ECS) algorithm in 2021 and evaluated its performance in several experiments on 14 benchmark functions. Brajević 9 presented a novel shuffle-based artificial bee colony (SB-ABC) algorithm for solving integer programming and minimax problems in 2021, testing it on 7 integer programming problems and 10 minimax problems. In 2022, Khan et al. 10 proposed a non-deterministic metaheuristic called Non-linear Activated Beetle Antennae Search (NABAS) for a non-convex tax-aware portfolio selection problem. Brajević et al. 11 proposed a hybridization of the sine cosine algorithm (HSCA) in 2022 to solve 15 complex structural and mechanical engineering design optimization problems. Abed-alguni et al. 12 proposed an improved Salp Swarm Algorithm (ISSA) in 2022 for single-objective continuous optimization problems and evaluated it on a set of 14 standard benchmark functions. In 2023, Nadimi et al. 13 proposed a binary starling murmuration optimization (BSMO) to select effective features for several important diseases. In the same year, Nadimi et al. 14 systematically reviewed the developments of WOA over the previous five years and critically analyzed its variants. In 2024, Fatahi et al. 15 proposed an Improved Binary Quantum-based Avian Navigation Optimizer Algorithm (IBQANA) for the feature subset selection problem in the medical domain; experiments on 12 medical datasets show that IBQANA outperforms 7 established algorithms. Also in 2024, Abed-alguni et al. 16 proposed an Improved Binary DJaya Algorithm (IBJA) for the feature selection problem and compared its performance against 4 ML classifiers and 10 efficient optimization algorithms.

Improved PSO algorithms

Many researchers have proposed improved PSO algorithms to solve engineering problems in different fields. For instance, Yeh 17 proposed an improved particle swarm algorithm that combines a new self-boundary search with a bivariate update mechanism to solve the reliability redundancy allocation problem (RRAP). Solomon et al. 18 designed a highly parallel collaborative multi-swarm particle swarm algorithm to test the adaptability of Graphics Processing Units (GPUs) in distributed computing environments. Mukhopadhyay and Banerjee 19 proposed a chaotic multi-swarm particle swarm optimization (CMS-PSO) to estimate the unknown parameters of an autonomous chaotic laser system. Duan et al. 20 designed an improved particle swarm algorithm with nonlinear adjustment of the inertia weight to improve the coupling accuracy between laser diodes and single-mode fibers. Sun et al. 21 proposed a particle swarm optimization algorithm combined with a non-Gaussian stochastic distribution for the optimal design of wind turbine blades. Based on a multiple-swarm scheme, Liu et al. 22 proposed an improved particle swarm optimization algorithm to predict the temperatures of steel billets in a reheating furnace. In 2022, Gad 23 analyzed 2140 papers on swarm intelligence published between 2017 and 2019 and pointed out that the PSO algorithm still needs further research. In general, the improvement methods can be classified into four categories:

Adjusting the distribution of algorithm parameters. Feng et al. 24 used a nonlinear adaptive method on inertia weights to balance local and global search and introduced asynchronously varying acceleration coefficients.

Changing the update formula of the particle positions. Papers 25 and 26 both used chaotic mapping functions to update the inertia weight parameter and combined them with a dynamic weighting strategy to update the particle positions. This approach gives the particle swarm algorithm fast convergence.

Initializing the swarm. Alsaidy and Abbood 27 proposed hybrid task scheduling algorithms (MCT-PSO and LJFP-PSO) that replace the random initialization of the metaheuristic with the MCT and LJFP heuristic algorithms.

Combining with other intelligent algorithms. Liu et al. 28 introduced the differential evolution (DE) algorithm into PSO to increase the diversity of the particle swarm and reduce the probability of the population falling into a local optimum.

Particle swarm optimization (PSO)

The particle swarm optimization algorithm is a swarm intelligence algorithm for solving continuous and discrete optimization problems. It originated from the social behavior of individuals in bird flocks and fish schools 6. The core idea of PSO is that each particle searches for potential solutions by flying through a defined constraint space, adjusts its exploration direction toward the global optimal solution based on information shared within the swarm, and thereby solves the optimization problem. Each particle \(i\) has two attributes: a velocity vector \(V_{i}=[v_{i1},v_{i2},v_{i3},\dots,v_{ij},\dots,v_{iD}]\) and a position vector \(X_{i}=[x_{i1},x_{i2},x_{i3},\dots,x_{ij},\dots,x_{iD}]\). The velocity vector modifies the motion path of the particle; the position vector represents a potential solution to the optimization problem. Here, \(j=1,2,\dots,D\), where \(D\) is the dimension of the constraint space. The equations for updating the velocity and position of the particles are given in Eqs. (1) and (2).
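Assuming the standard global-best formulation, which uses exactly the symbols defined in the next paragraph, Eqs. (1) and (2) read:

\[
v_{ij}^{k+1} = \omega\, v_{ij}^{k} + c_{1} r_{1}\left(Pbest_{ij}^{k} - x_{ij}^{k}\right) + c_{2} r_{2}\left(Gbest_{j} - x_{ij}^{k}\right) \qquad (1)
\]
\[
x_{ij}^{k+1} = x_{ij}^{k} + v_{ij}^{k+1} \qquad (2)
\]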

Here \(Pbest_{i}^{k}\) is the best position found so far by particle \(i\), and \(Gbest\) is the best position discovered by the whole population; \(i=1,2,\dots,n\), where \(n\) is the size of the particle swarm. \(c_{1}\) and \(c_{2}\) are the acceleration constants, which adjust the search step of the particle 29. \(r_{1}\) and \(r_{2}\) are two random numbers uniformly distributed in \([0,1]\), which increase the randomness of the particle search. \(\omega\) is the inertia weight parameter, which adjusts the scale of the search range of the particle swarm 30. The basic PSO treats the inertia weight as a time-varying parameter to balance global exploration and local exploitation. The update equation of the inertia weight parameter is given as follows:
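Assuming the usual linearly decreasing schedule of the basic PSO, which is consistent with the symbols \(\omega_{max}\), \(\omega_{min}\), \(k\) and \(Mk\) defined next, the inertia weight at iteration \(k\) is:

\[
\omega_{k} = \omega_{max} - \left(\omega_{max} - \omega_{min}\right)\frac{k}{Mk}
\]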

where \(\omega_{max}\) and \(\omega_{min}\) are the upper and lower limits of the inertia weight, and \(k\) and \(Mk\) are the current and maximum iteration numbers, respectively.
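The basic PSO described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' MATLAB code: it assumes the standard global-best update, a linearly decreasing inertia weight, velocity clamping at 20% of the search range, and illustrative values \(c_1=c_2=1.5\); the names `basic_pso` and `sphere` are hypothetical.

```python
import random


def basic_pso(objective, dim, n=30, max_iter=200, lb=-100.0, ub=100.0,
              w_max=0.9, w_min=0.4, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO with a linearly decreasing inertia weight (sketch)."""
    rng = random.Random(seed)
    v_max = 0.2 * (ub - lb)  # velocity clamp: an assumed, common heuristic
    x = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    v = [[0.0] * dim for _ in range(n)]
    pbest = [xi[:] for xi in x]
    pbest_f = [objective(xi) for xi in x]
    g = min(range(n), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for k in range(max_iter):
        w = w_max - (w_max - w_min) * k / max_iter  # linear inertia weight
        for i in range(n):
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][j] = (w * v[i][j]
                           + c1 * r1 * (pbest[i][j] - x[i][j])
                           + c2 * r2 * (gbest[j] - x[i][j]))   # Eq. (1)
                v[i][j] = max(-v_max, min(v_max, v[i][j]))
                x[i][j] = max(lb, min(ub, x[i][j] + v[i][j]))  # Eq. (2), clipped to bounds
            f = objective(x[i])
            if f < pbest_f[i]:        # update the personal best
                pbest[i], pbest_f[i] = x[i][:], f
                if f < gbest_f:       # update the global best
                    gbest, gbest_f = x[i][:], f
    return gbest, gbest_f


def sphere(z):
    return sum(t * t for t in z)


best_x, best_f = basic_pso(sphere, dim=5)
```

The global best is refreshed as soon as any particle improves on it (an asynchronous variant); a synchronous version would update it once per iteration instead.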

Improved particle swarm optimization algorithm

According to the no free lunch theorem 31, no algorithm can solve every practical problem with high quality and efficiency, particularly as optimization problems become increasingly complex and diverse. In this section, several improvement strategies are proposed to raise the search efficiency of the basic PSO algorithm and overcome its shortcomings.

Improvement strategies

The optimization strategies of the improved PSO algorithm are shown as follows:

The inertia weight parameter is updated by an improved chaotic-variable method instead of a linearly decreasing strategy. Chaotic mapping performs the whole search at a higher speed and is more resistant to falling into local optima than probability-dependent random search 32. However, with chaotic inertia weights, particles can easily fly out of the region around the global optimum. To ensure that the population can converge to the global optimum, an improved iterative mapping is adopted, as follows:

Here \(\omega_{k}\) is the inertia weight parameter at iteration \(k\), and \(b\) is a control parameter in the range \([0,1]\).
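To illustrate how a chaotic map can drive the inertia weight, the sketch below uses the classic iterative chaotic map \(z_{k+1}=\sin(b\pi/z_k)\). It is not the paper's improved map, whose time-varying part is not reproduced here; the initial value \(z_0=0.3\), the illustrative \(b=0.7\), and rescaling \(|z_k|\) into \([\omega_{min},\omega_{max}]\) are all assumptions, and `iterative_map_weights` is a hypothetical name.

```python
import math


def iterative_map_weights(n_iter, z0=0.3, b=0.7, w_min=0.4, w_max=0.9):
    """Inertia weights from the classic iterative chaotic map z_{k+1} = sin(b*pi / z_k).

    The paper's improved map is NOT reproduced; rescaling |z_k| into
    [w_min, w_max] is an assumption of this sketch.
    """
    z = z0  # must be non-zero
    weights = []
    for _ in range(n_iter):
        z = math.sin(b * math.pi / z)
        if abs(z) < 1e-12:  # avoid dividing by (almost) zero on the next step
            z = 1e-3
        weights.append(w_min + (w_max - w_min) * abs(z))
    return weights


ws = iterative_map_weights(500)
```

Because \(|\sin(\cdot)|\le 1\), every weight stays inside \([\omega_{min},\omega_{max}]\) while still fluctuating chaotically from one iteration to the next.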

The acceleration coefficients are updated by linear transformation. \(c_{1}\) and \(c_{2}\) represent how strongly a particle is influenced by its own information and by the population's information, respectively. To improve the search performance of the population, \(c_{1}\) and \(c_{2}\) are changed from fixed values to time-varying parameters that are updated linearly with the number of iterations:

where \({c}_{max}\) and \({c}_{min}\) are the maximum and minimum values of acceleration coefficients, respectively.
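A linear schedule of this kind can be sketched as follows. The direction of change is an assumption borrowed from the common time-varying acceleration coefficient scheme: \(c_1\) (self-cognition) falls from \(c_{max}\) to \(c_{min}\) while \(c_2\) (social influence) rises from \(c_{min}\) to \(c_{max}\). The defaults match the \([c_{max}, c_{min}] = [2.5, 1.5]\) setting used in the experiments; `acceleration_coefficients` is a hypothetical name.

```python
def acceleration_coefficients(k, max_iter, c_max=2.5, c_min=1.5):
    """Time-varying acceleration coefficients (assumed direction of change):
    c1 falls linearly from c_max to c_min, c2 rises from c_min to c_max."""
    frac = k / max_iter
    c1 = c_max - (c_max - c_min) * frac
    c2 = c_min + (c_max - c_min) * frac
    return c1, c2


early = acceleration_coefficients(0, 200)    # strong self-cognition at the start
late = acceleration_coefficients(200, 200)   # strong social pull at the end
```

Emphasizing \(c_1\) early keeps particles exploring around their own memories, while emphasizing \(c_2\) late pulls the swarm toward \(Gbest\) for refinement.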

The initialization scheme is based on elite opposition-based learning. A high-quality initial population accelerates the algorithm and improves the accuracy of the optimal solution. Thus, the elite opposition-based learning strategy 33 is introduced to generate the position matrix of the initial population. Suppose an elite individual of the population is \(X=[x_{1},x_{2},x_{3},\dots,x_{j},\dots,x_{D}]\), and the elite opposition-based solution of \(X\) is \(X_{o}=[x_{o1},x_{o2},x_{o3},\dots,x_{oj},\dots,x_{oD}]\). The elite opposition-based solution is computed as follows:

where \(k_{r}\) is a random value in \((0,1)\), and \(ux_{oij}\) and \(lx_{oij}\) are the dynamic boundaries of the elite opposition-based solution in the \(j\)-th dimension. Dynamic boundaries reduce the exploration space of the particles, which benefits the convergence of the algorithm. When an elite opposition-based solution falls out of bounds, it is handled as follows:

After the fitness values of the elite solutions and the elite opposition-based solutions are calculated, the \(n\) best solutions are selected to form the new initial population position matrix.
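The initialization steps above can be sketched as follows, assuming the common elite opposition form \(x_{o}=k_r(lx_j+ux_j)-x_j\) with per-dimension dynamic bounds taken as the min/max over the elite set. For simplicity the whole random population stands in for the elite set, \(k_r\) is drawn once per individual, and out-of-bounds opposites are resampled uniformly inside the dynamic bounds; these choices and the name `elite_obl_init` are assumptions, not the paper's exact procedure.

```python
import random


def elite_obl_init(objective, n, dim, lb, ub, seed=0):
    """Elite opposition-based initialization (sketch).

    Assumptions: the random population plays the role of the elite set, k_r is
    drawn once per individual, and out-of-bounds opposites are resampled
    uniformly inside the dynamic bounds.
    """
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    # Dynamic bounds: per-dimension min/max over the elite set
    lx = [min(p[j] for p in pop) for j in range(dim)]
    ux = [max(p[j] for p in pop) for j in range(dim)]
    opposites = []
    for p in pop:
        k_r = rng.random()
        q = []
        for j in range(dim):
            xo = k_r * (lx[j] + ux[j]) - p[j]   # elite opposite point
            if xo < lx[j] or xo > ux[j]:        # out-of-bounds processing
                xo = rng.uniform(lx[j], ux[j])
            q.append(xo)
        opposites.append(q)
    # Keep the n fittest of the 2n candidates as the initial population
    return sorted(pop + opposites, key=objective)[:n]


def sphere(z):
    return sum(t * t for t in z)


init = elite_obl_init(sphere, n=40, dim=30, lb=-100.0, ub=100.0)
```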

The position update Eq. (2) is modified using a dynamic weight strategy. To speed up the global search of the population, the dynamic weight strategy from the artificial bee colony algorithm 34 is introduced. The new position update equation is as follows:

Here \(\rho\) is a random value in \((0,1)\), \(\psi\) is the acceleration coefficient, and \(\omega'\) is the dynamic weight coefficient. Their update equations are as follows:

where \(f(i)\) is the fitness value of particle \(i\) and \(u\) is the average fitness of the population in the current iteration. Eqs. (11) and (12) are substituted into the position update equation; together they attract the particle toward the best-so-far solution in the search space.
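For illustration only, the sketch below assumes the dynamic-weight form used in chaotic dynamic-weight PSO variants such as CDWPSO 25: \(\psi = e^{f(i)/u}/(1+e^{-f(i)/u})^{k}\), \(\omega' = 1-\psi\), and \(x_i(k+1) = x_i(k)\,\omega' + v_i(k+1)\,\psi + \rho\,\psi\,Gbest\). These formulas are an assumption, not a transcription of this paper's equations. The ratio \(f(i)/u\) is clipped and \(\psi\) is computed in log space purely for numerical safety, and a single \(\rho\) is drawn per particle; the function names are hypothetical.

```python
import math
import random


def dynamic_weights(f_i, u, k):
    """ASSUMED CDWPSO-style weights:
    psi = exp(f_i/u) / (1 + exp(-f_i/u))**k  and  omega' = 1 - psi.
    Computed in log space, with f_i/u clipped to [-50, 50] for numerical safety."""
    ratio = f_i / u if u != 0 else 0.0
    ratio = max(-50.0, min(50.0, ratio))
    log_psi = ratio - k * math.log1p(math.exp(-ratio))
    psi = math.exp(log_psi)
    return psi, 1.0 - psi


def dynamic_weight_position(x_i, v_next, gbest, psi, omega_p, rng=random):
    """x_i(k+1) = x_i(k)*omega' + v_i(k+1)*psi + rho*psi*Gbest, with rho ~ U(0, 1)."""
    rho = rng.random()
    return [xj * omega_p + vj * psi + rho * psi * gj
            for xj, vj, gj in zip(x_i, v_next, gbest)]


psi, omega_p = dynamic_weights(f_i=2.0, u=4.0, k=10)
new_x = dynamic_weight_position([1.0, 2.0], [0.5, -0.5], [0.0, 0.0], psi, omega_p)
```

Under this assumed form, \(\psi\) shrinks as \(k\) grows, so the position update leans increasingly on the particle's current position rather than on its velocity.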

A new local-optimum jump-out strategy is added to escape from local optima. When the fitness value of the population's best particle does not change for \(M\) iterations, the algorithm judges that the population has fallen into a local optimum. To jump out of it, the positions of 40% of the individuals in the population are reset, that is, their position vectors are regenerated randomly in the search space. \(M\) is set to 5% of the maximum number of iterations.
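The detection-and-reset rule above can be sketched as a small stateful helper. It counts consecutive iterations in which the global-best fitness is unchanged and, once the count reaches \(M = 5\%\) of the maximum iterations, re-samples 40% of the particles uniformly in \([lb, ub]\). Which particles are reset is not specified in the text, so a random subset is assumed; the class name `StagnationReset` is hypothetical.

```python
import random


class StagnationReset:
    """Local-optimum jump-out (sketch): if the global-best fitness is unchanged for
    M consecutive iterations, re-sample 40% of the particles uniformly in [lb, ub]."""

    def __init__(self, max_iter, lb, ub, ratio=0.4, m_frac=0.05, seed=0):
        self.m = max(1, round(m_frac * max_iter))  # M = 5% of the maximum iterations
        self.lb, self.ub, self.ratio = lb, ub, ratio
        self.rng = random.Random(seed)
        self.last_best = None
        self.stall = 0

    def step(self, positions, gbest_f):
        """Call once per iteration; resets particles in place and returns True on stagnation."""
        if self.last_best is not None and gbest_f == self.last_best:
            self.stall += 1
        else:
            self.stall = 0
        self.last_best = gbest_f
        if self.stall < self.m:
            return False
        n = len(positions)
        # Which particles are reset is not specified; a random subset is assumed here
        for i in self.rng.sample(range(n), int(self.ratio * n)):
            positions[i] = [self.rng.uniform(self.lb, self.ub) for _ in positions[i]]
        self.stall = 0
        return True
```

In a main loop, `step` would be called after the global best is updated in each iteration.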

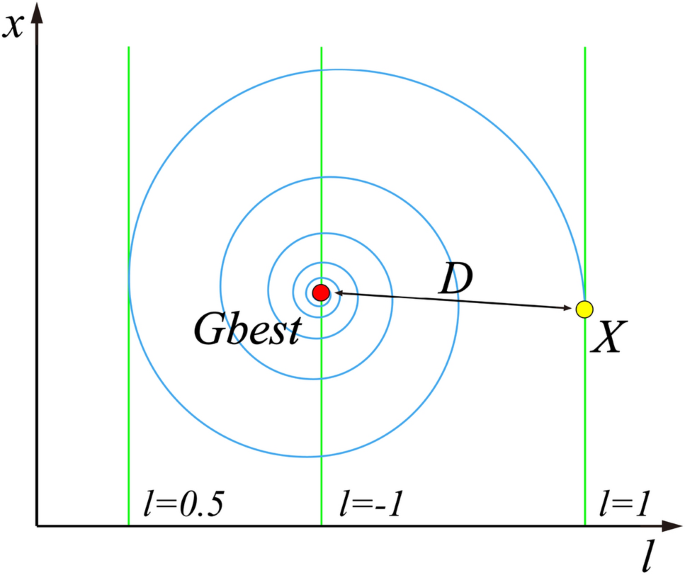

A new spiral update search strategy is added after the local-optimum jump-out strategy. Because the whale optimization algorithm (WOA) is good at searching the local region 35, the spiral update strategy of WOA 36 is introduced to update the particle positions after the swarm jumps out of a local optimum. The spiral update equation is as follows:

Here \(D=\left|x_{i}(k)-Gbest\right|\) denotes the distance between the particle and the best solution found so far, \(B\) is a constant that defines the shape of the logarithmic spiral, and \(l\) is a random value in \([-1,1]\). \(l\) determines how close the newly generated particle is to the global optimal position: \(l=-1\) gives the closest distance and \(l=1\) the farthest, as illustrated in Fig. 1.

Spiral updating position.
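The spiral move can be sketched with the standard WOA form \(x_i(k+1) = D\,e^{Bl}\cos(2\pi l) + Gbest\), applied element-wise with one \(l\) per particle. The optional `l` argument is a hypothetical convenience that makes the move reproducible; by default \(l\) is drawn uniformly from \([-1, 1]\).

```python
import math
import random


def spiral_update(x_i, gbest, B=1.0, l=None, rng=random):
    """WOA logarithmic-spiral move around Gbest:
    x(k+1) = D * exp(B*l) * cos(2*pi*l) + Gbest, with D = |x_i(k) - Gbest|."""
    if l is None:
        l = rng.uniform(-1.0, 1.0)
    factor = math.exp(B * l) * math.cos(2 * math.pi * l)
    return [abs(xj - gj) * factor + gj for xj, gj in zip(x_i, gbest)]


moved = spiral_update([1.0, -2.0], [0.0, 0.0], l=0.0)
```

With \(l=0\) the spiral factor is 1, so the particle lands at distance \(D\) on the positive side of \(Gbest\) in each dimension (here `[1.0, 2.0]`). Other values of \(l\) both scale and can flip that offset.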

The DE/best/2 mutation strategy is introduced to form mutant particles. Four individuals different from the current particle are randomly selected from the population; the two difference vectors between them are scaled and added to the global optimal position to form the mutant particle. The mutation equation for the particle position is as follows:

where \(x^{*}\) is the mutated particle, \(F\) is the mutation scale factor, and \(r_{1}\), \(r_{2}\), \(r_{3}\), \(r_{4}\) are random integers in \((0,n]\), each different from \(i\). Particles are selected for mutation according to the following screening condition:

where \(Cr\) is the mutation probability, \(rand(0,1)\) is a random number in \((0,1)\), and \(i_{rand}\) is a random integer in \((0,n]\).
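A minimal sketch of the mutation and screening rule follows. It assumes the standard DE/best/2 form \(x^{*} = Gbest + F(x_{r1}-x_{r2}) + F(x_{r3}-x_{r4})\), with \(r_1,\dots,r_4\) mutually distinct and different from \(i\). A particle is mutated when \(rand(0,1)\le Cr\) or \(i=i_{rand}\). Indices are 0-based here rather than the text's \((0,n]\), at least 5 particles are required, and whether a mutant replaces its parent unconditionally is not specified, so this sketch simply returns the candidates. The name `de_best_2` is hypothetical.

```python
import random


def de_best_2(positions, gbest, F=0.7, Cr=0.9, rng=random):
    """DE/best/2 mutation: x* = Gbest + F*(x_r1 - x_r2) + F*(x_r3 - x_r4).
    Particle i is mutated if rand(0,1) <= Cr or i == i_rand (requires n >= 5)."""
    n = len(positions)
    i_rand = rng.randrange(n)  # guarantees at least one mutated particle
    mutants = []
    for i, x in enumerate(positions):
        if rng.random() <= Cr or i == i_rand:
            # four distinct partners, none equal to the current particle
            r1, r2, r3, r4 = rng.sample([j for j in range(n) if j != i], 4)
            x = [g + F * (a - b) + F * (c - d)
                 for g, a, b, c, d in zip(gbest, positions[r1], positions[r2],
                                          positions[r3], positions[r4])]
        mutants.append(list(x))
    return mutants
```

A caller could keep each mutant only if it improves the particle's fitness (greedy selection), which is a common DE choice but an assumption with respect to this text.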

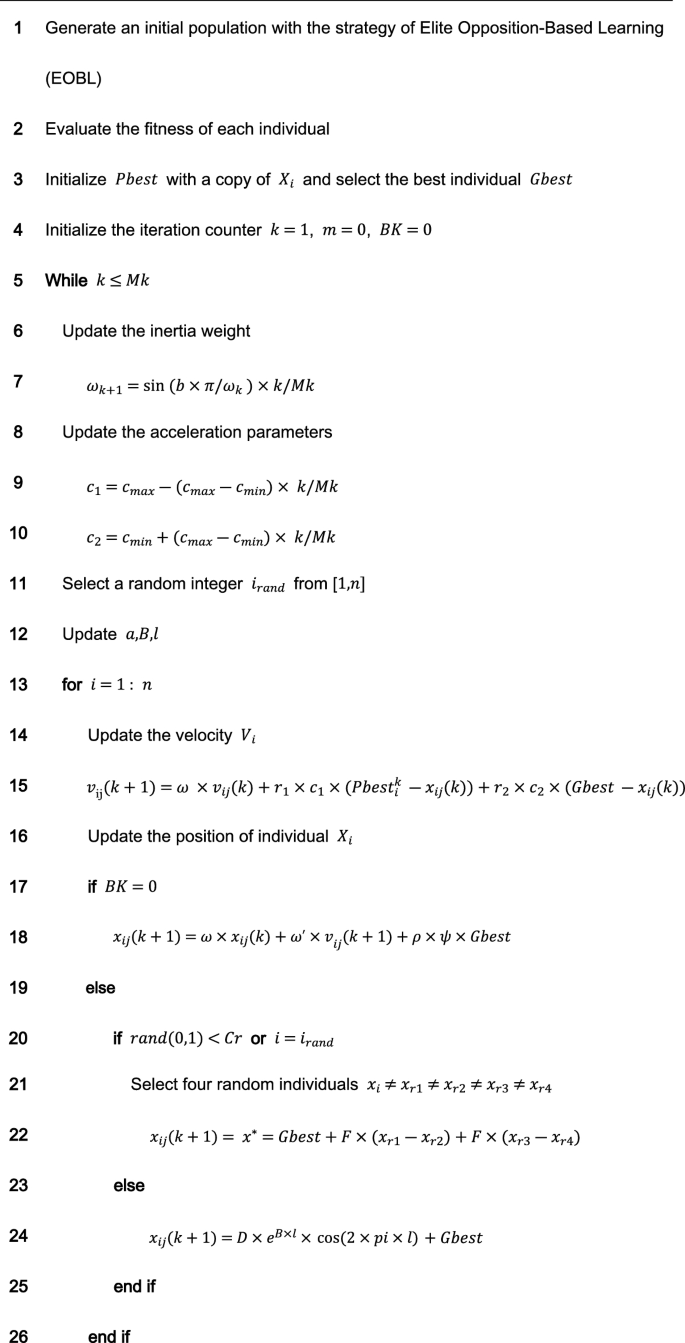

The pseudo-code of the NDWPSO algorithm is given as follows:

The main procedure of NDWPSO.

Comparing the distribution of inertia weight parameters

Several improved PSO algorithms (such as CDWPSO 25 and SDWPSO 26) adopt the dynamic-weight particle position update strategy. The inertia weight update equations of CDWPSO and SDWPSO are as follows:

where \(A\) is a value in \((0,1]\), \(r_{max}\) and \(r_{min}\) are the upper and lower limits of the fluctuation range of the inertia weight, \(k\) is the current iteration number, and \(Mk\) is the maximum number of iterations.

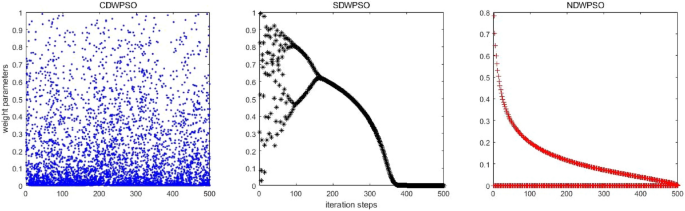

Because NDWPSO updates the inertia weight in a way comparable to CDWPSO and SDWPSO, this section compares the distributions of their inertia weights. The maximum number of iterations in the experiment is \(Mk=500\). The inertia weight distributions of CDWPSO, SDWPSO, and NDWPSO are shown in turn in Fig. 2.

The inertial weight distribution of CDWPSO, SDWPSO, and NDWPSO.

In Fig. 2, the inertia weight of CDWPSO is a random value in (0,1], which may cause individual particles to fly out of range in the late iterations of the algorithm. The inertia weight of SDWPSO, in turn, tends towards zero, so that the swarm can no longer move through the search space and the algorithm becomes extremely prone to falling into a local optimum. By contrast, the inertia weights of NDWPSO are distributed along two curves that form a gentle slope. As a result, the swarm can lock onto the region of the global optimum faster in the early iterations and locate the global optimum more precisely in the late iterations. This is because the inertia weight values of two adjacent iterations are inversely proportional to each other. In addition, the time-varying part of the NDWPSO inertia weight is designed to reduce the chaotic character of the parameter. The NDWPSO inertia weight thus avoids the disadvantages of the other two schemes, which makes its design more reasonable.

Experiment and discussion

In this section, three experiments are set up to evaluate the performance of NDWPSO: (1) a comparison on 23 classical benchmark functions 37 between NDWPSO and three particle swarm algorithms (PSO 6, CDWPSO 25, SDWPSO 26); (2) a comparison on the benchmark functions between NDWPSO and other intelligent algorithms (Whale Optimization Algorithm (WOA) 36, Harris Hawks Optimization (HHO) 38, Grey Wolf Optimizer (GWO) 39, Archimedes Optimization Algorithm (AOA) 40, Equilibrium Optimizer (EO) 41, and Differential Evolution (DE) 42); (3) the solution of three real engineering problems (welded beam design 43, pressure vessel design 44, and three-bar truss design 38). All experiments are run on a computer with an Intel i5-11400F CPU (2.60 GHz) and 16 GB RAM, and the code is written in MATLAB R2017b.

The benchmark suite consists of 23 classical functions: variable-dimensional unimodal functions (F1–F7), variable-dimensional multimodal functions (F8–F13), and fixed-dimensional multimodal functions (F14–F23). The unimodal functions are used to evaluate the global search performance of the algorithms, while the multimodal functions reflect an algorithm's ability to escape from local optima. The mathematical definitions of the benchmark functions are given in Supplementary Tables S1–S3 online.
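For readers reproducing the experiments, a few members of the suite are sketched below using their widely published standard definitions. The mapping F1 = Sphere, F9 = Rastrigin, F10 = Ackley, F11 = Griewank assumes the usual numbering of the classical 23-function set; the exact forms used in this study are those in Supplementary Tables S1–S3. Each has its global minimum of 0 at the origin.

```python
import math


def sphere(x):        # F1: unimodal
    return sum(t * t for t in x)


def rastrigin(x):     # F9: multimodal, many regularly spaced local minima
    return sum(t * t - 10 * math.cos(2 * math.pi * t) + 10 for t in x)


def ackley(x):        # F10: multimodal, nearly flat outer region
    d = len(x)
    s1 = sum(t * t for t in x) / d
    s2 = sum(math.cos(2 * math.pi * t) for t in x) / d
    return -20 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20 + math.e


def griewank(x):      # F11: multimodal, product term couples the dimensions
    s = sum(t * t for t in x) / 4000
    p = math.prod(math.cos(t / math.sqrt(i)) for i, t in enumerate(x, start=1))
    return s - p + 1


origin = [0.0] * 30
```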

Experiments on benchmark functions between NDWPSO and other PSO variants

The purpose of this experiment is to demonstrate the performance advantages of the NDWPSO algorithm. The dimensions and corresponding population sizes of the 13 variable-dimensional benchmark functions (7 unimodal and 6 multimodal) are set to (30, 40), (50, 70), and (100, 130), and the population size for the 10 fixed-dimensional multimodal functions is set to 40. Each algorithm is run 30 times independently with a maximum of 200 iterations. Performance is measured by the mean and the standard deviation (SD) of the results on each benchmark function. The parameters of NDWPSO are set as \([\omega_{min},\omega_{max}]=[0.4,0.9]\), \([c_{max},c_{min}]=[2.5,1.5]\), \(V_{max}=0.1\), \(b=e^{-50}\), \(M=0.05\times Mk\), \(B=1\), \(F=0.7\), \(Cr=0.9\). For CDWPSO, \(A=\omega_{max}\); for SDWPSO, \([r_{max},r_{min}]=[4,0]\).

In addition, the experimental data are reported to two decimal places, although some values are given with more digits where a finer comparison requires it. The best result in each group of experiments is shown in bold. Values below \(10^{-323}\) are set to 0. Because the experimental parameter settings in this paper differ from those in the references (PSO 6, CDWPSO 25, SDWPSO 26), the final experimental data differ from those reported there.
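The settings and reporting rules above can be captured in a small configuration object plus a summary helper, sketched below. The class and function names are hypothetical. Treating "below \(10^{-323}\)" as a bound on magnitude (so negative optima such as those of F8 are unaffected) and using the sample standard deviation are assumptions, since the text does not state either.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class NDWPSOSettings:
    """Parameter values stated for the PSO-variant comparison."""
    w_min: float = 0.4
    w_max: float = 0.9
    c_max: float = 2.5
    c_min: float = 1.5
    v_max: float = 0.1
    b: float = math.exp(-50)
    B: float = 1.0
    F: float = 0.7
    Cr: float = 0.9
    max_iter: int = 200   # Mk
    runs: int = 30

    @property
    def M(self) -> int:
        return round(0.05 * self.max_iter)  # stagnation window M = 0.05 * Mk


DIM_POP = {30: 40, 50: 70, 100: 130}  # (Dim -> population size) for f1-f13; f14-f23 use 40


def summarize(final_values, floor=1e-323):
    """Mean and sample SD over independent runs; magnitudes below `floor` become 0."""
    vals = [0.0 if abs(v) < floor else v for v in final_values]
    return statistics.mean(vals), statistics.stdev(vals)
```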

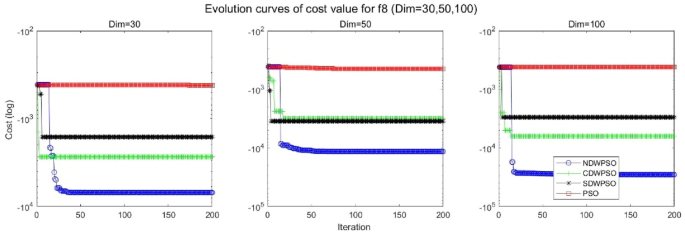

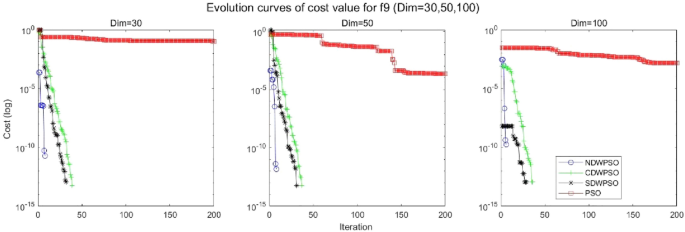

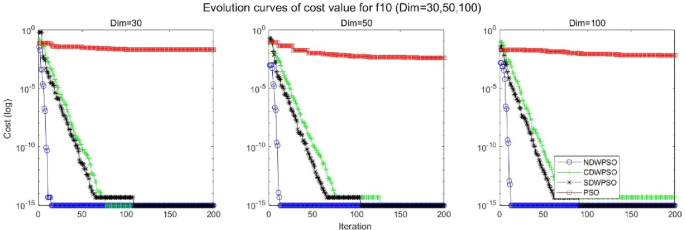

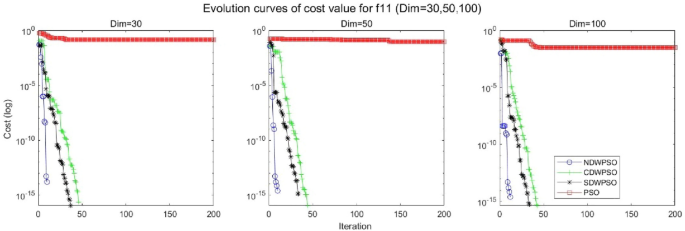

As shown in Tables 1 and 2, NDWPSO obtains better results than the other PSO variants on all 49 sets of data, covering both the 13 variable-dimensional benchmark functions (each in three dimensions) and the 10 fixed-dimensional multimodal benchmark functions. Notably, SDWPSO achieves the same accuracy as NDWPSO on the unimodal functions f1–f4 and the multimodal functions f9–f11. On the fixed-dimensional multimodal functions f14–f23, NDWPSO is more accurate than the other PSO variants. It can be concluded that NDWPSO has excellent global search capability, local search capability, and ability to escape local optima.

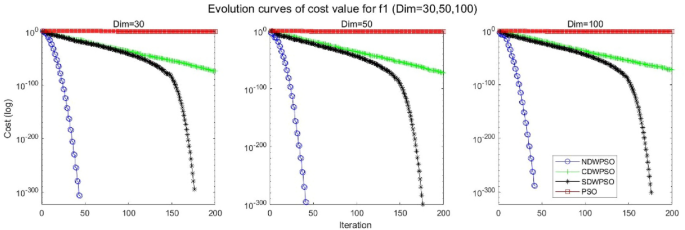

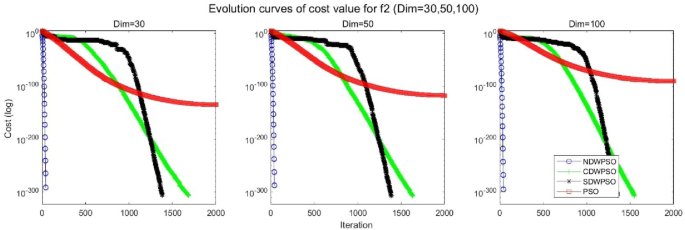

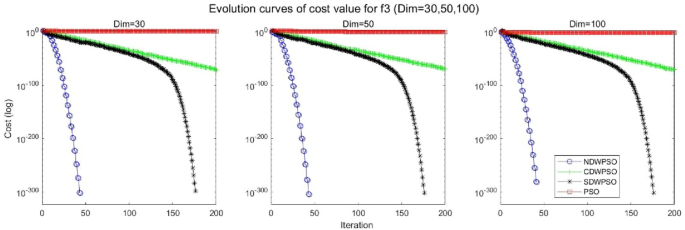

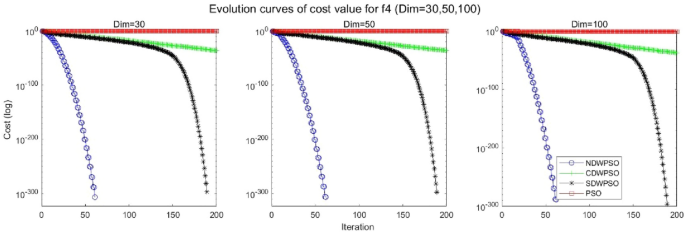

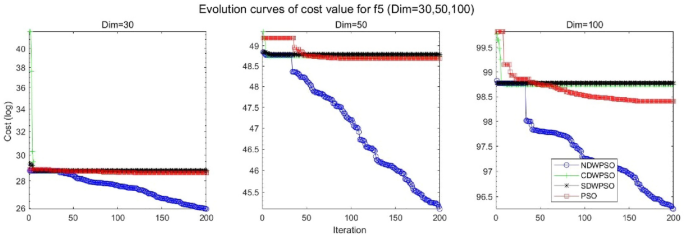

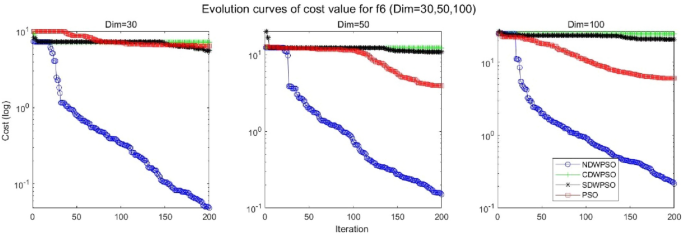

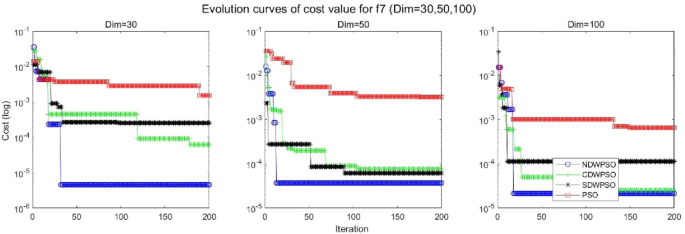

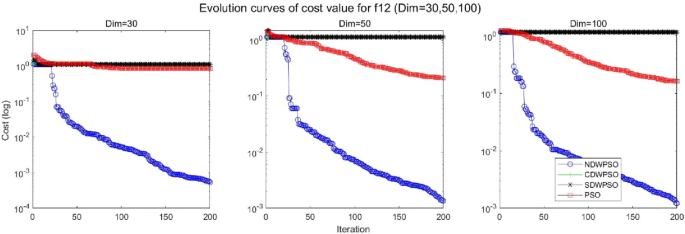

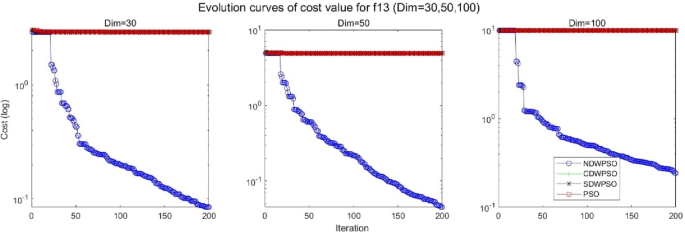

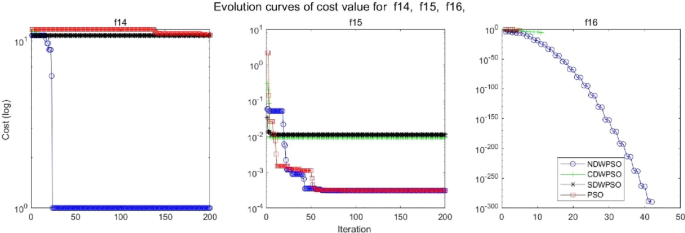

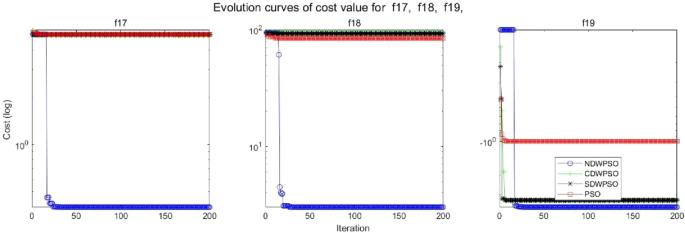

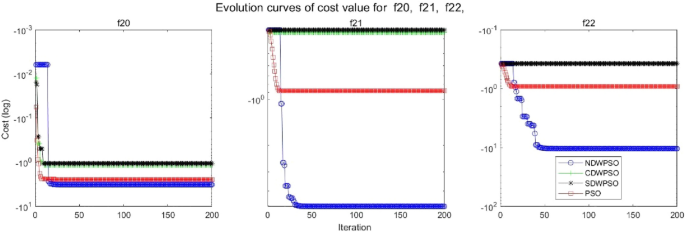

In addition, the convergence curves for the 23 benchmark functions are shown in Figs. 3–19. NDWPSO converges faster in the early stage of the search on functions f1–f6, f8–f14, f16, and f17, and finds the global optimal solution in fewer iterations. On the remaining benchmark functions, NDWPSO shows no outstanding convergence speed in the early iterations. There are two reasons for this. On the one hand, the fixed-dimensional multimodal benchmark functions contain many disturbances and local optima across the whole search space. On the other hand, the initialization scheme based on elite opposition-based learning is still stochastic and can leave the initial positions far from the global optimal solution. The chaotic-map inertia weight and the spiral update strategy can significantly improve the convergence speed and accuracy of the algorithm in the late search stage, so NDWPSO ultimately finds better solutions than the other algorithms in the middle and late stages of the search.

Evolution curve of NDWPSO and other PSO algorithms for f1 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f2 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f3 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f4 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f5 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f6 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f7 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f8 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f9 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f10 (Dim = 30,50,100).

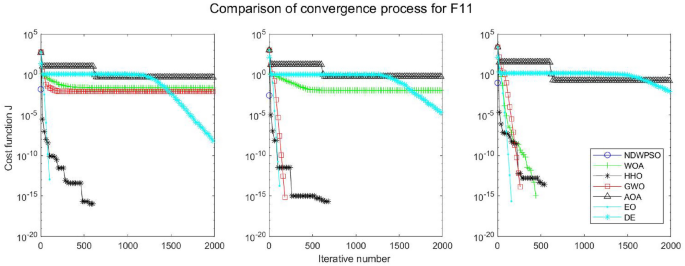

Evolution curve of NDWPSO and other PSO algorithms for f11 (Dim = 30,50,100).

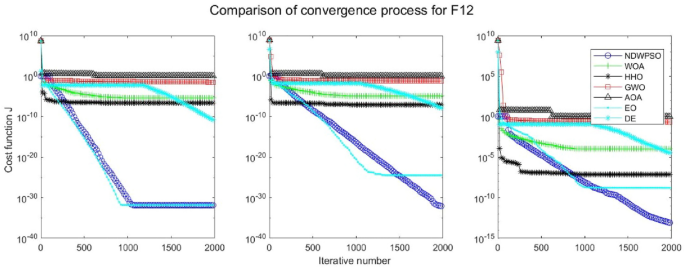

Evolution curve of NDWPSO and other PSO algorithms for f12 (Dim = 30,50,100).

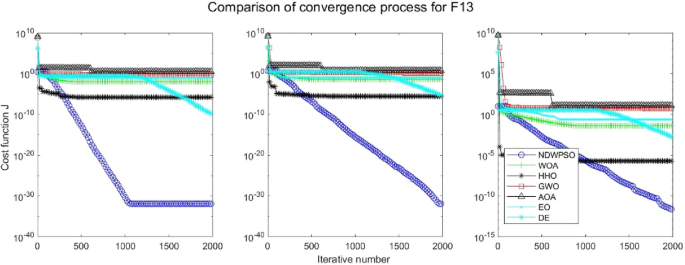

Evolution curve of NDWPSO and other PSO algorithms for f13 (Dim = 30,50,100).

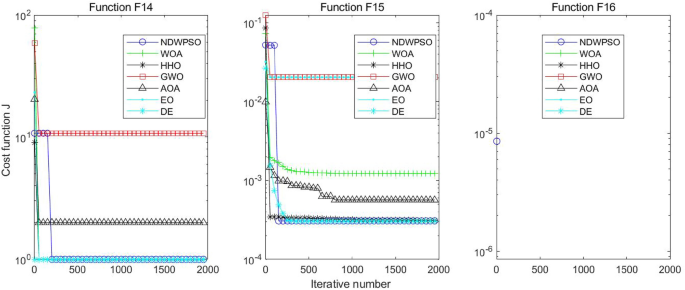

Evolution curve of NDWPSO and other PSO algorithms for f14, f15, f16.

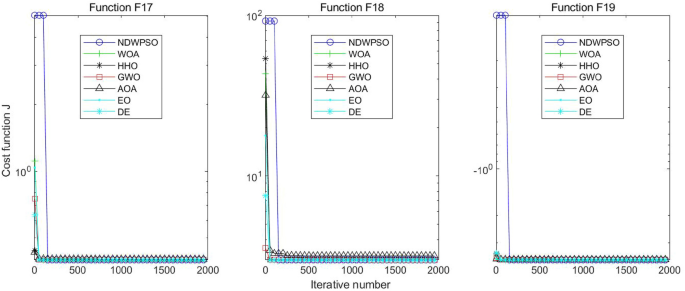

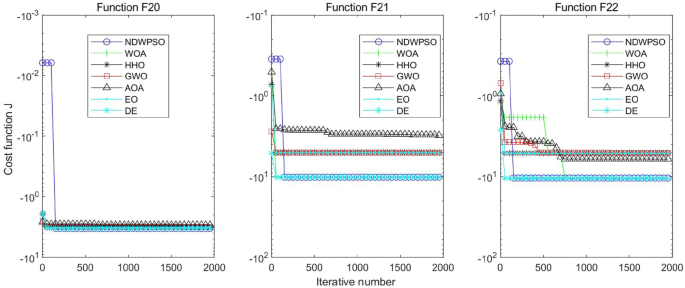

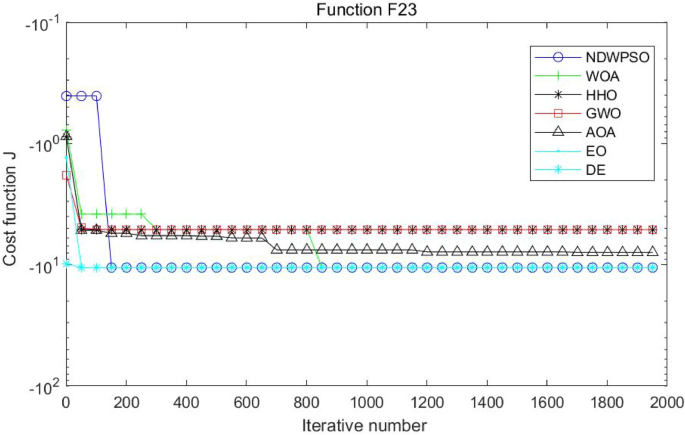

Evolution curve of NDWPSO and other PSO algorithms for f17, f18, f19.

Evolution curve of NDWPSO and other PSO algorithms for f20, f21, f22.

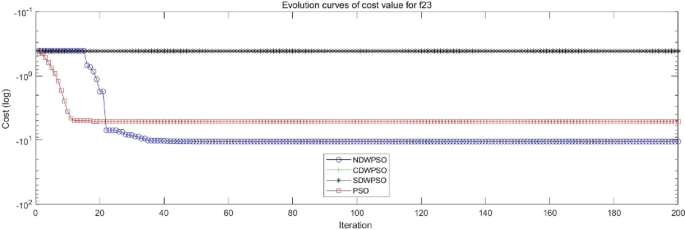

Evolution curve of NDWPSO and other PSO algorithms for f23.

To evaluate the performance of the different PSO algorithms, a statistical test is conducted. Because metaheuristics are stochastic, comparing algorithms only by their mean and standard deviation is not sufficient. The optimization results cannot be assumed to follow a normal distribution, so it is necessary to judge whether the results of the algorithms differ in a statistically significant way. Here, the non-parametric Wilcoxon rank-sum test 45 is used to obtain a p-value that indicates whether two sets of solutions differ to a statistically significant extent. Generally, p ≤ 0.05 is taken to indicate statistically significant superiority of the results. The p-values of Wilcoxon's rank-sum test comparing NDWPSO with the other PSO algorithms are listed in Table 3 for all benchmark functions. These p-values further confirm the superiority of NDWPSO, because all of them are much smaller than 0.05.
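The rank-sum test can be sketched with the standard library alone. The version below uses the large-sample normal approximation without tie or continuity correction, the same approach taken by SciPy's `scipy.stats.ranksums`. It is offered as an illustration of the test, not as the exact tool used to produce Table 3. The name `rank_sum_test` is hypothetical.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test (normal approximation, no tie correction).
    Returns (z, p)."""
    pooled = sorted((v, idx) for idx, v in enumerate(list(a) + list(b)))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1              # average 1-based rank for a run of ties
        for t in range(i, j + 1):
            ranks[pooled[t][1]] = avg
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(ranks[:n1])                    # rank sum of the first sample
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))   # two-sided p-value
    return z, p
```

Applied to, for example, the 30 final fitness values of NDWPSO versus those of another algorithm on one function, a negative \(z\) with \(p \le 0.05\) would indicate that NDWPSO's (minimization) results are significantly lower.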

In general, Figs. 3–19 show that NDWPSO has the fastest convergence rate in finding the global optimum, so we conclude that NDWPSO is superior to the other PSO variants during the optimization process.

Comparison experiments between NDWPSO and other intelligent algorithms

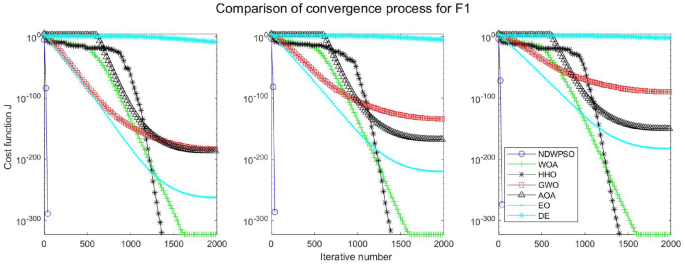

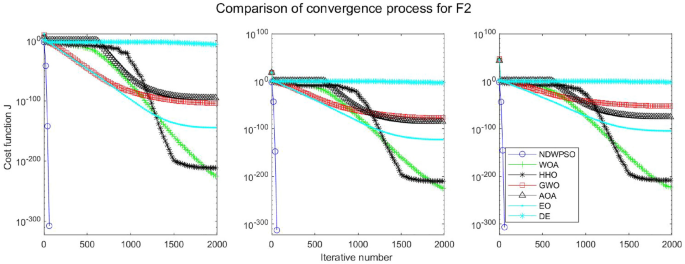

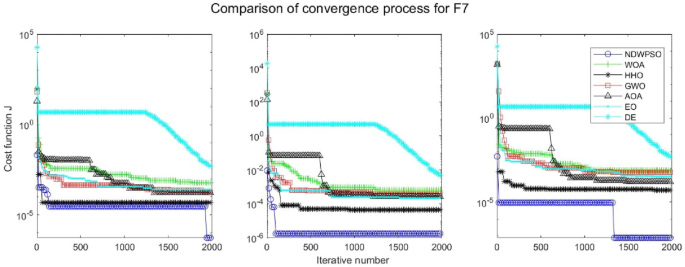

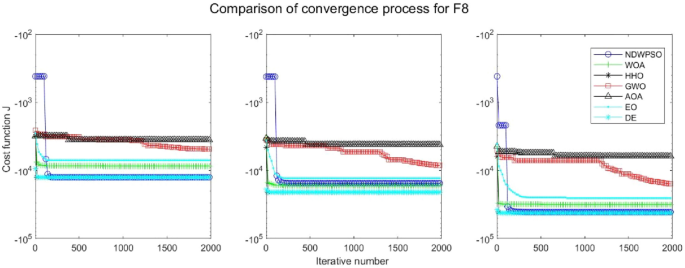

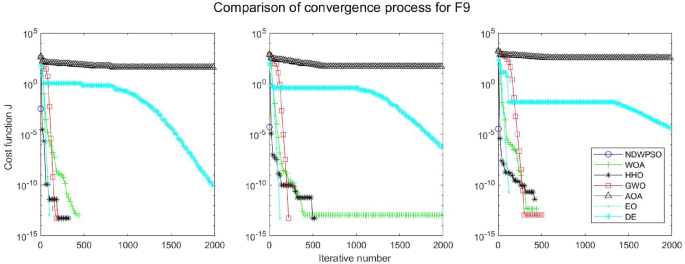

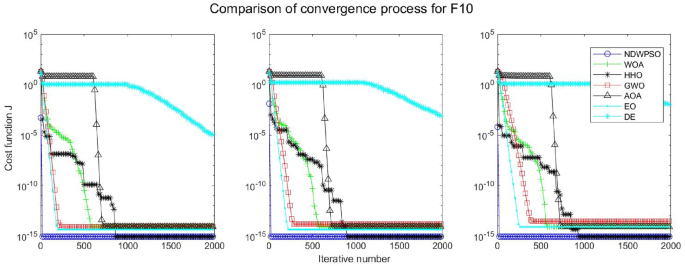

Experiments are conducted to compare NDWPSO with several other intelligent algorithms (WOA, HHO, GWO, AOA, EO and DE) on the 23 benchmark functions, with the NDWPSO parameters set as in Sect. “Experiments on benchmark functions between NDWPSO and other PSO variants”. To fully demonstrate the performance of each algorithm, the maximum number of iterations is increased to 2000. Each algorithm is run 30 times independently. The parameters of the compared algorithms are listed in Table 4; to ensure a fair comparison, all of them follow the original values in the corresponding literature. The experimental results are shown in Tables 5, 6, 7 and 8 and Figs. 20–36.

Evolution curve of NDWPSO and other algorithms for f1 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f2 (Dim = 30,50,100).

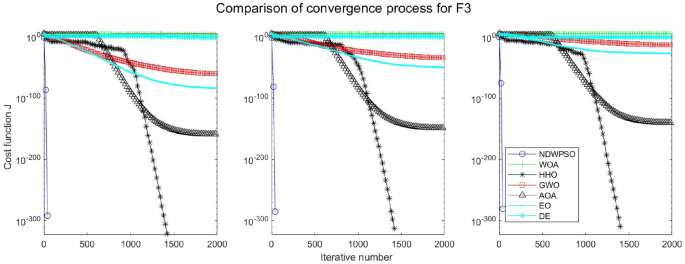

Evolution curve of NDWPSO and other algorithms for f3 (Dim = 30,50,100).

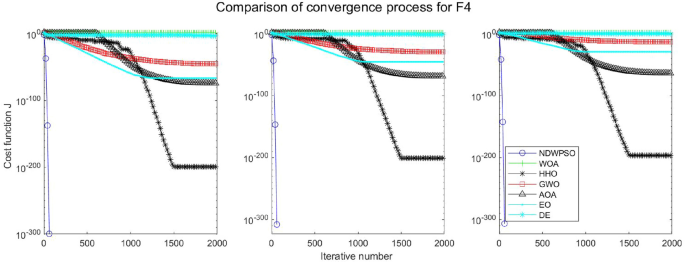

Evolution curve of NDWPSO and other algorithms for f4 (Dim = 30,50,100).

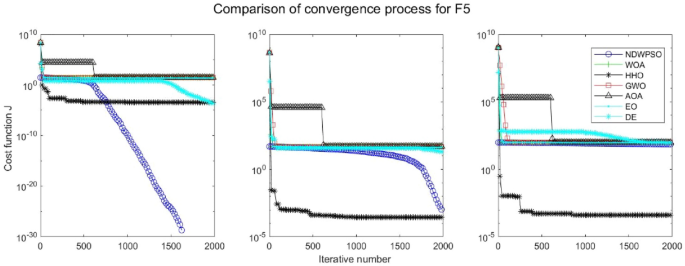

Evolution curve of NDWPSO and other algorithms for f5 (Dim = 30,50,100).

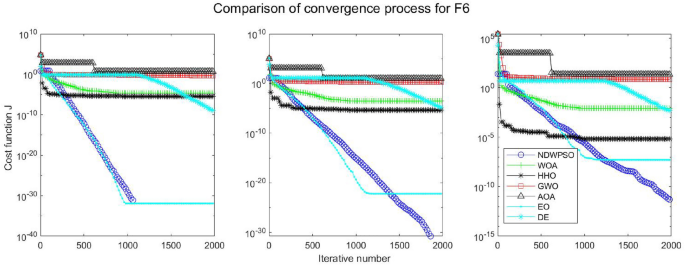

Evolution curve of NDWPSO and other algorithms for f6 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f7 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f8 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f9 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f10 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f11 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f12 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f13 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f14, f15, f16.

Evolution curve of NDWPSO and other algorithms for f17, f18, f19.

Evolution curve of NDWPSO and other algorithms for f20, f21, f22.

Evolution curve of NDWPSO and other algorithms for f23.

The experimental data of NDWPSO and the other intelligent algorithms on the 30-, 50-, and 100-dimensional benchmark functions ( \({f}_{1}-{f}_{13}\) ) are recorded in Tables 5, 6 and 7, respectively, and the comparison data for the fixed-dimension multimodal benchmark functions ( \({f}_{14}-{f}_{23}\) ) are recorded in Table 8. According to Tables 5, 6 and 7, NDWPSO obtains the best results on 69.2%, 84.6%, and 84.6% of the benchmark functions \({f}_{1}-{f}_{13}\) in the three search spaces (Dim = 30, 50, 100), respectively. In Table 8, NDWPSO obtains the optimal solution on 80% of the 10 fixed-dimension multimodal benchmark functions.
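As a quick consistency check, these percentages correspond to whole numbers of functions. With 13 scalable functions per dimension and 10 fixed-dimension functions, the counts below are inferred from the stated percentages rather than read from the tables:

```latex
\frac{9}{13} \approx 69.2\%, \qquad \frac{11}{13} \approx 84.6\%, \qquad \frac{8}{10} = 80\%
```

That is, NDWPSO is best on 9, 11, and 11 of the 13 functions for Dim = 30, 50, and 100, and on 8 of the 10 fixed-dimension functions.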

The convergence curves of each algorithm are shown in Figs. 20-36. NDWPSO shows two convergence behaviors on the benchmark functions in the 30-, 50-, and 100-dimensional search spaces. The first is fast convergence within a small number of iterations at the beginning of the search. This is attributed to the iterative-mapping strategy and the dynamically weighted position update scheme used in NDWPSO, which together quickly locate the region of the search space containing the global optimum and then refine the solution within it. This behavior is visible in the convergence curves of \({f}_{1}-{f}_{4}\) and \({f}_{9}-{f}_{11}\). The second behavior is that NDWPSO gradually improves its accuracy and rapidly approaches the global optimum in the middle and late stages of the iteration. Although NDWPSO does not converge quickly in the early iterations on these functions, this may help keep the swarm from becoming trapped in a local optimum. This behavior is visible in the curves of \({f}_{6}\), \({f}_{12}\), and \({f}_{13}\), and it also indicates that NDWPSO has a strong local search ability.

Taken together, the experimental data and the convergence curves indicate that NDWPSO converges faster than the compared intelligent algorithms and achieves better effectiveness and global convergence.
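Evolution curves of this kind are usually drawn from the best-so-far objective value at each iteration, averaged over the independent runs. The Python sketch below assumes that convention for a minimization problem; how the curves in this paper were actually computed is not stated, and the helper names `best_so_far` and `mean_convergence_curve` are hypothetical.

```python
from itertools import accumulate
from statistics import fmean
from typing import List, Sequence


def best_so_far(fitness_per_iter: Sequence[float]) -> List[float]:
    """Turn raw per-iteration fitness values (minimization) into a monotone best-so-far curve."""
    return list(accumulate(fitness_per_iter, min))


def mean_convergence_curve(runs: Sequence[Sequence[float]]) -> List[float]:
    """Average the best-so-far curves of several independent runs, iteration by iteration."""
    curves = [best_so_far(run) for run in runs]
    if len({len(c) for c in curves}) != 1:
        raise ValueError("all runs must record the same number of iterations")
    # zip(*curves) groups the values of all runs at the same iteration
    return [fmean(values) for values in zip(*curves)]
```

For example, `mean_convergence_curve([[5, 3, 4, 1], [6, 6, 2, 2]])` returns `[5.5, 4.5, 2.5, 1.5]`. Plotting this curve on a logarithmic y-axis is the usual way to show the early fast drop and the late refinement described above.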

Experiments on classical engineering problems

Three constrained classical engineering design problems (welded beam design, pressure vessel design 43, and three-bar truss design 38) are used to evaluate the NDWPSO algorithm. NDWPSO is compared with 5 other intelligent algorithms (WOA 36, HHO, GWO, AOA, EO 41). All algorithms use the same maximum number of iterations and population size ( \(Mk=500, n=40\) ), and each is run 30 times independently. The algorithm parameters are set as in Table 4. The results for the three engineering design problems are recorded in Tables 9, 10 and 11, respectively, and are averages over the independent runs.
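The constraint-handling technique is not described in this section. A common choice, shown here only as an assumption and not necessarily what NDWPSO uses, is a static quadratic penalty. It turns a problem with constraints \(g_i(x) \le 0\) into an unconstrained one that PSO can minimize directly: \(F(x) = f(x) + \rho \sum_i \max(0, g_i(x))^2\). The helper name `penalized` and the default \(\rho = 10^6\) are hypothetical.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def penalized(objective: Callable[[Vector], float],
              constraints: Sequence[Callable[[Vector], float]],
              rho: float = 1e6) -> Callable[[Vector], float]:
    """Wrap a constrained minimization problem as an unconstrained one.

    Each constraint g(x) is satisfied when g(x) <= 0; only positive values
    (violations) are squared, scaled by rho, and added to f(x).
    """
    def wrapped(x: Vector) -> float:
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + rho * violation
    return wrapped
```

For example, with \(f(x) = x_1^2\) and the single constraint \(1 - x_1 \le 0\), `penalized(f, [g], rho=100.0)` returns 4.0 at `[2.0]` (feasible, no penalty) and 100.0 at `[0.0]` (violation 1, squared and scaled). A static penalty is simple but sensitive to \(\rho\); adaptive schemes avoid hand-tuning it.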

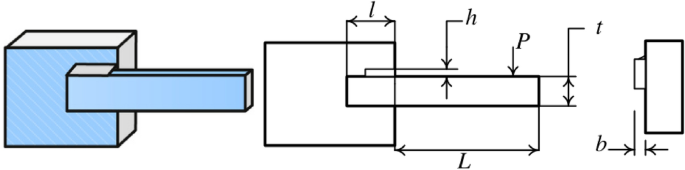

Welded beam design

The goal of the welded beam design problem is to minimize the manufacturing cost of the welded beam subject to constraints, as shown in Fig. 37. The design variables are the thickness of the weld seam ( \({\text{h}}\) ), the length of the clamped bar ( \({\text{l}}\) ), the height of the bar ( \({\text{t}}\) ) and the thickness of the bar ( \({\text{b}}\) ). The mathematical formulation of the optimization problem is given as follows:

Welded beam design.
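The version of the welded beam problem most widely used in the metaheuristics literature can be sketched in Python as follows. Its constants (P = 6000 lb, L = 14 in, E = 30e6 psi, G = 12e6 psi, τ_max = 13,600 psi, σ_max = 30,000 psi, δ_max = 0.25 in) are assumptions taken from that standard version and may differ in detail from the variant solved here. The function names are hypothetical.

```python
import math

# Constants of the commonly used welded beam formulation (assumed, not taken from the paper).
P, L_BEAM = 6000.0, 14.0            # load (lb) and beam overhang (in)
E, G = 30e6, 12e6                   # Young's modulus and shear modulus (psi)
TAU_MAX, SIGMA_MAX, DELTA_MAX = 13600.0, 30000.0, 0.25


def welded_beam_cost(h: float, l: float, t: float, b: float) -> float:
    """Fabrication cost: weld material plus bar material."""
    return 1.10471 * h**2 * l + 0.04811 * t * b * (14.0 + l)


def welded_beam_constraints(h: float, l: float, t: float, b: float) -> list:
    """Return the values g_i(x); the design is feasible when every value is <= 0."""
    tau_p = P / (math.sqrt(2) * h * l)                       # primary shear stress
    m = P * (L_BEAM + l / 2)                                 # bending moment at the weld
    r = math.sqrt(l**2 / 4 + ((h + t) / 2) ** 2)
    j = 2 * (math.sqrt(2) * h * l * (l**2 / 12 + ((h + t) / 2) ** 2))  # polar moment of inertia
    tau_pp = m * r / j                                       # secondary (torsional) shear stress
    tau = math.sqrt(tau_p**2 + 2 * tau_p * tau_pp * l / (2 * r) + tau_pp**2)
    sigma = 6 * P * L_BEAM / (b * t**2)                      # bending stress in the bar
    delta = 4 * P * L_BEAM**3 / (E * t**3 * b)               # end deflection
    p_c = (4.013 * E * math.sqrt(t**2 * b**6 / 36) / L_BEAM**2
           * (1 - t / (2 * L_BEAM) * math.sqrt(E / (4 * G))))  # buckling load
    return [
        tau - TAU_MAX,
        sigma - SIGMA_MAX,
        h - b,
        0.10471 * h**2 + 0.04811 * t * b * (14.0 + l) - 5.0,
        0.125 - h,
        delta - DELTA_MAX,
        P - p_c,
    ]
```

At the design (h, l, t, b) ≈ (0.20573, 3.47049, 9.03662, 0.20573), frequently reported as near-optimal for this formulation, the cost evaluates to about 1.7249.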

In Table 9, the NDWPSO, GWO, and EO algorithms obtain the best optimal cost. In addition, NDWPSO has the lowest standard deviation (SD), which indicates that it solves the welded beam design problem very consistently.

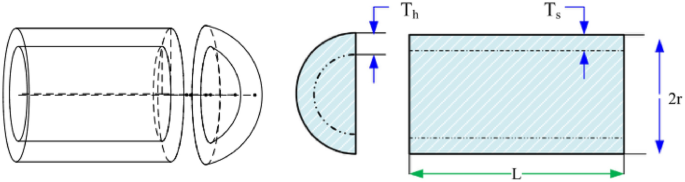

Pressure vessel design

Kannan and Kramer 43 proposed the pressure vessel design problem, shown in Fig. 38, whose goal is to minimize the total cost, including the costs of material, forming, and welding. There are four design variables: the thickness of the shell \({T}_{s}\); the thickness of the head \({T}_{h}\); the inner radius \({\text{R}}\); and the length of the cylindrical section without the head \({\text{L}}\). The objective function and constraints are as follows:

Pressure vessel design.
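The pressure vessel problem in its commonly used continuous form can be sketched as below. The coefficients are those that usually accompany the Kannan and Kramer problem in the literature and are assumed here rather than taken from this paper. In the original problem \(T_s\) and \(T_h\) are integer multiples of 0.0625 in; the sketch treats all four variables as continuous, and the function names are hypothetical.

```python
import math


def pressure_vessel_cost(ts: float, th: float, r: float, length: float) -> float:
    """Total cost of material, forming and welding for the cylindrical vessel."""
    return (0.6224 * ts * r * length + 1.7781 * th * r**2
            + 3.1661 * ts**2 * length + 19.84 * ts**2 * r)


def pressure_vessel_constraints(ts: float, th: float, r: float, length: float) -> list:
    """Return the values g_i(x); the design is feasible when every value is <= 0."""
    return [
        -ts + 0.0193 * r,                                                 # minimum shell thickness
        -th + 0.00954 * r,                                                # minimum head thickness
        -math.pi * r**2 * length - 4 / 3 * math.pi * r**3 + 1_296_000,   # minimum enclosed volume
        length - 240.0,                                                   # maximum length
    ]
```

The design (0.8125, 0.4375, 42.0984, 176.6366), often reported for this formulation, costs about 6059.71; its first and third constraints are active (close to zero).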

The results in Table 10 show that NDWPSO obtains the lowest optimal cost under the same constraints and has the lowest standard deviation of all the algorithms, which again demonstrates its good solution accuracy.

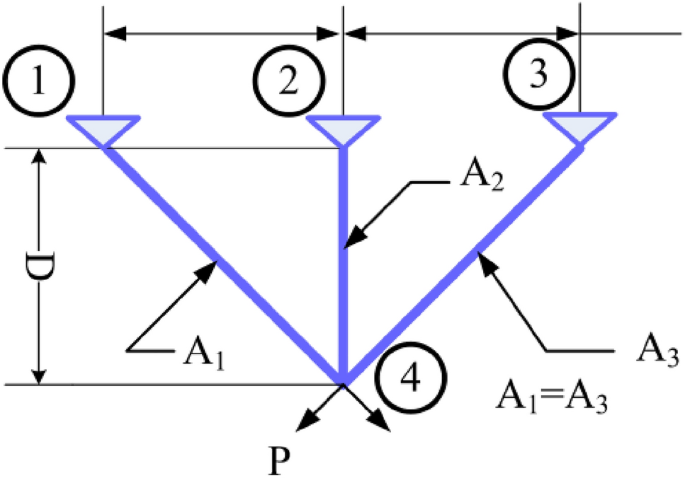

Three-bar truss design

This structural design problem 44, shown in Fig. 39, is one of the most widely used case studies. There are two main design parameters: the cross-sectional area of bars 1 and 3 ( \({A}_{1}={A}_{3}\) ) and the area of bar 2 ( \({A}_{2}\) ). The objective is to minimize the weight of the truss subject to stress, deflection, and buckling constraints. The problem is formulated as follows:

Three-bar truss design.
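The commonly used formulation of this problem (bar length l = 100 cm, load P = 2 kN/cm², allowable stress σ = 2 kN/cm², 0 ≤ A_1, A_2 ≤ 1) can be sketched as follows. These values are assumptions from the standard benchmark, the sketch contains only its three stress constraints, and the function names are hypothetical.

```python
import math

L_TRUSS, LOAD, SIGMA = 100.0, 2.0, 2.0   # bar length (cm), load and allowable stress (kN/cm^2)


def truss_weight(a1: float, a2: float) -> float:
    """Truss volume (proportional to weight) with A3 = A1."""
    return (2 * math.sqrt(2) * a1 + a2) * L_TRUSS


def truss_constraints(a1: float, a2: float) -> list:
    """Return the stress constraint values g_i(x); feasible when every value is <= 0."""
    denom = math.sqrt(2) * a1**2 + 2 * a1 * a2
    return [
        (math.sqrt(2) * a1 + a2) / denom * LOAD - SIGMA,
        a2 / denom * LOAD - SIGMA,
        1 / (math.sqrt(2) * a2 + a1) * LOAD - SIGMA,
    ]
```

At A_1 ≈ 0.78868 and A_2 ≈ 0.40825, the design often reported as best for this version, the weight is about 263.90 and the first stress constraint is active.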

According to Table 11, NDWPSO obtains the best design solution for this problem and has the smallest standard deviation. In summary, NDWPSO achieves very competitive results compared with the other intelligent algorithms.

Conclusions and future works

An improved algorithm named NDWPSO is proposed to increase the solving speed and the computational accuracy at the same time. NDWPSO incorporates search ideas from other intelligent algorithms (DE and WOA). In addition, we propose several new hybrid strategies that adjust algorithm components such as the inertia weight, the acceleration coefficients, the initialization scheme, and the position update equation.

Twenty-three classical benchmark functions, namely variable-dimension unimodal (f1-f7), variable-dimension multimodal (f8-f13), and fixed-dimension multimodal (f14-f23) functions, are used to evaluate the effectiveness and feasibility of NDWPSO. First, NDWPSO is compared with PSO, CDWPSO, and SDWPSO; the simulation results demonstrate its exploitation, exploration, and local-optima avoidance abilities. Second, NDWPSO is compared with 5 other intelligent algorithms (WOA, HHO, GWO, AOA, EO) and again performs better. Finally, 3 classical engineering problems show that NDWPSO achieves better results than the other algorithms on constrained engineering optimization problems.

Although NDWPSO performs well in many respects, it still has limitations that call for further improvement. NDWPSO initializes every particle with the “elite opposition-based learning” strategy, which costs extra computation time before the first velocity update. In addition, the “local optimal jump-out” strategy introduces additional randomness. How to reduce this randomness and how to make the initialization more efficient are issues for further study. In future work, we will also try to apply NDWPSO to a wider range of fields to solve more complex and diverse optimization problems.
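The extra initialization cost comes from evaluating an opposite candidate for every particle. The sketch below shows one common variant of elite opposition-based learning (after Zhou et al.'s elite opposition-based flower pollination algorithm), not NDWPSO's exact procedure. The elite particles define dynamic bounds [da, db] in each dimension, every particle x gets an opposite k(da + db) - x with random k in [0, 1], and the best n of the 2n candidates are kept. Initialization therefore needs 2n fitness evaluations instead of n. The elite fraction, the bound-repair rule, and all names are assumptions.

```python
import random
from typing import Callable, List, Optional, Sequence


def elite_opposition_init(fitness: Callable[[Sequence[float]], float], n: int, dim: int,
                          lower: float, upper: float, elite_frac: float = 0.2,
                          rng: Optional[random.Random] = None) -> List[List[float]]:
    """Return n initial particles chosen from random points and their elite opposites (minimization)."""
    rng = rng or random.Random()
    pop = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n)]
    # First n fitness evaluations: score and rank the random population.
    scored = sorted(((fitness(x), x) for x in pop), key=lambda s: s[0])
    elite = [x for _, x in scored[:max(1, int(elite_frac * n))]]
    da = [min(x[d] for x in elite) for d in range(dim)]   # dynamic lower bound of the elite set
    db = [max(x[d] for x in elite) for d in range(dim)]   # dynamic upper bound of the elite set
    for x in pop:
        k = rng.random()
        opp = []
        for d in range(dim):
            v = k * (da[d] + db[d]) - x[d]
            if not lower <= v <= upper:       # repair out-of-range components inside the elite bounds
                v = rng.uniform(da[d], db[d])
            opp.append(v)
        scored.append((fitness(opp), opp))    # second n fitness evaluations
    scored.sort(key=lambda s: s[0])
    return [x for _, x in scored[:n]]
```

Caching the scores, as done here, keeps the overhead at exactly one extra evaluation per particle; the remaining cost is the evaluation of the opposite population itself.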

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Sami, F. Optimize electric automation control using artificial intelligence (AI). Optik 271 , 170085 (2022).

Li, X. et al. Prediction of electricity consumption during epidemic period based on improved particle swarm optimization algorithm. Energy Rep. 8 , 437–446 (2022).

Sun, B. Adaptive modified ant colony optimization algorithm for global temperature perception of the underground tunnel fire. Case Stud. Therm. Eng. 40 , 102500 (2022).

Bartsch, G. et al. Use of artificial intelligence and machine learning algorithms with gene expression profiling to predict recurrent nonmuscle invasive urothelial carcinoma of the bladder. J. Urol. 195 (2), 493–498 (2016).

Bao, Z. Secure clustering strategy based on improved particle swarm optimization algorithm in internet of things. Comput. Intell. Neurosci. 2022 , 1–9 (2022).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In: Proceedings of ICNN'95-International Conference on Neural Networks . IEEE, 1942–1948 (1995).

Lin, Q. et al. A novel artificial bee colony algorithm with local and global information interaction. Appl. Soft Comput. 62 , 702–735 (2018).

Abed-alguni, B. H. et al. Exploratory cuckoo search for solving single-objective optimization problems. Soft Comput. 25 (15), 10167–10180 (2021).

Brajević, I. A shuffle-based artificial bee colony algorithm for solving integer programming and minimax problems. Mathematics 9 (11), 1211 (2021).

Khan, A. T. et al. Non-linear activated beetle antennae search: A novel technique for non-convex tax-aware portfolio optimization problem. Expert Syst. Appl. 197 , 116631 (2022).

Brajević, I. et al. Hybrid sine cosine algorithm for solving engineering optimization problems. Mathematics 10 (23), 4555 (2022).

Abed-Alguni, B. H., Paul, D. & Hammad, R. Improved Salp swarm algorithm for solving single-objective continuous optimization problems. Appl. Intell. 52 (15), 17217–17236 (2022).

Nadimi-Shahraki, M. H. et al. Binary starling murmuration optimizer algorithm to select effective features from medical data. Appl. Sci. 13 (1), 564 (2022).

Nadimi-Shahraki, M. H. et al. A systematic review of the whale optimization algorithm: Theoretical foundation, improvements, and hybridizations. Archiv. Comput. Methods Eng. 30 (7), 4113–4159 (2023).

Fatahi, A., Nadimi-Shahraki, M. H. & Zamani, H. An improved binary quantum-based avian navigation optimizer algorithm to select effective feature subset from medical data: A COVID-19 case study. J. Bionic Eng. 21 (1), 426–446 (2024).

Abed-alguni, B. H. & AL-Jarah, S. H. IBJA: An improved binary DJaya algorithm for feature selection. J. Comput. Sci. 75 , 102201 (2024).

Yeh, W.-C. A novel boundary swarm optimization method for reliability redundancy allocation problems. Reliab. Eng. Syst. Saf. 192 , 106060 (2019).

Solomon, S., Thulasiraman, P. & Thulasiram, R. Collaborative multi-swarm PSO for task matching using graphics processing units. In: Proceedings of the 13th Annual Conference on Genetic and Evolutionary Computation 1563–1570 (2011).

Mukhopadhyay, S. & Banerjee, S. Global optimization of an optical chaotic system by chaotic multi swarm particle swarm optimization. Expert Syst. Appl. 39 (1), 917–924 (2012).

Duan, L. et al. Improved particle swarm optimization algorithm for enhanced coupling of coaxial optical communication laser. Opt. Fiber Technol. 64 , 102559 (2021).

Sun, F., Xu, Z. & Zhang, D. Optimization design of wind turbine blade based on an improved particle swarm optimization algorithm combined with non-gaussian distribution. Adv. Civ. Eng. 2021 , 1–9 (2021).

Liu, M. et al. An improved particle-swarm-optimization algorithm for a prediction model of steel slab temperature. Appl. Sci. 12 (22), 11550 (2022).

Gad, A. G. Particle swarm optimization algorithm and its applications: A systematic review. Archiv. Comput. Methods Eng. 29 (5), 2531–2561 (2022).

Feng, H. et al. Trajectory control of electro-hydraulic position servo system using improved PSO-PID controller. Autom. Constr. 127 , 103722 (2021).

Chen, K., Zhou, F. & Liu, A. Chaotic dynamic weight particle swarm optimization for numerical function optimization. Knowl.-Based Syst. 139 , 23–40 (2018).

Bai, B. et al. Reliability prediction-based improved dynamic weight particle swarm optimization and back propagation neural network in engineering systems. Expert Syst. Appl. 177 , 114952 (2021).

Alsaidy, S. A., Abbood, A. D. & Sahib, M. A. Heuristic initialization of PSO task scheduling algorithm in cloud computing. J. King Saud Univ. Comput. Inf. Sci. 34 (6), 2370–2382 (2022).

Liu, H., Cai, Z. & Wang, Y. Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl. Soft Comput. 10 (2), 629–640 (2010).

Deng, W. et al. A novel intelligent diagnosis method using optimal LS-SVM with improved PSO algorithm. Soft Comput. 23 , 2445–2462 (2019).

Huang, M. & Zhen, L. Research on mechanical fault prediction method based on multifeature fusion of vibration sensing data. Sensors 20 (1), 6 (2019).

Wolpert, D. H. & Macready, W. G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1 (1), 67–82 (1997).

Gandomi, A. H. et al. Firefly algorithm with chaos. Commun. Nonlinear Sci. Numer. Simul. 18 (1), 89–98 (2013).

Zhou, Y., Wang, R. & Luo, Q. Elite opposition-based flower pollination algorithm. Neurocomputing 188 , 294–310 (2016).

Li, G., Niu, P. & Xiao, X. Development and investigation of efficient artificial bee colony algorithm for numerical function optimization. Appl. Soft Comput. 12 (1), 320–332 (2012).

Xiong, G. et al. Parameter extraction of solar photovoltaic models by means of a hybrid differential evolution with whale optimization algorithm. Solar Energy 176 , 742–761 (2018).

Mirjalili, S. & Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 95 , 51–67 (2016).

Yao, X., Liu, Y. & Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 3 (2), 82–102 (1999).

Heidari, A. A. et al. Harris hawks optimization: Algorithm and applications. Fut. Gener. Comput. Syst. 97 , 849–872 (2019).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69 , 46–61 (2014).

Hashim, F. A. et al. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 51 , 1531–1551 (2021).

Faramarzi, A. et al. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 191 , 105190 (2020).

Pant, M. et al. Differential evolution: A review of more than two decades of research. Eng. Appl. Artif. Intell. 90 , 103479 (2020).

Coello, C. A. C. Use of a self-adaptive penalty approach for engineering optimization problems. Comput. Ind. 41 (2), 113–127 (2000).

Kannan, B. K. & Kramer, S. N. An augmented lagrange multiplier based method for mixed integer discrete continuous optimization and its applications to mechanical design. J. Mech. Des. 116 , 405–411 (1994).

Derrac, J. et al. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 1 (1), 3–18 (2011).

Acknowledgements

This work was supported by Key R&D plan of Shandong Province, China (2021CXGC010207, 2023CXGC01020); First batch of talent research projects of Qilu University of Technology in 2023 (2023RCKY116); Introduction of urgently needed talent projects in Key Supported Regions of Shandong Province; Key Projects of Natural Science Foundation of Shandong Province (ZR2020ME116); the Innovation Ability Improvement Project for Technology-based Small- and Medium-sized Enterprises of Shandong Province (2022TSGC2051, 2023TSGC0024, 2023TSGC0931); National Key R&D Program of China (2019YFB1705002), LiaoNing Revitalization Talents Program (XLYC2002041) and Young Innovative Talents Introduction & Cultivation Program for Colleges and Universities of Shandong Province (Granted by Department of Education of Shandong Province, Sub-Title: Innovative Research Team of High Performance Integrated Device).

Author information

Authors and affiliations.

School of Mechanical and Automotive Engineering, Qilu University of Technology (Shandong Academy of Sciences), Jinan, 250353, China

Jinwei Qiao, Guangyuan Wang, Zhi Yang, Jun Chen & Pengbo Liu

Shandong Institute of Mechanical Design and Research, Jinan, 250353, China

School of Information Science and Engineering, Northeastern University, Shenyang, 110819, China

Xiaochuan Luo

Fushun Supervision Inspection Institute for Special Equipment, Fushun, 113000, China

Kan Li

Contributions

Z.Y., J.Q., and G.W. wrote the main manuscript text and prepared all figures and tables. J.C., P.L., K.L., and X.L. were responsible for the data curation and software. All authors reviewed the manuscript.

Corresponding author

Correspondence to Zhi Yang .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Qiao, J., Wang, G., Yang, Z. et al. A hybrid particle swarm optimization algorithm for solving engineering problem. Sci Rep 14 , 8357 (2024). https://doi.org/10.1038/s41598-024-59034-2

Received : 11 January 2024

Accepted : 05 April 2024

Published : 10 April 2024

DOI : https://doi.org/10.1038/s41598-024-59034-2

- Particle swarm optimization

- Elite opposition-based learning

- Iterative mapping

- Convergence analysis

Comput Intell Neurosci, v.2022 (2022)

An Improved Particle Swarm Optimization Algorithm and Its Application to the Extreme Value Optimization Problem of Multivariable Function

School of Mathematical and Statistics, Xuzhou University of Technology, Xuzhou 221008, China

Associated Data

The datasets generated and/or analyzed in the current study are available from the corresponding author upon reasonable request.

This paper studies an improved particle swarm optimization algorithm and its application, in order to address the low efficiency and weak local optimization ability of particle swarm optimization. First, the basic principle, mathematical description, parameters, and flow of the original Particle Swarm Optimization (PSO) algorithm are introduced; second, the standard PSO algorithm is presented; third, four types of improvements are proposed based on a study of improved particle swarm algorithms from the last 10 years. The improved algorithm (ICPSO) is applied to the extreme value optimization problem of multivariable functions. The simulation results show that, within 500 generations, the basic Cloud Particle Swarm Optimization (CPSO) algorithm fails to converge 8, 6, 4, and 5 times, respectively. Even when CPSO converges, its average number of iterations is much higher than that of ICPSO, whereas the improved algorithm converges in every run. ICPSO also converges in much less time than CPSO. Therefore, the improvement measures are effective: the improved algorithm needs fewer generations and offers fast convergence, high efficiency, and good population diversity.

1. Introduction

An optimization problem is to find a set of parameter values that satisfies certain constraints and optimizes some measure, that is, makes some performance index of the system reach its minimum or maximum. Optimization is a long-established mathematical discipline, and optimization problems arise widely in agriculture, the chemical industry, national defense, finance, transportation, electric power, communication, and many other fields [1]. Applying optimization technology in these fields has brought great economic and social benefits. Long-term practice shows that, under the same conditions, optimization significantly reduces system energy consumption, improves efficiency, and makes rational use of resources, and this advantage becomes more pronounced as the scale of the problem grows. Bionic algorithms provide a powerful tool for the many problems that traditional optimization algorithms cannot handle well. A bionic algorithm is an algorithmic model inspired by human or biological behavior or by the motion of matter [2]. Since such algorithms were proposed, they have been valued for their generality: they need no information about the objective function, not even an explicit expression of it, only the inputs and outputs of the problem. This avoids the computational complexity and practical difficulty of algorithms that depend on the properties of the objective function. Current bionic algorithms include genetic algorithms, artificial immune algorithms, ant colony optimization, particle swarm optimization, community location algorithms, and more [3, 4].

Particle swarm optimization (PSO) is a relatively new bionic optimization algorithm that, like the genetic algorithm, is an iterative optimization algorithm (see Figure 1). It starts from a set of random solutions and searches iteratively for the optimal solution. Unlike the genetic algorithm, however, it does not rely on the evolutionary principle of “survival of the fittest.” Compared with other bionic algorithms such as genetic algorithms, particle swarm algorithms are easier to understand, have fewer adjustable parameters, and are easier to implement. Research on PSO still faces several open problems. First, convergence analysis is the foundation of PSO, yet most improved PSO algorithms lack convergence models and convergence analysis. Second, PSO easily falls into local optima, that is, it may converge incompletely [5]. When optimizing high-dimensional or ultra-high-dimensional complex functions, PSO often converges prematurely: constrained by the particle update mechanism, the particles gather at one point and stagnate before the swarm has found the optimal solution. An effective mechanism that lets the algorithm escape from local minima and overcome premature convergence is therefore urgently needed. Third, the theory and applications of discrete PSO need to be expanded, as research on discrete PSO lags far behind continuous PSO. Fourth, broadening the applications of PSO, including how to combine it with other algorithms to solve practical problems, is an active research topic for researchers in China and abroad [6].

An improved particle swarm optimization algorithm.

2. Literature Review

Many researchers are devoted to the study of optimization problems, and optimization theories and algorithms are developing rapidly as a result. Traditional optimization methods include Newton's method, the simplex method, the conjugate gradient method, the trust region method, pattern search, the Rosenbrock method, and Powell's method. On some large-scale problems these methods must traverse the whole search space and suffer a combinatorial explosion, which leaves them “helpless”: their computation speed, convergence, and sensitivity to the initial point fall far short of requirements. Efficient optimization algorithms have therefore become a research goal [7]. Sun et al. proposed a model (the cloud model) for the uncertain conversion between a qualitative concept and its numerical representation, and it has been used in many fields, such as intelligent management and fuzzy evaluation. Because the cloud model's representation of knowledge combines uncertainty with certainty and stability with variation, it reflects the basic principles of species evolution in nature, so the field of evolutionary computation has also begun to focus on cloud models [8]. To overcome the poor performance of PSO on high-dimensional functions, Song proposed a PSO algorithm with nonlinearly distributed scattering. The algorithm applies scattering operations to particles with a nonlinearly increasing probability, so that many unnecessary scattering operations are avoided early in the iteration while the probability of scattering is high in the late iterations. This keeps the algorithm efficient and effectively improves its global search capability [9]. Zeng et al. proposed a cloud genetic algorithm that replaces the traditional crossover and mutation operators of the genetic algorithm with a cloud generator, achieving good results in function optimization [10]. Kumari et al. combined genetic algorithms with cloud models in a cloud-based evolutionary algorithm that effectively mitigates the tendency of genetic algorithms to fall into local optima and converge prematurely [11]. Omidinasab and Goodarzimehr proposed an adaptive cloud particle swarm optimization algorithm using particle fitness and different inertia weight evolution strategies, which effectively addresses local optima and overly fast convergence [12]. Zhu et al. suggested evaluating each particle's current state and position in the population by its fitness value and adjusting its velocity accordingly, so that the particle can search actively both locally and globally [13].

Building on this work, this paper proposes an improved particle swarm algorithm and studies its applications. Through a transformation of the solution space, local and global optimization are combined, and a simple cloud operator is used to evolve and mutate particles so as to accelerate convergence. The simulation results show that these improvements increase population diversity, search capability, and the convergence accuracy of the algorithm.

3. Research Methods

3.1. Particle Swarm Algorithm

Particle swarm optimization (PSO) is a relatively new bionic optimization algorithm based on modeling the foraging behavior of bird flocks under certain assumptions; it was discovered through the simulation of a simplified social model. It originated from the theory of complex adaptive systems (CAS), and PSO is developed from the following four characteristics of CAS. First, the agents are active. Second, the agents interact with the environment and with other agents, and this interaction is the main driving force for the development and change of the system. Third, the influence of the environment is macroscopic while the influence between agents is microscopic, and the macro and micro levels should be organically combined. Finally, the whole system may also be affected by random factors [14].

3.1.1. Standard Particle Swarm Optimization Algorithm

To better explore the solution space, Shi introduced the concept of inertia weight into the original particle swarm algorithm, which gradually developed into the standard particle swarm algorithm in use today. Its velocity and position are updated as follows:

\[ v_{id}^{t+1} = \omega v_{id}^{t} + c_1 r_1 \left(p_{id} - x_{id}^{t}\right) + c_2 r_2 \left(p_{gd} - x_{id}^{t}\right), \qquad x_{id}^{t+1} = x_{id}^{t} + v_{id}^{t+1}, \]

where \(c_1\) and \(c_2\) are acceleration coefficients, \(r_1\) and \(r_2\) are random numbers in [0, 1], \(p_{id}\) is the best position found so far by particle i, and \(p_{gd}\) is the best position found so far by the swarm.

The standard particle swarm algorithm described in this section uses a linearly decreasing inertia weight:

\[ \omega = \omega_{\max} - \frac{\left(\omega_{\max} - \omega_{\min}\right) \cdot iter}{Gen}, \]

where \(\omega_{\max}\) is the maximum inertia weight, \(\omega_{\min}\) is the minimum inertia weight, Gen is the maximum number of iterations, and iter is the current iteration. In the PSO update, each particle moves by inertia along its own velocity and also draws on its own experience (the cognitive part). At the same time, it shares information and cooperates with the rest of the swarm (the social part) to find the best position in the swarm. The interaction and balance of these three parts determine the optimization performance of the algorithm [15, 16]. The movement process is shown in Figure 2.
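The update rules described above can be sketched in Python as a minimal illustration on a box-constrained minimization problem; this is not the paper's code. The parameter values (w_max = 0.9, w_min = 0.4, c1 = c2 = 1.5), the velocity clamp of 20% of the search range, clipping positions to the box, and updating the global best as soon as a particle improves (asynchronous update) are all assumptions, and the function name `pso_ldiw` is hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple


def pso_ldiw(f: Callable[[Sequence[float]], float], dim: int, lower: float, upper: float,
             n: int = 20, gen: int = 200, w_max: float = 0.9, w_min: float = 0.4,
             c1: float = 1.5, c2: float = 1.5, seed: int = 0) -> Tuple[List[float], float]:
    """Standard PSO with a linearly decreasing inertia weight (minimization sketch)."""
    rng = random.Random(seed)
    v_max = 0.2 * (upper - lower)                        # velocity clamp (assumed)
    x = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n)]
    v = [[0.0] * dim for _ in range(n)]
    pbest = [xi[:] for xi in x]
    pbest_f = [f(xi) for xi in x]
    g = min(range(n), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for it in range(gen):
        w = w_max - (w_max - w_min) * it / gen           # linearly decreasing inertia weight
        for i in range(n):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pbest[i][d] - x[i][d])   # cognitive part
                           + c2 * r2 * (gbest[d] - x[i][d]))     # social part
                v[i][d] = max(-v_max, min(v_max, v[i][d]))
                x[i][d] = max(lower, min(upper, x[i][d] + v[i][d]))
            fx = f(x[i])
            if fx < pbest_f[i]:                          # update personal best
                pbest[i], pbest_f[i] = x[i][:], fx
                if fx < gbest_f:                         # update global best immediately
                    gbest, gbest_f = x[i][:], fx
    return gbest, gbest_f
```

For example, `pso_ldiw(lambda p: sum(t * t for t in p), dim=2, lower=-10.0, upper=10.0)` minimizes the 2-D sphere function, whose optimum is 0 at the origin. The large early inertia weight favors exploration, and the smaller late weight favors refinement around the best positions found.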

The schematic diagram of the update process.

3.1.2. Discrete Particle Swarm Optimization

Two quite different technical routes have been developed for solving discrete (combinatorial) optimization problems with PSO. The first is based on the classical continuous particle swarm algorithm: for a specific problem, the discrete solution space is mapped to a continuous particle-motion space with appropriate adjustments, and the computation still uses the velocity-position update of the classical algorithm. A representative example is the discrete binary version of PSO proposed by Kennedy and Eberhart on the basis of the original particle swarm algorithm. In their model, each dimension of a particle's position, its personal best, and the global best is restricted to 0 or 1, while the velocity is not restricted. When the position is updated from the velocity, a threshold is applied: if the velocity is above the threshold, the position component is set to 1; otherwise, it is set to 0. The velocity and position update equations are expressed as follows:

$$x_{id} = \begin{cases} 1, & \text{if } \mathrm{rand}() < S(v_{id}), \\ 0, & \text{otherwise}, \end{cases} \qquad S(v_{id}) = \frac{1}{1 + \exp(-v_{id})}, \qquad (7)$$

where S(v_id) is the sigmoid function and rand() is a random number uniformly distributed in [0, 1]. The velocity component v_id determines the probability that the position component x_id takes the value 1 or 0: the larger v_id, the more likely x_id is 1.
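A minimal sketch of the binary position rule in equation (7), assuming NumPy; the helper names are hypothetical. Sampling the rule many times shows that the fraction of ones approaches S(v_id).

```python
import numpy as np

def sigmoid(v):
    """S(v) = 1 / (1 + exp(-v)), the probability that a bit is set to 1."""
    return 1.0 / (1.0 + np.exp(-v))

def binary_position_update(v, rng):
    """Binary PSO position rule: x_id = 1 if rand() < S(v_id), else 0."""
    return (rng.random(np.shape(v)) < sigmoid(v)).astype(int)

rng = np.random.default_rng(42)
v = np.array([-4.0, 0.0, 4.0])
samples = np.array([binary_position_update(v, rng) for _ in range(10_000)])
freq_of_one = samples.mean(axis=0)  # empirical P(x_id = 1) for each velocity
```

A strongly negative velocity almost always yields 0 and a strongly positive one almost always yields 1, while v_id = 0 gives a fair coin flip.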

The second approach keeps the basic information-update mechanism of PSO but redefines the particle representation and the operators of the classical algorithm for the discrete space. Examples include the discrete PSO proposed by Clerc for the traveling salesman problem (TSP) and the discrete binary PSO proposed by Farzane for 0–1 programming problems. The two approaches differ as follows: the former maps the actual discrete problem into the continuous space of particle motion and solves it there, whereas the latter maps the PSO algorithm into the discrete space and solves the problem directly in that space [17, 18].

3.2. Improved Particle Swarm Optimization (ICPSO)

3.2.1. Cloud Model

The cloud model is a mathematical model for the uncertain transformation between a qualitative concept and its quantitative representation. It mainly reflects the fuzziness and randomness of concepts about things and people in the objective world, and it provides a way of combining qualitative and quantitative processing.

Definition 1 (clouds and cloud drops). Let U be a quantitative universe of discourse expressed by numerical values, and let C be a qualitative concept over U. If x ∈ U is a random realization of the concept C, and the certainty degree μ_C(x) ∈ [0, 1] of x with respect to C is a random number with a stable tendency, μ: U → [0, 1], ∀x ∈ U, x → μ(x), then the distribution of x over U is called a cloud, denoted C(X), and each x is called a cloud drop. A normal cloud is characterized by three numerical features: the expectation Ex, the entropy En, and the hyper-entropy He. The cloud model and its numerical features are shown in Figure 3, with Ex = 20, En = 3, and He = 0.1.

Cloud model and its numerical features.

Definition 2. The one-dimensional simple cloud operator ArForward(C(Ex, En, He)) is a mapping π: C → Π that converts the overall properties of a qualitative concept into a numerical representation. It satisfies the following conditions:

Here, Norm(μ, δ) is a normal random variable with expected value μ and variance δ, and N is the number of cloud drops. With the simple cloud operator, a concept can be converted into a set of cloud drops numerically represented by C(Ex, En, He), realizing the transformation from conceptual space to numerical space. The one-dimensional simple cloud operator can be extended to an n-dimensional simple cloud operator.
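The forward normal cloud generator is commonly implemented as below. This sketch assumes that each drop draws its own entropy En′ ~ Norm(En, He) and then a position x ~ Norm(Ex, En′), with certainty μ = exp(−(x − Ex)² / (2En′²)). It also assumes that the second argument of Norm is a standard deviation, because NumPy's `normal` takes a scale rather than a variance. The function name is hypothetical, and the authors' exact conditions may differ.

```python
import numpy as np

def forward_normal_cloud(ex, en, he, n_drops, rng=None):
    """Generate n_drops cloud drops of the normal cloud C(Ex, En, He).

    Returns the drop positions x and their certainty degrees mu in (0, 1].
    """
    rng = np.random.default_rng() if rng is None else rng
    en_prime = rng.normal(en, he, n_drops)          # randomized entropy per drop
    x = rng.normal(ex, np.abs(en_prime))            # drop positions around Ex
    mu = np.exp(-(x - ex) ** 2 / (2 * en_prime ** 2))  # certainty of each drop
    return x, mu

# Parameters of Figure 3: Ex = 20, En = 3, He = 0.1
x, mu = forward_normal_cloud(20.0, 3.0, 0.1, 20_000, np.random.default_rng(0))
```

With a small He the drops form a thin, nearly Gaussian band; increasing He thickens the cloud and makes the spread itself random.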

3.2.2. Basic Particle Swarm Optimization (CPSO)

Let the swarm size be N and let f_i be the fitness value of particle X_i at the t-th iteration. The average fitness value of the swarm is

$$f_{\mathrm{avg}} = \frac{1}{N}\sum_{i=1}^{N} f_i. \qquad (8)$$

Equations (9) and (10) are the velocity and position update formulas, respectively. The fitness values better than f_avg are averaged to obtain f′_avg, the fitness values worse than f_avg are averaged to obtain f″_avg, and the fitness value of the best particle is f_min. If f_i is better than f′_avg, the particle's fitness is small and it is close to the optimal solution; a small inertia weight is used, and the evolution strategy adopts the “social model” to speed up global convergence. If f_i is worse than f″_avg, the particle's fitness is large and it is far from the optimal solution; a large inertia weight is used, and the evolution strategy adopts the “cognitive model” so that these poorly performing particles can converge faster. If f_i is better than f″_avg but worse than f′_avg, the particle's fitness is moderate; the cloud adaptive inertia weight is used, and the evolution strategy adopts the “complete model” [19].
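The three-way split of the swarm described above can be sketched as follows for a minimization problem. Only the grouping rule is shown. The function name and the fallback used when no fitness lies strictly above or below f_avg are assumptions, and the inertia weights for each group are omitted because their values are not given here.

```python
import numpy as np

def classify_particles(f):
    """Assign each particle a strategy from f_avg, f'_avg and f''_avg (minimization)."""
    f = np.asarray(f, dtype=float)
    f_avg = f.mean()
    better, worse = f[f < f_avg], f[f > f_avg]
    f_good = better.mean() if better.size else f_avg  # f'_avg
    f_bad = worse.mean() if worse.size else f_avg     # f''_avg
    # better than f'_avg -> social model; worse than f''_avg -> cognitive model;
    # everything in between -> complete model with cloud adaptive inertia weight
    groups = np.where(f < f_good, "social",
                      np.where(f > f_bad, "cognitive", "complete"))
    return groups, f_avg, f_good, f_bad

groups, f_avg, f_good, f_bad = classify_particles(np.arange(1.0, 11.0))
```

For fitness values 1 to 10, f_avg = 5.5, f′_avg = 3 and f″_avg = 8, so particles 1 and 2 use the social model, 9 and 10 the cognitive model, and the rest the complete model.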

Definition 3 (evolutionary model). The process by which each particle generates a new particle through the normal cloud generator, centered on its individual extreme value, is called the evolutionary model.

Definition 4 (mutation). Given the thresholds N and K in advance, if the global extreme value has not improved for N consecutive generations, or the amplitude of evolution is less than K, the particles are considered to have fallen into a local optimum, and all particles are mutated through the normal cloud generator centered on the global extreme value.
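One way to implement the trigger of Definition 4 is sketched below. It assumes that the "amplitude of evolution" means the improvement of the global best fitness over the last N generations (minimization), and that the caller resets the history after each mutation. The class name is hypothetical.

```python
from collections import deque

class MutationTrigger:
    """Decide when to mutate all particles (Definition 4), for minimization."""

    def __init__(self, n_threshold):
        self.n = n_threshold
        # keeps the global best of the last N generations plus the current one
        self.history = deque(maxlen=n_threshold + 1)

    def should_mutate(self, gbest_f, k):
        """Record the current global best; return True if mutation should fire."""
        self.history.append(gbest_f)
        if len(self.history) <= self.n:
            return False                      # fewer than N generations observed
        improvement = self.history[0] - self.history[-1]
        # no evolution for N generations, or evolution amplitude below K
        return improvement == 0.0 or improvement < k

    def reset(self):
        self.history.clear()
```

The `improvement == 0.0` test only matters when K is zero; for any positive K it is already covered by `improvement < k`.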

3.2.3. ICPSO Algorithm

To address the above problems of the basic CPSO, this paper proposes the following two improvements.

- (1) Global search and local search are combined by means of group substitution and spatial transformation.

- Most of the running time of the basic CPSO algorithm is spent updating the population, and evolution often slows down in the later stage. To address this, group substitution and space transformation are introduced. Group-substitution PSO searches the solution space with several particle swarms that use different search methods: one swarm is the main search group and the other is the auxiliary search group. Under certain conditions during the search, some particles of the auxiliary group are exchanged with particles of the main group. This maintains the diversity of the main group, helps it avoid stagnation or premature convergence caused by a lack of diversity, and so ensures that it can reach the global optimum. To evaluate the quality of the current position of a cloud particle, the solution space must be transformed: the two positions occupied by each particle are mapped from the unit space I = [−1, 1]^n to the solution space of the optimization problem. Let the i-th component of cloud particle P_j be [α_i^j, β_i^j]^T and let [a_i, b_i] be the range of the i-th variable; the corresponding solution-space variables are then

  $$X_{i\alpha}^{j} = \frac{1}{2}\left[b_i\left(1+\alpha_i^{j}\right) + a_i\left(1-\alpha_i^{j}\right)\right], \qquad (12)$$

  $$X_{i\beta}^{j} = \frac{1}{2}\left[b_i\left(1+\beta_i^{j}\right) + a_i\left(1-\beta_i^{j}\right)\right]. \qquad (13)$$

  With this mapping, α_i^j = −1 corresponds to a_i and α_i^j = 1 corresponds to b_i.

- Then, if the optimal value obtained is better than the current-generation optimal solution, the solution is updated through the spatial transformation. After each iteration, the improved algorithm performs a local search near the current-generation optimal solution. This strengthens its search ability and remedies a weakness of the basic CPSO, whose best solution can remain unchanged for several generations [20].

- (2) The search method is improved by particle mutation based on the simple cloud operator. In the evolution of the basic CPSO, particles commonly concentrate around solutions that are not optimal, and the further evolution proceeds, the larger the deviation from the optimal solution. The following measure is therefore taken: initialize the current position and velocity of each particle, and then check whether the mutation threshold N has been reached. If it has, mutate each particle according to Definition 4; otherwise, update the particles according to equations (9) and (10).
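The spatial transformation of equations (12) and (13) and a simple form of group substitution can be sketched as follows. The mapping follows the equations. The substitution rule (replace the m worst main-group particles with the m best auxiliary particles) and both function names are assumptions, since the text does not specify which particles are exchanged.

```python
import numpy as np

def to_solution_space(alpha, beta, a, b):
    """Map the two unit-space positions of a cloud particle to [a_i, b_i] (eqs. 12-13)."""
    alpha, beta, a, b = (np.asarray(t, dtype=float) for t in (alpha, beta, a, b))
    x_alpha = 0.5 * (b * (1 + alpha) + a * (1 - alpha))
    x_beta = 0.5 * (b * (1 + beta) + a * (1 - beta))
    return x_alpha, x_beta

def substitute_groups(main_x, main_f, aux_x, aux_f, m):
    """Hypothetical group substitution (minimization): the m worst main-group
    particles are replaced by the m best auxiliary-group particles."""
    main_x, main_f = main_x.copy(), main_f.copy()
    worst = np.argsort(main_f)[-m:]   # indices of the m largest (worst) fitness values
    best = np.argsort(aux_f)[:m]      # indices of the m smallest (best) auxiliary values
    main_x[worst] = aux_x[best]
    main_f[worst] = aux_f[best]
    return main_x, main_f
```

For example, with bounds [−5, 5], α = −1 maps to −5, α = 0 to the midpoint 0, and α = 1 to 5.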

3.2.4. Algorithm Flow of ICPSO

The ICPSO algorithm flow using the above two improvement measures is as follows:

- (1) Initialize the population, that is, the position of each particle, the individual extreme value Pbest, the global extreme value Gbest, and so on.

- (2) Calculate the fitness value of each particle and update Pbest and Gbest.

- (3) Judge whether the mutation threshold N has been reached. If it has, perform the mutation operation of Definition 4: let the global best (minimum) of all particles be Gbest, set Ex = Gbest, En = 2·Gbest, and He = En/10 for the normal cloud C(Ex, En, He), and use the normal cloud generator of Definition 2 to mutate all particles. If the threshold has not been reached, go to step (4).

- (4) Evolve each particle: let the individual minimum of particle i be Pbest, set Ex = Pbest, En = 2·Pbest, and He = En/10 for the normal cloud C(Ex, En, He), generate a new particle j with the normal cloud generator of Definition 2, and let i = j to complete the evolution operation.

- (5) If the iteration limit has been reached, output Gbest and stop; otherwise, go to step (2).
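The flow above can be sketched as a compact loop. This is an illustrative reading, not the authors' implementation. It interprets Gbest and Pbest inside En = 2·Gbest and En = 2·Pbest as fitness values, treats the mutation threshold as a window of N generations, and clips cloud drops to the search box. It also caps En at a hypothetical `en_max`. This cap is not part of the described algorithm, but it stops early drops from piling up on the box boundary. All names are hypothetical.

```python
import numpy as np
from collections import deque

def cloud_drops(ex, en, he, rng):
    """One normal-cloud drop per coordinate of the center ex."""
    en_prime = np.abs(rng.normal(en, he, size=np.shape(ex)))  # randomized entropy
    return rng.normal(ex, en_prime)

def icpso(f, dim=5, n=30, max_iter=200, bounds=(-5.0, 5.0),
          n_threshold=5, en_max=1.0, seed=0):
    """Sketch of the ICPSO flow; minimizes f over a box."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    # (1)-(2) initialize positions, evaluate, set Pbest and Gbest
    x = rng.uniform(lo, hi, (n, dim))
    pbest, pbest_f = x.copy(), np.array([f(p) for p in x])
    g = int(np.argmin(pbest_f))
    gbest, gbest_f = pbest[g].copy(), pbest_f[g]
    history = deque([gbest_f], maxlen=n_threshold + 1)
    for _ in range(max_iter):
        k = abs(gbest_f) / 2                          # K = Gbest/2
        improvement = history[0] - history[-1]
        if len(history) > n_threshold and improvement < k:
            # (3) mutation: all particles drawn from C(Gbest, 2*Gbest, En/10)
            en = min(2 * abs(gbest_f), en_max)
            x = np.array([cloud_drops(gbest, en, en / 10, rng) for _ in range(n)])
            history.clear()
        else:
            # (4) evolution: particle i drawn from C(Pbest_i, 2*Pbest_i, En/10)
            en = np.minimum(2 * np.abs(pbest_f), en_max)
            x = np.array([cloud_drops(pbest[i], en[i], en[i] / 10, rng)
                          for i in range(n)])
        x = np.clip(x, lo, hi)
        # (2) evaluate and update Pbest / Gbest
        fx = np.array([f(p) for p in x])
        better = fx < pbest_f
        pbest[better], pbest_f[better] = x[better], fx[better]
        g = int(np.argmin(pbest_f))
        if pbest_f[g] < gbest_f:
            gbest, gbest_f = pbest[g].copy(), pbest_f[g]
        history.append(gbest_f)
    # (5) output Gbest
    return gbest, gbest_f

best_x, best_f = icpso(lambda z: float(np.sum(z ** 2)))
```

Because Pbest and Gbest are only replaced by better drops, the best fitness never increases, and En shrinks automatically as Gbest approaches the minimum.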

3.3. Analysis of Influence of Parameter Selection on Algorithm Performance

In the mutation operation, the global extreme value Gbest is selected as Ex. At this point the algorithm may have fallen into a local optimum, and by the sociological principle that better individuals are often found near the current excellent ones, there is a greater chance of finding the optimal solution around Gbest. En represents the horizontal width of the cloud: the larger En, the wider the cloud and the larger the particle search range. The search range should be wide in the early stage of evolution and the search accuracy should be raised in the later stage, so En should decrease dynamically. Since the global extreme (minimum) value Gbest decreases gradually toward the actual extreme value during evolution, taking En = 2·Gbest adjusts En dynamically to a certain extent [21].

The hyper-entropy He is proportional to the dispersion of the cloud drops: the larger He, the greater the dispersion and the more widely the cloud drops spread. If He is too large, the algorithm loses stability; if it is too small, the randomness of the search is lost to a certain extent. Taking He = En/10 balances the stability of the algorithm against its randomness.

If the parameter K is too large, mutation is triggered too often, which reduces the efficiency of the algorithm; if it is too small, the accuracy of the solution decreases. Moreover, because PSO converges rapidly in the early stage of evolution and more slowly in the later stage, it is difficult to choose a fully reasonable fixed value for K. In this paper, K = Gbest/2, so that K decreases dynamically with the global optimum Gbest and is adjusted adaptively. To select the mutation threshold N, the Sphere function is used to test the effect of different N values on the solution accuracy of ICPSO. The experimental parameters were as follows: population size 100, initial value range [−5, 5], maximum number of iterations 1000, Sphere function dimensions 5, 10, 30, 50, and 100, and N = 2, 5, 10, and 20. Each setting was run 50 times and the mean was taken, with K = Gbest/2. The test results are given in Table 1.
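The adaptive parameter rules of this subsection amount to a few lines. The absolute value, which keeps En and K non-negative if Gbest is negative, and the function name are assumptions.

```python
def adaptive_cloud_params(gbest_f):
    """Return (En, He, K) derived from the current global best value Gbest."""
    en = 2.0 * abs(gbest_f)   # En = 2*Gbest: wide search early, narrow later
    he = en / 10.0            # He = En/10: moderate dispersion of cloud drops
    k = abs(gbest_f) / 2.0    # K = Gbest/2: mutation amplitude threshold
    return en, he, k

schedule = [adaptive_cloud_params(g) for g in (10.0, 1.0, 0.01)]
```

As Gbest falls from 10 to 0.01, the cloud width En shrinks from 20 to 0.02, so the search contracts in step with the progress of the swarm.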

Solutions of sphere function under different N values.

As Table 1 shows, for low-dimensional functions (dimension less than 10), a smaller threshold N gives higher solution accuracy but a longer running time. For functions of dimension 10 to 100, a smaller N still increases the running time but does not necessarily improve accuracy, and the solution accuracy shows an inflection point at N = 5. The choice of N is therefore correlated with the dimension of the function [22].

4. Result Discussion

To check the effectiveness of the improvements, the following typical function extremum optimization problems are used.

- (1) The RA-Rastrigin function is given in equation (14):

  $$f_1(x, y) = x^2 + y^2 - \cos 18x - \cos 18y. \qquad (14)$$

Variation of Rastrigin function optimization results with dimension.

- (2) The generalized Rastrigin function is given in equation (15):

  $$f_2(x) = \sum_{i=1}^{30}\left[x_i^2 - 10\cos\left(2\pi x_i\right) + 10\right]. \qquad (15)$$

- Here, x_i ∈ [−5.12, 5.12], and the objective is to find the minimum of the function. The global minimum of f_2 is 0, attained at x = 0, and the feasible region contains a very large number of local minimum points.

- (3) The Branin function is given in equation (16):

  $$f_3(x, y) = \left(x - \frac{5.1}{4\pi^2}y^2 + \frac{5}{\pi}y - 6\right)^2 + 10\left(1 - \frac{1}{8\pi}\right)\cos y + 10. \qquad (16)$$

- Here, x ∈ [0, 15] and y ∈ [−5, 10], and the objective is to find the minimum of the function. The global minimum of f_3 is 0.3979, attained at the three points (x, y) = (12.275, −π), (2.275, π), and (2.475, 3π) ≈ (2.475, 9.4248).

- (4) The six-hump camel-back function is given in equation (17):

  $$f_4(x, y) = 4x^2 - 2.1x^4 + \frac{1}{3}x^6 + xy - 4y^2 + 4y^4. \qquad (17)$$

Here, x, y ∈ [−5, 5], and the objective is to find the minimum of the function. The global minimum of f_4 is approximately −1.0316, attained at the two points (0.0898, −0.7126) and (−0.0898, 0.7126). Each function is optimized 50 times with the basic CPSO and with ICPSO, using identical initial values for both algorithms. The maximum and minimum numbers of steps, the number of convergent runs, and the average number of steps of each algorithm are then recorded. The simulation results are shown in Figures 5 to 8 and Table 2.
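The four test functions can be written directly from equations (14) to (17), and evaluating them at the standard known minimizers is a quick sanity check. The function names are hypothetical.

```python
import numpy as np

def f1_ra(x, y):
    """RA-Rastrigin function, eq. (14)."""
    return x**2 + y**2 - np.cos(18 * x) - np.cos(18 * y)

def f2_rastrigin(x):
    """Generalized Rastrigin function, eq. (15), for a 30-dimensional x."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x**2 - 10 * np.cos(2 * np.pi * x) + 10))

def f3_branin(x, y):
    """Branin function with the variable roles of eq. (16): x in [0, 15], y in [-5, 10]."""
    return ((x - 5.1 / (4 * np.pi**2) * y**2 + 5 / np.pi * y - 6) ** 2
            + 10 * (1 - 1 / (8 * np.pi)) * np.cos(y) + 10)

def f4_camel(x, y):
    """Six-hump camel-back function, eq. (17)."""
    return 4 * x**2 - 2.1 * x**4 + x**6 / 3 + x * y - 4 * y**2 + 4 * y**4
```

The Branin function is written with y in the role usually played by the first variable, so its minimizers appear with the coordinates swapped relative to the common (x1, x2) form.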

RA-Rastrigin function optimization curve.

Optimization curve of six-hump camel-back function.

Performance comparison between PSO and ICPSO algorithms.