Experimental Research Design — 6 mistakes you should never make!

From their school days, students perform scientific experiments whose results illustrate and support the laws and theorems of science. These experiments rest on a strong foundation of experimental research design.

An experimental research design helps researchers execute their research objectives with more clarity and transparency.

In this article, we will discuss not only the key aspects of experimental research designs but also the issues to avoid and problems to resolve while designing your research study.


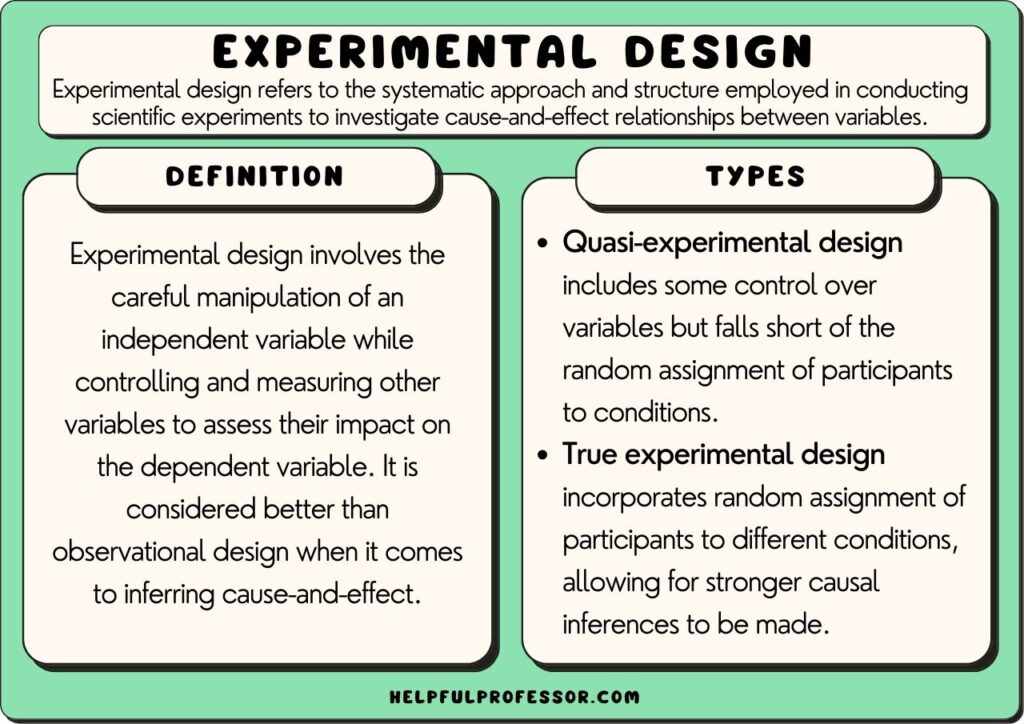

What Is Experimental Research Design?

Experimental research design is a framework of protocols and procedures created to conduct experimental research with a scientific approach using two sets of variables. The first set is held constant and is used to measure differences in the second set. Experimental research methods are among the best examples of quantitative research.

Experimental research helps a researcher gather the necessary data for making better research decisions and determining the facts of a research study.

When Can a Researcher Conduct Experimental Research?

A researcher can conduct experimental research in the following situations:

- When time is an important factor in establishing a relationship between the cause and effect.

- When the behavior between the cause and effect is invariable.

- When the researcher wishes to understand the importance of the cause and effect.

Importance of Experimental Research Design

A quality research design is the foundation on which a research study, and any significant results it publishes, is built. Moreover, an effective research design helps establish quality decision-making procedures, structures the research for easier data analysis, and addresses the main research question. Therefore, it is essential to devote undivided attention and time to creating an experimental research design before beginning the practical experiment.

By creating a research design, researchers also give themselves time to organize the research, set up relevant boundaries for the study, and increase the reliability of the results. These efforts also help avoid inconclusive results. If any part of the research design is flawed, the flaw will be reflected in the quality of the results.

Types of Experimental Research Designs

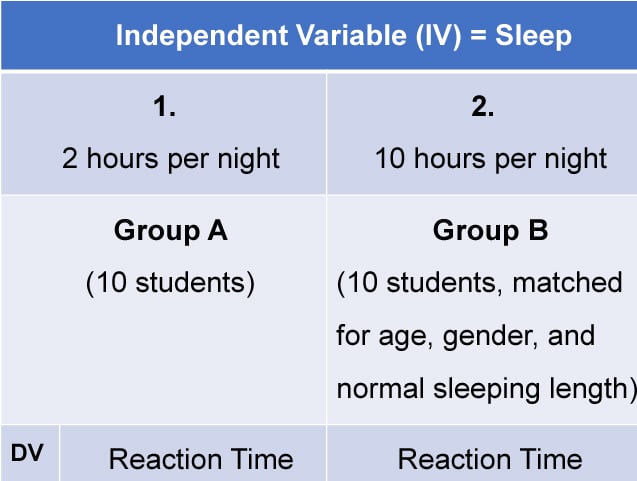

Based on the methods used to collect data in experimental studies, the experimental research designs are of three primary types:

1. Pre-experimental Research Design

A study can use a pre-experimental research design when one or more groups are observed after the factors of cause and effect under study have been implemented. A pre-experimental design helps researchers understand whether further investigation of the groups under observation is necessary.

Pre-experimental research is of three types:

- One-shot Case Study Research Design

- One-group Pretest-posttest Research Design

- Static-group Comparison

2. True Experimental Research Design

A true experimental research design relies on statistical analysis to prove or disprove a researcher’s hypothesis. It is one of the most accurate forms of research because it provides specific scientific evidence. Furthermore, out of all the types of experimental designs, only a true experimental design can establish a cause-effect relationship within a group. However, a true experiment must satisfy these three conditions:

- There is a control group that is not subjected to changes and an experimental group that will experience the changed variables

- There is a variable that can be manipulated by the researcher

- Subjects are randomly distributed between the groups

This type of experimental research is commonly observed in the physical sciences.

3. Quasi-experimental Research Design

The word “quasi” means “resembling.” A quasi-experimental design is similar to a true experimental design; the difference between the two is the assignment of the control group. In this research design, an independent variable is manipulated, but the participants of a group are not randomly assigned. This type of research design is used in field settings where random assignment is impractical or not possible.

The classification of the research subjects, conditions, or groups determines the type of research design to be used.

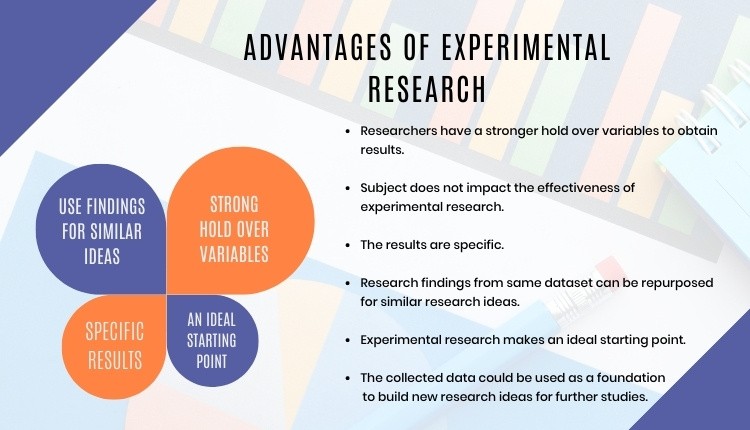

Advantages of Experimental Research

Experimental research allows you to test your idea in a controlled environment before taking the research to clinical trials. Moreover, it provides the best method to test your theory because of the following advantages:

- Researchers have firm control over variables to obtain results.

- The subject area does not affect the effectiveness of experimental research. Anyone can implement it for research purposes.

- The results are specific.

- After the results are analyzed, research findings from the same dataset can be repurposed for similar research ideas.

- Researchers can identify the cause and effect of the hypothesis and further analyze this relationship to develop more in-depth ideas.

- Experimental research makes an ideal starting point. The collected data could be used as a foundation to build new research ideas for further studies.

6 Mistakes to Avoid While Designing Your Research

There is no order to this list, and any one of these issues can seriously compromise the quality of your research. You could refer to the list as a checklist of what to avoid while designing your research.

1. Invalid Theoretical Framework

Researchers often fail to check whether their hypothesis can logically be tested. If your research design does not have basic assumptions or postulates, then it is fundamentally flawed and you need to rework your research framework.

2. Inadequate Literature Study

Without a comprehensive literature review, it is difficult to identify and fill the knowledge and information gaps. Furthermore, you need to clearly state how your research will contribute to the research field, either by adding value to the pertinent literature or challenging previous findings and assumptions.

3. Insufficient or Incorrect Statistical Analysis

Statistical results are among the most trusted forms of scientific evidence. The ultimate goal of a research experiment is to gain valid and sustainable evidence. Therefore, incorrect statistical analysis could affect the quality of any quantitative research.

4. Undefined Research Problem

Defining the research problem is one of the most basic aspects of research design. The research problem statement must be clear; to achieve that, you must set the framework for developing research questions that address the core problems.

5. Research Limitations

Every study has some type of limitations. You should anticipate and incorporate those limitations into your conclusion, as well as the basic research design. Include a statement in your manuscript about any perceived limitations, and how you considered them while designing your experiment and drawing the conclusion.

6. Ethical Implications

Ethics is the most important yet least discussed topic. Your research design must include ways to minimize any risk for your participants and also address the research problem or question at hand. If you cannot uphold ethical norms in your research study, your research objectives and validity could be questioned.

Experimental Research Design Example

In an experimental design, a researcher gathers plant samples and then randomly assigns half the samples to photosynthesize in sunlight and the other half to be kept in a dark box without sunlight, while controlling all the other variables (nutrients, water, soil, etc.).

By comparing their outcomes in biochemical tests, the researcher can confirm that the changes in the plants were due to the sunlight and not the other variables.
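
To make the random assignment step concrete, here is a minimal Python sketch. The sample labels, the group names, and the `assign_conditions` helper are hypothetical and are not part of the example above; the seed is fixed only so that the split can be reproduced.

```python
import random


def assign_conditions(sample_ids, seed=None):
    """Randomly split samples into two equally sized conditions."""
    rng = random.Random(seed)      # local generator, so the split is reproducible
    shuffled = list(sample_ids)    # copy, so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"sunlight": shuffled[:half], "dark_box": shuffled[half:]}


# Twenty hypothetical plant samples
plants = [f"plant_{i:02d}" for i in range(1, 21)]
groups = assign_conditions(plants, seed=42)

print(len(groups["sunlight"]), len(groups["dark_box"]))
```

Because chance alone decides which sample goes where, no systematic difference between the two groups is expected other than the light condition.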

Experimental research is often the final form of a study in the research process and is considered to provide conclusive and specific results. But it is not suited to every research project. It requires considerable resources, time, and money, and it is not easy to conduct unless a foundation of research has been built. Yet it is widely used in research institutes and commercial industries because it offers the most conclusive results in the scientific approach.


Frequently Asked Questions

Randomization is important in experimental research because it helps ensure unbiased results. It also helps measure the cause-effect relationship in a particular group of interest.

Experimental research design lays the foundation of a research study and structures the research to establish a quality decision-making process.

There are 3 types of experimental research designs. These are pre-experimental research design, true experimental research design, and quasi-experimental research design.

The differences between an experimental and a quasi-experimental design are: 1. In quasi-experimental research, the assignment of the control group is non-random, unlike in a true experimental design, where it is random. 2. Experimental research always has a control group, whereas a control group may not always be present in quasi-experimental research.

Experimental research establishes a cause-effect relationship by testing a theory or hypothesis using experimental groups or control variables. In contrast, descriptive research describes a study or a topic by defining the variables under it and answering the questions related to the same.


13. Experimental design

Chapter outline.

- What is an experiment and when should you use one? (8 minute read)

- True experimental designs (7 minute read)

- Quasi-experimental designs (8 minute read)

- Non-experimental designs (5 minute read)

- Critical, ethical, and cultural considerations (5 minute read)

Content warning: examples in this chapter contain references to non-consensual research in Western history, including experiments conducted during the Holocaust and on African Americans (section 13.6).

13.1 What is an experiment and when should you use one?

Learning objectives.

Learners will be able to…

- Identify the characteristics of a basic experiment

- Describe causality in experimental design

- Discuss the relationship between dependent and independent variables in experiments

- Explain the links between experiments and generalizability of results

- Describe advantages and disadvantages of experimental designs

The basics of experiments

The first experiment I can remember using was for my fourth grade science fair. I wondered if latex- or oil-based paint would hold up to sunlight better. So, I went to the hardware store and got a few small cans of paint and two sets of wooden paint sticks. I painted one with oil-based paint and the other with latex-based paint of different colors and put them in a sunny spot in the back yard. My hypothesis was that the oil-based paint would fade the most and that more fading would happen the longer I left the paint sticks out. (I know, it’s obvious, but I was only 10.)

I checked in on the paint sticks every few days for a month and wrote down my observations. The first part of my hypothesis ended up being wrong—it was actually the latex-based paint that faded the most. But the second part was right, and the paint faded more and more over time. This is a simple example, of course—experiments get a heck of a lot more complex than this when we’re talking about real research.

Merriam-Webster defines an experiment as “an operation or procedure carried out under controlled conditions in order to discover an unknown effect or law, to test or establish a hypothesis, or to illustrate a known law.” Each of these three components of the definition will come in handy as we go through the different types of experimental design in this chapter. Most of us probably think of the physical sciences when we think of experiments, and for good reason—these experiments can be pretty flashy! But social science and psychological research follow the same scientific methods, as we’ve discussed in this book.

Experiments can be used in social sciences just like they can in physical sciences. It makes sense to use an experiment when you want to determine the cause of a phenomenon with as much accuracy as possible. Some types of experimental designs do this more precisely than others, as we’ll see throughout the chapter. If you’ll remember back to Chapter 11 and the discussion of validity, experiments are the best way to ensure internal validity, or the extent to which a change in your independent variable causes a change in your dependent variable.

Experimental designs for research projects are most appropriate when trying to uncover or test a hypothesis about the cause of a phenomenon, so they are best for explanatory research questions. As we’ll learn throughout this chapter, different circumstances are appropriate for different types of experimental designs. Each type of experimental design has advantages and disadvantages, and some are better at controlling the effect of extraneous variables, meaning those variables and characteristics that have an effect on your dependent variable but aren’t the primary variable whose influence you’re interested in testing. For example, in a study that tries to determine whether aspirin lowers a person’s risk of a fatal heart attack, a person’s race would likely be an extraneous variable because you primarily want to know the effect of aspirin.

In practice, many types of experimental designs can be logistically challenging and resource-intensive. As practitioners, the likelihood that we will be involved in some of the types of experimental designs discussed in this chapter is fairly low. However, it’s important to learn about these methods, even if we might not ever use them, so that we can be thoughtful consumers of research that uses experimental designs.

While we might not use all of these types of experimental designs, many of us will engage in evidence-based practice during our time as social workers. A lot of research developing evidence-based practice, which has a strong emphasis on generalizability, will use experimental designs. You’ve undoubtedly seen one or two in your literature search so far.

The logic of experimental design

How do we know that one phenomenon causes another? The complexity of the social world in which we practice and conduct research means that causes of social problems are rarely cut and dried. Uncovering explanations for social problems is key to helping clients address them, and experimental research designs are one road to finding answers.

As you read about in Chapter 8 (and as we’ll discuss again in Chapter 15), just because two phenomena are related in some way doesn’t mean that one causes the other. Ice cream sales increase in the summer, and so does the rate of violent crime; does that mean that eating ice cream is going to make me murder someone? Obviously not, because ice cream is great. The reality of that relationship is far more complex: it could be that hot weather makes people more irritable and, at times, violent, while also making people want ice cream. More likely, though, there are other social factors not accounted for in the way we just described this relationship.
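
One way to see how a shared cause can produce a misleading relationship is a small simulation. The sketch below is a toy illustration in Python, not real data: it assumes that temperature drives both ice cream sales and crime, and the numbers, variable names, and the `pearson` and `residuals` helpers are all made up for the example.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def residuals(ys, xs):
    """Remove the linear effect of xs from ys (simple least squares)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return [(y - my) - slope * (x - mx) for x, y in zip(xs, ys)]


rng = random.Random(0)  # fixed seed so the run is reproducible

# Assumed data-generating story: temperature influences both outcomes,
# and neither outcome influences the other.
temperature = [rng.gauss(0, 1) for _ in range(2000)]
ice_cream = [t + rng.gauss(0, 0.5) for t in temperature]
crime = [t + rng.gauss(0, 0.5) for t in temperature]

raw_r = pearson(ice_cream, crime)
adjusted_r = pearson(residuals(ice_cream, temperature),
                     residuals(crime, temperature))

print(f"raw r = {raw_r:.2f}; after adjusting for temperature r = {adjusted_r:.2f}")
```

In this toy setup the raw correlation is strong, but it largely disappears once the linear effect of temperature is removed from both variables. Real relationships are rarely this tidy, as the paragraph above notes.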

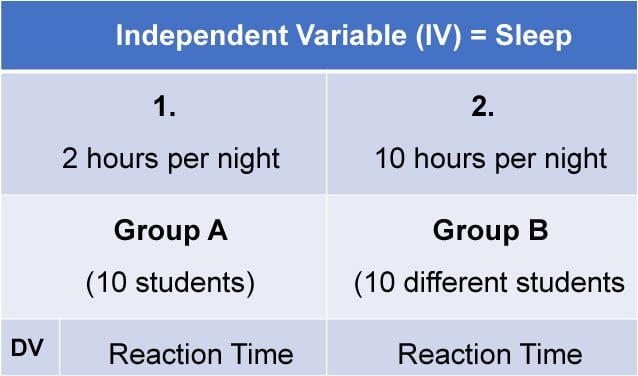

Experimental designs can help clear up at least some of this fog by allowing researchers to isolate the effect of interventions on dependent variables by controlling extraneous variables. In true experimental design (discussed in the next section) and some quasi-experimental designs, researchers accomplish this with the control group and the experimental group. (The experimental group is sometimes called the “treatment group,” but we will call it the experimental group in this chapter.) The control group does not receive the intervention you are testing (they may receive no intervention or what is known as “treatment as usual”), while the experimental group does. (You will hopefully remember our earlier discussion of control variables in Chapter 8; conceptually, the use of the word “control” here is the same.)

In a well-designed experiment, your control group should look almost identical to your experimental group in terms of demographics and other relevant factors. What if we want to know the effect of CBT on social anxiety, but we have learned in prior research that men tend to have a more difficult time overcoming social anxiety? We would want our control and experimental groups to have a similar gender mix because it would limit the effect of gender on our results, since ostensibly, both groups’ results would be affected by gender in the same way. If your control group has 5 women, 6 men, and 4 non-binary people, then your experimental group should be made up of roughly the same gender balance to help control for the influence of gender on the outcome of your intervention. (In reality, the groups should be similar along other dimensions, as well, and your group will likely be much larger.) The researcher will use the same outcome measures for both groups and compare them, and assuming the experiment was designed correctly, get a pretty good answer about whether the intervention had an effect on social anxiety.
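
As a rough sketch of how groups might be kept similar on one characteristic, the Python example below shuffles participants within each gender category and then alternates them between the two groups. This is one possible approach (sometimes called blocked or stratified random assignment), not something this chapter prescribes; the roster, the `blocked_assignment` helper, and the group sizes are assumptions chosen to mirror the 5/6/4 example above.

```python
import random
from collections import Counter, defaultdict


def blocked_assignment(participants, key, seed=None):
    """Shuffle within each stratum, then alternate group membership."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in participants:
        strata[person[key]].append(person)

    groups = {"control": [], "experimental": []}
    for members in strata.values():
        rng.shuffle(members)  # chance decides who lands in which group
        for i, person in enumerate(members):
            target = "control" if i % 2 == 0 else "experimental"
            groups[target].append(person)
    return groups


# Hypothetical roster: 10 women, 12 men, and 8 non-binary participants
roster = ["woman"] * 10 + ["man"] * 12 + ["non-binary"] * 8
participants = [{"id": i, "gender": g} for i, g in enumerate(roster)]

groups = blocked_assignment(participants, key="gender", seed=1)
for name, members in groups.items():
    print(name, dict(Counter(p["gender"] for p in members)))
```

With an even number of people in each category, both groups end up with 5 women, 6 men, and 4 non-binary participants. A real study would need to balance other characteristics as well.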

You will also hear people talk about comparison groups, which are similar to control groups. The primary difference between the two is that a control group is populated using random assignment, but a comparison group is not. Random assignment entails using a random process to decide which participants are put into the control or experimental group (which participants receive an intervention and which do not). By randomly assigning participants to a group, you can reduce the effect of extraneous variables on your research because there won’t be a systematic difference between the groups.

Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population, and is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other related fields. Random sampling also helps a great deal with generalizability, whereas random assignment increases internal validity.

We have already learned about internal validity in Chapter 11. The use of an experimental design will bolster internal validity since it works to isolate causal relationships. As we will see in the coming sections, some types of experimental design do this more effectively than others. It’s also worth considering that true experiments, which most effectively show causality, are often difficult and expensive to implement. Although other experimental designs aren’t perfect, they still produce useful, valid evidence and may be more feasible to carry out.

Key Takeaways

- Experimental designs are useful for establishing causality, but some types of experimental design do this better than others.

- Experiments help researchers isolate the effect of the independent variable on the dependent variable by controlling for the effect of extraneous variables.

- Experiments use a control/comparison group and an experimental group to test the effects of interventions. These groups should be as similar to each other as possible in terms of demographics and other relevant factors.

- True experiments have control groups with randomly assigned participants, while other types of experiments have comparison groups to which participants are not randomly assigned.

- Think about the research project you’ve been designing so far. How might you use a basic experiment to answer your question? If your question isn’t explanatory, try to formulate a new explanatory question and consider the usefulness of an experiment.

- Why is establishing a simple relationship between two variables not indicative of one causing the other?

13.2 True experimental design

Learning objectives.

Learners will be able to…

- Describe a true experimental design in social work research

- Understand the different types of true experimental designs

- Determine what kinds of research questions true experimental designs are suited for

- Discuss advantages and disadvantages of true experimental designs

True experimental design, often considered to be the “gold standard” in research designs, is thought of as one of the most rigorous of all research designs. In this design, one or more independent variables are manipulated by the researcher (as treatments), subjects are randomly assigned to different treatment levels (random assignment), and the results of the treatments on outcomes (dependent variables) are observed. The unique strength of experimental research is its internal validity and its ability to establish causality through treatment manipulation, while controlling for the effects of extraneous variables. Sometimes the treatment level is no treatment, while other times it is simply a different treatment than that which we are trying to evaluate. For example, we might have a control group that is made up of people who will not receive any treatment for a particular condition. Or, a control group could consist of people who consent to treatment with DBT when we are testing the effectiveness of CBT.

As we discussed in the previous section, a true experiment has a control group with participants randomly assigned, and an experimental group. This is the most basic element of a true experiment. The next decision a researcher must make is when they need to gather data during their experiment. Do they take a baseline measurement and then a measurement after treatment, or just a measurement after treatment, or do they handle measurement another way? Below, we’ll discuss the three main types of true experimental designs. There are sub-types of each of these designs, but here, we just want to get you started with some of the basics.

Using a true experiment in social work research is often pretty difficult since, as I mentioned earlier, true experiments can be quite resource intensive. True experiments work best with relatively large sample sizes, and random assignment, a key criterion for a true experimental design, is hard (and can be unethical) to execute in practice when you have people in dire need of an intervention. Nonetheless, some of the strongest evidence bases are built on true experiments.

For the purposes of this section, let’s bring back the example of CBT for the treatment of social anxiety. We have a group of 500 individuals who have agreed to participate in our study, and we have randomly assigned them to the control and experimental groups. The folks in the experimental group will receive CBT, while the folks in the control group will receive more unstructured, basic talk therapy. These designs, as we talked about above, are best suited for explanatory research questions.

Before we get started, take a look at the table below. When explaining experimental research designs, we often use diagrams with abbreviations to visually represent the experiment. Table 13.1 starts us off by laying out what each of the abbreviations means.

Pretest and post-test control group design

In pretest and post-test control group design, participants are given a pretest of some kind to measure their baseline state before their participation in an intervention. In our social anxiety experiment, we would have participants in both the experimental and control groups complete some measure of social anxiety (most likely an established scale and/or a structured interview) before they start their treatment. As part of the experiment, we would have a defined time period during which the treatment would take place (let’s say 12 weeks, just for illustration). At the end of 12 weeks, we would give both groups the same measure as a post-test.

In the diagram, RA (random assignment group A) is the experimental group and RB is the control group. O₁ denotes the pretest, Xₑ denotes the experimental intervention, and O₂ denotes the post-test. Let’s look at this diagram another way, using the example of CBT for social anxiety that we’ve been talking about.

In a situation where the control group received treatment as usual instead of no intervention, the diagram would look this way, with Xᵢ denoting treatment as usual (Figure 13.3).

Hopefully, these diagrams provide you a visualization of how this type of experiment establishes time order, a key component of a causal relationship. Did the change occur after the intervention? Assuming there is a change in the scores between the pretest and post-test, we would be able to say that yes, the change did occur after the intervention. Causality can’t exist if the change happened before the intervention; this would mean that something else led to the change, not our intervention.
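
To see what comparing the two groups might look like in practice, here is a small Python sketch. The scores are invented for illustration (lower means less social anxiety), and `mean_change` is a hypothetical helper; a real analysis would also need a statistical test, which this sketch leaves out.

```python
from statistics import mean


def mean_change(pre, post):
    """Average post-test minus pretest score across participants."""
    return mean(after - before for before, after in zip(pre, post))


# Invented scores for five participants per group
experimental_pre = [30, 28, 35, 32, 31]
experimental_post = [20, 20, 25, 23, 18]
control_pre = [31, 29, 34, 30, 32]
control_post = [28, 27, 31, 29, 31]

exp_change = mean_change(experimental_pre, experimental_post)
ctl_change = mean_change(control_pre, control_post)

# How much more the experimental group changed than the control group
difference = exp_change - ctl_change

print(f"experimental change: {exp_change}")
print(f"control change: {ctl_change}")
print(f"difference between groups: {difference}")
```

Because both groups are measured before and after, the change in the control group gives us a baseline for how much scores shift without the experimental intervention.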

Post-test only control group design

Post-test only control group design involves only giving participants a post-test, just like it sounds (Figure 13.4).

But why would you use this design instead of using a pretest/post-test design? One reason could be the testing effect that can happen when research participants take a pretest. In research, the testing effect refers to “measurement error related to how a test is given; the conditions of the testing, including environmental conditions; and acclimation to the test itself” (Engel & Schutt, 2017, p. 444). (When we say “measurement error,” all we mean is the accuracy of the way we measure the dependent variable.) Figure 13.4 is a visualization of this type of experiment. The testing effect isn’t always bad in practice: our initial assessments might help clients identify or put into words feelings or experiences they are having when they haven’t been able to do that before. In research, however, we might want to control its effects to isolate a cleaner causal relationship between intervention and outcome.

Going back to our CBT for social anxiety example, we might be concerned that participants would learn about social anxiety symptoms by virtue of taking a pretest. They might then identify that they have those symptoms on the post-test, even though they are not new symptoms for them. That could make our intervention look less effective than it actually is.

However, without a baseline measurement, establishing causality can be more difficult. If we don’t know someone’s state of mind before our intervention, how do we know our intervention did anything at all? Establishing time order is thus a little more difficult. You must balance this consideration with the benefits of this type of design.

Solomon four group design

One way we can possibly measure how much the testing effect might change the results of the experiment is with the Solomon four group design. Basically, as part of this experiment, you have two control groups and two experimental groups. The first pair of groups receives both a pretest and a post-test. The other pair of groups receives only a post-test (Figure 13.5). This design helps address the problem of establishing time order in post-test only control group designs.

For our CBT project, we would randomly assign people to four different groups instead of just two. Groups A and B would take our pretest measures and our post-test measures, and groups C and D would take only our post-test measures. We could then compare the results among these groups and see if they’re significantly different between the folks in A and B, and C and D. If they are, we may have identified some kind of testing effect, which enables us to put our results into full context. We don’t want to draw a strong causal conclusion about our intervention when we have major concerns about testing effects without trying to determine the extent of those effects.

Solomon four group designs are less common in social work research, primarily because of the logistics and resource needs involved. Nonetheless, this is an important experimental design to consider when we want to address major concerns about testing effects.
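
Here is a rough Python sketch of the comparisons described above. All scores are invented, and we assume that groups A and C are the experimental groups while B and D are the control groups (the text only specifies which groups take the pretest).

```python
from statistics import mean

# Invented post-test anxiety scores (lower = less anxiety)
post_test = {
    "A": [20, 22, 18, 20],  # experimental, took the pretest
    "B": [28, 30, 29, 29],  # control, took the pretest
    "C": [23, 25, 24, 24],  # experimental, post-test only (assumed)
    "D": [30, 31, 29, 30],  # control, post-test only (assumed)
}

# Apparent treatment effect within each pair of groups
effect_pretested = mean(post_test["A"]) - mean(post_test["B"])
effect_unpretested = mean(post_test["C"]) - mean(post_test["D"])

# Overall gap between everyone who took the pretest and everyone who did not
pretested_vs_not = (mean(post_test["A"] + post_test["B"])
                    - mean(post_test["C"] + post_test["D"]))

print(f"effect with pretest: {effect_pretested}")
print(f"effect without pretest: {effect_unpretested}")
print(f"pretested minus non-pretested: {pretested_vs_not}")
```

If the treatment effect looks noticeably different in the pretested pair than in the post-test-only pair, that is a hint that the pretest itself may be shaping the results. Whether the difference is statistically significant is a separate question that this sketch does not answer.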

Key Takeaways

- True experimental design is best suited for explanatory research questions.

- True experiments require random assignment of participants to control and experimental groups.

- Pretest/post-test research design involves two points of measurement—one pre-intervention and one post-intervention.

- Post-test only research design involves only one point of measurement—post-intervention. It is a useful design to minimize the effect of testing effects on our results.

- Solomon four group research design involves both of the above types of designs, using 2 pairs of control and experimental groups. One pair receives both a pretest and a post-test, while the other pair receives only a post-test. This can help uncover the influence of testing effects.

- Think about a true experiment you might conduct for your research project. Which design would be best for your research, and why?

- What challenges or limitations might make it unrealistic (or at least very complicated!) for you to carry out your true experimental design in the real world as a student researcher?

- What hypothesis(es) would you test using this true experiment?

13.3 Quasi-experimental designs

Learning objectives.

Learners will be able to…

- Describe a quasi-experimental design in social work research

- Understand the different types of quasi-experimental designs

- Determine what kinds of research questions quasi-experimental designs are suited for

- Discuss advantages and disadvantages of quasi-experimental designs

Quasi-experimental designs are a lot more common in social work research than true experimental designs. Although quasi-experiments don’t do as good a job of giving us robust proof of causality, they still allow us to establish time order, which is a key element of causality. The prefix quasi means “resembling,” so quasi-experimental research is research that resembles experimental research, but is not true experimental research. Nonetheless, given proper research design, quasi-experiments can still provide extremely rigorous and useful results.

There are a few key differences between true experimental and quasi-experimental research. The primary difference is that quasi-experimental research does not involve random assignment to control and experimental groups. Instead, we talk about comparison groups in quasi-experimental research. As a result, these types of experiments don’t control the effect of extraneous variables as well as a true experiment.

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. They are often conducted to evaluate the effectiveness of a treatment—perhaps a type of psychotherapy or an educational intervention. We’re able to eliminate some threats to internal validity, but we can’t do this as effectively as we can with a true experiment. Realistically, our CBT-social anxiety project is likely to be a quasi experiment, based on the resources and participant pool we’re likely to have available.

It’s important to note that not all quasi-experimental designs have a comparison group. There are many different kinds of quasi-experiments, but we will discuss the three main types below: nonequivalent comparison group designs, time series designs, and ex post facto comparison group designs.

Nonequivalent comparison group design

You will notice that this type of design looks extremely similar to the pretest/post-test design that we discussed in section 13.3. But instead of random assignment to control and experimental groups, researchers use other methods to construct their comparison and experimental groups. A diagram of this design will also look very similar to pretest/post-test design, but you’ll notice we’ve removed the “R” from our groups, since they are not randomly assigned (Figure 13.6).

Researchers using this design select a comparison group that’s as close as possible based on relevant factors to their experimental group. Engel and Schutt (2017) [2] identify two different selection methods:

- Individual matching: Researchers take the time to match individual cases in the experimental group to similar cases in the comparison group. It can be difficult, however, to match participants on all the variables you want to control for.

- Aggregate matching: Instead of trying to match individual participants to each other, researchers try to match the population profile of the comparison and experimental groups. For example, researchers would try to match the groups on average age, gender balance, or median income. This is a less resource-intensive matching method, but researchers have to ensure that participants aren’t choosing which group (comparison or experimental) they are a part of.
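The two matching methods can be sketched in a few lines of code. This is only an illustration under stated assumptions: the client records, the field names (`id`, `age`, `gender`), the five-year age window, and the greedy matching rule are all hypothetical and are not drawn from Engel and Schutt. Real projects usually match on more variables and may use more careful matching procedures.

```python
from statistics import mean


def match_individuals(experimental, pool, max_age_gap=5):
    """Individual matching: pair each experimental case with the closest
    available comparison case of the same gender (greedy, one-to-one)."""
    available = list(pool)
    pairs = []
    for person in experimental:
        candidates = [
            c for c in available
            if c["gender"] == person["gender"]
            and abs(c["age"] - person["age"]) <= max_age_gap
        ]
        if not candidates:
            continue  # no acceptable match, so this case stays unmatched
        best = min(candidates, key=lambda c: abs(c["age"] - person["age"]))
        available.remove(best)  # each comparison case is used at most once
        pairs.append((person["id"], best["id"]))
    return pairs


def group_profile(group):
    """Aggregate matching: summarize a whole group so profiles can be compared."""
    return {
        "mean_age": mean(p["age"] for p in group),
        "share_women": sum(p["gender"] == "woman" for p in group) / len(group),
    }


# Hypothetical client records, made up for this example.
experimental = [
    {"id": "E1", "age": 24, "gender": "woman"},
    {"id": "E2", "age": 41, "gender": "man"},
]
pool = [
    {"id": "C1", "age": 26, "gender": "woman"},
    {"id": "C2", "age": 39, "gender": "man"},
    {"id": "C3", "age": 60, "gender": "woman"},
]

pairs = match_individuals(experimental, pool)
print(pairs)
print(group_profile(experimental))
print(group_profile(pool))
```

Individual matching produces explicit pairs and may leave some cases unmatched. Aggregate matching only asks whether the two profiles look alike, which is why it needs less effort but gives less control over individual differences.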

As we’ve already talked about, this kind of design provides weaker evidence that the intervention itself leads to a change in outcome. Nonetheless, we are still able to establish time order using this method, and can thereby show an association between the intervention and the outcome. Like true experimental designs, this type of quasi-experimental design is useful for explanatory research questions.

What might this look like in a practice setting? Let’s say you’re working at an agency that provides CBT and other types of interventions, and you have identified a group of clients who are seeking help for social anxiety, as in our earlier example. Once you’ve obtained consent from your clients, you can create a comparison group using one of the matching methods we just discussed. If the group is small, you might match using individual matching, but if it’s larger, you’ll probably sort people by demographics to try to get similar population profiles. (You can do aggregate matching more easily when your agency has some kind of electronic records or database, but it’s still possible to do manually.)

Time series design

Another type of quasi-experimental design is a time series design. Unlike other types of experimental design, time series designs do not have a comparison group. A time series is a set of measurements taken at intervals over a period of time (Figure 13.7). Proper time series design should include at least three pre- and post-intervention measurement points. While there are a few types of time series designs, we’re going to focus on the most common: interrupted time series design.

But why use this method? Here’s an example. Let’s think about elementary student behavior throughout the school year. As anyone with children or who is a teacher knows, kids get very excited and animated around holidays, days off, or even just on a Friday afternoon. This fact might mean that around those times of year, there are more reports of disruptive behavior in classrooms. What if we took our one and only measurement in mid-December? It’s possible we’d see a higher-than-average rate of disruptive behavior reports, which could bias our results if our next measurement is around a time of year students are in a different, less excitable frame of mind. When we take multiple measurements throughout the first half of the school year, we can establish a more accurate baseline for the rate of these reports by looking at the trend over time.

We may want to test the effect of extended recess times in elementary school on reports of disruptive behavior in classrooms. When students come back after the winter break, the school extends recess by 10 minutes each day (the intervention), and the researchers start tracking the monthly reports of disruptive behavior again. These reports could be subject to the same fluctuations as the pre-intervention reports, and so we once again take multiple measurements over time to try to control for those fluctuations.

This method improves the extent to which we can establish causality because we are accounting for a major extraneous variable in the equation—the passage of time. On its own, it does not allow us to account for other extraneous variables, but it does establish time order and association between the intervention and the trend in reports of disruptive behavior. Finding a stable condition before the treatment that changes after the treatment is evidence for causality between treatment and outcome.
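To make the logic of comparing a pre-intervention baseline with a post-intervention trend concrete, here is a minimal sketch. The monthly report counts are invented for illustration, the function names are hypothetical, and the summary (means plus a simple least-squares slope) is a deliberately basic stand-in for the segmented regression models that published interrupted time series studies often use.

```python
from statistics import mean


def slope(values):
    """Least-squares slope of the values against their position (0, 1, 2, ...)."""
    n = len(values)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    denominator = sum((x - x_bar) ** 2 for x in range(n))
    return numerator / denominator


def summarize_interrupted_series(pre, post):
    """Compare level and trend before and after the intervention."""
    if len(pre) < 3 or len(post) < 3:
        raise ValueError("need at least three measurements before and after")
    return {
        "pre_mean": mean(pre),
        "post_mean": mean(post),
        "level_change": mean(post) - mean(pre),
        "pre_slope": slope(pre),
        "post_slope": slope(post),
    }


# Hypothetical monthly counts of disruptive behavior reports.
pre_reports = [12, 15, 13, 18]   # four months before recess was extended
post_reports = [10, 11, 9, 10]   # four months after recess was extended

summary = summarize_interrupted_series(pre_reports, post_reports)
for name, value in summary.items():
    print(name, round(value, 2))
```

Looking at the slope alongside the mean matters because a single high month before the intervention can distort a simple before-and-after comparison, while the trend across several months is harder to distort.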

Ex post facto comparison group design

Ex post facto (Latin for “after the fact”) designs are extremely similar to nonequivalent comparison group designs. There are still comparison and experimental groups, pretest and post-test measurements, and an intervention. But in ex post facto designs, participants are assigned to the comparison and experimental groups once the intervention has already happened. This type of design often occurs when interventions are already up and running at an agency and the agency wants to assess effectiveness based on people who have already completed treatment.

In most clinical agency environments, social workers conduct both initial and exit assessments, so there are usually some kind of pretest and post-test measures available. We also typically collect demographic information about our clients, which could allow us to try to use some kind of matching to construct comparison and experimental groups.

In terms of internal validity and establishing causality, ex post facto designs are a bit of a mixed bag. The ability to establish causality depends partially on the ability to construct comparison and experimental groups that are demographically similar so we can control for these extraneous variables.

Quasi-experimental designs are common in social work intervention research because, when designed correctly, they balance the intense resource needs of true experiments with the realities of research in practice. They still offer researchers tools to gather robust evidence about whether interventions are having positive effects for clients.

- Quasi-experimental designs are similar to true experiments, but do not require random assignment to experimental and control groups.

- In quasi-experimental projects, the group not receiving the treatment is called the comparison group, not the control group.

- Nonequivalent comparison group design is nearly identical to pretest/post-test experimental design, but participants are not randomly assigned to the experimental and comparison groups. As a result, this design provides slightly less robust evidence for causality.

- Nonequivalent groups can be constructed by individual matching or aggregate matching.

- Time series design does not have a control or experimental group, and instead compares the condition of participants before and after the intervention by measuring relevant factors at multiple points in time. This allows researchers to mitigate the error introduced by the passage of time.

- Ex post facto comparison group designs are also similar to true experiments, but experimental and comparison groups are constructed after the intervention is over. This makes it more difficult to control for the effect of extraneous variables, but still provides useful evidence for causality because it maintains the time order of the experiment.

- Think back to the experiment you considered for your research project in Section 13.3. Now that you know more about quasi-experimental designs, do you still think it's a true experiment? Why or why not?

- What should you consider when deciding whether an experimental or quasi-experimental design would be more feasible or fit your research question better?

13.5 Non-experimental designs

Learners will be able to...

- Describe non-experimental designs in social work research

- Discuss how non-experimental research differs from true and quasi-experimental research

- Demonstrate an understanding of the different types of non-experimental designs

- Determine what kinds of research questions non-experimental designs are suited for

- Discuss advantages and disadvantages of non-experimental designs

The previous sections have laid out the basics of some rigorous approaches to establish that an intervention is responsible for changes we observe in research participants. This type of evidence is extremely important to build an evidence base for social work interventions, but it's not the only type of evidence to consider. We will discuss qualitative methods, which provide us with rich, contextual information, in Part 4 of this text. The designs we'll talk about in this section are sometimes used in qualitative research, but in keeping with our discussion of experimental design so far, we're going to stay in the quantitative research realm for now. Non-experimental research is also often a stepping stone for more rigorous experimental design in the future, as it can help test the feasibility of your research.

In general, non-experimental designs do not strongly support causality and don't address threats to internal validity. However, that's not really what they're intended for. Non-experimental designs are useful for a few different types of research, including explanatory questions in program evaluation. Certain types of non-experimental design are also helpful for researchers when they are trying to develop a new assessment or scale. Other times, researchers or agency staff did not get a chance to gather any assessment information before an intervention began, so a pretest/post-test design is not possible.

A significant benefit of these types of designs is that they're pretty easy to execute in a practice or agency setting. They don't require a comparison or control group, and as Engel and Schutt (2017) [3] point out, they "flow from a typical practice model of assessment, intervention, and evaluating the impact of the intervention" (p. 177). Thus, these designs are fairly intuitive for social workers, even when they aren't expert researchers. Below, we will go into some detail about the different types of non-experimental design.

One group pretest/post-test design

Also known as a before-after one-group design, this type of research design does not have a comparison group and everyone who participates in the research receives the intervention (Figure 13.8). This is a common type of design in program evaluation in the practice world. Controlling for extraneous variables is difficult or impossible in this design, but given that it is still possible to establish some measure of time order, it does provide weak support for causality.

Imagine, for example, a researcher who is interested in the effectiveness of an anti-drug education program on elementary school students’ attitudes toward illegal drugs. The researcher could assess students' attitudes about illegal drugs (O1), implement the anti-drug program (X), and then, immediately after the program ends, measure students’ attitudes toward illegal drugs once again (O2). You can see how this would be relatively simple to do in practice, and you have probably been involved in this type of research design yourself, even if informally. But hopefully, you can also see that this design would not provide us with much evidence for causality because we have no way of controlling for the effect of extraneous variables. A lot of things could have affected any change in students' attitudes. Maybe girls already had different attitudes about illegal drugs than children of other genders, and when we look at the class's results as a whole, we couldn't account for that influence using this design.

All of that doesn't mean these results aren't useful, however. If we find that children's attitudes didn't change at all after the drug education program, then we need to think seriously about how to make it more effective or whether we should be using it at all. (This immediate, practical application of our results highlights a key difference between program evaluation and research, which we will discuss in Chapter 23.)

After-only design

As the name suggests, this type of non-experimental design involves measurement only after an intervention. There is no comparison or control group, and everyone receives the intervention. I have seen this design repeatedly in my time as a program evaluation consultant for nonprofit organizations, because often these organizations realize too late that they would like to or need to have some sort of measure of what effect their programs are having.

Because there is no pretest and no comparison group, this design is not useful for supporting causality since we can't establish the time order and we can't control for extraneous variables. However, that doesn't mean it's not useful at all! Sometimes, agencies need to gather information about how their programs are functioning. A classic example of this design is satisfaction surveys—realistically, these can only be administered after a program or intervention. Questions regarding satisfaction, ease of use or engagement, or other questions that don't involve comparisons are best suited for this type of design.

Static-group design

A final type of non-experimental research is the static-group design. In this type of research, there are both comparison and experimental groups, which are not randomly assigned. There is no pretest, only a post-test, and the comparison group has to be constructed by the researcher. Sometimes, researchers will use matching techniques to construct the groups, but often, the groups are constructed by convenience of who is being served at the agency.

Non-experimental research designs are easy to execute in practice, but we must be cautious about drawing causal conclusions from the results. A positive result may still suggest that we should continue using a particular intervention (and no result or a negative result should make us reconsider whether we should use that intervention at all). You have likely seen non-experimental research in your daily life or at your agency, and knowing the basics of how to structure such a project will help you ensure you are providing clients with the best care possible.

- Non-experimental designs are useful for describing phenomena, but cannot demonstrate causality.

- After-only designs are often used in agency and practice settings because practitioners are often not able to set up pre-test/post-test designs.

- Non-experimental designs are useful for explanatory questions in program evaluation and are helpful for researchers when they are trying to develop a new assessment or scale.

- Non-experimental designs are well-suited to qualitative methods.

- If you were to use a non-experimental design for your research project, which would you choose? Why?

- Have you conducted non-experimental research in your practice or professional life? Which type of non-experimental design was it?

13.6 Critical, ethical, and cultural considerations

- Describe critiques of experimental design

- Identify ethical issues in the design and execution of experiments

- Identify cultural considerations in experimental design

As I said at the outset, experiments, and especially true experiments, have long been seen as the gold standard to gather scientific evidence. When it comes to research in the biomedical field and other physical sciences, true experiments are subject to far less nuance than experiments in the social world. This doesn't mean they are easier—just subject to different forces. However, as a society, we have placed the most value on quantitative evidence obtained through empirical observation and especially experimentation.

Major critiques of experimental designs tend to focus on true experiments, especially randomized controlled trials (RCTs), but many of these critiques can be applied to quasi-experimental designs, too. Some researchers, even in the biomedical sciences, question the view that RCTs are inherently superior to other types of quantitative research designs. RCTs are far less flexible and have much more stringent requirements than other types of research. One seemingly small issue, like incorrect information about a research participant, can derail an entire RCT. RCTs also cost a great deal of money to implement and don't reflect “real world” conditions. The cost of true experimental research or RCTs also means that some communities are unlikely to ever have access to these research methods. It is then easy for people to dismiss their research findings because their methods are seen as "not rigorous."

Obviously, controlling outside influences is important for researchers to draw strong conclusions, but what if those outside influences are actually important for how an intervention works? Are we missing really important information by focusing solely on control in our research? Is a treatment going to work the same for white women as it does for indigenous women? With the myriad effects of our societal structures, you should be very careful about ever assuming this will be the case. This doesn't mean that cultural differences will negate the effect of an intervention; instead, it means that you should remember to practice cultural humility when implementing all interventions, even when we "know" they work.

How we build evidence through experimental research reveals a lot about our values and biases, and historically, much experimental research has been conducted on white people, and especially white men. [4] This makes sense when we consider the extent to which the sciences and academia have historically been dominated by white patriarchy. This is especially important for marginalized groups that have long been ignored in research literature, meaning they have also been ignored in the development of interventions and treatments that are accepted as "effective." There are examples of marginalized groups being experimented on without their consent, like the Tuskegee Experiment or Nazi experiments on Jewish people during World War II. We cannot ignore the collective consciousness situations like this can create about experimental research for marginalized groups.

None of this is to say that experimental research is inherently bad or that you shouldn't use it. Quite the opposite—use it when you can, because there are a lot of benefits, as we learned throughout this chapter. As a social work researcher, you are uniquely positioned to conduct experimental research while applying social work values and ethics to the process and be a leader for others to conduct research in the same framework. It can conflict with our professional ethics, especially respect for persons and beneficence, if we do not engage in experimental research with our eyes wide open. We also have the benefit of a great deal of practice knowledge that researchers in other fields have not had the opportunity to get. As with all your research, always be sure you are fully exploring the limitations of the research.

- While true experimental research gathers strong evidence, it can also be inflexible, expensive, and overly simplistic in terms of important social forces that affect the results.

- Marginalized communities' past experiences with experimental research can affect how they respond to research participation.

- Social work researchers should use both their values and ethics, and their practice experiences, to inform research and push other researchers to do the same.

- Think back to the true experiment you sketched out in the exercises for Section 13.3. Are there cultural or historical considerations you hadn't thought of with your participant group? What are they? Does this change the type of experiment you would want to do?

- How can you as a social work researcher encourage researchers in other fields to consider social work ethics and values in their experimental research?

- Engel, R. & Schutt, R. (2016). The practice of research in social work. Thousand Oaks, CA: SAGE Publications, Inc. ↵

- Sullivan, G. M. (2011). Getting off the “gold standard”: Randomized controlled trials and education research. Journal of Graduate Medical Education, 3(3), 285-289. ↵

an operation or procedure carried out under controlled conditions in order to discover an unknown effect or law, to test or establish a hypothesis, or to illustrate a known law.

explains why particular phenomena work in the way that they do; answers “why” questions

variables and characteristics that have an effect on your outcome, but aren't the primary variable whose influence you're interested in testing.

the group of participants in our study who do not receive the intervention we are researching in experiments with random assignment

in experimental design, the group of participants in our study who do receive the intervention we are researching

the group of participants in our study who do not receive the intervention we are researching in experiments without random assignment

using a random process to decide which participants are tested in which conditions

The ability to apply research findings beyond the study sample to some broader population.

Ability to say that one variable "causes" something to happen to another variable. Very important to assess when thinking about studies that examine causation such as experimental or quasi-experimental designs.

the idea that one event, behavior, or belief will result in the occurrence of another, subsequent event, behavior, or belief

An experimental design in which one or more independent variables are manipulated by the researcher (as treatments), subjects are randomly assigned to different treatment levels (random assignment), and the results of the treatments on outcomes (dependent variables) are observed

a type of experimental design in which participants are randomly assigned to control and experimental groups, one group receives an intervention, and both groups receive pre- and post-test assessments

A measure of a participant's condition before they receive an intervention or treatment.

A measure of a participant's condition after an intervention or, if they are part of the control/comparison group, at the end of an experiment.

A demonstration that a change occurred after an intervention. An important criterion for establishing causality.

an experimental design in which participants are randomly assigned to control and treatment groups, one group receives an intervention, and both groups receive only a post-test assessment

The measurement error related to how a test is given; the conditions of the testing, including environmental conditions; and acclimation to the test itself

a subtype of experimental design that is similar to a true experiment, but does not have randomly assigned control and treatment groups

In nonequivalent comparison group designs, the process by which researchers match individual cases in the experimental group to similar cases in the comparison group.

In nonequivalent comparison group designs, the process in which researchers match the population profile of the comparison and experimental groups.

a set of measurements taken at intervals over a period of time

Graduate research methods in Education (Leadership) Copyright © by Dan Laitsch is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Single-Case Experimental Designs in Educational Research: A Methodology for Causal Analyses in Teaching and Learning

- Reflection on the Field

- Published: 09 June 2013

- Volume 25, pages 549–569 (2013)

- Joshua B. Plavnick

- Summer J. Ferreri

Current legislation requires educational practices be informed by science. The effort to establish educational practices supported by science has, to date, emphasized experiments with large numbers of participants who are randomly assigned to an intervention or control condition. A potential limitation of such an emphasis at the expense of other research methods is that evidence-based practices in education will derive only from science in the hypothetico-deductive tradition. Such a process omits practices originating from and tested through an inductive approach to understanding phenomena. Single-case experimental designs, developed by experimental and applied behavior analysts, offer an inductive process to identify and alter the lawful relations between the behavior of individual organisms and the environmental variables that are causally related to the occurrence or nonoccurrence of the behavior. Such designs have been essential in the development of effective instructional practices for students with disabilities and have much to offer the broader educational population as well. The purpose of the present paper is to provide an overview of single-case research methodology and the process by which this methodology can contribute to the identification of evidence-based instructional practices.

Azrin, N. H., & Peterson, A. L. (1990). Treatment of Tourette’s sundrome by habit reversal: A waiting-list control group. Behavior Therapy, 21 , 305–318.

Article Google Scholar

Bellini, S., & Akullian, J. (2007). A meta-analysis of video modeling and video self-modeling interventions for children and adolescents with autism spectrum disorders. Exceptional Children, 73 , 264–287.

Google Scholar

Cooper, J. O., Herron, T. E., & Heward, W. L. (2007). Applied Behavior Analysis (2nd ed.). Upper Saddle River: Prentice Hall.

Eisenhart, M., & DeHaan, R. L. (2005). Doctoral preparation of scientifically based education researchers. Educational Researcher, 34 , 3–13.

Fisher, W. W., Kelley, M. E., & Lomas, J. E. (2003). Visual aids and structured criteria for improving visual inspection and interpretation of single-case designs. Journal of Applied Behavior Analysis, 36 , 387–406.

Gorman, B. S., & Allison, D. B. (1996). Statistical alternatives for single-case designs. In R. Franklin, D. Allison, & B. Gorman (Eds.), Design and analysis of single-case research . Hillsdale: Erlbaum.

Hains, A. H., & Baer, D. M. (1989). Interaction effects in multielement designs: Inevitable, desirable, and ignorable. Journal of Applied Behavior Analysis, 22 , 57–69.

Hall, R. V., & Fox, R. G. (1977). Changing-criterion designs: an alternate applied behavior analysis procedure. In B. C. Etzel, J. M. LeBlanc, & D. M. Baer (Eds.), New developments in behavioral research . Hillsdale, NJ: Erlbaum.

Hartmann, D. P., & Hall, R. V. (1976). The changing criterion design. Journal of Applied Behavior Analysis, 9 , 527–532.

Horner, R. H., Carr, E. G., Halle, J., McGee, G., Odom, S., & Wolery, M. (2005). The use of single-subject research to identify evidence-based practice in special education. Exceptional Children, 71 , 165–179.

Hudson, T. M., Hinkson-Lee, K., & Collins, B. (2013). Teaching paragraph composition to students with emotional/behavioral disorders using the simultaneous prompting procedure. Journal of Behavioral Education, 22 , 139–156.

Individuals with Disabilities Education Improvement Act. (2004). Pub. L. No. 108–446

Ittenbach, R. F., & Lawhead, W. F. (1996). Historical and philosophical foundations of single-case research. In R. Franklin, D. Allison, & B. Gorman (Eds.), Design and Analysis of Single-Case Research . Hillsdale: Erlbaum.

Johnston, J. M., & Pennypacker, H. S. (2008). Strategies and Tactics for Behavioral Research (3rd ed.). Hillsdale: Erlbaum.

Kahng, S. W., Chung, K. M., Gutshall, K., Pitts, S. C., Kao, J., & Girolami, K. (2010). Consistent visual analysis of intrasubject data. Journal of Applied Behavior Analysis, 43 , 35–45.

Kazdin, A. E. (2011). Single-Case Research Designs: Methods for Clinical and Applied Settings (2nd ed.). New York: Oxford University Press.

Kennedy, C. H. (2005). Single-Case Designs for Educational Research . Boston: Allyn and Bacon.

Kratochwill, T. R., & Stoiber, K. C. (2002). Evidence-based interventions in school psychology: Conceptual foundations of the procedural and coding manual of division 16 and the Society for the Study of School Psychology Task Force. School Psychology Quarterly, 17 , 341–389.

Kratochwill, T. R., Hitchcock, J., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M. et al. (2010). Single-case designs technical documentation. Retrieved from What Works Clearinghouse website: http://ies.ed.gov/ncee/wwc/pdf/wwc_scd.pdf .

Leaf, J. B., Dotson, W., Oppenheim, M. L., Sheldon, J. B., & Sherman, J. A. (2010). The effectiveness of group teaching interactions for young children with a pervasive developmental disorder. Research in Autism Spectrum Disorders, 4 , 186–198.

Maggin, D. M., Briesch, A. M., & Chafouleas, S. M. (2013). An application of the What Works Clearinghouse standards for evaluating single-subject research: synthesis of the self-management literature-base. Remedial and Special Education . doi: 10.1177/0741932511435176 .

Mason, R. A., Ganz, J. B., Parker, R. I., Burke, M. D., & Camargo, S. P. (2012). Moderating factors of video-modeling with other as model: A meta-analysis of single-case studies. Research in Developmental Disabilities, 33 , 1076–1086.

Neef, N. A. (2006). Advances in single subject design. In C. Reynolds & T. Gutkin (Eds.), The Handbook of School Psychology (4th ed.). New York: Wiley.

Neef, N. A., Marckel, J., Ferreri, S. J., Bicard, D. F., Endo, S., Aman, M. G., et al. (2005). Behavioral assessment of impulsivity: A comparison of children with and without attention deficit hyperactivity disorder. Journal of Applied Behavior Analysis, 38 , 23–37.

No Child Left Behind Act of 2001 (2002). Pub. L. No. 107–110, 115 Stat. 1425, 20 U.S.C. §§6301 et seq.

Odom, S. L., Brantlinger, E., Gersten, R., Horner, R. H., Thompson, B., & Harris, K. R. (2005). Research in special education: Scientific methods and evidence-based practices. Exceptional Children, 71 , 137–149.

Poncy, B. C., Duhon, G. J., Lee, S. B., & Key, A. (2010). Evaluation of techniques to promote generalization with basic math fact skills. Journal of Behavioral Education, 19 , 76–92.

Ricciardi, J. N., Luiselli, J. K., & Camare, M. (2013). Shaping approach responses as intervention for specific phobia in a child with autism. Journal of Applied Behavior Analysis, 39 , 445–448.

Sidman, M. (1960). Tactics of Scientific Research . New York: Basic Books.

Skinner, B. F. (1938). The Behavior of Organisms: An Experimental Analysis . New York: Appleton-Century.

Skinner, B. F. (1953). Science and Human Behavior . New York: Macmillan.

Skinner, B. F. (1968). Technology of Teaching . New York: Appleton.

Wolery, M. (2013). A commentary single-case design technical document of the What Works Clearinghouse. Remedial and Special Education, 34 , 39–43.

Author information

Authors and affiliations.

Department of Counseling, Educational Psychology, and Special Education, Michigan State University, 620 Farm Lane, Erickson Hall #341, East Lansing, MI, 48824, USA

Joshua B. Plavnick & Summer J. Ferreri

Corresponding author

Correspondence to Joshua B. Plavnick .

About this article

Plavnick, J.B., Ferreri, S.J. Single-Case Experimental Designs in Educational Research: A Methodology for Causal Analyses in Teaching and Learning. Educ Psychol Rev 25 , 549–569 (2013). https://doi.org/10.1007/s10648-013-9230-6

Published : 09 June 2013

Issue Date : December 2013

DOI : https://doi.org/10.1007/s10648-013-9230-6

- Applied behavior analysis

- Evidence-based practice

- Single-case experimental design

Experimental Research Designs: Types, Examples & Methods

Experimental research is the most familiar type of research design for individuals in the physical sciences and a host of other fields. This is mainly because experimental research is a classical scientific experiment, similar to those performed in high school science classes.

Imagine taking 2 samples of the same plant and exposing one of them to sunlight, while the other is kept away from sunlight. Let the plant exposed to sunlight be called sample A, while the latter is called sample B.

If, at the end of the research, we find that sample A has grown and sample B has died, even though both were watered regularly and otherwise given the same treatment, we can conclude that sunlight aids growth in similar plants.

What is Experimental Research?

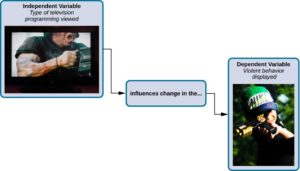

Experimental research is a scientific approach to research, where one or more independent variables are manipulated and applied to one or more dependent variables to measure their effect on the latter. The effect of the independent variables on the dependent variables is usually observed and recorded over some time, to aid researchers in drawing a reasonable conclusion regarding the relationship between these 2 variable types.

The experimental research method is widely used in physical and social sciences, psychology, and education. It is based on the comparison between two or more groups with a straightforward logic, which may, however, be difficult to execute.

Mostly associated with laboratory test procedures, experimental research designs involve collecting quantitative data and performing statistical analysis on them during research. This makes experimental research an example of a quantitative research method.

What are The Types of Experimental Research Design?

The types of experimental research design are determined by the way the researcher assigns subjects to different conditions and groups. They are of 3 types, namely: pre-experimental, quasi-experimental, and true experimental research.

Pre-experimental Research Design

In pre-experimental research design, either a group or various dependent groups are observed for the effect of the application of an independent variable which is presumed to cause change. It is the simplest form of experimental research design and is treated with no control group.

Although very practical, pre-experimental research falls short of several of the true experimental criteria. The pre-experimental research design is further divided into three types:

- One-shot Case Study Research Design

In this type of experimental study, only one dependent group or variable is considered. The study is carried out after some treatment which was presumed to cause change, making it a posttest study.

- One-group Pretest-posttest Research Design:

This research design combines both posttest and pretest study by carrying out a test on a single group before the treatment is administered and after the treatment is administered. The pretest is administered at the beginning of treatment and the posttest at the end.

- Static-group Comparison:

In a static-group comparison study, 2 or more groups are placed under observation, where only one of the groups is subjected to some treatment while the other groups are held static. All the groups are post-tested, and the observed differences between the groups are assumed to be a result of the treatment.
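The comparisons behind the one-group pretest-posttest design and the static-group comparison can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed analysis: the function names `mean_gain` and `static_group_difference` and all scores are hypothetical, invented only to show which numbers each design compares.

```python
from statistics import mean


def mean_gain(pretest, posttest):
    """Average per-subject change in a one-group pretest-posttest design."""
    if len(pretest) != len(posttest):
        raise ValueError("each subject needs both a pretest and a posttest score")
    # Pair each subject's pretest score with the same subject's posttest score.
    gains = [post - pre for pre, post in zip(pretest, posttest)]
    return mean(gains)


def static_group_difference(treated_posttest, untreated_posttest):
    """Difference in posttest means in a static-group comparison."""
    return mean(treated_posttest) - mean(untreated_posttest)


# Hypothetical scores, made up for illustration only.
pretest_scores = [52, 60, 47, 71]
posttest_scores = [58, 66, 50, 80]
print("Mean gain:", mean_gain(pretest_scores, posttest_scores))

treated = [70, 75, 80]
untreated = [65, 70, 72]
print("Posttest difference:", static_group_difference(treated, untreated))
```

Neither number isolates the effect of the treatment on its own. Without random assignment or a control group, the change could equally come from other factors, which is why these designs are classed as pre-experimental.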

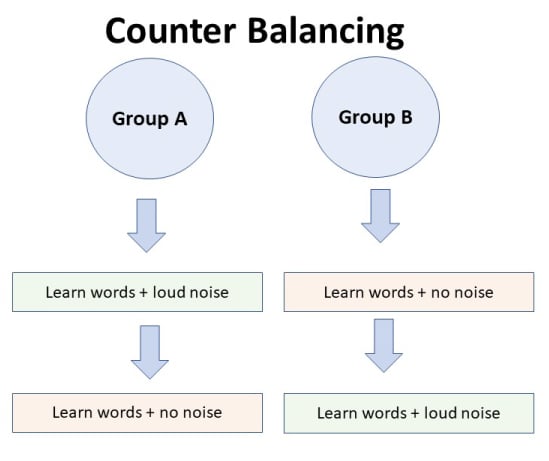

Quasi-experimental Research Design

The word “quasi” means partial, half, or pseudo. Therefore, quasi-experimental research bears a resemblance to true experimental research, but is not the same. In quasi-experiments, the participants are not randomly assigned, and as such, these designs are used in settings where randomization is difficult or impossible.

This is very common in educational research, where administrators are unwilling to allow the random selection of students for experimental samples.

Some examples of quasi-experimental research design include the time series design, the nonequivalent control group design, and the counterbalanced design.

True Experimental Research Design

The true experimental research design relies on statistical analysis to support or reject a hypothesis. It is the most accurate type of experimental design and may be carried out with or without a pretest on at least 2 groups of randomly assigned subjects.

The true experimental research design must contain a control group and a variable that can be manipulated by the researcher, and subjects must be assigned to groups at random. The classification of true experimental designs includes:

- The posttest-only Control Group Design: In this design, subjects are randomly selected and assigned to the 2 groups (control and experimental), and only the experimental group is treated. After close observation, both groups are post-tested, and a conclusion is drawn from the difference between these groups.

- The pretest-posttest Control Group Design: For this control group design, subjects are randomly assigned to the 2 groups, both are pretested, but only the experimental group is treated. After close observation, both groups are post-tested to measure the degree of change in each group.

- Solomon four-group Design: This is the combination of the posttest-only and the pretest-posttest control group designs. In this case, the randomly selected subjects are placed into 4 groups.

The first two of these groups are tested using the posttest-only method, while the other two are tested using the pretest-posttest method.
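Random assignment, which all three true experimental designs rely on, can be sketched as follows. This is a minimal sketch using only the Python standard library. The helper `assign_groups`, the group labels, and the subject IDs are assumptions made for illustration and do not come from any particular study.

```python
import random


def assign_groups(subjects, group_names, seed=None):
    """Shuffle subjects, then deal them round-robin into the named groups."""
    rng = random.Random(seed)  # a seed makes the assignment reproducible
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for index, subject in enumerate(shuffled):
        groups[group_names[index % len(group_names)]].append(subject)
    return groups


# Hypothetical labels for the four arms of a Solomon four-group design.
SOLOMON_GROUPS = [
    "pretest + treatment + posttest",
    "pretest + posttest (control)",
    "treatment + posttest",
    "posttest only (control)",
]

subjects = [f"S{i:02d}" for i in range(1, 21)]  # 20 made-up subject IDs

two_groups = assign_groups(subjects, ["control", "experimental"], seed=42)
solomon = assign_groups(subjects, SOLOMON_GROUPS, seed=42)

for name, members in solomon.items():
    print(name, members)
```

Shuffling first and then dealing round-robin keeps the group sizes as equal as possible, while chance alone decides which subject lands in which group.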

Examples of Experimental Research

Experimental research examples are different, depending on the type of experimental research design that is being considered. The most basic example of experimental research is laboratory experiments, which may differ in nature depending on the subject of research.

Administering Exams After The End of Semester

During the semester, students in a class are lectured on particular courses and an exam is administered at the end of the semester. In this case, the students are the subjects, their exam scores are the dependent variable, and the lectures are the independent variable (the treatment) applied to the subjects.

Only one group of carefully selected subjects is considered in this research, making it a pre-experimental research design example. We will also notice that tests are only carried out at the end of the semester, and not at the beginning.

This makes it easy for us to conclude that it is a one-shot case study research design.

Employee Skill Evaluation

Before employing a job seeker, organizations conduct tests that are used to screen out less qualified candidates from the pool of qualified applicants. This way, organizations can determine an employee’s skill set at the point of employment.

In the course of employment, organizations also carry out employee training to improve employee productivity and generally grow the organization. Further evaluation is carried out at the end of each training to test the impact of the training on employee skills, and test for improvement.

Here, the subject is the employee, while the treatment is the training conducted. Because the same employees are tested before and after the training and there is no control group, this is a one-group pretest-posttest experimental research example.

Evaluation of Teaching Method

Let us consider an academic institution that wants to evaluate the teaching methods of 2 teachers to determine which is best. Imagine a case whereby the students assigned to each teacher are carefully selected, probably due to personal requests by parents or due to the students’ stubbornness or smartness.

This is a nonequivalent group design example because the samples are not equivalent. By evaluating the effectiveness of each teacher’s teaching method this way, we may draw a conclusion after a post-test has been carried out.

However, this may be influenced by factors like the natural ability of a student. For example, a very smart student will grasp the material more easily than his or her peers irrespective of the method of teaching.

What are the Characteristics of Experimental Research?

Experimental research contains dependent, independent and extraneous variables. The dependent variables are the outcomes measured on the subjects of the research, and they are expected to change in response to the treatment.

The independent variables are the experimental treatments manipulated by the researcher and applied to the subjects. Extraneous variables, on the other hand, are other factors affecting the experiment that may also contribute to the change.

The setting is where the experiment is carried out. Many experiments are carried out in the laboratory, where control can be exerted on the extraneous variables, thereby eliminating them.

Other experiments are carried out in a less controllable setting. The choice of setting used in research depends on the nature of the experiment being carried out.

- Multivariable

Experimental research may include multiple independent variables, e.g. time, skills, test scores, etc.

Why Use Experimental Research Design?

Experimental research design is mainly used in physical sciences, social sciences, education, and psychology. It is used to make predictions and draw conclusions on a subject matter.

Some uses of experimental research design are highlighted below.

- Medicine: Experimental research is used to find the proper treatment for diseases. In most cases, rather than directly using patients as the research subjects, researchers take a sample of the bacteria from the patient’s body and treat it with the developed antibacterial.

The changes observed during this period are recorded and evaluated to determine its effectiveness. This process can be carried out using different experimental research methods.

- Education: Aside from science subjects like Chemistry and Physics, which involve teaching students how to perform experimental research, experimental research can also be used to improve the standard of an academic institution. This includes testing students’ knowledge on different topics, coming up with better teaching methods, and the implementation of other programs that will aid student learning.

- Human Behavior: Social scientists mostly use experimental research to test human behaviour. For example, consider 2 people randomly chosen as the subjects of social interaction research, where one person is placed in a room without human interaction for 1 year.

The other person is placed in a room with a few other people, enjoying human interaction. There will be a difference in their behaviour at the end of the experiment.

- UI/UX: During the product development phase, one of the major aims of the product team is to create a great user experience with the product. Therefore, before launching the final product design, potential users are brought in to interact with the product.

For example, when it is difficult to choose how to position a button or feature on the app interface, a random sample of product testers is allowed to test the 2 versions, and how the button positioning influences user interaction is recorded.
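One common way to compare the recorded interactions for the 2 button positions is a two-proportion z-test on the share of testers who clicked the button in each version. The sketch below assumes that clicks are the recorded outcome and uses made-up counts. The function name `two_proportion_z_test` is hypothetical, and the choice of test is an illustration rather than a method prescribed by the article.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Return (z, two-sided p-value) for the difference in click rates."""
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled click rate under the null hypothesis of no difference.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    if std_error == 0:
        raise ValueError("click rates of exactly 0% or 100% cannot be tested this way")
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 200 testers saw each button position.
z, p = two_proportion_z_test(clicks_a=40, users_a=200, clicks_b=60, users_b=200)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests that the difference in click rates is unlikely to be due to chance alone. That conclusion holds only if testers were randomly assigned to the 2 versions.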

What are the Disadvantages of Experimental Research?