- Teesside University Student & Library Services

- Learning Hub Group

Critical Appraisal for Health Students

Appraisal of a Quantitative paper: Top tips

- Introduction

Critical appraisal of a quantitative paper (RCT)

This guide, aimed at health students, provides basic level support for appraising quantitative research papers. It's designed for students who have already attended lectures on critical appraisal. One framework for appraising quantitative research (based on reliability, internal and external validity) is provided and there is an opportunity to practise the technique on a sample article.

Please note that this framework is for appraising one particular type of quantitative research, a Randomised Controlled Trial (RCT), which is defined as:

a trial in which participants are randomly assigned to one of two or more groups: the experimental group or groups receive the intervention or interventions being tested; the comparison group (control group) receive usual care or no treatment or a placebo. The groups are then followed up to see if there are any differences between the results. This helps in assessing the effectiveness of the intervention. (CASP, 2020)
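To make "randomly assigned" concrete, the following is a minimal sketch of simple randomisation into two equal-sized arms. It is an illustration only: the function name `randomise`, the arm labels and the participant IDs are assumptions, and it does not describe how any particular trial, including the sample article, allocated its participants.

```python
import random

def randomise(participant_ids, arms=("intervention", "control"), seed=None):
    """Shuffle participants, then deal them round-robin into the arms.

    Hypothetical helper for illustration; real trials usually use
    concealed, independently generated allocation sequences.
    """
    rng = random.Random(seed)          # seeded so the example is repeatable
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)              # chance alone decides the order
    allocation = {arm: [] for arm in arms}
    for index, pid in enumerate(shuffled):
        allocation[arms[index % len(arms)]].append(pid)
    return allocation

# 20 made-up participant IDs split into two groups of 10
groups = randomise(range(1, 21), seed=42)
```

Because allocation depends only on the shuffle, neither the researcher nor the participant chooses the group, which is the property the randomisation question in the framework is checking for.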

Support materials

- Framework for reading quantitative papers (RCTs)

- Critical appraisal of a quantitative paper PowerPoint

To practise following this framework for critically appraising a quantitative article, please look at the following article:

Marrero, D.G. et al (2016) 'Comparison of commercial and self-initiated weight loss programs in people with prediabetes: a randomized control trial', AJPH Research, 106(5), pp. 949-956.

Critical Appraisal of a quantitative paper (RCT): practical example

- Internal Validity

- External Validity

- Reliability Measurement Tool

How to use this practical example

Using the framework, you can have a go at appraising a quantitative paper - we are going to look at the following article:

Marrero, D.G. et al (2016) 'Comparison of commercial and self-initiated weight loss programs in people with prediabetes: a randomized control trial', AJPH Research, 106(5), pp. 949-956.

- Step 1. Take a quick look at the article.

- Step 2. Click on the Internal Validity tab above. There are questions to help you appraise the article. Read the questions and look for the answers in the article.

- Step 3. Click on each question and our answers will appear.

- Step 4. Repeat with the other aspects of external validity and reliability.

Questioning the internal validity:

- Randomisation: How were participants allocated to each group? Did a randomisation process take place?

- Comparability of groups: How similar were the groups (eg age, sex, ethnicity)? Is this made clear?

- Blinding (none, single, double or triple): Who was not aware of which group a patient was in (eg nobody; only the patient; patient and clinician; patient, clinician and researcher)? Was it feasible for more blinding to have taken place?

- Equal treatment of groups: Were both groups treated in the same way?

- Attrition: What percentage of participants dropped out? Did this adversely affect one group? Has this been evaluated?

- Overall internal validity: Does the research measure what it is supposed to be measuring?

Questioning the external validity:

- Attrition: Was everyone accounted for at the end of the study? Was any attempt made to contact drop-outs?

- Sampling approach: How was the sample selected? Was it based on probability or non-probability? What was the approach (eg simple random, convenience)? Was this an appropriate approach?

- Sample size (power calculation): How many participants were there? Was a sample size calculation performed? Did the study pass?

- Exclusion/inclusion criteria: Were the criteria set out clearly? Were they based on recognised diagnostic criteria?

- What is the overall external validity? Can the results be applied to the wider population?

Questioning the reliability (measurement tool):

- Internal consistency reliability (Cronbach's alpha): Has a Cronbach's alpha score of 0.7 or above been included?

- Test re-test reliability correlation: Was the test repeated more than once? Were the same results received? Has a correlation coefficient been reported? Is it above 0.7?

- Validity of measurement tool: Is it an established tool? If not, what has been done to check if it is reliable (pilot study, expert panel, literature review)?

- Criterion validity (test against other tools): Has a criterion validity comparison been carried out? Was the score above 0.7?

- What is the overall reliability? How consistent are the measurements?

Overall validity and reliability:

- Overall, how valid and reliable is the paper?
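For readers who want to see where a Cronbach's alpha score comes from, here is a minimal sketch using the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), where k is the number of questionnaire items. The function name `cronbach_alpha` and the response data are made up for illustration and are not taken from the sample article.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    responses: one row per respondent, one column per questionnaire item.
    Uses sample variances throughout.
    """
    k = len(responses[0])                                  # number of items
    items = list(zip(*responses))                          # columns = items
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)

# Hypothetical data: 5 respondents answering a 3-item scale (1 to 5)
scores = [
    [3, 4, 3],
    [4, 4, 5],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
]
alpha = cronbach_alpha(scores)   # about 0.90, above the 0.7 threshold
```

A value at or above 0.7 is the threshold the framework asks you to look for; the paper being appraised should report the score, so you would not normally need to calculate it yourself.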

- Last Updated: Aug 25, 2023 2:48 PM

- URL: https://libguides.tees.ac.uk/critical_appraisal

Research Evaluation

- First Online: 23 June 2020

- Carlo Ghezzi 2

- The original version of this chapter was revised. A correction to this chapter can be found at https://doi.org/10.1007/978-3-030-45157-8_7

This chapter is about research evaluation. Evaluation is quintessential to research. It is traditionally performed through qualitative expert judgement. The chapter presents the main evaluation activities in which researchers can be engaged. It also introduces the current efforts towards devising quantitative research evaluation based on bibliometric indicators and critically discusses their limitations, along with their possible (limited and careful) use.

Change history

19 October 2021

The original version of the chapter was inadvertently published with an error. The chapter has now been corrected.

Notice that the taxonomy presented in Box 5.1 does not cover all kinds of scientific papers. As an example, it does not cover survey papers, which normally are not submitted to a conference.

Private institutions and industry may follow different schemes.

Author information

Authors and affiliations.

Dipartimento di Elettronica, Informazione e Bioingegneria, Politecnico di Milano, Milano, Italy

Carlo Ghezzi

Corresponding author

Correspondence to Carlo Ghezzi .

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Ghezzi, C. (2020). Research Evaluation. In: Being a Researcher. Springer, Cham. https://doi.org/10.1007/978-3-030-45157-8_5

DOI : https://doi.org/10.1007/978-3-030-45157-8_5

Published : 23 June 2020

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-45156-1

Online ISBN : 978-3-030-45157-8

eBook Packages : Computer Science Computer Science (R0)

#QuantCrit: Integrating CRT With Quantitative Methods in Family Science

- Traditional quantitative methodologies are rooted in studying and norming the experiences of W.E.I.R.D. (Western, educated, industrial, rich, and democratic) populations.

- Quantitative Criticalism is a transdisciplinary approach to resist traditional quantitative methodologies.

- Quantitative Criticalists aim to produce socially just research informed by various critical theories (e.g., CRT, feminist, queer).

Race is frequently operationalized as an individual fixed trait that is used to explain individual differences in various outcomes (Zuberi, 2000). Family Scientists may use race to explain direct causal relationships (e.g., parenting styles) or as a control variable to account for the variation explained by race on a given outcome (e.g., communication styles; Zuberi & Bonilla-Silva, 2008). When using race as a variable, Family Scientists often unlink it from its sociocultural context. In effect, race is reduced to a phenotypic or genotypic marker for explaining research phenomena (Bonilla-Silva, 2009). This process is particularly pervasive in quantitative methods, which are frequently perceived as more empirically valuable than qualitative methods (Onwuegbuzie & Leech, 2005). Quantitative methods position the external world as independent of human perception and as subject to immutable scientific laws. Quantitative researchers utilize randomization, control, and manipulation to ensure that outside factors do not bias research findings. This emphasis on isolationism centers knowledge as objective. However, critical researchers argue that quantitative inquiry is no less socially constructed than any other form of research (Stage, 2007). For instance, sampling bias can greatly influence research findings and interpretations by privileging the lived experiences of certain groups of people (e.g., the high number of studies conducted on individuals from Western, educated, industrial, rich, and democratic backgrounds; Nielsen et al., 2017) or presenting results that are nonrepresentative of the internal diversity that exists within marginalized groups (e.g., the plethora of comparison studies that consolidate members of the African American diaspora to a single racial category; Jackson & Cothran, 2003).

To challenge the assumptions that shape quantitative inquiry’s emphasis on neutrality and objectivity, critical-race-conscious scholars have embraced critical race theory (CRT) as a mechanism for addressing the replication of racial stereotypes and White supremacy in empirical research. By failing to account for how racism and White supremacy shape family scholarship, Family Scientists are inadvertently perpetuating the position that change and betterment are the sole responsibility of the individual rather than challenging the systemic “creators” of inequality (Walsdorf et al., 2020). While conceptualizations of CRT tenets are evolving, a common thread among the elements is a commitment to identify, deconstruct, and remedy the oppressive realities of people of color, their families, and their communities (Bridges, 2019). This commitment has recently been extended to the critical evaluation of quantitative research via the development of “Quantitative Criticalism” (QuantCrit), which provides a framework for applying the principles and insights of CRT to quantitative data whenever it is used in research or encountered in policy and practice (Gillborn et al., 2018). In this article, we briefly summarize the tenets of QuantCrit and its connections to the principles of CRT and provide a brief case example of how Family Scientists can use QuantCrit.

Quantitative Criticalism

QuantCrit is an analytic framework that utilizes the tenets of CRT to challenge normative assumptions embedded in quantitative methodology (Covarrubias & Vélez, 2013; Gillborn et al., 2018; Sullivan et al., 2010). Despite its origin, QuantCrit has been used to critique traditional approaches to investigating various racial disparities, including breast cancer and genomic uncertainty (Gerido, 2020), teaching evaluations (Campbell, 2020), Asian American experiences in higher education (Teranishi, 2007), teacher prioritization of student achievement (Quinn et al., 2019), and student learning outcomes (Young & Cunningham, 2021). While heavily utilized by education scholars, QuantCrit is a transdisciplinary framework that applies the principles of socially constructed inequality and inherent inequality found within CRT to quantitative inquiry (insofar as socially constructed and inherent inequalities function to create and maintain social, economic, and political inequalities between dominant and marginalized groups). According to Gillborn et al. (2018), QuantCrit is not “an off-shoot movement of CRT” but “a kind of toolkit that embodies the need to apply CRT understandings and insights whenever quantitative data is used in research and/or encountered in policy and practice” (p. 169). Several central tenets guide this framework.

Acategorical Intersectionality

Identity is a complex, multidimensional aspect of individuals’ lived experience. Intersectionality describes how systems of identity, discrimination, and disadvantage co-influence individuals, families, and communities (Collins, 2019). Intersectionality challenges the idea of a single social category as the primary dimension of inequity and asserts that complex social inequalities are firmly entrenched in all aspects of people’s lived experiences. The gendered, racialized, and economic factors that shape an individual’s lived experiences cannot be understood independently, as they are intertwined. For example, Suzuki et al. (2021) discuss this complexity by explaining that not including race in research may suggest that it is unimportant, whereas addressing race only via the inclusion of racial categories, without explicitly elaborating on how racism influenced the outcome, may indicate that racial inequities are natural. As such, QuantCrit researchers refute the idea of categorization as natural or inherent, critically evaluating the categories they construct for analysis and providing a rationale regarding their use of categories.

Centrality of Counternarratives

QuantCrit places emphasis on reliably researching and centering individuals’ lived experience using counternarratives. Counternarratives represent the perspectives of minoritized groups that often contradict a culture’s dominant narrative. By centralizing minoritized voices and contextualizing privilege and power, QuantCrit researchers diversify research narratives. In doing so, QuantCrit researchers highlight the multidirectional effects of power, privilege, and oppression by disrupting narratives that frame minoritized group members as deficient. This disruption also includes critically evaluating and intervening in the oppressive systems that uphold the power and privilege of dominant groups.

Nonneutrality of Data

QuantCrit researchers heavily scrutinize the notion of objectivity and reject the idea that numbers “speak for themselves.” QuantCrit researchers acknowledge that all data and analytic methods have biases and strive to minimize and explicitly discuss these biases.

Bias in the Interpretation and Presentation of Research

Even when numbers are not explicitly used to advance oppressive notions, research findings are interpreted and presented through the cultural norms, values, and practices of the researcher (Gillborn et al., 2018). In presenting research results, QuantCrit researchers overtly discuss their positionality and how their lived experiences may have influenced their interpretation and presentation of their findings.

Social Justice Oriented

QuantCrit research is rooted in the goals of social justice: it rejects the notion that quantitative research is bias-free, identifies and acknowledges how prior and contemporary research is used as a tool of oppression, and disrupts systems of oppression by critically evaluating and changing oppressive aspects of the quantitative research process. In doing so, QuantCrit researchers commit themselves to capturing the nuances and depth of the lived experiences of marginalized groups while simultaneously challenging prevailing oppressive systems.

QuantCrit: A Brief Example

QuantCrit has far-reaching research implications for Family Science. Consider how Family Scientists construct variables to reflect aspects of social marginalization (e.g., neighborhood disadvantage). Neighborhood disadvantage refers to the “lack of economic and social resources that predisposes people to physical and social disorder” (Ross & Mirowsky, 2001, p. 258). These effects are often of interest to Family Scientists for their developmental and intergenerational consequences (e.g., social organization theory; Mancini & Bowen, 2013). However, this construct has issues regarding its operationalization and measurement. Prior studies have operationalized neighborhood disadvantage as an index of contextual elements of a participant’s environment, including, but not limited to, a) the proportion of households with children with single-parent mothers; b) the proportion of households living under poverty rate; c) unemployment rate; and d) proportion of African American households (Martin et al., 2019; Vazsonyi et al., 2006). These elements are frequently mathematically consolidated into a variable based on their high degree of relatability. An issue with this consolidation is the assumption that living near or around a higher proportion of African American households brings disadvantages. However, researchers rarely address how redlining has been used to systematically place African Americans in disenfranchised neighborhoods (Aaronson et al., 2021).

QuantCrit researchers would approach the assessment of neighborhood disadvantage much differently. They may use other factors such as access to resources (e.g., food, health care facilities, community resources) and physical signs of social disorder (e.g., graffiti, vandalism, abandoned buildings) as indicators of neighborhood disadvantage. In addition, QuantCrit researchers may collect data from residents on their perception of the neighborhood and how it has an impact on their lives. These factors respect the spirit of the unobserved concept without perpetuating harmful stereotypes about African Americans.

As Family Scientists seek to incorporate CRT into their praxis, there is a growing need to critically evaluate our approach to quantitative methodology and disrupt the perpetuation of racism and White supremacy within Family Science scholarship. QuantCrit provides researchers with fertile ground for such reflection as it challenges researchers to consider the historical, social, political, and economic power relations present within their research. While this article was a mere introduction to the QuantCrit framework, we hope that it inspires more Family Scientists to reflect upon its tenets and explore ways of dismantling racism and White supremacy within their quantitative research.

Bridges, K. (2019). Critical race theory: A primer (3rd ed.). Foundation Press.

Bonilla-Silva, E. (2009). Racism without racists: Color-blind racism and the persistence of racial inequality in the United States (2nd ed.). Rowman & Littlefield.

Campbell, S. L. (2020). Ratings in black and white: A quantcrit examination of race and gender in teacher evaluation reform. Race Ethnicity and Education. https://doi.org/10.1080/13613324.2020.1842345

Collins, P. H. (2019). Intersectionality as critical social theory. Duke University Press.

Covarrubias, A., & Vélez, V. (2013). Critical race quantitative intersectionality: An anti-racist research paradigm that refuses to “let the numbers speak for themselves.” In M. Lynn & A. D. Dixson (Eds.), Handbook of critical race theory in education (pp. 270–285). Routledge.

Gerido, L. H. (2020). Racial disparities in breast cancer and genomic uncertainty: A quantcrit mini-review. Open Information Science, 4(1), 39–57. https://doi.org/10.1515/opis-2020-0004

Gillborn, D., Warmington, P., & Demack, S. (2018). Quantcrit: Education, policy, “Big Data” and principles for a critical race theory of statistics. Race Ethnicity and Education, 21(2), 158–179. https://doi.org/10.1080/13613324.2017.1377417

Jackson, J. V., & Cothran, M. E. (2003). Black versus Black: The relationships among African, African American, and African Caribbean persons. Journal of Black Studies, 33(5), 576–604. https://doi.org/10.1177/0021934703033005003

Mancini, J. A., & Bowen, G. L. (2013). Families and communities: A social organization theory of action and change. In G. W. Peterson & K. R. Bush (Eds.), Handbook of marriage and the family (pp. 781–813). Springer.

Martin, C. L., Kane, J. B., Miles, G. L., Aiello, A. E., & Harris, K. M. (2019). Neighborhood disadvantage across the transition from adolescence to adulthood and risk of metabolic syndrome. Health & Place, 57, 131–138. https://doi.org/10.1016/j.healthplace.2019.03.002

Nielsen, M., Haun, D., Kärtner, J., & Legare, C. H. (2017). The persistent sampling bias in developmental psychology: A call to action. Journal of Experimental Child Psychology, 162, 31–38. https://doi.org/10.1016/j.jecp.2017.04.017

Onwuegbuzie, A. J., & Leech, N. L. (2005). Taking the “Q” out of research: Teaching research methodology courses without the divide between quantitative and qualitative paradigms. Quality & Quantity, 39, 267–296. https://doi.org/10.1007/s11135-004-1670-0

Quinn, D. M., Desruisseaux, T. M., & Nkansah-Amankra, A. (2019). “Achievement gap” language affects teachers’ issue prioritization. Educational Researcher, 48(7), 484–487. https://doi.org/10.3102/0013189X19863765

Ross, C. E., & Mirowsky, J. (2001). Neighborhood disadvantage, disorder, and health. Journal of Health & Social Behavior, 42(3), 258–276. https://doi.org/10.2307/3090214

Stage, F. K. (2007). Answering critical questions using quantitative data. New Directions for Institutional Research, 2007(133), 5–16. https://doi.org/10.1002/ir.200

Sullivan, E., Larke, P. J., & Webb-Hasan, G. (2010). Using critical policy and critical race theory to examine Texas’ school disciplinary policies. Race, Gender & Class, 17(1–2), 72–87. https://www.jstor.org/stable/41674726

Suzuki, S., Morris, S. L., & Johnson, S. K. (2021). Using quantcrit to advance an anti-racist developmental science: Applications to mixture modeling. Journal of Adolescent Research, 1–27. https://doi.org/10.1177/07435584211028229

Teranishi, R. T. (2007). Race, ethnicity, and higher education policy: The use of critical quantitative research. New Directions for Institutional Research, 2007(133), 37–49. https://doi.org/10.1002/ir.203

Vazsonyi, A. T., Cleveland, H. H., & Wiebe, R. P. (2006). Does the effect of impulsivity on delinquency vary by level of neighborhood disadvantage? Criminal Justice and Behavior, 33(4), 511–541. https://doi.org/10.1177/0093854806287318

Walsdorf, A. A., Jordan, L. S., McGeorge, C. R., & Caught, M. O. (2020). White supremacy and the web of family science: Implications of the missing spider. Journal of Family Theory & Review, 12(1), 64–79. https://doi.org/10.1111/jftr.12364

Young, J., & Cunningham, J. A. (2021). Repositioning black girls in mathematics disposition research: New perspectives from quantcrit. Investigations in Mathematics Learning, 13(1), 29–42. https://doi.org/10.1080/19477503.2020.1827664

Zuberi, T., & Bonilla-Silva, E. (2008). White logic, white methods: Racism and methodology. Rowman & Littlefield.

Zuberi, T. (2000). Deracializing social statistics: Problems in the quantification of race. Annals of the American Academy of Political and Social Science, 568(1), 172–185. https://doi.org/10.1177/000271620056800113

© Copyright 2023 NCFR

How to appraise quantitative research

This article has a correction. Please see:

- Correction: How to appraise quantitative research - April 01, 2019

- Xabi Cathala 1,

- Calvin Moorley 2

- 1 Institute of Vocational Learning, School of Health and Social Care, London South Bank University, London, UK

- 2 Nursing Research and Diversity in Care, School of Health and Social Care, London South Bank University, London, UK

- Correspondence to Mr Xabi Cathala, Institute of Vocational Learning, School of Health and Social Care, London South Bank University, London, UK; cathalax@lsbu.ac.uk and Dr Calvin Moorley, Nursing Research and Diversity in Care, School of Health and Social Care, London South Bank University, London SE1 0AA, UK; Moorleyc@lsbu.ac.uk

https://doi.org/10.1136/eb-2018-102996

Introduction

Some nurses feel that they lack the necessary skills to read a research paper and to then decide if they should implement the findings into their practice. This is particularly the case when considering the results of quantitative research, which often contains the results of statistical testing. However, nurses have a professional responsibility to critique research to improve their practice, care and patient safety. 1 This article provides a step by step guide on how to critically appraise a quantitative paper.

Title, keywords and the authors

The authors’ names may not mean much, but knowing the following will be helpful:

Their position, for example, academic, researcher or healthcare practitioner.

Their qualifications, both professional (eg, nurse or physiotherapist) and academic (eg, degree, masters, doctorate).

This can indicate how the research has been conducted and the authors’ competence on the subject. Basically, do you want to read a paper on quantum physics written by a plumber?

The abstract is a summary of the article and should contain:

Introduction.

Research question/hypothesis.

Methods including sample design, tests used and the statistical analysis (of course! Remember we love numbers).

Main findings.

Conclusion.

The subheadings in the abstract will vary depending on the journal. An abstract should not usually be more than 300 words but this varies depending on specific journal requirements. If the above information is contained in the abstract, it can give you an idea about whether the study is relevant to your area of practice. However, before deciding if the results of a research paper are relevant to your practice, it is important to review the overall quality of the article. This can only be done by reading and critically appraising the entire article.

The introduction

Example: the effect of paracetamol on levels of pain.

My hypothesis is that A has an effect on B, for example, paracetamol has an effect on levels of pain.

My null hypothesis is that A has no effect on B, for example, paracetamol has no effect on pain.

My study will test the null hypothesis. If the results do not provide enough evidence to reject the null hypothesis, the study has not shown that A has an effect on B. This is not proof that paracetamol has no effect on the level of pain, only that this study did not detect one. If the null hypothesis is rejected, the results support the hypothesis (A has an effect on B). This means the study found evidence that paracetamol has an effect on the level of pain.

Background/literature review

The literature review should include reference to recent and relevant research in the area. It should summarise what is already known about the topic and why the research study is needed and state what the study will contribute to new knowledge. 5 The literature review should be up to date, usually 5–8 years, but it will depend on the topic and sometimes it is acceptable to include older (seminal) studies.

Methodology

In quantitative studies, the data analysis varies depending on the type of design used. For example, descriptive, correlational and experimental studies all differ. A descriptive study will describe the pattern of a topic related to one or more variables. 6 A correlational study examines the link (correlation) between two variables 7 and focuses on how one variable changes when another variable changes. In experimental studies, the researchers manipulate variables and look at outcomes, 8 and the sample is commonly assigned at random to different groups (known as randomisation) to determine the causal effect of a condition (independent variable) on a certain outcome. This is a common method used in clinical trials.

There should be sufficient detail provided in the methods section for you to replicate the study (should you want to). To enable you to do this, the following sections are normally included:

Overview and rationale for the methodology.

Participants or sample.

Data collection tools.

Methods of data analysis.

Ethical issues.

Data collection should be clearly explained and the article should discuss how this process was undertaken. Data collection should be systematic, objective, precise, repeatable, valid and reliable. Any tool (eg, a questionnaire) used for data collection should have been piloted (or pretested and/or adjusted) to ensure the quality, validity and reliability of the tool. 9 The participants (the sample) and any randomisation technique used should be identified. The sample size is central in quantitative research, as the findings should be able to be generalised for the wider population. 10 Simple data analysis can be done manually, while more complex analyses are performed using computer software, sometimes with the advice of a statistician. Results from this analysis, such as the mode, mean, median, p value and CI, are always presented in a numerical format.

The author(s) should present the results clearly. These may be presented in graphs, charts or tables alongside some text. You should perform your own critique of the data analysis process; just because a paper has been published, it does not mean it is perfect. Your findings may be different from the authors’. Through critical analysis the reader may find an error in the study process that the authors have not seen or highlighted. These errors can change the study result or show that a study you thought was strong is in fact weak. To help you critique a quantitative research paper, some guidance on understanding statistical terminology is provided in table 1.

Some basic guidance for understanding statistics

Quantitative studies examine the relationship between variables, and the p value illustrates this objectively. 11 The p value is the probability of obtaining a result at least as extreme as the one observed if the null hypothesis (that there is no difference or relationship) were true. If the p value is less than 0.05, the null hypothesis is rejected, the study hypothesis is supported and the study will say there is a significant difference. If the p value is 0.05 or more, the null hypothesis is not rejected, the study hypothesis is not supported and the study will say there is no significant difference. As a general rule, a p value of less than 0.05 means the result is treated as statistically significant, and a p value of 0.05 or more means it is not.
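To make the 0.05 rule concrete, the sketch below works out a p value from first principles using an exact permutation test. This is one simple way of obtaining a p value, chosen here because it needs no statistical tables; it is not necessarily the test used in any given paper. The outcome scores and the `permutation_p_value` helper are invented for illustration.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Two-sided exact permutation test for a difference in means.

    Under the null hypothesis the group labels are interchangeable, so every
    way of splitting the pooled values into groups of the same sizes is
    equally likely. The p value is the share of splits whose difference in
    means is at least as extreme as the one observed.
    """
    pooled = list(group_a) + list(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = 0
    total = 0
    for chosen in combinations(range(len(pooled)), len(group_a)):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        total += 1
        # small tolerance guards against floating point rounding
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Invented outcome scores for two small trial arms
control = [1, 2, 3, 4]
intervention = [5, 6, 7, 8]
p = permutation_p_value(control, intervention)
alpha = 0.05  # conventional significance threshold
print(f"p = {p:.4f}; reject null hypothesis: {p < alpha}")
```

Here only 2 of the 70 possible splits are as extreme as the observed one, so p is about 0.029, which is below 0.05.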

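The CI (confidence interval) is reported with a confidence level, a number between 0 and 1 or written as a per cent, which demonstrates the level of confidence the reader can have in the result. 12 The confidence level is calculated by subtracting the significance threshold (not the p value obtained in the study) from 1. With the usual threshold of 0.05, the confidence level will be 1–0.05=0.95=95%. A 95% CI is the range of values within which the true effect is likely to lie. If this range excludes the value that indicates no effect (0 for a difference, 1 for a ratio), the result is statistically significant at the 0.05 level; if the range includes that value, the result is not statistically significant. The p values and CI highlight the confidence and robustness of a result.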
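In published results a 95% CI usually appears as a range around an estimate. The sketch below shows one common way such a range is obtained for a mean, assuming a normal approximation (small samples would normally use the t distribution, which gives a slightly wider interval). The weight changes and the `mean_confidence_interval` helper are invented for illustration.

```python
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(values, confidence=0.95):
    """Approximate confidence interval for a mean (normal approximation)."""
    alpha = 1 - confidence                   # e.g. 1 - 0.95 = 0.05
    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95%
    centre = mean(values)
    standard_error = stdev(values) / len(values) ** 0.5
    return centre - z * standard_error, centre + z * standard_error


# Invented weight changes (kg) for illustration only
changes = [-3.1, -2.4, -4.0, -1.8, -2.9, -3.5, -2.2, -3.8, -2.6, -3.0]
low, high = mean_confidence_interval(changes)
print(f"mean change {mean(changes):.2f} kg, 95% CI {low:.2f} to {high:.2f}")
print("interval excludes 0:", not (low <= 0 <= high))
```

A narrow interval that stays on one side of zero suggests a more precise and more convincing estimate than a wide interval that crosses it.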

Discussion, recommendations and conclusion

The final section of the paper is where the authors discuss their results and link them to other literature in the area (some of which may have been included in the literature review at the start of the paper). This reminds the reader of what is already known, what the study has found and what new information it adds. The discussion should demonstrate how the authors interpreted their results and how they contribute to new knowledge in the area. Implications for practice and future research should also be highlighted in this section of the paper.

A few other areas you may find helpful are:

Limitations of the study.

Conflicts of interest.

Table 2 provides a useful tool to help you apply the learning in this paper to the critiquing of quantitative research papers.

Quantitative paper appraisal checklist

- 1. Nursing and Midwifery Council, 2015. The code: standard of conduct, performance and ethics for nurses and midwives https://www.nmc.org.uk/globalassets/sitedocuments/nmc-publications/nmc-code.pdf (accessed 21.8.18).

- Gerrish K ,

- Moorley C ,

- Tunariu A , et al

- Shorten A ,

Competing interests None declared.

Patient consent Not required.

Provenance and peer review Commissioned; internally peer reviewed.

Correction notice This article has been updated since its original publication to update p values from 0.5 to 0.05 throughout.

Linked Articles

- Miscellaneous Correction: How to appraise quantitative research BMJ Publishing Group Ltd and RCN Publishing Company Ltd Evidence-Based Nursing 2019; 22 62-62 Published Online First: 31 Jan 2019. doi: 10.1136/eb-2018-102996corr1

Critical Appraisal Tools

JBI’s critical appraisal tools assist in assessing the trustworthiness, relevance and results of published papers.

These tools have been revised. Recently published articles detail the revision.

"Assessing the risk of bias of quantitative analytical studies: introducing the vision for critical appraisal within JBI systematic reviews"

"Revising the JBI quantitative critical appraisal tools to improve their applicability: an overview of methods and the development process"

Analytical Cross Sectional Studies

Checklist for analytical cross sectional studies

Case Control Studies

Checklist for case control studies

Case Reports

Checklist for case reports

Case Series

Checklist for case series

Munn Z, Barker TH, Moola S, Tufanaru C, Stern C, McArthur A, Stephenson M, Aromataris E. Methodological quality of case series studies: an introduction to the JBI critical appraisal tool. JBI Evidence Synthesis. 2020;18(10):2127-2133

Cohort Studies

Checklist for cohort studies

Diagnostic Test Accuracy Studies

Checklist for diagnostic test accuracy studies

Campbell JM, Klugar M, Ding S, Carmody DP, Hakonsen SJ, Jadotte YT, White S, Munn Z. Chapter 9: Diagnostic test accuracy systematic reviews. In: Aromataris E, Munn Z (Editors). JBI Manual for Evidence Synthesis. JBI, 2020

Economic Evaluations

Checklist for economic evaluations

Prevalence Studies

Checklist for prevalence studies

Munn Z, Moola S, Lisy K, Riitano D, Tufanaru C. Chapter 5: Systematic reviews of prevalence and incidence. In: Aromataris E, Munn Z (Editors). JBI Manual for Evidence Synthesis. JBI, 2020

Qualitative Research

Checklist for qualitative research.

Lockwood C, Munn Z, Porritt K. Qualitative research synthesis: methodological guidance for systematic reviewers utilizing meta-aggregation. Int J Evid Based Healthc. 2015;13(3):179–187

Chapter 2: Systematic reviews of qualitative evidence

Quasi-Experimental Studies

Checklist for quasi-experimental studies

Randomized Controlled Trials

Checklist for randomized controlled trials

Barker TH, Stone JC, Sears K, Klugar M, Tufanaru C, Leonardi-Bee J, Aromataris E, Munn Z. The revised JBI critical appraisal tool for the assessment of risk of bias for randomized controlled trials. JBI Evidence Synthesis. 2023;21(3):494-506

Randomized controlled trials checklist (archive)

Systematic Reviews

Checklist for systematic reviews

Aromataris E, Fernandez R, Godfrey C, Holly C, Khalil H, Tungpunkom P. Summarizing systematic reviews: methodological development, conduct and reporting of an Umbrella review approach. Int J Evid Based Healthc. 2015;13(3):132-40.

Chapter 10: Umbrella Reviews

Textual Evidence: Expert Opinion

Checklist for textual evidence: expert opinion.

McArthur A, Klugarova J, Yan H, Florescu S. Chapter 4: Systematic reviews of text and opinion. In: Aromataris E, Munn Z (Editors). JBI Manual for Evidence Synthesis. JBI, 2020

Textual Evidence: Narrative

Checklist for textual evidence: narrative

Textual Evidence: Policy

Checklist for textual evidence: policy

Qualitative vs Quantitative Research Methods & Data Analysis

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

What is the difference between quantitative and qualitative?

The main difference between quantitative and qualitative research is the type of data they collect and analyze.

Quantitative research collects numerical data and analyzes it using statistical methods. The aim is to produce objective, empirical data that can be measured and expressed in numerical terms. Quantitative research is often used to test hypotheses, identify patterns, and make predictions.

Qualitative research, on the other hand, collects non-numerical data such as words, images, and sounds. The focus is on exploring subjective experiences, opinions, and attitudes, often through observation and interviews.

Qualitative research aims to produce rich and detailed descriptions of the phenomenon being studied, and to uncover new insights and meanings.

Quantitative data is information about quantities, and therefore numbers, whereas qualitative data is descriptive and concerns phenomena that can be observed but not measured, such as language.

What Is Qualitative Research?

Qualitative research is the process of collecting, analyzing, and interpreting non-numerical data, such as language. Qualitative research can be used to understand how an individual subjectively perceives and gives meaning to their social reality.

Qualitative data is non-numerical data, such as text, video, photographs, or audio recordings. This type of data can be collected using diary accounts or in-depth interviews and analyzed using grounded theory or thematic analysis.

Qualitative research is multimethod in focus, involving an interpretive, naturalistic approach to its subject matter. This means that qualitative researchers study things in their natural settings, attempting to make sense of, or interpret, phenomena in terms of the meanings people bring to them. Denzin and Lincoln (1994, p. 2)

Interest in qualitative data came about as the result of the dissatisfaction of some psychologists (e.g., Carl Rogers) with the scientific approach of psychologists such as the behaviorists (e.g., Skinner).

Since psychologists study people, these critics do not see the traditional approach to science as an appropriate way of carrying out research, since it fails to capture the totality of human experience and the essence of being human. Exploring participants’ experiences is known as a phenomenological approach (re: Humanism).

Qualitative research is primarily concerned with meaning, subjectivity, and lived experience. The goal is to understand the quality and texture of people’s experiences, how they make sense of them, and the implications for their lives.

Qualitative research aims to understand the social reality of individuals, groups, and cultures as nearly as possible as participants feel or live it. Thus, people and groups are studied in their natural setting.

Some examples of qualitative research questions are provided, such as what an experience feels like, how people talk about something, how they make sense of an experience, and how events unfold for people.

Research following a qualitative approach is exploratory and seeks to explain ‘how’ and ‘why’ a particular phenomenon, or behavior, operates as it does in a particular context. It can be used to generate hypotheses and theories from the data.

Qualitative Methods

There are different types of qualitative research methods, including diary accounts, in-depth interviews, documents, focus groups, case study research, and ethnography.

The results of qualitative methods provide a deep understanding of how people perceive their social realities and in consequence, how they act within the social world.

The researcher has several methods for collecting empirical materials, ranging from the interview to direct observation, to the analysis of artifacts, documents, and cultural records, to the use of visual materials or personal experience. Denzin and Lincoln (1994, p. 14)

Here are some examples of qualitative data:

Interview transcripts: Verbatim records of what participants said during an interview or focus group. They allow researchers to identify common themes and patterns, and draw conclusions based on the data. Interview transcripts can also be useful in providing direct quotes and examples to support research findings.

Observations: The researcher typically takes detailed notes on what they observe, including any contextual information, nonverbal cues, or other relevant details. The resulting observational data can be analyzed to gain insights into social phenomena, such as human behavior, social interactions, and cultural practices.

Unstructured interviews: These generate qualitative data through the use of open questions. This allows the respondent to talk in some depth, choosing their own words. This helps the researcher develop a real sense of a person’s understanding of a situation.

Diaries or journals: Written accounts of personal experiences or reflections.

Notice that qualitative data could be much more than just words or text. Photographs, videos, sound recordings, and so on, can be considered qualitative data. Visual data can be used to understand behaviors, environments, and social interactions.

Qualitative Data Analysis

Qualitative research is endlessly creative and interpretive. The researcher does not just leave the field with mountains of empirical data and then easily write up his or her findings.

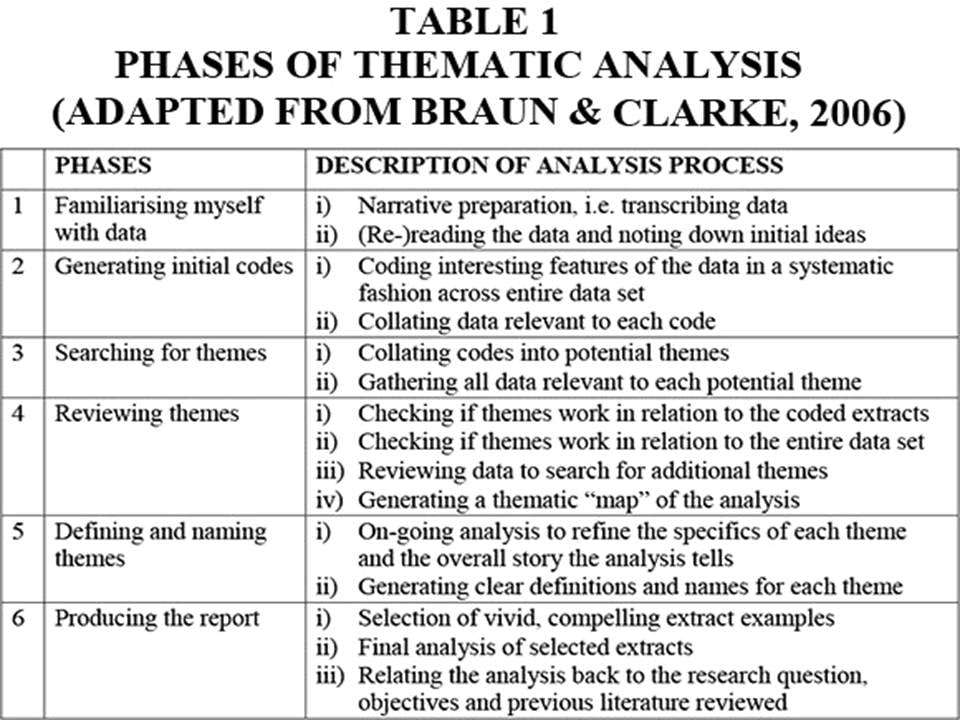

Qualitative interpretations are constructed, and various techniques can be used to make sense of the data, such as content analysis, grounded theory (Glaser & Strauss, 1967), thematic analysis (Braun & Clarke, 2006), or discourse analysis.

For example, thematic analysis is a qualitative approach that involves identifying implicit or explicit ideas within the data. Themes will often emerge once the data has been coded.

Key Features

- Events can be understood adequately only if they are seen in context. Therefore, a qualitative researcher immerses her/himself in the field, in natural surroundings. The contexts of inquiry are not contrived; they are natural. Nothing is predefined or taken for granted.

- Qualitative researchers want those who are studied to speak for themselves, to provide their perspectives in words and other actions. Therefore, qualitative research is an interactive process in which the persons studied teach the researcher about their lives.

- The qualitative researcher is an integral part of the data; without the active participation of the researcher, no data exists.

- The study’s design evolves during the research and can be adjusted or changed as it progresses. For the qualitative researcher, there is no single reality. It is subjective and exists only in reference to the observer.

- The theory is data-driven and emerges as part of the research process, evolving from the data as they are collected.

Limitations of Qualitative Research

- Because of the time and costs involved, qualitative designs do not generally draw samples from large-scale data sets.

- The problem of adequate validity or reliability is a major criticism. Because of the subjective nature of qualitative data and its origin in single contexts, it is difficult to apply conventional standards of reliability and validity. For example, because of the central role played by the researcher in the generation of data, it is not possible to replicate qualitative studies.

- Also, contexts, situations, events, conditions, and interactions cannot be replicated to any extent, nor can generalizations be made to a wider context than the one studied with confidence.

- The time required for data collection, analysis, and interpretation is lengthy. Analysis of qualitative data is difficult, and expert knowledge of an area is necessary to interpret qualitative data. Great care must be taken when doing so, for example, looking for mental illness symptoms.

Advantages of Qualitative Research

- Because of close researcher involvement, the researcher gains an insider’s view of the field. This allows the researcher to find issues that are often missed (such as subtleties and complexities) by the scientific, more positivistic inquiries.

- Qualitative descriptions can be important in suggesting possible relationships, causes, effects, and dynamic processes.

- Qualitative analysis allows for ambiguities/contradictions in the data, which reflect social reality (Denscombe, 2010).

- Qualitative research uses a descriptive, narrative style; this research might be of particular benefit to the practitioner as she or he could turn to qualitative reports to examine forms of knowledge that might otherwise be unavailable, thereby gaining new insight.

What Is Quantitative Research?

Quantitative research involves the process of objectively collecting and analyzing numerical data to describe, predict, or control variables of interest.

The goals of quantitative research are to test causal relationships between variables, make predictions, and generalize results to wider populations.

Quantitative researchers aim to establish general laws of behavior and phenomenon across different settings/contexts. Research is used to test a theory and ultimately support or reject it.

Quantitative Methods

Experiments typically yield quantitative data, as they are concerned with measuring things. However, other research methods, such as controlled observations and questionnaires, can produce both quantitative and qualitative information.

For example, a rating scale or closed questions on a questionnaire would generate quantitative data as these produce either numerical data or data that can be put into categories (e.g., “yes,” “no” answers).

Experimental methods limit how research participants react to and express appropriate social behavior.

Findings are, therefore, likely to be context-bound and simply a reflection of the assumptions that the researcher brings to the investigation.

There are numerous examples of quantitative data in psychological research, including mental health. Here are a few examples:

For example, the Beck Depression Inventory (BDI) is a self-report questionnaire widely used to assess the severity of depressive symptoms in individuals.

The BDI consists of 21 questions, each scored on a scale of 0 to 3, with higher scores indicating more severe depressive symptoms.

Another example is the Experience in Close Relationships Scale (ECR), a self-report questionnaire widely used to assess adult attachment styles.

The ECR provides quantitative data that can be used to assess attachment styles and predict relationship outcomes.

Neuroimaging data: Neuroimaging techniques, such as MRI and fMRI, provide quantitative data on brain structure and function.

This data can be analyzed to identify brain regions involved in specific mental processes or disorders.
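The BDI scoring described above (21 items, each scored 0 to 3) shows how a questionnaire turns answers into a number. The sketch below assumes the total is the simple sum of the item scores, so it can range from 0 to 63; the `score_inventory` helper and the responses are invented for illustration, and no severity cut-offs are implied.

```python
def score_inventory(responses, items=21, min_score=0, max_score=3):
    """Sum item responses for a 21-item inventory scored 0 to 3 per item.

    Validates the number of items and the range of each response before
    summing, so the total always lies between 0 and 63.
    """
    if len(responses) != items:
        raise ValueError(f"expected {items} responses, got {len(responses)}")
    for value in responses:
        if not min_score <= value <= max_score:
            raise ValueError(f"response {value} is outside {min_score}-{max_score}")
    return sum(responses)


# Invented responses for one participant (not real data)
responses = [0, 1, 2, 1, 0, 0, 3, 1, 1, 0, 2, 1, 0, 0, 1, 2, 1, 0, 1, 0, 1]
total = score_inventory(responses)
print(f"total score: {total} out of {21 * 3}")
```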

Quantitative Data Analysis

Statistics help us turn quantitative data into useful information to help with decision-making. We can use statistics to summarize our data, describing patterns, relationships, and connections. Statistics can be descriptive or inferential.

Descriptive statistics help us to summarize our data. In contrast, inferential statistics are used to identify statistically significant differences between groups of data (such as intervention and control groups in a randomized control study).

Key Features

- Quantitative researchers try to control extraneous variables by conducting their studies in the lab.

- The research aims for objectivity (i.e., without bias) and is separated from the data.

- The design of the study is determined before it begins.

- For the quantitative researcher, the reality is objective, exists separately from the researcher, and can be seen by anyone.

- Research is used to test a theory and ultimately support or reject it.

Limitations of Quantitative Research

- Context: Quantitative experiments do not take place in natural settings. In addition, they do not allow participants to explain their choices or the meaning the questions may have for them (Carr, 1994).

- Researcher expertise: Poor knowledge of the application of statistical analysis may negatively affect analysis and subsequent interpretation (Black, 1999).

- Variability of data quantity: Large sample sizes are needed for more accurate analysis. Small-scale quantitative studies may be less reliable because of the low quantity of data (Denscombe, 2010). This also affects the ability to generalize study findings to wider populations.

- Confirmation bias: The researcher might miss observing phenomena because of a focus on theory or hypothesis testing rather than on theory or hypothesis generation.

Advantages of Quantitative Research

- Scientific objectivity: Quantitative data can be interpreted with statistical analysis, and since statistics are based on the principles of mathematics, the quantitative approach is viewed as scientifically objective and rational (Carr, 1994; Denscombe, 2010).

- Useful for testing and validating already constructed theories.

- Rapid analysis: Sophisticated software removes much of the need for prolonged data analysis, especially with large volumes of data involved (Antonius, 2003).

- Replication: Quantitative data is based on measured values and can be checked by others because numerical data is less open to ambiguities of interpretation.

- Hypotheses can also be tested because of statistical analysis (Antonius, 2003).

Antonius, R. (2003). Interpreting quantitative data with SPSS. Sage.

Black, T. R. (1999). Doing quantitative research in the social sciences: An integrated approach to research design, measurement and statistics. Sage.

Braun, V. & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3, 77–101.

Carr, L. T. (1994). The strengths and weaknesses of quantitative and qualitative research: what method for nursing? Journal of Advanced Nursing, 20(4), 716-721.

Denscombe, M. (2010). The Good Research Guide: for small-scale social research. McGraw Hill.

Denzin, N., & Lincoln. Y. (1994). Handbook of Qualitative Research. Thousand Oaks, CA, US: Sage Publications Inc.

Glaser, B. G., Strauss, A. L., & Strutzel, E. (1968). The discovery of grounded theory; strategies for qualitative research. Nursing research, 17(4) , 364.

Minichiello, V. (1990). In-Depth Interviewing: Researching People. Longman Cheshire.

Punch, K. (1998). Introduction to Social Research: Quantitative and Qualitative Approaches. London: Sage

Further Information

- Designing qualitative research

- Methods of data collection and analysis

- Introduction to quantitative and qualitative research

- Checklists for improving rigour in qualitative research: a case of the tail wagging the dog?

- Qualitative research in health care: Analysing qualitative data

- Qualitative data analysis: the framework approach

- Using the framework method for the analysis of qualitative data in multi-disciplinary health research

- Content Analysis

- Grounded Theory

- Thematic Analysis

A Critical Evaluation of Qualitative & Quantitative Research Designs Compare & Contrast Essay

A wealth of literature demonstrates that research is a discursive practice that must be carried out using meticulous and systematic means so as to meet pertinent norms and standards, especially in regard to its validity, reliability, and rationale (Lankshear, n.d.).

Equally, good quality research must have the capacity to elucidate strong evidence in the form of quantitative or qualitative data that is relevant to a phenomenon or variable under study.

The development of an effective procedure or guideline of undertaking the research is of paramount importance in the context of allowing objective data to be collected, organized, analyzed and presented in ways that will allow people to acknowledge that the findings are not only informative, but the inferences drawn upon them are logical.

This paper sets out to evaluate some of the differences between quantitative and qualitative research designs and the characteristics of each.

Both qualitative and quantitative research studies have unique characteristics. To start with, it is imperative to note that qualitative researchers are mainly concerned with studying the subject matter in the natural settings in an effort to make sense of, or to understand, observable occurrences in terms of the meanings attached to them by people (Patton, 2002).

One of the characteristics of a qualitative research study, therefore, is that it is interested in studying real-world situations or occurrences as they unfold naturally without controlling or manipulating any variables. In essence, it is not predetermined as is the case with quantitative studies.

The second characteristic is that most qualitative studies utilize purposive sampling procedures. Specifically, cases designated for study are selected by virtue of the fact that they have the needed information and provide valuable manifestations of the phenomenon under study.

In most qualitative studies, sampling of cases is aimed at getting more insight about the phenomenon under study, not empirical generalization from a sample of subjects to a population as is the case in quantitative studies (Patton, 2002).

The third characteristic of a qualitative research study is that the design adopted must be open and flexible enough to enable the researcher to adapt to new lines of inquiry depending on the level of understanding needed.

A qualitative design must have the capacity to accommodate new situations as they emerge, in addition to allowing the researcher to pursue new paths of discovery, as opposed to a quantitative design, which is rigid and unresponsive (Patton, 2002).

Quantitative studies, on the other hand, are basically undertaken by developing a testable hypothesis or research questions and collecting data, which are then ordered and statistically analyzed to produce findings. Finally, the inferences drawn from the findings will determine whether the original hypothesis is supported by the evidence collected from the field (Creswell, 2003). One of the unique characteristics of a quantitative study is that the researcher is independent of the phenomena under study, as opposed to a qualitative study, where the researcher must always interact with the phenomena under study.

The second characteristic is that reality in a quantitative study is viewed in an objective and singular manner, intricately separate from the researcher (Creswell, 2003).

A qualitative study, however, views reality in a subjective manner. Finally, the facts collected from the field in a quantitative study must be value-free and unbiased, whereas in a qualitative study the facts are often value-laden and biased.

The type of research design adopted by the researcher to a large extent influences the sampling method to be used for the study (Patton, 2002). A sampling method, according to Creswell (2003), is basically a technique employed in drawing samples from a larger population in such a way that the sample drawn will assist in the determination of some observations or hypothesis concerning the population.

There exist different types of sampling methods, each with its own practical importance in relation to the kind of research design employed for the study.

As such, most quantitative research designs utilize probability sampling procedures, which include random, systematic, and stratified sampling techniques. It is imperative to note that in probability sampling procedures, every subject within a population has a known non-zero chance or probability of being selected (StatPac, 2010).

In random sampling method, the nature of the population must first be defined to allow all subjects equal chance of selection. This is the purest form of probability sampling, and greatly assists quantitative researchers to come up with objective and unbiased data from the field (Creswell, 2003).

In stratified sampling technique, a stratum or a subset of the population that is known to share some common aspects is used to select the sample, thereby reducing sampling error. When the relevant subsets are identified by the researcher and their actual representation in the population known, random sampling is then employed to select adequate number of participants from each subset.

This type of sampling is helpful to a quantitative researcher since it allows him or her to study the subgroups in greater detail while in their own naturalistic world and free from value interferences or bias (Marshall, 1996; Creswell, 2003). Still, this type of sampling assists the quantitative researcher to study some unique subsets within a population that may have low or high incidences of a particular phenomenon relative to the other subsets (StatPac, 2010).

Systematic sampling, for its part, works in much the same way as random sampling but selects every Nth member of a predetermined list of the population. The technique helps a quantitative researcher to remain objective, and it is also simple to use.
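The three probability sampling techniques described above can be compared side by side in a short sketch. This is an illustration under stated assumptions, not a prescribed procedure: the sampling frame of 100 IDs, the two strata, the 10% sampling fraction and the helper function names are all hypothetical.

```python
import random


def simple_random_sample(population, k, rng):
    """Every member has an equal chance of selection."""
    return rng.sample(population, k)


def systematic_sample(population, step, rng):
    """Pick a random start, then take every `step`-th member of the list."""
    start = rng.randrange(step)
    return population[start::step]


def stratified_sample(strata, fraction, rng):
    """Draw the same fraction at random from each stratum (subset)."""
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


rng = random.Random(7)  # fixed seed so the sketch is reproducible
population = list(range(1, 101))  # invented sampling frame of 100 IDs
strata = {
    "under_40": population[:60],    # hypothetical strata
    "40_and_over": population[60:],
}

print(simple_random_sample(population, 10, rng))
print(systematic_sample(population, 10, rng))
print(stratified_sample(strata, 0.1, rng))
```

Note that the stratified draw always returns six members from the larger stratum and four from the smaller one, which is how it keeps each subset represented in proportion to its size.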

Most qualitative research designs employ non-probability sampling procedures, with the most common type being the convenience and purposeful sampling techniques (StatPac, 2010).

It is however imperative to note that some non-probability sampling techniques such as convenience sampling can be used in quantitative research and some probability sampling techniques such as stratified sampling can also be used in qualitative research (Creswell, 2003).

In a convenience sample, subjects are selected by virtue of being in the right place at the right time. In short, subjects are selected based on convenience. This is helpful to both quantitative and qualitative researchers since it saves not only time but also money (StatPac, 2010).

Due to the depth of information required in qualitative studies, most researchers employ purposive sampling technique to get the participant who is well placed to offer comprehensive and detailed information about a case or phenomena under study (Creswell, 2003).

As such, this technique is not interested in calculating numbers about sampling errors and sample representation as is the case in samples used in quantitative research; on the contrary, a purposive sample is interested in gaining an in-depth analysis of something, and therefore may be value-laden and subjective. However, these externalities form the basis of qualitative research.

As such, it can be said that a sampling method such as random sampling will assist in quantitative research since the nature of the research design is more mechanistic and only interested in coming up with results that can then be generalized to the wider population.

However, a sampling method such as purposive sampling will serve qualitative studies well since they are interested in providing illumination and understanding of complicated issues that can only be answered by the ‘why’ and ‘how’ questions (Marshall, 1996).

Such questions can only be answered by someone who is knowledgeable enough about the case under study, thus the use of purposive sampling.

Second, it is known that for a true random or stratified sample to be selected, the characteristics under investigation must be known for the whole population beforehand. This is often possible in quantitative studies, but is rarely possible in qualitative studies (Marshall, 1996).

Third, the random or stratified sampling mostly used in quantitative research is likely to generate a representative sample only if the underlying characteristics of the study are normally distributed within the population.

However, “…there is no evidence that the values, beliefs and attitudes that form the core of qualitative investigation are normally distributed, making the probability approach inappropriate” (Marshall, 1996, p. 523). Lastly, it is clear that individuals are not equally good at perceiving, understanding, and interpreting either their own or other people’s actions and behavior.

This means that while sampling methods such as random sampling are best suited to quantitative research, since such studies are interested in producing results that can be generalized to a wider population, it is more plausible for a qualitative researcher to employ a sampling technique such as purposive sampling, which will enable him or her to obtain information that is insightful and richer in context.

Reference List

Creswell, J.W. (2003). Research design: Qualitative, quantitative, and mixed method approaches. Thousand Oaks, CA: Sage Publications, Inc.

Lankshear, C. Some notes on the nature and importance of research design within educational research. Web.

Marshall, M.N. (1996). Sampling for qualitative research. Family Practice, 13(6), 522-525. Web.

Patton, M.Q. (2002). Qualitative research and evaluation methods (3rd ed.). Thousand Oaks, CA: Sage Publications, Inc.

StatPac. (2010). Survey sampling methods. Web.


IvyPanda. (2024, March 13). A Critical Evaluation of Qualitative & Quantitative Research Designs. https://ivypanda.com/essays/a-critical-evaluation-of-qualitative-quantitative-research-designs/



- J Clin Diagn Res

- v.11(5); 2017 May

Critical Appraisal of Clinical Research

Azzam Al-Jundi

1 Professor, Department of Orthodontics, King Saud bin Abdul Aziz University for Health Sciences-College of Dentistry, Riyadh, Kingdom of Saudi Arabia.

Salah Sakka

2 Associate Professor, Department of Oral and Maxillofacial Surgery, Al Farabi Dental College, Riyadh, KSA.

Evidence-based practice is the integration of individual clinical expertise with the best available external clinical evidence from systematic research and the patient’s values and expectations into the decision-making process for patient care. Being able to identify and appraise the best available evidence, in order to integrate it with your own clinical experience and your patients’ values, is a fundamental skill. The aim of this article is to provide a robust and simple process for assessing the credibility of articles and their value to your clinical practice.

Introduction

Decisions about patient care are made by carefully integrating the best existing evidence, clinical experience and patient preference. Critical appraisal is the process of carefully and systematically examining research to assess its reliability, value and relevance in order to guide professionals in their clinical decision making [ 1 ].

Critical appraisal is essential to:

- Combat information overload;

- Identify papers that are clinically relevant;

- Support Continuing Professional Development (CPD).

Carrying out Critical Appraisal:

Assessing the research methods used in the study is a prime step in its critical appraisal. This is done using checklists which are specific to the study design.

Standard Common Questions:

- What is the research question?

- What is the study type (design)?

- Selection issues.

- What are the outcome factors and how are they measured?

- What are the study factors and how are they measured?

- What important potential confounders are considered?

- What is the statistical method used in the study?

- Statistical results.

- What conclusions did the authors reach about the research question?

- Are ethical issues considered?

The Critical Appraisal starts by double checking the following main sections:

I. Overview of the paper:

- The publishing journal and the year

- The article title: Does it state key trial objectives?

- The author(s) and their institution(s)

The presence of a peer review process in journal acceptance protocols also adds robustness to the assessment criteria for research papers and hence would indicate a reduced likelihood of publication of poor quality research. Other areas to consider may include authors’ declarations of interest and potential market bias. Attention should be paid to any declared funding or the issue of a research grant, in order to check for a conflict of interest [ 2 ].

II. Abstract: Reading the abstract is a quick way of getting to know the article and its purpose, major procedures and methods, main findings, and conclusions.

- Aim of the study: It should be well and clearly written.

- Materials and Methods: The study design and type of groups, the type of randomization process, the sample size, gender and age, the procedure rendered to each group and the measuring tool(s) should be clearly stated.

- Results: The measured variables with their statistical analysis and significance.

- Conclusion: It must clearly answer the question of interest.

III. Introduction/Background section:

An excellent introduction will include thorough references to earlier work in the area under discussion and express the importance and limitations of what is already known [ 2 ].

- Why is this study considered necessary? What is the purpose of this study? Was the purpose identified before the study, or was it a chance result revealed as part of ‘data searching’?

- What has already been achieved, and how does this study differ?

- Does the scientific approach outline the advantages along with possible drawbacks associated with the intervention or observations?

IV. Methods and Materials section: Full details of how the study was actually carried out should be given. Precise information should be provided on the study design, the population, the sample size and the interventions. All measurement approaches should be clearly stated [ 3 ].

V. Results section: This section should clearly show what actually happened to the subjects. The results might contain raw data and explain the statistical analysis. These can be shown in related tables, diagrams and graphs.

VI. Discussion section: This section should include a thorough comparison of what is already known about the topic of interest with the clinical relevance of what has been newly established. Possible limitations and the need for further studies should also be discussed.

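- Does it summarize the main findings of the study and relate them to any deficiencies in the study design or problems in the conduct of the study? For trials, this includes whether participants were analysed in the groups to which they were originally allocated (intention-to-treat analysis).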

- Does it address any source of potential bias?

- Are interpretations consistent with the results?

- How are null findings interpreted?

- Does it mention how the findings of this study relate to previous work in the area?

- Can they be generalized (external validity)?

- Does it mention their clinical implications/applicability?

- To whom or what are the results/outcomes/findings applicable, and will they affect clinical practice?

- Does the conclusion answer the study question?

- Is the conclusion convincing?

- Does the paper indicate ethics approval?

- Can you identify potential ethical issues?

- Do the results apply to the population in which you are interested?

- Will you use the results of the study?

Once you have answered the preliminary and key questions and identified the research method used, you can incorporate specific questions related to each method into your appraisal process or checklist.

1- What is the research question?

For a study to have value, it should address a significant problem within healthcare and provide new or meaningful results. A useful structure for assessing the problem addressed in the article is the Problem Intervention Comparison Outcome (PICO) method [ 3 ].

P = Patient/Problem/Population: Identify whether the research has a focused question. What is the chief complaint? E.g., disease status, previous ailments, current medications.

I = Intervention: An appropriately and clearly stated management strategy, e.g., a new diagnostic test, treatment or adjunctive therapy.

C = Comparison: A suitable control or alternative, e.g., specific and limited to one alternative choice.

O = Outcomes: The desired results or patient-related consequences have to be identified, e.g., eliminating symptoms, improving function or esthetics.
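As a rough aid, the four PICO elements can be recorded in a small structure that flags which parts of a question are still missing. The sketch below is illustrative only: the class name, field names and the example clinical question are hypothetical and are not taken from the article.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class PicoQuestion:
    """Holds the four PICO elements of a clinical question."""
    population: Optional[str] = None
    intervention: Optional[str] = None
    comparison: Optional[str] = None
    outcome: Optional[str] = None

    def missing_elements(self) -> List[str]:
        """Names of the elements that have not been filled in."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def is_focused(self) -> bool:
        """A question is treated as focused when all four elements are stated."""
        return not self.missing_elements()


# Invented example question, for illustration only.
question = PicoQuestion(
    population="adults with chronic low back pain",
    intervention="supervised exercise programme",
    comparison="usual care",
)
print(question.missing_elements())
```

Here the outcome has been left out, so the question would not yet count as focused under this simple check.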

The clinical question determines which study designs are appropriate. There are five broad categories of clinical questions, as shown in [ Table/Fig-1 ].

[Table/Fig-1]:

Categories of clinical questions and the related study designs.

2- What is the study type (design)?

The study design of the research is fundamental to the usefulness of the study.

In a clinical paper, the methodology employed to generate the results should be fully explained. In general, all questions about the clinical query, the study design, the subjects and the measures taken to reduce bias and confounding should be adequately and thoroughly explored and answered.

Participants/Sample Population:

Researchers identify the target population they are interested in. A sample population is therefore taken and results from this sample are then generalized to the target population.

The sample should be representative of the target population from which it came. Knowing the baseline characteristics of the sample population is important because this allows researchers to see how closely the subjects match their own patients [ 4 ].

Sample size calculation (Power calculation): A trial should be large enough to have a high chance of detecting a worthwhile effect if it exists. Statisticians can work out before the trial begins how large the sample size should be in order to have a good chance of detecting a true difference between the intervention and control groups [ 5 ].
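To make the idea of a power calculation concrete, here is a minimal sketch for one common case: comparing the means of two equal-sized groups using the normal approximation, n per group = 2(z₁₋α/₂ + z₁₋β)²σ²/δ². This textbook formula, the function name and the parameter names are assumptions chosen for illustration; the article does not prescribe a specific method, and real trials may need a different calculation (for example, for proportions or unequal groups).

```python
import math
from statistics import NormalDist


def sample_size_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-group comparison of means.

    delta: smallest difference in means considered worthwhile to detect
    sigma: assumed common standard deviation of the outcome
    alpha: two-sided significance level
    power: desired probability of detecting delta if it truly exists
    """
    if delta == 0:
        raise ValueError("delta must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # value corresponding to the power
    n = 2 * ((z_alpha + z_beta) * sigma / delta) ** 2
    return math.ceil(n)


# Hypothetical inputs: detect a 5-point difference when the SD is 10.
print(sample_size_per_group(delta=5, sigma=10))
```

Halving the difference to be detected roughly quadruples the required sample size, which is why a paper should state the effect size its calculation was based on.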

- Is the sample defined? Human, Animals (type); what population does it represent?

- Does it mention eligibility criteria with reasons?

- Does it mention where and how the sample was recruited, selected and assessed?

- Does it mention where the study was carried out?

- Is the sample size justified? Correctly calculated? Is it adequate to detect statistically and clinically significant results?

- Does it mention a suitable study design/type?

- Is the study type appropriate to the research question?

- Is the study adequately controlled? Does it mention type of randomization process? Does it mention the presence of control group or explain lack of it?

- Are the samples similar at baseline? Is sample attrition mentioned?

- All studies should report the number of participants/specimens at the start of the study, together with details of how many of them completed the study and the reasons for any incomplete follow-up.

- Does it mention who was blinded? Are the assessors and participants blind to the interventions received?

- Does it mention how the data were analysed?

- Are any measurements taken likely to be valid?

Researchers use measuring techniques and instruments that have been shown to be valid and reliable.

Validity refers to the extent to which a test measures what it is supposed to measure, that is, the extent to which the value obtained represents the object of interest.

- Soundness and effectiveness of the measuring instrument;

- What does the test measure?

- Does it measure what it is supposed to measure?

- How well and how accurately does it measure?